The Digital Algorithm Processors for the ATLAS Level-1 Calorimeter Trigger

- Slides: 31

The Digital Algorithm Processors for the ATLAS Level-1 Calorimeter Trigger

Samuel Silverstein, Stockholm University

For the ATLAS TDAQ collaboration

Digital Algorithm Processors for ATLAS L1Calo - RT 2009 Beijing

Acknowledgements

- The work presented here is a major collaborative effort among the member institutes in L1Calo:
  - UK: Rutherford Appleton Laboratory, University of Birmingham, Queen Mary University of London
  - Germany: University of Heidelberg, Johannes Gutenberg University of Mainz
  - Sweden: Stockholm University
- Thanks to many collaborators whose work is included in this talk.
- NB: Much more information and detail in the conference proceedings and TNS submission

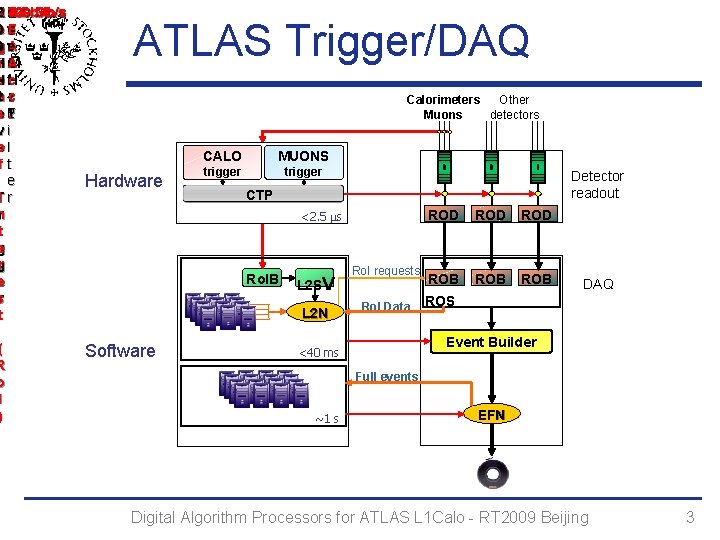

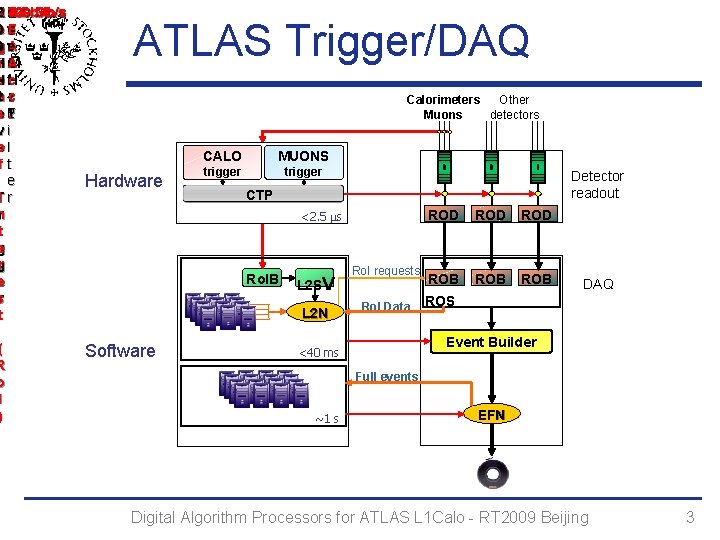

ATLAS Trigger/DAQ (block diagram)

- Detectors: calorimeters, muons, other detectors
- Hardware trigger: CALO and MUONS feed the CTP; latency < 2.5 µs
- Regions of Interest (RoI) passed to Level-2 through the RoIB
- Software trigger: Level-2 (L2SV, L2N) issues RoI requests and receives RoI data; < 40 ms
- Detector readout and DAQ: ROD, ROB, ROS, Event Builder
- Event filter (EFN): full events, ~1 s
- Data rates shown: 120 Gb/s, 3 Gb/s, 300 Mb/s

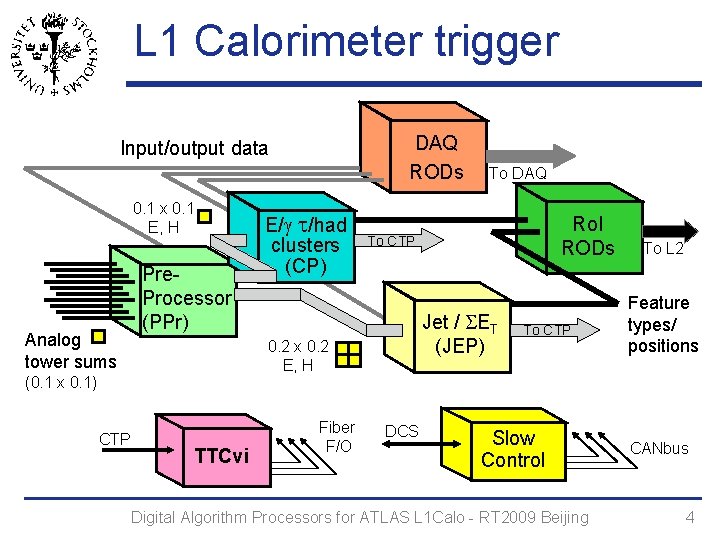

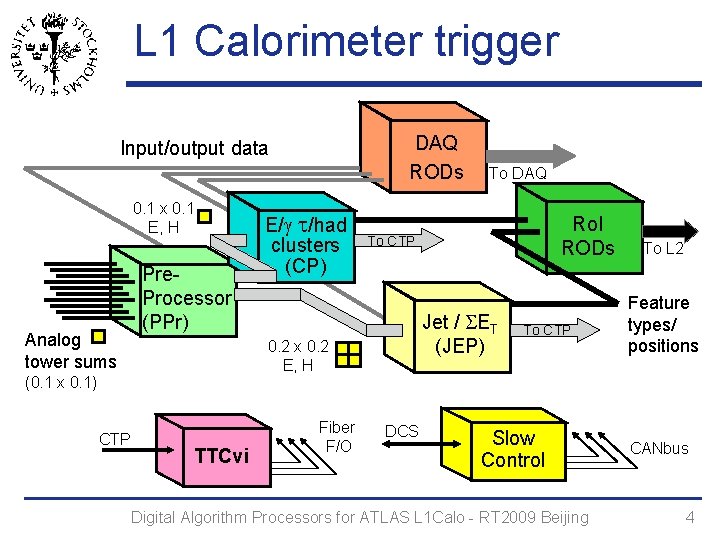

L1 Calorimeter trigger (block diagram)

- Input: analog tower sums (0.1 x 0.1; E, H) to the PreProcessor (PPr)
- PPr to Cluster Processor (CP): 0.1 x 0.1 (E, H); e/γ and τ/had clusters
- PPr to Jet/Energy-sum Processor (JEP): 0.2 x 0.2 (E, H); Jet / ΣET
- CP and JEP results to CTP
- Feature types/positions to L2 via RoI RODs
- Input/output data to DAQ via DAQ RODs
- Timing: TTCvi with fiber fan-out
- Slow control: CANbus to DCS

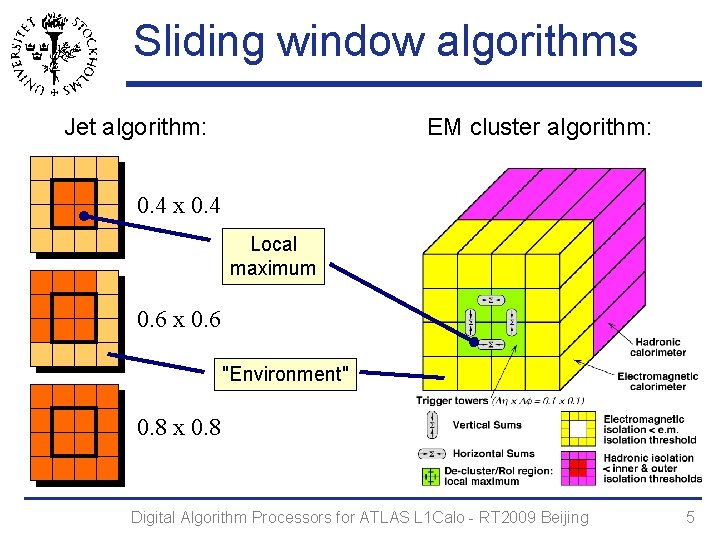

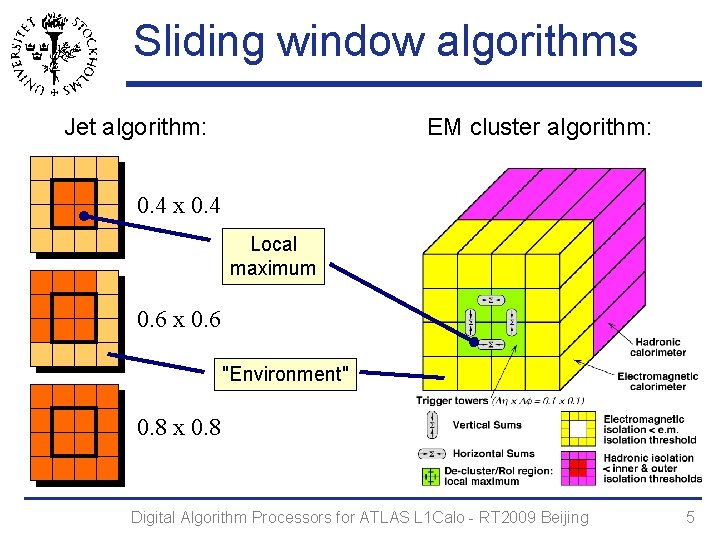

Sliding window algorithms

- EM cluster algorithm
- Jet algorithm: window sizes 0.4 x 0.4, 0.6 x 0.6, 0.8 x 0.8
- Figure labels: local maximum, "environment"
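A minimal sketch of a sliding-window search with a local-maximum test, in the spirit of the algorithms named on this slide. The grid layout, the 2 x 2 window (0.4 x 0.4 if each cell is a 0.2 x 0.2 jet element), the threshold, and the tie-breaking rule are illustrative assumptions, not the firmware implementation.

```python
def window_sum(grid, r, c, size):
    """Sum of the size x size block whose top-left corner is (r, c)."""
    return sum(grid[i][j] for i in range(r, r + size) for j in range(c, c + size))


def find_local_maxima(grid, size=2, threshold=1):
    """Return (row, col, sum) for every window that is a local maximum.

    Assumed tie-break: a window must be strictly greater than neighbours
    above it (or to its left in the same row) and greater-or-equal to the
    others, so two equal adjacent windows never both fire.
    """
    n_r = len(grid) - size + 1
    n_c = len(grid[0]) - size + 1
    sums = [[window_sum(grid, r, c, size) for c in range(n_c)] for r in range(n_r)]
    found = []
    for r in range(n_r):
        for c in range(n_c):
            s = sums[r][c]
            if s < threshold:
                continue
            is_max = True
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (dr, dc) == (0, 0) or not (0 <= rr < n_r and 0 <= cc < n_c):
                        continue
                    strict = (dr, dc) < (0, 0)  # neighbour above, or left in same row
                    if sums[rr][cc] > s or (strict and sums[rr][cc] == s):
                        is_max = False
            if is_max:
                found.append((r, c, s))
    return found


example = [
    [0, 0, 0, 0, 0],
    [0, 3, 4, 0, 0],
    [0, 2, 5, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
maxima = find_local_maxima(example)
```

The asymmetric comparison is one common way to make the result unique when neighbouring windows carry identical sums; a symmetric "greater than all neighbours" test would report no maximum at all in that case.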

Digital algorithm processors

- Two independent subsystems
  - Cluster Processor (CP)
  - Jet/Energy-sum Processor (JEP)
- These have evolved together
  - Common architecture, technology choices
  - In many cases, common hardware

Outline

- Common architecture
  - Input data links
  - Readout scheme
  - Slow control
- Hardware
- Production/commissioning experience
- Outlook (upgrade)

Input data links

- Technology: 480 MBaud serial links
  - National Semiconductor Bus-LVDS
  - 10 data bits @ 40 MHz + 2 sync bits
  - 11 m shielded parallel-pair cables for all links
- Jet/Energy-sum processor (2 crates)
  - 0.2 x 0.2 "jet elements" (9 bits + parity)
  - ~1400 links per crate
- Cluster processor (4 crates)
  - 0.1 x 0.1 "trigger towers" from PreProcessor (8 bits + parity)
  - ~2240 em and hadronic trigger towers per crate!
  - We exploit a feature of the PreProcessor to halve the number of links needed in CP (to ~1120)
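The link figures quoted on this slide follow from simple arithmetic, sketched here. The constant and function names are illustrative, and the assumption of two towers per CP link is taken from the halving of the link count described on the slide.

```python
CLOCK_HZ = 40_000_000        # 40 MHz, as quoted on the slide
DATA_BITS = 10               # data bits per word
SYNC_BITS = 2                # synchronisation bits per word
CP_TOWERS_PER_CRATE = 2240   # approximate figure from the slide


def line_rate_baud(data_bits=DATA_BITS, sync_bits=SYNC_BITS, clock_hz=CLOCK_HZ):
    """Symbols per second on the serial link, including sync bits."""
    return (data_bits + sync_bits) * clock_hz


def payload_rate_bits(data_bits=DATA_BITS, clock_hz=CLOCK_HZ):
    """Data bits per second carried by one link, excluding sync bits."""
    return data_bits * clock_hz


def cp_links_per_crate(towers=CP_TOWERS_PER_CRATE, towers_per_link=2):
    """Links needed when several towers share one link (assumed: two)."""
    return towers // towers_per_link


baud = line_rate_baud()         # (10 + 2) * 40 MHz
payload = payload_rate_bits()   # 10 * 40 MHz
links = cp_links_per_crate()    # 2240 / 2
```

The 12-bit word at 40 MHz gives the 480 MBaud line rate, of which 400 Mbit/s is payload.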

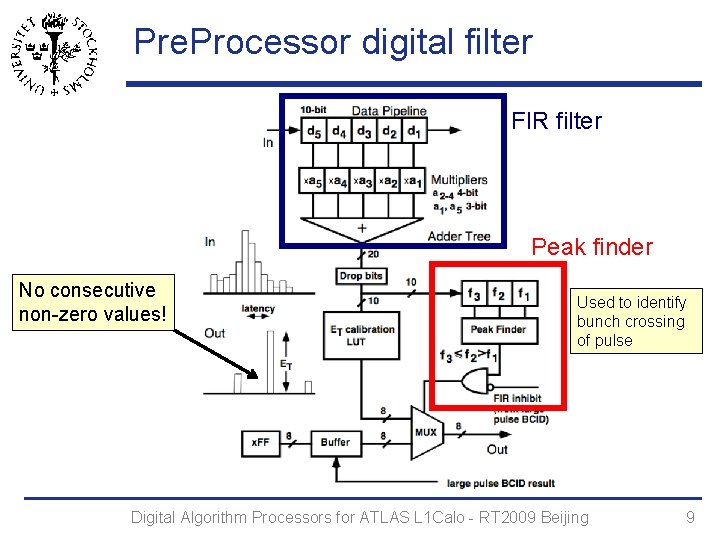

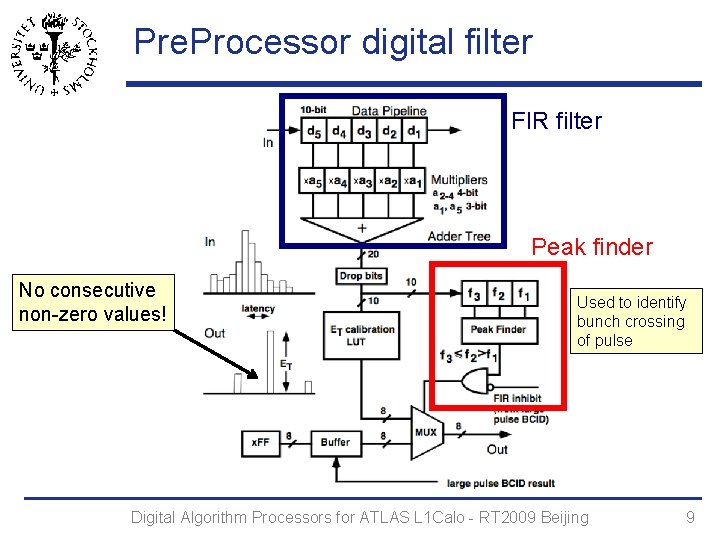

PreProcessor digital filter

- FIR filter
- Peak finder
  - Used to identify bunch crossing of pulse
  - No consecutive non-zero values!
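A minimal sketch of an FIR filter followed by a peak finder, showing why the output never contains two consecutive non-zero values. The coefficients, the sample values, and the exact peak condition (previous < current >= next) are illustrative assumptions, not the PreProcessor's configuration.

```python
def fir_filter(samples, coeffs):
    """Causal FIR: out[n] = sum over k of coeffs[k] * samples[n - k]."""
    out = []
    for n in range(len(samples)):
        acc = 0
        for k, c in enumerate(coeffs):
            if n - k >= 0:
                acc += c * samples[n - k]
        out.append(acc)
    return out


def peak_finder(filtered):
    """Keep filtered[n] only where filtered[n-1] < filtered[n] >= filtered[n+1].

    If n is a peak then filtered[n] >= filtered[n+1], so n+1 cannot satisfy
    the strict '<' on its left side: two adjacent outputs are never both set.
    """
    out = [0] * len(filtered)
    for n in range(1, len(filtered) - 1):
        if filtered[n - 1] < filtered[n] >= filtered[n + 1]:
            out[n] = filtered[n]
    return out


pulse = [0, 0, 1, 4, 8, 5, 2, 0, 0, 0]      # hypothetical digitised pulse
filtered = fir_filter(pulse, [1, 2, 1])     # hypothetical coefficients
peaks = peak_finder(filtered)
```

Only one sample of the pulse survives, which tags the bunch crossing the energy is assigned to.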

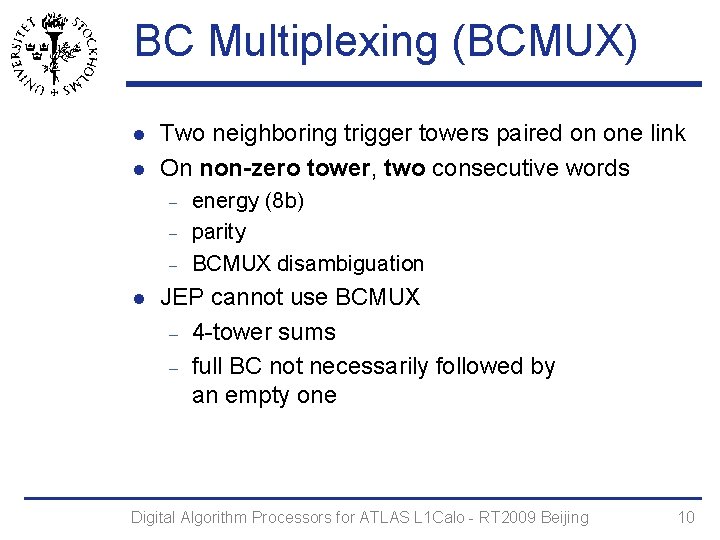

BC Multiplexing (BCMUX)

- Two neighboring trigger towers paired on one link
- On non-zero tower, two consecutive words, each with:
  - energy (8 b)
  - parity
  - BCMUX disambiguation
- JEP cannot use BCMUX
  - 4-tower sums: a full BC is not necessarily followed by an empty one
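One way such a multiplexing scheme can work is sketched below. It relies only on the property that a tower is never non-zero in two consecutive bunch crossings. The meaning given to the disambiguation flag (first word: which tower; second word: which bunch crossing) is an assumption made for this sketch, and parity is omitted.

```python
def bcmux_encode(a, b):
    """Multiplex tower streams a and b onto one link as (energy, flag) words.

    Assumed flag meaning: in the first word of a pair, 0 = tower A, 1 = tower B;
    in the second word, 0 = same bunch crossing as the first word, 1 = the next.
    """
    assert len(a) == len(b)
    out = []
    n = 0
    while n < len(a):
        if a[n] == 0 and b[n] == 0:
            out.append((0, 0))          # idle word
            n += 1
            continue
        first_is_b = a[n] == 0
        first, other = (b, a) if first_is_b else (a, b)
        out.append((first[n], int(first_is_b)))
        if n + 1 < len(a):
            if other[n] != 0:
                out.append((other[n], 0))        # partner tower, same crossing
            else:
                nxt = other[n + 1]
                out.append((nxt, 1 if nxt else 0))  # partner tower, next crossing
        n += 2
    return out


def bcmux_decode(words):
    """Recover the two tower streams from the multiplexed words."""
    a = [0] * len(words)
    b = [0] * len(words)
    n = 0
    while n < len(words):
        value, flag = words[n]
        if value == 0:
            n += 1
            continue
        first, other = (b, a) if flag else (a, b)
        first[n] = value
        if n + 1 < len(words):
            value2, flag2 = words[n + 1]
            other[n + flag2] = value2
        n += 2
    return a, b


tower_a = [0, 5, 0, 0, 7, 0, 0, 3, 0]
tower_b = [0, 9, 0, 0, 0, 4, 0, 2, 0]
link = bcmux_encode(tower_a, tower_b)
decoded = bcmux_decode(link)
```

The link carries one word per bunch crossing for two towers, so the stream length is unchanged while the number of links halves. Sums of several towers do not have the "non-zero is followed by zero" property, which is why the same trick does not apply to the jet elements.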

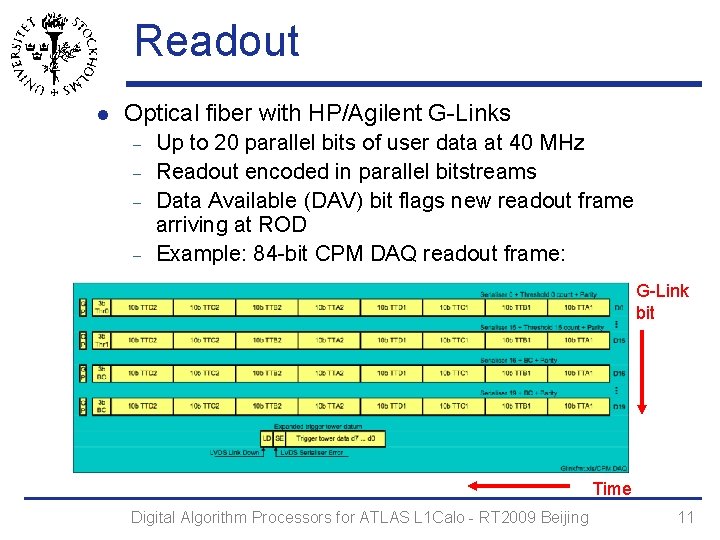

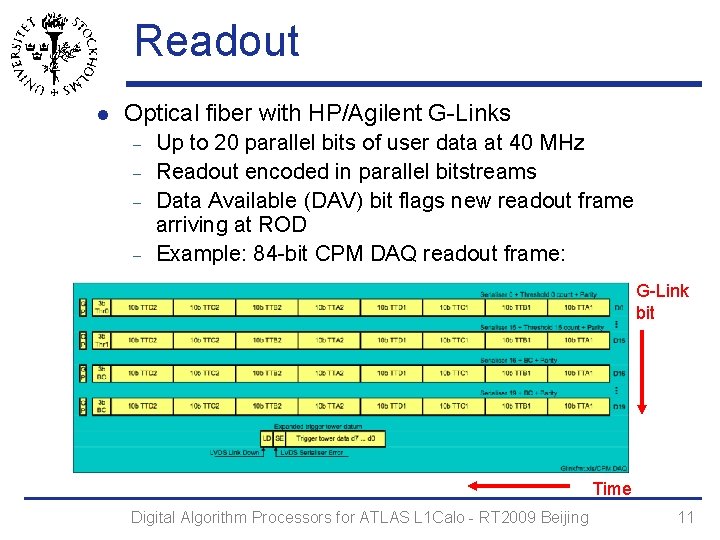

Readout

- Optical fiber with HP/Agilent G-Links
  - Up to 20 parallel bits of user data at 40 MHz
- Readout encoded in parallel bitstreams
  - Data Available (DAV) bit flags new readout frame arriving at ROD
- Example: 84-bit CPM DAQ readout frame (figure axes: G-Link bit vs. time)
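A minimal sketch of readout as parallel bitstreams: each G-Link data bit carries one serial stream, and a frame of 84 bits per stream takes 84 clock ticks. The bit ordering (least significant bit first), the representation of DAV as a per-tick flag, and all function names are assumptions made for illustration.

```python
FRAME_BITS = 84      # frame length from the slide's CPM DAQ example
MAX_PINS = 20        # up to 20 parallel user bits per G-Link word


def serialise_frame(streams, frame_bits=FRAME_BITS):
    """Turn one integer per pin into a list of (dav, word) ticks.

    Tick t carries bit t of every stream, placed at that stream's pin position.
    """
    assert len(streams) <= MAX_PINS
    ticks = []
    for t in range(frame_bits):
        word = 0
        for pin, value in enumerate(streams):
            word |= ((value >> t) & 1) << pin
        ticks.append((1, word))     # DAV asserted while the frame is on the link
    return ticks


def deserialise_frame(ticks, n_pins):
    """Rebuild the per-pin integers, ignoring ticks where DAV is not set."""
    streams = [0] * n_pins
    t = 0
    for dav, word in ticks:
        if not dav:
            continue
        for pin in range(n_pins):
            streams[pin] |= ((word >> pin) & 1) << t
        t += 1
    return streams


def frame_duration_ns(frame_bits=FRAME_BITS, clock_mhz=40):
    """Time on the link for one frame: one bit per stream per clock tick."""
    return frame_bits * 1000 / clock_mhz


payload = [(1 << 84) - 1, 0, 0xABCDEF, 1 << 83]   # hypothetical stream contents
ticks = serialise_frame(payload)
recovered = deserialise_frame([(0, 0)] + ticks + [(0, 0)], len(payload))
```

At 40 MHz an 84-bit frame occupies the link for 84 x 25 ns = 2.1 µs, independent of how many of the parallel bits are in use.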

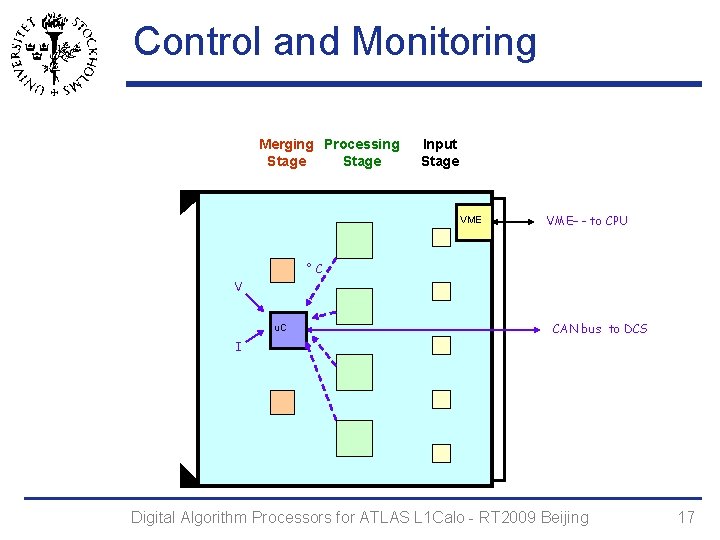

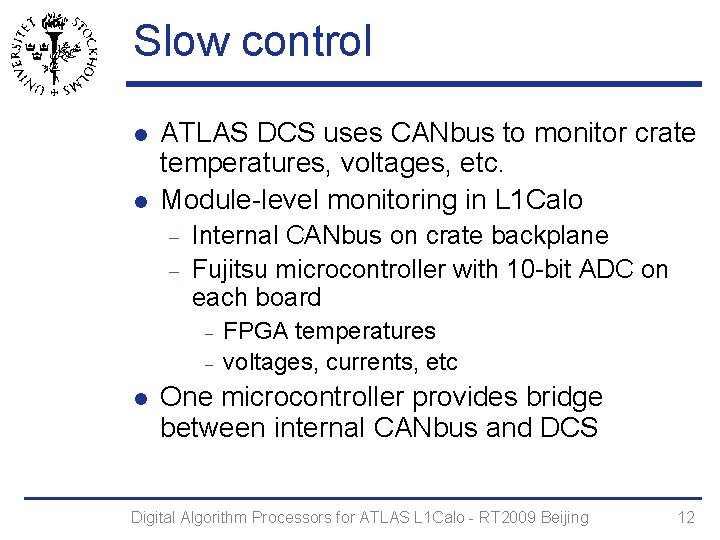

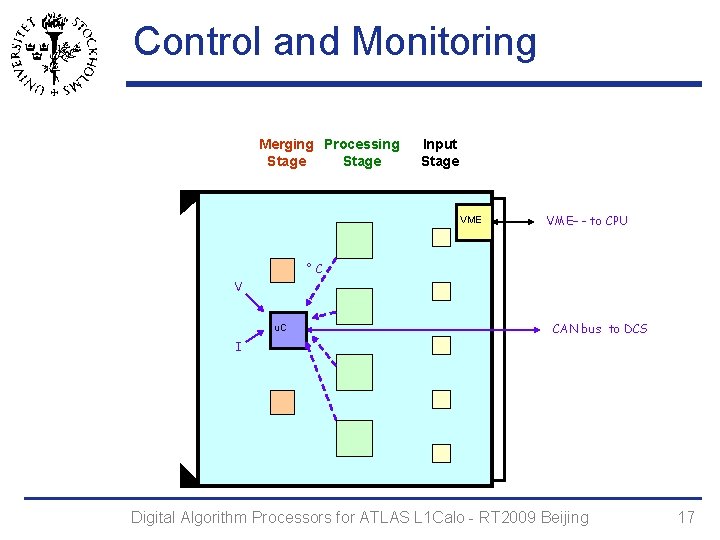

Slow control

- ATLAS DCS uses CANbus to monitor crate temperatures, voltages, etc.
- Module-level monitoring in L1Calo
  - Internal CANbus on crate backplane
  - Fujitsu microcontroller with 10-bit ADC on each board
    - FPGA temperatures
    - Voltages, currents, etc.
  - One microcontroller provides bridge between internal CANbus and DCS

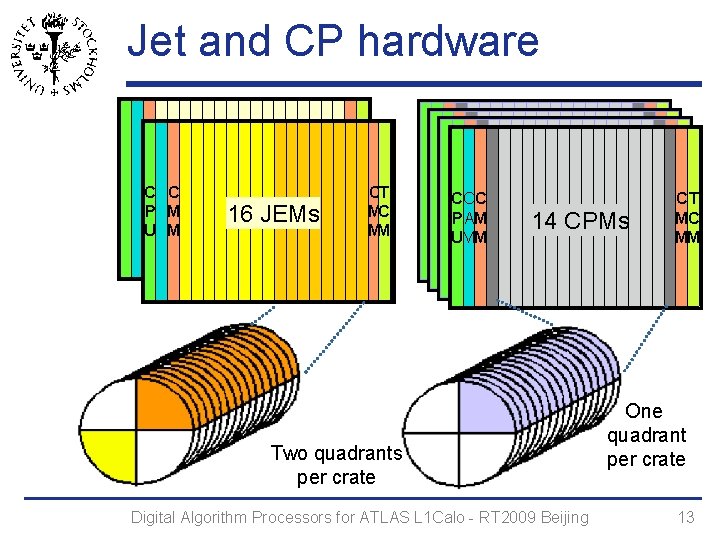

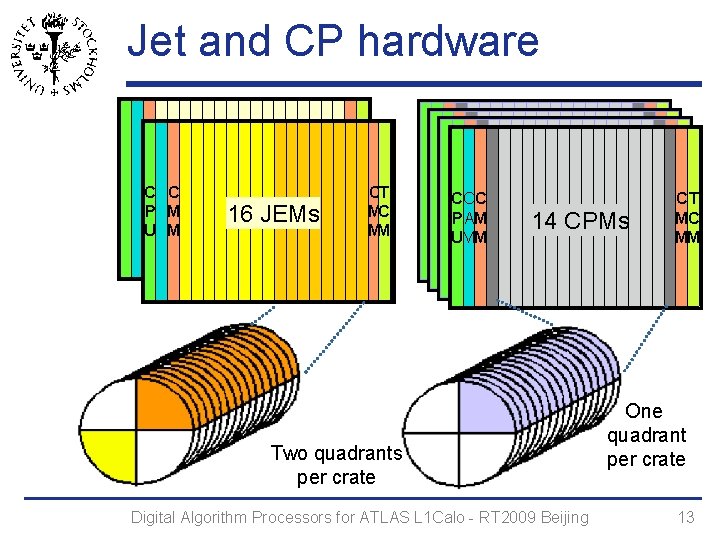

Jet and CP hardware

- JEP crate: CPU, CMM, 16 JEMs, CMM, TCM; two quadrants per crate
- CP crate: CPU, CAM, CMM, 14 CPMs, CMM, TCM; one quadrant per crate

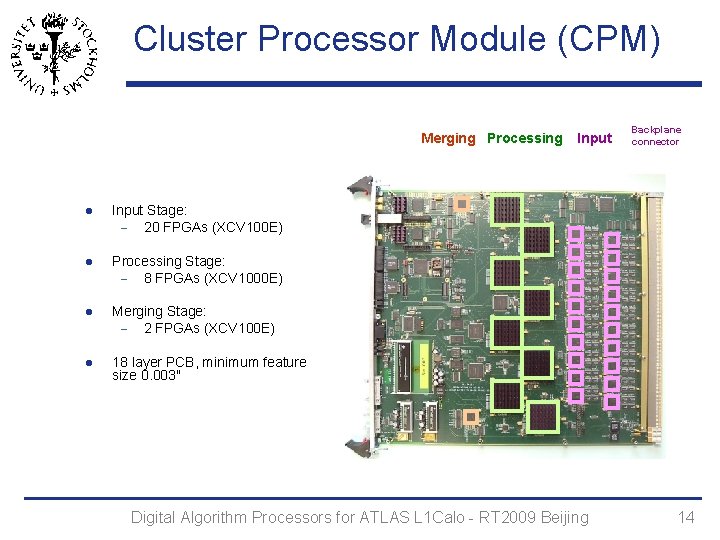

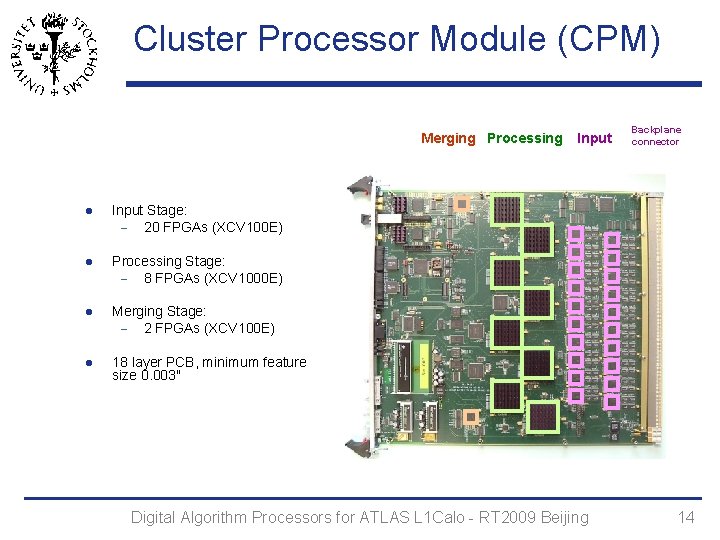

Cluster Processor Module (CPM)

- Input Stage: 20 FPGAs (XCV100E)
- Processing Stage: 8 FPGAs (XCV1000E)
- Merging Stage: 2 FPGAs (XCV100E)
- 18-layer PCB, minimum feature size 0.003"
- Photo labels: Input, Processing, Merging, Backplane connector

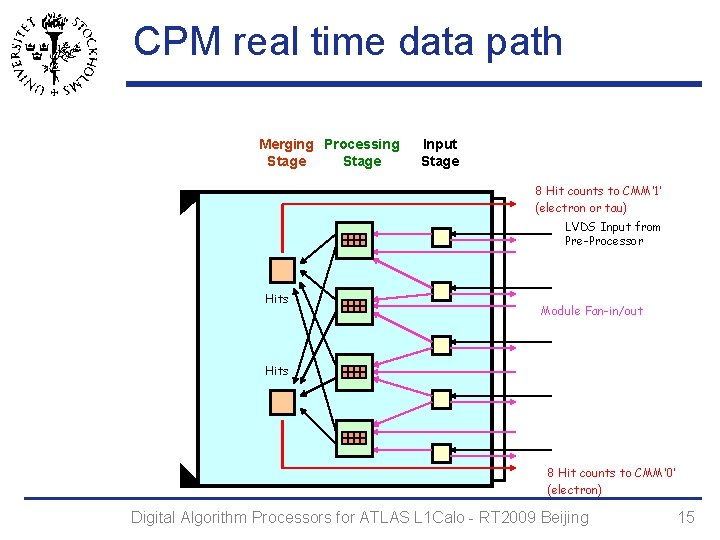

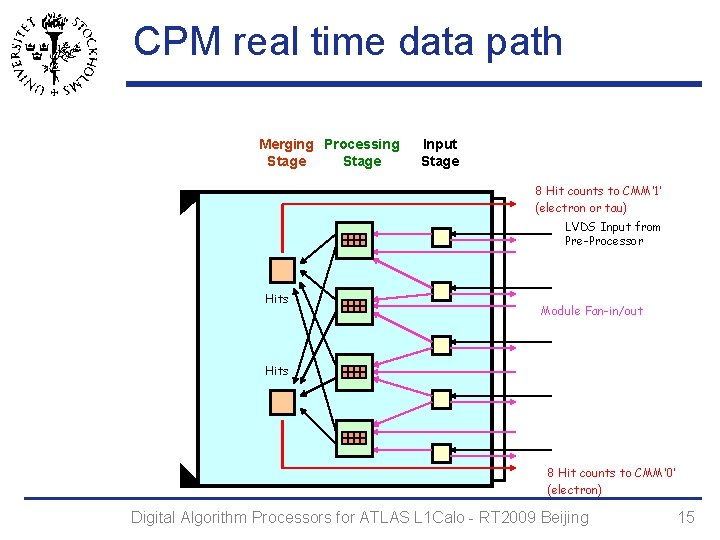

CPM real time data path

- LVDS input from PreProcessor into the Input Stage
- Processing Stage, with module fan-in/out
- Hits passed to the Merging Stage
  - 8 hit counts to CMM '1' (electron or tau)
  - 8 hit counts to CMM '0' (electron)

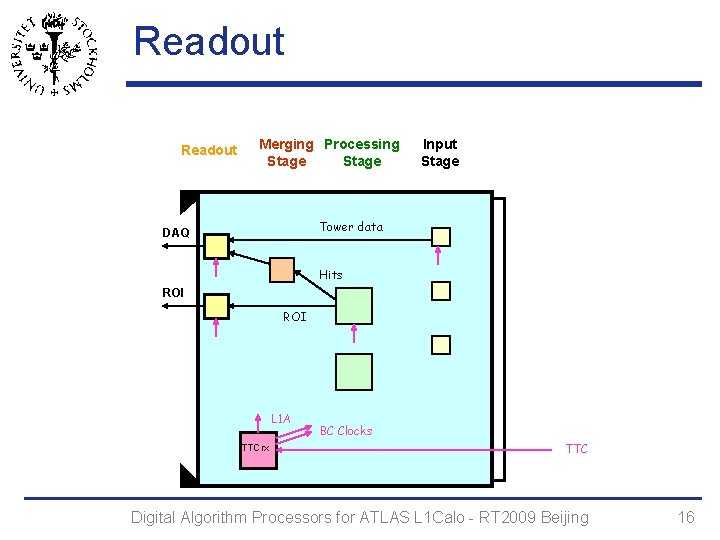

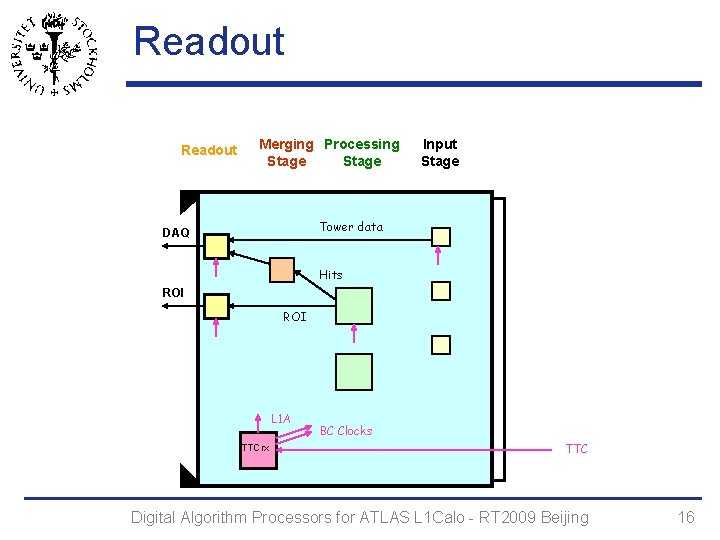

Readout (CPM block diagram)

- Stages: Input Stage, Processing Stage, Merging
- DAQ readout: tower data, hits
- ROI readout
- TTC: TTCrx provides L1A, BC clocks

Control and Monitoring (CPM block diagram)

- Stages: Input Stage, Processing Stage, Merging
- VME-- to CPU
- µC monitors temperature (°C), voltage (V), current (I)
- CAN bus to DCS

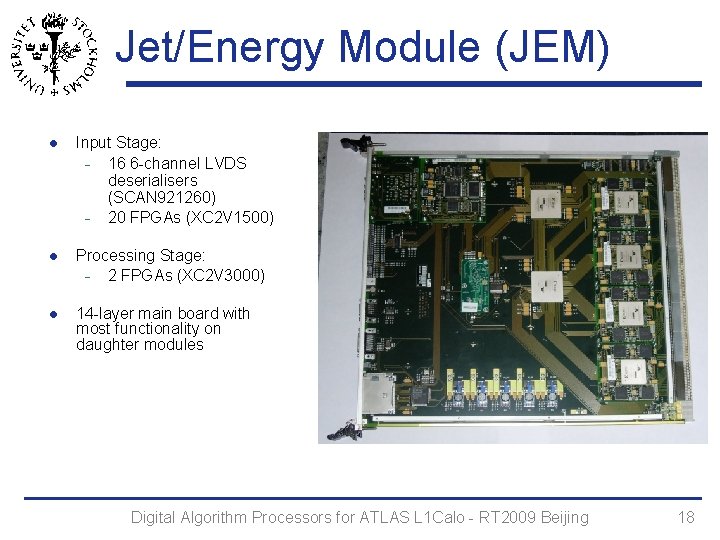

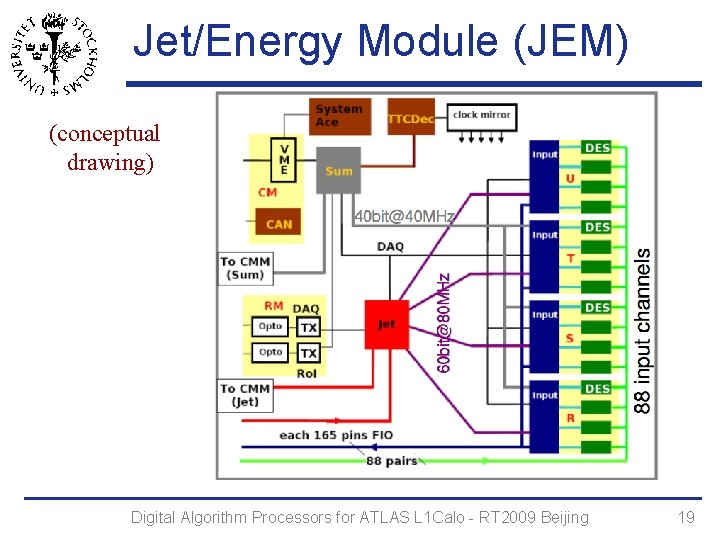

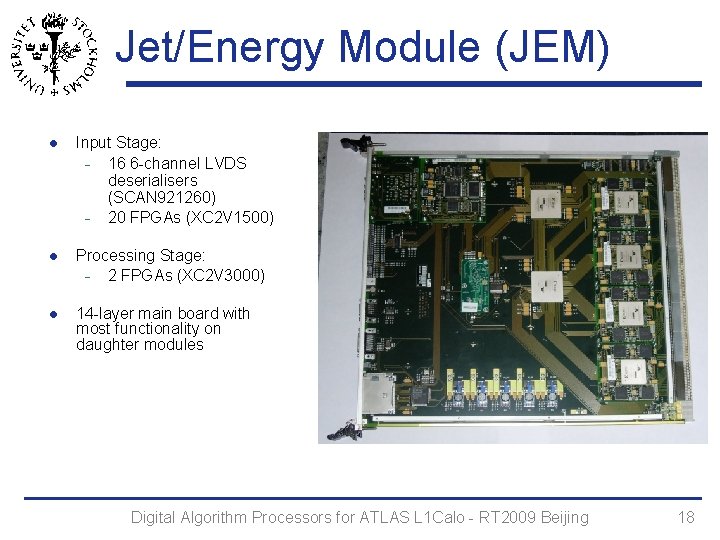

Jet/Energy Module (JEM)

- Input Stage:
  - 16 6-channel LVDS deserialisers (SCAN921260)
  - 20 FPGAs (XC2V1500)
- Processing Stage: 2 FPGAs (XC2V3000)
- 14-layer main board with most functionality on daughter modules

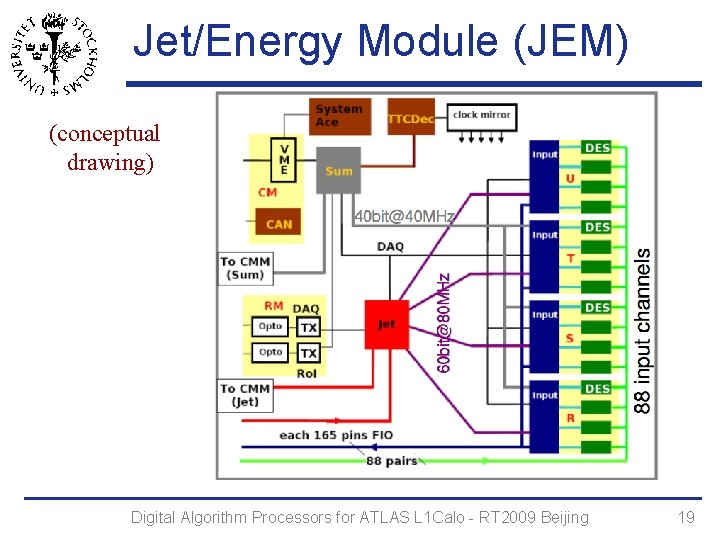

Jet/Energy Module (JEM) (conceptual drawing)

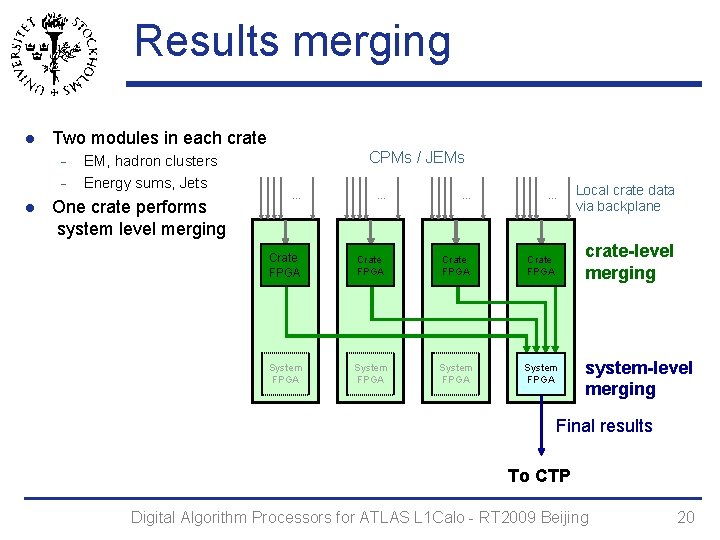

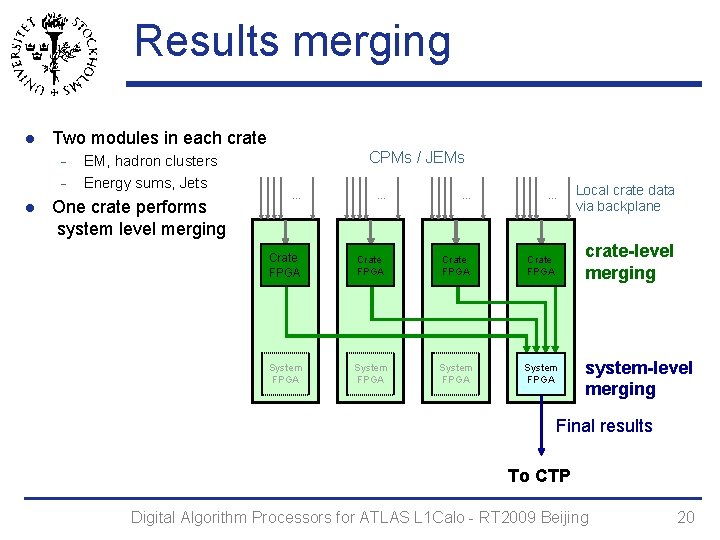

Results merging

- Two modules in each crate
  - EM, hadron clusters
  - Energy sums, jets
- One crate performs system-level merging
- Data flow: CPMs / JEMs, local crate data via backplane, Crate FPGA (crate-level merging), System FPGA (system-level merging), final results to CTP
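The two-level merging can be sketched as an element-wise sum of per-threshold hit counts, first across the modules of a crate and then across crates. The 3-bit count width, the saturating behaviour, and the example values are assumptions for illustration, not details given on the slide.

```python
def saturating_sum(counts, bits=3):
    """Add hit counts, clamping at the largest value the field can hold."""
    limit = (1 << bits) - 1
    return min(sum(counts), limit)


def merge(count_lists, bits=3):
    """Element-wise saturating sum over a list of per-threshold count lists."""
    return [saturating_sum(column, bits) for column in zip(*count_lists)]


# Hypothetical hit counts for three thresholds, one list per module.
crate_0_modules = [[1, 0, 2], [0, 0, 3], [1, 1, 4]]
crate_1_modules = [[3, 0, 0]]

crate_0 = merge(crate_0_modules)        # crate-level merging
crate_1 = merge(crate_1_modules)
system = merge([crate_0, crate_1])      # system-level merging
```

Because the counts are non-negative, clamping at each level gives the same final value as clamping once on the full sum, so the crate and system stages can use identical logic.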

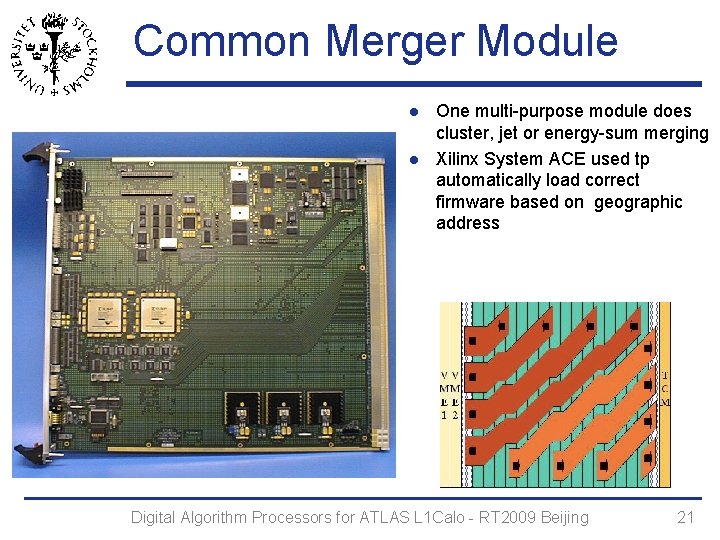

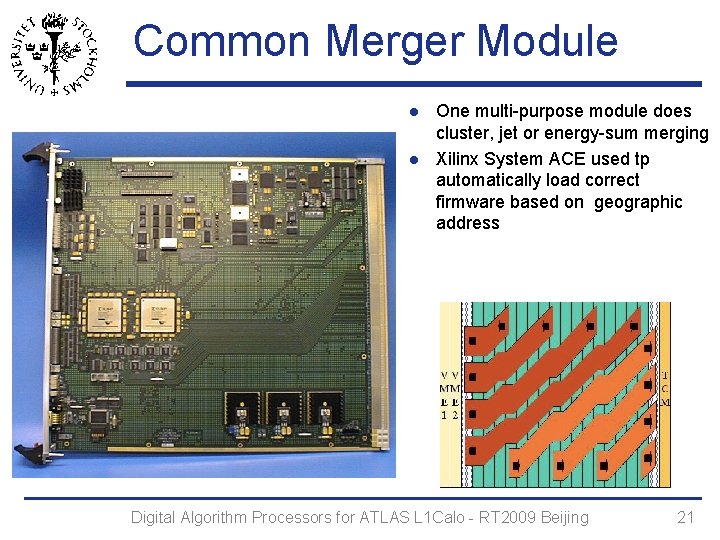

Common Merger Module

- One multi-purpose module does cluster, jet or energy-sum merging
- Xilinx System ACE used to automatically load the correct firmware based on geographic address

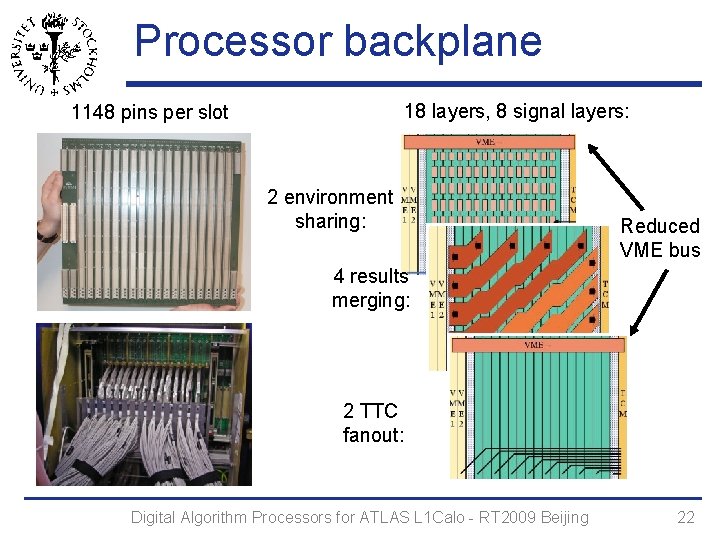

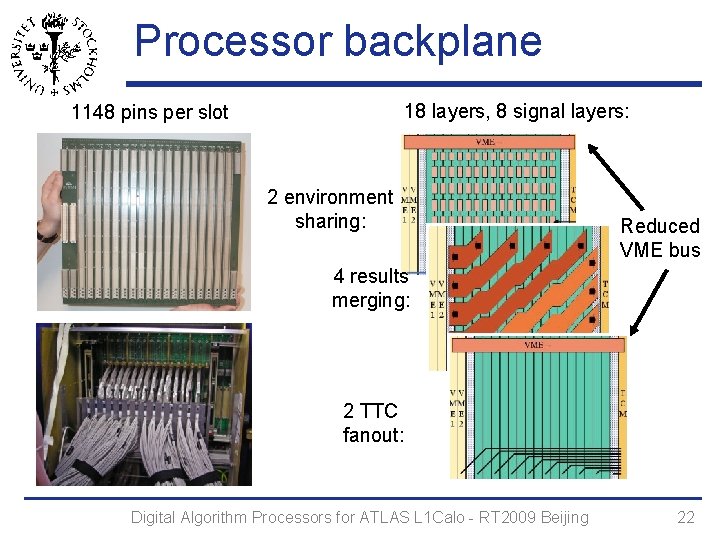

Processor backplane

- 18 layers, 8 signal layers
- 1148 pins per slot
- Functions: environment sharing, reduced VME bus, results merging, TTC fanout

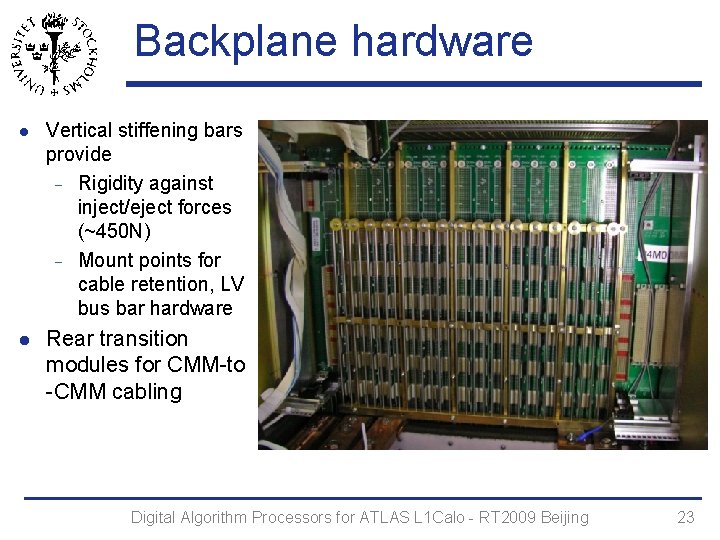

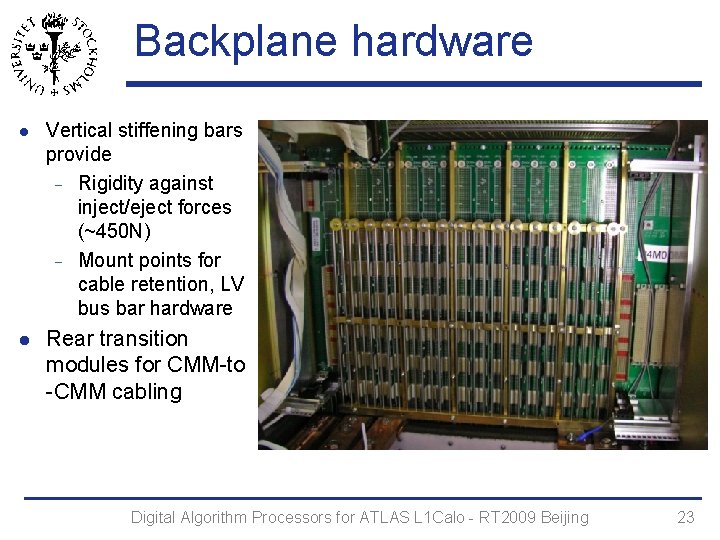

Backplane hardware

- Vertical stiffening bars provide
  - Rigidity against inject/eject forces (~450 N)
  - Mount points for cable retention, LV bus bar hardware
- Rear transition modules for CMM-to-CMM cabling

Other backplane features

- Reduced VME bus (VME--)
  - Constrained by limited pin count
  - A24 D16 slave cycles
  - 3.3 V levels
- Extended geographic addressing
  - Position in crate
  - Which crate in system (set by rotary switch)

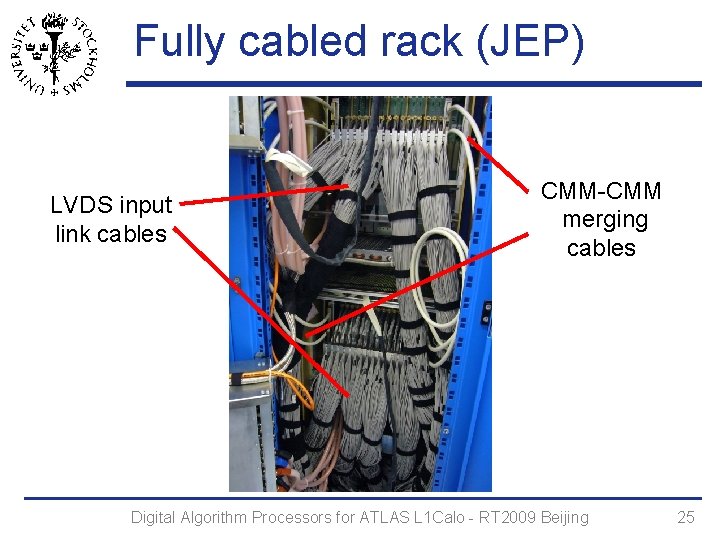

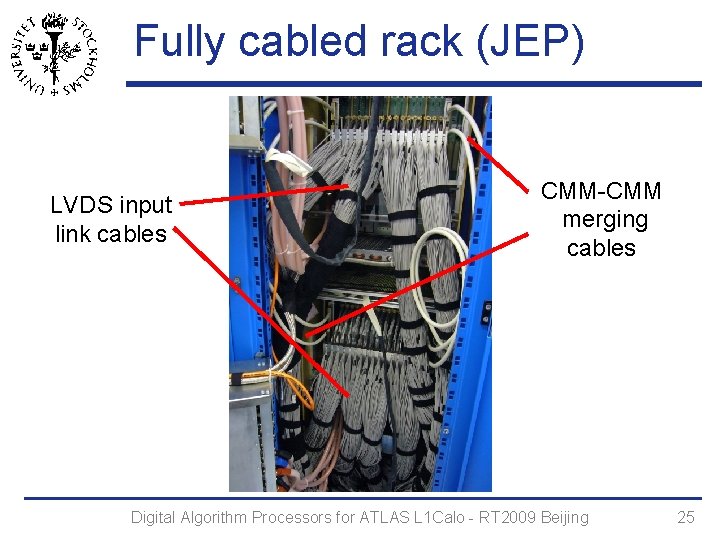

Fully cabled rack (JEP)

- LVDS input link cables
- CMM-CMM merging cables

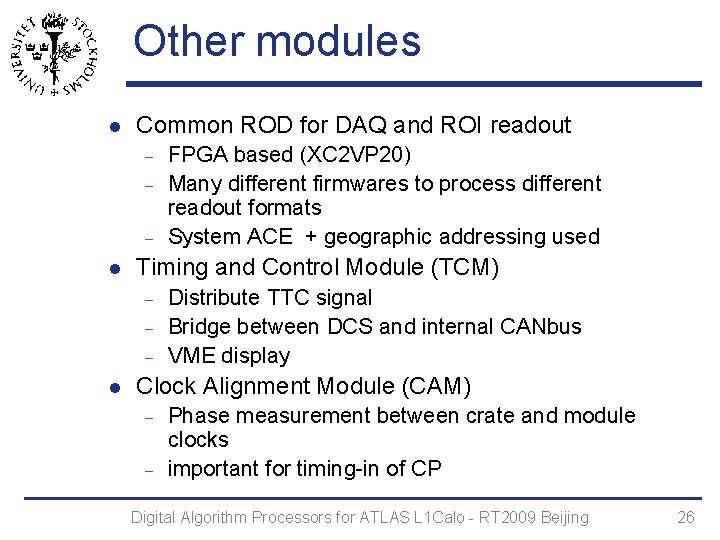

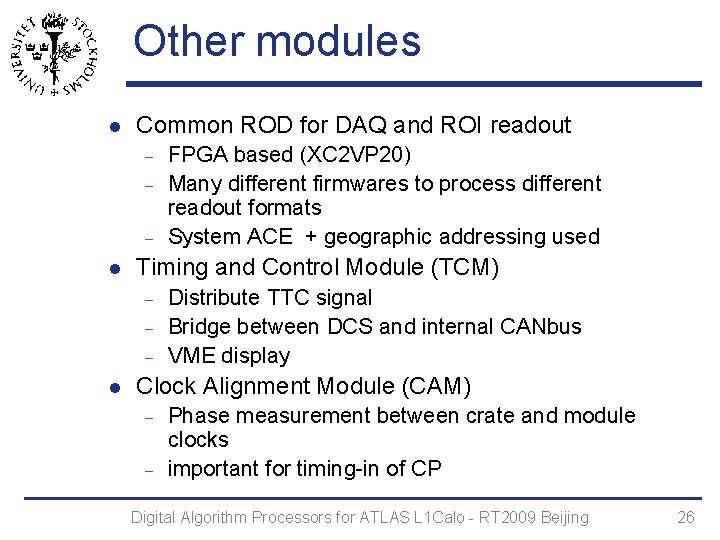

Other modules

- Common ROD for DAQ and ROI readout
  - FPGA based (XC2VP20)
  - Many different firmwares to process different readout formats
  - System ACE + geographic addressing used
- Timing and Control Module (TCM)
  - Distribute TTC signal
  - Bridge between DCS and internal CANbus
  - VME display
- Clock Alignment Module (CAM)
  - Phase measurement between crate and module clocks
  - Important for timing-in of CP

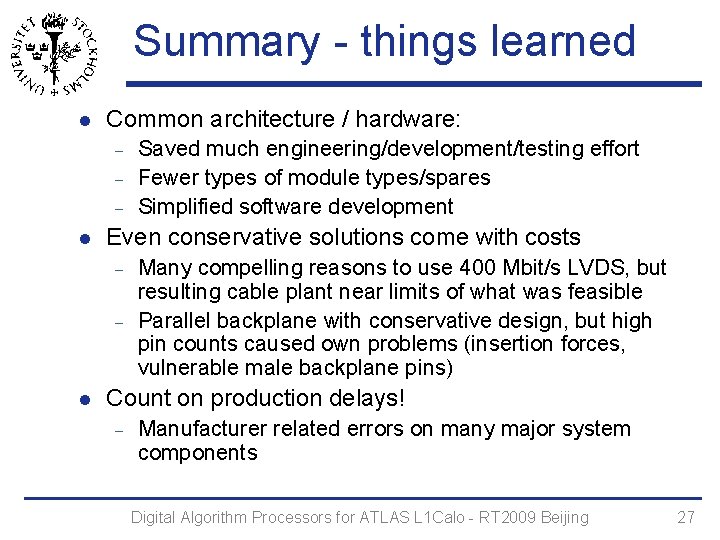

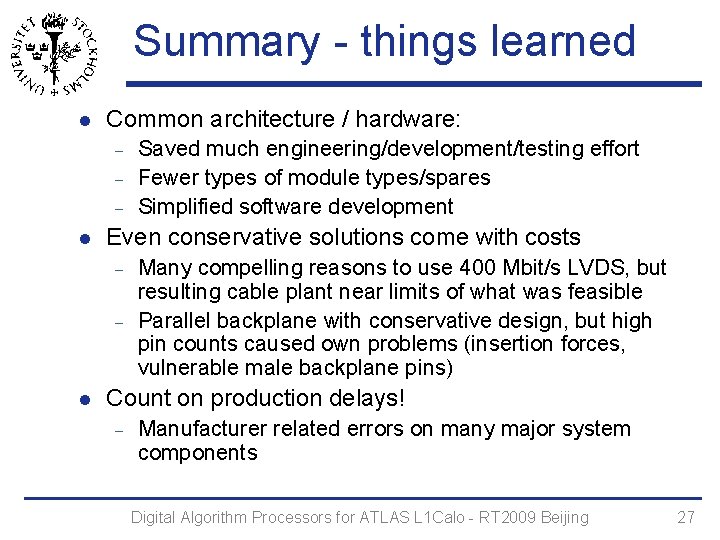

Summary - things learned

- Common architecture / hardware:
  - Saved much engineering/development/testing effort
  - Fewer module types/spares
  - Simplified software development
- Even conservative solutions come with costs
  - Many compelling reasons to use 400 Mbit/s LVDS, but the resulting cable plant was near the limits of what was feasible
  - Parallel backplane with conservative design, but high pin counts caused their own problems (insertion forces, vulnerable male backplane pins)
- Count on production delays!
  - Manufacturer-related errors on many major system components

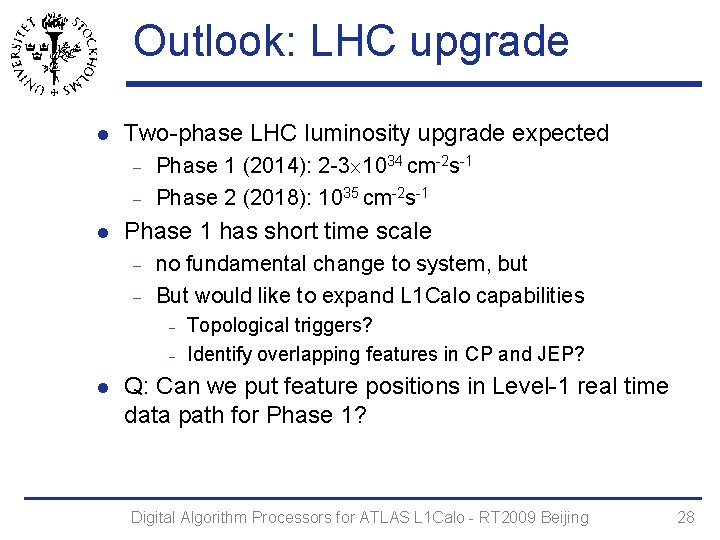

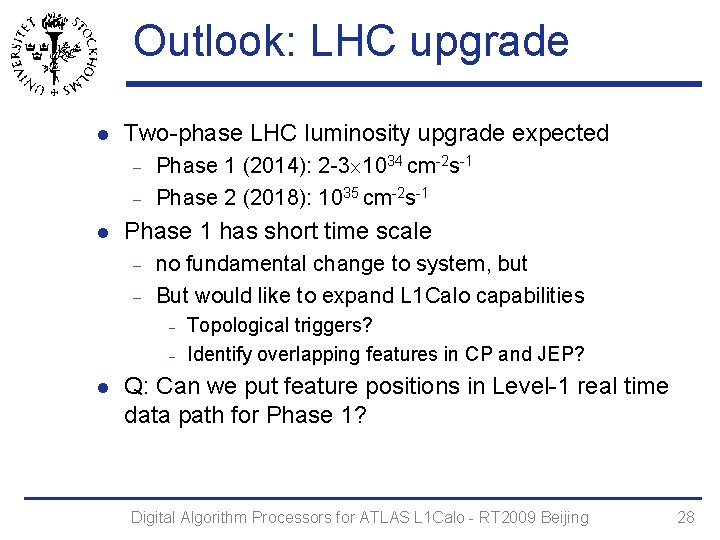

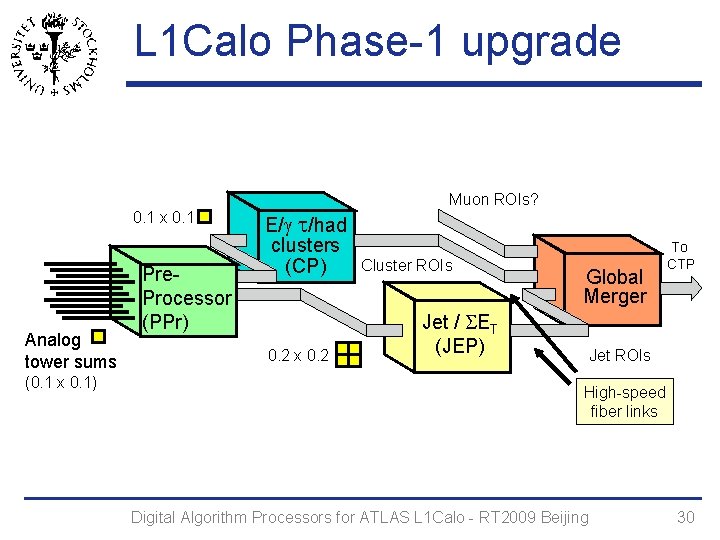

Outlook: LHC upgrade

- Two-phase LHC luminosity upgrade expected
  - Phase 1 (2014): 2-3 x 10^34 cm^-2 s^-1
  - Phase 2 (2018): 10^35 cm^-2 s^-1
- Phase 1 has a short time scale: no fundamental change to the system
- But we would like to expand L1Calo capabilities
  - Topological triggers?
  - Identify overlapping features in CP and JEP?
- Q: Can we put feature positions in the Level-1 real time data path for Phase 1?

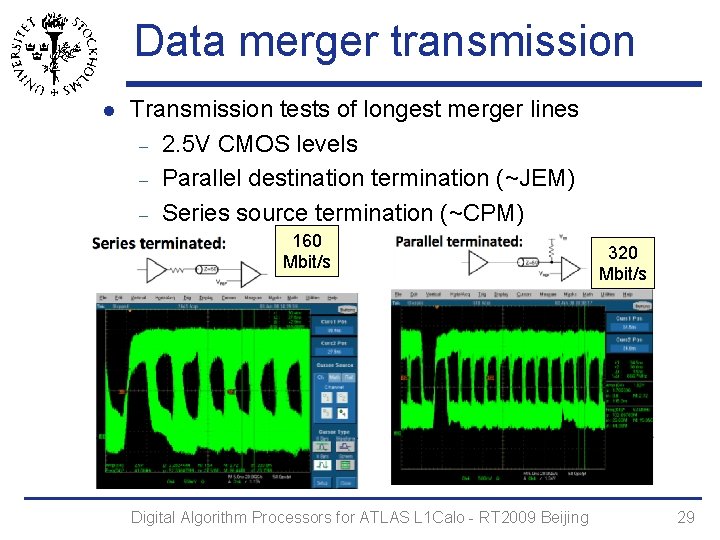

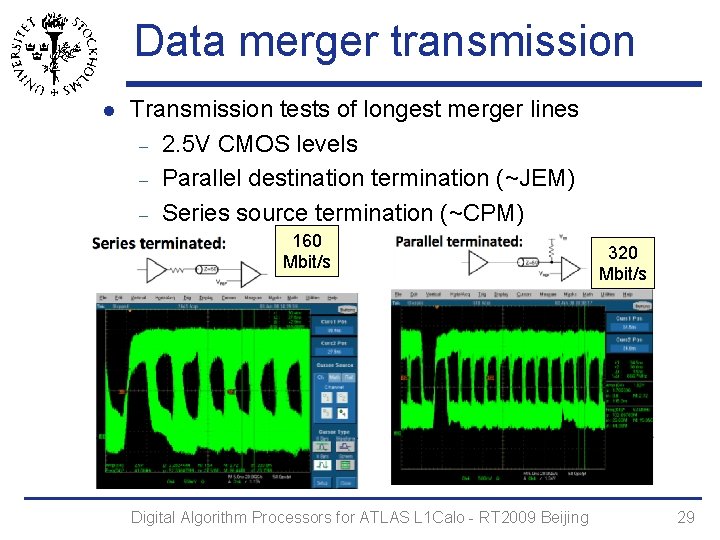

Data merger transmission

- Transmission tests of longest merger lines
  - 2.5 V CMOS levels
  - Parallel destination termination (~JEM)
  - Series source termination (~CPM)
- Figure panels: 160 Mbit/s, 320 Mbit/s

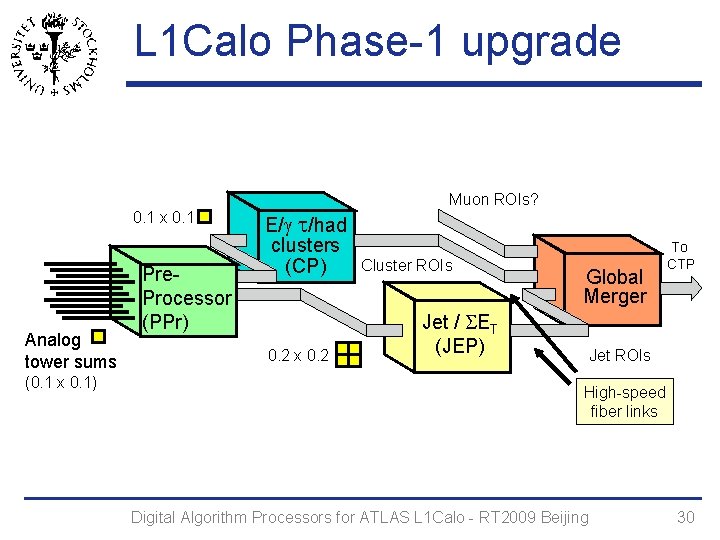

L1Calo Phase-1 upgrade (block diagram)

- Analog tower sums (0.1 x 0.1) to the PreProcessor (PPr)
- PPr to CP (0.1 x 0.1): e/γ, τ/had clusters; cluster ROIs
- PPr to JEP (0.2 x 0.2): Jet / ΣET; jet ROIs
- Muon ROIs?
- ROIs sent over high-speed fiber links to a Global Merger, then to CTP

Thank you for your attention!