The Bootstrap
• An example of a resampling method.
• Allows us to estimate the sampling distribution of an estimator (in the absence of information about the distribution of the underlying data or of the estimator itself).
• The bootstrap method generates repeated samples of our data by randomly sampling from our dataset with replacement and then calculating the estimator of interest for each of those resampled datasets.
• Very useful for scenarios in which the standard error of the estimator cannot be easily calculated from a formula, e.g., the standard error (SE) of the median.
• To estimate the SE, compute the standard deviation of the estimator (e.g., the median) across the bootstrapped samples.

Summer Institutes 2020 Module 1, Session 9
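The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `bootstrap_se`, the number of resamples (2000), the fixed seed, and the data values are all assumptions made for the example, and each resample is taken to be the same size as the original dataset.

```python
import random
import statistics


def bootstrap_se(data, estimator, n_boot=2000, seed=0):
    """Estimate the SE of `estimator` as the SD of its bootstrap replicates."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(data)
    estimates = []
    for _ in range(n_boot):
        # Draw n observations from the data with replacement.
        resample = rng.choices(data, k=n)
        estimates.append(estimator(resample))
    # The bootstrap SE is the standard deviation of the resampled estimates.
    return statistics.stdev(estimates)


# Made-up illustrative values, not data from the slides.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 6.1, 3.3, 4.9]
se_median = bootstrap_se(data, statistics.median)
print(f"bootstrap SE of the median: {se_median:.3f}")
```

Any function of a sample can be passed as `estimator`, which is the sense in which the method needs no formula for the standard error.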

Bootstrap Pros and Cons

Advantages
• All-purpose, computer-intensive method useful for statistical inference.
• Bootstrap estimates of precision do not require knowledge of the theoretical form of an estimator's standard error, no matter how complicated it is.

Disadvantages
• Typically not useful for correlated (dependent) data.
• Missing data, censoring, and data with outliers are also problematic.
• Often used incorrectly.

Note that there are many different types of bootstraps; we have only discussed one.

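The Jackknife
• Also known as the 'leave-one-out' method because it is based on sequentially deleting one observation from the dataset and computing the estimator for each of these n samples (each of size n-1). That is, there are exactly n jackknife estimates obtained in a sample of size n.
• The standard error of the estimator can then be calculated from the spread of the n jackknife estimates: $\mathrm{SE}_{\text{jack}} = \sqrt{\frac{n-1}{n}\sum_{i=1}^{n}\left(\hat\theta_{(i)} - \bar\theta_{(\cdot)}\right)^2}$, where $\hat\theta_{(i)}$ is the estimate with observation i deleted and $\bar\theta_{(\cdot)}$ is the mean of the n jackknife estimates. This is the standard deviation of the jackknife estimates multiplied by $(n-1)/\sqrt{n}$; the inflation is needed because the leave-one-out samples overlap heavily, so their estimates vary far less than estimates from independent samples would.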
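The leave-one-out procedure can be sketched as follows. The function names and data values are hypothetical. Note that the sketch uses the usual jackknife variance formula, (n-1)/n times the sum of squared deviations of the leave-one-out estimates from their mean, rather than their raw standard deviation. The raw standard deviation would be far too small because the n leave-one-out samples overlap almost entirely.

```python
import math
import statistics


def jackknife_estimates(data, estimator):
    """Return the n leave-one-out estimates, each from a sample of size n-1."""
    return [estimator(data[:i] + data[i + 1:]) for i in range(len(data))]


def jackknife_se(data, estimator):
    """Jackknife SE: sqrt((n-1)/n * sum of squared deviations from the mean)."""
    n = len(data)
    loo = jackknife_estimates(data, estimator)
    center = sum(loo) / n  # mean of the leave-one-out estimates
    return math.sqrt((n - 1) / n * sum((t - center) ** 2 for t in loo))


# Made-up illustrative values, not data from the slides.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 6.1, 3.3, 4.9]
se_mean = jackknife_se(data, statistics.mean)
print(f"jackknife SE of the mean: {se_mean:.4f}")
print(f"s / sqrt(n):              {statistics.stdev(data) / math.sqrt(len(data)):.4f}")
```

For the sample mean the jackknife standard error reproduces the textbook formula s/√n exactly, which is a convenient sanity check on the scaling factor.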

Jackknife Pros and Cons

Advantages
• Useful method for estimating and compensating for bias in an estimator.
• Like the bootstrap, the methodology does not require knowledge of the theoretical form of an estimator's standard error.
• Generally less computationally intensive than the bootstrap method.

Disadvantages
• The jackknife method is more conservative than the bootstrap method; that is, its estimated standard error tends to be slightly larger.
• Performs poorly when the estimator is not sufficiently smooth, e.g., the median.
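To illustrate the first advantage, here is a minimal sketch of jackknife bias estimation. It assumes the standard jackknife bias estimate, (n-1) times the difference between the mean of the leave-one-out estimates and the full-sample estimate. The function names and data values are hypothetical. The estimator used is the plug-in variance (divisor n), which is known to be biased downward.

```python
import statistics


def plug_in_variance(x):
    """Variance with divisor n (biased downward for the population variance)."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / len(x)


def jackknife_bias_corrected(data, estimator):
    """Return (bias-corrected estimate, estimated bias) via the jackknife."""
    n = len(data)
    full = estimator(data)
    loo = [estimator(data[:i] + data[i + 1:]) for i in range(n)]
    loo_mean = sum(loo) / n
    bias = (n - 1) * (loo_mean - full)  # jackknife estimate of the bias
    return full - bias, bias


# Made-up illustrative values, not data from the slides.
data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 6.1, 3.3, 4.9]
corrected, bias = jackknife_bias_corrected(data, plug_in_variance)
print(f"plug-in variance:    {plug_in_variance(data):.4f}")
print(f"estimated bias:      {bias:.4f}")
print(f"jackknife-corrected: {corrected:.4f}")
print(f"unbiased variance:   {statistics.variance(data):.4f}")
```

In this particular case the correction recovers the usual unbiased sample variance (divisor n-1); for other estimators the jackknife typically reduces the bias rather than removing it entirely.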

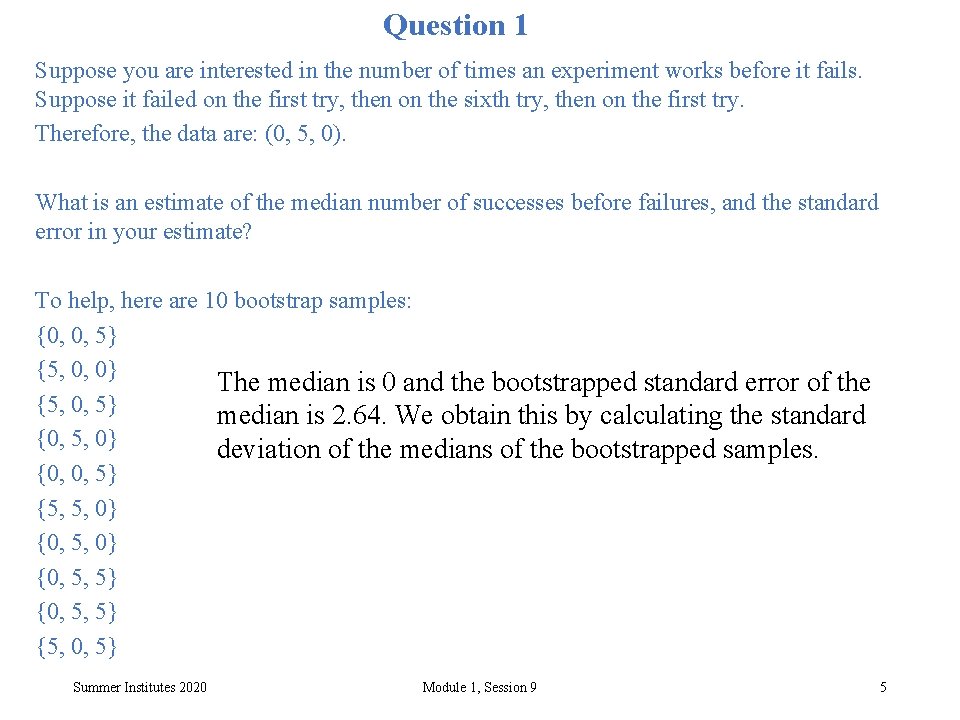

Question 1

Suppose you are interested in the number of times an experiment works before it fails. Suppose it failed on the first try, then on the sixth try, then on the first try. Therefore, the data are: (0, 5, 0). What is an estimate of the median number of successes before failure, and the standard error of your estimate?

To help, here are 10 bootstrap samples:
{0, 0, 5} {5, 0, 0} {5, 0, 5} {0, 5, 0} {0, 0, 5} {5, 5, 0} {0, 5, 5} {5, 0, 5}

Answer: The median is 0 and the bootstrapped standard error of the median is 2.64. We obtain this by calculating the standard deviation of the medians of the bootstrapped samples.
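The calculation in the answer can be reproduced as a short sketch. Only eight bootstrap samples are listed in the text even though the question mentions 10, so the code uses just those eight. On them the standard deviation of the medians comes out at about 2.67, close to but not identical to the 2.64 quoted above. The variable names are chosen for illustration.

```python
import statistics

# Observed data: number of successes before each failure.
data = (0, 5, 0)

# The eight bootstrap samples listed in the text (the question mentions 10).
samples = [
    (0, 0, 5), (5, 0, 0), (5, 0, 5), (0, 5, 0),
    (0, 0, 5), (5, 5, 0), (0, 5, 5), (5, 0, 5),
]

# Point estimate: the median of the observed data.
estimate = statistics.median(data)

# Bootstrap SE: the standard deviation of the per-sample medians.
medians = [statistics.median(s) for s in samples]
se = statistics.stdev(medians)

print(f"median estimate: {estimate}")
print(f"bootstrap medians: {medians}")
print(f"bootstrap SE from the listed samples: {se:.2f}")
```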

- Slides: 5