The ATLAS Data Acquisition & Trigger: concept, design & status

- Slides: 41

The ATLAS Data Acquisition & Trigger: concept, design & status. Kostas KORDAS, INFN – Frascati. 10th Topical Seminar on Innovative Particle & Radiation Detectors (IPRD 06), Siena, 1-5 Oct. 2006. IPRD 06, 1-5 Oct. 2006, Siena, Italy. TDAQ concept, design & status - Kostas KORDAS

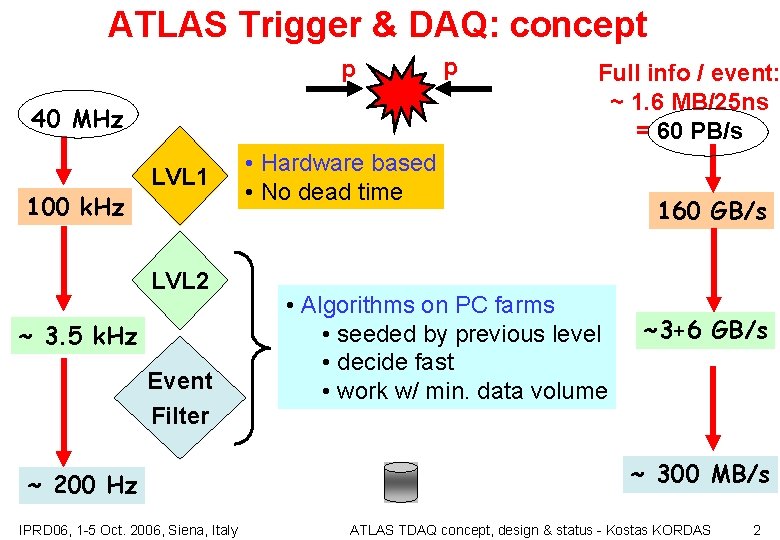

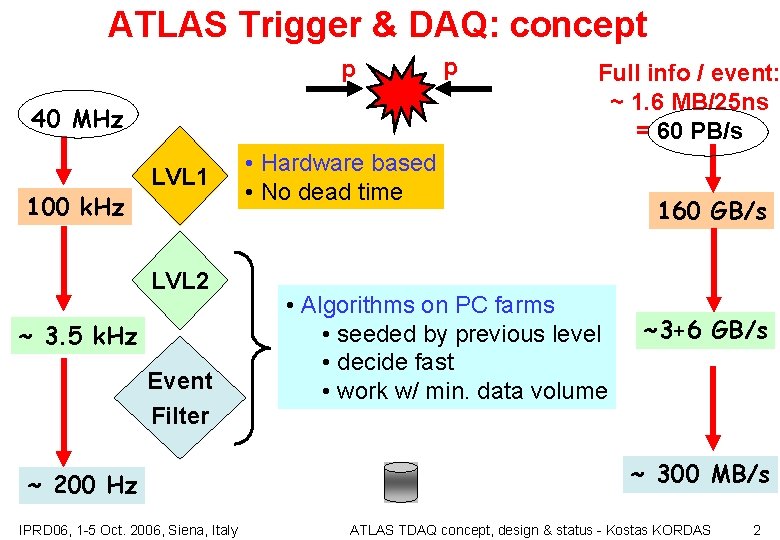

ATLAS Trigger & DAQ: concept. Rates: 40 MHz of bunch crossings → LVL1 → 100 kHz → LVL2 → ~3.5 kHz → Event Filter → ~200 Hz. The full information per event is ~1.6 MB every 25 ns ≈ 64 TB/s; after each level the data volume drops to 160 GB/s, ~3+6 GB/s (LVL2 RoI data + event building) and ~300 MB/s.

- LVL1: hardware based, no dead time.
- LVL2 and Event Filter: algorithms on PC farms, each seeded by the previous level; they decide fast and work with the minimum data volume.
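The rate and bandwidth figures above follow from multiplying each accept rate by the event size. A minimal Python sketch of that budget, assuming the nominal ~1.6 MB event size throughout (the ~300 MB/s output figure corresponds to ~1.5 MB events at ~200 Hz); the names `bandwidth` and `budget` are illustrative only:

```python
# Nominal ATLAS TDAQ figures from the concept slide (approximate values).
BC_RATE_HZ = 40e6        # bunch-crossing rate: one crossing every 25 ns
EVENT_SIZE_B = 1.6e6     # ~1.6 MB of full detector information per event

# (trigger level, output rate in Hz)
STAGES = [("LVL1", 100e3), ("LVL2", 3.5e3), ("EF", 200.0)]


def bandwidth(rate_hz: float, size_b: float = EVENT_SIZE_B) -> float:
    """Data rate in bytes/s for a given event rate and event size."""
    return rate_hz * size_b


def budget():
    """Return (level, output rate, rejection factor, bandwidth) per level."""
    rows = []
    prev = BC_RATE_HZ
    for name, rate in STAGES:
        rows.append((name, rate, prev / rate, bandwidth(rate)))
        prev = rate
    return rows


if __name__ == "__main__":
    print(f"raw: {bandwidth(BC_RATE_HZ) / 1e12:.0f} TB/s")
    for name, rate, rejection, bw in budget():
        print(f"{name}: {rate:>9.0f} Hz, rejection x{rejection:.0f}, {bw / 1e9:.2f} GB/s")
```

The rejection factors come out at about 400 for LVL1, 29 for LVL2 and 18 for the Event Filter.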

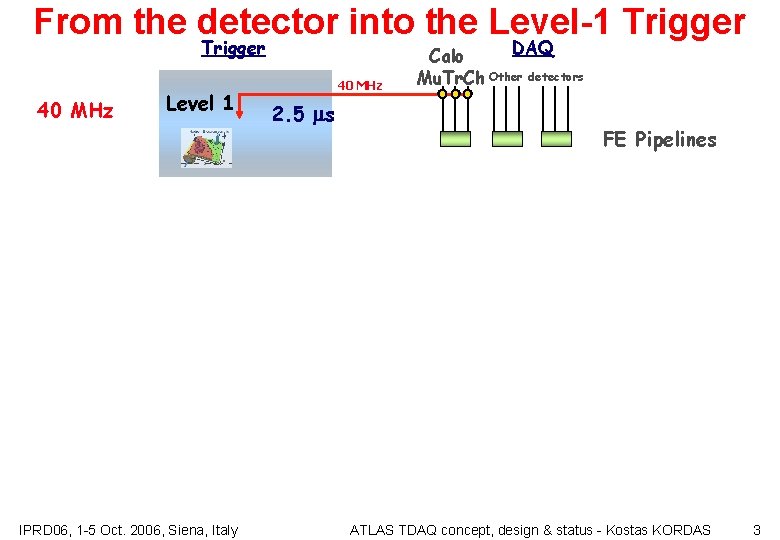

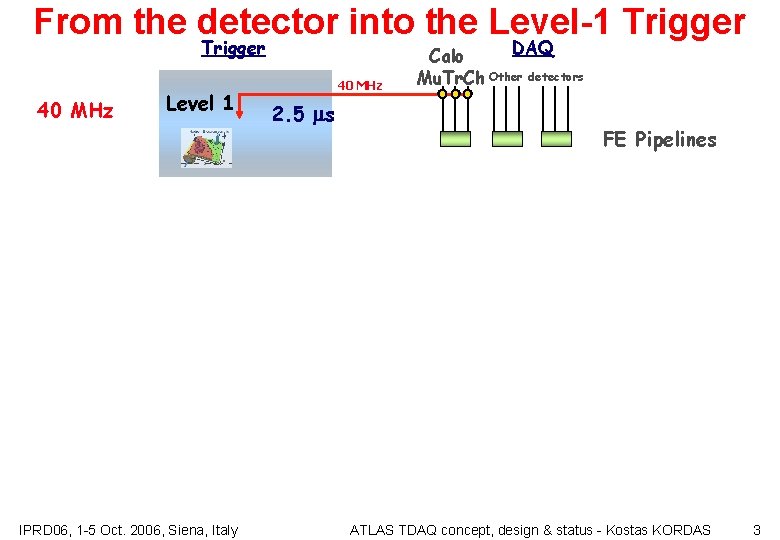

From the detector into the Level-1 Trigger. Diagram: the calorimeters (Calo), the muon trigger chambers (MuTrCh) and the other detectors operate at 40 MHz. Calo and MuTrCh data feed LVL1, while the data of all detectors wait in front-end (FE) pipelines during the 2.5 μs LVL1 latency.
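The depth of the front-end pipelines follows from the fixed LVL1 latency: 2.5 μs at one bunch crossing every 25 ns means each pipeline must hold 100 crossings. The toy model below (a hypothetical `FrontEndPipeline` class, not ATLAS front-end code, which lives in detector electronics) shows why a fixed-length buffer is enough: when LVL1 accepts, the sample leaving the pipeline is exactly the crossing being decided on.

```python
from collections import deque

BC_PERIOD_NS = 25                          # bunch-crossing period
LVL1_LATENCY_NS = 2500                     # fixed LVL1 latency (2.5 us)
DEPTH = LVL1_LATENCY_NS // BC_PERIOD_NS    # crossings to buffer: 100


class FrontEndPipeline:
    """Toy fixed-latency pipeline holding one sample per bunch crossing (hypothetical)."""

    def __init__(self, depth: int = DEPTH):
        self.cells = deque(maxlen=depth)

    def clock(self, sample, lvl1_accept: bool):
        """Advance one crossing; return the sample from `depth` crossings ago if accepted."""
        full = len(self.cells) == self.cells.maxlen
        oldest = self.cells[0] if full else None
        self.cells.append(sample)          # deque(maxlen=...) drops the oldest cell
        return oldest if lvl1_accept else None


if __name__ == "__main__":
    pipe = FrontEndPipeline()
    # LVL1 accepts at crossing 150; the data read out belong to crossing 50.
    out = [pipe.clock(bc, lvl1_accept=(bc == 150)) for bc in range(200)]
    print(DEPTH, out[150])
```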

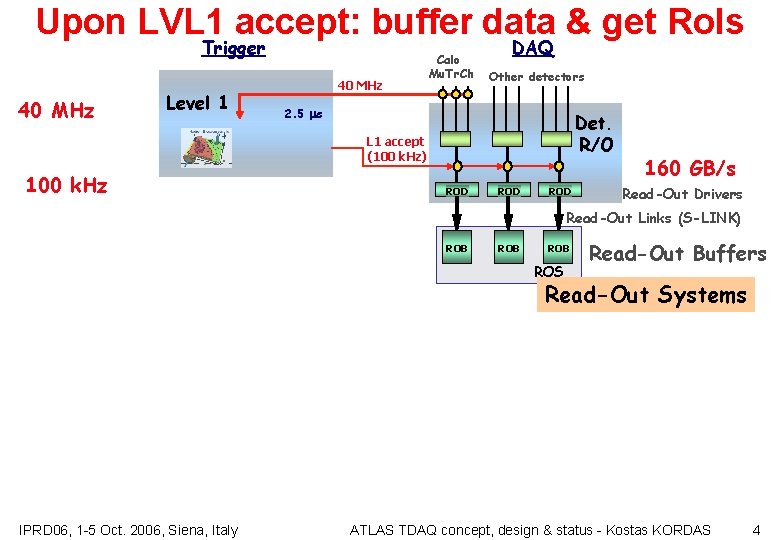

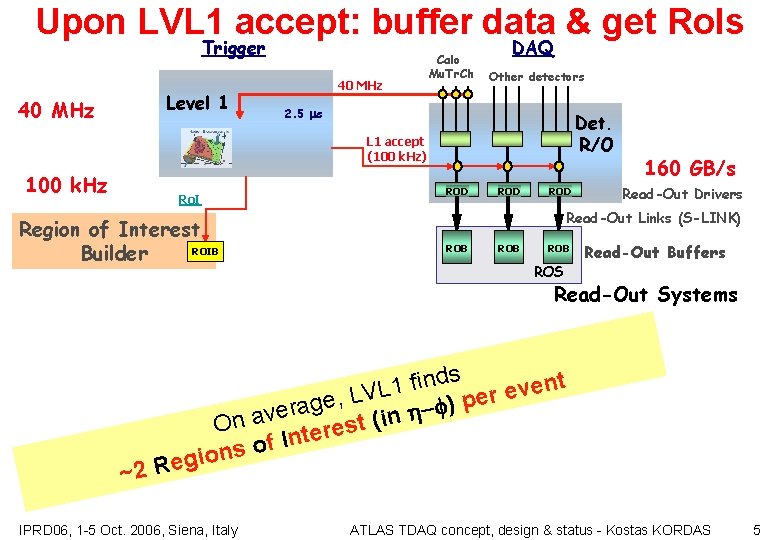

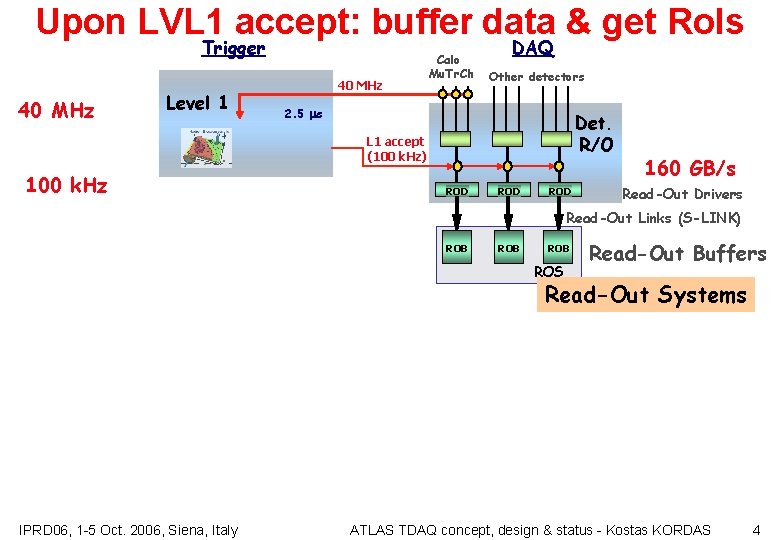

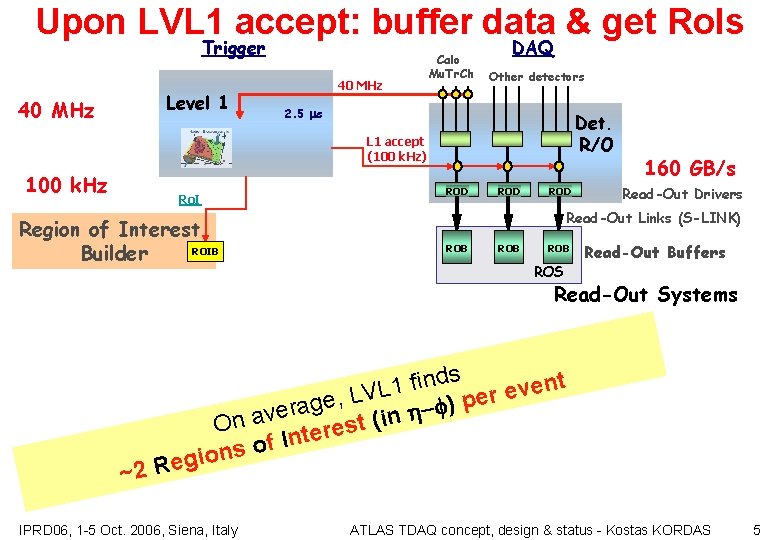


Upon LVL1 accept: buffer data & get RoIs. On a LVL1 accept (100 kHz), the detector read-out pushes the data through the Read-Out Drivers (RODs) and Read-Out Links (S-LINK) into the Read-Out Buffers (ROBs) housed in the Read-Out Systems (ROSs): 160 GB/s in total. In parallel, the Region of Interest (RoI) information goes to the RoI Builder (ROIB). On average, LVL1 finds ~2 Regions of Interest (in η-φ) per event.
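Conceptually, the RoI Builder has to merge the RoI information belonging to the same LVL1-accepted event before passing it on. A minimal sketch, assuming each LVL1 source delivers one fragment per accepted event tagged with an event ID; the class `RoIBuilderSketch`, the source names and the `RoI` record are all hypothetical:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RoI:
    kind: str    # e.g. "muon", "em" (hypothetical labels)
    eta: float
    phi: float


class RoIBuilderSketch:
    """Hypothetical RoI assembly: one merged record per LVL1-accepted event."""

    def __init__(self, sources):
        self.sources = frozenset(sources)
        self.pending = defaultdict(dict)   # event_id -> {source: [RoI, ...]}

    def add_fragment(self, event_id, source, rois):
        """Store one source's RoIs; return the merged list once every source has reported."""
        if source not in self.sources:
            raise ValueError(f"unknown LVL1 source: {source}")
        self.pending[event_id][source] = list(rois)
        if set(self.pending[event_id]) == self.sources:
            parts = self.pending.pop(event_id)
            # Concatenate in a fixed (sorted) source order for reproducibility.
            return [roi for src in sorted(parts) for roi in parts[src]]
        return None
```

Until the last source reports, `add_fragment` returns `None` and the event stays pending.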

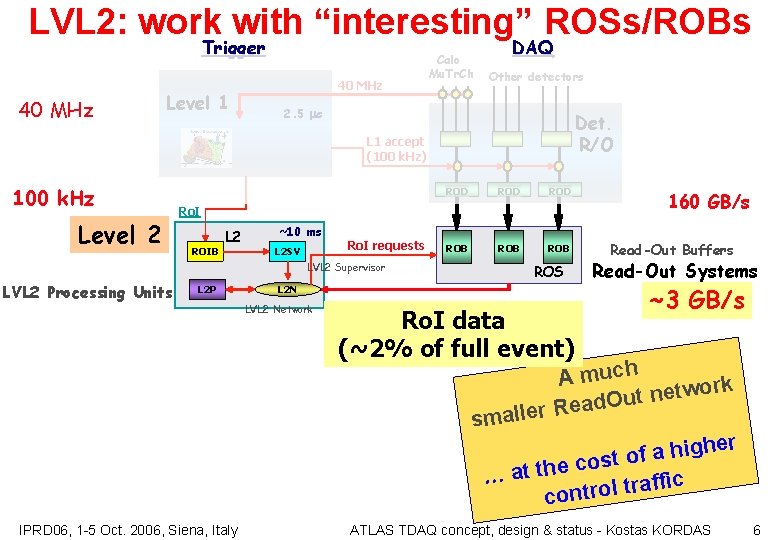

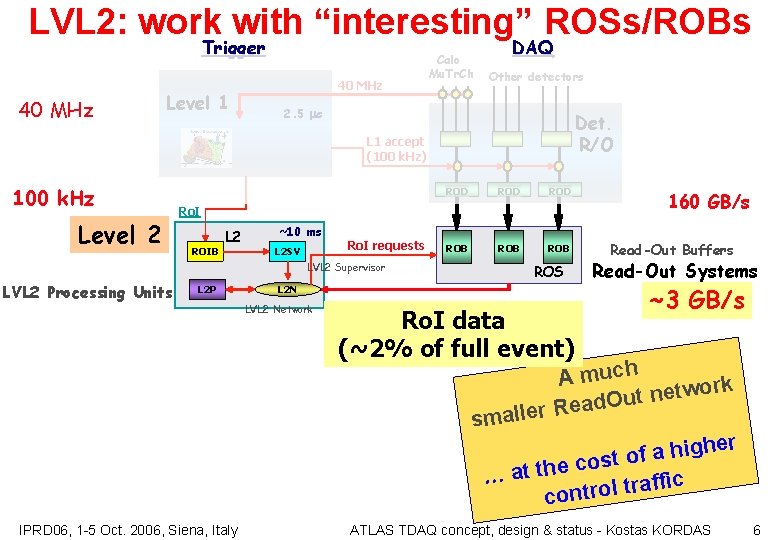

LVL2: work with "interesting" ROSs/ROBs. The LVL2 Supervisor (L2SV) receives the RoIs from the ROIB and assigns each event to a LVL2 Processing Unit (L2P), which requests only the RoI data (~2% of the full event) from the ROSs over the LVL2 Network (L2N): ~3 GB/s in total, with a LVL2 latency of ~10 ms. A much smaller Read-Out network … at the cost of higher control traffic.
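Selecting the "interesting" ROBs amounts to mapping an RoI's η-φ window onto the read-out elements it overlaps. The sketch assumes a hypothetical geometry in which ROBs tile η ∈ [-2.5, 2.5] and φ ∈ [0, 2π) on a regular grid (real ROB-to-region maps are detector specific), and it also reproduces the ~3 GB/s LVL2 traffic from the ~2% RoI fraction. Function names and window sizes are illustrative:

```python
import math


def robs_for_roi(eta, phi, d_eta=0.2, d_phi=0.2, n_eta=10, n_phi=16, eta_max=2.5):
    """(i_eta, i_phi) indices of ROB tiles overlapping an RoI window.

    Hypothetical geometry: ROBs tile eta in [-eta_max, eta_max] with n_eta
    slices and phi in [0, 2*pi) with n_phi slices.
    """
    eta_w = 2 * eta_max / n_eta
    phi_w = 2 * math.pi / n_phi
    lo = max(0, math.floor((eta - d_eta + eta_max) / eta_w))
    hi = min(n_eta - 1, math.floor((eta + d_eta + eta_max) / eta_w))
    # phi is periodic: walk the window slice by slice and wrap modulo n_phi
    first = math.floor((phi - d_phi) / phi_w)
    last = math.floor((phi + d_phi) / phi_w)
    phis = sorted({k % n_phi for k in range(first, last + 1)})
    return [(i, j) for i in range(lo, hi + 1) for j in phis]


def roi_bandwidth(fraction=0.02, event_size_b=1.6e6, lvl1_rate_hz=100e3):
    """LVL2 RoI traffic in bytes/s: requested fraction x event size x LVL1 rate."""
    return fraction * event_size_b * lvl1_rate_hz


if __name__ == "__main__":
    tiles = robs_for_roi(0.1, 0.1)
    print(tiles, len(tiles) / (10 * 16))
    print(f"{roi_bandwidth() / 1e9:.1f} GB/s")
```

With the defaults, an RoI at (η, φ) = (0.1, 0.1) touches 4 of 160 tiles, and its φ window wraps across φ = 0.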

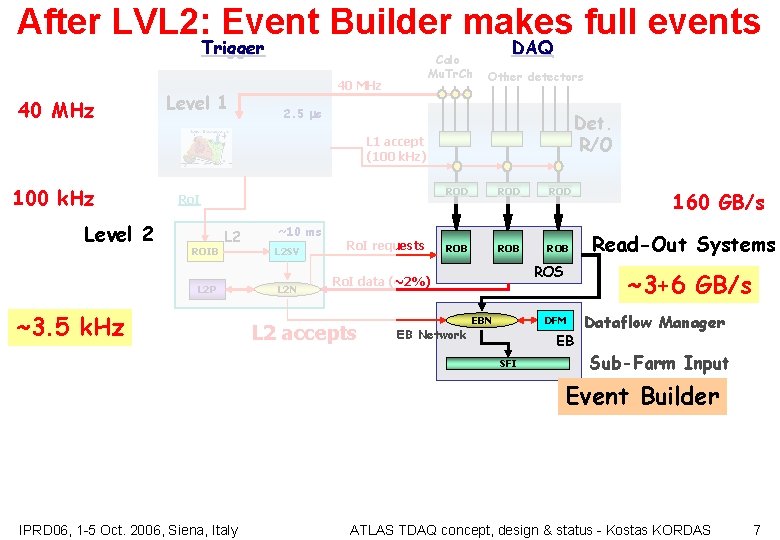

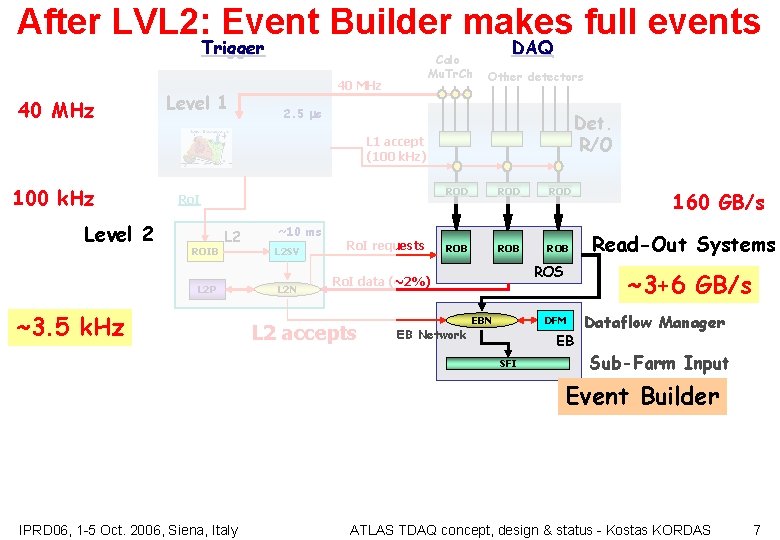

After LVL2: Event Builder makes full events. LVL2 accepts (~3.5 kHz) go to the DataFlow Manager (DFM), which assigns each accepted event to a Sub-Farm Input (SFI); the SFI collects all of the event's fragments from the ROSs over the Event Builder Network (EBN). Traffic out of the ROSs: ~3 GB/s of RoI data + ~6 GB/s for event building.
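The event-building flow, DFM assignment, SFI pulling one fragment per ROS, then freeing the ROS buffers, can be sketched as below. This is a simplification under stated assumptions: round-robin assignment and byte-string fragments are illustrative choices, and `DataflowManagerSketch`, `build_event` and `process_l2_accept` are hypothetical names, not the ATLAS DataFlow software:

```python
from itertools import cycle


class DataflowManagerSketch:
    """Hypothetical DFM: assigns LVL2-accepted events to SFIs, records deletes."""

    def __init__(self, sfis):
        self._next_sfi = cycle(sfis)   # round-robin, a simplifying assumption
        self.deletes = []              # events whose ROS fragments were freed

    def assign(self, event_id):
        # event_id is unused by round-robin but kept for a realistic interface
        return next(self._next_sfi)

    def built(self, event_id):
        self.deletes.append(event_id)


def build_event(event_id, ros_buffers):
    """SFI side: pull one fragment per ROS and concatenate them in ROS order."""
    return b"".join(buf[event_id] for buf in ros_buffers)


def process_l2_accept(dfm, event_id, ros_buffers):
    sfi = dfm.assign(event_id)
    event = build_event(event_id, ros_buffers)
    for buf in ros_buffers:
        del buf[event_id]              # "delete command": free ROS buffer space
    dfm.built(event_id)
    return sfi, event
```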

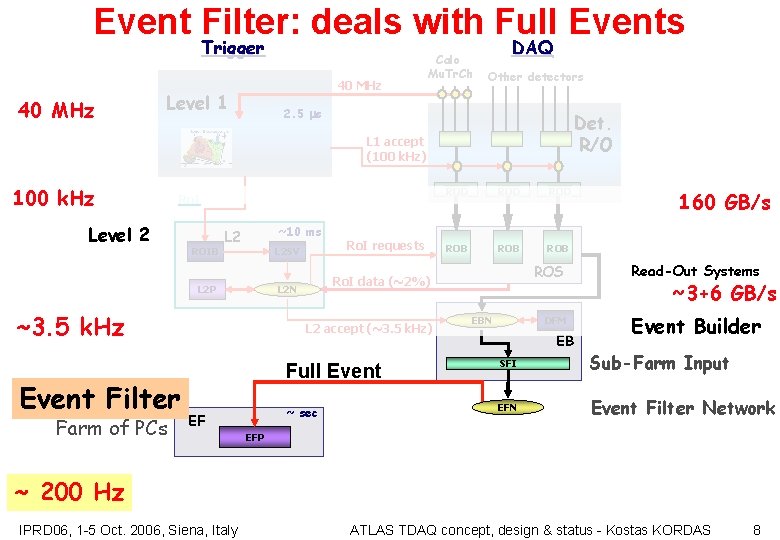

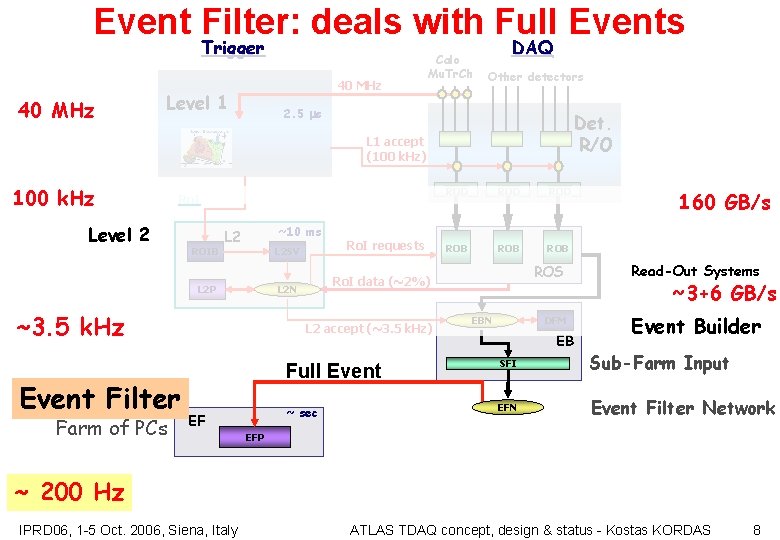

Event Filter: deals with full events. Full events built in the SFIs go over the Event Filter Network (EFN) to the Event Filter, a farm of PCs (Event Filter Processors, EFP); processing takes ~1 s per event, and the EF output rate is ~200 Hz.

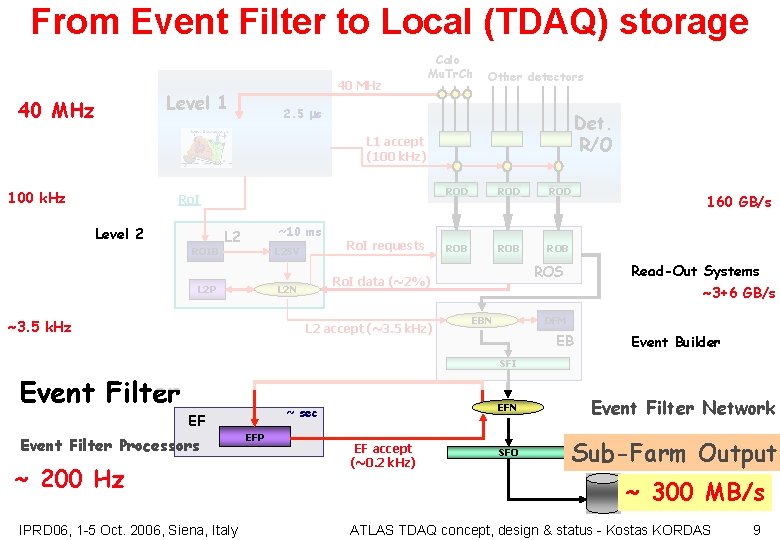

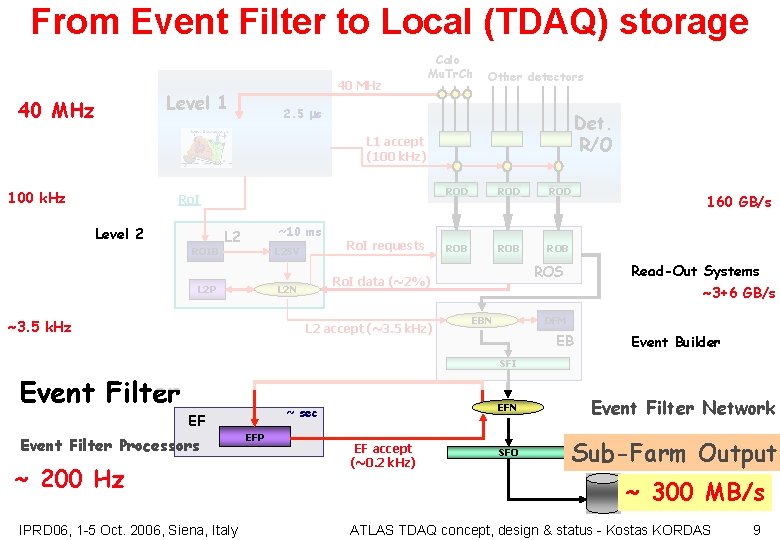

From Event Filter to local (TDAQ) storage. EF accepts (~0.2 kHz) leave the Event Filter Processors (EFP) over the Event Filter Network (EFN) for the Sub-Farm Outputs (SFOs), which write ~300 MB/s to local storage.

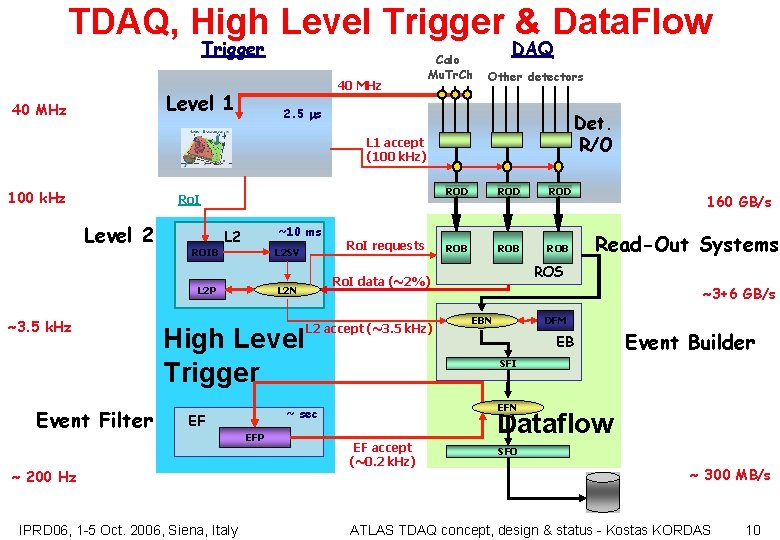

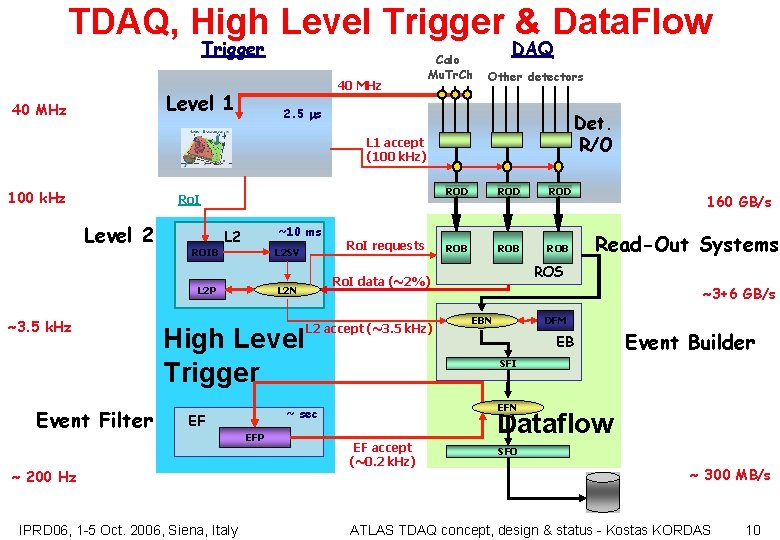


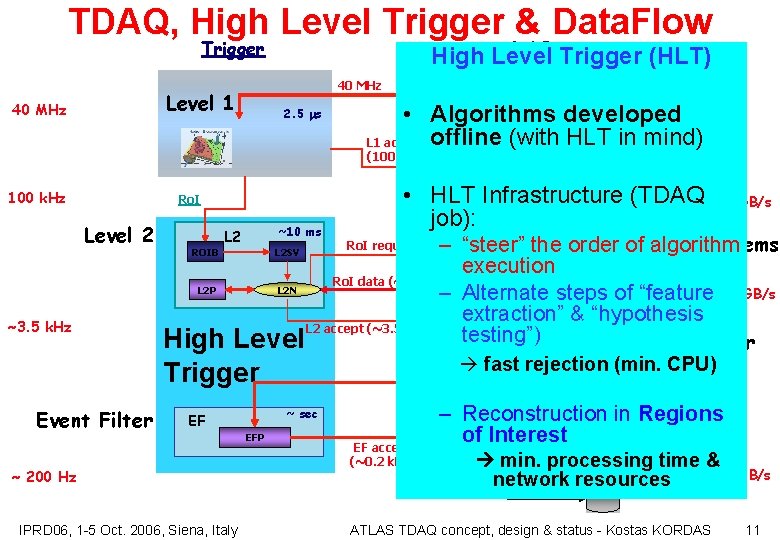

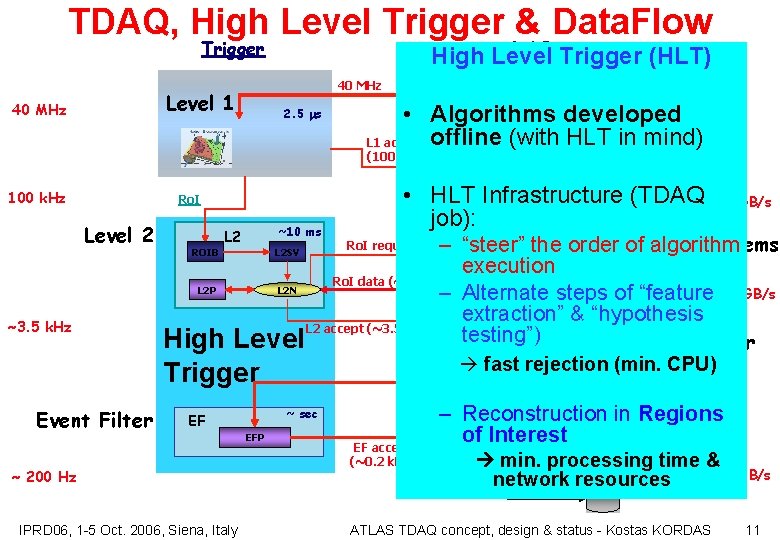

TDAQ, High Level Trigger & DataFlow. In the diagram, LVL2 and the Event Filter together form the High Level Trigger (HLT); the read-out, event building and output components form the DataFlow.

- HLT algorithms are developed offline (with the HLT in mind).
- HLT infrastructure (TDAQ job):
  - "steer" the order of algorithm execution;
  - alternate steps of "feature extraction" and "hypothesis testing", for fast rejection with minimal CPU;
  - reconstruction in Regions of Interest, for minimal processing time and network resources.
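The steering idea, alternating feature extraction with hypothesis testing so that most events are rejected after the first cheap step, can be sketched as follows. The chain structure, the 6 GeV threshold and the feature names are hypothetical illustrations, not the ATLAS HLT steering API:

```python
from typing import Callable, List, Tuple

# One step = (feature extraction, hypothesis test) acting on RoI features.
Step = Tuple[Callable[[dict], dict], Callable[[dict], bool]]


def run_chain(roi: dict, steps: List[Step]) -> Tuple[bool, int]:
    """Run steps in order, stopping at the first failed hypothesis.

    Returns (accepted, number of steps executed).
    """
    features: dict = {}
    for n, (extract, hypothesis) in enumerate(steps, start=1):
        features.update(extract({**roi, **features}))
        if not hypothesis(features):
            return False, n          # early rejection: later steps never run
    return True, len(steps)


# Hypothetical two-step muon chain: a cheap pT cut first, a track match second.
MU_CHAIN: List[Step] = [
    (lambda d: {"pt": d["raw_pt"]}, lambda f: f["pt"] > 6.0),
    (lambda d: {"matched": d["has_track"]}, lambda f: f["matched"]),
]

if __name__ == "__main__":
    print(run_chain({"raw_pt": 4.0, "has_track": True}, MU_CHAIN))
```

Ordering the cheapest, most rejecting step first is what keeps the average CPU time per event low.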

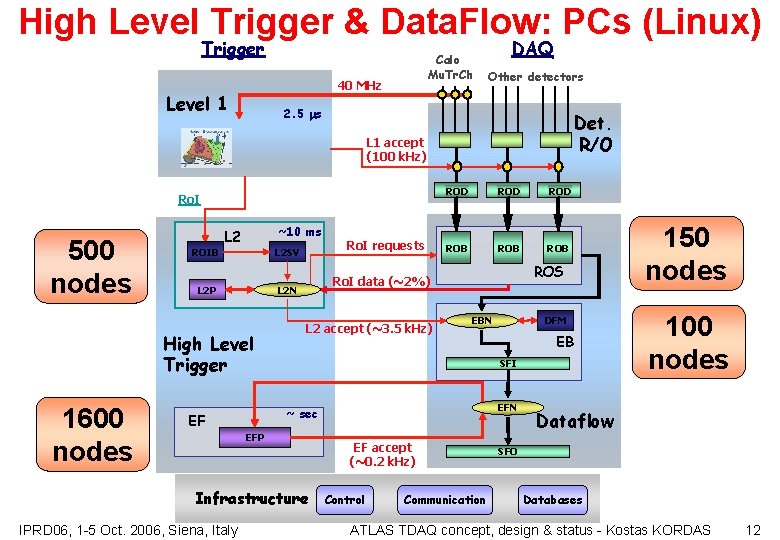

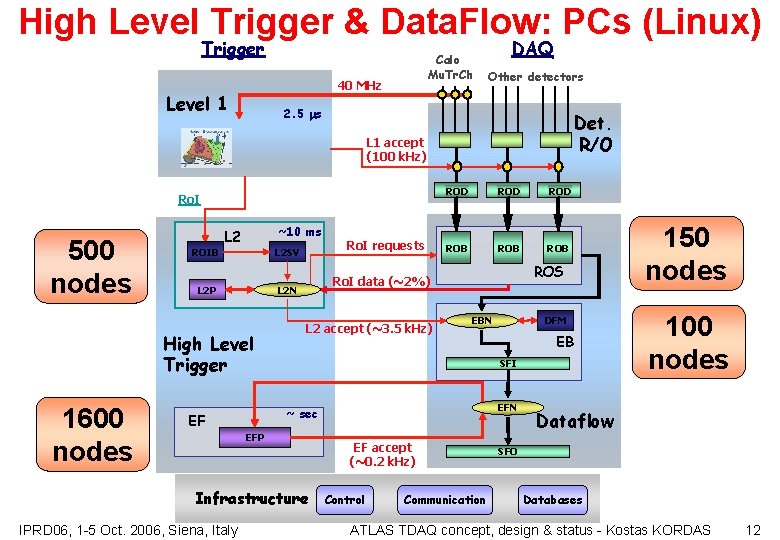

High Level Trigger & DataFlow: PCs (Linux). Diagram labels: ~500 nodes for LVL2 and ~1600 nodes for the Event Filter (together the High Level Trigger), ~150- and ~100-node groups in the DataFlow, plus infrastructure nodes for control, communication and databases.

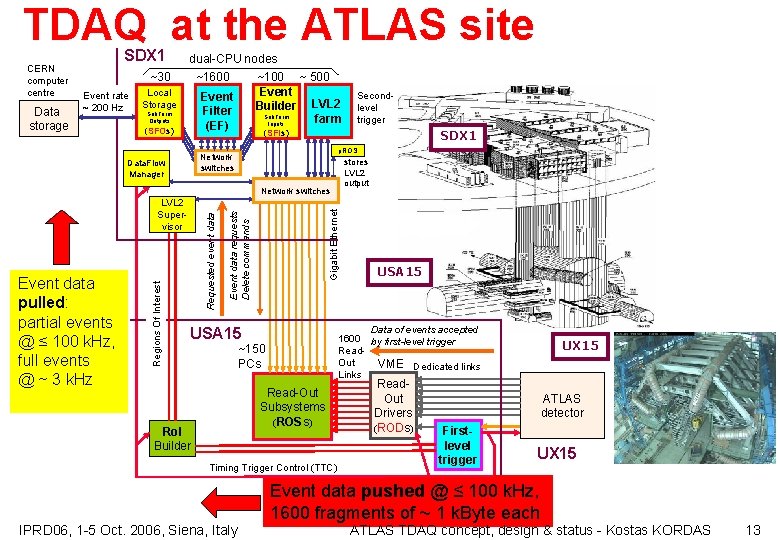

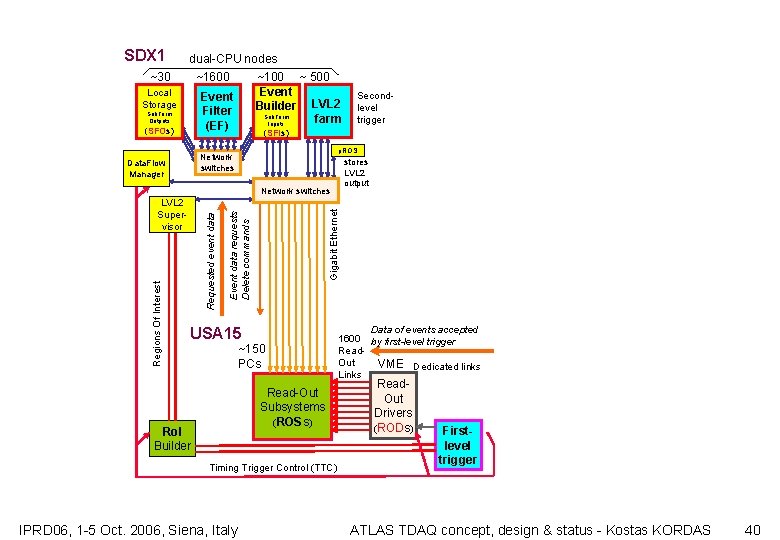

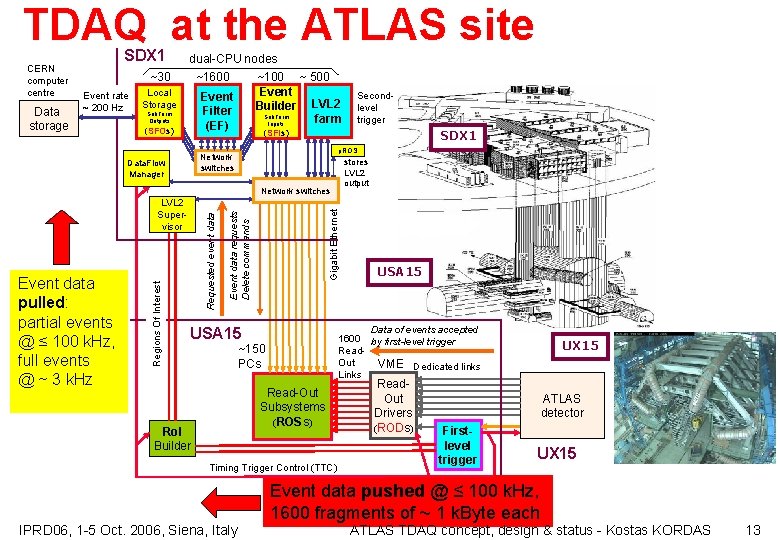

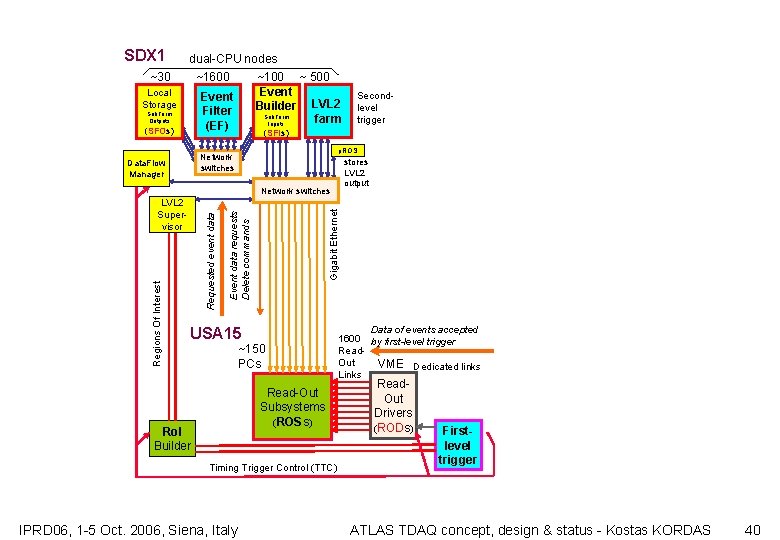

TDAQ at the ATLAS site. Diagram of the system:

- Underground (UX15, USA15): ATLAS detector, first-level trigger, Timing Trigger Control (TTC), Read-Out Drivers (RODs, VME), RoI Builder and ~150 Read-Out Subsystem (ROS) PCs. For events accepted by the first-level trigger, event data are pushed at ≤ 100 kHz as 1600 fragments of ~1 kByte each, over 1600 dedicated Read-Out Links.
- SDX1: dual-CPU nodes connected by Gigabit Ethernet network switches: the LVL2 Supervisor and ~500-node LVL2 farm (second-level trigger), the DataFlow Manager, the pROS (stores LVL2 output), ~100 Event Builder SubFarm Inputs (SFIs), the ~1600-node Event Filter (EF) and ~30 SubFarm Outputs (SFOs) with local storage. Event data are pulled: partial events at ≤ 100 kHz, full events at ~3 kHz. Messages include Regions of Interest, event data requests, requested event data and delete commands.
- Output: ~200 Hz event rate to data storage at the CERN computer centre.
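The push side of the diagram fixes the load on each Read-Out Link and on the ROSs, while the pull side sets the event-building load. A small sketch of that arithmetic with the diagram's figures (1600 links, ~1 kByte fragments, ≤ 100 kHz push, ~3 kHz full events); the function name is illustrative:

```python
def readout_rates(n_links=1600, fragment_b=1_000, lvl1_rate_hz=100e3, eb_rate_hz=3e3):
    """Push (ROD -> ROS) and pull (ROS -> Event Builder) data rates in bytes/s."""
    event_size = n_links * fragment_b                 # ~1.6 MB per event
    return {
        "per_link": lvl1_rate_hz * fragment_b,        # each Read-Out Link
        "push_total": lvl1_rate_hz * event_size,      # into all ROSs
        "event_size": event_size,
        "eb_pull": eb_rate_hz * event_size,           # full events to the SFIs
    }


if __name__ == "__main__":
    for key, value in readout_rates().items():
        print(f"{key}: {value:.3g}")
```

Each link carries ~100 MB/s and the ROSs receive 160 GB/s in total; at the 3.5 kHz design LVL2 accept rate the event-building pull rises from ~4.8 GB/s to 5.6 GB/s.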

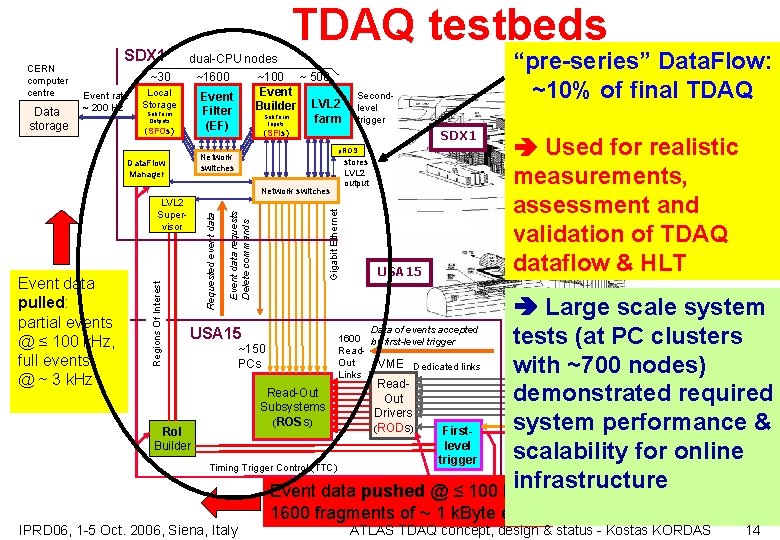

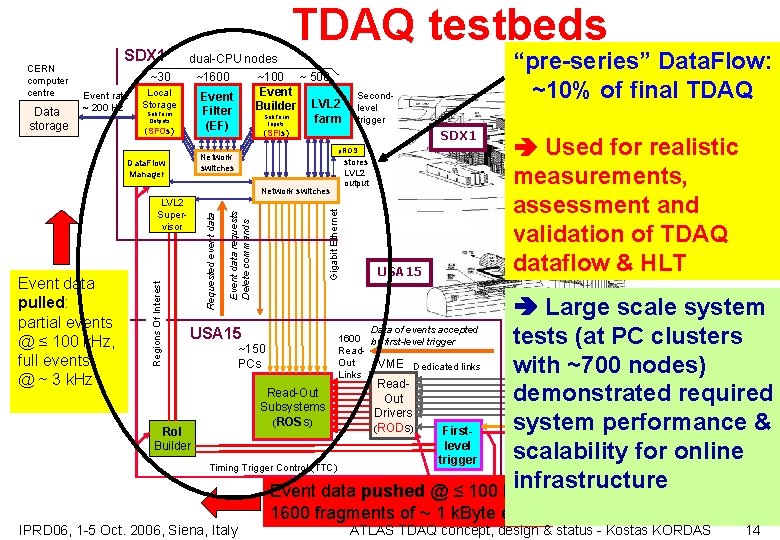

TDAQ testbeds. The "pre-series" DataFlow, ~10% of the final TDAQ, is used for realistic measurements, assessment and validation of the TDAQ dataflow & HLT. Large-scale system tests (on PC clusters with ~700 nodes) demonstrated the required system performance & scalability for the online infrastructure. (Diagram: the same layout as on the "TDAQ at the ATLAS site" slide.)

Muon + HAD Cal. cosmics run with LVL1. The calorimeter, muon and central trigger logics are in the production and installation phases, for both hardware & software. August 2006: first combined cosmic-ray run of ATLAS detectors (muon + Tile hadronic calorimeter), triggered by the muon trigger chambers.

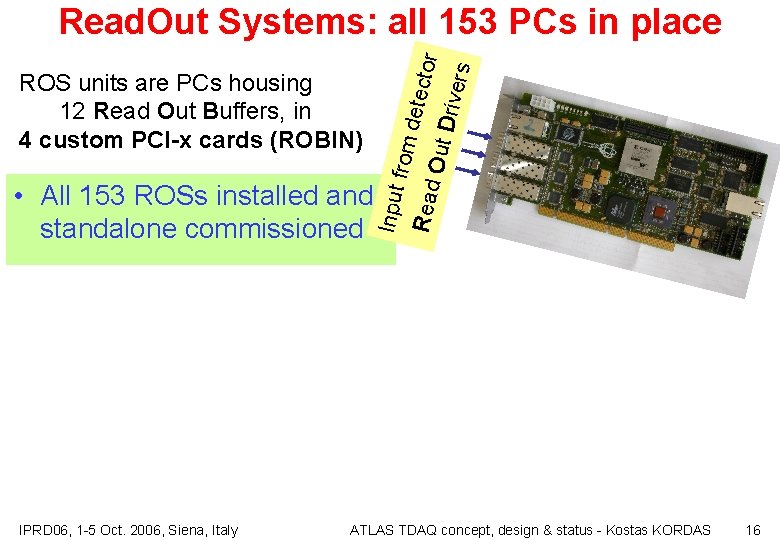

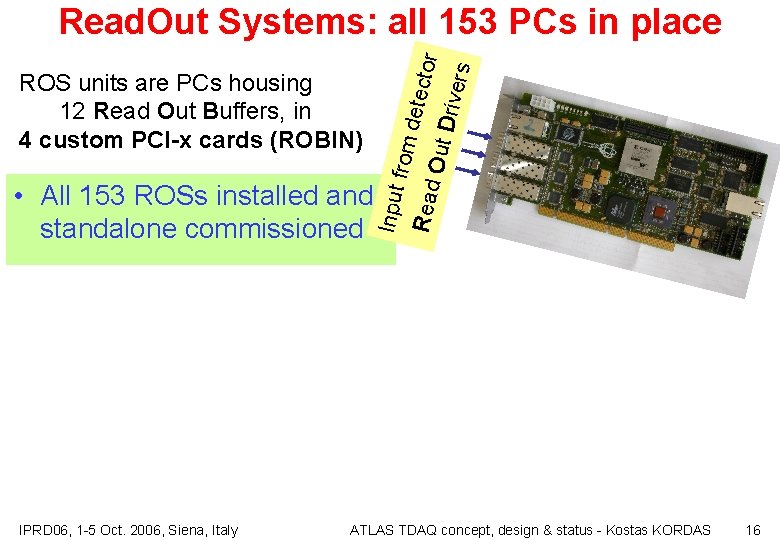


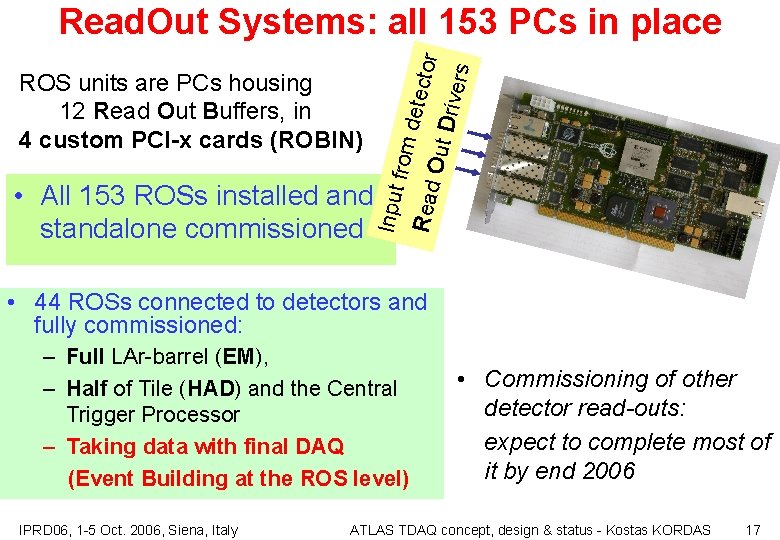

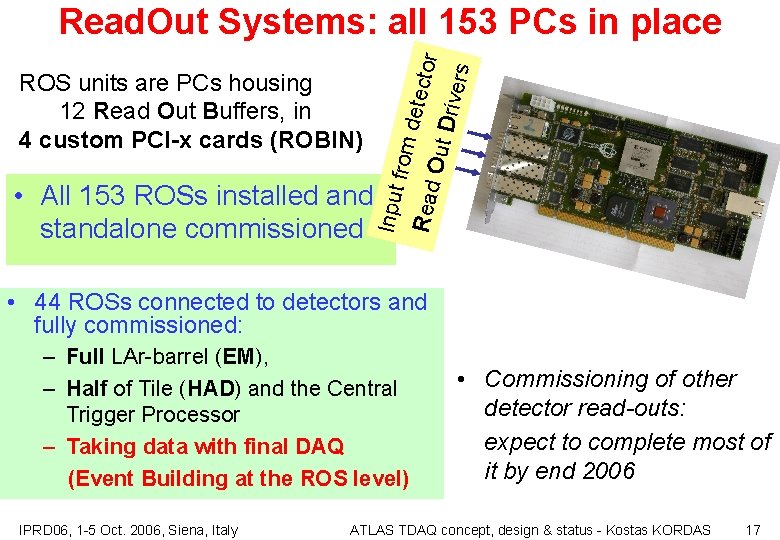

ReadOut Systems: all 153 PCs in place.

- All 153 ROSs installed and standalone commissioned. ROS units are PCs housing 12 Read-Out Buffers on 4 custom PCI-X cards (ROBIN), with input from the detector Read-Out Drivers.
- 44 ROSs connected to detectors and fully commissioned:
  - full LAr barrel (EM);
  - half of Tile (HAD) and the Central Trigger Processor;
  - taking data with the final DAQ (event building at the ROS level).
- Commissioning of other detector read-outs: expected to be mostly complete by the end of 2006.

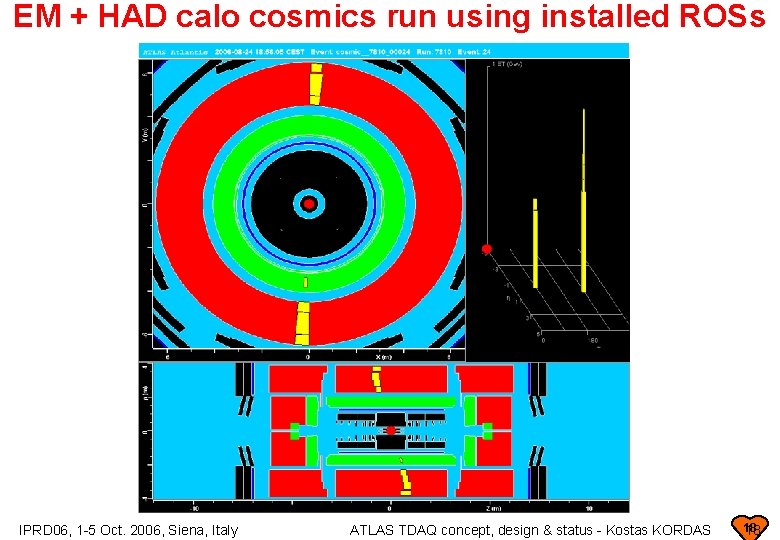

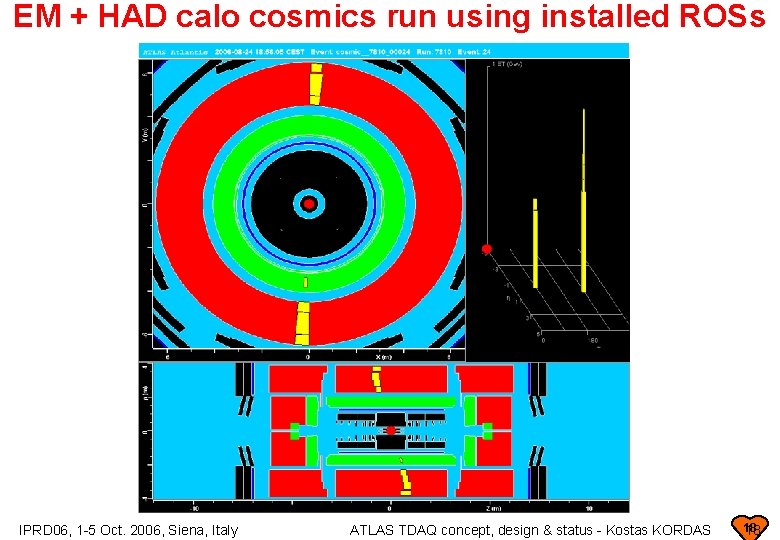

EM + HAD calo cosmics run using installed ROSs

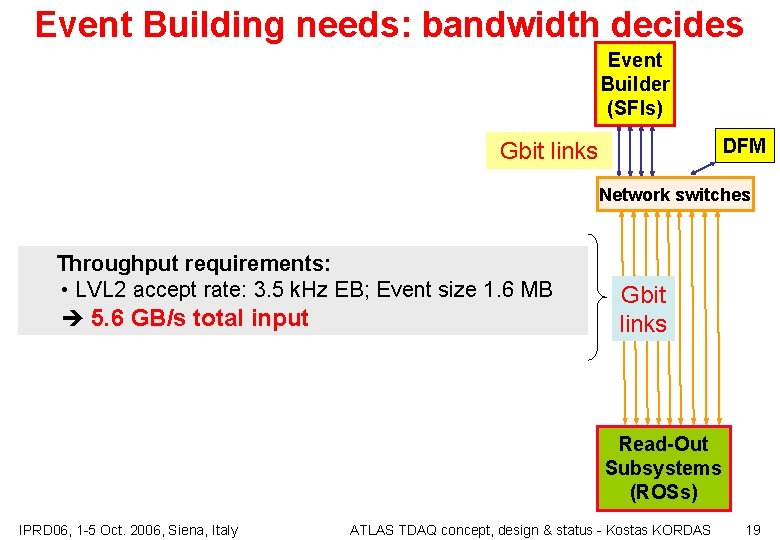

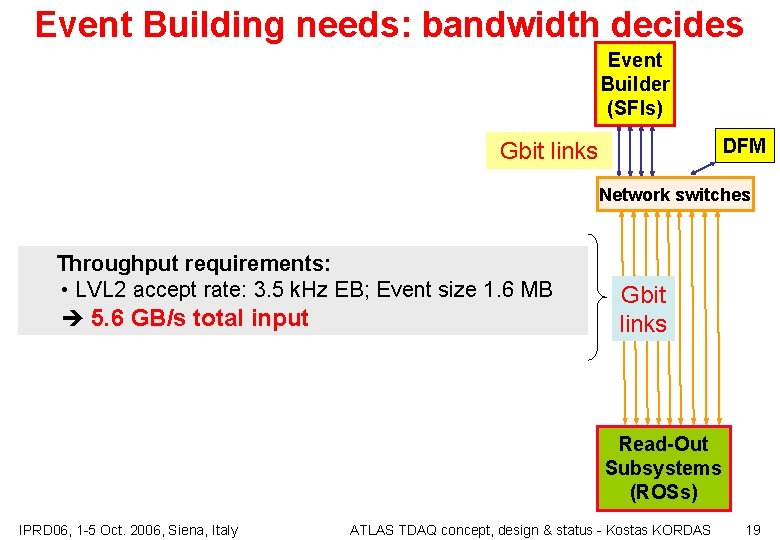


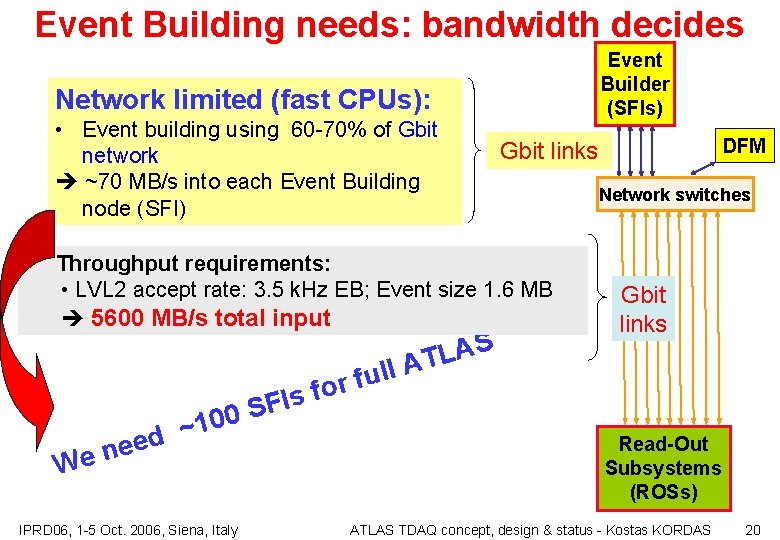

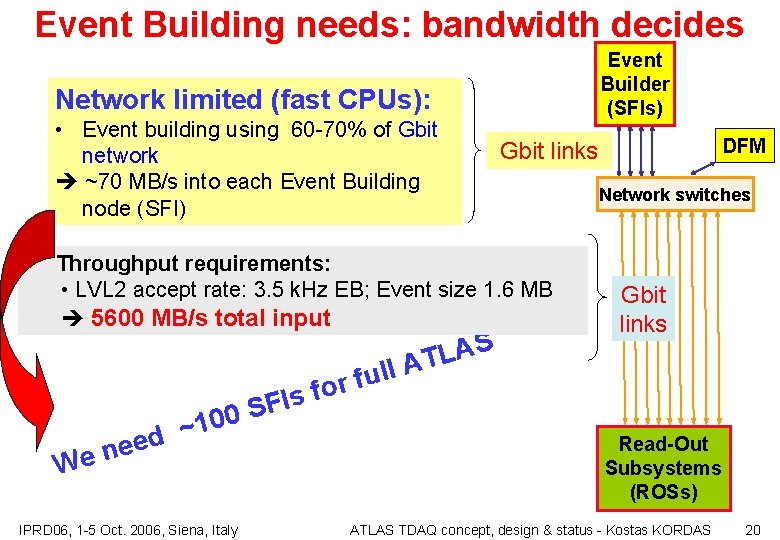

Event Building needs: bandwidth decides.

- Throughput requirements: LVL2 accept rate into EB 3.5 kHz; event size 1.6 MB → 5600 MB/s total input.
- Network limited (fast CPUs): event building uses 60-70% of the Gbit network, i.e. ~70 MB/s into each Event Building node (SFI).
- We need ~100 SFIs for full ATLAS.

(Diagram: ROSs → Gbit links → network switches → Gbit links → SFIs, coordinated by the DFM.)
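Dividing the required event-building input by the ~70 MB/s each SFI can absorb gives the minimum SFI count; the ~100 SFIs quoted sit above that minimum. A sketch of the arithmetic (the function name is illustrative):

```python
import math


def sfis_needed(accept_rate_hz: float, event_size_b: float, per_sfi_b_s: float) -> int:
    """Minimum number of SFIs whose combined input covers the event-building load."""
    return math.ceil(accept_rate_hz * event_size_b / per_sfi_b_s)


if __name__ == "__main__":
    # 3.5 kHz x 1.6 MB = 5.6 GB/s, spread over SFIs taking ~70 MB/s each
    print(sfis_needed(3.5e3, 1.6e6, 70e6))
```

If each SFI only sustained ~60 MB/s, the minimum would rise to 94.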

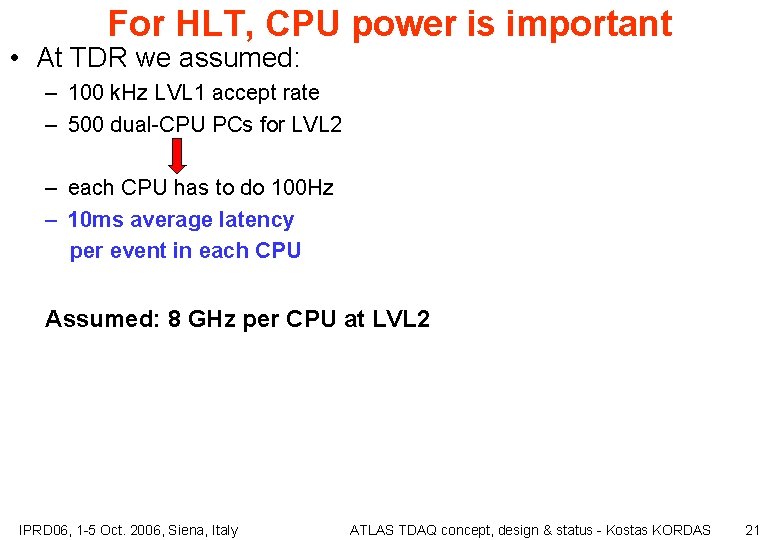

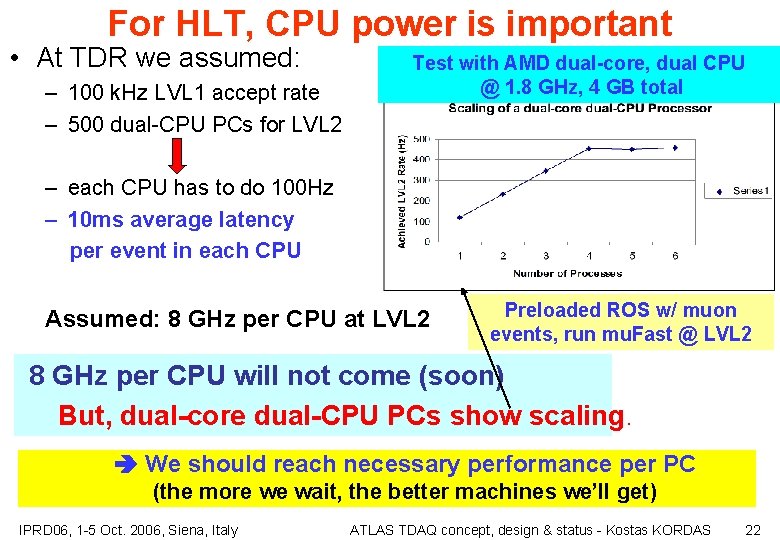

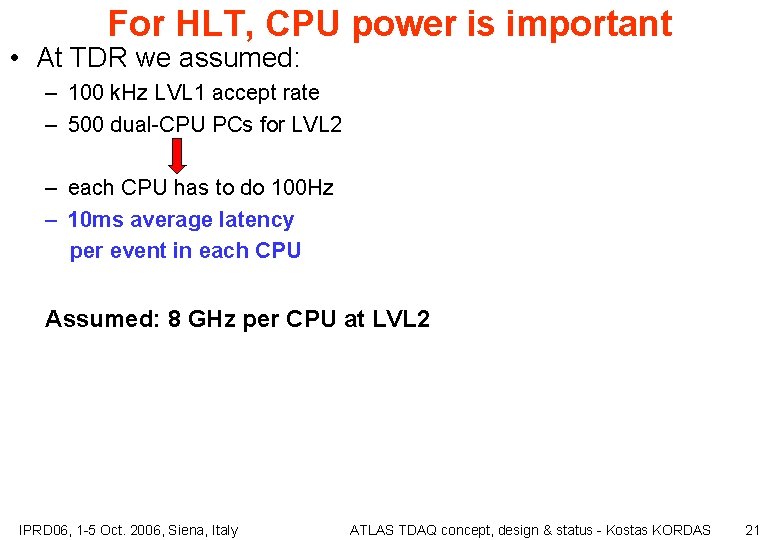

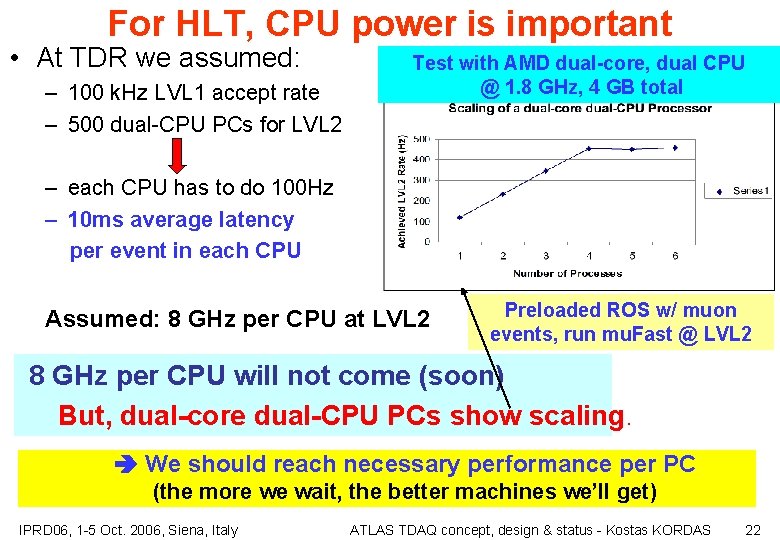


For HLT, CPU power is important.

- At the TDR we assumed:
  - 100 kHz LVL1 accept rate;
  - 500 dual-CPU PCs for LVL2;
  - each CPU has to do 100 Hz;
  - 10 ms average latency per event in each CPU.
- Assumed: 8 GHz per CPU at LVL2.
- Test with AMD dual-core, dual-CPU PCs @ 1.8 GHz, 4 GB total: ROS preloaded with muon events, running muFast at LVL2.
- 8 GHz per CPU will not come (soon). But dual-core dual-CPU PCs show scaling: we should reach the necessary performance per PC (the longer we wait, the better the machines we'll get).
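The TDR numbers hang together through Little's law: 100 kHz of events, each occupying a CPU for 10 ms on average, means ~1000 events in flight, i.e. 1000 CPUs (500 dual-CPU PCs) each running at 100 Hz and fully busy. A sketch of that arithmetic (the function name is illustrative); passing `cpus_per_pc=4` shows the per-core load for the four-core test machines, assuming each core alone could meet the 10 ms average:

```python
def lvl2_cpu_budget(l1_rate_hz=100e3, n_pcs=500, cpus_per_pc=2, latency_s=10e-3):
    """Per-CPU load implied by the TDR LVL2 assumptions.

    Returns (events/s per CPU, events in flight, per-CPU utilisation).
    """
    n_cpus = n_pcs * cpus_per_pc
    rate_per_cpu = l1_rate_hz / n_cpus          # LVL1 rate spread over all CPUs
    in_flight = l1_rate_hz * latency_s          # Little's law: L = lambda * W
    utilisation = rate_per_cpu * latency_s      # busy fraction of each CPU
    return rate_per_cpu, in_flight, utilisation


if __name__ == "__main__":
    print(lvl2_cpu_budget())
    print(lvl2_cpu_budget(cpus_per_pc=4))
```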

DAQ / HLT commissioning. Online infrastructure:

- A useful fraction has been operational since last year, growing according to need.
- The final network is almost done.

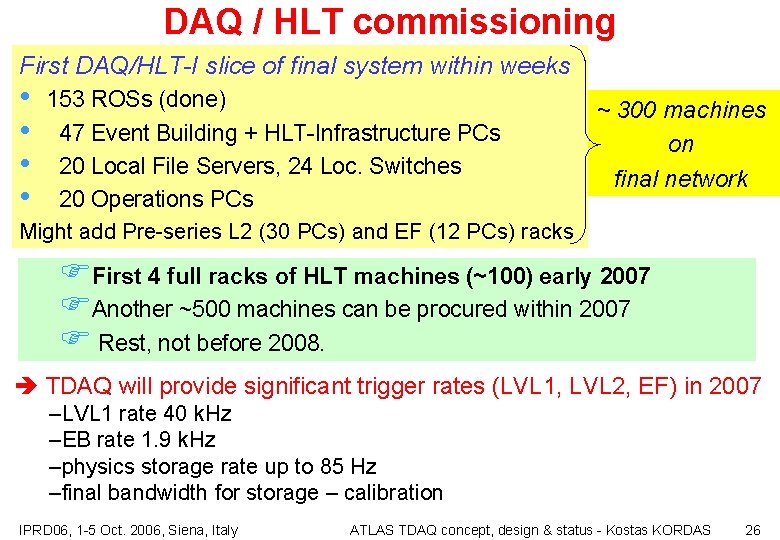

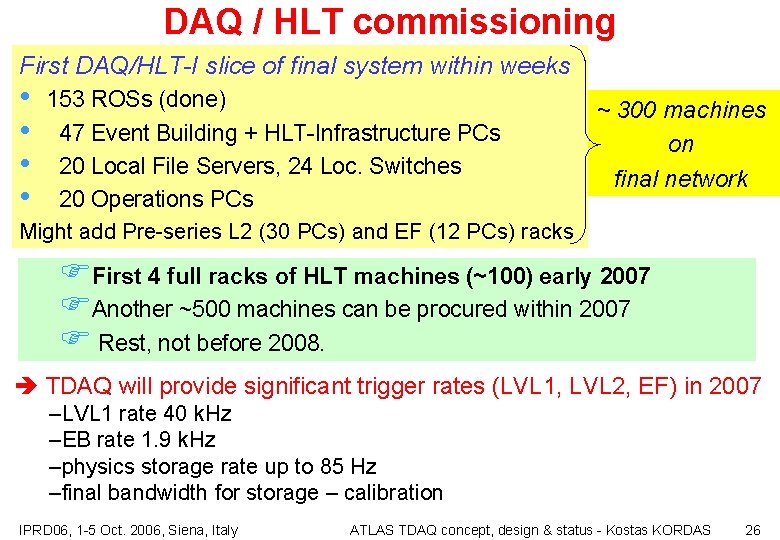


DAQ / HLT commissioning. First DAQ/HLT-I slice of the final system within weeks:

- 153 ROSs (done).
- ~300 machines on the final network, including 47 Event Building + HLT-Infrastructure PCs, 20 Local File Servers, 24 local switches and 20 Operations PCs.
- Might add Pre-series L2 (30 PCs) and EF (12 PCs) racks.
- First 4 full racks of HLT machines (~100) early 2007.
- Another 500 to 600 machines can be procured within 2007.
- Rest, not before 2008.

DAQ / HLT commissioning. First DAQ/HLT-I slice of the final system within weeks:

- 153 ROSs (done).
- ~300 machines on the final network, including 47 Event Building + HLT-Infrastructure PCs, 20 Local File Servers, 24 local switches and 20 Operations PCs.
- Might add Pre-series L2 (30 PCs) and EF (12 PCs) racks.
- First 4 full racks of HLT machines (~100) early 2007.
- Another ~500 machines can be procured within 2007.
- Rest, not before 2008.

TDAQ will provide significant trigger rates (LVL1, LVL2, EF) in 2007:

- LVL1 rate 40 kHz;
- EB rate 1.9 kHz;
- physics storage rate up to 85 Hz;
- final bandwidth for storage and calibration.

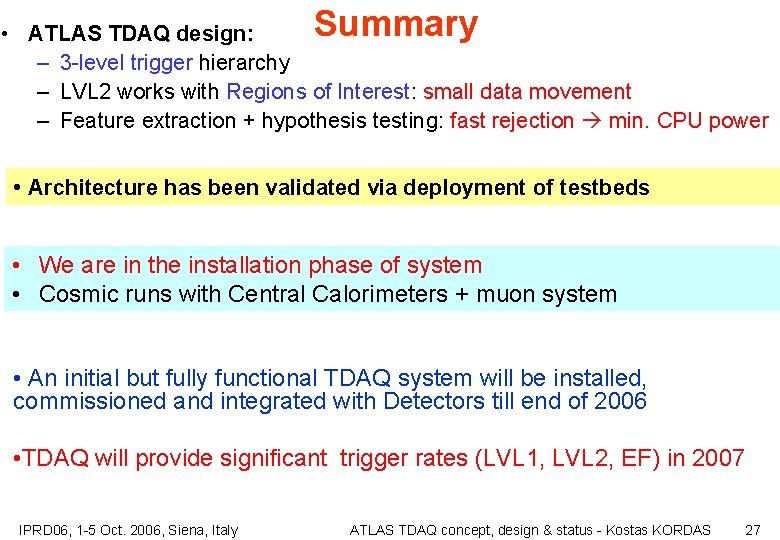

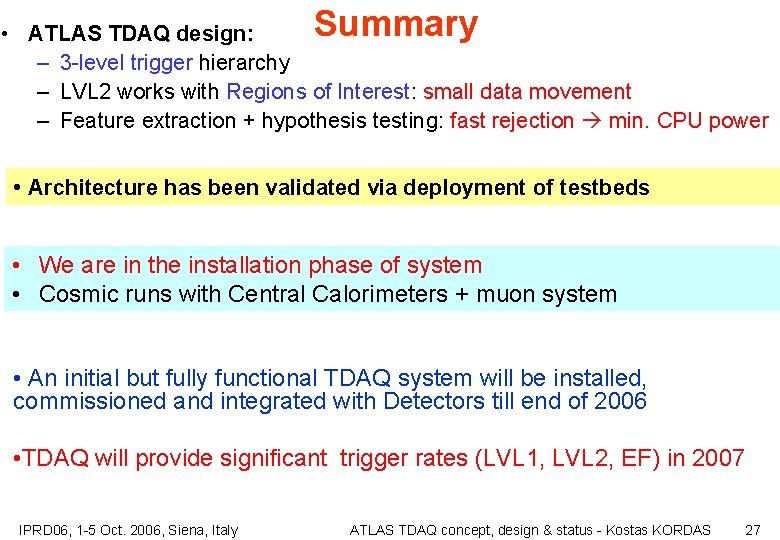

Summary

- ATLAS TDAQ design:
  - 3-level trigger hierarchy;
  - LVL2 works with Regions of Interest: small data movement;
  - feature extraction + hypothesis testing: fast rejection with minimal CPU power.
- The architecture has been validated via deployment of testbeds.
- We are in the installation phase of the system.
- Cosmic runs with the central calorimeters + muon system.
- An initial but fully functional TDAQ system will be installed, commissioned and integrated with the detectors by the end of 2006.
- TDAQ will provide significant trigger rates (LVL1, LVL2, EF) in 2007.

Thank you

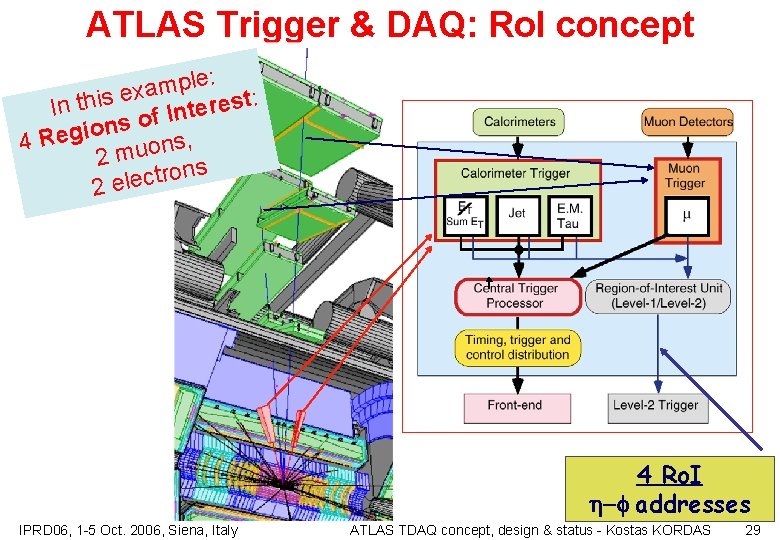

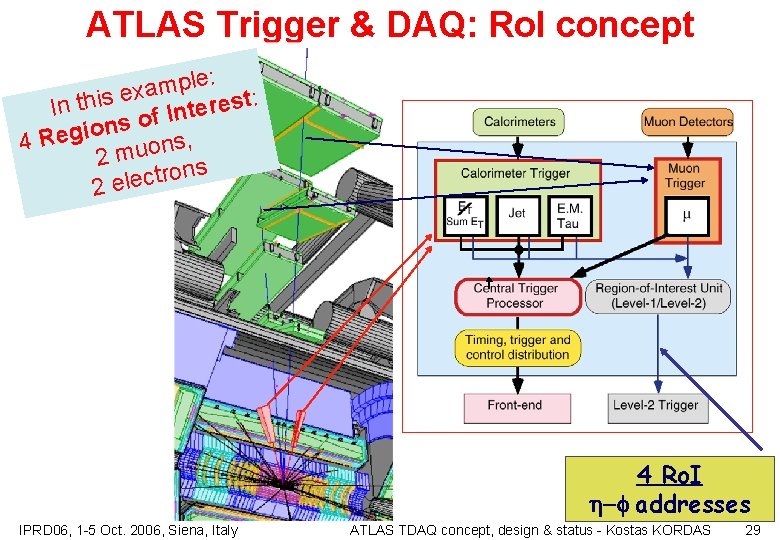

ATLAS Trigger & DAQ: RoI concept. In this example there are 4 Regions of Interest (2 muons, 2 electrons), i.e. 4 RoI η-φ addresses.

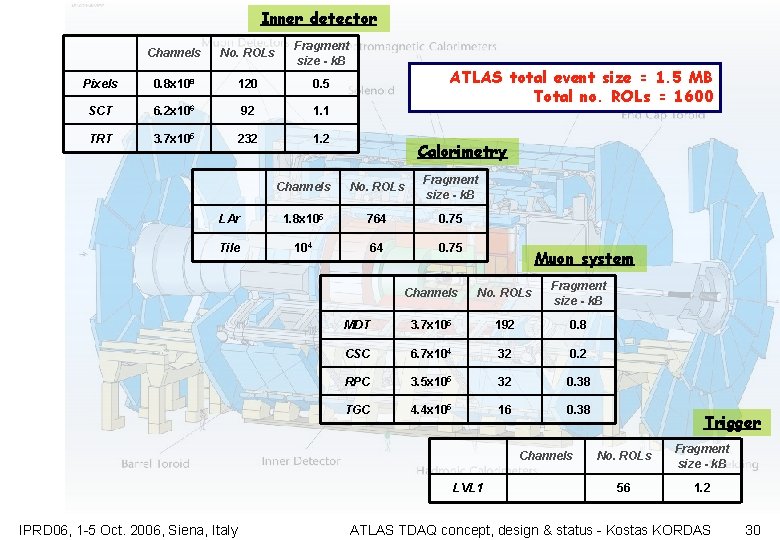

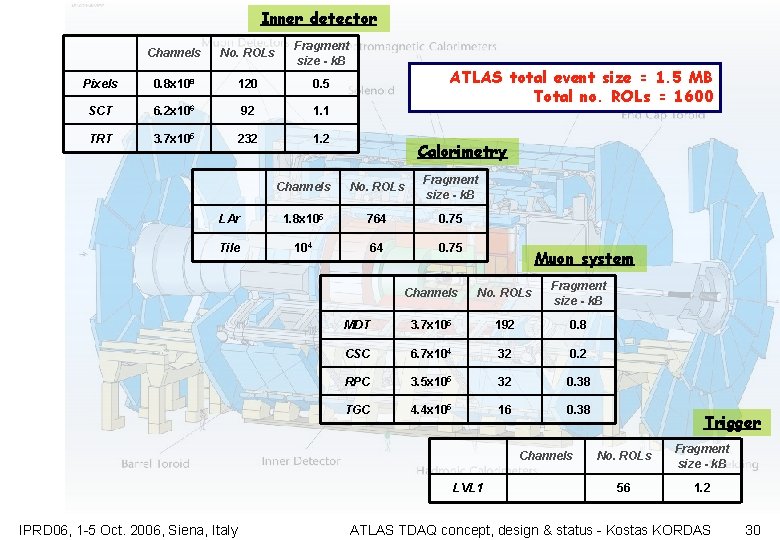

Channels, Read-Out Links (ROLs) and fragment sizes per sub-detector:

| System | Sub-detector | Channels | No. ROLs | Fragment size (kB) |
|---|---|---|---|---|
| Inner detector | Pixels | 0.8 × 10⁸ | 120 | 0.5 |
| Inner detector | SCT | 6.2 × 10⁶ | 92 | 1.1 |
| Inner detector | TRT | 3.7 × 10⁵ | 232 | 1.2 |
| Calorimetry | LAr | 1.8 × 10⁵ | 764 | 0.75 |
| Calorimetry | Tile | 10⁴ | 64 | 0.75 |
| Muon system | MDT | 3.7 × 10⁵ | 192 | 0.8 |
| Muon system | CSC | 6.7 × 10⁴ | 32 | 0.2 |
| Muon system | RPC | 3.5 × 10⁵ | 32 | 0.38 |
| Muon system | TGC | 4.4 × 10⁵ | 16 | 0.38 |
| Trigger | LVL1 | | 56 | 1.2 |

ATLAS total event size = 1.5 MB; total no. of ROLs = 1600.
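Summing the table row by row reproduces the 1600 Read-Out Links; the listed fragment sizes alone add up to about 1.3 MB, somewhat below the quoted 1.5 MB total. A quick tally:

```python
# (sub-detector, number of ROLs, fragment size in kB), copied from the table
ROLS = [
    ("Pixels", 120, 0.5), ("SCT", 92, 1.1), ("TRT", 232, 1.2),
    ("LAr", 764, 0.75), ("Tile", 64, 0.75),
    ("MDT", 192, 0.8), ("CSC", 32, 0.2), ("RPC", 32, 0.38), ("TGC", 16, 0.38),
    ("LVL1", 56, 1.2),
]

total_rols = sum(n for _, n, _ in ROLS)
payload_kb = sum(n * size for _, n, size in ROLS)   # ROLs x fragment size

if __name__ == "__main__":
    print(total_rols, round(payload_kb))
```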

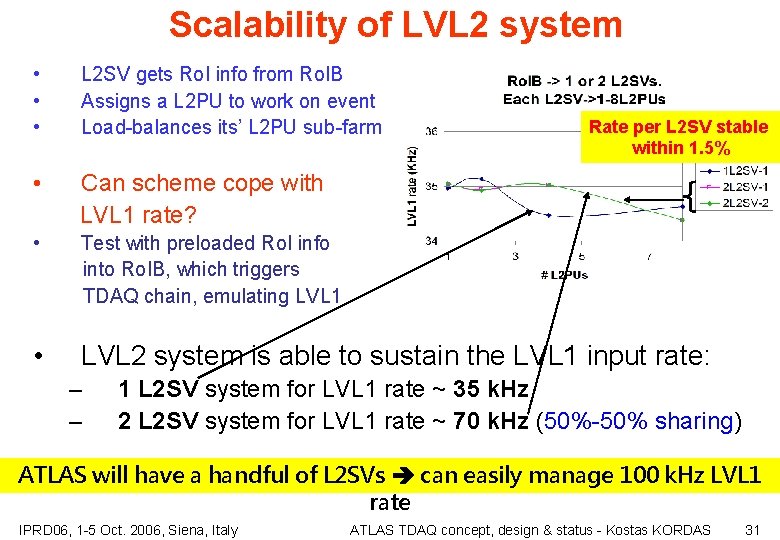

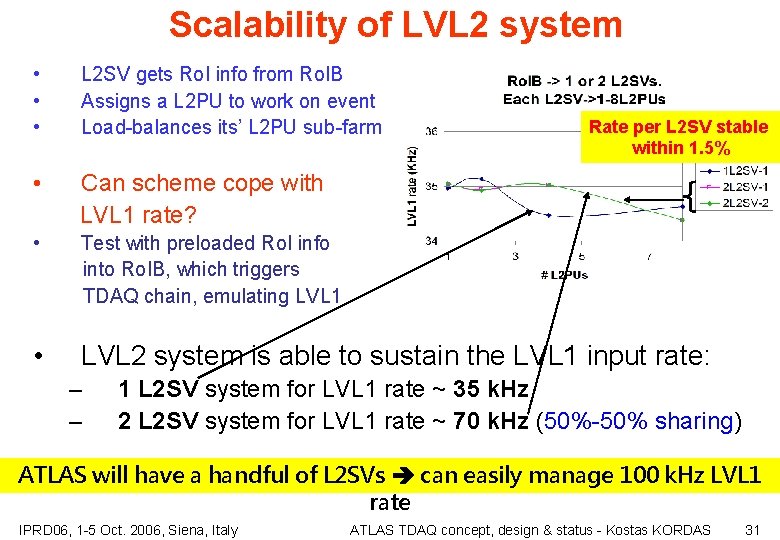

Scalability of the LVL2 system.

- The L2SV gets RoI info from the RoIB, assigns an L2PU to work on each event, and load-balances its L2PU sub-farm.
- Can this scheme cope with the LVL1 rate? Test: RoI info preloaded into the RoIB, which triggers the TDAQ chain, emulating LVL1.
- The LVL2 system is able to sustain the LVL1 input rate:
  - rate per L2SV stable within 1.5%;
  - 1-L2SV system: LVL1 rate ~35 kHz;
  - 2-L2SV system: LVL1 rate ~70 kHz (50%-50% sharing).
- ATLAS will have a handful of L2SVs, which can easily manage a 100 kHz LVL1 rate.
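The measured ~35 kHz per L2SV translates directly into how many supervisors a 100 kHz LVL1 rate needs, assuming the linear scaling seen with two L2SVs continues. The sketch also shows one simple load-balancing policy, least-busy L2PU first; this policy and the class name are assumptions for illustration, not necessarily what the L2SV implements:

```python
import math


def l2svs_needed(l1_rate_hz: float, per_l2sv_hz: float = 35e3) -> int:
    """Supervisors needed if each sustains ~35 kHz and the load shares evenly."""
    return math.ceil(l1_rate_hz / per_l2sv_hz)


class L2SupervisorSketch:
    """Hypothetical least-busy assignment of events to L2PUs."""

    def __init__(self, l2pus):
        self.busy = {name: 0 for name in l2pus}   # events in progress per L2PU

    def assign(self) -> str:
        # Pick the L2PU with the fewest events in progress; break ties by name.
        name = min(self.busy, key=lambda n: (self.busy[n], n))
        self.busy[name] += 1
        return name

    def done(self, name: str) -> None:
        self.busy[name] -= 1
```

Under these assumptions three L2SVs cover 100 kHz, consistent with "a handful".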

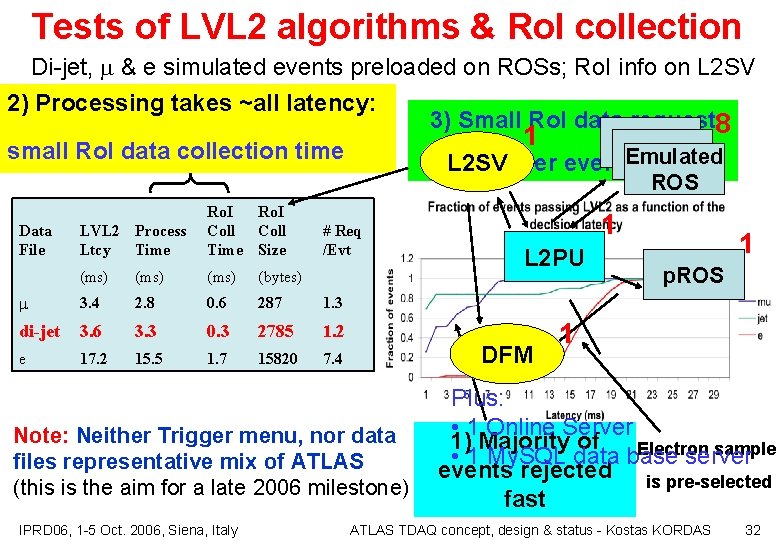

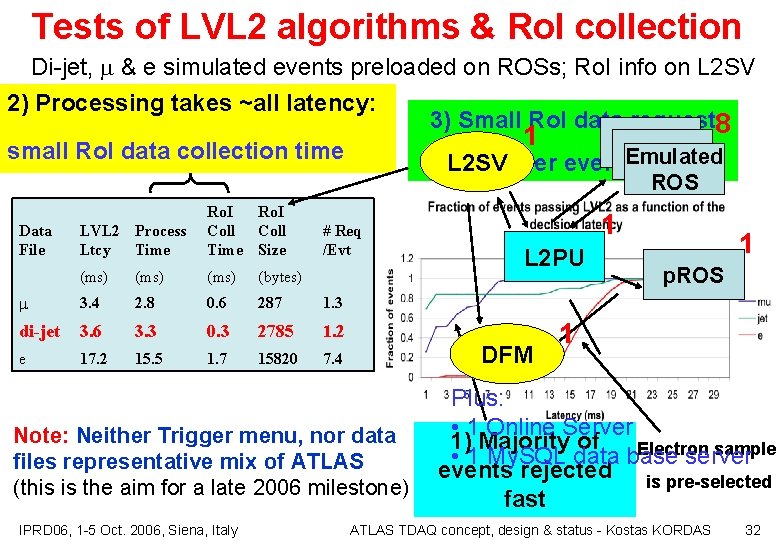

Tests of LVL2 algorithms & RoI collection. Di-jet, μ and e simulated events were preloaded on the ROSs, with the RoI info on the L2SV. Test setup: emulated ROSs, pROS, L2SV, L2PU and DFM, plus 1 Online Server and 1 MySQL database server.

| Data file | LVL2 latency (ms) | Process time (ms) | RoI coll. time (ms) | RoI coll. size (bytes) | # Req/Evt |
|---|---|---|---|---|---|
| μ | 3.4 | 2.8 | 0.6 | 287 | 1.3 |
| di-jet | 3.6 | 3.3 | 0.3 | 2785 | 1.2 |
| e | 17.2 | 15.5 | 1.7 | 15820 | 7.4 |

1) The majority of events are rejected fast (the electron sample is pre-selected).
2) Processing takes ~all the latency: the RoI data collection time is small.
3) Small RoI data request per event.

Note: neither the trigger menu nor the data files are a representative mix of ATLAS (this is the aim for a late-2006 milestone).
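The table's columns are consistent: in each row the LVL2 latency is the sum of processing time and RoI collection time. A small check of that decomposition (the helper name is illustrative) shows RoI collection taking roughly 8-18% of the latency, in line with note 2:

```python
# (sample, LVL2 latency, processing time, RoI collection time), all in ms
MEASURED = [
    ("mu", 3.4, 2.8, 0.6),
    ("di-jet", 3.6, 3.3, 0.3),
    ("e", 17.2, 15.5, 1.7),
]


def collection_share(latency_ms, processing_ms, collection_ms, tol_ms=0.05):
    """Fraction of LVL2 latency spent collecting RoI data.

    Raises if latency is not (approximately) processing + collection.
    """
    if abs(latency_ms - (processing_ms + collection_ms)) > tol_ms:
        raise ValueError("latency does not equal processing + collection time")
    return collection_ms / latency_ms


if __name__ == "__main__":
    for name, lat, proc, coll in MEASURED:
        print(f"{name}: {collection_share(lat, proc, coll):.0%} of latency in RoI collection")
```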

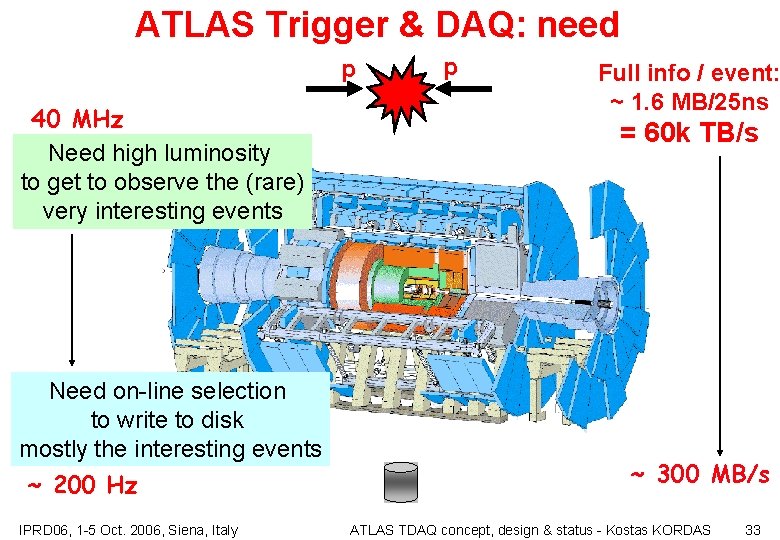

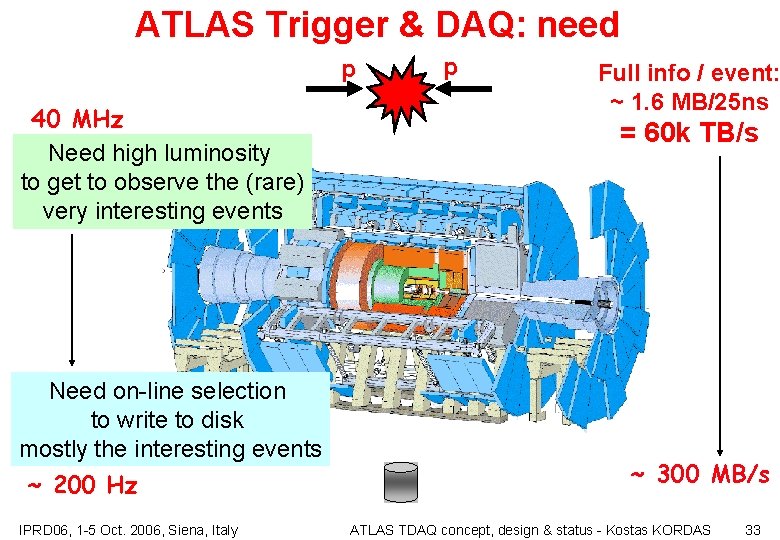

ATLAS Trigger & DAQ: need. At 40 MHz the full information per event is ~1.6 MB every 25 ns (≈ 64 TB/s), while the recorded output is ~200 Hz (~300 MB/s).

- High luminosity is needed to observe the (rare) very interesting events.
- Online selection is needed so that mostly the interesting events are written to disk.

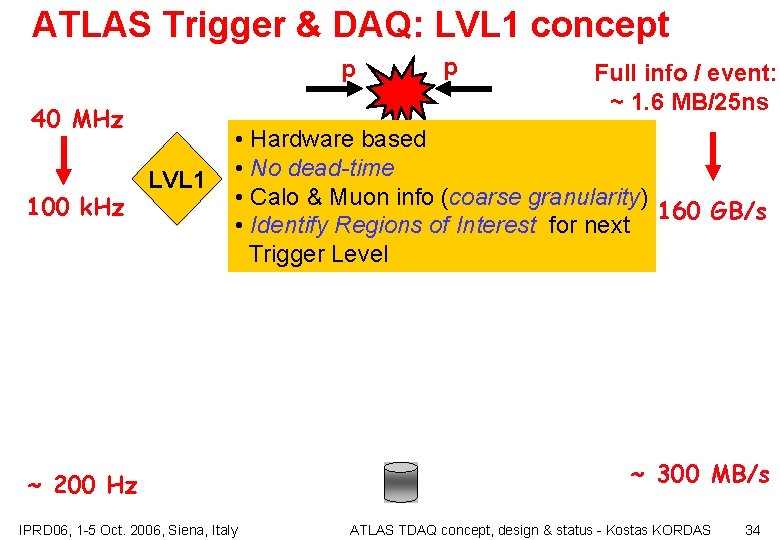

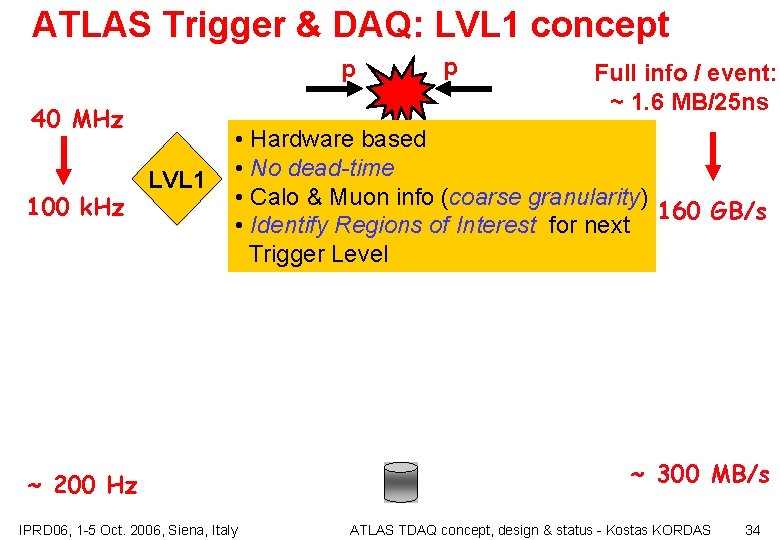

ATLAS Trigger & DAQ: LVL1 concept. 40 MHz → LVL1 → 100 kHz (160 GB/s); full information per event ~1.6 MB every 25 ns.

- Hardware based.
- No dead time.
- Calo & muon info (coarse granularity).
- Identifies Regions of Interest for the next trigger level.

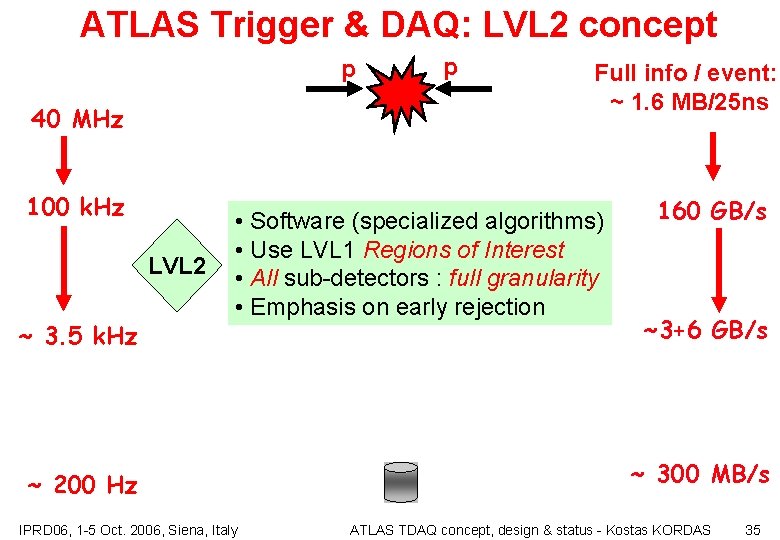

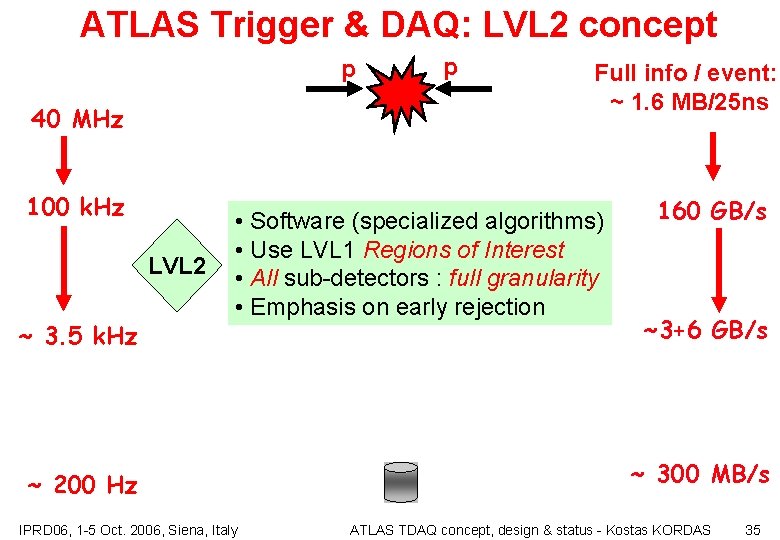

ATLAS Trigger & DAQ: LVL2 concept. 100 kHz → LVL2 → ~3.5 kHz.

- Software (specialized algorithms).
- Uses the LVL1 Regions of Interest.
- All sub-detectors: full granularity.
- Emphasis on early rejection.

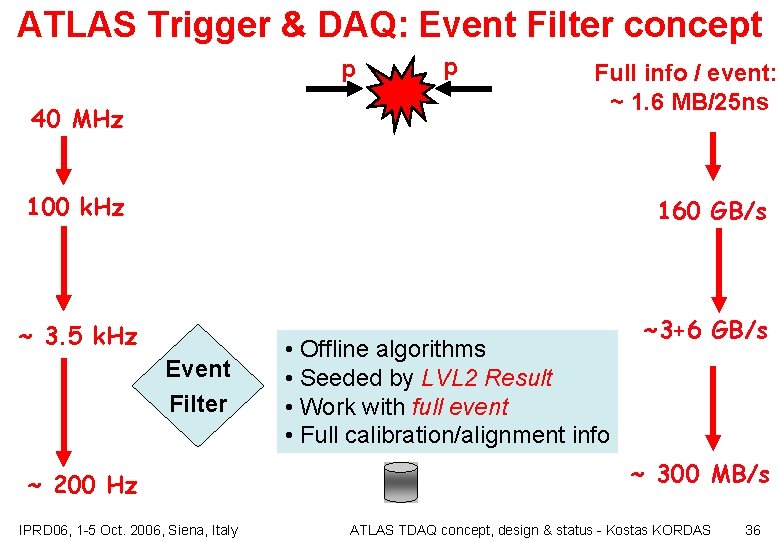

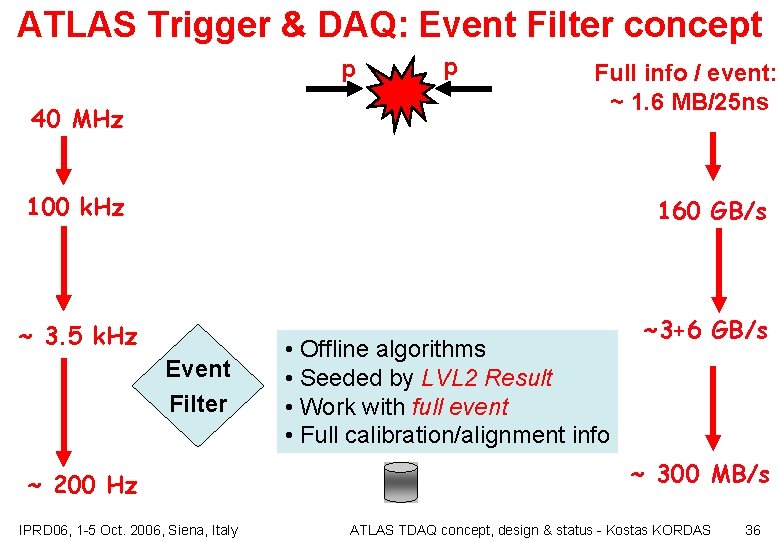

ATLAS Trigger & DAQ: Event Filter concept. ~3.5 kHz → Event Filter → ~200 Hz (~300 MB/s).

- Offline algorithms.
- Seeded by the LVL2 result.
- Works with the full event.
- Full calibration/alignment info.

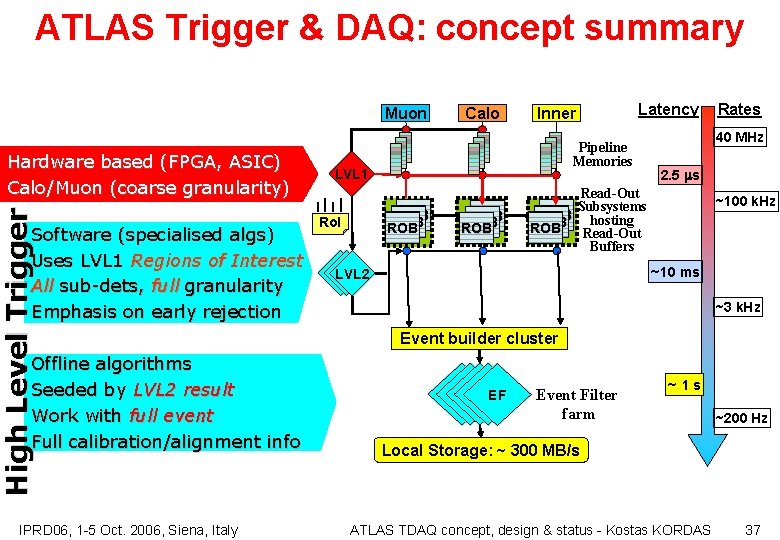

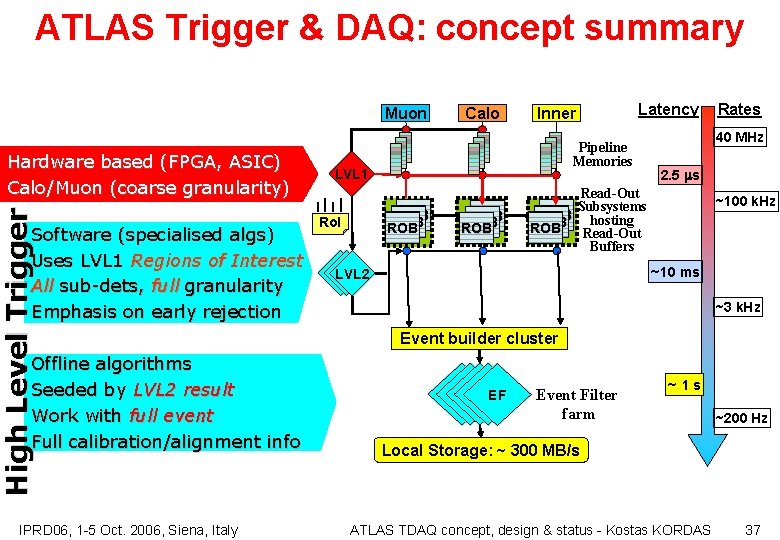

ATLAS Trigger & DAQ: concept summary.

- LVL1 (latency 2.5 μs; 40 MHz → ~100 kHz): hardware based (FPGA, ASIC), calo/muon info (coarse granularity). Calo, muon and inner-detector data wait in pipeline memories, then go to the Read-Out Buffers (ROBs) hosted by the Read-Out Subsystems.
- High Level Trigger:
  - LVL2 (~10 ms; → ~3 kHz): software (specialised algorithms), uses the LVL1 Regions of Interest, all sub-detectors at full granularity, emphasis on early rejection.
  - Event Filter (~1 s; → ~200 Hz), a farm fed by the event builder cluster: offline algorithms, seeded by the LVL2 result, works with the full event, full calibration/alignment info.
- Local storage: ~300 MB/s.

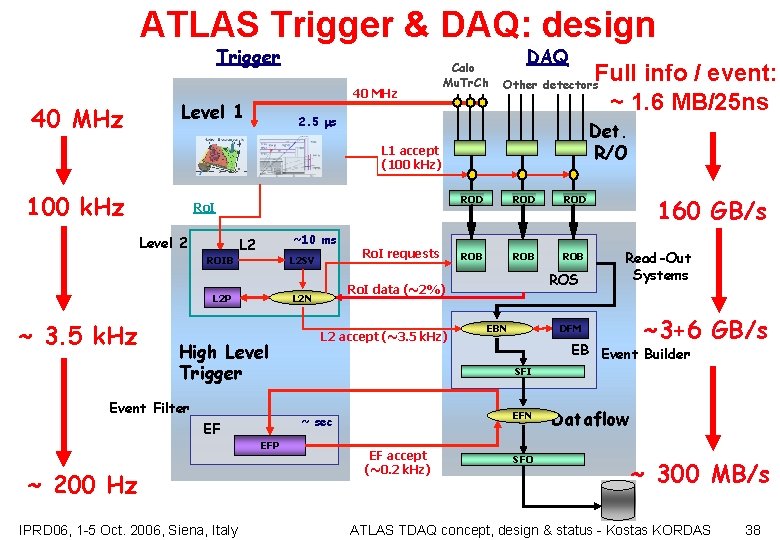

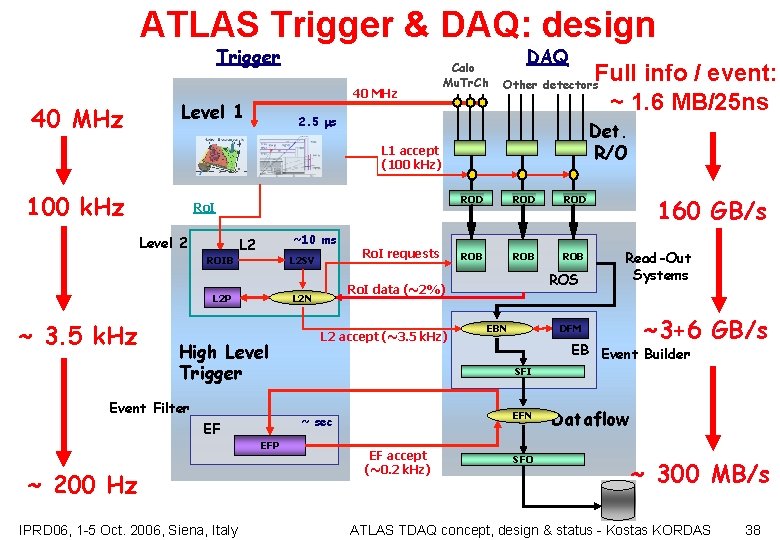

ATLAS Trigger & DAQ: design. (Diagram of the full TDAQ chain: LVL1 at 40 MHz with 2.5 μs latency, accepting 100 kHz; 160 GB/s read out into the ROSs; LVL2 with ~10 ms latency using RoI data (~2%), accepting ~3.5 kHz; event building at ~3+6 GB/s; Event Filter taking ~seconds, accepting ~0.2 kHz; SFO output ~300 MB/s. Full information per event: ~1.6 MB every 25 ns.)

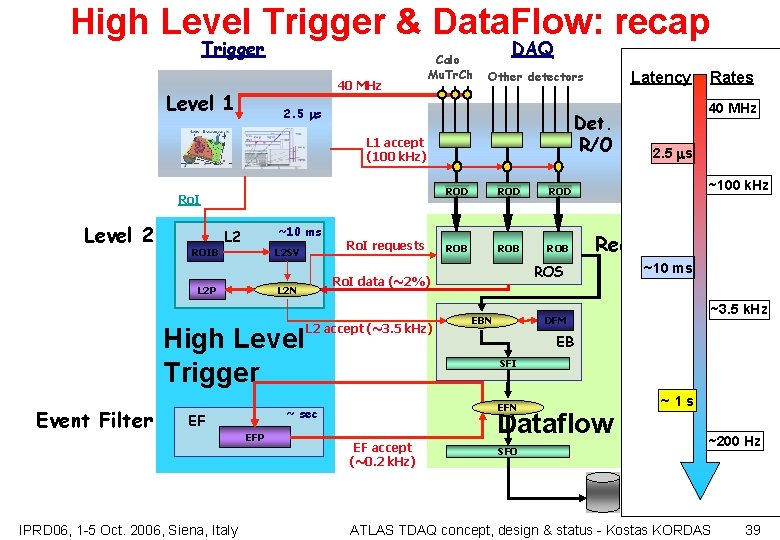

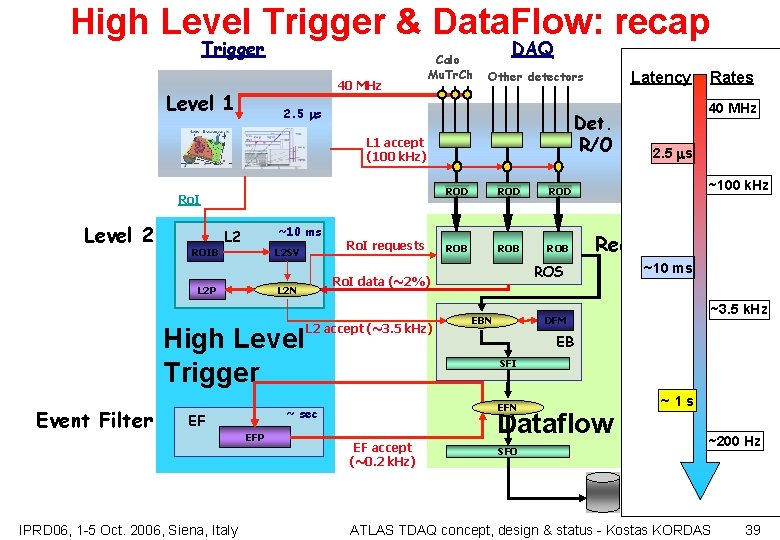

High Level Trigger & DataFlow: recap. (Diagram with rates and latencies: 40 MHz input; LVL1 2.5 μs → ~100 kHz, 160 GB/s; LVL2 ~10 ms → ~3.5 kHz, ~3+6 GB/s; Event Filter ~1 s → ~200 Hz, ~300 MB/s.)

(Diagram: the TDAQ layout of the "TDAQ at the ATLAS site" slide, without rates: 1600 Read-Out Links from the RODs into ~150 ROS PCs, RoI Builder, TTC, LVL2 Supervisor and ~500-node LVL2 farm, DataFlow Manager, pROS, ~100 SFIs, ~1600-node Event Filter and ~30 SFOs with local storage, all on Gigabit Ethernet network switches.)

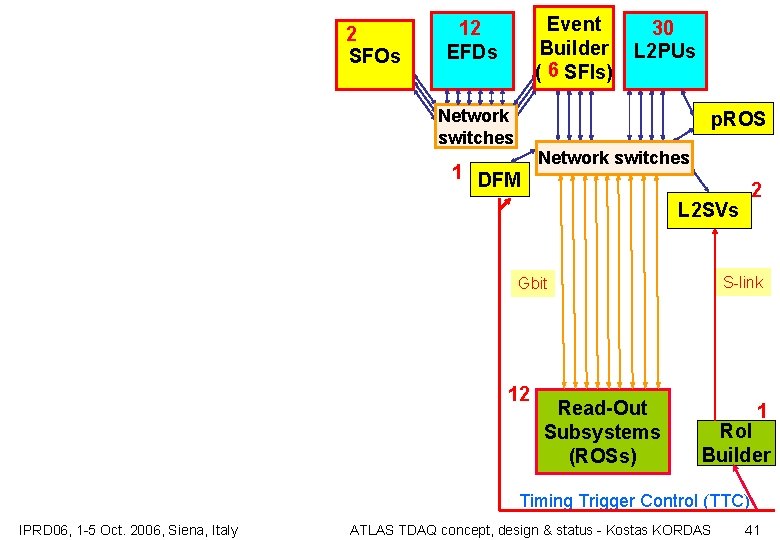

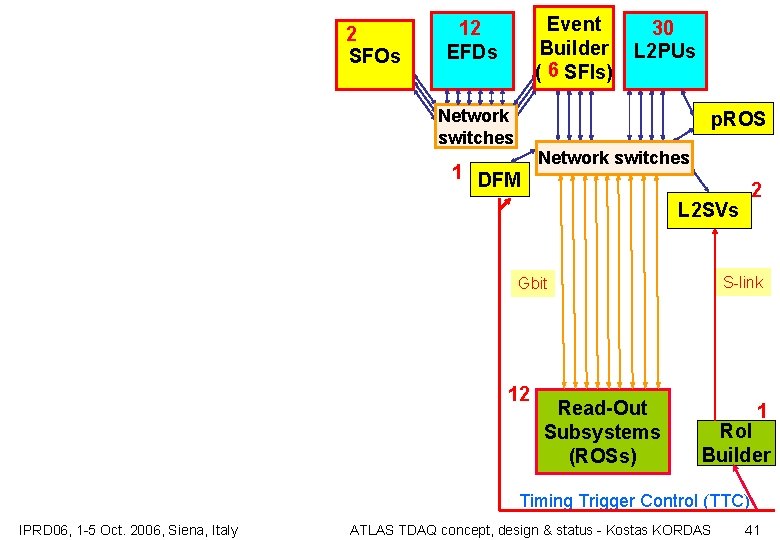

(Diagram: 12 Read-Out Subsystems (ROSs) fed by S-link, 1 RoI Builder, Timing Trigger Control (TTC), 2 L2SVs, 30 L2PUs, 1 DFM, 1 pROS, Event Builder (6 SFIs), 12 EFDs and 2 SFOs, connected through Gbit network switches.)