Text classification: In Search of a Representation

- Slides: 29

Text classification: In Search of a Representation
Stan Matwin
School of Information Technology and Engineering, University of Ottawa
stan@site.uottawa.ca
Matwin 1999

Outline
- Supervised learning = classification
- ML/DM at U of O
- Classical approach
- Attempt at a linguistic representation
- N-grams – how to get them?
- Labelling and co-learning
- Next steps? …

Supervised learning (classification)
Given:
- a set of training instances T = {(e, t)}, where each e is an example and each t is its class label: one of the classes C1, …, Ck
- a concept with k classes C1, …, Ck (but the definition of the concept is NOT known)

Find:
- a description for each class which will perform well in determining (predicting) class membership for unseen instances

Classification
- Prevalent practice: examples are represented as vectors of attribute values
- Theoretical wisdom, confirmed empirically: the more examples, the better the predictive accuracy

ML/DM at U of O
- Learning from imbalanced classes: applications in remote sensing
- A relational, rather than propositional, representation: learning the maintainability concept
- Learning in the presence of background knowledge: Bayesian belief networks and how to get them; application to distributed databases

Why text classification?
- Automatic file saving
- Internet filters
- Recommenders
- Information extraction
- …

Text classification: standard approach (bag of words)
1. Remove stop words and markings
2. Remaining words are all attributes
3. A document becomes a vector of <word, frequency> pairs
4. Train a boolean classifier for each class
5. Evaluate the results on an unseen sample
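
The first four steps of the standard approach can be sketched in a few lines of Python. This is a minimal illustration, not the system used in the talk: the stop-word list, the tokenizer, and the function names `bag_of_words` and `binary_targets` are assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical, tiny stop-word list; a real system would use a fuller one.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "on", "for"}

def bag_of_words(text):
    """Steps 1-3: strip markup, drop stop words, count the remaining words."""
    text = re.sub(r"<[^>]+>", " ", text)          # remove markings (tags)
    words = re.findall(r"[a-z]+", text.lower())   # crude tokenizer (assumption)
    return Counter(w for w in words if w not in STOP_WORDS)

def binary_targets(labels, target_class):
    """Step 4 setup: one yes/no problem per class."""
    return [target_class in doc_labels for doc_labels in labels]

docs = ["<p>The price of wheat rose in Canada</p>", "Gold and the price of gold"]
labels = [{"grain"}, {"gold"}]
vectors = [bag_of_words(d) for d in docs]
```

Each `Counter` plays the role of the <word, frequency> vector, and `binary_targets(labels, "grain")` gives the boolean target that a per-class learner would be trained on.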

Text classification: tools
- RIPPER
  - A "covering" learner
  - Works well with large sets of binary features
- Naïve Bayes
  - Efficient (no search)
  - Simple to program
  - Gives "degree of belief"
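
To make the Naïve Bayes points concrete, here is a minimal sketch of a two-class multinomial Naïve Bayes learner. Training is plain counting (no search), and the output is a posterior probability that can be read as a degree of belief. The add-one smoothing and the names `train_nb` and `belief` are assumptions for illustration; the slides do not specify which variant was used.

```python
import math
from collections import Counter

def train_nb(docs, labels):
    """docs: list of word lists; labels: list of booleans (in class or not).
    Assumes both classes occur at least once in the training data."""
    model = {"vocab": {w for d in docs for w in d},
             "prior": {}, "counts": {}, "total": {}}
    for c in (True, False):
        in_c = [d for d, y in zip(docs, labels) if y == c]
        model["prior"][c] = len(in_c) / len(docs)
        model["counts"][c] = Counter(w for d in in_c for w in d)
        model["total"][c] = sum(model["counts"][c].values())
    return model

def belief(model, doc):
    """Posterior P(class = True | doc), using add-one smoothing."""
    v = len(model["vocab"])
    log_p = {}
    for c in (True, False):
        lp = math.log(model["prior"][c])
        for w in doc:
            if w in model["vocab"]:   # words never seen in training are ignored
                lp += math.log((model["counts"][c][w] + 1) / (model["total"][c] + v))
        log_p[c] = lp
    m = max(log_p.values())           # subtract the max for numerical stability
    p = {c: math.exp(lp - m) for c, lp in log_p.items()}
    return p[True] / (p[True] + p[False])
```

For example, after training on two "grain" documents and two non-grain documents, `belief(model, ["wheat"])` returns a number between 0 and 1 rather than a hard yes/no decision.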

“Prior art”
- Yang: best results using k-NN: 82.3% microaveraged accuracy
- Joachims: results using Support Vector Machine + unlabelled data
- SVM insensitive to high dimensionality, sparseness of examples

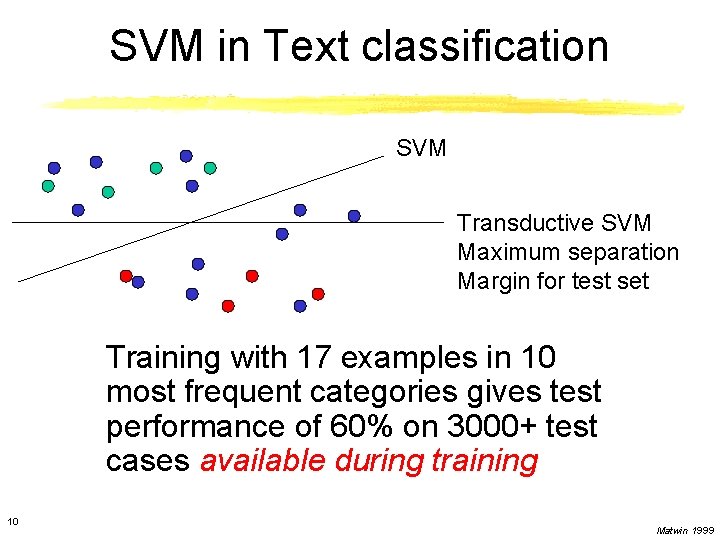

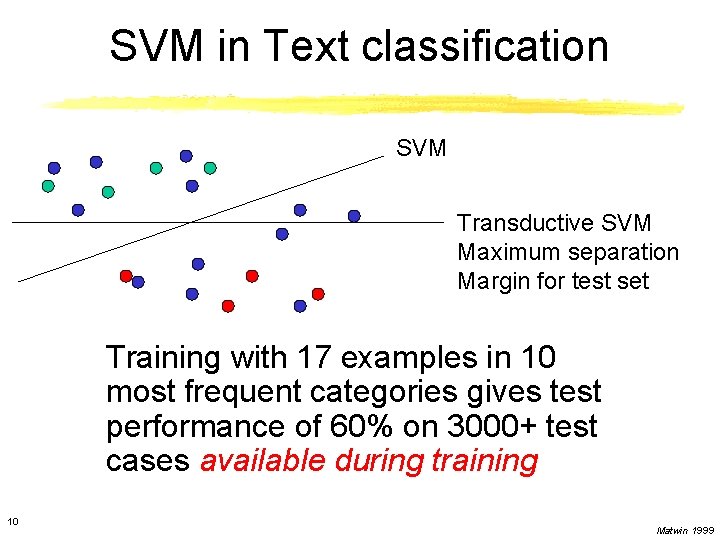

SVM in text classification
- SVM: maximum separation
- Transductive SVM: margin for test set
- Training with 17 examples in the 10 most frequent categories gives test performance of 60% on 3000+ test cases available during training

Problem 1: aggressive feature selection

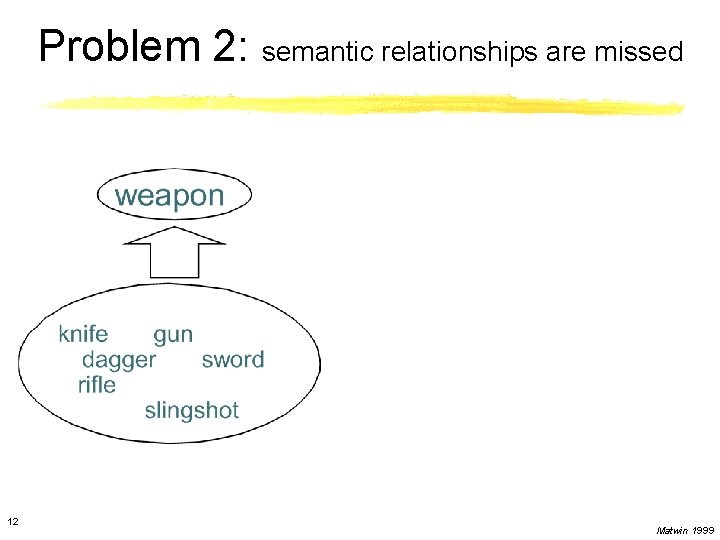

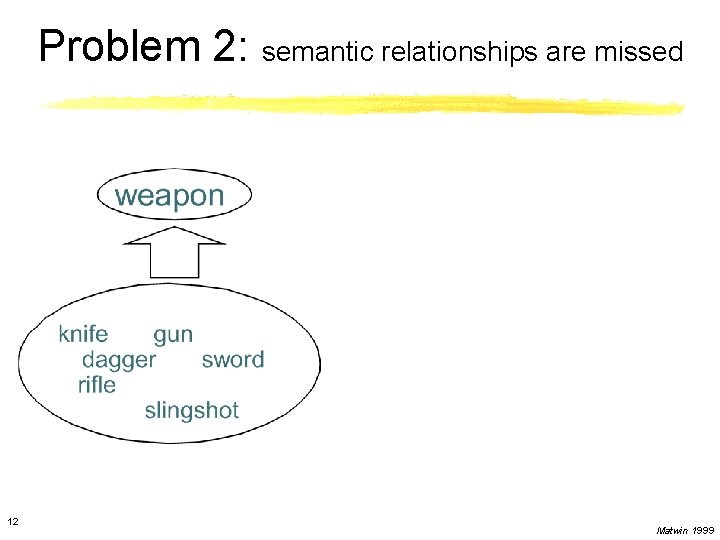

Problem 2: semantic relationships are missed

Proposed solution (Sam Scott)
- Get noun phrases and/or key phrases (Extractor) and add to the feature list
- Add hypernyms

Hypernyms - WordNet

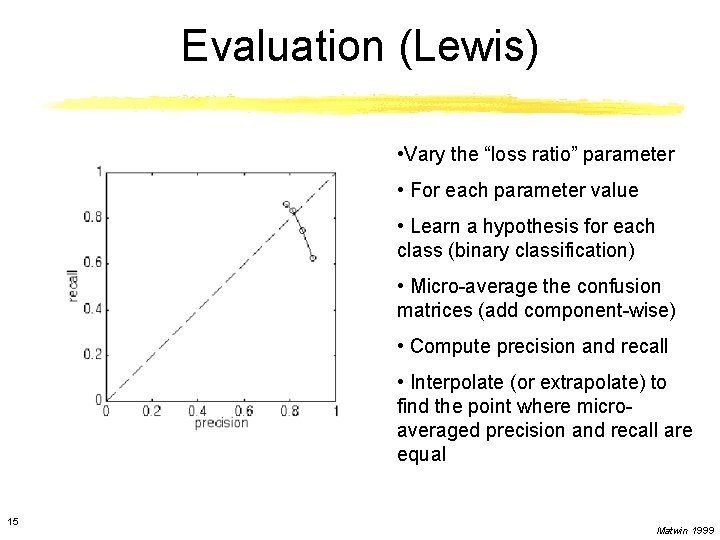

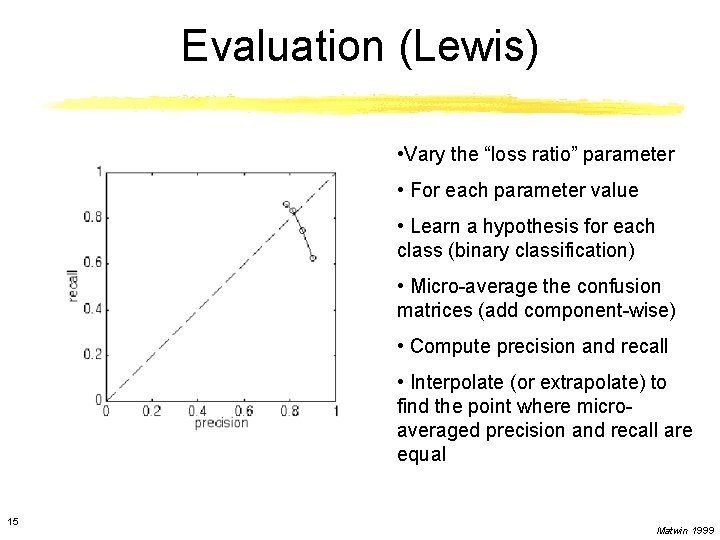

Evaluation (Lewis)
- Vary the “loss ratio” parameter
- For each parameter value:
  - Learn a hypothesis for each class (binary classification)
  - Micro-average the confusion matrices (add component-wise)
  - Compute precision and recall
- Interpolate (or extrapolate) to find the point where microaveraged precision and recall are equal
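
The evaluation procedure above can be sketched as follows. This is an illustrative reading of the steps, not the original evaluation code: the tuple layout `(tp, fp, fn)` and the function names are assumptions, and only linear interpolation is shown (the extrapolation case returns `None`).

```python
def micro_average(confusions):
    """confusions: per-class (tp, fp, fn) tuples, summed component-wise."""
    tp = sum(c[0] for c in confusions)
    fp = sum(c[1] for c in confusions)
    fn = sum(c[2] for c in confusions)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def breakeven(points):
    """points: (precision, recall) pairs, one per loss-ratio setting.
    Interpolates linearly between the two neighbouring points where
    precision - recall changes sign."""
    pts = sorted(points, key=lambda pr: pr[1])        # order by recall
    for (p0, r0), (p1, r1) in zip(pts, pts[1:]):
        d0, d1 = p0 - r0, p1 - r1
        if d0 == 0:
            return p0
        if d0 * d1 < 0:
            t = d0 / (d0 - d1)                        # where the gap reaches zero
            return p0 + t * (p1 - p0)
    if pts and pts[-1][0] == pts[-1][1]:
        return pts[-1][0]
    return None   # no crossing found; extrapolation would be needed
```

Micro-averaging sums the counts before dividing, so frequent classes weigh more heavily than rare ones in the final figure.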

Results
- No gain over BW (bag of words) in alternative representations
- But… comprehensibility…

Combining classifiers
- Comparable to best known results (Yang)

Other possibilities
- Using hypernyms with a small training set (avoids ambiguous words)
- Use Bayes + RIPPER in a cascade scheme (Gama)
- Other representations:

Collocations
- Do not need to be noun phrases, just pairs of words possibly separated by stop words
- Only the well-discriminating ones are chosen
- These are added to the bag of words, and…
- RIPPER
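
A rough sketch of collocation extraction under these constraints is given below. The slides do not say how "well discriminating" is measured, so the document-frequency gap used in `discriminating` is purely an assumed criterion, as are the stop-word list and the function names.

```python
import re
from collections import Counter

# Illustrative stop-word list only.
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "on", "for"}

def collocations(text):
    """Pairs of consecutive content words; stop words in between are skipped."""
    words = [w for w in re.findall(r"[a-z]+", text.lower())
             if w not in STOP_WORDS]
    return set(zip(words, words[1:]))

def discriminating(pos_docs, neg_docs, min_gap=0.5):
    """Keep pairs whose share of documents differs between the two classes
    by at least min_gap (an assumed selection criterion)."""
    pos = Counter(p for d in pos_docs for p in collocations(d))
    neg = Counter(p for d in neg_docs for p in collocations(d))
    keep = set()
    for pair in set(pos) | set(neg):
        gap = abs(pos[pair] / len(pos_docs) - neg[pair] / len(neg_docs))
        if gap >= min_gap:
            keep.add(pair)
    return keep
```

The selected pairs would then be added as extra attributes next to the single words before the learner is run.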

N-grams
- N-grams are substrings of a given length
- Good results in Reuters [Mladenic, Grobelnik] with Bayes; we try RIPPER
- A different task: classifying text files
  - Attachments
  - Audio/video
  - Coded
- From n-grams to relational features
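
As a small illustration of the definition, character n-grams and their counts can be collected like this (the function name `char_ngrams` is chosen for the example):

```python
from collections import Counter

def char_ngrams(text, n):
    """All overlapping substrings of length n, with their counts."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))

counts = char_ngrams("abababa", 2)
```

Because the n-grams are taken over raw characters rather than words, the same function applies to files that are not natural-language text.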

How to get good n-grams?
- We use Ziv-Lempel for frequent substring detection (.gz!)
- [Figure: example with the string abababa]
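
The slide does not say which Ziv-Lempel variant is used. As one possible illustration, the sketch below uses an LZ78-style parse, chosen here only because its dictionary directly lists substrings that recur in the input; `lz78_phrases` is a name made up for the example.

```python
def lz78_phrases(text):
    """Parse text into LZ78-style phrases: each new phrase is a
    previously seen phrase extended by one character."""
    seen = set()
    phrases = []
    current = ""
    for ch in text:
        current += ch
        if current not in seen:
            seen.add(current)
            phrases.append(current)
            current = ""
    if current:                 # trailing piece that was already in the dictionary
        phrases.append(current)
    return phrases

phrases = lz78_phrases("abababa")
```

Longer phrases appear only after their prefixes have been seen, so the phrase list grows towards the substrings that repeat most often.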

N-grams
- Counting
- Pruning: substring occurrence ratio < acceptance threshold
- Building relations: string A almost always precedes string B
- Feeding into relational learner (FOIL)
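
The counting, pruning, and relation-building steps might look like the sketch below. The slide does not define "occurrence ratio" or "almost always", so both are assumptions here: the ratio is taken as the share of files containing the substring, and "almost always" as a configurable fraction `min_ratio`.

```python
from collections import Counter

def frequent_substrings(files, n, threshold):
    """Keep n-grams whose occurrence ratio (share of files that contain
    them, an assumed definition) reaches the acceptance threshold."""
    df = Counter()
    for text in files:
        df.update({text[i:i + n] for i in range(len(text) - n + 1)})
    return {g for g, c in df.items() if c / len(files) >= threshold}

def precedes(files, a, b, min_ratio=0.9):
    """True if, among files containing both strings, a first occurs
    before b in at least min_ratio of them."""
    both = [t for t in files if a in t and b in t]
    if not both:
        return False
    ordered = sum(t.find(a) < t.find(b) for t in both)
    return ordered / len(both) >= min_ratio
```

Facts such as `precedes(A, B)` are the kind of relational feature that could then be handed to a relational learner.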

Using grammar induction (text files)
- Idea: detect patterns of substrings
- Patterns are regular languages
- Methods of automata induction: a recognizer for each class of files
- We use a modified version of RPNI2 [Dupont, Miclet]

What’s new…
- Work with marked up text (Word, Web)
- XML with semantic tags: mixed blessing for DM/TM
- Co-learning
- Text mining

Co-learning
- How to use unlabelled data? Or: how to limit the number of examples that need to be labelled?
- Two classifiers and two redundantly sufficient representations
- Train both, run both on the test set, and add the best predictions to the training set
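
The loop described above can be sketched as follows. The learner here is a deliberately tiny stand-in (per-class word counts); `train`, `predict`, and `co_train` are hypothetical names, and picking the `per_round` most confident predictions is an assumed reading of "best predictions".

```python
from collections import Counter

def train(examples):
    """Stand-in learner: per-class word counts. examples: (words, label) pairs."""
    model = {}
    for words, label in examples:
        model.setdefault(label, Counter()).update(words)
    return model

def predict(model, words):
    """Return (label, confidence); confidence is the winner's share of matches."""
    scores = {label: sum(c[w] for w in words) for label, c in model.items()}
    total = sum(scores.values())
    if total == 0:
        return None, 0.0
    label = max(scores, key=scores.get)
    return label, scores[label] / total

def co_train(labelled, unlabelled, rounds=3, per_round=1):
    """labelled: ((view1, view2), label) pairs; unlabelled: (view1, view2) pairs."""
    labelled, pool = list(labelled), list(unlabelled)
    for _ in range(rounds):
        if not pool:
            break
        m1 = train([(v[0], y) for v, y in labelled])   # classifier on view 1
        m2 = train([(v[1], y) for v, y in labelled])   # classifier on view 2
        scored = []
        for item in pool:
            for model, view in ((m1, item[0]), (m2, item[1])):
                label, conf = predict(model, view)
                if label is not None:
                    scored.append((conf, label, item))
        if not scored:
            break
        scored.sort(key=lambda s: s[0], reverse=True)
        for conf, label, item in scored[:per_round]:
            if item in pool:                           # move it to the training set
                pool.remove(item)
                labelled.append((item, label))
    return labelled
```

Each classifier sees only its own representation, so an example that one view labels confidently can become training data for the other view.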

Co-learning
- Training set grows as…
- …each learner predicts independently due to redundant sufficiency (different representations)
- Would also work with our learners if we used Bayes?
- Would work with classifying emails

Co-learning
- Mitchell experimented with the task of classifying web pages (profs, students, courses, projects) – a supervised learning task
- Used:
  - Anchor text
  - Page contents
- Error rate halved (from 11% to 5%)

Cog-sci?
- Co-learning seems to be cognitively justified
- Model: students learning in groups (pairs)
- What other social learning mechanisms could provide models for supervised learning?

Conclusion
- A practical task, needs a solution
- No satisfactory solution so far
- Fruitful ground for research