Tests of the ATLAS Trigger and Data Acquisition System

- Slides: 15

Tests of the ATLAS Trigger and Data Acquisition System

G. Lehmann Miotto, CERN-PH/ATD, on behalf of the ATLAS TDAQ Group

Milano, 12 September 2005

2 Outline

- Overview of system requirements
- The ATLAS TDAQ architecture
- Performance and stability challenges
- Tests on a large general-purpose computer cluster
- Tests on a purpose-built setup
- Conclusions

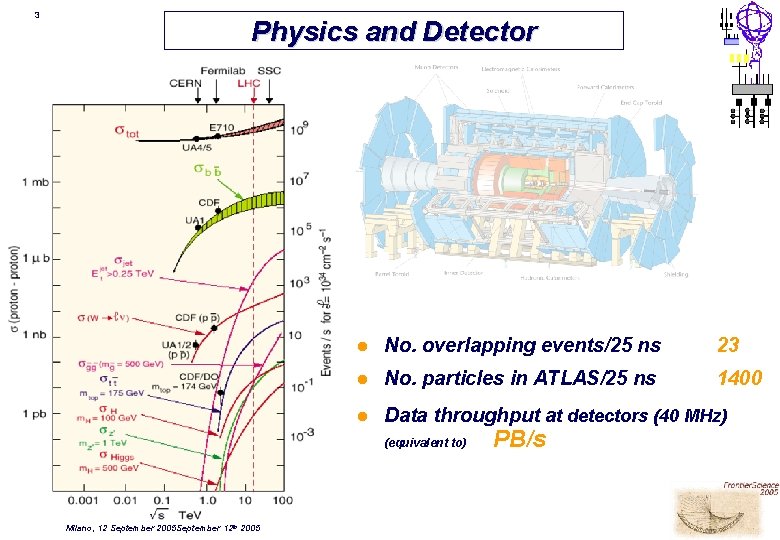

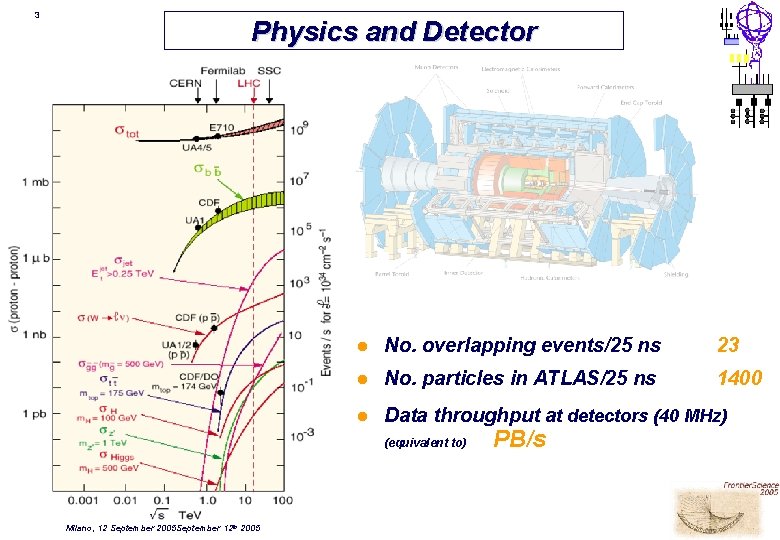

3 Physics and Detector

- Number of overlapping events per 25 ns: 23
- Number of particles in ATLAS per 25 ns: 1400
- Data throughput at the detectors (at 40 MHz): equivalent to petabytes per second (PB/s)
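A quick arithmetic check of the numbers above, using only the slide's own figures: a 25 ns bunch spacing corresponds to the 40 MHz crossing rate, so 23 overlapping events per crossing amount to roughly 10⁹ interactions per second.

```python
# Back-of-envelope LHC numbers quoted on the slide.
BUNCH_SPACING_S = 25e-9          # 25 ns between bunch crossings
OVERLAPPING_EVENTS = 23          # pile-up per bunch crossing
PARTICLES_PER_CROSSING = 1400    # particles in ATLAS per crossing

crossing_rate_hz = 1.0 / BUNCH_SPACING_S
interaction_rate_hz = crossing_rate_hz * OVERLAPPING_EVENTS
particles_per_second = crossing_rate_hz * PARTICLES_PER_CROSSING

print(f"crossing rate   : {crossing_rate_hz / 1e6:.0f} MHz")
print(f"interaction rate: {interaction_rate_hz:.2e} /s")
print(f"particles       : {particles_per_second:.2e} /s")
```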

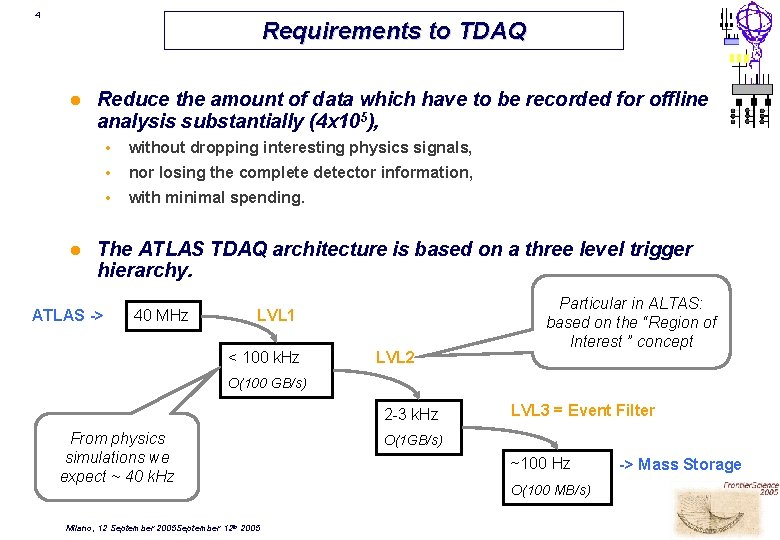

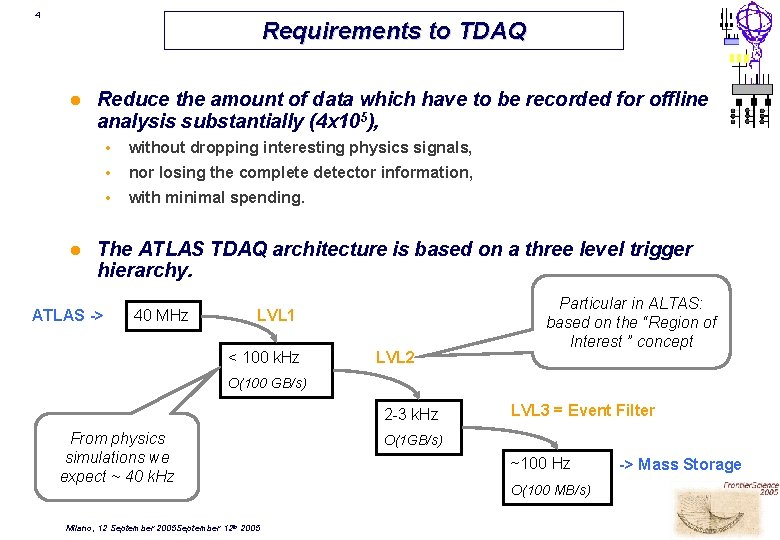

4 Requirements for TDAQ

- Substantially reduce (by a factor of 4 × 10⁵) the amount of data that has to be recorded for offline analysis,
  - without dropping interesting physics signals,
  - without losing the complete detector information,
  - with minimal spending.
- The ATLAS TDAQ architecture is based on a three-level trigger hierarchy:
  - ATLAS: 40 MHz bunch-crossing rate
  - LVL1: accept rate < 100 kHz (from physics simulations we expect ~40 kHz), O(100 GB/s)
  - LVL2: particular to ATLAS, based on the "Region of Interest" concept; accept rate 2-3 kHz, O(1 GB/s)
  - LVL3 = Event Filter: ~100 Hz, O(100 MB/s) to mass storage
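The cascade above can be checked with a few lines of arithmetic. This is a minimal sketch assuming an event size of ~1.6 MB (derived from the data-flow slides' "1600 fragments of ~1 kByte each") and taking 3 kHz, the upper end of the quoted 2-3 kHz, as the LVL2 output rate; it reproduces the overall 4 × 10⁵ reduction and the O(100 GB/s), O(1 GB/s), O(100 MB/s) throughputs.

```python
# Assumption: event size ~1.6 MB (1600 fragments x ~1 kByte).
EVENT_SIZE_BYTES = 1600 * 1_000

# (level, output rate in Hz); 3 kHz for LVL2 is an assumed point in 2-3 kHz.
CASCADE = [
    ("bunch crossings", 40e6),
    ("LVL1", 100e3),
    ("LVL2", 3e3),
    ("Event Filter", 100.0),
]


def rejection_factors(cascade):
    """Return (level, rejection relative to the previous level) pairs."""
    return [
        (name, prev_rate / rate)
        for (_, prev_rate), (name, rate) in zip(cascade, cascade[1:])
    ]


def throughput_bytes_per_s(rate_hz, event_size=EVENT_SIZE_BYTES):
    """Data rate leaving a level that accepts events at rate_hz."""
    return rate_hz * event_size


for name, factor in rejection_factors(CASCADE):
    print(f"{name:13s} rejection x{factor:,.0f}")
for name, rate in CASCADE[1:]:
    print(f"after {name:13s} {throughput_bytes_per_s(rate) / 1e9:8.2f} GB/s")
print(f"overall reduction: {CASCADE[0][1] / CASCADE[-1][1]:.0e}")
```

The LVL2 entry comes out at ~33, in line with the "> 30" rejection factor quoted for the RoI-based Level-2 trigger.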

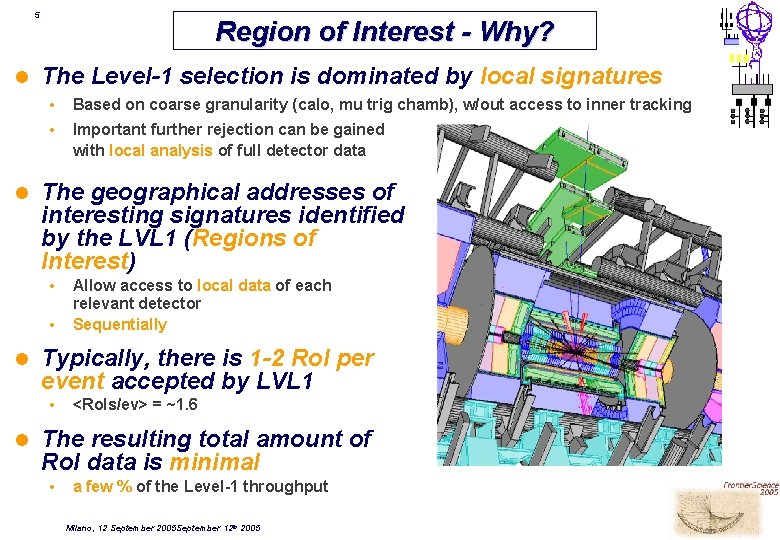

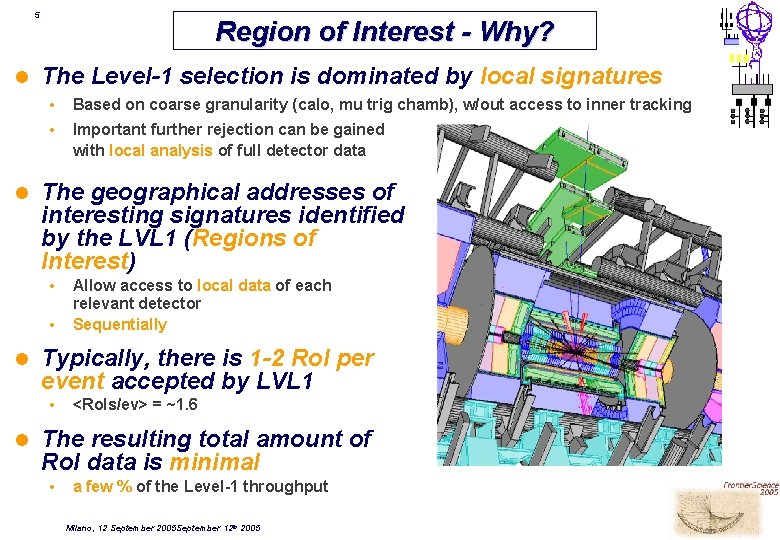

5 Region of Interest: Why?

- The Level-1 selection is dominated by local signatures
  - based on coarse granularity (calorimeters, muon trigger chambers), without access to inner tracking
  - important further rejection can be gained with a local analysis of the full detector data
- The geographical addresses of interesting signatures are identified by LVL1 (Regions of Interest)
  - they allow access to the local data of each relevant detector
  - sequentially
- Typically, there are 1-2 RoIs per event accepted by LVL1
  - <RoIs/event> ≈ 1.6
- The resulting total amount of RoI data is minimal
  - a few % of the Level-1 throughput
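Why the RoI data amount to only a few percent can be illustrated with a rough estimate. This sketch takes the mean of ~1.6 RoIs per event from the slide and 1600 read-out buffers from the data-flow slides (assuming one buffer per read-out link); the number of buffers touched by one RoI, `ROBS_PER_ROI`, is a hypothetical value chosen only for illustration, and overlaps between RoIs are ignored.

```python
# From the slides: mean RoIs per event; 1600 read-out links (assumed 1 ROB each).
MEAN_ROIS_PER_EVENT = 1.6
TOTAL_ROBS = 1600
# HYPOTHETICAL: read-out buffers touched by one RoI across all detectors.
ROBS_PER_ROI = 16


def roi_data_fraction(rois_per_event, robs_per_roi, total_robs):
    """Fraction of an event's fragments requested by LVL2 (overlaps ignored)."""
    return min(1.0, rois_per_event * robs_per_roi / total_robs)


fraction = roi_data_fraction(MEAN_ROIS_PER_EVENT, ROBS_PER_ROI, TOTAL_ROBS)
print(f"RoI data ~ {fraction:.1%} of the LVL1 throughput")
```

With these illustrative inputs the fraction is 1.6%; the point is the scaling, which stays small as long as an RoI touches a small subset of the buffers.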

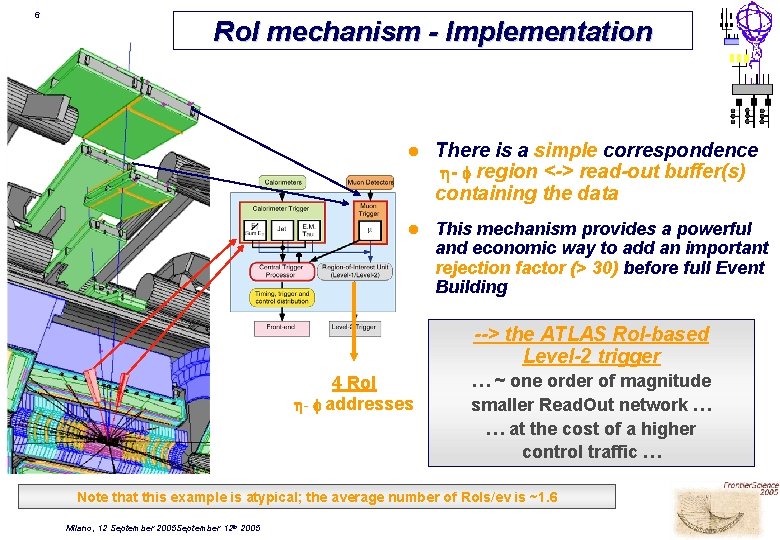

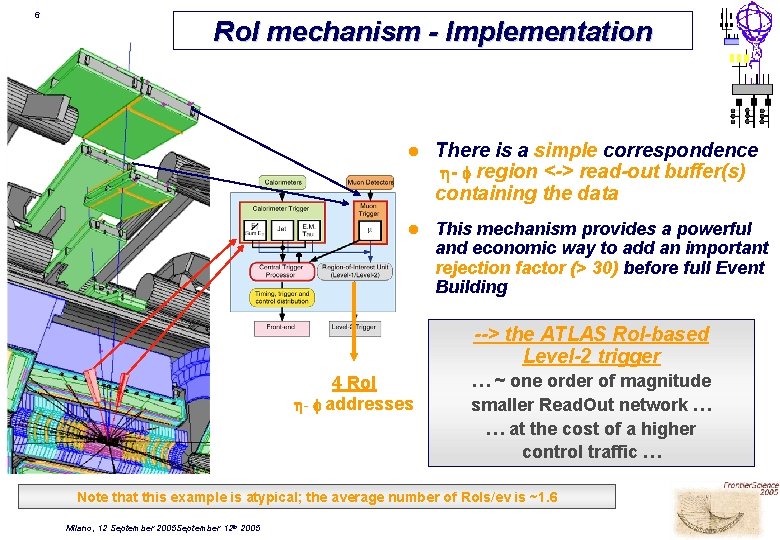

6 RoI Mechanism: Implementation

- There is a simple correspondence: region ↔ read-out buffer(s) containing the data
- This mechanism provides a powerful and economical way to add an important rejection factor (> 30) before full Event Building: the ATLAS RoI-based Level-2 trigger
  - a ReadOut network about one order of magnitude smaller
  - at the cost of higher control traffic

(Figure: example event with 4 RoI addresses. Note that this example is atypical; the average number of RoIs per event is ~1.6.)
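The region ↔ read-out buffer correspondence can be sketched as a simple lookup. This is a minimal sketch under a hypothetical geometry: the (η, φ) plane is cut into a regular grid and each cell is read out by exactly one buffer. The grid size, `rob_id`, `robs_for_roi` and the RoI half-width are all illustrative assumptions; real ATLAS sub-detectors each have their own irregular mapping.

```python
import math

# HYPOTHETICAL geometry: a regular (eta, phi) grid, one read-out buffer
# (ROB) per cell. Real sub-detectors have their own, irregular mappings.
ETA_MIN, ETA_MAX, N_ETA = -2.5, 2.5, 10
N_PHI = 16
ETA_STEP = (ETA_MAX - ETA_MIN) / N_ETA
PHI_STEP = 2 * math.pi / N_PHI


def rob_id(ieta, iphi):
    """Flatten a grid cell index into a hypothetical ROB identifier."""
    return ieta * N_PHI + iphi


def robs_for_roi(eta, phi, half_width=0.2):
    """Return the ids of the ROBs whose cells overlap an RoI window."""
    lo = max(ETA_MIN, eta - half_width)
    hi = min(ETA_MAX, eta + half_width)
    ieta_lo = int((lo - ETA_MIN) // ETA_STEP)
    ieta_hi = min(N_ETA - 1, int((hi - ETA_MIN) // ETA_STEP))
    # phi is periodic, so indices wrap around modulo N_PHI
    iphi_lo = math.floor((phi - half_width) / PHI_STEP)
    iphi_hi = math.floor((phi + half_width) / PHI_STEP)
    return {
        rob_id(ieta, iphi % N_PHI)
        for ieta in range(ieta_lo, ieta_hi + 1)
        for iphi in range(iphi_lo, iphi_hi + 1)
    }


print(sorted(robs_for_roi(eta=0.1, phi=0.1)))  # -> [64, 79, 80, 95]
```

In this toy grid a LVL2 processing unit would request 4 of the 160 buffers for that RoI instead of the full event, which is the source of the smaller ReadOut network; each request is one more control message, hence the higher control traffic.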

7 ATLAS Trigger / DAQ Data Flow

(Figure: overview of the data flow across the SDX1, USA15 and UX15 areas)

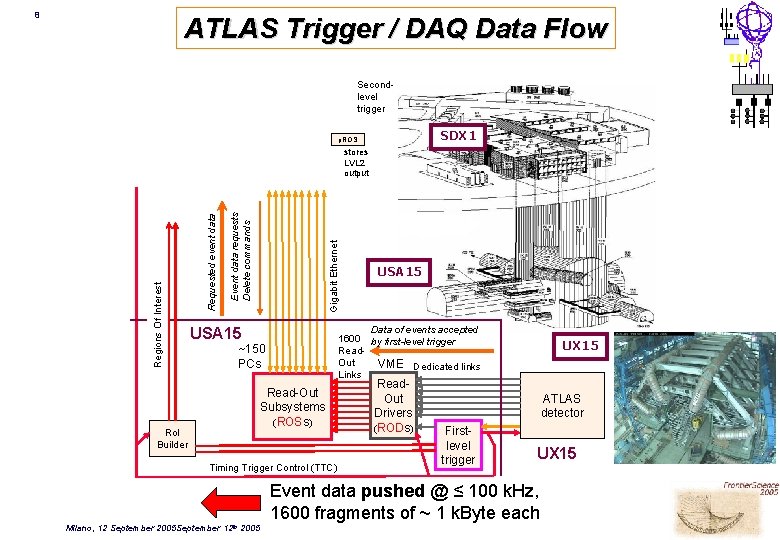

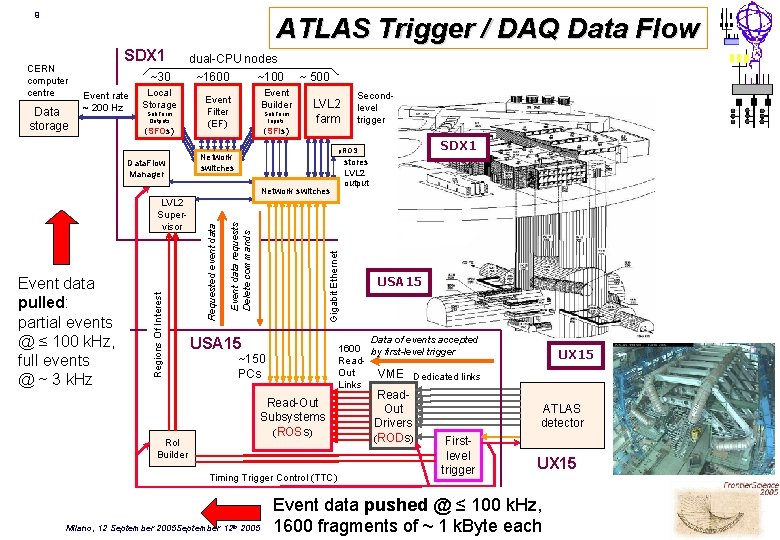

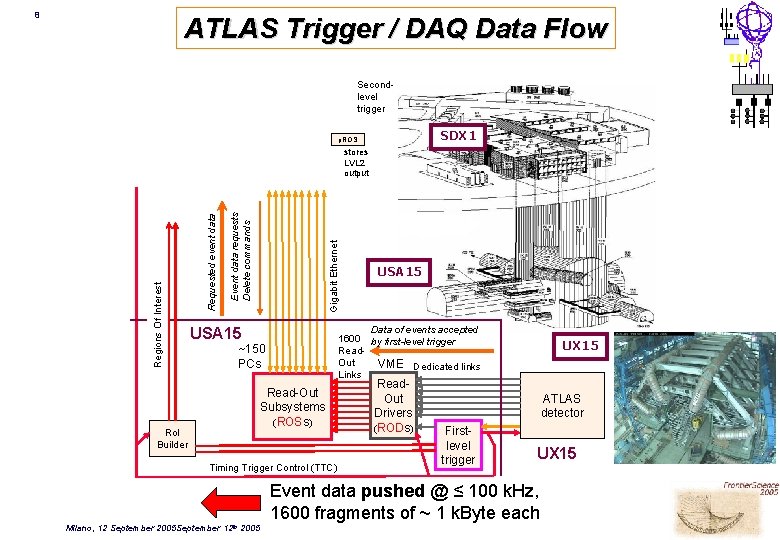

8 ATLAS Trigger / DAQ Data Flow

(Figure: data flow from the detector to the second-level trigger)

- UX15: ATLAS detector and first-level trigger; event data pushed @ ≤ 100 kHz, 1600 fragments of ~1 kByte each
- Timing Trigger Control (TTC) and Read-Out Drivers (RODs, VME)
- Data of events accepted by the first-level trigger travel over 1600 dedicated Read-Out Links to the Read-Out Subsystems (ROSs), ~150 PCs in USA15
- SDX1: second-level trigger with the RoI Builder and the pROS (stores LVL2 output)
- Gigabit Ethernet carries Regions of Interest, event data requests, requested event data and delete commands
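The push rate into the Read-Out Subsystems follows directly from the numbers above. This sketch assumes the 1600 links are spread as evenly as possible over the ~150 ROS PCs, so the busiest PC serves ⌈1600/150⌉ links.

```python
import math

# Figures from the slide; even distribution of links over ROS PCs is assumed.
N_LINKS = 1600            # dedicated read-out links, one fragment each per event
N_ROS_PCS = 150           # "~150 PCs"
LVL1_RATE_HZ = 100e3      # maximum LVL1 accept rate
FRAGMENT_BYTES = 1_000    # "~1 kByte" per fragment

links_per_ros = math.ceil(N_LINKS / N_ROS_PCS)           # busiest ROS PC
total_input = N_LINKS * FRAGMENT_BYTES * LVL1_RATE_HZ    # bytes/s into all ROSs
input_per_ros = links_per_ros * FRAGMENT_BYTES * LVL1_RATE_HZ

print(f"links per ROS PC : {links_per_ros}")
print(f"total push rate  : {total_input / 1e9:.0f} GB/s")
print(f"input per ROS PC : {input_per_ros / 1e9:.1f} GB/s")
```

About 160 GB/s enters the ROSs in total, roughly 1.1 GB/s per PC; this is why the data are buffered there and only pulled on request.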

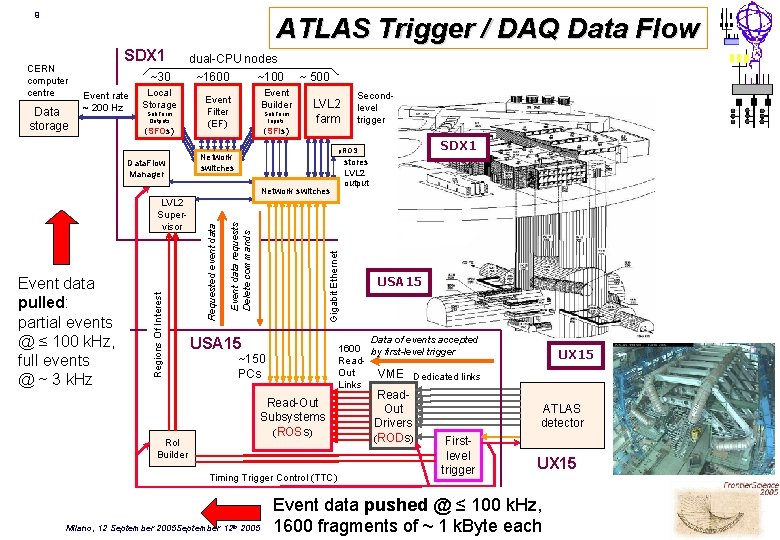

9 ATLAS Trigger / DAQ Data Flow

(Figure: complete data flow, from the detector to data storage)

- UX15: ATLAS detector and first-level trigger; event data pushed @ ≤ 100 kHz, 1600 fragments of ~1 kByte each
- Timing Trigger Control (TTC) and Read-Out Drivers (RODs, VME); 1600 dedicated Read-Out Links to the Read-Out Subsystems (ROSs), ~150 PCs in USA15
- SDX1, connected through network switches over Gigabit Ethernet:
  - second-level trigger: RoI Builder, LVL2 Supervisor, LVL2 farm (~500 nodes), pROS (stores LVL2 output)
  - Event Builder: DataFlow Manager and Sub-Farm Inputs (SFIs, ~100)
  - Event Filter (EF): ~1600 nodes (farms of dual-CPU nodes)
  - Sub-Farm Outputs (SFOs, ~30) with local storage
- Event data pulled: partial events @ ≤ 100 kHz, full events @ ~3 kHz
- Event rate to data storage in the CERN computer centre: ~200 Hz
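The farm sizes in the diagram imply per-node loads that can be estimated directly. This is a minimal sketch assuming an event size of ~1.6 MB (1600 fragments × ~1 kByte), that the ~500 LVL2 nodes are dual-CPU machines (the slide labels the farms "dual-CPU nodes" without stating it per farm), and that each CPU handles one event at a time.

```python
# Assumptions: ~1.6 MB events; dual-CPU LVL2 nodes; one event per CPU at a time.
EVENT_SIZE_BYTES = 1600 * 1_000


def per_node(total, n_nodes):
    """Share of a total load carried by each of n_nodes equal nodes."""
    return total / n_nodes


# Event building: full events pulled at ~3 kHz by ~100 SFIs
eb_rate_hz, n_sfi = 3e3, 100
eb_bandwidth = eb_rate_hz * EVENT_SIZE_BYTES
sfi_bandwidth = per_node(eb_bandwidth, n_sfi)

# Output: ~200 Hz written by ~30 SFOs
sfo_rate_hz, n_sfo = 200.0, 30
sfo_bandwidth = per_node(sfo_rate_hz * EVENT_SIZE_BYTES, n_sfo)

# LVL2: partial events at <= 100 kHz shared by ~500 nodes
lvl1_rate_hz, n_l2_nodes, cpus_per_node = 100e3, 500, 2
l2_rate_per_cpu = per_node(lvl1_rate_hz, n_l2_nodes * cpus_per_node)
l2_time_budget_ms = 1e3 / l2_rate_per_cpu

print(f"event building: {eb_bandwidth / 1e9:.1f} GB/s, {sfi_bandwidth / 1e6:.0f} MB/s per SFI")
print(f"storage       : {sfo_bandwidth / 1e6:.1f} MB/s per SFO")
print(f"LVL2          : {l2_rate_per_cpu:.0f} Hz per CPU, ~{l2_time_budget_ms:.0f} ms per event")
```

Under these assumptions each SFI handles ~48 MB/s, and each LVL2 CPU has on average ~10 ms per event.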

10 The TDAQ Challenges

- Control and configuration of a huge, distributed and inhomogeneous computing system
  - Test the software on the largest possible computing clusters.
- Performance and scaling of the data flow elements
  - Reproduce the ATLAS flow of data on a reduced scale, using realistic elements (both computers and networks), in a dedicated laboratory.

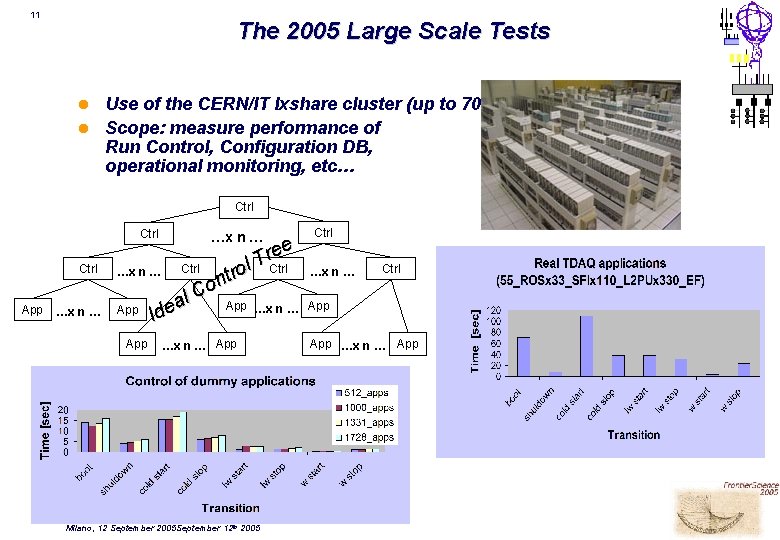

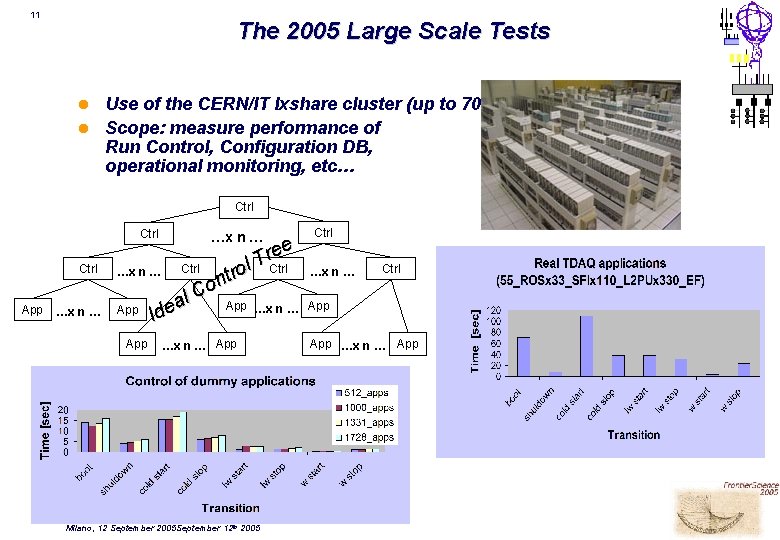

11 The 2005 Large Scale Tests

- Use of the CERN/IT lxshare cluster (up to 700 nodes) for 6 weeks
- Scope: measure the performance of Run Control, the Configuration DB, operational monitoring, etc.

(Figure: ideal control tree, in which each controller (Ctrl) drives n child controllers or n applications (App))
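The control tree in the figure can be modelled as a recursive structure in which each controller forwards a state transition to its children. This is a hypothetical sketch, not the TDAQ Run Control API: `Node`, `transition`, `build_tree`, the state names and the fan-out/depth values are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A run-control node: a controller if it has children, else an application."""
    name: str
    children: list = field(default_factory=list)
    state: str = "INITIAL"

    def transition(self, new_state):
        """Move the whole subtree to new_state; return how many nodes were reached."""
        reached = 1
        for child in self.children:
            reached += child.transition(new_state)
        self.state = new_state
        return reached


def build_tree(fan_out, depth, name="root"):
    """Build controllers down to `depth` levels, each with `fan_out` children."""
    node = Node(name)
    if depth > 0:
        node.children = [build_tree(fan_out, depth - 1, f"{name}.{i}")
                         for i in range(fan_out)]
    return node


def leaves(node):
    """Applications are the nodes without children."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


tree = build_tree(fan_out=8, depth=3)
reached = tree.transition("CONFIGURED")
print(f"{len(leaves(tree))} applications, {reached} nodes reached")
```

With fan-out n and depth d a command reaches n^d applications in d hops, so the tree grows in width rather than depth as the system scales; large clusters are where such assumptions about fan-out and latency get tested.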

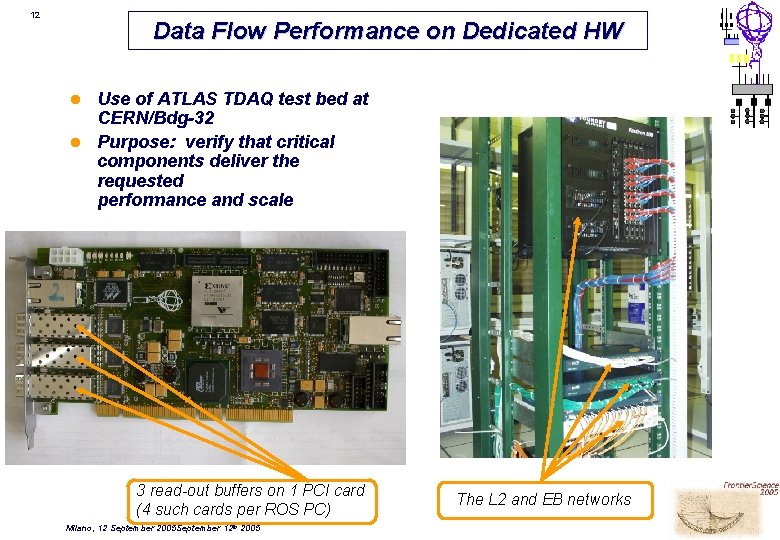

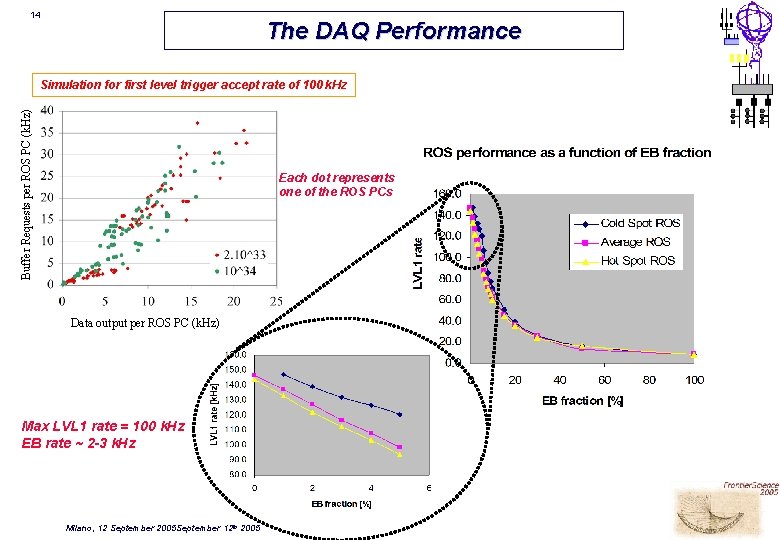

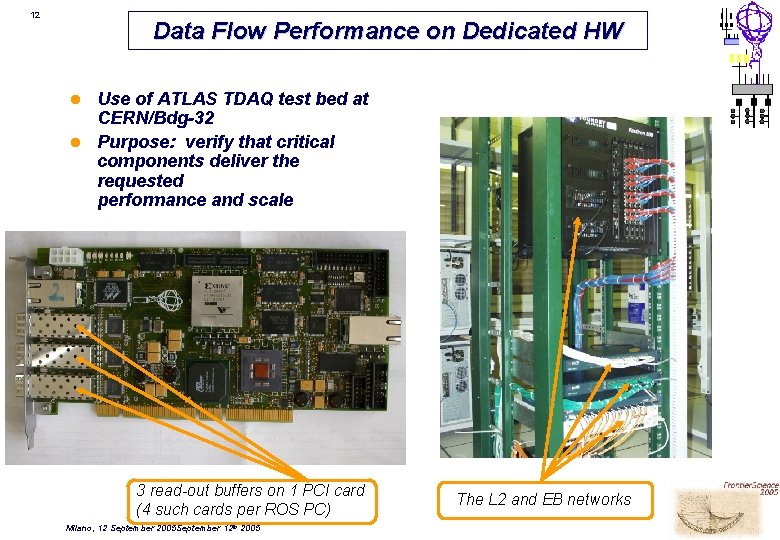

12 Data Flow Performance on Dedicated HW

- Use of the ATLAS TDAQ test bed at CERN/Bdg-32
- Purpose: verify that critical components deliver the requested performance and scale
- 3 read-out buffers on 1 PCI card (4 such cards per ROS PC)

(Figure: the L2 and EB networks)
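The card layout fixes how many read-out buffers a single ROS PC can host. This sketch assumes one read-out link per buffer and takes the 1600 links from the data-flow slides; it gives a lower bound on the number of ROS PCs, consistent with the "~150 PCs" quoted there once partially filled PCs are allowed for.

```python
import math

ROBS_PER_CARD = 3      # read-out buffers on one PCI card (from the slide)
CARDS_PER_ROS = 4      # such cards per ROS PC (from the slide)
N_LINKS = 1600         # read-out links, assuming one link per buffer

robs_per_ros = ROBS_PER_CARD * CARDS_PER_ROS
ros_pcs_needed = math.ceil(N_LINKS / robs_per_ros)
print(f"{robs_per_ros} ROBs per ROS PC -> at least {ros_pcs_needed} ROS PCs for {N_LINKS} links")
```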

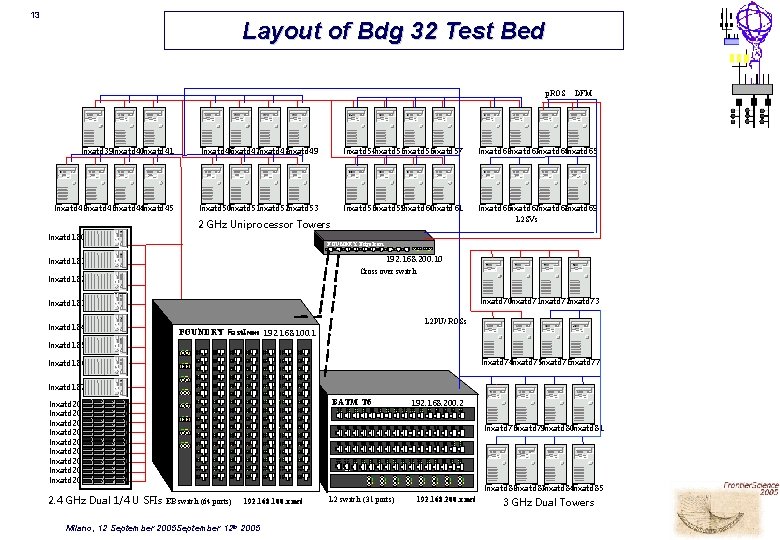

13 Layout of the Bdg-32 Test Bed

(Figure: test-bed layout. Hosts lnxatd39 to lnxatd85, lnxatd180 to lnxatd182, lnxatd184 to lnxatd187 and lnxatd200 to lnxatd208, in the roles pROS, DFM, L2SVs, L2PUs/ROSs and SFIs; machine types: 2 GHz uniprocessor towers, 2.4 GHz dual 1/4U, 3 GHz dual towers. Networks: EB switch (64 ports, 192.168.100.x net) and L2 switch (31 ports, 192.168.200.x net); equipment labels FOUNDRY FastIron (192.168.100.1), FOUNDRY EdgeIron (192.168.200.10), BATM T6, cross-over switch, 192.168.200.2.)
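The two test-bed networks can be described as address ranges and used to check which network an interface is on. This is a small sketch with Python's standard `ipaddress` module, assuming that "192.168.100.x net" and "192.168.200.x net" denote /24 subnets; `NETWORKS` and `network_of` are hypothetical names.

```python
import ipaddress

# Assumption: the "x net" labels on the layout denote /24 subnets.
NETWORKS = {
    "EB": ipaddress.ip_network("192.168.100.0/24"),
    "L2": ipaddress.ip_network("192.168.200.0/24"),
}


def network_of(address):
    """Return the test-bed network name an address belongs to, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in NETWORKS.items():
        if ip in net:
            return name
    return None


for addr in ("192.168.100.1", "192.168.200.10", "192.168.200.2"):
    print(addr, "->", network_of(addr))
```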

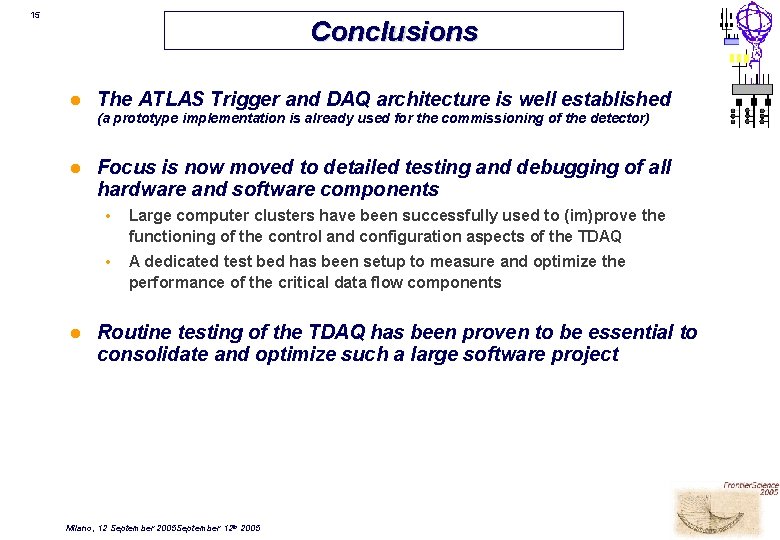

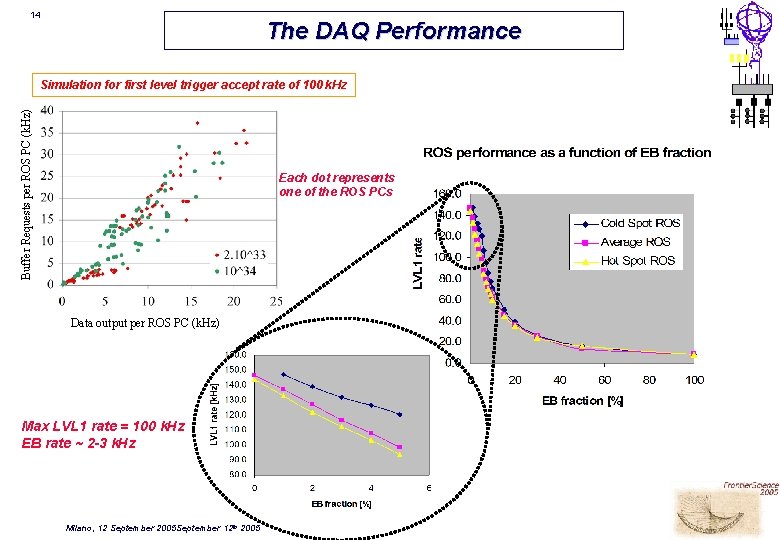

14 The DAQ Performance

(Figure: simulation for a first-level trigger accept rate of 100 kHz, showing buffer requests per ROS PC (kHz) versus data output per ROS PC (kHz); each dot represents one of the ROS PCs. Max LVL1 rate = 100 kHz; EB rate ~2-3 kHz.)
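Why different ROS PCs see different request rates can be illustrated with a toy model. This is a hypothetical sketch, not the simulation behind the figure: every event accepted by LVL2 is fully built, so each ROS receives all event-building requests, while it receives a LVL2 request only when an RoI touches its buffers. The per-ROS probability `p_roi` is an illustrative free parameter, and delete commands are ignored.

```python
# HYPOTHETICAL toy model of the request load on one ROS PC.
# p_roi: probability that a LVL1-accepted event has an RoI in this ROS.


def ros_request_rate_hz(lvl1_rate_hz, eb_rate_hz, p_roi):
    """LVL2 requests (RoI-driven) plus event-building requests seen by one ROS."""
    if not 0.0 <= p_roi <= 1.0:
        raise ValueError("p_roi must be a probability")
    lvl2_requests = lvl1_rate_hz * p_roi
    return lvl2_requests + eb_rate_hz


for p in (0.0, 0.05, 0.2):
    rate = ros_request_rate_hz(100e3, 3e3, p)
    print(f"p_roi={p:.2f}: {rate / 1e3:.0f} kHz of requests")
```

In this model the event-building rate sets a common floor for all ROS PCs, while the spread between them comes from how often their detector region falls inside an RoI.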

15 Conclusions

- The ATLAS Trigger and DAQ architecture is well established (a prototype implementation is already used for the commissioning of the detector)
- The focus has now moved to detailed testing and debugging of all hardware and software components
  - Large computer clusters have been used successfully to (im)prove the functioning of the control and configuration aspects of the TDAQ
  - A dedicated test bed has been set up to measure and optimize the performance of the critical data flow components
- Routine testing of the TDAQ has proven essential to consolidate and optimize such a large software project