Testing the Test: Serbian STANAG 6001 English Language Test

STANAG 6001 Testing Team, PELT Directorate, Serbian MOD

STANAG 6001 Testing Workshop, Brno, Czech Republic, 6–8 September 2016

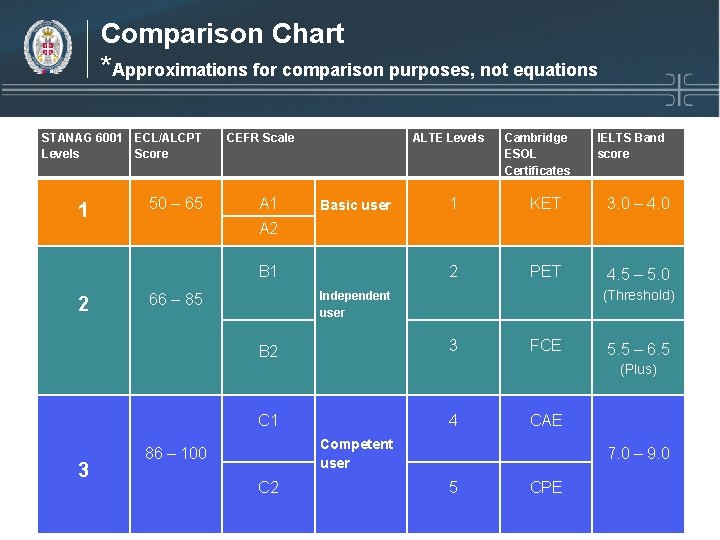

General and Specific Concerns

- Any kind of testing/examination has some general and some specific points of concern.
- On the general points, relevant to any kind of language examination, we are governed by the principles set out in the Principles of Good Practice for ALTE Examinations (ALTE: Association of Language Testers in Europe).
- Specific points of concern arise from the following:
  - STANAG 6001 is a high-stakes examination;
  - it is a proficiency test of general English in a military setting;
  - it is a criterion-referenced test, based on the STANAG 6001 table of level descriptors, and is incommensurate with other criterion-referenced tests (e.g. Cambridge ESOL exams, IELTS, etc.) and language proficiency scales (CEFR, ALTE levels, etc.).

Limiting Factors

Bearing this in mind, there are many serious constraints when designing the test (including things beyond your control):
- What are the actual needs of the particular nation? (NATO member? PfP member? MD member? Test all levels? Test L4?)
- What kind of test? (Multilevel 1-2-3? Bilevel L1/2, L2/3? Single level?)
- STANAG 6001 language descriptors are uniform and not open to individual/national interpretation.
- Number of test takers per cycle
- Number of testing cycles per year
- Testing facilities at your disposal: premises (small/large testing rooms?), amenities (multimedia equipment? PCs/laptops? headphones/loudspeakers?), staff (number of invigilators? trained OPI testers?), etc.

Your Responsibilities

Things you are in control of and can make individual decisions on are the following:
- test format (based on the test specifications you designed);
- number of questions, type of questions, elicitation techniques, etc.;
- rating criteria (analytic/holistic? mixed?), cut-off scores, etc.

But even these decisions are heavily influenced by the aforesaid constraints. Whatever your test eventually becomes, it has to meet the following examination qualities:
- Validity
- Reliability
- Impact
- Practicality

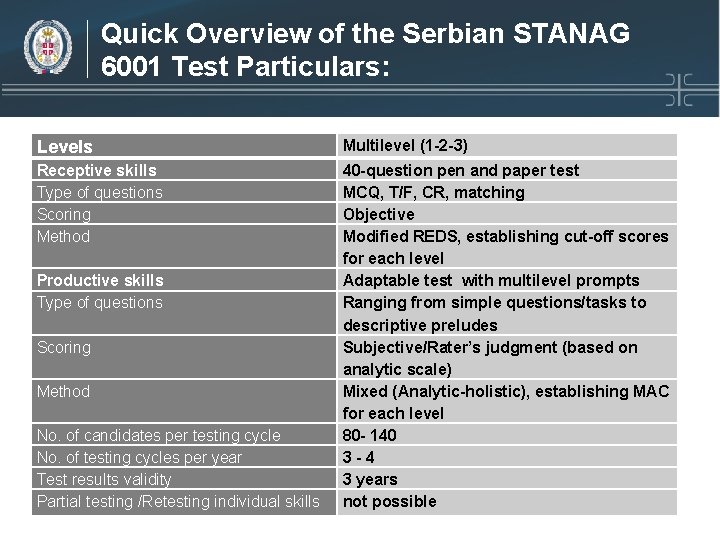

Quick Overview of the Serbian STANAG 6001 Test

Levels: Multilevel (1-2-3)

| Particulars | Receptive skills | Productive skills |
|---|---|---|
| Test format | 40-question pen-and-paper test | Adaptable test with multilevel prompts |
| Type of questions | MCQ, T/F, CR, matching | Ranging from simple questions/tasks to descriptive preludes |
| Scoring | Objective | Subjective/rater's judgment (based on an analytic scale) |
| Method | Modified REDS, establishing cut-off scores for each level | Mixed (analytic-holistic), establishing MAC for each level |

- No. of candidates per testing cycle: 80–140
- No. of testing cycles per year: 3–4
- Test results validity: 3 years
- Partial testing/retesting of individual skills: not possible

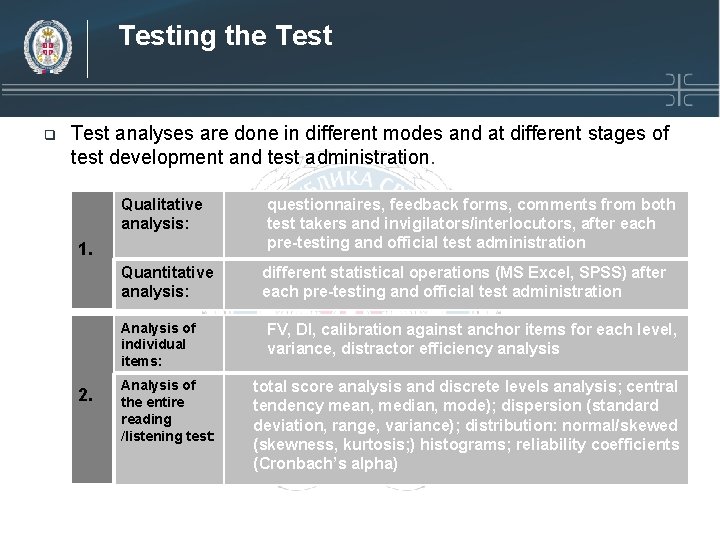

Testing the Test

Test analyses are done in different modes and at different stages of test development and test administration.

1. Analysis of individual items:
   - Qualitative analysis: questionnaires, feedback forms, and comments from both test takers and invigilators/interlocutors, after each pretesting and official test administration
   - Quantitative analysis: different statistical operations (MS Excel, SPSS) after each pretesting and official test administration: FV (facility value), DI (discrimination index), calibration against anchor items for each level, variance, distractor efficiency analysis
2. Analysis of the entire reading/listening test: total score analysis and discrete levels analysis; central tendency (mean, median, mode); dispersion (standard deviation, range, variance); distribution: normal/skewed (skewness, kurtosis), histograms; reliability coefficients (Cronbach's alpha)
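The item-level indices named above can be sketched in a few lines of Python. This is a minimal illustration that assumes dichotomously scored items (1 = correct, 0 = incorrect) and uses one common formulation of the discrimination index, the upper/lower-group difference with 27% groups. The slide does not say which DI formula the team applies in Excel or SPSS, and the function names are hypothetical.

```python
from collections import Counter


def facility_value(item_scores):
    """FV: proportion of candidates who answered the item correctly (0/1 scores)."""
    return sum(item_scores) / len(item_scores)


def discrimination_index(item_scores, total_scores, fraction=0.27):
    """Upper-lower DI: FV in the top group minus FV in the bottom group.

    Candidates are ranked by total test score; the top and bottom `fraction`
    of candidates form the two comparison groups (27% assumed here).
    """
    n = len(total_scores)
    k = max(1, round(n * fraction))
    ranked = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = ranked[:k], ranked[-k:]
    fv_upper = sum(item_scores[i] for i in upper) / k
    fv_lower = sum(item_scores[i] for i in lower) / k
    return fv_upper - fv_lower


def distractor_counts(responses, options="ABCD"):
    """How often each option was chosen; a distractor nobody picks is not working."""
    counts = Counter(responses)
    return {option: counts.get(option, 0) for option in options}


# Hypothetical data for one item and ten candidates
item = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
totals = [38, 35, 30, 28, 25, 22, 20, 15, 12, 10]
print(facility_value(item))                           # 0.6
print(round(discrimination_index(item, totals), 2))   # 0.67
print(distractor_counts(["A", "B", "A", "C", "A"]))
```

Positive DI values mean stronger candidates got the item right more often than weaker ones; values near zero or negative usually flag an item for review.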

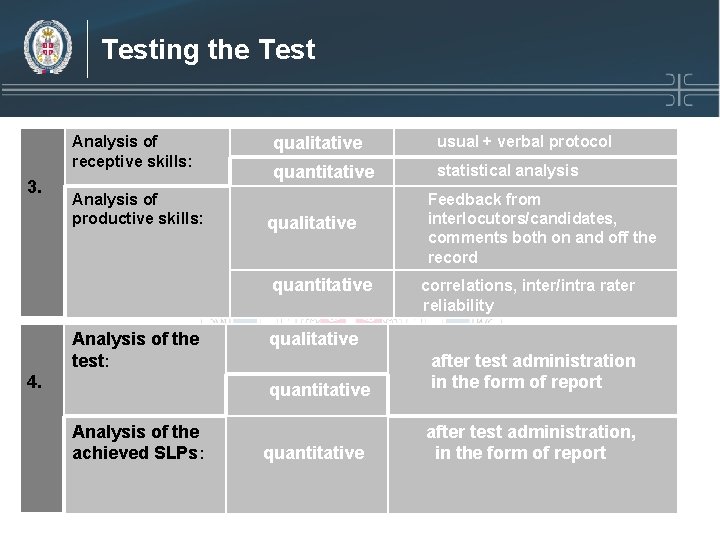

Testing the Test

Analysis of receptive skills:
- Qualitative: the usual methods + verbal protocol
- Quantitative: statistical analysis

3. Analysis of productive skills:
   - Qualitative: feedback from interlocutors/candidates, comments both on and off the record
   - Quantitative: correlations, inter-/intra-rater reliability
4. Analysis of the test (analysis of the achieved SLPs):
   - Qualitative: after test administration, in the form of a report
   - Quantitative: after test administration, in the form of a report

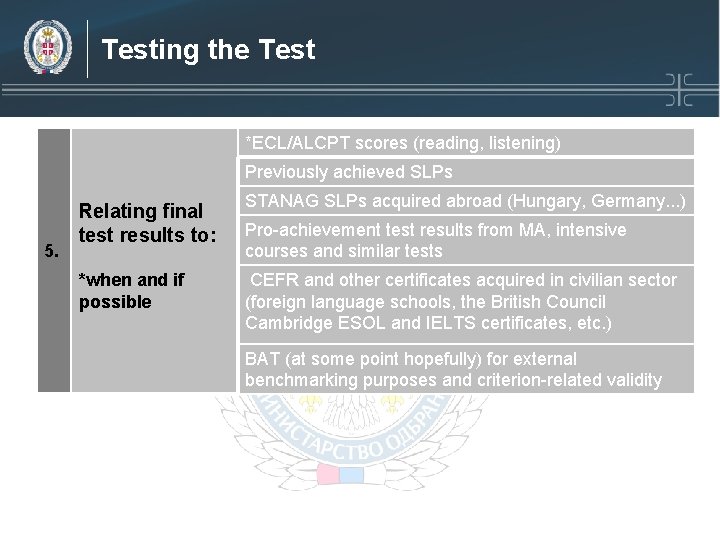

Testing the Test

5. Relating final test results to:
   - previously achieved SLPs
   - ECL/ALCPT scores (reading, listening)*
   - STANAG SLPs acquired abroad (Hungary, Germany...)
   - pro-achievement test results from the Military Academy (MA), intensive courses and similar tests
   - CEFR and other certificates acquired in the civilian sector (foreign language schools, the British Council, Cambridge ESOL and IELTS certificates, etc.)
   - BAT (at some point, hopefully) for external benchmarking purposes and criterion-related validity

*when and if possible

Scoring Criteria for STANAG 6001 Speaking & Writing Tests

- The interlocutor frame (scripted interview) in the speaking test enhances standardization and reduces variability among raters.
- Analytic rating scales enhance reliability in the speaking and writing tests because they yield more consistent scores; they also reduce the effect of rater-candidate interaction and bias.
- Recorded speaking responses and writing responses are cross-rated for a higher degree of consistency/reliability.

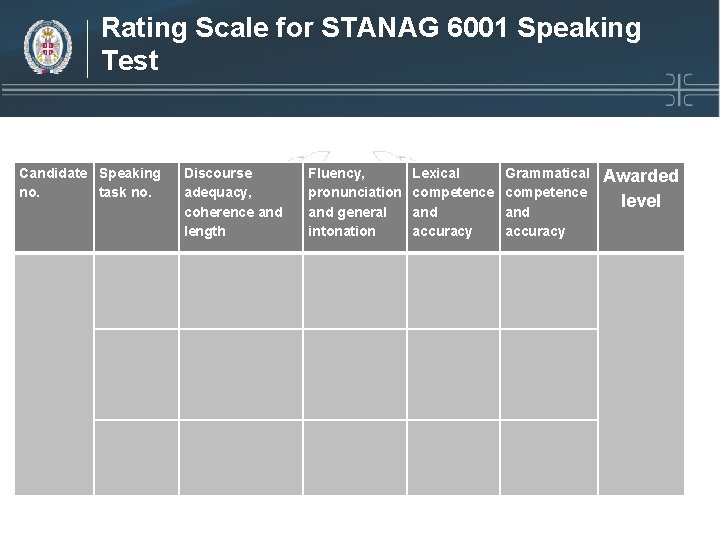

Rating Scale for STANAG 6001 Speaking Test

| Candidate no. | Speaking task no. | Discourse adequacy, coherence and length | Fluency, pronunciation and general intonation | Lexical competence and accuracy | Grammatical competence and accuracy | Awarded level |
|---|---|---|---|---|---|---|

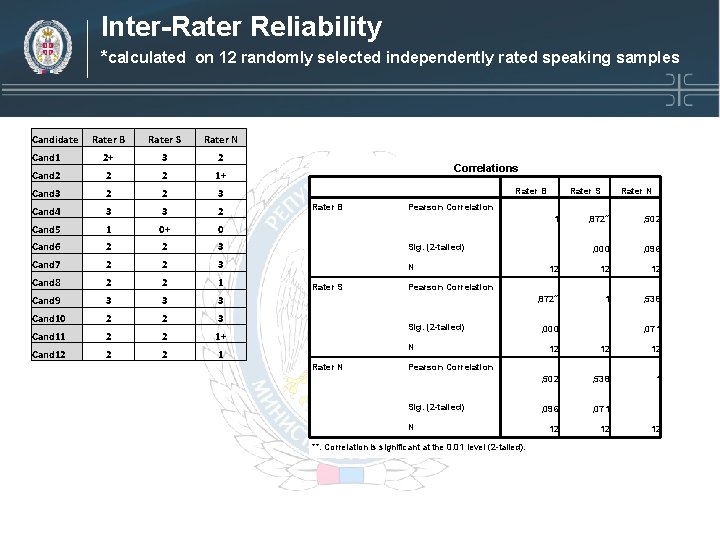

Inter-Rater Reliability

*Calculated on 12 randomly selected, independently rated speaking samples.

| Candidate | Rater B | Rater S | Rater N |
|---|---|---|---|
| Cand 1 | 2+ | 3 | 2 |
| Cand 2 | 2 | 2 | 1+ |
| Cand 3 | 2 | 2 | 3 |
| Cand 4 | 3 | 3 | 2 |
| Cand 5 | 1 | 0+ | 0 |
| Cand 6 | 2 | 2 | 3 |
| Cand 7 | 2 | 2 | 3 |
| Cand 8 | 2 | 2 | 1 |
| Cand 9 | 3 | 3 | 3 |
| Cand 10 | 2 | 2 | 3 |
| Cand 11 | 2 | 2 | 1+ |
| Cand 12 | 2 | 2 | 1 |

Correlations (Pearson correlation; Sig. 2-tailed; N = 12):

| | Rater B | Rater S | Rater N |
|---|---|---|---|
| Rater B | 1 | .872** (Sig. .000) | .502 (Sig. .096) |
| Rater S | .872** (Sig. .000) | 1 | .538 (Sig. .071) |
| Rater N | .502 (Sig. .096) | .538 (Sig. .071) | 1 |

**Correlation is significant at the 0.01 level (2-tailed).
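The coefficients in the correlation table are Pearson correlations between pairs of raters' ratings. A minimal Python sketch of the same computation follows. It assumes that a plus level is coded as the base level + 0.5; the slide does not state which numeric coding was used in SPSS, so values computed this way may differ from the table. The function names are hypothetical, and the ratings in the usage lines are invented for illustration.

```python
import math


def level_to_number(level):
    """Convert a STANAG 6001 rating such as '2+' to a number (assumed: plus = +0.5)."""
    level = level.strip()
    if level.endswith("+"):
        return int(level[:-1]) + 0.5
    return float(level)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length (at least 2 values)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical ratings of five speaking samples by two raters
rater_1 = [level_to_number(s) for s in ["2", "2+", "3", "1+", "2"]]
rater_2 = [level_to_number(s) for s in ["2", "2", "3", "1", "2+"]]
print(round(pearson_r(rater_1, rater_2), 3))
```

The same function serves for intra-rater reliability when the two lists are one rater's first and second ratings of the same recordings.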

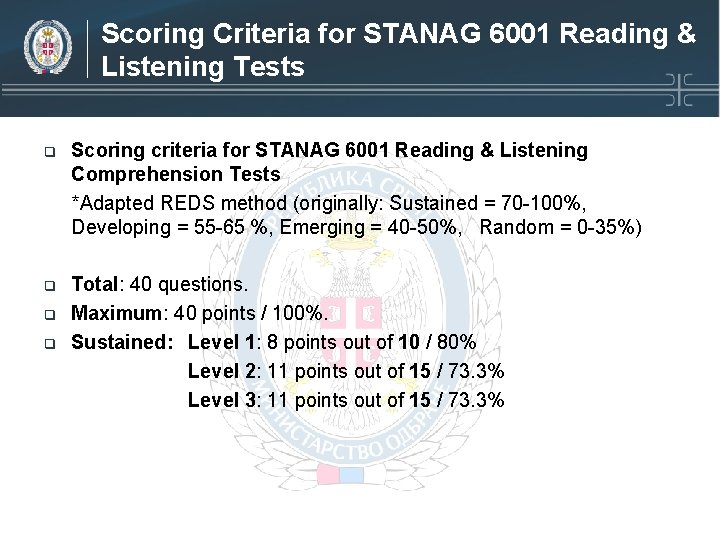

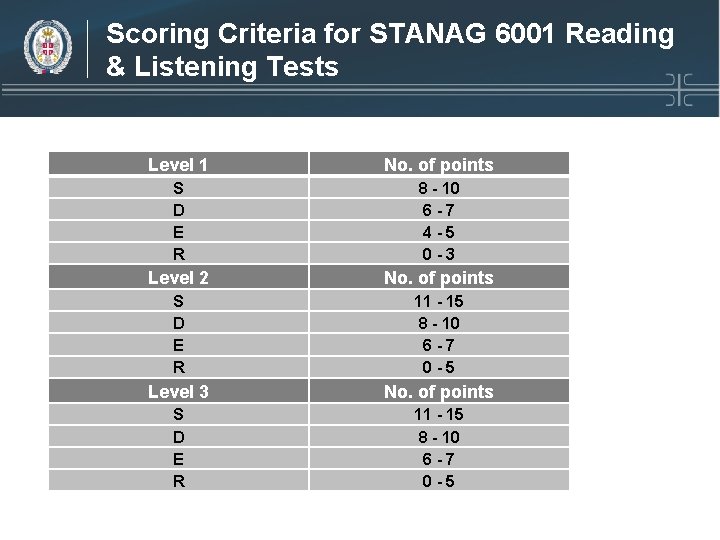

Scoring Criteria for STANAG 6001 Reading & Listening Tests

- Scoring criteria for the STANAG 6001 Reading & Listening Comprehension Tests: adapted REDS method (originally: Sustained = 70–100%, Developing = 55–65%, Emerging = 40–50%, Random = 0–35%)
- Total: 40 questions. Maximum: 40 points / 100%.
- Sustained:
  - Level 1: 8 points out of 10 / 80%
  - Level 2: 11 points out of 15 / 73.3%
  - Level 3: 11 points out of 15 / 73.3%

Scoring Criteria for STANAG 6001 Reading & Listening Tests

| No. of points | Sustained (S) | Developing (D) | Emerging (E) | Random (R) |
|---|---|---|---|---|
| Level 1 | 8–10 | 6–7 | 4–5 | 0–3 |
| Level 2 | 11–15 | 8–10 | 6–7 | 0–5 |
| Level 3 | 11–15 | 8–10 | 6–7 | 0–5 |
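Converting a candidate's raw points on one level into a REDS category is a range lookup over the bands listed above. A minimal Python sketch, with the bands copied from the slide; the names `REDS_BANDS` and `reds_category` are hypothetical.

```python
# Inclusive raw-score bands per level, copied from the slide (10 items at
# Level 1, 15 items at Levels 2 and 3).
REDS_BANDS = {
    1: {"S": (8, 10), "D": (6, 7), "E": (4, 5), "R": (0, 3)},
    2: {"S": (11, 15), "D": (8, 10), "E": (6, 7), "R": (0, 5)},
    3: {"S": (11, 15), "D": (8, 10), "E": (6, 7), "R": (0, 5)},
}


def reds_category(level, points):
    """Return 'S', 'D', 'E' or 'R' for the points scored on one level's items."""
    for category, (low, high) in REDS_BANDS[level].items():
        if low <= points <= high:
            return category
    raise ValueError(f"{points} points is outside the range for level {level}")


print(reds_category(1, 8), reds_category(2, 10), reds_category(3, 5))  # S D R
```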

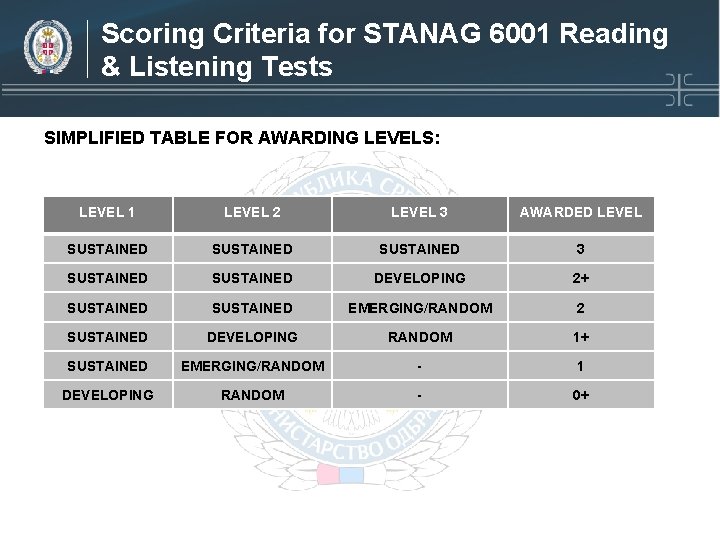

Scoring Criteria for STANAG 6001 Reading & Listening Tests

Simplified table for awarding levels:

| Level 1 | Level 2 | Level 3 | Awarded level |
|---|---|---|---|
| Sustained | Sustained | Sustained | 3 |
| Sustained | Sustained | Developing | 2+ |
| Sustained | Sustained | Emerging/Random | 2 |
| Sustained | Developing | Random | 1+ |
| Sustained | Emerging/Random | - | 1 |
| Developing | Random | - | 0+ |
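The simplified table can be read as an ordered list of rules over the three REDS categories. The Python sketch below is only an illustration. It assumes that "-" means the Level 3 result is not considered, and it returns `None` for combinations the simplified table does not cover (for example Sustained/Developing/Developing), which in practice would need the full table or a rater's decision. `AWARD_RULES` and `awarded_level` are hypothetical names.

```python
# One row per line of the simplified table:
# (accepted Level 1 results, accepted Level 2 results, accepted Level 3 results, award)
# S/D/E/R = Sustained/Developing/Emerging/Random; None = Level 3 not considered ("-").
AWARD_RULES = [
    ({"S"}, {"S"}, {"S"}, "3"),
    ({"S"}, {"S"}, {"D"}, "2+"),
    ({"S"}, {"S"}, {"E", "R"}, "2"),
    ({"S"}, {"D"}, {"R"}, "1+"),
    ({"S"}, {"E", "R"}, None, "1"),
    ({"D"}, {"R"}, None, "0+"),
]


def awarded_level(level_1, level_2, level_3):
    """Return the awarded level, or None if the combination is not in the table."""
    for ok_1, ok_2, ok_3, award in AWARD_RULES:
        if level_1 in ok_1 and level_2 in ok_2 and (ok_3 is None or level_3 in ok_3):
            return award
    return None


print(awarded_level("S", "S", "D"))  # 2+
print(awarded_level("S", "E", "R"))  # 1
```

Keeping the rules as data rather than nested `if` statements makes it easy to compare the code line by line with the published table.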

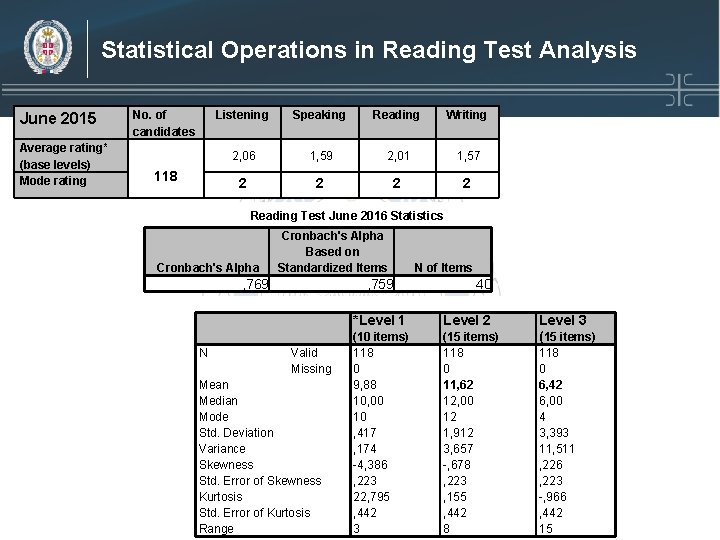

Statistical Operations in Reading Test Analysis

June 2015, no. of candidates: 118

| | Listening | Speaking | Reading | Writing |
|---|---|---|---|---|
| Average rating (base levels) | 2.06 | 1.59 | 2.01 | 1.57 |

Mode rating: 2

Reading Test, June 2016: reliability statistics

| Cronbach's alpha | Cronbach's alpha based on standardized items | N of items |
|---|---|---|
| .759 | .769 | 40 |

Reading Test, June 2016: statistics per level

| | Level 1 (10 items) | Level 2 (15 items) | Level 3 (15 items) |
|---|---|---|---|
| N valid | 118 | 118 | 118 |
| N missing | 0 | 0 | 0 |
| Mean | 9.88 | 11.62 | 6.42 |
| Median | 10.00 | 12.00 | 6.00 |
| Mode | 10 | 12 | 4 |
| Std. deviation | .417 | 1.912 | 3.393 |
| Variance | .174 | 3.657 | 11.511 |
| Skewness | -4.386 | -.678 | .226 |
| Std. error of skewness | .223 | .223 | .223 |
| Kurtosis | 22.795 | .155 | -.966 |
| Std. error of kurtosis | .442 | .442 | .442 |
| Range | 3 | 8 | 15 |
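The per-level figures above are standard SPSS descriptives, and the reliability figure is Cronbach's alpha. The following Python sketch, which uses only the standard library, shows how these quantities are defined. It assumes the usual bias-adjusted formulas for skewness and kurtosis (those used by SPSS and Excel) and reports the smallest value when there are several modes. The function names are hypothetical, and the alpha based on standardized items is not covered. As a check, the standard errors depend only on N, and for N = 118 these formulas give the .223 and .442 shown in the table.

```python
import math
import statistics


def descriptives(scores):
    """SPSS-style descriptive statistics for a list of at least four scores."""
    n = len(scores)
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation (n - 1)
    m3 = sum((x - mean) ** 3 for x in scores)
    m4 = sum((x - mean) ** 4 for x in scores)
    # Bias-adjusted skewness (G1) and excess kurtosis (G2)
    skewness = n * m3 / ((n - 1) * (n - 2) * sd ** 3)
    kurtosis = (n * (n + 1) * m4 / ((n - 1) * (n - 2) * (n - 3) * sd ** 4)
                - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    se_skewness = math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    se_kurtosis = 2 * se_skewness * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))
    return {
        "n": n,
        "mean": mean,
        "median": statistics.median(scores),
        "mode": min(statistics.multimode(scores)),
        "sd": sd,
        "variance": sd ** 2,
        "skewness": skewness,
        "se_skewness": se_skewness,
        "kurtosis": kurtosis,
        "se_kurtosis": se_kurtosis,
        "range": max(scores) - min(scores),
    }


def cronbach_alpha(rows):
    """Cronbach's alpha; rows = one list of item scores (0/1) per candidate."""
    k = len(rows[0])
    item_variances = [statistics.variance(column) for column in zip(*rows)]
    total_variance = statistics.variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical Level 3 scores (0-15) and a hypothetical 4-candidate, 3-item matrix
print(descriptives([4, 4, 6, 7, 9, 12, 15]))
print(cronbach_alpha([[1, 1, 0], [1, 0, 0], [1, 1, 1], [0, 0, 0]]))
```

A strongly negative skew with very high kurtosis, as at Level 1, is what a ceiling effect looks like: almost every candidate scores at or near the maximum.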

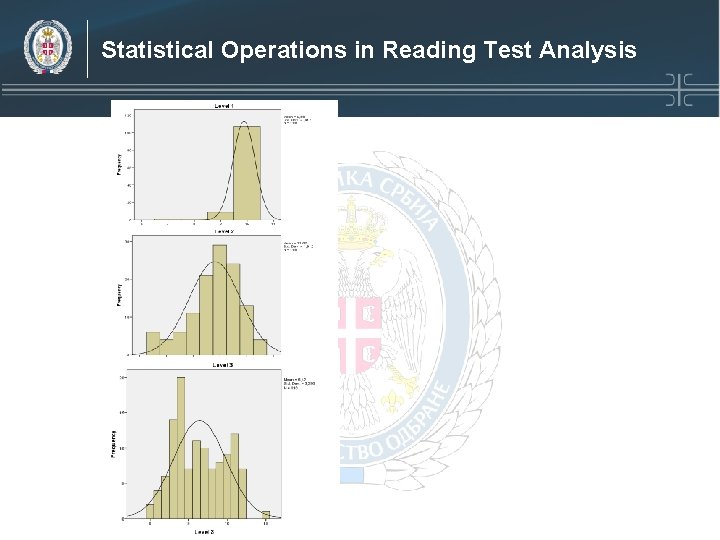

Statistical Operations in Reading Test Analysis

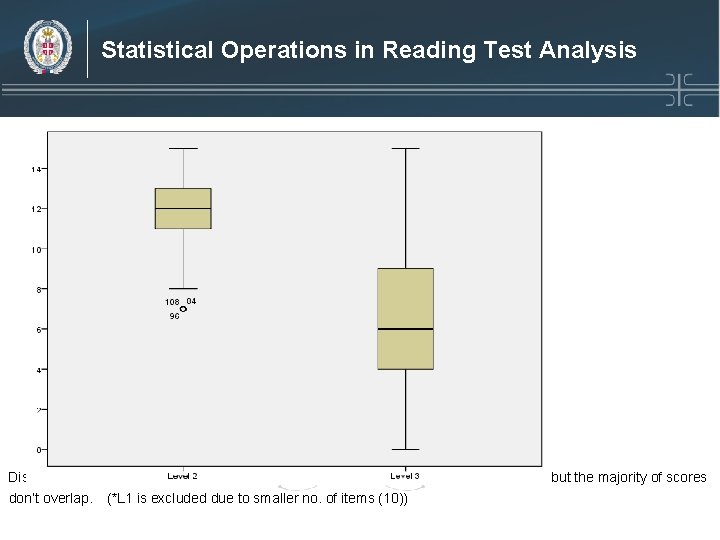

Statistical Operations in Reading Test Analysis

The distribution of candidates' scores (0–15) per level shows some overlap among outliers, but the majority of scores do not overlap. (*Level 1 is excluded because of its smaller number of items (10).)

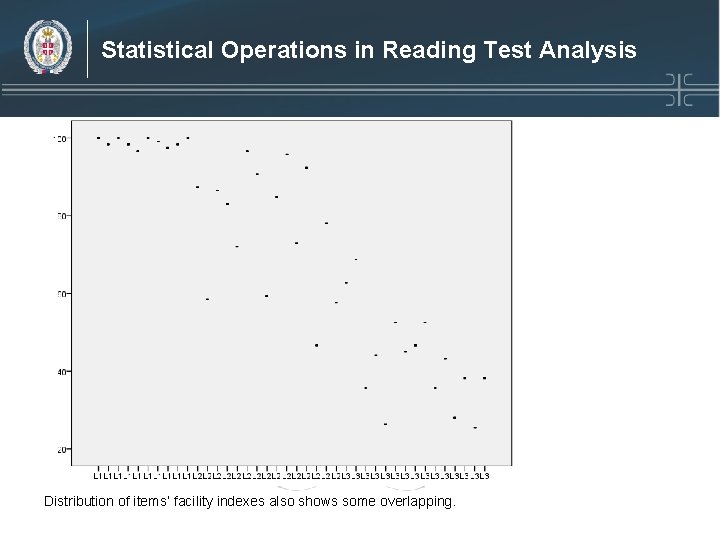

Statistical Operations in Reading Test Analysis

The distribution of the items' facility indexes also shows some overlap.

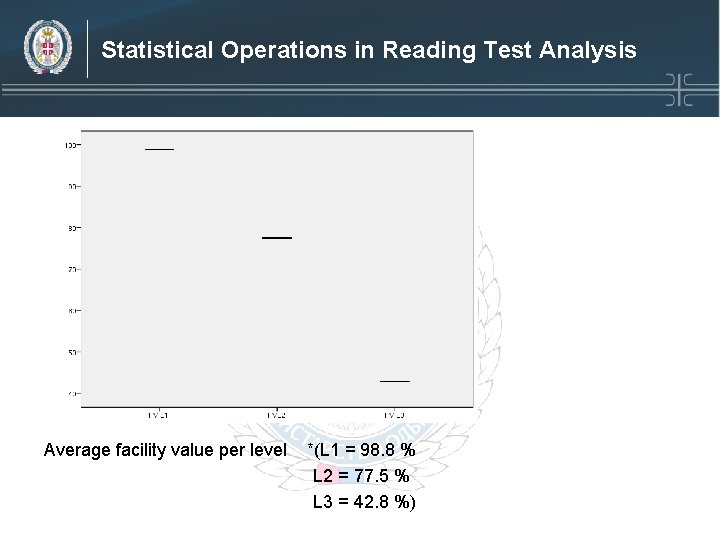

Statistical Operations in Reading Test Analysis

Average facility value per level: Level 1 = 98.8%, Level 2 = 77.5%, Level 3 = 42.8%
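For dichotomously scored items, the average facility value of a level equals the mean raw score on that level divided by its number of items, because averaging the items' proportions correct is the same as averaging candidates' totals and dividing by the item count. The percentages above can therefore be checked against the June 2016 reading statistics (means of 9.88, 11.62 and 6.42 on 10, 15 and 15 items). A minimal Python sketch; `average_facility` is a hypothetical helper.

```python
def average_facility(mean_score, n_items):
    """Average item facility value (%) = mean raw score / number of items * 100."""
    return 100 * mean_score / n_items


# Mean raw scores and item counts per level, June 2016 reading test
for level, mean_score, n_items in [(1, 9.88, 10), (2, 11.62, 15), (3, 6.42, 15)]:
    print(f"Level {level}: {average_facility(mean_score, n_items):.1f}%")
# Level 1: 98.8%
# Level 2: 77.5%
# Level 3: 42.8%
```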

Comparison Chart

*Approximations for comparison purposes, not equivalences.

- STANAG 6001 levels: 1, 2, 3
- ECL/ALCPT score: 50–65, 66–85, 86–100
- CEFR scale: A1, A2 (basic user); B1, B2 (independent user); C1, C2 (competent user)
- ALTE levels with Cambridge ESOL certificates: 1 (KET), 2 (PET), 3 (FCE), 4 (CAE), 5 (CPE)
- IELTS band score: 3.0–4.0, 4.5–5.0, 5.5–6.5, 7.0–9.0
- Band labels also marked on the chart: (Threshold), (Plus)
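Because the ECL/ALCPT column uses score bands, relating an ALCPT score to the chart is a simple threshold lookup. The Python sketch below is only that. The bands are copied from the chart, which itself presents approximations rather than equivalences, and reporting scores below 50 as 0 is an assumption, since the chart does not cover them. The function name is hypothetical.

```python
def approx_stanag_level(alcpt_score):
    """Approximate STANAG 6001 level for an ECL/ALCPT score, per the chart's bands."""
    if not 0 <= alcpt_score <= 100:
        raise ValueError("expected a score on a 0-100 scale")
    if alcpt_score >= 86:
        return 3
    if alcpt_score >= 66:
        return 2
    if alcpt_score >= 50:
        return 1
    return 0  # assumption: below the chart's lowest band


print([approx_stanag_level(s) for s in (85, 58, 61, 73)])  # [2, 1, 1, 2]
```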

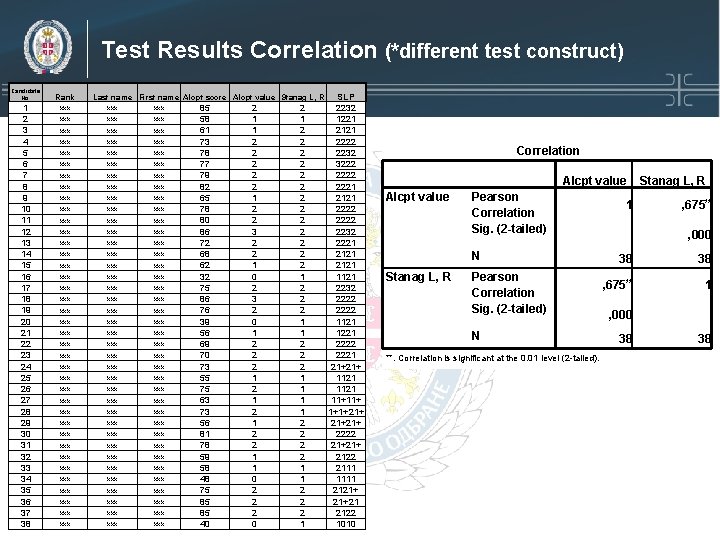

Test Results Correlation (*different test constructs)

The table lists 38 candidates (candidate no., rank, last name and first name anonymized as "xxx") with their ALCPT score, ALCPT value, STANAG L, R levels and full SLP. ALCPT scores in candidate order: 85, 58, 61, 73, 78, 77, 79, 82, 65, 78, 80, 86, 72, 68, 62, 32, 75, 86, 76, 39, 56, 69, 70, 73, 55, 75, 63, 73, 56, 81, 78, 59, 58, 48, 75, 85, 85, 40.

Correlations (Pearson correlation; Sig. 2-tailed; N = 38):

| | ALCPT value | STANAG L, R |
|---|---|---|
| ALCPT value | 1 | .675** (Sig. .000) |
| STANAG L, R | .675** (Sig. .000) | 1 |

**Correlation is significant at the 0.01 level (2-tailed).
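The significance values SPSS reports for a Pearson r come from a t test with N - 2 degrees of freedom, where t = r * sqrt(N - 2) / sqrt(1 - r^2). The standard-library Python sketch below reproduces that test by integrating the Student t density numerically with Simpson's rule, a stand-in for a statistics library's t distribution. The function names are hypothetical. Applied to the reported coefficients, it gives p well below .001 for r = .675 with N = 38, and roughly .096 for r = .502 with N = 12, in line with the SPSS tables.

```python
import math


def t_density(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def pearson_p_value(r, n, steps=2000):
    """Two-tailed p-value for H0: no correlation, given Pearson r and sample size n."""
    if abs(r) >= 1:
        return 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df) / math.sqrt(1 - r * r)
    # Simpson's rule (steps must be even) for the area under the density on [0, t]
    h = t / steps
    area = t_density(0, df) + t_density(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_density(i * h, df)
    area *= h / 3
    return max(0.0, 1 - 2 * area)


print(pearson_p_value(0.675, 38) < 0.001)    # True
print(round(pearson_p_value(0.502, 12), 3))  # 0.096
```

SPSS prints such very small p-values as ".000", meaning p < .0005 rather than exactly zero.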

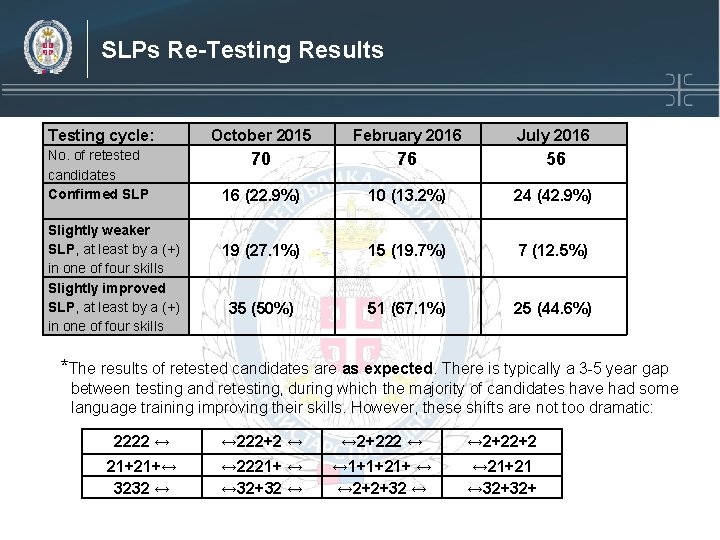

SLPs Re-Testing Results

| Testing cycle | October 2015 | February 2016 | July 2016 |
|---|---|---|---|
| No. of retested candidates | 70 | 76 | 56 |
| Confirmed SLP | 16 (22.9%) | 10 (13.2%) | 24 (42.9%) |
| Slightly weaker SLP, by at least a (+) in one of four skills | 19 (27.1%) | 15 (19.7%) | 7 (12.5%) |
| Slightly improved SLP, by at least a (+) in one of four skills | 35 (50%) | 51 (67.1%) | 25 (44.6%) |

*The results of retested candidates are as expected. There is typically a 3–5 year gap between testing and retesting, during which most candidates have had some language training that improved their skills. However, these shifts are not dramatic; the profiles involved include 2222, 222+2, 2+22+2, 21+21+, 3232, 2221+, 32+32, 1+1+21+, 2+2+32, 21+21 and 32+32+.
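Each cycle's three outcomes add up to the number of retested candidates (16 + 19 + 35 = 70, 10 + 15 + 51 = 76, 24 + 7 + 25 = 56), and the percentages are shares of that total. A minimal Python sketch that recomputes them from the counts; the names are hypothetical.

```python
# Retest outcomes per cycle: (confirmed, slightly weaker, slightly improved)
RETEST_COUNTS = {
    "October 2015": (16, 19, 35),
    "February 2016": (10, 15, 51),
    "July 2016": (24, 7, 25),
}


def outcome_shares(counts):
    """Return the number retested and each outcome's share in percent (1 decimal)."""
    total = sum(counts)
    return total, [round(100 * c / total, 1) for c in counts]


for cycle, counts in RETEST_COUNTS.items():
    total, shares = outcome_shares(counts)
    print(cycle, total, shares)
# October 2015 70 [22.9, 27.1, 50.0]
# February 2016 76 [13.2, 19.7, 67.1]
# July 2016 56 [42.9, 12.5, 44.6]
```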

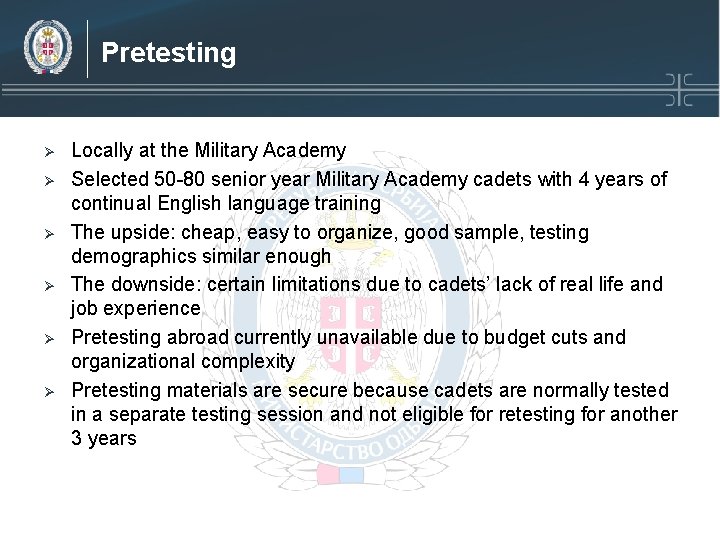

Pretesting

- Locally at the Military Academy: 50–80 selected senior-year Military Academy cadets with 4 years of continuous English language training.
- The upside: cheap, easy to organize, a good sample, and testing demographics similar enough.
- The downside: certain limitations due to the cadets' lack of real-life and job experience.
- Pretesting abroad is currently unavailable due to budget cuts and organizational complexity.
- Pretesting materials remain secure because cadets are normally tested in a separate testing session and are not eligible for retesting for another 3 years.

Cooperation with Other Language Professionals

- Cooperation with English language professionals, experts and teachers within the defence system exists at all levels and in all forms (Military Academy Department of Foreign Languages; GS J-7 Training and Doctrine Department, Group for English Language Training; PELT part-time English language experts and lecturers; etc.).
- English teachers act as invigilators, interlocutors and expert judges when determining content and face validity, cut-off scores, feedback, etc.

Cooperation with Other Functional Units in the HR Sector

- Reporting the test results
- Interpreting STANAG 6001 language proficiency levels to language non-professionals
- Consulting with personnel departments in the MoD and GS, the Centre for Peacekeeping Operations, the Military Academy, the National Defence School, etc. about language-related career matters, language requirements for appointments, attending courses abroad, participation in PK missions, etc.

Thank you for your attention. Questions?
