Testing research software: An academic perspective on compromise


Daniel Standage (@byuhobbes), https://osf.io/f2gcz/

Research questions
• Software requirements are rarely fully understood at the outset
• Subtle and complicated
• Research is rarely “done”
• Incredibly fulfilling!

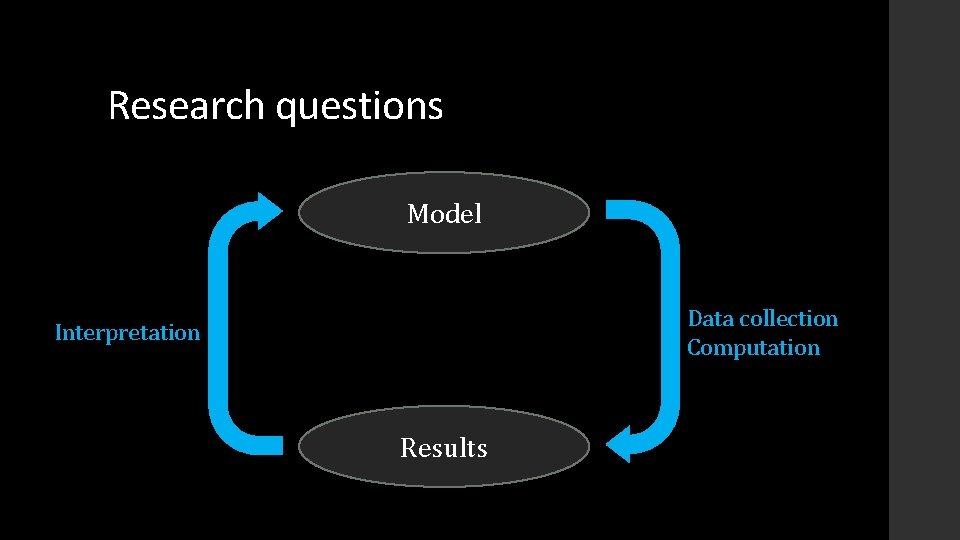

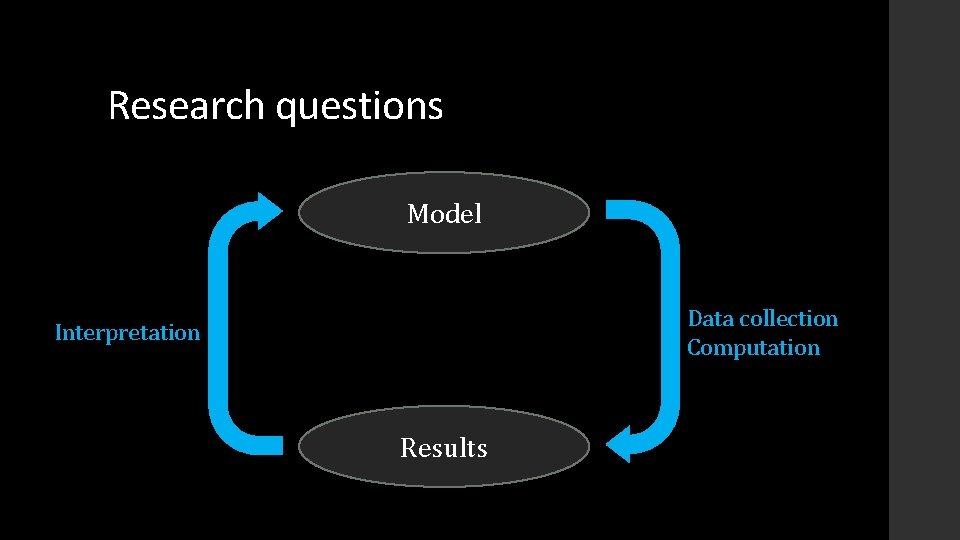

Research questions (diagram: model, data collection, computation, interpretation, results)

Research groups
• Diverse scientific backgrounds
• Diverse computing skills & levels of experience
• Little to no funding or direct incentive for responsible software development
• Even less so for software maintenance
Very little automated testing in academic research software!

Research software contributors
• Extensive domain knowledge (surface chemistry, marine biology, etc.)
• Don’t know all the right answers, but know some
• Lots of intuition and critical thinking about the problem domain (vs. the technical domain)
• Often very little formal training in programming

Automated testing is critical… but…

Compromise on best practices is essential!

Priorities for research software
1. Accuracy / insight
2. Performance / efficiency
3. Openness / transparency / clarity
4. …
5. …
6. …
N. User experience

How does all this inform our approach to testing?

“Stupidity-driven development”
• Write simple but extensive tests for the scientific core of the code
• Start with far fewer tests for other project components
• When critical bugs are encountered:
  1. Reproduce the bug with a regression test
  2. THEN fix the bug
  3. Get on with more important things
Brown CT (2014) Some Thoughts on Research Coding and Stupidity Driven Development. http://ivory.idyll.org/blog/2014-research-coding.html
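The workflow on this slide can be sketched in a few lines of Python using pytest-style test functions (they also run as a plain script). This is an illustrative sketch, not code from the talk: `gc_content()` is a hypothetical stand-in for a project's scientific core, and the empty-sequence crash is a hypothetical "critical bug" used to show the regression-test-then-fix step. Counting only A/C/G/T bases (ignoring N) is likewise an assumption made for the example.

```python
"""A minimal, hypothetical sketch of stupidity-driven development.

gc_content() stands in for the scientific core of a research project.
"""


def gc_content(sequence: str) -> float:
    """Return the fraction of G and C among the A/C/G/T bases in a DNA sequence.

    Case-insensitive; ambiguous bases such as N are ignored (an assumption
    for this sketch). An empty or all-N sequence returns 0.0 instead of
    raising ZeroDivisionError, which is the hypothetical bug fixed here.
    """
    called = [base for base in sequence.upper() if base in "ACGT"]
    if not called:  # the fix, added after the regression test reproduced the crash
        return 0.0
    gc = sum(1 for base in called if base in "GC")
    return gc / len(called)


# Simple but extensive tests for the scientific core: known answers
# that a domain expert can verify by hand.
def test_gc_content_known_values():
    assert gc_content("GGCC") == 1.0
    assert gc_content("AATT") == 0.0
    assert gc_content("ACGT") == 0.5
    assert gc_content("acgt") == 0.5  # lowercase (soft-masked) input


# Regression test written only after the (hypothetical) bug showed up:
# an empty sequence used to crash with ZeroDivisionError. The test was
# written first to reproduce the failure, then the guard above fixed it.
def test_gc_content_empty_sequence_regression():
    assert gc_content("") == 0.0
    assert gc_content("NNNN") == 0.0


if __name__ == "__main__":
    test_gc_content_known_values()
    test_gc_content_empty_sequence_regression()
    print("all tests passed")
```

Running `pytest` on a file containing this code, or running it directly with `python`, executes both tests. In the spirit of the slide, the regression test fails against the unguarded version of `gc_content()`, passes once the fix is in, and then stays in the suite so the bug cannot quietly return; no further effort goes into edge cases nobody has hit.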

“Stupidity-driven development”
Avoid wasting time writing tests for bugs that aren’t important or that never appear!

Acknowledgements
• Sascha Steinbiss (@ssatta), Gordon Gremme, Prof. Stefan Kurtz: http://genometools.org
• Prof. Titus Brown (@ctitusbrown): https://github.com/dib-lab
• Greg Wilson (@gvwilson): http://software-carpentry.org