Testing

- Slides: 24

Testing

- Coverage
  - ideally, testing will exercise the system in all possible ways
  - this is not possible, so we use different criteria to judge how well our testing strategy “covers” the system
- Test case
  - consists of data, procedure, and expected result
  - represents just one situation under which the system (or some part of it) might run
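
The three parts of a test case (data, procedure, expected result) can be sketched in Python. This is a minimal illustration, not a prescribed format: the `Case` class and the `absolute` function are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Case:
    """One situation under which the unit might run (hypothetical structure)."""
    data: tuple            # input data
    procedure: Callable    # how the data is applied to the unit under test
    expected: Any          # expected result

    def run(self) -> bool:
        # The case passes when the actual result matches the expected result.
        return self.procedure(*self.data) == self.expected


def absolute(x):
    """Hypothetical unit under test."""
    return -x if x < 0 else x


cases = [
    Case(data=(-3,), procedure=absolute, expected=3),
    Case(data=(0,), procedure=absolute, expected=0),
    Case(data=(7,), procedure=absolute, expected=7),
]
results = [case.run() for case in cases]
```

Each `Case` covers only one situation, which is why a suite needs several of them chosen by some coverage criterion.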

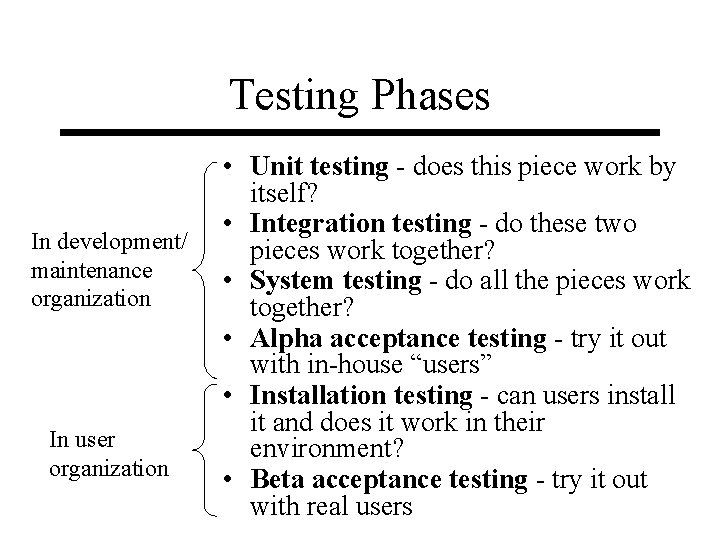

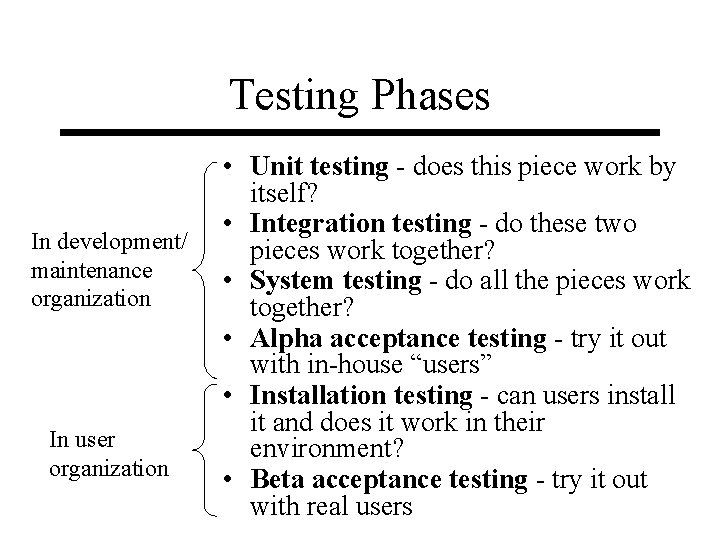

Testing Phases

In the development/maintenance organization:

- Unit testing - does this piece work by itself?
- Integration testing - do these two pieces work together?
- System testing - do all the pieces work together?
- Alpha acceptance testing - try it out with in-house “users”

In the user organization:

- Installation testing - can users install it, and does it work in their environment?
- Beta acceptance testing - try it out with real users

Test Planning

- A test plan:
  - covers all types and phases of testing
  - guides the entire testing process
  - answers who, why, when, and what
  - is developed as the requirements, functional specification, and high-level design are developed
  - should be done before implementation starts

Test Planning (cont.)

- A test plan includes:
  - test objectives
  - schedule and logistics
  - test strategies
  - test cases
    - procedure
    - data
    - expected result
  - procedures for handling problems

Testing Techniques

- Structural testing techniques
  - “white box” testing
  - based on statements in the code
  - coverage criteria related to physical parts of the system
  - tests how a program/system does something
- Functional testing techniques
  - “black box” testing
  - based on input and output
  - coverage criteria based on behavioral aspects
  - tests the behavior of a system or program

System Testing Techniques

- Goal is to evaluate the system as a whole, not its parts
- Techniques can be structural or functional
- Techniques can be used in any stage that tests the system as a whole (acceptance, installation, etc.)
- Techniques are not mutually exclusive

System Testing Techniques (cont.)

- Structural techniques
  - stress testing - test larger-than-normal capacity in terms of transactions, data, users, speed, etc.
  - execution testing - test performance in terms of speed, precision, etc.
  - recovery testing - test how the system recovers from a disaster, how it handles corrupted data, etc.

System Testing Techniques (cont.)

- Structural techniques (cont.)
  - operations testing - test how the system fits in with existing operations and procedures in the user organization
  - compliance testing - test adherence to standards
  - security testing - test security requirements

System Testing Techniques (cont.)

- Functional techniques
  - requirements testing - the fundamental form of testing; makes sure the system does what it’s required to do
  - regression testing - make sure unchanged functionality remains unchanged
  - error-handling testing - test required error-handling functions (usually for user error)
  - manual-support testing - test that the system can be used properly; includes user documentation

System Testing Techniques (cont.)

- Functional techniques (cont.)
  - intersystem handling testing - test that the system is compatible with other systems in the environment
  - control testing - test required control mechanisms
  - parallel testing - feed the same input into two versions of the system to make sure they produce the same output
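
Parallel testing can be sketched at small scale as follows. The two "versions" here are hypothetical stand-ins (`total_v1`, `total_v2`) for an old and a new release; a real system-level parallel test would compare whole-system outputs, not single functions.

```python
def total_v1(prices):
    """Hypothetical old version: explicit loop."""
    total = 0
    for p in prices:
        total += p
    return total


def total_v2(prices):
    """Hypothetical new version: built-in sum."""
    return sum(prices)


def parallel_test(old, new, inputs):
    """Return the inputs on which the two versions disagree."""
    return [x for x in inputs if old(x) != new(x)]


inputs = [[], [5], [1, 2, 3], [-4, 4]]
mismatches = parallel_test(total_v1, total_v2, inputs)
```

An empty `mismatches` list means the two versions agreed on every input that was tried, not that they agree on all inputs.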

Unit Testing

- Goal is to evaluate some piece (file, program, module, component, etc.) in isolation
- Techniques can be structural or functional
- In practice, it’s usually ad hoc and looks a lot like debugging
- More structured approaches exist

Unit Testing Techniques

- Functional techniques
  - input domain testing - pick test cases representative of the range of allowable input, including high, low, and average values
  - equivalence partitioning - partition the range of allowable input so that the program is expected to behave similarly for all inputs in a given partition, then pick a test case from each partition
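
A minimal sketch of equivalence partitioning, assuming a hypothetical `grade` function whose allowable input is 0 to 100. The partition boundaries (60 and 90) are invented for illustration.

```python
def grade(score):
    """Hypothetical unit under test; allowable input is 0..100."""
    if not 0 <= score <= 100:
        raise ValueError("score out of range")
    if score >= 90:
        return "distinction"
    return "pass" if score >= 60 else "fail"


# One partition per expected behavior, each with one representative input.
partitions = [
    (range(0, 60), 30, "fail"),
    (range(60, 90), 75, "pass"),
    (range(90, 101), 95, "distinction"),
]

# Sanity check: each representative really lies inside its partition.
representatives_ok = all(rep in part for part, rep, _ in partitions)

# Run one test case per partition.
results = [grade(rep) == expected for _, rep, expected in partitions]
```

Three test cases stand in for 101 possible inputs, on the assumption that the program behaves the same way throughout each partition.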

Unit Testing Techniques (cont.)

- Functional techniques (cont.)
  - boundary value - choose test cases with input values at the boundary (both inside and outside) of the allowable range
  - syntax checking - choose test cases that violate the format rules for input
  - special values - design test cases that use input values that represent special situations
  - output domain testing - pick test cases that will produce output at the extremes of the output domain
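
Boundary value selection can be sketched like this, again assuming a hypothetical `grade` function that accepts 0 to 100. The helper names `boundary_values` and `accepts` are introduced only for this example.

```python
def grade(score):
    """Hypothetical unit under test; allowable input is 0..100."""
    if not 0 <= score <= 100:
        raise ValueError("score out of range")
    return "pass" if score >= 60 else "fail"


def boundary_values(low, high):
    """Values just outside, on, and just inside each end of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def accepts(value):
    """True if the unit accepts the value, False if it rejects it."""
    try:
        grade(value)
        return True
    except ValueError:
        return False


report = {v: accepts(v) for v in boundary_values(0, 100)}
```

The expected pattern is rejection just outside the range (-1 and 101) and acceptance on and just inside it; an off-by-one error in the range check would show up as a deviation from that pattern.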

Unit Testing Techniques (cont.)

- Structural techniques
  - statement testing - ensure the set of test cases exercises every statement at least once
  - branch testing - ensure each branch of every if/then statement is exercised
  - conditional testing - ensure each condition is exercised as both true and false
  - expression testing - ensure every part of every expression is exercised
  - path testing - ensure every path is exercised (impossible in practice)
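
The difference between statement testing and branch testing can be seen with a small hand-instrumented sketch. The function `apply_discount` and the `branches_taken` bookkeeping are hypothetical; the `else` clause exists only to make the otherwise empty false branch visible.

```python
branches_taken = set()


def apply_discount(price, is_member):
    """Hypothetical unit under test, instrumented by hand."""
    if is_member:
        branches_taken.add("if-true")
        price = price - 10
    else:
        # The original code has no else clause; this records the false branch.
        branches_taken.add("if-false")
    return price


# A single test with is_member=True executes every statement of the
# uninstrumented function, so it satisfies statement testing...
apply_discount(100, True)
after_statement_suite = set(branches_taken)

# ...but branch testing also requires the false branch to be taken.
apply_discount(100, False)
after_branch_suite = set(branches_taken)
```

After the first call only the true branch has been recorded; the second call is what branch testing adds on top of statement testing.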

Unit Testing Techniques (cont.)

- Error-based techniques
  - basic idea is that if you know something about the nature of the defects in the code, you can estimate whether or not you’ve found all of them
  - fault seeding - put a certain number of known faults into the code, then test until they are all found
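
One common way to turn fault seeding into an estimate, not stated on the slide, is a simple proportion: assume testing finds real faults at the same rate as seeded ones. The sketch below rests entirely on that assumption, and the function name and sample numbers are hypothetical.

```python
def estimate_remaining(seeded_total, seeded_found, real_found):
    """Estimate real faults still undiscovered.

    Assumes (a strong assumption) that seeded faults are as easy to find
    as real ones, so seeded_found / seeded_total approximates the fraction
    of real faults found so far.
    """
    if seeded_found == 0:
        raise ValueError("no seeded faults found yet; cannot estimate")
    estimated_real_total = real_found * seeded_total / seeded_found
    return estimated_real_total - real_found


# Example: 20 faults seeded, 15 of them found, along with 30 real faults.
remaining = estimate_remaining(seeded_total=20, seeded_found=15, real_found=30)
```

When every seeded fault has been found, the estimate of remaining real faults drops to zero, which corresponds to the slide's rule of testing until all seeded faults are found.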

Unit Testing Techniques (cont.)

- Error-based techniques (cont.)
  - mutation testing - create mutants of the program by making single changes, then run test cases until all mutants have been killed
  - historical test data - an organization keeps records of the average number of defects in the products it produces, then tests a new product until the number of defects found approaches the expected number
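
A toy sketch of mutation testing follows. The mutants are written by hand rather than generated by a tool, and `is_adult`, `mutants`, and `surviving` are hypothetical names used only here.

```python
def is_adult(age):
    """Hypothetical original program."""
    return age >= 18


# Hand-written mutants, each a single change to the original.
mutants = {
    ">= becomes >": lambda age: age > 18,
    ">= becomes <=": lambda age: age <= 18,
    "18 becomes 19": lambda age: age >= 19,
}


def surviving(tests, mutants):
    """Names of mutants that pass every test, i.e. have not been killed."""
    return sorted(
        name for name, mutant in mutants.items()
        if all(mutant(x) == expected for x, expected in tests)
    )


# Each test is (input, expected output of the original program).
weak_tests = [(30, True), (5, False)]
strong_tests = weak_tests + [(18, True)]

survivors_weak = surviving(weak_tests, mutants)
survivors_strong = surviving(strong_tests, mutants)
```

The weak suite leaves two mutants alive because it never probes the boundary at 18; adding the boundary case kills them all, which is the stopping condition the slide describes.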

Testing vs. Static Analysis

- Static analysis attempts to evaluate a program without executing it
- Techniques:
  - inspections and reviews
  - mathematical correctness proofs
  - safety analysis
  - program measurements
  - data flow and control flow analysis
  - symbolic execution

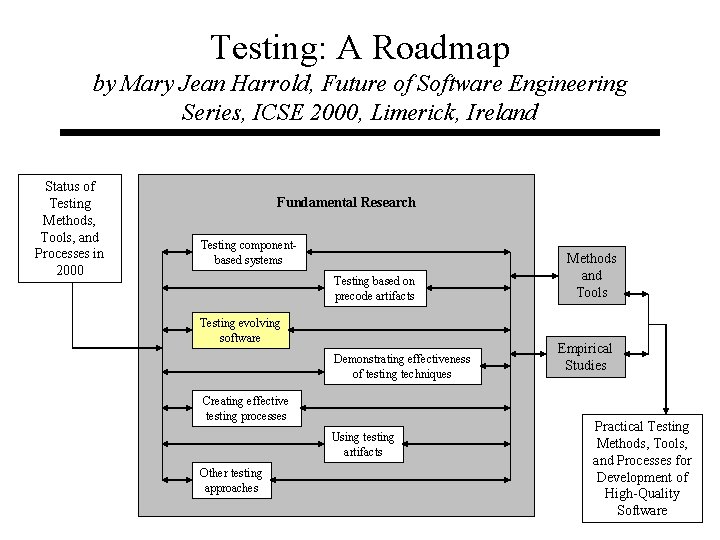

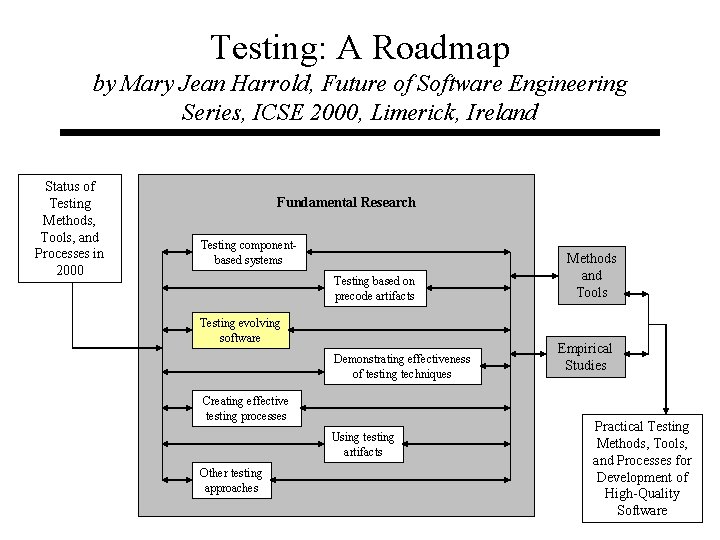

Testing: A Roadmap
by Mary Jean Harrold, Future of Software Engineering Series, ICSE 2000, Limerick, Ireland

[Figure: roadmap from “Status of Testing Methods, Tools, and Processes in 2000” to “Practical Testing Methods, Tools, and Processes for Development of High-Quality Software”]

- Fundamental Research
  - Testing component-based systems
  - Testing based on precode artifacts
  - Testing evolving software
  - Demonstrating effectiveness of testing techniques
  - Creating effective testing processes
  - Using testing artifacts
  - Other testing approaches
- Methods and Tools
- Empirical Studies

Testing: A Roadmap (cont.)

- Regression testing can account for up to 1/3 of the total cost of software
- Research has focused on ways to make regression testing more efficient:
  - finding the most effective subsets of the test suite
  - managing the growth of the test suite
  - using precode artifacts to help plan regression testing
- Need to focus on reducing the size of the test suite, prioritizing test cases, and assessing testability

Test Case Prioritization: An Empirical Study
Gregg Rothermel et al., ICSM 1999, Oxford, England

- Prioritization is necessary:
  - during regression testing
  - when there is not enough time or resources to run the entire test suite
  - when there is a need to decide which test cases to run first, i.e., which give the biggest bang for the buck
- Prioritization can be based on:
  - which test cases are likely to find the most faults
  - which test cases are likely to find the most severe faults
  - which test cases are likely to cover the most statements
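
Prioritizing by statement coverage can be sketched as a simple sort. The coverage data below is invented, and this ordering (most statements covered first) is only one illustrative scheme, not necessarily any of the techniques evaluated in the study.

```python
# Hypothetical coverage data: test name -> set of statement numbers it executes.
coverage = {
    "t1": {1, 2, 3},
    "t2": {1, 2, 3, 4, 5, 6},
    "t3": {7},
    "t4": {2, 3, 4, 5},
}


def prioritize_by_total_coverage(coverage):
    """Order tests by number of statements covered, largest first.

    Ties are broken by test name so the ordering is deterministic.
    """
    return sorted(coverage, key=lambda t: (-len(coverage[t]), t))


order = prioritize_by_total_coverage(coverage)

# With time for only two tests, run the first two in priority order.
budgeted = order[:2]
```

Note that `t3` is ranked last even though it is the only test that reaches statement 7; ranking by coverage not yet achieved by earlier tests would order the suite differently.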

Test Case Prioritization: An Empirical Study

- The study:
  - applied 9 different prioritization techniques to 7 different programs
  - all prioritization techniques were aimed at finding faults faster
  - results showed that prioritization definitely increases the fault detection rate, but the different prioritization techniques didn’t differ much in effectiveness

A Manager’s Guide to Evaluating Test Suites
Brian Marick, et al.
http://www.testing.com/writings/evaluating-testsuites-paper.pdf

- Basic assumption: the purpose of testing is to help the manager decide whether or not to release the system to the customer
- Threat, Product, Problem, Victim
- Users - create detailed descriptions of fictitious, but realistic, users
- Pay attention to usability
- Coverage - code, documentation

Summary of Readings

- Rigorous scientific study vs. practical experience
  - Harrold and Rothermel are academic researchers
  - Marick is a practitioner/consultant
  - differences in perspective, concerns, approaches
- Plan ahead vs. evaluate after the fact

Summary of Readings (cont.)

- Regression testing
  - big cost
  - size and quality of the test suite are crucial
  - automation only goes so far
  - tradeoff between test planning time and test execution time