Testing Challenges for Next-Generation CPS Software


Testing Challenges for Next-Generation CPS Software

Mike Whalen, University of Minnesota

7/12/2017

Acknowledgements

- Rockwell Collins: Steven Miller, Darren Cofer, Lucas Wagner, Andrew Gacek, John Backes
- University of Minnesota: Mats P. E. Heimdahl, Sanjai Rayadurgam, Matt Staats, Ajitha Rajan, Gregory Gay
- Funding sponsors: NASA, Air Force Research Labs, DARPA

Who Am I?

My main aim is reducing verification and validation (V&V) cost and increasing rigor.

- Applied automated V&V techniques on industrial systems at Rockwell Collins for 6½ years
  - Proofs, bounded analyses, static analysis, automated testing
  - Combining several kinds of assurance artifacts
- I’m interested in requirements as they pertain to V&V.

Main research thrusts in testing:

- Factors in testing: how do we make testing experiments fair and repeatable?
- Test metrics: what are reasonable metrics for testing safety-critical systems?
  - What does it mean for a metric to be reasonable?

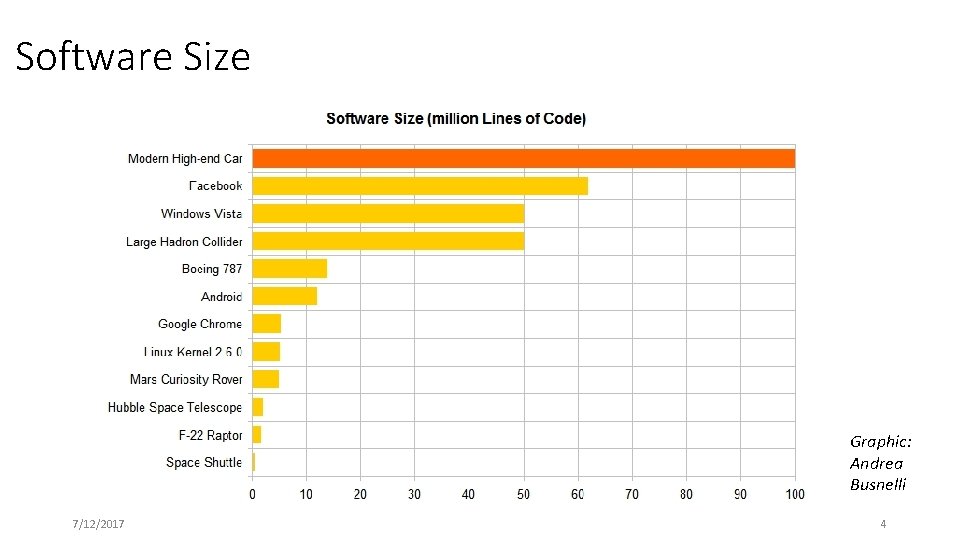

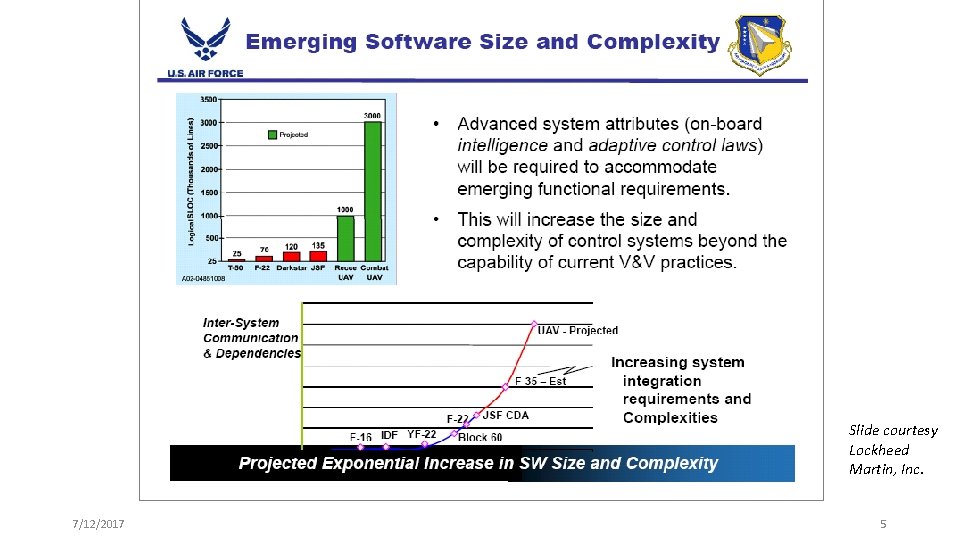

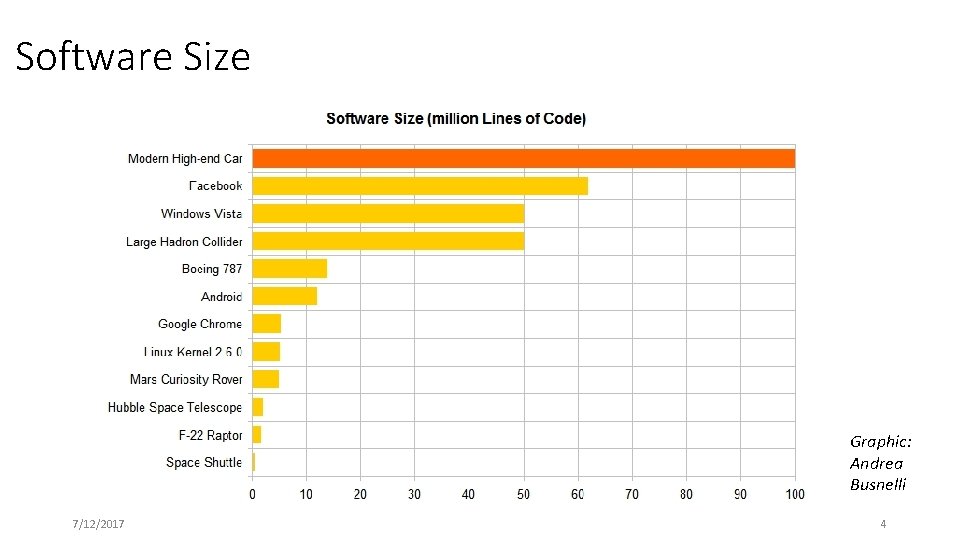

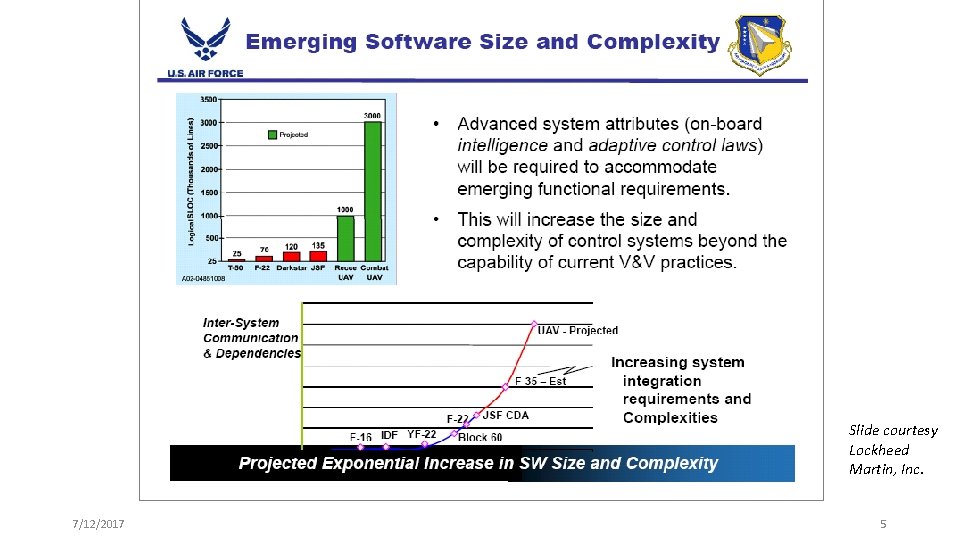

Software Size (graphic: Andrea Busnelli)

Slide courtesy Lockheed Martin, Inc.

Software Connectivity

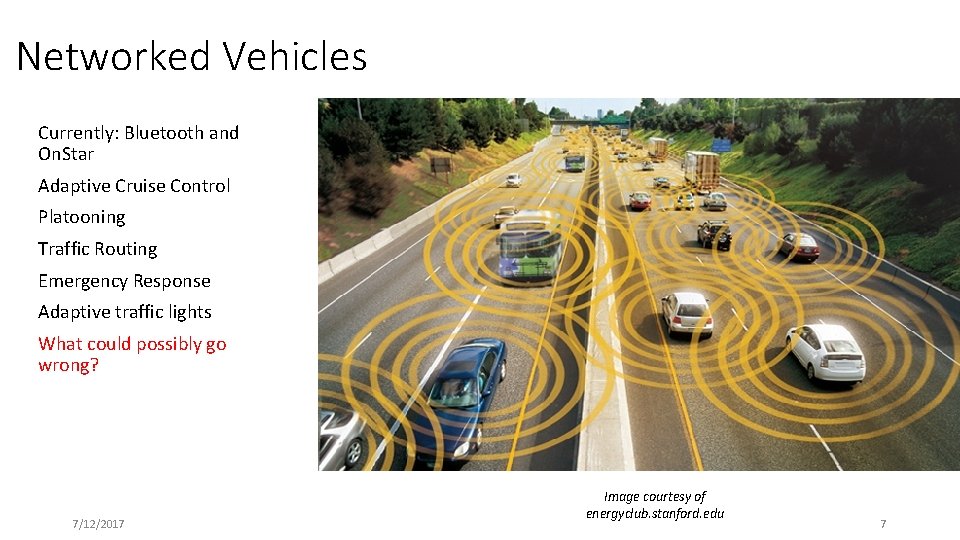

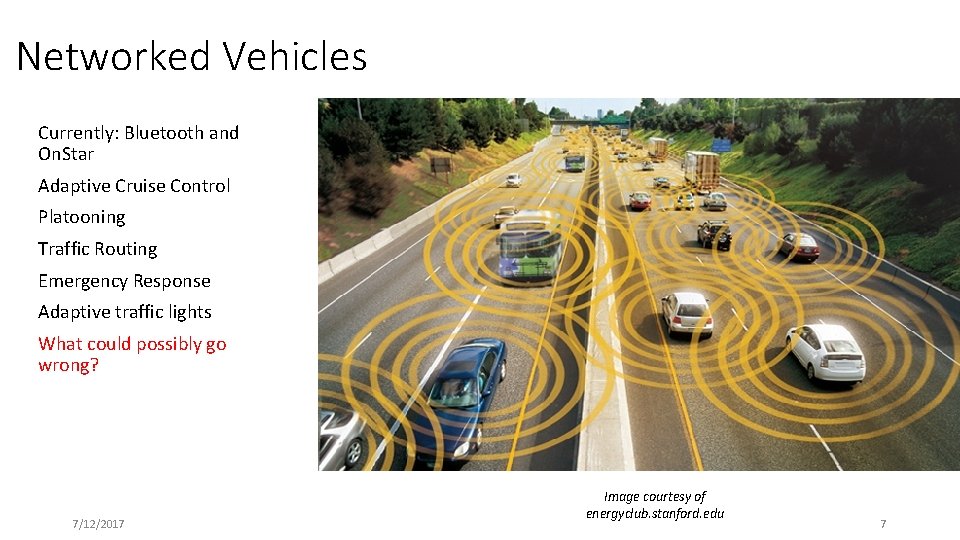

Networked Vehicles

Currently: Bluetooth and OnStar

- Adaptive cruise control
- Platooning
- Traffic routing
- Emergency response
- Adaptive traffic lights

What could possibly go wrong?

Image courtesy of energyclub.stanford.edu

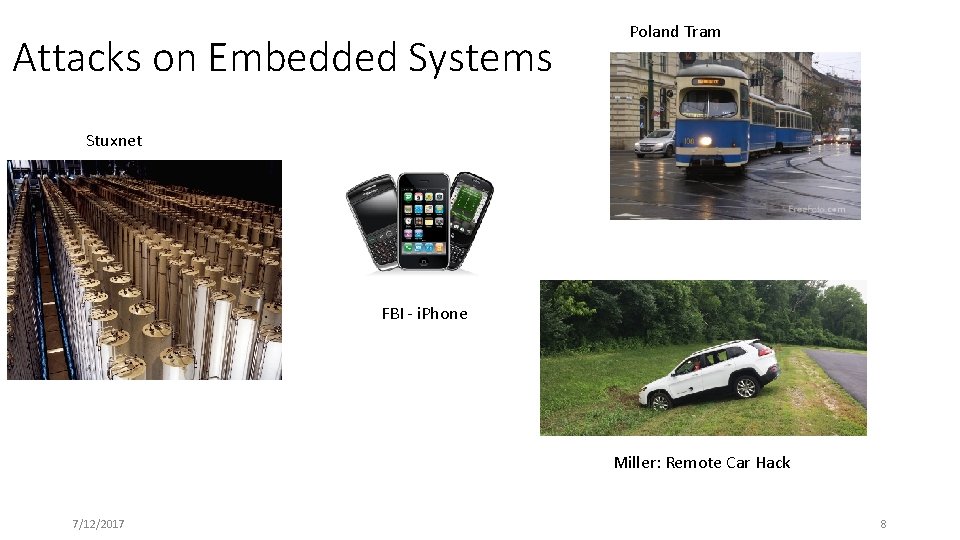

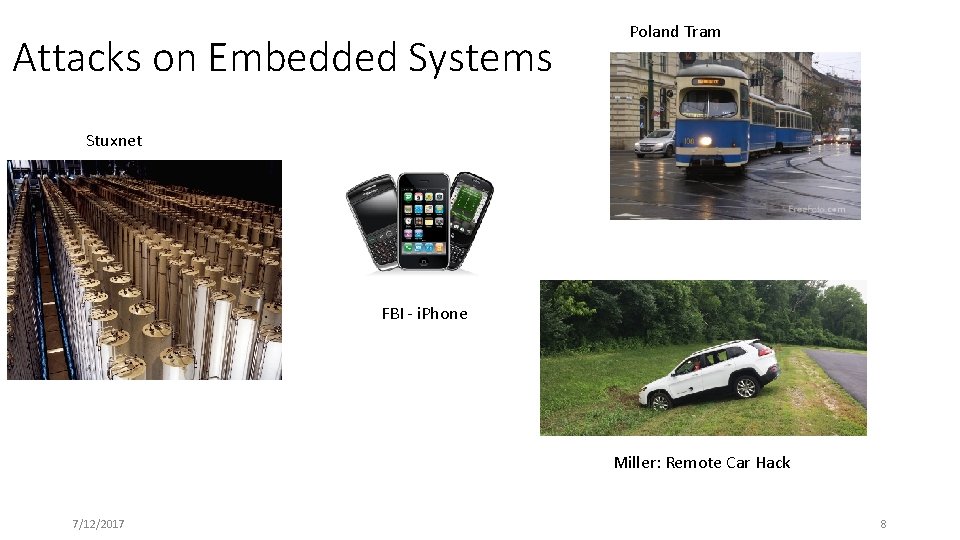

Attacks on Embedded Systems

- Poland tram
- Stuxnet
- FBI - iPhone
- Miller: remote car hack

Hypotheses

CPS testers are facing enormous challenges of scale and scrutiny:

- Substantially larger code bases
- Increased attention from attackers

Thorough use of automation is necessary to increase rigor for CPS verification:

- Requires understanding of factors in testing
- Common coverage metrics are not as well suited for CPS as for general-purpose software
- Structure of programs and oracles is important for automated testing!

Creating intelligent / adaptive systems will make the testing problem harder:

- Use of “deep learning” for critical functionality
- We have little knowledge of how to systematically white-box test deep-learning-generated code such as neural nets

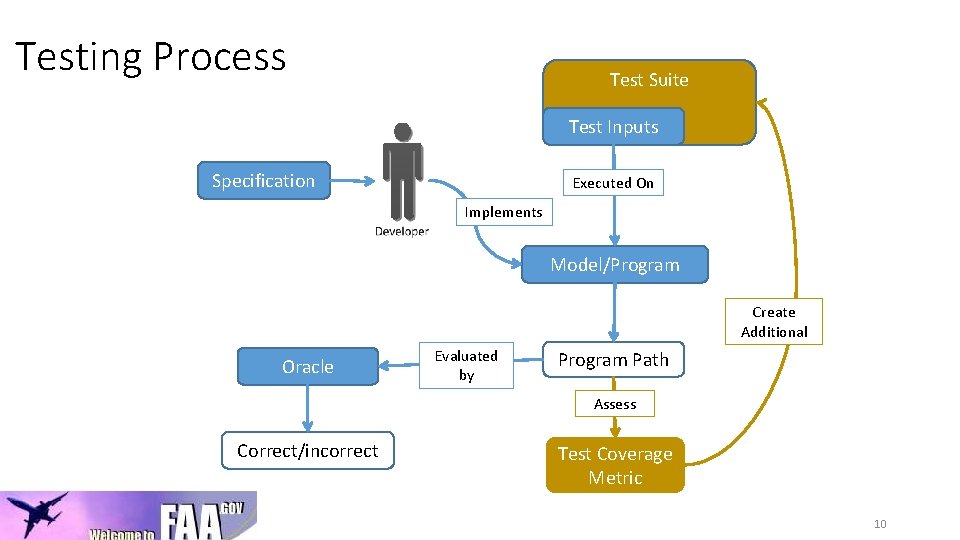

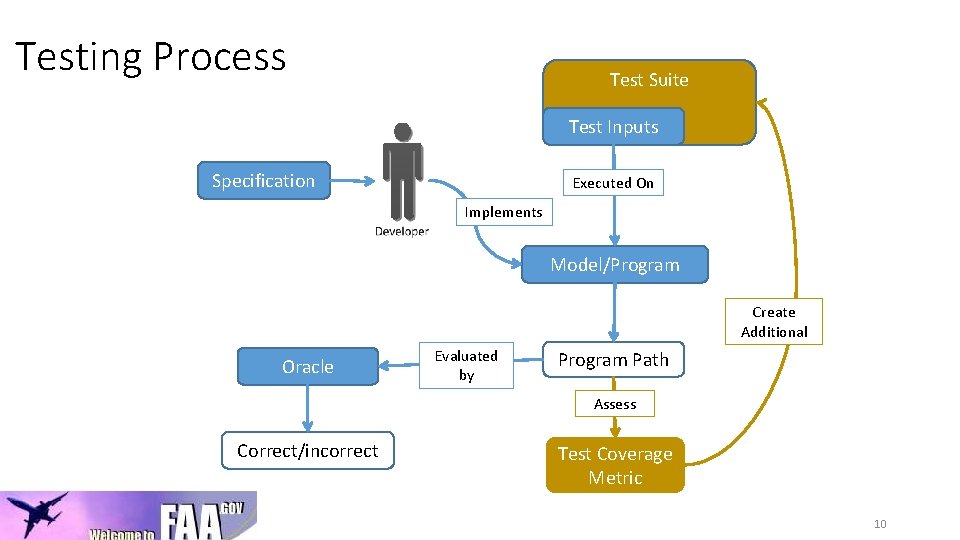

Testing Process

[Diagram. Nodes: Specification, Model/Program, Test Suite, Test Inputs, Oracle, Program Path, Test Coverage Metric, Correct/incorrect. Edge labels: Implements, Executed On, Evaluated by, Assess, Create Additional.]

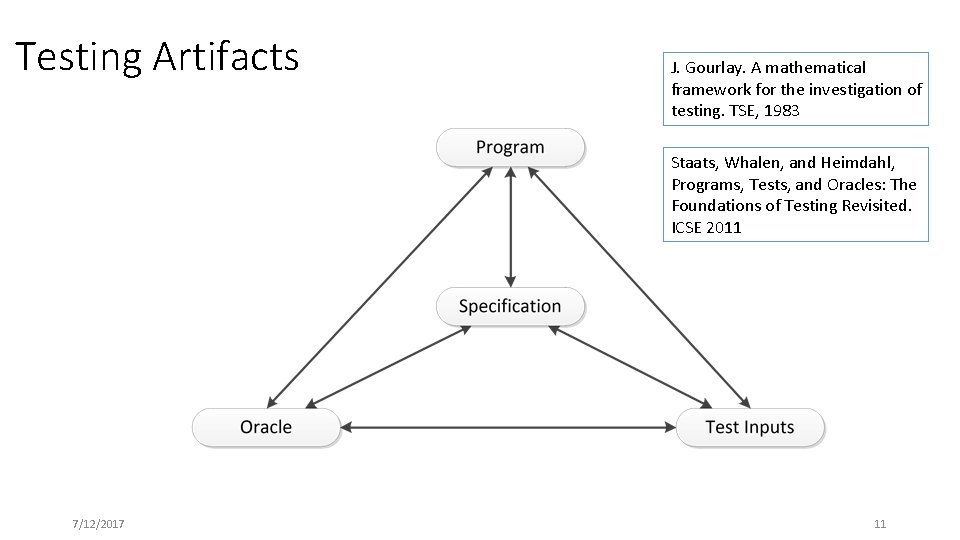

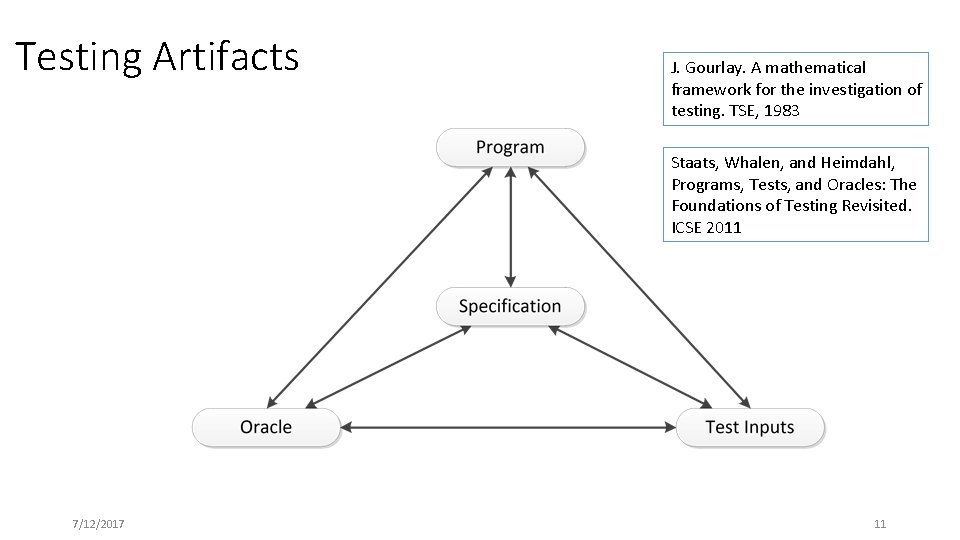

Testing Artifacts

- J. Gourlay. A mathematical framework for the investigation of testing. TSE, 1983
- Staats, Whalen, and Heimdahl. Programs, Tests, and Oracles: The Foundations of Testing Revisited. ICSE 2011

Testing Artifacts – In Practice

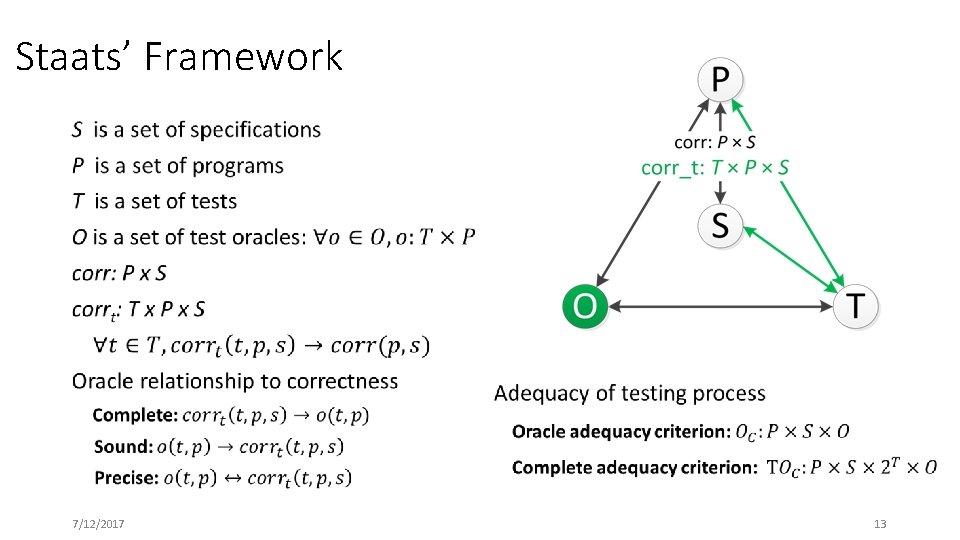

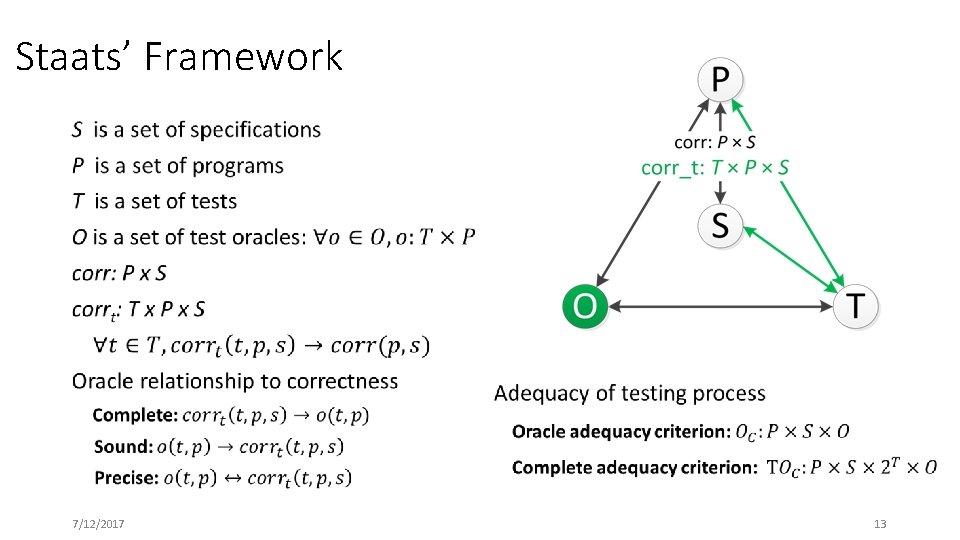

Staats’ Framework

Theory in Practice

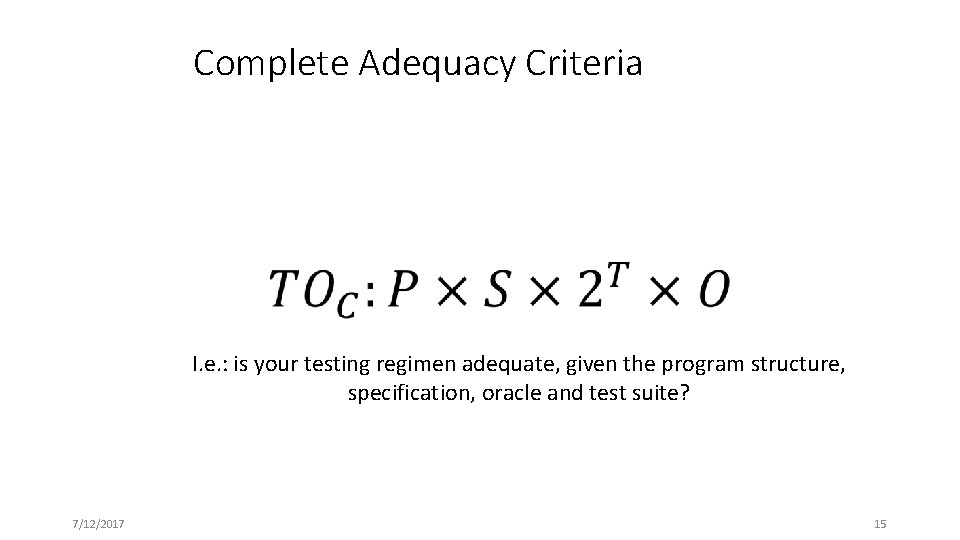

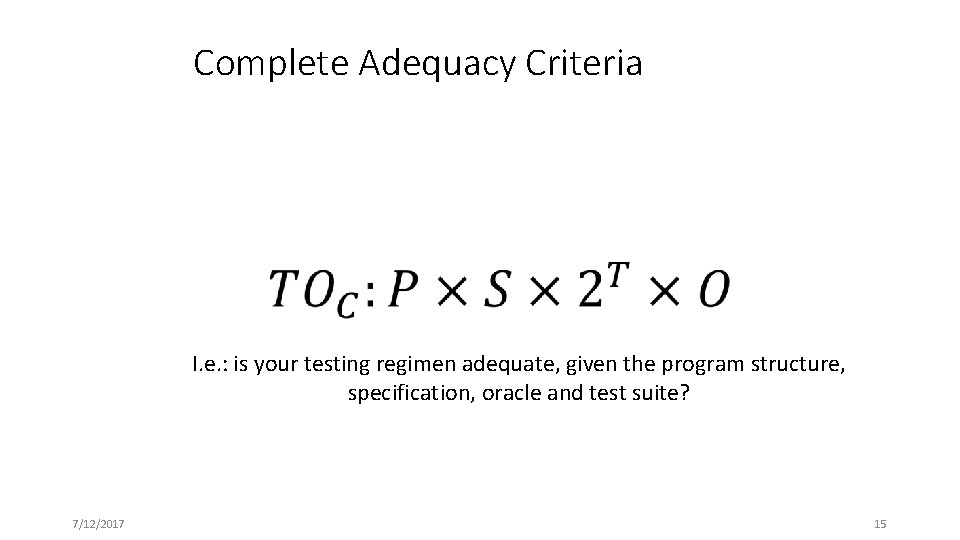

Complete Adequacy Criteria

I.e., is your testing regimen adequate, given the program structure, specification, oracle, and test suite?

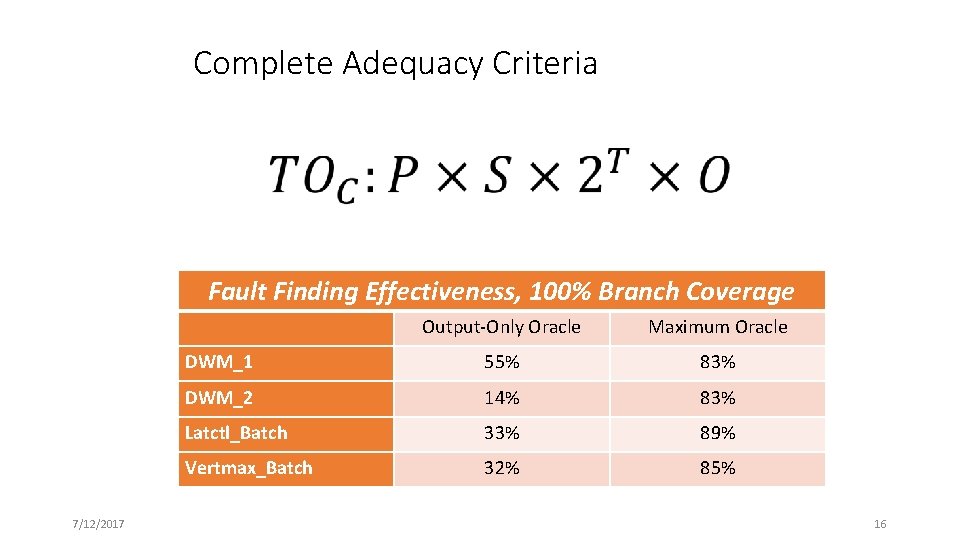

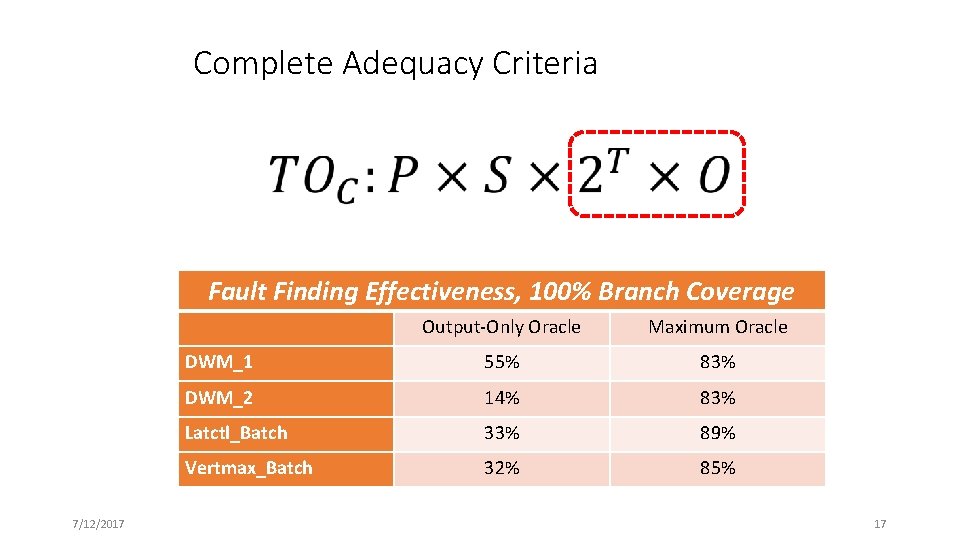

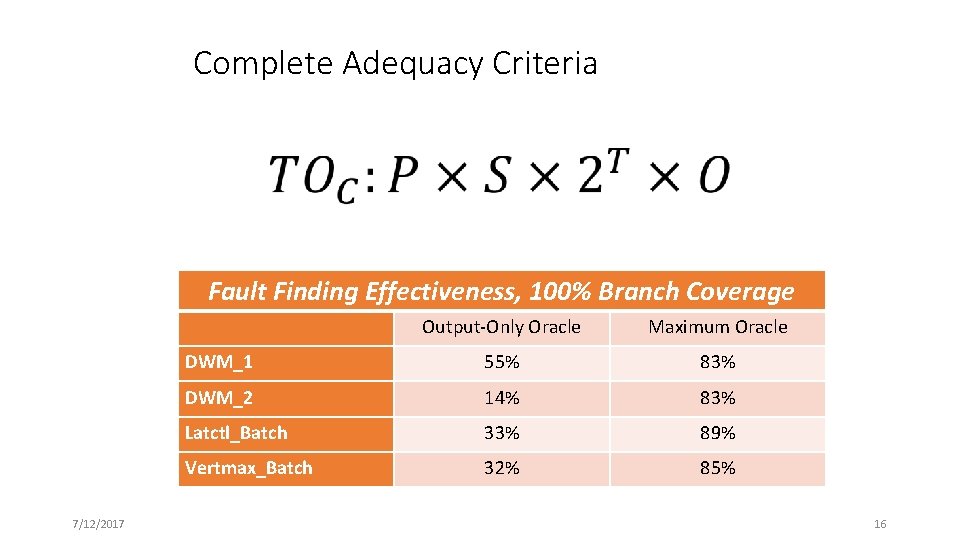

Complete Adequacy Criteria

Fault Finding Effectiveness, 100% Branch Coverage

| System | Output-Only Oracle | Maximum Oracle |
|---|---|---|
| DWM_1 | 55% | 83% |
| DWM_2 | 14% | 83% |
| Latctl_Batch | 33% | 89% |
| Vertmax_Batch | 32% | 85% |


Complete Adequacy Criteria

Gay, Staats, Whalen, and Heimdahl. The Risks of Coverage-Directed Test Case Generation. FASE 2012, TSE 2015.

MC/DC Effectiveness

[Charts: DWM_2, DWM_3, Vertmax_Batch]

- Code structure has a large effect!
- Choice of oracle has a large effect!

Goals for a “Good” Test Metric

- Effective at finding faults
  - Better than random testing for suites of the same size
  - Better than other metrics
  - This often requires accounting for the oracle
    - Inozemtseva and Holmes. Coverage Is Not Strongly Correlated with Test Suite Effectiveness. ICSE 2014
    - Zhang and Mesbah. Assertions Are Strongly Correlated with Test Suite Effectiveness. FSE 2015
- Robust to changes in program structure
- Reasonable in terms of the number of required tests and the cost of coverage analysis

Another Way to Look at MC/DC

Masking MC/DC can be expressed: [formula not preserved in this transcript]

Where [notation not preserved] means: for program P, the computed value for the nth instance of expression e is replaced by value v.

This describes whether a condition is observable in a decision (i.e., not masked).

- Problem 1: any masking after the decision is not accounted for.
- Problem 2: we can rewrite programs to make decisions large or small (and MC/DC easy or hard to satisfy!)
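To make "observable in a decision" concrete, here is a minimal Python sketch. It assumes each condition appears exactly once in the decision, so flipping the input stands in for replacing the condition's computed value; the decision `a and (b or c)` and the helper name `unmasked_conditions` are illustrative and not taken from the slides.

```python
def decision(a, b, c):
    """Hypothetical decision with three conditions."""
    return a and (b or c)


def unmasked_conditions(fn, names, values):
    """Return the conditions whose flipped value changes the decision outcome."""
    baseline = fn(*values)
    unmasked = []
    for index, name in enumerate(names):
        flipped = list(values)
        flipped[index] = not flipped[index]
        if fn(*flipped) != baseline:
            unmasked.append(name)
    return unmasked


names = ["a", "b", "c"]
# With b true, c is masked by the 'or'; a and b are observable.
first = unmasked_conditions(decision, names, (True, True, False))
# With a false, the 'and' masks both b and c.
second = unmasked_conditions(decision, names, (False, True, True))
print(first, second)
```

The check stops at the decision boundary, which is exactly Problem 1: nothing here says whether the decision's value ever reaches an output.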

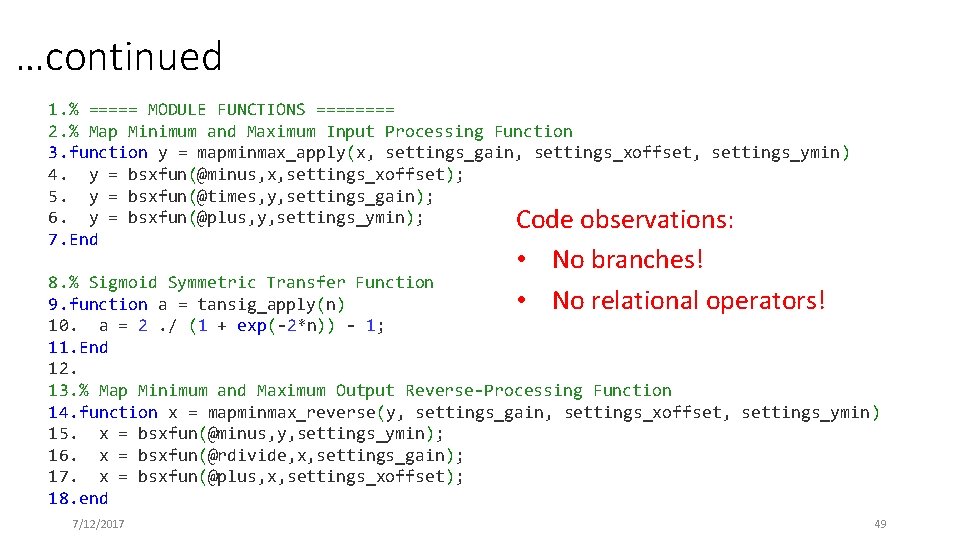

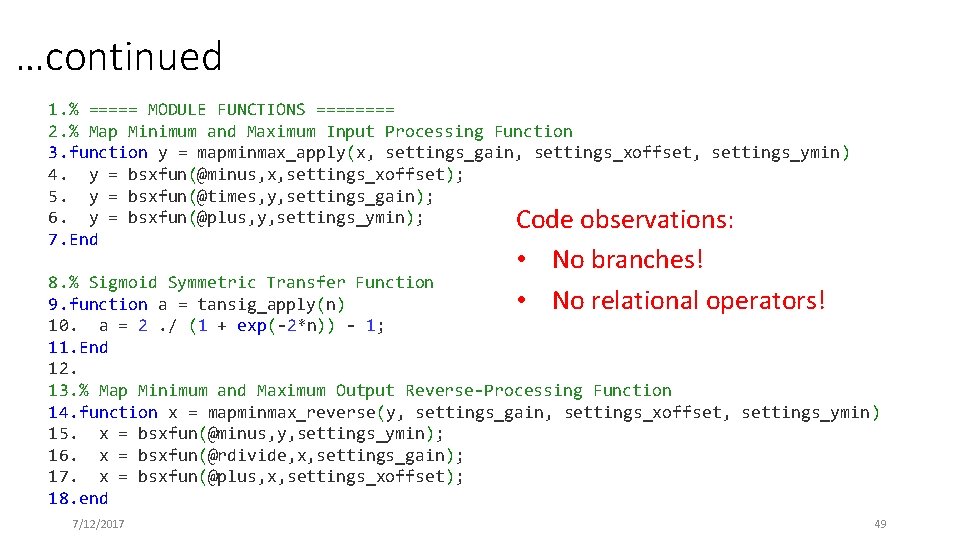

Reachability and Observability

![Examining Observability With Model Counting and Symbolic Evaluation true test X Y Examining Observability With Model Counting and Symbolic Evaluation [ true ] test (X, Y)](https://slidetodoc.com/presentation_image_h/ed0abc5fd16a64ed68424b11259d9ae9/image-24.jpg)

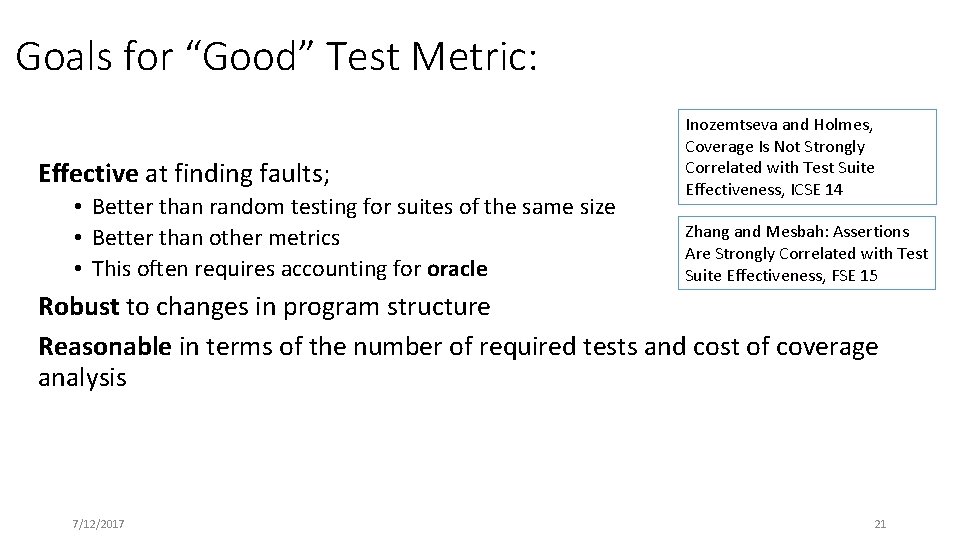

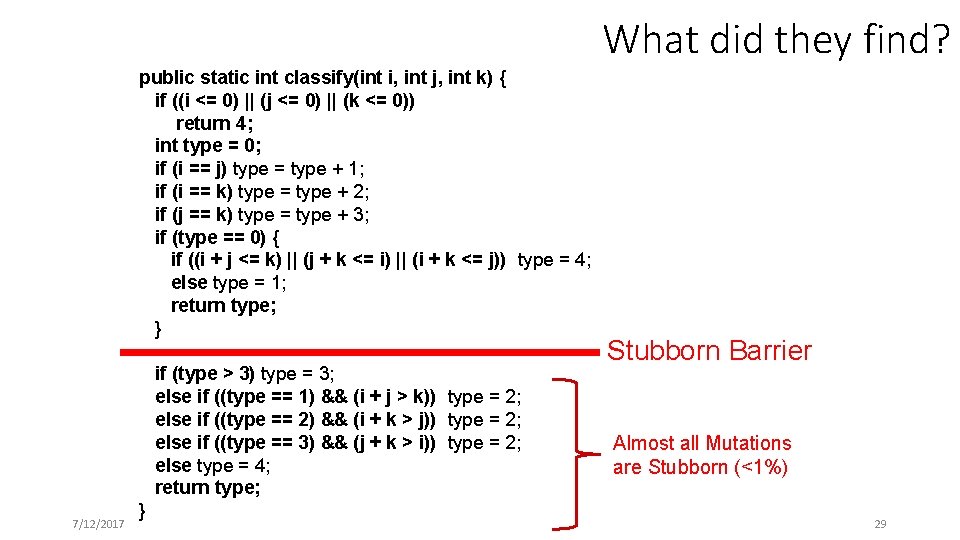

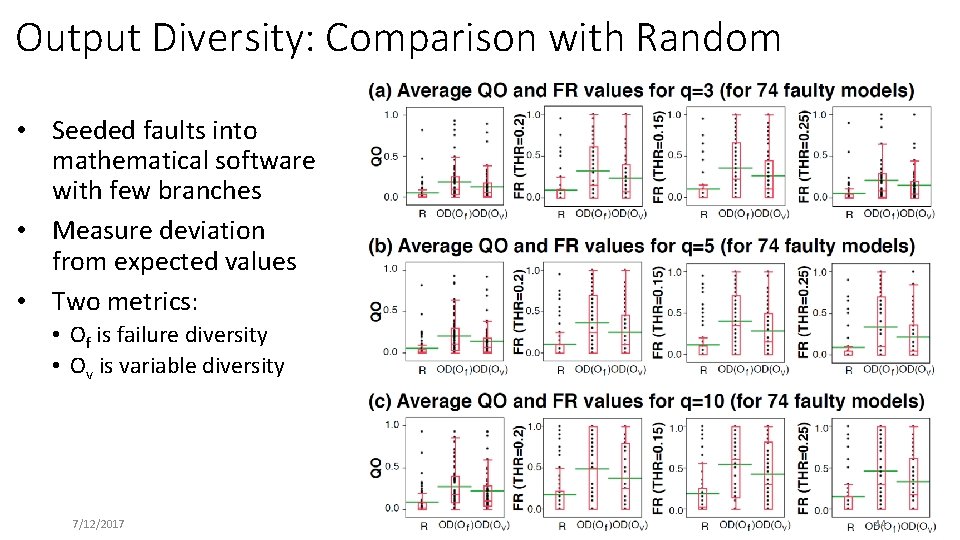

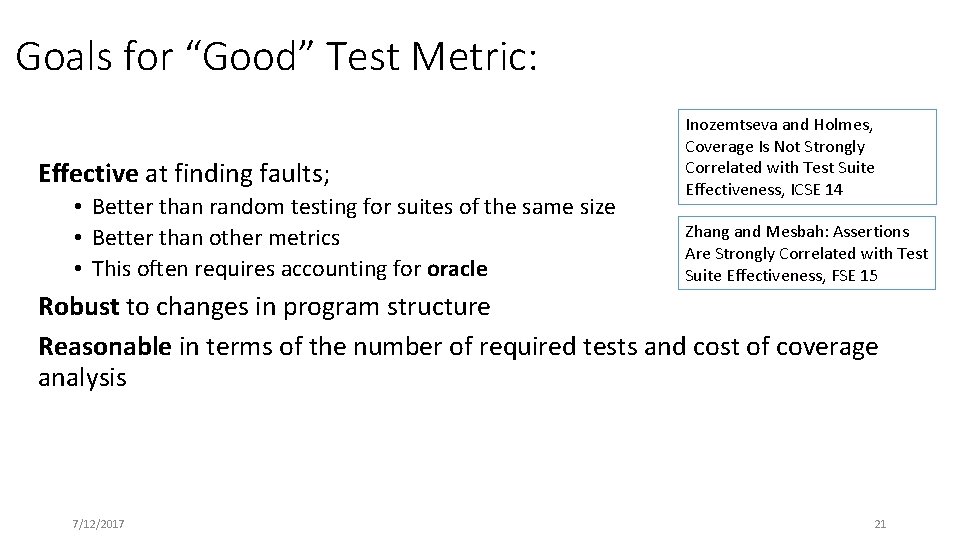

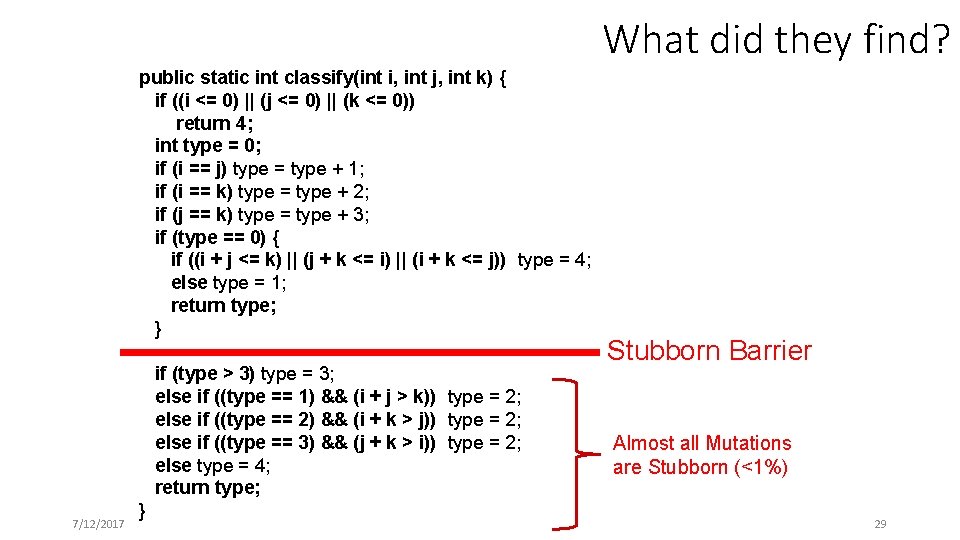

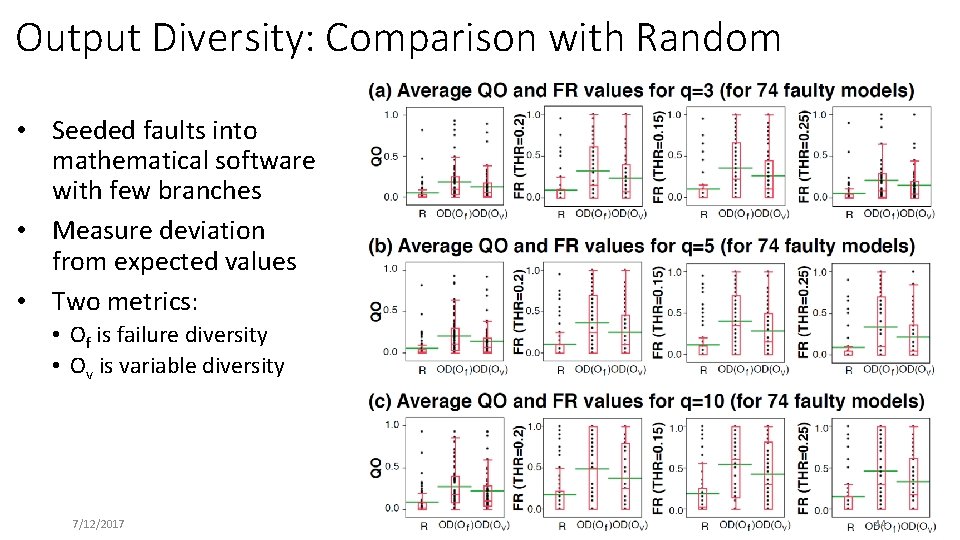

Examining Observability With Model Counting and Symbolic Evaluation

```c
int test(int x, int y) {
    int z;
    if (y == x*10) S0; else S1;          /* S0..S3 stand for arbitrary statements */
    if (x > 3 && y > 10) S2; else S3;
    return z;
}
```

Symbolic execution tree (path condition, then the statement reached):

- [ true ] test(X, Y)
  - [ Y=X*10 ] S0
    - [ X>3 & 10<Y=X*10 ] S2
    - [ Y=X*10 & !(X>3 & Y>10) ] S3
  - [ Y!=X*10 ] S1
    - [ X>3 & 10<Y!=X*10 ] S2
    - [ Y!=X*10 & !(X>3 & Y>10) ] S3

Example tests:

- test(1, 10) reaches S0, S3
- test(0, 1) reaches S1, S3
- test(4, 11) reaches S1, S2

Work by: Willem Visser, Matt Dwyer, Jaco Geldenhuys, Corina Pasareanu, Antonio Filieri, Tevfik Bultan (ISSTA ’12, ICSE ’13, PLDI ’14, SPIN ’15, CAV ’15)
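The three example tests can be checked mechanically. The following Python sketch mirrors the branch structure of `test` and records which placeholder statements are reached. The helper `covering_input` and its 0..99 search domain are assumptions added for illustration; a symbolic executor would solve the path condition rather than enumerate.

```python
def trace(x, y):
    """Return the placeholder statements that test(x, y) reaches."""
    reached = ["S0" if y == x * 10 else "S1"]
    reached.append("S2" if (x > 3 and y > 10) else "S3")
    return reached


def covering_input(target, domain=range(100)):
    """Exhaustively search a small (assumed) domain for an input with the given trace."""
    for x in domain:
        for y in domain:
            if trace(x, y) == target:
                return x, y
    return None


for args in [(1, 10), (0, 1), (4, 11)]:
    print(args, trace(*args))

# The three slide tests never take the S0-then-S2 path; find an input that does.
print(covering_input(["S0", "S2"]))
```

Only one of the four paths needs the coupled constraint `Y = X*10` together with `X > 3`, which is why random inputs rarely hit it.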

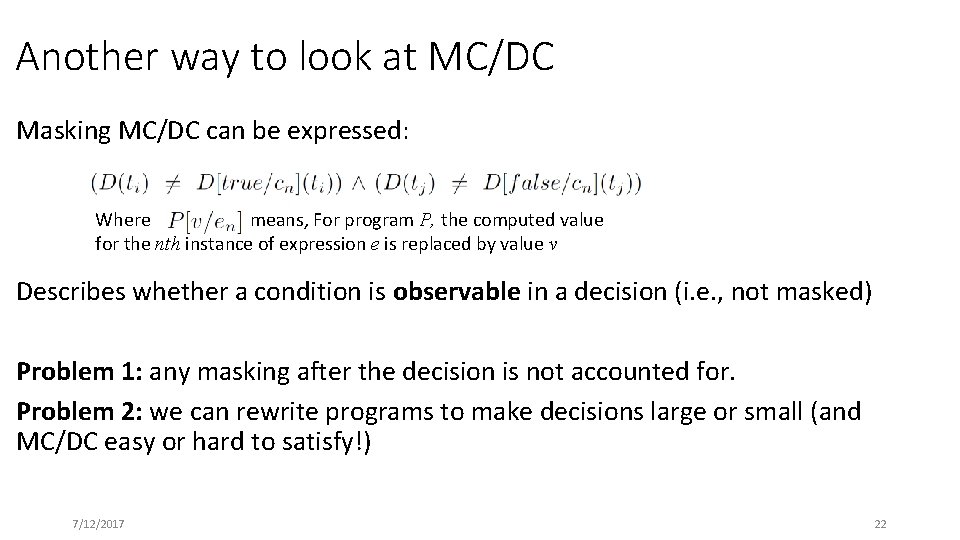

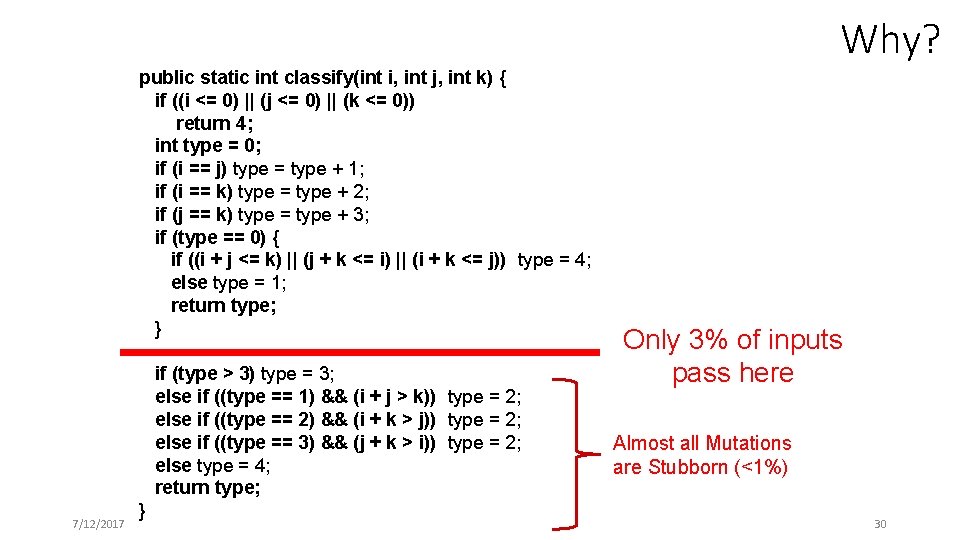

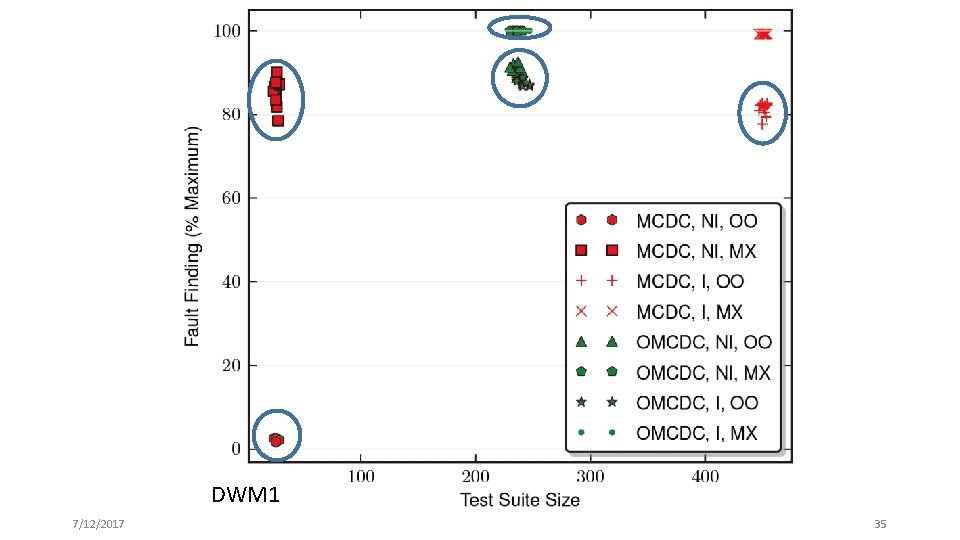

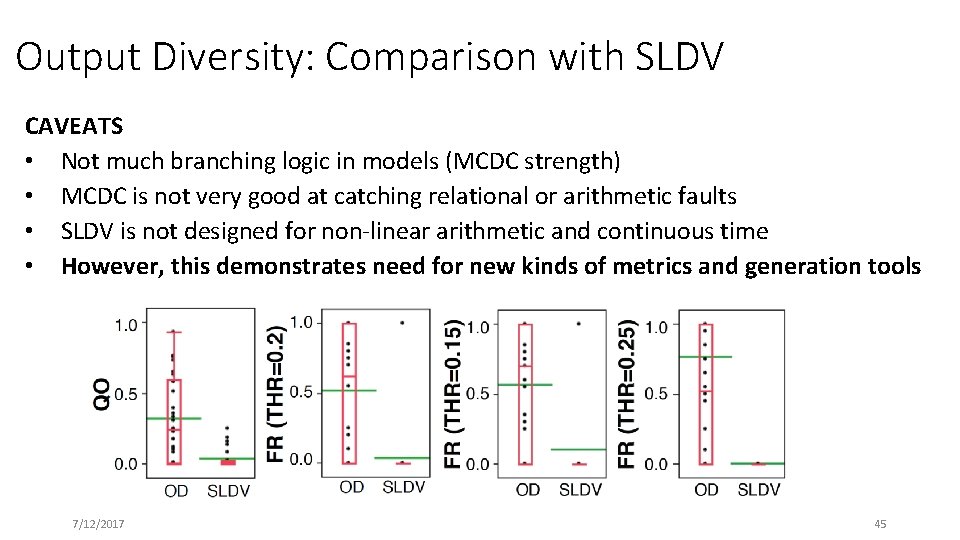

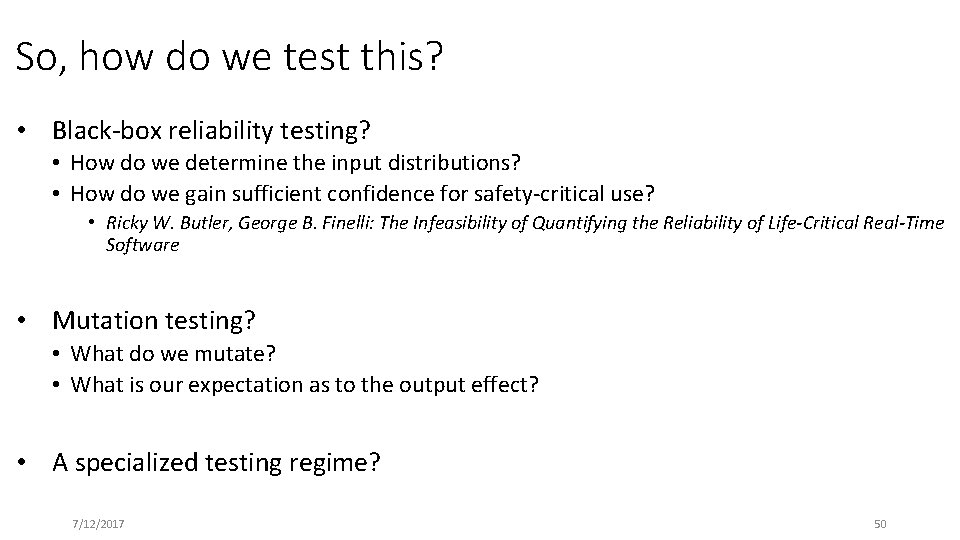

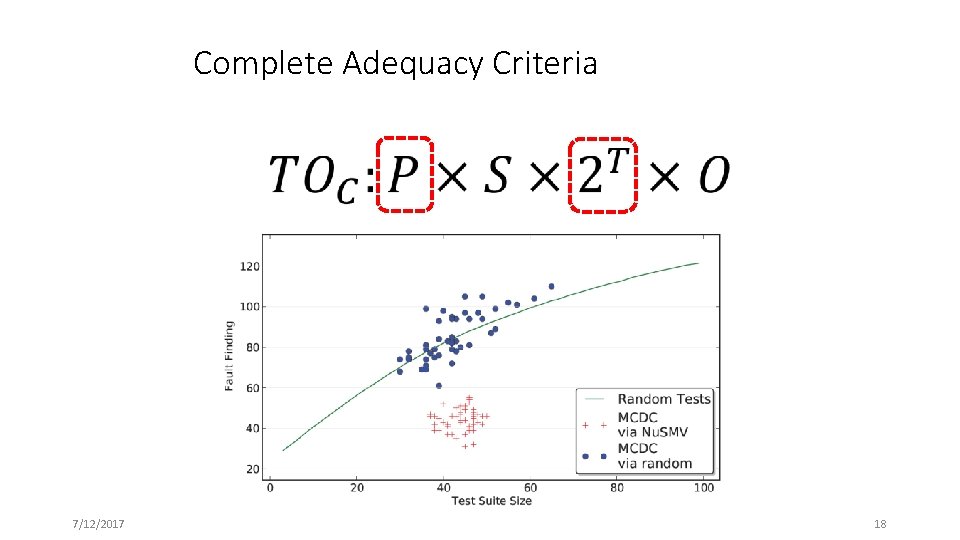

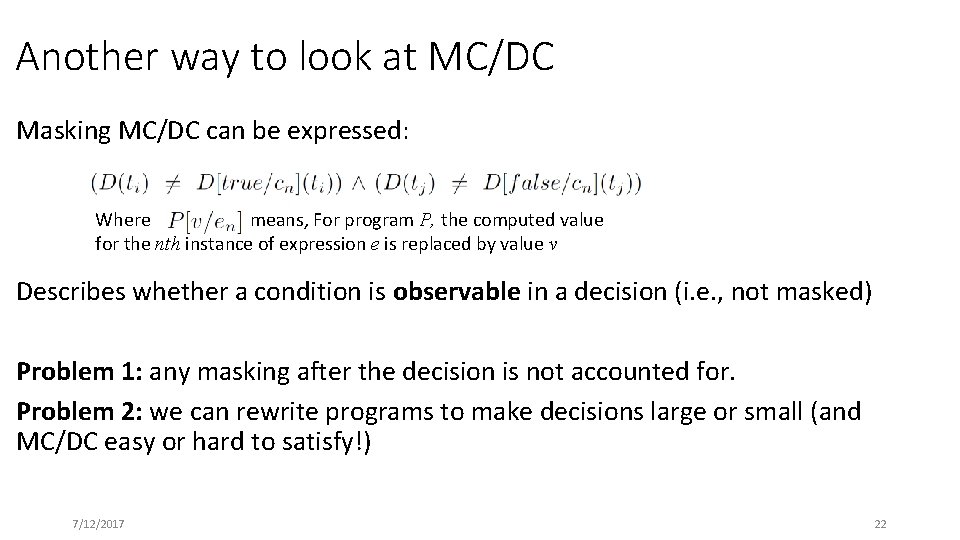

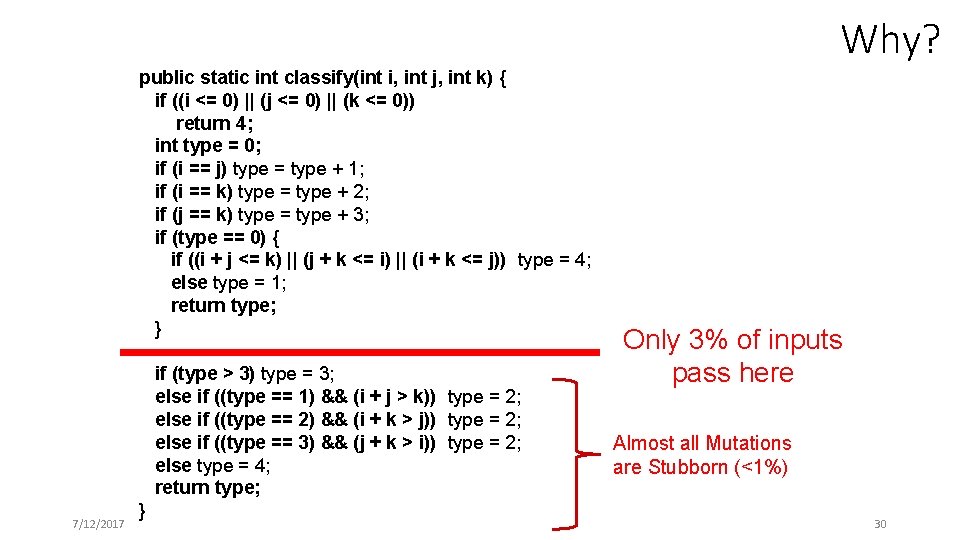

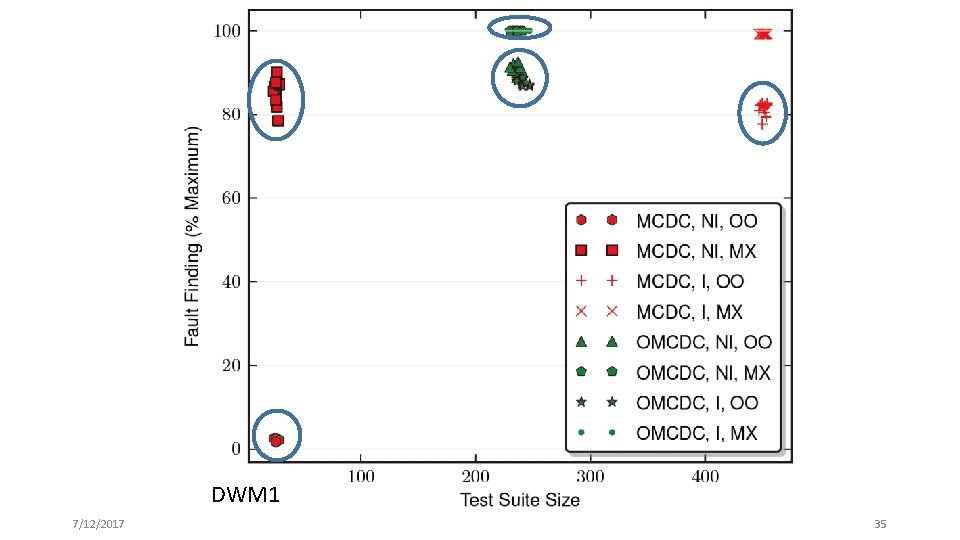

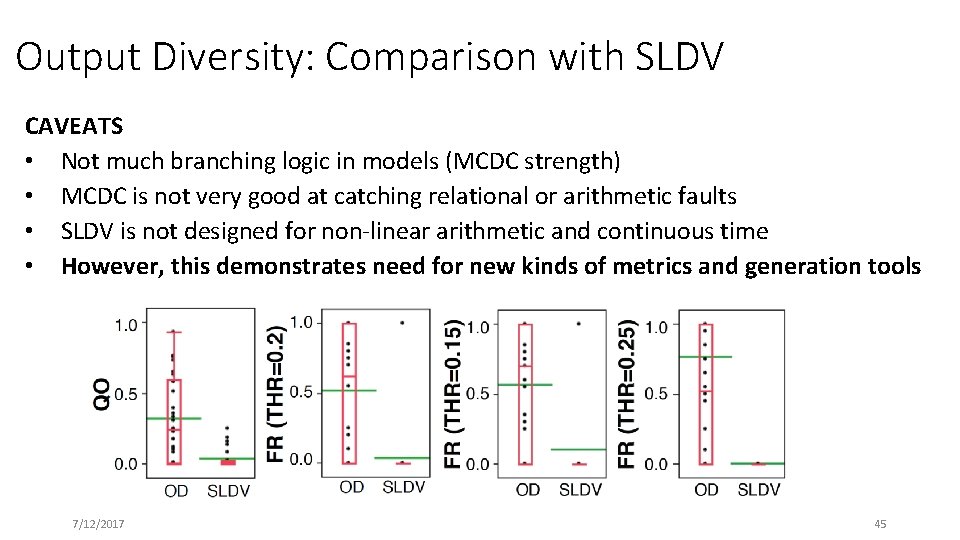

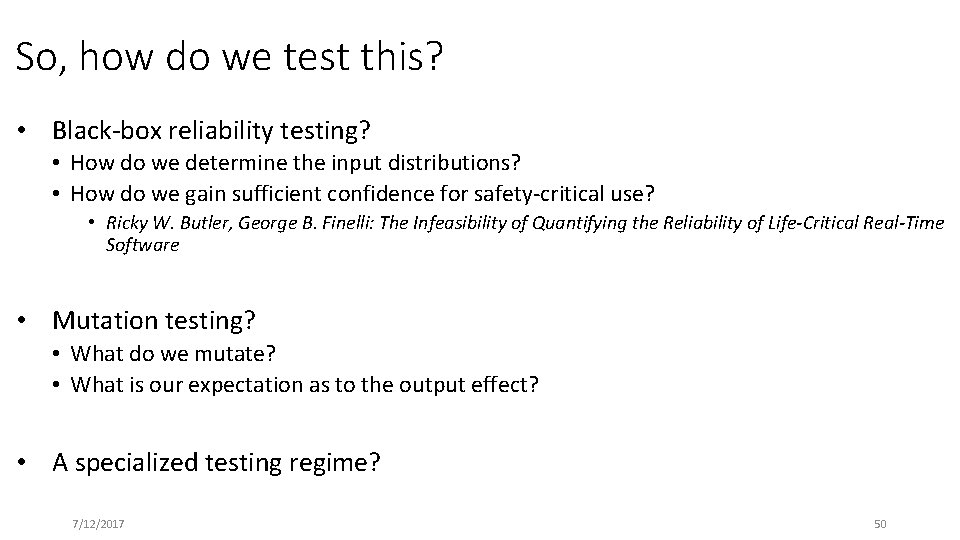

Probabilistic Symbolic Execution

```c
/* x and y each range over 0..99 */
int test(int x, int y) {
    int z;
    if (y == x*10) S0; else z = 10;
    if (x > 3 && y > 10) z = 8; else S3;
    return z;
}
```

Number of inputs on each path, out of 10^4:

- [ true ]: 10^4
  - [ Y=X*10 ]: 10
    - [ X>3 & 10<Y=X*10 ]: 6
    - [ Y=X*10 & !(X>3 & Y>10) ]: 4
  - [ Y!=X*10 ]: 9990
    - [ X>3 & 10<Y!=X*10 ]: 8538
    - [ Y!=X*10 & !(X>3 & Y>10) ]: 1452

The statement z = 10 gets visited in 99.9% of tests, but it only affects the outcome in 14% of tests.
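The path counts can be reproduced by brute force over the 100 x 100 input domain. This Python sketch is a stand-in for model counting, which computes the same numbers from the path conditions without enumerating inputs; the function name `path_of` is illustrative.

```python
from collections import Counter
from itertools import product


def path_of(x, y):
    """Return which side of each of the two branches test(x, y) takes."""
    first = "then" if y == x * 10 else "else"             # else side runs z = 10
    second = "then" if (x > 3 and y > 10) else "else"     # then side runs z = 8
    return first, second


counts = Counter(path_of(x, y) for x, y in product(range(100), repeat=2))
total = sum(counts.values())

# z = 10 is executed on both paths through the first else side.
visited = counts[("else", "then")] + counts[("else", "else")]
# It survives to the return only when z = 8 does not overwrite it.
observed = counts[("else", "else")]

print(f"visited:  {visited / total:.1%}")
print(f"observed: {observed / total:.1%}")
```

The gap between the two ratios is the point of the slide: reaching a statement and observing its effect are separate questions.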

![Probabilistic SE 104 true y10 x Hard to reach YX10 Probabilistic SE 104 [ true ] y=10 x Hard to reach [ Y=X*10 ]](https://slidetodoc.com/presentation_image_h/ed0abc5fd16a64ed68424b11259d9ae9/image-26.jpg)

Probabilistic SE

The same tree, annotated (counts out of 10^4 inputs):

- [ Y=X*10 ]: 10 inputs. Hard to reach, easy to observe.
  - [ X>3 & 10<Y=X*10 ]: 6
  - [ Y=X*10 & !(X>3 & Y>10) ]: 4
- [ Y!=X*10 ]: 9990 inputs. Easy to reach, (somewhat) hard to observe.
  - [ X>3 & 10<Y!=X*10 ]: 8538
  - [ Y!=X*10 & !(X>3 & Y>10) ]: 1452

How hard is it to kill a mutant?

Spoiler alert:

- Location, location
- More important than chicken or bull
- Not hard at all

References:

- Just, Jalali, Inozemtseva, Ernst, Holmes, and Fraser. Are mutants a valid substitute for real faults in software testing? FSE 2014
- Yao, Harman, and Jia. A Study of Equivalent and Stubborn Mutation Operators using Human Analysis of Equivalence. ICSE 2014
- W. Visser. What makes killing a mutant hard? ASE 2016

In the initial results, they saw something interesting.

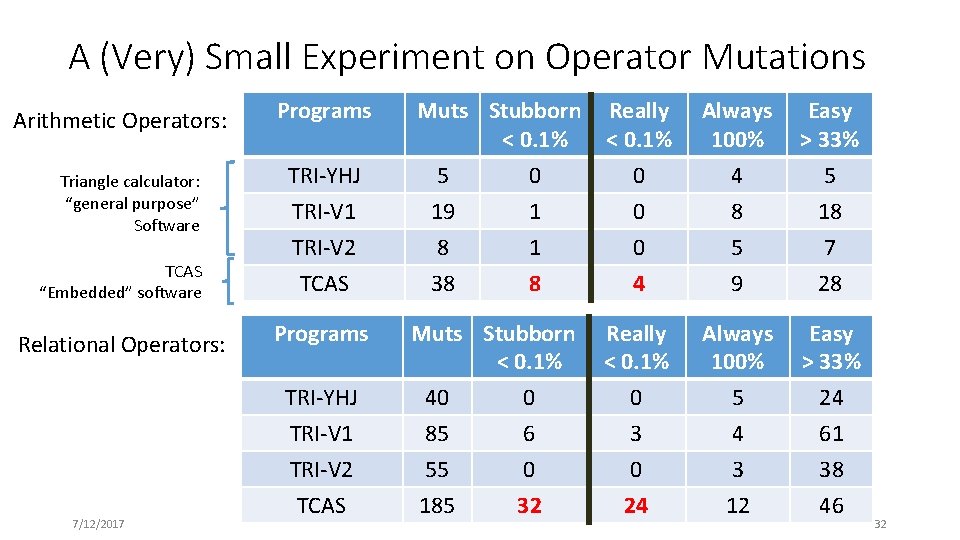

What did they find?

```java
public static int classify(int i, int j, int k) {
    if ((i <= 0) || (j <= 0) || (k <= 0)) return 4;
    int type = 0;
    if (i == j) type = type + 1;
    if (i == k) type = type + 2;
    if (j == k) type = type + 3;
    if (type == 0) {
        if ((i + j <= k) || (j + k <= i) || (i + k <= j)) type = 4;
        else type = 1;
        return type;
    }
    if (type > 3) type = 3;
    else if ((type == 1) && (i + j > k)) type = 2;
    else if ((type == 2) && (i + k > j)) type = 2;
    else if ((type == 3) && (j + k > i)) type = 2;
    else type = 4;
    return type;
}
```

Slide annotations:

- Stubborn barrier
- Almost all mutations are stubborn (<1%)

Why?

The same `classify` code as on the previous slide, annotated:

- Only 3% of inputs pass here
- Almost all mutations are stubborn (<1%)
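A rough way to see where a figure like 3% can come from is to port `classify` to Python and count. The sketch below makes three assumptions the slides do not state: inputs are drawn uniformly from 1..100, the barrier sits just after the `type == 0` block, and the mutant shown (`>` replaced by `>=` in the first isosceles check) is a hypothetical example of a relational-operator mutation.

```python
from itertools import product


def classify(i, j, k, mutate=False):
    """Port of classify(); returns (result, reached_assumed_barrier)."""
    if i <= 0 or j <= 0 or k <= 0:
        return 4, False
    kind = 0
    if i == j:
        kind += 1
    if i == k:
        kind += 2
    if j == k:
        kind += 3
    if kind == 0:
        if i + j <= k or j + k <= i or i + k <= j:
            return 4, False
        return 1, False
    # Assumed location of the "stubborn barrier".
    if kind > 3:
        return 3, True
    # Hypothetical mutant: '>' becomes '>=' in the first check only.
    first_check = (i + j >= k) if mutate else (i + j > k)
    if kind == 1 and first_check:
        return 2, True
    if kind == 2 and i + k > j:
        return 2, True
    if kind == 3 and j + k > i:
        return 2, True
    return 4, True


def measure(low=1, high=100):
    """Count inputs that pass the barrier and inputs that kill the mutant."""
    total = reached = killed = 0
    for i, j, k in product(range(low, high + 1), repeat=3):
        total += 1
        result, past_barrier = classify(i, j, k)
        if past_barrier:
            reached += 1
            # The mutated code lies past the barrier, so only these inputs can differ.
            if classify(i, j, k, mutate=True)[0] != result:
                killed += 1
    return total, reached, killed


total, reached, killed = measure()
print(f"reach barrier: {reached / total:.2%}, kill mutant: {killed / total:.4%}")
```

Under these assumptions, the mutant is killed only when `i == j` and `k == 2 * i`, so it is both hard to reach and hard to observe.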

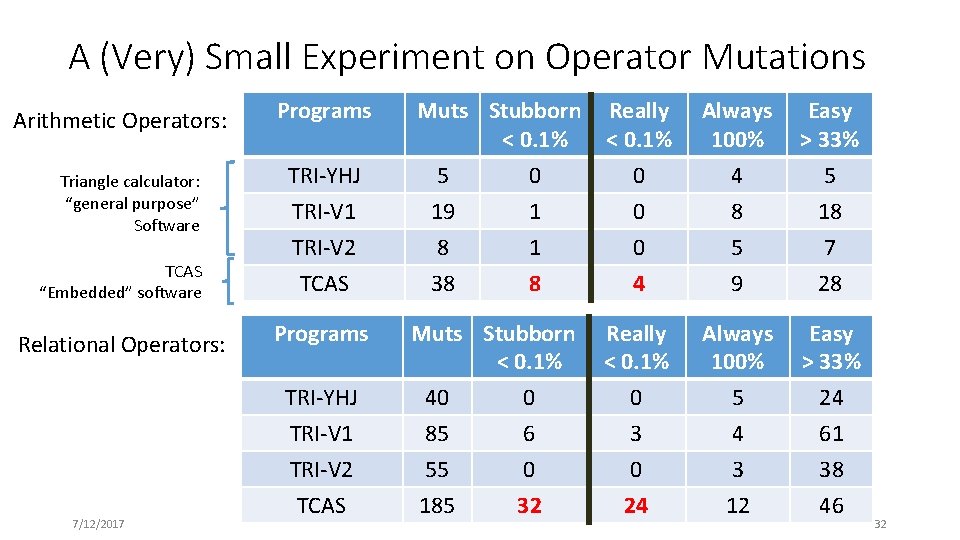

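A (Very) Small Experiment on Operator Mutations

- Triangle calculator (TRI-YHJ, TRI-V1, TRI-V2): “general purpose” software
- TCAS: “embedded” software

Arithmetic operators:

| Program | Muts | Stubborn (< 0.1%) | Really (< 0.1%) | Always (100%) | Easy (> 33%) |
|---|---|---|---|---|---|
| TRI-YHJ | 5 | 0 | 0 | 4 | 5 |
| TRI-V1 | 19 | 1 | 0 | 8 | 18 |
| TRI-V2 | 8 | 1 | 0 | 5 | 7 |
| TCAS | 38 | 8 | 4 | 9 | 28 |

Relational operators:

| Program | Muts | Stubborn (< 0.1%) | Really (< 0.1%) | Always (100%) | Easy (> 33%) |
|---|---|---|---|---|---|
| TRI-YHJ | 40 | 0 | 0 | 5 | 24 |
| TRI-V1 | 85 | 6 | 3 | 4 | 61 |
| TRI-V2 | 55 | 0 | 0 | 3 | 38 |

TCAS (relational), values as they appear in the slide text: 24, 12, 46, 185, 32 (column order not recoverable).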


Why is observability an issue for embedded systems?

- Often long tests are required to expose faults from earlier computations
  - Rate limiters
  - Hysteresis / de-bounce
  - Feedback bounds
  - System modes
- Physical systems can impede observability
  - Cannot observe all outputs
  - Or cannot observe them accurately
- Fault tolerance logic can impede observability
  - Richer oracle data than system outputs required
- Structure of programs can impede observability
  - Graphical dataflow notations (Simulink / SCADE) put conditional blocks at the end of computation flows rather than at the beginning.
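The de-bounce case can be illustrated with a small Python sketch. The filter below is a hypothetical example, not code from the talk: its output switches only after `hold` consecutive samples disagree with it, so an upstream fault that corrupts the input stays invisible at the output until the test runs for at least `hold` steps.

```python
def debounce(samples, hold=3):
    """Switch the output only after `hold` consecutive samples disagree with it."""
    output = False
    streak = 0
    outputs = []
    for sample in samples:
        if sample != output:
            streak += 1
            if streak >= hold:
                output = sample
                streak = 0
        else:
            streak = 0
        outputs.append(output)
    return outputs


correct_input = [False, False, False]
faulty_input = [True, True, True]   # an upstream fault inverts the signal

# A two-step test cannot tell the faulty input from the correct one.
short_correct = debounce(correct_input[:2])
short_faulty = debounce(faulty_input[:2])

# A three-step test finally exposes the difference at the output.
long_correct = debounce(correct_input)
long_faulty = debounce(faulty_input)
print(short_correct == short_faulty, long_correct == long_faulty)
```

An output-only oracle needs the longer test; an oracle that can also inspect internal state (here, `streak`) would see the fault after one step.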

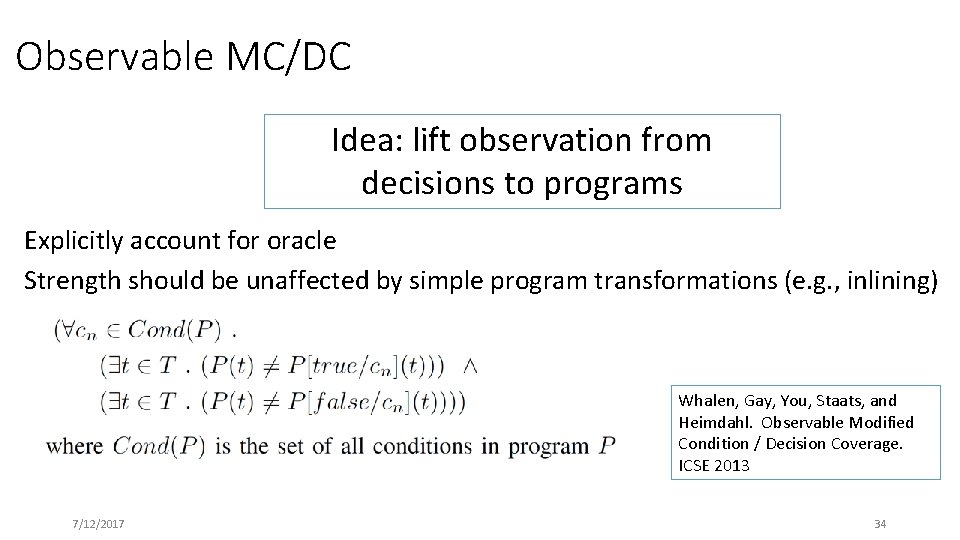

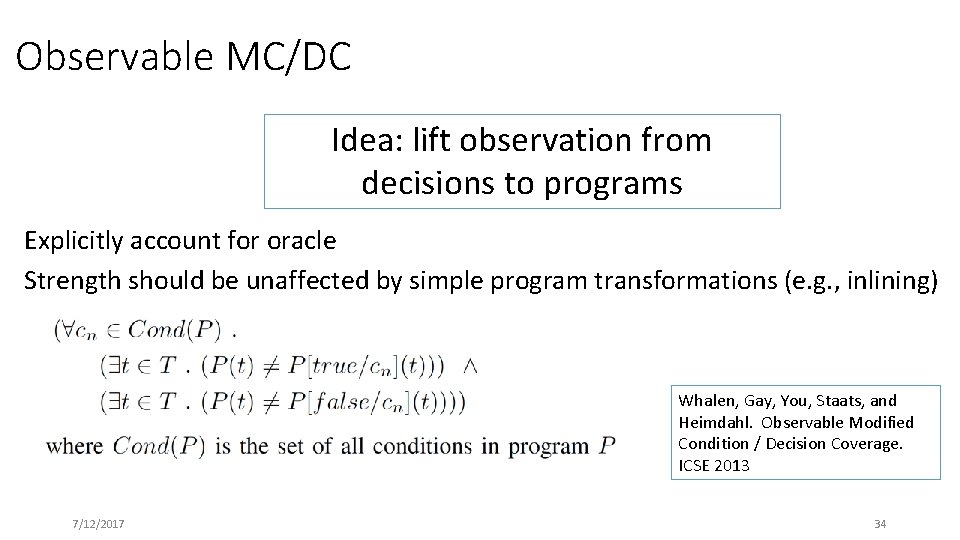

Observable MC/DC

- Idea: lift observation from decisions to programs
- Explicitly account for the oracle
- Strength should be unaffected by simple program transformations (e.g., inlining)

Whalen, Gay, You, Staats, and Heimdahl. Observable Modified Condition / Decision Coverage. ICSE 2013
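The difference between decision-level and program-level observation can be sketched in a few lines of Python. This is a simplification for illustration only: it re-runs a hypothetical two-statement program with one condition forced to the opposite value, and is not the formulation used in the cited paper.

```python
def program(a, b, enable, override_a=None):
    """Hypothetical program: a decision whose result is later gated by `enable`."""
    cond_a = a if override_a is None else override_a
    latch = cond_a and b          # the decision under test
    output = latch and enable     # downstream logic that can mask the decision
    return latch, output


def observability(a, b, enable):
    """Check whether flipping condition `a` shows up at the decision and at the output."""
    latch, output = program(a, b, enable)
    flipped_latch, flipped_output = program(a, b, enable, override_a=not a)
    return {
        "decision": latch != flipped_latch,
        "output": output != flipped_output,
    }


enabled = observability(True, True, True)
disabled = observability(True, True, False)
print(enabled, disabled)
```

With `enable` false, the test still counts toward decision-level MC/DC for `a`, yet no output-only oracle could detect a fault in that condition.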

[Results charts: DWM 1]

[Results charts: DWM 2, Vertmax, Latctl, Microwave]

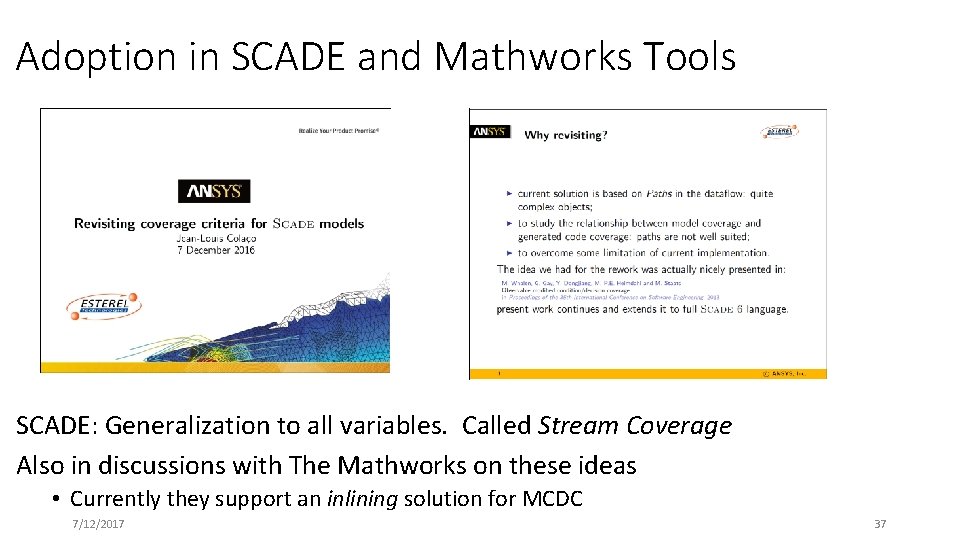

Adoption in SCADE and MathWorks Tools

- SCADE: generalization to all variables, called Stream Coverage
- Also in discussions with The MathWorks on these ideas
  - Currently they support an inlining solution for MC/DC

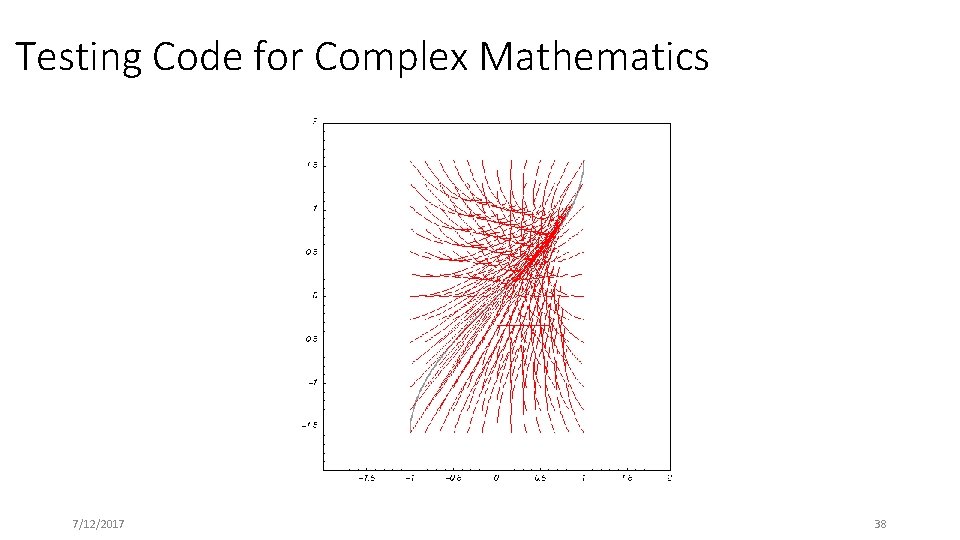

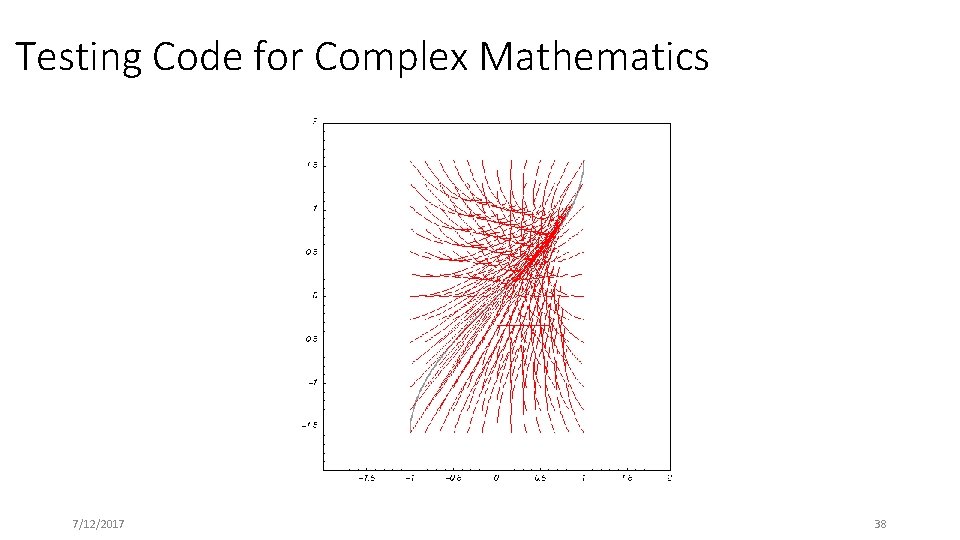

Testing Code for Complex Mathematics

Testing Complex Mathematics

Metrics describing branching logic often miss errors in complex mathematics. Errors often exist in parts of the “numerical space” rather than portions of the CFG:

- Overflow / underflow
- Loss of precision
- Divide by zero
- Oscillation
- Transients
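A small Python illustration of the "numerical space" point: both functions below are branch-free, so a single test gives full branch coverage, yet each misbehaves only in part of the input range. The function names are hypothetical, and the behaviour shown assumes IEEE 754 double-precision floats, which is what Python's `float` uses.

```python
import math


def recover_increment(x):
    """Mathematically always 1.0; numerically loses the increment for large x."""
    return (x + 1.0) - x


def scale(x, gain=10.0):
    """Mathematically a plain product; numerically overflows to infinity."""
    return x * gain


small = recover_increment(1.0)     # inside the well-behaved region
large = recover_increment(1e16)    # 1.0 is below the spacing of doubles near 1e16
overflowed = scale(1e308)          # exceeds the largest finite double

print(small, large, overflowed)
```

A structural metric sees no difference between the tests with `1.0` and `1e16`; only the outputs reveal the problem.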

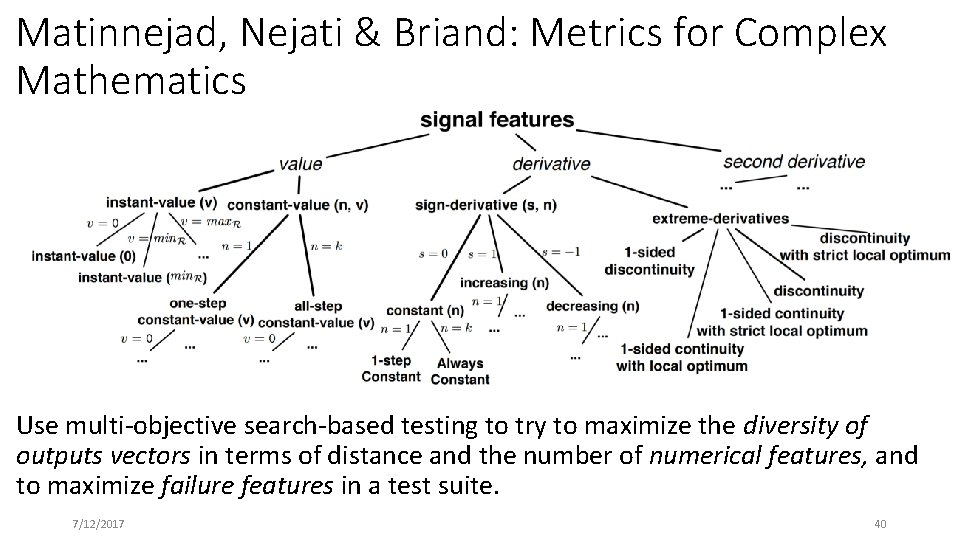

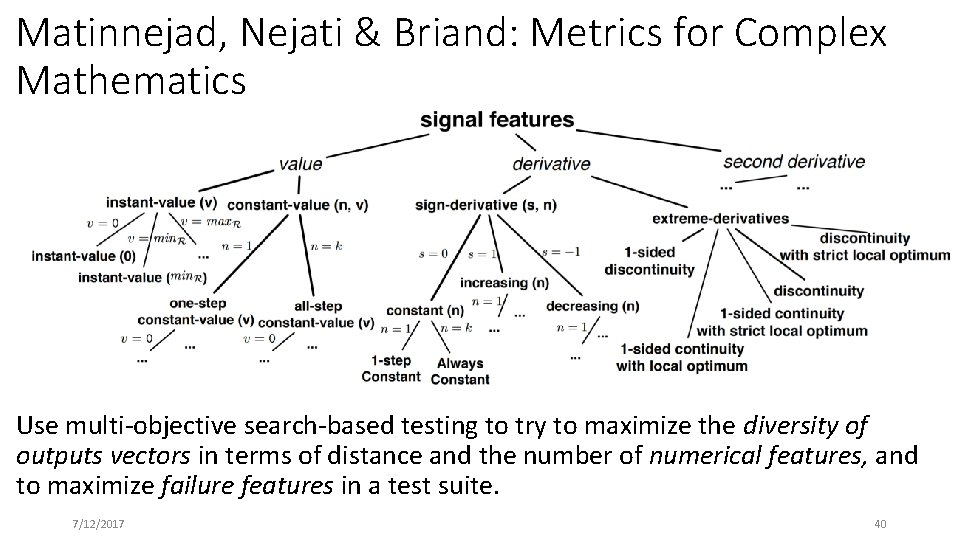

Matinnejad, Nejati & Briand: Metrics for Complex Mathematics

Use multi-objective search-based testing to maximize the diversity of output vectors, in terms of distance and the number of numerical features, and to maximize failure features in a test suite.

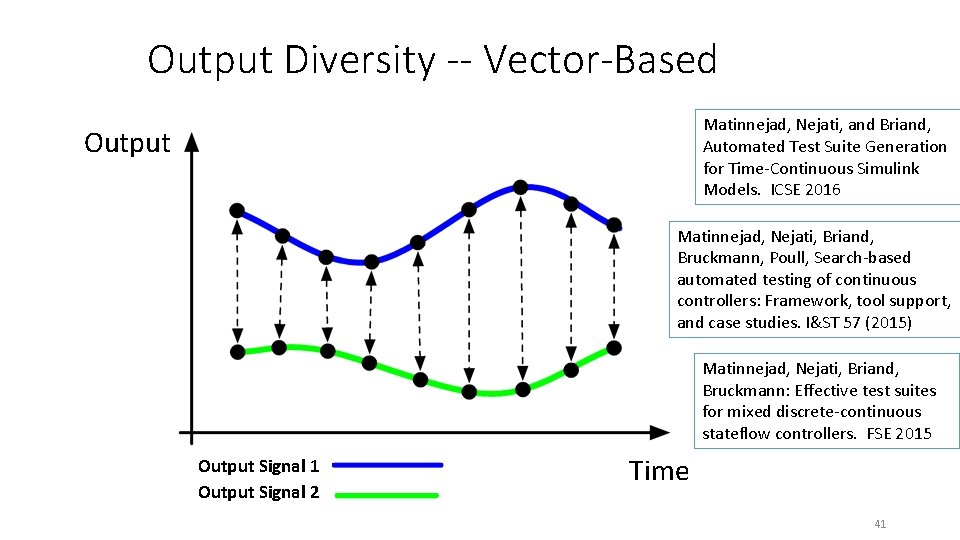

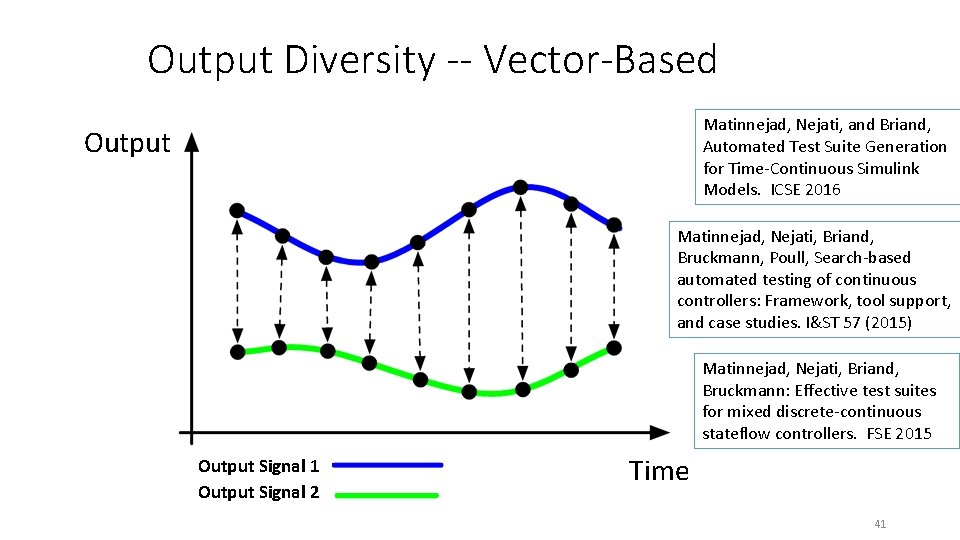

Output Diversity -- Vector-Based

[Plot: Output Signal 1 and Output Signal 2; axes: Output vs. Time]

- Matinnejad, Nejati, and Briand. Automated Test Suite Generation for Time-Continuous Simulink Models. ICSE 2016
- Matinnejad, Nejati, Briand, Bruckmann, and Poull. Search-based automated testing of continuous controllers: Framework, tool support, and case studies. I&ST 57 (2015)
- Matinnejad, Nejati, Briand, and Bruckmann. Effective test suites for mixed discrete-continuous stateflow controllers. FSE 2015
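One plausible reading of vector-based output diversity, sketched in Python: treat each sampled output signal as a vector, and score a suite by how far each signal is from its nearest neighbour. The exact distance and normalization used in the cited work may differ; the function names and sample signals here are illustrative assumptions.

```python
import math


def signal_distance(first, second):
    """Euclidean distance between two equally sampled output signals."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(first, second)))


def output_diversity(signals):
    """Sum, over all signals, of the distance to the nearest other signal."""
    total = 0.0
    for index, signal in enumerate(signals):
        total += min(
            signal_distance(signal, other)
            for other_index, other in enumerate(signals)
            if other_index != index
        )
    return total


clustered = [[0, 0, 0], [0, 0, 0], [0, 0, 1]]   # tests that produce near-identical outputs
spread = [[0, 0, 0], [3, 4, 0], [0, 0, 5]]      # tests that exercise different behaviour

print(output_diversity(clustered), output_diversity(spread))
```

A suite with a higher score exercises more distinct output behaviour, which is the property the search tries to maximize.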

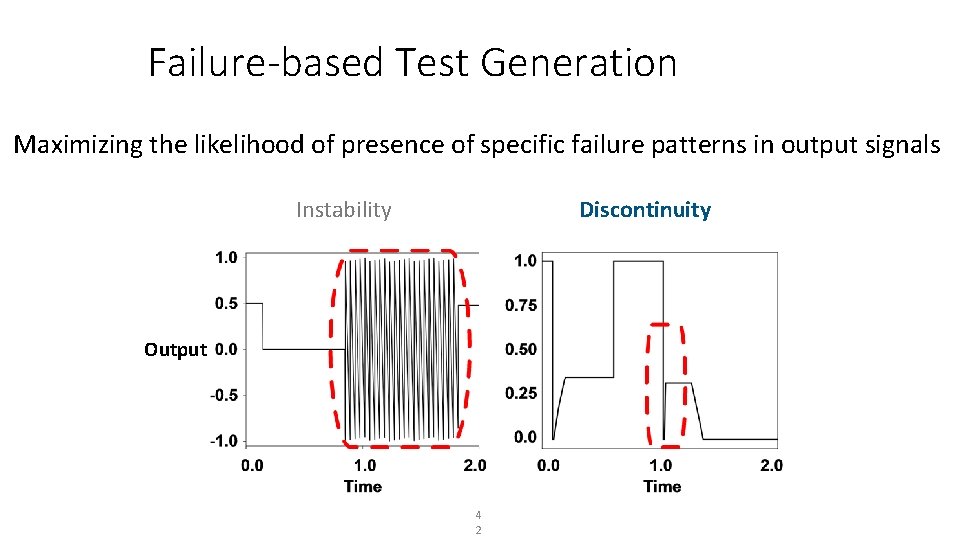

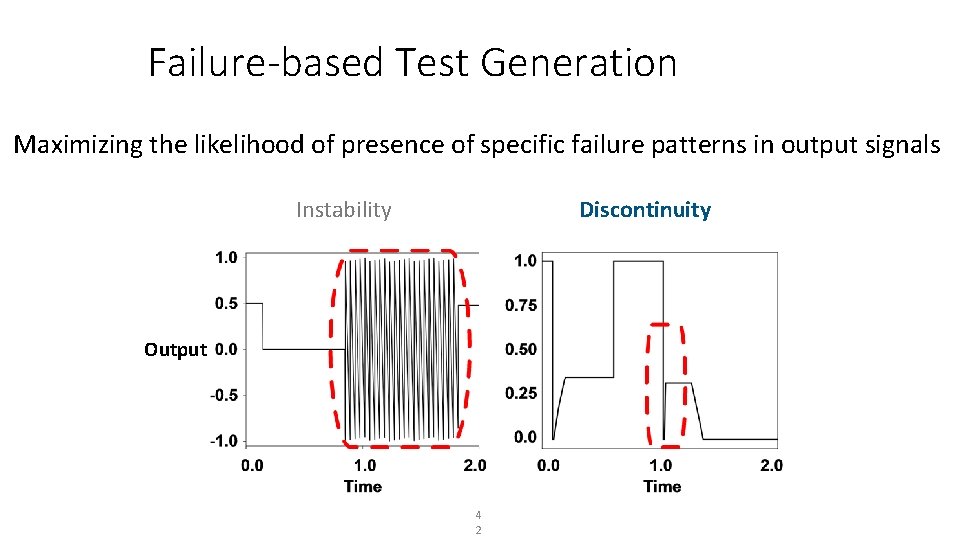

Failure-based Test Generation

Maximizing the likelihood of presence of specific failure patterns in output signals:

- Instability
- Discontinuity
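The two failure patterns can be turned into simple numeric features of a sampled output signal. The Python definitions below are simplified assumptions for illustration (total variation for instability, largest single-step jump for discontinuity) and are not claimed to match the published formulas.

```python
def instability(signal):
    """Total variation: sum of absolute changes between consecutive samples."""
    return sum(abs(later - earlier) for earlier, later in zip(signal, signal[1:]))


def discontinuity(signal):
    """Largest change between two consecutive samples."""
    return max(
        (abs(later - earlier) for earlier, later in zip(signal, signal[1:])),
        default=0.0,
    )


settling = [0.0, 0.5, 1.0, 1.0]      # smooth rise to a setpoint
oscillating = [0.0, 1.0, 0.0, 1.0]   # output keeps swinging
stepping = [0.0, 0.0, 5.0, 5.0]      # sudden jump

print(instability(settling), instability(oscillating), discontinuity(stepping))
```

A test generator can use such features as fitness values, preferring inputs whose outputs score higher.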

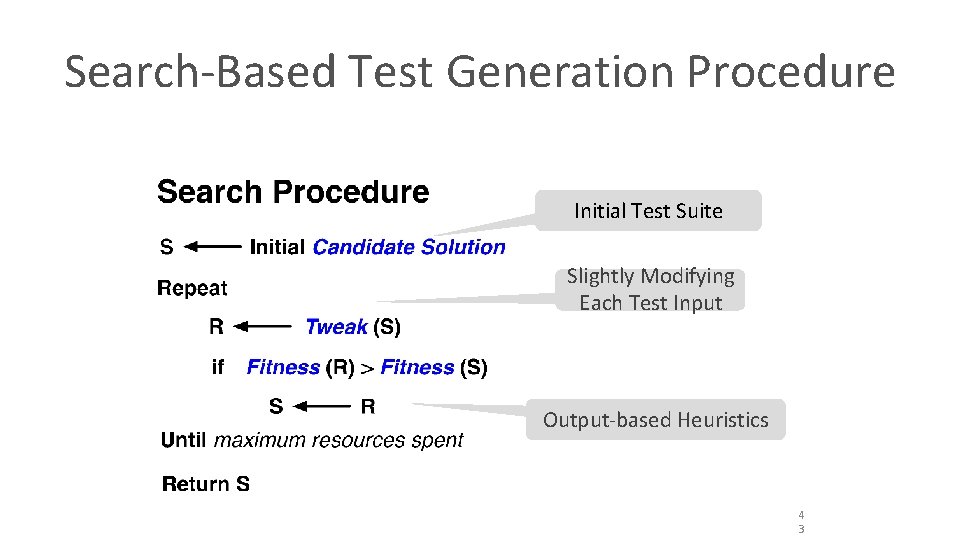

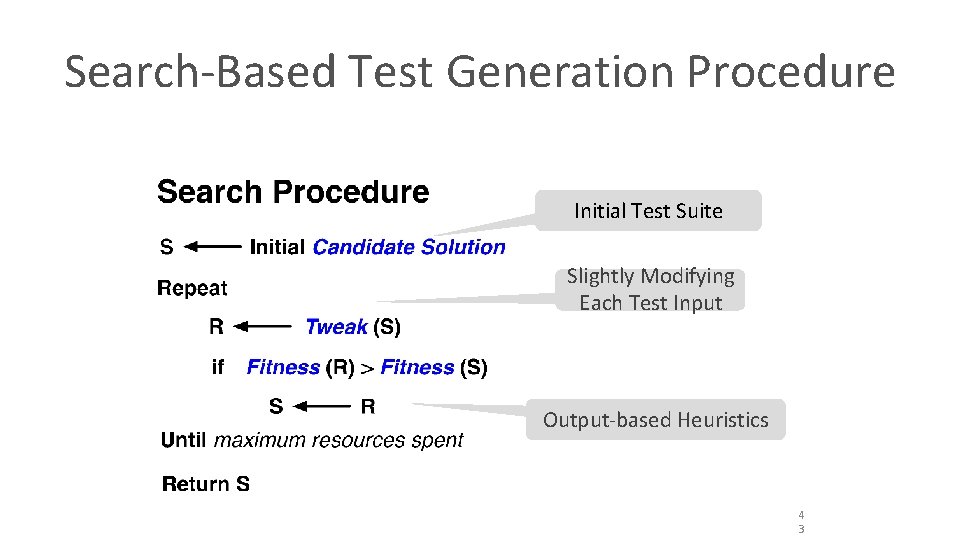

Search-Based Test Generation Procedure

- Initial test suite
- Slightly modifying each test input
- Output-based heuristics
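The procedure can be sketched as a simple hill climb in Python. Everything concrete here is an assumption made for the example: the `simulate` function stands in for running a model, a test input is a single gain value in 0..5, and the heuristic is the nearest-neighbour output distance summed over the suite.

```python
import math
import random


def simulate(gain, steps=5):
    """Hypothetical stand-in for a model run: a ramp that saturates at 10."""
    return [min(gain * t, 10.0) for t in range(steps)]


def diversity(outputs):
    """Output-based heuristic: sum of each signal's distance to its nearest neighbour."""
    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    return sum(
        min(dist(signal, other) for j, other in enumerate(outputs) if j != i)
        for i, signal in enumerate(outputs)
    )


def search(suite, iterations=200, step=0.5, seed=0):
    """Slightly modify every test input; keep the new suite only if the heuristic improves."""
    rng = random.Random(seed)
    best = list(suite)
    best_score = diversity([simulate(g) for g in best])
    for _ in range(iterations):
        candidate = [min(5.0, max(0.0, g + rng.uniform(-step, step))) for g in best]
        score = diversity([simulate(g) for g in candidate])
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


initial = [1.0, 1.1, 1.2]
initial_score = diversity([simulate(g) for g in initial])
final, final_score = search(initial)
print(initial_score, final_score, final)
```

The search never looks at the model's structure; it is guided only by what the outputs look like.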

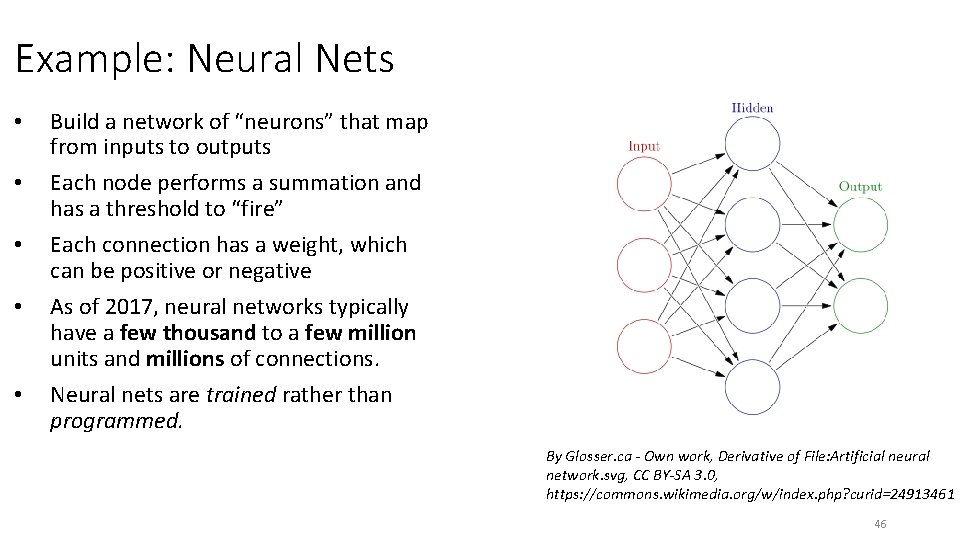

Output Diversity: Comparison with Random

- Seeded faults into mathematical software with few branches
- Measure deviation from expected values
- Two metrics:
  - Of is failure diversity
  - Ov is variable diversity

Output Diversity: Comparison with SLDV

CAVEATS

- Not much branching logic in models (MC/DC strength)
- MC/DC is not very good at catching relational or arithmetic faults
- SLDV is not designed for non-linear arithmetic and continuous time
- However, this demonstrates the need for new kinds of metrics and generation tools

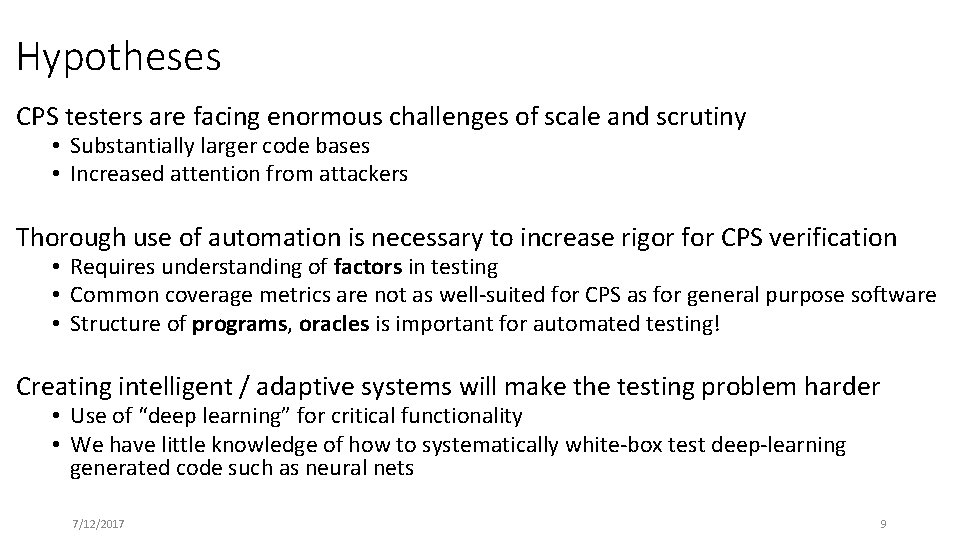

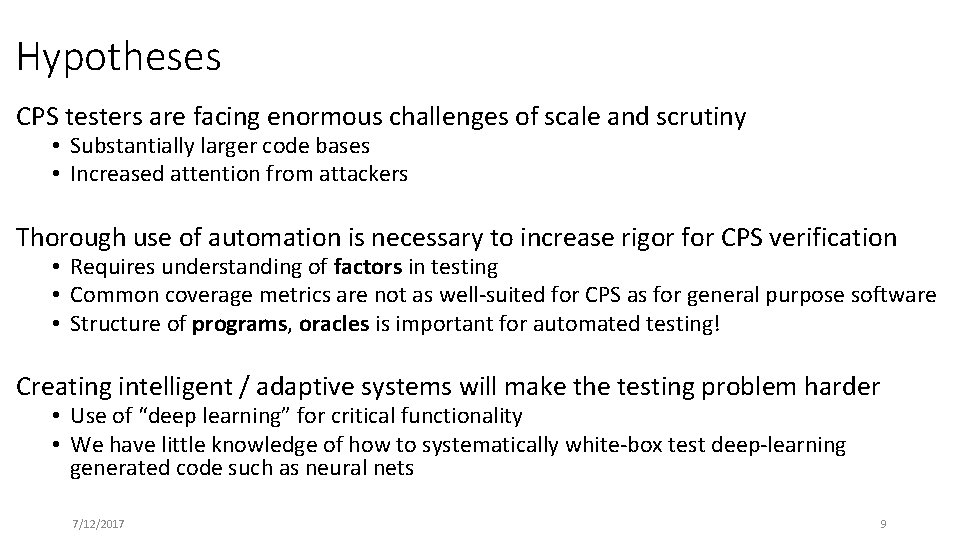

Example: Neural Nets

- Build a network of “neurons” that map from inputs to outputs
- Each node performs a summation and has a threshold to “fire”
- Each connection has a weight, which can be positive or negative

As of 2017, neural networks typically have a few thousand to a few million units and millions of connections. Neural nets are trained rather than programmed.

Image: by Glosser.ca - Own work, Derivative of File:Artificial neural network.svg, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=24913461
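A single node of this kind fits in a few lines of Python. The sketch below uses a hard threshold, as described on the slide; the weights and threshold values are made up for the example, whereas in a trained network they would come from the training process.

```python
def neuron(inputs, weights, threshold):
    """Weighted summation followed by a hard threshold: 1 means the node fires."""
    total = sum(value * weight for value, weight in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Two excitatory (positive) connections: both inputs are needed to fire.
both_on = neuron([1, 1], [0.6, 0.6], threshold=1.0)
one_on = neuron([1, 0], [0.6, 0.6], threshold=1.0)

# An inhibitory (negative) connection cancels the excitatory one.
inhibited = neuron([1, 1], [0.6, -0.6], threshold=0.5)

print(both_on, one_on, inhibited)
```

The behaviour lives entirely in the numbers: changing a weight changes the function without changing a line of code.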

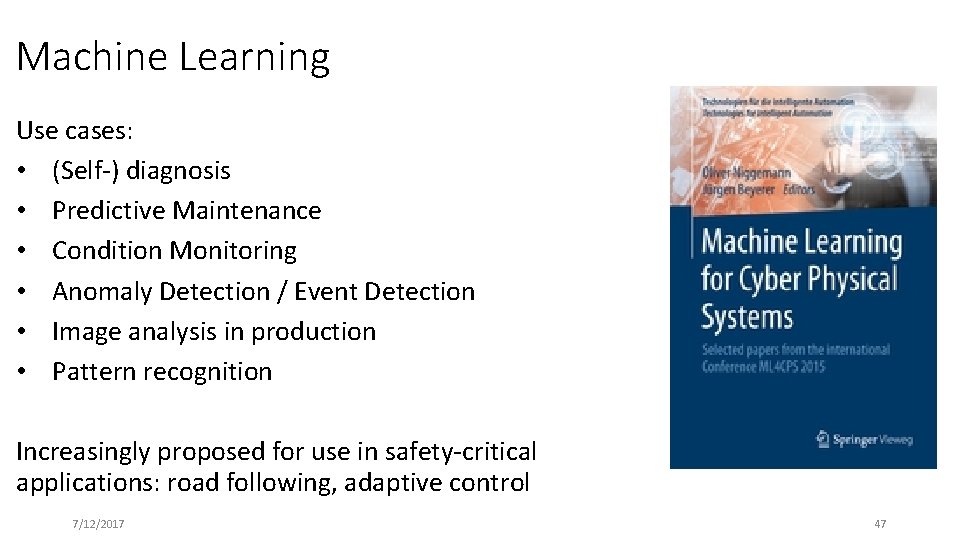

Machine Learning

Use cases:

- (Self-) diagnosis
- Predictive maintenance
- Condition monitoring
- Anomaly detection / event detection
- Image analysis in production
- Pattern recognition

Increasingly proposed for use in safety-critical applications: road following, adaptive control

![Neural Net Code Structure in MATLAB 1 function y 1 simulate Standalone Netx Neural Net Code Structure (in MATLAB) 1. function [y 1] = simulate. Standalone. Net(x](https://slidetodoc.com/presentation_image_h/ed0abc5fd16a64ed68424b11259d9ae9/image-48.jpg)

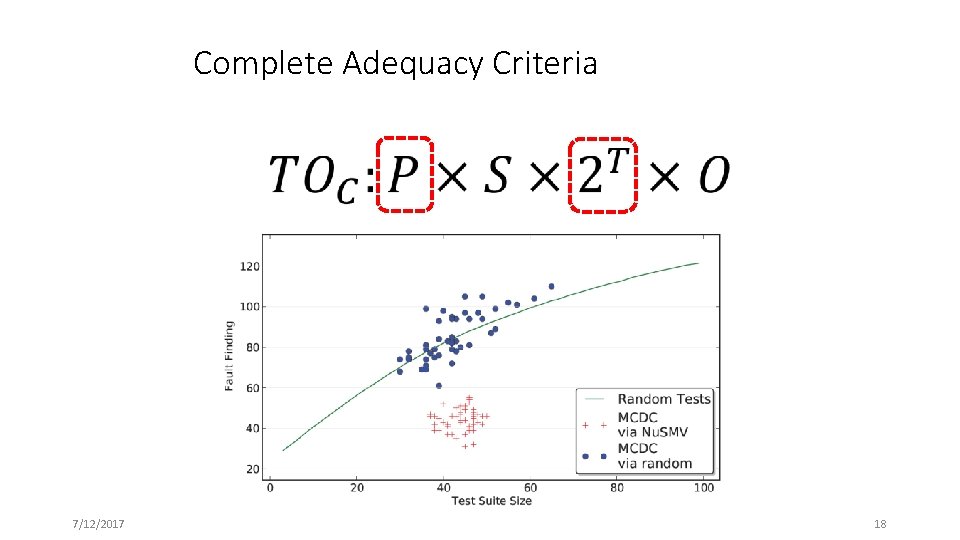

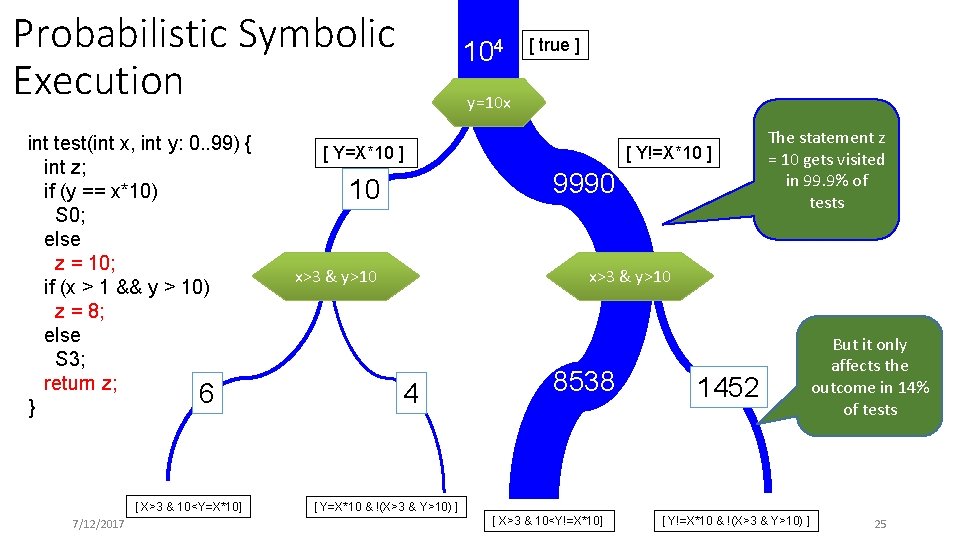

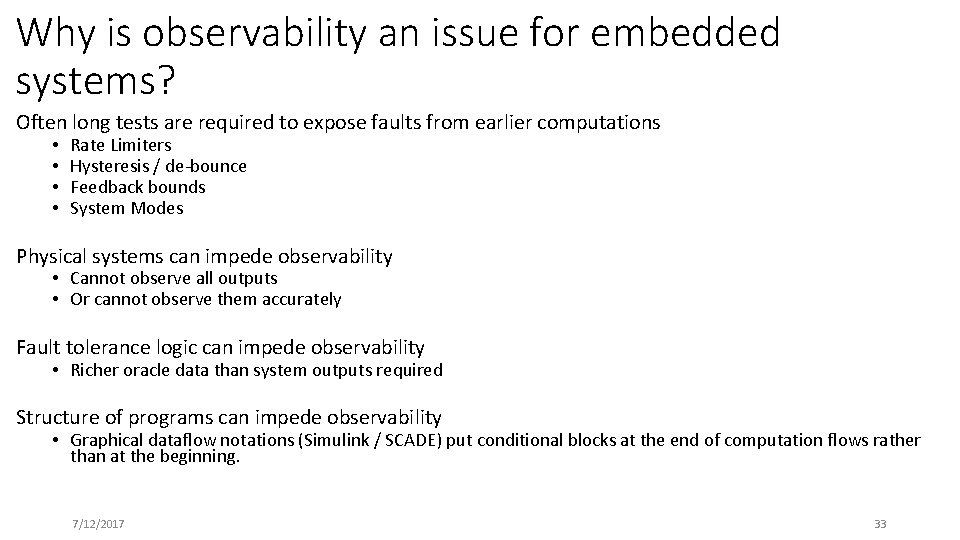

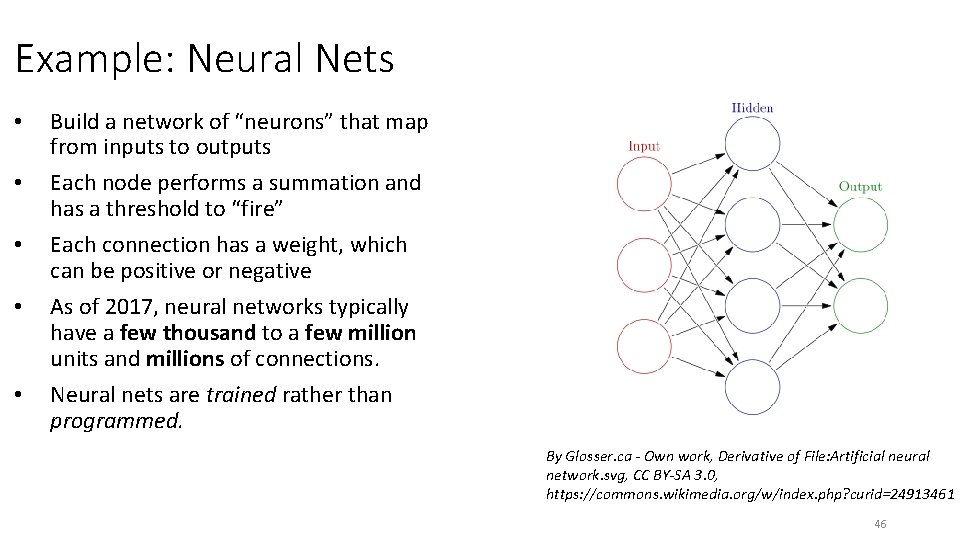

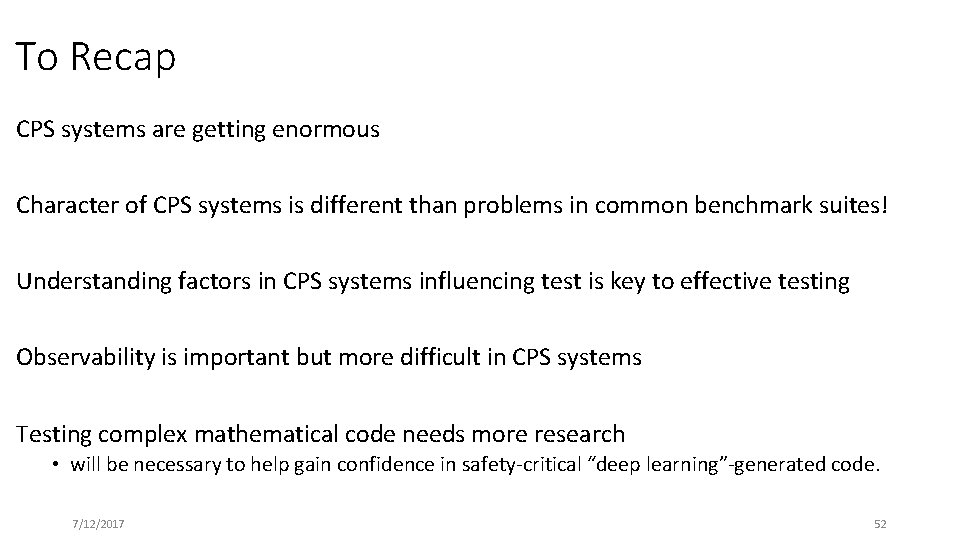

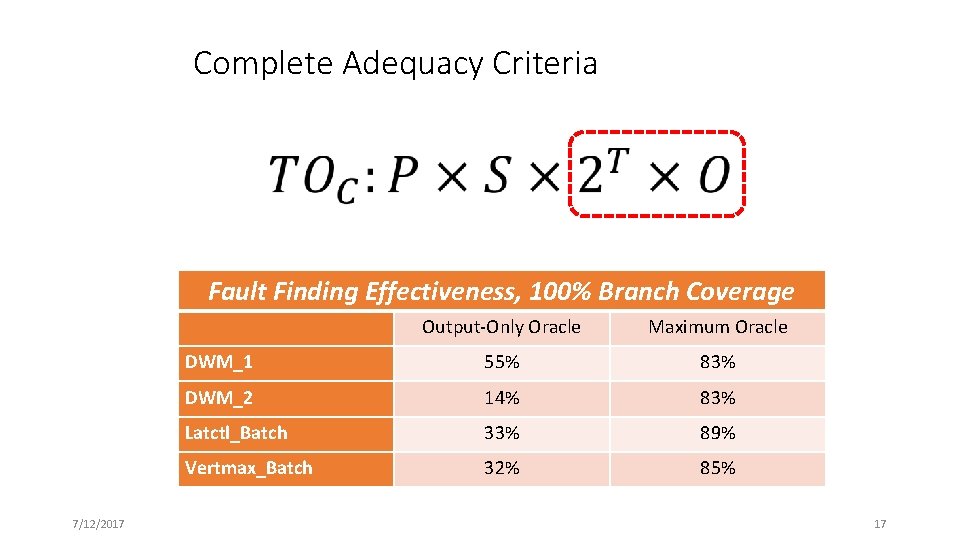

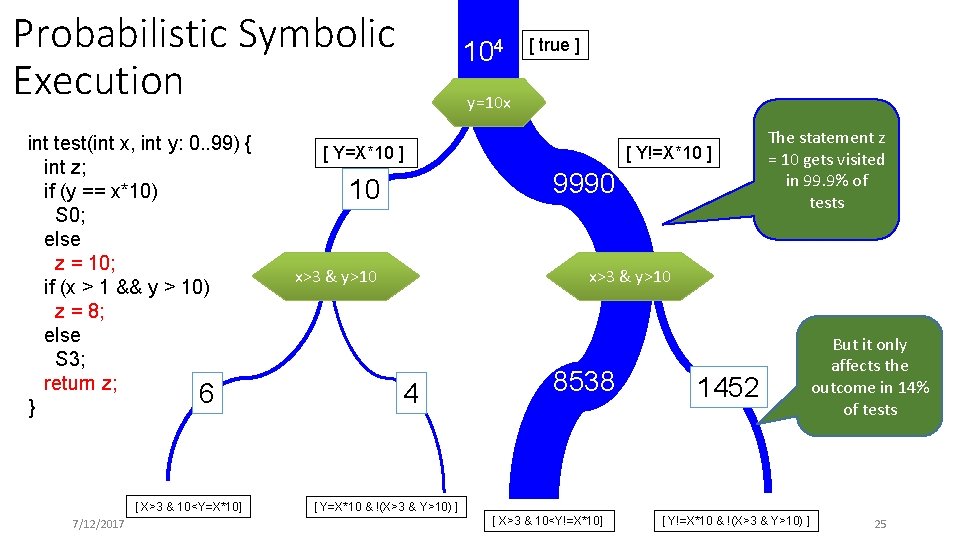

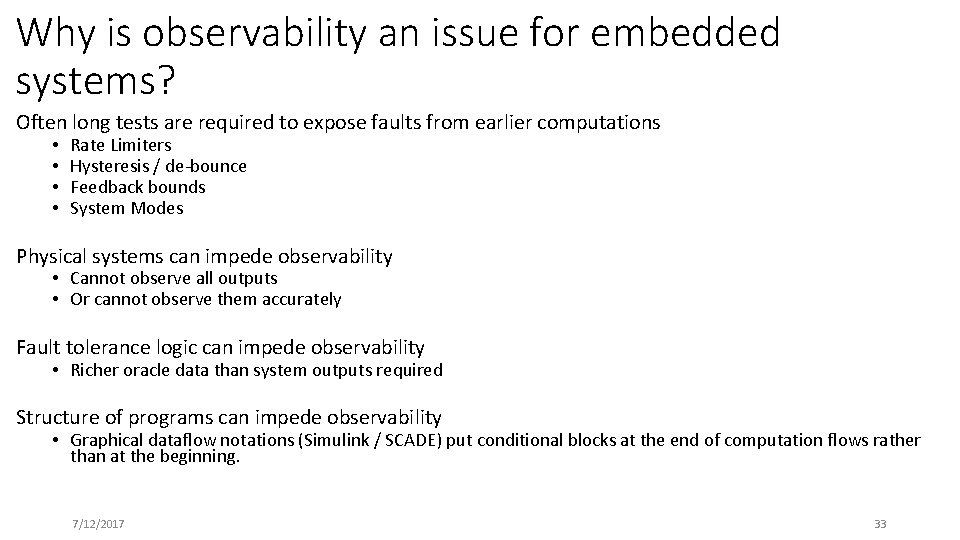

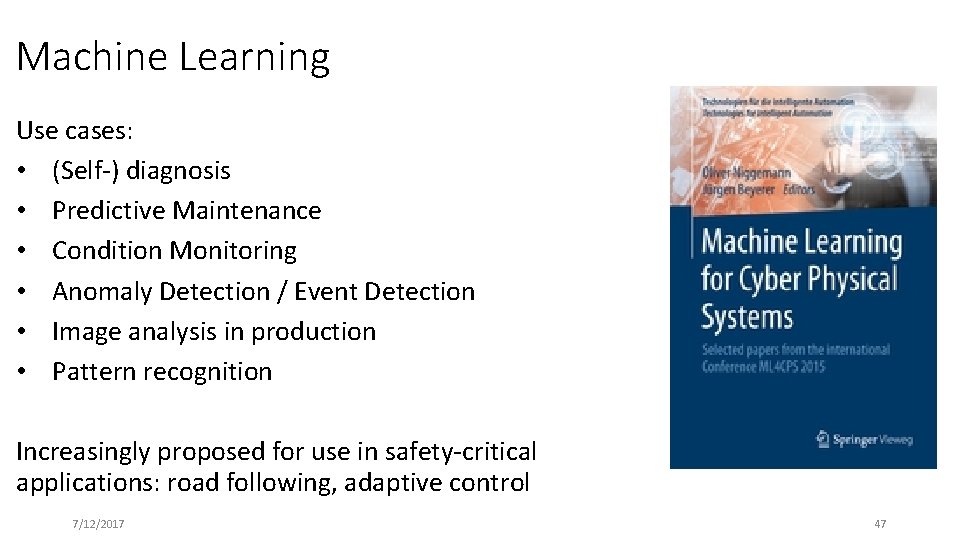

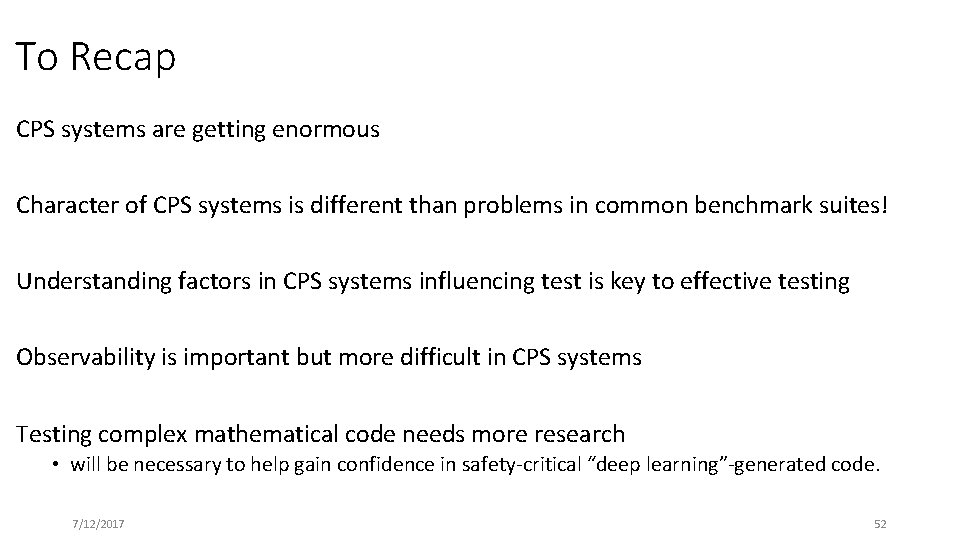

Neural Net Code Structure (in MATLAB)

```matlab
function [y1] = simulateStandaloneNet(x1)
% Input 1
x1_step1_xoffset = 0;
x1_step1_gain = 0.200475452649894;
x1_step1_ymin = -1;

% Layer 1
b1 = [6.0358701949520981; 2.725693924978148; 0.58426771719145909; -5.1615078566382975];
IW1_1 = [14.001919491063946; 4.90641117353245; 15.228280764533135; -5.264207948688032];

% Layer 2
b2 = -0.75620725148640833;
LW2_1 = [0.5484626432316061 0.43580234386123884 -0.085111261420612969 1.1367922825337915];

% Output 1
y1_step1_ymin = -1;
y1_step1_gain = 0.2;
y1_step1_xoffset = 0;

% ===== SIMULATION ========
% Dimensions
Q = size(x1, 2); % samples

% Input 1
xp1 = mapminmax_apply(x1, x1_step1_gain, x1_step1_xoffset, x1_step1_ymin);

% Layer 1
a1 = tansig_apply(repmat(b1, 1, Q) + IW1_1*xp1);

% Layer 2
a2 = repmat(b2, 1, Q) + LW2_1*a1;

% Output 1
y1 = mapminmax_reverse(a2, y1_step1_gain, y1_step1_xoffset, y1_step1_ymin);
end
```

…continued

```matlab
% ===== MODULE FUNCTIONS ========

% Map Minimum and Maximum Input Processing Function
function y = mapminmax_apply(x, settings_gain, settings_xoffset, settings_ymin)
y = bsxfun(@minus, x, settings_xoffset);
y = bsxfun(@times, y, settings_gain);
y = bsxfun(@plus, y, settings_ymin);
end

% Sigmoid Symmetric Transfer Function
function a = tansig_apply(n)
a = 2 ./ (1 + exp(-2*n)) - 1;
end

% Map Minimum and Maximum Output Reverse-Processing Function
function x = mapminmax_reverse(y, settings_gain, settings_xoffset, settings_ymin)
x = bsxfun(@minus, y, settings_ymin);
x = bsxfun(@rdivide, x, settings_gain);
x = bsxfun(@plus, x, settings_xoffset);
end
```

Code observations:

- No branches!
- No relational operators!
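For experimentation outside MATLAB, the same forward pass can be written as a scalar Python sketch. The constants are copied from the slide; the function signature, the optional `lw2` parameter, and the 1% weight perturbation are assumptions added here to show what a "mutant" of such code might look like. `math.tanh` is used because `2 / (1 + exp(-2n)) - 1` is mathematically equal to `tanh(n)`.

```python
import math

X_OFFSET, X_GAIN, X_YMIN = 0.0, 0.200475452649894, -1.0
B1 = [6.0358701949520981, 2.725693924978148, 0.58426771719145909, -5.1615078566382975]
IW1 = [14.001919491063946, 4.90641117353245, 15.228280764533135, -5.264207948688032]
B2 = -0.75620725148640833
LW2 = [0.5484626432316061, 0.43580234386123884, -0.085111261420612969, 1.1367922825337915]
Y_YMIN, Y_GAIN, Y_OFFSET = -1.0, 0.2, 0.0


def simulate_standalone_net(x, lw2=LW2):
    """Scalar forward pass: scale input, tanh hidden layer, linear output, unscale."""
    xp = (x - X_OFFSET) * X_GAIN + X_YMIN
    a1 = [math.tanh(bias + weight * xp) for bias, weight in zip(B1, IW1)]
    a2 = B2 + sum(weight * activation for weight, activation in zip(lw2, a1))
    return (a2 - Y_YMIN) / Y_GAIN + Y_OFFSET


baseline = simulate_standalone_net(0.0)

# Hypothetical "mutant": perturb one output-layer weight by 1%.
mutated_lw2 = LW2[:3] + [LW2[3] * 1.01]
mutant = simulate_standalone_net(0.0, lw2=mutated_lw2)

print(baseline, mutant, abs(mutant - baseline))
```

Unlike a flipped relational operator, the perturbed weight shifts the output by a small continuous amount, so deciding whether the mutant is "killed" depends on a tolerance chosen by the tester.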

So, how do we test this?

- Black-box reliability testing?
  - How do we determine the input distributions?
  - How do we gain sufficient confidence for safety-critical use?
  - Ricky W. Butler, George B. Finelli: The Infeasibility of Quantifying the Reliability of Life-Critical Real-Time Software
- Mutation testing?
  - What do we mutate?
  - What is our expectation as to the output effect?
- A specialized testing regime?


To Recap

- CPS systems are getting enormous
- The character of CPS systems is different from the problems in common benchmark suites!
- Understanding the factors in CPS systems that influence testing is key to effective testing
- Observability is important but more difficult in CPS systems
- Testing complex mathematical code needs more research
  - This will be necessary to help gain confidence in safety-critical “deep learning”-generated code.

Citations

- J. Gourlay. A mathematical framework for the investigation of testing. TSE, 1983
- Staats, Whalen, and Heimdahl. Programs, Tests, and Oracles: The Foundations of Testing Revisited. ICSE 2011
- Gay, Staats, Whalen, and Heimdahl. The Risks of Coverage-Directed Test Case Generation. FASE 2012, TSE 2015
- Inozemtseva and Holmes. Coverage Is Not Strongly Correlated with Test Suite Effectiveness. ICSE 2014
- Zhang and Mesbah. Assertions Are Strongly Correlated with Test Suite Effectiveness. FSE 2015
- Just, Jalali, Inozemtseva, Ernst, Holmes, and Fraser. Are mutants a valid substitute for real faults in software testing? FSE 2014
- Yao, Harman, and Jia. A Study of Equivalent and Stubborn Mutation Operators using Human Analysis of Equivalence. ICSE 2014
- W. Visser. What makes killing a mutant hard? ASE 2016
- Whalen, Gay, You, Staats, and Heimdahl. Observable Modified Condition / Decision Coverage. ICSE 2013
- Matinnejad, Nejati, and Briand. Automated Test Suite Generation for Time-Continuous Simulink Models. ICSE 2016
- Matinnejad, Nejati, Briand, Bruckmann, and Poull. Search-based automated testing of continuous controllers: Framework, tool support, and case studies. I&ST 57 (2015)
- Matinnejad, Nejati, Briand, and Bruckmann. Effective test suites for mixed discrete-continuous stateflow controllers. FSE 2015
- Machine Learning for Cyber Physical Systems. 2015, 2016, 2017. Springer
- Ricky W. Butler and George B. Finelli. The Infeasibility of Quantifying the Reliability of Life-Critical Real-Time Software. TSE 1993