Testing Approach and the Project Management Life Cycle

- Slides: 28

Tim Flahaven, PMP, 08/13/2013

Agenda

- Goal
- Phases of PM Lifecycle affected by Testing
- Systems Engineering Lifecycle
- Scope Management
- Requirements
- Quality Management
- Testing Philosophy
- Building and Executing the Test
- Evaluate the Test Results
- Brief the Results
- Test Closeout

Goal

- This presentation shows some of the parallels between the Project Management Life Cycle (PMI) and the Systems Engineering Lifecycle (INCOSE) with regard to testing.
- The format is that of an “after action report” highlighting the highs and lows of a large multi-discipline project.

PMBOK Process Groups

- Initiating Process Group. Those processes performed to define a new project or a new phase of an existing project by obtaining authorization to start the project or phase.
- Planning Process Group. Those processes required to establish the scope of the project, refine the objectives, and define the course of action to attain the objectives that the project was undertaken to achieve.
- Executing Process Group. Those processes performed to complete the work defined in the project management plan to satisfy the project specifications.
- Monitoring and Controlling Process Group. Those processes required to track, review, and regulate the progress and performance of the project; identify any areas in which changes to the plan are required; and initiate the corresponding changes.
- Closing Process Group. Those processes performed to finalize all activities across all Process Groups to formally close the project or phase.

Testing in PM Lifecycle

- Process Groups affected
  - Planning
    - Analyze testing needs and plan accordingly.
  - Monitoring/Controlling
    - Implement the testing plan.
- Remember that testing will always expose the shortcuts taken within the triple constraint. At the same time, it will indicate your successes.

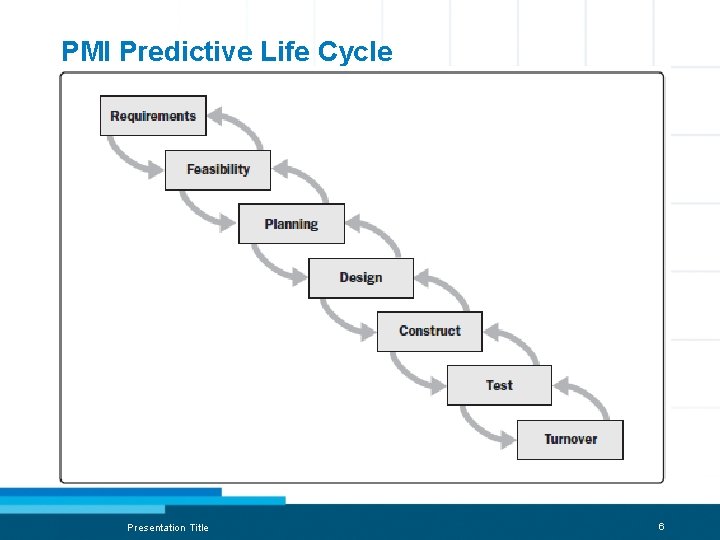

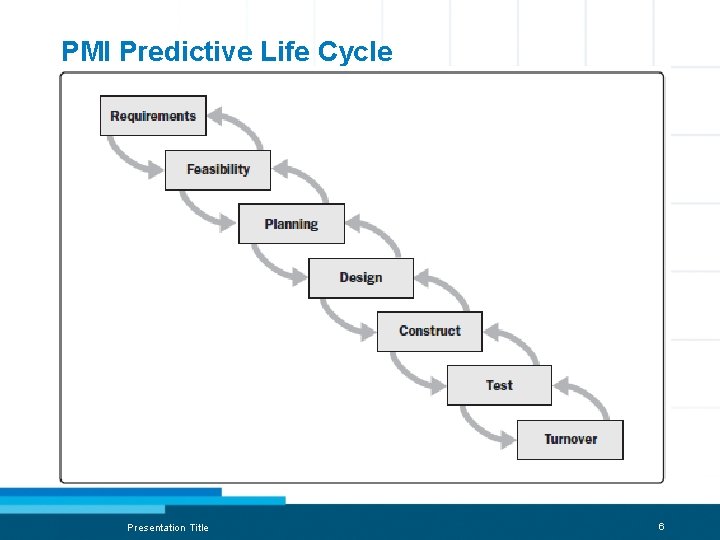

PMI Predictive Life Cycle

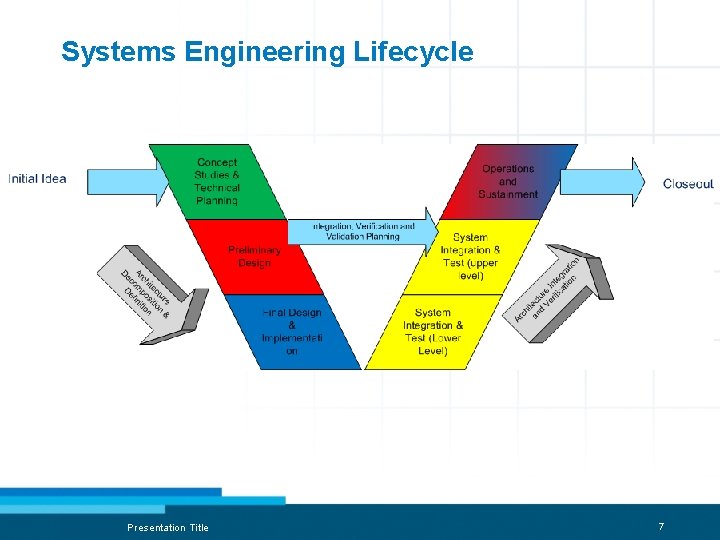

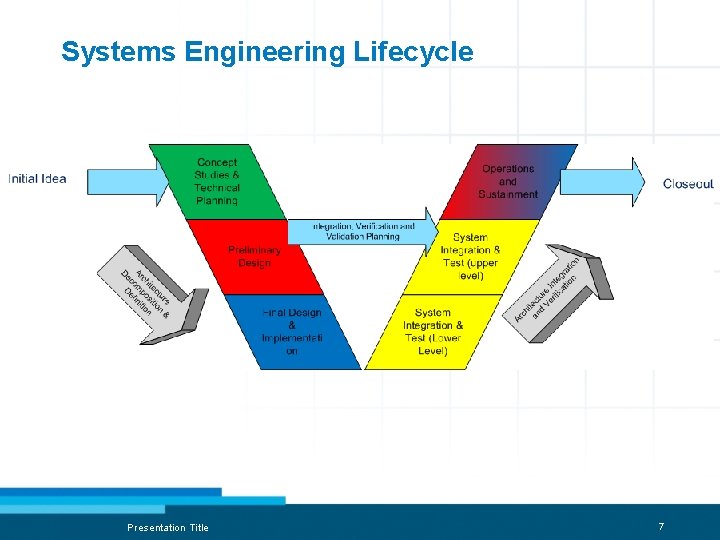

Systems Engineering Lifecycle

Scope Management

- Good requirements are:
  - Measurable and testable
  - Traceable
  - Complete
  - Consistent
  - Acceptable to key stakeholders
  - Additional thoughts from a Systems Engineering perspective:
    - Will a simulator be needed for testing? If so, what is its schedule?
    - What are the dependencies?
    - Who is responsible for each requirement?
    - How are changes being handled?
    - Identify the Verification Method (next slide)

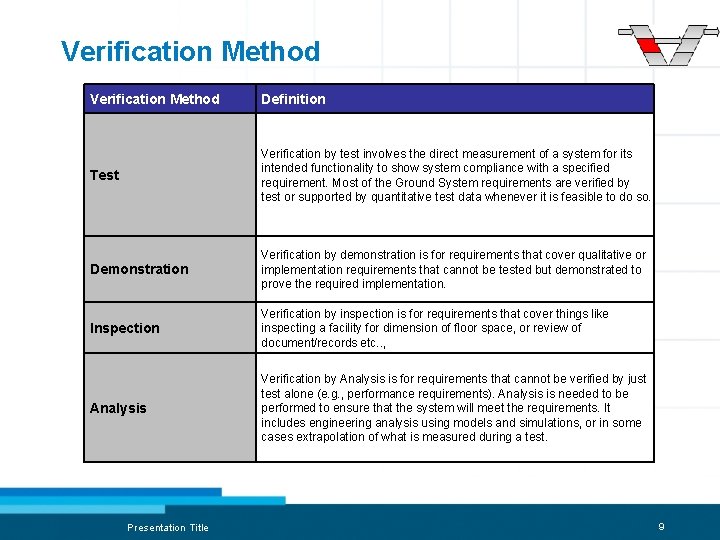

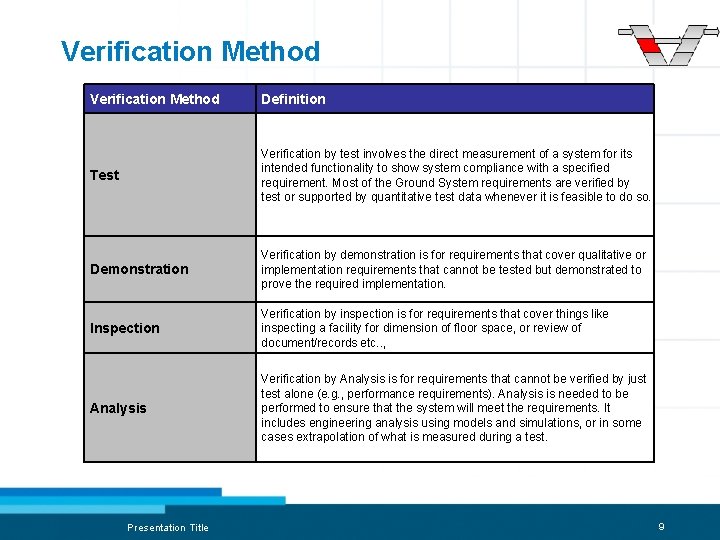

| Verification Method | Definition |
| --- | --- |
| Test | Verification by test involves the direct measurement of a system for its intended functionality to show system compliance with a specified requirement. Most of the Ground System requirements are verified by test or supported by quantitative test data whenever it is feasible to do so. |
| Demonstration | Verification by demonstration is for qualitative or implementation requirements that cannot be tested but can be demonstrated to prove the required implementation. |
| Inspection | Verification by inspection is for requirements that are confirmed by examination, such as inspecting a facility for the dimensions of its floor space or reviewing documents and records. |
| Analysis | Verification by analysis is for requirements that cannot be verified by test alone (e.g., performance requirements). Analysis must be performed to ensure that the system will meet the requirements. It includes engineering analysis using models and simulations or, in some cases, extrapolation of what is measured during a test. |

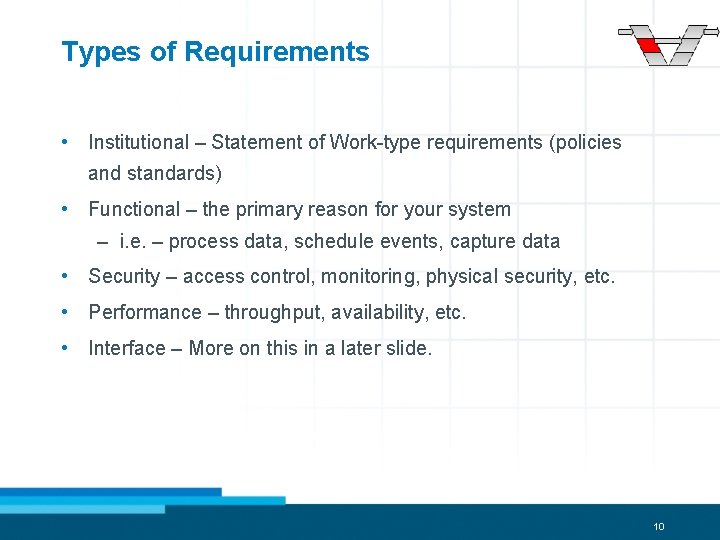

Types of Requirements

- Institutional: Statement of Work-type requirements (policies and standards)
- Functional: the primary reason for your system, e.g., process data, schedule events, capture data
- Security: access control, monitoring, physical security, etc.
- Performance: throughput, availability, etc.
- Interface: more on this in a later slide.

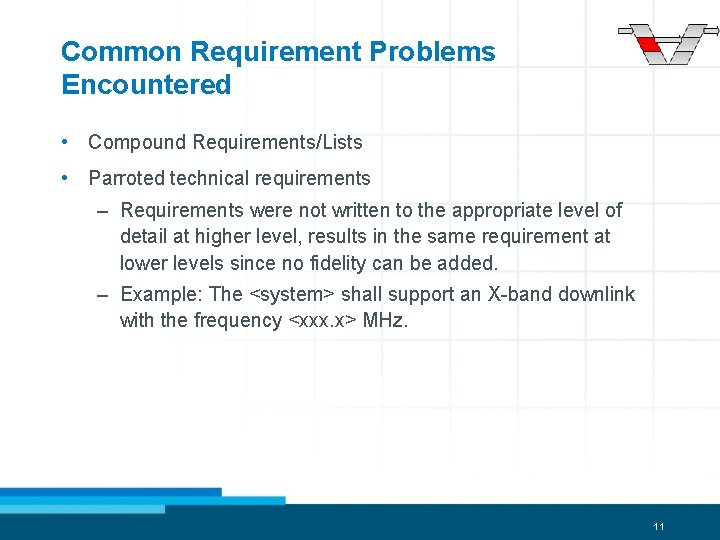

Common Requirement Problems Encountered

- Compound requirements/lists
- Parroted technical requirements
  - Requirements were not written to the appropriate level of detail at the higher level, so the same requirement is repeated at lower levels because no fidelity can be added.
  - Example: The <system> shall support an X-band downlink with the frequency <xxx.x> MHz.

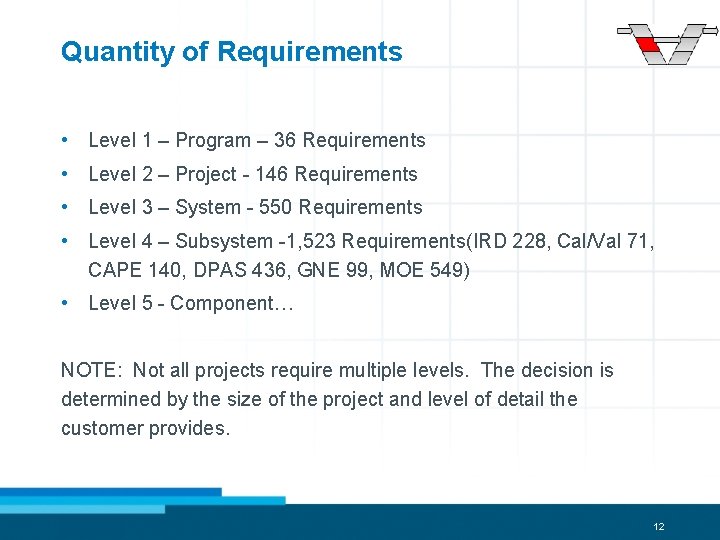

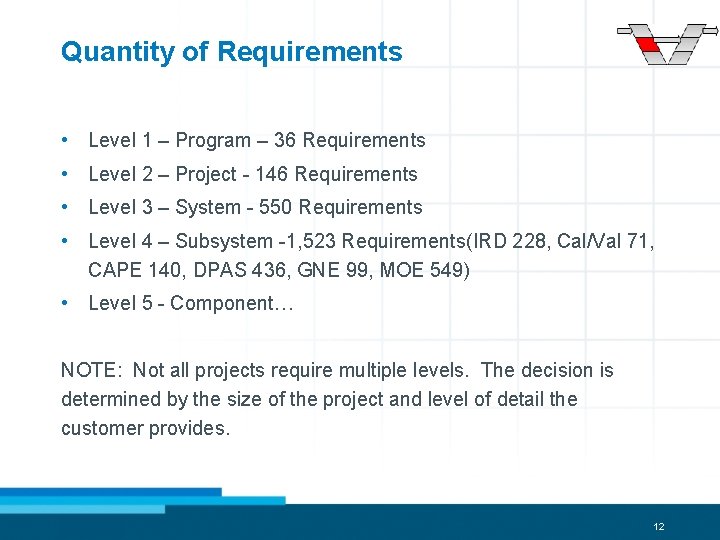

Quantity of Requirements

- Level 1 (Program): 36 requirements
- Level 2 (Project): 146 requirements
- Level 3 (System): 550 requirements
- Level 4 (Subsystem): 1,523 requirements (IRD 228, Cal/Val 71, CAPE 140, DPAS 436, GNE 99, MOE 549)
- Level 5 (Component): …

NOTE: Not all projects require multiple levels. The decision is determined by the size of the project and the level of detail the customer provides.

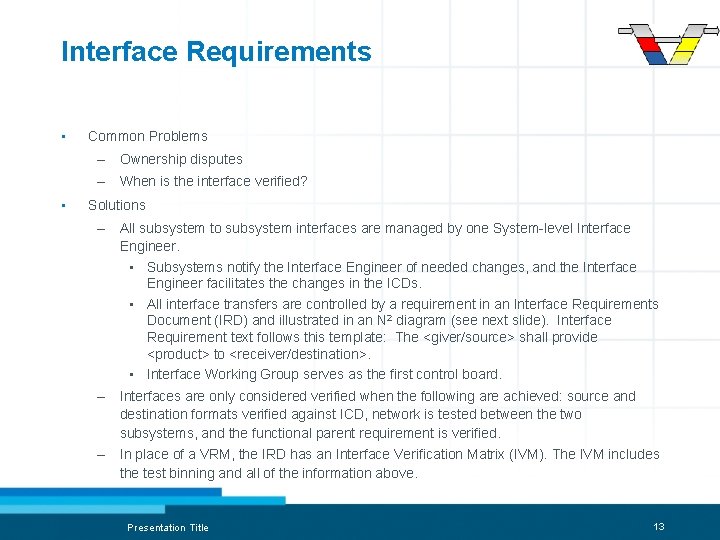

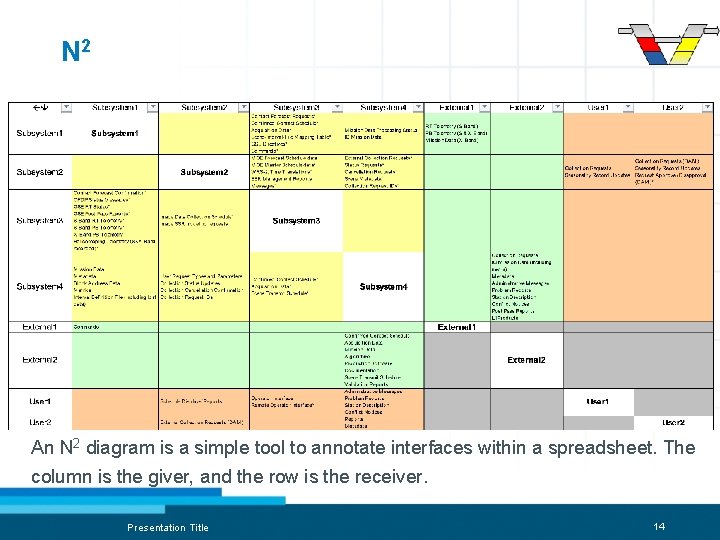

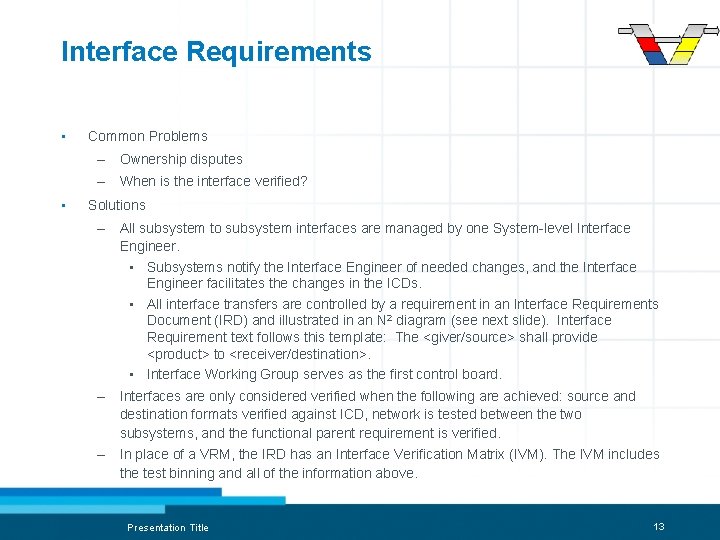

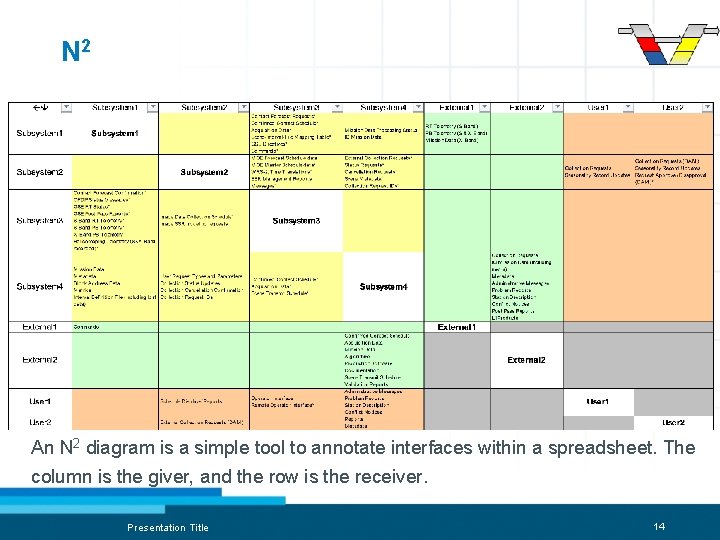

Interface Requirements

- Common Problems
  - Ownership disputes
  - When is the interface verified?
- Solutions
  - All subsystem-to-subsystem interfaces are managed by one System-level Interface Engineer.
    - Subsystems notify the Interface Engineer of needed changes, and the Interface Engineer facilitates the changes in the ICDs.
    - All interface transfers are controlled by a requirement in an Interface Requirements Document (IRD) and illustrated in an N² diagram (see next slide). Interface Requirement text follows this template: The <giver/source> shall provide <product> to <receiver/destination>.
    - The Interface Working Group serves as the first control board.
  - Interfaces are only considered verified when the following are achieved: source and destination formats are verified against the ICD, the network is tested between the two subsystems, and the functional parent requirement is verified.
  - In place of a VRM, the IRD has an Interface Verification Matrix (IVM). The IVM includes the test binning and all of the information above.
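The interface requirement template and the three-part verification rule can be expressed as a small check. This is a minimal Python sketch, not the project's actual tooling: the class name `InterfaceRequirement`, its field names, and the sample product are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class InterfaceRequirement:
    """One IRD entry following the template:
    The <giver/source> shall provide <product> to <receiver/destination>."""
    source: str
    product: str
    destination: str
    formats_match_icd: bool = False            # formats verified against the ICD
    network_tested: bool = False               # network tested between the subsystems
    parent_requirement_verified: bool = False  # functional parent requirement verified

    def text(self) -> str:
        return f"The {self.source} shall provide {self.product} to {self.destination}."

    def is_verified(self) -> bool:
        # All three conditions must hold before the interface counts as verified.
        return (self.formats_match_icd
                and self.network_tested
                and self.parent_requirement_verified)


# Hypothetical example: the product name is invented for illustration.
req = InterfaceRequirement("MOE", "pass schedules", "GNE",
                           formats_match_icd=True, network_tested=True)
print(req.text())
print(req.is_verified())
```

Keeping the three conditions as separate fields means a partially checked interface cannot be reported as verified by accident.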

N²

An N² diagram is a simple tool to annotate interfaces within a spreadsheet. The column is the giver, and the row is the receiver.
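The column-is-giver, row-is-receiver convention can be sketched as a grid built from a list of interfaces. This is an illustrative Python sketch only: the function name `build_n2` and the placeholder products are assumptions, and a real project would more likely keep the grid in a spreadsheet as the slide describes.

```python
def build_n2(subsystems, interfaces):
    """Return an N x N grid (list of rows) in which the column is the
    giver and the row is the receiver."""
    index = {name: i for i, name in enumerate(subsystems)}
    n = len(subsystems)
    grid = [["" for _ in range(n)] for _ in range(n)]
    for giver, receiver, product in interfaces:
        row, col = index[receiver], index[giver]
        # Several products may flow across the same interface.
        grid[row][col] = "; ".join(filter(None, [grid[row][col], product]))
    return grid


# Placeholder products; the subsystem pairings are hypothetical.
subsystems = ["MOE", "GNE", "DPAS"]
interfaces = [
    ("MOE", "GNE", "product A"),
    ("GNE", "DPAS", "product B"),
]
grid = build_n2(subsystems, interfaces)
for name, row in zip(subsystems, grid):
    print(name, row)
```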

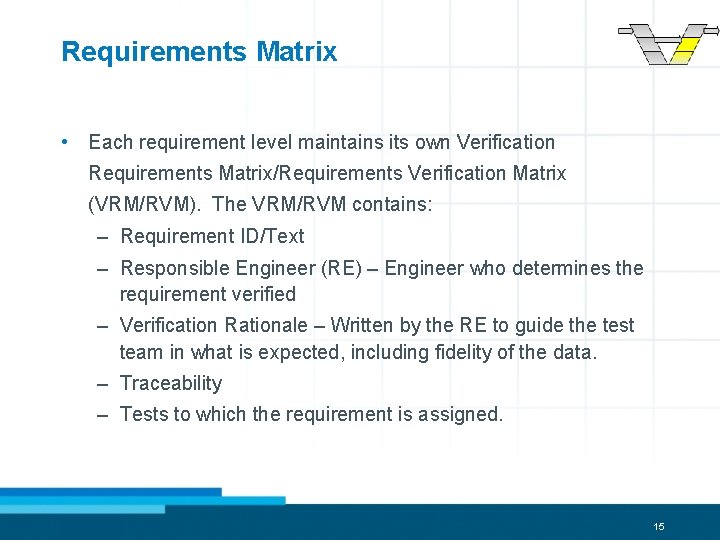

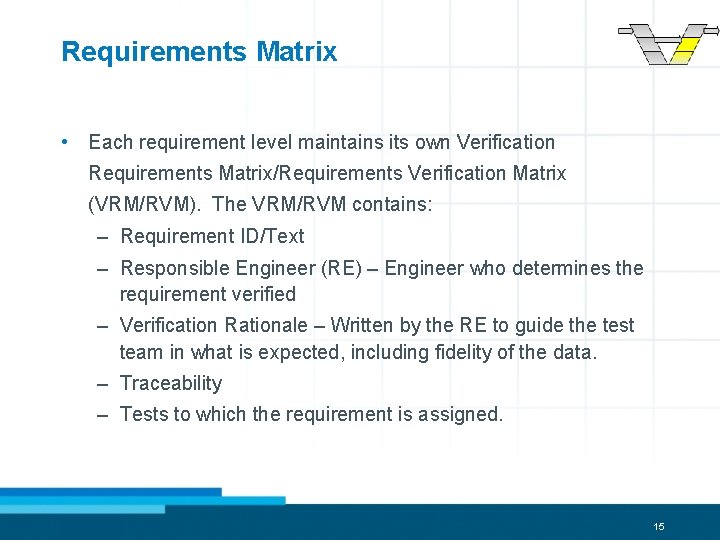

Requirements Matrix

- Each requirement level maintains its own Verification Requirements Matrix/Requirements Verification Matrix (VRM/RVM). The VRM/RVM contains:
  - Requirement ID/Text
  - Responsible Engineer (RE): the engineer who determines whether the requirement is verified
  - Verification Rationale: written by the RE to guide the test team in what is expected, including the fidelity of the data
  - Traceability: the tests to which the requirement is assigned
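The VRM/RVM columns map naturally onto a record type. The sketch below is a hedged illustration in Python: `VrmEntry`, `untraced`, the requirement IDs, and the engineer name are all hypothetical, and the helper simply flags requirements that have not yet been assigned to a test.

```python
from dataclasses import dataclass, field


@dataclass
class VrmEntry:
    requirement_id: str
    text: str
    responsible_engineer: str
    verification_rationale: str
    tests: list = field(default_factory=list)  # traceability: assigned tests


def untraced(vrm):
    """Return the IDs of requirements not yet assigned to any test."""
    return [entry.requirement_id for entry in vrm if not entry.tests]


# Hypothetical entries for illustration only.
vrm = [
    VrmEntry("SYS-001", "<requirement text>", "J. Doe", "<rationale>", ["Test 1"]),
    VrmEntry("SYS-002", "<requirement text>", "J. Doe", "<rationale>"),
]
print(untraced(vrm))
```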

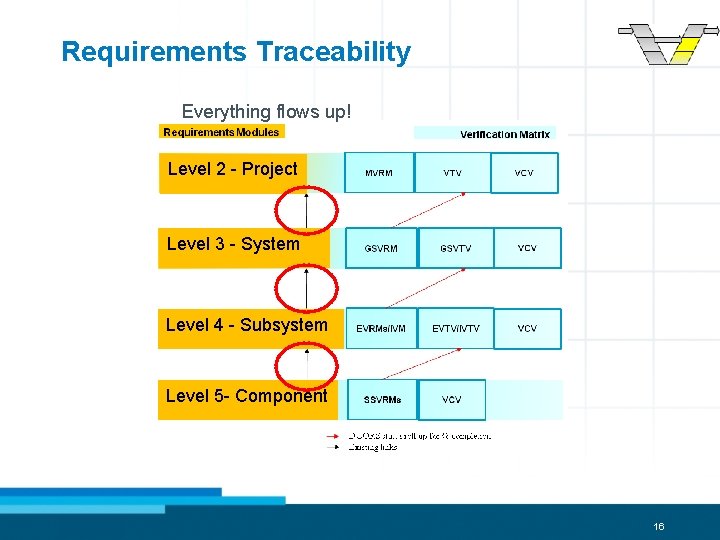

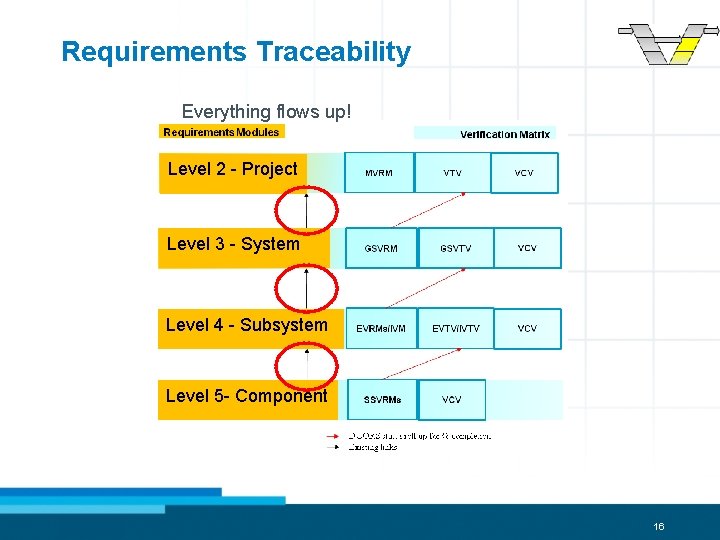

Requirements Traceability

Everything flows up!

- Level 2 - Project
- Level 3 - System
- Level 4 - Subsystem
- Level 5 - Component
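Upward traceability can be checked by following parent links from a lower-level requirement to its top-level ancestor. The following is a small Python sketch under assumed names: `trace_up`, the `parent_of` mapping, and the requirement IDs are invented for illustration.

```python
def trace_up(requirement_id, parent_of):
    """Follow parent links from a requirement up to its top-level ancestor."""
    chain = [requirement_id]
    while chain[-1] in parent_of:
        parent = parent_of[chain[-1]]
        if parent in chain:  # guard against an accidental circular link
            raise ValueError(f"circular trace at {parent}")
        chain.append(parent)
    return chain


# Hypothetical IDs: component -> subsystem -> system -> project.
parent_of = {
    "L5-0007": "L4-0031",
    "L4-0031": "L3-0012",
    "L3-0012": "L2-0004",
}
print(trace_up("L5-0007", parent_of))
```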

Quality Management

- Perform Quality Assurance
  - Statistical sampling can be performed in conjunction with testing
  - Perform quality audits
    - Testing adds value in identifying nonconformity, gaps, and shortcomings.
  - Use of Change Requests (or Discrepancy Reports)

Testing Philosophies

- Lessons Learned are reviewed prior to writing any test procedures.
- All software and hardware is tested multiple times through the requirements levels.
- Fidelity of the tests changes at each level. Individual components are tested rigorously at lower levels. The higher-level tests are more about integration, overall operability, and performance.
- All tests are witnessed and thoroughly documented, and test artifacts are collected.
- Tests are logical binnings of Test Cases based on functionality and schedule (next slide).

Create Logical Tests

- Tests should be developed in agreement with the Test Director, Responsible Engineer, Verification Owner, and Quality Assurance (roles in next slide).
- Try to look at your Concept of Operations (or Operations Concept) and match tests to scenarios/use cases.
  - This allows for testing of requirements in an operational context and not an unrealistic engineering test. Furthermore, scenario testing allows multiple requirements to be tested at once (a good way to save schedule).
  - If an engineering test is required, limit the requirements assigned to a minimum.
  - Scenario testing is also modular by nature and allows for future reuse.
  - “Test as you Fly” is a common NASA term that embraces the scenario idea.
- One common idea is to test early and test often.
  - Results in several partial verifications that show minor maturity gains.
  - Contributes to more formal retests that may not be necessary (remember, everyone loves performance metrics).
  - It is best to perform informal “looks” or “checks” for maturity and formally verify each requirement only once. If it is not possible to test the entire functionality in one test, consider each test as being of equal importance (avoid partial and full verification distinctions).
- Plan for a capstone/end-to-end test as the final test.
  - Builds confidence for the customer and end users.
  - Allows for a built-in retest if required.

Testing Roles

- Verification Owner: customer who is responsible for the overall development, integration, verification, and validation of the respective level of requirements/ops concept. Provides final sign-off for all requirements.
- Test Director: overall owner of the tests. Generates Test Plans, provides Test Procedure templates, and identifies the requirements to be verified, interfaces to be exercised, and data products to be exchanged in each of the tests.
- Test Conductor: reviews Test Plans and generates Test Procedures with the detailed steps (test cases) necessary to execute the tests, coordinating with the Test Director, Responsible Engineers, and others as necessary. Executes the test per the test procedure, records test progress and results, and archives as needed. Ideally an end user.
- Responsible Engineer: performs requirements verification analysis and assessments on behalf of the Verification Owner. Provides inputs to the Test Plan and Test Procedure and is a signatory. Witnesses tests affecting their requirements.
- Test Witness: witnesses tests on behalf of the RE when the RE is unable to witness a test (e.g., testing in two geographic locations at once, leave, etc.). Ensures the test procedure is followed and annotated.
- Quality Assurance: monitors test preparation, execution, and reporting per established standards.

Test Plan/Procedure

- Includes the following:
  - Test Objectives: outline the goal of the test and known limitations.
  - Test Reporting: identifies how discrepancies and results are reported.
  - Test Resources: test participants, test configuration, inputs/outputs.
  - Test Case: the actual test steps. Includes a unique identifier, the requirement, detailed steps, identified artifacts, and the TD/TC results (outline in next slide). Advice: avoid Word documents for test cases and use spreadsheets instead. This allows for more attributes and an easier test case for operators/testers to follow.
  - Test Case Traceability: test-specific subset of the VRM showing which test cases test each requirement.
  - Space for the Test Director, Test Conductor, RE/Test Witness, and Quality Assurance to initial/date each page.

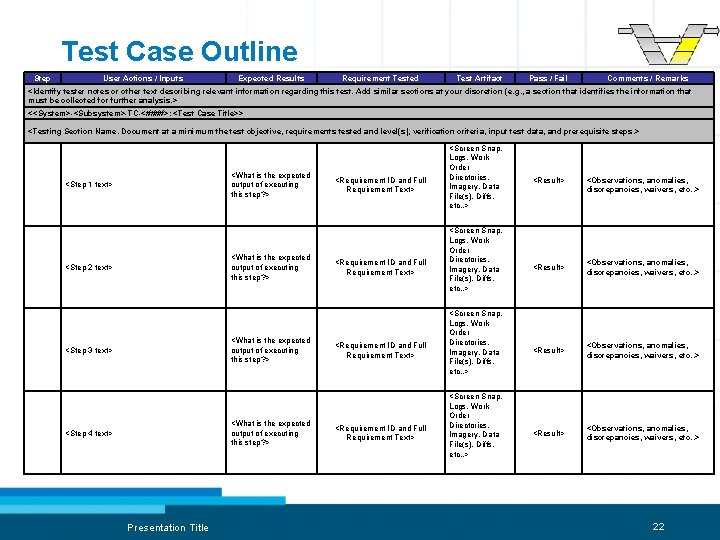

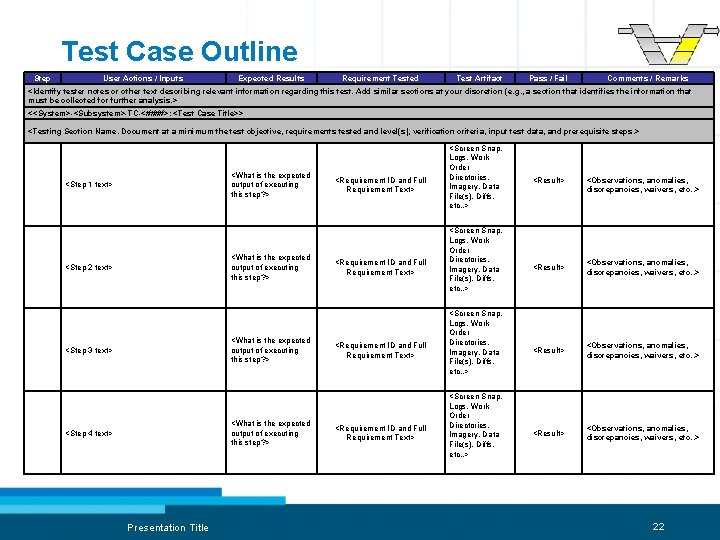

Test Case Outline

<<System>-<Subsystem>-TC-<####>: <Test Case Title>>

<Testing Section Name. Document at a minimum the test objective, requirements tested and level(s), verification criteria, input test data, and prerequisite steps.>

| Step | User Actions / Inputs | Expected Results | Requirement Tested | Test Artifact | Pass / Fail | Comments / Remarks |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | <Step 1 text> | <What is the expected output of executing this step?> | <Requirement ID and Full Requirement Text> | <Screen Snap, Logs, Work Order Directories, Imagery, Data File(s), Diffs, etc.> | <Result> | <Observations, anomalies, discrepancies, waivers, etc.> |
| 2 | <Step 2 text> | <What is the expected output of executing this step?> | | | <Result> | <Observations, anomalies, discrepancies, waivers, etc.> |
| 3 | <Step 3 text> | <What is the expected output of executing this step?> | | | | |
| 4 | <Step 4 text> | <What is the expected output of executing this step?> | | | | |

<Identify tester notes or other text describing relevant information regarding this test. Add similar sections at your discretion (e.g., a section that identifies the information that must be collected for further analysis).>
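The test case header template lends itself to an automated format check when test cases are kept in spreadsheets. The sketch below is one possible reading of the template, written in Python: it assumes that the system and subsystem names are alphanumeric tokens and that `<####>` means exactly four digits. The function name `parse_header` and the sample header are hypothetical.

```python
import re

# Assumed reading of <System>-<Subsystem>-TC-<####>: <Test Case Title>
TEST_CASE_HEADER = re.compile(
    r"^(?P<system>[A-Za-z0-9]+)-(?P<subsystem>[A-Za-z0-9]+)"
    r"-TC-(?P<number>\d{4}): (?P<title>.+)$"
)


def parse_header(header):
    """Return the header fields as a dict, or None if the format is wrong."""
    match = TEST_CASE_HEADER.match(header)
    return match.groupdict() if match else None


# Hypothetical headers for illustration.
print(parse_header("GS-MOE-TC-0042: Schedule a contact"))
print(parse_header("MOE-TC-42"))
```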

Discrepancy Reporting

- Directly relates to Quality Management.
- Document all test discrepancies, no matter how minor.
- The discrepancy reports must include the following: description of the problem, submitter, date observed, logs if applicable, test identified in, priority, additional notes (optional), and requirement affected if known.
- The Responsible Engineers will review all discrepancies to determine whether a requirement is really impacted.
- The discrepancy is assigned to a developer for resolution in a future release.
- The discrepancy remains open until the fix release is formally tested/retested in the environment in which the problem was identified.
  - The Test Director will determine whether the problem is resolved, and the Responsible Engineer will provide concurrence.
  - The retest must regression test the other functionality provided by the module that was fixed.
- There is no such thing as a perfect system; expect discrepancies.
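The required fields and the closure rule can be captured in a simple record. This is a minimal Python sketch with assumed names (`DiscrepancyReport` and its fields are not from any specific tracking tool), and the sample values are invented.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DiscrepancyReport:
    description: str
    submitter: str
    date_observed: date
    test_identified_in: str
    priority: str
    logs: Optional[str] = None                  # if applicable
    notes: Optional[str] = None                 # optional
    requirement_affected: Optional[str] = None  # if known
    retested_in_original_environment: bool = False
    test_director_resolved: bool = False
    responsible_engineer_concurs: bool = False

    def is_open(self) -> bool:
        # Stays open until the fix is retested where the problem was found,
        # the Test Director judges it resolved, and the RE concurs.
        return not (self.retested_in_original_environment
                    and self.test_director_resolved
                    and self.responsible_engineer_concurs)


# Hypothetical report for illustration.
dr = DiscrepancyReport("Output file missing header", "A. Tester",
                       date(2013, 8, 13), "Test 3", "Low")
print(dr.is_open())
```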

Evaluate the Test Results

- You’re not done yet: executing the test is only part of testing.
- Responsible Engineers need to review all test artifacts and perform assessments for each requirement tested.
- The Test Director, Test Conductors, Responsible Engineers, and Verification Owners need to meet to reconcile the results for each requirement (remember that the Test Director and Test Conductor determine Pass/Fail while running the test).
- Final results are combined into one assessment spreadsheet and archived with any ancillary data (analysis documents, inputs/outputs).

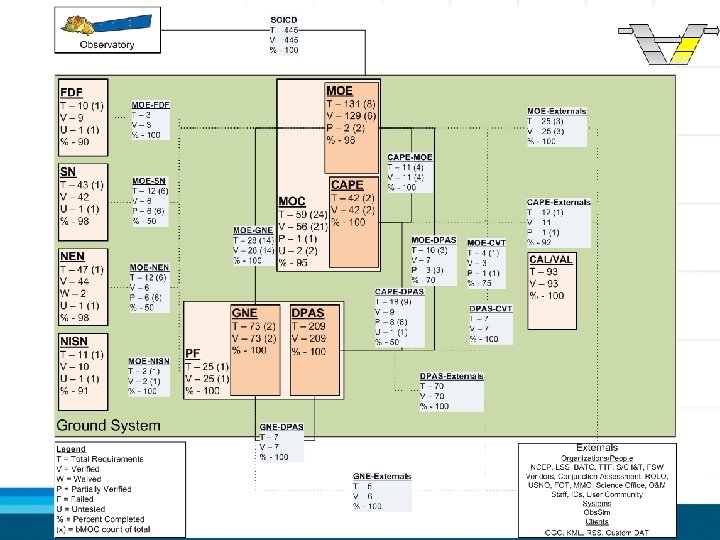

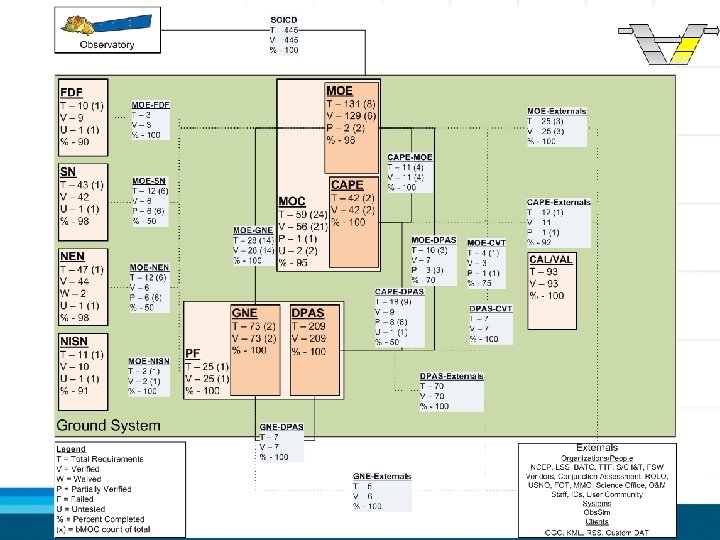

Brief the Results

- Post Test Reports are generated after each test. These detail the results of the test in a prescribed template, stating at a minimum pass/fail and any discrepancy reports generated for each test.
- Post Test Reviews are presented after each test and walk management and the customer through the Post Test Reports. Reporting of the test successes and data volume is often overlooked in these presentations and should be briefed every time.
- Provide an artifact for snapshot status (a scorecard example is in the next slide).
  - During the course of the project it became evident that review panels needed a visual representation of each subsystem's/component's progress.
  - After much experimentation it was determined that traditional bar charts became overwhelming and a simpler diagram-type chart was needed.
  - An overall scorecard includes the progress of all requirements (including interfaces) in a manner that shows the maturity of all subsystems/components.
  - Allows for a simple format the customer can use in their internal meetings.
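The data behind a scorecard can be as simple as a per-subsystem tally of verified requirements. The following Python sketch is an assumption about how such a tally might be computed, not the scorecard format used on the project; the function name `scorecard` and the sample results are invented.

```python
from collections import defaultdict


def scorecard(results):
    """Summarize (subsystem, requirement_id, verified) tuples per subsystem."""
    totals = defaultdict(lambda: [0, 0])  # subsystem -> [verified, total]
    for subsystem, _requirement_id, verified in results:
        totals[subsystem][1] += 1
        if verified:
            totals[subsystem][0] += 1
    return {name: f"{done}/{total} verified"
            for name, (done, total) in totals.items()}


# Hypothetical results for illustration.
results = [
    ("MOE", "MOE-001", True),
    ("MOE", "MOE-002", False),
    ("GNE", "GNE-001", True),
]
print(scorecard(results))
```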

Scorecards

Test Closeout

- Key items to collect and archive (in PDF format if possible):
  - Digital copies of all test procedures
  - Scanned copies of the as-run procedures
  - Exports of all discrepancy reports from your tracking tool into a common archive format (e.g., a spreadsheet)
  - Test Artifacts
    - Test scripts (if used)
    - Test data (inputs)
    - Test products (outputs)
    - Responsible Engineer analysis
    - Assessment spreadsheets

Questions?