TensorLayer: A Versatile Deep Learning Library for Developers and Scientists

Speakers: Luo Mai, Jingqing Zhang

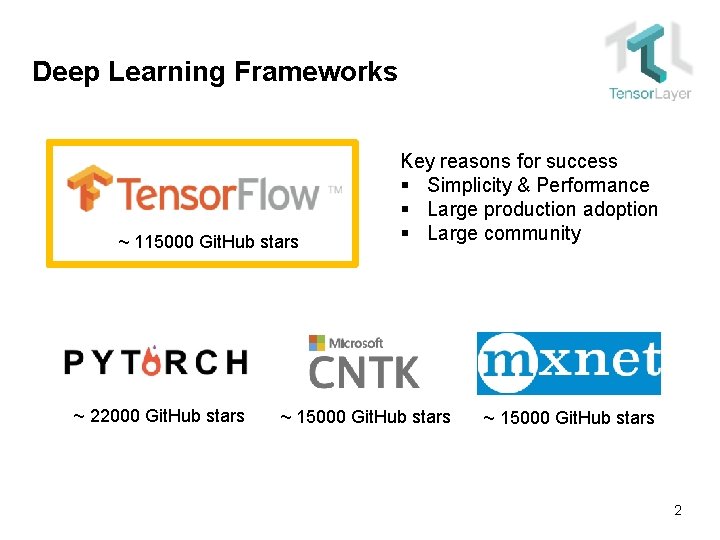

Deep Learning Frameworks

GitHub stars of the three frameworks pictured: ~115,000, ~22,000, ~15,000

Key reasons for success
§ Simplicity & Performance
§ Large production adoption
§ Large community

Abstraction Mismatch

TensorFlow low-level API: dataflow graph, placeholder, session, queue runner, devices …
Deep learning high-level elements: neural networks stacked with layers
Wrapper libraries that bridge the gap (e.g. TFLearn)

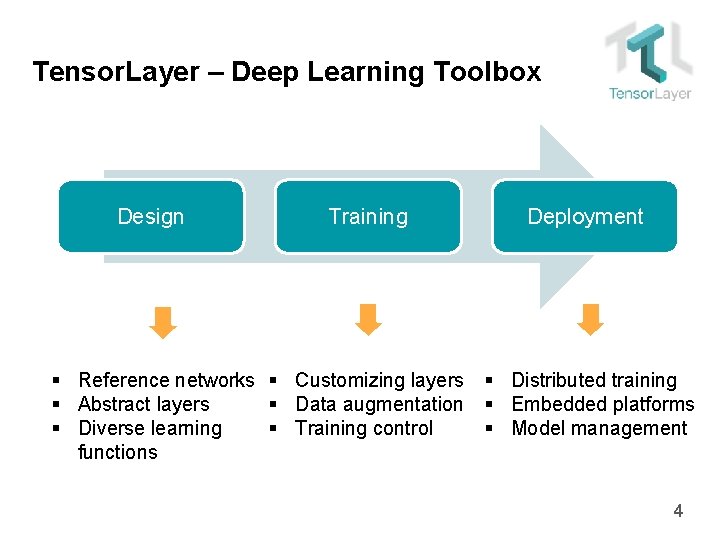

TensorLayer - Deep Learning Toolbox

Stages covered: Design, Training, Deployment
§ Reference networks
§ Customizing layers
§ Distributed training
§ Abstract layers
§ Data augmentation
§ Embedded platforms
§ Diverse learning
§ Training control
§ Model management functions

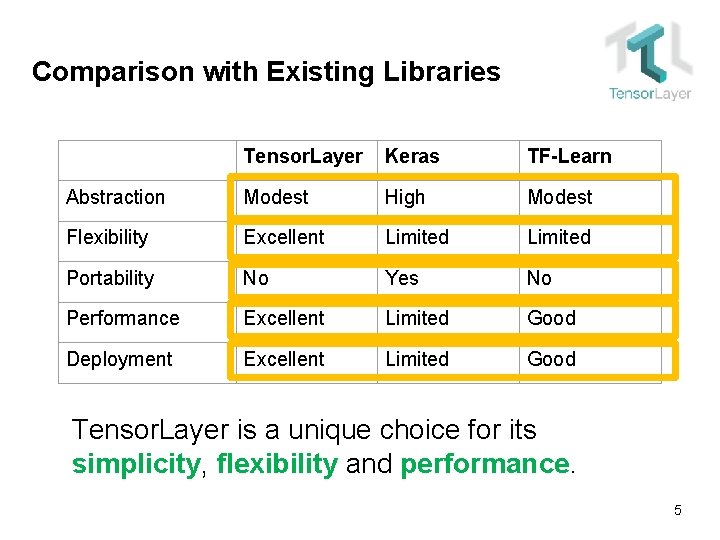

Comparison with Existing Libraries

| | TensorLayer | Keras | TF-Learn |
|---|---|---|---|
| Abstraction | Modest | High | Modest |
| Flexibility | Excellent | Limited | |
| Portability | No | Yes | No |
| Performance | Excellent | Limited | Good |
| Deployment | Excellent | Limited | Good |

TensorLayer is a unique choice for its simplicity, flexibility and performance.

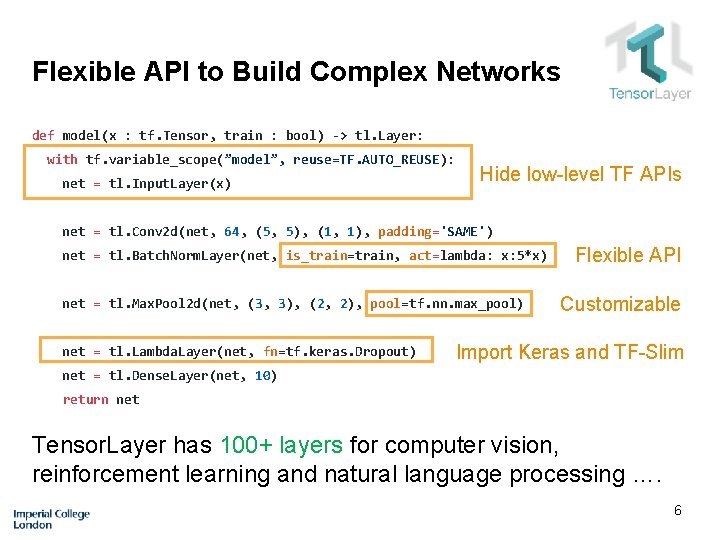

Flexible API to Build Complex Networks

```python
# `tf` and `tl` are the slide's shorthand for TensorFlow and TensorLayer.
def model(x: tf.Tensor, train: bool) -> tl.Layer:
    with tf.variable_scope("model", reuse=tf.AUTO_REUSE):
        net = tl.InputLayer(x)
        net = tl.Conv2d(net, 64, (5, 5), (1, 1), padding='SAME')
        # Any callable can serve as the activation.
        net = tl.BatchNormLayer(net, is_train=train, act=lambda x: 5 * x)
        net = tl.MaxPool2d(net, (3, 3), (2, 2), pool=tf.nn.max_pool)
        net = tl.LambdaLayer(net, fn=tf.keras.layers.Dropout)
        net = tl.DenseLayer(net, 10)
        return net
```

Callouts on the slide:
§ Hide low-level TF APIs
§ Flexible API
§ Customizable
§ Import Keras and TF-Slim

TensorLayer has 100+ layers for computer vision, reinforcement learning and natural language processing.
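The stacking pattern used on this slide, where each layer constructor takes the previous layer and returns a new one, can be sketched without TensorFlow. The following is a minimal NumPy illustration only: the class names mirror the slide, but the implementations are hypothetical and are not TensorLayer's own code.

```python
import numpy as np

class Layer:
    """Hypothetical base class: wraps the previous layer and exposes `outputs`."""
    def __init__(self, prev, outputs):
        self.prev = prev
        self.outputs = outputs
        # Keep the whole chain so the network can be inspected later.
        self.all_layers = (prev.all_layers if prev is not None else []) + [self]

class InputLayer(Layer):
    def __init__(self, x):
        super().__init__(None, np.asarray(x, dtype=float))

class DenseLayer(Layer):
    def __init__(self, prev, n_units, act=lambda z: z, seed=0):
        rng = np.random.default_rng(seed)
        n_in = prev.outputs.shape[-1]
        self.W = rng.normal(scale=0.1, size=(n_in, n_units))
        self.b = np.zeros(n_units)
        super().__init__(prev, act(prev.outputs @ self.W + self.b))

class LambdaLayer(Layer):
    """Wraps an arbitrary function, the hook for importing external code."""
    def __init__(self, prev, fn):
        super().__init__(prev, fn(prev.outputs))

def model(x):
    net = InputLayer(x)
    net = DenseLayer(net, 8, act=lambda z: np.maximum(z, 0.0))  # custom activation
    net = LambdaLayer(net, fn=lambda z: 5 * z)                  # arbitrary function
    net = DenseLayer(net, 10)
    return net

net = model(np.ones((4, 3)))
print(net.outputs.shape, [type(l).__name__ for l in net.all_layers])
```

Because every layer holds a reference to its predecessor, the returned object gives access to the whole network, which is what lets the wrapper hide graph-level details from the user.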

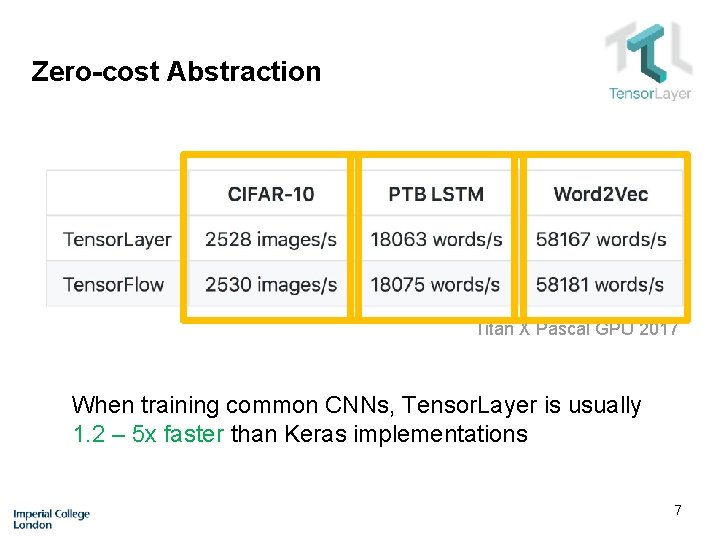

Zero-cost Abstraction

Benchmark: Titan X Pascal GPU, 2017

When training common CNNs, TensorLayer is usually 1.2x to 5x faster than Keras implementations.

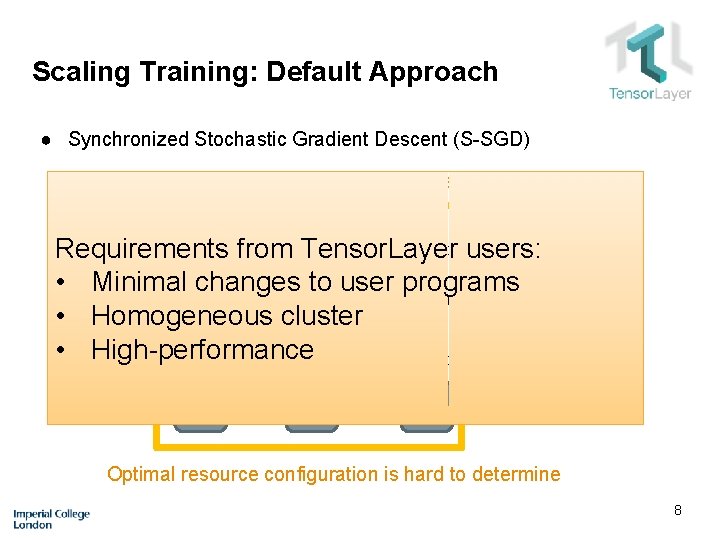

Scaling Training: Default Approach

● Synchronized Stochastic Gradient Descent (S-SGD)

[Diagram: parameter servers send parameters to GPU workers; the workers send local gradients back.]

Requirements from TensorLayer users:
• Minimal changes to user programs
• Homogeneous cluster
• High-performance

Drawbacks of the parameter-server approach:
• Extra work to include a parameter server in TF programs
• Optimal resource configuration is hard to determine

Scaling Training: TensorLayer + Horovod

● S-SGD through peer-to-peer communication

[Diagram: GPU workers broadcast parameters and aggregate gradients among themselves.]

Steps highlighted on the slide: initialize environment, enable synchronization.
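The two operations named on this slide, broadcasting parameters and aggregating gradients, can be simulated in a single process. The sketch below is an illustration in plain NumPy and does not use Horovod: `allreduce_mean`, `local_gradient` and `s_sgd` are hypothetical helpers, and the least-squares problem is made up for the example.

```python
import numpy as np

def local_gradient(w, X, y):
    """Gradient of 0.5 * mean((Xw - y)^2) on one worker's data shard."""
    return X.T @ (X @ w - y) / len(y)

def allreduce_mean(grads):
    """Stand-in for a peer-to-peer allreduce: every worker receives the average."""
    avg = np.mean(grads, axis=0)
    return [avg.copy() for _ in grads]

def s_sgd(X, y, n_workers=4, lr=0.1, steps=200):
    shards = list(zip(np.array_split(X, n_workers), np.array_split(y, n_workers)))
    # "Broadcast parameters": every worker starts from the same weights.
    w0 = np.zeros(X.shape[1])
    weights = [w0.copy() for _ in range(n_workers)]
    for _ in range(steps):
        grads = [local_gradient(w, Xs, ys) for w, (Xs, ys) in zip(weights, shards)]
        # "Aggregate gradients": average across workers before any update.
        synced = allreduce_mean(grads)
        weights = [w - lr * g for w, g in zip(weights, synced)]
    return weights

rng = np.random.default_rng(0)
X = rng.normal(size=(400, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w
weights = s_sgd(X, y)
print(weights[0])
```

Since all workers start from identical weights and apply the same averaged gradient, their replicas stay identical at every step, so no parameter server is needed to hold a master copy.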

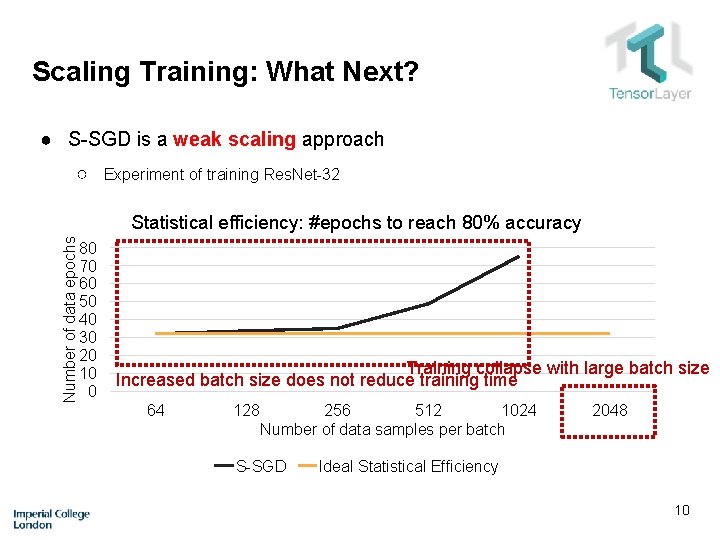

Scaling Training: What Next?

● S-SGD is a weak scaling approach
  ○ Experiment of training ResNet-32

[Figure: statistical efficiency, measured as the number of data epochs to reach 80% accuracy (y-axis, 0 to 80), against the number of data samples per batch (x-axis, 64 to 2048). Series: S-SGD, Ideal Statistical Efficiency. Annotations: "Training collapse with large batch size"; "Increased batch size does not reduce training time".]
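The reason a larger batch may not shorten training can be shown with simple arithmetic: the number of sequential parameter updates is the number of epochs multiplied by the number of batches per epoch. The sketch below uses made-up numbers throughout; the dataset size and epoch counts are hypothetical and are not read from the slide's plot.

```python
import math

def updates_to_target(epochs, dataset_size, batch_size):
    """Sequential parameter updates needed: epochs x batches per epoch."""
    return epochs * math.ceil(dataset_size / batch_size)

DATASET_SIZE = 51_200  # hypothetical, chosen to divide evenly

# Ideal statistical efficiency: epochs to target do not depend on batch size.
ideal = {b: updates_to_target(10, DATASET_SIZE, b) for b in (64, 128, 256)}

# Degraded efficiency (made-up numbers): epochs double whenever the batch doubles.
degraded = {b: updates_to_target(e, DATASET_SIZE, b)
            for b, e in ((64, 10), (128, 20), (256, 40))}

print(ideal)
print(degraded)
```

In the ideal case each doubling of the batch halves the number of updates. In the degraded case the extra epochs cancel the gain exactly, so adding workers to process larger batches buys no reduction in sequential steps.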

Scaling Training: What Next?

"If the training rate of some models is restricted to small batch sizes, then we will need to find other algorithmic and architectural approaches to their acceleration" – Jeff Dean & David Patterson

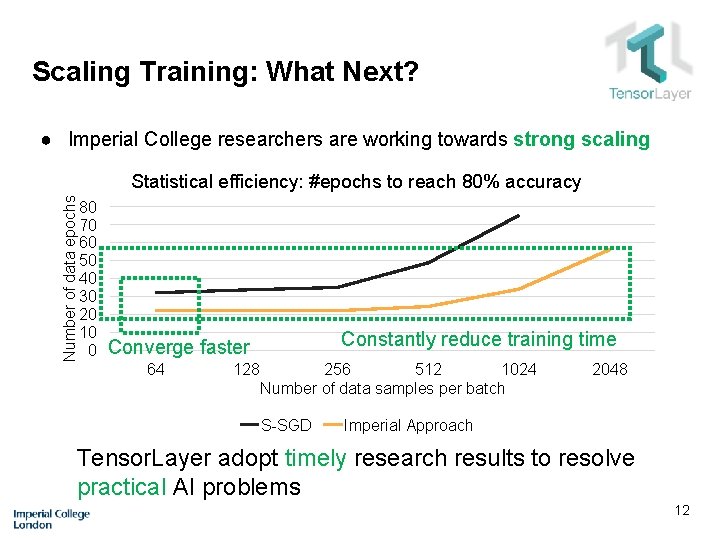

Scaling Training: What Next?

● Imperial College researchers are working towards strong scaling

[Figure: statistical efficiency, measured as the number of data epochs to reach 80% accuracy (y-axis, 0 to 80), against the number of data samples per batch (x-axis, 64 to 2048). Series: S-SGD, Imperial Approach. Annotations: "Constantly reduce training time"; "Converge faster".]

TensorLayer adopts timely research results to resolve practical AI problems.

Impact - GitHub

December 2018: ~4,500 stars, ~1,000 forks, ~70 contributors

Impact - Media and Award

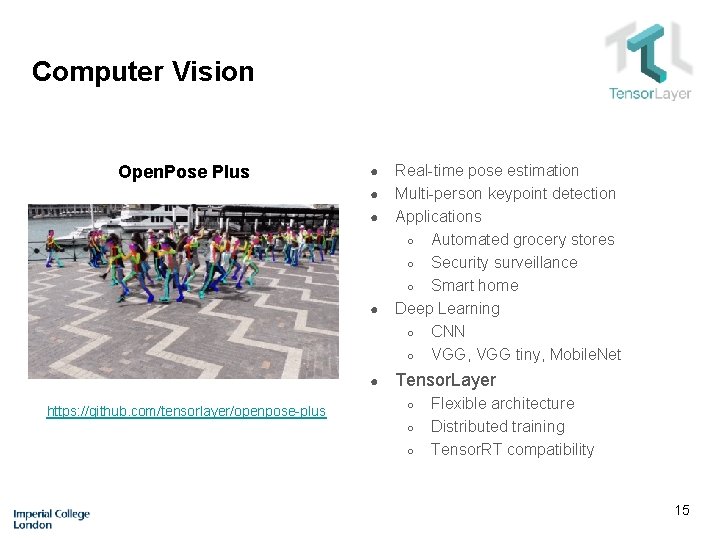

Computer Vision - OpenPose Plus

https://github.com/tensorlayer/openpose-plus

● Real-time pose estimation
● Multi-person keypoint detection
● Applications
  ○ Automated grocery stores
  ○ Security surveillance
  ○ Smart home
● Deep Learning
  ○ CNN
  ○ VGG, VGG tiny, MobileNet
● TensorLayer
  ○ Flexible architecture
  ○ Distributed training
  ○ TensorRT compatibility

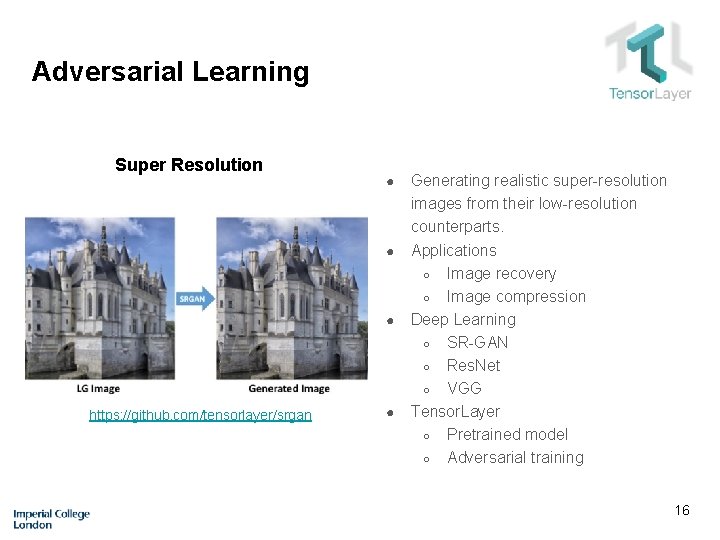

Adversarial Learning - Super Resolution

https://github.com/tensorlayer/srgan

● Generating realistic super-resolution images from their low-resolution counterparts
● Applications
  ○ Image recovery
  ○ Image compression
● Deep Learning
  ○ SR-GAN
  ○ ResNet
  ○ VGG
● TensorLayer
  ○ Pretrained model
  ○ Adversarial training

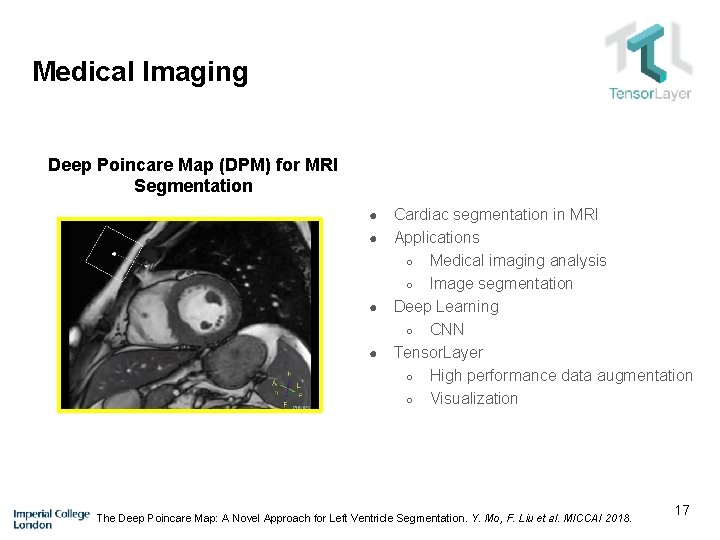

Medical Imaging - Deep Poincare Map (DPM) for MRI Segmentation

● Cardiac segmentation in MRI
● Applications
  ○ Medical imaging analysis
  ○ Image segmentation
● Deep Learning
  ○ CNN
● TensorLayer
  ○ High performance data augmentation
  ○ Visualization

The Deep Poincare Map: A Novel Approach for Left Ventricle Segmentation. Y. Mo, F. Liu et al. MICCAI 2018.

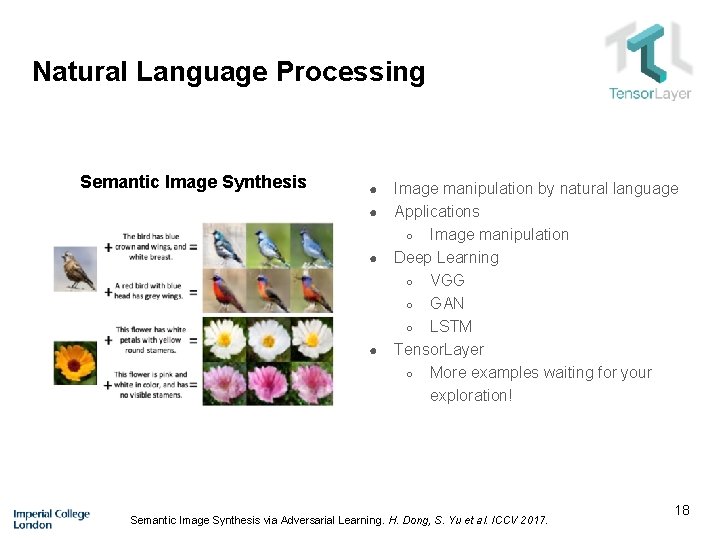

Natural Language Processing - Semantic Image Synthesis

● Image manipulation by natural language
● Applications
  ○ Image manipulation
● Deep Learning
  ○ VGG
  ○ GAN
  ○ LSTM
● TensorLayer
  ○ More examples waiting for your exploration!

Semantic Image Synthesis via Adversarial Learning. H. Dong, S. Yu et al. ICCV 2017.

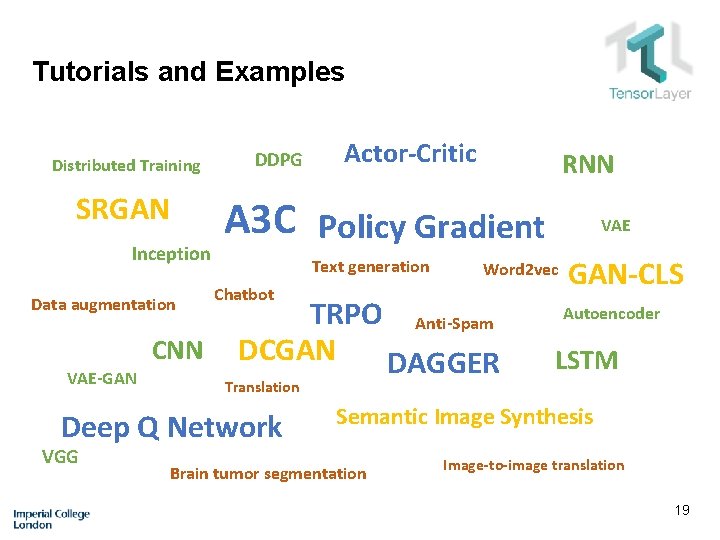

Tutorials and Examples

Distributed Training, SRGAN, Inception, Data augmentation, VAE-GAN, CNN, DDPG, RNN, A3C, Policy Gradient, Text generation, Chatbot, VAE, Word2vec, TRPO, Anti-Spam, DCGAN, DAGGER, GAN-CLS, Autoencoder, LSTM, Translation, Deep Q Network, VGG, Actor-Critic, Semantic Image Synthesis, Brain tumor segmentation, Image-to-image translation

Luo Mai: luo.mai11@imperial.ac.uk
Jingqing Zhang: jz9215@imperial.ac.uk
https://github.com/tensorlayer
