TensorFlow: Check installation and version (Python 3)



- Slides: 25

Introduction to TensorFlow

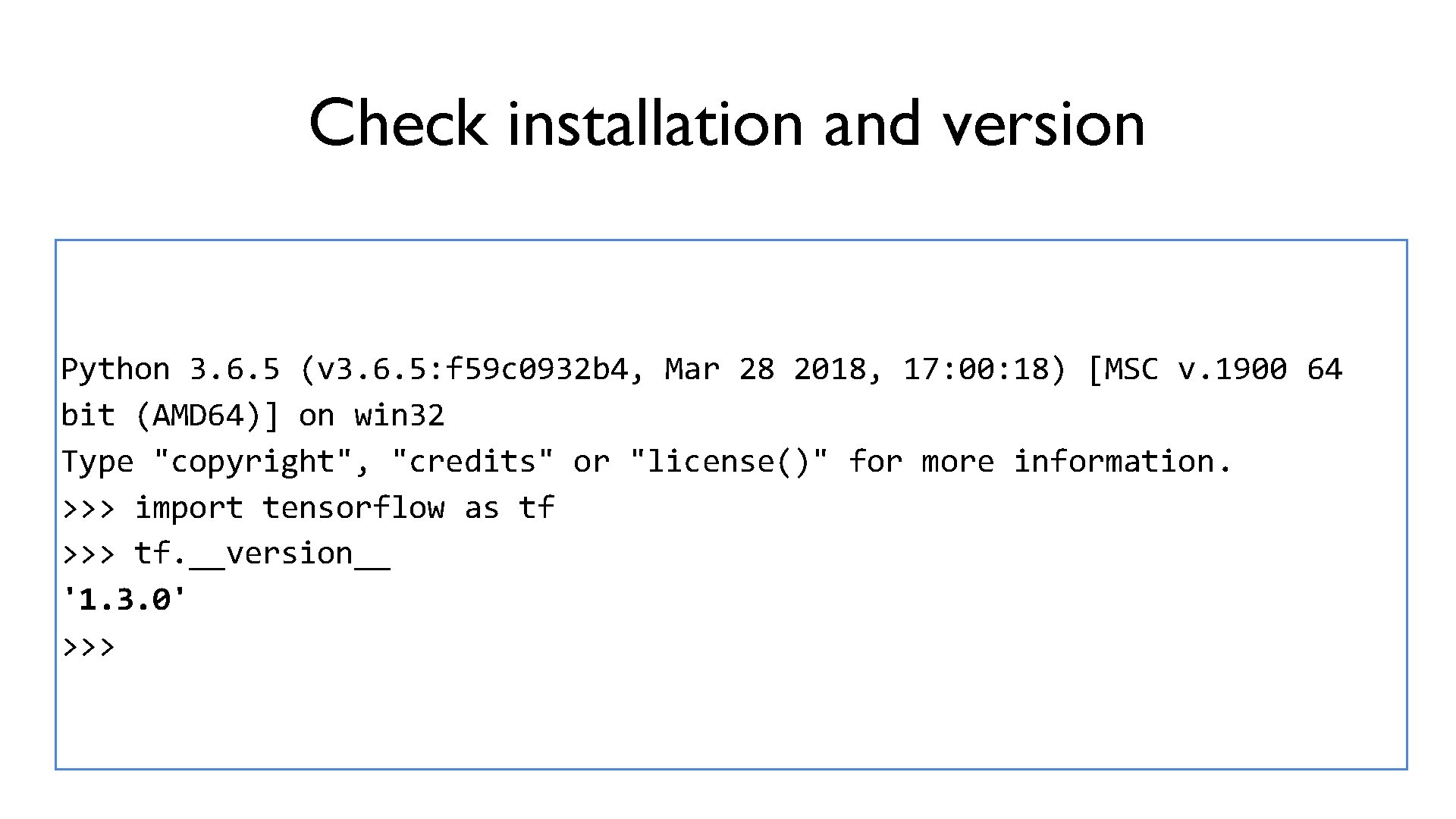

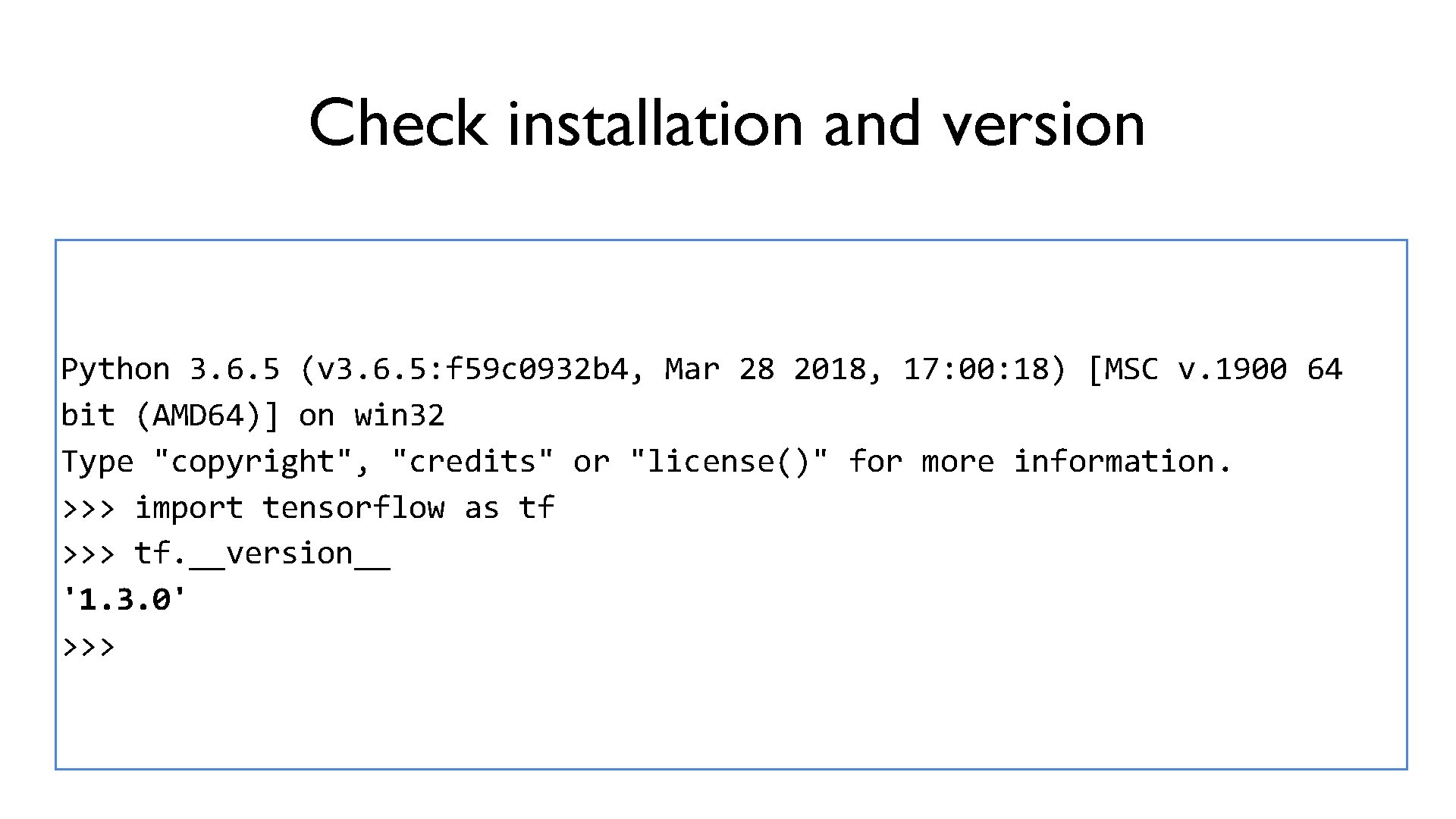

Check installation and version

```
Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)] on win32
Type "copyright", "credits" or "license()" for more information.
>>> import tensorflow as tf
>>> tf.__version__
'1.3.0'
>>>
```
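The examples that follow use the TensorFlow 1.x graph API (`tf.Session`, `tf.placeholder`). TensorFlow 2.x no longer provides these at the top level and only keeps them under `tf.compat.v1`. Below is a minimal sketch of checking the installed version before running the examples. It assumes a version string of the form `"1.3.0"`, and the helper names `major_version` and `supports_tf1_graph_api` are hypothetical:

```python
from importlib import metadata


def major_version(version: str) -> int:
    """Return the major component of a version string such as '1.3.0'."""
    return int(version.split(".")[0])


def supports_tf1_graph_api(version: str) -> bool:
    # Assumption: the slides' examples need TensorFlow 1.x, where
    # tf.Session and tf.placeholder are top-level names.
    return major_version(version) == 1


if __name__ == "__main__":
    try:
        installed = metadata.version("tensorflow")
    except metadata.PackageNotFoundError:
        print("tensorflow is not installed")
    else:
        print(installed, "TF1 graph API available:", supports_tf1_graph_api(installed))
```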

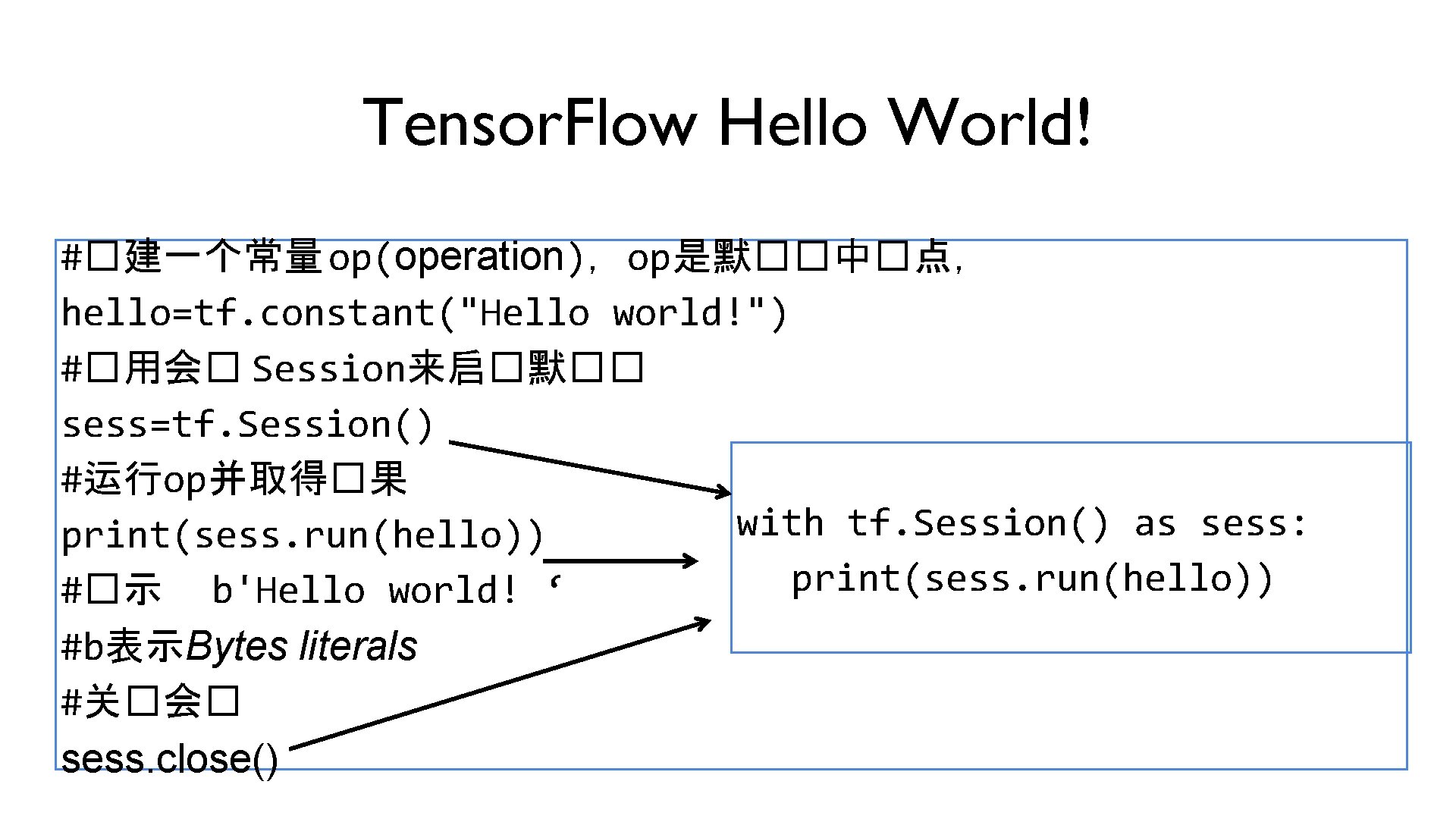

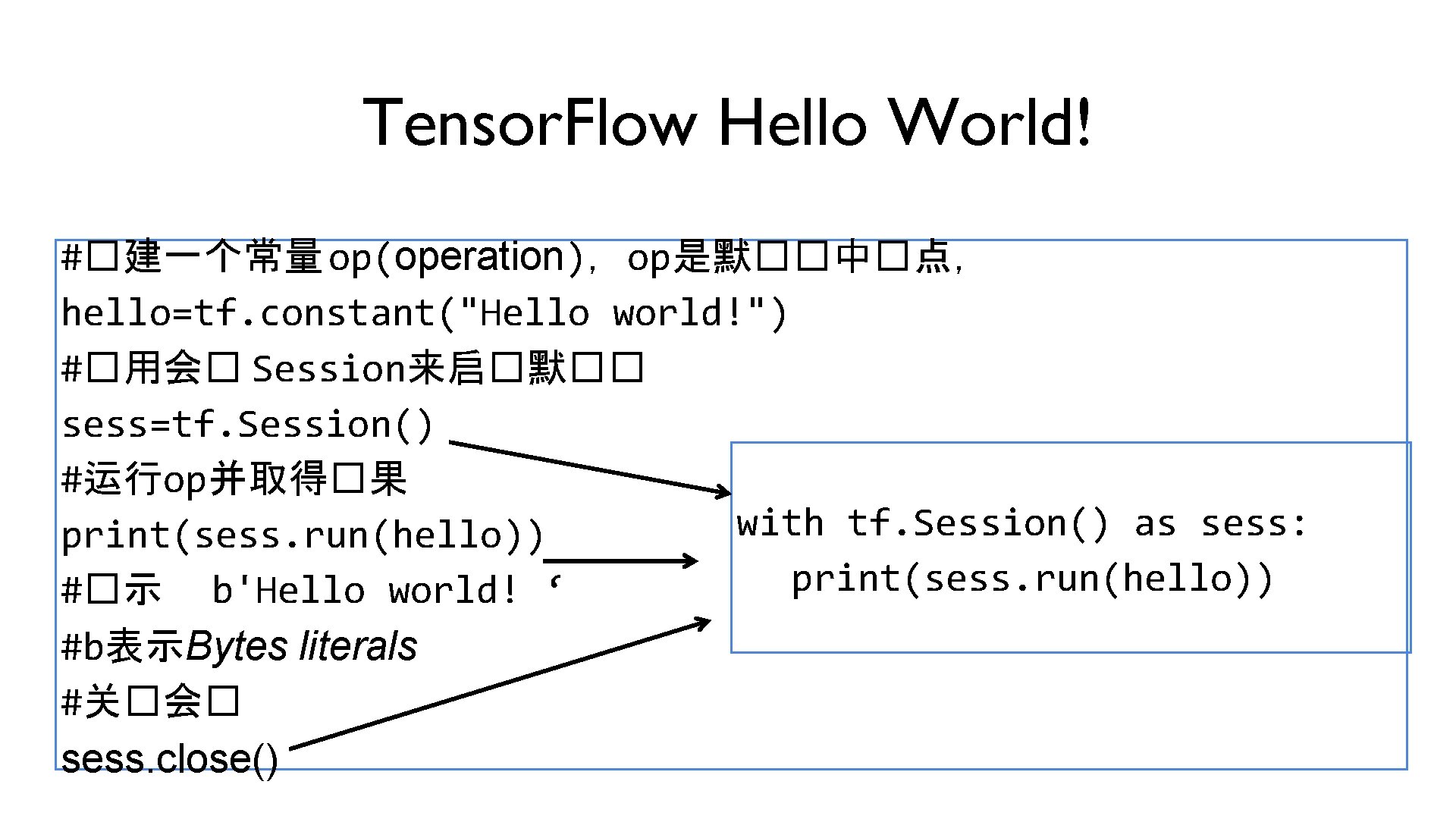

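TensorFlow Hello World!

```python
import tensorflow as tf  # TensorFlow 1.x API

# Create a constant op (operation); the op is added as a node to the default graph
hello = tf.constant("Hello world!")

# Launch the default graph in a Session, run the op, and fetch the result
with tf.Session() as sess:
    print(sess.run(hello))  # prints b'Hello world!'; the b prefix marks a bytes literal

# Leaving the with block closes the session, so no explicit sess.close() is needed
```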
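`sess.run` returns string tensors to Python as `bytes`, which is why the output carries the `b` prefix. Here is a small plain-Python sketch of converting such a result to `str`. It assumes the bytes are UTF-8, and the helper `to_text` is hypothetical:

```python
def to_text(value: bytes, encoding: str = "utf-8") -> str:
    """Decode a bytes result (such as sess.run on a string tensor) into str."""
    return value.decode(encoding)


# Stand-in for the value sess.run(hello) returns in the slide
result = b"Hello world!"
print(result)           # b'Hello world!'
print(to_text(result))  # Hello world!
```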

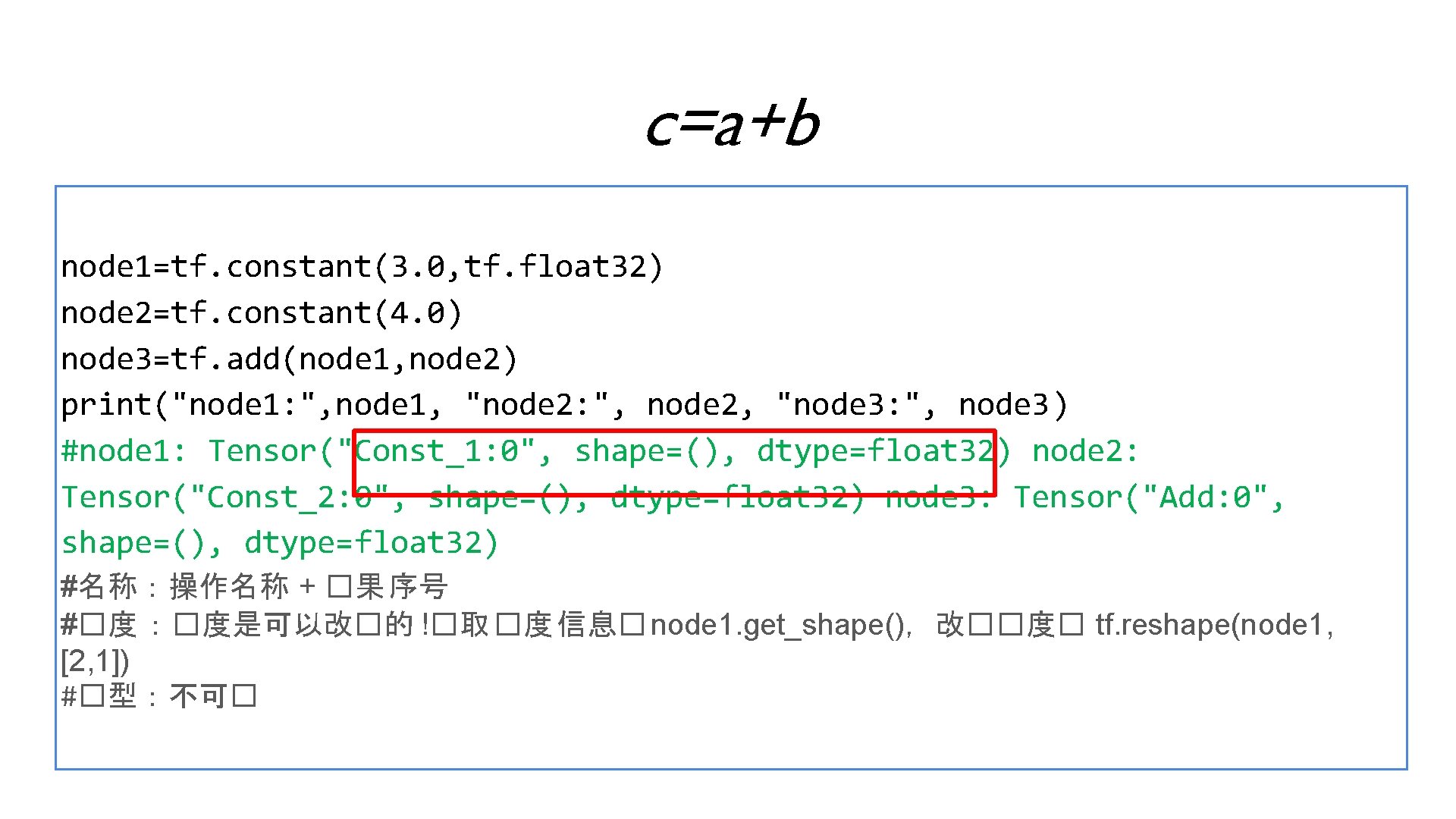

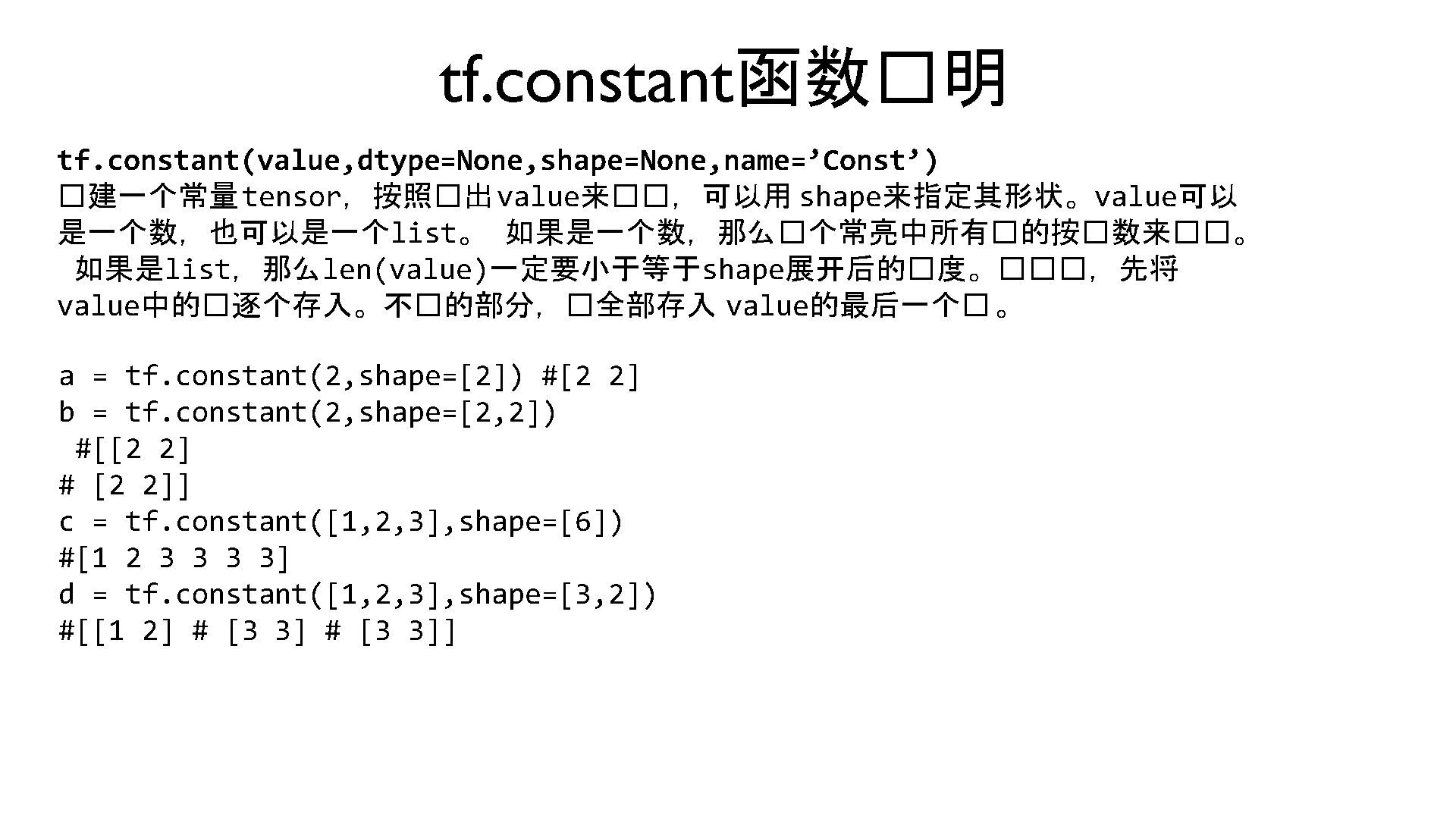

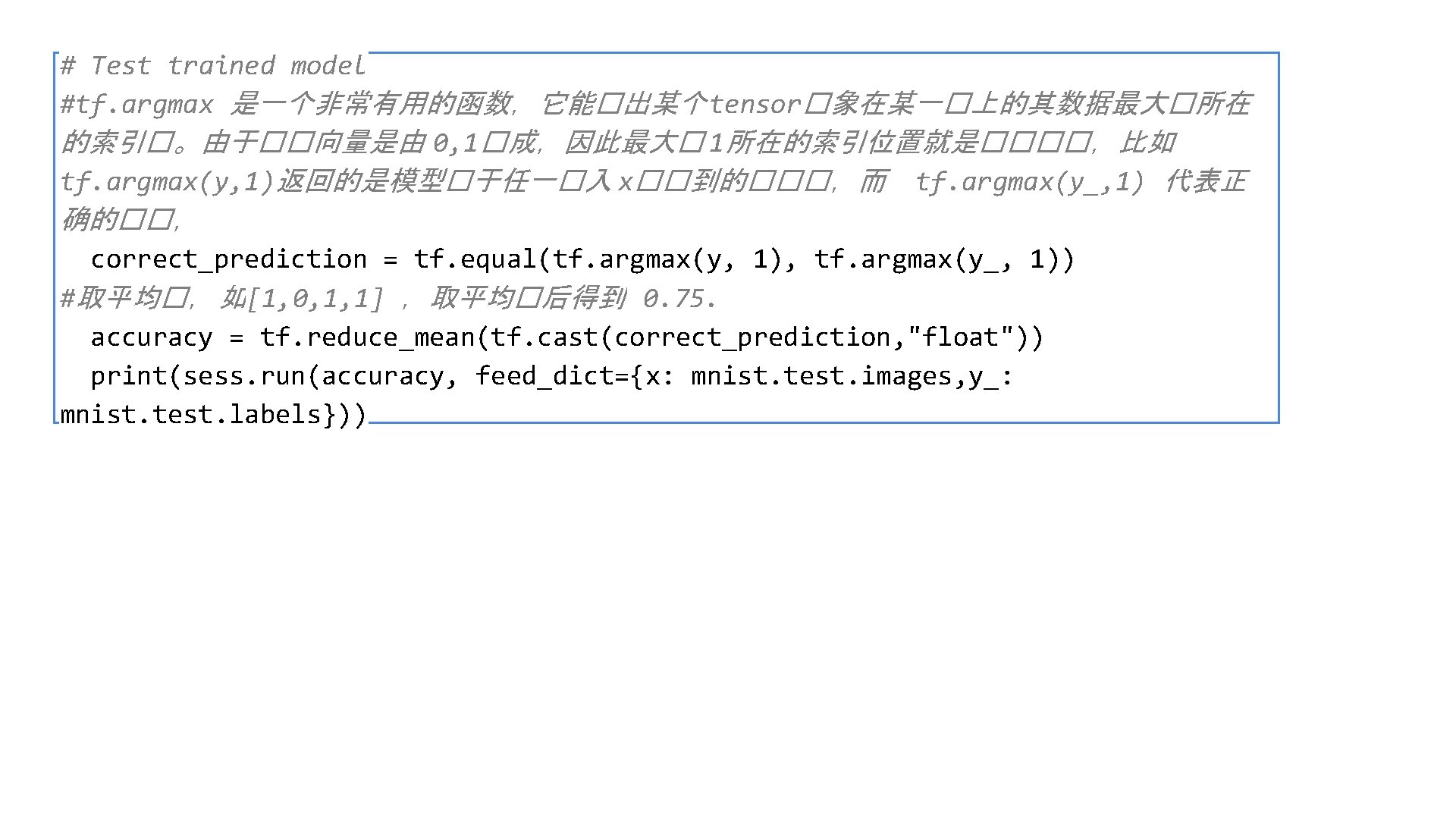

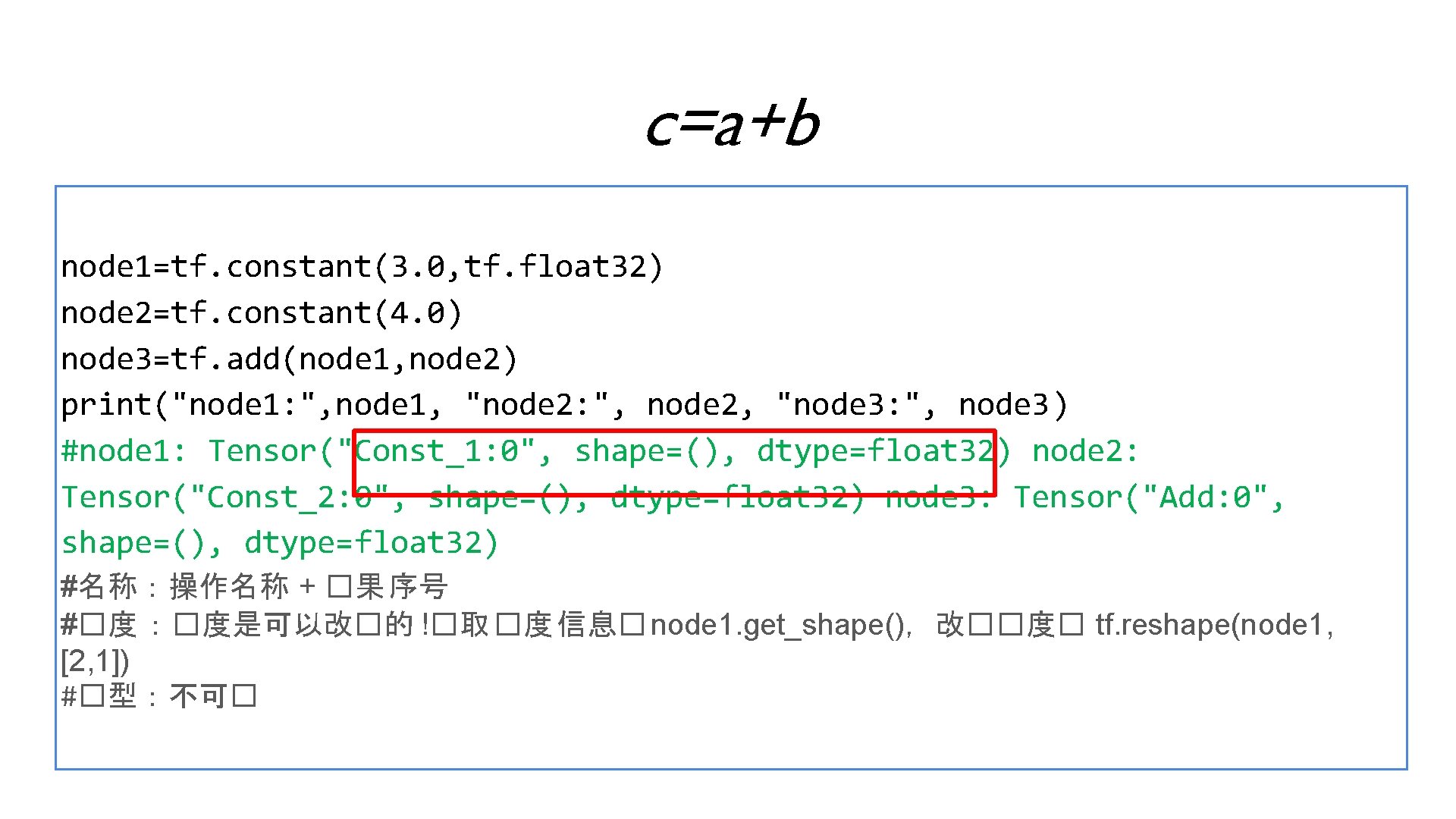

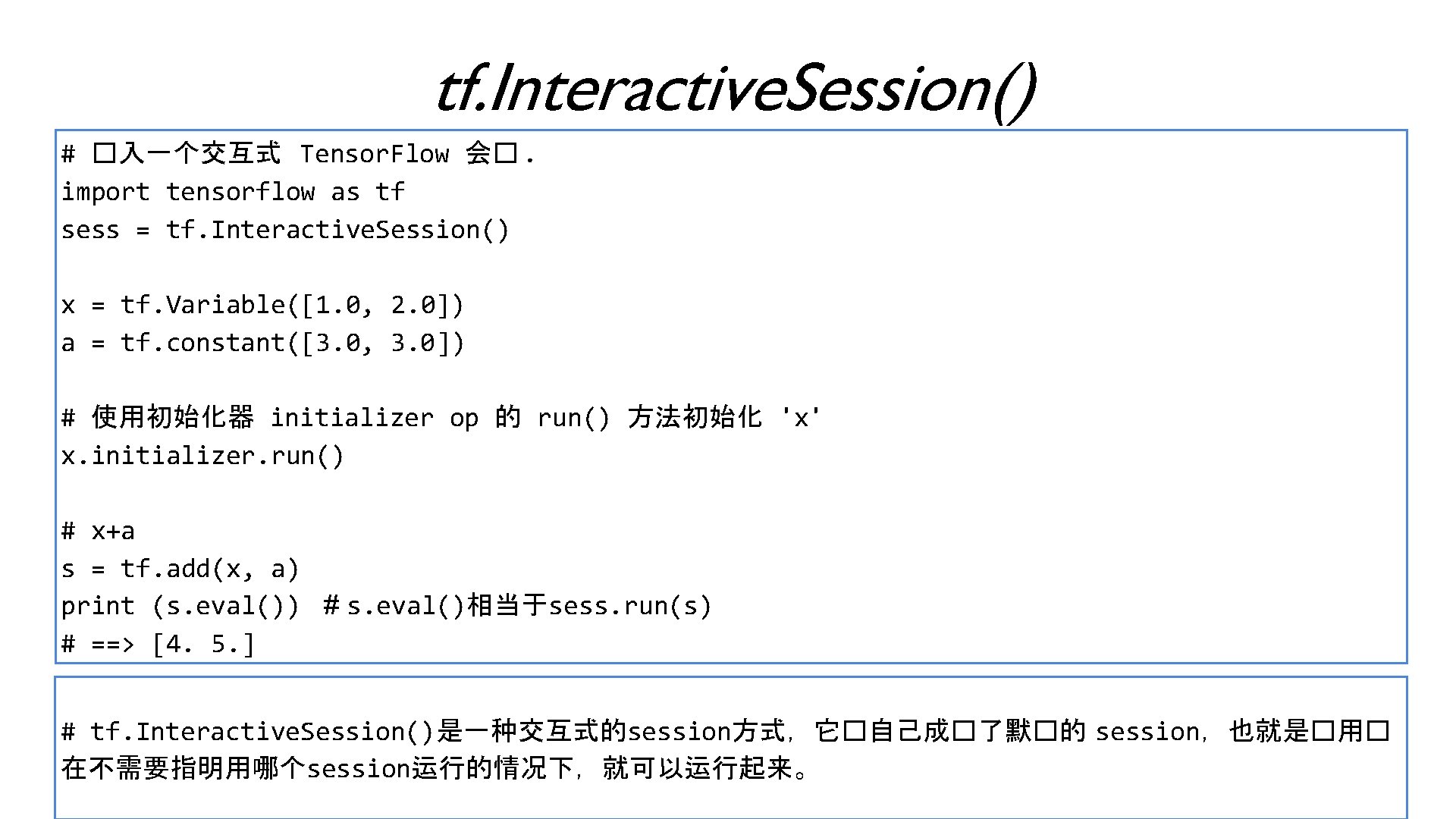

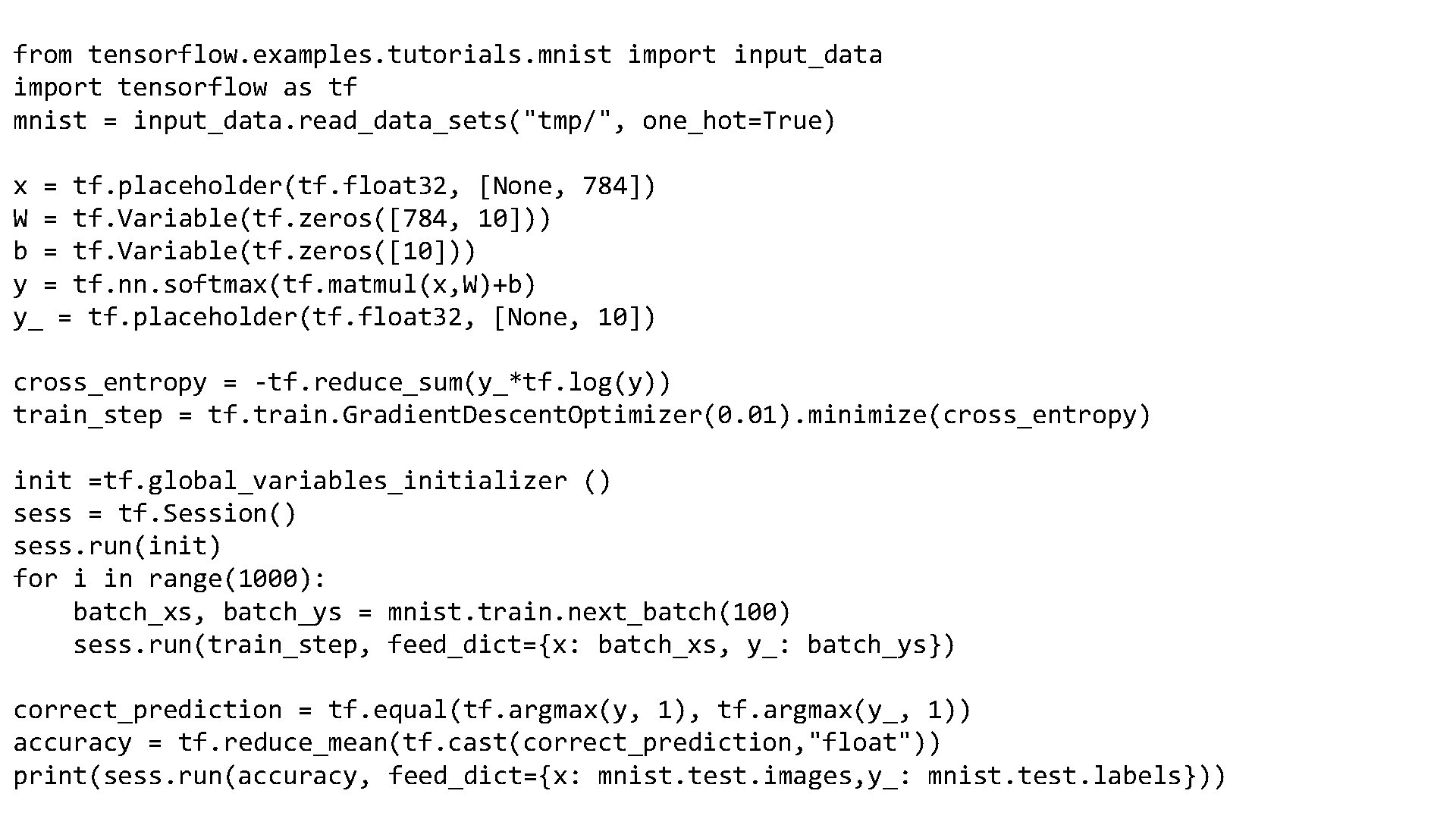

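c = a + b

```python
import tensorflow as tf  # TensorFlow 1.x API

node1 = tf.constant(3.0, tf.float32)
node2 = tf.constant(4.0)  # dtype is inferred as tf.float32
node3 = tf.add(node1, node2)
print("node1:", node1, "node2:", node2, "node3:", node3)
# node1: Tensor("Const_1:0", shape=(), dtype=float32) node2: Tensor("Const_2:0", shape=(), dtype=float32) node3: Tensor("Add:0", shape=(), dtype=float32)
```

Printing a node shows the symbolic tensor, not its value:

- Name: the operation name plus the index of the output, e.g. `Add:0`.
- Shape: can change. Read it with `node1.get_shape()`. `tf.reshape(t, new_shape)` returns a new tensor with the requested shape, and that shape must hold the same number of elements. For example, a two-element tensor can become `[2, 1]`, but the scalar `node1` cannot.
- Type (dtype): cannot be changed.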
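TensorFlow 1.x keeps op names unique within a graph by appending `_1`, `_2`, ... to repeated names. That is why the constants above print as `Const_1` and `Const_2`, presumably because an earlier constant (such as the "Hello world!" one) already took the name `Const`. The class below is a hypothetical plain-Python illustration of that naming scheme, not TensorFlow's code:

```python
from collections import defaultdict


class ToyGraph:
    """Hypothetical stand-in for a TF1 graph that only tracks op names."""

    def __init__(self):
        self._counts = defaultdict(int)

    def unique_name(self, op_type: str) -> str:
        # The first op of a type keeps the bare name; later ones get _1, _2, ...
        n = self._counts[op_type]
        self._counts[op_type] += 1
        return op_type if n == 0 else f"{op_type}_{n}"

    def tensor_name(self, op_type: str, output_index: int = 0) -> str:
        # Tensor names follow the "<op name>:<output index>" pattern
        return f"{self.unique_name(op_type)}:{output_index}"


g = ToyGraph()
print(g.tensor_name("Const"))  # Const:0   (e.g. an earlier constant)
print(g.tensor_name("Const"))  # Const_1:0 (node1)
print(g.tensor_name("Const"))  # Const_2:0 (node2)
print(g.tensor_name("Add"))    # Add:0     (node3)
```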

![Slide: c = a + b, running node1 and node2 in a session](https://slidetodoc.com/presentation_image_h2/3d3b1ec848de1fb74091c09c2c0d5041/image-5.jpg)

c = a + b

```python
sess = tf.Session()
print("sess.run([node1, node2]):", sess.run([node1, node2]))
# sess.run([node1, node2]): [3.0, 4.0]
print("sess.run(node3):", sess.run(node3))
# sess.run(node3): 7.0
```

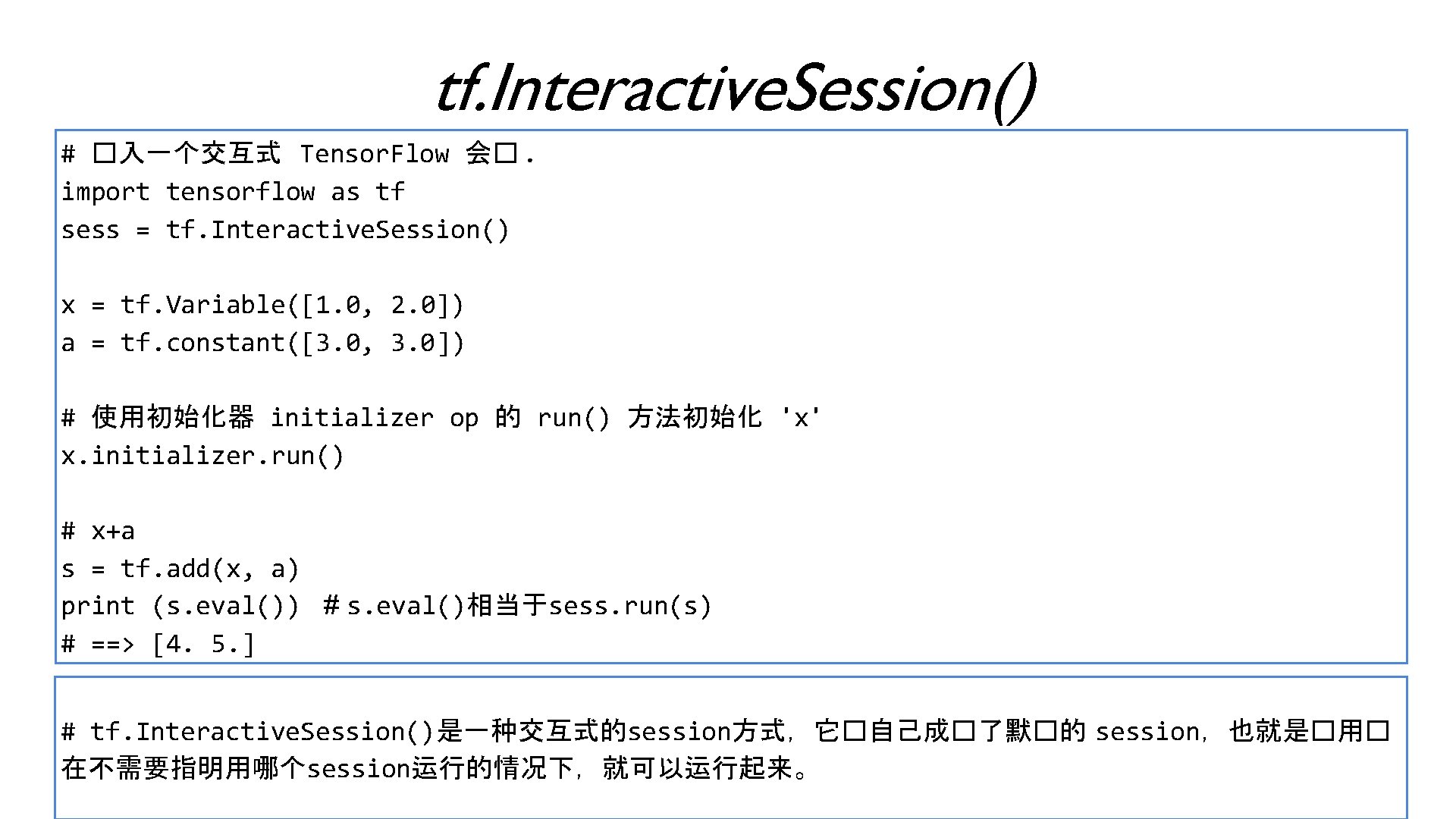

`tf.InteractiveSession()`: enter an interactive TensorFlow session.

```python
import tensorflow as tf  # TensorFlow 1.x API

sess = tf.InteractiveSession()

x = tf.Variable([1.0, 2.0])
a = tf.constant([3.0, 3.0])

# Initialize 'x' by calling the run() method of its initializer op
x.initializer.run()

# x + a
s = tf.add(x, a)
print(s.eval())  # s.eval() is equivalent to sess.run(s)
# ==> [4. 5.]
```

`tf.InteractiveSession()` installs itself as the default session. Calls such as `Tensor.eval()` and `Operation.run()` therefore work without specifying which session to use.
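The default-session mechanism can be imitated in a few lines of plain Python. This is a hypothetical toy: the classes `Session`, `InteractiveSession` and `Node` here are stand-ins, not TensorFlow's. It shows how `eval()` can find a session without being told which one to use:

```python
class Session:
    """Toy session: evaluates a node by calling its compute function."""

    def run(self, node):
        return node.compute()


_default_session = None


class InteractiveSession(Session):
    def __init__(self):
        # Like tf.InteractiveSession, register itself as the default on construction
        global _default_session
        _default_session = self


class Node:
    def __init__(self, compute):
        self.compute = compute

    def eval(self, session=None):
        # Fall back to the default session when none is passed explicitly
        session = session or _default_session
        if session is None:
            raise RuntimeError("No default session is registered")
        return session.run(self)


sess = InteractiveSession()
x = [1.0, 2.0]
a = [3.0, 3.0]
s = Node(lambda: [xi + ai for xi, ai in zip(x, a)])
print(s.eval())  # [4.0, 5.0], same as sess.run(s)
```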

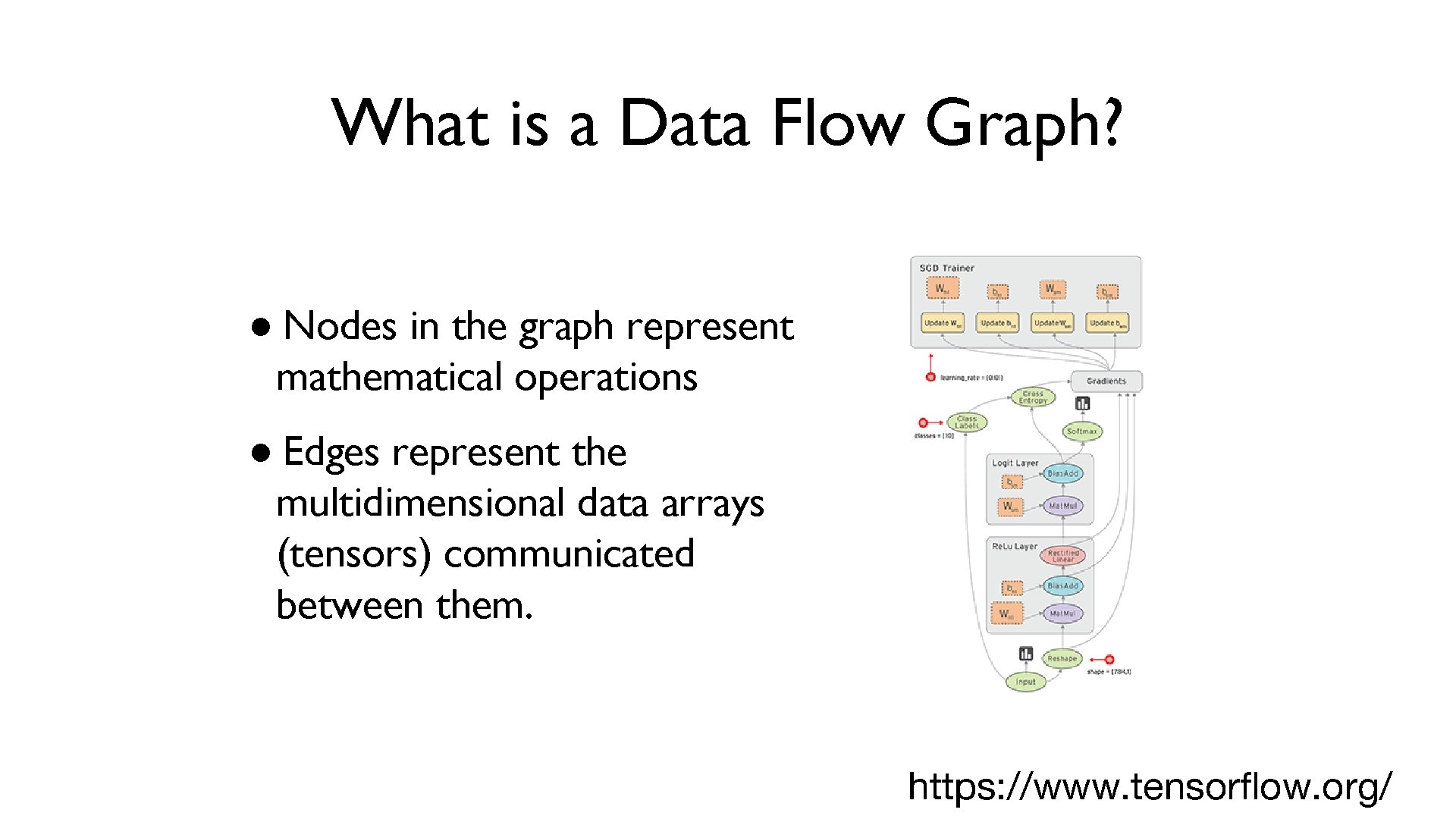

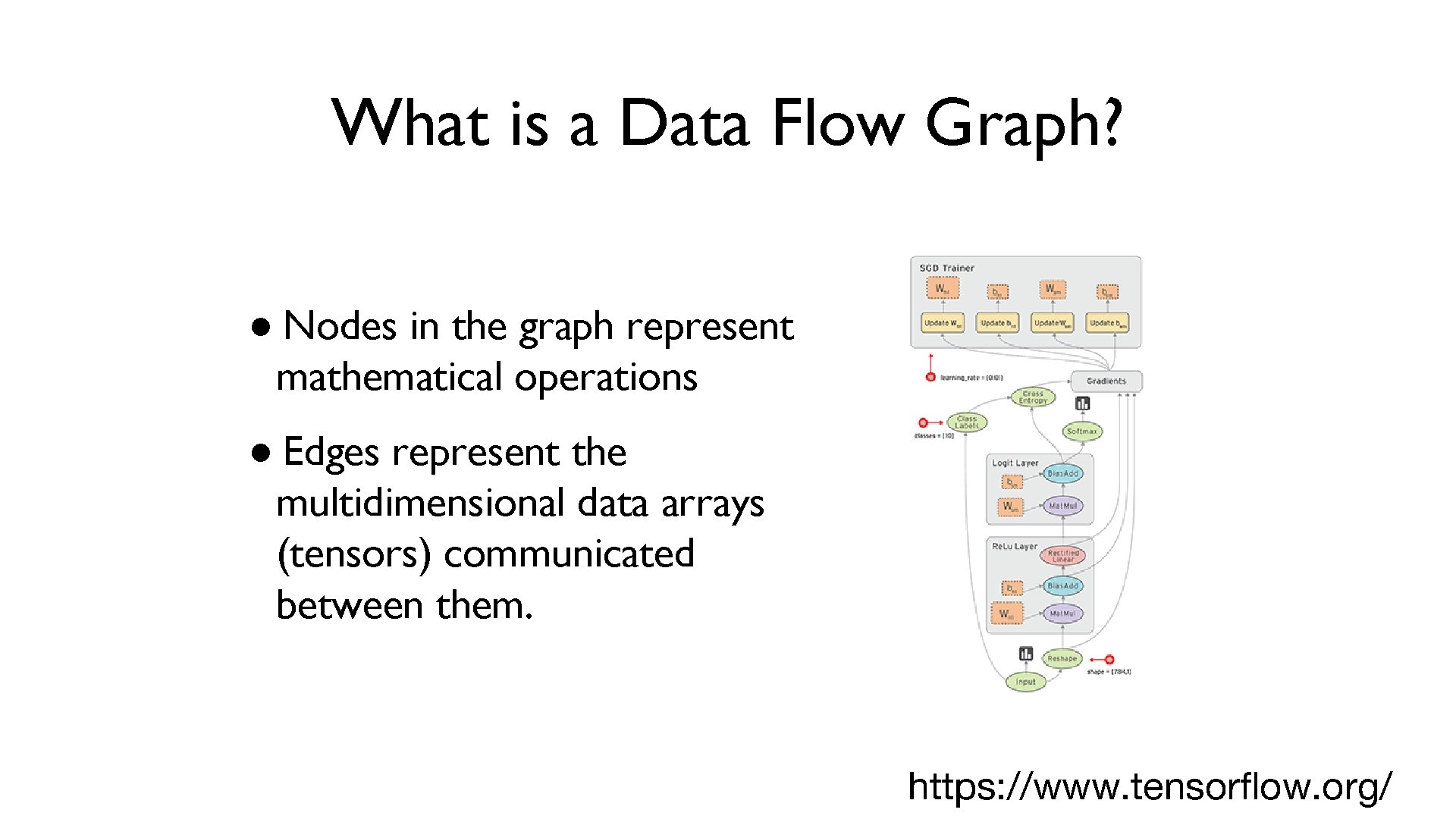

What is a Data Flow Graph?

- Nodes in the graph represent mathematical operations.
- Edges represent the multidimensional data arrays (tensors) communicated between them.

Source: https://www.tensorflow.org/
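A data flow graph can be sketched in plain Python. Each node stores an operation and the edges to its inputs, and evaluating a node pulls values along those edges. The `Op` class and `run` function below are hypothetical and only mirror the idea behind `sess.run`; TensorFlow's actual executor is far more involved:

```python
from typing import Callable, Dict, Sequence


class Op:
    """A node in a toy dataflow graph: an operation plus its input edges."""

    def __init__(self, name: str, fn: Callable, inputs: Sequence["Op"] = ()):
        self.name = name
        self.fn = fn
        self.inputs = list(inputs)


def run(fetch: Op) -> object:
    """Evaluate `fetch` by walking its input edges depth-first.

    Each node is computed at most once per call; its result flows
    along the edges to every node that consumes it.
    """
    cache: Dict[Op, object] = {}

    def visit(op: Op) -> object:
        if op not in cache:
            args = [visit(i) for i in op.inputs]
            cache[op] = op.fn(*args)
        return cache[op]

    return visit(fetch)


a = Op("a", lambda: 3.0)
b = Op("b", lambda: 4.0)
c = Op("c", lambda x, y: x + y, [a, b])  # c = a + b
d = Op("d", lambda x, y: x * y, [c, a])  # d = c * a; node a feeds two edges
print(run(c))  # 7.0
print(run(d))  # 21.0
```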

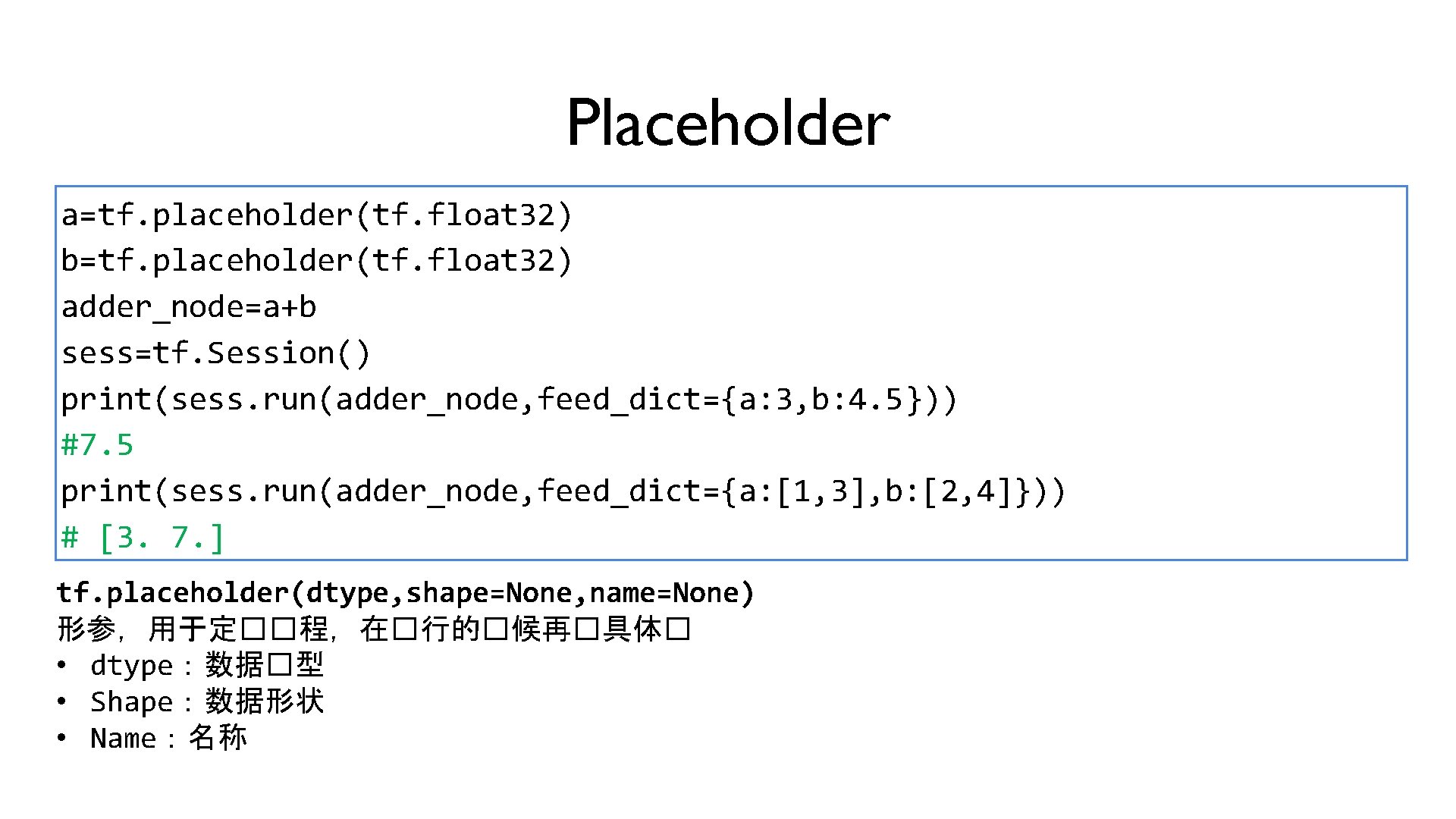

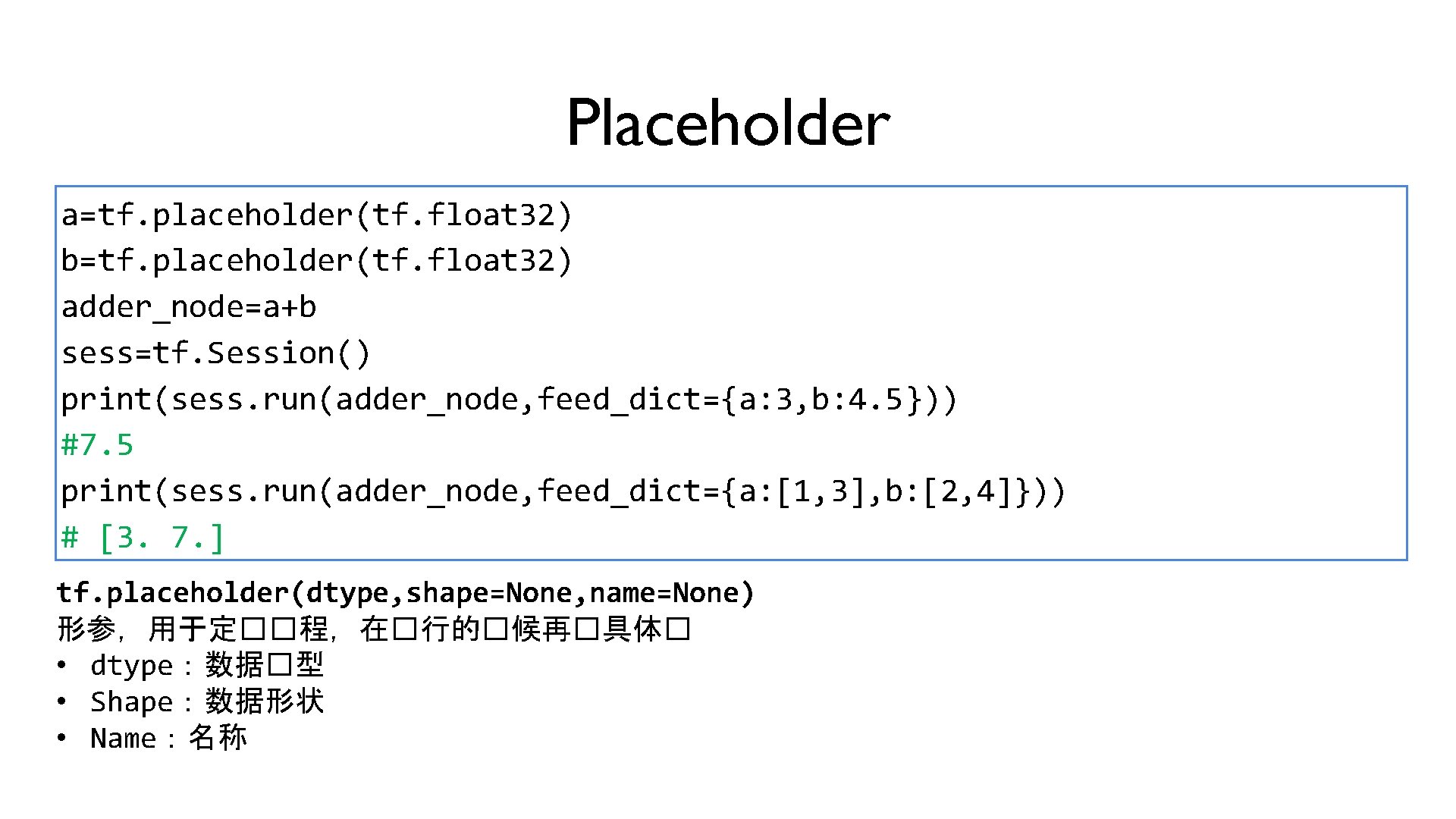

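Placeholder

```python
import tensorflow as tf  # TensorFlow 1.x API

a = tf.placeholder(tf.float32)
b = tf.placeholder(tf.float32)
adder_node = a + b  # shorthand for tf.add(a, b)
sess = tf.Session()
print(sess.run(adder_node, feed_dict={a: 3, b: 4.5}))          # 7.5
print(sess.run(adder_node, feed_dict={a: [1, 3], b: [2, 4]}))  # [3. 7.]
```

`tf.placeholder(dtype, shape=None, name=None)` acts as a formal parameter. It is used when defining the computation, and the concrete value is supplied at execution time.

- dtype: the data type
- shape: the shape of the data
- name: the name of the op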
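The same contract, where a placeholder holds no value until one is fed at run time, can be sketched in plain Python. `Placeholder`, `add` and `run_adder` are hypothetical names for illustration. The missing-value error imitates TensorFlow's but does not reproduce it:

```python
from typing import Dict, Union

Value = Union[int, float, list]


class Placeholder:
    """Toy placeholder: a named slot whose value is supplied at run time."""

    def __init__(self, name: str):
        self.name = name


def add(x: Value, y: Value) -> Value:
    # Element-wise for two equal-length lists, plain addition for two scalars
    if isinstance(x, list) and isinstance(y, list):
        return [float(xi + yi) for xi, yi in zip(x, y)]
    return float(x + y)


def run_adder(a: Placeholder, b: Placeholder, feed_dict: Dict[Placeholder, Value]) -> Value:
    # As with tf.placeholder, running without feeding every input is an error
    missing = [p.name for p in (a, b) if p not in feed_dict]
    if missing:
        raise KeyError(f"You must feed a value for: {', '.join(missing)}")
    return add(feed_dict[a], feed_dict[b])


a = Placeholder("a")
b = Placeholder("b")
print(run_adder(a, b, {a: 3, b: 4.5}))          # 7.5
print(run_adder(a, b, {a: [1, 3], b: [2, 4]}))  # [3.0, 7.0]
```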

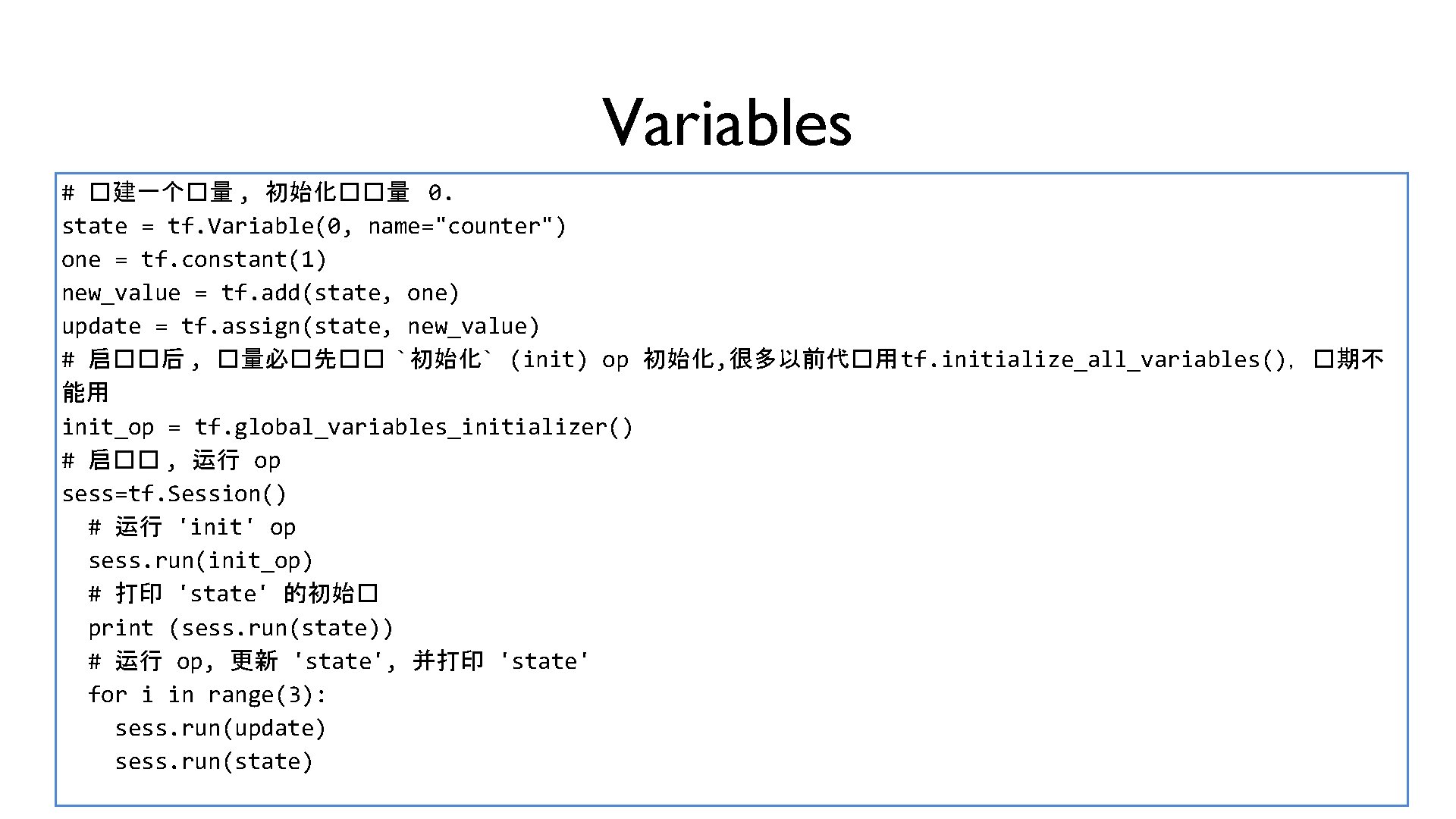

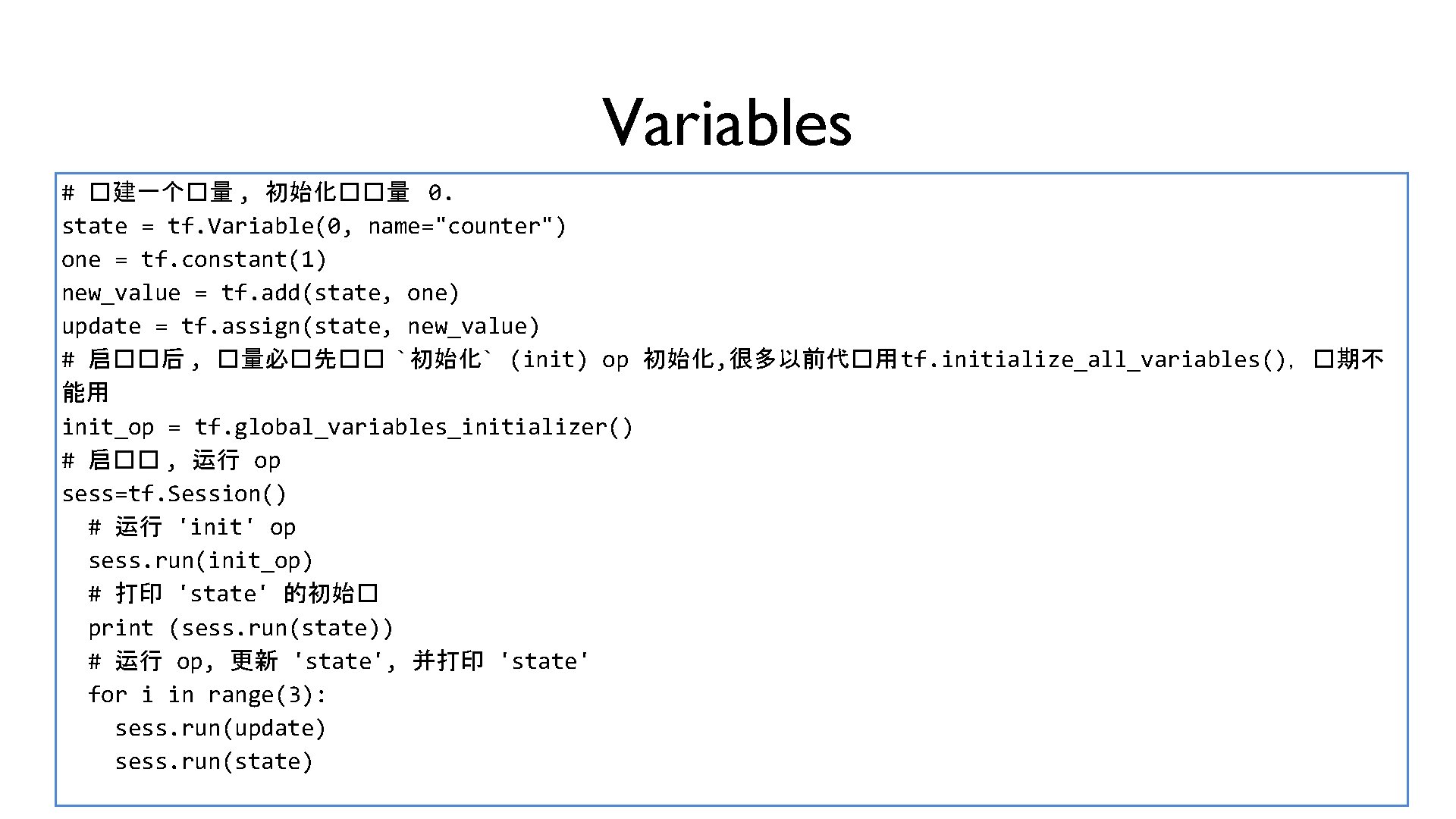

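Variables

```python
import tensorflow as tf  # TensorFlow 1.x API

# Create a variable, initialized to the scalar 0
state = tf.Variable(0, name="counter")
one = tf.constant(1)
new_value = tf.add(state, one)
update = tf.assign(state, new_value)

# After the graph is launched, variables must first be initialized by running an init op.
# Much older code uses tf.initialize_all_variables(), which is deprecated; use this instead:
init_op = tf.global_variables_initializer()

# Launch the graph and run the ops
sess = tf.Session()
# Run the 'init' op
sess.run(init_op)
# Print the initial value of 'state'
print(sess.run(state))  # 0
# Run the update op, then print 'state'
for i in range(3):
    sess.run(update)
    print(sess.run(state))  # 1, then 2, then 3
```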
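Unlike a constant, a variable keeps its state between runs and must be initialized before it is read. Below is a hypothetical plain-Python `ToyVariable` that sketches that life cycle for the counter example. Its error message only imitates TensorFlow's:

```python
class ToyVariable:
    """Hypothetical stand-in for tf.Variable: state that survives across runs."""

    def __init__(self, initial_value):
        self._initial_value = initial_value
        self._value = None
        self._initialized = False

    def initialize(self):
        # Plays the role of running the op from tf.global_variables_initializer()
        self._value = self._initial_value
        self._initialized = True

    def read(self):
        if not self._initialized:
            raise RuntimeError("Attempting to use uninitialized value")
        return self._value

    def assign(self, new_value):
        # Plays the role of tf.assign: overwrite the state and return the new value
        self.read()  # reading first enforces initialization
        self._value = new_value
        return new_value


state = ToyVariable(0)
state.initialize()
print(state.read())  # 0
for _ in range(3):
    state.assign(state.read() + 1)
    print(state.read())  # 1, then 2, then 3
```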

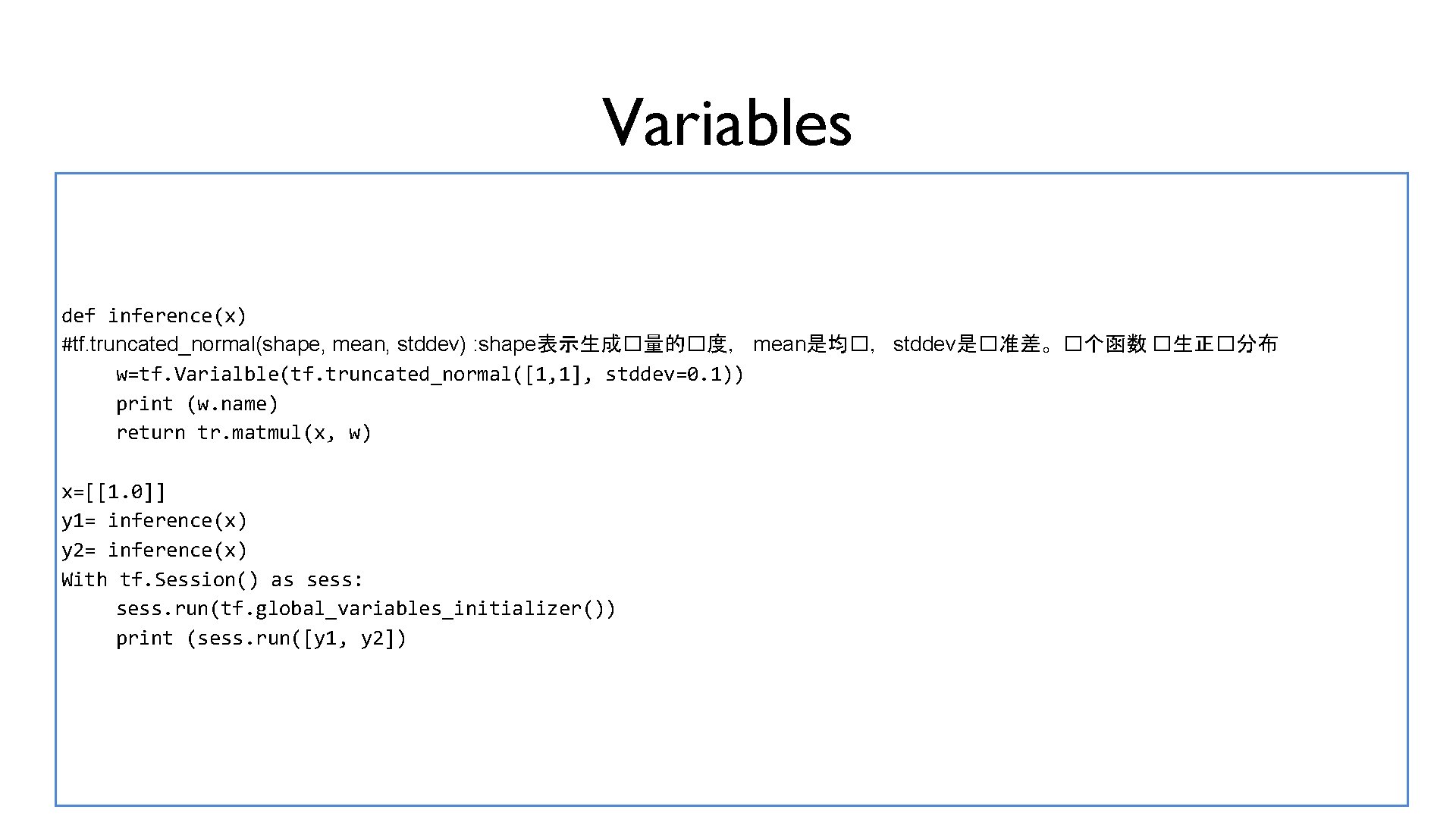

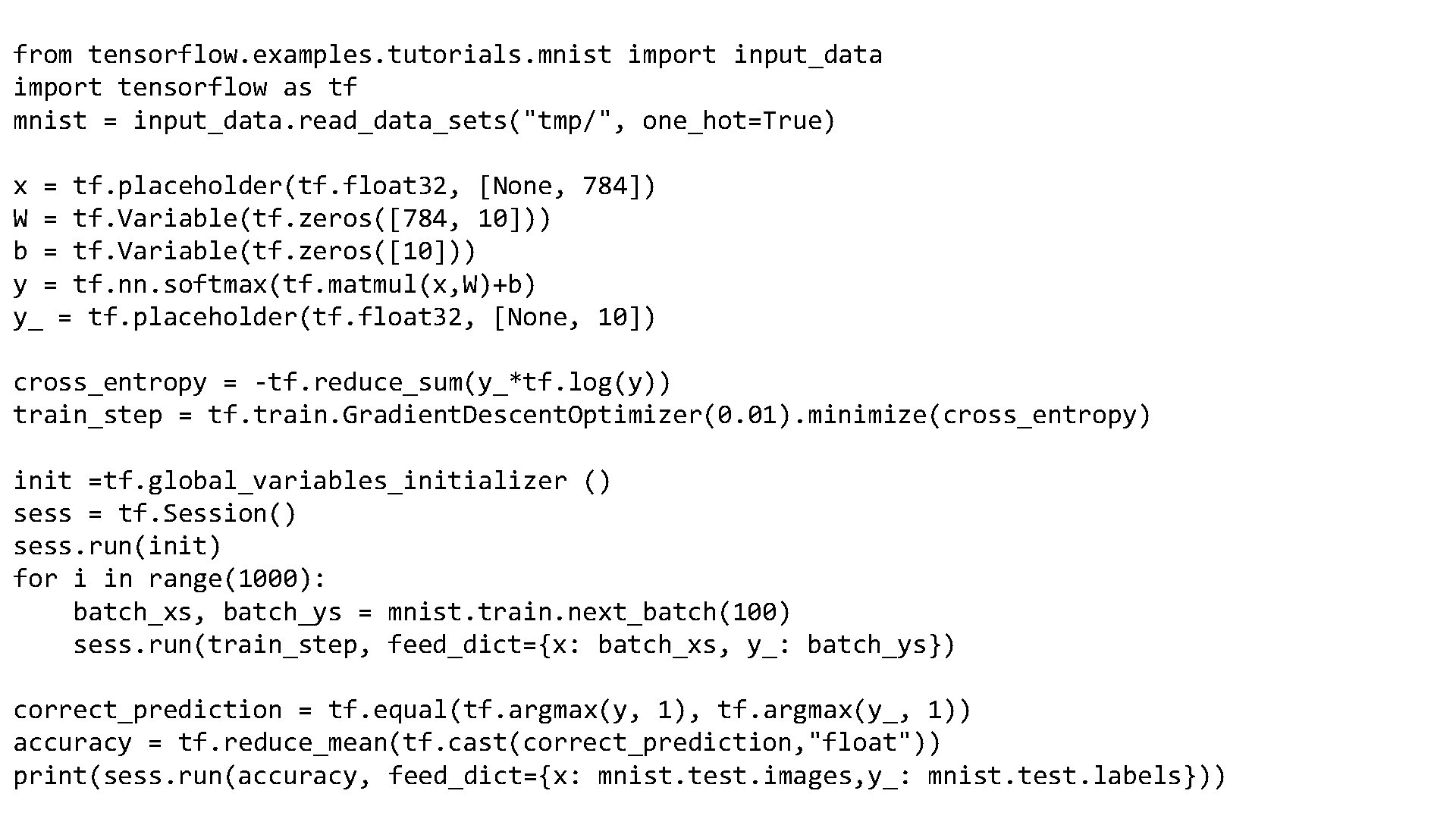

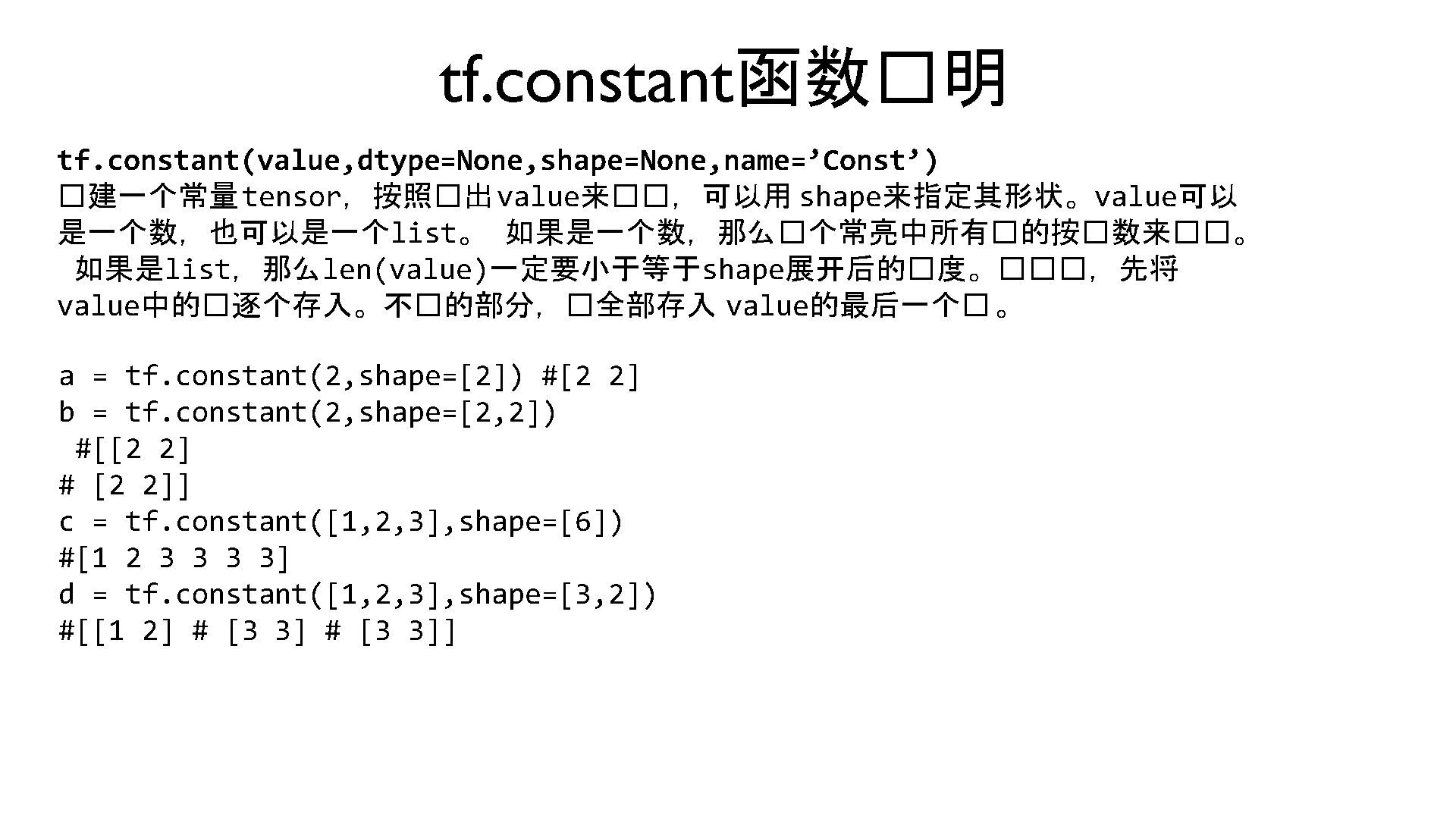

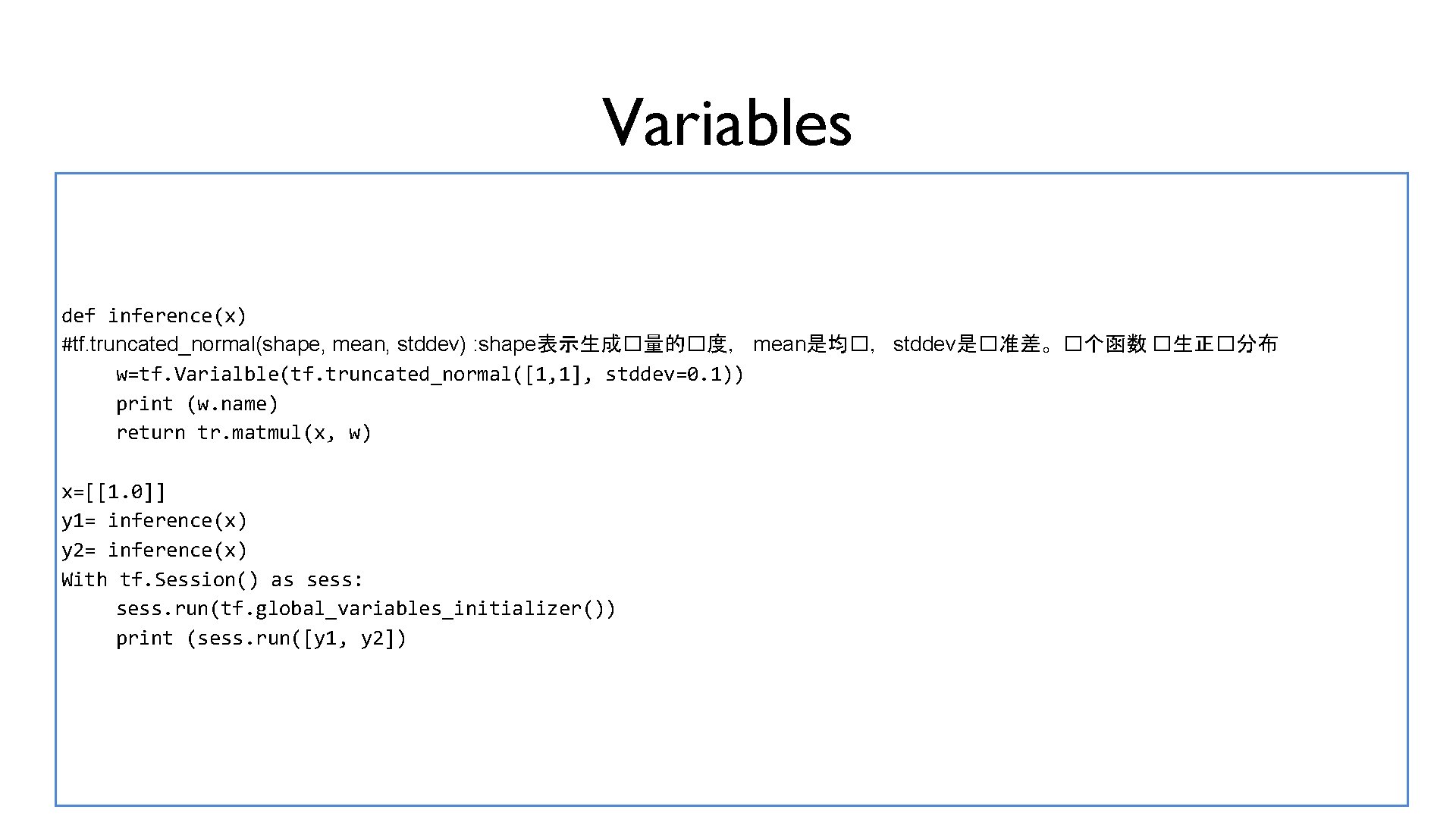

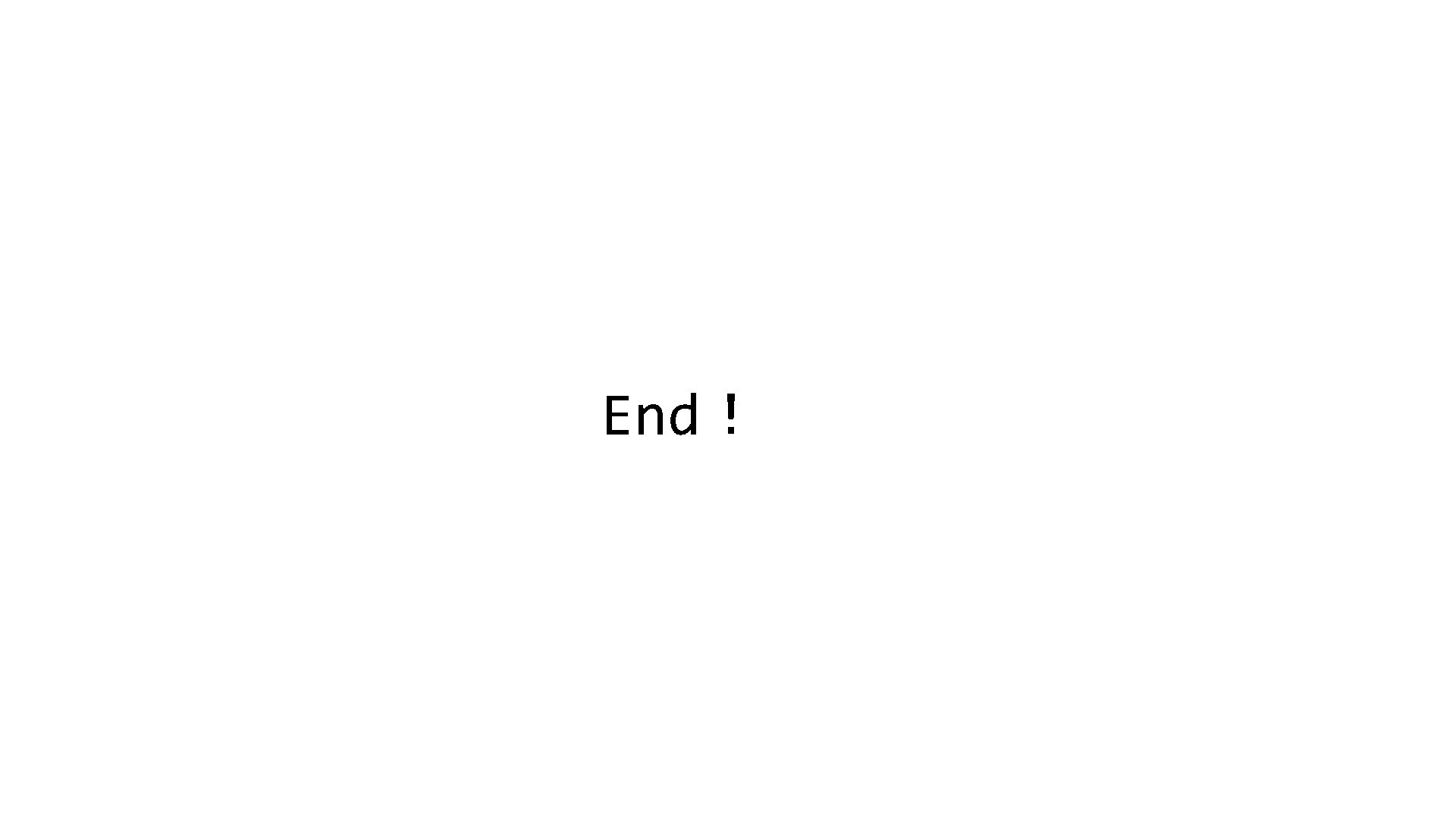

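Variables

```python
import tensorflow as tf  # TensorFlow 1.x API


def inference(x):
    # tf.truncated_normal(shape, mean, stddev): shape is the shape of the generated tensor,
    # mean is the mean and stddev the standard deviation. It samples a normal distribution,
    # re-drawing any value more than two standard deviations from the mean.
    w = tf.Variable(tf.truncated_normal([1, 1], stddev=0.1))
    print(w.name)
    return tf.matmul(x, w)


x = [[1.0]]
y1 = inference(x)
y2 = inference(x)
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(sess.run([y1, y2]))
```

Each call to `inference` creates a new `tf.Variable`, and the two printed names differ. As a result, `y1` and `y2` generally hold different values.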
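To make the `tf.truncated_normal` behaviour concrete, here is a plain-Python sketch based on rejection sampling. It draws from a normal distribution and discards any value more than two standard deviations from the mean. The function `truncated_normal` is a hypothetical stand-in, not TensorFlow's implementation:

```python
import random
import statistics


def truncated_normal(n: int, mean: float = 0.0, stddev: float = 1.0, seed=None) -> list:
    """Sample n values from a normal distribution, re-drawing any value that
    falls more than two standard deviations from the mean."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2 * stddev:
            samples.append(value)
    return samples


w = truncated_normal(10_000, stddev=0.1, seed=0)
print(max(abs(v) for v in w) <= 0.2)  # True: nothing lies beyond 2 * stddev
print(round(statistics.pstdev(w), 3))  # below 0.1, because the tails are cut off
```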

![Slide: Variables and get_variable](https://slidetodoc.com/presentation_image_h2/3d3b1ec848de1fb74091c09c2c0d5041/image-12.jpg)

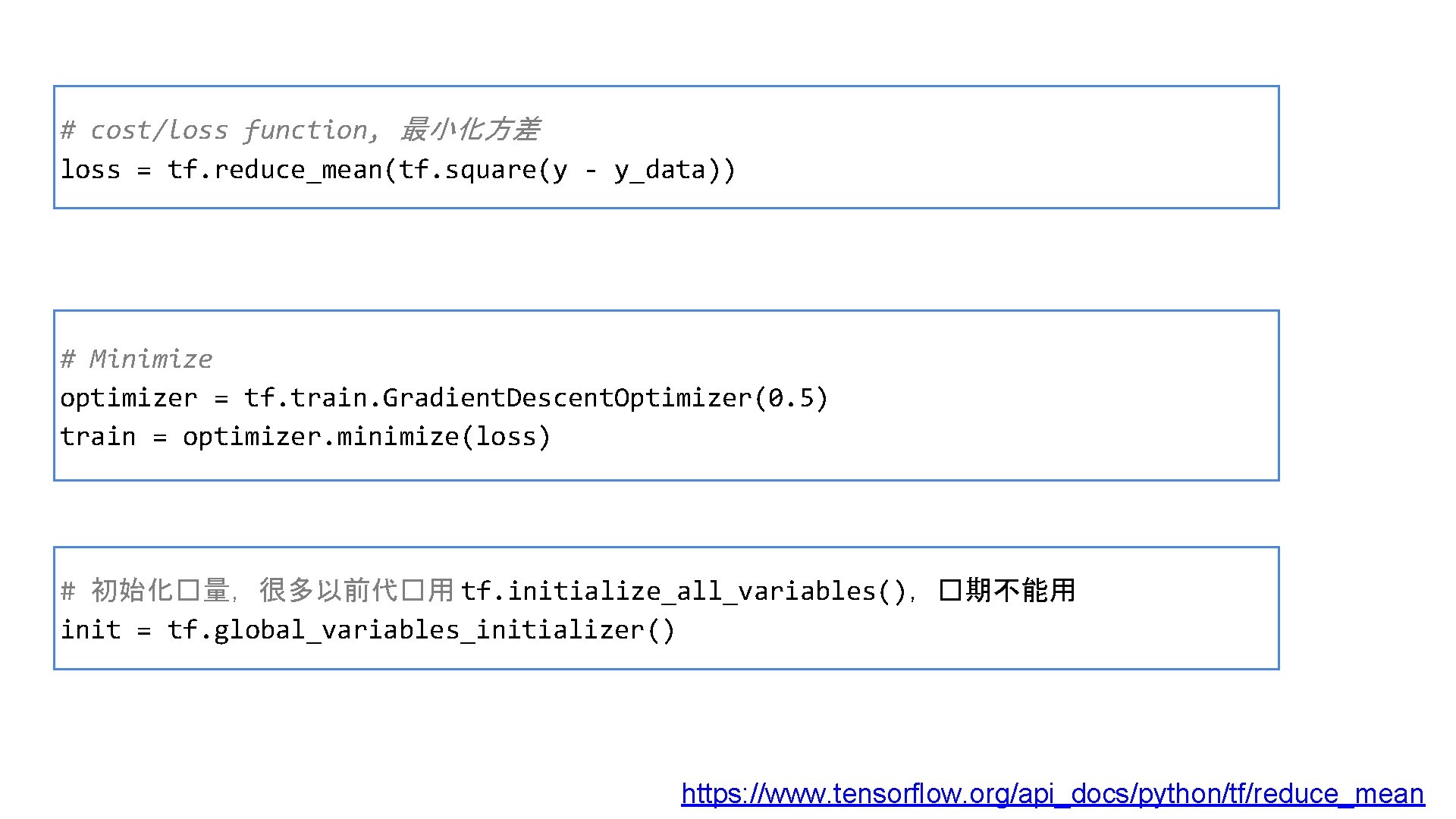

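Variables and get_variable

```python
import tensorflow as tf  # TensorFlow 1.x API

v = tf.get_variable("v", [1])
with tf.variable_scope("", reuse=True):
    v1 = tf.get_variable("v", [1])
print(v is v1)  # True: with reuse=True, get_variable returns the existing variable


def inference(x):
    # tf.get_variable looks the variable up by name instead of always creating a new one
    w = tf.get_variable("w", [1, 1], initializer=tf.truncated_normal_initializer(stddev=0.1))
    return tf.matmul(x, w)


x = [[1.0]]
with tf.variable_scope("model"):
    y1 = inference(x)  # creates model/w
with tf.variable_scope("model", reuse=True):
    y2 = inference(x)  # reuses model/w
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(sess.run([y1, y2]))  # both results come from the same w, so they are equal
```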
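The reuse rules of `tf.get_variable` amount to a name-keyed lookup table. Under `reuse=True` the name must already exist, and without reuse, creating a name that already exists is an error. Below is a hypothetical plain-Python `VariableStore` that sketches those two rules. It ignores scopes, shapes and initializers:

```python
class VariableStore:
    """Hypothetical toy version of the name-keyed store behind tf.get_variable."""

    def __init__(self):
        self._vars = {}

    def get_variable(self, name: str, make, reuse: bool = False):
        if reuse:
            # reuse=True: the variable must already exist; return the same object
            if name not in self._vars:
                raise ValueError(f"Variable {name} does not exist")
            return self._vars[name]
        # reuse=False: creating the same name twice is an error
        if name in self._vars:
            raise ValueError(f"Variable {name} already exists")
        self._vars[name] = make()
        return self._vars[name]


store = VariableStore()
v = store.get_variable("v", lambda: [0.0])
v1 = store.get_variable("v", lambda: [0.0], reuse=True)
print(v is v1)  # True
```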

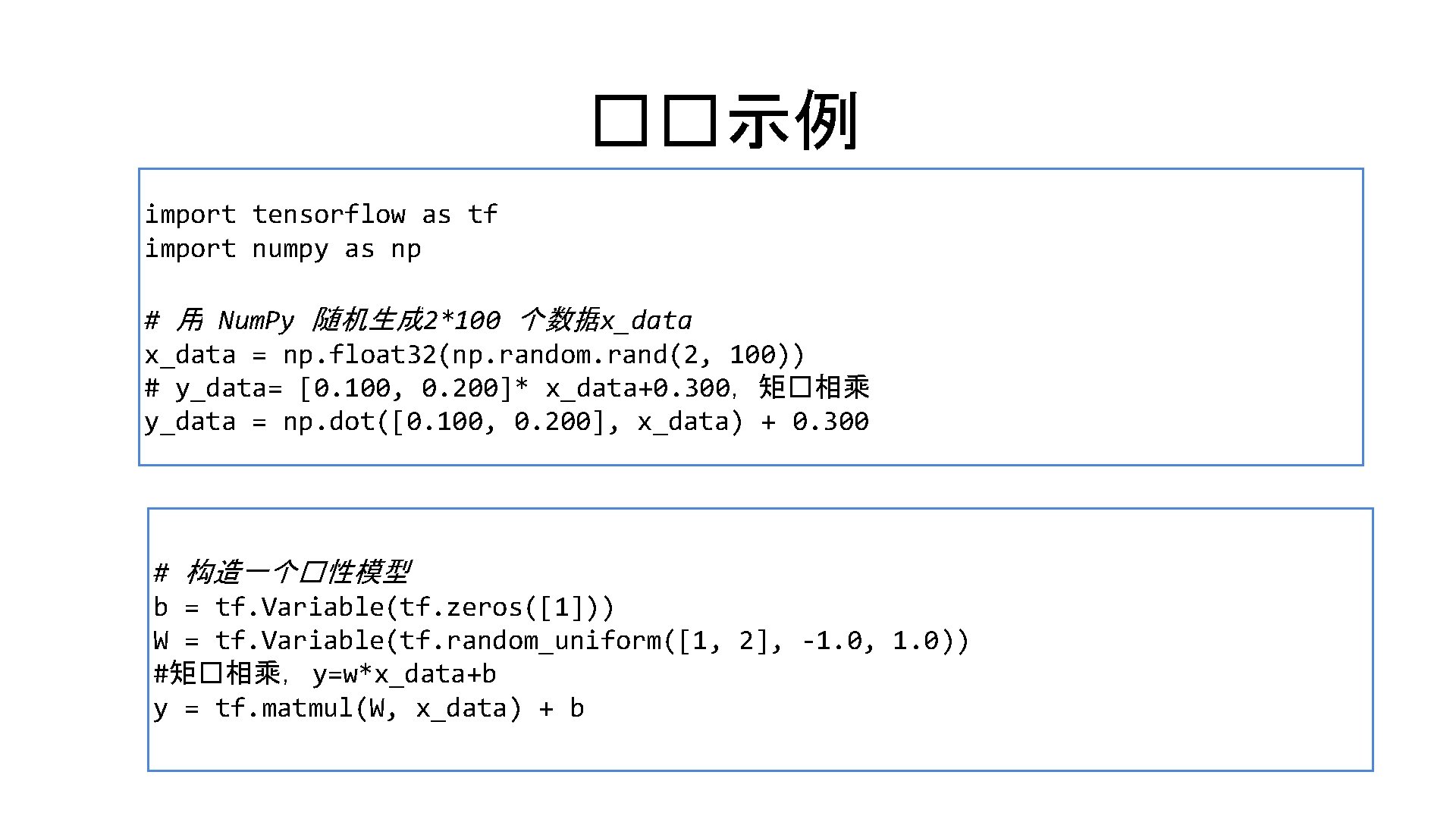

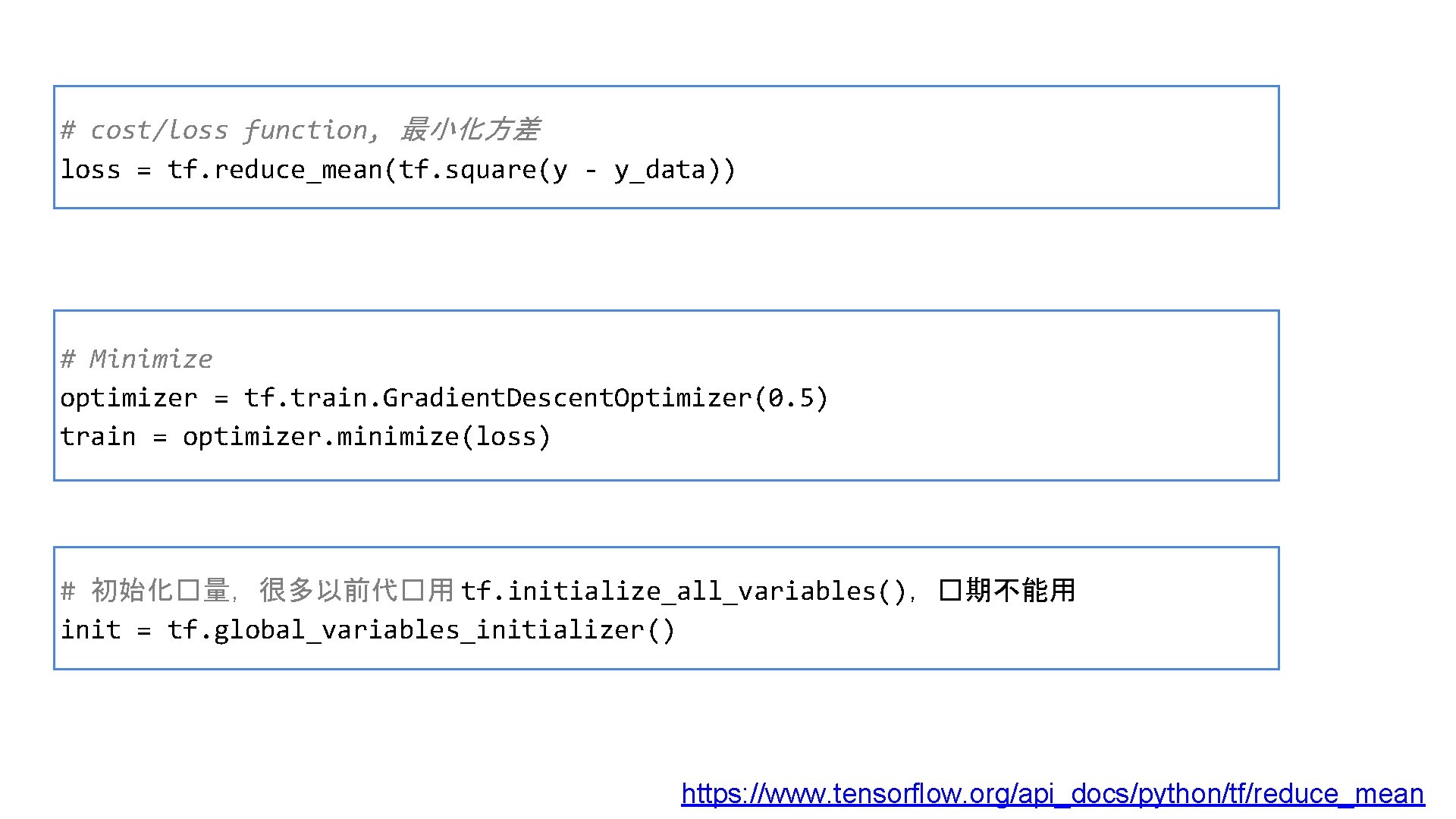

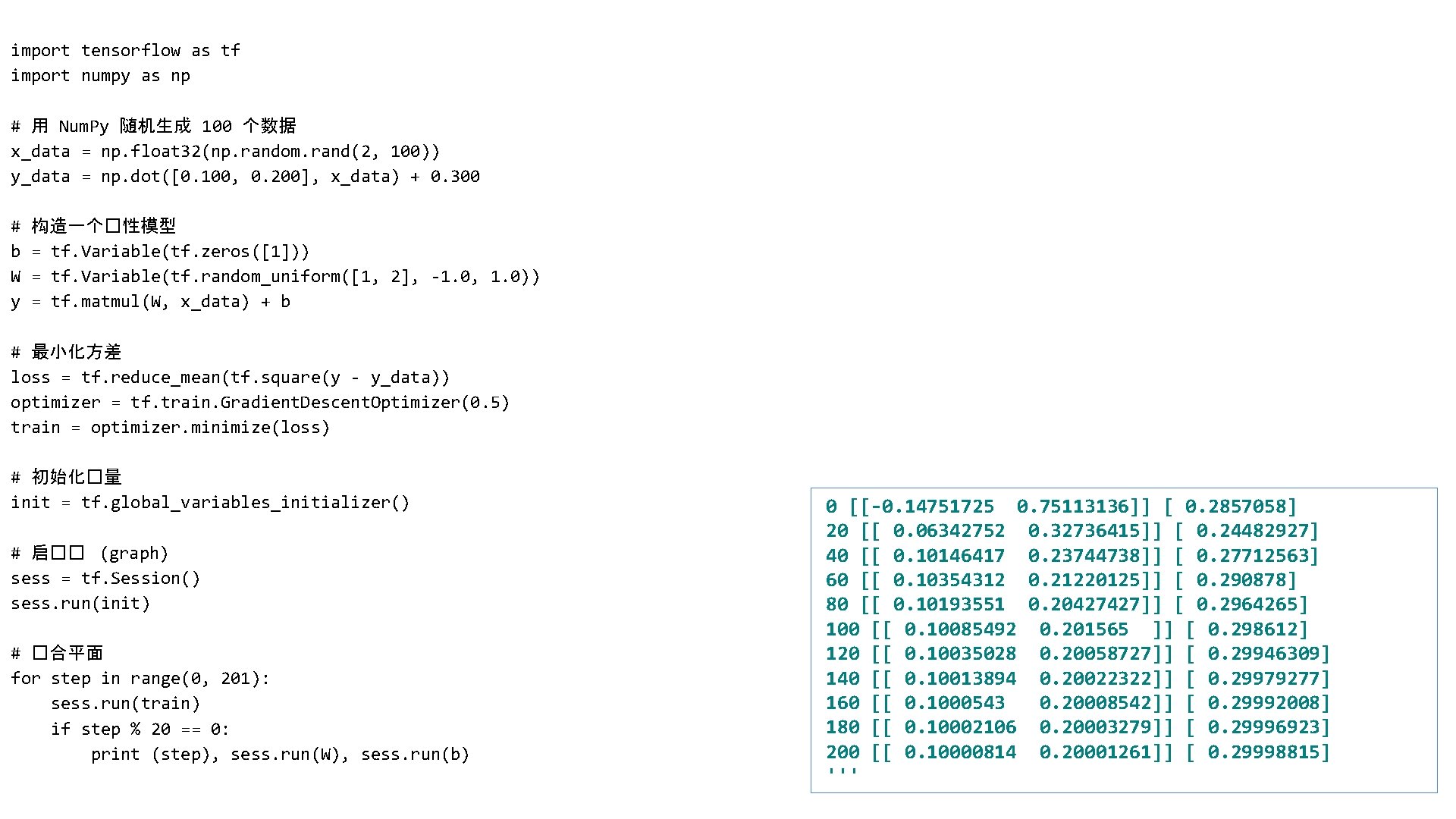

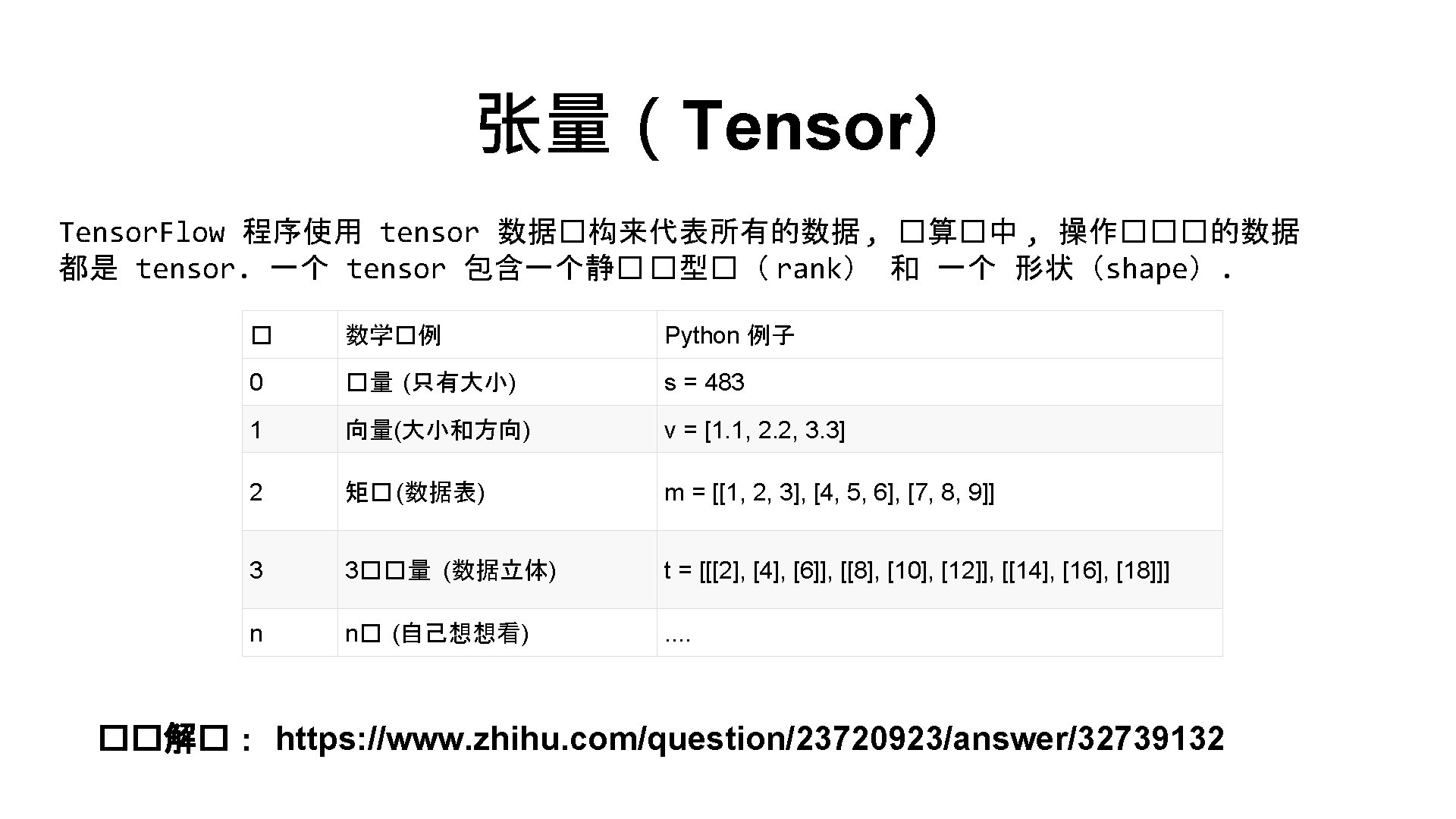

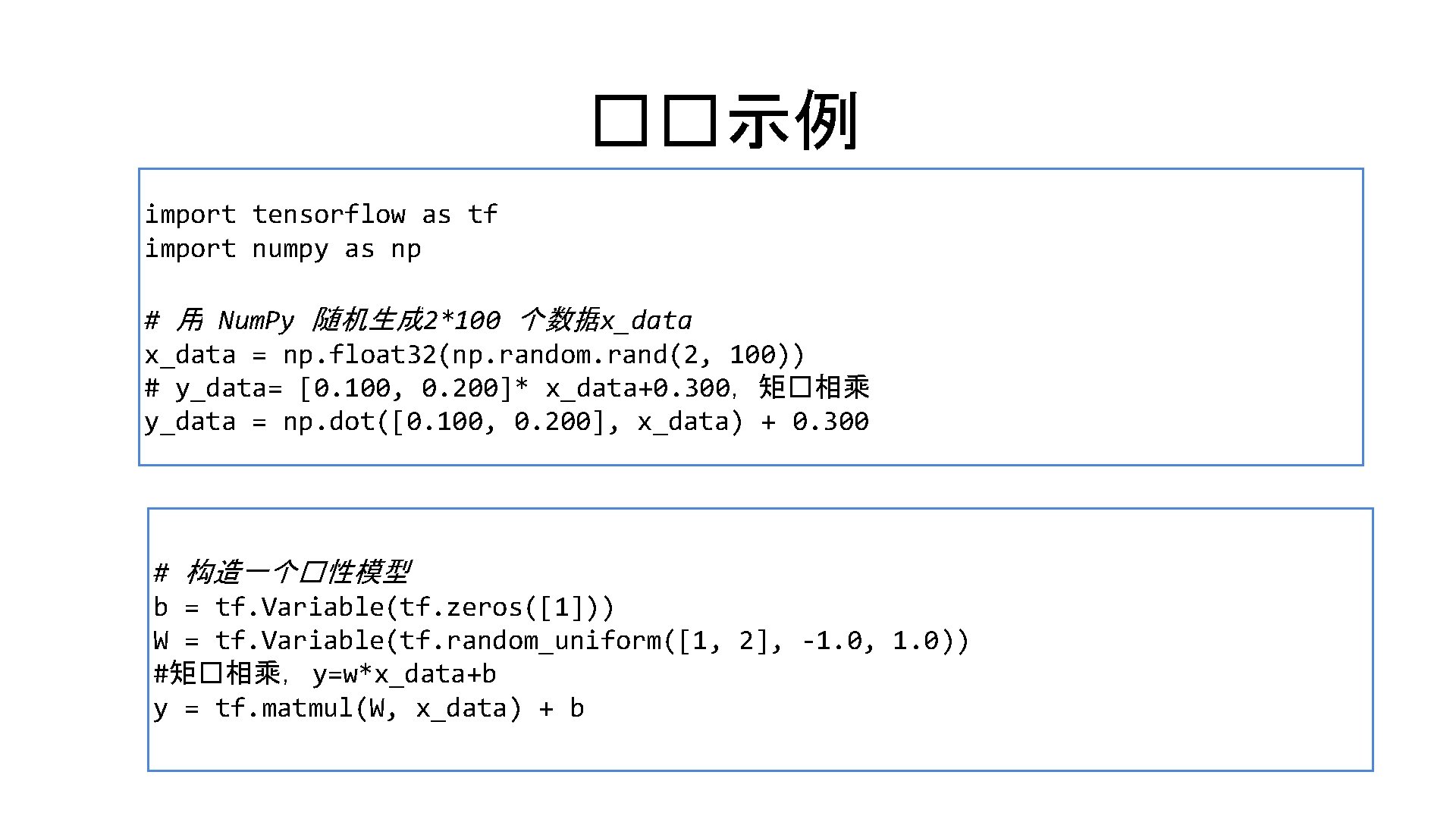

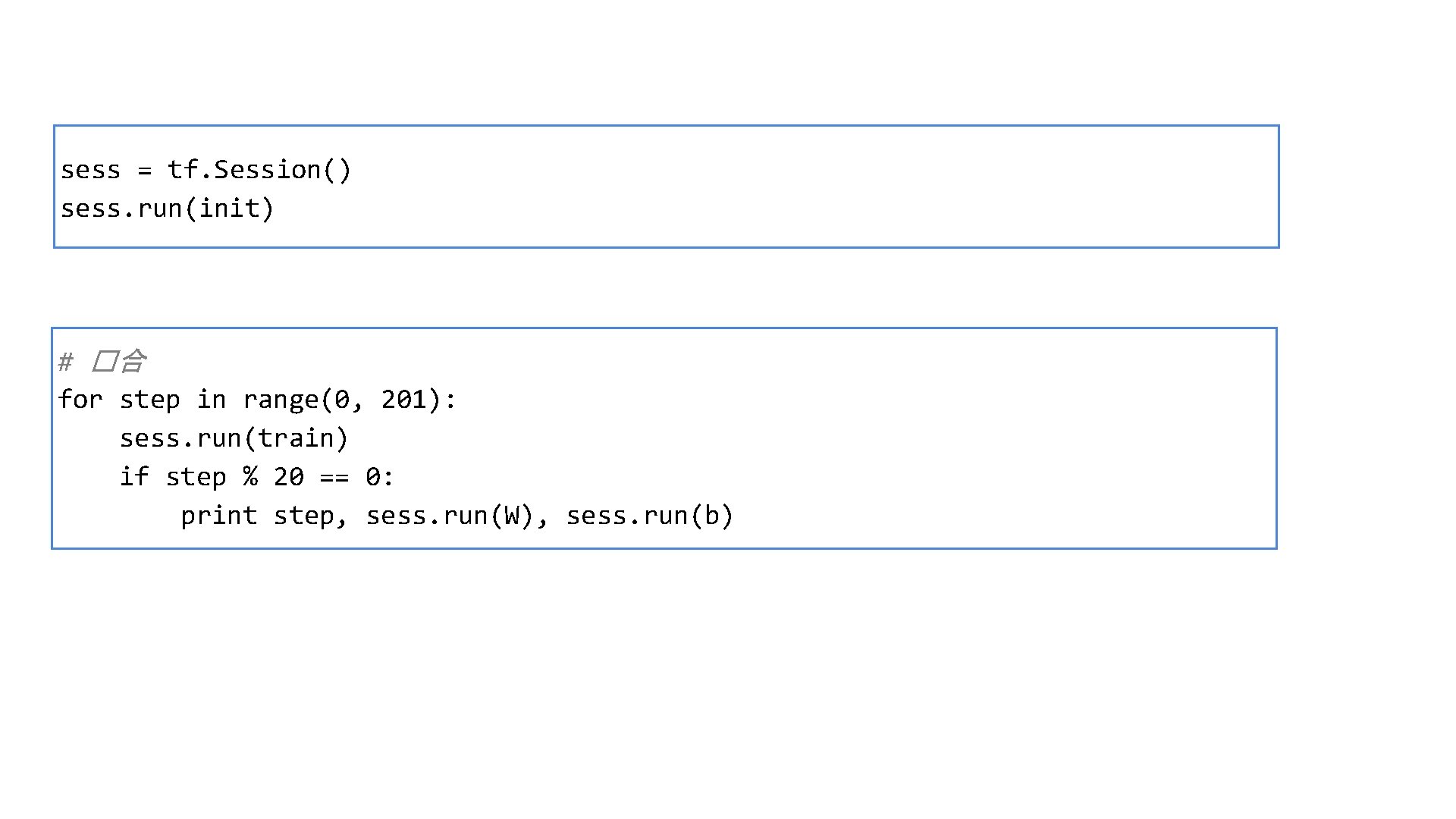

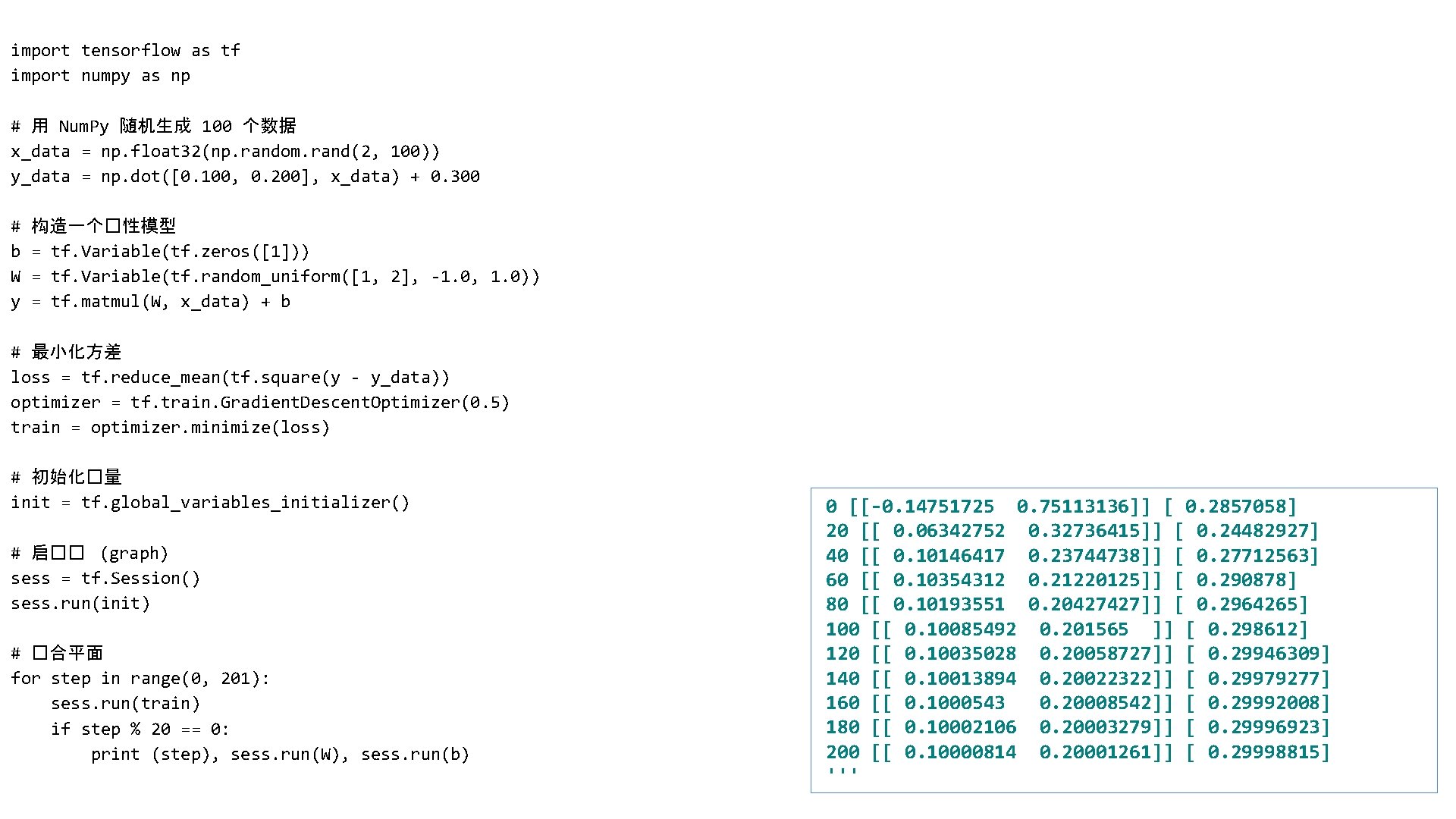

Fitting example

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

# Use NumPy to generate 2 x 100 random data points
x_data = np.float32(np.random.rand(2, 100))
# y_data = [0.100, 0.200] x_data + 0.300 (matrix multiplication)
y_data = np.dot([0.100, 0.200], x_data) + 0.300

# Build a linear model
b = tf.Variable(tf.zeros([1]))
W = tf.Variable(tf.random_uniform([1, 2], -1.0, 1.0))
# Matrix multiplication: y = W x_data + b
y = tf.matmul(W, x_data) + b
```

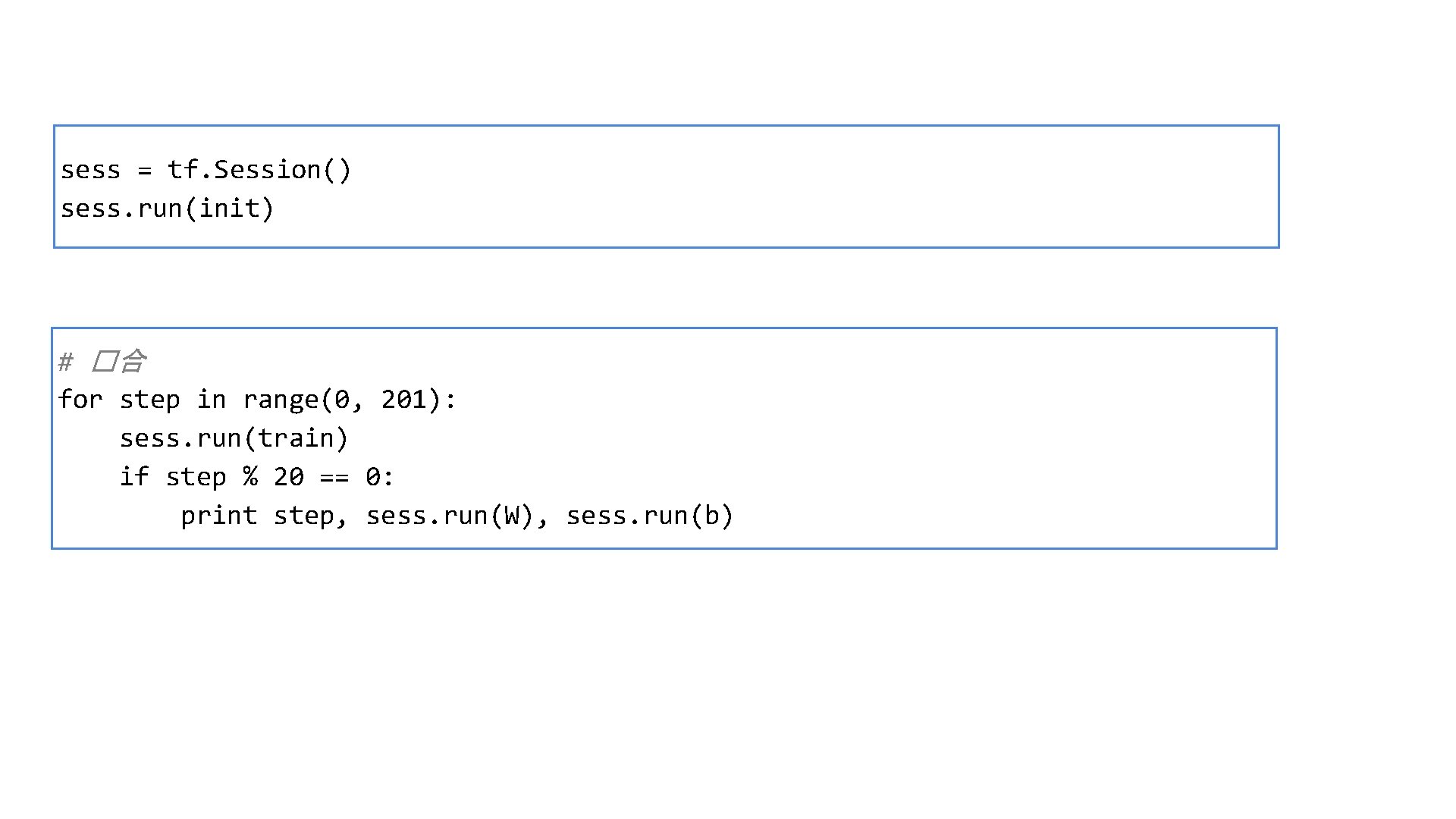

```python
# Cost/loss function: minimize the mean squared error
loss = tf.reduce_mean(tf.square(y - y_data))
# Minimize it with gradient descent (learning rate 0.5)
optimizer = tf.train.GradientDescentOptimizer(0.5)
train = optimizer.minimize(loss)
# Initialize the variables. Much older code uses tf.initialize_all_variables(), which is deprecated.
init = tf.global_variables_initializer()
```

Reference: https://www.tensorflow.org/api_docs/python/tf/reduce_mean
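For reference, here are the loss that `tf.reduce_mean(tf.square(y - y_data))` computes and the update that one step of `GradientDescentOptimizer(0.5)` applies. The notation uses $N = 100$ samples, $x_i$ for the $i$-th column of `x_data`, $y_i$ for the $i$-th entry of `y_data`, and learning rate $\eta = 0.5$. TensorFlow obtains the same gradients by automatic differentiation:

$$
L(W, b) = \frac{1}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)^2
$$

$$
\frac{\partial L}{\partial W} = \frac{2}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)x_i^{\top},
\qquad
\frac{\partial L}{\partial b} = \frac{2}{N}\sum_{i=1}^{N}\left(W x_i + b - y_i\right)
$$

$$
W \leftarrow W - \eta\,\frac{\partial L}{\partial W},
\qquad
b \leftarrow b - \eta\,\frac{\partial L}{\partial b}
$$

Here $W$ is $1 \times 2$ and $x_i$ is $2 \times 1$, so $W x_i$ is a scalar and $\partial L / \partial W$ has the same $1 \times 2$ shape as $W$.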

```python
sess = tf.Session()
sess.run(init)
# Fit
for step in range(0, 201):
    sess.run(train)
    if step % 20 == 0:
        print(step, sess.run(W), sess.run(b))
```

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

# Use NumPy to generate 100 random data points (x_data has shape 2 x 100)
x_data = np.float32(np.random.rand(2, 100))
y_data = np.dot([0.100, 0.200], x_data) + 0.300

# Build a linear model
b = tf.Variable(tf.zeros([1]))
W = tf.Variable(tf.random_uniform([1, 2], -1.0, 1.0))
y = tf.matmul(W, x_data) + b

# Minimize the mean squared error
loss = tf.reduce_mean(tf.square(y - y_data))
optimizer = tf.train.GradientDescentOptimizer(0.5)
train = optimizer.minimize(loss)

# Initialize the variables
init = tf.global_variables_initializer()

# Launch the graph
sess = tf.Session()
sess.run(init)

# Fit the plane
for step in range(0, 201):
    sess.run(train)
    if step % 20 == 0:
        print(step, sess.run(W), sess.run(b))

'''
0 [[-0.14751725  0.75113136]] [ 0.2857058]
20 [[ 0.06342752  0.32736415]] [ 0.24482927]
40 [[ 0.10146417  0.23744738]] [ 0.27712563]
60 [[ 0.10354312  0.21220125]] [ 0.290878]
80 [[ 0.10193551  0.20427427]] [ 0.2964265]
100 [[ 0.10085492  0.201565  ]] [ 0.298612]
120 [[ 0.10035028  0.20058727]] [ 0.29946309]
140 [[ 0.10013894  0.20022322]] [ 0.29979277]
160 [[ 0.1000543   0.20008542]] [ 0.29992008]
180 [[ 0.10002106  0.20003279]] [ 0.29996923]
200 [[ 0.10000814  0.20001261]] [ 0.29998815]
'''
```
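The same fit can be reproduced without TensorFlow, which makes the update rule explicit. This is a minimal pure-Python sketch, assuming the same data recipe as the slides: 100 points with y = 0.1·x1 + 0.2·x2 + 0.3, learning rate 0.5, and weights drawn uniformly from [-1, 1]. The function name `fit_plane` is hypothetical. The random numbers, and therefore the printed trajectory, will differ from the run shown above:

```python
import random


def fit_plane(steps: int = 201, lr: float = 0.5, seed: int = 0):
    """Fit y = w1*x1 + w2*x2 + b by full-batch gradient descent on the mean squared error."""
    rng = random.Random(seed)
    # Same recipe as the slides: 100 points with y = 0.1*x1 + 0.2*x2 + 0.3
    xs = [(rng.random(), rng.random()) for _ in range(100)]
    ys = [0.1 * x1 + 0.2 * x2 + 0.3 for x1, x2 in xs]

    w1, w2 = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)  # like tf.random_uniform([1, 2], -1, 1)
    b = 0.0                                                    # like tf.zeros([1])
    n = len(xs)
    for step in range(steps):
        # Residuals r_i = prediction - target
        r = [w1 * x1 + w2 * x2 + b - y for (x1, x2), y in zip(xs, ys)]
        # Gradients of mean(r_i ** 2) with respect to w1, w2 and b
        g1 = 2.0 / n * sum(ri * x1 for ri, (x1, _) in zip(r, xs))
        g2 = 2.0 / n * sum(ri * x2 for ri, (_, x2) in zip(r, xs))
        gb = 2.0 / n * sum(r)
        w1, w2, b = w1 - lr * g1, w2 - lr * g2, b - lr * gb
        if step % 20 == 0:
            print(step, [w1, w2], b)
    return w1, w2, b


w1, w2, b = fit_plane()
```

The gradients are written out by hand here. In the TensorFlow version, `optimizer.minimize(loss)` derives them automatically from the graph.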

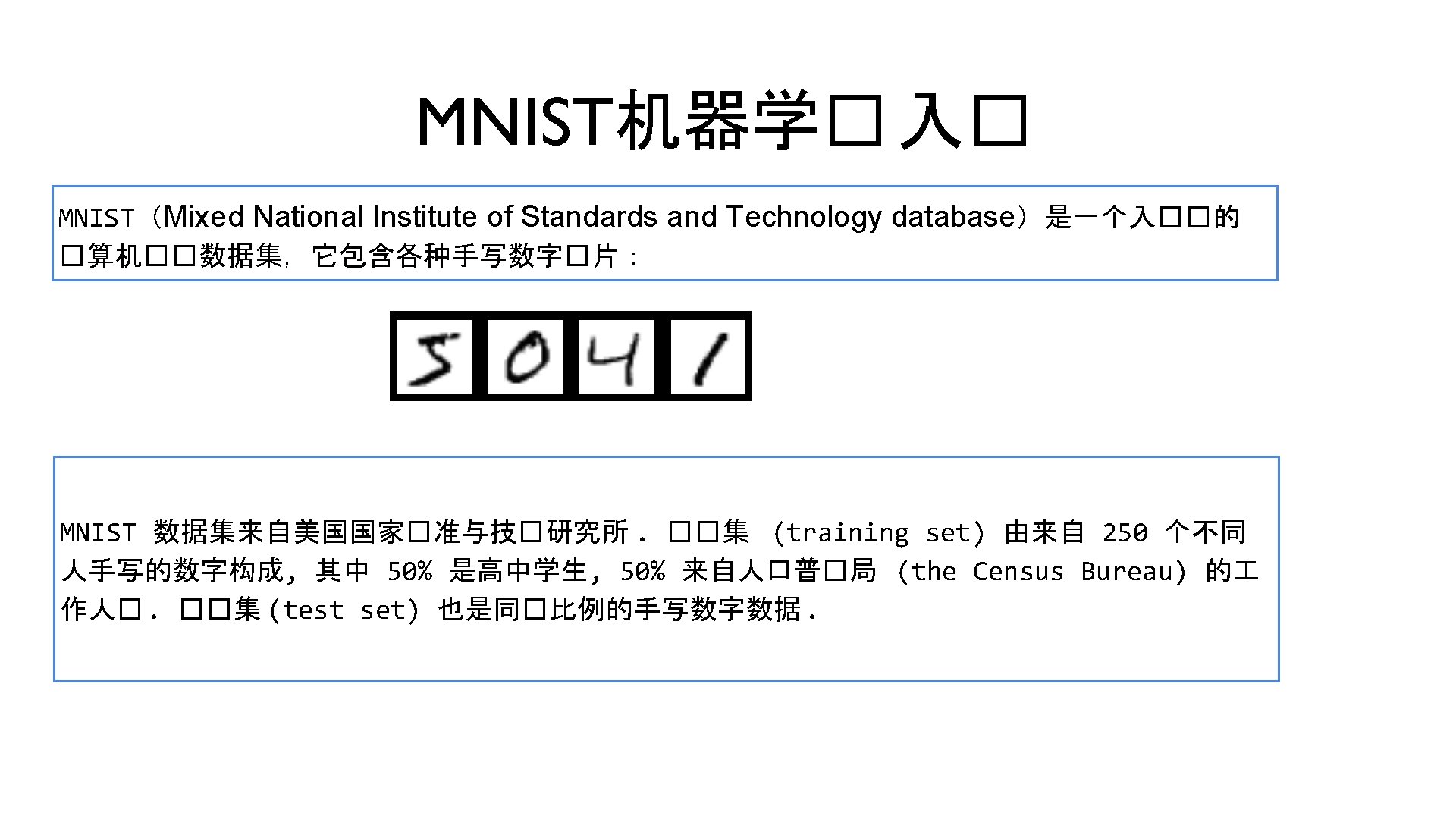

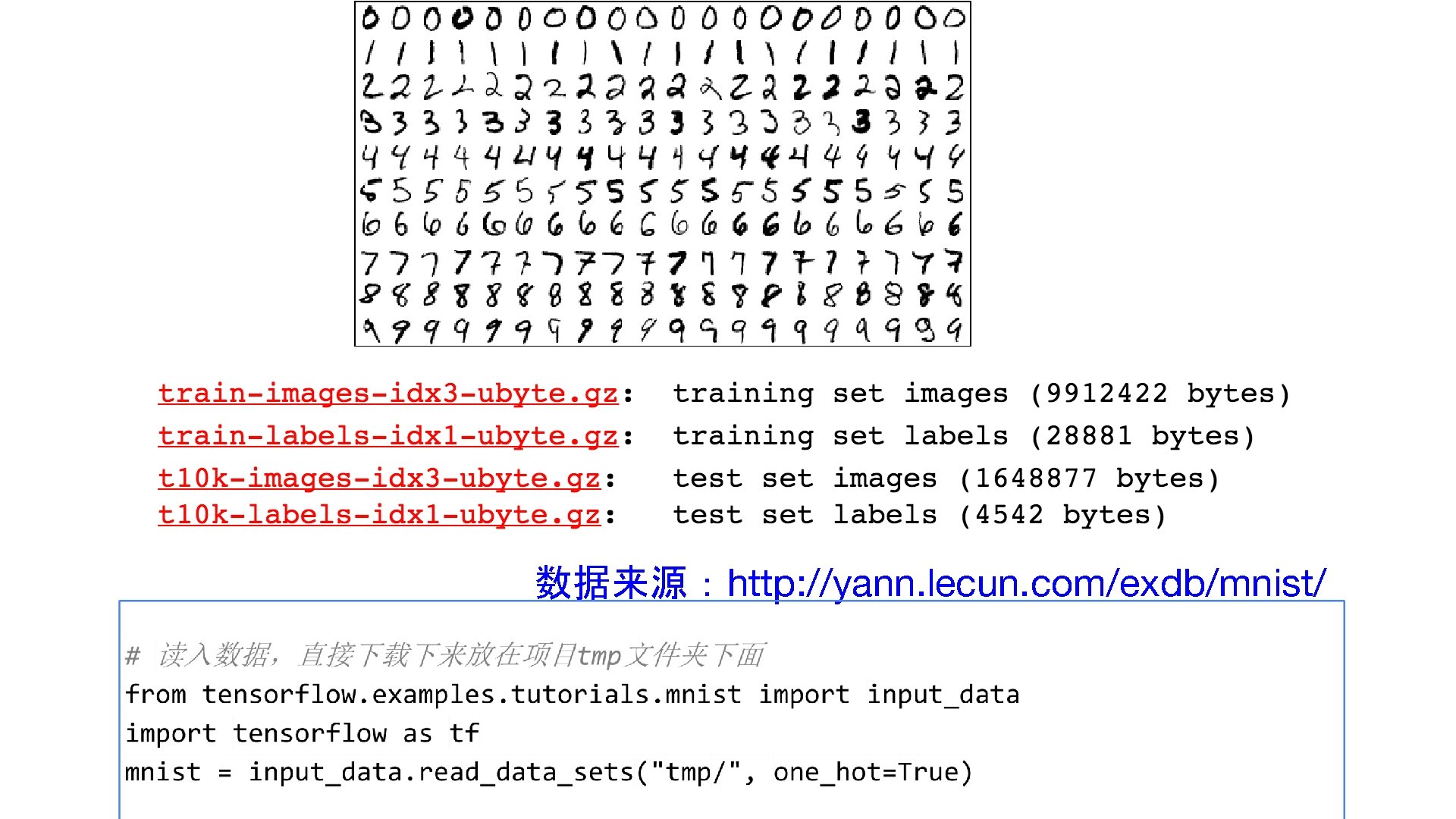

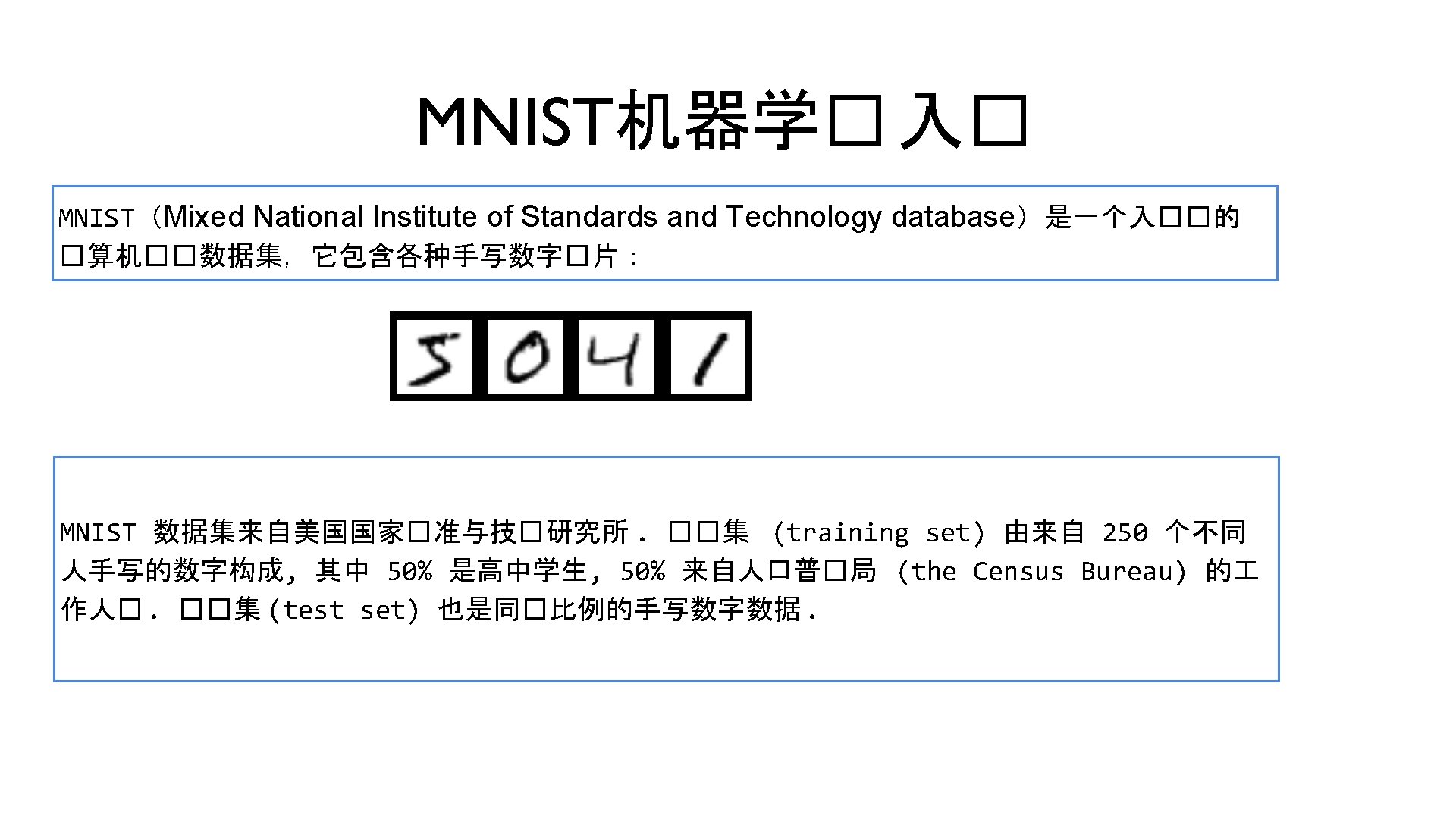

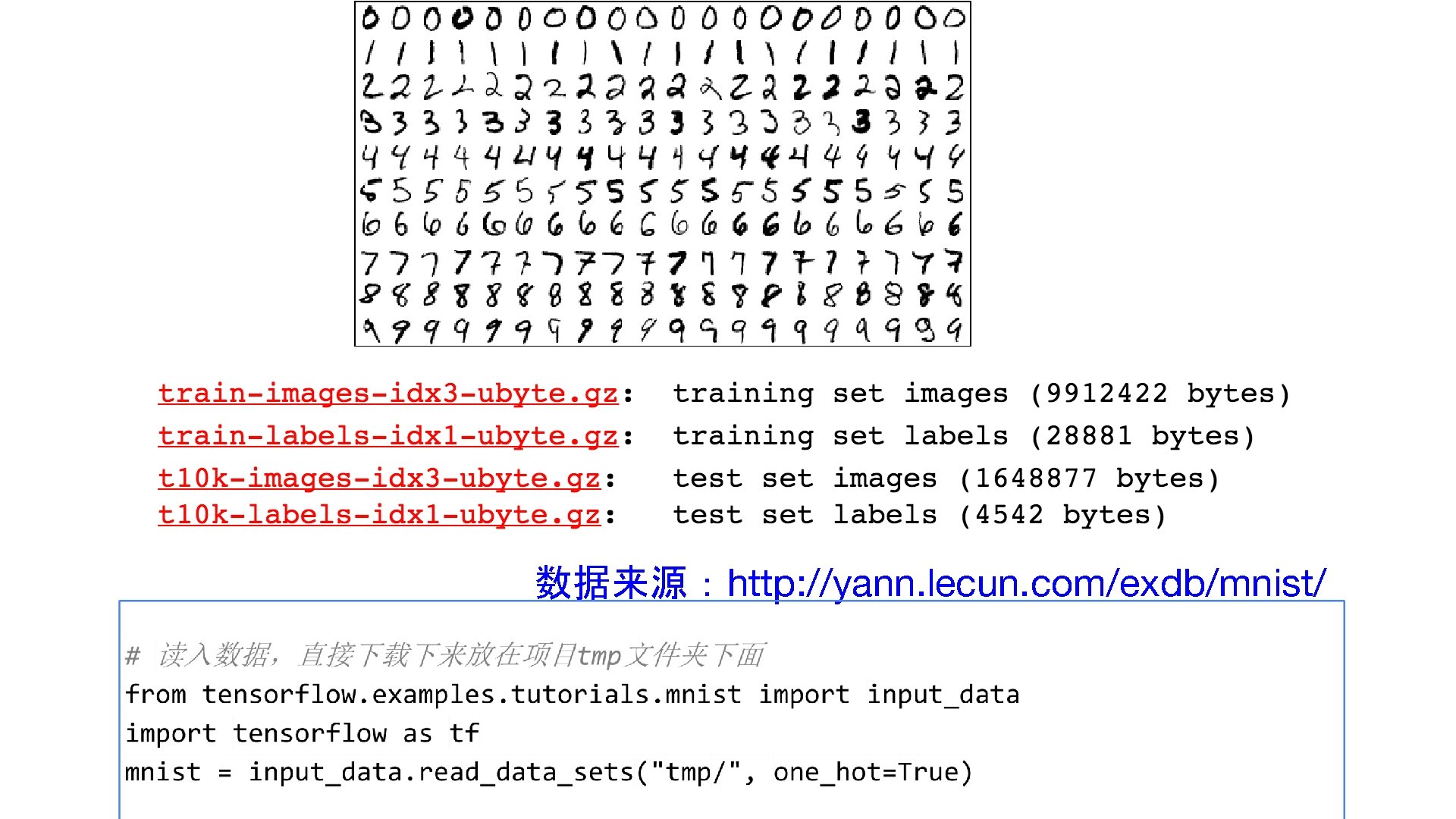

MNIST data source: http://yann.lecun.com/exdb/mnist/

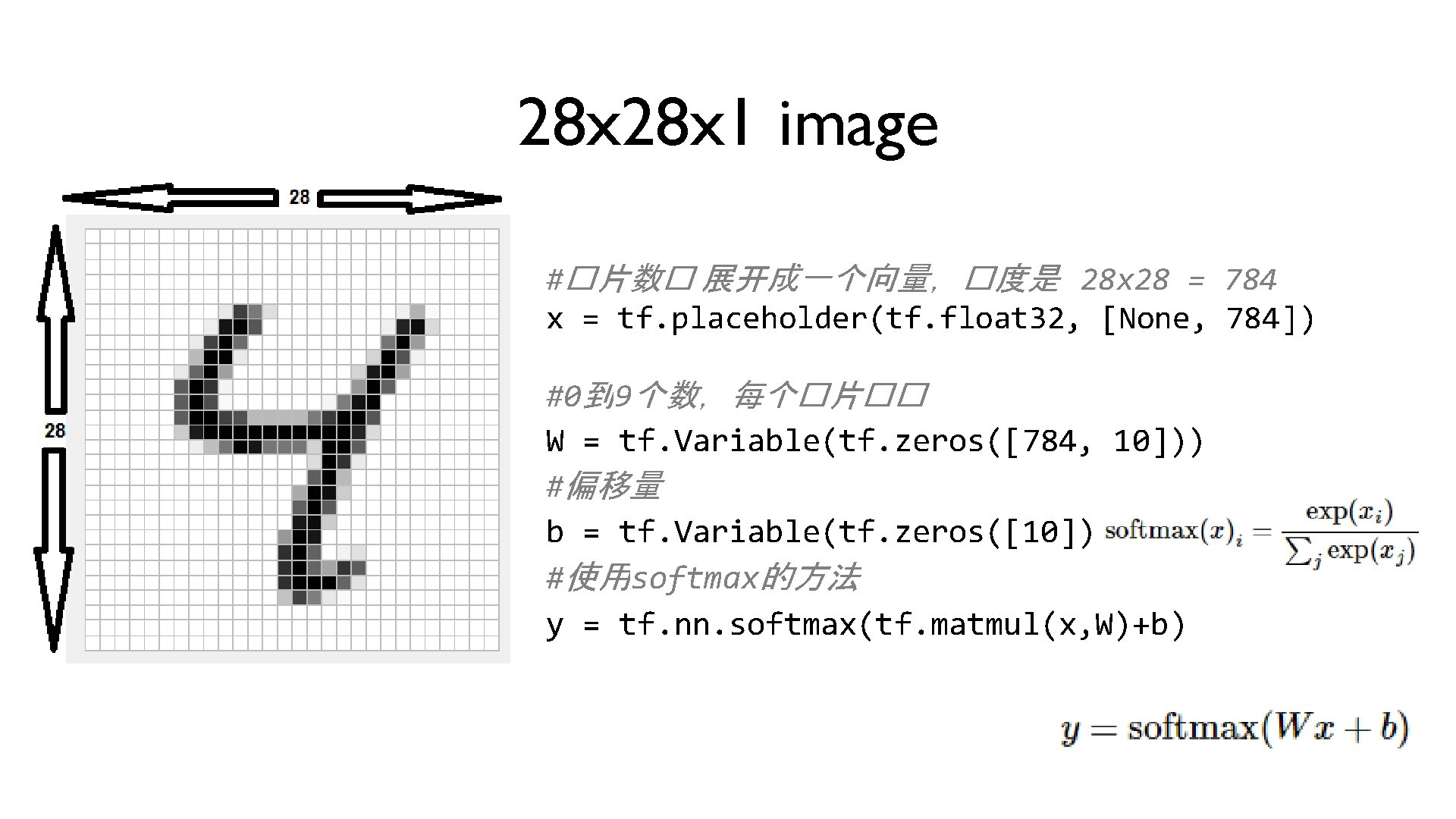

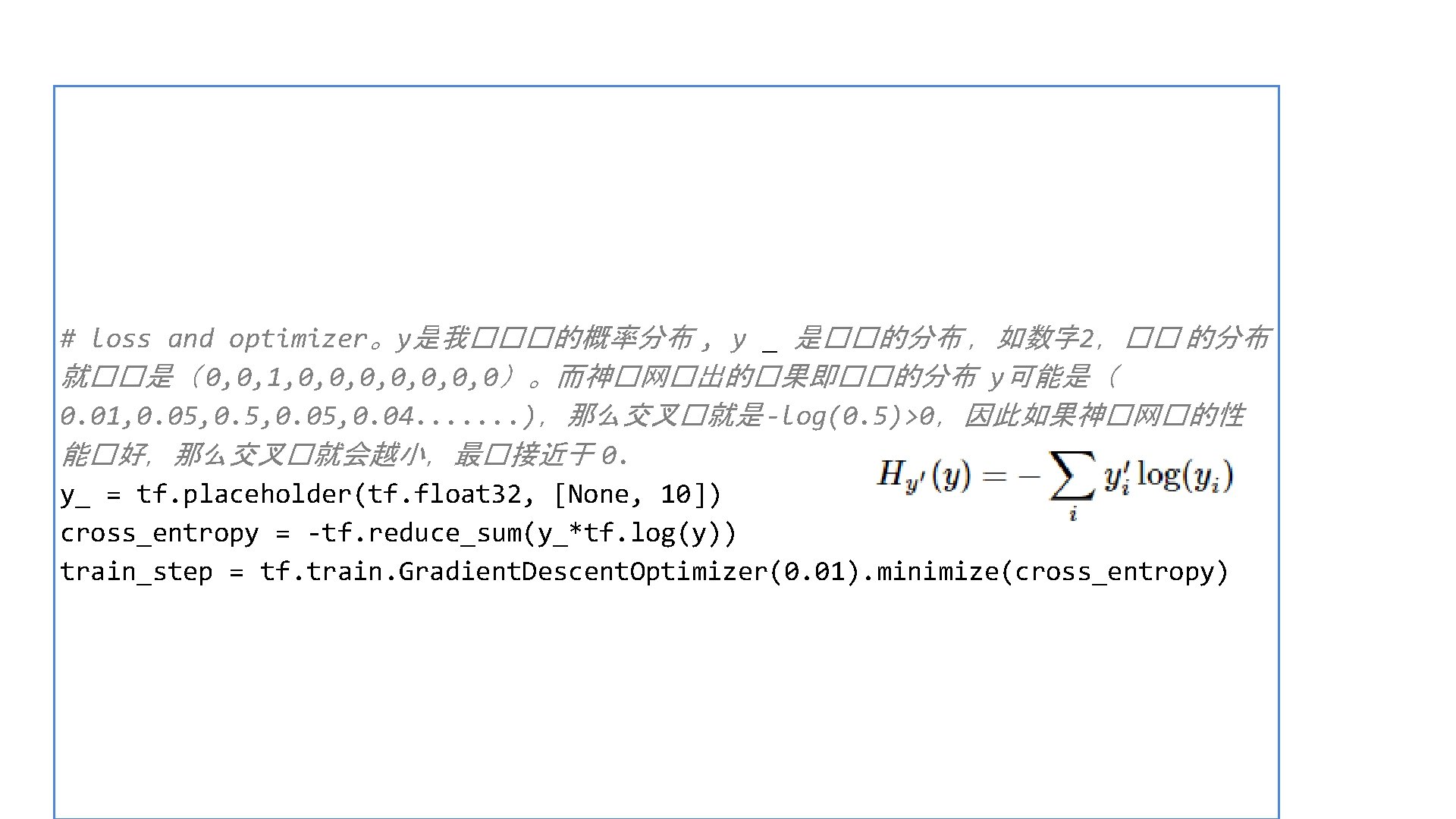

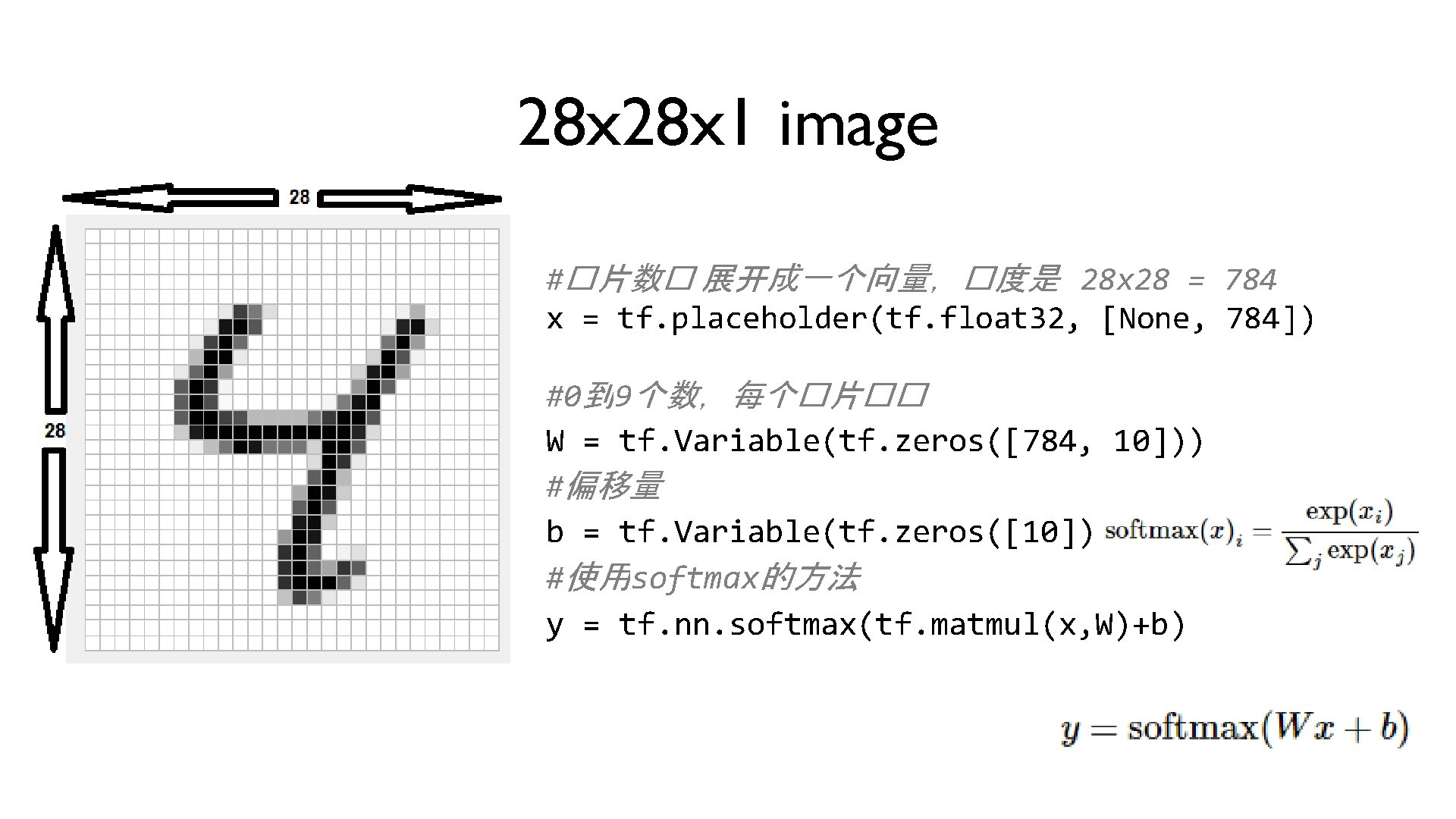

28 x 28 image

```python
# Flatten each image into a vector of length 28 x 28 = 784
x = tf.placeholder(tf.float32, [None, 784])
# Weights: one column for each of the 10 digit classes (0 to 9)
W = tf.Variable(tf.zeros([784, 10]))
# Bias
b = tf.Variable(tf.zeros([10]))
# Apply softmax to get class probabilities
y = tf.nn.softmax(tf.matmul(x, W) + b)
```
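The model $y = \mathrm{softmax}(xW + b)$ can be spelled out in plain Python for a single image. This is a minimal sketch. `softmax` and `predict` are hypothetical helpers, and subtracting the maximum logit is a standard way to keep `exp` from overflowing without changing the result:

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtracting the max leaves the result unchanged."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    """y = softmax(x W + b) for one flattened image x (length 784) and W of shape 784 x 10."""
    logits = [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]
    return softmax(logits)


# With W and b initialized to zeros, as in the slides, every class gets probability 1/10
x = [0.0] * 784
W = [[0.0] * 10 for _ in range(784)]
b = [0.0] * 10
print(predict(x, W, b))
```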

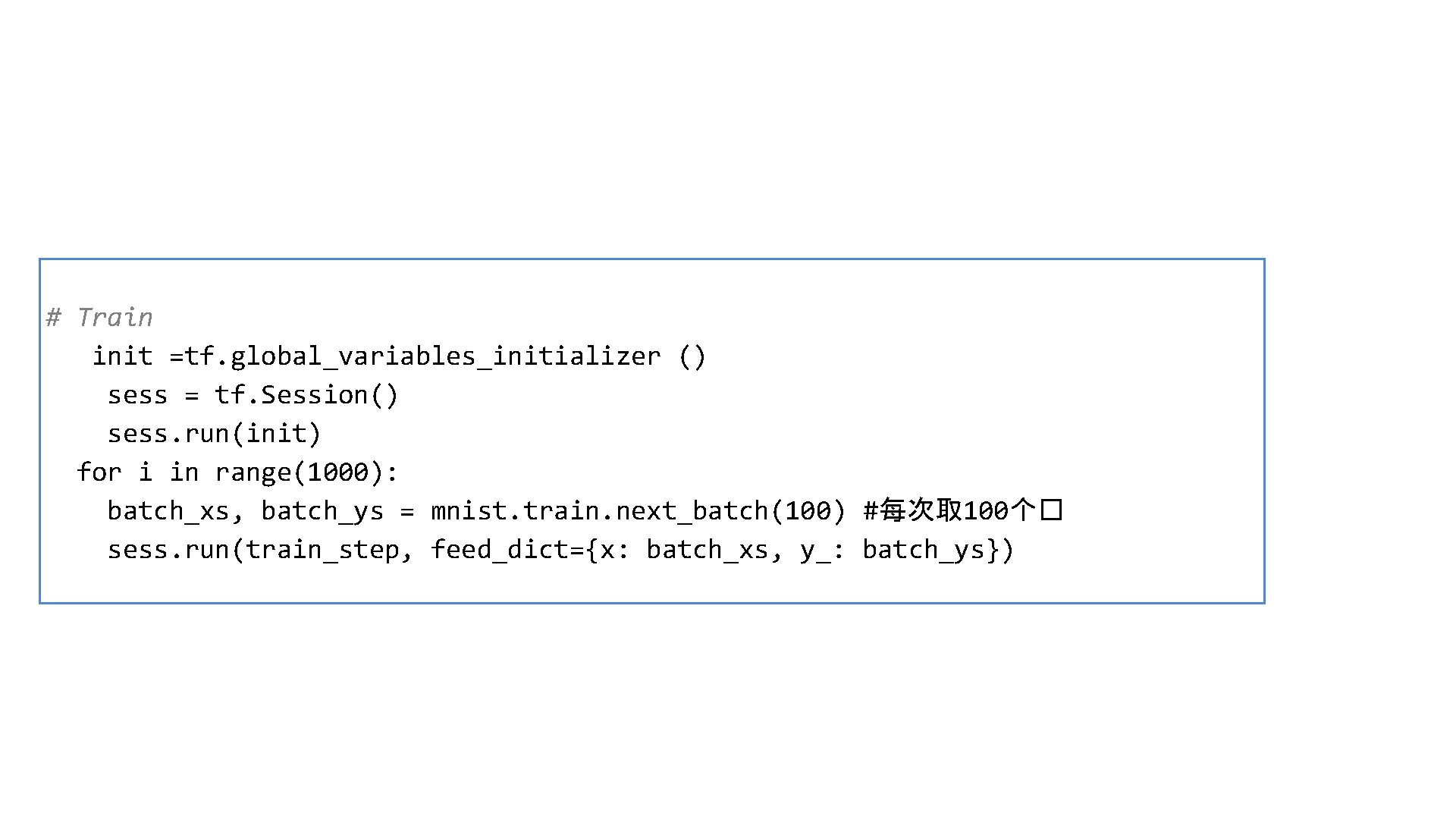

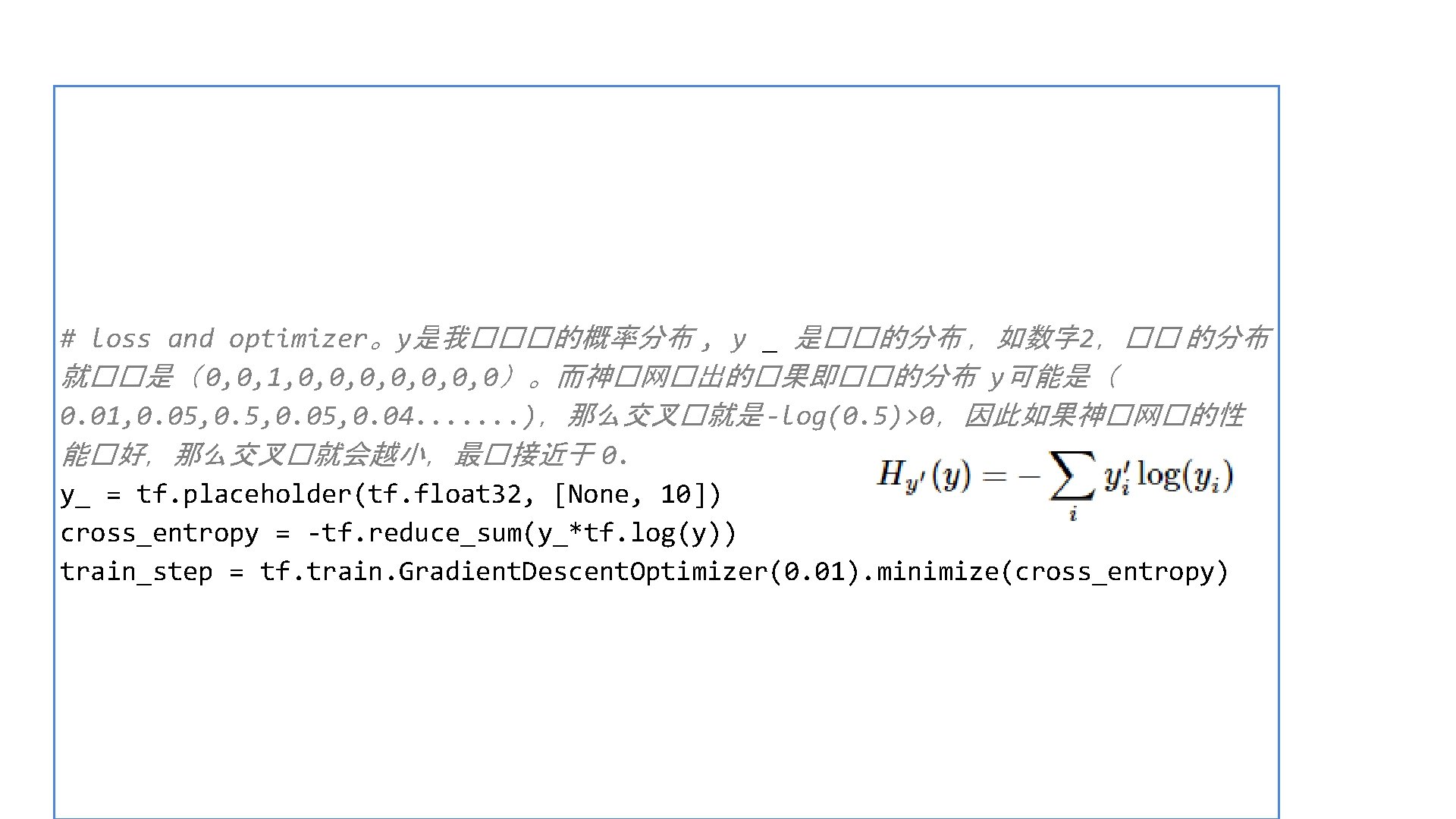

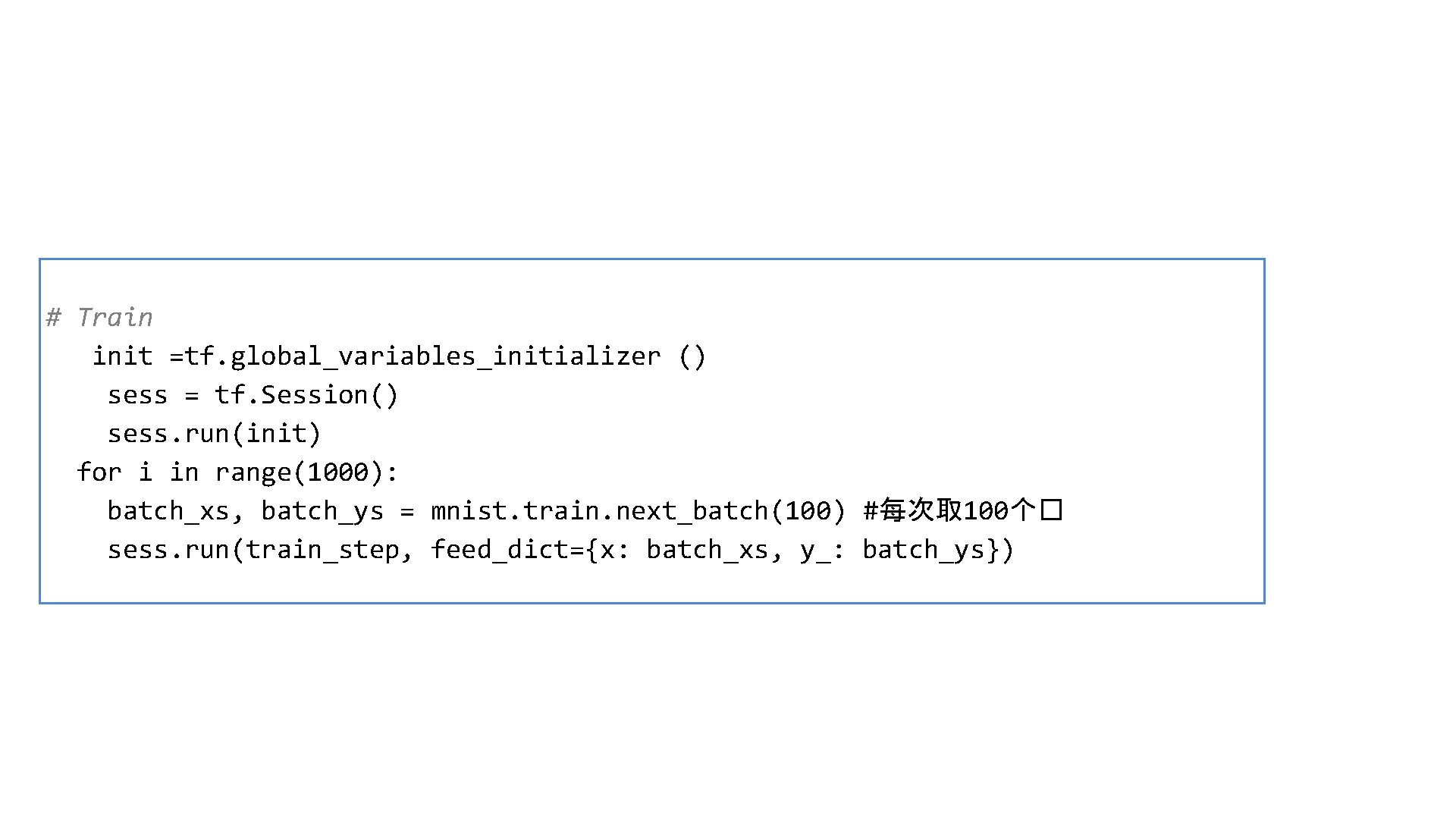

```python
# Train
init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)
for i in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)  # take 100 examples per step
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
```
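`mnist.train.next_batch(100)` hands back the next 100 training examples. Below is a hypothetical plain-Python generator, `batches`, that does something similar by reshuffling once per epoch. The real `next_batch` may differ in details such as how it handles the end of an epoch:

```python
import random
from typing import Iterator, List, Sequence, Tuple


def batches(images: Sequence, labels: Sequence, batch_size: int,
            seed: int = 0) -> Iterator[Tuple[List, List]]:
    """Yield (images, labels) minibatches forever, reshuffling at the start of each epoch.

    A leftover partial batch at the end of an epoch is dropped for simplicity.
    """
    if batch_size > len(images):
        raise ValueError("batch_size is larger than the dataset")
    rng = random.Random(seed)
    order = list(range(len(images)))
    while True:
        rng.shuffle(order)
        for start in range(0, len(order) - batch_size + 1, batch_size):
            idx = order[start:start + batch_size]
            yield [images[i] for i in idx], [labels[i] for i in idx]


# Toy data standing in for mnist.train: 250 "images" with integer labels
images = list(range(250))
labels = [i % 10 for i in images]
stream = batches(images, labels, batch_size=100)
for step in range(3):
    batch_xs, batch_ys = next(stream)
    print(step, len(batch_xs), batch_xs[:3])
```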

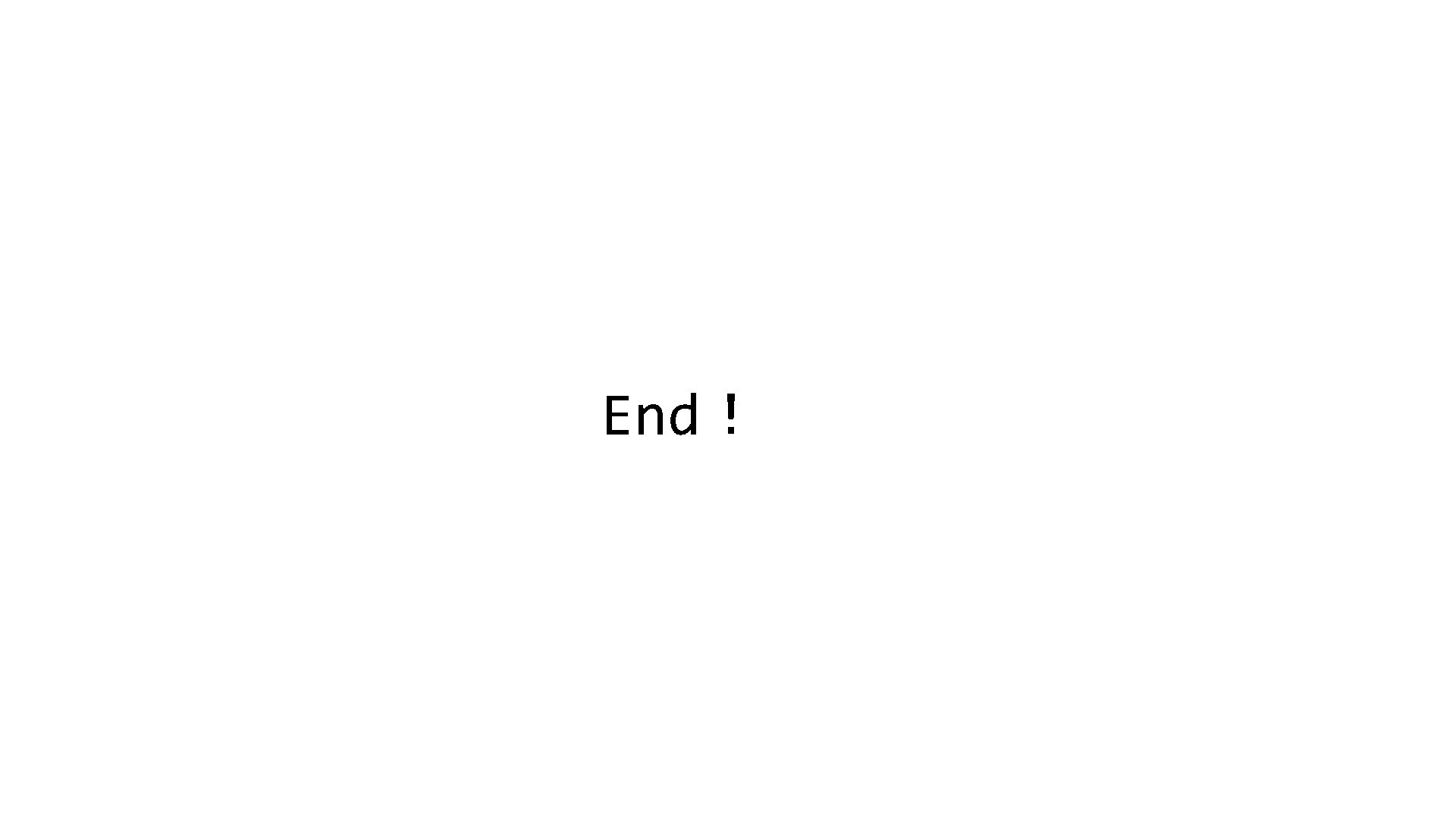

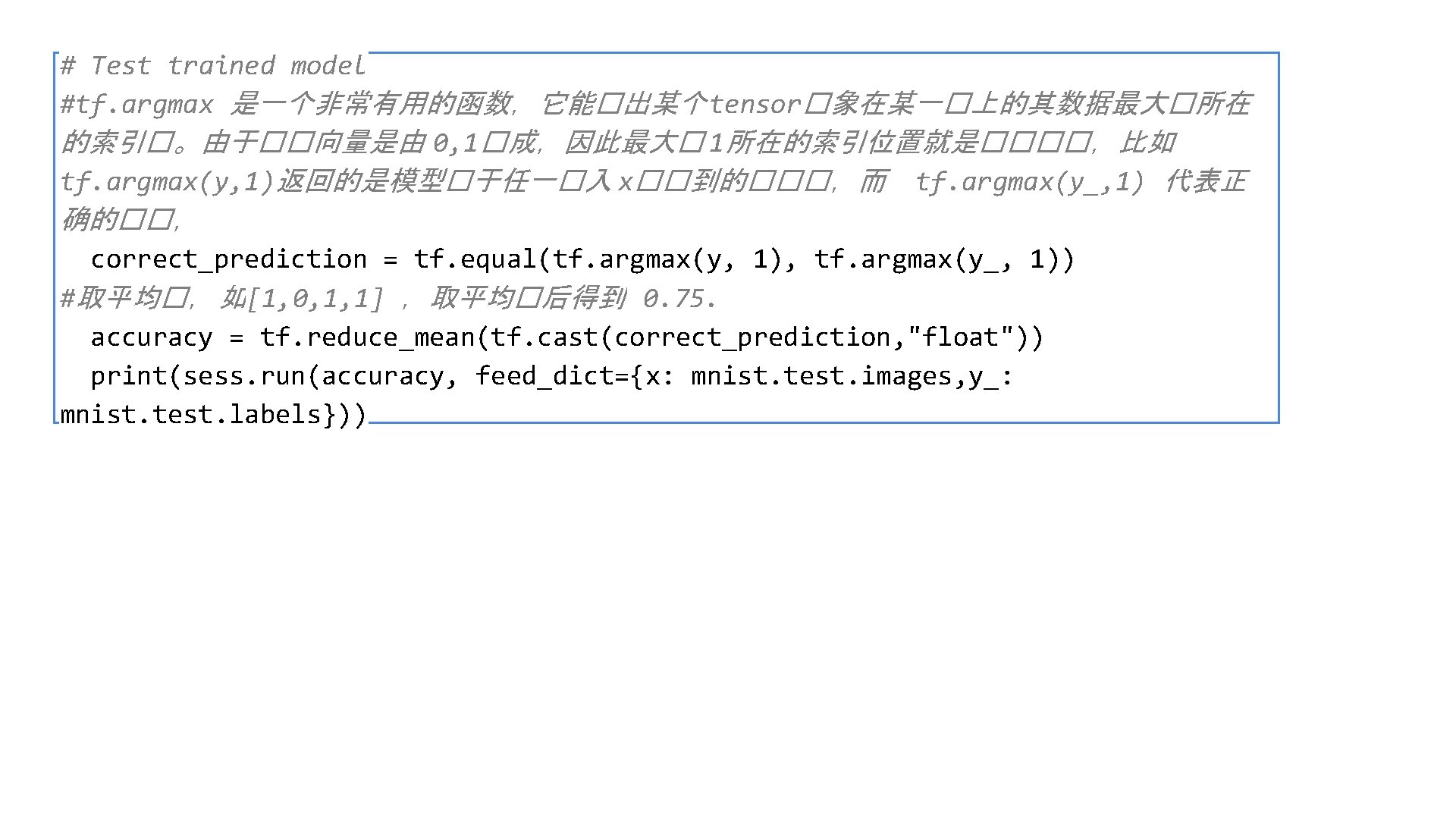

```python
# Test the trained model
# tf.argmax is a very useful function: it returns the index of the largest value of a tensor
# along a given axis. Because each label vector is one-hot (all 0s except a single 1),
# the index of the 1 is the class label. For example, tf.argmax(y, 1) returns the label the
# model predicts for each input x, while tf.argmax(y_, 1) gives the correct label.
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
# Take the mean: for example, [1, 0, 1, 1] averages to 0.75
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))
```
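The accuracy computation can be checked by hand. This plain-Python sketch uses made-up predictions whose correctness pattern is `[1, 0, 1, 1]`, matching the 0.75 example in the comment. `argmax` and `accuracy` are hypothetical helpers:

```python
from typing import List, Sequence


def argmax(row: Sequence[float]) -> int:
    """Index of the largest entry (the first one on ties), like tf.argmax along axis 1."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy(predictions: List[Sequence[float]], one_hot_labels: List[Sequence[float]]) -> float:
    correct = [1.0 if argmax(p) == argmax(t) else 0.0
               for p, t in zip(predictions, one_hot_labels)]
    return sum(correct) / len(correct)


y = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.2, 0.2, 0.6], [0.3, 0.4, 0.3]]
y_ = [[0, 1, 0], [0, 0, 1], [0, 0, 1], [0, 1, 0]]
print(accuracy(y, y_))  # 0.75
```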

```python
from tensorflow.examples.tutorials.mnist import input_data
import tensorflow as tf  # TensorFlow 1.x API

mnist = input_data.read_data_sets("tmp/", one_hot=True)

x = tf.placeholder(tf.float32, [None, 784])
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))
y = tf.nn.softmax(tf.matmul(x, W) + b)
y_ = tf.placeholder(tf.float32, [None, 10])

# Note: tf.log(y) gives -inf when a probability underflows to 0, so the loss can become inf or NaN;
# tf.nn.softmax_cross_entropy_with_logits is the numerically stable alternative
cross_entropy = -tf.reduce_sum(y_ * tf.log(y))
train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)
for i in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))
```
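The `-tf.reduce_sum(y_ * tf.log(y))` loss breaks down when a predicted probability underflows to zero. This plain-Python sketch compares that naive form with the log-sum-exp form, which is the idea behind `tf.nn.softmax_cross_entropy_with_logits`. The helper names are hypothetical. In TensorFlow the naive form yields inf or NaN instead of raising, whereas Python's `math.log(0.0)` raises `ValueError`:

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_naive(logits: Sequence[float], one_hot: Sequence[float]) -> float:
    """-sum(y_ * log(softmax(z))): the form used for cross_entropy in the slide."""
    return -sum(t * math.log(p) for p, t in zip(softmax(logits), one_hot))


def cross_entropy_from_logits(logits: Sequence[float], one_hot: Sequence[float]) -> float:
    """Same quantity computed via log-softmax (log-sum-exp), which never takes log(0)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_sum_exp) for z, t in zip(logits, one_hot))


logits = [1000.0, 0.0]
label = [0.0, 1.0]  # the true class is the one the model considers almost impossible
try:
    print(cross_entropy_naive(logits, label))
except ValueError as err:
    print("naive version failed:", err)  # softmax gives exactly 0.0, and log(0) is undefined
print(cross_entropy_from_logits(logits, label))  # 1000.0
```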

End!