
- Slides: 53

Technische Universität München

IMU Sensor Fusion with Machine Learning

Özgür Akyazı, 18.04.2019

Final: Master, Data Engineering and Analytics. Supervisor(s): Adnane Jadid

Index

- Introduction/Motivation
- Problem Description: Issues
- Goals of this Project
- Existing Solutions
- Approach / Related Work
- Data Collection
- Implementation
- Data Preprocessing
- Neural Network (NN) Construction
- Evaluation
- Discussion of Potential Issues
- References

Akyazı: Sensor Fusion with Deep Learning 2

Introduction / Motivation

- Tracking is one of the main tasks in Augmented Reality.
- It should be as precise, accurate, and robust as possible.
- This requires multiple sensors → sensor fusion.
- Deep learning is a very popular topic!

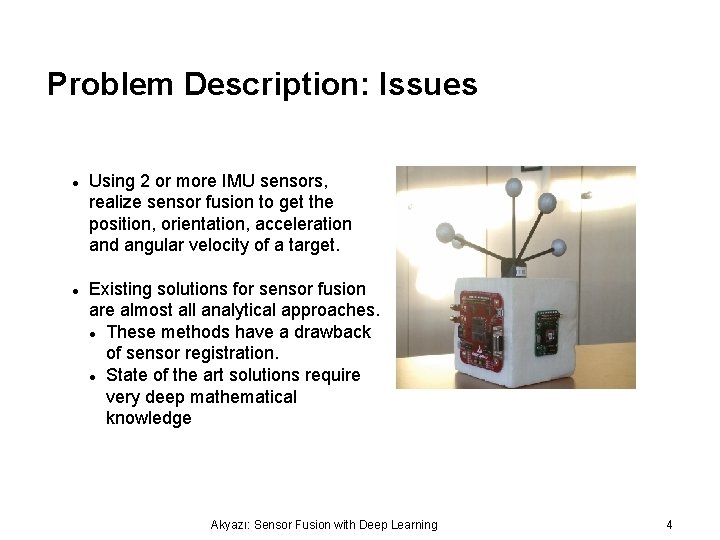

Problem Description: Issues

- Using 2 or more IMU sensors, perform sensor fusion to obtain the position, orientation, acceleration, and angular velocity of a target.
- Almost all existing solutions for sensor fusion are analytical approaches.
- These methods have the drawback of requiring sensor registration.
- State-of-the-art solutions require very deep mathematical knowledge.

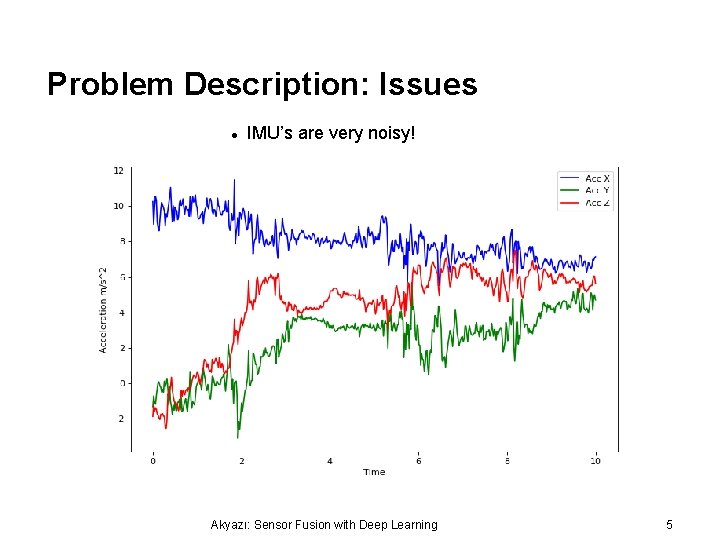

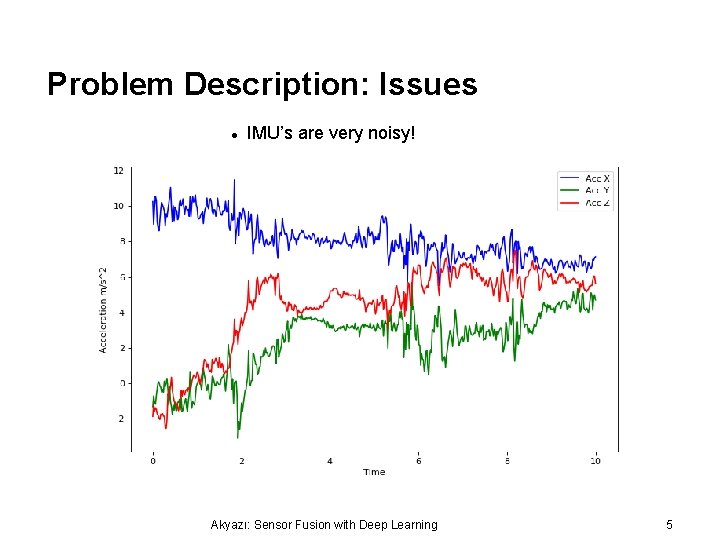

Problem Description: Issues. IMUs are very noisy!

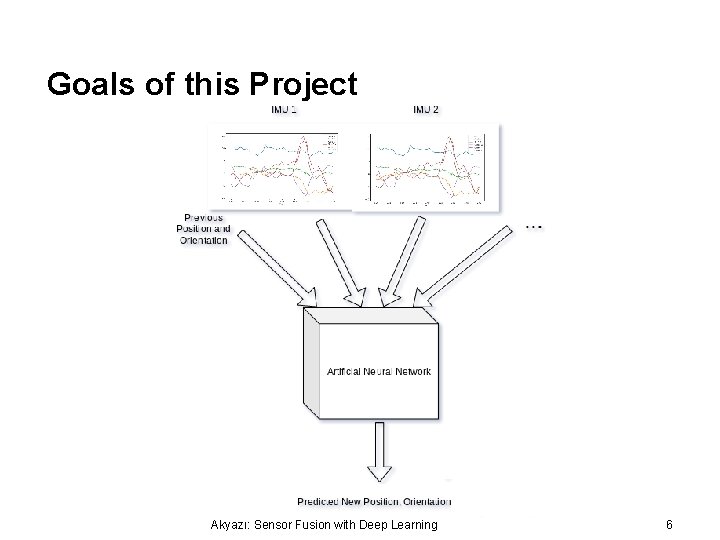

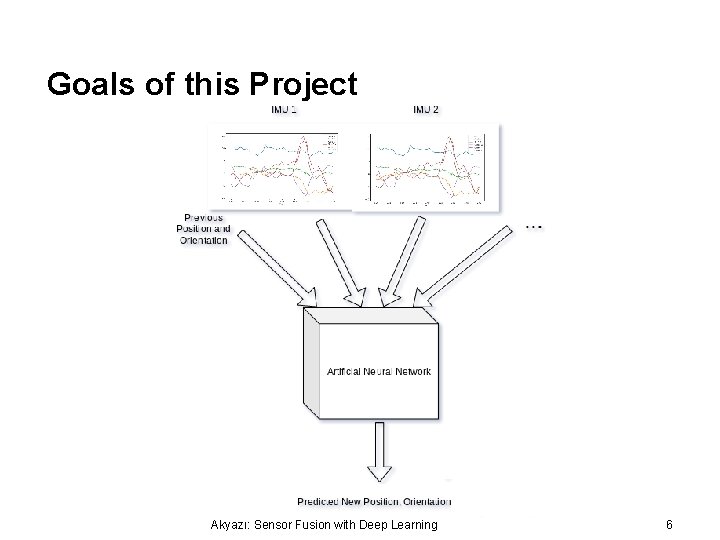

Goals of this Project

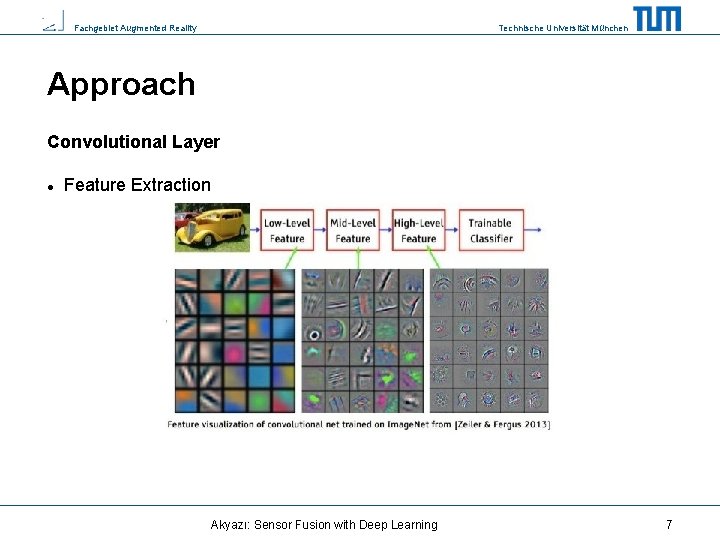

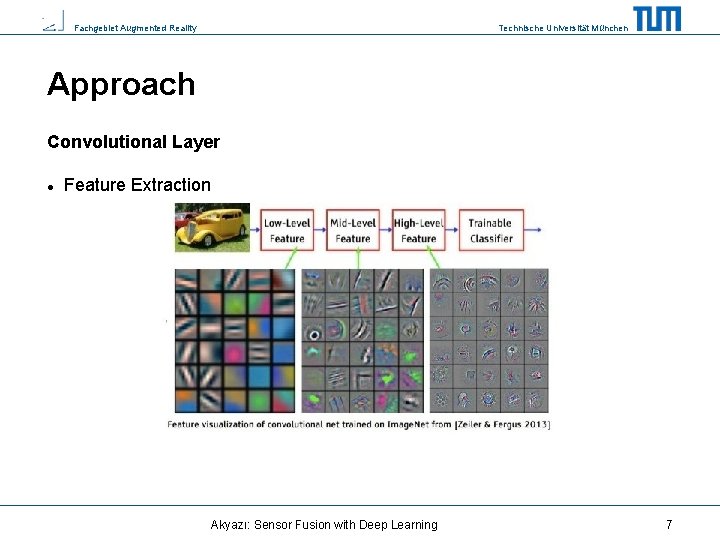

Fachgebiet Augmented Reality, Technische Universität München

Approach: Convolutional Layer, used for feature extraction.

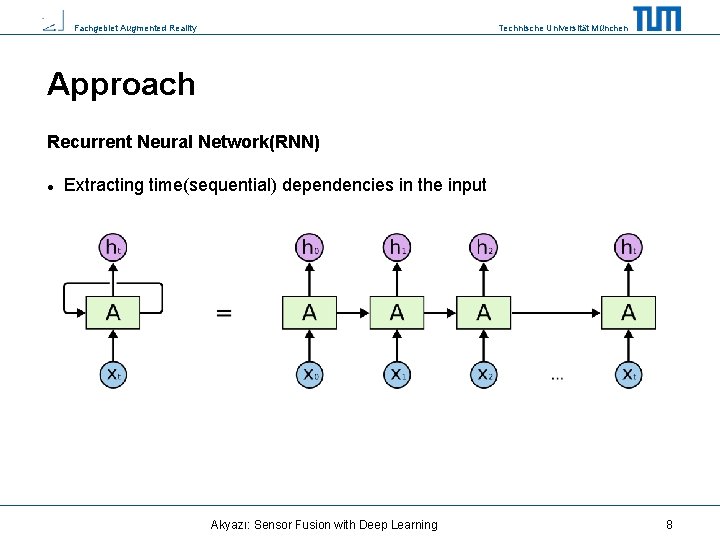

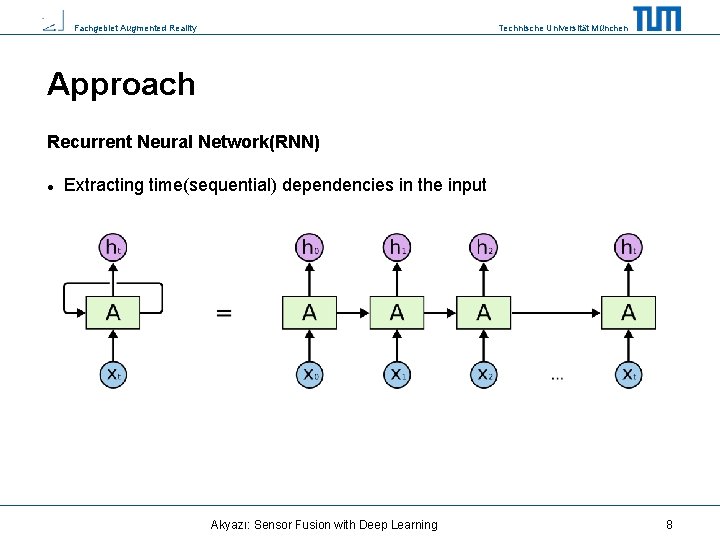
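To make the feature-extraction step concrete, here is a minimal sketch of what one 1D convolutional filter computes over a single channel of an IMU window. It assumes "valid" padding and stride 1, and, like Keras `Conv1D`, it computes a cross-correlation (the kernel is not flipped). The function name `conv1d_valid` and the toy values are hypothetical, not taken from the project code.

```python
from typing import List


def conv1d_valid(signal: List[float], kernel: List[float], bias: float = 0.0) -> List[float]:
    """Slide `kernel` over `signal` (stride 1, no padding) and return one
    feature value per position, as a single Conv1D filter would before activation."""
    k = len(kernel)
    if k == 0 or k > len(signal):
        raise ValueError("kernel must be non-empty and no longer than the signal")
    return [
        sum(w * x for w, x in zip(kernel, signal[i:i + k])) + bias
        for i in range(len(signal) - k + 1)
    ]


# A difference-like kernel responds to changes in, e.g., one acceleration axis.
accel_x = [0.0, 0.0, 1.0, 3.0, 3.0, 3.0]
print(conv1d_valid(accel_x, [-1.0, 1.0]))  # [0.0, 1.0, 2.0, 0.0, 0.0]
```

A real convolutional layer learns many such kernels and sums over all input channels (for example the acceleration and angular-velocity axes of every IMU), producing one feature sequence per filter.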

Approach: Recurrent Neural Network (RNN), for extracting time (sequential) dependencies in the input.

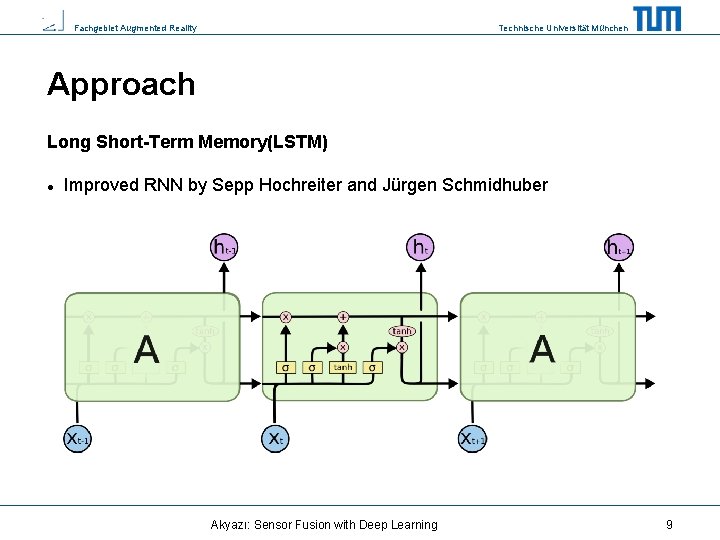

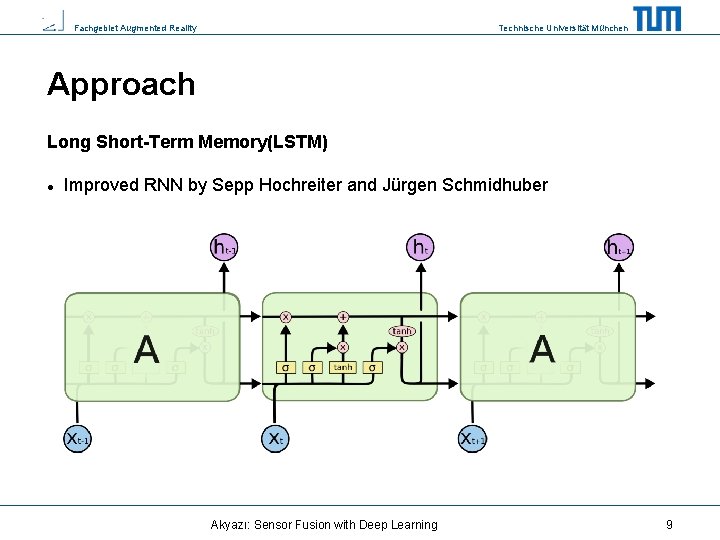
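The recurrence that lets an RNN capture sequential dependencies can be sketched with a scalar Elman-style cell, $h_t = \tanh(w_x x_t + w_h h_{t-1} + b)$. This is a minimal illustration with hypothetical scalar weights; real layers (such as Keras `SimpleRNN`) use weight matrices and vector states.

```python
import math
from typing import List


def simple_rnn(inputs: List[float], w_x: float, w_h: float, b: float = 0.0,
               h0: float = 0.0) -> List[float]:
    """Return the hidden state after each time step of a scalar Elman RNN."""
    h = h0
    states = []
    for x in inputs:
        # The previous state h feeds back in, so h_t depends on all earlier inputs.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


seq_a = simple_rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5)
seq_b = simple_rnn([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5)
print(seq_a[-1] != seq_b[-1])  # True: the first input still affects the last state
```

With `w_h = 0` the cell would forget everything after one step; the recurrent weight is what carries information forward in time.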

Approach: Long Short-Term Memory (LSTM), an improved RNN introduced by Sepp Hochreiter and Jürgen Schmidhuber.

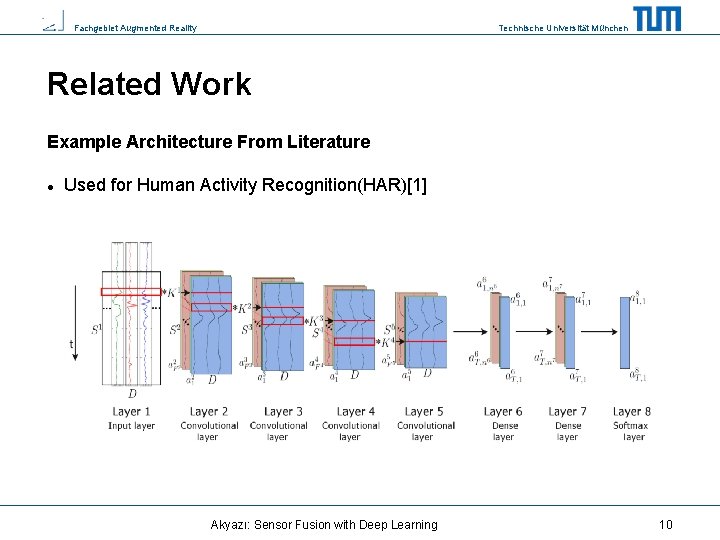

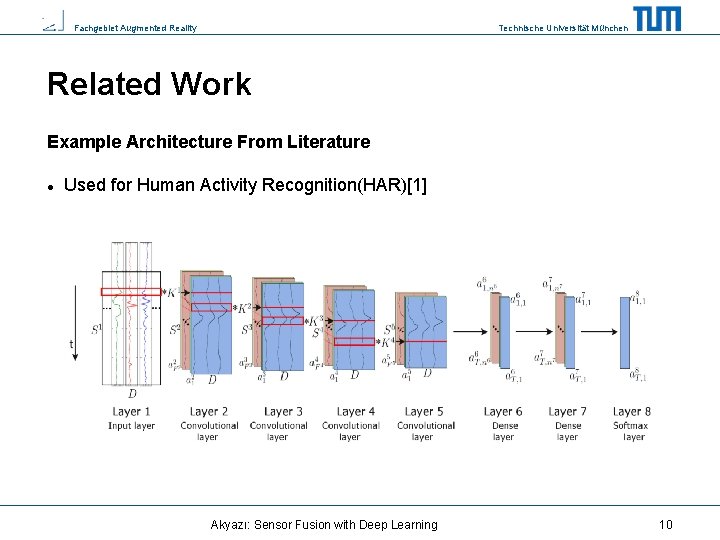
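A single LSTM step can be sketched in the same scalar style. It follows the widely used formulation with a forget gate (added to the original Hochreiter and Schmidhuber design in later work by Gers, Schmidhuber and Cummins), which is also the variant Keras `LSTM` implements. The parameter dictionary and its key names are hypothetical.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float,
              p: Dict[str, float]) -> Tuple[float, float]:
    """One scalar LSTM step. `p` holds hypothetical weights w_* (input),
    u_* (recurrent) and b_* (bias) for gates f, i, o and candidate g."""
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    c = f * c_prev + i * g   # cell state: keep part of the old memory, add new content
    h = o * math.tanh(c)     # hidden state passed to the next step / layer
    return h, c


# All-zero parameters: every gate is sigmoid(0) = 0.5 and the candidate is 0.
zero = {f"{k}_{gate}": 0.0 for k in ("w", "u", "b") for gate in "fiog"}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=1.0, p=zero)
print(h, c)
```

Because the cell state `c` is updated additively and only scaled by the forget gate, information can persist over many steps, which is the main improvement over the plain RNN cell.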

Related Work: Example architecture from the literature, used for Human Activity Recognition (HAR) [1].

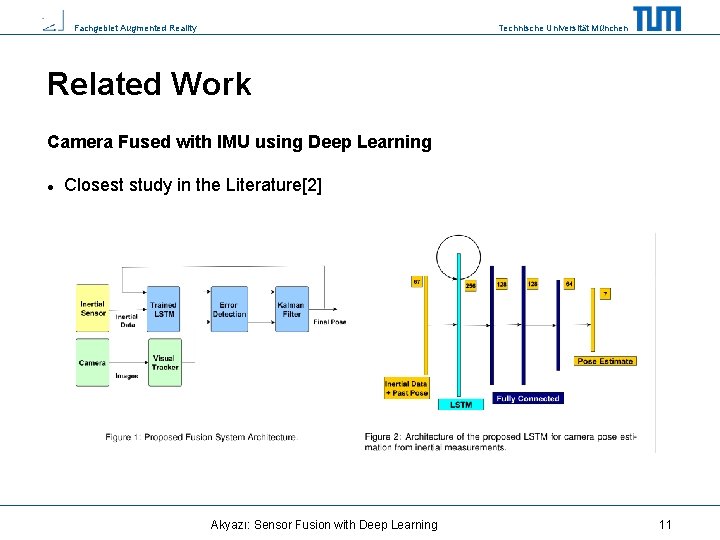

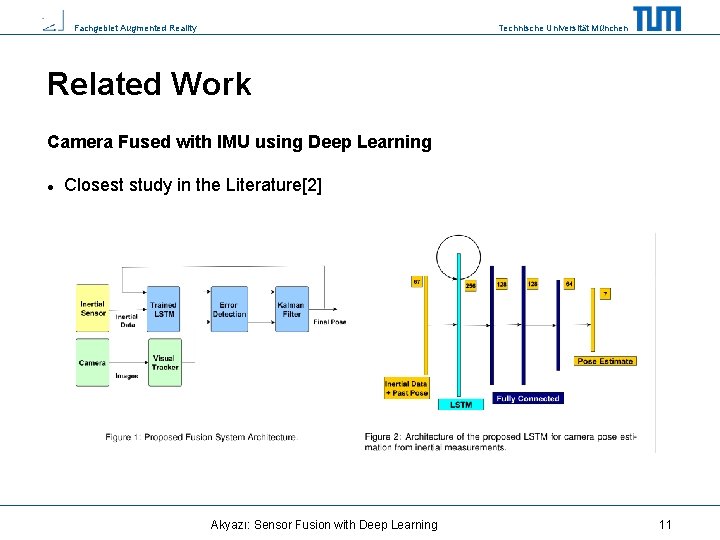

Related Work: Camera fused with IMU using deep learning, the closest study in the literature [2].

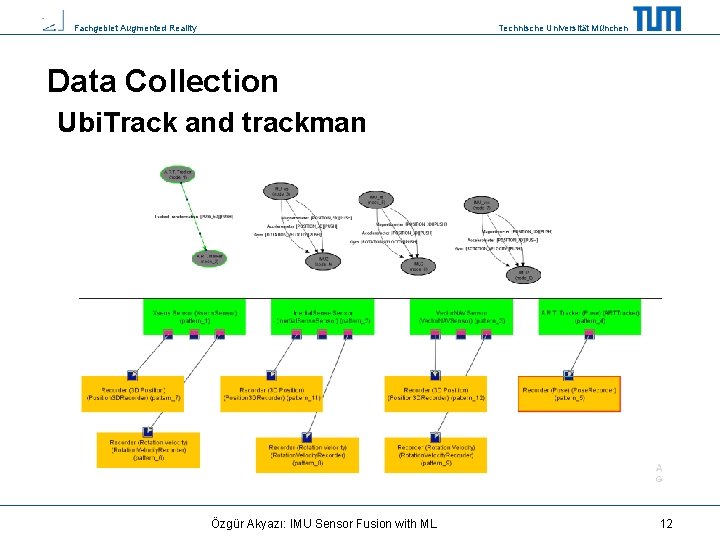

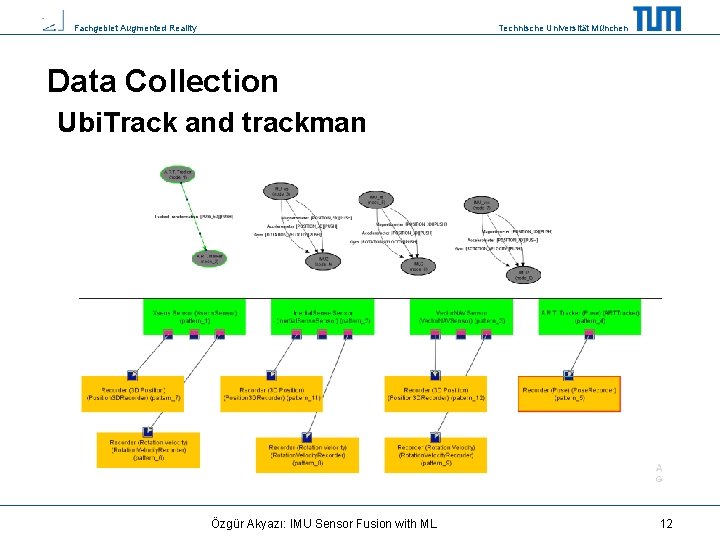

Data Collection: UbiTrack and trackman

Özgür Akyazı: IMU Sensor Fusion with ML 12

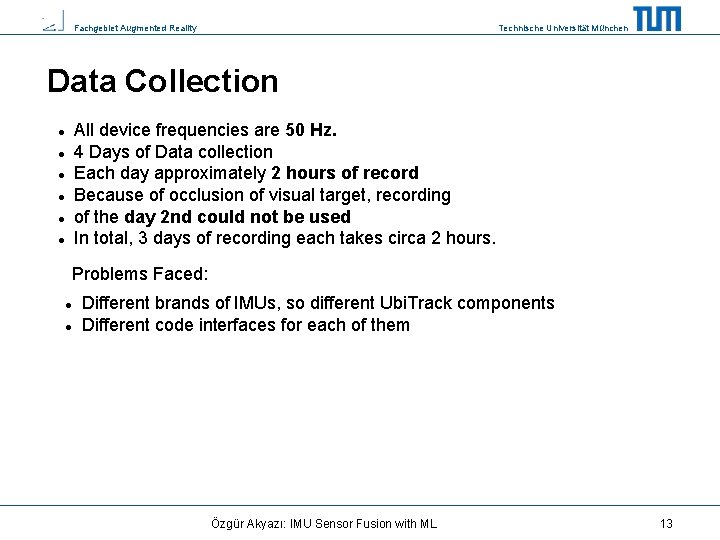

Data Collection

- All device frequencies are 50 Hz.
- 4 days of data collection, each day approximately 2 hours of recording.
- Because the visual target was occluded, the recording of day 2 could not be used.
- In total, 3 days of recording, each circa 2 hours long.

Problems faced:

- Different brands of IMUs, hence different UbiTrack components.
- Different code interfaces for each of them.

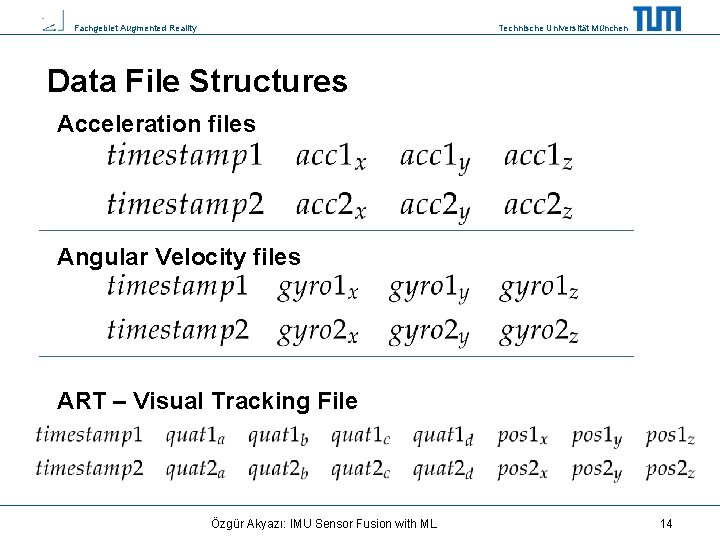

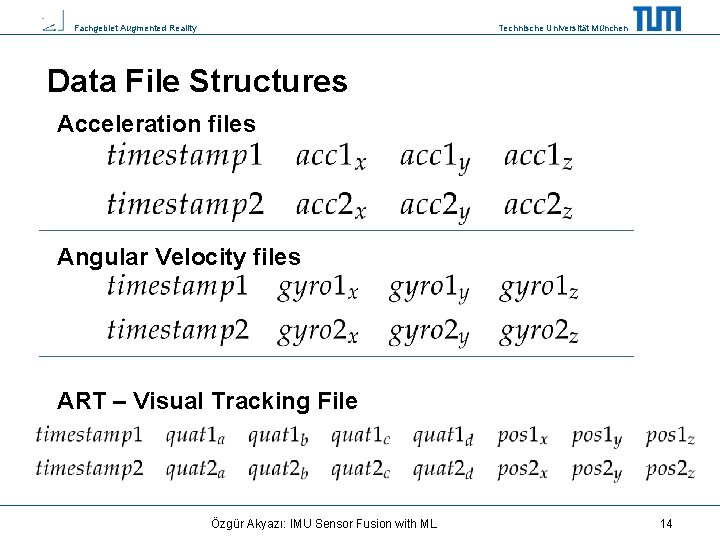

Data File Structures: acceleration files, angular velocity files, and the ART visual tracking file.

Data Issues

- Unequal number of data points in each data collection session.
- Asynchronous data.

Implementation

- For data collection, C/C++ is used to write UbiTrack modules and to interact with the IMU APIs.
- Python is the programming language for data preprocessing, the neural network, model evaluation, and testing.
- TensorFlow and Keras are used to develop the neural network.

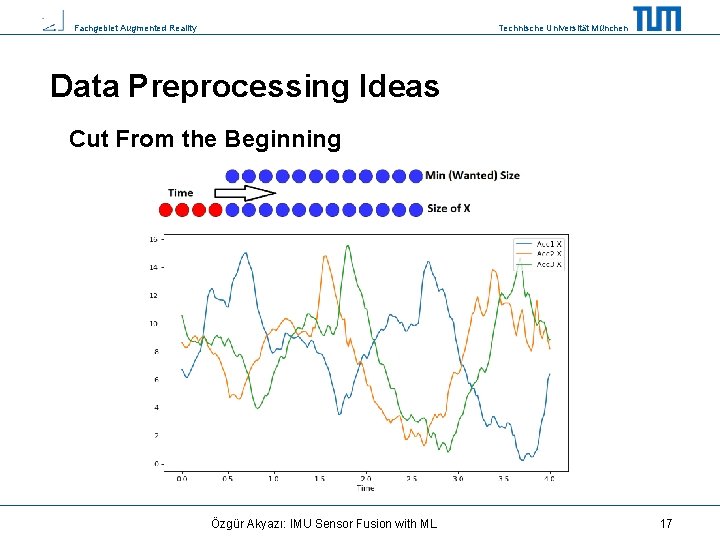

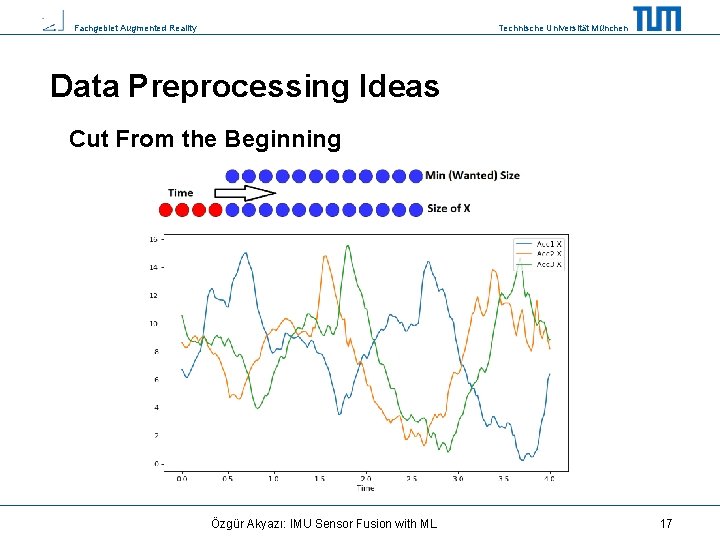

Data Preprocessing Ideas: Cut From the Beginning

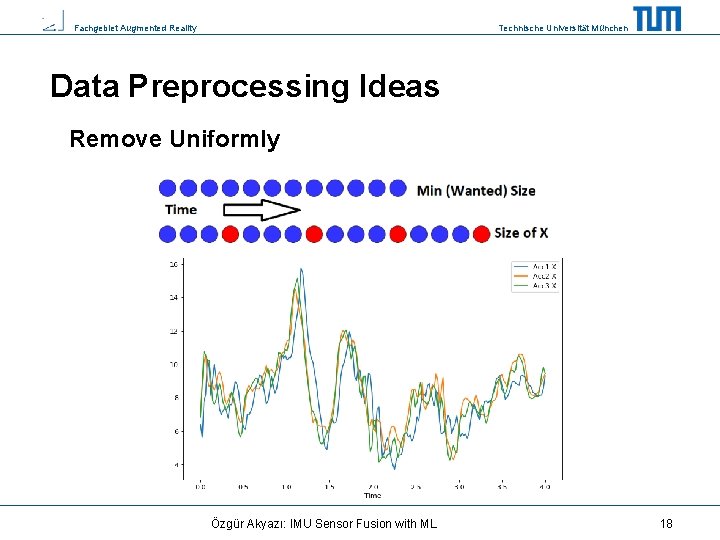

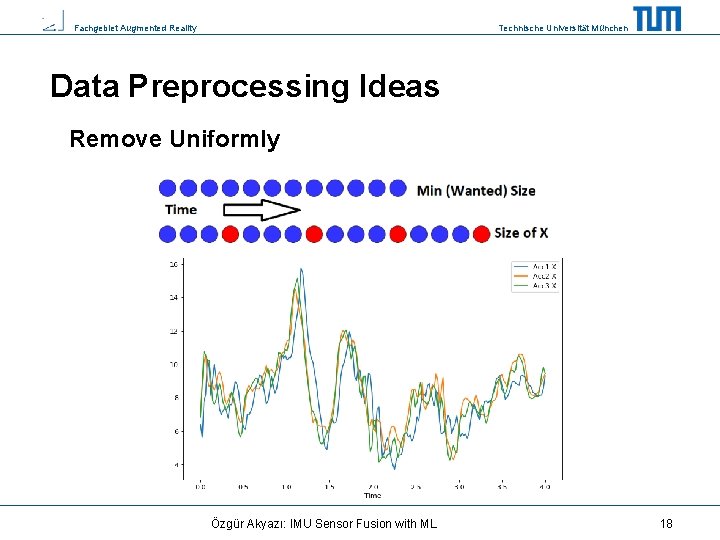

Data Preprocessing Ideas: Remove Uniformly

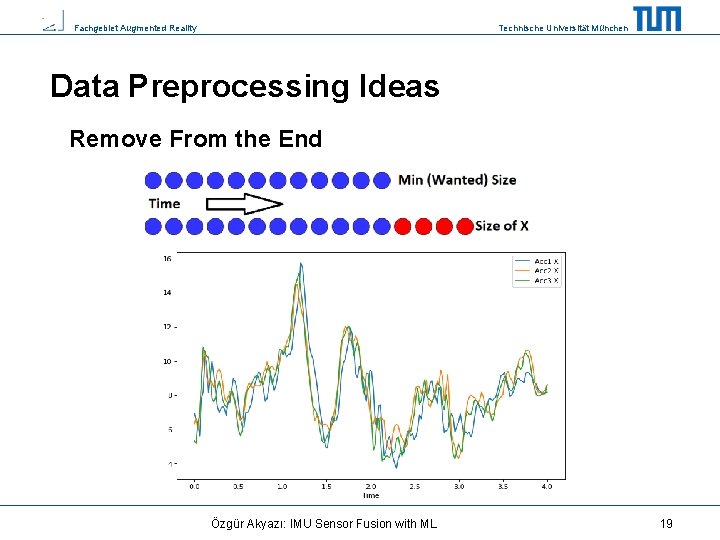

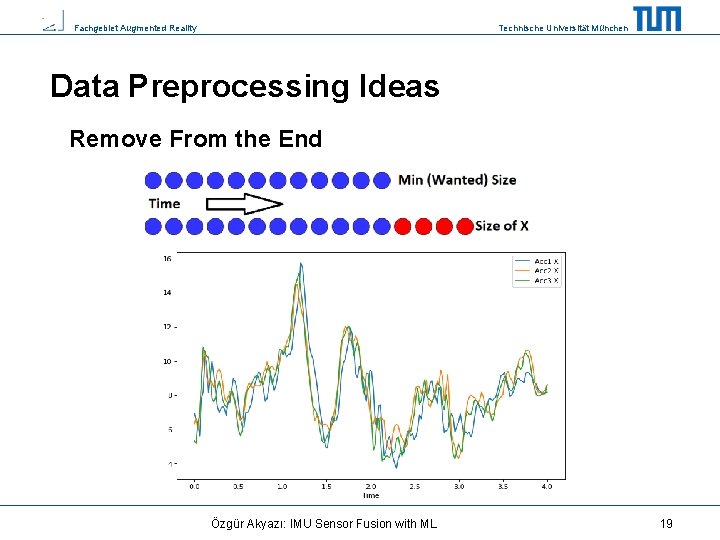

Data Preprocessing Ideas: Remove From the End

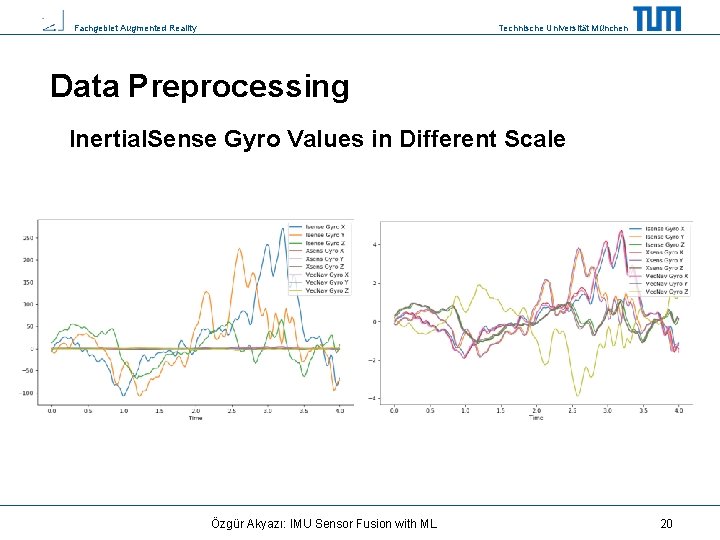

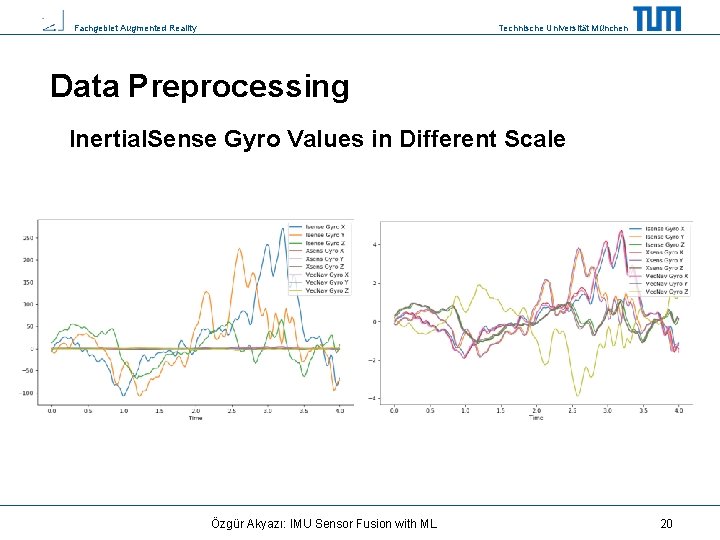
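The three preprocessing ideas above (cut from the beginning, remove uniformly, remove from the end) can be sketched as ways of trimming the longer streams so that every sensor has the same number of samples. This is one interpretation of the slide titles, not the project's code; all function names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Stream = List[float]


def cut_from_beginning(stream: Sequence[float], n: int) -> Stream:
    """Drop the surplus samples at the start, keeping the last n."""
    return list(stream[len(stream) - n:])


def remove_from_end(stream: Sequence[float], n: int) -> Stream:
    """Drop the surplus samples at the end, keeping the first n."""
    return list(stream[:n])


def remove_uniformly(stream: Sequence[float], n: int) -> Stream:
    """Drop surplus samples spread evenly over the stream, keeping n of them."""
    length = len(stream)
    # i * length // n is strictly increasing when length >= n, so no index repeats.
    return [stream[i * length // n] for i in range(n)]


def equalize(streams: Dict[str, Sequence[float]],
             strategy: Callable[[Sequence[float], int], Stream]) -> Dict[str, Stream]:
    """Trim every stream to the length of the shortest one."""
    n = min(len(s) for s in streams.values())
    return {name: strategy(s, n) for name, s in streams.items()}


streams = {"imu_a": list(range(10)), "imu_b": list(range(5))}
print(equalize(streams, remove_uniformly)["imu_a"])  # [0, 2, 4, 6, 8]
```

None of these strategies restores true time alignment: they only equalize lengths, so any clock offset between the sensors remains.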

Data Preprocessing: InertialSense Gyro Values on a Different Scale

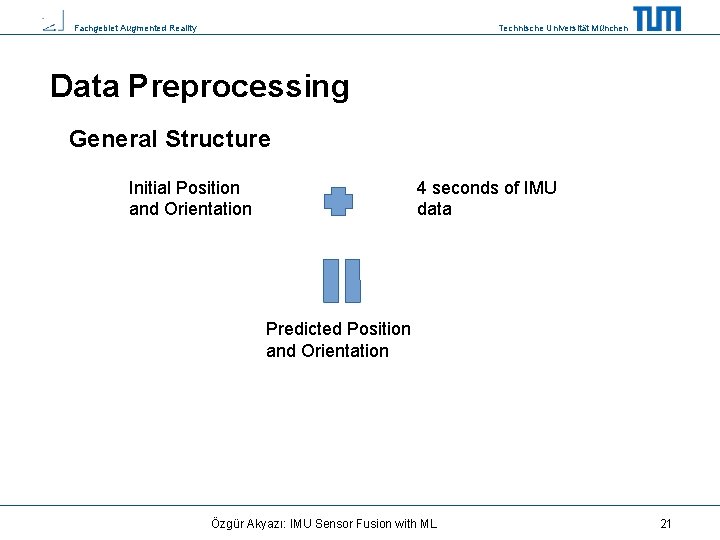

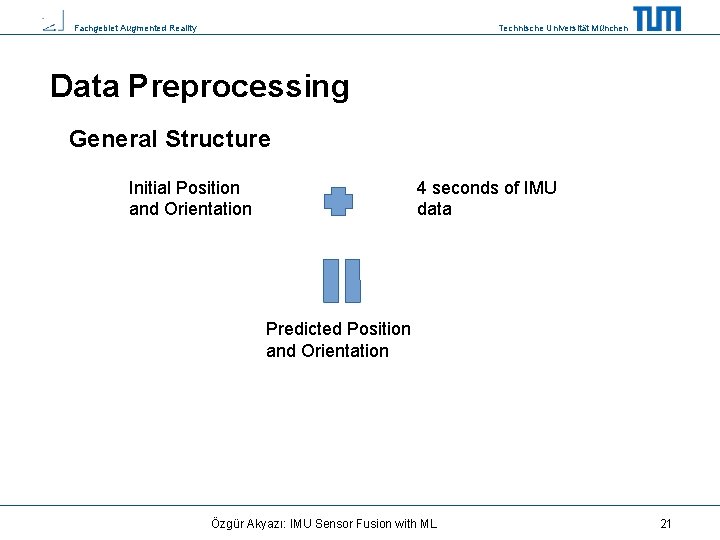

Data Preprocessing: General Structure

Initial position and orientation + 4 seconds of IMU data → predicted position and orientation.

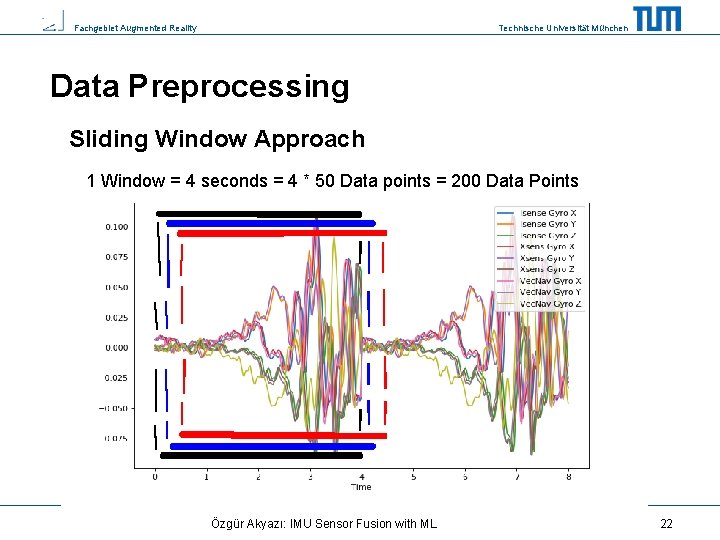

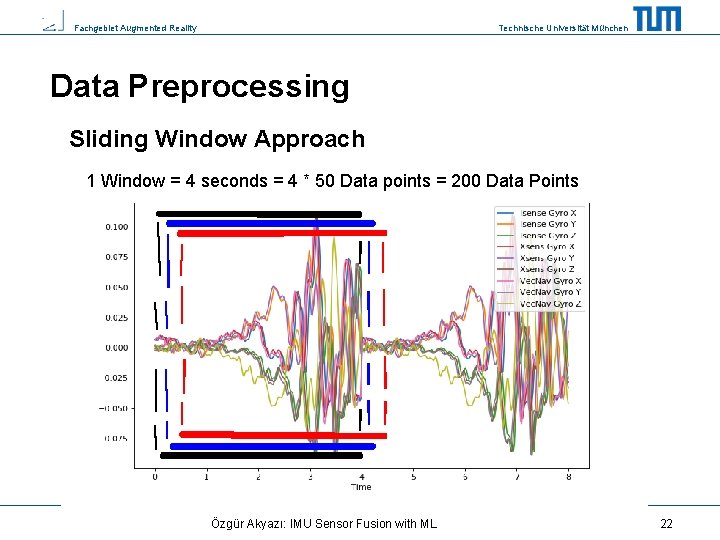

Data Preprocessing: Sliding Window Approach

1 window = 4 seconds = 4 × 50 data points = 200 data points.

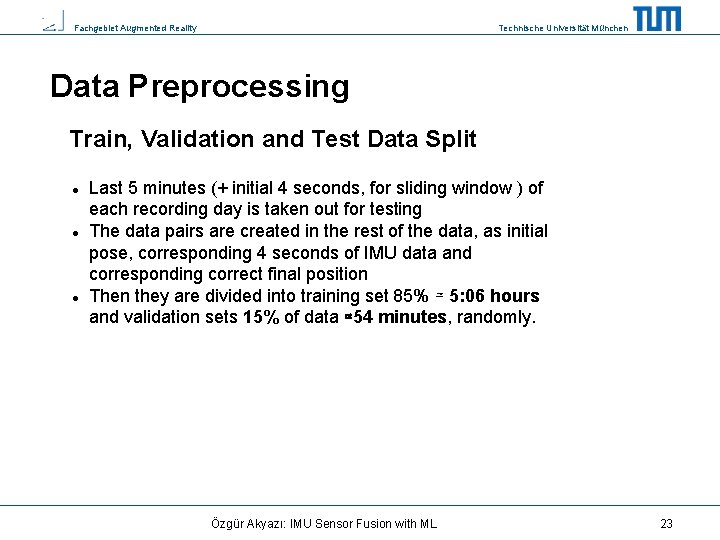

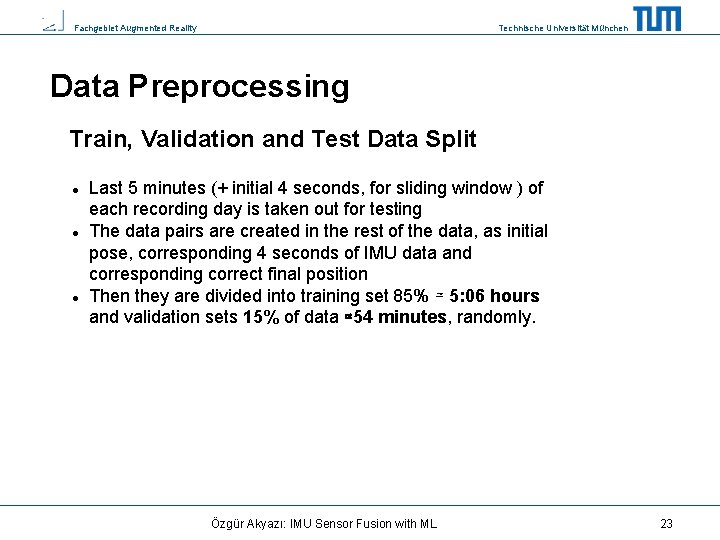
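A minimal sketch of the sliding-window pairing from the General Structure and Sliding Window slides: each training sample combines the pose at the start of a 200-sample window, the IMU data inside it, and the pose at its end. The stride of 1 sample, the list-based data layout, taking the final pose at the window's last sample, and the name `make_pairs` are all assumptions for illustration.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")
P = TypeVar("P")

RATE_HZ = 50                        # all devices record at 50 Hz
WINDOW_SECONDS = 4
WINDOW = RATE_HZ * WINDOW_SECONDS   # 4 * 50 = 200 samples per window


def sliding_windows(samples: Sequence[T], size: int = WINDOW,
                    stride: int = 1) -> List[Sequence[T]]:
    """All windows of `size` consecutive samples, shifted by `stride`."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, stride)]


def make_pairs(imu: Sequence[T], poses: Sequence[P], size: int = WINDOW,
               stride: int = 1) -> List[Tuple[P, Sequence[T], P]]:
    """(initial pose, IMU window, final pose) triples.

    Assumes imu[k] and poses[k] already refer to the same time step and takes
    the poses at the first and last sample of each window.
    """
    if len(imu) != len(poses):
        raise ValueError("IMU samples and poses must be aligned one-to-one")
    return [(poses[i], imu[i:i + size], poses[i + size - 1])
            for i in range(0, len(imu) - size + 1, stride)]


imu = list(range(250))                      # stand-in for 5 s of IMU readings
poses = [f"pose{k}" for k in range(250)]    # stand-in for ART poses
pairs = make_pairs(imu, poses)
print(len(pairs), pairs[0][0], pairs[0][2])  # 51 pose0 pose199
```

A stride of 1 maximizes the number of pairs but makes neighbouring pairs almost identical; a larger stride trades data volume for less redundancy.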

Data Preprocessing: Train, Validation and Test Data Split

- The last 5 minutes of each recording day (plus the 4 seconds before them, needed for the first sliding window) are held out for testing.
- In the rest of the data, data pairs are created, each consisting of an initial pose, the corresponding 4 seconds of IMU data, and the corresponding correct final position.
- These pairs are then randomly divided into a training set (85% ≃ 5:06 hours) and a validation set (15% ≃ 54 minutes).

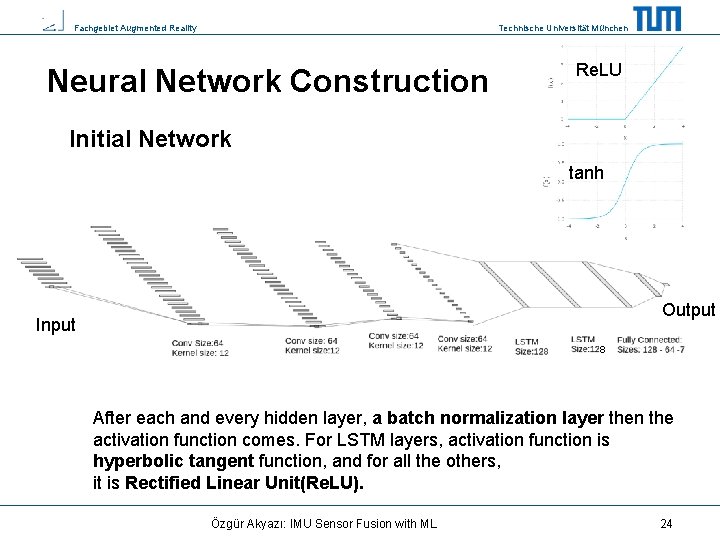

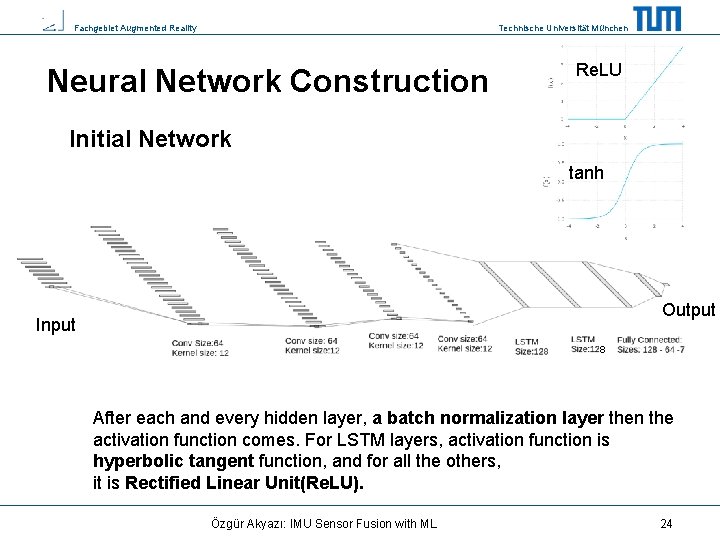
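The split can be sketched as follows, assuming each recording day is a list of 50 Hz samples: the tail (5 minutes plus the 4-second lead-in for the first window) is set aside for testing, and the pairs built from the remainder are shuffled and split 85/15. `split_day`, `train_val_split` and the fixed seed are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

RATE_HZ = 50
TEST_SECONDS = 5 * 60      # last 5 minutes of each day
WARMUP_SECONDS = 4         # extra 4 s so the first test window is complete


def split_day(samples: Sequence[T]) -> Tuple[Sequence[T], Sequence[T]]:
    """Return (train/validation part, held-out test part) of one recording day."""
    n_test = (TEST_SECONDS + WARMUP_SECONDS) * RATE_HZ   # 304 s * 50 Hz = 15200
    if n_test >= len(samples):
        raise ValueError("recording is shorter than the test segment")
    return samples[:-n_test], samples[-n_test:]


def train_val_split(pairs: Sequence[T], val_fraction: float = 0.15,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Randomly split pairs into training (85%) and validation (15%) sets."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


day = list(range(2 * 60 * 60 * RATE_HZ))   # about 2 hours at 50 Hz
rest, test = split_day(day)
train, val = train_val_split(range(1000))
print(len(test), len(train), len(val))     # 15200 850 150
```

Holding out a contiguous tail per day (instead of random windows) keeps the test windows from overlapping the training windows, which would otherwise leak nearly identical samples into the test set.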

Neural Network Construction: Initial Network

[Figure: initial network architecture from Input to Output, with ReLU and tanh activations]

After every hidden layer, a batch normalization layer follows, and then the activation function. For the LSTM layers, the activation function is the hyperbolic tangent (tanh); for all other layers, it is the Rectified Linear Unit (ReLU).

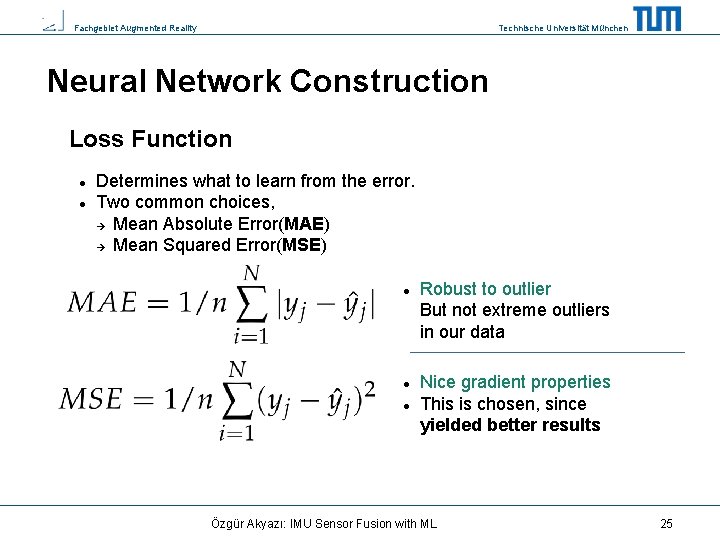

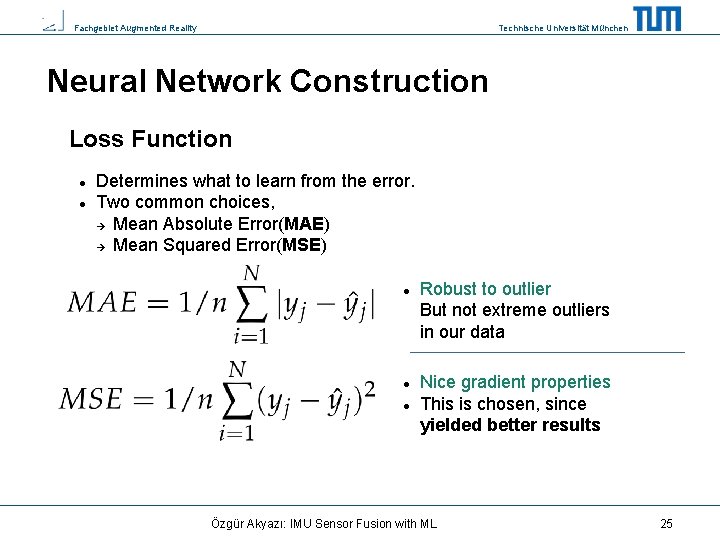

Neural Network Construction: Loss Function

The loss function determines what is learned from the error. Two common choices:

- Mean Absolute Error (MAE): robust to outliers, but there are no extreme outliers in our data.
- Mean Squared Error (MSE): nice gradient properties. This one is chosen, since it yielded better results.

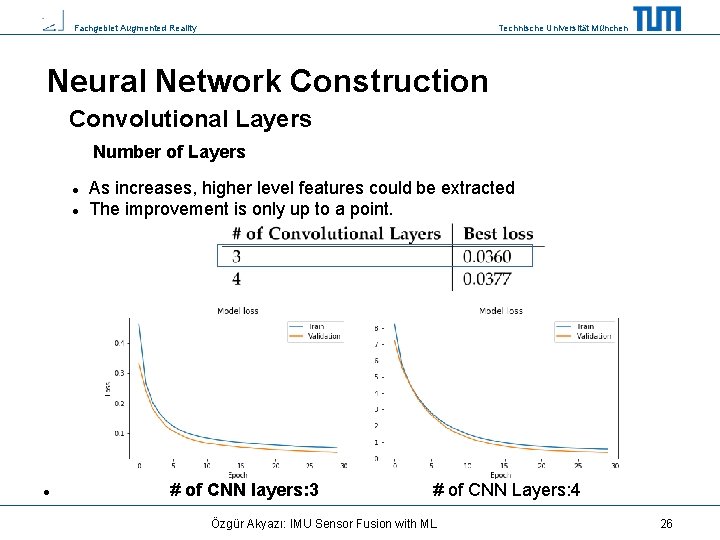

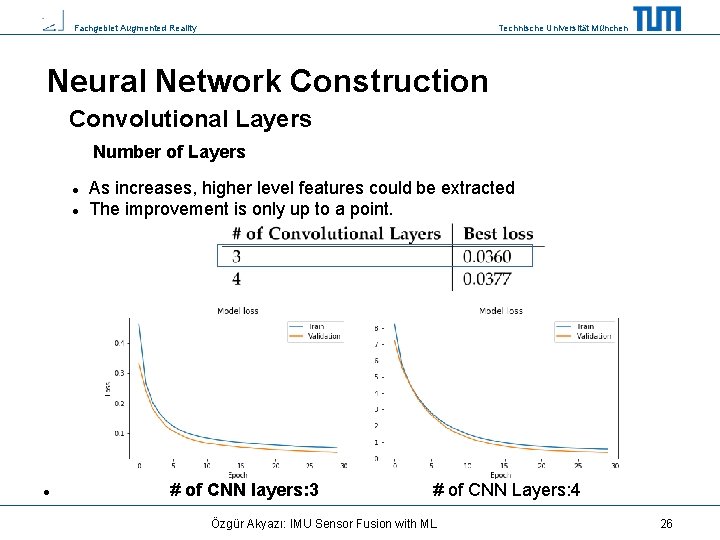
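The trade-off between the two losses shows up on a toy example: squaring makes MSE weigh one large error far more heavily than MAE, and its gradient shrinks as the error shrinks, whereas the MAE gradient keeps a constant magnitude. A plain-Python sketch with made-up numbers.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Absolute Error, in the same units as the data (e.g. metres)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Squared Error, in squared units."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def grad_mae(error: float) -> float:
    """d|e|/de: constant magnitude even for tiny errors (0 taken at e == 0)."""
    return float((error > 0) - (error < 0))


def grad_mse(error: float) -> float:
    """d(e^2)/de = 2e: shrinks smoothly as the error approaches zero."""
    return 2.0 * error


truth = [0.0, 0.0, 0.0, 0.0]
pred = [1.0, 1.0, 1.0, 5.0]                 # one outlier prediction
print(mae(truth, pred), mse(truth, pred))   # 2.0 7.0
print(grad_mae(0.01), grad_mse(0.01))       # 1.0 0.02
```

The shrinking MSE gradient near the minimum is what makes its optimization smoother, while MAE keeps its result in the original units, which is why it remains a convenient metric for reporting.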

Neural Network Construction: Convolutional Layers, Number of Layers

As the number of layers increases, higher-level features can be extracted, but the improvement only holds up to a point. Compared: 3 CNN layers and 4 CNN layers.

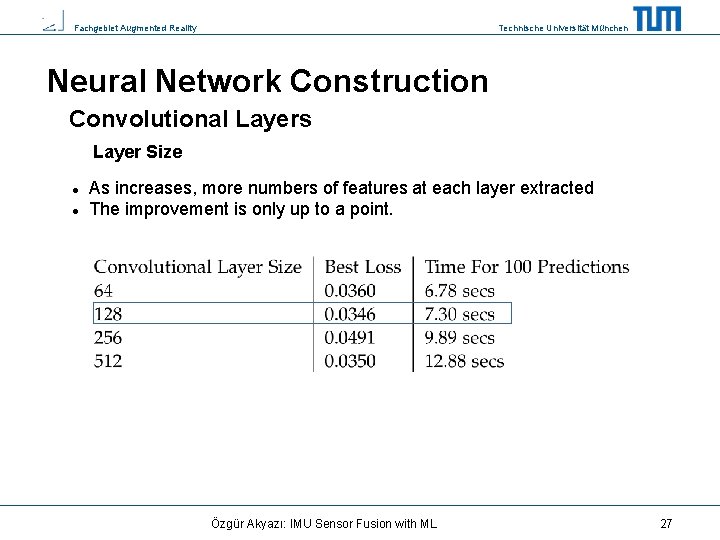

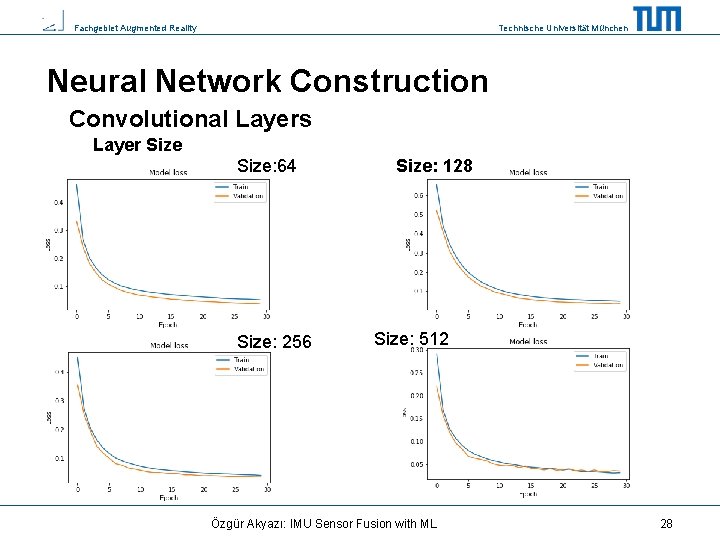

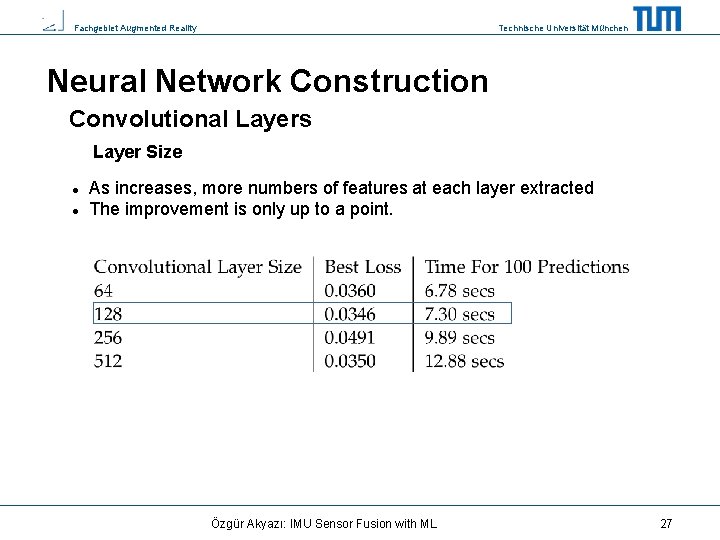

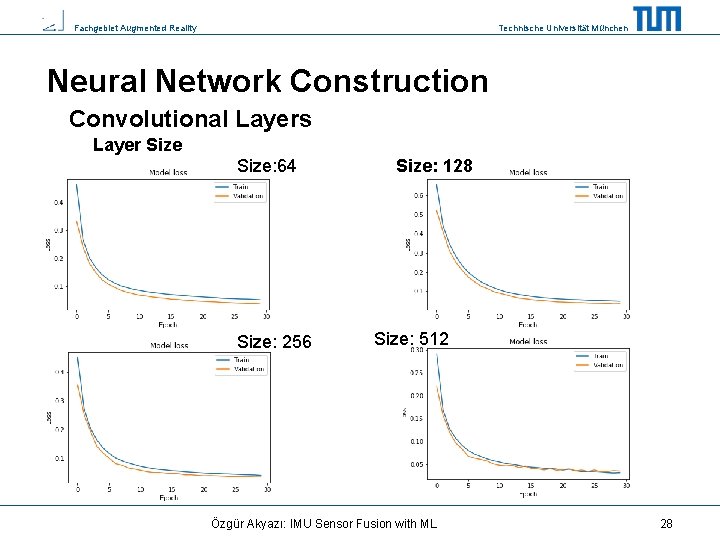

Neural Network Construction: Convolutional Layers, Layer Size

As the layer size increases, more features are extracted at each layer. The improvement only holds up to a point.

Neural Network Construction: Convolutional Layers, Layer Size. Compared sizes: 64, 128, 256, and 512.

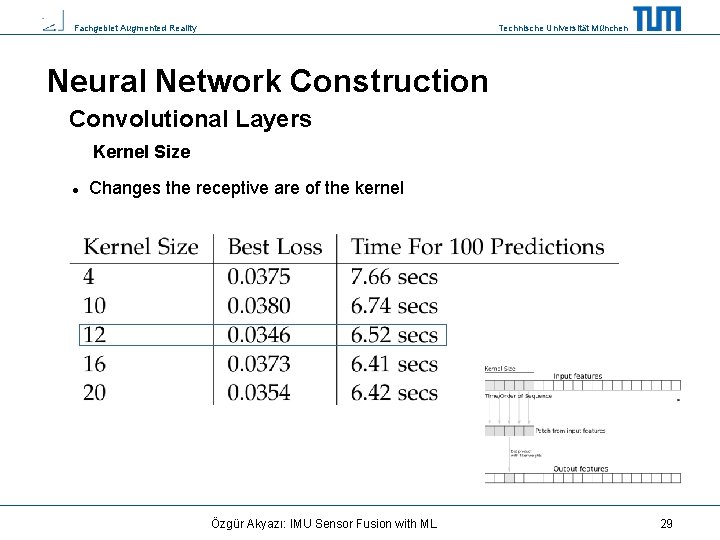

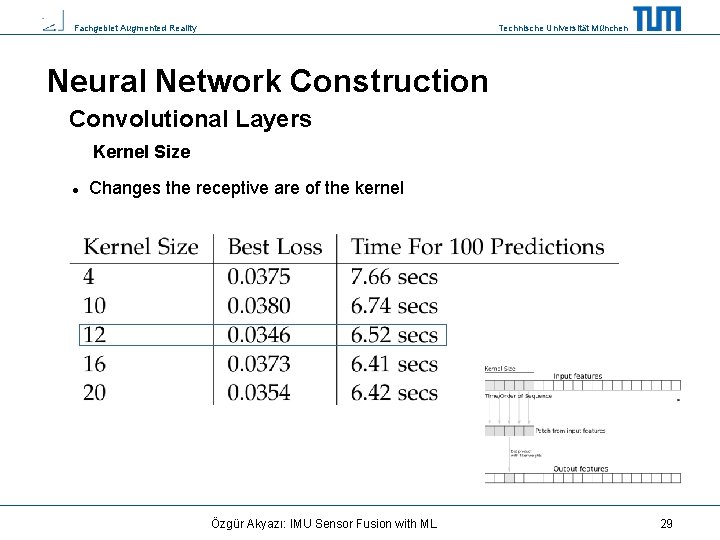

Neural Network Construction: Convolutional Layers, Kernel Size

The kernel size changes the receptive field of the kernel.

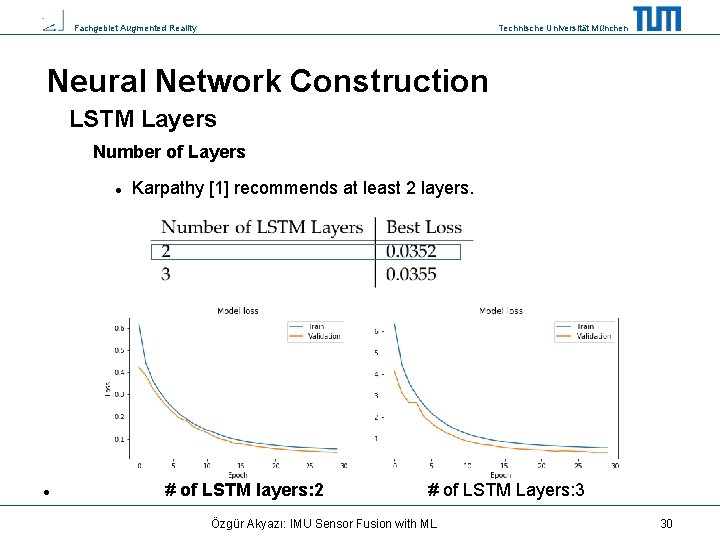

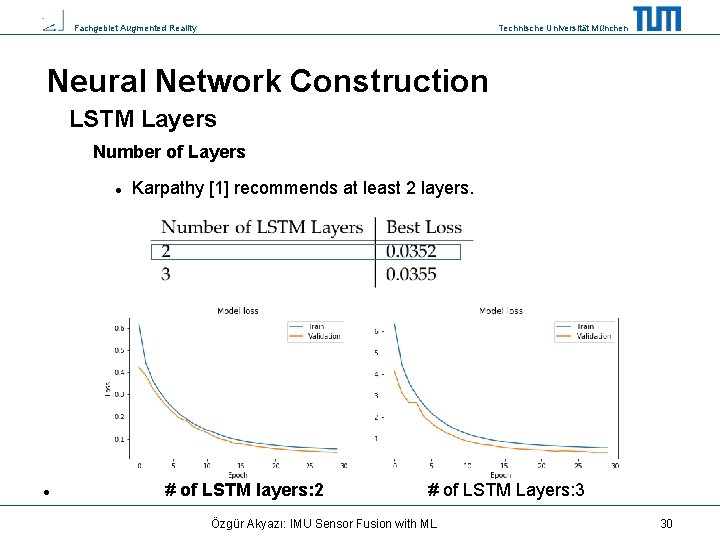
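How the number of convolutional layers and the kernel size change what each output feature "sees" can be worked out with two small helpers. They assume stride 1, no dilation, no pooling and "valid" padding, which may differ from the project's actual layer settings; the kernel sizes in the example are hypothetical.

```python
from typing import Sequence


def receptive_field(kernel_sizes: Sequence[int]) -> int:
    """Input samples covered by one output of stacked stride-1 conv layers."""
    return 1 + sum(k - 1 for k in kernel_sizes)


def output_length(input_length: int, kernel_sizes: Sequence[int]) -> int:
    """Sequence length after stacked stride-1 'valid' conv layers."""
    return input_length - sum(k - 1 for k in kernel_sizes)


WINDOW = 200  # 4 s at 50 Hz
for kernels in ([5, 5, 5], [5, 5, 5, 5], [9, 9, 9]):
    print(len(kernels), "layers, kernel", kernels[0], "->",
          "receptive field", receptive_field(kernels), "samples,",
          "output length", output_length(WINDOW, kernels))
```

At 50 Hz, a receptive field of 13 samples (three layers with kernel size 5) corresponds to about 0.26 s of motion; adding a layer or enlarging the kernel widens this time span.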

Neural Network Construction: LSTM Layers, Number of Layers

Karpathy [4] recommends at least 2 layers. Compared: 2 LSTM layers and 3 LSTM layers.

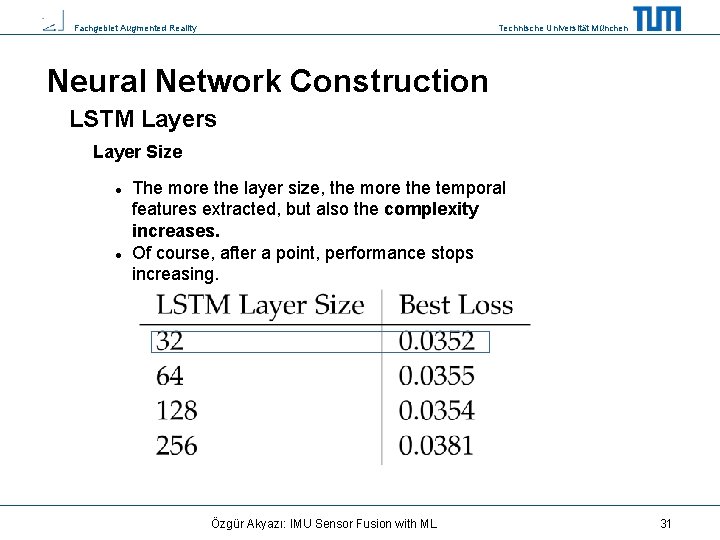

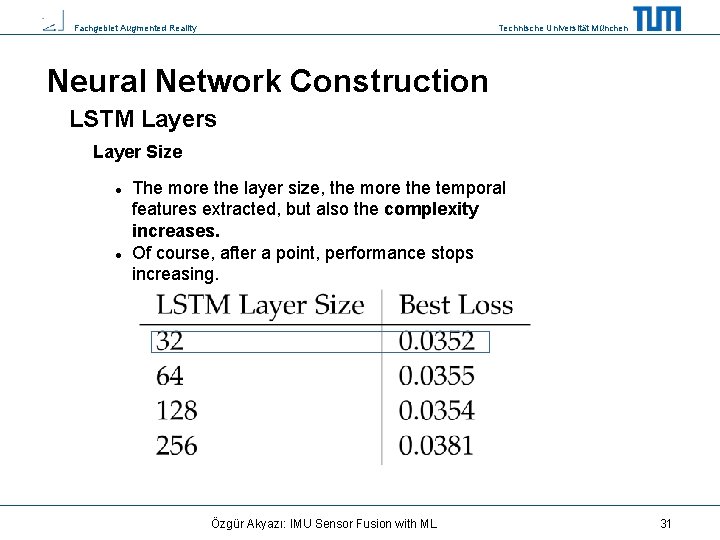

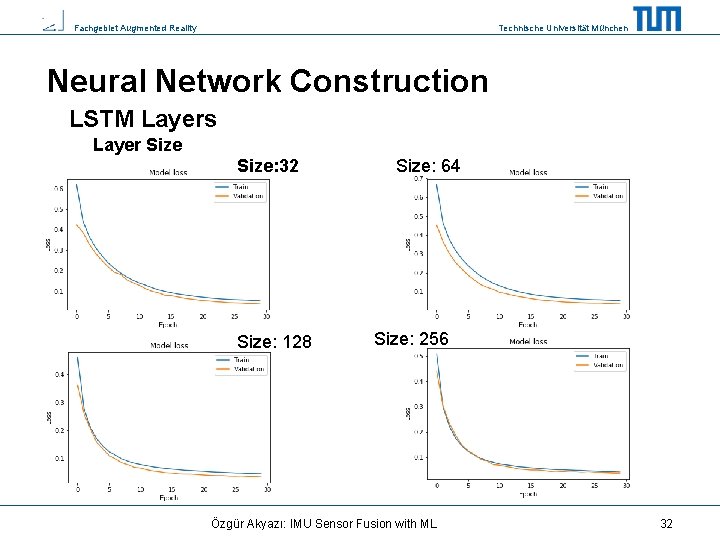

Neural Network Construction: LSTM Layers, Layer Size

The larger the layer size, the more temporal features are extracted, but the complexity also increases. After a certain point, performance stops improving.

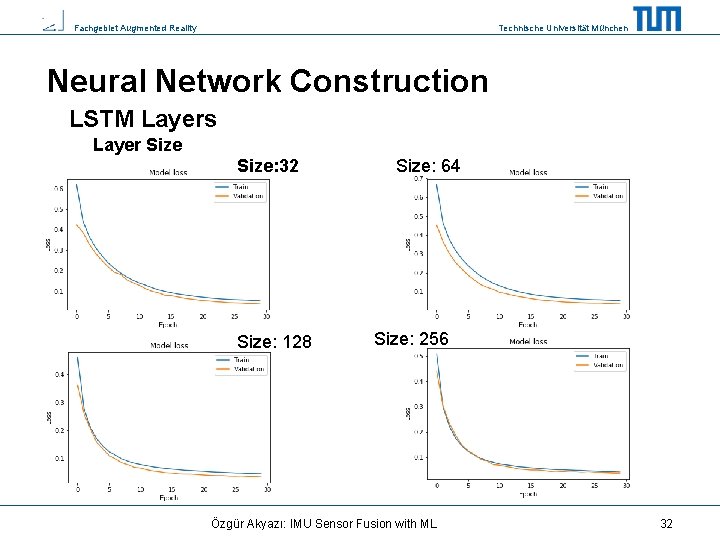

Neural Network Construction: LSTM Layers, Layer Size. Compared sizes: 32, 64, 128, and 256.

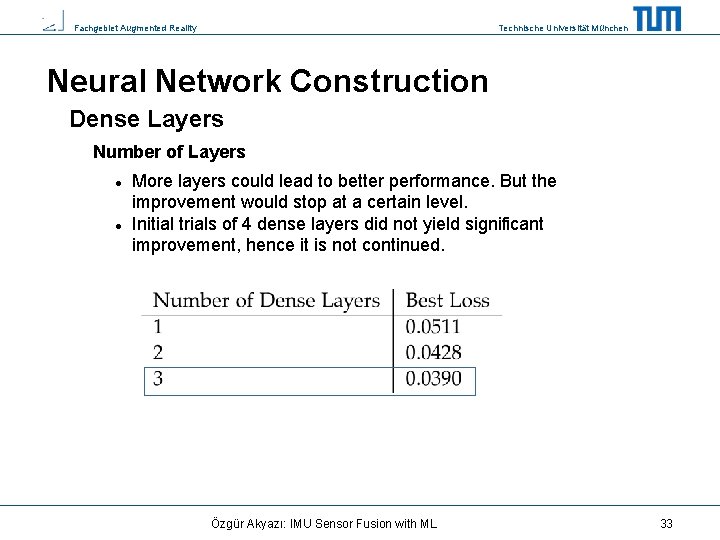

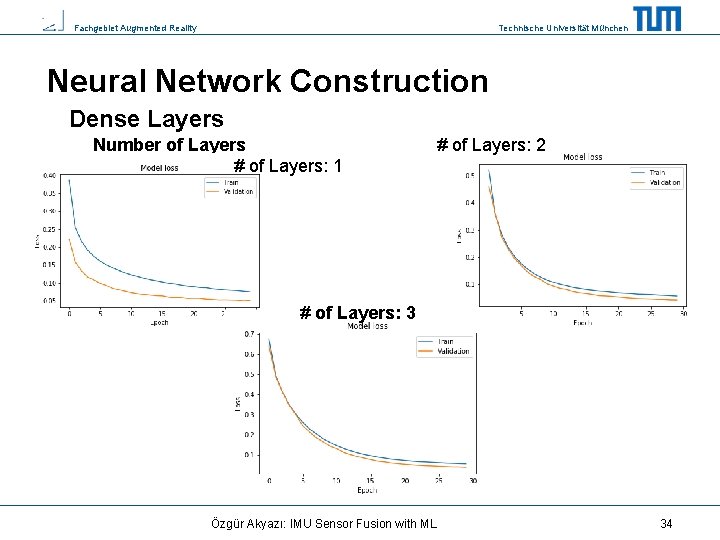

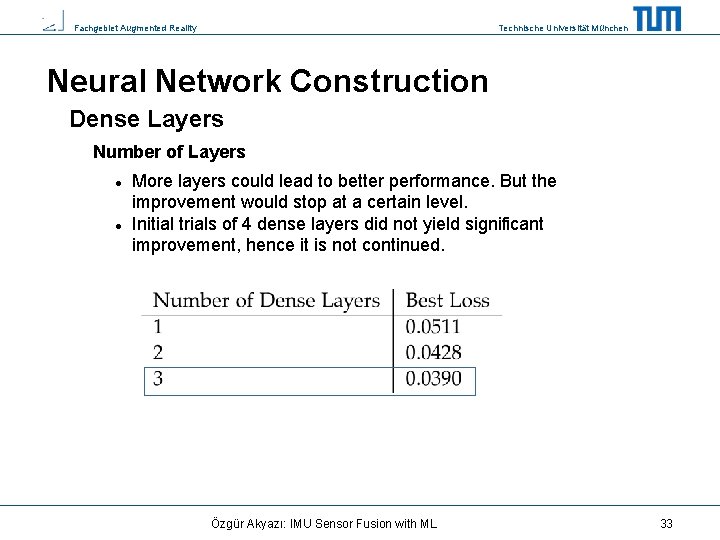

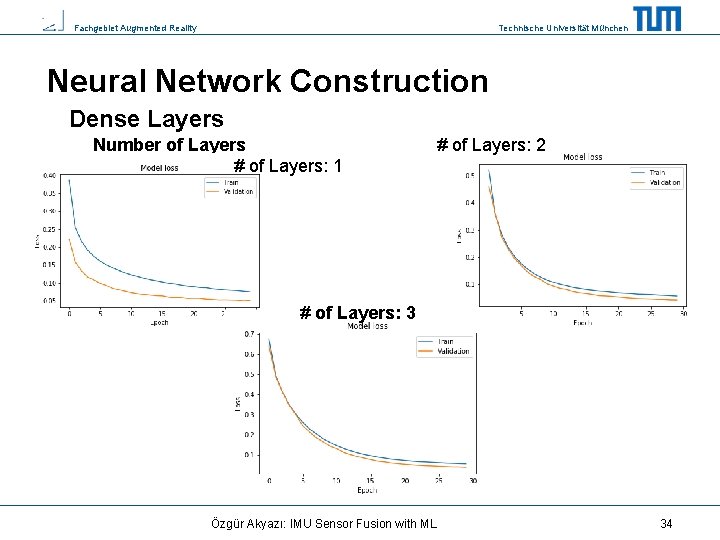

Neural Network Construction: Dense Layers, Number of Layers

More layers could lead to better performance, but the improvement stops at a certain level. Initial trials with 4 dense layers did not yield a significant improvement, so this direction was not pursued further.

Neural Network Construction: Dense Layers, Number of Layers. Compared: 1, 2, and 3 layers.

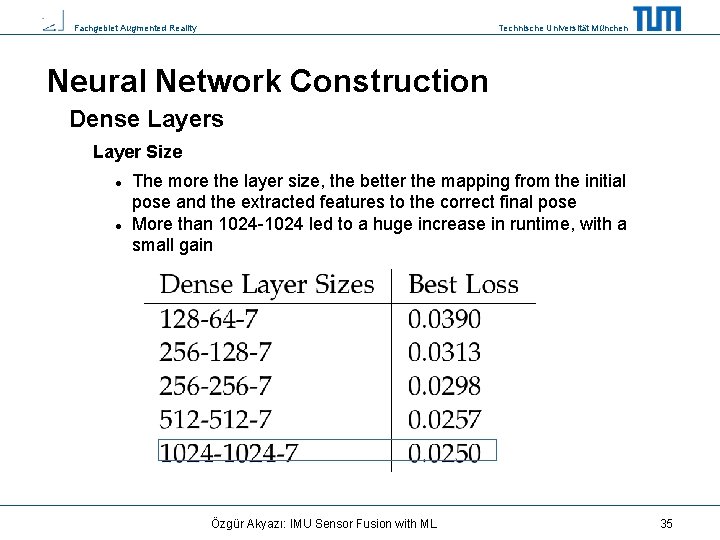

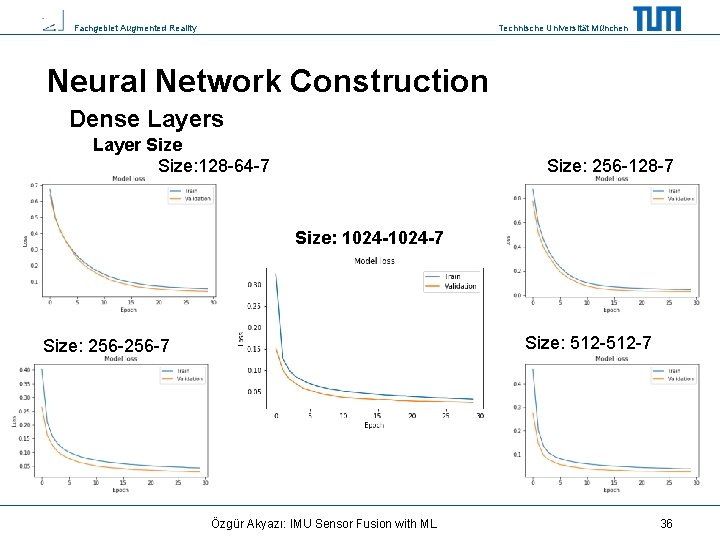

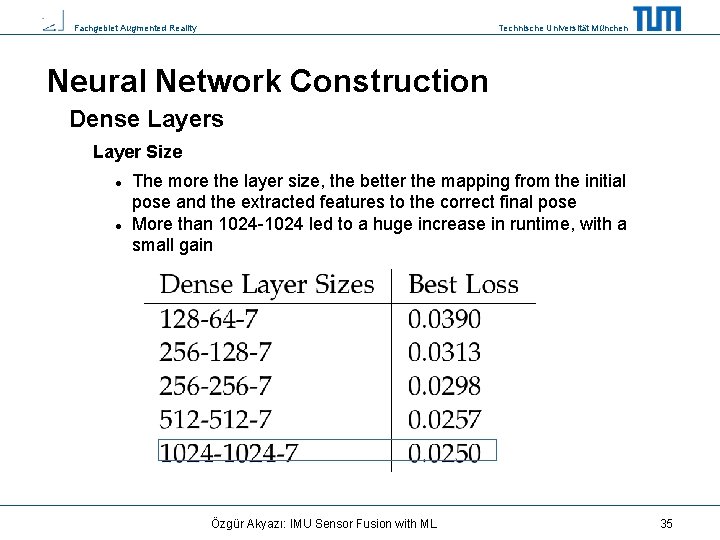

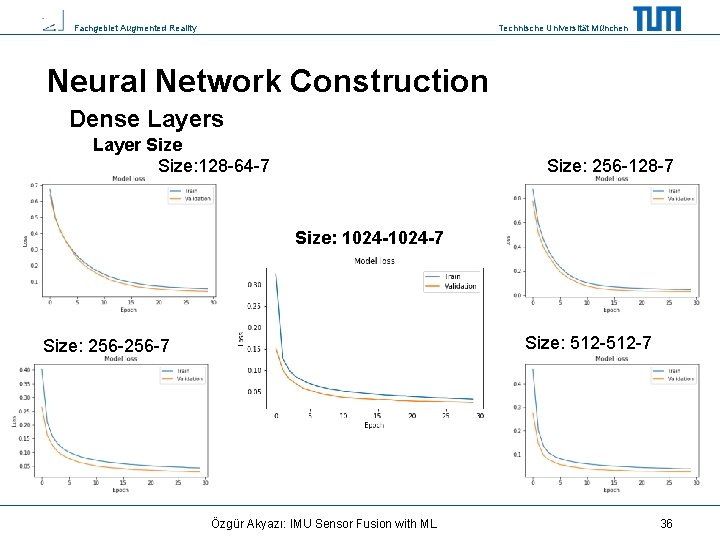

Neural Network Construction: Dense Layers, Layer Size

The larger the layer size, the better the mapping from the initial pose and the extracted features to the correct final pose. Going beyond 1024-1024 led to a large increase in runtime for only a small gain.

Neural Network Construction: Dense Layers, Layer Size. Compared configurations: 256-7, 512-7, 1024-7, 128-64-7, and 256-128-7.

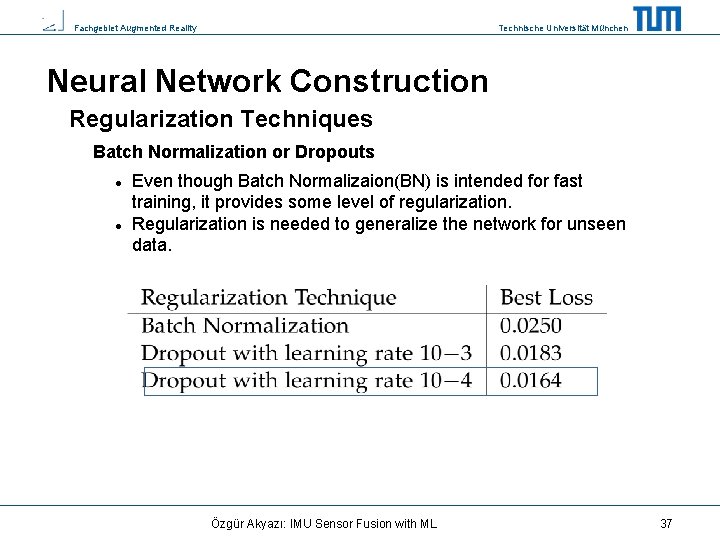

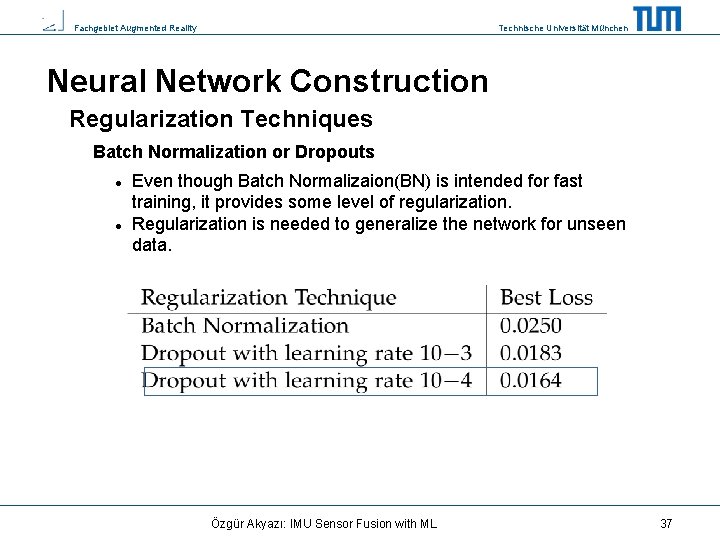

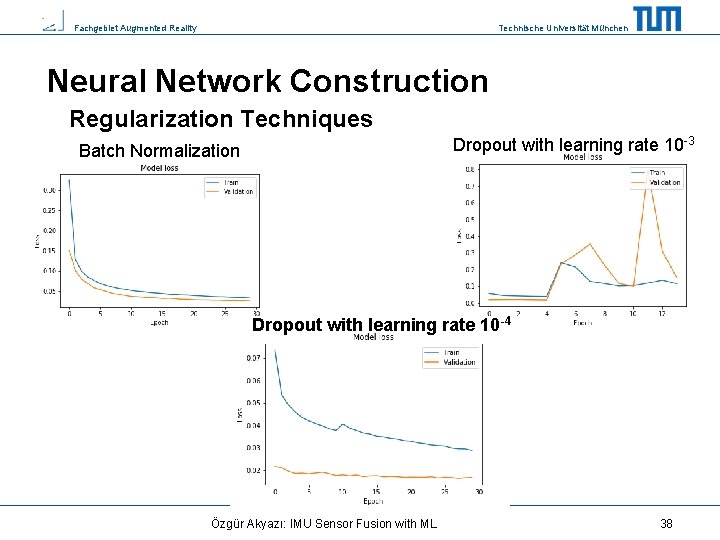
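The runtime growth for wider dense layers follows from parameter counts: a dense layer mapping $n_{in}$ to $n_{out}$ units has $n_{in} n_{out} + n_{out}$ weights, so stacking two wide layers grows quadratically with the width. The 256-dimensional input feature size below is a hypothetical value; the output size of 7 is taken from the layer configurations listed on the slides.

```python
from typing import Sequence


def dense_params(input_dim: int, layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a stack of fully connected layers."""
    total, n_in = 0, input_dim
    for n_out in layer_sizes:
        total += n_in * n_out + n_out   # weight matrix + bias vector
        n_in = n_out
    return total


FEATURES = 256  # hypothetical size of the features fed into the dense part
for sizes in ([256, 7], [512, 7], [1024, 7], [1024, 1024, 7]):
    print("-".join(map(str, sizes)), dense_params(FEATURES, sizes))
```

Under this assumption, going from 1024-7 to 1024-1024-7 multiplies the dense parameters by almost 5, mostly from the single 1024 × 1024 weight matrix.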

Neural Network Construction: Regularization Techniques, Batch Normalization or Dropout

Even though Batch Normalization (BN) is intended to speed up training, it also provides some level of regularization. Regularization is needed so that the network generalizes to unseen data.

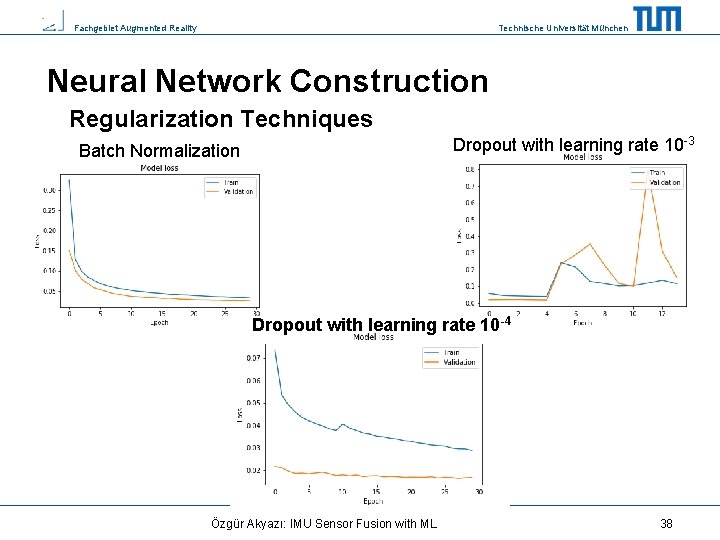
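Both regularizers can be sketched in a few lines. The dropout function uses "inverted" dropout (surviving activations are scaled by $1/(1-\text{rate})$ during training, as Keras `Dropout` does), and the batch-norm function shows only the training-time normalization of one feature over one batch, without the running averages used at inference. Names and values are illustrative.

```python
import math
import random
from typing import List, Optional, Sequence


def dropout(x: Sequence[float], rate: float, training: bool,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability `rate` while training."""
    if not training or rate == 0.0:
        return list(x)               # identity at inference time
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in x]


def batch_norm(batch: Sequence[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-3) -> List[float]:
    """Normalize one feature over a batch to zero mean, unit variance, then scale/shift."""
    mean = sum(batch) / len(batch)
    var = sum((v - mean) ** 2 for v in batch) / len(batch)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]


print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, training=True, rng=random.Random(0)))
print(batch_norm([1.0, 2.0, 3.0]))
```

Dropout regularizes by injecting explicit noise; batch normalization does so only as a side effect, because each sample's output depends on the statistics of the other samples in its batch.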

Neural Network Construction: Regularization Techniques. Compared: Dropout with learning rate $10^{-3}$, Batch Normalization, and Dropout with learning rate $10^{-4}$.

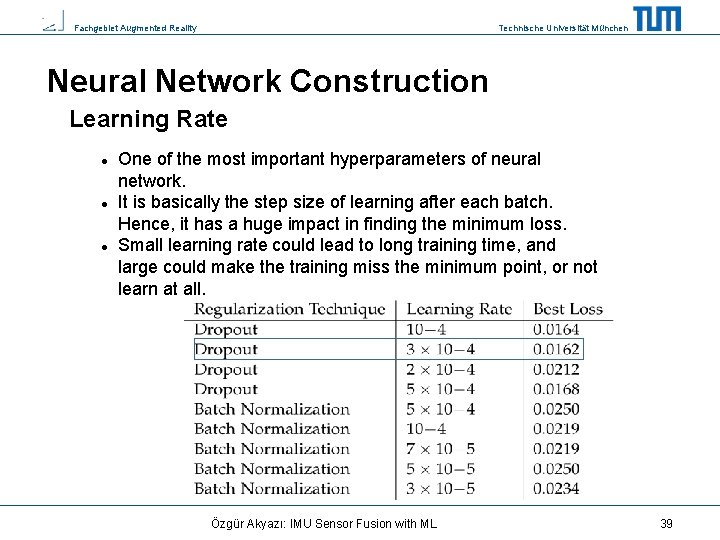

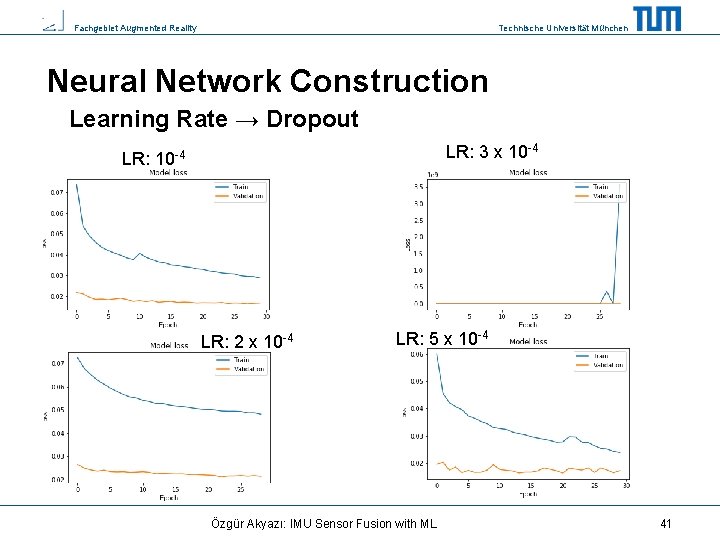

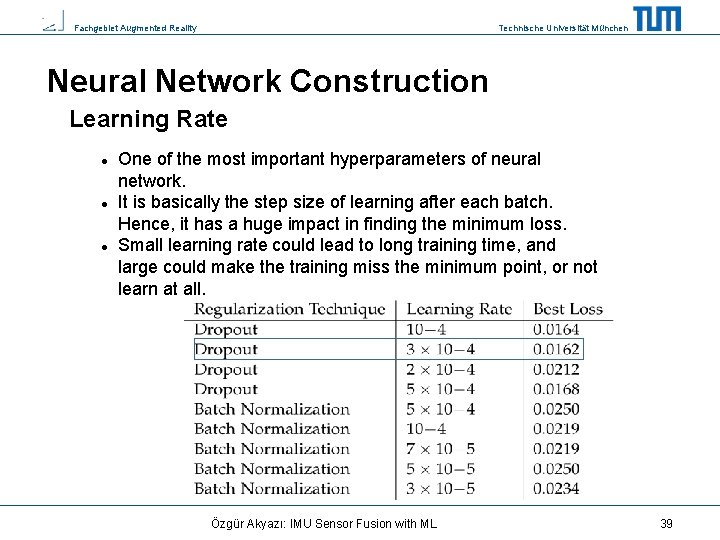

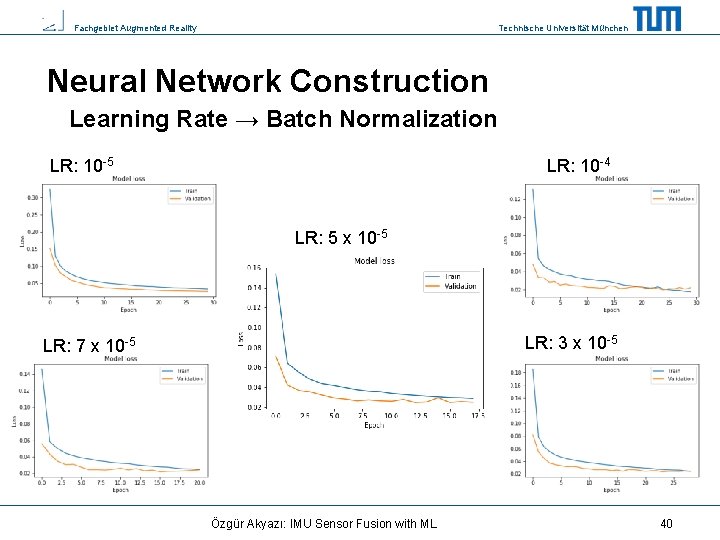

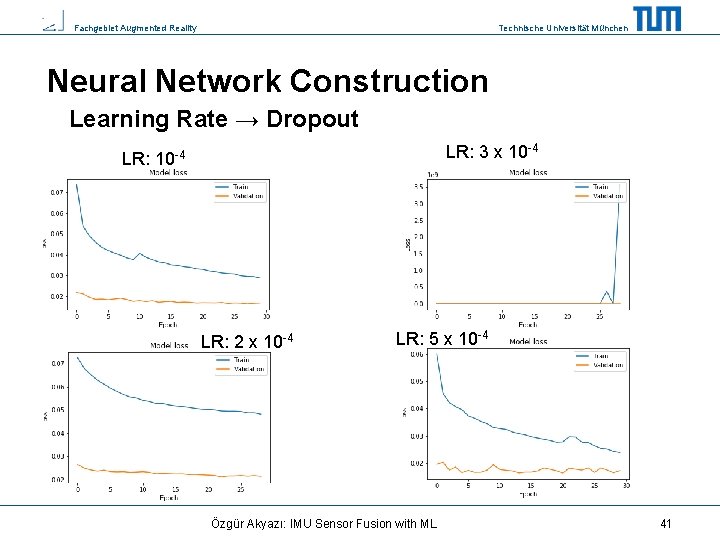

Neural Network Construction: Learning Rate

The learning rate is one of the most important hyperparameters of a neural network. It is essentially the step size of the update after each batch, so it has a huge impact on finding the minimum of the loss. A small learning rate can lead to long training times, while a large one can make training overshoot the minimum or not learn at all.

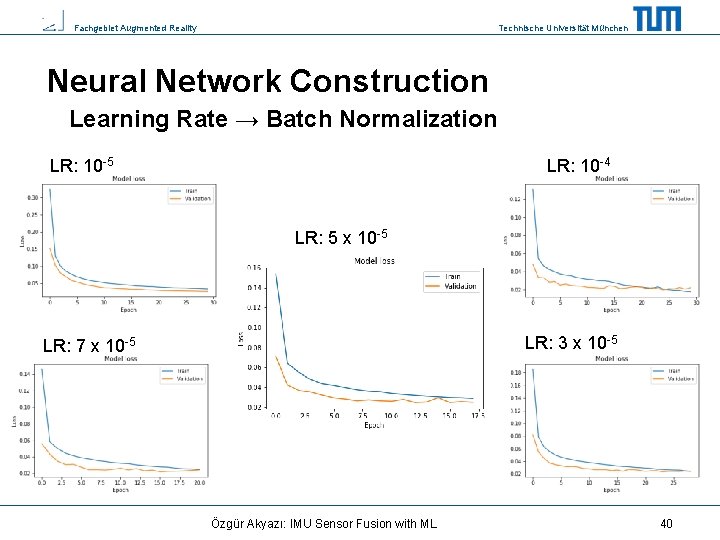
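The effect of the step size can be seen with plain gradient descent on the one-dimensional loss $L(w) = w^2$, whose gradient is $2w$, so each update is $w \leftarrow (1 - 2\eta)\,w$. This toy loss only stands in for the real one; the learning rates are chosen to show the three regimes.

```python
def gradient_descent(lr: float, steps: int = 20, w0: float = 1.0) -> float:
    """Minimize L(w) = w**2 (minimum at w = 0) and return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w          # dL/dw
        w -= lr * grad          # one update per step
    return w


for lr in (0.01, 0.4, 1.1):
    print(f"lr={lr}: final w = {gradient_descent(lr):.4f}")
```

With `lr=0.01` the weight is still far from the minimum after 20 steps (slow training), `lr=0.4` converges almost immediately, and `lr=1.1` overshoots further on every step and diverges.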

Neural Network Construction: Learning Rate → Batch Normalization. Compared learning rates: $10^{-5}$, $3 \times 10^{-5}$, $5 \times 10^{-5}$, $7 \times 10^{-5}$, and $10^{-4}$.

Neural Network Construction: Learning Rate → Dropout. Compared learning rates: $2 \times 10^{-4}$, $3 \times 10^{-4}$, and $5 \times 10^{-4}$.

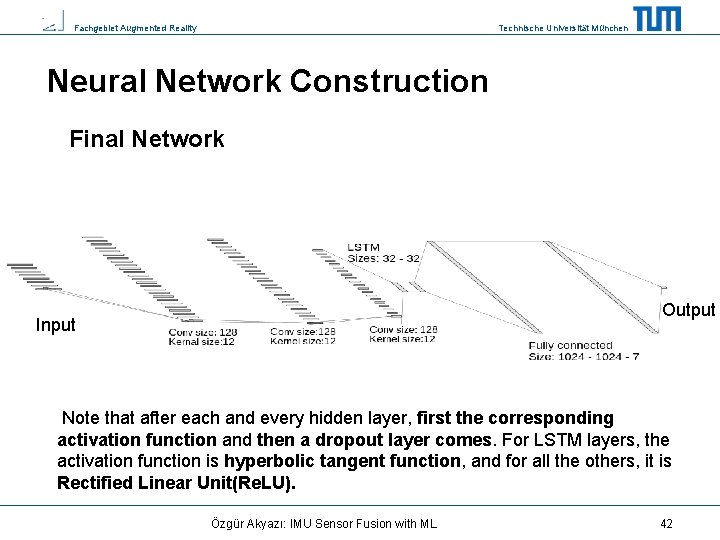

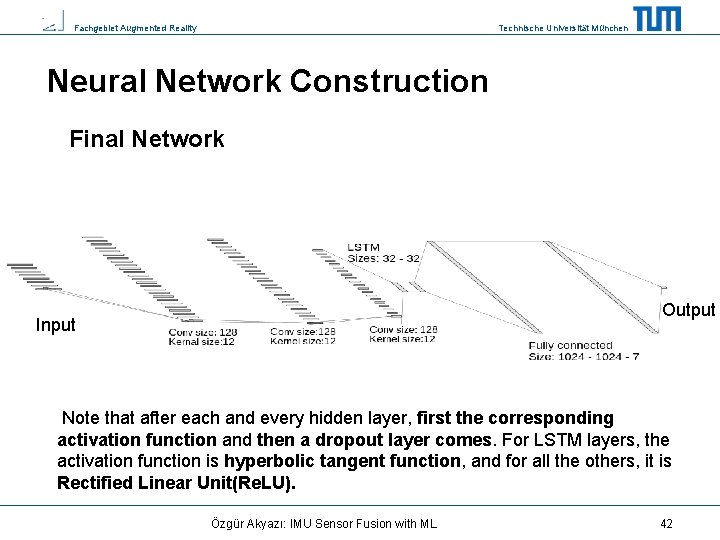

Neural Network Construction: Final Network

[Figure: final network architecture from Input to Output]

Note that after every hidden layer, first the corresponding activation function and then a dropout layer follows. For the LSTM layers, the activation function is the hyperbolic tangent (tanh); for all other layers, it is the Rectified Linear Unit (ReLU).

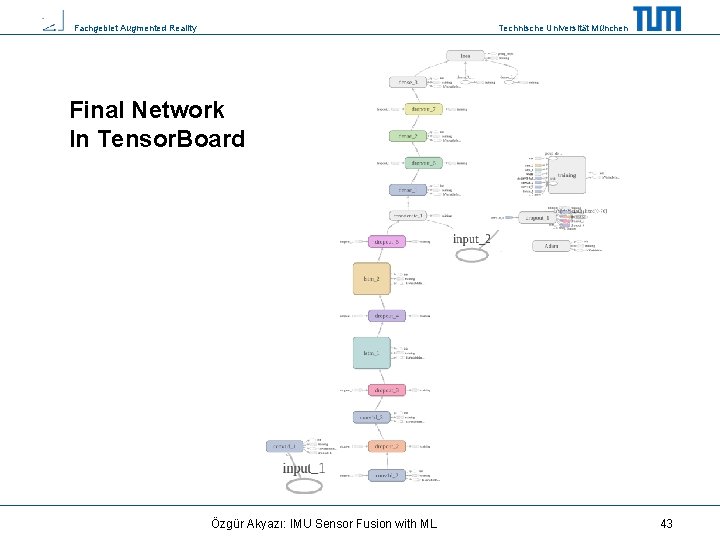

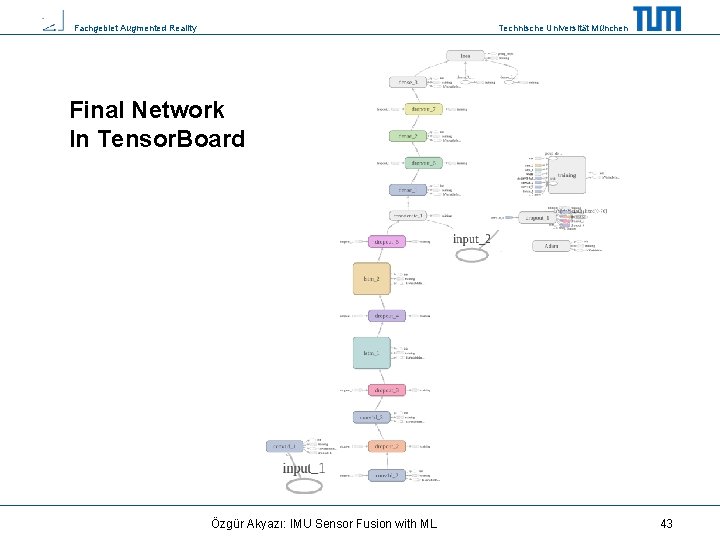

Final Network in TensorBoard

Neural Network Construction: Issues

Asynchronous data has a negative effect on the results: with each recording, the index shifts between the 3 IMUs change, which makes learning hard for the neural network. This effect shows up in the Evaluation.

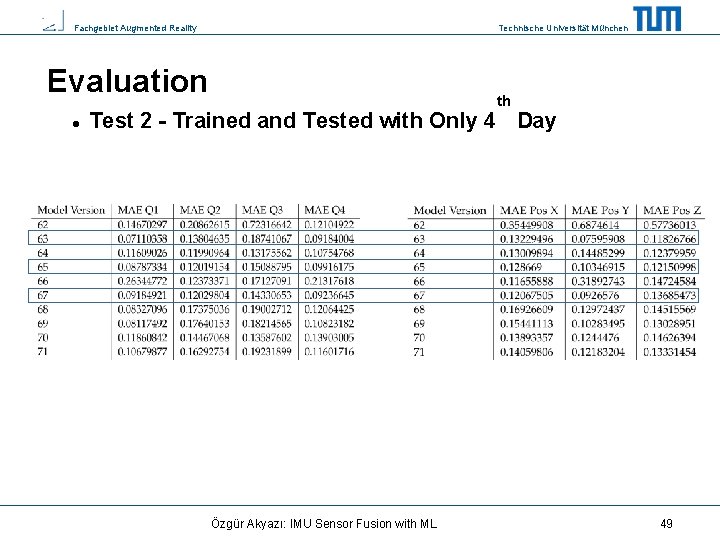
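One way to reduce the effect of shifting indices, in the spirit of the future-work point that synchronous data would improve the results, is to align streams by timestamp rather than by index. This is a hypothetical sketch, not what the project did: it assumes each sample carries a timestamp in milliseconds (at 50 Hz, roughly every 20 ms) and picks, for every reference timestamp, the nearest sample of another sensor.

```python
import bisect
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def align_nearest(ref_ts: Sequence[float], ts: Sequence[float],
                  values: Sequence[T]) -> List[T]:
    """For each reference timestamp, return the value whose timestamp is closest.

    `ts` must be sorted ascending; ties go to the earlier sample.
    """
    if not ts:
        raise ValueError("cannot align against an empty stream")
    aligned = []
    for t in ref_ts:
        j = bisect.bisect_left(ts, t)        # first index with ts[j] >= t
        if j == 0:
            aligned.append(values[0])
        elif j == len(ts):
            aligned.append(values[-1])
        else:
            before, after = ts[j - 1], ts[j]
            aligned.append(values[j - 1] if t - before <= after - t else values[j])
    return aligned


imu_a_ts = [0, 20, 40, 60]           # reference sensor
imu_b_ts = [5, 15, 30, 45, 65]       # second sensor, offset and jittered
print(align_nearest(imu_a_ts, imu_b_ts, ["b0", "b1", "b2", "b3", "b4"]))
```

Here `b2` is skipped because it lies closer to no reference timestamp than its neighbours do; after alignment, index `k` refers to the same moment in every stream, which is what the sliding windows implicitly assume.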

Evaluation

Errors are reported as MAE, so that they keep the same units as the data, which makes them easier for humans to interpret.

- Test 1: the models are trained with the data of all 3 recording days and tested on all 3 days (the last 5 minutes of each day).
- Test 2: the models are trained only with the 4th day's data and tested only on it (its last 5 minutes).

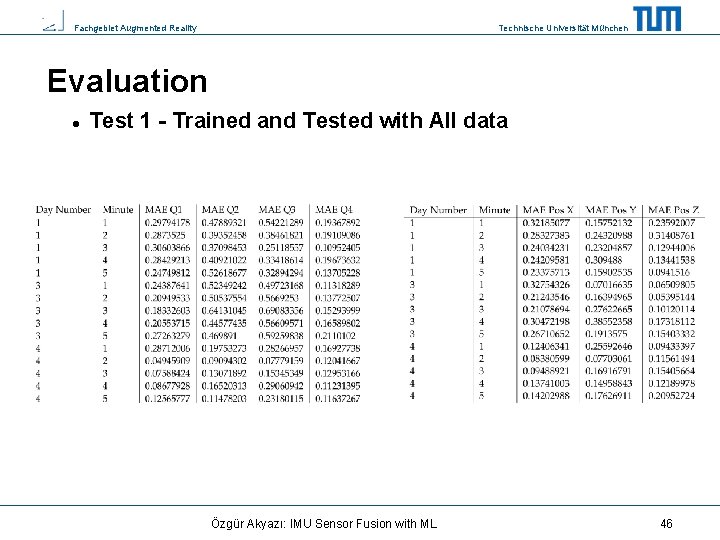

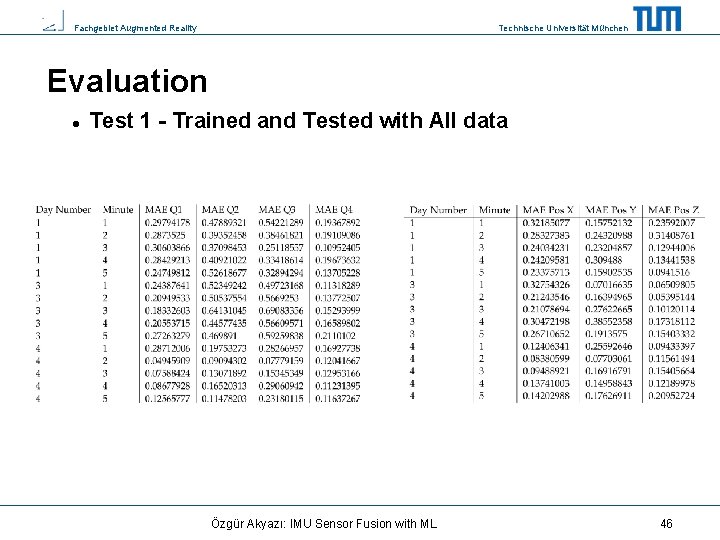

Evaluation: Test 1, Trained and Tested with All Data

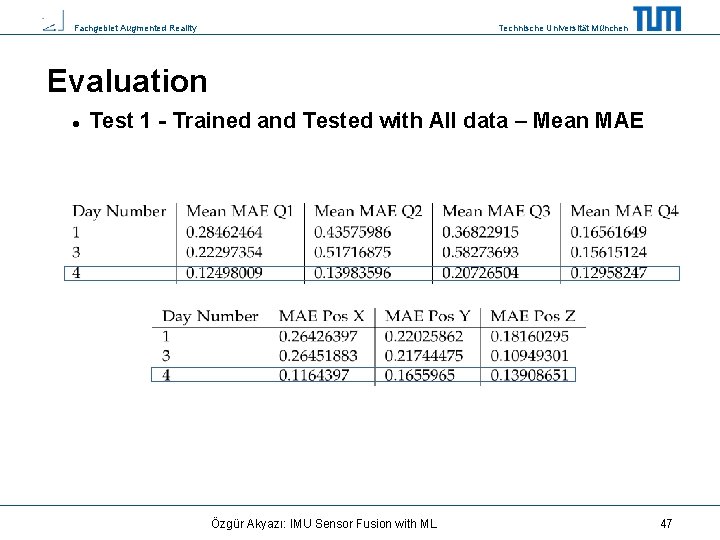

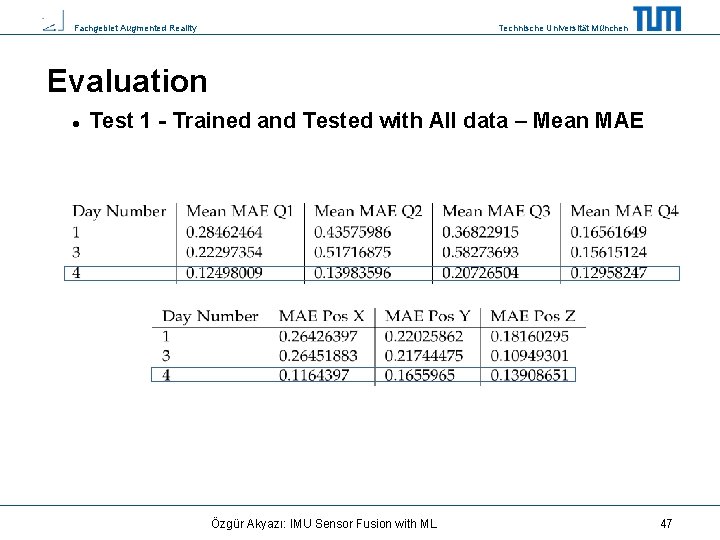

Evaluation: Test 1, Trained and Tested with All Data, Mean MAE

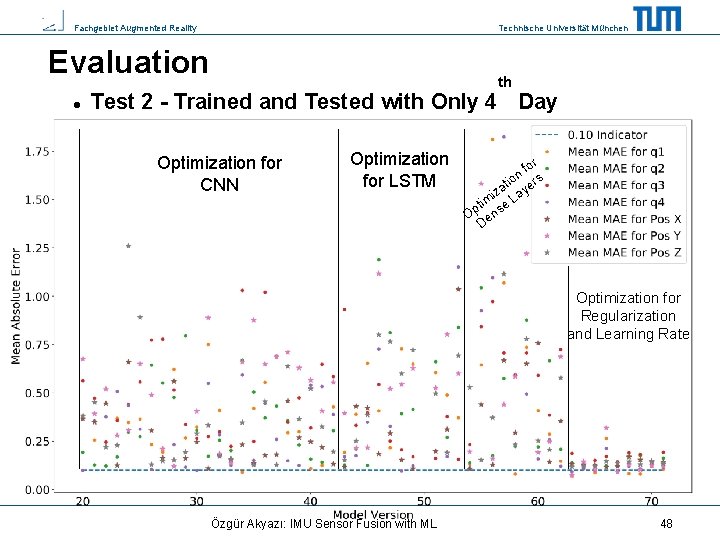

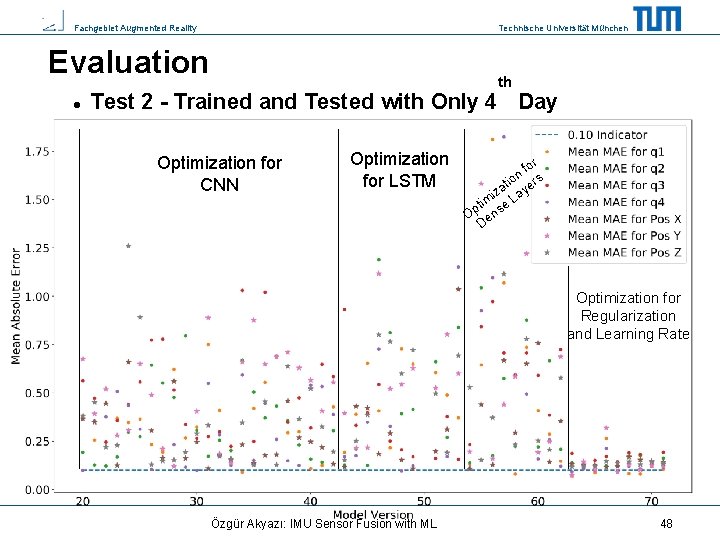

Evaluation: Test 2, Trained and Tested with Only the 4th Day. Panels: Optimization for CNN; Optimization for LSTM; Optimization for Dense Layers; Optimization for Regularization and Learning Rate.

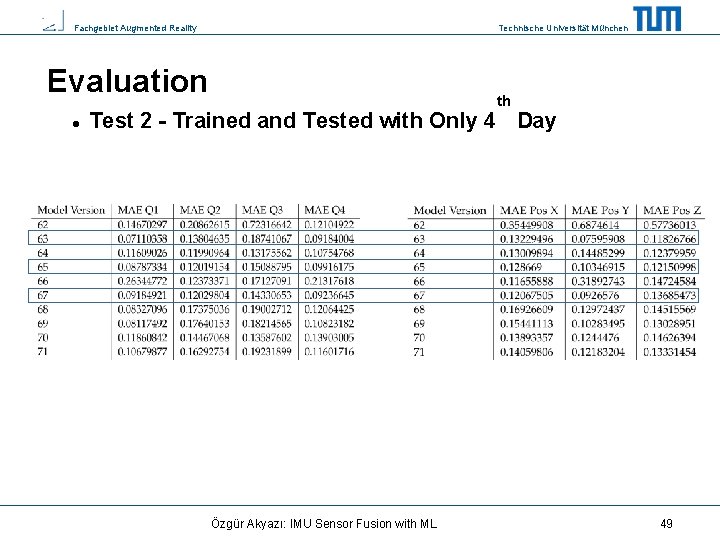

Evaluation: Test 2, Trained and Tested with Only the 4th Day

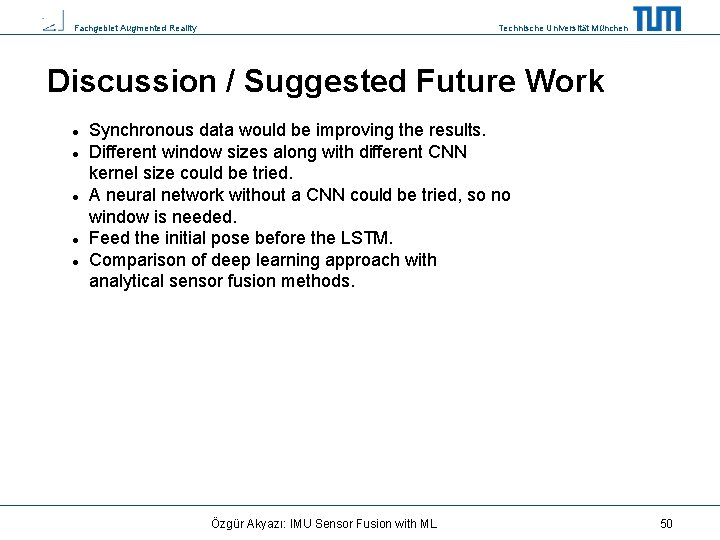

Discussion / Suggested Future Work

- Synchronous data would improve the results.
- Different window sizes, together with different CNN kernel sizes, could be tried.
- A neural network without a CNN could be tried, so that no window is needed.
- Feed the initial pose in before the LSTM.
- Compare the deep learning approach with analytical sensor fusion methods.

Conclusion

- Design of a neural network for multi-IMU sensor fusion
- Evaluation of the accuracy of the NN
- Continuous improvement of the initial design

List of References

1. Ordóñez, F. J., & Roggen, D. (2016). Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors (Switzerland).
2. Rambach, J. R., Tewari, A., Pagani, A., & Stricker, D. (2016). Learning to Fuse: A Deep Learning Approach to Visual-Inertial Camera Pose Estimation.
3. https://developer.apple.com/library/archive/documentation/Performance/Conceptual/vImage/ConvolutionOperations/ConvolutionOperations.html
4. Karpathy, A., Johnson, J., & Fei-Fei, L. (2015). Visualizing and Understanding Recurrent Networks, 1–12. https://doi.org/10.1007/9783-319-10590-1_53
5. Int8, 02.2018, https://int8.io/neural-networks-in-julia-hyperbolic-tangent-and-relu/, access date: 16.04.2019
6. Janakiev, N., Practical Text Classification With Python and Keras, 24.10.2018

Thank you.