Software Defined Networking: SDN Applications & Research Challenges

- Slides: 37

Software Defined Networking: SDN Applications & Research Challenges
James Won-Ki Hong
Department of Computer Science and Engineering
POSTECH, Korea
jwkhong@postech.ac.kr
CSED 702Y: Software Defined Networking

Outline
- OpenFlow Use Cases
  - VLAN, MPLS
- Wireless and Mobile SDN
- SDN in 5G Network
- Other SDNs
  - LISP
- SDN Standardization
- Research Challenges

OpenFlow Applications & Use Cases
- SDN separates the control plane from the data plane of traditional networking equipment
- OpenFlow is one of the SDN protocols for controlling data flows
- Many applications can be built on top of OpenFlow; popular examples include:
  - MPLS-TE
  - VLAN

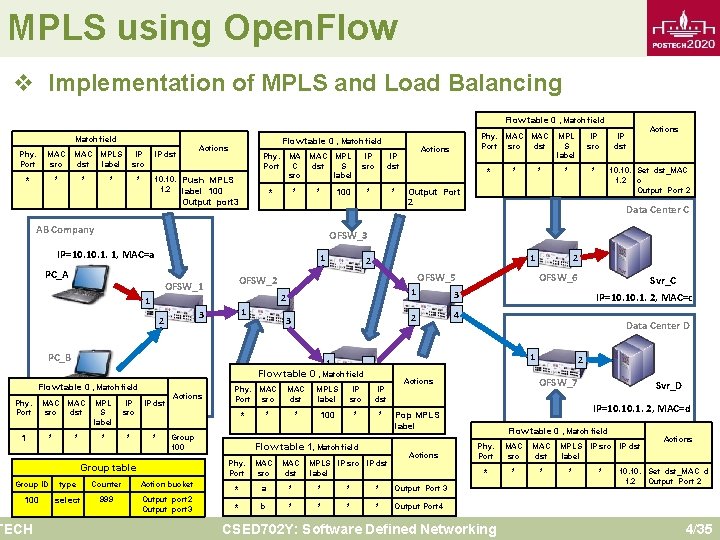

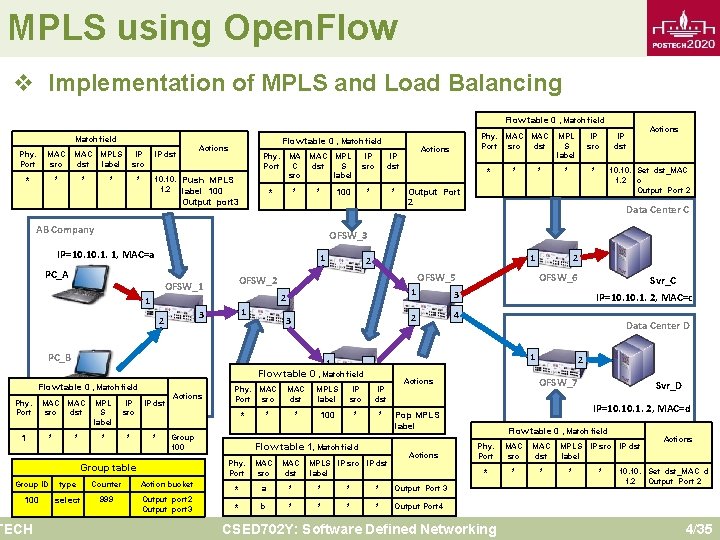

MPLS using OpenFlow
- Implementation of MPLS and load balancing

[Figure: PC_A and PC_B at AB Company reach Svr_C (MAC c) in Data Center C and Svr_D (MAC d) in Data Center D through switches OFSW_1 to OFSW_7. The ingress flow entry matches on IP dst, pushes MPLS label 100, and outputs on port 3. A transit switch matches MPLS label 100 and sends the packet to group 100 (type select, buckets: output port 2, output port 3) to balance load. Egress switches pop the MPLS label, set dst_MAC to c or d, and output on port 2. Flow table 1 on another switch forwards by MAC dst (a: output port 3, b: output port 4).]
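The label operations in the figure can be modeled in a few lines. This is a minimal Python sketch, not a controller API: the function names and addresses are hypothetical, and because OpenFlow leaves the bucket-selection algorithm of a select group to the switch, hashing the source/destination IP pair is an assumption.

```python
import zlib
from dataclasses import dataclass, field

@dataclass
class Packet:
    ip_src: str
    ip_dst: str
    mac_dst: str = ""
    labels: list = field(default_factory=list)  # MPLS label stack; top of stack is the last element

# Select-type group 100 from the figure: one bucket per output port.
GROUP_100_BUCKETS = [2, 3]

def ingress(pkt: Packet) -> int:
    """Ingress entry: push MPLS label 100, then output on port 3."""
    pkt.labels.append(100)
    return 3

def transit(pkt: Packet) -> int:
    """Transit entry: label 100 goes to group 100; hashing the IP pair keeps a flow on one bucket."""
    if not pkt.labels or pkt.labels[-1] != 100:
        raise LookupError("no matching flow entry")
    key = f"{pkt.ip_src}->{pkt.ip_dst}".encode()
    return GROUP_100_BUCKETS[zlib.crc32(key) % len(GROUP_100_BUCKETS)]

def egress(pkt: Packet, server_mac: str) -> int:
    """Egress entry: pop the label, set dst_MAC to the server's MAC, then output on port 2."""
    pkt.labels.pop()
    pkt.mac_dst = server_mac
    return 2

pkt = Packet("10.0.0.1", "10.0.0.2")  # hypothetical addresses
print(ingress(pkt), transit(pkt), egress(pkt, "c"), pkt)
```

Hashing per flow rather than choosing a bucket per packet keeps each flow's packets in order while different flows spread across ports 2 and 3.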

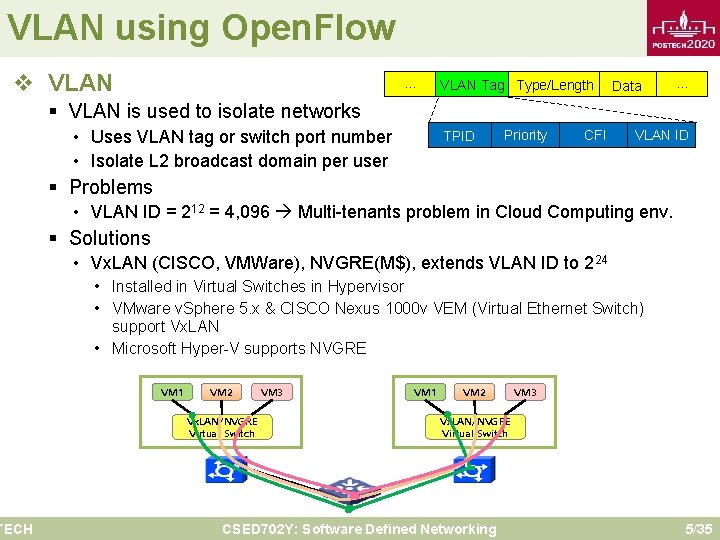

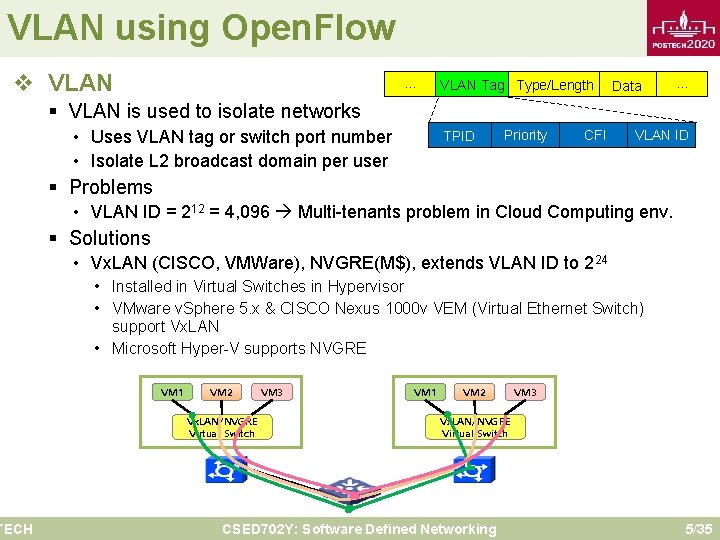

VLAN using OpenFlow
- VLAN
  - VLAN is used to isolate networks
    - Uses a VLAN tag or the switch port number
    - Isolates the L2 broadcast domain per user
  - Frame layout: … | VLAN Tag (TPID | Priority | CFI | VLAN ID) | Type/Length | Data | …
  - Problems
    - VLAN ID space = 2^12 = 4,096, which causes a multi-tenancy problem in cloud computing environments
  - Solutions
    - VXLAN (Cisco, VMware) and NVGRE (Microsoft) extend the network ID space to 2^24
    - Installed in virtual switches in the hypervisor
    - VMware vSphere 5.x and the Cisco Nexus 1000V VEM (Virtual Ethernet Module) support VXLAN
    - Microsoft Hyper-V supports NVGRE

[Figure: VM 1 to VM 3 on each of two hosts, attached to a VXLAN/NVGRE virtual switch]
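The ID-space arithmetic and the tag layout above can be checked directly. This sketch assumes a standard 4-byte 802.1Q tag (16-bit TPID followed by a 16-bit TCI); the helper names are illustrative.

```python
import struct

VLAN_ID_BITS = 12  # 802.1Q VLAN ID field
VNI_BITS = 24      # VXLAN / NVGRE virtual network identifier

def id_space(bits: int) -> int:
    """Number of distinct identifiers a field of this width can hold."""
    return 2 ** bits

def parse_dot1q_tag(tag: bytes) -> dict:
    """Split a 4-byte 802.1Q tag into TPID, priority, CFI, and VLAN ID."""
    tpid, tci = struct.unpack("!HH", tag)
    return {
        "tpid": tpid,              # 0x8100 for a customer VLAN tag
        "priority": tci >> 13,     # 3-bit priority code point
        "cfi": (tci >> 12) & 0x1,  # 1-bit CFI
        "vlan_id": tci & 0x0FFF,   # 12-bit VLAN ID (0 and 4095 are reserved)
    }

print(id_space(VLAN_ID_BITS), id_space(VNI_BITS))  # 4096 16777216
print(parse_dot1q_tag(bytes([0x81, 0x00, 0xA0, 0x64])))
```

Going from 12 to 24 bits multiplies the number of isolated tenant networks by 4,096.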

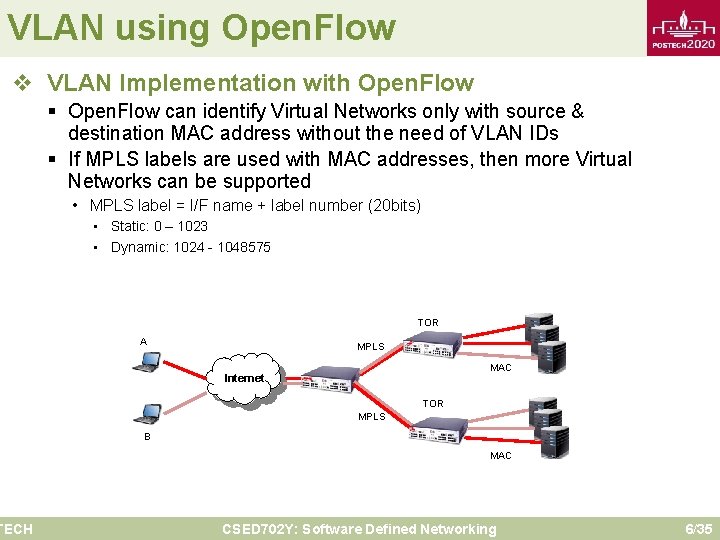

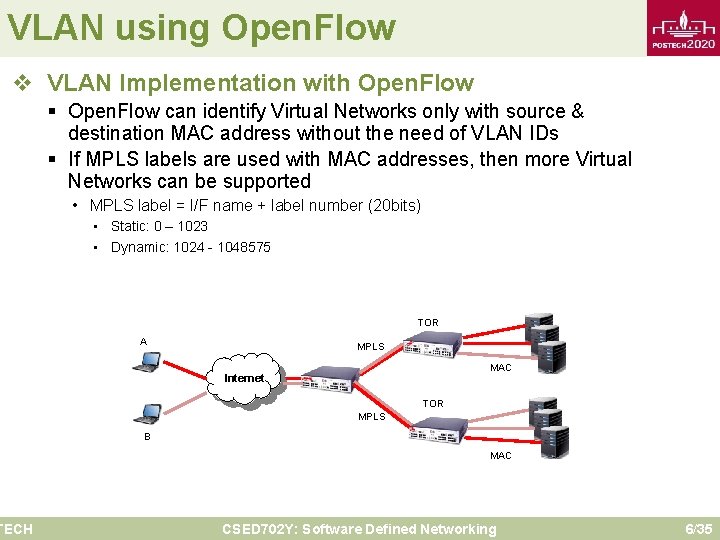

VLAN using OpenFlow
- VLAN implementation with OpenFlow
  - OpenFlow can identify virtual networks using only the source and destination MAC addresses, without VLAN IDs
  - If MPLS labels are used together with MAC addresses, more virtual networks can be supported
    - MPLS label = I/F name + label number (20 bits)
    - Static: 0 – 1023
    - Dynamic: 1024 – 1048575

[Figure: TOR A and TOR B connected through the Internet, each tagging traffic with an MPLS label and MAC address]
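The 20-bit label lives inside a 32-bit MPLS label stack entry (label 20 bits, traffic class 3 bits, bottom-of-stack 1 bit, TTL 8 bits). The sketch below encodes and decodes one entry and classifies a label with the static/dynamic split given on the slide; that split is the slide's convention, not a protocol rule, and the helper names are illustrative.

```python
import struct

MAX_LABEL = (1 << 20) - 1  # 1,048,575

def encode_lse(label: int, tc: int = 0, bottom: bool = True, ttl: int = 64) -> bytes:
    """Pack label(20) | TC(3) | S(1) | TTL(8) into 4 bytes, network byte order."""
    if not 0 <= label <= MAX_LABEL:
        raise ValueError("label must fit in 20 bits")
    word = (label << 12) | ((tc & 0x7) << 9) | (int(bottom) << 8) | (ttl & 0xFF)
    return struct.pack("!I", word)

def decode_lse(data: bytes) -> tuple:
    """Return (label, tc, bottom_of_stack, ttl)."""
    (word,) = struct.unpack("!I", data)
    return word >> 12, (word >> 9) & 0x7, bool((word >> 8) & 0x1), word & 0xFF

def label_range(label: int) -> str:
    """Static/dynamic split from the slide (RFC 3032 also reserves labels 0-15)."""
    return "static" if label <= 1023 else "dynamic"

print(encode_lse(100).hex(), decode_lse(encode_lse(100)), label_range(100))
```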

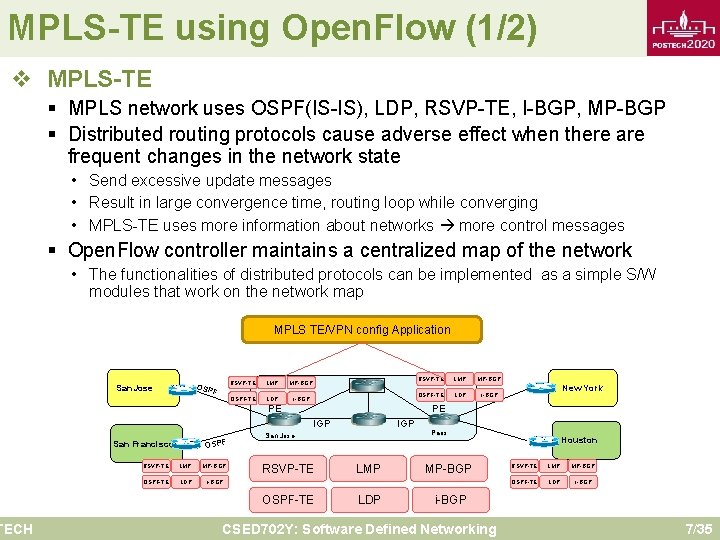

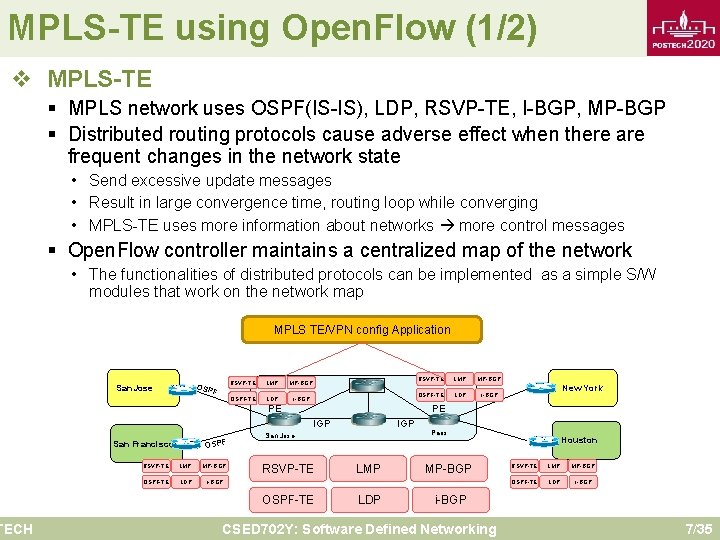

MPLS-TE using OpenFlow (1/2)
- MPLS-TE
  - An MPLS network uses OSPF (IS-IS), LDP, RSVP-TE, I-BGP, and MP-BGP
  - Distributed routing protocols have adverse effects when the network state changes frequently
    - They send excessive update messages
    - They incur long convergence times and can form routing loops while converging
    - MPLS-TE uses more information about the network, and therefore more control messages
  - An OpenFlow controller maintains a centralized map of the network
    - The functionality of the distributed protocols can be implemented as simple software modules that operate on the network map

[Figure: an MPLS TE/VPN configuration application over PE routers in San Jose, San Francisco, New York, Houston, and Paris, each running OSPF/IGP, OSPF-TE, RSVP-TE, LMP, LDP, MP-BGP, and i-BGP]

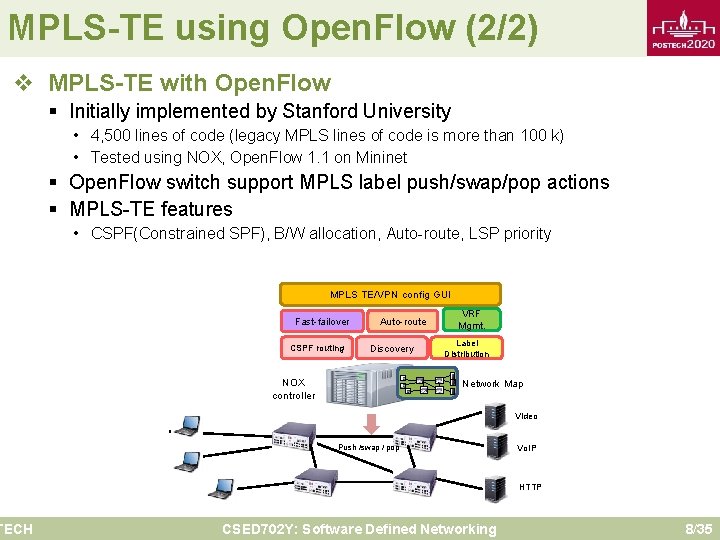

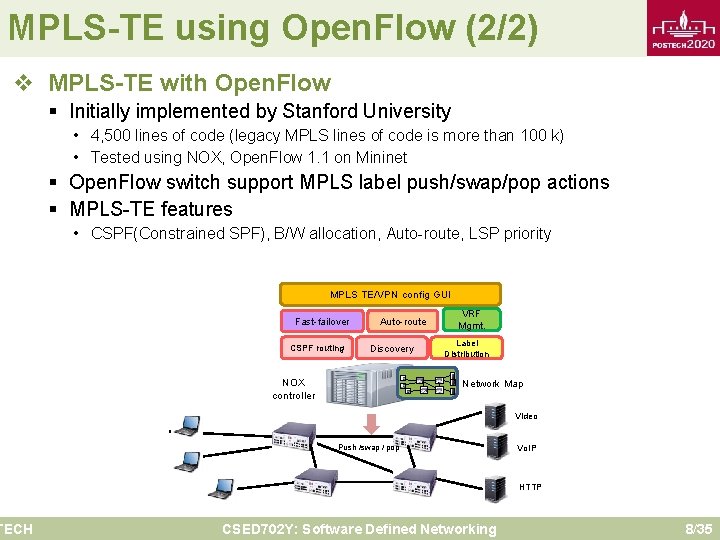

MPLS-TE using OpenFlow (2/2)
- MPLS-TE with OpenFlow
  - Initially implemented by Stanford University
    - 4,500 lines of code (legacy MPLS implementations exceed 100k lines)
    - Tested using NOX and OpenFlow 1.1 on Mininet
  - OpenFlow switches support MPLS label push/swap/pop actions
  - MPLS-TE features
    - CSPF (Constrained SPF), bandwidth allocation, auto-route, LSP priority

[Figure: MPLS TE/VPN configuration GUI and modules (fast failover, CSPF routing, auto-route, discovery, VRF management, label distribution, network map) on the NOX controller; switches push/swap/pop labels for video, VoIP, and HTTP traffic]
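CSPF, one of the features listed above, is a natural fit for a module that works on the controller's network map: remove every link that cannot carry the requested bandwidth, then run an ordinary shortest-path search on what remains. This is a minimal sketch; the topology, link costs, and available bandwidths are hypothetical values reusing city names from the previous slide.

```python
import heapq

def cspf(links: dict, src: str, dst: str, bandwidth: float):
    """links: {(u, v): (cost, available_bw)}, treated as bidirectional.
    Returns (total_cost, path) or None if no path satisfies the constraint."""
    graph = {}
    for (u, v), (cost, avail) in links.items():
        if avail >= bandwidth:  # constraint: prune links without enough headroom
            graph.setdefault(u, []).append((v, cost))
            graph.setdefault(v, []).append((u, cost))
    dist, prev, heap = {src: 0}, {}, [(0, src)]
    while heap:  # plain Dijkstra on the pruned graph
        d, node = heapq.heappop(heap)
        if node == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return d, path[::-1]
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], prev[nbr] = nd, node
                heapq.heappush(heap, (nd, nbr))
    return None

LINKS = {  # hypothetical (cost, available Gbps)
    ("San Francisco", "San Jose"): (1, 10),
    ("San Jose", "Houston"): (5, 2),
    ("San Jose", "New York"): (10, 10),
    ("Houston", "New York"): (4, 10),
}
print(cspf(LINKS, "San Francisco", "New York", 1))
print(cspf(LINKS, "San Francisco", "New York", 5))
```

With a 5 Gbps request the cheaper route via Houston is pruned, so the LSP falls back to the direct San Jose to New York link.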

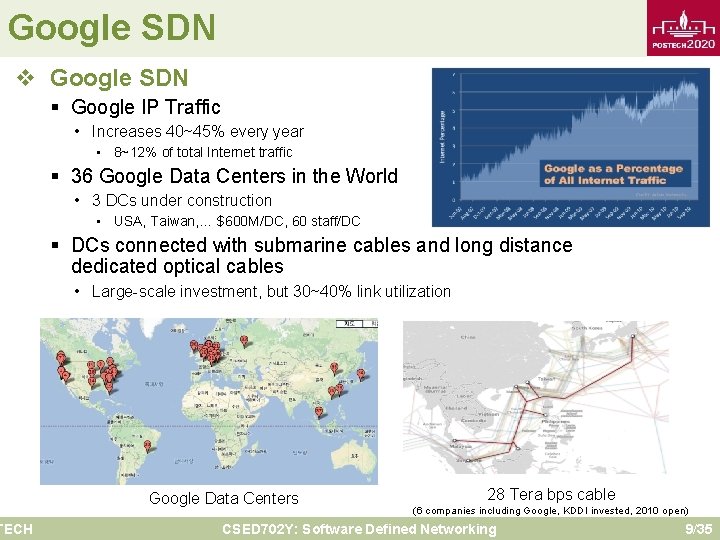

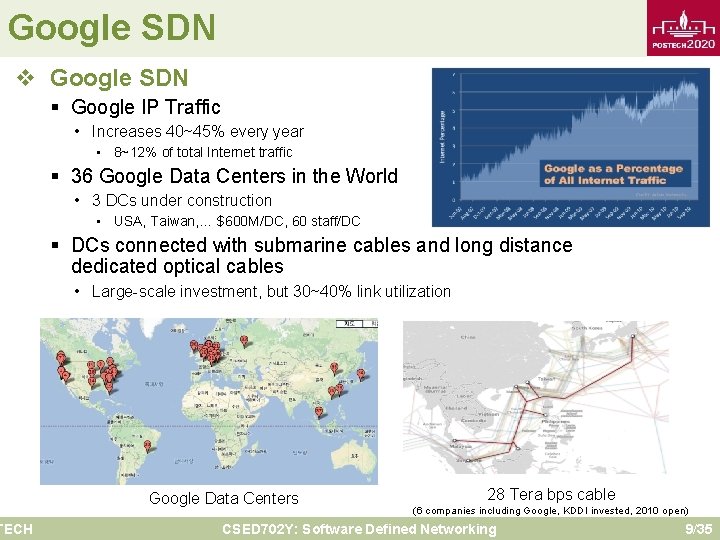

Google SDN
- Google IP traffic
  - Increases 40~45% every year
  - 8~12% of total Internet traffic
- 36 Google data centers worldwide
  - 3 DCs under construction
  - USA, Taiwan, …: $600M per DC, 60 staff per DC
- DCs are connected by submarine cables and long-distance dedicated optical cables
  - Large-scale investment, but only 30~40% link utilization

[Figure: map of Google data centers; 28 Tbps cable (opened in 2010, invested in by 6 companies including Google and KDDI)]

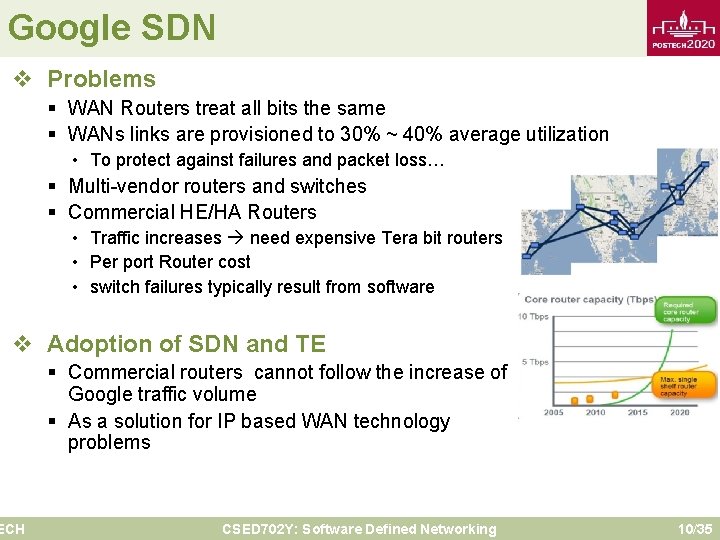

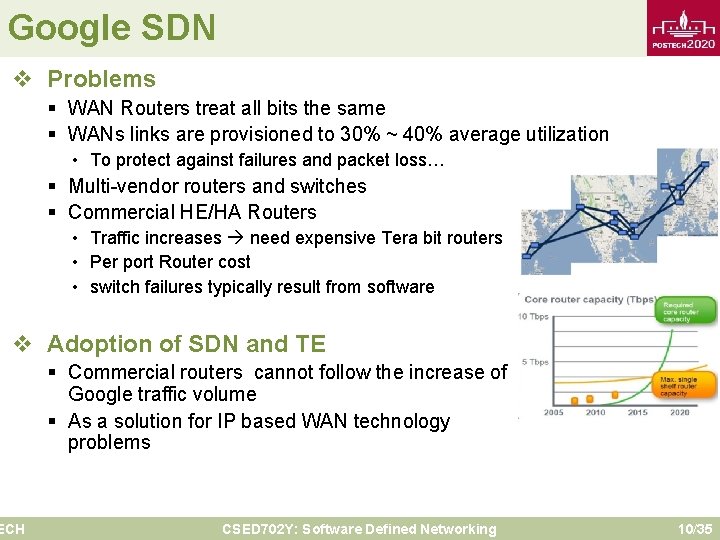

Google SDN
- Problems
  - WAN routers treat all bits the same
  - WAN links are provisioned to 30% ~ 40% average utilization
    - To protect against failures and packet loss
  - Multi-vendor routers and switches
  - Commercial HE/HA routers
    - Traffic growth requires expensive terabit routers
    - High per-port router cost
    - Switch failures typically result from software
- Adoption of SDN and TE
  - Commercial routers cannot keep up with the growth of Google's traffic volume
  - Adopted as a solution to the problems of IP-based WAN technology

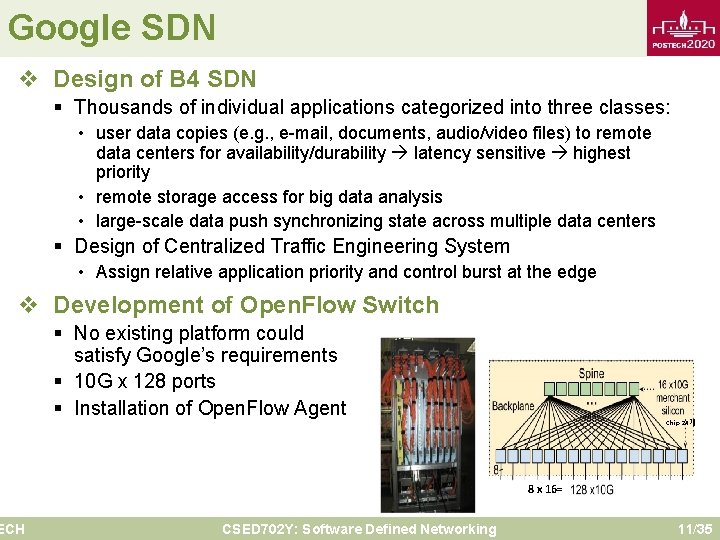

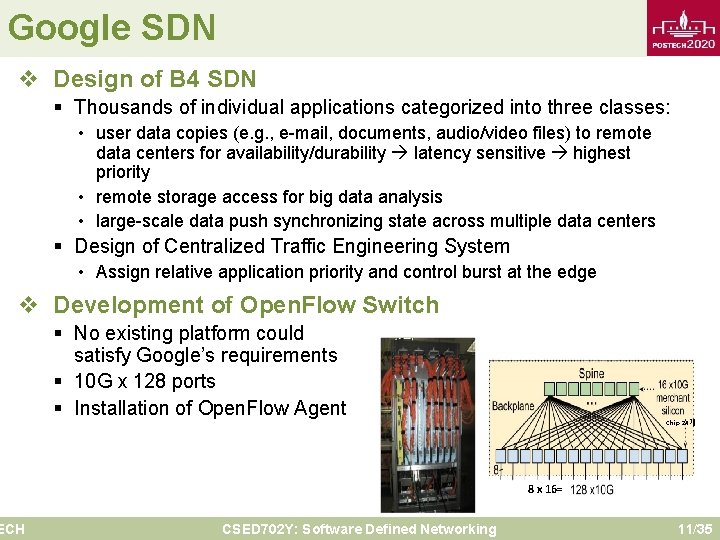

Google SDN
- Design of B4 SDN
  - Thousands of individual applications, categorized into three classes:
    - User data copies (e.g., e-mail, documents, audio/video files) to remote data centers for availability/durability: latency sensitive, highest priority
    - Remote storage access for big data analysis
    - Large-scale data push, synchronizing state across multiple data centers
  - Design of a centralized traffic engineering system
    - Assigns relative application priority and controls bursts at the edge
- Development of an OpenFlow switch
  - No existing platform could satisfy Google's requirements
  - 10G x 128 ports
  - Installation of an OpenFlow agent
  - Built from 24 switch chips (8 x 16 = 128 ports)

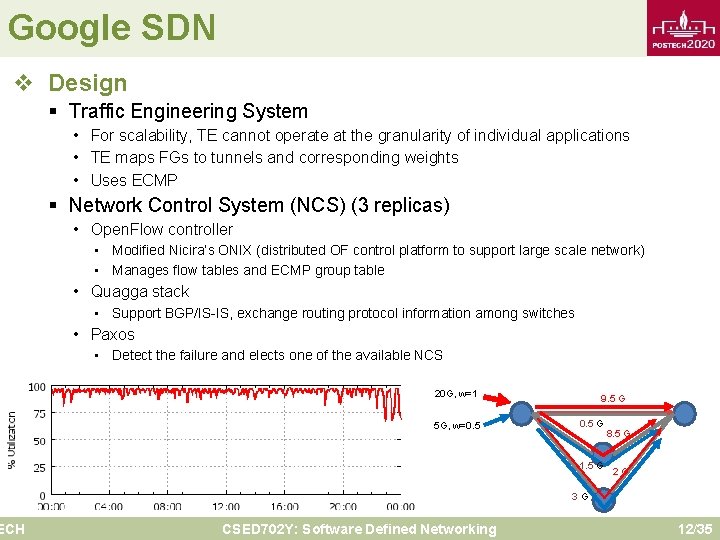

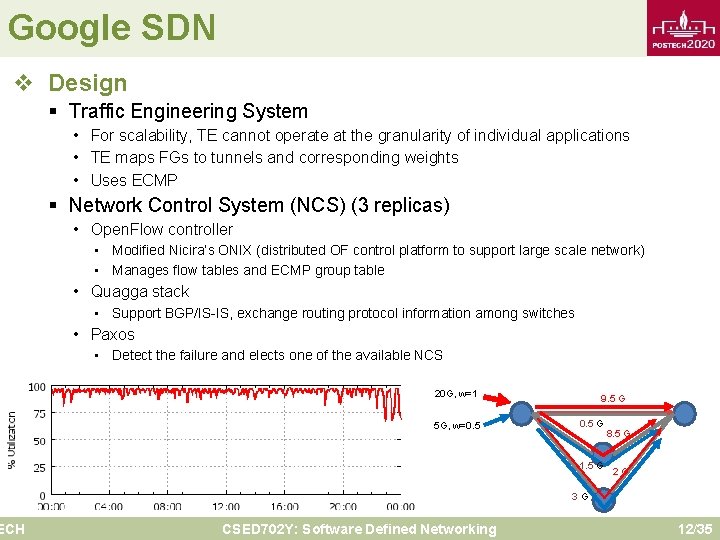

Google SDN
- Design
  - Traffic engineering system
    - For scalability, TE cannot operate at the granularity of individual applications
    - TE maps flow groups (FGs) to tunnels and corresponding weights
    - Uses ECMP
  - Network Control System (NCS) (3 replicas)
    - OpenFlow controller
      - Modified Nicira ONIX (a distributed OpenFlow control platform that supports large-scale networks)
      - Manages flow tables and ECMP group tables
    - Quagga stack
      - Supports BGP/IS-IS and exchanges routing protocol information among switches
    - Paxos
      - Detects failures and elects one of the available NCS replicas

[Figure: TE example with links of 20G (w=1) and 5G (w=0.5) and flow allocations of 9.5G, 0.5G, 1.5G, 8.5G, 2G, and 3G]
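To illustrate "TE maps FGs to tunnels and corresponding weights", here is a minimal sketch of how a weight set can be turned into a traffic split and realized in a switch by hashing flows into a fixed number of weighted ECMP buckets. The tunnel names, the 16-bucket table, and the CRC32 hash are assumptions for illustration, not B4's actual implementation.

```python
import zlib

def split_demand(demand_gbps: float, weights: dict) -> dict:
    """Divide a flow group's demand across its tunnels in proportion to the TE weights."""
    total = sum(weights.values())
    return {tunnel: demand_gbps * w / total for tunnel, w in weights.items()}

def build_buckets(weights: dict, n_buckets: int = 16) -> list:
    """Approximate the weights with repeated entries in a hash-bucket table (weighted ECMP)."""
    total = sum(weights.values())
    table = []
    for tunnel, w in sorted(weights.items()):
        table += [tunnel] * max(1, round(n_buckets * w / total))
    return table

def pick_tunnel(flow_key: str, table: list) -> str:
    """Hash a flow onto a bucket so that all of its packets follow the same tunnel."""
    return table[zlib.crc32(flow_key.encode()) % len(table)]

weights = {"T1": 1.0, "T2": 0.5}  # hypothetical tunnels and weights
print(split_demand(15, weights))
print(pick_tunnel("10.0.0.1->10.1.0.9:443", build_buckets(weights)))
```

The bucket count bounds how precisely a switch can follow the computed weights; 16 buckets turns a 2:1 ratio into 11:5.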

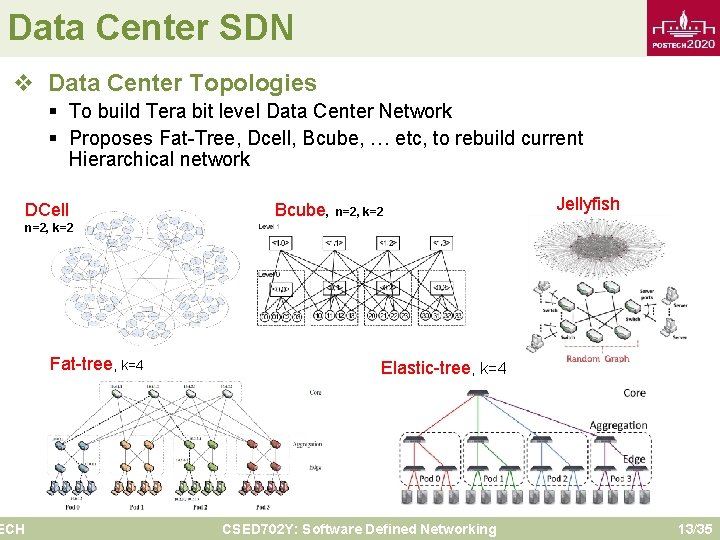

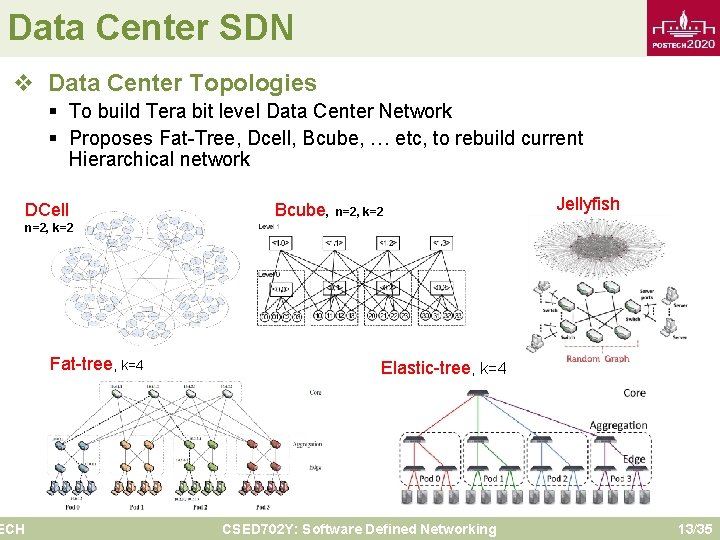

Data Center SDN
- Data center topologies
  - Goal: build terabit-level data center networks
  - Fat-Tree, DCell, BCube, etc. have been proposed to rebuild the current hierarchical network

[Figure: DCell; BCube (n=2, k=2); Jellyfish (n=2, k=2); Fat-tree (k=4); Elastic-tree (k=4)]

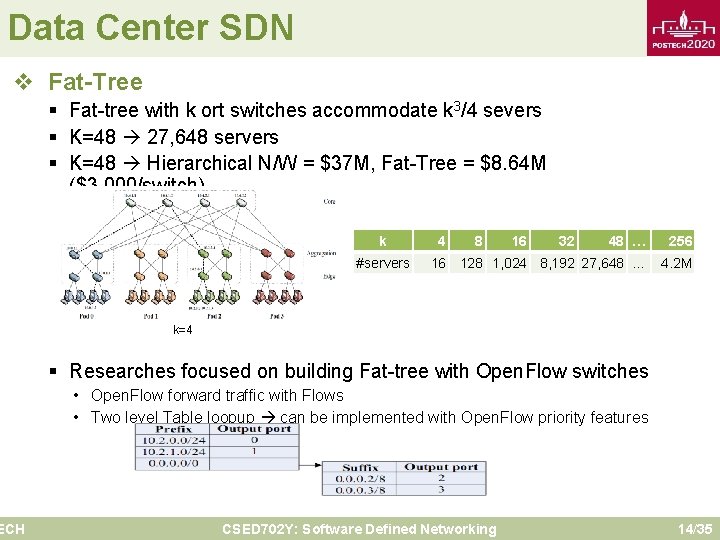

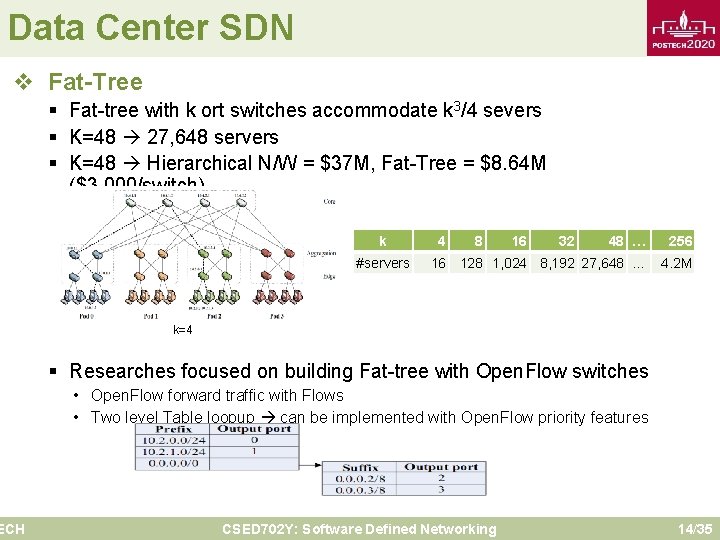

Data Center SDN
- Fat-Tree
  - A fat-tree built from k-port switches accommodates k^3/4 servers
  - k=48: 27,648 servers
  - k=48: hierarchical network = $37M, fat-tree = $8.64M ($3,000/switch)

| k | #servers |
|---|---|
| 4 | 16 |
| 8 | 128 |
| 16 | 1,024 |
| 32 | 8,192 |
| 48 | 27,648 |
| … | … |
| 256 | 4.2M |

- Research has focused on building fat-trees with OpenFlow switches
  - OpenFlow forwards traffic by flows
  - The two-level table lookup can be implemented with OpenFlow priorities

[Figure: fat-tree, k=4]
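The figures in the table follow from the fat-tree structure: k pods, each with k/2 edge and k/2 aggregation switches, plus (k/2)^2 core switches, for 5k^2/4 switches in total. The sketch below reproduces the server counts and the $8.64M cost at the slide's assumed $3,000 per switch.

```python
def fat_tree(k: int, switch_cost: float = 3000.0) -> dict:
    """Size a fat-tree built entirely from identical k-port switches."""
    if k % 2:
        raise ValueError("k must be even")
    edge = agg = k * (k // 2)  # k pods x k/2 switches per layer
    core = (k // 2) ** 2
    switches = edge + agg + core  # = 5k^2/4
    return {"servers": k ** 3 // 4, "switches": switches, "cost": switches * switch_cost}

for k in (4, 8, 16, 32, 48, 256):
    print(k, fat_tree(k))
```

For k=48 this gives 2,880 switches, and 2,880 x $3,000 = $8.64M.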

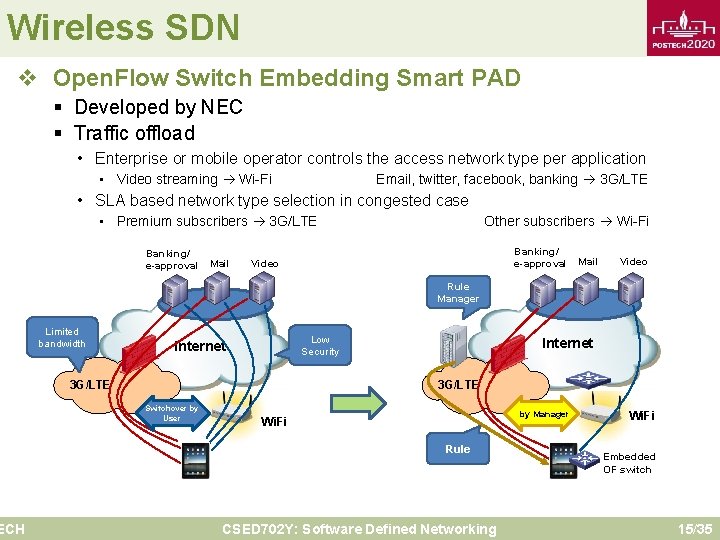

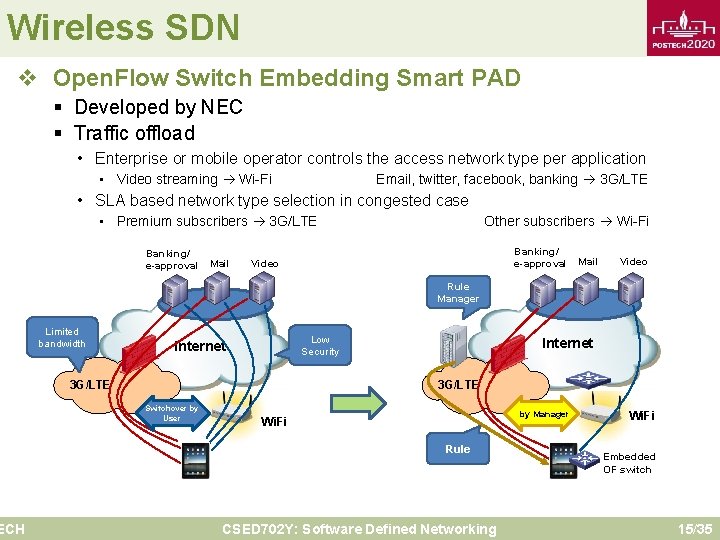

Wireless SDN
- Smart pad with an embedded OpenFlow switch
  - Developed by NEC
  - Traffic offload
    - An enterprise or mobile operator controls the access network type per application
      - Video streaming: Wi-Fi
      - Email, Twitter, Facebook, banking: 3G/LTE
    - SLA-based network type selection under congestion
      - Premium subscribers: 3G/LTE
      - Other subscribers: Wi-Fi

[Figure: a rule manager pushes rules to the tablet's embedded OF switch, which steers banking/e-approval, mail, and video traffic over 3G/LTE or Wi-Fi to the Internet (annotated "limited bandwidth" and "low security"); switchover can be triggered by the user or by the manager]
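The rule manager's decision can be pictured as a small lookup. This is a hypothetical sketch of the policy described above, not NEC's implementation: the application names, the Wi-Fi default for unlisted applications, and the rule that congestion overrides the per-application choice are all assumptions.

```python
# Per-application defaults from the slide; keys are illustrative names.
APP_NETWORK = {
    "video": "Wi-Fi",
    "email": "3G/LTE",
    "twitter": "3G/LTE",
    "facebook": "3G/LTE",
    "banking": "3G/LTE",
}

def select_network(app: str, premium: bool, congested: bool) -> str:
    """Pick the access network for one application's traffic."""
    if congested:
        # Assumed SLA rule: premium subscribers keep 3G/LTE, others are offloaded to Wi-Fi.
        return "3G/LTE" if premium else "Wi-Fi"
    return APP_NETWORK.get(app, "Wi-Fi")  # assumed default for unlisted apps

print(select_network("banking", premium=False, congested=True))
```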

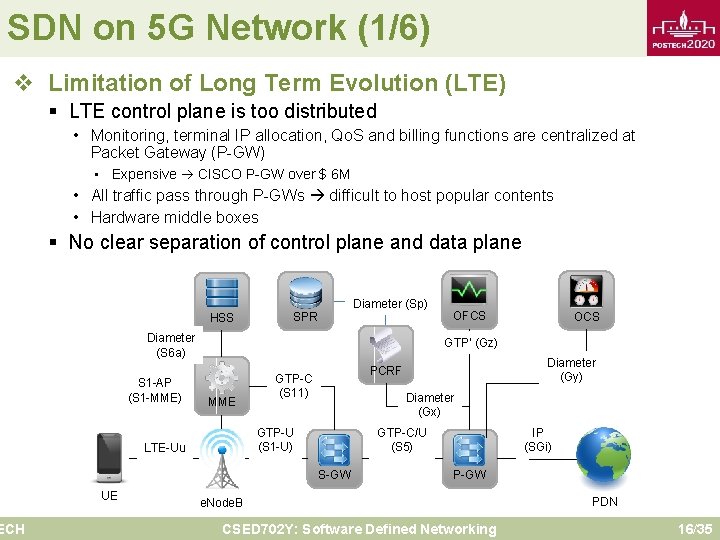

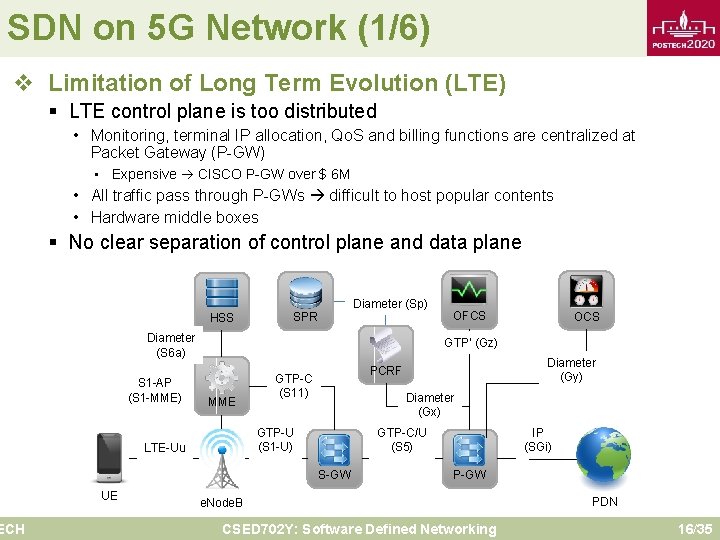

SDN on 5G Network (1/6)
- Limitations of Long Term Evolution (LTE)
  - The LTE control plane is too distributed
    - Monitoring, terminal IP allocation, QoS, and billing functions are centralized at the Packet Gateway (P-GW)
      - An expensive Cisco P-GW costs over $6M
      - All traffic passes through P-GWs, which makes it difficult to host popular content
    - Hardware middleboxes
  - No clear separation of control plane and data plane

[Figure: LTE EPC architecture. The UE connects over LTE-Uu to the eNodeB; eNodeB to MME (S1-AP, S1-MME) and to S-GW (GTP-U, S1-U); MME to HSS (Diameter, S6a) and to S-GW (GTP-C, S11); S-GW to P-GW (GTP-C/U, S5); P-GW to PCRF (Diameter, Gx), OCS (Diameter, Gy), OFCS (GTP', Gz), and the PDN (IP, SGi); PCRF to SPR (Diameter, Sp)]

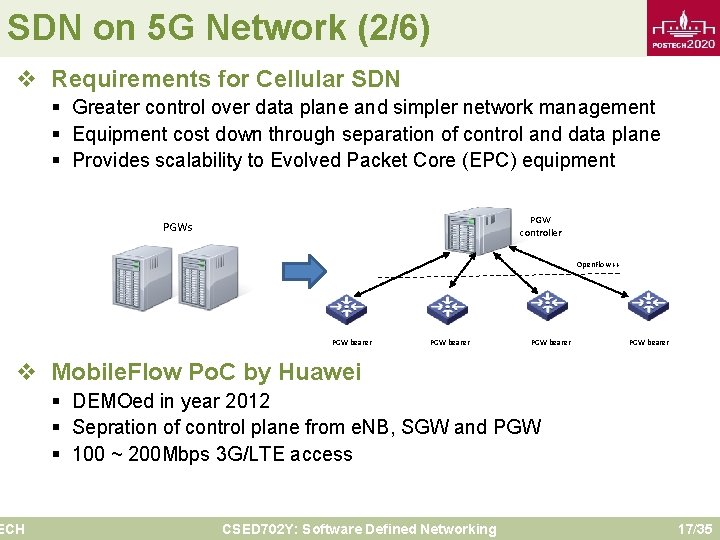

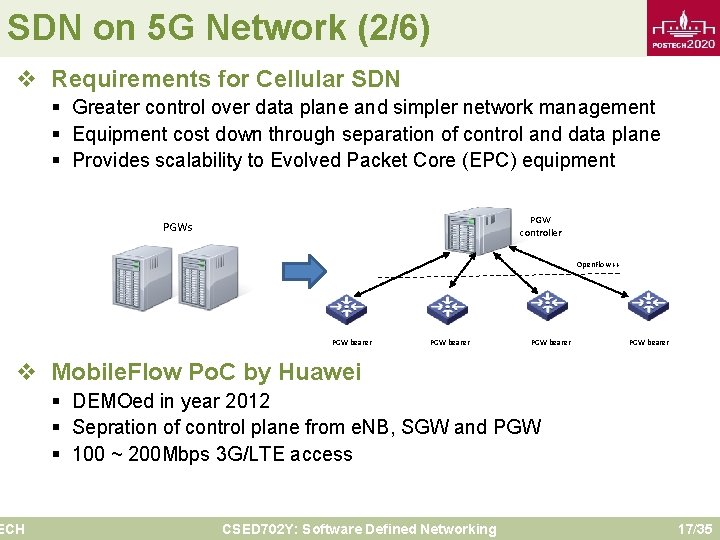

SDN on 5G Network (2/6)
- Requirements for cellular SDN
  - Greater control over the data plane and simpler network management
  - Lower equipment cost through separation of the control and data planes
  - Scalability for Evolved Packet Core (EPC) equipment
- MobileFlow PoC by Huawei
  - Demoed in 2012
  - Separation of the control plane from the eNB, SGW, and PGW
  - 100 ~ 200 Mbps 3G/LTE access

[Figure: a PGW controller controls PGWs through OpenFlow++; PGW bearer]

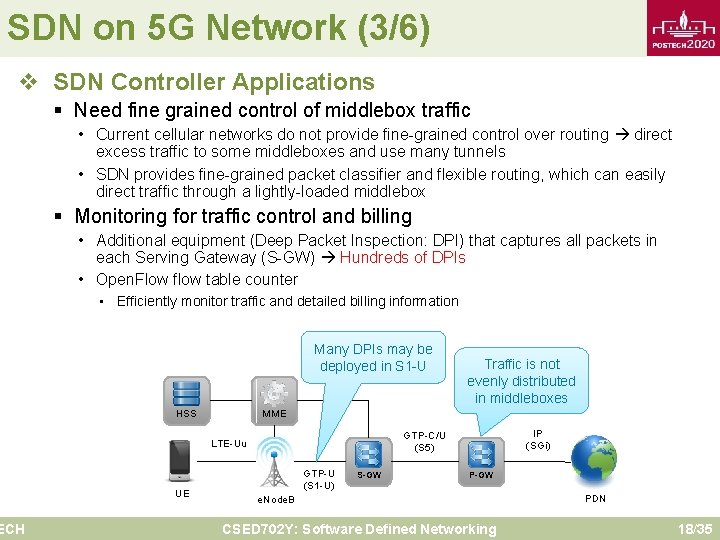

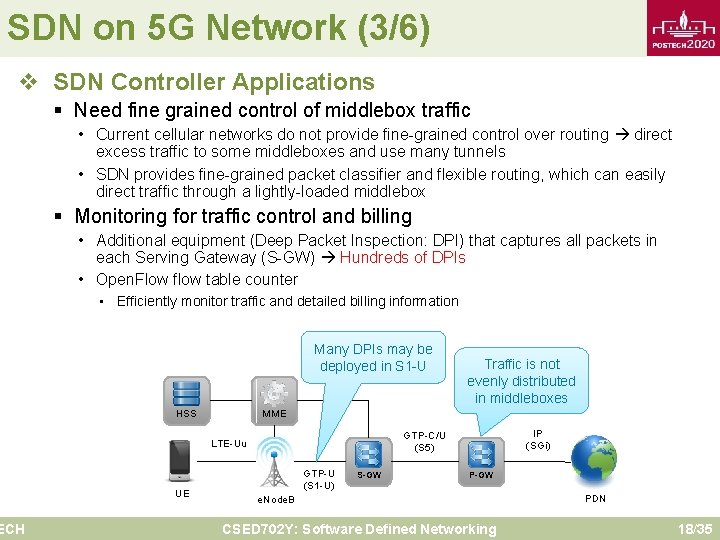

SDN on 5G Network (3/6)
- SDN controller applications
  - Need fine-grained control of middlebox traffic
    - Current cellular networks do not provide fine-grained control over routing, so they direct excess traffic to some middleboxes and use many tunnels
    - SDN provides a fine-grained packet classifier and flexible routing, which can easily direct traffic through a lightly loaded middlebox
  - Monitoring for traffic control and billing
    - Requires additional equipment (Deep Packet Inspection, DPI) that captures all packets at each Serving Gateway (S-GW), resulting in hundreds of DPIs
    - OpenFlow flow table counters
      - Efficiently monitor traffic and provide detailed billing information

[Figure: LTE path UE, eNodeB, S-GW, P-GW, PDN; many DPIs may be deployed on S1-U, and traffic is not evenly distributed across middleboxes]
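Using flow table counters for billing mainly comes down to taking differences between polls, because OpenFlow flow counters are cumulative. The sketch below assumes a hypothetical controller that already knows which subscriber each flow belongs to, and that a counter lower than before means the flow was reinstalled and restarted from zero. The price-per-MB model is also illustrative.

```python
from collections import defaultdict

def usage_deltas(prev: dict, curr: dict) -> dict:
    """prev/curr: {flow_id: (subscriber, cumulative_byte_count)} from two successive polls."""
    usage = defaultdict(int)
    for flow_id, (sub, nbytes) in curr.items():
        before = prev.get(flow_id, (sub, 0))[1]
        # A smaller count is treated as a counter reset (flow reinstalled), an assumption.
        usage[sub] += nbytes - before if nbytes >= before else nbytes
    return dict(usage)

def charge(usage_bytes: dict, price_per_mb: float) -> dict:
    """Convert per-subscriber byte usage into a charge (1 MB = 10^6 bytes here)."""
    return {sub: b / 1_000_000 * price_per_mb for sub, b in usage_bytes.items()}

prev = {"f1": ("alice", 1000), "f2": ("bob", 500)}
curr = {"f1": ("alice", 4000), "f2": ("bob", 500), "f3": ("alice", 2000)}
print(usage_deltas(prev, curr))
```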

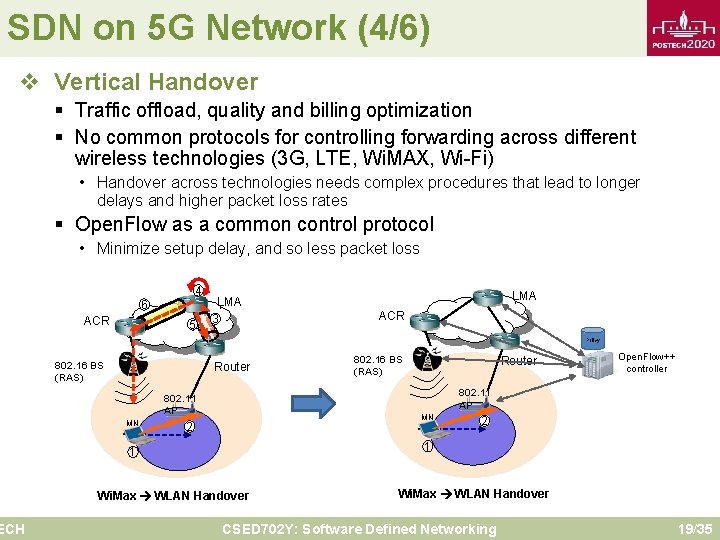

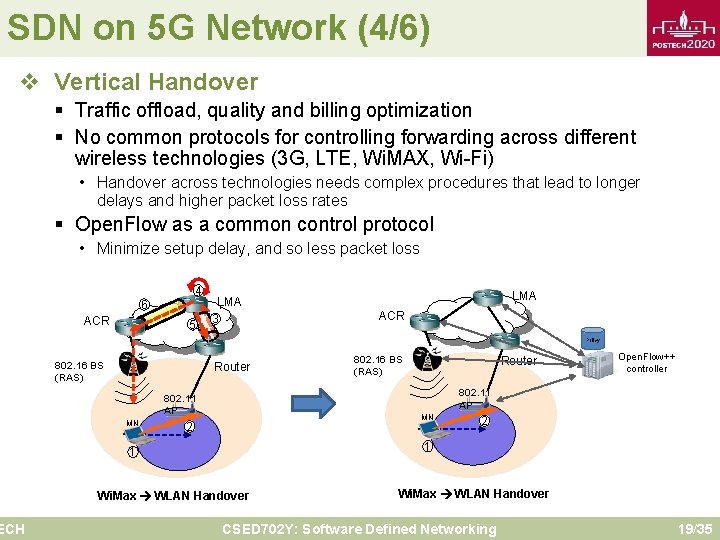

SDN on 5G Network (4/6)
- Vertical handover
  - Traffic offload, quality, and billing optimization
  - No common protocol for controlling forwarding across different wireless technologies (3G, LTE, WiMAX, Wi-Fi)
    - Handover across technologies requires complex procedures, which lead to longer delays and higher packet loss rates
  - OpenFlow as a common control protocol
    - Minimizes setup delay, and therefore packet loss

[Figure: numbered handover steps (1 to 6) of a mobile node (MN) moving between WiMAX (802.16 BS/RAS) and WLAN (802.11 AP); the legacy ACR/LMA-based handover is compared with an OpenFlow++ controller applying a policy to the router]

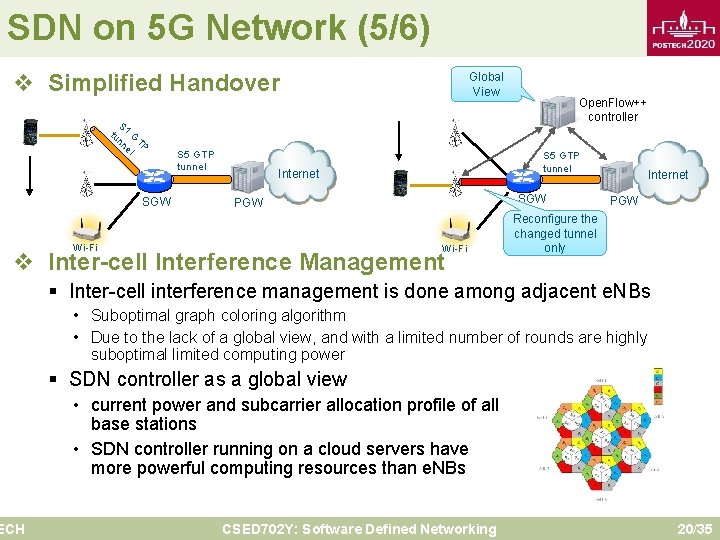

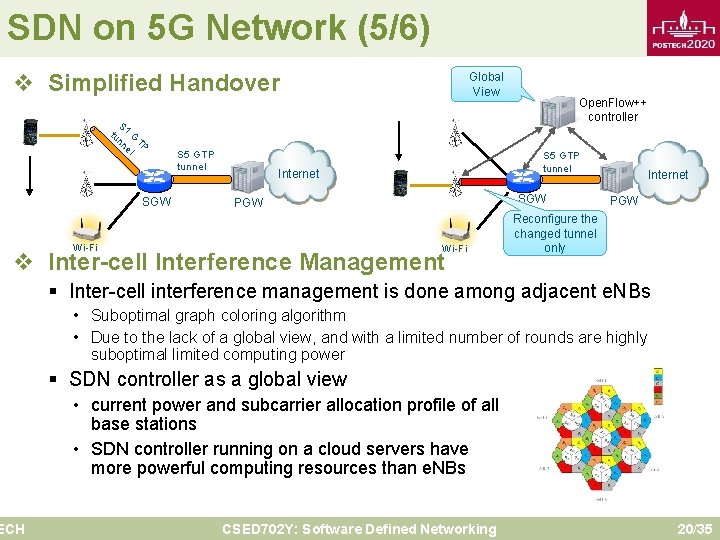

SDN on 5G Network (5/6)
- Simplified handover
  - With a global view, the OpenFlow++ controller reconfigures only the changed tunnel (S1 and S5 GTP tunnels between the eNB, SGW, and PGW, or a Wi-Fi path to the Internet)
- Inter-cell interference management
  - Inter-cell interference management is done among adjacent eNBs
    - Uses a suboptimal graph coloring algorithm
    - Because eNBs lack a global view, run only a limited number of rounds, and have limited computing power, the results are highly suboptimal
  - SDN controller with a global view
    - Knows the current power and subcarrier allocation profile of all base stations
    - An SDN controller running on cloud servers has more powerful computing resources than eNBs
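With a global view, the controller can color the whole interference graph at once instead of negotiating among neighbors. As an illustration, assume each eNB is a vertex, an edge means two cells interfere, and a "color" is a subcarrier block. The sketch uses greedy Welsh-Powell coloring, which is still a heuristic but sees every cell; the graph is hypothetical.

```python
def greedy_coloring(adjacency: dict) -> dict:
    """Welsh-Powell style: color high-degree cells first, giving each the lowest
    subcarrier block not already used by a colored neighbor."""
    order = sorted(adjacency, key=lambda n: (-len(adjacency[n]), n))
    color = {}
    for node in order:
        used = {color[nbr] for nbr in adjacency[node] if nbr in color}
        c = 0
        while c in used:
            c += 1
        color[node] = c
    return color

# Hypothetical interference graph: three mutually interfering cells plus one edge cell.
CELLS = {
    "eNB-A": {"eNB-B", "eNB-C"},
    "eNB-B": {"eNB-A", "eNB-C"},
    "eNB-C": {"eNB-A", "eNB-B", "eNB-D"},
    "eNB-D": {"eNB-C"},
}
print(greedy_coloring(CELLS))
```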

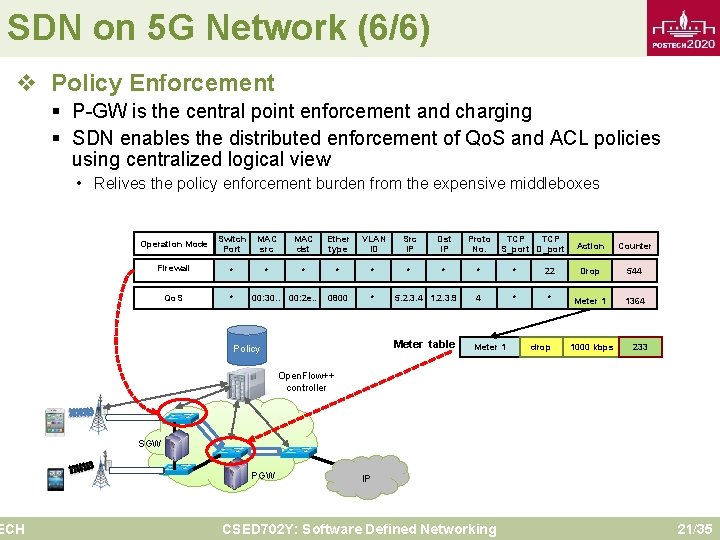

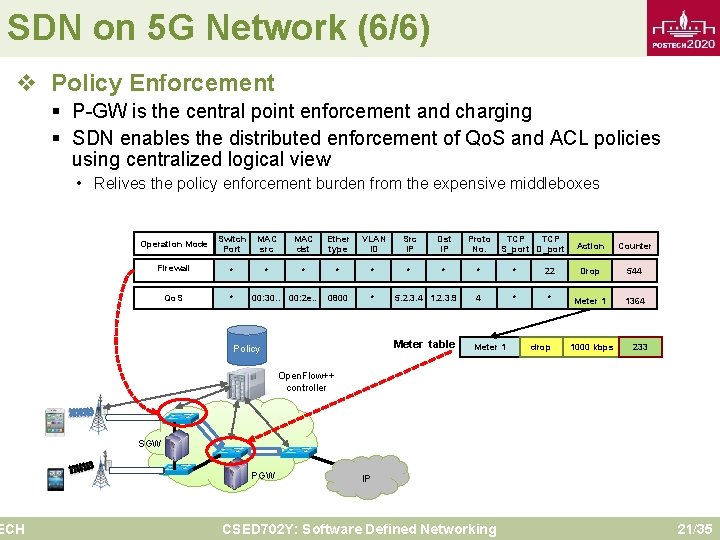

SDN on 5G Network (6/6)
- Policy enforcement
  - The P-GW is the central point of enforcement and charging
  - SDN enables distributed enforcement of QoS and ACL policies using a centralized logical view
    - Relieves expensive middleboxes of the policy enforcement burden

[Figure: an OpenFlow++ controller installs policy entries in switches between the SGW and PGW. Flow table: a Firewall entry drops TCP port 22 traffic (counter 544); a QoS entry matches Ether type 0800, MAC src 00:30.., MAC dst 00:2e.., Src IP 5.2.3.4, and Dst IP 1.2.3.9 and applies Meter 1 (counter 1364). Meter table: Meter 1 drops traffic above 1000 kbps (counter 233).]
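The two policy entries and the meter can be simulated to show how distributed enforcement works at a switch. This is a sketch under stated assumptions: the rule priorities, the field names, treating port 22 as the destination port, and the token-bucket burst size are all hypothetical. A field left out of a match acts as a wildcard.

```python
# (priority, match, action); values follow the policy table above, priorities assumed.
RULES = [
    (200, {"proto": "TCP", "tp_dst": 22}, "drop"),  # Firewall entry
    (100, {"eth_type": 0x0800, "ip_src": "5.2.3.4", "ip_dst": "1.2.3.9"}, "meter:1"),  # QoS entry
]

def lookup(pkt: dict) -> str:
    """Return the action of the highest-priority rule whose fields all match."""
    for _prio, match, action in sorted(RULES, key=lambda r: -r[0]):
        if all(pkt.get(f) == v for f, v in match.items()):
            return action
    return "table-miss"

class DropMeter:
    """Token-bucket approximation of a meter with a single drop band."""
    def __init__(self, rate_kbps: float, burst_bits: float):
        self.rate_bps = rate_kbps * 1000
        self.burst = burst_bits
        self.tokens = burst_bits
        self.last = 0.0

    def allow(self, size_bytes: int, now: float) -> bool:
        """Refill tokens for the elapsed time; forward if the packet fits, else drop."""
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate_bps)
        self.last = now
        bits = size_bytes * 8
        if bits <= self.tokens:
            self.tokens -= bits
            return True
        return False

meter_1 = DropMeter(rate_kbps=1000, burst_bits=12_000)  # burst size is an assumption
print(lookup({"proto": "TCP", "tp_dst": 22}), meter_1.allow(1500, now=0.0))
```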

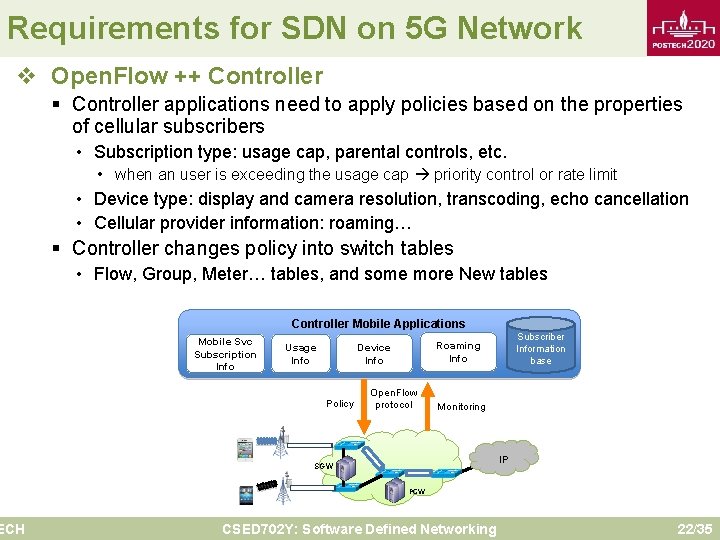

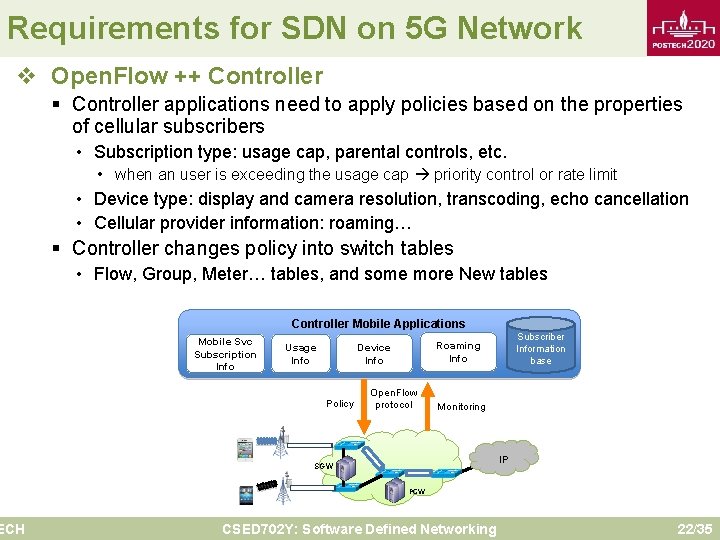

Requirements for SDN on 5G Network
- OpenFlow++ controller
  - Controller applications need to apply policies based on the properties of cellular subscribers
    - Subscription type: usage cap, parental controls, etc.
      - When a user exceeds the usage cap: priority control or rate limiting
    - Device type: display and camera resolution, transcoding, echo cancellation
    - Cellular provider information: roaming, …
  - The controller translates policies into switch tables
    - Flow, group, meter, … tables, plus some new tables

[Figure: mobile applications on the controller use a subscriber information base (subscription, usage, roaming, and device info) for policy and monitoring, and program the SGW and PGW via the OpenFlow protocol]
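To make "translate subscriber properties into switch tables" concrete, here is a hypothetical sketch of a controller application that reads a subscriber record and emits abstract table actions. The Subscriber fields and action names (including the content-filter table) are illustrative assumptions, not an OpenFlow++ API.

```python
from dataclasses import dataclass

@dataclass
class Subscriber:
    usage_cap_gb: float
    used_gb: float
    parental_controls: bool = False

def actions_for(sub: Subscriber) -> list:
    """Map subscription properties to abstract table actions for this user's flows."""
    actions = []
    if sub.used_gb > sub.usage_cap_gb:
        actions.append(("meter", "cap-exceeded"))  # rate limit once the cap is exceeded
    if sub.parental_controls:
        actions.append(("goto_table", "content-filter"))  # hypothetical filtering table
    return actions or [("output", "normal")]

print(actions_for(Subscriber(usage_cap_gb=10, used_gb=12, parental_controls=True)))
```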

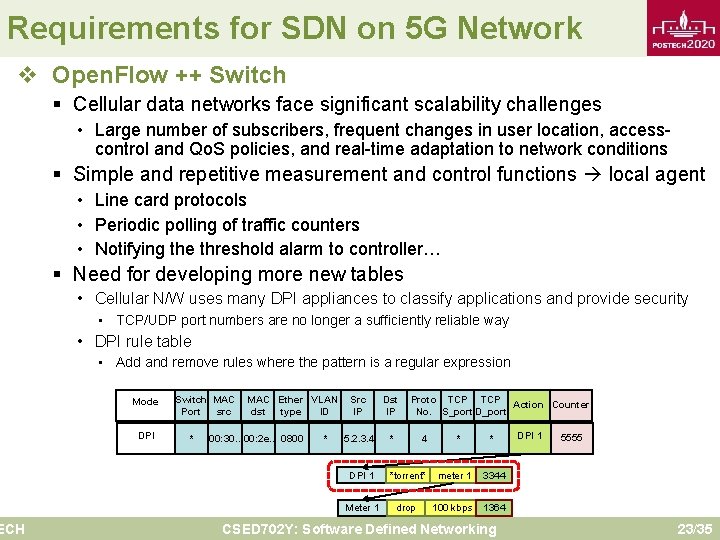

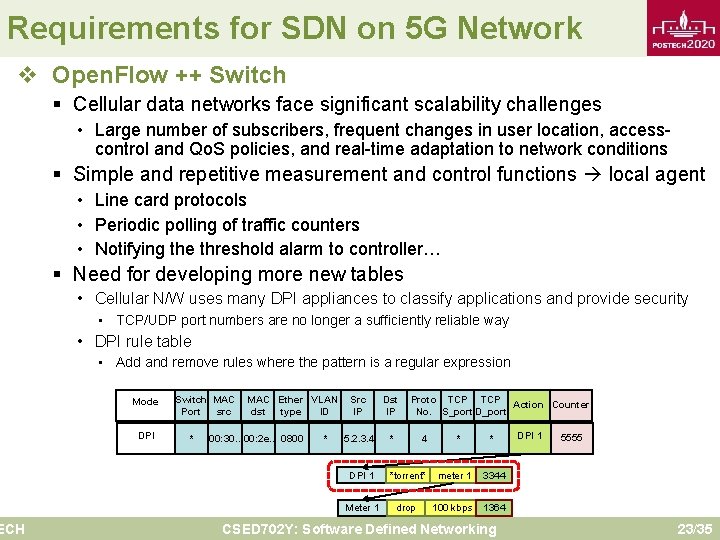

Requirements for SDN on 5G Network
- OpenFlow++ switch
  - Cellular data networks face significant scalability challenges
    - Large numbers of subscribers, frequent changes in user location, access control and QoS policies, and real-time adaptation to network conditions
  - Simple, repetitive measurement and control functions move to a local agent
    - Line card protocols
    - Periodic polling of traffic counters
    - Notifying the controller of threshold alarms, …
  - Need to develop more new tables
    - Cellular networks use many DPI appliances to classify applications and provide security
      - TCP/UDP port numbers are no longer a sufficiently reliable way to classify applications
    - DPI rule table
      - Add and remove rules whose pattern is a regular expression

[Figure: a DPI-mode flow entry matches TCP traffic from Src IP 5.2.3.4 (Ether type 0800, MAC src 00:30.., MAC dst 00:2e..) and sends it to DPI rule 1; DPI rule 1 matches the pattern *torrent* and applies Meter 1; Meter 1 drops traffic above 100 kbps]
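A DPI rule table with add/remove operations and regular-expression patterns might look like the following sketch. The *torrent* pattern and the Meter 1 action come from the figure; writing the glob as the regex `torrent`, matching case-insensitively, and the function names are assumptions.

```python
import re

DPI_RULES = {}  # rule_id -> (compiled regex, action)

def add_rule(rule_id: int, pattern: str, action: str) -> None:
    """Install (or replace) a DPI rule; payloads are bytes, so the pattern is compiled as bytes."""
    DPI_RULES[rule_id] = (re.compile(pattern.encode(), re.IGNORECASE), action)

def remove_rule(rule_id: int) -> None:
    DPI_RULES.pop(rule_id, None)

def inspect(rule_id: int, payload: bytes) -> str:
    """Apply the rule's action if its pattern occurs anywhere in the payload."""
    rule = DPI_RULES.get(rule_id)
    if rule is None:
        return "forward"
    regex, action = rule
    return action if regex.search(payload) else "forward"

add_rule(1, r"torrent", "meter:1")  # the slide's *torrent* pattern
print(inspect(1, b"\x13BitTorrent protocol"))
```

Matching on payload content is what lets this rule catch traffic that port numbers alone would misclassify.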

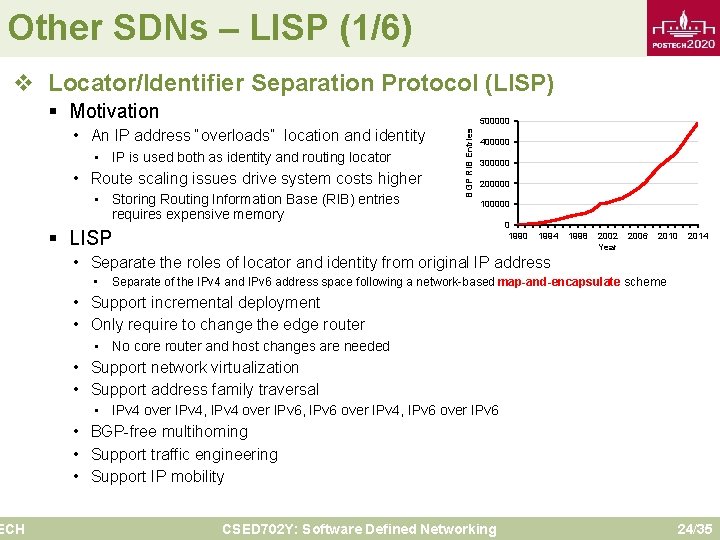

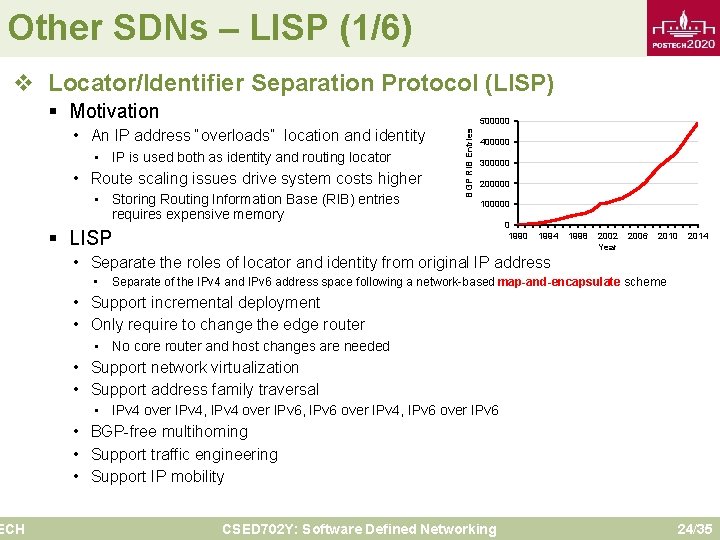

Other SDNs – LISP (1/6)
- Locator/Identifier Separation Protocol (LISP)
  - Motivation
    - IP addresses are used both as identity and as routing locator
      - An IP address "overloads" location and identity
    - Route scaling issues drive system costs higher
      - Storing Routing Information Base (RIB) entries requires expensive memory
  - LISP
    - Separates the roles of locator and identity in the original IP address
    - Splits the IPv4 and IPv6 address spaces using a network-based map-and-encapsulate scheme
    - Supports incremental deployment
      - Only edge routers need to change
      - No core router or host changes are needed
    - Supports network virtualization
    - Supports address family traversal
      - IPv4 over IPv4, IPv4 over IPv6, IPv6 over IPv4, IPv6 over IPv6
    - BGP-free multihoming
    - Supports traffic engineering
    - Supports IP mobility

[Figure: growth in BGP RIB entries, 1994 to 2014 (y-axis 0 to 500,000)]

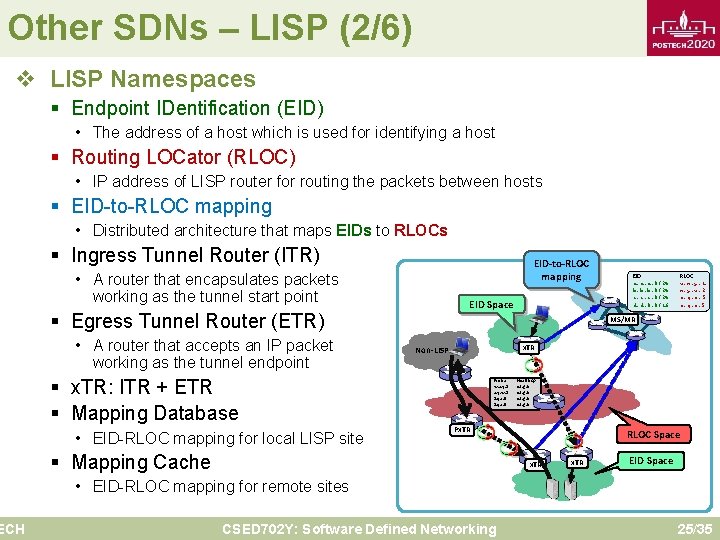

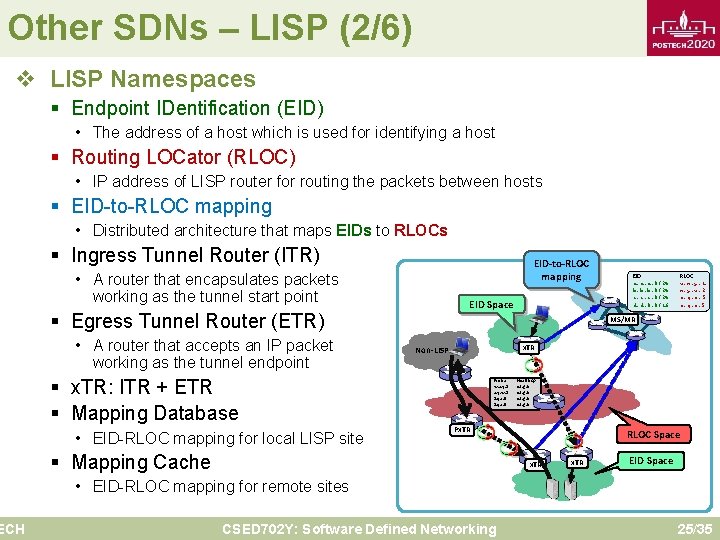

Other SDNs – LISP (2/6)
- LISP namespaces
  - Endpoint Identifier (EID)
    - The address used to identify a host
  - Routing Locator (RLOC)
    - The IP address of a LISP router, used to route packets between hosts
  - EID-to-RLOC mapping
    - A distributed architecture that maps EIDs to RLOCs
  - Ingress Tunnel Router (ITR)
    - A router that encapsulates packets, acting as the tunnel start point
  - Egress Tunnel Router (ETR)
    - A router that accepts an encapsulated IP packet, acting as the tunnel endpoint
  - xTR: ITR + ETR
  - Mapping database
    - EID-RLOC mappings for the local LISP site
  - Mapping cache
    - EID-RLOC mappings for remote sites

[Figure: xTRs connect EID-space sites across the RLOC space, with an MS/MR and a PxTR. Example mapping table: EID prefixes a.a.a.0/24, b.b.b.0/24, c.c.c.0/24, d.d.0.0/16 and RLOCs w.x.y.1, x.y.w.2, z.q.r.5; non-LISP prefixes use next hop e.f.g.h via the PxTR]
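An ITR's mapping cache behaves like a routing table keyed by EID prefix: the most specific matching prefix wins, and a miss means the destination is either not yet resolved or non-LISP. The sketch uses Python's standard `ipaddress` module. The 2.0.0.0/8 entry reuses the data-plane example on the next slide; the 2.1.0.0/16 entry and its RLOC are hypothetical.

```python
import ipaddress

class MapCache:
    """EID-prefix -> RLOC list, with longest-prefix-match lookup."""
    def __init__(self):
        self.entries = {}

    def add(self, eid_prefix: str, rlocs: list) -> None:
        self.entries[ipaddress.ip_network(eid_prefix)] = list(rlocs)

    def lookup(self, eid: str):
        addr = ipaddress.ip_address(eid)
        matches = [net for net in self.entries if net.version == addr.version and addr in net]
        if not matches:
            return None  # miss: resolve via the mapping system, or forward natively / via a PxTR
        return self.entries[max(matches, key=lambda net: net.prefixlen)]

cache = MapCache()
cache.add("2.0.0.0/8", ["12.0.0.2", "13.0.0.2"])
cache.add("2.1.0.0/16", ["198.51.100.7"])  # hypothetical more-specific entry
print(cache.lookup("2.0.0.2"), cache.lookup("2.1.0.9"), cache.lookup("8.8.8.8"))
```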

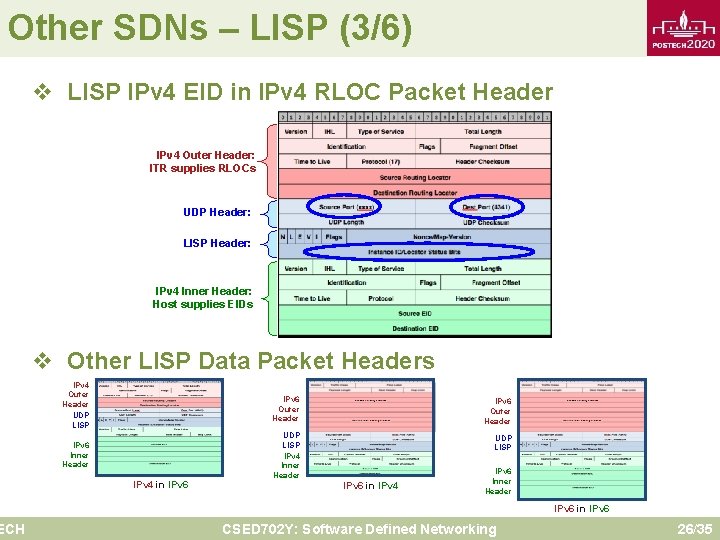

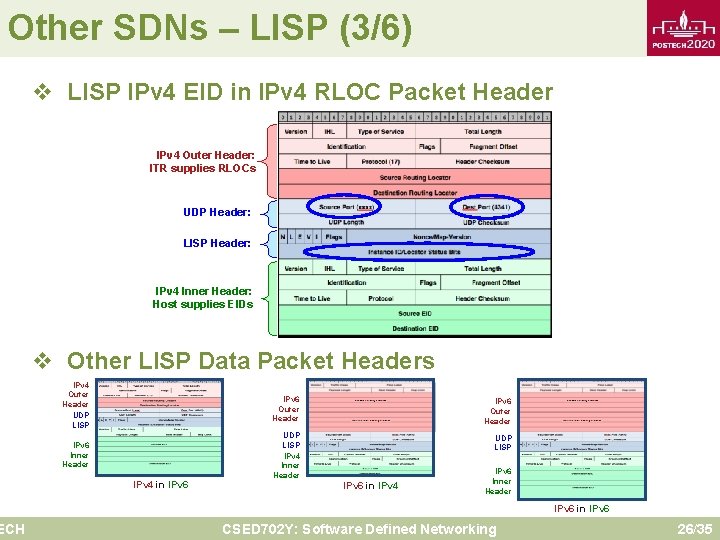

Other SDNs – LISP (3/6)
- LISP IPv4 EID in IPv4 RLOC packet header
  - IPv4 outer header: ITR supplies RLOCs
  - UDP header
  - LISP header
  - IPv4 inner header: host supplies EIDs
- Other LISP data packet headers
  - IPv4 in IPv6: IPv6 outer header | UDP | LISP | IPv4 inner header
  - IPv6 in IPv4: IPv4 outer header | UDP | LISP | IPv6 inner header
  - IPv6 in IPv6: IPv6 outer header | UDP | LISP | IPv6 inner header
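Each encapsulation adds a fixed amount of overhead that depends only on the outer header family: outer IP header + 8-byte UDP header + 8-byte LISP header. This arithmetic sketch assumes outer IPv4 headers without options and IPv6 headers without extension headers. LISP data packets use UDP destination port 4341.

```python
IP_HEADER = {"IPv4": 20, "IPv6": 40}  # bytes, no options / extension headers
UDP_HEADER = 8
LISP_HEADER = 8
LISP_DATA_PORT = 4341  # UDP destination port for LISP-encapsulated data

def encap_overhead(outer: str) -> int:
    """Bytes the ITR adds in front of the original (inner) packet."""
    if outer not in IP_HEADER:
        raise ValueError("outer header must be IPv4 or IPv6")
    return IP_HEADER[outer] + UDP_HEADER + LISP_HEADER

def max_inner_packet(path_mtu: int, outer: str) -> int:
    """Largest inner packet that avoids fragmentation on the RLOC path."""
    return path_mtu - encap_overhead(outer)

for outer in ("IPv4", "IPv6"):
    print(outer, encap_overhead(outer), max_inner_packet(1500, outer))
```

The inner header does not change the overhead because it is the host's original packet, carried unchanged.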

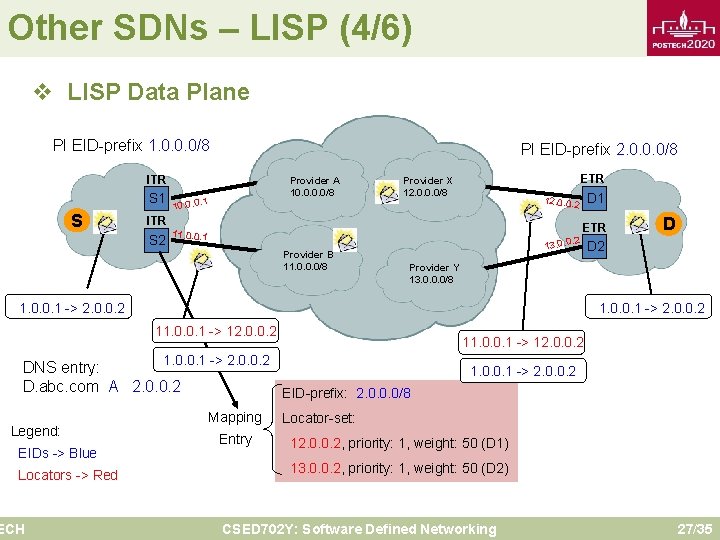

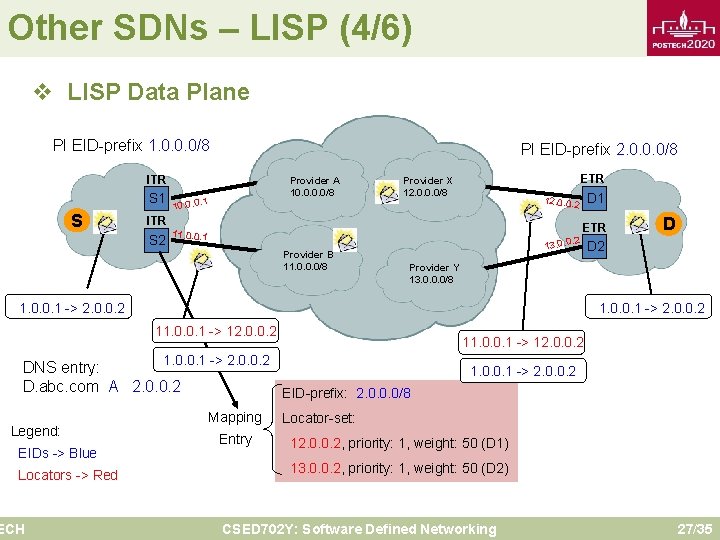

Other SDNs – LISP (4/6)
- LISP data plane
  - Source host S (EID 1.0.0.1) is in a site with PI EID-prefix 1.0.0.0/8, served by ITRs S1 (Provider A, 10.0.0.0/8, RLOC 10.0.0.1) and S2 (Provider B, 11.0.0.0/8, RLOC 11.0.0.1)
  - Destination host D (DNS entry: D.abc.com A 2.0.0.2) is in a site with PI EID-prefix 2.0.0.0/8, served by ETRs D1 (Provider X, 12.0.0.0/8, RLOC 12.0.0.2) and D2 (Provider Y, 13.0.0.0/8, RLOC 13.0.0.2)
  - The inner header 1.0.0.1 -> 2.0.0.2 is carried end to end; between ITR and ETR the outer header is 11.0.0.1 -> 12.0.0.2
- Mapping entry
  - EID-prefix: 2.0.0.0/8
  - Locator-set:
    - 12.0.0.2, priority: 1, weight: 50 (D1)
    - 13.0.0.2, priority: 1, weight: 50 (D2)

[Figure legend: EIDs in blue, locators in red]
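The priority and weight fields in the mapping entry control how an ITR chooses an ETR. Locators with the lowest priority value are preferred, traffic is shared among them in proportion to weight, and priority 255 marks a locator that must not be used for unicast. The sketch applies these rules per flow by hashing a flow key; the hashing scheme and the fallback when all weights are zero are assumptions.

```python
import zlib

# (RLOC, priority, weight) from the mapping entry above
LOCATOR_SET = [("12.0.0.2", 1, 50), ("13.0.0.2", 1, 50)]

def choose_rloc(locators, flow_key: str, reachable=None):
    """Pick an RLOC: best (lowest) priority first, then a weighted, per-flow choice."""
    usable = [loc for loc in locators
              if loc[1] != 255 and (reachable is None or loc[0] in reachable)]
    if not usable:
        return None
    best = min(prio for _, prio, _ in usable)
    candidates = [loc for loc in usable if loc[1] == best]
    total = sum(weight for _, _, weight in candidates)
    if total == 0:
        return candidates[0][0]  # assumed fallback when all weights are zero
    point = zlib.crc32(flow_key.encode()) % total
    for rloc, _, weight in candidates:
        if point < weight:
            return rloc
        point -= weight

print(choose_rloc(LOCATOR_SET, "1.0.0.1->2.0.0.2"))
print(choose_rloc(LOCATOR_SET, "1.0.0.1->2.0.0.2", reachable={"13.0.0.2"}))
```

With equal priority and weights of 50/50, flows to D are split between D1 and D2; if D1 becomes unreachable, all flows move to D2.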

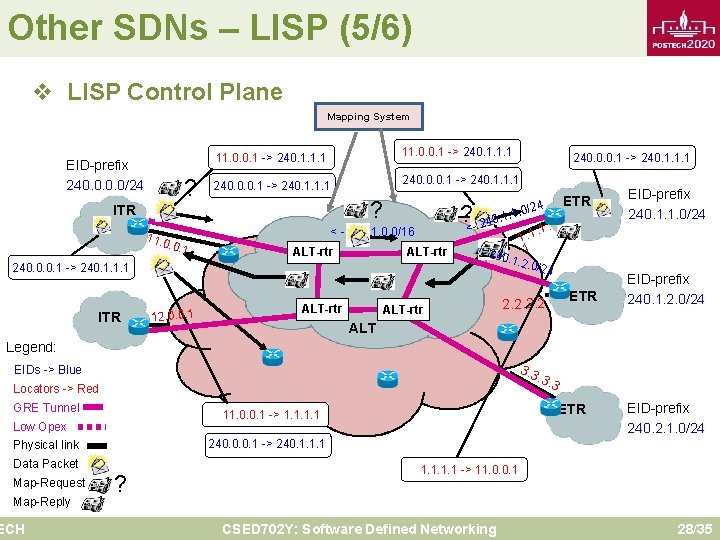

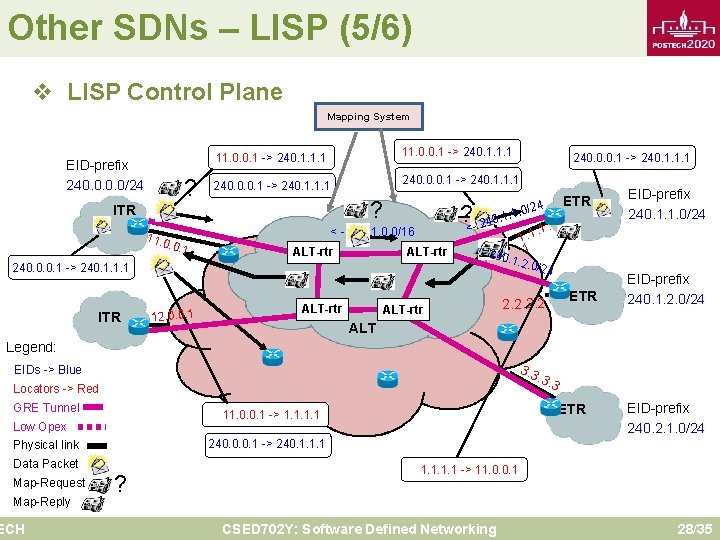

Other SDNs – LISP (5/6)
- LISP control plane

[Figure: a mapping system built as an ALT overlay of ALT routers connected by GRE tunnels, each advertising aggregated EID prefixes (e.g., 240.1.0.0/16 covering EID-prefixes 240.1.1.0/24 and 240.1.2.0/24; EID-prefix 240.2.1.0/24 at another site). When the ITR at 11.0.0.1 has a data packet 240.0.0.1 -> 240.1.1.1 and no mapping, it sends a Map-Request that the ALT routes to the ETR responsible for 240.1.1.0/24, which returns a Map-Reply to the ITR. Legend: EIDs in blue, locators in red; physical link, GRE tunnel, data packet, Map-Request, Map-Reply.]
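The ITR side of the control plane reduces to "on a cache miss, ask the mapping system and cache the answer for a while". In this sketch, `mapping_system` is a hypothetical stand-in for the Map-Request/Map-Reply exchange over the ALT. Caching per EID rather than per EID-prefix and the 60-second TTL are simplifications.

```python
class ITRMapCache:
    """Resolve EIDs on demand and cache the replies until their TTL expires."""
    def __init__(self, mapping_system, ttl_s: float = 60.0):
        self.mapping_system = mapping_system  # callable: eid -> list of RLOCs (Map-Reply)
        self.ttl_s = ttl_s
        self.cache = {}  # eid -> (rlocs, expiry_time)
        self.map_requests = 0

    def rlocs_for(self, eid: str, now: float) -> list:
        hit = self.cache.get(eid)
        if hit is not None and hit[1] > now:
            return hit[0]  # cache hit: encapsulate immediately
        self.map_requests += 1  # cache miss or expired: send a Map-Request
        rlocs = self.mapping_system(eid)
        self.cache[eid] = (rlocs, now + self.ttl_s)
        return rlocs

itr = ITRMapCache(lambda eid: ["192.0.2.10"])  # hypothetical ETR RLOC
print(itr.rlocs_for("240.1.1.1", now=0.0), itr.map_requests)
```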

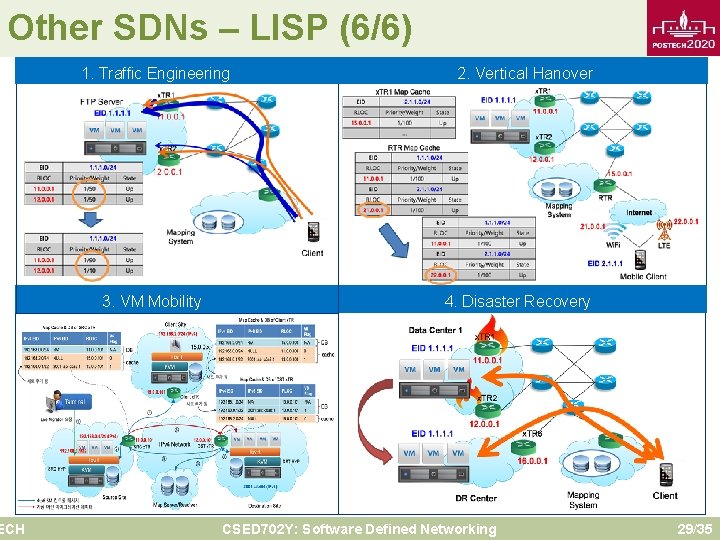

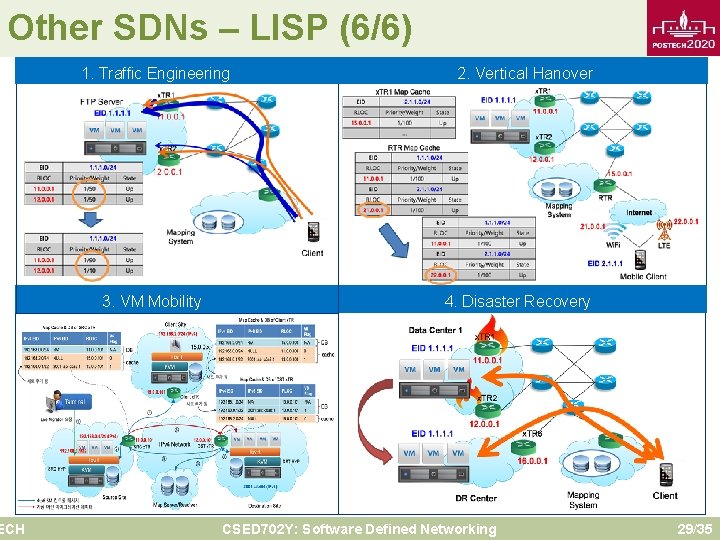

Other SDNs – LISP (6/6)
1. Traffic Engineering
2. Vertical Handover
3. VM Mobility
4. Disaster Recovery

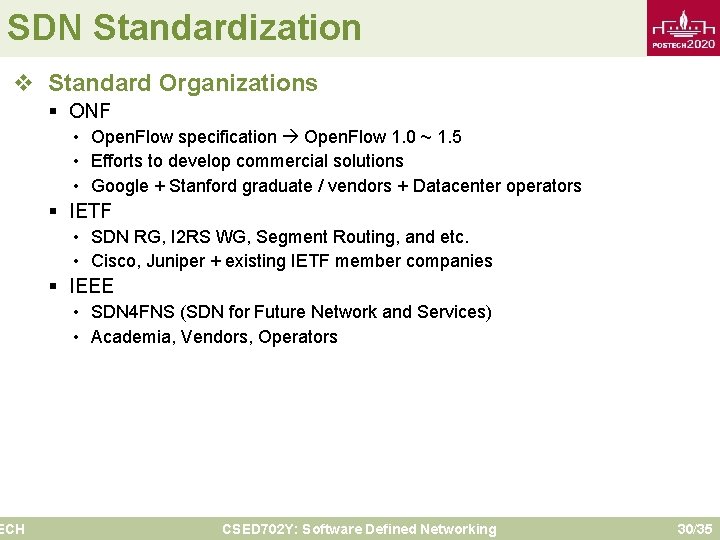

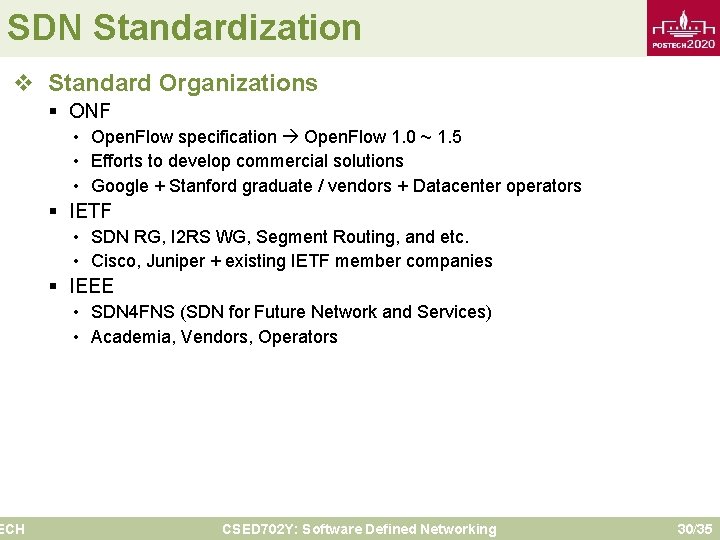

SDN Standardization
- Standards organizations
  - ONF
    - OpenFlow specifications (OpenFlow 1.0 ~ 1.5)
    - Efforts to develop commercial solutions
    - Google + Stanford graduates / vendors + data center operators
  - IETF
    - SDN RG, I2RS WG, Segment Routing, etc.
    - Cisco, Juniper + existing IETF member companies
  - IEEE
    - SDN4FNS (SDN for Future Network and Services)
    - Academia, vendors, operators

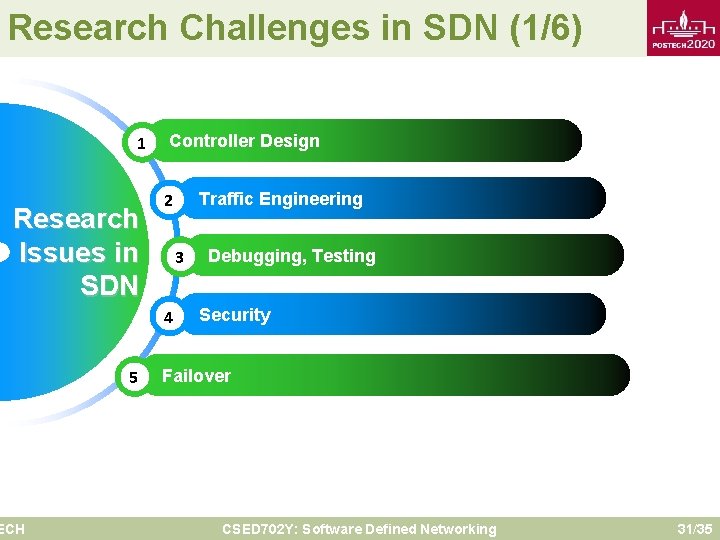

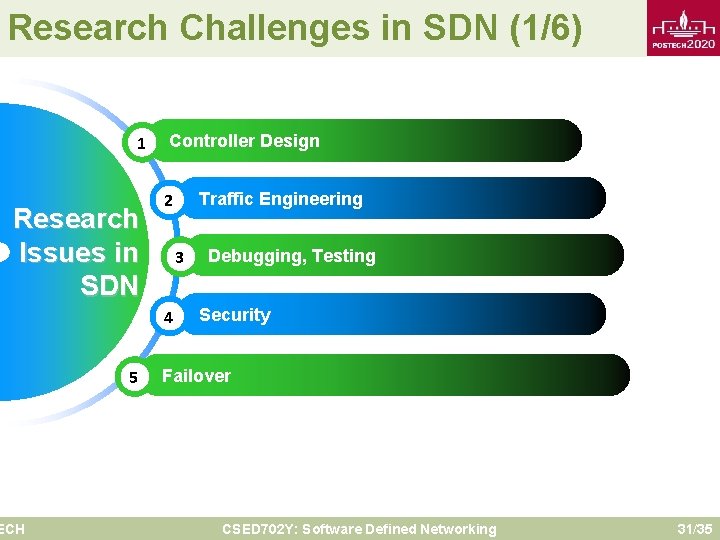

Research Challenges in SDN (1/6)
- Research issues in SDN
  1. Controller Design
  2. Traffic Engineering
  3. Debugging, Testing
  4. Security
  5. Failover

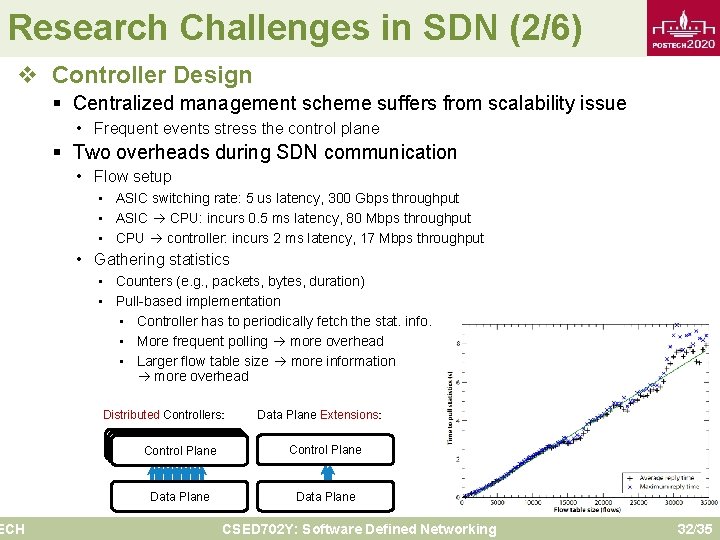

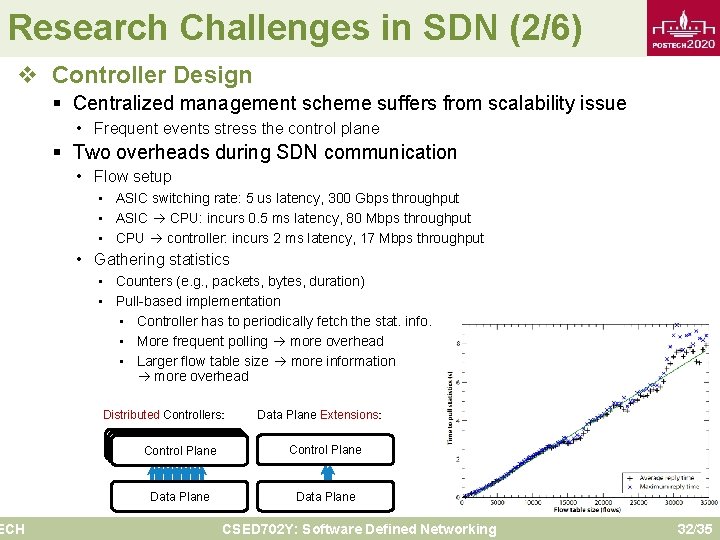

Research Challenges in SDN (2/6)
- Controller design
  - A centralized management scheme suffers from scalability issues
    - Frequent events stress the control plane
  - Two sources of overhead in SDN communication
    - Flow setup
      - ASIC switching: 5 µs latency, 300 Gbps throughput
      - ASIC to switch CPU: 0.5 ms latency, 80 Mbps throughput
      - Switch CPU to controller: 2 ms latency, 17 Mbps throughput
    - Gathering statistics
      - Counters (e.g., packets, bytes, duration)
      - Pull-based implementation
        - The controller has to periodically fetch statistics
        - More frequent polling means more overhead
        - Larger flow tables mean more information, and therefore more overhead

[Figure: two approaches: distributed controllers (control plane) and data plane extensions (data plane)]
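A back-of-the-envelope estimate shows why pull-based statistics collection scales poorly: the reply size grows with the flow table, and the rate grows with the polling frequency. The sketch assumes 88 bytes per flow entry (roughly an OpenFlow 1.0 flow-stats entry without actions) and ignores message headers. The flow count is hypothetical.

```python
def stats_load_bps(flows: int, interval_s: float, bytes_per_entry: int = 88) -> float:
    """Bits per second one switch sends to the controller if all flow stats are pulled every interval."""
    return flows * bytes_per_entry * 8 / interval_s

CPU_TO_CONTROLLER_BPS = 17e6  # switch CPU to controller throughput from the slide

for interval in (1.0, 5.0):
    load = stats_load_bps(10_000, interval)  # hypothetical 10,000-entry flow table
    print(f"every {interval:.0f}s: {load / 1e6:.2f} Mbps "
          f"({load / CPU_TO_CONTROLLER_BPS:.0%} of the 17 Mbps channel)")
```

Polling a 10,000-entry table every second would use about 7 Mbps, close to half of the 17 Mbps channel that flow setups also depend on.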

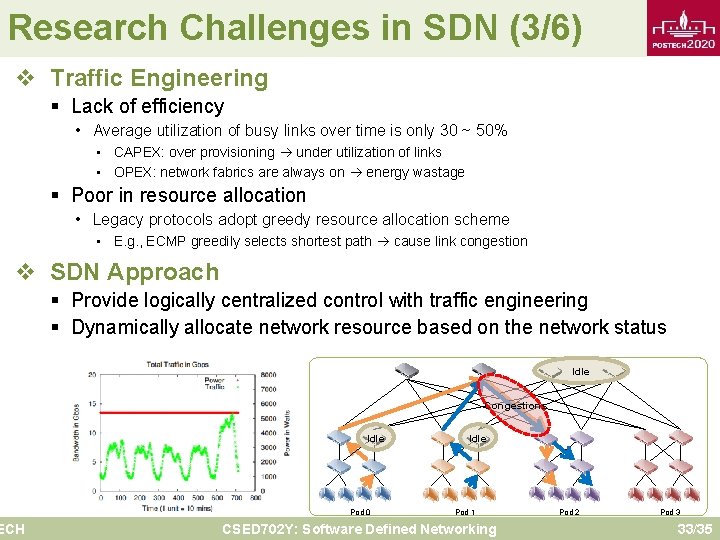

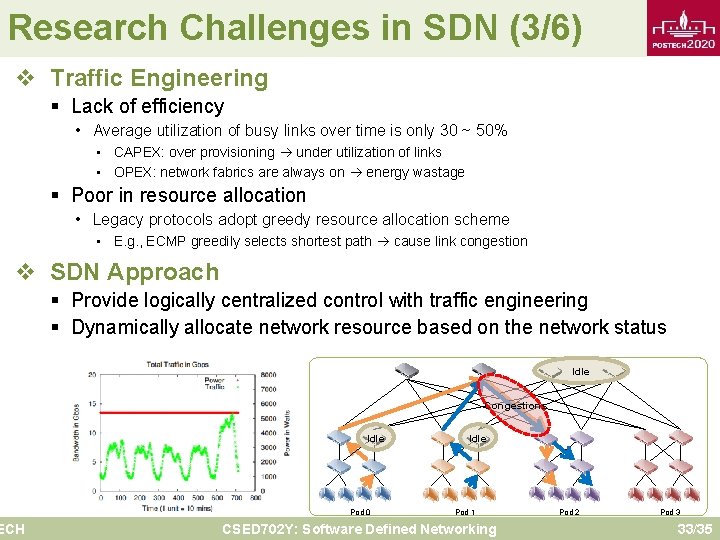

Research Challenges in SDN (3/6)
- Traffic engineering
  - Lack of efficiency
    - Average utilization of busy links over time is only 30 ~ 50%
    - CAPEX: over-provisioning leads to underutilized links
    - OPEX: network fabrics are always on, wasting energy
  - Poor resource allocation
    - Legacy protocols adopt greedy resource allocation schemes
    - E.g., ECMP hashes flows onto equal-cost shortest paths regardless of load, which can cause link congestion
- SDN approach
  - Provide logically centralized control with traffic engineering
  - Dynamically allocate network resources based on network status

[Figure: Pods 0 to 3 of a data center network, with congested links next to idle ones]
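The contrast between load-blind ECMP and centralized allocation can be shown in a few lines. ECMP picks a path by hash alone, so two large flows can land on the same core link. A controller that knows flow rates can place them with a simple first-fit heuristic instead. The flow rates, path names, and link capacity below are hypothetical.

```python
import zlib

def ecmp_path(flow: str, paths: list) -> str:
    """Load-blind ECMP: the hash alone decides the path."""
    return paths[zlib.crc32(flow.encode()) % len(paths)]

def first_fit(flows: dict, paths: list, capacity: float):
    """Centralized placement: largest flows first, each on the first path with room left."""
    load = {p: 0.0 for p in paths}
    placement = {}
    for flow, rate in sorted(flows.items(), key=lambda kv: -kv[1]):
        for p in paths:
            if load[p] + rate <= capacity:
                break
        else:
            p = min(paths, key=load.get)  # no room anywhere: fall back to the least-loaded path
        placement[flow] = p
        load[p] += rate
    return placement, load

FLOWS = {"f1": 6, "f2": 6, "f3": 3}  # Gbps, hypothetical
PATHS = ["core0", "core1"]
print({f: ecmp_path(f, PATHS) for f in FLOWS})
print(first_fit(FLOWS, PATHS, capacity=10))
```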

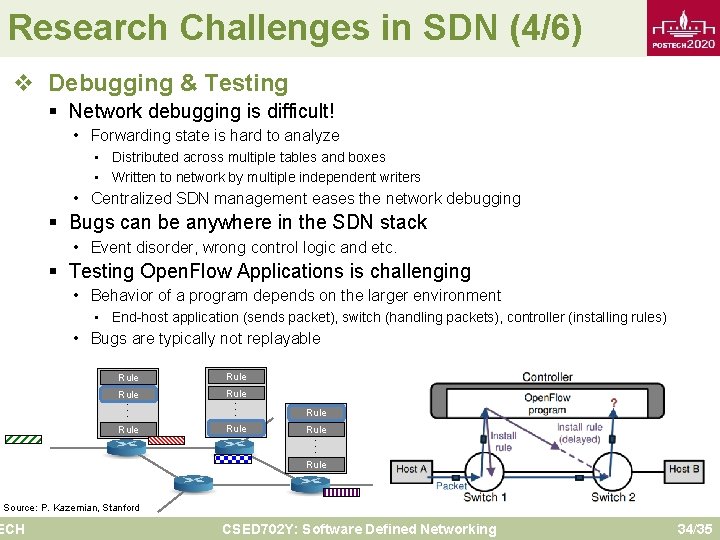

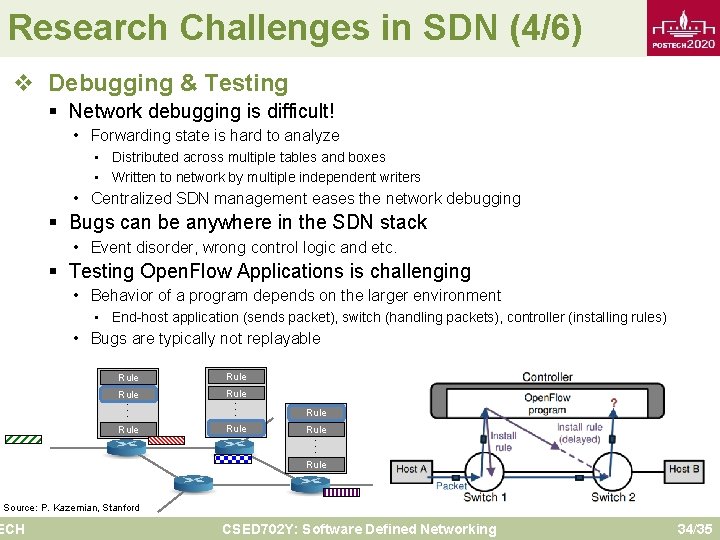

Research Challenges in SDN (4/6)
- Debugging & testing
  - Network debugging is difficult!
    - Forwarding state is hard to analyze
      - Distributed across multiple tables and boxes
      - Written to the network by multiple independent writers
    - Centralized SDN management eases network debugging
  - Bugs can be anywhere in the SDN stack
    - Event disorder, wrong control logic, etc.
  - Testing OpenFlow applications is challenging
    - The behavior of a program depends on the larger environment
      - End-host applications (sending packets), switches (handling packets), controller (installing rules)
    - Bugs are typically not replayable

[Figure: forwarding rules spread across many switches. Source: P. Kazemian, Stanford]
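Centralized forwarding state makes checks like "does this destination loop or blackhole?" easy to automate. Tools for this reason about whole sets of packet headers; the much simpler sketch below follows a single destination through hypothetical per-switch next-hop tables.

```python
def trace(fib: dict, src_switch: str, dst: str, max_hops: int = 64):
    """Follow next hops for dst; report 'delivered', 'blackhole', 'loop', or 'too-many-hops'."""
    path, seen, node = [src_switch], {src_switch}, src_switch
    for _ in range(max_hops):
        nxt = fib.get(node, {}).get(dst)
        if nxt is None:
            return "blackhole", path  # no rule for dst at this switch
        if nxt == "deliver":
            return "delivered", path  # this switch hands the packet to the host
        if nxt in seen:
            return "loop", path + [nxt]
        seen.add(nxt)
        path.append(nxt)
        node = nxt
    return "too-many-hops", path

FIB = {"s1": {"h2": "s2"}, "s2": {"h2": "s3"}, "s3": {"h2": "deliver"}}  # hypothetical state
print(trace(FIB, "s1", "h2"))
```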

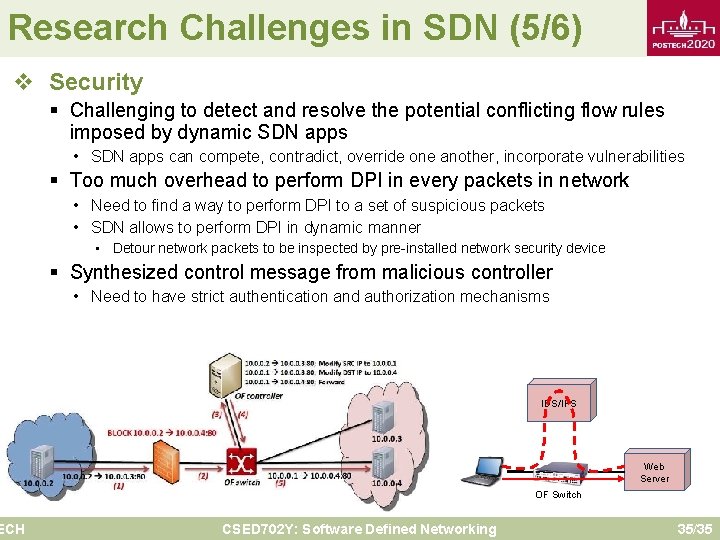

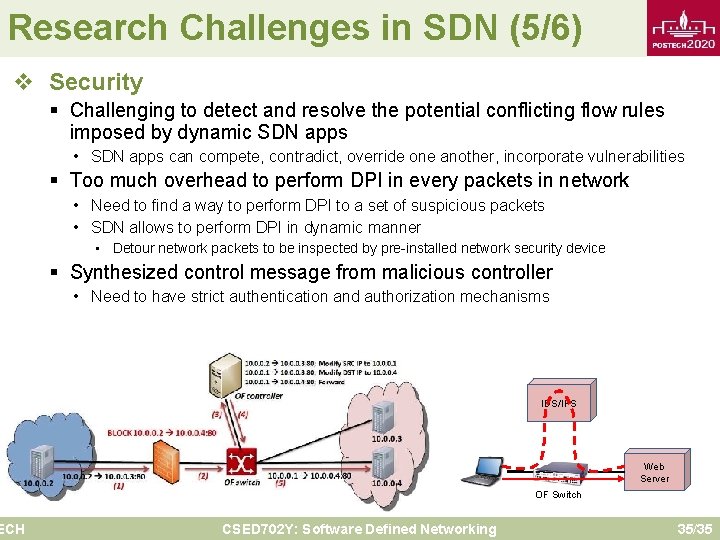

Research Challenges in SDN (5/6)
- Security
  - It is challenging to detect and resolve potentially conflicting flow rules imposed by dynamic SDN apps
    - SDN apps can compete with, contradict, or override one another, and can incorporate vulnerabilities
  - Performing DPI on every packet in the network has too much overhead
    - Need a way to perform DPI on a set of suspicious packets
    - SDN allows DPI to be performed dynamically
      - Detour network packets to be inspected by pre-installed network security devices
  - Synthesized control messages from a malicious controller
    - Strict authentication and authorization mechanisms are needed

[Figure: an OF switch detours traffic destined for a web server through an IDS/IPS]
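A first step toward detecting conflicting rules from different apps is pairwise checking: two rules conflict if they have the same priority, their matches can both hit the same packet, and their actions differ. This sketch handles exact-value fields only (a missing field is a wildcard) and ignores prefix masks and cross-priority overrides. The app names and rules are hypothetical.

```python
def overlaps(m1: dict, m2: dict) -> bool:
    """Two matches overlap unless some field is pinned to different values in both."""
    return all(m1[f] == m2[f] for f in m1.keys() & m2.keys())

def conflicts(rules: list) -> list:
    """rules: list of (app, priority, match, action); returns conflicting app pairs."""
    found = []
    for i, (a1, p1, m1, act1) in enumerate(rules):
        for a2, p2, m2, act2 in rules[i + 1:]:
            if p1 == p2 and act1 != act2 and overlaps(m1, m2):
                found.append((a1, a2))
    return found

RULES = [
    ("firewall", 100, {"ip_dst": "10.0.0.5", "tp_dst": 80}, "drop"),
    ("load_balancer", 100, {"ip_dst": "10.0.0.5"}, "output:2"),
    ("monitor", 100, {"ip_dst": "10.0.0.9"}, "output:3"),
]
print(conflicts(RULES))
```

Here, web traffic to 10.0.0.5 matches both the firewall's drop rule and the load balancer's forwarding rule at the same priority, so the switch's behavior is ambiguous.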

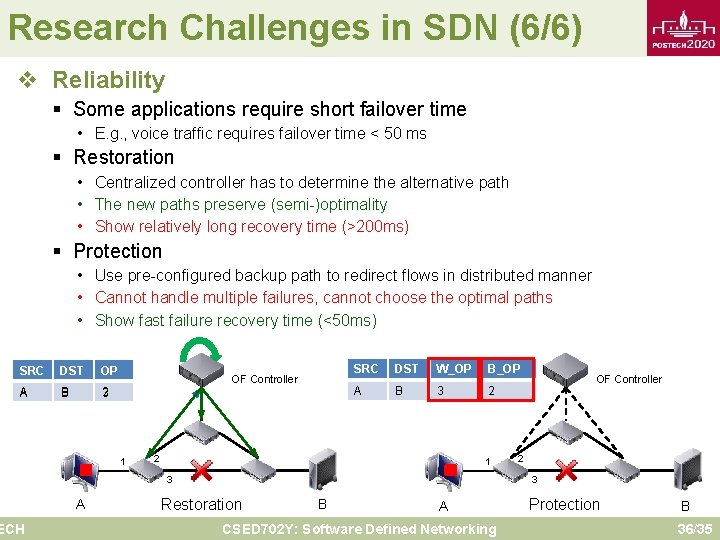

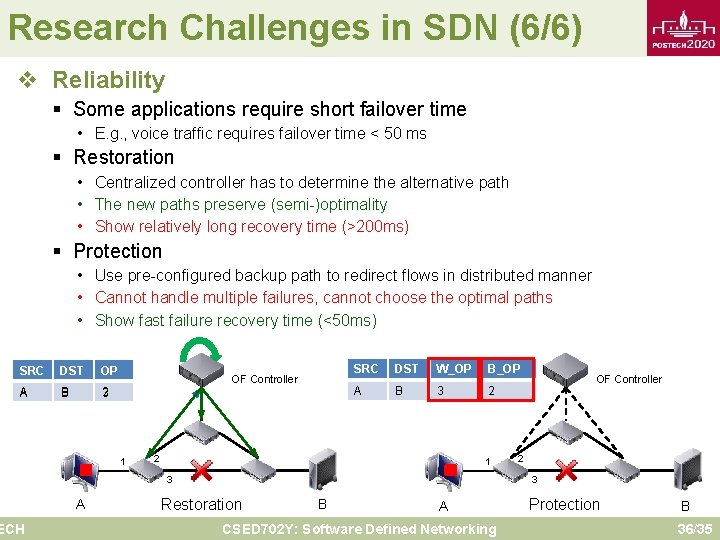

Research Challenges in SDN (6/6)
- Reliability
  - Some applications require short failover times
    - E.g., voice traffic requires a failover time < 50 ms
  - Restoration
    - The centralized controller has to determine an alternative path
    - The new paths preserve (semi-)optimality
    - Relatively long recovery time (> 200 ms)
  - Protection
    - Uses pre-configured backup paths to redirect flows in a distributed manner
    - Cannot handle multiple failures, and cannot choose optimal paths
    - Fast failure recovery (< 50 ms)

[Figure: restoration vs. protection for traffic from SRC A to DST B. In restoration, the OF controller installs a new path after the failure; in protection, each switch holds an entry with a working output port (W_OP) and a backup output port (B_OP).]
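OpenFlow 1.1 and later can express protection with a fast-failover group: the switch executes the first bucket whose watched port is live, so it switches from the working to the backup output port locally, without a controller round trip. The sketch models only that selection rule; the port numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Bucket:
    watch_port: int   # port whose liveness gates this bucket
    output_port: int  # where packets go if this bucket is chosen

def fast_failover(buckets: list, live_ports: set):
    """Return the output port of the first live bucket, or None (packet dropped)."""
    for b in buckets:
        if b.watch_port in live_ports:
            return b.output_port
    return None

GROUP = [Bucket(watch_port=2, output_port=2),  # W_OP: working path
         Bucket(watch_port=3, output_port=3)]  # B_OP: backup path
print(fast_failover(GROUP, {1, 2, 3}), fast_failover(GROUP, {1, 3}))
```

Because the decision is local, recovery is bounded by failure detection time rather than controller latency. If both ports fail, however, this entry cannot recover, which matches the multiple-failure limitation noted above.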

Q&A