Teacher Practices in Scoring Multiple-Choice Items, Interpreting Test Scores & Performing Item Analysis

- Slides: 23

Teacher Practices in Scoring Multiple-Choice Items, Interpreting Test Scores & Performing Item Analysis Cheryl Ng Ling Hui, Hee Jee Mei, Ph.D., Universiti Teknologi Malaysia

Introduction Multiple-choice items are widely used to assess student learning. They feature prominently in public examinations and likewise in teacher-made tests. Research Project – to develop a tool to assist teachers in scoring multiple-choice items, interpreting test scores and performing item analysis. Thus, this preliminary needs analysis study was conducted….

Aim and Purposes of the Study Objectives: Identify teachers’ current practices and the challenges they face in scoring multiple-choice items, interpreting test scores and performing item analysis; and ascertain the prevalence of OMR scanners and item analysis software in Malaysian secondary schools. Aim: Findings from this preliminary study serve to inform the subsequent development of software to aid teachers in these three aspects.

Methodology Research Design: Survey Participants: 130 secondary school teachers serving in public schools across Malaysia. Instrument: Questionnaire with 3 sections: Section A: Demographic Profile – gender, location and type of school, teaching experience Section B: Teacher Practices – scoring OMR answer sheets, interpreting test scores, performing item analysis Section C: OMR Scanner and Item Analysis Software – availability in school, willingness to invest in one etc.

Methodology Procedures: Questionnaires were disseminated to various schools through the help of colleagues and friends. An online version of the questionnaire was created using a free online survey tool and the link was sent to potential respondents. The collected data were analysed using descriptive statistics.

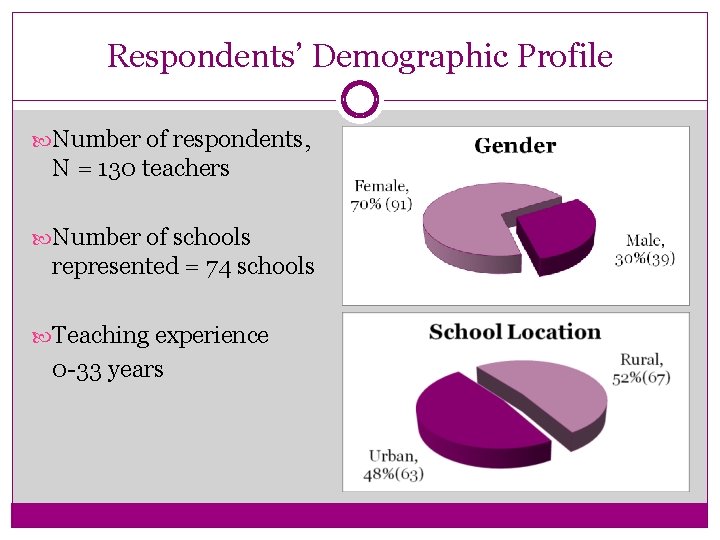

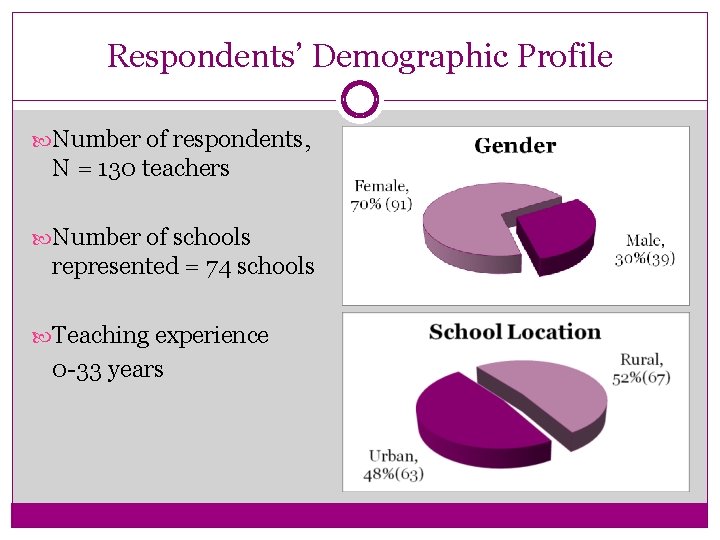

Respondents’ Demographic Profile Number of respondents, N = 130 teachers Number of schools represented = 74 schools Teaching experience: 0-33 years

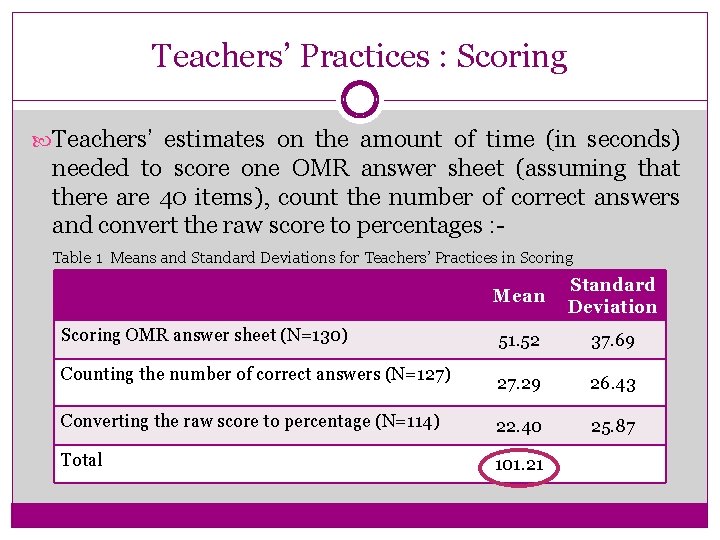

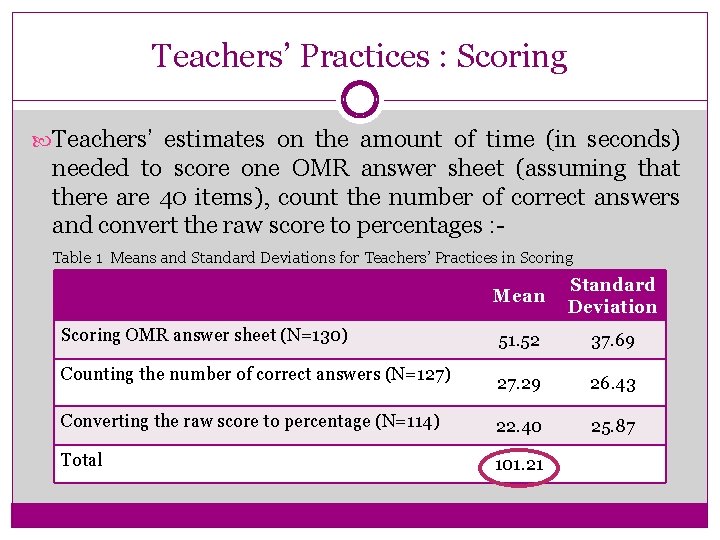

Teachers’ Practices: Scoring Teachers’ estimates of the amount of time (in seconds) needed to score one OMR answer sheet (assuming that there are 40 items), count the number of correct answers and convert the raw score to a percentage:

Table 1 Means and Standard Deviations for Teachers’ Practices in Scoring

| Task | Mean (s) | Standard Deviation (s) |
| --- | --- | --- |
| Scoring OMR answer sheet (N=130) | 51.52 | 37.69 |
| Counting the number of correct answers (N=127) | 27.29 | 26.43 |
| Converting the raw score to percentage (N=114) | 22.40 | 25.87 |
| Total | 101.21 | |
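The three manual steps timed in Table 1 (marking each response, counting the correct answers, converting the raw score to a percentage) can be sketched in a few lines. This is a minimal illustration, not the tool proposed in the study: the function name `score_sheet`, the answer key and the student responses are all hypothetical, and a blank or doubly marked item is assumed to be recorded as `None` and counted as incorrect.

```python
def score_sheet(responses, answer_key):
    """Return (raw_score, percentage) for one answer sheet.

    Assumption: a response of None stands for a blank or doubly
    marked item and is counted as incorrect.
    """
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")
    # Step 1 and 2: mark each response against the key and count the matches.
    raw_score = sum(
        1 for given, correct in zip(responses, answer_key) if given == correct
    )
    # Step 3: convert the raw score to a percentage of the total items.
    percentage = 100.0 * raw_score / len(answer_key)
    return raw_score, percentage


# Hypothetical 40-item key and one student's responses (illustrative data only).
answer_key = list("ABCD" * 10)
responses = list("ABCD" * 9) + ["A", "A", None, "D"]

raw, pct = score_sheet(responses, answer_key)
print(f"Raw score: {raw}/{len(answer_key)} ({pct:.1f}%)")
```

In this made-up example the student misses two of the last four items, so the raw score is 38 out of 40.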

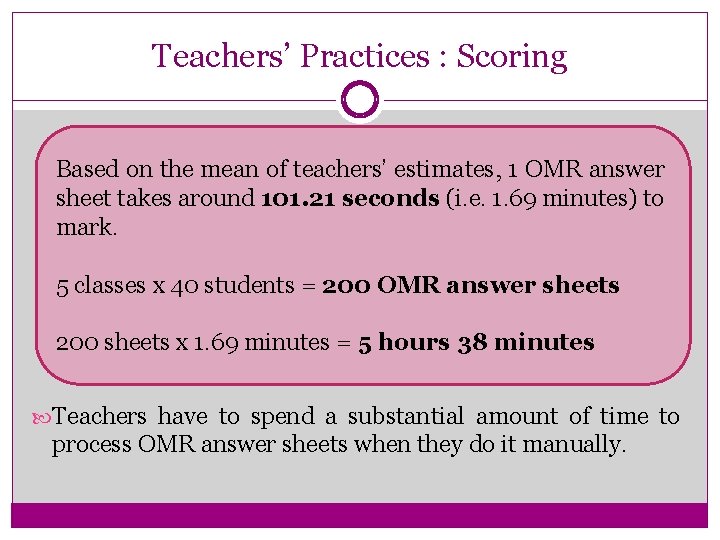

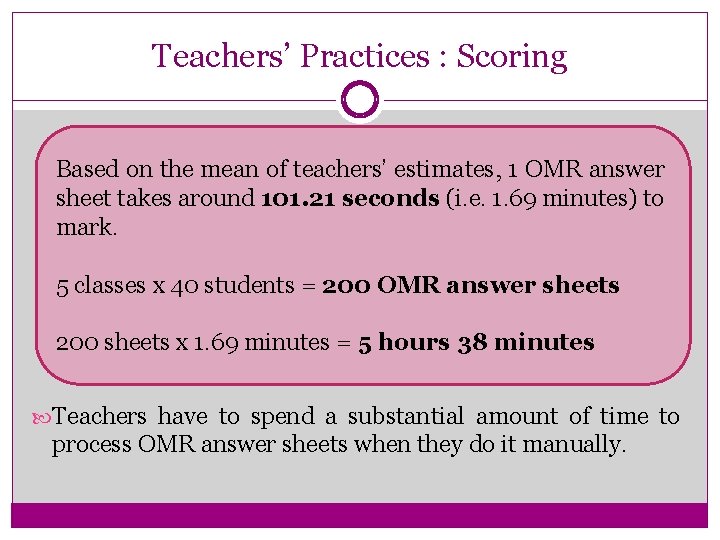

Teachers’ Practices: Scoring Based on the mean of teachers’ estimates, one OMR answer sheet takes around 101.21 seconds (i.e. 1.69 minutes) to mark. 5 classes x 40 students = 200 OMR answer sheets. 200 sheets x 1.69 minutes = 338 minutes = 5 hours 38 minutes. Teachers have to spend a substantial amount of time processing OMR answer sheets when they do it manually.
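The workload estimate on this slide can be reproduced step by step. The sketch below uses only the mean times reported in the study and the slide's own assumption of 5 classes of 40 students; the variable names are illustrative.

```python
# Mean time per sheet (seconds) for each manual step, as reported in Table 1.
mean_seconds = {"scoring": 51.52, "counting": 27.29, "converting": 22.40}

seconds_per_sheet = sum(mean_seconds.values())        # about 101.21 s
minutes_per_sheet = round(seconds_per_sheet / 60, 2)  # 1.69 min, rounded as on the slide

# Workload assumed on the slide: 5 classes of 40 students each.
sheets = 5 * 40
total_minutes = sheets * minutes_per_sheet

hours, minutes = divmod(round(total_minutes), 60)
print(f"{sheets} sheets x {minutes_per_sheet} min = {hours} h {minutes} min")
```

The slide rounds the per-sheet time to 1.69 minutes before multiplying; working from the unrounded 101.21 seconds gives roughly 337 minutes, about one minute less.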

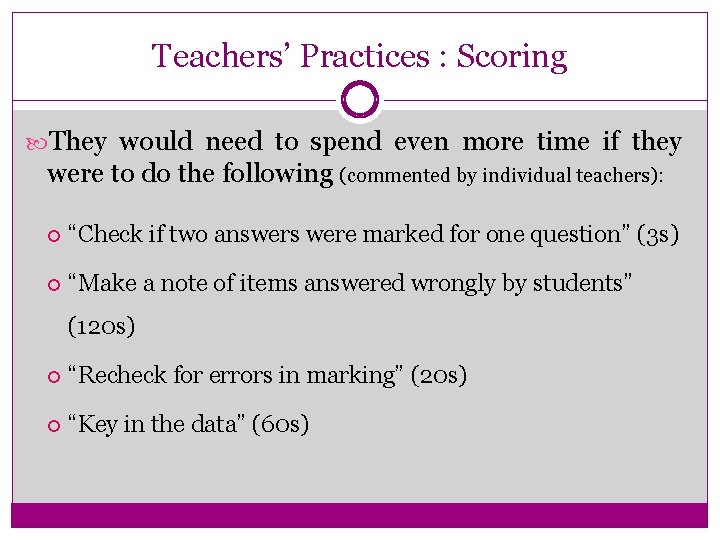

Teachers’ Practices: Scoring They would need to spend even more time if they were to do the following (commented by individual teachers): “Check if two answers were marked for one question” (3 s) “Make a note of items answered wrongly by students” (120 s) “Recheck for errors in marking” (20 s) “Key in the data” (60 s)

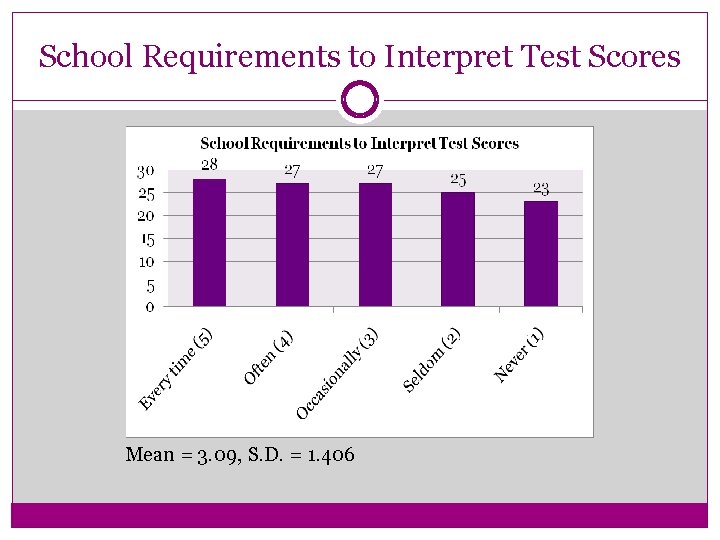

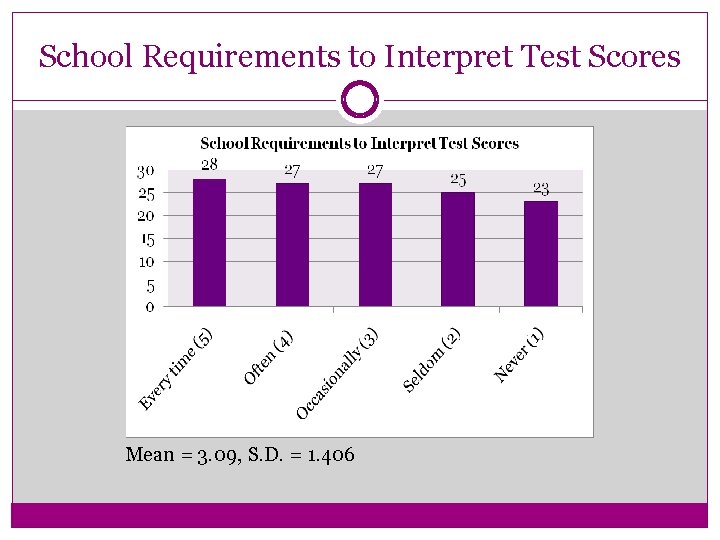

School Requirements to Interpret Test Scores Mean = 3.09, S.D. = 1.406

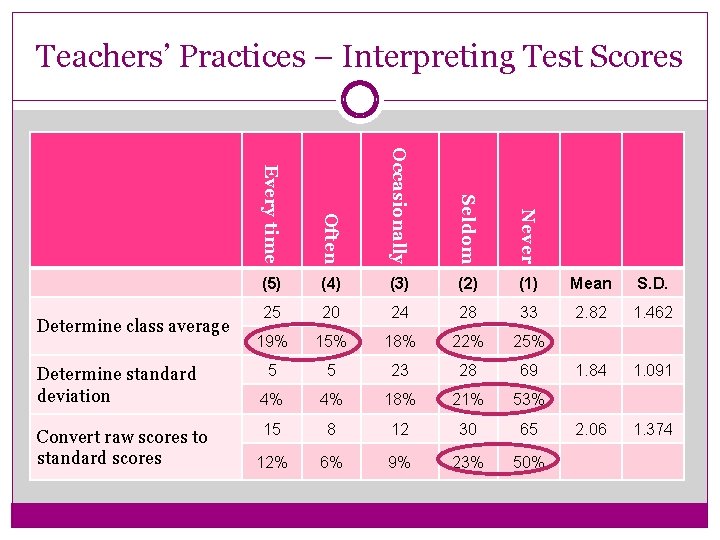

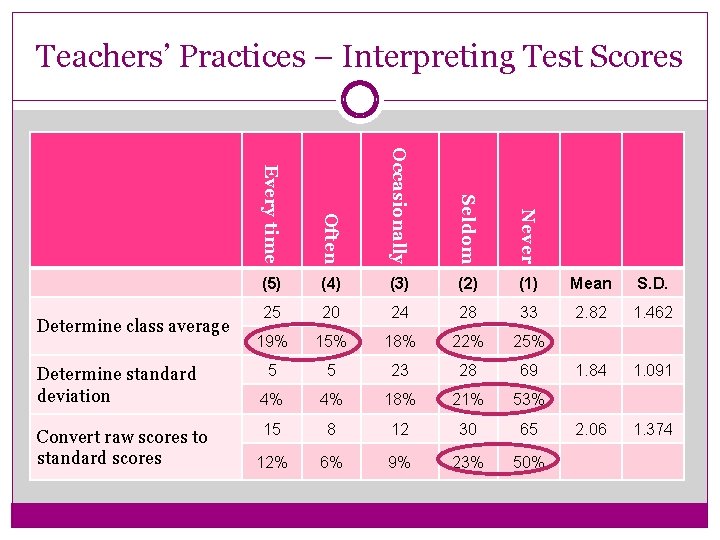

Teachers’ Practices – Interpreting Test Scores

| Practice | Every time (5) | Often (4) | Occasionally (3) | Seldom (2) | Never (1) | Mean | S.D. |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Determine class average | 25 (19%) | 20 (15%) | 24 (18%) | 28 (22%) | 33 (25%) | 2.82 | 1.462 |
| Determine standard deviation | 5 (4%) | 5 (4%) | 23 (18%) | 28 (21%) | 69 (53%) | 1.84 | 1.091 |
| Convert raw scores to standard scores | 15 (12%) | 8 (6%) | 12 (9%) | 30 (23%) | 65 (50%) | 2.06 | 1.374 |
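For readers unfamiliar with the three practices surveyed here, the following sketch shows one way to compute a class average, a standard deviation and standard scores (z-scores) from raw scores. It is an illustration under stated assumptions: the scores are hypothetical, the function name `standard_scores` is made up for this example, and the population standard deviation is used because the class is treated as the whole group of interest (a sample standard deviation would be an equally defensible choice).

```python
from statistics import mean, pstdev


def standard_scores(raw_scores):
    """Return (class_average, standard_deviation, z_scores).

    Assumption: the population standard deviation is used, treating
    the class as the whole group of interest.
    """
    avg = mean(raw_scores)
    sd = pstdev(raw_scores)
    if sd == 0:
        raise ValueError("all scores are identical; z-scores are undefined")
    # A z-score expresses each raw score as a distance from the class
    # average, measured in standard deviations.
    return avg, sd, [(x - avg) / sd for x in raw_scores]


# Hypothetical raw scores out of 40 (illustrative data only).
raw_scores = [20, 24, 28, 32, 36]
avg, sd, z = standard_scores(raw_scores)

print(f"Class average: {avg}")
print(f"Standard deviation: {sd:.2f}")
print("Standard scores:", [round(value, 2) for value in z])
```

A positive standard score means the student scored above the class average, a negative one below it.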

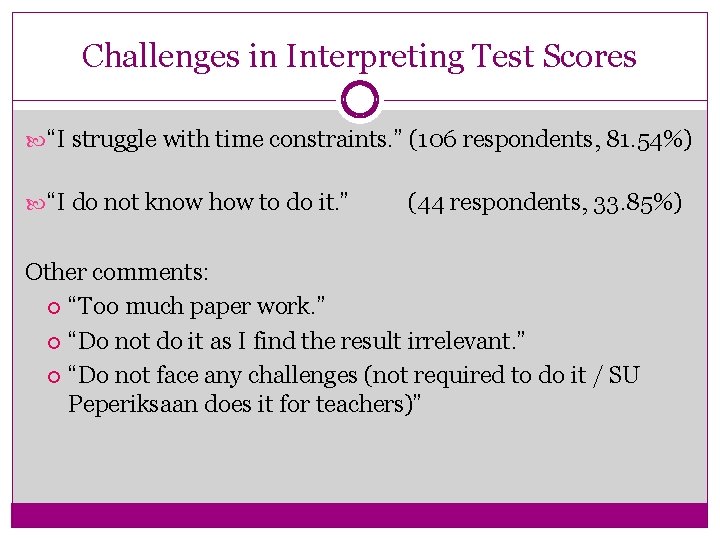

Challenges in Interpreting Test Scores “I struggle with time constraints.” (106 respondents, 81.54%) “I do not know how to do it.” (44 respondents, 33.85%) Other comments: “Too much paper work.” “Do not do it as I find the result irrelevant.” “Do not face any challenges (not required to do it / SU Peperiksaan does it for teachers)”

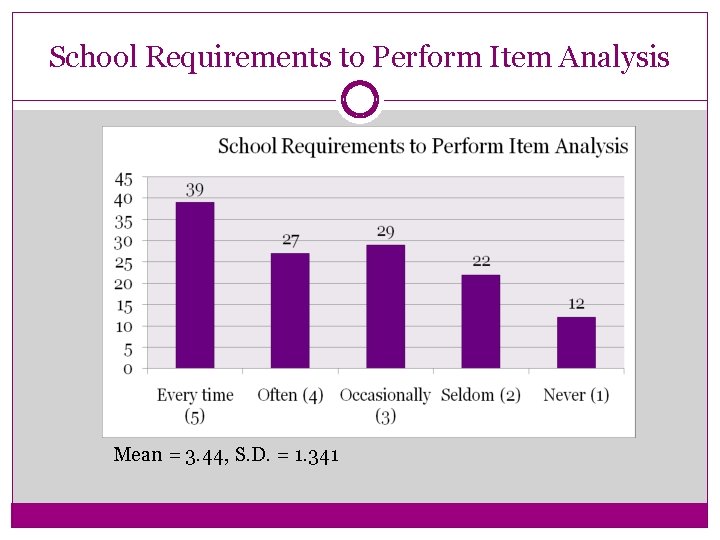

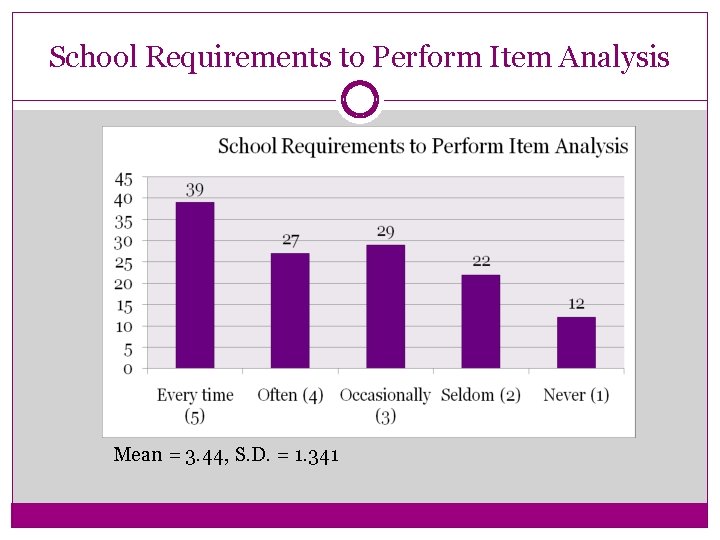

School Requirements to Perform Item Analysis Mean = 3.44, S.D. = 1.341

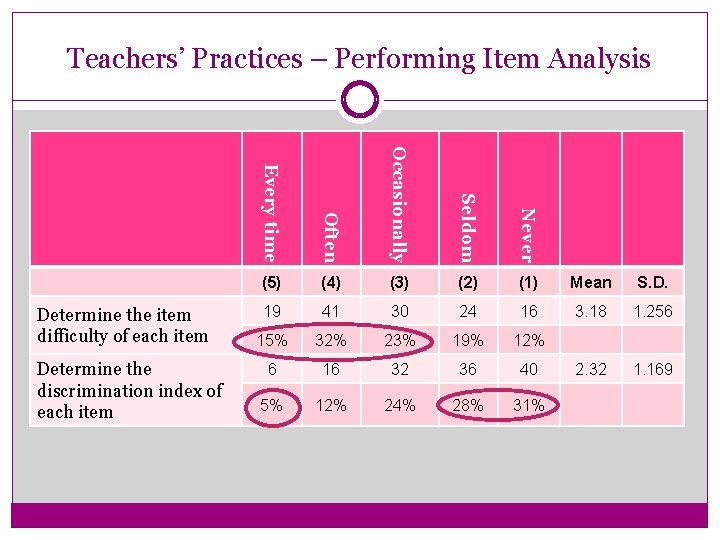

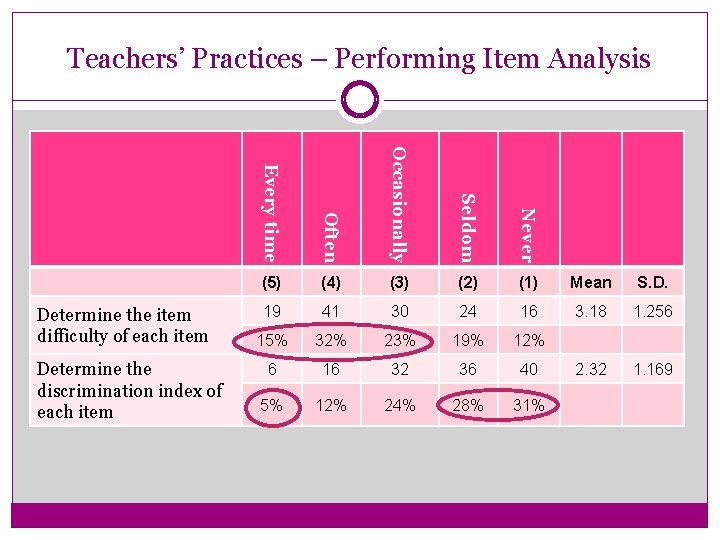

Teachers’ Practices – Performing Item Analysis

| Practice | Every time (5) | Often (4) | Occasionally (3) | Seldom (2) | Never (1) | Mean | S.D. |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Determine the item difficulty of each item | 19 (15%) | 41 (32%) | 30 (23%) | 24 (19%) | 16 (12%) | 3.18 | 1.256 |
| Determine the discrimination index of each item | 6 (5%) | 16 (12%) | 32 (24%) | 36 (28%) | 40 (31%) | 2.32 | 1.169 |
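The two statistics named on this slide can be illustrated with a short sketch. The slides do not define them, so the code assumes the common textbook definitions: item difficulty is the proportion of students who answered the item correctly, and the discrimination index is the proportion correct in an upper-scoring group minus the proportion correct in a lower-scoring group. The function name `item_analysis`, the 27% group size and the response data are assumptions made for this example.

```python
def item_analysis(score_matrix, group_fraction=0.27):
    """Return a list of (difficulty, discrimination) pairs, one per item.

    score_matrix has one row per student and one 0/1 entry per item.
    Assumption: upper and lower groups each hold group_fraction of the
    students, ranked by total score (27% is a common convention).
    """
    n_students = len(score_matrix)
    n_items = len(score_matrix[0])
    group_size = max(1, round(n_students * group_fraction))

    # Rank students by total score, highest first.
    ranked = sorted(score_matrix, key=sum, reverse=True)
    upper, lower = ranked[:group_size], ranked[-group_size:]

    results = []
    for item in range(n_items):
        # Difficulty: proportion of all students answering correctly.
        difficulty = sum(row[item] for row in score_matrix) / n_students
        # Discrimination: upper-group proportion minus lower-group proportion.
        p_upper = sum(row[item] for row in upper) / group_size
        p_lower = sum(row[item] for row in lower) / group_size
        results.append((difficulty, p_upper - p_lower))
    return results


# Hypothetical responses: 6 students x 4 items, 1 = correct (illustrative only).
score_matrix = [
    [1, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
]

results = item_analysis(score_matrix)
for number, (difficulty, discrimination) in enumerate(results, start=1):
    print(f"Item {number}: difficulty={difficulty:.2f}, discrimination={discrimination:.2f}")
```

In this made-up data the fourth item has a discrimination index of 0: the upper and lower groups answer it correctly at the same rate, so it does not separate stronger from weaker students.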

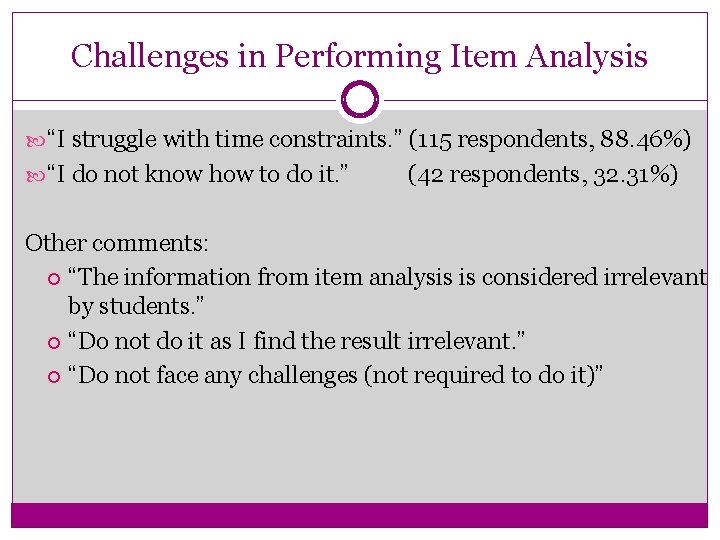

Challenges in Performing Item Analysis “I struggle with time constraints.” (115 respondents, 88.46%) “I do not know how to do it.” (42 respondents, 32.31%) Other comments: “The information from item analysis is considered irrelevant by students.” “Do not do it as I find the result irrelevant.” “Do not face any challenges (not required to do it)”

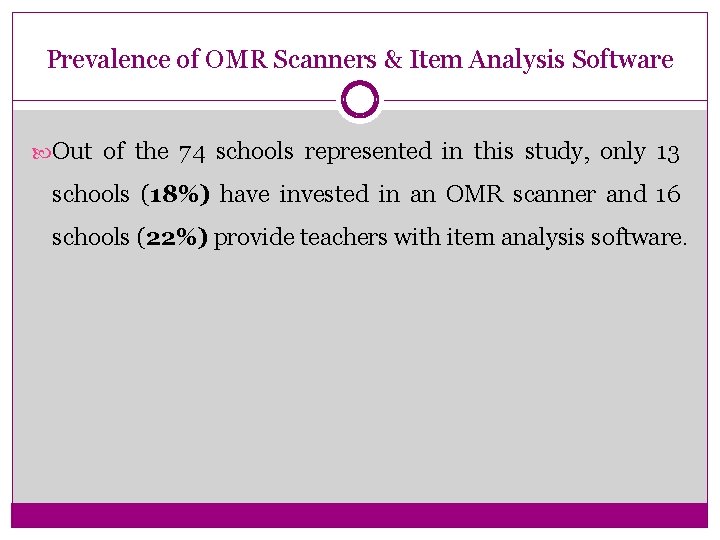

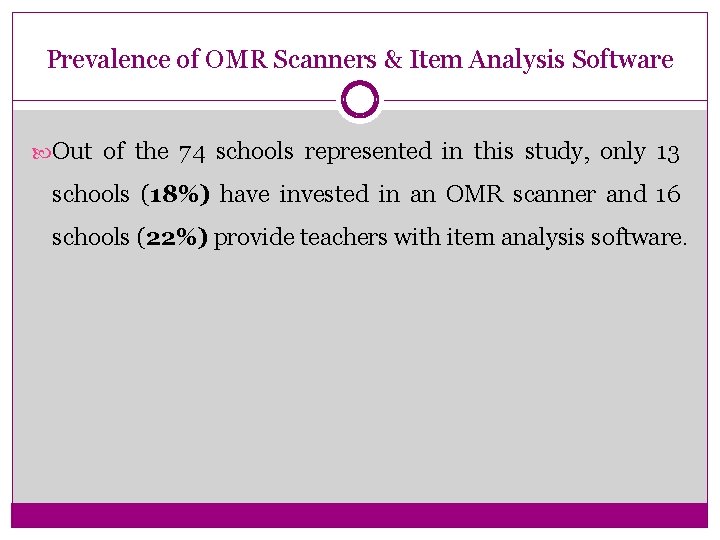

Prevalence of OMR Scanners & Item Analysis Software Out of the 74 schools represented in this study, only 13 schools (18%) have invested in an OMR scanner and 16 schools (22%) provide teachers with item analysis software.

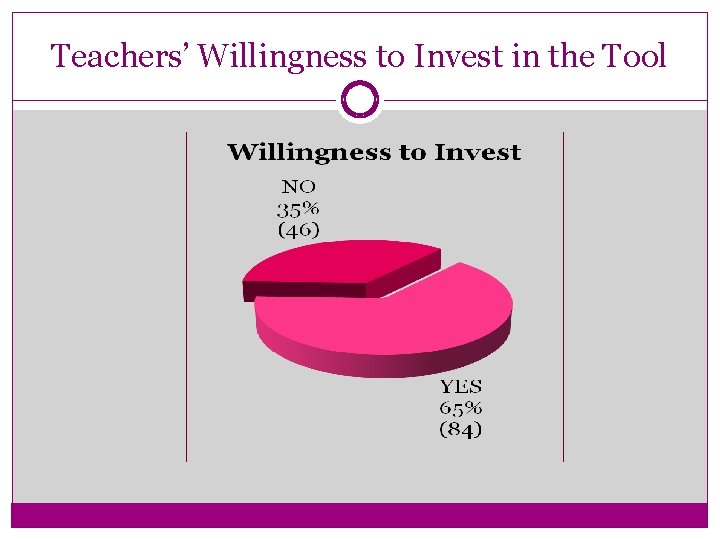

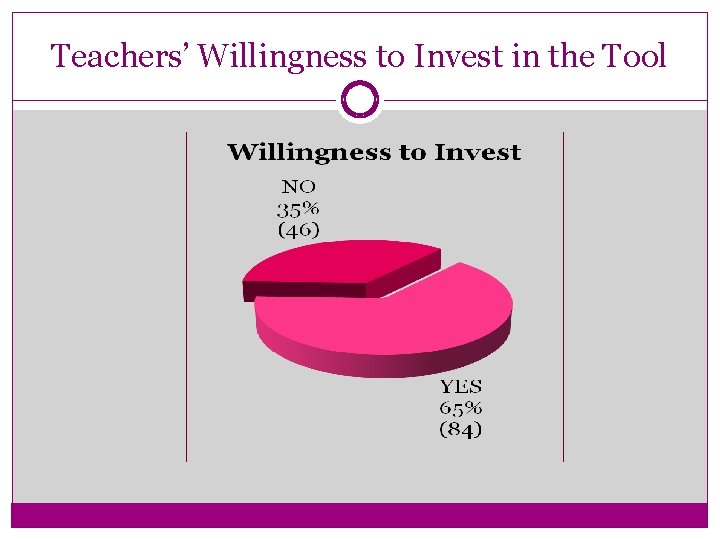

Teachers’ Willingness to Invest in the Tool

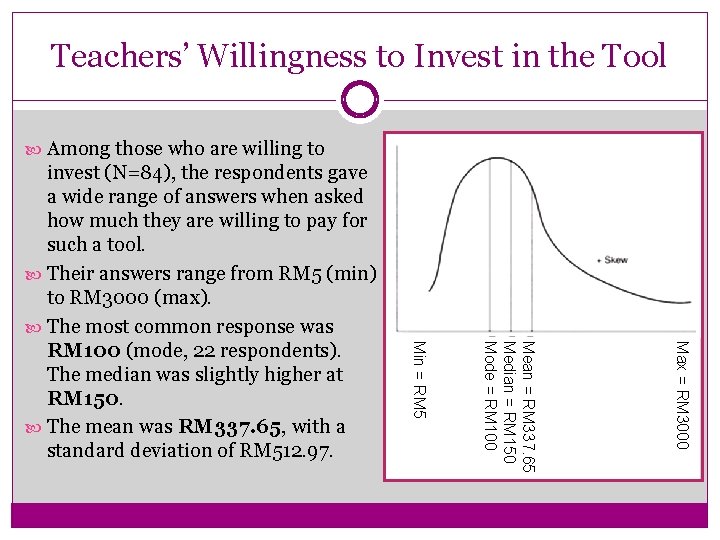

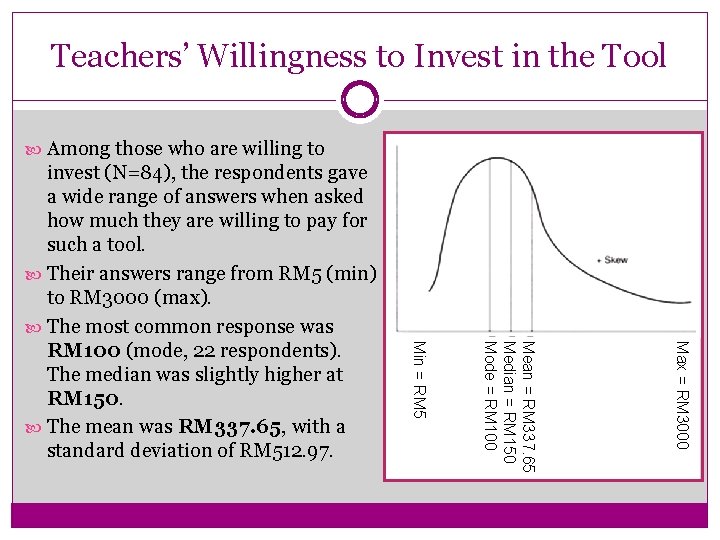

Teachers’ Willingness to Invest in the Tool Min = RM 5, Max = RM 3000, Mean = RM 337.65, Median = RM 150, Mode = RM 100. Among those who are willing to invest (N=84), the respondents gave a wide range of answers when asked how much they are willing to pay for such a tool. Their answers range from RM 5 (min) to RM 3000 (max). The most common response was RM 100 (mode, 22 respondents). The median was slightly higher at RM 150. The mean was RM 337.65, with a standard deviation of RM 512.97.

Discussion Teachers have to spend a substantial amount of time scoring OMR answer sheets, interpreting test scores and performing item analysis if they do it manually. Time constraints are the greatest challenge hindering teachers from interpreting test scores and performing item analysis. Therefore, a tool to aid teachers in scoring OMR answer sheets would greatly reduce teachers’ burden and allow them more time to focus on enhancing test items and improving their teaching practice. However, the tool should be made available to teachers at an affordable price range (RM 100-RM 350).

Discussion Interpreting test scores and performing item analysis are crucial aspects of the assessment process. Interpreting test scores gives teachers insight into their students’ performance in the test. It also helps to highlight areas of weakness that need to be addressed. Information from the item analysis is crucial for improving item quality. From the item analysis results, teachers can decide whether to retain, modify or discard test items. Functional and modified items could be stored for future use (Gronlund and Linn, 1990; Reynolds, Livingston and Willson, 2010).

Discussion Requirements to interpret test scores and perform item analysis vary from school to school. If teachers neglect to interpret test scores and perform item analysis, then the value of the assessment process is greatly reduced. Suggestion: Teachers should be required to interpret test scores and perform item analysis as part of their professional practice. However, they should be released from the burden of clerical work (i.e. manually scoring OMR answer sheets and keying in the data) which could be done effectively with the assistance of an OMR processing and item analysis tool.

Discussion In conclusion, there is a need to develop an affordable and teacher-friendly tool to help teachers to process OMR answer sheets and provide them with information on students’ performance in the test as well as the quality of the items.

~Thank you~ Cheryl Ng Ling Hui Universiti Teknologi Malaysia cherlinghui@gmail.com http://intoherworldofteaching.wordpress.com