TCP loss sensitivity analysis ADAM KRAJEWSKI, IT-CS-CE, CERN

TCP loss sensitivity analysis ADAM KRAJEWSKI, IT-CS-CE, CERN

The original presentation has been modified for the 2nd ATCF 2016 by EDOARDO MARTELI, CERN

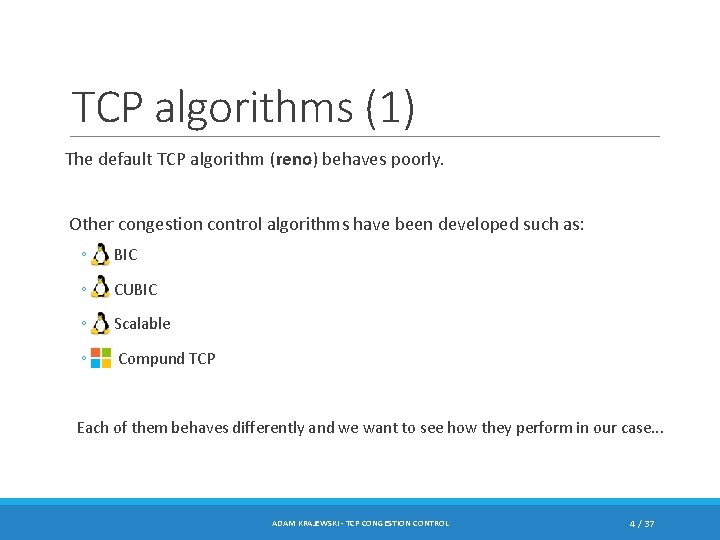

Original problem: 4 G out of... 10 G between Meyrin and Wigner.

ADAM KRAJEWSKI - TCP CONGESTION CONTROL

TCP algorithms (1)

The default TCP algorithm (reno) behaves poorly. Other congestion control algorithms have been developed, such as:
◦ BIC
◦ CUBIC
◦ Scalable
◦ Compound TCP

Each of them behaves differently, and we want to see how they perform in our case.

Testbed

Two servers connected back-to-back. How does the performance of each congestion control algorithm change with packet loss and delay?

Tested with iperf3. Packet loss and delay emulated using NetEm in Linux:
◦ tc qdisc add dev eth2 root netem loss 0.1 delay 12.5ms
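The NetEm setup above can be scripted for a sweep over loss rates. The following is a minimal sketch, not the authors' tooling: it only builds the `tc` command lines and executes nothing. The helper name `netem_command` is hypothetical, `tc qdisc replace` is used instead of `add` so that the same command works on repeated runs, and the list of loss rates is an assumption taken from the x-axis range of the plots (0.0001 % to 10 %).

```python
import shlex


def netem_command(dev, loss_percent, delay_ms):
    """Build (but do not run) a tc/netem command as an argument list.

    `replace` creates the root qdisc if it is missing and updates it
    otherwise, so the same call can be reused for every point of a sweep.
    """
    return [
        "tc", "qdisc", "replace", "dev", dev, "root", "netem",
        "loss", f"{loss_percent:g}%",
        "delay", f"{delay_ms:g}ms",
    ]


# Assumed sweep: one value per decade, matching the plots' x-axis range.
LOSS_RATES_PERCENT = [0.0001, 0.001, 0.01, 0.1, 1, 10]

if __name__ == "__main__":
    for loss in LOSS_RATES_PERCENT:
        # Only print the command; applying it requires root privileges.
        print(shlex.join(netem_command("eth2", loss, 12.5)))
```

Each printed line can be run as root before the corresponding iperf3 measurement.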

![0 ms delay, default settings](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-6.jpg)

0 ms delay, default settings. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10) for cubic, reno, bic, and scalable.

![25 ms delay, default settings](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-7.jpg)

25 ms delay, default settings. PROBLEM: only 1 G. Plot: bandwidth [Gbps] (0 to 1.2) vs packet loss rate [%] (0.0001 to 10) for cubic, reno, bic, and scalable.

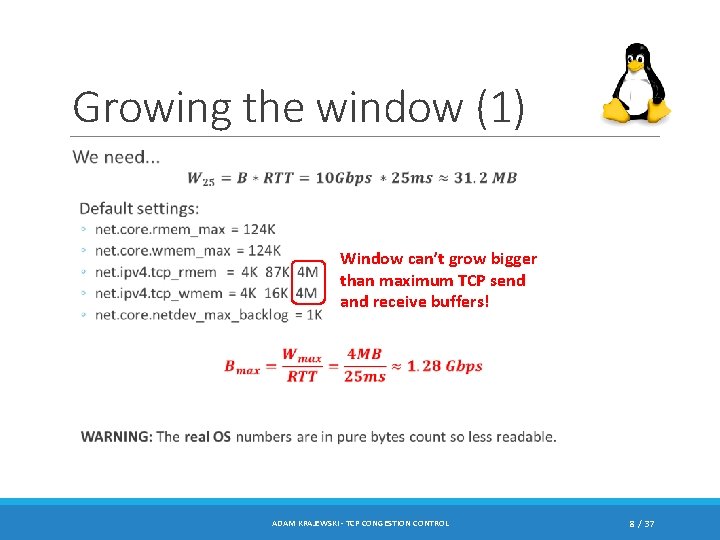

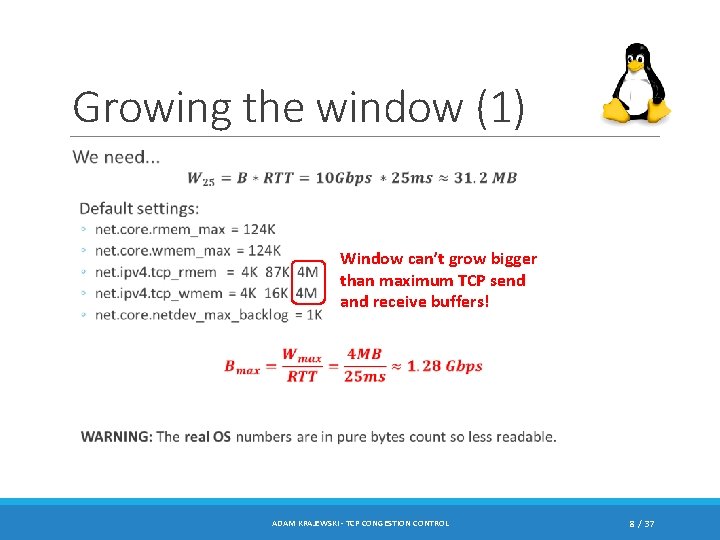

Growing the window (1)

The window can't grow bigger than the maximum TCP send and receive buffers!

Growing the window (2)

We tune TCP settings on both server and client. Tuned settings:
◦ net.core.rmem_max = 67 M
◦ net.core.wmem_max = 67 M
◦ net.ipv4.tcp_rmem = 4 K 87 K 67 M
◦ net.ipv4.tcp_wmem = 4 K 65 K 67 M
◦ net.core.netdev_max_backlog = 30 K

Now it's fine.

WARNING: TCP buffer size doesn't translate directly to window size, because TCP uses some portion of its buffers to allocate operational data structures. So the effective window size will be smaller than the maximum buffer size set.

Helpful link: https://fasterdata.es.net/host-tuning/linux/
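The buffer sizes above can be checked against the bandwidth-delay product (BDP): to keep a path full, the window must hold one round trip's worth of data. Below is a minimal sketch of that arithmetic, assuming a 10 Gbps link and a 25 ms RTT as in the test setup, and reading "67 M" as 67,108,864 bytes (an assumption; the slide gives only the rounded value). The function names are illustrative.

```python
def bdp_bytes(bandwidth_bps, rtt_ms):
    """Bytes in flight needed to fill the path for one round trip."""
    return bandwidth_bps * rtt_ms / 1000 / 8


def max_throughput_bps(window_bytes, rtt_ms):
    """Upper bound on throughput when at most one window is sent per RTT."""
    return window_bytes * 8 * 1000 / rtt_ms


LINK_BPS = 10_000_000_000      # 10 Gbps
RTT_MS = 25
TUNED_MAX_BUFFER = 67_108_864  # assumed exact value behind "67 M"

needed = bdp_bytes(LINK_BPS, RTT_MS)
ceiling = max_throughput_bps(TUNED_MAX_BUFFER, RTT_MS)
print(f"window needed for 10 Gbps at 25 ms: {needed / 1e6:.2f} MB")
print(f"ceiling with a 67 M buffer: {ceiling / 1e9:.1f} Gbps")
```

Under these assumptions the required window is 31.25 MB, well below the tuned maximum, which leaves headroom for the buffer overhead mentioned in the warning. By the same arithmetic, a ceiling near 1 Gbps at 25 ms corresponds to an effective window of roughly 3 MB.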

![25 ms delay, tuned settings](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-10.jpg)

25 ms delay, tuned settings. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10) for cubic, reno, scalable, and bic.
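For a rough feel of why throughput falls with loss once the buffers no longer limit the window, the well-known Mathis et al. approximation for Reno-style TCP can be evaluated. This is a sketch under stated assumptions, not a model used in the slides: the MSS of 1460 bytes is assumed, the formula applies only to Reno-like additive increase, and measured values in the plot may differ (for example because of jumbo frames or offloads).

```python
import math


def mathis_throughput_bps(mss_bytes, rtt_s, loss_probability):
    """Approximate Reno-style throughput bound: (MSS / RTT) * C / sqrt(p)."""
    c = math.sqrt(3 / 2)  # constant from the Mathis et al. model
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss_probability)


if __name__ == "__main__":
    for loss_percent in [0.0001, 0.001, 0.01, 0.1, 1, 10]:
        p = loss_percent / 100  # the plots use percent, the model a probability
        bps = mathis_throughput_bps(1460, 0.025, p)
        print(f"loss {loss_percent:g}%: about {bps / 1e6:.1f} Mbps")
```

The inverse square-root dependence means that a hundredfold increase in loss rate cuts the bound by a factor of ten.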

![0 ms delay, tuned settings](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-11.jpg)

0 ms delay, tuned settings. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10). Series: cubic, DEFAULT CUBIC, scalable, reno, bic.

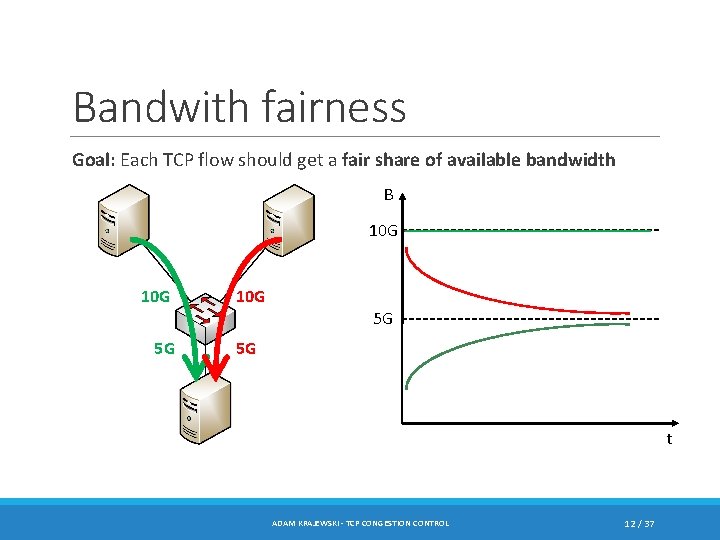

Bandwidth fairness

Goal: Each TCP flow should get a fair share of available bandwidth.

Figure: bandwidth B over time t, with per-flow shares of 10 G and 5 G.
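The fairness goal can be made concrete with a small calculation. The sketch below computes the equal share per flow and, as one common way to score measured rates, Jain's fairness index. The index is not mentioned in the slides and is used here only as an illustration; both function names are hypothetical.

```python
def fair_share(capacity_gbps, n_flows):
    """Equal share of the link capacity for each of n_flows flows."""
    return capacity_gbps / n_flows


def jain_index(rates):
    """Jain's fairness index: 1.0 for equal rates, 1/n if one flow takes all."""
    total = sum(rates)
    squares = sum(r * r for r in rates)
    if squares == 0:
        raise ValueError("at least one rate must be non-zero")
    return total * total / (len(rates) * squares)


if __name__ == "__main__":
    print(fair_share(10, 2))     # equal share of a 10 G link for two flows
    print(jain_index([5, 5]))    # perfectly fair split
    print(jain_index([10, 0]))   # one flow starves the other
```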

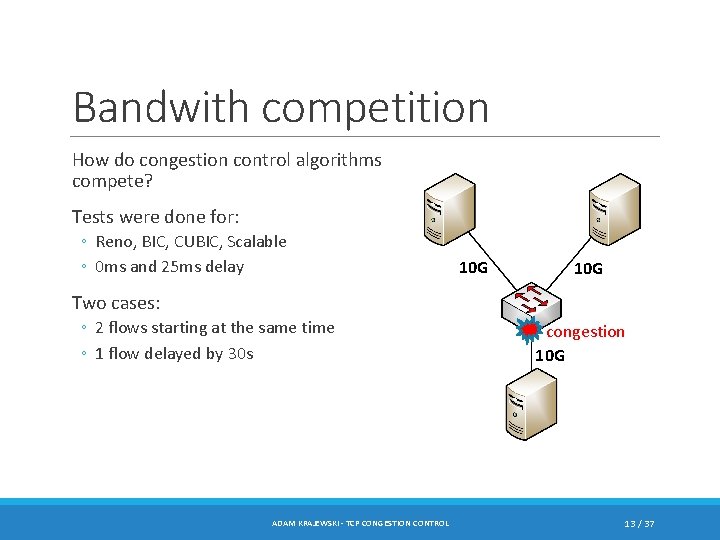

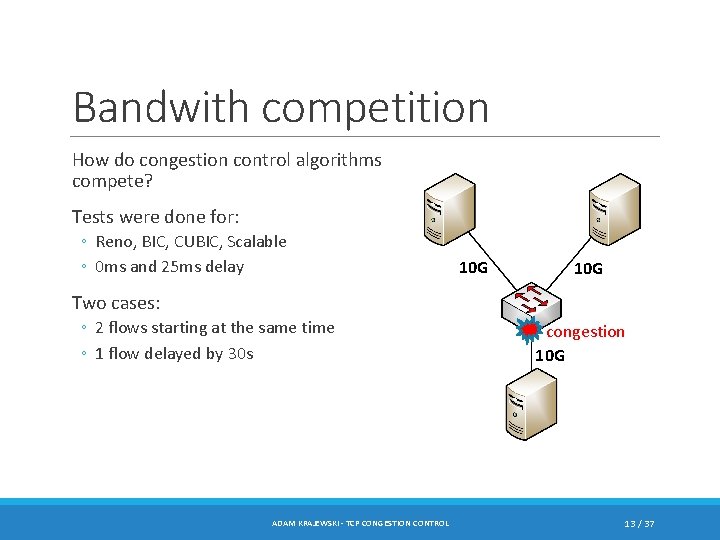

Bandwidth competition

How do congestion control algorithms compete? Tests were done for:
◦ Reno, BIC, CUBIC, Scalable
◦ 0 ms and 25 ms delay

Two cases:
◦ 2 flows starting at the same time
◦ 1 flow delayed by 30 s

Diagram: 10 G links with a congestion point.

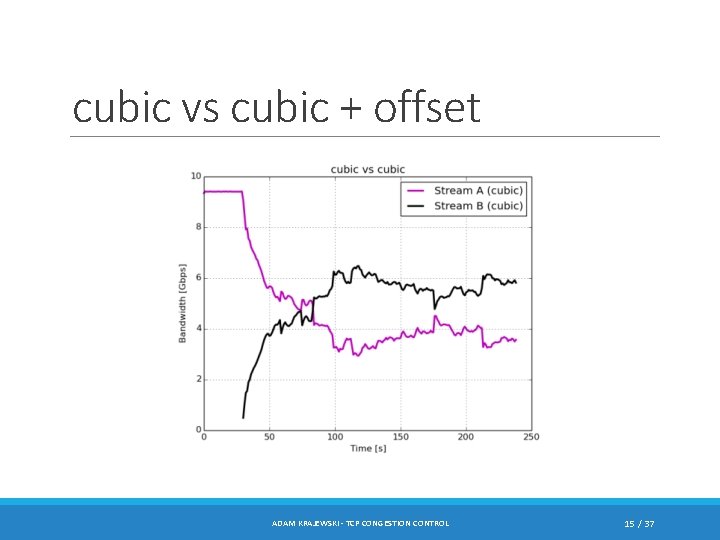

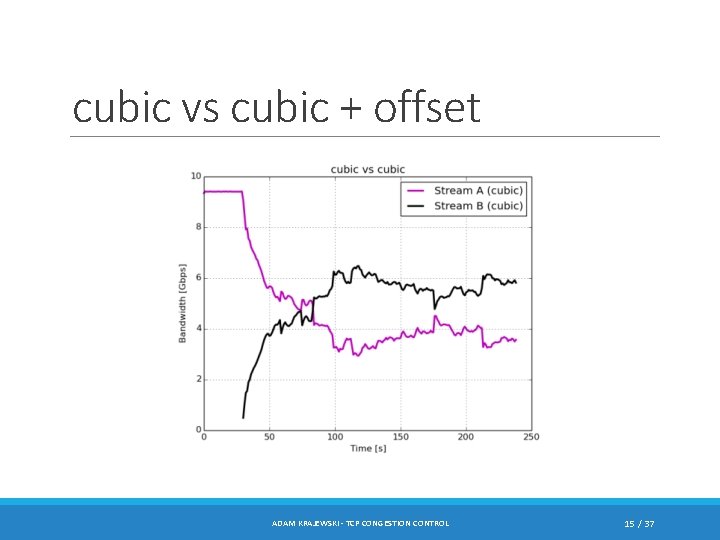

cubic vs cubic + offset

![cubic vs bic, congestion control, 0 ms](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-15.jpg)

cubic vs bic, congestion control, 0 ms. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10) for the two competing flows.

![cubic vs scalable, congestion control, 0 ms](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-16.jpg)

cubic vs scalable, congestion control, 0 ms. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10) for cubic and scalable.

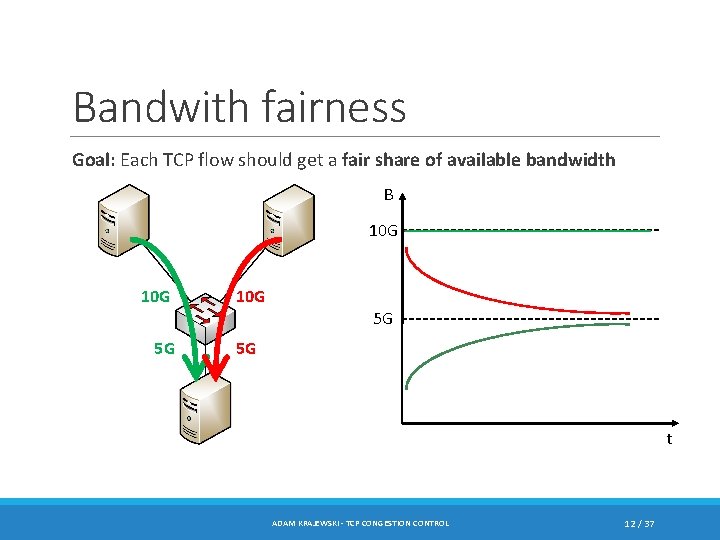

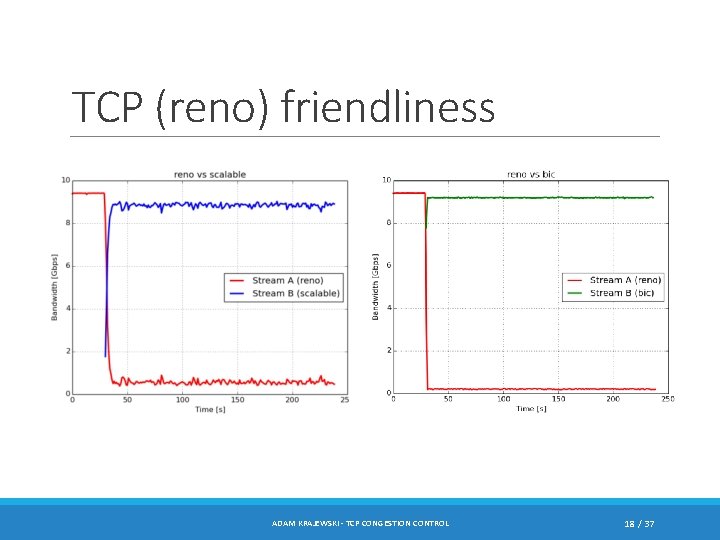

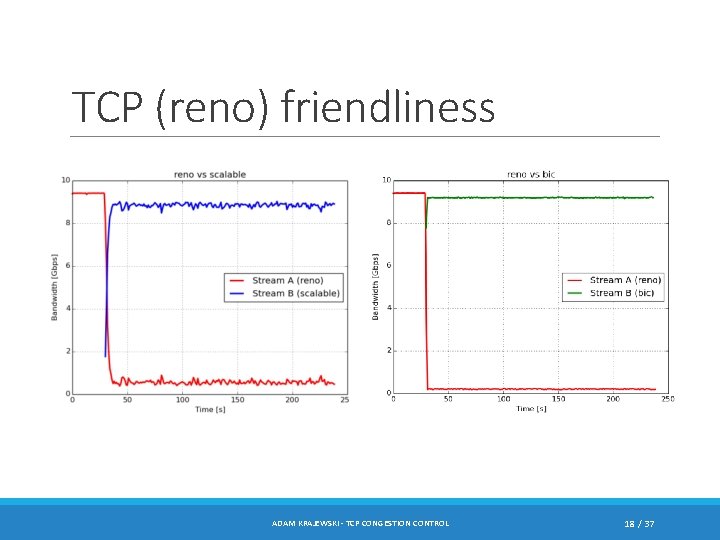

TCP (reno) friendliness

![cubic vs reno, congestion control, 25 ms delay](https://slidetodoc.com/presentation_image_h2/4e6e3ed3225bd84772e2d510736c09d8/image-18.jpg)

cubic vs reno, congestion control, 25 ms delay. Plot: bandwidth [Gbps] (0 to 10) vs packet loss rate [%] (0.0001 to 10) for cubic and reno.

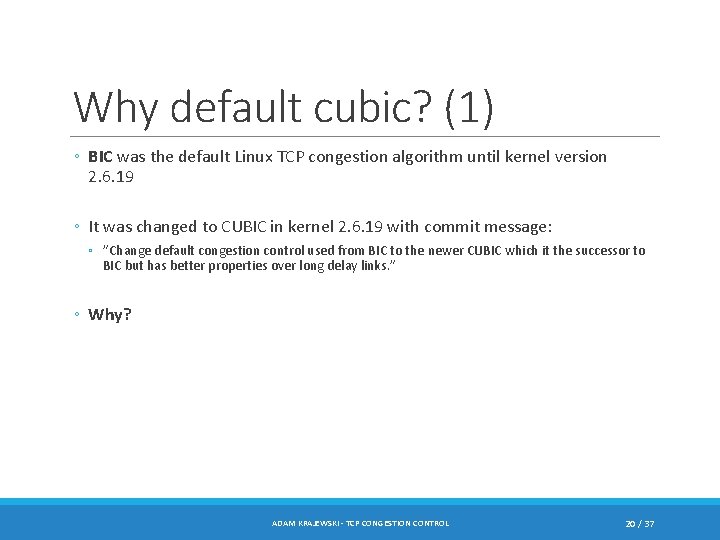

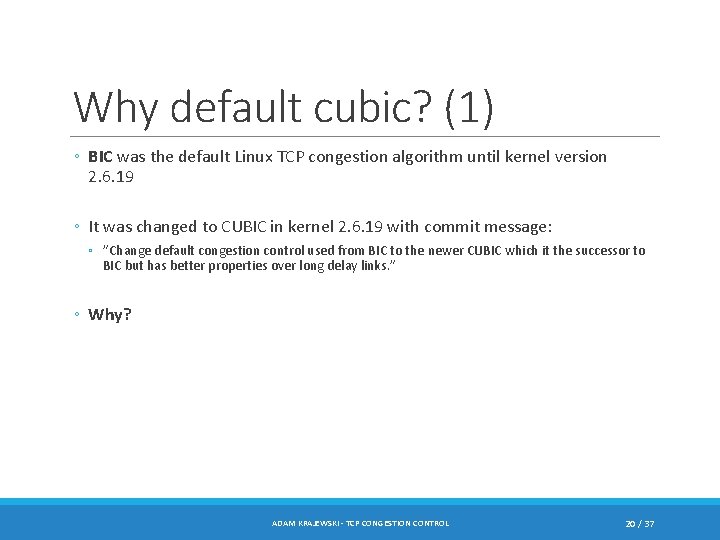

Why default cubic? (1)

◦ BIC was the default Linux TCP congestion algorithm until kernel version 2.6.19
◦ It was changed to CUBIC in kernel 2.6.19 with commit message:
◦ "Change default congestion control used from BIC to the newer CUBIC which it the successor to BIC but has better properties over long delay links."
◦ Why?

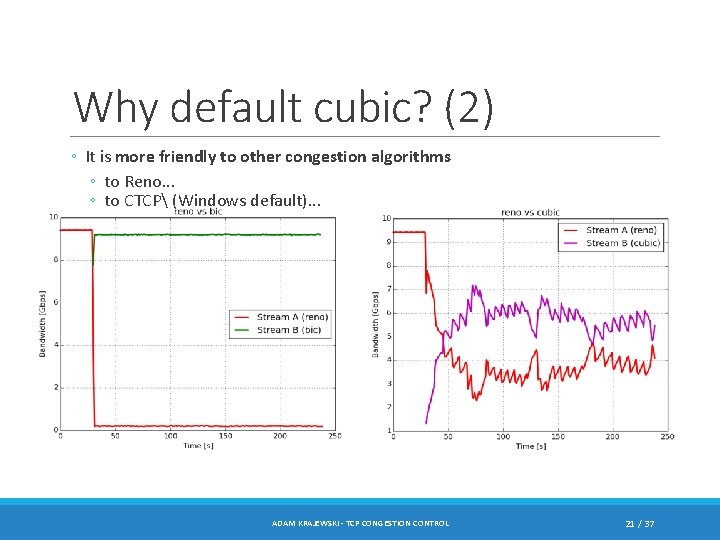

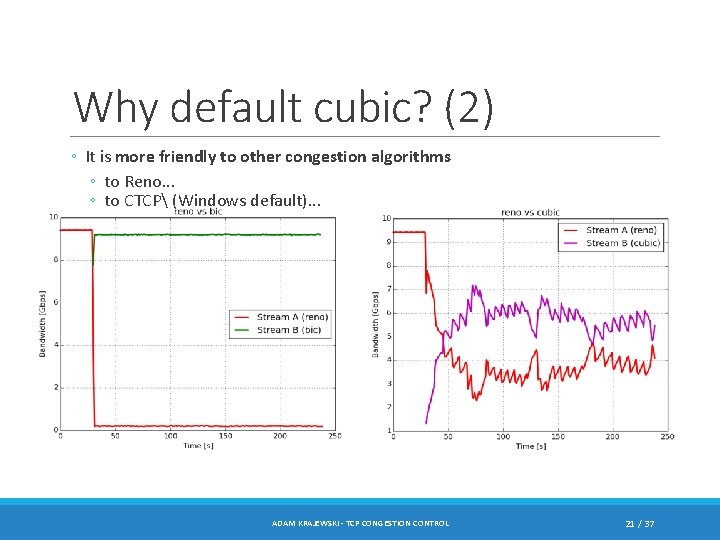

Why default cubic? (2)

◦ It is more friendly to other congestion algorithms
◦ to Reno...
◦ to CTCP (Windows default)...

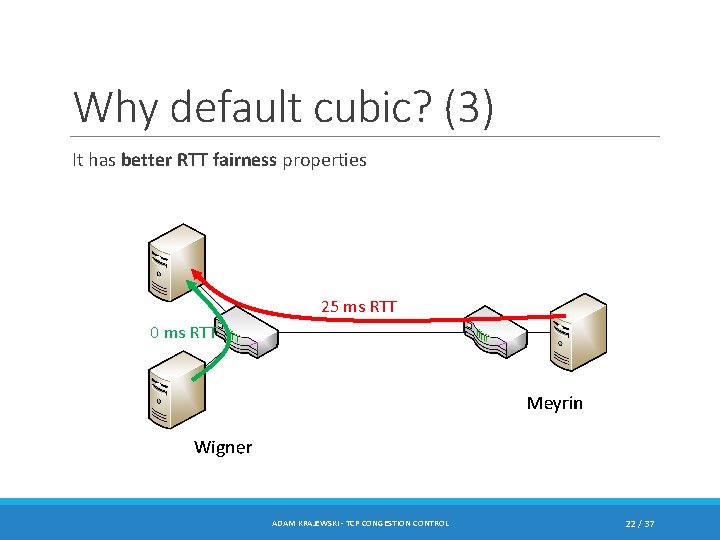

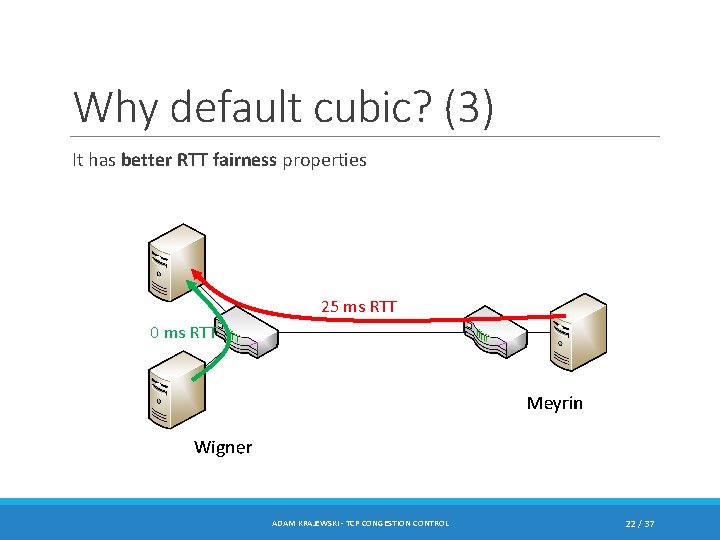

Why default cubic? (3)

It has better RTT fairness properties.

Figure labels: 25 ms RTT, 0 ms RTT.
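One way to see where the RTT fairness comes from: in CUBIC the congestion window is a function of the time elapsed since the last loss event, not of the number of round trips. The sketch below implements the window function as specified in RFC 8312, with its constants C = 0.4 and beta = 0.7. It is not taken from the slides, and real implementations add further details (such as a Reno-friendly region) that are omitted here.

```python
C = 0.4      # scaling constant from RFC 8312
BETA = 0.7   # multiplicative decrease factor from RFC 8312


def cubic_k(w_max):
    """Time in seconds the window needs to climb back to w_max after a loss."""
    return (w_max * (1 - BETA) / C) ** (1 / 3)


def cubic_window(t, w_max):
    """Congestion window (in segments) t seconds after the last loss event."""
    return C * (t - cubic_k(w_max)) ** 3 + w_max


if __name__ == "__main__":
    w_max = 1000  # window in segments at the time of the loss (example value)
    for t in [0, 2, 4, 6, 8, 10]:
        print(f"t={t:>2} s  cwnd={cubic_window(t, w_max):.0f} segments")
```

Neither function takes an RTT argument: two flows with the same `w_max` regrow their windows at the same pace in wall-clock time, whereas Reno grows by roughly one segment per round trip and therefore favours the flow with the shorter RTT.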

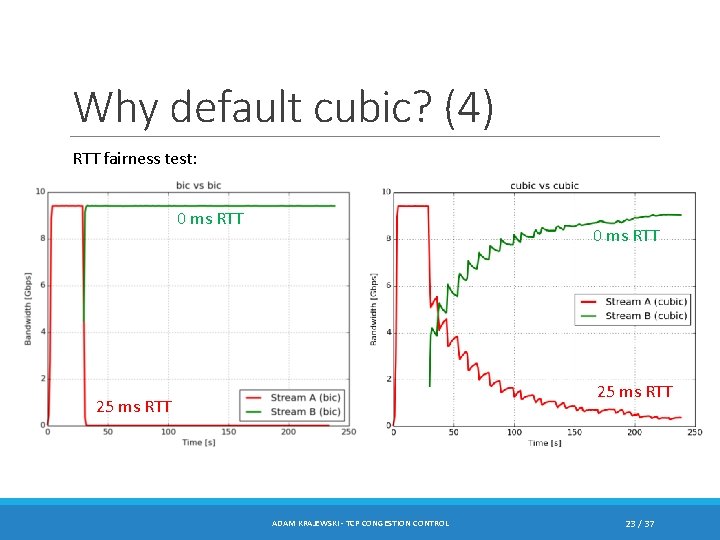

Why default cubic? (4)

RTT fairness test. Figure labels: 0 ms RTT, 25 ms RTT.

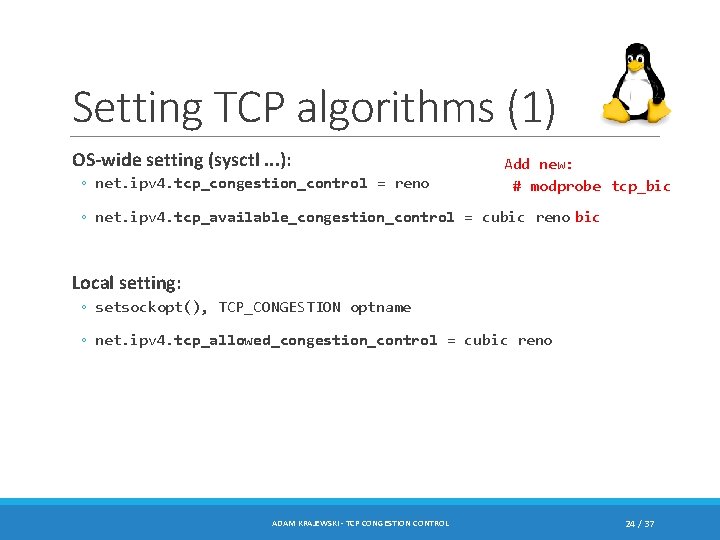

Setting TCP algorithms (1)

OS-wide setting (sysctl ...):
◦ net.ipv4.tcp_congestion_control = reno
◦ Add new: # modprobe tcp_bic
◦ net.ipv4.tcp_available_congestion_control = cubic reno bic

Local setting:
◦ setsockopt(), TCP_CONGESTION optname
◦ net.ipv4.tcp_allowed_congestion_control = cubic reno
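The local (per-socket) setting can be sketched as follows. This is a minimal Linux-only illustration, assuming a Python build that exposes `socket.TCP_CONGESTION`; the helper names are hypothetical, and the sysctl values are read through `/proc/sys` rather than the `sysctl` tool.

```python
import socket


def parse_algorithms(sysctl_value):
    """Split a sysctl value such as 'cubic reno bic' into a list of names."""
    return sysctl_value.split()


def read_sysctl(name):
    """Read a sysctl through /proc/sys (Linux only)."""
    path = "/proc/sys/" + name.replace(".", "/")
    with open(path) as f:
        return f.read().strip()


def set_congestion_control(sock, algorithm):
    """Select the congestion control algorithm for one socket (Linux only)."""
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, algorithm.encode())


def get_congestion_control(sock):
    """Return the name of the algorithm currently used by the socket."""
    raw = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, 16)
    return raw.split(b"\x00", 1)[0].decode()


if __name__ == "__main__":
    try:
        allowed = parse_algorithms(
            read_sysctl("net.ipv4.tcp_allowed_congestion_control"))
        print("allowed:", allowed)
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            set_congestion_control(s, "reno")
            print("socket uses:", get_congestion_control(s))
    except (OSError, AttributeError) as exc:
        # Non-Linux hosts or restricted sandboxes may not support this.
        print("per-socket congestion control not available:", exc)
```

An unprivileged process can only select algorithms listed in net.ipv4.tcp_allowed_congestion_control; other names make `setsockopt()` fail with an error.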

Setting TCP algorithms (2)

OS-wide settings:
◦ C:\> netsh interface tcp show global
◦ Add-On Congestion Control Provider: ctcp

Enabled by default in Windows Server 2008 and newer. Needs to be enabled manually on client systems:
◦ Windows 7: C:\> netsh interface tcp set global congestionprovider=ctcp
◦ Windows 8/8.1 [PowerShell]: Set-NetTCPSetting -CongestionProvider ctcp
