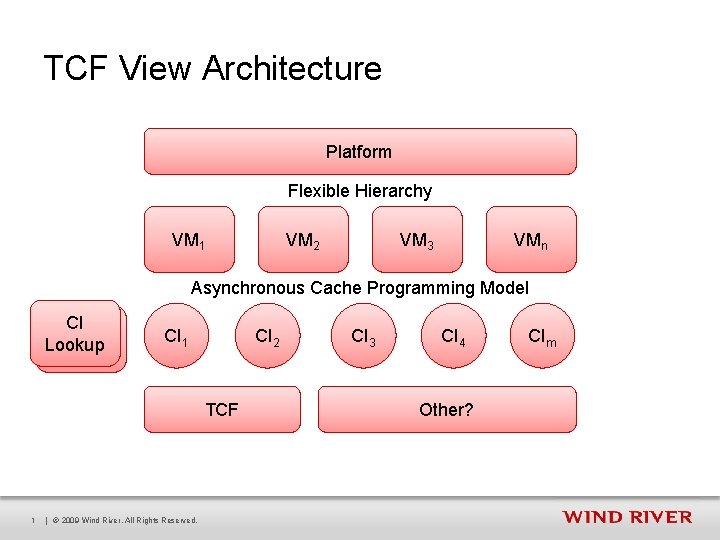

TCF View Architecture

Figure: architecture diagram. Recoverable labels: Platform Flexible Hierarchy; View Models VM 1, VM 2, VM 3 through VMn; Asynchronous Cache Programming Model; CI Lookup; Cache Instances CI 1, CI 2, CI 3, CI 4 through CIm; TCF; Other?

1 | © 2009 Wind River. All Rights Reserved.

View Model Layer
§ The purpose of this layer is to provide the information required by views and the flexible hierarchy
  – This may involve data from one or more Cache Instances (CIs)
§ In simplified terms, a view model
  – Collects information from the appropriate cache instances and formats it as needed for the flexible hierarchy
  – Listens for changes from CIs to generate delta events
§ More details to be discussed
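A minimal Python sketch of the view model role described above, assuming a simplified cache instance that notifies persistent listeners whenever it changes. `CacheInstance`, `add_listener`, `ThreadViewModel`, and the `("CONTENT", label)` delta tuple are hypothetical names chosen for illustration, not the platform's API. The view model formats data from two CIs into one label and turns CI changes into delta events.

```python
from typing import Callable, List, Optional, Tuple

Delta = Tuple[str, Optional[str]]  # hypothetical delta event: (kind, new label)


class CacheInstance:
    """Simplified CI (hypothetical): holds data and notifies listeners on change."""

    def __init__(self) -> None:
        self.valid = False
        self.data: Optional[str] = None
        self._listeners: List[Callable[[], None]] = []

    def add_listener(self, fn: Callable[[], None]) -> None:
        self._listeners.append(fn)

    def set(self, data: str) -> None:
        self.valid, self.data = True, data
        self._fire()

    def reset(self) -> None:
        self.valid, self.data = False, None
        self._fire()

    def _fire(self) -> None:
        for fn in list(self._listeners):
            fn()


class ThreadViewModel:
    """Hypothetical view model for one thread node: name CI + state CI -> label."""

    def __init__(self, name_ci: CacheInstance, state_ci: CacheInstance,
                 post_delta: Callable[[Delta], None]) -> None:
        self.name_ci, self.state_ci = name_ci, state_ci
        self.post_delta = post_delta          # callback into the flexible hierarchy
        self._last_label: Optional[str] = None
        name_ci.add_listener(self._on_ci_change)
        state_ci.add_listener(self._on_ci_change)

    def label(self) -> Optional[str]:
        """Format data from both CIs; None means the data is not available yet."""
        if not (self.name_ci.valid and self.state_ci.valid):
            return None
        return f"{self.name_ci.data} ({self.state_ci.data})"

    def _on_ci_change(self) -> None:
        # Emit a delta only when the formatted label actually changed.
        new_label = self.label()
        if new_label != self._last_label:
            self._last_label = new_label
            self.post_delta(("CONTENT", new_label))


deltas: List[Delta] = []
name, state = CacheInstance(), CacheInstance()
vm = ThreadViewModel(name, state, deltas.append)
name.set("main")          # state still invalid: label stays None, no delta
state.set("Suspended")    # first complete label -> delta
state.set("Running")      # state change -> new delta
```

Comparing against the last posted label is a choice made for this sketch: a CI change that does not alter what the view displays produces no delta.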

Cache Instance Abstraction
§ Same as, or similar to, Eugene’s TCF prototype
§ APIs
  – validate() – returns true if valid; otherwise requests the data and returns false
  – getData() – retrieves the data (callable only if valid)
  – getError() – retrieves the error (callable only if valid)
  – wait() – registers a callback
  – set(data, error) – makes the CI valid and notifies all waiters
  – reset() – makes the CI invalid and notifies all waiters
  – abstract requestData() – called by validate() when needed
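The API above can be sketched in Python as follows, with the method names converted to snake_case (`getData()` becomes `get_data()`, `requestData()` becomes `request_data()`). This is a minimal, hypothetical rendering that assumes all calls run on a single dispatch thread; `RegisterCI` and its simulated reply list are invented for the usage example.

```python
from abc import ABC, abstractmethod
from typing import Callable, Generic, List, Optional, TypeVar

T = TypeVar("T")


class CacheInstance(ABC, Generic[T]):
    """Sketch of the CI API (hypothetical); assumes a single dispatch thread."""

    def __init__(self) -> None:
        self._valid = False
        self._pending = False                 # True while request_data() is outstanding
        self._data: Optional[T] = None
        self._error: Optional[Exception] = None
        self._waiters: List[Callable[[], None]] = []

    def validate(self) -> bool:
        """Return True if valid; otherwise request the data (once) and return False."""
        if self._valid:
            return True
        if not self._pending:
            self._pending = True
            self.request_data()
        return self._valid                    # False unless request_data() completed synchronously

    def get_data(self) -> Optional[T]:
        if not self._valid:
            raise RuntimeError("get_data() is callable only if the CI is valid")
        return self._data

    def get_error(self) -> Optional[Exception]:
        if not self._valid:
            raise RuntimeError("get_error() is callable only if the CI is valid")
        return self._error

    def wait(self, callback: Callable[[], None]) -> None:
        """Register a one-shot callback, run on the next set() or reset()."""
        self._waiters.append(callback)

    def set(self, data: Optional[T], error: Optional[Exception] = None) -> None:
        """Make the CI valid and notify all waiters."""
        self._valid, self._pending = True, False
        self._data, self._error = data, error
        self._notify()

    def reset(self) -> None:
        """Make the CI invalid and notify all waiters.
        Simplification: a reply to a request issued before reset() is not discarded."""
        self._valid, self._pending = False, False
        self._data, self._error = None, None
        self._notify()

    def _notify(self) -> None:
        waiters, self._waiters = self._waiters, []
        for callback in waiters:
            callback()

    @abstractmethod
    def request_data(self) -> None:
        """Start retrieving the data; the reply must eventually call set()."""


class RegisterCI(CacheInstance[int]):
    """Hypothetical CI for one register; the remote reply is simulated by queueing it."""

    def __init__(self, replies: List[Callable[[], None]]) -> None:
        super().__init__()
        self._replies = replies
        self.requests = 0

    def request_data(self) -> None:
        self.requests += 1
        self._replies.append(lambda: self.set(0x1000))


replies: List[Callable[[], None]] = []
pc = RegisterCI(replies)
events: List[str] = []
if not pc.validate():                         # issues the request
    pc.wait(lambda: events.append("changed"))
pc.validate()                                 # still pending: no second request
for reply in replies:                         # deliver the simulated reply
    reply()
```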

Cache Instances
§ A cache instance can be created for any type of data that needs to be cached
§ Examples include
  – Lists of child contexts/CIs, context properties, state, memory data, register data, symbols, variables, etc.
§ There is an open-ended number of CI types, and there can be multiple CIs of the same type
§ Clients of CIs can register for CI changes, but do not need to handle any particular service events, since the CIs take care of that
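The following Python sketch shows one hypothetical way to organize an open-ended set of CI kinds: a lookup keyed by (kind, context id) creates CIs on demand, and each CI decides for itself which raw service events make it stale, so its clients only ever see CI changes. `CILookup`, `STALE_ON`, and the event names are assumptions for illustration only.

```python
from typing import Callable, Dict, FrozenSet, Hashable, List, Tuple

# Hypothetical mapping: which service events make which kind of cached data stale.
STALE_ON: Dict[str, FrozenSet[str]] = {
    "registers": frozenset({"resumed", "register_written"}),
    "memory": frozenset({"resumed", "memory_written"}),
    "children": frozenset({"context_added", "context_removed"}),
}


class CacheInstance:
    """One cached item of any kind (registers, memory, child list, ...)."""

    def __init__(self, kind: str, context_id: Hashable) -> None:
        self.kind, self.context_id = kind, context_id
        self.valid, self.data = False, None
        self._listeners: List[Callable[["CacheInstance"], None]] = []

    def add_listener(self, fn: Callable[["CacheInstance"], None]) -> None:
        self._listeners.append(fn)

    def set(self, data: object) -> None:
        self.valid, self.data = True, data
        self._fire()

    def on_event(self, event: str, context_id: Hashable) -> None:
        """The CI itself decides whether a service event invalidates it."""
        stale = event in STALE_ON.get(self.kind, frozenset())
        if stale and context_id == self.context_id and self.valid:
            self.valid, self.data = False, None
            self._fire()

    def _fire(self) -> None:
        for fn in list(self._listeners):
            fn(self)


class CILookup:
    """Hypothetical registry: one CI per (kind, context id), created on first use.
    New kinds of CI need no changes here; they are simply new keys."""

    def __init__(self) -> None:
        self._cis: Dict[Tuple[str, Hashable], CacheInstance] = {}

    def get(self, kind: str, context_id: Hashable) -> CacheInstance:
        key = (kind, context_id)
        if key not in self._cis:
            self._cis[key] = CacheInstance(kind, context_id)
        return self._cis[key]

    def dispatch(self, event: str, context_id: Hashable) -> None:
        """Forward a raw service event to every CI; each CI filters it itself."""
        for ci in list(self._cis.values()):
            ci.on_event(event, context_id)


lookup = CILookup()
regs_t1 = lookup.get("registers", "thread-1")
regs_t2 = lookup.get("registers", "thread-2")   # a second CI of the same kind
mem_t1 = lookup.get("memory", "thread-1")
for ci in (regs_t1, regs_t2, mem_t1):
    ci.set("cached")                            # pretend each reply arrived
changed: List[str] = []
regs_t1.add_listener(lambda ci: changed.append(f"{ci.kind}@{ci.context_id}"))
lookup.dispatch("resumed", "thread-1")          # listeners see only the CI change
```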

Asynchronous Cache Programming Model – Approach
§ Validate all data required for the task
  – Validation implicitly requests any invalid data
§ If anything is invalid, then
  – Register (wait()) for a “change” callback from the invalid cache instance(s) and return
  – Once the callback is called, the full validation is restarted
§ Once everything is valid, the real work is done
  – All validation and calculation for a particular request is done in a single dispatch cycle (transaction)
Similar to Software Transactional Memory
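The approach can be sketched in Python with a single-threaded queue standing in for the dispatch thread (`Dispatcher`, `CacheInstance`, and `run_transaction` are hypothetical names). Each attempt calls `validate()` on every CI, waits on the first invalid one, and restarts from scratch when it changes; the real work runs only once everything is valid, inside one dispatch cycle. Because the whole list is validated before waiting, both requests are issued in the first pass.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence


class Dispatcher:
    """Single-threaded event queue standing in for the dispatch thread (hypothetical)."""

    def __init__(self) -> None:
        self._queue: Deque[Callable[[], None]] = deque()

    def post(self, fn: Callable[[], None]) -> None:
        self._queue.append(fn)

    def run(self) -> None:
        while self._queue:
            self._queue.popleft()()          # one dispatch cycle per queued item


class CacheInstance:
    """Minimal CI whose data arrives in a later dispatch cycle, like a network reply."""

    def __init__(self, dispatcher: Dispatcher, fetch: Callable[[], object]) -> None:
        self._dispatcher, self._fetch = dispatcher, fetch
        self.valid, self.pending, self.data = False, False, None
        self.requests = 0
        self._waiters: List[Callable[[], None]] = []

    def validate(self) -> bool:
        if not self.valid and not self.pending:
            self.pending = True
            self.requests += 1
            self._dispatcher.post(lambda: self.set(self._fetch()))
        return self.valid

    def wait(self, callback: Callable[[], None]) -> None:
        self._waiters.append(callback)

    def set(self, data: object) -> None:
        self.valid, self.pending, self.data = True, False, data
        waiters, self._waiters = self._waiters, []
        for callback in waiters:
            callback()


def run_transaction(dispatcher: Dispatcher, cis: Sequence[CacheInstance],
                    work: Callable[[], object], done: Callable[[object], None]) -> None:
    """Validate every CI; if any is invalid, wait for it and restart from scratch.
    `work` runs only once all CIs are valid, inside a single dispatch cycle."""

    def attempt() -> None:
        # validate() is called on every CI, so all missing data is requested now.
        invalid = [ci for ci in cis if not ci.validate()]
        if invalid:
            invalid[0].wait(lambda: dispatcher.post(attempt))   # restart later
            return
        done(work())

    dispatcher.post(attempt)


dispatcher = Dispatcher()
pc = CacheInstance(dispatcher, lambda: 0x4010)
symbols = CacheInstance(dispatcher, lambda: {0x4010: "main"})
results: List[object] = []
run_transaction(dispatcher, [pc, symbols],
                lambda: symbols.data[pc.data],   # the "real work"
                results.append)
dispatcher.run()
```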

Asynchronous Cache Programming Model – Benefits
§ Data consistency
  – Data from multiple sources is guaranteed to be consistent, even if already validated CIs change while waiting for other CIs, because data is taken from the cache only once all CIs are valid, within a single dispatch cycle
§ Natural way to issue multiple requests before waiting
  – This keeps the request pipeline full, which addresses the problems caused by high-latency networks
§ Synchronous-like programming model
  – Because the full validation is restarted, code can be written much like a synchronous program, which is familiar to most programmers and avoids a complete rewrite of existing code
§ Allows code sharing between Value-add and Agent
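To illustrate the data-consistency benefit, the Python sketch below (hypothetical names throughout; a simulated queue replaces real TCF replies) resets an already-valid CI (`pc`) while the transaction is waiting for another CI (`symbols`). Because the restart re-validates everything, the recorded result pairs the new address with its own symbol and never combines the stale address with freshly arrived data. The transaction body also reads top to bottom like synchronous code.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

queue: Deque[Callable[[], None]] = deque()   # stand-in for the dispatch thread


def run_queue() -> None:
    while queue:
        queue.popleft()()


class CacheInstance:
    """Minimal CI; `source()` simulates the remote value when the reply is produced.
    Simplification: replies to requests in flight during reset() are not discarded."""

    def __init__(self, source: Callable[[], object]) -> None:
        self.source = source
        self.valid, self.pending, self.data = False, False, None
        self.requests = 0
        self.waiters: List[Callable[[], None]] = []

    def validate(self) -> bool:
        if not self.valid and not self.pending:
            self.pending = True
            self.requests += 1
            queue.append(lambda: self.set(self.source()))
        return self.valid

    def wait(self, callback: Callable[[], None]) -> None:
        self.waiters.append(callback)

    def set(self, data: object) -> None:
        self.valid, self.pending, self.data = True, False, data
        self._notify()

    def reset(self) -> None:
        self.valid, self.data = False, None
        self._notify()

    def _notify(self) -> None:
        waiters, self.waiters = self.waiters, []
        for callback in waiters:
            callback()


target = {"pc": 0x4010}                                  # simulated target state
pc = CacheInstance(lambda: target["pc"])
symbols = CacheInstance(lambda: {0x4010: "main", 0x4020: "helper"})
seen: List[Tuple[str, object]] = []


def transaction() -> None:
    # Reads like synchronous code: get pc, look up its symbol, record the pair.
    missing = [ci for ci in (pc, symbols) if not ci.validate()]
    if missing:
        missing[0].wait(lambda: queue.append(transaction))   # restart from scratch
        return
    seen.append((hex(pc.data), symbols.data[pc.data]))      # both read in one cycle


def target_moves() -> None:
    # The target stops at a new address while the transaction waits for symbols.
    target["pc"] = 0x4020
    pc.reset()


pc.validate()
run_queue()                 # pc is now cached as 0x4010
queue.append(transaction)   # first pass: pc valid, symbols requested
queue.append(target_moves)  # runs before the symbols reply arrives
run_queue()
```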

- Slides: 6