TCCluster: A Cluster Architecture Utilizing the Processor Host Interface as a Network Interconnect

Heiner Litz, University of Heidelberg

![Motivation: future trends](https://slidetodoc.com/presentation_image_h2/d7580294d599ef453f5a58429f920842/image-2.jpg)

Motivation

- Future trends
  - More cores: 2-fold increase per year [Asanovic 2006]
  - More nodes: 200,000+ nodes for Exascale [Exascale Rep.]
- Consequence
  - Exploit fine-grain parallelism
  - Improve serialization/synchronization
- Requirement
  - Low-latency communication

Heiner.litz@uni-hd.de

![Motivation: latency lags bandwidth](https://slidetodoc.com/presentation_image_h2/d7580294d599ef453f5a58429f920842/image-3.jpg)

Motivation

- Latency lags bandwidth [Patterson, 2004]
- Memory vs. network
  - Memory bandwidth: 10 GB/s
  - Network bandwidth: 5 GB/s
  - Memory latency: 50 ns
  - Network latency: 1 µs
  - The bandwidth gap is 2x, while the latency gap is 20x

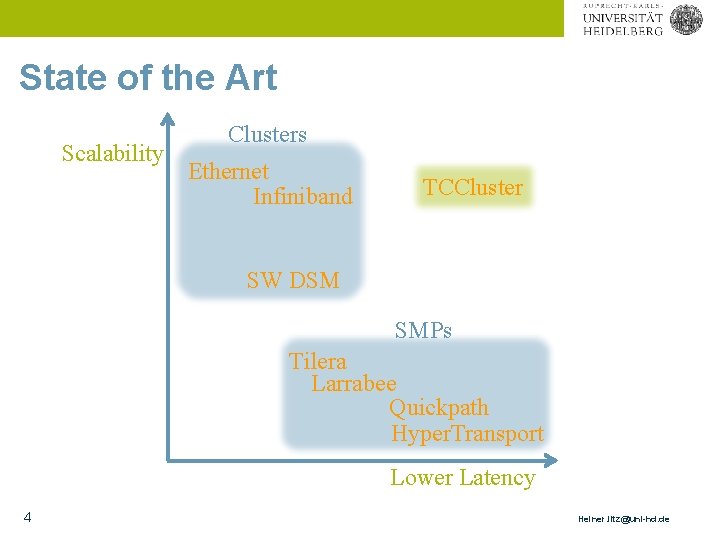

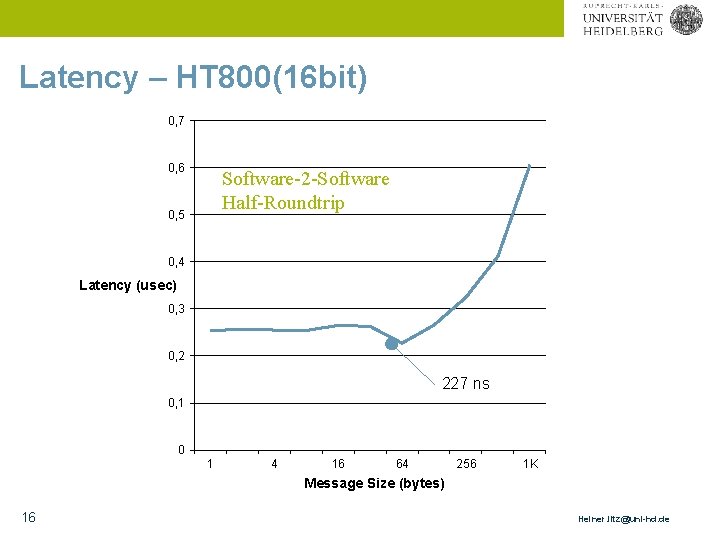

State of the Art

- Diagram axes: scalability and lower latency
- Diagram labels: Clusters (Ethernet, Infiniband), TCCluster, SW DSM, SMPs (Tilera, Larrabee, Quickpath, HyperTransport)

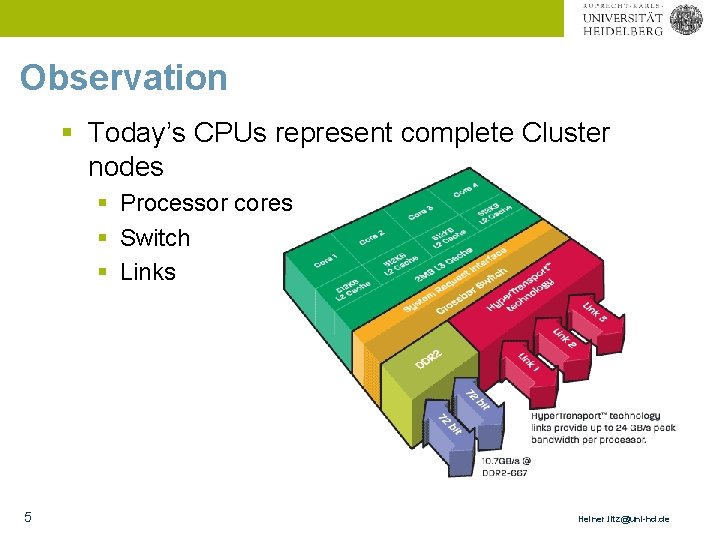

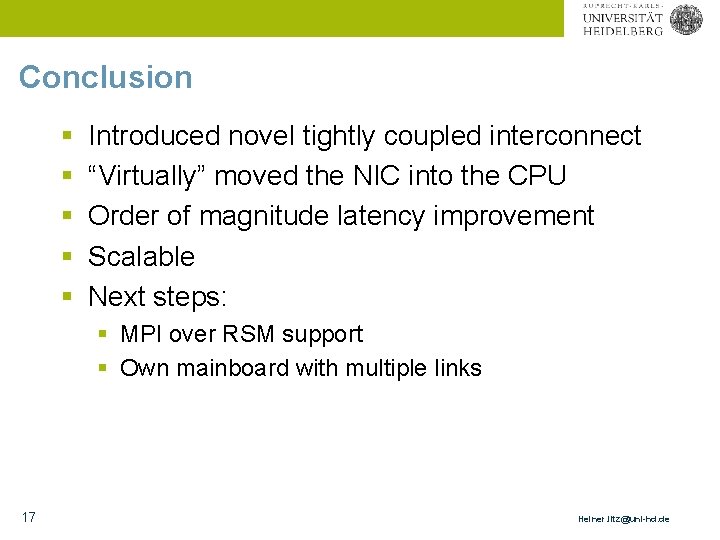

Observation

- Today's CPUs represent complete cluster nodes
  - Processor cores
  - Switch
  - Links

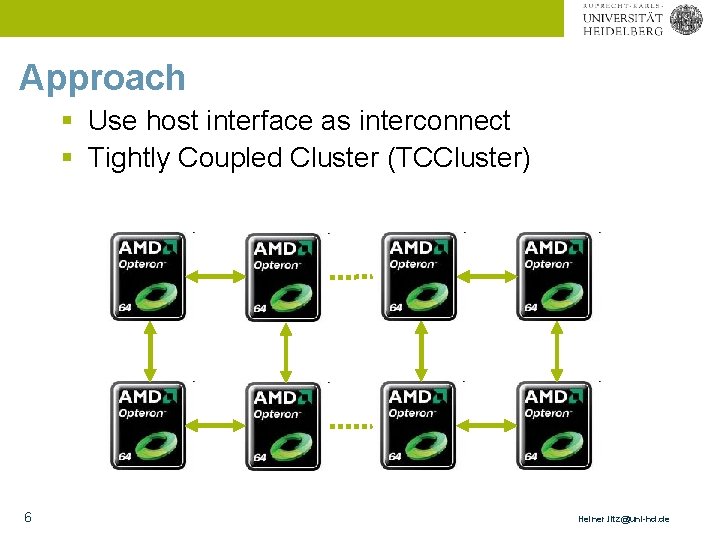

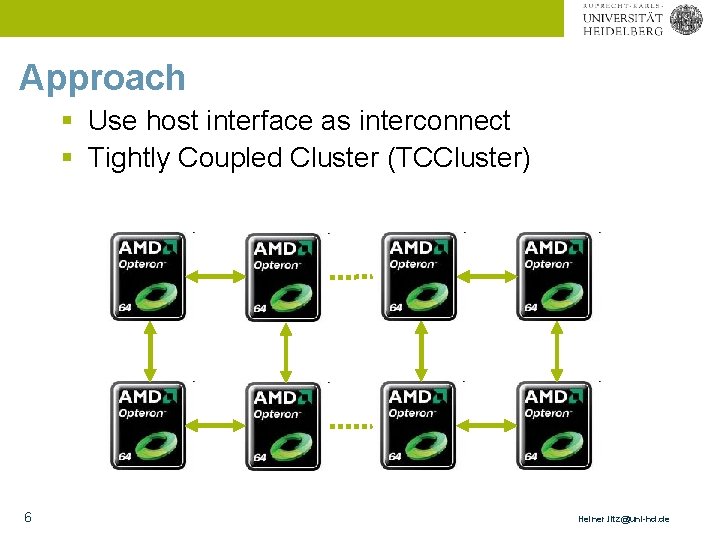

Approach

- Use the host interface as the interconnect
- Tightly Coupled Cluster (TCCluster)

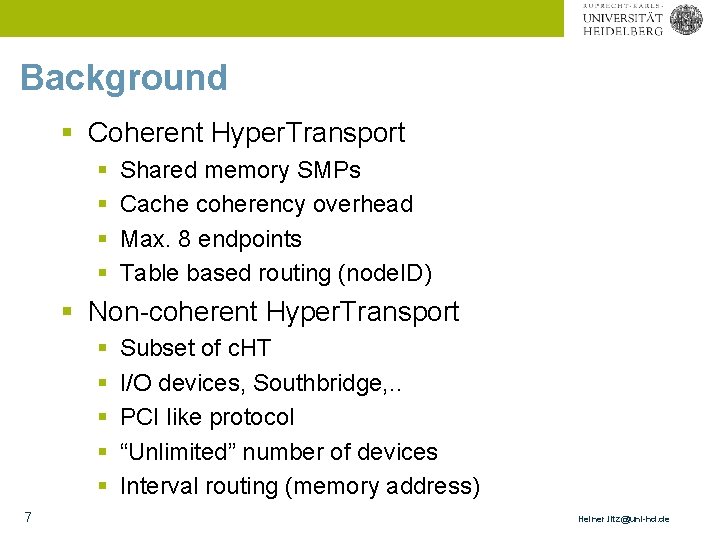

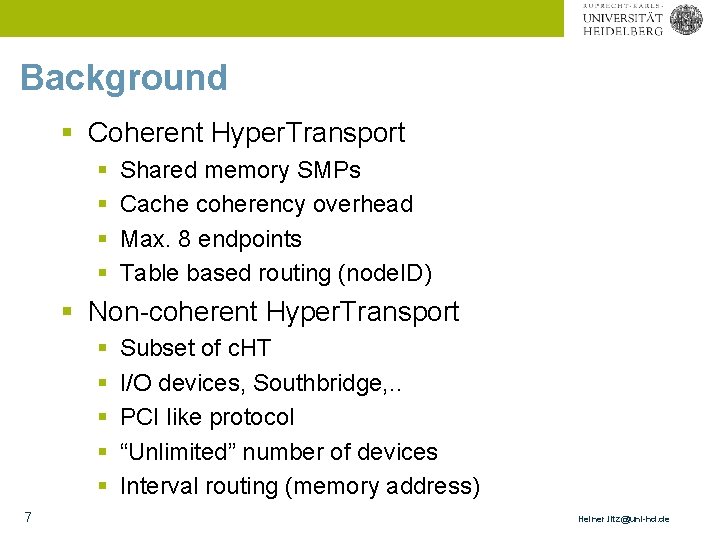

Background

- Coherent HyperTransport (cHT)
  - Shared-memory SMPs
  - Cache coherency overhead
  - Max. 8 endpoints
  - Table-based routing (node ID)
- Non-coherent HyperTransport (ncHT)
  - Subset of cHT
  - I/O devices, Southbridge, ...
  - PCI-like protocol
  - "Unlimited" number of devices
  - Interval routing (memory address)
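The difference between the two routing schemes can be illustrated with a small model. This is only a sketch under stated assumptions: the interval boundaries, link names, and the `NODE_TABLE` contents are hypothetical and do not come from the slides; only the ideas of routing by node ID through a table of at most 8 entries and routing by address interval are taken from the text above.

```python
from bisect import bisect_right

GIB = 1 << 30

# Hypothetical address intervals: (base, exclusive limit, target).
# The boundaries and names are illustrative, not taken from the slides.
INTERVALS = [
    (0 * GIB, 4 * GIB, "local DRAM"),
    (4 * GIB, 5 * GIB, "link 0"),
    (5 * GIB, 6 * GIB, "link 1"),
]

# Hypothetical routing table for the coherent case: node ID -> target.
MAX_COHERENT_NODES = 8  # "Max. 8 endpoints" on the slide
NODE_TABLE = {0: "local", 1: "link 0", 2: "link 1"}


def route_by_interval(address, intervals=INTERVALS):
    """Interval routing: pick the target whose [base, limit) holds address."""
    bases = [base for base, _, _ in intervals]
    index = bisect_right(bases, address) - 1
    if index < 0:
        raise ValueError("address below the first interval")
    _, limit, target = intervals[index]
    if address >= limit:
        raise ValueError("address not covered by any interval")
    return target


def route_by_node_id(node_id, table=NODE_TABLE):
    """Table-based routing: the destination node ID indexes a fixed table."""
    if not 0 <= node_id < MAX_COHERENT_NODES:
        raise ValueError("node ID outside the coherent fabric")
    return table[node_id]
```

In the interval scheme the number of reachable devices is bounded by the address space rather than by the width of a node ID field, which is one way to read the slide's "unlimited" number of devices.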

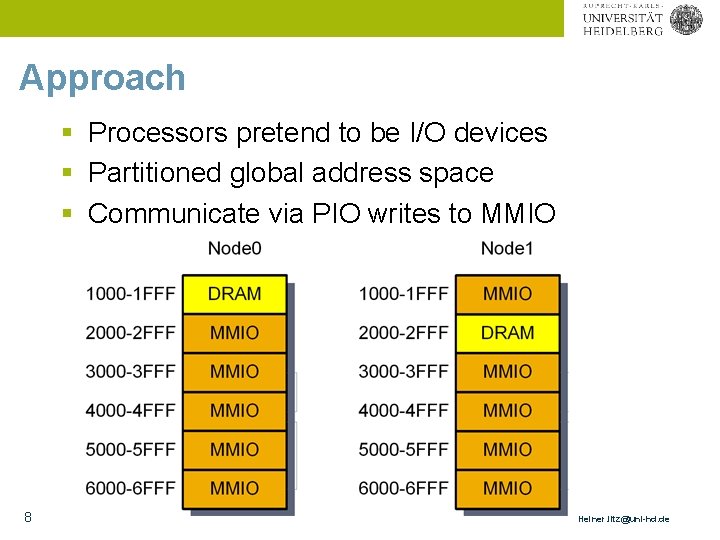

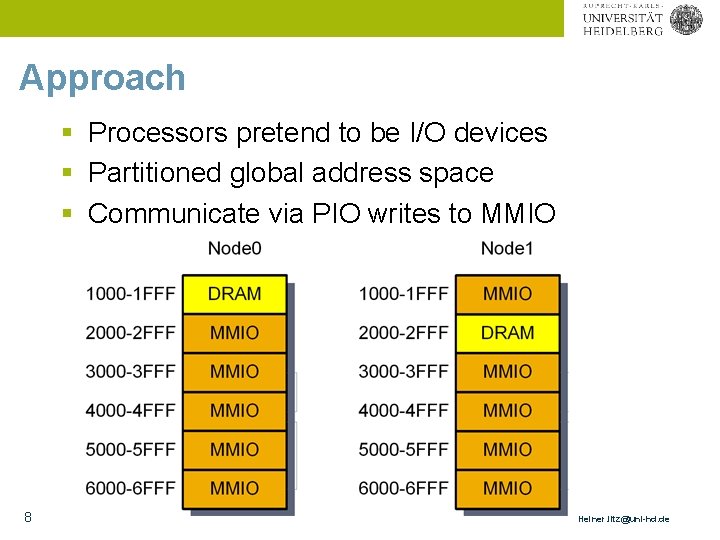

Approach

- Processors pretend to be I/O devices
- Partitioned global address space
- Communicate via PIO writes to MMIO
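A partitioned global address space can be pictured as one address range cut into per-node windows, so that the address of a store alone decides which node receives it. The following toy model is a sketch, not the TCCluster implementation: `WINDOW_SIZE`, the `Node` class, and the `store` helper are hypothetical names, and equal-sized windows are an assumption made for simplicity.

```python
WINDOW_SIZE = 4096  # hypothetical window size in bytes, kept tiny for the demo


def owner_and_offset(global_address, window_size=WINDOW_SIZE):
    """Split a global address into (owning node index, offset in its window)."""
    if global_address < 0:
        raise ValueError("address must be non-negative")
    return divmod(global_address, window_size)


class Node:
    """Toy node that owns one window of the global address space."""

    def __init__(self, node_id, window_size=WINDOW_SIZE):
        self.node_id = node_id
        self.window = bytearray(window_size)


def store(nodes, global_address, data):
    """Model a write: the address alone selects the node that receives it."""
    window_size = len(nodes[0].window)
    owner, offset = owner_and_offset(global_address, window_size)
    if owner >= len(nodes):
        raise ValueError("address is outside the global address space")
    if offset + len(data) > window_size:
        raise ValueError("write crosses a window boundary")
    nodes[owner].window[offset:offset + len(data)] = data
    return owner
```

For example, with two nodes, `store(nodes, WINDOW_SIZE + 8, b"hi")` lands at offset 8 of node 1's window and leaves node 0 untouched.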

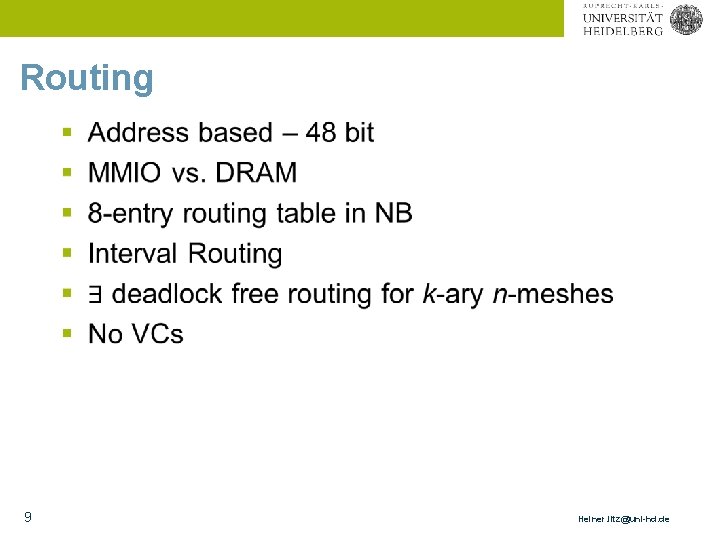

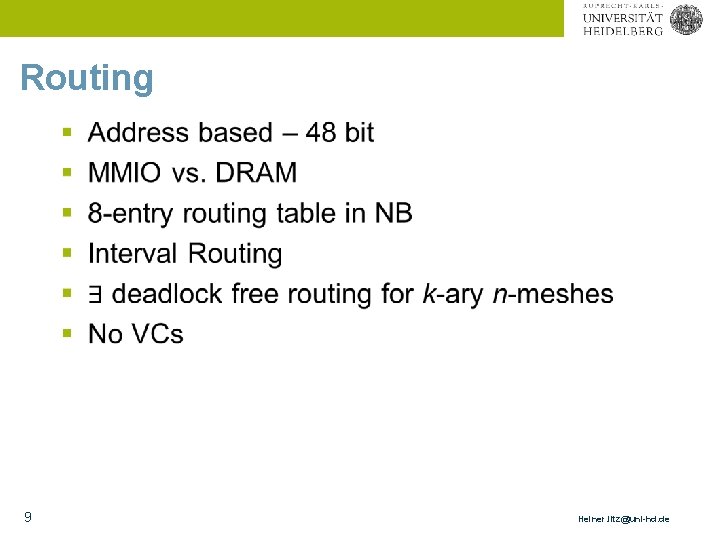

Routing

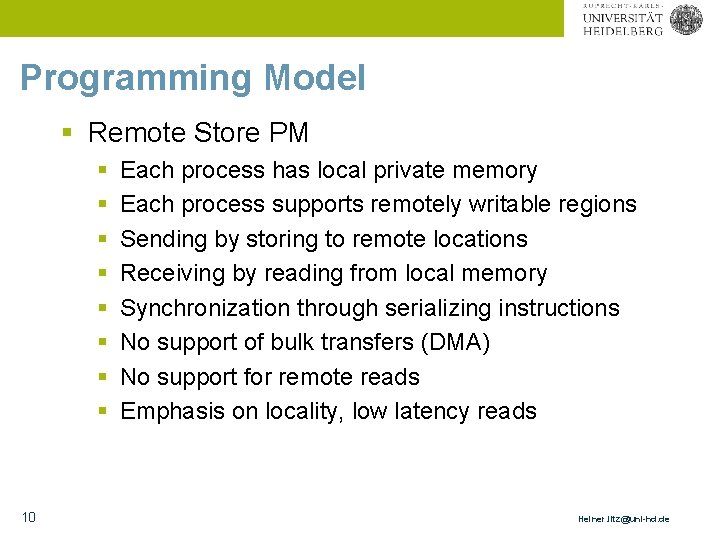

Programming Model

- Remote Store PM
  - Each process has local private memory
  - Each process supports remotely writable regions
  - Sending by storing to remote locations
  - Receiving by reading from local memory
  - Synchronization through serializing instructions
  - No support for bulk transfers (DMA)
  - No support for remote reads
  - Emphasis on locality, low-latency reads
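The remote store model listed above can be sketched as a one-slot mailbox: the sender writes the payload and then a flag into the receiver's remotely writable region, and the receiver polls its own local memory. This is a simulation under assumptions, not the actual TCCluster API: the class, the slot layout (`FLAG_OFFSET`, `LEN_OFFSET`, `PAYLOAD_OFFSET`), and the sequence-number scheme are all hypothetical. On real hardware a serializing instruction would separate the payload stores from the flag store; the slides do not say which one is used.

```python
FLAG_OFFSET = 0     # 1 byte: sequence number of the message in the slot
LEN_OFFSET = 1      # 2 bytes, little endian: payload length
PAYLOAD_OFFSET = 8  # payload bytes start here


class RemotelyWritableRegion:
    """Memory that peers may store into but only its owner may load from."""

    def __init__(self, size):
        self._buf = bytearray(size)

    def remote_store(self, offset, data):
        """Called by the sender; there is deliberately no remote load."""
        if offset < 0 or offset + len(data) > len(self._buf):
            raise ValueError("store outside the region")
        self._buf[offset:offset + len(data)] = data

    def local_load(self, offset, length):
        """Called by the owner only; models a low-latency local read."""
        return bytes(self._buf[offset:offset + length])


def send(region, payload, sequence):
    """Send by storing: payload and length first, flag last."""
    if not 1 <= sequence <= 255:
        raise ValueError("sequence must fit in one non-zero byte")
    region.remote_store(PAYLOAD_OFFSET, payload)
    region.remote_store(LEN_OFFSET, len(payload).to_bytes(2, "little"))
    # Real hardware would need a serializing instruction here so the
    # flag cannot become visible before the payload.
    region.remote_store(FLAG_OFFSET, bytes([sequence]))


def try_receive(region, expected_sequence):
    """Receive by polling local memory; returns None if nothing arrived."""
    if region.local_load(FLAG_OFFSET, 1)[0] != expected_sequence:
        return None
    length = int.from_bytes(region.local_load(LEN_OFFSET, 2), "little")
    return region.local_load(PAYLOAD_OFFSET, length)
```

Writing the flag last is the design choice that matters: the receiver never has to read remote memory, which matches the model's restriction to remote stores and local reads.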

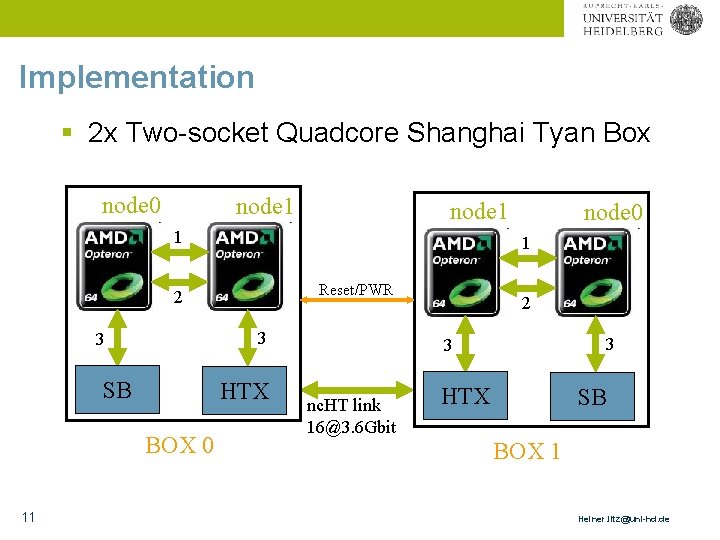

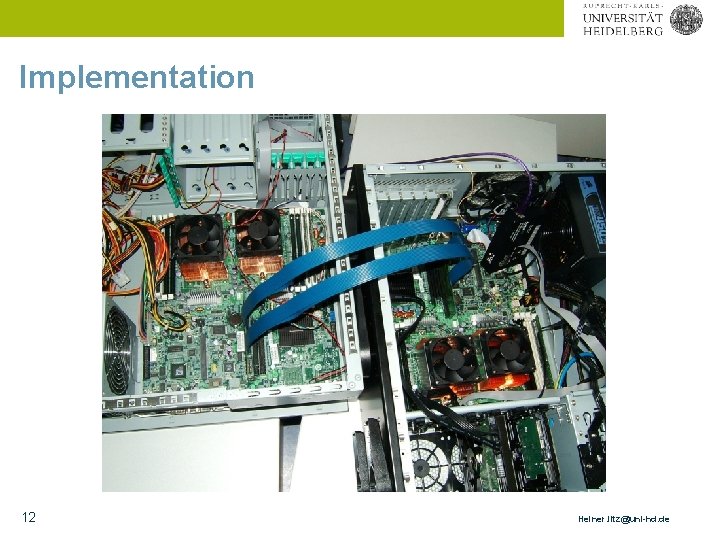

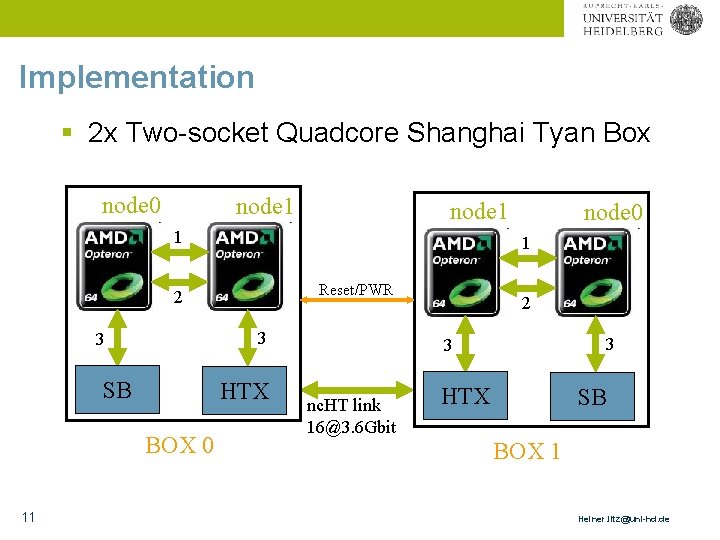

Implementation

- 2x two-socket quad-core Shanghai Tyan box
- Diagram labels: BOX 0 and BOX 1, each with node 0, node 1, a Southbridge (SB), and an HTX connector; Reset/PWR; ncHT link, 16 @ 3.6 Gbit, between the boxes

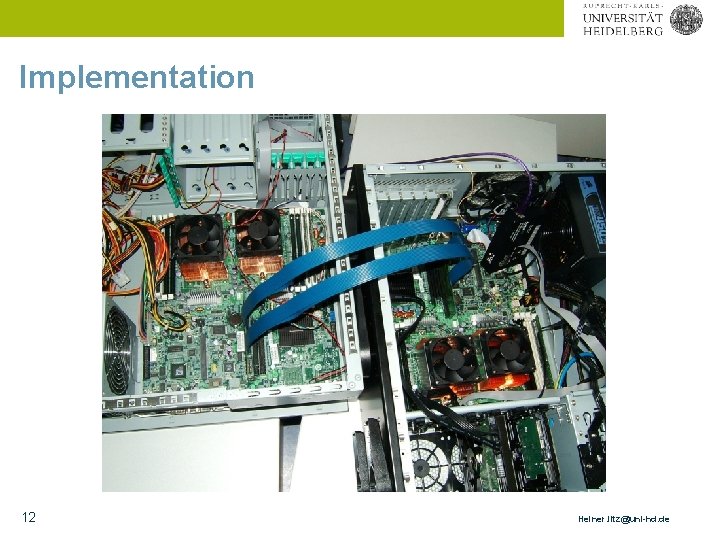

Implementation

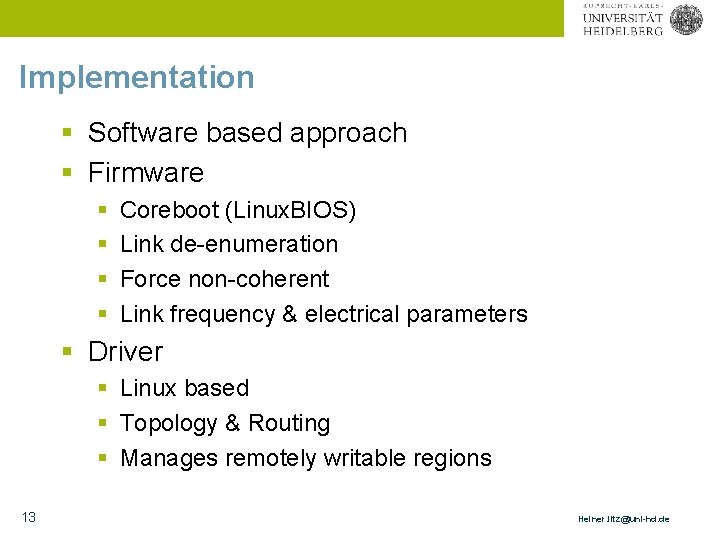

Implementation

- Software-based approach
- Firmware
  - Coreboot (LinuxBIOS)
  - Link de-enumeration
  - Force non-coherent
  - Link frequency and electrical parameters
- Driver
  - Linux based
  - Topology and routing
  - Manages remotely writable regions

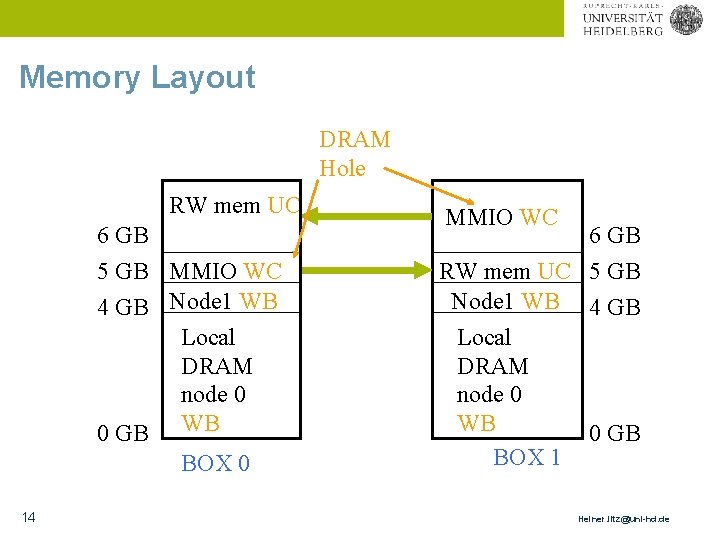

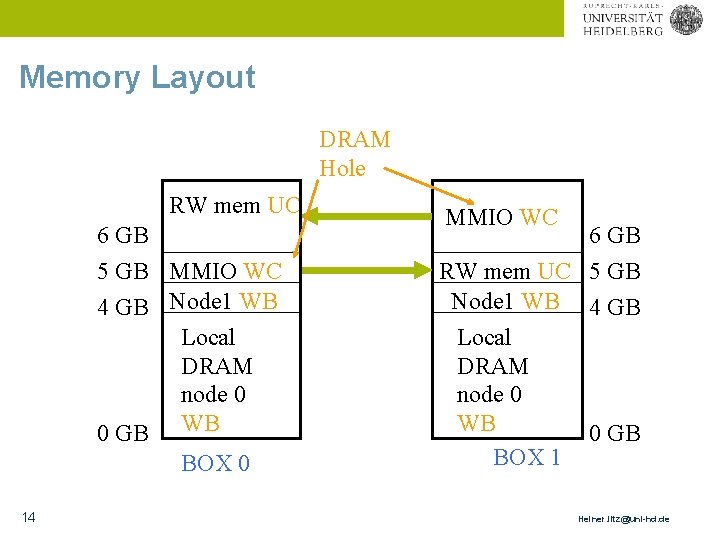

Memory Layout

- BOX 0
  - DRAM hole
  - 5 GB to 6 GB: RW mem (UC)
  - 4 GB to 5 GB: MMIO (WC)
  - Below 4 GB: Node 1 (WB)
  - From 0 GB: local DRAM, node 0 (WB)
- BOX 1
  - 5 GB to 6 GB: MMIO (WC)
  - 4 GB to 5 GB: RW mem (UC)
  - Below 4 GB: Node 1 (WB)
  - From 0 GB: local DRAM, node 0 (WB)

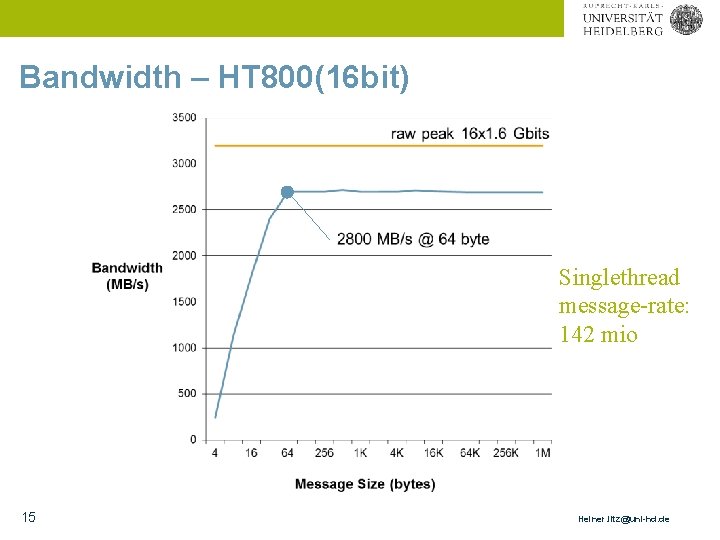

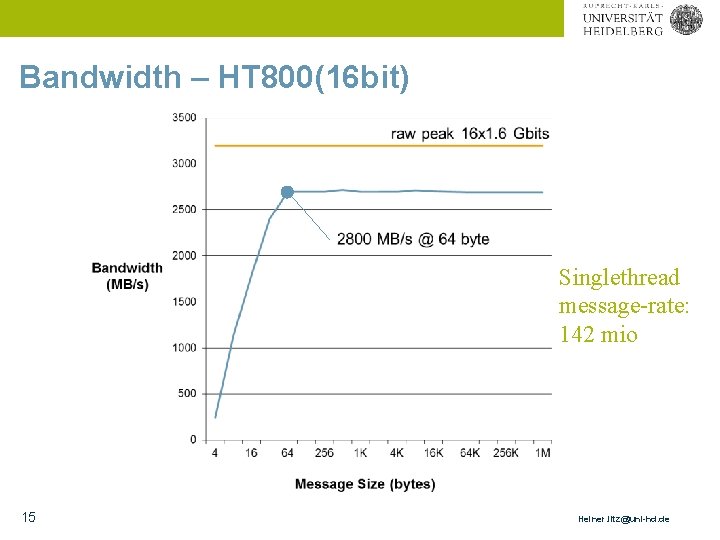

Bandwidth: HT800 (16 bit)

- Single-thread message rate: 142 million

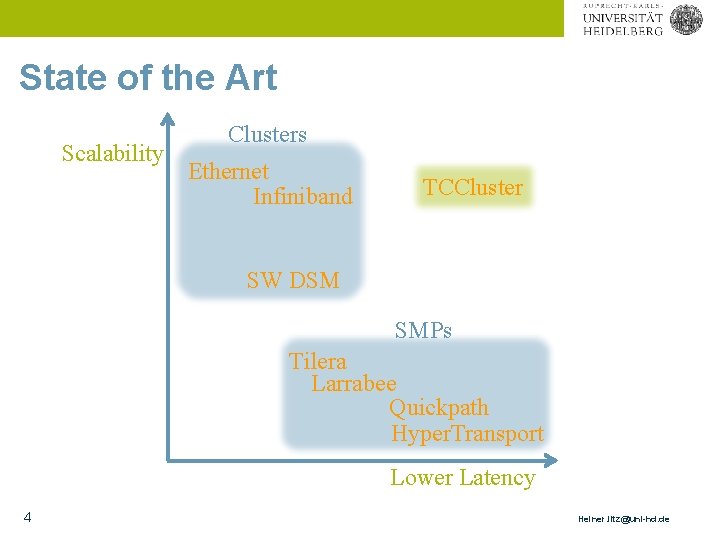

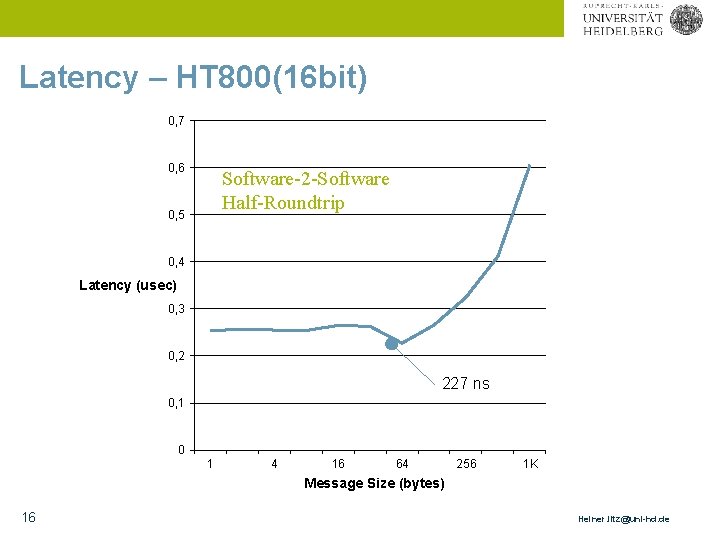

Latency: HT800 (16 bit)

- Plot: software-to-software half-roundtrip latency (µs, axis from 0 to 0.7) over message size (bytes, 1 to 1K)
- Annotated value: 227 ns
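A half-roundtrip figure is usually obtained from a ping-pong loop: one side sends, waits for the echo, and the total time is divided by twice the number of iterations. The helper below is a minimal sketch of that arithmetic only. The `ping_pong` callable is a hypothetical stand-in for one full send-and-echo exchange, and nothing here reproduces how the numbers on the slide were measured.

```python
import time


def half_roundtrip_ns(ping_pong, iterations=1000):
    """Estimate one-way latency as total round-trip time / (2 * iterations)."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    start = time.perf_counter_ns()
    for _ in range(iterations):
        ping_pong()  # one message out and its echo back
    elapsed = time.perf_counter_ns() - start
    return elapsed / (2 * iterations)
```

Averaging over many iterations hides timer overhead, which matters when the quantity being measured is in the range of a few hundred nanoseconds.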

Conclusion

- Introduced a novel tightly coupled interconnect
- "Virtually" moved the NIC into the CPU
- Order-of-magnitude latency improvement
- Scalable
- Next steps
  - MPI over RSM support
  - Own mainboard with multiple links

![References](https://slidetodoc.com/presentation_image_h2/d7580294d599ef453f5a58429f920842/image-18.jpg)

References

- [Asanovic, 2006] Asanovic K, Bodik R, Catanzaro B, Gebis J. The landscape of parallel computing research: A view from Berkeley. UC Berkeley Tech Report. 2006.
- [Exascale Rep] ExaScale Computing Study: Technology Challenges in Achieving Exascale Systems.
- [Patterson, 2004] Latency lags Bandwidth. Communications of the ACM, vol. 47, no. 10, pp. 71-75, October 2004.