TASK WP4.4 (a.k.a. JRA2.4)

- Slides: 30

TASK WP4.4 (a.k.a. JRA2.4) Accelerated Computing Work Plan. Marco Verlato/INFN, Viet Tran/IISAS. www.egi.eu. EGI-Engage is co-funded by the Horizon 2020 Framework Programme of the European Union under grant number 654142

Outline • Task description • Plan for the grid platform • Plan for the cloud platform. EGI Conference 2015, 18-22 May 2015, Lisbon, Portugal

Task description • The task is part of WP4 (JRA2): Platforms for the Data Commons – Expand the EGI Federated Cloud platform with new IaaS capabilities – Prototype an open data platform – Provide a new accelerated computing platform – Integrate existing commercial and public IaaS Cloud deployments and e-Infrastructures with the current EGI production infrastructure • Duration: 1 March 2015 – 31 May 2016 (15 months) • Funded effort: 13 PM • Partners: – INFN (6 PM): CREAM and WMS developers at the Padua and Milan divisions – IISAS (5 PM): Institute of Informatics, Slovak Academy of Sciences – CIRMMP (2 PM): scientific partner of the MoBrain CC

From the DoW • This task will: – implement support in the information system to expose correct information about the accelerated computing technologies available (both software and hardware) at site level, developing a common extension of the information system structure based on the OGF GLUE standard… – extend the HTC and cloud middleware support for co-processors, where needed, in order to provide users with a transparent and uniform way to allocate these resources efficiently together with CPU cores • Ideally divided into two subtasks: – Accelerated computing in the Grid – Accelerated computing in the Cloud

Synergy with the CC • A Competence Center to Serve Translational Research from Molecule to Brain (see also the next presentation) • Dedicated task for “GPU portals for biomolecular simulations” – Deploy the AMBER and/or GROMACS packages on GPGPU testbeds, develop standardized protocols optimized for GPGPUs, and build web portals for their use – Develop GPGPU-enabled web portals for exhaustive search in cryo-EM density. This result links to cryo-EM task 2 of MoBrain • Requirements – GPGPU resources for development and testing (CIRMMP, CESNET, BCBR) – Discoverable GPGPU resources for grid (or Cloud) submission (for putting the portals into production) – A GPGPU Cloud solution, if used, should allow transparent and automated submission – Software and compiler support on sites providing GPGPU resources (CUDA, OpenCL)

Accelerated computing in Grid: Istituto Nazionale di Fisica Nucleare (INFN), Italy

Outline • Status of accelerated computing in Grid – Accelerators – Previous work • Work plan

Accelerators • GPGPU (General-Purpose computing on Graphics Processing Units) – NVIDIA GPU/Tesla/GRID, AMD Radeon/FirePro, Intel HD Graphics, ... • Intel Many Integrated Core Architecture – Xeon Phi Coprocessor • Specialized PCIe cards with accelerators: – DSP (Digital Signal Processors) – FPGA (Field Programmable Gate Array)

Previous work • GPGPU Virtual Team: June–Sep. 2012 – Produced surveys for RC Admins and User Communities – Results presented at EGI TF 2012, Prague (bit.ly/egitf12-gpu) – Main conclusions: • GPGPU deployment and user base expected to increase in the next 24 months • Predominantly NVIDIA • Users would like to be able to access these resources on EGI • Users prefer full node allocation • Potentially many GPGPUs per physical host • GPGPU Working Group: Sep. 2013 – June 2014 – “Simple” goal of working toward full grid support: • Discovery and Enumeration (GLUE + Info Providers) • JDL and Job Submission Support • Resource Accounting – vt-gpgpu(at)mailman.egi.eu and wiki.egi.eu/wiki/GPGPU-WG

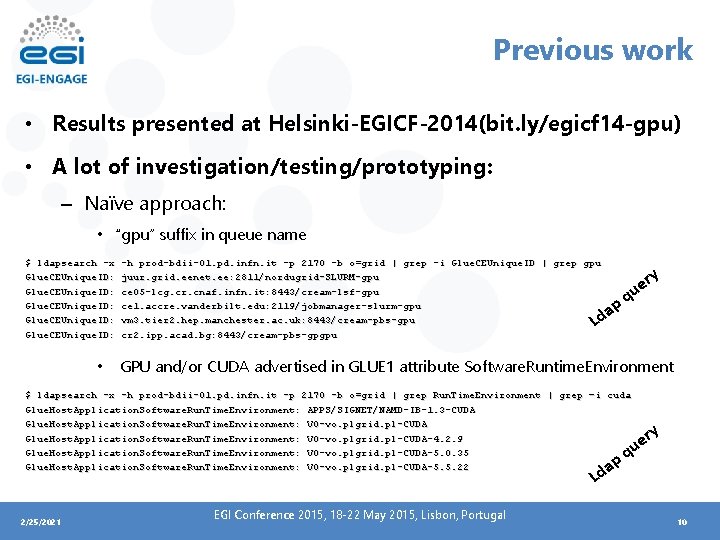

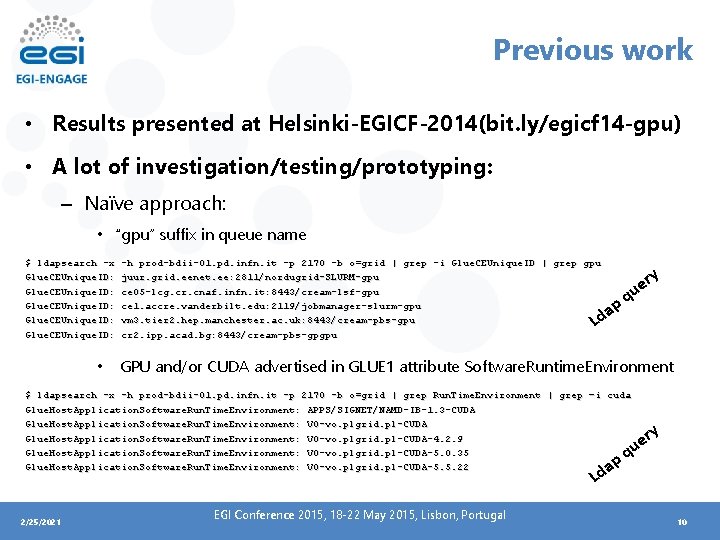

Previous work • Results presented at EGI CF 2014, Helsinki (bit.ly/egicf14-gpu) • A lot of investigation/testing/prototyping: – Naïve approach:
• “gpu” suffix in the queue name (LDAP query):
$ ldapsearch -x -h prod-bdii-01.pd.infn.it -p 2170 -b o=grid | grep -i GlueCEUniqueID | grep gpu
juur.grid.eenet.ee:2811/nordugrid-SLURM-gpu
ce05-lcg.cr.cnaf.infn.it:8443/cream-lsf-gpu
ce1.accre.vanderbilt.edu:2119/jobmanager-slurm-gpu
vm3.tier2.hep.manchester.ac.uk:8443/cream-pbs-gpu
cr2.ipp.acad.bg:8443/cream-pbs-gpgpu
• GPU and/or CUDA advertised in the GLUE 1 attribute SoftwareRunTimeEnvironment (LDAP query):
$ ldapsearch -x -h prod-bdii-01.pd.infn.it -p 2170 -b o=grid | grep RunTimeEnvironment | grep -i cuda
GlueHostApplicationSoftwareRunTimeEnvironment: APPS/SIGNET/NAMD-IB-1.3-CUDA
GlueHostApplicationSoftwareRunTimeEnvironment: VO-vo.plgrid.pl-CUDA-4.2.9
GlueHostApplicationSoftwareRunTimeEnvironment: VO-vo.plgrid.pl-CUDA-5.0.35
GlueHostApplicationSoftwareRunTimeEnvironment: VO-vo.plgrid.pl-CUDA-5.5.22
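The two naïve heuristics above are just text filters over the BDII's LDIF output. As an illustration only, the sketch below applies them to captured ldapsearch output in Python, also handling LDIF line folding, which a plain `grep` can miss. The function names and the sample records are hypothetical; only the attribute names and value formats come from the queries above.

```python
"""Sketch: the two naive GPU discovery heuristics applied to ldapsearch (LDIF) output.

Assumes the text was captured from a GLUE 1 BDII query such as
  ldapsearch -x -h prod-bdii-01.pd.infn.it -p 2170 -b o=grid
Function names and the SAMPLE records are hypothetical.
"""

def unfold_ldif(text):
    """Join LDIF continuation lines (a folded line starts with a single space)."""
    lines = []
    for raw in text.splitlines():
        if raw.startswith(" ") and lines:
            lines[-1] += raw[1:]
        else:
            lines.append(raw)
    return lines

def attribute_values(text, name):
    """Return every value of attribute `name` (case-insensitive), like grep on 'Name: value'."""
    prefix = name.lower() + ":"
    return [line.split(":", 1)[1].strip()
            for line in unfold_ldif(text) if line.lower().startswith(prefix)]

def gpu_queues(text):
    # Heuristic 1: 'gpu' in the queue part of GlueCEUniqueID (host:port/jobmanager-lrms-queue)
    return [ce for ce in attribute_values(text, "GlueCEUniqueID")
            if "gpu" in ce.rsplit("/", 1)[-1].lower()]

def cuda_runtime_tags(text):
    # Heuristic 2: CUDA advertised as a GLUE 1 software run-time environment tag
    return [tag for tag in attribute_values(text, "GlueHostApplicationSoftwareRunTimeEnvironment")
            if "cuda" in tag.lower()]

SAMPLE = (
    "GlueCEUniqueID: ce05-lcg.cr.cnaf.infn.it:8443/cream-lsf-gpu\n"
    "GlueCEUniqueID: ce05-lcg.cr.cnaf.infn.it:8443/cream-lsf-long\n"
    "GlueHostApplicationSoftwareRunTimeEnvironment: APPS/SIGNET/NAMD-IB-1.3\n"
    " -CUDA\n"  # folded continuation line
    "GlueHostApplicationSoftwareRunTimeEnvironment: VO-vo.plgrid.pl-CUDA-5.5.22\n"
    "GlueHostApplicationSoftwareRunTimeEnvironment: VO-vo.plgrid.pl-MPI\n"
)
```

Both heuristics depend on naming conventions chosen by each site, which is why the later work moved towards explicit GLUE attributes.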

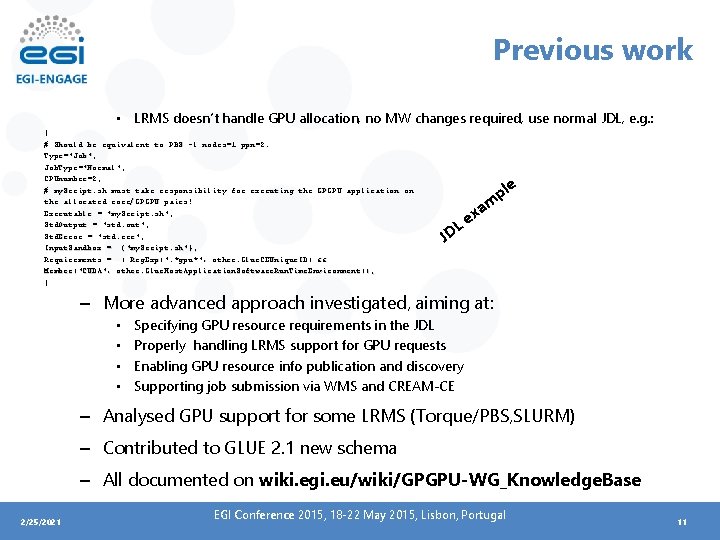

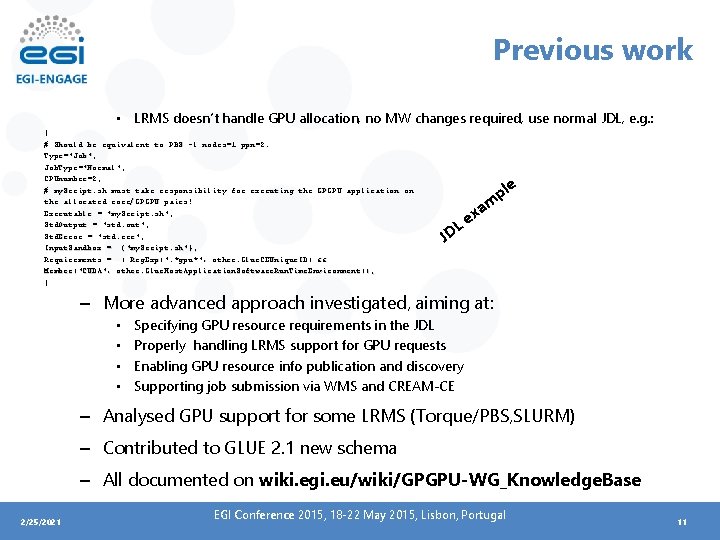

Previous work • The LRMS does not handle GPU allocation; no middleware changes are required and a normal JDL is used. JDL example:
[
# Should be equivalent to PBS -l nodes=1:ppn=2
Type = "Job";
JobType = "Normal";
CPUNumber = 2;
# myScript.sh must take responsibility for executing the GPGPU application on the allocated core/GPGPU pairs!
Executable = "myScript.sh";
StdOutput = "std.out";
StdError = "std.err";
InputSandbox = {"myScript.sh"};
Requirements = (RegExp(".*gpu.*", other.GlueCEUniqueID) && Member("CUDA", other.GlueHostApplicationSoftwareRunTimeEnvironment));
]
– More advanced approach investigated, aiming at: • Specifying GPU resource requirements in the JDL • Properly handling LRMS support for GPU requests • Enabling GPU resource info publication and discovery • Supporting job submission via WMS and CREAM-CE – Analysed GPU support for some LRMSes (Torque/PBS, SLURM) – Contributed to the new GLUE 2.1 schema – All documented at wiki.egi.eu/wiki/GPGPU-WG_KnowledgeBase
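To make explicit which CEs the Requirements expression in the JDL example selects during matchmaking, here is a small Python model of it. This is a sketch, not WMS code: each CE is a plain dict with the two GLUE 1 attributes the expression reads, Python's `re.search` stands in for the ClassAd `RegExp()` function, and an exact-string list membership test stands in for `Member()`. ClassAd details such as case handling may differ. The CE records are hypothetical.

```python
"""Sketch: what the JDL Requirements expression selects during matchmaking.

re.search approximates ClassAd RegExp(); exact-string list membership
approximates Member(). The CE records below are hypothetical.
"""
import re

def matches_requirements(ce):
    # RegExp(".*gpu.*", other.GlueCEUniqueID)
    queue_is_gpu = re.search(r".*gpu.*", ce["GlueCEUniqueID"]) is not None
    # Member("CUDA", other.GlueHostApplicationSoftwareRunTimeEnvironment)
    has_cuda_tag = "CUDA" in ce["GlueHostApplicationSoftwareRunTimeEnvironment"]
    return queue_is_gpu and has_cuda_tag

CES = [
    {"GlueCEUniqueID": "ce-a.example.org:8443/cream-pbs-gpu",
     "GlueHostApplicationSoftwareRunTimeEnvironment": ["CUDA", "MPI-START"]},
    {"GlueCEUniqueID": "ce-b.example.org:8443/cream-pbs-gpu",
     "GlueHostApplicationSoftwareRunTimeEnvironment": ["VO-vo.plgrid.pl-CUDA-5.5.22"]},
    {"GlueCEUniqueID": "ce-c.example.org:8443/cream-pbs-long",
     "GlueHostApplicationSoftwareRunTimeEnvironment": ["CUDA"]},
]

def matching_ces(ces):
    return [ce["GlueCEUniqueID"] for ce in ces if matches_requirements(ce)]
```

In this model only `ce-a` matches. `ce-b` is rejected because membership compares whole tags, so a versioned tag such as `VO-vo.plgrid.pl-CUDA-5.5.22` does not satisfy a test for the bare tag `CUDA`. That kind of fragility is one motivation for the more advanced approach.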

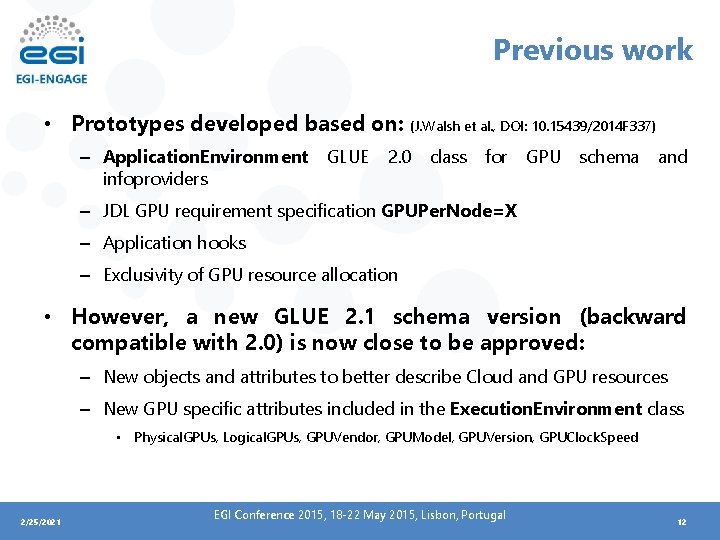

Previous work • Prototypes developed based on (J. Walsh et al., DOI: 10.15439/2014F337): – GLUE 2.0 ApplicationEnvironment class for the GPU schema and infoproviders – JDL GPU requirement specification: GPUPerNode=X – Application hooks – Exclusivity of GPU resource allocation • However, a new GLUE 2.1 schema version (backward compatible with 2.0) is now close to being approved: – New objects and attributes to better describe Cloud and GPU resources – New GPU-specific attributes included in the ExecutionEnvironment class: • PhysicalGPUs, LogicalGPUs, GPUVendor, GPUModel, GPUVersion, GPUClockSpeed
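As a rough illustration of how the new GLUE 2.1 ExecutionEnvironment attributes could be consumed, the sketch below models them as a Python dataclass and checks a GPUPerNode=X style request against them. The field types, the MHz unit for GPUClockSpeed, the `satisfies` helper, and the reading of PhysicalGPUs/LogicalGPUs as per-worker-node counts are all assumptions, not taken from the GLUE 2.1 text. The example node mirrors the MoBrain testbed (2 Tesla K20m per node); its version and clock values are illustrative.

```python
"""Sketch: GLUE 2.1 GPU attributes of ExecutionEnvironment and a GPUPerNode check.

Assumptions: field types, the MHz unit, per-node GPU counts, and the
satisfies() helper are illustrative only.
"""
from dataclasses import dataclass

@dataclass
class ExecutionEnvironmentGPU:
    physical_gpus: int    # GLUE 2.1 PhysicalGPUs
    logical_gpus: int     # GLUE 2.1 LogicalGPUs (GPUs a job can be given, assumed)
    gpu_vendor: str       # GLUE 2.1 GPUVendor
    gpu_model: str        # GLUE 2.1 GPUModel
    gpu_version: str      # GLUE 2.1 GPUVersion
    gpu_clock_speed: int  # GLUE 2.1 GPUClockSpeed (MHz assumed)

def satisfies(env, gpu_per_node, vendor=None):
    """True if one node of this environment can host a job asking for gpu_per_node GPUs."""
    if vendor is not None and env.gpu_vendor.lower() != vendor.lower():
        return False
    return env.logical_gpus >= gpu_per_node

# Hypothetical publication for a node like those of the MoBrain testbed
k20_node = ExecutionEnvironmentGPU(physical_gpus=2, logical_gpus=2,
                                   gpu_vendor="NVIDIA", gpu_model="Tesla K20m",
                                   gpu_version="3.5", gpu_clock_speed=706)
```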

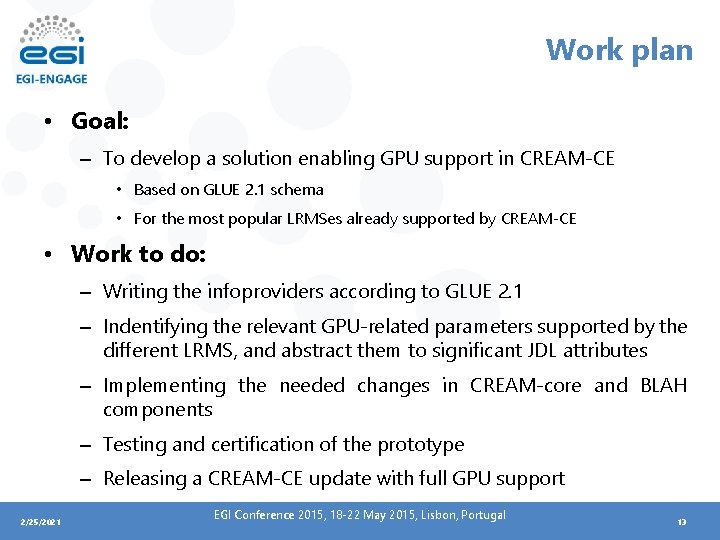

Work plan • Goal: – To develop a solution enabling GPU support in CREAM-CE • Based on the GLUE 2.1 schema • For the most popular LRMSes already supported by CREAM-CE • Work to do: – Writing the infoproviders according to GLUE 2.1 – Identifying the relevant GPU-related parameters supported by the different LRMSes, and abstracting them into meaningful JDL attributes – Implementing the needed changes in the CREAM-core and BLAH components – Testing and certification of the prototype – Releasing a CREAM-CE update with full GPU support
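The step "abstracting LRMS parameters into JDL attributes" amounts to one JDL-level request being translated into batch-system-specific directives. The Python sketch below shows that translation for Torque and SLURM under stated assumptions. The JDL attribute name `GPUNumber` and all function names are hypothetical; this is not CREAM/BLAH code. The emitted directives use standard Torque (`nodes=...:ppn=...:gpus=...`) and SLURM (`--gres=gpu:N`) syntax, and both batch systems must already be configured for GPUs.

```python
"""Sketch: mapping an abstract JDL GPU request onto LRMS directives.

Hypothetical: the JDL attribute GPUNumber and all function names.
"""

def torque_directives(cpu_number, gpu_number):
    # One node; cores as ppn, GPUs via Torque's gpus= node resource (GPU-enabled Torque)
    resource = f"nodes=1:ppn={cpu_number}"
    if gpu_number > 0:
        resource += f":gpus={gpu_number}"
    return [f"#PBS -l {resource}"]

def slurm_directives(cpu_number, gpu_number):
    directives = ["#SBATCH --nodes=1", f"#SBATCH --ntasks-per-node={cpu_number}"]
    if gpu_number > 0:
        # GPUs are requested as a generic resource (GRES) in SLURM
        directives.append(f"#SBATCH --gres=gpu:{gpu_number}")
    return directives

MAPPERS = {"pbs": torque_directives, "slurm": slurm_directives}

def lrms_directives(jdl, lrms):
    """jdl: dict of already-parsed JDL attributes; lrms: 'pbs' or 'slurm'."""
    try:
        mapper = MAPPERS[lrms]
    except KeyError:
        raise ValueError(f"unsupported LRMS: {lrms}") from None
    cpus = int(jdl.get("CPUNumber", 1))
    gpus = int(jdl.get("GPUNumber", 0))  # hypothetical abstract attribute
    return mapper(cpus, gpus)
```

For example, `lrms_directives({"CPUNumber": 2, "GPUNumber": 2}, "pbs")` yields `["#PBS -l nodes=1:ppn=2:gpus=2"]`. Keeping a single abstract attribute means that supporting another LRMS only requires adding one entry to the mapping.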

Work plan • Work has already started from the MoBrain use case and testbed – AMBER and GROMACS applications with CUDA 5.5 – 3 nodes (2x Intel Xeon E5-2620 v2) with 2 NVIDIA Tesla K20m GPUs per node at CIRMMP – Torque 4.2.10 (compiled from source with the NVML libs) / Maui 3.3.1 – EMI 3 CREAM-CE • Other use cases and testbeds from User Communities and Resource Centres are welcome – Collection and analysis of requirements – Our aim is to develop the most general solution

Timeline • M1–M4 – Review of available technologies for using GPUs in the grid • Investigating GPU support in the most popular LRMSes – Collecting and analysing requirements from user communities • M5–M7 – Definition of abstract GPU-related CREAM JDL attributes to be mapped onto the supported LRMSes / Update of the CREAM JDL specification document • M8–M10 – First implementation of GPU support in CREAM / GPU-enabled CREAM prototype released for testing • M12 – End of testing of the GPU-enabled CREAM prototype and feedback collection / Official final CREAM release with full GPU support

Accelerated computing in Clouds: Institute of Informatics (IISAS), Slovakia

Outline • Status of accelerated computing in Clouds – Accelerators – Hypervisors – Cloud management frameworks (CMFs) – VM images – Federated cloud facilities • Next steps • Long-term plan

Supporting accelerators in clouds • Requires development and support effort at all levels – Chipset: HW virtualization support – OS level: correct kernel configuration for the accelerators – Hypervisor: passthrough configuration, vGPU – CMFs: VM start, scheduler – FedCloud facilities: accounting, information discovery – Application: VM images with the correct drivers for specific chipsets

Accelerators • GPGPU (General-Purpose computing on Graphics Processing Units) – NVIDIA GPU/Tesla/GRID, AMD Radeon/FirePro, Intel HD Graphics, ... – Virtualization using VGA passthrough, or vGPU (GPU partitioning) on NVIDIA GRID accelerators • Intel Many Integrated Core Architecture – Xeon Phi Coprocessor – Virtualization using PCI passthrough • Specialized PCIe cards with accelerators: – DSP (Digital Signal Processors) – FPGA (Field Programmable Gate Array) – Not commonly used in cloud environments

Passthrough • Gives VMs direct access to devices (typically PCI), bypassing the virtualization layer – VMs use the physical devices directly – Can reduce the overhead introduced by virtualization • However, there are several limitations – Devices cannot be shared between VMs – Live migration is not supported – Various stability and security issues
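For a concrete picture of what passthrough means at the hypervisor level, the sketch below builds the libvirt `<hostdev>` element that hands a whole PCI device (such as a GPU) to a single guest. It assumes a QEMU/KVM host managed through libvirt, which the slide does not specify, and the PCI address is hypothetical (on a real host it would come from `lspci -D`). Because the device is assigned to exactly one guest, this also shows why passthrough devices cannot be shared between VMs.

```python
"""Sketch: a libvirt <hostdev> element for PCI passthrough of one device.

Assumes QEMU/KVM managed by libvirt; the PCI address used is hypothetical.
"""
import re
import xml.etree.ElementTree as ET

PCI_ADDR = re.compile(r"^([0-9a-fA-F]{4}):([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])$")

def hostdev_xml(pci_address):
    """Turn 'domain:bus:slot.function' into a <hostdev> snippet for the domain XML."""
    m = PCI_ADDR.match(pci_address)
    if m is None:
        raise ValueError(f"not a domain:bus:slot.function PCI address: {pci_address!r}")
    domain, bus, slot, function = m.groups()
    # managed='yes' lets libvirt detach the device from its host driver when the VM starts
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", domain=f"0x{domain}", bus=f"0x{bus}",
                  slot=f"0x{slot}", function=f"0x{function}")
    return ET.tostring(hostdev, encoding="unicode")
```

The resulting snippet would be placed inside the `<devices>` section of the guest's domain XML.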

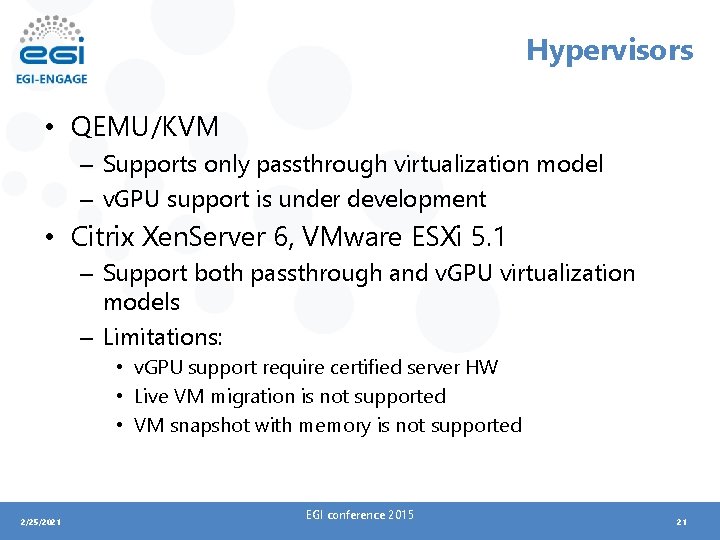

Hypervisors • QEMU/KVM – Supports only the passthrough virtualization model – vGPU support is under development • Citrix XenServer 6, VMware ESXi 5.1 – Support both passthrough and vGPU virtualization models – Limitations: • vGPU support requires certified server HW • Live VM migration is not supported • VM snapshots with memory are not supported

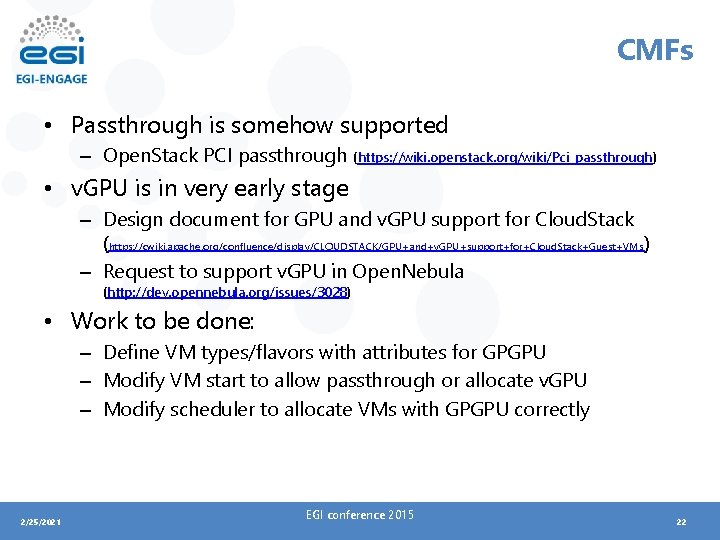

CMFs • Passthrough is partially supported – OpenStack PCI passthrough (https://wiki.openstack.org/wiki/Pci_passthrough) • vGPU support is at a very early stage – Design document for GPU and vGPU support for CloudStack (https://cwiki.apache.org/confluence/display/CLOUDSTACK/GPU+and+vGPU+support+for+CloudStack+Guest+VMs) – Request to support vGPU in OpenNebula (http://dev.opennebula.org/issues/3028) • Work to be done: – Define VM types/flavors with attributes for GPGPU – Modify VM start to allow passthrough or allocate a vGPU – Modify the scheduler to allocate VMs with GPGPUs correctly
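The "define flavors with GPGPU attributes" and "modify the scheduler" items fit together: the scheduler has to read the GPU request from the flavor and place the VM only on hosts with enough free devices. The Python sketch below illustrates this. The flavor request follows OpenStack's `pci_passthrough:alias` extra-spec format (`alias:count[,alias:count]`). The alias name `K20m`, the host inventories, the default count of 1, and the function names are hypothetical, and this is not Nova's actual filter code.

```python
"""Sketch: a GPU-aware host filter for a CMF scheduler.

Flavor requests use the OpenStack 'pci_passthrough:alias' extra-spec format;
alias names, inventories and function names are hypothetical.
"""

def requested_devices(flavor):
    """Parse 'alias:count[,alias:count]' into {alias: count} (count defaults to 1, assumed)."""
    spec = flavor.get("extra_specs", {}).get("pci_passthrough:alias", "")
    wanted = {}
    for item in filter(None, (part.strip() for part in spec.split(","))):
        alias, _, count = item.partition(":")
        wanted[alias] = wanted.get(alias, 0) + int(count or 1)
    return wanted

def hosts_for(flavor, hosts):
    """hosts: {hostname: {alias: free_devices}} -> sorted hosts able to start the VM."""
    wanted = requested_devices(flavor)
    return sorted(name for name, free in hosts.items()
                  if all(free.get(alias, 0) >= n for alias, n in wanted.items()))

gpu_flavor = {"name": "gpu.large", "extra_specs": {"pci_passthrough:alias": "K20m:2"}}
inventory = {"node1": {"K20m": 2}, "node2": {"K20m": 1}, "node3": {}}
```

With this inventory, `hosts_for(gpu_flavor, inventory)` returns only `node1`, while a flavor without the extra spec may land on any host.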

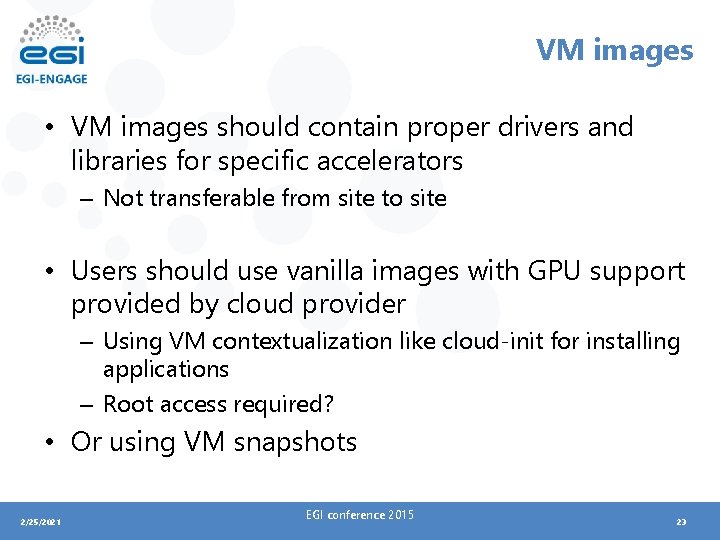

VM images • VM images must contain the proper drivers and libraries for the specific accelerators – Not transferable from site to site • Users should use vanilla images with GPU support provided by the cloud provider – Using VM contextualization such as cloud-init to install applications – Root access required? • Or using VM snapshots
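Contextualization lets users keep the provider's tested GPU image and add their applications at boot. The Python sketch below generates cloud-init user data for that purpose. `#cloud-config`, `package_update`, `packages` and `runcmd` are standard cloud-config keys. The package name and command in the usage line are hypothetical, and the `cloud_config` helper is only an illustration. Because cloud-init runs these modules with root privileges during boot, the user does not need interactive root access for this step. Whether that is acceptable for security is exactly the open question on the slide.

```python
"""Sketch: cloud-init user data installing applications on a provider GPU image.

The package/command values in the example are hypothetical.
"""
import json

def cloud_config(packages, commands):
    lines = ["#cloud-config", "package_update: true", "packages:"]
    # json.dumps yields double-quoted strings, which are valid YAML scalars
    lines += [f"  - {json.dumps(p)}" for p in packages]
    lines.append("runcmd:")
    lines += [f"  - {json.dumps(c)}" for c in commands]
    return "\n".join(lines) + "\n"

user_data = cloud_config(["gromacs"], ["nvidia-smi"])
```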

FedCloud facilities • AppDB – VM images are rather site-specific: does it make sense to use AppDB? • Information discovery – Should reuse the GLUE 2 schema of grid sites with GPGPU • Accounting – How to account for GPU usage? • Brokering, monitoring, VM management

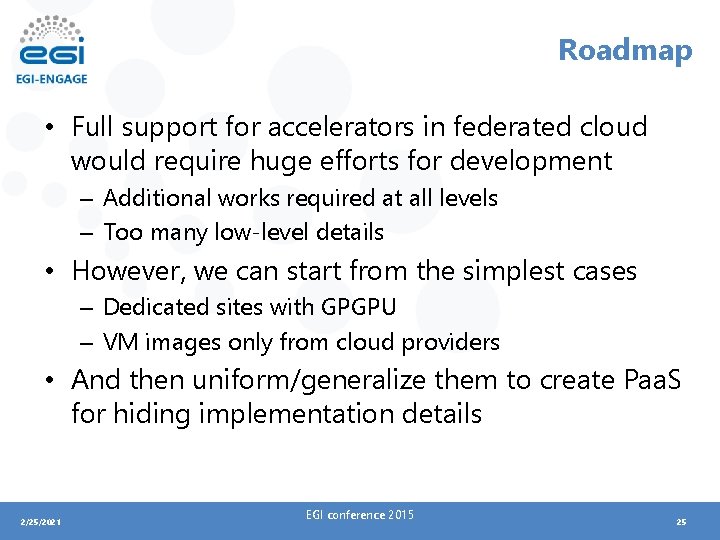

Roadmap • Full support for accelerators in the federated cloud would require a huge development effort – Additional work required at all levels – Too many low-level details • However, we can start from the simplest cases – Dedicated sites with GPGPU – VM images only from cloud providers • And then unify/generalize them to create a PaaS that hides implementation details

Next step • Dedicated cloud sites with GPGPU – Homogeneous, identical worker nodes – Single VM type, single VM per node → Simple configuration, no conflicting resources, no change to the CMF scheduler, no vGPU support required – VM image from the cloud provider → No additional drivers required, thoroughly tested by the provider → Possibly configured without root access for security – Users just start the VMs and use them → No low-level details: just download apps/data and compute • And integrate the site into EGI FedCloud

Work in WP4 • Review available technologies for supporting accelerated computing in clouds – Identify what additional work is required and evaluate it • Create cloud sites with GPGPU support as a proof of concept – Dedicated cloud sites first, then generalize • Integrate the sites into FedCloud – Requires cooperation with the FedCloud facilities

Long-term plan • Create a PaaS for GPGPU – Hide low-level technical details – Uniform access to the federated cloud – Performance optimization and brokering

Additional information • More information is available on the wiki page https://wiki.egi.eu/wiki/GPGPU-FedCloud

Thank you for your attention. Questions?