Systems Engineering at NASA Selected Topics Jim Andary



Systems Engineering at NASA: Selected Topics
Jim Andary, NASA Goddard Space Flight Center, James.F.Andary@nasa.gov
November 5, 2009
Morgan State University • Systems Engineering Lecture 3

Agenda
The lecture will be based on the NASA Systems Engineering Handbook (SP-2007-6105). Included are discussions of the following:
• Handbook Overview
• System Modeling
• Risk Management
• Configuration Management
• Technical Measures
• Decision Analysis

Finding Documents
• NASA Systems Engineering Processes and Requirements (NPR 7123.1A) can be viewed at: http://nodis3.gsfc.nasa.gov/displayDir.cfm?t=NPR&c=7123&s=1A
• The NASA Systems Engineering Handbook (SP-2007-6105 Rev. 1) can be downloaded from: http://education.ksc.nasa.gov/esmdspacegrant/Documents/NASA%20SP-2007-6105%20Rev%201%20Final%2031Dec2007.pdf

Handbook Overview: Why a Handbook?
• The handbook is intended to provide general guidance and information on systems engineering as it should be applied throughout NASA.
• Its goal is to increase systems engineering process awareness and consistency across NASA and to advance the practice of systems engineering.
• The handbook is a companion to NASA Systems Engineering Processes and Requirements (NPR 7123.1), which we studied last week.

Handbook Overview: Table of Contents
1. Introduction
2. Fundamentals of Systems Engineering
3. NASA Program/Project Life Cycle
4. System Design
5. Product Realization
6. Crosscutting Technical Management
7. Special Topics
Appendices (17)


System Modeling: Models
• Models are the language of the designer.
• Models are representations of the system-to-be-built or as-built.
• Models are a vehicle for communication with various stakeholders.
• Models allow reasoning about characteristics of the real system.
• Models can be used for verification by analysis.
• All models must themselves be verified.

System Modeling Tools
• There are many modeling tools available to the systems engineering team:
  – Vitech CORE© – models IDEF0, functional flow block diagrams, etc.
  – MagicDraw – UML business process, architecture, software, and system modeling tool with teamwork support
  – Enterprise Architect – UML, SysML, architecture, system modeling
  – SolidWorks – 3D design

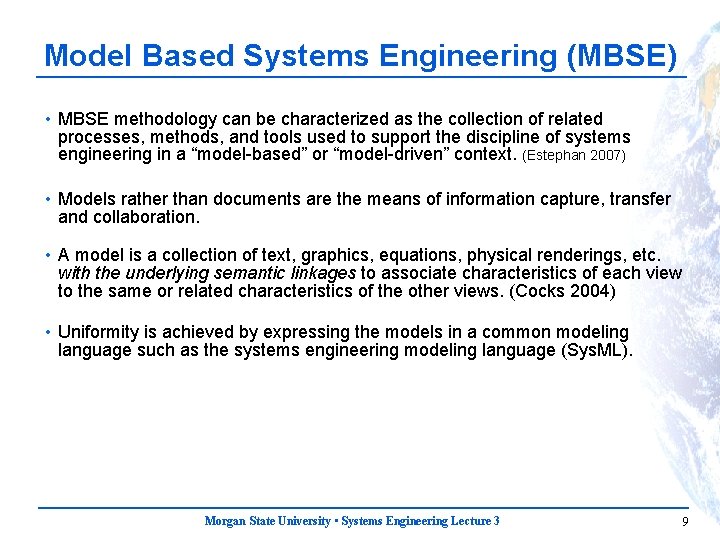

Model-Based Systems Engineering (MBSE)
• MBSE methodology can be characterized as the collection of related processes, methods, and tools used to support the discipline of systems engineering in a “model-based” or “model-driven” context (Estefan 2007).
• Models rather than documents are the means of information capture, transfer, and collaboration.
• A model is a collection of text, graphics, equations, physical renderings, etc., with underlying semantic linkages that associate characteristics of each view with the same or related characteristics of the other views (Cocks 2004).
• Uniformity is achieved by expressing the models in a common modeling language such as the Systems Modeling Language (SysML).

Model-Based Systems Engineering (MBSE)
• Although MBSE holds considerable promise for revolutionizing the systems engineering process and freeing systems engineering from the present document-centric environment, it still has a long way to go before it is universally accepted and implemented.
• The key enablers of a fully developed MBSE are the emerging technologies that facilitate the exchange of information among the various system viewpoints developed in domain-specific languages using a variety of tools and platforms.
• The International Council on Systems Engineering [INCOSE, 2007] vision for the future development of MBSE predicts that MBSE will be widely used throughout both academia and industry by the year 2020.

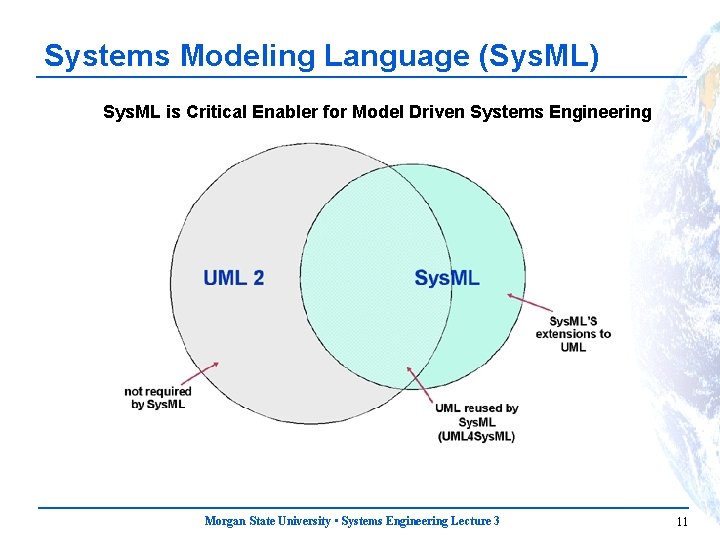

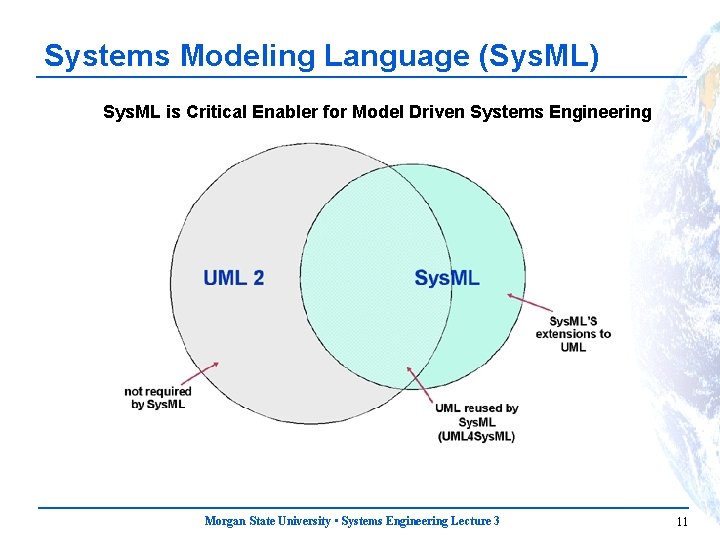

Systems Modeling Language (SysML)
SysML Is a Critical Enabler for Model-Driven Systems Engineering

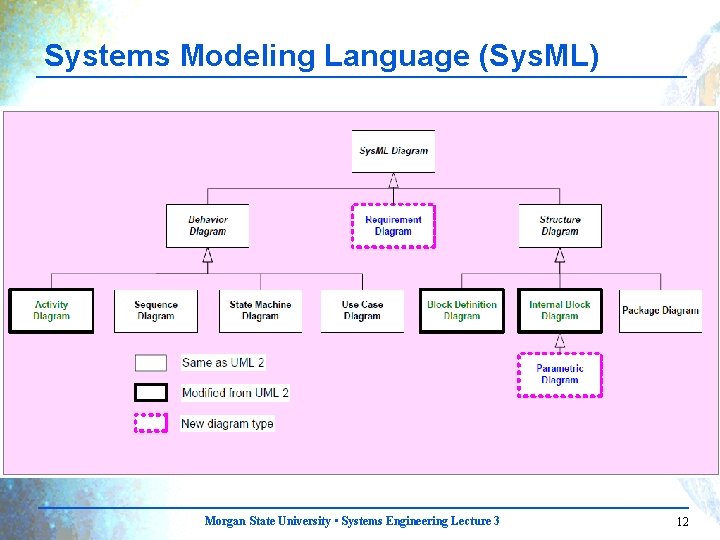

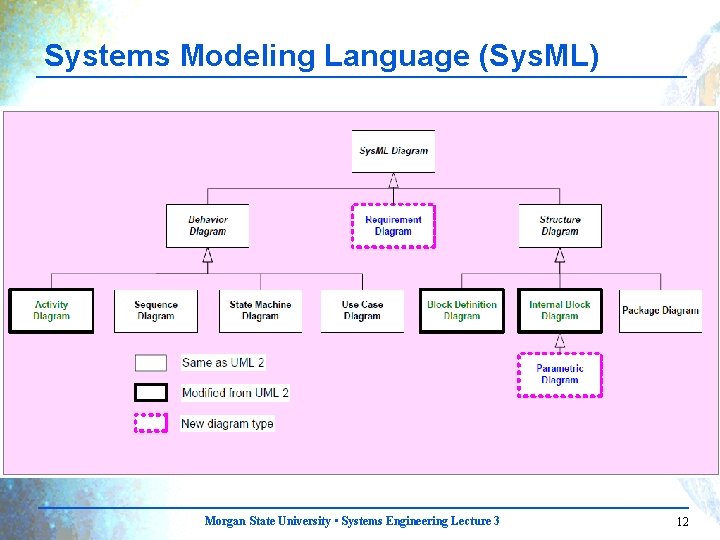

Systems Modeling Language (SysML)

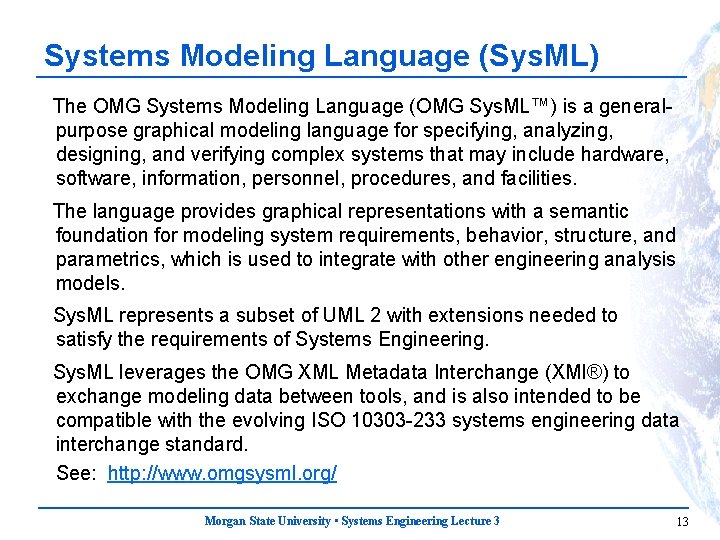

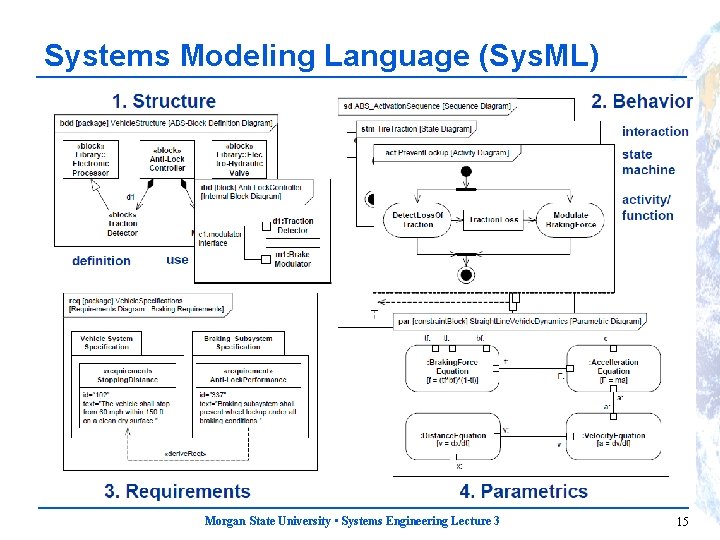

Systems Modeling Language (SysML)
The OMG Systems Modeling Language (OMG SysML™) is a general-purpose graphical modeling language for specifying, analyzing, designing, and verifying complex systems that may include hardware, software, information, personnel, procedures, and facilities. The language provides graphical representations with a semantic foundation for modeling system requirements, behavior, structure, and parametrics, which is used to integrate with other engineering analysis models. SysML represents a subset of UML 2 with extensions needed to satisfy the requirements of systems engineering. SysML leverages the OMG XML Metadata Interchange (XMI®) to exchange modeling data between tools, and is also intended to be compatible with the evolving ISO 10303-233 systems engineering data interchange standard. See: http://www.omgsysml.org/

Systems Modeling Language (SysML)
• SysML is a visual modeling language that provides:
  – Semantics (meaning)
  – Notation (representation of meaning)
• SysML is not a methodology or a tool.
• SysML is methodology- and tool-independent.

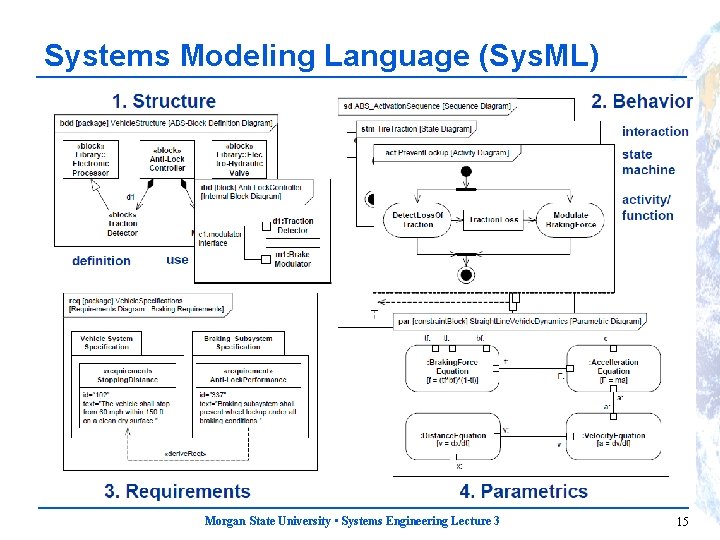

Systems Modeling Language (SysML)

![Systems Modeling Language (SysML), from Peak 2007](https://slidetodoc.com/presentation_image/2d14d5c9a400ef445660b8a2c2d1a343/image-16.jpg)

Systems Modeling Language (SysML), from [Peak, 2007]

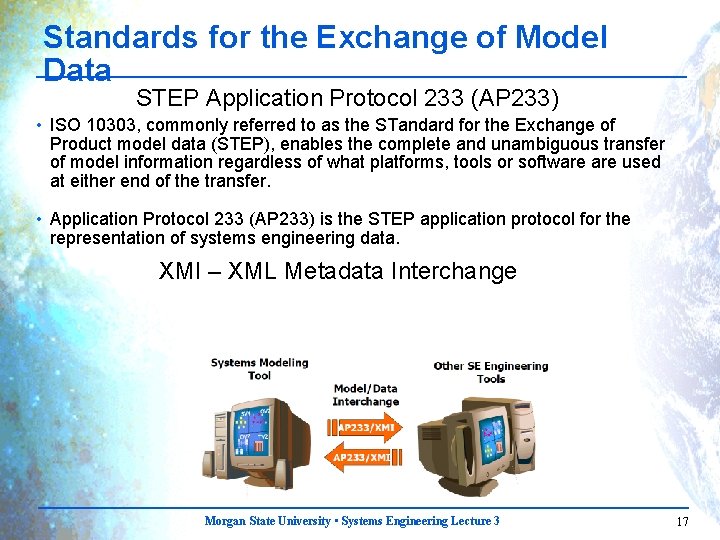

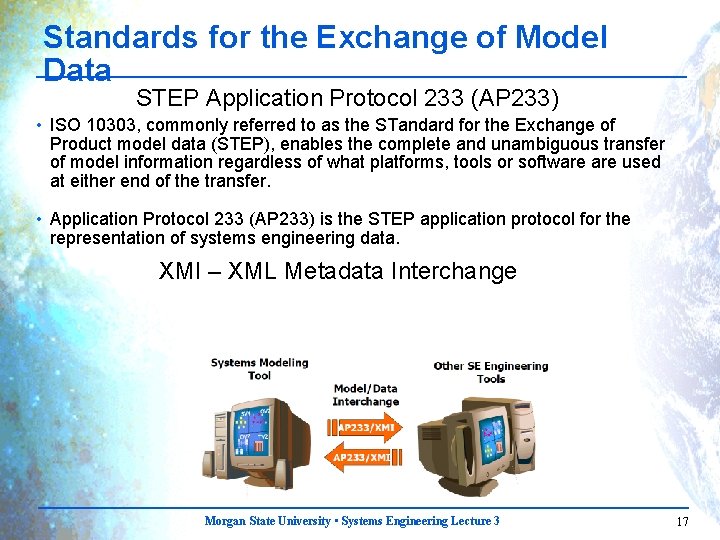

Standards for the Exchange of Model Data
STEP Application Protocol 233 (AP 233)
• ISO 10303, commonly referred to as the STandard for the Exchange of Product model data (STEP), enables the complete and unambiguous transfer of model information regardless of the platforms, tools, or software used at either end of the transfer.
• Application Protocol 233 (AP 233) is the STEP application protocol for the representation of systems engineering data.
XMI – XML Metadata Interchange


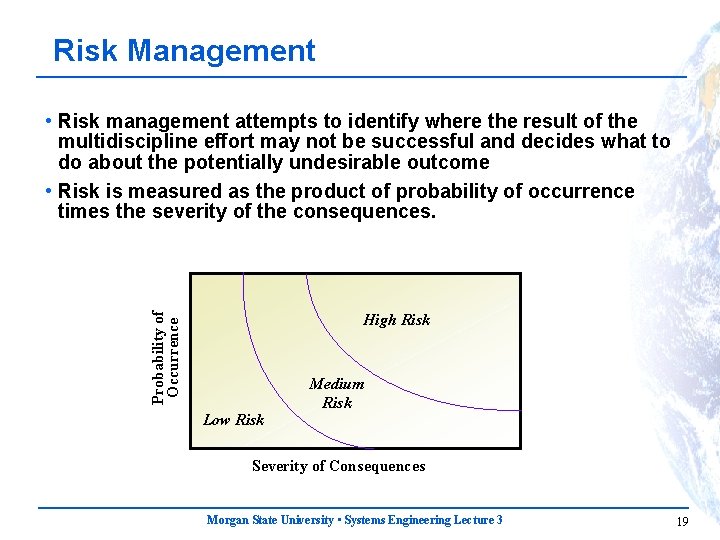

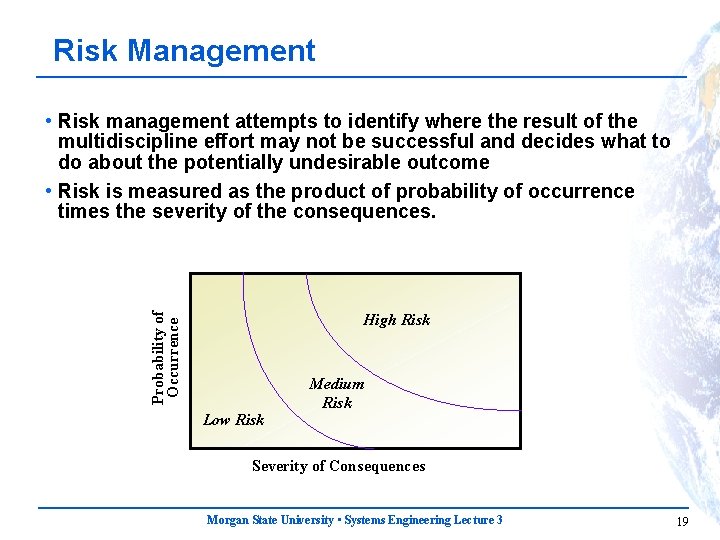

Risk Management
• Risk management attempts to identify where the result of the multidiscipline effort may not be successful and decides what to do about the potentially undesirable outcome.
• Risk is measured as the product of the probability of occurrence and the severity of the consequences.
[Figure: risk matrix with Probability of Occurrence on one axis and Severity of Consequences on the other, divided into Low Risk, Medium Risk, and High Risk regions]
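The product rule above can be sketched as a small scoring routine. This is a minimal illustration, not the Handbook's method: the 1–5 rating scales, the Low/Medium/High cut-offs, and the example risks are all hypothetical.

```python
# Minimal sketch: risk score = probability rating x severity rating.
# The 1-5 scales and the Low/Medium/High cut-offs are hypothetical.

def risk_score(probability: int, severity: int) -> int:
    """Product of the probability-of-occurrence and severity ratings."""
    if not (1 <= probability <= 5 and 1 <= severity <= 5):
        raise ValueError("ratings must be integers from 1 to 5")
    return probability * severity

def risk_level(score: int) -> str:
    """Map a 1-25 score onto hypothetical Low/Medium/High bands."""
    if score >= 15:
        return "High"
    if score >= 6:
        return "Medium"
    return "Low"

# Hypothetical risk list: (risk, probability rating, severity rating)
risks = [
    ("Late delivery of star tracker", 4, 4),
    ("Thruster valve leak", 2, 5),
    ("Ground software defect", 3, 2),
    ("Launch vehicle failure", 1, 5),
]

# Rank so that resources can be applied to the "High" risks first
ranked = sorted(risks, key=lambda r: risk_score(r[1], r[2]), reverse=True)
for name, p, s in ranked:
    score = risk_score(p, s)
    print(f"{name:30s} P={p} S={s} score={score:2d} {risk_level(score)}")
```

Note that a pure product rates the launch vehicle failure (1 × 5) as "Low". This is one reason risk matrices are often read cell by cell, so that catastrophic-consequence cells are never dismissed on the product alone.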

Risk Management
• Risk management must consider the risk of mission loss and the risk that the end item is not completed on time and within cost:
  – Define tolerable risks of mission loss.
  – Identify and document risks.
  – Use reliability analyses to assess risks:
    • Probabilistic Risk Assessment (PRA)
    • Failure Modes and Effects Analysis (FMEA)
    • Fault Tree Analysis (FTA)
  – Analyze the probability and consequences of their occurrence.
  – Apply resources to reduce the “High” risks.
  – Implement risk reduction measures to mitigate risks before they become a problem.
• There are many types of risks:
  – Safety risks
  – Risks to end item performance
  – Risks to delivery of the end item on time and within cost

Risk Management Responsibilities
• The systems engineering team is responsible for:
  – Coordinating risk identification,
  – Performing risk analysis, and
  – Recommending risk reduction measures.
• The product manager is responsible for deciding if, and to what level, resources will be allocated for risk reduction.

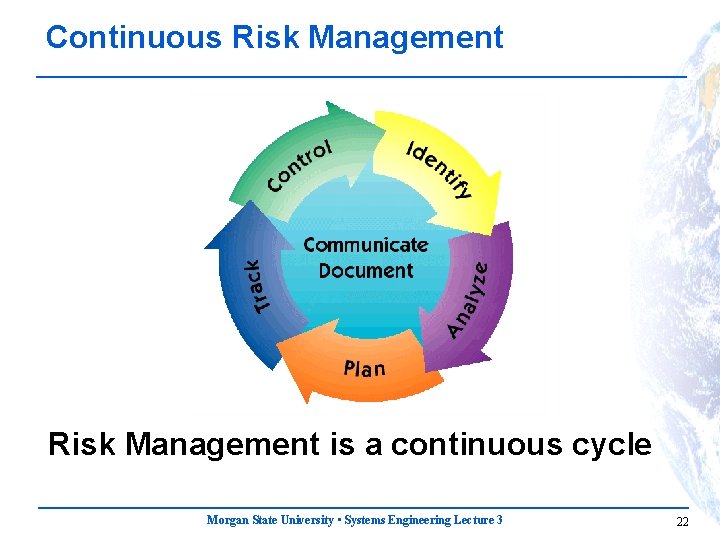

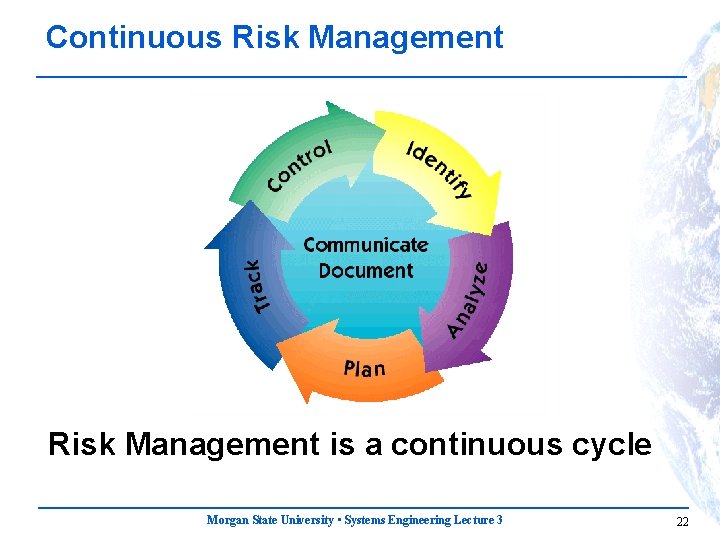

Continuous Risk Management is a continuous cycle

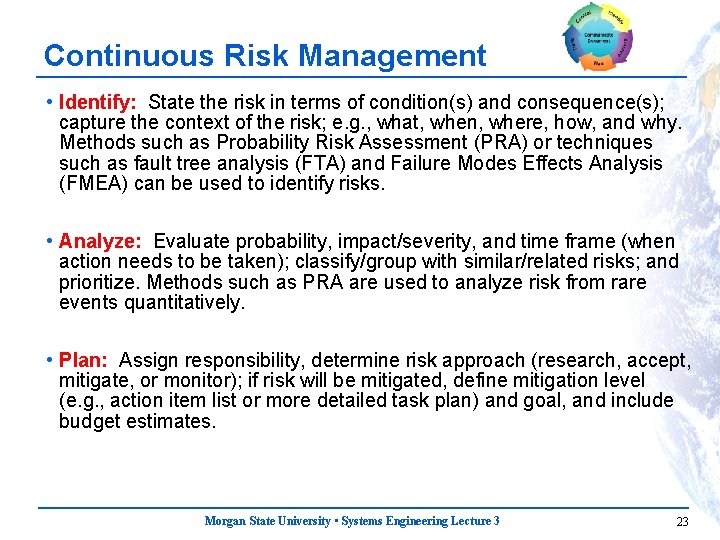

Continuous Risk Management
• Identify: State the risk in terms of condition(s) and consequence(s); capture the context of the risk, e.g., what, when, where, how, and why. Methods such as Probabilistic Risk Assessment (PRA) or techniques such as Fault Tree Analysis (FTA) and Failure Modes and Effects Analysis (FMEA) can be used to identify risks.
• Analyze: Evaluate probability, impact/severity, and time frame (when action needs to be taken); classify/group with similar/related risks; and prioritize. Methods such as PRA are used to analyze risk from rare events quantitatively.
• Plan: Assign responsibility; determine the risk approach (research, accept, mitigate, or monitor); if the risk will be mitigated, define the mitigation level (e.g., action item list or more detailed task plan) and goal, and include budget estimates.

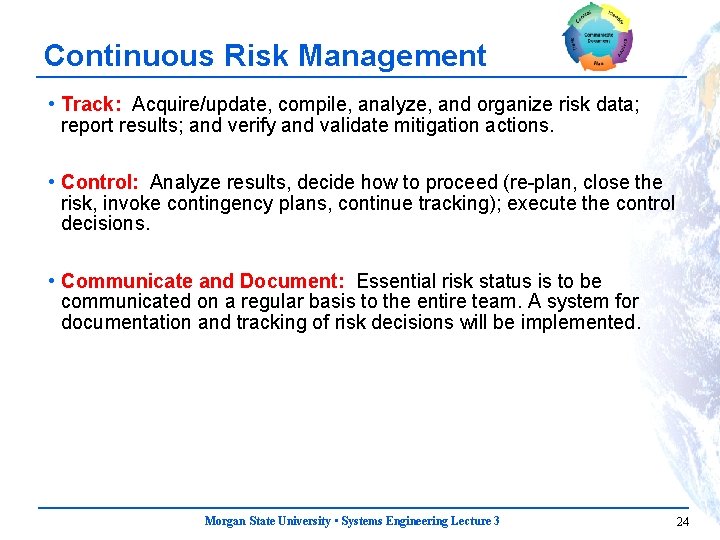

Continuous Risk Management
• Track: Acquire/update, compile, analyze, and organize risk data; report results; and verify and validate mitigation actions.
• Control: Analyze results, decide how to proceed (re-plan, close the risk, invoke contingency plans, continue tracking), and execute the control decisions.
• Communicate and Document: Essential risk status is to be communicated on a regular basis to the entire team. A system for documentation and tracking of risk decisions will be implemented.
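The Identify → Analyze → Plan → Track → Control cycle, with every transition documented, can be sketched as a small risk record. This is an illustrative sketch only: the class names, the "Given that …, there is a possibility that …" statement template, and the example risk are hypothetical, not a NASA tool or format.

```python
from dataclasses import dataclass, field
from enum import Enum

class Step(Enum):
    IDENTIFY = "Identify"
    ANALYZE = "Analyze"
    PLAN = "Plan"
    TRACK = "Track"
    CONTROL = "Control"

# CRM functions in cycle order; Control feeds back into Identify.
CYCLE = list(Step)

@dataclass
class Risk:
    condition: str
    consequence: str
    step: Step = Step.IDENTIFY
    closed: bool = False
    log: list = field(default_factory=list)  # "Communicate and Document": every decision recorded

    def statement(self) -> str:
        # Hypothetical condition/consequence template
        return f"Given that {self.condition}, there is a possibility that {self.consequence}."

    def advance(self, note: str) -> None:
        """Move to the next CRM function and record why."""
        if self.closed:
            raise ValueError("risk is closed")
        nxt = CYCLE[(CYCLE.index(self.step) + 1) % len(CYCLE)]
        self.log.append(f"{self.step.value} -> {nxt.value}: {note}")
        self.step = nxt

    def close(self, note: str) -> None:
        """Closing a risk is one of the decisions available in Control."""
        if self.step is not Step.CONTROL:
            raise ValueError("a risk can only be closed from the Control step")
        self.closed = True
        self.log.append(f"Control: closed ({note})")

# Hypothetical example risk
r = Risk("the battery vendor has slipped two deliveries",
         "flight batteries arrive after integration starts")
for note in ("risk stated and context captured",
             "P=4, S=3; action needed within 2 months",
             "mitigate: qualify a second vendor",
             "vendor milestones reviewed monthly"):
    r.advance(note)
print(r.statement())
print(r.step.value)  # Control
r.close("second vendor delivered qualified cells")
print(*r.log, sep="\n")
```

Keeping the log inside the record means the documentation requirement is met as a side effect of working the risk, rather than as a separate activity.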

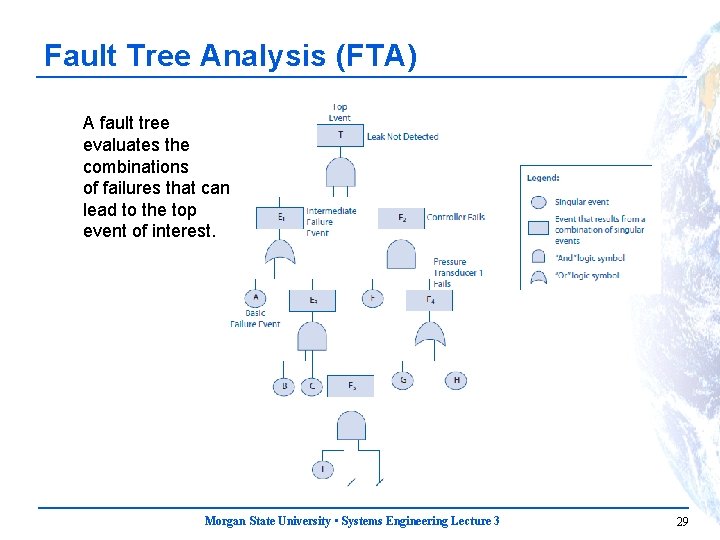

Risk Analysis Methods
Failure Modes and Effects Analysis (FMEA) and Fault Tree Analysis (FTA) are methodologies designed to:
• Identify potential failure modes for a product or process,
• Assess the risk associated with those failure modes,
• Rank the issues in terms of importance, and
• Identify and carry out corrective actions to address the most serious concerns.

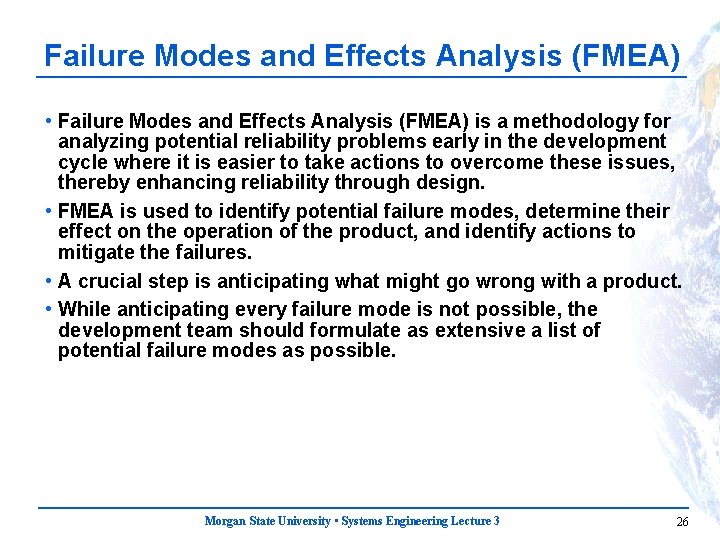

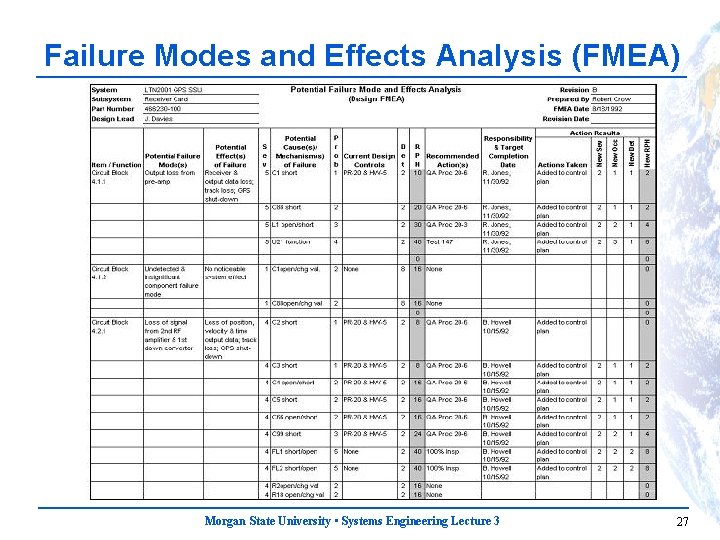

Failure Modes and Effects Analysis (FMEA)
• Failure Modes and Effects Analysis (FMEA) is a methodology for analyzing potential reliability problems early in the development cycle, when it is easier to take actions to overcome these issues, thereby enhancing reliability through design.
• FMEA is used to identify potential failure modes, determine their effect on the operation of the product, and identify actions to mitigate the failures.
• A crucial step is anticipating what might go wrong with a product.
• While anticipating every failure mode is not possible, the development team should formulate as extensive a list of potential failure modes as possible.

Failure Modes and Effects Analysis (FMEA)

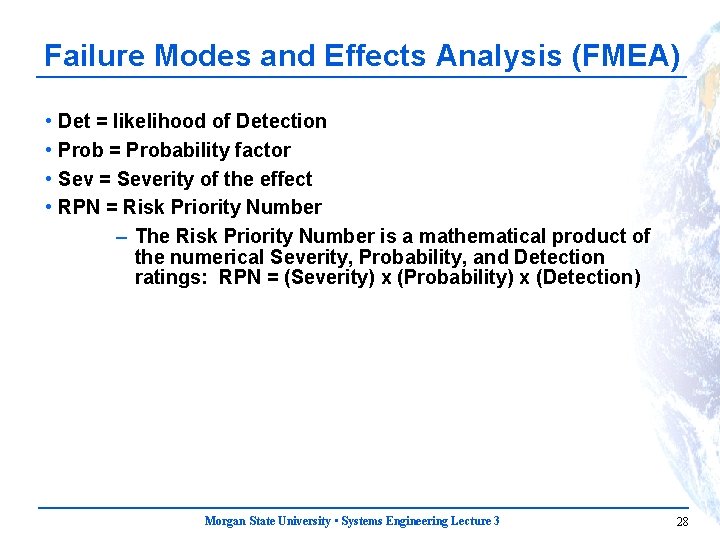

Failure Modes and Effects Analysis (FMEA)
• Det = likelihood of Detection
• Prob = Probability factor
• Sev = Severity of the effect
• RPN = Risk Priority Number
  – The Risk Priority Number is the mathematical product of the numerical Severity, Probability, and Detection ratings:
    RPN = (Severity) × (Probability) × (Detection)
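The RPN formula above can be applied to an FMEA worksheet and used to rank failure modes. This is a minimal sketch: the 1–10 rating scales are a common convention assumed here, the detection rating is assumed to grow as a failure becomes harder to detect, and the worksheet rows are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class FailureMode:
    item: str
    mode: str
    sev: int   # severity of the effect (1-10, assumed scale)
    prob: int  # probability/occurrence rating (1-10, assumed scale)
    det: int   # detection rating (1-10, assumed: higher = harder to detect)

    @property
    def rpn(self) -> int:
        # RPN = Severity x Probability x Detection
        return self.sev * self.prob * self.det

# Hypothetical FMEA worksheet rows
rows = [
    FailureMode("Latch valve", "Fails closed", sev=8, prob=3, det=4),
    FailureMode("Pressure transducer", "Drifts high", sev=5, prob=4, det=7),
    FailureMode("Heater", "Fails on", sev=6, prob=2, det=3),
]

# Highest RPN first: candidates for corrective action
ranked = sorted(rows, key=lambda fm: fm.rpn, reverse=True)
for fm in ranked:
    print(f"{fm.item:20s} {fm.mode:12s} RPN={fm.rpn}")
```

Because very different rating combinations can yield the same RPN, teams often also review high-severity items directly, whatever their RPN.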

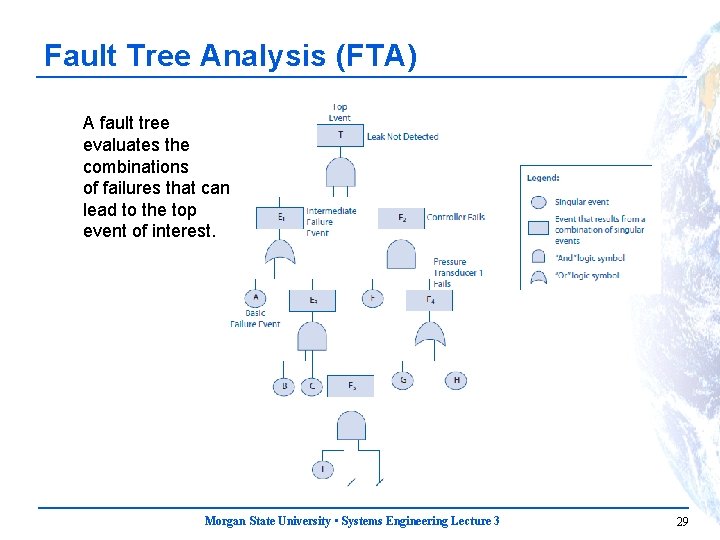

Fault Tree Analysis (FTA)
A fault tree evaluates the combinations of failures that can lead to the top event of interest.
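A fault tree can be evaluated by recursing through its AND and OR gates. This is a minimal sketch under two stated assumptions: basic events are independent, and no basic event appears more than once in the tree. Trees with repeated events need cut-set methods instead. The tree and its probabilities are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Basic:
    name: str
    p: float  # probability of this basic event (assumed value)

@dataclass
class Gate:
    kind: str     # "AND" or "OR"
    inputs: list  # child Basic events and/or Gates

def prob(node) -> float:
    """Probability of the event at `node`, assuming all basic events are
    independent and no basic event appears more than once in the tree."""
    if isinstance(node, Basic):
        return node.p
    child_ps = [prob(child) for child in node.inputs]
    if node.kind == "AND":           # output occurs only if every input occurs
        out = 1.0
        for p in child_ps:
            out *= p
        return out
    if node.kind == "OR":            # output occurs if at least one input occurs
        none_occur = 1.0
        for p in child_ps:
            none_occur *= 1.0 - p
        return 1.0 - none_occur
    raise ValueError(f"unknown gate type {node.kind!r}")

# Hypothetical tree: no thrust if the valve fails closed OR both heaters fail
top = Gate("OR", [
    Basic("Isolation valve fails closed", 0.01),
    Gate("AND", [Basic("Primary heater fails", 0.02),
                 Basic("Backup heater fails", 0.05)]),
])
print(f"P(top event) = {prob(top):.5f}")  # 1 - 0.99 * 0.999 = 0.01099
```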

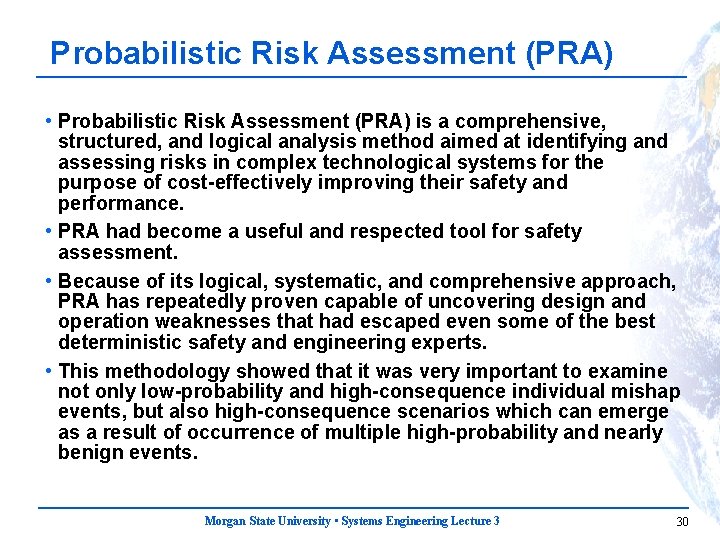

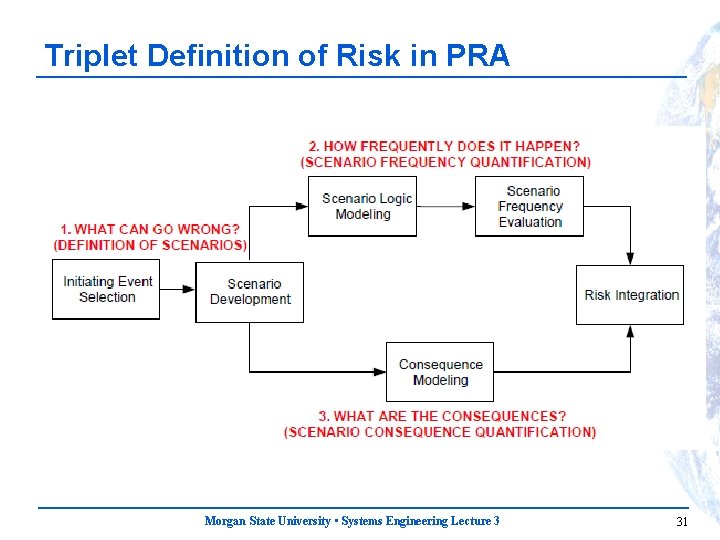

Probabilistic Risk Assessment (PRA)
• Probabilistic Risk Assessment (PRA) is a comprehensive, structured, and logical analysis method aimed at identifying and assessing risks in complex technological systems for the purpose of cost-effectively improving their safety and performance.
• PRA has become a useful and respected tool for safety assessment.
• Because of its logical, systematic, and comprehensive approach, PRA has repeatedly proven capable of uncovering design and operation weaknesses that had escaped even some of the best deterministic safety and engineering experts.
• This methodology showed that it is very important to examine not only low-probability, high-consequence individual mishap events, but also high-consequence scenarios that can emerge from the combined occurrence of multiple high-probability, nearly benign events.

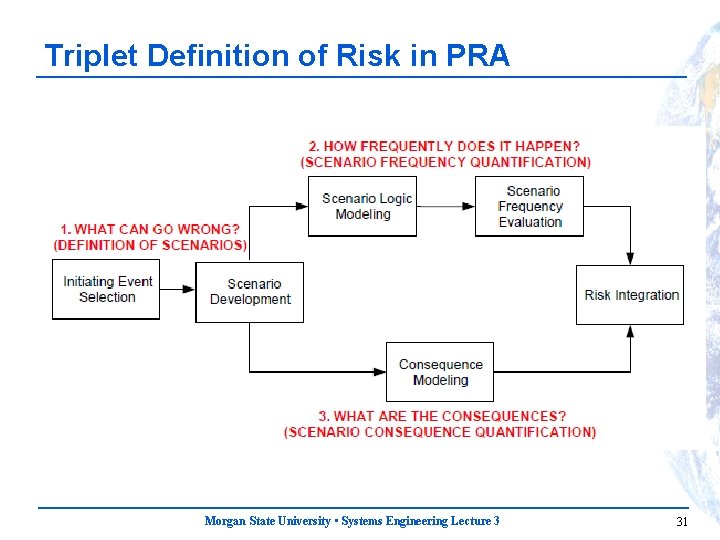

Triplet Definition of Risk in PRA
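In the PRA literature the triplet definition (commonly attributed to Kaplan and Garrick) treats risk as a set of triplets ⟨S_i, L_i, X_i⟩: a scenario (what can go wrong), its likelihood (how likely it is), and its consequence (how bad it is). The sketch below stores such a set and computes one point on a risk curve. It assumes the scenarios are mutually exclusive, so their likelihoods can be summed. The scenarios and numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Triplet:
    scenario: str        # S_i: what can go wrong
    likelihood: float    # L_i: how likely it is (per mission, assumed)
    consequence: float   # X_i: how bad it is (hypothetical loss units)

def exceedance(risk: list, x: float) -> float:
    """Likelihood that the consequence is at least x, assuming the
    scenarios are mutually exclusive. Sweeping x traces a risk curve."""
    return sum(t.likelihood for t in risk if t.consequence >= x)

# Hypothetical scenario set
risk = [
    Triplet("Leak detected, safe shutdown", 1e-2, 1.0),
    Triplet("Leak undetected, partial propellant loss", 1e-3, 50.0),
    Triplet("Tank rupture, loss of mission", 1e-5, 500.0),
]
for x in (1.0, 50.0, 500.0):
    print(f"P(consequence >= {x:g}) = {exceedance(risk, x):.3g}")
```

Keeping the scenarios explicit, rather than collapsing them into one number, preserves the information PRA needs to find which scenario dominates each part of the curve.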

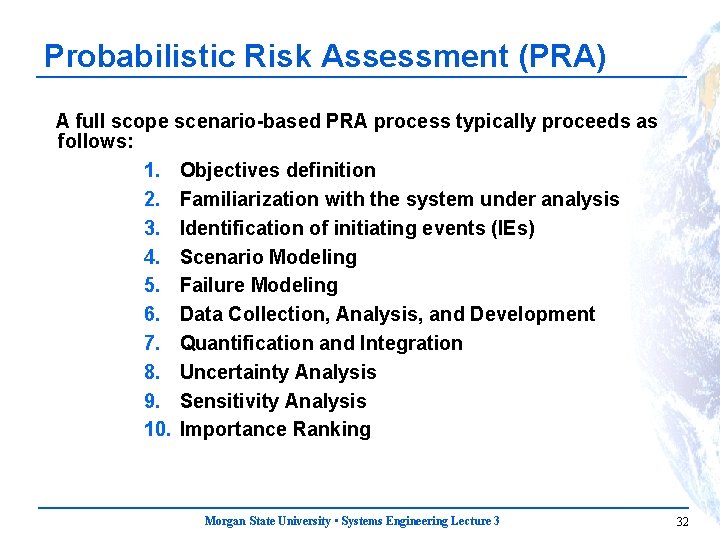

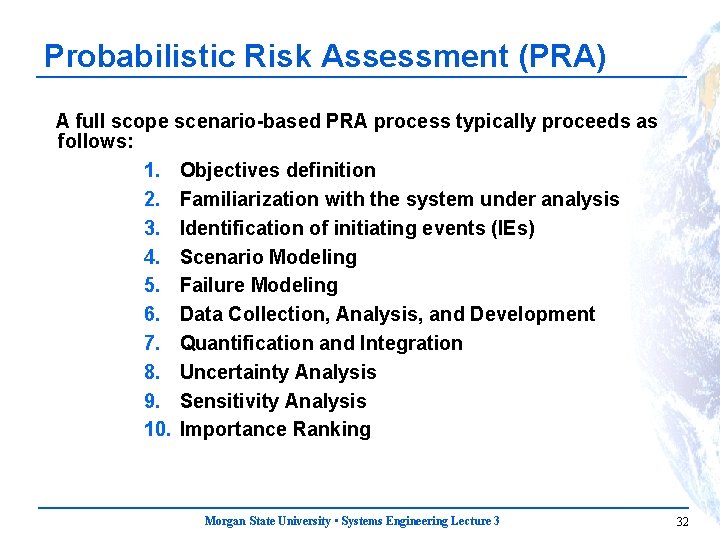

Probabilistic Risk Assessment (PRA)
A full-scope, scenario-based PRA process typically proceeds as follows:
1. Objectives definition
2. Familiarization with the system under analysis
3. Identification of initiating events (IEs)
4. Scenario Modeling
5. Failure Modeling
6. Data Collection, Analysis, and Development
7. Quantification and Integration
8. Uncertainty Analysis
9. Sensitivity Analysis
10. Importance Ranking
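Steps 7 and 8 (quantification and uncertainty analysis) are often carried out by Monte Carlo sampling. Each basic-event probability is drawn from a distribution, the top event is re-quantified for each draw, and the resulting spread is reported. This is a minimal sketch of that idea, not a NASA tool. The lognormal distributions, the error-factor convention, the small valve/heater logic, and all numbers are assumptions made for illustration.

```python
import math
import random
import statistics

# Hypothetical basic events: name -> (median probability, error factor).
# The lognormal model with EF = 95th percentile / median is an assumed,
# commonly used convention; all numbers are invented for illustration.
EVENTS = {
    "valve_fails_closed": (1e-2, 3.0),
    "primary_heater_fails": (2e-2, 5.0),
    "backup_heater_fails": (5e-2, 5.0),
}

def sample_probability(median: float, ef: float, rng: random.Random) -> float:
    sigma = math.log(ef) / 1.645                 # EF = exp(1.645 * sigma)
    p = rng.lognormvariate(math.log(median), sigma)
    return min(p, 1.0)                           # clip to a valid probability

def top_event(p: dict) -> float:
    # Quantification: valve OR (primary heater AND backup heater),
    # treating the basic events as independent.
    both_heaters = p["primary_heater_fails"] * p["backup_heater_fails"]
    return 1.0 - (1.0 - p["valve_fails_closed"]) * (1.0 - both_heaters)

def propagate(n: int = 20_000, seed: int = 1) -> list:
    """Uncertainty analysis: sample every input, recompute the top event."""
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        p = {name: sample_probability(m, ef, rng) for name, (m, ef) in EVENTS.items()}
        results.append(top_event(p))
    return results

samples = propagate()
cuts = statistics.quantiles(samples, n=20)   # 19 cut points: 5th, 10th, ..., 95th percentile
print(f"mean   = {statistics.fmean(samples):.3g}")
print(f"median = {statistics.median(samples):.3g}")
print(f"5th    = {cuts[0]:.3g}, 95th = {cuts[-1]:.3g}")
```

A fixed seed keeps the run reproducible, which matters when the uncertainty bands are compared across design iterations.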

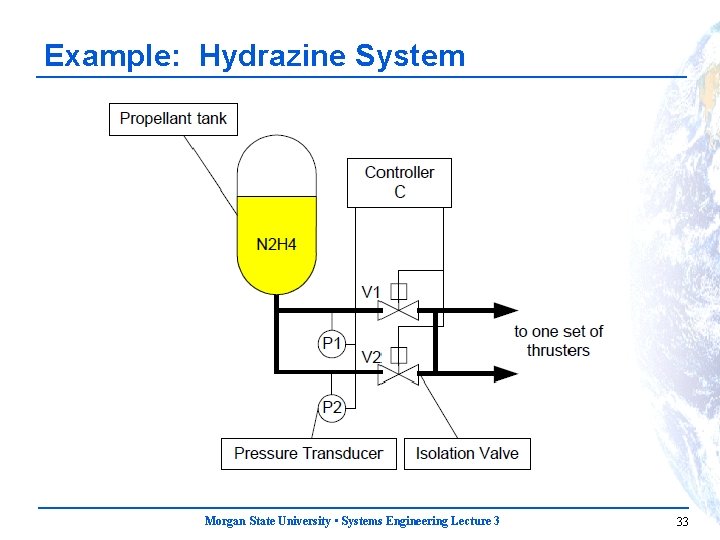

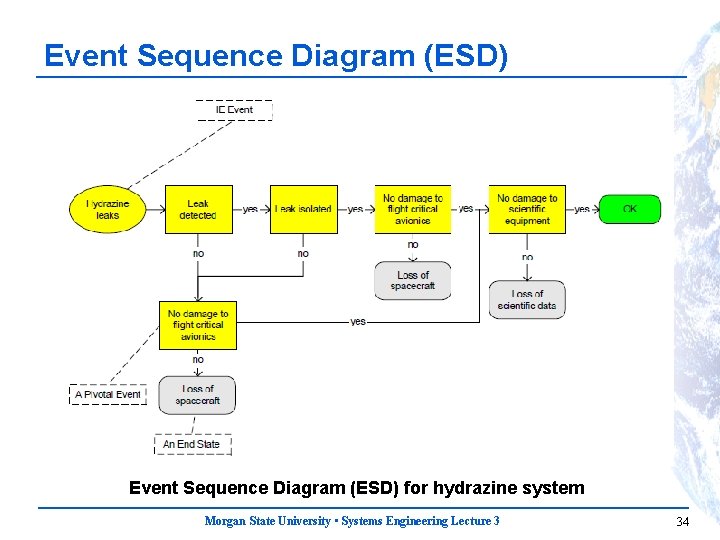

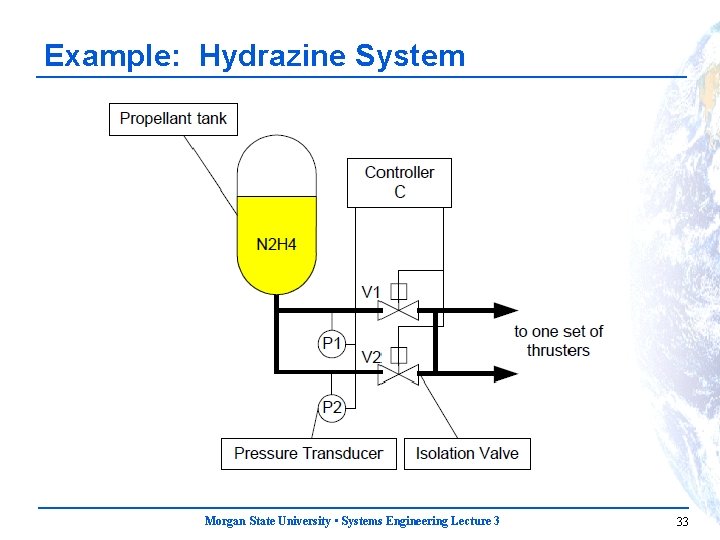

Example: Hydrazine System

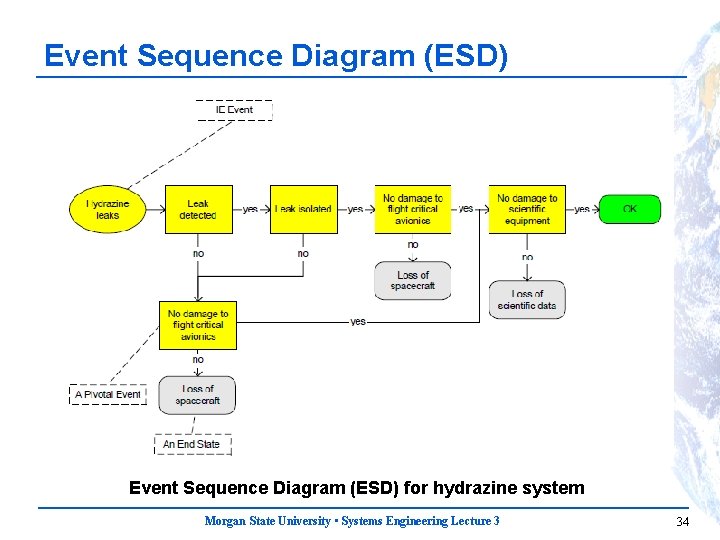

Event Sequence Diagram (ESD) for the Hydrazine System


Configuration Management
• Configuration Management (CM) is a process that establishes and maintains consistency of a product’s attributes with the requirements and product configuration information throughout the product’s life cycle.
• CM prevents requirements from “creeping.”
• CM is a management discipline applied over the product’s life cycle to provide visibility into, and to control changes to, performance and functional and physical characteristics.
• CM ensures that the configuration of a product is known and reflected in product information, that any product change is beneficial and is effected without adverse consequences, and that changes are managed.
• CM reduces technical risk by ensuring correct product configurations, distinguishing among product versions, and ensuring consistency between the product and information about the product.
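The control idea above is that a baseline changes only through an approved change, and every change is recorded. A minimal sketch follows. The class names, the approval flag, and the configuration items are hypothetical and do not represent a real CM system.

```python
from dataclasses import dataclass, field

@dataclass
class ChangeRequest:
    cr_id: str
    item: str                 # configuration item the change affects
    new_value: str
    approved: bool = False    # set by the (hypothetical) change board

@dataclass
class Baseline:
    items: dict                                  # configuration item -> controlled definition
    version: int = 1
    history: list = field(default_factory=list)  # status accounting record

    def apply(self, cr: ChangeRequest) -> None:
        """The baseline changes only through an approved change request."""
        if not cr.approved:
            raise PermissionError(f"{cr.cr_id} has not been approved")
        if cr.item not in self.items:
            raise KeyError(f"{cr.item} is not a controlled configuration item")
        old = self.items[cr.item]
        self.items[cr.item] = cr.new_value
        self.version += 1
        self.history.append((self.version, cr.cr_id, cr.item, old, cr.new_value))

# Hypothetical configuration items and change request
bl = Baseline({"flight_software": "v2.3", "battery_capacity_Ah": "40"})
cr = ChangeRequest("CR-001", "flight_software", "v2.4")
try:
    bl.apply(cr)                      # rejected: not yet approved
except PermissionError as err:
    print("Rejected:", err)
cr.approved = True
bl.apply(cr)
print(bl.version, bl.items["flight_software"])  # 2 v2.4
print(bl.history)
```

Each accepted change bumps the version and leaves a history entry, so product versions stay distinguishable and the record stays consistent with the product.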

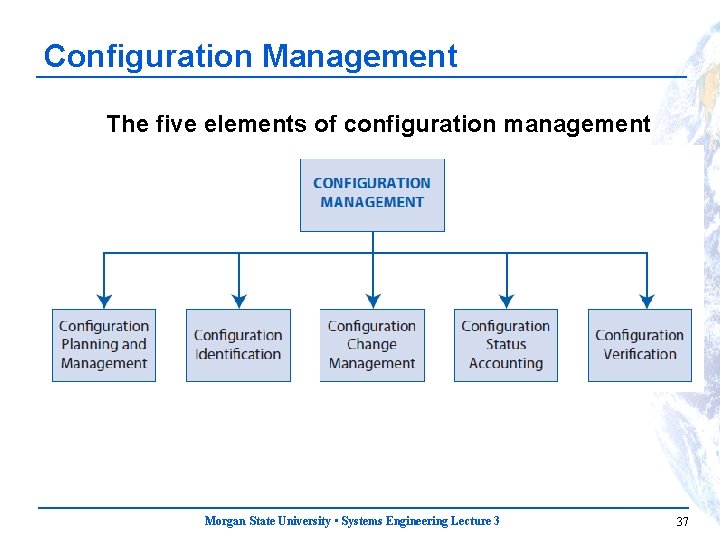

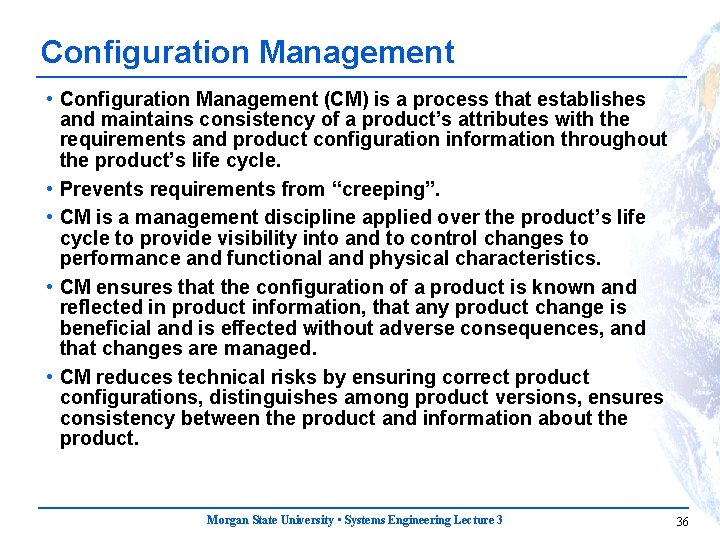

Configuration Management
The five elements of configuration management

Configuration Management
• A project that does not perform CM may be plagued by confusion, inaccuracies, low productivity, and unmanageable configuration data.
• The following impacts are possible and have occurred in the past:
  – Mission failure and loss of property and life due to improperly configured or installed hardware or software,
  – Failure to gather mission data due to improperly configured or installed hardware or software,
  – Significant mission delay incurring additional cost due to improperly configured or installed hardware or software, and
  – Significant mission cost or delay due to parts or subsystems that were improperly certified on the basis of fraudulent verification data.

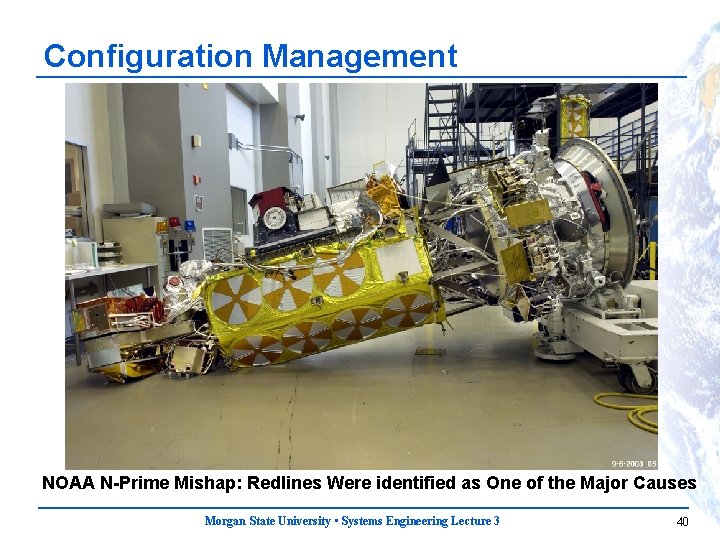

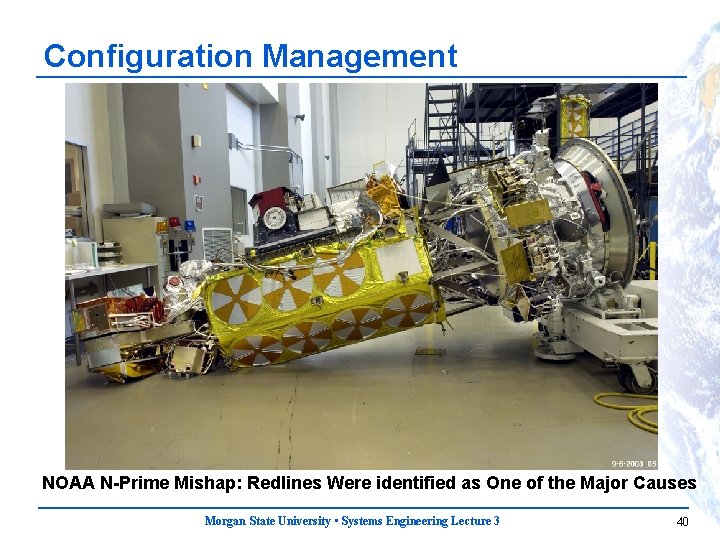

Configuration Management
• “Redline” refers to the control process of marking up drawings and documents that are found to contain errors or inaccuracies during design, fabrication, production, and testing.
• Redlines avoid the work stoppages that could occur if every such document had to be corrected through the formal change process.
• All redlines require, at a minimum, the approval of the responsible hardware manager and the quality assurance manager.
• These managers determine whether redlines are to be incorporated into the plan or procedure.
• The important point is that each project must have a controlled redline procedure that specifies how redlines are made and approved.
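The minimum-approval rule for redlines can be expressed as a simple check. This sketch assumes the two approvers named above and lets a project add more. The role identifiers, the class, and the example document are hypothetical.

```python
from dataclasses import dataclass, field

# Minimum approvers named on the slide; role identifiers are hypothetical.
MINIMUM_APPROVERS = frozenset({"hardware_manager", "qa_manager"})

@dataclass
class Redline:
    document: str
    markup: str
    extra_approvers: frozenset = frozenset()    # a project may require more signatures
    approvals: set = field(default_factory=set)

    def approve(self, role: str) -> None:
        self.approvals.add(role)

    def is_usable(self) -> bool:
        """A redline may be worked to only after every required role has signed."""
        return (MINIMUM_APPROVERS | self.extra_approvers) <= self.approvals

rl = Redline("Integration procedure (hypothetical), step 12",
             "Correct the fixture bolt torque value")
rl.approve("hardware_manager")
print(rl.is_usable())  # False: QA has not signed
rl.approve("qa_manager")
print(rl.is_usable())  # True
```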

Configuration Management
NOAA N-Prime Mishap: Redlines Were Identified as One of the Major Causes


Technical Measures
• Measures of Effectiveness (MOEs)
  – Are derived from stakeholder expectation statements
  – Are deemed critical to the mission or operational success of the system
  – Example: Amount of science data to be collected during the mission
• Measures of Performance (MOPs)
  – Broad physical and performance parameters
  – A means of ensuring that the associated MOEs are met
  – Example: Total vehicle mass at launch
• Technical Performance Measures (TPMs)
  – Typically selected from the defined set of MOEs and MOPs
  – Critical mission success or performance attributes
  – Measurable
  – Progress profile established, controlled, and monitored

Technical Performance Measures (TPMs)
• TPMs are intended to provide an early warning of the adequacy of a design in satisfying selected critical technical parameter requirements.
• The systems engineer should select TPMs that fall within well-defined (quantitative) limits for reasons of system effectiveness or mission feasibility.
• In summary, for a TPM to be a valuable status and assessment tool, it must meet certain criteria:
  – Be a significant descriptor of the system (e.g., weight, range, capacity, response time, safety parameter) that will be monitored at key events (e.g., reviews, audits, planned tests)
  – Be measurable (by test, inspection, demonstration, or analysis)
  – Be such that reasonable projected progress profiles can be established (e.g., from historical data or based on test planning)

Technical Performance Measures (TPMs)
• A formal TPM assessment program should be fully planned and baselined with the Systems Engineering Management Plan (SEMP).
• Tracking TPMs should begin as soon as practical in Phase B. However, data to support the full set of selected TPMs may not be available until later in the project life cycle.
• As the project life cycle proceeds through Phases C and D, the measurement of TPMs should become increasingly accurate as more actual data about the system become available.

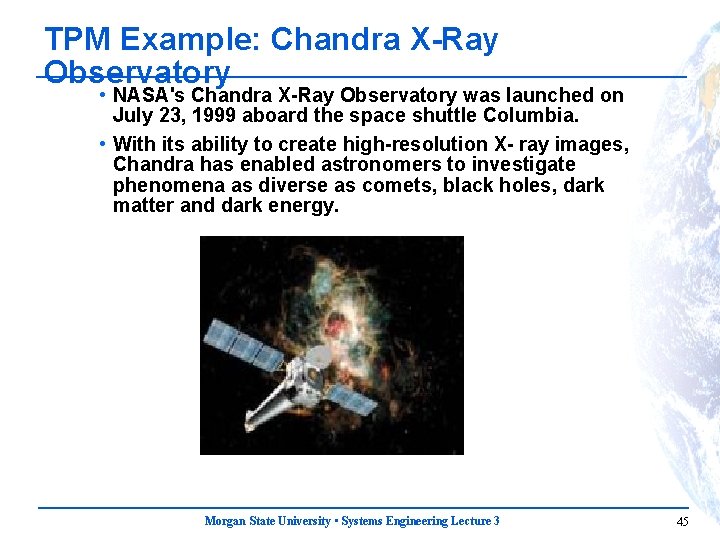

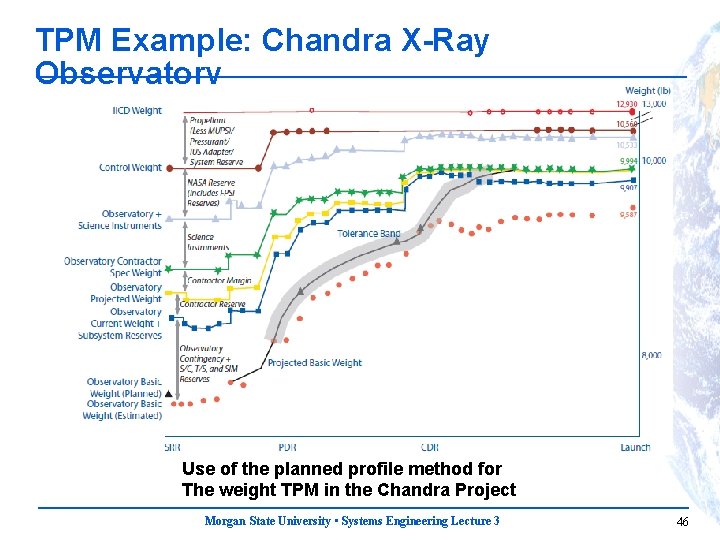

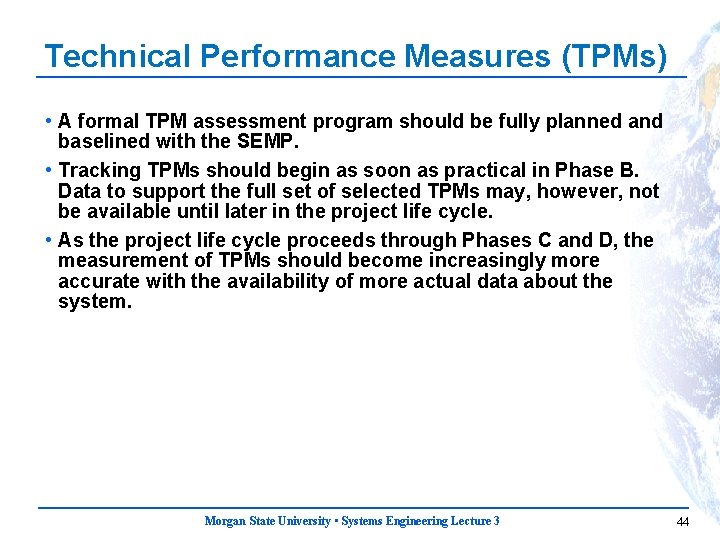

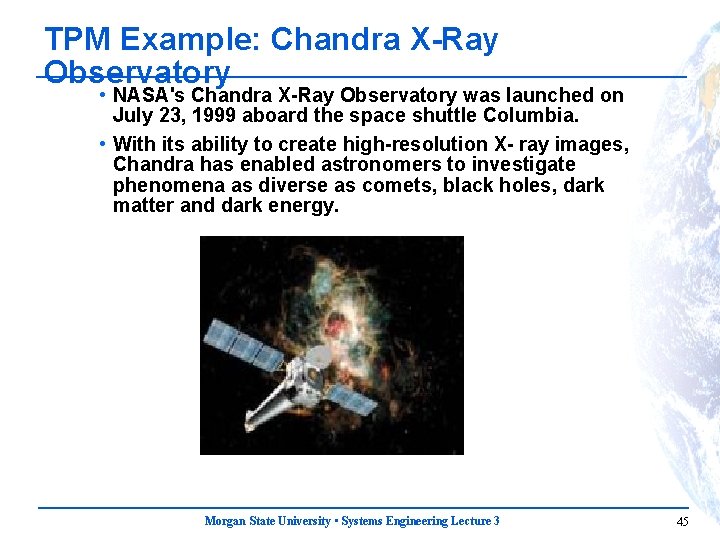

TPM Example: Chandra X-Ray Observatory
• NASA's Chandra X-Ray Observatory was launched on July 23, 1999, aboard the Space Shuttle Columbia.
• With its ability to create high-resolution X-ray images, Chandra has enabled astronomers to investigate phenomena as diverse as comets, black holes, dark matter, and dark energy.

TPM Example: Chandra X-Ray Observatory
Use of the planned profile method for the weight TPM in the Chandra Project
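The planned profile method compares each new estimate of a TPM against a planned value and tolerance band for that milestone, while also tracking margin against the requirement. This is a minimal sketch for a mass TPM. The allocation, planned values, and tolerance bands are hypothetical and are not Chandra data. The narrowing bands reflect the expectation that TPM estimates become more accurate later in the life cycle.

```python
# Hypothetical mass TPM; neither the planned values nor the
# allocation are Chandra data.
ALLOCATION_KG = 5000.0   # hypothetical not-to-exceed mass requirement

# Planned profile: milestone -> (planned value, tolerance band half-width), kg
PLANNED_PROFILE = {
    "SRR": (4300.0, 300.0),
    "PDR": (4500.0, 250.0),
    "CDR": (4650.0, 150.0),
    "SIR": (4750.0, 75.0),
}

def assess(milestone: str, estimate_kg: float) -> str:
    """Compare the current mass estimate with the planned profile."""
    planned, tol = PLANNED_PROFILE[milestone]
    margin = ALLOCATION_KG - estimate_kg
    if estimate_kg > ALLOCATION_KG:
        status = "requirement exceeded"
    elif estimate_kg > planned + tol:
        status = "above planned profile: corrective action needed"
    elif estimate_kg < planned - tol:
        status = "below planned profile: check the estimate for missing items"
    else:
        status = "within tolerance band"
    return f"{milestone}: estimate {estimate_kg:.0f} kg, margin {margin:.0f} kg, {status}"

for milestone, estimate in [("SRR", 4350.0), ("PDR", 4600.0), ("CDR", 4900.0)]:
    print(assess(milestone, estimate))
```

In this sketch the CDR estimate still has positive margin against the requirement but is flagged anyway. That early warning is the purpose of tracking against a profile rather than only against the final limit.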


Decision Analysis
• Decision analysis offers individuals and organizations a methodology for making decisions; it also offers techniques for modeling decision problems mathematically and finding optimal decisions numerically.
• Decision models have the capacity for accepting and quantifying human subjective inputs: judgments of experts and preferences of decision makers.
• Implementation of models can take the form of simple paper-and-pencil procedures or sophisticated computer programs known as decision aids or decision systems.

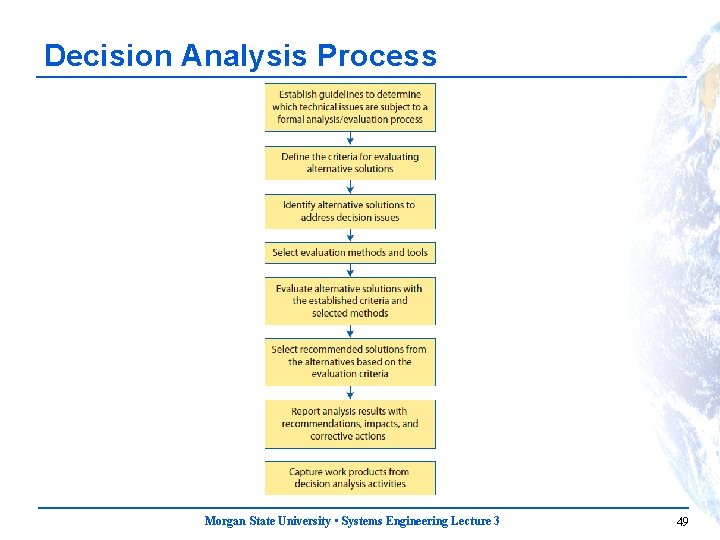

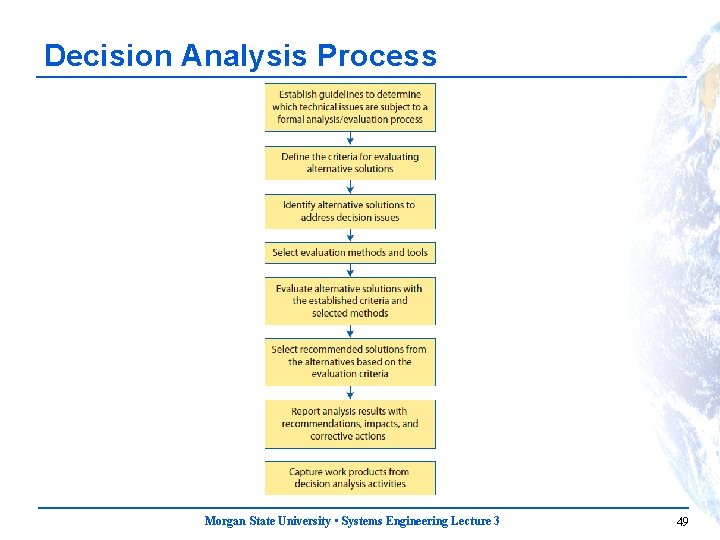

Decision Analysis Process

Decision Analysis Methods
• Influence Diagrams
• Decision Trees
• Multi-Criteria Decision Analysis (MCDA)
• Analytic Hierarchy Process (AHP)

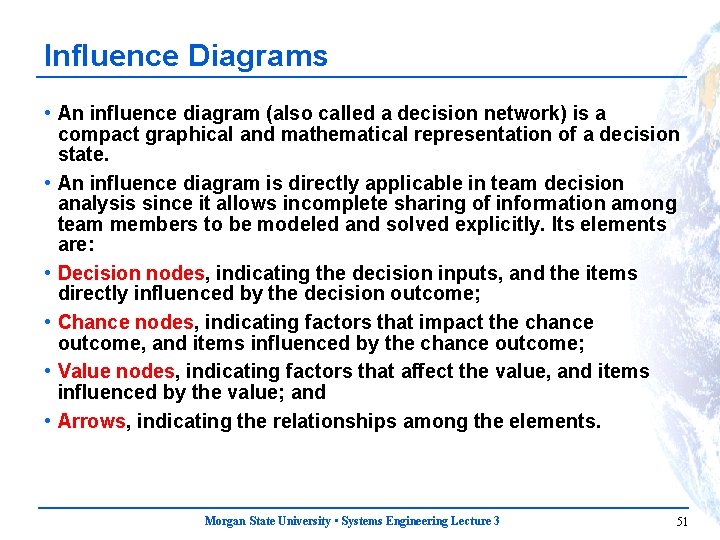

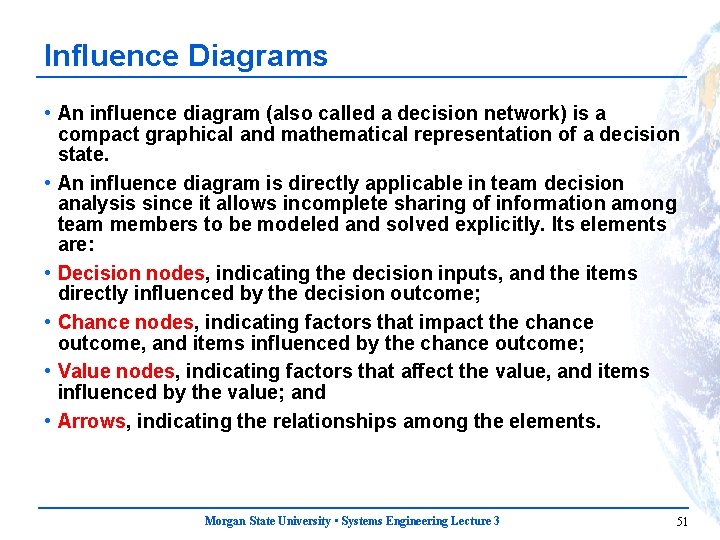

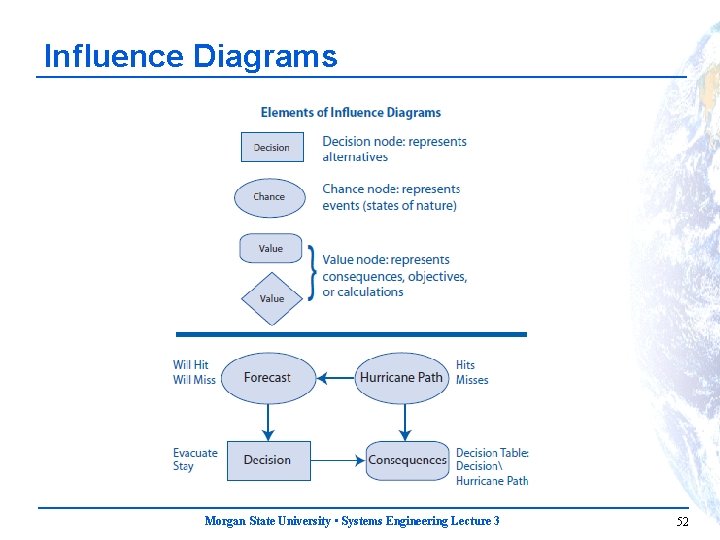

Influence Diagrams
• An influence diagram (also called a decision network) is a compact graphical and mathematical representation of a decision state.
• An influence diagram is directly applicable in team decision analysis, since it allows incomplete sharing of information among team members to be modeled and solved explicitly. Its elements are:
  – Decision nodes, indicating the decision inputs and the items directly influenced by the decision outcome;
  – Chance nodes, indicating factors that impact the chance outcome and items influenced by the chance outcome;
  – Value nodes, indicating factors that affect the value and items influenced by the value; and
  – Arrows, indicating the relationships among the elements.

Influence Diagrams

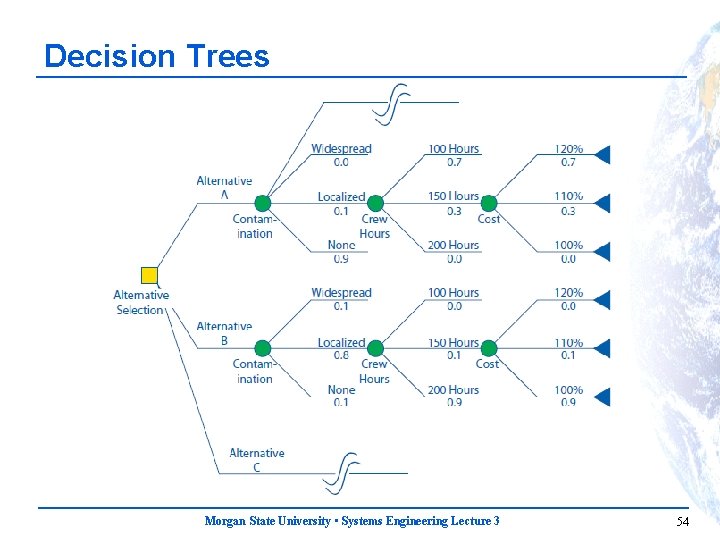

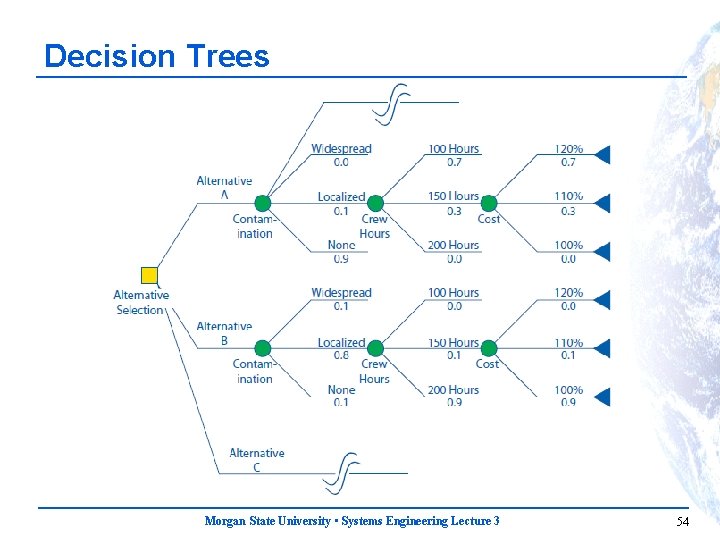

Decision Trees
• Like the influence diagram, a decision tree portrays a decision model, but a decision tree is drawn from a point of view different from that of the influence diagram.
• The decision tree exhaustively works out the expected consequences of all decision alternatives by discretizing all “chance” nodes and, based on this discretization, calculating and appropriately weighting all possible consequences of all alternatives.
• The preferred alternative is then identified by summing the appropriate outcome variables from the path end states.
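The weighting described above is usually done by "rolling back" the tree from its end states. A chance node takes the probability-weighted sum of its branches, and a decision node takes its best alternative. This is a minimal sketch with a hypothetical two-alternative tree. It uses risk-neutral expected value, which is an assumption; a risk-averse decision maker might replace the end-state values with utilities.

```python
from dataclasses import dataclass

@dataclass
class Outcome:
    value: float          # value of the path end state (units assumed)

@dataclass
class Chance:
    branches: list        # (probability, subtree) pairs; probabilities sum to 1

@dataclass
class Decision:
    options: dict         # alternative name -> subtree

def expected_value(node) -> float:
    """Roll the tree back from its end states: chance nodes take the
    probability-weighted sum, decision nodes take the best alternative."""
    if isinstance(node, Outcome):
        return node.value
    if isinstance(node, Chance):
        return sum(p * expected_value(sub) for p, sub in node.branches)
    if isinstance(node, Decision):
        return max(expected_value(sub) for sub in node.options.values())
    raise TypeError(f"unexpected node {node!r}")

def preferred(decision: Decision) -> str:
    return max(decision.options, key=lambda name: expected_value(decision.options[name]))

# Hypothetical choice between a heritage sensor and a new development
tree = Decision({
    "Fly heritage sensor": Outcome(80.0),
    "Develop new sensor": Chance([(0.6, Outcome(120.0)),   # development succeeds
                                  (0.4, Outcome(40.0))]),  # development falls short
})
for name, sub in tree.options.items():
    print(f"{name:20s} expected value = {expected_value(sub):.1f}")
print("Preferred:", preferred(tree))
```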

Decision Trees

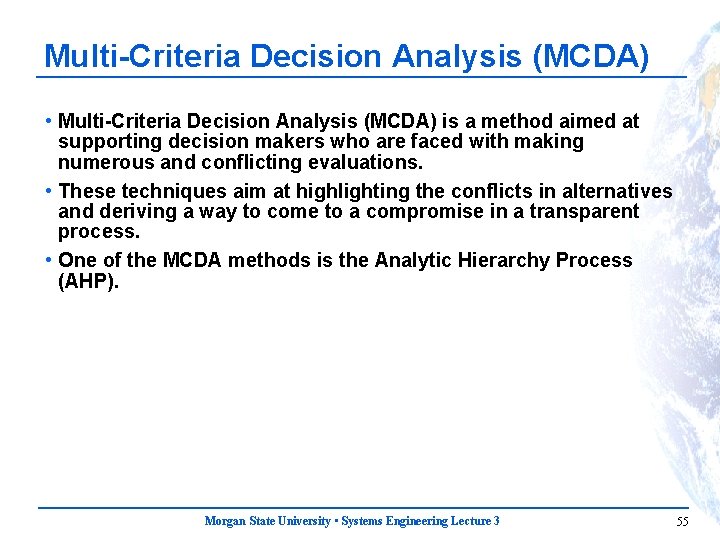

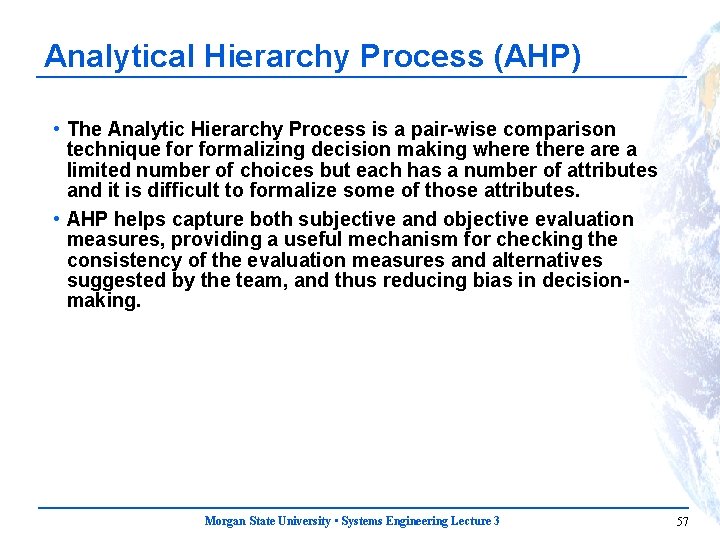

Multi-Criteria Decision Analysis (MCDA)
• Multi-Criteria Decision Analysis (MCDA) is a method aimed at supporting decision makers who are faced with making numerous and conflicting evaluations.
• These techniques aim at highlighting the conflicts in alternatives and deriving a way to come to a compromise in a transparent process.
• One of the MCDA methods is the Analytic Hierarchy Process (AHP).

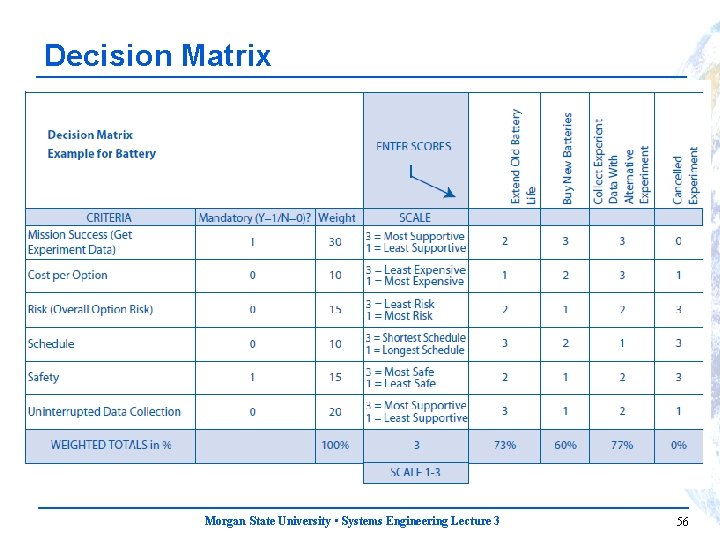

Decision Matrix
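A decision matrix is often scored as a weighted sum: each alternative's score on a criterion is multiplied by that criterion's weight, and the products are added. This is a minimal sketch of that weighted-sum variant. The criteria, weights, and 1–10 scores are hypothetical, and every score is assumed to be "higher is better" (e.g., a cheaper option scores higher on cost).

```python
# Hypothetical criteria weights (summing to 1) and 1-10 scores,
# where a higher score is always better (e.g. lower cost scores higher).
WEIGHTS = {"cost": 0.40, "performance": 0.35, "schedule risk": 0.25}

SCORES = {
    "Option A": {"cost": 7, "performance": 6, "schedule risk": 8},
    "Option B": {"cost": 5, "performance": 9, "schedule risk": 6},
    "Option C": {"cost": 9, "performance": 4, "schedule risk": 7},
}

def weighted_total(scores: dict) -> float:
    """Weighted-sum score of one alternative across all criteria."""
    assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights must sum to 1"
    return sum(WEIGHTS[criterion] * score for criterion, score in scores.items())

totals = {alt: weighted_total(s) for alt, s in SCORES.items()}
ranking = sorted(totals, key=totals.get, reverse=True)
for alt in ranking:
    print(f"{alt}: {totals[alt]:.2f}")
```

Making the weights explicit is what makes the compromise transparent. With these scores, shifting the weights to 0.25/0.50/0.25 for cost/performance/schedule risk would lift Option B (7.25) above Option A (6.75), so a quick sensitivity check on the weights is worthwhile.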

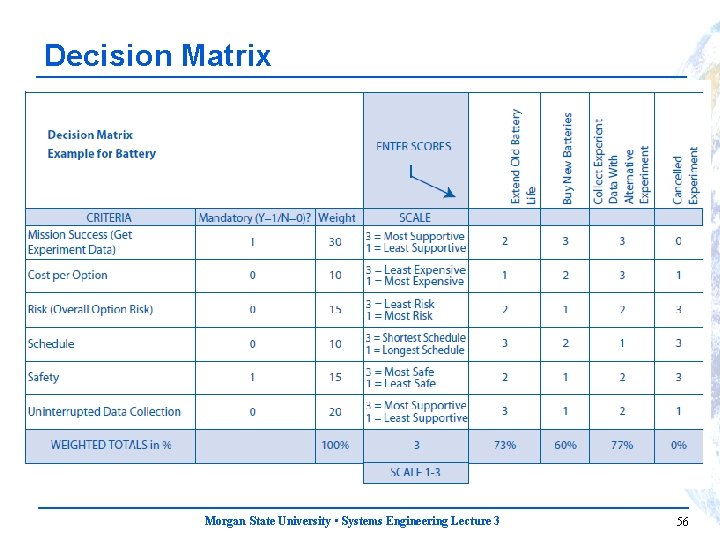

Analytic Hierarchy Process (AHP)
• The Analytic Hierarchy Process is a pairwise comparison technique that formalizes decision making when there are a limited number of choices, each choice has a number of attributes, and some of those attributes are difficult to formalize.
• AHP helps capture both subjective and objective evaluation measures. It provides a useful mechanism for checking the consistency of the evaluation measures and alternatives suggested by the team, and thus reduces bias in decision making.

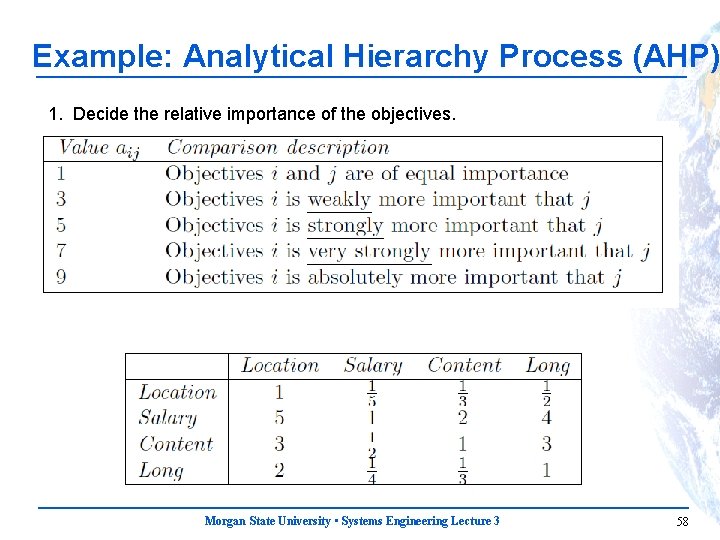

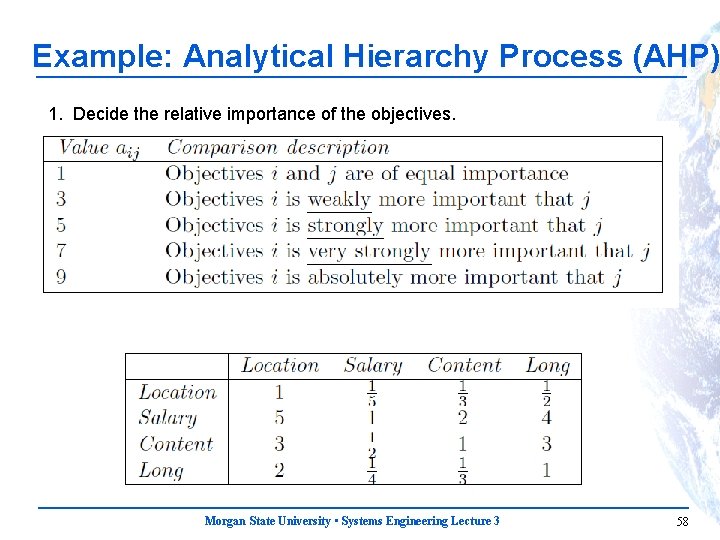

Example: Analytic Hierarchy Process (AHP)
1. Decide the relative importance of the objectives.

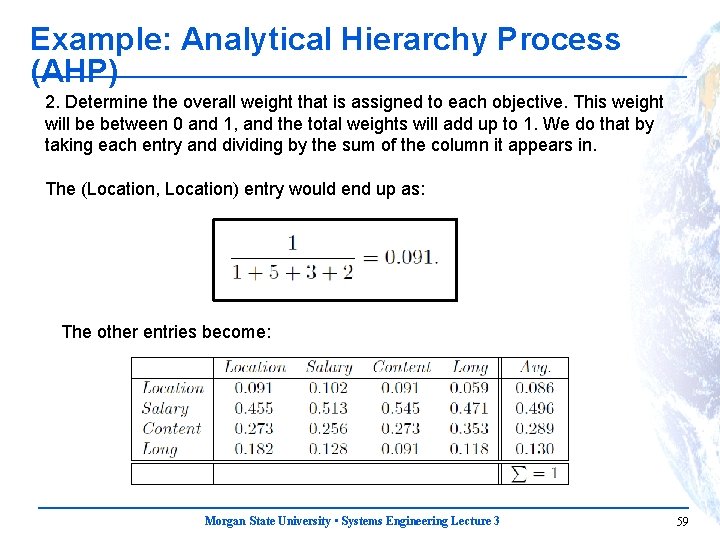

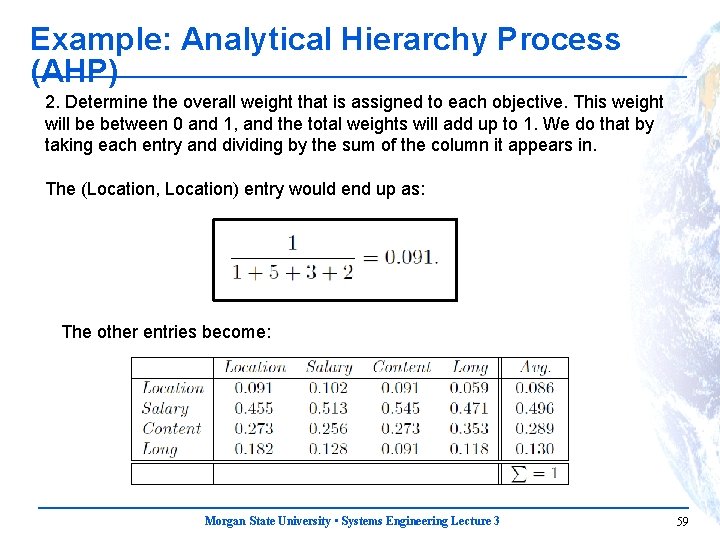

Example: Analytic Hierarchy Process (AHP)
2. Determine the overall weight assigned to each objective. Each weight will be between 0 and 1, and the weights will sum to 1. We start by dividing each entry by the sum of the column it appears in. The normalized (Location, Location) entry and the other normalized entries appear in the accompanying tables.

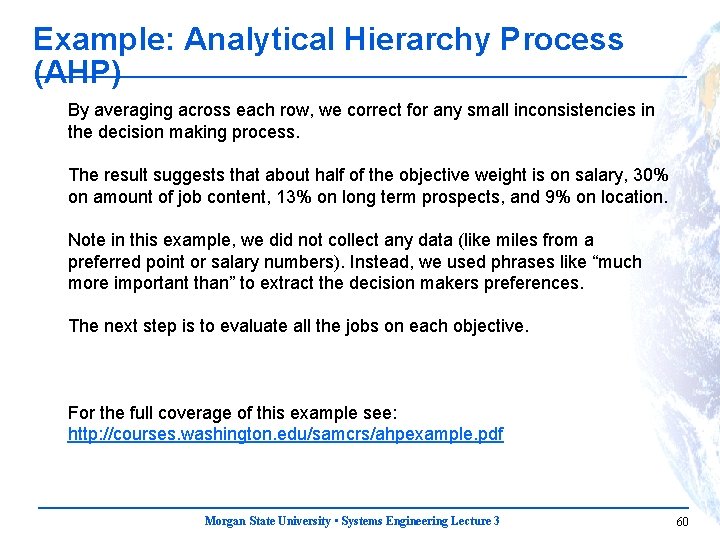

Example: Analytic Hierarchy Process (AHP)
By averaging across each row, we smooth out any small inconsistencies in the decision-making process. The result suggests that about half of the objective weight is on salary, 30% on amount of job content, 13% on long-term prospects, and 9% on location. Note that in this example we did not collect any data (such as miles from a preferred point or salary numbers). Instead, we used phrases like “much more important than” to extract the decision maker's preferences. The next step is to evaluate all the jobs on each objective. For full coverage of this example, see: http://courses.washington.edu/samcrs/ahpexample.pdf
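The two AHP steps above (normalize each column, then average each row) can be sketched in a few lines. The sketch adds Saaty's consistency ratio, which is one form of the consistency check AHP provides. The pairwise matrix is hypothetical. It is not the matrix from the Washington example, whose entries appear only in the slide images, but it was chosen so that the weights come out at roughly the same proportions.

```python
# Hypothetical pairwise-comparison matrix on Saaty's 1-9 scale.
# A[i][j] = how much more important objective i is than objective j;
# the matrix is reciprocal: A[j][i] = 1 / A[i][j].
OBJECTIVES = ["Salary", "Content", "Long-term prospects", "Location"]
A = [
    [1,   2,   4,   5],
    [1/2, 1,   3,   3],
    [1/4, 1/3, 1,   2],
    [1/5, 1/3, 1/2, 1],
]

def ahp_weights(a):
    n = len(a)
    col_sums = [sum(a[i][j] for i in range(n)) for j in range(n)]
    # Divide each entry by its column sum, then average across each row.
    return [sum(a[i][j] / col_sums[j] for j in range(n)) / n for i in range(n)]

# Saaty's random consistency index for n = 3..5
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}

def consistency_ratio(a, w):
    n = len(a)
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n  # estimate of the principal eigenvalue
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]

weights = ahp_weights(A)
cr = consistency_ratio(A, weights)
for name, w in zip(OBJECTIVES, weights):
    print(f"{name:20s} {w:.3f}")
print(f"consistency ratio = {cr:.3f}")  # below 0.10 is the usual acceptance threshold
```

A consistency ratio above about 0.10 is conventionally taken as a sign that the pairwise judgments should be revisited before the weights are used.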

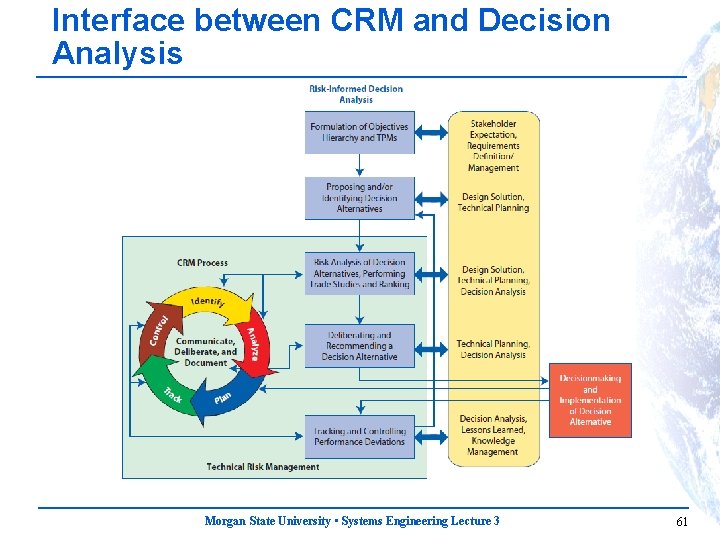

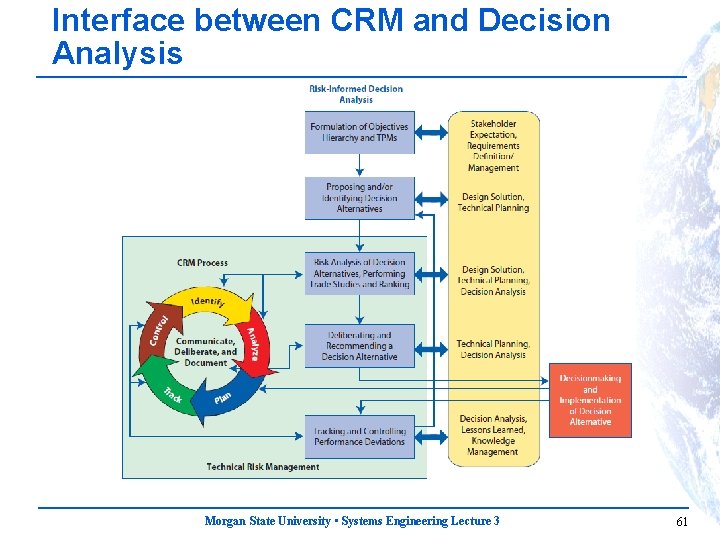

Interface between CRM and Decision Analysis

Decision Analysis Process
• An important aspect of the Decision Analysis Process is to consider and understand when it is appropriate or required for a decision to be made or not made.
• When considering a decision, it is important to ask questions such as:
  – Why is a decision required at this time?
  – For how long can a decision be delayed?
  – What is the impact of delaying a decision?
  – Is all of the necessary information available to make a decision?
  – Are there other key drivers or dependent factors and criteria that must be in place before a decision can be made?

References
• Jeff A. Estefan, Survey of Model-Based Systems Engineering (MBSE) Methodologies, Rev. B, INCOSE-TD-2007-003-01, June 10, 2008.
• Thomas L. Saaty, The Analytic Hierarchy Process, 1980.
• M. Stamelatos, H. Dezfuli, and G. Apostolakis, A Proposed Risk-Informed Decision-Making Framework for NASA, 2006.

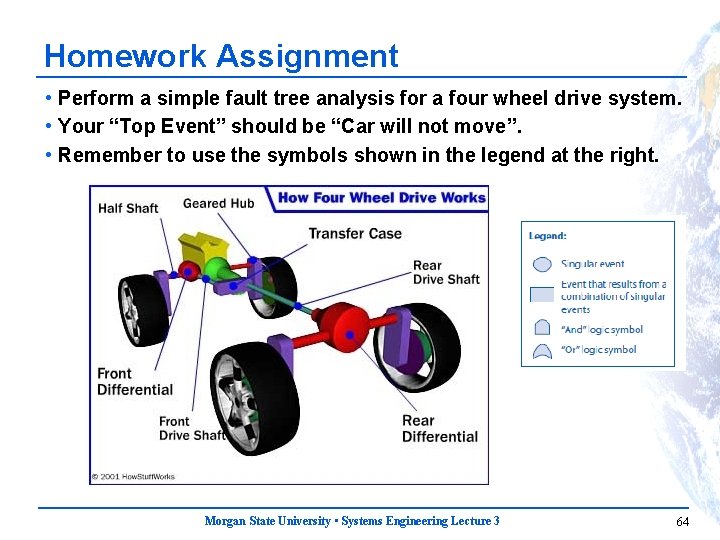

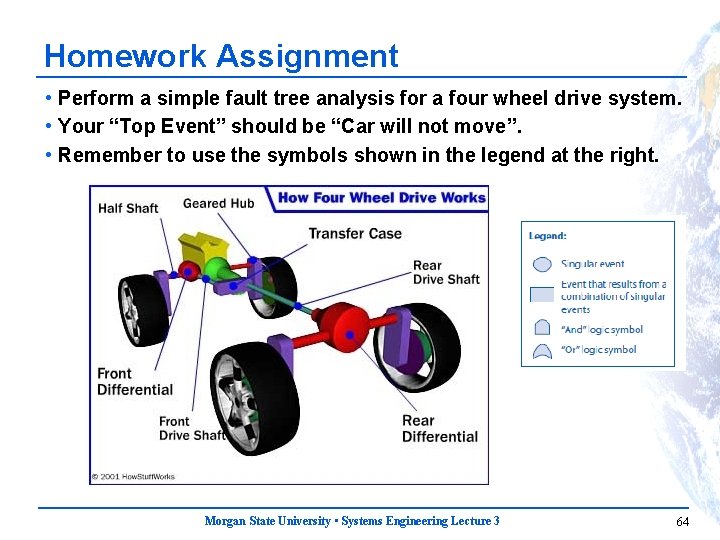

Homework Assignment
• Perform a simple fault tree analysis for a four-wheel-drive system.
• Your “Top Event” should be “Car will not move.”
• Remember to use the symbols shown in the legend at the right.