Synchronization Shared Memory Thread Synchronization Threads cooperate in

- Slides: 28

Synchronization

Shared Memory Thread Synchronization • Threads cooperate in multithreaded environments – User threads and kernel threads – Share resources and data structures • E. g. memory cache in a web server – Coordinate execution • Producer/consumer model • For correctness, cooperation must be controlled – Must assume threads interleave execution arbitrarily • Scheduler cannot know everything – Control mechanism: synchronization • Allows restriction of interleaving • Note: This is a global issue (kernel and user) – Also in distributed systems

Shared Resources • Our focus: Coordinating access to shared resource • Basic Problem: – Two concurrent threads access a shared variable – Access methods: Read-Modify-Write • Two levels of approach: – Mechanisms for control • Low level locks • Higher level mutexes, semaphores, monitors, condition variables – Patterns for coordinating access • Bounded buffer, producer/consumer, …

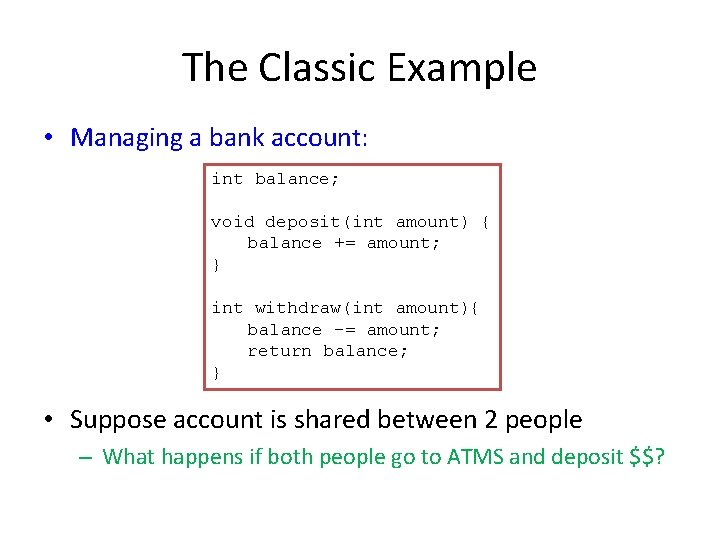

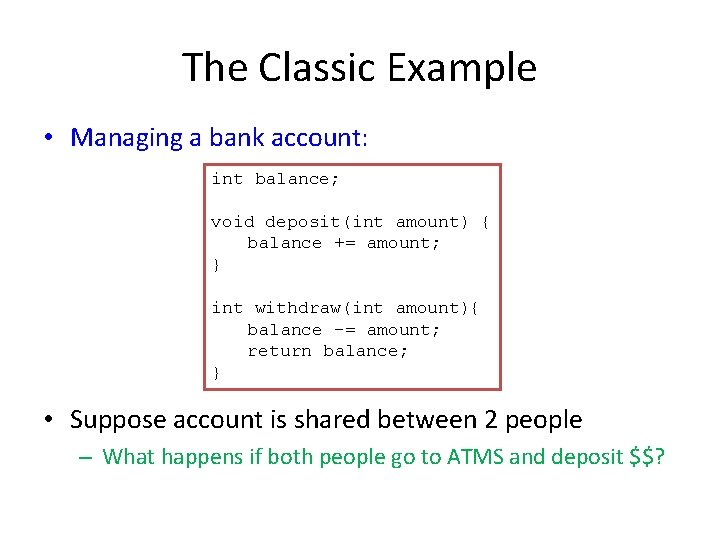

The Classic Example • Managing a bank account: int balance; void deposit(int amount) { balance += amount; } int withdraw(int amount){ balance -= amount; return balance; } • Suppose account is shared between 2 people – What happens if both people go to ATMS and deposit $$?

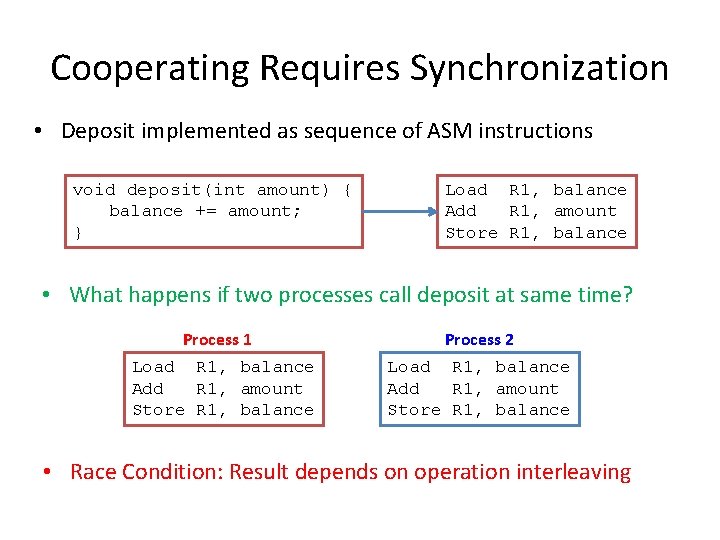

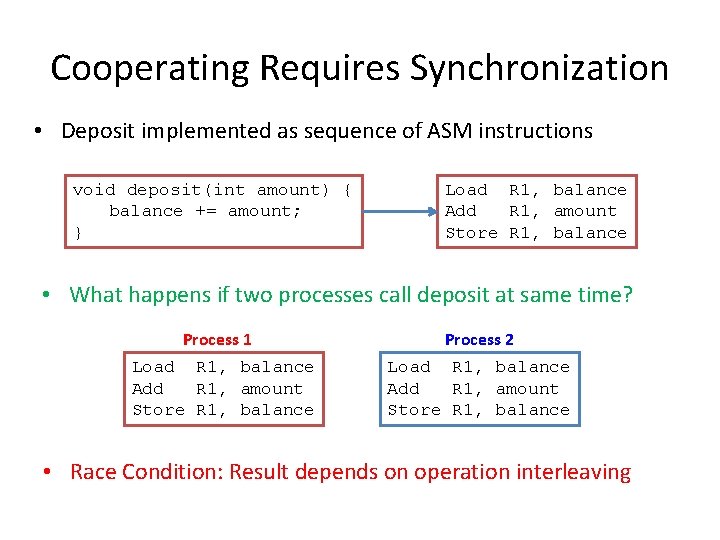

Cooperating Requires Synchronization • Deposit implemented as sequence of ASM instructions void deposit(int amount) { balance += amount; } Load R 1, balance Add R 1, amount Store R 1, balance • What happens if two processes call deposit at same time? Process 1 Load R 1, balance Add R 1, amount Store R 1, balance Process 2 Load R 1, balance Add R 1, amount Store R 1, balance • Race Condition: Result depends on operation interleaving

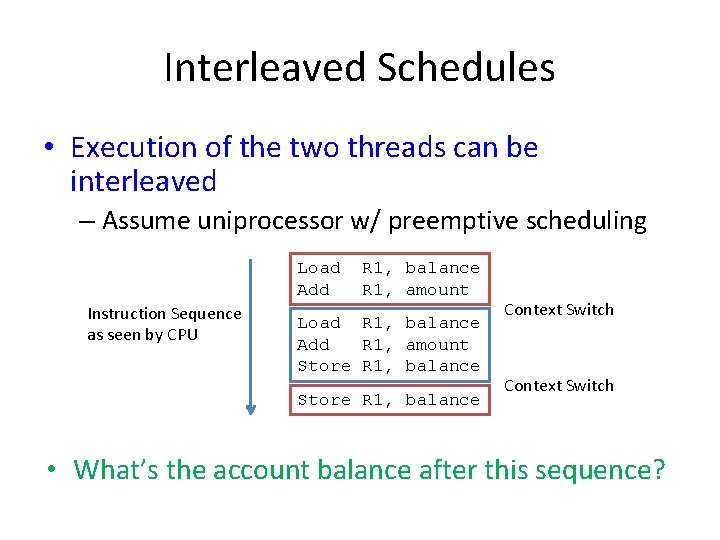

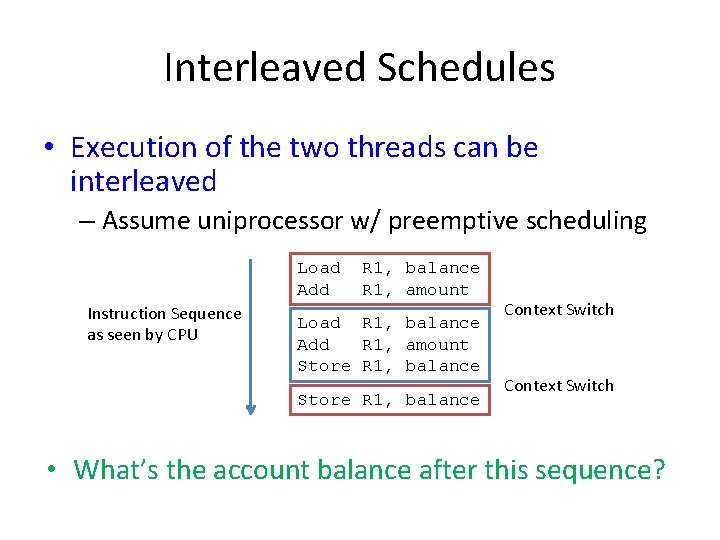

Interleaved Schedules • Execution of the two threads can be interleaved – Assume uniprocessor w/ preemptive scheduling Load Add Instruction Sequence as seen by CPU R 1, balance R 1, amount Load R 1, balance Add R 1, amount Store R 1, balance Context Switch • What’s the account balance after this sequence?

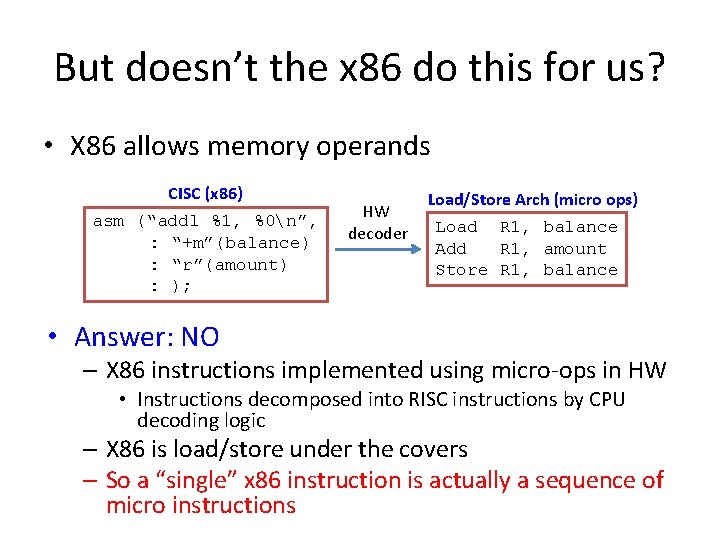

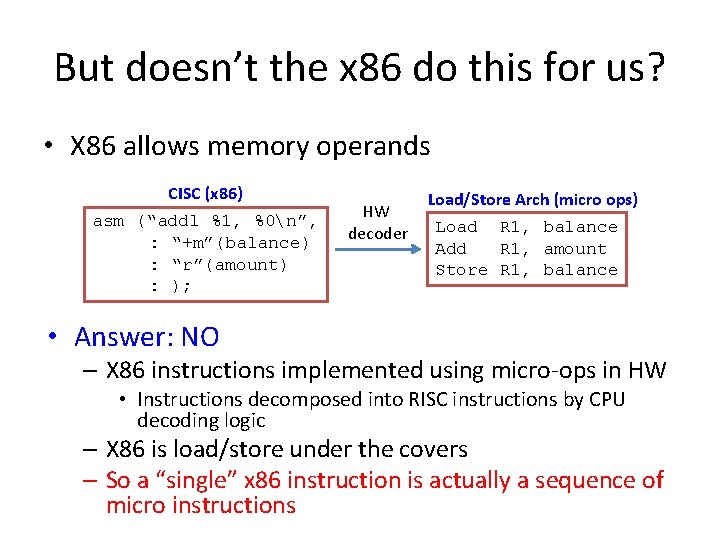

But doesn’t the x 86 do this for us? • X 86 allows memory operands CISC (x 86) asm (“addl %1, %0n”, : “+m”(balance) : “r”(amount) : ); HW decoder Load/Store Arch (micro ops) Load R 1, balance Add R 1, amount Store R 1, balance • Answer: NO – X 86 instructions implemented using micro-ops in HW • Instructions decomposed into RISC instructions by CPU decoding logic – X 86 is load/store under the covers – So a “single” x 86 instruction is actually a sequence of micro instructions

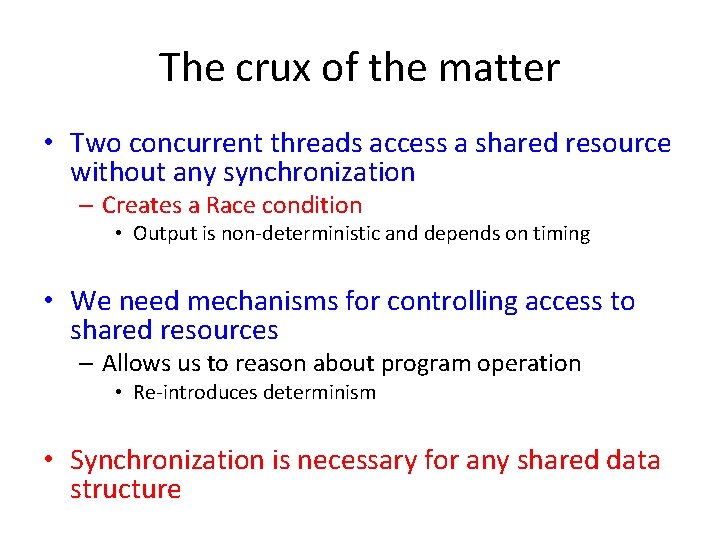

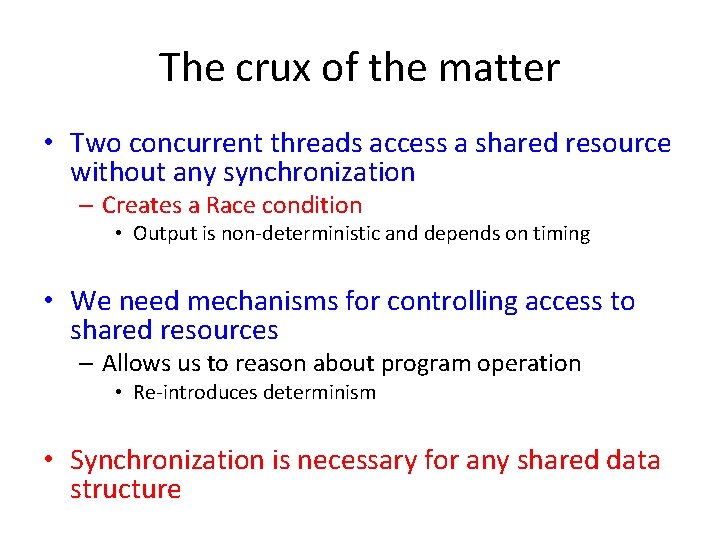

The crux of the matter • Two concurrent threads access a shared resource without any synchronization – Creates a Race condition • Output is non-deterministic and depends on timing • We need mechanisms for controlling access to shared resources – Allows us to reason about program operation • Re-introduces determinism • Synchronization is necessary for any shared data structure

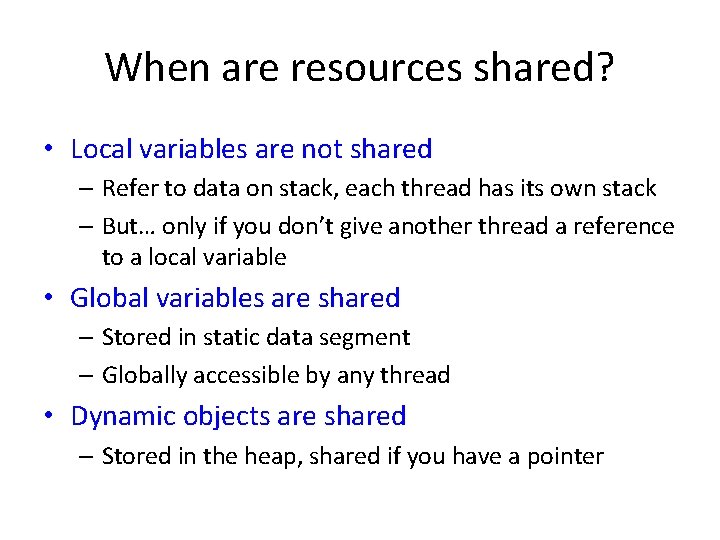

When are resources shared? • Local variables are not shared – Refer to data on stack, each thread has its own stack – But… only if you don’t give another thread a reference to a local variable • Global variables are shared – Stored in static data segment – Globally accessible by any thread • Dynamic objects are shared – Stored in the heap, shared if you have a pointer

Mutual Exclusion • Mutual exclusion synchronizes access to shared resources • Code requiring mutual exclusion for correctness is a “critical section” – Only one thread at a time can execute in critical section – All other threads must wait to enter – Only when a thread leaves can another can enter

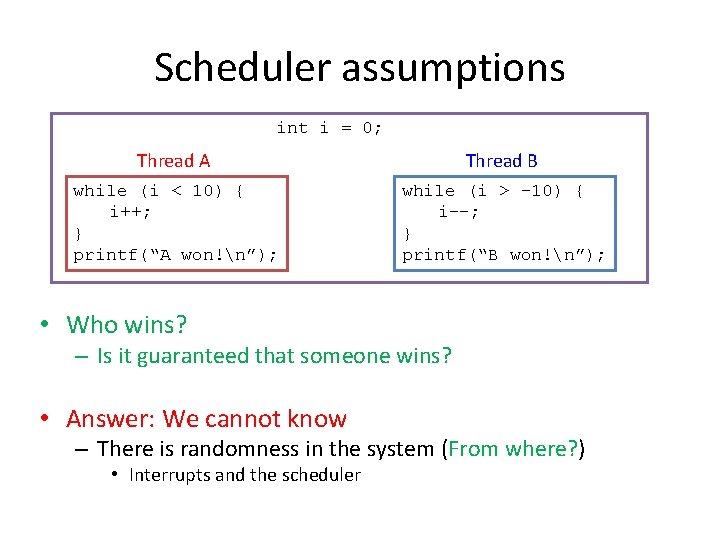

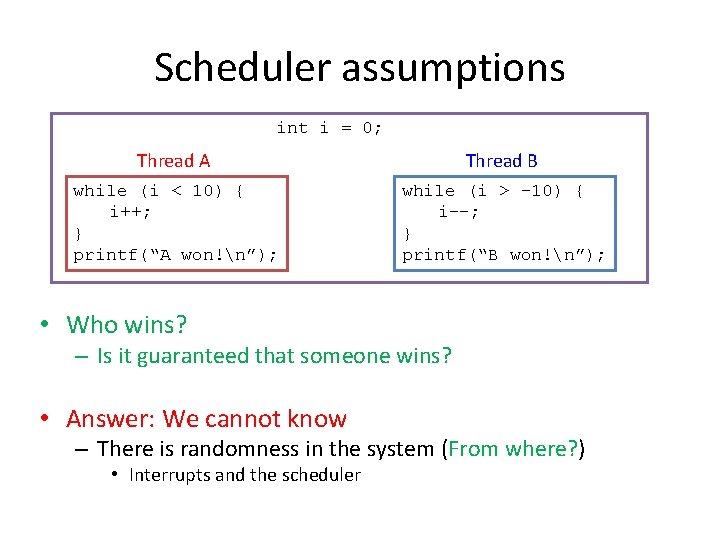

Scheduler assumptions int i = 0; Thread A Thread B while (i < 10) { i++; } printf(“A won!n”); while (i > -10) { i--; } printf(“B won!n”); • Who wins? – Is it guaranteed that someone wins? • Answer: We cannot know – There is randomness in the system (From where? ) • Interrupts and the scheduler

Easy solution for uniprocessors • Problem == Preemption • Solution == Disable Preemption • Sources of preemption – Voluntary preemption: Controlled by thread – Involuntary preemption: Controlled by Scheduler • How is scheduler invoked? • Solution: – Disable interrupts and do not give up CPU • On Linux w/ a single processor all spinlocks are translated to: asm (“cli”); and asm(“sti”);

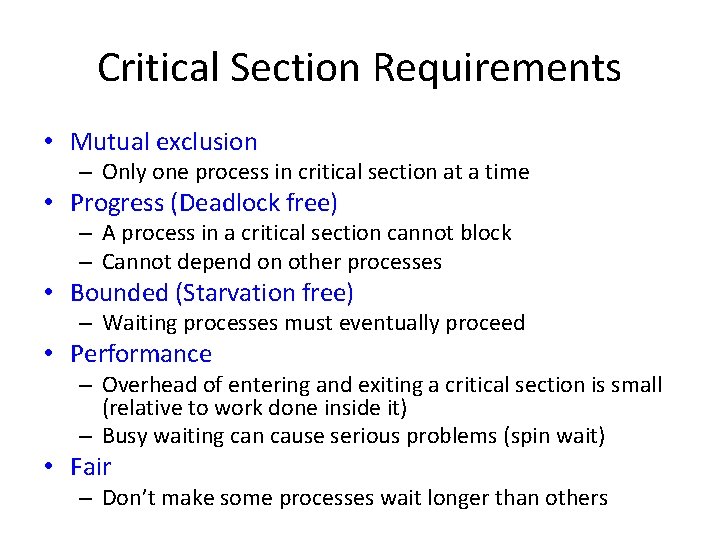

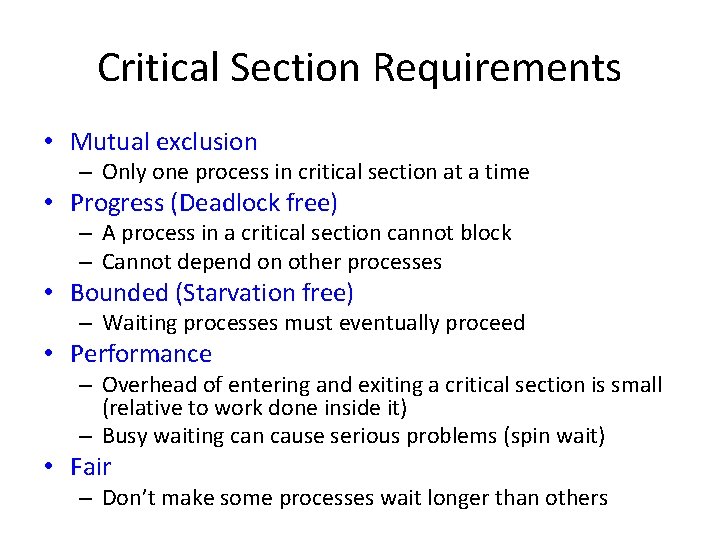

Critical Section Requirements • Mutual exclusion – Only one process in critical section at a time • Progress (Deadlock free) – A process in a critical section cannot block – Cannot depend on other processes • Bounded (Starvation free) – Waiting processes must eventually proceed • Performance – Overhead of entering and exiting a critical section is small (relative to work done inside it) – Busy waiting can cause serious problems (spin wait) • Fair – Don’t make some processes wait longer than others

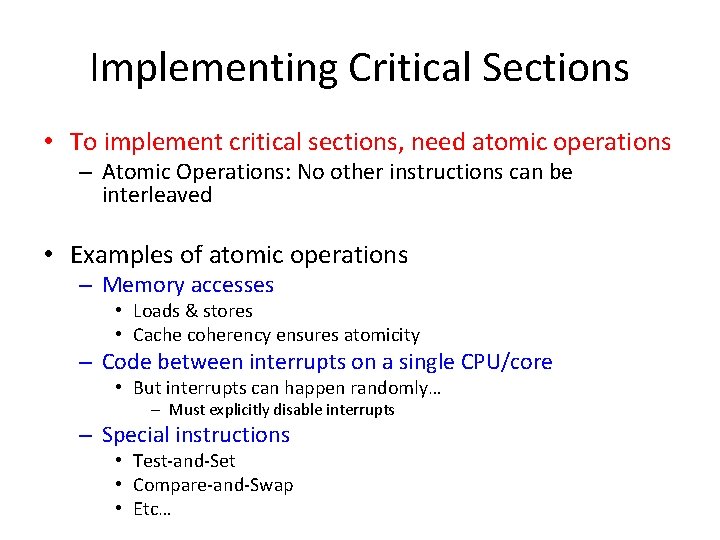

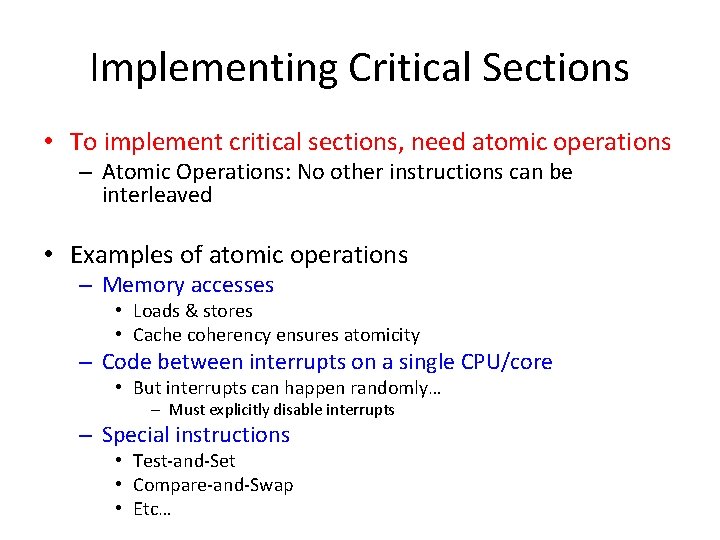

Implementing Critical Sections • To implement critical sections, need atomic operations – Atomic Operations: No other instructions can be interleaved • Examples of atomic operations – Memory accesses • Loads & stores • Cache coherency ensures atomicity – Code between interrupts on a single CPU/core • But interrupts can happen randomly… – Must explicitly disable interrupts – Special instructions • Test-and-Set • Compare-and-Swap • Etc…

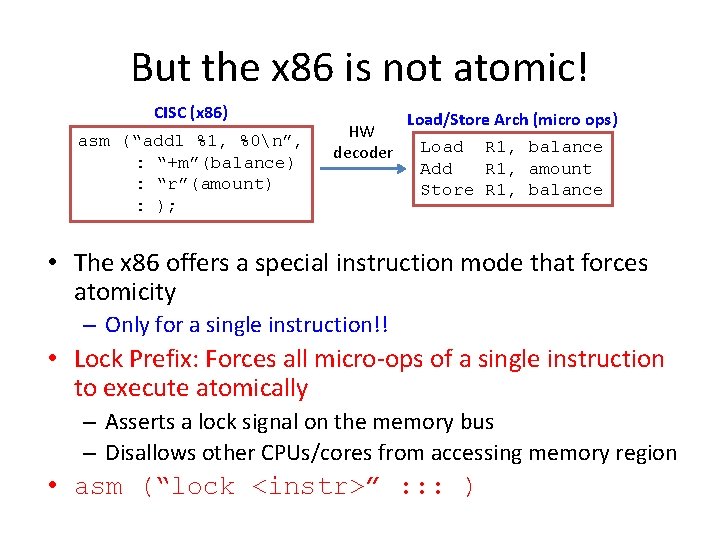

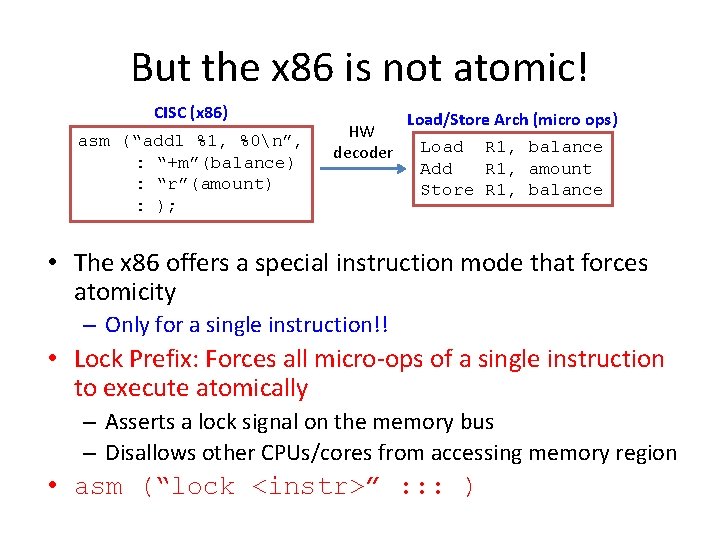

But the x 86 is not atomic! CISC (x 86) asm (“addl %1, %0n”, : “+m”(balance) : “r”(amount) : ); HW decoder Load/Store Arch (micro ops) Load R 1, balance Add R 1, amount Store R 1, balance • The x 86 offers a special instruction mode that forces atomicity – Only for a single instruction!! • Lock Prefix: Forces all micro-ops of a single instruction to execute atomically – Asserts a lock signal on the memory bus – Disallows other CPUs/cores from accessing memory region • asm (“lock <instr>” : : : )

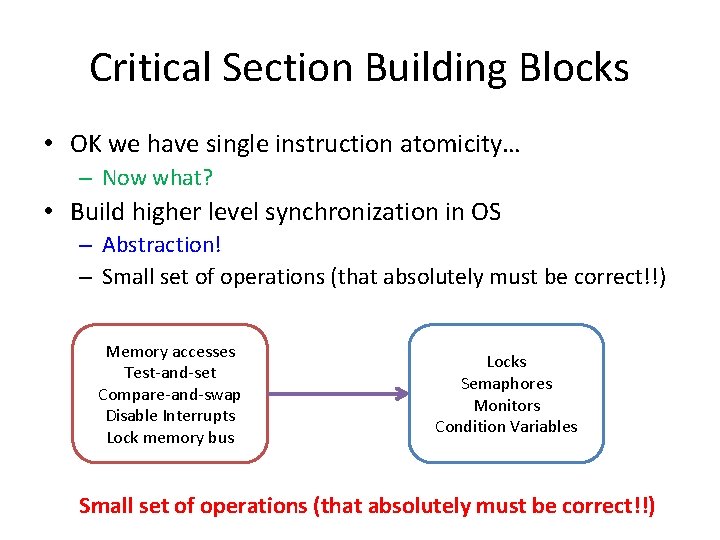

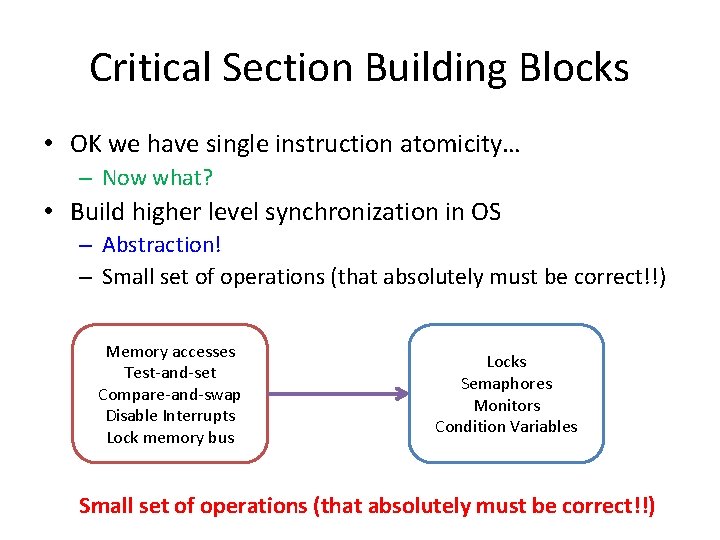

Critical Section Building Blocks • OK we have single instruction atomicity… – Now what? • Build higher level synchronization in OS – Abstraction! – Small set of operations (that absolutely must be correct!!) Memory accesses Test-and-set Compare-and-swap Disable Interrupts Lock memory bus Locks Semaphores Monitors Condition Variables Small set of operations (that absolutely must be correct!!)

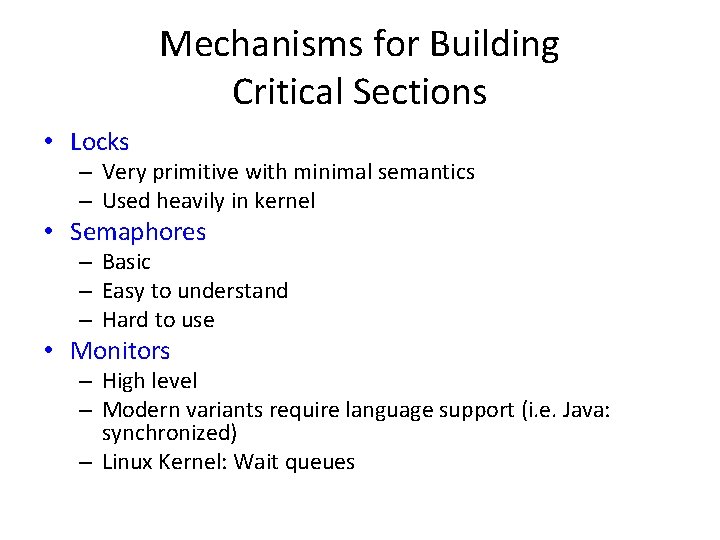

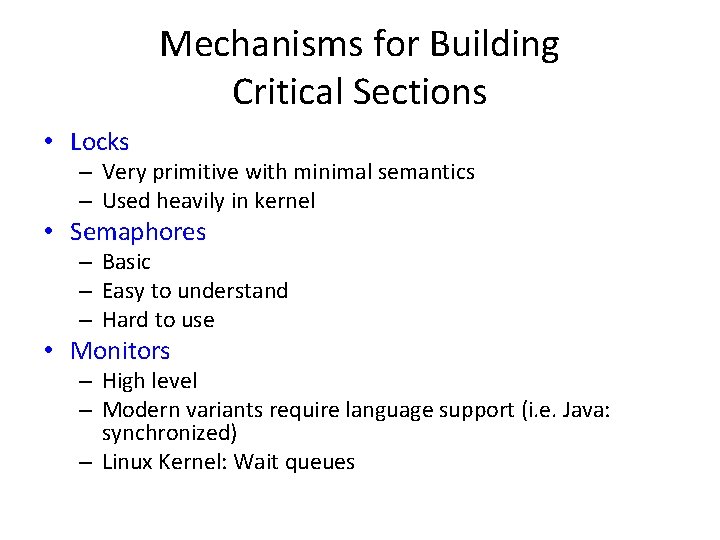

Mechanisms for Building Critical Sections • Locks – Very primitive with minimal semantics – Used heavily in kernel • Semaphores – Basic – Easy to understand – Hard to use • Monitors – High level – Modern variants require language support (i. e. Java: synchronized) – Linux Kernel: Wait queues

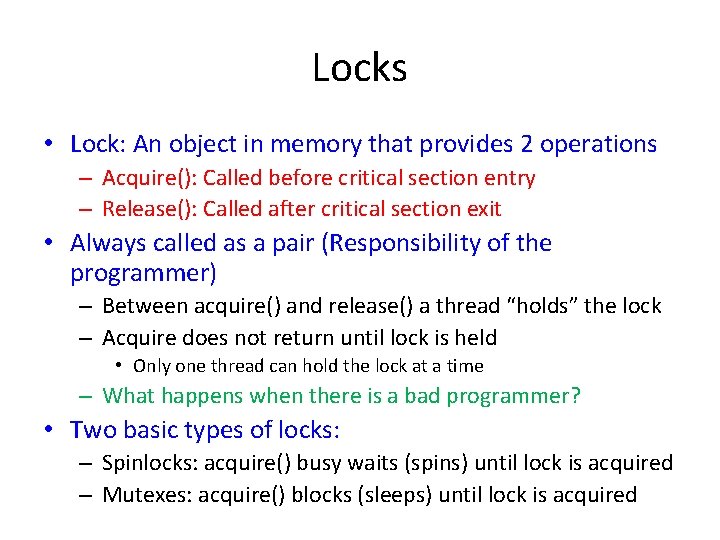

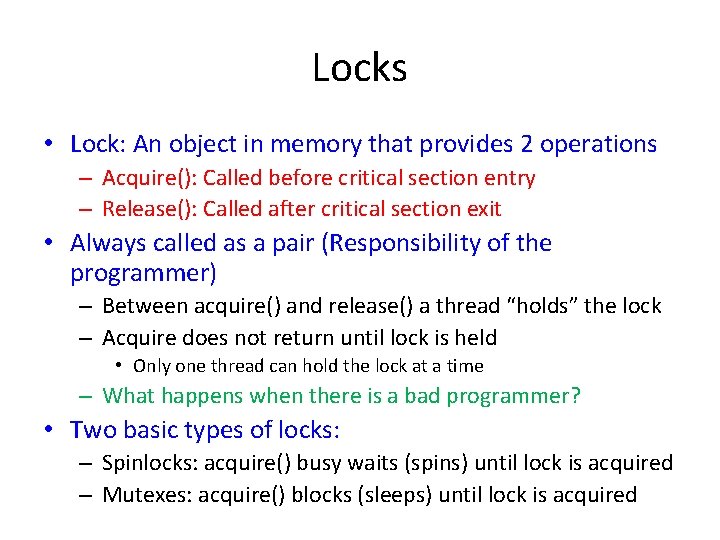

Locks • Lock: An object in memory that provides 2 operations – Acquire(): Called before critical section entry – Release(): Called after critical section exit • Always called as a pair (Responsibility of the programmer) – Between acquire() and release() a thread “holds” the lock – Acquire does not return until lock is held • Only one thread can hold the lock at a time – What happens when there is a bad programmer? • Two basic types of locks: – Spinlocks: acquire() busy waits (spins) until lock is acquired – Mutexes: acquire() blocks (sleeps) until lock is acquired

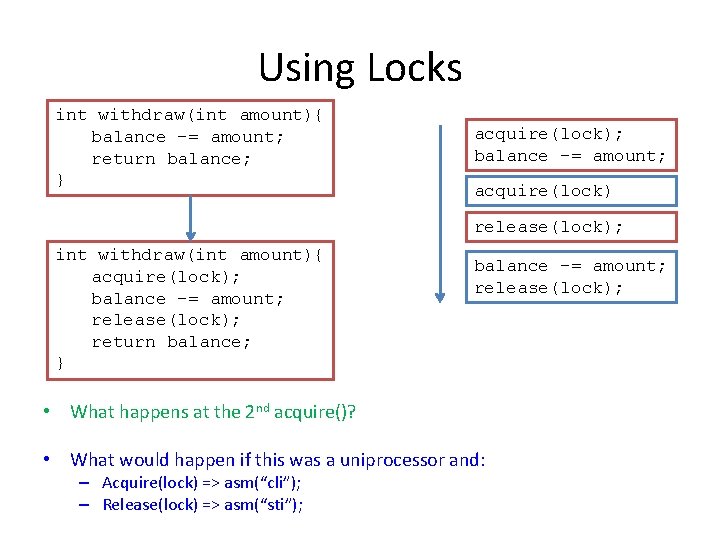

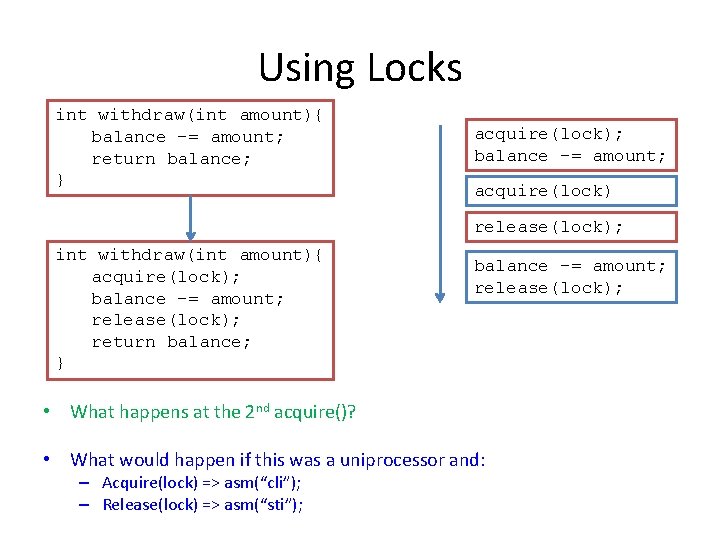

Using Locks int withdraw(int amount){ balance -= amount; return balance; } acquire(lock); balance -= amount; acquire(lock) release(lock); int withdraw(int amount){ acquire(lock); balance -= amount; release(lock); return balance; } balance -= amount; release(lock); • What happens at the 2 nd acquire()? • What would happen if this was a uniprocessor and: – Acquire(lock) => asm(“cli”); – Release(lock) => asm(“sti”);

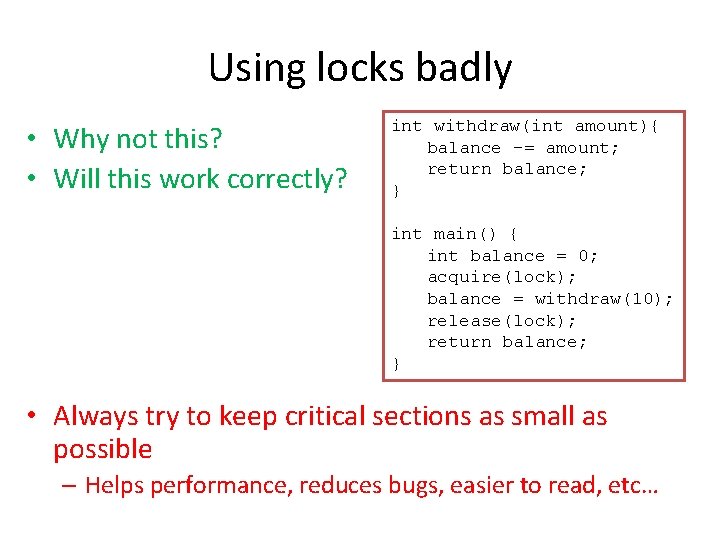

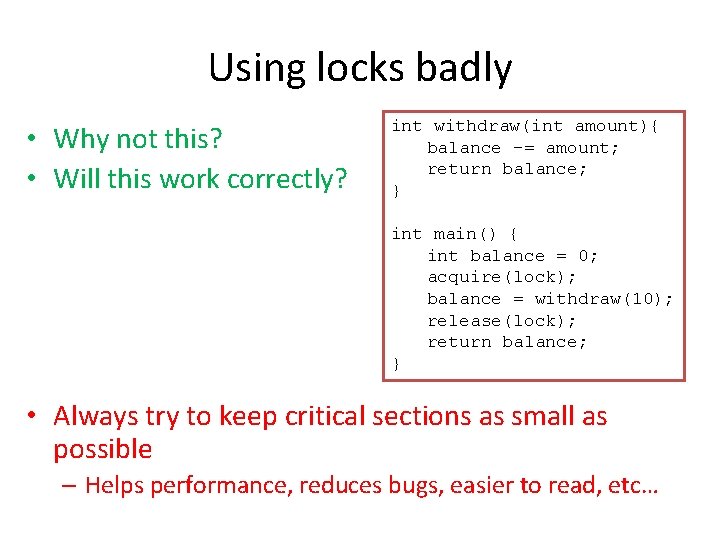

Using locks badly • Why not this? • Will this work correctly? int withdraw(int amount){ balance -= amount; return balance; } int main() { int balance = 0; acquire(lock); balance = withdraw(10); release(lock); return balance; } • Always try to keep critical sections as small as possible – Helps performance, reduces bugs, easier to read, etc…

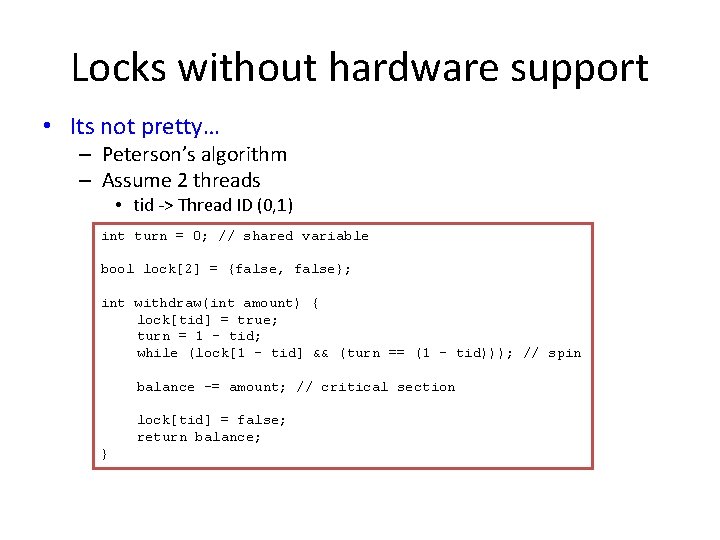

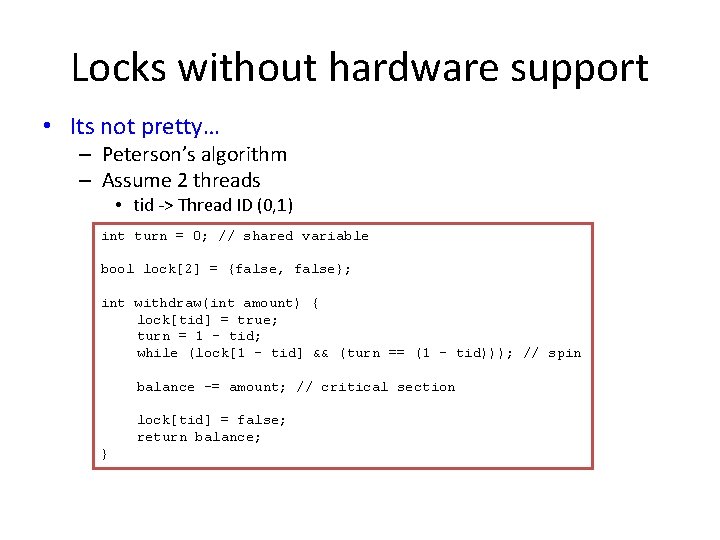

Locks without hardware support • Its not pretty… – Peterson’s algorithm – Assume 2 threads • tid -> Thread ID (0, 1) int turn = 0; // shared variable bool lock[2] = {false, false}; int withdraw(int amount) { lock[tid] = true; turn = 1 – tid; while (lock[1 - tid] && (turn == (1 – tid))); // spin balance -= amount; // critical section lock[tid] = false; return balance; }

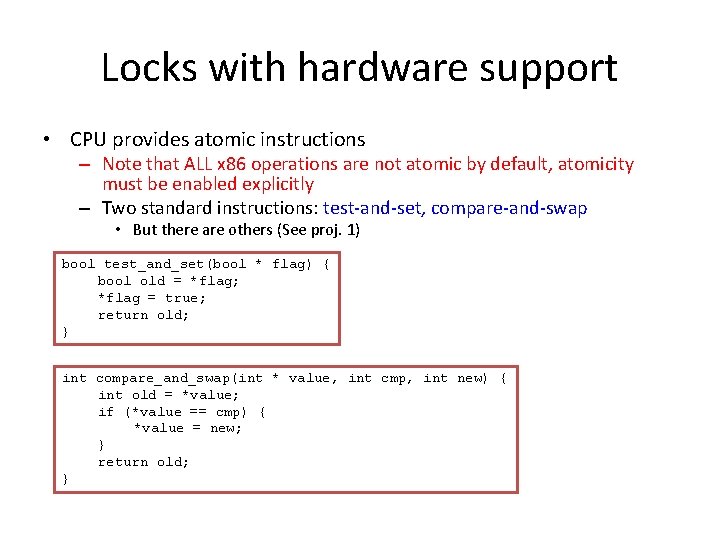

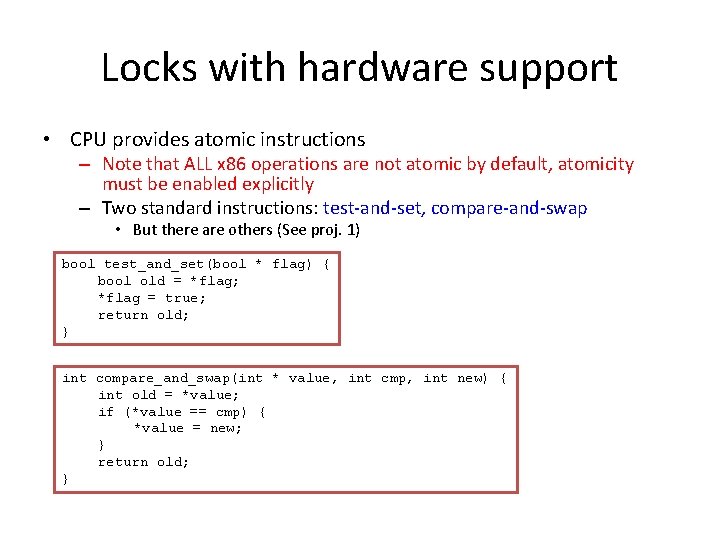

Locks with hardware support • CPU provides atomic instructions – Note that ALL x 86 operations are not atomic by default, atomicity must be enabled explicitly – Two standard instructions: test-and-set, compare-and-swap • But there are others (See proj. 1) bool test_and_set(bool * flag) { bool old = *flag; *flag = true; return old; } int compare_and_swap(int * value, int cmp, int new) { int old = *value; if (*value == cmp) { *value = new; } return old; }

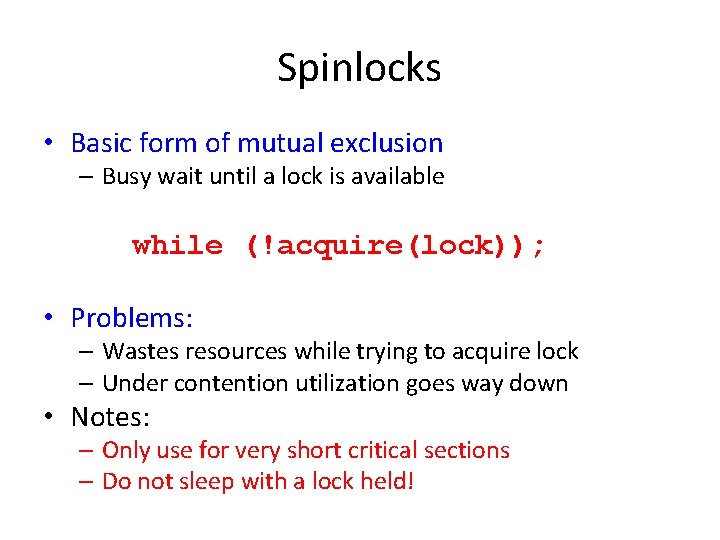

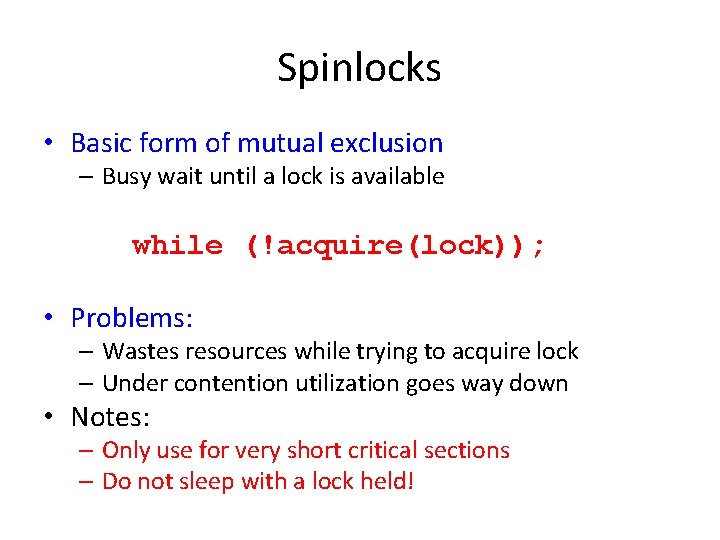

Spinlocks • Basic form of mutual exclusion – Busy wait until a lock is available while (!acquire(lock)); • Problems: – Wastes resources while trying to acquire lock – Under contention utilization goes way down • Notes: – Only use for very short critical sections – Do not sleep with a lock held!

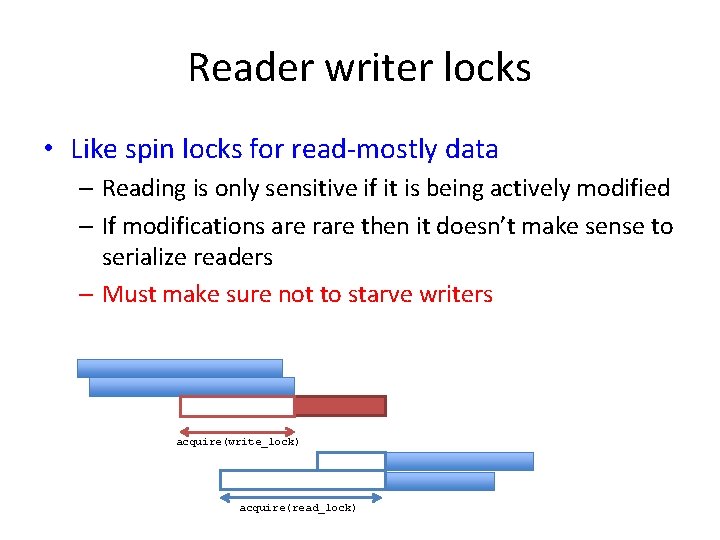

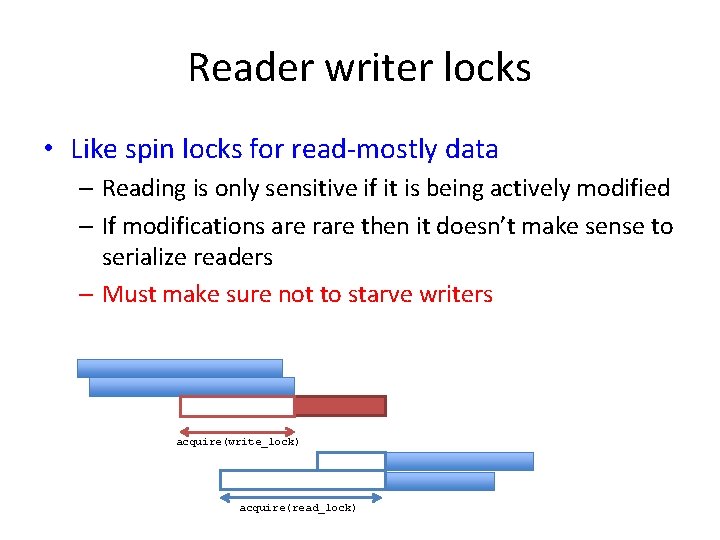

Reader writer locks • Like spin locks for read-mostly data – Reading is only sensitive if it is being actively modified – If modifications are rare then it doesn’t make sense to serialize readers – Must make sure not to starve writers acquire(write_lock) acquire(read_lock)

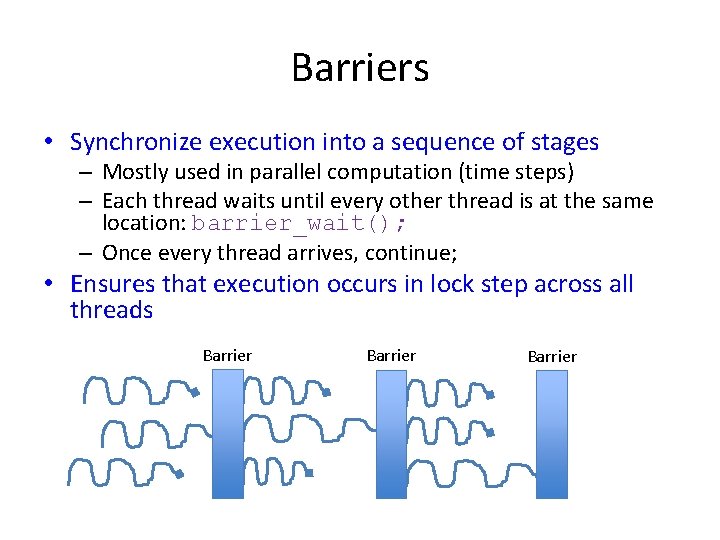

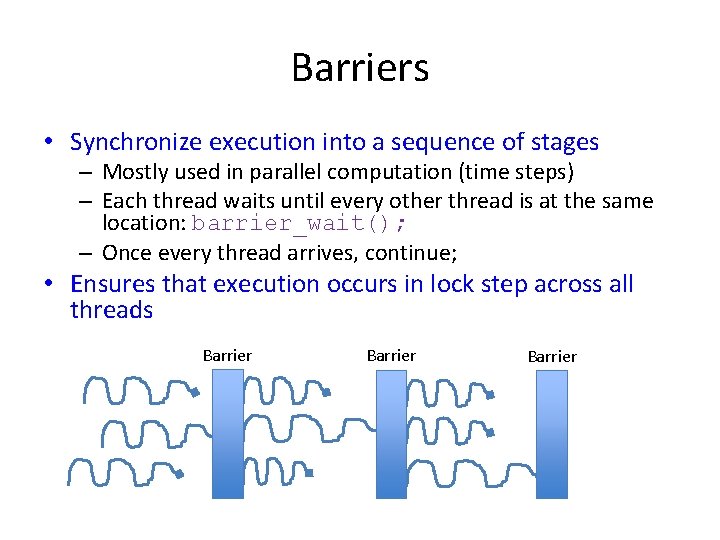

Barriers • Synchronize execution into a sequence of stages – Mostly used in parallel computation (time steps) – Each thread waits until every other thread is at the same location: barrier_wait(); – Once every thread arrives, continue; • Ensures that execution occurs in lock step across all threads Barrier

Wait Queues • Locking is bad • Busy waiting wastes resources • How do we use time waiting to do other stuff – Wait Queues – Sleep until an event arrives – Require signal from other thread to indicate status has changed – Re-evaluate current state before continuing

RCU • Read-copy-update – Replacement for reader-writer locks – Does not actually use locks – Creates different versions of shared data • Modifying data creates a new copy • Future readers access new copy • Old readers still have old version • Must garbage collect – Only after all readers are done – But how do we know? • Quiescent state – Place requirements on reader contexts (cannot sleep) – Therefore if a CPU with a reader has slept, then reader is done

Transactional memory • Embed transaction processing into memory system – Memory system tracks memory touched inside critical section (transaction) – If another thread modifies same data, transaction aborts • Execution returns to initial state • Memory contents reset to initial values • Present on latest Intel Haswell CPUs