SWE 681 / ISA 681 Secure Software Design & Programming: Lecture 7: Cryptography. Dr. David A. Wheeler, 2020-06-02

Outline • Introduction • Basic crypto algorithms • Storing passwords – Use iterated per-user salted hashes! • Basic protocols • Crypto wars

Cryptography & Cryptanalysis • Cryptography = the science (or art) of transforming intelligible data to an unintelligible form, and its inverse transformation – Means “Secret writing” • Cryptanalysis = the science (or art) of undoing the cryptographic transformation without (exact) knowledge of how that transformation was initially executed • We’ll focus on cryptography (defense), emphasizing how to counter cryptanalysis (attack)

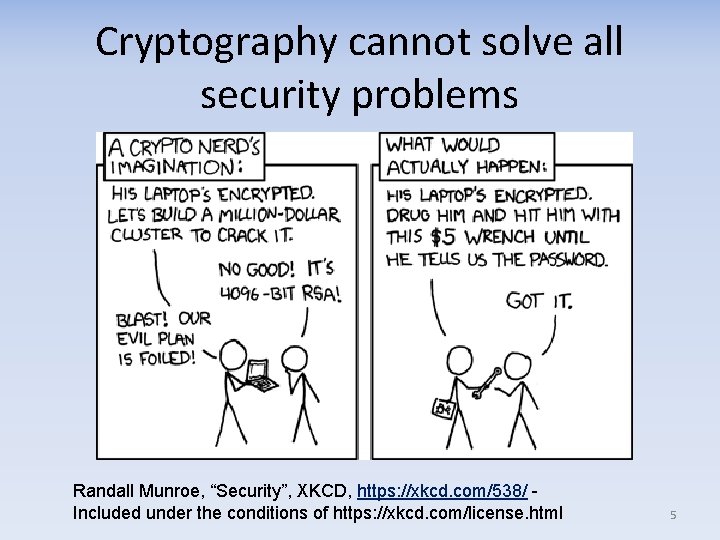

What cryptography is… and is not • Cryptography is a set of tools that can help develop secure software – Important component in some cases • Cryptography won’t solve most security issues – Cryptography is not the same as developing secure software – Note that most of this class is unrelated to crypto – “If you think cryptography is the answer to your problem, then you don’t know what your problem is” – Peter Neumann

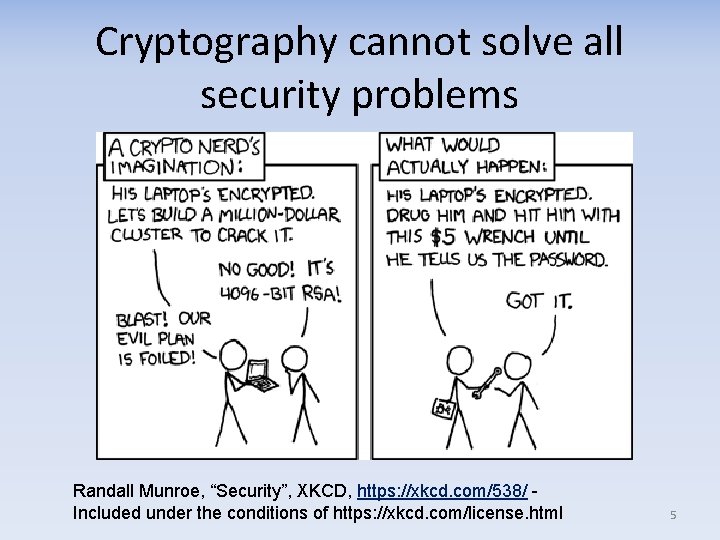

Cryptography cannot solve all security problems. Randall Munroe, “Security”, XKCD, https://xkcd.com/538/. Included under the conditions of https://xkcd.com/license.html

Danger, Will Robinson • Never, never, never create your own cryptographic algorithm or protocol – Specialist training: Ph.D. in cryptography (which requires a large amount of advanced college math), and many years of experience, to create new approaches that actually work – Even then, the odds are good you’ll get it wrong – Instead: Reuse what’s been done • Tiny implementation errors in crypto algorithms, or in how they are invoked, often become massive vulnerabilities – Don’t write your own crypto implementation either – Reuse good implementations where practical

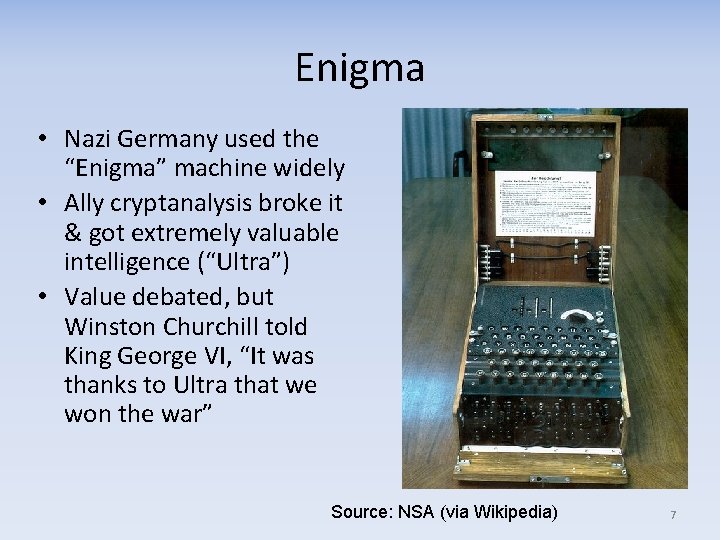

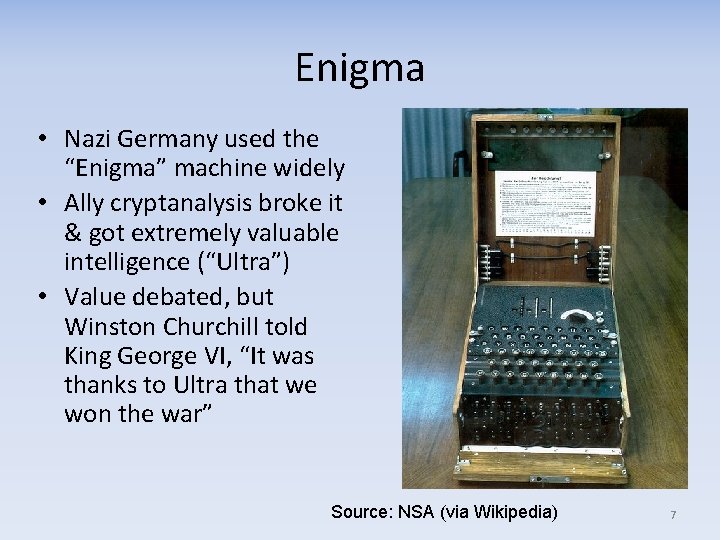

Enigma • Nazi Germany used the “Enigma” machine widely • Allied cryptanalysis broke it & got extremely valuable intelligence (“Ultra”) • Value debated, but Winston Churchill told King George VI, “It was thanks to Ultra that we won the war” Source: NSA (via Wikipedia)

Some common basic types of cryptographic algorithms • Symmetric (secret/shared key) encryption/decryption – Algorithm examples: DES*, 3DES*, AES • Key exchange – Algorithm examples: Diffie-Hellman, Curve25519 • Asymmetric (public key) encryption/decryption – Algorithm examples: RSA, elliptic curve family • Cryptographic (one-way) hash (aka “digital fingerprint”) – Algorithm examples: MD5*, SHA-1*, SHA-512, SHA-3 • Cryptographic pseudo-random number generator (PRNG) * These are examples; do not use these algorithms in future systems

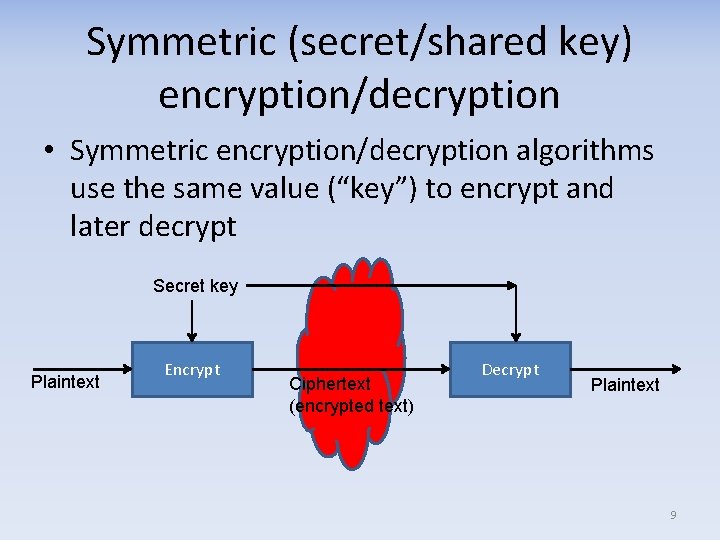

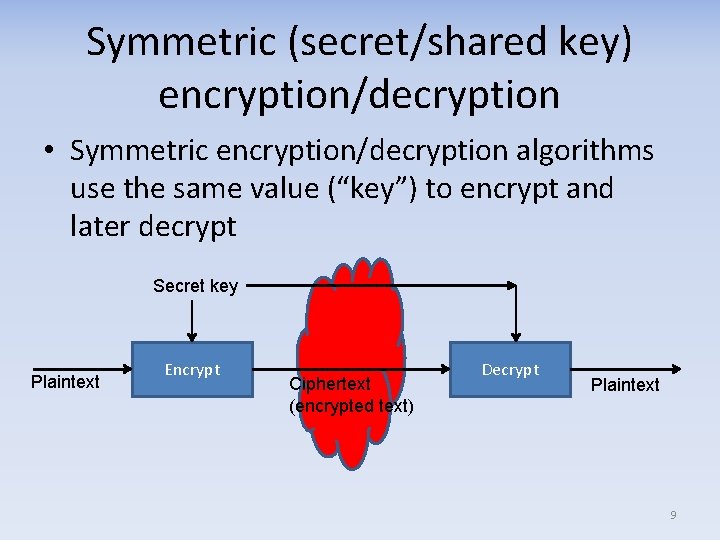

Symmetric (secret/shared key) encryption/decryption • Symmetric encryption/decryption algorithms use the same value (“key”) to encrypt and later decrypt [Diagram: Plaintext → Encrypt → Ciphertext (encrypted text) → Decrypt → Plaintext, with the same secret key fed to both Encrypt and Decrypt]

Data Encryption Standard (DES) • 1973: NBS (now NIST) solicited for a DES • 1974: Second solicitation, IBM responded • 1975: Algorithm published • 1976: Workshop, judged adequate for “10-15 years” • 1977: DES standardized (FIPS) • Has held up relatively well over time – Do need to avoid weak/semi-weak keys • Slow in software • Big problem: Key only 56 variable bits – 64-bit key, but every 8th bit is odd parity (beware: an implementation may use a known key if you don’t give correct parity!) – Easily broken with modern computers/hardware – Do not use DES for security today
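The parity rule on this slide can be sketched in Python. This is only an illustration of the key format (DES itself should not be used); the function names `set_odd_parity` and `has_odd_parity` are hypothetical helpers, not part of any library. Each key byte carries 7 variable bits plus one odd-parity bit in its least-significant position, which is where the 56 variable bits come from.

```python
def set_odd_parity(key: bytes) -> bytes:
    """Return a copy of an 8-byte DES key with each byte's low bit set for odd parity."""
    if len(key) != 8:
        raise ValueError("DES keys are 8 bytes (64 bits) long")
    fixed = bytearray()
    for byte in key:
        high7 = byte & 0xFE  # the 7 variable bits of this byte
        ones = bin(high7).count("1")
        # Choose the low bit so the total number of 1 bits in the byte is odd.
        fixed.append(high7 | (0 if ones % 2 == 1 else 1))
    return bytes(fixed)


def has_odd_parity(key: bytes) -> bool:
    """Check that every byte of the key has an odd number of 1 bits."""
    return all(bin(byte).count("1") % 2 == 1 for byte in key)


if __name__ == "__main__":
    key = set_odd_parity(bytes(8))
    print(key.hex(), has_odd_parity(key))
```

A caller would typically reject or repair keys that fail `has_odd_parity` before handing them to a DES implementation, rather than letting the library silently substitute something else.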

Triple DES • Uses DES 3 times, with 3 keys K1…K3: – ciphertext = E(K3, D(K2, E(K1, plaintext))) – plaintext = D(K1, E(K2, D(K3, ciphertext))) • Each DES key 56 bits, full key length 3 x 56 = 168 bits – Effective key length 112 bits due to a “meet-in-the-middle” attack – Just like DES, keys must have correct parity & avoid weak keys • Historically relatively secure, but slow • Defined, as Triple Data Encryption Algorithm, in: – NIST Special Publication 800-67 Revision 1 – ISO/IEC 18033-3:2005, Information technology, Security techniques, Encryption algorithms, Part 3: Block ciphers • Big problem: Block size only 64 bits (same as Blowfish) – Stop using it; attackable with long-lived connections: https://sweet32.info/ , CVE-2016-2183 (TLS), CVE-2016-6329 (OpenVPN)

Advanced Encryption Standard (AES) • Replaced DES – DES key too short, 3DES too slow & block too short • Developed through open international competition run by NIST – Required algorithms & sample implementation – Forbade patents (so anyone can use) – Competition was a fantastic success • 15 candidates; Rijndael won & became AES – U.S. FIPS PUB 197 • A simple-to-understand detailed description is at: http://www.moserware.com/2009/09/stick-figure-guide-to-advanced.html

AES (2) • Rijndael algorithm allows block and key sizes of any multiple of 32 bits, from 128 to 256 bits • AES is a limited variant of Rijndael with block size = 128 bits, key sizes = 128, 192, or 256 bits – Algorithm internally repeats; as key lengthens, number of iterations (rounds) increases – 128-bit key: 10 rounds, 192-bit: 12, 256-bit: 14 • Much faster than 3DES, longer keys
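The relationship between key size and round count on this slide follows a simple rule from FIPS 197: the number of rounds is the key length in 32-bit words plus 6. A minimal sketch, where `aes_rounds` is a hypothetical helper name used only for illustration:

```python
def aes_rounds(key_bits: int) -> int:
    """Return the number of AES rounds for a given key size in bits."""
    if key_bits not in (128, 192, 256):
        raise ValueError("AES key sizes are 128, 192, or 256 bits")
    key_words = key_bits // 32  # key length in 32-bit words
    return key_words + 6


if __name__ == "__main__":
    for bits in (128, 192, 256):
        print(bits, aes_rounds(bits))
```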

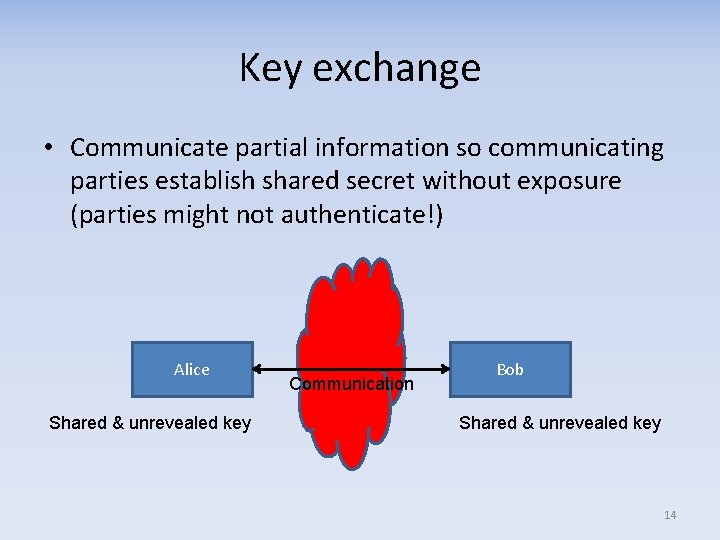

Key exchange • Communicate partial information so communicating parties establish a shared secret without exposing it (parties might not authenticate!) [Diagram: Alice ↔ Communication ↔ Bob; each side ends up holding the same shared & unrevealed key]

Diffie-Hellman key exchange • Aka “Diffie–Hellman–Merkle” key exchange • First published in 1976 • Allows two parties, without prior knowledge of each other, to jointly establish a shared secret key over an insecure communications channel – Key can then be used to encrypt subsequent communications using a symmetric-key cipher – Does not authenticate (vulnerable to man-in-the-middle) by itself, but other mechanisms can be used to address this • Used in Transport Layer Security (TLS) ephemeral modes (EDH or DHE) to provide perfect forward secrecy – Perfect forward secrecy = each session generates fresh random key pairs instead of deriving them deterministically. Thus, if one session key is compromised, newer & older session keys are not

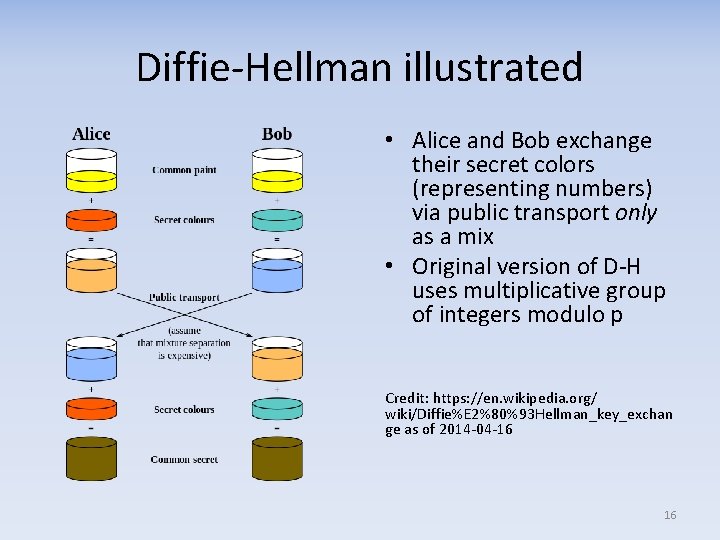

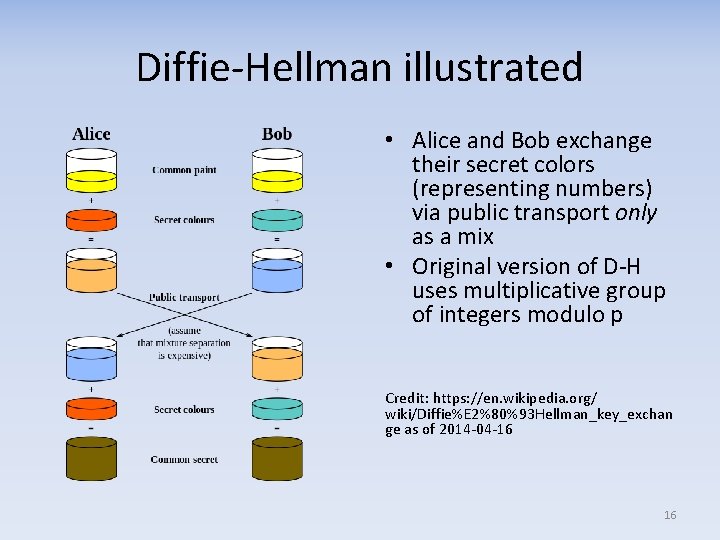

Diffie-Hellman illustrated • Alice and Bob exchange their secret colors (representing numbers) via public transport only as a mix • Original version of D-H uses multiplicative group of integers modulo p Credit: https://en.wikipedia.org/wiki/Diffie%E2%80%93Hellman_key_exchange as of 2014-04-16
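The color-mixing picture corresponds to modular exponentiation in the original integer version of D-H. The sketch below is a toy for illustration only: the modulus `P` (the Mersenne prime 2^127 - 1) and base `G` are assumptions chosen for readability, are far too small for real use, and the exchange is unauthenticated. Real systems should use a vetted library and standardized groups or curves.

```python
import secrets

# Toy public parameters (assumptions for illustration; NOT secure sizes).
P = 2**127 - 1  # a known Mersenne prime, used as the modulus
G = 3           # arbitrary public base chosen for this sketch


def dh_keypair():
    """Generate a (private, public) pair: public = G^private mod P."""
    private = secrets.randbelow(P - 3) + 2  # random value in [2, P-2]
    public = pow(G, private, P)
    return private, public


def dh_shared(private: int, other_public: int) -> int:
    """Combine our private value with the other party's public value."""
    return pow(other_public, private, P)


if __name__ == "__main__":
    alice_private, alice_public = dh_keypair()
    bob_private, bob_public = dh_keypair()
    # Only the public values cross the network; both sides derive the same secret.
    print(dh_shared(alice_private, bob_public) == dh_shared(bob_private, alice_public))
```

Both sides compute G^(a·b) mod P, so the shared values match even though neither private value was transmitted.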

Curve25519 • Developed by D. J. Bernstein • Intended to be “a state-of-the-art Diffie-Hellman function” – “Given a user's 32-byte secret key, Curve25519 computes the user's 32-byte public key.” – “Given the user's 32-byte secret key and another user's 32-byte public key, Curve25519 computes a 32-byte secret shared by the two users. This secret can then be used to authenticate and encrypt messages between the two users.” • Based on elliptic curve cryptography • Ed25519 is a related public-key elliptic curve signature system • More info: “Curve25519: new Diffie-Hellman speed records” by Daniel J. Bernstein, http://cr.yp.to/ecdh.html

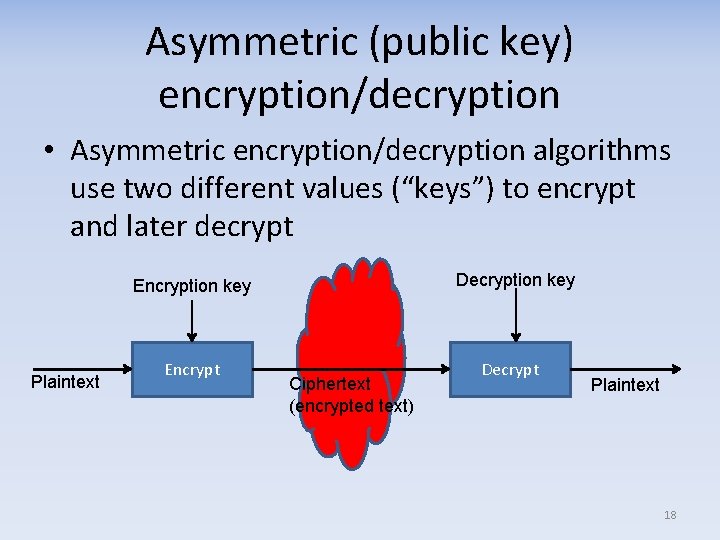

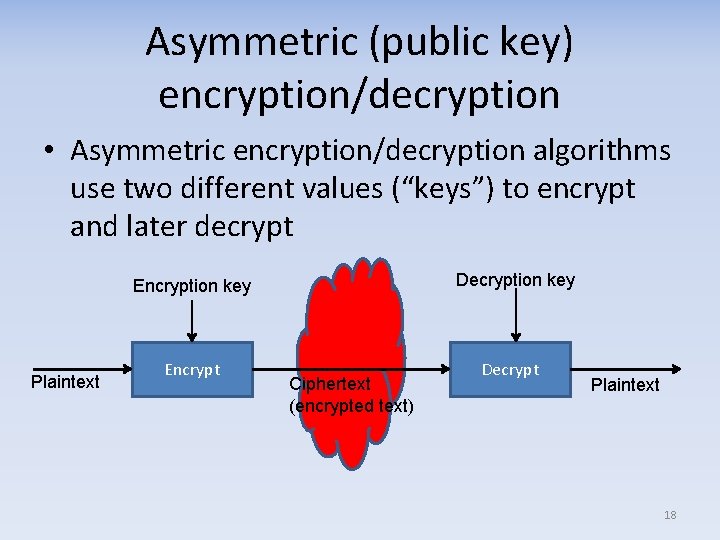

Asymmetric (public key) encryption/decryption • Asymmetric encryption/decryption algorithms use two different values (“keys”) to encrypt and later decrypt [Diagram: Plaintext → Encrypt (with encryption key) → Ciphertext (encrypted text) → Decrypt (with decryption key) → Plaintext]

RSA • Named after Rivest, Shamir, & Adleman • Public key crypto based on difficulty of factoring large numbers into primes – Public key & private key – Encryption & decryption raise the “message” to a large exponent (modulo the product of two primes) • Patent released/expired in 2000 • Don’t use RSA keys < 1024 bits; 2048+ better – On August 14, 2012, Microsoft issued an update to Windows XP & later to block RSA keys < 1024 bits • Don’t re-implement yourself – The math is easy, doing it correctly for crypto is hard
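To make “raise the message to a large exponent” concrete, here is a toy sketch of textbook RSA with tiny primes. It is an assumption-laden illustration only: the primes 61 and 53 and exponent 17 are classroom values, there is no padding, and nothing here is safe to use. It reinforces the slide's point that the math is easy while a correct implementation is not.

```python
# Toy textbook RSA (illustration only: tiny primes, no padding, NOT secure).
p, q = 61, 53                 # secret primes (absurdly small, for readability)
n = p * q                     # public modulus
phi = (p - 1) * (q - 1)       # Euler's totient of n
e = 17                        # public exponent, coprime with phi
d = pow(e, -1, phi)           # private exponent: modular inverse (Python 3.8+)


def rsa_encrypt(message: int) -> int:
    """Encrypt an integer message < n with the public key (e, n)."""
    return pow(message, e, n)


def rsa_decrypt(ciphertext: int) -> int:
    """Decrypt with the private key (d, n)."""
    return pow(ciphertext, d, n)


if __name__ == "__main__":
    ciphertext = rsa_encrypt(65)
    print(ciphertext, rsa_decrypt(ciphertext))
```

Anyone who can factor `n` back into `p` and `q` can recompute `d`, which is why key size matters so much.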

Elliptic curve cryptography (ECC) • Algorithms based on elliptic curves presume it’s infeasible to find the discrete logarithm of a random elliptic curve element, w.r.t. a publicly known base point – This is the “elliptic curve discrete logarithm problem” (ECDLP) – Smaller key size for equivalent protection, e.g., 256-bit ECC key ~ 3072-bit RSA key – Developed in 1985 by Neal Koblitz and Victor Miller – Very general approach, many algorithms based on ECC – Intro: http://arstechnica.com/security/2013/10/a-relatively-easy-to-understand-primer-on-elliptic-curve-cryptography/ • Several ECC algorithms included in NSA’s Suite B (a suite of encryption algorithms)

Issue: Elliptic Curve Cryptography (ECC) & patents • Patent concerns are primary inhibitor of ECC use – ECC application may in some cases be inhibited in US by patents (Certicom) – Often unclear what’s covered (typical software patent problem) – RSA claims: “ECC… have no general patents, though some newer elliptic curve algorithms and certain efficient implementation techniques may be covered by patents… it is the implementation technique that is patented, not the prime or representation, and there are alternative, compatible implementation techniques that are not covered by the patents…” • http://web.archive.org/web/20130524001754/http://www.rsa.com/rsalabs/node.asp?id=2325 – IETF RFC 6090 (released 2011) describes the fundamental ECC algorithms as defined in seminal references from 1994 and earlier, with the goal of allowing patent-free implementation… but patent holders can always sue anyway • US government has a license for national security uses – That doesn’t help the rest of us! – All parties have to agree before they can use a crypto algorithm – http://www.nsa.gov/business/programs/elliptic_curve.shtml • Yet another example of how software patents put computer users & software developers at risk & inhibit innovation

Asymmetric often used with symmetric algorithms • Asymmetric algorithms tend to be slow • When used to encrypt, often used with symmetric algorithm – Sender creates single-use “shared” key using cryptographically secure pseudo-random number generator – Shared key encrypted using asymmetric algorithm – Receiver receives & decrypts shared key – Rest of data is encrypted with (faster) symmetric algorithm using this single-use key

Cryptographic (one-way) hash function • Cryptographic (one-way) hash function takes arbitrary-length data & generates fixed-length hash (“fingerprint”) so that it is infeasible to: – Find any message with a given hash value (“preimage resistance”) – Create another (modified?) message with same hash as a given first message (“second preimage resistance”) – Create any two messages with same hash (“collision resistance”) [Diagram: Message → Hash → Cryptographic hash (fingerprint, digest), fixed width]

Cryptographic Hash • Overall goal: Adversary can’t replace or modify data without changing fingerprint • Some uses: – Verifying integrity – just store fingerprint, verify by recomputing to ensure unchanged/expected – Digital signing – Use private key to “encrypt” fingerprint; anyone can use public key to verify – File id – e.g., CM systems – Creating “random” values – Password storage (“per-user salted hashes”)
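The “verifying integrity” use can be sketched with Python's standard `hashlib`. This is a minimal illustration, assuming the stored fingerprint itself is kept somewhere the attacker cannot modify; the helper names `fingerprint` and `is_unchanged` are hypothetical.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 fingerprint of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unchanged(data: bytes, expected_hex: str) -> bool:
    """Recompute the fingerprint and compare it with the stored one."""
    # compare_digest avoids leaking where the first mismatch occurs.
    return hmac.compare_digest(fingerprint(data), expected_hex)


if __name__ == "__main__":
    stored = fingerprint(b"release-1.0 contents")
    print(is_unchanged(b"release-1.0 contents", stored))
    print(is_unchanged(b"release-1.0 contents (tampered)", stored))
```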

Cryptographic hash algorithms: MD5, SHA-1, SHA-2 • MD5: Was widely used, but now broken • SHA-1: Many moved to it, still widely used – Cryptanalysis work in 2004 found important weaknesses – Recommendation: gradually move from it • SHA-2 – Family: SHA-224, SHA-256, SHA-384, SHA-512 – Technical similarities with SHA-1 raised concerns

Cryptographic hash algorithms: SHA-3 • NIST announced on November 2, 2007 a public competition to develop a new cryptographic hash algorithm, SHA-3 • NIST received sixty-four entries from cryptographers around the world by October 31, 2008 • NIST announced Keccak as the winner of the SHA-3 competition on October 2, 2012

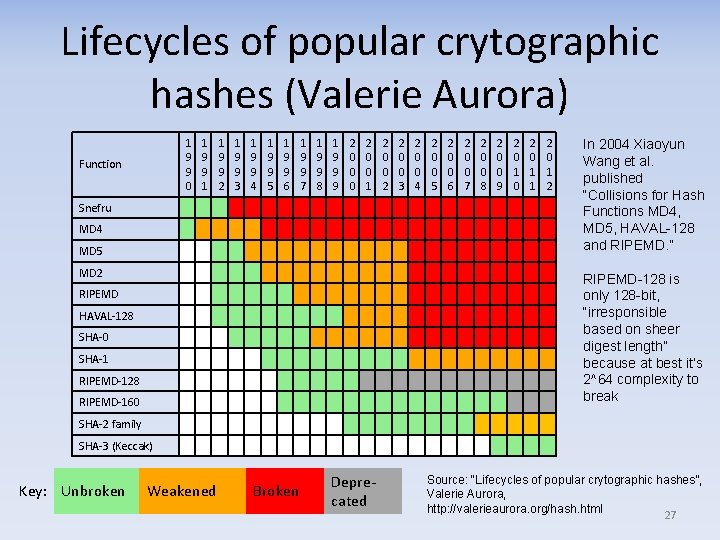

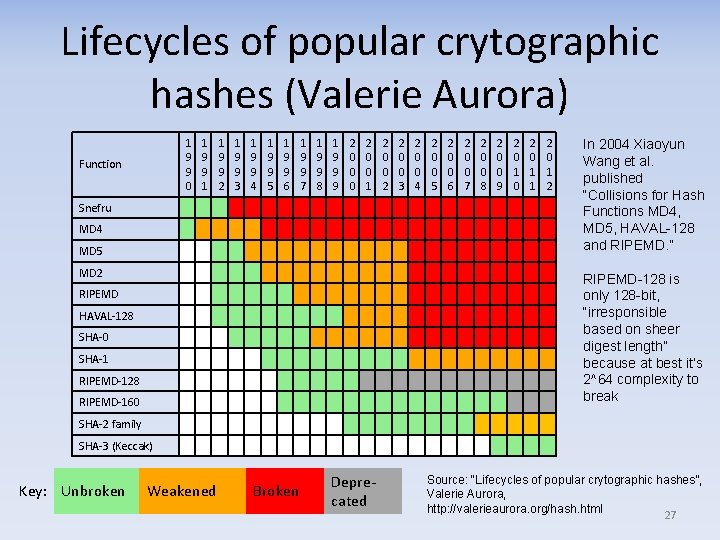

Lifecycles of popular cryptographic hashes (Valerie Aurora) [Figure: chart showing, for each year from 1990 to 2012, the status of Snefru, MD4, MD5, MD2, RIPEMD, HAVAL-128, SHA-0, SHA-1, RIPEMD-128, RIPEMD-160, the SHA-2 family, and SHA-3 (Keccak). Key: Unbroken, Weakened, Broken, Deprecated.] • In 2004 Xiaoyun Wang et al. published “Collisions for Hash Functions MD4, MD5, HAVAL-128 and RIPEMD.” • RIPEMD-128 is only 128-bit, “irresponsible based on sheer digest length” because at best it’s 2^64 complexity to break Source: “Lifecycles of popular cryptographic hashes”, Valerie Aurora, http://valerieaurora.org/hash.html

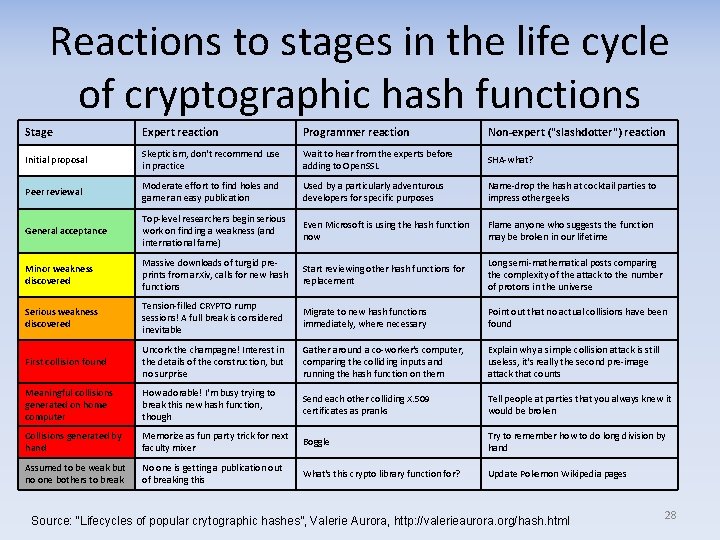

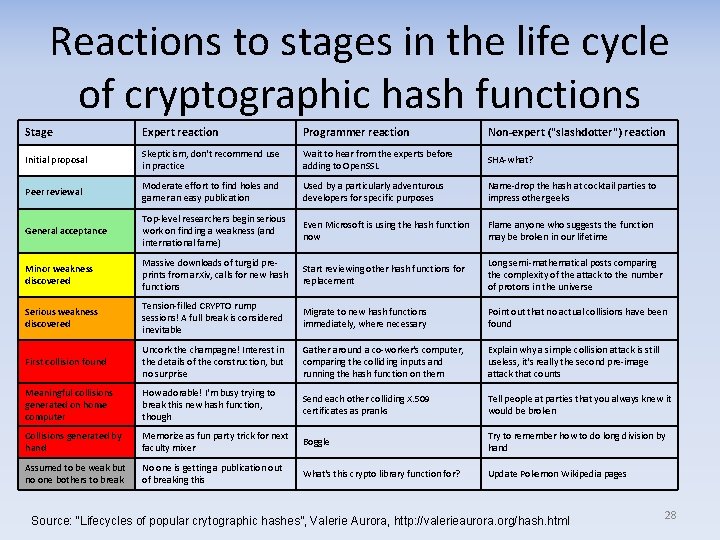

Reactions to stages in the life cycle of cryptographic hash functions

| Stage | Expert reaction | Programmer reaction | Non-expert ("slashdotter") reaction |
| --- | --- | --- | --- |
| Initial proposal | Skepticism, don't recommend use in practice | Wait to hear from the experts before adding to OpenSSL | SHA-what? |
| Peer reviewal | Moderate effort to find holes and garner an easy publication | Used by particularly adventurous developers for specific purposes | Name-drop the hash at cocktail parties to impress other geeks |
| General acceptance | Top-level researchers begin serious work on finding a weakness (and international fame) | Even Microsoft is using the hash function now | Flame anyone who suggests the function may be broken in our lifetime |
| Minor weakness discovered | Massive downloads of turgid pre-prints from arXiv, calls for new hash functions | Start reviewing other hash functions for replacement | Long semi-mathematical posts comparing the complexity of the attack to the number of protons in the universe |
| Serious weakness discovered | Tension-filled CRYPTO rump sessions! A full break is considered inevitable | Migrate to new hash functions immediately, where necessary | Point out that no actual collisions have been found |
| First collision found | Uncork the champagne! Interest in the details of the construction, but no surprise | Gather around a co-worker's computer, comparing the colliding inputs and running the hash function on them | Explain why a simple collision attack is still useless, it's really the second pre-image attack that counts |
| Meaningful collisions generated on home computer | How adorable! I'm busy trying to break this new hash function, though | Send each other colliding X.509 certificates as pranks | Tell people at parties that you always knew it would be broken |
| Collisions generated by hand | Memorize as fun party trick for next faculty mixer | Boggle | Try to remember how to do long division by hand |
| Assumed to be weak but no one bothers to break | No one is getting a publication out of breaking this | What's this crypto library function for? | Update Pokemon Wikipedia pages |

Source: “Lifecycles of popular cryptographic hashes”, Valerie Aurora, http://valerieaurora.org/hash.html

Cryptographic algorithms are often (eventually) broken • Cryptanalysis is an active field – Sometimes advances lead to weakening/break in algorithms… even ones considered secure before – Lifecycles of cryptographic hashes good illustration • Make sure you can change algorithms! – Designs should not assume that crypto algorithms never change • Best to have at least two algorithms implemented – So you can switch away from “broken” one • Not all experts agree that crypto agility is best – Alternate expert view: make it easy to update systems – My opinion: Agree in principle, but in practice can’t make everyone (especially OTHER people) update their systems
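One way to keep the option of changing algorithms open is to store the algorithm name next to every digest, so old records stay verifiable while new ones use a newer algorithm. The sketch below is one possible design, not a standard format: the `name:hexdigest` layout, the allow-list, and the function names are all assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical policy: which algorithms we accept, and which we use for new data.
ALLOWED_ALGORITHMS = {"sha256", "sha512", "sha3_256"}
DEFAULT_ALGORITHM = "sha256"


def tagged_digest(data: bytes, algorithm: str = DEFAULT_ALGORITHM) -> str:
    """Return 'algorithm:hexdigest' so the algorithm travels with the value."""
    if algorithm not in ALLOWED_ALGORITHMS:
        raise ValueError(f"algorithm not allowed: {algorithm}")
    return f"{algorithm}:{hashlib.new(algorithm, data).hexdigest()}"


def verify_tagged(data: bytes, tagged: str) -> bool:
    """Verify data against a stored 'algorithm:hexdigest' value."""
    algorithm, _, expected = tagged.partition(":")
    if algorithm not in ALLOWED_ALGORITHMS:
        return False  # unknown or retired algorithm: refuse rather than guess
    actual = hashlib.new(algorithm, data).hexdigest()
    return hmac.compare_digest(actual, expected)


if __name__ == "__main__":
    old_record = tagged_digest(b"document", "sha256")
    new_record = tagged_digest(b"document", "sha3_256")
    print(verify_tagged(b"document", old_record), verify_tagged(b"document", new_record))
```

Retiring a broken algorithm then means removing it from the allow-list and re-hashing the affected records, instead of redesigning the storage format.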

RSA & DH: Some concerns • Concerns about RSA & Diffie-Hellman (DH) raised at Black Hat 2013 – DH is underpinned by the difficulty of the “discrete logarithm problem”; RSA by the related problem of integer factoring – French academic Antoine Joux published two papers suggesting an algorithm to break it could be found before long – “Our conclusion is there is a small but definite chance that RSA and classic Diffie-Hellman will not be usable for encryption purposes in four to five years” (Alex Stamos, chief technology officer, Artemis) – “The RSA protocol that is the foundation of security on the Internet is likely to be broken in the very near future” (Philippe Courtot, CEO of Qualys) – http://www.technologyreview.com/news/517781/math-advances-raise-the-prospect-of-an-internet-security-crisis/ • Elliptic Curve Cryptography (ECC) techniques available – May be inhibited in US by Certicom patents (patents vs. security), as noted earlier • No evidence NIST will start a new public key crypto competition – why?!?

Use what you check (Android “Master Key”) • CVE-2013-4787 / Android bug 8219321 – Reported to Google by Jeff Forristal (Rain Forest Puppy) – Packages are really “zip” archive files – Special zip files can be created where >1 file can exist in a directory with the same name – One is checked for its fingerprint… but a different one is actually installed • App stores can check for such malformed zip files
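A check for this kind of malformed archive can be sketched with Python's standard `zipfile` module. This is an illustration of the idea only, not how any particular app store implements its check; `duplicate_entry_names` and `make_demo_archive` are hypothetical helpers, and the entry name `classes.dex` is just an example.

```python
import io
import warnings
import zipfile
from collections import Counter


def duplicate_entry_names(archive) -> list:
    """Return the entry names that appear more than once in a zip archive."""
    with zipfile.ZipFile(archive) as zf:
        counts = Counter(info.filename for info in zf.infolist())
    return sorted(name for name, count in counts.items() if count > 1)


def make_demo_archive() -> io.BytesIO:
    """Build an in-memory zip that contains the same entry name twice."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        zf.writestr("classes.dex", b"checked contents")
        with warnings.catch_warnings():
            warnings.simplefilter("ignore")  # zipfile warns about duplicate names
            zf.writestr("classes.dex", b"different contents")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    print(duplicate_entry_names(make_demo_archive()))
```

Rejecting any archive for which this list is non-empty removes the ambiguity about which copy gets verified and which gets installed.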

Cryptographic pseudo-random number generator (PRNG) • Many algorithms depend on secret keys or a “nonce” that won’t be reused • A great way to get these is to generate a random number (if enough bits) • Software is fundamentally deterministic – Where you can, using hardware to get truly random values is helpful – But can’t always use hardware sources, or just them, or trust them • Cryptographic pseudo-random number generators create “random” data – So attackers cannot determine past/future values

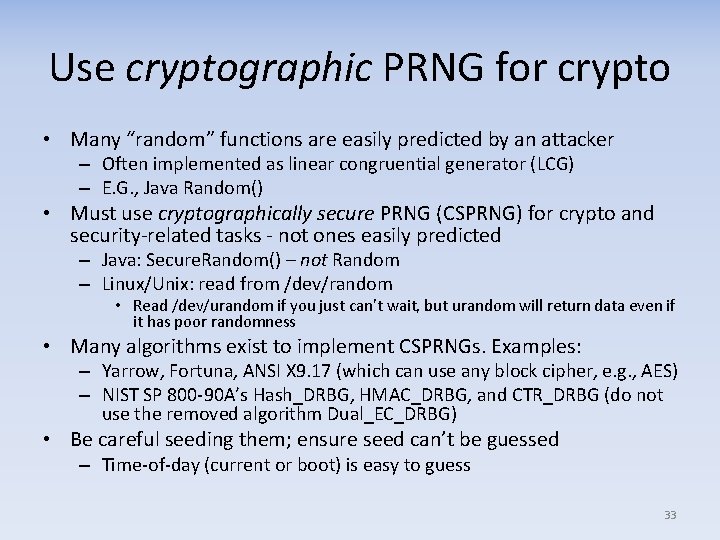

Use cryptographic PRNG for crypto • Many “random” functions are easily predicted by an attacker – Often implemented as linear congruential generator (LCG) – E.g., Java Random() • Must use a cryptographically secure PRNG (CSPRNG) for crypto and security-related tasks, not one that is easily predicted – Java: SecureRandom(), not Random – Linux/Unix: read from /dev/random • Read /dev/urandom if you just can’t wait, but urandom will return data even if it has poor randomness • Many algorithms exist to implement CSPRNGs. Examples: – Yarrow, Fortuna, ANSI X9.17 (which can use any block cipher, e.g., AES) – NIST SP 800-90A’s Hash_DRBG, HMAC_DRBG, and CTR_DRBG (do not use the removed algorithm Dual_EC_DRBG) • Be careful seeding them; ensure seed can’t be guessed – Time of day (current or boot) is easy to guess
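Why an LCG is “easily predicted” can be shown in a few lines. The sketch below uses a toy generator that returns its entire internal state as output; the constants are commonly cited LCG parameters chosen here as an assumption for illustration, and `ToyLCG` / `predict_next` are hypothetical names. Real-world LCGs that truncate their output take more work to predict, but the principle is the same.

```python
class ToyLCG:
    """A linear congruential generator: state = (A * state + C) mod M."""

    A = 1103515245
    C = 12345
    M = 2**31

    def __init__(self, seed: int):
        self.state = seed % self.M

    def next(self) -> int:
        self.state = (self.A * self.state + self.C) % self.M
        return self.state  # the whole state is exposed as the output


def predict_next(observed_output: int) -> int:
    """Attacker's view: one observed output is enough to compute the next."""
    return (ToyLCG.A * observed_output + ToyLCG.C) % ToyLCG.M


if __name__ == "__main__":
    victim = ToyLCG(seed=2024)
    seen = victim.next()
    print(predict_next(seen) == victim.next())
```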

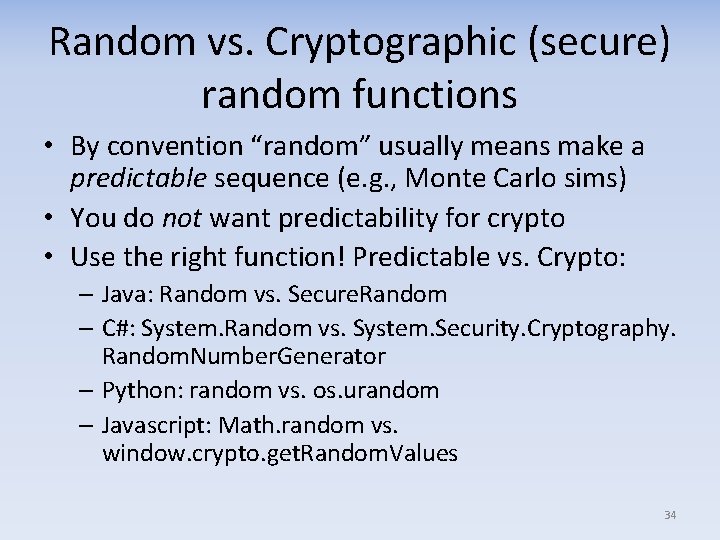

Random vs. Cryptographic (secure) random functions • By convention, “random” functions usually produce a predictable (reproducible) sequence (e.g., for Monte Carlo sims) • You do not want predictability for crypto • Use the right function! Predictable vs. Crypto: – Java: Random vs. SecureRandom – C#: System.Random vs. System.Security.Cryptography.RandomNumberGenerator – Python: random vs. os.urandom – JavaScript: Math.random vs. window.crypto.getRandomValues
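The Python pair from this slide can be contrasted directly. In this sketch, `random.Random` with a fixed seed reproduces the same sequence every time (useful for simulations, fatal for secrets), while the standard `secrets` module (which draws on the same OS source as `os.urandom`) is meant for tokens and keys. The function names are hypothetical.

```python
import random
import secrets


def predictable_sequence(seed: int, count: int = 5) -> list:
    """Reproducible 'random' numbers: anyone who knows the seed gets the same list."""
    rng = random.Random(seed)
    return [rng.randrange(2**32) for _ in range(count)]


def new_session_token() -> str:
    """An unpredictable token from the operating system's CSPRNG."""
    return secrets.token_hex(16)  # 16 random bytes, shown as 32 hex characters


if __name__ == "__main__":
    print(predictable_sequence(42) == predictable_sequence(42))
    print(new_session_token())
```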

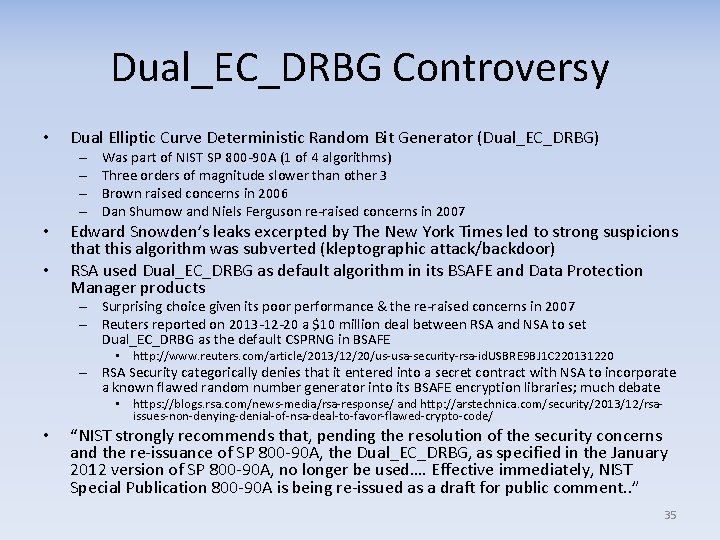

Dual_EC_DRBG Controversy • Dual Elliptic Curve Deterministic Random Bit Generator (Dual_EC_DRBG) – Was part of NIST SP 800-90A (1 of 4 algorithms) – Three orders of magnitude slower than other 3 • Brown raised concerns in 2006 • Dan Shumow and Niels Ferguson re-raised concerns in 2007 • Edward Snowden’s leaks excerpted by The New York Times led to strong suspicions that this algorithm was subverted (kleptographic attack/backdoor) • RSA used Dual_EC_DRBG as default algorithm in its BSAFE and Data Protection Manager products – Surprising choice given its poor performance & the re-raised concerns in 2007 – Reuters reported on 2013-12-20 a $10 million deal between RSA and NSA to set Dual_EC_DRBG as the default CSPRNG in BSAFE • http://www.reuters.com/article/2013/12/20/us-usa-security-rsa-idUSBRE9BJ1C220131220 – RSA Security categorically denies that it entered into a secret contract with NSA to incorporate a known flawed random number generator into its BSAFE encryption libraries; much debate • https://blogs.rsa.com/news-media/rsa-response/ and http://arstechnica.com/security/2013/12/rsa-issues-non-denying-denial-of-nsa-deal-to-favor-flawed-crypto-code/ • “NIST strongly recommends that, pending the resolution of the security concerns and the re-issuance of SP 800-90A, the Dual_EC_DRBG, as specified in the January 2012 version of SP 800-90A, no longer be used…. Effective immediately, NIST Special Publication 800-90A is being re-issued as a draft for public comment.”

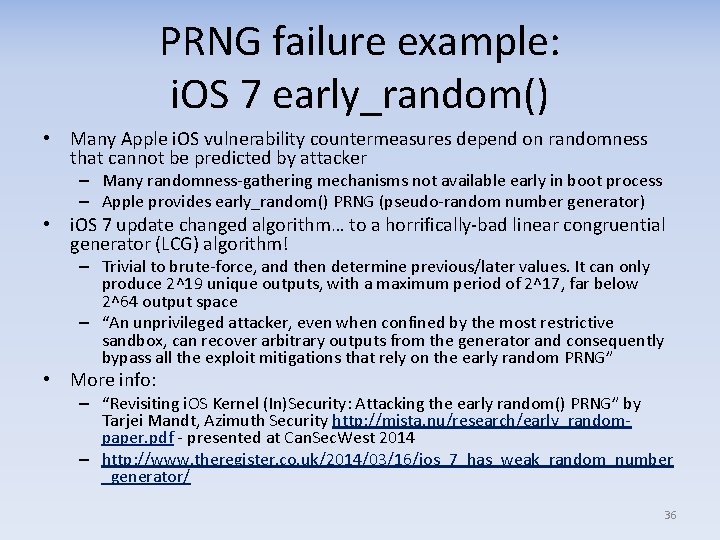

PRNG failure example: iOS 7 early_random() • Many Apple iOS vulnerability countermeasures depend on randomness that cannot be predicted by attacker – Many randomness-gathering mechanisms not available early in boot process – Apple provides early_random() PRNG (pseudo-random number generator) • iOS 7 update changed algorithm… to a horrifically bad linear congruential generator (LCG) algorithm! – Trivial to brute force, and then determine previous/later values. It can only produce 2^19 unique outputs, with a maximum period of 2^17, far below the 2^64 output space – “An unprivileged attacker, even when confined by the most restrictive sandbox, can recover arbitrary outputs from the generator and consequently bypass all the exploit mitigations that rely on the early random PRNG” • More info: – “Revisiting iOS Kernel (In)Security: Attacking the early random() PRNG” by Tarjei Mandt, Azimuth Security, http://mista.nu/research/early_random-paper.pdf , presented at CanSecWest 2014 – http://www.theregister.co.uk/2014/03/16/ios_7_has_weak_random_number_generator/

Crypto algorithm: Simple, stream, and block level • “Simple” algorithms work a symbol at a time – Read a byte, determine what to translate that to • Block-level algorithms – Group symbols together into blocks – Replace sequence of blocks • Later byte in same block changes encoding of this byte – Many modern algorithms work this way • Stream algorithms – Work a bit at a time

Block algorithm modes • Electronic code book (ECB) – Same block of data returns the same result – Essentially a debug/test mode for crypto algorithms – NEVER USE ECB for real work!! • Cipher block chaining (CBC) – Uses previous block result to encrypt next block – Secure & supported everywhere (issue: lacks an authentication code) – Big issue: sequential nature makes it slow for multi-core systems • Counter (CTR): Much faster than CBC (parallelizable) • Galois/Counter Mode (GCM) – Fast (parallelizable) – Adds authentication code so it can immediately detect if decryption is correct (so it has built-in support for confidentiality and integrity) – Widely used today; it’s a good mode to use • Block encryption algorithms can be used in many different modes – GCM is a good widely-used one, and beware of ECB!
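The difference between ECB's “same block in, same block out” behavior and a counter-based mode can be illustrated without a real cipher. In the sketch below, a truncated HMAC stands in for the block cipher; this is purely an assumption for demonstration (it is not AES, the ECB-like version is not even decryptable, and none of it should be used to protect data). All names are hypothetical.

```python
import hashlib
import hmac

BLOCK = 16  # block size in bytes, matching AES's 128-bit blocks


def _keyed_block(key: bytes, data: bytes) -> bytes:
    """Toy keyed function standing in for a block cipher (NOT a real cipher)."""
    return hmac.new(key, data, hashlib.sha256).digest()[:BLOCK]


def _split(data: bytes) -> list:
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]


def ecb_like(key: bytes, plaintext: bytes) -> list:
    """Transform each block independently: equal blocks give equal outputs."""
    return [_keyed_block(key, block) for block in _split(plaintext)]


def ctr_like(key: bytes, nonce: bytes, data: bytes) -> list:
    """XOR each block with a keystream derived from nonce + block counter."""
    out = []
    for counter, block in enumerate(_split(data)):
        keystream = _keyed_block(key, nonce + counter.to_bytes(8, "big"))
        out.append(bytes(x ^ y for x, y in zip(block, keystream)))
    return out


if __name__ == "__main__":
    key, nonce = b"k" * 32, b"n" * 8
    plaintext = b"A" * 32  # two identical 16-byte blocks
    ecb = ecb_like(key, plaintext)
    ctr = ctr_like(key, nonce, plaintext)
    print(ecb[0] == ecb[1], ctr[0] == ctr[1])
```

The repeated plaintext block is visible in the ECB-like output but hidden in the counter-based output, which is the same effect the ECB Penguin image shows at scale. Applying `ctr_like` again with the same key and nonce recovers the plaintext, which is also why a nonce must never be reused across messages.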

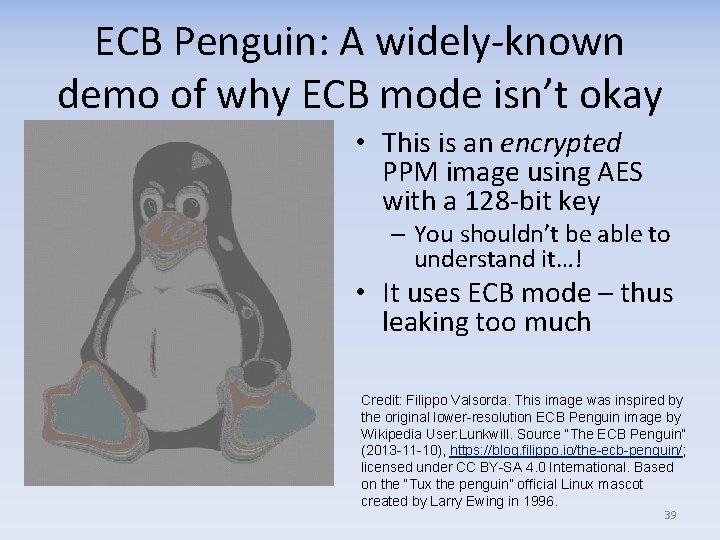

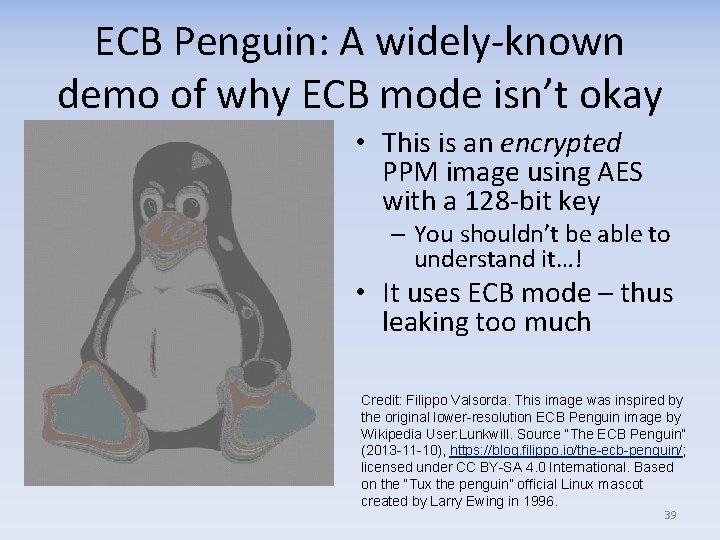

ECB Penguin: A widely known demo of why ECB mode isn’t okay • This is an encrypted PPM image using AES with a 128-bit key – You shouldn’t be able to understand it…! • It uses ECB mode – thus leaking too much Credit: Filippo Valsorda. This image was inspired by the original lower-resolution ECB Penguin image by Wikipedia User:Lunkwill. Source: “The ECB Penguin” (2013-11-10), https://blog.filippo.io/the-ecb-penguin/; licensed under CC BY-SA 4.0 International. Based on the “Tux the penguin” official Linux mascot created by Larry Ewing in 1996.

Initialization vector (IV) • Many cryptographic modes require an “initialization vector” (IV) – Need not be secret, but must be unpredictable • Never reuse IVs – Create new IV each session/encryption – In general, don’t reuse values for crypto algorithms unless it is specifically documented as being fine • Use cryptographically random values – I.e., from a cryptographic pseudo-random number generator (PRNG) – In general, never use non-cryptographic random values with crypto algorithms

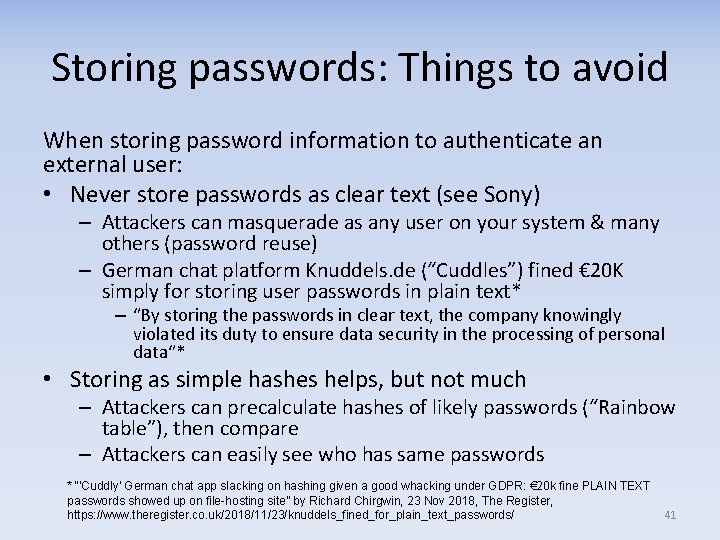

Storing passwords: Things to avoid When storing password information to authenticate an external user: • Never store passwords as clear text (see Sony) – Attackers can masquerade as any user on your system & many others (password reuse) – German chat platform Knuddels.de (“Cuddles”) fined €20K simply for storing user passwords in plain text* – “By storing the passwords in clear text, the company knowingly violated its duty to ensure data security in the processing of personal data”* • Storing as simple hashes helps, but not much – Attackers can precalculate hashes of likely passwords (“Rainbow table”), then compare – Attackers can easily see who has same passwords * “'Cuddly' German chat app slacking on hashing given a good whacking under GDPR: €20k fine. PLAIN TEXT passwords showed up on file-hosting site” by Richard Chirgwin, 23 Nov 2018, The Register, https://www.theregister.co.uk/2018/11/23/knuddels_fined_for_plain_text_passwords/

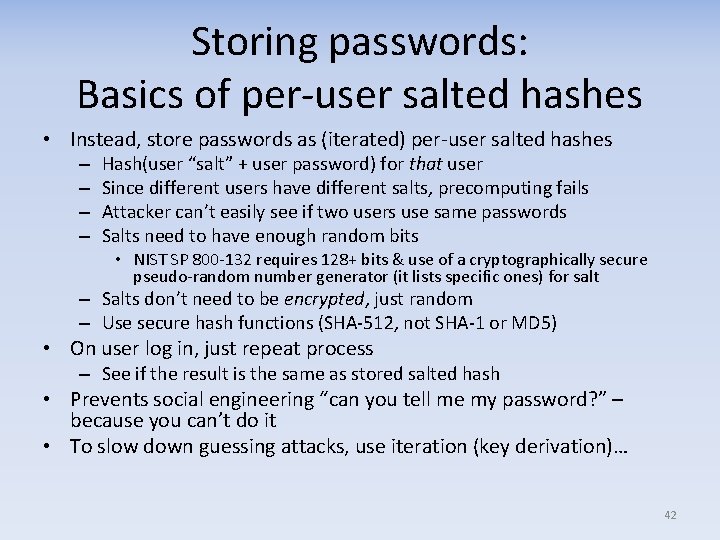

Storing passwords: Basics of per-user salted hashes • Instead, store passwords as (iterated) per-user salted hashes – Hash(user “salt” + user password) for that user – Since different users have different salts, precomputing fails – Attacker can’t easily see if two users use same passwords – Salts need to have enough random bits • NIST SP 800-132 requires 128+ bits & use of a cryptographically secure pseudo-random number generator (it lists specific ones) for salt – Salts don’t need to be encrypted, just random – Use secure hash functions (SHA-512, not SHA-1 or MD5) • On user log in, just repeat process – See if the result is the same as stored salted hash • Prevents social engineering “can you tell me my password?” – because you can’t do it • To slow down guessing attacks, use iteration (key derivation)…

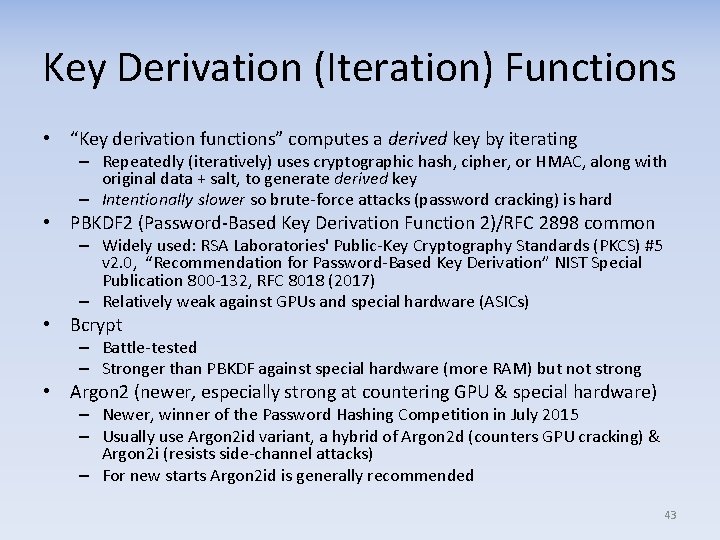

Key Derivation (Iteration) Functions • “Key derivation functions” compute a derived key by iterating – Repeatedly (iteratively) use a cryptographic hash, cipher, or HMAC, along with original data + salt, to generate derived key – Intentionally slow so brute force attacks (password cracking) are hard • PBKDF2 (Password-Based Key Derivation Function 2)/RFC 2898 common – Widely used: RSA Laboratories' Public-Key Cryptography Standards (PKCS) #5 v2.0, “Recommendation for Password-Based Key Derivation” NIST Special Publication 800-132, RFC 8018 (2017) – Relatively weak against GPUs and special hardware (ASICs) • Bcrypt – Battle tested – Stronger than PBKDF2 against special hardware (more RAM) but not strong • Argon2 (newer, especially strong at countering GPU & special hardware) – Winner of the Password Hashing Competition in July 2015 – Usually use Argon2id variant, a hybrid of Argon2d (counters GPU cracking) & Argon2i (resists side-channel attacks) – For new starts Argon2id is generally recommended
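A per-user salted, iterated hash can be sketched with PBKDF2 from Python's standard library. PBKDF2 is used here only because it ships with Python; as the slide notes, Argon2id or bcrypt (available through third-party packages) are generally stronger choices. The iteration count below is an assumed placeholder to be tuned against current guidance, and the function names are hypothetical.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumed work factor; consult current guidance and tune


def hash_password(password: str, iterations: int = ITERATIONS):
    """Return (salt, iterations, derived_key) to store for one user."""
    salt = secrets.token_bytes(16)  # 128-bit salt from a CSPRNG, unique per user
    derived = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations)
    return salt, iterations, derived


def verify_password(password: str, salt: bytes, iterations: int, expected: bytes) -> bool:
    """Repeat the derivation with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    salt, rounds, stored = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, rounds, stored))
    print(verify_password("wrong guess", salt, rounds, stored))
```

Storing the iteration count with each record lets the work factor be raised later without invalidating existing accounts.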

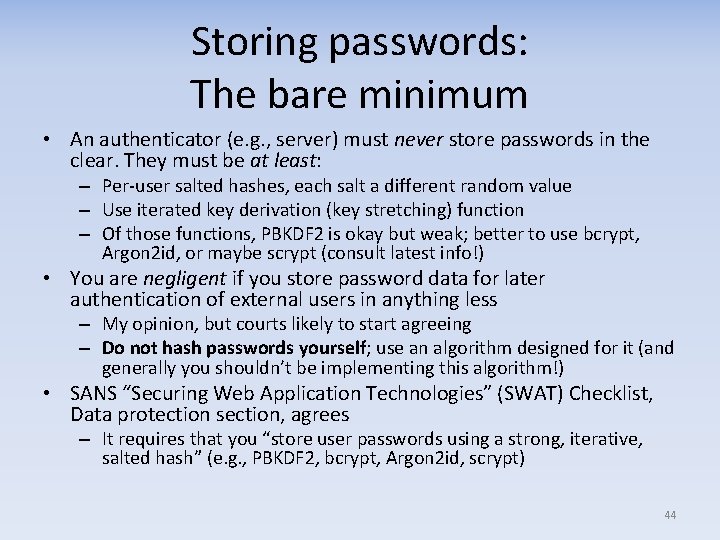

Storing passwords: The bare minimum • An authenticator (e.g., server) must never store passwords in the clear. They must be at least: – Per-user salted hashes, each salt a different random value – Use iterated key derivation (key stretching) function – Of those functions, PBKDF2 is okay but weak; better to use bcrypt, Argon2id, or maybe scrypt (consult latest info!) • You are negligent if you store password data for later authentication of external users in anything less – My opinion, but courts likely to start agreeing – Do not hash passwords yourself; use an algorithm designed for it (and generally you shouldn’t be implementing this algorithm!) • SANS “Securing Web Application Technologies” (SWAT) Checklist, Data protection section, agrees – It requires that you “store user passwords using a strong, iterative, salted hash” (e.g., PBKDF2, bcrypt, Argon2id, scrypt)

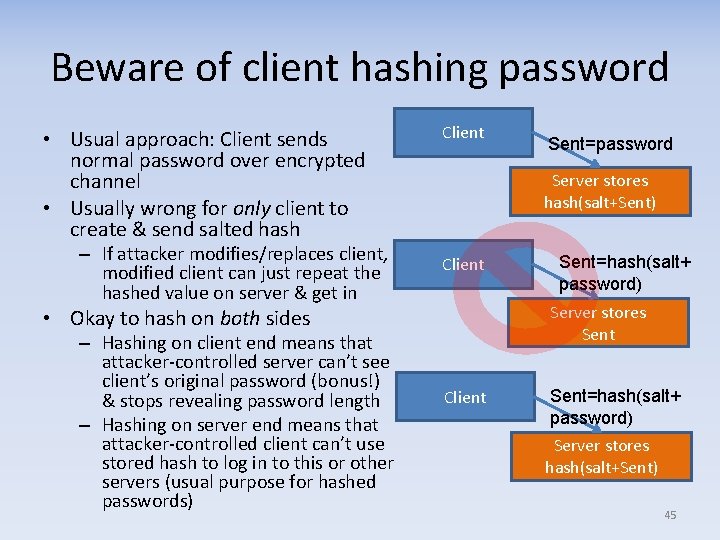

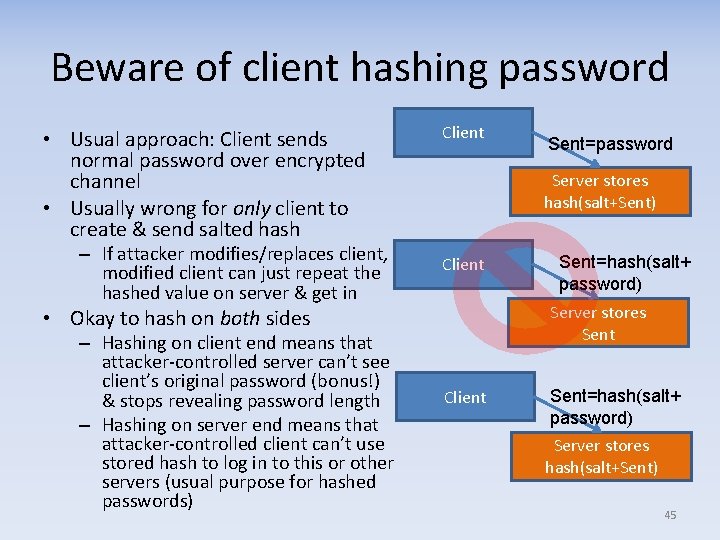

Beware of client hashing password • Usual approach: Client sends normal password over encrypted channel [Diagram: Client, Sent=password → Server stores hash(salt+Sent)] • Usually wrong for only the client to create & send salted hash – If attacker obtains the stored value, a modified/replaced client can just replay the hashed value to the server & get in [Diagram: Client, Sent=hash(salt+password) → Server stores Sent] • Okay to hash on both sides – Hashing on client end means that attacker-controlled server can’t see client’s original password (bonus!) & stops revealing password length – Hashing on server end means that attacker-controlled client can’t use stored hash to log in to this or other servers (usual purpose for hashed passwords) [Diagram: Client, Sent=hash(salt+password) → Server stores hash(salt+Sent)]
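The “hash on both sides” arrangement can be sketched as two PBKDF2 steps. This is a simplified illustration under several assumptions: the client salt is some per-user value the client can obtain (how it is distributed is out of scope), the iteration counts are placeholders, the channel is already encrypted, and all function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def client_prehash(password: str, client_salt: bytes, iterations: int = 100_000) -> bytes:
    """Client side: what gets sent instead of the raw password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), client_salt, iterations)


def server_store(sent: bytes, iterations: int = 100_000):
    """Server side: hash the received value again with its own per-user salt."""
    server_salt = secrets.token_bytes(16)
    return server_salt, hashlib.pbkdf2_hmac("sha256", sent, server_salt, iterations)


def server_verify(sent: bytes, server_salt: bytes, stored: bytes,
                  iterations: int = 100_000) -> bool:
    """Server side: recompute from the received value and compare."""
    candidate = hashlib.pbkdf2_hmac("sha256", sent, server_salt, iterations)
    return hmac.compare_digest(candidate, stored)


if __name__ == "__main__":
    client_salt = b"per-user-client-salt"  # hypothetical value for the demo
    sent = client_prehash("hunter2", client_salt)
    server_salt, stored = server_store(sent)
    print(server_verify(sent, server_salt, stored))    # legitimate login
    print(server_verify(stored, server_salt, stored))  # replaying the stored hash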

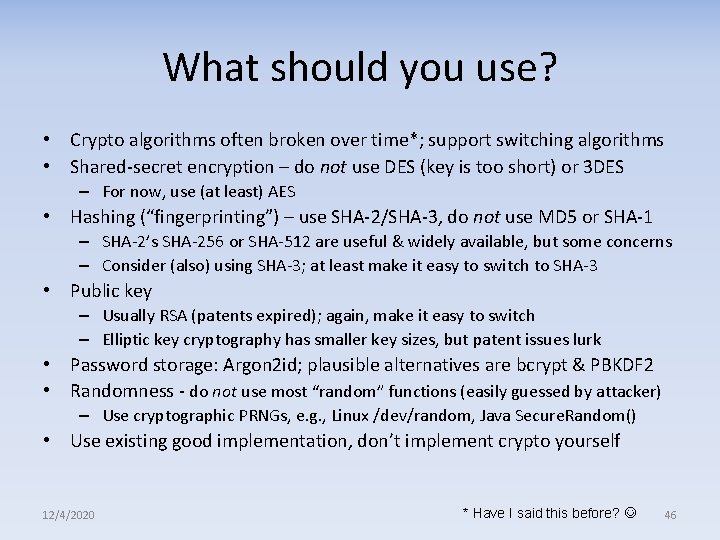

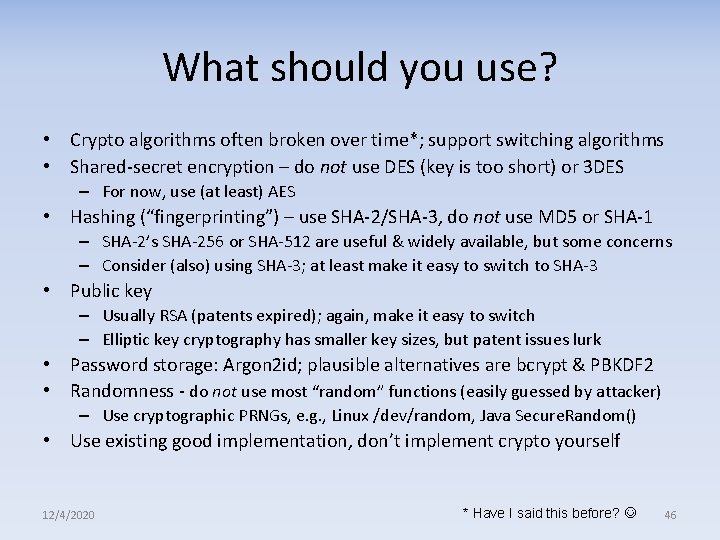

What should you use? • Crypto algorithms often broken over time*; support switching algorithms • Shared secret encryption – do not use DES (key is too short) or 3DES – For now, use (at least) AES • Hashing (“fingerprinting”) – use SHA-2/SHA-3, do not use MD5 or SHA-1 – SHA-2’s SHA-256 or SHA-512 are useful & widely available, but some concerns – Consider (also) using SHA-3; at least make it easy to switch to SHA-3 • Public key – Usually RSA (patents expired); again, make it easy to switch – Elliptic curve cryptography has smaller key sizes, but patent issues lurk • Password storage: Argon2id; plausible alternatives are bcrypt & PBKDF2 • Randomness – do not use most “random” functions (easily guessed by attacker) – Use cryptographic PRNGs, e.g., Linux /dev/random, Java SecureRandom() • Use existing good implementation, don’t implement crypto yourself * Have I said this before?

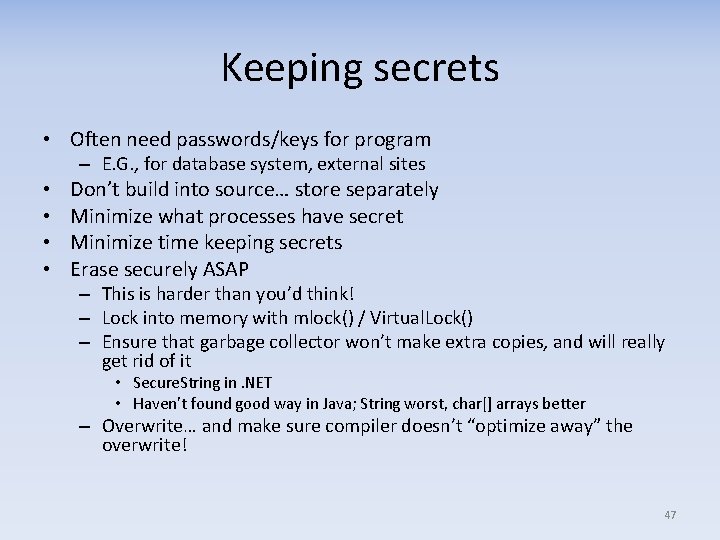

Keeping secrets • Often need passwords/keys for program – E.g., for database system, external sites • Don’t build into source… store separately • Minimize what processes have secret • Minimize time keeping secrets • Erase securely ASAP – This is harder than you’d think! – Lock into memory with mlock() / VirtualLock() – Ensure that garbage collector won’t make extra copies, and will really get rid of it • SecureString in .NET • Haven’t found good way in Java; String worst, char[] arrays better – Overwrite… and make sure compiler doesn’t “optimize away” the overwrite!
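Two of these points (store secrets outside the source, and overwrite them when done) can be sketched in Python, with a loud caveat: this is best-effort only. The environment variable name `DEMO_DB_PASSWORD` is a hypothetical example, and Python may keep other copies of the value (for instance the original `str` from the environment) that this code cannot erase, which illustrates the slide's point that secure erasure is harder than it looks.

```python
import os


def load_secret(name: str) -> bytearray:
    """Read a secret from the environment instead of embedding it in source."""
    value = os.environ.get(name)
    if value is None:
        raise RuntimeError(f"secret {name!r} is not configured")
    # A mutable bytearray can be overwritten later; an immutable str cannot.
    return bytearray(value.encode("utf-8"))


def wipe(buffer: bytearray) -> None:
    """Best-effort overwrite of the buffer's contents with zeros."""
    for index in range(len(buffer)):
        buffer[index] = 0


if __name__ == "__main__":
    os.environ.setdefault("DEMO_DB_PASSWORD", "example-only")
    secret = load_secret("DEMO_DB_PASSWORD")
    try:
        pass  # ... use the secret here, for as short a time as possible ...
    finally:
        wipe(secret)
    print(secret == bytearray(len(secret)))
```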

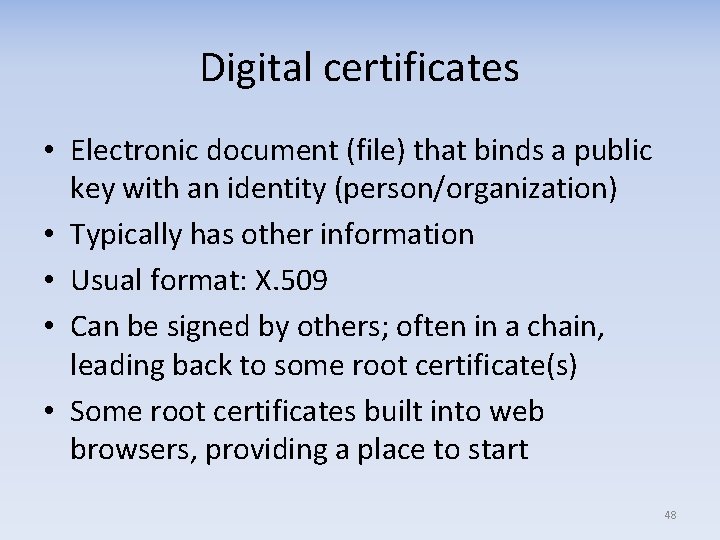

Digital certificates • Electronic document (file) that binds a public key with an identity (person/organization) • Typically has other information • Usual format: X.509 • Can be signed by others; often in a chain, leading back to some root certificate(s) • Some root certificates built into web browsers, providing a place to start

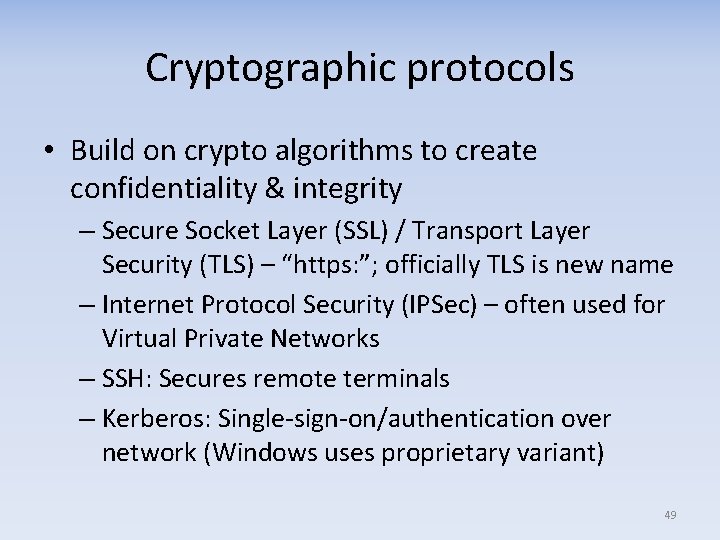

Cryptographic protocols • Build on crypto algorithms to create confidentiality & integrity – Secure Sockets Layer (SSL) / Transport Layer Security (TLS) – “https:”; officially TLS is new name – Internet Protocol Security (IPsec) – often used for Virtual Private Networks – SSH: Secures remote terminals – Kerberos: Single sign-on/authentication over network (Windows uses proprietary variant)

Configuring an HTTPS server? Look for good guidance • Mozilla’s “Security/Server Side TLS” is good: – https://wiki.mozilla.org/Security/Server_Side_TLS • Discusses some key issues – How old are your oldest acceptable clients? (modern, intermediate, old) – Avoid bad/broken algorithms – Enable countermeasures for known attacks • Qualys test: – https://www.ssllabs.com/ssltest/

SSL/TLS cert validation often implemented incorrectly • Common errors: – Failure to verify chain of trust – Failure to verify hostname (certs only valid for given hostname or pattern!) – Failure to deal with certificate revocation & X.509 extensions (key usage limits, name constraints, etc.) • Cause: Confusing APIs in SSL/TLS & data transport libraries – OpenSSL SSL_connect: Return value signals some errors, but if “OK” must then check “verify result” flags – Java SSLSocketFactory doesn’t always verify hostname – cURL: if CURLOPT_SSL_VERIFYHOST=true (i.e., 1), hostname check disabled (historically the value had to be 2) • Always test code with abnormal certs (unknown, wrong host, etc.) • In test environment add self-signed certs/CAs to keystore; don’t disable validation • Read API docs carefully, explicitly set options, carefully check results • Read “The most dangerous code in the world: Validating SSL certificates in non-browser software” by Georgiev et al., 2012
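The advice to “explicitly set options” can be sketched with Python's standard `ssl` module. `ssl.create_default_context()` already enables chain and hostname verification; the sketch sets both again explicitly so the intent is visible and cannot silently drift. The helper names are hypothetical, and `fetch_peer_certificate` needs network access, so it is shown but not run here.

```python
import socket
import ssl


def make_verifying_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies chain of trust and hostname."""
    context = ssl.create_default_context()   # loads the system's trusted CAs
    context.check_hostname = True            # cert must match the requested host
    context.verify_mode = ssl.CERT_REQUIRED  # fail if the chain cannot be verified
    return context


def fetch_peer_certificate(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect with verification on; raises ssl.SSLError on a bad certificate."""
    context = make_verifying_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # server_hostname drives both SNI and the hostname check.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


if __name__ == "__main__":
    ctx = make_verifying_context()
    print(ctx.check_hostname, ctx.verify_mode == ssl.CERT_REQUIRED)
```

For testing against self-signed certificates, loading the test CA into the context (for example with `context.load_verify_locations`) keeps verification on, in line with the slide's “don't disable” advice.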

Public Key Infrastructure (PKI) / Certificate Authority (CA) Issues (1) • Most protocols authenticate the server (destination) by checking certificates – How can you be sure that the certificate belongs to that server? – Accepting a wrong one leads to man-in-the-middle attack – “PKI” provides certificates, e.g., from 1+ certificate authorities (CAs) • Unfortunately, many CAs untrustworthy – Summary: “Defcon 19: Moxie Marlinspike SSL And The Future Of Authenticity” http://www.youtube.com/watch?v=xIiklPyS8MU – Throw away “bad” CAs = can’t access most of the Internet – Some organizations even self-subvert! • TLS/SSL still helpful, but greatly weakened because of this – Originally designed vs. passive attacks, authenticity was “a bit of a hand wave” • No easy solution, and not enough people working to solve it – In some scenarios “certificate pinning” & DNSSEC can help 52

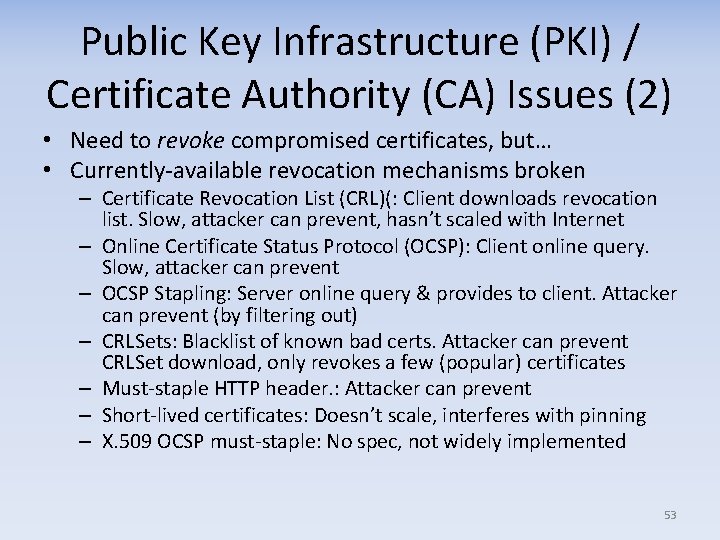

Public Key Infrastructure (PKI) / Certificate Authority (CA) Issues (2) • Need to revoke compromised certificates, but… • Currently available revocation mechanisms broken – Certificate Revocation List (CRL): Client downloads revocation list. Slow, attacker can prevent, hasn’t scaled with Internet – Online Certificate Status Protocol (OCSP): Client online query. Slow, attacker can prevent – OCSP Stapling: Server online query & provides to client. Attacker can prevent (by filtering out) – CRLSets: Blacklist of known bad certs. Attacker can prevent CRLSet download, only revokes a few (popular) certificates – Must-staple HTTP header: Attacker can prevent – Short-lived certificates: Doesn’t scale, interferes with pinning – X.509 OCSP must-staple: No spec, not widely implemented 53

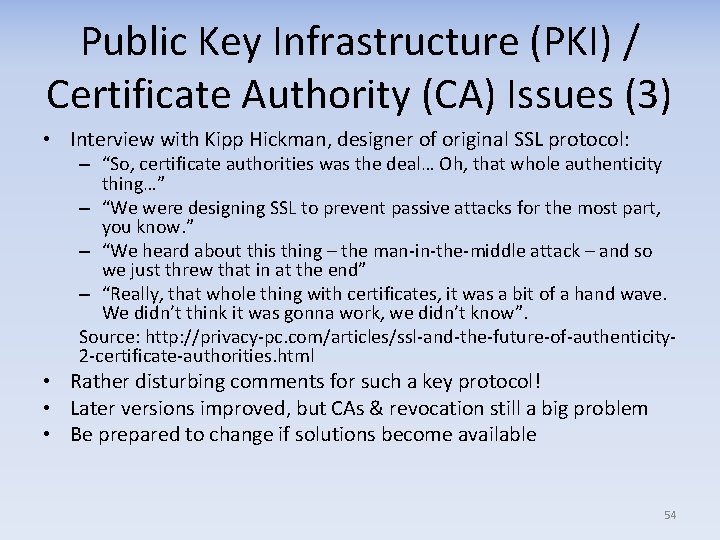

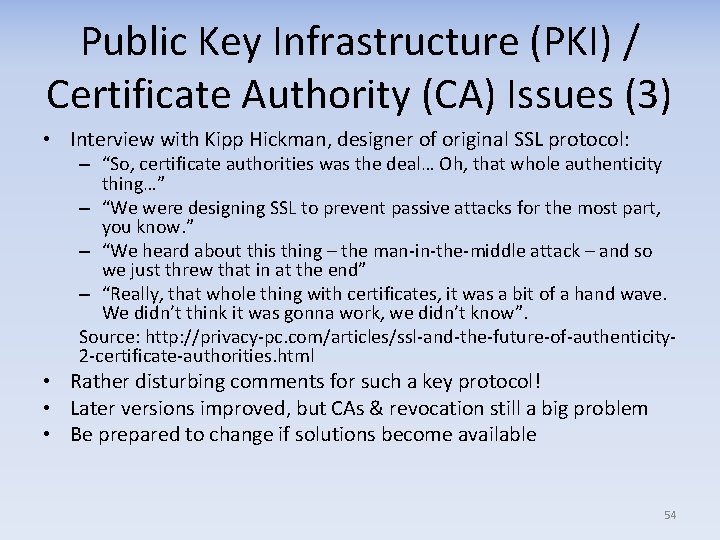

Public Key Infrastructure (PKI) / Certificate Authority (CA) Issues (3) • Interview with Kipp Hickman, designer of original SSL protocol: – “So, certificate authorities was the deal… Oh, that whole authenticity thing…” – “We were designing SSL to prevent passive attacks for the most part, you know.” – “We heard about this thing – the man in the middle attack – and so we just threw that in at the end” – “Really, that whole thing with certificates, it was a bit of a hand wave. We didn’t think it was gonna work, we didn’t know”. Source: http://privacy-pc.com/articles/ssl-and-the-future-of-authenticity-2-certificate-authorities.html • Rather disturbing comments for such a key protocol! • Later versions improved, but CAs & revocation still a big problem • Be prepared to change if solutions become available 54

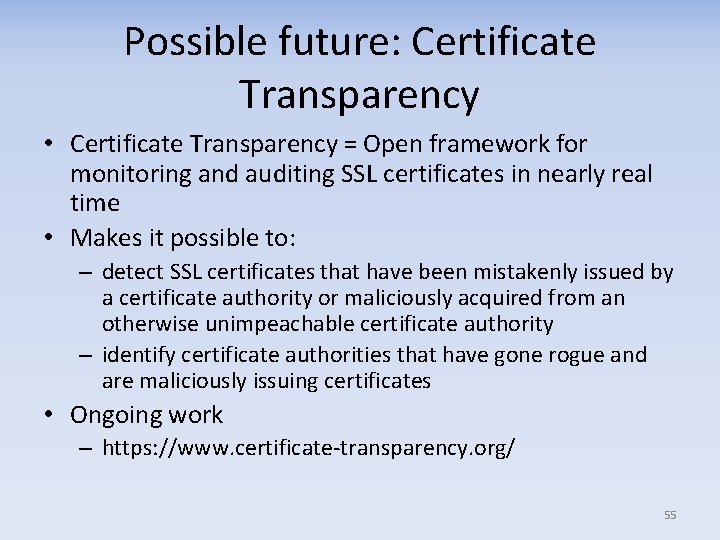

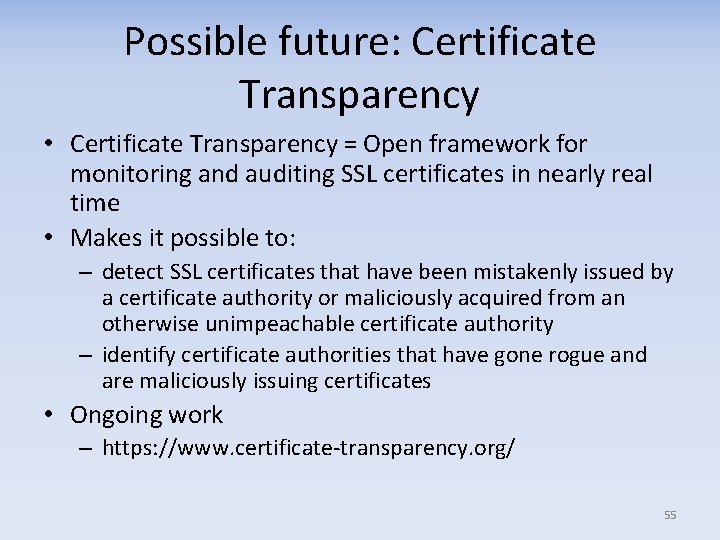

Possible future: Certificate Transparency • Certificate Transparency = Open framework for monitoring and auditing SSL certificates in nearly real time • Makes it possible to: – detect SSL certificates that have been mistakenly issued by a certificate authority or maliciously acquired from an otherwise unimpeachable certificate authority – identify certificate authorities that have gone rogue and are maliciously issuing certificates • Ongoing work – https://www.certificate-transparency.org/ 55

Certificate Transparency specific goals • Make the issuance and existence of SSL certificates open to scrutiny by domain owners, CAs, and domain users: 1. Make it impossible (or at least very difficult) for a CA to issue an SSL certificate for a domain without the certificate being visible to the owner of that domain. 2. Provide an open auditing and monitoring system that lets any domain owner or CA determine whether certificates have been mistakenly or maliciously issued. 3. Protect users (as much as possible) from being duped by certificates that were mistakenly or maliciously issued. Source: https://www.certificate-transparency.org/what-is-ct 56

Certificate Transparency Components • Certificate logs = simple network services that maintain cryptographically assured, publicly auditable, append-only records of certificates – Anyone can submit certificates to a log, although CAs will likely be the foremost submitters – Anyone can query a log for a cryptographic proof • Monitors = publicly run servers that periodically contact all of the log servers and watch for suspicious certificates • Auditors = lightweight software components that: – Verify that logs are behaving & cryptographically consistent. If a log is not behaving properly, then the log will need to explain itself or risk being shut down. – Certify that a particular certificate appears in a log. Source: https://www.certificate-transparency.org/what-is-ct 57
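
Sketch (not from the original slides): the "cryptographically assured, append-only" property of a log is commonly obtained with a Merkle hash tree, which is the construction RFC 6962 specifies for Certificate Transparency. The code below computes a tree root in that style (leaf hashes prefixed with 0x00, interior hashes with 0x01, split at the largest power of two smaller than the entry count). The class and method names are hypothetical, and a real log also needs signed tree heads plus inclusion and consistency proofs, which are omitted here.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.List;

final class MerkleLogSketch {
    /** SHA-256 over the concatenation of the given parts. */
    static byte[] sha256(byte[]... parts) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            for (byte[] part : parts) {
                md.update(part);
            }
            return md.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e); // SHA-256 is required on every Java platform
        }
    }

    /** Merkle tree root over the log entries, in the style of RFC 6962. */
    static byte[] root(List<byte[]> entries) {
        int n = entries.size();
        if (n == 0) {
            return sha256(); // hash of the empty string
        }
        if (n == 1) {
            return sha256(new byte[] {0x00}, entries.get(0)); // leaf hash
        }
        int k = Integer.highestOneBit(n - 1); // largest power of two strictly less than n
        return sha256(new byte[] {0x01},
                root(entries.subList(0, k)),
                root(entries.subList(k, n))); // interior node hash
    }

    /** Lowercase hex encoding, for display and comparison. */
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }
}
```

Because every appended certificate changes the root, a monitor or auditor that remembers an earlier root can detect a log that silently drops or rewrites entries.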

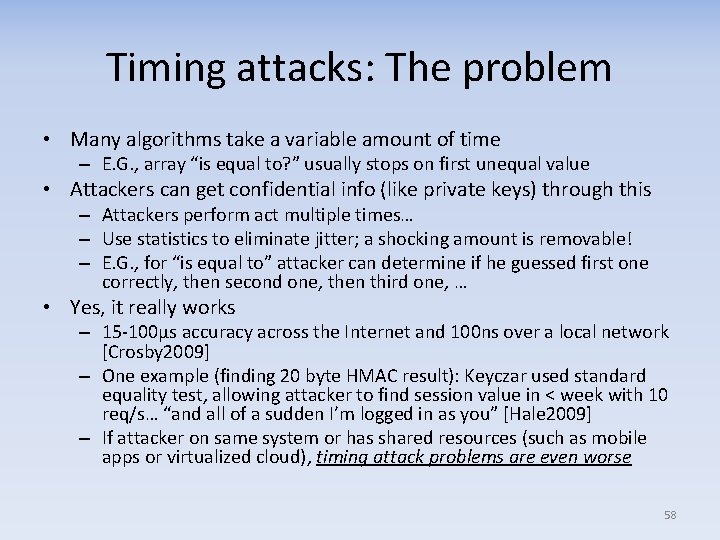

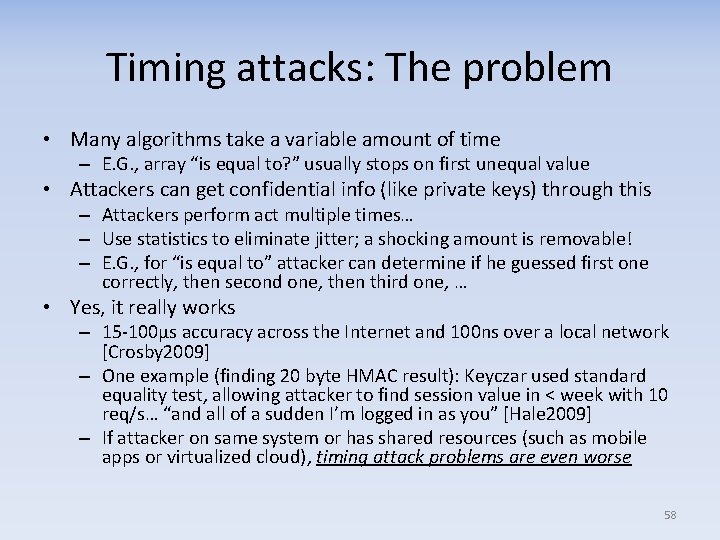

Timing attacks: The problem • Many algorithms take a variable amount of time – E.g., array “is equal to?” usually stops on first unequal value • Attackers can get confidential info (like private keys) through this – Attackers perform the same action many times… – Use statistics to eliminate jitter; a shocking amount is removable! – E.g., for “is equal to” attacker can determine if he guessed first one correctly, then second one, then third one, … • Yes, it really works – 15-100μs accuracy across the Internet and 100ns over a local network [Crosby 2009] – One example (finding 20-byte HMAC result): Keyczar used standard equality test, allowing attacker to find session value in < 1 week with 10 req/s… “and all of a sudden I’m logged in as you” [Hale 2009] – If attacker on same system or has shared resources (such as mobile apps or virtualized cloud), timing attack problems are even worse 58
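
Sketch (not from the original slides): why an early-exit comparison leaks. Instead of measuring time, the hypothetical method below counts the byte comparisons a typical early-exit equality loop performs; that count, and hence the running time, grows with the length of the correctly guessed prefix.

```java
final class EarlyExitLeakSketch {
    /** Counts the byte comparisons made by a typical early-exit equality test. */
    static int comparisonsBeforeExit(byte[] secret, byte[] guess) {
        int count = 0;
        for (int i = 0; i < secret.length && i < guess.length; i++) {
            count++;
            if (secret[i] != guess[i]) {
                break; // early exit: the work done reveals the matching prefix length
            }
        }
        return count;
    }
}
```

An attacker who can estimate this count through repeated timing measurements can confirm one byte at a time, turning a search over all values into a short byte-by-byte search.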

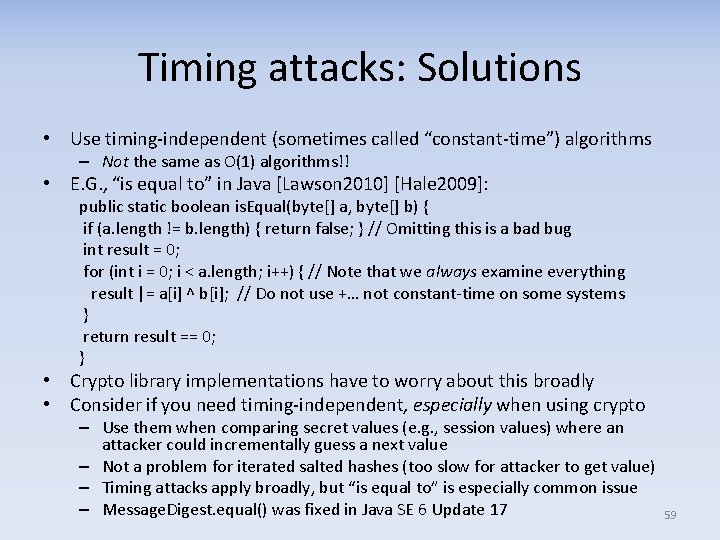

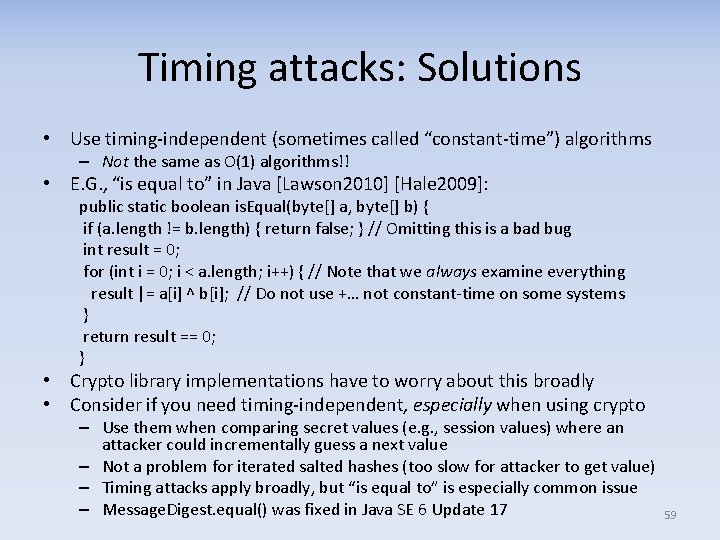

Timing attacks: Solutions • Use timing-independent (sometimes called “constant time”) algorithms – Not the same as O(1) algorithms!! • E.g., “is equal to” in Java [Lawson 2010] [Hale 2009]: public static boolean isEqual(byte[] a, byte[] b) { if (a.length != b.length) { return false; } // Omitting this is a bad bug int result = 0; for (int i = 0; i < a.length; i++) { // Note that we always examine everything result |= a[i] ^ b[i]; // Do not use +… not constant time on some systems } return result == 0; } • Crypto library implementations have to worry about this broadly • Consider if you need timing-independent algorithms, especially when using crypto – Use them when comparing secret values (e.g., session values) where an attacker could incrementally guess a next value – Not a problem for iterated salted hashes (too slow for attacker to get value) – Timing attacks apply broadly, but “is equal to” is especially common issue – MessageDigest.isEqual() was fixed in Java SE 6 Update 17 59
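
Sketch (not from the original slides): applying the advice to a secret comparison. It computes an HMAC-SHA256 tag with the standard `javax.crypto.Mac` API and compares it to a presented tag using the JDK's `MessageDigest.isEqual` rather than `Arrays.equals`. The class and method names (`HmacVerifySketch`, `tag`, `verify`) are hypothetical, and key management is out of scope.

```java
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

final class HmacVerifySketch {
    /** Computes an HMAC-SHA256 tag over the message with the given (non-empty) key. */
    static byte[] tag(byte[] key, byte[] message) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(message);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Checks a presented tag without an early-exit comparison. */
    static boolean verify(byte[] key, byte[] message, byte[] presentedTag) {
        // MessageDigest.isEqual is the JDK comparison the slide refers to.
        return MessageDigest.isEqual(tag(key, message), presentedTag);
    }
}
```

Recomputing the expected tag on the server and comparing it in a timing-independent way avoids the byte-at-a-time guessing described on the previous slide.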

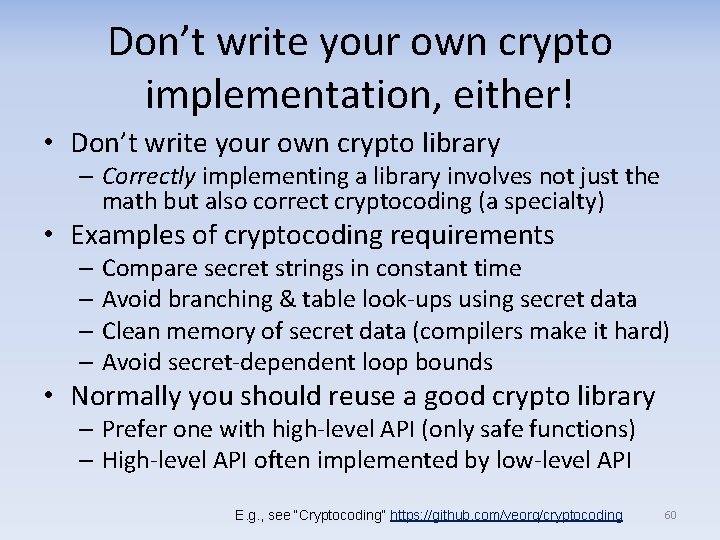

Don’t write your own crypto implementation, either! • Don’t write your own crypto library – Correctly implementing a library involves not just the math but also correct cryptocoding (a specialty) • Examples of cryptocoding requirements – Compare secret strings in constant time – Avoid branching & table lookups using secret data – Clean memory of secret data (compilers make it hard) – Avoid secret-dependent loop bounds • Normally you should reuse a good crypto library – Prefer one with high-level API (only safe functions) – High-level API often implemented by low-level API • E.g., see “Cryptocoding” https://github.com/veorq/cryptocoding 60
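
Sketch (not from the original slides): what two of these requirements look like in code, purely to illustrate why cryptocoding is a specialty. `select` picks one of two values using a bit mask instead of an `if` on a secret bit, and `wipe` overwrites a secret buffer after use. The names are hypothetical, and a compiler or JIT is free to undo such tricks, which is one more reason to reuse a vetted library rather than ship code like this.

```java
import java.util.Arrays;

final class CryptocodingSketch {
    /**
     * Returns a when choice is 1 and b when choice is 0, without branching on choice.
     * The caller must pass only 0 or 1.
     */
    static int select(int choice, int a, int b) {
        int mask = -choice; // 1 becomes 0xFFFFFFFF, 0 stays 0x00000000
        return (a & mask) | (b & ~mask);
    }

    /** Overwrites secret material once it is no longer needed. */
    static void wipe(byte[] secret) {
        Arrays.fill(secret, (byte) 0);
    }
}
```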

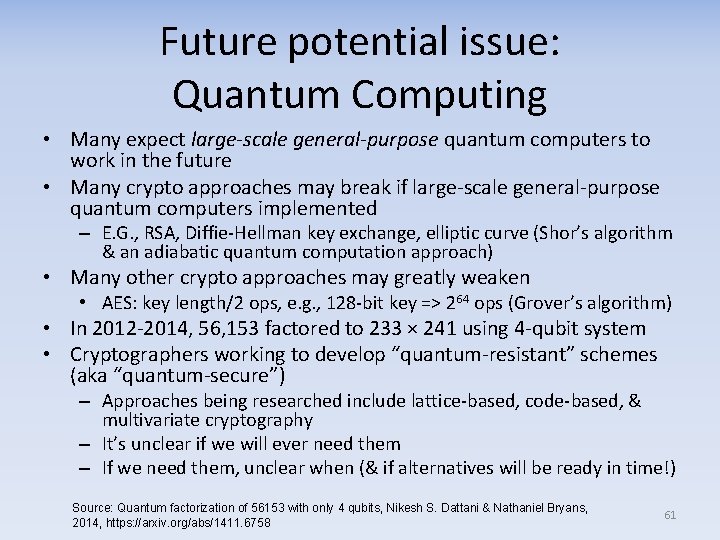

Future potential issue: Quantum Computing • Many expect large-scale general-purpose quantum computers to work in the future • Many crypto approaches may break if large-scale general-purpose quantum computers are implemented – E.g., RSA, Diffie-Hellman key exchange, elliptic curve (Shor’s algorithm & an adiabatic quantum computation approach) • Many other crypto approaches may greatly weaken – AES: effective key length halved, e.g., 128-bit key => about 2^64 ops (Grover’s algorithm) • In 2012-2014, 56,153 factored to 233 × 241 using 4-qubit system • Cryptographers working to develop “quantum-resistant” schemes (aka “quantum-secure”) – Approaches being researched include lattice-based, code-based, & multivariate cryptography – It’s unclear if we will ever need them – If we need them, unclear when (& if alternatives will be ready in time!) Source: Quantum factorization of 56153 with only 4 qubits, Nikesh S. Dattani & Nathaniel Bryans, 2014, https://arxiv.org/abs/1411.6758 61

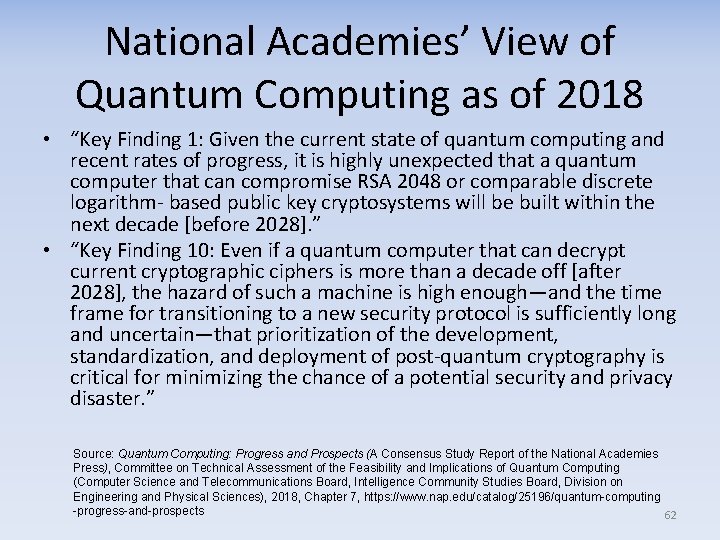

National Academies’ View of Quantum Computing as of 2018 • “Key Finding 1: Given the current state of quantum computing and recent rates of progress, it is highly unexpected that a quantum computer that can compromise RSA 2048 or comparable discrete logarithm-based public key cryptosystems will be built within the next decade [before 2028].” • “Key Finding 10: Even if a quantum computer that can decrypt current cryptographic ciphers is more than a decade off [after 2028], the hazard of such a machine is high enough, and the time frame for transitioning to a new security protocol is sufficiently long and uncertain, that prioritization of the development, standardization, and deployment of post-quantum cryptography is critical for minimizing the chance of a potential security and privacy disaster.” Source: Quantum Computing: Progress and Prospects (A Consensus Study Report of the National Academies Press), Committee on Technical Assessment of the Feasibility and Implications of Quantum Computing (Computer Science and Telecommunications Board, Intelligence Community Studies Board, Division on Engineering and Physical Sciences), 2018, Chapter 7, https://www.nap.edu/catalog/25196/quantum-computing-progress-and-prospects 62

Crypto Wars: Government access vs. security 63

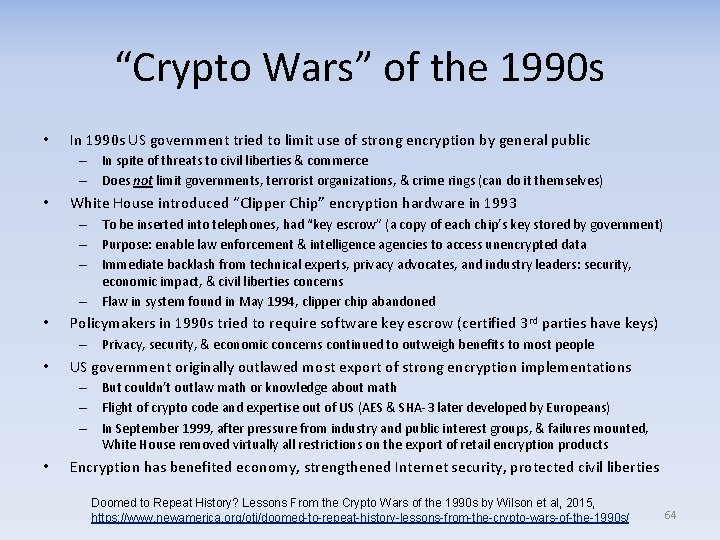

“Crypto Wars” of the 1990s • In 1990s US government tried to limit use of strong encryption by general public – In spite of threats to civil liberties & commerce – Does not limit governments, terrorist organizations, & crime rings (can do it themselves) • White House introduced “Clipper Chip” encryption hardware in 1993 – To be inserted into telephones, had “key escrow” (a copy of each chip’s key stored by government) – Purpose: enable law enforcement & intelligence agencies to access unencrypted data – Immediate backlash from technical experts, privacy advocates, and industry leaders: security, economic impact, & civil liberties concerns – Flaw in system found in May 1994, Clipper Chip abandoned • Policymakers in 1990s tried to require software key escrow (certified 3rd parties have keys) – Privacy, security, & economic concerns continued to outweigh benefits to most people • US government originally outlawed most export of strong encryption implementations – But couldn’t outlaw math or knowledge about math – Flight of crypto code and expertise out of US (AES & SHA-3 later developed by Europeans) – In September 1999, after pressure from industry and public interest groups, & as failures mounted, White House removed virtually all restrictions on the export of retail encryption products • Encryption has benefited economy, strengthened Internet security, protected civil liberties. Source: “Doomed to Repeat History? Lessons From the Crypto Wars of the 1990s” by Wilson et al., 2015, https://www.newamerica.org/oti/doomed-to-repeat-history-lessons-from-the-crypto-wars-of-the-1990s/ 64

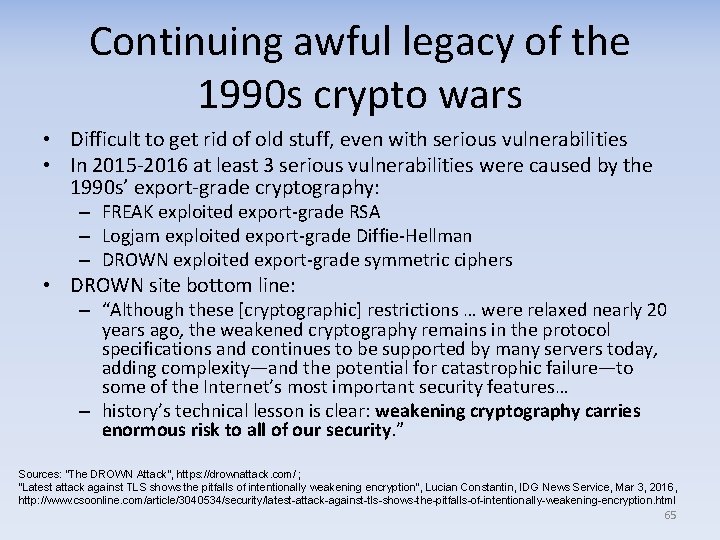

Continuing awful legacy of the 1990s crypto wars • Difficult to get rid of old stuff, even with serious vulnerabilities • In 2015-2016 at least 3 serious vulnerabilities were caused by the 1990s’ export-grade cryptography: – FREAK exploited export-grade RSA – Logjam exploited export-grade Diffie-Hellman – DROWN exploited export-grade symmetric ciphers • DROWN site bottom line: – “Although these [cryptographic] restrictions … were relaxed nearly 20 years ago, the weakened cryptography remains in the protocol specifications and continues to be supported by many servers today, adding complexity (and the potential for catastrophic failure) to some of the Internet’s most important security features… – history’s technical lesson is clear: weakening cryptography carries enormous risk to all of our security.” Sources: “The DROWN Attack”, https://drownattack.com/ ; “Latest attack against TLS shows the pitfalls of intentionally weakening encryption”, Lucian Constantin, IDG News Service, Mar 3, 2016, http://www.csoonline.com/article/3040534/security/latest-attack-against-tls-shows-the-pitfalls-of-intentionally-weakening-encryption.html 65

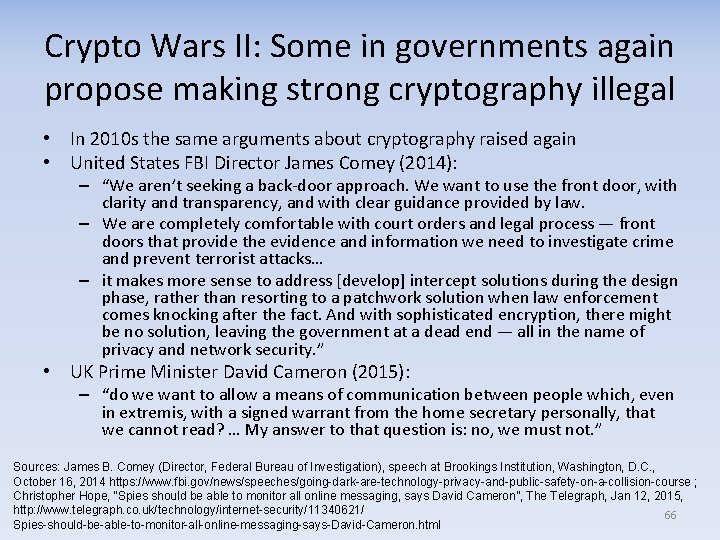

Crypto Wars II: Some in governments again propose making strong cryptography illegal • In 2010s the same arguments about cryptography raised again • United States FBI Director James Comey (2014): – “We aren’t seeking a back-door approach. We want to use the front door, with clarity and transparency, and with clear guidance provided by law. – We are completely comfortable with court orders and legal process, front doors that provide the evidence and information we need to investigate crime and prevent terrorist attacks… – it makes more sense to address [develop] intercept solutions during the design phase, rather than resorting to a patchwork solution when law enforcement comes knocking after the fact. And with sophisticated encryption, there might be no solution, leaving the government at a dead end, all in the name of privacy and network security.” • UK Prime Minister David Cameron (2015): – “do we want to allow a means of communication between people which, even in extremis, with a signed warrant from the home secretary personally, that we cannot read? … My answer to that question is: no, we must not.” Sources: James B. Comey (Director, Federal Bureau of Investigation), speech at Brookings Institution, Washington, D.C., October 16, 2014, https://www.fbi.gov/news/speeches/going-dark-are-technology-privacy-and-public-safety-on-a-collision-course ; Christopher Hope, “Spies should be able to monitor all online messaging, says David Cameron”, The Telegraph, Jan 12, 2015, http://www.telegraph.co.uk/technology/internet-security/11340621/Spies-should-be-able-to-monitor-all-online-messaging-says-David-Cameron.html 66

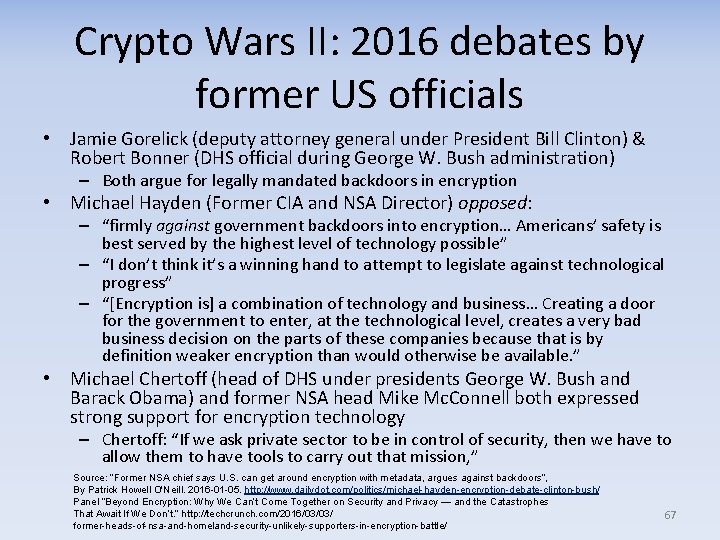

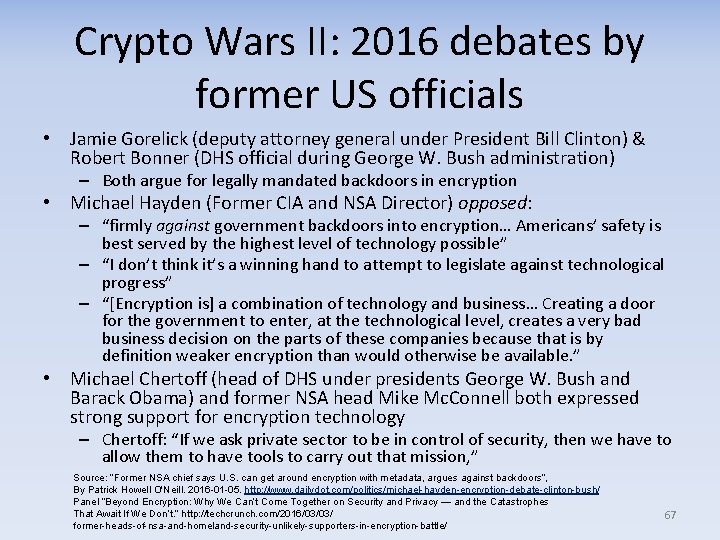

Crypto Wars II: 2016 debates by former US officials • Jamie Gorelick (deputy attorney general under President Bill Clinton) & Robert Bonner (DHS official during George W. Bush administration) – Both argue for legally mandated backdoors in encryption • Michael Hayden (former CIA and NSA Director) opposed: – “firmly against government backdoors into encryption… Americans’ safety is best served by the highest level of technology possible” – “I don’t think it’s a winning hand to attempt to legislate against technological progress” – “[Encryption is] a combination of technology and business… Creating a door for the government to enter, at the technological level, creates a very bad business decision on the parts of these companies because that is by definition weaker encryption than would otherwise be available.” • Michael Chertoff (head of DHS under presidents George W. Bush and Barack Obama) and former NSA head Mike McConnell both expressed strong support for encryption technology – Chertoff: “If we ask private sector to be in control of security, then we have to allow them to have tools to carry out that mission” Sources: “Former NSA chief says U.S. can get around encryption with metadata, argues against backdoors” by Patrick Howell O'Neill, 2016-01-05, http://www.dailydot.com/politics/michael-hayden-encryption-debate-clinton-bush/ ; Panel “Beyond Encryption: Why We Can’t Come Together on Security and Privacy - and the Catastrophes That Await If We Don’t.” http://techcrunch.com/2016/03/03/former-heads-of-nsa-and-homeland-security-unlikely-supporters-in-encryption-battle/ 67

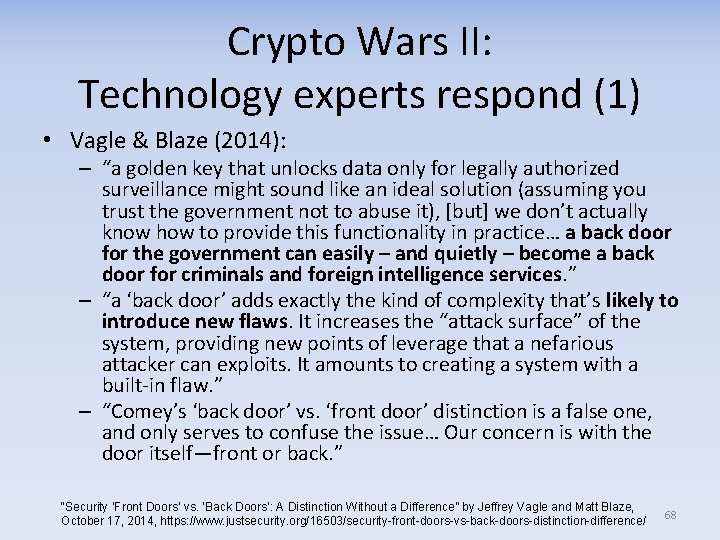

Crypto Wars II: Technology experts respond (1) • Vagle & Blaze (2014): – “a golden key that unlocks data only for legally authorized surveillance might sound like an ideal solution (assuming you trust the government not to abuse it), [but] we don’t actually know how to provide this functionality in practice… a back door for the government can easily – and quietly – become a back door for criminals and foreign intelligence services.” – “a ‘back door’ adds exactly the kind of complexity that’s likely to introduce new flaws. It increases the “attack surface” of the system, providing new points of leverage that a nefarious attacker can exploit. It amounts to creating a system with a built-in flaw.” – “Comey’s ‘back door’ vs. ‘front door’ distinction is a false one, and only serves to confuse the issue… Our concern is with the door itself, front or back.” Source: “Security ‘Front Doors’ vs. ‘Back Doors’: A Distinction Without a Difference” by Jeffrey Vagle and Matt Blaze, October 17, 2014, https://www.justsecurity.org/16503/security-front-doors-vs-back-doors-distinction-difference/ 68

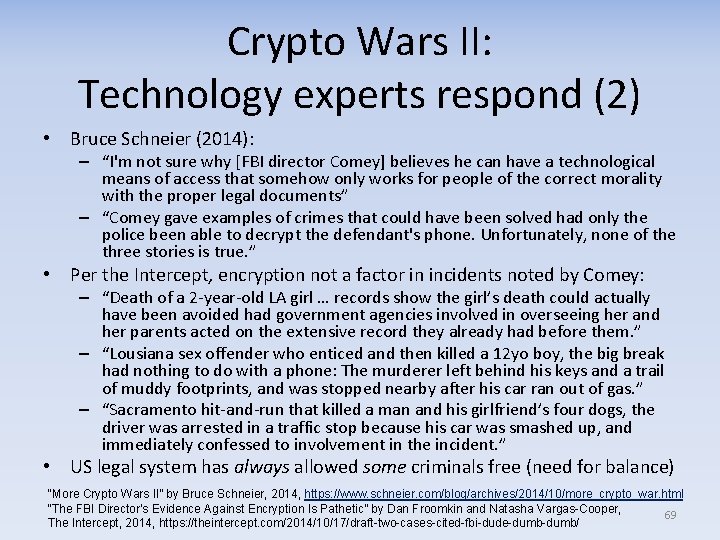

Crypto Wars II: Technology experts respond (2) • Bruce Schneier (2014): – “I'm not sure why [FBI director Comey] believes he can have a technological means of access that somehow only works for people of the correct morality with the proper legal documents” – “Comey gave examples of crimes that could have been solved had only the police been able to decrypt the defendant's phone. Unfortunately, none of the three stories is true.” • Per The Intercept, encryption not a factor in incidents noted by Comey: – “Death of a 2-year-old LA girl … records show the girl’s death could actually have been avoided had government agencies involved in overseeing her and her parents acted on the extensive record they already had before them.” – “Louisiana sex offender who enticed and then killed a 12-year-old boy, the big break had nothing to do with a phone: The murderer left behind his keys and a trail of muddy footprints, and was stopped nearby after his car ran out of gas.” – “Sacramento hit-and-run that killed a man and his girlfriend’s four dogs, the driver was arrested in a traffic stop because his car was smashed up, and immediately confessed to involvement in the incident.” • US legal system has always allowed some criminals to go free (need for balance) Sources: “More Crypto Wars II” by Bruce Schneier, 2014, https://www.schneier.com/blog/archives/2014/10/more_crypto_war.html ; “The FBI Director’s Evidence Against Encryption Is Pathetic” by Dan Froomkin and Natasha Vargas-Cooper, The Intercept, 2014, https://theintercept.com/2014/10/17/draft-two-cases-cited-fbi-dude-dumb/ 69

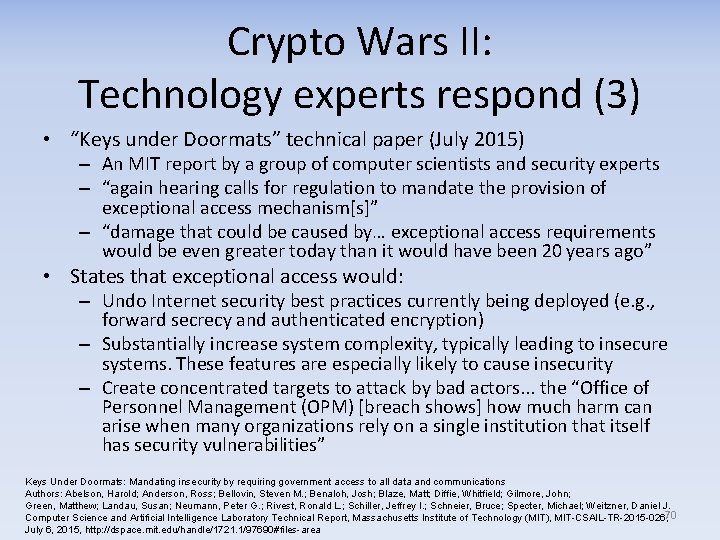

Crypto Wars II: Technology experts respond (3) • “Keys under Doormats” technical paper (July 2015) – An MIT report by a group of computer scientists and security experts – “again hearing calls for regulation to mandate the provision of exceptional access mechanism[s]” – “damage that could be caused by… exceptional access requirements would be even greater today than it would have been 20 years ago” • States that exceptional access would: – Undo Internet security best practices currently being deployed (e.g., forward secrecy and authenticated encryption) – Substantially increase system complexity, typically leading to insecure systems. These features are especially likely to cause insecurity – Create concentrated targets to attack by bad actors... the “Office of Personnel Management (OPM) [breach shows] how much harm can arise when many organizations rely on a single institution that itself has security vulnerabilities” Source: “Keys Under Doormats: Mandating insecurity by requiring government access to all data and communications”, Authors: Abelson, Harold; Anderson, Ross; Bellovin, Steven M.; Benaloh, Josh; Blaze, Matt; Diffie, Whitfield; Gilmore, John; Green, Matthew; Landau, Susan; Neumann, Peter G.; Rivest, Ronald L.; Schiller, Jeffrey I.; Schneier, Bruce; Specter, Michael; Weitzner, Daniel J. Computer Science and Artificial Intelligence Laboratory Technical Report, Massachusetts Institute of Technology (MIT), MIT-CSAIL-TR-2015-026, July 6, 2015, http://dspace.mit.edu/handle/1721.1/97690#files-area 70

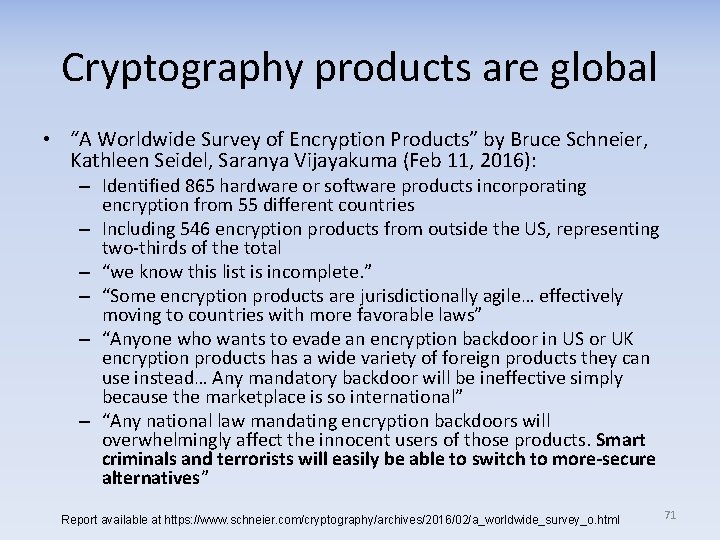

Cryptography products are global • “A Worldwide Survey of Encryption Products” by Bruce Schneier, Kathleen Seidel, Saranya Vijayakumar (Feb 11, 2016): – Identified 865 hardware or software products incorporating encryption from 55 different countries – Including 546 encryption products from outside the US, representing two-thirds of the total – “we know this list is incomplete.” – “Some encryption products are jurisdictionally agile… effectively moving to countries with more favorable laws” – “Anyone who wants to evade an encryption backdoor in US or UK encryption products has a wide variety of foreign products they can use instead… Any mandatory backdoor will be ineffective simply because the marketplace is so international” – “Any national law mandating encryption backdoors will overwhelmingly affect the innocent users of those products. Smart criminals and terrorists will easily be able to switch to more-secure alternatives” Report available at https://www.schneier.com/cryptography/archives/2016/02/a_worldwide_survey_o.html 71

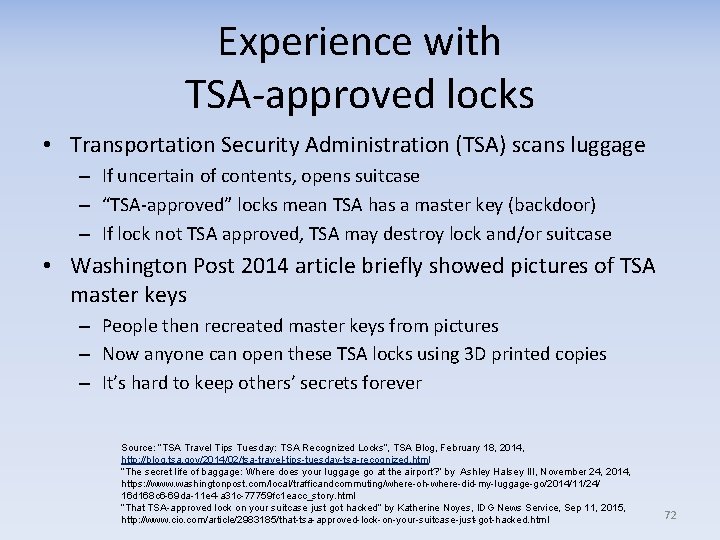

Experience with TSA-approved locks • Transportation Security Administration (TSA) scans luggage – If uncertain of contents, opens suitcase – “TSA-approved” locks mean TSA has a master key (backdoor) – If lock not TSA-approved, TSA may destroy lock and/or suitcase • Washington Post 2014 article briefly showed pictures of TSA master keys – People then recreated master keys from pictures – Now anyone can open these TSA locks using 3D-printed copies – It’s hard to keep others’ secrets forever Sources: “TSA Travel Tips Tuesday: TSA Recognized Locks”, TSA Blog, February 18, 2014, http://blog.tsa.gov/2014/02/tsa-travel-tips-tuesday-tsa-recognized.html ; “The secret life of baggage: Where does your luggage go at the airport?” by Ashley Halsey III, November 24, 2014, https://www.washingtonpost.com/local/trafficandcommuting/where-oh-where-did-my-luggage-go/2014/11/24/16d168c6-69da-11e4-a31c-77759fc1eacc_story.html ; “That TSA-approved lock on your suitcase just got hacked” by Katherine Noyes, IDG News Service, Sep 11, 2015, http://www.cio.com/article/2983185/that-tsa-approved-lock-on-your-suitcase-just-got-hacked.html 72

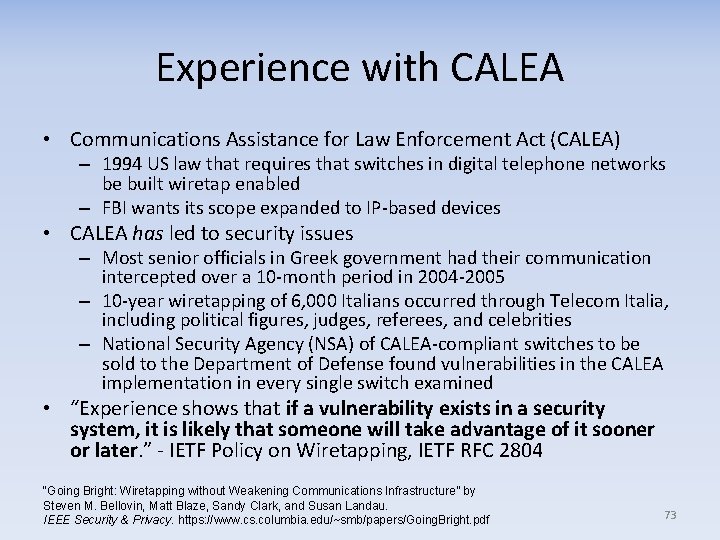

Experience with CALEA • Communications Assistance for Law Enforcement Act (CALEA) – 1994 US law that requires that switches in digital telephone networks be built wiretap-enabled – FBI wants its scope expanded to IP-based devices • CALEA has led to security issues – Most senior officials in Greek government had their communication intercepted over a 10-month period in 2004-2005 – 10-year wiretapping of 6,000 Italians occurred through Telecom Italia, including political figures, judges, referees, and celebrities – National Security Agency (NSA) evaluation of CALEA-compliant switches to be sold to the Department of Defense found vulnerabilities in the CALEA implementation in every single switch examined • “Experience shows that if a vulnerability exists in a security system, it is likely that someone will take advantage of it sooner or later.” IETF Policy on Wiretapping, IETF RFC 2804 Source: “Going Bright: Wiretapping without Weakening Communications Infrastructure” by Steven M. Bellovin, Matt Blaze, Sandy Clark, and Susan Landau. IEEE Security & Privacy. https://www.cs.columbia.edu/~smb/papers/GoingBright.pdf 73

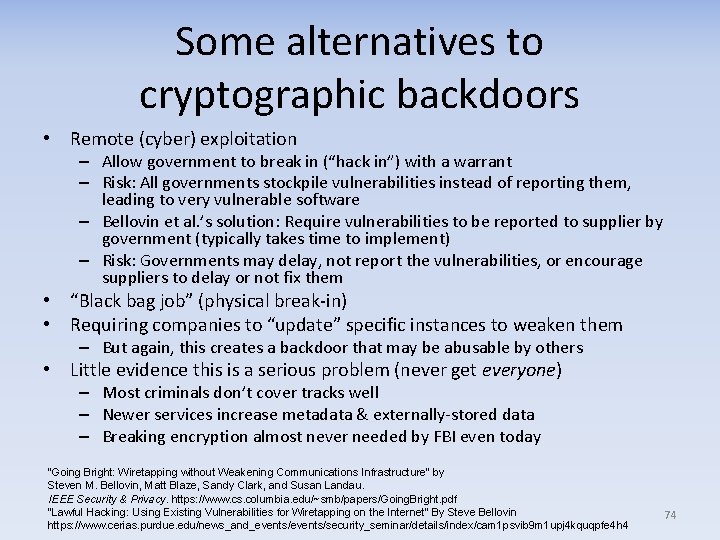

Some alternatives to cryptographic backdoors • Remote (cyber) exploitation – Allow government to break in (“hack in”) with a warrant – Risk: All governments stockpile vulnerabilities instead of reporting them, leading to very vulnerable software – Bellovin et al.’s solution: Require vulnerabilities to be reported to supplier by government (typically takes time to implement) – Risk: Governments may delay, not report the vulnerabilities, or encourage suppliers to delay or not fix them • “Black bag job” (physical break-in) • Requiring companies to “update” specific instances to weaken them – But again, this creates a backdoor that may be abusable by others • Little evidence this is a serious problem (never get everyone) – Most criminals don’t cover tracks well – Newer services increase metadata & externally stored data – Breaking encryption almost never needed by FBI even today Sources: “Going Bright: Wiretapping without Weakening Communications Infrastructure” by Steven M. Bellovin, Matt Blaze, Sandy Clark, and Susan Landau. IEEE Security & Privacy. https://www.cs.columbia.edu/~smb/papers/GoingBright.pdf ; “Lawful Hacking: Using Existing Vulnerabilities for Wiretapping on the Internet” by Steve Bellovin, https://www.cerias.purdue.edu/news_and_events/security_seminar/details/index/cam1psvib9m1upj4kquqpfe4h4 74

Open letter on encryption to President Barack Obama, May 2015 • Huge number of signatories, including: – Companies: Adobe, Apple, Cisco, CCIA, Dropbox, Facebook, Google, HP, LinkedIn, Microsoft, Mozilla, Symantec, Tumblr, Twitter, Wikimedia Foundation, Yahoo, … – Individuals: Steven M. Bellovin, Matt Bishop, Whitfield Diffie, Peter G. Neumann, Ronald L. Rivest, Bruce Schneier, Chris Wysopal, Philip Zimmermann, … – Civil groups: ACLU, Electronic Frontier Foundation (EFF), Electronic Privacy Information Center (EPIC), Free Software Foundation (FSF), Open Source Initiative (OSI), … • Key points in open letter: – “officials have suggested [US] companies should refrain from providing any products that are secured by encryption, unless [they] weaken their security [to] maintain the capability to decrypt their customers’ data at the government’s request. Some officials have [suggested] that Congress should act to ban such products or mandate such capabilities.” – “We urge you to reject any proposal that U.S. companies deliberately weaken the security of their products… instead focus on developing policies that will promote rather than undermine the wide adoption of strong encryption technology. Such policies will in turn help to promote and protect cybersecurity, economic growth, and human rights, both here and abroad. Encryption... protects us from innumerable criminal and national security threats.” – “This protection would be undermined by the mandatory insertion of any new vulnerabilities into encrypted devices and services. Whether you call them “front doors” or “back doors”, introducing intentional vulnerabilities… for the government’s use will make those products less secure against other attackers.” Source: Open Letter to President Barack Obama, May 19, 2015, https://static.newamerica.org/attachments/3138--113/Encryption_Letter_to_Obama_final_051915.pdf 75

TechCrunch commentary on the latest round of the crypto wars • “politicians… apparently cling to the notion that encryption can be magicked out of existence… You have to hope [they] are… not so stupid as to… attempt to outlaw math… The argument that national security is enhanced by perforating secure encryption has been roundly and consistently condemned by the security industry. You don’t enhance the public’s security by making everyone’s information more easily accessible to… bad actors… [California bill argues this access is necessary] to combat human trafficking. In the U.K. [the justifications] are terrorism and/or pedophilia. The problem with such arguments is they have no boundaries. Where do you draw the line? Should every home have government-installed security camera in every room…? Sure you might catch some criminals but it’s a massively disproportionate response... Policing can’t be absolute. It needs to be balanced against other considerations…. Yet mass surveillance rides roughshod over hard-won democratic boundaries in the name of an ill-defined and apparently eternal ‘war on terror’… attempts to outlaw encryption are doomed to fail on the grounds that it’s not possible to control… access to encrypted technology… bad actors will always find ways [around while] everyone else’s data security gets screwed… Is there any way to stop the madness of repeat history? The most positive sign… is the robust public defense… by Apple… Even so, the cycle remains terribly tedious.” Source: “The Repeat Political Madness Of Never-Ending Crypto Wars,” Natasha Lomas, TechCrunch, 2016, http://techcrunch.com/2016/01/23/the-repeat-political-madness-of-never-ending-crypto-wars/ 76

Attorney General William Barr • July 2019: Attorney General William Barr spoke on “going dark” at Fordham University in New York – Argued that “adding backdoors decreases security but that it is worth it” • Bruce Schneier: – “this is a major change in government position. Previously [law enforcement] had claimed that backdoors for law enforcement could be added without any loss of security [and] technologists just need to figure out how, an approach we have derisively named ‘nerd harder.’ – With this change, we can finally have a sensible policy conversation. Yes, adding a backdoor increases our collective security because it allows law enforcement to eavesdrop on the bad guys. But adding that backdoor also decreases our collective security because the bad guys can eavesdrop on everyone. – This is exactly the policy debate we should be having, not the fake one about whether or not we can have both security and surveillance. – [Hopefully we can move to the real debate.] I know where I stand on that: As computers continue to permeate every aspect of our lives, society, and critical infrastructure, it is much more important to ensure that they are secure from everybody, even at the cost of law enforcement access, than it is to allow access at the cost of security.” • Source: https://www.schneier.com/blog/archives/2019/08/attorney_genera.html 77

EARN IT (2020) • “EARN IT” bill is latest US attempt to require backdoors for government surveillance into all encrypted communications – Mechanism: Make it practically impossible to operate a website without following “best practices” developed by a group controlled by those against strong end-to-end encryption – Proposed law never mentions encryption & claims it’s for child protection, in a transparent attempt to slip the law past the public • More information: – “The EARN IT Bill Is the Government’s Plan to Scan Every Message Online” https://www.eff.org/deeplinks/2020/03/earn-it-bill-governments-not-so-secret-plan-scan-every-message-online – “A sneaky attempt to end encryption is worming its way through Congress” https://www.theverge.com/interface/2020/3/12/21174815/earn-it-act-encryption-killer-lindsay-graham-match-group – “The EARN IT Act” by Bruce Schneier, https://www.schneier.com/blog/archives/2020/03/the_earn-it_act.html – S. 3398 EARN IT Act of 2020 https://www.congress.gov/bill/116th-congress/senate-bill/3398/text 78

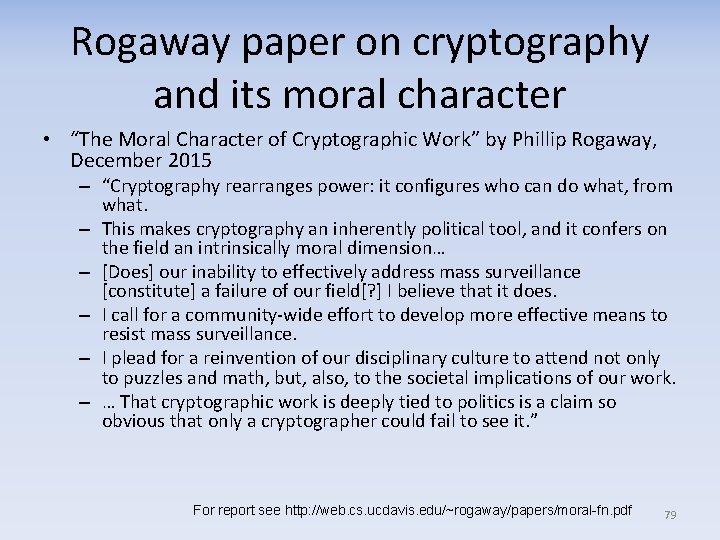

Rogaway paper on cryptography and its moral character • “The Moral Character of Cryptographic Work” by Phillip Rogaway, December 2015 – “Cryptography rearranges power: it configures who can do what, from what. – This makes cryptography an inherently political tool, and it confers on the field an intrinsically moral dimension… – [Does] our inability to effectively address mass surveillance [constitute] a failure of our field[?] I believe that it does. – I call for a community-wide effort to develop more effective means to resist mass surveillance. – I plead for a reinvention of our disciplinary culture to attend not only to puzzles and math, but, also, to the societal implications of our work. – … That cryptographic work is deeply tied to politics is a claim so obvious that only a cryptographer could fail to see it.” For report see http://web.cs.ucdavis.edu/~rogaway/papers/moral-fn.pdf 79

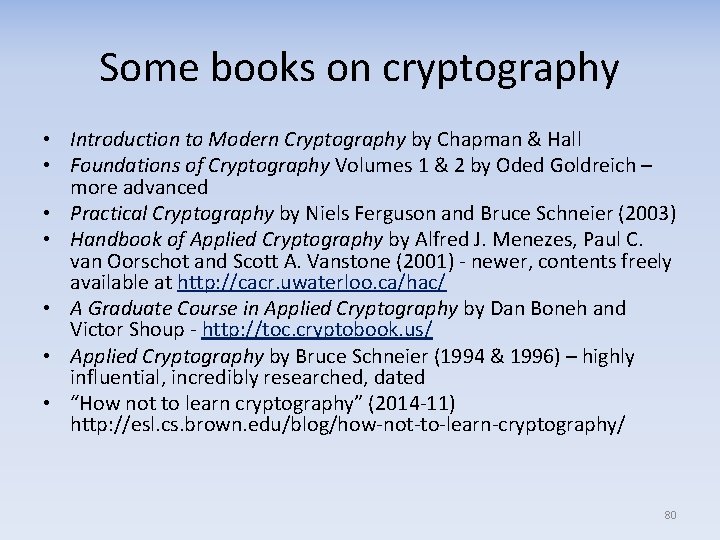

Some books on cryptography • Introduction to Modern Cryptography by Jonathan Katz and Yehuda Lindell (Chapman & Hall/CRC) • Foundations of Cryptography Volumes 1 & 2 by Oded Goldreich – more advanced • Practical Cryptography by Niels Ferguson and Bruce Schneier (2003) • Handbook of Applied Cryptography by Alfred J. Menezes, Paul C. van Oorschot and Scott A. Vanstone (2001) – newer, contents freely available at http://cacr.uwaterloo.ca/hac/ • A Graduate Course in Applied Cryptography by Dan Boneh and Victor Shoup http://toc.cryptobook.us/ • Applied Cryptography by Bruce Schneier (1994 & 1996) – highly influential, incredibly researched, dated • “How not to learn cryptography” (2014-11) http://esl.cs.brown.edu/blog/how-not-to-learn-cryptography/ 80

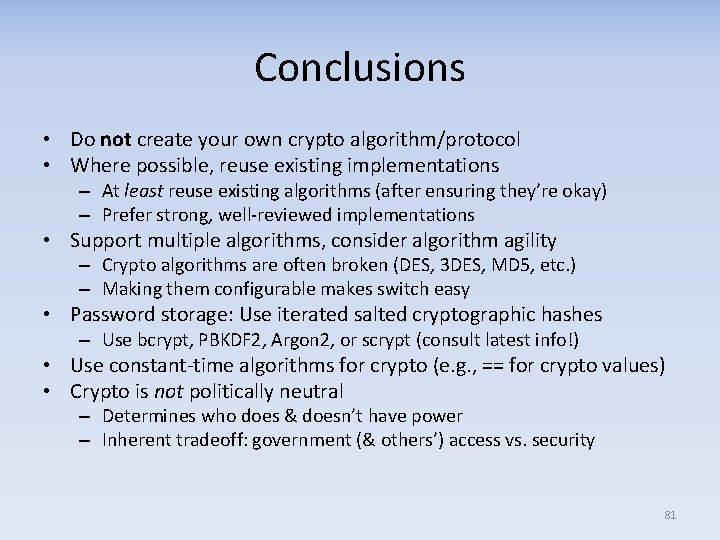

Conclusions • Do not create your own crypto algorithm/protocol • Where possible, reuse existing implementations – At least reuse existing algorithms (after ensuring they’re okay) – Prefer strong, well-reviewed implementations • Support multiple algorithms, consider algorithm agility – Crypto algorithms are often broken (DES, 3DES, MD5, etc.) – Making them configurable makes switching easy • Password storage: Use iterated salted cryptographic hashes – Use bcrypt, PBKDF2, Argon2, or scrypt (consult latest info!) • Use constant-time algorithms for crypto (e.g., do not use an ordinary == to compare secret crypto values, since its timing can leak information) • Crypto is not politically neutral – Determines who does & doesn’t have power – Inherent tradeoff: government (& others’) access vs. security 81
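
The password-storage and constant-time points in the conclusions can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not a vetted implementation: the function names `hash_password` and `verify_password`, the `$`-separated record layout, and the iteration count are all illustrative choices that do not come from the slides, and current guidance should be consulted before picking real parameters.

```python
import hashlib
import hmac
import os

# Assumed values for illustration only; check current guidance before use.
ALGORITHM = "pbkdf2_sha256"  # stored in the record so the scheme can be changed later
ITERATIONS = 600_000         # hypothetical work factor
SALT_BYTES = 16


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Return 'algorithm$iterations$salt_hex$hash_hex' using a fresh random salt."""
    salt = os.urandom(SALT_BYTES)  # per-user salt from the OS CSPRNG
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return f"{ALGORITHM}${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, record: str) -> bool:
    """Recompute the iterated salted hash and compare it in constant time."""
    algorithm, iterations, salt_hex, hash_hex = record.split("$")
    if algorithm != ALGORITHM:
        return False  # unknown scheme; a real system would dispatch on this field
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    # hmac.compare_digest avoids the early-exit timing leak of an ordinary ==
    return hmac.compare_digest(candidate, bytes.fromhex(hash_hex))
```

Storing the algorithm name and iteration count next to each hash is one simple way to leave room for algorithm agility: old records stay verifiable while new ones use stronger settings.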

Backup 82

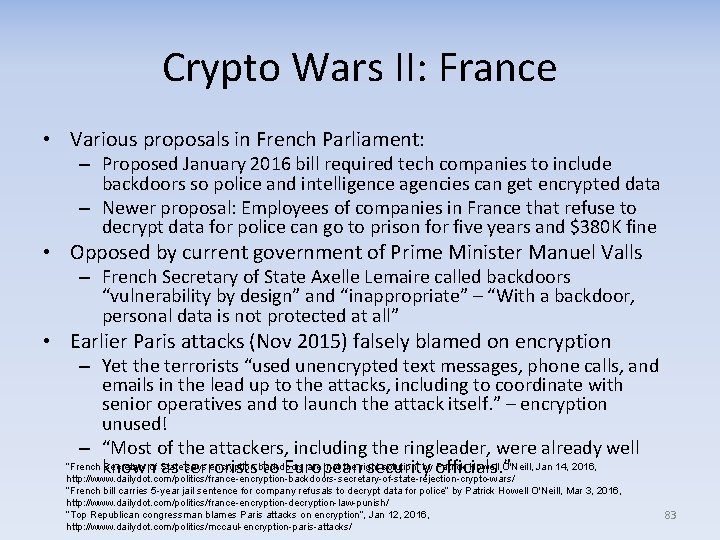

Crypto Wars II: France • Various proposals in French Parliament: – Proposed January 2016 bill required tech companies to include backdoors so police and intelligence agencies can get encrypted data – Newer proposal: Employees of companies in France that refuse to decrypt data for police can go to prison for five years and face a $380K fine • Opposed by current government of Prime Minister Manuel Valls – French Secretary of State Axelle Lemaire called backdoors “vulnerability by design” and “inappropriate” – “With a backdoor, personal data is not protected at all” • Earlier Paris attacks (Nov 2015) falsely blamed on encryption – Yet the terrorists “used unencrypted text messages, phone calls, and emails in the lead up to the attacks, including to coordinate with senior operatives and to launch the attack itself.” – encryption unused! – “Most of the attackers, including the ringleader, were already well known as terrorists to European security officials.” Sources: “French Secretary of State says encryption backdoors are 'not the right solution'” by Patrick Howell O'Neill, Jan 14, 2016, http://www.dailydot.com/politics/france-encryption-backdoors-secretary-of-state-rejection-crypto-wars/ ; “French bill carries 5-year jail sentence for company refusals to decrypt data for police” by Patrick Howell O'Neill, Mar 3, 2016, http://www.dailydot.com/politics/france-encryption-decryption-law-punish/ ; “Top Republican congressman blames Paris attacks on encryption”, Jan 12, 2016, http://www.dailydot.com/politics/mccaul-encryption-paris-attacks/ 83

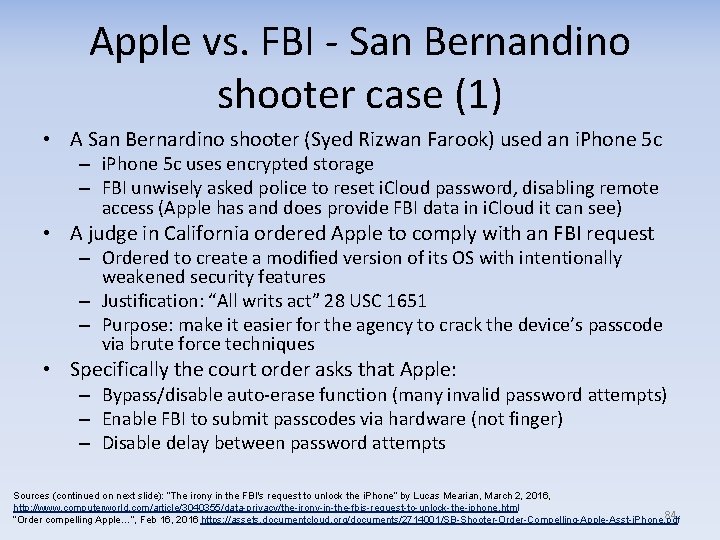

Apple vs. FBI San Bernardino shooter case (1) • A San Bernardino shooter (Syed Rizwan Farook) used an iPhone 5c – iPhone 5c uses encrypted storage – FBI unwisely asked police to reset the iCloud password, disabling remote access (Apple has provided, and does provide, the FBI with iCloud data it can see) • A judge in California ordered Apple to comply with an FBI request – Ordered to create a modified version of its OS with intentionally weakened security features – Justification: “All Writs Act”, 28 USC 1651 – Purpose: make it easier for the agency to crack the device’s passcode via brute force techniques • Specifically the court order asks that Apple: – Bypass/disable the auto-erase function (triggered by many invalid passcode attempts) – Enable FBI to submit passcodes via hardware (not by finger) – Disable the delay between passcode attempts Sources (continued on next slide): “The irony in the FBI's request to unlock the iPhone” by Lucas Mearian, March 2, 2016, http://www.computerworld.com/article/3040355/data-privacy/the-irony-in-the-fbis-request-to-unlock-the-iphone.html ; “Order compelling Apple…”, Feb 16, 2016, https://assets.documentcloud.org/documents/2714001/SB-Shooter-Order-Compelling-Apple-Asst-iPhone.pdf 84
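
To see why removing the auto-erase limit and the inter-attempt delay matters, a rough worst-case estimate for guessing a numeric passcode can be worked out as below. This is only an illustrative sketch: the helper name `worst_case_hours` and the 0.08-second cost per guess are assumptions made for the arithmetic, not figures taken from the slides or the court order.

```python
def worst_case_hours(digits: int, seconds_per_guess: float) -> float:
    """Hours needed to try every numeric passcode of the given length."""
    keyspace = 10 ** digits  # number of possible passcodes
    return keyspace * seconds_per_guess / 3600


# Hypothetical per-guess cost once delays and auto-erase are disabled.
ASSUMED_SECONDS_PER_GUESS = 0.08

for digits in (4, 6):
    hours = worst_case_hours(digits, ASSUMED_SECONDS_PER_GUESS)
    print(f"{digits}-digit passcode: up to {hours:.2f} hours")
```

With the protections in place, an attacker gets only a handful of guesses before the device erases itself; without them, the search is bounded only by the size of the passcode space and the time per guess.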

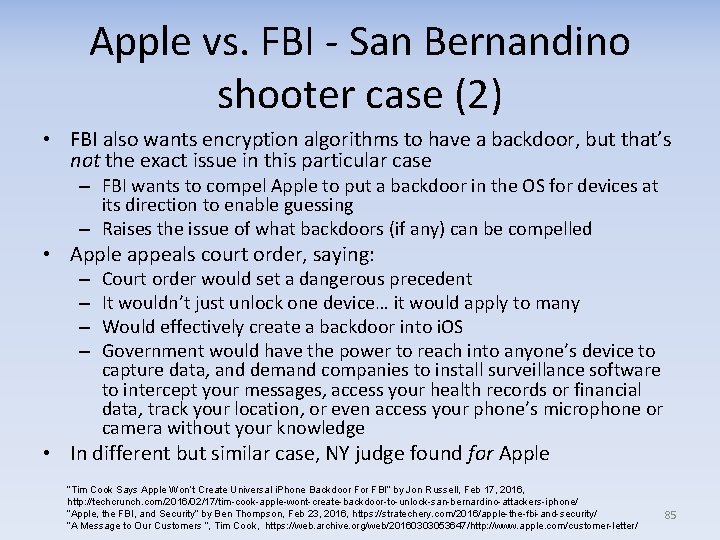

Apple vs. FBI San Bernardino shooter case (2) • FBI also wants encryption algorithms to have a backdoor, but that’s not the exact issue in this particular case – FBI wants to compel Apple to put a backdoor in the OS for devices at its direction to enable guessing – Raises the issue of what backdoors (if any) can be compelled • Apple appeals court order, saying: – Court order would set a dangerous precedent – It wouldn’t just unlock one device… it would apply to many – Would effectively create a backdoor into iOS – Government would have the power to reach into anyone’s device to capture data, and to demand that companies install surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge • In a different but similar case, a NY judge found for Apple Sources: “Tim Cook Says Apple Won’t Create Universal iPhone Backdoor For FBI” by Jon Russell, Feb 17, 2016, http://techcrunch.com/2016/02/17/tim-cook-apple-wont-create-backdoor-to-unlock-san-bernardino-attackers-iphone/ ; “Apple, the FBI, and Security” by Ben Thompson, Feb 23, 2016, https://stratechery.com/2016/apple-the-fbi-and-security/ ; “A Message to Our Customers”, Tim Cook, https://web.archive.org/web/20160303053647/http://www.apple.com/customer-letter/ 85

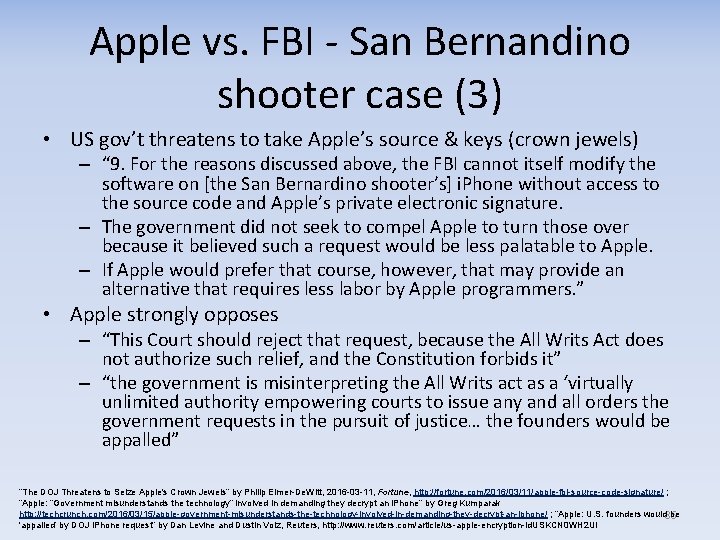

Apple vs. FBI San Bernardino shooter case (3) • US gov’t threatens to take Apple’s source & keys (crown jewels) – “9. For the reasons discussed above, the FBI cannot itself modify the software on [the San Bernardino shooter’s] iPhone without access to the source code and Apple’s private electronic signature. – The government did not seek to compel Apple to turn those over because it believed such a request would be less palatable to Apple. – If Apple would prefer that course, however, that may provide an alternative that requires less labor by Apple programmers.” • Apple strongly opposes – “This Court should reject that request, because the All Writs Act does not authorize such relief, and the Constitution forbids it” – “the government is misinterpreting the All Writs Act as a ‘virtually unlimited authority empowering courts to issue any and all orders the government requests in the pursuit of justice’… the founders would be appalled” Sources: “The DOJ Threatens to Seize Apple's Crown Jewels” by Philip Elmer-DeWitt, 2016-03-11, Fortune, http://fortune.com/2016/03/11/apple-fbi-source-code-signature/ ; “Apple: “Government misunderstands the technology” involved in demanding they decrypt an iPhone” by Greg Kumparak, http://techcrunch.com/2016/03/15/apple-government-misunderstands-the-technology-involved-in-demanding-they-decrypt-an-iphone/ ; “Apple: U.S. founders would be 'appalled' by DOJ iPhone request” by Dan Levine and Dustin Volz, Reuters, http://www.reuters.com/article/us-apple-encryption-idUSKCN0WH2UI 86

Microsoft Azure in Germany – no unapproved access • In 2016 Microsoft will offer their cloud services from within German datacenters – Microsoft Azure, Office 365 and Dynamics CRM Online – in addition to the more than 100 worldwide datacenters • Keys (physical and logical) that control access to customer data are held by a German company – Microsoft will have no access to customer data without approval and supervision by the Data Trustee – “Data trustee” is Deutsche Telekom’s subsidiary, T-Systems • If data isn’t safe in a country, users can flee to another – Mandating access within a country is not really effective “Microsoft Cloud in Germany” by RalfWi, 8 Dec 2015, http://blogs.technet.com/b/ralfwi/archive/2015/12/08/microsoft-cloud-in-germany.aspx 87

Detailed timeline for “Crypto wars 2” • “A complete guide to the new 'Crypto Wars'” by Eric Geller, The Daily Dot, Apr 26, 2016, http://www.dailydot.com/politics/encryption-crypto-wars-backdoors-timeline-security-privacy/ • Starts on Jan. 9, 2003, when the Justice Department drafts the Domestic Security Enhancement Act of 2003, aka “Patriot Act II” – Didn’t pass, but included a provision adding a minimum of five years to the sentence of any convicted felon who used encryption to conceal “incriminating communication or information” related to their crime – a foray into re-criminalizing encryption 88

Released under CC BY-SA 3.0 • This presentation is released under the Creative Commons Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license • You are free: – to Share: to copy, distribute and transmit the work – to Remix: to adapt the work – to make commercial use of the work • Under the following conditions: – Attribution: You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work) – Share Alike: If you alter, transform, or build upon this work, you may distribute the resulting work only under the same or similar license to this one • These conditions can be waived by permission from the copyright holder – dwheeler at dwheeler dot com • Details at: http://creativecommons.org/licenses/by-sa/3.0/ • Attribute me as “David A. Wheeler” 89