SWE 681 ISA 681 Secure Software Design Programming

- Slides: 67

SWE 681 / ISA 681 Secure Software Design & Programming
Lecture 9: Analysis Approaches & Tools
Dr. David A. Wheeler
2018-09-25

Outline
• Verification in the lifecycle
• Types of analysis (static/dynamic/hybrid)
  – Some measurement terminology
• Analysis
  – Static analysis
  – Dynamic analysis (including fuzz testing)
  – Hybrid analysis
• Operational
• Fool with a tool
• SWAMP
• Adopting tools

Verification in the lifecycle
• Software development lifecycle has a set of processes
  – Including design, implementation, & verification
  – Verification = compare system/element against the requirements/design characteristics
• Verification process is tightly integrated with the rest
  – It’s a mistake to think design/implementation/verification happen in lockstep sequence in time
  – We must discuss verification, incl. analysis tools to help verification

Types of analysis
• Static analysis: Approach for verifying software (including finding defects) without executing software
  – Source code vulnerability scanning tools, code inspections, etc.
• Dynamic analysis: Approach for verifying software (including finding defects) by executing software on specific inputs & checking results (“oracle”)
  – Functional testing, web application scanners, fuzz testing, etc.
• Hybrid analysis: Combine above approaches
Analysis can be implemented by humans (manual/technique), automated tools, or a combination, but depending solely on manual techniques is generally too expensive

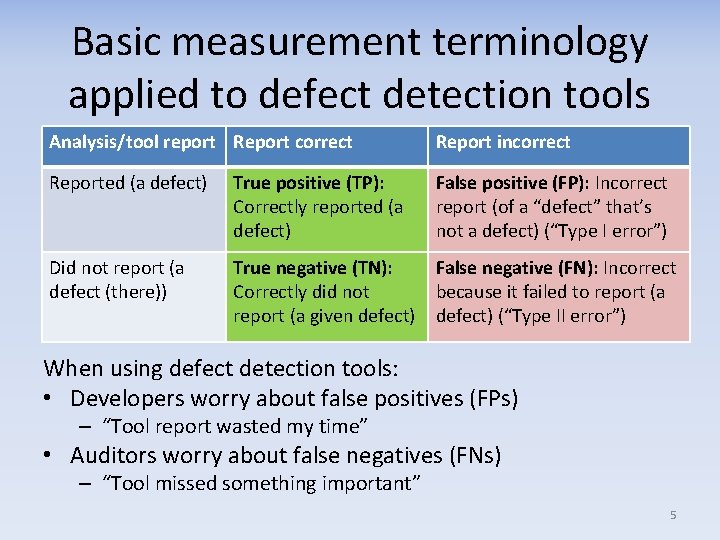

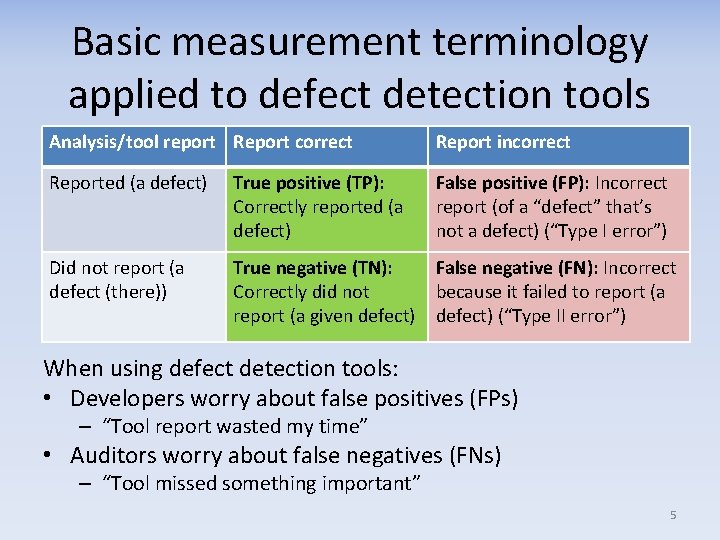

Basic measurement terminology applied to defect detection tools

| Analysis/tool report | Report correct | Report incorrect |
|---|---|---|
| Reported (a defect) | True positive (TP): Correctly reported (a defect) | False positive (FP): Incorrect report (of a “defect” that’s not a defect) (“Type I error”) |
| Did not report (a defect (there)) | True negative (TN): Correctly did not report (a given defect) | False negative (FN): Incorrect because it failed to report (a defect) (“Type II error”) |

When using defect detection tools:
• Developers worry about false positives (FPs)
  – “Tool report wasted my time”
• Auditors worry about false negatives (FNs)
  – “Tool missed something important”

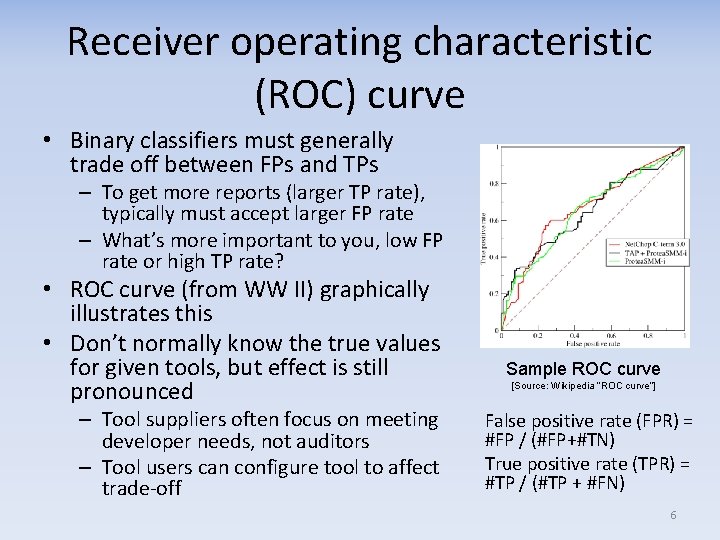

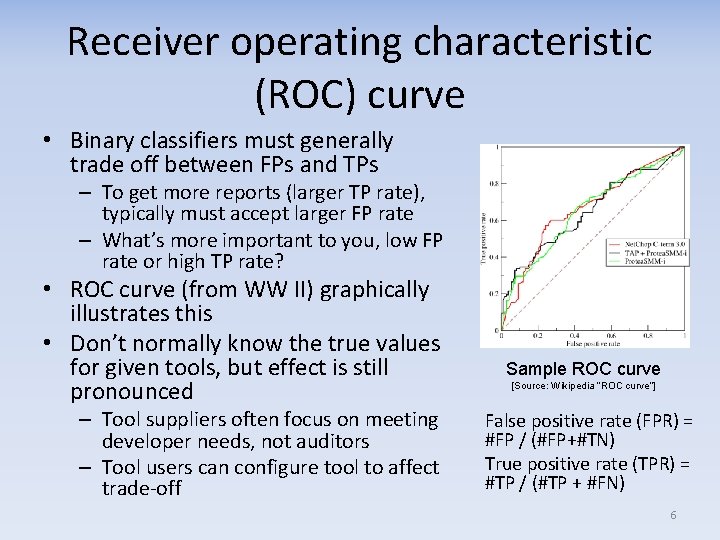

Receiver operating characteristic (ROC) curve
• Binary classifiers must generally trade off between FPs and TPs
  – To get more reports (larger TP rate), typically must accept larger FP rate
  – What’s more important to you, low FP rate or high TP rate?
• ROC curve (from WW II) graphically illustrates this
• Don’t normally know the true values for given tools, but effect is still pronounced
  – Tool suppliers often focus on meeting developer needs, not auditors
  – Tool users can configure tool to affect trade-off
[Figure: Sample ROC curve. Source: Wikipedia “ROC curve”]
False positive rate (FPR) = #FP / (#FP + #TN)
True positive rate (TPR) = #TP / (#TP + #FN)

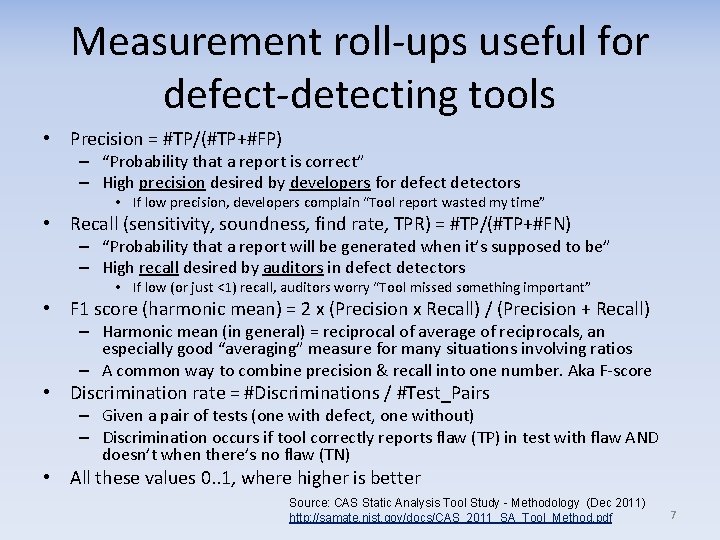

Measurement roll-ups useful for defect-detecting tools
• Precision = #TP / (#TP + #FP)
  – “Probability that a report is correct”
  – High precision desired by developers for defect detectors
    • If low precision, developers complain “Tool report wasted my time”
• Recall (sensitivity, soundness, find rate, TPR) = #TP / (#TP + #FN)
  – “Probability that a report will be generated when it’s supposed to be”
  – High recall desired by auditors in defect detectors
    • If low (or just <1) recall, auditors worry “Tool missed something important”
• F1 score (harmonic mean) = 2 × (Precision × Recall) / (Precision + Recall)
  – Harmonic mean (in general) = reciprocal of average of reciprocals, an especially good “averaging” measure for many situations involving ratios
  – A common way to combine precision & recall into one number. Aka F-score
• Discrimination rate = #Discriminations / #Test_Pairs
  – Given a pair of tests (one with defect, one without)
  – Discrimination occurs if tool correctly reports flaw (TP) in test with flaw AND doesn’t when there’s no flaw (TN)
• All these values 0..1, where higher is better
Source: CAS Static Analysis Tool Study - Methodology (Dec 2011) http://samate.nist.gov/docs/CAS_2011_SA_Tool_Method.pdf
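The roll-up formulas above can be sketched in a few lines of Python. This is only an illustration: the function names and the counts (40 TP, 10 FP, 930 TN, 20 FN) are made up for the example and do not come from any real tool evaluation.

```python
def precision(tp, fp):
    """Probability that a report is correct: #TP / (#TP + #FP)."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Probability that a defect gets reported: #TP / (#TP + #FN)."""
    return tp / (tp + fn)

def false_positive_rate(fp, tn):
    """#FP / (#FP + #TN), the x-axis of a ROC curve."""
    return fp / (fp + tn)

def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * (p * r) / (p + r)

def discrimination_rate(pair_results):
    """pair_results: list of (reported_on_flawed, reported_on_fixed) booleans.
    A discrimination is a report on the flawed test AND no report on the fixed one."""
    hits = sum(1 for flawed, fixed in pair_results if flawed and not fixed)
    return hits / len(pair_results)

# Hypothetical counts, chosen only to exercise the formulas.
tp, fp, tn, fn = 40, 10, 930, 20
p, r = precision(tp, fp), recall(tp, fn)
print(f"precision={p:.2f} recall={r:.2f} "
      f"F1={f1_score(p, r):.2f} FPR={false_positive_rate(fp, tn):.3f}")
```

Note that a tool can score well on one measure and poorly on another, which is why developers and auditors often disagree about the same tool.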

Some tool information sources
• State-of-the-Art Resources (SOAR) for Software Vulnerability Detection, Test, and Evaluation 2016 (aka “Software SOAR”) by David A. Wheeler and Amy E. Henninger, Institute for Defense Analyses (Report P-8005), November 2016
  – https://www.acq.osd.mil/se/initiatives/init_jfac.html
  – “Appendix E” has a large matrix of different types of tools
• NIST SAMATE (http://samate.nist.gov)
  – “Classes of tools & techniques”: http://samate.nist.gov/index.php/Tool_Survey.html
  – Can test tools using Software Assurance Reference Dataset (SARD), formerly known as the SAMATE Reference Dataset (SRD). It’s a set of programs with known properties: http://samate.nist.gov/SARD/
• NSA Center for Assured Software (CAS)
• OWASP (https://www.owasp.org)

Static analysis

Static analysis: Source vs. Executables
• Source code pros:
  – Provides much more context; executable-only tools can miss important information
  – Can examine variable names & comments (can be very helpful!)
  – Can fix problems found (hard with just executable)
  – Difficult to decompile code
• Source code cons:
  – Can mislead tools – executable runs, not source (if there’s a difference)
  – Often can’t get source for proprietary off-the-shelf programs
    • Can get for open source software
    • Often can get for custom
• Bytecode is somewhere between

(Some) Static analysis approaches
• Human analysis (including peer reviews)
• Type checkers
• Compiler warnings
• Style checkers / defect finders / quality scanners
• Security analysis:
  – Security weakness analysis - text scanners
  – Security weakness analysis - beyond text scanners
• Property checkers
• Knowledge extraction
• We’ll cover formal methods separately
Different people will group approaches in different ways

Human (manual) analysis
• Humans are great at discerning context & intent
• Get bored & get overwhelmed
• Expensive
  – Especially if analyzing executables
• Can be one person, e.g., “desk-checking”
• Peer reviews
  – Inspections: Special way to use group, defined roles including “reader”; see IEEE standard 1028
• Can focus on specific issues
  – E.g., “Is everything that’s supposed to be authenticated covered by authentication processes?”

Automated tool limitations
• Tools typically don’t “understand”:
  – System architecture
  – System mission/goal
  – Technical environment
  – Human environment
• Except formal methods…
  – Most have significant FP and/or FN rates
• Best when part of a process to develop secure software, not as the only mechanism

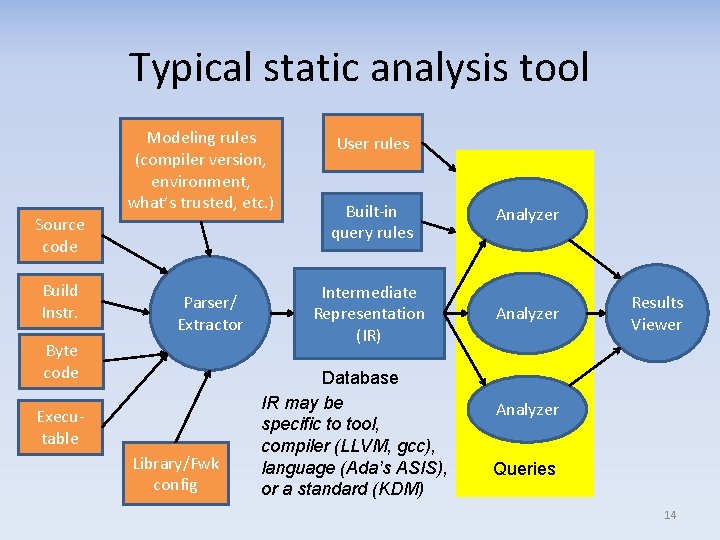

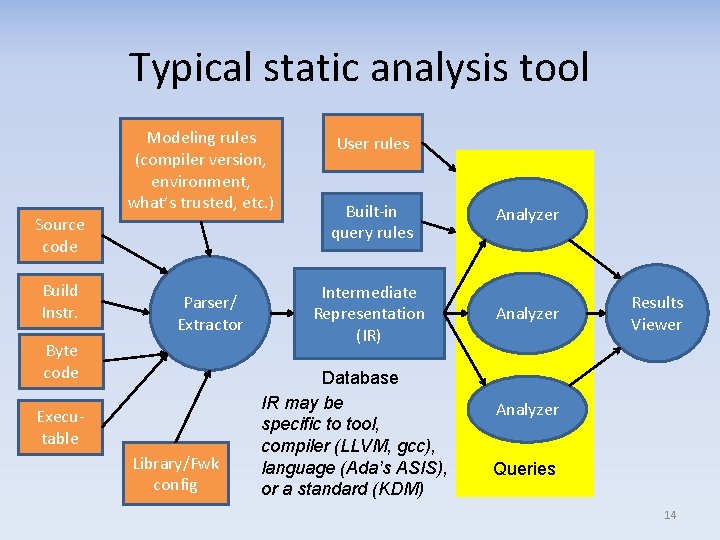

Typical static analysis tool
[Diagram components: inputs (source code, build instructions, byte code, executable, library/framework config); modeling rules (compiler version, environment, what’s trusted, etc.); parser/extractor; intermediate representation (IR); analyzer database; analyzer with built-in query rules, user rules, and analyzer queries; results viewer]
IR may be specific to tool, compiler (LLVM, gcc), language (Ada’s ASIS), or a standard (KDM)

Static analysis tools not specific to security can still be useful
• Many static analysis tools’ focus is other than security
  – E.g., may look for generic defects, or focus on “code cleanliness” (maintainability, style, “quality”, etc.)
  – Some defects are security vulnerabilities
  – Reports that “clean” code is easier for other (security-specific) static analysis to analyze (for fewer false positives/negatives)
    • They’re probably easier for humans to review too
    • Know of no hard evidence, though; some would be welcome!
  – Such tools often faster, cheaper, & easier
    • E.g., many don’t need to do whole-program analysis
• Such tools may be useful as a precursor step (reducing defects) before using security-specific tools
• Java users: Consider quality scanners SpotBugs (successor of FindBugs) or PMD

Type checkers
• Many languages have static type checking built in
  – Some more rigorous than others
  – C/C++ not very strong (& must often work around)
  – Java/C# stronger (interfaces, etc., ease use)
• Can detect some defects before fielding
  – Including some security defects
  – Also really useful in documenting intent
• Work with type system – be as narrow as you can
  – Beware diminishing returns

Compiler warnings: Not security-specific but useful
• Where practical, enable compiler/interpreter warnings & fix anything found
  – E.g., gcc “-Wall -Wextra -Wimplicit-fallthrough”, perl/JavaScript “use strict”
  – Include in implementation/build commands
    • Autoconf: GNU autoconf archive https://www.gnu.org/software/autoconf-archive/
      AX_CFLAGS_WARN_ALL
      AX_APPEND_COMPILE_FLAGS([-Wextra])
      AX_APPEND_COMPILE_FLAGS([-pedantic])
  – “Fix” so no warning, even if technically not a problem
    • That way, any warning is obviously a new issue
• Turn on run-time warnings too (where reasonable)
• Reasons:
  – May detect security vulnerabilities
  – Improve other tools’ results (fewer false results) – expected, no data so far
• Often hard/expensive to turn on later
  – Code not written with warnings in mind may require substantial changes

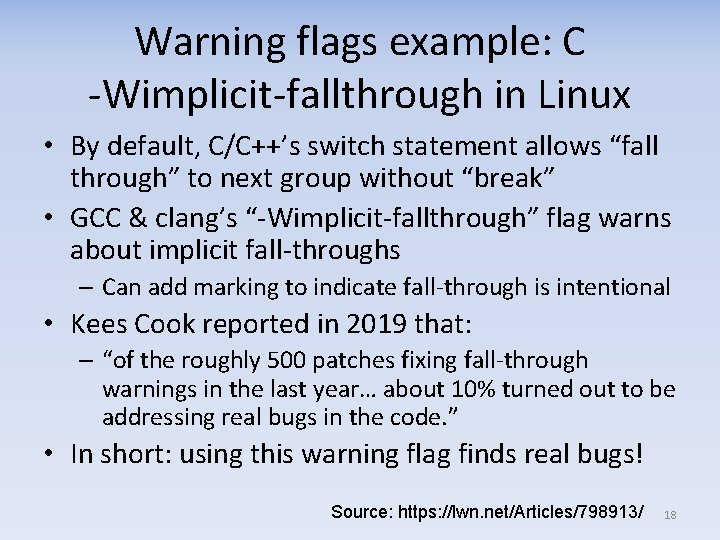

Warning flags example: C -Wimplicit-fallthrough in Linux
• By default, C/C++’s switch statement allows “fall through” to next group without “break”
• GCC & clang’s “-Wimplicit-fallthrough” flag warns about implicit fall-throughs
  – Can add marking to indicate fall-through is intentional
• Kees Cook reported in 2019 that:
  – “of the roughly 500 patches fixing fall-through warnings in the last year… about 10% turned out to be addressing real bugs in the code.”
• In short: using this warning flag finds real bugs!
Source: https://lwn.net/Articles/798913/

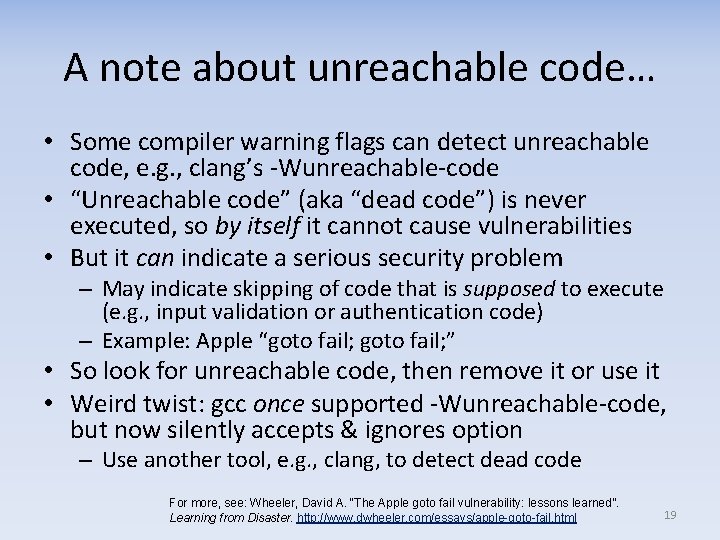

A note about unreachable code…
• Some compiler warning flags can detect unreachable code, e.g., clang’s -Wunreachable-code
• “Unreachable code” (aka “dead code”) is never executed, so by itself it cannot cause vulnerabilities
• But it can indicate a serious security problem
  – May indicate skipping of code that is supposed to execute (e.g., input validation or authentication code)
  – Example: Apple “goto fail;”
• So look for unreachable code, then remove it or use it
• Weird twist: gcc once supported -Wunreachable-code, but now silently accepts & ignores option
  – Use another tool, e.g., clang, to detect dead code
For more, see: Wheeler, David A. “The Apple goto fail vulnerability: lessons learned”. Learning from Disaster. http://www.dwheeler.com/essays/apple-goto-fail.html

Style checkers / Defect finders / Quality scanners
• Compare code (usually source) to set of precanned “style” rules or probable defects
• Goal:
  – Make it easier to understand/modify code
  – Avoid common defects/mistakes, or patterns likely to lead to them
• Some try to have low FP rate
  – Don’t report something unless it’s a defect

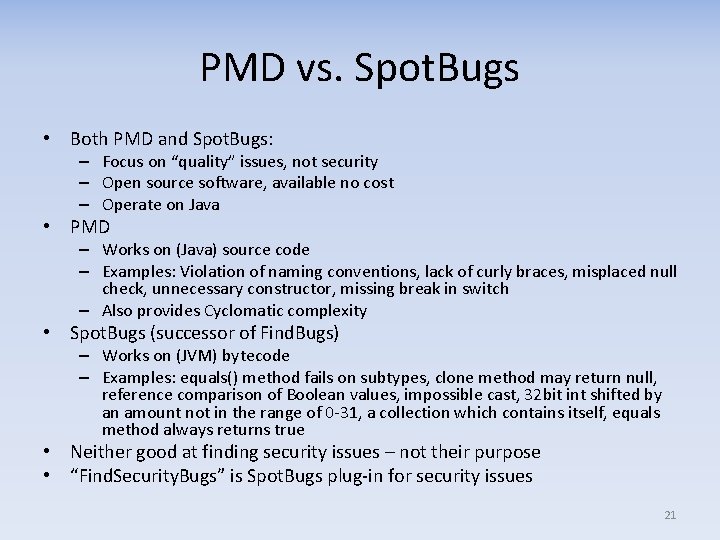

PMD vs. SpotBugs
• Both PMD and SpotBugs:
  – Focus on “quality” issues, not security
  – Open source software, available at no cost
  – Operate on Java
• PMD
  – Works on (Java) source code
  – Examples: Violation of naming conventions, lack of curly braces, misplaced null check, unnecessary constructor, missing break in switch
  – Also provides cyclomatic complexity
• SpotBugs (successor of FindBugs)
  – Works on (JVM) bytecode
  – Examples: equals() method fails on subtypes, clone method may return null, reference comparison of Boolean values, impossible cast, 32-bit int shifted by an amount not in the range of 0-31, a collection which contains itself, equals method always returns true
• Neither good at finding security issues – not their purpose
• “FindSecurityBugs” is SpotBugs plug-in for security issues

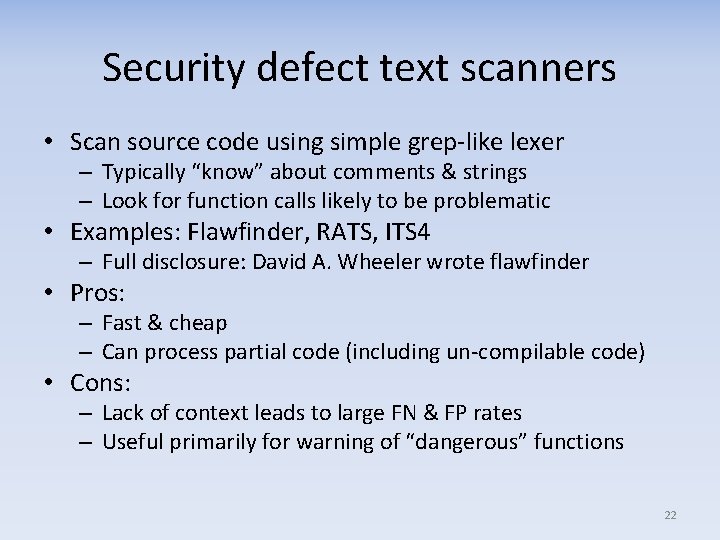

Security defect text scanners
• Scan source code using simple grep-like lexer
  – Typically “know” about comments & strings
  – Look for function calls likely to be problematic
• Examples: Flawfinder, RATS, ITS4
  – Full disclosure: David A. Wheeler wrote flawfinder
• Pros:
  – Fast & cheap
  – Can process partial code (including un-compilable code)
• Cons:
  – Lack of context leads to large FN & FP rates
  – Useful primarily for warning of “dangerous” functions
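To make the "grep-like lexer" idea concrete, here is a minimal Python sketch of a text scanner for C source. It is not how Flawfinder, RATS, or ITS4 are implemented; the list of risky functions and the sample input are assumptions chosen for illustration. It blanks out comments and string literals first (so it "knows" about them), then looks for calls by name.

```python
import re

# Illustrative list only; real scanners ship much larger rule databases.
RISKY_CALLS = ("gets", "strcpy", "strcat", "sprintf", "system")

# C block comments, line comments, string literals, and char literals.
_SKIP = re.compile(
    r'/\*.*?\*/|//[^\n]*|"(?:\\.|[^"\\])*"|\'(?:\\.|[^\'\\])*\'', re.DOTALL)
_CALL = re.compile(r'\b(' + '|'.join(RISKY_CALLS) + r')\s*\(')

def _blank(match):
    # Replace skipped text with spaces but keep newlines,
    # so reported line numbers still match the original file.
    return re.sub(r'[^\n]', ' ', match.group(0))

def scan(source):
    """Return a list of (line_number, function_name) for risky-looking calls."""
    cleaned = _SKIP.sub(_blank, source)
    hits = []
    for lineno, line in enumerate(cleaned.splitlines(), start=1):
        for m in _CALL.finditer(line):
            hits.append((lineno, m.group(1)))
    return hits

sample = '''
/* gets(buf) in a comment is ignored */
char buf[16];
printf("do not call gets()");
gets(buf);
strcpy(dst, src);  // strcpy(x, y) in a trailing comment is ignored
'''
print(scan(sample))
```

The sketch also shows the cons listed above: it has no idea whether `dst` is large enough for `src`, so it flags every `strcpy` call whether or not it is actually exploitable.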

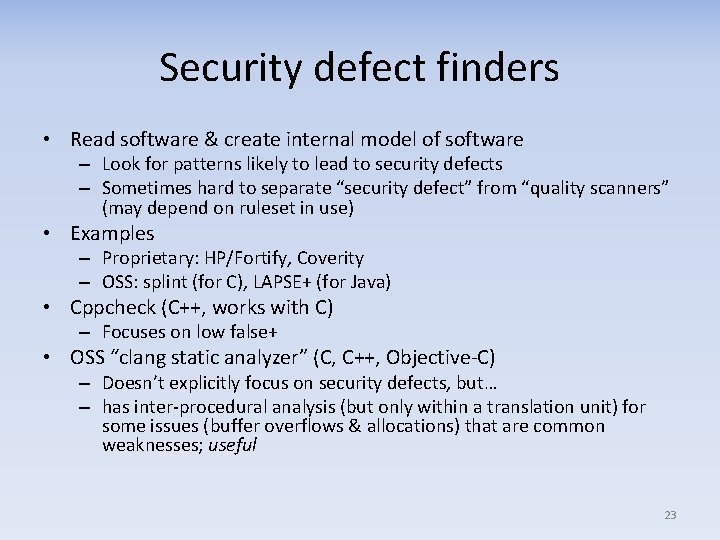

Security defect finders
• Read software & create internal model of software
  – Look for patterns likely to lead to security defects
  – Sometimes hard to separate “security defect” from “quality scanners” (may depend on ruleset in use)
• Examples
  – Proprietary: HP/Fortify, Coverity
  – OSS: splint (for C), LAPSE+ (for Java)
• Cppcheck (C++, works with C)
  – Focuses on low false+
• OSS “clang static analyzer” (C, C++, Objective-C)
  – Doesn’t explicitly focus on security defects, but…
  – has inter-procedural analysis (but only within a translation unit) for some issues (buffer overflows & allocations) that are common weaknesses; useful

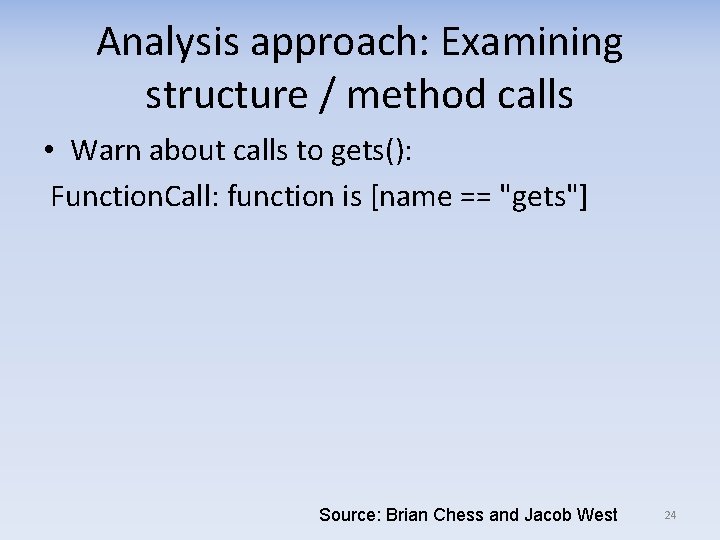

Analysis approach: Examining structure / method calls
• Warn about calls to gets():
    FunctionCall: function is [name == "gets"]
Source: Brian Chess and Jacob West
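The same kind of structural rule can be sketched with Python's standard `ast` module. This is an analogy, not the rule language shown above: it parses Python source rather than C, and it flags calls to `eval` as a stand-in for `gets()`. The helper name `find_calls` and the sample code are hypothetical.

```python
import ast

def find_calls(source, banned_names):
    """Return sorted (line_number, name) for calls to any plain name in banned_names."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        # Structural rule: a Call node whose function is a simple Name in the banned set.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in banned_names:
                findings.append((node.lineno, node.func.id))
    return sorted(findings)

code = '''
text = "eval(x) inside a string is not a call"
value = eval(user_input)
'''
print(find_calls(code, {"eval"}))
```

Because the rule matches on the parse tree rather than on text, the string literal on line 2 is not reported, which is the main advantage over a grep-like scanner.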

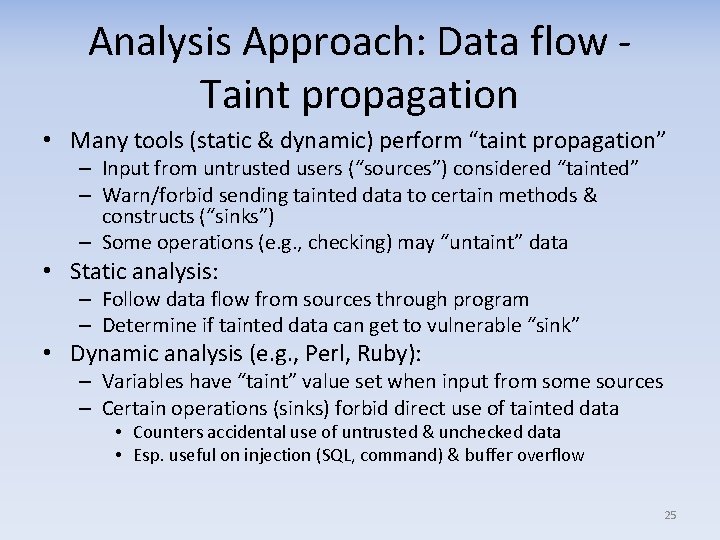

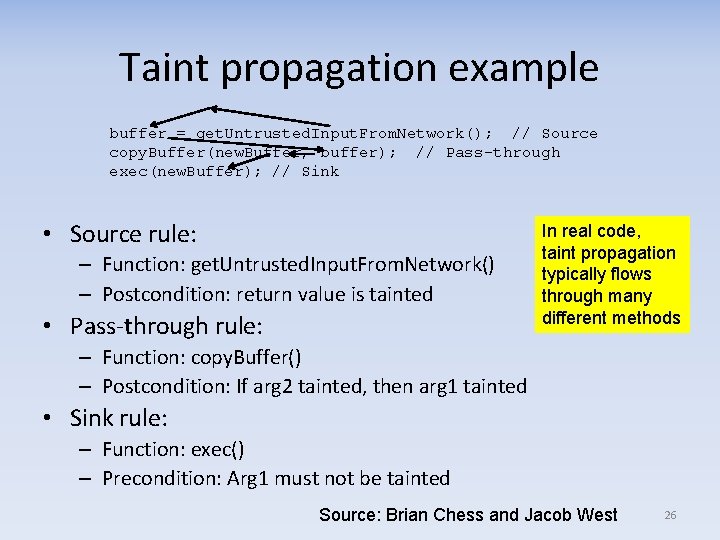

Analysis approach: Data flow – Taint propagation
• Many tools (static & dynamic) perform “taint propagation”
  – Input from untrusted users (“sources”) considered “tainted”
  – Warn/forbid sending tainted data to certain methods & constructs (“sinks”)
  – Some operations (e.g., checking) may “untaint” data
• Static analysis:
  – Follow data flow from sources through program
  – Determine if tainted data can get to vulnerable “sink”
• Dynamic analysis (e.g., Perl, Ruby):
  – Variables have “taint” value set when input from some sources
  – Certain operations (sinks) forbid direct use of tainted data
• Counters accidental use of untrusted & unchecked data
• Esp. useful on injection (SQL, command) & buffer overflow

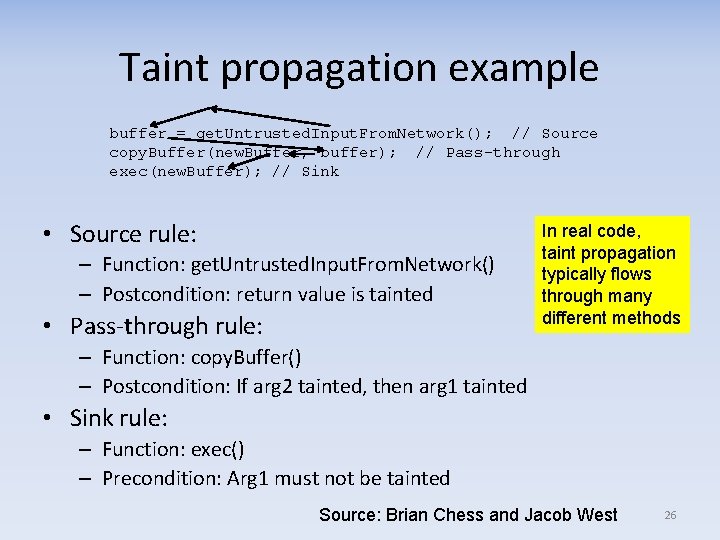

Taint propagation example
    buffer = getUntrustedInputFromNetwork();   // Source
    copyBuffer(newBuffer, buffer);             // Pass-through
    exec(newBuffer);                           // Sink
• Source rule:
  – Function: getUntrustedInputFromNetwork()
  – Postcondition: return value is tainted
• Pass-through rule:
  – Function: copyBuffer()
  – Postcondition: If arg 2 tainted, then arg 1 tainted
• Sink rule:
  – Function: exec()
  – Precondition: Arg 1 must not be tainted
In real code, taint propagation typically flows through many different methods
Source: Brian Chess and Jacob West
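The three rules above can be applied mechanically. The following Python sketch does so for a straight-line program only; the tuple-based program encoding is an assumption made for this example, and real tools also handle branches, loops, aliasing, and "untainting" checks. Argument positions are 0-based here, so the slide's "arg 2 taints arg 1" becomes index 1 taints index 0.

```python
SOURCES = {"getUntrustedInputFromNetwork"}   # postcondition: return value is tainted
PASS_THROUGH = {"copyBuffer": (1, 0)}        # if args[1] is tainted, args[0] becomes tainted
SINKS = {"exec": 0}                          # precondition: args[0] must not be tainted

def check(program):
    """program: list of (result_variable_or_None, function_name, argument_variables)."""
    tainted = set()
    warnings = []
    for lineno, (result, func, args) in enumerate(program, start=1):
        # Sink rule: warn if a tainted variable is passed in the guarded position.
        if func in SINKS and args[SINKS[func]] in tainted:
            warnings.append(
                f"line {lineno}: tainted '{args[SINKS[func]]}' reaches sink {func}()")
        # Pass-through rule: taint flows from one argument to another.
        if func in PASS_THROUGH:
            src, dst = PASS_THROUGH[func]
            if args[src] in tainted:
                tainted.add(args[dst])
        # Source rule: the returned value is tainted.
        if func in SOURCES and result is not None:
            tainted.add(result)
    return warnings

program = [
    ("buffer", "getUntrustedInputFromNetwork", []),
    (None, "copyBuffer", ["newBuffer", "buffer"]),
    (None, "exec", ["newBuffer"]),
]
print(check(program))
```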

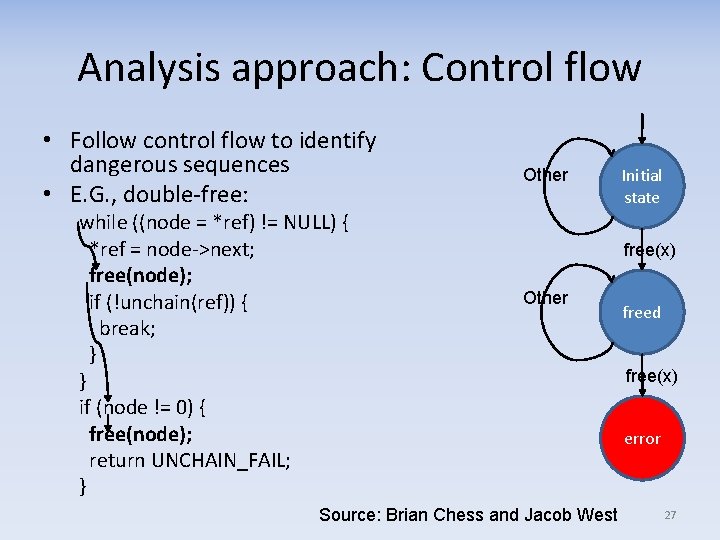

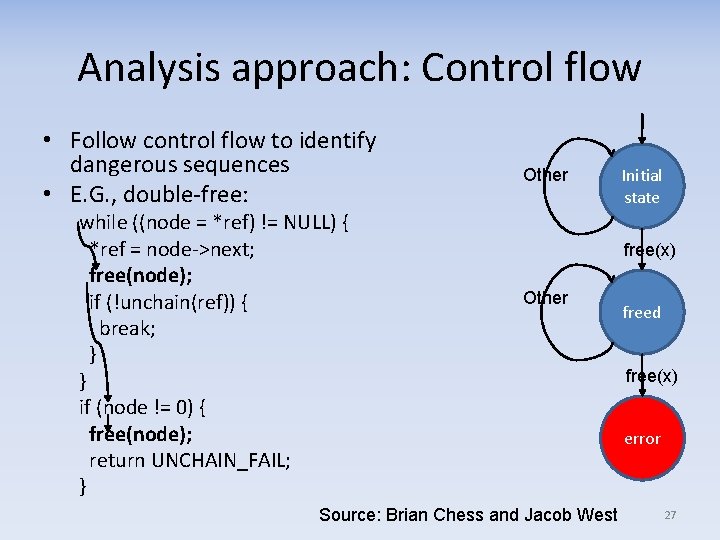

Analysis approach: Control flow
• Follow control flow to identify dangerous sequences
• E.g., double-free:
    while ((node = *ref) != NULL) {
        *ref = node->next;
        free(node);
        if (!unchain(ref)) {
            break;
        }
    }
    if (node != 0) {
        free(node);
        return UNCHAIN_FAIL;
    }
[State diagram: initial state → (free(x)) → freed → (free(x)) → error; “other” operations leave the initial and freed states unchanged]
Source: Brian Chess and Jacob West
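The state machine above can be sketched as a small checker that walks one control-flow path at a time. This is a simplification: a real static analyzer enumerates the paths itself from the control-flow graph, whereas here the two paths are written out by hand. The event encoding and the "assign resets the state" rule are assumptions added for the example.

```python
def check_path(events):
    """events: list of (operation, variable) along one control-flow path."""
    state = {}      # variable -> "initial" or "freed"
    errors = []
    for step, (op, var) in enumerate(events, start=1):
        if op == "free":
            if state.get(var, "initial") == "freed":
                errors.append(f"step {step}: double free of {var}")
            else:
                state[var] = "freed"
        elif op == "assign":
            # Assumption: after reassignment the variable refers to other memory.
            state[var] = "initial"
        # Any other operation leaves the state unchanged.
    return errors

# Path where unchain() fails: the loop breaks, then node is freed a second time.
failing_path = [("assign", "node"), ("free", "node"),
                ("call", "unchain"), ("free", "node")]
# Path where unchain() succeeds: node is reassigned before anything else happens.
normal_path = [("assign", "node"), ("free", "node"),
               ("call", "unchain"), ("assign", "node")]

print(check_path(failing_path))
print(check_path(normal_path))
```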

Property checkers
• “Prove” that a program has very specific narrow property
• Typically focuses on very specific temporal safety, e.g.:
  – “Always frees allocated memory”
  – “Can never have livelock/deadlock”
• Many strive to be sound (“reports all possible problems”)
• Examples: GrammaTech, GNATPro Praxis, Polyspace

Knowledge extraction / program understanding
• Create view of software automatically for analysis
  – Especially useful for large code bases
  – Visualizes architecture
  – Enables queries, translation to another language
• Examples:
  – Hatha Systems’ Knowledge Refinery
  – IBM Rational Asset Analyzer (RAA)
  – Relativity MicroFocus (COBOL-focused)

Source/Byte/Binary code security scanners/analyzers – some lists
• NIST SAMATE’s tool list:
  – http://samate.nist.gov/index.php/Tool_Survey.html
  – Click on “Source Code Security Analyzers”, “Byte Code Scanners”, & “Binary Code Scanners”
• OWASP’s:
  – https://www.owasp.org/index.php/Static_Code_Analysis
• David A. Wheeler’s list:
  – https://www.dwheeler.com/essays/static-analysis-tools.html

Origin analysis (Obsolete/Vulnerable libraries)
• Also called Software Composition Analysis (SCA)
• Examine embedded software libraries/modules
  – Historically for legal review (are licenses okay?), e.g., part of due diligence for mergers and acquisitions (M&A)
  – Can identify some libraries with known vulnerabilities (“The Unfortunate Reality of Insecure Libraries”)
  – Useful for developers, potential users, and current users
• Can use package manager data or review source/executable
  – Opinion: Better to use package managers & integrate with origin analysis – easy, fast, low false+ rates
• Examples
  – OWASP – Dependency Check (OSS)
  – Sonatype – Nexus, Component Lifecycle Management (CLM)
  – Black Duck Software – Black Duck Suite
• One data source for “what is vulnerable” is NIST’s National Vulnerability Database (NVD)

Dynamic analysis

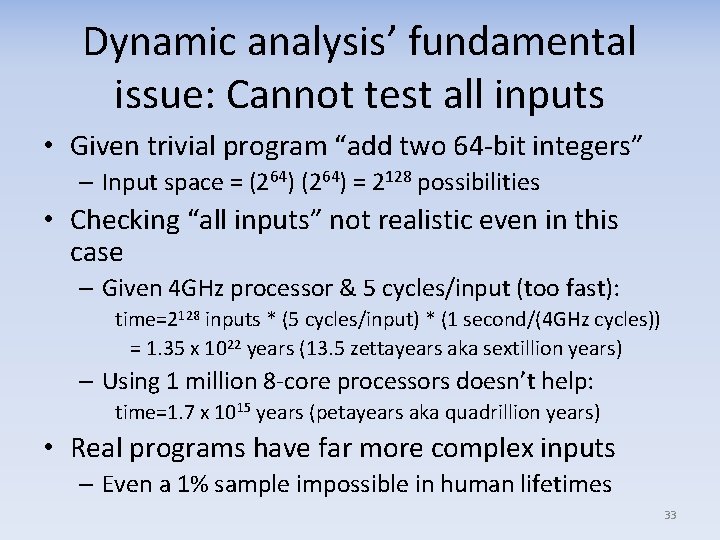

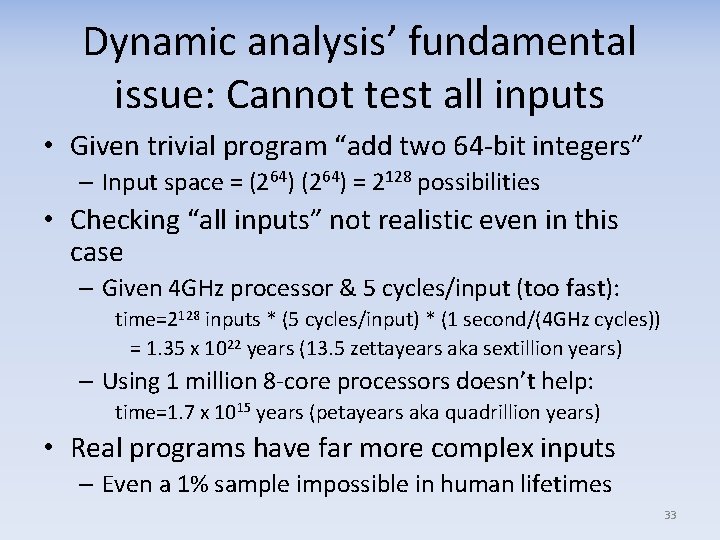

Dynamic analysis’ fundamental issue: Cannot test all inputs
• Given trivial program “add two 64-bit integers”
  – Input space = $(2^{64})^2 = 2^{128}$ possibilities
• Checking “all inputs” not realistic even in this case
  – Given 4 GHz processor & 5 cycles/input (too fast): time = $2^{128}$ inputs × (5 cycles/input) × (1 second / $4 \times 10^{9}$ cycles) ≈ $1.35 \times 10^{22}$ years (13.5 zettayears aka sextillion years)
  – Using 1 million 8-core processors doesn’t help: time ≈ $1.7 \times 10^{15}$ years (petayears aka quadrillion years)
• Real programs have far more complex inputs
  – Even a 1% sample impossible in human lifetimes
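The arithmetic above is easy to reproduce. The short Python calculation below uses the slide's figures (4 GHz, 5 cycles per input, 1 million 8-core processors); the only added assumption is a year of 365.25 days for the seconds-to-years conversion.

```python
inputs = (2 ** 64) ** 2                   # two 64-bit operands: 2^128 combinations
cycles_per_input = 5
clock_hz = 4e9                            # 4 GHz
seconds_per_year = 365.25 * 24 * 3600     # assumption: Julian year

years_one_cpu = inputs * cycles_per_input / clock_hz / seconds_per_year
years_many_cpus = years_one_cpu / (1_000_000 * 8)   # 1 million processors, 8 cores each

print(f"one processor:   {years_one_cpu:.3g} years")
print(f"8 million cores: {years_many_cpus:.3g} years")
```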

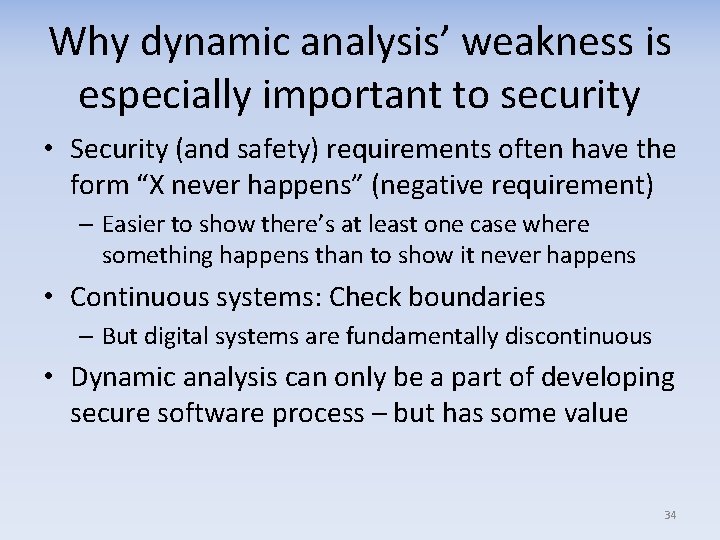

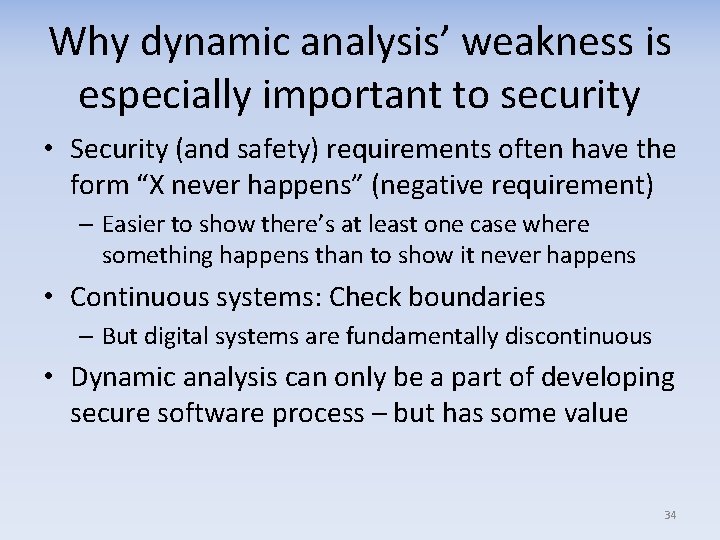

Why dynamic analysis’ weakness is especially important to security
• Security (and safety) requirements often have the form “X never happens” (negative requirement)
  – Easier to show there’s at least one case where something happens than to show it never happens
• Continuous systems: Check boundaries
  – But digital systems are fundamentally discontinuous
• Dynamic analysis can only be one part of a process for developing secure software – but has some value

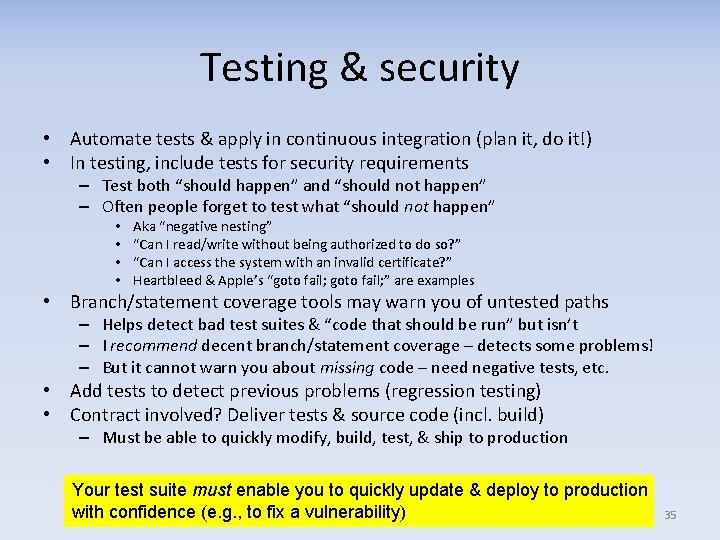

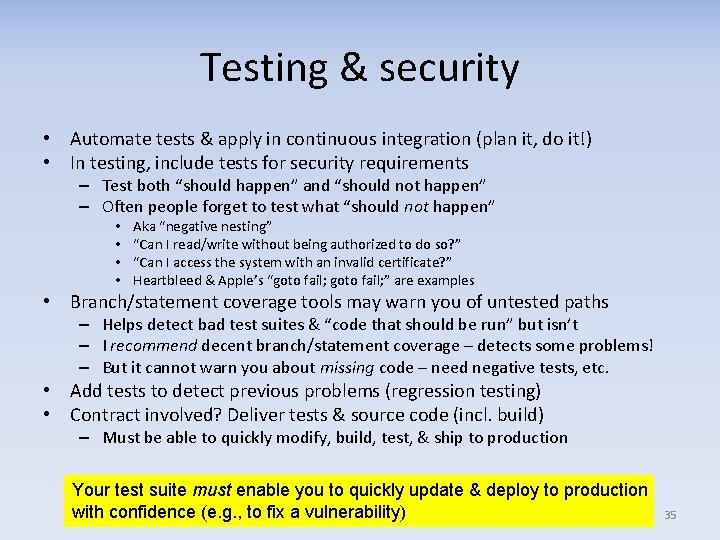

Testing & security
• Automate tests & apply in continuous integration (plan it, do it!)
• In testing, include tests for security requirements
  – Test both “should happen” and “should not happen”
  – Often people forget to test what “should not happen”
    • Aka “negative testing”
    • “Can I read/write without being authorized to do so?”
    • “Can I access the system with an invalid certificate?”
    • Heartbleed & Apple’s “goto fail;” are examples
• Branch/statement coverage tools may warn you of untested paths
  – Helps detect bad test suites & “code that should be run” but isn’t
  – I recommend decent branch/statement coverage – detects some problems!
  – But it cannot warn you about missing code – need negative tests, etc.
• Add tests to detect previous problems (regression testing)
• Contract involved? Deliver tests & source code (incl. build)
  – Must be able to quickly modify, build, test, & ship to production
Your test suite must enable you to quickly update & deploy to production with confidence (e.g., to fix a vulnerability)

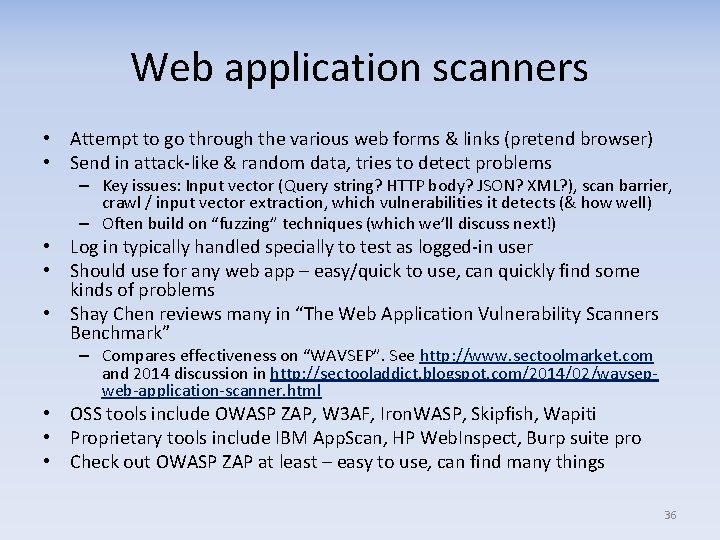

Web application scanners
• Attempt to go through the various web forms & links (pretend browser)
• Send in attack-like & random data, try to detect problems
  – Key issues: Input vector (Query string? HTTP body? JSON? XML?), scan barrier, crawl / input vector extraction, which vulnerabilities it detects (& how well)
  – Often build on “fuzzing” techniques (which we’ll discuss next!)
• Log in typically handled specially to test as logged-in user
• Should use for any web app – easy/quick to use, can quickly find some kinds of problems
• Shay Chen reviews many in “The Web Application Vulnerability Scanners Benchmark”
  – Compares effectiveness on “WAVSEP”. See http://www.sectoolmarket.com and 2014 discussion in http://sectooladdict.blogspot.com/2014/02/wavsep-web-application-scanner.html
• OSS tools include OWASP ZAP, W3AF, IronWASP, Skipfish, Wapiti
• Proprietary tools include IBM AppScan, HP WebInspect, Burp suite pro
• Check out OWASP ZAP at least – easy to use, can find many things

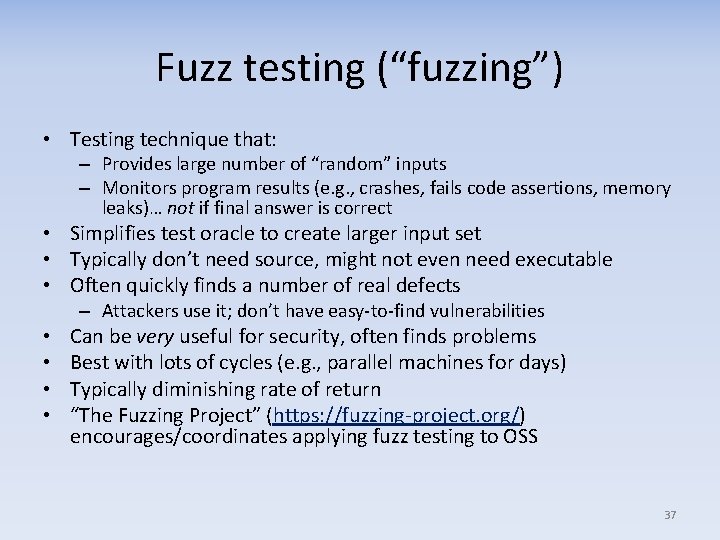

Fuzz testing (“fuzzing”)
• Testing technique that:
  – Provides large number of “random” inputs
  – Monitors program results (e.g., crashes, failed code assertions, memory leaks)… not whether final answer is correct
• Simplifies test oracle to create larger input set
• Typically don’t need source, might not even need executable
• Often quickly finds a number of real defects
  – Attackers use it; don’t have easy-to-find vulnerabilities
• Can be very useful for security, often finds problems
• Best with lots of cycles (e.g., parallel machines for days)
• Typically diminishing rate of return
• “The Fuzzing Project” (https://fuzzing-project.org/) encourages/coordinates applying fuzz testing to OSS

Fuzz testing history
• Fuzz testing concept from Barton Miller’s 1988 class project at the University of Wisconsin
  – Project created “fuzzer” to test reliability of command-line Unix programs
  – Repeatedly generated random data for them until crash/hang
  – Later expanded for GUIs, network protocols, etc.
• Approach quickly found a number of defects
• Many tools & approach variations created since

Fuzz testing variations: Input
• Basic test data creation approaches:
  – Fully random
  – Mutation based: mutate input samples to create test data (“Dumb”)
  – Generation based: create test data based on model of input (“Smart”)
• May try to improve input sets using:
  – Evolution: mutate preferring the use of inputs that produced “more interesting” responses (e.g., paths previously not covered)
  – Constraints: Generate tests that execute previously-unexecuted paths using, e.g., symbolic execution or constraint analysis (e.g., SAGE, fuzzgrind)
  – Heuristics: Create “likely security vulnerability” patterns (e.g., metachars) to increase value, provide keyword list
• Type of input data: File formats, network protocols, environment variables, API call sequences, database contents, etc.
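A mutation-based ("dumb") fuzzer fits in a few lines. The Python sketch below is illustrative only: `parse_record` is a hypothetical toy target with a planted bug, and the mutation operators (bit flip, byte insert, byte delete) are a small assumed subset of what real fuzzers use. The oracle is the simplified one described earlier: a graceful rejection is fine, any other exception counts as a crash.

```python
import random

def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one random mutation: flip a bit, insert a byte, or delete a byte."""
    buf = bytearray(data)
    choice = rng.choice(("flip", "insert", "delete"))
    if choice == "flip" and buf:
        pos = rng.randrange(len(buf))
        buf[pos] ^= 1 << rng.randrange(8)
    elif choice == "insert":
        buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
    elif choice == "delete" and buf:
        del buf[rng.randrange(len(buf))]
    return bytes(buf)

def parse_record(data: bytes) -> bytes:
    """Hypothetical target: first byte is a length, the rest is the payload."""
    length = data[0]                      # planted bug: IndexError on empty input
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated record")   # expected, graceful rejection
    return payload

def fuzz(seed_input: bytes, iterations: int, seed: int = 0):
    """Return a list of (input, exception_name) for inputs that crashed the target."""
    rng = random.Random(seed)
    crashes = []
    for _ in range(iterations):
        candidate = seed_input
        for _ in range(rng.randint(1, 4)):     # stack a few mutations per test case
            candidate = mutate(candidate, rng)
        try:
            parse_record(candidate)
        except ValueError:
            pass                               # rejected cleanly: not a defect
        except Exception as exc:               # anything else is treated as a crash
            crashes.append((candidate, type(exc).__name__))
    return crashes

crashes = fuzz(b"\x01A", iterations=2000)
print(f"{len(crashes)} crashing inputs found")
```

An evolutionary fuzzer would additionally keep mutated inputs that reach new code and mutate those further, instead of always restarting from the original sample.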

Fuzz testing variations: Monitoring results
• Originally, just “did it crash/hang”?
• Adding program assertions (enabled!) can reveal more
• Test other “should not happen”
  – Ensure files/directories unchanged if shouldn’t be
  – Memory leak (e.g., valgrind)
  – Branches executed or not (and perhaps how often)
  – More intermediate (external) state checking
  – Final state or results “valid” (!= “correct”)
    • Codenomicon used this approach to find Heartbleed
• Generational approaches can have a lot of information to help
  – Invalid memory access, e.g., using AddressSanitizer (aka ASan) to detect buffer overflows & double-frees
  – More generally, using tools (“sanitizers”) to detect problems

Sample fuzz testing tools
• zzuf – very simple mutation-based fuzzer
• CERT Basic Fuzzing Framework (BFF)
  – Built on “zzuf” which does the input fuzzing
• CERT Failure Observation Engine (FOE)
  – From-scratch Windows
• Peach fuzzer
• Immunity’s SPIKE Proxy – generation with “blocks”, C
• Sulley – Python, monitoring framework, generation (uses block concept like SPIKE)
• Codenomicon – various proprietary tools, generation based
• American Fuzzy Lop (AFL) – Mutation+evolutionary
There are a huge number of fuzzing tools!

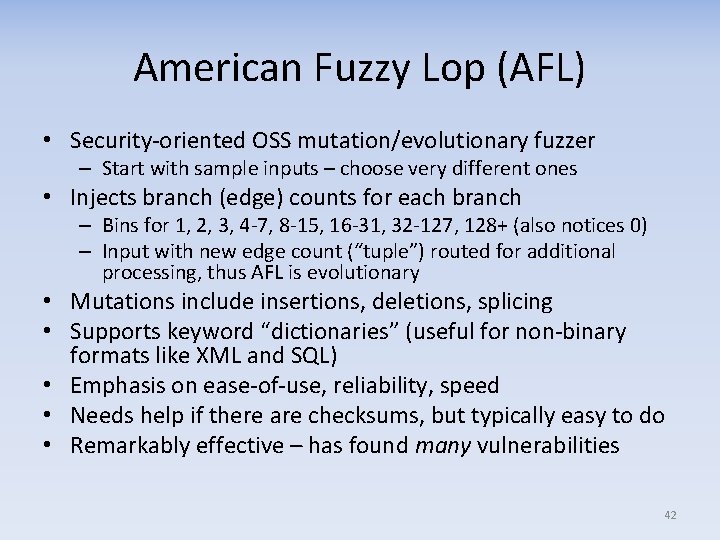

American Fuzzy Lop (AFL)
• Security-oriented OSS mutation/evolutionary fuzzer
  – Start with sample inputs – choose very different ones
• Injects branch (edge) counts for each branch
  – Bins for 1, 2, 3, 4-7, 8-15, 16-31, 32-127, 128+ (also notices 0)
  – Input with new edge count (“tuple”) routed for additional processing, thus AFL is evolutionary
• Mutations include insertions, deletions, splicing
• Supports keyword “dictionaries” (useful for non-binary formats like XML and SQL)
• Emphasis on ease-of-use, reliability, speed
• Needs help if there are checksums, but typically easy to do
• Remarkably effective – has found many vulnerabilities
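The binning idea can be sketched as follows. This is not AFL's actual implementation (AFL records counts in a compact shared-memory bitmap inside an instrumented binary); it only illustrates why an input that runs a loop 5 times instead of once is kept, while one that runs it 7 times instead of 5 is not. The edge labels are hypothetical.

```python
def bucket(count):
    """Map an edge hit count to its bin: 0, 1, 2, 3, 4-7, 8-15, 16-31, 32-127, 128+."""
    if count <= 3:
        return count          # 0, 1, 2, and 3 each get their own bin
    if count <= 7:
        return 4
    if count <= 15:
        return 8
    if count <= 31:
        return 16
    if count <= 127:
        return 32
    return 128

def is_interesting(edge_counts, seen):
    """edge_counts: dict of edge -> hit count for one run.
    seen: set of (edge, bin) tuples observed so far; updated in place.
    Returns True if this run produced any (edge, bin) tuple not seen before."""
    new = {(edge, bucket(n)) for edge, n in edge_counts.items() if n > 0} - seen
    seen |= new
    return bool(new)

seen = set()
print(is_interesting({"A->B": 1}, seen))   # first time this edge is hit
print(is_interesting({"A->B": 1}, seen))   # nothing new
print(is_interesting({"A->B": 5}, seen))   # same edge, new bin (4-7)
print(is_interesting({"A->B": 7}, seen))   # still the 4-7 bin
```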

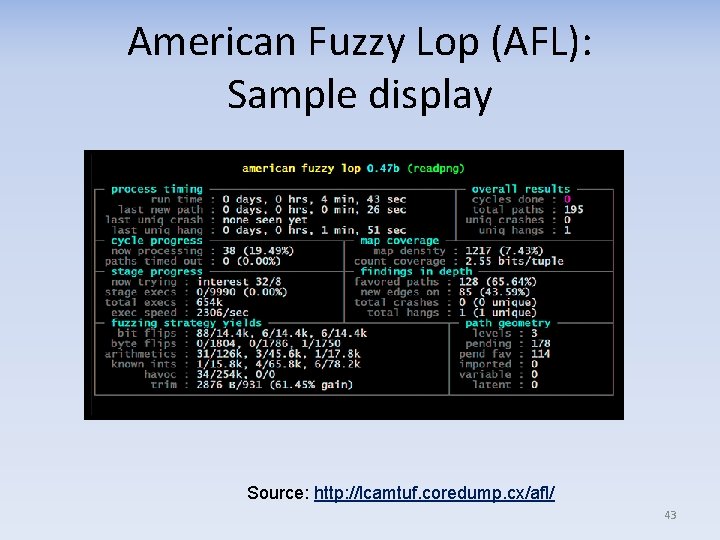

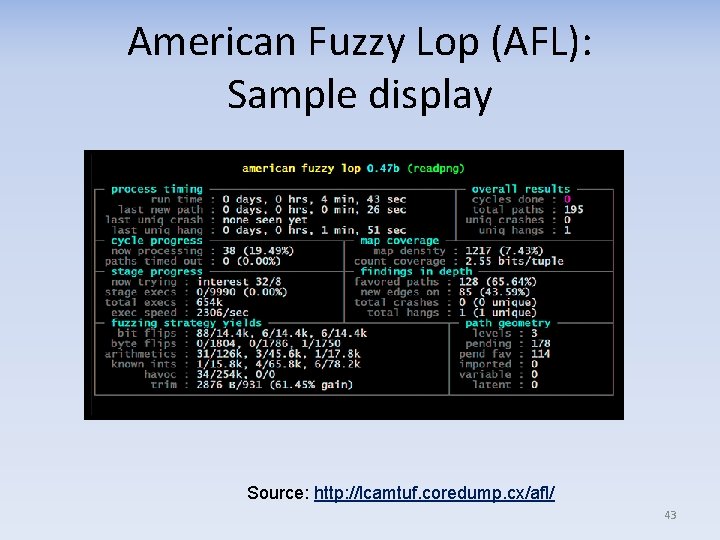

American Fuzzy Lop (AFL): Sample display
Source: http://lcamtuf.coredump.cx/afl/

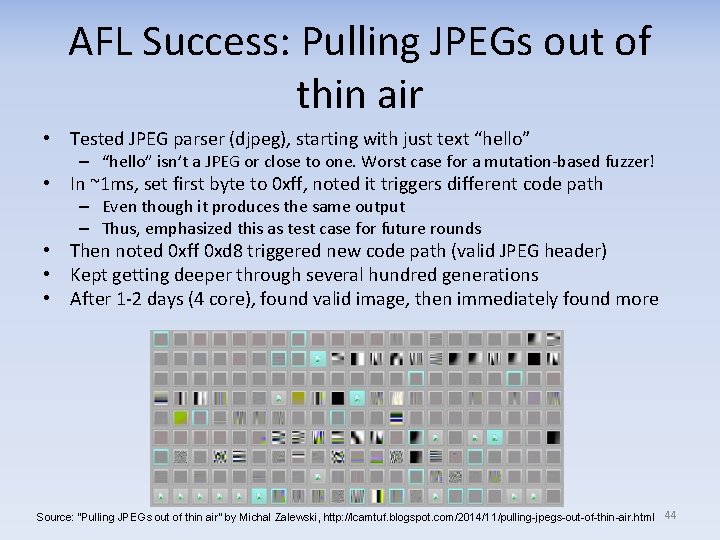

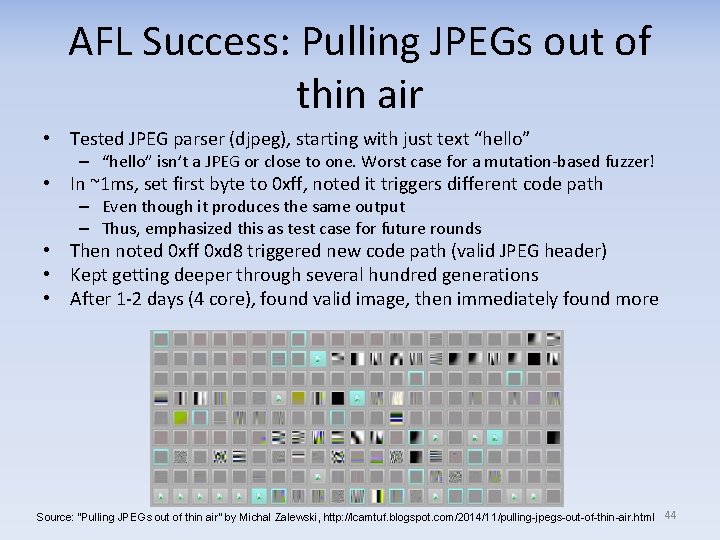

AFL Success: Pulling JPEGs out of thin air
• Tested JPEG parser (djpeg), starting with just text “hello”
  – “hello” isn’t a JPEG or close to one. Worst case for a mutation-based fuzzer!
• In ~1 ms, set first byte to 0xff, noted it triggers different code path
  – Even though it produces the same output
  – Thus, emphasized this as test case for future rounds
• Then noted 0xff 0xd8 triggered new code path (valid JPEG header)
• Kept getting deeper through several hundred generations
• After 1-2 days (4 core), found valid image, then immediately found more
Source: “Pulling JPEGs out of thin air” by Michal Zalewski, http://lcamtuf.blogspot.com/2014/11/pulling-jpegs-out-of-thin-air.html

Fuzz testing: Issues
• Simple random fuzzing finds only “shallow” problems
  – Some conditions/situations unlikely to be hit randomly
  – Often only a small amount of program gets covered
  – E.g., if input has a checksum, random fuzz testing ends up primarily checking the checksum algorithm
  – Often need to tweak fuzzing process or program for checksums
• Ongoing work to improve coverage depth, with some success
  – E.g., generational fuzz testers, American Fuzzy Lop branch coverage approach, constraint analysis (e.g., SAGE)
  – Often hybrid instead of just dynamic approaches
• Once defects found by fuzz testing fixed, fuzz testing typically has a quickly diminishing rate of return
  – Fuzz testing is still a very good idea… but not by itself

Fuzz testing: Some resources
• Sutton, Michael, Adam Greene, and Pedram Amini. Fuzzing: Brute Force Vulnerability Discovery. 2007.
• Takanen, Ari, Jared D. DeMott, and Charles Miller. Fuzzing for Software Security Testing and Quality Assurance. 2007. (Takanen is CTO of Codenomicon)

Address Sanitizer (ASan): Compilation-time countermeasure
• “Address Sanitizer” (ASan) available in LLVM & gcc as “-fsanitize=address”
  – Counters buffer overflow (global/stack/heap), use-after-free, & double-free
    • Can also detect use-after-return, memory leaks. In rare cases doesn’t detect above list
    • Counters some other C/C++ memory issues, but not read-before-write
  – 73% measured average CPU overhead (often 2x), 2x-4x memory overhead
    • Overhead low given how it works, but still significant (hw support could help!)
    • Overhead sometimes acceptable, e.g., fuzz testing (Chromium & Firefox)
  – More info: http://code.google.com/p/address-sanitizer/
    • Esp. “AddressSanitizer: A Fast Address Sanity Checker” by Konstantin Serebryany, Derek Bruening, Alexander Potapenko, & Dmitry Vyukov (Google), USENIX ATC 2012
• Uses “shadow bytes” to record memory addressability
  – All allocations (global, stack, & heap) aligned to (at least) 8 bytes
  – Every 8 bytes of memory’s addressability represented by “shadow byte”
  – In shadow byte, 0 = all 8 bytes addressable, 1..7 = only next N addressable, negative (high bit) means no bytes addressable (addressability = read or write)
  – All allocations surrounded by inaccessible “red zones” (default: 128 bytes)
  – Every allocation/deallocation in stack & heap manipulates shadow bytes
  – Every read/write checks shadow bytes to see if access ok (hw ~20% instead)
  – Strong, but can be fooled if calculated address is in different valid region
    • Strengtheners: Delay reusing previously-allocated memory & big red zones
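The shadow-byte encoding can be modeled in a few lines of Python. This is a toy model, not ASan itself: the class and method names are invented for the example, memory is just a list of shadow values, and accesses are assumed to be 1 to 8 bytes and to stay within a single 8-byte granule.

```python
GRANULE = 8
POISONED = -1    # any negative shadow value means "no bytes addressable"

class ShadowMemory:
    def __init__(self, total_bytes):
        # Start with everything poisoned, as if it were all red zone.
        self.shadow = [POISONED] * (total_bytes // GRANULE)

    def mark_allocated(self, start, size):
        """Make `size` bytes addressable, starting at an 8-byte-aligned address."""
        assert start % GRANULE == 0
        full, rest = divmod(size, GRANULE)
        first = start // GRANULE
        for i in range(first, first + full):
            self.shadow[i] = 0                 # all 8 bytes addressable
        if rest:
            self.shadow[first + full] = rest   # only the first `rest` bytes addressable

    def mark_freed(self, start, size):
        """Poison the granules of a freed allocation (catches use-after-free)."""
        for i in range(start // GRANULE, (start + size - 1) // GRANULE + 1):
            self.shadow[i] = POISONED

    def access_ok(self, addr, size):
        """Check an access of `size` bytes that does not cross a granule boundary."""
        value = self.shadow[addr // GRANULE]
        if value == 0:
            return True
        if value < 0:
            return False
        return (addr % GRANULE) + size <= value

mem = ShadowMemory(64)
mem.mark_allocated(16, 13)        # a 13-byte object at address 16
print(mem.access_ok(28, 1))       # last valid byte of the object
print(mem.access_ok(29, 1))       # one byte past the end
```

The model also shows the limitation noted above: an out-of-bounds address that happens to land inside some other live allocation would pass the check.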

Sanitizers & exploit mitigation
• Many tools designed to detect run-time errors
  – Sanitizers: Often used in testing to detect likely problems, some tolerance for false+
  – Exploit mitigation: Used in production to reduce exploit likelihood, 0 tolerance for false+
  – Some tools can be used both ways
• “SoK: Sanitizing for Security” (Song et al.) is survey paper of sanitizers; see: https://arxiv.org/pdf/1806.04355.pdf

Hybrid analysis

Coverage measures
• Hybrid = Combine static & dynamic analysis
• Historically common hybrid approach: Coverage measures
• “Coverage measures” measure “how well” program has been tested in dynamic analysis (by some measure)
  – Many coverage measures exist
• Two common coverage measures for dynamic testing:
  – Statement coverage: Which (%) program statements have been executed by at least one test?
  – Branch coverage: Which (%) program branch options have been executed by at least one test?
    if (a > 0) {
        dostuff();
    }
    // Has two branches, “true” & “false”
    // Statement coverage 100% with a=1
• Can then examine what’s uncovered (untested)
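The difference between the two measures shows up clearly on the `if (a > 0)` example. The Python sketch below instruments a hand-translated version of that snippet by recording which statements and branch outcomes each test hits. Real coverage tools insert this instrumentation automatically; the manual bookkeeping here is an assumption made to keep the example self-contained.

```python
def run_tests(inputs):
    """Run the code under test on each input; return (statement_cov, branch_cov)."""
    statements_hit = set()
    branches_hit = set()

    def under_test(a):
        statements_hit.add("if")              # the `if (a > 0)` statement itself
        if a > 0:
            branches_hit.add("true")
            statements_hit.add("dostuff")     # stands in for dostuff();
        else:
            branches_hit.add("false")         # implicit empty else branch

    for a in inputs:
        under_test(a)

    statement_cov = len(statements_hit) / 2   # two statements: `if` and `dostuff()`
    branch_cov = len(branches_hit) / 2        # two branch outcomes: true and false
    return statement_cov, branch_cov

print(run_tests([1]))       # every statement runs, but the "false" branch never does
print(run_tests([1, 0]))    # adding a=0 covers the remaining branch
```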

More hybrid approaches
• Concolic testing (“Concolic” = concrete + symbolic)
  – Hybrid software verification technique that interleaves concrete execution (testing on particular inputs) with symbolic execution
  – Can be combined with fuzz testing for better test coverage to detect vulnerabilities
• Sparks, Embleton, Cunningham, Zou 2007 “Automated Vulnerability Analysis: Leveraging Control Flow for Evolutionary Input Crafting” http://www.acsac.org/2007/abstracts/22.html
  – Extends black box fuzz testing with genetic algorithm
  – Uses “dynamic program instrumentation to gather runtime information about each input’s progress on the control flow graph, and using this information, we calculate and assign it a ‘fitness’ value. Inputs which make more runtime progress on the control flow graph or explore new, previously unexplored regions receive a higher fitness value. Eventually, the inputs achieving the highest fitness are ‘mated’ (e.g. combined using various operators) to produce a new generation of inputs…. does not require that source code be available”
• Hybrid approaches are an active research area

More hybrid approaches (2)
• Dao and Shibayama 2011, “Security sensitive data flow coverage criterion for automatic security testing of web applications” (ACM)
  – Proposes new coverage measure, “security sensitive data flow coverage”: “This criterion aims to show how well test cases cover security sensitive data flows. We conducted an experiment of automatic security testing of real-world web applications to evaluate the effectiveness of our proposed coverage criterion, which is intended to guide test case generation. The experiment results show that security sensitive data flow coverage helps reduce test cost while keeping the effectiveness of vulnerability detection high.”

Penetration testing (pen testing)
• Pretend to be adversary, try to break in
• Depends on the skills of the pen testers
• Need to set rules-of-engagement (RoE)
  – Problem: RoE often unrealistic
• Really a combination of static & dynamic approaches

Tool collections
• Often convenient to have a pre-created set of tools
• Kali Linux (successor to BackTrack):
  – Linux distribution based on (widely-used) Debian
  – Preinstalled with over 600 penetration-testing programs, including nmap (a port scanner), Wireshark (a packet analyzer), and OWASP ZAP
  – Can run natively when installed on a computer's hard disk, can be booted from a live CD or live USB, or it can run within a virtual machine
  – Useful for pen testing applications before release

Operational

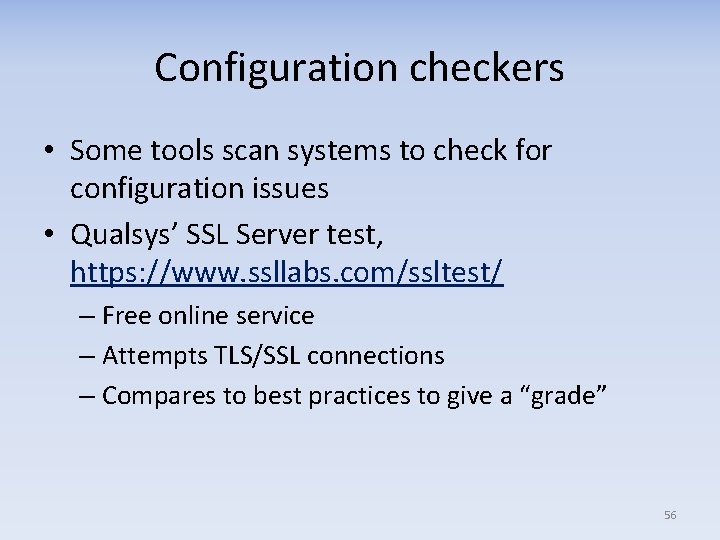

Configuration checkers
• Some tools scan systems to check for configuration issues
• Qualys’ SSL Server test, https://www.ssllabs.com/ssltest/
  – Free online service
  – Attempts TLS/SSL connections
  – Compares to best practices to give a “grade”

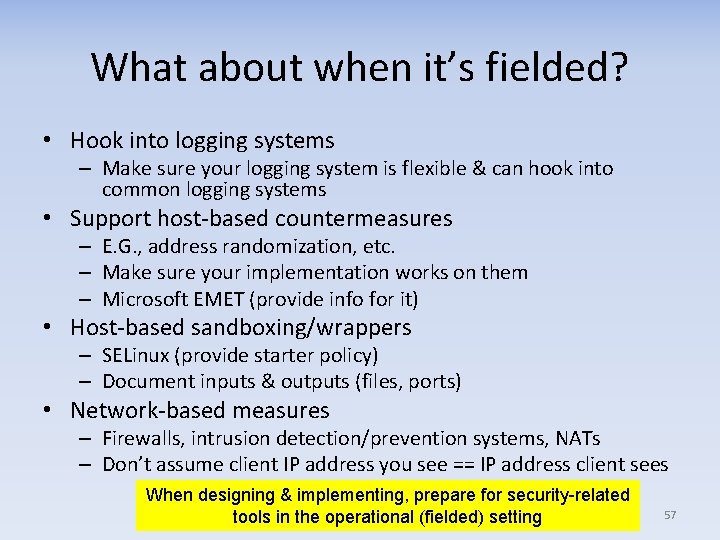

What about when it’s fielded?
• Hook into logging systems
  – Make sure your logging system is flexible & can hook into common logging systems
• Support host-based countermeasures
  – E.g., address randomization, etc.
  – Make sure your implementation works on them
  – Microsoft EMET (provide info for it)
• Host-based sandboxing/wrappers
  – SELinux (provide starter policy)
  – Document inputs & outputs (files, ports)
• Network-based measures
  – Firewalls, intrusion detection/prevention systems, NATs
  – Don’t assume client IP address you see == IP address client sees
When designing & implementing, prepare for security-related tools in the operational (fielded) setting

Many ways to organize tool types

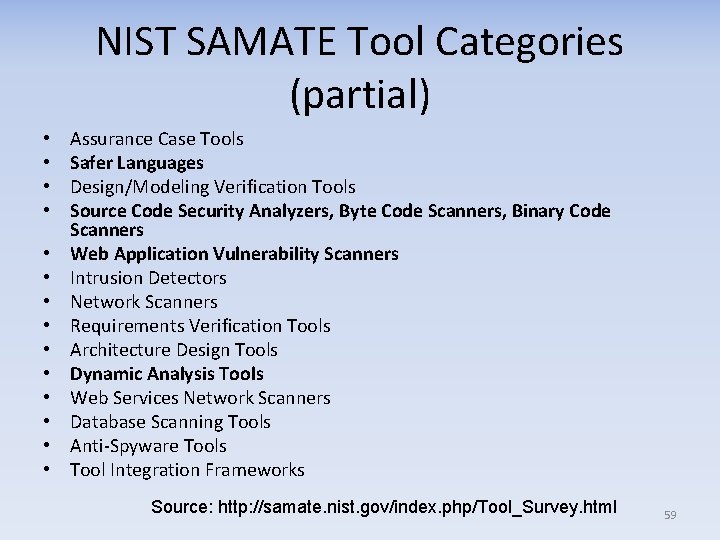

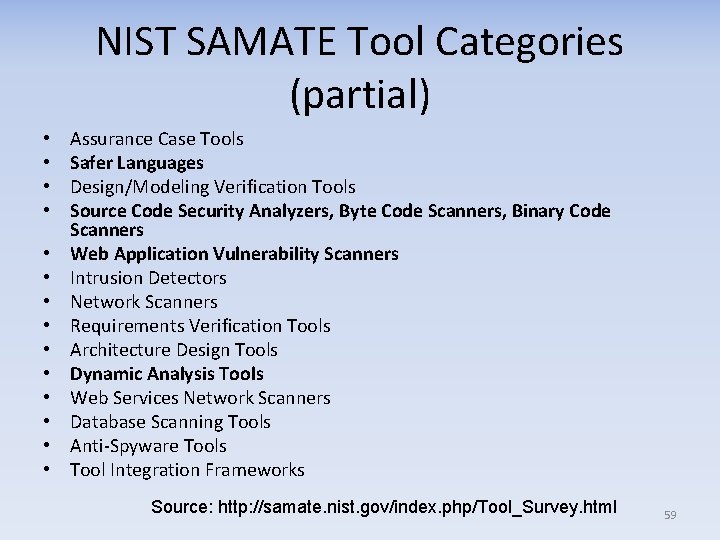

NIST SAMATE Tool Categories (partial)
• Assurance Case Tools
• Safer Languages
• Design/Modeling Verification Tools
• Source Code Security Analyzers, Byte Code Scanners, Binary Code Scanners
• Web Application Vulnerability Scanners
• Intrusion Detectors
• Network Scanners
• Requirements Verification Tools
• Architecture Design Tools
• Dynamic Analysis Tools
• Web Services Network Scanners
• Database Scanning Tools
• Anti-Spyware Tools
• Tool Integration Frameworks
Source: http://samate.nist.gov/index.php/Tool_Survey.html

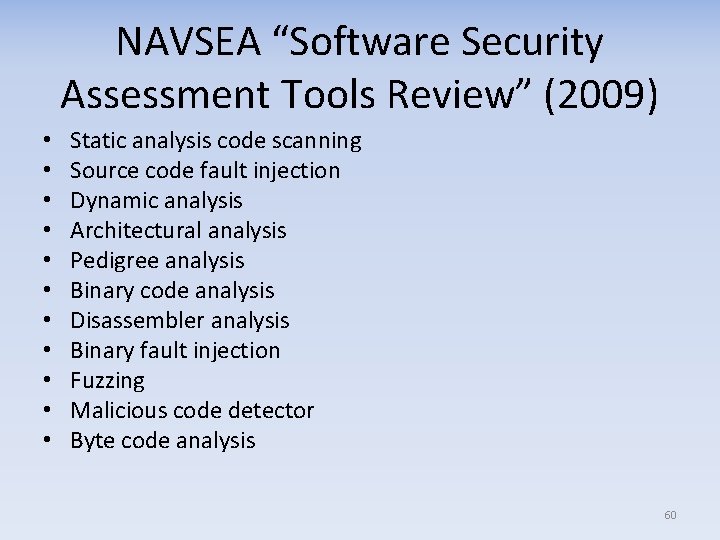

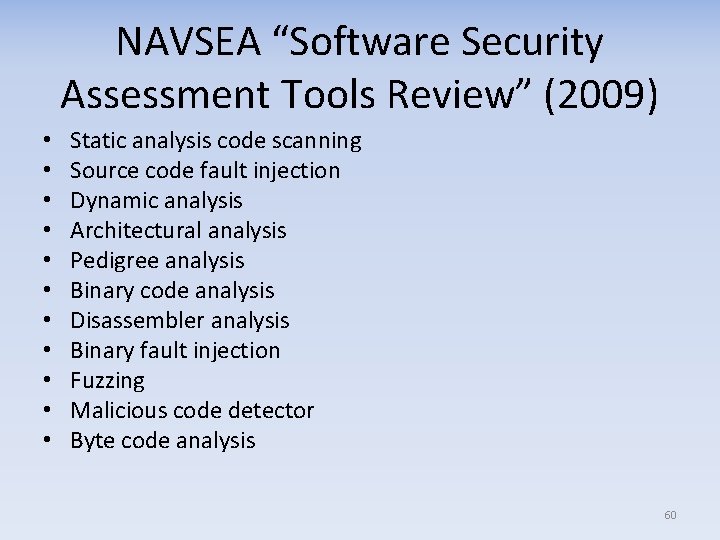

NAVSEA “Software Security Assessment Tools Review” (2009)
• Static analysis code scanning
• Source code fault injection
• Dynamic analysis
• Architectural analysis
• Pedigree analysis
• Binary code analysis
• Disassembler analysis
• Binary fault injection
• Fuzzing
• Malicious code detector
• Byte code analysis

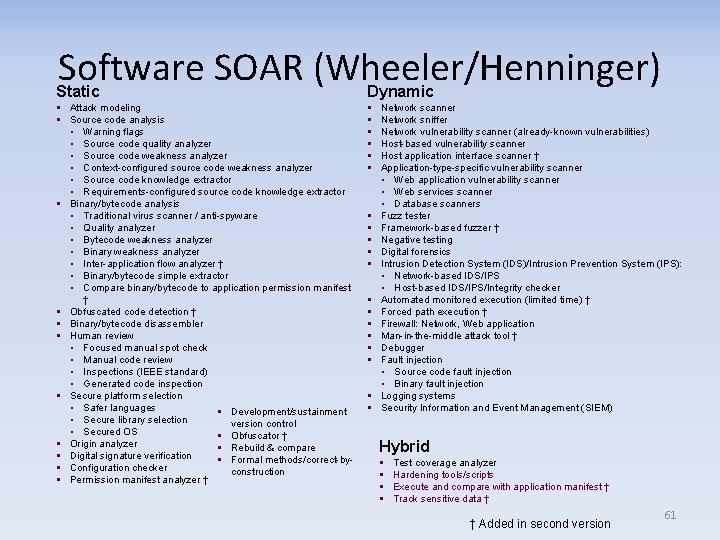

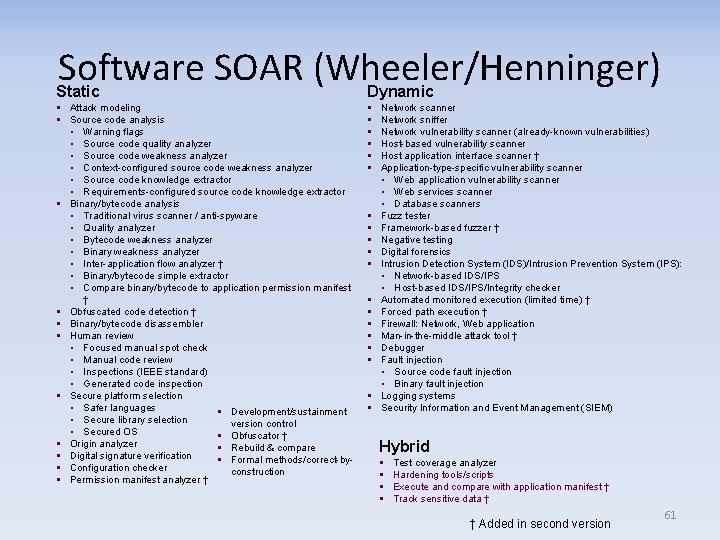

Software SOAR (Wheeler/Henninger)

Static
• Attack modeling
• Source code analysis
  – Warning flags
  – Source code quality analyzer
  – Source code weakness analyzer
  – Context-configured source code weakness analyzer
  – Source code knowledge extractor
  – Requirements-configured source code knowledge extractor
• Binary/bytecode analysis
  – Traditional virus scanner / anti-spyware
  – Quality analyzer
  – Bytecode weakness analyzer
  – Binary weakness analyzer
  – Inter-application flow analyzer †
  – Binary/bytecode simple extractor
  – Compare binary/bytecode to application permission manifest †
• Obfuscated code detection †
• Binary/bytecode disassembler
• Human review
  – Focused manual spot check
  – Manual code review
  – Inspections (IEEE standard)
  – Generated code inspection
• Secure platform selection
  – Safer languages
  – Secure library selection
  – Secured OS
• Origin analyzer
• Digital signature verification
• Configuration checker
• Permission manifest analyzer †
• Development/sustainment version control
• Obfuscator †
• Rebuild & compare
• Formal methods/correct-by-construction

Dynamic
• Network scanner
• Network sniffer
• Network vulnerability scanner (already-known vulnerabilities)
• Host-based vulnerability scanner
• Host application interface scanner †
• Application-type-specific vulnerability scanner
  – Web application vulnerability scanner
  – Web services scanner
  – Database scanners
• Fuzz tester
• Framework-based fuzzer †
• Negative testing
• Digital forensics
• Intrusion Detection System (IDS)/Intrusion Prevention System (IPS):
  – Network-based IDS/IPS
  – Host-based IDS/IPS/Integrity checker
• Automated monitored execution (limited time) †
• Forced path execution †
• Firewall: Network, Web application
• Man-in-the-middle attack tool †
• Debugger
• Fault injection
  – Source code fault injection
  – Binary fault injection
• Logging systems
• Security Information and Event Management (SIEM)

Hybrid
• Test coverage analyzer
• Hardening tools/scripts
• Execute and compare with application manifest †
• Track sensitive data †

† Added in second version

A fool with a tool… and adopting tools

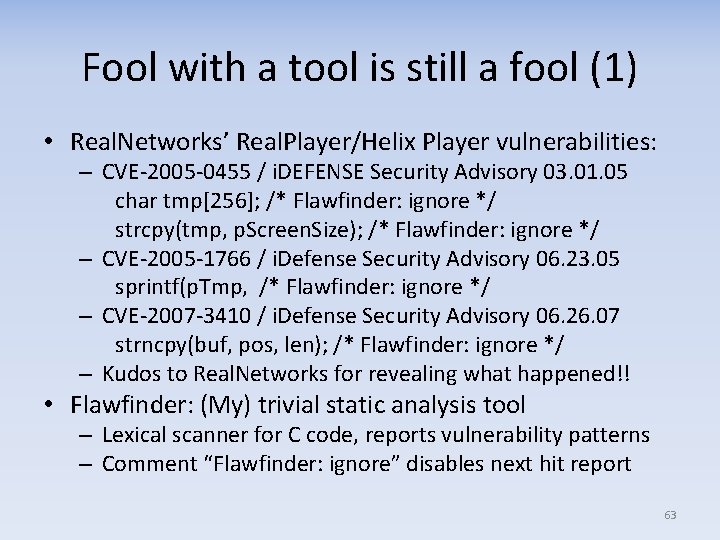

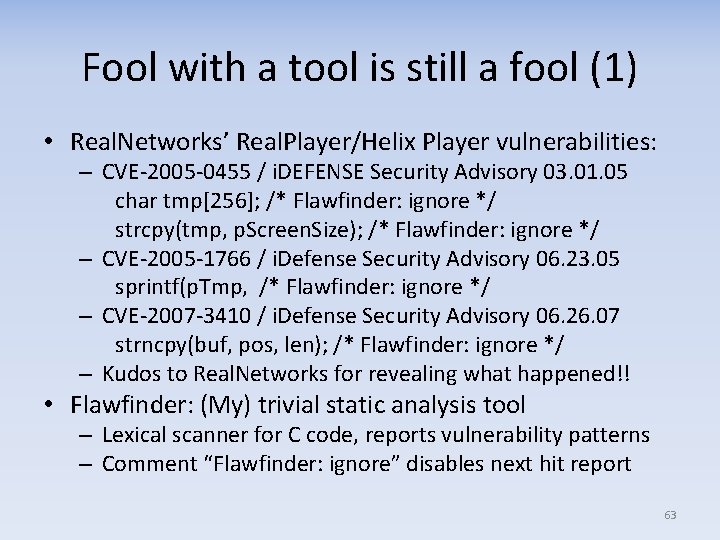

Fool with a tool is still a fool (1)
• RealNetworks’ RealPlayer/Helix Player vulnerabilities:
  – CVE-2005-0455 / iDEFENSE Security Advisory 03.01.05
      char tmp[256]; /* Flawfinder: ignore */
      strcpy(tmp, pScreenSize); /* Flawfinder: ignore */
  – CVE-2005-1766 / iDefense Security Advisory 06.23.05
      sprintf(pTmp, /* Flawfinder: ignore */
  – CVE-2007-3410 / iDefense Security Advisory 06.26.07
      strncpy(buf, pos, len); /* Flawfinder: ignore */
  – Kudos to RealNetworks for revealing what happened!!
• Flawfinder: (My) trivial static analysis tool
  – Lexical scanner for C code, reports vulnerability patterns
  – Comment “Flawfinder: ignore” disables next hit report
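To make the mechanism concrete, here is a toy lexical scanner. It is a simplified sketch of the idea, not Flawfinder's actual implementation. The rule list `RISKY_CALLS` and the function `scan` are assumptions for illustration, and the marker is assumed to suppress hits on the line where it appears (the placement seen in the fragments above). Suppressed hits are returned separately, since a suppression is a claim someone should review rather than proof of safety.

```python
import re

# Assumed, illustrative list of risky C calls; real tools ship far larger rule sets.
RISKY_CALLS = ("strcpy", "strcat", "sprintf", "gets", "strncpy")
CALL_RE = re.compile(r"\b(" + "|".join(RISKY_CALLS) + r")\s*\(")
IGNORE_MARK = "Flawfinder: ignore"


def scan(source: str):
    """Return (reported, suppressed) lists of (line_number, function_name).

    Simplification: a marker suppresses hits on the line it appears on.
    No parsing is done; this is pure pattern matching on the text.
    """
    reported, suppressed = [], []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in CALL_RE.finditer(line):
            hit = (lineno, match.group(1))
            (suppressed if IGNORE_MARK in line else reported).append(hit)
    return reported, suppressed


if __name__ == "__main__":
    sample = (
        "char tmp[256]; /* Flawfinder: ignore */\n"
        "strcpy(tmp, pScreenSize); /* Flawfinder: ignore */\n"
        "strcat(tmp, suffix);\n"
    )
    reported, suppressed = scan(sample)
    print("reported:", reported)
    print("suppressed (still needs human review):", suppressed)
```

The scanner cannot tell whether a suppressed `strcpy` is actually safe. It only records that someone said so.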

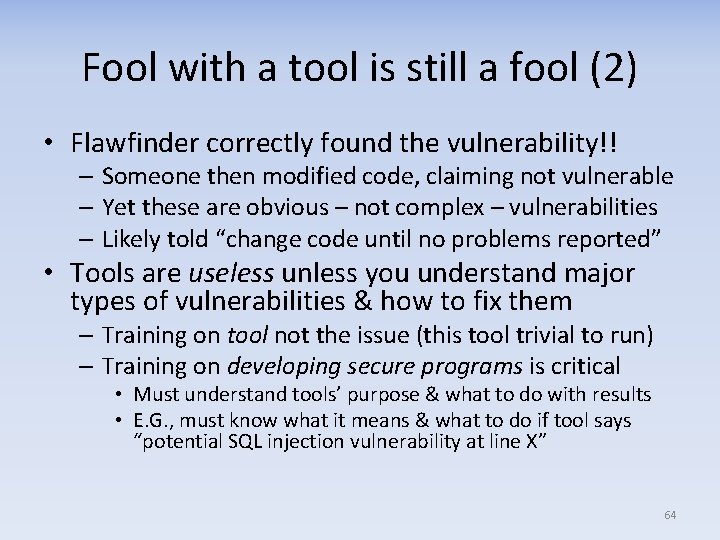

Fool with a tool is still a fool (2)
• Flawfinder correctly found the vulnerability!!
  – Someone then modified code, claiming not vulnerable
  – Yet these are obvious – not complex – vulnerabilities
  – Likely told “change code until no problems reported”
• Tools are useless unless you understand major types of vulnerabilities & how to fix them
  – Training on tool not the issue (this tool trivial to run)
  – Training on developing secure programs is critical
• Must understand tools’ purpose & what to do with results
  – E.g., must know what it means & what to do if tool says “potential SQL injection vulnerability at line X”
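As an illustration of “what it means & what to do” for a SQL injection report, the sketch below contrasts the pattern a tool would typically flag with the usual fix, a parameterized query. It uses Python's standard `sqlite3` module; the `users` table and the function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a throwaway in-memory table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Flagged pattern: attacker-controlled text becomes part of the SQL itself.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Fix: a parameterized query keeps the input as data, never as SQL syntax.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # the WHERE clause is always true: every row leaks
    print(find_user_safe(conn, attack))    # no user has that literal name: empty result
```

Silencing the report without changing `find_user_unsafe` would repeat the RealPlayer mistake: the warning disappears and the vulnerability stays.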

SWAMP
• SWAMP = Software Assurance (SwA) Marketplace
  – DHS-sponsored project
  – Cloud-based tool analysis of submitted software
• Makes it easy to run many SwA tools against software. Users:
  – “Software developers can bring software… to continuously test it against a suite of software assurance tools. We will provide interfaces to common source code repositories and develop common tool reporting formats…”
  – “Software assurance tool developers can run tools in our facility to access a large set of software packages and compare the performance of their tools against other tools.”
  – “Software assurance researchers will have a unique set of continuous data to analyze…”
• Initial capability focuses on analyzing C, C++, Java using OSS tools
  – Initial tools: SpotBugs, PMD, cppcheck, clang, gcc
  – Focus: Making it easy to apply the tools – don’t need to install tools, SWAMP figures out how to apply the tools (e.g., through build monitoring), and works to integrate tool results
• More info: http://continuousassurance.org/
  – Short video: https://www.youtube.com/watch?v=11cZVLtW9go

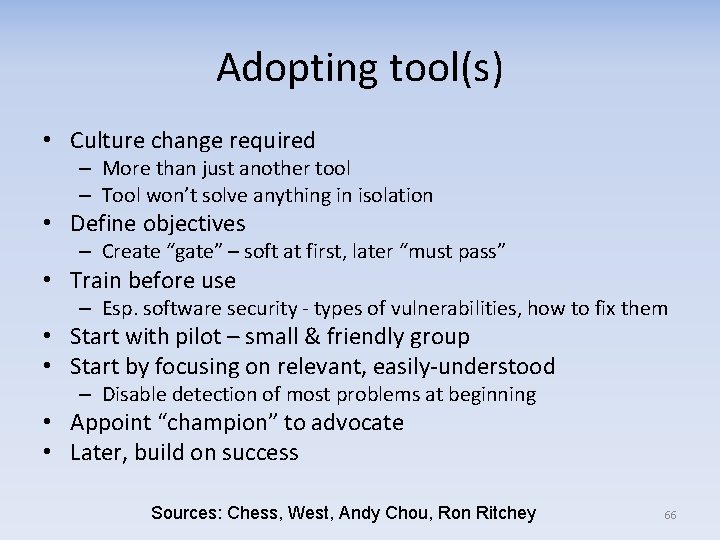

Adopting tool(s)
• Culture change required
  – More than just another tool
  – Tool won’t solve anything in isolation
• Define objectives
  – Create “gate” – soft at first, later “must pass”
• Train before use
  – Esp. software security: types of vulnerabilities, how to fix them
• Start with pilot – small & friendly group
• Start by focusing on relevant, easily-understood problems
  – Disable detection of most problems at beginning
• Appoint “champion” to advocate
• Later, build on success
Sources: Chess, West, Andy Chou, Ron Ritchey
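The “gate” idea can be sketched as a small policy check, assuming tool output has already been parsed into findings. Everything here (`Finding`, `evaluate_gate`, the rule names) is hypothetical and not tied to any particular tool. A soft gate reports but never blocks; a “must pass” gate blocks on any finding from the small set of enabled rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str      # tool-specific rule identifier (the names used below are made up)
    location: str  # e.g. "file.c:42"


def evaluate_gate(findings, enabled_rules, must_pass):
    """Return (passed, relevant_findings).

    enabled_rules: the small set of rules the pilot team agreed to act on;
                   everything else is ignored at the beginning.
    must_pass:     False = soft gate (report only), True = hard gate (block).
    """
    relevant = [f for f in findings if f.rule in enabled_rules]
    passed = (not must_pass) or (not relevant)
    return passed, relevant


if __name__ == "__main__":
    findings = [
        Finding("buffer-overflow", "player.c:120"),
        Finding("style-nit", "player.c:121"),
    ]
    # Pilot phase: one well-understood rule, soft gate.
    print(evaluate_gate(findings, {"buffer-overflow"}, must_pass=False))
    # Later: same rule set, but the gate now blocks.
    print(evaluate_gate(findings, {"buffer-overflow"}, must_pass=True))
```

Widening `enabled_rules` over time is one way to “build on success” without overwhelming the pilot group with reports on day one.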

Released under CC BY-SA 3.0
• This presentation is released under the Creative Commons Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license
• You are free:
  – to Share: to copy, distribute and transmit the work
  – to Remix: to adapt the work
  – to make commercial use of the work
• Under the following conditions:
  – Attribution: You must attribute the work in the manner specified by the author or licensor (but not in any way that suggests that they endorse you or your use of the work)
  – Share Alike: If you alter, transform, or build upon this work, you may distribute the resulting work only under the same or similar license to this one
• These conditions can be waived by permission from the copyright holder
  – dwheeler at dwheeler dot com
• Details at: http://creativecommons.org/licenses/by-sa/3.0/
• Attribute me as “David A. Wheeler”