
Survivability Analysis Framework

Carol Woody, Ph.D.
Software Engineering Institute
Carnegie Mellon University
Pittsburgh, PA 15213
11/19/08
© 2008 Carnegie Mellon University

NO WARRANTY

THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN “AS-IS” BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT.

Use of any trademarks in this presentation is not intended in any way to infringe on the rights of the trademark holder.

This Presentation may be reproduced in its entirety, without modification, and freely distributed in written or electronic form without requesting formal permission. Permission is required for any other use. Requests for permission should be directed to the Software Engineering Institute at permission@sei.cmu.edu.

This work was created in the performance of Federal Government Contract Number FA8721-05-C-0003 with Carnegie Mellon University for the operation of the Software Engineering Institute, a federally funded research and development center. The Government of the United States has a royalty-free government-purpose license to use, duplicate, or disclose the work, in whole or in part and in any manner, and to have or permit others to do so, for government purposes pursuant to the copyright license under the clause at 252.227-7013.

Agenda

• Plan for Mission Survivability
• Survivability Analysis Framework (SAF)
• Analysis of a Complex Failure: Northeast power failure

Change is Always Occurring

Usual problems
• Power and communication outages
• Bomb scares
• Snow storms
• Staff illness and death

Expected changes
• New technology insertion
• Technology refreshes
• Location moves
• Operational security

Catastrophe is a matter of scale.

Operational Assurance

Build a shared view of the influences on critical operational processes to analyze them for quality and survivability:
• Hardware
• Software
• People
• Policies and practices

All components must work together for effective results.


Survivability Analysis Framework (SAF) -1

Focus on successful completion of a mission thread (satisfactory execution of each critical step).

SAF Process:
• Identify a mission thread (a specific example)
• Describe the critical steps required to complete the process end to end: sequenced activities, participants, and resources
• Select one or more critical steps within the mission thread for detailed analysis
• Identify the mission-critical resource(s)
• Identify stresses relevant to the critical resources within the context of this mission
• Evaluate threats relevant to the selected mission-critical resource
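The SAF process steps above can be captured as a small data model. The following is a minimal sketch, not part of the SAF itself: the class names, the `analysis_worklist` helper, and the example thread, resources, stresses, and threats are all hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    """One sequenced activity in a mission thread (hypothetical structure)."""
    name: str
    participants: list[str]
    resources: list[str]
    critical: bool = False  # True when the step is selected for detailed analysis


@dataclass
class MissionThread:
    """An end-to-end example of a mission, described as ordered steps."""
    name: str
    steps: list[Step] = field(default_factory=list)

    def critical_resources(self) -> set[str]:
        """Resources used by the steps selected for detailed analysis."""
        return {r for s in self.steps if s.critical for r in s.resources}


def analysis_worklist(thread, stresses, threats):
    """Pair each mission-critical resource with its recorded stresses and threats."""
    return [
        {
            "resource": resource,
            "stresses": stresses.get(resource, []),
            "threats": threats.get(resource, []),
        }
        for resource in sorted(thread.critical_resources())
    ]


# Hypothetical example data, invented for this sketch.
thread = MissionThread(
    "respond to line outage",
    [
        Step("detect outage", ["operator"], ["alarm console", "operator"], critical=True),
        Step("dispatch crew", ["dispatcher"], ["radio"]),
    ],
)
stresses = {"operator": ["personnel change"], "alarm console": ["configuration change"]}
threats = {"alarm console": ["software defect"]}

for row in analysis_worklist(thread, stresses, threats):
    print(row)
```

Keeping stresses and threats in separate lookups keyed by resource mirrors the order of the process: resources are identified first, then stresses and threats are attached to them.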

Survivability Analysis Framework (SAF) -2

Focus on catastrophe support (satisfactorily addressing crisis needs).

SAF Process:
• Identify critical mission threads
• Describe the critical steps required end to end: sequenced activities, participants, and resources
• Identify the mission resource(s) anticipated to be available
• Identify catastrophe stresses relevant to critical resources

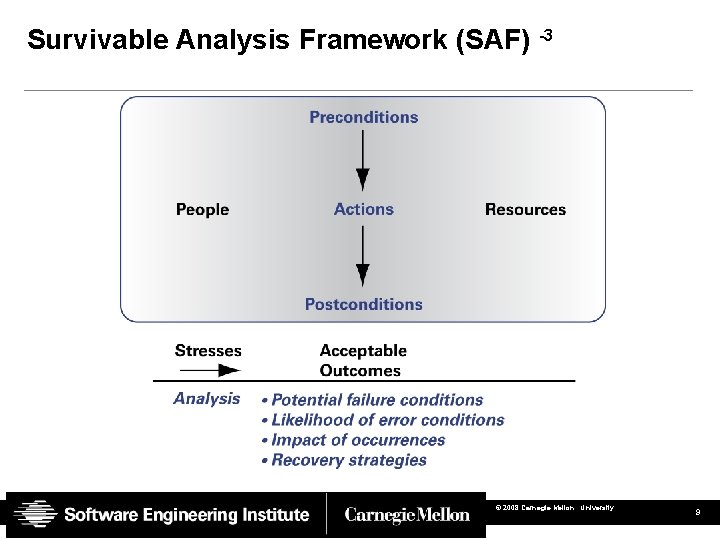

Survivability Analysis Framework (SAF) -3

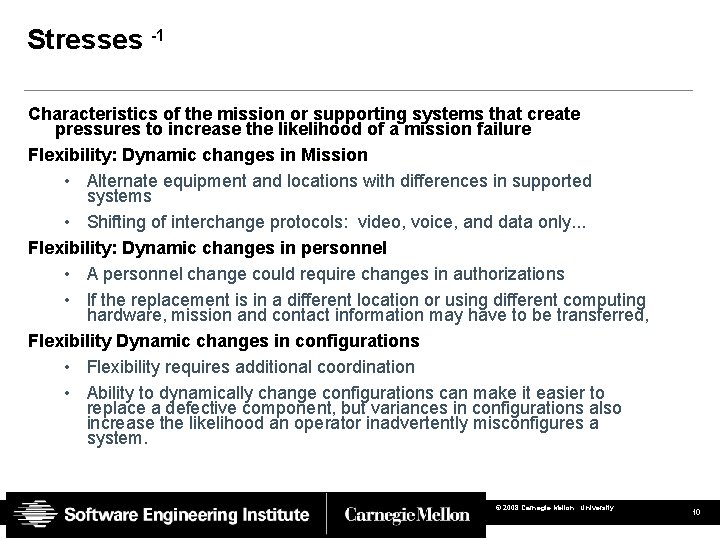

Stresses -1

Characteristics of the mission or supporting systems that create pressures that increase the likelihood of a mission failure.

Flexibility: Dynamic changes in mission
• Alternate equipment and locations with differences in supported systems
• Shifting of interchange protocols: video, voice, and data only ...

Flexibility: Dynamic changes in personnel
• A personnel change could require changes in authorizations
• If the replacement is in a different location or using different computing hardware, mission and contact information may have to be transferred

Flexibility: Dynamic changes in configurations
• Flexibility requires additional coordination
• The ability to dynamically change configurations can make it easier to replace a defective component, but variances in configurations also increase the likelihood that an operator inadvertently misconfigures a system

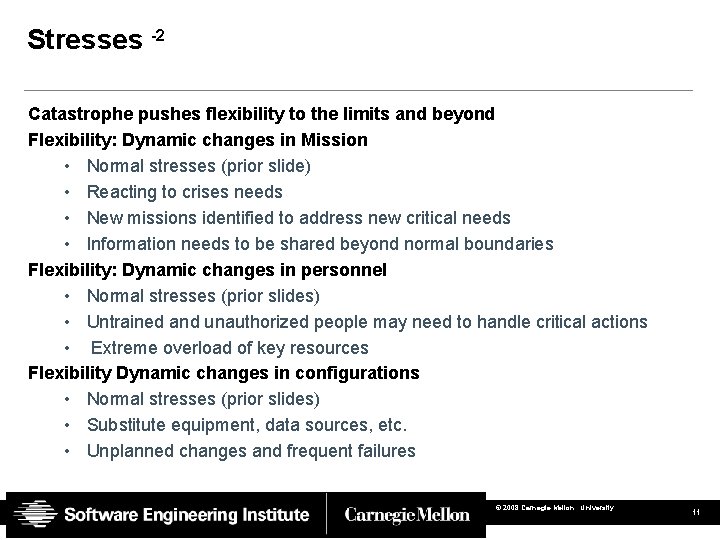

Stresses -2

Catastrophe pushes flexibility to the limits and beyond.

Flexibility: Dynamic changes in mission
• Normal stresses (prior slide)
• Reacting to crisis needs
• New missions identified to address new critical needs
• Information needs to be shared beyond normal boundaries

Flexibility: Dynamic changes in personnel
• Normal stresses (prior slide)
• Untrained and unauthorized people may need to handle critical actions
• Extreme overload of key resources

Flexibility: Dynamic changes in configurations
• Normal stresses (prior slide)
• Substitute equipment, data sources, etc.
• Unplanned changes and frequent failures

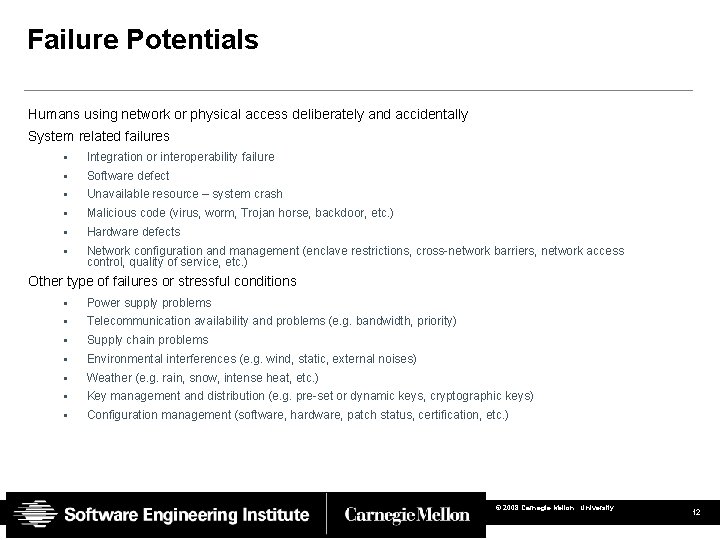

Failure Potentials

Humans using network or physical access, deliberately and accidentally

System-related failures
• Integration or interoperability failure
• Software defect
• Unavailable resource (system crash)
• Malicious code (virus, worm, Trojan horse, backdoor, etc.)
• Hardware defects
• Network configuration and management (enclave restrictions, cross-network barriers, network access control, quality of service, etc.)

Other types of failures or stressful conditions
• Power supply problems
• Telecommunication availability and problems (e.g., bandwidth, priority)
• Supply chain problems
• Environmental interference (e.g., wind, static, external noise)
• Weather (e.g., rain, snow, intense heat)
• Key management and distribution (e.g., pre-set or dynamic keys, cryptographic keys)
• Configuration management (software, hardware, patch status, certification, etc.)


Complex Failure: 2003 Power Blackout -1

On August 14, 2003, approximately 50 million electricity consumers in Canada and the northeastern U.S. were subject to a cascading blackout. There was no single cause for this event.

The blackout began when three high-voltage transmission lines went out of service after coming into contact with trees that were too close to the lines. The loss of the three lines sent too much electricity onto other nearby lines, which overloaded and were then automatically shut down.

The independent monitoring system was not set up to consider the effects of an out-of-service line and had to be manually adjusted.
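The cascade described above, where load from lost lines overloads their neighbors, can be illustrated with a toy model. This is a sketch under strong simplifying assumptions: load from tripped lines is split equally among the lines still in service, which is not how real power flow behaves, and the line names, loads, and capacities are invented for illustration rather than taken from the 2003 event.

```python
def simulate_cascade(loads, capacities, initial_outages):
    """Toy cascade: return (lines tripped in order, loads on surviving lines).

    Assumption: load from tripped lines is shared equally by the lines
    still in service. Any line pushed above its capacity trips in turn.
    """
    in_service = {n: load for n, load in loads.items() if n not in initial_outages}
    tripped = list(initial_outages)
    # Load that the initially lost lines were carrying must move elsewhere.
    orphaned = sum(loads[n] for n in initial_outages)

    while orphaned > 0 and in_service:
        share = orphaned / len(in_service)
        for name in in_service:
            in_service[name] += share
        orphaned = 0.0
        # Automatic protection shuts down every line now above its capacity.
        for name in sorted(n for n, load in in_service.items() if load > capacities[n]):
            orphaned += in_service.pop(name)
            tripped.append(name)

    return tripped, in_service


# Hypothetical six-line grid (arbitrary units).
loads = {"A": 50, "B": 50, "C": 50, "D": 60, "E": 60, "F": 40}
capacities = {"A": 100, "B": 100, "C": 100, "D": 100, "E": 90, "F": 120}

print(simulate_cascade(loads, capacities, ["A"]))            # one line lost
print(simulate_cascade(loads, capacities, ["A", "B", "C"]))  # three lines lost
```

In this toy grid the loss of one line is absorbed by the others, while the loss of three lines trips every remaining line, which is the qualitative behavior the slide describes.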

Complex Failure: 2003 Power Blackout -2

The power failure demonstrated the need to consider not only the technical systems but also business operations, training, and IT operations:
• Tree trimmers did not follow proper procedures.
• The system operators were not well trained for managing emergency conditions.
• The power grid operators were no longer being notified of failure conditions in the grid, and no one told them that the notifications had stopped.
• The design had considered how to handle hardware failures but had not considered the effects of software, operator, and user failures.

There were no requirements for “business continuity.”
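The third finding above, operators relying on notifications that had silently stopped, is the kind of failure a heartbeat check is commonly used to expose. The sketch below is an assumption for illustration, not something the presentation or the blackout analysis prescribes: the `AlarmFeedWatchdog` class, its method names, and the 30-second threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AlarmFeedWatchdog:
    """Flags an alarm feed as stale when it has been silent too long (hypothetical)."""
    max_silence_s: float
    last_heartbeat_s: float = 0.0

    def heartbeat(self, now_s: float) -> None:
        """Record that the alarm feed reported in at time now_s (seconds)."""
        self.last_heartbeat_s = now_s

    def status(self, now_s: float) -> str:
        """Return "OK" while the feed is fresh, "STALE" once it has gone quiet."""
        silent_for = now_s - self.last_heartbeat_s
        return "STALE" if silent_for > self.max_silence_s else "OK"


watchdog = AlarmFeedWatchdog(max_silence_s=30.0)
watchdog.heartbeat(100.0)
print(watchdog.status(120.0))  # 20 s of silence
print(watchdog.status(131.0))  # 31 s of silence
```

Timestamps are passed in explicitly so the check is easy to exercise; the point of the sketch is that silence from a monitoring system is itself treated as a condition operators are told about.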

Systems of Systems Tolerate Discrepancies

A mission process breakdown results from a combination of failures that drive operational execution outside of acceptable limits.

Work processes span multiple systems. Analysis must include understanding how the failure of one system affects the work process, including related systems.

Inconsistencies must be assumed as we compose systems
• Components are developed at different times, with variances in technology and expected usage
• A system will not be constructed from uniform parts: there are always some misfits, especially as the system is extended and repaired

Human interactions are necessary to bridge between components
• Interface capabilities are insufficient for business needs
• An extensive legacy environment remains unchanged, and people become the interfaces

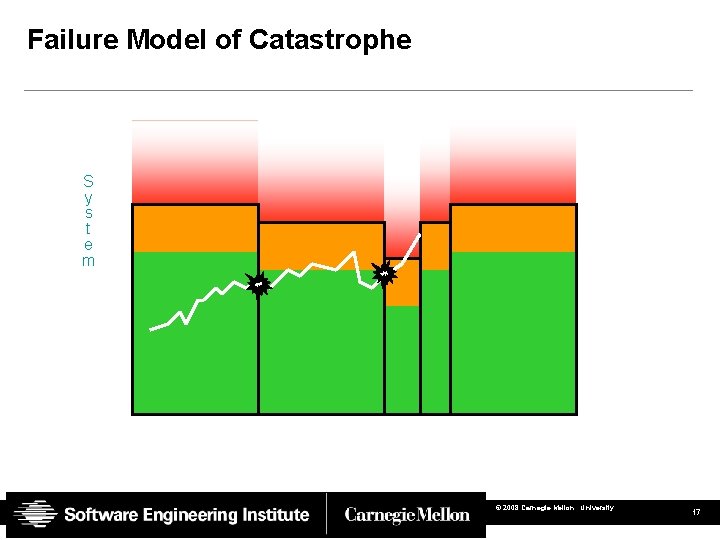

Failure Model of Catastrophe

Summary

Apply SAF for catastrophe
• Develop the range of mission threads that must be survivable (normal and catastrophe operations)
• Identify critical mission activities and resources
• Evaluate the range of failure potentials that could create mission failure
• Adjust current operations to support extreme stresses
• Identify ways to simplify missions for catastrophe

Reference

"Survivability Assurance for System of Systems"
http://www.sei.cmu.edu/publications/documents/08.reports/08tr008.html

Based on research sponsored by the Office of the Under Secretary of Defense for Acquisition, Technology and Logistics (OUSD [AT&L]) and the U.S. Air Force’s Electronic Systems Center (ESC) Cryptologic Systems Group (CPSG)

Contact Information

Carol Woody, Ph.D.
Senior Technical Staff
CERT
Telephone: +1 412-268-9137
Email: cwoody@cert.org

U.S. mail:
Software Engineering Institute
Customer Relations
4500 Fifth Avenue
Pittsburgh, PA 15213-2612 USA

World Wide Web:
www.sei.cmu.edu
www.sei.cmu.edu/contact.html

Customer Relations
Email: customerrelations@sei.cmu.edu
Telephone: +1 412-268-5800
SEI Phone: +1 412-268-5800
SEI Fax: +1 412-268-6257
