- Slides: 37

Surveying

Data collection methods • Interviews • Focus groups • Surveys/Questionnaires

When we Use Surveys • Requirements specification • User and task analysis • Prototype testing • User feedback

Surveys • Principles, methods of survey research in general • Content of surveys for needs and usability • Survey methods for needs, usability

Definitions • Survey: – (n): A gathering of a sample of data or opinions considered to be representative of a whole. – (v): To conduct a statistical survey on. • Questionnaire: (n) A form containing a set of questions, especially one addressed to a statistically significant number of subjects as a way of gathering information for a survey. • Interview – (n): A conversation, such as one conducted by a reporter, in which facts or statements are elicited from another. – (v) To obtain an interview from. – American Heritage Dictionary

Surveying Steps • Sample selection • Questionnaire construction • Data collection • Data analysis

Surveys – detailed steps • Determine purpose, information needed • Identify target audience(s) • Select method of administration • Design sampling method • Design preliminary questionnaire, including analysis – Often based on unstructured or semi-structured interviews with people like your respondents • Pretest, revise • Administer: draw sample, administer questionnaire, follow up with non-respondents • Analyze results

Why surveys? • Answers from many people, including those at a distance • Relatively easy to administer • Can continue for a long time • Easy to analyze • Yield quantitative data – Including data comparable over time

Ways of Administering Surveys • In person • Phone • Mail • Paper, in person • Email (usually with a link) • Web

Possible Data • Facts – Characteristics of respondents – Self-reported behavior • This instance • Generally/usually • Past • Opinions and attitudes: – Preferences, opinions, satisfaction, concerns

Some Limits of Surveys • Reaching users is easier than reaching non-users; likewise members vs. non-members, insiders vs. outsiders • Relies on voluntary cooperation, possibly biasing responses • Questions have to be unambiguous, amenable to short answers • Relies on self-reports • Only get answers to the questions you ask • The longer, more complex, or more sensitive the survey, the less cooperation

Some sources of error • Sample, respondents • Question choice • Question wording • Method of administration • Inferences from the data • Users’ interests in influencing results – “vote and view the results” CNN quick vote: http://www.cnn.com/

When to do interviews? • Need details that you can’t get from a survey • Need more open-ended discussions with users • Small numbers OK • Can identify and gain cooperation from target group • Sometimes: want to influence respondents as well as get info from them

Sample selection

Targeting respondents • What info do you need? • From whom can you get the information you need? – E.g., non-users are hard to reach – Can’t ask 5-year-olds; what do their parents know?

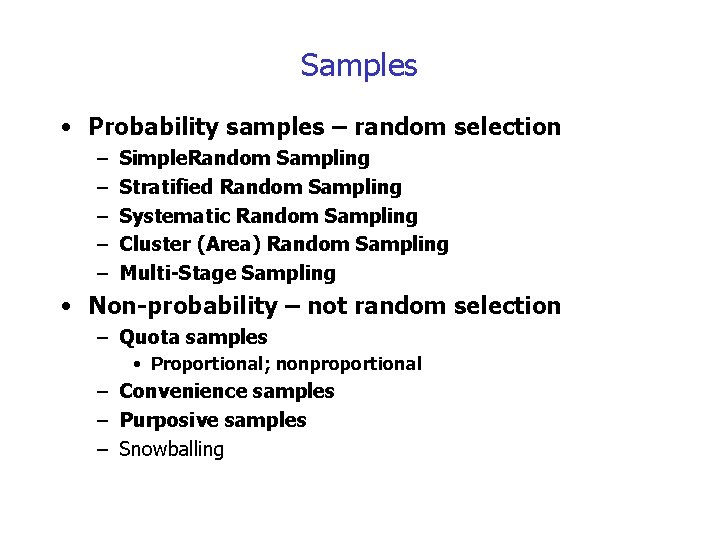

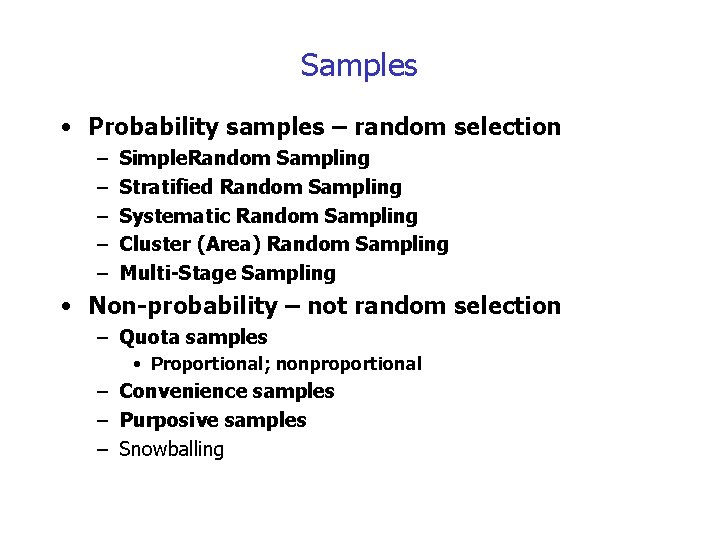

Samples • Probability samples: random selection – Simple random sampling – Stratified random sampling – Systematic random sampling – Cluster (area) random sampling – Multi-stage sampling • Non-probability samples: not random selection – Quota samples (proportional; nonproportional) – Convenience samples – Purposive samples – Snowballing
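The probability designs listed on this slide can be sketched in a few lines of Python. This is a minimal illustration, not a production sampler: the frame of 100 students, the `status` and `dorm` fields, and all function names are hypothetical, and the systematic version assumes the frame size is a multiple of the sample size.

```python
import random

def simple_random_sample(frame, n, rng):
    """Every unit in the frame has the same chance of selection."""
    return rng.sample(frame, n)

def systematic_sample(frame, n, rng):
    """Pick a random start, then take every k-th unit in frame order."""
    k = len(frame) // n
    start = rng.randrange(k)
    return [frame[start + i * k] for i in range(n)]

def stratified_sample(frame, stratum_of, fraction, rng):
    """Draw the same fraction at random from each stratum."""
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        sample.extend(rng.sample(units, max(1, round(len(units) * fraction))))
    return sample

def cluster_sample(frame, cluster_of, n_clusters, rng):
    """Select whole clusters at random and keep every unit in them."""
    clusters = {}
    for unit in frame:
        clusters.setdefault(cluster_of(unit), []).append(unit)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for c in chosen for unit in clusters[c]]

# Hypothetical frame: 100 students, 60 undergrads and 40 grads, in 5 dorms.
frame = [
    {"id": i, "status": "undergrad" if i < 60 else "grad", "dorm": i % 5}
    for i in range(100)
]
rng = random.Random(42)  # fixed seed so the draw is repeatable
srs = simple_random_sample(frame, 10, rng)
systematic = systematic_sample(frame, 10, rng)
stratified = stratified_sample(frame, lambda u: u["status"], 0.10, rng)
clustered = cluster_sample(frame, lambda u: u["dorm"], 2, rng)
```

Multi-stage sampling would chain these steps, for example choosing clusters first and then drawing a simple random sample inside each chosen cluster.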

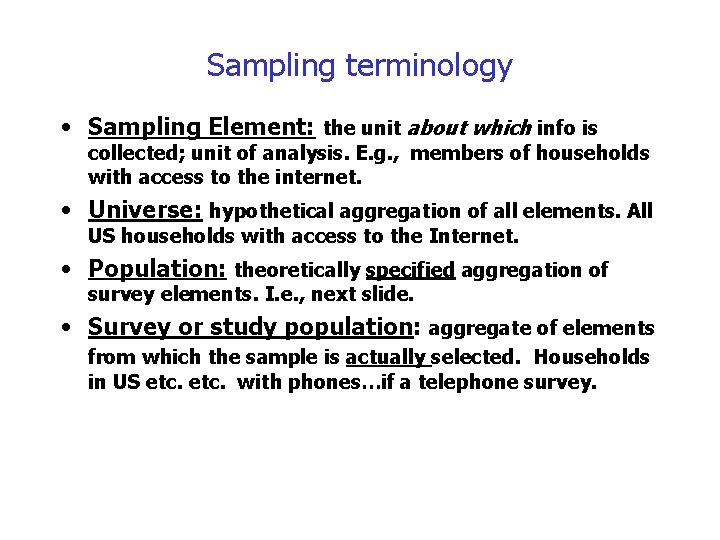

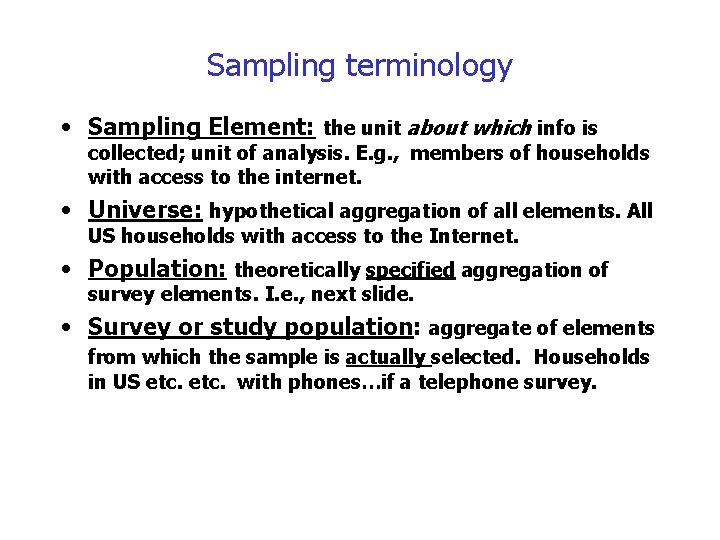

Sampling terminology • Sampling element: the unit about which info is collected; unit of analysis. E.g., members of households with access to the Internet. • Universe: hypothetical aggregation of all elements. E.g., all US households with access to the Internet. • Population: theoretically specified aggregation of survey elements. I.e., next slide. • Survey or study population: aggregate of elements from which the sample is actually selected. E.g., households in US etc. with phones, if a telephone survey.

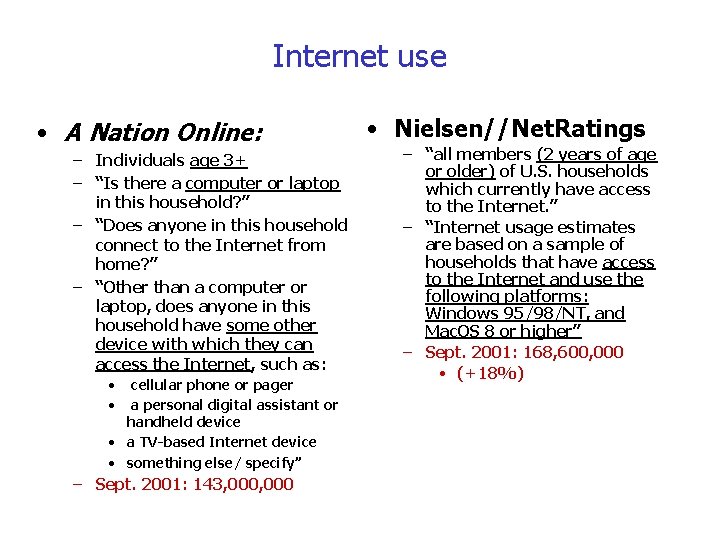

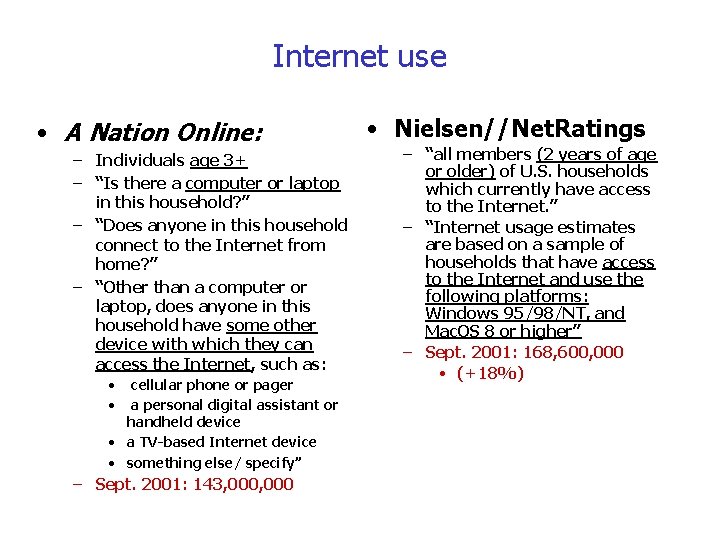

Internet use • A Nation Online: – Individuals age 3+ – “Is there a computer or laptop in this household?” – “Does anyone in this household connect to the Internet from home?” – “Other than a computer or laptop, does anyone in this household have some other device with which they can access the Internet, such as: • cellular phone or pager • a personal digital assistant or handheld device • a TV-based Internet device • something else/specify” – Sept. 2001: 143,000,000 • Nielsen//NetRatings – “all members (2 years of age or older) of U.S. households which currently have access to the Internet.” – “Internet usage estimates are based on a sample of households that have access to the Internet and use the following platforms: Windows 95/98/NT, and MacOS 8 or higher” – Sept. 2001: 168,600,000 • (+18%)

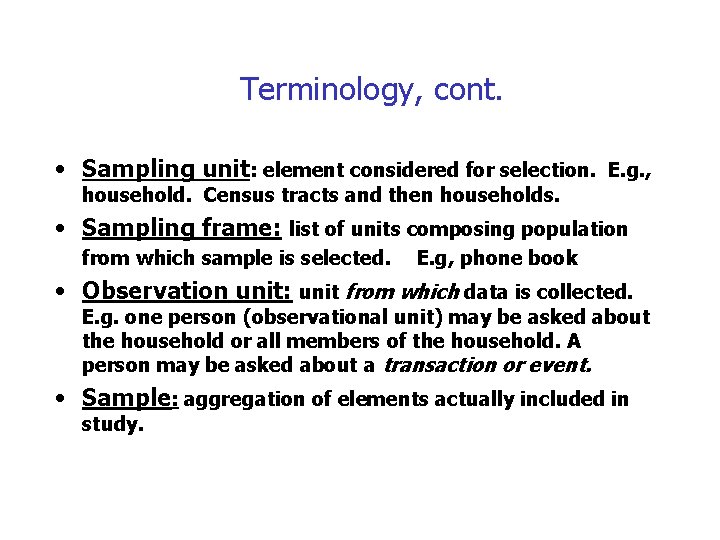

Terminology, cont. • Sampling unit: element considered for selection. E.g., household; or census tracts and then households. • Sampling frame: list of units composing the population from which the sample is selected. E.g., phone book. • Observation unit: unit from which data is collected. E.g., one person (observation unit) may be asked about the household or all members of the household. A person may be asked about a transaction or event. • Sample: aggregation of elements actually included in study.

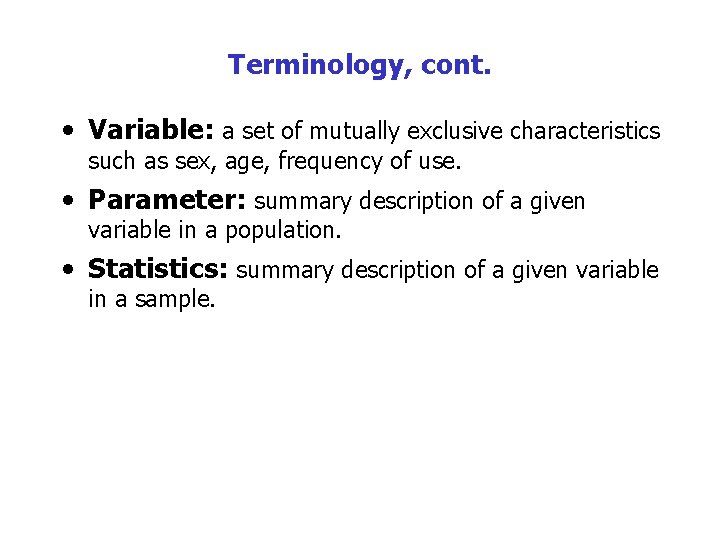

Terminology, cont. • Variable: a set of mutually exclusive characteristics such as sex, age, frequency of use. • Parameter: summary description of a given variable in a population. • Statistic: summary description of a given variable in a sample.
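The parameter/statistic distinction can be made concrete with a small simulation. This is a sketch under invented assumptions: the population of 10,000 users and the variable "weekly site visits" are hypothetical and exist only to show that the sample value estimates the population value.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the example is repeatable

# Hypothetical population: weekly site visits for 10,000 users.
population = [rng.randint(0, 20) for _ in range(10_000)]

# Parameter: summary of the variable over the whole population.
parameter = mean(population)

# Statistic: the same summary computed on a simple random sample.
sample = rng.sample(population, 200)
statistic = mean(sample)

print(f"parameter (population mean) = {parameter:.2f}")
print(f"statistic (sample mean)     = {statistic:.2f}")
```

In a real survey the parameter is unknown, so the statistic is all you observe; the simulation only works because the whole population was generated here.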

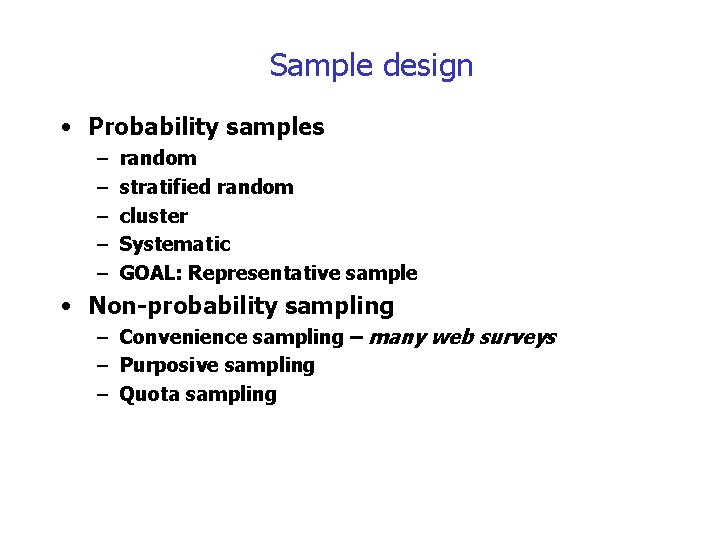

Sample design • Probability samples – Random – Stratified random – Cluster – Systematic • GOAL: Representative sample • Non-probability sampling – Convenience sampling (many web surveys) – Purposive sampling – Quota sampling

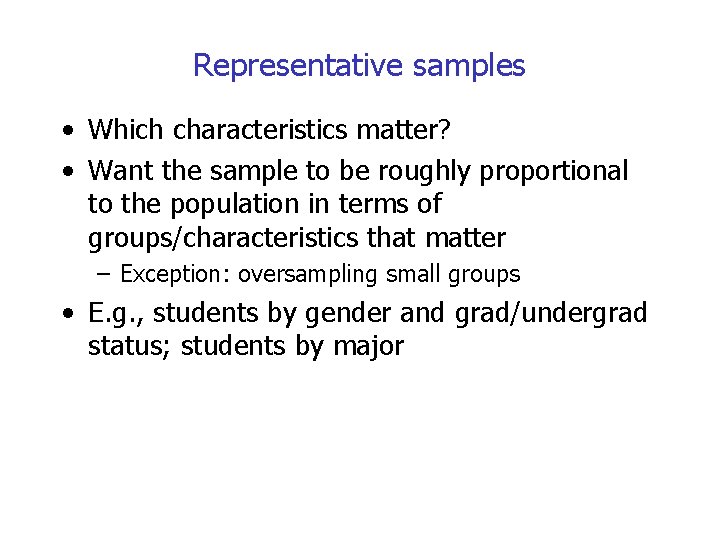

Representative samples • Which characteristics matter? • Want the sample to be roughly proportional to the population in terms of groups/characteristics that matter – Exception: oversampling small groups • E.g., students by gender and grad/undergrad status; students by major
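One simple way to check "roughly proportional" is to compare each group's share of the sample with its share of the population. The sketch below assumes you already have counts per group; the function name and the enrollment figures are hypothetical.

```python
def share_gaps(population_counts, sample_counts):
    """Sample share minus population share for each group.

    Positive values mean the group is over-represented in the sample,
    negative values mean it is under-represented.
    """
    pop_total = sum(population_counts.values())
    sample_total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / sample_total
        - population_counts[group] / pop_total
        for group in population_counts
    }

# Hypothetical campus: 6,000 undergrads and 4,000 grads.
population_counts = {"undergrad": 6000, "grad": 4000}
# Hypothetical sample of 100 students.
sample_counts = {"undergrad": 45, "grad": 55}

gaps = share_gaps(population_counts, sample_counts)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.0%}")
```

A large gap is not automatically a problem: if a small group was oversampled on purpose, the gap is expected and is handled later in analysis.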

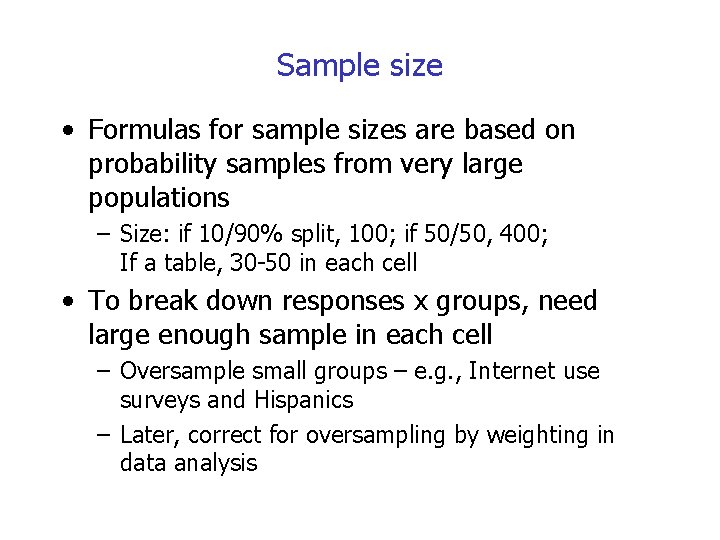

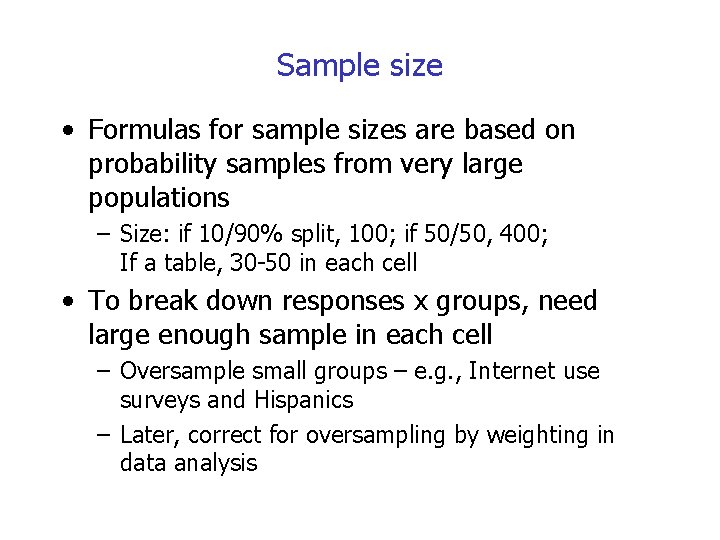

Sample size • Formulas for sample sizes are based on probability samples from very large populations – Size: if 10/90% split, 100; if 50/50 split, 400; if a table, 30-50 in each cell • To break down responses by group, need a large enough sample in each cell – Oversample small groups, e.g., Internet use surveys and Hispanics – Later, correct for oversampling by weighting in data analysis
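The slide does not say which formula sits behind its rules of thumb. A common choice, assumed here, is the normal approximation for a proportion from a simple random sample of a very large population at 95% confidence (z = 1.96). The weighting helper is likewise one simple convention (population share divided by sample share), and the group names and shares are hypothetical.

```python
import math

Z_95 = 1.96  # z-score for 95% confidence (assumed; not stated on the slide)

def margin_of_error(p, n, z=Z_95):
    """Half-width of the confidence interval for a proportion p with sample size n."""
    return z * math.sqrt(p * (1 - p) / n)

def required_sample_size(p, margin, z=Z_95):
    """Smallest n whose margin of error is at most `margin`."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)

def design_weights(population_shares, sample_counts):
    """Weight per group = population share / sample share."""
    n = sum(sample_counts.values())
    return {
        group: population_shares[group] / (count / n)
        for group, count in sample_counts.items()
    }

moe_50_50 = margin_of_error(0.5, 400)   # the slide's 50/50 case
moe_10_90 = margin_of_error(0.1, 100)   # the slide's 10/90 case
n_for_5_points = required_sample_size(0.5, 0.05)

# Hypothetical oversample: a group that is 10% of the population
# but 25% of the sample.
weights = design_weights(
    {"small_group": 0.10, "everyone_else": 0.90},
    {"small_group": 100, "everyone_else": 300},
)
```

Under these assumptions the slide's sizes correspond to roughly ±5 percentage points for a 50/50 split with 400 respondents and roughly ±6 points for a 10/90 split with 100. The oversampled group gets a weight below 1 and the rest a weight above 1, which restores population proportions in weighted totals.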

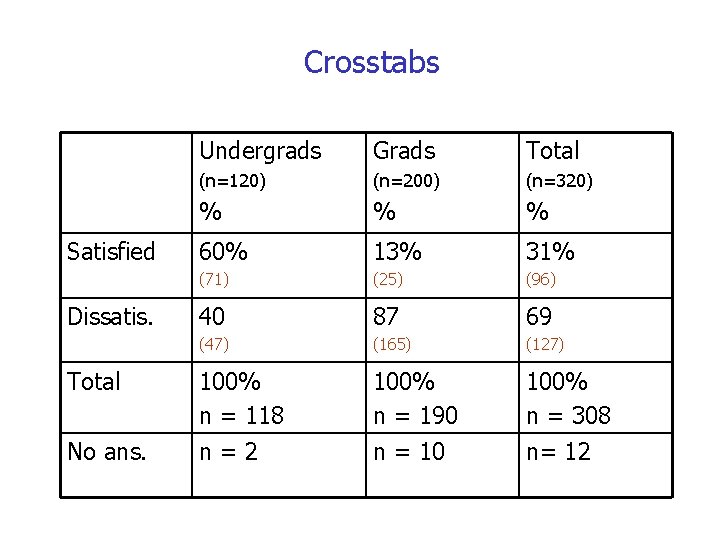

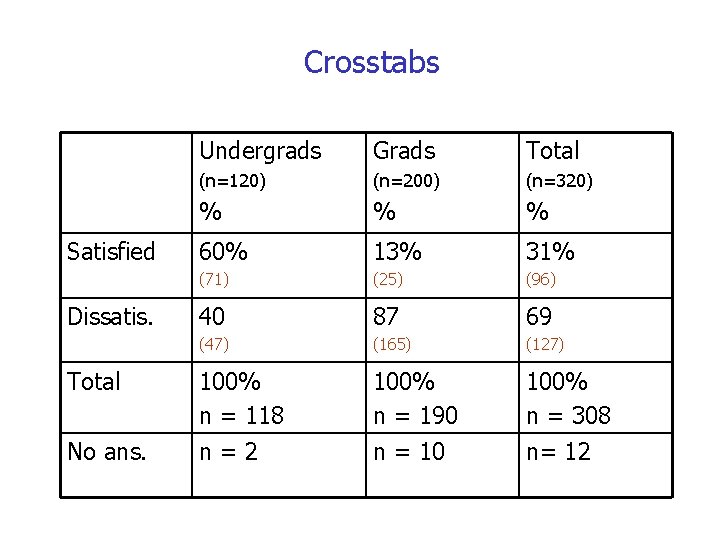

Crosstabs

| | Undergrads (n=120) | Grads (n=200) | Total (n=320) |
|---|---|---|---|
| Satisfied | 60% (71) | 13% (25) | 31% (96) |
| Dissatisfied | 40% (47) | 87% (165) | 69% (212) |
| Total | 100% (n = 118) | 100% (n = 190) | 100% (n = 308) |
| No answer | n = 2 | n = 10 | n = 12 |
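The percentages in a crosstab like this one are column percentages computed on those who answered, with "no answer" reported separately. The sketch below uses the undergrad and grad counts from the slide and derives the total column by summing them; the function names are hypothetical.

```python
counts = {
    "Undergrads": {"Satisfied": 71, "Dissatisfied": 47, "No answer": 2},
    "Grads": {"Satisfied": 25, "Dissatisfied": 165, "No answer": 10},
}

def add_total(counts):
    """Return a copy of the table with a 'Total' column summed across groups."""
    total = {}
    for answers in counts.values():
        for answer, count in answers.items():
            total[answer] = total.get(answer, 0) + count
    return {**counts, "Total": total}

def column_percentages(counts, exclude="No answer"):
    """Percent of each answer within a group, based on valid answers only."""
    table = {}
    for group, answers in counts.items():
        valid = {a: c for a, c in answers.items() if a != exclude}
        base = sum(valid.values())
        row = {a: round(100 * c / base) for a, c in valid.items()}
        row["n"] = base  # number of valid answers the percentages rest on
        table[group] = row
    return table

table = column_percentages(add_total(counts))
for group, row in table.items():
    print(group, row)
```

Reporting the base n next to each column lets readers see how many answers each percentage rests on.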

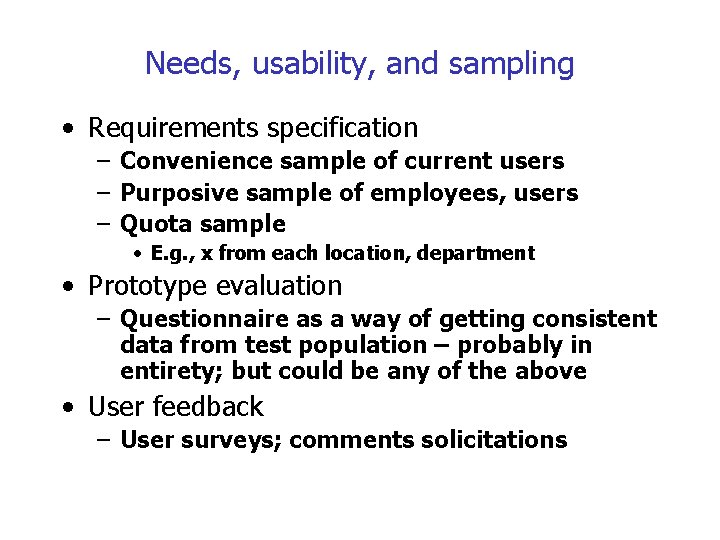

Needs, usability, and sampling • Requirements specification – Convenience sample of current users – Purposive sample of employees, users – Quota sample • E.g., x from each location, department • Prototype evaluation – Questionnaire as a way of getting consistent data from test population, probably in its entirety; but could be any of the above • User feedback – User surveys; comment solicitations

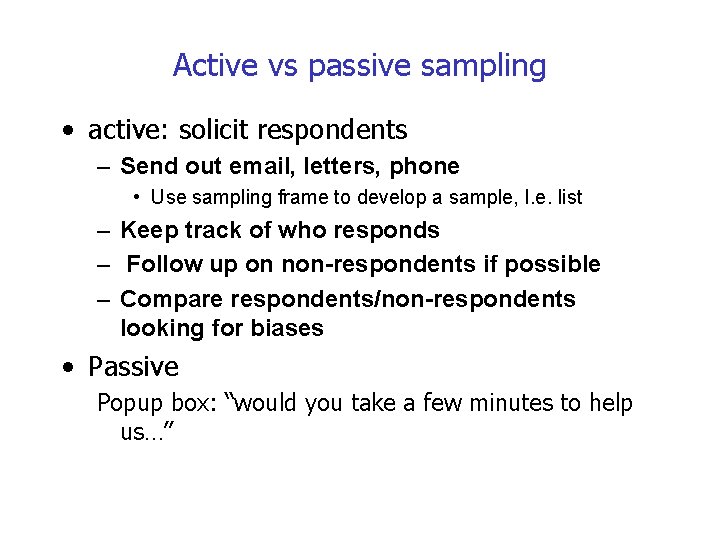

Active vs passive sampling • Active: solicit respondents – Send out email, letters; phone – Use sampling frame (i.e., a list) to develop a sample – Keep track of who responds – Follow up on non-respondents if possible – Compare respondents/non-respondents looking for biases • Passive: popup box: “Would you take a few minutes to help us…”
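With an active sample drawn from a list, tracking responses and comparing respondents with non-respondents is straightforward bookkeeping. The sketch below assumes each sampled person has an `id` and one known characteristic (`status`); both field names and the data are hypothetical.

```python
from collections import Counter

def split_by_response(sample, responded_ids):
    """Separate the drawn sample into respondents and non-respondents."""
    respondents = [p for p in sample if p["id"] in responded_ids]
    non_respondents = [p for p in sample if p["id"] not in responded_ids]
    return respondents, non_respondents

def shares(people, key):
    """Share of each category of `key` among the given people."""
    counts = Counter(p[key] for p in people)
    total = sum(counts.values())
    return {k: c / total for k, c in counts.items()} if total else {}

# Hypothetical drawn sample and the ids that have responded so far.
sample = [
    {"id": 1, "status": "undergrad"},
    {"id": 2, "status": "undergrad"},
    {"id": 3, "status": "grad"},
    {"id": 4, "status": "grad"},
    {"id": 5, "status": "grad"},
    {"id": 6, "status": "undergrad"},
]
responded_ids = {1, 2, 3, 6}

respondents, non_respondents = split_by_response(sample, responded_ids)
follow_up_ids = [p["id"] for p in non_respondents]   # who to remind
respondent_shares = shares(respondents, "status")
non_respondent_shares = shares(non_respondents, "status")
```

If the two share tables differ sharply, as they do in this toy data where every non-respondent is a grad student, that is a hint of non-response bias on that characteristic.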

Response Rates • % of sample who actually participate • low rates may indicate bias in responses – Whom did you miss? Why? – Who chose to cooperate? Why? • How much is enough? – Babbie: 50% is adequate; 70% is very good
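The response rate and the thresholds quoted from Babbie can be computed directly. This is a minimal sketch: the function names and the "below adequate" label are my own, and the counts are hypothetical.

```python
def response_rate(completed, sampled):
    """Share of the drawn sample that actually participated."""
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    return completed / sampled

def babbie_label(rate):
    """Label a rate using the two thresholds quoted on the slide."""
    if rate >= 0.70:
        return "very good"
    if rate >= 0.50:
        return "adequate"
    return "below adequate"  # label chosen for this sketch, not Babbie's wording

# Hypothetical survey: 320 people sampled, 160 completed questionnaires.
rate = response_rate(160, 320)
label = babbie_label(rate)
print(f"{rate:.0%} response rate: {label}")
```

A high rate does not by itself rule out bias; the questions about whom you missed and who chose to cooperate still apply.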

Increasing response rates • Harder to say ‘no’ to a person • Captive audience • NOT an extra step • Explain purpose of study – Don’t underestimate altruism • Why you need them • Incentives – Reporting back to respondents as a way of getting response – Money; entry in a sweepstakes • Follow up (if you can)

Web survey problems • Loss of context – what exactly are you asking about, what are they responding to? – Are you reaching them at the appropriate point in their interaction with site? • Incomplete responses • Multiple submissions
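Incomplete responses and multiple submissions are usually handled when cleaning the data. The sketch below assumes each submission carries a per-respondent `token` and answers keyed `q1` to `q3`; those names, and the rule of keeping the first complete submission per token, are illustrative choices rather than a standard.

```python
REQUIRED = ("q1", "q2", "q3")  # hypothetical required questions

def clean_submissions(submissions, required=REQUIRED):
    """Keep the first complete submission per token, in arrival order."""
    seen = set()
    kept = []
    for sub in submissions:
        token = sub["token"]
        if token in seen:
            continue  # a complete submission from this token was already kept
        if any(sub.get(q) in (None, "") for q in required):
            continue  # incomplete: at least one required answer is missing
        seen.add(token)
        kept.append(sub)
    return kept

# Hypothetical raw data: one duplicate and two incomplete submissions.
raw = [
    {"token": "a", "q1": 4, "q2": 5, "q3": 3},
    {"token": "a", "q1": 1, "q2": 1, "q3": 1},     # repeat from token "a"
    {"token": "b", "q1": 2, "q2": "", "q3": 4},    # incomplete
    {"token": "b", "q1": 2, "q2": 3, "q3": 4},     # later complete retry
    {"token": "c", "q1": 5, "q2": None, "q3": 5},  # incomplete
]
cleaned = clean_submissions(raw)
```

Anonymous web surveys often lack a reliable token, in which case duplicates can only be guessed at, which is part of why the slide lists this as a problem.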

Passive: problems may include • Response rate probably unmeasurable • May be difficult to compare respondents to population as a whole • Likely to be biased (systematic error) – Frequent users probably over-represented – Busy people probably under-represented – Disgruntled and/or happy users probably over-represented

Questionnaire construction

Questionnaire construction • Content – Goals of study: What do you need to know? – What can respondents tell you? • Conceptualization • Operationalization – e. g. , how exactly do you define “household with access to internet”? • Question design • Question ordering

Topics addressed by surveys • Respondent characteristics • Sampling element characteristics – “Tell me about every member of this household…” • Respondent/sampling element behavior • Respondent opinions, perceptions, preferences, evaluations

Respondent characteristics • Demographics: what do you need to know? How will you analyze data? – Age, sex, education, occupation, year in school, race/ethnicity, type of employer… – Equal intervals • User role (e. g. , buyer, browser…) • Expertise – hard to ask – Subject domain – Technology – System/site

Behavior • Tasks (e.g., what did you do today?) • Site usage, activity – Frequency; common functions (hard to answer accurately) – Self-reports vs observations • Web and internet use: Pew study

Opinions, preferences, concerns • About the site: Content, organization, architecture, interface • Ease of use • Perceived needs • Preferences • Concerns – E.g., security • Success, satisfaction – Subdivided by part of site, task, purpose… • Other requirements • Suggestions