Supporting Annotation Layers for Natural Language Processing



Supporting Annotation Layers for Natural Language Processing

Marti Hearst, Preslav Nakov, Ariel Schwartz, Brian Wolf, Rowena Luk, UC Berkeley

Stanford Info. Seminar, March 17, 2006

Supported by NSF DBI-0317510 and a gift from Genentech

Outline
- Motivation: NLP tasks
- System description
  - Annotation architecture
  - Sample queries
- Database design and evaluation
- Related work
- Future work

UC Berkeley Biotext Project

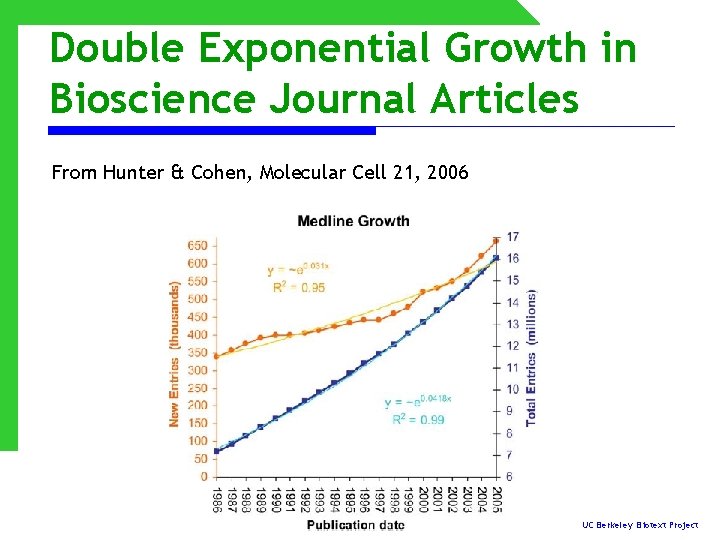

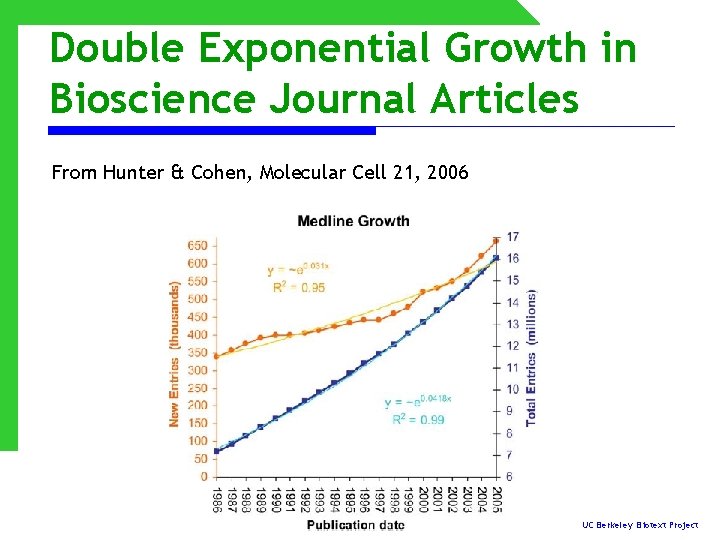

Double Exponential Growth in Bioscience Journal Articles (from Hunter & Cohen, Molecular Cell 21, 2006)

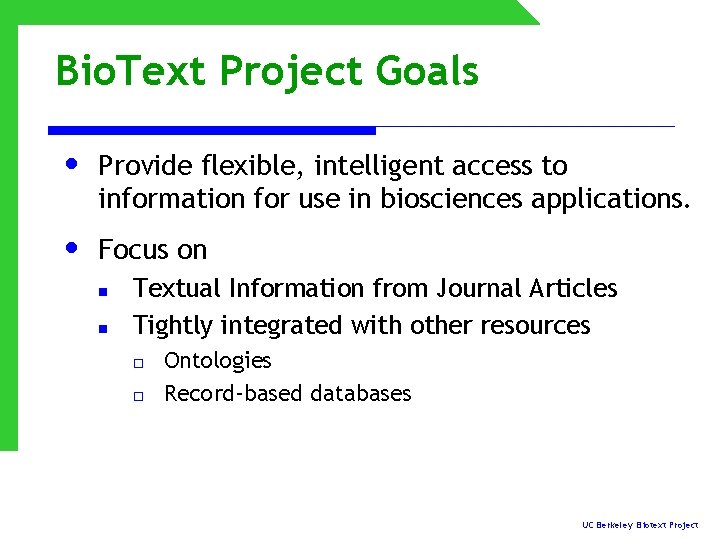

BioText Project Goals
- Provide flexible, intelligent access to information for use in biosciences applications.
- Focus on:
  - Textual information from journal articles
  - Tightly integrated with other resources:
    - Ontologies
    - Record-based databases

Project Team
- Project leaders:
  - PI: Marti Hearst
  - Co-PI: Adam Arkin
- Computational linguistics and databases:
  - Preslav Nakov
  - Ariel Schwartz
  - Brian Wolf
  - Barbara Rosario (alum)
  - Gaurav Bhalotia (alum)
- User interface / IR:
  - Rowena Luk
  - Dr. Emilia Stoica
- Bioscience:
  - Janice Hamerja
  - Dr. Ting Zhang (alum)

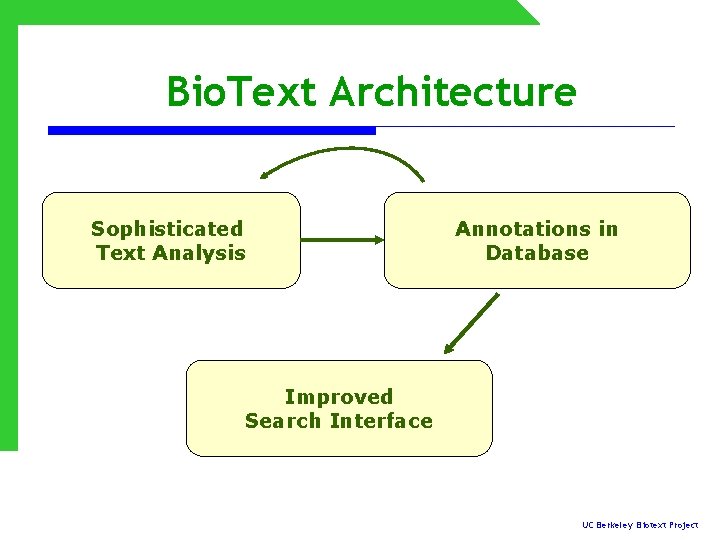

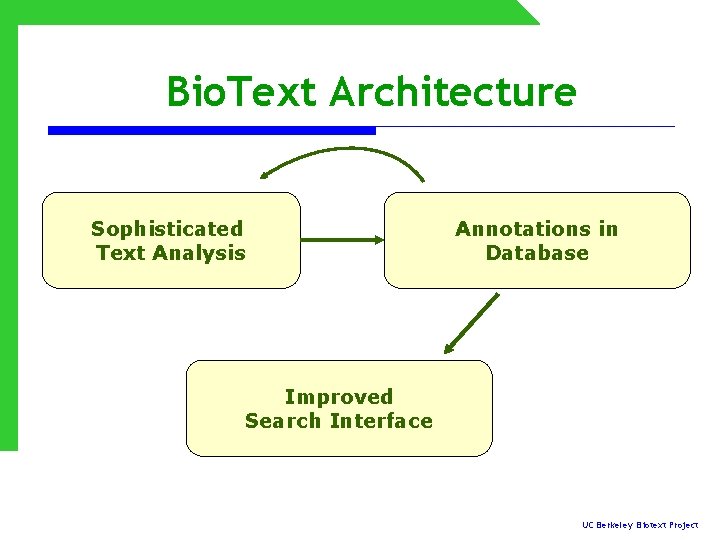

BioText Architecture
- Sophisticated text analysis
- Annotations in database
- Improved search interface

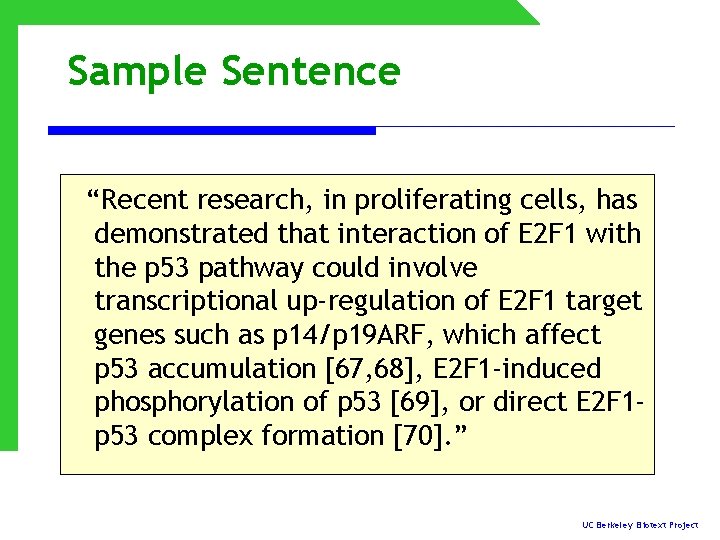

Sample Sentence

“Recent research, in proliferating cells, has demonstrated that interaction of E2F1 with the p53 pathway could involve transcriptional up-regulation of E2F1 target genes such as p14/p19ARF, which affect p53 accumulation [67, 68], E2F1-induced phosphorylation of p53 [69], or direct E2F1–p53 complex formation [70].”

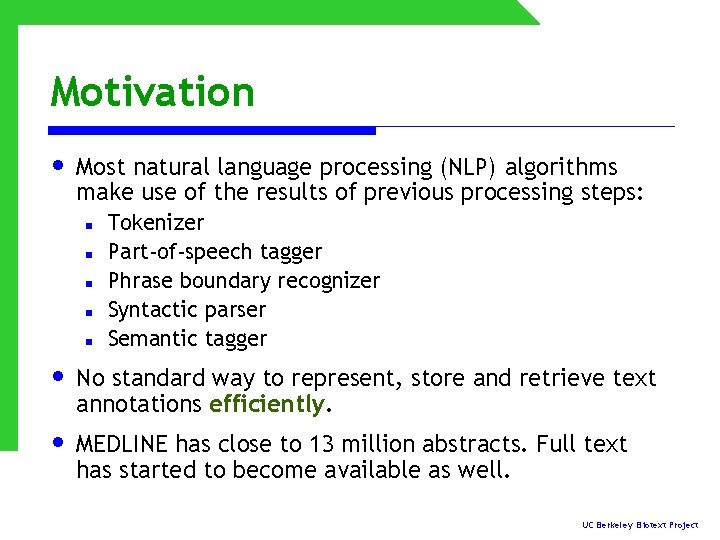

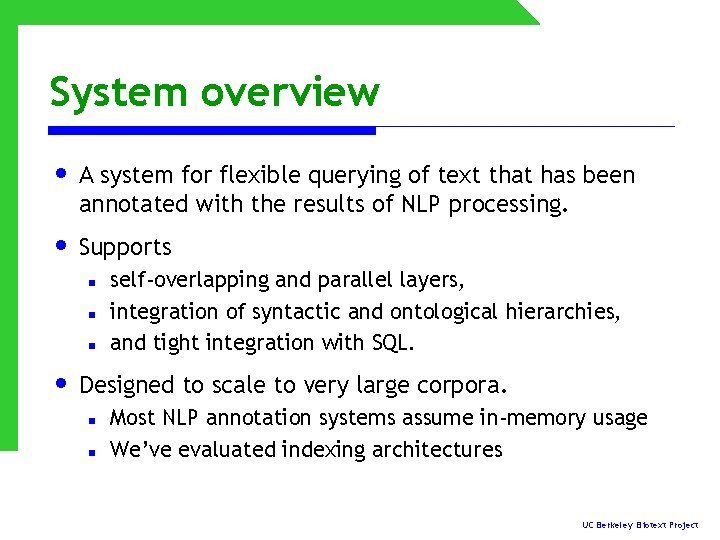

Motivation
- Most natural language processing (NLP) algorithms make use of the results of previous processing steps:
  - Tokenizer
  - Part-of-speech tagger
  - Phrase boundary recognizer
  - Syntactic parser
  - Semantic tagger
- There is no standard way to represent, store and retrieve text annotations efficiently.
- MEDLINE has close to 13 million abstracts, and full text has started to become available as well.

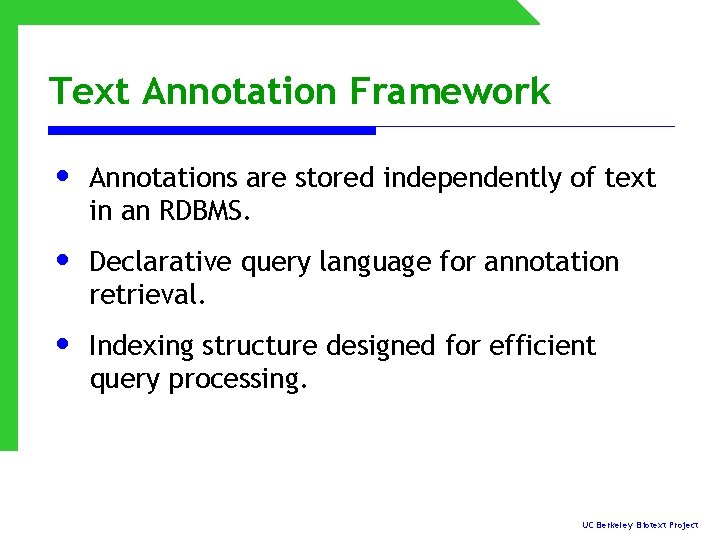

System Overview
- A system for flexible querying of text that has been annotated with the results of NLP processing.
- Supports:
  - self-overlapping and parallel layers,
  - integration of syntactic and ontological hierarchies, and
  - tight integration with SQL.
- Designed to scale to very large corpora:
  - Most NLP annotation systems assume in-memory usage.
  - We have evaluated several indexing architectures.

Text Annotation Framework
- Annotations are stored independently of the text in an RDBMS.
- A declarative query language supports annotation retrieval.
- An indexing structure is designed for efficient query processing.

Key Contributions
- Support for hierarchical and overlapping layers of annotation.
- Querying multiple levels of annotations simultaneously.
- First to evaluate different physical database designs for an NLP annotation architecture.

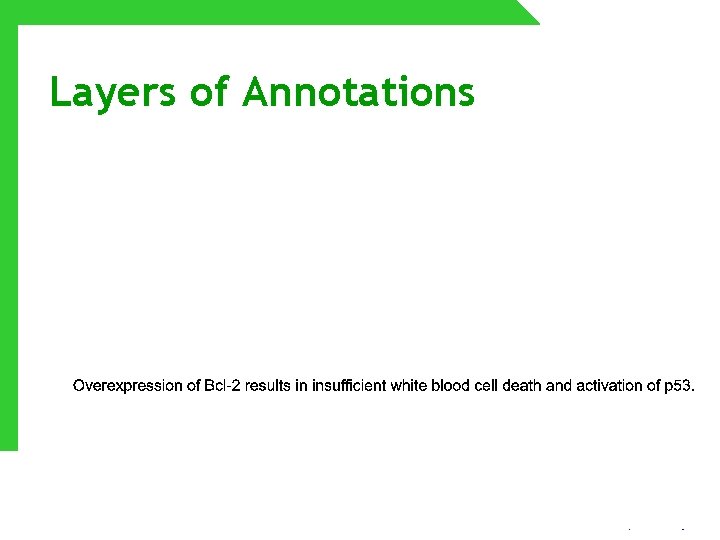

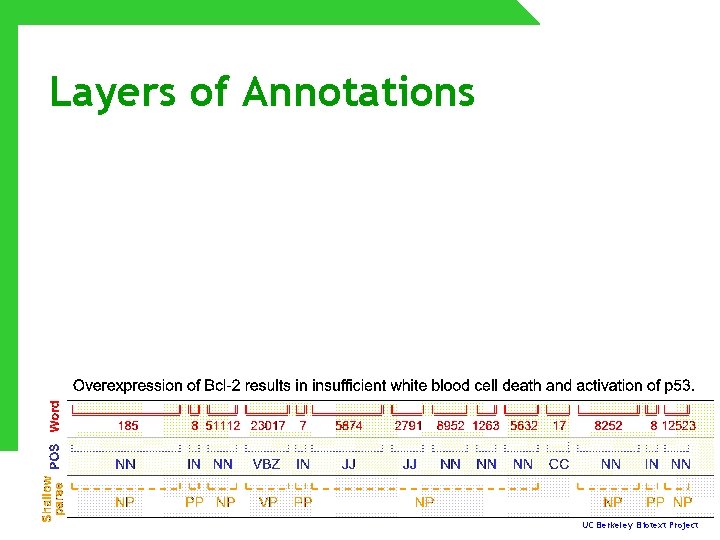

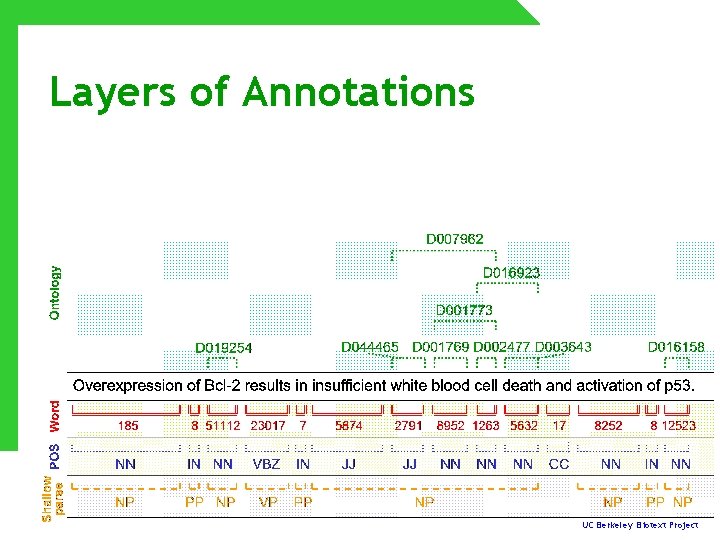

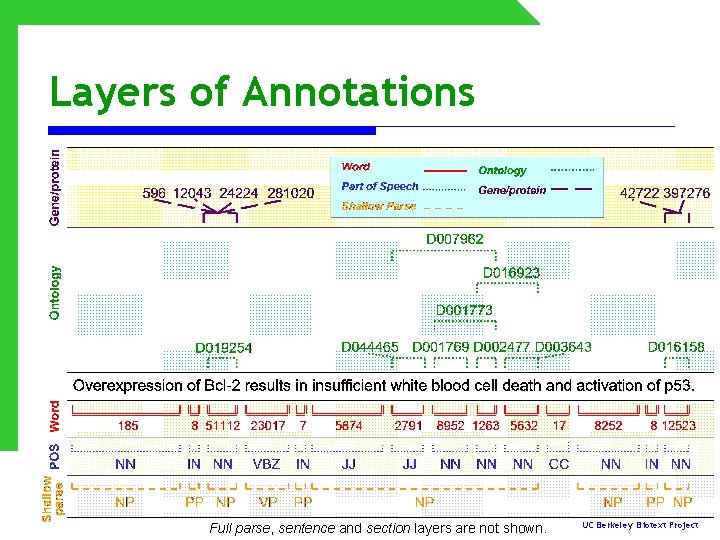

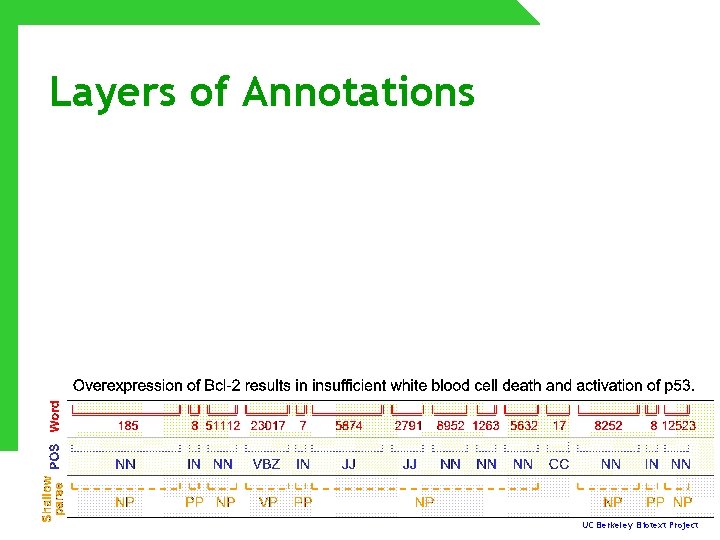

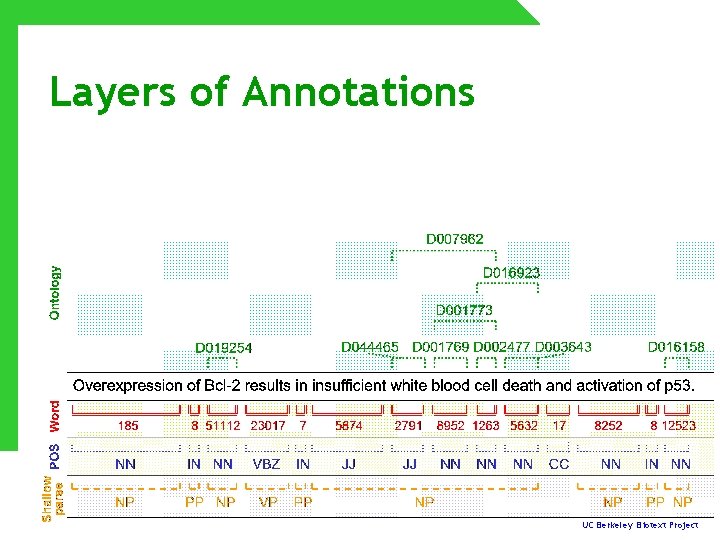

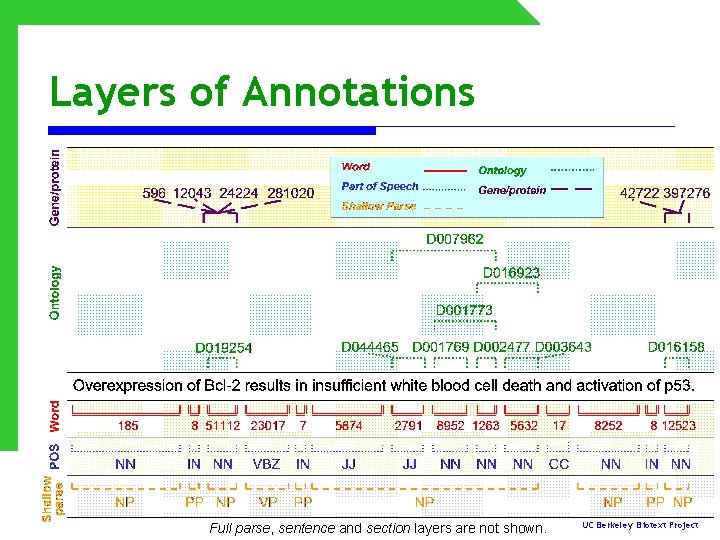

Layers of Annotations
- Each annotation represents an interval spanning a sequence of characters:
  - absolute start and end positions.
- Each layer corresponds to a conceptually different kind of annotation:
  - protein, MeSH label, noun phrase.
- Layers can be:
  - Sequential
  - Overlapping
    - e.g., two multiple-word concepts sharing a word
  - Hierarchical (in two different ways):
    - spanning, when the intervals are nested as in a parse tree, or
    - ontologically, when the token itself is derived from a hierarchical ontology.
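The interval model can be made concrete with a small Python sketch. It assumes each annotation carries only a layer name and half-open [start, end) character offsets; the class `Annotation`, the function `relation`, the layer names and the example offsets are illustrative, not the system's actual schema or API. It classifies a pair of annotations as sequential, overlapping, or nested (the spanning kind of hierarchy); ontological hierarchy is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """An annotation: its layer name plus absolute character offsets [start, end)."""
    layer: str
    start: int
    end: int


def relation(a: Annotation, b: Annotation) -> str:
    """Classify how annotation a relates to annotation b."""
    if a.end <= b.start or b.end <= a.start:
        return "sequential"      # disjoint: one precedes the other
    if a.start <= b.start and b.end <= a.end:
        return "contains"        # b nested inside a (spanning hierarchy)
    if b.start <= a.start and a.end <= b.end:
        return "contained_by"    # a nested inside b
    return "overlapping"         # the intervals cross


text = "protein kinase inhibitor"
concept1 = Annotation("mesh", 0, 14)         # "protein kinase" (hypothetical concept)
concept2 = Annotation("mesh", 8, 24)         # "kinase inhibitor" (hypothetical concept)
word1 = Annotation("word", 0, 7)             # "protein"
word2 = Annotation("word", 8, 14)            # "kinase"
np = Annotation("shallow_parse", 0, 24)      # the whole noun phrase

print(relation(concept1, concept2))  # overlapping: the concepts share "kinase"
print(relation(np, concept1))        # contains
print(relation(word1, word2))        # sequential
```

Half-open offsets make adjacency and containment tests simple comparisons, which is one reason character-offset stand-off annotation is easy to store in relational rows.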

Layers of Annotations

Full parse, sentence and section layers are not shown.

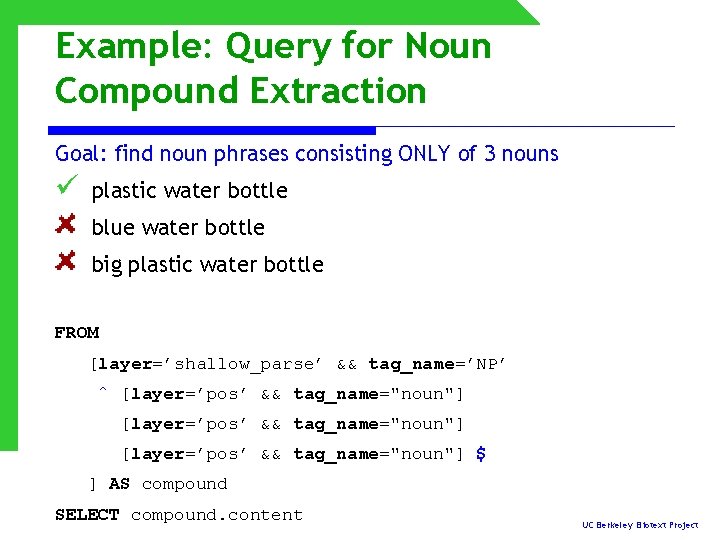

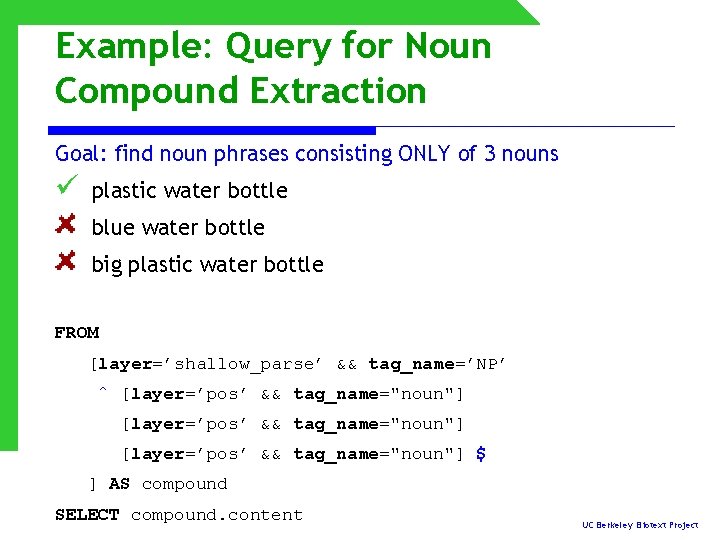

Example: Query for Noun Compound Extraction

Goal: find noun phrases consisting ONLY of 3 nouns.
- Matches: plastic water bottle
- Does not match: blue water bottle, big plastic water bottle

`FROM [layer='shallow_parse' && tag_name='NP' ^ [layer='pos' && tag_name="noun"] [layer='pos' && tag_name="noun"] [layer='pos' && tag_name="noun"] $ ] AS compound SELECT compound.content`
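A Python sketch of what the anchored pattern checks, assuming the NP's POS children are already available as a list of tags and that the "noun" tag group consists of NN, NNS, NNP and NNPS (as in the tag-type meta-data table). The function name and the tags assigned to the example phrases are hypothetical.

```python
# Tags whose TAG_GROUP is "noun" (assumed Penn Treebank tag set).
NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}


def only_three_nouns(np_child_tags: list[str]) -> bool:
    """Mimic `^ noun noun noun $`: the anchors force the three noun
    constraints to cover the whole NP, with nothing before or after."""
    return len(np_child_tags) == 3 and all(t in NOUN_TAGS for t in np_child_tags)


# Hypothetical POS tags for the NPs on the slide.
examples = {
    "plastic water bottle": ["NN", "NN", "NN"],
    "blue water bottle": ["JJ", "NN", "NN"],
    "big plastic water bottle": ["JJ", "NN", "NN", "NN"],
}
matches = [phrase for phrase, tags in examples.items() if only_three_nouns(tags)]
print(matches)  # ['plastic water bottle']
```

Without `^` and `$`, "big plastic water bottle" would also match, because three consecutive nouns occur inside it.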

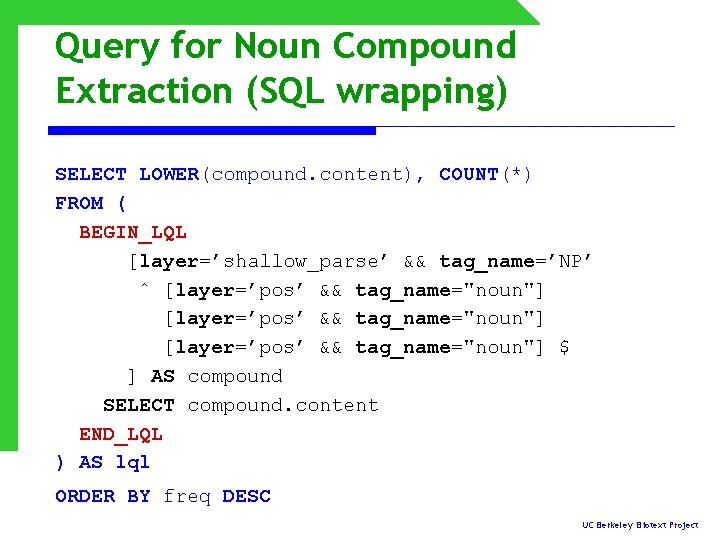

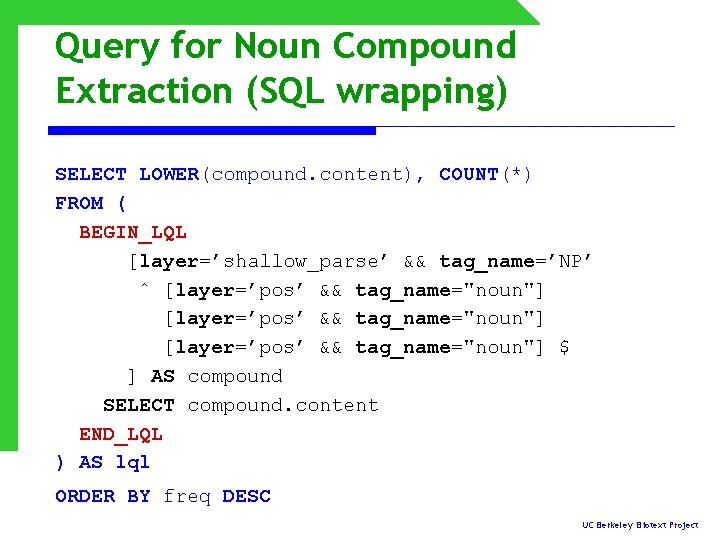

Query for Noun Compound Extraction (SQL wrapping)

`SELECT LOWER(compound_content) AS compound, COUNT(*) AS freq FROM ( BEGIN_LQL [layer='shallow_parse' && tag_name='NP' ^ [layer='pos' && tag_name="noun"] [layer='pos' && tag_name="noun"] [layer='pos' && tag_name="noun"] $ ] AS compound SELECT compound.content AS compound_content END_LQL ) AS lql GROUP BY LOWER(compound_content) ORDER BY freq DESC`
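The outer SQL only post-processes the rows produced by the LQL block. The sketch below is a minimal illustration that uses Python's built-in `sqlite3` module rather than DB2: it loads some hypothetical compound strings into a stand-in table named `lql` and applies the same case-folding, counting and frequency ranking.

```python
import sqlite3

# Stand-in for the rows the inner LQL block would return (hypothetical data).
lql_rows = [("Plastic water bottle",), ("plastic water bottle",),
            ("protein kinase inhibitor",), ("cell cycle arrest",),
            ("Cell cycle arrest",), ("cell cycle arrest",)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE lql (compound_content TEXT)")
conn.executemany("INSERT INTO lql VALUES (?)", lql_rows)

# The wrapping SQL: normalize case, count occurrences, rank by frequency.
rows = conn.execute("""
    SELECT LOWER(compound_content) AS compound, COUNT(*) AS freq
      FROM lql
     GROUP BY LOWER(compound_content)
     ORDER BY freq DESC, compound""").fetchall()
print(rows)
# [('cell cycle arrest', 3), ('plastic water bottle', 2), ('protein kinase inhibitor', 1)]
conn.close()
```

The secondary sort key on `compound` only makes ties deterministic; it is not part of the slide's query.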

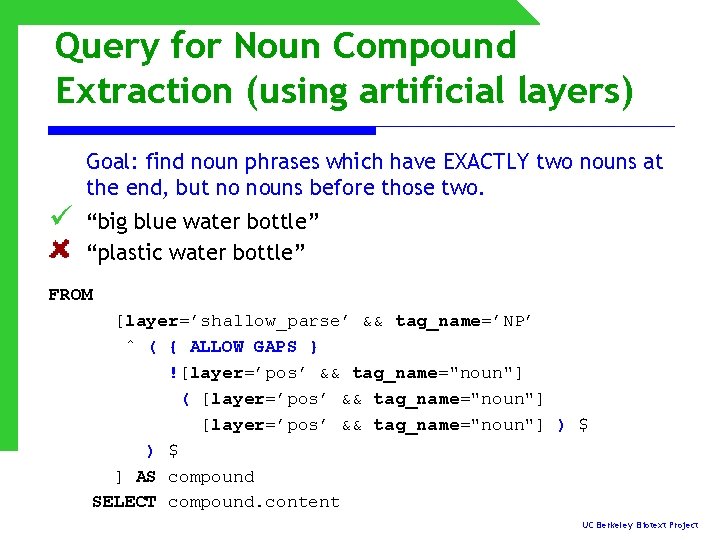

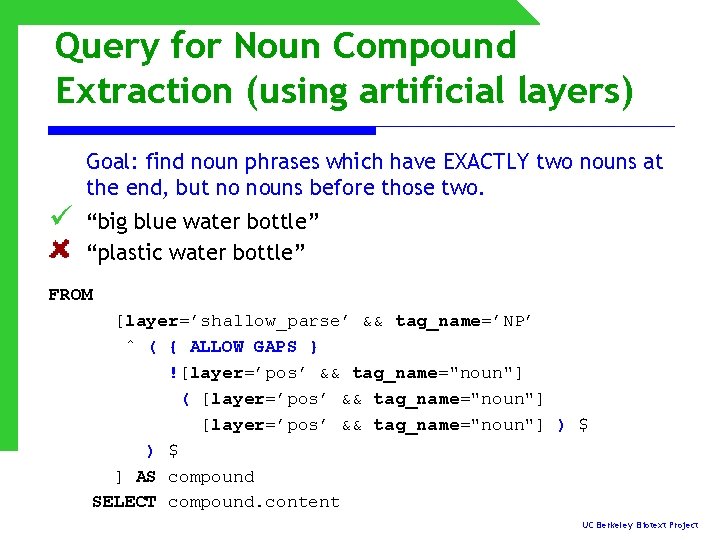

Query for Noun Compound Extraction (using artificial layers)

Goal: find noun phrases that have EXACTLY two nouns at the end and no nouns before those two.
- Matches: “big blue water bottle”
- Does not match: “plastic water bottle”

`FROM [layer='shallow_parse' && tag_name='NP' ^ ( { ALLOW GAPS } ![layer='pos' && tag_name="noun"] ) ( [layer='pos' && tag_name="noun"] [layer='pos' && tag_name="noun"] ) $ ] AS compound SELECT compound.content`
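One way to see what the artificial-layer query accepts is to reduce each NP to a string of noun/non-noun marks and match it with a regular expression. This Python sketch assumes the noun tag group is NN, NNS, NNP and NNPS, and it approximates rather than reproduces LQL's semantics: `x*` plays the role of the gapped, negated noun constraint, and `NN` the two trailing nouns before `$`. The function name is hypothetical.

```python
import re

NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}   # assumed "noun" tag group


def ends_in_exactly_two_nouns(np_tags: list[str]) -> bool:
    """Any run of non-nouns, then exactly two nouns closing the NP."""
    # Encode each token as 'N' (noun) or 'x' (anything else) and match the shape.
    shape = "".join("N" if t in NOUN_TAGS else "x" for t in np_tags)
    return re.fullmatch(r"x*NN", shape) is not None


print(ends_in_exactly_two_nouns(["JJ", "JJ", "NN", "NN"]))  # True: big blue water bottle
print(ends_in_exactly_two_nouns(["NN", "NN", "NN"]))        # False: plastic water bottle
```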

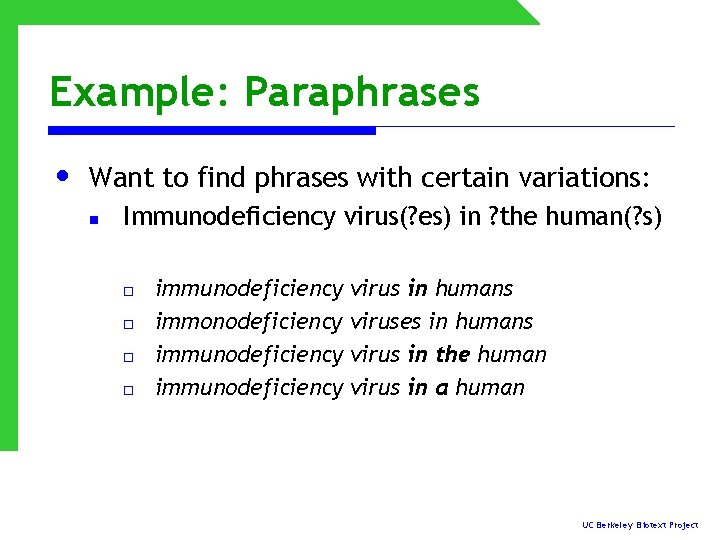

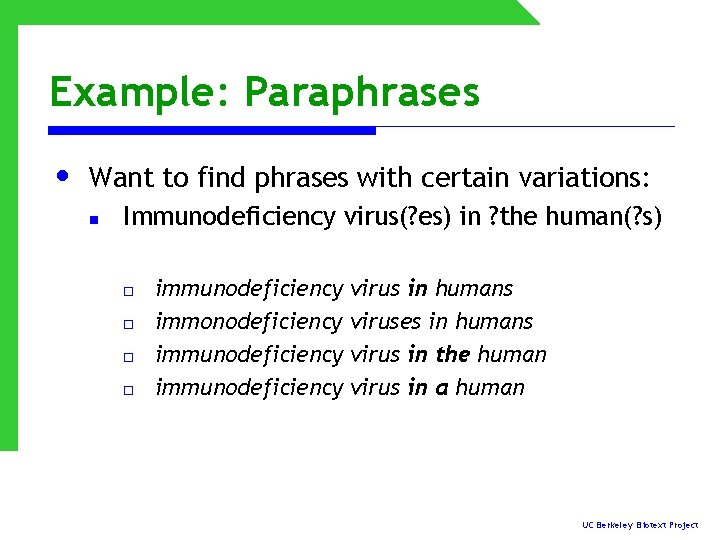

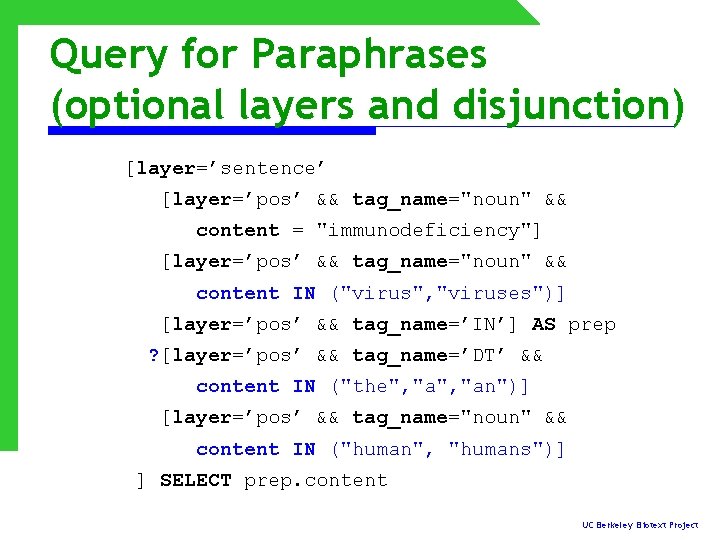

Example: Paraphrases
- Want to find phrases with certain variations:
  - immunodeficiency virus(es) in (the) human(s)
    - immunodeficiency virus in humans
    - immunodeficiency viruses in humans
    - immunodeficiency virus in the human
    - immunodeficiency virus in a human

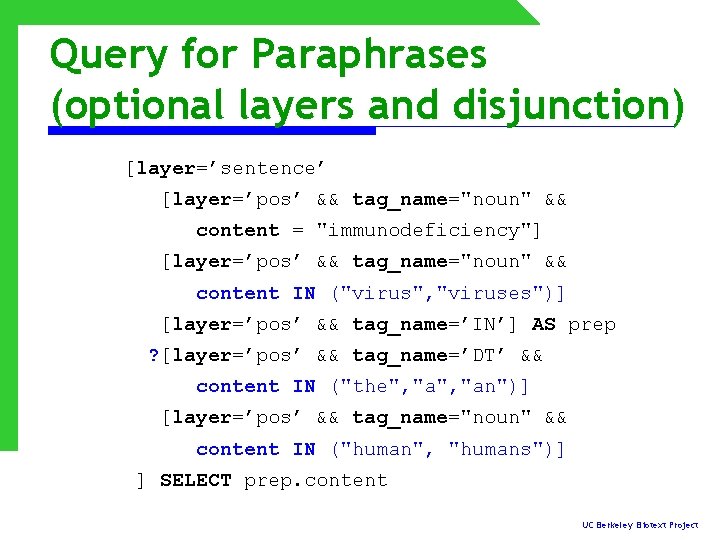

Query for Paraphrases (optional layers and disjunction)

`[layer='sentence' [layer='pos' && tag_name="noun" && content="immunodeficiency"] [layer='pos' && tag_name="noun" && content IN ("virus", "viruses")] [layer='pos' && tag_name='IN'] AS prep ? [layer='pos' && tag_name='DT' && content IN ("the", "a")] [layer='pos' && tag_name="noun" && content IN ("human", "humans")] ] SELECT prep.content`
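A hand-written Python matcher for the same pattern shows how the optional determiner (`?`) and the `IN (...)` disjunctions behave. It assumes tokens arrive as (word, tag) pairs, treats NN and NNS as the "noun" group, accepts "the" or "a" as in the listed variants, and compares words case-insensitively; the function names are hypothetical.

```python
from typing import Optional

Token = tuple[str, str]          # (word, POS tag)
NOUNS = {"NN", "NNS"}            # assumed members of the "noun" tag group


def match_at(tokens: list[Token], i: int) -> Optional[str]:
    """Try the paraphrase pattern at position i; return the preposition or None."""
    def ok(k: int, tags: set, words: Optional[set] = None) -> bool:
        if k >= len(tokens):
            return False
        word, tag = tokens[k]
        return tag in tags and (words is None or word.lower() in words)

    if not (ok(i, NOUNS, {"immunodeficiency"})
            and ok(i + 1, NOUNS, {"virus", "viruses"})   # disjunction
            and ok(i + 2, {"IN"})):                       # any preposition
        return None
    k = i + 3
    if ok(k, {"DT"}, {"the", "a"}):                       # optional determiner
        k += 1
    return tokens[i + 2][0] if ok(k, NOUNS, {"human", "humans"}) else None


def find_preps(tokens: list[Token]) -> list[str]:
    """Return the preposition of every match in the sentence."""
    return [p for i in range(len(tokens)) if (p := match_at(tokens, i))]


s1 = [("Immunodeficiency", "NN"), ("viruses", "NNS"), ("in", "IN"), ("humans", "NNS")]
s2 = [("immunodeficiency", "NN"), ("virus", "NN"), ("in", "IN"), ("the", "DT"), ("human", "NN")]
print(find_preps(s1), find_preps(s2))  # ['in'] ['in']
```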

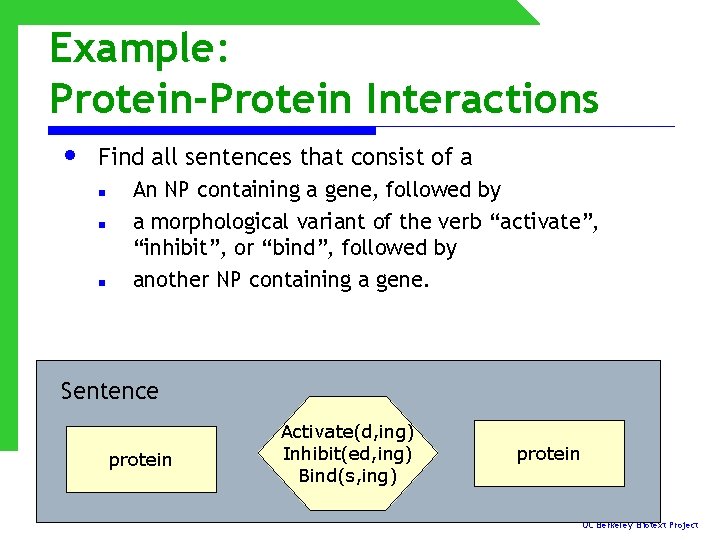

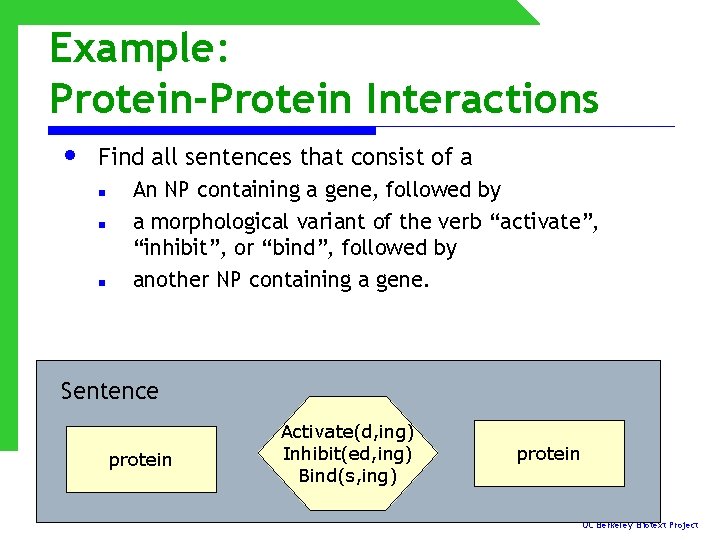

Example: Protein-Protein Interactions
- Find all sentences that consist of:
  - an NP containing a gene, followed by
  - a morphological variant of the verb “activate”, “inhibit”, or “bind”, followed by
  - another NP containing a gene.
- Schematically: sentence = protein, then activate(d, ing) / inhibit(ed, ing) / bind(s, ing), then protein.

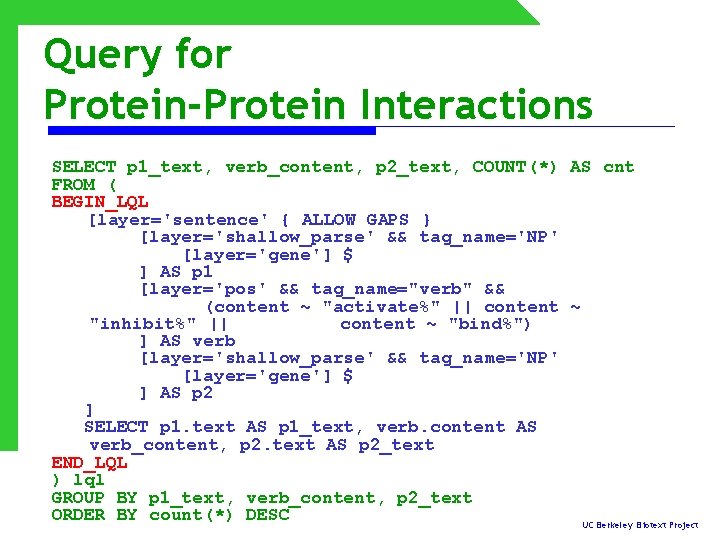

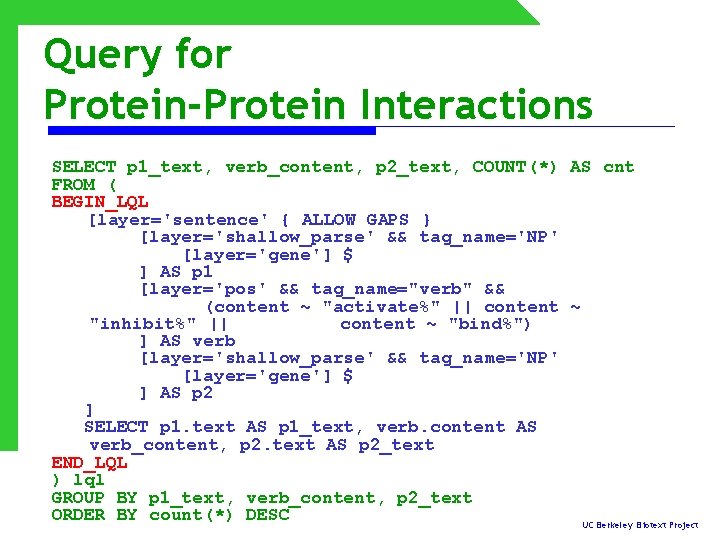

Query for Protein-Protein Interactions

`SELECT p1_text, verb_content, p2_text, COUNT(*) AS cnt FROM ( BEGIN_LQL [layer='sentence' { ALLOW GAPS } [layer='shallow_parse' && tag_name='NP' [layer='gene'] $ ] AS p1 [layer='pos' && tag_name="verb" && (content ~ "activate%" || content ~ "inhibit%" || content ~ "bind%")] AS verb [layer='shallow_parse' && tag_name='NP' [layer='gene'] $ ] AS p2 ] SELECT p1.text AS p1_text, verb.content AS verb_content, p2.text AS p2_text END_LQL ) lql GROUP BY p1_text, verb_content, p2_text ORDER BY COUNT(*) DESC`
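The effect of `{ ALLOW GAPS }` plus the outer `GROUP BY ... ORDER BY COUNT(*) DESC` can be sketched in Python over pre-chunked sentences. Everything here is an assumption for illustration: each chunk is a (kind, text, has_gene) tuple, verbs are matched by prefix in the spirit of the `~ "activate%"` conditions, and the sentences are invented.

```python
from collections import Counter
from itertools import combinations

VERB_PREFIXES = ("activate", "inhibit", "bind")   # like content ~ "activate%" etc.


def interactions(chunks):
    """chunks: ordered (kind, text, has_gene) tuples for one sentence.
    Yield (protein1, verb, protein2) for each gene NP ... verb ... gene NP,
    letting other chunks sit in between (the effect of { ALLOW GAPS })."""
    for i, j, k in combinations(range(len(chunks)), 3):   # i < j < k keeps order
        kind1, text1, gene1 = chunks[i]
        kind2, text2, _ = chunks[j]
        kind3, text3, gene3 = chunks[k]
        if (kind1 == "NP" and gene1
                and kind2 == "VERB" and text2.lower().startswith(VERB_PREFIXES)
                and kind3 == "NP" and gene3):
            yield text1, text2, text3


# Hypothetical pre-chunked sentences.
sentences = [
    [("NP", "TNF", True), ("VERB", "activates", False), ("NP", "protein tyrosine kinase", True)],
    [("NP", "TNF", True), ("ADVP", "strongly", False), ("VERB", "activates", False),
     ("NP", "protein tyrosine kinase", True)],
    [("NP", "Phospholamban", True), ("VERB", "inhibits", False), ("NP", "the pump", False)],
]
# GROUP BY p1, verb, p2 ORDER BY COUNT(*) DESC, expressed with a Counter.
counts = Counter(t for s in sentences for t in interactions(s))
print(counts.most_common())
# [(('TNF', 'activates', 'protein tyrosine kinase'), 2)]
```

The second sentence matches only because gaps are allowed; the third fails because its object NP contains no gene.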

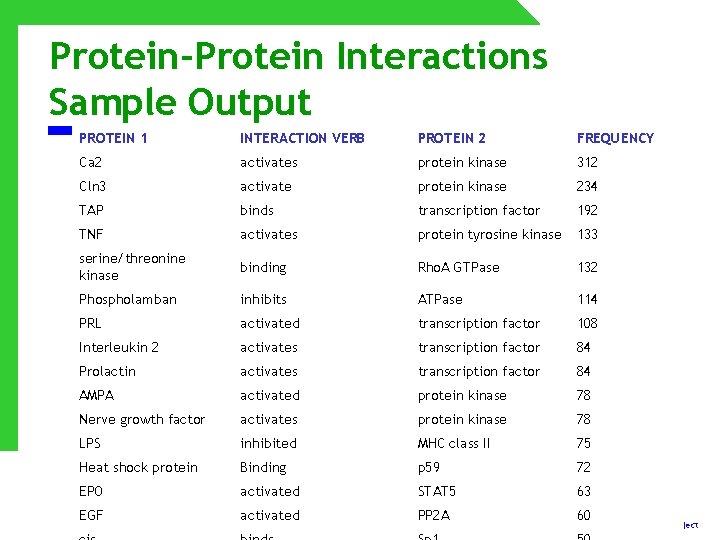

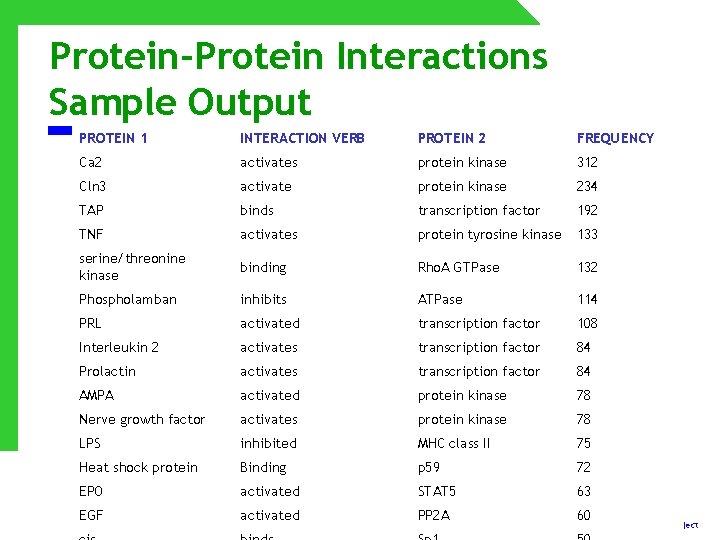

Protein-Protein Interactions: Sample Output

| Protein 1 | Interaction verb | Protein 2 | Frequency |
|---|---|---|---|
| Ca2 | activates | protein kinase | 312 |
| Cln3 | activate | protein kinase | 234 |
| TAP | binds | transcription factor | 192 |
| TNF | activates | protein tyrosine kinase | 133 |
| serine/threonine kinase | binding | RhoA GTPase | 132 |
| Phospholamban | inhibits | ATPase | 114 |
| PRL | activated | transcription factor | 108 |
| Interleukin 2 | activates | transcription factor | 84 |
| Prolactin | activates | transcription factor | 84 |
| AMPA | activated | protein kinase | 78 |
| Nerve growth factor | activates | protein kinase | 78 |
| LPS | inhibited | MHC class II | 75 |
| Heat shock protein | Binding | p59 | 72 |
| EPO | activated | STAT5 | 63 |
| EGF | activated | PP2A | 60 |

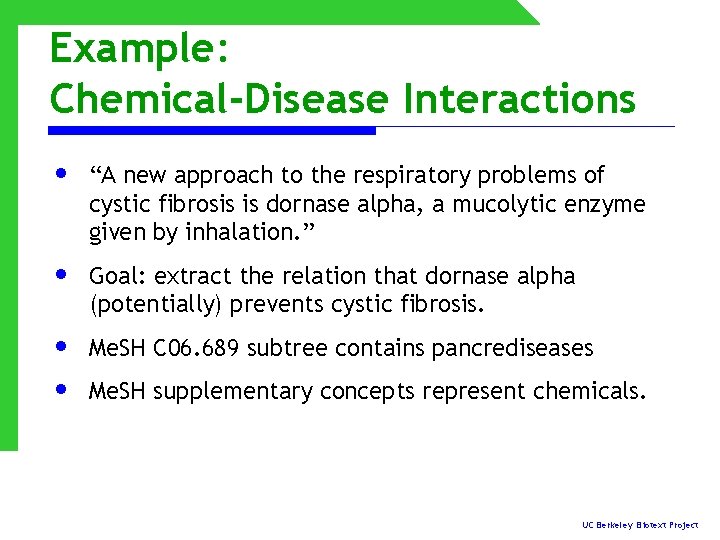

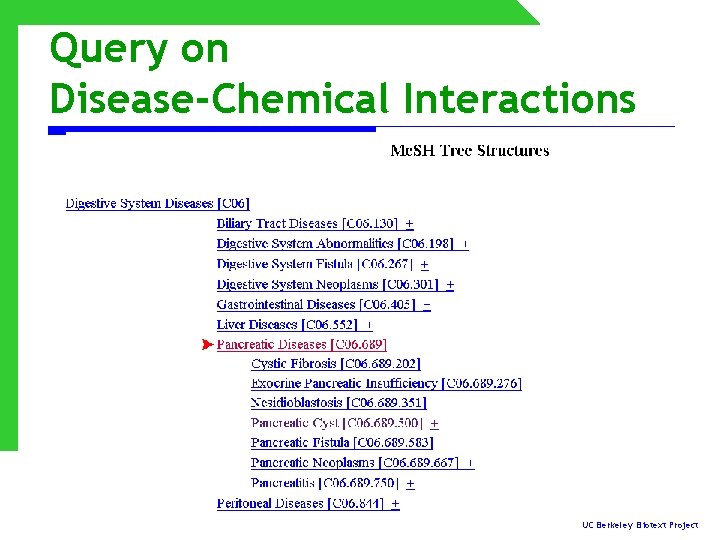

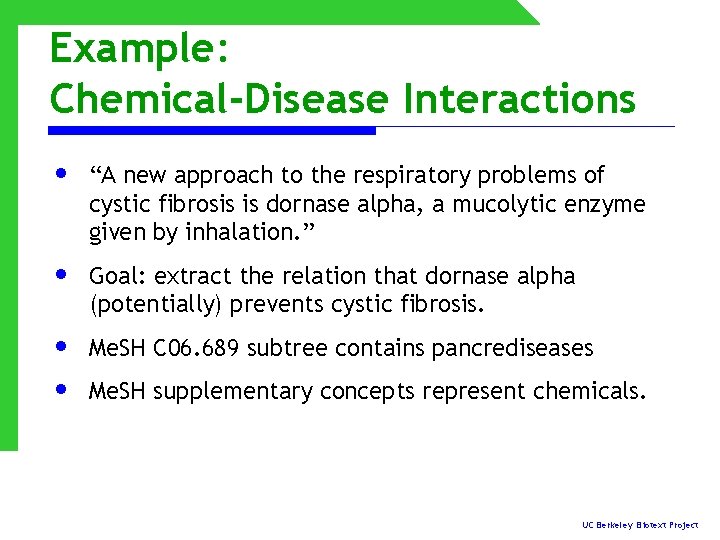

Example: Chemical-Disease Interactions
- “A new approach to the respiratory problems of cystic fibrosis is dornase alpha, a mucolytic enzyme given by inhalation.”
- Goal: extract the relation that dornase alpha (potentially) prevents cystic fibrosis.
  - The MeSH C06.689 subtree contains pancreatic diseases.
  - MeSH supplementary concepts represent chemicals.

Query on Disease-Chemical Interactions

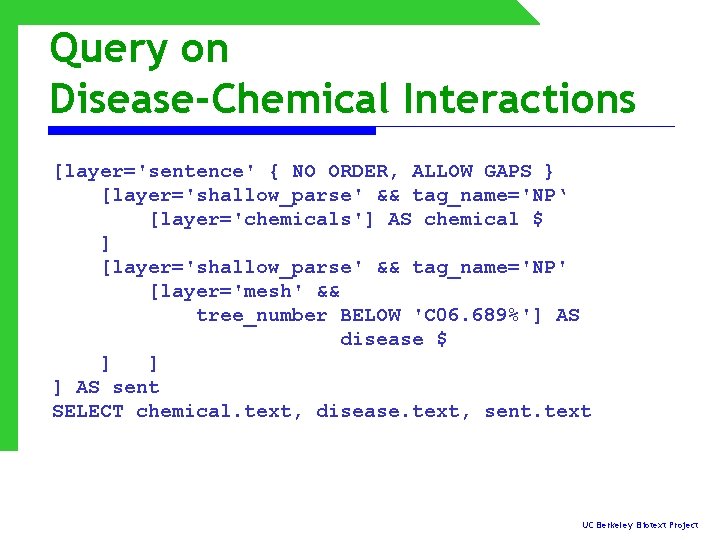

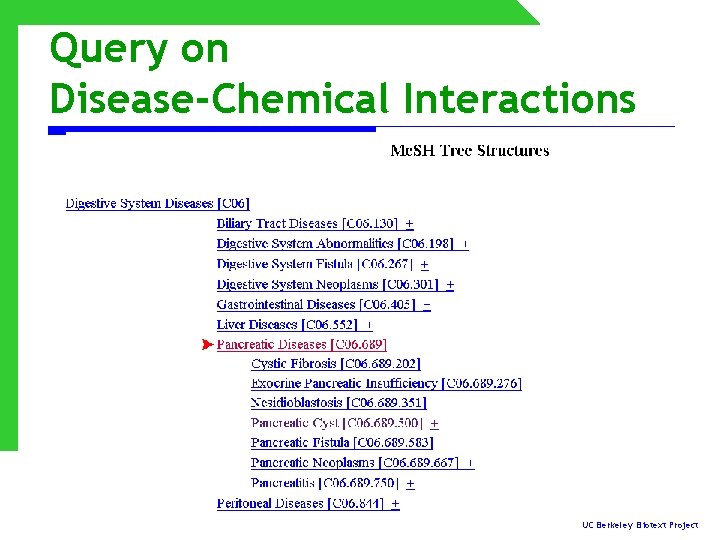

Query on Disease-Chemical Interactions

`[layer='sentence' { NO ORDER, ALLOW GAPS } [layer='shallow_parse' && tag_name='NP' [layer='chemicals'] AS chemical $ ] [layer='shallow_parse' && tag_name='NP' [layer='mesh' && tree_number BELOW 'C06.689%'] AS disease $ ] ] AS sent SELECT chemical.text, disease.text, sent.text`
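The `BELOW` operator can be read as a subtree test on MeSH tree numbers, which are dot-separated paths. The Python sketch below assumes that reading (treating the `%` in the query as "anything below"), and mimics `NO ORDER` by pairing chemicals and diseases regardless of which comes first. The function names and the tree number used for cystic fibrosis are assumptions for illustration.

```python
def is_below(tree_number: str, ancestor: str) -> bool:
    """True if tree_number is the ancestor itself or lies in its subtree.
    Tree numbers are dot-separated paths, so compare whole segments."""
    return tree_number == ancestor or tree_number.startswith(ancestor + ".")


def chemical_disease_pairs(nps, disease_root="C06.689"):
    """nps: (text, kind, tree_number) for the NPs of one sentence.
    NO ORDER: pair every chemical with every disease, whichever comes first."""
    chemicals = [text for text, kind, _ in nps if kind == "chemical"]
    diseases = [text for text, kind, tn in nps
                if kind == "mesh" and tn is not None and is_below(tn, disease_root)]
    return [(c, d) for c in chemicals for d in diseases]


# The slide's example sentence reduced to its two NPs (tree number assumed).
sentence = [("cystic fibrosis", "mesh", "C06.689.202"), ("dornase alpha", "chemical", None)]
print(chemical_disease_pairs(sentence))  # [('dornase alpha', 'cystic fibrosis')]
print(is_below("C06.689", "C06.68"))     # False: a plain string prefix would say True
```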

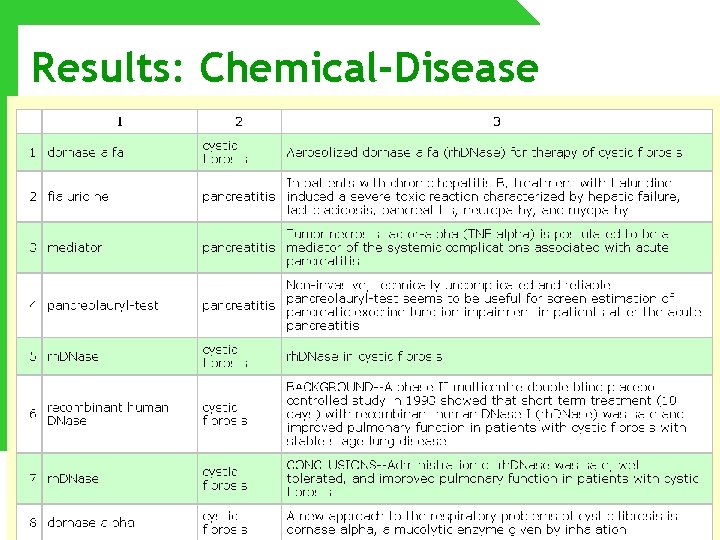

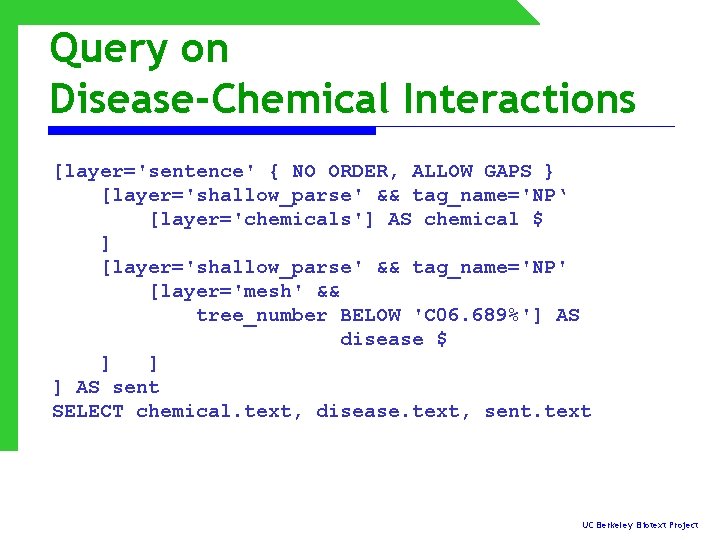

Results: Chemical-Disease

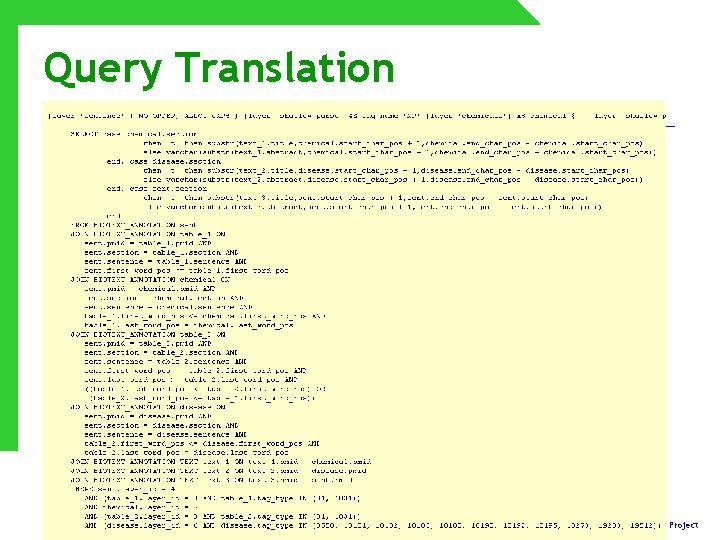

Query Translation

Database Design & Evaluation

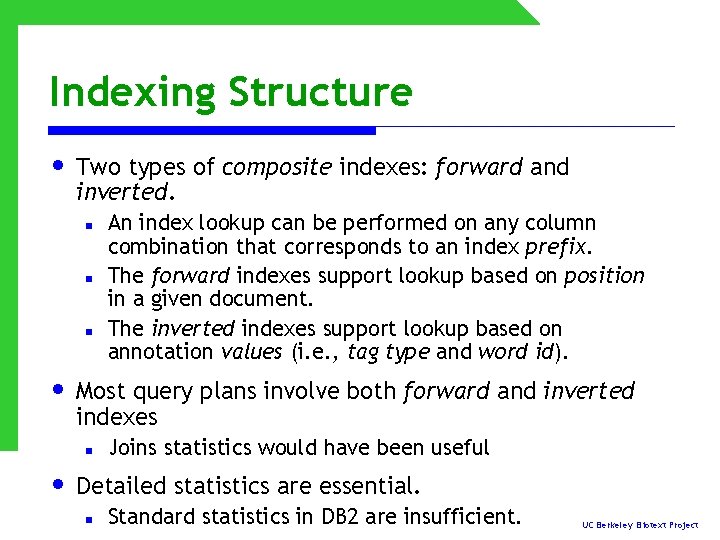

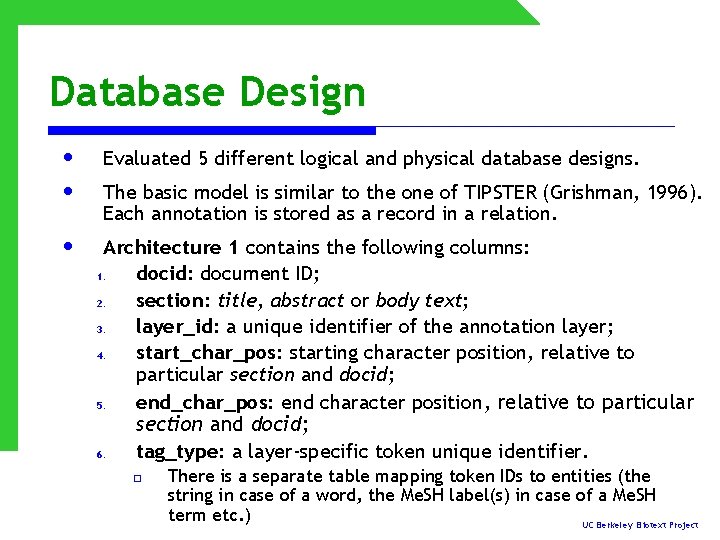

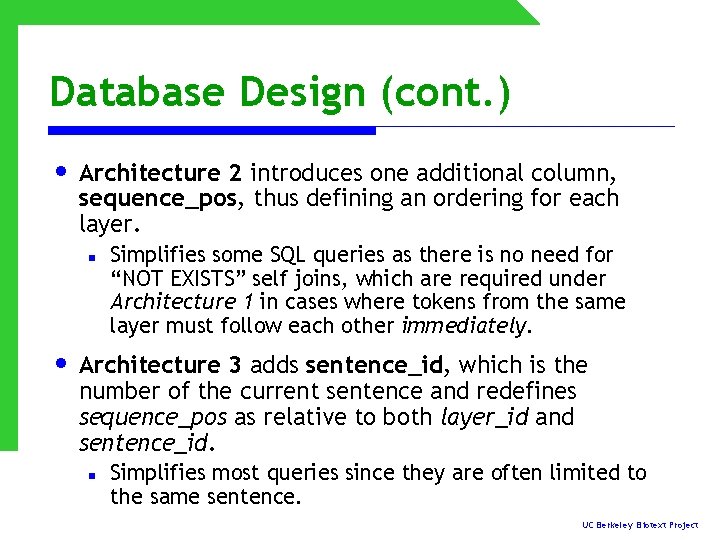

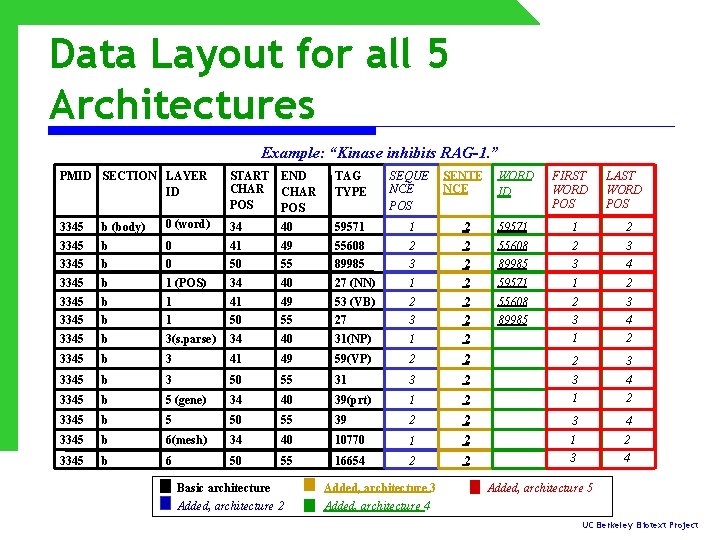

Database Design
- Evaluated 5 different logical and physical database designs.
- The basic model is similar to that of TIPSTER (Grishman, 1996): each annotation is stored as a record in a relation.
- Architecture 1 contains the following columns:
  1. docid: document ID;
  2. section: title, abstract or body text;
  3. layer_id: a unique identifier of the annotation layer;
  4. start_char_pos: starting character position, relative to a particular section and docid;
  5. end_char_pos: end character position, relative to a particular section and docid;
  6. tag_type: a layer-specific token unique identifier.
     - A separate table maps token IDs to entities (the string in the case of a word, the MeSH label(s) in the case of a MeSH term, etc.).
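A minimal sketch of the Architecture 1 layout, using Python's `sqlite3` as a stand-in for DB2. Table and column names follow the list above except `word_token`, a hypothetical name for the token-to-entity table; the rows reuse the word-layer values of the "Kinase inhibits RAG-1." example, with the word strings inferred from the character positions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Architecture 1: one row per annotation; positions relative to (docid, section).
conn.execute("""
    CREATE TABLE annotation (
        docid          INTEGER NOT NULL,
        section        TEXT    NOT NULL,   -- 'b' = body text in the example layout
        layer_id       INTEGER NOT NULL,
        start_char_pos INTEGER NOT NULL,
        end_char_pos   INTEGER NOT NULL,
        tag_type       INTEGER NOT NULL    -- layer-specific token id
    )""")
# Separate table mapping token ids to entities (for the word layer: the string).
conn.execute("CREATE TABLE word_token (tag_type INTEGER PRIMARY KEY, entity TEXT)")

# Word layer (layer_id 0) for "Kinase inhibits RAG-1." in document 3345.
conn.executemany("INSERT INTO annotation VALUES (?, ?, ?, ?, ?, ?)", [
    (3345, "b", 0, 34, 40, 59571),
    (3345, "b", 0, 41, 49, 55608),
    (3345, "b", 0, 50, 55, 89985),
])
conn.executemany("INSERT INTO word_token VALUES (?, ?)",
                 [(59571, "Kinase"), (55608, "inhibits"), (89985, "RAG-1")])

# Rebuild the word sequence of the body text by joining through the token table.
words = [w for (w,) in conn.execute("""
    SELECT t.entity FROM annotation a JOIN word_token t ON a.tag_type = t.tag_type
     WHERE a.docid = 3345 AND a.section = 'b' AND a.layer_id = 0
     ORDER BY a.start_char_pos""")]
print(words)  # ['Kinase', 'inhibits', 'RAG-1']
```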

Database Design (cont.)
- Architecture 2 introduces one additional column, sequence_pos, thus defining an ordering for each layer.
  - Simplifies some SQL queries, as there is no need for “NOT EXISTS” self-joins, which are required under Architecture 1 in cases where tokens from the same layer must follow each other immediately.
- Architecture 3 adds sentence_id, the number of the current sentence, and redefines sequence_pos as relative to both layer_id and sentence_id.
  - Simplifies most queries, since they are often limited to the same sentence.
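The difference between Architectures 1 and 2 shows up in the SQL for "token b immediately follows token a". This sketch uses SQLite as a stand-in for DB2 and takes the POS tag ids from the example layout (27 = NN, 53 = VB); it writes the Architecture 1 version with a NOT EXISTS self-join and the Architecture 2 version with `sequence_pos`, and both return the same NN-VB pair.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE annotation (
    docid INTEGER, section TEXT, layer_id INTEGER,
    start_char_pos INTEGER, end_char_pos INTEGER, tag_type INTEGER,
    sequence_pos INTEGER)""")                 # sequence_pos: added by Architecture 2
# POS layer (layer_id 1) of "Kinase inhibits RAG-1": 27 = NN, 53 = VB.
conn.executemany("INSERT INTO annotation VALUES (?, ?, ?, ?, ?, ?, ?)", [
    (3345, "b", 1, 34, 40, 27, 1),
    (3345, "b", 1, 41, 49, 53, 2),
    (3345, "b", 1, 50, 55, 27, 3),
])

JOIN = """SELECT a.start_char_pos, b.start_char_pos
  FROM annotation a JOIN annotation b
    ON a.docid = b.docid AND a.section = b.section AND a.layer_id = b.layer_id
 WHERE a.layer_id = 1 AND a.tag_type = 27 AND b.tag_type = 53"""

# Architecture 1: b starts after a ends AND no other token of the same layer
# lies between them (the NOT EXISTS self-join).
ARCH1 = JOIN + """
   AND b.start_char_pos >= a.end_char_pos
   AND NOT EXISTS (SELECT 1 FROM annotation c
                    WHERE c.docid = a.docid AND c.section = a.section
                      AND c.layer_id = a.layer_id
                      AND c.start_char_pos >= a.end_char_pos
                      AND c.end_char_pos <= b.start_char_pos)"""

# Architecture 2: adjacency is a single arithmetic condition on sequence_pos.
ARCH2 = JOIN + "\n   AND b.sequence_pos = a.sequence_pos + 1"

arch1_rows = conn.execute(ARCH1).fetchall()
arch2_rows = conn.execute(ARCH2).fetchall()
print(arch1_rows, arch2_rows)  # [(34, 41)] [(34, 41)]
```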

Database Design (cont.)
- Architecture 4 merges the word and POS layers and adds word_id, assuming a one-to-one correspondence between them.
  - Reduces the number of stored annotations and the number of joins in queries with both word and POS constraints.
- Architecture 5 replaces sequence_pos with first_word_pos and last_word_pos, which correspond to the sequence_pos of the first/last word covered by the annotation.
  - Requires all annotation boundaries to coincide with word boundaries.
  - Copes naturally with adjacency constraints between different layers.
  - Allows for a simpler indexing structure.
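Architecture 5's cross-layer adjacency test can be sketched the same way. The sketch assumes positions are inclusive word indices (a one-word annotation has `first_word_pos == last_word_pos`), following the definition above; layer ids 1 (POS) and 3 (shallow parse) and tag ids 31 (NP), 59 (VP) and 53 (VB) are taken from the example layout. SQLite stands in for DB2.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE annotation (
    docid INTEGER, section TEXT, layer_id INTEGER, sentence INTEGER,
    first_word_pos INTEGER, last_word_pos INTEGER, tag_type INTEGER)""")
# "Kinase inhibits RAG-1": layer 3 = shallow parse, layer 1 = POS.
# Positions assumed inclusive: a one-word annotation has first == last.
conn.executemany("INSERT INTO annotation VALUES (?, ?, ?, ?, ?, ?, ?)", [
    (3345, "b", 3, 2, 1, 1, 31),   # NP "Kinase"
    (3345, "b", 3, 2, 2, 2, 59),   # VP "inhibits"
    (3345, "b", 3, 2, 3, 3, 31),   # NP "RAG-1"
    (3345, "b", 1, 2, 2, 2, 53),   # VB "inhibits"
])
# An NP immediately followed by a verb: a cross-layer test is one equality,
# with no NOT EXISTS and no character arithmetic.
rows = conn.execute("""
    SELECT np.first_word_pos, v.first_word_pos
      FROM annotation np JOIN annotation v
        ON np.docid = v.docid AND np.section = v.section AND np.sentence = v.sentence
     WHERE np.layer_id = 3 AND np.tag_type = 31
       AND v.layer_id = 1 AND v.tag_type = 53
       AND v.first_word_pos = np.last_word_pos + 1""").fetchall()
print(rows)  # [(1, 2)]
```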

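Data Layout for All 5 Architectures

Example: “Kinase inhibits RAG-1.”

| PMID | SECTION | LAYER_ID | START_CHAR_POS | END_CHAR_POS | TAG_TYPE | SEQUENCE_POS | SENTENCE | WORD_ID | FIRST_WORD_POS | LAST_WORD_POS |
|---|---|---|---|---|---|---|---|---|---|---|
| 3345 | b (body) | 0 (word) | 34 | 40 | 59571 | 1 | 2 | | | |
| 3345 | b | 0 | 41 | 49 | 55608 | 2 | 2 | 55608 | 2 | 3 |
| 3345 | b | 0 | 50 | 55 | 89985 | 3 | 2 | 89985 | 3 | 4 |
| 3345 | b | 1 (POS) | 34 | 40 | 27 (NN) | 1 | 2 | 59571 | 1 | 2 |
| 3345 | b | 1 | 41 | 49 | 53 (VB) | 2 | 2 | 55608 | 2 | 3 |
| 3345 | b | 1 | 50 | 55 | 27 | 3 | 2 | 89985 | 3 | 4 |
| 3345 | b | 3 (shallow parse) | 34 | 40 | 31 (NP) | 1 | 2 | | 1 | 2 |
| 3345 | b | 3 | 41 | 49 | 59 (VP) | 2 | 2 | | 2 | 3 |
| 3345 | b | 3 | 50 | 55 | 31 | 3 | 2 | | 3 | 4 |
| 3345 | b | 5 (gene) | 34 | 40 | 39 (prt) | 1 | 2 | | 1 | 2 |
| 3345 | b | 5 | 50 | 55 | 39 | 2 | 2 | | 3 | 4 |
| 3345 | b | 6 (mesh) | 34 | 40 | 10770 | 1 | 2 | | 1 | 2 |
| 3345 | b | 6 | 50 | 55 | 16654 | 2 | 2 | | 3 | 4 |

PMID through TAG_TYPE form the basic architecture; SEQUENCE_POS is added in Architecture 2, SENTENCE in Architecture 3, WORD_ID in Architecture 4, and FIRST_WORD_POS/LAST_WORD_POS in Architecture 5.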

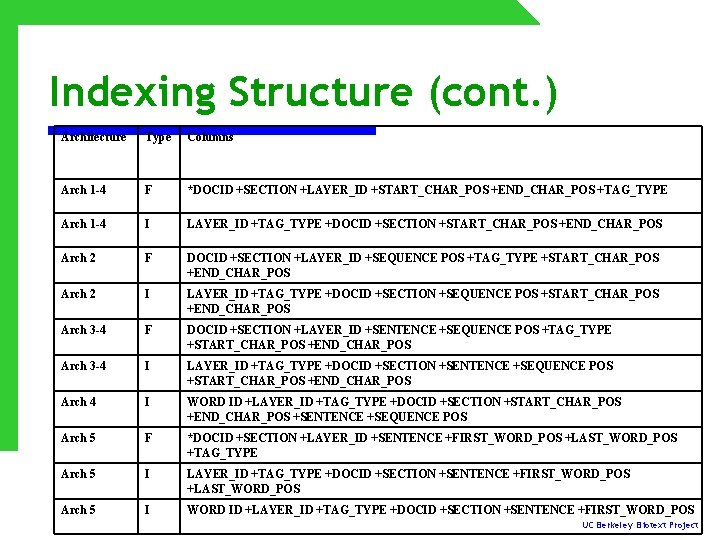

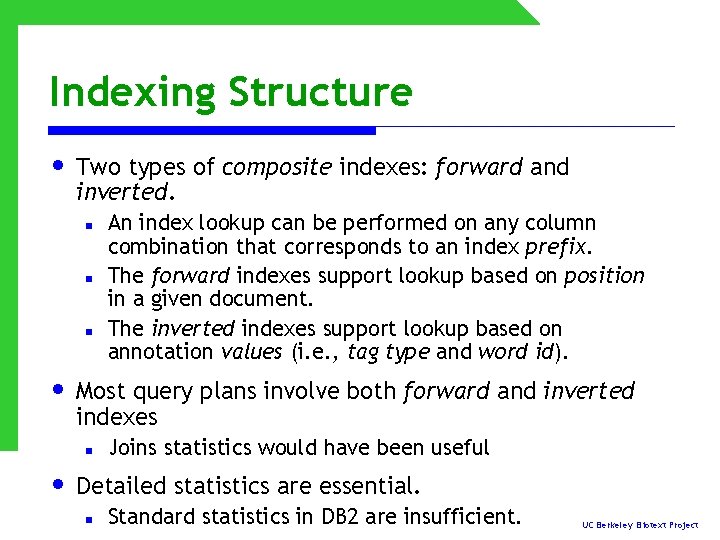

Indexing Structure
- Two types of composite indexes: forward and inverted.
  - An index lookup can be performed on any column combination that corresponds to an index prefix.
  - The forward indexes support lookup based on position in a given document.
  - The inverted indexes support lookup based on annotation values (i.e., tag type and word ID).
- Most query plans involve both forward and inverted indexes.
  - Join statistics would have been useful.
- Detailed statistics are essential.
  - Standard statistics in DB2 are insufficient.
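The index-prefix rule can be illustrated with sorted Python tuples standing in for B-tree composite indexes (an analogy, not how DB2 stores them). The column orders follow the Architecture 1 forward and inverted indexes; the rows come from the "Kinase inhibits RAG-1." layout plus one hypothetical document, 4001.

```python
from bisect import bisect_left

# Forward index key: (docid, section, layer_id, start_char_pos, end_char_pos, tag_type)
forward = sorted([
    (3345, "b", 0, 34, 40, 59571), (3345, "b", 0, 41, 49, 55608),
    (3345, "b", 1, 34, 40, 27), (3345, "b", 1, 41, 49, 53),
    (3345, "b", 1, 50, 55, 27), (4001, "b", 1, 0, 5, 27),   # 4001: hypothetical
])
# Inverted index: the same rows keyed by value first:
# (layer_id, tag_type, docid, section, start_char_pos, end_char_pos)
inverted = sorted((lay, tag, doc, sec, s, e) for doc, sec, lay, s, e, tag in forward)


def prefix_lookup(index, prefix):
    """All keys whose leading columns equal `prefix`: binary-search to the
    first candidate, then scan while the prefix still matches."""
    i = bisect_left(index, prefix)
    hits = []
    while i < len(index) and index[i][:len(prefix)] == prefix:
        hits.append(index[i])
        i += 1
    return hits


# Positional lookup: the POS layer of document 3345, body text.
print(len(prefix_lookup(forward, (3345, "b", 1))))   # 3
# Value lookup: every NN token (layer 1, tag 27) in any document.
print([k[2:] for k in prefix_lookup(inverted, (1, 27))])
# [(3345, 'b', 34, 40), (3345, 'b', 50, 55), (4001, 'b', 0, 5)]
```

A lookup by tag type alone matches no prefix of the forward index and would require a full scan, which is why both key orders are kept.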

Indexing Structure (cont.)

| Architecture | Type | Columns |
|---|---|---|
| Arch 1-4 | F | *DOCID +SECTION +LAYER_ID +START_CHAR_POS +END_CHAR_POS +TAG_TYPE |
| Arch 1-4 | I | LAYER_ID +TAG_TYPE +DOCID +SECTION +START_CHAR_POS +END_CHAR_POS |
| Arch 2 | F | DOCID +SECTION +LAYER_ID +SEQUENCE_POS +TAG_TYPE +START_CHAR_POS +END_CHAR_POS |
| Arch 2 | I | LAYER_ID +TAG_TYPE +DOCID +SECTION +SEQUENCE_POS +START_CHAR_POS +END_CHAR_POS |
| Arch 3-4 | F | DOCID +SECTION +LAYER_ID +SENTENCE +SEQUENCE_POS +TAG_TYPE +START_CHAR_POS +END_CHAR_POS |
| Arch 3-4 | I | LAYER_ID +TAG_TYPE +DOCID +SECTION +SENTENCE +SEQUENCE_POS +START_CHAR_POS +END_CHAR_POS |
| Arch 4 | I | WORD_ID +LAYER_ID +TAG_TYPE +DOCID +SECTION +START_CHAR_POS +END_CHAR_POS +SENTENCE +SEQUENCE_POS |
| Arch 5 | F | *DOCID +SECTION +LAYER_ID +SENTENCE +FIRST_WORD_POS +LAST_WORD_POS +TAG_TYPE |
| Arch 5 | I | LAYER_ID +TAG_TYPE +DOCID +SECTION +SENTENCE +FIRST_WORD_POS +LAST_WORD_POS |
| Arch 5 | I | WORD_ID +LAYER_ID +TAG_TYPE +DOCID +SECTION +SENTENCE +FIRST_WORD_POS |

F = forward index, I = inverted index.

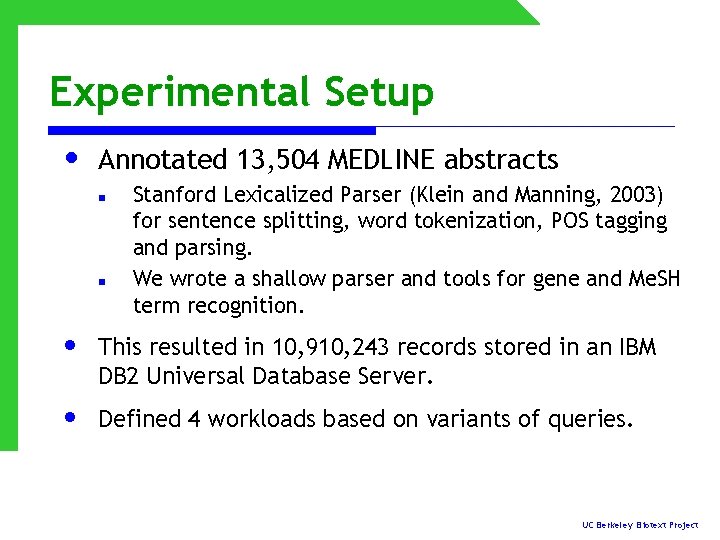

Experimental Setup
- Annotated 13,504 MEDLINE abstracts:
  - Stanford Lexicalized Parser (Klein and Manning, 2003) for sentence splitting, word tokenization, POS tagging and parsing.
  - We wrote a shallow parser and tools for gene and MeSH term recognition.
- This resulted in 10,910,243 records stored in an IBM DB2 Universal Database server.
- Defined 4 workloads based on variants of queries.

![Experimental Setup: 4 Workloads](https://slidetodoc.com/presentation_image_h2/681f3a5c1f37240a34182de20078ec77/image-38.jpg)

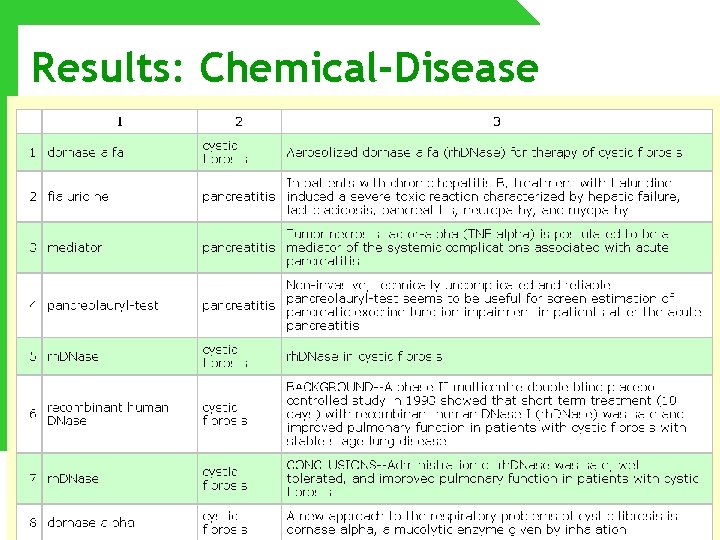

Experimental Setup: 4 Workloads

(a) Protein-protein interaction (Blaschke et al., 1999):
`[layer='sentence' {ALLOW GAPS} [layer='gene'] AS gene1 [layer='pos' && tag_name="verb" && content="binds"] AS verb [layer='gene'] AS gene2 ] SELECT gene1.content, verb.content, gene2.content`

(b) Protein-protein interaction (Thomas et al., 2000):
`[layer='sentence' [layer='shallow_parse' && tag_name="NP"] AS np1 [layer='pos' && tag_name="verb" && content='binds'] AS verb [layer='pos' && tag_name="prep" && content='to'] [layer='shallow_parse' && tag_name="NP"] AS np2 ] SELECT np1.content, verb.content, np2.content`

(c) Descent of hierarchy (Rosario et al., 2002):
`[layer='shallow_parse' && tag_name="NP" [layer='pos' && tag_name="noun" ^ [layer='mesh' && tree_number BELOW "G07.553"] AS m1 $ ] [layer='pos' && tag_name="noun" ^ [layer='mesh' && tree_number BELOW "D"] AS m2 $ ] ] SELECT m1.content, m2.content`
Illustration (MeSH categories A01, A07): limb: vein; shoulder: artery.

(d) Acronym-meaning extraction (Pustejovsky et al., 2001):
`[layer='shallow_parse' && tag_name="NP"] AS np1 [layer='pos' && content='('] [layer='shallow_parse' && tag_name="NP"] AS np2 [layer='pos' && content=')']`

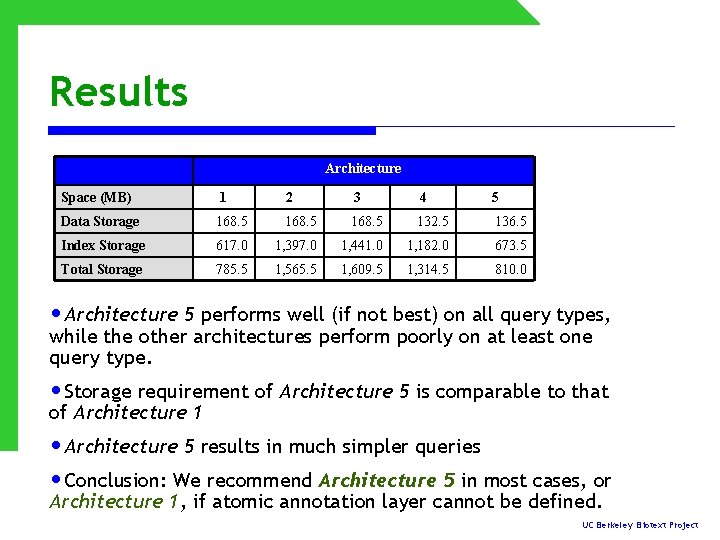

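Results

| Workload | (a) | (b) | (c) | (d) |
|---|---|---|---|---|
| #Queries | 54 | 11 | 50 | 1 |
| #Results/query | 303.4 | 77.5 | 1.6 | 16,701 |
| LQL lines | 8 | 6 | 5 | 4 |

| Workload | Measure | Arch 1 | Arch 2 | Arch 3 | Arch 4 | Arch 5 |
|---|---|---|---|---|---|---|
| (a) | SQL lines | 37 | 37 | 34 | 29 | 29 |
| (a) | # Joins | 6 | 6 | 6 | 5 | 5 |
| (a) | Time (sec) | 3.98 | 4.35 | 3.59 | 1.69 | 1.94 |
| (b) | SQL lines | 91 | 77 | 75 | 65 | 50 |
| (b) | # Joins | 12 | 11 | 11 | 9 | 7 |
| (b) | Time (sec) | 3.88 | 5.68 | 5.41 | 3.85 | 3.55 |
| (c) | SQL lines | 45 | 38 | 38 | 39 | 41 |
| (c) | # Joins | 7 | 6 | 6 | 7 | 7 |
| (c) | Time (sec) | 17.9 | 23.42 | 21.49 | 30.07 | 4.06 |
| (d) | SQL lines | 59 | 50 | 53 | 53 | 35 |
| (d) | # Joins | 4 | | | | |
| (d) | Time (sec) | 1,879 | 1,700 | 2,182 | 1,682 | 1,582 |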

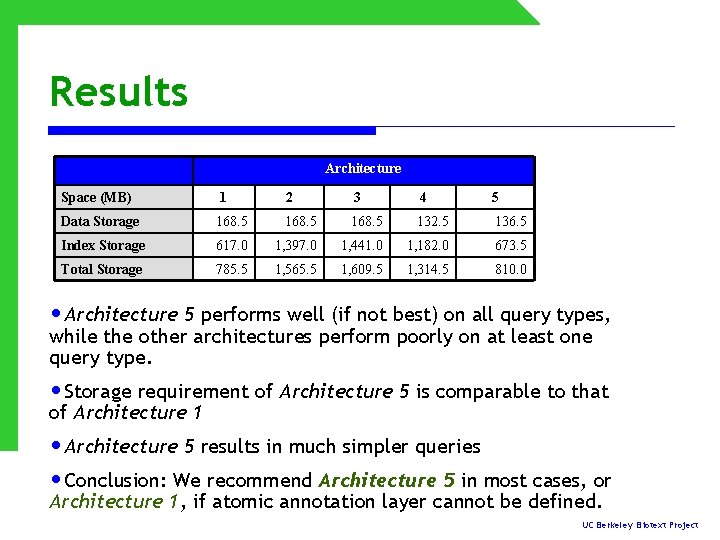

Results

| Space (MB) | Arch 1 | Arch 2 | Arch 3 | Arch 4 | Arch 5 |
|---|---|---|---|---|---|
| Data storage | 168.5 | 168.5 | 168.5 | 132.5 | 136.5 |
| Index storage | 617.0 | 1,397.0 | 1,441.0 | 1,182.0 | 673.5 |
| Total storage | 785.5 | 1,565.5 | 1,609.5 | 1,314.5 | 810.0 |

- Architecture 5 performs well (if not best) on all query types, while the other architectures perform poorly on at least one query type.
- The storage requirement of Architecture 5 is comparable to that of Architecture 1.
- Architecture 5 results in much simpler queries.
- Conclusion: we recommend Architecture 5 in most cases, or Architecture 1 if an atomic annotation layer cannot be defined.
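A quick arithmetic check of the space figures in Python: subtracting index storage from total storage gives the data storage of each architecture, and the last line compares the totals of Architectures 5 and 1.

```python
index_mb = {1: 617.0, 2: 1397.0, 3: 1441.0, 4: 1182.0, 5: 673.5}
total_mb = {1: 785.5, 2: 1565.5, 3: 1609.5, 4: 1314.5, 5: 810.0}

# Data storage = total storage - index storage, per architecture.
data_mb = {arch: total_mb[arch] - index_mb[arch] for arch in total_mb}
print(data_mb)  # {1: 168.5, 2: 168.5, 3: 168.5, 4: 132.5, 5: 136.5}

# Architecture 5 needs only about 3% more space in total than Architecture 1.
print(round(total_mb[5] / total_mb[1], 3))  # 1.031
```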

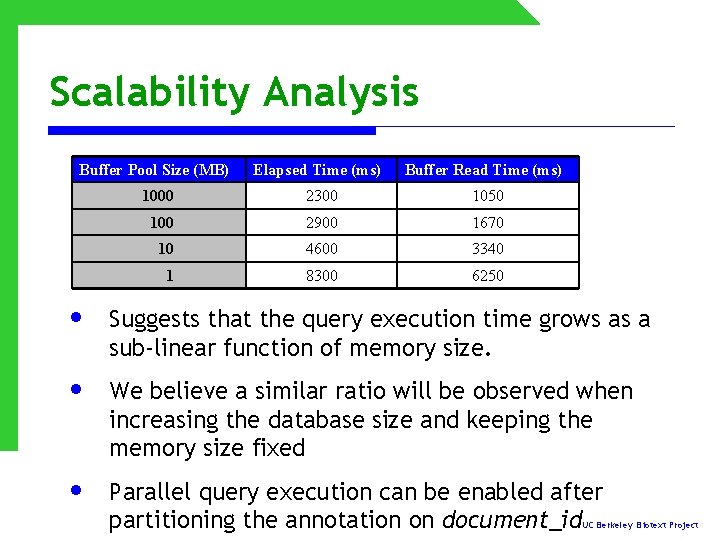

Scalability Analysis
- Combined workload of 3 query types
- Varying buffer pool sizes

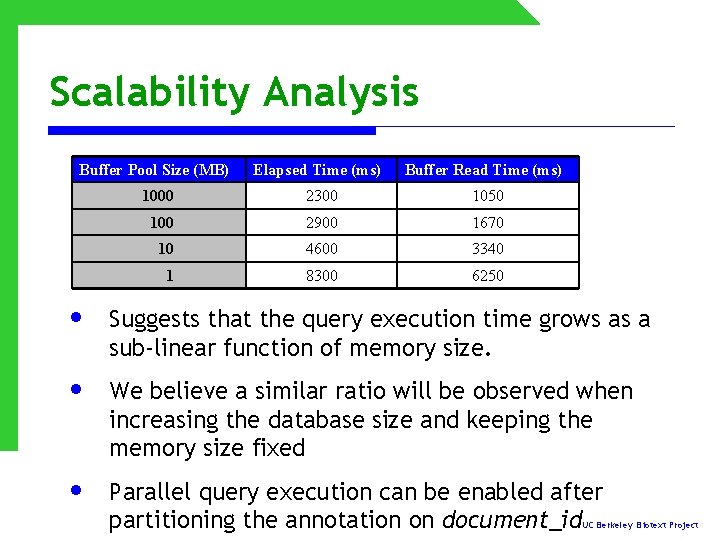

Scalability Analysis

| Buffer pool size (MB) | Elapsed time (ms) | Buffer read time (ms) |
|---|---|---|
| 1000 | 2300 | 1050 |
| 100 | 2900 | 1670 |
| 10 | 4600 | 3340 |
| 1 | 8300 | 6250 |

- Suggests that query execution time grows sub-linearly as the buffer pool shrinks: a 1000-fold reduction in memory (1000 MB to 1 MB) increases elapsed time only about 3.6-fold (2300 ms to 8300 ms).
- We believe a similar ratio will be observed when increasing the database size while keeping the memory size fixed.
- Parallel query execution can be enabled after partitioning the annotations on the document ID (docid).
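The sub-linearity can be quantified from the table with a short Python calculation that fits elapsed_time ~ buffer_size ** (-e) between the 1000 MB and 1 MB endpoints. The power-law form is an assumption used only to summarize the trend.

```python
import math

# (buffer pool MB, elapsed ms) from the scalability table
measurements = [(1000, 2300), (100, 2900), (10, 4600), (1, 8300)]

(m_hi, t_hi), (m_lo, t_lo) = measurements[0], measurements[-1]
slowdown = t_lo / t_hi                                # time ratio for a 1000x memory cut
exponent = math.log(slowdown) / math.log(m_hi / m_lo)
print(round(slowdown, 2), round(exponent, 2))  # 3.61 0.19
```

An exponent well below 1 is what "sub-linear" means here. The per-decade ratios (roughly 1.26, 1.59 and 1.80) grow as the pool shrinks, so a single power law fits only roughly.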

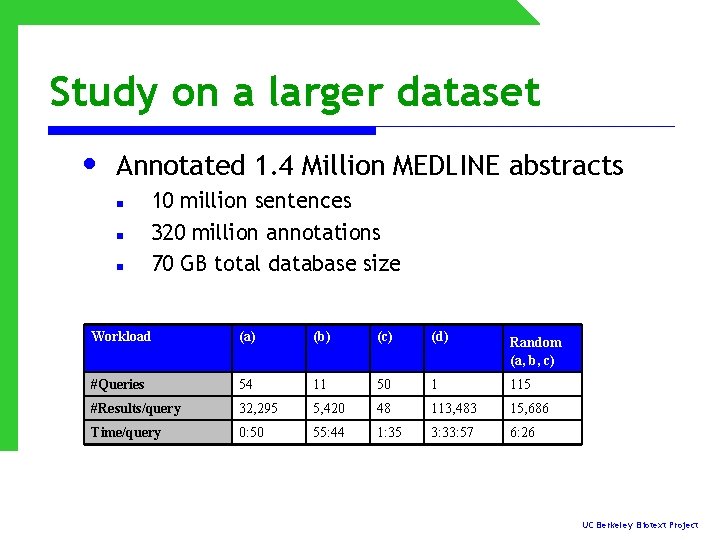

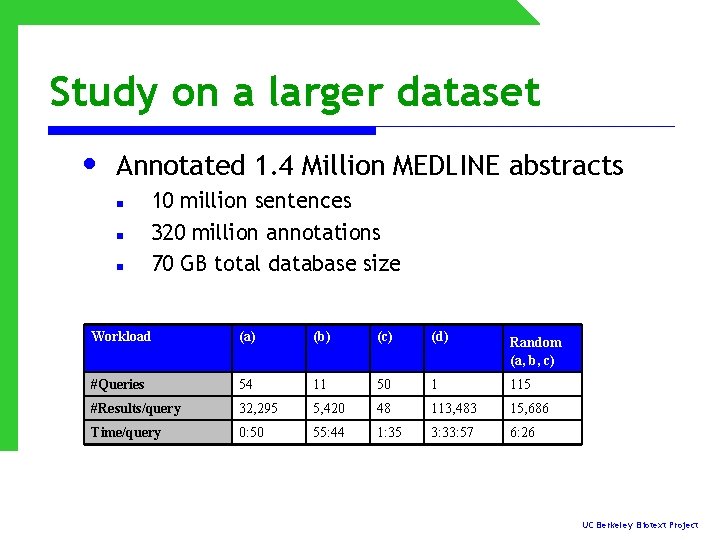

Study on a Larger Dataset
- Annotated 1.4 million MEDLINE abstracts:
  - 10 million sentences
  - 320 million annotations
  - 70 GB total database size

| Workload | (a) | (b) | (c) | (d) | Random (a, b, c) |
|---|---|---|---|---|---|
| #Queries | 54 | 11 | 50 | 1 | 115 |
| #Results/query | 32,295 | 5,420 | 48 | 113,483 | 15,686 |
| Time/query | 0:50 | 55:44 | 1:35 | 3:33:57 | 6:26 |

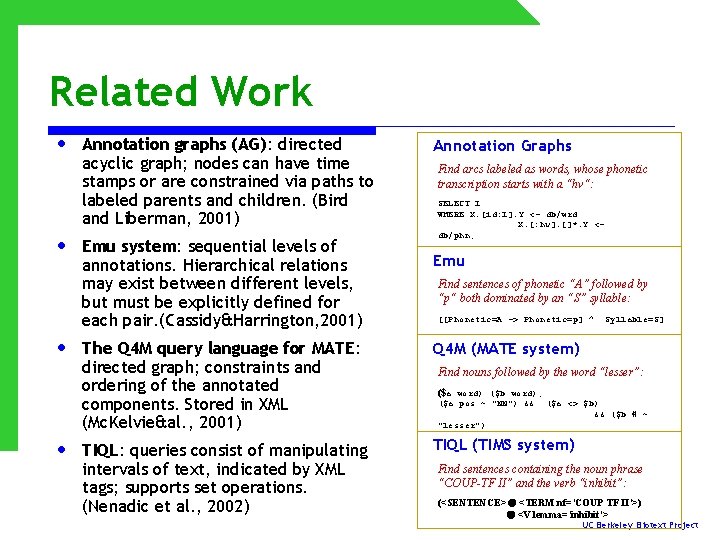

Related Work
- Annotation graphs (AG): directed acyclic graph; nodes can have time stamps or are constrained via paths to labeled parents and children (Bird and Liberman, 2001).
  - Find arcs labeled as words whose phonetic transcription starts with “hv”: `SELECT I WHERE X.[id:I].Y <- db/wrd X.[:hv].[]*.Y <- db/phn;`
- Emu system: sequential levels of annotations. Hierarchical relations may exist between different levels, but must be explicitly defined for each pair (Cassidy & Harrington, 2001).
  - Find sequences of phonetic “A” followed by “p”, both dominated by an “S” syllable: `[[Phonetic=A -> Phonetic=p] ^ Syllable=S]`
- The Q4M query language for MATE: directed graph; constraints and ordering of the annotated components; stored in XML (McKelvie et al., 2001).
  - Find nouns followed by the word “lesser”: `($a word) ($b word); ($a pos ~ "NN") && ($a <> $b) && ($b # ~ "lesser")`
- TIQL (TIMS system): queries consist of manipulating intervals of text, indicated by XML tags; supports set operations (Nenadic et al., 2002).
  - Find sentences containing the noun phrase “COUP-TF II” and the verb “inhibit”: `(<SENTENCE> <TERM nf='COUP TF II'>) <V lemma='inhibit'>`

What about XQuery/XPath?

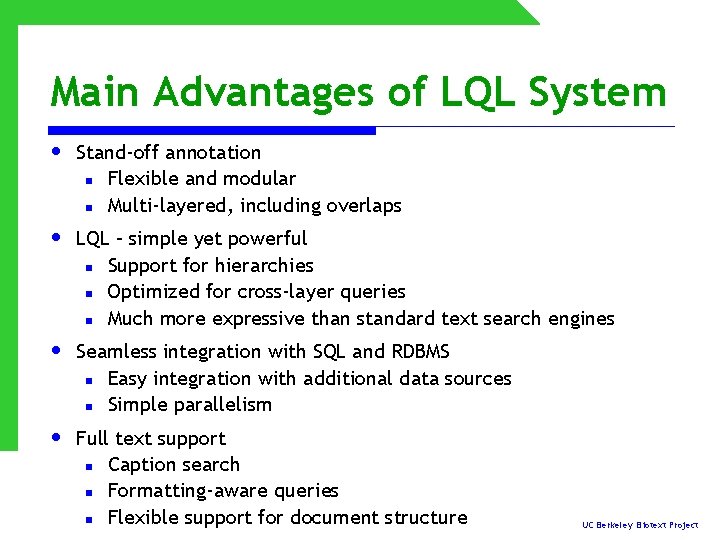

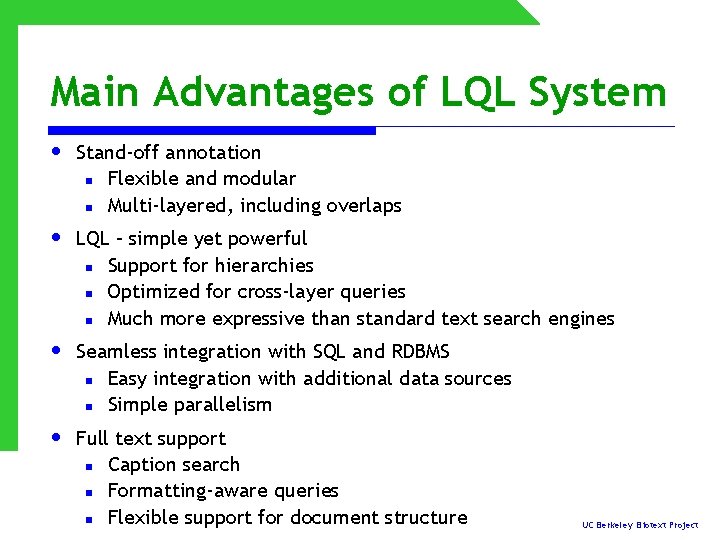

Main Advantages of the LQL System
- Stand-off annotation
  - Flexible and modular
  - Multi-layered, including overlaps
- LQL: simple yet powerful
  - Support for hierarchies
  - Optimized for cross-layer queries
  - Much more expressive than standard text search engines
- Seamless integration with SQL and RDBMS
  - Easy integration with additional data sources
  - Simple parallelism
- Full text support
  - Caption search
  - Formatting-aware queries
  - Flexible support for document structure

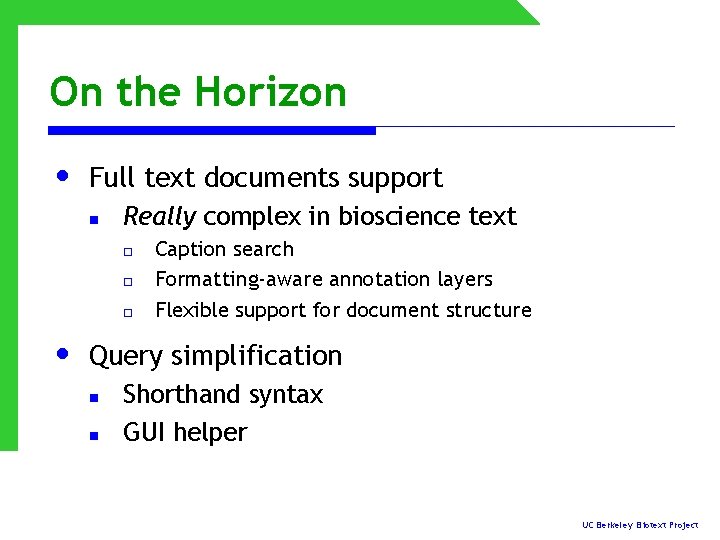

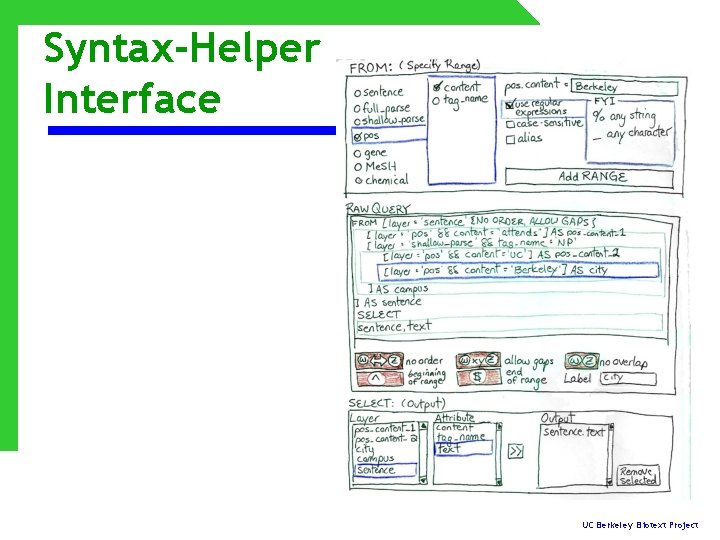

On the Horizon
- Full text document support
  - Really complex in bioscience text:
    - Caption search
    - Formatting-aware annotation layers
    - Flexible support for document structure
- Query simplification
  - Shorthand syntax
  - GUI helper

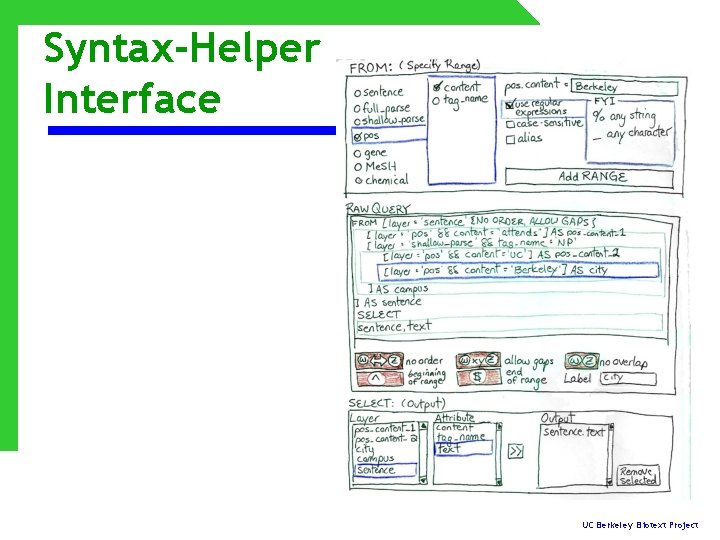

Syntax-Helper Interface

Thank you! biotext.berkeley.edu/lql

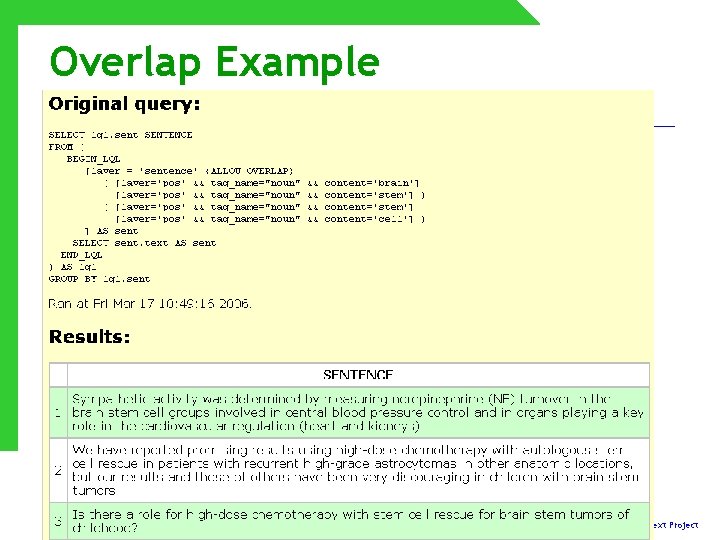

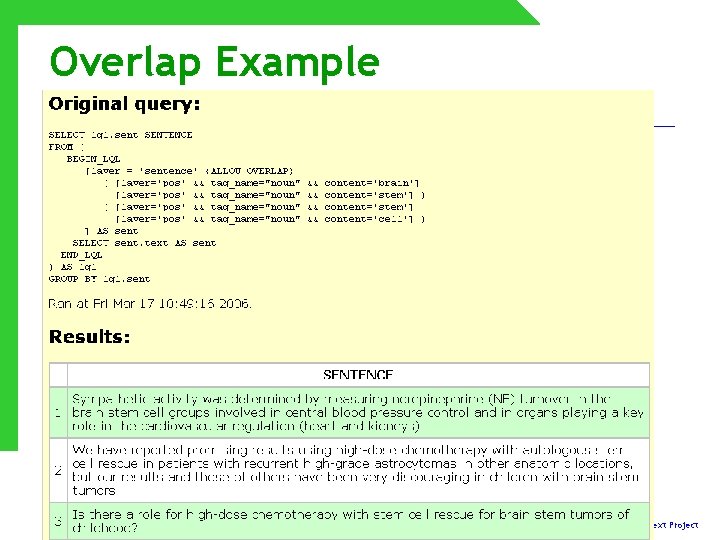

Overlap Example

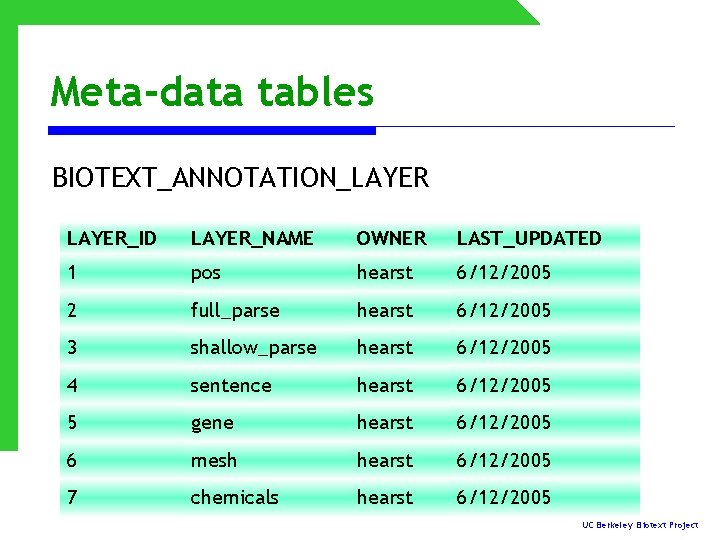

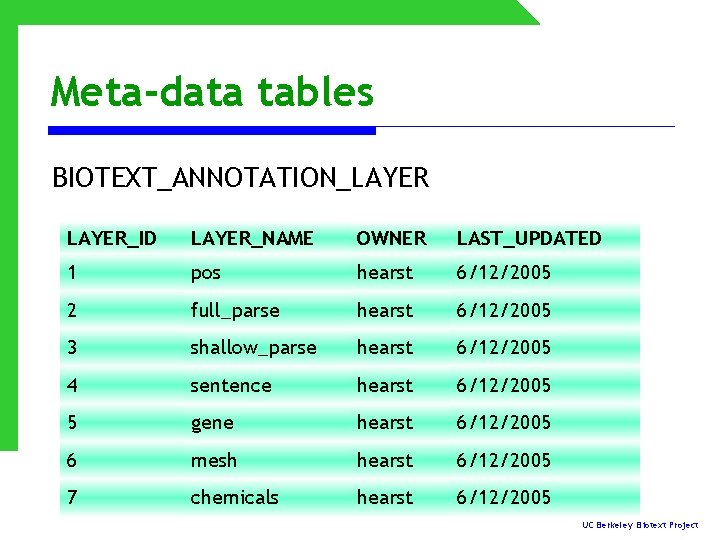

Meta-data tables: BIOTEXT_ANNOTATION_LAYERS

| LAYER_ID | LAYER_NAME | OWNER | LAST_UPDATED |
|---|---|---|---|
| 1 | pos | hearst | 6/12/2005 |
| 2 | full_parse | hearst | 6/12/2005 |
| 3 | shallow_parse | hearst | 6/12/2005 |
| 4 | sentence | hearst | 6/12/2005 |
| 5 | gene | hearst | 6/12/2005 |
| 6 | mesh | hearst | 6/12/2005 |
| 7 | chemicals | hearst | 6/12/2005 |

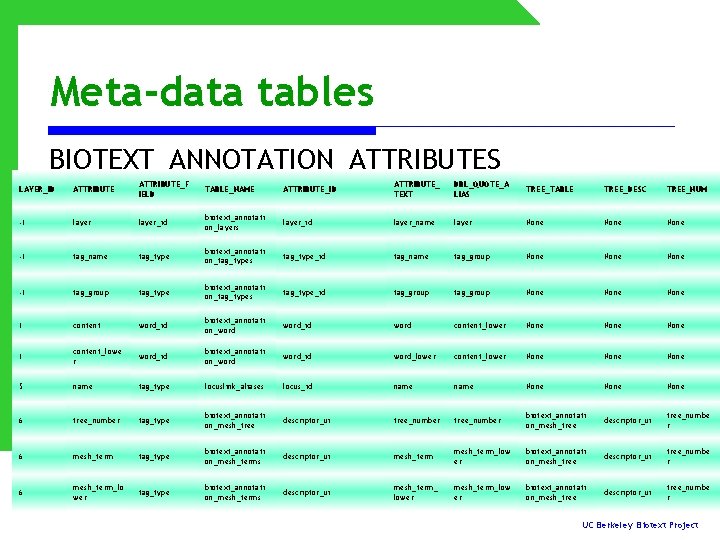

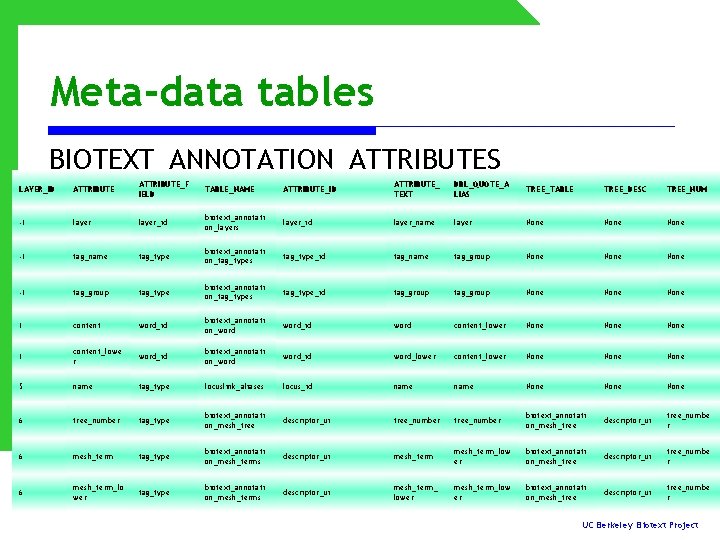

Meta-data tables: BIOTEXT_ANNOTATION_ATTRIBUTES

LAYER_ID ATTRIBUTE_FIELD TABLE_NAME ATTRIBUTE_ID ATTRIBUTE_TEXT DBL_QUOTE_ALIAS TREE_TABLE TREE_DESC TREE_NUM -1 layer_id biotext_annotation_layers layer_id layer_name layer None -1 tag_name tag_type biotext_annotation_tag_types tag_type_id tag_name tag_group None -1 tag_group tag_type biotext_annotation_tag_types tag_type_id tag_group None 1 content word_id biotext_annotation_word_id word content_lower None 1 content_lower word_id biotext_annotation_word_id word_lower content_lower None 5 name tag_type locuslink_aliases locus_id name None 6 tree_number tag_type biotext_annotation_mesh_tree descriptor_ui tree_number biotext_annotation_mesh_tree descriptor_ui tree_number 6 mesh_term tag_type biotext_annotation_mesh_terms descriptor_ui mesh_term_lower biotext_annotation_mesh_tree descriptor_ui tree_number 6 mesh_term_lower tag_type biotext_annotation_mesh_terms descriptor_ui mesh_term_lower mesh_term_lower biotext_annotation_mesh_tree descriptor_ui tree_number

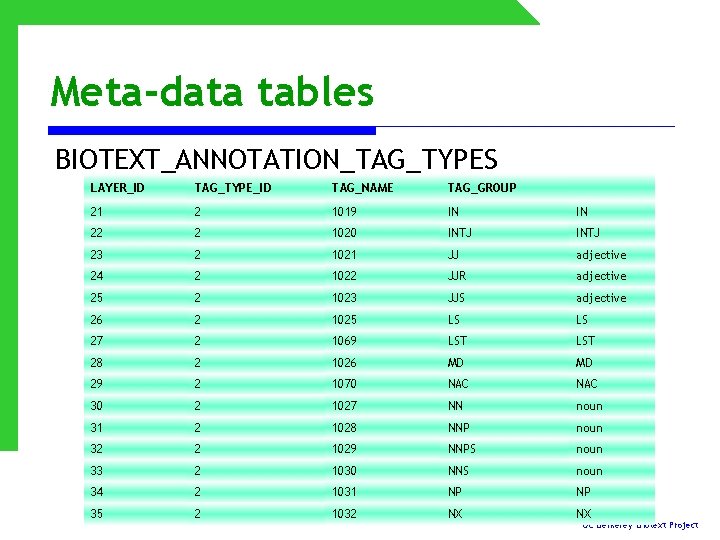

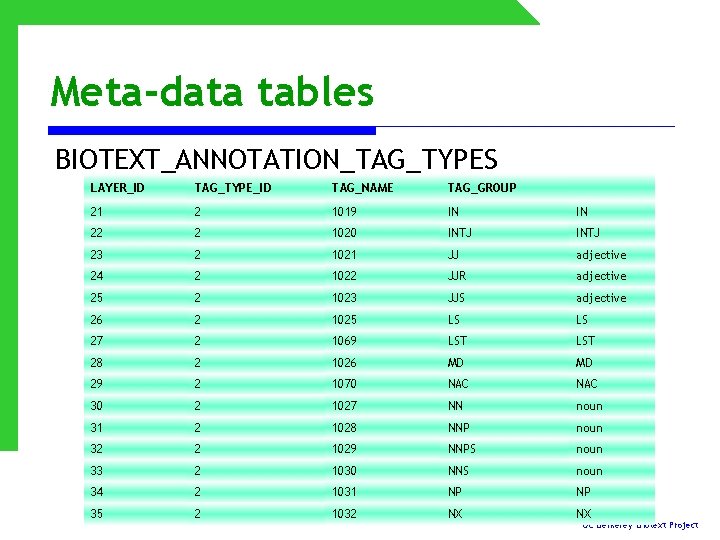

Meta-data tables: BIOTEXT_ANNOTATION_TAG_TYPES

| LAYER_ID | TAG_TYPE_ID | TAG_NAME | TAG_GROUP |
|---|---|---|---|
| 2 | 1019 | IN | IN |
| 2 | 1020 | INTJ | |
| 2 | 1021 | JJ | adjective |
| 2 | 1022 | JJR | adjective |
| 2 | 1023 | JJS | adjective |
| 2 | 1025 | LS | LS |
| 2 | 1069 | LST | |
| 2 | 1026 | MD | MD |
| 2 | 1070 | NAC | |
| 2 | 1027 | NN | noun |
| 2 | 1028 | NNP | noun |
| 2 | 1029 | NNPS | noun |
| 2 | 1030 | NNS | noun |
| 2 | 1031 | NP | NP |
| 2 | 1032 | NX | NX |

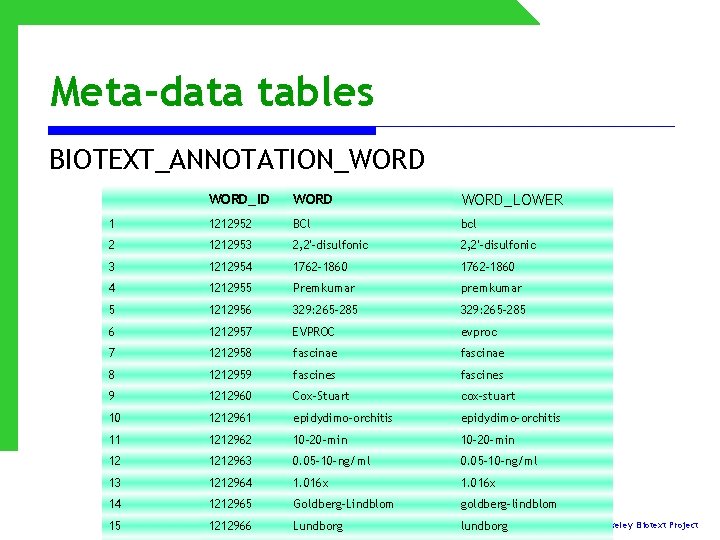

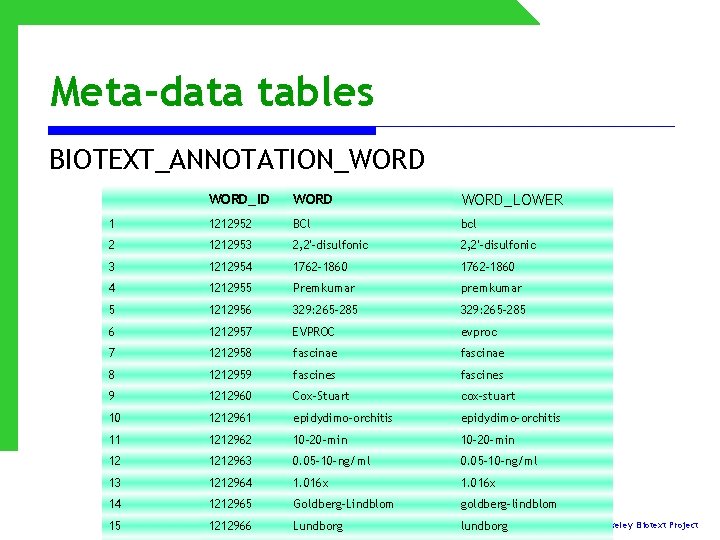

Meta-data tables: BIOTEXT_ANNOTATION_WORD_ID

| WORD_ID | WORD | WORD_LOWER |
|---|---|---|
| 1212952 | BCl | bcl |
| 1212953 | 2,2'-disulfonic | |
| 1212954 | 1762-1860 | |
| 1212955 | Premkumar | premkumar |
| 1212956 | 329:265-285 | |
| 1212957 | EVPROC | evproc |
| 1212958 | fascinae | |
| 1212959 | fascines | |
| 1212960 | Cox-Stuart | cox-stuart |
| 1212961 | epidydimo-orchitis | |
| 1212962 | 10-20-min | |
| 1212963 | 0.05-10-ng/ml | |
| 1212964 | 1.016 x | |
| 1212965 | Goldberg-Lindblom | goldberg-lindblom |
| 1212966 | Lundborg | lundborg |

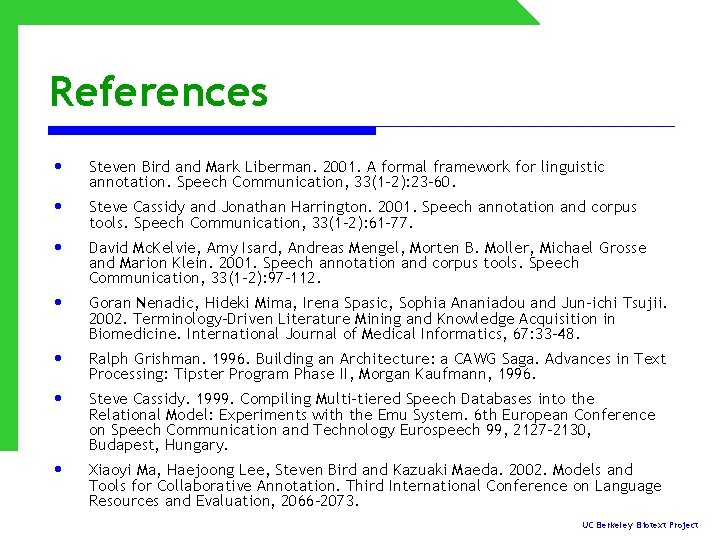

References
- Steven Bird and Mark Liberman. 2001. A formal framework for linguistic annotation. Speech Communication, 33(1–2): 23–60.
- Steve Cassidy and Jonathan Harrington. 2001. Multi-level annotation in the Emu speech database management system. Speech Communication, 33(1–2): 61–77.
- David McKelvie, Amy Isard, Andreas Mengel, Morten B. Moller, Michael Grosse and Marion Klein. 2001. The MATE workbench: an annotation tool for XML coded speech corpora. Speech Communication, 33(1–2): 97–112.
- Goran Nenadic, Hideki Mima, Irena Spasic, Sophia Ananiadou and Jun-ichi Tsujii. 2002. Terminology-driven literature mining and knowledge acquisition in biomedicine. International Journal of Medical Informatics, 67: 33–48.
- Ralph Grishman. 1996. Building an architecture: a CAWG saga. In Advances in Text Processing: Tipster Program Phase II. Morgan Kaufmann.
- Steve Cassidy. 1999. Compiling multi-tiered speech databases into the relational model: experiments with the Emu system. In 6th European Conference on Speech Communication and Technology (Eurospeech 99), 2127–2130, Budapest, Hungary.
- Xiaoyi Ma, Haejoong Lee, Steven Bird and Kazuaki Maeda. 2002. Models and tools for collaborative annotation. In Third International Conference on Language Resources and Evaluation, 2066–2073.

Acquiring Labeled Data using Citances

A discovery is made … A paper is written …

That paper is cited … and cited … … as the evidence for some fact(s) F.

Each of these in turn is cited for some fact(s) … … until it is the case that all important facts in the field can be found in citation sentences alone!

Citances
- Nearly every statement in a bioscience journal article is backed up with a citation.
  - The text around the citation tends to state biological facts. (Call these citances.)
- It is quite common for papers to be cited 30-100 times.
  - Different citances will state the same facts in different ways …
  - … so can we use these for creating models of language expressing semantic relations?