
Supervised learning: Mixture Of Experts (MOE) Network

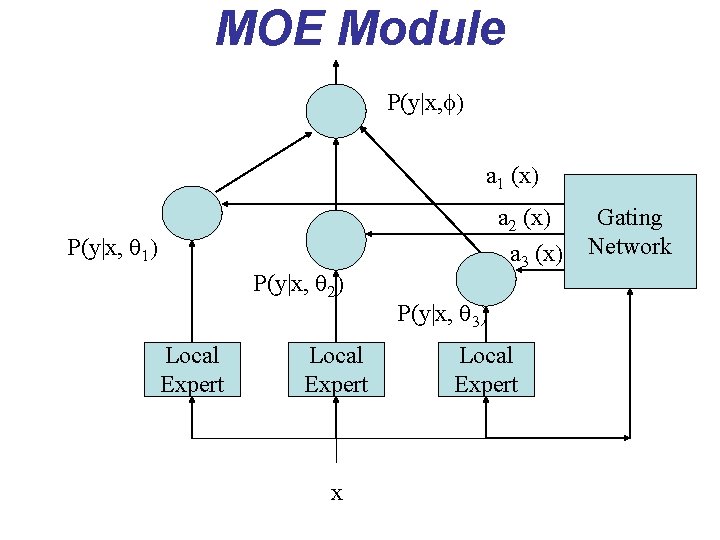

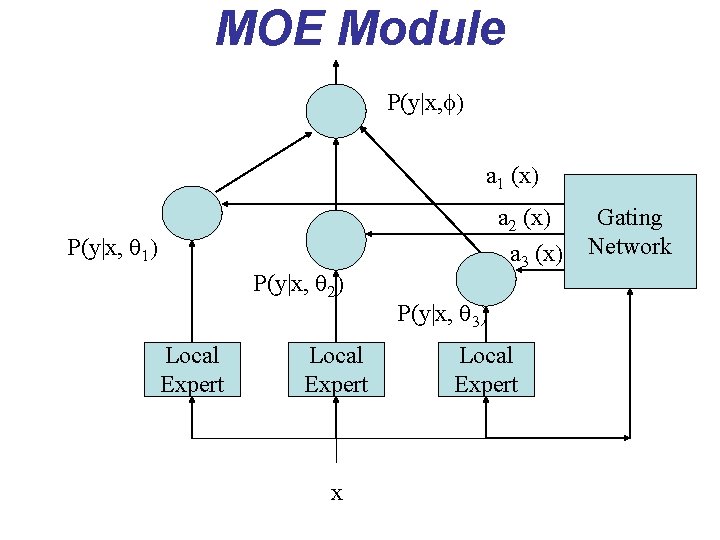

MOE Module (diagram): the input x feeds three local experts, which output P(y|x, Θ1), P(y|x, Θ2) and P(y|x, Θ3), and a gating network, which outputs the weights a1(x), a2(x) and a3(x); the module output is P(y|x, Φ).

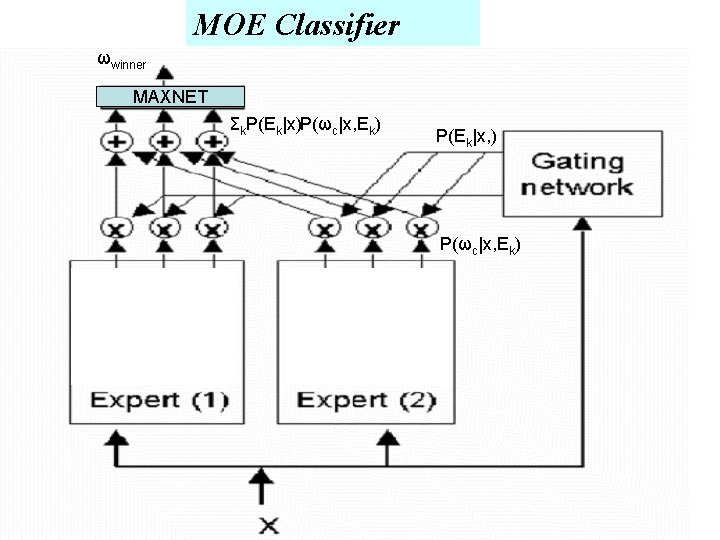

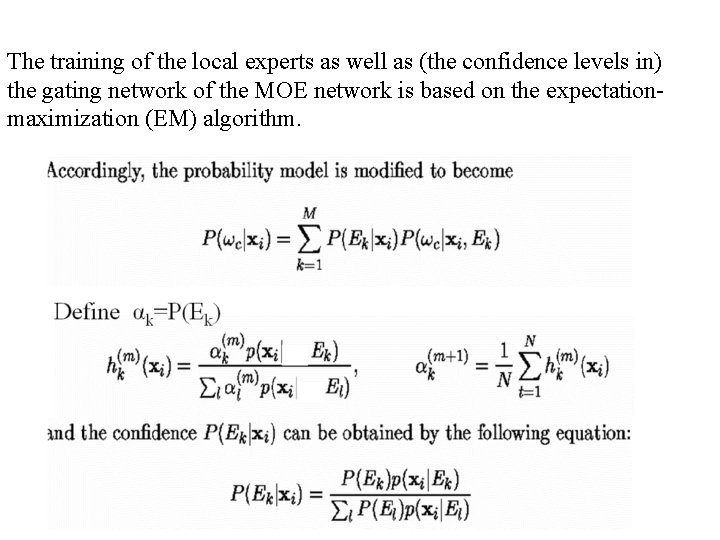

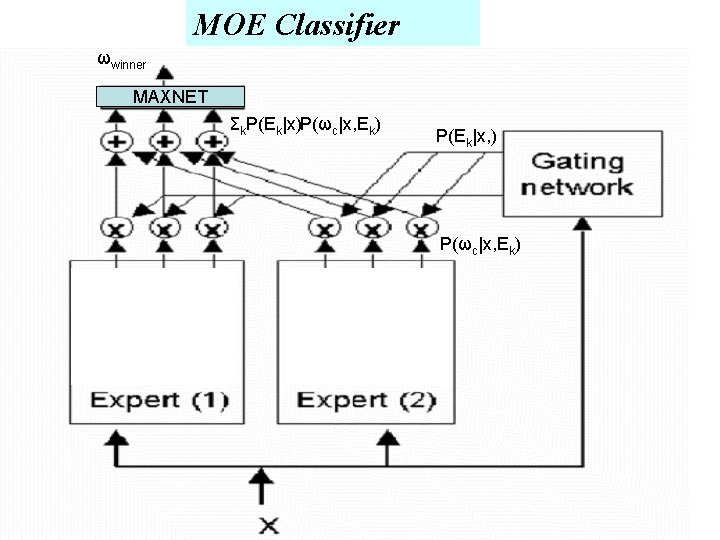

The objective is to estimate the model parameters that maximize the likelihood of the training set. For a given input x, the posterior probability of class y given x, computed with K experts, is

$$P(y \mid x, \Phi) = \sum_{j=1}^{K} P(y \mid x, \Theta_j)\, a_j(x)$$

where Θj denotes the parameters of the j-th local expert, aj(x) is the weight the gating network assigns to that expert, and Φ collects all parameters of the model.
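The weighted sum above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slides do not say how the gate or the experts are parameterized, so a linear layer followed by a softmax is assumed for both, and the names `softmax`, `linear_scores`, `moe_posterior` and all numbers are hypothetical.

```python
import math

def softmax(scores):
    """Turn arbitrary real scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear_scores(weights, x):
    """One score per row of `weights`: dot(row[:-1], x) + row[-1] (bias)."""
    return [sum(w * xi for w, xi in zip(row[:-1], x)) + row[-1] for row in weights]

def moe_posterior(x, gate_weights, expert_weights):
    """P(y | x, Phi) = sum_j a_j(x) * P(y | x, Theta_j).

    gate_weights:   one row per expert (assumed softmax gate)
    expert_weights: per expert, one row per class (assumed softmax expert)
    """
    a = softmax(linear_scores(gate_weights, x))                     # a_j(x)
    expert_probs = [softmax(linear_scores(theta, x))                # P(y | x, Theta_j)
                    for theta in expert_weights]
    n_classes = len(expert_probs[0])
    return [sum(a[j] * expert_probs[j][c] for j in range(len(a)))
            for c in range(n_classes)]

# Toy setup: 2 experts, 2 classes, 2-dimensional input (made-up numbers).
gate_weights = [[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]
expert_weights = [
    [[2.0, 0.0, 0.0], [-2.0, 0.0, 0.0]],   # expert 1 looks at the first feature
    [[0.0, 2.0, 0.0], [0.0, -2.0, 0.0]],   # expert 2 looks at the second feature
]
posterior = moe_posterior([0.5, -1.0], gate_weights, expert_weights)
```

Because the gate weights and each expert's class probabilities both sum to one, `posterior` is itself a valid probability distribution over the classes.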

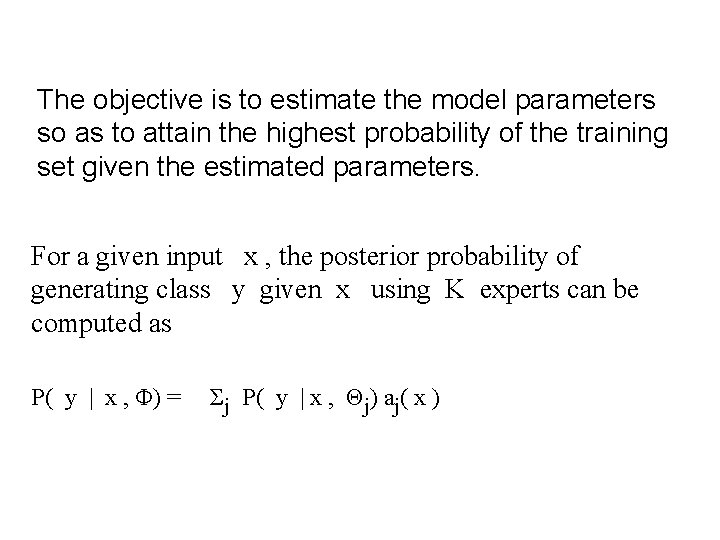

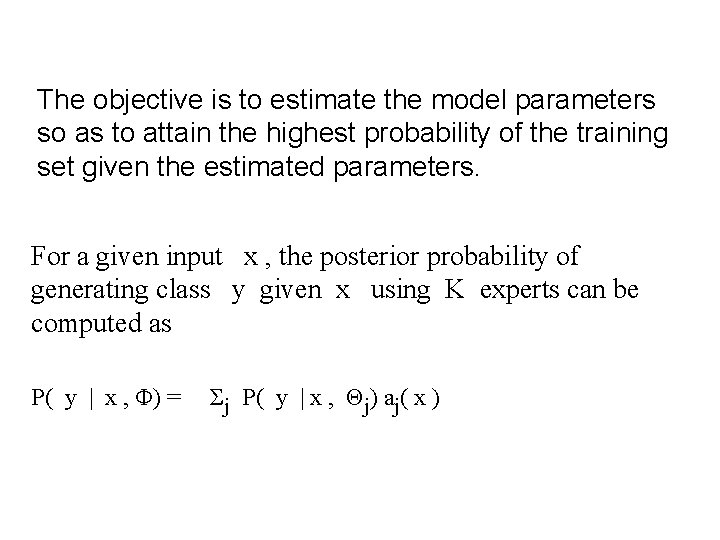

Each RBF Gaussian kernel can be viewed as a local expert. (Diagram labels: MAXNET, Gating NET.)
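One way to read this statement is that a Gaussian kernel responds strongly only near its center, so the kernel whose center is closest to the input dominates. The sketch below illustrates that reading; the normalization step, the shared width `sigma`, and the function names are assumptions made for illustration and are not taken from the slides.

```python
import math

def gaussian_kernel(x, center, sigma):
    """Gaussian RBF: equals 1 at the center and decays with squared distance."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def normalized_activations(x, centers, sigma=1.0):
    """Kernel activations rescaled to sum to 1 (assumes x is not so far from
    every center that all activations underflow to zero)."""
    k = [gaussian_kernel(x, c, sigma) for c in centers]
    total = sum(k)
    return [ki / total for ki in k]

# Two kernels with made-up centers; each one "owns" the region around its center.
centers = [[0.0, 0.0], [3.0, 3.0]]
near_first = normalized_activations([0.2, -0.1], centers)
near_second = normalized_activations([2.9, 3.1], centers)
```

For an input near the first center almost all of the normalized activation goes to the first kernel, and the reverse holds near the second center.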

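MOE Classifier (diagram): the gating network outputs P(Ek|x) and each expert Ek outputs P(ωc|x, Ek); the combined score Σk P(Ek|x) P(ωc|x, Ek) is computed for each class ωc, and a MAXNET selects ωwinner.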
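The decision rule in this diagram can be sketched as follows, treating the MAXNET as a plain argmax over the combined class scores. The function name `moe_classify` and the probabilities are hypothetical and only illustrate the arithmetic.

```python
def moe_classify(gate_probs, expert_class_probs):
    """Return (winner, scores), where scores[c] = sum_k P(E_k|x) * P(w_c|x, E_k).

    gate_probs:         P(E_k | x), one entry per expert
    expert_class_probs: P(w_c | x, E_k), one row per expert, one column per class
    """
    n_classes = len(expert_class_probs[0])
    scores = [sum(g * probs[c] for g, probs in zip(gate_probs, expert_class_probs))
              for c in range(n_classes)]
    # MAXNET step, modeled here as picking the index of the largest score.
    winner = max(range(n_classes), key=lambda c: scores[c])
    return winner, scores

# Made-up numbers: the gate trusts expert 0 more, and expert 0 favors class 1.
gate_probs = [0.7, 0.3]
expert_class_probs = [[0.2, 0.8],
                      [0.9, 0.1]]
winner, scores = moe_classify(gate_probs, expert_class_probs)
```

Here the scores are 0.7·0.2 + 0.3·0.9 = 0.41 for class 0 and 0.7·0.8 + 0.3·0.1 = 0.59 for class 1, so class 1 wins even though expert 1 strongly prefers class 0.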

![Mixture of Experts](https://slidetodoc.com/presentation_image_h/e6a4c63c30586115f10916ef57e1a56e/image-6.jpg)

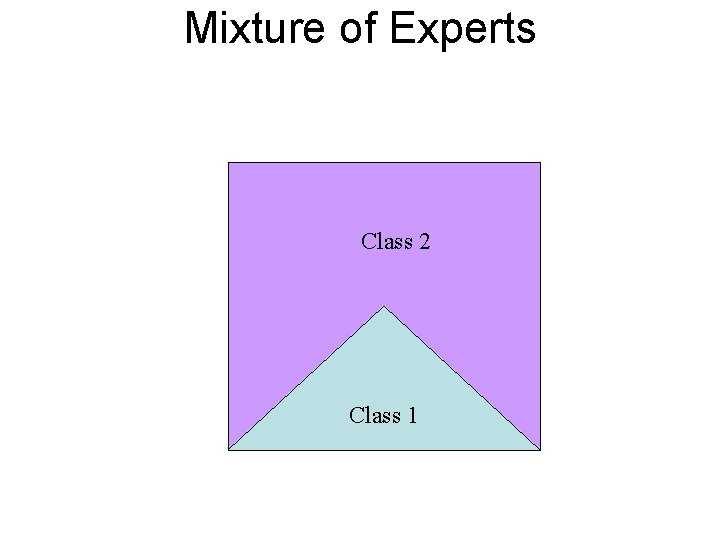

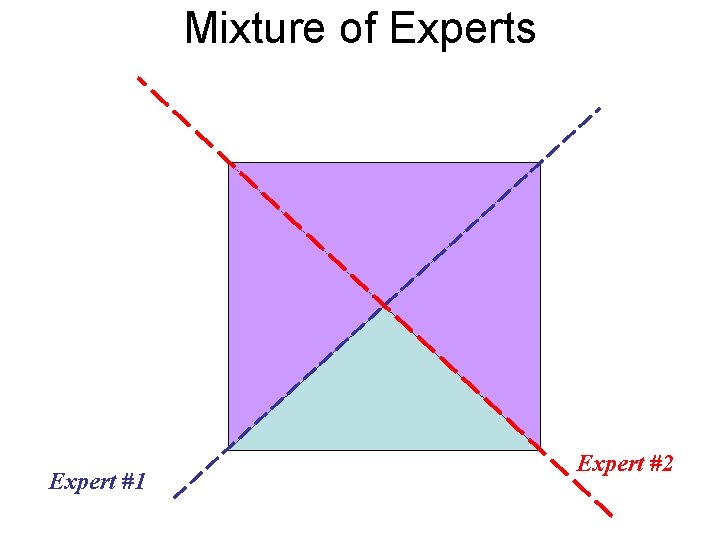

Mixture of Experts

The MOE [Jacobs 91] exhibits an explicit relationship with statistical pattern classification methods as well as a close resemblance to fuzzy inference systems. Given a pattern, each expert network estimates the pattern's conditional a posteriori probability on the (adaptively tuned or preassigned) feature space. Each local expert network performs multi-way classification over K classes by using either K independent binomial models, each modeling only one class, or one multinomial model for all classes.
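The two output options for a local expert can be contrasted with a small sketch. It assumes the binomial models are logistic (sigmoid) units and the multinomial model is a softmax over the same K scores; the slides do not specify the functional forms, and the scores are made up.

```python
import math

def sigmoid(z):
    """Logistic function: maps a real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Maps K real scores to K probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def binomial_outputs(scores):
    """K independent binomial models: each class gets its own yes/no probability."""
    return [sigmoid(s) for s in scores]

def multinomial_outputs(scores):
    """One multinomial model: the K classes compete for a total probability of 1."""
    return softmax(scores)

scores = [2.0, 0.5, -1.0]          # hypothetical per-class scores from one expert
independent = binomial_outputs(scores)
joint = multinomial_outputs(scores)
```

The independent outputs need not sum to one, because each unit models only its own class, while the multinomial outputs always form a distribution over all K classes.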

Two Components of MOE

- local experts
- gating network

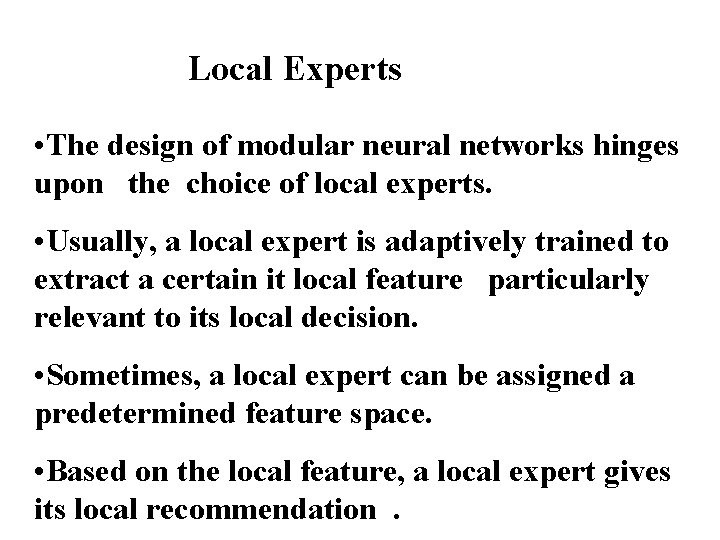

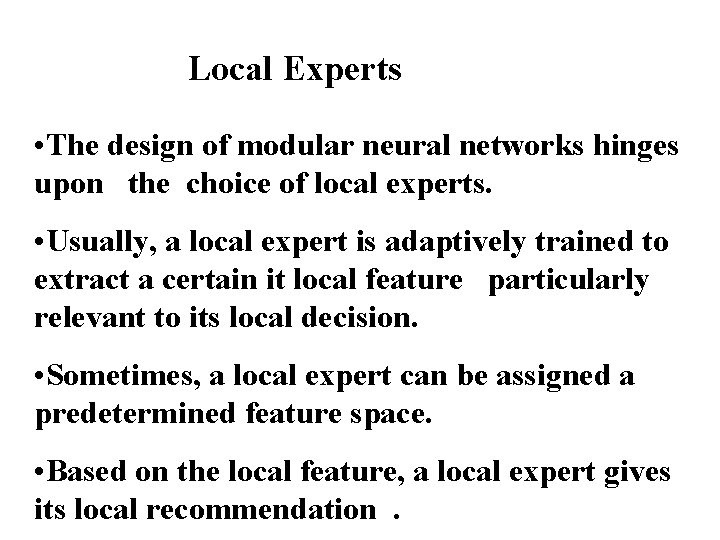

Local Experts

- The design of modular neural networks hinges upon the choice of local experts.
- Usually, a local expert is adaptively trained to extract a certain local feature particularly relevant to its local decision.
- Sometimes, a local expert can be assigned a predetermined feature space.
- Based on the local feature, a local expert gives its local recommendation.

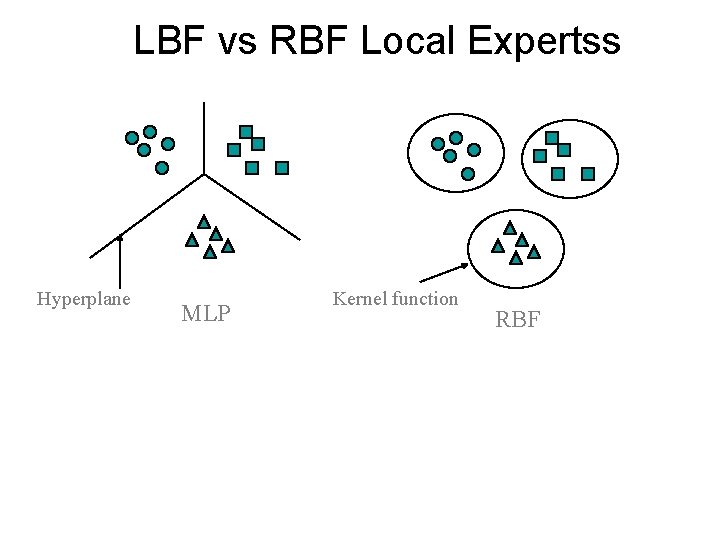

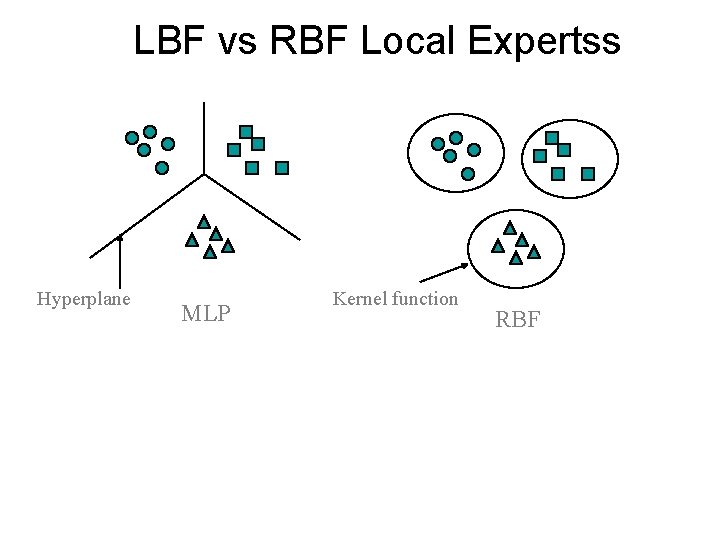

LBF vs RBF Local Experts

- LBF: hyperplane (MLP)
- RBF: kernel function
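The contrast between the two kinds of expert can be sketched with one unit of each type. This assumes LBF stands for a linear basis function of the form w·x + b, as used in MLP neurons, and that the RBF is Gaussian; the weights, center and width are made up.

```python
import math

def lbf(x, w, b):
    """Linear basis function: its zero level set {x : w.x + b = 0} is a hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def rbf(x, center, sigma):
    """Gaussian radial basis function: depends only on the distance to the center."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

w, b = [1.0, 0.0], 0.0             # hypothetical LBF unit
center, sigma = [0.0, 0.0], 1.0    # hypothetical RBF unit

# Moving away from the origin along w: the LBF response keeps growing,
# while the RBF response falls toward zero.
lbf_near, lbf_far = lbf([1.0, 0.0], w, b), lbf([10.0, 0.0], w, b)
rbf_near, rbf_far = rbf([1.0, 0.0], center, sigma), rbf([10.0, 0.0], center, sigma)
```

An LBF unit therefore splits the input space into two half-spaces, whereas an RBF unit is active only in a bounded neighborhood of its center.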

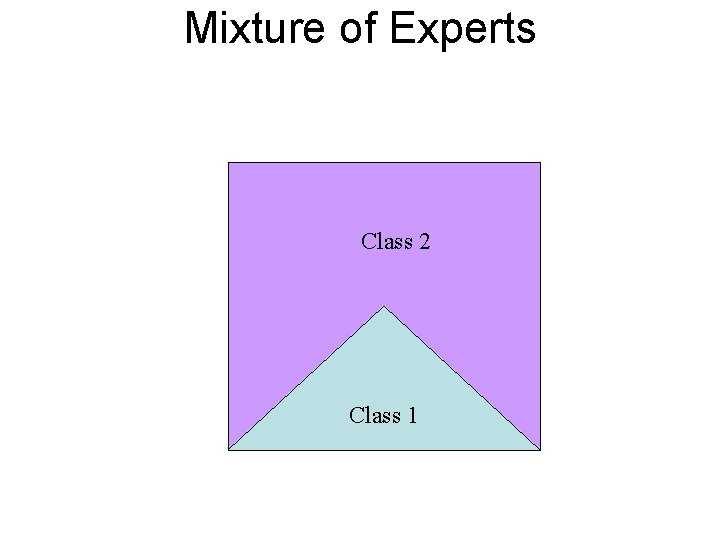

Mixture of Experts (figure labels: Class 1, Class 2)

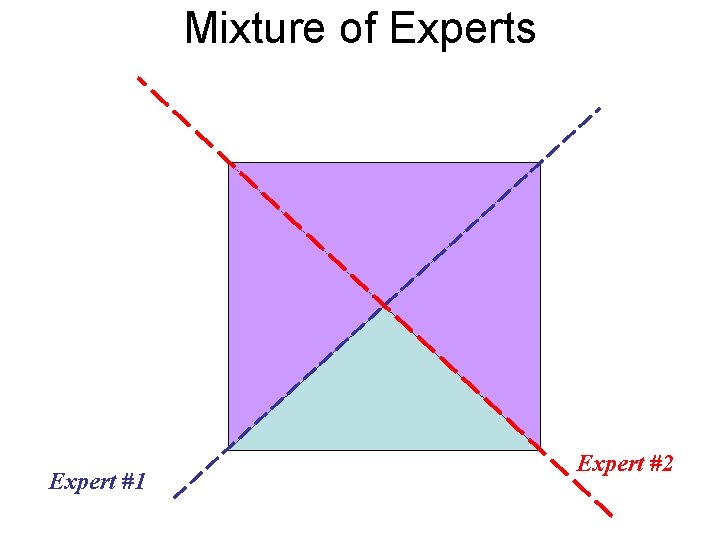

Mixture of Experts (figure labels: Expert #1, Expert #2)

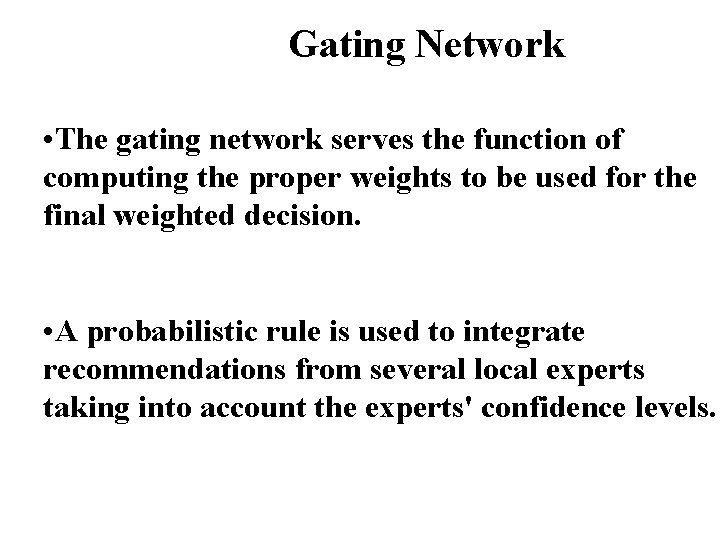

Gating Network

- The gating network serves the function of computing the proper weights to be used for the final weighted decision.
- A probabilistic rule is used to integrate recommendations from several local experts, taking into account the experts' confidence levels.

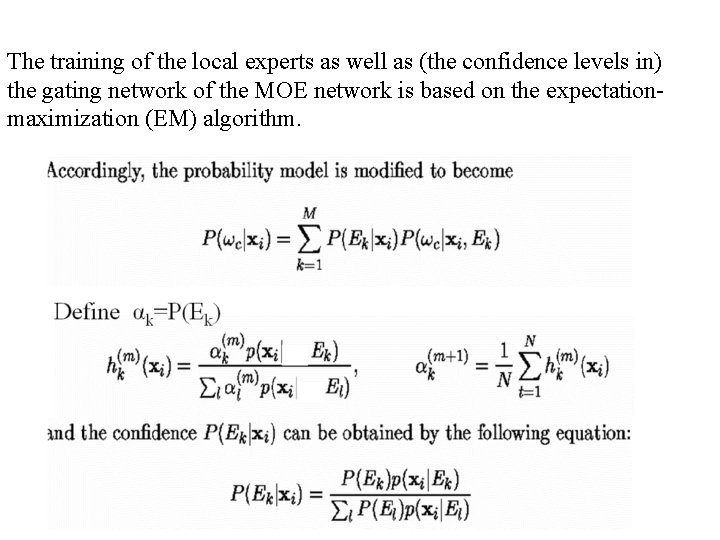

The training of the local experts as well as (the confidence levels in) the gating network of the MOE network is based on the expectation-maximization (EM) algorithm.
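The slides do not spell out the EM updates, so the following is only a sketch of the E-step as it is commonly formulated for mixture models: for one training pair (x, y), each expert's responsibility is its gating weight times the likelihood it assigns to the observed y, normalized over all experts. The function name `e_step` and the numbers are hypothetical.

```python
def e_step(gate_probs, expert_likelihoods):
    """Responsibilities h_j = a_j(x) * P(y|x, Theta_j) / sum_k a_k(x) * P(y|x, Theta_k).

    gate_probs:         a_j(x), the prior weight of each expert for this input
    expert_likelihoods: P(y | x, Theta_j), evaluated at the observed label y
    """
    joint = [a * p for a, p in zip(gate_probs, expert_likelihoods)]
    total = sum(joint)  # equals P(y | x, Phi), the mixture likelihood of this sample
    return [j / total for j in joint]

# Made-up numbers: the gate is undecided, but expert 0 explains the label far better.
gate_probs = [0.5, 0.5]
expert_likelihoods = [0.9, 0.1]
responsibilities = e_step(gate_probs, expert_likelihoods)
```

In the M-step these responsibilities would typically serve as per-sample weights when re-fitting each expert and as targets when re-fitting the gating network; the exact update depends on the chosen expert and gate models.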