SUMS: STAR Unified Meta Scheduler

- Slides: 43

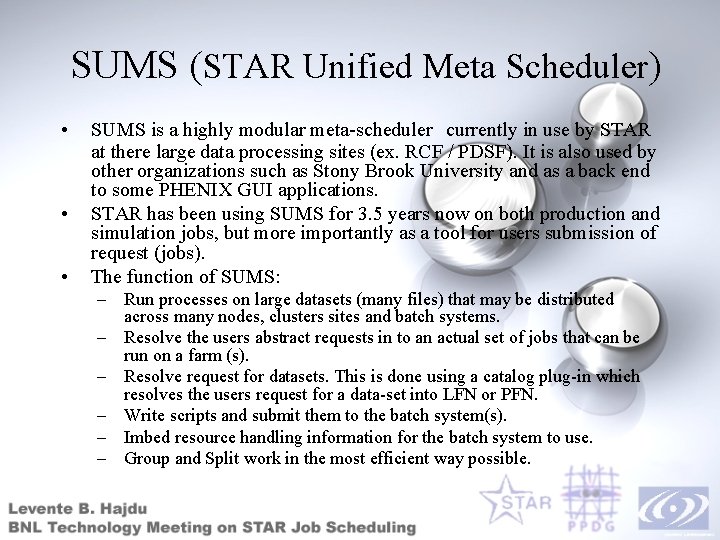

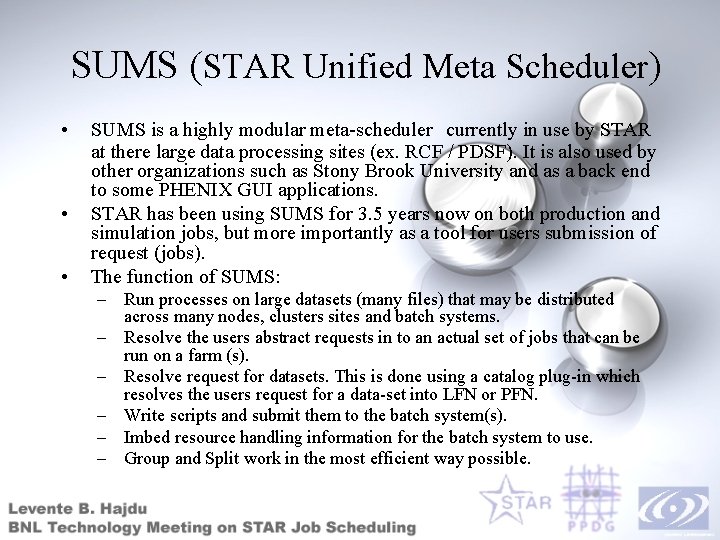

SUMS (STAR Unified Meta Scheduler)

- SUMS is a highly modular meta-scheduler currently in use by STAR at its large data processing sites (e.g. RCF and PDSF).
- It is also used by other organizations, such as Stony Brook University, and as a back end to some PHENIX GUI applications.
- STAR has been using SUMS for 3.5 years for both production and simulation jobs, and more importantly as the tool through which users submit their requests (jobs).

The function of SUMS:

- Run processes on large datasets (many files) that may be distributed across many nodes, clusters, sites and batch systems.
- Resolve the user's abstract request into an actual set of jobs that can be run on one or more farms.
- Resolve requests for datasets. This is done using a catalog plug-in that resolves the user's dataset request into LFNs or PFNs.
- Write scripts and submit them to the batch system(s).
- Embed resource-handling information for the batch system to use.
- Group and split work in the most efficient way possible.

Who contributes to SUMS research, development and administration

- PPDG: funding
- Jerome Lauret and Levente Hajdu: development and administration of SUMS at BNL
- Lidia Didenko: testing for grid readiness
- David Alexander and Paul Hamill (Tech-X Corp): RDL deployment, prototype client and web service
- Eric Hjort, Iwona Sakrejda, Doug Olson: administration of SUMS at PDSF
- Valeri Fine: job tracking
- Andrey Y. Shevel: administration of SUMS at Stony Brook University
- Gabriele Carcassi: development and administration of SUMS
- Efstratios Efstathiadis: queue monitoring, research
- And others

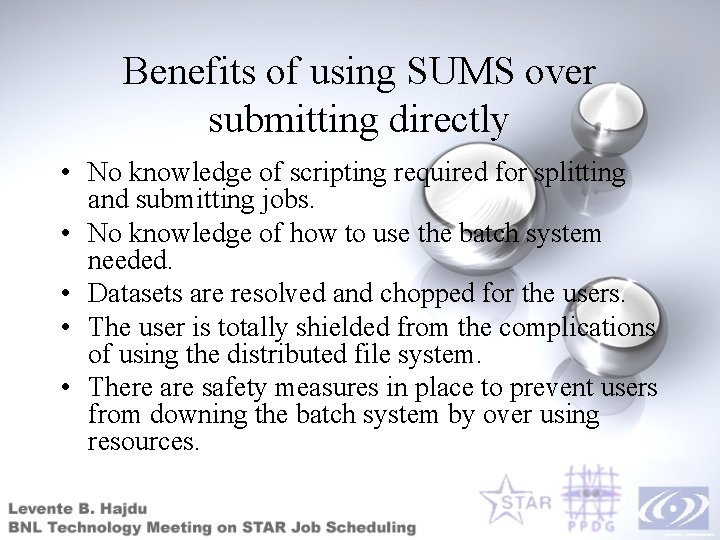

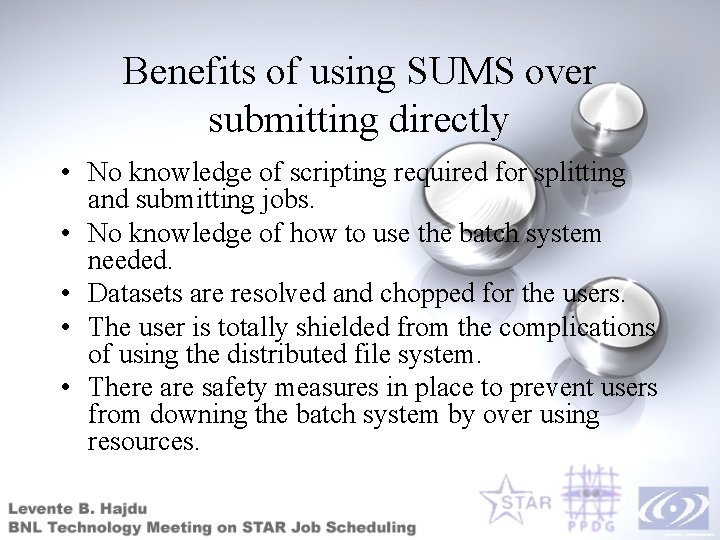

Benefits of using SUMS over submitting directly

- No knowledge of scripting is required for splitting and submitting jobs.
- No knowledge of how to use the batch system is needed.
- Datasets are resolved and chopped for the user.
- The user is completely shielded from the complications of using the distributed file system.
- Safety measures are in place to prevent users from bringing down the batch system by overusing resources.

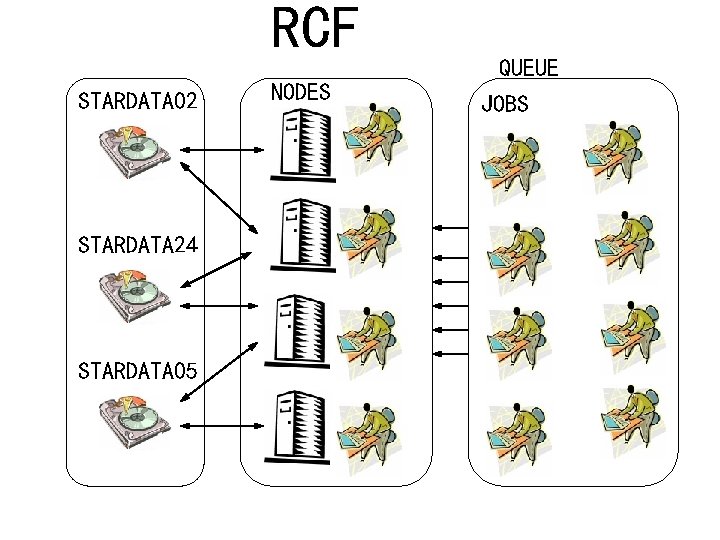

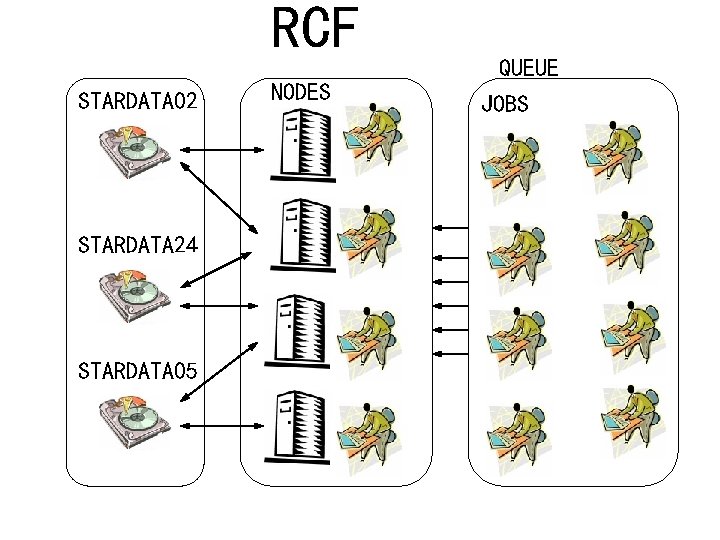

[Figure: RCF nodes, queue and jobs sharing the disks STARDATA02, STARDATA05 and STARDATA24. Each disk is shown as a virtual resource (SD05: 2040 units total, SD24: 102 units total, SD02: 800 units total), and each queued or running job is labeled with the units it uses, for example SD05 = 800, SD24 = 50.]

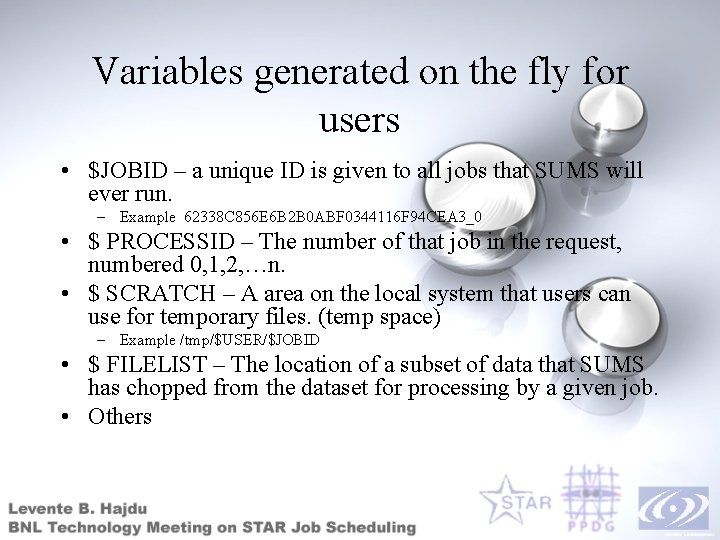

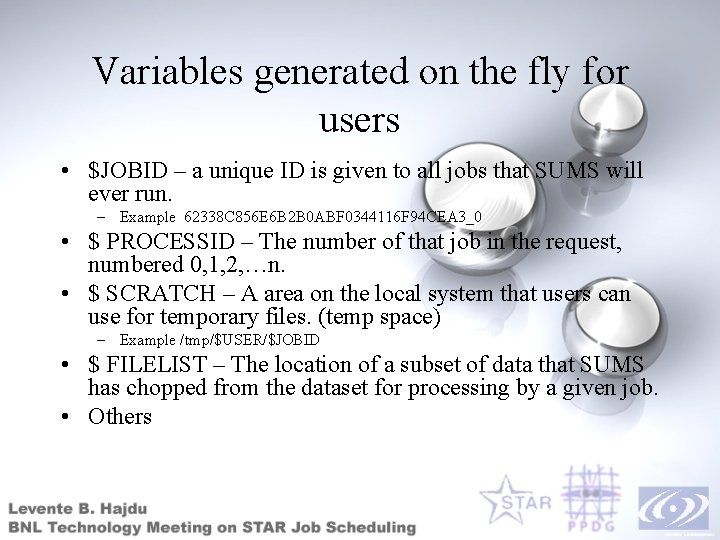

Variables generated on the fly for users

- $JOBID: a unique ID given to every job that SUMS will ever run.
  - Example: 62338C856E6B2B0ABF0344116F94CEA3_0
- $PROCESSID: the number of that job within the request, numbered 0, 1, 2, …, n.
- $SCRATCH: an area on the local system that users can use for temporary files (temp space).
  - Example: /tmp/$USER/$JOBID
- $FILELIST: the location of the subset of data that SUMS has chopped from the dataset for processing by a given job.
- Others
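As a rough illustration of how such variables could be expanded inside a user's command, here is a minimal sketch using Python's `string.Template`. The function name `expand_job_variables`, the way the job ID is built (request ID plus process number), and the example paths are assumptions for illustration, not SUMS internals.

```python
from string import Template


def expand_job_variables(command, request_id, process_id, user, filelist_path):
    """Substitute SUMS-style variables into a command string (illustrative only)."""
    # Assumption: the job ID is the request ID followed by "_<process number>".
    job_id = f"{request_id}_{process_id}"
    values = {
        "JOBID": job_id,
        "PROCESSID": str(process_id),
        "SCRATCH": f"/tmp/{user}/{job_id}",
        "FILELIST": filelist_path,
    }
    # safe_substitute leaves unknown $NAMES untouched instead of raising.
    return Template(command).safe_substitute(values)


example = expand_job_variables(
    "myprog --in $FILELIST --out $SCRATCH/out.$PROCESSID.root",
    request_id="62338C856E6B2B0ABF0344116F94CEA3",
    process_id=0,
    user="alice",
    filelist_path="/tmp/alice/job0.list",
)
print(example)
```

Using `safe_substitute` means a shell variable such as `$HOME` that is not in the table passes through unchanged for the batch node to resolve.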

JDL job XSD tree View

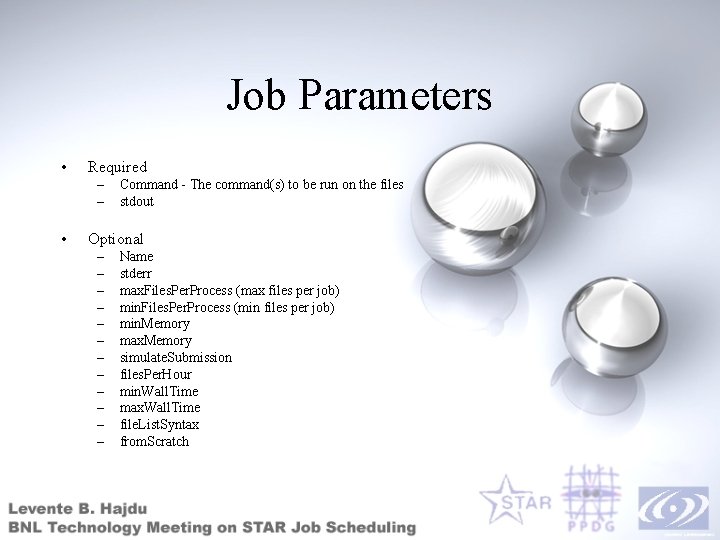

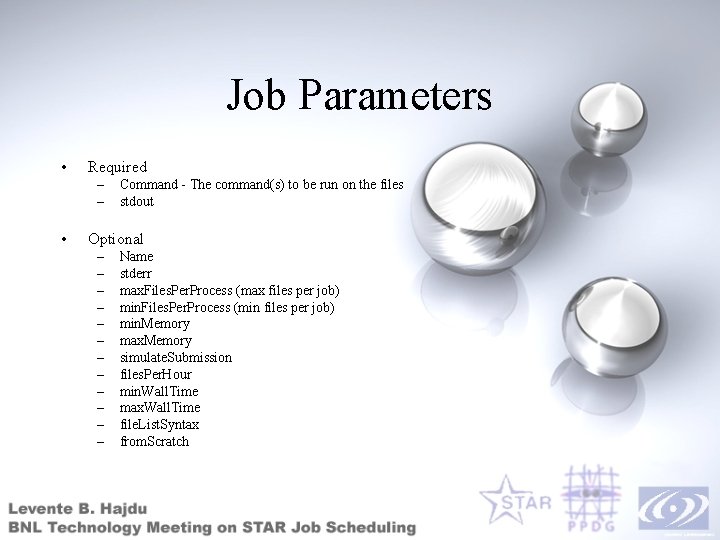

Job Parameters

- Required
  - command: the command(s) to be run on the files
  - stdout
- Optional
  - name
  - stderr
  - maxFilesPerProcess (max files per job)
  - minFilesPerProcess (min files per job)
  - minMemory
  - maxMemory
  - simulateSubmission
  - filesPerHour
  - minWallTime
  - maxWallTime
  - fileListSyntax
  - fromScratch

Sample job

    <?xml version="1.0" encoding="utf-8" ?>
    <job maxFilesPerProcess="10" fileListSyntax="rootd" minMemory="15">
      <command>root4star -q -b /star/macro/runMuHeavyMaker.C("$SCRATCH/heavy.MuDst.root", "$FILELIST")</command>
      <stdout URL="file:/star/u/lbhajdu/temp/heavy.$JOBID.out" />
      <input URL="catalog:star.bnl.gov?production=P04ik,trgsetupname=proHigh,filetype=daq_reco_MuDst,tpc=1,ftpc=1,sanity=1" nFiles="all" />
      <output fromScratch="*.MuDst.root" toURL="file:/star/data02/heavy.$JOBID.root" />
    </job>
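To make the structure of such a job description concrete, the following sketch reads a simplified job description with Python's standard `xml.etree.ElementTree` and splits the catalog query into key/value pairs. The helper `read_job` and the shortened XML are assumptions for illustration; SUMS itself is configured and run through Java, and its actual parser is not shown in these slides.

```python
import xml.etree.ElementTree as ET

# Simplified job description modeled on the sample job (illustrative values).
JOB_XML = """<job maxFilesPerProcess="10" fileListSyntax="rootd" minMemory="15">
  <command>echo $FILELIST</command>
  <stdout URL="file:/tmp/example.$JOBID.out" />
  <input URL="catalog:star.bnl.gov?production=P04ik,filetype=daq_reco_MuDst" nFiles="all" />
</job>"""


def read_job(xml_text):
    """Return the job attributes, command and catalog query as plain Python data."""
    job = ET.fromstring(xml_text)
    input_url = job.find("input").get("URL")
    # Everything after '?' is a comma-separated list of key=value conditions.
    query = input_url.split("?", 1)[1]
    conditions = dict(item.split("=", 1) for item in query.split(","))
    return {
        "attributes": dict(job.attrib),
        "command": job.findtext("command").strip(),
        "stdout": job.find("stdout").get("URL"),
        "catalog_conditions": conditions,
    }


job = read_job(JOB_XML)
print(job["catalog_conditions"])
```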

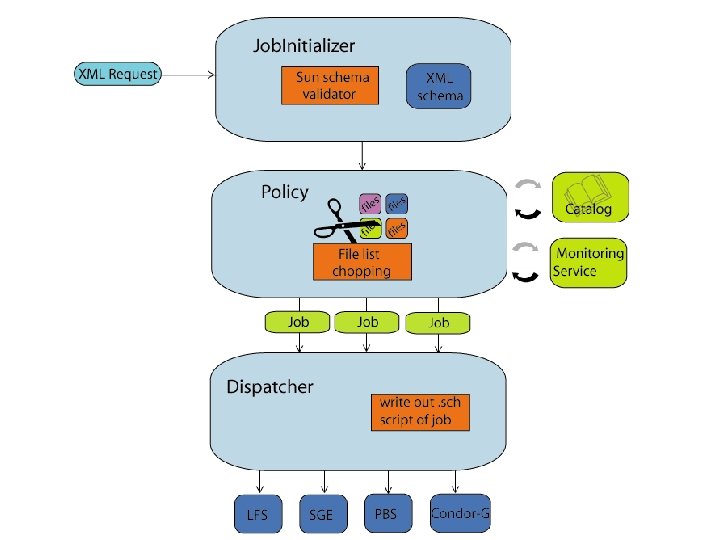

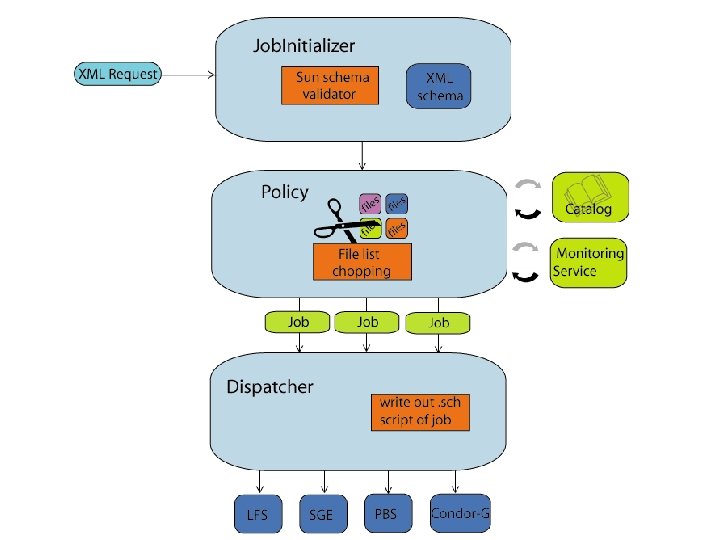

Configuring SUMS

- SUMS uses Java's standard XML de-serialization for its configuration. Over the years we have found this to be the ideal balance between ease of use and the power to define complex systems abstractly.
- Pre-initialized scheduler objects are defined by the administrator. One configuration file can hold many different instances of the same object.
- By default the user is given the default objects, or they can specify other objects that have been customized for the special needs of their jobs.
- Objects include:
  - JobInitializer
  - Policy
  - Queue
  - Dispatcher
  - Application
  - Statistics recorder
  - Others

JobInitializer

- The job initializer is the module through which the user submits a job.
- JobInitializers currently available:
  - Local command line
  - Command line (web service): tested, still in beta
  - GUI (web service): tested, still in beta

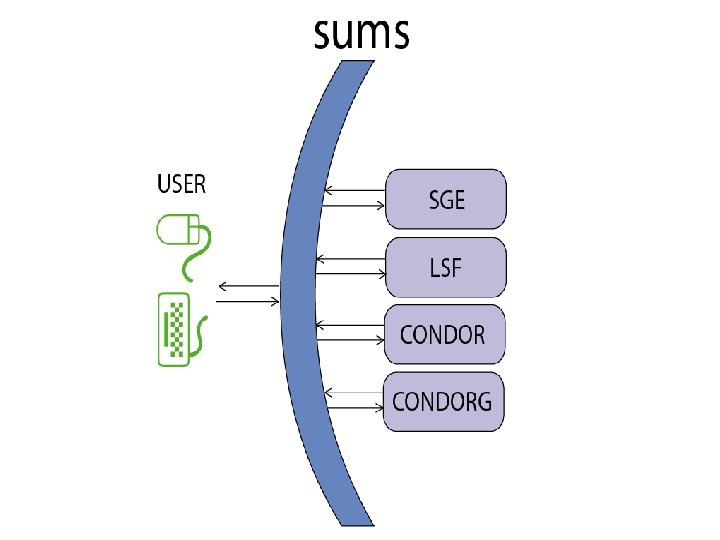

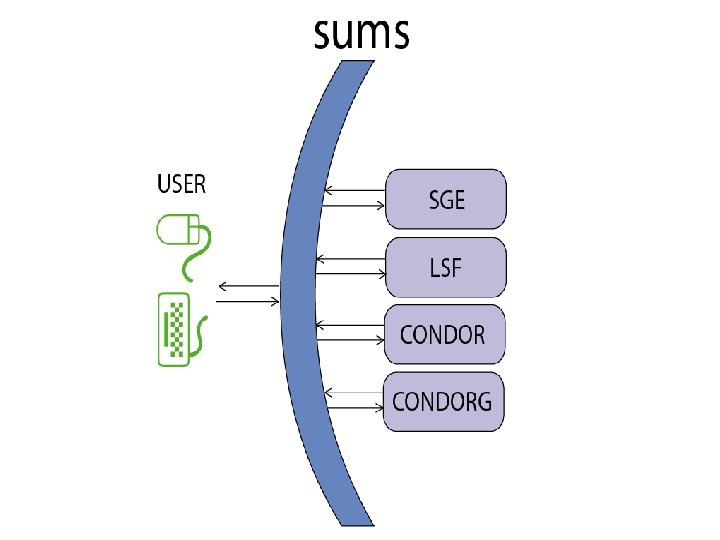

Dispatchers

- A dispatcher is a scheduler plug-in module that implements the dispatcher interface and converts job objects into "real" jobs that are actually submitted to the batch system.
- Currently available dispatchers:
  - Boss
  - CondorG
  - Local (new)
  - LSF
  - PBS
  - SGE (new but heavily tested by PDSF)

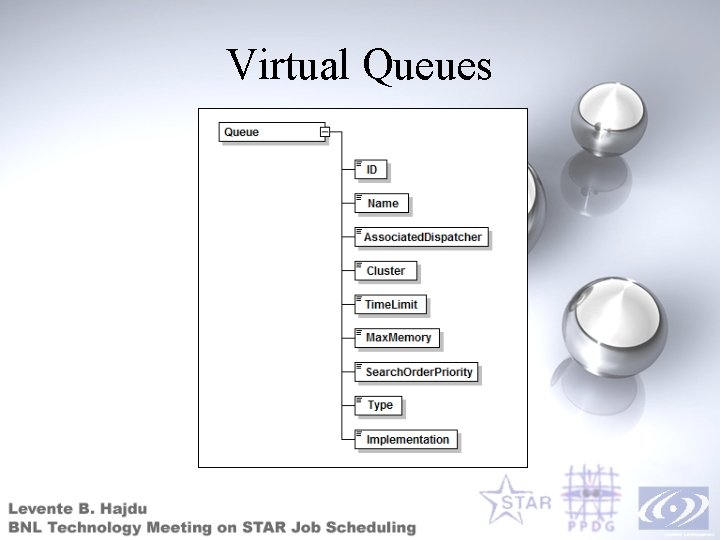

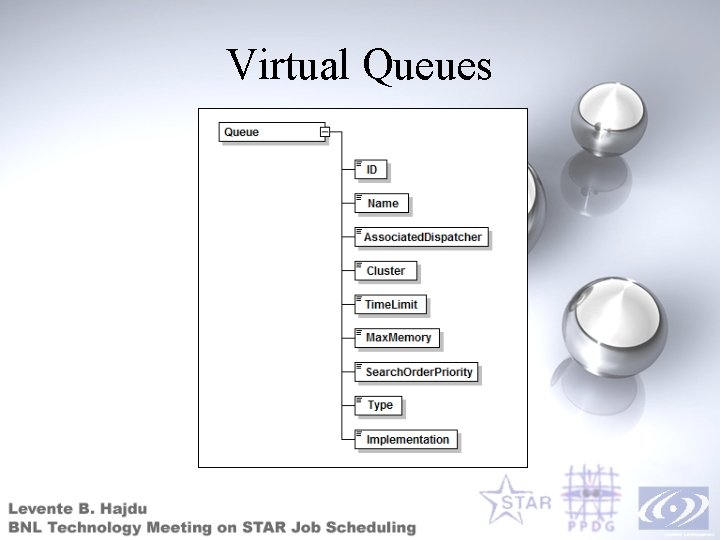

Virtual Queues

- A virtual queue defines a "place" (queue, pool, meta queue, service, etc.) that a job can be submitted to.
- It defines the properties of that place.
- Each virtual queue points to one dispatcher object.

Virtual Queues

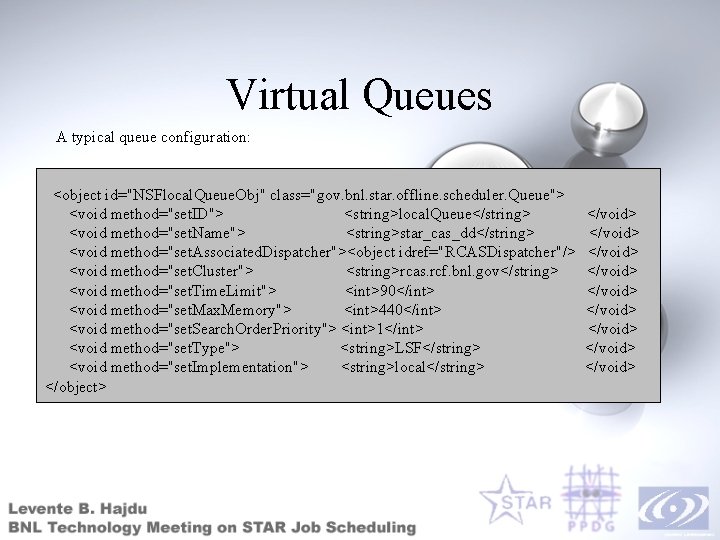

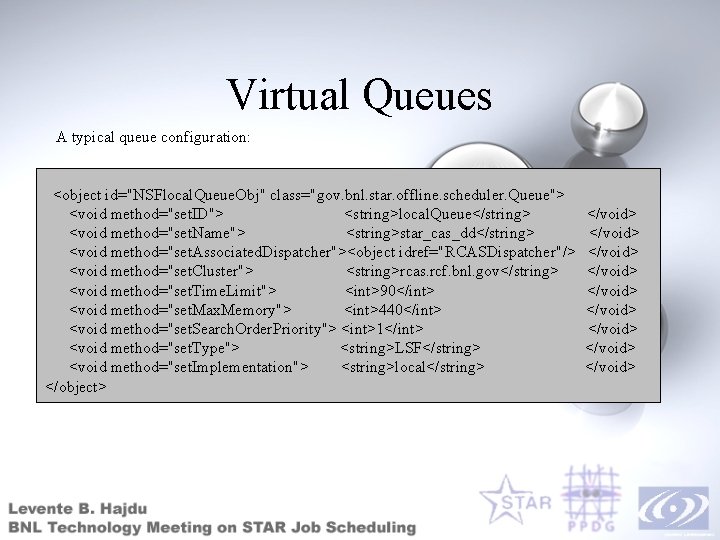

Virtual Queues

A typical queue configuration:

    <object id="NSFlocalQueueObj" class="gov.bnl.star.offline.scheduler.Queue">
      <void method="setID"><string>localQueue</string></void>
      <void method="setName"><string>star_cas_dd</string></void>
      <void method="setAssociatedDispatcher"><object idref="RCASDispatcher"/></void>
      <void method="setCluster"><string>rcas.rcf.bnl.gov</string></void>
      <void method="setTimeLimit"><int>90</int></void>
      <void method="setMaxMemory"><int>440</int></void>
      <void method="setSearchOrderPriority"><int>1</int></void>
      <void method="setType"><string>LSF</string></void>
      <void method="setImplementation"><string>local</string></void>
    </object>
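Each `<void method="setX">` element in this format is a setter call on the object being built. The sketch below reads a shortened version of such a queue definition into a plain dictionary, which can be handy for inspecting a configuration outside of Java. The helper `read_queue_properties` is hypothetical and handles only the `string`, `int` and `object idref` argument types that appear in the slide.

```python
import xml.etree.ElementTree as ET

# Shortened queue definition in the same bean-style XML as the slide.
QUEUE_XML = """
<object id="NSFlocalQueueObj" class="gov.bnl.star.offline.scheduler.Queue">
  <void method="setName"><string>star_cas_dd</string></void>
  <void method="setAssociatedDispatcher"><object idref="RCASDispatcher"/></void>
  <void method="setTimeLimit"><int>90</int></void>
  <void method="setSearchOrderPriority"><int>1</int></void>
</object>
"""


def read_queue_properties(xml_text):
    """Map each setX call to a property X with its single argument."""
    root = ET.fromstring(xml_text.strip())
    props = {"id": root.get("id")}
    for call in root.findall("void"):
        name = call.get("method")[len("set"):]  # "setTimeLimit" -> "TimeLimit"
        arg = call[0]                           # the single argument element
        if arg.tag == "int":
            props[name] = int(arg.text)
        elif arg.tag == "string":
            props[name] = arg.text
        else:
            # A reference to another configured object, kept as its id.
            props[name] = arg.get("idref")
    return props


queue = read_queue_properties(QUEUE_XML)
print(queue)
```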

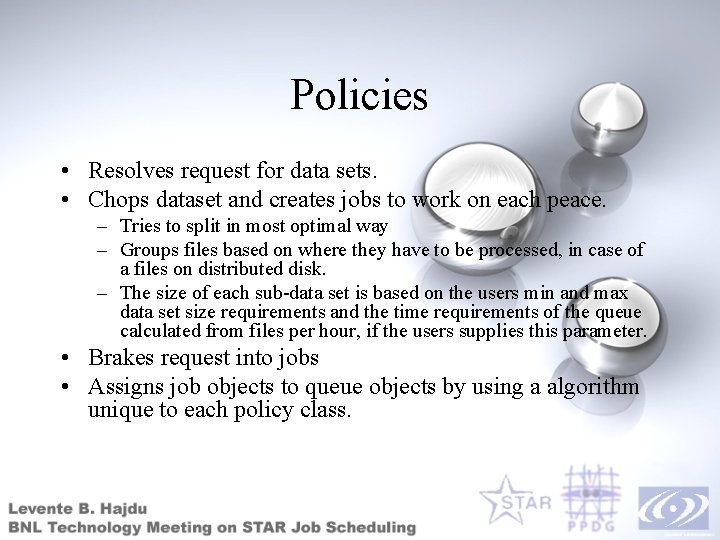

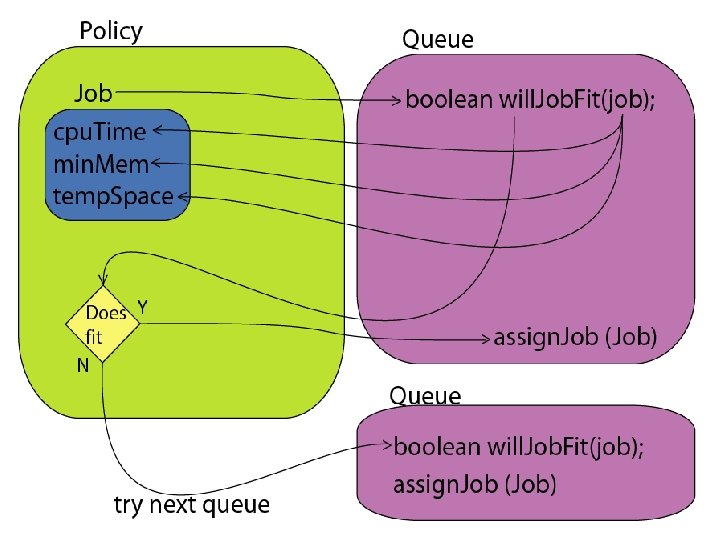

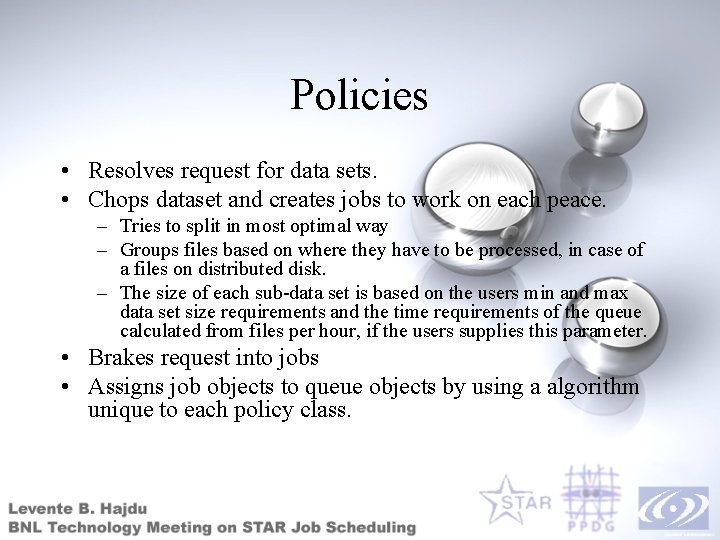

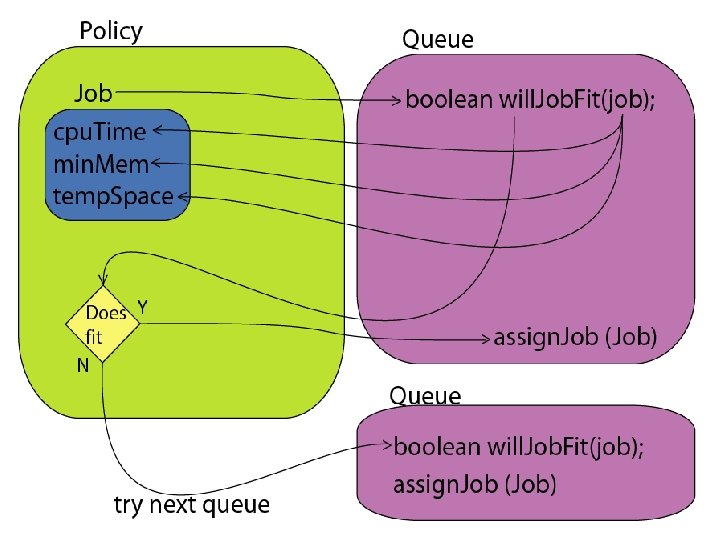

Policies

- Resolves requests for datasets.
- Chops the dataset and creates jobs to work on each piece.
  - Tries to split in the most efficient way.
  - Groups files based on where they have to be processed, in the case of files on distributed disk.
  - The size of each sub-dataset is based on the user's minimum and maximum dataset size requirements and on the time limit of the queue, calculated from files per hour if the user supplies this parameter.
- Breaks the request into jobs.
- Assigns job objects to queue objects using an algorithm unique to each policy class.
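The splitting step described above can be sketched roughly as follows. This is a simplified illustration, not the SUMS algorithm: the function `split_dataset` and its parameters are assumptions, the minimum-files requirement is ignored, and the chunk size is simply the smaller of the user's maximum and the number of files that fit in the queue's time limit at the stated files-per-hour rate.

```python
from collections import defaultdict


def split_dataset(files, max_files, files_per_hour=None, time_limit_minutes=None):
    """Group (path, node) pairs by node, then cut each group into job-sized chunks."""
    limit = max_files
    if files_per_hour and time_limit_minutes:
        # Number of files a job can process before the queue's time limit.
        fit = int(files_per_hour * time_limit_minutes / 60)
        limit = max(1, min(limit, fit))

    by_node = defaultdict(list)
    for path, node in files:
        by_node[node].append(path)

    jobs = []
    for node, paths in by_node.items():
        for start in range(0, len(paths), limit):
            jobs.append({"node": node, "files": paths[start:start + limit]})
    return jobs


dataset = [(f"a{i}.root", "nodeA") for i in range(5)] + [(f"b{i}.root", "nodeB") for i in range(2)]
jobs = split_dataset(dataset, max_files=10, files_per_hour=2, time_limit_minutes=90)
for job in jobs:
    print(job["node"], len(job["files"]))
```

With 2 files per hour and a 90-minute queue, at most 3 files fit in one job, so the five files on `nodeA` become two jobs and the two files on `nodeB` become one.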

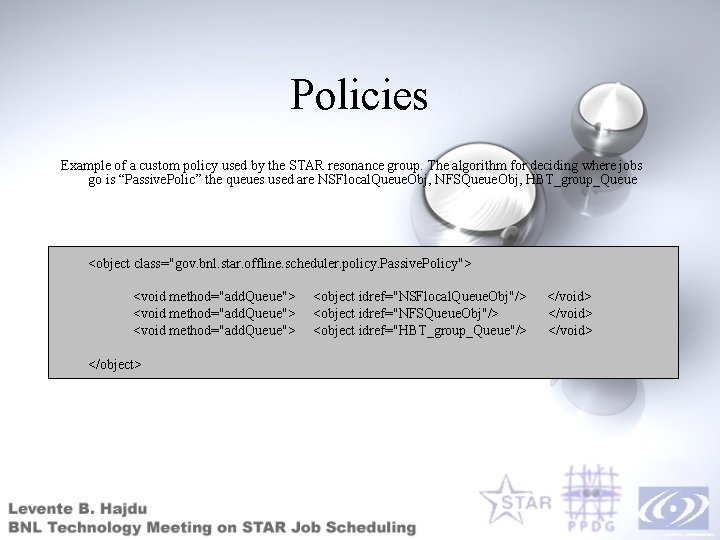

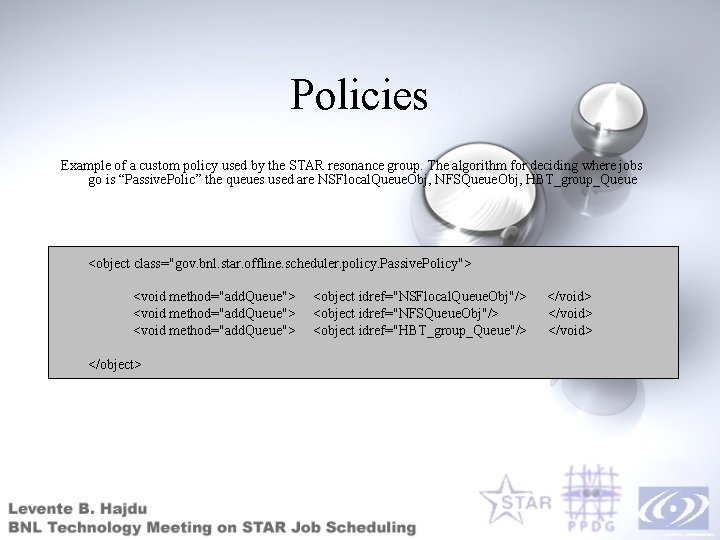

Policies

Policies

Example of a custom policy used by the STAR resonance group. The algorithm for deciding where jobs go is PassivePolicy; the queues used are NSFlocalQueueObj, NFSQueueObj and HBT_group_Queue.

    <object class="gov.bnl.star.offline.scheduler.policy.PassivePolicy">
      <void method="addQueue">
        <object idref="NSFlocalQueueObj"/>
        <object idref="NFSQueueObj"/>
        <object idref="HBT_group_Queue"/>
      </void>
    </object>

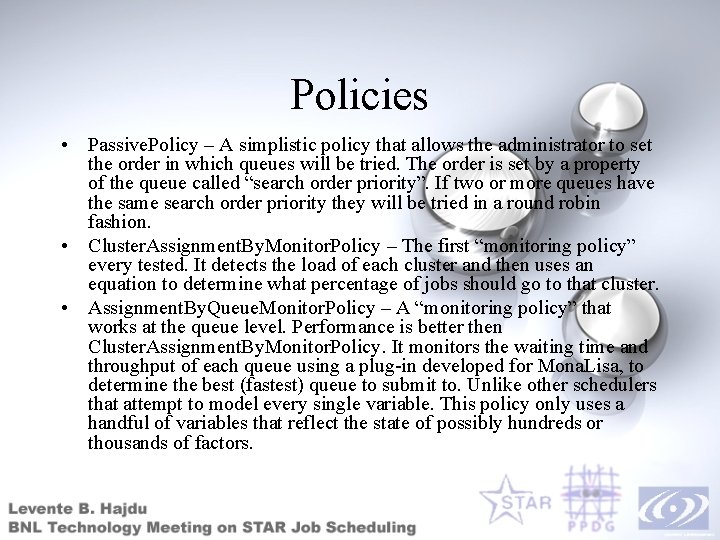

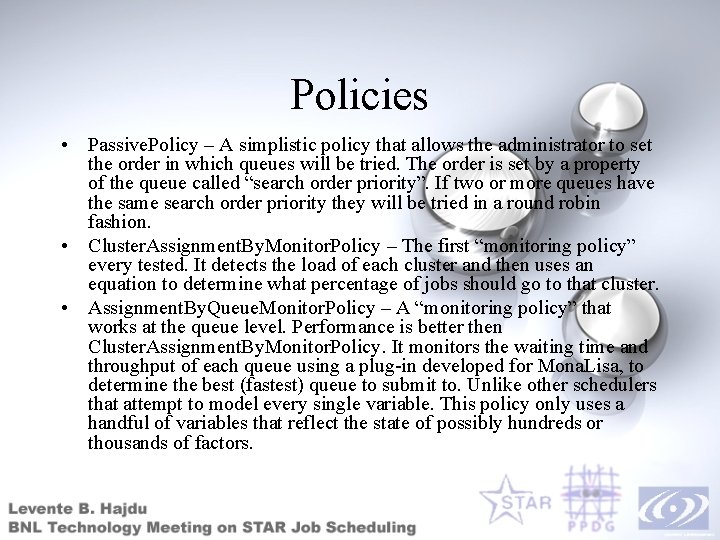

Policies

- PassivePolicy: a simple policy that allows the administrator to set the order in which queues will be tried. The order is set by a property of the queue called "search order priority". If two or more queues have the same search order priority, they are tried in round-robin fashion.
- ClusterAssignmentByMonitorPolicy: the first "monitoring policy" ever tested. It detects the load of each cluster and then uses an equation to determine what percentage of jobs should go to that cluster.
- AssignmentByQueueMonitorPolicy: a "monitoring policy" that works at the queue level. It performs better than ClusterAssignmentByMonitorPolicy. It monitors the waiting time and throughput of each queue, using a plug-in developed for MonALISA, to determine the best (fastest) queue to submit to. Unlike other schedulers that attempt to model every single variable, this policy uses only a handful of variables that reflect the state of possibly hundreds or thousands of factors.
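The queue ordering described for the passive policy can be sketched in a few lines. This is an illustration only: the class name `PassiveOrder` is hypothetical, and the sketch assumes that a lower search order priority value means the queue is tried earlier, which the slides do not state.

```python
from itertools import groupby


class PassiveOrder:
    """Order queues by search order priority, rotating queues that share a priority."""

    def __init__(self, queues):
        # queues: list of (name, search_order_priority) pairs.
        # Assumption: lower priority values are tried first.
        ordered = sorted(queues, key=lambda q: q[1])
        self._tiers = [
            [name for name, _ in group]
            for _, group in groupby(ordered, key=lambda q: q[1])
        ]
        self._calls = 0

    def next_order(self):
        """Return the order in which queues are tried for the next job."""
        order = []
        for tier in self._tiers:
            shift = self._calls % len(tier)  # round robin within a tier
            order.extend(tier[shift:] + tier[:shift])
        self._calls += 1
        return order


policy = PassiveOrder([("A", 1), ("B", 2), ("C", 2)])
first = policy.next_order()
second = policy.next_order()
print(first, second)
```

Queue `A` is always tried first, while `B` and `C`, which share a priority, alternate as the first fallback from one job to the next.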

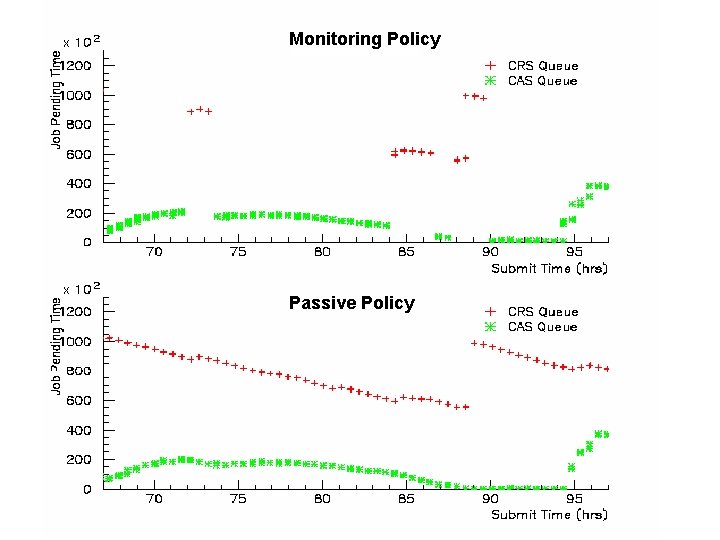

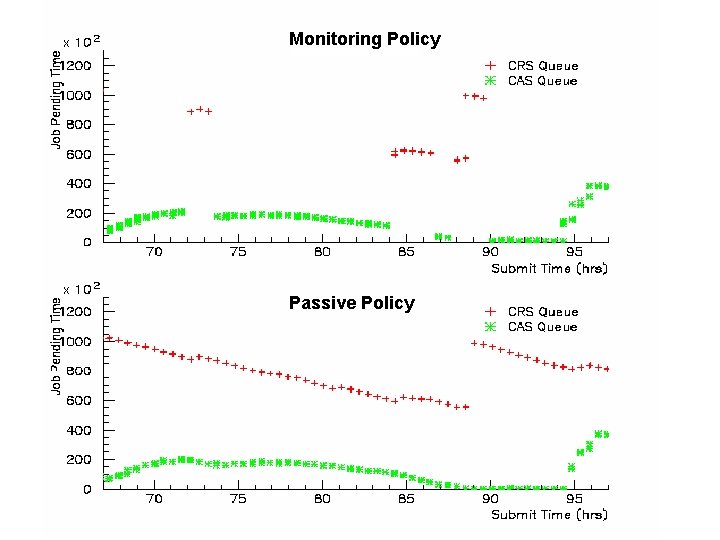

[Figure panels: Monitoring Policy, Passive Policy]

Reports, Logs and Statistics

- Collection of logs and statistics is optional; the user's report file is always generated.
- Reports
  - Reports are put in the user's directory. They give the user information about the internal workings of SUMS.
  - They report information about every job that was processed.
  - The user decides when to delete them.
- Logs
  - Logs hold information in a central area that is more relevant to the administrator, for diagnosing problems.
  - The administrator decides when to delete them.
- Statistics
  - General information about how many people are using SUMS and what options they are using.

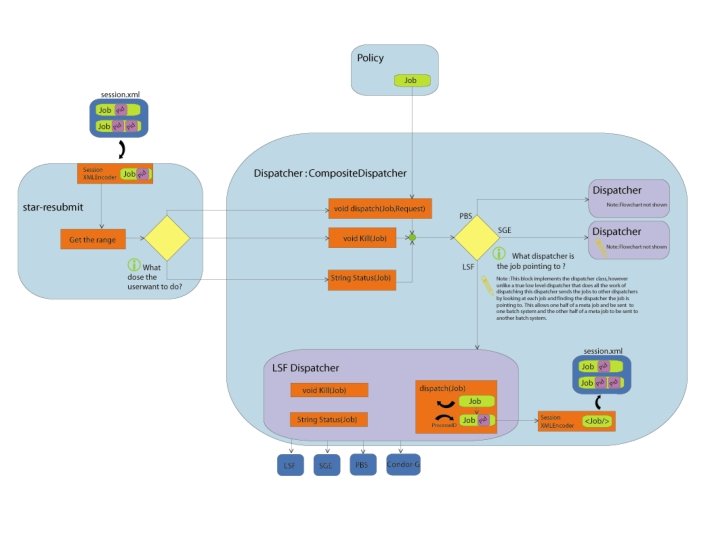

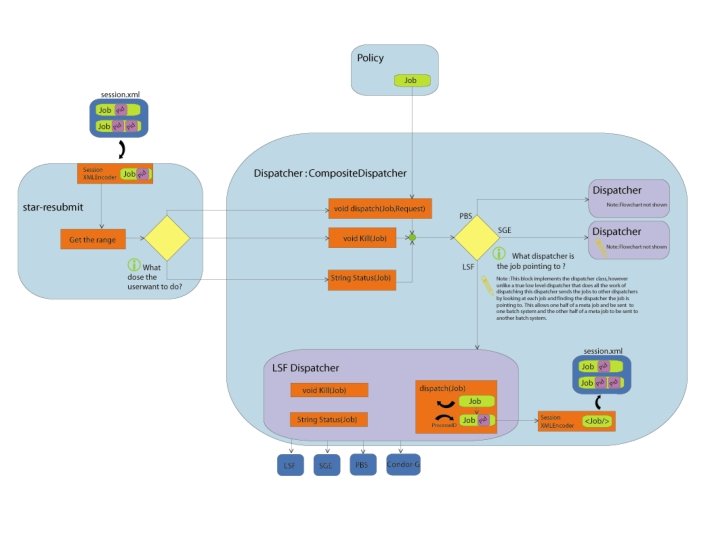

Job tracking / monitoring / crash recovery

- Dispatchers in SUMS currently provide 3 functions:
  - Submit job(s)
  - Get status of job(s)
  - Kill job(s)
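The three dispatcher functions suggest an interface along the following lines. This is a hedged sketch: the class and method names, the status strings, and the in-memory stand-in for a batch system are all assumptions for illustration, and the real SUMS dispatcher interface is a Java interface whose signatures are not given in the slides.

```python
from abc import ABC, abstractmethod


class Dispatcher(ABC):
    """Hypothetical interface with the three functions listed above."""

    @abstractmethod
    def submit(self, jobs):
        """Submit jobs and return their batch-system IDs."""

    @abstractmethod
    def status(self, job_ids):
        """Return a mapping of job ID to status."""

    @abstractmethod
    def kill(self, job_ids):
        """Kill the given jobs."""


class InMemoryDispatcher(Dispatcher):
    """Stand-in for a real batch system, useful only for demonstration."""

    def __init__(self):
        self._state = {}

    def submit(self, jobs):
        ids = []
        for _ in jobs:
            job_id = f"job{len(self._state)}"
            self._state[job_id] = "QUEUED"
            ids.append(job_id)
        return ids

    def status(self, job_ids):
        return {j: self._state.get(j, "UNKNOWN") for j in job_ids}

    def kill(self, job_ids):
        for j in job_ids:
            if j in self._state:
                self._state[j] = "KILLED"


dispatcher = InMemoryDispatcher()
ids = dispatcher.submit(["script0.csh", "script1.csh"])
dispatcher.kill([ids[1]])
print(dispatcher.status(ids))
```

A dispatcher for a specific batch system would implement the same three methods by calling that system's own submit, query and kill commands.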

Job tracking / monitoring / crash recovery

- To implement this in the simplest, most carefree way possible, it was decided that no central database should be used to store this information. The information is given to the user directly.
- The benefits are:
  - No databases need to be set up at sites running SUMS. This automatically eliminates all security and administration considerations.
  - The user decides when they no longer need this data, because the data now lives in the user's file system as a file generated by SUMS.

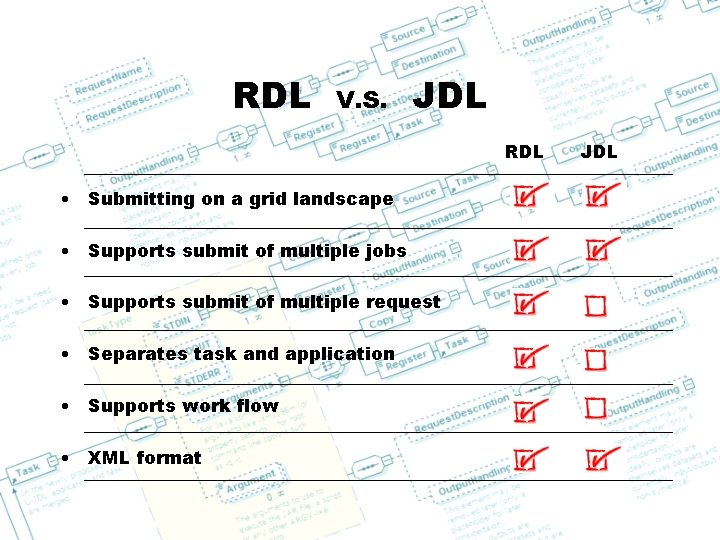

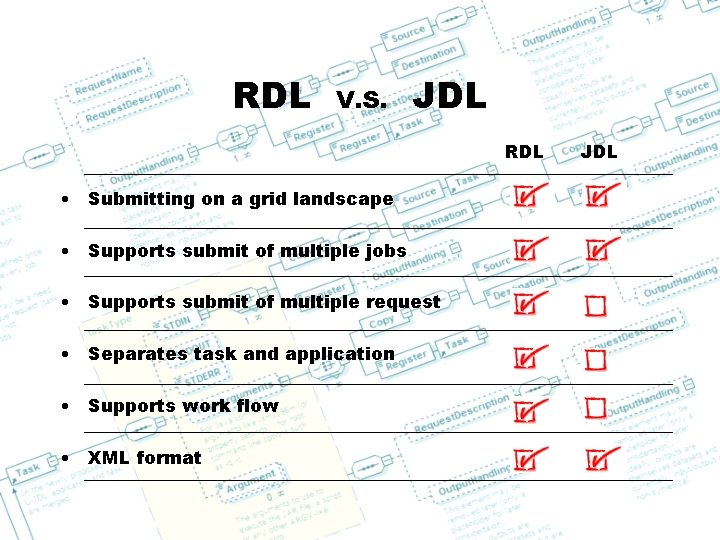

RDL

Request Definition Language: an XML-based language under development by STAR, in collaboration with other scientific groups and private industry, for describing not just one job but many jobs and the relationships between them. It is geared towards web services with advanced GUI clients.

RDL Terminology

The terminology for the layers of abstraction is not very clear, and all-inclusive definitions are hard to come by. Note: these are only guidelines.

- Abstract / Meta / Composite request: defines a group of requests performing a common task. The order in which they run may be important. The output of one request may be the input to another request in the same meta request. Example: make a new dataset by running a program; when it is done, sum the output and render a histogram.
- Request or meta job: defines a group of [0 to many] jobs that have a common function and can be run simultaneously. Example: take a dataset and run an application on it.
- Physical job: the unit of work the batch system deals with. Example: take a dataset and run an application on it.
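The ordering constraint inside a composite request can be pictured as a dependency graph between requests. The sketch below uses Python's standard `graphlib` (Python 3.9+) to derive a valid run order for the histogram example; the request names and the dictionary layout are assumptions for illustration and do not reflect actual RDL syntax.

```python
from graphlib import TopologicalSorter

# Each request maps to the set of requests whose output it needs.
depends_on = {
    "make_dataset": set(),
    "sum_output": {"make_dataset"},
    "render_histogram": {"sum_output"},
}

# static_order yields every request after all of its prerequisites.
run_order = list(TopologicalSorter(depends_on).static_order())
print(run_order)
```

Requests with no path between them in the graph could run simultaneously, which matches the idea that only some requests in a composite request depend on each other's output.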

RDL vs. JDL

Points of comparison between RDL and JDL:

- Submitting on a grid landscape
- Supports submission of multiple jobs
- Supports submission of multiple requests
- Separates task and application
- Supports workflow
- XML format
