Summer School on Hashing '14: Locality Sensitive Hashing

- Slides: 31

Summer School on Hashing '14 • Locality Sensitive Hashing • Alex Andoni (Microsoft Research)

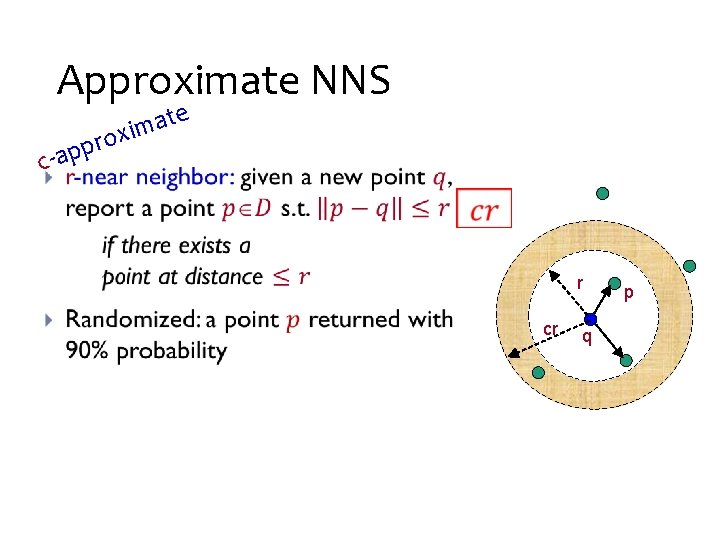

Nearest Neighbor Search (NNS)

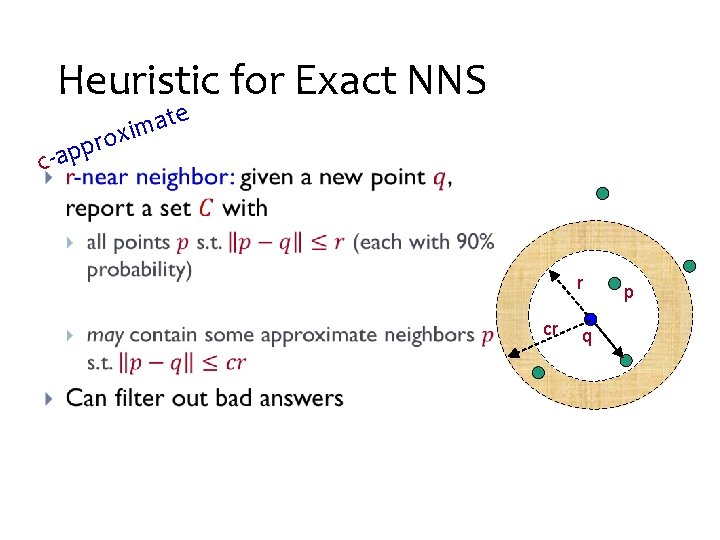

Approximate NNS • c-approximate • [figure: query q, point p, radii r and cr]

Heuristic for Exact NNS • c-approximate • [figure: query q, point p, radii r and cr]

![Locality-Sensitive Hashing [Indyk-Motwani '98]](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-5.jpg)

Locality-Sensitive Hashing [Indyk-Motwani '98] • [figure: points p and q; a collision probability labeled “not-so-small”; axis mark 1]

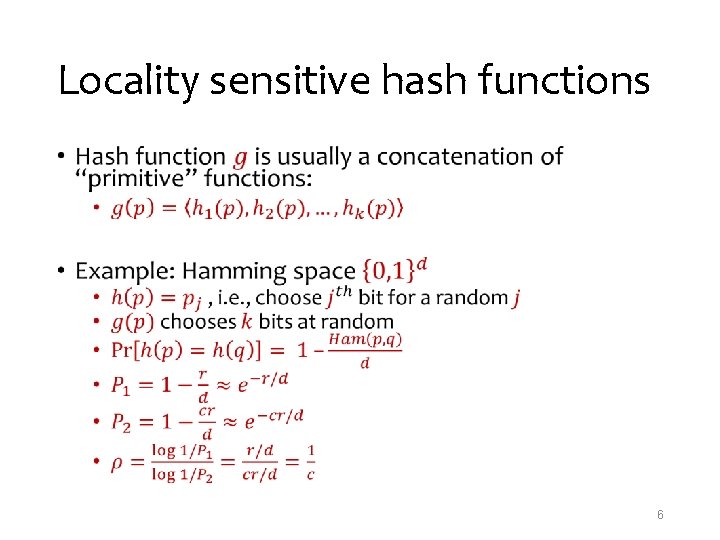

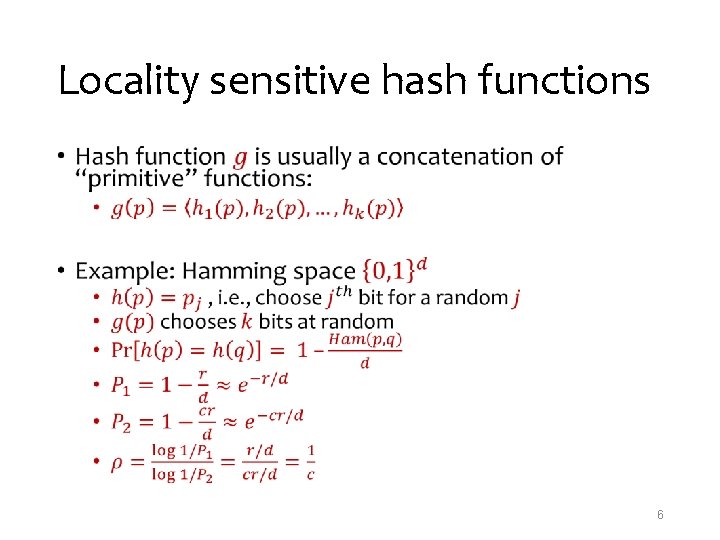

Locality sensitive hash functions

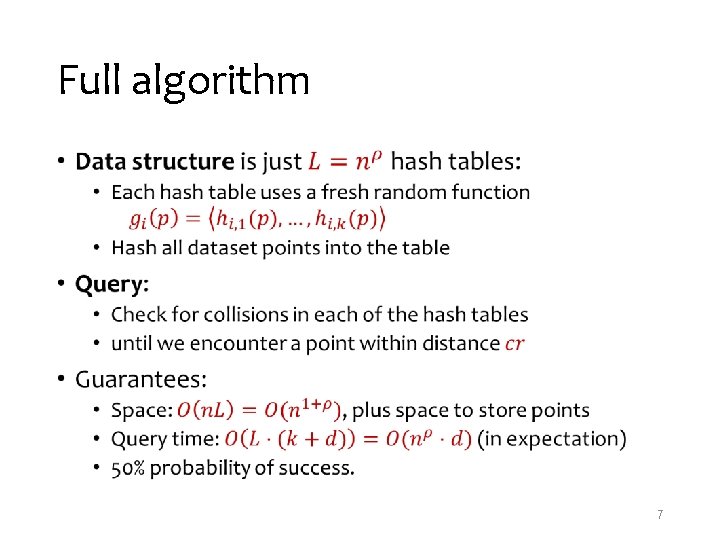

Full algorithm
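A minimal sketch of how an LSH index is commonly organized, assuming Hamming space with bit-sampling hash functions: each of `L` tables is keyed by `k` randomly chosen coordinates, and a query scans only the buckets it lands in. The class name `BitSamplingLSH` and the parameter names are illustrative assumptions, not taken from the slides.

```python
import random
from collections import defaultdict


def hamming(a, b):
    """Number of coordinates where two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


class BitSamplingLSH:
    """Hypothetical index: L tables, each keyed by k randomly sampled bits."""

    def __init__(self, dim, k, L, seed=0):
        rng = random.Random(seed)
        # One list of k sampled coordinates per table.
        self.coords = [[rng.randrange(dim) for _ in range(k)] for _ in range(L)]
        self.tables = [defaultdict(list) for _ in range(L)]

    def _key(self, i, p):
        # Concatenation of the k sampled bits of p for table i.
        return tuple(p[j] for j in self.coords[i])

    def insert(self, p):
        for i, table in enumerate(self.tables):
            table[self._key(i, p)].append(p)

    def query(self, q, cr):
        """Return some stored point within Hamming distance cr of q, or None."""
        for i, table in enumerate(self.tables):
            for p in table.get(self._key(i, q), []):
                if hamming(p, q) <= cr:
                    return p
        return None
```

A point always collides with itself in every table, so querying with a stored point returns it; points far from everything stored typically land in empty buckets and yield `None`.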

Analysis of LSH Scheme • [plot: collision probability vs. distance]

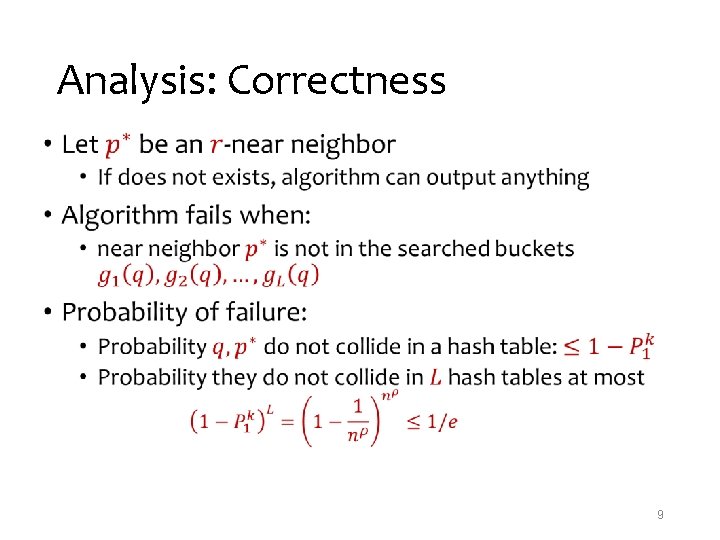

Analysis: Correctness

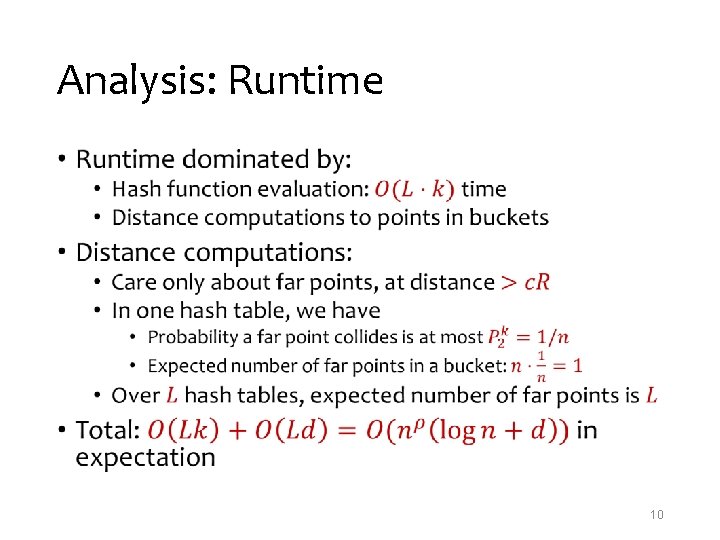

Analysis: Runtime
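As a rough illustration of the usual parameter setting in the LSH analysis, the sketch below assumes the standard definitions: `p1` and `p2` are the collision probabilities for close and far pairs, the exponent is ρ = log(1/p1) / log(1/p2), each table concatenates `k` hash functions, and about n^ρ tables are used. The function name `lsh_parameters` and the exact rounding are assumptions for illustration only.

```python
import math


def lsh_parameters(n, p1, p2):
    """Return (rho, k, L) for n points, assuming 1 > p1 > p2 > 0."""
    rho = math.log(1 / p1) / math.log(1 / p2)
    # k chosen so that a far pair collides with probability about 1/n.
    k = math.ceil(math.log(n) / math.log(1 / p2))
    # Number of tables grows like n^rho (constant factors ignored).
    L = math.ceil(n ** rho)
    return rho, k, L
```

For example, with `p1 = 0.5` and `p2 = 0.25` the exponent is 1/2, so the number of tables grows like the square root of `n`.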

![NNS for Euclidean space [Datar-Immorlica-Indyk-Mirrokni '04]](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-11.jpg)

NNS for Euclidean space [Datar-Immorlica-Indyk-Mirrokni '04]
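A small sketch of the projection-based hash family usually associated with [Datar-Immorlica-Indyk-Mirrokni '04]: project onto a random Gaussian direction, shift by a random offset, and cut the line into cells of width `w`. The helper names `make_euclidean_hash` and `collision_rate`, and the choice of `w`, are assumptions for illustration.

```python
import math
import random


def make_euclidean_hash(dim, w, rng):
    """Sample h(p) = floor((<a, p> + b) / w) with Gaussian a and uniform b."""
    a = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    b = rng.uniform(0.0, w)

    def h(p):
        projection = sum(ai * pi for ai, pi in zip(a, p))
        return math.floor((projection + b) / w)

    return h


def collision_rate(p, q, w, trials=2000, seed=0):
    """Empirical fraction of sampled hash functions with h(p) == h(q)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        h = make_euclidean_hash(len(p), w, rng)
        hits += h(p) == h(q)
    return hits / trials
```

Estimating `collision_rate` for a nearby pair and a distant pair shows the locality-sensitive behavior: the closer pair collides noticeably more often.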

![Optimal* LSH [A-Indyk '06]: regular grid → grid of balls](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-12.jpg)

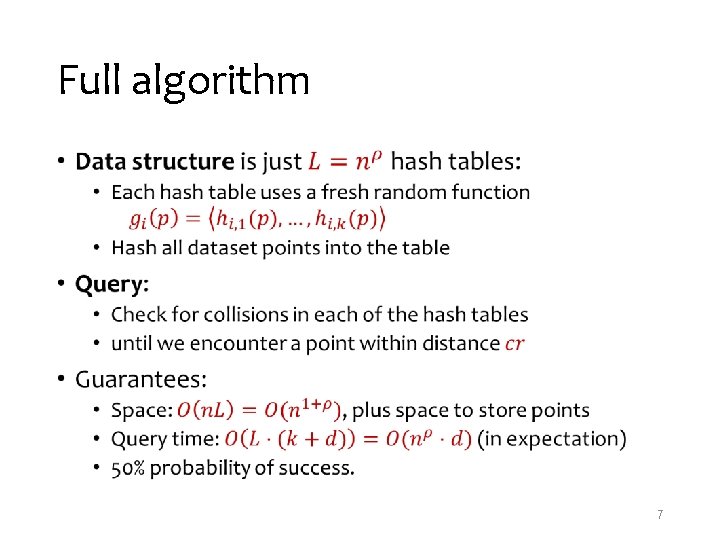

Optimal* LSH [A-Indyk '06]

- Regular grid → grid of balls
- p can hit empty space, so take more such grids until p is in a ball
- Need (too) many grids of balls
- Start by projecting in dimension t
- Analysis gives [formula missing in source]
- Choice of reduced dimension t?
- Tradeoff between
  - \# hash tables, $n^{\rho}$, and
  - time to hash, $t^{O(t)}$
- Total query time: $d\,n^{1/c^2+o(1)}$
- [figure labels: 2D, p, $\mathbb{R}^t$]

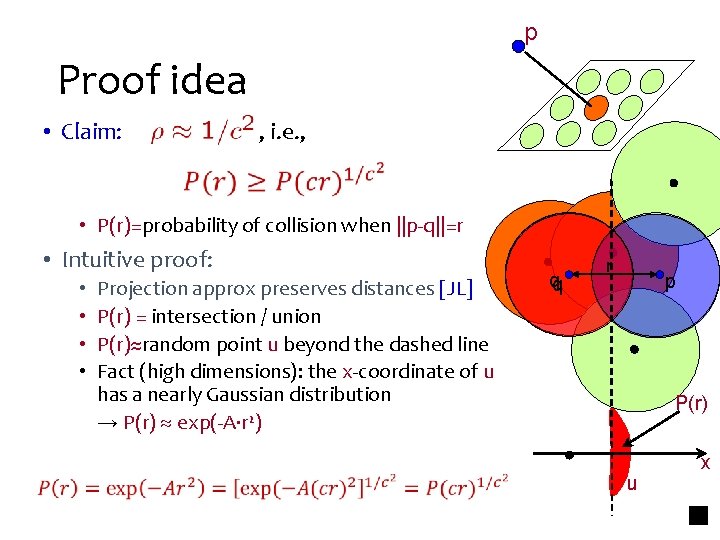

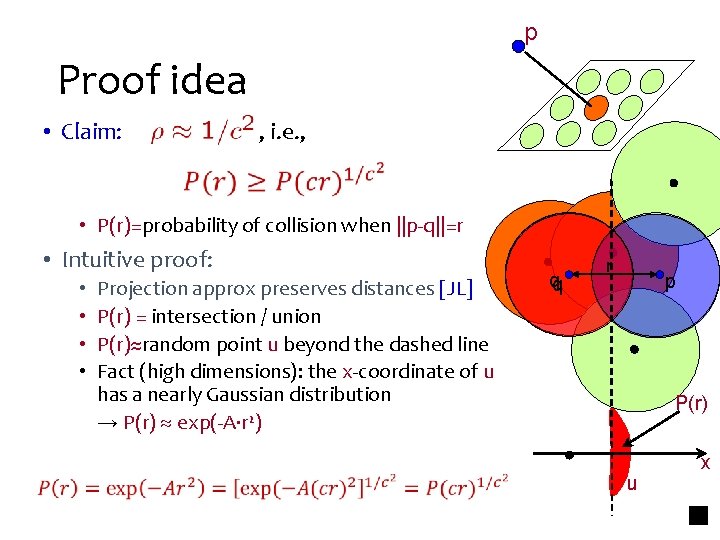

Proof idea

- Claim: [formula missing in source]
- $P(r)$ = probability of collision when $\|p-q\| = r$
- Intuitive proof:
  - Projection approximately preserves distances [JL]
  - $P(r)$ = intersection / union
  - $P(r) \approx$ probability that a random point u lies beyond the dashed line
  - Fact (high dimensions): the x-coordinate of u has a nearly Gaussian distribution
  - → $P(r) \approx \exp(-A \cdot r^2)$
- [figure labels: p, q, r, u, x, P(r)]
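To see why the Gaussian-like collision probability leads to an exponent of $1/c^2$, the following short derivation may help. It assumes the standard LSH exponent $\rho = \log(1/P_1)/\log(1/P_2)$ with distances scaled so that close pairs are at distance 1 and far pairs at distance $c$, and it treats $P(r) \approx \exp(-A r^2)$ as exact; both are assumptions made here for illustration.

```latex
\[
\rho \;=\; \frac{\log\bigl(1/P(1)\bigr)}{\log\bigl(1/P(c)\bigr)}
\;\approx\; \frac{A \cdot 1^2}{A \cdot c^2}
\;=\; \frac{1}{c^2}
\]
```

The constant $A$ cancels, which is consistent with the total query time $d\,n^{1/c^2+o(1)}$ quoted for this scheme.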

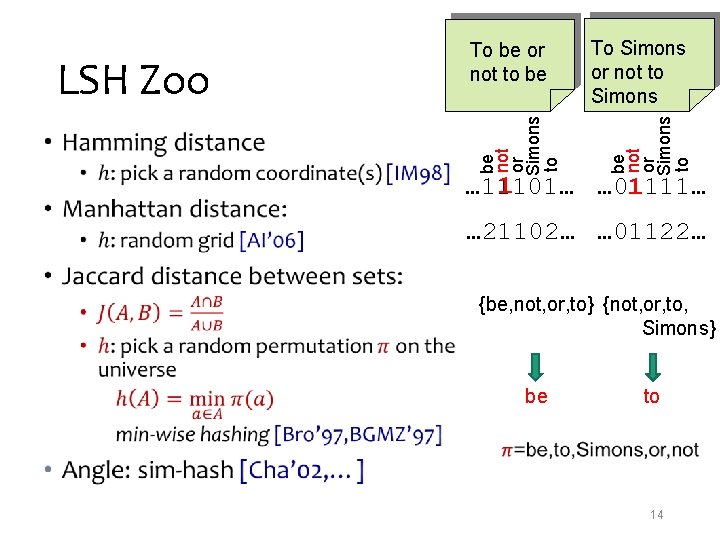

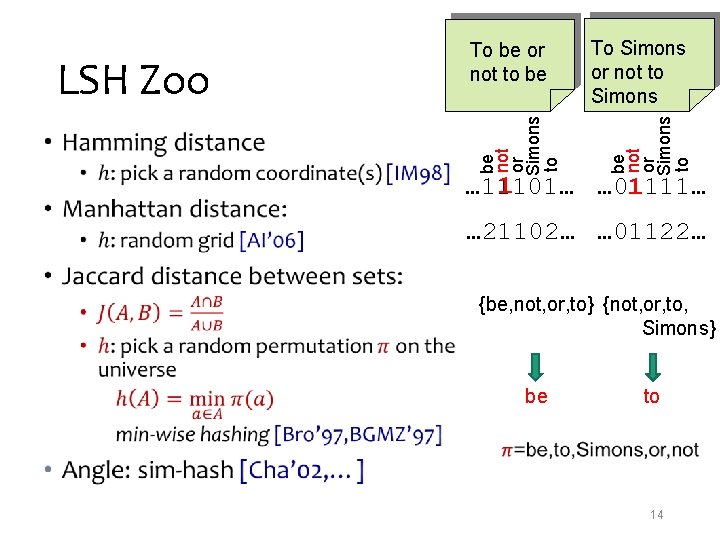

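LSH Zoo

- Example texts: “To be or not to be” and “To Simons or not to Simons”
- Word order used for the vectors: be, not, or, Simons, to
- As bit vectors: …11101… and …01111…
- As count vectors: …21102… and …01122…
- As sets: {be, not, or, to} and {not, or, to, Simons}
- [figure labels: be, to]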
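The representations above can be reproduced with a short sketch. The bit, count, and set encodings follow the slide's example; the Jaccard similarity and the min-wise hash at the end are an assumption about how the set representation would typically be hashed, and all function names are hypothetical.

```python
import random
from collections import Counter

VOCAB = ["be", "not", "or", "Simons", "to"]  # word order used on the slide


def tokens(text):
    """Split into words, lowercasing anything not already in VOCAB."""
    return [w if w in VOCAB else w.lower() for w in text.split()]


def count_vector(text):
    counts = Counter(tokens(text))
    return [counts[w] for w in VOCAB]


def bit_vector(text):
    return [int(c > 0) for c in count_vector(text)]


def word_set(text):
    return set(tokens(text))


def jaccard(a, b):
    return len(a & b) / len(a | b)


def minhash(words, order):
    """Min-wise hash: the first element of the set under a given ordering."""
    return min(words, key=order.index)


def minhash_collision_rate(a, b, trials=2000, seed=0):
    """Fraction of random orderings under which both sets share a min-hash."""
    rng = random.Random(seed)
    universe = sorted(a | b)
    hits = 0
    for _ in range(trials):
        order = universe[:]
        rng.shuffle(order)
        hits += minhash(a, order) == minhash(b, order)
    return hits / trials
```

Under a uniformly random ordering, the two sets share a min-hash exactly when the smallest element of their union lies in their intersection, so the collision rate should track the Jaccard similarity (3/5 for the two example sets).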

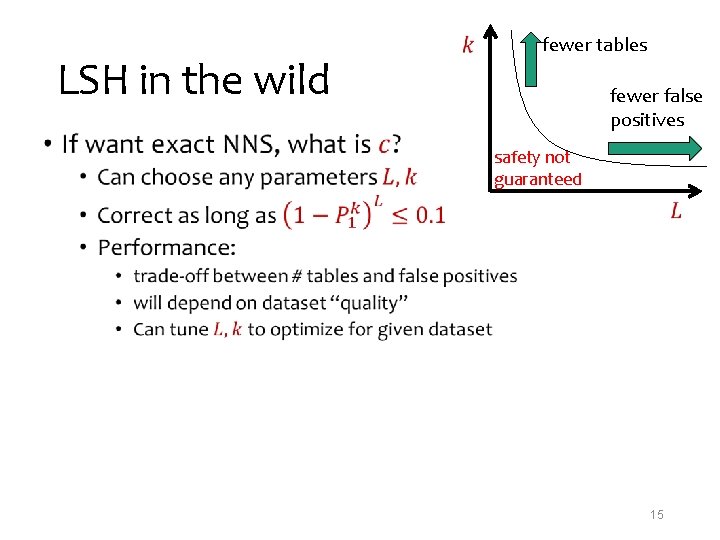

LSH in the wild • fewer tables • fewer false positives • safety not guaranteed

![Time-Space Trade-offs](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-16.jpg)

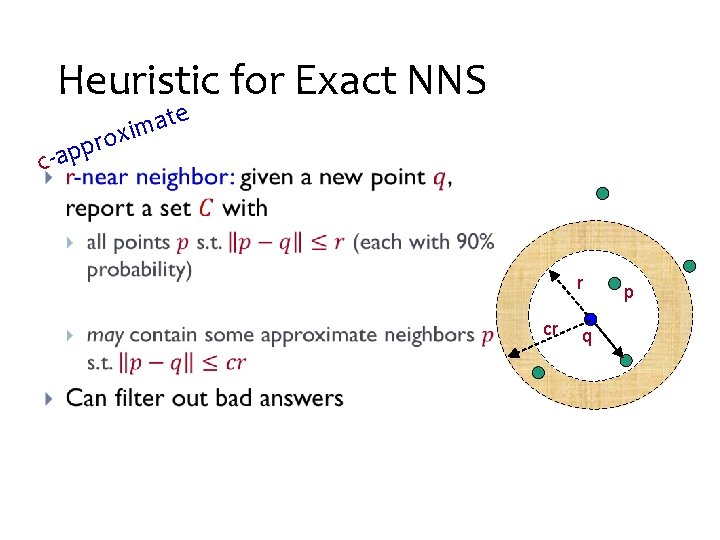

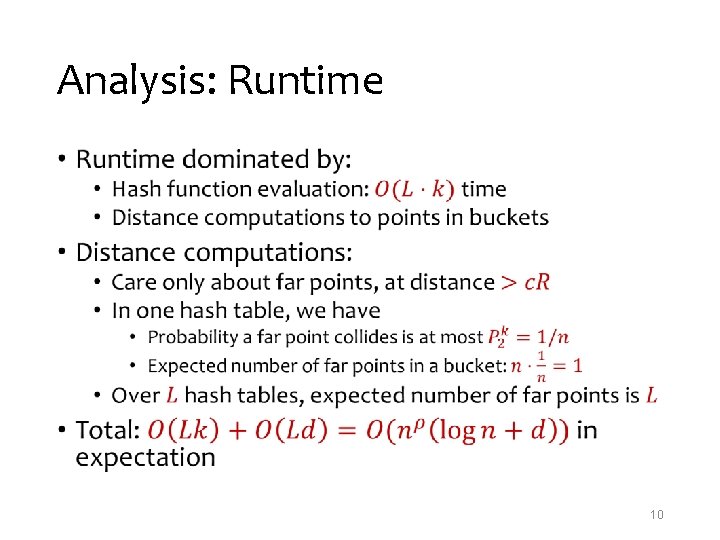

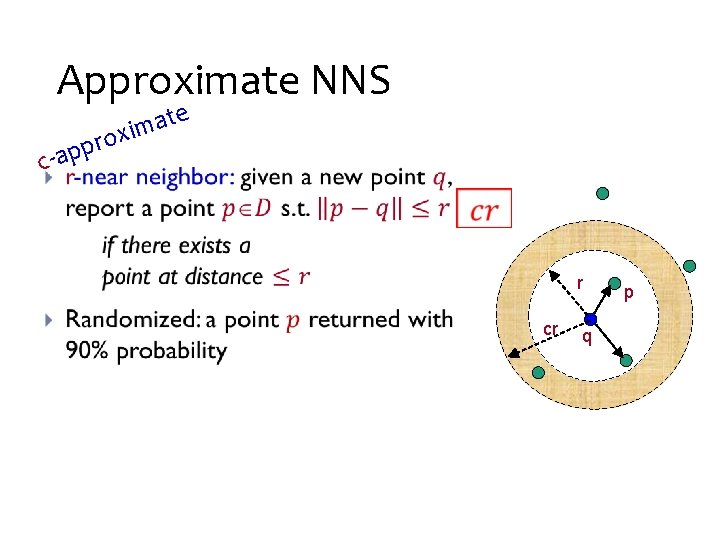

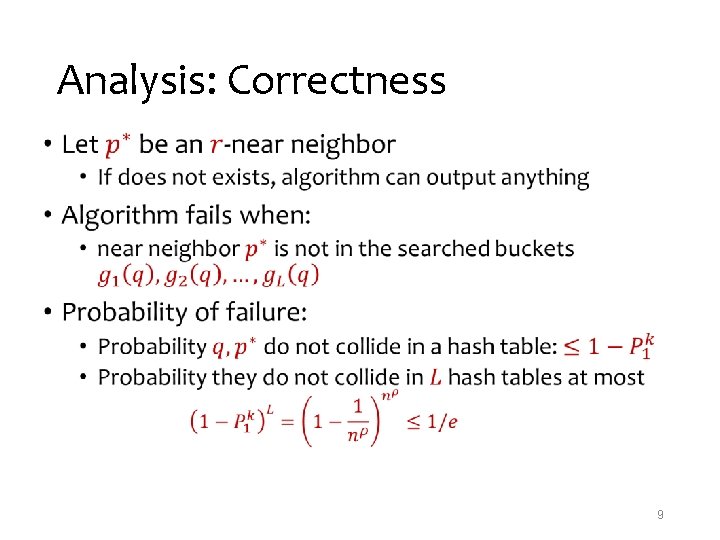

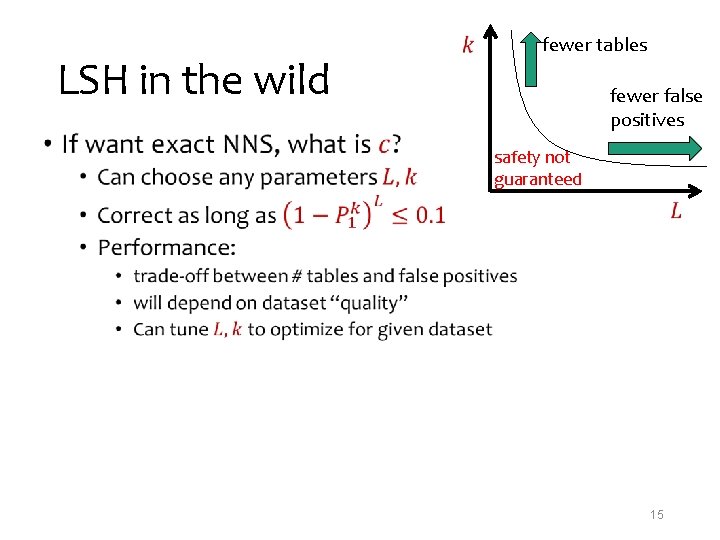

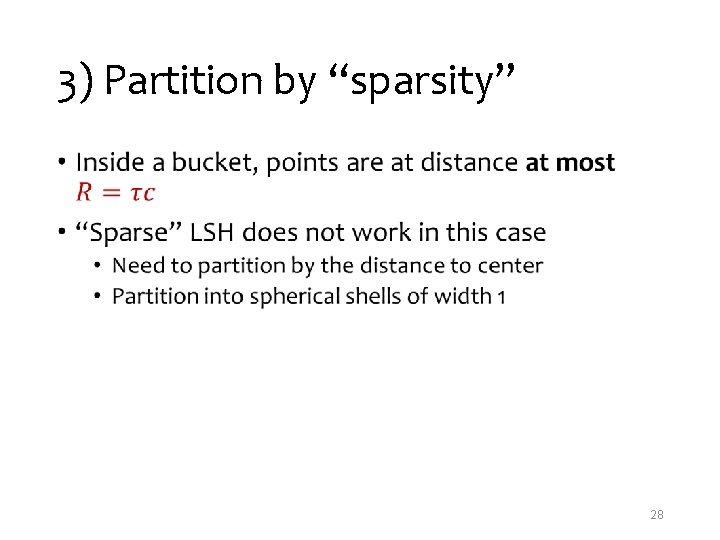

Time-Space Trade-offs

- Table columns: Space, Time, Comment, Reference
- Regimes (space / query time): low / high; medium; high / low
- References: [Ind '01, Pan '06]; [AI '06]; [IM '98]; [DIIM '04, AI '06]; [MNP '06, OWZ '11]; [PTW '08, PTW '10]; [KOR '98, IM '98, Pan '06]; [AIP '06]
- Comments: ω(1) memory lookups (twice); 1 mem lookup

LSH is tight… leave the rest to cell-probe lower bounds?

![Data-dependent Hashing! [A-Indyk-Nguyen-Razenshteyn '14]](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-18.jpg)

Data-dependent Hashing! [A-Indyk-Nguyen-Razenshteyn '14]

![A look at LSH lower bounds [O'Donnell-Wu-Zhou '11]](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-19.jpg)

A look at LSH lower bounds • [O'Donnell-Wu-Zhou '11]

Why not NNS lower bound?

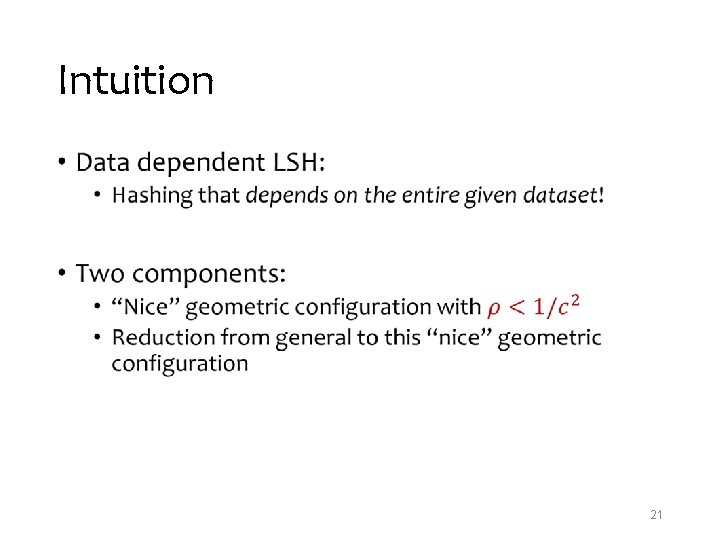

Intuition

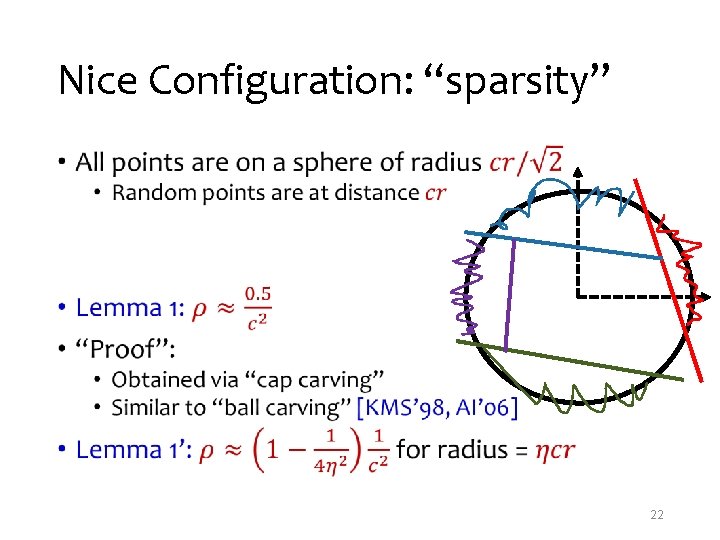

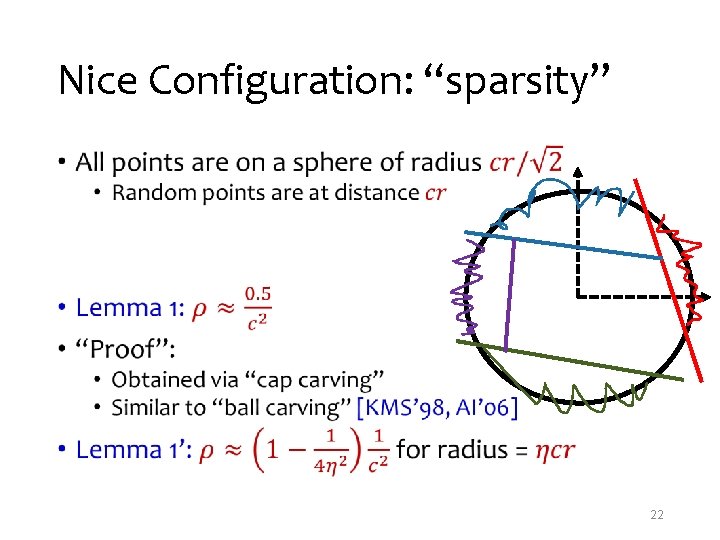

Nice Configuration: “sparsity”

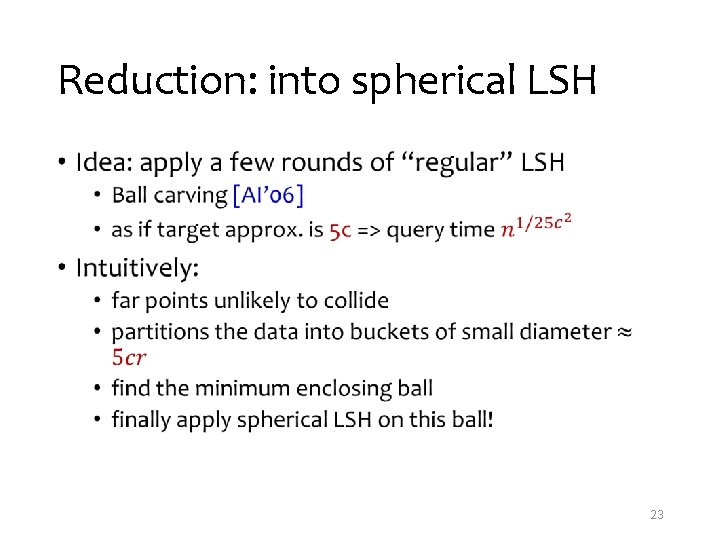

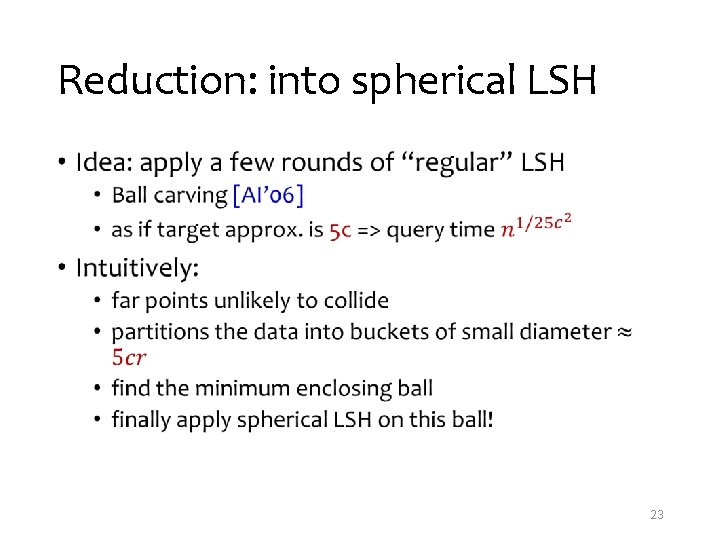

Reduction: into spherical LSH

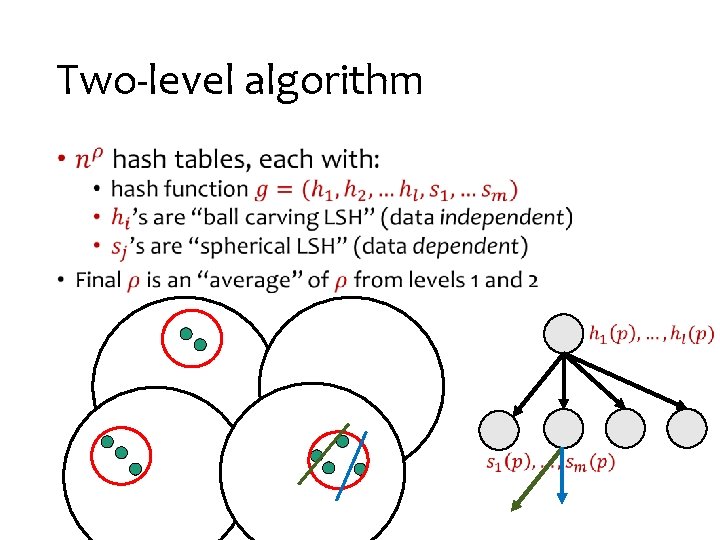

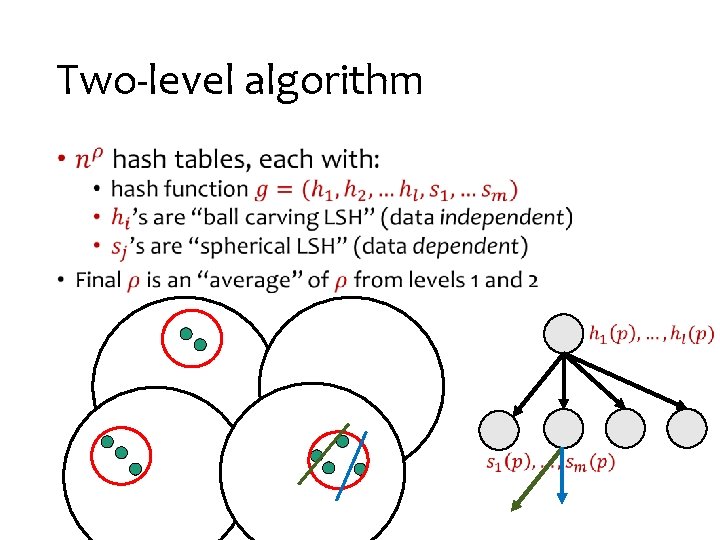

Two-level algorithm

Details

- Inside a bucket, need to ensure “sparse” case
  - 1\) drop all “far pairs”
  - 2\) find minimum enclosing ball (MEB)
  - 3\) partition by “sparsity” (distance from center)

1) Far points

2) Minimum Enclosing Ball

3) Partition by “sparsity”
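Steps 2 and 3 can be pictured with a rough sketch: approximate the center of the minimum enclosing ball, then group points into shells by their distance from that center. The farthest-point iteration used here (in the style of Bădoiu and Clarkson) and the fixed shell `width` are assumptions chosen for illustration, not necessarily the procedure used in [A-Indyk-Nguyen-Razenshteyn '14].

```python
import math


def dist(a, b):
    """Euclidean distance between two points given as sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def approx_meb_center(points, iterations=100):
    """Approximate MEB center: repeatedly step toward the farthest point."""
    center = list(points[0])
    for i in range(1, iterations + 1):
        far = max(points, key=lambda p: dist(center, p))
        # Step size shrinks as 1 / (i + 1), so the center settles down.
        center = [c + (f - c) / (i + 1) for c, f in zip(center, far)]
    return center


def partition_by_radius(points, center, width):
    """Group points into shells keyed by floor(distance from center / width)."""
    shells = {}
    for p in points:
        shells.setdefault(int(dist(center, p) // width), []).append(p)
    return shells
```

For four points on the unit circle plus the origin, the approximate center stays close to the origin, and the partition separates the central point from the outer shell.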

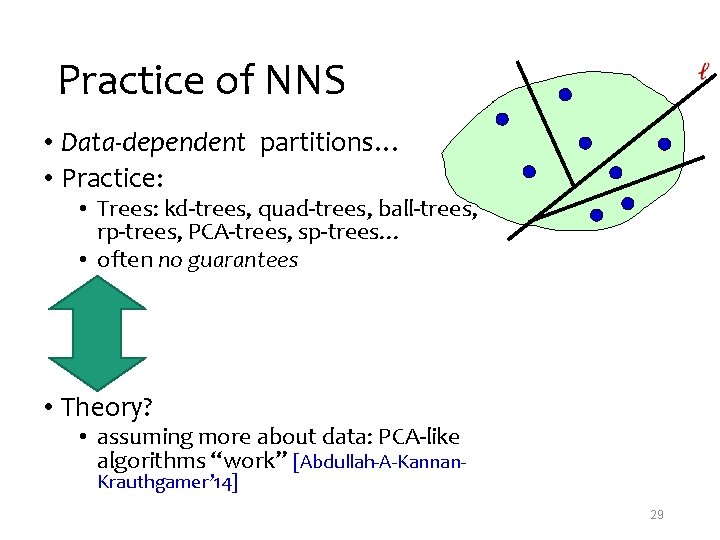

Practice of NNS

- Data-dependent partitions…
- Practice:
  - Trees: kd-trees, quad-trees, ball-trees, rp-trees, PCA-trees, sp-trees…
  - often no guarantees
- Theory?
  - assuming more about data: PCA-like algorithms “work” [Abdullah-A-Kannan-Krauthgamer '14]

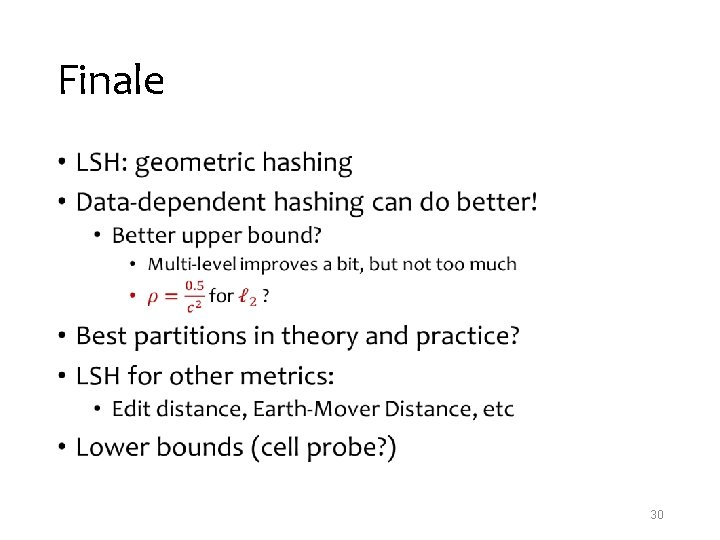

Finale

![Open question](https://slidetodoc.com/presentation_image_h/cf19b3c2600c5e0a2c985bca2760f1a8/image-31.jpg)

Open question: • $\Pr[\text{needle of length } 1 \text{ is not cut}] \ge \left(\Pr[\text{needle of length } c \text{ is not cut}]\right)^{1/c^2}$