Summary of Machine Learning J S Roger Jang

- Slides: 10

Summary of Machine Learning
J.-S. Roger Jang (張智星)
jang@mirlab.org
http://mirlab.org/jang
MIR Lab, CSIE Dept., National Taiwan University

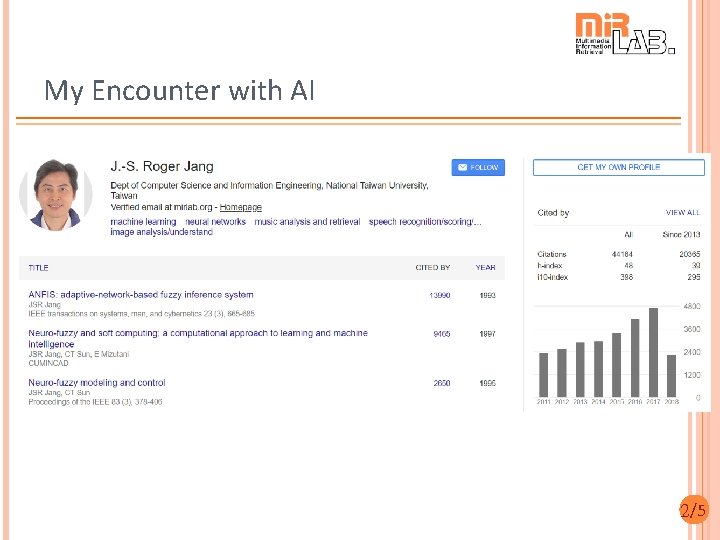

My Encounter with AI

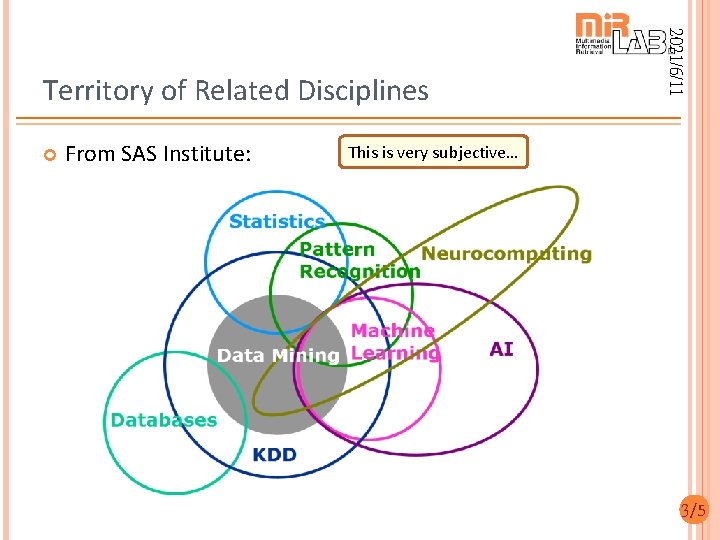

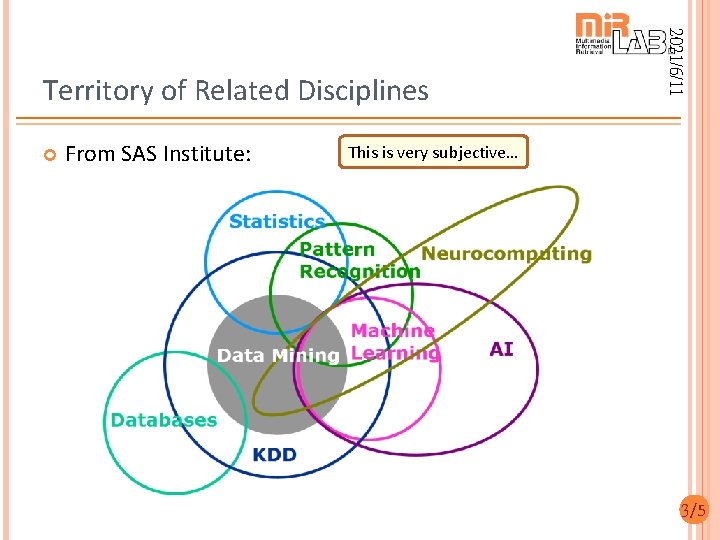

Territory of Related Disciplines

- From SAS Institute: 2021/6/11
- This is very subjective…

Differences between ML and Statistics

What ML and statistics have in common:

- Both aim to build models for regression and classification

Machine learning focuses on:

- Mostly nonlinear models
- Less theoretical exploration (for instance, we do not need to assume that the regression error follows a Gaussian distribution)
- More application-driven work
- A short history (since 1990 or so)
- Leveraging GPUs for complex computation
- Leveraging diversified optimization techniques

Naming Convention: ML vs. Statistics

| Machine Learning | Statistics |
| --- | --- |
| Neural networks, classifiers | Models |
| Weights (for NN) | Parameters |
| Learning | Fitting, classification |
| Generalization | Test set performance |
| Supervised learning | Parameter identification in regression or classification |
| Unsupervised learning | Clustering, density estimation |

Pain Points of "AI Industrialization"

Training data is hard to obtain:

- Data is King. Who masters the Data will rule the World!
- Data is the oil of the data economy!
- Amazon Echo, Google Home, …
- Compare with .com bubbles…

Lack of domain knowledge (domain know-how):

- Data correction, e.g. body temperature: 363; height: 72, weight: 168
- Feature filtering: useless features affect modeling!
- Missing data imputation
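The three data-preparation steps named on this slide (data correction, missing data imputation, feature filtering) can be sketched in a few lines. This is only an illustration under stated assumptions: the records, the plausible ranges in `PLAUSIBLE`, the median fill, and the variance threshold are all hypothetical choices, not part of the original slides.

```python
from statistics import median, pvariance

# Hypothetical records; None marks a missing value.
records = [
    {"temp_c": 36.5, "height_cm": 170.0, "site": 1.0},
    {"temp_c": 363.0, "height_cm": 165.0, "site": 1.0},  # implausible reading
    {"temp_c": 37.1, "height_cm": None, "site": 1.0},
    {"temp_c": 36.8, "height_cm": 180.0, "site": 1.0},
]

# Assumed plausible ranges, standing in for domain know-how.
PLAUSIBLE = {"temp_c": (30.0, 45.0), "height_cm": (50.0, 250.0)}


def correct(rows):
    """Data correction: turn out-of-range values into missing values."""
    cleaned = []
    for row in rows:
        new = dict(row)
        for key, (lo, hi) in PLAUSIBLE.items():
            if new[key] is not None and not lo <= new[key] <= hi:
                new[key] = None
        cleaned.append(new)
    return cleaned


def impute(rows):
    """Missing data imputation: fill each gap with the column median."""
    keys = list(rows[0])
    fill = {k: median([r[k] for r in rows if r[k] is not None]) for k in keys}
    return [{k: fill[k] if r[k] is None else r[k] for k in keys} for r in rows]


def filter_features(rows, min_variance=1e-12):
    """Feature filtering: drop columns that are (nearly) constant."""
    keep = [k for k in rows[0] if pvariance([r[k] for r in rows]) > min_variance]
    return [{k: r[k] for k in keep} for r in rows]


clean = filter_features(impute(correct(records)))
```

Here the implausible temperature is treated as missing and then imputed, and the constant `site` column is dropped. In practice the ranges and the filtering rule depend on the domain, which is the point the slide makes about domain know-how.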

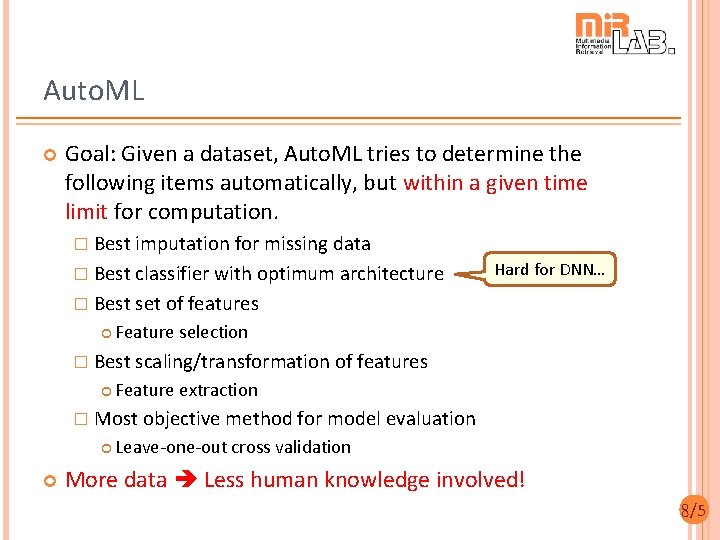

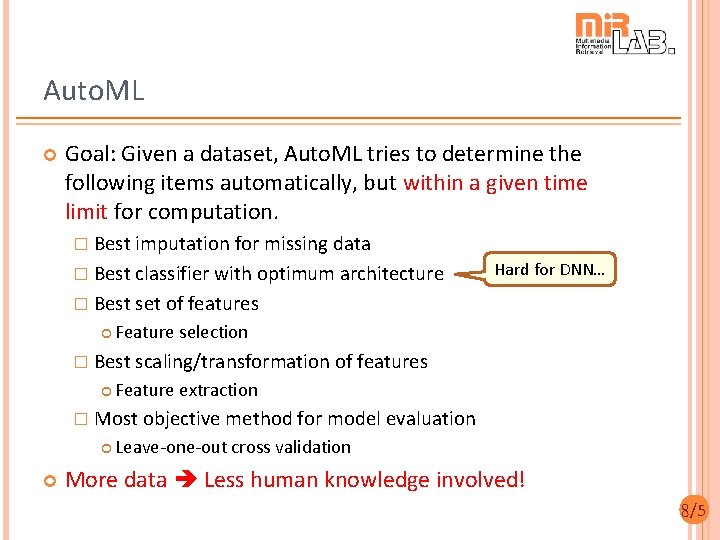

AutoML

Goal: Given a dataset, AutoML tries to determine the following items automatically, within a given time limit for computation.

- Best imputation for missing data
- Best classifier with optimum architecture (hard for DNN…)
- Best set of features (feature selection)
- Best scaling/transformation of features (feature extraction)
- Most objective method for model evaluation (leave-one-out cross validation)

More data, less human knowledge involved!
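To make the idea concrete, the sketch below searches feature subsets under a time budget and scores each candidate with leave-one-out cross validation. It is a toy stand-in for one AutoML ingredient, not how any particular AutoML product works: the 1-nearest-neighbour classifier, the exhaustive subset search, the function names, and the toy data are all assumptions made for illustration.

```python
import time
from itertools import combinations


def nearest_label(x, train, features):
    """Predict with 1-nearest-neighbour using only the chosen features."""
    def dist(sample):
        return sum((sample[0][f] - x[f]) ** 2 for f in features)
    return min(train, key=dist)[1]


def loocv_accuracy(data, features):
    """Leave-one-out CV: hold out each sample once, train on the rest."""
    hits = 0
    for i, (x, y) in enumerate(data):
        train = data[:i] + data[i + 1:]
        hits += nearest_label(x, train, features) == y
    return hits / len(data)


def search_features(data, n_features, time_limit_s=1.0):
    """Try feature subsets until all are scored or the time budget runs out."""
    deadline = time.monotonic() + time_limit_s
    best_score, best_subset = -1.0, ()
    for size in range(1, n_features + 1):
        for subset in combinations(range(n_features), size):
            if time.monotonic() > deadline:
                return best_subset, best_score
            score = loocv_accuracy(data, subset)
            if score > best_score:
                best_score, best_subset = score, subset
    return best_subset, best_score


# Toy data: feature 0 separates the classes, feature 1 is noise.
toy = [
    ((0.0, 5.0), "a"), ((0.1, -3.0), "a"), ((0.2, 9.0), "a"),
    ((1.0, 4.0), "b"), ((1.1, -2.0), "b"), ((1.2, 8.0), "b"),
]
best_subset, best_score = search_features(toy, n_features=2)
```

On this toy data the search keeps only feature 0, since adding the noisy feature does not improve the leave-one-out accuracy. Exhaustive search grows exponentially with the number of features, which is why a time limit is part of the goal statement.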

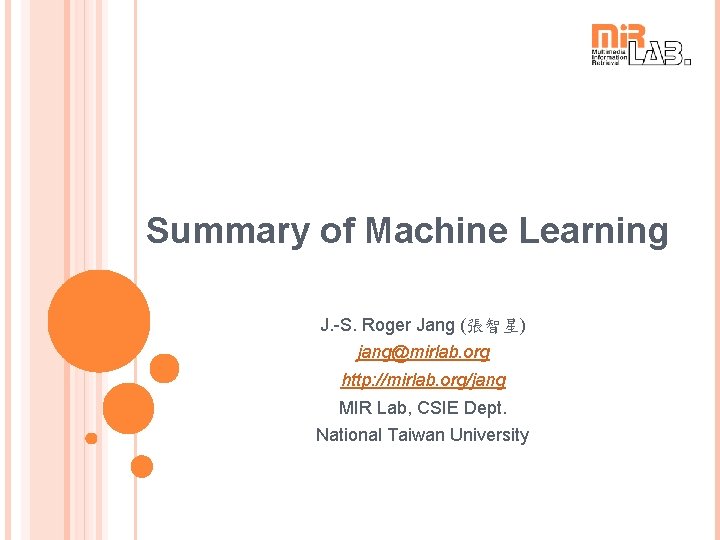

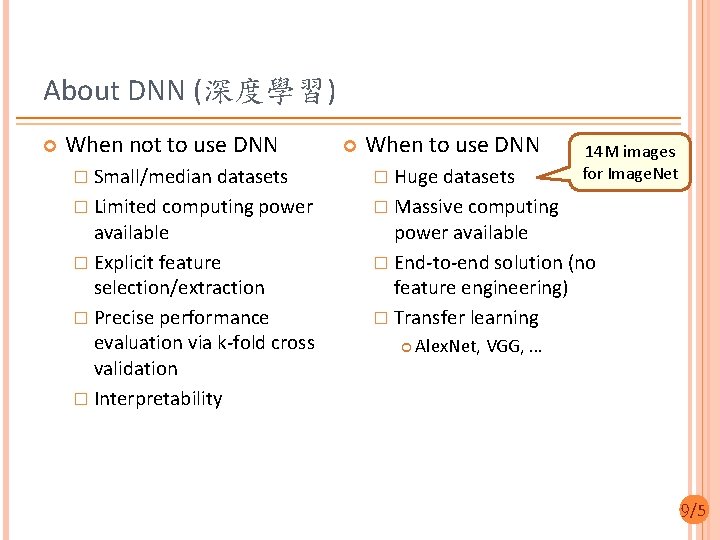

About DNN (Deep Learning)

When not to use DNN:

- Small/medium datasets
- Limited computing power
- Explicit feature selection/extraction
- Precise performance evaluation via k-fold cross validation
- Interpretability

When to use DNN:

- Huge datasets (14 M images for ImageNet)
- Massive computing power available
- End-to-end solution (no feature engineering)
- Transfer learning (AlexNet, VGG, …)
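The k-fold cross validation mentioned above is cheap for small models because each fold requires a full retraining. A minimal sketch follows; the helper names, the fixed shuffle seed, and the one-feature threshold classifier are hypothetical and chosen only to keep the example self-contained.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle indices once and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(xs, ys, k, fit, predict):
    """Return one accuracy per fold; each fold is the test set exactly once."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        hits = sum(predict(model, xs[i]) == ys[i] for i in test_idx)
        scores.append(hits / len(test_idx))
    return scores


# Hypothetical one-feature classifier: threshold halfway between class means.
def fit_threshold(xs, ys):
    mean0 = mean([x for x, y in zip(xs, ys) if y == 0])
    mean1 = mean([x for x, y in zip(xs, ys) if y == 1])
    return (mean0 + mean1) / 2


def predict_threshold(threshold, x):
    return int(x > threshold)


xs = [0.1, 0.3, 0.2, 0.4, 1.6, 1.8, 1.7, 1.9, 0.0, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1, 0, 1]
scores = cross_validate(xs, ys, k=5, fit=fit_threshold, predict=predict_threshold)
```

With a deep network, the `fit` call would be repeated k times on a huge dataset, which is one reason this kind of evaluation fits the "when not to use DNN" column.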

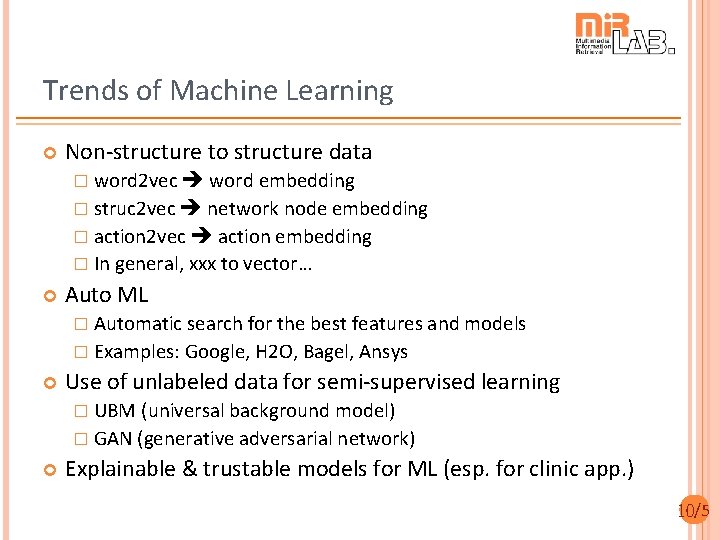

Trends of Machine Learning

Unstructured to structured data:

- word2vec: word embedding
- struc2vec: network node embedding
- action2vec: action embedding
- In general, xxx to vector…

AutoML:

- Automatic search for the best features and models
- Examples: Google, H2O, Bagel, Ansys

Use of unlabeled data for semi-supervised learning:

- UBM (universal background model)
- GAN (generative adversarial network)

Explainable and trustworthy models for ML (esp. for clinical applications)
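One reason the "xxx to vector" trend matters is that, once items are vectors, standard vector operations such as cosine similarity apply to them. The sketch below shows only that downstream step. The three-dimensional vectors are hand-written and hypothetical; real embeddings are learned from data and are much longer.

```python
import math

# Hypothetical 3-d embeddings, written by hand for illustration only.
embeddings = {
    "cat": (0.9, 0.1, 0.0),
    "dog": (0.8, 0.2, 0.1),
    "car": (0.0, 0.1, 0.9),
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word):
    """Return the other word whose vector is closest in cosine similarity."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine(embeddings[word], embeddings[w]))
```

For example, `most_similar("cat")` picks `"dog"` here because the two hand-written vectors point in nearly the same direction.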