Successfully Virtualizing SQL Server on vSphere - Straight from the Source

- Slides: 56

Successfully Virtualizing SQL Server on vSphere - Straight from the Source

Microsoft Applications Virtualization Lead, VMware

© 2014 VMware Inc. All rights reserved.

Let’s Agree on These First

- Virtualization is Mainstream
- You will Virtualize Your Applications
- You will Care about the Outcome
- Your Applications will be Important
- That is WHY you are Here

Virtualizing Microsoft Applications for Performance and Scale

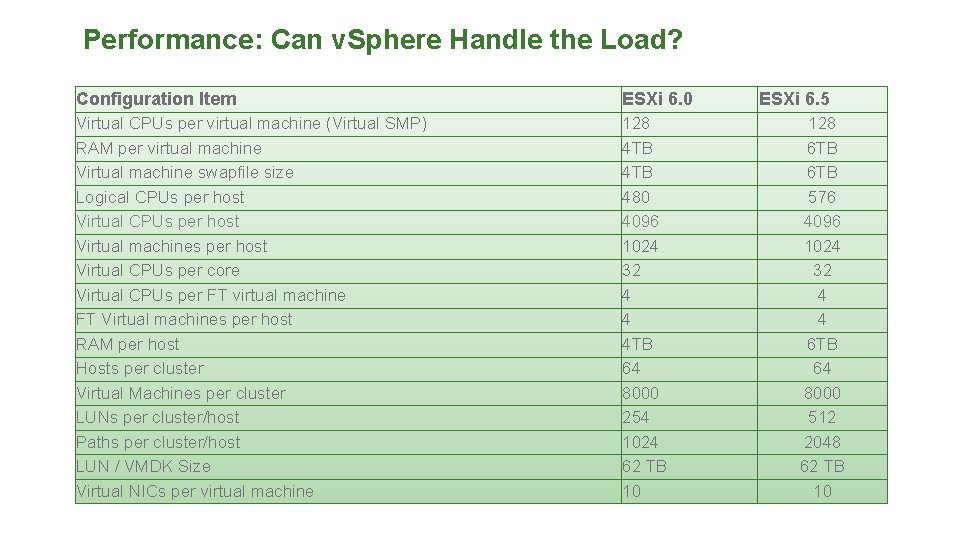

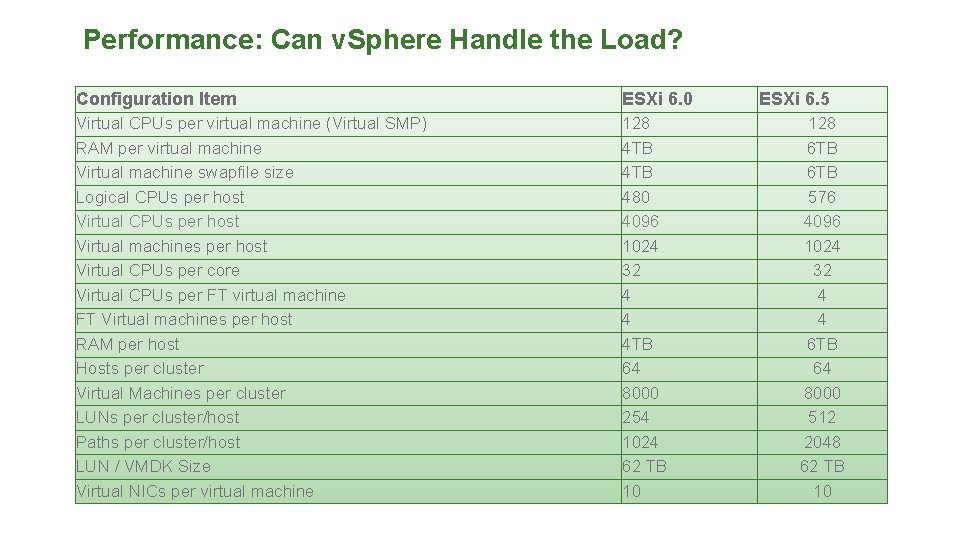

Performance: Can vSphere Handle the Load?

Configuration items: Virtual CPUs per virtual machine (Virtual SMP); RAM per virtual machine; Virtual machine swapfile size; Logical CPUs per host; Virtual machines per host; Virtual CPUs per core; Virtual CPUs per FT virtual machine; FT virtual machines per host; RAM per host; Hosts per cluster; Virtual machines per cluster; LUNs per cluster/host; Paths per cluster/host; LUN / VMDK size; Virtual NICs per virtual machine

ESXi 6.0: 128; 4 TB; 480; 4096; 1024; 32; 4; 4; 4 TB; 64; 8000; 254; 1024; 62 TB; 10

ESXi 6.5: 128; 6 TB; 576; 4096; 1024; 32; 4; 4; 6 TB; 64; 8000; 512; 2048; 62 TB; 10

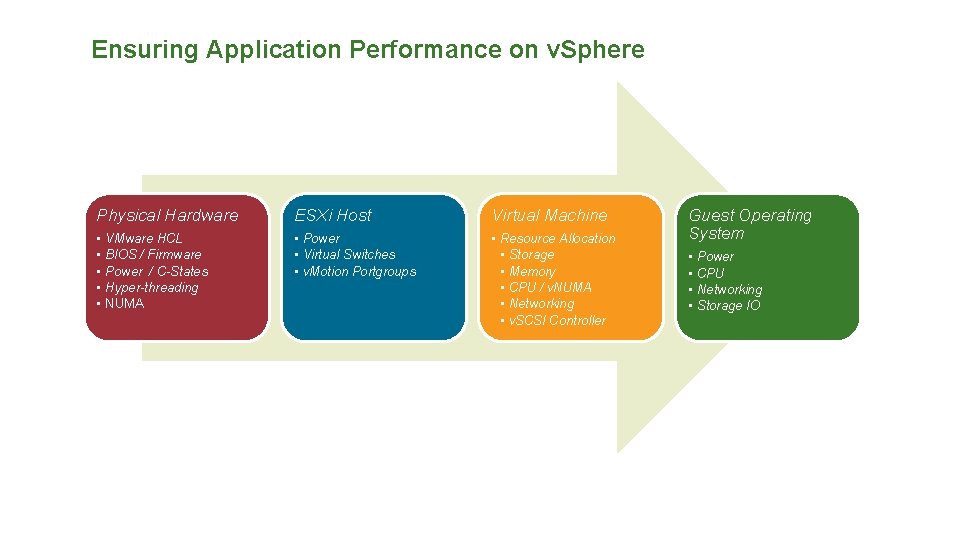

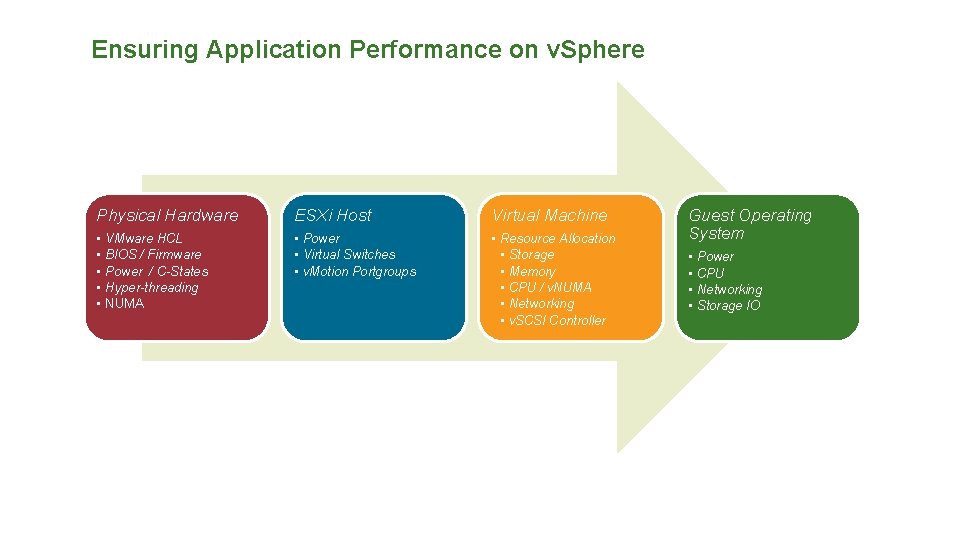

Ensuring Application Performance on vSphere

- Physical Hardware
  - VMware HCL
  - BIOS / Firmware
  - Power / C-States
  - Hyper-threading
  - NUMA
- ESXi Host
  - Power
  - Virtual Switches
  - vMotion Portgroups
- Virtual Machine
  - Resource Allocation
  - Storage
  - Memory
  - CPU / vNUMA
  - Networking
  - vSCSI Controller
- Guest Operating System
  - Power
  - CPU
  - Networking
  - Storage IO

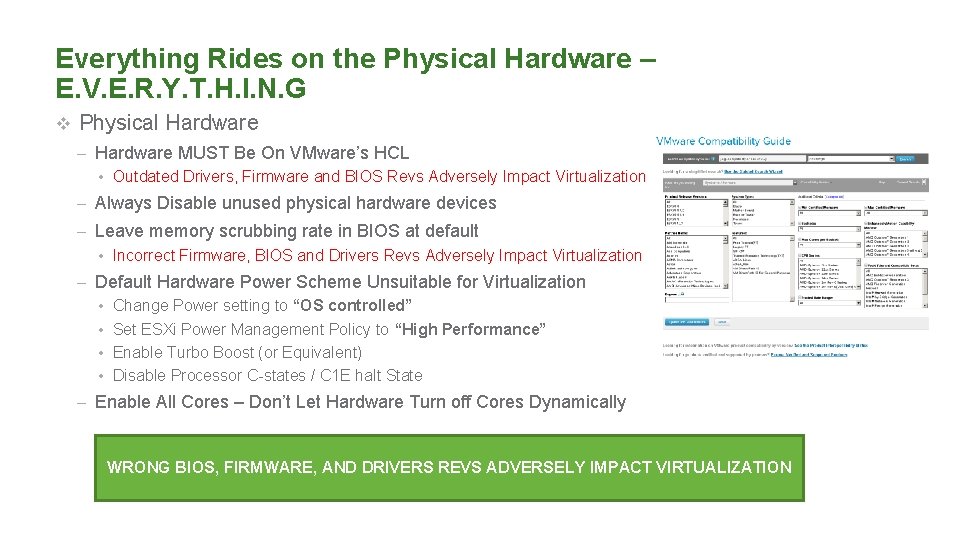

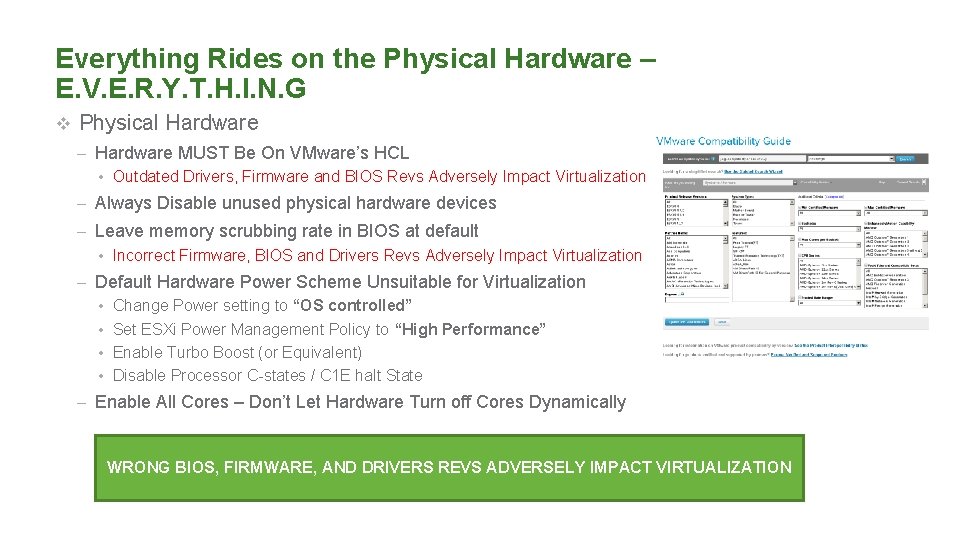

Everything Rides on the Physical Hardware – E.V.E.R.Y.T.H.I.N.G

- Physical Hardware
  - Hardware MUST be on VMware’s HCL
  - Outdated or incorrect drivers, firmware, and BIOS revisions adversely impact virtualization
  - Always disable unused physical hardware devices
  - Leave the memory scrubbing rate in BIOS at its default
  - The default hardware power scheme is unsuitable for virtualization
    - Change the power setting to “OS controlled”
    - Set the ESXi Power Management Policy to “High Performance”
    - Enable Turbo Boost (or equivalent)
    - Disable processor C-states / C1E halt state
  - Enable all cores; don’t let the hardware turn off cores dynamically

WRONG BIOS, FIRMWARE, AND DRIVER REVS ADVERSELY IMPACT VIRTUALIZATION

Storage Optimization

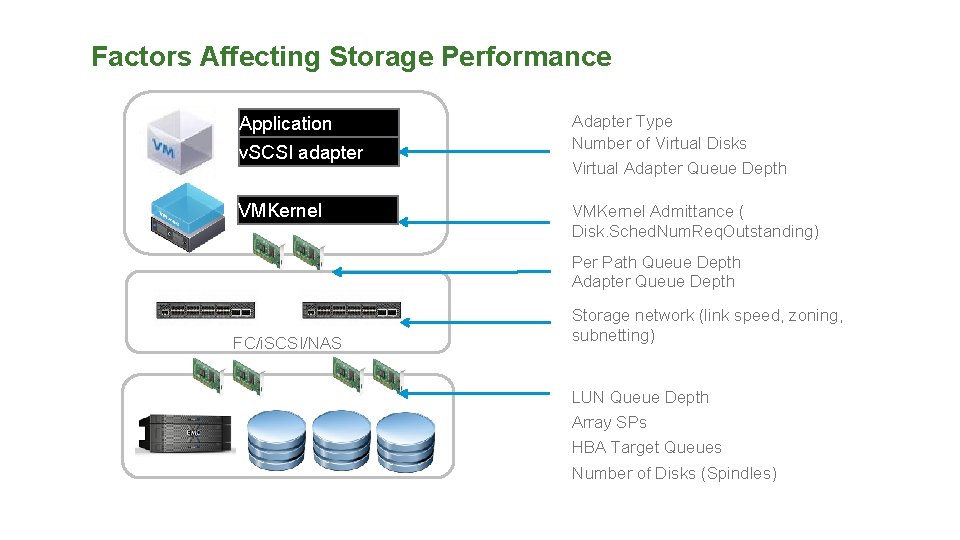

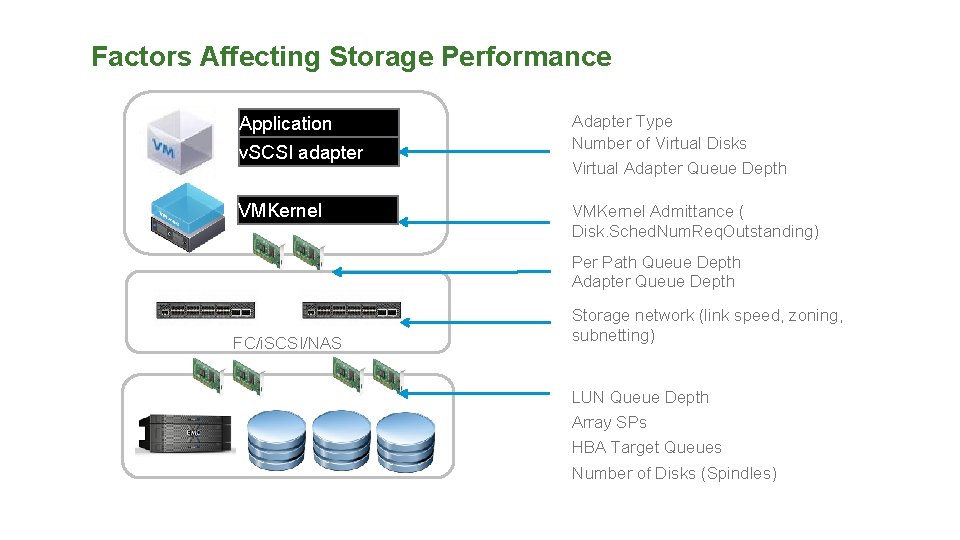

Factors Affecting Storage Performance

- Application
- vSCSI adapter
  - Adapter type
  - Number of virtual disks
  - Virtual adapter queue depth
- VMkernel
  - Admittance (Disk.SchedNumReqOutstanding)
  - Per-path queue depth
  - Adapter queue depth
- FC / iSCSI / NAS
  - Storage network (link speed, zoning, subnetting)
- Array SPs
  - HBA target queues
  - LUN queue depth
  - Number of disks (spindles)

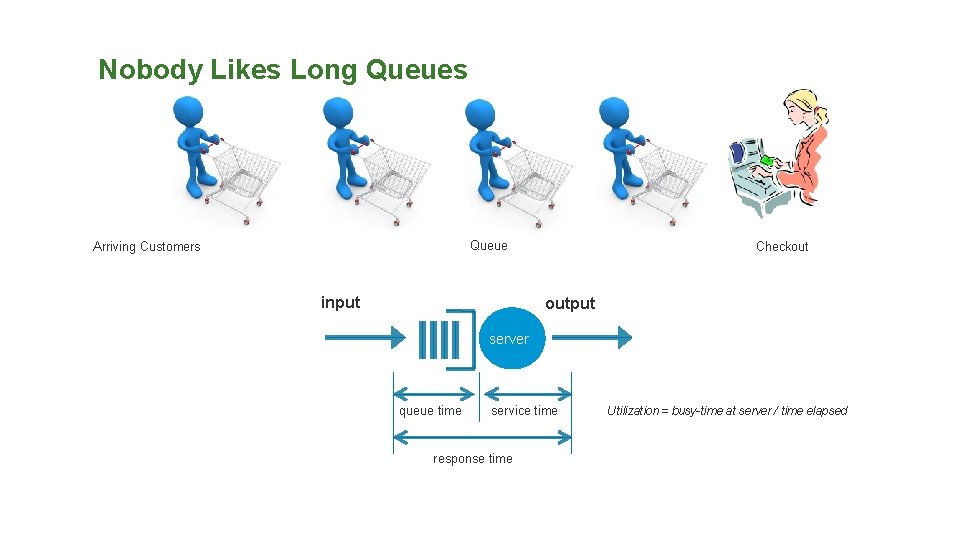

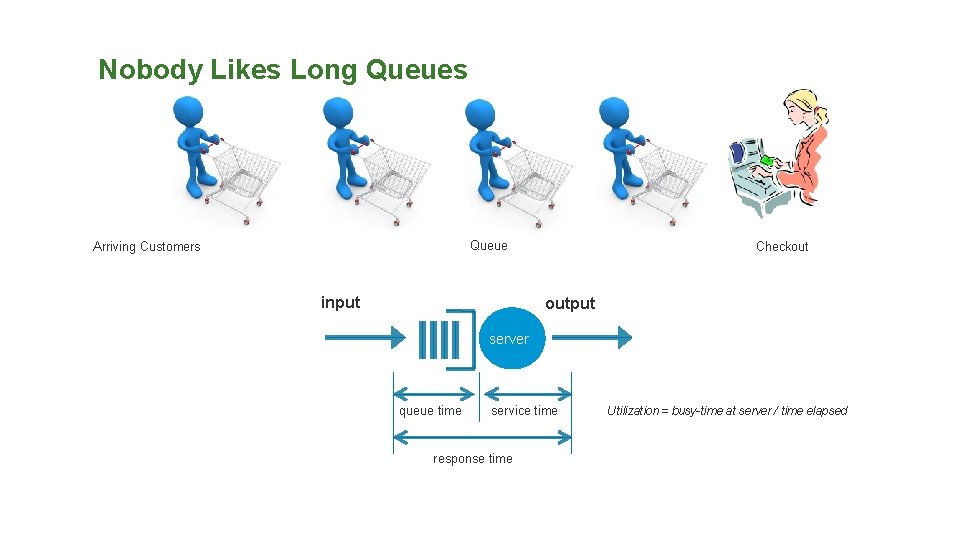

Nobody Likes Long Queues

- Arriving customers (input) wait in the queue, are served at the checkout (server), and leave (output)
- Response time = queue time + service time
- Utilization = busy time at server / time elapsed
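The queueing terms on this slide can be turned into a few lines of arithmetic. The sketch below computes utilization and response time exactly as the slide defines them. The third function uses the textbook M/M/1 formula, which is an assumption added for illustration and is not on the slide; it is only meant to show why response time climbs steeply as a device approaches saturation.

```python
def utilization(busy_time_s: float, elapsed_s: float) -> float:
    """Utilization = busy time at server / time elapsed (slide definition)."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return busy_time_s / elapsed_s


def response_time_ms(queue_time_ms: float, service_time_ms: float) -> float:
    """Response time is time spent waiting plus time being served."""
    return queue_time_ms + service_time_ms


def mm1_response_time_ms(service_time_ms: float, util: float) -> float:
    """ASSUMPTION (not from the slide): M/M/1 model, R = S / (1 - U)."""
    if not 0 <= util < 1:
        raise ValueError("utilization must be in [0, 1)")
    return service_time_ms / (1.0 - util)


if __name__ == "__main__":
    # Hypothetical numbers: a device with a 1 ms service time.
    for u in (0.5, 0.8, 0.9, 0.95):
        r = mm1_response_time_ms(1.0, u)
        print(f"utilization {u:.0%}: about {r:.1f} ms response time")
```

Under that assumed model, doubling the load from 45% to 90% utilization raises response time by far more than a factor of two, which is the intuition behind keeping queues short.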

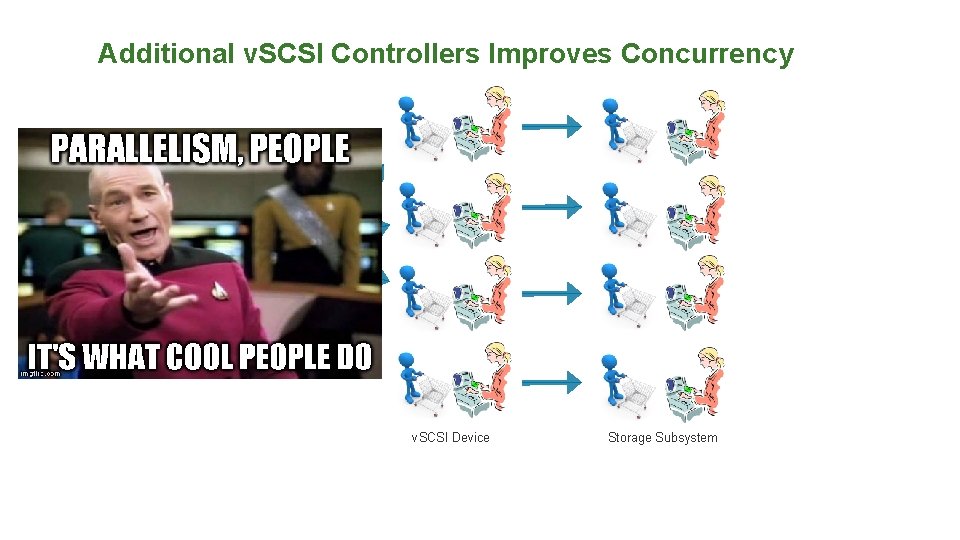

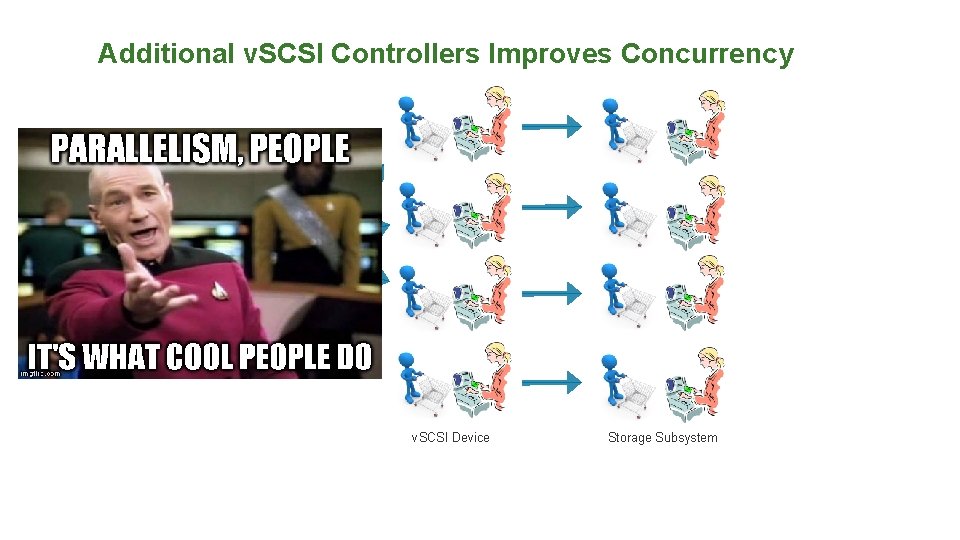

Additional vSCSI Controllers Improve Concurrency

Diagram layers: Guest Device, vSCSI Device, Storage Subsystem

Optimize for Performance – Queue Depth

- vSCSI Adapter
  - Be aware of per-device/adapter queue depth maximums (KB 1267)
  - Use multiple PVSCSI adapters
- VMkernel Admittance
  - The VMkernel admittance policy affects shared datastores (KB 1268); use dedicated datastores for DB and log volumes
  - VMkernel admittance changes dynamically when SIOC is enabled (may be used to control IOs for lower-tiered VMs)
- Physical HBAs
  - Follow vendor recommendation on max queue depth per LUN (http://kb.vmware.com/kb/1267)
  - Follow vendor recommendation on HBA execution throttle
  - Be aware that settings are global if the host is connected to multiple storage arrays
  - Ensure cards are installed in slots with enough bandwidth to support their expected throughput
  - Pick the right multi-pathing policy based on the vendor's storage array design (ask your storage vendor)

Increase PVSCSI Queue Depth

- Just increasing LUN and HBA queue depths is NOT ENOUGH
- PVSCSI - http://kb.vmware.com/kb/2053145
- Increase the PVSCSI default queue depth (after consultation with the array vendor)
- Linux:
  - Add the following line to a file in /etc/modprobe.d/ or to /etc/modprobe.conf:
    - options vmw_pvscsi cmd_per_lun=254 ring_pages=32
  - OR, append these to the appropriate kernel boot arguments (grub.conf or grub.cfg):
    - vmw_pvscsi.cmd_per_lun=254
    - vmw_pvscsi.ring_pages=32
- Windows:
  - Key: HKLM\SYSTEM\CurrentControlSet\services\pvscsi\Parameters\Device
  - Value: DriverParameter
  - Value data: "RequestRingPages=32,MaxQueueDepth=254"
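The three places where the PVSCSI values are set use three slightly different syntaxes, which makes typos easy. The following is a minimal sketch that renders the strings from one pair of numbers. The function names are hypothetical, and treating 254 and 32 as upper bounds is an assumption based on the values shown on the slide; it only builds text and does not touch the system.

```python
MAX_QUEUE_DEPTH = 254  # value from the slide, treated here as an upper bound (assumption)
MAX_RING_PAGES = 32    # value from the slide, treated here as an upper bound (assumption)


def _check(queue_depth: int, ring_pages: int) -> None:
    if not 1 <= queue_depth <= MAX_QUEUE_DEPTH:
        raise ValueError(f"queue depth must be 1..{MAX_QUEUE_DEPTH}")
    if not 1 <= ring_pages <= MAX_RING_PAGES:
        raise ValueError(f"ring pages must be 1..{MAX_RING_PAGES}")


def linux_modprobe_line(queue_depth: int = 254, ring_pages: int = 32) -> str:
    """Line for a file under /etc/modprobe.d/ or for /etc/modprobe.conf."""
    _check(queue_depth, ring_pages)
    return f"options vmw_pvscsi cmd_per_lun={queue_depth} ring_pages={ring_pages}"


def linux_boot_args(queue_depth: int = 254, ring_pages: int = 32) -> str:
    """Arguments to append to the kernel boot line in grub.conf or grub.cfg."""
    _check(queue_depth, ring_pages)
    return f"vmw_pvscsi.cmd_per_lun={queue_depth} vmw_pvscsi.ring_pages={ring_pages}"


def windows_driver_parameter(queue_depth: int = 254, ring_pages: int = 32) -> str:
    """Value data for the DriverParameter registry value."""
    _check(queue_depth, ring_pages)
    return f"RequestRingPages={ring_pages},MaxQueueDepth={queue_depth}"


if __name__ == "__main__":
    print(linux_modprobe_line())
    print(linux_boot_args())
    print(windows_driver_parameter())
```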

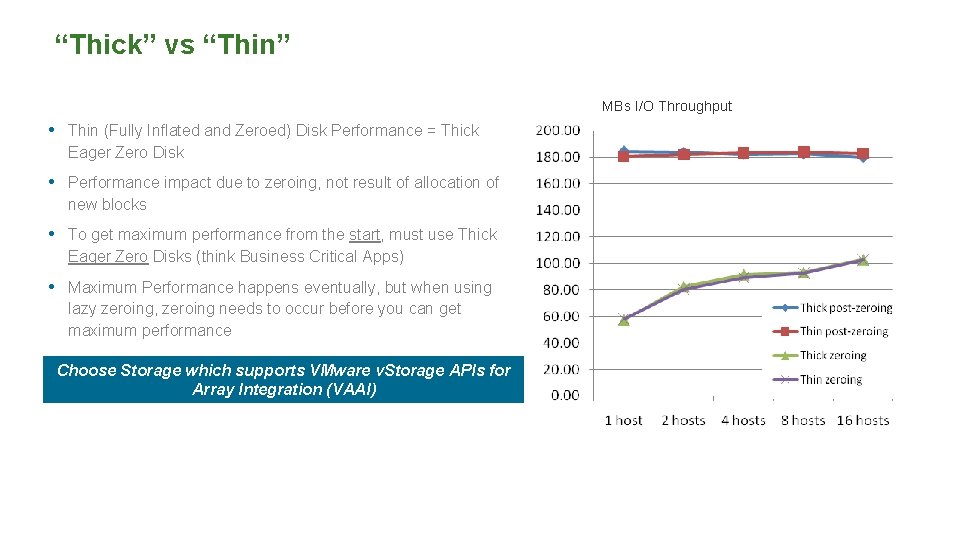

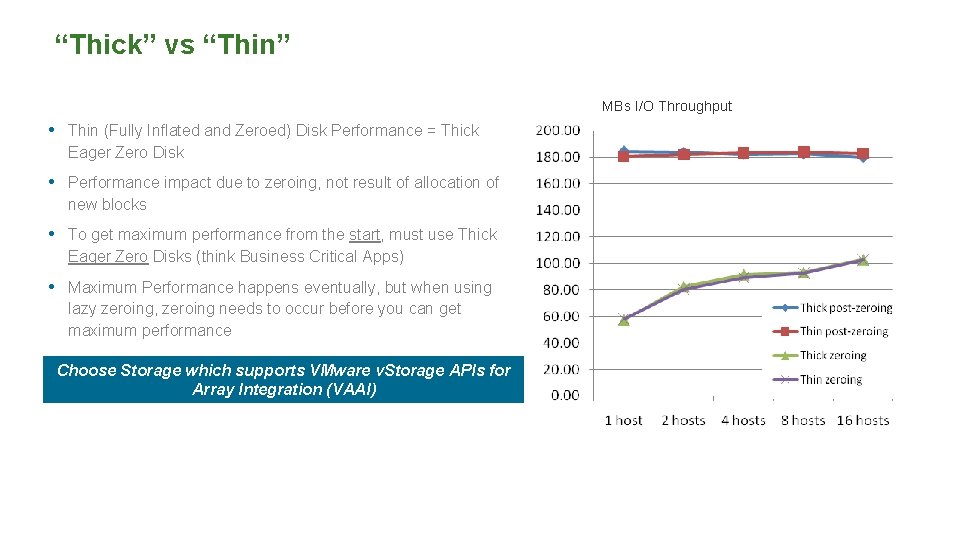

“Thick” vs “Thin”

Chart: I/O throughput (MB/s)

- Thin (fully inflated and zeroed) disk performance = Thick Eager Zero disk performance
- The performance impact is due to zeroing, not to the allocation of new blocks
- To get maximum performance from the start, you must use Thick Eager Zero disks (think Business Critical Apps)
- Maximum performance happens eventually, but with lazy zeroing, zeroing needs to occur before you can get maximum performance
- Choose storage which supports VMware vStorage APIs for Array Integration (VAAI)

http://www.vmware.com/pdf/vsp_4_thinprov_perf.pdf

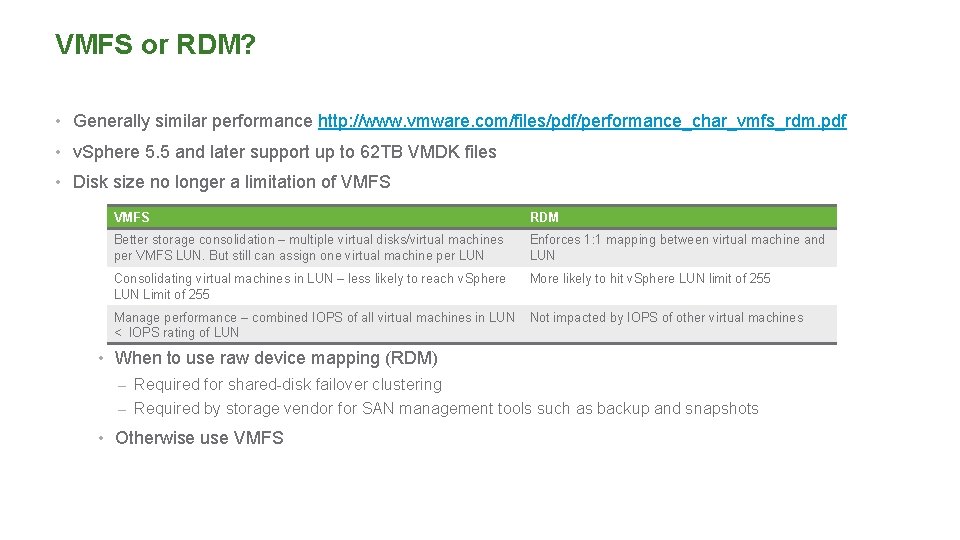

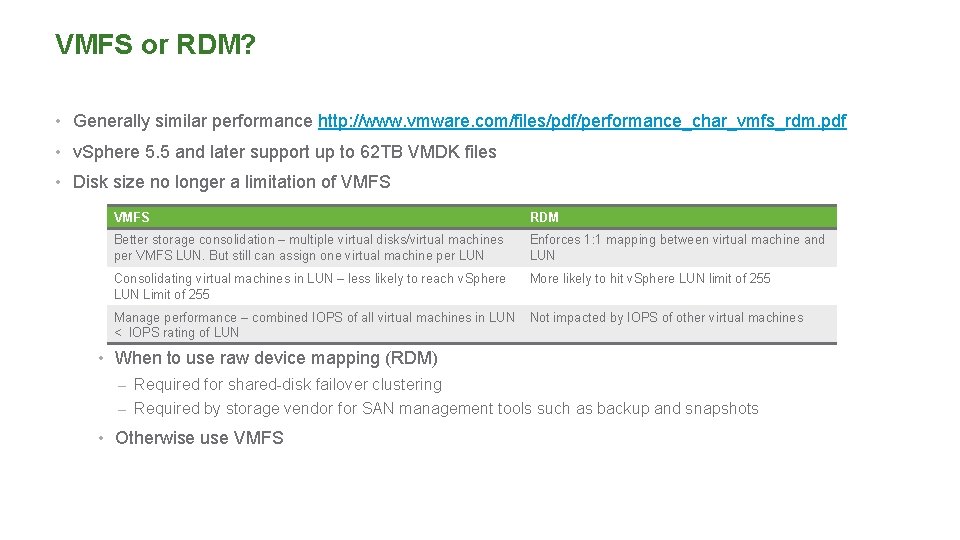

VMFS or RDM?

- Generally similar performance: http://www.vmware.com/files/pdf/performance_char_vmfs_rdm.pdf
- vSphere 5.5 and later support up to 62 TB VMDK files
- Disk size is no longer a limitation of VMFS

| VMFS | RDM |
| --- | --- |
| Better storage consolidation: multiple virtual disks/virtual machines per VMFS LUN, but you can still assign one virtual machine per LUN | Enforces 1:1 mapping between virtual machine and LUN |
| Consolidating virtual machines in a LUN: less likely to reach the vSphere LUN limit of 255 | More likely to hit the vSphere LUN limit of 255 |
| Manage performance: combined IOPS of all virtual machines in the LUN < IOPS rating of the LUN | Not impacted by IOPS of other virtual machines |

- When to use raw device mapping (RDM)
  - Required for shared-disk failover clustering
  - Required by storage vendor for SAN management tools such as backup and snapshots
- Otherwise use VMFS

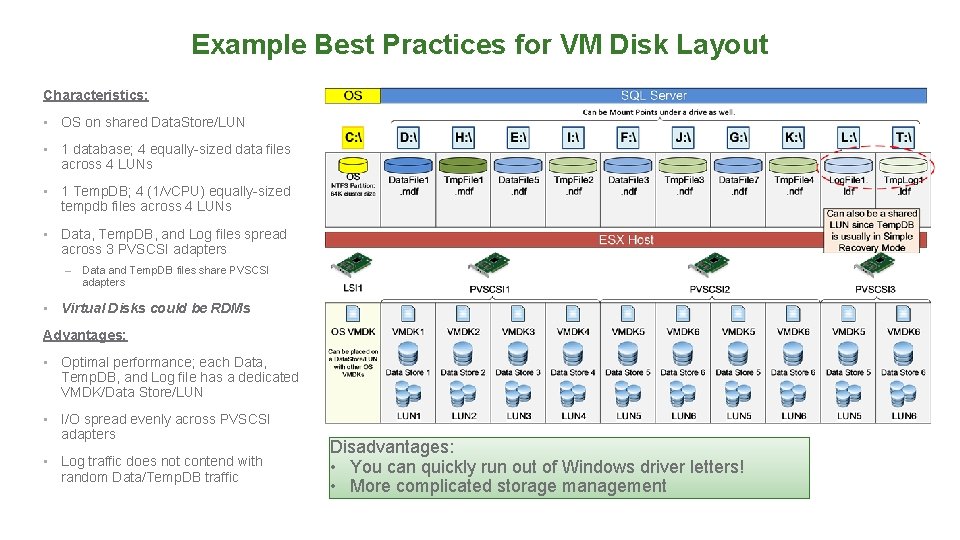

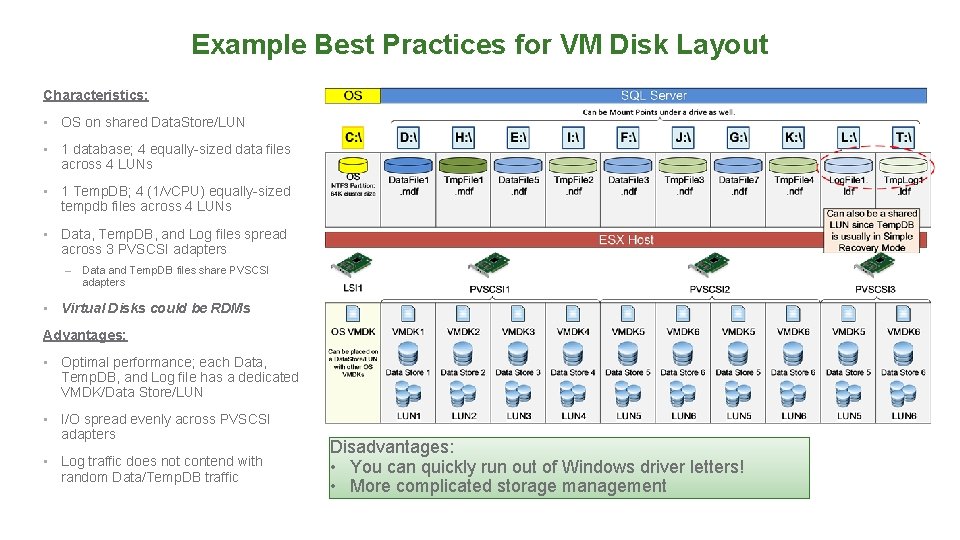

Example Best Practices for VM Disk Layout

Characteristics:
- OS on shared datastore/LUN
- 1 database; 4 equally-sized data files across 4 LUNs
- 1 TempDB; 4 (1/vCPU) equally-sized tempdb files across 4 LUNs
- Data, TempDB, and Log files spread across 3 PVSCSI adapters
  - Data and TempDB files share PVSCSI adapters
- Virtual disks could be RDMs

Advantages:
- Optimal performance; each Data, TempDB, and Log file has a dedicated VMDK/datastore/LUN
- I/O spread evenly across PVSCSI adapters
- Log traffic does not contend with random Data/TempDB traffic

Disadvantages:
- You can quickly run out of Windows drive letters!
- More complicated storage management

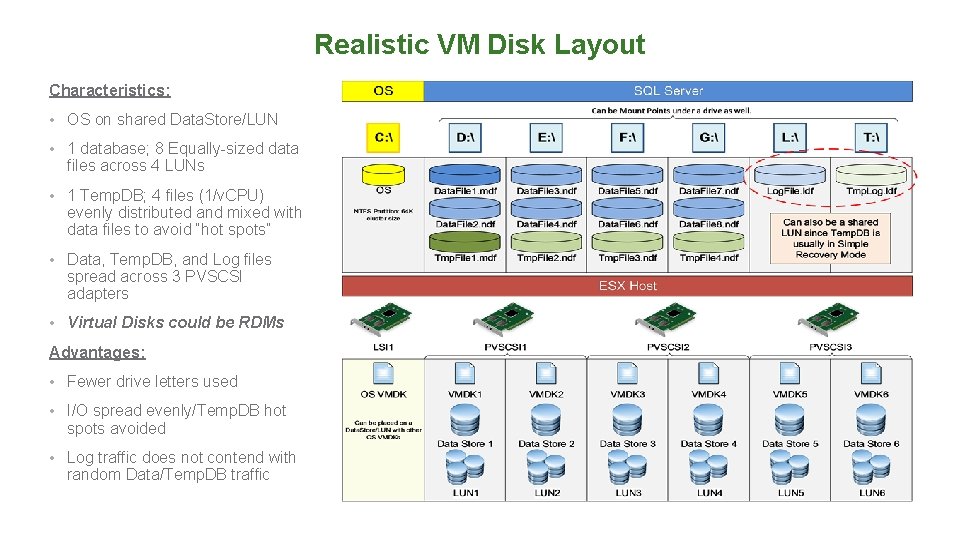

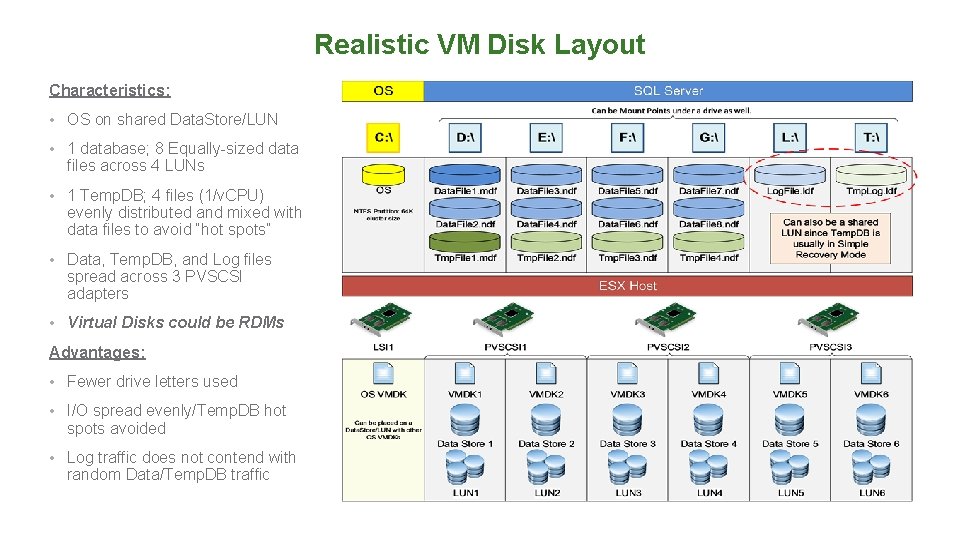

Realistic VM Disk Layout

Characteristics:
- OS on shared datastore/LUN
- 1 database; 8 equally-sized data files across 4 LUNs
- 1 TempDB; 4 files (1/vCPU) evenly distributed and mixed with data files to avoid “hot spots”
- Data, TempDB, and Log files spread across 3 PVSCSI adapters
- Virtual disks could be RDMs

Advantages:
- Fewer drive letters used
- I/O spread evenly; TempDB hot spots avoided
- Log traffic does not contend with random Data/TempDB traffic

Now, We Talk CPU, vCPUs and Other Things

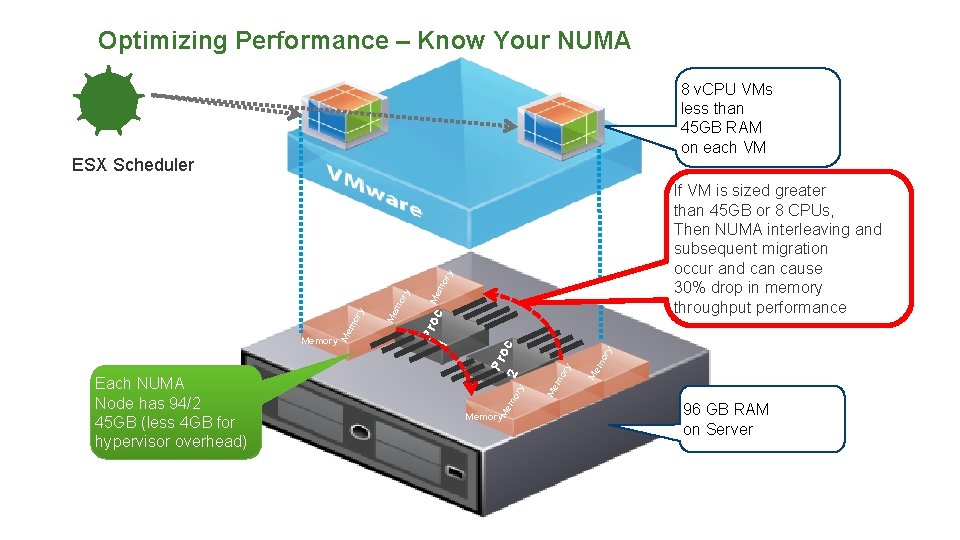

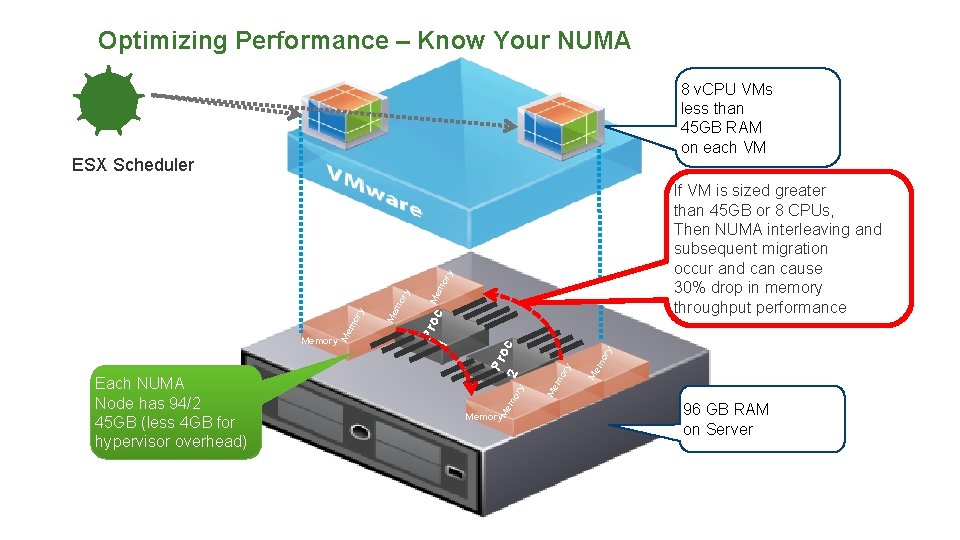

Optimizing Performance – Know Your NUMA

Diagram: a two-processor server with 96 GB RAM, with memory banks attached to each processor and the ESX scheduler placing VMs on NUMA nodes.

- 96 GB RAM on the server
- Each NUMA node has 94/2, about 45 GB (less 4 GB for hypervisor overhead)
- 8 vCPU VMs with less than 45 GB RAM on each VM fit within a node
- If a VM is sized greater than 45 GB or 8 CPUs, then NUMA interleaving and subsequent migration occur and can cause a 30% drop in memory throughput performance

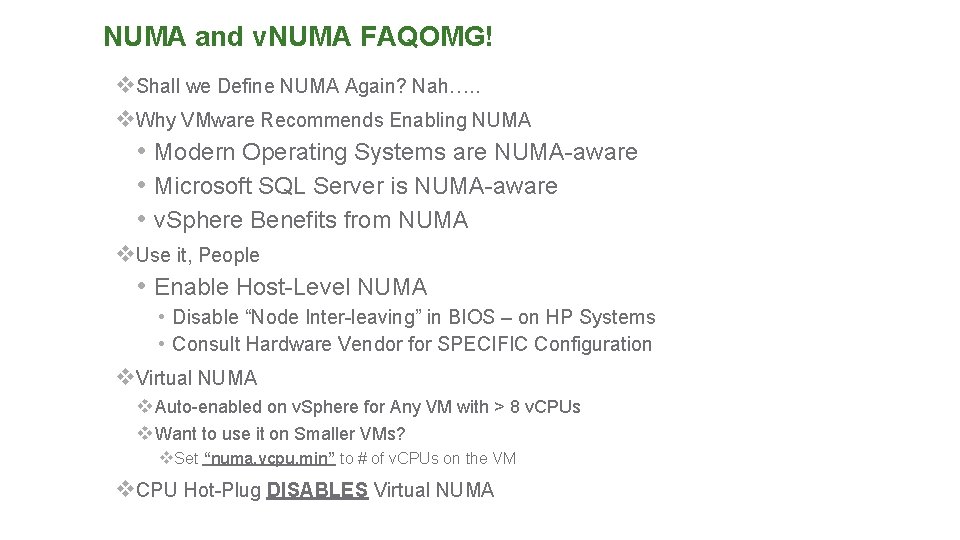

NUMA and vNUMA FAQOMG!

- Shall we Define NUMA Again? Nah…
- Why VMware Recommends Enabling NUMA
  - Modern operating systems are NUMA-aware
  - Microsoft SQL Server is NUMA-aware
  - vSphere benefits from NUMA
- Use it, People
  - Enable host-level NUMA
  - Disable “Node Interleaving” in BIOS (on HP systems)
  - Consult your hardware vendor for SPECIFIC configuration
- Virtual NUMA
  - Auto-enabled on vSphere for any VM with > 8 vCPUs
  - Want to use it on smaller VMs?
    - Set “numa.vcpu.min” to the # of vCPUs on the VM
  - CPU Hot-Plug DISABLES Virtual NUMA

NUMA Best Practices

- http://www.vmware.com/files/pdf/techpaper/VMware-vSphere-CPU-Sched-Perf.pdf
- Avoid remote NUMA access
  - Size the # of vCPUs to be <= the # of cores on a NUMA node (processor socket)
- Allocate vCPUs based on PHYSICAL NUMA topologies
- Where possible, align VMs with physical NUMA boundaries:
  - ONE NUMA boundary
  - Multiple NUMA boundaries
  - EVEN divisor (fraction) of a NUMA boundary
- Hyperthreading
  - Initial conservative sizing: set vCPUs equal to the # of physical cores
- When performing vMotion operations, move between hosts with the same NUMA architecture to avoid a performance hit (until reboot)
- Use ESXTOP to monitor NUMA performance in vSphere
  - Use Coreinfo.exe to see NUMA topology in the Windows guest
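The sizing rule above (vCPUs no greater than the cores on a NUMA node, memory no greater than the node's share of RAM) is easy to check before a VM is deployed. Here is a rough sketch; the `Host` class and its field names are hypothetical, and splitting RAM evenly across sockets after subtracting a flat hypervisor overhead is a simplifying assumption rather than how ESXi actually accounts for memory.

```python
from dataclasses import dataclass


@dataclass
class Host:
    sockets: int                  # assumed to equal the number of NUMA nodes
    cores_per_socket: int         # physical cores, not hyper-threads
    ram_gb: float
    hypervisor_overhead_gb: float = 4.0  # figure used on the NUMA slide


def node_memory_gb(host: Host) -> float:
    """Approximate memory per NUMA node, assuming an even split."""
    return (host.ram_gb - host.hypervisor_overhead_gb) / host.sockets


def fits_single_node(host: Host, vcpus: int, vm_ram_gb: float) -> bool:
    """True if the VM can stay inside one NUMA node (no remote memory access)."""
    return vcpus <= host.cores_per_socket and vm_ram_gb <= node_memory_gb(host)


if __name__ == "__main__":
    # Two-socket, 96 GB host; 8 cores per socket is an assumed value.
    host = Host(sockets=2, cores_per_socket=8, ram_gb=96)
    print(f"memory per node: {node_memory_gb(host):.0f} GB")
    for vcpus, ram in ((8, 44), (10, 44), (8, 64)):
        verdict = "fits" if fits_single_node(host, vcpus, ram) else "spans nodes"
        print(f"{vcpus} vCPU / {ram} GB: {verdict}")
```

A VM that fails this check is not necessarily misconfigured; it simply becomes a candidate for aligning to multiple NUMA boundaries instead of one.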

Memory Optimization

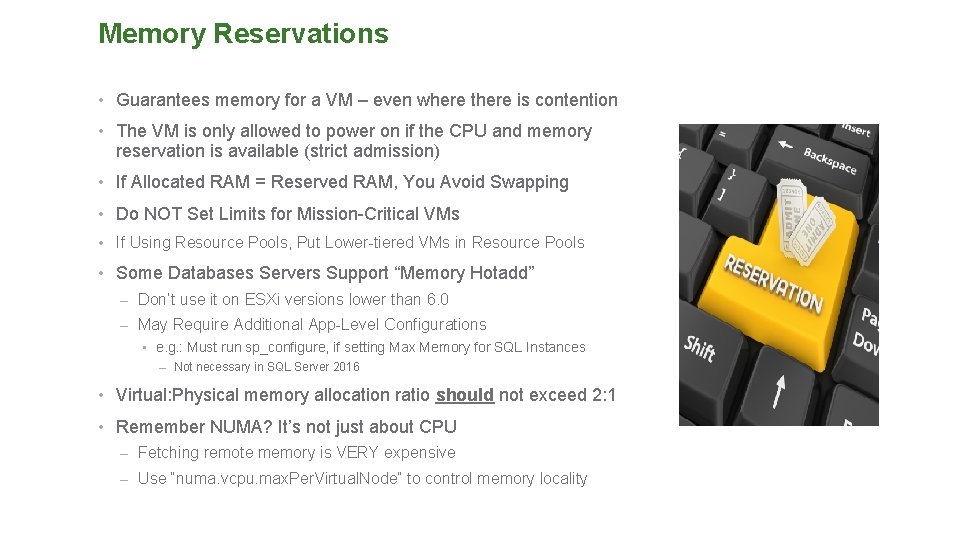

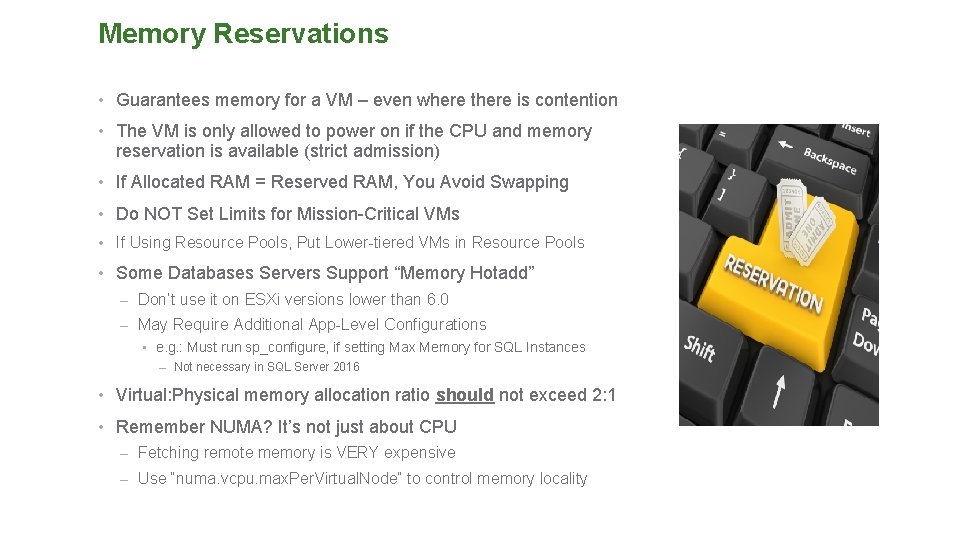

Memory Reservations

- Guarantees memory for a VM, even where there is contention
- The VM is only allowed to power on if the CPU and memory reservation is available (strict admission)
- If Allocated RAM = Reserved RAM, you avoid swapping
- Do NOT set limits for mission-critical VMs
- If using resource pools, put lower-tiered VMs in resource pools
- Some database servers support “Memory Hot-add”
  - Don’t use it on ESXi versions lower than 6.0
  - May require additional app-level configuration
    - e.g. you must run sp_configure if setting Max Memory for SQL instances (not necessary in SQL Server 2016)
- Virtual:Physical memory allocation ratio should not exceed 2:1
- Remember NUMA? It’s not just about CPU
  - Fetching remote memory is VERY expensive
  - Use “numa.vcpu.maxPerVirtualNode” to control memory locality
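Two of the rules above are simple arithmetic: the virtual-to-physical memory ratio should stay at or below 2:1, and a VM avoids swapping when its reservation equals its allocation. A minimal sketch of both checks follows; the function names and the sample VM sizes are hypothetical.

```python
from typing import Iterable


def overcommit_ratio(vm_allocations_gb: Iterable[float], host_ram_gb: float) -> float:
    """Virtual:physical memory ratio for one host."""
    if host_ram_gb <= 0:
        raise ValueError("host RAM must be positive")
    return sum(vm_allocations_gb) / host_ram_gb


def within_ratio(vm_allocations_gb: Iterable[float], host_ram_gb: float,
                 max_ratio: float = 2.0) -> bool:
    """True if the host stays at or below the 2:1 guideline from the slide."""
    return overcommit_ratio(vm_allocations_gb, host_ram_gb) <= max_ratio


def fully_reserved(allocated_gb: float, reserved_gb: float) -> bool:
    """True if reserved RAM covers all allocated RAM for the VM."""
    return reserved_gb >= allocated_gb


if __name__ == "__main__":
    vms = [64, 64, 32, 16]  # hypothetical VM allocations in GB
    print(f"ratio on a 96 GB host: {overcommit_ratio(vms, 96):.2f}:1")
    print("within 2:1 guideline:", within_ratio(vms, 96))
    print("64 GB VM with 64 GB reserved:", fully_reserved(64, 64))
```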

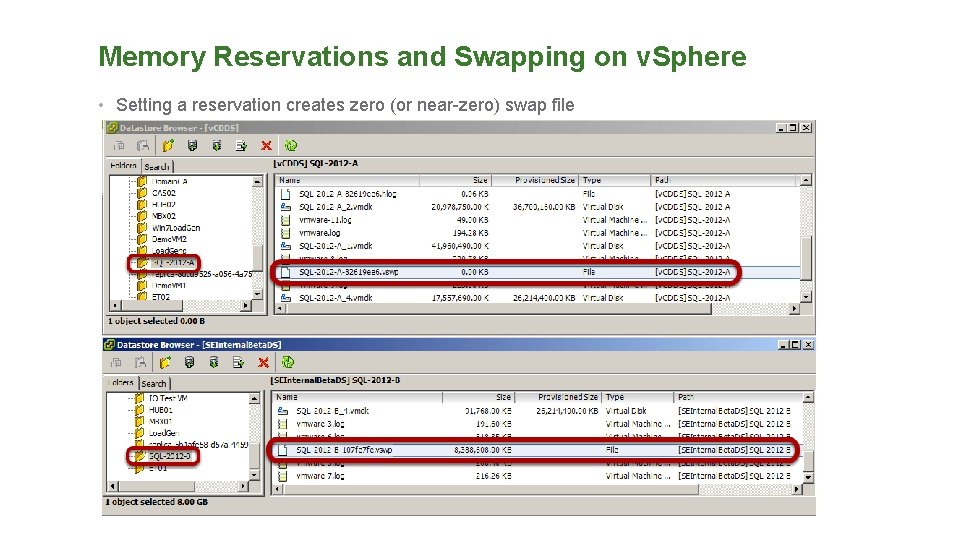

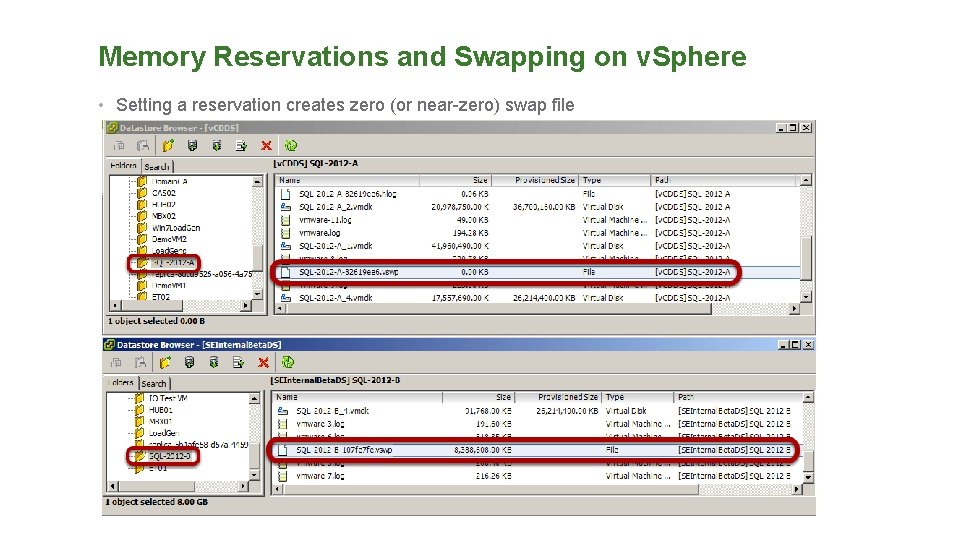

Memory Reservations and Swapping on vSphere

- Setting a reservation creates a zero-size (or near-zero) swap file

Network Optimization

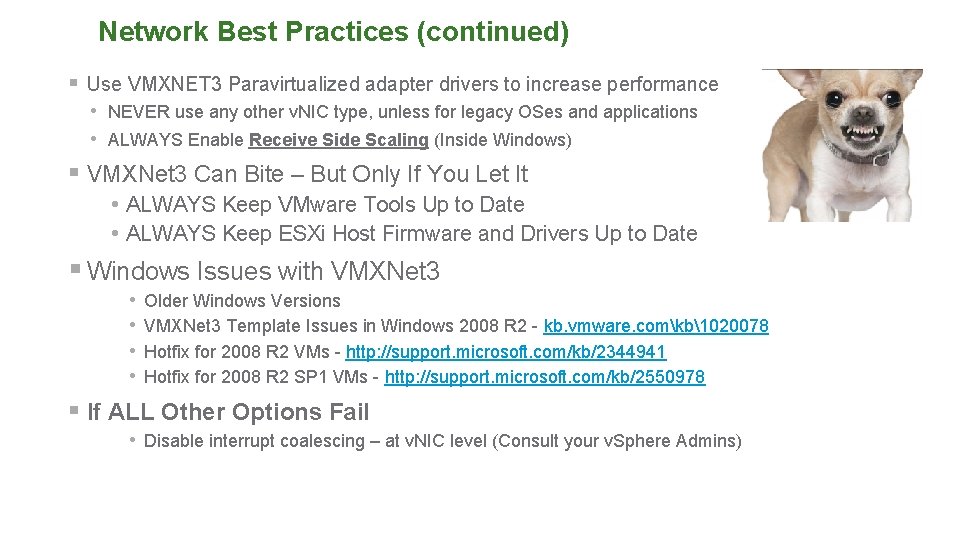

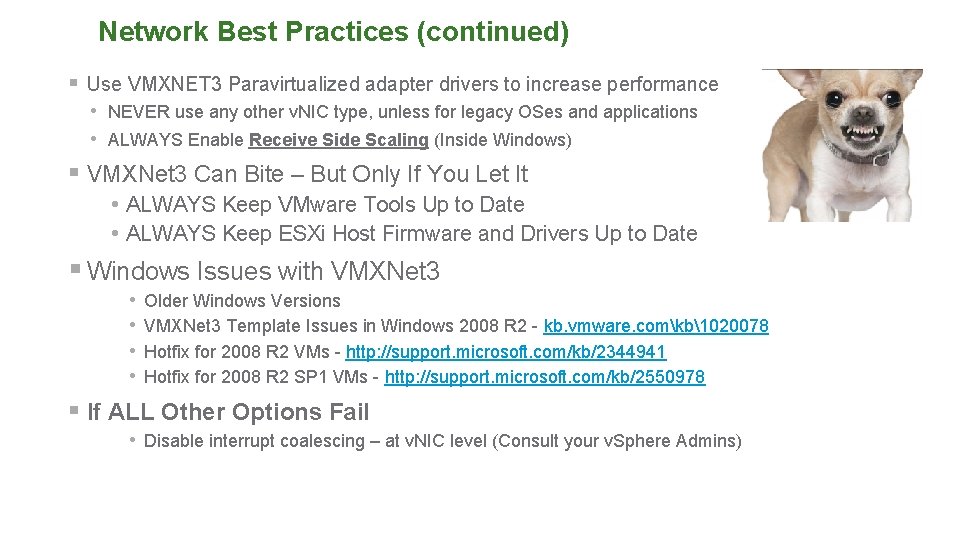

Network Best Practices (continued)

- Use VMXNET3 paravirtualized adapter drivers to increase performance
  - NEVER use any other vNIC type, unless for legacy OSes and applications
  - ALWAYS enable Receive Side Scaling (inside Windows)
- VMXNET3 Can Bite, But Only If You Let It
  - ALWAYS keep VMware Tools up to date
  - ALWAYS keep ESXi host firmware and drivers up to date
- Windows Issues with VMXNET3
  - Older Windows versions
  - VMXNET3 template issues in Windows 2008 R2 - kb.vmware.com/kb/1020078
  - Hotfix for 2008 R2 VMs - http://support.microsoft.com/kb/2344941
  - Hotfix for 2008 R2 SP1 VMs - http://support.microsoft.com/kb/2550978
- If ALL Other Options Fail
  - Disable interrupt coalescing at the vNIC level (consult your vSphere admins)

A Word on Windows RSS – Don’t Tase Me, Bro

- Windows Default Behaviors
  - Default RSS behavior results in unbalanced CPU usage
  - Saturates CPU 0, which services network IOs
  - Problem manifests as in-guest packet drops
  - Problems are not seen in the vSphere kernel, making the problem difficult to detect
- Solution
  - Enable RSS in 2 places in Windows
    - At the NIC properties
      - Get-NetAdapterRss | fl name, enabled
      - Enable-NetAdapterRss -Name <AdapterName>
    - At the Windows kernel
      - netsh int tcp show global
      - netsh int tcp set global rss=enabled
- Please see http://kb.vmware.com/kb/2008925 and http://kb.vmware.com/kb/2061598

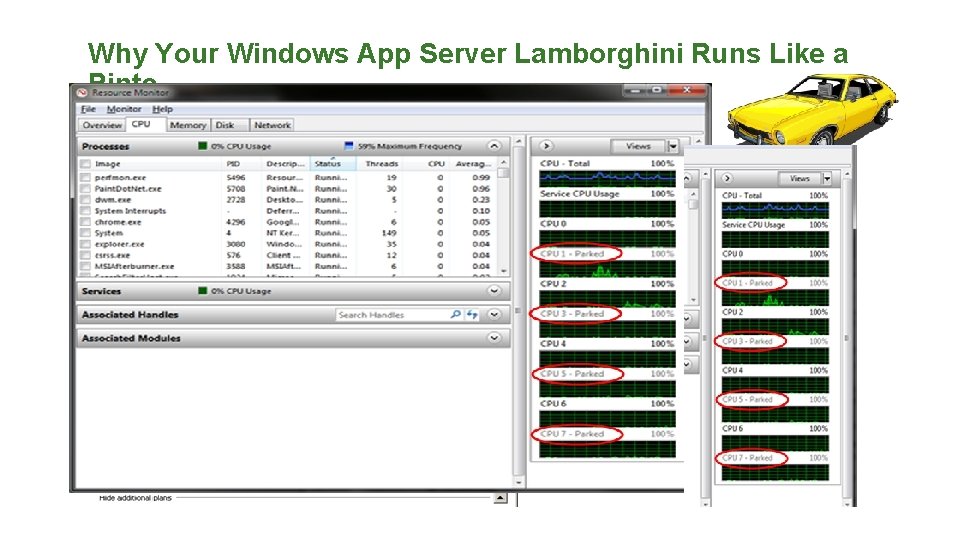

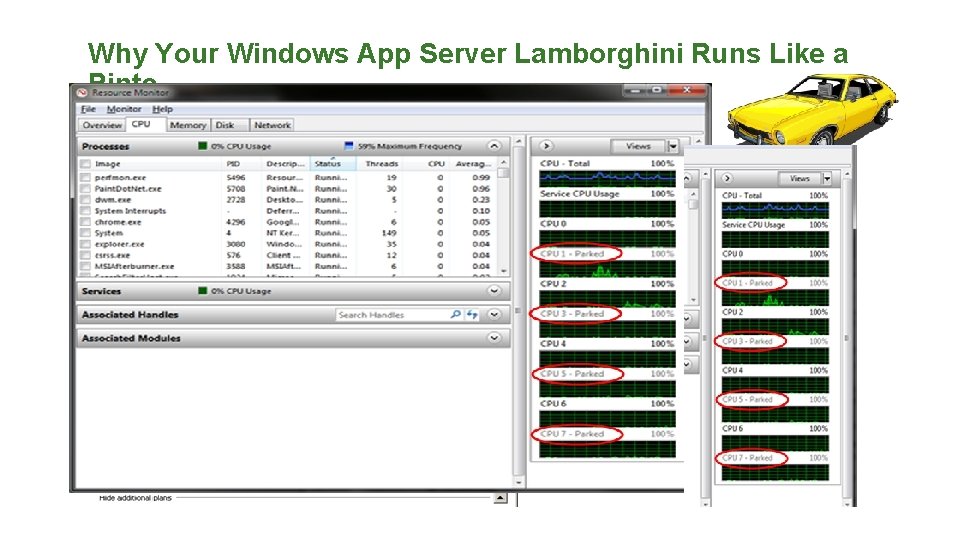

Why Your Windows App Server Lamborghini Runs Like a Pinto

- Default “Balanced” power setting results in core parking
  - De-scheduling and re-scheduling CPUs introduces performance latency
  - Doesn’t even save power - http://bit.ly/20DauDR
  - Now (allegedly) changed in Windows Server 2012
- How to Check:
  - Perfmon:
    - If "Processor Information(_Total)\% of Maximum Frequency" < 100, “core parking” is going on
  - Command prompt:
    - “powercfg -list” (Anything other than “High Performance”? You have “core parking”)
- Solution
  - Set the power scheme to “High Performance”
  - Do some other “complex” things - http://bit.ly/1HQsOxL

Microsoft Applications High Availability Options – The Caveats

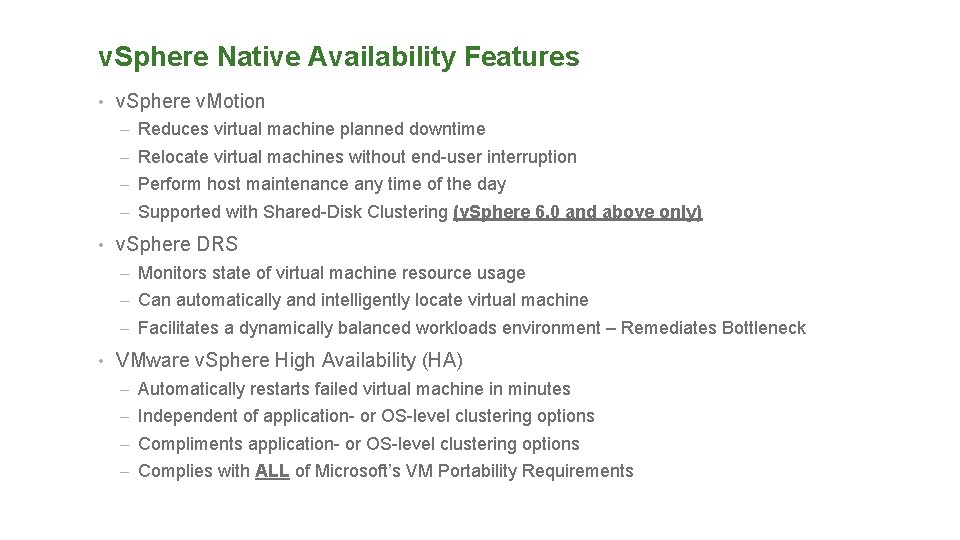

vSphere Native Availability Features

- vSphere vMotion
  - Reduces virtual machine planned downtime
  - Relocates virtual machines without end-user interruption
  - Lets you perform host maintenance any time of the day
  - Supported with shared-disk clustering (vSphere 6.0 and above only)
- vSphere DRS
  - Monitors state of virtual machine resource usage
  - Can automatically and intelligently locate virtual machines
  - Facilitates a dynamically balanced workload environment
  - Remediates bottlenecks
- VMware vSphere High Availability (HA)
  - Automatically restarts failed virtual machines in minutes
  - Independent of application- or OS-level clustering options
  - Complements application- or OS-level clustering options
  - Complies with ALL of Microsoft’s VM portability requirements

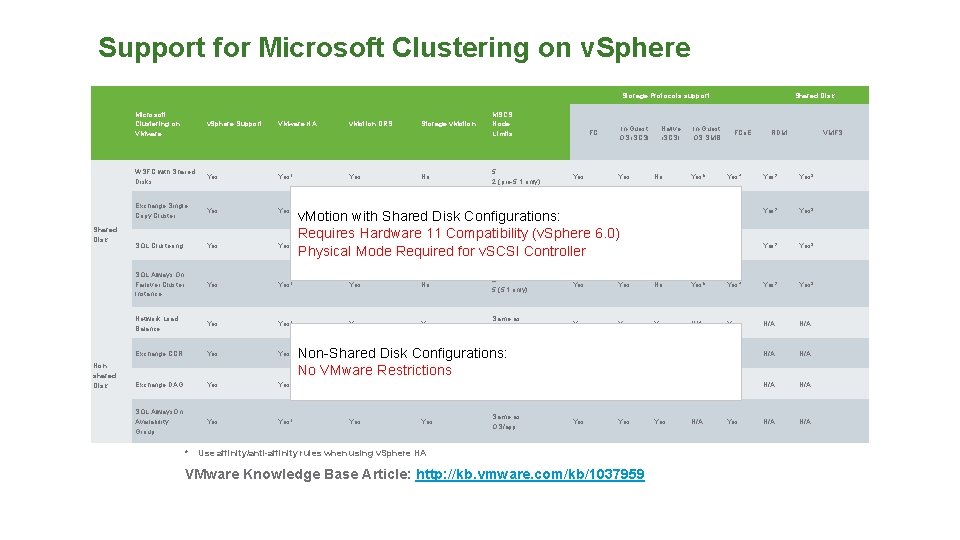

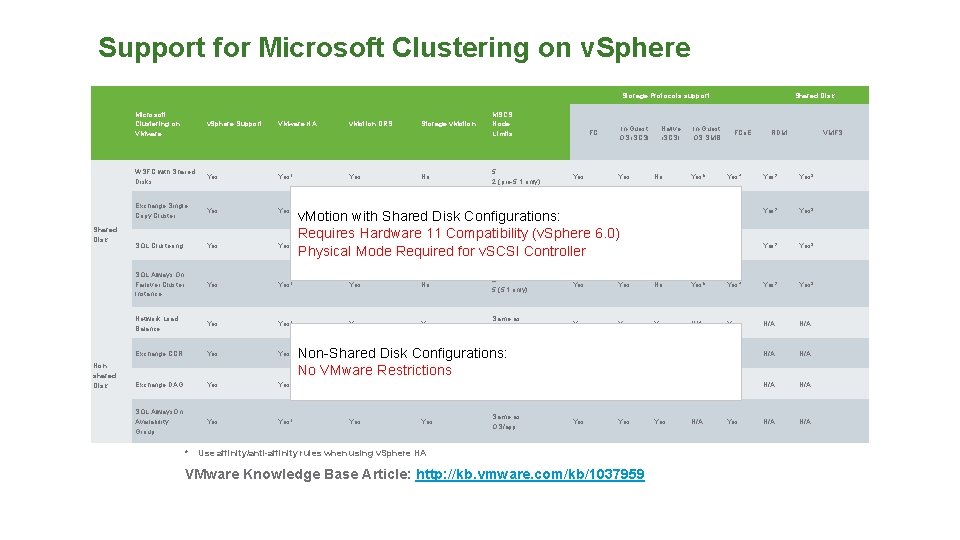

Support for Microsoft Clustering on vSphere

[Support matrix: Microsoft clustering on VMware vSphere. Rows: shared-disk configurations (WSFC with shared disks, Exchange Single Copy Cluster, SQL Clustering, SQL AlwaysOn Failover Cluster Instance) and non-shared-disk configurations (Network Load Balance, Exchange CCR, Exchange DAG, SQL AlwaysOn Availability Group). Columns: vSphere support, VMware HA, vMotion / DRS, Storage vMotion, MSCS node limits, storage protocol support (FC, in-guest OS iSCSI, native iSCSI, in-guest OS SMB, FCoE), and shared disk type (RDM, VMFS).]

- vMotion with shared-disk configurations: requires hardware version 11 compatibility (vSphere 6.0)
- Shared-disk configurations: physical mode required for the vSCSI controller
- Non-shared-disk configurations: no VMware restrictions, same as native
- Use affinity/anti-affinity rules when using vSphere HA
- VMware Knowledge Base article: http://kb.vmware.com/kb/1037959

Are You Going to Cluster THAT?

- Do you NEED SQL Clustering?
  - Purely a business and administrative decision
  - Virtualization does not preclude you from doing so
  - vSphere HA is NOT a replacement for SQL Clustering
- Want AG?
  - No “special” requirements on vSphere <EOM>
- Want FCI? MSCS?
  - You MUST use Raw Device Mapping (RDM) disk type for shared disks
  - Shared disks MUST be connected to vSCSI controllers in PHYSICAL mode bus sharing
  - Wonder why it’s called “Physical Mode RDM”, eh?
  - In pre-vSphere 6.0, FCI/MSCS nodes CANNOT be vMotioned. Period
  - In vSphere 6.0, you have vMotion capabilities under the following conditions:
    - Clustered VMs are at hardware version 11
    - vMotion VMkernel portgroup is connected to a 10 Gb network

vMotioning Clustered Windows Nodes – Avoid the Pitfall

- Clustered Windows applications use Windows Server Failover Clustering (WSFC)
  - WSFC has a default 5-second heartbeat timeout threshold
  - vMotion operations MAY exceed 5 seconds (during VM quiescing)
  - This leads to unintended and disruptive clustered resource failover events
- SOLUTION
  - Use MULTIPLE vMotion portgroups, where possible
  - Enable jumbo frames on all vmkernel ports, IF the PHYSICAL network supports it
  - If jumbo frames are not supported, consider modifying default WSFC behaviors:
    - (get-cluster).SameSubnetThreshold = 10
    - (get-cluster).CrossSubnetThreshold = 20
    - (get-cluster).RouteHistoryLength = 40
  - NOTES:
    - You may need to “Import-Module FailoverClusters” first
- Behavior NOT unique to VMware or virtualization
  - If your backup software quiesces Exchange, you experience the same symptom
  - See Microsoft’s “Tuning Failover Cluster Network Thresholds” – http://bit.ly/1nJRPs3
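To see why raising the thresholds helps, it can be useful to work out how long a node may stay silent before WSFC declares it down. The sketch below assumes the tolerance is the heartbeat interval multiplied by the threshold, with a 1000 ms interval; the interval value is an assumption not stated on the slide, chosen because it reproduces the 5-second default quoted above. The function names are hypothetical.

```python
def heartbeat_tolerance_s(threshold: int, delay_ms: int = 1000) -> float:
    """Seconds of missed heartbeats tolerated: threshold x heartbeat interval.

    ASSUMPTION: delay_ms defaults to 1000 ms, which is not given on the slide.
    """
    if threshold < 1 or delay_ms < 1:
        raise ValueError("threshold and delay must be positive")
    return threshold * delay_ms / 1000.0


def survives_stun(stun_s: float, threshold: int, delay_ms: int = 1000) -> bool:
    """True if a pause of stun_s seconds stays under the tolerance."""
    return stun_s < heartbeat_tolerance_s(threshold, delay_ms)


if __name__ == "__main__":
    stun = 7.0  # hypothetical pause during a vMotion, in seconds
    for name, threshold in (("default threshold", 5),
                            ("SameSubnetThreshold = 10", 10),
                            ("CrossSubnetThreshold = 20", 20)):
        tol = heartbeat_tolerance_s(threshold)
        outcome = "no failover" if survives_stun(stun, threshold) else "failover"
        print(f"{name}: tolerates {tol:.0f} s, {stun:.0f} s pause -> {outcome}")
```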

Dude, What About Security???

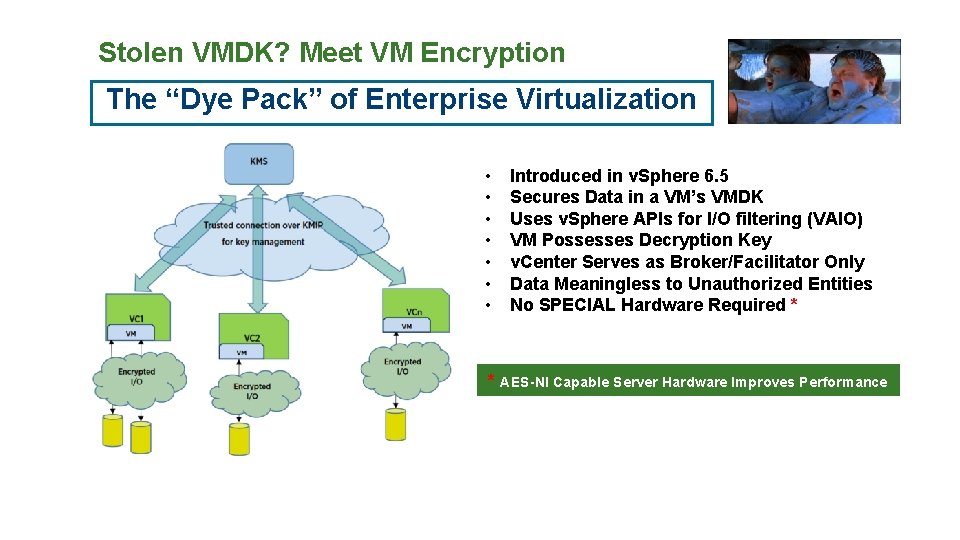

Stolen VMDK? Meet VM Encryption

The “Dye Pack” of Enterprise Virtualization

- Introduced in vSphere 6.5
- Secures data in a VM’s VMDK
- Uses vSphere APIs for I/O Filtering (VAIO)
- VM possesses the decryption key
- vCenter serves as broker/facilitator only
- Data is meaningless to unauthorized entities
- No SPECIAL hardware required *

\* AES-NI capable server hardware improves performance

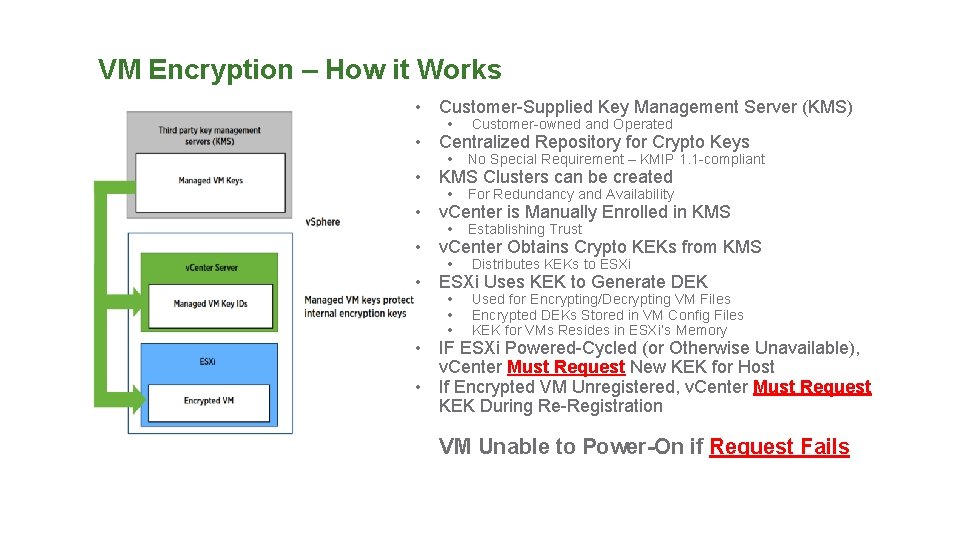

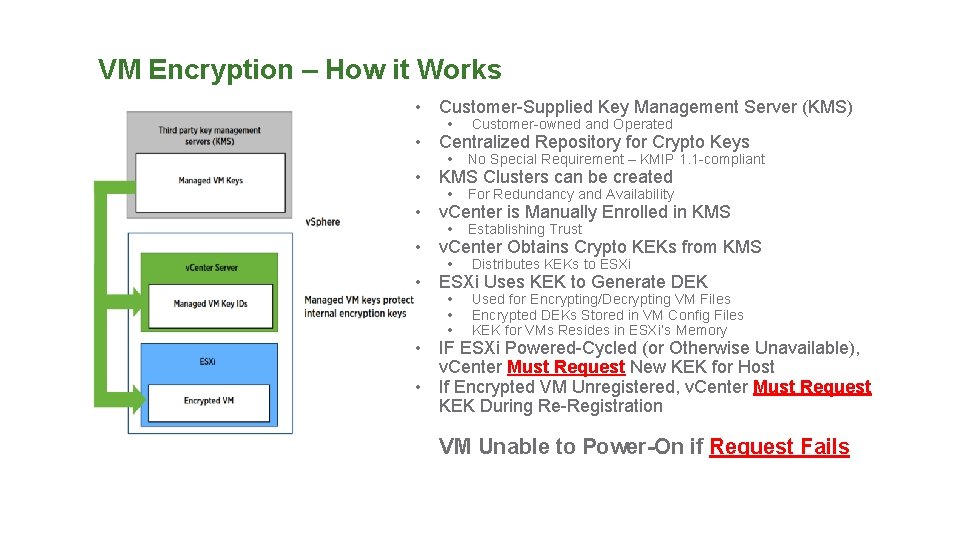

VM Encryption – How it Works

- Customer-supplied Key Management Server (KMS)
  - Customer-owned and operated
  - No special requirement: KMIP 1.1-compliant
  - Centralized repository for crypto keys
  - KMS clusters can be created, for redundancy and availability
- vCenter
  - vCenter is manually enrolled in the KMS, establishing trust
  - vCenter obtains crypto KEKs from the KMS
  - vCenter distributes KEKs to ESXi
- ESXi
  - ESXi uses the KEK to generate DEKs
    - DEKs are used for encrypting/decrypting VM files
    - Encrypted DEKs are stored in VM config files
    - The KEK for VMs resides in ESXi’s memory
  - IF ESXi is power-cycled (or otherwise unavailable), vCenter must request a new KEK for the host
  - If an encrypted VM is unregistered, vCenter must request the KEK during re-registration
  - The VM is unable to power on if the request fails

VMware vSphere Documentation – One Link to Rule them All

http://bit.ly/2voACb9

The Links are Free. Really

ALL ABOUT VIRTUALIZING MICROSOFT BCA ON VSPHERE
- http://bit.ly/2mXT32R - Architecting Microsoft SQL Server on VMware vSphere
- http://bit.ly/2j6xkFQ - Microsoft Exchange Server 2016 on VMware vSphere Best Practices Guide
- http://bit.ly/2kb9Tyx - Microsoft Exchange Server 2013 on VMware vSphere Best Practices Guide
- http://bit.ly/2kdW0Qd - Skype for Business on VMware vSphere Best Practices Guide
- http://bit.ly/2AvnbfK - Virtualizing Active Directory Domain Services on VMware vSphere
- http://bit.ly/2zTu4s9 - Microsoft SQL Server on VMware Availability and Recovery Option
- http://bit.ly/2AmAgpe - Performance Characterization of Microsoft SQL Server on VMware vSphere 6.5
- http://bit.ly/1LRotvE - Performance and Scalability of Microsoft SQL Server on VMware vSphere 6.0
- http://bit.ly/2jAMgvF - Performance Best Practices for VMware vSphere® 6.5
- http://bit.ly/1tfGvRN - Performance Tuning for Latency-sensitive apps
- http://bit.ly/2jz4NrS - DBA Guide to Databases on vSphere
- http://bit.ly/1m9HnZl - Setup for Failover Clustering and Microsoft Cluster Service (6.0)
- http://bit.ly/1MnEJGi - Microsoft SQL Server on VMware Availability and Recovery Option
- http://bit.ly/2i4g1V4 - Microsoft Document on Cluster Failover Tuning
- http://bit.ly/2Aweulq - Perennial Reservations for RDMs for Windows Clustering (1016106)
- http://bit.ly/2i4f8vI - vSphere Resource Management (Section 14, Page 107 is where the NUMA/vNUMA starts)
- http://bit.ly/1DCVCfd - vSphere 6 ESXTOP quick overview for Troubleshooting
- http://bit.ly/2Ai7FDu - Everything about Clustering Windows Applications on vSphere

UNINTENDED RESOURCE FAILOVER for CLUSTERED DATABASES
- Microsoft Document on Cluster Failover Tuning

QUEUE DEPTH and Storage IO Optimization:
- Changing the queue depth for QLogic and Emulex HBAs (VMware KB 1267)
- Setting the Maximum Outstanding Disk Requests for virtual machines (VMware KB 1268)
- Controlling LUN queue depth throttling in VMware ESX/ESXi (VMware KB 1008113)
- Execution Throttle and Queue Depth with VMware and QLogic HBAs
- Fibre Channel Adapter for VMware ESX User’s Guide

Take-Home Slides

Time-Keeping in your vSphere Infrastructure

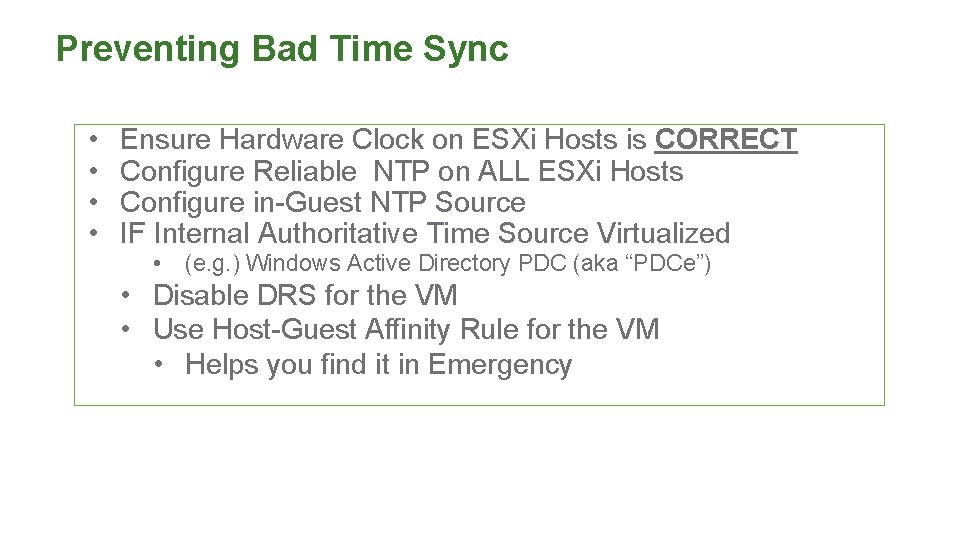

Preventing Bad Time Sync

- Ensure the hardware clock on ESXi hosts is CORRECT
- Configure reliable NTP on ALL ESXi hosts
- Configure an in-guest NTP source
- IF the internal authoritative time source is virtualized
  - (e.g.) Windows Active Directory PDC (aka “PDCe”)
  - Disable DRS for the VM
  - Use a host-guest affinity rule for the VM
    - Helps you find it in an emergency

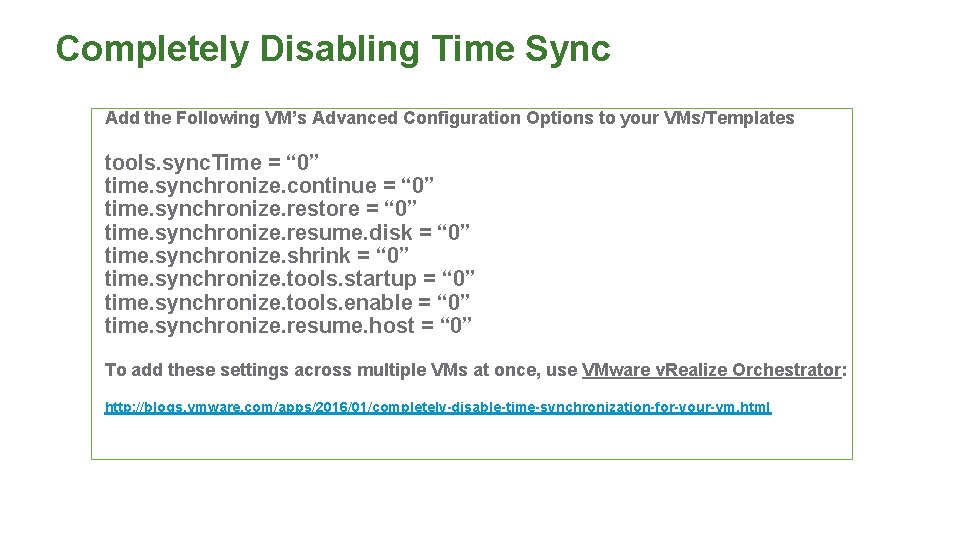

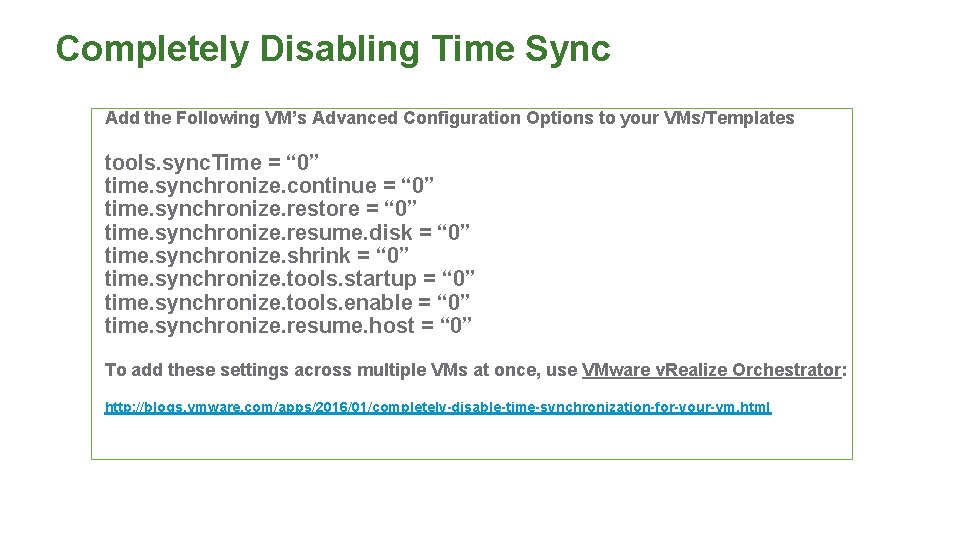

Completely Disabling Time Sync

Add the following advanced configuration options to your VMs/templates:

- tools.syncTime = "0"
- time.synchronize.continue = "0"
- time.synchronize.restore = "0"
- time.synchronize.resume.disk = "0"
- time.synchronize.shrink = "0"
- time.synchronize.tools.startup = "0"
- time.synchronize.tools.enable = "0"
- time.synchronize.resume.host = "0"

To add these settings across multiple VMs at once, use VMware vRealize Orchestrator: http://blogs.vmware.com/apps/2016/01/completely-disable-time-synchronization-for-your-vm.html
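Typing eight similar option names by hand invites mistakes, so it can help to generate them. The following is a small sketch that renders the settings listed on this slide in `name = "value"` form for pasting into a template. The function name is hypothetical, and the script only produces text; it does not modify any VM.

```python
from typing import List

# Option names exactly as listed on the slide.
TIME_SYNC_SETTINGS = (
    "tools.syncTime",
    "time.synchronize.continue",
    "time.synchronize.restore",
    "time.synchronize.resume.disk",
    "time.synchronize.shrink",
    "time.synchronize.tools.startup",
    "time.synchronize.tools.enable",
    "time.synchronize.resume.host",
)


def time_sync_lines(value: str = "0") -> List[str]:
    """Render each setting as a 'name = "value"' line."""
    return [f'{name} = "{value}"' for name in TIME_SYNC_SETTINGS]


if __name__ == "__main__":
    print("\n".join(time_sync_lines()))
```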

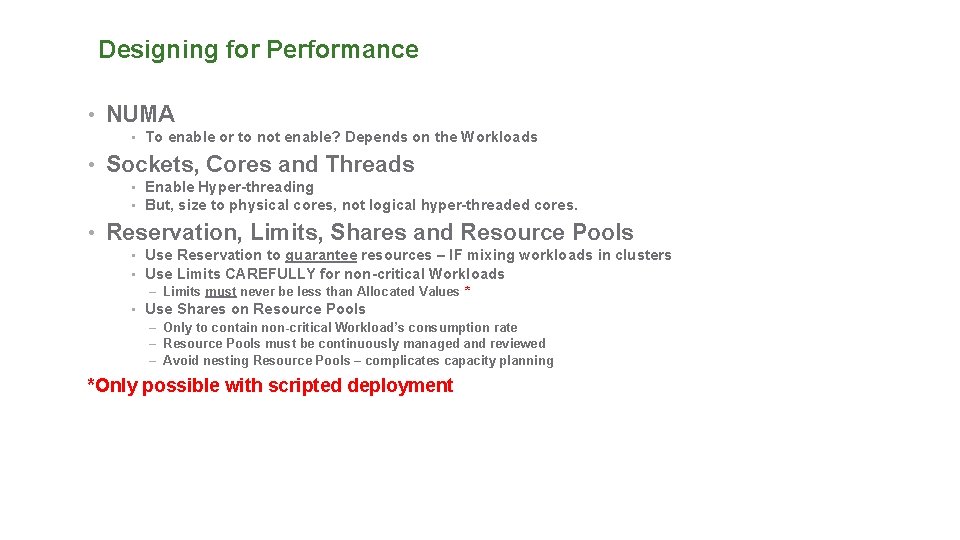

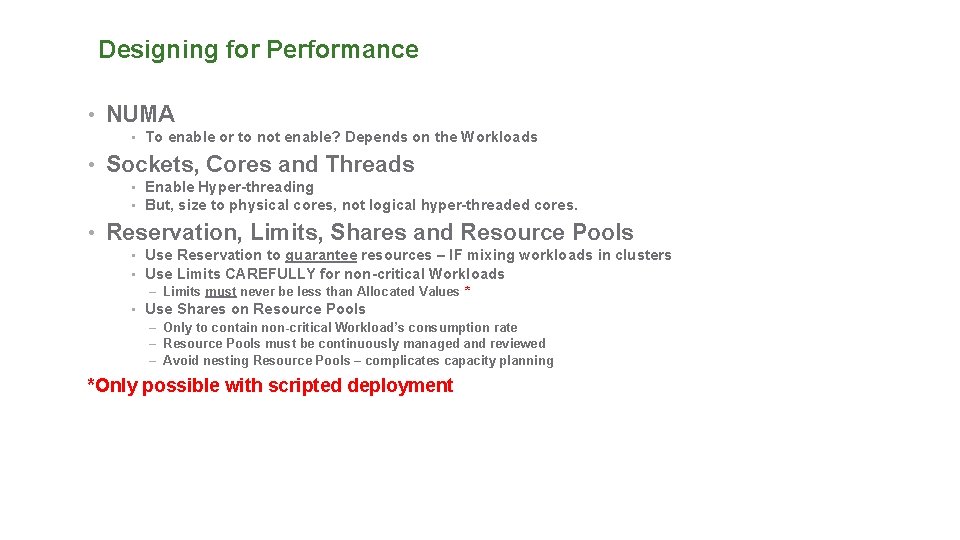

Designing for Performance

- NUMA
  - To enable or not to enable? Depends on the workloads
- Sockets, Cores and Threads
  - Enable Hyper-threading
  - But size to physical cores, not logical hyper-threaded cores
- Reservations, Limits, Shares and Resource Pools
  - Use Reservations to guarantee resources
    - IF mixing workloads in clusters
  - Use Limits CAREFULLY for non-critical workloads
    - Limits must never be less than allocated values *
  - Use Shares on Resource Pools
    - Only to contain non-critical workloads' consumption rate
    - Resource Pools must be continuously managed and reviewed
    - Avoid nesting Resource Pools; it complicates capacity planning

\* Only possible with scripted deployment

Monitoring Your Virtualized SQL Server

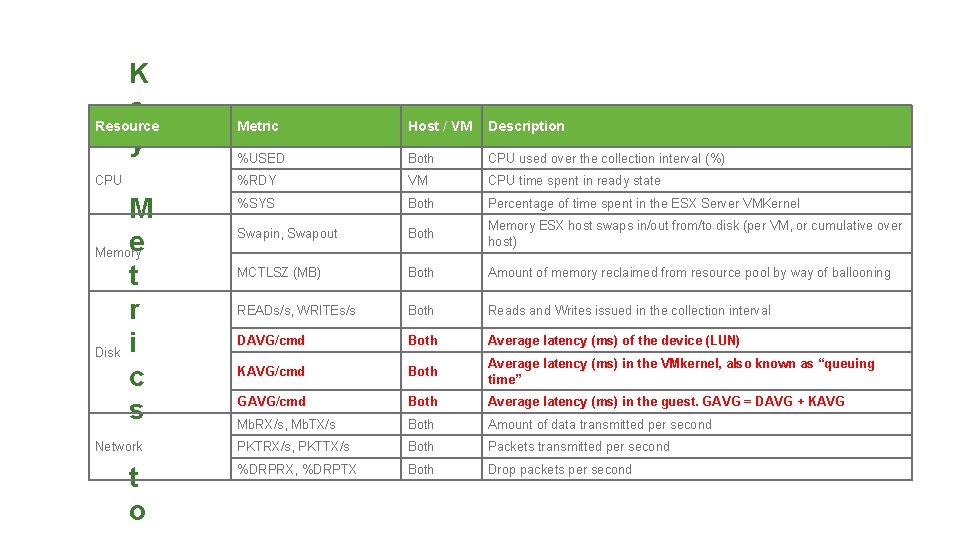

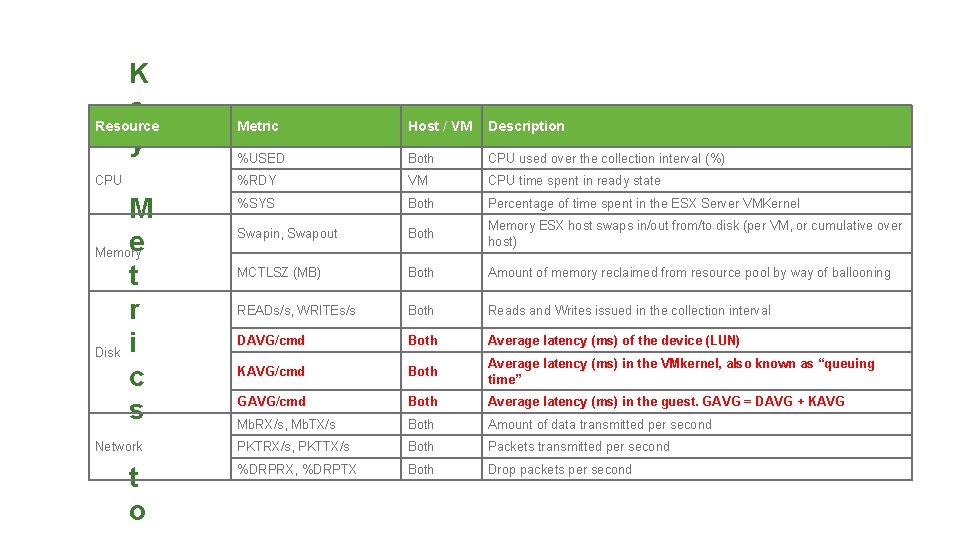

Key Metrics

| Resource | Metric | Host / VM | Description |
| --- | --- | --- | --- |
| CPU | %USED | Both | CPU used over the collection interval (%) |
| CPU | %RDY | VM | CPU time spent in ready state |
| CPU | %SYS | Both | Percentage of time spent in the ESX Server VMkernel |
| Memory | Swapin, Swapout | Both | Memory the ESX host swaps in/out from/to disk (per VM, or cumulative over host) |
| Memory | MCTLSZ (MB) | Both | Amount of memory reclaimed from resource pool by way of ballooning |
| Disk | READs/s, WRITEs/s | Both | Reads and writes issued in the collection interval |
| Disk | DAVG/cmd | Both | Average latency (ms) of the device (LUN) |
| Disk | KAVG/cmd | Both | Average latency (ms) in the VMkernel, also known as “queuing time” |
| Disk | GAVG/cmd | Both | Average latency (ms) in the guest. GAVG = DAVG + KAVG |
| Network | MbRX/s, MbTX/s | Both | Amount of data transmitted per second |
| Network | PKTRX/s, PKTTX/s | Both | Packets transmitted per second |
| Network | %DRPRX, %DRPTX | Both | Drop packets per second |

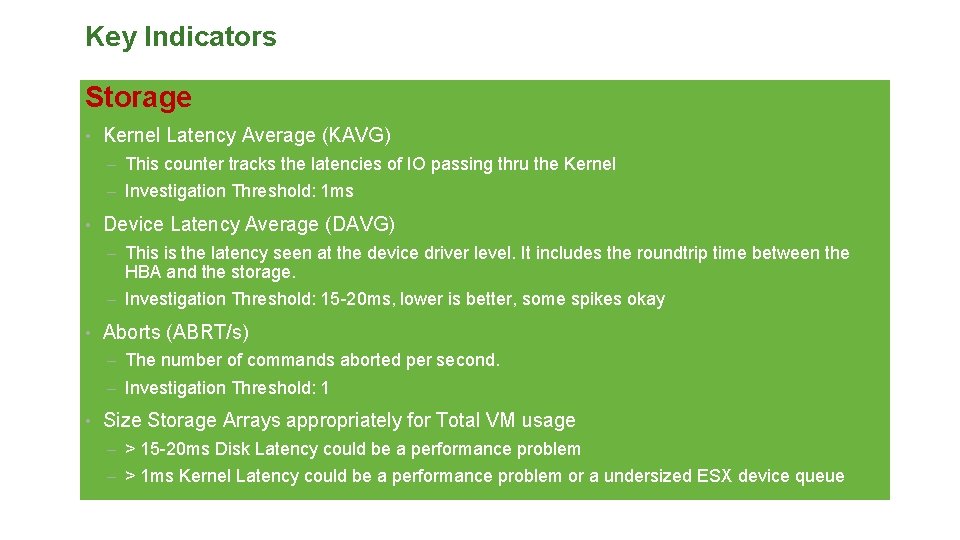

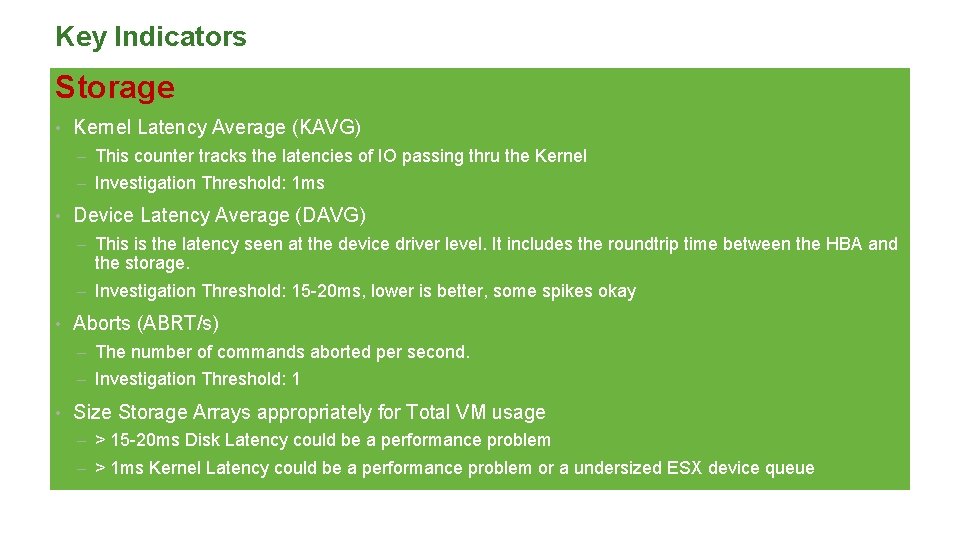

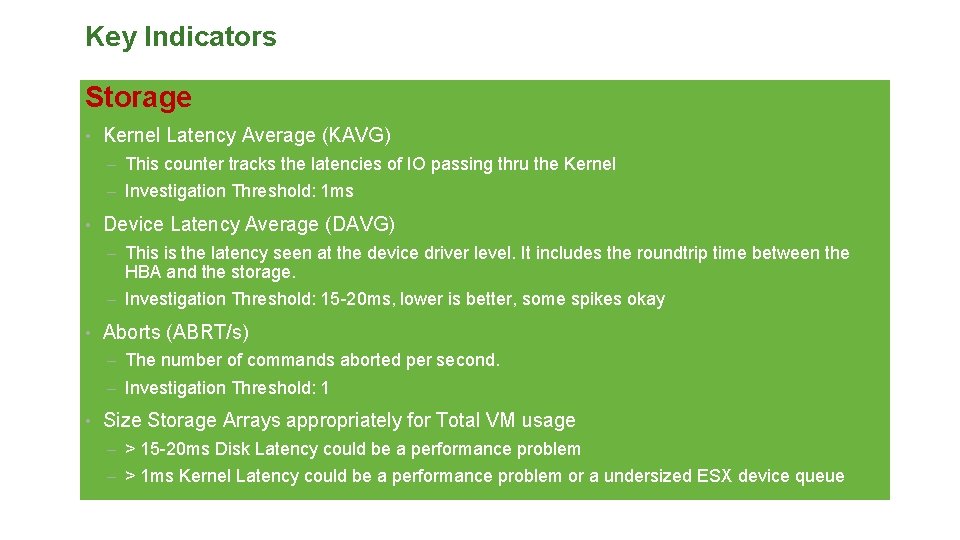

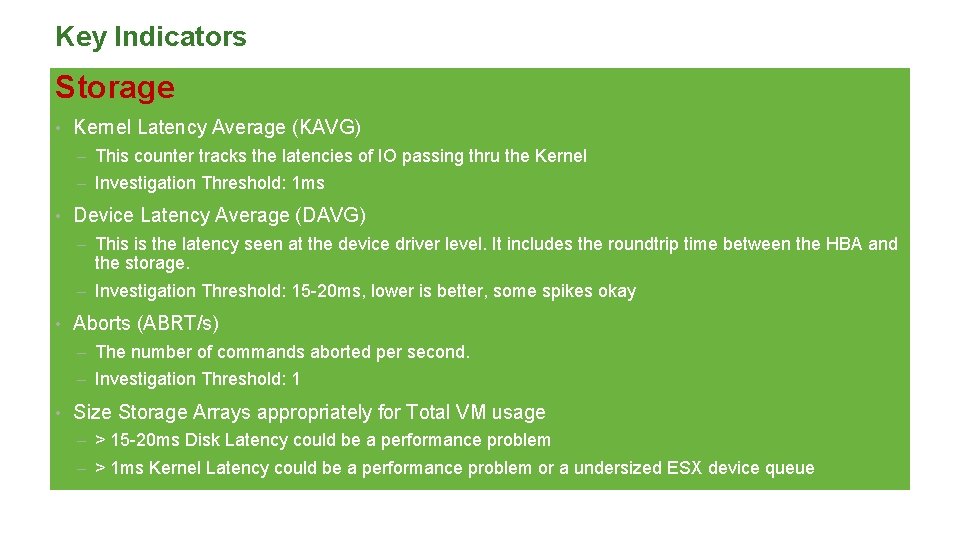

Key Indicators: Storage

- Kernel Latency Average (KAVG)
  - This counter tracks the latencies of IO passing through the kernel
  - Investigation threshold: 1 ms
- Device Latency Average (DAVG)
  - This is the latency seen at the device driver level. It includes the round-trip time between the HBA and the storage.
  - Investigation threshold: 15-20 ms, lower is better, some spikes okay
- Aborts (ABRT/s)
  - The number of commands aborted per second
  - Investigation threshold: 1
- Size storage arrays appropriately for total VM usage
  - > 15-20 ms disk latency could be a performance problem
  - > 1 ms kernel latency could be a performance problem or an undersized ESX device queue
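The storage thresholds above can be applied mechanically to values read from esxtop. The sketch below flags any counter that crosses its investigation threshold and derives guest latency as GAVG = DAVG + KAVG. The function names and sample numbers are hypothetical, and using the upper end of the 15-20 ms range as the DAVG cutoff is a choice made for this example.

```python
from typing import List


def gavg_ms(davg_ms: float, kavg_ms: float) -> float:
    """Guest latency as the sum of device and kernel latency."""
    return davg_ms + kavg_ms


def storage_findings(davg_ms: float, kavg_ms: float, aborts_per_s: float,
                     davg_limit_ms: float = 20.0,
                     kavg_limit_ms: float = 1.0) -> List[str]:
    """Return the counters that exceed their investigation thresholds."""
    findings = []
    if kavg_ms > kavg_limit_ms:
        findings.append("KAVG: kernel latency high, check device queue sizing")
    if davg_ms > davg_limit_ms:
        findings.append("DAVG: device latency high, check array and fabric")
    if aborts_per_s >= 1:
        findings.append("ABRT/s: commands are being aborted")
    return findings


if __name__ == "__main__":
    # Hypothetical sample: healthy array, queuing in the kernel.
    davg, kavg, aborts = 4.0, 6.5, 0.0
    print(f"GAVG = {gavg_ms(davg, kavg):.1f} ms")
    for finding in storage_findings(davg, kavg, aborts) or ["no thresholds crossed"]:
        print(finding)
```

Separating the two latencies matters for triage: a high KAVG with a low DAVG points at queuing inside the host, while the reverse points at the array or fabric.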

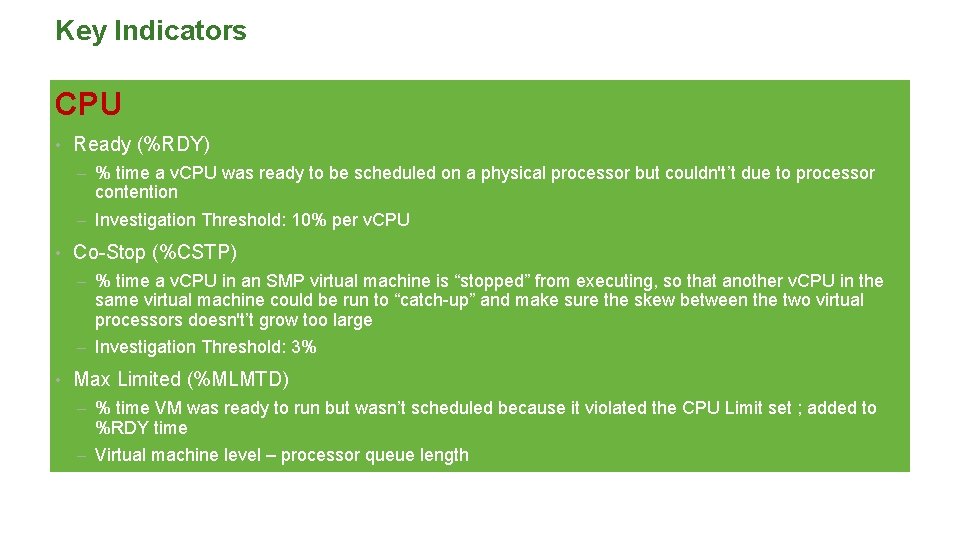

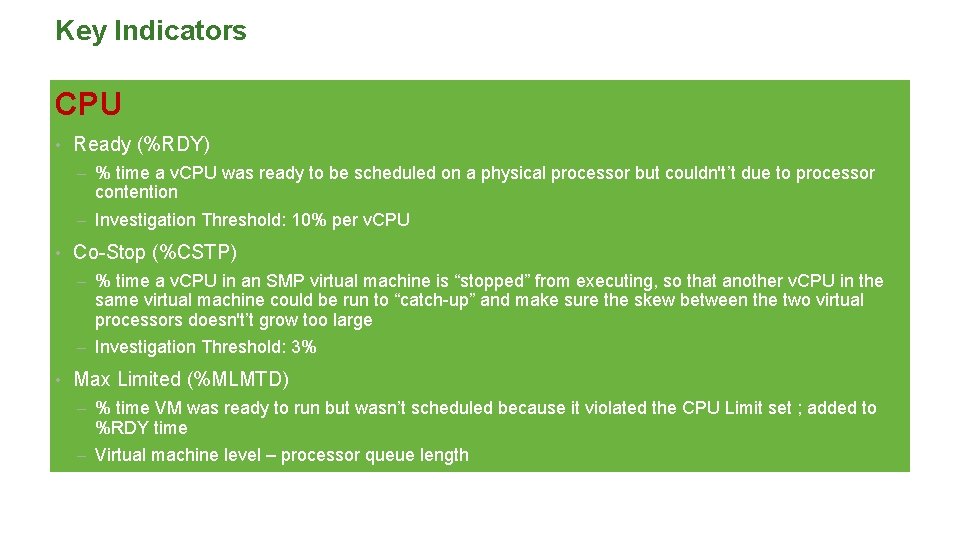

Key Indicators: CPU

- Ready (%RDY)
  - % time a vCPU was ready to be scheduled on a physical processor but couldn’t be, due to processor contention
  - Investigation threshold: 10% per vCPU
- Co-Stop (%CSTP)
  - % time a vCPU in an SMP virtual machine is “stopped” from executing, so that another vCPU in the same virtual machine can be run to “catch up” and make sure the skew between the two virtual processors doesn’t grow too large
  - Investigation threshold: 3%
- Max Limited (%MLMTD)
  - % time the VM was ready to run but wasn’t scheduled because it violated the CPU limit set; added to %RDY time
- Virtual machine level: processor queue length
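Because the ready-time threshold is stated per vCPU, a VM-level reading has to be divided by the vCPU count before it is compared. The sketch below assumes the %RDY figure passed in is the VM-level total summed across vCPUs; that interpretation, the function names, and the sample numbers are assumptions for illustration.

```python
from typing import List


def ready_per_vcpu(rdy_pct_total: float, vcpus: int) -> float:
    """Average %RDY per vCPU, assuming the input is summed across vCPUs."""
    if vcpus < 1:
        raise ValueError("a VM has at least one vCPU")
    return rdy_pct_total / vcpus


def cpu_findings(rdy_pct_total: float, cstp_pct: float, vcpus: int,
                 rdy_limit: float = 10.0, cstp_limit: float = 3.0) -> List[str]:
    """Return the CPU counters that cross the thresholds from the slide."""
    findings = []
    if ready_per_vcpu(rdy_pct_total, vcpus) > rdy_limit:
        findings.append("%RDY: vCPUs are waiting for physical CPU time")
    if cstp_pct > cstp_limit:
        findings.append("%CSTP: co-stop is high, consider fewer vCPUs")
    return findings


if __name__ == "__main__":
    # Hypothetical 8-vCPU VM showing 96% total ready time and 1% co-stop.
    print(f"ready per vCPU: {ready_per_vcpu(96.0, 8):.1f}%")
    print(cpu_findings(96.0, 1.0, 8) or "no thresholds crossed")
```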

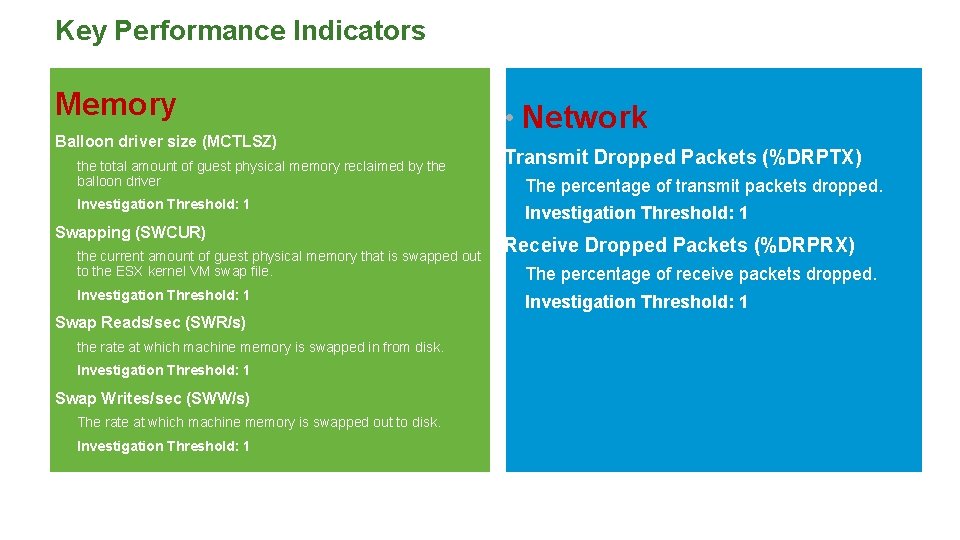

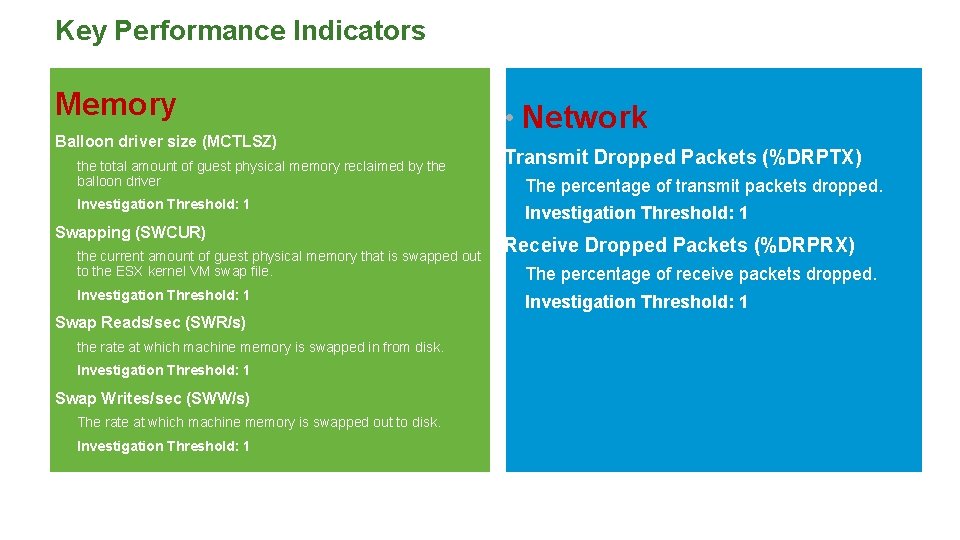

Key Performance Indicators

- Memory
  - Balloon driver size (MCTLSZ): the total amount of guest physical memory reclaimed by the balloon driver. Investigation threshold: 1
  - Swapping (SWCUR): the current amount of guest physical memory that is swapped out to the ESX kernel VM swap file. Investigation threshold: 1
  - Swap Reads/sec (SWR/s): the rate at which machine memory is swapped in from disk. Investigation threshold: 1
  - Swap Writes/sec (SWW/s): the rate at which machine memory is swapped out to disk. Investigation threshold: 1
- Network
  - Transmit Dropped Packets (%DRPTX): the percentage of transmit packets dropped. Investigation threshold: 1
  - Receive Dropped Packets (%DRPRX): the percentage of receive packets dropped. Investigation threshold: 1

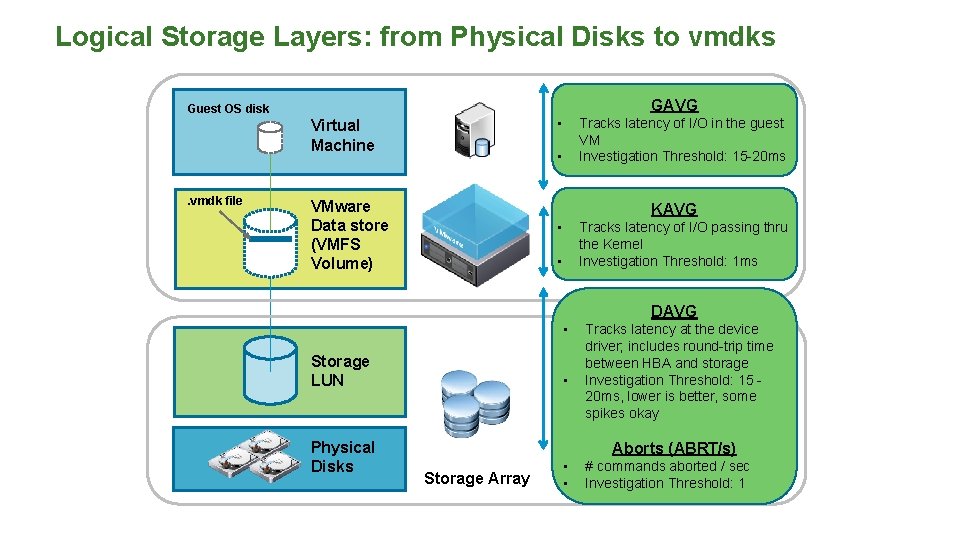

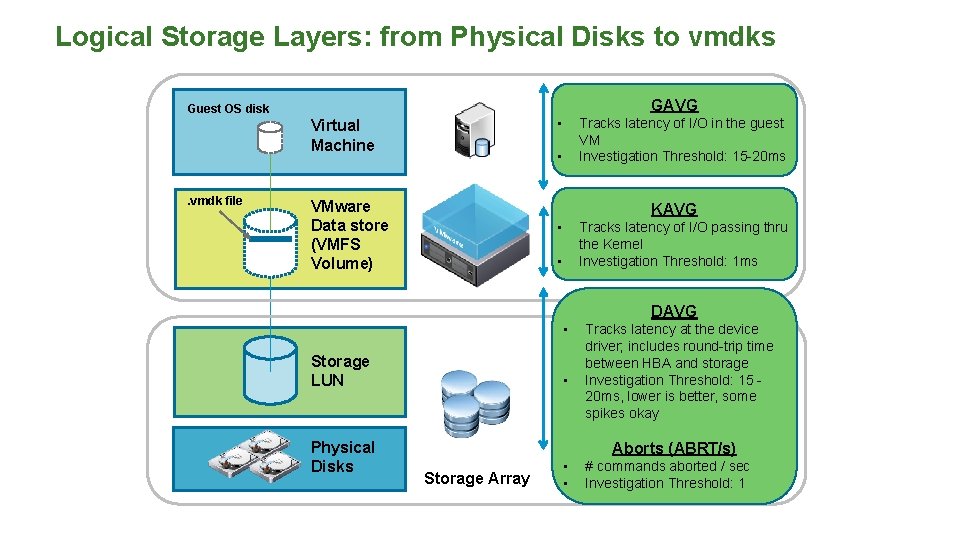

Logical Storage Layers: from Physical Disks to vmdks

Layers, top to bottom: Guest OS disk; virtual machine .vmdk file; VMware datastore (VMFS volume); storage LUN; physical disks in the storage array

- GAVG
  - Tracks latency of I/O in the guest VM
  - Investigation threshold: 15-20 ms
- KAVG
  - Tracks latency of I/O passing through the kernel
  - Investigation threshold: 1 ms
- DAVG
  - Tracks latency at the device driver; includes round-trip time between HBA and storage
  - Investigation threshold: 15-20 ms, lower is better, some spikes okay
- Aborts (ABRT/s)
  - # commands aborted / sec
  - Investigation threshold: 1

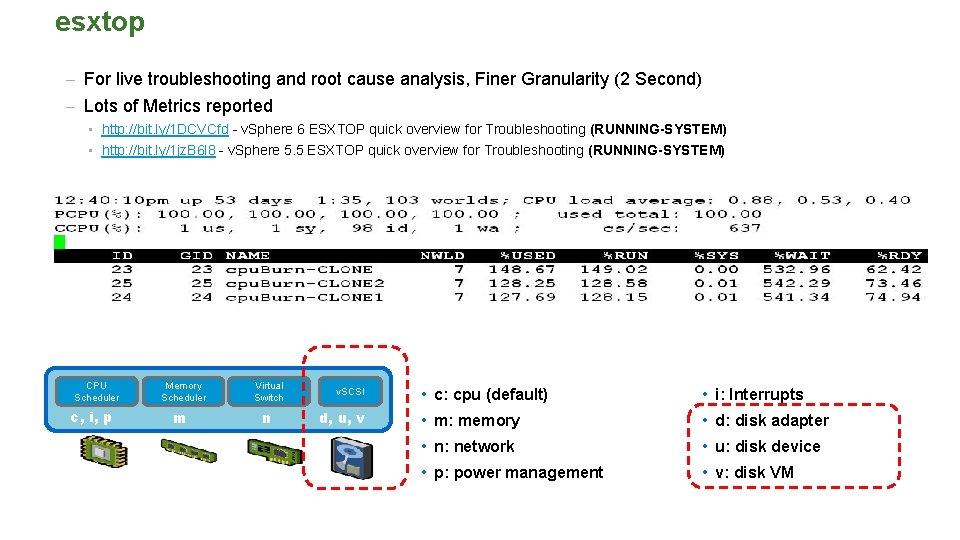

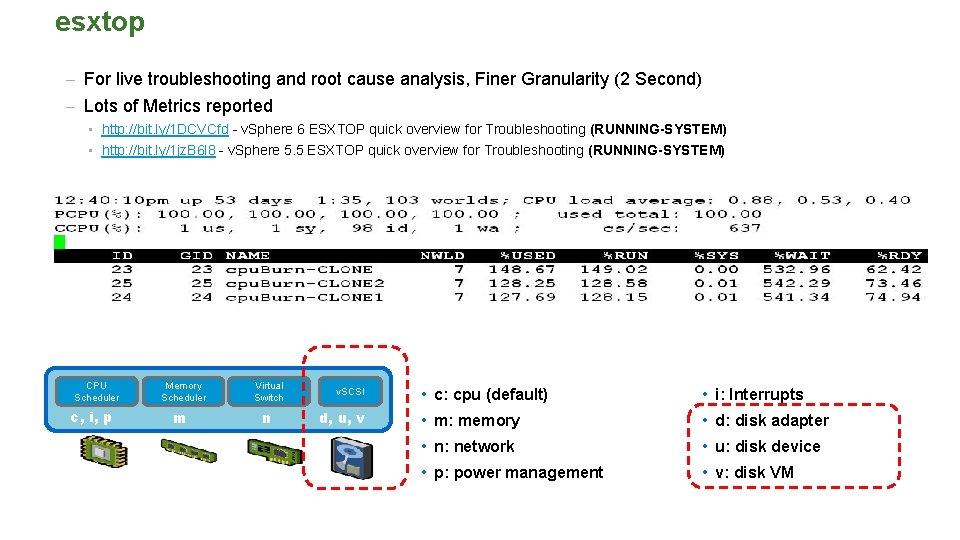

esxtop

- For live troubleshooting and root cause analysis
- Finer granularity (2 seconds)
- Lots of metrics reported
- http://bit.ly/1DCVCfd - vSphere 6 ESXTOP quick overview for Troubleshooting (RUNNING-SYSTEM)
- http://bit.ly/1jzB6l8 - vSphere 5.5 ESXTOP quick overview for Troubleshooting (RUNNING-SYSTEM)

ESXTOP screens by component:
- CPU Scheduler: c, i, p
- Memory Scheduler: m
- Virtual Switch: n
- vSCSI: d, u, v

Screen keys:
- c: cpu (default)
- m: memory
- n: network
- p: power management
- i: interrupts
- d: disk adapter
- u: disk device
- v: disk VM

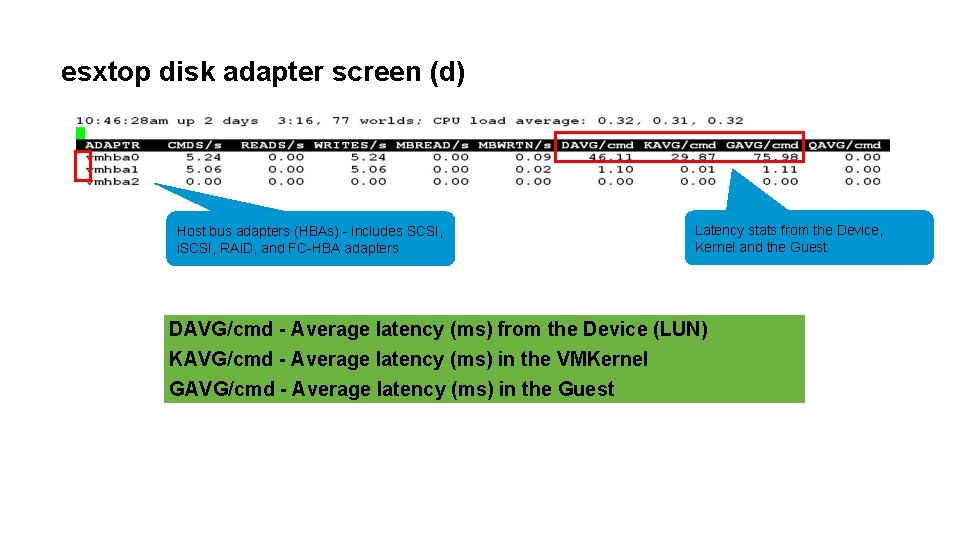

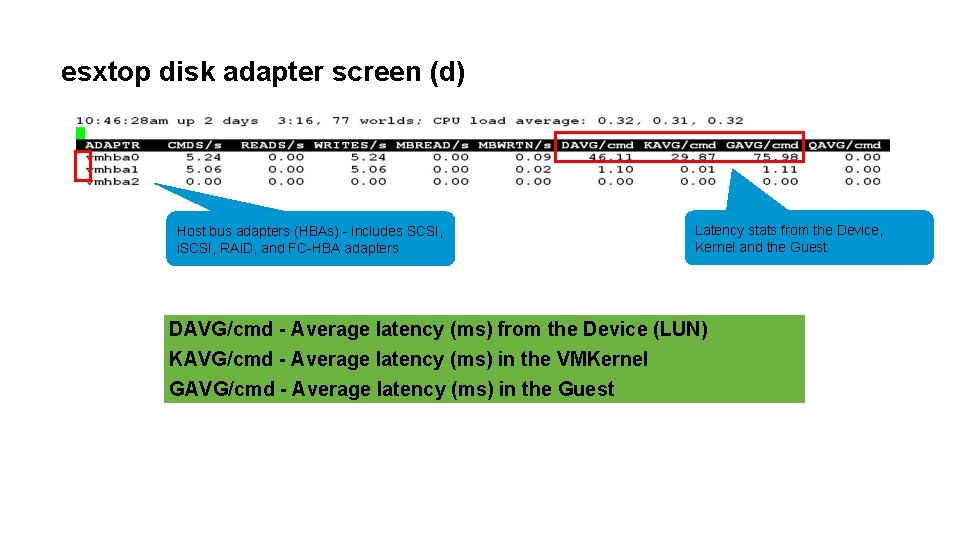

esxtop disk adapter screen (d)

- Host bus adapters (HBAs): includes SCSI, iSCSI, RAID, and FC-HBA adapters
- Latency stats from the device, kernel and the guest
  - DAVG/cmd - Average latency (ms) from the device (LUN)
  - KAVG/cmd - Average latency (ms) in the VMkernel
  - GAVG/cmd - Average latency (ms) in the guest

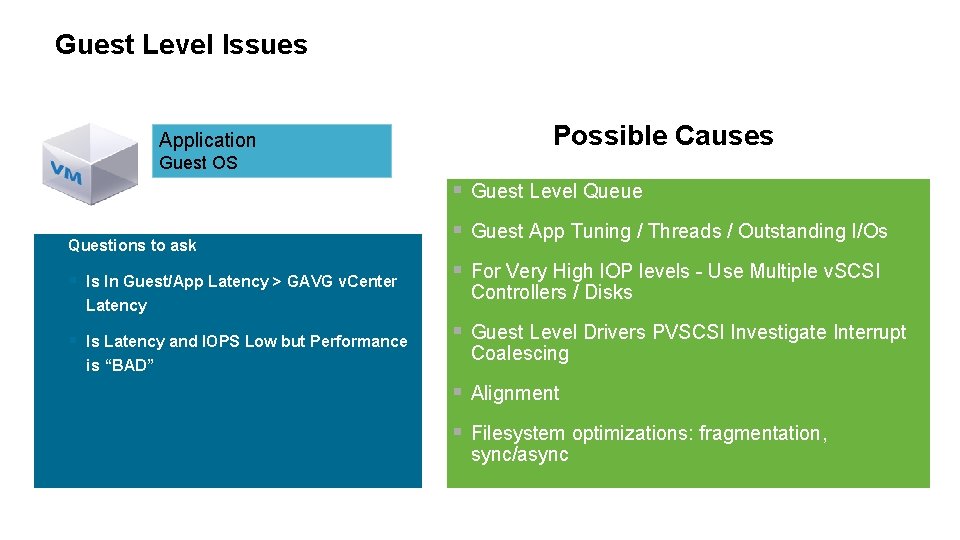

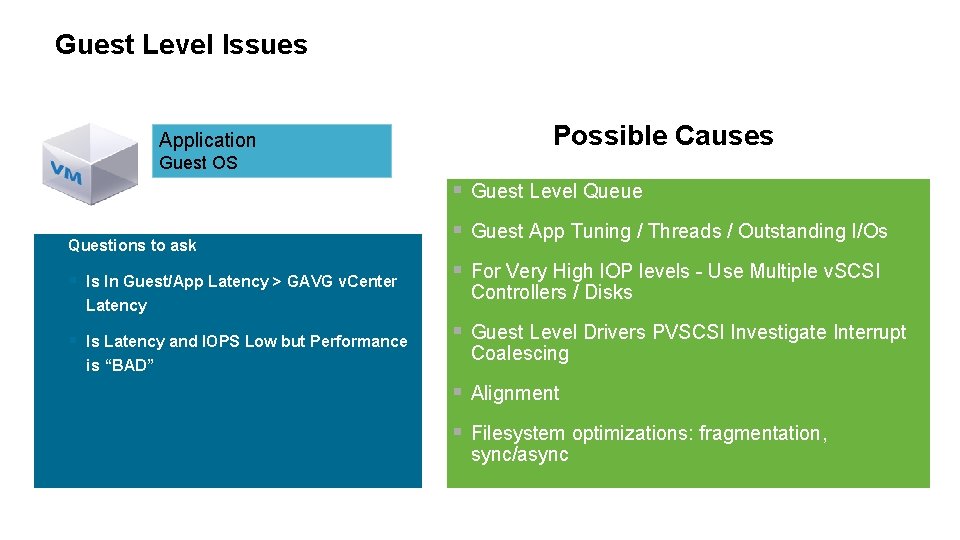

Guest Level Issues

Layers: Application, Guest OS

Possible causes:
- Guest-level queue
- Guest app tuning / threads / outstanding I/Os
- Guest-level drivers (PVSCSI)
- Alignment
- Filesystem optimizations: fragmentation, sync/async

Questions to ask:
- Is in-guest/app latency > GAVG vCenter latency?
- Are latency and IOPS low but performance is “BAD”?
- For very high IOP levels, use multiple vSCSI controllers / disks
- Investigate interrupt coalescing

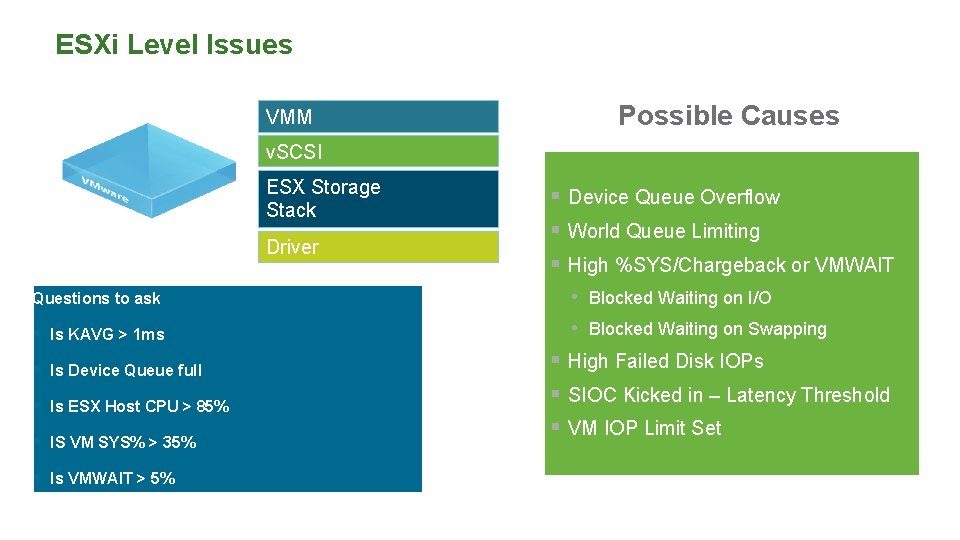

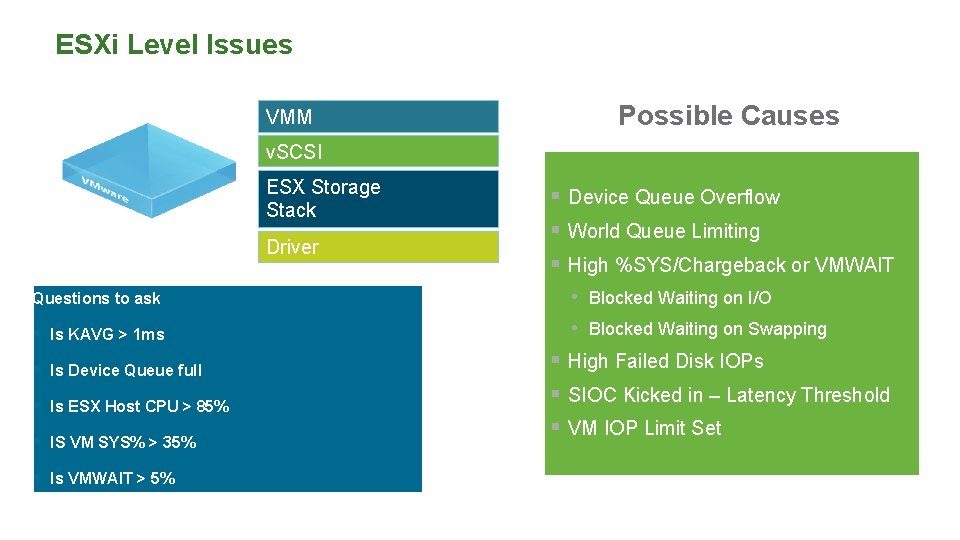

ESXi Level Issues

Layers: VMM, vSCSI, ESX storage stack, driver

Possible causes:
- Device queue overflow
- World queue limiting
- High %SYS/chargeback or VMWAIT
  - Blocked waiting on I/O
  - Blocked waiting on swapping
- High failed disk IOPs
- SIOC kicked in (latency threshold)
- VM IOP limit set

Questions to ask:
- Is KAVG > 1 ms?
- Is the device queue full?
- Is ESX host CPU > 85%?
- Is VM SYS% > 35%?
- Is VMWAIT > 5%?

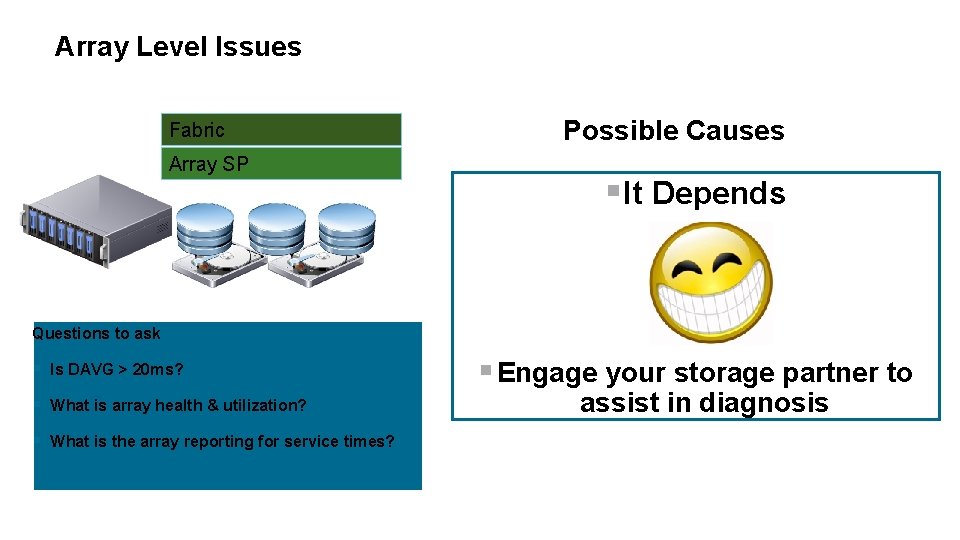

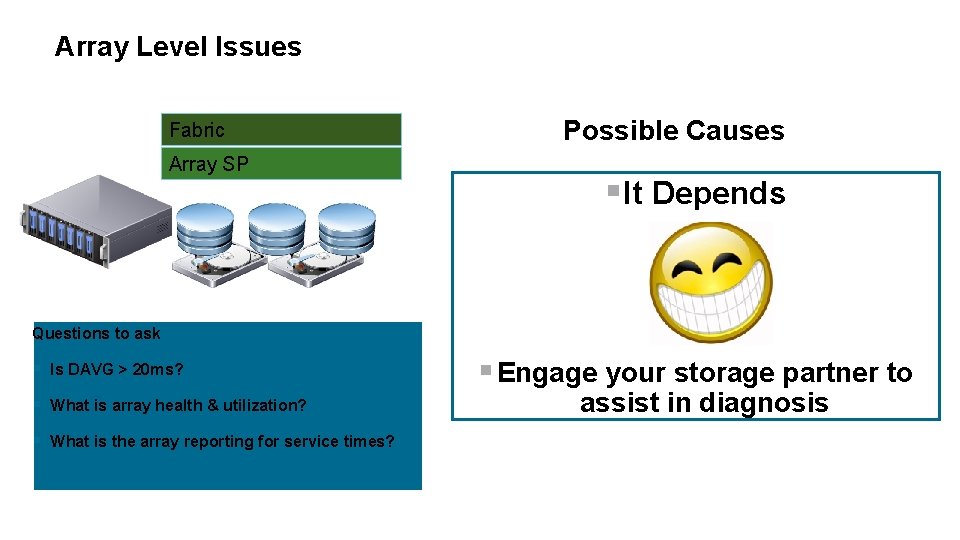

Array Level Issues

Layers: Fabric, Array SP

Possible causes:
- It depends

Questions to ask:
- Is DAVG > 20 ms?
- What is array health and utilization?
- What is the array reporting for service times?
- Engage your storage partner to assist in diagnosis

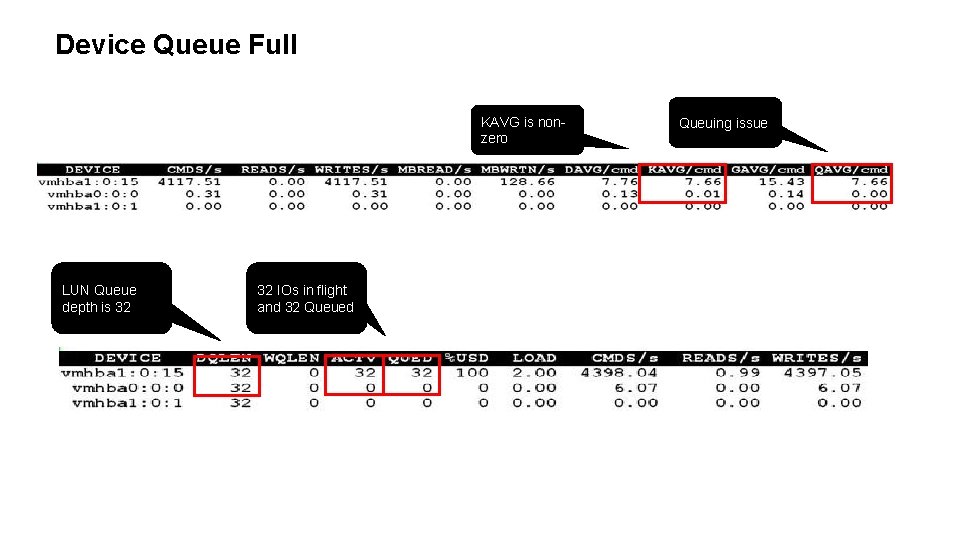

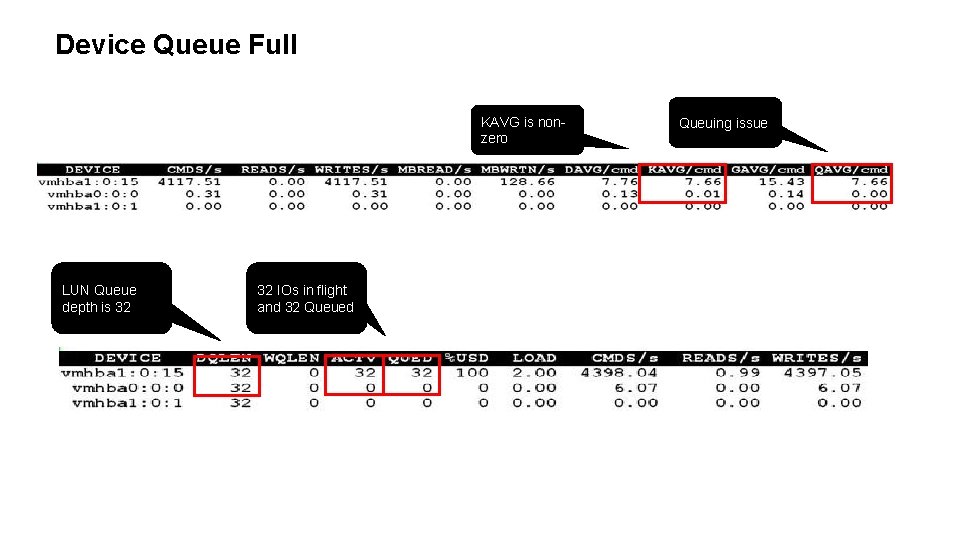

Device Queue Full

- KAVG is nonzero
- LUN queue depth is 32
- 32 IOs in flight and 32 queued
- Queuing issue
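The numbers on this slide follow from a simple split: a device can only have as many commands in flight as its queue depth allows, and everything beyond that waits in the kernel. A minimal sketch of that arithmetic, with a hypothetical function name, is below.

```python
from typing import Tuple


def split_outstanding(outstanding_ios: int, queue_depth: int) -> Tuple[int, int]:
    """Return (in_flight, queued) for a device with the given queue depth."""
    if outstanding_ios < 0 or queue_depth < 1:
        raise ValueError("need outstanding_ios >= 0 and queue_depth >= 1")
    in_flight = min(outstanding_ios, queue_depth)
    queued = max(0, outstanding_ios - queue_depth)
    return in_flight, queued


if __name__ == "__main__":
    # The slide's case: 64 outstanding IOs against a LUN queue depth of 32.
    in_flight, queued = split_outstanding(64, 32)
    print(f"{in_flight} in flight, {queued} queued")
```

Any nonzero queued count is time the guest spends waiting inside the host, which is why it shows up as kernel latency rather than device latency.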

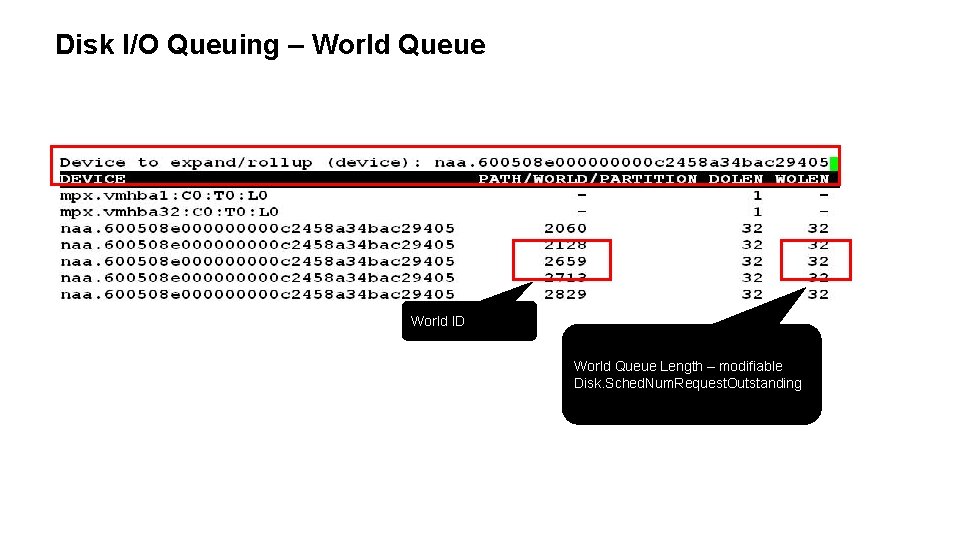

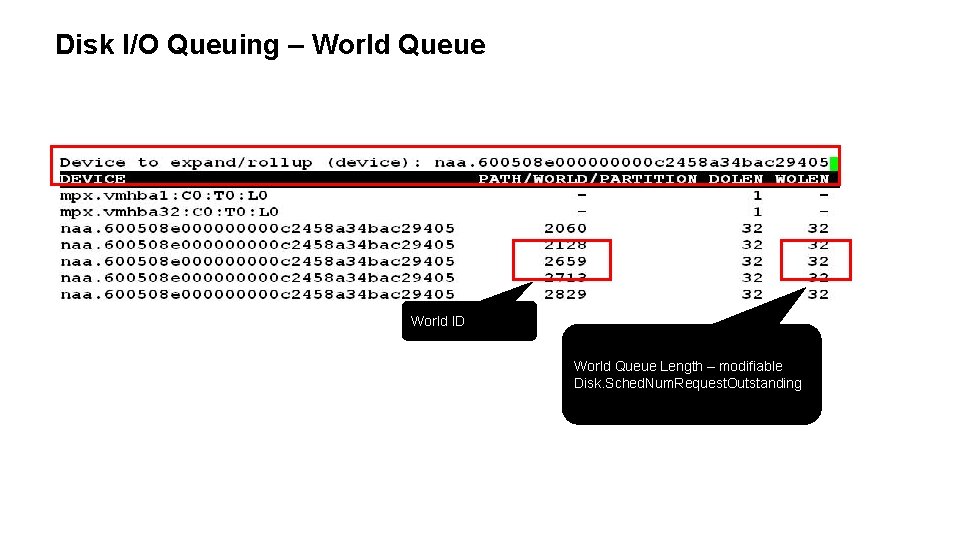

Disk I/O Queuing – World Queue

- World ID
- World queue length: modifiable via Disk.SchedNumReqOutstanding