Structure of Computer Systems, Course 4: The Central Processing Unit (CPU)

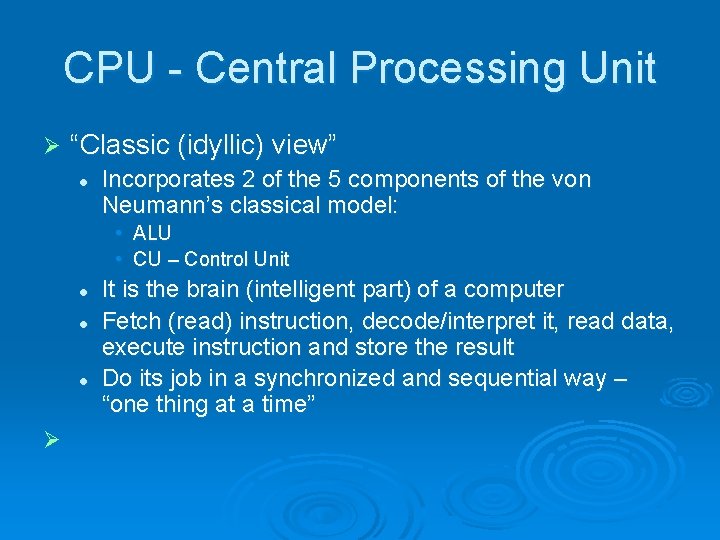

CPU - Central Processing Unit

- "Classic (idyllic) view":
  - Incorporates 2 of the 5 components of von Neumann's classical model:
    - ALU
    - CU (Control Unit)
  - It is the brain (the intelligent part) of a computer
  - It fetches (reads) an instruction, decodes/interprets it, reads the data, executes the instruction and stores the result
  - It does its job in a synchronized and sequential way: "one thing at a time"

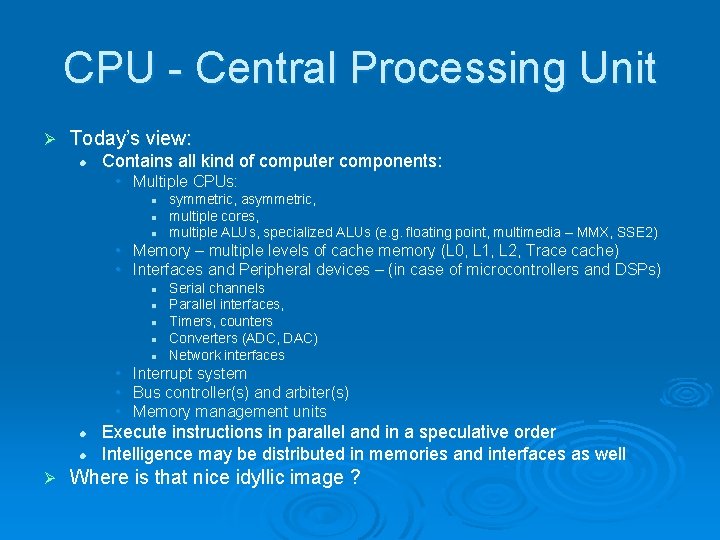

CPU - Central Processing Unit

- Today's view:
  - Contains all kinds of computer components:
    - Multiple CPUs: symmetric, asymmetric, multiple cores, multiple ALUs, specialized ALUs (e.g. floating point, multimedia: MMX, SSE2)
    - Memory: multiple levels of cache memory (L0, L1, L2, trace cache)
    - Interfaces and peripheral devices (in the case of microcontrollers and DSPs):
      - Serial channels
      - Parallel interfaces
      - Timers, counters
      - Converters (ADC, DAC)
      - Network interfaces
    - Interrupt system
    - Bus controller(s) and arbiter(s)
    - Memory management units
  - Executes instructions in parallel and in a speculative order
  - Intelligence may be distributed in memories and interfaces as well
- Where is that nice idyllic image?

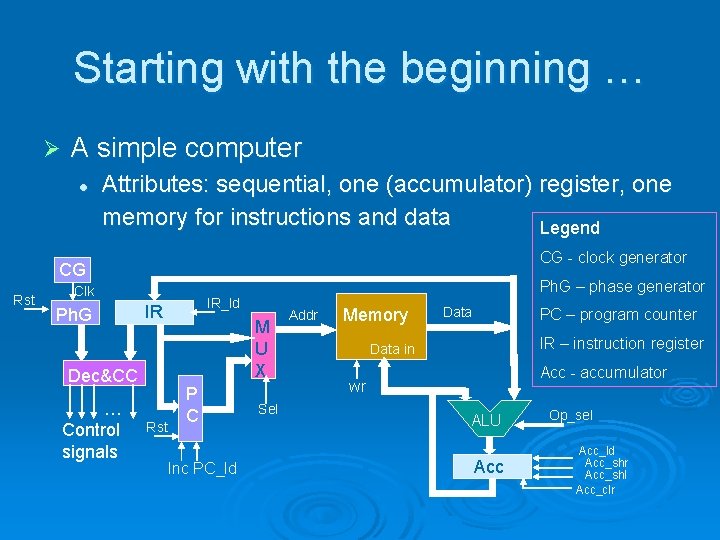

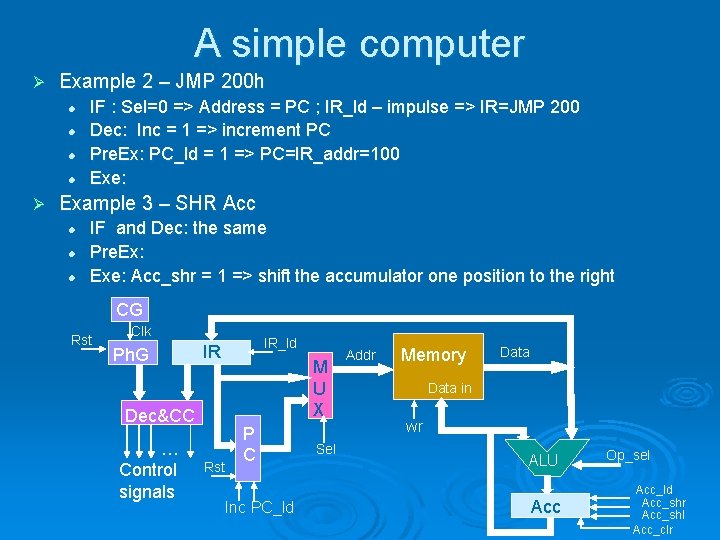

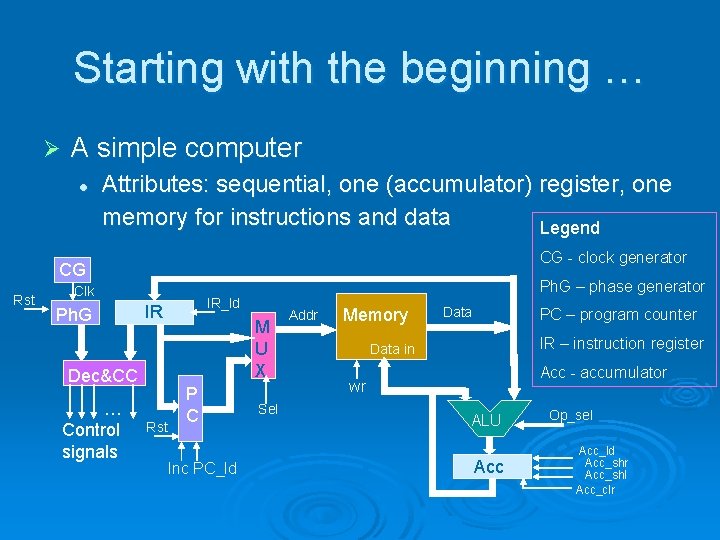

Starting with the beginning …

- A simple computer
  - Attributes: sequential, one register (the accumulator), one memory for instructions and data

[Block diagram of the simple computer. Blocks: CG, PhG, Dec&CC, IR, MUX, PC, Memory, ALU, Acc. Signals: Rst, Clk, control signals, IR_ld, Inc, PC_ld, Sel, Addr, Data, Data in, wr, Op_sel, Acc_ld, Acc_shr, Acc_shl, Acc_clr]

Legend:

- CG: clock generator
- PhG: phase generator
- PC: program counter
- IR: instruction register
- Acc: accumulator

A simple computer

- How does it work?
  - 4 phases:
    - IF (instruction fetch): read the instruction into IR
    - Dec (decode): decode the instruction and generate the control signals
    - PreEx (prepare execution): e.g. read the data from memory
    - Exe (execute): e.g. addition, subtraction

![A simple computer l Example 1 ADD Acc M100 h IF A simple computer l Example 1 – ADD Acc, M[100 h] • • IF](https://slidetodoc.com/presentation_image_h2/c9009e01a1d712fb397747c843cd2cf4/image-6.jpg)

A simple computer

- Example 1: ADD Acc, M[100h]
  - IF: Sel=0 => Address = PC; IR_ld pulse => IR = ADD 100h
  - Dec: Sel=1 => Address = IR_addr (100h); Inc=1 => increment PC
  - PreEx: Op_sel = code_add => the ALU performs an addition
  - Exe: Acc_ld => Acc = Acc + M[100h]

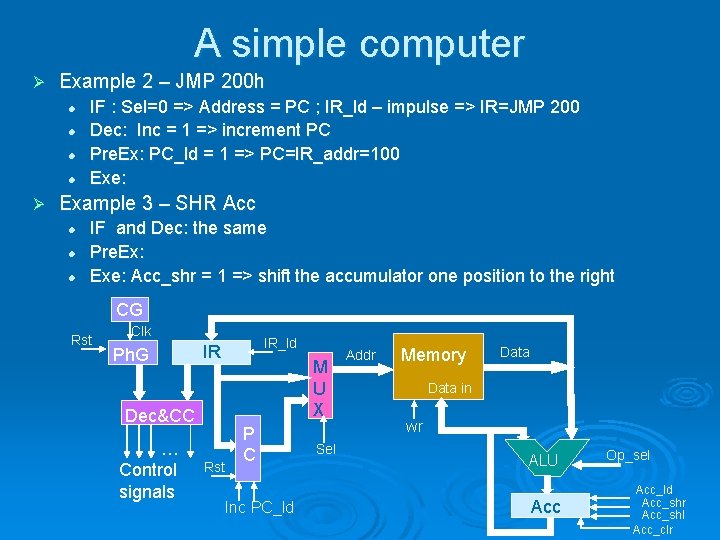

A simple computer

- Example 2: JMP 200h
  - IF: Sel=0 => Address = PC; IR_ld pulse => IR = JMP 200h
  - Dec: Inc=1 => increment PC
  - PreEx: PC_ld=1 => PC = IR_addr = 200h
  - Exe: (nothing to do)
- Example 3: SHR Acc
  - IF and Dec: the same as above
  - PreEx: (nothing to do)
  - Exe: Acc_shr=1 => shift the accumulator one position to the right
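The three examples (ADD, JMP, SHR) can be traced with a small simulation. This is only a sketch under stated assumptions: instruction words are modelled as hypothetical `(mnemonic, address)` tuples, the `HALT` marker and the `run` function are illustrative names that do not appear in the slides, and the PreEx and Exe phases are merged into one branch per instruction.

```python
def run(memory, pc=0, acc=0, max_instructions=100):
    """Simulate the one-accumulator machine, one 4-phase instruction at a time.

    memory maps addresses to data words (int) or to (mnemonic, address)
    tuples, a hypothetical stand-in for encoded instruction words.
    """
    for _ in range(max_instructions):
        # IF: Sel=0 => Address = PC; the IR_ld pulse loads IR.
        op, addr = memory[pc]
        if op == "HALT":              # hypothetical stop marker, not in the slides
            break
        # Dec: Inc=1 => increment PC; Sel=1 puts IR_addr on the address bus.
        pc += 1
        # PreEx and Exe: the actions depend on the decoded instruction.
        if op == "ADD":
            operand = memory[addr]    # PreEx: read M[addr], Op_sel = code_add
            acc = acc + operand       # Exe: Acc_ld => Acc = Acc + M[addr]
        elif op == "JMP":
            pc = addr                 # PreEx: PC_ld=1 => PC = IR_addr
        elif op == "SHR":
            acc >>= 1                 # Exe: Acc_shr=1 => shift right by one
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return pc, acc


# ADD Acc, M[100h]; JMP 200h; then at 200h: SHR Acc
program = {
    0x000: ("ADD", 0x100),
    0x001: ("JMP", 0x200),
    0x100: 6,
    0x200: ("SHR", None),
    0x201: ("HALT", None),
}
final_pc, final_acc = run(program)
```

Starting from Acc = 0, the run adds M[100h] = 6, jumps to 200h and shifts right once, so it ends with Acc = 3 and PC = 201h.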

![A simple computer l Homework try to implement MOV Maddr Acc MOV A simple computer l Homework: try to implement: • • MOV M[addr], Acc MOV](https://slidetodoc.com/presentation_image_h2/c9009e01a1d712fb397747c843cd2cf4/image-8.jpg)

A simple computer

- Homework: try to implement:
  - MOV M[addr], Acc
  - MOV Acc, M[addr]
  - Conditional jump (e.g. if Acc=0, >0, <0)
  - MOV Acc, 0

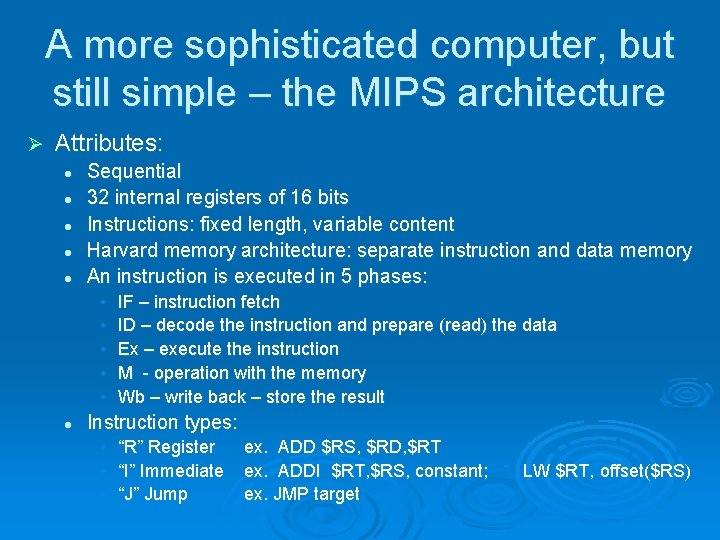

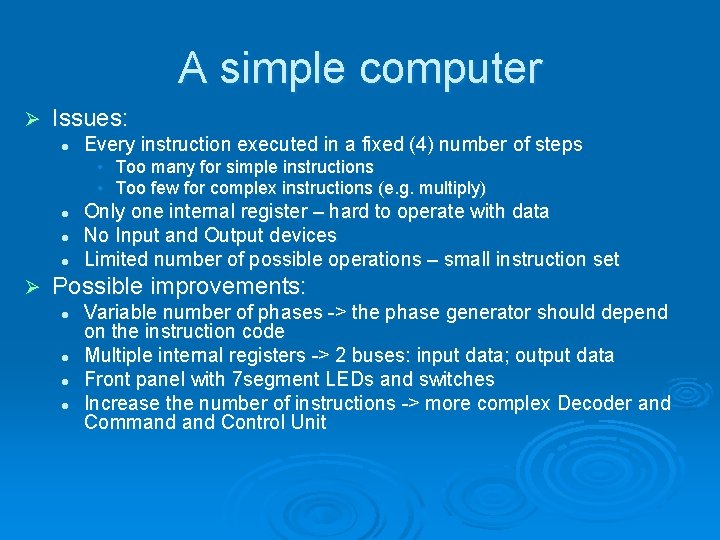

A simple computer

- Issues:
  - Every instruction is executed in a fixed number of steps (4):
    - Too many for simple instructions
    - Too few for complex instructions (e.g. multiply)
  - Only one internal register: hard to operate with data
  - No input and output devices
  - Limited number of possible operations: small instruction set
- Possible improvements:
  - Variable number of phases -> the phase generator should depend on the instruction code
  - Multiple internal registers -> 2 buses: input data, output data
  - Front panel with 7-segment LEDs and switches
  - Increase the number of instructions -> more complex Decoder and Command Control Unit

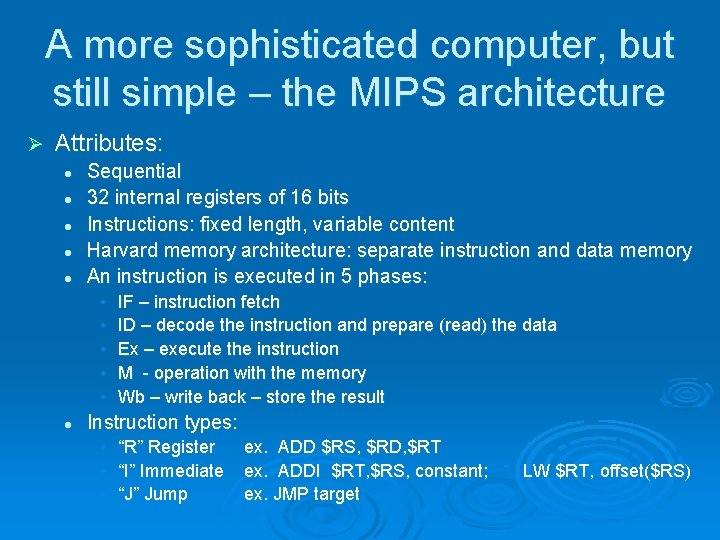

A more sophisticated computer, but still simple: the MIPS architecture

- Attributes:
  - Sequential
  - 32 internal registers of 16 bits
  - Instructions: fixed length, variable content
  - Harvard memory architecture: separate instruction and data memory
  - An instruction is executed in 5 phases:
    - IF: instruction fetch
    - ID: decode the instruction and prepare (read) the data
    - Ex: execute the instruction
    - M: operation with the memory
    - Wb: write back (store the result)
  - Instruction types:
    - "R" (Register), e.g. ADD $RD, $RS, $RT
    - "I" (Immediate), e.g. ADDI $RT, $RS, constant; LW $RT, offset($RS)
    - "J" (Jump), e.g. JMP target

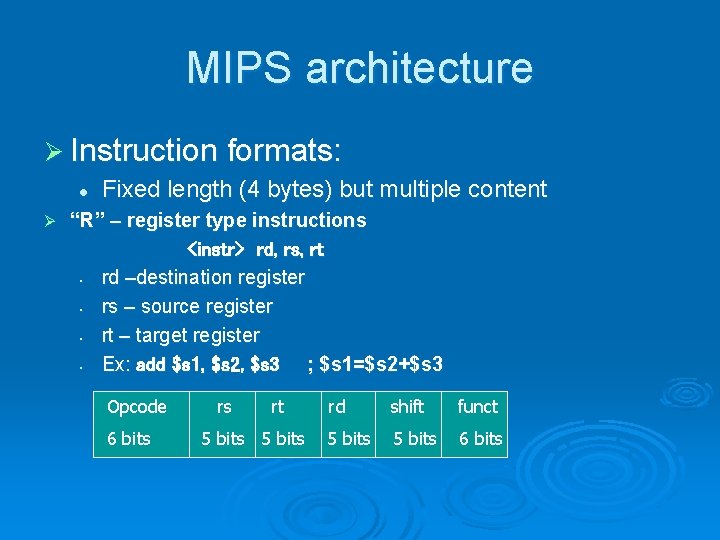

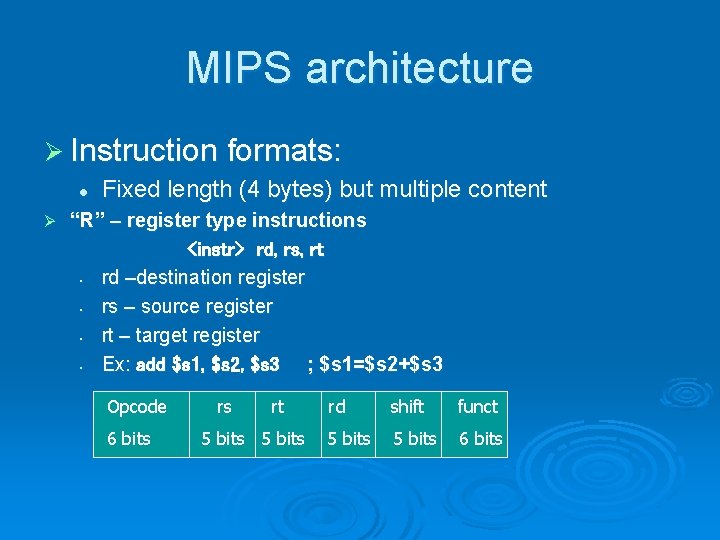

MIPS architecture

- Instruction formats:
  - Fixed length (4 bytes), but several content layouts
- "R": register type instructions: <instr> rd, rs, rt
  - rd: destination register
  - rs: source register
  - rt: target register
  - Ex: add $s1, $s2, $s3 ; $s1 = $s2 + $s3

| Opcode | rs | rt | rd | shift | funct |
|---|---|---|---|---|---|
| 6 bits | 5 bits | 5 bits | 5 bits | 5 bits | 6 bits |

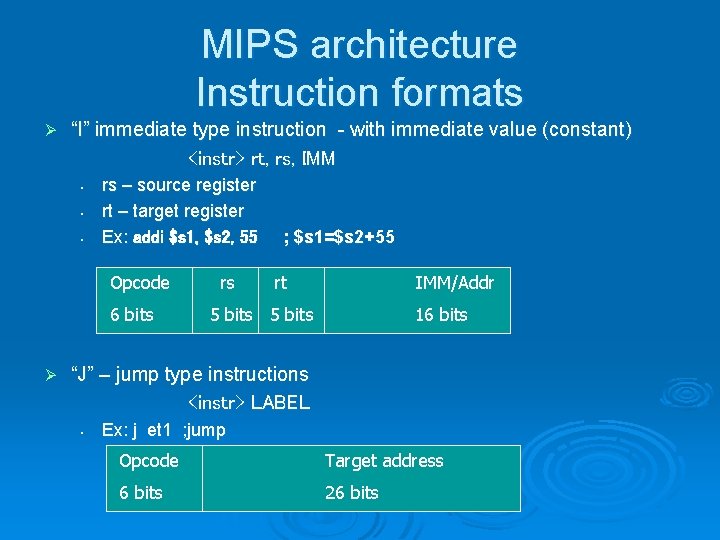

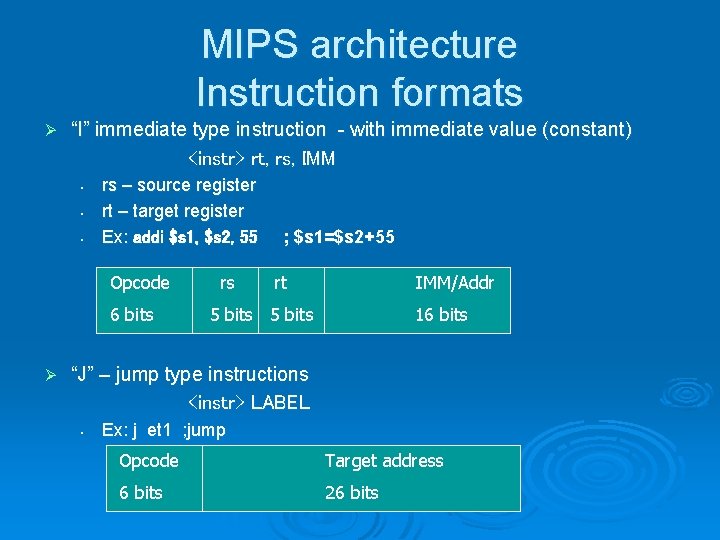

MIPS architecture: instruction formats

- "I": immediate type instructions, with an immediate value (constant): <instr> rt, rs, IMM
  - rs: source register
  - rt: target register
  - Ex: addi $s1, $s2, 55 ; $s1 = $s2 + 55

| Opcode | rs | rt | IMM/Addr |
|---|---|---|---|
| 6 bits | 5 bits | 5 bits | 16 bits |

- "J": jump type instructions: <instr> LABEL
  - Ex: j et1 ; jump to label et1

| Opcode | Target address |
|---|---|
| 6 bits | 26 bits |
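The three formats can be checked by packing the fields into a 32-bit word. The sketch below is illustrative: the function names are made up for this example, and the opcode, funct and register numbers (add: opcode 0, funct 0x20; addi: opcode 8; j: opcode 2; $s1, $s2, $s3 = registers 17, 18, 19) are the standard MIPS32 values, which the slides do not list.

```python
def encode_r(rs, rt, rd, shamt, funct, opcode=0):
    """R format: opcode(6) | rs(5) | rt(5) | rd(5) | shift(5) | funct(6)."""
    return (opcode << 26) | (rs << 21) | (rt << 16) | (rd << 11) | (shamt << 6) | funct


def encode_i(opcode, rs, rt, imm):
    """I format: opcode(6) | rs(5) | rt(5) | immediate(16)."""
    return (opcode << 26) | (rs << 21) | (rt << 16) | (imm & 0xFFFF)


def encode_j(opcode, target):
    """J format: opcode(6) | target address field(26)."""
    return (opcode << 26) | (target & 0x03FFFFFF)


S1, S2, S3 = 17, 18, 19  # standard MIPS register numbers for $s1..$s3

add_word = encode_r(rs=S2, rt=S3, rd=S1, shamt=0, funct=0x20)  # add $s1, $s2, $s3
addi_word = encode_i(opcode=8, rs=S2, rt=S1, imm=55)           # addi $s1, $s2, 55
jump_word = encode_j(opcode=2, target=0x100)                   # j with field value 100h

print(f"{add_word:08x} {addi_word:08x} {jump_word:08x}")
# prints "02538820 22510037 08000100"
```

Note that the destination register comes first in the assembly text (`add rd, rs, rt`) but third among the register fields of the encoded word.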

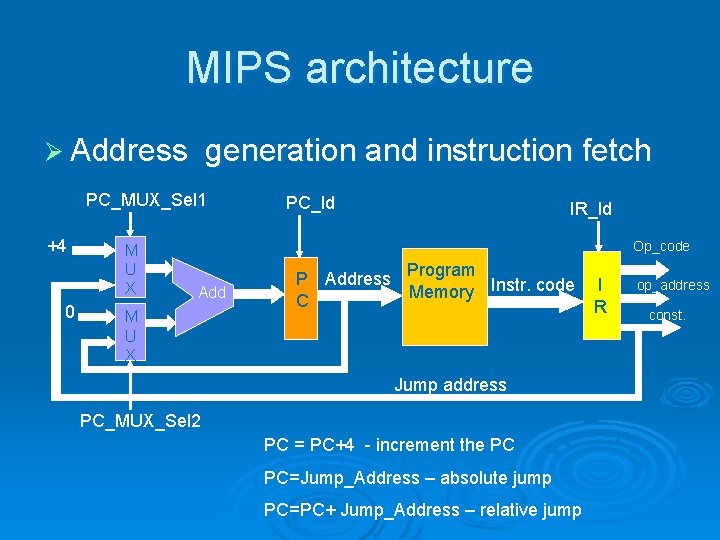

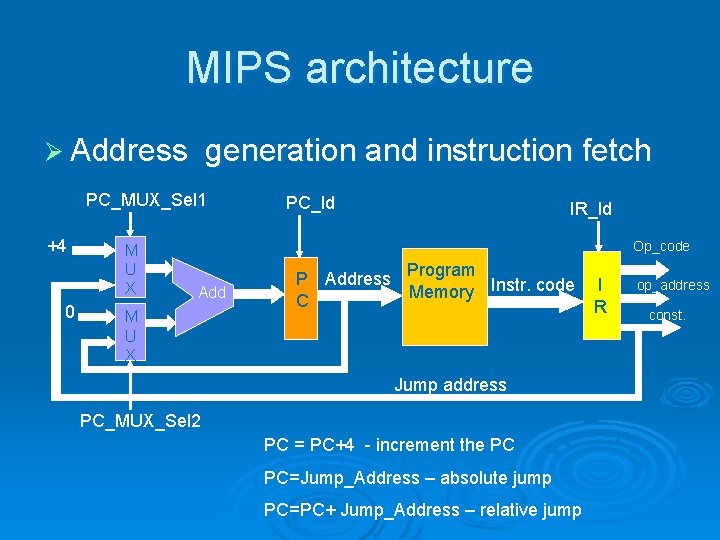

MIPS architecture

- Address generation and instruction fetch
  - PC = PC + 4: increment the PC
  - PC = Jump_Address: absolute jump
  - PC = PC + Jump_Address: relative jump

[Block diagram. Blocks: two MUXes (PC_MUX_Sel1, PC_MUX_Sel2), +4, Add, PC (PC_ld), Program Memory (Address, Instr. code), IR (IR_ld) with fields Op_code, op_address, const.; Jump address]
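The three ways of updating the PC can be written as one selection function, which mirrors what the two multiplexers choose between. This is a sketch: the `mode` strings and the 32-bit wrap-around of the PC are assumptions made for the example, not details given in the slides.

```python
PC_MASK = 0xFFFFFFFF  # assumed 32-bit program counter


def next_pc(pc, mode="sequential", jump_address=0):
    """Return the next PC value for the three cases named above."""
    if mode == "sequential":
        return (pc + 4) & PC_MASK              # PC = PC + 4
    if mode == "absolute":
        return jump_address & PC_MASK          # PC = Jump_Address
    if mode == "relative":
        return (pc + jump_address) & PC_MASK   # PC = PC + Jump_Address
    raise ValueError(f"unknown mode {mode!r}")


sequential = next_pc(0x100)                            # 0x104
absolute = next_pc(0x100, "absolute", 0x400)           # 0x400
backward = next_pc(0x100, "relative", -8)              # 0x0F8
```

A negative `jump_address` in relative mode gives a backward jump, as used for loops.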

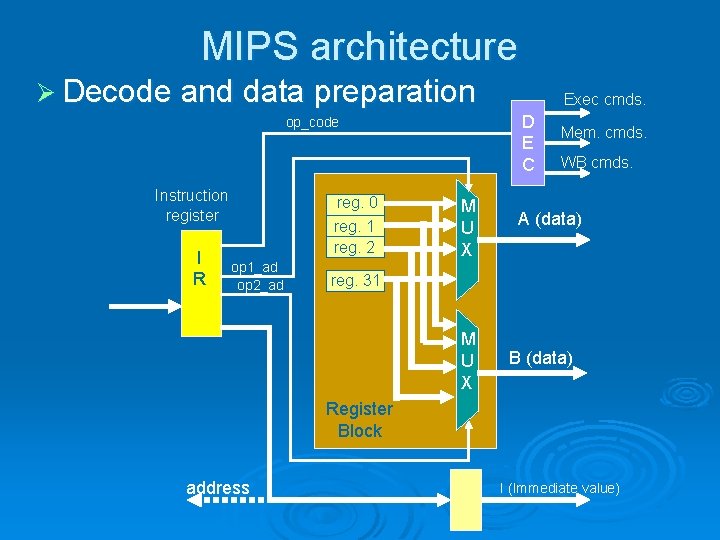

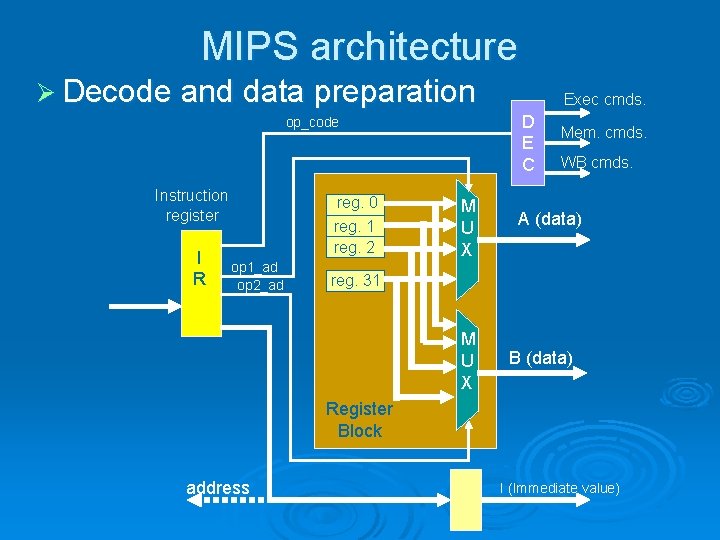

MIPS architecture

- Decode and data preparation

[Block diagram. Blocks: instruction register (IR), decoder (DEC), register block (reg. 0, reg. 1, reg. 2, ..., reg. 31), two MUXes. Signals: op_code, op1_ad, op2_ad, address, Exec cmds., Mem. cmds., WB cmds. Outputs: A (data), B (data), I (immediate value)]

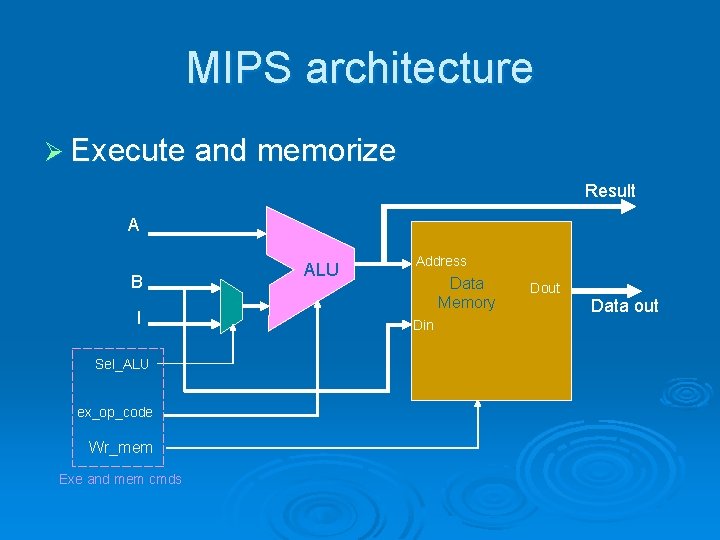

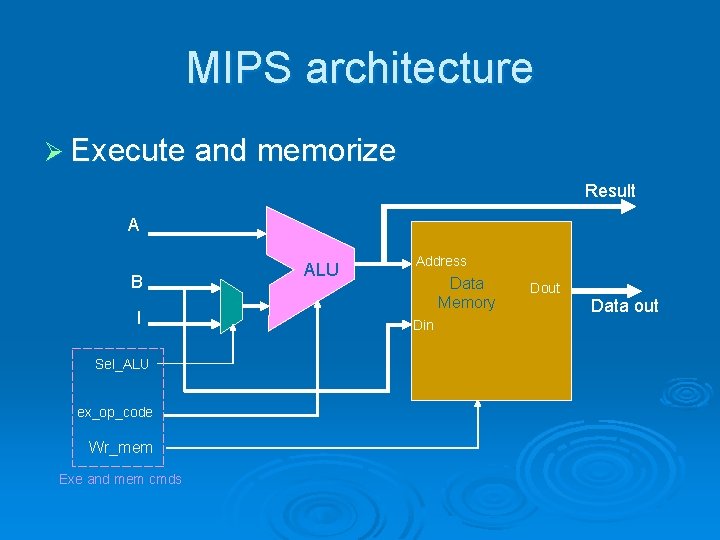

MIPS architecture

- Execute and memorize

[Block diagram. Blocks: ALU, Data Memory (Address, Din, Dout). Inputs: A, B, I. Signals: Sel_ALU, ex_op_code, Wr_mem (Exe and mem cmds). Outputs: Result, Data out]

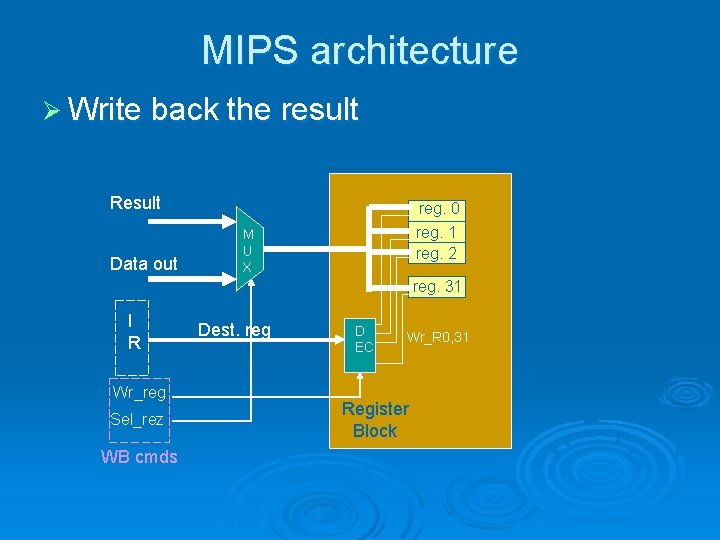

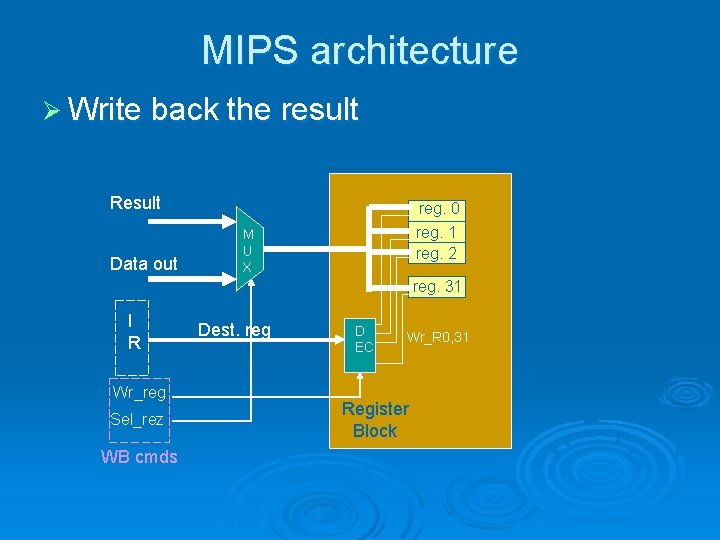

MIPS architecture

- Write back the result

[Block diagram. Blocks: MUX, decoder (DEC), IR, register block (reg. 0, reg. 1, reg. 2, ..., reg. 31). Inputs: Result, Data out. Signals: Wr_reg, Sel_rez (WB cmds), Dest. reg, Wr_R0 ... Wr_R31]

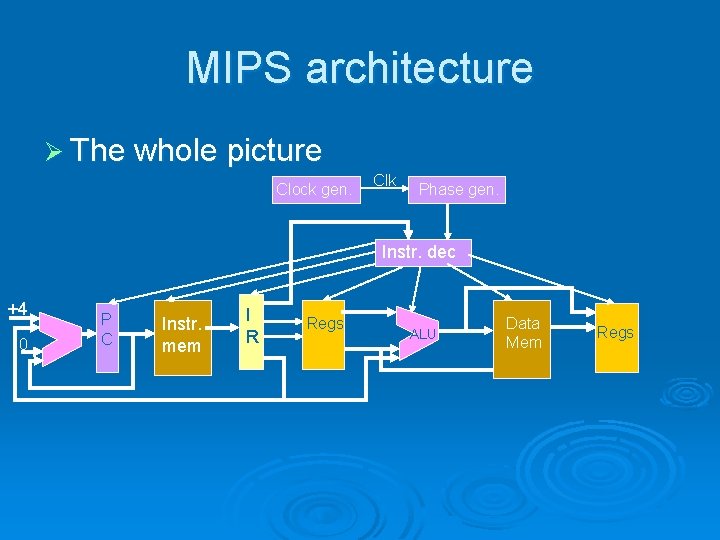

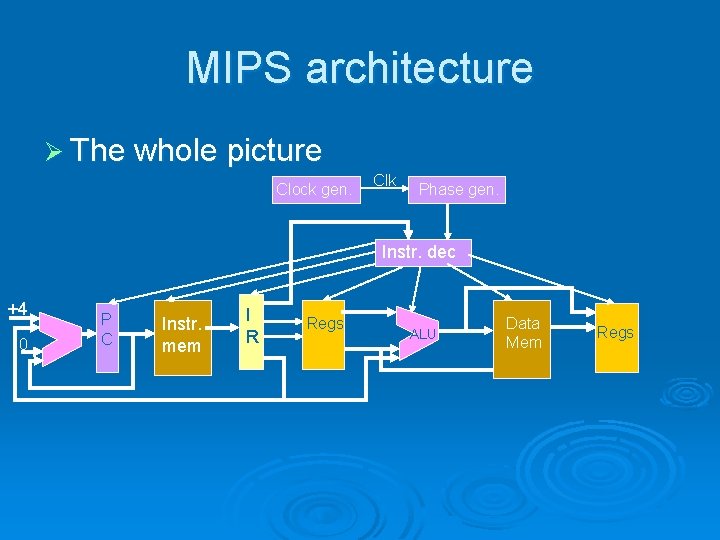

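MIPS architecture

- The whole picture

[Block diagram. Control: clock generator (Clk), phase generator, instruction decoder. Datapath blocks, in order: PC (+4), instruction memory, IR, registers, ALU, data memory, registers]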

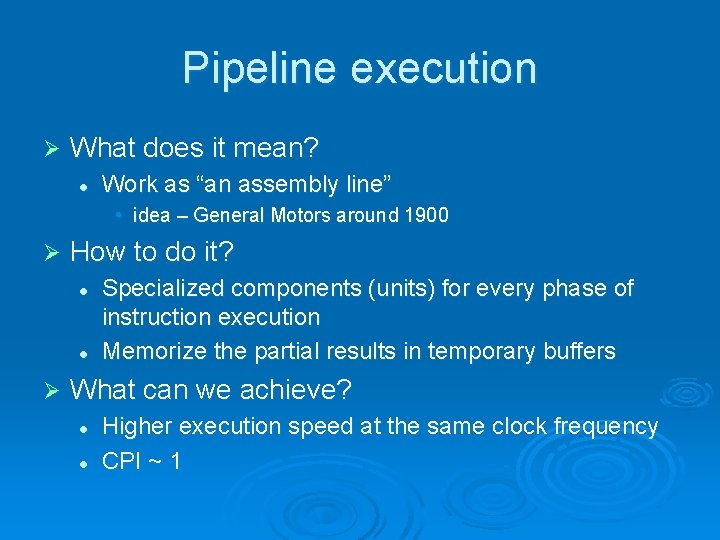

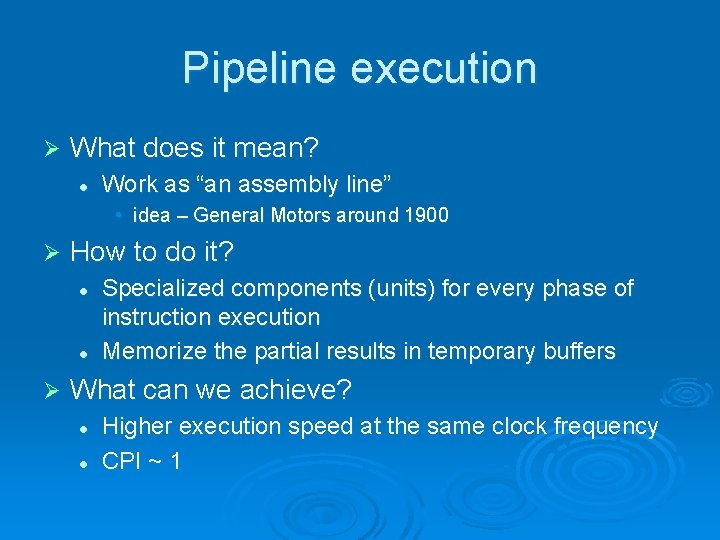

Pipeline execution

- What does it mean?
  - Work as "an assembly line"
    - idea: General Motors, around 1900
- How to do it?
  - Specialized components (units) for every phase of instruction execution
  - Memorize the partial results in temporary buffers
- What can we achieve?
  - Higher execution speed at the same clock frequency
  - CPI ~ 1

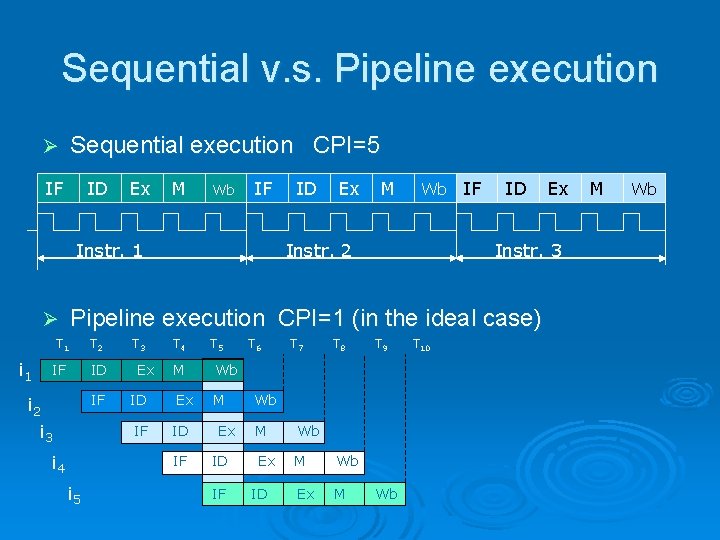

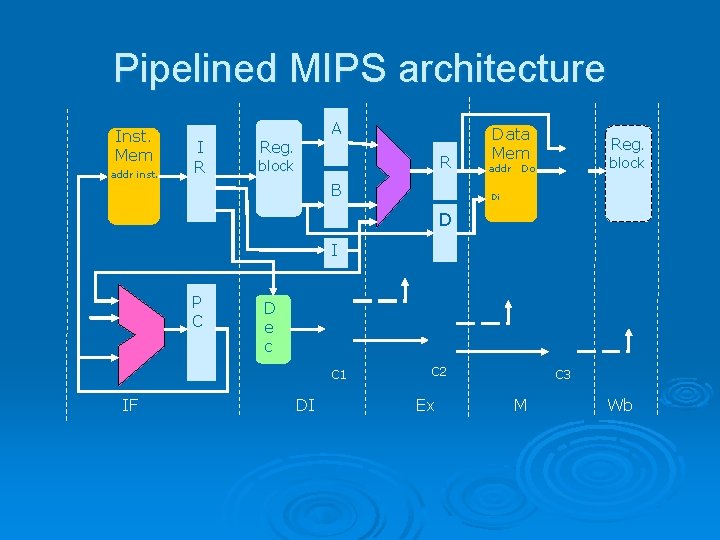

Sequential vs. pipeline execution

- Sequential execution: CPI = 5
  - Instr. 1 goes through IF, ID, Ex, M, Wb; only then does Instr. 2 start with IF, then Instr. 3, and so on
- Pipeline execution: CPI = 1 (in the ideal case)
  - Instructions i1 ... i5 start one clock period apart, so their IF, ID, Ex, M, Wb phases overlap

[Timing diagrams: sequential execution of Instr. 1 ... Instr. 3; pipeline execution of i1 ... i5 over clock periods T1 ... T10]
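The two CPI figures follow from counting clock periods. The sketch below assumes an ideal pipeline (no hazards or stalls), where the first instruction needs all 5 phases and every later one finishes one period after its predecessor; the function names are illustrative.

```python
def sequential_cycles(n_instructions, phases=5):
    """Each instruction runs all its phases before the next one starts."""
    return n_instructions * phases


def pipelined_cycles(n_instructions, phases=5):
    """Ideal pipeline: fill it once, then one instruction completes per cycle."""
    if n_instructions == 0:
        return 0
    return phases + n_instructions - 1


def cpi(cycles, n_instructions):
    """Average clock cycles per instruction."""
    return cycles / n_instructions


for n in (5, 1000):
    seq = sequential_cycles(n)
    pipe = pipelined_cycles(n)
    print(n, seq, cpi(seq, n), pipe, cpi(pipe, n))
# prints "5 25 5.0 9 1.8" and "1000 5000 5.0 1004 1.004"
```

The pipelined CPI only approaches 1 for long instruction sequences, because the fill time of the pipeline is spread over more instructions.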

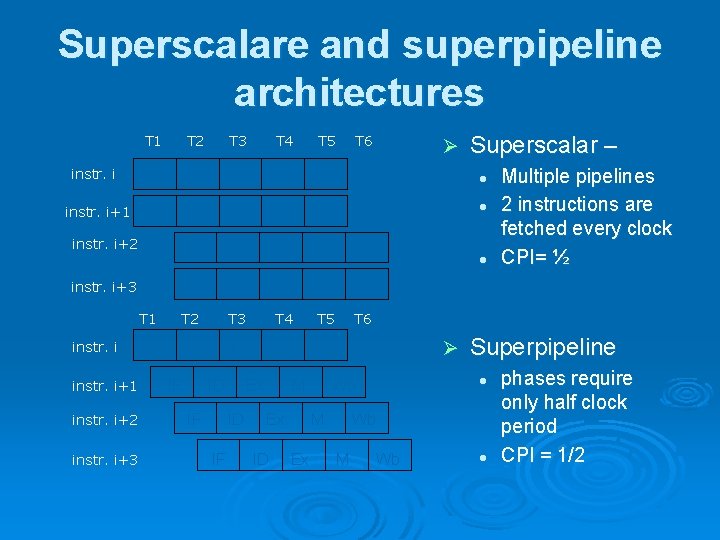

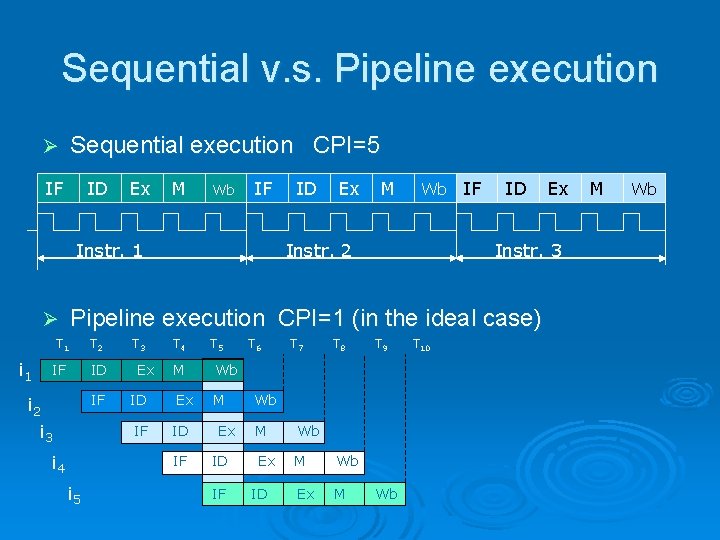

Superscalar and superpipeline architectures

- Superscalar:
  - Multiple pipelines
  - 2 instructions are fetched every clock
  - CPI = 1/2
- Superpipeline:
  - Phases require only half a clock period
  - CPI = 1/2

[Timing diagrams: instr. i+1, i+2, i+3 passing through IF, ID, Ex, M, Wb over clock periods T1 ... T6, for the superscalar and the superpipeline case]
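Both CPI = 1/2 figures can be reproduced with the same kind of cycle count used for a plain pipeline. This is a sketch under idealized assumptions (no hazards, 5 phases, two pipelines for the superscalar case, phases split in two for the superpipeline case); the function names and parameters are illustrative.

```python
import math


def superscalar_cycles(n_instructions, phases=5, width=2):
    """`width` instructions enter the pipelines together every clock period."""
    if n_instructions == 0:
        return 0
    return phases + math.ceil(n_instructions / width) - 1


def superpipelined_cycles(n_instructions, phases=5, split=2):
    """Each phase lasts 1/split of a clock period; one instruction starts per sub-period."""
    if n_instructions == 0:
        return 0.0
    return (phases + n_instructions - 1) / split


n = 1000
cpi_superscalar = superscalar_cycles(n) / n         # 504 cycles  -> 0.504
cpi_superpipelined = superpipelined_cycles(n) / n   # 502.0 cycles -> 0.502
```

For long instruction sequences both values tend to 1/2, which matches the figures on the slide.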

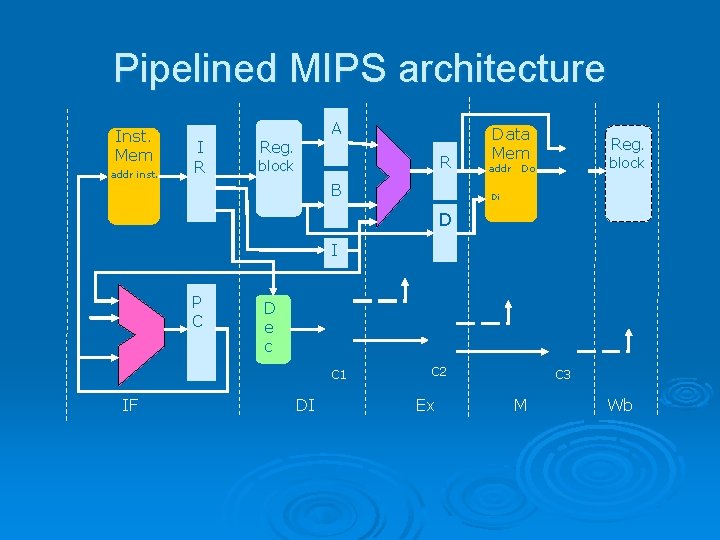

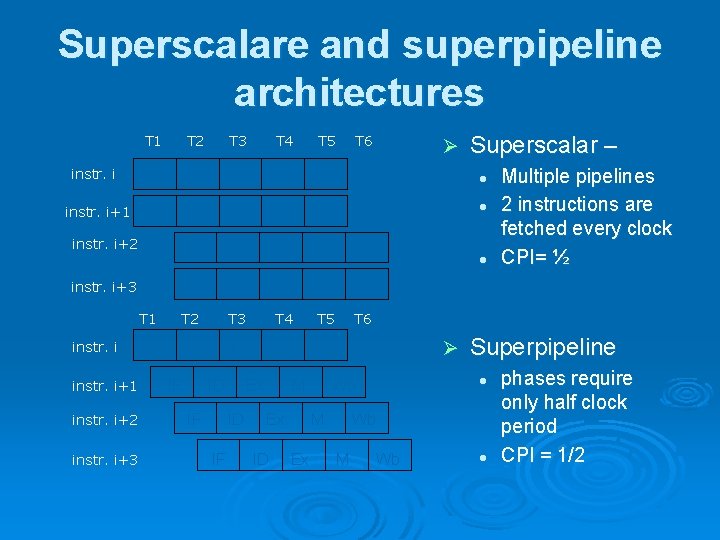

Pipelined MIPS architecture

[Block diagram. Stages: IF, DI, Ex, M, Wb. Blocks: PC (+4), Inst. Mem (addr, inst.), IR, Dec, Reg. block (A, B, I), Data Mem (addr, Di, Do), Reg. block. Command buffers between stages: C1 (ex, m, wb), C2 (m, wb), C3 (wb)]

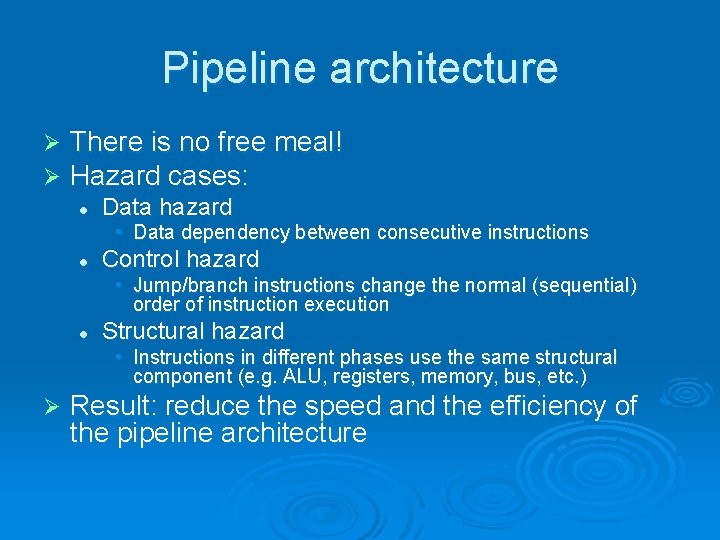

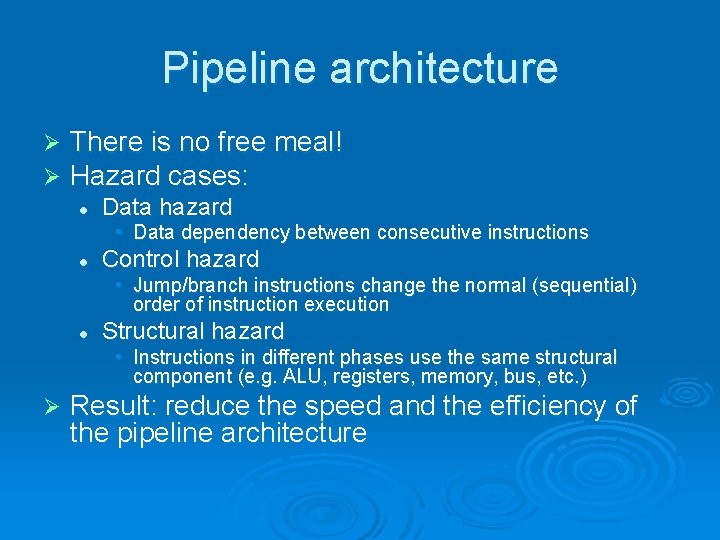

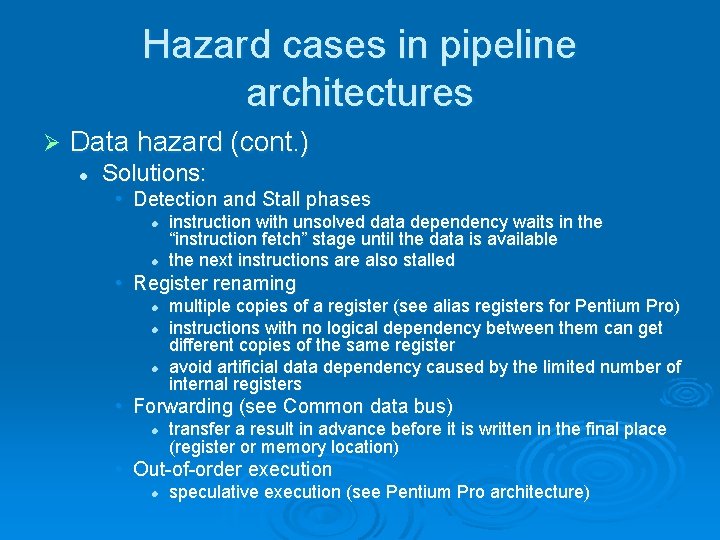

Pipeline architecture

- There is no free lunch!
- Hazard cases:
  - Data hazard
    - Data dependency between consecutive instructions
  - Control hazard
    - Jump/branch instructions change the normal (sequential) order of instruction execution
  - Structural hazard
    - Instructions in different phases use the same structural component (e.g. ALU, registers, memory, bus, etc.)
- Result: reduced speed and efficiency of the pipeline architecture

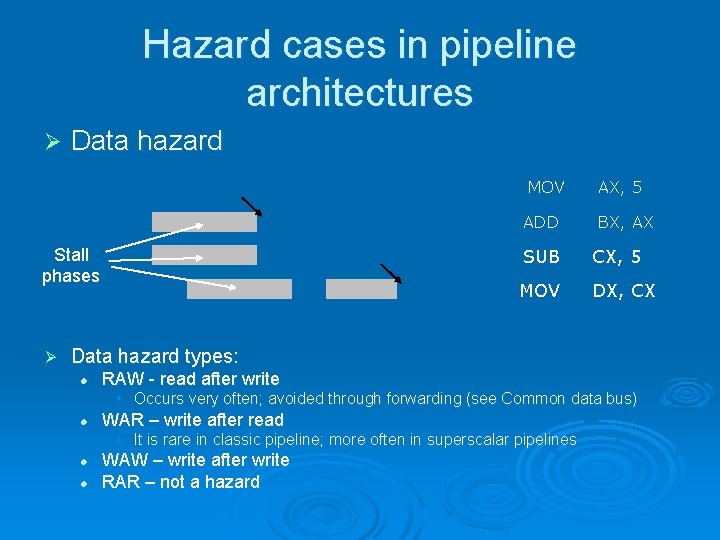

Hazard cases in pipeline architectures

- Data hazard
  - Example sequence (a dependent instruction waits through stall phases):
    - MOV AX, 5
    - ADD BX, AX
    - SUB CX, 5
    - MOV DX, CX
- Data hazard types:
  - RAW (read after write)
    - Occurs very often; avoided through forwarding (see Common data bus)
  - WAR (write after read)
    - Rare in a classic pipeline; more frequent in superscalar pipelines
  - WAW (write after write)
  - RAR (read after read): not a hazard

[Timing diagram: the four instructions passing through IF, ID, Ex, M, Wb, with stall phases inserted]
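The hazard types can be told apart by comparing the registers that two instructions read and write. In this sketch each instruction is reduced to a hypothetical `(destination, sources)` pair; the representation and the function name are assumptions made for illustration.

```python
def classify_hazards(first, second):
    """Return the data hazards between an earlier and a later instruction.

    Each instruction is a (destination, sources) pair of register names.
    """
    dest1, sources1 = first
    dest2, sources2 = second
    hazards = set()
    if dest1 in sources2:
        hazards.add("RAW")   # second reads what first writes
    if dest2 in sources1:
        hazards.add("WAR")   # second writes what first reads
    if dest1 == dest2:
        hazards.add("WAW")   # both write the same register
    return hazards


mov_ax = ("AX", ())            # MOV AX, 5
add_bx = ("BX", ("BX", "AX"))  # ADD BX, AX
sub_cx = ("CX", ("CX",))       # SUB CX, 5
mov_dx = ("DX", ("CX",))       # MOV DX, CX

print(classify_hazards(mov_ax, add_bx))  # prints "{'RAW'}"
print(classify_hazards(add_bx, sub_cx))  # prints "set()"
print(classify_hazards(sub_cx, mov_dx))  # prints "{'RAW'}"
```

In the example sequence the first and second instructions, and the third and fourth, form RAW pairs, while the second and third are independent.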

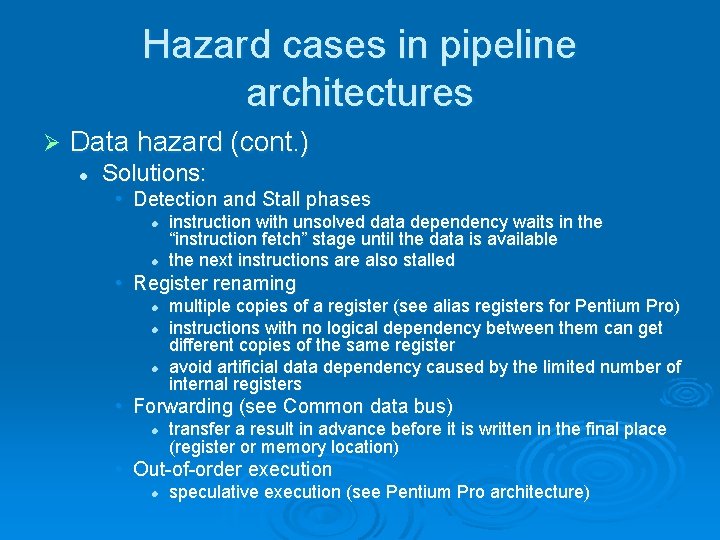

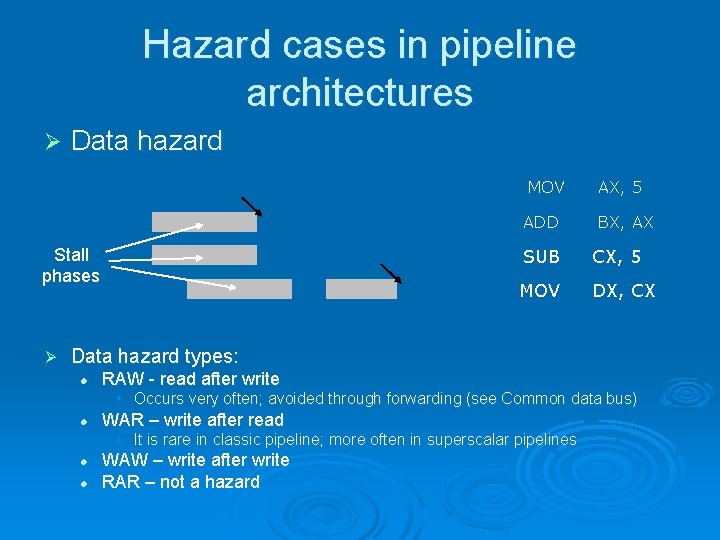

Hazard cases in pipeline architectures

- Data hazard (cont.)
  - Solutions:
    - Detection and stall phases
      - An instruction with an unsolved data dependency waits in the "instruction fetch" stage until the data is available
      - The next instructions are also stalled
    - Register renaming
      - Multiple copies of a register (see alias registers for Pentium Pro)
      - Instructions with no logical dependency between them can get different copies of the same register
      - Avoids artificial data dependencies caused by the limited number of internal registers
    - Forwarding (see Common data bus)
      - Transfer a result in advance, before it is written in its final place (register or memory location)
    - Out-of-order execution
      - Speculative execution (see Pentium Pro architecture)
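Register renaming can be illustrated with a mapping table from architectural registers to register copies. The sketch below is a simplification, not the Pentium Pro mechanism: it assumes an unlimited supply of copies named `p0`, `p1`, ... and reuses a hypothetical `(destination, sources)` representation of instructions.

```python
def rename(instructions, arch_regs):
    """Give every register write a fresh copy; reads use the latest copy."""
    mapping = {reg: f"p{i}" for i, reg in enumerate(arch_regs)}
    next_copy = len(arch_regs)
    renamed = []
    for dest, sources in instructions:
        # Sources are looked up first, so they see the copy written earlier.
        new_sources = tuple(mapping[s] for s in sources)
        # The destination gets a copy that no earlier instruction touches.
        mapping[dest] = f"p{next_copy}"
        next_copy += 1
        renamed.append((mapping[dest], new_sources))
    return renamed


program = [
    ("BX", ("BX", "AX")),  # ADD BX, AX
    ("AX", ()),            # MOV AX, 5   (WAR with the ADD above)
    ("CX", ("CX", "AX")),  # ADD CX, AX  (RAW with the MOV above)
]
renamed = rename(program, ["AX", "BX", "CX"])
# renamed == [("p3", ("p1", "p0")), ("p4", ()), ("p5", ("p2", "p4"))]
```

After renaming, the MOV writes `p4` while the first ADD still reads `p0`, so the artificial WAR dependency is gone; the true RAW dependency of the last ADD on `p4` is preserved.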

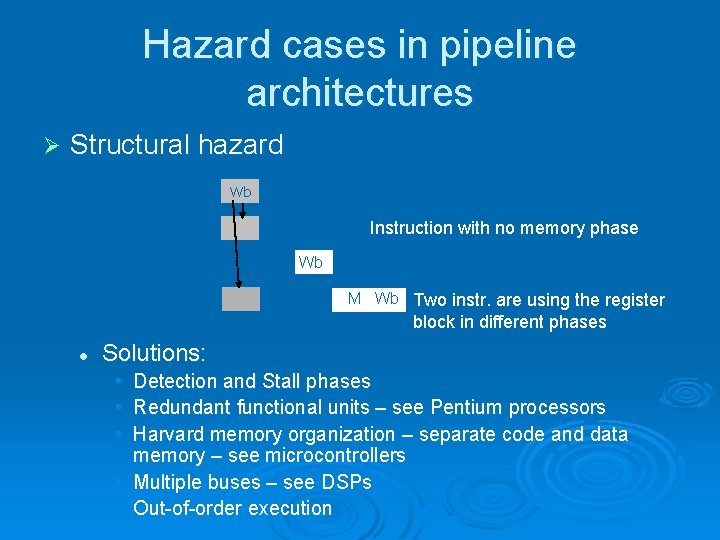

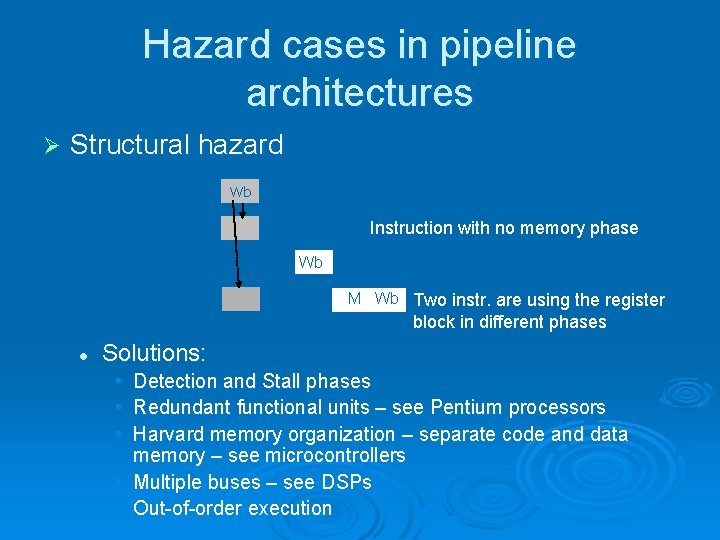

Hazard cases in pipeline architectures

- Structural hazard
  - Example: after an instruction with no memory phase, two instructions use the register block in different phases at the same time
  - Solutions:
    - Detection and stall phases
    - Redundant functional units (see Pentium processors)
    - Harvard memory organization: separate code and data memory (see microcontrollers)
    - Multiple buses (see DSPs)
    - Out-of-order execution

[Timing diagram: instructions passing through IF, ID, Ex, M, Wb, one of them without the M phase]

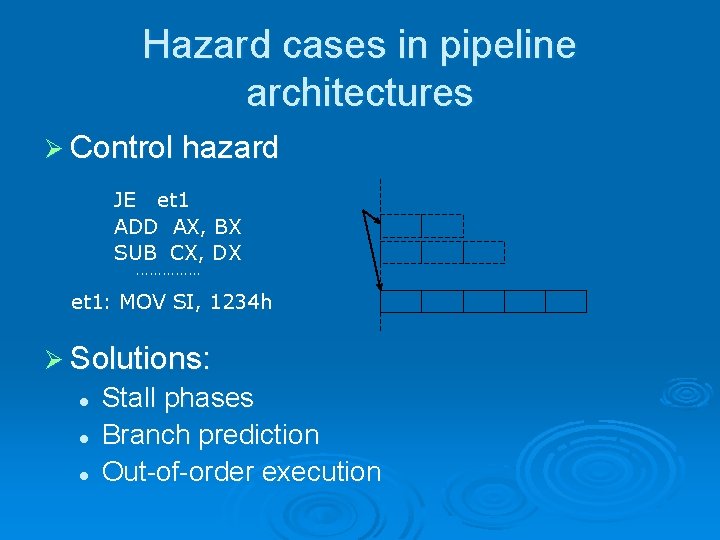

Hazard cases in pipeline architectures

- Control hazard
  - Example sequence:
    - JE et1
    - ADD AX, BX
    - SUB CX, DX
    - . . .
    - et1: MOV SI, 1234h
- Solutions:
  - Stall phases
  - Branch prediction
  - Out-of-order execution

[Timing diagram: the instructions after JE enter the pipeline before the jump is resolved]

Pipeline architecture: hazard cases

- Solving hazard cases:
  - Detect hazard cases and introduce "stall" phases
  - Rearrange instructions:
    - Reorder instructions to reduce the dependences between consecutive instructions
    - Methods:
      - Static scheduling: made before program execution; optimization made by the compiler or the user
      - Dynamic scheduling: made during program execution; optimization made by the processor (out-of-order execution)
  - Branch prediction techniques
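As one concrete instance of a branch prediction technique, here is a sketch of a 2-bit saturating counter, a widely used scheme that the slides do not name. The class and function names are illustrative, and the initial state is an arbitrary choice.

```python
class TwoBitPredictor:
    """States 0 and 1 predict 'not taken'; states 2 and 3 predict 'taken'."""

    def __init__(self, state=1):
        self.state = state

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        # Move one step towards the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def count_mispredictions(outcomes, predictor=None):
    """Replay a list of branch outcomes (True = taken) and count wrong guesses."""
    predictor = predictor or TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1
        predictor.update(taken)
    return misses


# A loop branch: taken 9 times, then not taken when the loop exits.
loop_outcomes = [True] * 9 + [False]
loop_misses = count_mispredictions(loop_outcomes)  # 2
```

With this starting state the predictor is wrong on the first iteration and on the loop exit, and right on the eight iterations in between.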

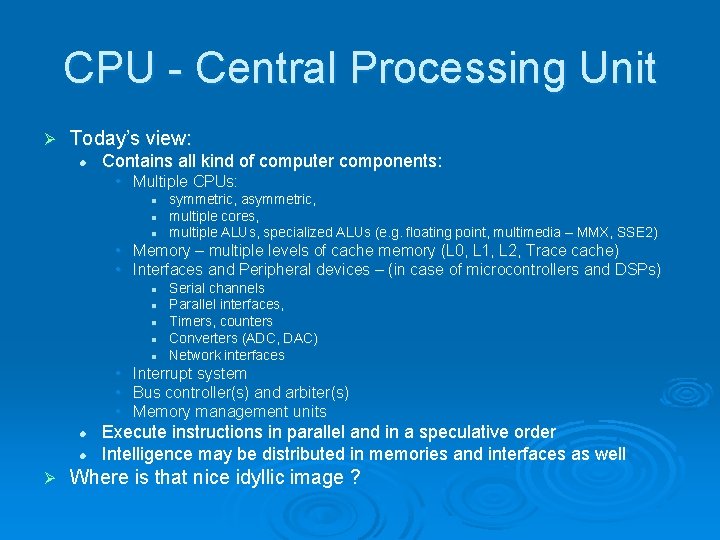

Static vs. dynamic scheduling

- Static scheduling:
  - The optimal order of instructions is established by the compiler, based on information about the structure of the pipeline
  - Advantage: it is done once and pays off every time the code is executed
  - Drawbacks: the compiler must know the structure of the hardware (e.g. pipeline stages, phases of every instruction); the compiler must be changed when the processor version changes
- Dynamic scheduling:
  - The hardware has the capacity to reorder instructions to avoid or reduce the effect of hazard cases
  - Advantages: the processor knows its own structure best; the optimization can be better matched to the hardware; some dependences are revealed only at run-time
  - Drawbacks: reordering decisions are made every time the code is executed; more complex hardware is needed
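The effect of static scheduling can be shown by counting stalls before and after a reordering that a compiler could make. This sketch assumes a simplified, hypothetical pipeline model in which an instruction costs one stall whenever it reads a register written by the instruction immediately before it; the `(destination, sources)` representation is also an assumption.

```python
def count_stalls(program):
    """Count adjacent producer/consumer pairs (one stall each in this model)."""
    stalls = 0
    for previous, current in zip(program, program[1:]):
        prev_dest, _ = previous
        _, cur_sources = current
        if prev_dest in cur_sources:
            stalls += 1
    return stalls


mov_ax = ("AX", ())            # MOV AX, 5
add_bx = ("BX", ("BX", "AX"))  # ADD BX, AX
sub_cx = ("CX", ("CX",))       # SUB CX, 5
mov_dx = ("DX", ("CX",))       # MOV DX, CX

original = [mov_ax, add_bx, sub_cx, mov_dx]
# ADD BX, AX and SUB CX, 5 are independent, so they may be swapped.
reordered = [mov_ax, sub_cx, add_bx, mov_dx]

stalls_before = count_stalls(original)   # 2
stalls_after = count_stalls(reordered)   # 0
```

The reordered sequence computes the same results, because the swap keeps each consumer after its producer, but no instruction directly follows the one it depends on.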