Structural Logistic Regression for Data Mining and Link Prediction

- Slides: 16

Structural Logistic Regression for Data Mining and Link Prediction
by Alexandrin Popescul and Lyle Ungar, University of Pennsylvania
presented by Mark Goadrich, 1/28/2003, for CS 838 Statistical Relational Learning

Outline
- Upgrading vs. Propositionalization
- Dataset: Citeseer Document Graph
- Document Classification
- Structural Logistic Regression
- Citation Prediction
- Results and Discussion

Upgrading vs. Propositionalization
How to use standard ML algorithms for relational tasks?
- Propositionalization
  - reduction of relational data to a feature vector
  - usually a join of all possible attributes
- Upgrading
  - adapting the algorithm to handle relational data
  - feature selection directly coupled with the algorithm
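To make the propositionalization route concrete, here is a minimal sketch that flattens two relations into one fixed-length feature row per document. The facts, the helper name `propositionalize`, and the choice of three count features are all assumptions made for illustration; they are not taken from the paper.

```python
from collections import defaultdict

# Toy relational facts (hypothetical), named after the deck's predicates.
cites = [("d1", "d2"), ("d1", "d3"), ("d2", "d3")]
author = [("d1", "smith"), ("d2", "smith"), ("d3", "jones")]

def propositionalize(docs, cites, author):
    """Flatten the relations into one feature row per document:
    [number of papers cited, number of times cited, number of authors]."""
    out_deg = defaultdict(int)
    in_deg = defaultdict(int)
    for src, dst in cites:
        out_deg[src] += 1
        in_deg[dst] += 1
    n_authors = defaultdict(int)
    for doc, _person in author:
        n_authors[doc] += 1
    return {d: [out_deg[d], in_deg[d], n_authors[d]] for d in docs}

table = propositionalize(["d1", "d2", "d3"], cites, author)
for doc, row in table.items():
    print(doc, row)
```

Once the table exists, any standard "flat" learner can consume it. The upgrading route instead interleaves feature generation with model fitting, so the table is never fully materialized.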

CiteSeer and DBLP
- Look at towell94knowledgebased
- http://citeseer.ist.psu.edu/
- http://www.pmbrowser.info/citeseer.html
- http://dblp.uni-trier.de/

Document Classification Task
- Select pair of journals or conferences for core documents
- Split into 50/50 train/test
- Include documents in the immediate citation neighborhood: cites(A, B)
- Remove all cites references to test data
- Generate background knowledge for word_count, title_word, author_of, and published_in (published_in only generated for linked documents)
- Compare between flat and relational learning

Background Knowledge
- published_in(Document, Venue)
- cites(Document, Document)
- author(Document, Person)
- word_count(Document, Word, Count)
- title_word(Document, Word)

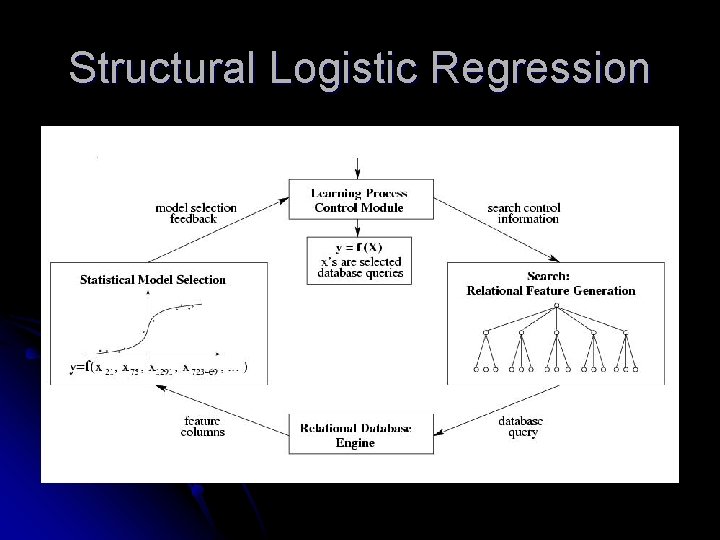

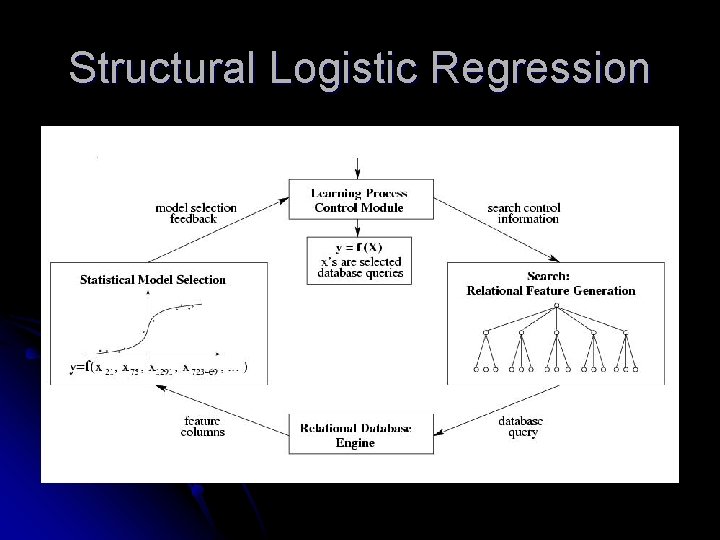

Structural Logistic Regression

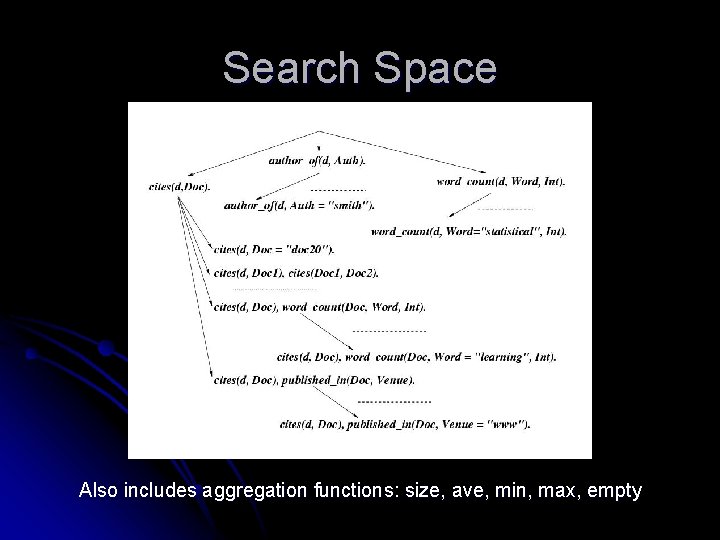

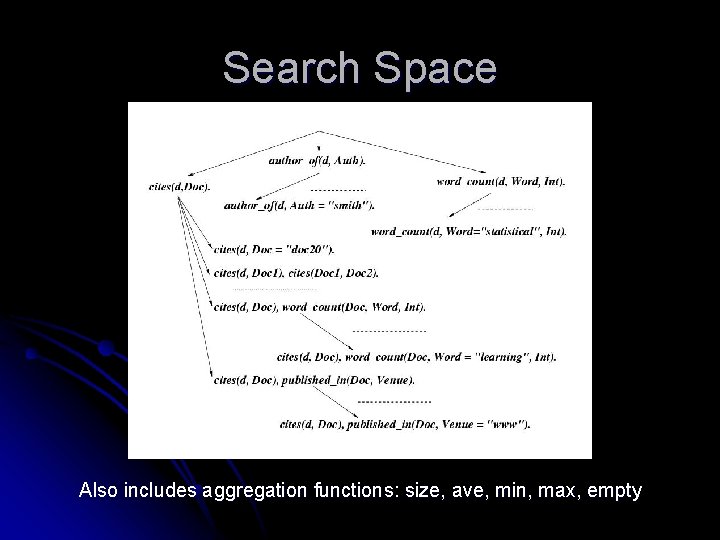

Search Space
- Also includes aggregation functions: size, ave, min, max, empty
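As a rough illustration of how the aggregation functions turn a variable-size query result into scalar features, the sketch below applies all five to a list of numbers. The function name `aggregate`, the example values, and the choice to return `None` for `ave`, `min`, and `max` on an empty result are assumptions, not details from the deck.

```python
def aggregate(values):
    """Summarize a multiset of query results with the five aggregates
    named on the slide: size, ave, min, max, empty."""
    vals = list(values)
    n = len(vals)
    return {
        "size": n,
        "empty": n == 0,
        # Assumption: numeric aggregates are undefined (None) on an empty result.
        "ave": sum(vals) / n if n else None,
        "min": min(vals) if n else None,
        "max": max(vals) if n else None,
    }

# Hypothetical query result: how often some word occurs in each
# of the three documents cited by a given document.
counts = [3, 0, 5]
feats = aggregate(counts)
print(feats)
```

Each aggregate yields one candidate feature, so a single relational query can contribute several columns to the regression.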

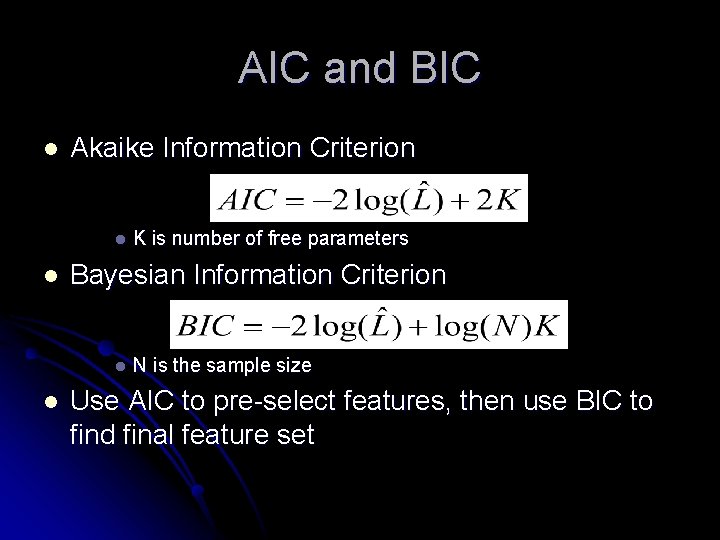

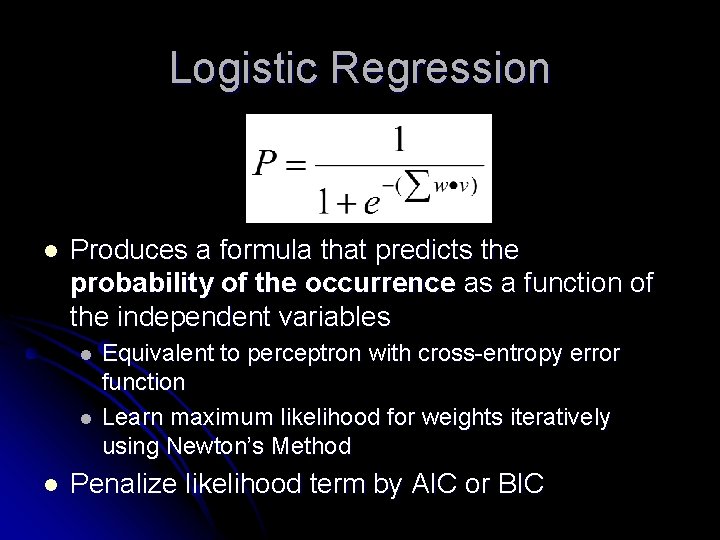

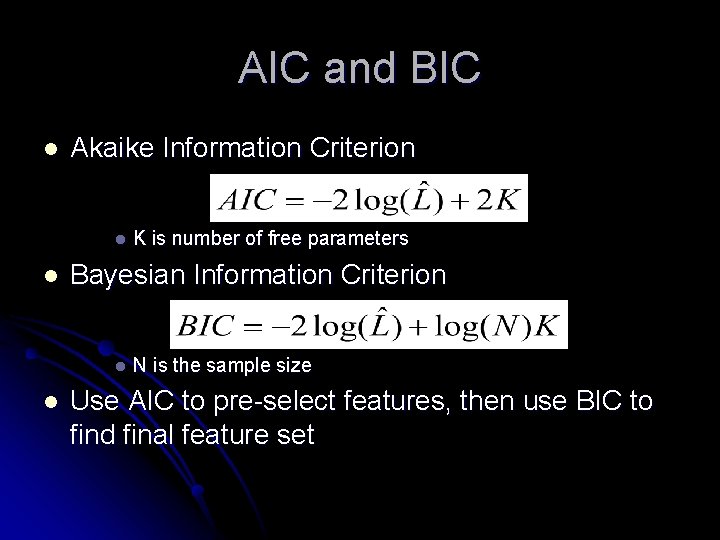

Logistic Regression
- Produces a formula that predicts the probability of the occurrence as a function of the independent variables
- Equivalent to perceptron with cross-entropy error function
- Learn maximum likelihood for weights iteratively using Newton’s Method
- Penalize likelihood term by AIC or BIC
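The Newton iteration mentioned above can be sketched for the smallest interesting case, one feature plus an intercept, where the 2x2 system is solved in closed form. This is a minimal pure-Python illustration on made-up data, not the authors' implementation; the function name and the data are assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_newton(xs, ys, iters=25):
    """Fit p(y=1|x) = sigmoid(w0 + w1*x) by Newton's method
    on the log-likelihood (one feature plus intercept)."""
    w0, w1 = 0.0, 0.0
    for _ in range(iters):
        g0 = g1 = 0.0           # gradient of the log-likelihood
        h00 = h01 = h11 = 0.0   # entries of the negative Hessian
        for x, y in zip(xs, ys):
            p = sigmoid(w0 + w1 * x)
            g0 += y - p
            g1 += (y - p) * x
            s = p * (1.0 - p)
            h00 += s
            h01 += s * x
            h11 += s * x * x
        det = h00 * h11 - h01 * h01
        if abs(det) < 1e-12:    # singular system: stop rather than divide
            break
        # Newton step: w <- w + (negative Hessian)^-1 * gradient
        w0 += (h11 * g0 - h01 * g1) / det
        w1 += (h00 * g1 - h01 * g0) / det
    return w0, w1

# Made-up, non-separable data so the maximum-likelihood weights are finite.
xs = [0, 1, 2, 3, 4, 5]
ys = [0, 0, 1, 0, 1, 1]
w0, w1 = fit_logistic_newton(xs, ys)
print(w0, w1, sigmoid(w0 + w1 * 5))
```

With many features the same update needs a general linear solve, and on perfectly separable data the weights diverge, which is one reason a penalty term is useful.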

AIC and BIC
- Akaike Information Criterion
- Bayesian Information Criterion
- K is number of free parameters
- N is the sample size
- Use AIC to pre-select features, then use BIC to find final feature set
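The formulas on this slide did not survive as text. For reference, the standard textbook definitions are given below, with $\hat{L}$ denoting the maximized likelihood of the fitted model (a symbol introduced here) and $K$ and $N$ as defined above. The slide may have used a different but equivalent sign or scaling convention.

```latex
\mathrm{AIC} = -2 \ln \hat{L} + 2K
\qquad
\mathrm{BIC} = -2 \ln \hat{L} + K \ln N
```

Lower values are better under this convention. Because $\ln N > 2$ once $N \ge 8$, BIC penalizes each extra parameter more heavily than AIC on any realistic sample, which fits the order described: the more permissive AIC pre-selects candidates and the stricter BIC picks the final set.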

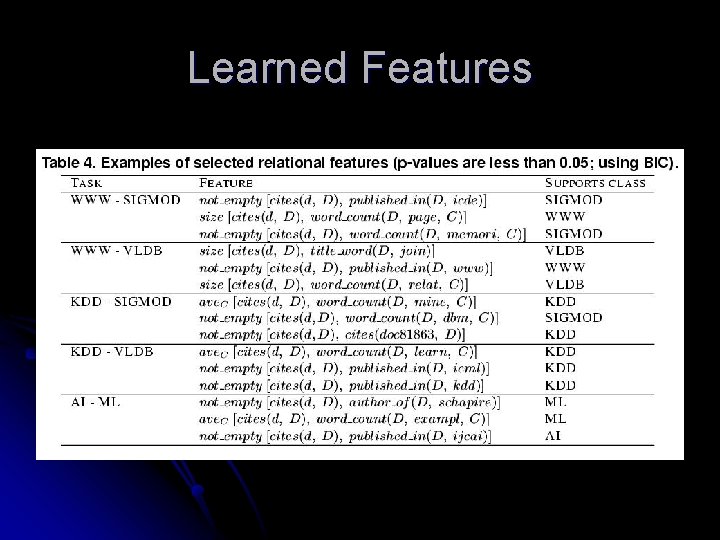

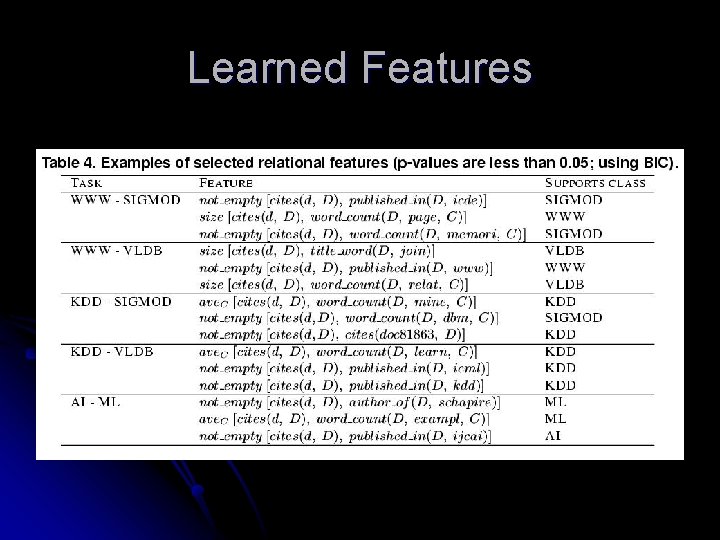

Learned Features

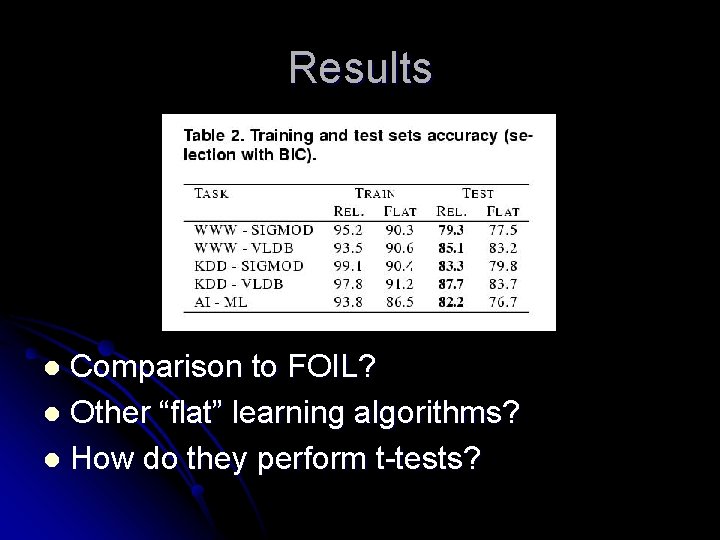

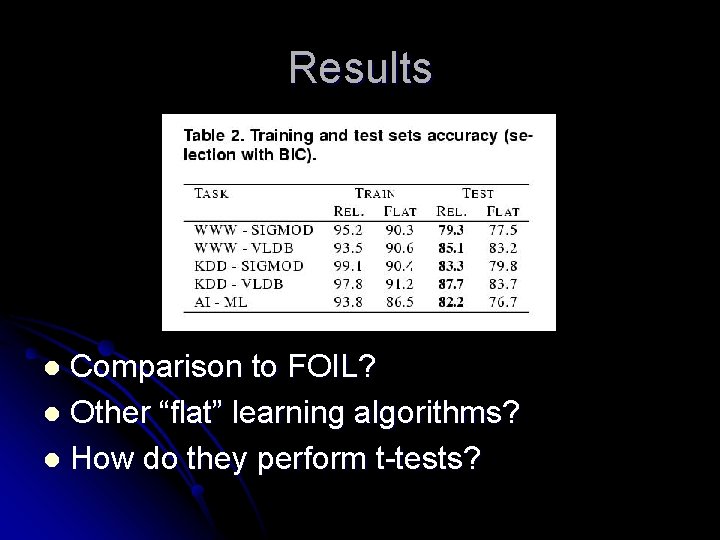

Results
- Comparison to FOIL?
- Other “flat” learning algorithms?
- How do they perform t-tests?

Objects vs. Relations
- Classification of relational objects
  - drugs: good vs. bad
  - web pages: professor, class, student, project
- Learning relations between objects
  - links between web pages
  - relations between phrases in sentences
- Size of the example set grows quadratically
- Most examples are of one class (negative)

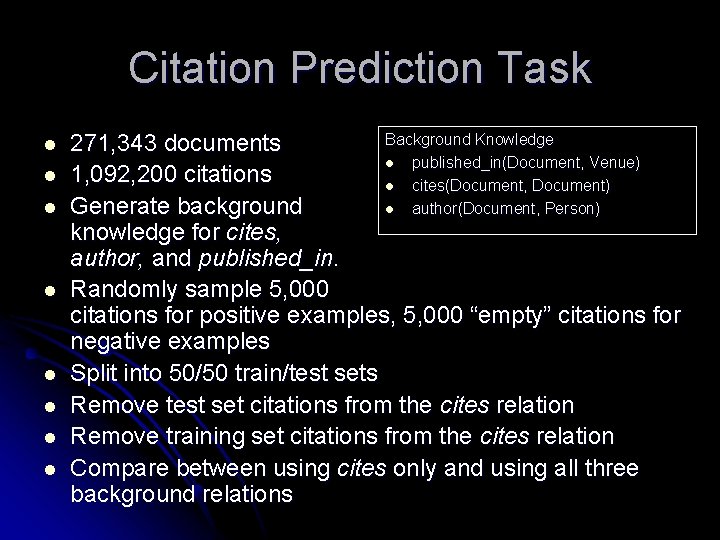

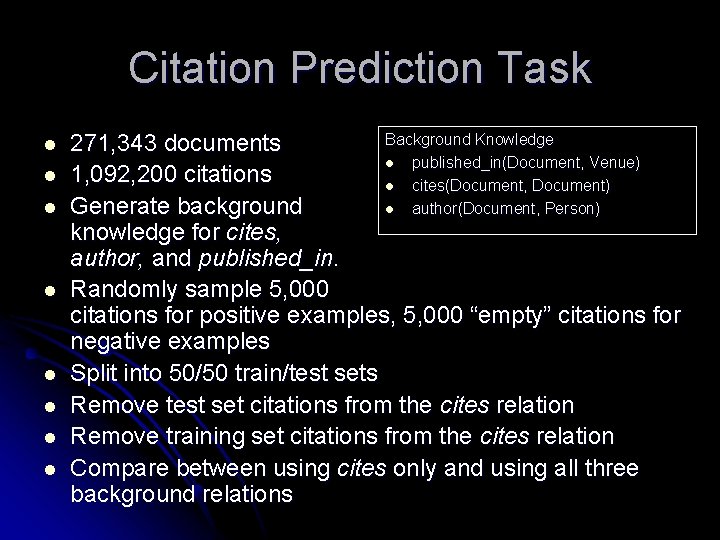

Citation Prediction Task
- 271,343 documents
- 1,092,200 citations
- Generate background knowledge for cites, author, and published_in
- Randomly sample 5,000 citations for positive examples, 5,000 “empty” citations for negative examples
- Split into 50/50 train/test sets
- Remove test set citations from the cites relation
- Remove training set citations from the cites relation
- Compare between using cites only and using all three background relations

Background Knowledge
- published_in(Document, Venue)
- cites(Document, Document)
- author(Document, Person)
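The sampling procedure above can be sketched on a toy graph as follows. The function name `build_link_dataset`, the 20-document graph, and the rejection-sampling loop for "empty" citations are assumptions for illustration; the deck does not say how the negatives were drawn.

```python
import random

def build_link_dataset(docs, cites, n_pos, n_neg, seed=0):
    """Sample positive and negative citation pairs, split them 50/50,
    and return the cites relation with the sampled positives removed."""
    rng = random.Random(seed)
    cite_set = set(cites)
    positives = rng.sample(sorted(cite_set), n_pos)
    negatives = set()
    while len(negatives) < n_neg:
        a, b = rng.sample(docs, 2)        # two distinct documents
        if (a, b) not in cite_set:        # an "empty" citation
            negatives.add((a, b))
    # Drop sampled citations from the background relation so a label
    # cannot be read straight off cites(d1, d2).
    background = cite_set - set(positives)
    examples = [(p, 1) for p in positives] + [(n, 0) for n in sorted(negatives)]
    rng.shuffle(examples)
    half = len(examples) // 2
    return examples[:half], examples[half:], background

# Toy graph: 20 documents, each citing the next two (40 citations).
docs = ["d%d" % i for i in range(20)]
cites = [(docs[i], docs[(i + 1) % 20]) for i in range(20)]
cites += [(docs[i], docs[(i + 2) % 20]) for i in range(20)]
train, test, background = build_link_dataset(docs, cites, n_pos=10, n_neg=10)
print(len(train), len(test), len(background))
```

Removing the sampled citations from the background relation is what keeps the task honest: features are computed only from links the model is not being asked to predict.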

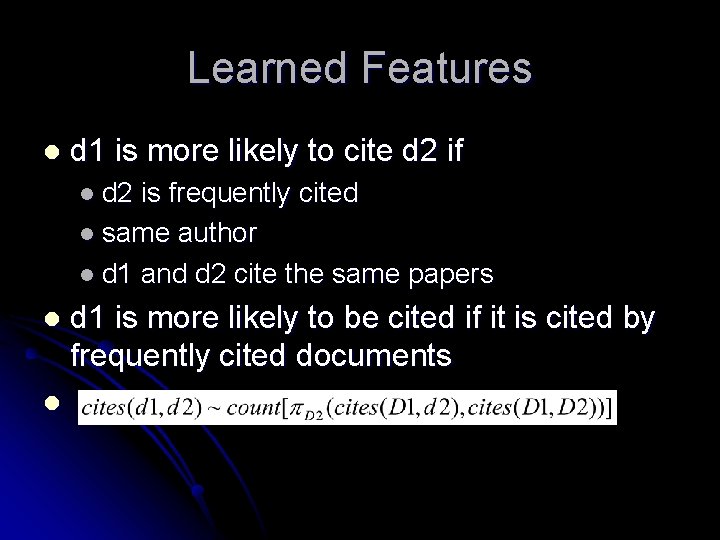

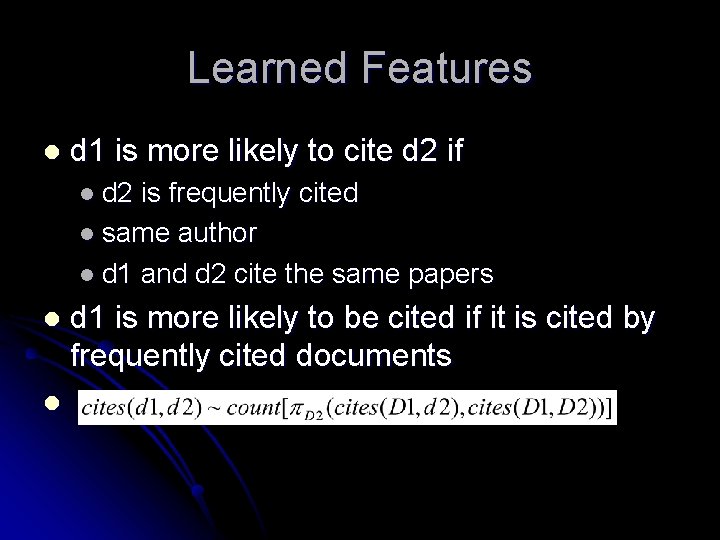

Learned Features
- d1 is more likely to cite d2 if
  - d2 is frequently cited
  - same author
  - d1 and d2 cite the same papers
- d1 is more likely to be cited if it is cited by frequently cited documents
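The three "d1 cites d2" conditions listed above correspond to simple counts over the background relations. The sketch below computes them for one candidate pair on a toy graph; the function name `link_features`, the feature names, and the data are hypothetical, and the learned model's actual feature definitions may differ.

```python
def link_features(d1, d2, cites, author):
    """Compute three illustrative features for the candidate link d1 -> d2."""
    cited_by_d1 = {b for a, b in cites if a == d1}
    cited_by_d2 = {b for a, b in cites if a == d2}
    authors_d1 = {p for d, p in author if d == d1}
    authors_d2 = {p for d, p in author if d == d2}
    return {
        # d2 is frequently cited: number of incoming citations of d2
        "d2_in_degree": sum(1 for _a, b in cites if b == d2),
        # same author: the two documents share at least one author
        "shared_author": len(authors_d1 & authors_d2) > 0,
        # d1 and d2 cite the same papers: size of the overlap
        "shared_citations": len(cited_by_d1 & cited_by_d2),
    }

# Toy background knowledge (hypothetical).
cites = [("a", "c"), ("b", "c"), ("a", "d"), ("b", "d"), ("e", "b")]
author = [("a", "smith"), ("b", "smith"), ("c", "jones")]
f = link_features("a", "b", cites, author)
print(f)
```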

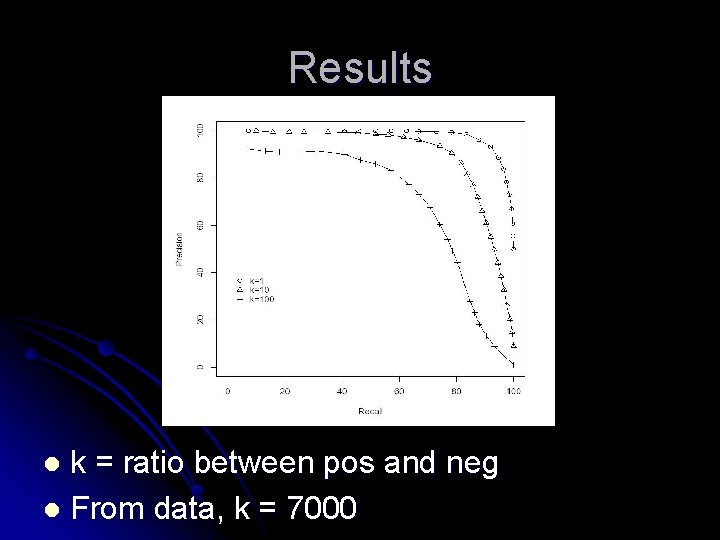

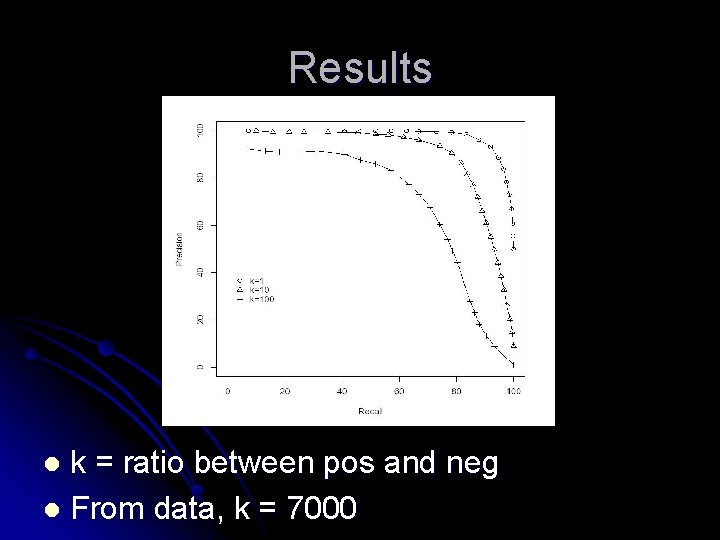

Results
- k = ratio between pos and neg
- From data, k = 7000

Discussion and Future Work
- Other tasks
  - web, social networks, biological interactions
- Create features from clusters of data
- Others???