
String Matching Algorithm Overview & Analysis By cyclone @ NSlab, RIIT Sep. 6 2008

Structure
- Algorithm overview
- Performance experiments
- Solution and future work
- Bibliography and resources

Algorithm Overview

Definition
- Given an alphabet Σ, a pattern P of length m, and a text T of length n, decide whether P occurs in T, or find the position(s) where P matches a substring of T; usually m << n.
- Depending on the form of the pattern P:
  - string: exact string matching
  - string with errors: approximate string matching
  - regular expression: regular expression matching
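As a baseline for the exact-matching case of the definition above, here is a minimal brute-force sketch in Python. The function name `find_all` is an illustrative choice, not something from the slides; it checks every alignment and therefore takes O(mn) time in the worst case.

```python
def find_all(pattern, text):
    """Return every position where pattern matches a substring of text."""
    m, n = len(pattern), len(text)
    # Try each of the n - m + 1 possible alignments of the pattern.
    return [i for i in range(n - m + 1) if text[i:i + m] == pattern]


print(find_all("ATA", "ATATATA"))
```

The algorithms in the rest of the deck can be read as ways of avoiding most of these alignments or comparisons.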

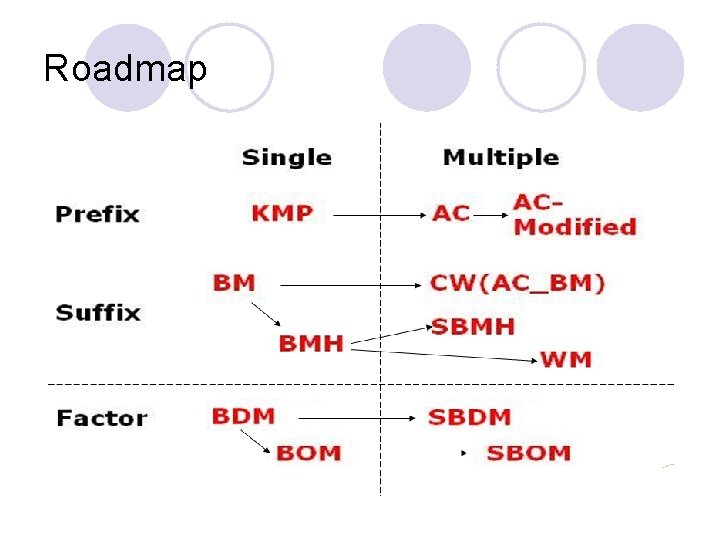

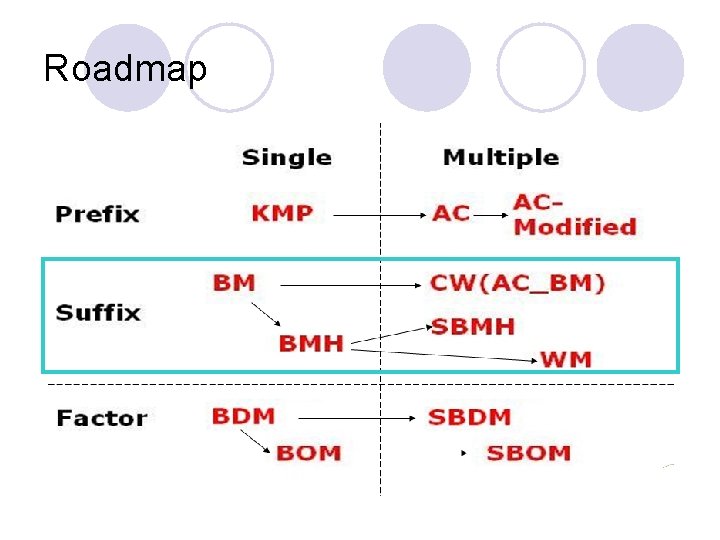

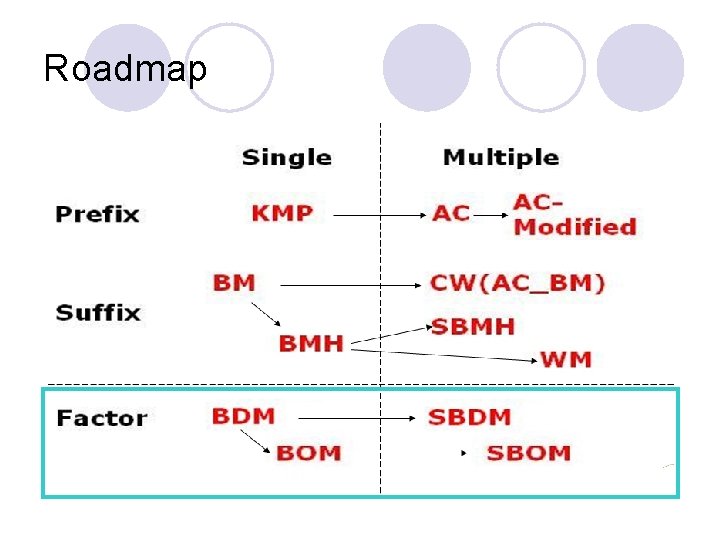

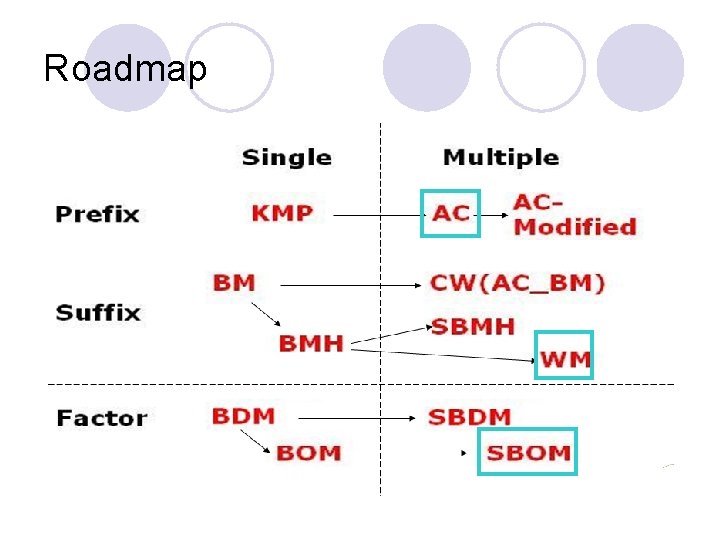

Categories
- Category 1
  - Single string matching algorithms
  - Multiple string matching algorithms
- Category 2
  - Prefix based algorithms
  - Suffix based algorithms
  - Factor based algorithms
- Category 3
  - Automaton based algorithms
  - Trie based algorithms
  - Table based algorithms
- ……

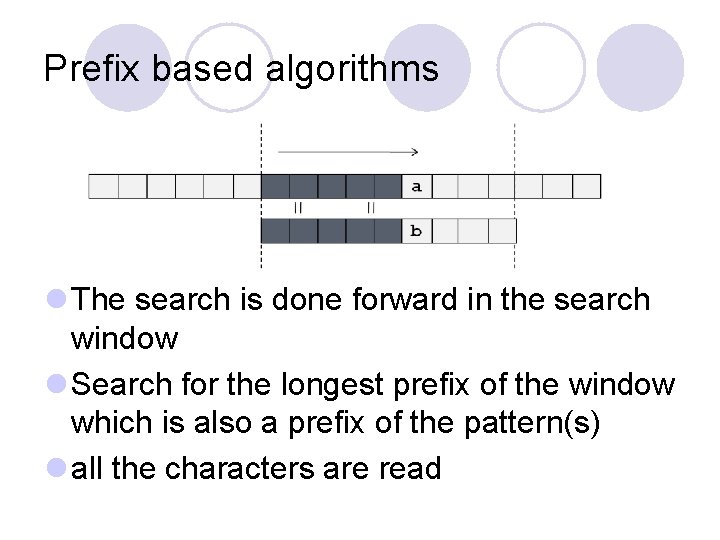

Prefix based algorithms
- The search proceeds forward through the search window
- Search for the longest prefix of the window that is also a prefix of the pattern(s)
- All the characters are read

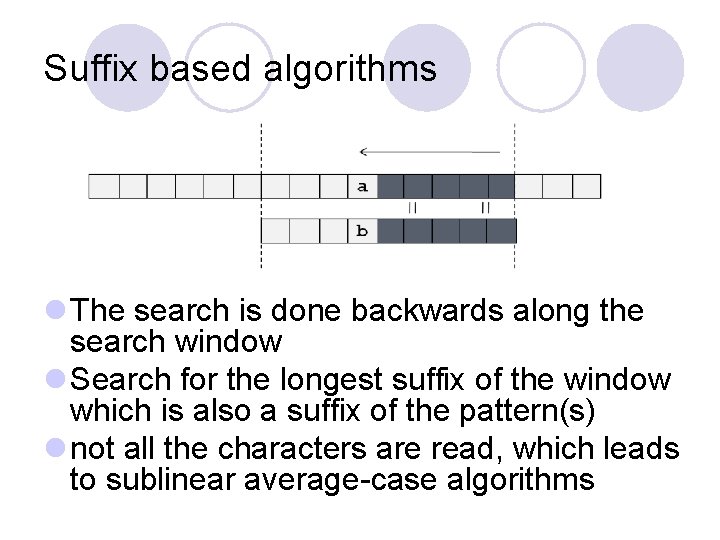

Suffix based algorithms
- The search proceeds backwards through the search window
- Search for the longest suffix of the window that is also a suffix of the pattern(s)
- Not all the characters are read, which leads to algorithms that are sublinear on average

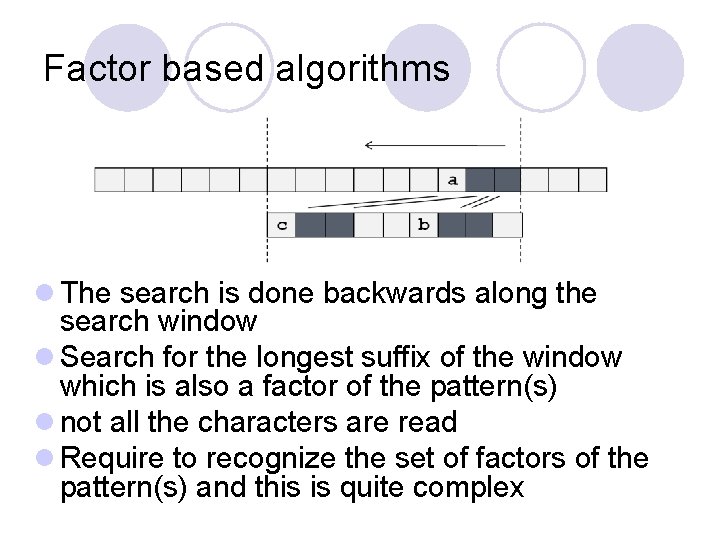

Factor based algorithms
- The search proceeds backwards through the search window
- Search for the longest suffix of the window that is also a factor of the pattern(s)
- Not all the characters are read
- Requires recognizing the set of factors of the pattern(s), which is quite complex

Roadmap

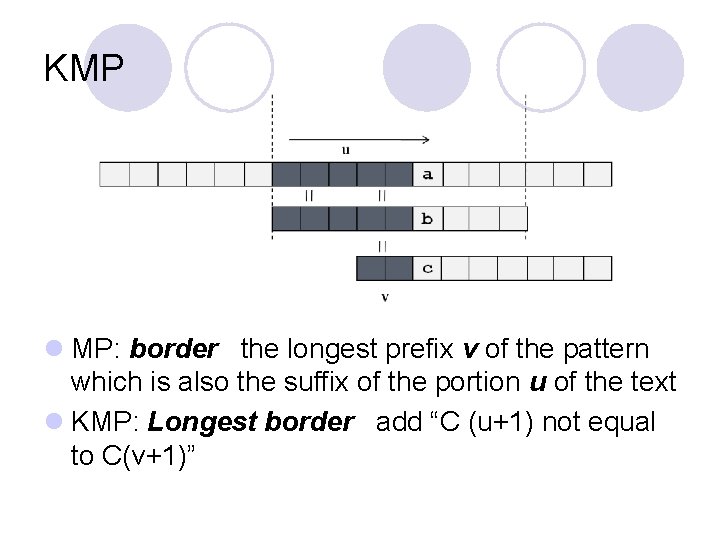

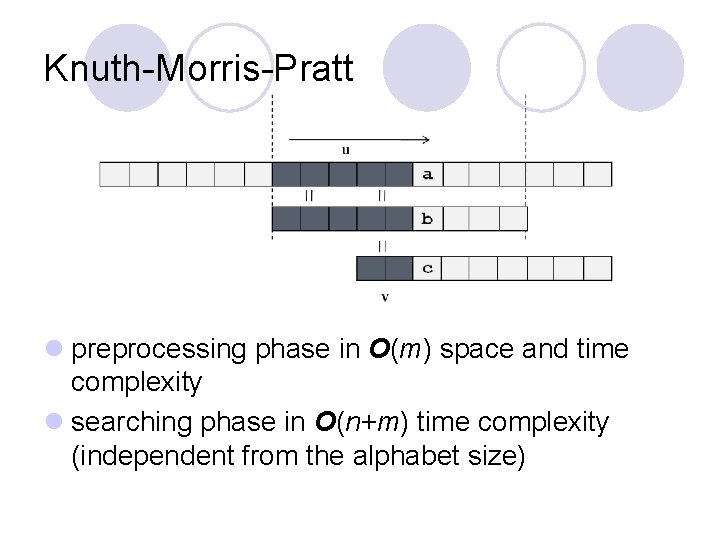

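KMP
- MP: uses the border, the longest prefix v of the pattern that is also a suffix of the matched portion u of the text
- KMP: uses the longest border that also satisfies "C(u+1) not equal to C(v+1)", i.e. the character following u must differ from the character following v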

Knuth-Morris-Pratt
- Preprocessing phase: O(m) space and time complexity
- Searching phase: O(n+m) time complexity (independent of the alphabet size)
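The border idea can be sketched in a few lines of Python. This is a minimal sketch of the MP variant (plain longest borders, without the extra KMP mismatch condition), and the names `border_table` and `kmp_search` are illustrative, not from the slides.

```python
def border_table(pattern):
    """border[i] = length of the longest proper border of pattern[:i + 1]."""
    border = [0] * len(pattern)
    k = 0  # length of the border currently being extended
    for i in range(1, len(pattern)):
        while k and pattern[i] != pattern[k]:
            k = border[k - 1]  # fall back to the next shorter border
        if pattern[i] == pattern[k]:
            k += 1
        border[i] = k
    return border


def kmp_search(pattern, text):
    """Return the start positions of all occurrences of pattern in text."""
    if not pattern:
        return []
    border = border_table(pattern)
    hits, k = [], 0  # k = number of pattern characters currently matched
    for i, ch in enumerate(text):
        while k and ch != pattern[k]:
            k = border[k - 1]
        if ch == pattern[k]:
            k += 1
        if k == len(pattern):
            hits.append(i - k + 1)
            k = border[k - 1]  # keep going to find overlapping occurrences
    return hits


print(border_table("ATATATA"))
print(kmp_search("ATA", "ATATATA"))
```

Each text character is read once and the fallback loop only undoes earlier progress, which is where the linear search bound comes from.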

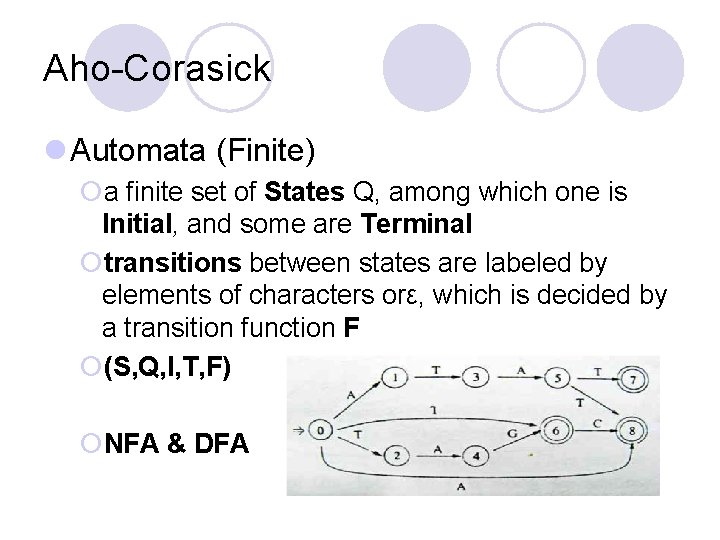

Aho-Corasick
- (Finite) automata
  - A finite set of states Q, among which one is initial and some are terminal
  - Transitions between states are labeled by characters of Σ or by ε, as determined by a transition function F
  - (Σ, Q, I, T, F)
  - NFA & DFA

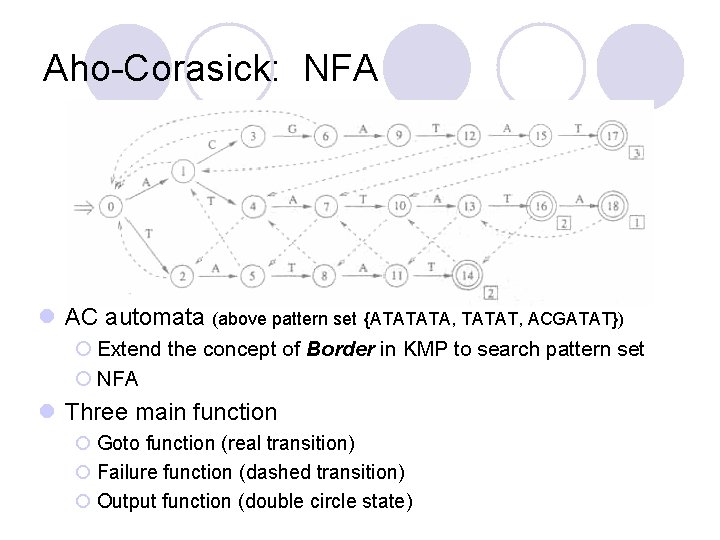

Aho-Corasick: NFA
- AC automaton (pictured above for the pattern set {ATATATA, TATAT, ACGATAT})
  - Extends the concept of the border in KMP to a pattern set
  - NFA
- Three main functions
  - Goto function (solid transitions)
  - Failure function (dashed transitions)
  - Output function (double-circled states)

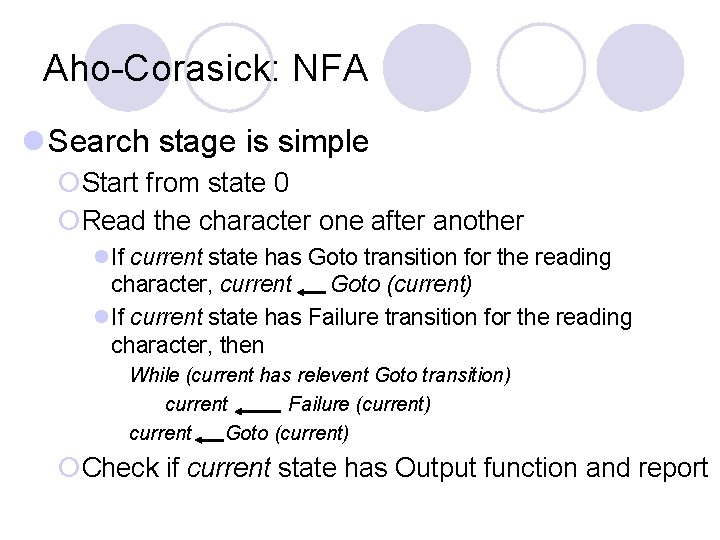

Aho-Corasick: NFA
- The search stage is simple
  - Start from state 0
  - Read the characters one after another
    - If the current state has a Goto transition for the character read: current ← Goto(current)
    - Otherwise, follow the Failure transitions: while current has no relevant Goto transition, current ← Failure(current); then current ← Goto(current)
  - Check whether the current state has an Output and report it
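The Goto, Failure and Output functions and the search loop above can be sketched as follows. This is a minimal Python sketch, not the implementation measured later in the deck; the names `build_ac` and `ac_search` and the list-of-dicts representation are assumptions made for illustration.

```python
from collections import deque


def build_ac(patterns):
    """Build the Goto (trie), Failure and Output functions."""
    goto, fail, out = [{}], [0], [[]]
    for p in patterns:
        s = 0
        for ch in p:
            if ch not in goto[s]:
                goto.append({})
                fail.append(0)
                out.append([])
                goto[s][ch] = len(goto) - 1
            s = goto[s][ch]
        out[s].append(p)
    # Breadth-first traversal: failure links of shallower states come first.
    queue = deque(goto[0].values())
    while queue:
        s = queue.popleft()
        for ch, t in goto[s].items():
            queue.append(t)
            f = fail[s]
            while f and ch not in goto[f]:
                f = fail[f]
            fail[t] = goto[f].get(ch, 0)
            out[t] = out[t] + out[fail[t]]  # inherit outputs along the failure link
    return goto, fail, out


def ac_search(text, patterns):
    """Return (start, pattern) for every occurrence of every pattern."""
    goto, fail, out = build_ac(patterns)
    s, hits = 0, []
    for i, ch in enumerate(text):
        while s and ch not in goto[s]:
            s = fail[s]  # follow failure transitions
        s = goto[s].get(ch, 0)  # state 0 loops on unknown characters
        for p in out[s]:
            hits.append((i - len(p) + 1, p))
    return hits


print(ac_search("ACGATATATA", ["ATATATA", "TATAT", "ACGATAT"]))
```

The example reuses the pattern set from the slide; matches are reported in the order in which their last character is read.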

Aho-Corasick: DFA
- Preprocessing: conversion from NFA to DFA
  - Traverse the NFA and precompute all failure paths
  - Every character read then finds a Goto transition
- Search
  - No need to travel back along failure paths
- Trade-off between storage and search speed
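One way to picture the NFA-to-DFA conversion is to fill in a complete transition row for every state, so that the search never follows a failure link. The sketch below is a hedged illustration in Python: `build_ac_dfa`, `dfa_search` and the explicit `alphabet` parameter are assumed names, and all pattern characters are assumed to belong to `alphabet`.

```python
from collections import deque


def build_ac_dfa(patterns, alphabet):
    """Return (delta, out): a full transition table and per-state outputs."""
    trie, out = [{}], [set()]
    for p in patterns:
        s = 0
        for ch in p:
            if ch not in trie[s]:
                trie.append({})
                out.append(set())
                trie[s][ch] = len(trie) - 1
            s = trie[s][ch]
        out[s].add(p)
    delta = [dict() for _ in trie]
    fail = [0] * len(trie)
    for ch in alphabet:
        delta[0][ch] = trie[0].get(ch, 0)
    queue = deque(trie[0].values())
    while queue:
        s = queue.popleft()
        out[s] |= out[fail[s]]
        for ch in alphabet:
            if ch in trie[s]:
                t = trie[s][ch]
                fail[t] = delta[fail[s]][ch]
                delta[s][ch] = t
                queue.append(t)
            else:
                # Precomputed failure path: copy the target of the fallback state.
                delta[s][ch] = delta[fail[s]][ch]
    return delta, out


def dfa_search(text, delta, out):
    """One table lookup per character; no failure links are followed."""
    s, hits = 0, []
    for i, ch in enumerate(text):
        s = delta[s].get(ch, 0)  # characters outside the alphabet reset to state 0
        for p in out[s]:
            hits.append((i - len(p) + 1, p))
    return hits


delta, out = build_ac_dfa(["ATATATA", "TATAT", "ACGATAT"], "ACGT")
print(sorted(dfa_search("ACGATATATA", delta, out)))
```

Every state now stores one entry per alphabet character, which is the storage side of the trade-off: with an alphabet of size 256 the table is far larger than the trie it came from.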

Aho-Corasick
- Pro:
  - Searching time complexity is O(n) (independent of the pattern set size)
- Cons:
  - As the pattern set grows, the memory needed increases drastically
  - Cache behavior and memory access time then compromise the time performance

AC-Modified
- AC-sparse and AC-banded
  - Sparse vector storage method for transitions
- State and path compression by Tuck
- Character index AC by Jianming
- Others……

Boyer-Moore
- How to safely shift the search window without missing a possible match
- Two major heuristics
  - Good suffix (two shift values: s1, s2)
  - Bad character (one shift value: s3)
  - In the searching stage, the shift value is calculated as max(min(s1, s2), s3)

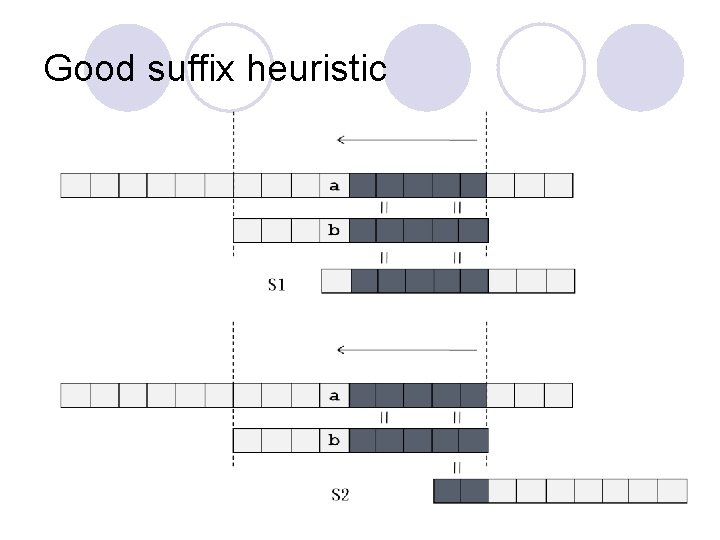

Good suffix heuristic

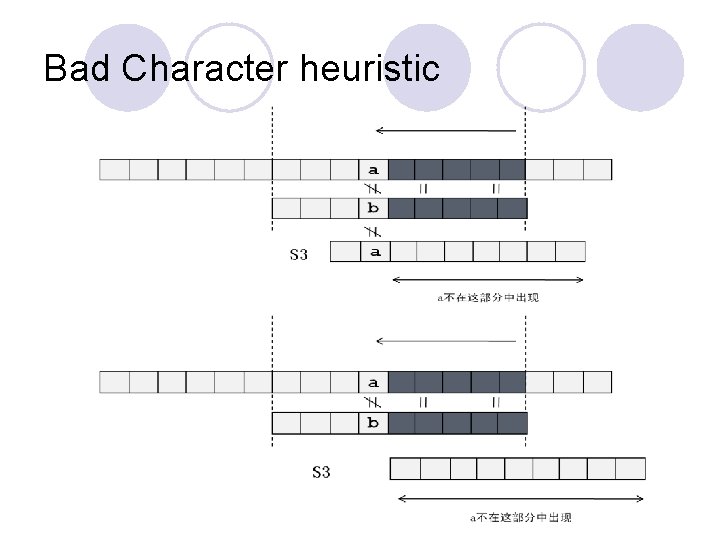

Bad Character heuristic

Boyer-Moore
- Excellent time performance
  - Worst case: O(mn)
  - Average case: sublinear
  - Best case: O(n/m)
    - Ex: $a^{m-1}b$ in $b^n$
    - Fast when the alphabet size is large, common in NIMS
- Cons: calculating the shift value in both heuristics is somewhat complex

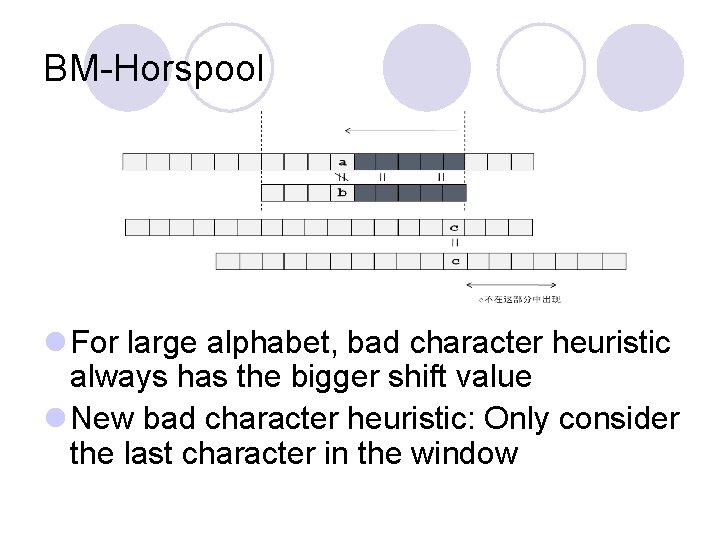

BM-Horspool
- For a large alphabet, the bad character heuristic usually gives the larger shift value
- New bad character heuristic: only consider the last character in the window
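The simplified heuristic fits in a short Python sketch. The name `horspool_search` and the dictionary-based shift table are illustrative assumptions; the shift for a character is the distance from its rightmost occurrence in the pattern (excluding the last position) to the end of the pattern, or m if it does not occur.

```python
def horspool_search(pattern, text):
    """Return the start positions of all occurrences of pattern in text."""
    m, n = len(pattern), len(text)
    if m == 0 or m > n:
        return []
    # Later occurrences overwrite earlier ones, so the rightmost one wins.
    shift = {ch: m - 1 - i for i, ch in enumerate(pattern[:-1])}
    hits, pos = [], 0
    while pos <= n - m:
        if text[pos:pos + m] == pattern:
            hits.append(pos)
        # Only the last character of the window decides the shift.
        pos += shift.get(text[pos + m - 1], m)
    return hits


print(horspool_search("ATA", "ATATATA"))
```

When the last window character does not occur in the pattern at all, the window jumps by the full pattern length, which is the source of the O(n/m) best case.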

Roadmap

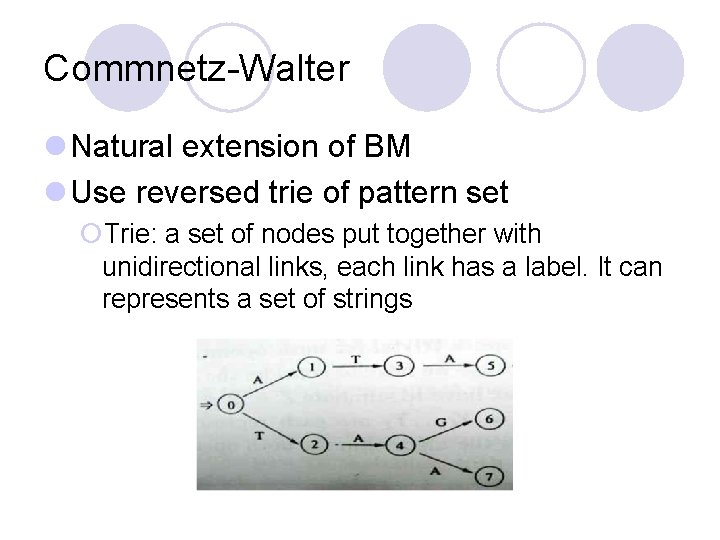

Commentz-Walter
- Natural extension of BM
- Uses a reversed trie of the pattern set
  - Trie: a set of nodes connected by unidirectional links, each carrying a label; it can represent a set of strings

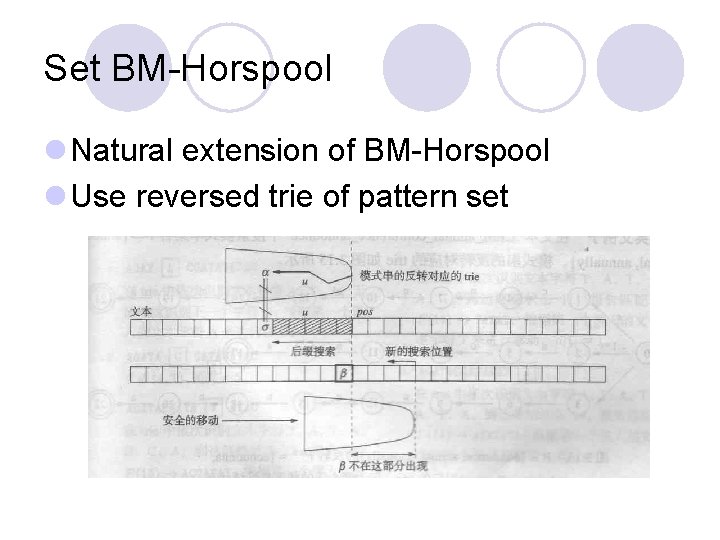

Set BM-Horspool
- Natural extension of BM-Horspool
- Uses a reversed trie of the pattern set
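A reversed trie combined with a Horspool-style shift can be sketched as below. This is a minimal Python sketch under stated assumptions: `set_horspool_search` is an illustrative name, patterns are assumed non-empty and distinct, and the shift is capped at lmin, the length of the shortest pattern.

```python
def set_horspool_search(patterns, text):
    """Return (start, pattern) for every occurrence of every pattern."""
    lmin = min(len(p) for p in patterns)
    # Reversed trie: each pattern is inserted from its last character.
    trie, terminal = [{}], [None]
    for p in patterns:
        s = 0
        for ch in reversed(p):
            if ch not in trie[s]:
                trie.append({})
                terminal.append(None)
                trie[s][ch] = len(trie) - 1
            s = trie[s][ch]
        terminal[s] = p
    # shift[c]: smallest distance (at least 1) from an occurrence of c to the
    # end of some pattern, capped at lmin.
    shift = {}
    for p in patterns:
        for k in range(1, len(p)):
            ch = p[len(p) - 1 - k]
            shift[ch] = min(shift.get(ch, lmin), k)
    hits, pos = [], lmin - 1  # pos = index of the last character of the window
    while pos < len(text):
        s, j = 0, pos
        while j >= 0 and text[j] in trie[s]:  # read backwards along the trie
            s = trie[s][text[j]]
            if terminal[s] is not None:
                hits.append((j, terminal[s]))
            j -= 1
        pos += shift.get(text[pos], lmin)
    return hits


print(set_horspool_search(["announce", "annual", "annually"],
                          "CPM annual conference announce"))
```

With many patterns almost every character occurs near the end of some pattern, so the shifts shrink toward 1; this is the weakness that the Wu-Manber slides address.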

Wu-Manber
- AC_BM is complex in its shift value calculation
- SBMH performs poorly when the pattern set is large
  - The probability that a given character appears in some pattern is high, so the average shift value is comparatively small
- So WM extends the "bad character" of SBMH to a "bad character block"

Wu-Manber
- Uses a hash table called SHIFT to store the shift values of character blocks
- Uses a HASH table to link the patterns that have the same last character block
- Uses a PREFIX table to discriminate among the patterns linked to the same HASH entry
- The SHIFT table and the HASH table share the same hash function

Wu-Manber
- SHIFT
  - Block: Bl; size of block: B; hash function: h()
  - Minimum pattern length: lmin
  - The SHIFT(j) entry stores the minimum shift value over all blocks with h(Bl) = j
  - The shift value of a block is calculated as follows:
    - If Bl does not appear in any pattern, its shift value is lmin-B+1
    - If Bl appears in some patterns, take its rightmost occurrence and let j be the offset of Bl in that pattern; its shift value is lmin-B-j
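The shift rule can be checked on a small example. The Python sketch below makes two simplifying assumptions that are not in the slides: a dictionary keyed by the block itself stands in for the hash function h(), and only the first lmin characters of each pattern are scanned, with j taken as the 0-based offset of the block. The name `wm_shift_table` is illustrative.

```python
def wm_shift_table(patterns, B=2):
    """Return (shift, default): per-block shifts and the shift for unseen blocks."""
    lmin = min(len(p) for p in patterns)
    default = lmin - B + 1  # block does not appear in any pattern
    shift = {}
    for p in patterns:
        for j in range(lmin - B + 1):
            block = p[j:j + B]
            # The rightmost occurrence gives the smallest value lmin - B - j.
            shift[block] = min(shift.get(block, default), lmin - B - j)
    return shift, default


shift, default = wm_shift_table(["baa", "caa", "daa"], B=2)
print(shift, default)
```

A block that ends a pattern prefix of length lmin gets shift 0, which is the case that triggers verification during the search.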

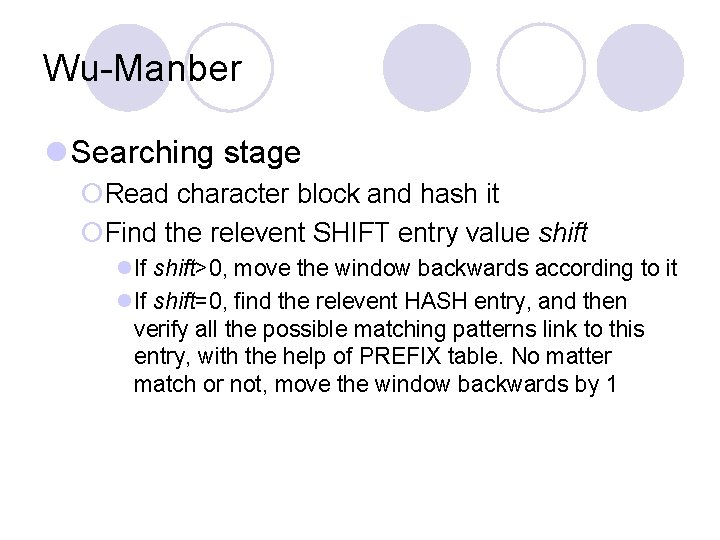

Wu-Manber
- Searching stage
  - Read a character block and hash it
  - Look up the relevant SHIFT entry to get the value shift
    - If shift > 0, advance the window by shift
    - If shift = 0, find the relevant HASH entry and verify all the candidate patterns linked to it, with the help of the PREFIX table; whether or not there is a match, advance the window by 1
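The searching stage can be sketched end to end in Python. As assumptions for illustration only: dictionaries keyed by the block replace the hashed SHIFT and HASH tables, the PREFIX table is reduced to storing each pattern's first B characters next to it, every pattern is assumed to be at least B characters long, and `wm_search` is a made-up name.

```python
def wm_search(patterns, text, B=2):
    """Return (start, pattern) for every occurrence of every pattern."""
    lmin = min(len(p) for p in patterns)
    default = lmin - B + 1
    shift, bucket = {}, {}
    for p in patterns:
        head = p[:lmin]  # only the first lmin characters drive the tables
        for j in range(lmin - B + 1):
            block = head[j:j + B]
            shift[block] = min(shift.get(block, default), lmin - B - j)
        # HASH entry: patterns sharing the last block of their lmin-prefix.
        bucket.setdefault(head[-B:], []).append((p[:B], p))
    hits, pos = [], lmin - 1  # pos = index of the last character of the window
    while pos < len(text):
        block = text[pos - B + 1:pos + 1]
        s = shift.get(block, default)
        if s > 0:
            pos += s
            continue
        start = pos - lmin + 1
        for prefix, p in bucket.get(block, []):
            # Cheap prefix check first, full verification only if it passes.
            if text[start:start + B] == prefix and text.startswith(p, start):
                hits.append((start, p))
        pos += 1
    return hits


print(wm_search(["announce", "annual"], "an annual announcement"))
```

On the text "aaaaaa" with the patterns {baa, caa, daa}, every window has shift 0 and three candidates to verify, which illustrates how the worst case arises.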

Wu-Manber
- Pros: excellent average time performance
  - Hash function
  - Avoids unnecessary character comparisons
- Cons:
  - Bad worst-case performance
    - Ex: {baa, caa, daa} in $a^n$
  - The shift value is limited by lmin

Roadmap

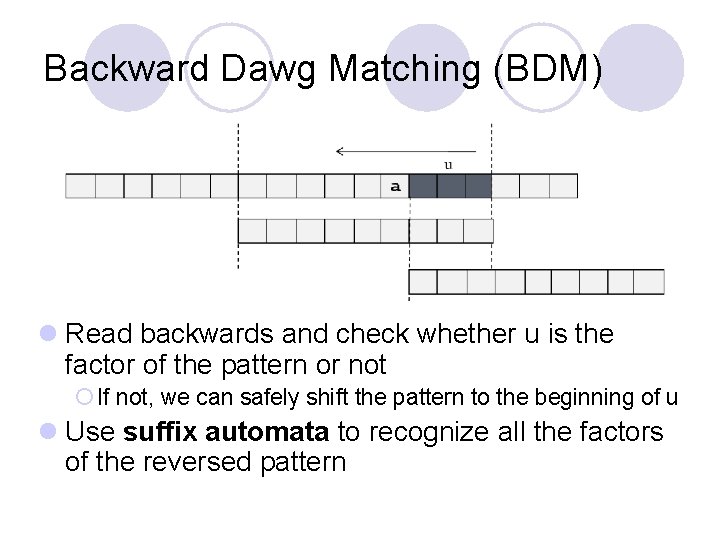

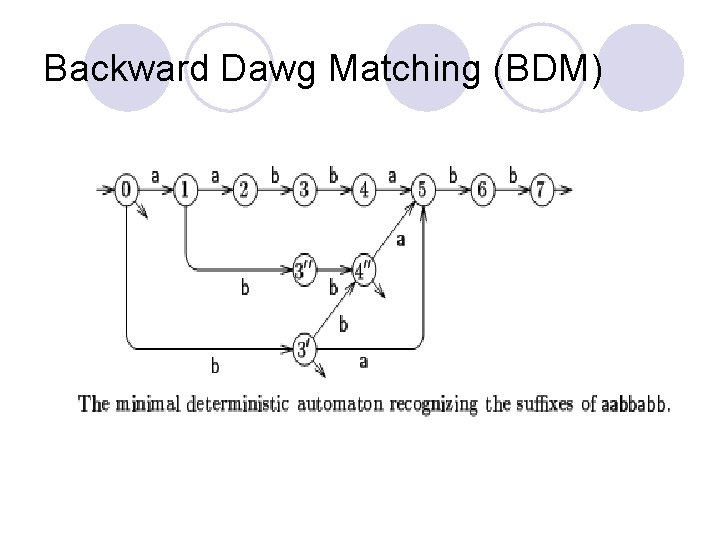

Backward Dawg Matching (BDM)
- Read the window backwards and check whether the string u read so far is a factor of the pattern
  - If not, we can safely shift the pattern to the beginning of u
- Use a suffix automaton to recognize all the factors of the reversed pattern

Backward Dawg Matching (BDM)

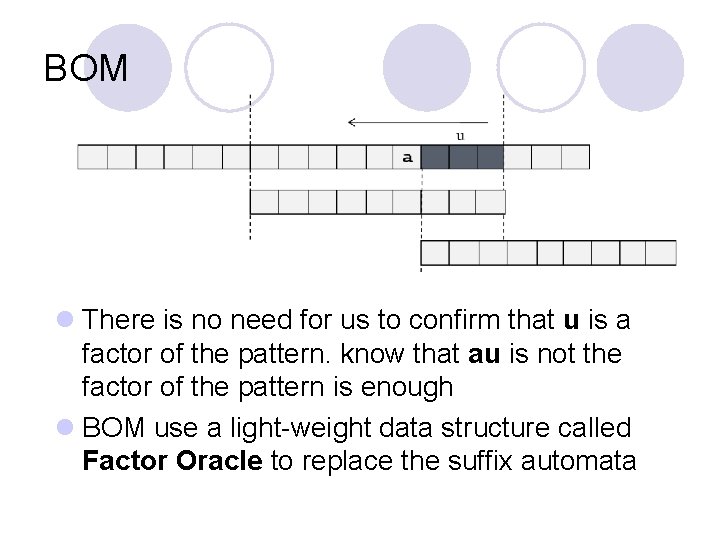

BOM
- There is no need to confirm that u is a factor of the pattern; knowing that au is not a factor of the pattern is enough
- BOM uses a lightweight data structure called the Factor Oracle in place of the suffix automaton

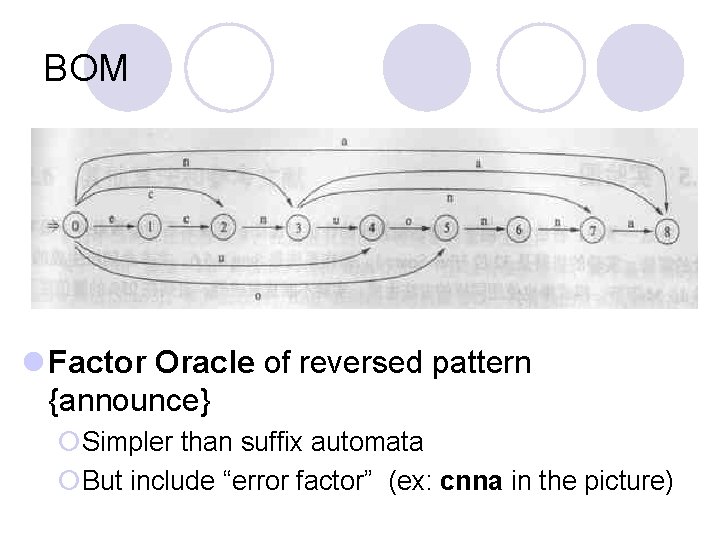

BOM
- Factor Oracle of the reversed pattern {announce}
  - Simpler than a suffix automaton
  - But it also accepts "error factors", strings that are not factors of the pattern (ex: cnna in the picture)
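A factor oracle and the BOM search loop can be sketched compactly. The Python below is a hedged sketch, not the code behind the measurements: `factor_oracle` and `bom_search` are illustrative names, and the construction follows the usual online scheme with a supply link per state.

```python
def factor_oracle(p):
    """Return the transitions of the factor oracle of p (states 0..len(p))."""
    m = len(p)
    trans = [dict() for _ in range(m + 1)]
    supply = [-1] * (m + 1)
    for i, c in enumerate(p):
        trans[i][c] = i + 1  # internal transition along the word
        k = supply[i]
        while k > -1 and c not in trans[k]:
            trans[k][c] = i + 1  # external transition
            k = supply[k]
        supply[i + 1] = 0 if k == -1 else trans[k][c]
    return trans


def bom_search(pattern, text):
    """Return the start positions of all occurrences of pattern in text."""
    m, n = len(pattern), len(text)
    if m == 0:
        return []
    trans = factor_oracle(pattern[::-1])  # oracle of the reversed pattern
    hits, pos = [], 0
    while pos <= n - m:
        state, j = 0, m
        while j > 0 and state is not None:
            state = trans[state].get(text[pos + j - 1])  # read backwards
            j -= 1
        if state is not None:
            # The whole window was read: the only word of length m that the
            # oracle accepts is the reversed pattern itself.
            hits.append(pos)
        pos += j + 1  # jump past the character where recognition stopped
    return hits


print(bom_search("announce", "CPM annual conference announce"))
```

Accepting a few error factors only makes some shifts shorter than they could be; it never causes a missed match, because every true factor is still recognized.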

Set BDM
- Natural extension of BDM
- Cons:
  - The suffix automaton consumes a lot of memory
  - The construction of the automaton is complex

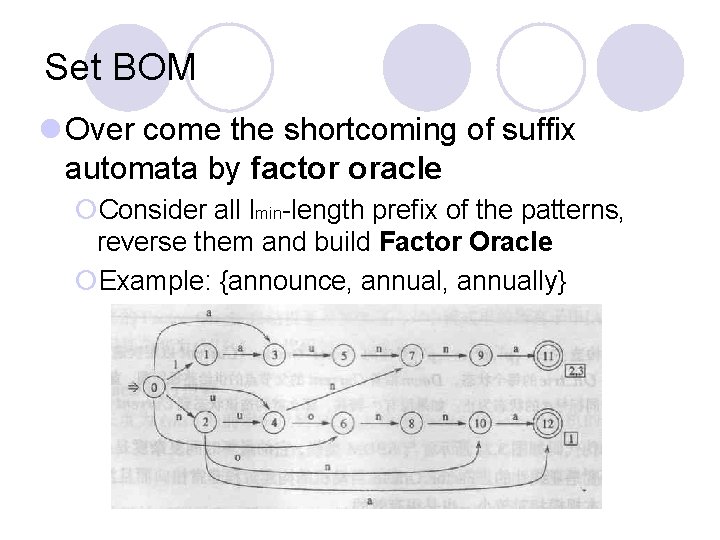

Set BOM
- Overcomes the shortcomings of the suffix automaton by using a factor oracle
  - Take the lmin-length prefixes of all patterns, reverse them, and build the Factor Oracle
  - Example: {announce, annually}

Set BOM
- Searching stage
  - Read the characters backwards; if factor recognition stops, we can shift the search window
  - If we reach the beginning of the window, we first need to verify the lmin path in SBOM against the characters in the window
    - If verification passes, we can further verify the whole pattern
    - If verification fails, advance the window by one character

Roadmap

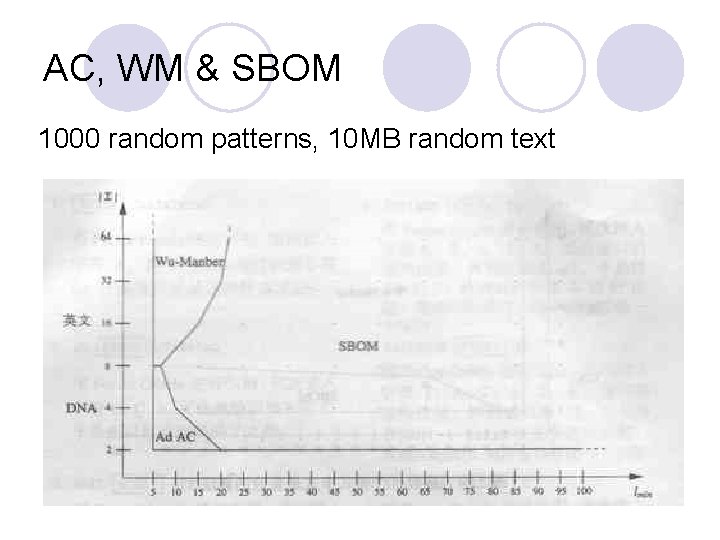

AC, WM & SBOM 1000 random patterns, 10 MB random text

Performance Experiments

Performance Test
- Algorithms
  - AC, AC_BM, WM, HybridWM, SBOM, RSI
- Test environment
  - Processor: Intel Centrino Duo, 1.83 GHz
  - Cache: 32 KB L1 instruction, 32 KB L1 data, 2048 KB shared L2 cache
  - DRAM: 1.5 GB DDR2, 667 MHz
  - OS: Windows XP SP2

Test 1 - Random Scenario
- Alphabet size: 256
- Random text of 32 MB with manually set matches of 10%
- Random pattern set with a special length distribution (pattern lengths from 4 to 100; about 80% of the patterns have length 8 to 16)
- Pattern set size from 50 to 5000

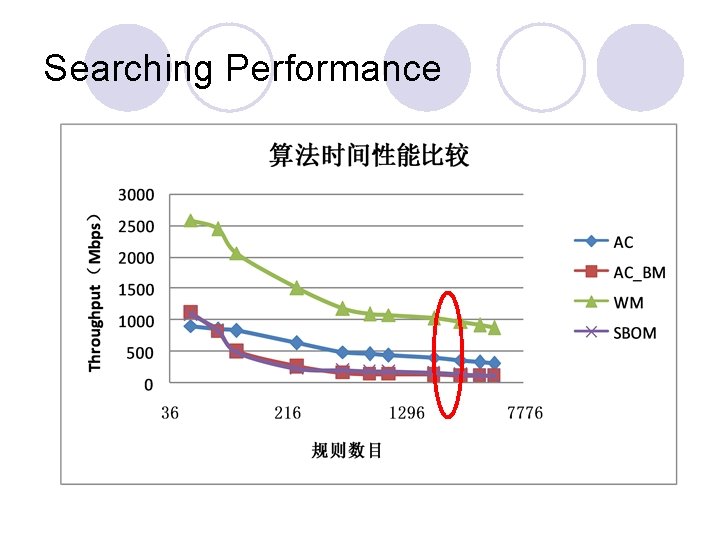

Searching Performance

Analysis
- WM is the most efficient algorithm under such scenarios
  - Long random patterns
  - Low matching rate
  - Low memory requirement
- AC performance does not decline much compared with the others
  - Matches the theoretical analysis

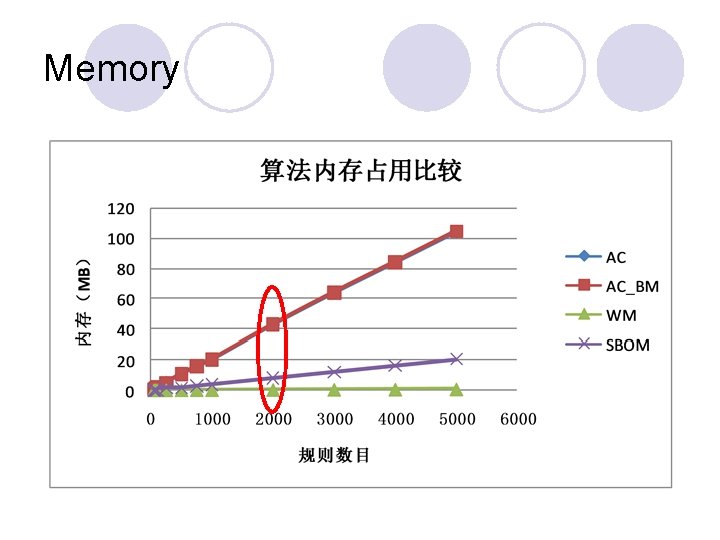

Memory

Analysis
- WM consumes much less memory than the other algorithms
  - Hash table
- AC and AC_BM consume a lot of memory
  - Automaton data structure
- SBOM is in the middle
  - Lightweight Factor Oracle

Test 2
- Snort pattern set (1785 patterns, average length 16)

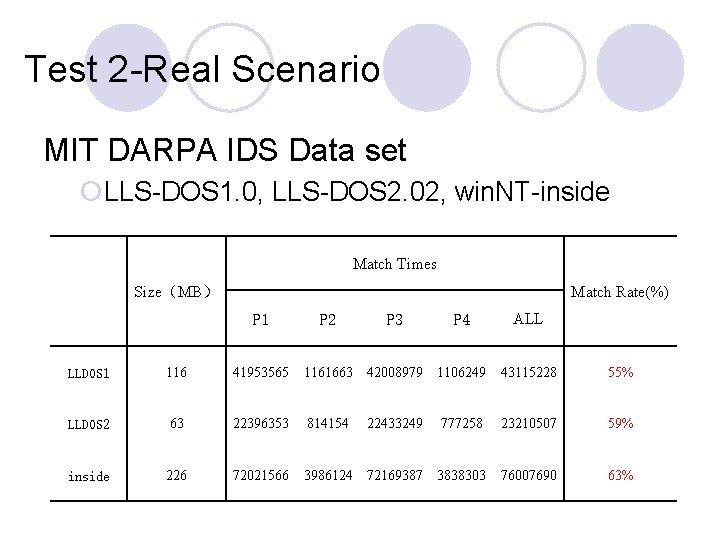

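Test 2 - Real Scenario
- MIT DARPA IDS data set
  - LLS-DOS 1.0, LLS-DOS 2.02, winNT-inside

| Data set | Size (MB) | Match times: P1 | Match times: P2 | Match times: P3 | Match times: P4 | Match times: ALL | Match rate (%) |
|---|---|---|---|---|---|---|---|
| LLDOS 1 | 116 | 41953565 | 1161663 | 42008979 | 1106249 | 43115228 | 55% |
| LLDOS 2 | 63 | 22396353 | 814154 | 22433249 | 777258 | 23210507 | 59% |
| inside | 226 | 72021566 | 3986124 | 72169387 | 3838303 | 76007690 | 63% |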

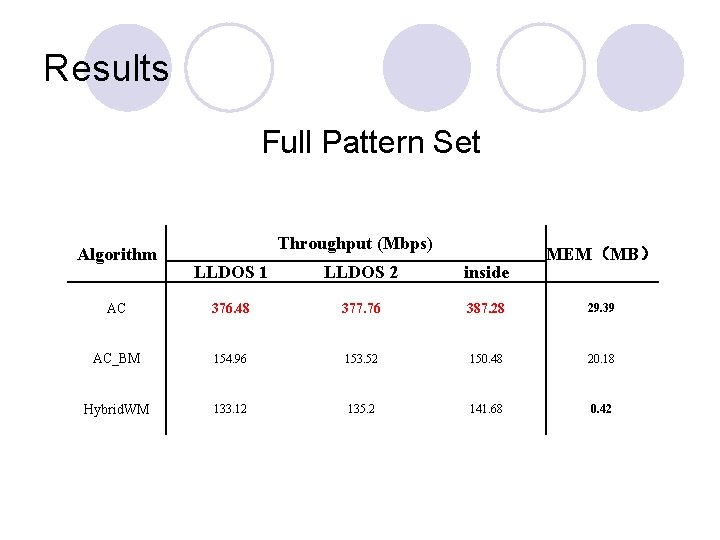

Results: Full Pattern Set

| Algorithm | Throughput (Mbps): LLDOS 1 | Throughput (Mbps): LLDOS 2 | Throughput (Mbps): inside | MEM (MB) |
|---|---|---|---|---|
| AC | 376.48 | 377.76 | 387.28 | 29.39 |
| AC_BM | 154.96 | 153.52 | 150.48 | 20.18 |
| HybridWM | 133.12 | 135.2 | 141.68 | 0.42 |

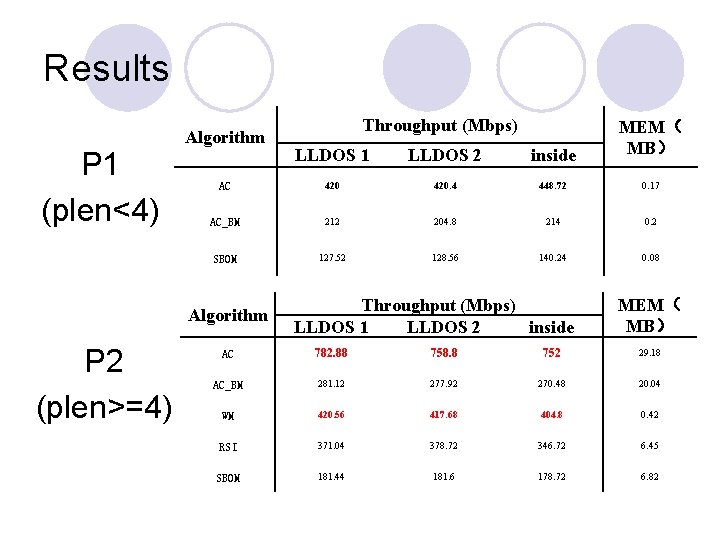

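Results: P1 (plen<4)

| Algorithm | Throughput (Mbps): LLDOS 1 | Throughput (Mbps): LLDOS 2 | Throughput (Mbps): inside | MEM (MB) |
|---|---|---|---|---|
| AC | 420.4 | 448.72 | (missing) | 0.17 |
| AC_BM | 212 | 204.8 | 214 | 0.2 |
| SBOM | 127.52 | 128.56 | 140.24 | 0.08 |

Only two AC throughput values survive for P1, so their column placement is tentative.

Results: P2 (plen>=4)

| Algorithm | Throughput (Mbps): LLDOS 1 | Throughput (Mbps): LLDOS 2 | Throughput (Mbps): inside | MEM (MB) |
|---|---|---|---|---|
| AC | 782.88 | 758.8 | 752 | 29.18 |
| AC_BM | 281.12 | 277.92 | 270.48 | 20.04 |
| WM | 420.56 | 417.68 | 404.8 | 0.42 |
| RSI | 371.04 | 378.72 | 346.72 | 6.45 |
| SBOM | 181.44 | 181.6 | 178.72 | 6.82 |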

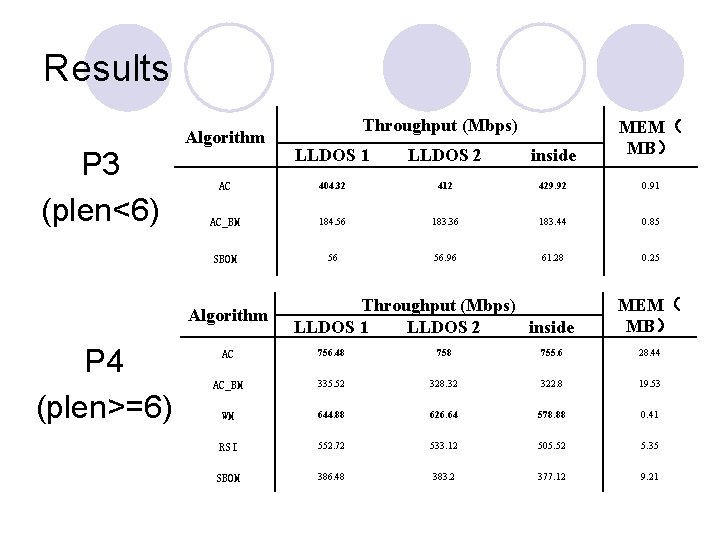

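Results: P3 (plen<6)

| Algorithm | Throughput (Mbps): LLDOS 1 | Throughput (Mbps): LLDOS 2 | Throughput (Mbps): inside | MEM (MB) |
|---|---|---|---|---|
| AC | 404.32 | 412 | 429.92 | 0.91 |
| AC_BM | 184.56 | 183.36 | 183.44 | 0.85 |
| SBOM | 56 | 56.96 | 61.28 | 0.25 |

Results: P4 (plen>=6)

| Algorithm | Throughput (Mbps): LLDOS 1 | Throughput (Mbps): LLDOS 2 | Throughput (Mbps): inside | MEM (MB) |
|---|---|---|---|---|
| AC | 756.48 | 755.6 | (missing) | 28.44 |
| AC_BM | 335.52 | 328.32 | 322.8 | 19.53 |
| WM | 644.88 | 626.64 | 578.88 | 0.41 |
| RSI | 552.72 | 533.12 | 505.52 | 5.35 |
| SBOM | 386.48 | 383.2 | 377.12 | 9.21 |

Only two AC throughput values survive for P4, so their column placement is tentative.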

Analysis
- AC performance does not decline much from P1 to P3
- WM performance increases greatly from P2 to P4
  - The dividing pattern length could be larger than 6
- AC achieves higher searching performance, even compared with WM in P2 and P4
  - Many of the patterns in Snort share the same prefixes, which is bad for WM, especially when the matching rate is high

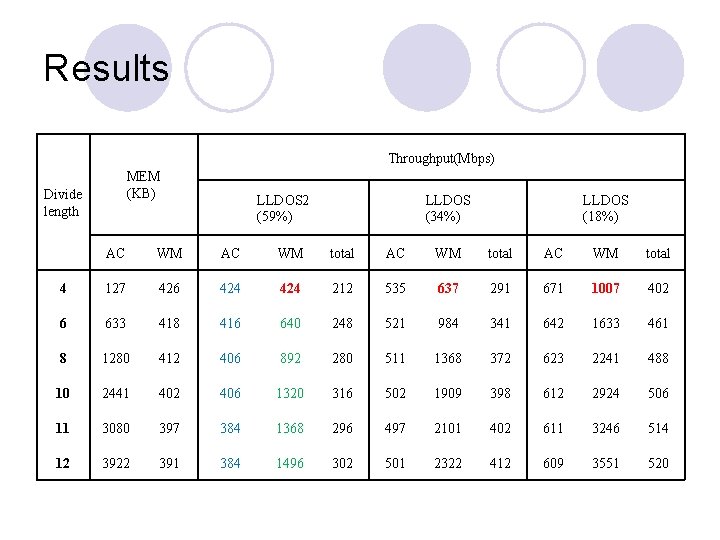

Test 3 - AC & WM Hybrid
- MIT DARPA IDS data set
  - LLS-DOS 2.02 (match rate: 59%)
  - Mixed with background flows
    - LLDOS 30 (match rate: 34%)
    - LLDOS 10 (match rate: 18%)
- Flexible pattern division
  - Division line from 4 to 12
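The pattern division can be pictured with a tiny Python sketch. It assumes, in line with the P1/P2 and P3/P4 splits in Test 2, that patterns shorter than the division line go to AC and the rest go to WM; the slides do not spell this out, and `split_by_length` is a made-up name.

```python
def split_by_length(patterns, divide_length):
    """Split a pattern set at the division line (assumed: short -> AC, long -> WM)."""
    short = [p for p in patterns if len(p) < divide_length]
    long_ = [p for p in patterns if len(p) >= divide_length]
    return short, long_


ac_set, wm_set = split_by_length(["GET", "POST", "/bin/sh", "cmd.exe"], 4)
print(ac_set, wm_set)
```

Raising the division line moves more patterns to AC, which is consistent with the AC memory growing with the divide length in the results.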

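Results

Throughput is in Mbps and memory in KB.

| Divide length | MEM: AC | MEM: WM | LLDOS 2 (59%): AC | LLDOS 2 (59%): WM | LLDOS 2 (59%): total | LLDOS (34%): AC | LLDOS (34%): WM | LLDOS (34%): total | LLDOS (18%): AC | LLDOS (18%): WM | LLDOS (18%): total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | 127 | 426 | 424 | (missing) | 212 | 535 | 637 | 291 | 671 | 1007 | 402 |
| 6 | 633 | 418 | 416 | 640 | 248 | 521 | 984 | 341 | 642 | 1633 | 461 |
| 8 | 1280 | 412 | 406 | 892 | 280 | 511 | 1368 | 372 | 623 | 2241 | 488 |
| 10 | 2441 | 402 | 406 | 1320 | 316 | 502 | 1909 | 398 | 612 | 2924 | 506 |
| 11 | 3080 | 397 | 384 | 1368 | 296 | 497 | 2101 | 402 | 611 | 3246 | 514 |
| 12 | 3922 | 391 | 384 | 1496 | 302 | 501 | 2322 | 412 | 609 | 3551 | 520 |

The row for divide length 4 has one value fewer than the others, so the position of the missing cell is tentative.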

Solution and Future Work

Alternatives
- AC only
- AC & WM hybrid

Future Work
- AC (memory compression)
  - Automata: NFA, banded, sparse, or other ideas?
  - Pattern set: subset division?
  - ……
- WM
  - Same-prefix problem: dynamic cut?
  - Worst-case problem: matches signal?
  - Performance improvement: intelligent verification?

Bibliography
- All the papers involved in this presentation have been uploaded to NSlab server 20
  - Categorized by prefix, suffix and factor (done)
  - There is also a document named "current" for new papers that appeared in recent high-ranked conferences such as INFOCOM, SIGCOMM, USENIX Security, CPM and so on (in progress)
  - \166.111.137.20venus文献 资源Zongweistring matching algorithm

Other Useful Resources
- Book
- Websites
  - Pattern Matching Pointer
    - http://www.cs.ucr.edu/~stelo/pattern.html
    - Maintained by associate professor Stefano Lonardi of UC Riverside
  - EXACT STRING MATCHING ALGORITHMS
    - http://www-igm.univ-mlv.fr/~lecroq/string/
    - Descriptions, complexity analysis, and C source code of many single string matching algorithms
