

- Slides: 45

Stream, Stream: Different Streaming methods with Spark and Kafka Itai Yaffe Nielsen

Introduction Itai Yaffe ● Tech Lead, Big Data group ● Dealing with Big Data challenges since 2012

Introduction - part 2 (or: “your turn…”) ● Data engineers? Data architects? Something else? ● Working with Spark? Planning to? ● Working with Kafka? Planning to?

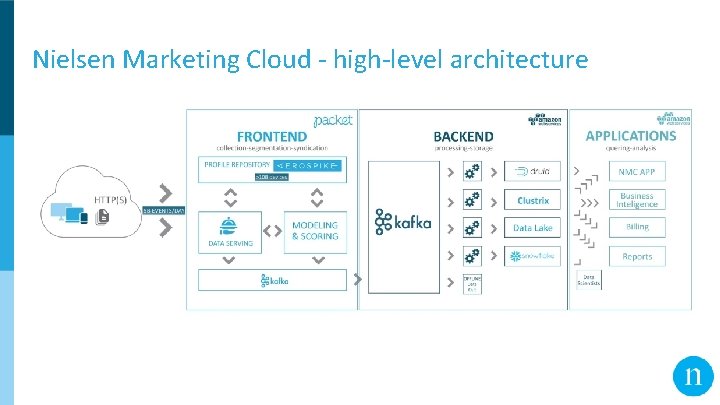

Agenda ● Nielsen Marketing Cloud (NMC) ○ About ○ High-level architecture ● Data flow - past and present ● Spark Streaming ○ “Stateless” and “stateful” use-cases ● Spark Structured Streaming ● “Streaming” over our Data Lake

Nielsen Marketing Cloud (NMC) ● eXelate was acquired by Nielsen in March 2015 ● A Data company ● Machine learning models for insights ● Targeting ● Business decisions

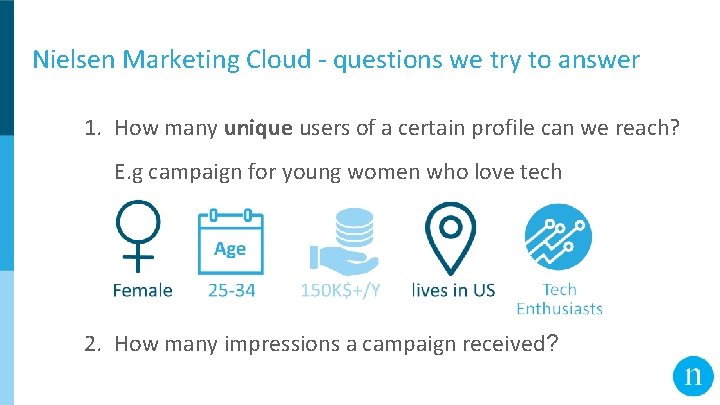

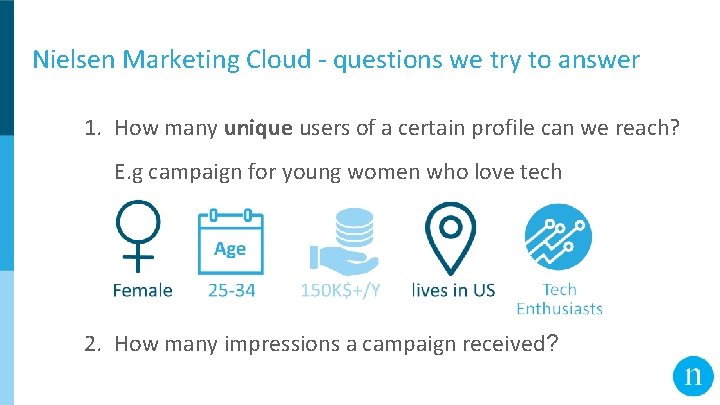

Nielsen Marketing Cloud - questions we try to answer 1. How many unique users of a certain profile can we reach? E.g., a campaign for young women who love tech 2. How many impressions did a campaign receive?

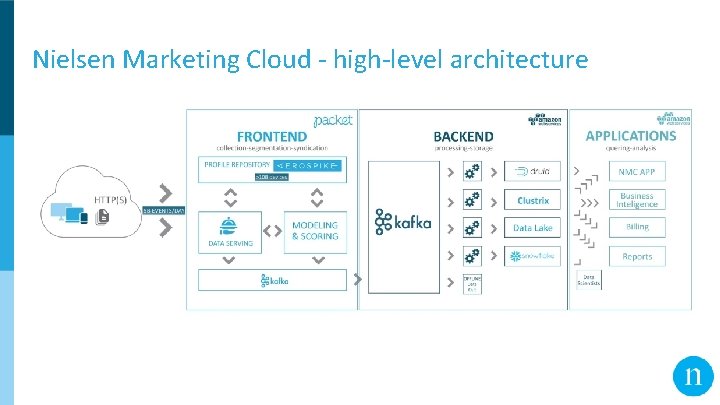

Nielsen Marketing Cloud - high-level architecture

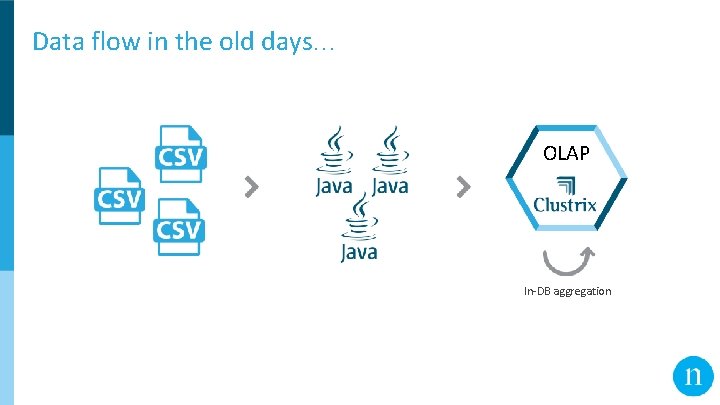

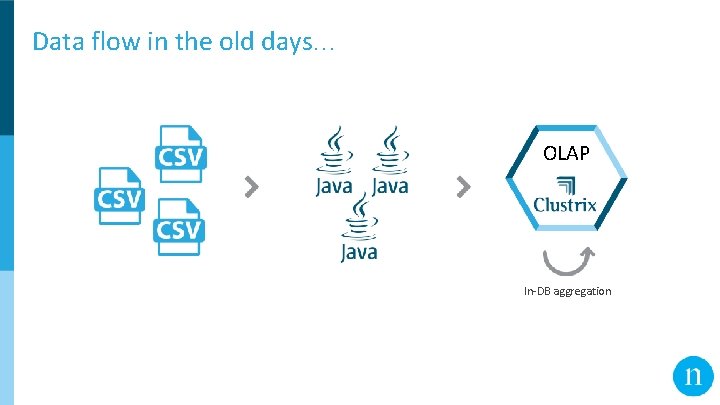

Data flow in the old days... OLAP, In-DB aggregation

Data flow in the old days… What’s wrong with that? ● CSV-related issues, e.g.: ○ Truncated lines in input files ○ Can’t enforce schema ● Scale-related issues, e.g.: ○ Had to “manually” scale the processes

![That's one small step for a man… (2014)](https://slidetodoc.com/presentation_image_h/e00b1eace27fd8e864a9f18d9f8d7b7d/image-10.jpg)

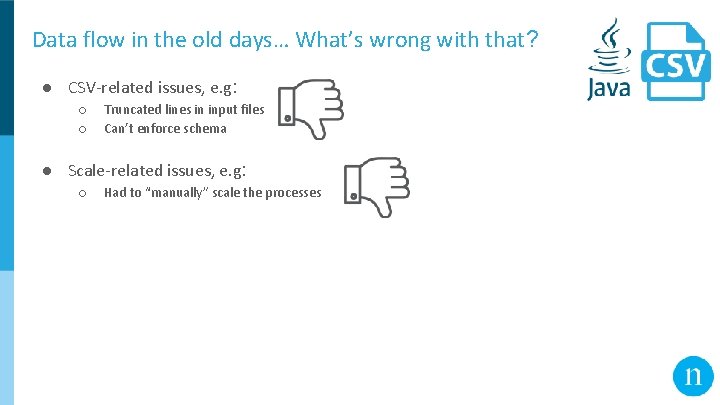

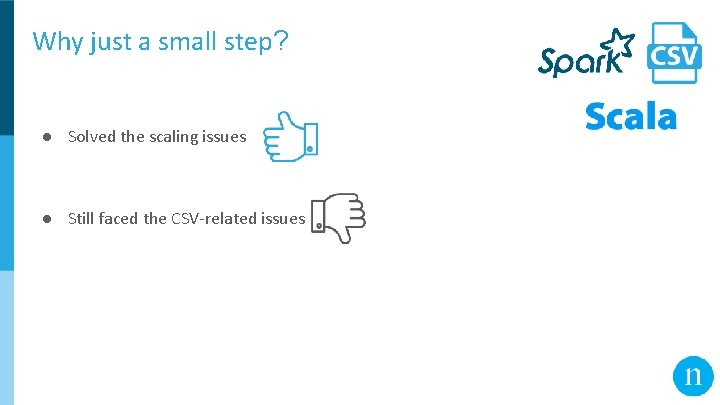

That's one small step for [a] man… (2014) OLAP, In-DB aggregation ● “Apache Spark is the Taylor Swift of big data software” (Derrick Harris, Fortune.com, 2015)

Why just a small step? ● Solved the scaling issues ● Still faced the CSV-related issues

Data flow - the modern way (Photography Copyright: NBC)

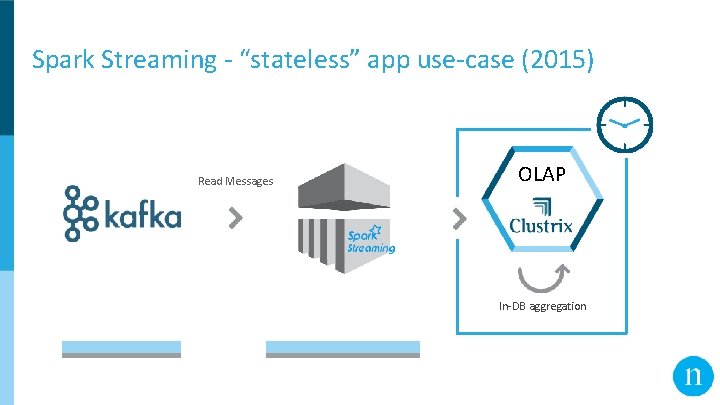

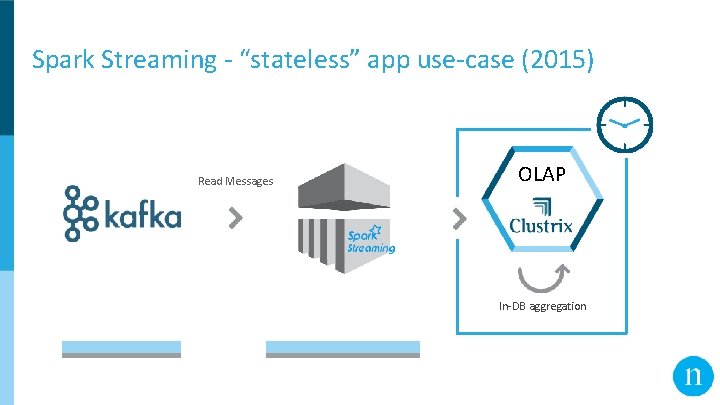

Spark Streaming - “stateless” app use-case (2015) Read Messages OLAP In-DB aggregation

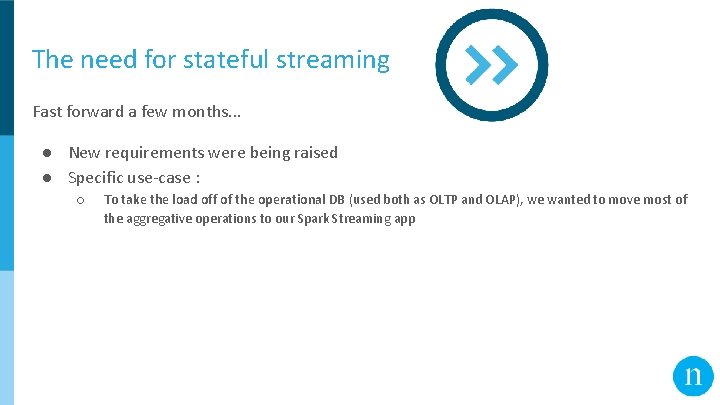

The need for stateful streaming ● Fast forward a few months... ● New requirements were being raised ● Specific use-case: ○ To take the load off the operational DB (used both as OLTP and OLAP), we wanted to move most of the aggregative operations to our Spark Streaming app

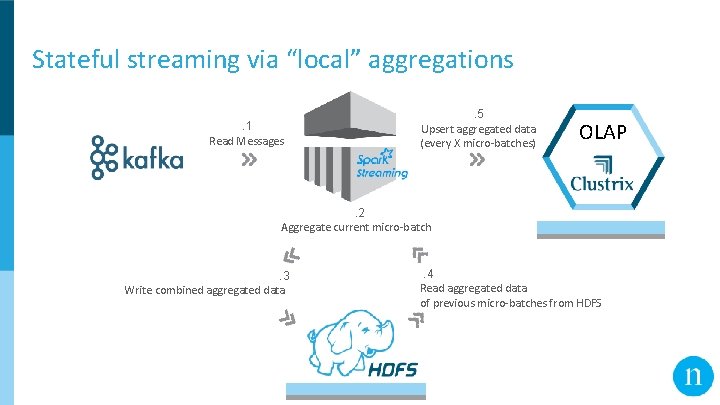

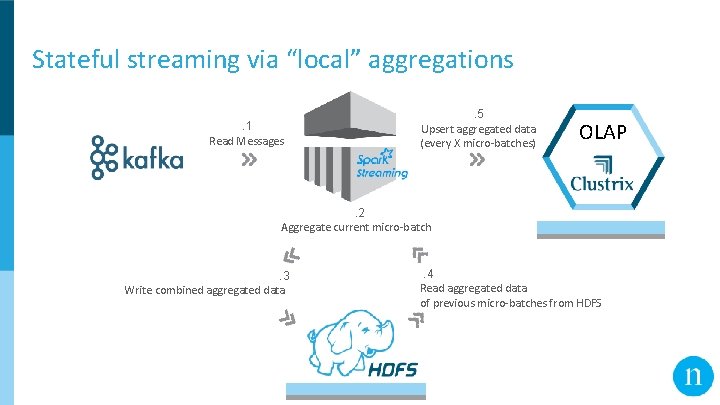

Stateful streaming via “local” aggregations: 1. Read messages 2. Aggregate current micro-batch 3. Write combined aggregated data 4. Read aggregated data of previous micro-batches from HDFS 5. Upsert aggregated data (every X micro-batches) into OLAP

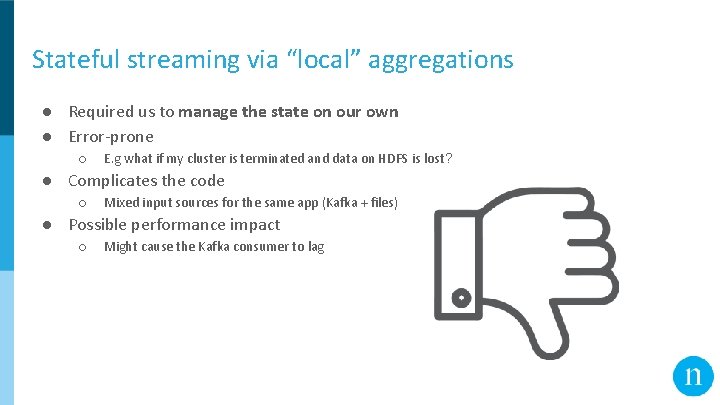

Stateful streaming via “local” aggregations ● Required us to manage the state on our own ● Error-prone ○ E.g., what if my cluster is terminated and data on HDFS is lost? ● Complicates the code ○ Mixed input sources for the same app (Kafka + files) ● Possible performance impact ○ Might cause the Kafka consumer to lag
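A minimal sketch of the "manage the state on our own" idea: aggregate the current micro-batch, then combine it with the aggregates of previous micro-batches. The key shape, the count metric and the names `LocalAggregation`, `aggregateBatch` and `merge` are hypothetical, and an in-memory map stands in for the state the slide keeps on HDFS.

```scala
object LocalAggregation {
  // Hypothetical aggregation key, e.g. (campaignId, date).
  type Key = (String, String)

  /** Aggregate one micro-batch: count events per key. */
  def aggregateBatch(events: Seq[Key]): Map[Key, Long] =
    events.groupBy(identity).map { case (k, vs) => k -> vs.size.toLong }

  /** Combine the current micro-batch with the state of previous micro-batches. */
  def merge(previous: Map[Key, Long], current: Map[Key, Long]): Map[Key, Long] =
    current.foldLeft(previous) { case (acc, (k, v)) =>
      acc.updated(k, acc.getOrElse(k, 0L) + v)
    }

  def main(args: Array[String]): Unit = {
    val batch1 = aggregateBatch(Seq(("c1", "2019-05-01"), ("c1", "2019-05-01"), ("c2", "2019-05-01")))
    val batch2 = aggregateBatch(Seq(("c1", "2019-05-01")))
    // The state must survive between micro-batches; losing it loses the aggregates.
    val state = merge(merge(Map.empty, batch1), batch2)
    println(state)
  }
}
```

Every restart has to reload `previous` from wherever it was persisted, which is where the error-prone part listed above comes from.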

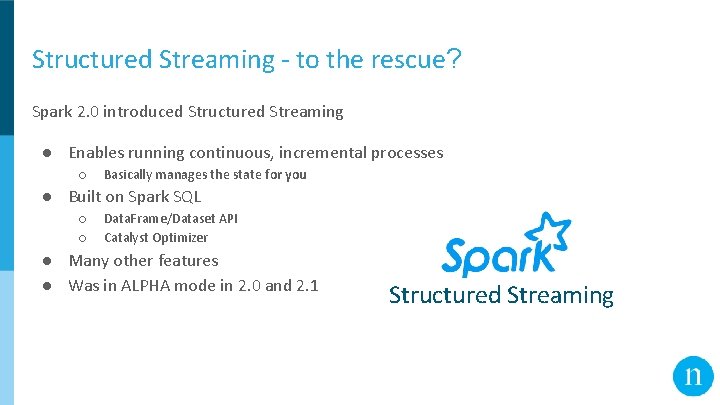

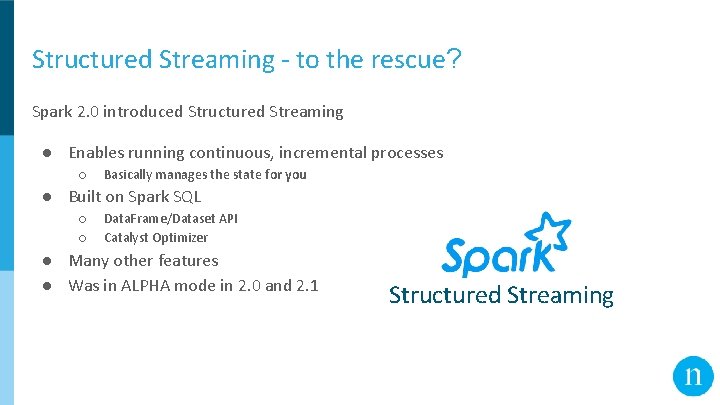

Structured Streaming - to the rescue? Spark 2.0 introduced Structured Streaming ● Enables running continuous, incremental processes ○ Basically manages the state for you ● Built on Spark SQL ○ DataFrame/Dataset API ○ Catalyst Optimizer ● Many other features ● Was in ALPHA mode in 2.0 and 2.1

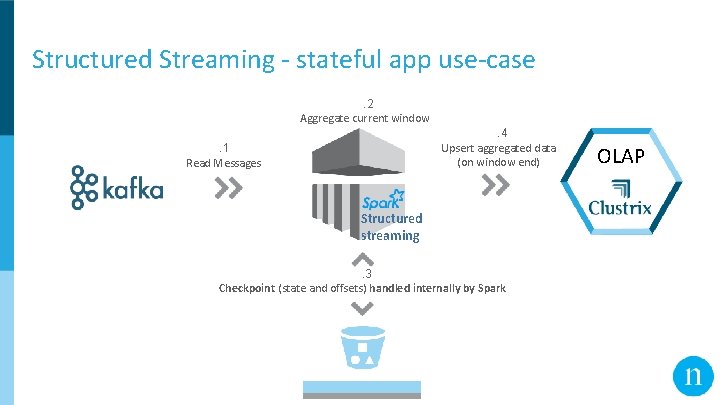

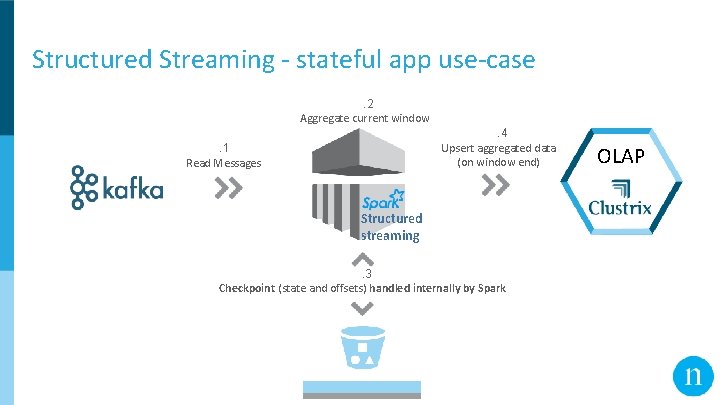

Structured Streaming - stateful app use-case: 1. Read messages 2. Aggregate current window 3. Checkpoint (state and offsets) handled internally by Spark 4. Upsert aggregated data (on window end) into OLAP
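The flow on this slide could look roughly like the sketch below. It is not the original application: the broker address, topic name, window size, the use of the Kafka record key as `campaign_id` and the console sink (standing in for the OLAP upsert) are all assumptions, and the job needs the `spark-sql-kafka-0-10` package on the classpath.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, count, window}

object StatefulAggregationSketch {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("stateful-aggregation-sketch").getOrCreate()

    // 1. Read messages from Kafka (placeholder broker and topic).
    val messages = spark.readStream
      .format("kafka")
      .option("kafka.bootstrap.servers", "broker:9092")
      .option("subscribe", "events")
      .load()

    // 2. Aggregate per 1-hour window, using the Kafka record timestamp as event time.
    val aggregated = messages
      .selectExpr("CAST(key AS STRING) AS campaign_id", "timestamp")
      .withWatermark("timestamp", "1 hour")
      .groupBy(window(col("timestamp"), "1 hour"), col("campaign_id"))
      .agg(count("*").as("impressions"))

    // 3. State and offsets are checkpointed by Spark under checkpointLocation.
    // 4. Append mode emits a window only once the watermark has passed its end.
    val query = aggregated.writeStream
      .outputMode("append")
      .option("checkpointLocation", "path/to/checkpoint/dir")
      .format("console")
      .start()

    query.awaitTermination()
  }
}
```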

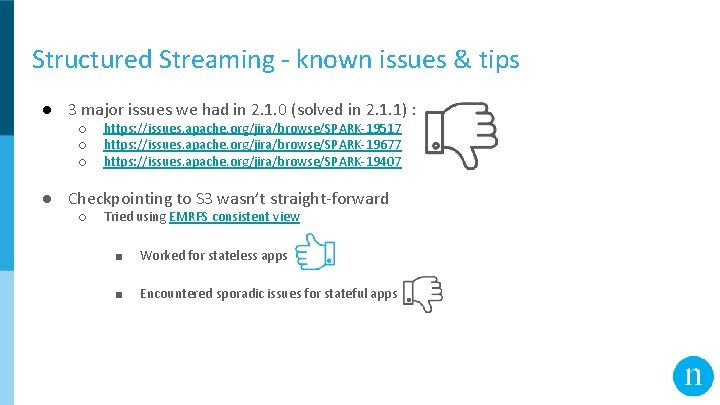

Structured Streaming - known issues & tips ● 3 major issues we had in 2.1.0 (solved in 2.1.1): ○ https://issues.apache.org/jira/browse/SPARK-19517 ○ https://issues.apache.org/jira/browse/SPARK-19677 ○ https://issues.apache.org/jira/browse/SPARK-19407 ● Checkpointing to S3 wasn’t straightforward ○ Tried using EMRFS consistent view ■ Worked for stateless apps ■ Encountered sporadic issues for stateful apps

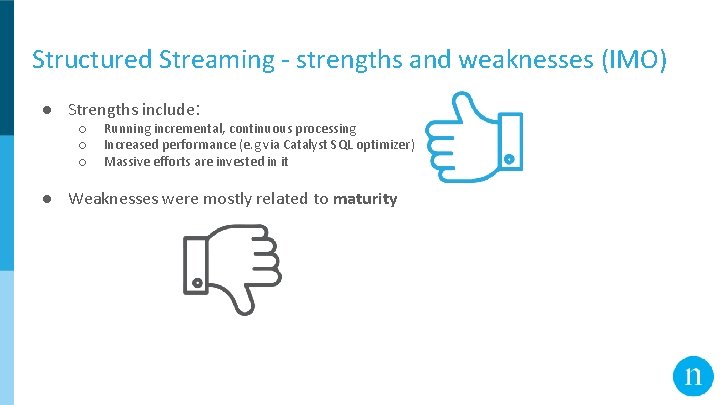

Structured Streaming - strengths and weaknesses (IMO) ● Strengths include: ○ Running incremental, continuous processing ○ Increased performance (e.g., via Catalyst SQL optimizer) ○ Massive efforts are invested in it ● Weaknesses were mostly related to maturity

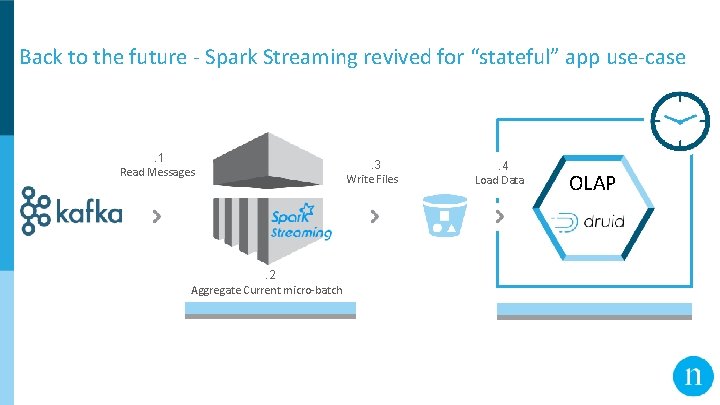

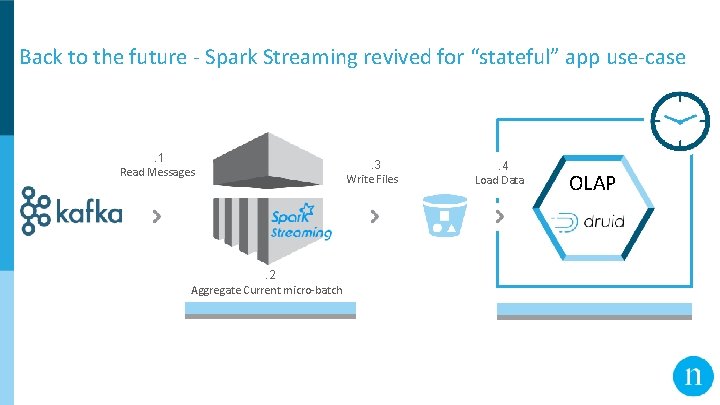

Back to the future - Spark Streaming revived for “stateful” app use-case: 1. Read messages 2. Aggregate current micro-batch 3. Write files 4. Load data into OLAP

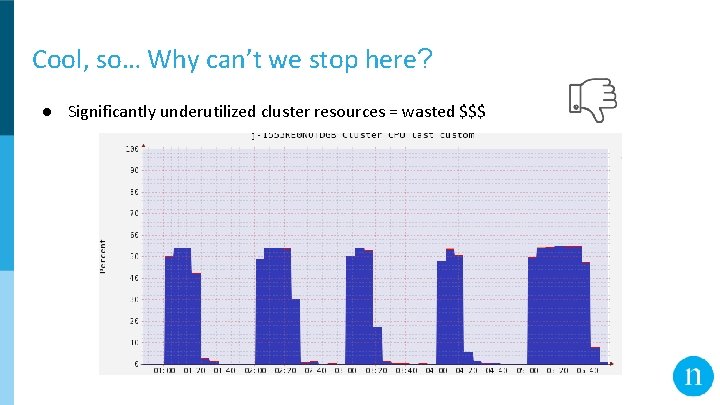

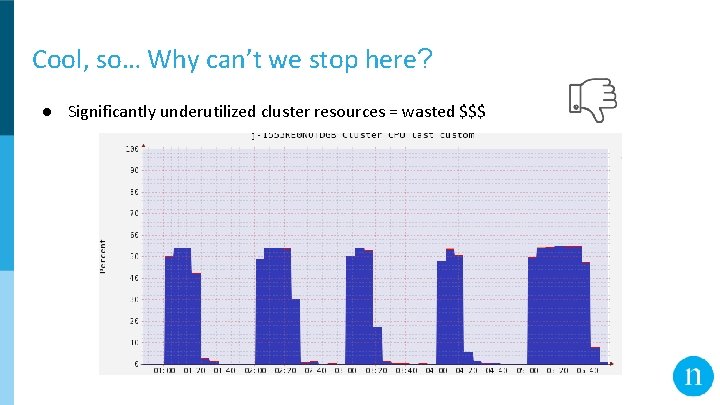

Cool, so… Why can’t we stop here? ● Significantly underutilized cluster resources = wasted $$$

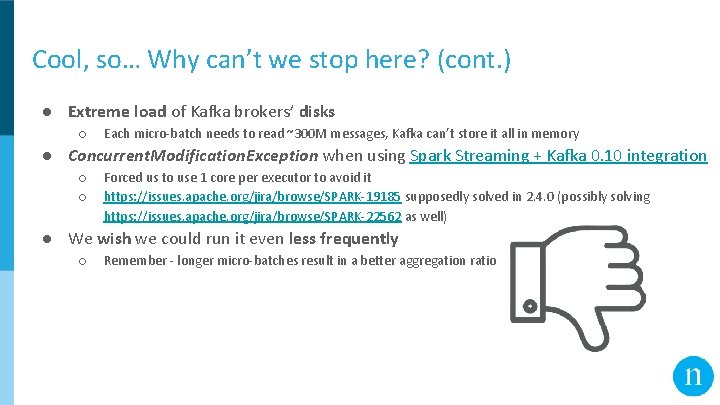

Cool, so… Why can’t we stop here? (cont.) ● Extreme load on Kafka brokers’ disks ○ Each micro-batch needs to read ~300M messages, and Kafka can’t store it all in memory ● ConcurrentModificationException when using Spark Streaming + Kafka 0.10 integration ○ Forced us to use 1 core per executor to avoid it ○ https://issues.apache.org/jira/browse/SPARK-19185 supposedly solved in 2.4.0 (possibly solving https://issues.apache.org/jira/browse/SPARK-22562 as well) ● We wish we could run it even less frequently ○ Remember - longer micro-batches result in a better aggregation ratio
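To illustrate the last point, here is a toy calculation. It assumes "aggregation ratio" means input records per output row after grouping by key; that definition, the sample keys and the name `AggregationRatio` are assumptions, not taken from the slides.

```scala
object AggregationRatio {
  /** Input records per output row after grouping by key. */
  def ratio(keys: Seq[String]): Double =
    if (keys.isEmpty) 0.0 else keys.size.toDouble / keys.distinct.size

  def main(args: Array[String]): Unit = {
    val firstHalf  = Seq("a", "b", "a", "c")
    val secondHalf = Seq("a", "b", "c", "c")
    // One short micro-batch: 4 records collapse into 3 rows.
    println(ratio(firstHalf))
    // One micro-batch twice as long: 8 records still collapse into 3 rows.
    println(ratio(firstHalf ++ secondHalf))
  }
}
```

The longer batch sees the same keys repeat more often, so fewer rows per input record have to be written downstream.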

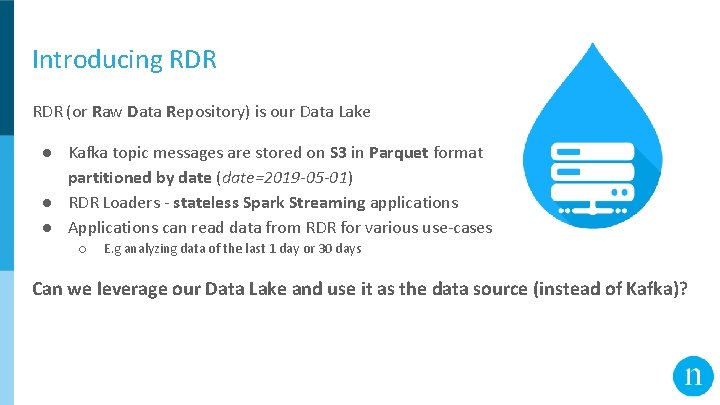

Introducing RDR ● RDR (or Raw Data Repository) is our Data Lake ● Kafka topic messages are stored on S3 in Parquet format, partitioned by date (date=2019-05-01) ● RDR Loaders - stateless Spark Streaming applications ● Applications can read data from RDR for various use-cases ○ E.g., analyzing data of the last 1 day or 30 days ● Can we leverage our Data Lake and use it as the data source (instead of Kafka)?

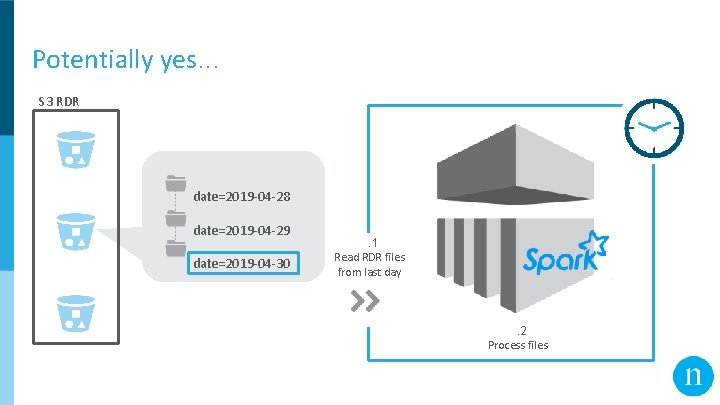

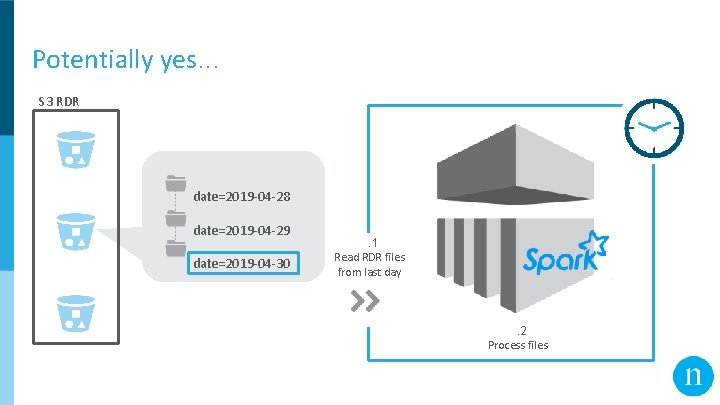

Potentially yes... ● S3 RDR: date=2019-04-28, date=2019-04-29, date=2019-04-30 ● 1. Read RDR files from last day 2. Process files

...but ● This ignores late arriving events

Enter “streaming” over RDR

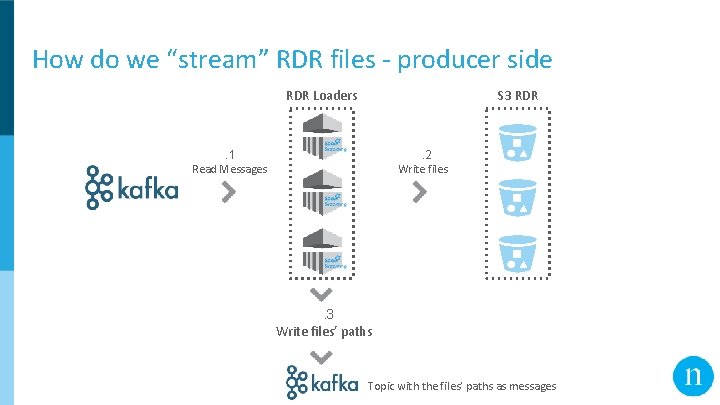

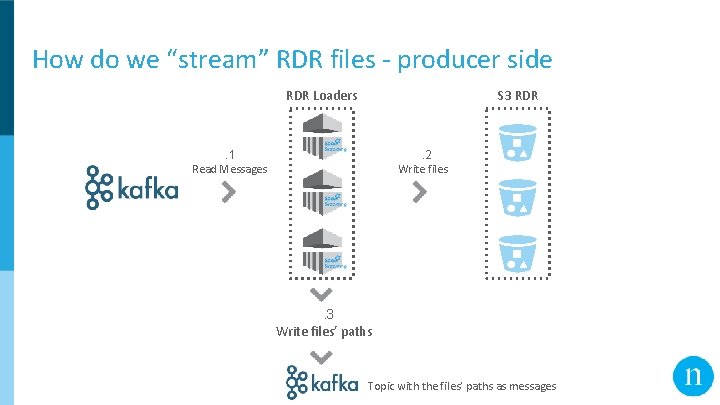

How do we “stream” RDR files - producer side (RDR Loaders): 1. Read messages 2. Write files to S3 RDR 3. Write the files’ paths to a topic (a topic with the files’ paths as messages)
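Step 3 on the producer side could be sketched with the plain Kafka producer API as below. The object name `FilePathsPublisher`, the topic and broker values passed in, and the choice of a standalone producer (rather than whatever the RDR Loaders actually use) are assumptions for illustration; the `kafka-clients` library is required.

```scala
import java.util.Properties
import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}

object FilePathsPublisher {
  /** Publish each written file's path as one message on the given topic. */
  def publish(paths: Seq[String], topic: String, bootstrapServers: String): Unit = {
    val props = new Properties()
    props.put("bootstrap.servers", bootstrapServers)
    props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer")
    props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer")

    val producer = new KafkaProducer[String, String](props)
    try {
      // Paths are sent only after the files themselves were written (step 2).
      paths.foreach(path => producer.send(new ProducerRecord[String, String](topic, path)))
    } finally {
      producer.close() // close() flushes any pending sends
    }
  }
}
```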

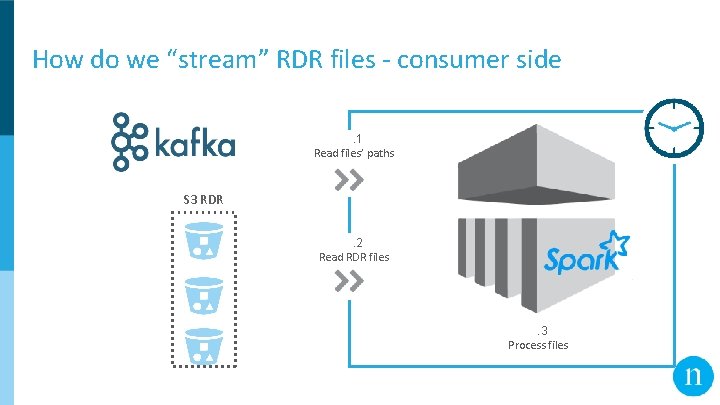

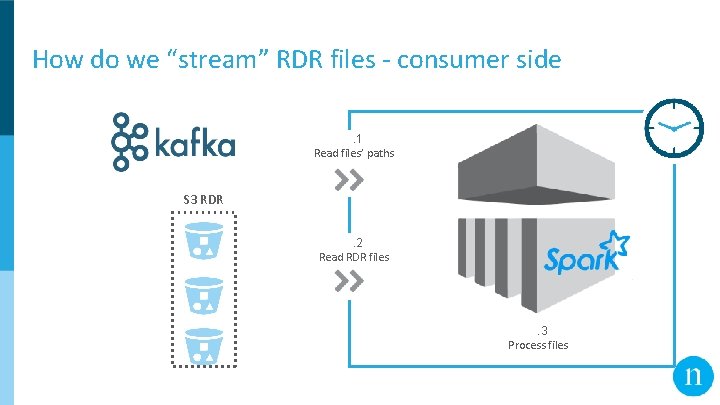

How do we “stream” RDR files - consumer side: 1. Read files’ paths 2. Read RDR files from S3 RDR 3. Process files
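A rough sketch of the consumer side as a Spark batch job, assuming the paths are read with Spark's batch Kafka source (`spark-sql-kafka-0-10`). The broker, the topic name `rdr-file-paths` and the `earliest`/`latest` offsets are placeholders; a real application would presumably track the offsets it has already processed, which the slides do not detail.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object RdrFilesConsumerSketch {
  /** Step 2: read the RDR Parquet files behind the given paths (None if there are none). */
  def readRdrFiles(spark: SparkSession, paths: Seq[String]): Option[DataFrame] =
    if (paths.isEmpty) None else Some(spark.read.parquet(paths: _*))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder.appName("rdr-consumer-sketch").getOrCreate()
    import spark.implicits._

    // Step 1: read the files' paths as a bounded (batch) Kafka read.
    val paths = spark.read
      .format("kafka")
      .option("kafka.bootstrap.servers", "broker:9092")
      .option("subscribe", "rdr-file-paths")
      .option("startingOffsets", "earliest") // placeholder offset handling
      .option("endingOffsets", "latest")
      .load()
      .selectExpr("CAST(value AS STRING)")
      .as[String]
      .collect()
      .toSeq

    // Step 3: "process" the files; counting rows stands in for the real logic.
    readRdrFiles(spark, paths).foreach(df => println(df.count()))
    spark.stop()
  }
}
```

Because the job is driven by file paths rather than by a date partition, a file that lands late is picked up by whichever run sees its path message.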

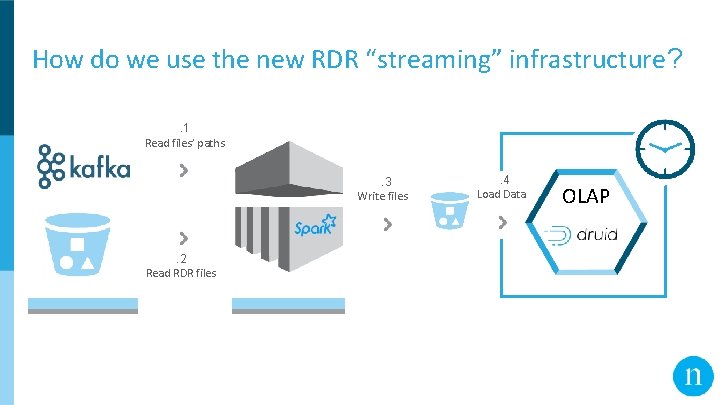

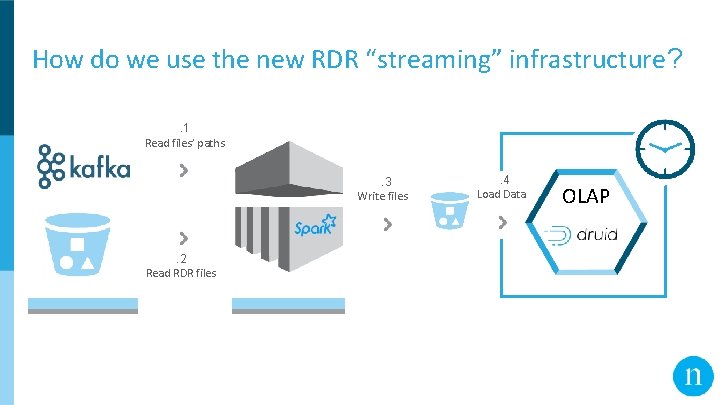

How do we use the new RDR “streaming” infrastructure? 1. Read files’ paths 2. Read RDR files 3. Write files 4. Load data into OLAP

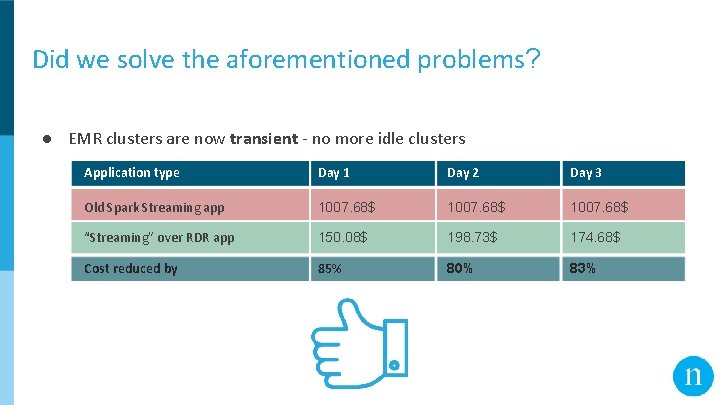

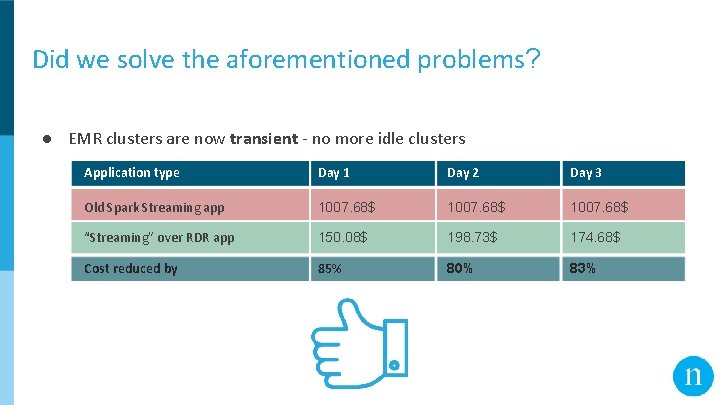

Did we solve the aforementioned problems? ● EMR clusters are now transient - no more idle clusters

| Application type | Day 1 | Day 2 | Day 3 |
|---|---|---|---|
| Old Spark Streaming app | 1007.68$ | | |
| “Streaming” over RDR app | 150.08$ | 198.73$ | 174.68$ |
| Cost reduced by | 85% | 80% | 83% |
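The percentages can be reproduced with the small calculation below, under the assumption that the old app's 1007.68$ is the daily baseline for all three days (the slide lists it only once). The name `CostReduction` is illustrative.

```scala
object CostReduction {
  /** Cost reduction in whole percent, relative to the old cost. */
  def reductionPercent(oldCost: Double, newCost: Double): Long =
    math.round((1 - newCost / oldCost) * 100)

  def main(args: Array[String]): Unit = {
    val oldDailyCost = 1007.68 // assumed to apply to each of the three days
    val newDailyCosts = Seq(150.08, 198.73, 174.68)
    // Reproduces the 85%, 80% and 83% figures from the slide.
    println(newDailyCosts.map(reductionPercent(oldDailyCost, _)))
  }
}
```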

Did we solve the aforementioned problems? (cont.) ● No more extreme load on Kafka brokers’ disks ○ We still read old messages from Kafka, but now we only read about 1K messages per hour (rather than ~300M) ● The new infra doesn’t depend on the integration of Spark Streaming with Kafka ○ No more weird exceptions... ● We can run the Spark batch applications as (in)frequently as we’d like ● Built-in handling of late arriving events

Summary ● Initially replaced standalone Java with Spark & Scala ○ Still faced CSV-related issues ● Introduced Spark Streaming & Kafka for “stateless” use-cases ○ Quickly needed to handle stateful use-cases as well ● Tried Spark Streaming for stateful use-cases (via “local” aggregations) ○ Required us to manage the state on our own ● Moved to Structured Streaming (for all use-cases) ○ Cons were mostly related to maturity

Summary (cont.) ● Went back to Spark Streaming (with Druid as OLAP) ○ Performance penalty in Kafka for long micro-batches ○ Under-utilized Spark clusters ○ Etc. ● Introduced “streaming” over our Data Lake ○ Eliminated Kafka performance penalty ○ Spark clusters are much better utilized = $$$ saved ○ And more...

Want to know more? ● Women in Big Data ○ A world-wide program that aims: ■ To inspire, connect, grow, and champion success of women in Big Data ■ To grow women’s representation in the Big Data field to > 25% by 2020 ○ Visit the website (https://www.womeninbigdata.org/) ● Nielsen presents: Fun with Kafka, Spark, and offset management ○ Today, 14:05-14:45, Expo Hall 2 (Capital Hall N24), http://tinyurl.com/yywkbclg ● NMC Tech Blog - https://medium.com/nmc-techblog

QUESTIONS

THANK YOU https://www.linkedin.com/in/itaiy/

Structured Streaming additional slides

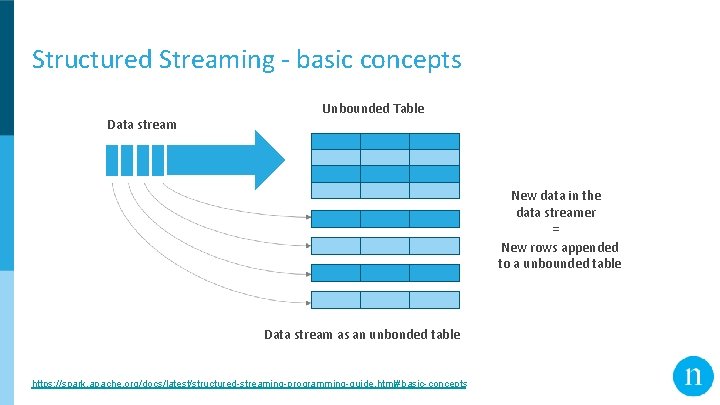

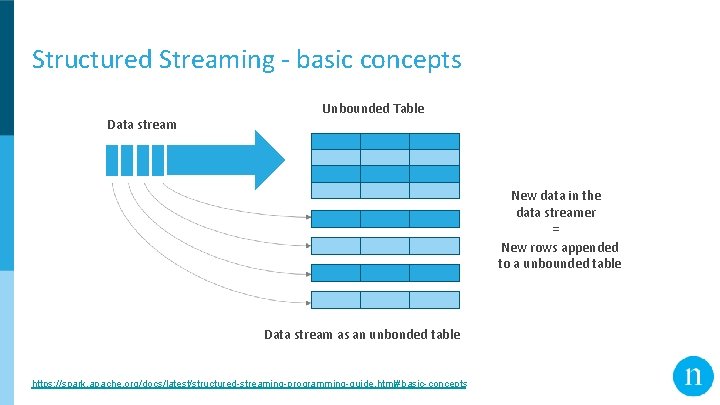

Structured Streaming - basic concepts ● Data stream as an unbounded table: new data in the data stream = new rows appended to an unbounded table ● https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html#basic-concepts

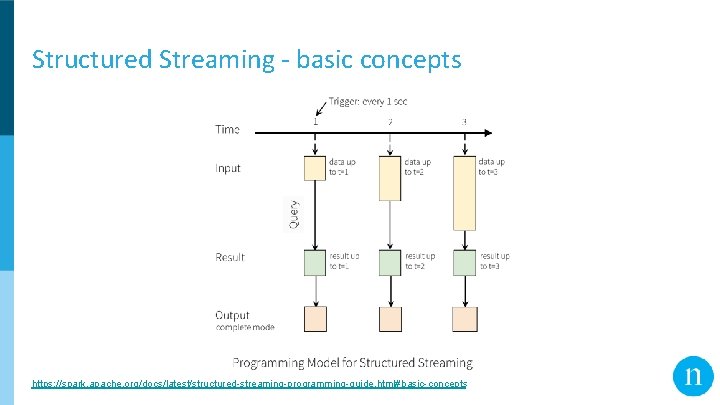

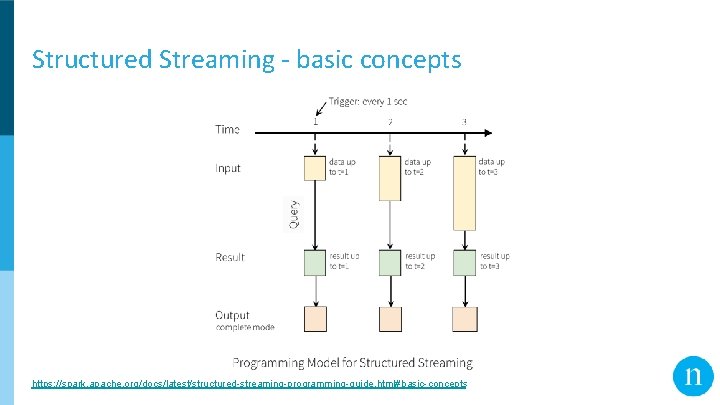

Structured Streaming - basic concepts https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html#basic-concepts

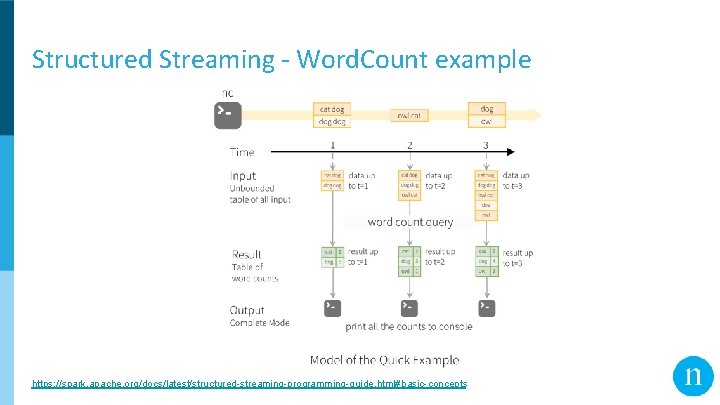

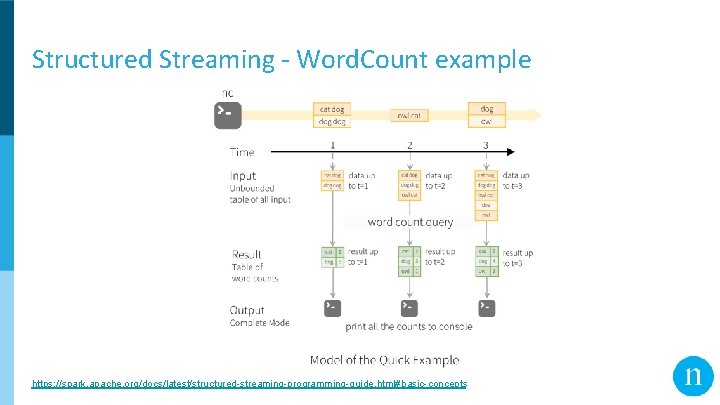

Structured Streaming - WordCount example https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html#basic-concepts

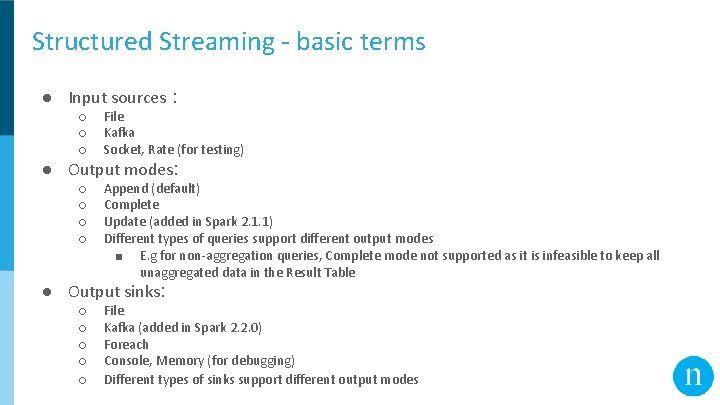

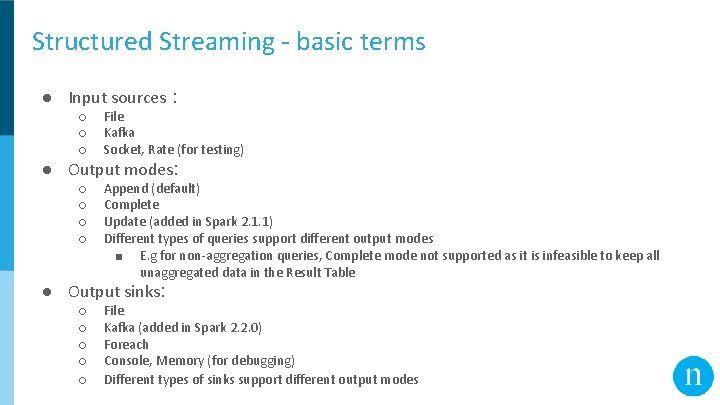

Structured Streaming - basic terms ● Input sources: ○ File ○ Kafka ○ Socket, Rate (for testing) ● Output modes: ○ Append (default) ○ Complete ○ Update (added in Spark 2.1.1) ○ Different types of queries support different output modes ■ E.g., for non-aggregation queries, Complete mode is not supported, as it is infeasible to keep all unaggregated data in the Result Table ● Output sinks: ○ File ○ Kafka (added in Spark 2.2.0) ○ Foreach ○ Console, Memory (for debugging) ○ Different types of sinks support different output modes

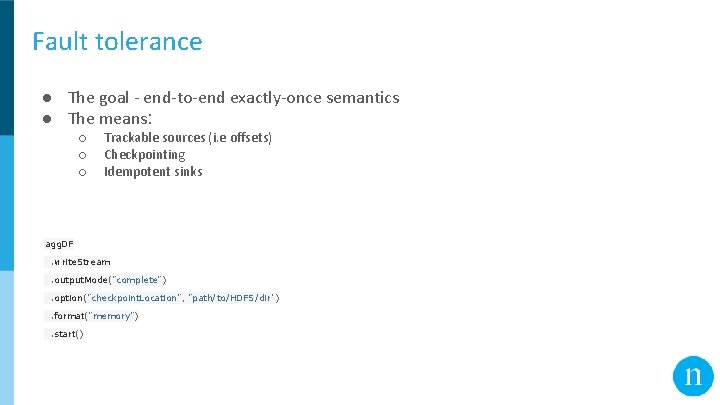

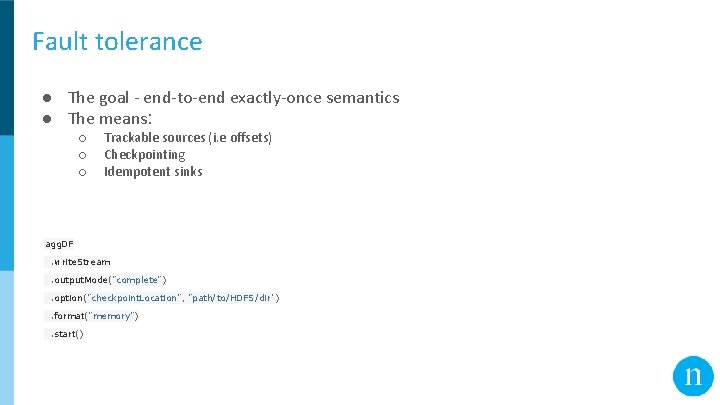

Fault tolerance ● The goal - end-to-end exactly-once semantics ● The means: ○ Trackable sources (i.e., offsets) ○ Checkpointing ○ Idempotent sinks ● aggDF.writeStream.outputMode("complete").option("checkpointLocation", "path/to/HDFS/dir").format("memory").start()

Monitoring ● Interactive APIs: ○ streamingQuery.lastProgress()/status() ○ Output example ● Asynchronous API: ○ val spark: SparkSession = ... spark.streams.addListener(new StreamingQueryListener() { override def onQueryStarted(queryStarted: QueryStartedEvent): Unit = { println("Query started: " + queryStarted.id) } override def onQueryTerminated(queryTerminated: QueryTerminatedEvent): Unit = { println("Query terminated: " + queryTerminated.id) } override def onQueryProgress(queryProgress: QueryProgressEvent): Unit = { println("Query made progress: " + queryProgress.progress) } })

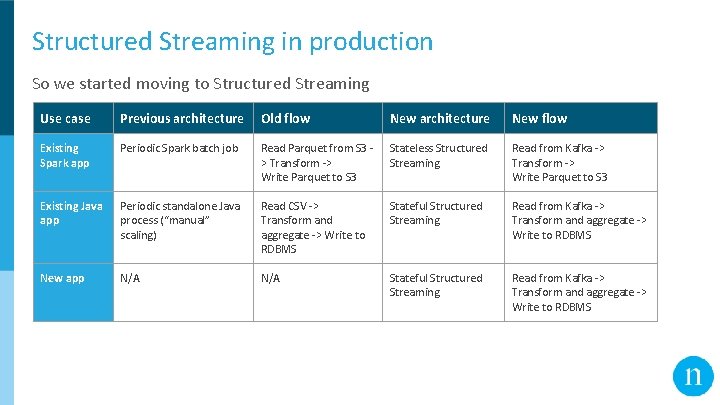

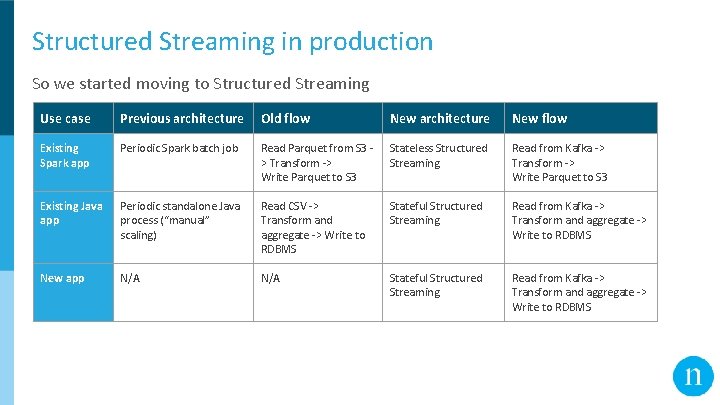

Structured Streaming in production ● So we started moving to Structured Streaming

| Use case | Previous architecture | Old flow | New architecture | New flow |
|---|---|---|---|---|
| Existing Spark app | Periodic Spark batch job | Read Parquet from S3 -> Transform -> Write Parquet to S3 | Stateless Structured Streaming | Read from Kafka -> Transform -> Write Parquet to S3 |
| Existing Java app | Periodic standalone Java process (“manual” scaling) | Read CSV -> Transform and aggregate -> Write to RDBMS | Stateful Structured Streaming | Read from Kafka -> Transform and aggregate -> Write to RDBMS |
| New app | N/A | N/A | Stateful Structured Streaming | Read from Kafka -> Transform and aggregate -> Write to RDBMS |