Stream Programming: Luring Programmers into the Multicore Era

Bill Thies
Computer Science and Artificial Intelligence Laboratory
Massachusetts Institute of Technology
Spring 2008

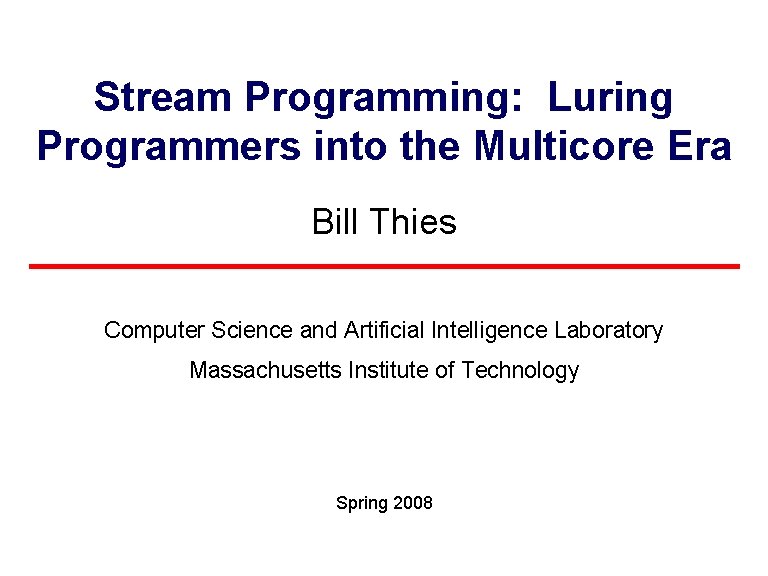

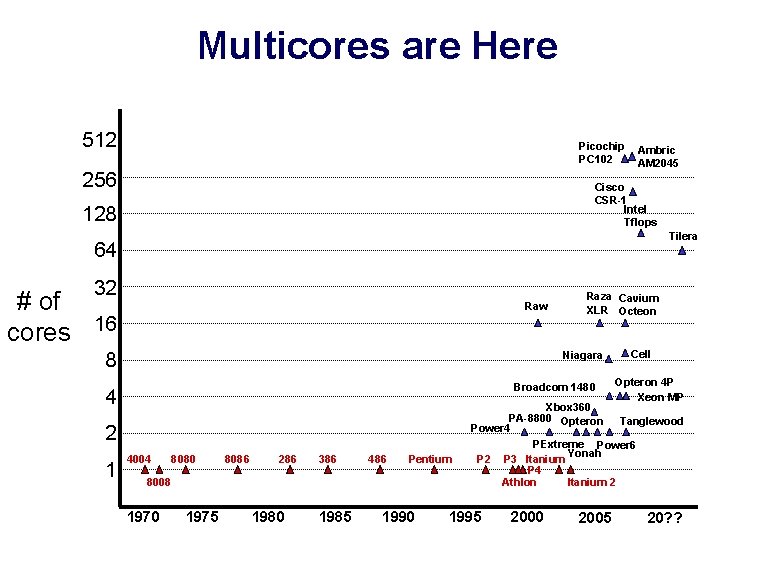

Multicores are Here 512 Picochip PC 102 256 Ambric AM 2045 Cisco CSR-1 Intel Tflops 128 Tilera 64 32 # of cores 16 Raw 8 Niagara Broadcom 1480 4 2 1 Raza Cavium XLR Octeon 4004 8080 8086 286 386 486 Pentium 8008 1970 1975 1980 1985 1990 Cell Opteron 4 P Xeon MP Xbox 360 PA-8800 Opteron Tanglewood Power 4 PExtreme Power 6 Yonah P 2 P 3 Itanium P 4 Athlon Itanium 2 1995 2000 2005 20? ?

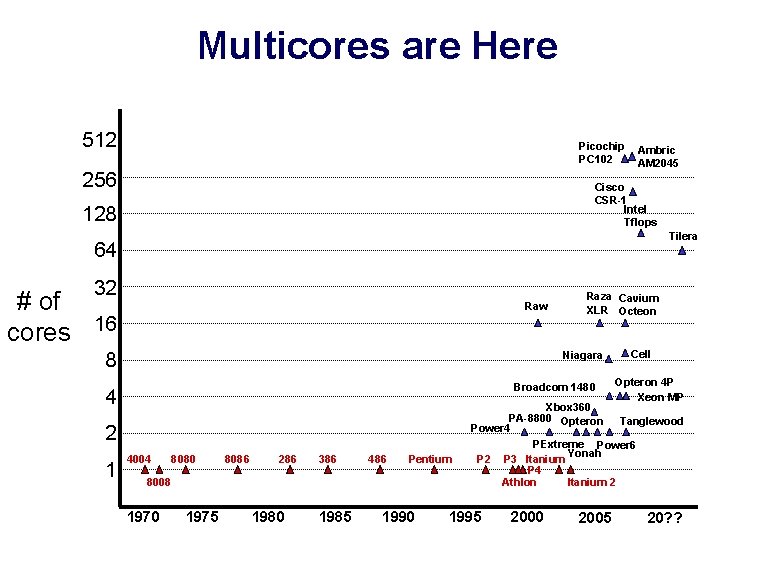

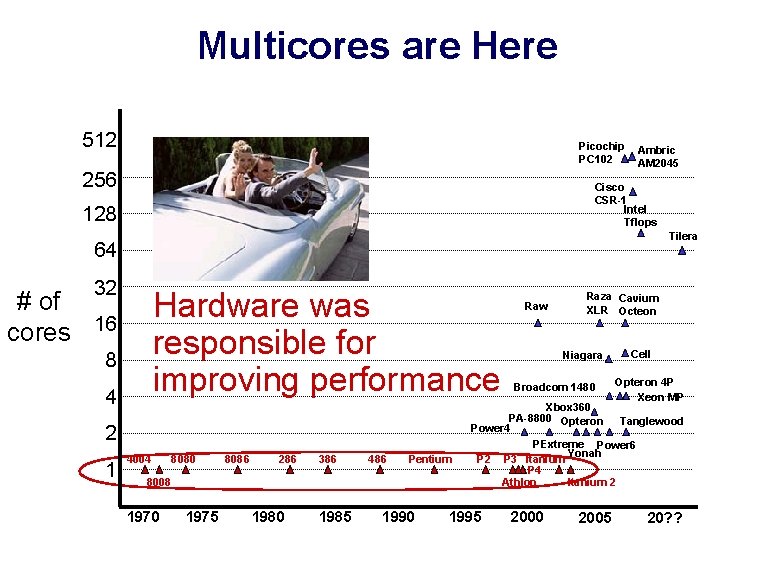

Multicores are Here 512 Picochip PC 102 256 Ambric AM 2045 Cisco CSR-1 Intel Tflops 128 Tilera 64 32 # of cores 16 Hardware was responsible for improving performance 8 4 2 1 4004 8080 8086 286 386 486 Pentium 8008 1970 1975 1980 1985 1990 Raw Raza Cavium XLR Octeon Niagara Broadcom 1480 Cell Opteron 4 P Xeon MP Xbox 360 PA-8800 Opteron Tanglewood Power 4 PExtreme Power 6 Yonah P 2 P 3 Itanium P 4 Athlon Itanium 2 1995 2000 2005 20? ?

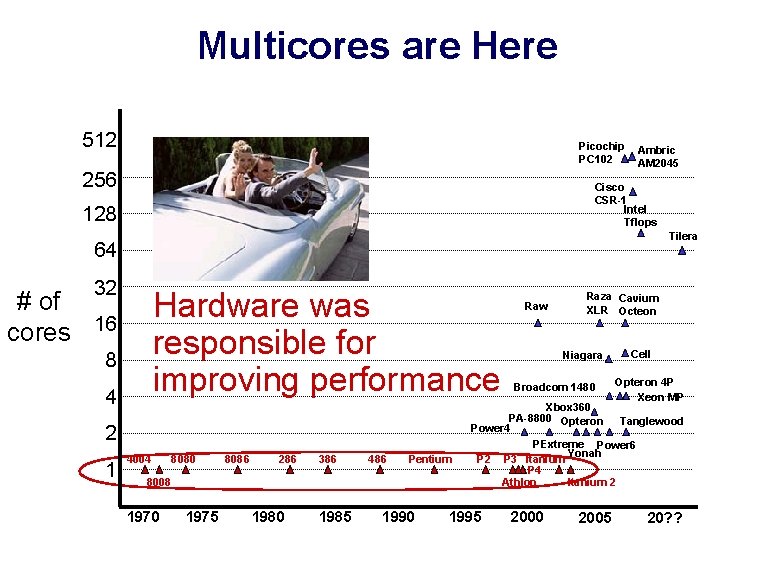

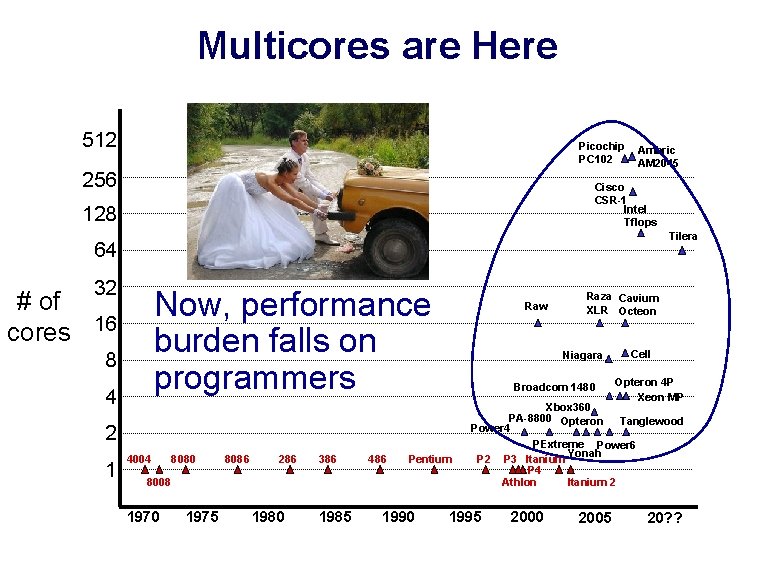

Multicores are Here 512 Picochip PC 102 256 Ambric AM 2045 Cisco CSR-1 Intel Tflops 128 Tilera 64 32 Now, performance burden falls on programmers # of cores 16 8 4 Raw Niagara Broadcom 1480 2 1 4004 8080 8086 286 386 486 Pentium 8008 1970 1975 1980 1985 1990 Raza Cavium XLR Octeon Cell Opteron 4 P Xeon MP Xbox 360 PA-8800 Opteron Tanglewood Power 4 PExtreme Power 6 Yonah P 2 P 3 Itanium P 4 Athlon Itanium 2 1995 2000 2005 20? ?

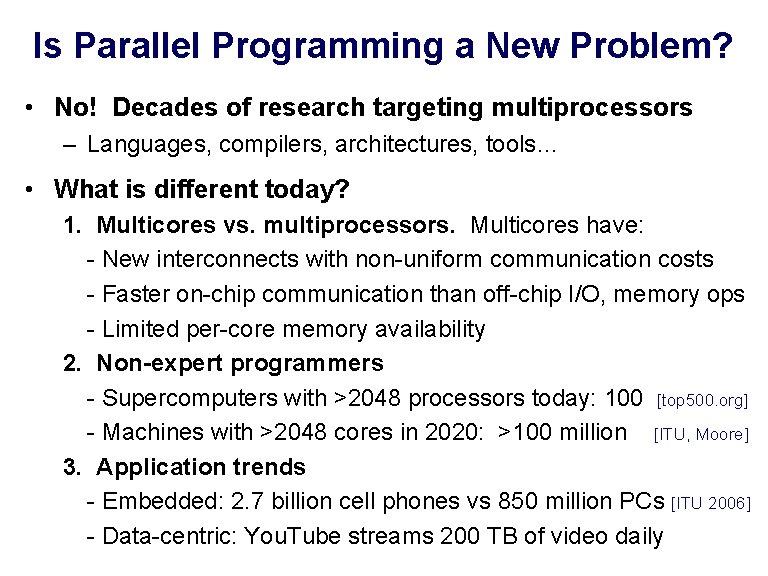

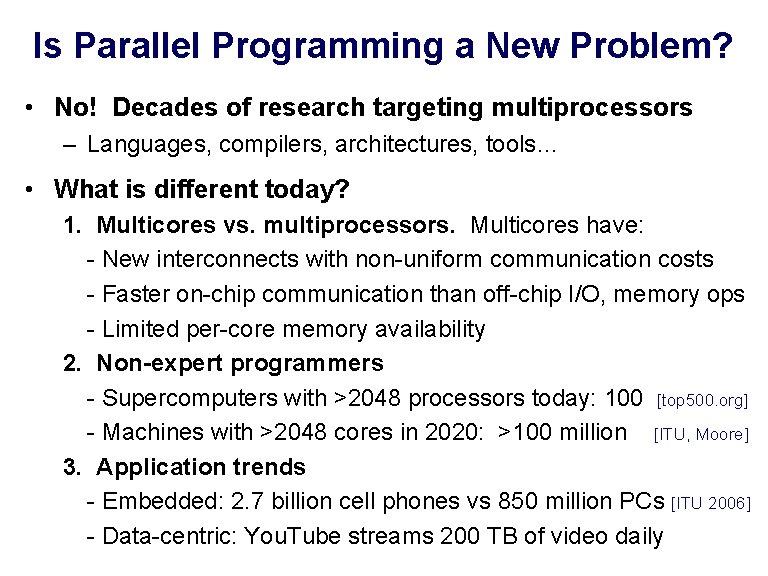

Is Parallel Programming a New Problem?
• No! Decades of research targeting multiprocessors
  – Languages, compilers, architectures, tools…
• What is different today?
  1. Multicores vs. multiprocessors. Multicores have:
    – New interconnects with non-uniform communication costs
    – Faster on-chip communication than off-chip I/O and memory operations
    – Limited per-core memory availability
  2. Non-expert programmers
    – Supercomputers with >2048 processors today: 100 [top500.org]
    – Machines with >2048 cores in 2020: >100 million [ITU, Moore]
  3. Application trends
    – Embedded: 2.7 billion cell phones vs. 850 million PCs [ITU 2006]
    – Data-centric: YouTube streams 200 TB of video daily

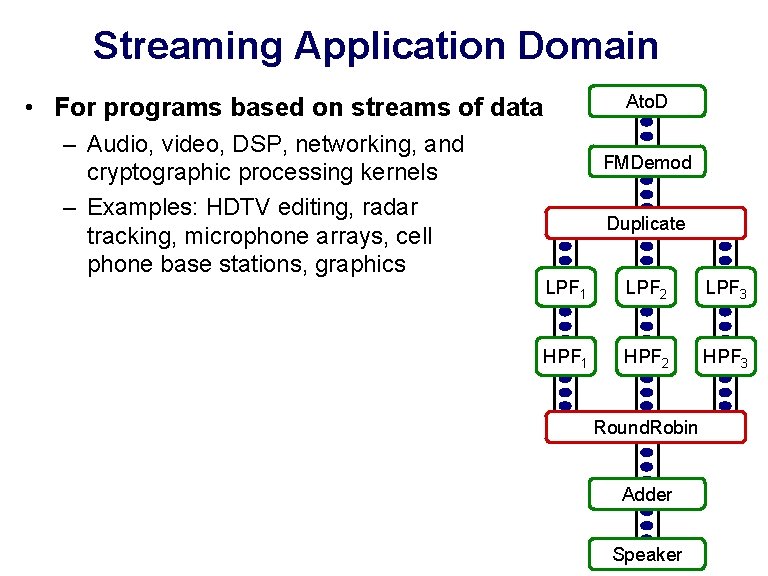

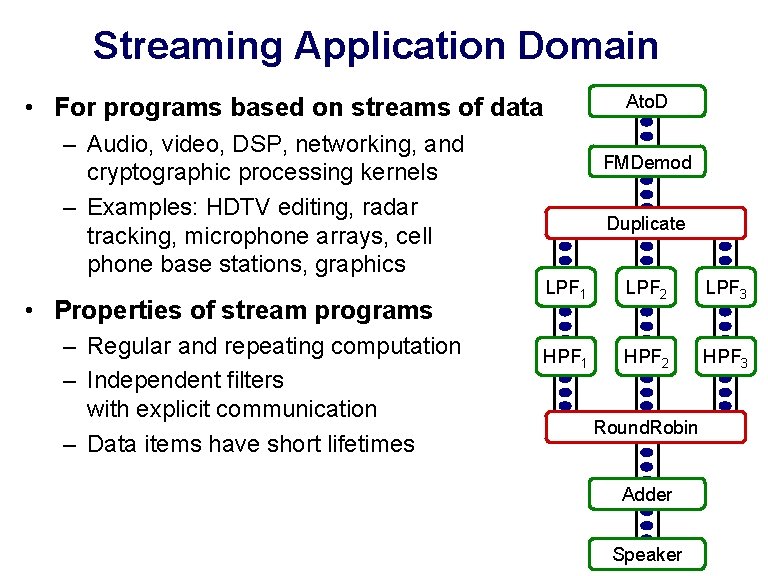

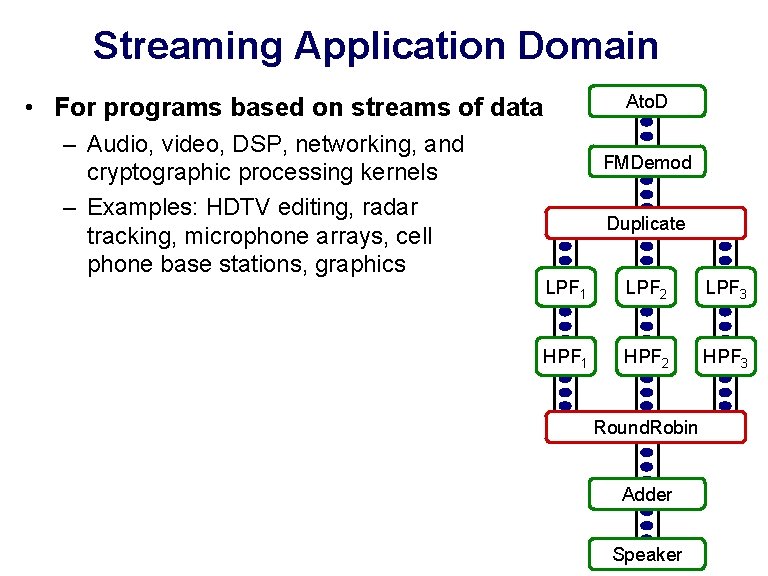

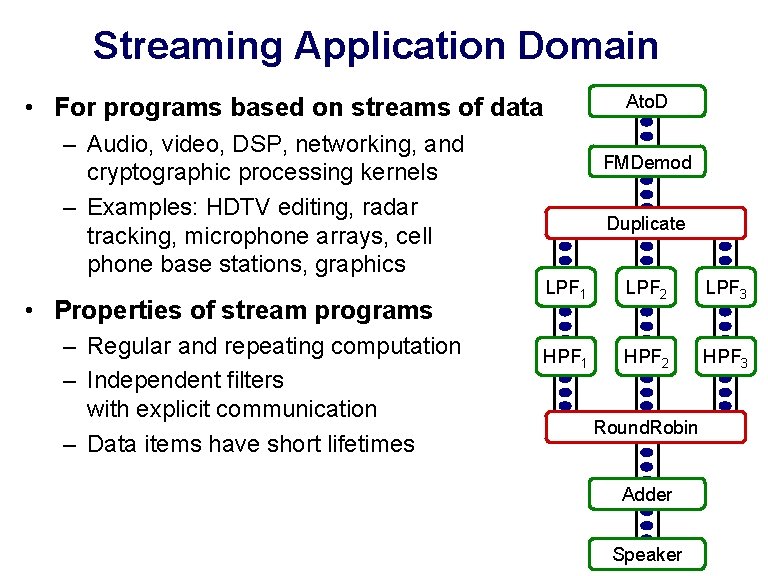

Streaming Application Domain
• For programs based on streams of data
  – Audio, video, DSP, networking, and cryptographic processing kernels
  – Examples: HDTV editing, radar tracking, microphone arrays, cell phone base stations, graphics
• Properties of stream programs
  – Regular and repeating computation
  – Independent filters with explicit communication
  – Data items have short lifetimes
[Figure: FM radio stream graph: AtoD → FMDemod → Duplicate → three bands (LPF 1/HPF 1, LPF 2/HPF 2, LPF 3/HPF 3) → RoundRobin → Adder → Speaker]

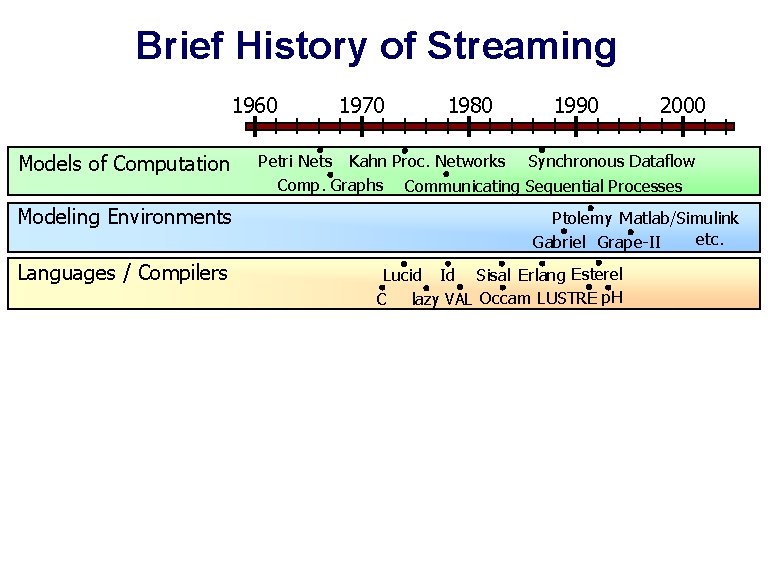

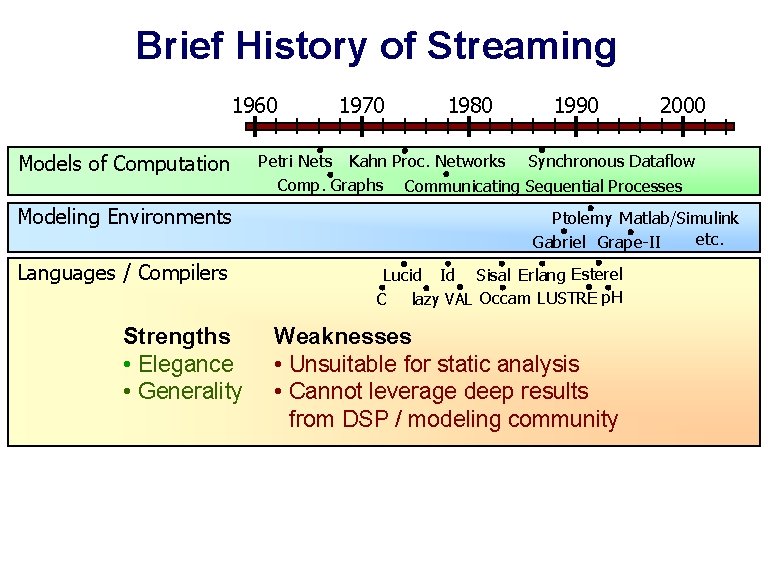

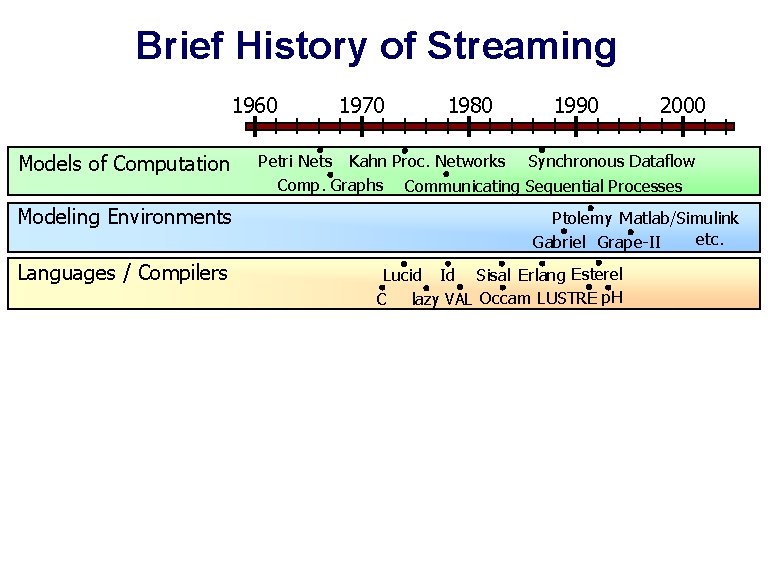

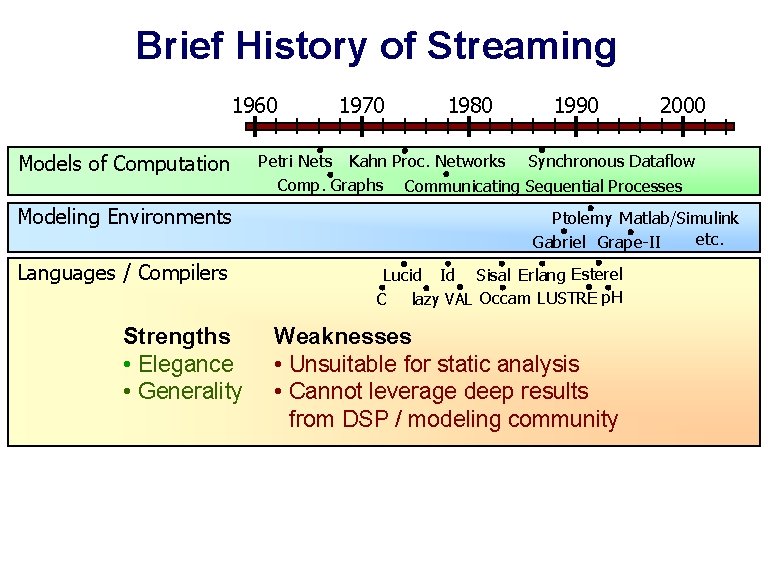

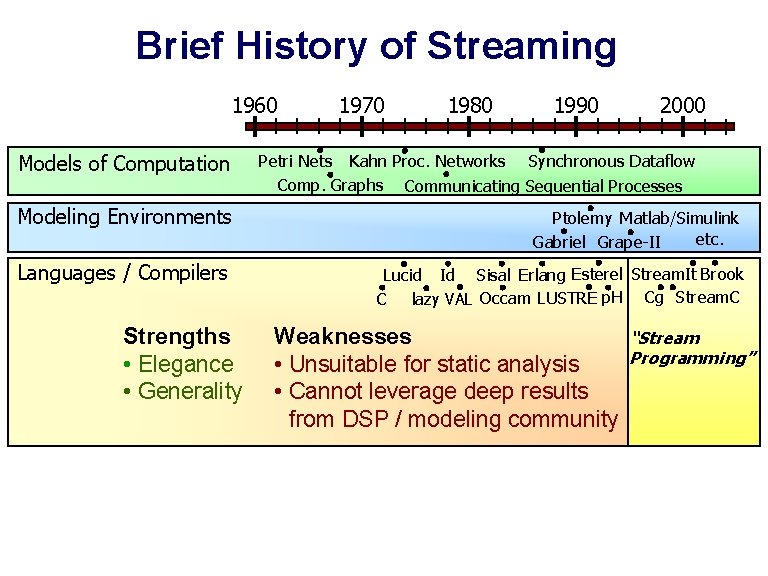

Brief History of Streaming 1960 Models of Computation Modeling Environments Languages / Compilers 1970 1980 1990 2000 Petri Nets Kahn Proc. Networks Synchronous Dataflow Comp. Graphs Communicating Sequential Processes Ptolemy Matlab/Simulink etc. Gabriel Grape-II Lucid Id Sisal Erlang Esterel C lazy VAL Occam LUSTRE p. H

Brief History of Streaming 1960 Models of Computation Modeling Environments Languages / Compilers Strengths • Elegance • Generality 1970 1980 1990 2000 Petri Nets Kahn Proc. Networks Synchronous Dataflow Comp. Graphs Communicating Sequential Processes Ptolemy Matlab/Simulink etc. Gabriel Grape-II Lucid Id Sisal Erlang Esterel C lazy VAL Occam LUSTRE p. H Weaknesses • Unsuitable for static analysis • Cannot leverage deep results from DSP / modeling community

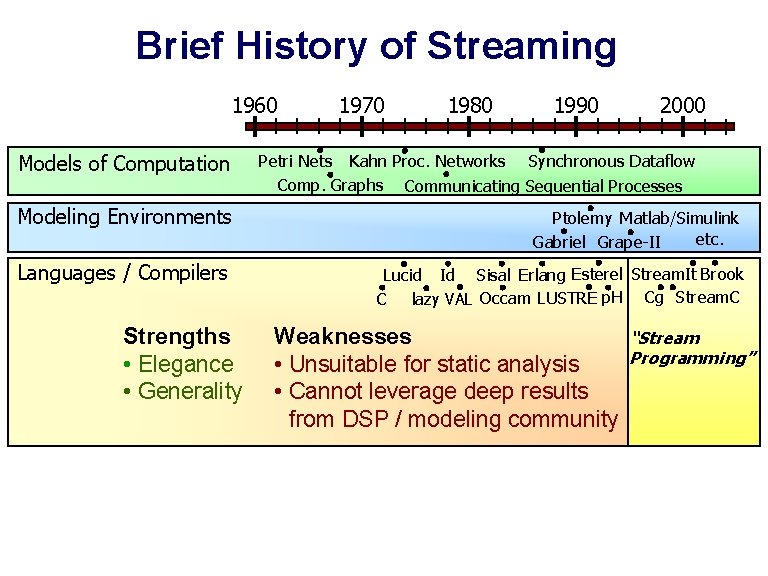

Brief History of Streaming 1960 Models of Computation 1970 1980 1990 2000 Petri Nets Kahn Proc. Networks Synchronous Dataflow Comp. Graphs Communicating Sequential Processes Modeling Environments Ptolemy Matlab/Simulink etc. Gabriel Grape-II Languages / Compilers Lucid Id Sisal Erlang Esterel Stream. It Brook C lazy VAL Occam LUSTRE p. H Cg Stream. C Strengths • Elegance • Generality Weaknesses • Unsuitable for static analysis • Cannot leverage deep results from DSP / modeling community “Stream Programming”

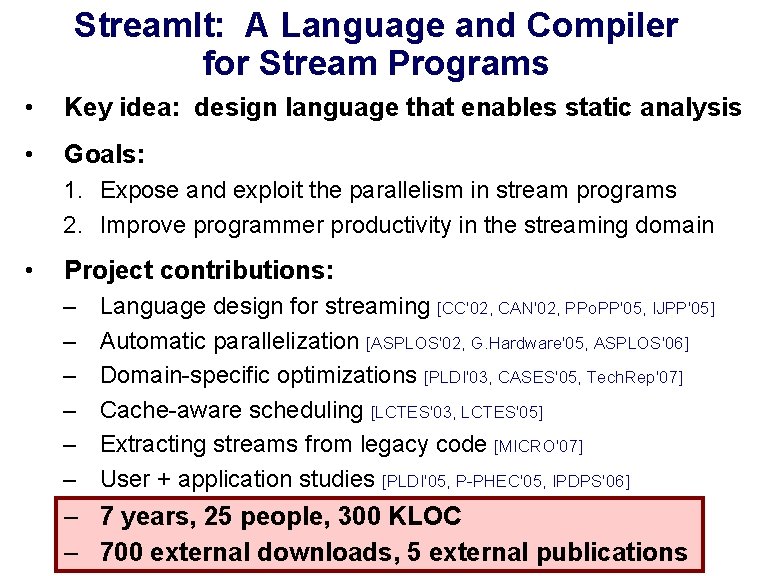

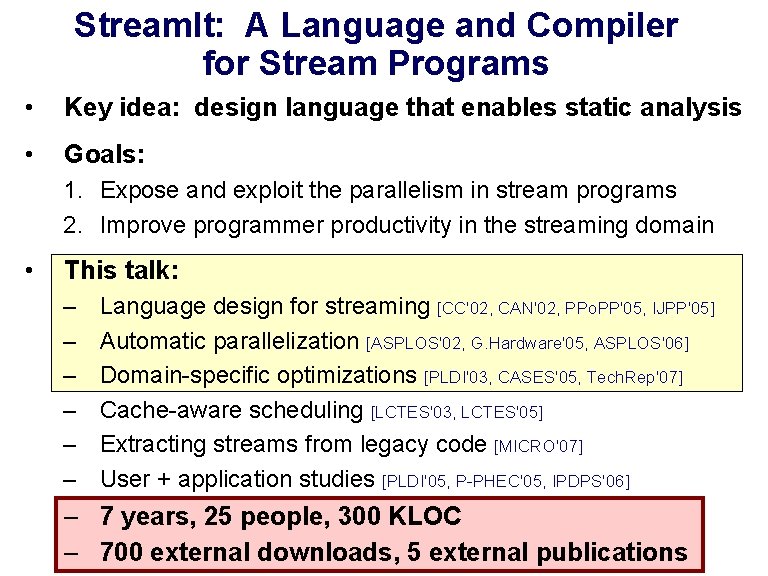

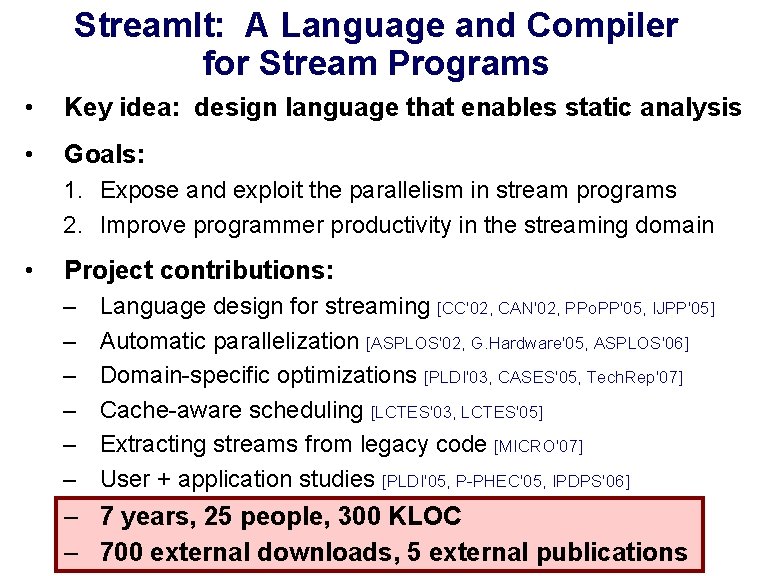

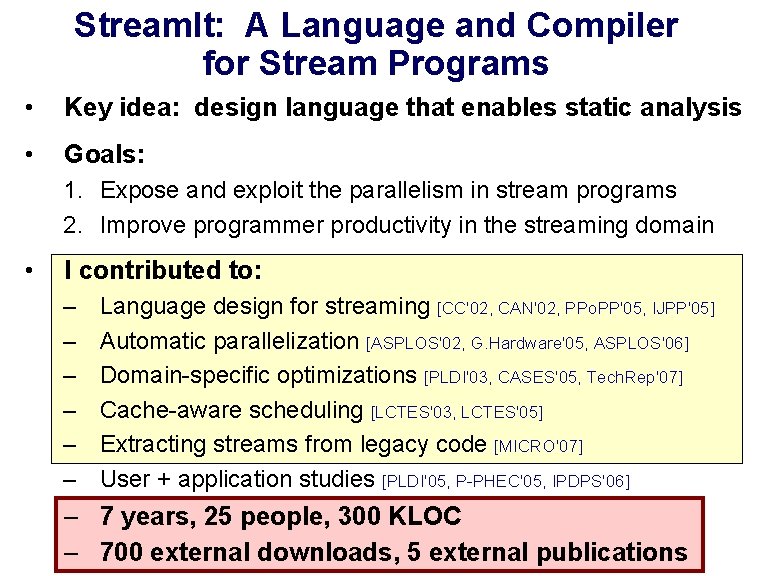

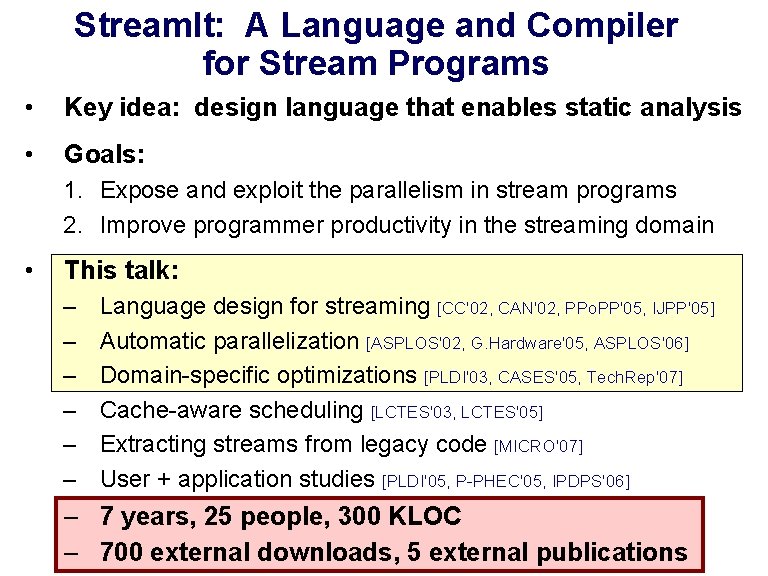

Stream. It: A Language and Compiler for Stream Programs • Key idea: design language that enables static analysis • Goals: 1. Expose and exploit the parallelism in stream programs 2. Improve programmer productivity in the streaming domain • Project contributions: – – – Language design for streaming [CC'02, CAN'02, PPo. PP'05, IJPP'05] Automatic parallelization [ASPLOS'02, G. Hardware'05, ASPLOS'06] Domain-specific optimizations [PLDI'03, CASES'05, Tech. Rep'07] Cache-aware scheduling [LCTES'03, LCTES'05] Extracting streams from legacy code [MICRO'07] User + application studies [PLDI'05, P-PHEC'05, IPDPS'06] – 7 years, 25 people, 300 KLOC – 700 external downloads, 5 external publications

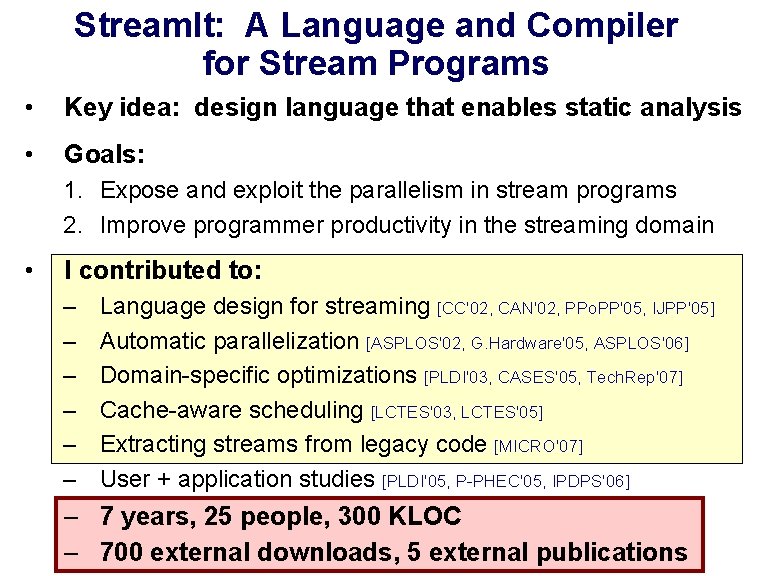

Stream. It: A Language and Compiler for Stream Programs • Key idea: design language that enables static analysis • Goals: 1. Expose and exploit the parallelism in stream programs 2. Improve programmer productivity in the streaming domain • I contributed to: – – – Language design for streaming [CC'02, CAN'02, PPo. PP'05, IJPP'05] Automatic parallelization [ASPLOS'02, G. Hardware'05, ASPLOS'06] Domain-specific optimizations [PLDI'03, CASES'05, Tech. Rep'07] Cache-aware scheduling [LCTES'03, LCTES'05] Extracting streams from legacy code [MICRO'07] User + application studies [PLDI'05, P-PHEC'05, IPDPS'06] – 7 years, 25 people, 300 KLOC – 700 external downloads, 5 external publications

Stream. It: A Language and Compiler for Stream Programs • Key idea: design language that enables static analysis • Goals: 1. Expose and exploit the parallelism in stream programs 2. Improve programmer productivity in the streaming domain • This talk: – – – Language design for streaming [CC'02, CAN'02, PPo. PP'05, IJPP'05] Automatic parallelization [ASPLOS'02, G. Hardware'05, ASPLOS'06] Domain-specific optimizations [PLDI'03, CASES'05, Tech. Rep'07] Cache-aware scheduling [LCTES'03, LCTES'05] Extracting streams from legacy code [MICRO'07] User + application studies [PLDI'05, P-PHEC'05, IPDPS'06] – 7 years, 25 people, 300 KLOC – 700 external downloads, 5 external publications

Part 1: Language Design
Joint work with Michael Gordon
• William Thies, Michal Karczmarek, Saman Amarasinghe (CC'02)
• William Thies, Michal Karczmarek, Janis Sermulins, Rodric Rabbah, Saman Amarasinghe (PPoPP'05)

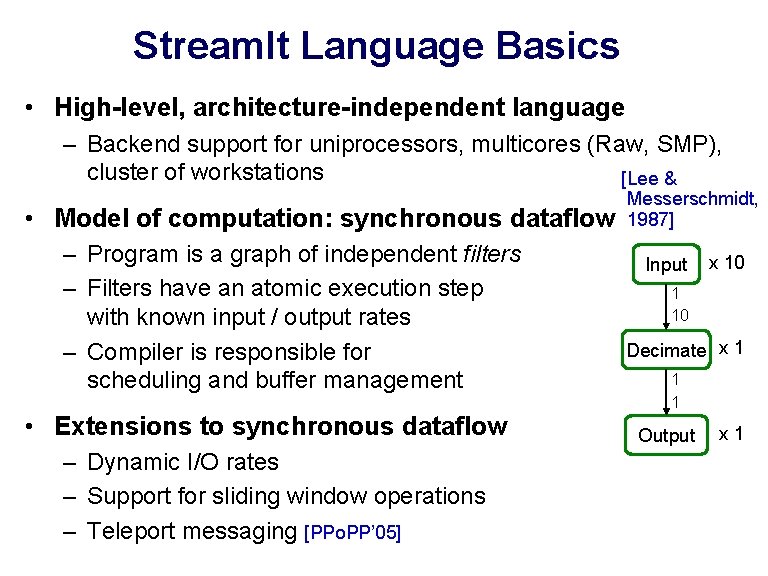

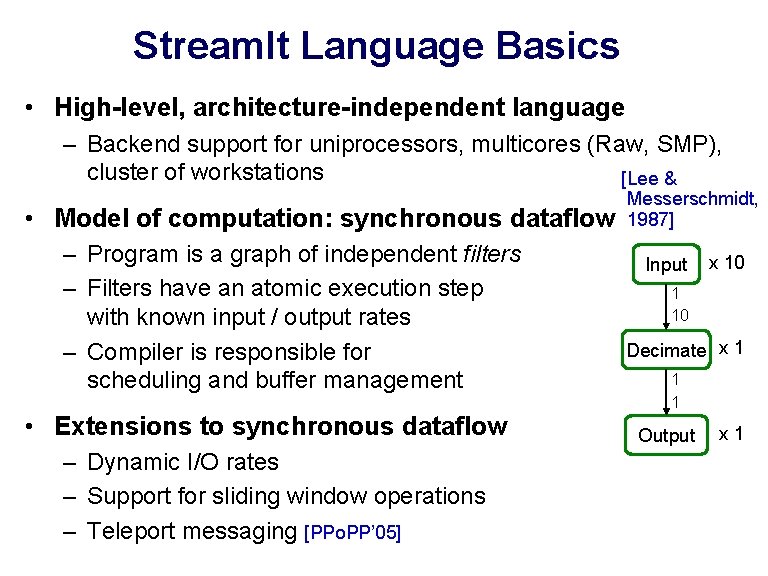

StreamIt Language Basics
• High-level, architecture-independent language
  – Backend support for uniprocessors, multicores (Raw, SMP), and clusters of workstations
• Model of computation: synchronous dataflow [Lee & Messerschmitt, 1987]
  – Program is a graph of independent filters
  – Filters have an atomic execution step with known input / output rates
  – Compiler is responsible for scheduling and buffer management
• Extensions to synchronous dataflow
  – Dynamic I/O rates
  – Support for sliding window operations
  – Teleport messaging [PPoPP'05]
[Figure: Input (push 1, fires ×10) → Decimate (pop 10, push 1, fires ×1) → Output (pop 1, fires ×1)]
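
Because every filter declares fixed pop and push rates, a steady-state schedule can be computed before the program runs by solving one balance equation per channel: producer firings × push = consumer firings × pop. A minimal sketch in Python, restricted to a simple pipeline; the function name `steady_state` and the `(pop, push)` encoding are assumptions for illustration, not StreamIt's scheduler:

```python
from fractions import Fraction
from math import gcd, lcm  # math.lcm requires Python 3.9+
from typing import List, Tuple


def steady_state(rates: List[Tuple[int, int]]) -> List[int]:
    """Minimal firing counts for a pipeline of SDF filters.

    rates[i] = (pop_i, push_i). Only the first filter may pop 0 (a source);
    every downstream filter must pop at least one item.
    """
    reps = [Fraction(1)]
    for (_, push_prev), (pop_next, _) in zip(rates, rates[1:]):
        # Balance equation for the channel between the two filters:
        # reps_prev * push_prev == reps_next * pop_next
        reps.append(reps[-1] * push_prev / pop_next)
    # Scale the rational solution up to the smallest all-integer one.
    scale = 1
    for r in reps:
        scale = lcm(scale, r.denominator)
    ints = [int(r * scale) for r in reps]
    g = 0
    for v in ints:
        g = gcd(g, v)
    return [v // g for v in ints]


# Input (push 1) -> Decimate (pop 10, push 1) -> Output (pop 1)
print(steady_state([(0, 1), (10, 1), (1, 0)]))  # [10, 1, 1]
```

The result matches the figure: Input fires 10 times for each firing of Decimate and Output. Splitjoins and feedback loops need the same equations solved over a graph rather than a chain.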

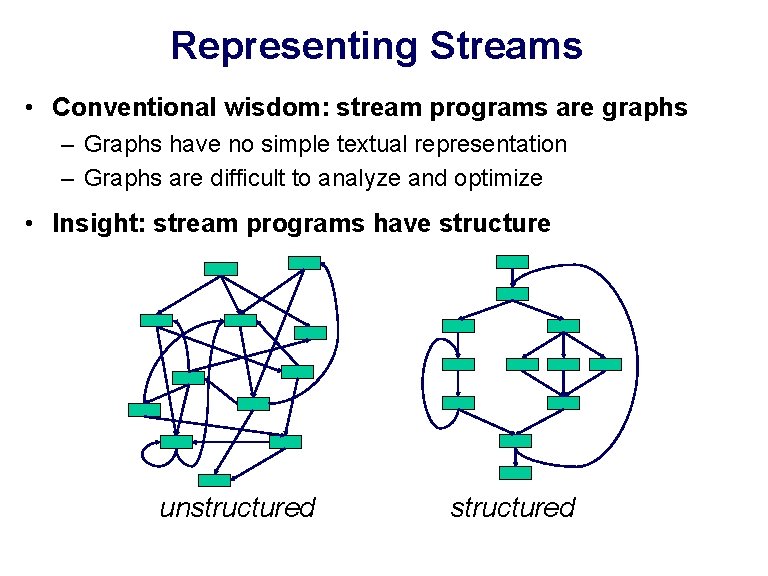

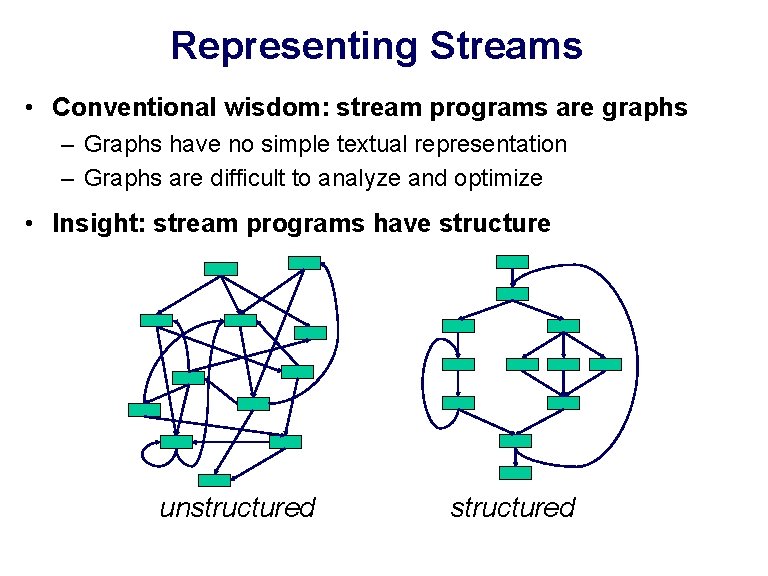

Representing Streams
• Conventional wisdom: stream programs are graphs
  – Graphs have no simple textual representation
  – Graphs are difficult to analyze and optimize
• Insight: stream programs have structure
[Figure: an unstructured stream graph contrasted with a structured one]

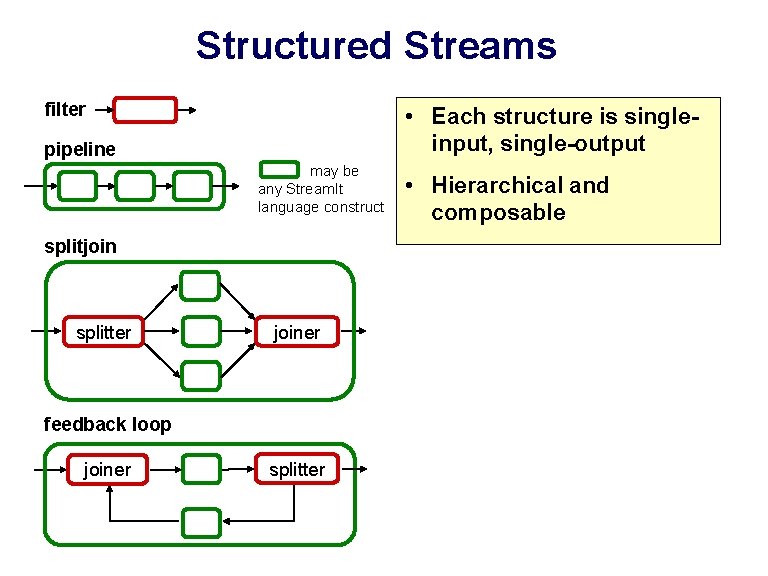

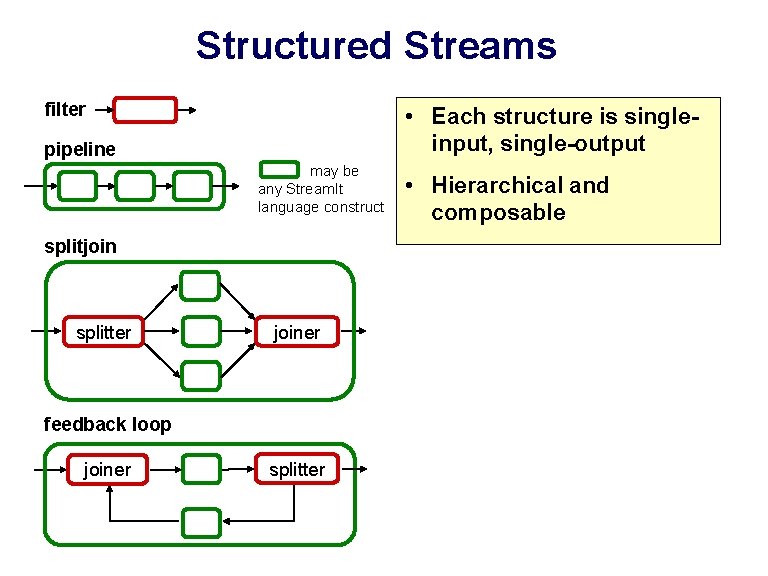

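Structured Streams
[Figure: the four constructs: filter; pipeline; splitjoin (splitter, parallel children, joiner); feedback loop (joiner, body, splitter, with a loop back to the joiner)]
• Each structure is single-input, single-output
• Each child may be any StreamIt language construct
• Hierarchical and composable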
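
Because every construct is single-input, single-output, each one can be treated as a value of the same type and nested freely. A minimal Python sketch of that idea, under a simplifying assumption: a "stream" is modeled as a function from a finite batch of input items to a batch of output items, not a true unbounded stream. The names `filter_`, `pipeline`, and `splitjoin` are hypothetical, only `split duplicate` / `join roundrobin` is modeled, and feedback loops are omitted:

```python
from typing import Callable, List

# A stream maps a finite batch of input items to a batch of output items.
Stream = Callable[[List[float]], List[float]]


def filter_(pop: int, work: Callable[[List[float]], List[float]]) -> Stream:
    """Fire `work` on each complete window of `pop` items; leftovers are dropped."""
    def run(items: List[float]) -> List[float]:
        out: List[float] = []
        for i in range(0, len(items) - pop + 1, pop):
            out.extend(work(items[i:i + pop]))
        return out
    return run


def pipeline(*children: Stream) -> Stream:
    """Feed each child's output into the next child."""
    def run(items: List[float]) -> List[float]:
        for child in children:
            items = child(items)
        return items
    return run


def splitjoin(*children: Stream) -> Stream:
    """split duplicate; join roundrobin (one item from each child in turn)."""
    def run(items: List[float]) -> List[float]:
        outs = [child(list(items)) for child in children]
        n = min(len(o) for o in outs)
        return [o[k] for k in range(n) for o in outs]
    return run


# Every construct is itself a Stream, so constructs compose hierarchically.
scale2 = filter_(1, lambda w: [2 * w[0]])
scale3 = filter_(1, lambda w: [3 * w[0]])
adder = filter_(2, lambda w: [w[0] + w[1]])
program = pipeline(splitjoin(scale2, scale3), adder)
print(program([1, 2, 3]))  # [5, 10, 15]
```

The splitjoin produces `[2, 3, 4, 6, 6, 9]`, and the adder sums each pair, which mirrors the Duplicate → bands → RoundRobin → Adder shape of the FM radio.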

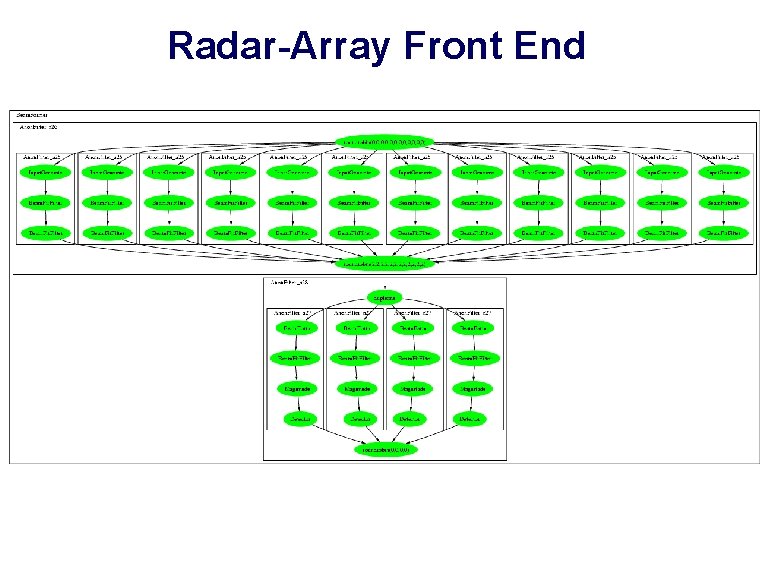

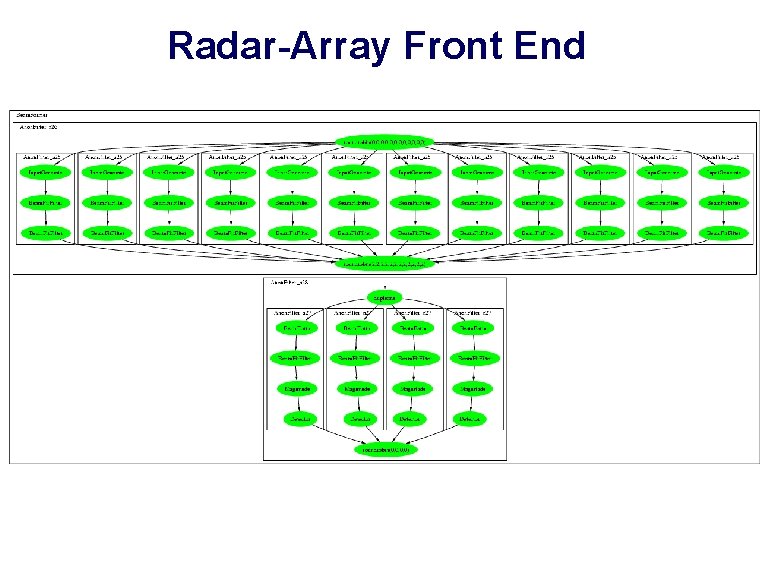

Radar-Array Front End

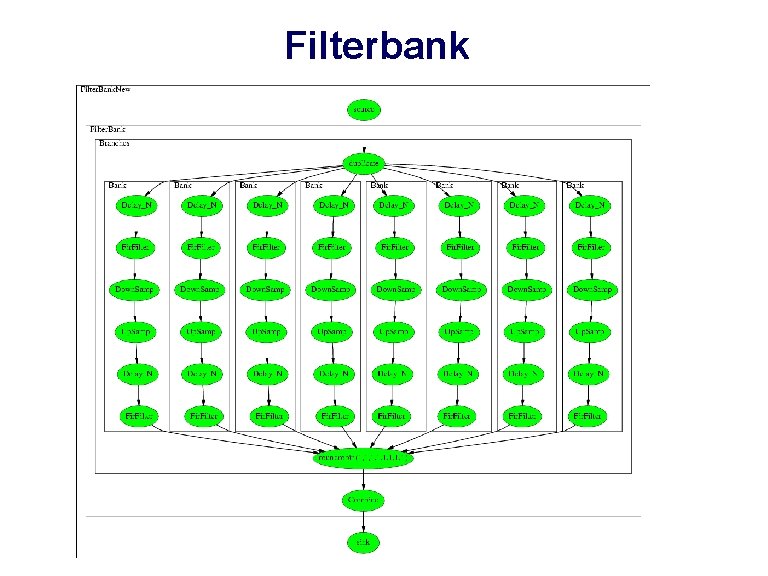

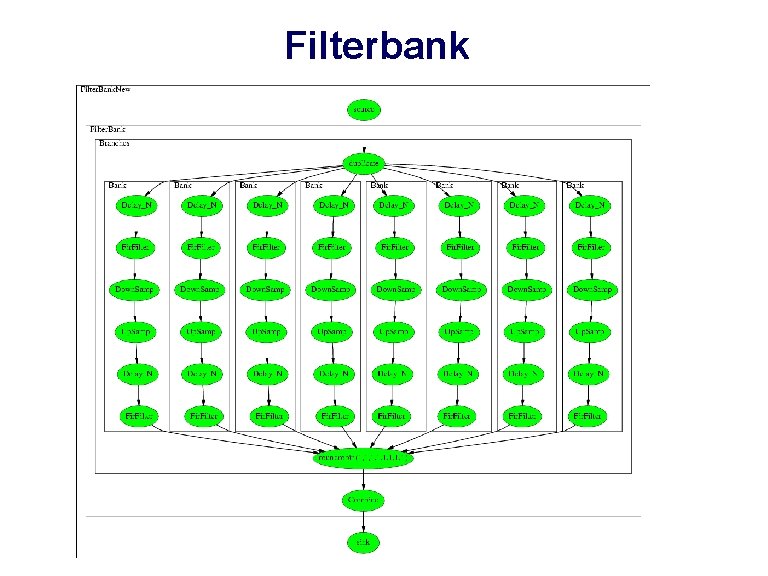

Filterbank

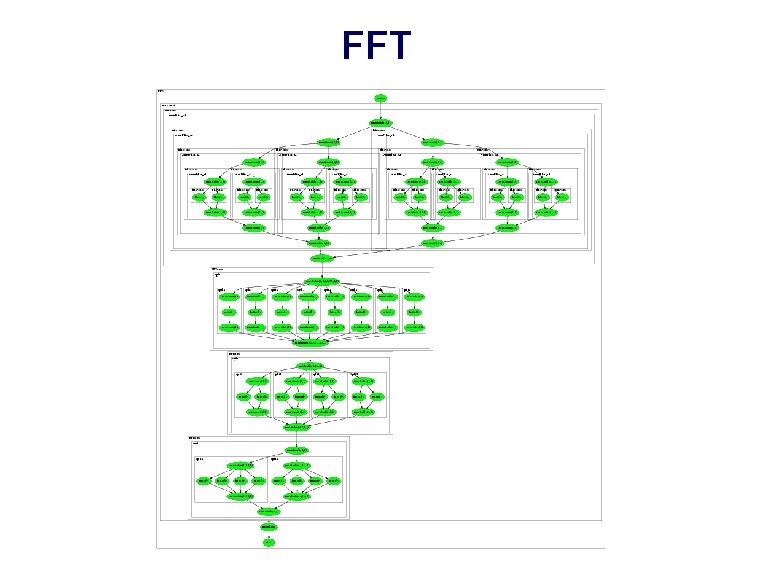

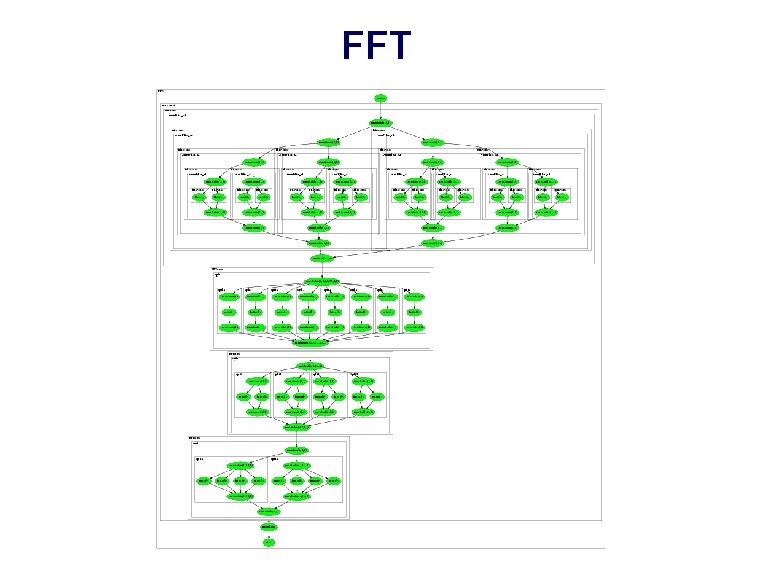

FFT

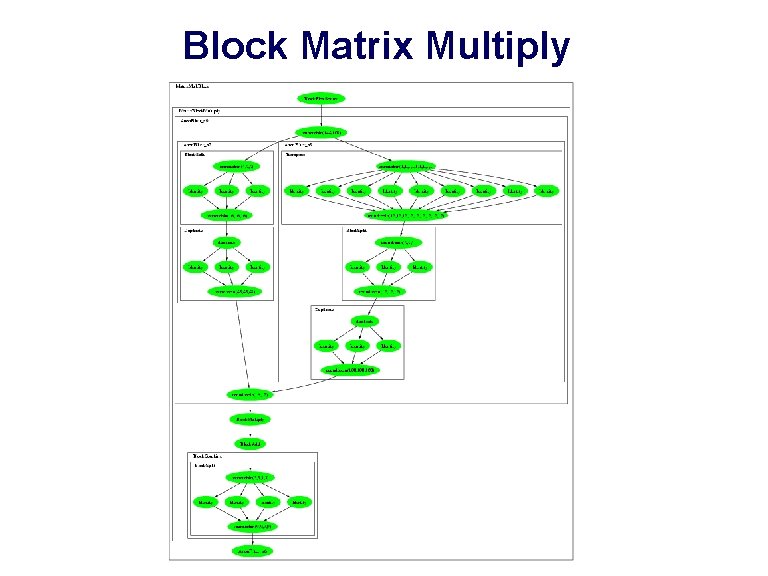

Block Matrix Multiply

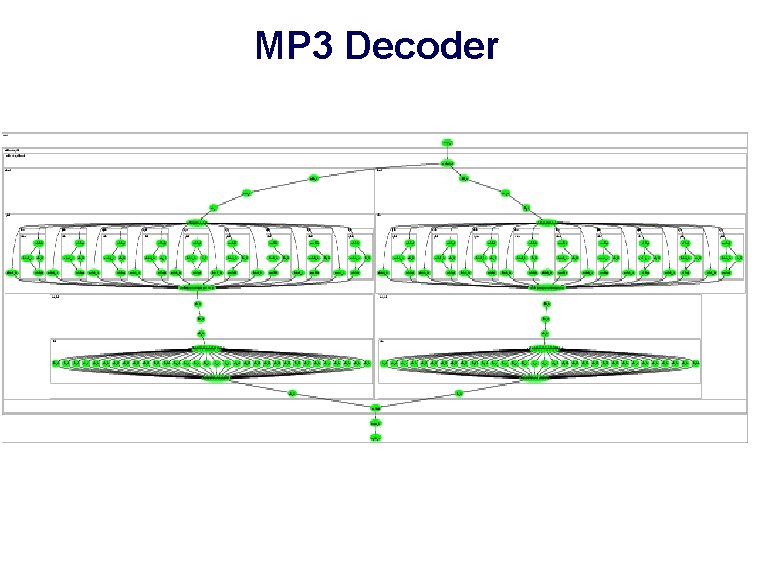

MP 3 Decoder

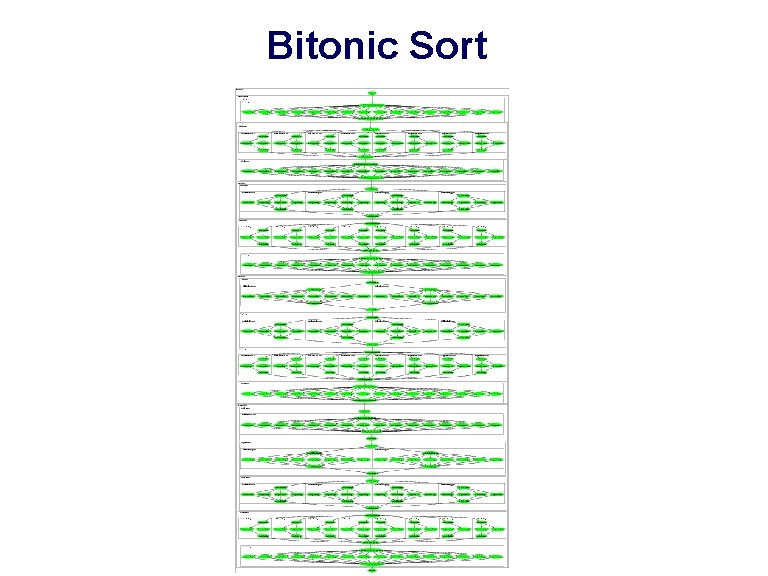

Bitonic Sort

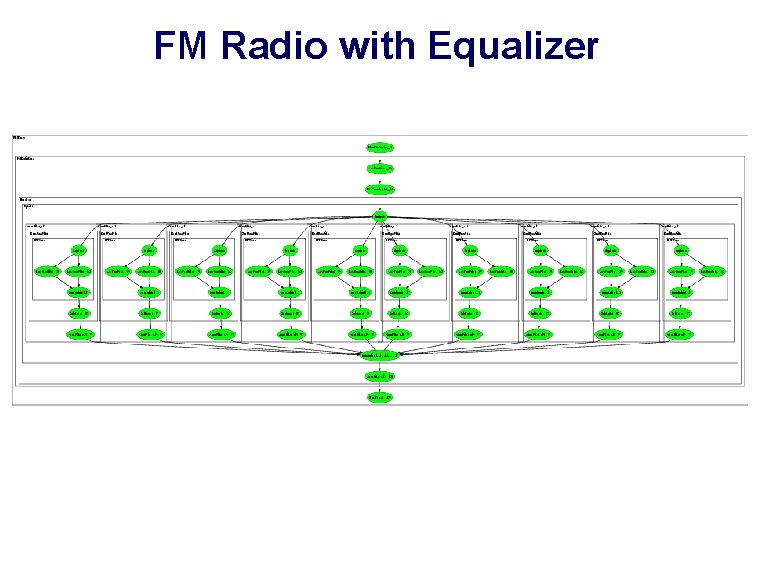

FM Radio with Equalizer

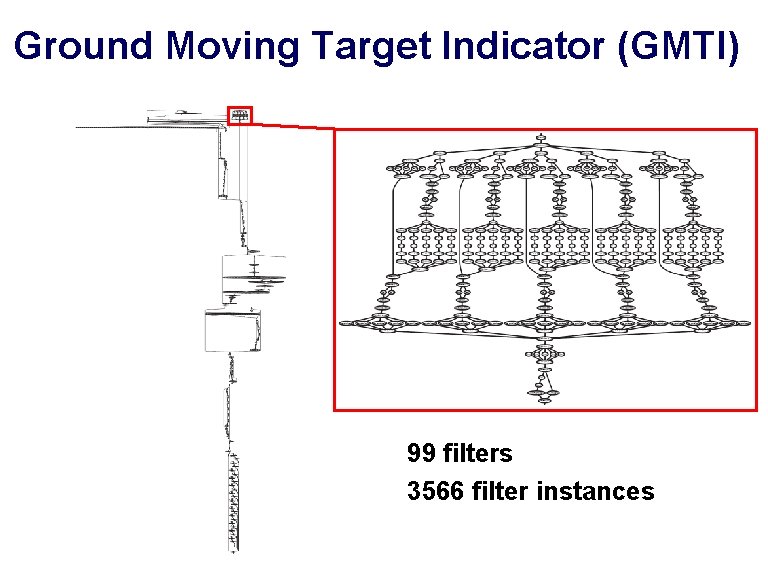

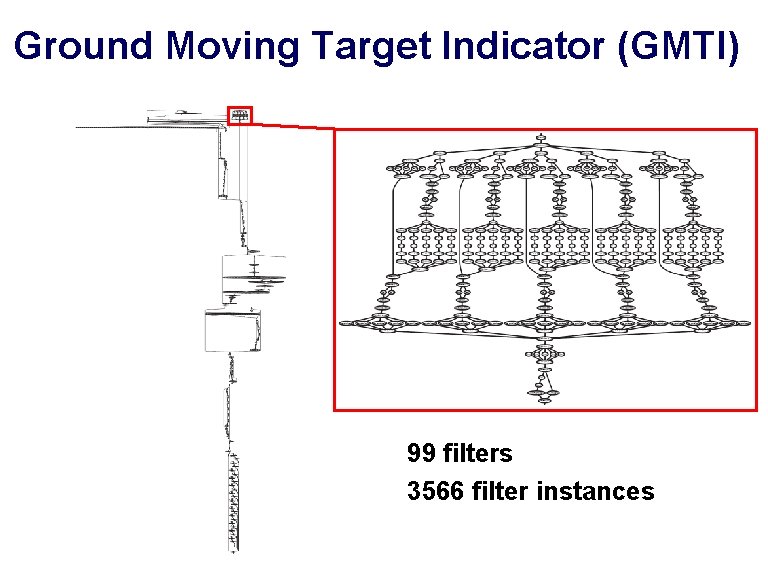

Ground Moving Target Indicator (GMTI) 99 filters 3566 filter instances

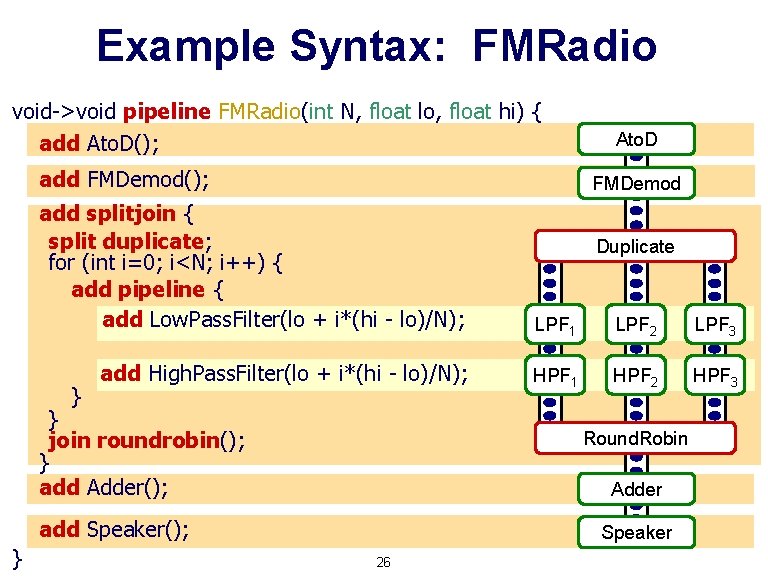

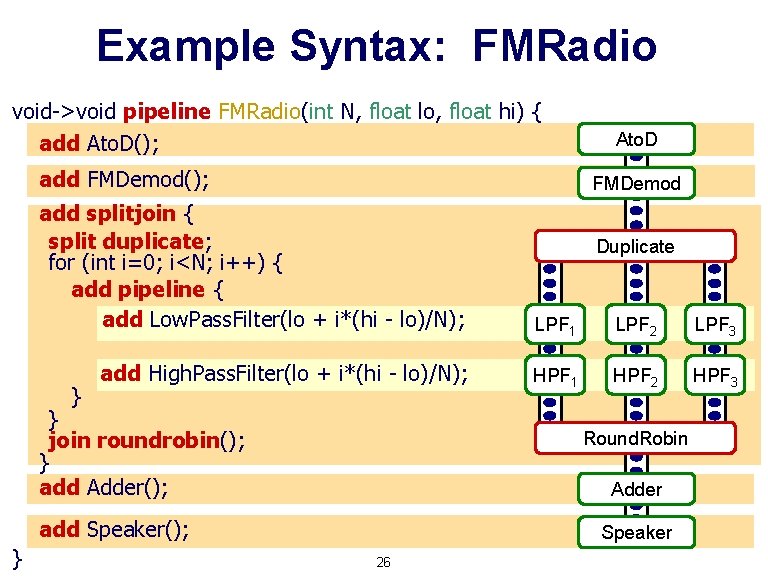

Example Syntax: FMRadio

    void->void pipeline FMRadio(int N, float lo, float hi) {
        add AtoD();
        add FMDemod();
        add splitjoin {
            split duplicate;
            for (int i=0; i<N; i++) {
                add pipeline {
                    add LowPassFilter(lo + i*(hi - lo)/N);
                    add HighPassFilter(lo + i*(hi - lo)/N);
                }
            }
            join roundrobin();
        }
        add Adder();
        add Speaker();
    }

[Figure: the resulting stream graph: AtoD → FMDemod → Duplicate → N parallel LPF/HPF pipelines → RoundRobin → Adder → Speaker]

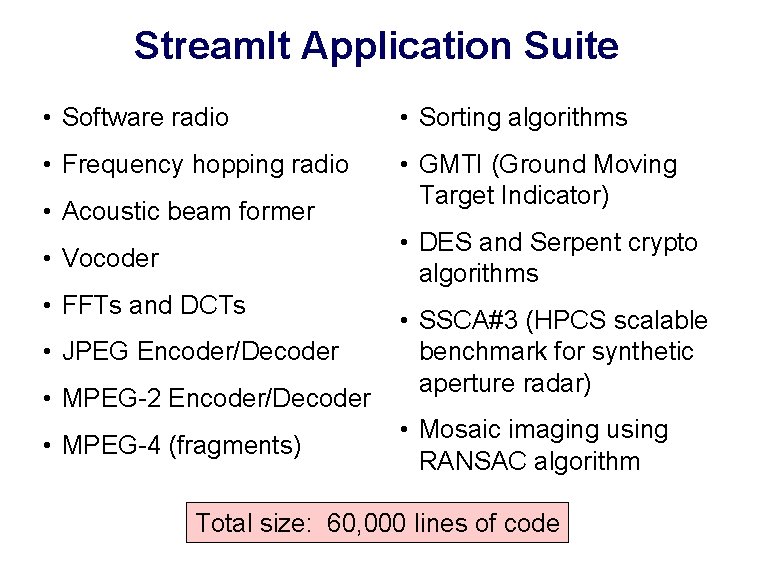

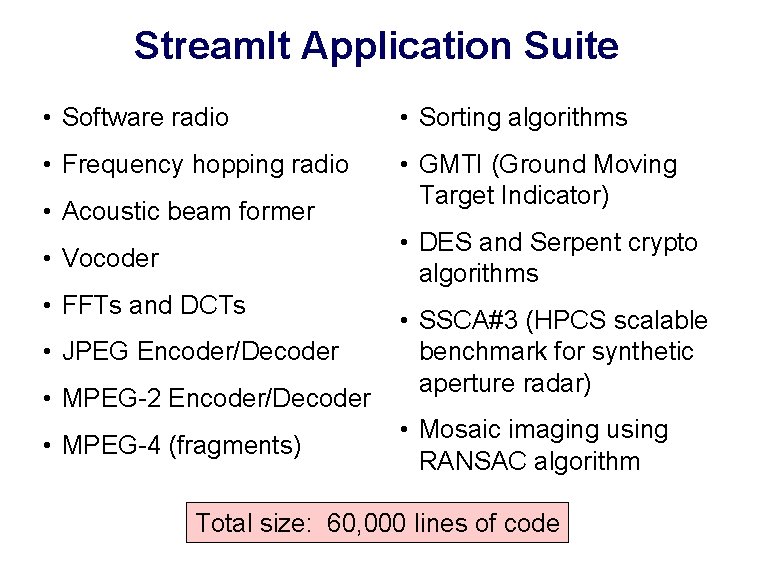

StreamIt Application Suite
• Software radio
• Frequency hopping radio
• Acoustic beam former
• Vocoder
• FFTs and DCTs
• JPEG Encoder/Decoder
• MPEG-2 Encoder/Decoder
• MPEG-4 (fragments)
• Sorting algorithms
• GMTI (Ground Moving Target Indicator)
• DES and Serpent crypto algorithms
• SSCA#3 (HPCS scalable benchmark for synthetic aperture radar)
• Mosaic imaging using RANSAC algorithm
Total size: 60,000 lines of code

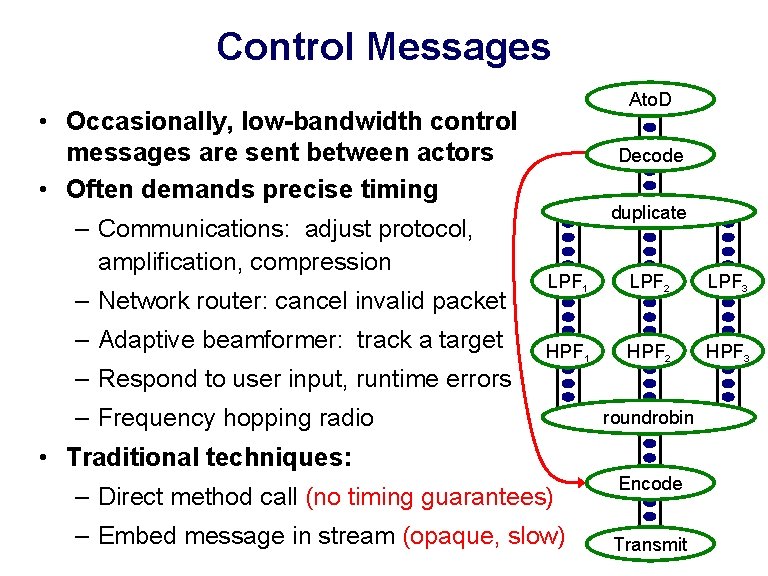

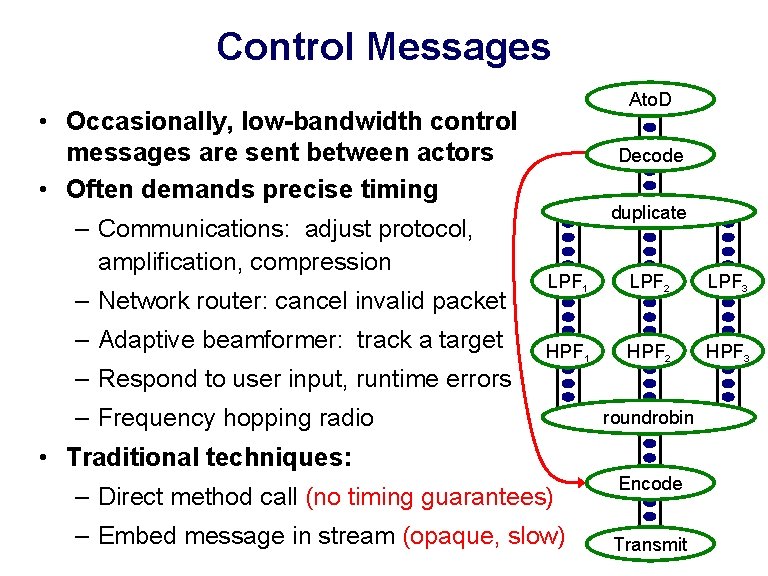

Control Messages
• Occasionally, low-bandwidth control messages are sent between actors
• These often demand precise timing:
  – Communications: adjust protocol, amplification, compression
  – Network router: cancel invalid packet
  – Adaptive beamformer: track a target
  – Respond to user input, runtime errors
  – Frequency hopping radio
• Traditional techniques:
  – Direct method call (no timing guarantees)
  – Embed message in stream (opaque, slow)
[Figure: AtoD → Decode → duplicate → LPF/HPF bands → roundrobin → Encode → Transmit]

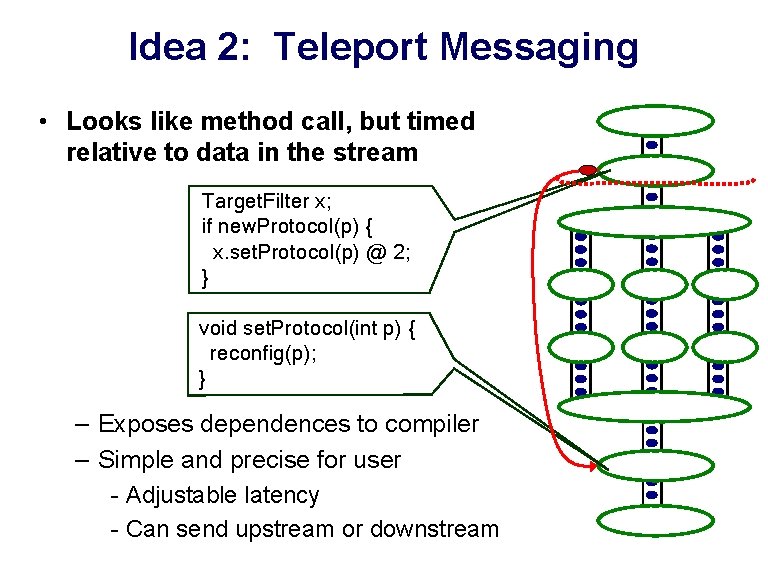

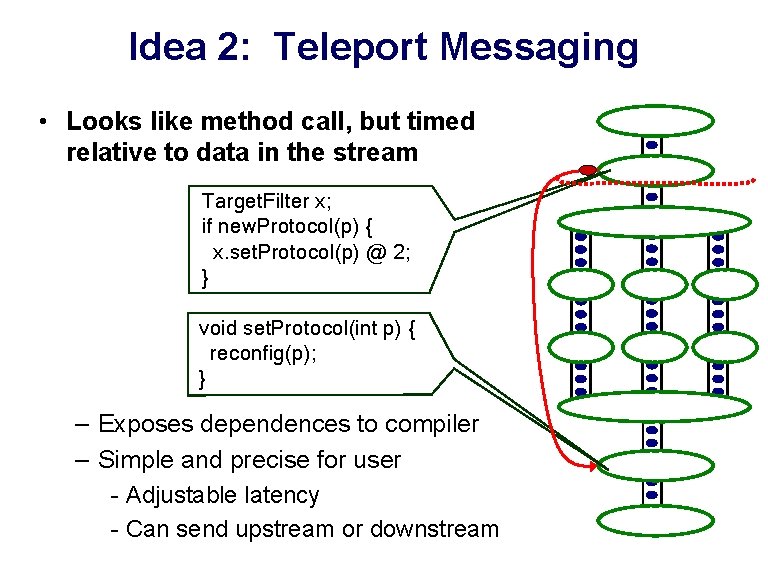

Idea 2: Teleport Messaging
• Looks like a method call, but timed relative to data in the stream

    TargetFilter x;
    if (newProtocol(p)) {
        x.setProtocol(p) @ 2;
    }

    void setProtocol(int p) {
        reconfig(p);
    }

  – Exposes dependences to the compiler
  – Simple and precise for the user
  – Adjustable latency
  – Can send upstream or downstream
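
To make "timed relative to data" concrete, here is a minimal Python sketch under a strong simplifying assumption: sender and receiver each fire once per data item (1:1 rates, downstream message), so `@ 2` means the receiver applies the new protocol just before the firing that handles the item the sender produces two firings later. The `Receiver` class, the `run` function, and the protocol tagging are hypothetical; StreamIt's actual delivery rule is defined in terms of data dependences and also covers mismatched rates and upstream messages:

```python
from typing import Dict, List, Tuple


class Receiver:
    """Hypothetical downstream filter with a reconfigurable protocol."""

    def __init__(self) -> None:
        self.protocol = 0

    def set_protocol(self, p: int) -> None:
        self.protocol = p

    def work(self, item: int) -> Tuple[int, int]:
        # Tag each item with the protocol in effect when it was processed.
        return (item, self.protocol)


def run(items: List[int], sends: Dict[int, int], latency: int) -> List[Tuple[int, int]]:
    """Sender and receiver both fire once per item (1:1 rates assumed).

    `sends` maps a sender firing index n to a new protocol value; the message
    is delivered just before the receiver's firing n + latency.
    """
    rx = Receiver()
    pending: Dict[int, List[int]] = {}
    out: List[Tuple[int, int]] = []
    for n, item in enumerate(items):
        if n in sends:  # sender firing n executes rx.setProtocol(p) @ latency
            pending.setdefault(n + latency, []).append(sends[n])
        for p in pending.pop(n, []):  # deliver messages due before this firing
            rx.set_protocol(p)
        out.append(rx.work(item))
    return out


print(run([10, 11, 12, 13, 14], {1: 7}, latency=2))
# [(10, 0), (11, 0), (12, 0), (13, 7), (14, 7)]
```

Unlike a direct method call, the switch lands at a deterministic point in the data: item 13 is always the first one processed under the new protocol, regardless of how the filters are scheduled.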

Part 2: Automatic Parallelization
Joint work with Michael Gordon
• Michael I. Gordon, William Thies, Saman Amarasinghe (ASPLOS'06)
• Michael I. Gordon, William Thies, Michal Karczmarek, Jasper Lin, Ali S. Meli, Andrew A. Lamb, Chris Leger, Jeremy Wong, Henry Hoffmann, David Maze, Saman Amarasinghe (ASPLOS'02)

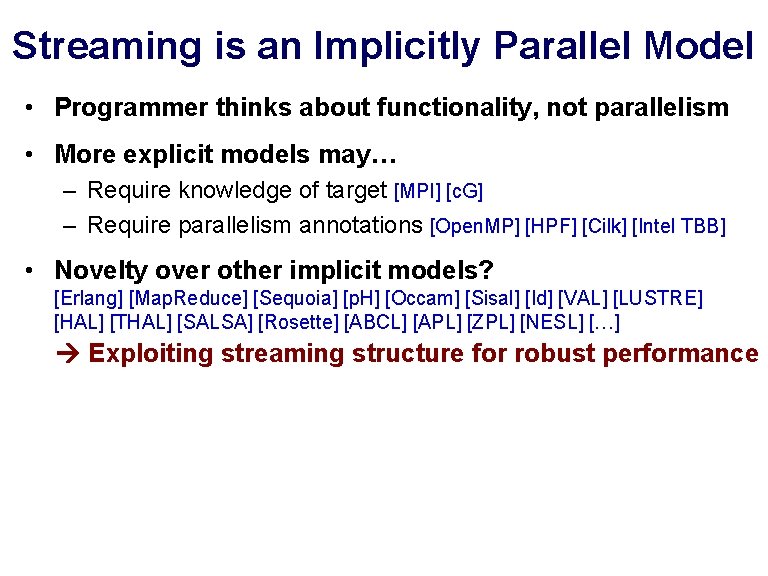

Streaming is an Implicitly Parallel Model
• Programmer thinks about functionality, not parallelism
• More explicit models may…
  – Require knowledge of target [MPI] [cG]
  – Require parallelism annotations [OpenMP] [HPF] [Cilk] [Intel TBB]
• Novelty over other implicit models? [Erlang] [MapReduce] [Sequoia] [pH] [Occam] [Sisal] [Id] [VAL] [LUSTRE] [HAL] [THAL] [SALSA] [Rosette] [ABCL] [APL] [ZPL] [NESL] […]
  – Exploiting streaming structure for robust performance

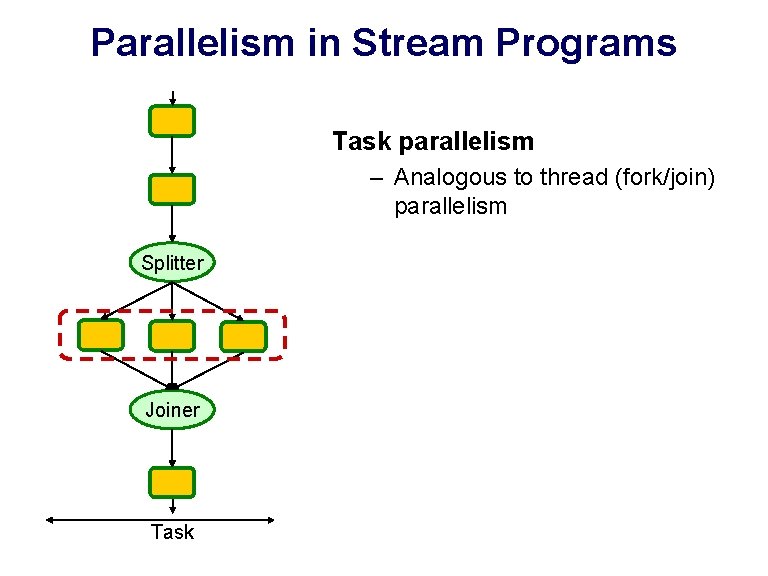

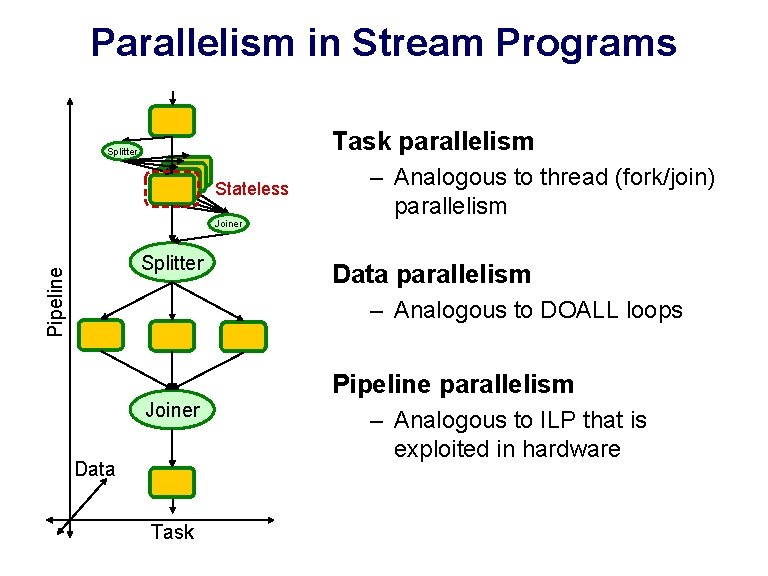

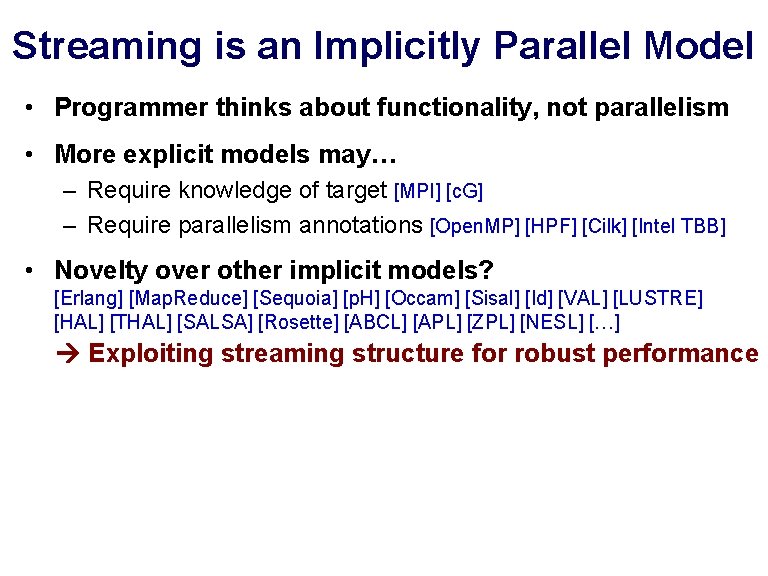

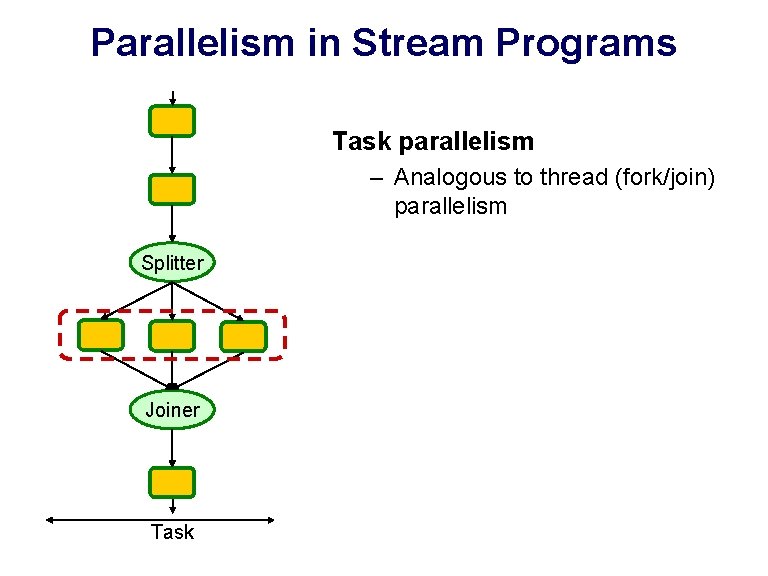

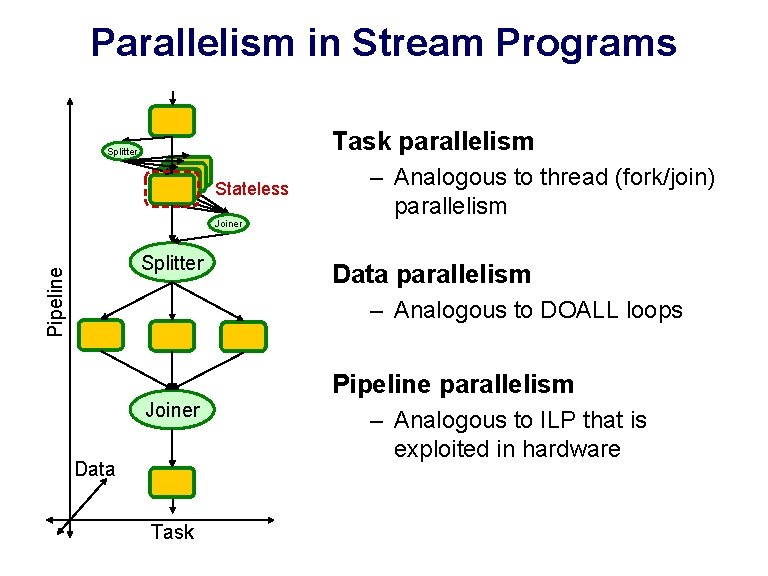

Parallelism in Stream Programs
[Figure: a stream graph highlighting task parallelism (branches between a Splitter and a Joiner), data parallelism (stateless filters), and pipeline parallelism (producer/consumer chains)]
• Task parallelism
  – Analogous to thread (fork/join) parallelism
• Data parallelism
  – Analogous to DOALL loops
  – Peel iterations of a filter and place them within a scatter/gather pair (fission)
  – Cannot parallelize filters with state
• Pipeline parallelism
  – Between producers and consumers
  – Stateful filters can be parallelized
  – Analogous to ILP that is exploited in hardware
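
Fission can be sketched in a few lines: deal whole firings of a stateless filter round-robin to k replicas, run the replicas independently, and let a round-robin joiner restore the original order. A minimal Python sketch, assuming a stateless filter with fixed pop and push rates; `run_filter`, `fission`, and `pair_sum` are illustrative names, not compiler APIs:

```python
from typing import Callable, List

Work = Callable[[List[int]], List[int]]


def run_filter(work: Work, pop: int, items: List[int]) -> List[int]:
    """Sequential execution: one firing per complete window of `pop` items."""
    out: List[int] = []
    for i in range(0, len(items) - pop + 1, pop):
        out.extend(work(items[i:i + pop]))
    return out


def fission(work: Work, pop: int, push: int, items: List[int], k: int) -> List[int]:
    """k replicas of a stateless filter between roundrobin(pop) and roundrobin(push)."""
    firings = len(items) // pop
    lanes: List[List[int]] = [[] for _ in range(k)]
    for f in range(firings):  # splitter: firing f's window goes to replica f % k
        lanes[f % k].extend(items[f * pop:(f + 1) * pop])
    # Replicas share nothing, so these calls could run on separate cores.
    outs = [run_filter(work, pop, lane) for lane in lanes]
    joined: List[int] = []
    taken = [0] * k
    for f in range(firings):  # joiner: collect each firing's outputs in order
        r = f % k
        joined.extend(outs[r][taken[r]:taken[r] + push])
        taken[r] += push
    return joined


def pair_sum(window: List[int]) -> List[int]:
    return [window[0] + window[1]]  # stateless: pop 2, push 1


data = list(range(10))
print(run_filter(pair_sum, 2, data))       # [1, 5, 9, 13, 17]
print(fission(pair_sum, 2, 1, data, k=3))  # [1, 5, 9, 13, 17]
```

The equality only holds because `pair_sum` keeps no state between firings; a filter that carries a running value would see a different history in each replica, which is why data parallelism cannot be applied to stateful filters.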

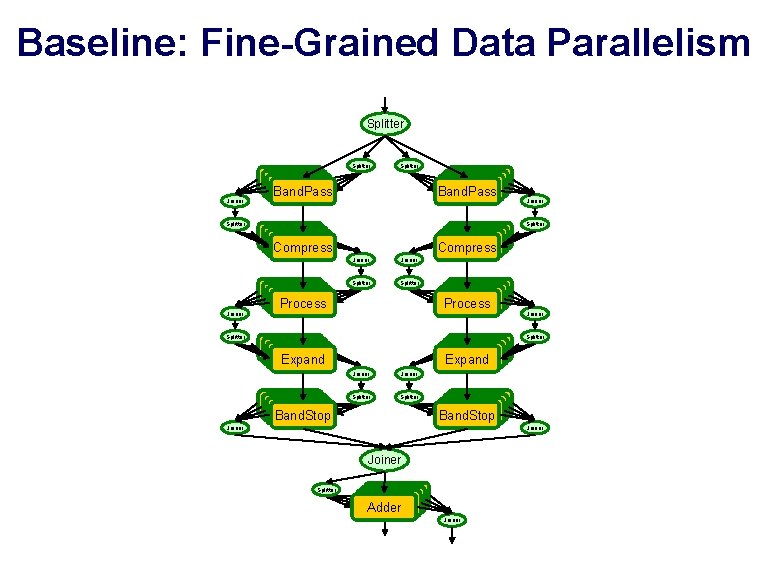

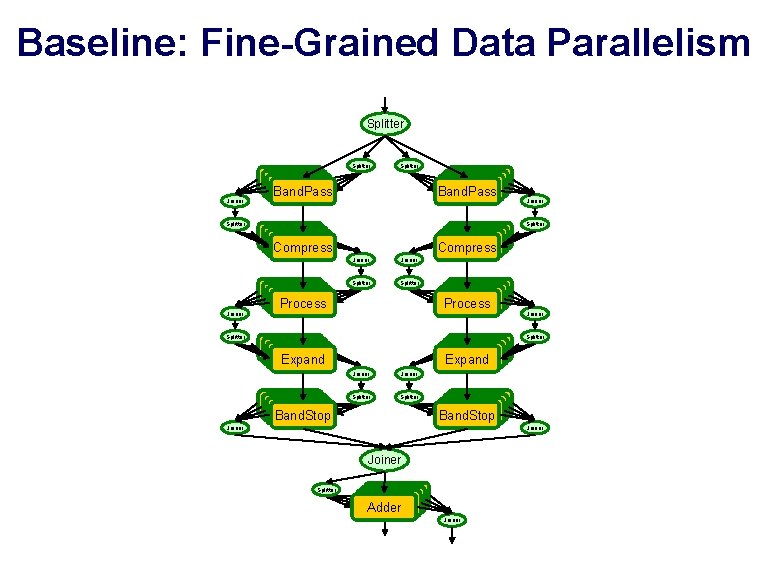

Baseline: Fine-Grained Data Parallelism
[Figure: each stateless filter (BandPass, Compress, Process, Expand, BandStop) is fissed individually, so every filter is wrapped in its own Splitter/Joiner pair before the final Adder]

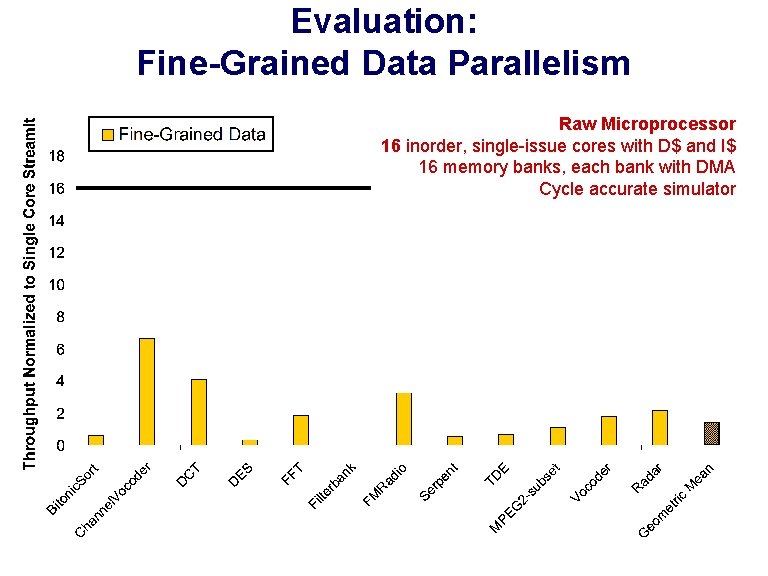

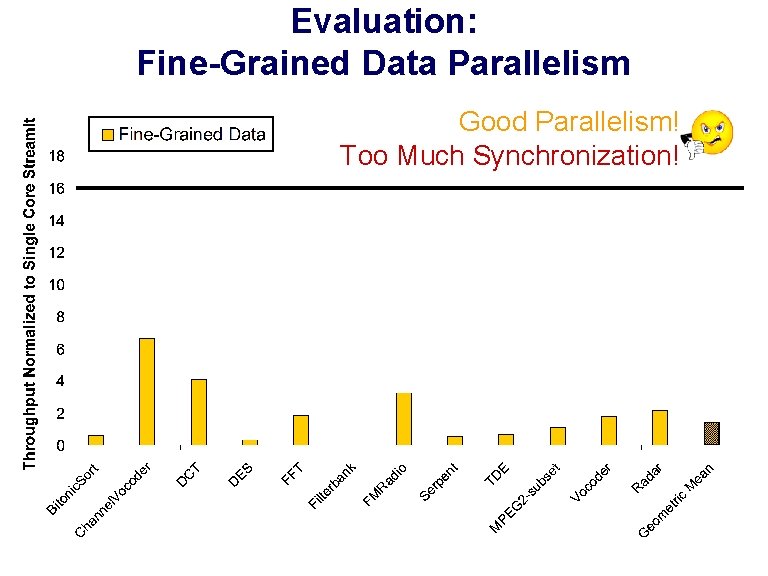

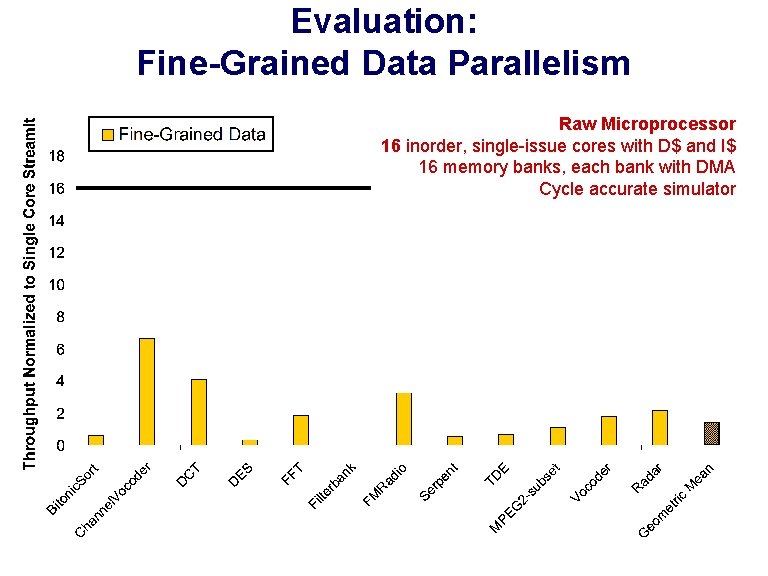

Evaluation: Fine-Grained Data Parallelism
• Raw Microprocessor
  – 16 in-order, single-issue cores with D$ and I$
  – 16 memory banks, each bank with DMA
  – Cycle-accurate simulator
[Figure: results chart]
Good parallelism! Too much synchronization!

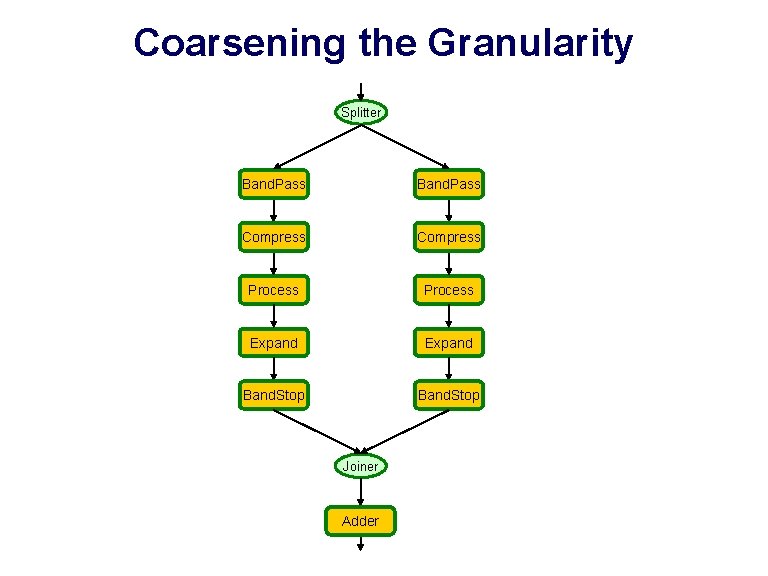

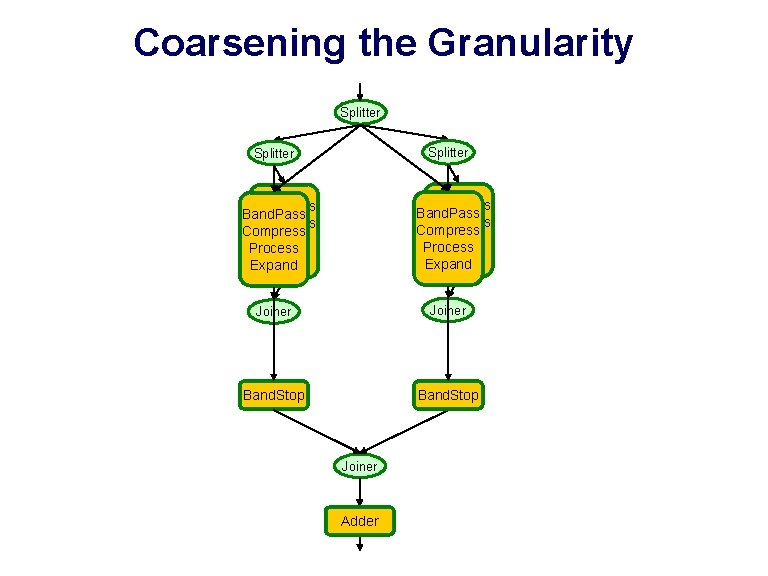

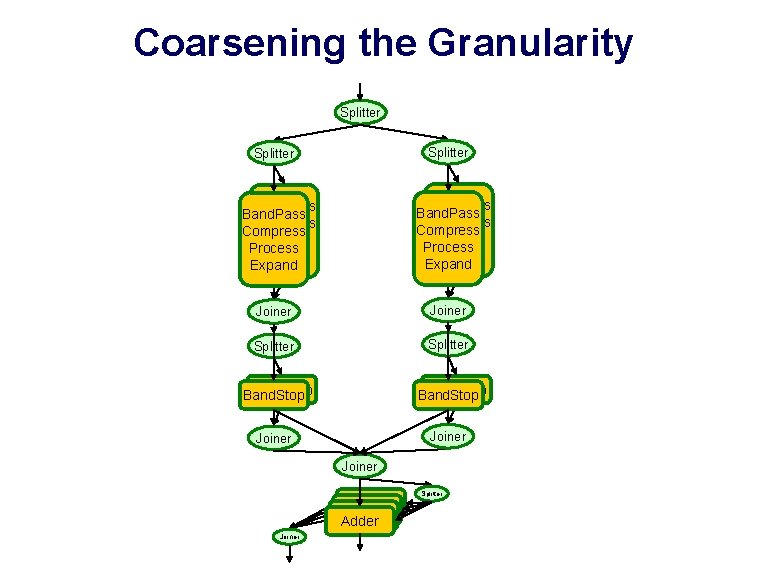

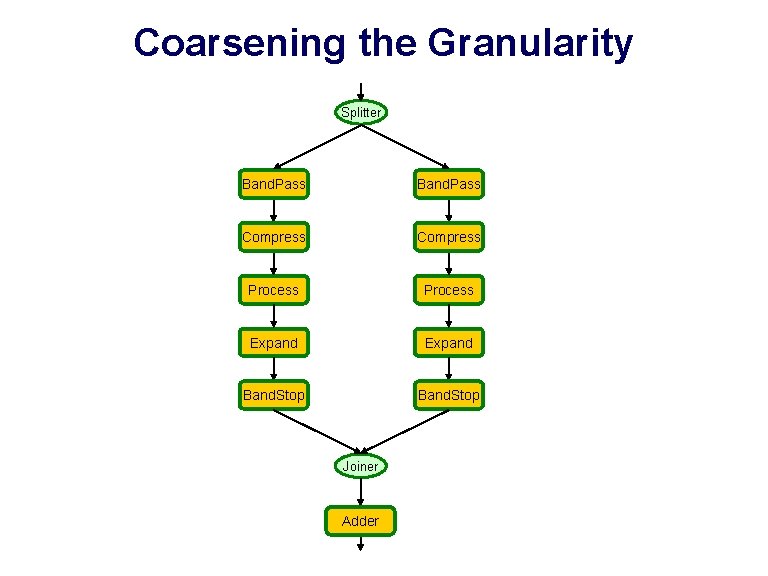

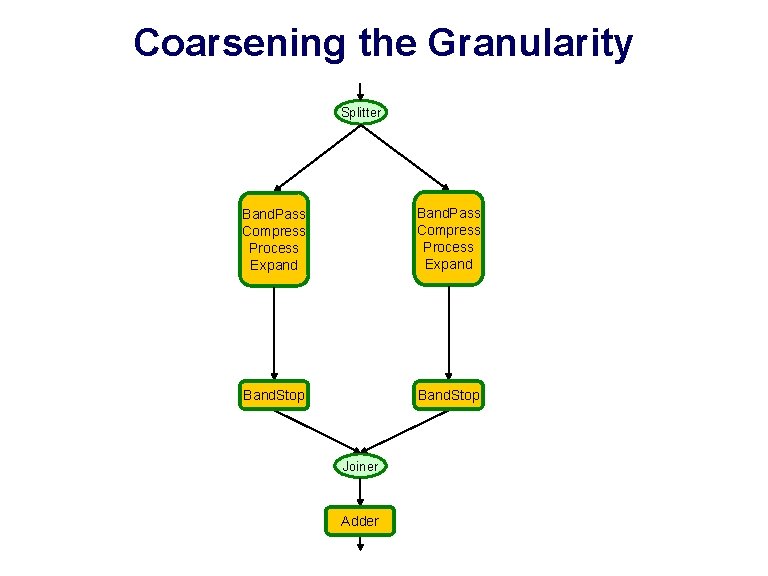

Coarsening the Granularity Splitter Band. Pass Compress Process Expand Band. Stop Joiner Adder

Coarsening the Granularity Splitter Band. Pass Compress Process Expand Band. Stop Joiner Adder

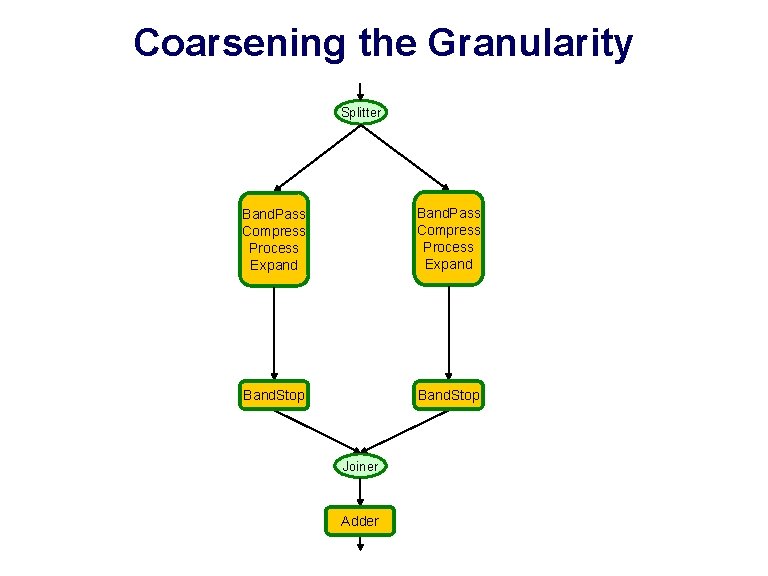

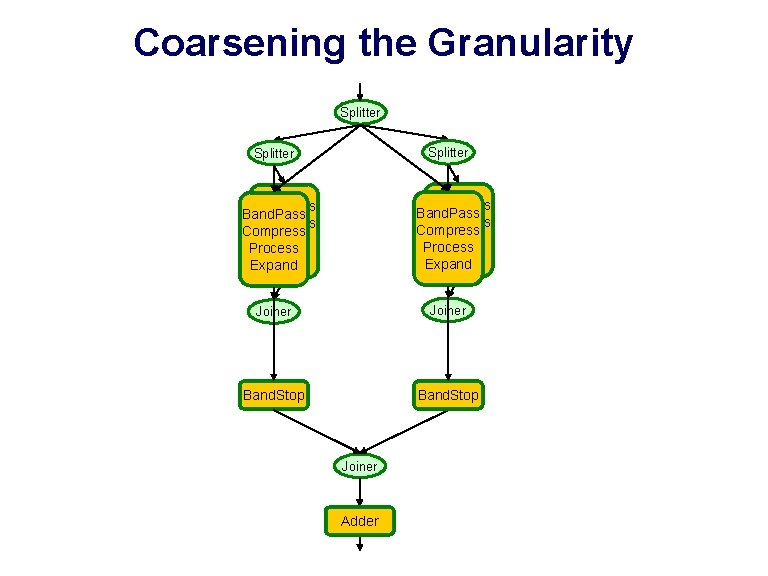

Coarsening the Granularity Splitter Band. Pass Compress Process Expand Joiner Band. Stop Joiner Adder

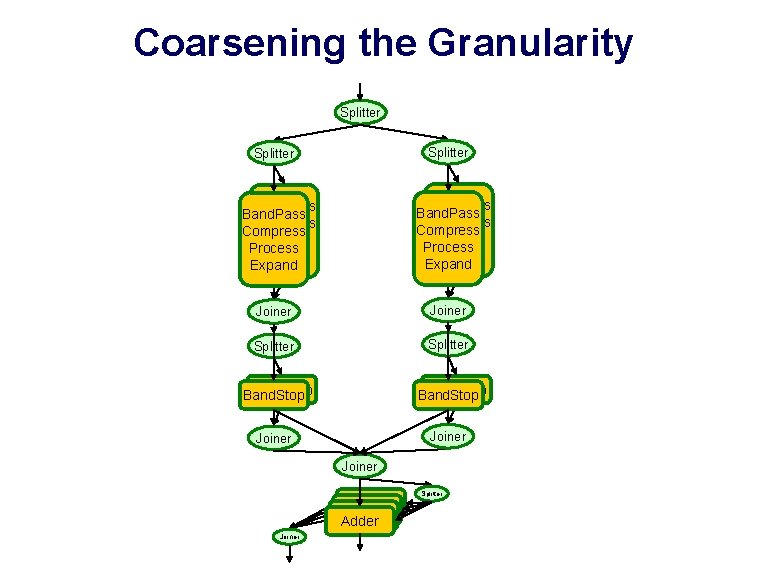

Coarsening the Granularity Splitter Band. Pass Compress Process Expand Joiner Splitter Band. Stop Joiner Adder Joiner Splitter
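
The benefit of coarsening can be seen by counting scatter/gather pairs. A minimal Python sketch, assuming every stage is a stateless filter that pops 1 and pushes 1 (real filters have arbitrary rates and the compiler's fusion must account for them); `fuse`, `data_parallel`, `fine_grained`, and `coarse_grained` are hypothetical names. Both versions compute the same output, but fine-grained fission needs one split/join pair per filter, while fusing first needs only one:

```python
from functools import reduce
from typing import Callable, List, Tuple

Work = Callable[[int], int]  # stateless filter with pop 1, push 1


def fuse(stages: List[Work]) -> Work:
    """Fusion: one coarse filter that applies every stage to an item in order."""
    return lambda x: reduce(lambda acc, stage: stage(acc), stages, x)


def data_parallel(work: Work, items: List[int], k: int) -> List[int]:
    """Fission with a roundrobin(1) splitter and joiner: one split/join pair."""
    lanes = [items[i::k] for i in range(k)]  # item i goes to lane i % k
    outs = [[work(x) for x in lane] for lane in lanes]
    return [outs[i % k][i // k] for i in range(len(items))]


def fine_grained(stages: List[Work], items: List[int], k: int) -> Tuple[List[int], int]:
    for stage in stages:  # every filter gets its own split/join pair
        items = data_parallel(stage, items, k)
    return items, len(stages)


def coarse_grained(stages: List[Work], items: List[int], k: int) -> Tuple[List[int], int]:
    return data_parallel(fuse(stages), items, k), 1  # fuse first, then one split/join


stages: List[Work] = [lambda x: x + 1, lambda x: 2 * x, lambda x: x - 3]
data = list(range(8))
print(fine_grained(stages, data, k=4))    # ([-1, 1, 3, 5, 7, 9, 11, 13], 3)
print(coarse_grained(stages, data, k=4))  # ([-1, 1, 3, 5, 7, 9, 11, 13], 1)
```

Each split/join pair is a synchronization point, so the fused version keeps the same parallelism with a third of the synchronization in this toy case.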

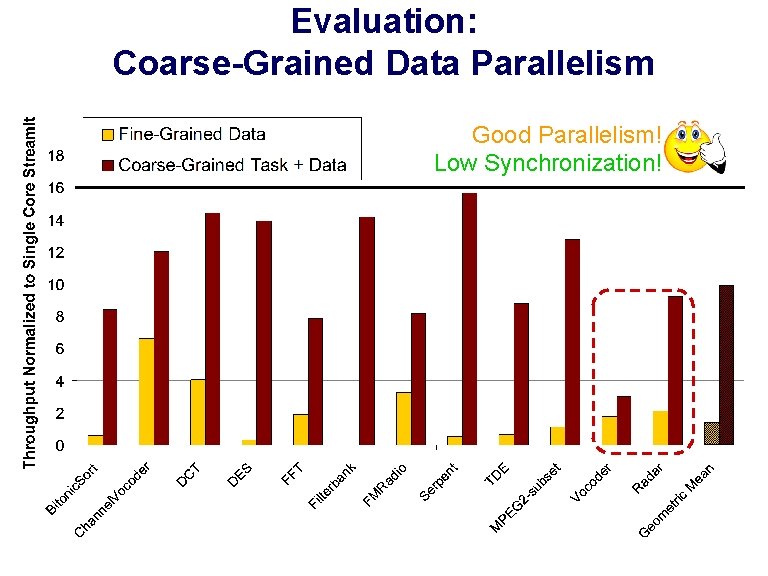

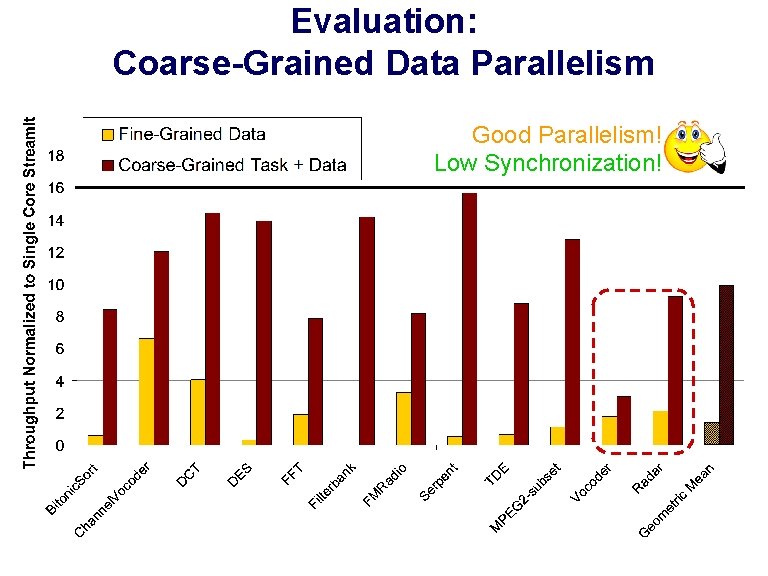

Evaluation: Coarse-Grained Data Parallelism
Good parallelism! Low synchronization!

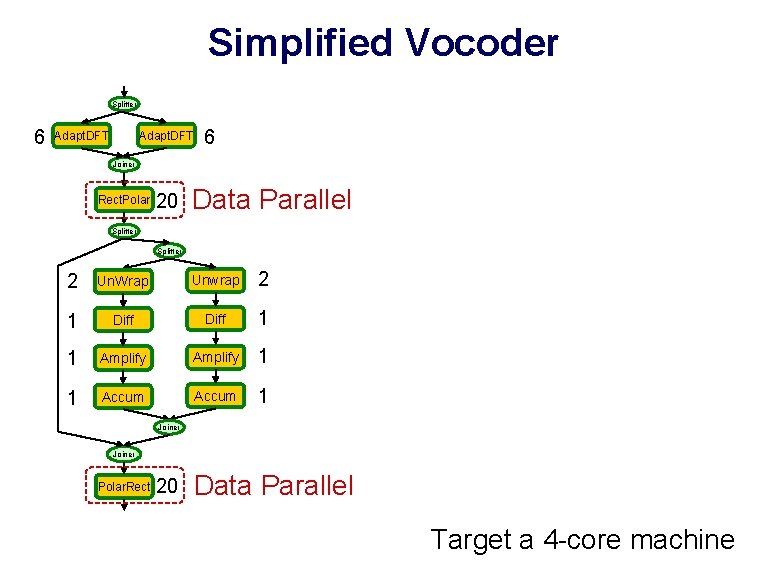

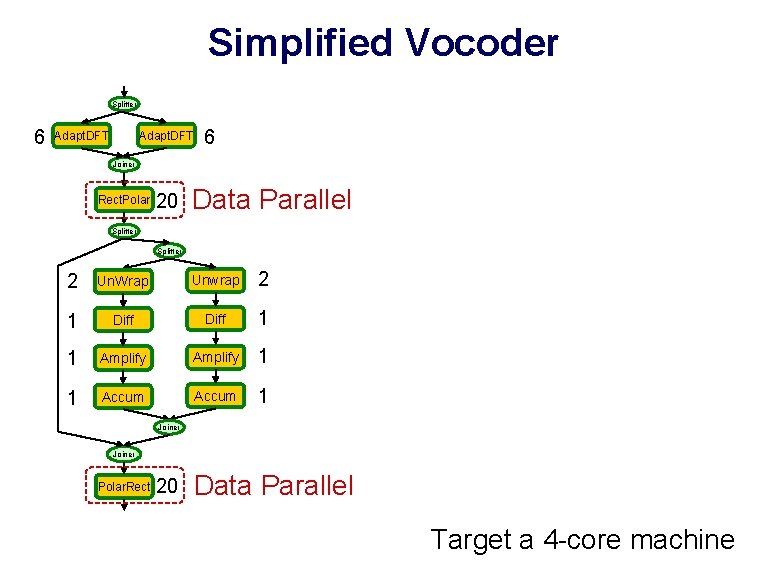

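Simplified Vocoder
[Figure: stream graph with per-filter work estimates: a Splitter feeding two AdaptDFT filters (6 each) and a Joiner; RectPolar (20, data parallel); a Splitter feeding two branches of Unwrap (2) → Diff (1) → Amplify (1) → Accum (1) and a Joiner; PolarRect (20, data parallel)]
Target: a 4-core machine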

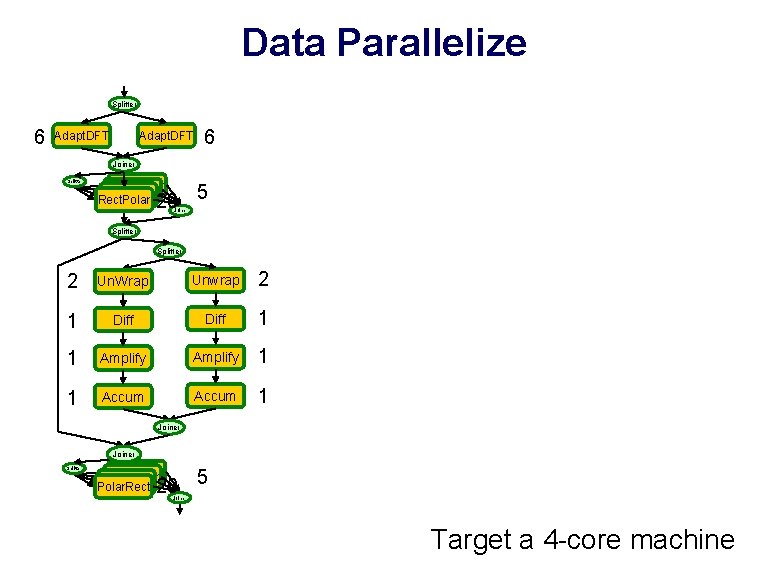

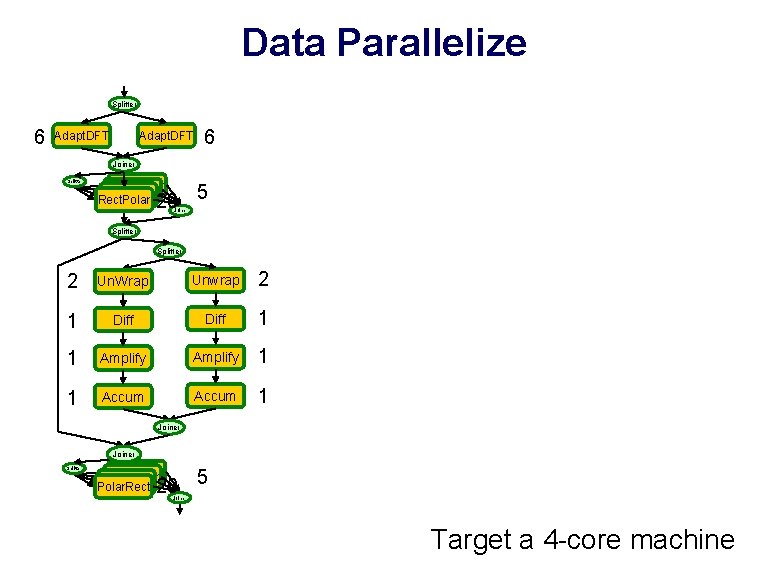

Data Parallelize
[Figure: RectPolar and PolarRect (work 20 each) are each fissed four ways behind a Splitter/Joiner pair, reducing their work per core to 5]
Target: a 4-core machine

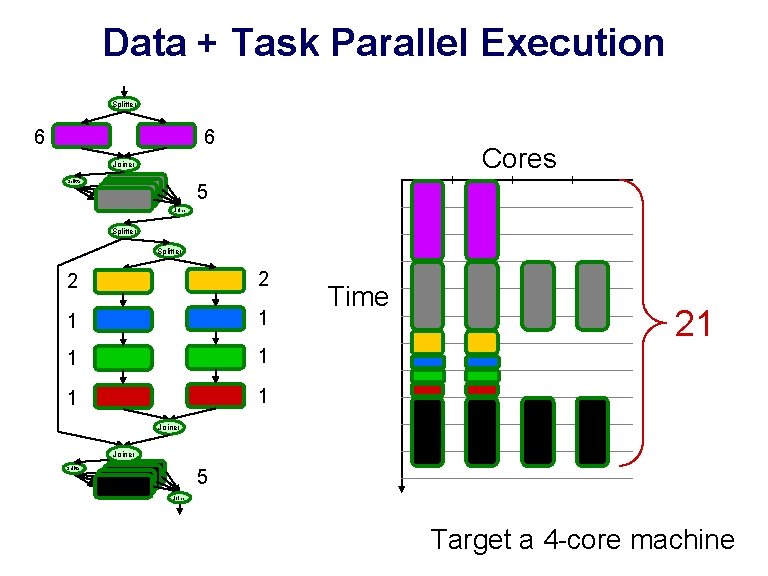

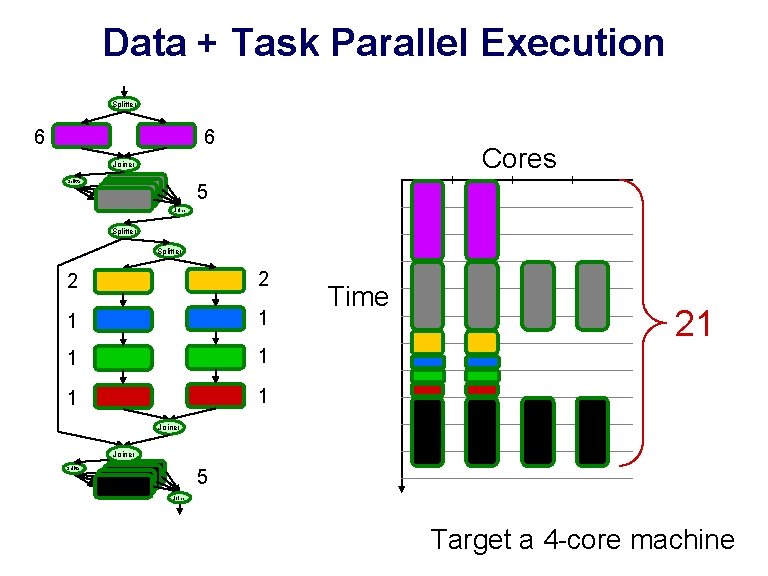

Data + Task Parallel Execution
[Figure: cores-versus-time schedule on a 4-core machine; the task- and data-parallel phases run one after another, for a total time of 21]

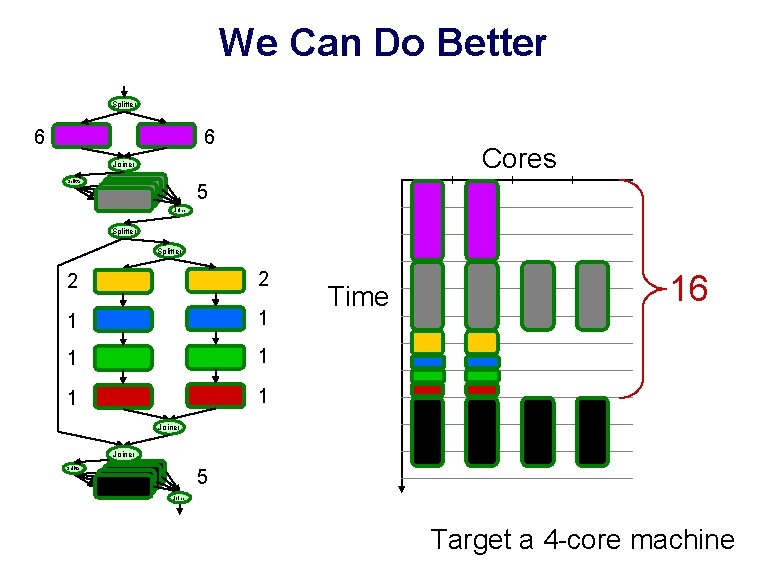

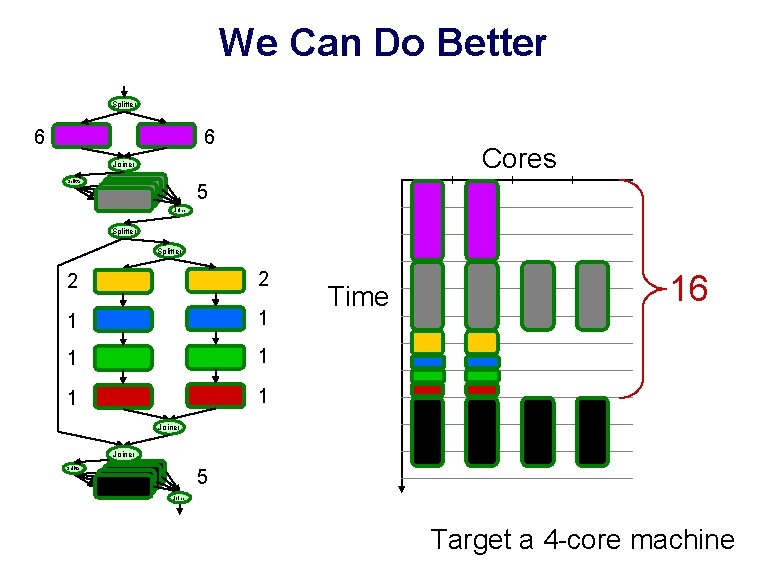

We Can Do Better
[Figure: the same work packed more tightly onto the 4 cores, for a total time of 16]

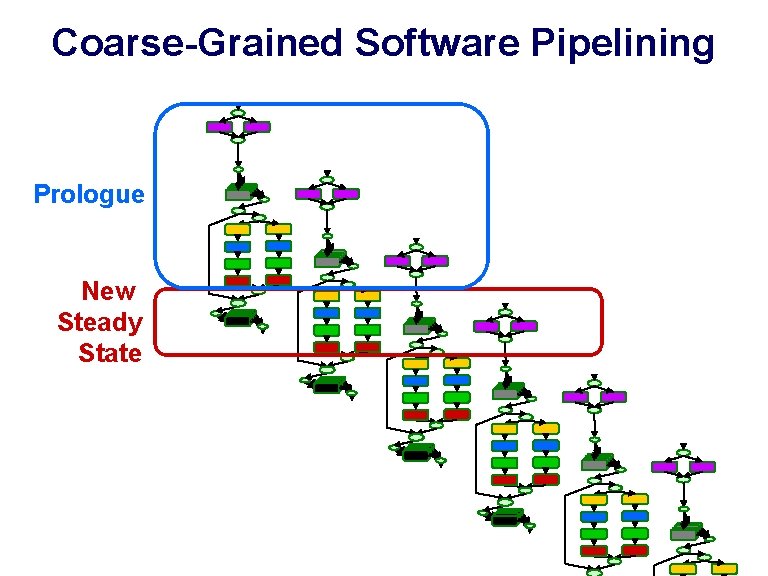

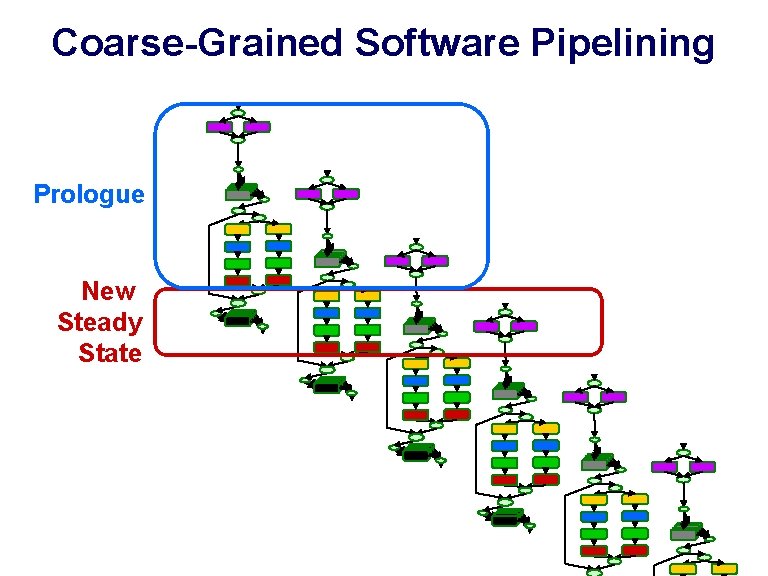

Coarse-Grained Software Pipelining
[Figure: a prologue, followed by the new steady-state schedule (RectPolar highlighted)]
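
The schedule lengths on the vocoder slides (21 versus 16) can be reproduced with a little arithmetic. A minimal Python sketch, assuming the work estimates read off the figures (two AdaptDFT filters of work 6; RectPolar and PolarRect of work 20, each fissed four ways into pieces of 5; two Unwrap → Diff → Amplify → Accum branches of work 2 + 1 + 1 + 1 = 5) and using a greedy longest-processing-time heuristic as a stand-in for the compiler's actual partitioner. If each phase must finish before the next starts, the phase lengths add up to 6 + 5 + 5 + 5 = 21. Once software pipelining makes the steady state consume data produced in an earlier iteration, all 62 units of work can be packed together, reaching the lower bound ⌈62/4⌉ = 16:

```python
from typing import List


def lpt_makespan(tasks: List[int], cores: int) -> int:
    """Greedy longest-processing-time list scheduling; returns the finish time."""
    loads = [0] * cores
    for t in sorted(tasks, reverse=True):
        i = loads.index(min(loads))  # place the task on the least-loaded core
        loads[i] += t
    return max(loads)


# Work pieces as read off the vocoder figures (an interpretation, not exact data).
phases = [
    [6, 6],        # two AdaptDFT filters (task parallel)
    [5, 5, 5, 5],  # RectPolar (work 20) fissed four ways
    [5, 5],        # two Unwrap -> Diff -> Amplify -> Accum branches (2+1+1+1)
    [5, 5, 5, 5],  # PolarRect (work 20) fissed four ways
]
CORES = 4

# Without software pipelining, each phase waits for the previous one.
with_barriers = sum(lpt_makespan(p, CORES) for p in phases)
# With software pipelining, filters no longer wait on each other within an
# iteration, so all pieces can be packed onto the cores together.
pipelined = lpt_makespan([t for p in phases for t in p], CORES)
print(with_barriers, pipelined)  # 21 16
```

The prologue is what pays for this: it runs part of an iteration ahead of time so that every filter in the steady state already has its input buffered.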

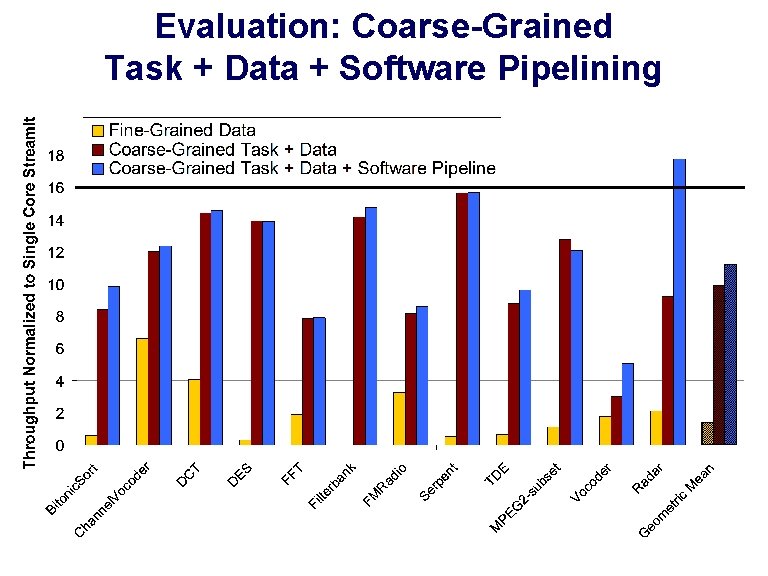

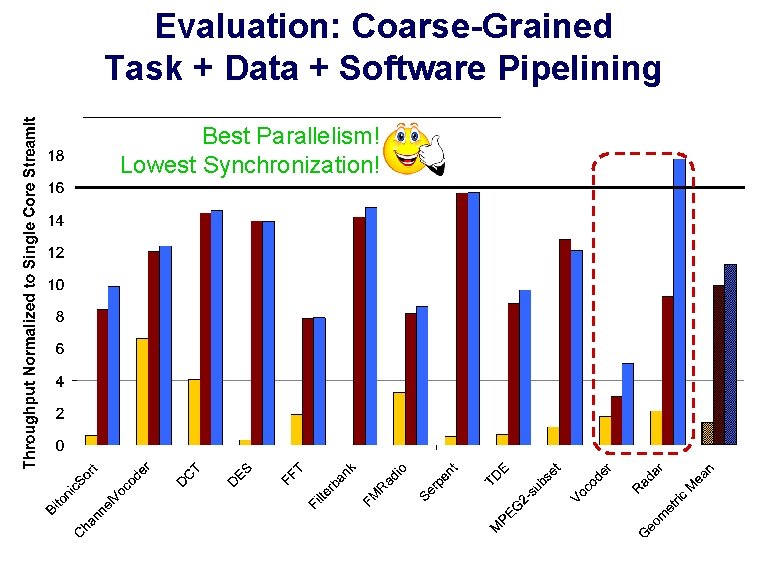

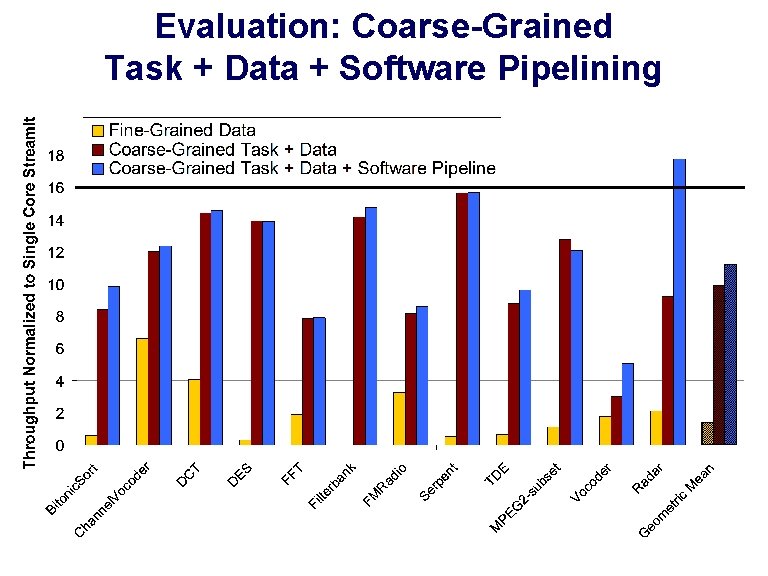

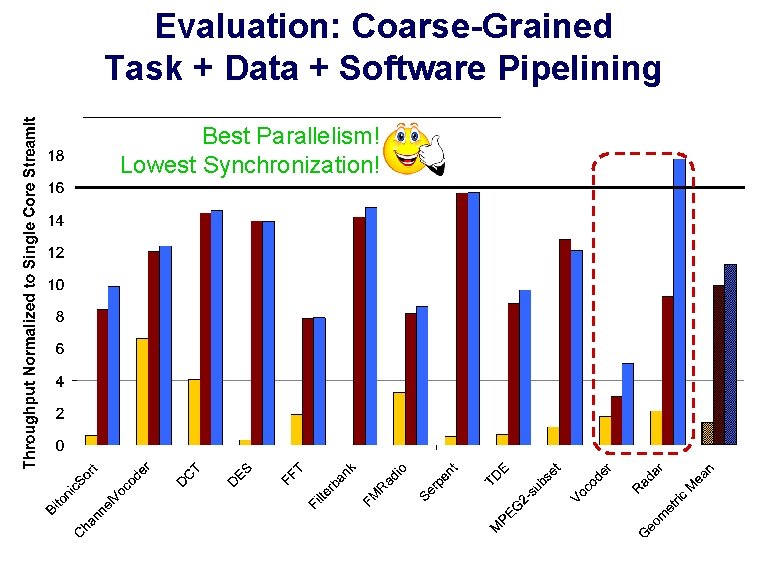

Evaluation: Coarse-Grained Task + Data + Software Pipelining
Best parallelism! Lowest synchronization!

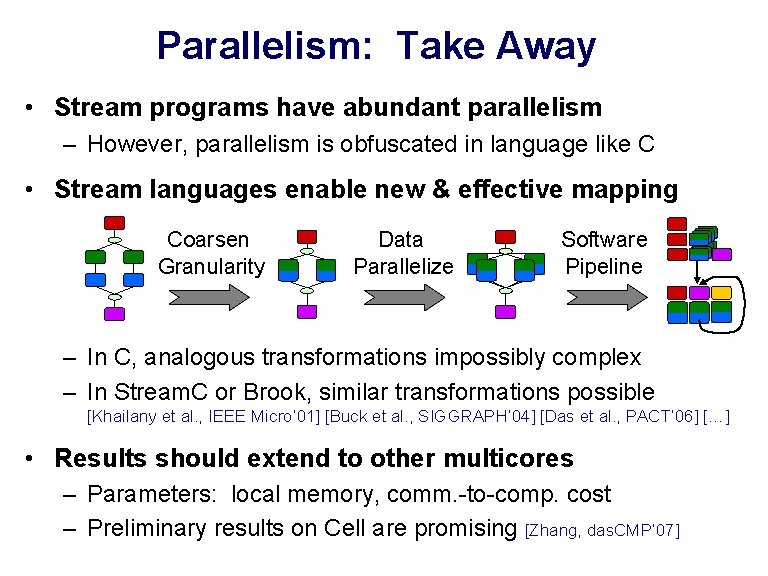

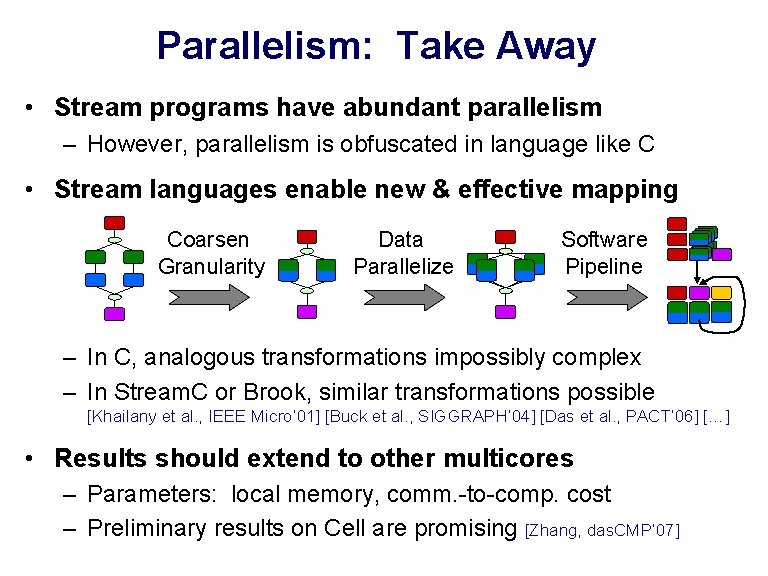

Parallelism: Take Away
• Stream programs have abundant parallelism
  – However, parallelism is obfuscated in languages like C
• Stream languages enable a new and effective mapping: coarsen granularity, data parallelize, software pipeline
  – In C, analogous transformations are impossibly complex
  – In StreamC or Brook, similar transformations are possible [Khailany et al., IEEE Micro'01] [Buck et al., SIGGRAPH'04] [Das et al., PACT'06] […]
• Results should extend to other multicores
  – Parameters: local memory, communication-to-computation cost
  – Preliminary results on Cell are promising [Zhang, dasCMP'07]

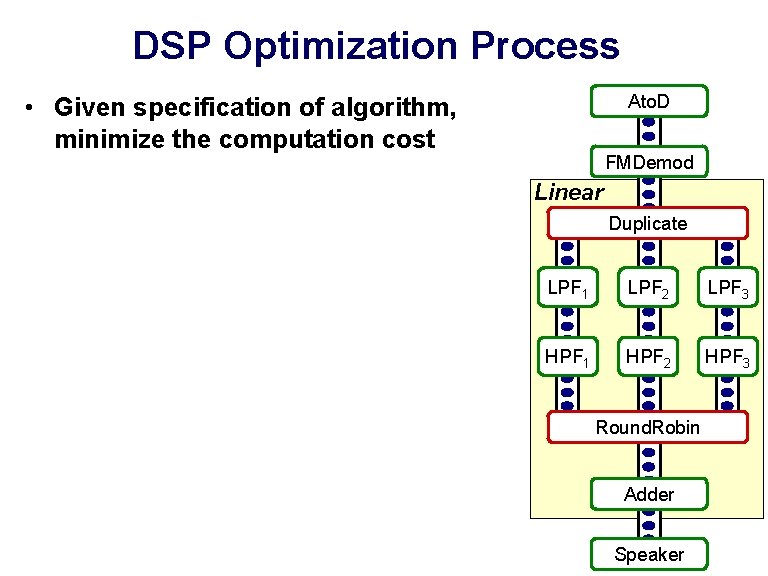

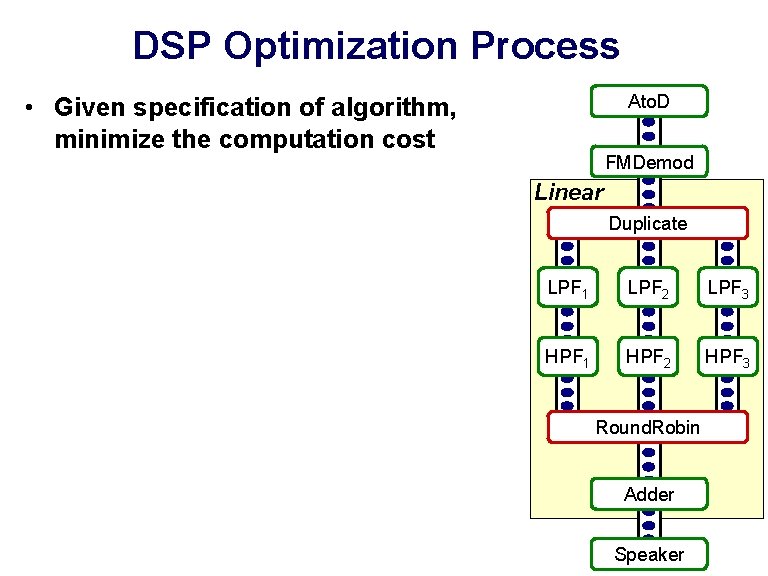

Part 3: Domain-Specific Optimizations
Joint work with Andrew Lamb
• Andrew Lamb, William Thies, Saman Amarasinghe (PLDI'03)
• Sitij Agrawal, William Thies, Saman Amarasinghe (CASES'05)

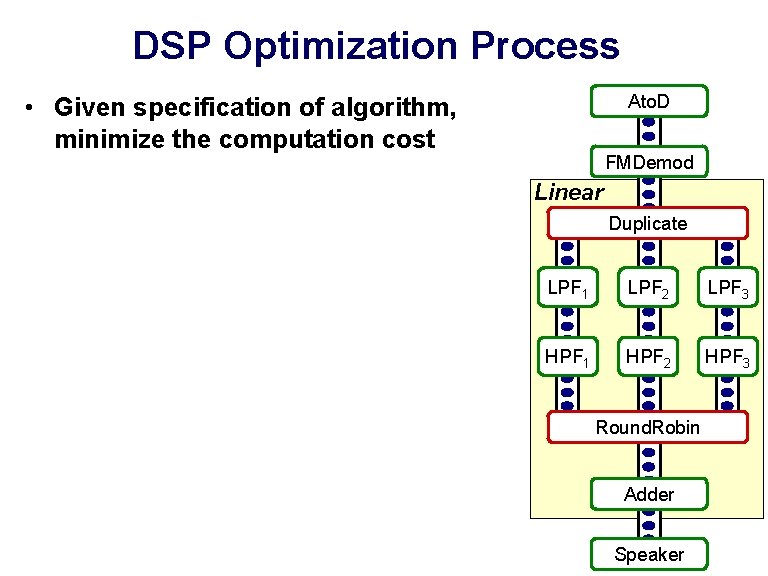

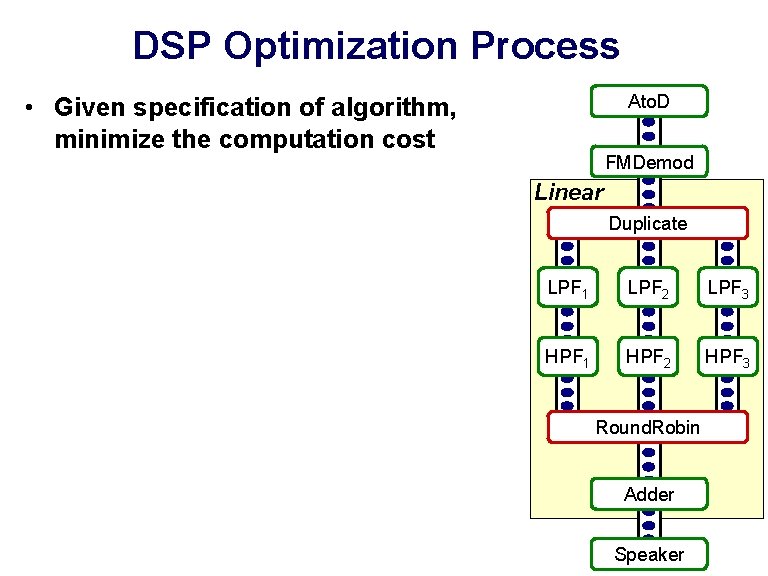

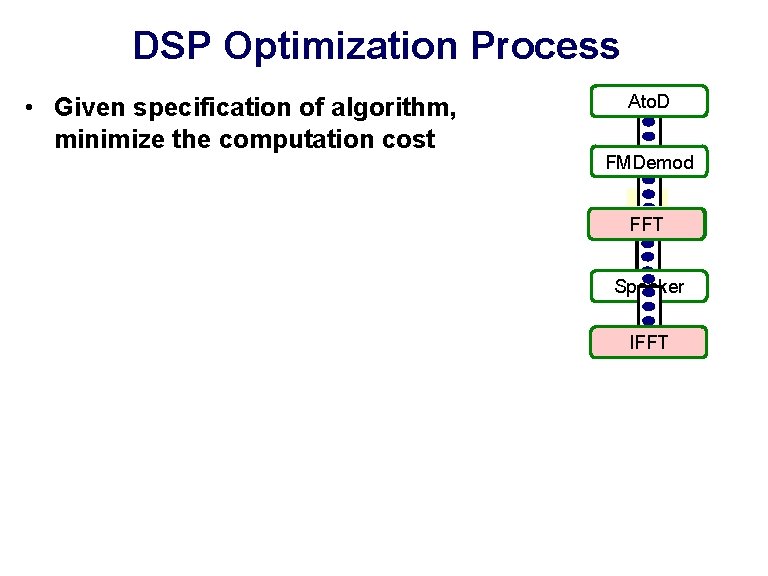

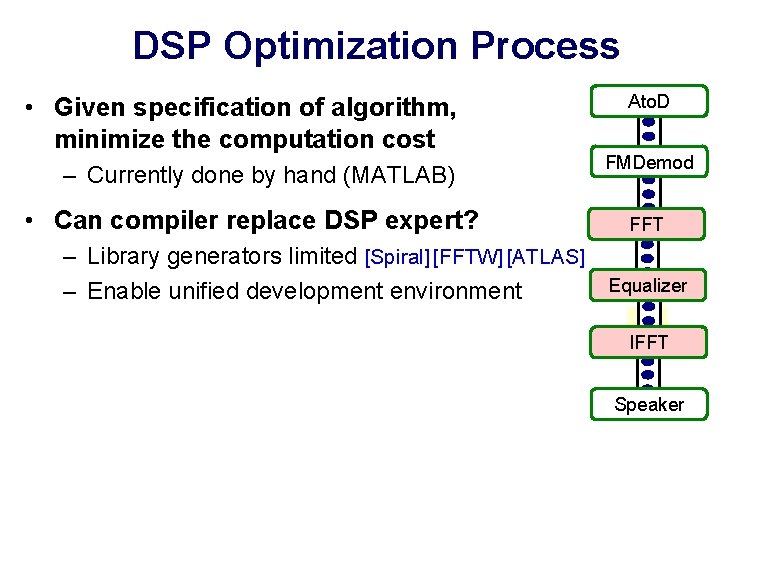

DSP Optimization Process Ato. D • Given specification of algorithm, minimize the computation cost FMDemod Linear Duplicate LPF 1 LPF 2 LPF 3 HPF 1 HPF 2 HPF 3 Round. Robin Adder Speaker

DSP Optimization Process Ato. D • Given specification of algorithm, minimize the computation cost FMDemod Linear Duplicate Equalizer LPF 1 LPF 2 LPF 3 HPF 1 HPF 2 HPF 3 Round. Robin Adder Speaker

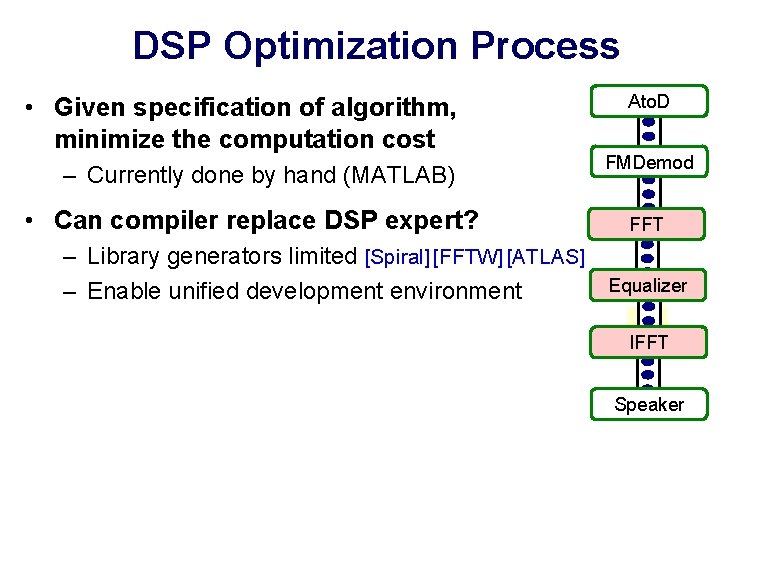

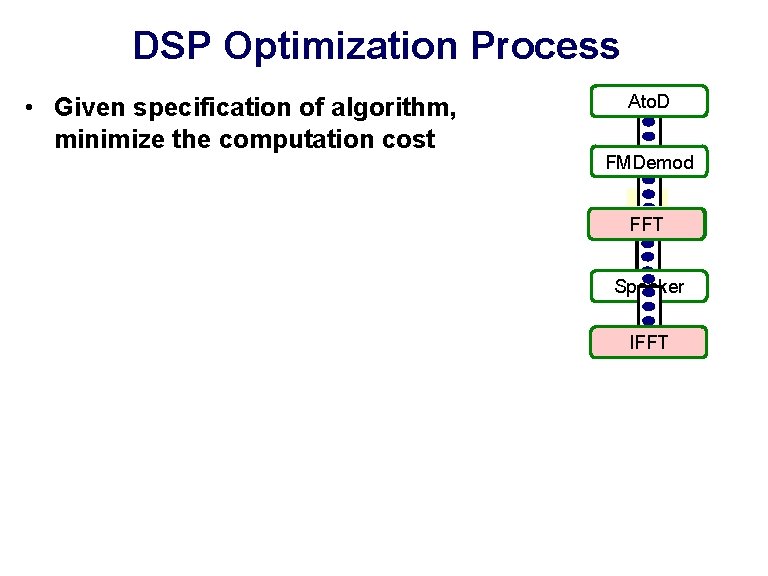

DSP Optimization Process • Given specification of algorithm, minimize the computation cost Ato. D FMDemod Equalizer FFT Speaker IFFT

DSP Optimization Process • Given specification of algorithm, minimize the computation cost – Currently done by hand (MATLAB) • Can compiler replace DSP expert? – Library generators limited [Spiral] [FFTW] [ATLAS] – Enable unified development environment Ato. D FMDemod FFT Equalizer IFFT Speaker
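
The FFT → Equalizer → IFFT rewrite rests on the convolution theorem: convolving a signal with a filter's taps in time equals multiplying their spectra in frequency. A minimal Python sketch, assuming a single finite block of input (a streaming implementation would process overlapping blocks, e.g. overlap-add, and use a real FFT instead of the naive O(n²) DFT used here for clarity); `fir_direct`, `dft`, and `fir_frequency` are illustrative names, not StreamIt APIs:

```python
import cmath
from typing import List, Sequence


def fir_direct(h: Sequence[float], x: Sequence[float]) -> List[float]:
    """Time domain: full linear convolution, len(h) multiplications per output."""
    n = len(x) + len(h) - 1
    return [sum(h[k] * x[i - k] for k in range(len(h)) if 0 <= i - k < len(x))
            for i in range(n)]


def dft(v: Sequence[complex], inverse: bool = False) -> List[complex]:
    """Naive O(n^2) DFT, for clarity only; a real system would use an FFT."""
    n = len(v)
    sign = 1 if inverse else -1
    out = [sum(v[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n) for t in range(n))
           for f in range(n)]
    return [c / n for c in out] if inverse else out


def fir_frequency(h: Sequence[float], x: Sequence[float]) -> List[float]:
    """Frequency domain: zero-pad, transform, multiply pointwise, transform back."""
    n = len(x) + len(h) - 1  # padding to the full length avoids circular wrap-around
    H = dft(list(h) + [0.0] * (n - len(h)))
    X = dft(list(x) + [0.0] * (n - len(x)))
    y = dft([a * b for a, b in zip(H, X)], inverse=True)
    return [v.real for v in y]


h, x = [1.0, 2.0, 3.0], [1.0, 0.0, -1.0, 2.0]
print(fir_direct(h, x))  # [1.0, 2.0, 2.0, 0.0, 1.0, 6.0]
print([round(v, 9) for v in fir_frequency(h, x)])
```

With an FFT, the frequency-domain version costs O(n log n) per block instead of len(h) multiplications per output, so it wins for long filters; for short filters the direct form can be cheaper, which is why the choice of transformation matters.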

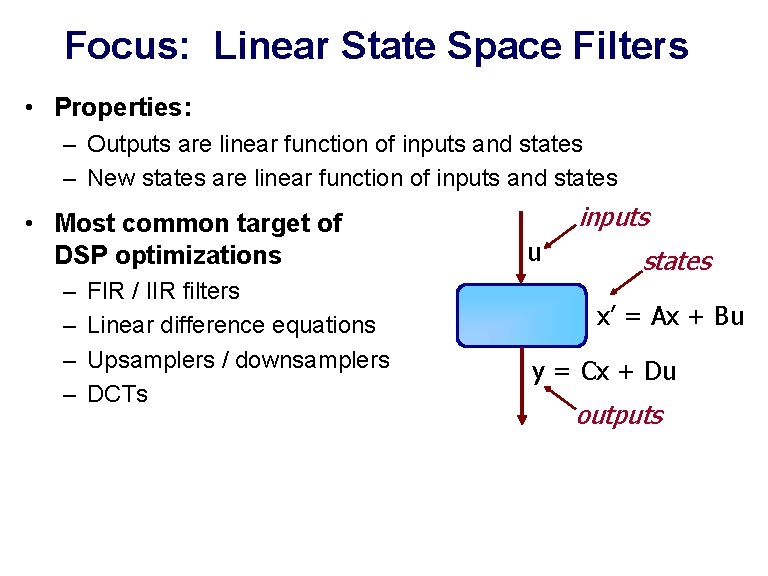

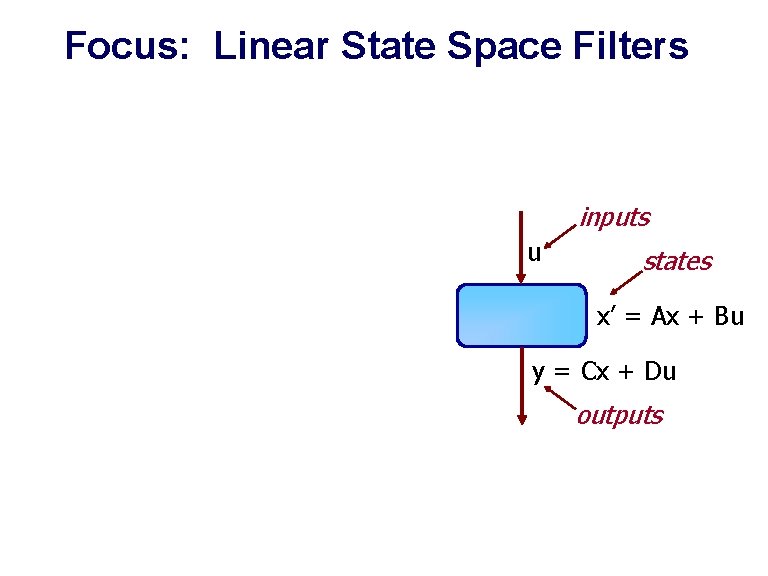

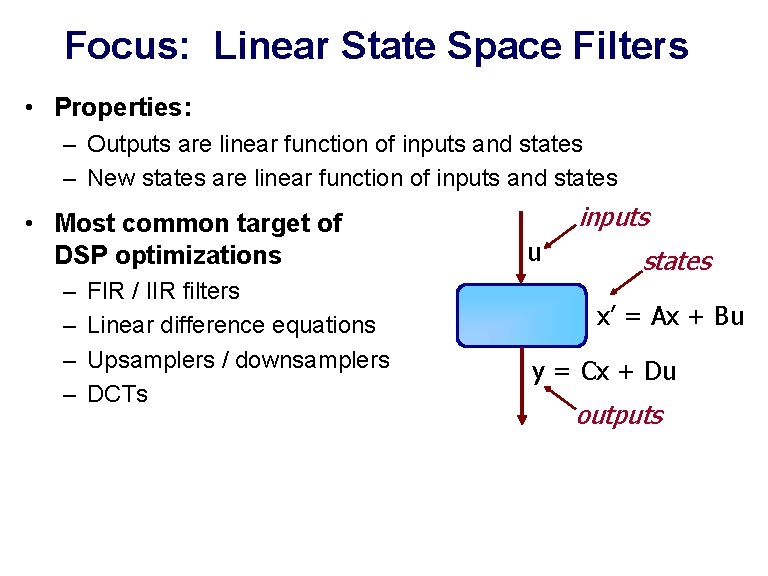

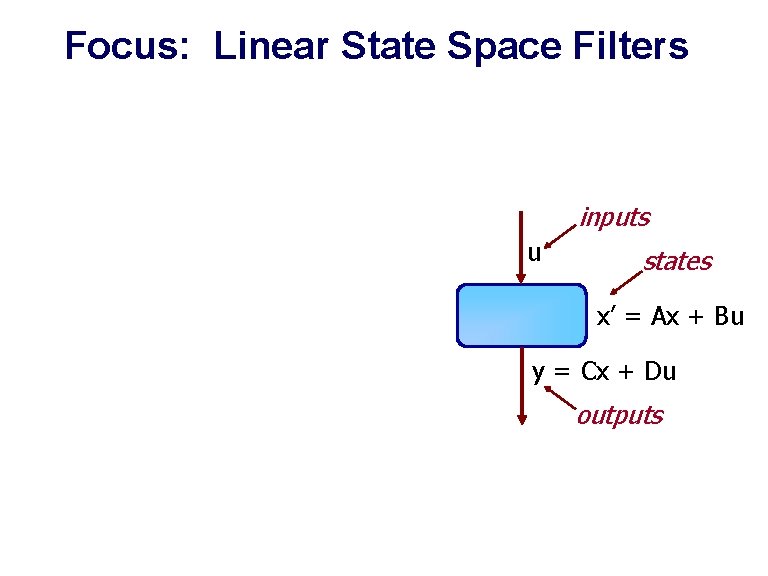

Focus: Linear State Space Filters
• Properties:
  – Outputs are a linear function of inputs and states
  – New states are a linear function of inputs and states
• Most common target of DSP optimizations
  – FIR / IIR filters
  – Linear difference equations
  – Upsamplers / downsamplers
  – DCTs

$$x' = Ax + Bu, \qquad y = Cx + Du$$

where u is the input vector, x the state vector (x' its updated value), and y the output vector.
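
One firing of a linear state-space filter is just two matrix-vector products: emit y = Cx + Du from the current state, then move to x' = Ax + Bu. A minimal Python sketch of that execution step; the helper names and the one-pole IIR example are hypothetical illustrations, not the compiler's representation:

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * e for m, e in zip(row, v)) for row in M]


def vadd(a: Vector, b: Vector) -> Vector:
    return [p + q for p, q in zip(a, b)]


def run_state_space(A: Matrix, B: Matrix, C: Matrix, D: Matrix,
                    x0: Vector, inputs: List[Vector]) -> List[Vector]:
    """One firing per input vector u: emit y = Cx + Du, then update x' = Ax + Bu."""
    x = list(x0)
    outputs: List[Vector] = []
    for u in inputs:
        outputs.append(vadd(matvec(C, x), matvec(D, u)))
        x = vadd(matvec(A, x), matvec(B, u))
    return outputs


# Hypothetical one-pole IIR filter y[n] = 0.5*y[n-1] + u[n], with the state
# holding the previous output: A = C = [[0.5]], B = D = [[1.0]].
A, B, C, D = [[0.5]], [[1.0]], [[0.5]], [[1.0]]
print(run_state_space(A, B, C, D, [0.0], [[1.0], [0.0], [0.0]]))
# [[1.0], [0.5], [0.25]]
```

An FIR filter is the special case with no state (or state that only shifts past inputs), which is why the state-space form covers both FIR and IIR filters.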

Focus: Linear State Space Filters inputs u states x’ = Ax + Bu y = Cx + Du outputs

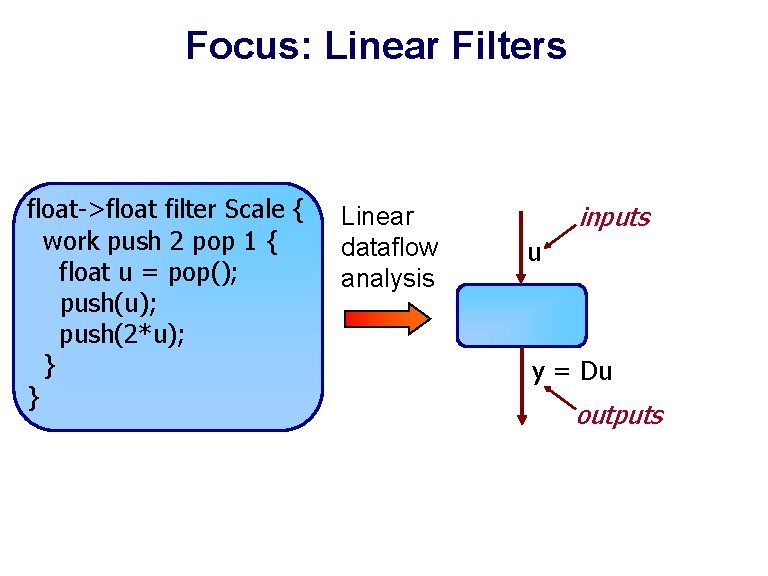

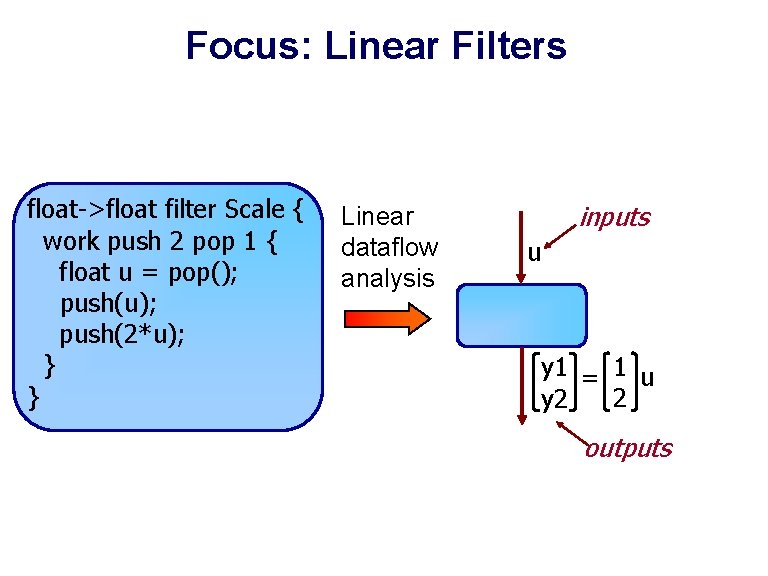

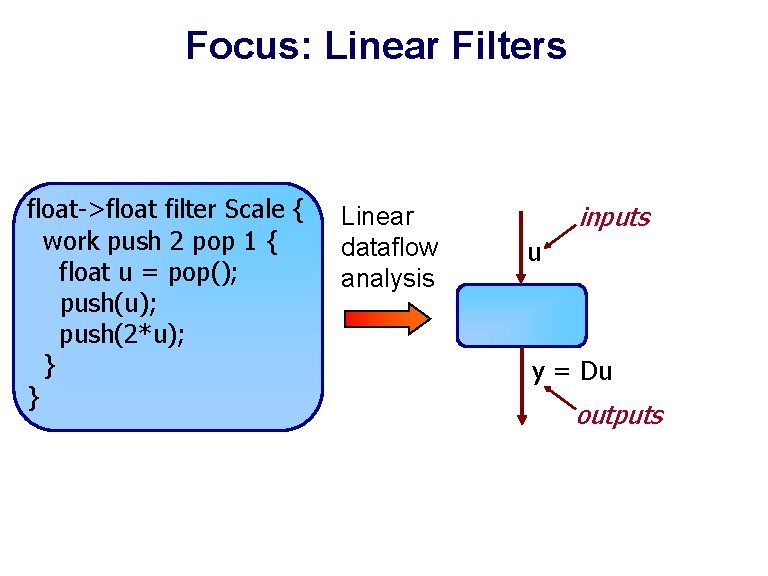

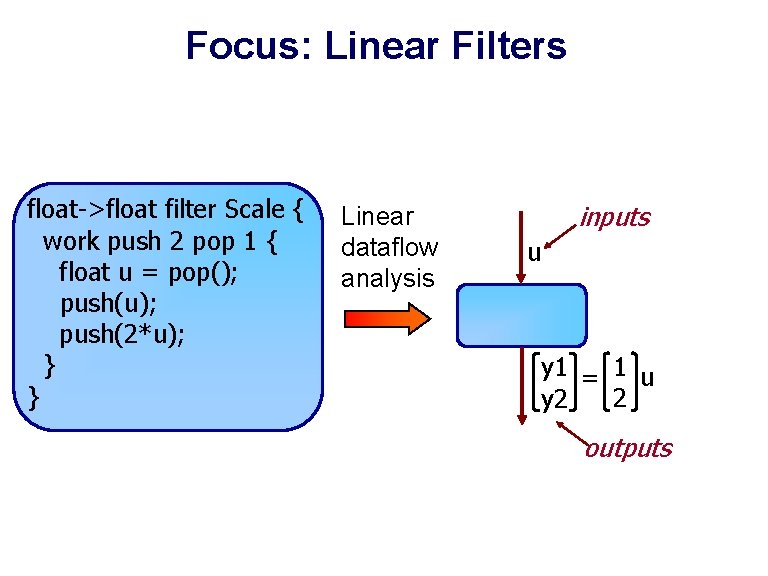

Focus: Linear Filters

    float->float filter Scale {
        work push 2 pop 1 {
            float u = pop();
            push(u);
            push(2*u);
        }
    }

Linear dataflow analysis maps the filter's input u to its outputs y = Du:

$$\begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 2 \end{bmatrix} u$$
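
StreamIt's linear analysis inspects the work function symbolically. As a rough numerical stand-in, assuming the filter is already known to be linear and does not peek, the matrix D can be recovered by probing: column j of D is the output for the j-th unit input. The names `scale_work` and `extract_matrix` are hypothetical, and probing alone cannot prove linearity:

```python
from typing import Callable, List

Work = Callable[[List[float]], List[float]]


def scale_work(popped: List[float]) -> List[float]:
    """Python mirror of the Scale filter: pop u, push u, push 2*u."""
    u = popped[0]
    return [u, 2 * u]


def extract_matrix(work: Work, pop: int) -> List[List[float]]:
    """Recover D in y = D u for a work function assumed to be linear.

    Column j of D is the output produced by the j-th unit input vector.
    """
    if any(v != 0 for v in work([0.0] * pop)):
        raise ValueError("nonzero output for zero input: not purely linear")
    columns = []
    for j in range(pop):
        e = [0.0] * pop
        e[j] = 1.0
        columns.append(work(e))
    return [[columns[j][i] for j in range(pop)] for i in range(len(columns[0]))]


print(extract_matrix(scale_work, pop=1))  # [[1.0], [2.0]]
```

The result matches the matrix above: one input, two outputs with coefficients 1 and 2.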

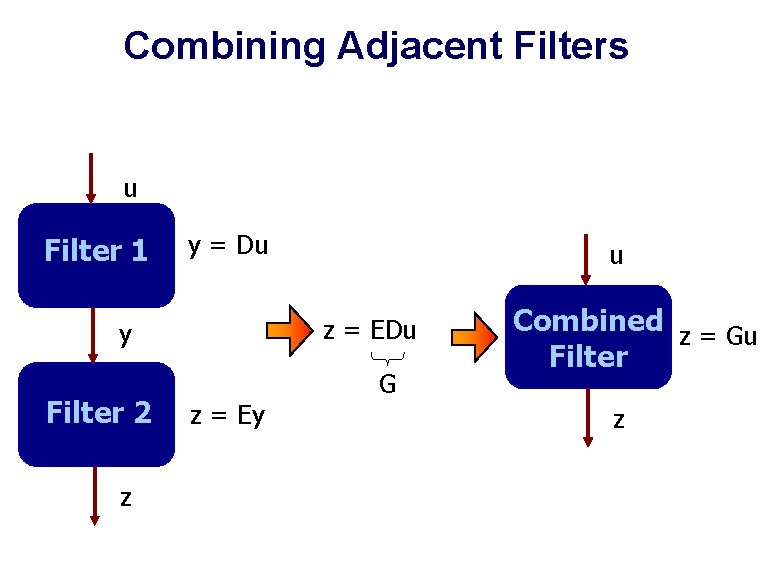

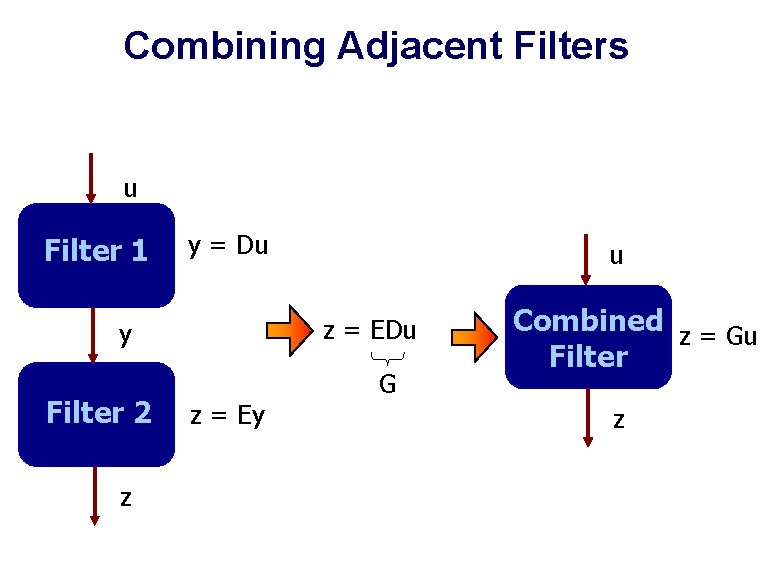

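Combining Adjacent Filters
• Filter 1 computes y = Du; Filter 2 consumes y and computes z = Ey
• Substituting gives z = EDu, so the pair can be replaced by a single combined filter z = Gu:

$$z = Ey = E(Du) = (ED)u = Gu, \qquad G = ED$$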

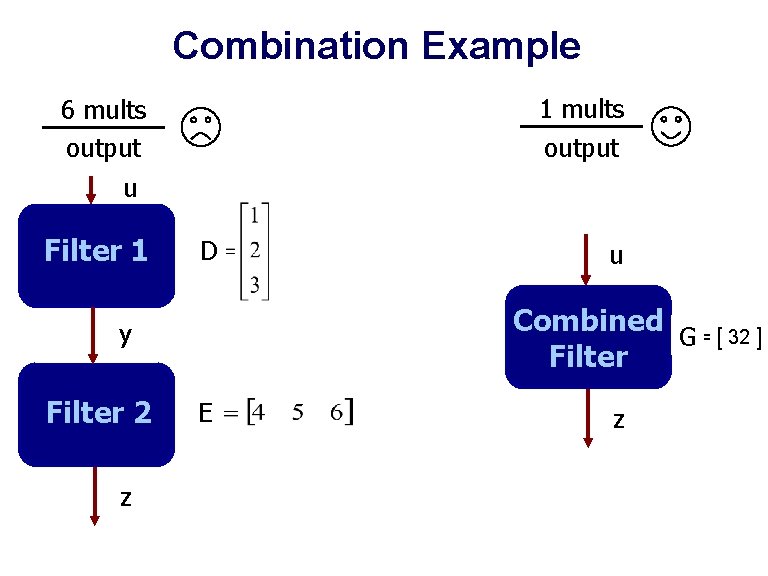

Combination Example
• Original: Filter 1 (matrix D) followed by Filter 2 (matrix E): 6 multiplications per output
• Combined filter: G = [32]: 1 multiplication per output
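
The combination step is a matrix product, and the savings come from counting coefficients. A minimal Python sketch; the slide's actual D and E did not survive extraction, so the values below are hypothetical, chosen so that G = ED = [32]. The names `matmul` and `mults` are illustrative, and `mults` counts every nonzero coefficient as one multiplication (including 1s):

```python
from typing import List

Matrix = List[List[float]]


def matmul(E: Matrix, D: Matrix) -> Matrix:
    return [[sum(E[i][k] * D[k][j] for k in range(len(D))) for j in range(len(D[0]))]
            for i in range(len(E))]


def mults(M: Matrix) -> int:
    """Multiplications per firing: one per nonzero coefficient."""
    return sum(1 for row in M for c in row if c != 0)


# Hypothetical values: Filter 1 pops 1 and pushes 3; Filter 2 pops 3 and pushes 1.
D = [[4], [5], [6]]
E = [[1, 2, 3]]
G = matmul(E, D)
print(G)                    # [[32]]
print(mults(D) + mults(E))  # 6
print(mults(G))             # 1
```

Combination does not always pay off: when the product matrix is denser than its factors, the combined filter can need more multiplications, which motivates choosing transformations selectively.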

The General Case
• What if the matrix dimensions mismatch?
  – Matrix expansion: the original D is expanded (replicated) until the number of items it pushes matches the number that E pops per combined firing
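
For the simplest mismatch, a non-peeking upstream filter that produces fewer items than the downstream filter pops, expansion amounts to running the upstream filter k times per combined firing, i.e. placing k copies of D on a block diagonal. A minimal Python sketch under that assumption (peeking filters need shifted, overlapping copies instead, which this does not handle); `expand`, `matmul`, and the example matrices are hypothetical, with items ordered by pop order:

```python
from typing import List

Matrix = List[List[float]]


def expand(D: Matrix, k: int) -> Matrix:
    """Block-diagonal expansion: k firings of a non-peeking filter as one firing."""
    rows, cols = len(D), len(D[0])
    out: Matrix = [[0] * (cols * k) for _ in range(rows * k)]
    for b in range(k):
        for i in range(rows):
            for j in range(cols):
                out[b * rows + i][b * cols + j] = D[i][j]
    return out


def matmul(E: Matrix, D: Matrix) -> Matrix:
    return [[sum(E[i][k] * D[k][j] for k in range(len(D))) for j in range(len(D[0]))]
            for i in range(len(E))]


# Hypothetical mismatch: Filter 1 scales each item (pop 1, push 1) and
# Filter 2 adds pairs (pop 2, push 1), so E has 2 columns but D has 1 row.
D = [[3]]
E = [[1, 1]]
D2 = expand(D, 2)     # [[3, 0], [0, 3]]
print(matmul(E, D2))  # [[3, 3]]
```

After expansion the dimensions agree, and the combined filter pops 2 items and computes 3·u1 + 3·u2, exactly what the original pair produced.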

![The General Case If matrix dimensions mismatch Matrix expansion Original U E D The General Case • If matrix dimensions mis-match? Matrix expansion: Original U E [D]](https://slidetodoc.com/presentation_image_h/04490bf95e0fbce745ae09bbb5bf210e/image-62.jpg)

The General Case • If matrix dimensions mis-match? Matrix expansion: Original U E [D] pop = U E Expanded [ D]

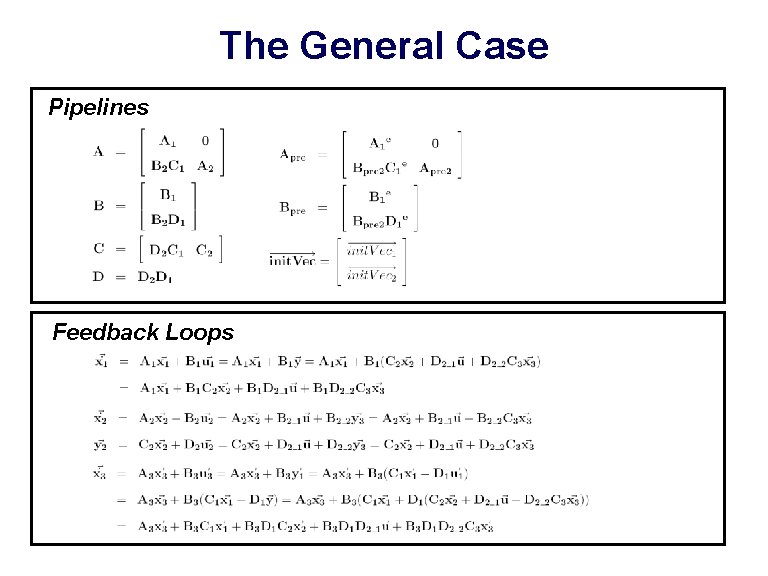

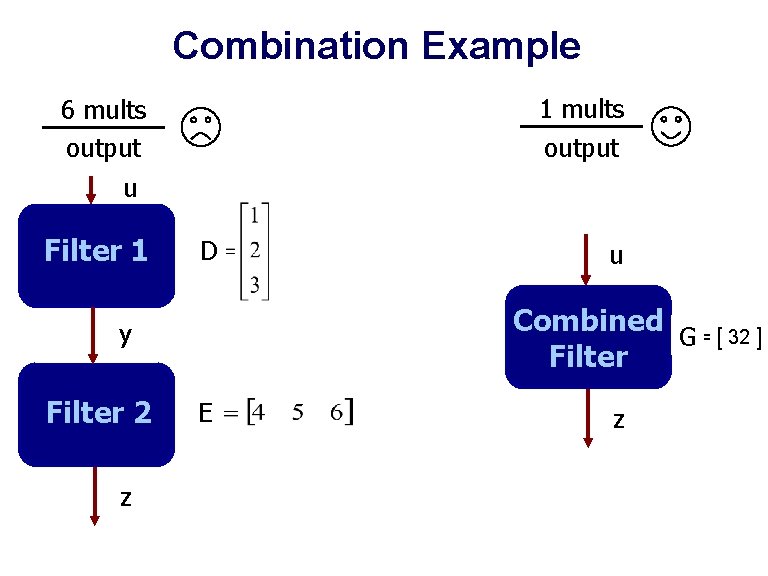

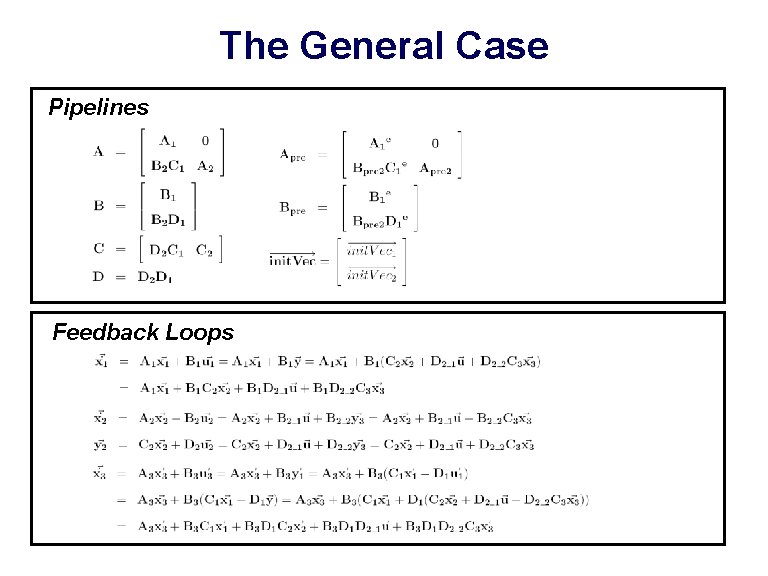

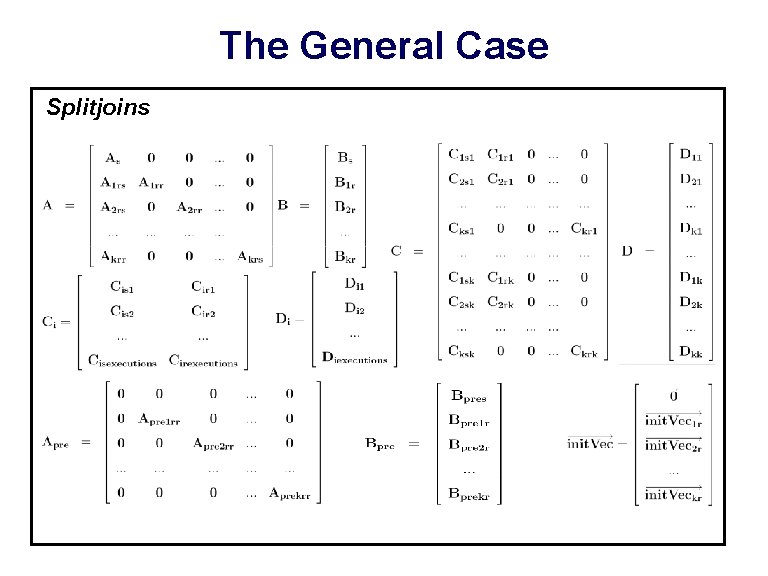

The General Case Pipelines Feedback Loops

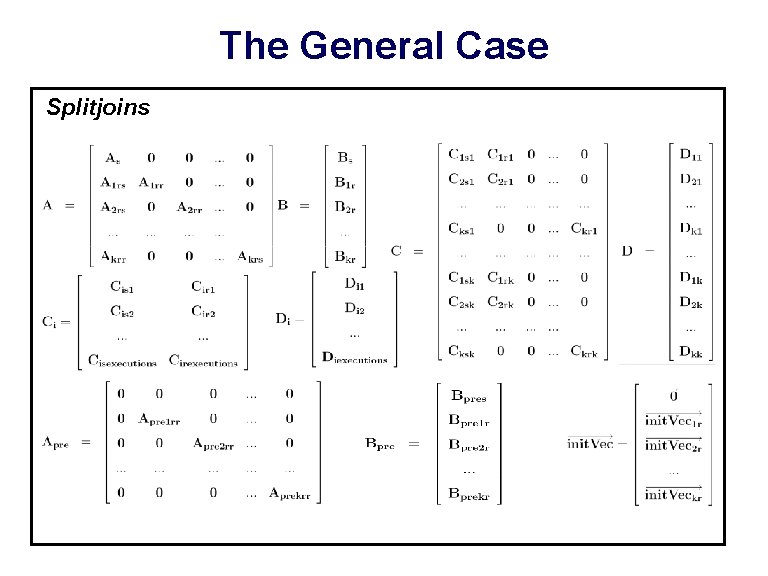

The General Case Splitjoins

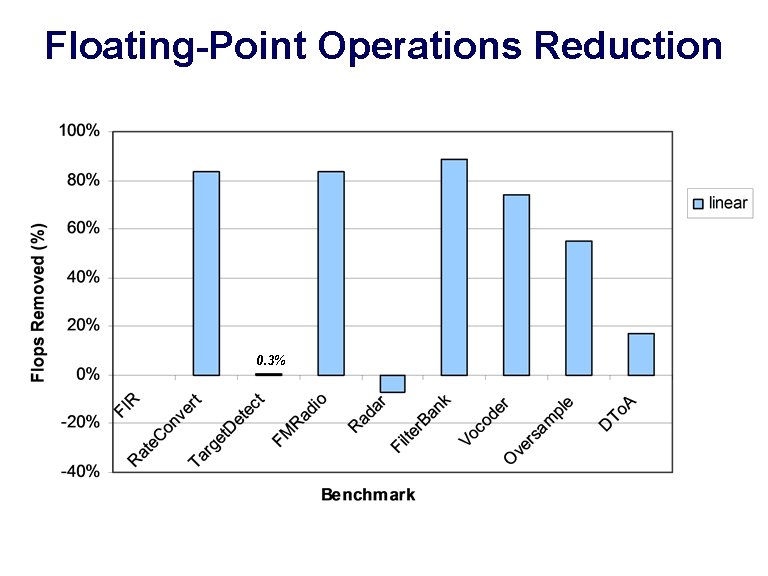

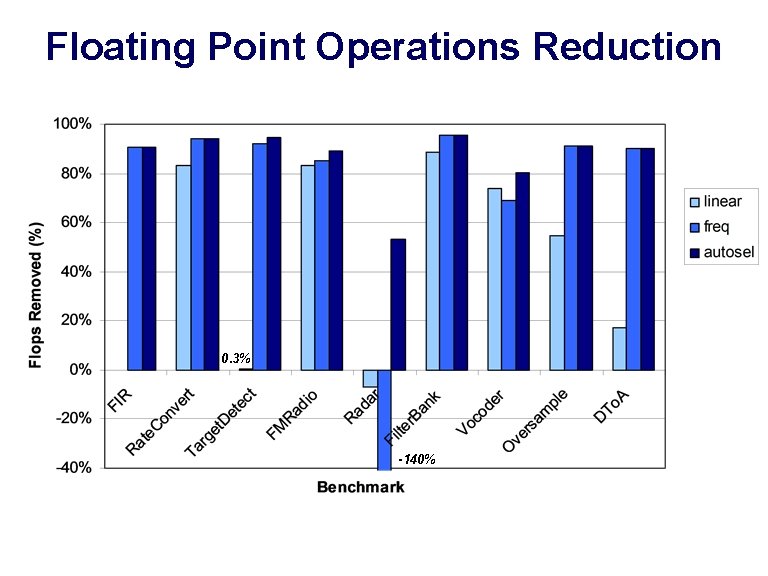

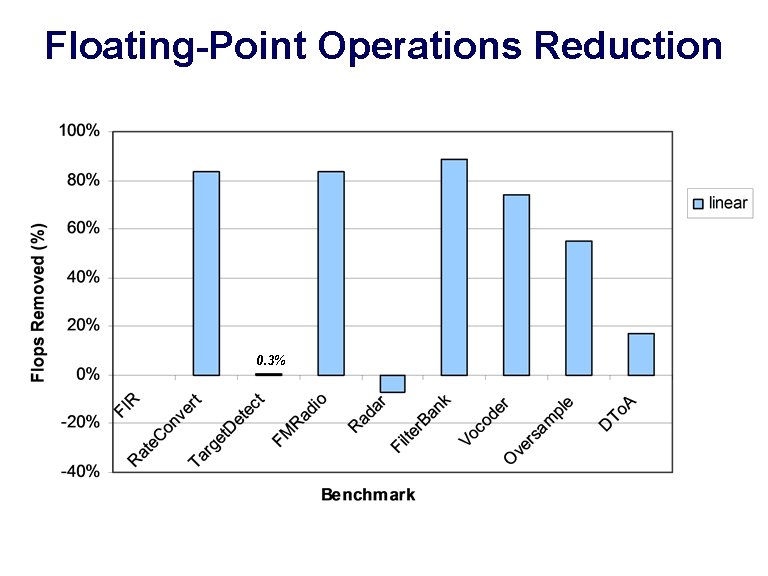

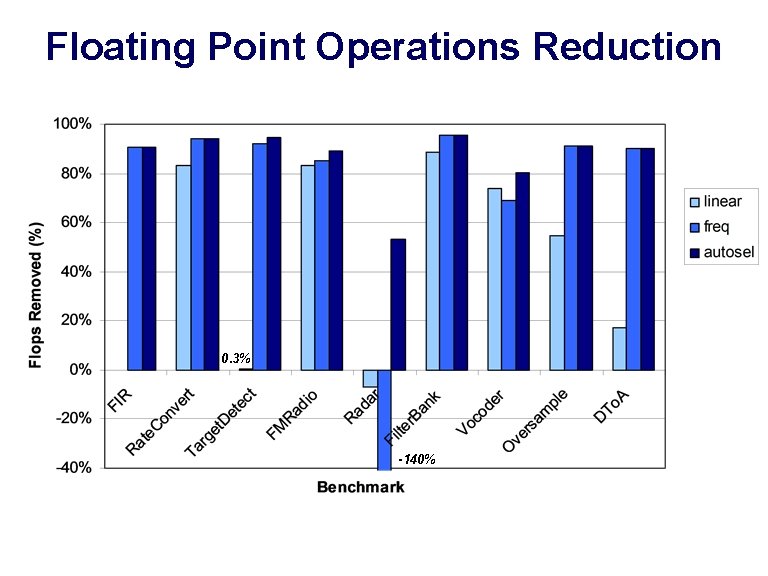

Floating-Point Operations Reduction 0. 3%

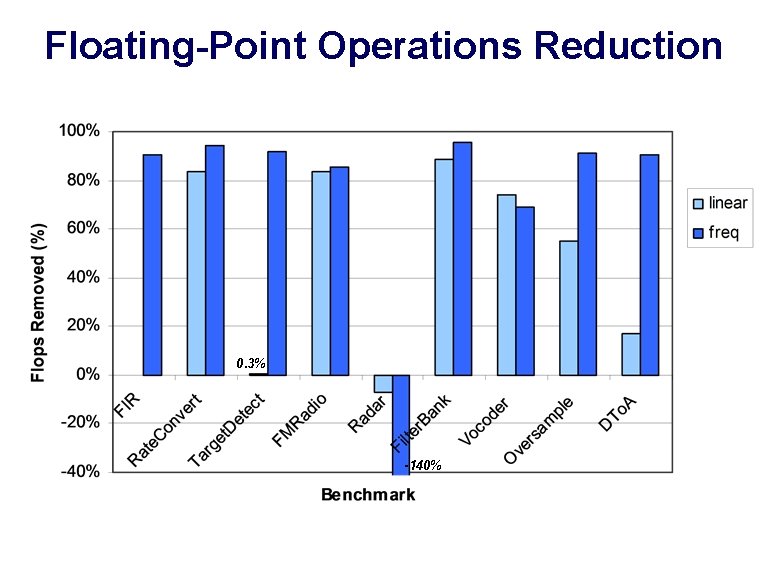

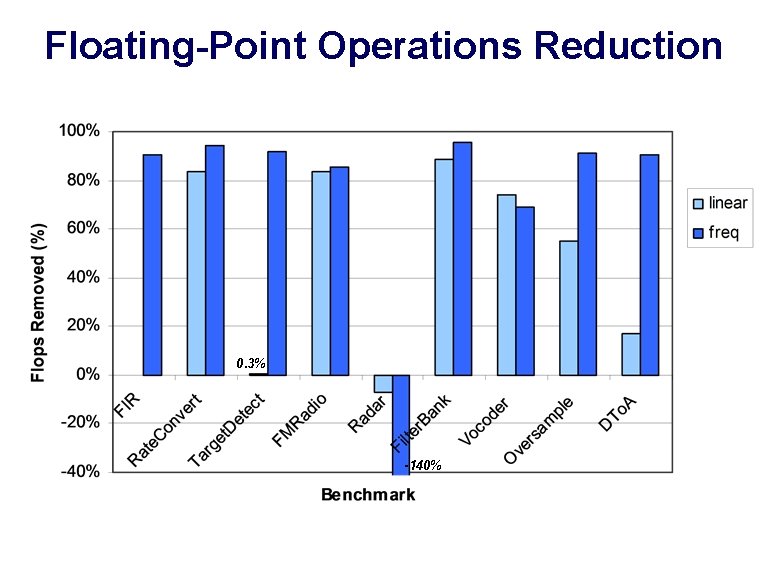

Floating-Point Operations Reduction 0. 3% -140%

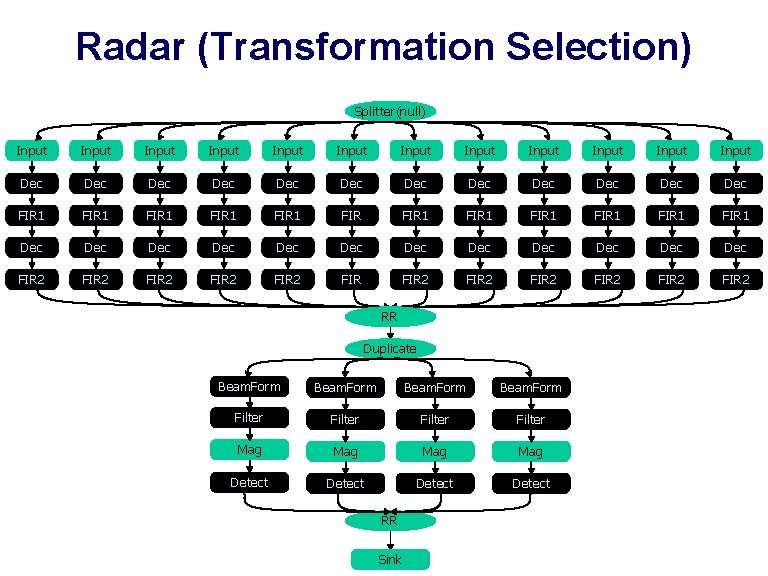

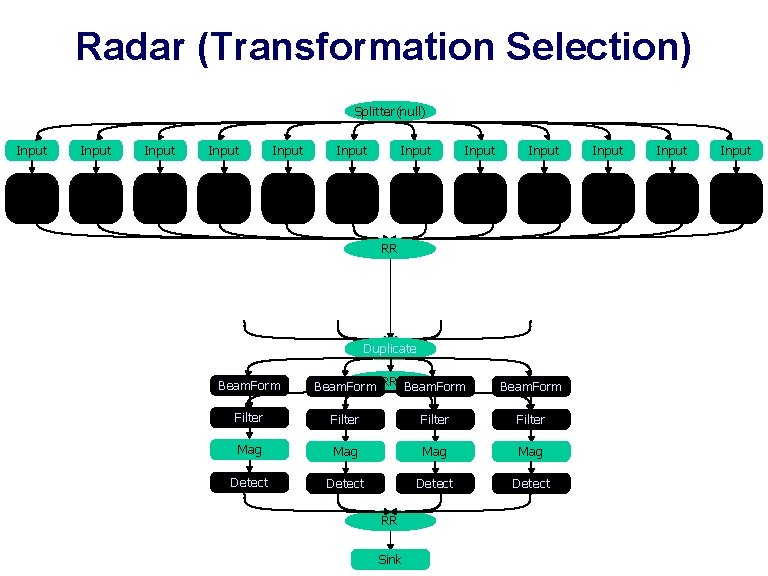

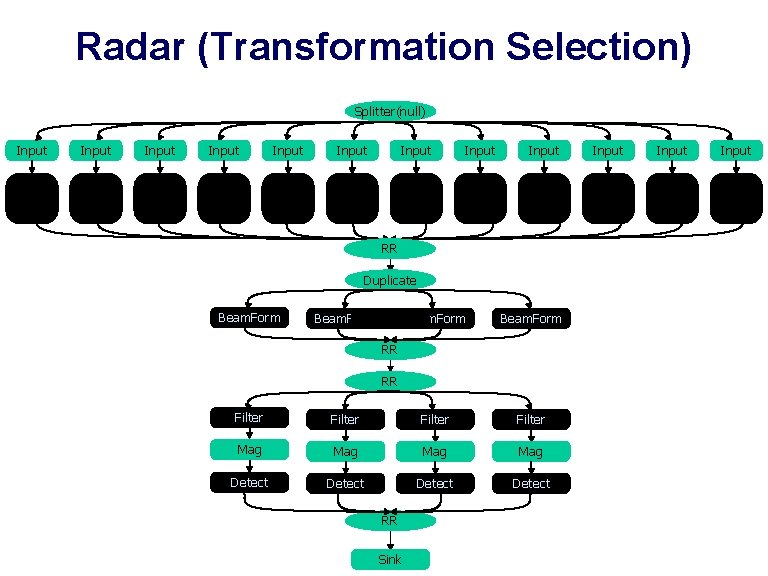

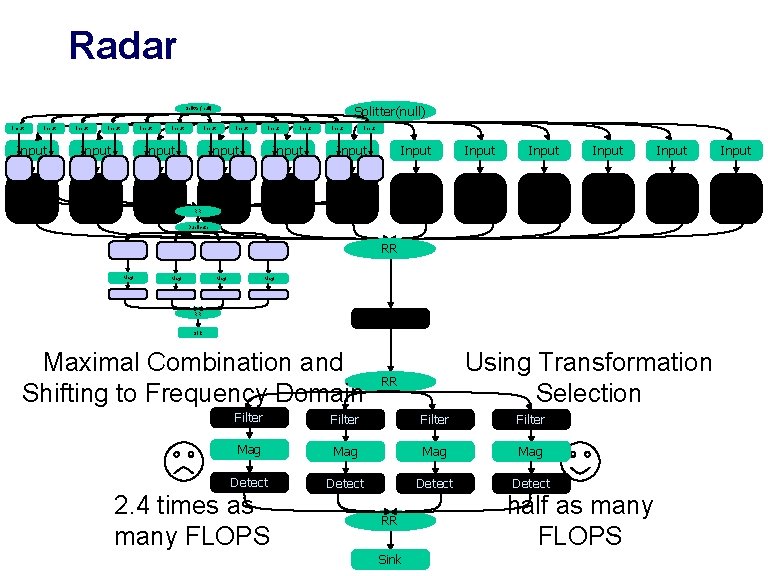

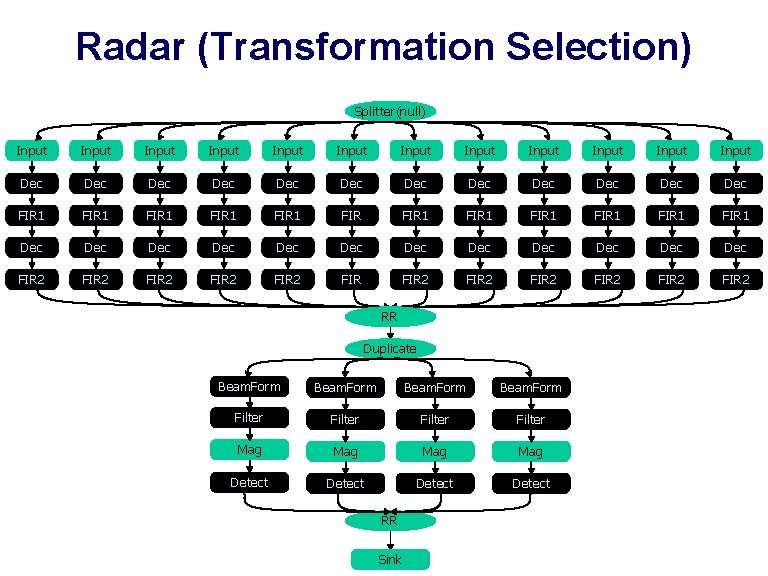

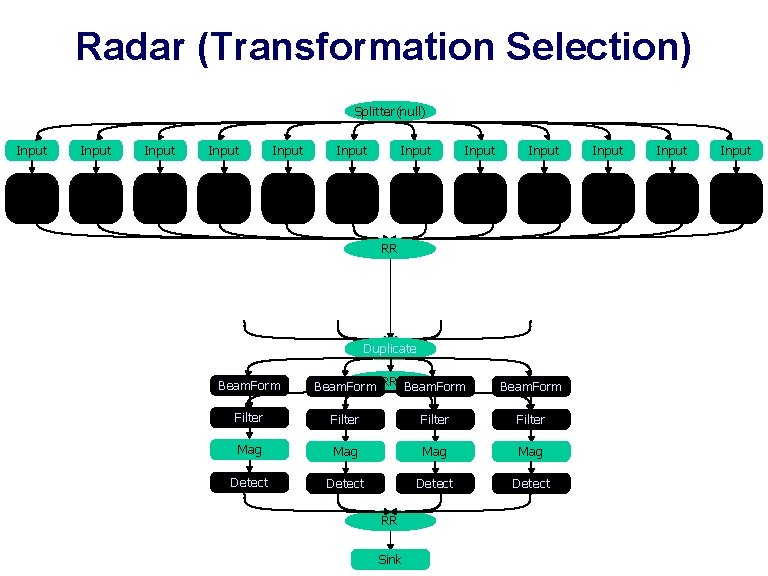

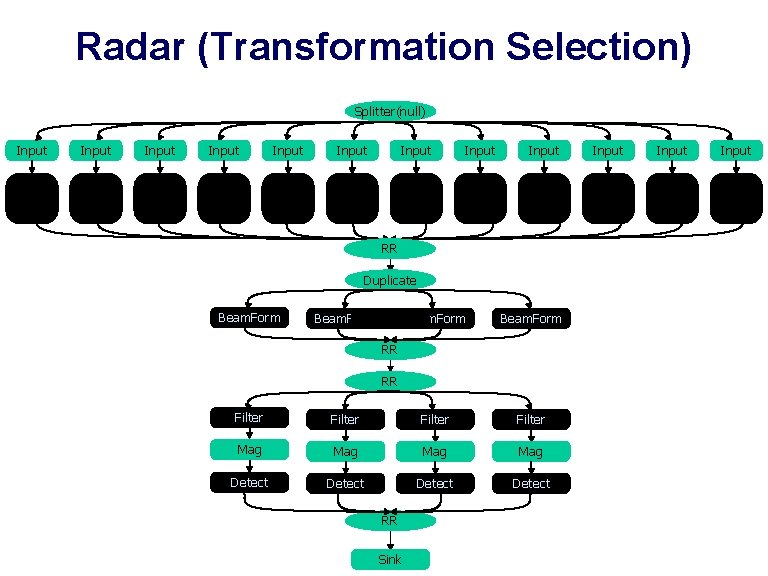

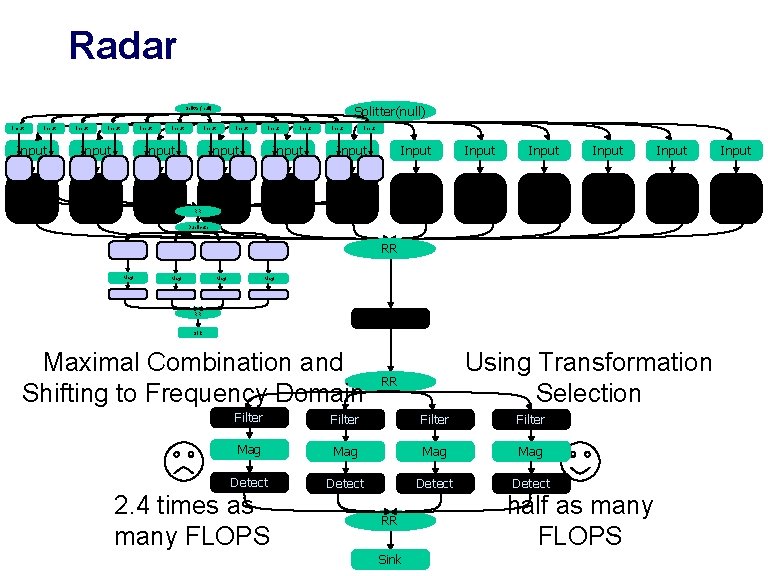

Radar (Transformation Selection)
[Figure: radar stream graph: Splitter(null) → parallel channels of Input → Dec → FIR 1 → Dec → FIR 2 → RR → Duplicate → BeamForm → Filter → Mag → Detect → RR → Sink]
• Maximal combination and shifting to the frequency domain: 2.4 times as many FLOPS
• Using transformation selection: half as many FLOPS

Floating Point Operations Reduction 0. 3% -140%

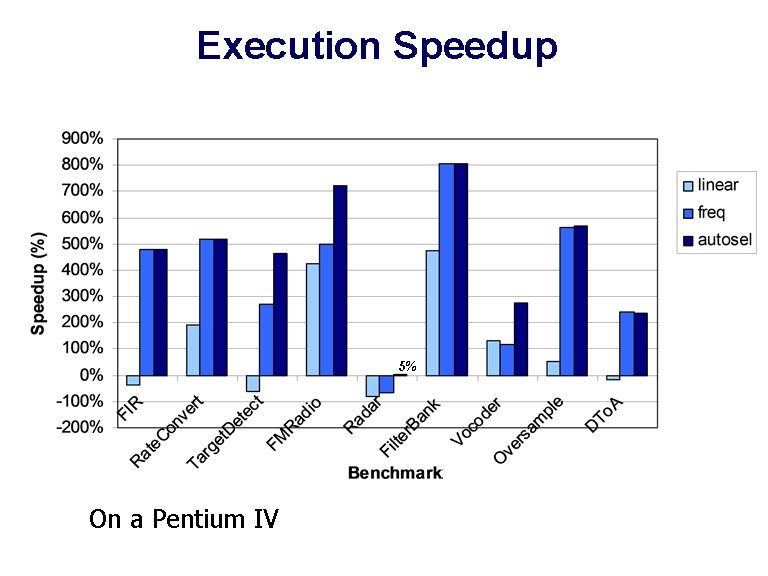

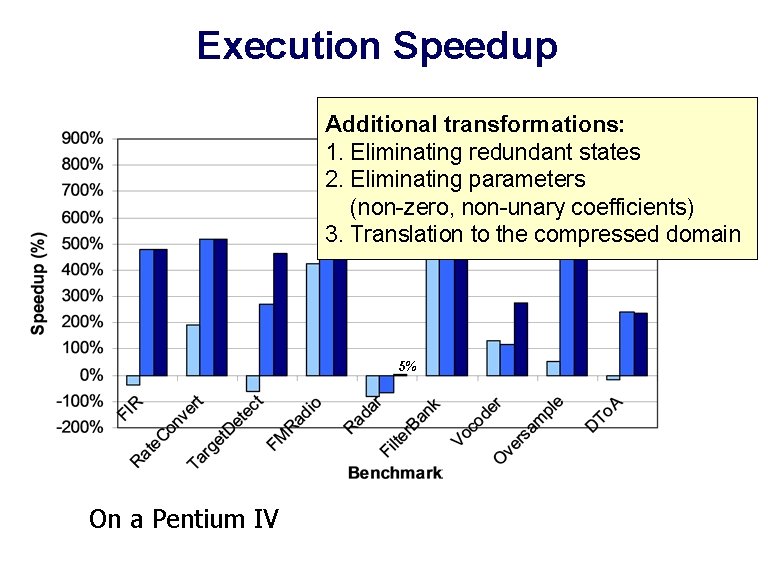

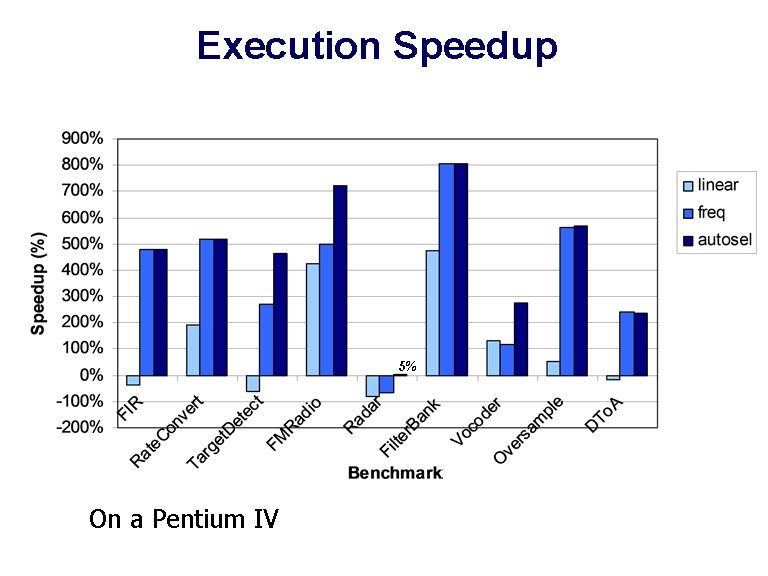

Execution Speedup 5% On a Pentium IV

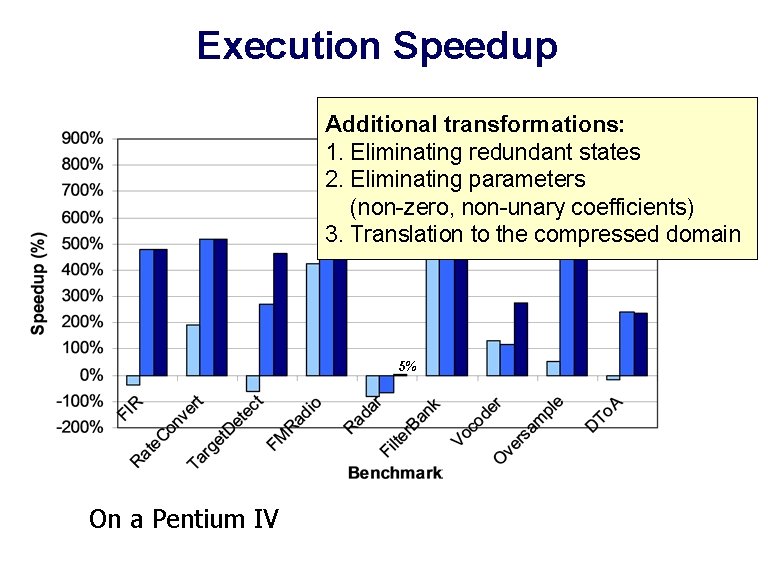

Execution Speedup Additional transformations: 1. Eliminating redundant states 2. Eliminating parameters (non-zero, non-unary coefficients) 3. Translation to the compressed domain 5% On a Pentium IV

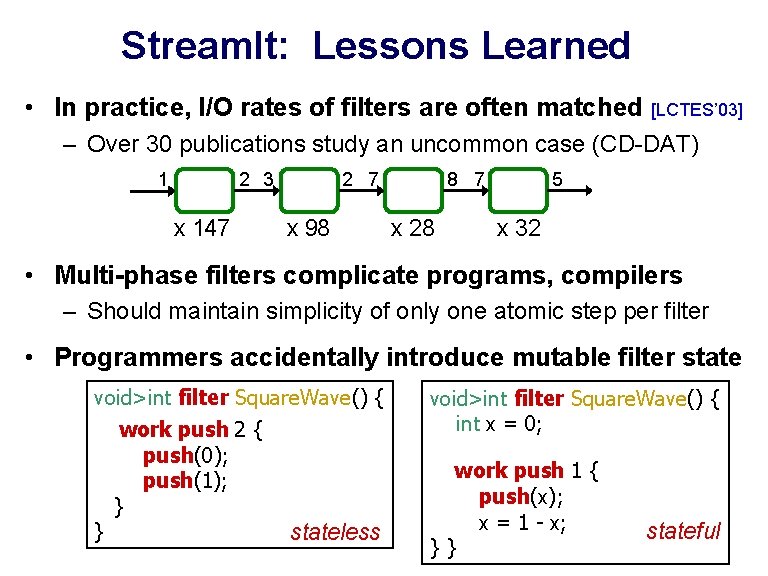

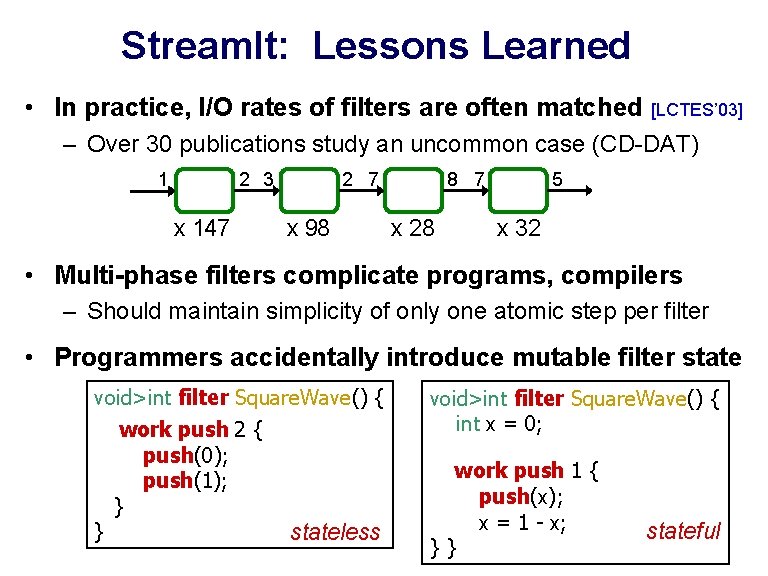

StreamIt: Lessons Learned
• In practice, I/O rates of filters are often matched [LCTES'03]
  – Over 30 publications study an uncommon case: the CD-DAT sample-rate converter, whose four stages (pop 1/push 2, pop 3/push 2, pop 7/push 8, pop 7/push 5) fire 147, 98, 28, and 32 times per steady state
• Multi-phase filters complicate programs and compilers
  – Should maintain the simplicity of only one atomic step per filter
• Programmers accidentally introduce mutable filter state

Stateless:

    void->int filter SquareWave() {
        work push 2 {
            push(0);
            push(1);
        }
    }

Stateful:

    void->int filter SquareWave() {
        int x = 0;
        work push 1 {
            push(x);
            x = 1 - x;
        }
    }
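
Why the accidental state matters: both versions produce the same sequence when run sequentially, but only the stateless one survives fission. A minimal Python sketch mirroring the two SquareWave filters; `sequential` and `naive_fission` are hypothetical helpers that run firings either on one instance or round-robin across k independent replicas, keeping outputs in firing order:

```python
from typing import Callable, List, Union


class StatelessSquareWave:
    def work(self) -> List[int]:
        return [0, 1]  # push 2; no fields, so every firing is identical


class StatefulSquareWave:
    def __init__(self) -> None:
        self.x = 0  # mutable field carried from one firing to the next

    def work(self) -> List[int]:
        out = [self.x]
        self.x = 1 - self.x
        return out


Filter = Union[StatelessSquareWave, StatefulSquareWave]


def sequential(make: Callable[[], Filter], firings: int) -> List[int]:
    f = make()
    return [v for _ in range(firings) for v in f.work()]


def naive_fission(make: Callable[[], Filter], firings: int, k: int) -> List[int]:
    """k independent replicas; firing n runs on replica n % k, outputs kept in order."""
    replicas = [make() for _ in range(k)]
    return [v for n in range(firings) for v in replicas[n % k].work()]


print(sequential(StatelessSquareWave, 2), naive_fission(StatelessSquareWave, 2, 2))
# [0, 1, 0, 1] [0, 1, 0, 1]
print(sequential(StatefulSquareWave, 4), naive_fission(StatefulSquareWave, 4, 2))
# [0, 1, 0, 1] [0, 0, 1, 1]
```

Each stateful replica starts from x = 0 and only sees every other firing, so the interleaved output is wrong; the stateless version pushes both values in one firing and needs no history.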

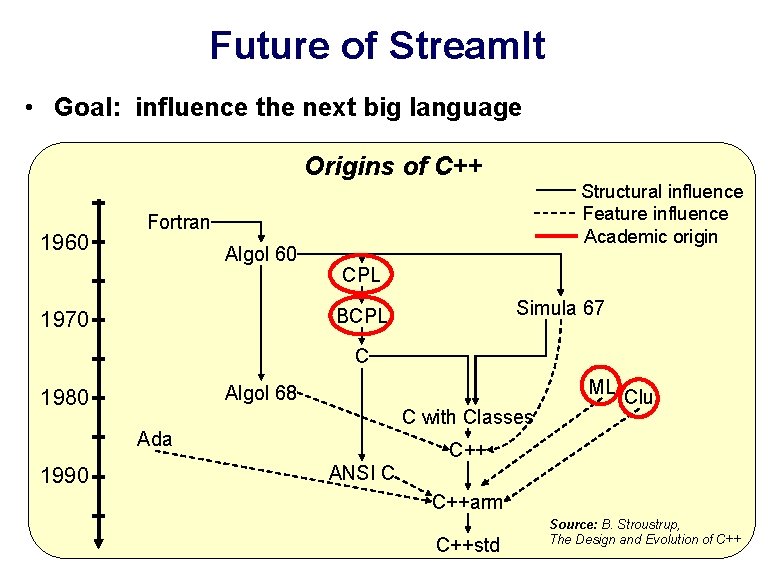

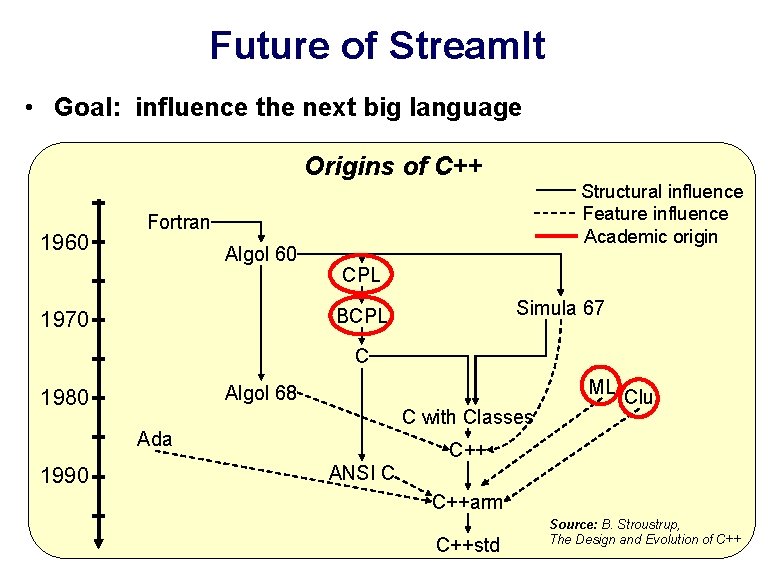

Future of StreamIt
• Goal: influence the next big language
[Figure: Origins of C++ (source: B. Stroustrup, The Design and Evolution of C++): a lineage from 1960 to 1990 through Fortran, Algol 60, CPL, Simula 67, BCPL, C, ML, Algol 68, C with Classes, Ada, and Clu to C++, ANSI C, C++arm, and C++std, distinguishing structural influence, feature influence, and academic origin]

Research Trajectory
• Vision: make emerging computational substrates universally accessible and useful
  1. Languages, compilers, & tools for multicores
    – I believe new language / compiler technology can enable scalable and robust performance
    – Next inroads: expose & exploit flexibility in programs
  2. Programmable microfluidics
    – We have developed programming languages, tools, and flexible new devices for microfluidics
    – Potential to revolutionize biology experimentation
  3. Technologies for the developing world
    – TEK: enable Internet experience over an email account
    – Audio Wiki: publish content from a low-cost phone
    – uBox / uPhone: monitor & improve rural healthcare

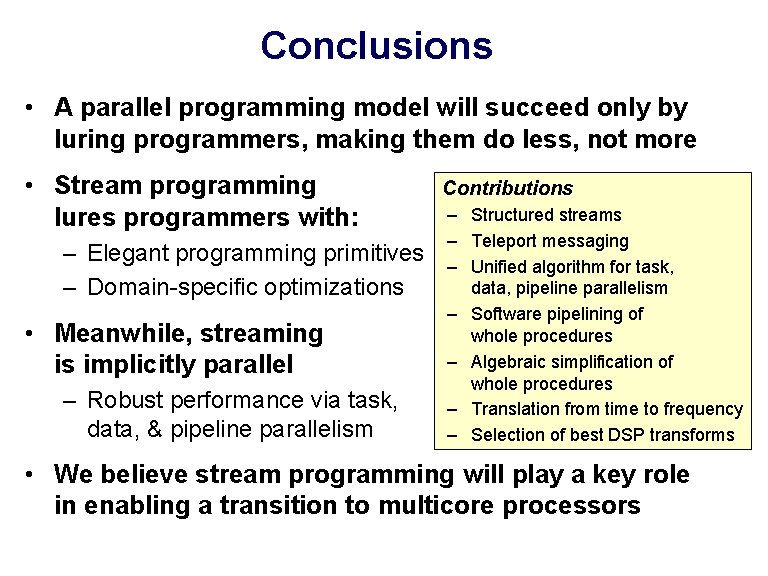

Conclusions
• A parallel programming model will succeed only by luring programmers, making them do less, not more
• Stream programming lures programmers with:
  – Elegant programming primitives
  – Domain-specific optimizations
• Meanwhile, streaming is implicitly parallel
  – Robust performance via task, data, & pipeline parallelism
• Contributions
  – Structured streams
  – Teleport messaging
  – Unified algorithm for task, data, pipeline parallelism
  – Software pipelining of whole procedures
  – Algebraic simplification of whole procedures
  – Translation from time to frequency
  – Selection of best DSP transforms
• We believe stream programming will play a key role in enabling a transition to multicore processors

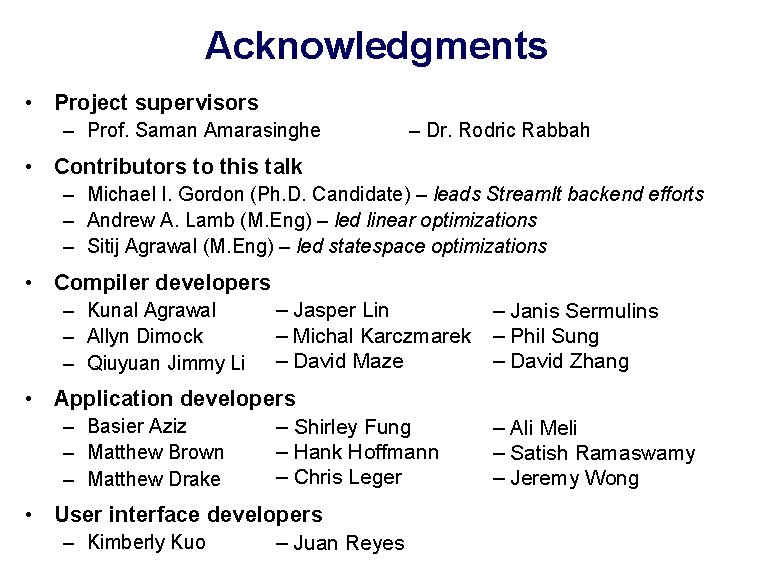

Acknowledgments
• Project supervisors
  – Prof. Saman Amarasinghe
  – Dr. Rodric Rabbah
• Contributors to this talk
  – Michael I. Gordon (PhD candidate): leads StreamIt backend efforts
  – Andrew A. Lamb (M.Eng.): led linear optimizations
  – Sitij Agrawal (M.Eng.): led state-space optimizations
• Compiler developers: Kunal Agrawal, Allyn Dimock, Qiuyuan Jimmy Li, Jasper Lin, Michal Karczmarek, David Maze
• Application developers: Basier Aziz, Shirley Fung, Matthew Brown, Matthew Drake, Hank Hoffmann, Chris Leger
• User interface developers: Kimberly Kuo, Juan Reyes, Janis Sermulins, Phil Sung, David Zhang, Ali Meli, Satish Ramaswamy, Jeremy Wong