Strategies for improving Monitoring and Evaluation

- Slides: 64

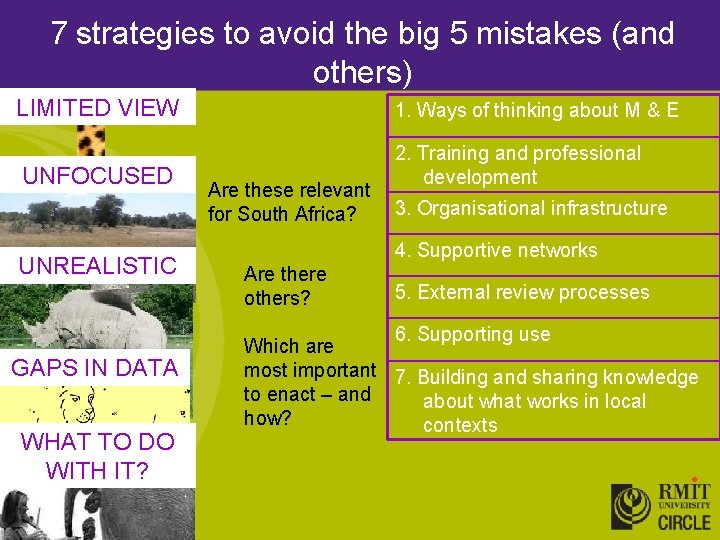

Strategies for improving Monitoring and Evaluation

South African Monitoring & Evaluation Association Inaugural conference, 28 to 30 March 2007

Associate Professor Patricia Rogers, CIRCLE at RMIT University, Australia

Sign at the Apartheid Museum, Johannesburg

- Good evaluation can help make things better
- Bad evaluation can be useless, or worse:
  - Findings that are too late, not credible or not relevant
  - False positives (wrongly conclude things work)
  - False negatives (wrongly conclude things don’t work)
  - Destructive effect of poor processes

Overview of presentation

- The ‘Big Five’ problems in M & E
- Seven possible strategies for improving the quality of M & E

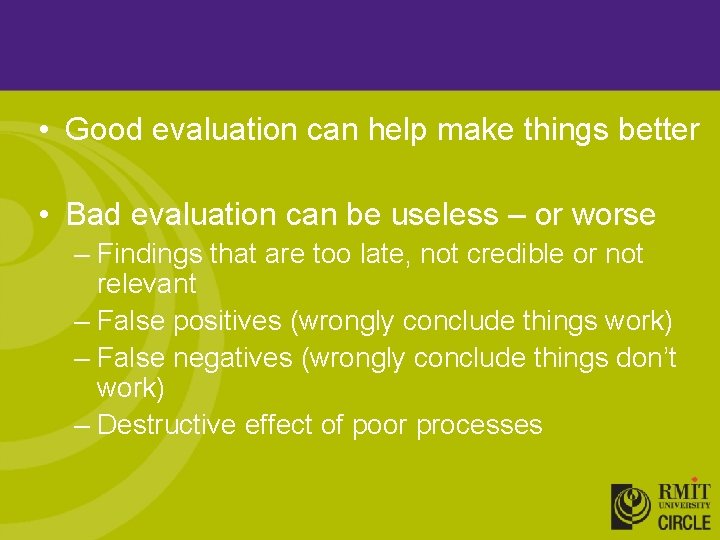

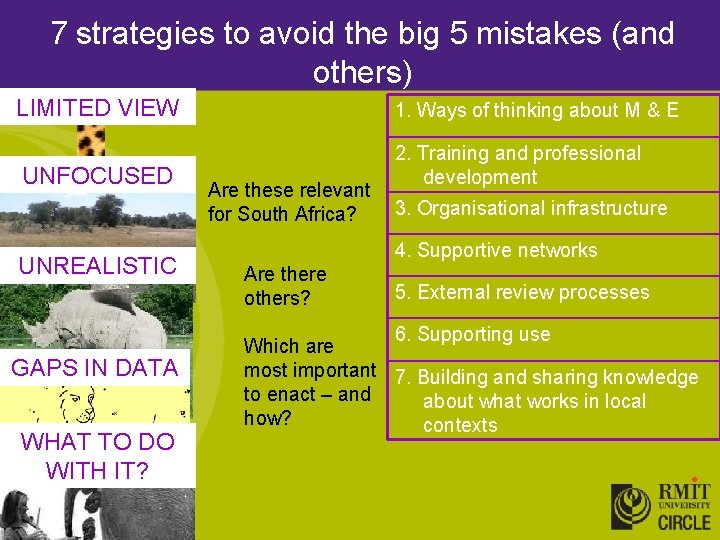

Questions for you

The ‘Big Five’ problems in M & E
- Are these relevant for South Africa?
- Are there others?
- Which are most important to address?

Seven possible strategies for improving the quality of M & E
- Are these relevant for South Africa?
- Are there others?
- Which are most important to enact, and how?

1. Presenting a limited view

- Only in terms of stated objectives and/or targets
- Only from the perspectives of certain groups and individuals
- Only certain types of data or research designs
- Bare indicators without explanation

2. Unfocused

- Trying to look at everything, and looking at nothing well
- Not communicating clear messages

3. Unrealistic expectations

Expecting:
- too much too soon and too easily
- definitive answers
- immediate answers about long-term impacts

4. Not enough good information

- Poor measurement and other data collection
- Poor response rate
- Inadequate data analysis
- Sensitive data removed
- Pressure to fill in missing data

5. Waiting till the end to work out what to do with what comes out

- Collecting lots of data, and then not being sure how to analyse it
- Doing lots of evaluations, and then not being sure how to use them

Avoiding the Big 5

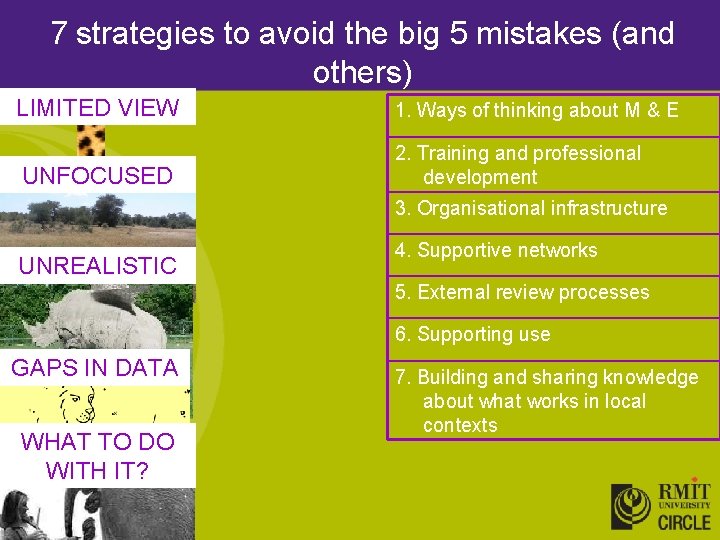

- LIMITED VIEW
- UNFOCUSED
- UNREALISTIC
- GAPS IN DATA
- WHAT TO DO WITH IT?

Seven strategies

1. Better ways to think about M & E
2. Training and professional development
3. Organisational infrastructure
4. Supportive networks
5. External review processes
6. Strategies for supporting use
7. Building knowledge about what works in evaluation in particular contexts

1. Better ways to think about M & E

- Useful definitions
- Models of what evaluation is, and how it relates to policy and practice

1. Better ways to think about M & E

Useful definitions
- Not just measuring whether objectives have been met
- Articulating, negotiating:
  - What do we value?
  - How is it going?

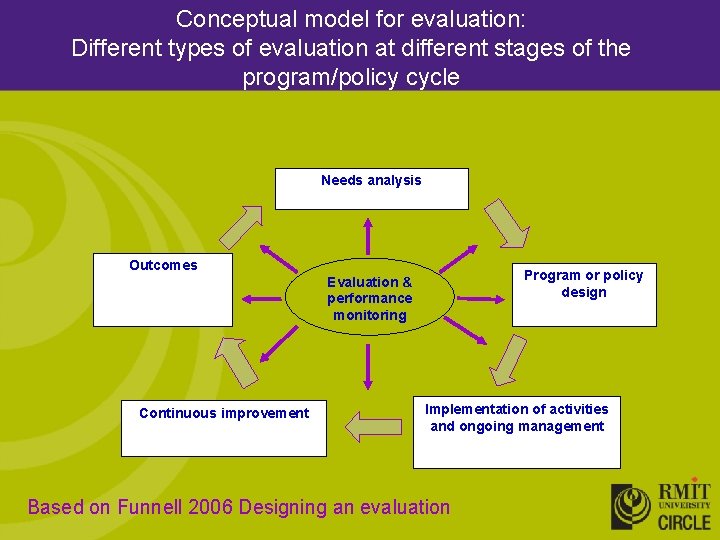

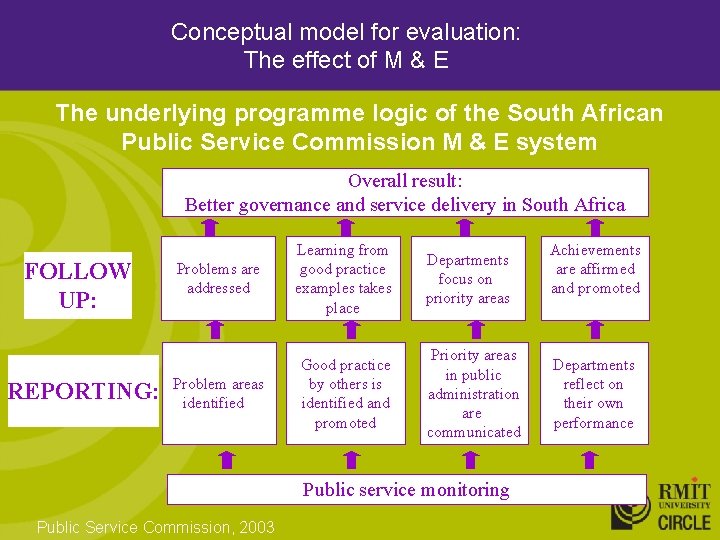

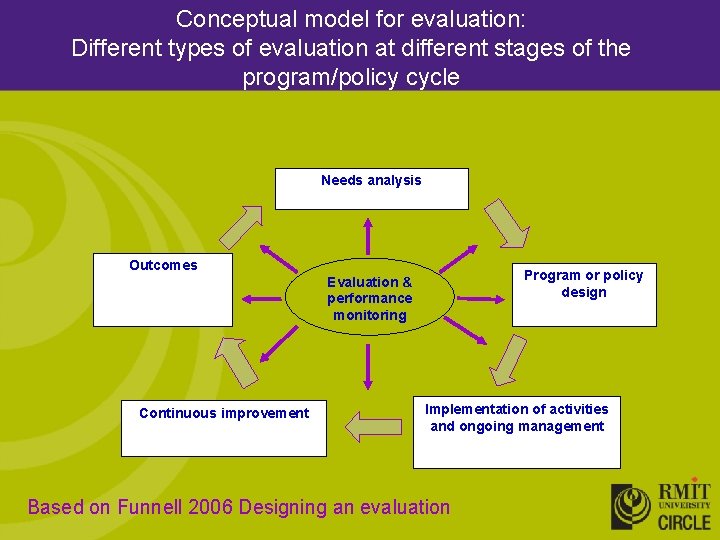

1. Better ways to think about M & E

Models of what evaluation is, and how it relates to policy and practice
1. Different types of evaluation at different stages of the program/policy cycle, rather than a final activity
2. The effect of M & E
3. Iteratively building evaluation capacity

Common understandings of M & E

- Including definitions and models in major documents, not just training manuals
- Having these familiar to managers, staff and communities, not just to evaluators
- Also recognising the value of different definitions and conceptualisations

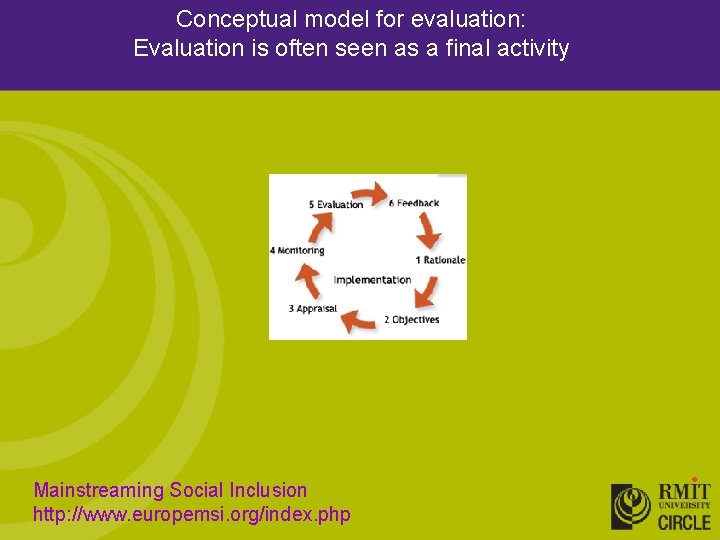

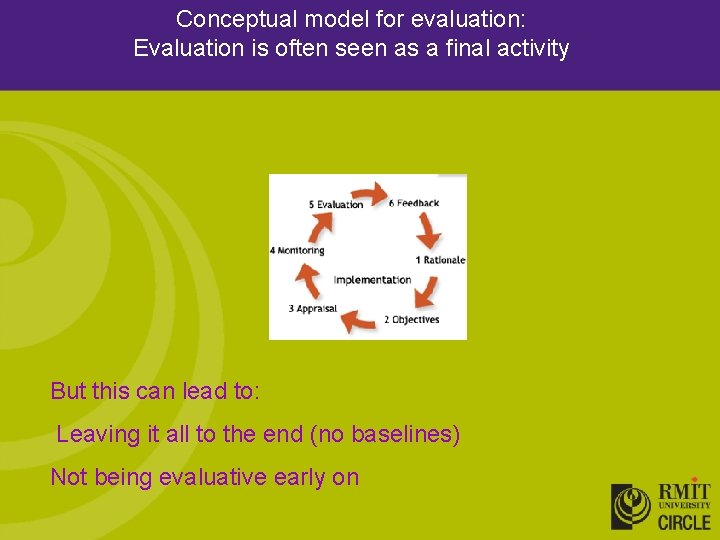

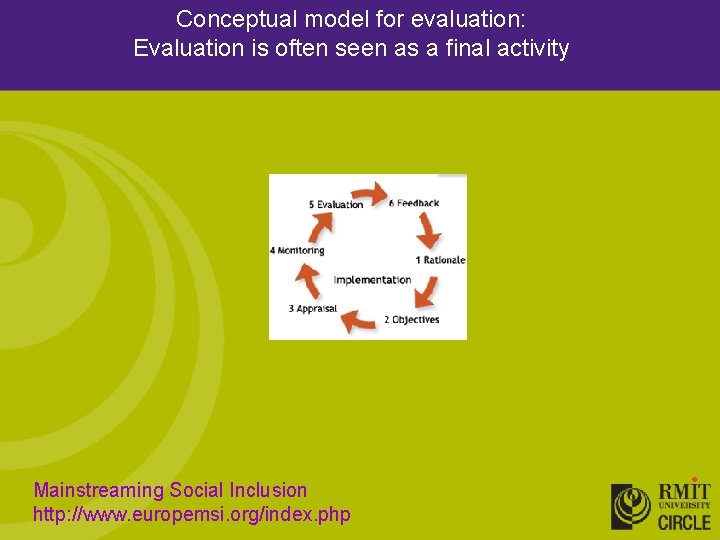

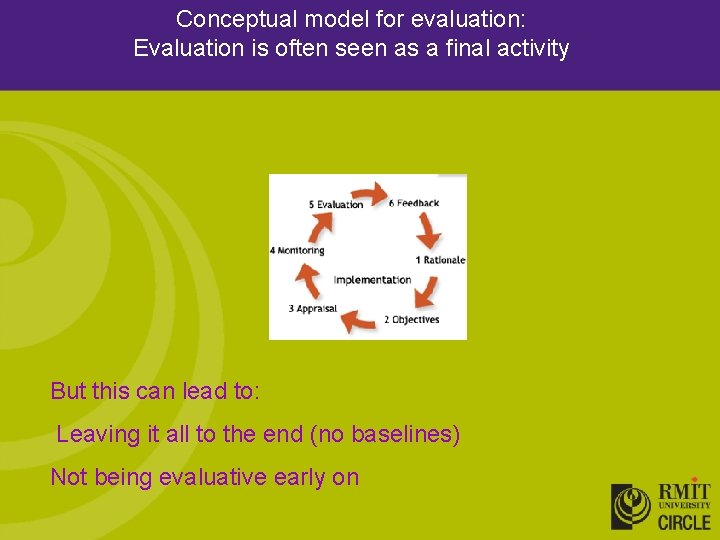

Conceptual model for evaluation: Evaluation is often seen as a final activity

(Mainstreaming Social Inclusion, http://www.europemsi.org/index.php)

But this can lead to:
- Leaving it all to the end (no baselines)
- Not being evaluative early on

Conceptual model for evaluation: Different types of evaluation at different stages of the program/policy cycle

- Needs analysis
- Outcomes
- Program or policy design
- Evaluation & performance monitoring
- Continuous improvement
- Implementation of activities and ongoing management

(Based on Funnell 2006, Designing an evaluation)

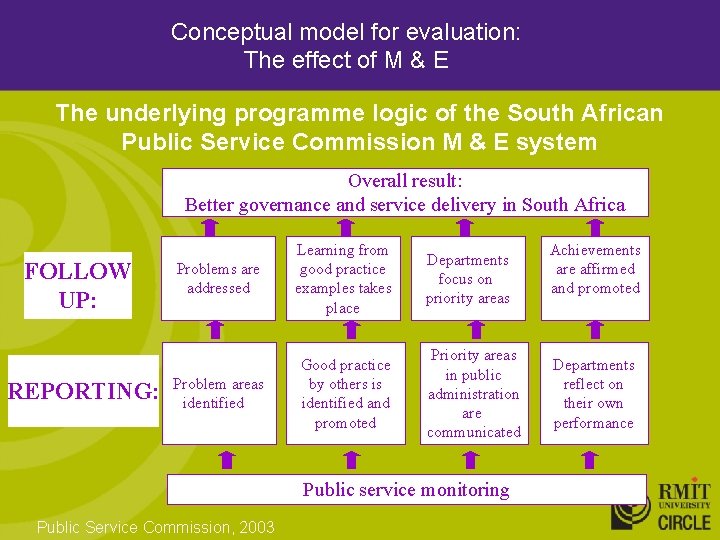

Conceptual model for evaluation: The effect of M & E

The underlying programme logic of the South African Public Service Commission M & E system (Public Service Commission, 2003)

- Overall result: Better governance and service delivery in South Africa
- Stages labelled in the diagram: FOLLOW UP, REPORTING
- Elements of the diagram:
  - Problems are addressed
  - Learning from good practice examples takes place
  - Problem areas identified
  - Good practice by others is identified and promoted
  - Departments focus on priority areas
  - Priority areas in public administration are communicated
  - Achievements are affirmed and promoted
  - Departments reflect on their own performance
  - Public service monitoring

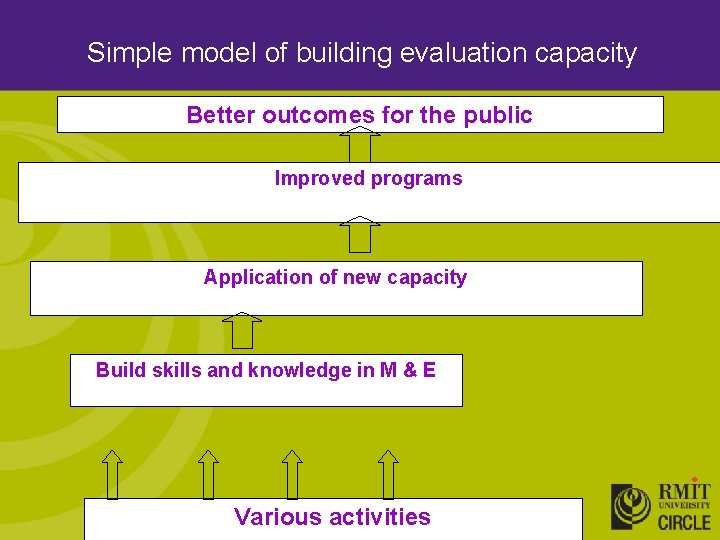

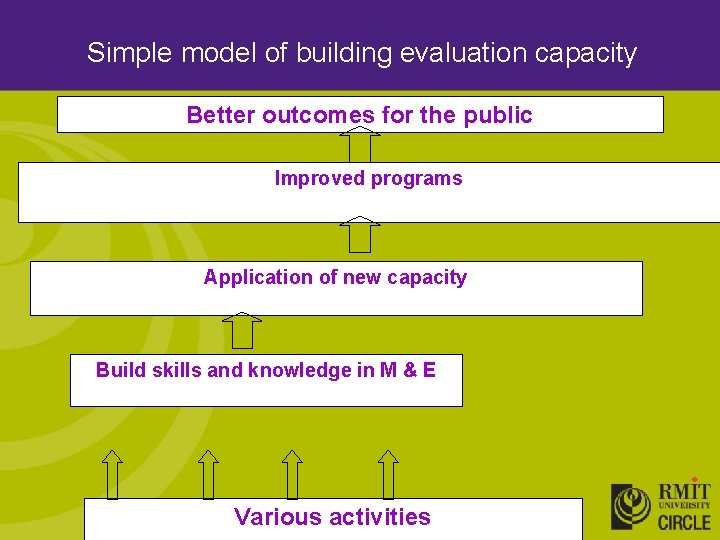

Simple model of building evaluation capacity

- Better outcomes for the public
- Improved programs
- Application of new capacity
- Build skills and knowledge in M & E
- Various activities

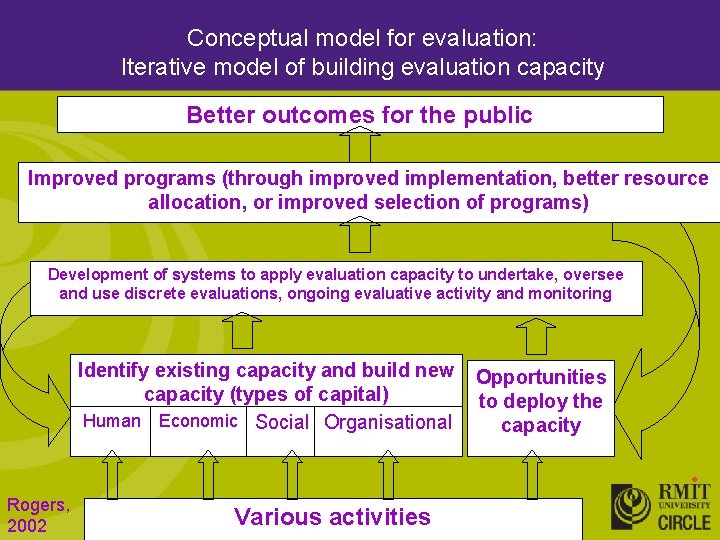

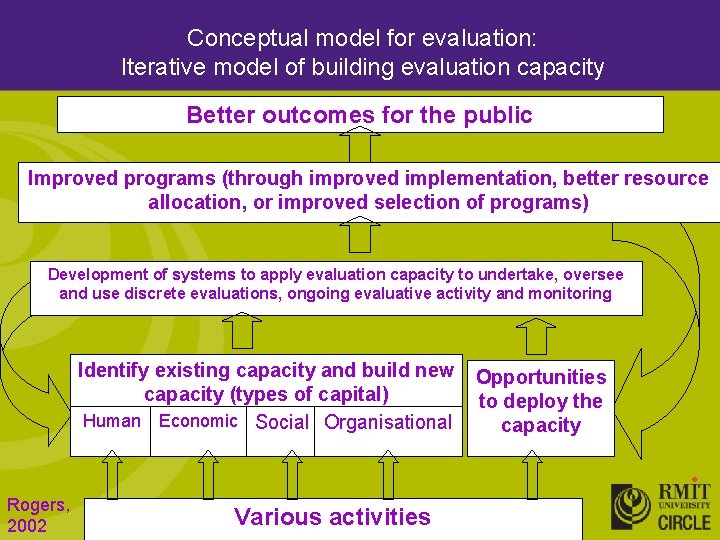

Conceptual model for evaluation: Iterative model of building evaluation capacity (Rogers, 2002)

- Better outcomes for the public
- Improved programs (through improved implementation, better resource allocation, or improved selection of programs)
- Development of systems to apply evaluation capacity to undertake, oversee and use discrete evaluations, ongoing evaluative activity and monitoring
- Identify existing capacity and build new capacity (types of capital)
  - Human
  - Economic
  - Social
  - Organisational
- Various activities
- Opportunities to deploy the capacity

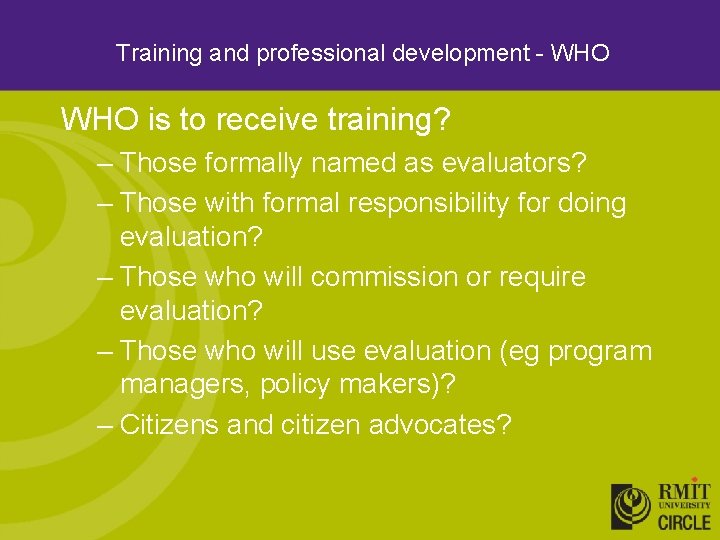

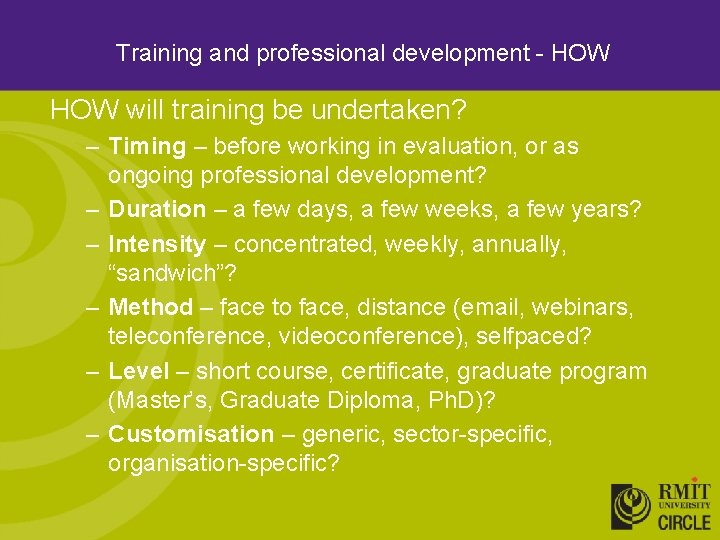

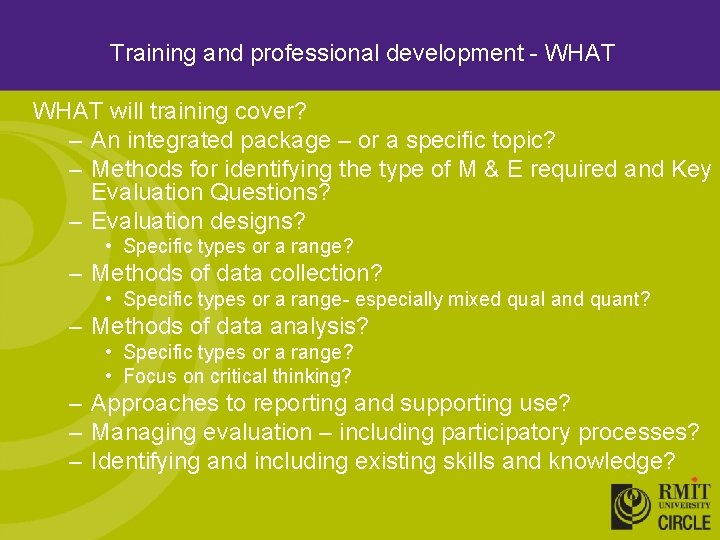

2. Training and professional development

- WHO is to receive training?
- HOW will training be undertaken?
- WHAT will training cover?
- WHO will control content, certification and accreditation?

Training and professional development: WHO is to receive training?

- Those formally named as evaluators?
- Those with formal responsibility for doing evaluation?
- Those who will commission or require evaluation?
- Those who will use evaluation (e.g. program managers, policy makers)?
- Citizens and citizen advocates?

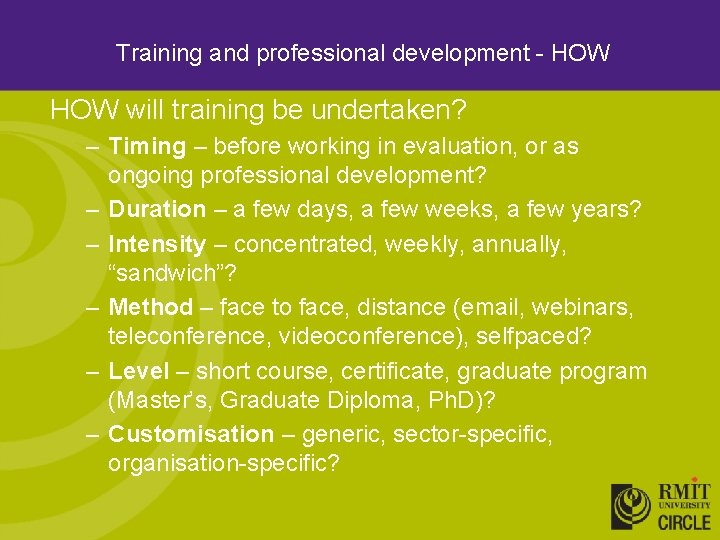

Training and professional development: HOW will training be undertaken?

- Timing: before working in evaluation, or as ongoing professional development?
- Duration: a few days, a few weeks, a few years?
- Intensity: concentrated, weekly, annually, “sandwich”?
- Method: face to face, distance (email, webinars, teleconference, videoconference), self-paced?
- Level: short course, certificate, graduate program (Master’s, Graduate Diploma, PhD)?
- Customisation: generic, sector-specific, organisation-specific?

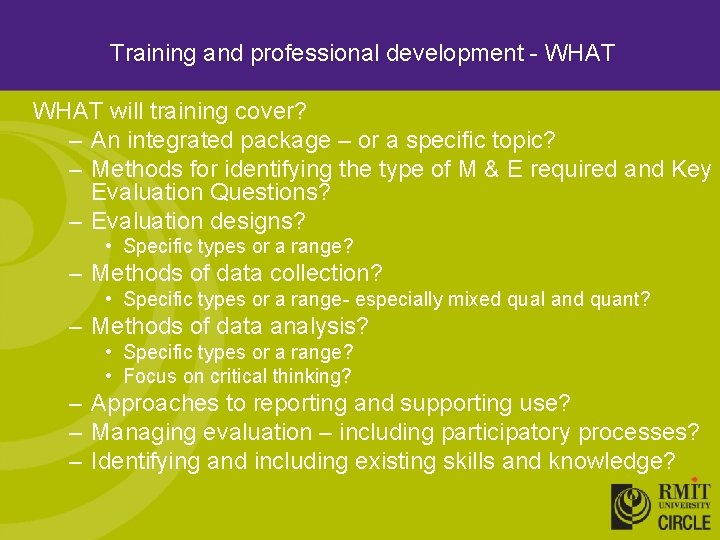

Training and professional development: WHAT will training cover?

- An integrated package, or a specific topic?
- Methods for identifying the type of M & E required and Key Evaluation Questions?
- Evaluation designs?
  - Specific types or a range?
- Methods of data collection?
  - Specific types or a range, especially mixed qual and quant?
- Methods of data analysis?
  - Specific types or a range?
  - Focus on critical thinking?
- Approaches to reporting and supporting use?
- Managing evaluation, including participatory processes?
- Identifying and including existing skills and knowledge?

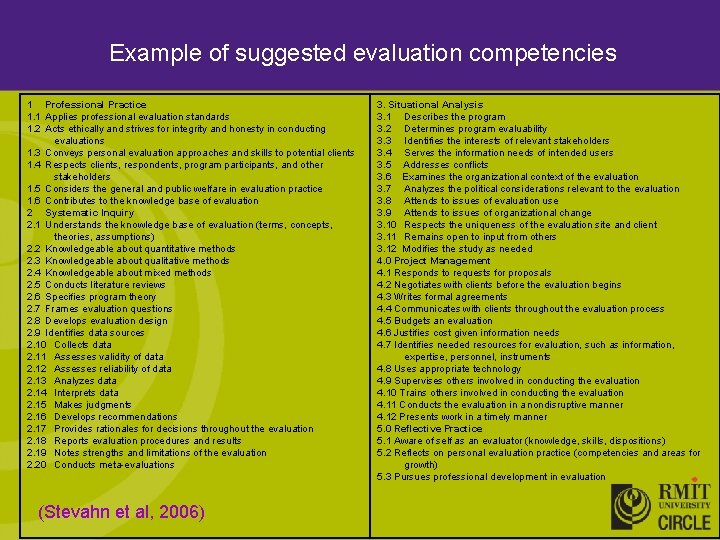

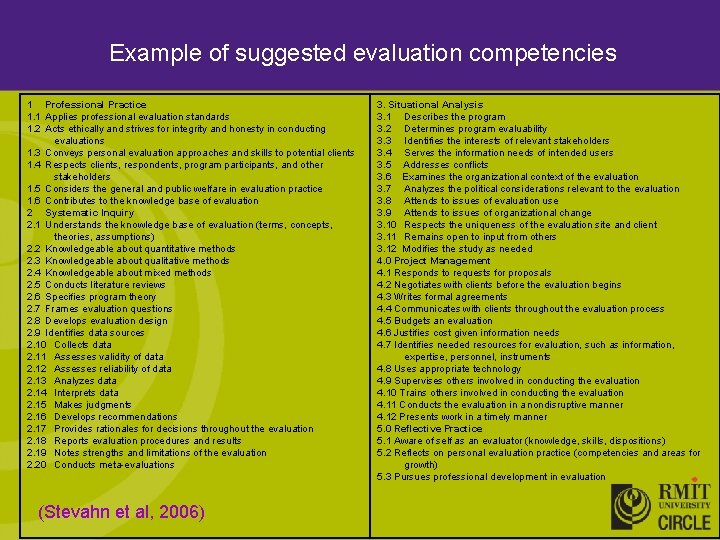

Example of suggested evaluation competencies (Stevahn et al, 2006)

1.0 Professional Practice
- 1.1 Applies professional evaluation standards
- 1.2 Acts ethically and strives for integrity and honesty in conducting evaluations
- 1.3 Conveys personal evaluation approaches and skills to potential clients
- 1.4 Respects clients, respondents, program participants, and other stakeholders
- 1.5 Considers the general and public welfare in evaluation practice
- 1.6 Contributes to the knowledge base of evaluation

2.0 Systematic Inquiry
- 2.1 Understands the knowledge base of evaluation (terms, concepts, theories, assumptions)
- 2.2 Knowledgeable about quantitative methods
- 2.3 Knowledgeable about qualitative methods
- 2.4 Knowledgeable about mixed methods
- 2.5 Conducts literature reviews
- 2.6 Specifies program theory
- 2.7 Frames evaluation questions
- 2.8 Develops evaluation design
- 2.9 Identifies data sources
- 2.10 Collects data
- 2.11 Assesses validity of data
- 2.12 Assesses reliability of data
- 2.13 Analyzes data
- 2.14 Interprets data
- 2.15 Makes judgments
- 2.16 Develops recommendations
- 2.17 Provides rationales for decisions throughout the evaluation
- 2.18 Reports evaluation procedures and results
- 2.19 Notes strengths and limitations of the evaluation
- 2.20 Conducts meta-evaluations

3.0 Situational Analysis
- 3.1 Describes the program
- 3.2 Determines program evaluability
- 3.3 Identifies the interests of relevant stakeholders
- 3.4 Serves the information needs of intended users
- 3.5 Addresses conflicts
- 3.6 Examines the organizational context of the evaluation
- 3.7 Analyzes the political considerations relevant to the evaluation
- 3.8 Attends to issues of evaluation use
- 3.9 Attends to issues of organizational change
- 3.10 Respects the uniqueness of the evaluation site and client
- 3.11 Remains open to input from others
- 3.12 Modifies the study as needed

4.0 Project Management
- 4.1 Responds to requests for proposals
- 4.2 Negotiates with clients before the evaluation begins
- 4.3 Writes formal agreements
- 4.4 Communicates with clients throughout the evaluation process
- 4.5 Budgets an evaluation
- 4.6 Justifies cost given information needs
- 4.7 Identifies needed resources for evaluation, such as information, expertise, personnel, instruments
- 4.8 Uses appropriate technology
- 4.9 Supervises others involved in conducting the evaluation
- 4.10 Trains others involved in conducting the evaluation
- 4.11 Conducts the evaluation in a nondisruptive manner
- 4.12 Presents work in a timely manner

5.0 Reflective Practice
- 5.1 Aware of self as an evaluator (knowledge, skills, dispositions)
- 5.2 Reflects on personal evaluation practice (competencies and areas for growth)
- 5.3 Pursues professional development in evaluation

Training and professional development: Short course examples

- University of Zambia M & E course
- IPDET (International Program for Development Evaluation Training), Independent Evaluation Group of the World Bank and Carleton University, http://www.ipdet.org
- CDC (Centers for Disease Control) Summer Institute, USA, http://www.eval.org/SummerInstitute/06SIhome.asp
- The Evaluators Institute, San Francisco, Chicago, Washington DC, USA, www.evaluatorsinstitute.com
- CDRA (Community Development Resource Association), Developmental Planning, Monitoring, Evaluation and Reporting, Cape Town, South Africa, www.cdra.org.za
- Pre-conference workshops:
  - AfrEA: African Evaluation Association, www.afrea.org
  - AEA: American Evaluation Association, www.eval.org
  - SAMEA: South African Monitoring and Evaluation Association, www.samea.org.za
  - AES: Australasian Evaluation Society, www.aes.asn.au
  - EES: European Evaluation Society, www.europeanevaluation.org
  - CES: Canadian Evaluation Society, www.evaluationcanada.ca
  - UKES: United Kingdom Evaluation Society, www.evaluation.org.uk

Training and professional development: Graduate programs

- Centre for Research on Science and Technology, The University of Stellenbosch, Cape Town, South Africa: Postgraduate Diploma in Monitoring and Evaluation Methods. One-year course delivered in intensive mode, with face-to-face courses interspersed with self-study.
- School of Health Systems and Public Health (SHSPH), the University of Pretoria, South Africa, in collaboration with the MEASURE Evaluation Project: M&E concentration in their Master of Public Health degree program. Courses taught in modules of one to three weeks, plus a six-month internship and individual research.
- Graduate School of Public & Development Management (P&DM), the University of the Witwatersrand, Johannesburg: Electives on monitoring and evaluation as part of their Masters Degree programmes in Public and Development Management as well as in Public Policy.
- Centre for Program Evaluation, University of Melbourne, Australia: Masters of Assessment and Evaluation. Available by distance education. www.unimelb.edu.au/cpe
- CIRCLE, Royal Melbourne Institute of Technology, Australia: Masters and PhD by research.
- Western Michigan University, USA: Interdisciplinary PhD residential coursework program.

Training and professional development: On-line material

- Self-paced courses
- Manuals
- Guidelines

Training and professional development: Key Questions

- Who controls the curriculum, accreditation of courses and certification of evaluators?
- What are the consequences of this control?

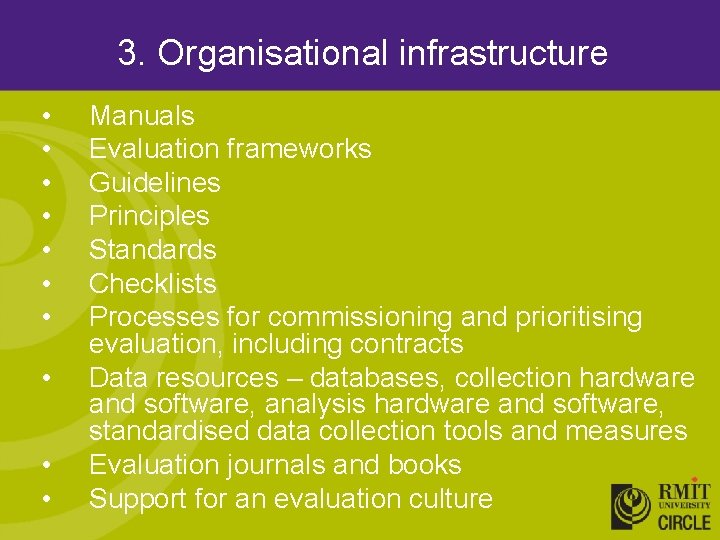

3. Organisational infrastructure

- Manuals
- Evaluation frameworks
- Guidelines
- Principles
- Standards
- Checklists
- Processes for commissioning and prioritising evaluation, including contracts
- Data resources: databases, collection hardware and software, analysis hardware and software, standardised data collection tools and measures
- Evaluation journals and books
- Support for an evaluation culture

Options for organisational infrastructure

- Importing existing infrastructure
- Adapting existing infrastructure
- Developing locally specific infrastructure

Some existing infrastructure

- Guidelines, e.g. Australasian Evaluation Society Ethical Guidelines, www.aes.asn.au
  - 6. Practise within competence: The evaluator or evaluation team should possess the knowledge, abilities, skills and experience appropriate to undertake the tasks proposed in the evaluation. Evaluators should fairly represent their competence, and should not practice beyond it.
  - 21. Fully reflect evaluator’s findings: The final report(s) of the evaluation should reflect fully the findings and conclusions determined by the evaluator, and these should not be amended without the evaluator's consent.

Some existing infrastructure

- Checklist, e.g. Patton’s Qualitative Evaluation Checklist, http://www.wmich.edu/evalctr/checklists/
  - 1. Determine the extent to which qualitative methods are appropriate given the evaluation’s purposes and intended uses.
    - Be prepared to explain the variations, strengths, and weaknesses of qualitative evaluations.
    - Determine the criteria by which the quality of the evaluation will be judged.
    - Determine the extent to which qualitative evaluation will be accepted or controversial given the evaluation’s purpose, users, and audiences.
    - Determine what foundation should be laid to assure that the findings of a qualitative evaluation will be credible.

4. Supportive networks

- Informal networks
- Evaluation societies and associations
- Learning circles
- Mentoring

5. External review processes

- WHAT
  - Priorities for evaluation
  - Guidelines, manuals
  - Plans for individual evaluations
  - Specifications for indicators
  - Data collection
  - Data analysis
  - Reports

5. External review processes

- WHEN
  - Before next stage of evaluation (review for improvement)
  - Before acceptance of evaluation report
  - At end of an episode of evaluation: identify and document lessons learned about evaluation
  - As part of ongoing quality assurance

5. External review processes

- WHO
  - Peer review: reciprocal review of each other’s work
  - External expert

6. Supporting use

- Register of evaluation reports: summary of methods used, findings, availability of report
- Publishing evaluation reports: library and web access
- Tracking and reporting on implementation of recommendations
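One way a register of evaluation reports and the tracking of recommendations might be kept is sketched below. This is a minimal illustration only, not a system described in the presentation: the class names, field names, status values and sample report are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    # Hypothetical status values for tracking a recommendation
    NOT_STARTED = "not started"
    IN_PROGRESS = "in progress"
    IMPLEMENTED = "implemented"


@dataclass
class Recommendation:
    text: str
    status: Status = Status.NOT_STARTED


@dataclass
class EvaluationReport:
    # Fields mirror the slide: methods used, findings, availability
    title: str
    methods_summary: str
    findings_summary: str
    availability: str  # e.g. "library", "web" (assumed values)
    recommendations: list[Recommendation] = field(default_factory=list)


def implementation_summary(register: list[EvaluationReport]) -> dict[str, int]:
    """Count recommendations across the register by implementation status."""
    counts = {status.value: 0 for status in Status}
    for report in register:
        for rec in report.recommendations:
            counts[rec.status.value] += 1
    return counts


# Hypothetical example entry
register = [
    EvaluationReport(
        title="Hypothetical programme review",
        methods_summary="Survey and interviews",
        findings_summary="Mixed results across sites",
        availability="web",
        recommendations=[
            Recommendation("Collect baseline data", Status.IMPLEMENTED),
            Recommendation("Publish a summary report"),
        ],
    )
]
print(implementation_summary(register))
```

Keeping the recommendations attached to each report means the same register can serve both purposes on the slide: finding a report, and reporting on how far its recommendations have been acted on.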

7. Building knowledge about what works in evaluation in particular contexts

- Research into evaluation: empirically documenting what is done and how it goes
- Publishing accounts and lessons learned
  - Books
  - Journals
  - Web sites
  - Locally and internationally

Example: Kaupapa Maori evaluation (New Zealand)

Seven key ethical considerations:
1. Aroha ki te tangata (respect for people)
2. Kanohi kitea (the seen face; a requirement to present yourself ‘face to face’)
3. Titiro, whakarongo…korero (look, listen… then speak)
4. Manaaki ki te tangata (share and host people, be generous)
5. Kia tupato (be cautious)
6. Kaua e takahia te mana o te tangata (do not trample on the mana of people)
7. Kaua e mahaki (do not flaunt your knowledge)

Smith, G. H. (1997) The Development of Kaupapa Maori: theory and praxis. University of Auckland, Auckland.

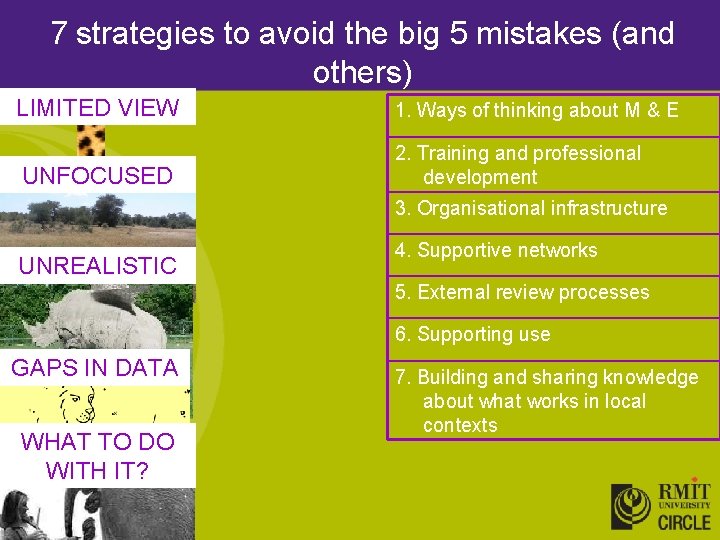

7 strategies to avoid the big 5 mistakes (and others)

The big 5 mistakes:
- LIMITED VIEW
- UNFOCUSED
- UNREALISTIC
- GAPS IN DATA
- WHAT TO DO WITH IT?

The 7 strategies:
1. Ways of thinking about M & E
2. Training and professional development
3. Organisational infrastructure
4. Supportive networks
5. External review processes
6. Supporting use
7. Building and sharing knowledge about what works in local contexts

Questions:
- Are these relevant for South Africa?
- Are there others?
- Which are most important to enact, and how?

Patricia.Rogers@rmit.edu.au

CIRCLE at RMIT University

Collaborative Institute for Research, Consulting and Learning in Evaluation, Royal Melbourne Institute of Technology, Melbourne, AUSTRALIA