StoreGPU: Exploiting Graphics Processing Units to Accelerate Distributed Storage Systems



StoreGPU: Exploiting Graphics Processing Units to Accelerate Distributed Storage Systems

Samer Al-Kiswany, with Abdullah Gharaibeh, Elizeu Santos-Neto, George Yuan, Matei Ripeanu

NetSysLab, The University of British Columbia

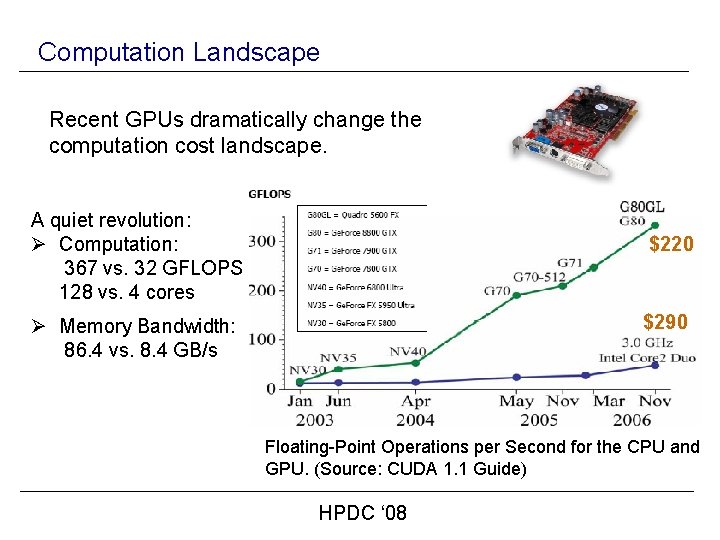

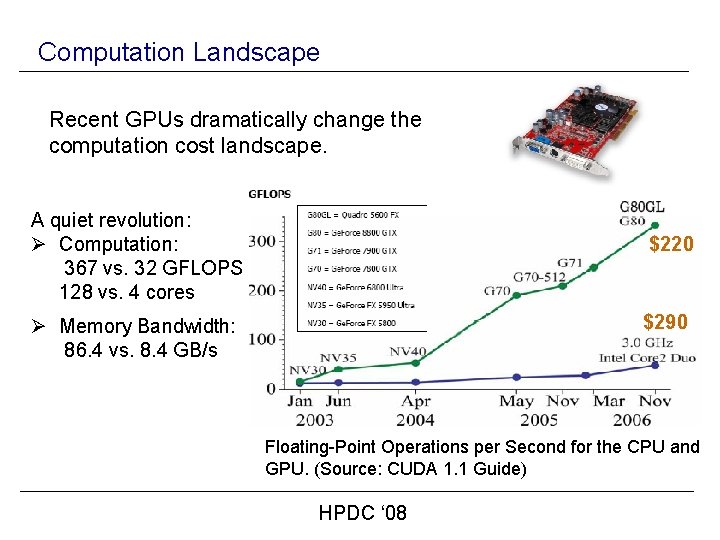

Computation Landscape

Recent GPUs dramatically change the computation cost landscape. A quiet revolution (GPU vs. CPU):

- Computation: 367 vs. 32 GFLOPS, 128 vs. 4 cores
- Price: $220 vs. $290
- Memory bandwidth: 86.4 vs. 8.4 GB/s

Figure: Floating-point operations per second for the CPU and GPU. (Source: CUDA 1.1 Guide)

HPDC ’08

Computation Landscape

Recent GPUs dramatically change the computation cost landscape. They are:

- Affordable
- Widely available in commodity desktops
- Equipped with 10s to 100s of cores (can support 1000s of threads)
- General-purpose programming friendly

![Exploiting GPUs’ Computational Power](https://slidetodoc.com/presentation_image_h/dbde80b91acf0b45a2ad4520d9c9e677/image-4.jpg)

Exploiting GPUs’ Computational Power

Studies exploiting the GPU:

- Bioinformatics: [Liu 06]
- Chemistry: [Vogt 08]
- Physics: [Anderson 08]
- And many more: [Owens 07]

Report: 4x to 50x speedup.

But: mostly scientific and specialized applications.

Motivating Question

System design is a balancing act in a multi-dimensional space: e.g., given certain objectives, say job turnaround, minimize total system cost given component prices, I/O bottlenecks, bounds on storage and network traffic, energy consumption, etc.

Q: Does the 10x reduction in computation costs that GPUs offer change the way we design and implement (distributed) system middleware?

Distributed Systems: Computationally Intensive Operations

- Hashing
- Erasure coding
- Encryption/decryption
- Compression
- Membership testing (Bloom filter)

Used in:

- Storage systems
- Security protocols
- Data dissemination techniques
- Virtual machine memory management
- And many more …

These operations are computationally intensive and often avoided in existing systems.

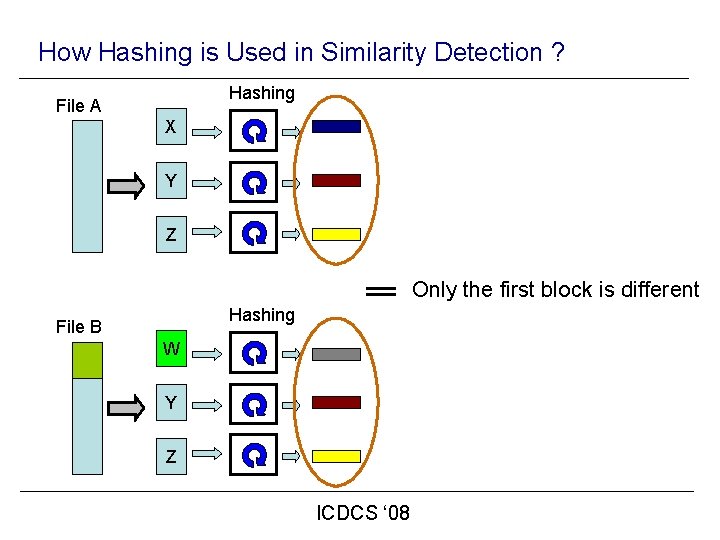

Why Start with Hashing?

Popular, used in many situations:

- Similarity detection
- Content addressability
- Integrity
- Copyright infringement detection
- Load balancing

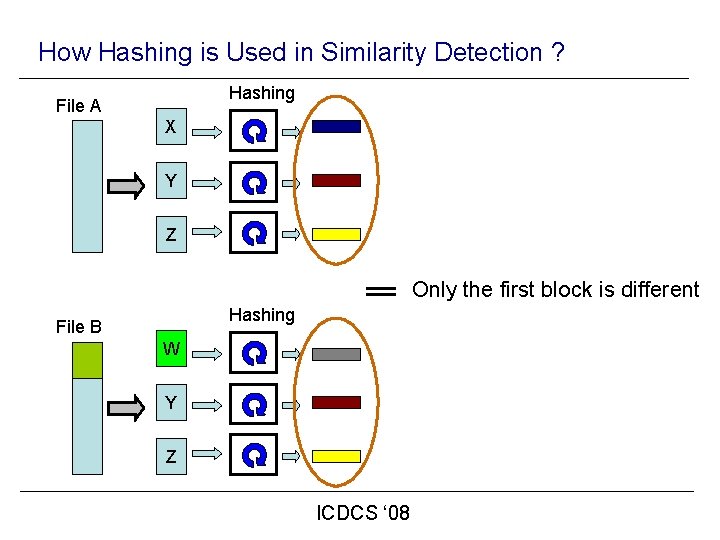

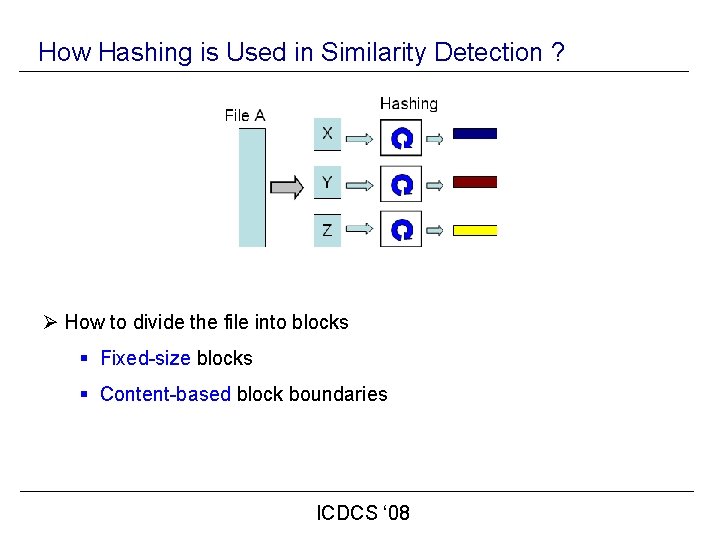

How Is Hashing Used in Similarity Detection?

- File A: blocks X, Y, Z, each hashed
- File B: blocks W, Y, Z, each hashed

Only the first block is different.

ICDCS ’08
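The comparison on this slide can be sketched in a few lines of Python. This is a minimal illustration, not StoreGPU code: the function names `block_hashes` and `shared_blocks` and the 4-byte block size are assumptions chosen to keep the example small.

```python
import hashlib

def block_hashes(data: bytes, block_size: int) -> list:
    """Hash each fixed-size block of `data` with MD5."""
    return [
        hashlib.md5(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]

def shared_blocks(a: bytes, b: bytes, block_size: int) -> list:
    """For each block of `b`, report whether an identical block exists in `a`."""
    known = set(block_hashes(a, block_size))
    return [h in known for h in block_hashes(b, block_size)]

# Files A = X Y Z and B = W Y Z, as on the slide (hypothetical 4-byte blocks).
file_a = b"XXXXYYYYZZZZ"
file_b = b"WWWWYYYYZZZZ"
print(shared_blocks(file_a, file_b, block_size=4))  # [False, True, True]
```

Only the hashes are compared, so the second and third blocks of file B are recognized as already stored without comparing the block contents themselves.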

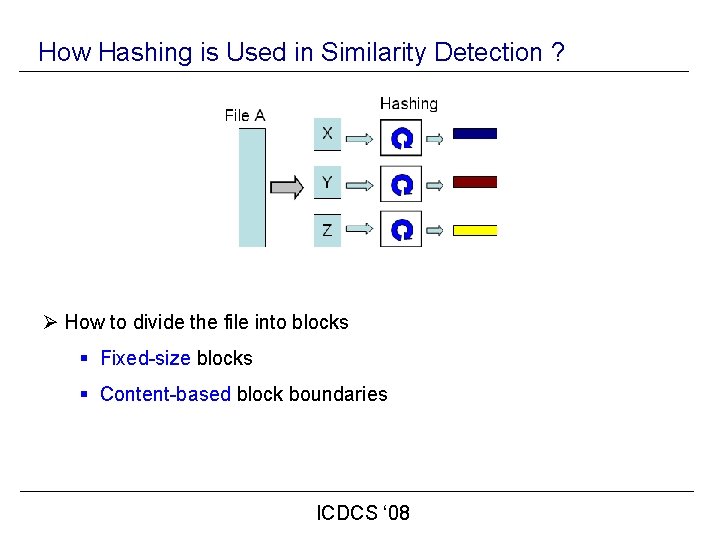

How Is Hashing Used in Similarity Detection?

How to divide the file into blocks:

- Fixed-size blocks
- Content-based block boundaries

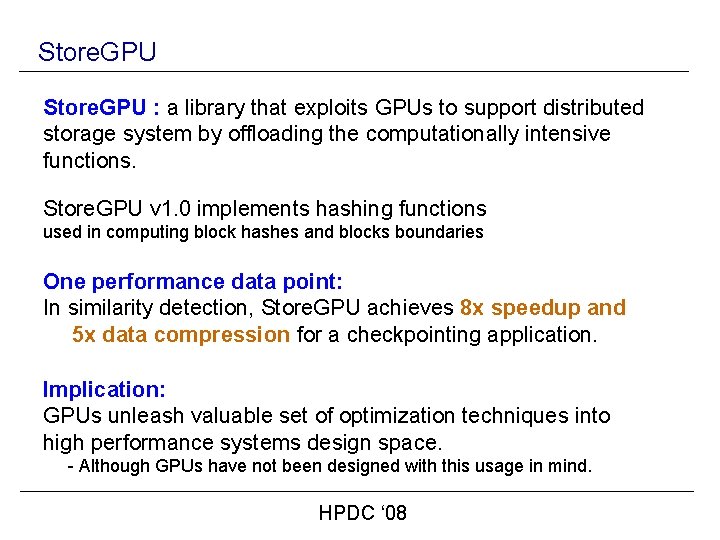

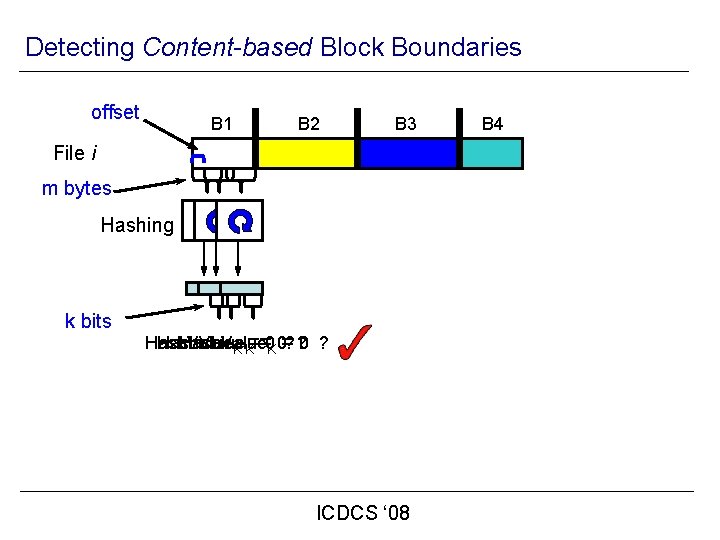

Detecting Content-based Block Boundaries

[Figure: a window of m bytes slides over file i in steps of offset bytes. Each window is hashed, and a block boundary (B1, B2, B3, B4) is declared wherever k bits of the hash value are all 0.]
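A sliding-window boundary detector along these lines might look as follows. This is a sketch under stated assumptions: the function name `find_boundaries` is hypothetical, MD5 is used as the window hash, the test is applied to the low-order k bits, and k = 4 is an arbitrary choice. The defaults m = 20 and offset = 4 are the configuration quoted later in the evaluation slides.

```python
import hashlib

def find_boundaries(data: bytes, m: int = 20, offset: int = 4, k: int = 4) -> list:
    """Return end positions of windows whose hash has its low k bits equal to 0."""
    mask = (1 << k) - 1
    boundaries = []
    # Slide an m-byte window over the data, advancing `offset` bytes per step.
    for start in range(0, len(data) - m + 1, offset):
        window = data[start:start + m]
        value = int.from_bytes(hashlib.md5(window).digest(), "big")
        if value & mask == 0:  # assumed test: low-order k bits are all zero
            boundaries.append(start + m)
    return boundaries

# Deterministic sample input; the boundaries depend only on the content.
sample = bytes((i * 131 + 7) % 256 for i in range(2048))
print(find_boundaries(sample))
```

Every window is hashed independently of the others, which is why this step involves hashing a large number of very small inputs and lends itself to one-window-per-thread parallelism.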

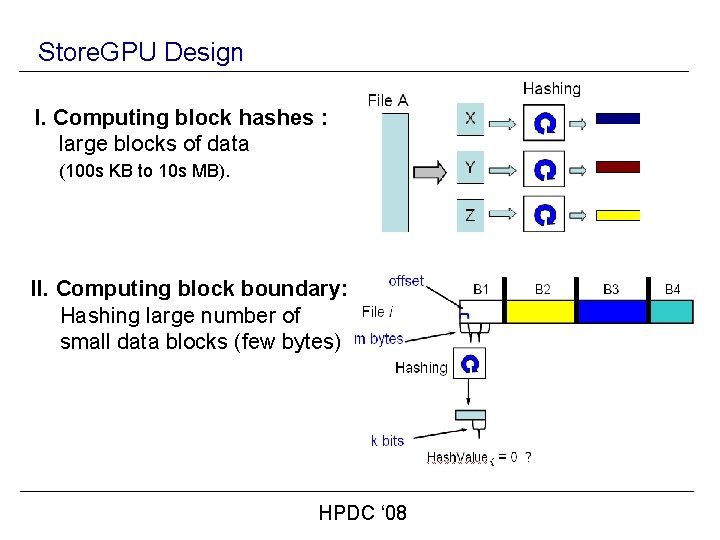

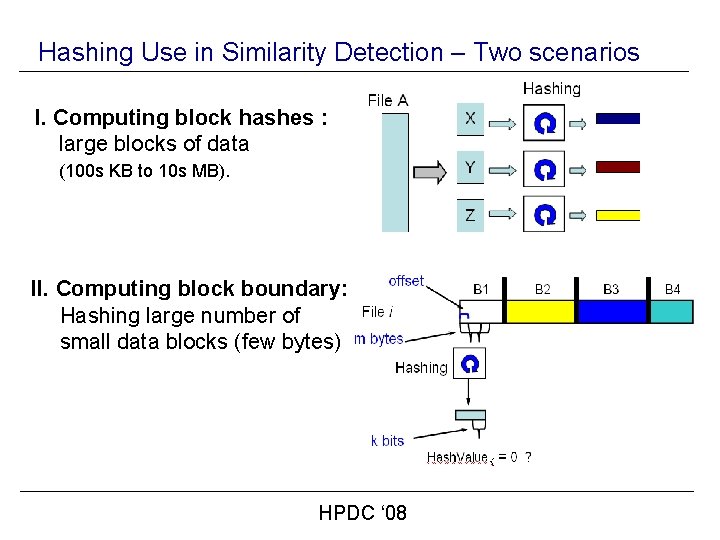

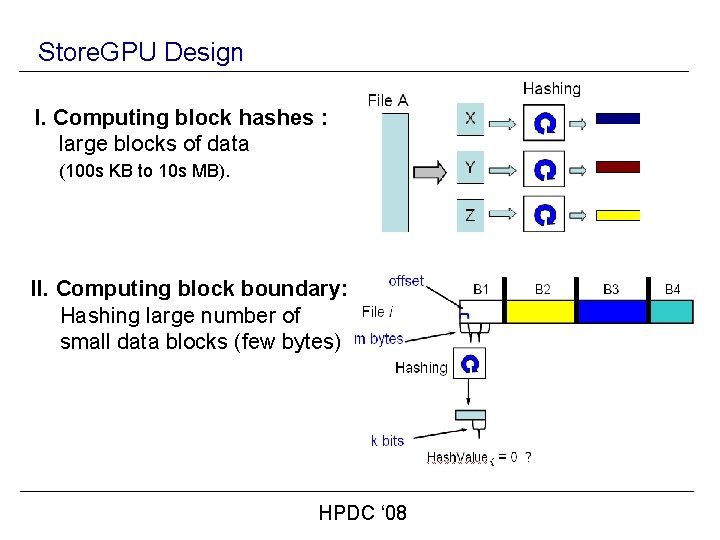

Hashing Use in Similarity Detection: Two Scenarios

I. Computing block hashes: hashing large blocks of data (100s of KB to 10s of MB).

II. Computing block boundaries: hashing a large number of small data blocks (a few bytes each).

StoreGPU: a library that exploits GPUs to support distributed storage systems by offloading computationally intensive functions.

StoreGPU v1.0 implements the hashing functions used in computing block hashes and block boundaries.

One performance data point: in similarity detection, StoreGPU achieves 8x speedup and 5x data compression for a checkpointing application.

Implication: GPUs unleash a valuable set of optimization techniques into the high-performance systems design space, although GPUs have not been designed with this usage in mind.

Outline

- GPU architecture
- GPU programming
- Typical application flow
- StoreGPU design
- Evaluation

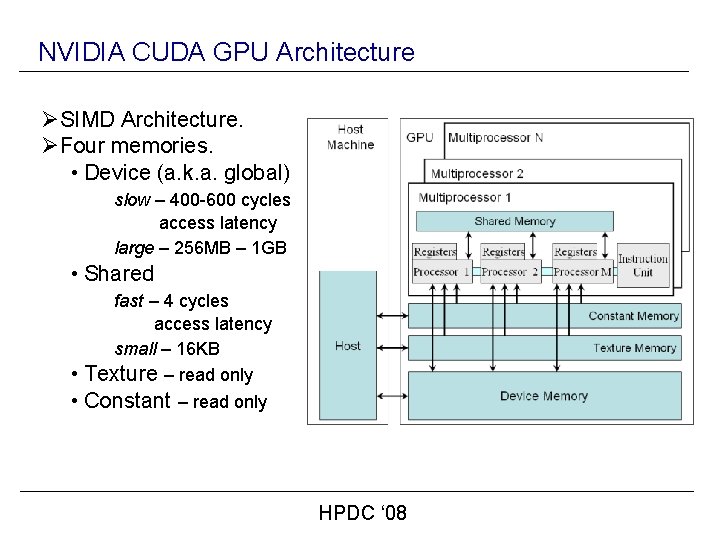

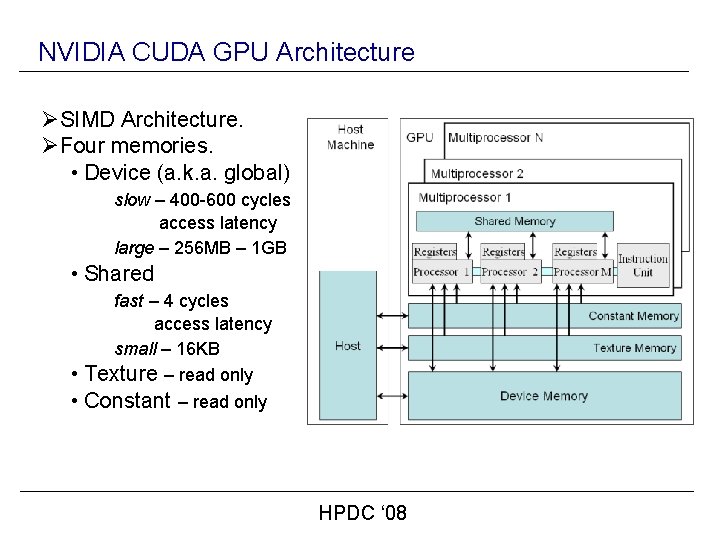

NVIDIA CUDA GPU Architecture

- SIMD architecture.
- Four memories:
  - Device (a.k.a. global): slow (400-600 cycles access latency), large (256 MB to 1 GB)
  - Shared: fast (4 cycles access latency), small (16 KB)
  - Texture: read only
  - Constant: read only

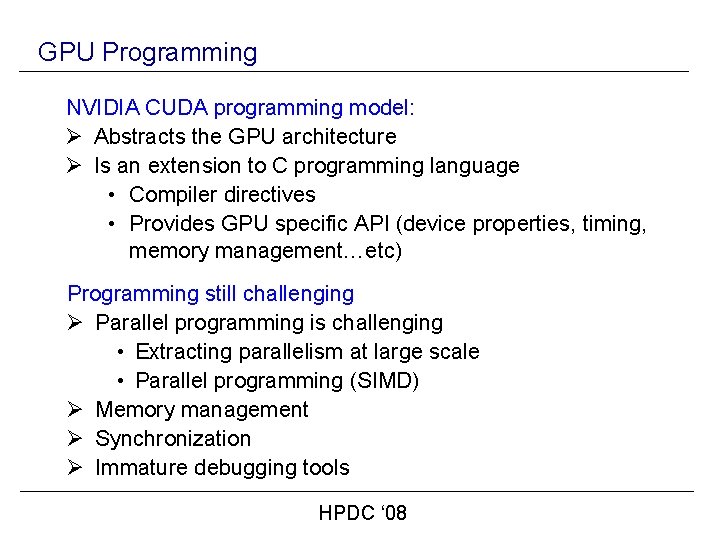

GPU Programming

NVIDIA CUDA programming model:

- Abstracts the GPU architecture
- Is an extension to the C programming language
  - Compiler directives
  - Provides a GPU-specific API (device properties, timing, memory management, etc.)

Programming is still challenging:

- Parallel programming is challenging
  - Extracting parallelism at large scale
  - Parallel programming (SIMD)
- Memory management
- Synchronization
- Immature debugging tools

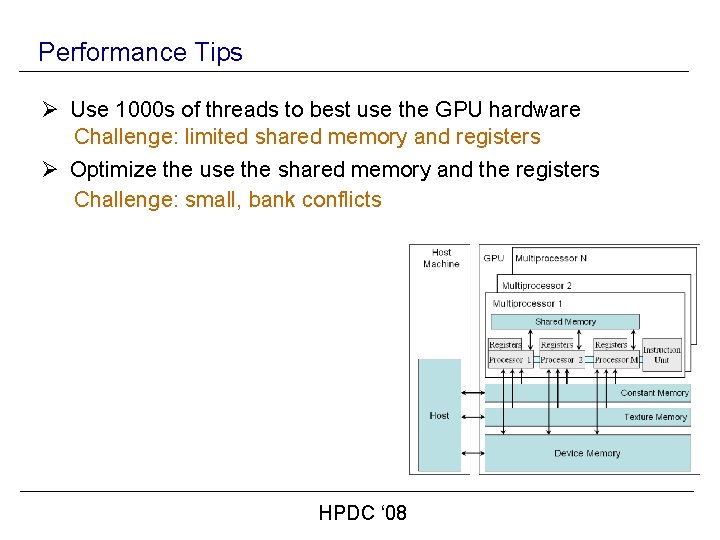

Performance Tips

- Use 1000s of threads to best use the GPU hardware. Challenge: limited shared memory and registers.
- Optimize the use of shared memory and registers. Challenge: shared memory is small and subject to bank conflicts.

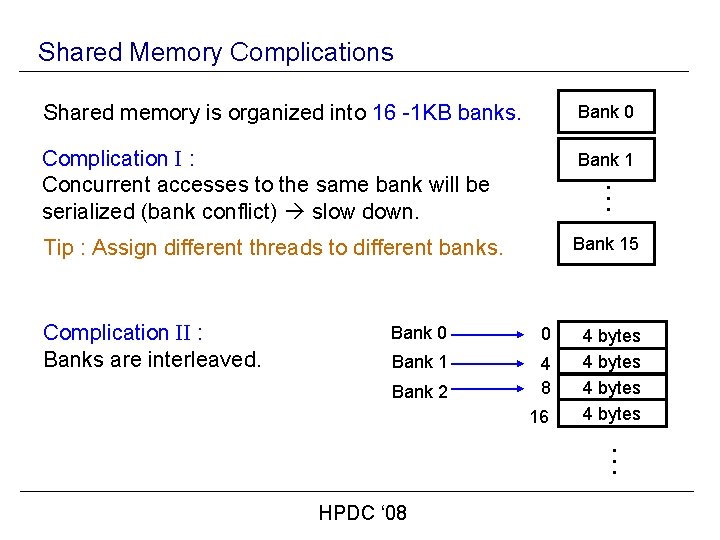

Shared Memory Complications

Shared memory is organized into 16 banks of 1 KB each.

Complication I: concurrent accesses to the same bank are serialized (bank conflict), which slows execution down.

Tip: assign different threads to different banks.

Complication II: banks are interleaved.

[Figure: banks 0 through 15; consecutive 4-byte words map to consecutive banks.]
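The interleaving can be made concrete with a small Python model. It is a simplified sketch: it assumes the 16-bank, 4-byte-word layout stated on the slide and counts only accesses to distinct addresses, ignoring hardware details such as broadcast reads. The names `bank_of` and `conflict_degree` are hypothetical.

```python
NUM_BANKS = 16   # from the slide: shared memory has 16 banks
WORD_BYTES = 4   # from the slide: banks are interleaved in 4-byte words

def bank_of(byte_address: int) -> int:
    """Bank that serves the 4-byte word containing `byte_address`."""
    return (byte_address // WORD_BYTES) % NUM_BANKS

def conflict_degree(addresses) -> int:
    """Largest number of the given accesses that land in the same bank."""
    counts = [0] * NUM_BANKS
    for address in addresses:
        counts[bank_of(address)] += 1
    return max(counts)

threads = range(16)
# Each thread reads its own consecutive word: one access per bank.
word_strided = [t * 4 for t in threads]
# Each thread reads the first word of a private contiguous 64-byte region:
# every address maps to bank 0, so the accesses are serialized.
region_strided = [t * 64 for t in threads]
print(conflict_degree(word_strided))    # 1
print(conflict_degree(region_strided))  # 16
```

The second pattern is the natural layout when each thread gets its own contiguous buffer, which is why a naive per-thread workspace runs into Complication II.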

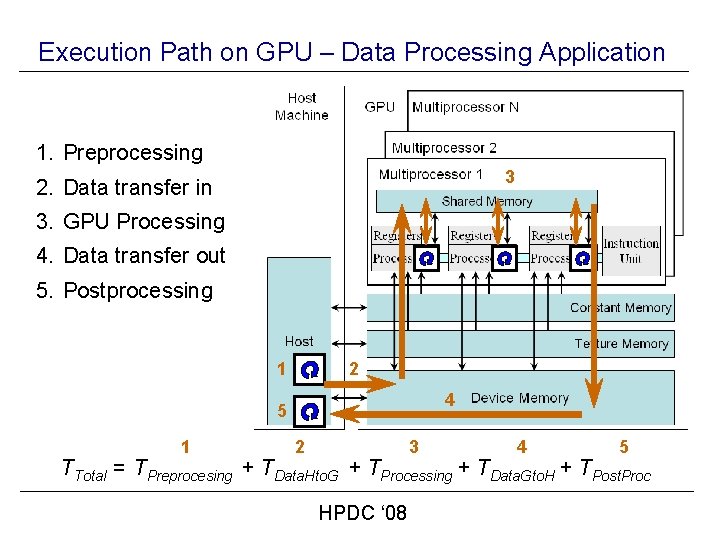

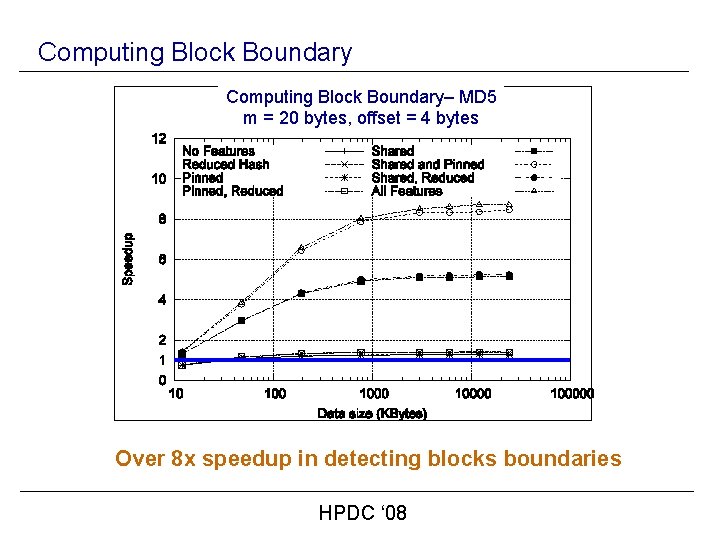

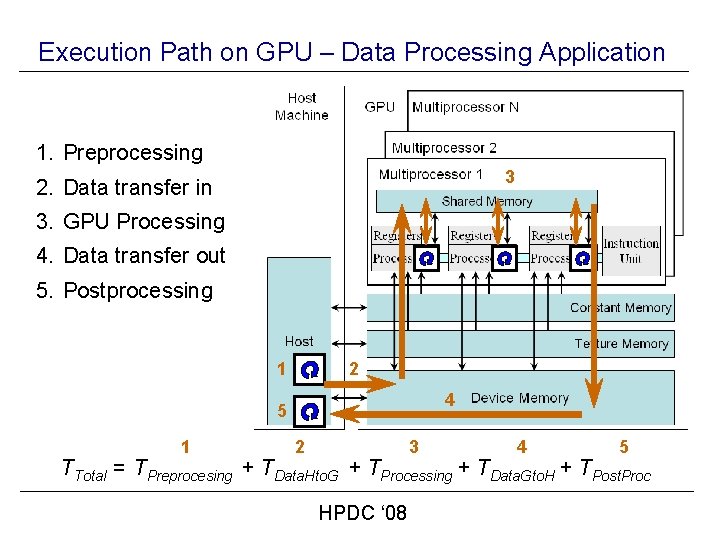

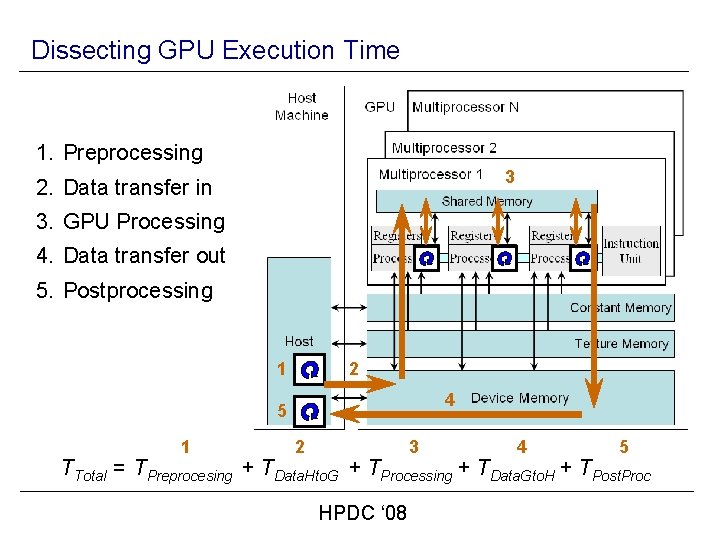

Execution Path on GPU – Data Processing Application

1. Preprocessing
2. Data transfer in
3. GPU processing
4. Data transfer out
5. Postprocessing

$T_{Total} = T_{Preprocessing} + T_{DataHtoG} + T_{Processing} + T_{DataGtoH} + T_{PostProc}$
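The five-stage model is a plain sum, which a short Python sketch makes easy to experiment with. The class name `GpuRun`, its field names, and the stage timings below are hypothetical and are not measurements from the talk.

```python
from dataclasses import dataclass

@dataclass
class GpuRun:
    """Per-stage times (any consistent unit) for one offloaded operation."""
    preprocessing: float
    data_h_to_g: float      # host-to-GPU transfer
    processing: float       # GPU kernel execution
    data_g_to_h: float      # GPU-to-host transfer
    postprocessing: float

    def total(self) -> float:
        """Total time as the sum of the five stages."""
        return (self.preprocessing + self.data_h_to_g + self.processing
                + self.data_g_to_h + self.postprocessing)

    def transfer_share(self) -> float:
        """Fraction of the total time spent moving data over the bus."""
        return (self.data_h_to_g + self.data_g_to_h) / self.total()

# Hypothetical timings in milliseconds, for illustration only.
run = GpuRun(preprocessing=1.0, data_h_to_g=4.0, processing=3.0,
             data_g_to_h=1.0, postprocessing=1.0)
print(run.total())           # 10.0
print(run.transfer_share())  # 0.5
```

Because the stages add up, a faster kernel helps only as far as the transfer and host-side stages allow.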

Outline

- GPU architecture
- GPU programming
- Typical application flow
- StoreGPU design
- Evaluation

StoreGPU Design

I. Computing block hashes: hashing large blocks of data (100s of KB to 10s of MB).

II. Computing block boundaries: hashing a large number of small data blocks (a few bytes each).

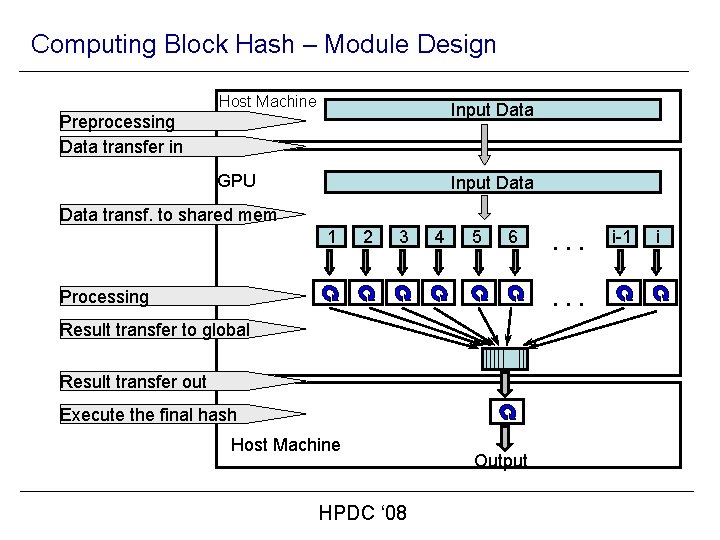

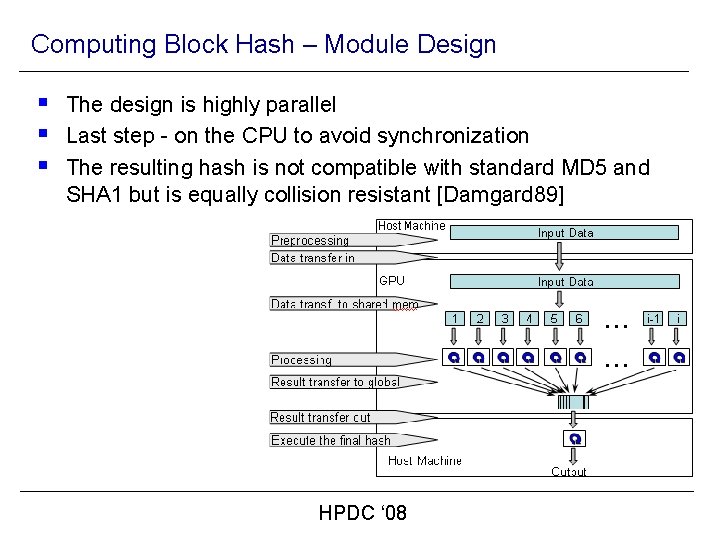

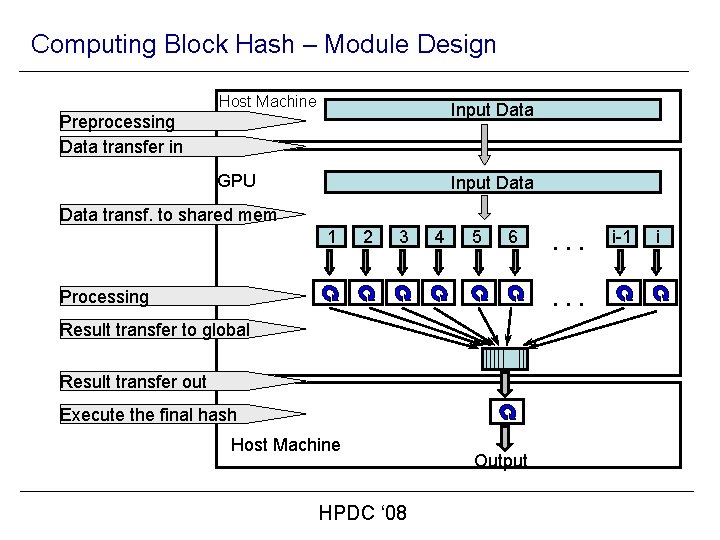

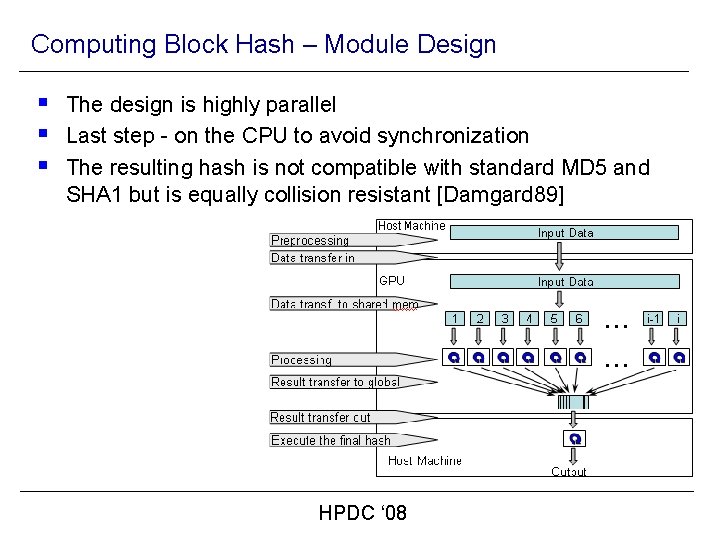

Computing Block Hash – Module Design

[Figure: pipeline stages. Host machine: input data, preprocessing, data transfer in. GPU: data transfer to shared memory, processing in parallel (1, 2, ..., i-1, i), result transfer to global memory. Host machine: result transfer out, execute the final hash, output.]

Computing Block Hash – Module Design

- The design is highly parallel.
- The last step runs on the CPU to avoid synchronization.
- The resulting hash is not compatible with standard MD5 and SHA1 but is equally collision resistant [Damgard 89].
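One way to picture this design is a two-level hash: chunks are hashed independently, then a single final hash is computed over the concatenated chunk digests. The Python sketch below follows that idea only in outline. The slides do not give the exact construction, so the function name `parallel_block_hash`, the 4096-byte chunk size, and the thread pool (standing in for GPU threads) are all assumptions.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

def parallel_block_hash(block: bytes, chunk_size: int = 4096) -> str:
    """Hash chunks independently, then hash the concatenated chunk digests."""
    chunks = [block[i:i + chunk_size] for i in range(0, len(block), chunk_size)]
    # Parallel stage: each chunk is hashed on its own (GPU threads in StoreGPU;
    # a thread pool here, purely as a stand-in).
    with ThreadPoolExecutor() as pool:
        digests = list(pool.map(lambda c: hashlib.md5(c).digest(), chunks))
    # Final stage: a single sequential hash over the intermediate digests,
    # mirroring the last step that the slide places on the CPU.
    return hashlib.md5(b"".join(digests)).hexdigest()

block = bytes(range(256)) * 64  # 16 KB of sample data
print(parallel_block_hash(block))
print(hashlib.md5(block).hexdigest())  # standard MD5 of the same data, for comparison
```

The two printed values are computed differently, which illustrates why such a hash is not interchangeable with standard MD5 even though the same primitive is used throughout.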

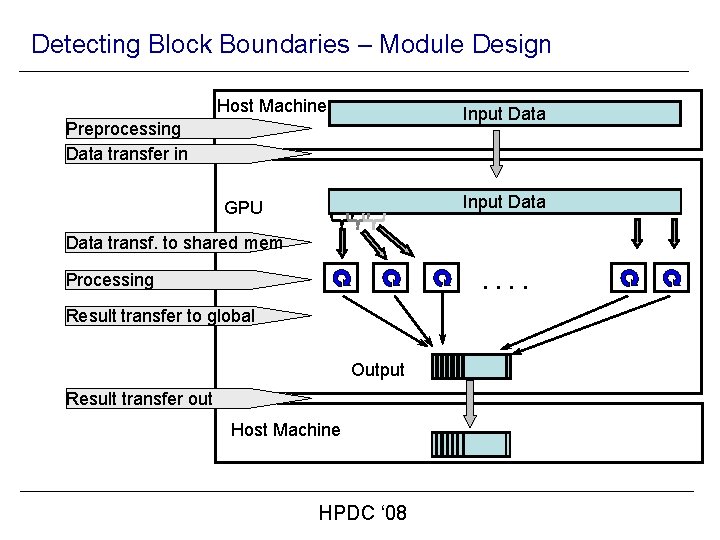

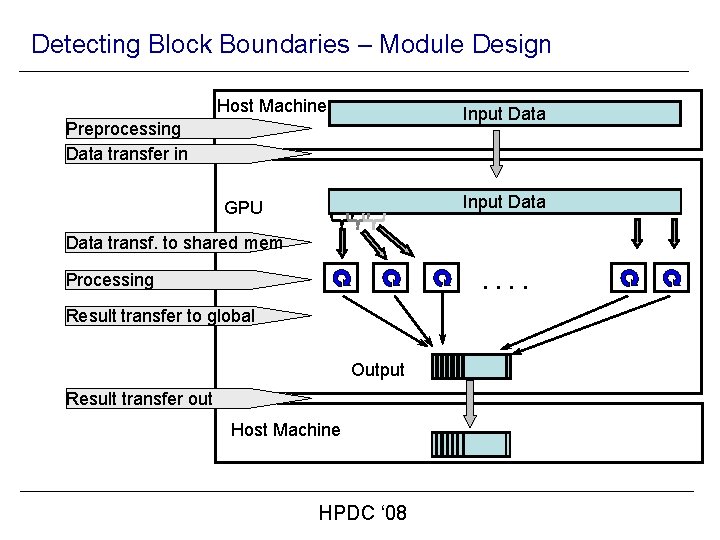

Detecting Block Boundaries – Module Design

[Figure: pipeline stages. Host machine: input data, preprocessing, data transfer in. GPU: data transfer to shared memory, processing, result transfer to global memory. Host machine: result transfer out, output.]

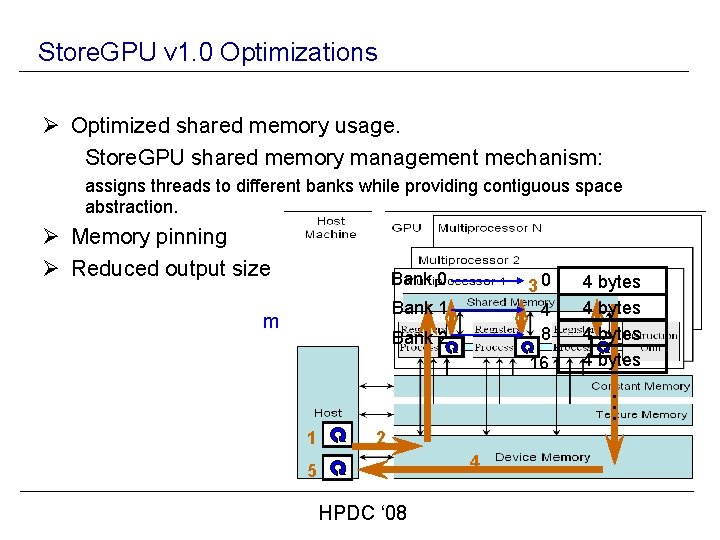

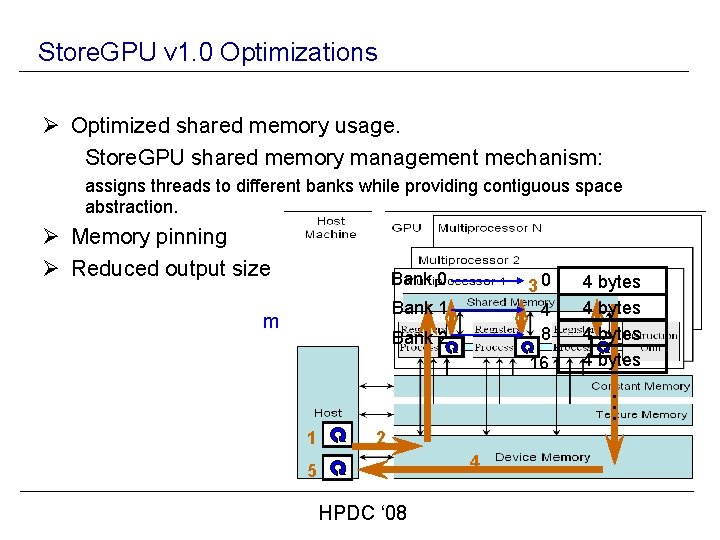

StoreGPU v1.0 Optimizations

- Optimized shared memory usage. The StoreGPU shared memory management mechanism assigns threads to different banks while providing a contiguous space abstraction.
- Memory pinning
- Reduced output size

[Figure: the m-byte windows of the boundary detection (k-bit test on the hash value) laid out across shared memory banks 0, 1, 2, ..., which are interleaved in 4-byte words.]
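A contiguous-space abstraction over interleaved banks can be illustrated with a simple address translation. This is a guess at the general idea, not StoreGPU's actual mechanism: the function name `physical_address`, the restriction to one group of 16 threads, and the layout (thread t owns every 16th word, starting at word t) are assumptions.

```python
NUM_BANKS = 16   # from the slides: 16 shared memory banks
WORD_BYTES = 4   # from the slides: 4-byte interleaving

def physical_address(thread_id: int, logical_offset: int) -> int:
    """Map a byte offset in a thread's private 'contiguous' space to shared memory."""
    if not 0 <= thread_id < NUM_BANKS:
        raise ValueError("this sketch covers one group of 16 threads")
    word, byte = divmod(logical_offset, WORD_BYTES)
    # Word w of thread t is stored in row w, column t of a 16-column word grid,
    # so every word a thread touches lives in that thread's own bank.
    return (word * NUM_BANKS + thread_id) * WORD_BYTES + byte

def bank_of(byte_address: int) -> int:
    """Bank that serves the 4-byte word containing `byte_address`."""
    return (byte_address // WORD_BYTES) % NUM_BANKS

# Thread 3 walks its logical buffer byte by byte and stays in bank 3.
print({bank_of(physical_address(3, off)) for off in range(64)})  # {3}
```

Each thread addresses its buffer as if it were contiguous, while the translation spreads the 16 buffers across the 16 banks so that threads advancing in lockstep do not collide.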

Outline

- GPU architecture
- GPU programming
- Typical application flow
- StoreGPU design
- StoreGPU v1.0 optimizations
- Evaluation

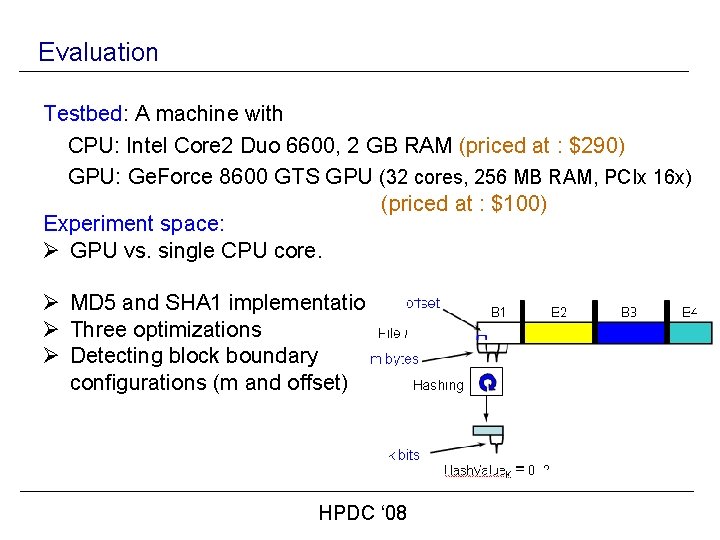

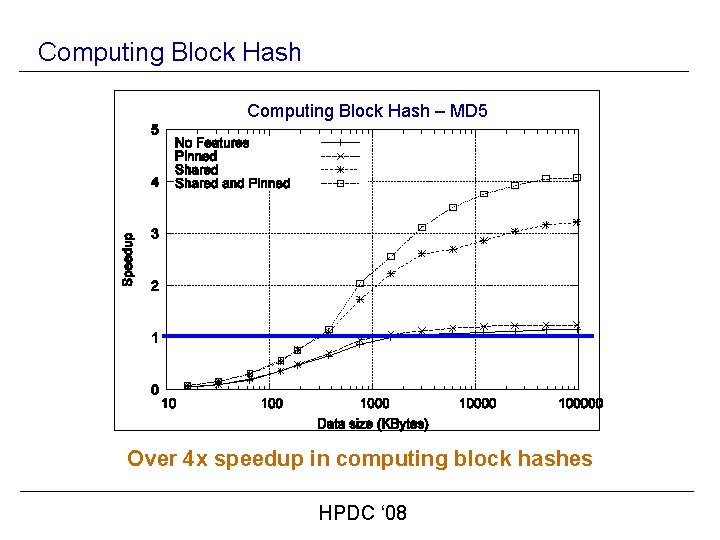

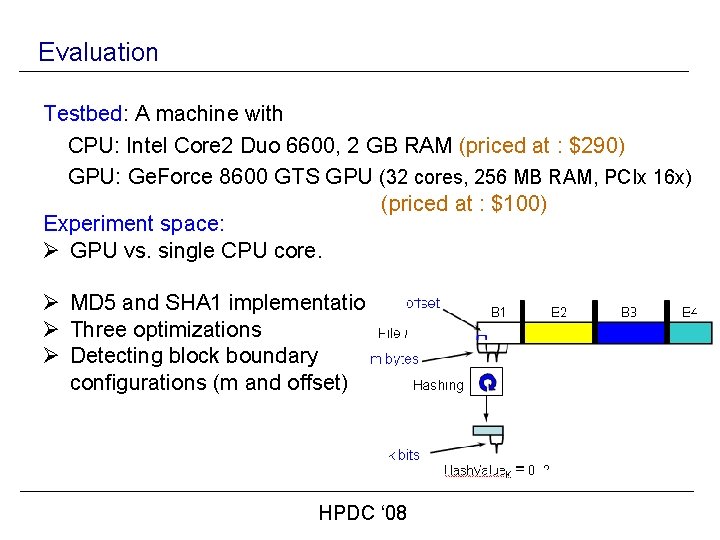

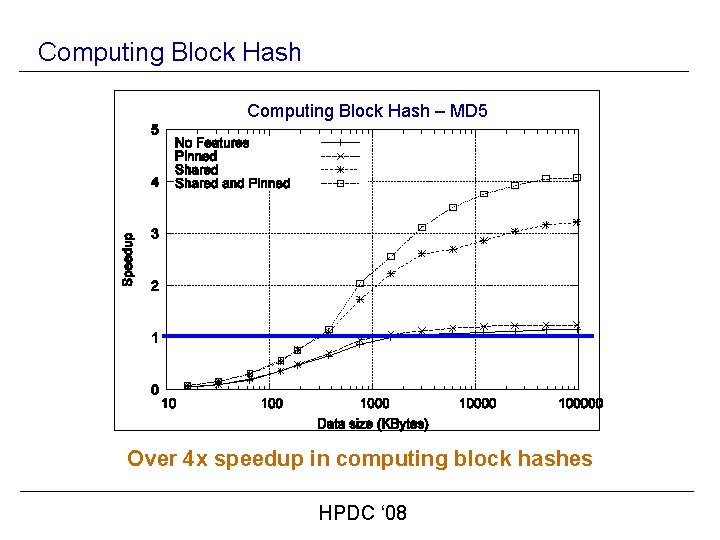

Evaluation

Testbed: a machine with

- CPU: Intel Core 2 Duo 6600, 2 GB RAM (priced at $290)
- GPU: GeForce 8600 GTS (32 cores, 256 MB RAM, PCIe x16) (priced at $100)

Experiment space:

- GPU vs. single CPU core
- MD5 and SHA1 implementations
- Three optimizations
- Detecting block boundary configurations (m and offset)

Computing Block Hash – MD5

Over 4x speedup in computing block hashes.

Computing Block Boundary – MD5

m = 20 bytes, offset = 4 bytes

Over 8x speedup in detecting block boundaries.

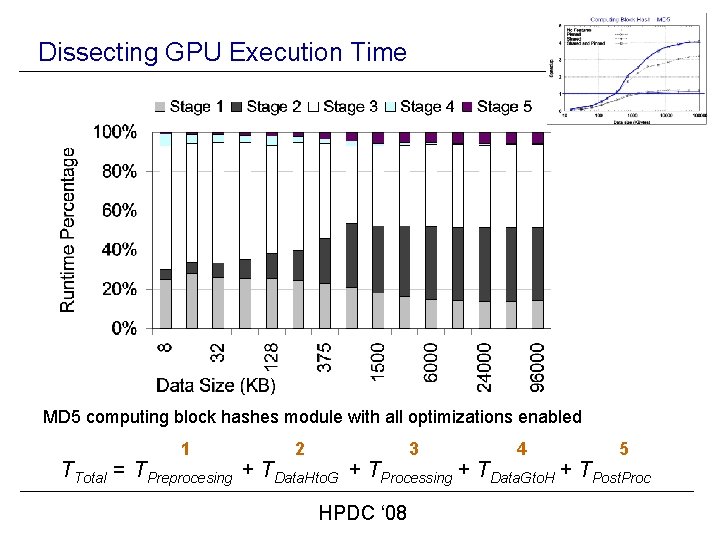

Dissecting GPU Execution Time

1. Preprocessing
2. Data transfer in
3. GPU processing
4. Data transfer out
5. Postprocessing

$T_{Total} = T_{Preprocessing} + T_{DataHtoG} + T_{Processing} + T_{DataGtoH} + T_{PostProc}$

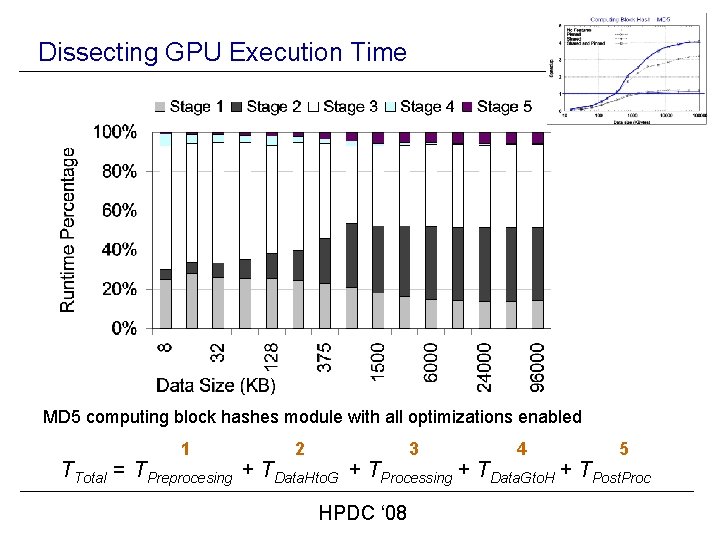

Dissecting GPU Execution Time

MD5 computing-block-hashes module with all optimizations enabled.

$T_{Total} = T_{Preprocessing} + T_{DataHtoG} + T_{Processing} + T_{DataGtoH} + T_{PostProc}$

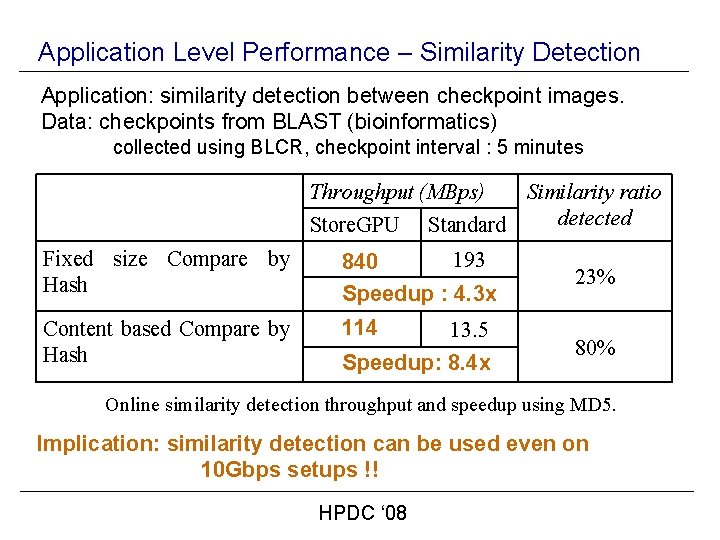

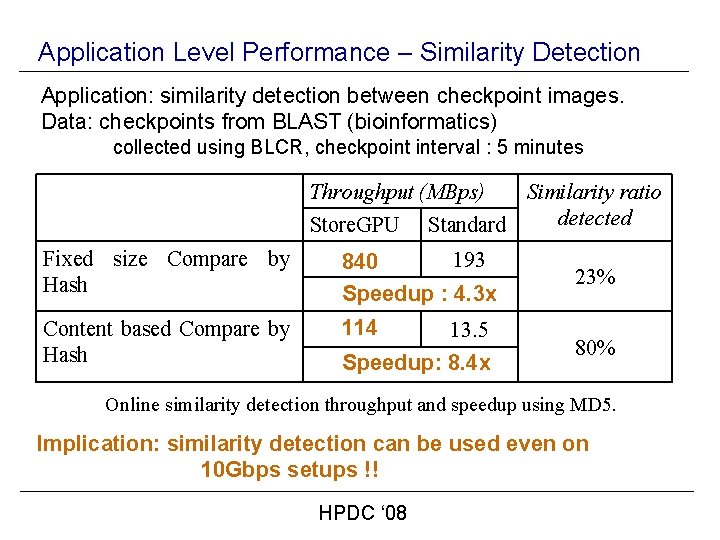

Application-Level Performance – Similarity Detection

Application: similarity detection between checkpoint images.

Data: checkpoints from BLAST (bioinformatics) collected using BLCR; checkpoint interval: 5 minutes.

| Technique | Standard throughput (MBps) | StoreGPU throughput (MBps) | Speedup | Similarity ratio detected |
| --- | --- | --- | --- | --- |
| Fixed size, compare by hash | 193 | 840 | 4.3x | 23% |
| Content based, compare by hash | 13.5 | 114 | 8.4x | 80% |

Online similarity detection throughput and speedup using MD5.

Implication: similarity detection can be used even on 10 Gbps setups.

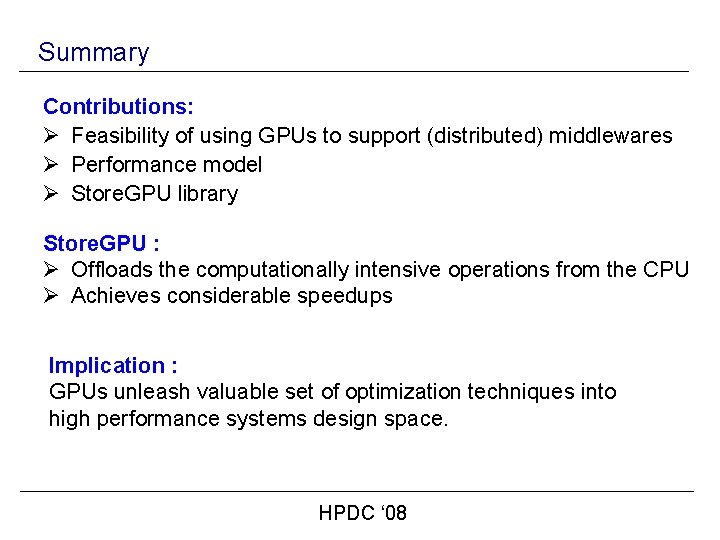

Summary

Contributions:

- Feasibility of using GPUs to support (distributed) middleware
- Performance model
- StoreGPU library

StoreGPU:

- Offloads the computationally intensive operations from the CPU
- Achieves considerable speedups

Implication: GPUs unleash a valuable set of optimization techniques into the high-performance systems design space.

Other GPU Applications

- Hashing
- Erasure coding
- Encryption/decryption
- Compression
- Membership testing (Bloom filter)

Current NetSysLab GPU-related projects:

- Exploring GPUs to support other middleware primitives: Bloom filters (BloomGPU)
- Packet classification
- Medical imaging compression

Thank you

netsyslab.ece.ubc.ca

![References](https://slidetodoc.com/presentation_image_h/dbde80b91acf0b45a2ad4520d9c9e677/image-35.jpg)

References

- [Damgard 89] Damgard, I. A Design Principle for Hash Functions. In Advances in Cryptology - CRYPTO, 1989. Lecture Notes in Computer Science.
- [Liu 06] Liu, W., et al. Bio-sequence database scanning on a GPU. In Parallel and Distributed Processing Symposium (IPDPS), 2006.
- [Vogt 08] Vogt, L., et al. Accelerating Resolution-of-the-Identity Second-Order Moller-Plesset Quantum Chemistry Calculations with Graphical Processing Units. J. Phys. Chem. A, 112(10), 2049-2057, 2008.
- [Anderson 08] Anderson, J. A., Lorenz, C. D., and Travesset, A. General purpose molecular dynamics simulations fully implemented on graphics processing units. Journal of Computational Physics, 227(10), 5342-5359, 2008.
- [Owens 07] Owens, J. D., et al. A Survey of General-Purpose Computation on Graphics Hardware. Computer Graphics Forum, 26(1), 80-113, 2007.