Storage Area Network Usage: A UNIX Sys. Admin’s View of How a SAN Works

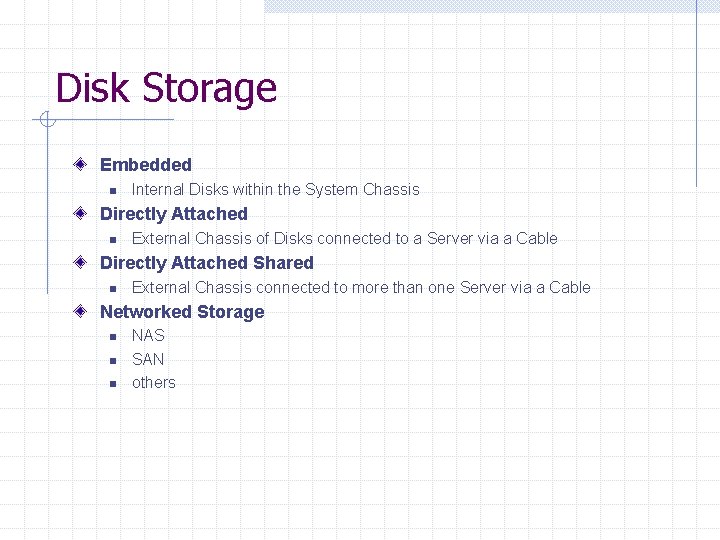

Disk Storage

- Embedded
  - Internal Disks within the System Chassis
- Directly Attached
  - External Chassis of Disks connected to a Server via a Cable
- Directly Attached Shared
  - External Chassis connected to more than one Server via a Cable
- Networked Storage
  - NAS
  - SAN
  - others

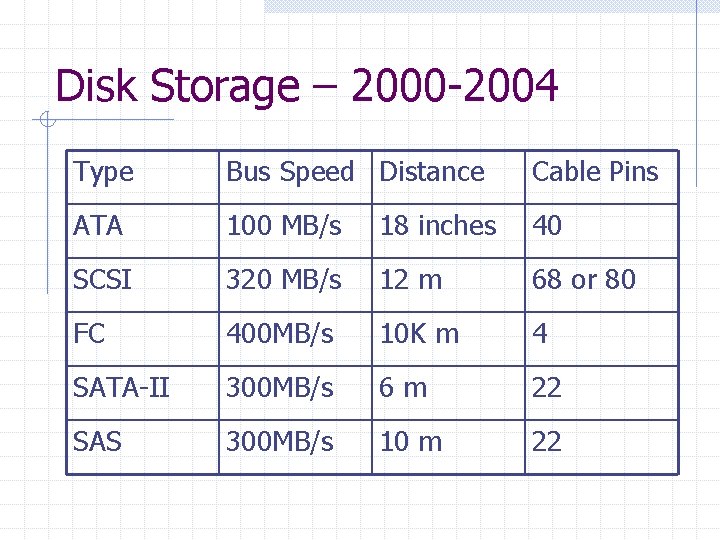

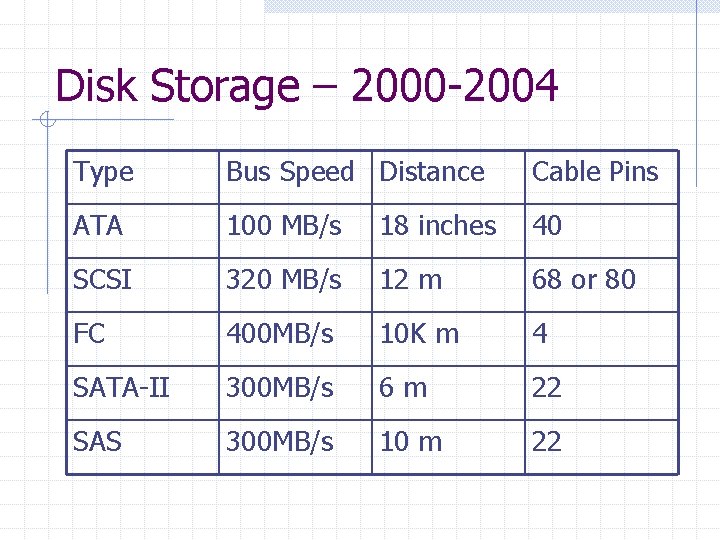

Disk Storage – 2000-2004

| Type | Bus Speed | Distance | Cable Pins |
| --- | --- | --- | --- |
| ATA | 100 MB/s | 18 inches | 40 |
| SCSI | 320 MB/s | 12 m | 68 or 80 |
| FC | 400 MB/s | 10 km | 4 |
| SATA-II | 300 MB/s | 6 m | 22 |
| SAS | 300 MB/s | 10 m | 22 |

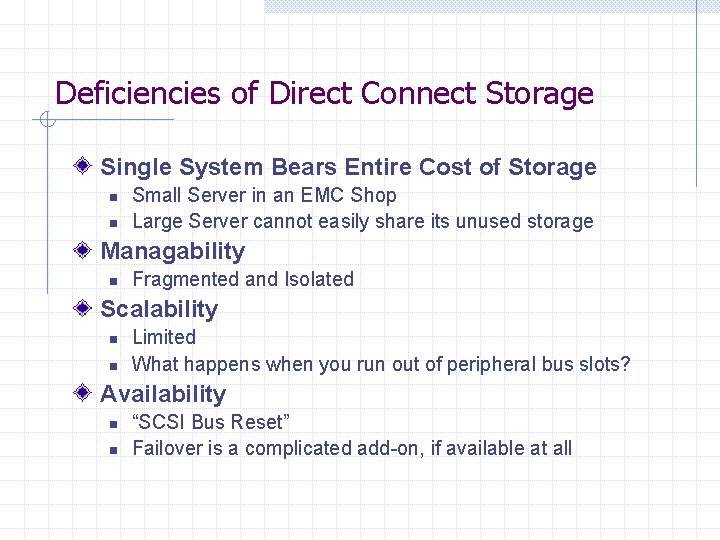

Deficiencies of Direct Connect Storage

- Single System Bears Entire Cost of Storage
  - Small Server in an EMC Shop
  - Large Server cannot easily share its unused storage
- Manageability
  - Fragmented and Isolated
- Scalability
  - Limited
  - What happens when you run out of peripheral bus slots?
- Availability
  - “SCSI Bus Reset”
  - Failover is a complicated add-on, if available at all

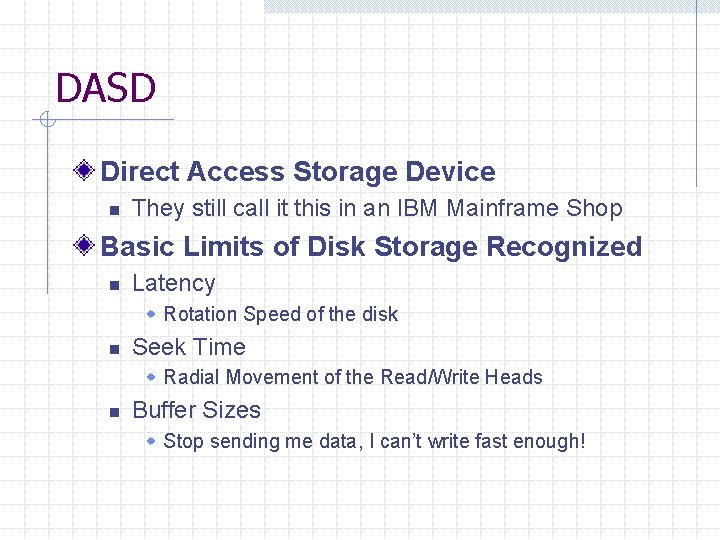

DASD

- Direct Access Storage Device
  - They still call it this in an IBM Mainframe Shop
- Basic Limits of Disk Storage Recognized
  - Latency
    - Rotation Speed of the disk
  - Seek Time
    - Radial Movement of the Read/Write Heads
  - Buffer Sizes
    - Stop sending me data, I can’t write fast enough!

SCSI – Small Computer System Interface

- From Shugart’s 1979 SASI implementation
  - SASI: Shugart Associates System Interface
- Both Hardware and I/O Protocol Standards
  - Both have evolved over time
  - Hardware is source of most limitations
  - I/O Protocol has long-term potential

SCSI - Pro

- Device Independence
  - Mix and match device types on the bus
  - Disk, Tape, Scanners, etc.
- Overlapping I/O Capability
  - Multiple read & write commands can be outstanding simultaneously
- Ubiquitous

SCSI - Con

- Distance vs. Speed
  - Double the Signaling Rate
    - Speed: 40, 80, 160, 320 MBps
  - Halve the Cable Length Limits
- Device Count: 16 Maximum
  - Low Voltage Differential Ultra3 SCSI can support only 16 devices on a 12 meter cable at 160 MBps
- Server Access to Data Resources
  - Hardware changes are disruptive

SCSI – Overcoming the Con

- New Hardware & Signaling Platforms
- SCSI-3 Introduces Serial SCSI Support
  - Fibre Channel
  - Serial Storage Architecture (SSA)
    - Primarily an IBM implementation
  - FireWire (IEEE 1394 – Apple fixes SCSI)
    - Attractive in consumer market
- Retains SCSI I/O Protocol

Scaling SCSI Devices

- Increase Controller Count within Server
  - Increasing Burden To CPU
    - Device Overhead
    - Bus Controllers can be saturated
  - You can run out of slots
  - Many Queues, Many Devices
    - Queuing Theory 101 (check-out line) - undesirable

Scaling SCSI Devices

- Use Dedicated External Device Controller
  - Hides Individual Devices
    - Provide One Large Virtual Resource
  - Offloads Device Overhead
  - One Queue, Many Devices - good
  - Cost and Benefit
    - Still borne by one system
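The "many queues" versus "one queue" comparison can be illustrated with textbook queueing formulas. The sketch below is an idealized model, not a measurement: it assumes Poisson arrivals, exponentially distributed service times, and that any device behind the shared queue can serve any request. The disk count and the request rates are made-up illustrative values.

```python
from math import factorial


def erlang_c(servers: int, offered_load: float) -> float:
    """Probability that an arriving request must wait in an M/M/c queue."""
    if offered_load >= servers:
        raise ValueError("unstable: offered load must be below the server count")
    top = (offered_load ** servers / factorial(servers)) * (
        servers / (servers - offered_load)
    )
    bottom = sum(offered_load ** k / factorial(k) for k in range(servers)) + top
    return top / bottom


def mean_wait(servers: int, arrival_rate: float, service_rate: float) -> float:
    """Mean time a request spends queued before service starts (M/M/c)."""
    offered_load = arrival_rate / service_rate
    return erlang_c(servers, offered_load) / (servers * service_rate - arrival_rate)


DISKS = 8          # assumed device count
ARRIVALS = 600.0   # assumed total I/O requests per second
SERVICE = 100.0    # assumed requests per second a single disk can serve

# Many queues, many devices: each disk has its own queue and 1/8 of the load.
separate = mean_wait(1, ARRIVALS / DISKS, SERVICE)

# One queue, many devices: a controller feeds all disks from a single queue.
pooled = mean_wait(DISKS, ARRIVALS, SERVICE)

print(f"separate queues: {separate * 1000:.2f} ms average wait")
print(f"single queue:    {pooled * 1000:.2f} ms average wait")
```

Under these assumptions the single shared queue always waits less, for the same reason one check-out line feeding every register beats picking a line per register.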

RAID

- Redundant Array of Inexpensive Disks
- Combine multiple disks into a single virtual device
- How this is implemented determines different strengths
  - Storage Capacity
  - Speed
    - Fast Read or Fast Write
  - Resilience in the face of device failure

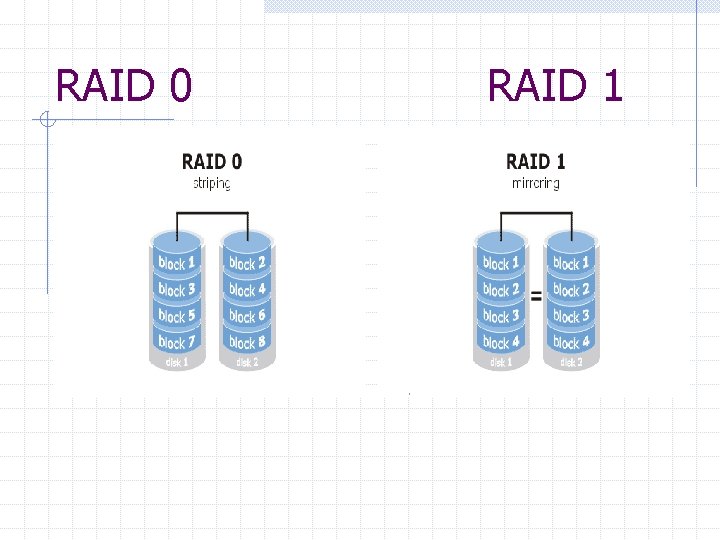

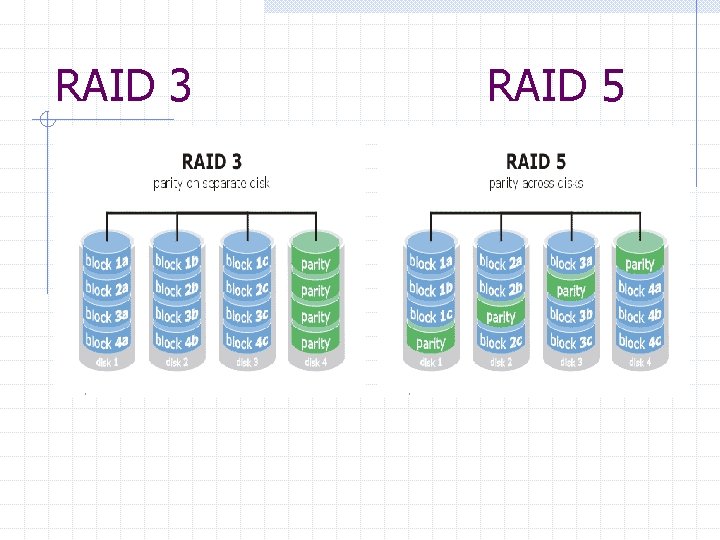

RAID Functions

- Striping
  - Write consecutive logical byte/blocks on consecutive physical disks
- Mirroring
  - Write the same block on two or more physical disks
- Parity Calculation
  - Given N disks, N-1 consecutive blocks are data blocks, Nth block is for parity
  - When any of the N-1 data blocks are altered, N-2 XOR calculations are performed on these N-1 blocks
  - The Data Block(s) and Parity Block are written
  - Destroy one of these N blocks, and that block can be reconstructed using N-2 XOR calculations on the remaining N-1 blocks
  - Destroy two or more blocks – reconstruction is not possible
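The parity calculation can be sketched in a few lines. This is a minimal illustration, not a RAID implementation: the block contents and the choice of N = 4 are made up, and `xor_blocks` is a hypothetical helper name.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte column at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# N = 4 disks: N-1 = 3 data blocks plus one parity block.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)        # combining 3 blocks takes N-2 = 2 XORs
stripe = data + [parity]

# Destroy one block (index 1) and rebuild it from the remaining N-1 blocks.
lost = 1
survivors = [block for i, block in enumerate(stripe) if i != lost]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[lost])     # the lost block is recovered
```

Losing a second block leaves the XOR with two unknowns, which is why reconstruction is no longer possible.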

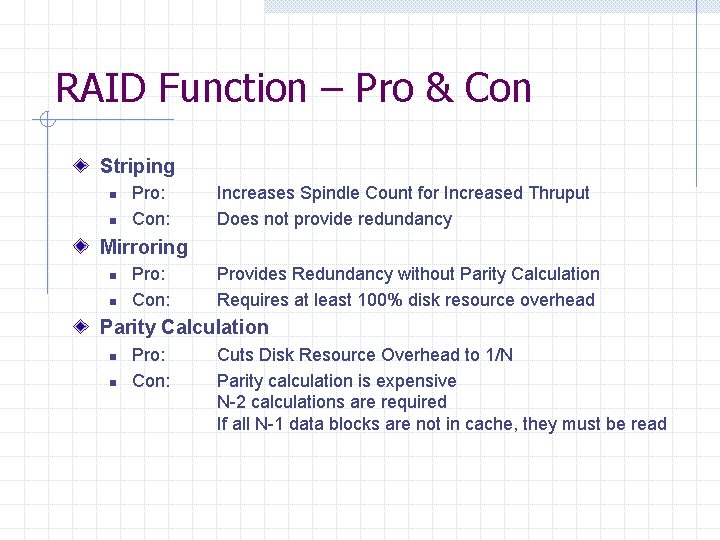

RAID Function – Pro & Con

- Striping
  - Pro: Increases Spindle Count for Increased Thruput
  - Con: Does not provide redundancy
- Mirroring
  - Pro: Provides Redundancy without Parity Calculation
  - Con: Requires at least 100% disk resource overhead
- Parity Calculation
  - Pro: Cuts Disk Resource Overhead to 1/N
  - Con: Parity calculation is expensive
    - N-2 calculations are required
    - If all N-1 data blocks are not in cache, they must be read
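The overhead figures above translate directly into usable capacity. A rough sketch follows; the function name, the scheme labels, and the 146 GB disk size are illustrative assumptions, not values from the slides.

```python
def usable_fraction(scheme: str, disks: int, copies: int = 2) -> float:
    """Fraction of raw capacity left for data under each RAID function."""
    if scheme == "stripe":
        return 1.0                    # no redundancy, no overhead
    if scheme == "mirror":
        return 1.0 / copies           # two copies means 100% overhead
    if scheme == "parity":
        if disks < 3:
            raise ValueError("parity needs at least 3 disks")
        return (disks - 1) / disks    # one disk's worth (1/N) holds parity
    raise ValueError(f"unknown scheme: {scheme}")


DISK_GB = 146   # assumed disk size
DISKS = 6       # assumed disk count
for scheme in ("stripe", "mirror", "parity"):
    usable = DISK_GB * DISKS * usable_fraction(scheme, DISKS)
    print(f"{scheme:7s} {usable:7.1f} GB usable of {DISK_GB * DISKS} GB raw")
```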

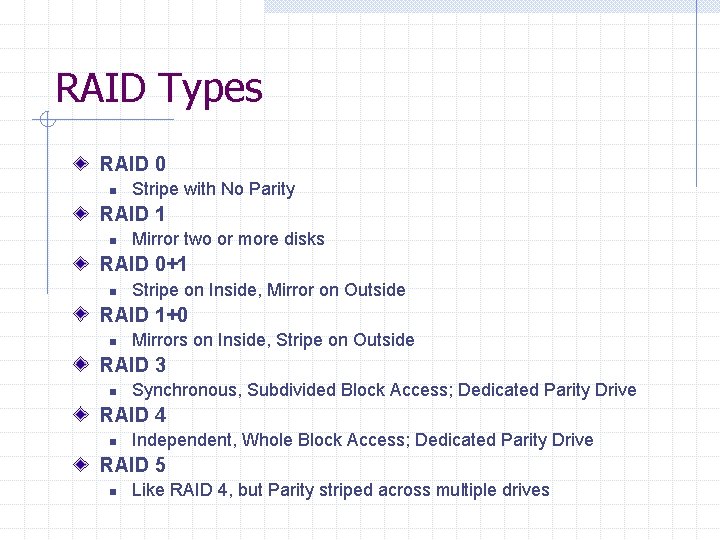

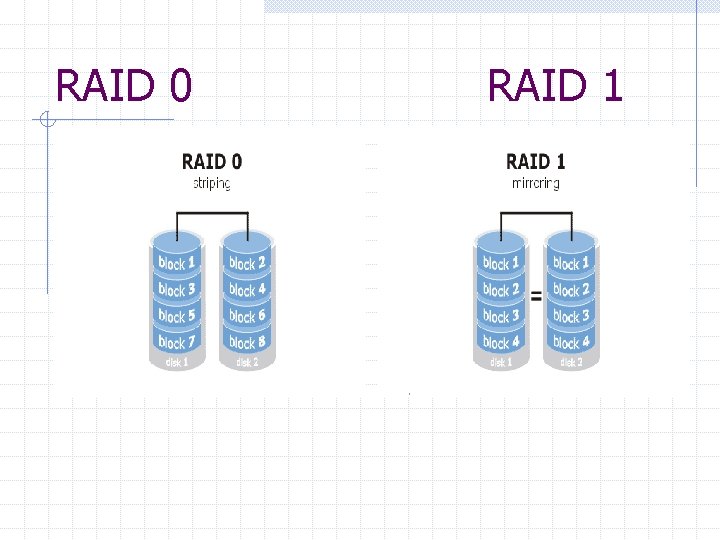

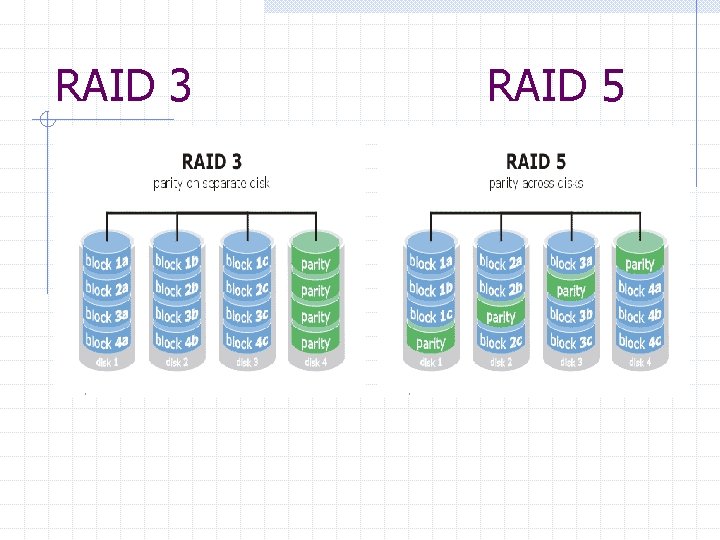

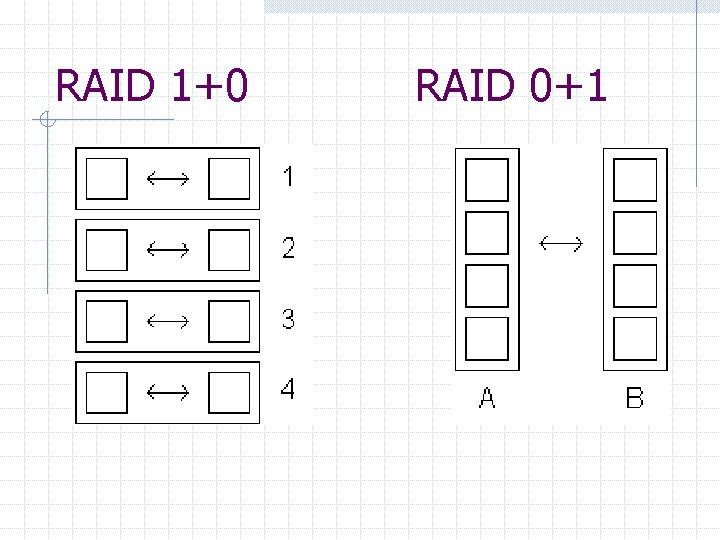

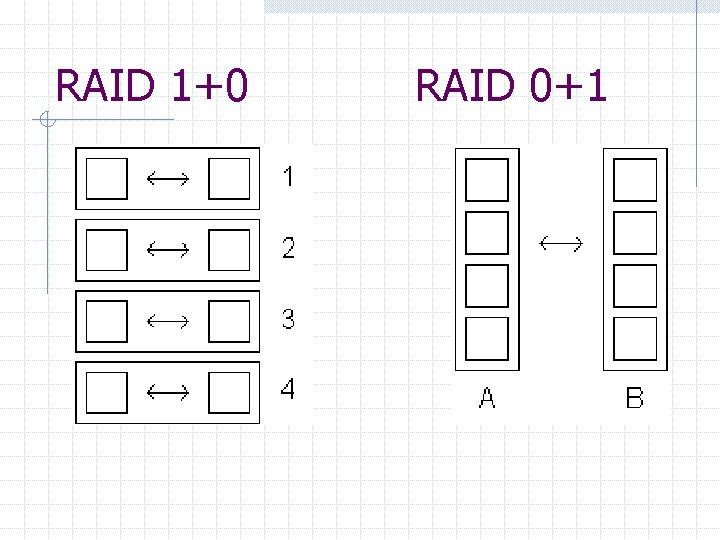

RAID Types

- RAID 0
  - Stripe with No Parity
- RAID 1
  - Mirror two or more disks
- RAID 0+1
  - Stripe on Inside, Mirror on Outside
- RAID 1+0
  - Mirrors on Inside, Stripe on Outside
- RAID 3
  - Synchronous, Subdivided Block Access; Dedicated Parity Drive
- RAID 4
  - Independent, Whole Block Access; Dedicated Parity Drive
- RAID 5
  - Like RAID 4, but Parity striped across multiple drives

RAID 0 RAID 1

RAID 3 RAID 5

RAID 1+0 RAID 0+1

Breaking the Direct Connection

- Now you have high performance RAID
  - The storage bottleneck has been reduced
  - You’ve invested $$$ to do it
  - How do you extend this advantage to N servers without spending N x $$$?
- How about using existing networks?

How to Provide Data Over IP

- NFS (or CIFS) over a TCP/IP Network
  - This is Network Attached Storage (NAS)
  - Overcomes some distance problems
  - Full Filesystem Semantics are Lacking
    - …such as file locking
  - Speed and Latency are problems
  - Security and Integrity are problems as well
- IP encapsulation of I/O Protocols
  - Not yet established in the marketplace
  - Current speed & security issues

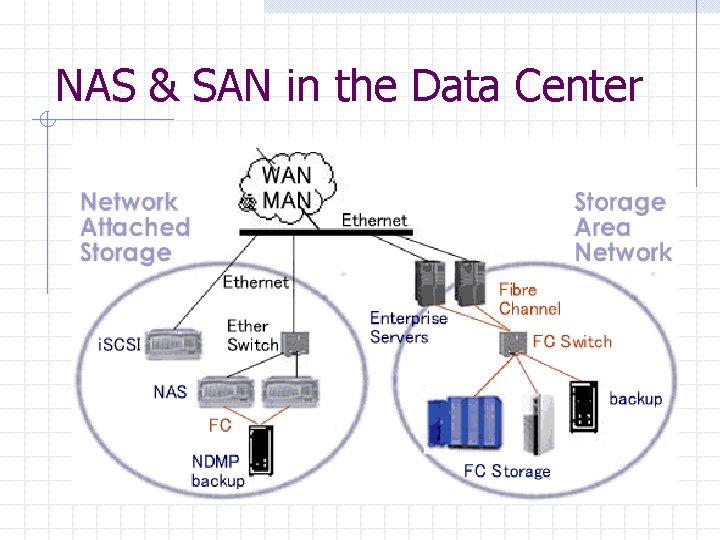

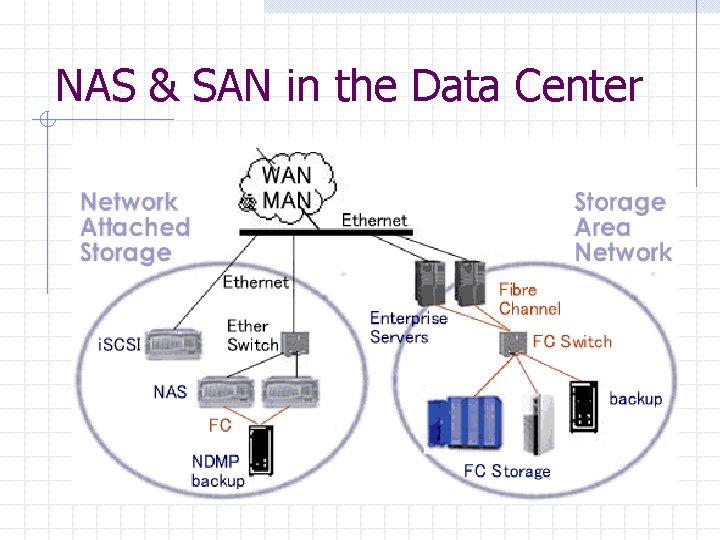

NAS and SAN

- NAS – Network Attached Storage
  - File-oriented access
  - Multiple Clients, Shared Access to Data
- SAN – Storage Area Network
  - Block-oriented access
  - Single Server, Exclusive Access to Data

NAS: Network Attached Storage

- File Objects and Filesystems
  - OS Dependent
  - OS Access & Authentication
- Possible Multiple Writers
  - Require locking protocols
- Network Protocol: i.e., IP
- “Front-end” Network

SAN: Storage Area Network

- Block Oriented Access To Data
- Device-like Object is presented
- Unique Writer
- I/O Protocol: SCSI, HIPPI, IPI
- “Back-end” Network

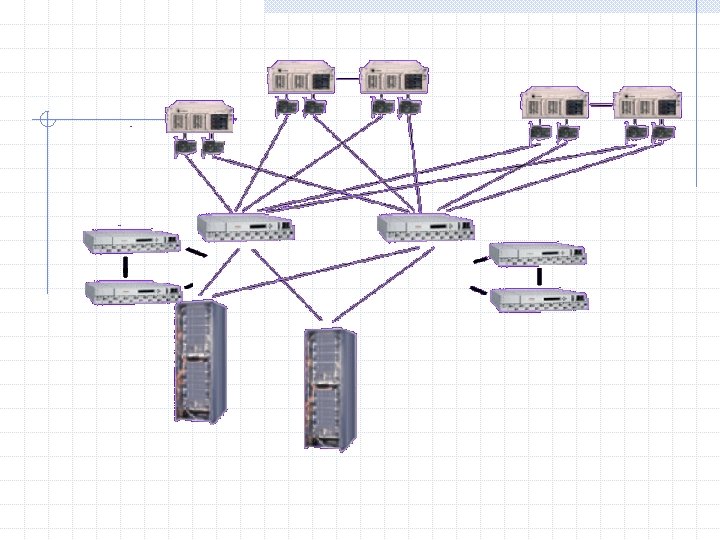

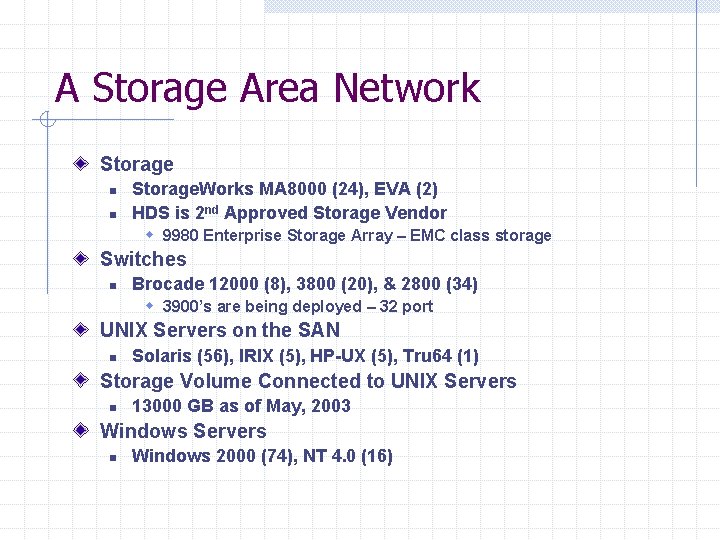

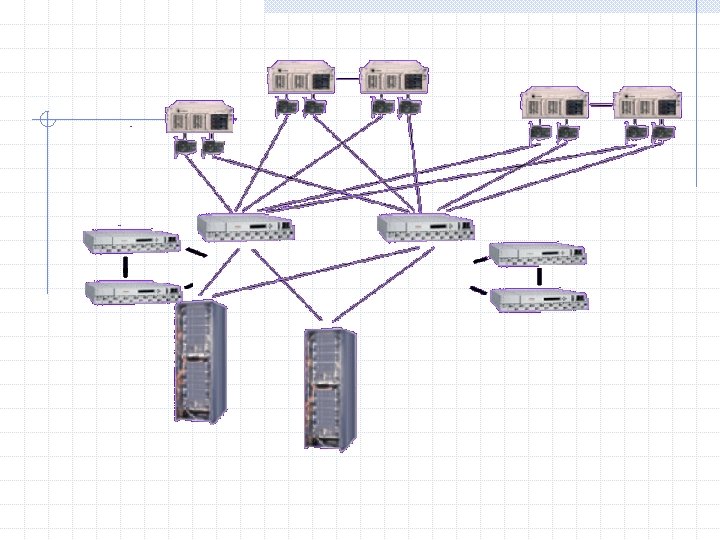

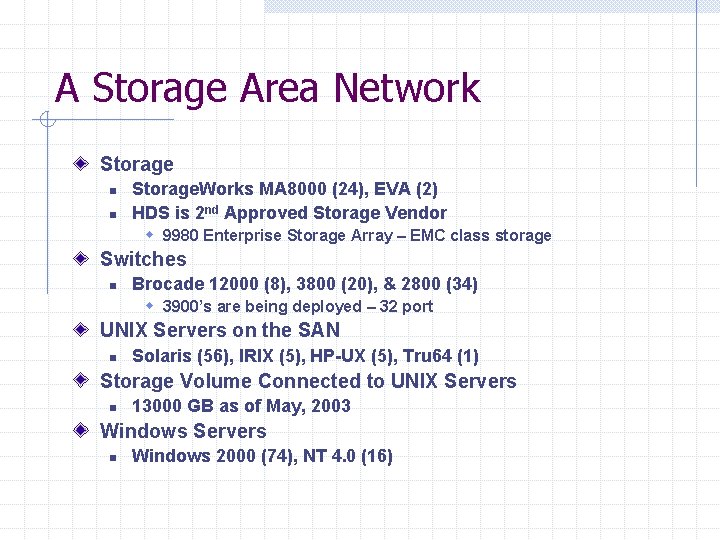

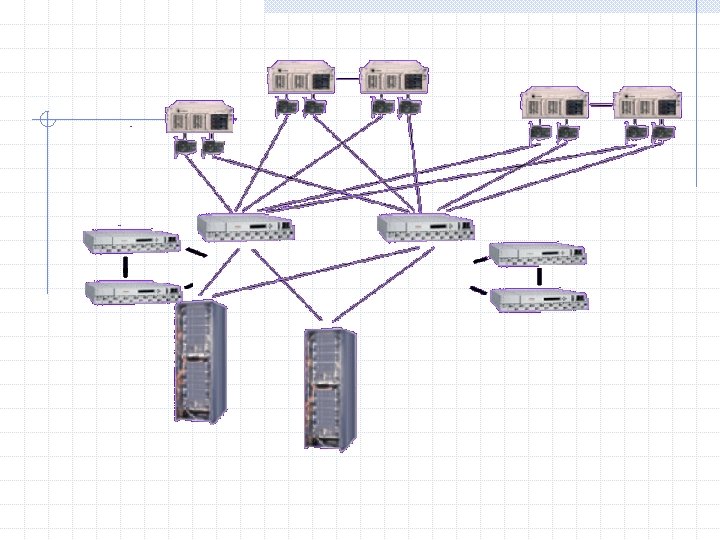

A Storage Area Network

- Storage
  - StorageWorks MA8000 (24), EVA (2)
  - HDS is 2nd Approved Storage Vendor
    - 9980 Enterprise Storage Array – EMC class storage
- Switches
  - Brocade 12000 (8), 3800 (20), & 2800 (34)
    - 3900’s are being deployed – 32 port
- UNIX Servers on the SAN
  - Solaris (56), IRIX (5), HP-UX (5), Tru64 (1)
- Storage Volume Connected to UNIX Servers
  - 13000 GB as of May, 2003
- Windows Servers
  - Windows 2000 (74), NT 4.0 (16)

SAN Implementations

- FibreChannel
  - FC Signalling Carrying SCSI Commands & Data
  - Non-Ethernet Network Infrastructure
- iSCSI
  - SCSI Encapsulated By IP
  - Ethernet Infrastructure
- FCIP – FibreChannel over IP
  - FibreChannel Encapsulated by IP
  - Extending FibreChannel over WAN Distances
- Future Bridge between Ethernet & FibreChannel
- iFCP - another gateway implementation

NAS & SAN in the Data Center

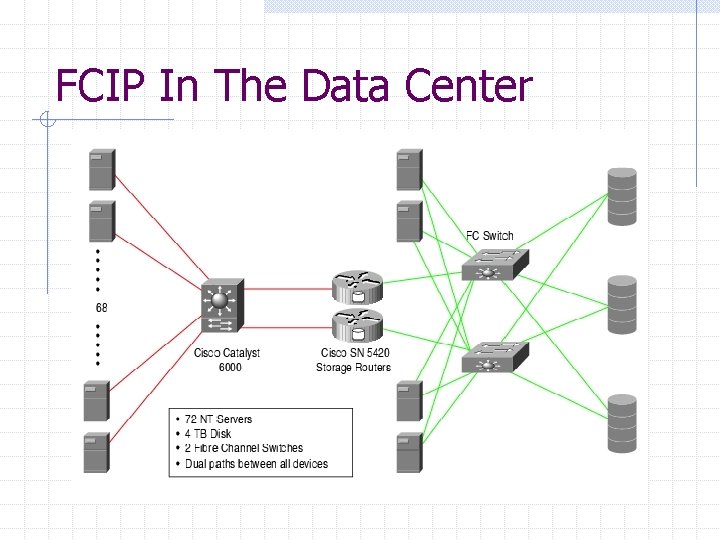

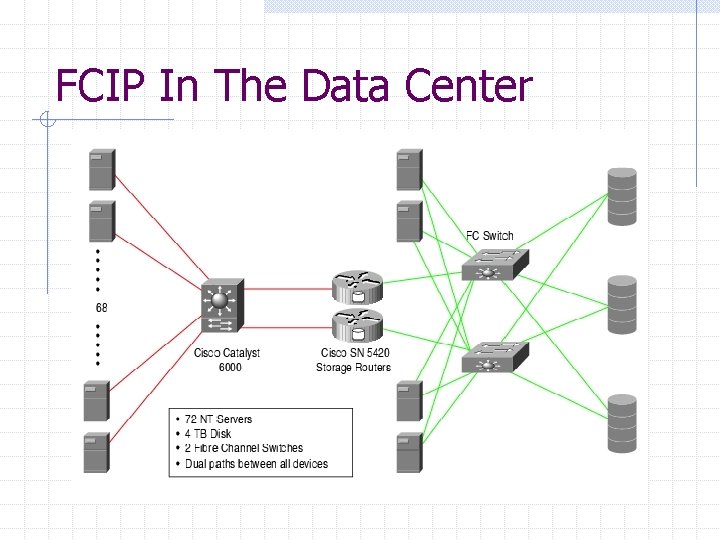

FCIP In The Data Center

FibreChannel

- How SCSI Limitations are Addressed
  - Speed
  - Distance
  - Device Count
  - Access

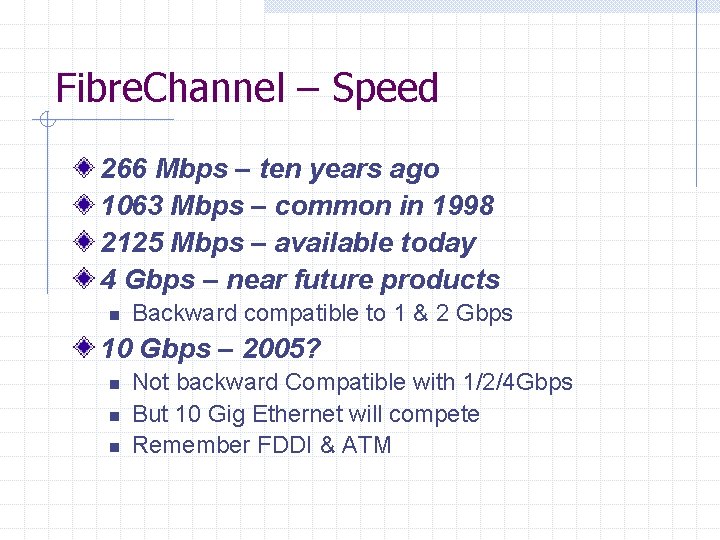

FibreChannel – Speed

- 266 Mbps – ten years ago
- 1063 Mbps – common in 1998
- 2125 Mbps – available today
- 4 Gbps – near future products
  - Backward compatible to 1 & 2 Gbps
- 10 Gbps – 2005?
  - Not backward compatible with 1/2/4 Gbps
  - But 10 Gig Ethernet will compete
  - Remember FDDI & ATM

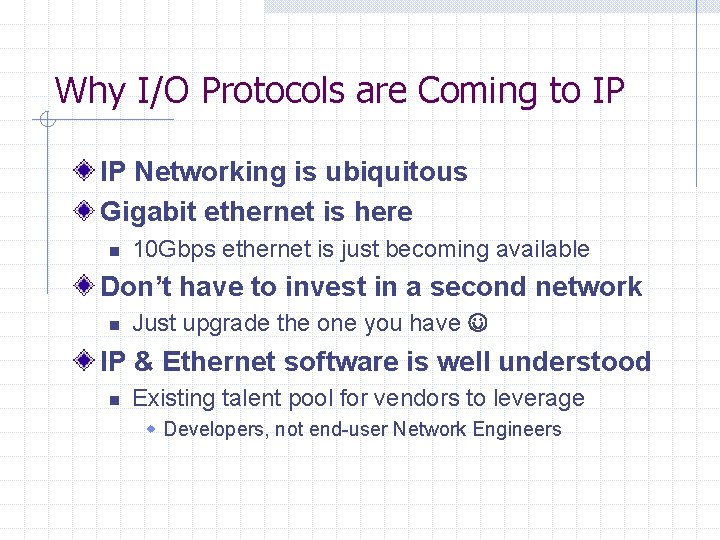

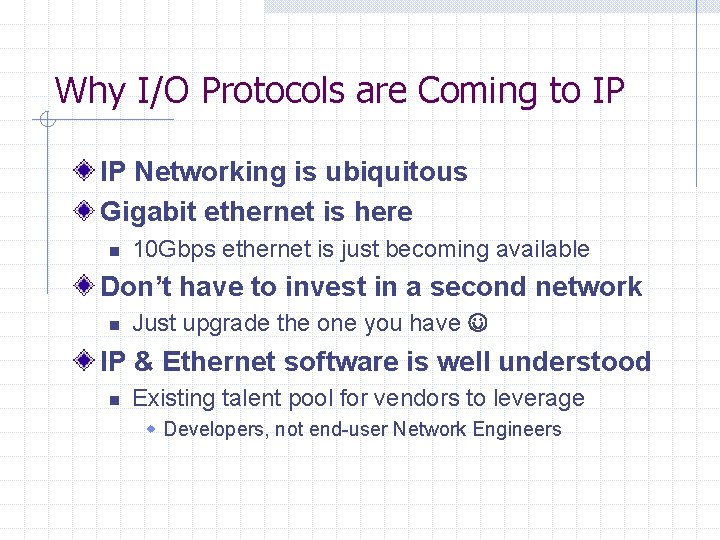

Why I/O Protocols are Coming to IP

- IP Networking is ubiquitous
- Gigabit ethernet is here
  - 10 Gbps ethernet is just becoming available
- Don’t have to invest in a second network
  - Just upgrade the one you have
- IP & Ethernet software is well understood
  - Existing talent pool for vendors to leverage
    - Developers, not end-user Network Engineers

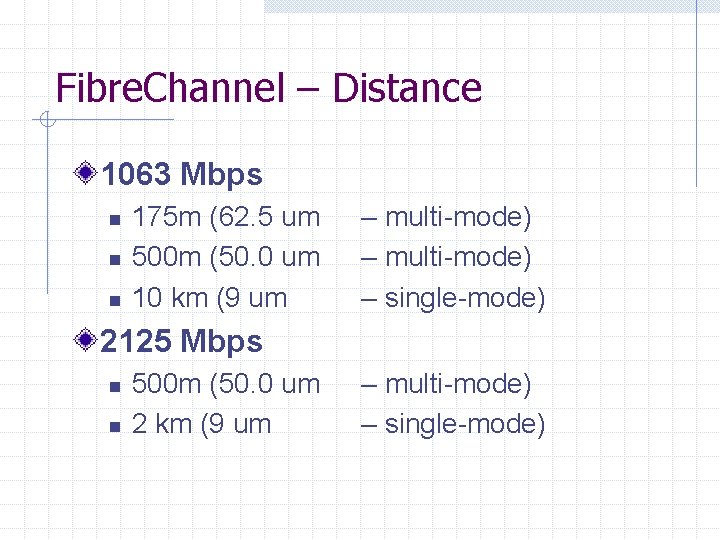

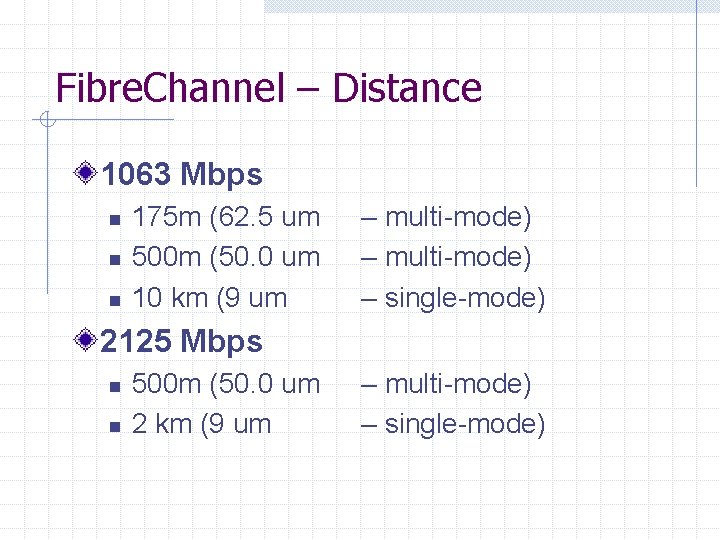

FibreChannel – Distance

- 1063 Mbps
  - 175 m (62.5 um – multi-mode)
  - 500 m (50.0 um – multi-mode)
  - 10 km (9 um – single-mode)
- 2125 Mbps
  - 500 m (50.0 um – multi-mode)
  - 2 km (9 um – single-mode)

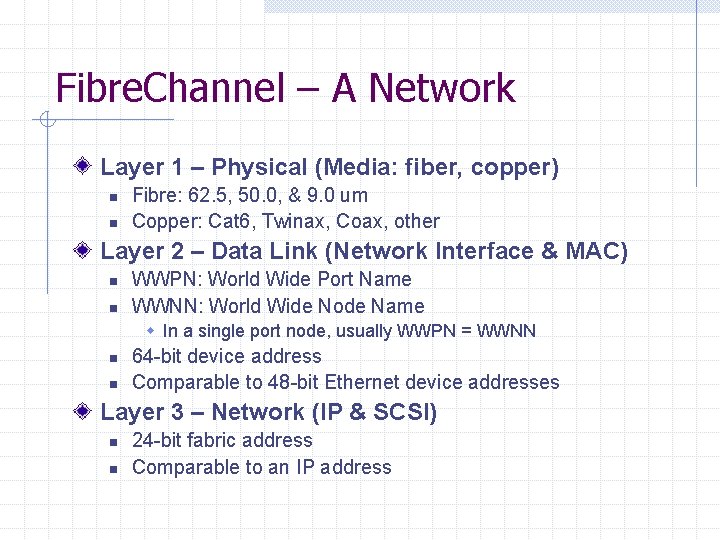

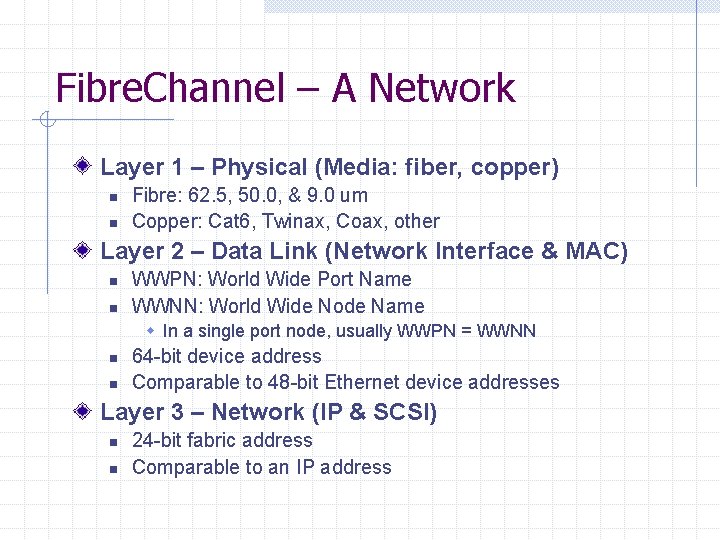

FibreChannel – A Network

- Layer 1 – Physical (Media: fiber, copper)
  - Fibre: 62.5, 50.0, & 9.0 um
  - Copper: Cat 6, Twinax, Coax, other
- Layer 2 – Data Link (Network Interface & MAC)
  - WWPN: World Wide Port Name
  - WWNN: World Wide Node Name
    - In a single port node, usually WWPN = WWNN
  - 64-bit device address
  - Comparable to 48-bit Ethernet device addresses
- Layer 3 – Network (IP & SCSI)
  - 24-bit fabric address
  - Comparable to an IP address

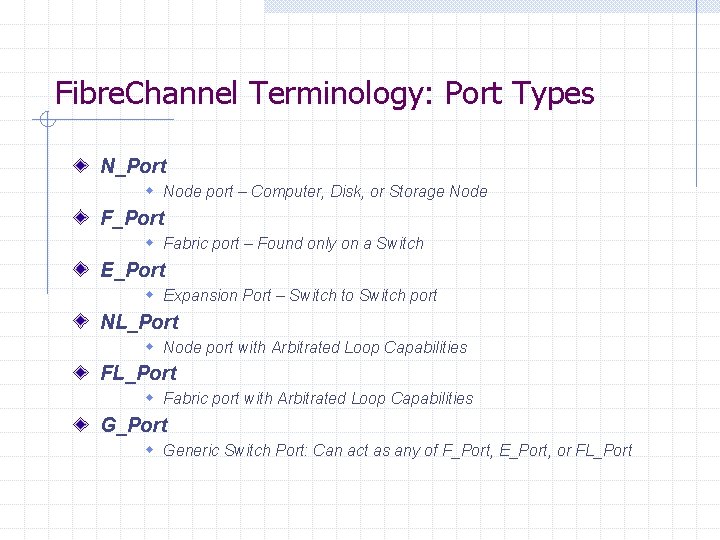

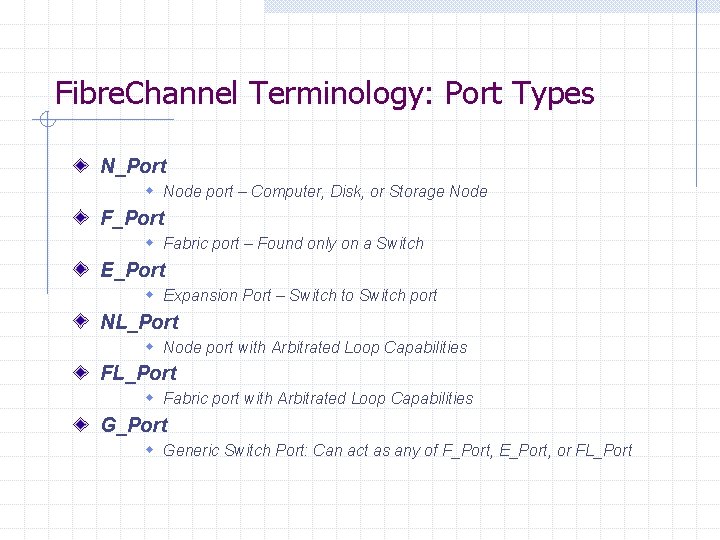

FibreChannel Terminology: Port Types

- N_Port
  - Node port – Computer, Disk, or Storage Node
- F_Port
  - Fabric port – Found only on a Switch
- E_Port
  - Expansion Port – Switch to Switch port
- NL_Port
  - Node port with Arbitrated Loop Capabilities
- FL_Port
  - Fabric port with Arbitrated Loop Capabilities
- G_Port
  - Generic Switch Port: Can act as any of F_Port, E_Port, or FL_Port

FibreChannel - Topology

- Point-to-Point
- Arbitrated Loop
- Fabric

FibreChannel – Point-to-point

- Direct Connection of Server and Storage Node
- Two N_Ports and One Link

FibreChannel - Arbitrated Loop

- Up to 126 Devices in a Loop via NL_Ports
- Token-access, Polled Environment (like FDDI)
- Wait For Access Increases with Device Count

FibreChannel - Fabric

- Arbitrary Topology
- Requires At Least One Switch
- Up to 15 million ports can be concurrently logged in with the 24-bit address ID.
- Dedicated Circuits between Servers & Storage
  - via Switches
- Interoperability Issues Increase With Scale

FibreChannel – Device Count

- 126 devices in Arbitrated Loop
- 15 Million in a fabric (24-bit addresses)
  - Bit 0-7: Port or Arbitrated Loop addr
  - Bit 8-15: Area, identifies FL_Port
  - Bit 16-23: Domain, address of switch
    - 239 of 256 addresses available
    - 239 x 256 x 256 = 15,663,104
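The 24-bit fabric address splits into three one-byte fields, which is easy to show with shifts and masks. A small sketch follows; `FabricAddress`, `decode_fcid`, and the sample address `0x010200` are illustrative names and values, not taken from any vendor tool.

```python
from typing import NamedTuple


class FabricAddress(NamedTuple):
    domain: int   # bits 16-23: address of the switch
    area: int     # bits 8-15: identifies the FL_Port
    port: int     # bits 0-7: port or arbitrated loop address


def decode_fcid(fcid: int) -> FabricAddress:
    """Split a 24-bit fabric address into domain, area, and port bytes."""
    if not 0 <= fcid <= 0xFFFFFF:
        raise ValueError("a fabric address is 24 bits wide")
    return FabricAddress((fcid >> 16) & 0xFF, (fcid >> 8) & 0xFF, fcid & 0xFF)


addr = decode_fcid(0x010200)   # made-up example address
print(addr)                    # FabricAddress(domain=1, area=2, port=0)

# 239 usable domain values, each with 256 areas of 256 ports.
USABLE_DOMAINS = 239
max_ports = USABLE_DOMAINS * 256 * 256
print(f"{max_ports:,}")        # 15,663,104
```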

FibreChannel Definitions

- WWPN
- Zone & Zoning
- LUN Masking

FibreChannel - WWPN

- World-Wide Port Name
- A unique 64-bit hardware address for each FibreChannel Device
- Analogous to a 48-bit ethernet hardware address
- WWNN - World-Wide Node Name
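A WWPN is commonly displayed as eight colon-separated hex bytes, much like an Ethernet MAC address with two extra bytes. The helpers below are a hypothetical sketch of that convention; the sample value is made up.

```python
def format_wwpn(value: int) -> str:
    """Render a 64-bit WWPN as eight colon-separated hex bytes."""
    if not 0 <= value < 2 ** 64:
        raise ValueError("a WWPN is 64 bits wide")
    return ":".join(f"{byte:02x}" for byte in value.to_bytes(8, "big"))


def parse_wwpn(text: str) -> int:
    """Parse the colon-separated form back into an integer."""
    parts = text.split(":")
    if len(parts) != 8:
        raise ValueError("expected 8 colon-separated bytes")
    return int.from_bytes(bytes(int(part, 16) for part in parts), "big")


example = 0x10000000C9123456      # made-up WWPN for illustration
print(format_wwpn(example))       # 10:00:00:00:c9:12:34:56
```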

FibreChannel – Zone & Zoning

- Switch-Based Access Control
- Analogous to an Ethernet Broadcast Domain
- Soft Zone
  - Zoning based on WWPN of Nodes Connected
  - Preferred
- Hard Zone
  - Zoning Based on Port Number on Switch to which the Nodes are Connected
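Conceptually, a zone is a named set of members, and two nodes may talk only if some zone contains both. The sketch below models that idea only; it is not any switch vendor's configuration syntax, and the zone names, WWPNs, and port numbers are made up.

```python
def can_communicate(zones, member_a, member_b) -> bool:
    """True if at least one zone contains both members."""
    return any(
        member_a in members and member_b in members for members in zones.values()
    )


# Soft zoning: members are identified by WWPN (made-up values).
soft_zones = {
    "solaris_zone": {"10:00:00:00:c9:aa:00:01", "50:00:00:00:00:00:00:a1"},
    "hpux_zone": {"10:00:00:00:c9:bb:00:02", "50:00:00:00:00:00:00:a1"},
}

# Hard zoning: members are (switch domain, port number) pairs instead.
hard_zones = {
    "solaris_zone": {(1, 4), (1, 15)},
}

server, storage = "10:00:00:00:c9:aa:00:01", "50:00:00:00:00:00:00:a1"
print(can_communicate(soft_zones, server, storage))                    # True
print(can_communicate(soft_zones, server, "10:00:00:00:c9:bb:00:02"))  # False
```

With WWPN-based zones a node keeps its access when it is recabled to another switch port, whereas port-based zones follow the cable.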

FibreChannel - LUN

- Logical Unit Number
- Storage Node Allocates Storage and Assigns a LUN
- Appears to the server as a unique device (disk)

FibreChannel – LUN Masking

- Storage Node Based Access Control List (ACL)
- LUNs and Visible Server Connections (WWPN) are allowed to see each other thru the ACL.
- LUNs are Masked from Servers not in the ACL

LUN Security

- Host Software
- HBA-based
  - firmware or driver configuration
- Zoning
- LUN Masking

LUN Security

- Host-based & HBA
  - Both these methods rely on correct security implemented at the edges
  - Most difficult to manage due to large numbers and types of servers
  - Storage Managers may not be Server Managers
  - Don’t trust the consumer to manage resources
    - Trusting the fox to guard the hen house

LUN Security

- Zoning
  - An access control list
  - Establishes a conduit
    - A circuit will be constructed thru this
  - Allows only selected Servers to see a Storage Node
  - Lessons learned
    - Implement in parallel with LUN Masking
    - Segregate OS types into different Zones
    - Always Promptly Remove Entries For Retired Servers

LUN Security

- LUN Masking
  - The Storage Node’s Access Control List
    - Sees the Server’s WWPN
    - Masks all LUNs not allocated to that server
    - Allows the Server to see only its assigned LUNs
  - Implement in parallel with Fabric Zoning
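The masking behavior amounts to a lookup keyed by the server's WWPN. A toy model follows, assuming a hypothetical `StorageNode` class and made-up WWPNs and LUN numbers; real arrays expose this through their own management tools.

```python
class StorageNode:
    """Toy model of a storage node's LUN masking ACL."""

    def __init__(self):
        self._acl = {}   # server WWPN -> set of LUNs that server may see

    def allow(self, wwpn: str, lun: int) -> None:
        self._acl.setdefault(wwpn, set()).add(lun)

    def retire(self, wwpn: str) -> None:
        """Remove every entry for a retired server."""
        self._acl.pop(wwpn, None)

    def visible_luns(self, wwpn: str) -> list:
        """LUNs presented to this WWPN; everything else stays masked."""
        return sorted(self._acl.get(wwpn, set()))


node = StorageNode()
node.allow("10:00:00:00:c9:aa:00:01", 0)   # made-up server WWPN
node.allow("10:00:00:00:c9:aa:00:01", 1)
node.allow("10:00:00:00:c9:bb:00:02", 2)

print(node.visible_luns("10:00:00:00:c9:aa:00:01"))   # [0, 1]
print(node.visible_luns("10:00:00:00:c9:ff:00:09"))   # [] (not in the ACL)
```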

LUN - Persistent Binding of LUNs to Server Device IDs

- Permanently assign a System SCSI ID to a LUN.
- Ensures the Device ID Remains Consistent Across Reconfiguration Reboots
- Different HBAs use different binding methods & syntax
- Tape Drive Device Changes have been a repeated source of NetBackup Media Server Failure

SAN Performance

- Storage Configuration
- Fabric Configuration
- Server Configuration

SAN - Storage Configuration

- More Spindles are Better
- Faster Disks are Better
- RAID 1+0 vs. RAID 5
  - “RAID 5 performs poorly compared to RAID 0+1 when both are implemented with software RAID” (Allan Packer, Sun Microsystems, 2002)
  - Where does RAID 5 underperform RAID 1+0?
    - Random Write
- Limit Partition Numbers Within RAIDsets

SAN - Fabric Configuration

- Common Switch for Server & Storage
  - Multiple “hops” reduce performance
  - Increases Reliability
- Large Port-count switches
  - 32 ports or more
  - 16 port switches create larger fabrics simply to carry their own overhead

SAN - Server Configuration

- Choose The Highest Performance HBA Available
  - PCI: 64-bit is better than 32-bit
  - PCI: 66 MHz is better than 33 MHz
- Place in the Highest Performance Slot
  - Choose the widest, fastest slot in the system
  - Choose an Underutilized Controller
- Size LUNs by RAIDset disk size
  - BAD: LUN sizes smaller than underlying disk size

SAN Resilience

- At Least Two Fabrics
- Dual Path Server Connections
  - Each Server N_Port is Connected to a Different Fabric
  - Circuit Failover upon Switch Failure
- Automatic Traffic Rerouting
- Hot-Pluggable Disks & Power Supplies

SAN Resilience – Dual Path

- Multiple FibreChannel Ports within Server
- Active/Passive Links
- Most GPRD SAN disruptions have affected single-attached servers
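Active/passive dual pathing can be pictured as an ordered list of paths where I/O uses the first healthy one. The class below is a hypothetical sketch of that selection logic only; real multipath drivers also handle health probing, retries, and failback policy, and the path names are made up.

```python
class DualPath:
    """Toy active/passive path selector for a dual-attached server."""

    def __init__(self, paths):
        self.paths = list(paths)        # preference order: active first
        self.healthy = set(self.paths)

    def mark_failed(self, path: str) -> None:
        self.healthy.discard(path)

    def mark_restored(self, path: str) -> None:
        if path in self.paths:
            self.healthy.add(path)

    def active(self) -> str:
        """Return the first healthy path; raise if every path is down."""
        for path in self.paths:
            if path in self.healthy:
                return path
        raise RuntimeError("no path to storage")


# Each HBA port is cabled to a different fabric (made-up names).
server = DualPath(["hba0-fabricA", "hba1-fabricB"])
print(server.active())                  # hba0-fabricA
server.mark_failed("hba0-fabricA")      # e.g. a switch in fabric A fails
print(server.active())                  # hba1-fabricB
```

A single-attached server is the one-element case: the first failure leaves it with no path at all.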

SAN – Good Housekeeping

- Stay Current With OS Drivers & HBA Firmware
- Before You Buy a Server’s HBA
  - Is it supported by the switch & storage vendors?
- Coordinate Firmware Upgrades
  - Storage & Other Server Admin Teams Using SAN
- Monitor Disk I/O Statistics
  - Be Proactive; Identify and Eliminate I/O Problems

SAN Backups

- Why We Should
  - Offload Front-end IP Network
    - Most Servers are still connected to 100baseT IP
  - 1 or 2 Gbps FC Links Increase Thruput
  - Shrink Backup Times
- Why We Don’t
  - Cost
    - NetBackup Media Server License: starts at $5K list
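A back-of-the-envelope calculation shows why faster links shrink backup windows. The sketch uses raw line rates and ignores encoding and protocol overhead, tape drive speed, and disk read speed, so real backups take longer; the 100 GB backup size is an assumed figure.

```python
def transfer_hours(gigabytes: float, link_mbps: float) -> float:
    """Hours to move the data at the raw line rate (no overhead modeled)."""
    bits = gigabytes * 8 * 1000 ** 3
    return bits / (link_mbps * 1_000_000) / 3600


BACKUP_GB = 100   # assumed backup size
for name, mbps in [("100baseT", 100), ("1 Gbps FC", 1063), ("2 Gbps FC", 2125)]:
    print(f"{name:10s} {transfer_hours(BACKUP_GB, mbps):5.2f} hours")
```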

Backup Futures

- Incremental Backups
  - No longer stored on tape
  - Use “near-line” cheap disk arrays
    - Several vendors are under current evaluation
- Still over IP
  - 1 Gbps ethernet is commonly available on new servers
  - 10 Gbps ethernet needed in core

Questions