
STORAGE AND I/O Jehan-François Pâris jfparis@uh.edu

Chapter Organization • Availability and Reliability • Technology review – Solid-state storage devices – I/O Operations – Redundant Arrays of Inexpensive Disks

DEPENDABILITY

Reliability and Availability • Reliability – Probability R(t) that system will be up at time t if it was up at time t = 0 • Availability – Fraction of time the system is up • Reliability and availability do not measure the same thing!

Which matters? • It depends: – Reliability for real-time systems • Flight control • Process control, … – Availability for many other applications • DSL service • File server, web server, …

MTTF, MTTR and MTBF • MTTF is mean time to failure • MTTR is mean time to repair • 1/MTTF is the failure rate λ • MTBF, the mean time between failures, is MTBF = MTTF + MTTR

Reliability • As a first approximation R(t) = exp(–t/MTTF) – Not true if failure rate varies over time

Availability • Measured by MTTF/(MTTF + MTTR) = MTTF/MTBF – MTTR is very important • A good MTTR requires that we detect the failure quickly

The nine notation • Availability is often expressed in "nines" – 99 percent is two nines – 99.9 percent is three nines – … • Formula is $-\log_{10}(1 - A)$ • Example: $-\log_{10}(1 - 0.999) = -\log_{10}(10^{-3}) = 3$
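The nines formula can be checked numerically with a minimal sketch; the function name `nines` is hypothetical, and the function simply evaluates $-\log_{10}(1 - A)$ for an availability A strictly between 0 and 1.

```python
import math

def nines(availability: float) -> float:
    """Number of nines of an availability A: -log10(1 - A)."""
    if not 0.0 < availability < 1.0:
        raise ValueError("availability must be strictly between 0 and 1")
    return -math.log10(1.0 - availability)

print(f"{nines(0.99):.1f}")   # 2.0  (two nines)
print(f"{nines(0.999):.1f}")  # 3.0  (three nines)
```

The result is rarely an exact integer in floating point, which is why it is printed rounded.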

Example • A server crashes on the average once a month • When this happens, it takes 12 hours to reboot it • What is the server availability?

Solution • MTBF = 30 days • MTTR = 12 hours = ½ day • MTTF = 29 ½ days • Availability is 29.5/30 = 98.3 %
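The same computation as a small sketch; `availability` is a hypothetical helper, both arguments must use the same time unit, and a month is taken as 30 days exactly as in the solution.

```python
def availability(mttf: float, mttr: float) -> float:
    """Availability = MTTF / (MTTF + MTTR) = MTTF / MTBF."""
    return mttf / (mttf + mttr)

mtbf_days = 30.0          # one crash per (30-day) month
mttr_days = 0.5           # 12 hours to reboot
mttf_days = mtbf_days - mttr_days

a = availability(mttf_days, mttr_days)
print(f"{a:.1%}")         # 98.3%
```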

Keep in mind • A 99 percent availability is not as great as we might think – One hour down every 100 hours • Fifteen minutes down every 24 hours

Example • A disk drive has a MTTF of 20 years. • What is the probability that the data it contains will not be lost over a period of five years?

Example • A disk farm contains 100 disks whose MTTF is 20 years. • What is the probability that no data will be lost over a period of five years?

Solution • The aggregate failure rate of the disk farm is 100 × 1/20 = 5 failures/year • The mean time to failure of the farm is 1/5 year • We apply the formula $R(t) = \exp(-t/\mathrm{MTTF}) = \exp(-5 \times 5) = \exp(-25) \approx 1.4 \times 10^{-11}$
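Both disk examples can be evaluated with the first-approximation formula R(t) = exp(–t/MTTF), which assumes a constant failure rate. The sketch below (hypothetical helper `reliability`) also evaluates the single-disk example, whose answer the slides leave open.

```python
import math

def reliability(t: float, mttf: float) -> float:
    """R(t) = exp(-t / MTTF), assuming a constant failure rate."""
    return math.exp(-t / mttf)

# Single disk: MTTF = 20 years, period of 5 years
single = reliability(5, 20)

# Disk farm: 100 disks, failure rates add up to 100 x 1/20 = 5 failures/year
farm_failure_rate = 100 * (1 / 20)
farm = reliability(5, 1 / farm_failure_rate)

print(f"single disk: {single:.3f}")  # 0.779
print(f"disk farm:   {farm:.1e}")    # 1.4e-11
```

With a hundred disks the aggregate failure rate is a hundred times higher, so the probability of losing no data over five years collapses from about 78 % to practically zero.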

TECHNOLOGY OVERVIEW

Disk drives • See previous chapter • Recall that the disk access time is the sum of – The disk seek time (to get to the right track) – The disk rotational latency – The actual transfer time
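A minimal sketch of the access-time sum, assuming the average rotational latency is half a revolution and ignoring controller overhead and queuing. The drive parameters are hypothetical, and 1 MB is taken as 1024 KB.

```python
def access_time_ms(seek_ms: float, rpm: float, transfer_mb_per_s: float, request_kb: float) -> float:
    """Access time = seek time + average rotational latency + transfer time."""
    # On average the desired sector is half a revolution away.
    rotational_latency_ms = 0.5 * 60_000.0 / rpm
    # Time to transfer request_kb at transfer_mb_per_s (1 MB = 1024 KB assumed).
    transfer_ms = (request_kb / 1024.0) / transfer_mb_per_s * 1000.0
    return seek_ms + rotational_latency_ms + transfer_ms

# Hypothetical drive: 9 ms average seek, 7200 RPM, 100 MB/s, one 4 KB request
print(f"{access_time_ms(9.0, 7200, 100.0, 4.0):.2f} ms")
```

For a small request like this one, the transfer time is a tiny fraction of the total; seek time and rotational latency dominate.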

Flash drives • Widely used in flash drives, most MP3 players and some small portable computers • Similar technology to EEPROM • Two technologies

What about flash? • Widely used in flash drives, most MP3 players and some small portable computers • Several important limitations – Limited write bandwidth • Must erase a whole block of data before overwriting it – Limited endurance • 10,000 to 100,000 write cycles
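The two limitations can be illustrated with a toy model of a single erase block. This is a sketch under stated assumptions: the class name, the page count, and the endurance value are all hypothetical (the default of 10,000 is the low end of the range quoted above), and real devices differ in their page and block sizes.

```python
class FlashBlock:
    """Toy model of one flash erase block; page count and endurance are hypothetical."""

    def __init__(self, pages: int = 4, endurance: int = 10_000):
        self.pages = [None] * pages     # None marks an erased page
        self.erase_count = 0
        self.endurance = endurance      # maximum number of erase cycles

    def write(self, page: int, data: bytes) -> None:
        """Program one page; only allowed while the page is still erased."""
        if self.pages[page] is not None:
            raise RuntimeError("page already written: the whole block must be erased first")
        self.pages[page] = data

    def erase(self) -> None:
        """Erase every page of the block at once, consuming one cycle of endurance."""
        if self.erase_count >= self.endurance:
            raise RuntimeError("block worn out")
        self.pages = [None] * len(self.pages)
        self.erase_count += 1

    def overwrite(self, page: int, data: bytes) -> None:
        """Change one page in place: save the block, erase it, write everything back."""
        saved = list(self.pages)
        saved[page] = data
        self.erase()
        for i, content in enumerate(saved):
            if content is not None:
                self.write(i, content)

block = FlashBlock(pages=4, endurance=2)
block.write(0, b"a")
block.write(1, b"b")
block.overwrite(0, b"A")   # one page changed, but the whole block was erased and rewritten
print(block.pages, block.erase_count)
```

Changing a single page costs a full block erase plus rewrites of every live page. This is why write bandwidth is limited, and why each in-place update uses up endurance.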

Storage Class Memories • Solid-state storage – Non-volatile – Much faster than conventional disks • Numerous proposals: – Ferroelectric RAM (FRAM) – Magnetoresistive RAM (MRAM) – Phase-Change Memories (PCM)

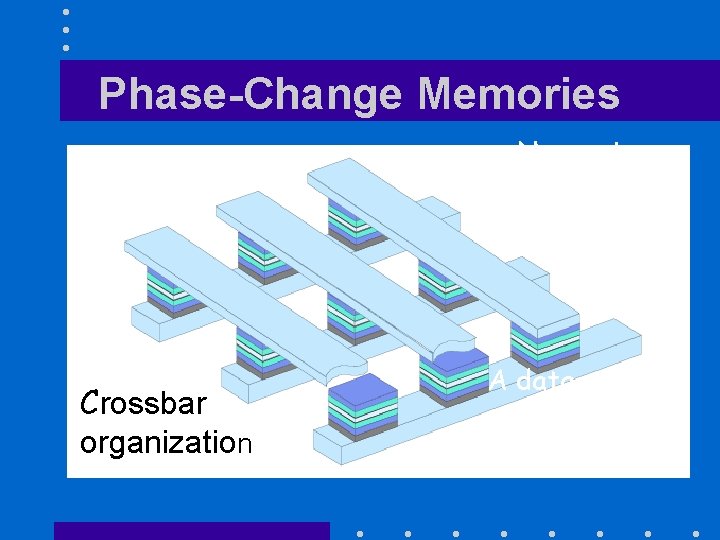

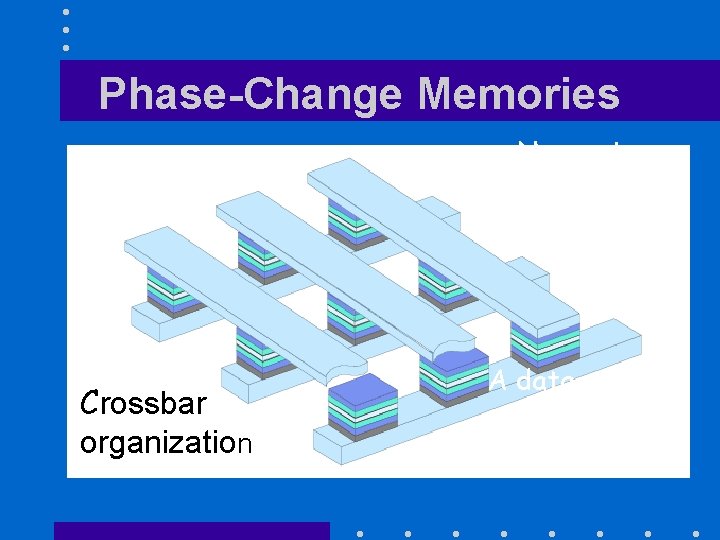

Phase-Change Memories • No moving parts (figures: crossbar organization; a data cell)

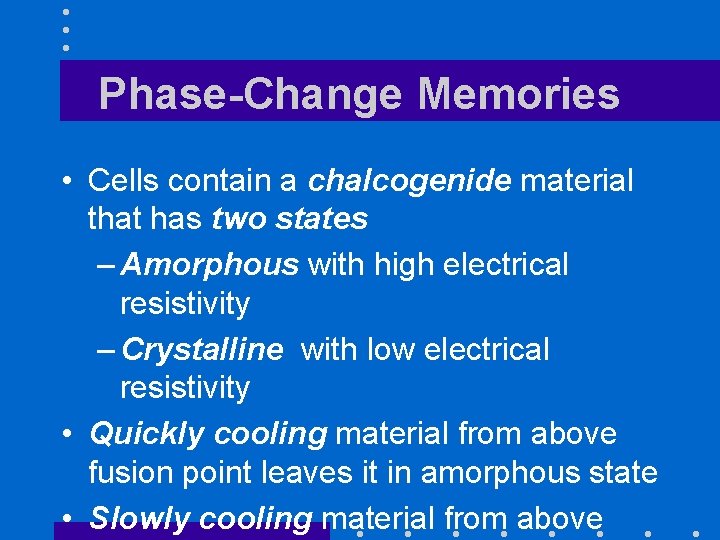

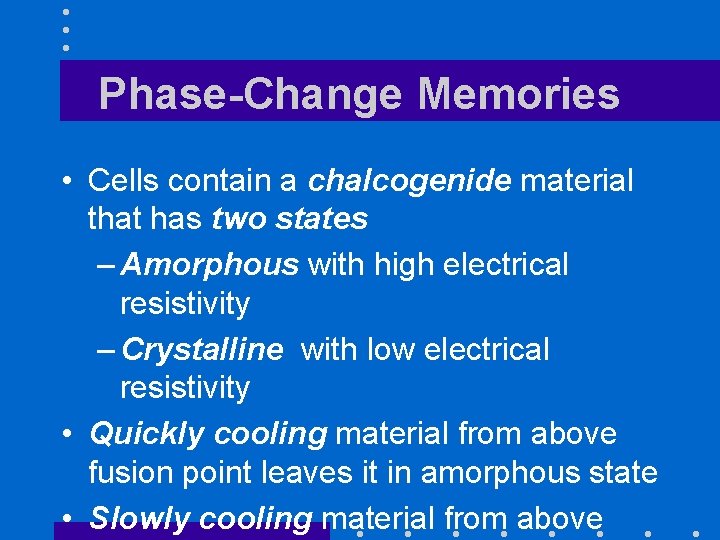

Phase-Change Memories • Cells contain a chalcogenide material that has two states – Amorphous with high electrical resistivity – Crystalline with low electrical resistivity • Quickly cooling the material from above its melting point leaves it in the amorphous state • Slowly cooling material from above

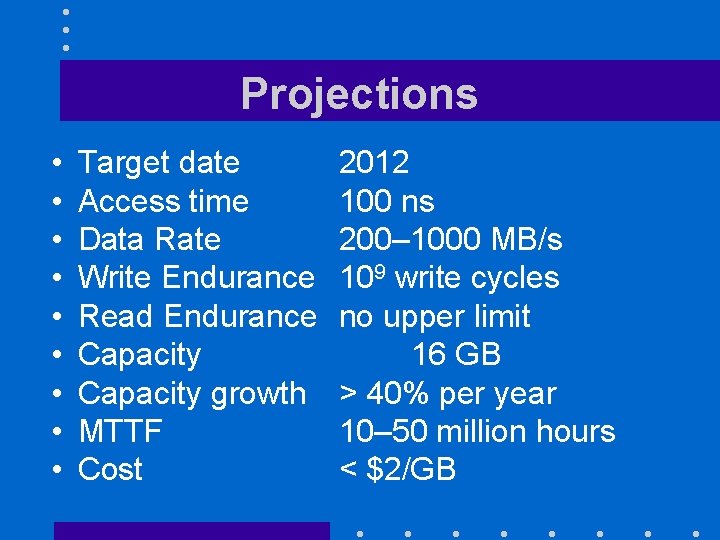

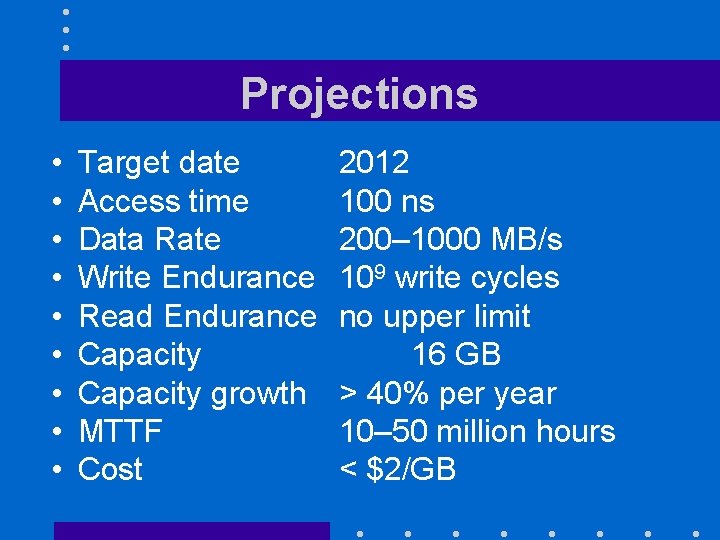

Projections

| Parameter | Projected value |
|---|---|
| Target date | 2012 |
| Access time | 100 ns |
| Data rate | 200–1000 MB/s |
| Write endurance | 10⁹ write cycles |
| Read endurance | No upper limit |
| Capacity | 16 GB |
| Capacity growth | > 40% per year |
| MTTF | 10–50 million hours |
| Cost | < $2/GB |

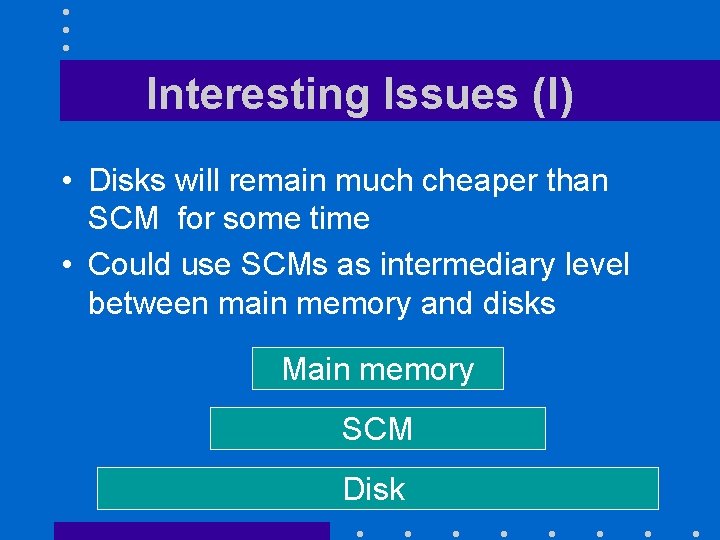

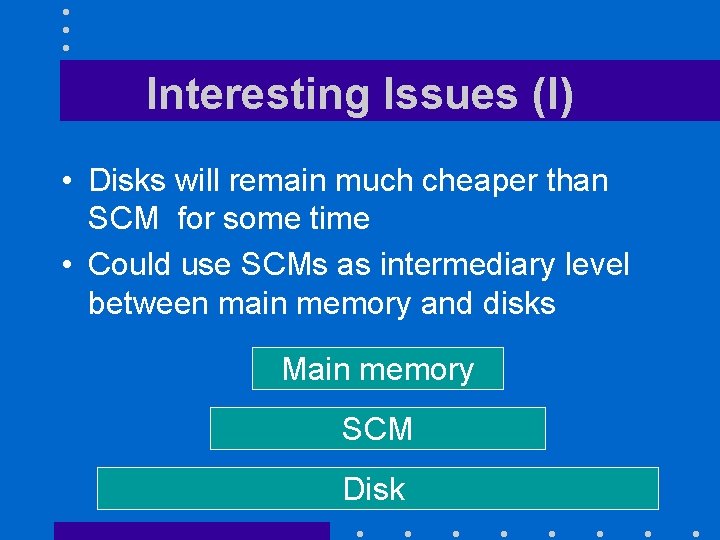

Interesting Issues (I) • Disks will remain much cheaper than SCM for some time • Could use SCMs as an intermediary level between main memory and disks (figure: main memory → SCM → disk)
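Why an intermediary SCM level could help can be seen with a simplified average-time model. The assumptions are loud ones: a main-memory miss is served by the SCM with probability `scm_hit_ratio` and by the disk otherwise, the cost of checking the SCM first is ignored, the 100 ns SCM figure is the projected access time, and the 10 ms disk access time and the hit ratios are hypothetical.

```python
def mean_miss_service_ns(scm_hit_ratio: float, scm_ns: float, disk_ns: float) -> float:
    """Mean time to serve a main-memory miss with an SCM level in front of the disk.

    Simplified model: the time spent looking in the SCM before going to disk is ignored.
    """
    return scm_hit_ratio * scm_ns + (1.0 - scm_hit_ratio) * disk_ns

SCM_NS = 100            # projected SCM access time
DISK_NS = 10_000_000    # hypothetical 10 ms disk access

for hit_ratio in (0.0, 0.9, 0.99):   # 0.0 means "no SCM level"
    print(hit_ratio, mean_miss_service_ns(hit_ratio, SCM_NS, DISK_NS))
```

Because the disk term dominates, each extra "nine" of SCM hit ratio cuts the mean miss cost by roughly a factor of ten.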

A last comment • The technology is still experimental • Not sure when it will come to the market • Might never even come to the market

Interesting Issues (II) • Rather narrow gap between SCM access times and main memory access times • Main memory and SCM will interact – As the L3 cache interacts with the main memory – Not as the main memory now interacts with the disk

RAID Arrays

Today’s Motivation • We use RAID today for – Increasing disk throughput by allowing parallel access – Eliminating the need to make disk backups • Disks are too big to be backed up in an efficient fashion

RAID LEVEL 0 • No replication • Advantages: – Simple to implement – No overhead • Disadvantage: – If the array has n disks, its failure rate is n times the failure rate of a single disk
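A minimal sketch of RAID level 0 striping and of its failure-rate penalty. It assumes a stripe unit of one block and round-robin placement, and both function names are hypothetical.

```python
def raid0_location(logical_block: int, n_disks: int) -> tuple[int, int]:
    """Return (disk, block offset on that disk) for round-robin striping."""
    return logical_block % n_disks, logical_block // n_disks

def array_failure_rate(n_disks: int, disk_failure_rate: float) -> float:
    """Without redundancy, any single disk failure loses data, so the rates add up."""
    return n_disks * disk_failure_rate

print([raid0_location(b, 4) for b in range(6)])
# [(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (1, 1)]
print(array_failure_rate(4, 1 / 20))  # failures/year for four disks with a 20-year MTTF
```

Consecutive blocks land on different disks, so large transfers proceed in parallel. The price is that the array fails as soon as any one of its n disks does.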

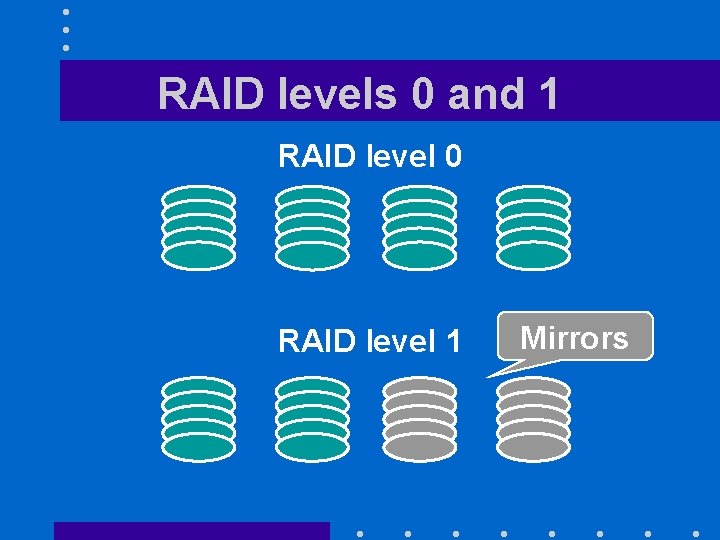

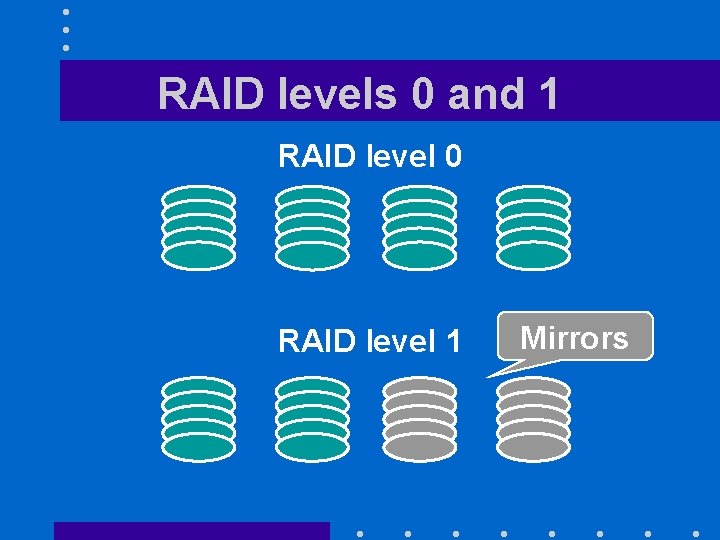

RAID levels 0 and 1 (figure: RAID level 0; RAID level 1 with mirrors)

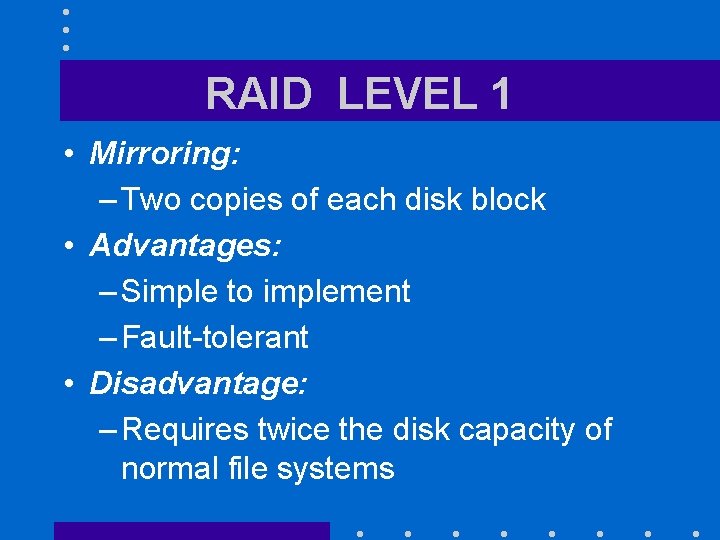

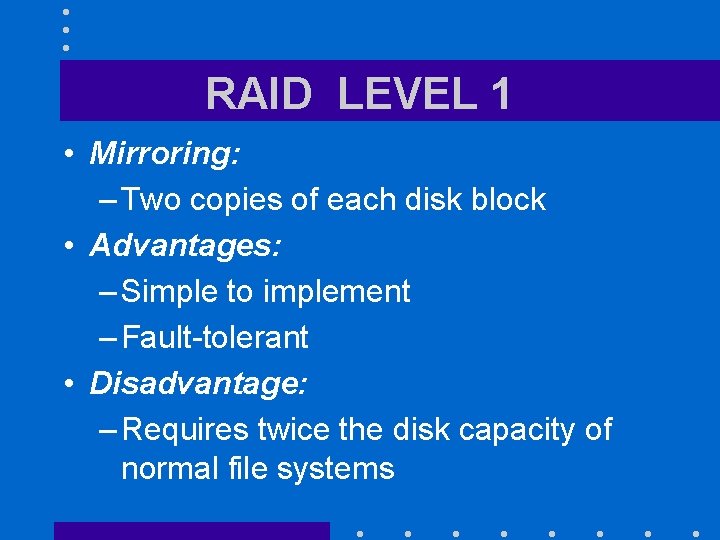

RAID LEVEL 1 • Mirroring: – Two copies of each disk block • Advantages: – Simple to implement – Fault-tolerant • Disadvantage: – Requires twice the disk capacity of normal file systems

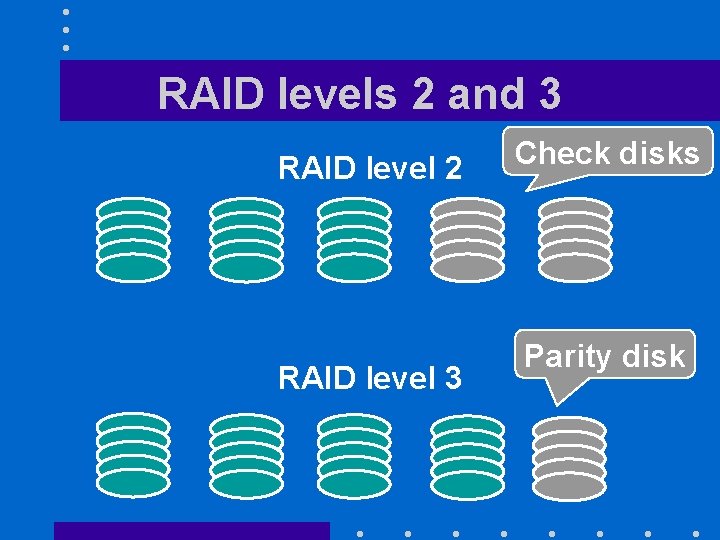

RAID LEVEL 2 • Instead of duplicating the data blocks we use an error correction code • Very bad idea because disk drives either work correctly or do not work at all – Only possible errors are omission errors – We need an omission correction code

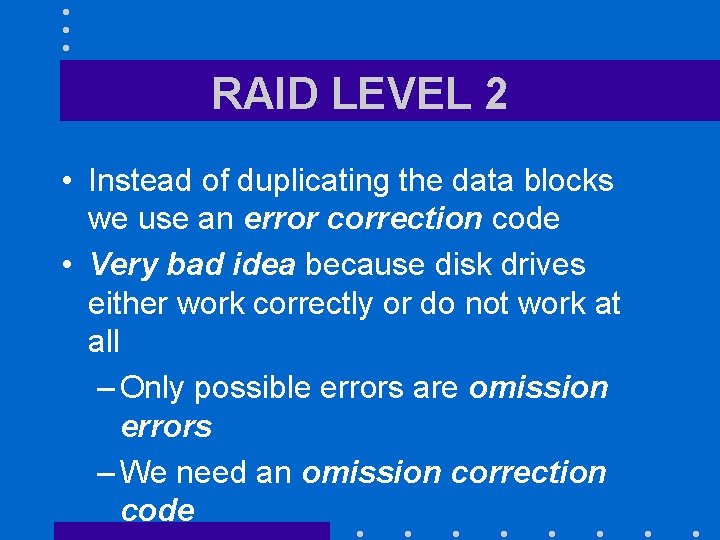

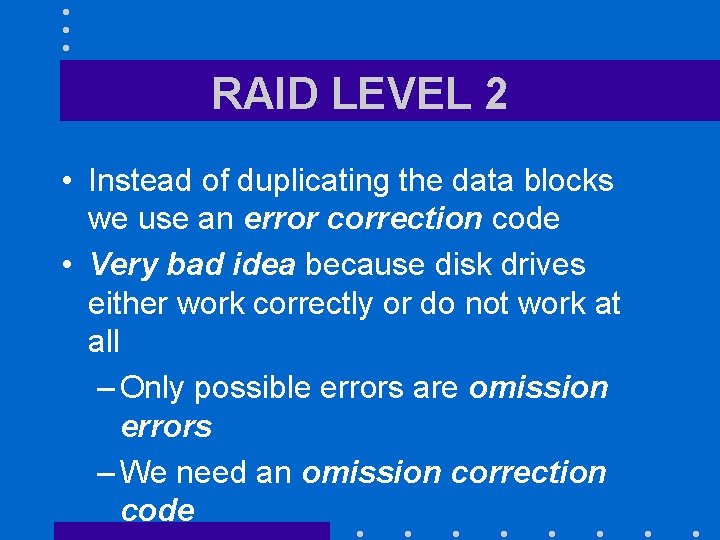

RAID levels 2 and 3 (figure: RAID level 2 with check disks; RAID level 3 with a parity disk)

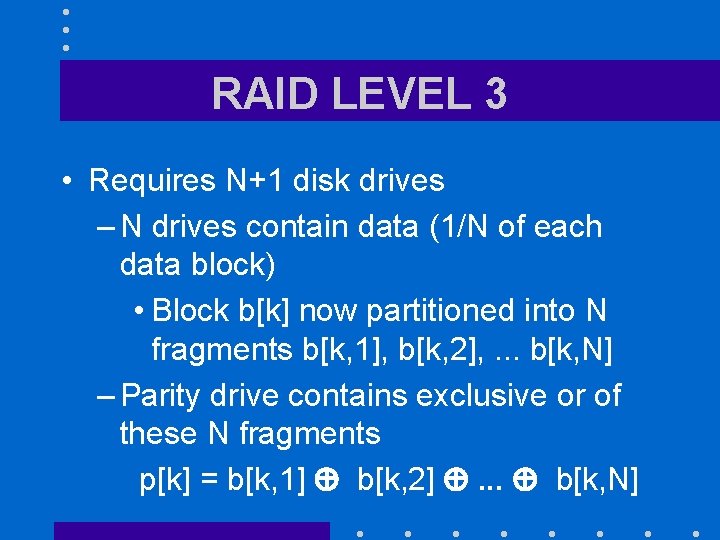

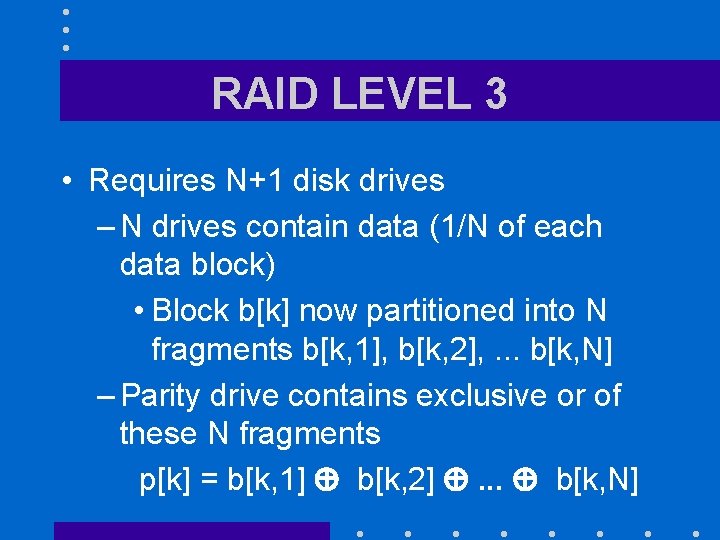

RAID LEVEL 3 • Requires N+1 disk drives – N drives contain data (1/N of each data block) • Block b[k] now partitioned into N fragments b[k,1], b[k,2], …, b[k,N] – Parity drive contains exclusive or of these N fragments: $p[k] = b[k,1] \oplus b[k,2] \oplus \cdots \oplus b[k,N]$

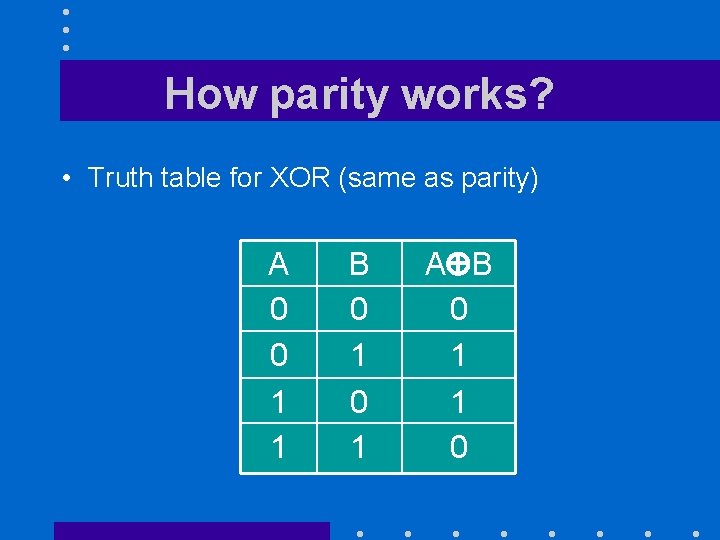

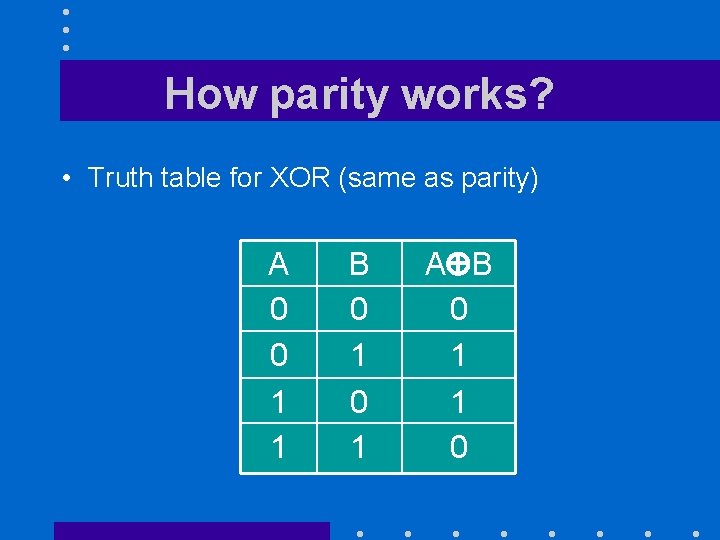

How does parity work? • Truth table for XOR (same as parity):

| A | B | A ⊕ B |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

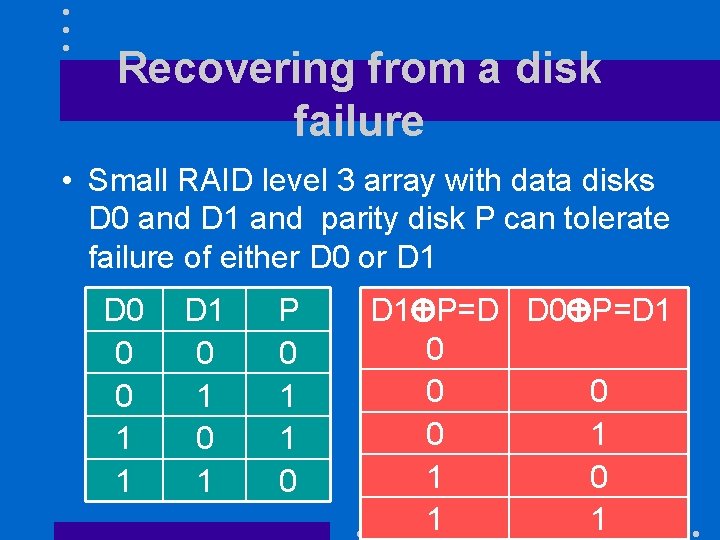

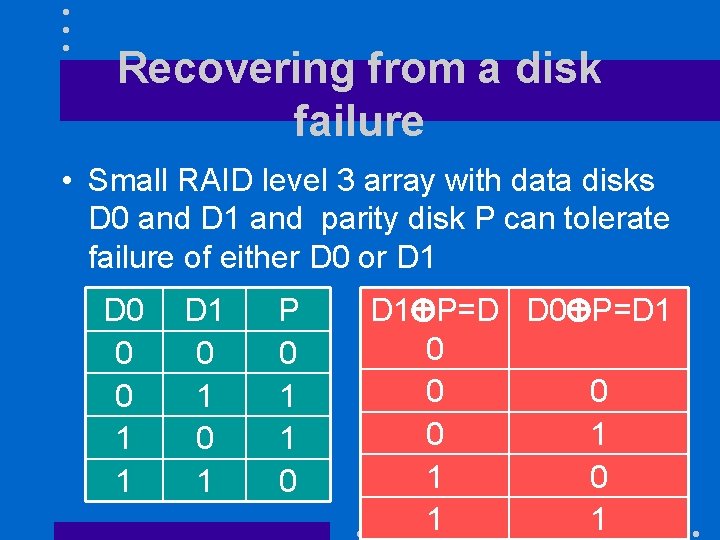

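Recovering from a disk failure • A small RAID level 3 array with data disks D0 and D1 and parity disk P can tolerate the failure of either D0 or D1:

| D0 | D1 | P | D1 ⊕ P = D0 | D0 ⊕ P = D1 |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 0 | 1 | 1 | 0 | 1 |
| 1 | 0 | 1 | 1 | 0 |
| 1 | 1 | 0 | 1 | 1 |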
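The recovery rule generalizes: any single lost disk, whether data or parity, is the XOR of all the surviving disks. The sketch below uses hypothetical helper names and packs the four rows of the D0, D1 and P columns on this slide into the bits of one byte per disk.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

def rebuild(surviving: list[bytes]) -> bytes:
    """A single lost disk (data or parity) is the XOR of all surviving disks."""
    return xor_blocks(*surviving)

# Each bit position is one row of the table: D0 = 0011, D1 = 0101.
d0, d1 = bytes([0b0011]), bytes([0b0101])
p = xor_blocks(d0, d1)
print(format(p[0], "04b"))        # 0110, the P column
print(rebuild([d1, p]) == d0)     # True: D0 recovered from D1 and P
print(rebuild([d0, p]) == d1)     # True: D1 recovered from D0 and P
```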

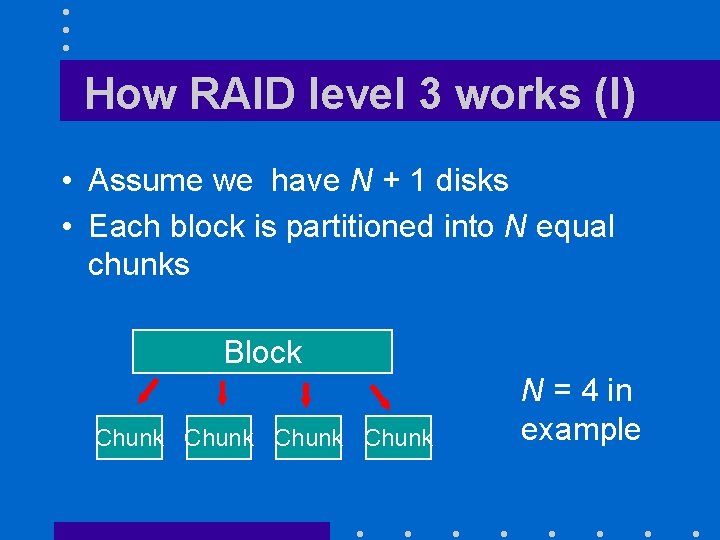

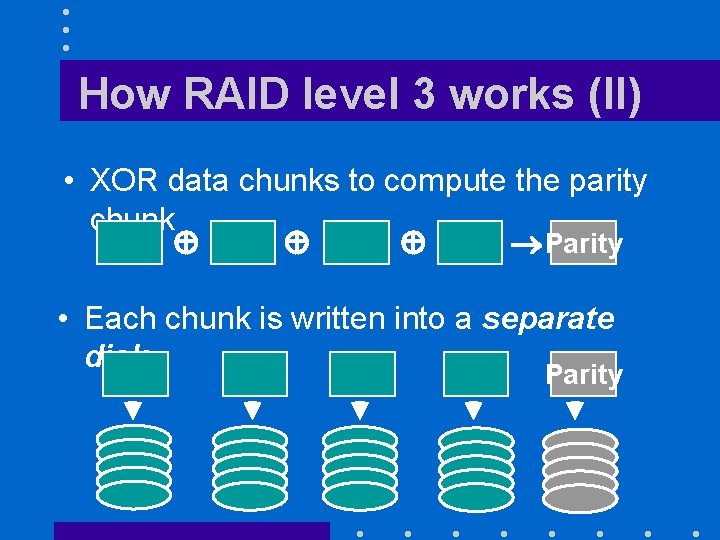

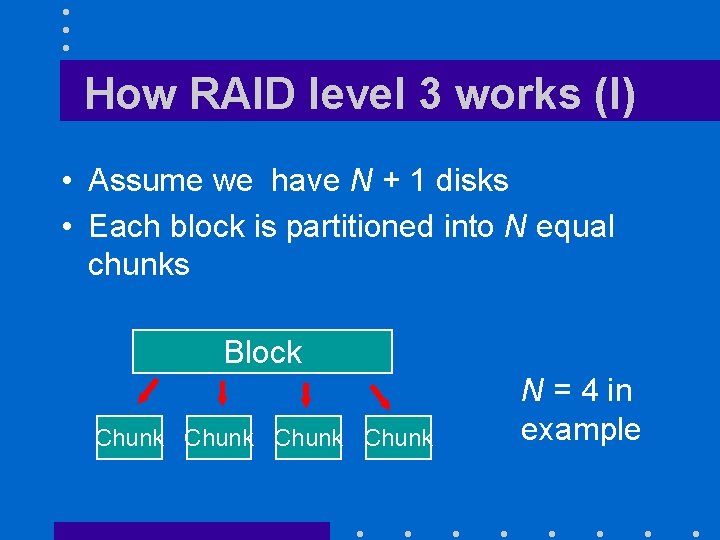

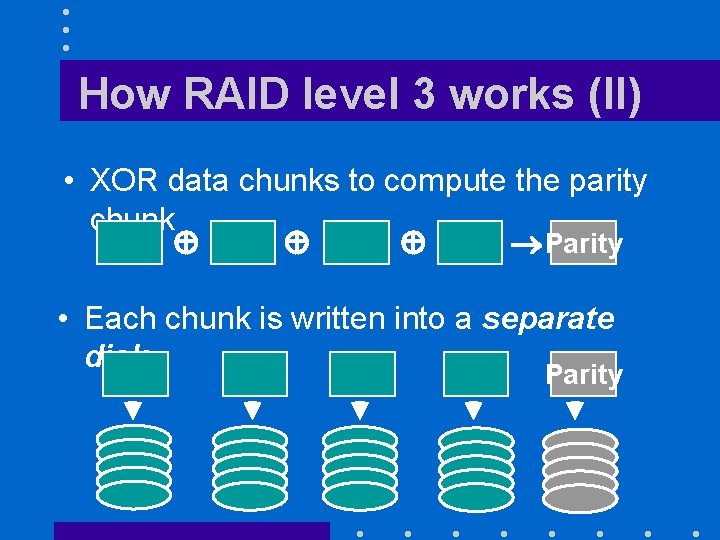

How RAID level 3 works (I) • Assume we have N + 1 disks • Each block is partitioned into N equal chunks (figure: a block split into chunks, N = 4 in the example)

How RAID level 3 works (II) • XOR data chunks to compute the parity chunk • Each chunk is written into a separate disk (figure: data chunks and parity chunk)
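The two steps, splitting a block into N chunks and XORing them into a parity chunk, can be sketched as follows. The sketch uses N = 4 as in the slide's example and assumes the block size is a multiple of N, so no padding is needed. The name `raid3_stripe` is hypothetical.

```python
from functools import reduce

def raid3_stripe(block: bytes, n: int) -> list[bytes]:
    """Split a block into n equal chunks and append their XOR parity chunk.

    Returns n + 1 chunks: chunk i goes to data disk i, the last one to the parity disk.
    """
    if len(block) % n:
        raise ValueError("block size must be a multiple of n")  # assumption: no padding
    size = len(block) // n
    chunks = [block[i * size:(i + 1) * size] for i in range(n)]
    parity = bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*chunks))
    return chunks + [parity]

disks = raid3_stripe(b"ABCDEFGH", 4)   # N = 4
print(disks)
```

Since every block is spread over all N data disks, each read or write of a block involves every disk in the array.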

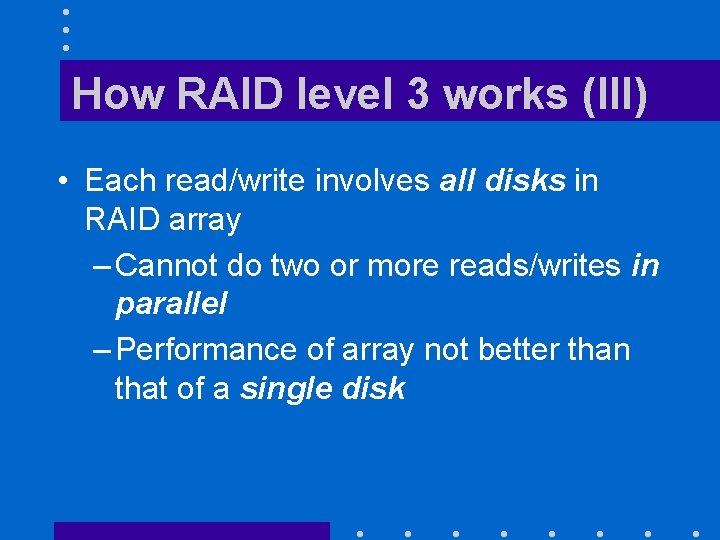

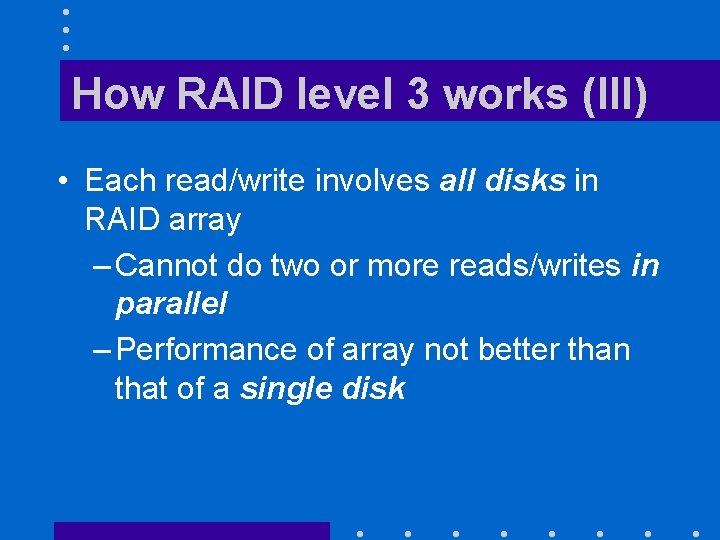

How RAID level 3 works (III) • Each read/write involves all disks in RAID array – Cannot do two or more reads/writes in parallel – Performance of array not better than that of a single disk

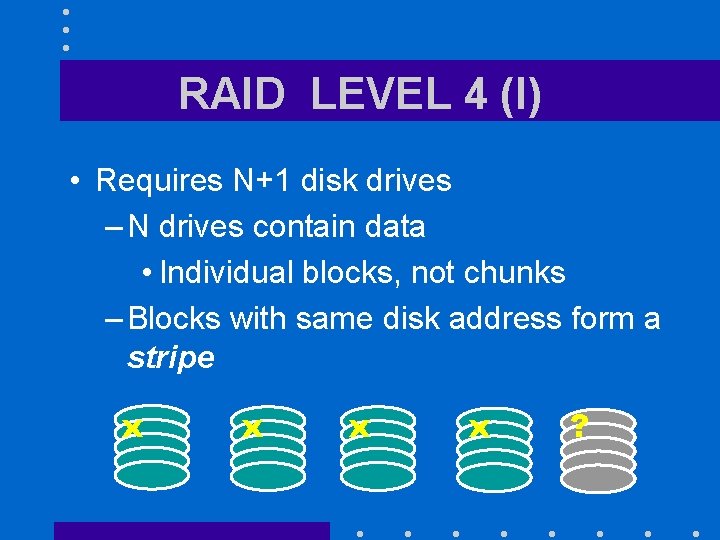

RAID LEVEL 4 (I) • Requires N+1 disk drives – N drives contain data • Individual blocks, not chunks – Blocks with the same disk address form a stripe

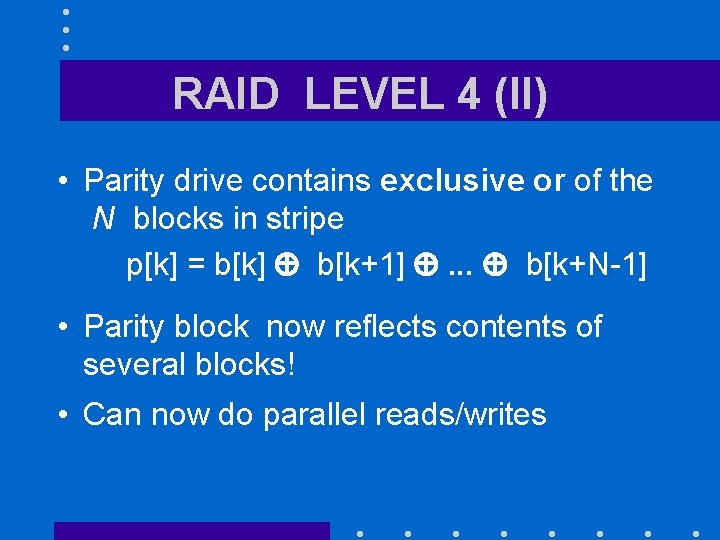

RAID LEVEL 4 (II) • Parity drive contains exclusive or of the N blocks in the stripe: $p[k] = b[k] \oplus b[k+1] \oplus \cdots \oplus b[k+N-1]$ • Parity block now reflects contents of several blocks! • Can now do parallel reads/writes

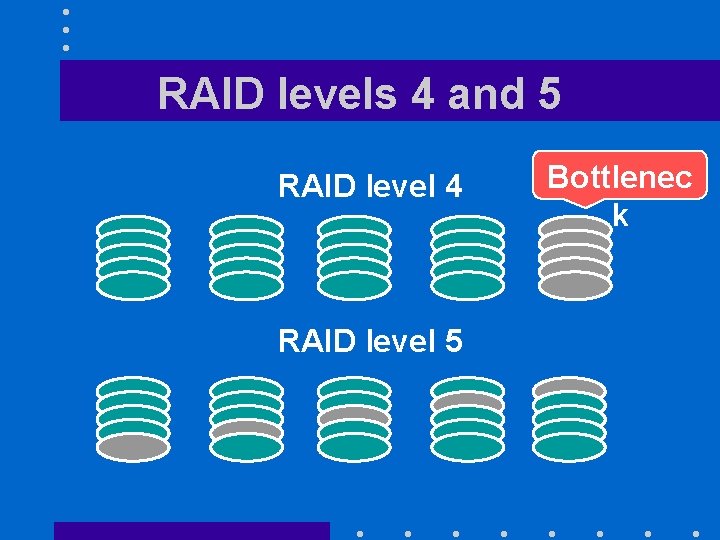

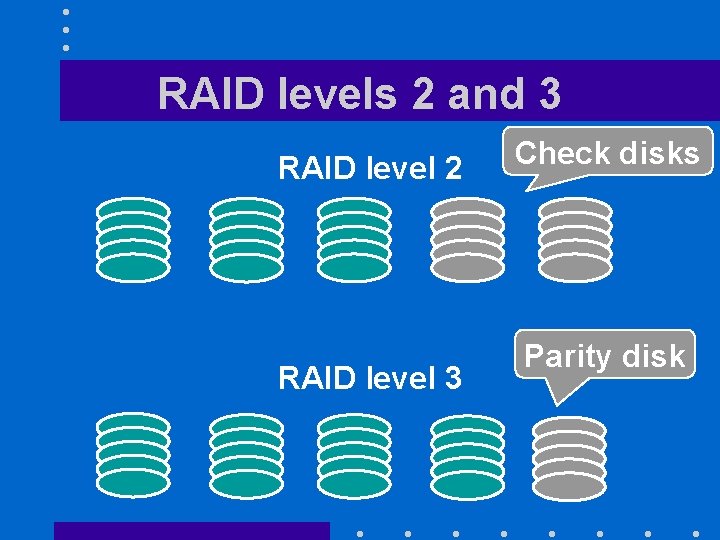

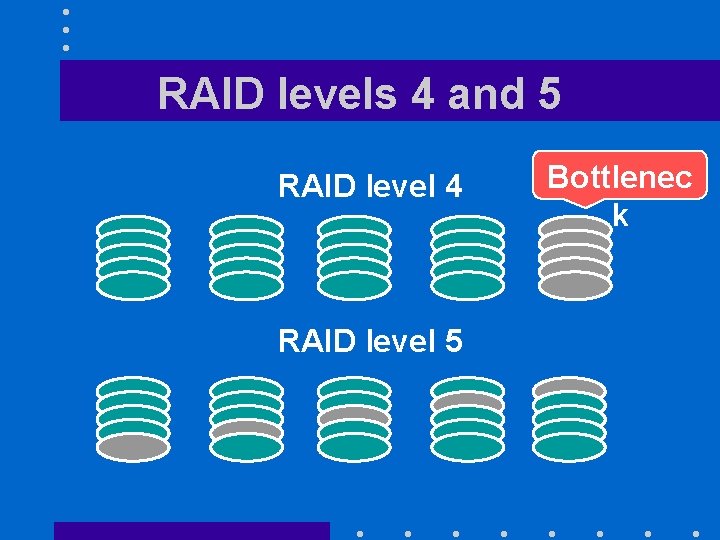

RAID levels 4 and 5 (figure: RAID level 4, whose parity disk is a bottleneck; RAID level 5)

RAID LEVEL 5 • Single parity drive of RAID level 4 is involved in every write – Will limit parallelism • RAID-5 distributes the parity blocks among the N+1 drives – Much better
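The difference between the two levels lies in where each stripe's parity block lives. The sketch below assumes one simple rotation for RAID level 5, in which parity starts on the last disk and moves one disk to the left for each stripe; real arrays use several such layouts. The five-drive array and the function names are hypothetical.

```python
from collections import Counter

def raid4_parity_disk(stripe: int, n_disks: int) -> int:
    """RAID level 4: the parity block of every stripe is on the same (last) disk."""
    return n_disks - 1

def raid5_parity_disk(stripe: int, n_disks: int) -> int:
    """RAID level 5 (one possible rotation): parity moves one disk left per stripe."""
    return (n_disks - 1 - stripe) % n_disks

n_disks = 5            # N + 1 = 5 drives
stripes = range(1000)
print(Counter(raid4_parity_disk(s, n_disks) for s in stripes))  # all 1000 on disk 4
print(Counter(raid5_parity_disk(s, n_disks) for s in stripes))  # 200 on each disk
```

Every write must also update its stripe's parity block. With RAID level 4, all of those parity updates queue up on a single disk. With RAID level 5 they are spread evenly across the drives.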

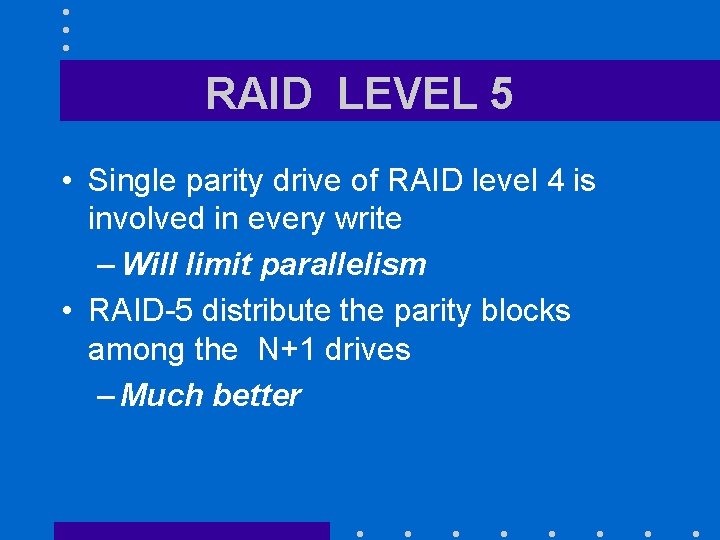

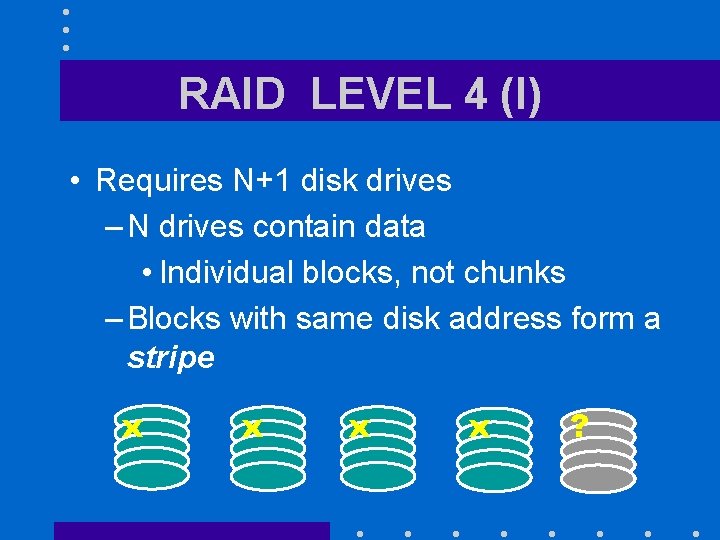

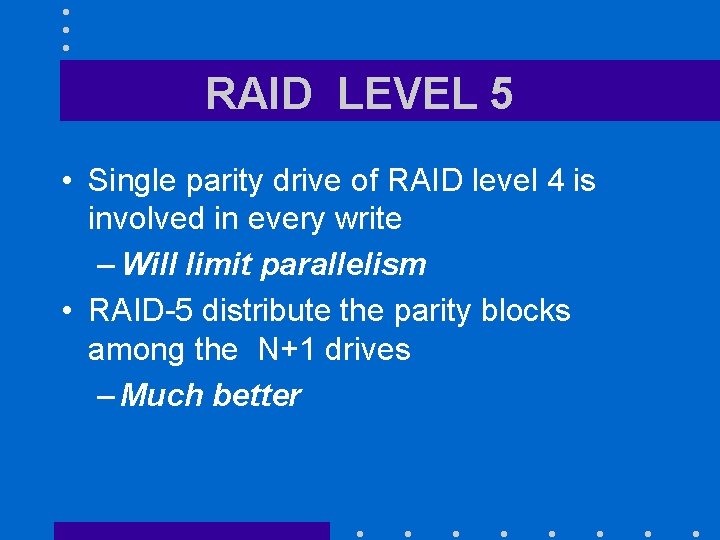

The small write problem • Arises with RAID level 5 (and likewise RAID level 4) • Happens when we want to update a single block – Block belongs to a stripe – How can we compute the new value of the parity block p[k]?


First solution • Read values of N-1 other blocks in stripe • Recompute $p[k] = b[k] \oplus b[k+1] \oplus \cdots \oplus b[k+N-1]$ • Solution requires – N-1 reads – 2 writes (new block and new parity block)
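The first solution as a sketch, using hypothetical names and 1-byte blocks for readability. The stripe holds the N data blocks as currently stored, and the function also reports the I/O count listed above.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

def small_write_recompute(stripe: list[bytes], m: int, new_block: bytes):
    """Read the N-1 other data blocks and recompute the parity from scratch.

    Returns (new parity, number of reads, number of writes).
    """
    others = [b for i, b in enumerate(stripe) if i != m]   # N-1 reads
    new_parity = xor_blocks(new_block, *others)
    return new_parity, len(others), 2                      # writes: new block + new parity

stripe = [b"\x01", b"\x02", b"\x04", b"\x08"]   # hypothetical stripe, N = 4
print(small_write_recompute(stripe, 2, b"\x10"))
```

The number of reads grows with the width of the stripe, which makes this approach costly for large arrays.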

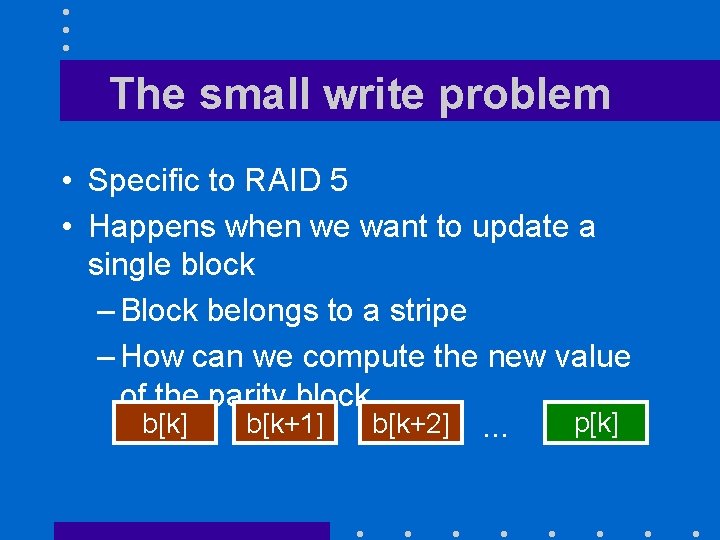

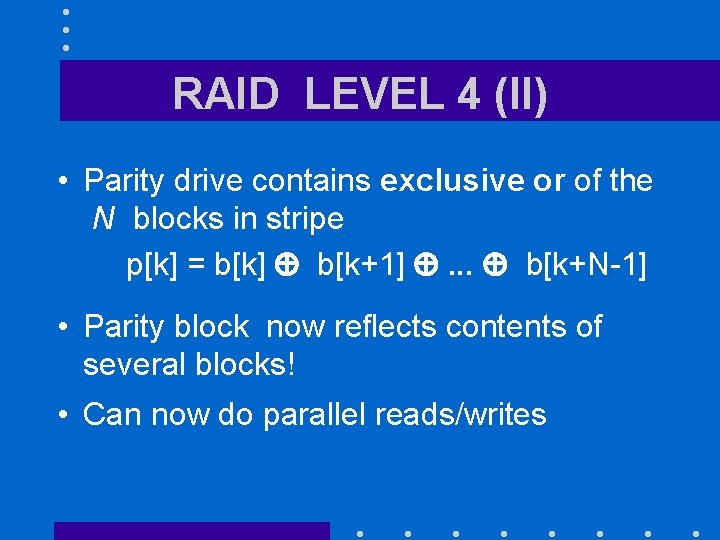

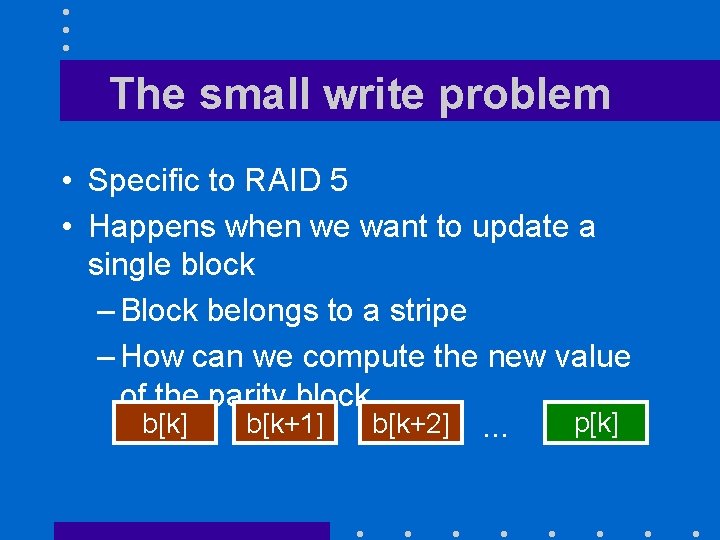


Second solution • Assume we want to update block b[m] • Read old values of b[m] and parity block p[k] • Compute new $p[k] = \text{new } b[m] \oplus \text{old } b[m] \oplus \text{old } p[k]$ • Solution requires – 2 reads (old values of block and parity block) – 2 writes (new block and new parity block)
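The second solution works because XORing the old block into the old parity cancels its contribution, and XORing in the new block adds the new one. The sketch below (hypothetical names, 1-byte blocks) checks that the result matches a full recomputation of the parity.

```python
from functools import reduce

def xor_blocks(*blocks: bytes) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

def small_write_delta(old_block: bytes, old_parity: bytes, new_block: bytes) -> bytes:
    """New parity = old parity XOR old block XOR new block (2 reads, 2 writes)."""
    return xor_blocks(old_parity, old_block, new_block)

stripe = [b"\x01", b"\x02", b"\x04", b"\x08"]   # hypothetical stripe, N = 4
old_parity = xor_blocks(*stripe)
m, new_block = 2, b"\x10"

new_parity = small_write_delta(stripe[m], old_parity, new_block)
stripe[m] = new_block
print(new_parity == xor_blocks(*stripe))        # True: same as a full recomputation
```

The cost stays at two reads and two writes however many disks the stripe spans.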

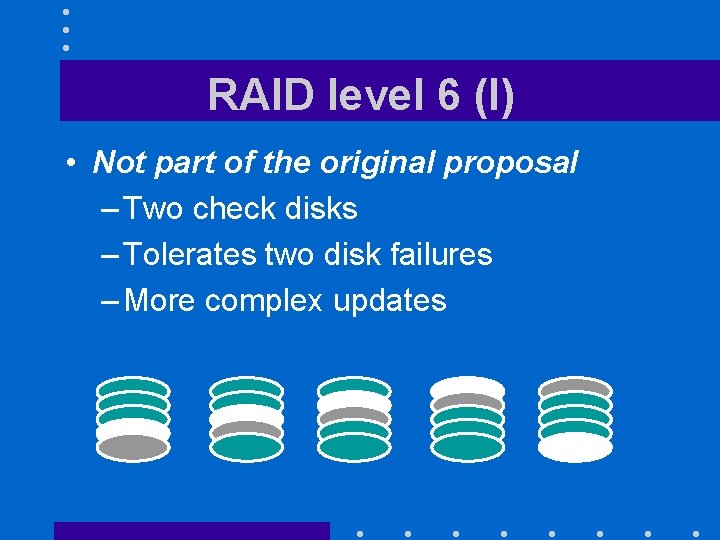

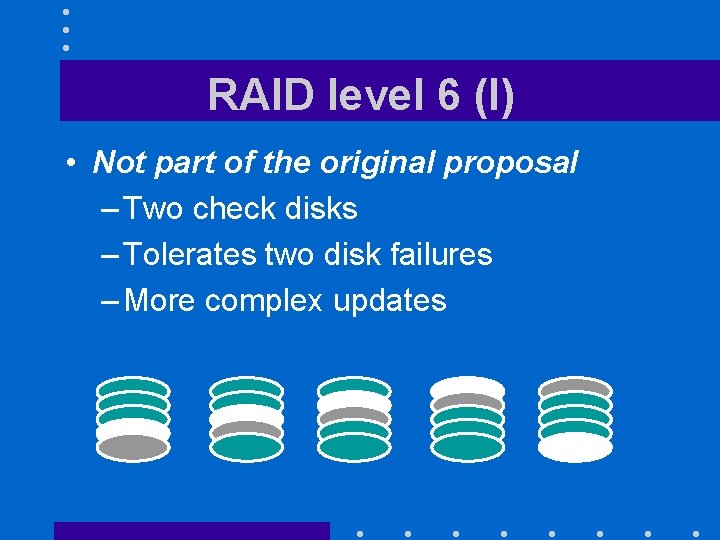

RAID level 6 (I) • Not part of the original proposal – Two check disks – Tolerates two disk failures – More complex updates

RAID level 6 (II) • Has become more popular as disks become – Bigger – More vulnerable to irrecoverable read errors • Most frequent cause of RAID level 5 array failures is – Irrecoverable read error occurring

RAID level 6 (III) • Typical array size is 12 disks • Space overhead is 2/12 = 16.7 % • Sole real issue is cost of small writes – Three reads and three writes: • Read old value of block being updated, old parity block P, old parity block Q • Write new value of block being updated, new parity block P, new parity block Q
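The slides do not say how the second check block Q is computed. The sketch below assumes one common construction: Q is a Reed–Solomon-style syndrome over GF(2^8) with generator 2 and reduction polynomial 0x11D, so Q = ⊕ gⁱ·Dᵢ while P stays the plain XOR. Under that assumption, a small write needs exactly the three reads and three writes listed above, because multiplication in GF(2^8) distributes over XOR. All function names are hypothetical, and only 4 data disks are used for brevity.

```python
from functools import reduce

def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8), reducing by x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    result = 0
    while b:
        if b & 1:
            result ^= a          # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11D           # reduce back to 8 bits
        b >>= 1
    return result

def g_pow(i: int) -> int:
    """Coefficient g^i for data disk i, with generator g = 2."""
    r = 1
    for _ in range(i):
        r = gf_mul(r, 2)
    return r

def compute_pq(data: list[bytes]) -> tuple[bytes, bytes]:
    """P = XOR of the data blocks; Q = XOR over i of g^i * D_i, byte by byte."""
    p = bytes(reduce(lambda x, y: x ^ y, col) for col in zip(*data))
    q = bytes(
        reduce(lambda x, y: x ^ y, (gf_mul(g_pow(i), byte) for i, byte in enumerate(col)))
        for col in zip(*data)
    )
    return p, q

def small_write_pq(m: int, old_d: bytes, new_d: bytes, old_p: bytes, old_q: bytes):
    """From the three values read (old D_m, P, Q), compute the new P and Q to write."""
    c = g_pow(m)
    new_p = bytes(p ^ o ^ n for p, o, n in zip(old_p, old_d, new_d))
    new_q = bytes(q ^ gf_mul(c, o ^ n) for q, o, n in zip(old_q, old_d, new_d))
    return new_p, new_q

data = [b"\x11\x22", b"\x33\x44", b"\x55\x66", b"\x77\x88"]  # hypothetical data disks
p, q = compute_pq(data)
new_p, new_q = small_write_pq(2, data[2], b"\x99\xaa", p, q)
data[2] = b"\x99\xaa"
print((new_p, new_q) == compute_pq(data))  # True: matches a full recomputation
```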

CONCLUSION (II) • Low cost of disk drives made RAID level 1 attractive for small installations • Otherwise pick – RAID level 5 for higher parallelism – RAID level 6 for higher protection • Can tolerate one disk failure and irrecoverable read errors

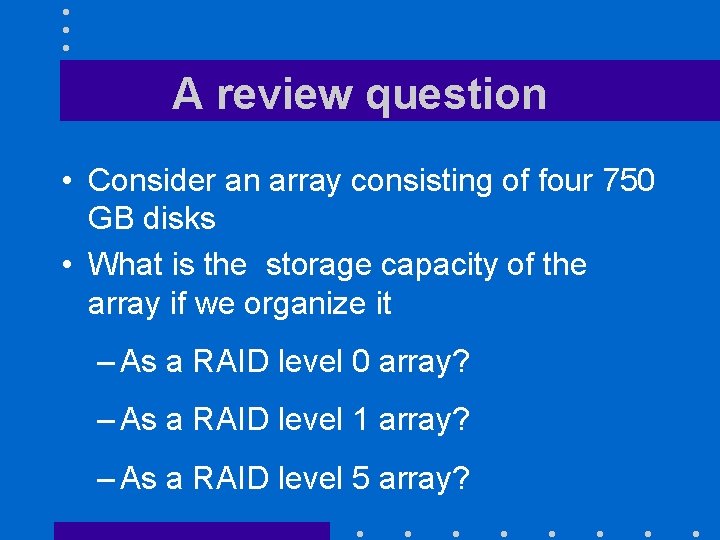

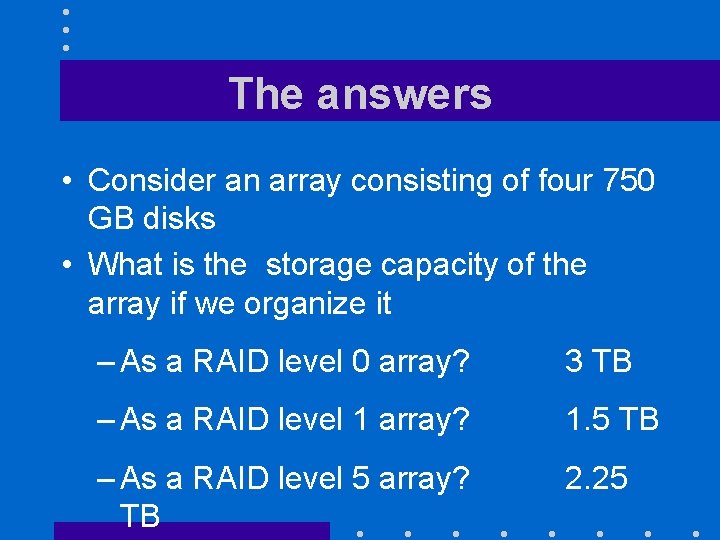

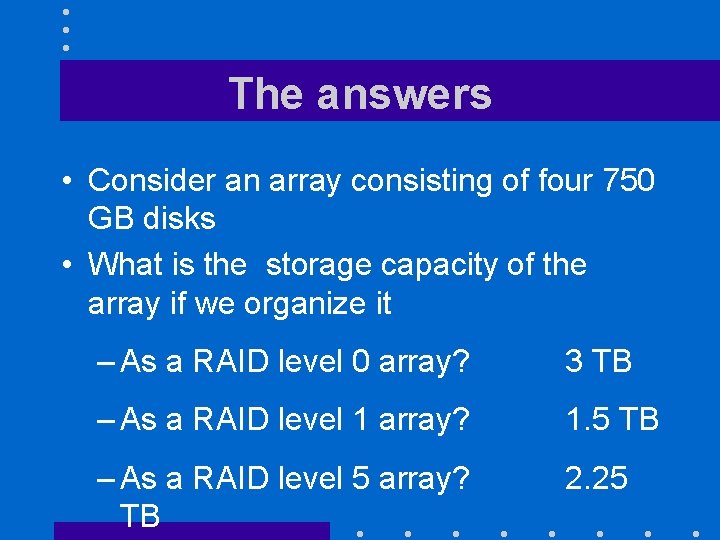

A review question • Consider an array consisting of four 750 GB disks • What is the storage capacity of the array if we organize it – As a RAID level 0 array? – As a RAID level 1 array? – As a RAID level 5 array?

The answers • Consider an array consisting of four 750 GB disks • What is the storage capacity of the array if we organize it – As a RAID level 0 array? 3 TB – As a RAID level 1 array? 1.5 TB – As a RAID level 5 array? 2.25 TB
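The answers follow from how much of the raw capacity each level devotes to redundancy. Here is a small sketch with a hypothetical function name, using 1 TB = 1000 GB as the answers do.

```python
def raid_capacity_gb(level: int, n_disks: int, disk_gb: float) -> float:
    """Usable capacity of an array of n identical disks."""
    if level == 0:
        return n_disks * disk_gb            # striping only, no redundancy
    if level == 1:
        return n_disks * disk_gb / 2        # every block is stored twice
    if level == 5:
        return (n_disks - 1) * disk_gb      # one disk's worth of parity
    if level == 6:
        return (n_disks - 2) * disk_gb      # two disks' worth of check blocks
    raise ValueError(f"RAID level {level} not handled in this sketch")

for level in (0, 1, 5):
    print(f"RAID level {level}: {raid_capacity_gb(level, 4, 750) / 1000} TB")
```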

CONNECTING I/O DEVICES

Busses • Connecting computer subsystems with each other was traditionally done through busses • A bus is a shared communication link connecting multiple devices • Transmit several bits at a time – Parallel buses

Busses

Examples • Processor-memory busses – Connect CPU with memory modules – Short and high-speed • I/O busses – Longer – Wide range of data bandwidths – Connect to memory through the processor-memory bus or a backplane bus

Standards • FireWire – For external use – 63 devices per channel – 4 signal lines – 400 Mb/s or 800 Mb/s – Up to 4.5 m

Standards • USB 2.0 – For external use – 127 devices per channel – 2 signal lines – 1.5 Mb/s (Low Speed), 12 Mb/s (Full Speed) and 480 Mb/s (Hi-Speed) – Up to 5 m

Standards • USB 3.0 – For external use – Adds a 5 Gb/s transfer rate (SuperSpeed) – Maximum distance is still 5 m

Standards • PCI Express – For internal use – 1 device per channel – 2 signal lines per "lane" – Multiples of 250 MB/s: • 1x, 2x, 4x, 8x, 16x and 32x – Up to 0.5 m

Standards • Serial ATA – For internal use – Connects cheap disks to computer – 1 device per channel – 4 data lines – 300 MB/s – Up to 1 m

Standards • Serial Attached SCSI (SAS) – For external use – 4 devices per channel – 4 data lines – 300 MB/s – Up to 8 m

Synchronous busses • Include a clock in the control lines • Bus protocols expressed in actions to be taken at each clock pulse • Have very simple protocols • Disadvantages – All bus devices must run at same clock rate – Due to clock skew issues, cannot be both fast and long

Asynchronous busses • Have no clock • Can accommodate a wide variety of devices • Have no clock skew issues • Require a handshaking protocol before any transmission – Implemented with extra control lines

Advantages of busses • Cheap – One bus can link many devices • Flexible – Can add devices

Disadvantages of busses • Shared devices – can become bottlenecks • Hard to run many parallel lines at high clock speeds

New trend • Away from parallel shared buses • Towards serial point-to-point switched interconnections – Serial • One bit at a time – Point-to-point • Each line links a specific device to another specific device

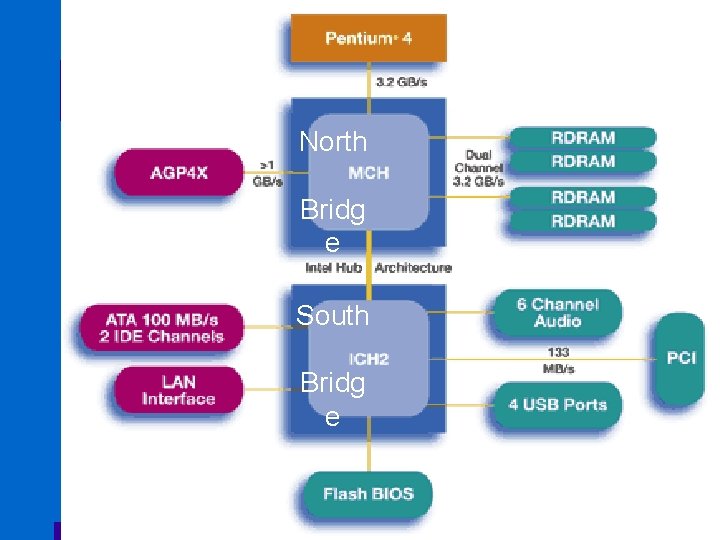

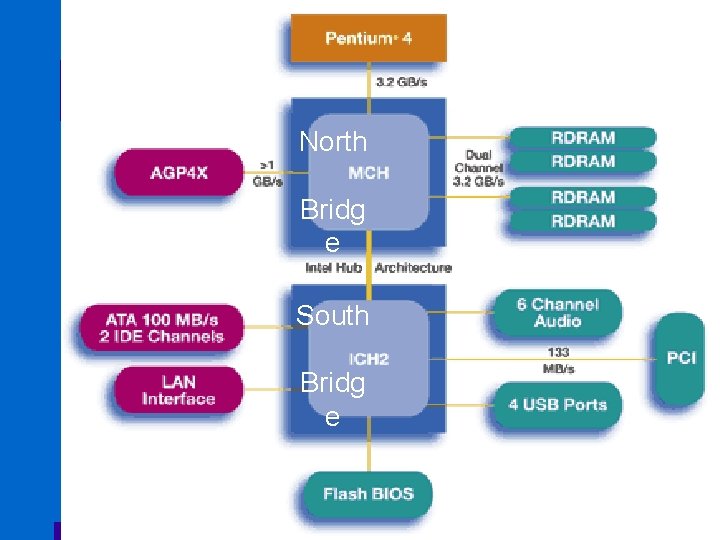

x86 bus organization • Processor connects to peripherals through two chips (bridges) – North Bridge – South Bridge

x86 bus organization (figure: North Bridge and South Bridge)

North bridge • Essentially a DMA controller – Lets disk controller access main memory w/o any intervention of the CPU • Connects CPU to – Main memory – Optional graphics card – South Bridge

South Bridge • Connects North bridge to a wide variety of I/O busses

Communicating with I/O devices • Two solutions – Memory-mapped I/O – Special I/O instructions

Memory mapped I/O • A portion of the address space is reserved for I/O operations – Writes to any of these addresses are interpreted as I/O commands – Reading from these addresses gives access to • Error bit • I/O completion bit • Data being read

Memory mapped I/O • User processes cannot access these addresses – Only the kernel • Prevents user processes from accessing the disk in an uncontrolled fashion

Dedicated I/O instructions • Privileged instructions that cannot be executed by user processes – Only the kernel • Prevents user processes from accessing the disk in an uncontrolled fashion

Polling • Simplest way for an I/O device to communicate with the CPU • CPU periodically checks the status of pending I/O operations – High CPU overhead
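Memory-mapped I/O and polling can be illustrated together with a simulation. Everything in it is hypothetical: the register addresses, the status bits, the `ToyDisk` device (which completes a read after a fixed number of status reads), and the `Bus` that routes the reserved address range to the device. The kernel-only protection of these addresses is not modeled.

```python
COMMAND, STATUS, DATA = 0xF000, 0xF004, 0xF008   # hypothetical device register addresses
IO_BASE = 0xF000                                 # start of the reserved I/O range
DONE, ERROR = 0b01, 0b10                         # hypothetical status bits

class ToyDisk:
    """Hypothetical device: completes a read after a fixed number of status reads."""
    def __init__(self, latency: int = 3):
        self.latency, self.countdown = max(1, latency), 0
        self.status, self.data, self.request = 0, 0, 0

    def store(self, addr: int, value: int) -> None:
        if addr == COMMAND:                  # a write here is interpreted as a command
            self.request, self.status, self.countdown = value, 0, self.latency

    def load(self, addr: int) -> int:
        if addr == STATUS:
            if self.countdown:
                self.countdown -= 1
                if self.countdown == 0:      # the operation completes
                    self.data = self.request * 100   # stand-in for the block's contents
                    self.status = DONE
            return self.status
        return self.data if addr == DATA else 0

class Bus:
    """Routes loads/stores in the reserved range to the device, everything else to RAM."""
    def __init__(self, device: ToyDisk):
        self.device, self.ram = device, {}

    def store(self, addr: int, value: int) -> None:
        if addr >= IO_BASE:
            self.device.store(addr, value)
        else:
            self.ram[addr] = value

    def load(self, addr: int) -> int:
        return self.device.load(addr) if addr >= IO_BASE else self.ram.get(addr, 0)

def read_with_polling(bus: Bus, block_no: int) -> tuple[int, int]:
    """Issue a command, then busy-wait on the status register (polling)."""
    bus.store(COMMAND, block_no)
    polls = 0
    while True:
        status = bus.load(STATUS)
        polls += 1                           # each iteration is CPU time spent waiting
        if status & (DONE | ERROR):
            break
    if status & ERROR:
        raise OSError("device reported an error")
    return bus.load(DATA), polls

value, polls = read_with_polling(Bus(ToyDisk(latency=3)), 7)
print(value, polls)   # 700 3
```

The `polls` counter makes the overhead visible: the CPU does nothing useful until the completion bit appears.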

I/O completion interrupts • Notify the CPU that an I/O operation has completed • Allows the CPU to do something else while waiting for the completion of an I/O operation – Multiprogramming • I/O completion interrupts are processed by CPU between instructions – No internal instruction state to save

Interrupt levels • See previous chapter

Direct memory access • DMA • Lets disk controller access main memory w/o any intervention of the CPU

DMA and virtual memory • A single DMA transfer may cross page boundaries with – One page being in main memory – One missing page

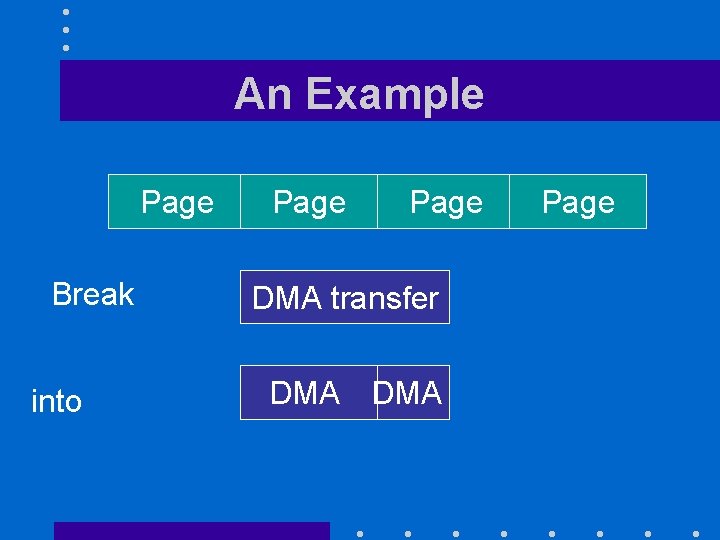

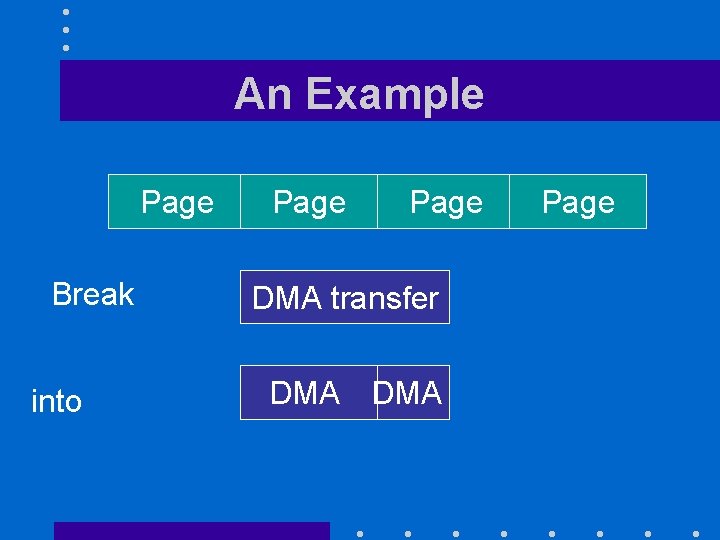

Solutions • Make DMA work with virtual addresses – The issue is then dealt with by the virtual memory subsystem • Break DMA transfers crossing page boundaries into chains of transfers that do not cross page boundaries
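The second solution can be sketched as a function that splits one transfer into (address, length) pieces, each of which stays within a single page. It assumes 4096-byte pages, and the function name is hypothetical. Each piece can then be translated to its own physical frame, once any missing page has been brought into memory.

```python
def split_dma(start: int, length: int, page_size: int = 4096) -> list[tuple[int, int]]:
    """Break one DMA transfer into (address, length) pieces that never cross a page boundary."""
    pieces = []
    addr, end = start, start + length
    while addr < end:
        next_page = (addr // page_size + 1) * page_size   # first byte of the next page
        piece_end = min(end, next_page)
        pieces.append((addr, piece_end - addr))
        addr = piece_end
    return pieces

print(split_dma(4000, 10000))
# [(4000, 96), (4096, 4096), (8192, 4096), (12288, 1712)]
```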


An example (figure: a DMA transfer crossing a page boundary is broken into separate DMA transfers, one per page)

DMA and cache hierarchy • Three approaches for handling temporary inconsistencies between caches and main memory

Solutions 1. Routing all DMA accesses through the cache – Bad solution 2. Have the OS selectively – Invalidate affected cache entries when performing a read – Force an immediate flush of dirty cache entries when performing a write

Benchmarking I/O

Benchmarks • Specific benchmarks for – Transaction processing • Emphasis on speed and graceful recovery from failures –Atomic transactions: • All or nothing behavior

An important observation • Very difficult to operate a disk subsystem at a reasonable fraction of its maximum throughput – Unless we access sequentially very large ranges of data • 512 KB and more

Major fallacies • Since rated MTTFs of disk drives exceed one million hours, disks can last more than 100 years – MTTF expresses the failure rate during the disk's actual service lifetime • Disk failure rates in the field match the MTTFs mentioned in the manufacturers' literature – They are up to ten times higher
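The arithmetic behind the first fallacy, as a small sketch with hypothetical function names: dividing one million hours by the hours in a year gives the misleading "lifetime", while inverting it gives the annual fraction of drives expected to fail during their service life, using the constant-failure-rate approximation from earlier.

```python
HOURS_PER_YEAR = 24 * 365

def naive_lifetime_years(mttf_hours: float) -> float:
    """What the fallacy computes: MTTF read as an expected lifetime."""
    return mttf_hours / HOURS_PER_YEAR

def annual_failure_rate(mttf_hours: float) -> float:
    """Fraction of drives expected to fail per year while within their service life."""
    return HOURS_PER_YEAR / mttf_hours

mttf = 1_000_000
print(f"{naive_lifetime_years(mttf):.0f} years")    # 114 years
print(f"{annual_failure_rate(mttf):.2%} per year")  # 0.88% per year
```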

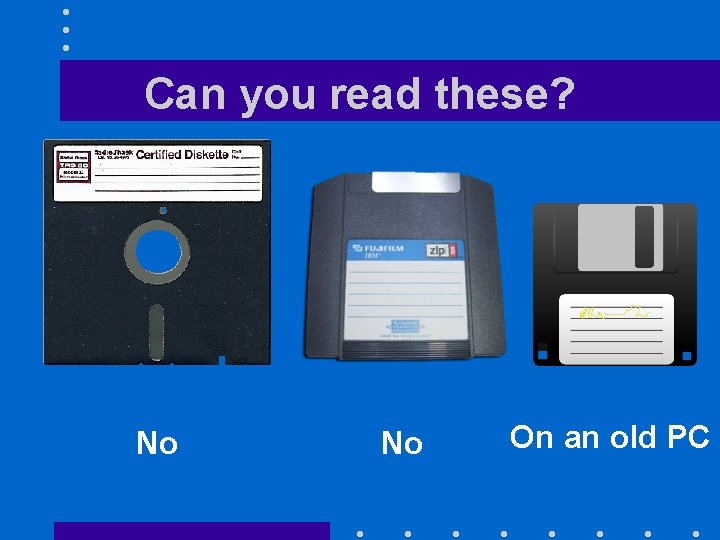

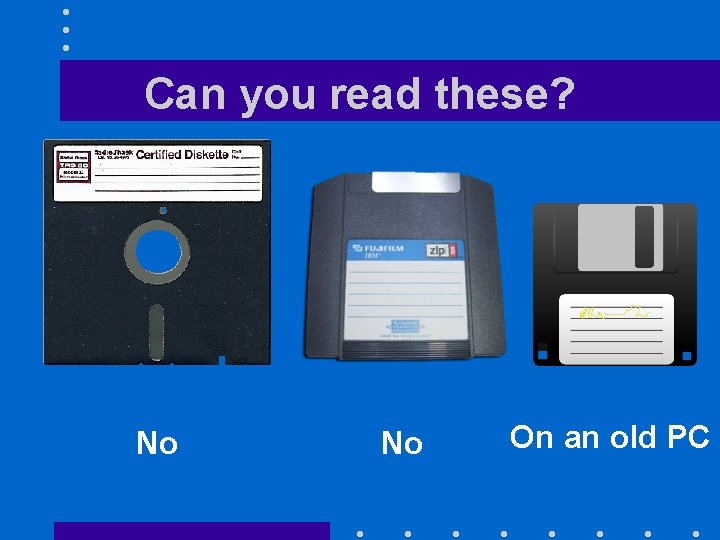

Major fallacies • Neglecting to do end-to-end checks –… • Using magnetic tapes to back up disks – Tape formats can quickly become obsolete – Disk bit densities have grown much faster than tape data densities.

Can you read these? (figure labels: No; No; On an old PC)

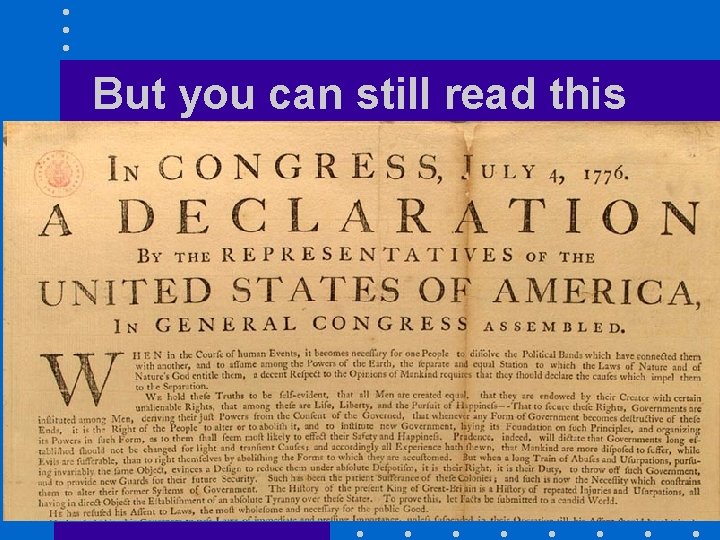

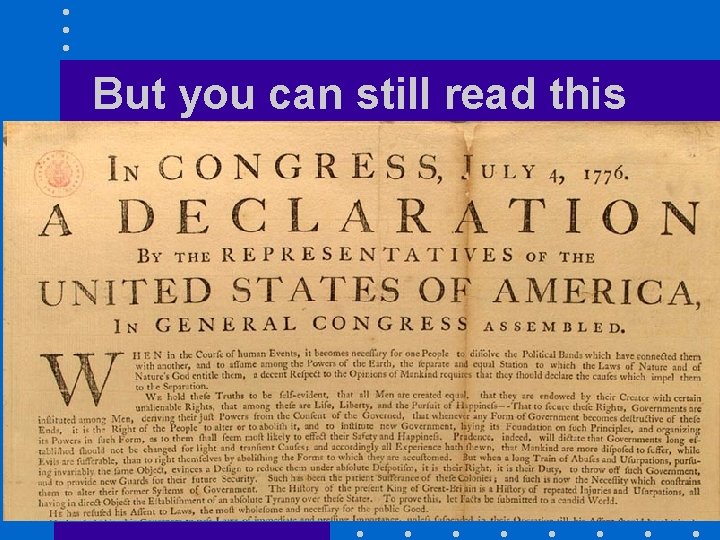

But you can still read this