

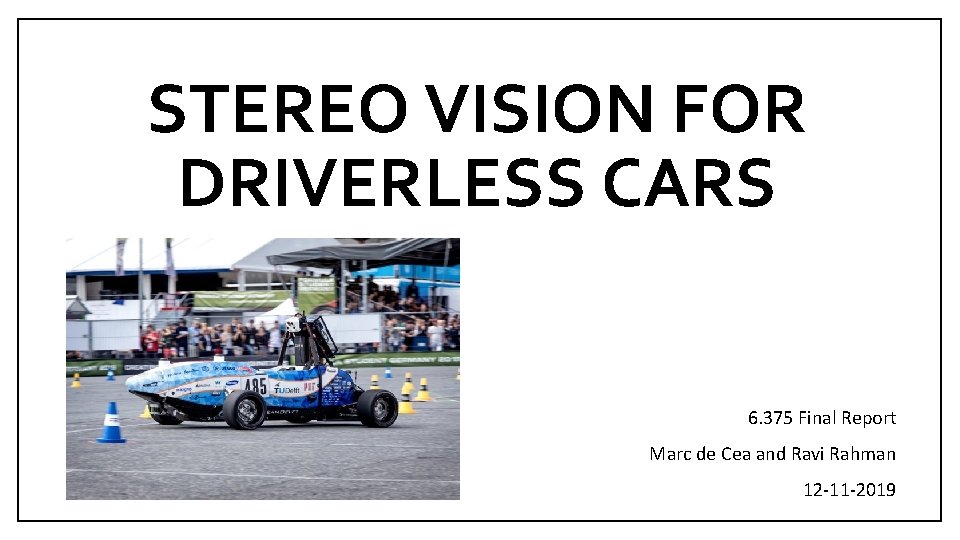

STEREO VISION FOR DRIVERLESS CARS

6.375 Final Report

Marc de Cea and Ravi Rahman

12-11-2019


STEREO VISION – WHY?

- Stereo vision (combined with other sensing and computation techniques) is a cheaper and potentially less complex alternative to LiDAR for 3D mapping in a self-driving car. Cornell researchers have shown that stereo vision can achieve LiDAR-like performance in object and obstacle detection [1].
- Elon Musk: “Anyone relying on LiDAR is doomed. [They are] expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices. Like, one appendix is bad, well now you have a whole bunch of them, it’s ridiculous, you’ll see. LiDAR is lame, they’re gonna dump LiDAR, mark my words.” \*

\* These are Elon Musk’s comments and do not represent our opinion!

[1] Y. Wang et al., “Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving”, arXiv:1812.07179 (2018).

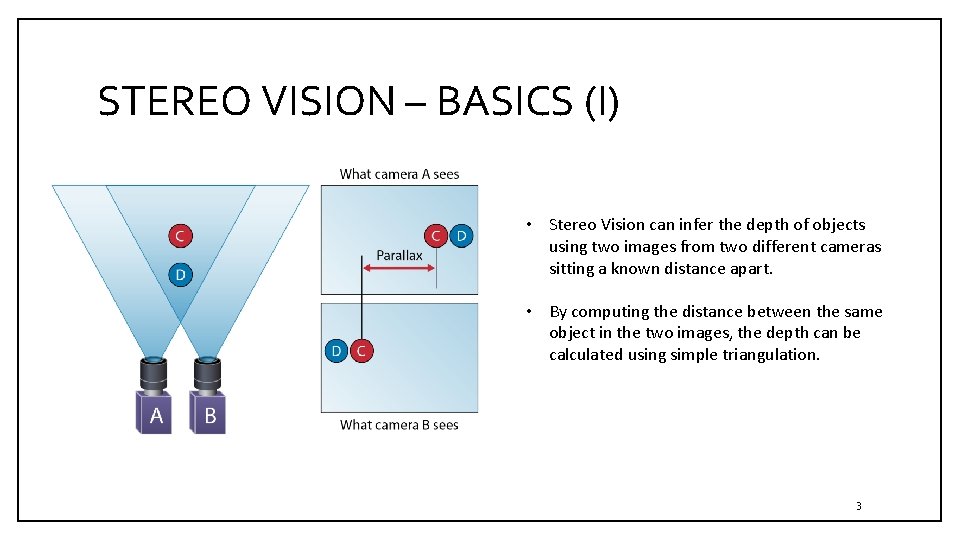

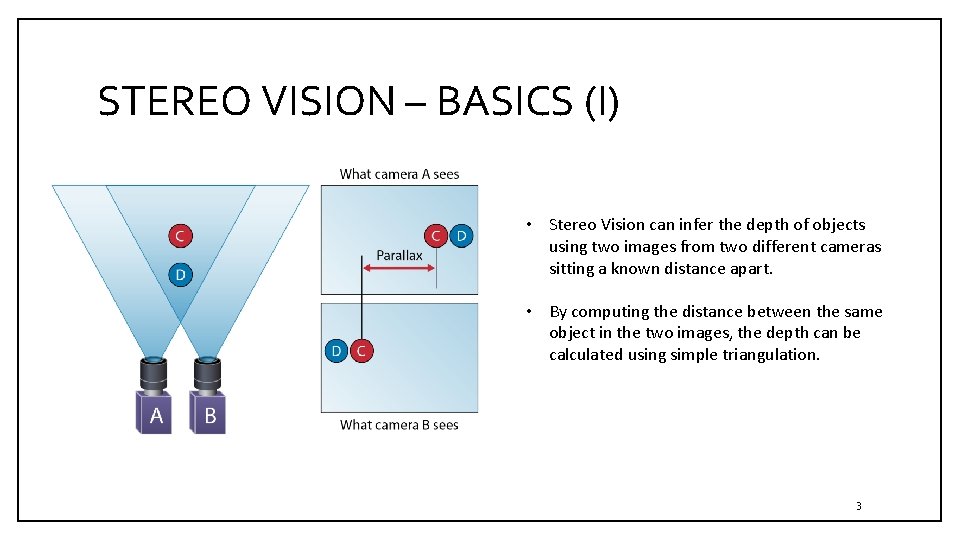

STEREO VISION – BASICS (I)

- Stereo vision can infer the depth of objects using two images from two different cameras sitting a known distance apart.
- By computing the distance between the positions of the same object in the two images (the disparity), the depth can be calculated using simple triangulation.
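The triangulation step can be written out as follows. This is the standard relation for two rectified cameras with parallel optical axes, which is an assumption here; the symbols are introduced for illustration and do not appear on the slides: $f$ is the focal length in pixels, $B$ is the distance between the cameras (the baseline), and $x_L$, $x_R$ are the horizontal pixel positions of the same object in the left and right images.

$$
d = x_L - x_R, \qquad Z = \frac{f \, B}{d}
$$

A larger disparity $d$ therefore corresponds to a closer object, and depth resolution degrades as $d$ approaches zero.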

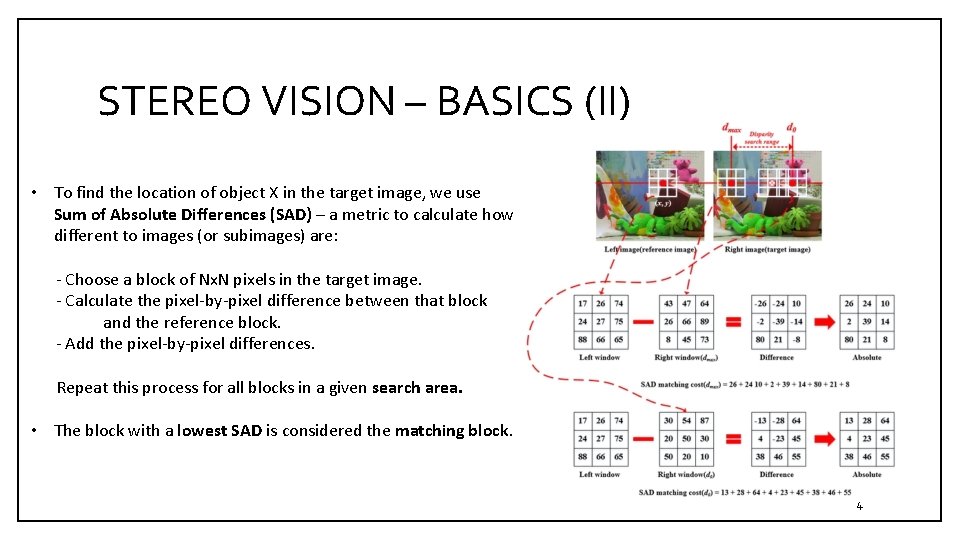

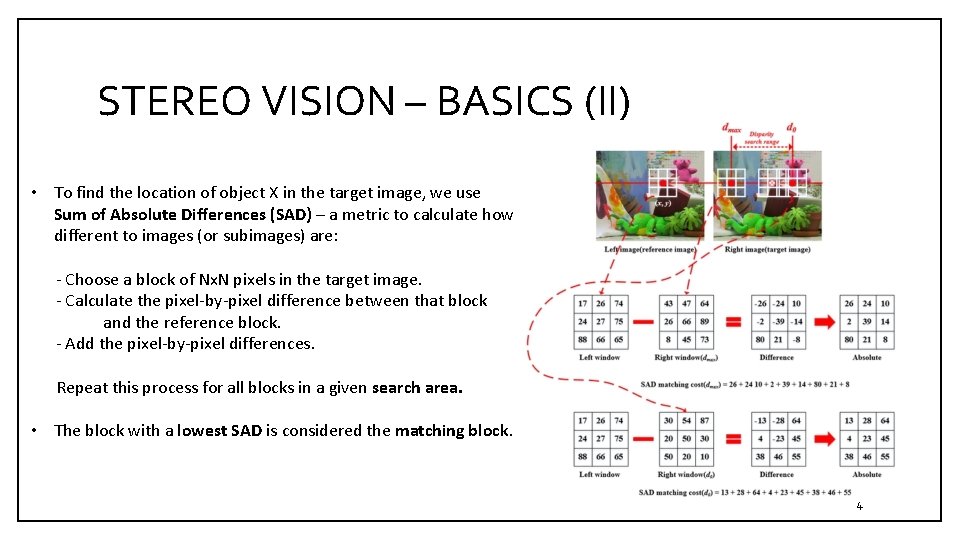

STEREO VISION – BASICS (II)

- To find the location of object X in the target image, we use the Sum of Absolute Differences (SAD), a metric of how different two images (or subimages) are:
  - Choose a block of N×N pixels in the target image.
  - Calculate the pixel-by-pixel absolute difference between that block and the reference block.
  - Add the pixel-by-pixel absolute differences.
  - Repeat this process for all blocks in a given search area.
- The block with the lowest SAD is considered the matching block.
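The block-matching steps above can be sketched in a few lines of Python. This is a minimal software illustration, not the FPGA implementation: it assumes single-channel images stored as lists of rows, rectified cameras (so the search area is a horizontal strip on the same rows), and a reference block taken from the left image. The function names and the `max_disparity` parameter are hypothetical.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(block_a, block_b)
        for a, b in zip(row_a, row_b)
    )


def get_block(image, top, left, n):
    """Extract the n x n block whose top-left corner is (top, left)."""
    return [row[left:left + n] for row in image[top:top + n]]


def find_disparity(reference, target, top, left, n, max_disparity):
    """Slide an n x n window leftwards along the same rows of `target`
    and return (disparity, score) for the block with the lowest SAD."""
    ref_block = get_block(reference, top, left, n)
    best_d, best_score = 0, None
    for d in range(max_disparity + 1):
        col = left - d
        if col < 0:  # window would leave the image
            break
        score = sad(ref_block, get_block(target, top, col, n))
        if best_score is None or score < best_score:
            best_d, best_score = d, score
    return best_d, best_score
```

For example, if a 2×2 patch sits at column 5 in the reference image and at column 3 in the target image, `find_disparity` returns a disparity of 2 with a SAD of 0.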

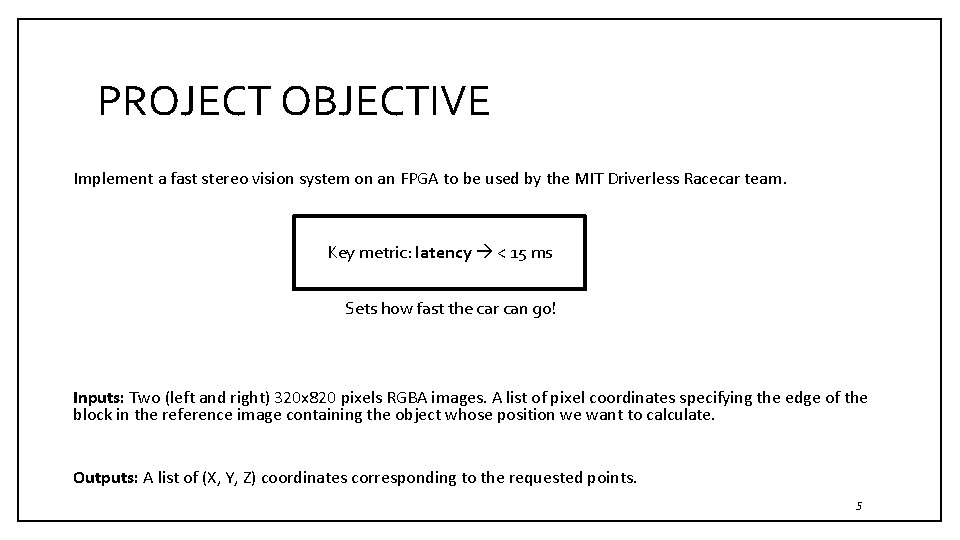

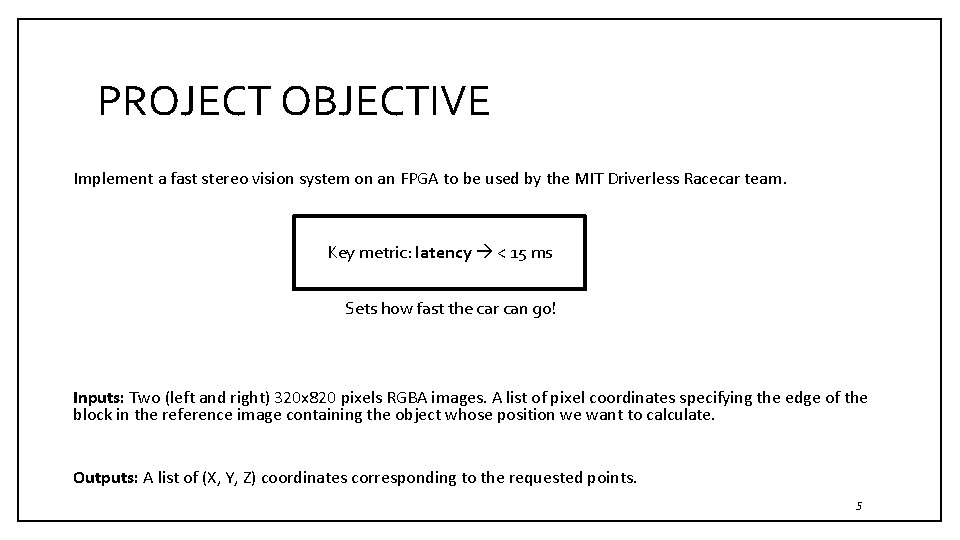

PROJECT OBJECTIVE

Implement a fast stereo vision system on an FPGA to be used by the MIT Driverless Racecar team.

Key metric: latency < 15 ms. This sets how fast the car can go!

Inputs:

- Two (left and right) 320 x 820 pixel RGBA images.
- A list of pixel coordinates specifying the edge of the block in the reference image containing the object whose position we want to calculate.

Outputs:

- A list of (X, Y, Z) coordinates corresponding to the requested points.

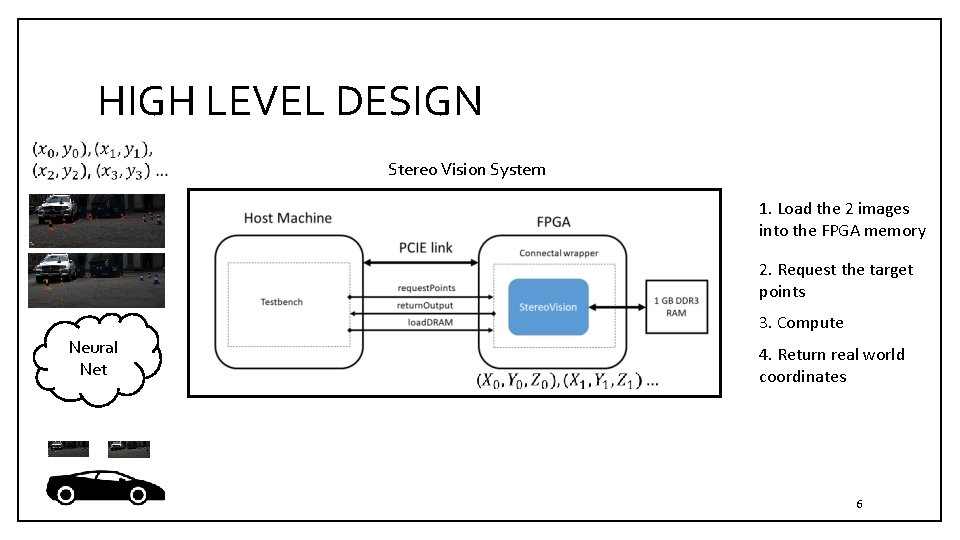

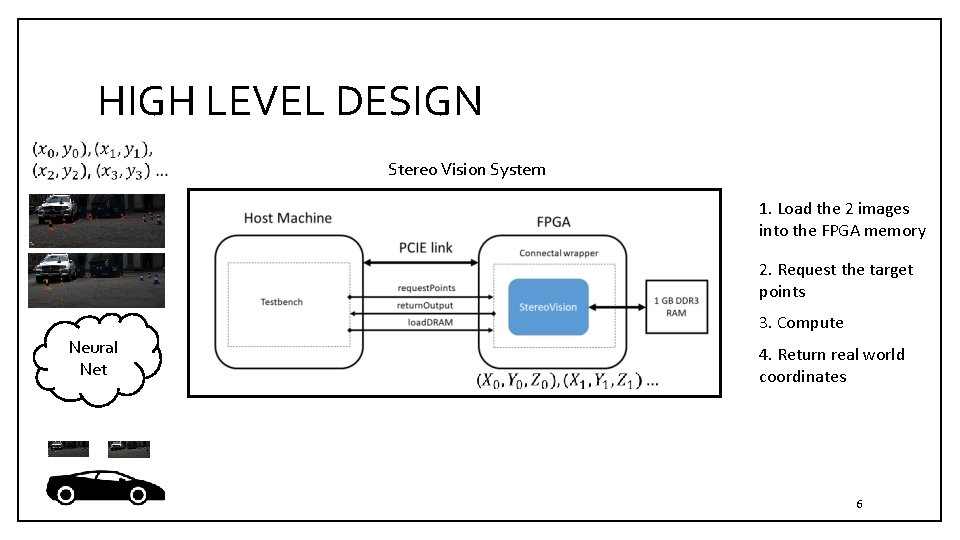

HIGH LEVEL DESIGN

Stereo Vision System

1. Load the 2 images into the FPGA memory
2. Request the target points
3. Compute Neural Net
4. Return real world coordinates

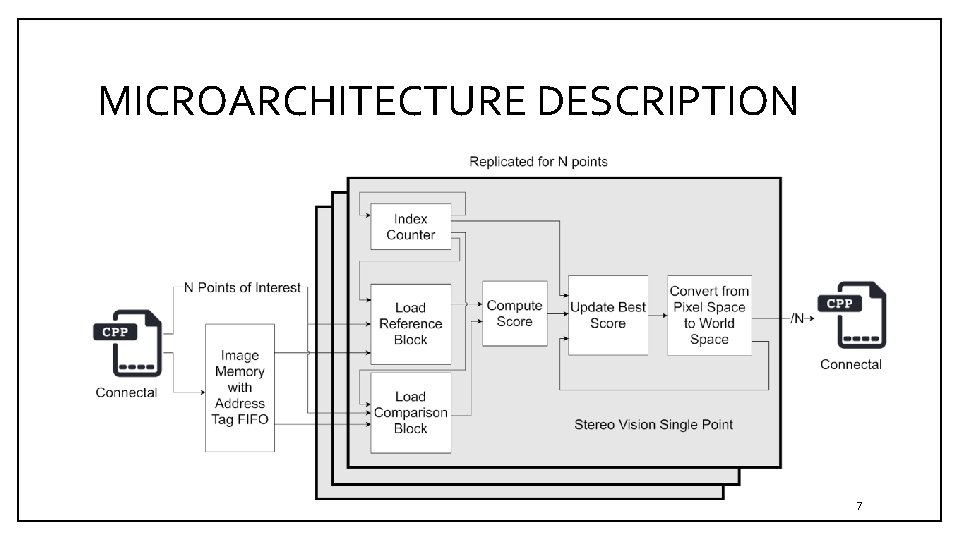

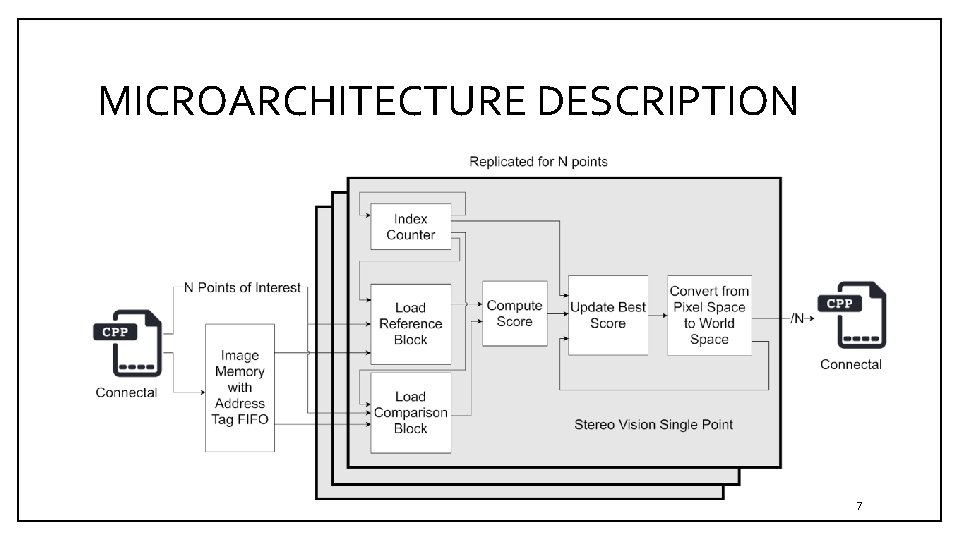

MICROARCHITECTURE DESCRIPTION

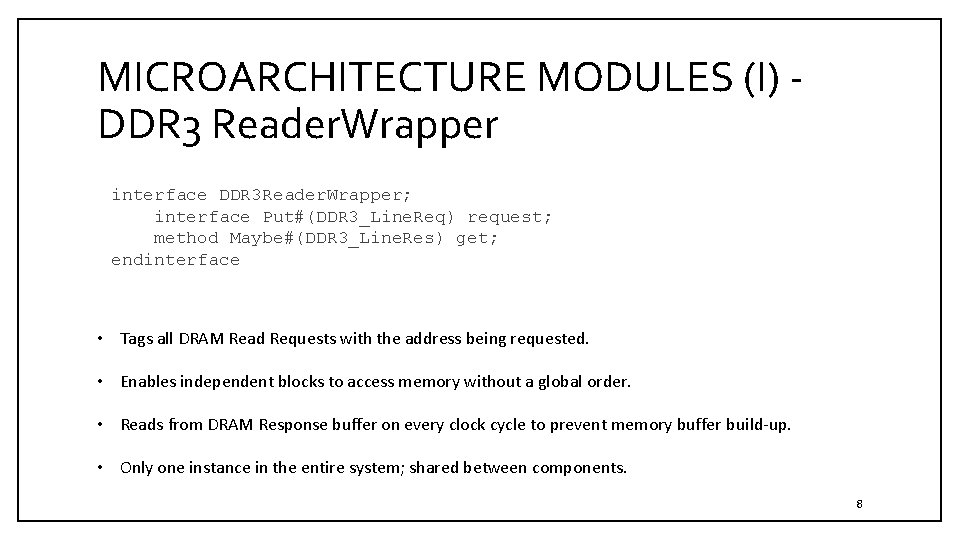

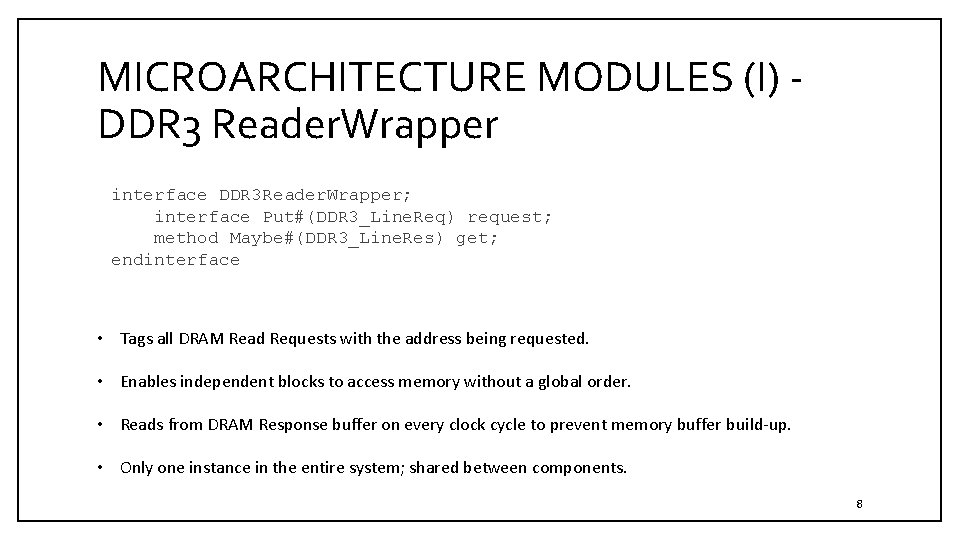

MICROARCHITECTURE MODULES (I) – DDR3ReaderWrapper

    interface DDR3ReaderWrapper;
        interface Put#(DDR3_LineReq) request;
        method Maybe#(DDR3_LineRes) get;
    endinterface

- Tags all DRAM read requests with the address being requested.
- Enables independent blocks to access memory without a global order.
- Reads from the DRAM response buffer on every clock cycle to prevent memory buffer build-up.
- Only one instance in the entire system; shared between components.
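The address-tagging idea can be illustrated with a small behavioural model in Python. This is only a sketch of the behaviour described in the bullets, not the authors' Bluespec module: the class names `TaggedReader` and `Consumer` are hypothetical, the DRAM is stood in for by a dictionary, and responses are assumed to come back in issue order with no latency.

```python
from collections import deque


class TaggedReader:
    """Single shared reader: every response carries the address requested."""

    def __init__(self, memory):
        self.memory = memory      # dict: address -> line data (stand-in for DRAM)
        self.pending = deque()    # addresses in flight, in issue order

    def request(self, address):
        self.pending.append(address)

    def get(self):
        """Called once per simulated cycle. Returns (address, data), or None
        when nothing is available. The response is always drained, so the
        buffer never builds up."""
        if not self.pending:
            return None
        address = self.pending.popleft()
        return address, self.memory[address]


class Consumer:
    """Independent block that keeps only the responses tagged with its addresses."""

    def __init__(self, wanted):
        self.wanted = set(wanted)
        self.received = {}

    def observe(self, response):
        if response is None:
            return
        address, data = response
        if address in self.wanted:
            self.received[address] = data
```

Because each response is tagged, two consumers can interleave their requests freely: on every cycle the single response is shown to all of them, and each keeps only the lines it asked for.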

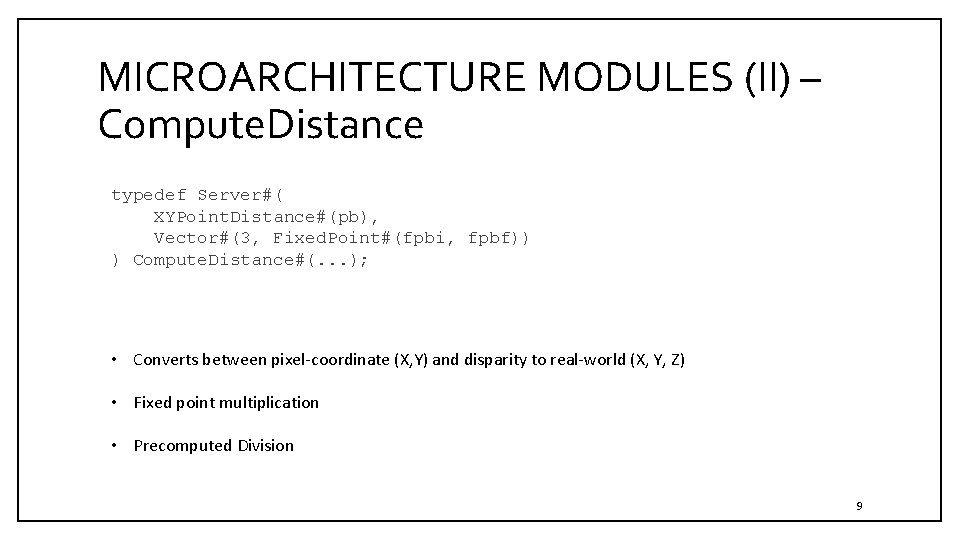

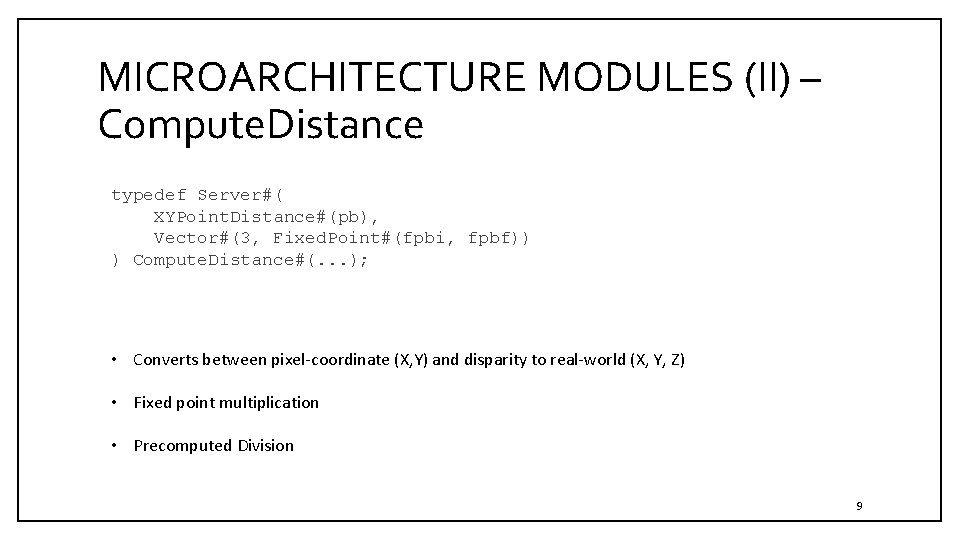

MICROARCHITECTURE MODULES (II) – ComputeDistance

    typedef Server#(
        XYPointDistance#(pb),
        Vector#(3, FixedPoint#(fpbi, fpbf))
    ) ComputeDistance#(...);

- Converts a pixel coordinate (X, Y) and its disparity to real-world (X, Y, Z) coordinates.
- Fixed-point multiplication.
- Precomputed division.
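A rough software model of this conversion is sketched below. It is an illustration under stated assumptions rather than the authors' design: a pinhole camera model with rectified images, a 16-bit fractional part, and a lookup table of reciprocals standing in for the precomputed division. All names and calibration values (`FRAC_BITS`, `focal_px`, `baseline_m`, `cx`, `cy`) are hypothetical.

```python
FRAC_BITS = 16  # assumed number of fractional bits in the fixed-point format


def to_fixed(x):
    """Convert a real number to fixed point (an integer scaled by 2**FRAC_BITS)."""
    return int(round(x * (1 << FRAC_BITS)))


def to_float(q):
    """Convert a fixed-point value back to a float, for inspection only."""
    return q / (1 << FRAC_BITS)


def fixed_mul(a, b):
    """Multiply two fixed-point values and rescale the product."""
    return (a * b) >> FRAC_BITS


def build_reciprocal_table(max_disparity):
    """Precompute 1/d in fixed point so no divider is needed at run time.
    Index 0 is a placeholder because a zero disparity has no finite depth."""
    return [0] + [to_fixed(1.0 / d) for d in range(1, max_disparity + 1)]


def pixel_to_world(u, v, disparity, recip, focal_px, baseline_m, cx, cy):
    """Map pixel (u, v) with an integer disparity to fixed-point (X, Y, Z)."""
    if disparity <= 0 or disparity >= len(recip):
        raise ValueError("disparity outside the precomputed table")
    scale = fixed_mul(to_fixed(baseline_m), recip[disparity])  # B / d
    x = (u - cx) * scale                       # integer offset times fixed point
    y = (v - cy) * scale
    z = fixed_mul(to_fixed(focal_px), scale)   # f * B / d
    return x, y, z
```

With a hypothetical 700-pixel focal length and 0.5 m baseline, a disparity of 35 pixels gives a depth close to 10 m; the small residual comes from rounding the table entries to 16 fractional bits.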

DEMO

Integrated with the Robot Operating System (ROS) SDK, the publisher/subscriber platform used by the MIT Driverless Racecar.

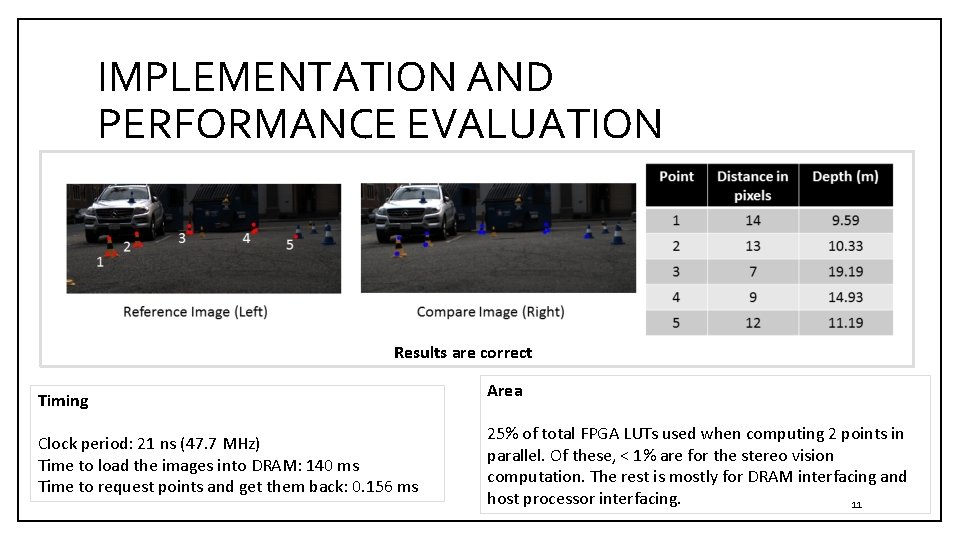

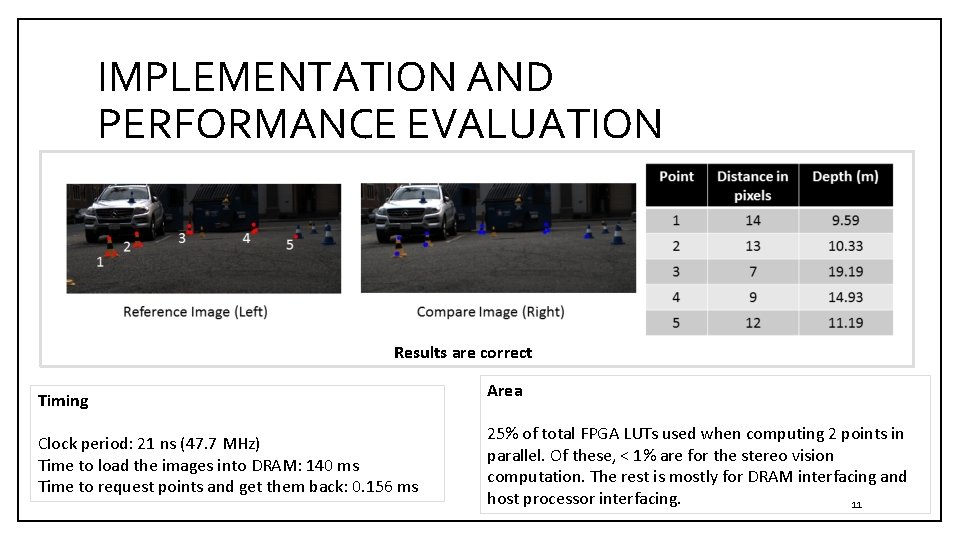

IMPLEMENTATION AND PERFORMANCE EVALUATION

Results are correct.

Timing

- Clock period: 21 ns (47.7 MHz)
- Time to load the images into DRAM: 140 ms
- Time to request points and get them back: 0.156 ms

Area

- 25% of total FPGA LUTs used when computing 2 points in parallel.
- Of these, < 1% are for the stereo vision computation. The rest is mostly for DRAM interfacing and host processor interfacing.
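To put the timing figures in context, the short calculation below converts the reported request time into clock cycles and compares it with the 15 ms latency goal stated in the project objective. It uses only the numbers reported above; the variable names are illustrative.

```python
CLOCK_PERIOD_NS = 21        # reported clock period
REQUEST_TIME_MS = 0.156     # reported time to request points and get them back
LATENCY_GOAL_MS = 15        # latency goal from the project objective

# Convert the request time to nanoseconds, then to clock cycles.
request_cycles = REQUEST_TIME_MS * 1e6 / CLOCK_PERIOD_NS

# How many times faster than the goal the request/response path is.
margin = LATENCY_GOAL_MS / REQUEST_TIME_MS

print(f"request takes about {request_cycles:.0f} cycles")
print(f"about {margin:.0f}x faster than the latency goal")
```

Note that this covers only the request/response path; the 140 ms image load is a separate cost.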

CONCLUSION

- We have successfully implemented a stereo vision system on an FPGA.
- By instantiating several modules that each perform the stereo vision algorithm on a single point, we can compute multiple points in parallel, speeding up the computation.
- The time taken by the FPGA to receive the target points, compute the real-world coordinates and return them is only 1 ms, which beats our goal of 15 ms latency by more than 10x.
- Our test demonstration was limited by the long time (≈140 ms) it takes for the two stereo images to be loaded into the FPGA’s DRAM, something that would not be necessary in a real stereo vision system implementation.

FUTURE WORK

- Use Direct Memory Access (DMA) to replace DRAM.
- Further increase the number of points being computed in parallel.
- Deploy the system on the actual racecar!