Stealing DNN models Attacks and Defenses Mika Juuti

- Slides: 21

Stealing DNN models: Attacks and Defenses Mika Juuti, Sebastian Szyller, Alexey Dmitrenko, Samuel Marchal, N. Asokan

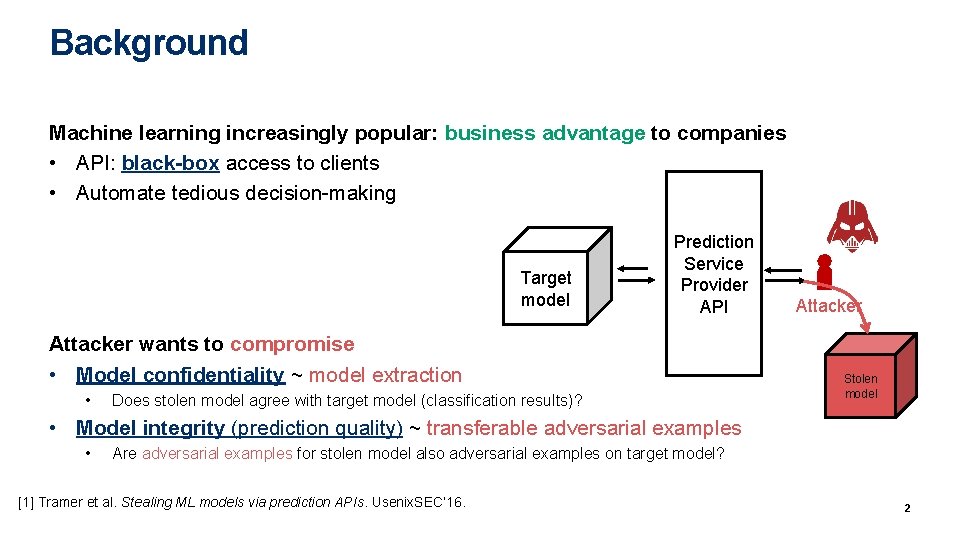

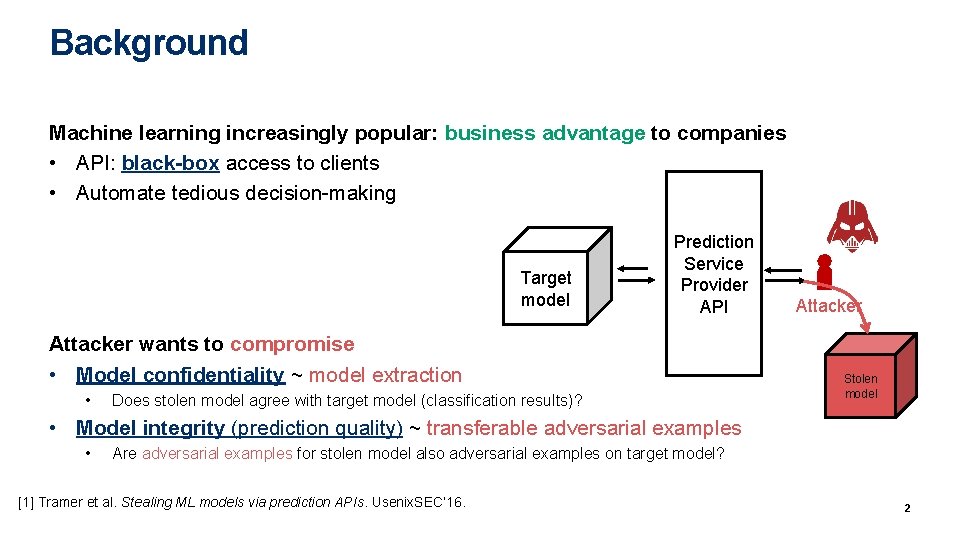

Background

Machine learning is increasingly popular: a business advantage to companies
• API: black-box access for clients
• Automates tedious decision-making

[Figure labels: Client, Attacker, Prediction API, Service Provider, Target ML model, Stolen model; example prediction "Speed limit 80 km/h"]

Attacker wants to compromise
• Model confidentiality ~ model extraction
  -- Does the stolen model agree with the target model (classification results)?
• Model integrity (prediction quality) ~ transferable adversarial examples
  -- Are adversarial examples for the stolen model also adversarial examples on the target model?

[1] Tramer et al. Stealing ML models via prediction APIs. USENIX Security ’16.
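The two questions on this slide correspond to two measurable quantities, agreement and transferability. The following is a minimal sketch of how they could be computed from predicted labels; the function names and the toy label arrays are illustrative assumptions, not taken from the slides.

```python
import numpy as np

def agreement(target_labels, stolen_labels):
    """Fraction of inputs where the stolen model predicts the same class as the target."""
    return float(np.mean(np.asarray(target_labels) == np.asarray(stolen_labels)))

def targeted_transferability(target_labels_on_adv, intended_classes):
    """Fraction of adversarial examples (crafted on the stolen model) that the
    target model classifies as the class the attacker intended."""
    return float(np.mean(np.asarray(target_labels_on_adv) == np.asarray(intended_classes)))

def untargeted_transferability(target_labels_on_adv, true_classes):
    """Fraction of adversarial examples (crafted on the stolen model) that the
    target model misclassifies, whatever the resulting class."""
    return float(np.mean(np.asarray(target_labels_on_adv) != np.asarray(true_classes)))

# Toy labels (hypothetical): four test inputs and four adversarial examples.
agree = agreement([0, 1, 2, 2], [0, 1, 1, 2])
targeted = targeted_transferability([3, 3, 1, 0], [3, 3, 3, 3])
untargeted = untargeted_transferability([3, 3, 1, 0], [1, 1, 1, 1])
```

Keeping the two metrics separate matters because a stolen model can score well on one and poorly on the other.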

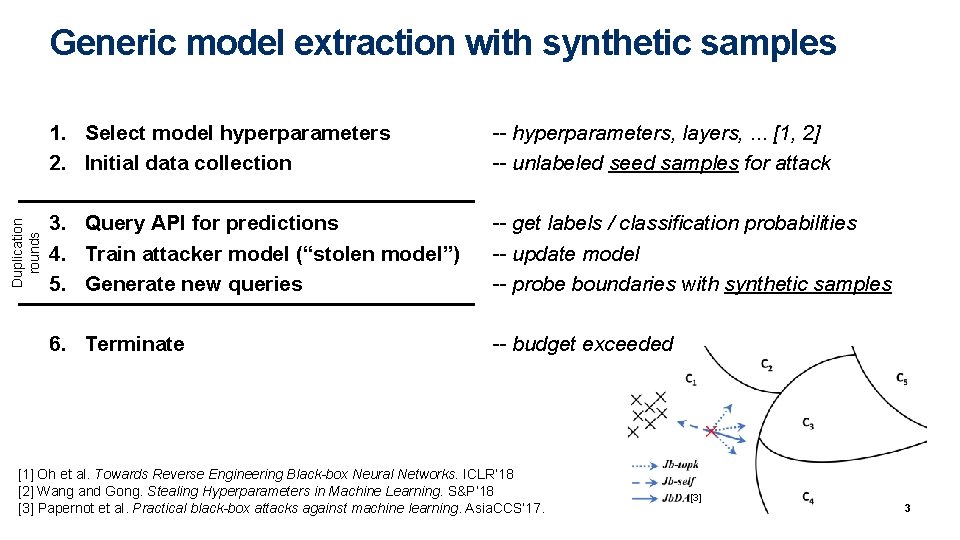

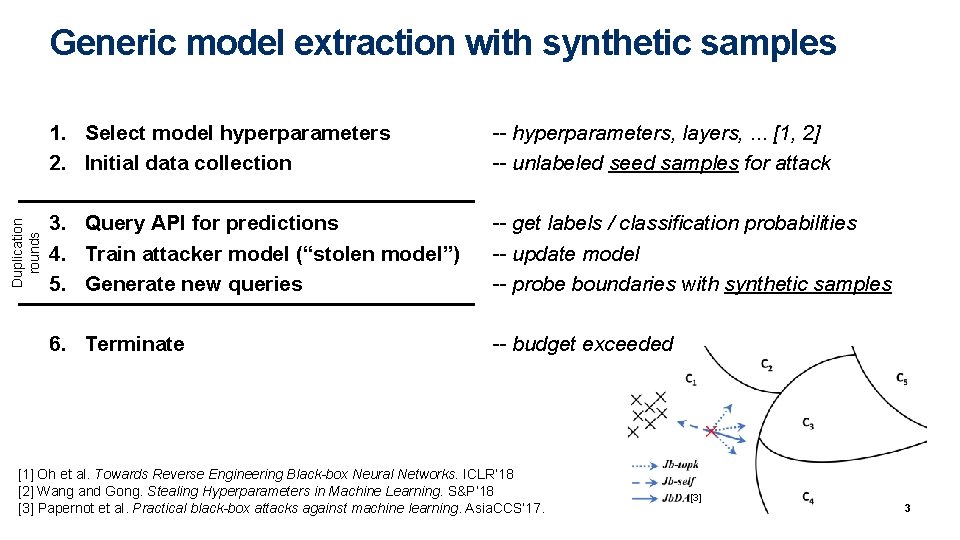

Generic model extraction with synthetic samples [3]

1. Select model hyperparameters
   -- hyperparameters, layers, ... [1, 2]
2. Initial data collection
   -- unlabeled seed samples for attack
3. Query API for predictions
   -- get labels / classification probabilities
4. Train attacker model (“stolen model”)
   -- update model
5. Generate new queries
   -- probe boundaries with synthetic samples
6. Terminate
   -- budget exceeded

[Figure label: Duplication rounds]

[1] Oh et al. Towards Reverse Engineering Black-box Neural Networks. ICLR ’18.
[2] Wang and Gong. Stealing Hyperparameters in Machine Learning. S&P ’18.
[3] Papernot et al. Practical black-box attacks against machine learning. AsiaCCS ’17.
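The steps above can be sketched as a short loop. This is a toy illustration under stated assumptions, not the attack evaluated in the talk: the target is a hypothetical fixed linear softmax model standing in for the remote API, the attacker model is also linear, synthetic samples are made by stepping along the sign of the stolen model's gradient, and the loop over steps 3 to 5 is assumed to be what the slide calls duplication rounds. All names (`query_api`, `synthesize`, `extract`) are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, CLASSES = 2, 3

# Hypothetical stand-in for the remote target model.
W_TARGET = rng.normal(size=(DIM, CLASSES))

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def query_api(x):
    """Step 3: black-box call returning class probabilities."""
    return softmax(x @ W_TARGET)

def train(x, probs, epochs=300, lr=0.5):
    """Step 4: fit a linear softmax model to the API outputs (cross-entropy)."""
    w = np.zeros((DIM, CLASSES))
    for _ in range(epochs):
        w -= lr * x.T @ (softmax(x @ w) - probs) / len(x)
    return w

def synthesize(x, w, step=0.3):
    """Step 5: shift each sample along the sign of the stolen model's
    gradient of its currently predicted class probability."""
    p = softmax(x @ w)
    top = p.argmax(axis=1)
    p_top = p[np.arange(len(x)), top][:, None]
    grad = p_top * (w[:, top].T - p @ w.T)  # d p[top] / d x for a linear softmax
    return x + step * np.sign(grad)

def extract(seeds, budget, rounds):
    x = seeds                        # step 2: seed samples
    y = query_api(x)                 # step 3
    used = len(x)
    w = train(x, y)                  # step 4
    for _ in range(rounds):          # assumed meaning of "duplication rounds"
        new = synthesize(x, w)       # step 5
        if used + len(new) > budget:
            break                    # step 6: budget exceeded
        y = np.vstack([y, query_api(new)])
        x = np.vstack([x, new])
        used += len(new)
        w = train(x, y)
    return w, used

seeds = np.random.default_rng(1).normal(size=(10, DIM))
w_stolen, queries_used = extract(seeds, budget=100, rounds=5)
```

Each round doubles the query set, so the budget check is what ends the loop here: 10 seeds grow to 20, 40 and 80 queries, and the next round would exceed 100.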

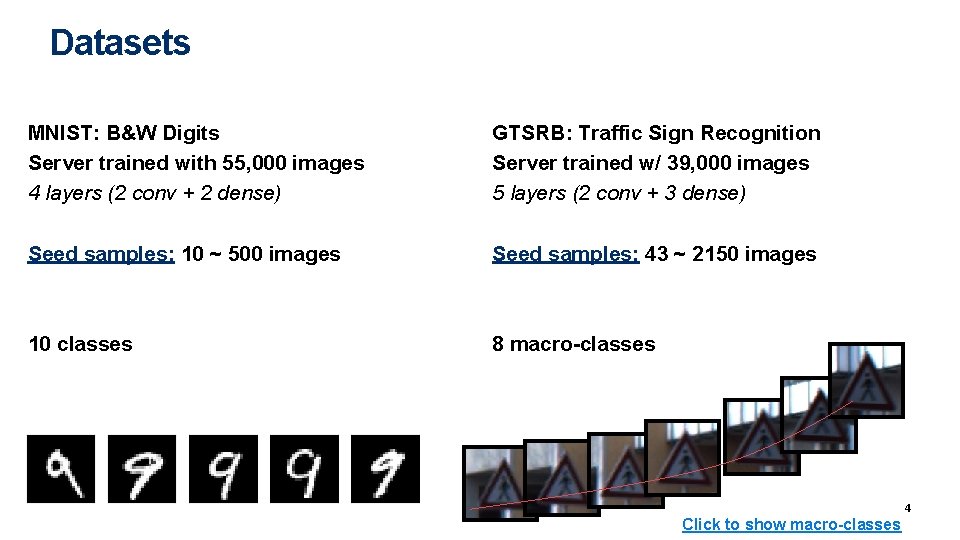

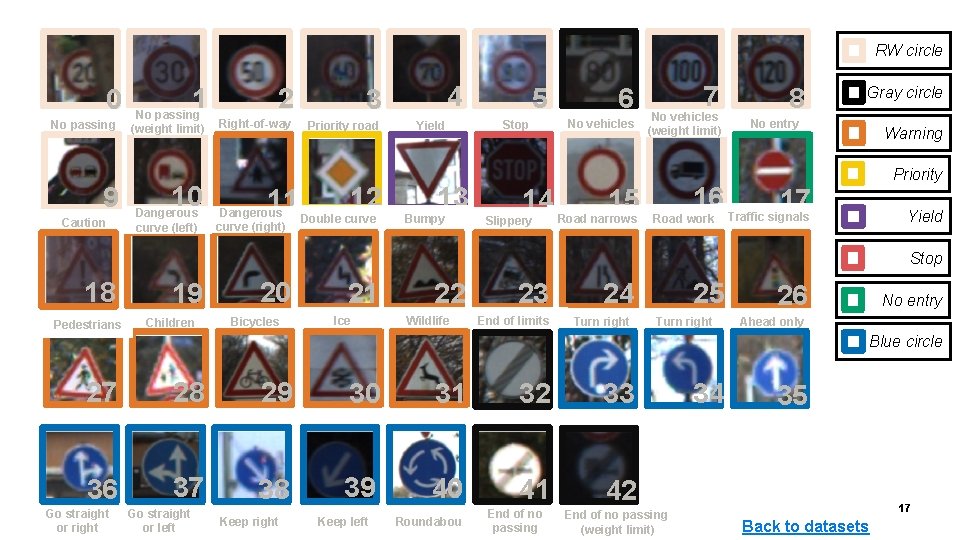

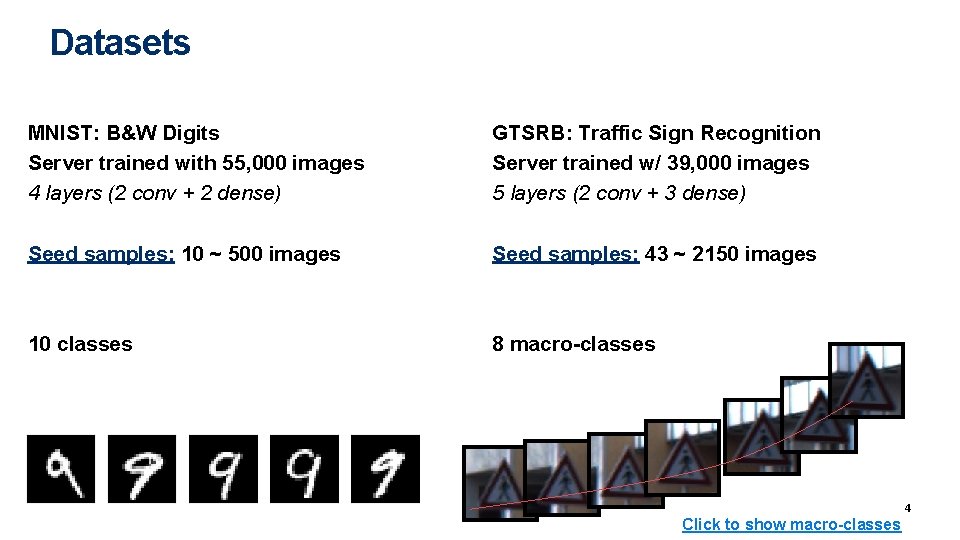

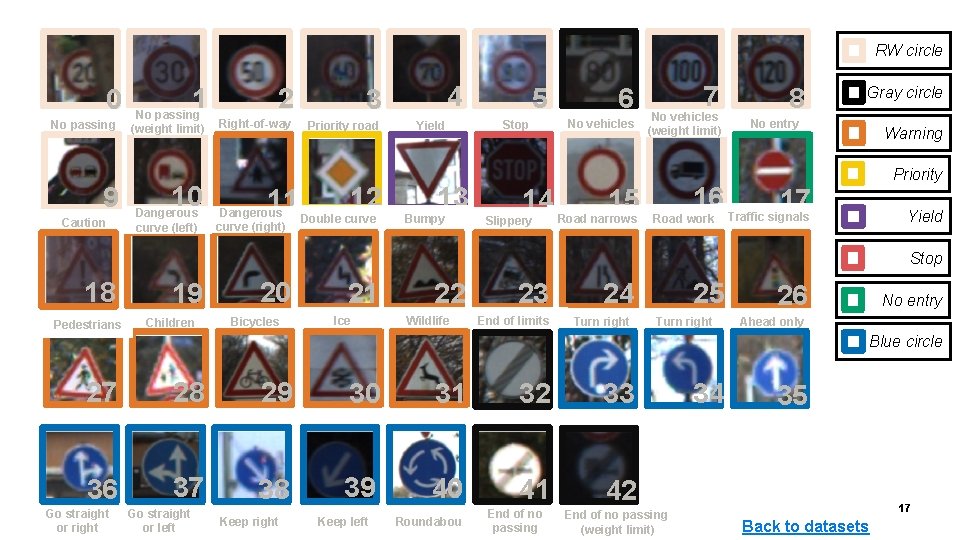

Datasets

MNIST: B&W digits
• Server trained with 55,000 images
• 4 layers (2 conv + 2 dense)
• Seed samples: 10 ~ 500 images
• 10 classes

GTSRB: Traffic sign recognition
• Server trained with 39,000 images
• 5 layers (2 conv + 3 dense)
• Seed samples: 43 ~ 2150 images
• 8 macro-classes

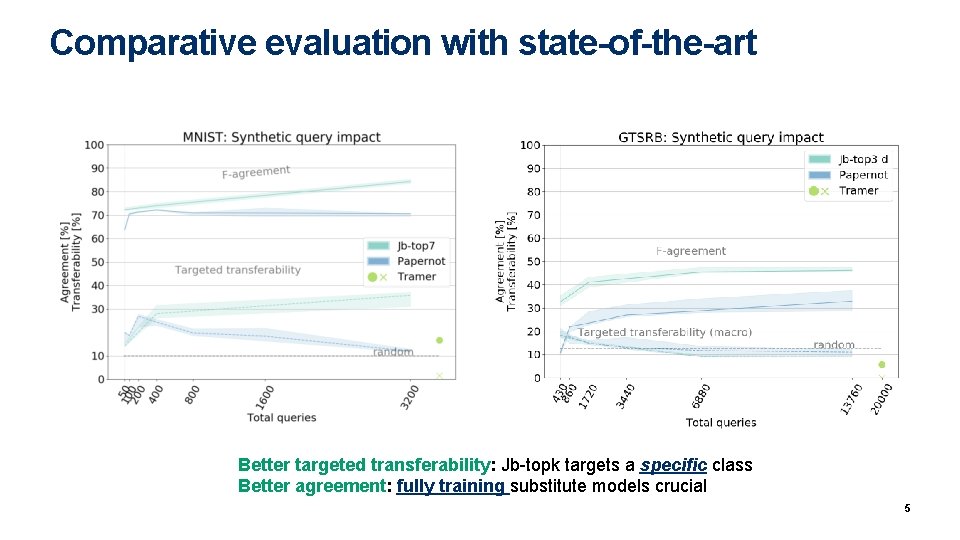

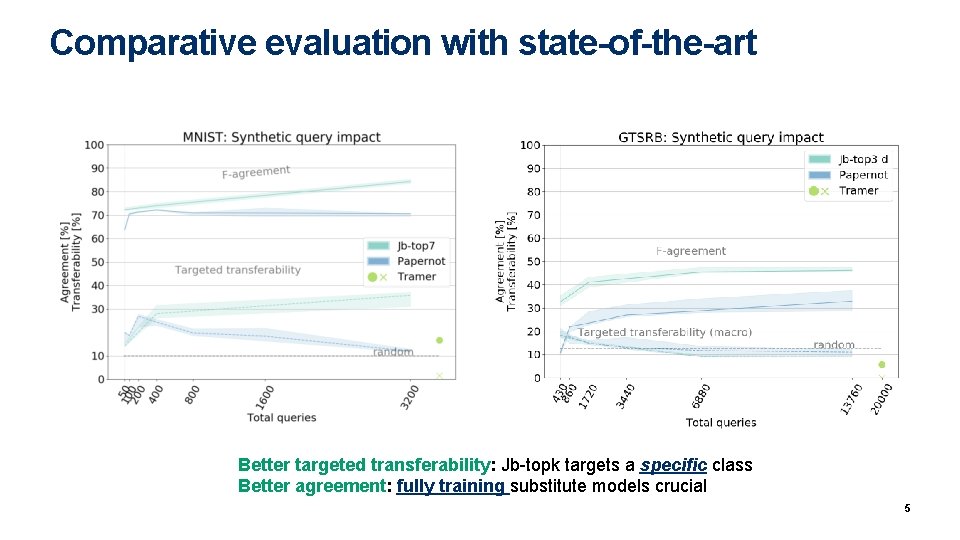

Comparative evaluation with state-of-the-art
• Better targeted transferability: Jb-topk targets a specific class
• Better agreement: fully training substitute models is crucial

Take-aways
(1) Agreement and transferability do not go hand-in-hand
(2) Probabilities improve transferability
(3) Dropout may help on complex data
(4) Synthetic samples with probabilities boost transferability
(5) Thinner, deeper networks are more resilient to transferable adversarial examples

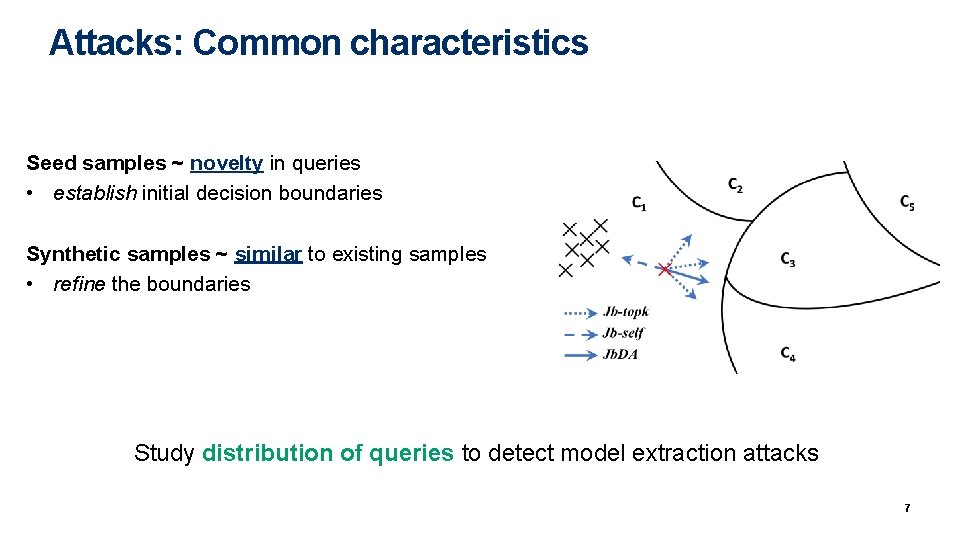

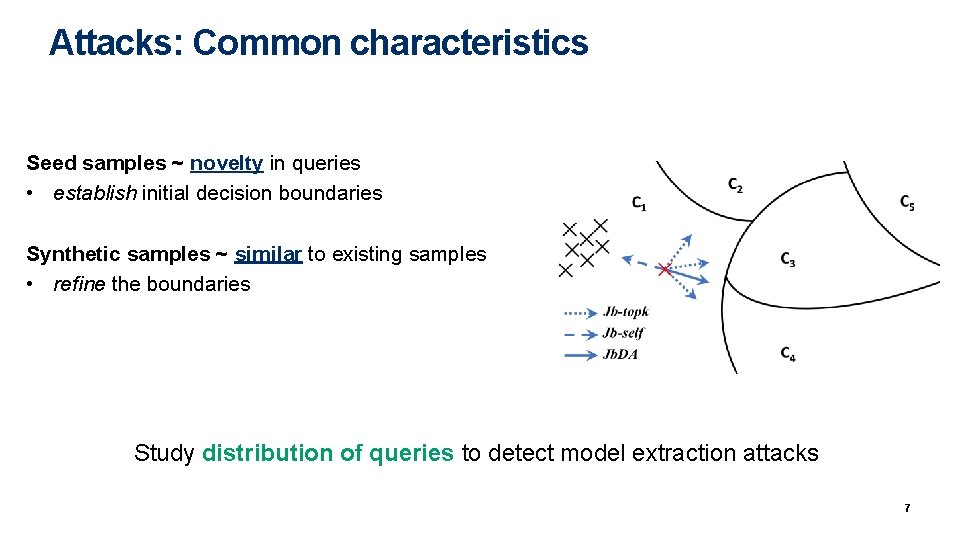

Attacks: Common characteristics
Seed samples ~ novelty in queries
• establish initial decision boundaries
Synthetic samples ~ similar to existing samples
• refine the boundaries
Study distribution of queries to detect model extraction attacks

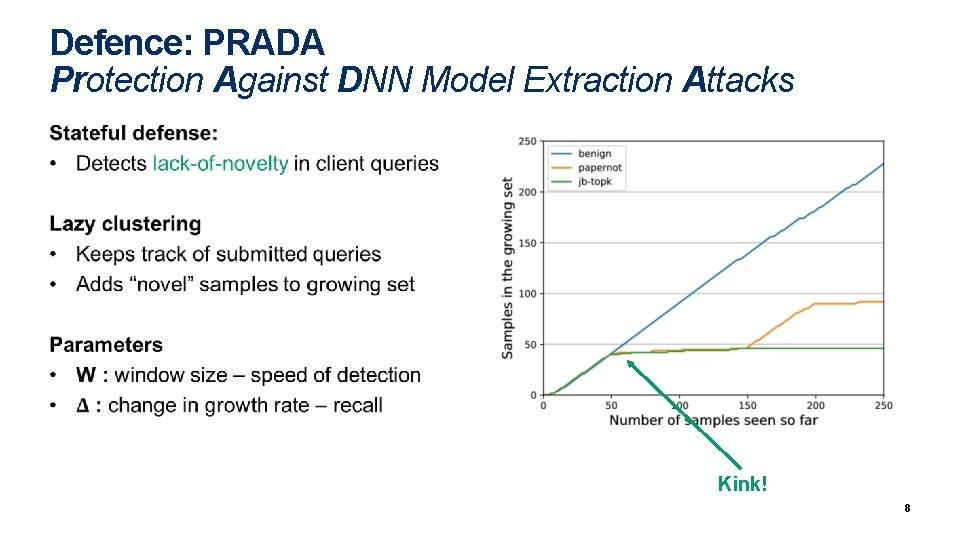

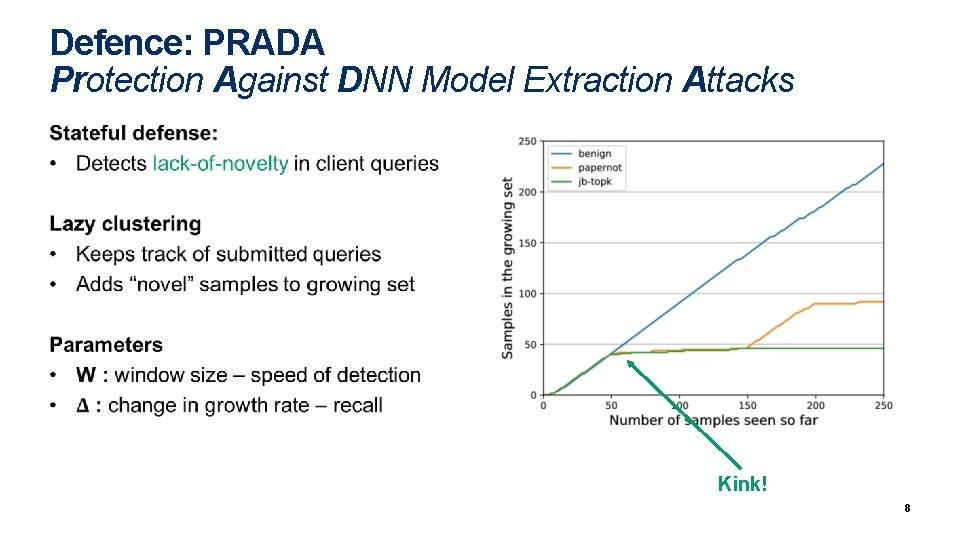

Defence: PRADA
Protection Against DNN Model Extraction Attacks
[Figure annotation: Kink!]
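A minimal sketch of the kind of query-distribution check this defence suggests: seed samples are novel (far from earlier queries) while synthetic samples sit close to existing ones, so the distances from each query to its nearest predecessor stop looking like a single bell-shaped distribution. The slides do not spell out the test, so everything concrete here is an assumption: the L2 distance, the use of the Shapiro-Wilk statistic via `scipy.stats.shapiro`, the simulated query streams, and the helper names.

```python
import numpy as np
from scipy.stats import shapiro

def min_distances(queries):
    """L2 distance from each query to its nearest earlier query (first query skipped)."""
    q = np.asarray(queries, dtype=float)
    return np.array([np.linalg.norm(q[:i] - q[i], axis=1).min()
                     for i in range(1, len(q))])

def normality_score(queries):
    """Shapiro-Wilk W statistic of the minimum distances; lower means less normal."""
    w, _ = shapiro(min_distances(queries))
    return float(w)

rng = np.random.default_rng(0)
dim = 20

# Simulated benign client: 300 independent natural samples.
benign = rng.normal(size=(300, dim))

# Simulated attacker: 50 natural seeds, then 250 synthetic samples,
# each a small signed perturbation of a randomly chosen seed.
seeds = rng.normal(size=(50, dim))
parents = seeds[rng.integers(0, len(seeds), size=250)]
synthetic = parents + 0.1 * np.sign(rng.normal(size=(250, dim)))
attack = np.vstack([seeds, synthetic])

w_benign = normality_score(benign)
w_attack = normality_score(attack)
```

In this toy setup the attacker's distances split into two clusters (far seeds, near synthetic samples), which pushes the W statistic well below the benign client's. A detector would compare the score against a threshold tuned per deployment; no threshold value is implied by the slides.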

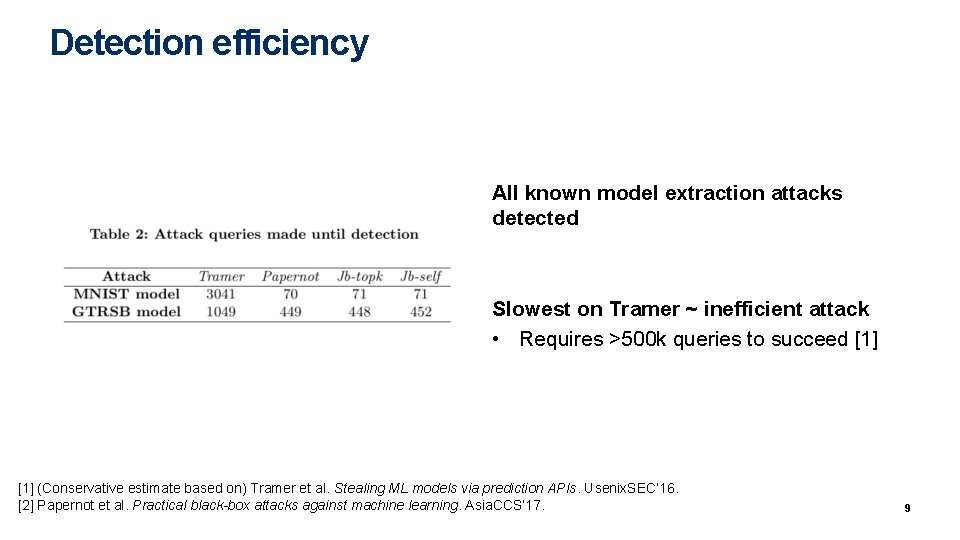

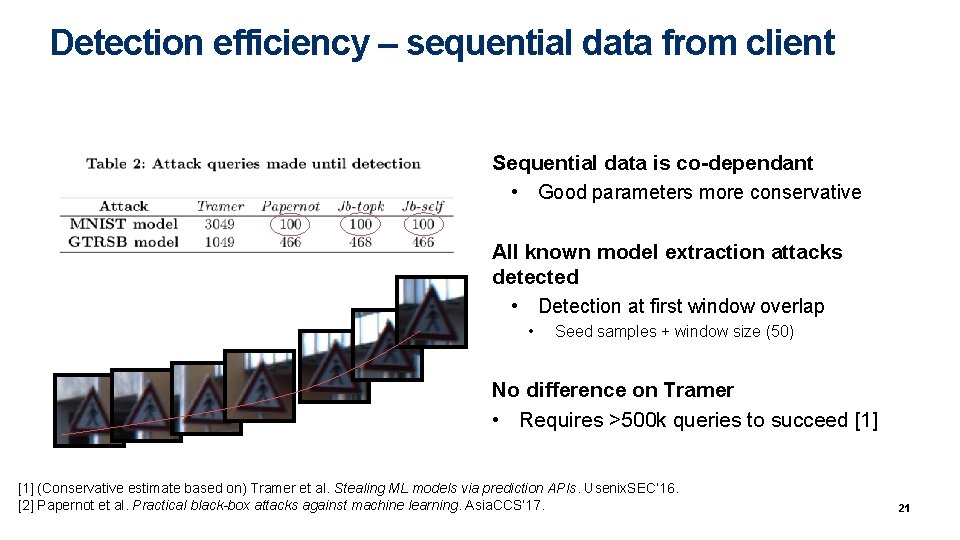

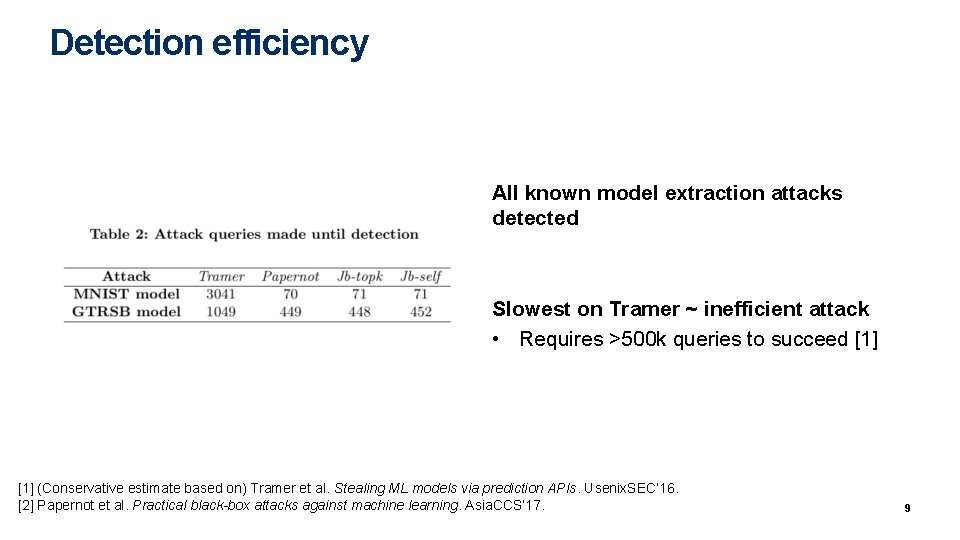

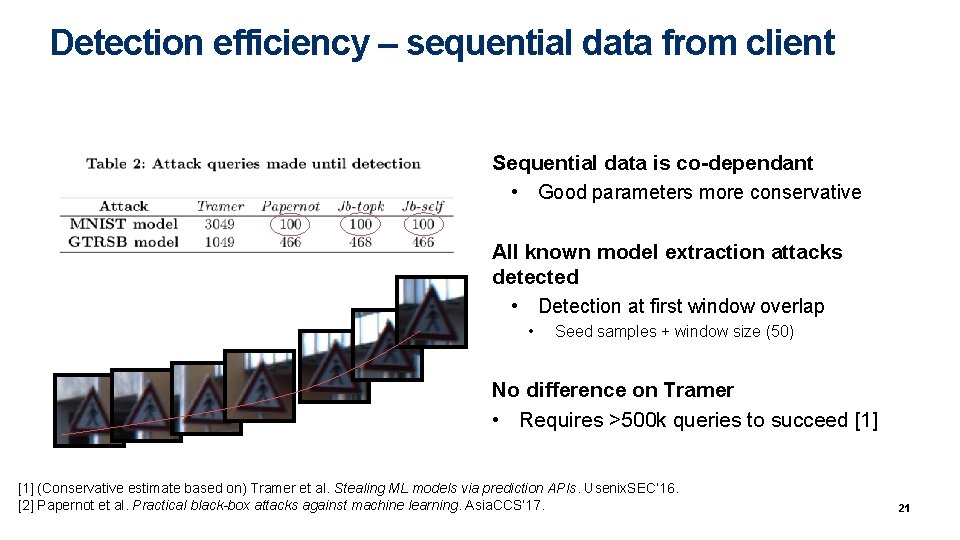

Detection efficiency
All known model extraction attacks detected
Slowest on Tramer ~ inefficient attack
• Requires >500k queries to succeed

[1] (Conservative estimate based on) Tramer et al. Stealing ML models via prediction APIs. USENIX Security ’16.
[2] Papernot et al. Practical black-box attacks against machine learning. AsiaCCS ’17.

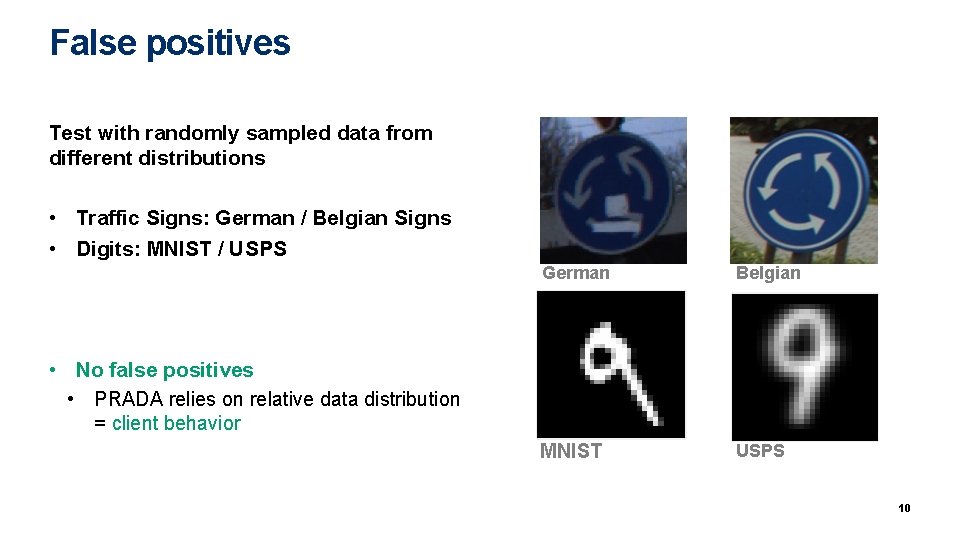

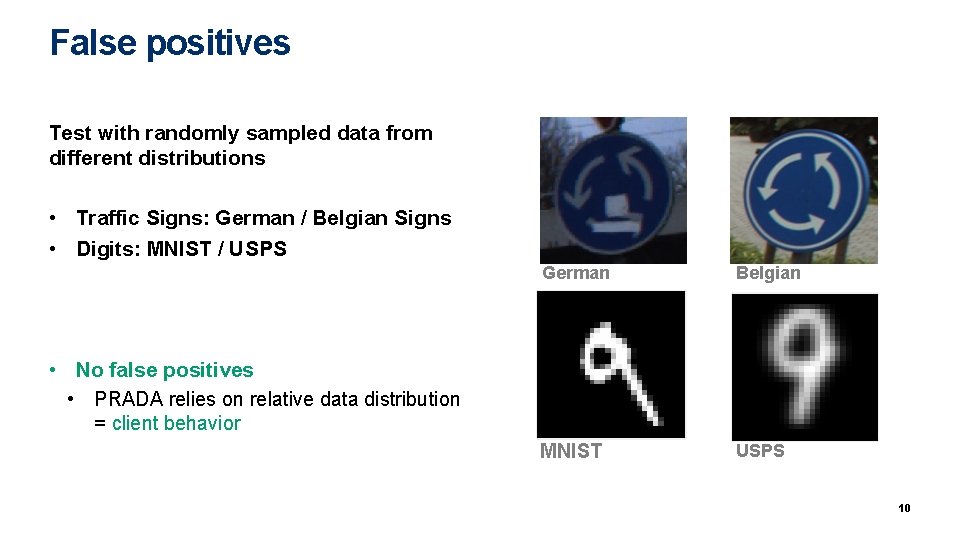

False positives
Test with randomly sampled data from different distributions
• Traffic signs: German / Belgian signs
• Digits: MNIST / USPS

[Figure panels: German, Belgian, MNIST, USPS]

• No false positives
• PRADA relies on relative data distribution = client behavior

Summary
• Model extraction is a serious threat to model owners
• Proposed new attack that outperforms state-of-the-art
• Take-aways for model extraction attacks & transferability
• PRADA detects all known model extraction attacks

https://arxiv.org/abs/1805.02628

Extra

Structured tests
Exp 1: What is the impact of natural seed samples?
• Attacker only uses seed samples.
Exp 2: What advantage do synthetic samples give?
• Continue attack with synthetic samples.
• Compare our new attack with existing attacks.
Exp 3: What effect does model complexity have?
• Transferability.
• Compare (1) number of layers and (2) number of parameters.

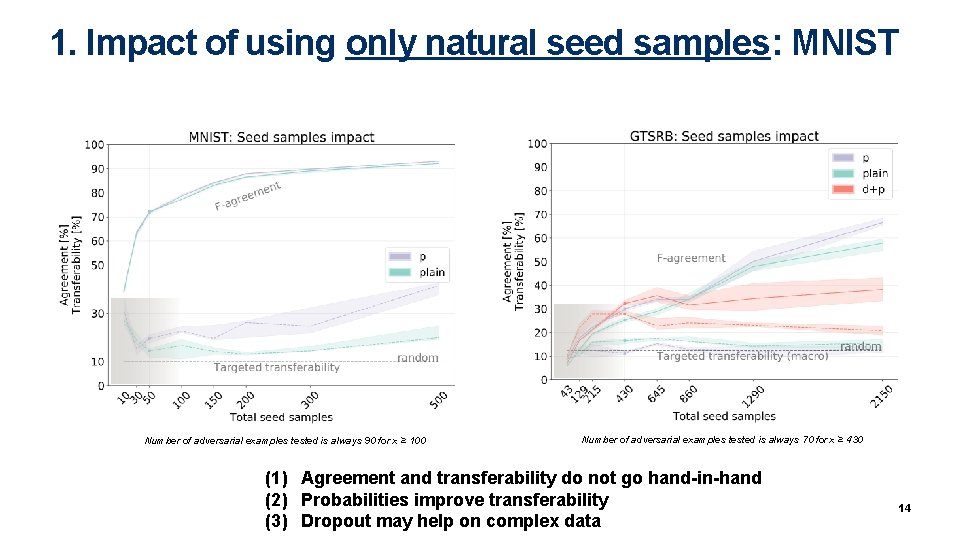

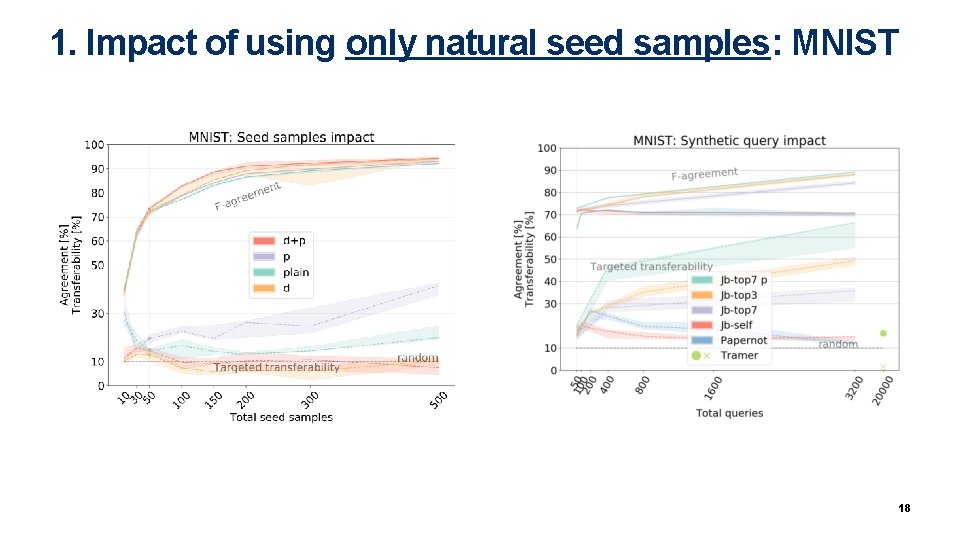

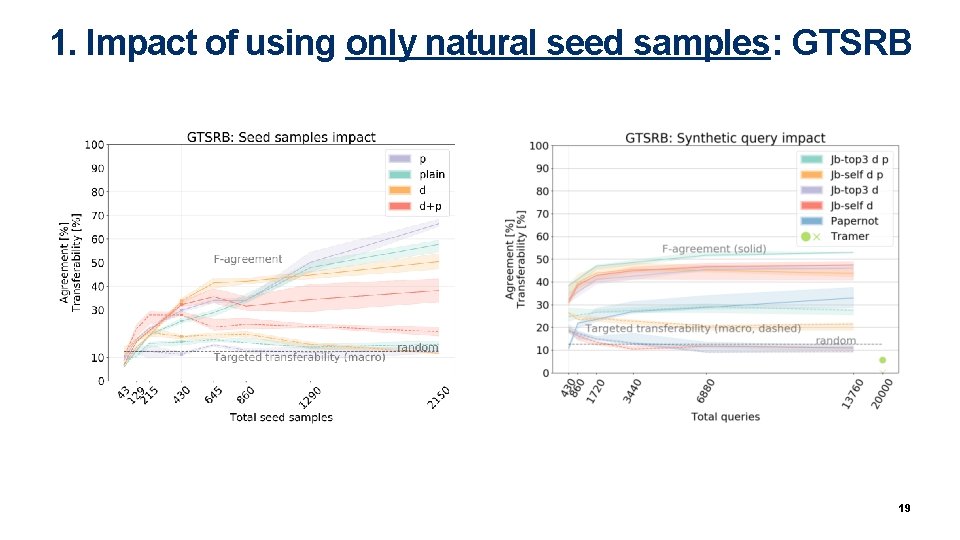

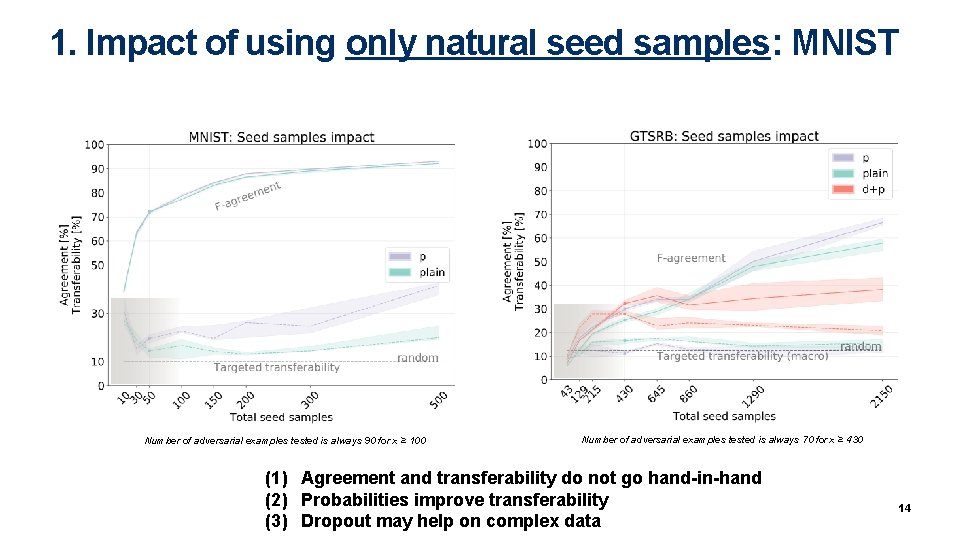

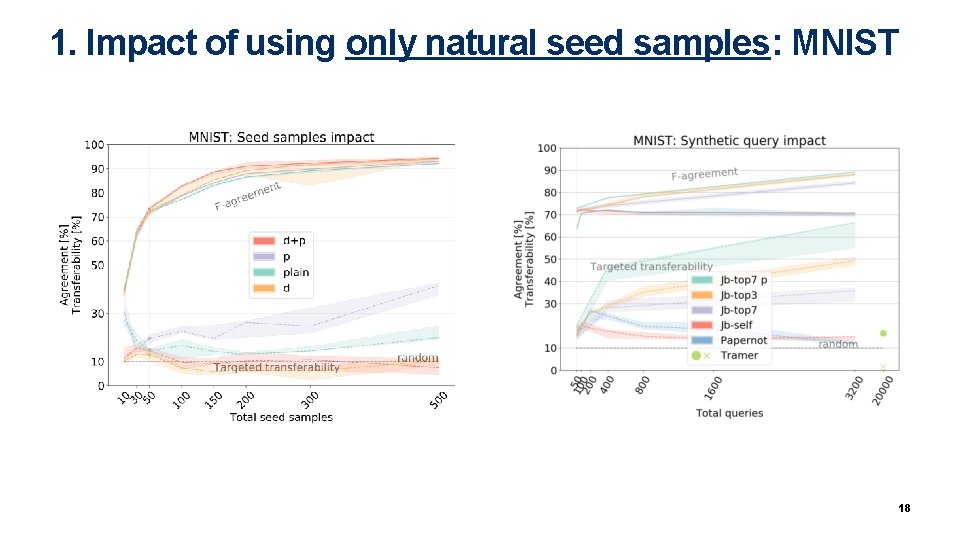

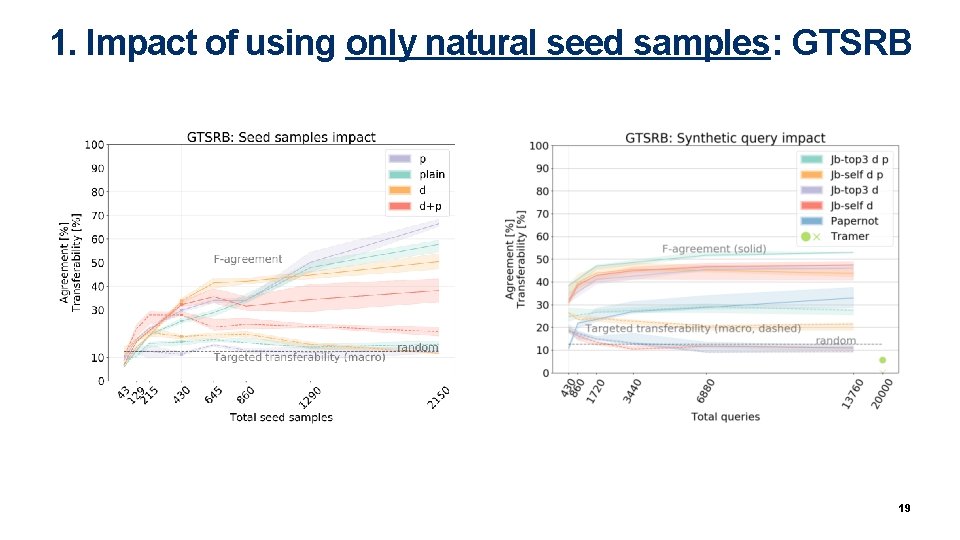

1. Impact of using only natural seed samples: MNIST
Number of adversarial examples tested is always 90 for x ≥ 100
Number of adversarial examples tested is always 70 for x ≥ 430
(1) Agreement and transferability do not go hand-in-hand
(2) Probabilities improve transferability
(3) Dropout may help on complex data

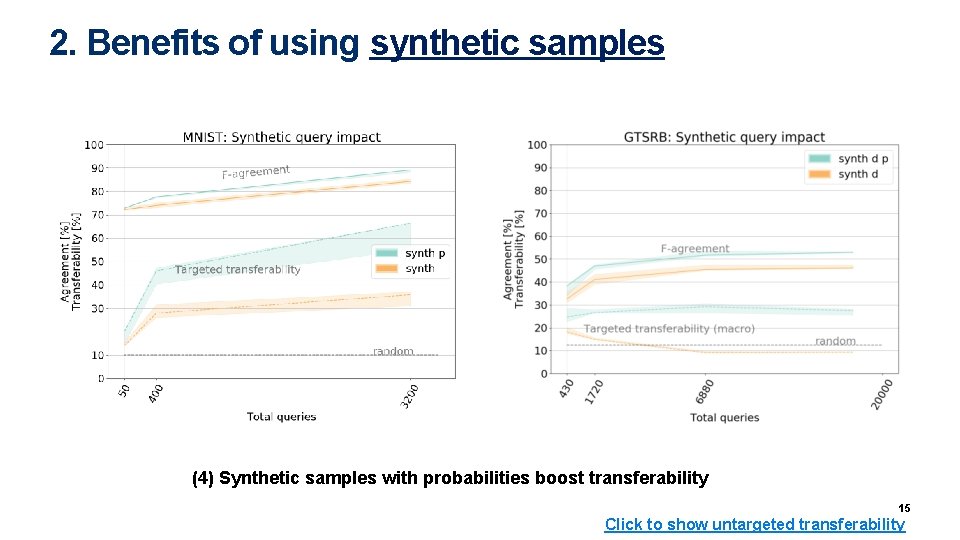

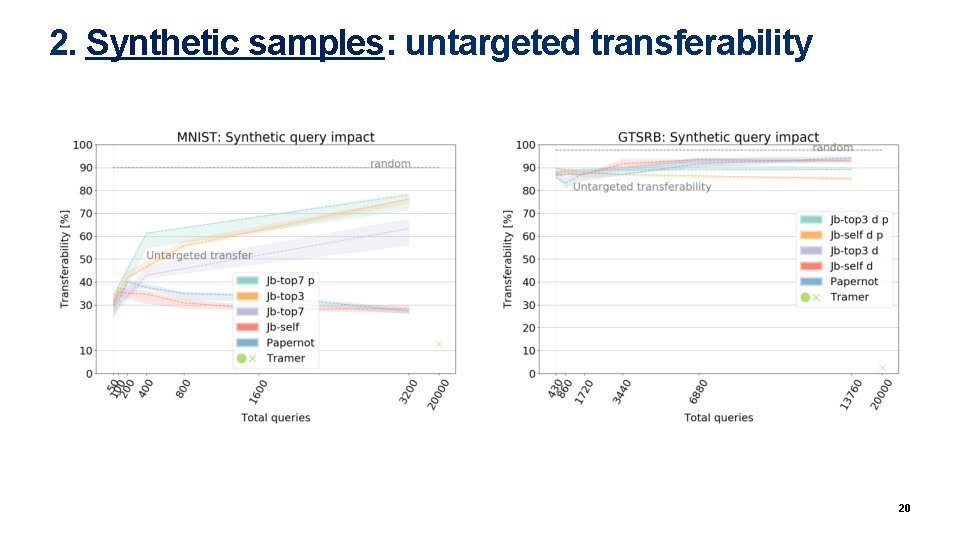

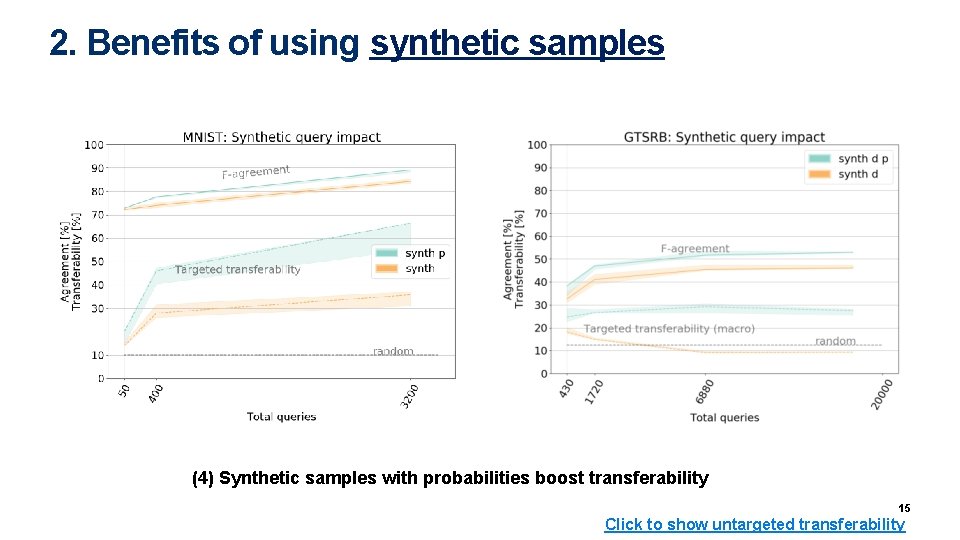

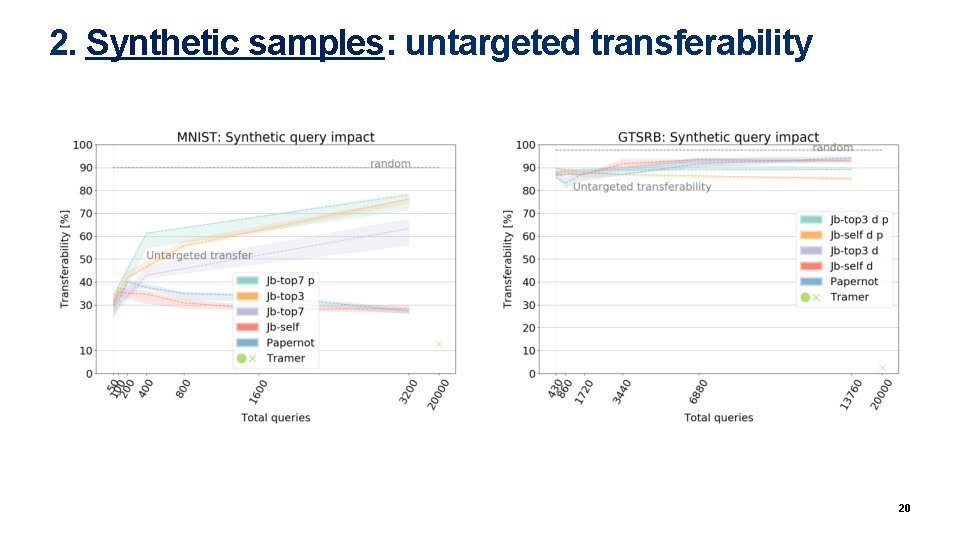

2. Benefits of using synthetic samples
(4) Synthetic samples with probabilities boost transferability

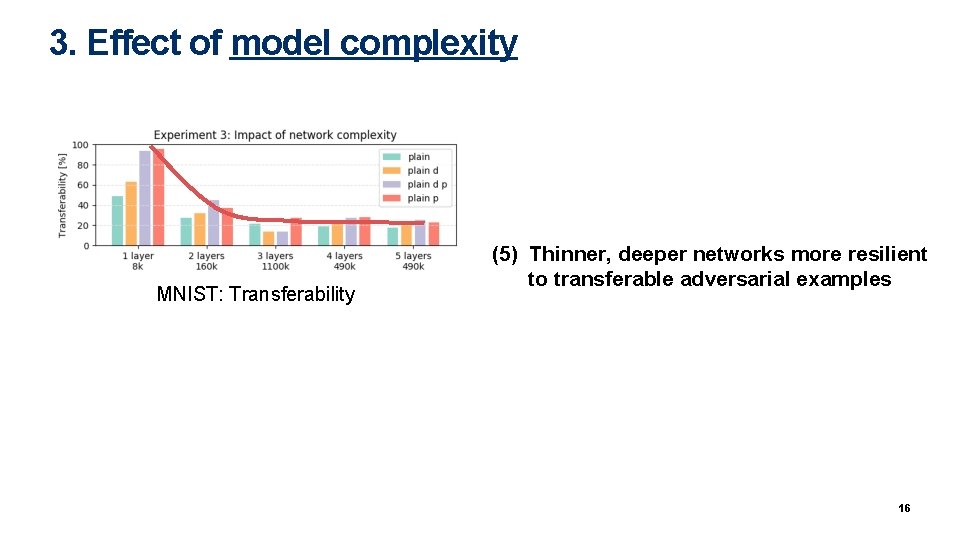

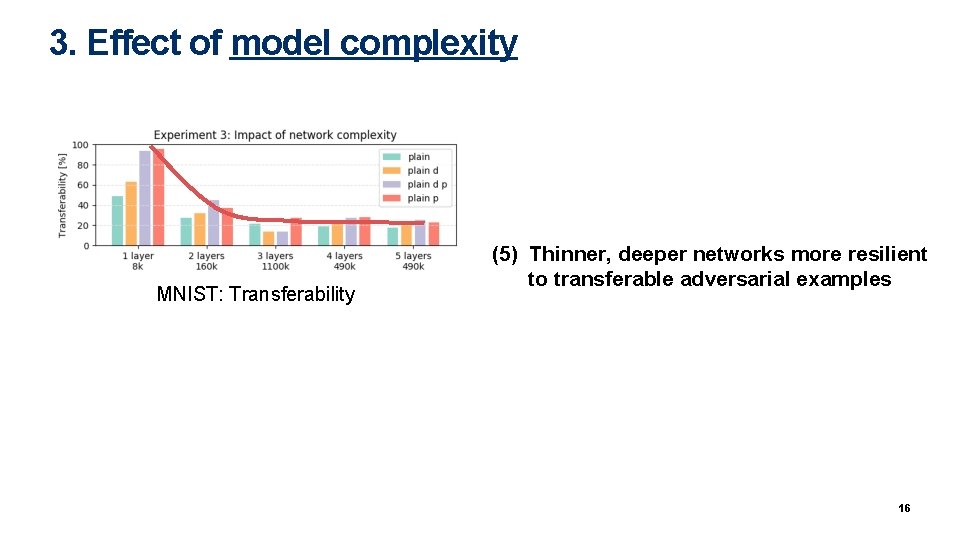

3. Effect of model complexity
MNIST: Transferability
(5) Thinner, deeper networks are more resilient to transferable adversarial examples

[Figure: GTSRB sign classes 0 to 42 grouped into macro-classes; group labels visible in the figure: RW circle, Gray circle, Warning, Priority, Yield, Stop, No entry, Blue circle]

1. Impact of using only natural seed samples: MNIST

1. Impact of using only natural seed samples: GTSRB

2. Synthetic samples: untargeted transferability

Detection efficiency – sequential data from client
Sequential data is co-dependent
• Good parameters are more conservative
All known model extraction attacks detected
• Detection at first window overlap
• Seed samples + window size (50)
No difference on Tramer
• Requires >500k queries to succeed

[1] (Conservative estimate based on) Tramer et al. Stealing ML models via prediction APIs. USENIX Security ’16.
[2] Papernot et al. Practical black-box attacks against machine learning. AsiaCCS ’17.