Stat-XFER: A General Framework for Search-based Syntax-driven MT
Alon Lavie
Language Technologies Institute, Carnegie Mellon University
Joint work with: Erik Peterson, Alok Parlikar, Vamshi Ambati, Abhaya Agarwal, Greg Hanneman, Kevin Gimpel, Edmund Huber

Outline
• Context and Rationale
• CMU Statistical Transfer MT Framework
• Automatic Acquisition of Syntax-based MT Resources
• Chinese-to-English System
• Urdu-to-English System
• Open Research Challenges
• Conclusions

March 28, 2008 NIST MT-08: CMU Stat-XFER

Rule-based vs. Statistical MT
• Traditional Rule-based MT:
  – Expressive and linguistically rich formalisms capable of describing complex mappings between the two languages
  – Accurate “clean” resources
  – Everything constructed manually by experts
  – Main challenge: obtaining broad coverage
• Phrase-based Statistical MT:
  – Learn word and phrase correspondences automatically from large volumes of parallel data
  – Search-based “decoding” framework:
    • Models propose many alternative translations
    • Effective search algorithms find the “best” translation
  – Main challenge: obtaining high translation accuracy

Research Goals
• Long-term research agenda (since 2000) focused on developing a unified framework for MT that addresses the core weaknesses of previous approaches:
  – Representation: explore richer formalisms that can capture complex divergences between languages
  – Ability to handle morphologically complex languages
  – Methods for automatically acquiring MT resources from available data and combining them with manual resources
  – Ability to address both resource-rich and resource-poor scenarios
• Focus has been on low-resource scenarios, scaling up to resource-rich scenarios in the past year
• Main research funding sources: NSF (AVENUE and LETRAS projects) and DARPA (GALE)

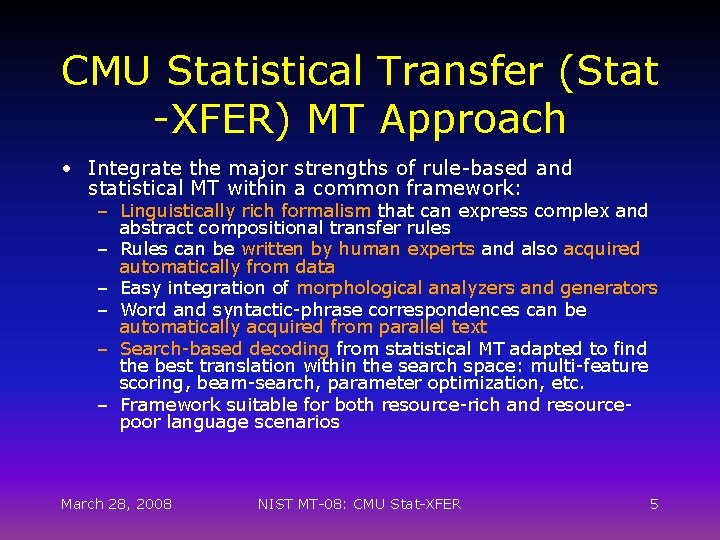

CMU Statistical Transfer (Stat-XFER) MT Approach
• Integrate the major strengths of rule-based and statistical MT within a common framework:
  – Linguistically rich formalism that can express complex and abstract compositional transfer rules
  – Rules can be written by human experts and also acquired automatically from data
  – Easy integration of morphological analyzers and generators
  – Word and syntactic-phrase correspondences can be automatically acquired from parallel text
  – Search-based decoding from statistical MT adapted to find the best translation within the search space: multi-feature scoring, beam search, parameter optimization, etc.
  – Framework suitable for both resource-rich and resource-poor language scenarios

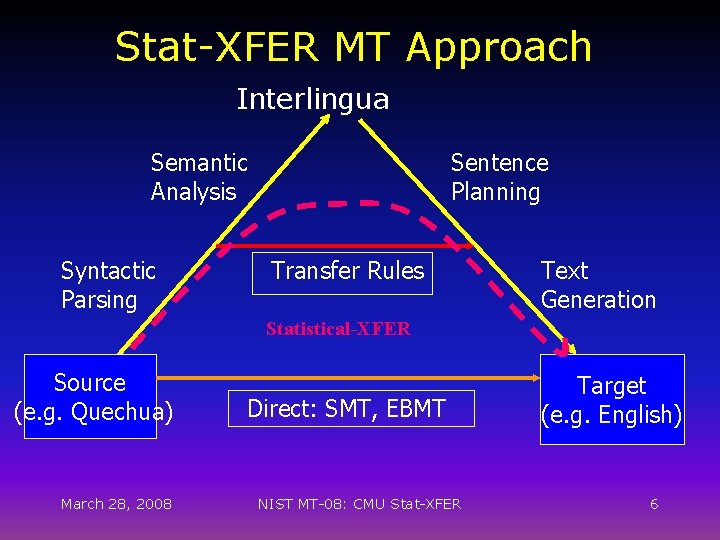

Stat-XFER MT Approach
[Figure: translation pyramid between Source (e.g. Quechua) and Target (e.g. English), with the labels: Direct: SMT, EBMT; Syntactic Parsing, Transfer Rules, Text Generation (Statistical-XFER); Semantic Analysis, Interlingua, Sentence Planning.]

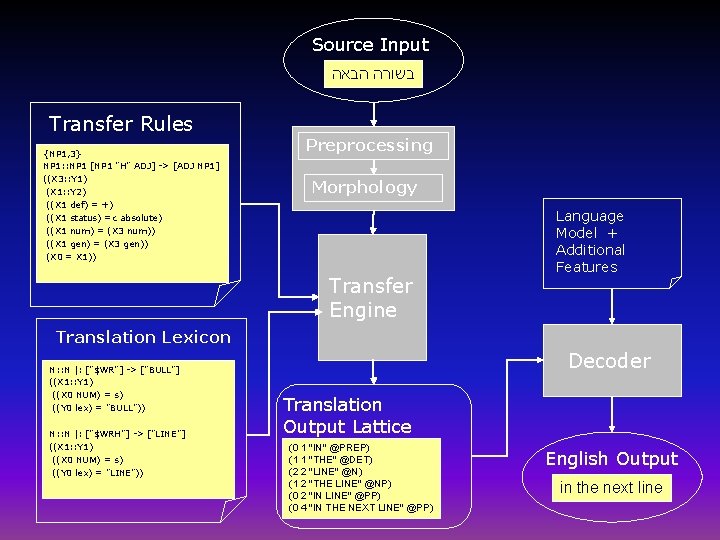

Source Input: בשורה הבאה

Components: Preprocessing, Morphology, Transfer Engine (with Transfer Rules and Translation Lexicon), Decoder (with Language Model + Additional Features)

Transfer Rules:
    {NP1,3}
    NP1::NP1 [NP1 "H" ADJ] -> [ADJ NP1]
    (
      (X3::Y1)
      (X1::Y2)
      ((X1 def) = +)
      ((X1 status) =c absolute)
      ((X1 num) = (X3 num))
      ((X1 gen) = (X3 gen))
      (X0 = X1)
    )

Translation Lexicon:
    N::N |: ["$WR"] -> ["BULL"]
    ((X1::Y1) ((X0 NUM) = s) ((Y0 lex) = "BULL"))

    N::N |: ["$WRH"] -> ["LINE"]
    ((X1::Y1) ((X0 NUM) = s) ((Y0 lex) = "LINE"))

Translation Output Lattice:
    (0 1 "IN" @PREP)
    (1 1 "THE" @DET)
    (2 2 "LINE" @N)
    (1 2 "THE LINE" @NP)
    (0 2 "IN LINE" @PP)
    (0 4 "IN THE NEXT LINE" @PP)

English Output: in the next line

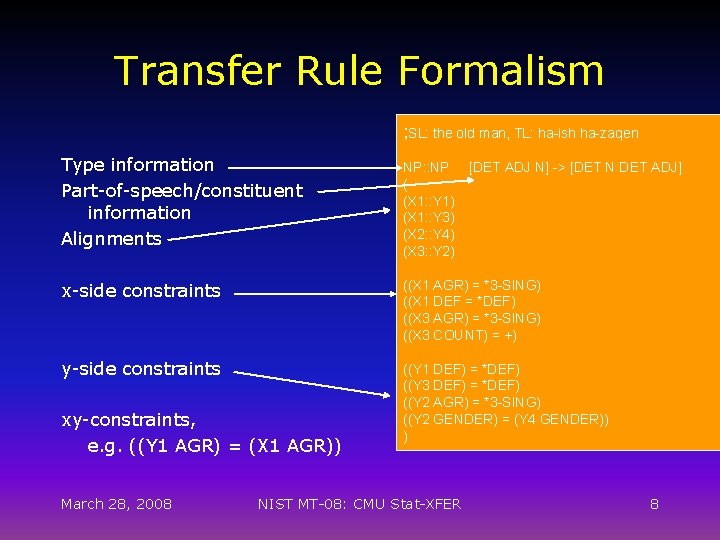

Transfer Rule Formalism
    ;SL: the old man, TL: ha-ish ha-zaqen
    NP::NP [DET ADJ N] -> [DET N DET ADJ]
    (
      ;; Alignments
      (X1::Y1)
      (X1::Y3)
      (X2::Y4)
      (X3::Y2)
      ;; x-side constraints
      ((X1 AGR) = *3-SING)
      ((X1 DEF) = *DEF)
      ((X3 AGR) = *3-SING)
      ((X3 COUNT) = +)
      ;; y-side constraints
      ((Y1 DEF) = *DEF)
      ((Y3 DEF) = *DEF)
      ((Y2 AGR) = *3-SING)
      ((Y2 GENDER) = (Y4 GENDER))
    )
• Type information: NP::NP
• Part-of-speech/constituent information: [DET ADJ N] -> [DET N DET ADJ]
• xy-constraints relate the two sides, e.g. ((Y1 AGR) = (X1 AGR))

Translation Lexicon: Examples
    PRO::PRO |: ["ANI"] -> ["I"]
    (
      (X1::Y1)
      ((X0 per) = 1)
      ((X0 num) = s)
      ((X0 case) = nom)
    )

    PRO::PRO |: ["ATH"] -> ["you"]
    (
      (X1::Y1)
      ((X0 per) = 2)
      ((X0 num) = s)
      ((X0 gen) = m)
      ((X0 case) = nom)
    )

    N::N |: ["$&H"] -> ["HOUR"]
    (
      (X1::Y1)
      ((X0 NUM) = s)
      ((Y0 lex) = "HOUR")
    )

    N::N |: ["$&H"] -> ["hours"]
    (
      (X1::Y1)
      ((Y0 NUM) = p)
      ((X0 NUM) = p)
      ((Y0 lex) = "HOUR")
    )

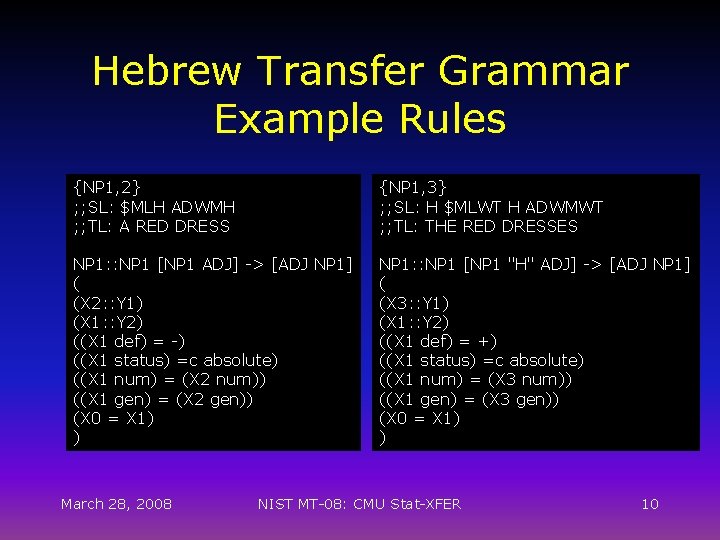

Hebrew Transfer Grammar Example Rules
    {NP1,2}
    ;;SL: $MLH ADWMH
    ;;TL: A RED DRESS
    NP1::NP1 [NP1 ADJ] -> [ADJ NP1]
    (
      (X2::Y1)
      (X1::Y2)
      ((X1 def) = -)
      ((X1 status) =c absolute)
      ((X1 num) = (X2 num))
      ((X1 gen) = (X2 gen))
      (X0 = X1)
    )

    {NP1,3}
    ;;SL: H $MLWT H ADWMWT
    ;;TL: THE RED DRESSES
    NP1::NP1 [NP1 "H" ADJ] -> [ADJ NP1]
    (
      (X3::Y1)
      (X1::Y2)
      ((X1 def) = +)
      ((X1 status) =c absolute)
      ((X1 num) = (X3 num))
      ((X1 gen) = (X3 gen))
      (X0 = X1)
    )
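To make the role of the alignments and agreement constraints concrete, here is a minimal Python sketch of applying rule {NP1,3} to already-translated children. It is an illustration only: the `Constituent` class, the `apply_rule` function, and the feature values given to the example nodes are assumptions, and plain equality checks stand in for the unification the real engine performs.

```python
from dataclasses import dataclass, field


@dataclass
class Constituent:
    label: str                      # e.g. "NP1", "ADJ", or the literal "H"
    translation: str = ""           # target string already built for this node
    features: dict = field(default_factory=dict)


def apply_rule(children, alignments, agreement, target_len):
    """Reorder source children X1..Xn into target slots Y1..Ym.

    alignments: list of (x, y) pairs, 1-based, as in (X3::Y1).
    agreement:  list of (x_i, x_j, feature) equality constraints,
                a simplified stand-in for ((X1 num) = (X3 num)).
    Returns the target string, or None if a constraint fails.
    """
    for i, j, feat in agreement:
        if children[i - 1].features.get(feat) != children[j - 1].features.get(feat):
            return None
    target = [None] * target_len
    for x, y in alignments:
        target[y - 1] = children[x - 1].translation
    return " ".join(t for t in target if t is not None)


# Hypothetical analysis of the slide's example children: $MLWT "H" ADWMWT
children = [
    Constituent("NP1", "DRESSES", {"num": "p", "gen": "f", "def": "+"}),
    Constituent("H"),  # unaligned literal, produces no target word at this level
    Constituent("ADJ", "RED", {"num": "p", "gen": "f"}),
]
result = apply_rule(
    children,
    alignments=[(3, 1), (1, 2)],
    agreement=[(1, 3, "num"), (1, 3, "gen")],
    target_len=2,
)
print(result)  # RED DRESSES
```

The sketch covers the NP1 level only; the definite article in "THE RED DRESSES" would come from a higher-level rule.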

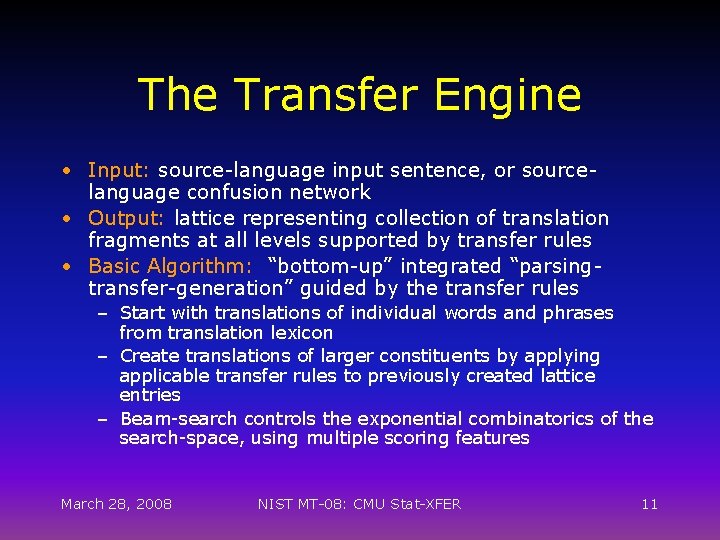

The Transfer Engine
• Input: source-language input sentence, or source-language confusion network
• Output: lattice representing collection of translation fragments at all levels supported by transfer rules
• Basic Algorithm: “bottom-up” integrated “parsing-transfer-generation” guided by the transfer rules
  – Start with translations of individual words and phrases from translation lexicon
  – Create translations of larger constituents by applying applicable transfer rules to previously created lattice entries
  – Beam search controls the exponential combinatorics of the search space, using multiple scoring features

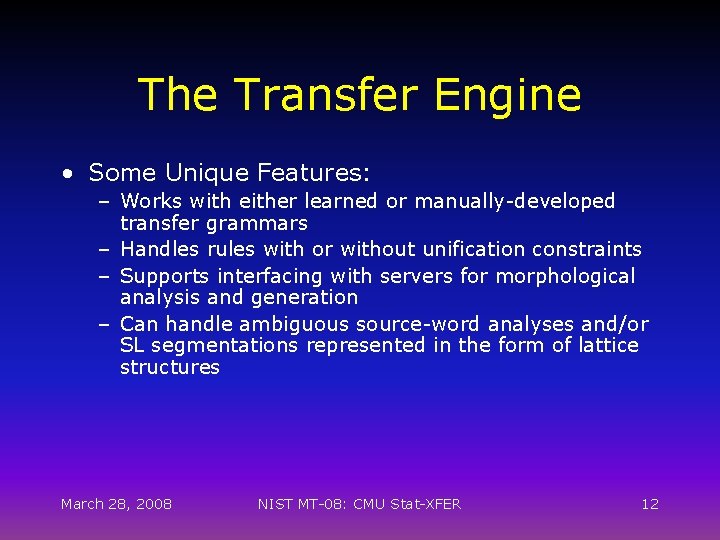

The Transfer Engine
• Some Unique Features:
  – Works with either learned or manually developed transfer grammars
  – Handles rules with or without unification constraints
  – Supports interfacing with servers for morphological analysis and generation
  – Can handle ambiguous source-word analyses and/or SL segmentations represented in the form of lattice structures

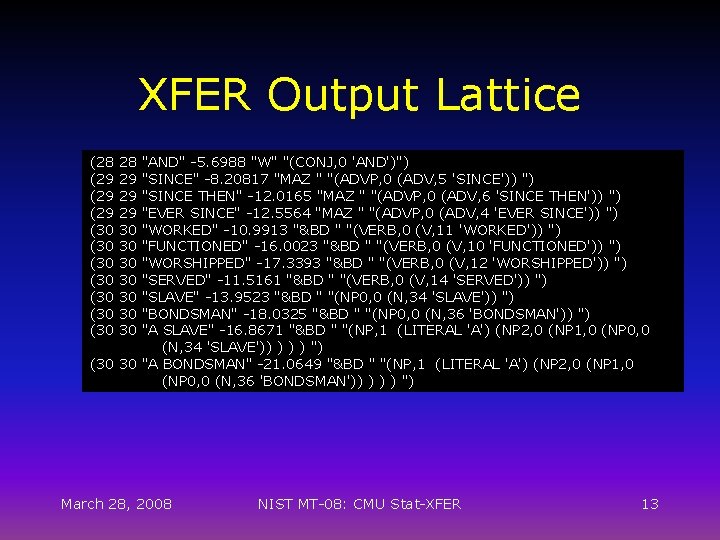

XFER Output Lattice
    (28 28 "AND" -5.6988 "W" "(CONJ,0 'AND')")
    (29 29 "SINCE" -8.20817 "MAZ " "(ADVP,0 (ADV,5 'SINCE')) ")
    (29 29 "SINCE THEN" -12.0165 "MAZ " "(ADVP,0 (ADV,6 'SINCE THEN')) ")
    (29 29 "EVER SINCE" -12.5564 "MAZ " "(ADVP,0 (ADV,4 'EVER SINCE')) ")
    (30 30 "WORKED" -10.9913 "&BD " "(VERB,0 (V,11 'WORKED')) ")
    (30 30 "FUNCTIONED" -16.0023 "&BD " "(VERB,0 (V,10 'FUNCTIONED')) ")
    (30 30 "WORSHIPPED" -17.3393 "&BD " "(VERB,0 (V,12 'WORSHIPPED')) ")
    (30 30 "SERVED" -11.5161 "&BD " "(VERB,0 (V,14 'SERVED')) ")
    (30 30 "SLAVE" -13.9523 "&BD " "(NP0,0 (N,34 'SLAVE')) ")
    (30 30 "BONDSMAN" -18.0325 "&BD " "(NP0,0 (N,36 'BONDSMAN')) ")
    (30 30 "A SLAVE" -16.8671 "&BD " "(NP,1 (LITERAL 'A') (NP2,0 (NP1,0 (NP0,0 (N,34 'SLAVE')) ) ")
    (30 30 "A BONDSMAN" -21.0649 "&BD " "(NP,1 (LITERAL 'A') (NP2,0 (NP1,0 (NP0,0 (N,36 'BONDSMAN')) ) ")

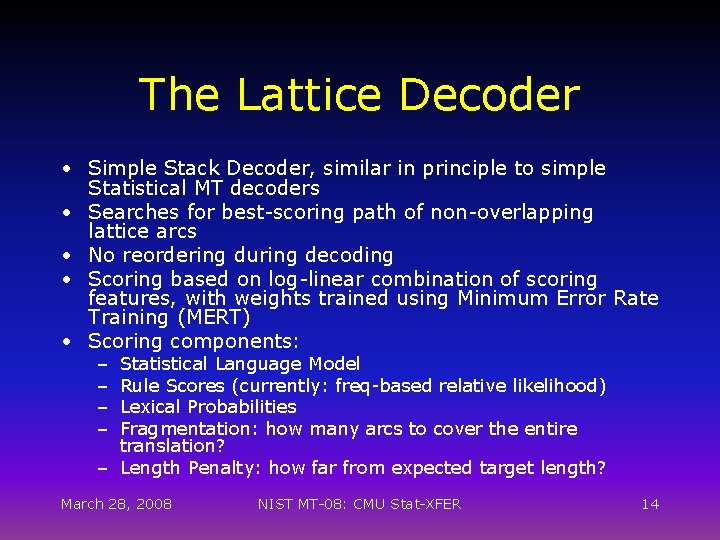

The Lattice Decoder
• Simple Stack Decoder, similar in principle to simple Statistical MT decoders
• Searches for best-scoring path of non-overlapping lattice arcs
• No reordering during decoding
• Scoring based on log-linear combination of scoring features, with weights trained using Minimum Error Rate Training (MERT)
• Scoring components:
  – Statistical Language Model
  – Rule Scores (currently: frequency-based relative likelihood)
  – Lexical Probabilities
  – Fragmentation: how many arcs to cover the entire translation?
  – Length Penalty: how far from expected target length?
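The search for a best-scoring path of non-overlapping arcs can be sketched as follows. This is a simplified illustration, not the Stat-XFER decoder: it swaps the stack decoder for a plain dynamic program over source positions, treats every feature as local to an arc (so the language model's cross-arc context is ignored), and uses a hypothetical toy lattice with made-up feature values and weights.

```python
def arc_score(arc, weights):
    """Log-linear score: weighted sum of the arc's feature values."""
    return sum(weights[name] * value for name, value in arc["features"].items())


def decode(arcs, n, weights):
    """Best monotone path of non-overlapping arcs covering positions [0, n).

    Arcs use half-open spans (start, end). Returns (score, [arc texts]),
    or None if no sequence of arcs covers the whole input.
    """
    best = {0: (0.0, [])}  # best[i] = best (score, path) covering [0, i)
    for end in range(1, n + 1):
        for arc in arcs:
            if arc["end"] != end or arc["start"] not in best:
                continue
            prev_score, prev_path = best[arc["start"]]
            score = prev_score + arc_score(arc, weights)
            if end not in best or score > best[end][0]:
                best[end] = (score, prev_path + [arc["text"]])
    return best.get(n)


# Hypothetical lattice: "tm" is a log translation score, "frag" counts one per arc.
arcs = [
    {"start": 0, "end": 1, "text": "IN", "features": {"tm": -1.0, "frag": 1}},
    {"start": 1, "end": 2, "text": "THE", "features": {"tm": -0.5, "frag": 1}},
    {"start": 2, "end": 3, "text": "NEXT", "features": {"tm": -1.2, "frag": 1}},
    {"start": 3, "end": 4, "text": "LINE", "features": {"tm": -0.8, "frag": 1}},
    {"start": 0, "end": 4, "text": "IN THE NEXT LINE", "features": {"tm": -4.0, "frag": 1}},
]
weights = {"tm": 1.0, "frag": -0.5}  # in practice tuned with MERT; values here are made up
best_score, best_path = decode(arcs, 4, weights)
print(best_score, best_path)
```

With these toy numbers the single large arc wins because the fragmentation feature penalizes the four-arc path more heavily, which is the behavior the Fragmentation component is meant to encourage.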

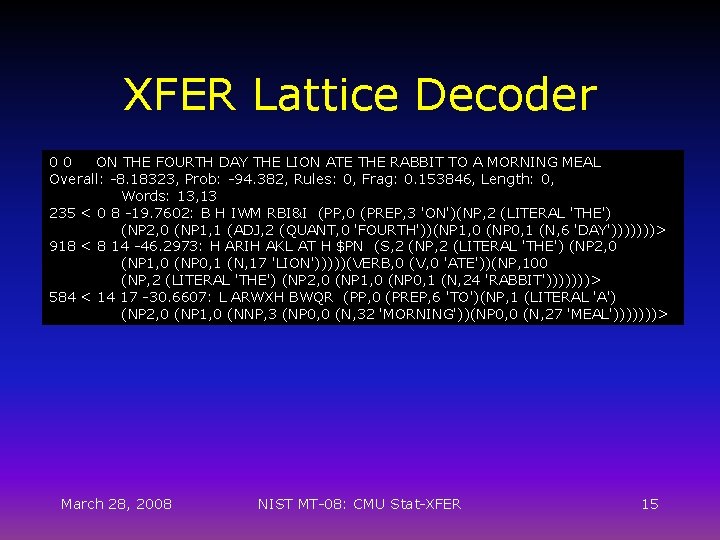

XFER Lattice Decoder
    00 ON THE FOURTH DAY THE LION ATE THE RABBIT TO A MORNING MEAL
    Overall: -8.18323, Prob: -94.382, Rules: 0, Frag: 0.153846, Length: 0, Words: 13, 13
    235 < 0 8 -19.7602: B H IWM RBI&I (PP,0 (PREP,3 'ON')(NP,2 (LITERAL 'THE') (NP2,0 (NP1,1 (ADJ,2 (QUANT,0 'FOURTH'))(NP1,0 (NP0,1 (N,6 'DAY')))))))>
    918 < 8 14 -46.2973: H ARIH AKL AT H $PN (S,2 (NP,2 (LITERAL 'THE') (NP2,0 (NP1,0 (NP0,1 (N,17 'LION')))))(VERB,0 (V,0 'ATE'))(NP,100 (NP,2 (LITERAL 'THE') (NP2,0 (NP1,0 (NP0,1 (N,24 'RABBIT')))))))>
    584 < 14 17 -30.6607: L ARWXH BWQR (PP,0 (PREP,6 'TO')(NP,1 (LITERAL 'A') (NP2,0 (NP1,0 (NNP,3 (NP0,0 (N,32 'MORNING'))(NP0,0 (N,27 'MEAL')))))))>

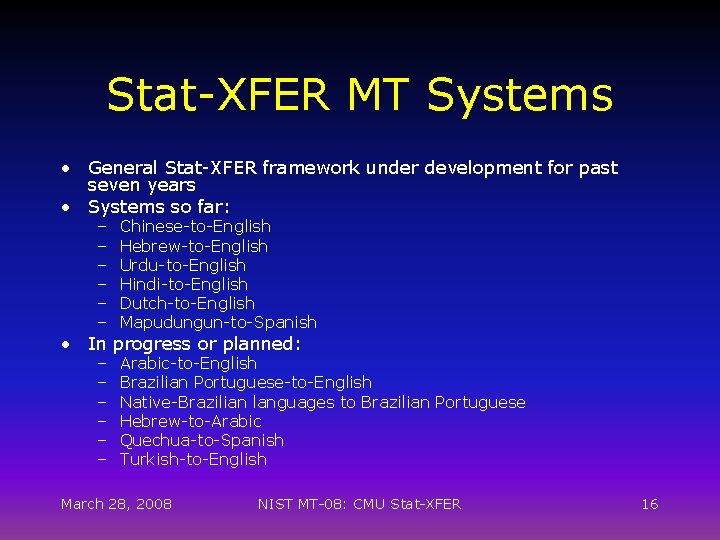

Stat-XFER MT Systems
• General Stat-XFER framework under development for the past seven years
• Systems so far:
  – Chinese-to-English
  – Hebrew-to-English
  – Urdu-to-English
  – Hindi-to-English
  – Dutch-to-English
  – Mapudungun-to-Spanish
• In progress or planned:
  – Arabic-to-English
  – Brazilian Portuguese-to-English
  – Native-Brazilian languages to Brazilian Portuguese
  – Hebrew-to-Arabic
  – Quechua-to-Spanish
  – Turkish-to-English

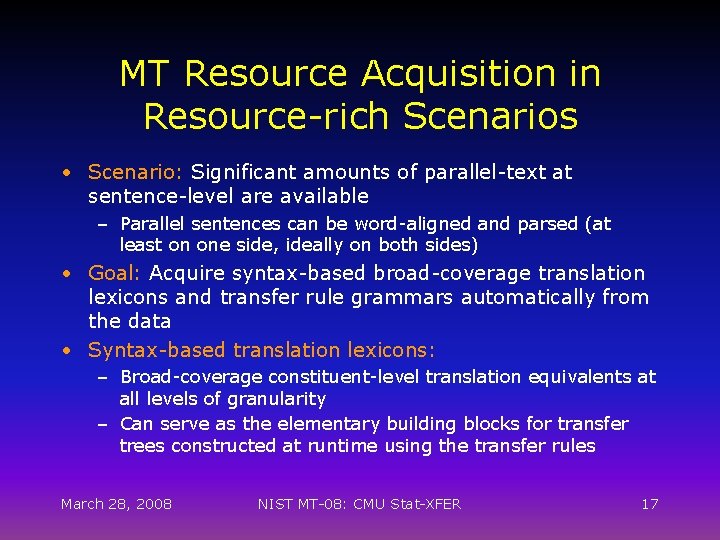

MT Resource Acquisition in Resource-rich Scenarios
• Scenario: Significant amounts of sentence-level parallel text are available
  – Parallel sentences can be word-aligned and parsed (at least on one side, ideally on both sides)
• Goal: Acquire syntax-based broad-coverage translation lexicons and transfer rule grammars automatically from the data
• Syntax-based translation lexicons:
  – Broad-coverage constituent-level translation equivalents at all levels of granularity
  – Can serve as the elementary building blocks for transfer trees constructed at runtime using the transfer rules

Acquisition Process
• Automatic process for extracting syntax-driven rules and lexicons from sentence-parallel data:
  1. Word-align the parallel corpus (GIZA++)
  2. Parse the sentences independently for both languages
  3. Run our new PFA Constituent Aligner over the parsed sentence pairs
  4. Extract all aligned constituents from the parallel trees
  5. Extract all derived synchronous transfer rules from the constituent-aligned parallel trees
  6. Construct a database of all extracted parallel constituents and synchronous rules with their frequencies, and model them statistically (assign them relative-likelihood probabilities)
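Step 6 can be pictured with a small sketch that turns extraction counts into relative-likelihood scores. The slides do not say what the frequencies are normalized over, so conditioning on the source side is an assumption here, as are the function name and the toy counts.

```python
from collections import Counter


def relative_likelihood(extracted_pairs):
    """Map each (source, target) pair to count(source, target) / count(source).

    extracted_pairs: iterable of (source, target) items, one per extraction
    event, e.g. syntactic phrase pairs or flattened rules.
    """
    pair_counts = Counter(extracted_pairs)
    source_totals = Counter()
    for (source, _target), count in pair_counts.items():
        source_totals[source] += count
    return {
        (source, target): count / source_totals[source]
        for (source, target), count in pair_counts.items()
    }


# Hypothetical extraction events for one source phrase
events = (
    [("NP 邦交", "NP diplomatic relations")] * 3
    + [("NP 邦交", "NP relations")]
)
probs = relative_likelihood(events)
for pair, p in sorted(probs.items()):
    print(pair, p)
```

The same function applies unchanged to rules if each rule is represented as a (source side, target side) pair.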

PFA Constituent Node Aligner
• Input: a bilingual pair of parsed and word-aligned sentences
• Goal: find all sub-sentential constituent alignments between the two trees which are translation equivalents of each other
• Equivalence Constraint: a pair of constituents <S, T> are considered translation equivalents if:
  – All words in the yield of <S> are aligned only to words in the yield of <T> (and vice versa)
  – If <S> has a sub-constituent <S1> that is aligned to <T1>, then <T1> must be a sub-constituent of <T> (and vice versa)
• Algorithm is a bottom-up process starting from the word level, marking nodes that satisfy the constraints
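The first equivalence constraint can be checked directly from the word alignment, as in the sketch below. It is a straightforward set-style test under stated assumptions, not the PFA aligner's own method: constituents are represented by the half-open word spans of their yields, the function name is hypothetical, and the requirement that at least one link falls inside the pair is an added assumption to exclude entirely unaligned spans. The sub-constituent condition is not covered.

```python
def yields_are_equivalent(links, s_span, t_span):
    """Check that no alignment link crosses the boundary of the span pair.

    links:  set of (source_index, target_index) word-alignment links.
    s_span: (lo, hi) half-open span of the source constituent's yield.
    t_span: (lo, hi) half-open span of the target constituent's yield.
    """
    s_lo, s_hi = s_span
    t_lo, t_hi = t_span
    links_inside = 0
    for s, t in links:
        s_in = s_lo <= s < s_hi
        t_in = t_lo <= t < t_hi
        if s_in != t_in:      # a word inside one yield is linked outside the other
            return False
        if s_in:
            links_inside += 1
    return links_inside > 0   # assumption: require at least one link inside


# Toy alignment: 3 source words, 4 target words; source word 1 links to two target words
links = {(0, 0), (1, 2), (1, 3), (2, 1)}
print(yields_are_equivalent(links, (1, 2), (2, 4)))  # True
print(yields_are_equivalent(links, (0, 2), (0, 3)))  # False: link (1, 3) leaves the target span
print(yields_are_equivalent(links, (1, 3), (1, 4)))  # True
```

The example also shows that one-to-many word links are acceptable as long as they stay within the paired spans.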

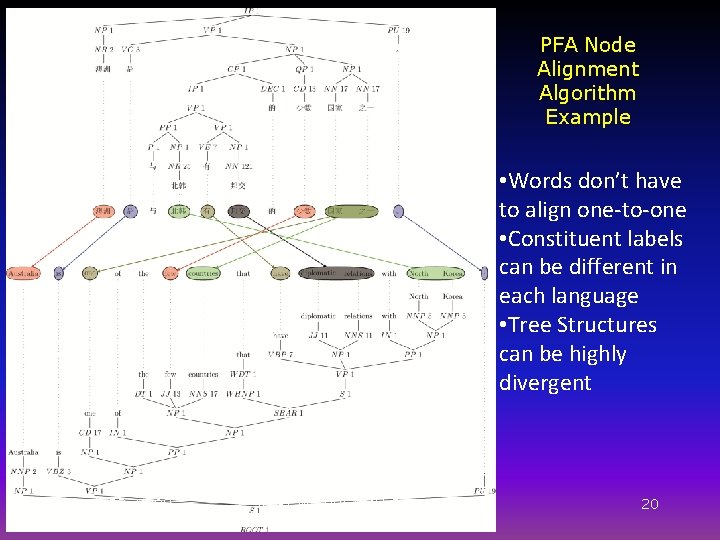

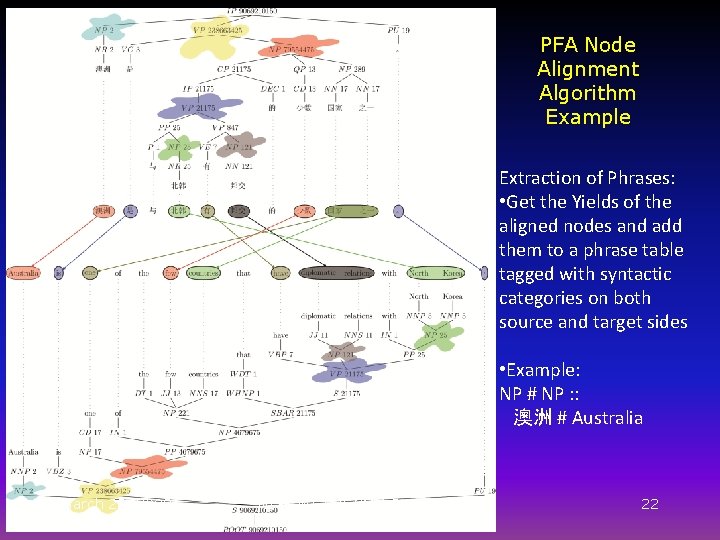

PFA Node Alignment Algorithm Example
• Words don’t have to align one-to-one
• Constituent labels can be different in each language
• Tree structures can be highly divergent

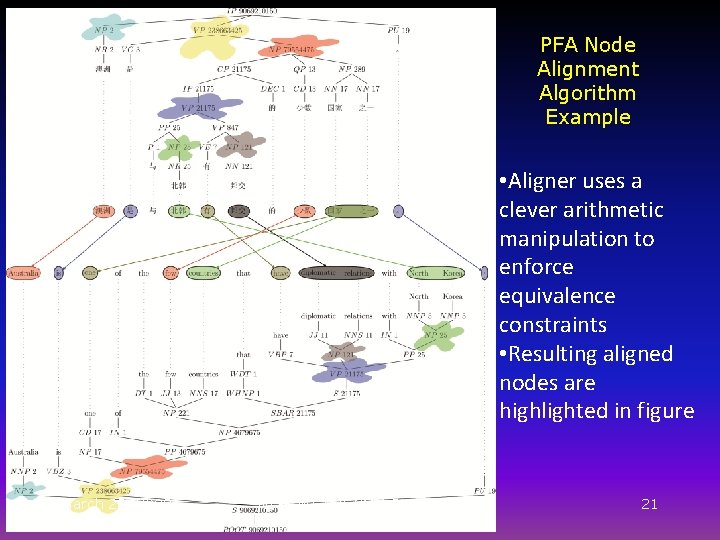

PFA Node Alignment Algorithm Example
• Aligner uses a clever arithmetic manipulation to enforce equivalence constraints
• Resulting aligned nodes are highlighted in figure

PFA Node Alignment Algorithm Example
Extraction of Phrases:
• Get the yields of the aligned nodes and add them to a phrase table tagged with syntactic categories on both source and target sides
• Example: NP # NP :: 澳洲 # Australia

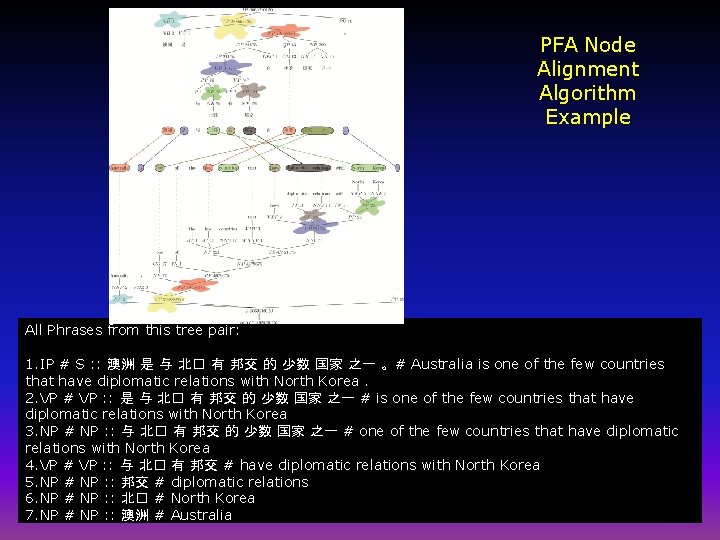

PFA Node Alignment Algorithm Example
All phrases from this tree pair:
1. IP # S :: 澳洲 是 与 北韩 有 邦交 的 少数 国家 之一 。 # Australia is one of the few countries that have diplomatic relations with North Korea.
2. VP # VP :: 是 与 北韩 有 邦交 的 少数 国家 之一 # is one of the few countries that have diplomatic relations with North Korea
3. NP # NP :: 与 北韩 有 邦交 的 少数 国家 之一 # one of the few countries that have diplomatic relations with North Korea
4. VP # VP :: 与 北韩 有 邦交 # have diplomatic relations with North Korea
5. NP # NP :: 邦交 # diplomatic relations
6. NP # NP :: 北韩 # North Korea
7. NP # NP :: 澳洲 # Australia

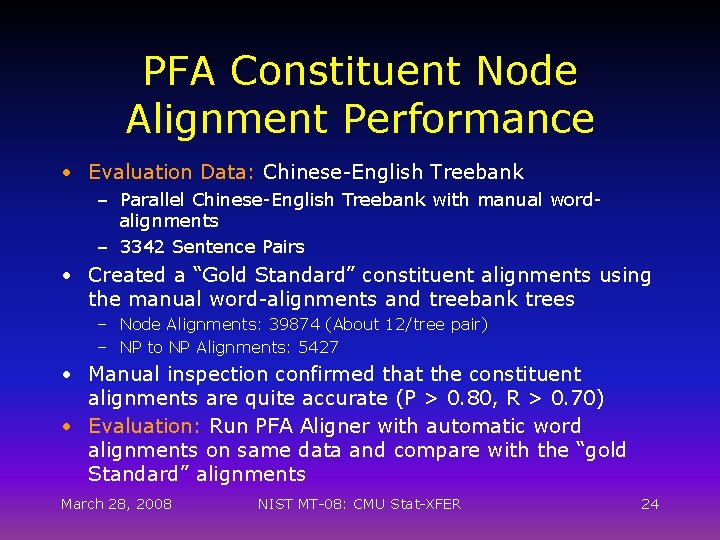

PFA Constituent Node Alignment Performance
• Evaluation Data: Chinese-English Treebank
  – Parallel Chinese-English Treebank with manual word alignments
  – 3342 sentence pairs
• Created “gold standard” constituent alignments using the manual word alignments and treebank trees
  – Node alignments: 39874 (about 12 per tree pair)
  – NP-to-NP alignments: 5427
• Manual inspection confirmed that the constituent alignments are quite accurate (P > 0.80, R > 0.70)
• Evaluation: run the PFA Aligner with automatic word alignments on the same data and compare with the “gold standard” alignments

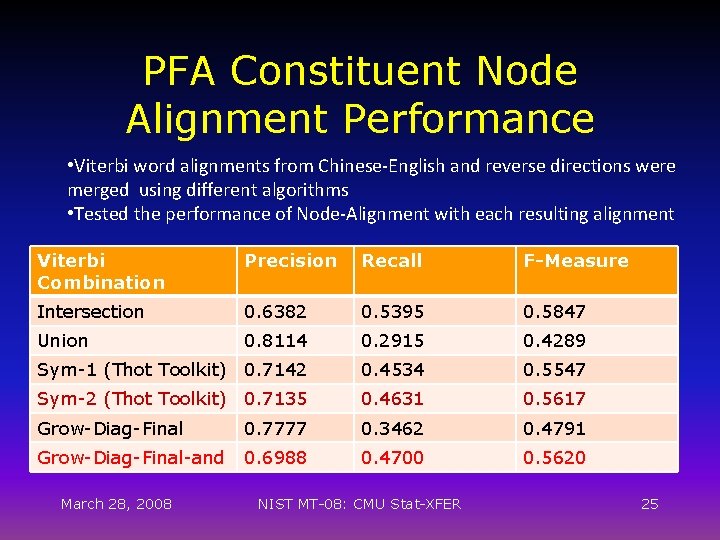

PFA Constituent Node Alignment Performance
• Viterbi word alignments from the Chinese-English and reverse directions were merged using different algorithms
• Tested the performance of Node Alignment with each resulting alignment

| Viterbi Combination | Precision | Recall | F-Measure |
|---|---|---|---|
| Intersection | 0.6382 | 0.5395 | 0.5847 |
| Union | 0.8114 | 0.2915 | 0.4289 |
| Sym-1 (Thot Toolkit) | 0.7142 | 0.4534 | 0.5547 |
| Sym-2 (Thot Toolkit) | 0.7135 | 0.4631 | 0.5617 |
| Grow-Diag-Final | 0.7777 | 0.3462 | 0.4791 |
| Grow-Diag-Final-and | 0.6988 | 0.4700 | 0.5620 |
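The F-Measure column appears to be the balanced harmonic mean of precision and recall (F1); the slides do not state the formula, so this is an inference from the numbers. The short check below recomputes it from the reported precision and recall values.

```python
def f_measure(precision, recall):
    """Balanced F-measure (F1): harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F-measure), copied from the table above
rows = {
    "Intersection": (0.6382, 0.5395, 0.5847),
    "Union": (0.8114, 0.2915, 0.4289),
    "Sym-1 (Thot Toolkit)": (0.7142, 0.4534, 0.5547),
    "Sym-2 (Thot Toolkit)": (0.7135, 0.4631, 0.5617),
    "Grow-Diag-Final": (0.7777, 0.3462, 0.4791),
    "Grow-Diag-Final-and": (0.6988, 0.4700, 0.5620),
}

for name, (p, r, reported) in rows.items():
    print(f"{name}: computed {f_measure(p, r):.4f}, reported {reported:.4f}")
```

Every row agrees with the reported value to four decimal places, and the table shows the usual trade-off: the sparse Intersection alignment gives the best recall and F-measure, while the dense Union alignment gives the highest precision but blocks many node alignments.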

Transfer Rule Learning
• Input: Constituent-aligned parallel trees
• Idea: Aligned nodes act as possible decomposition points of the parallel trees
  – The sub-trees of any aligned pair of nodes can be further decomposed at lower-level aligned nodes, creating an inventory of synchronous “TIG” correspondences
  – We decompose only at the “highest” level possible
  – Synchronous “TIGs” can be converted into synchronous rules
• Algorithm:
  – Find and extract all possible synchronous TIG decompositions from the node-aligned trees
  – “Flatten” the TIGs into synchronous CFG rules

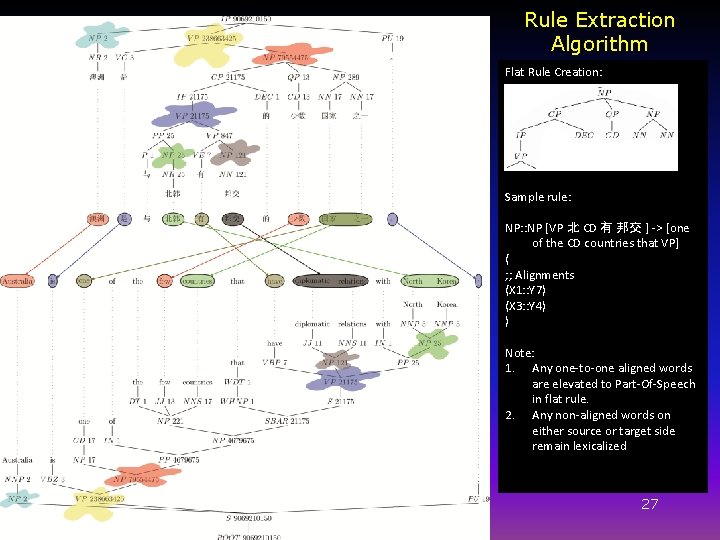

Rule Extraction Algorithm
Flat Rule Creation:
Sample rule:
    NP::NP [VP 北 CD 有 邦交 ] -> [one of the CD countries that VP]
    (
      ;; Alignments
      (X1::Y7)
      (X3::Y4)
    )
Note:
1. Any one-to-one aligned words are elevated to Part-Of-Speech in the flat rule.
2. Any non-aligned words on either source or target side remain lexicalized.

Rule Extraction Algorithm
All rules extracted:
    VP::VP [VC NP] -> [VBZ NP]
    (
      (*score* 0.5)
      ;; Alignments
      (X1::Y1)
      (X2::Y2)
    )

    NP::NP [VP 北 CD 有 邦交 ] -> [one of the CD countries that VP]
    (
      (*score* 0.5)
      ;; Alignments
      (X1::Y7)
      (X3::Y4)
    )

    IP::S [ NP VP ] -> [NP VP ]
    (
      (*score* 0.5)
      ;; Alignments
      (X1::Y1)
      (X2::Y2)
    )

    NP::NP ["北韩"] -> ["North" "Korea"]
    (
      ; Many to one alignment is a phrase
    )

    NP::NP [NR] -> [NNP]
    (
      (*score* 0.5)
      ;; Alignments
      (X1::Y1)
      (X2::Y2)
    )

    VP::VP [北 NP VE NP] -> [ VBP NP with NP]
    (
      (*score* 0.5)
      ;; Alignments
      (X2::Y4)
      (X3::Y1)
      (X4::Y2)
    )

Chinese-English System
• Developed over the past year under DARPA/GALE funding (within IBM-led “Rosetta” team)
• Participated in recent NIST MT-08 Evaluation
• Large-scale broad-coverage system
• Integrates large manual resources with automatically extracted resources
• Current performance level is still inferior to state-of-the-art phrase-based systems

Chinese-English System
• Lexical Resources:
  – Manual Lexicons (base forms):
    • LDC, ADSO, Wiki
    • Total number of entries: 1.07 million
  – Automatically acquired from parallel data:
    • Approx 5 million sentences LDC/GALE data
    • Filtered down to phrases < 10 words in length
    • Full formed
    • Total number of entries: 2.67 million

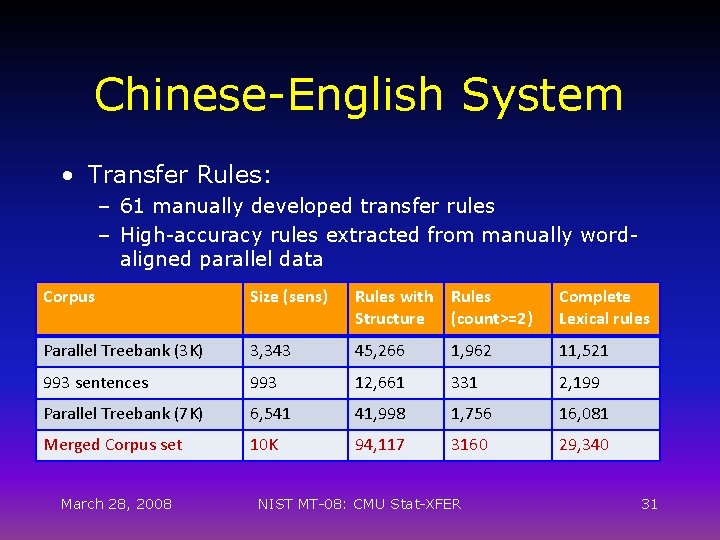

Chinese-English System
• Transfer Rules:
  – 61 manually developed transfer rules
  – High-accuracy rules extracted from manually word-aligned parallel data

| Corpus | Size (sens) | Rules with Structure | Rules (count>=2) | Complete Lexical rules |
|---|---|---|---|---|
| Parallel Treebank (3K) | 3,343 | 45,266 | 1,962 | 11,521 |
| 993 sentences | 993 | 12,661 | 331 | 2,199 |
| Parallel Treebank (7K) | 6,541 | 41,998 | 1,756 | 16,081 |
| Merged Corpus set | 10K | 94,117 | 3,160 | 29,340 |

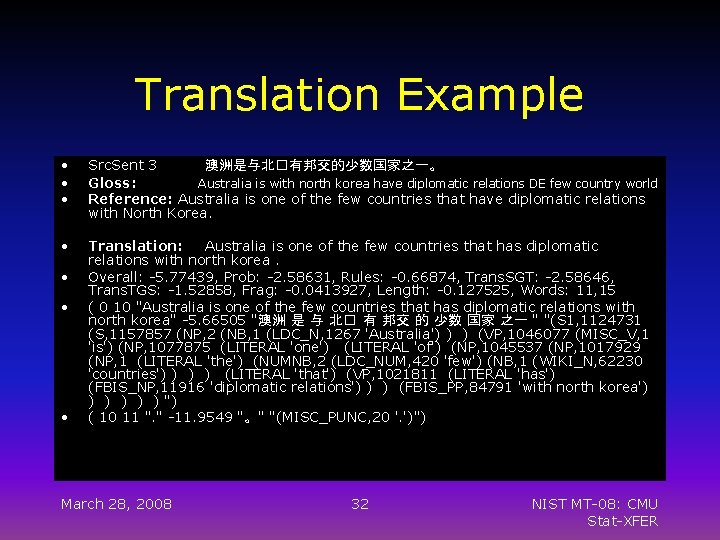

Translation Example
• Src. Sent 3: 澳洲是与北韩有邦交的少数国家之一。
• Gloss: Australia is with north korea have diplomatic relations DE few country world
• Reference: Australia is one of the few countries that have diplomatic relations with North Korea.
• Translation: Australia is one of the few countries that has diplomatic relations with north korea.

    Overall: -5.77439, Prob: -2.58631, Rules: -0.66874, Trans. SGT: -2.58646, Trans. TGS: -1.52858, Frag: -0.0413927, Length: -0.127525, Words: 11, 15
    ( 0 10 "Australia is one of the few countries that has diplomatic relations with north korea" -5.66505 "澳洲 是 与 北韩 有 邦交 的 少数 国家 之一 " "(S1,1124731 (S,1157857 (NP,2 (NB,1 (LDC_N,1267 'Australia') ) ) (VP,1046077 (MISC_V,1 'is') (NP,1077875 (LITERAL 'one') (LITERAL 'of') (NP,1045537 (NP,1017929 (NP,1 (LITERAL 'the') (NUMNB,2 (LDC_NUM,420 'few') (NB,1 (WIKI_N,62230 'countries') ) (LITERAL 'that') (VP,1021811 (LITERAL 'has') (FBIS_NP,11916 'diplomatic relations') ) ) (FBIS_PP,84791 'with north korea') ) ) ")
    ( 10 11 "." -11.9549 "。" "(MISC_PUNC,20 '.')")

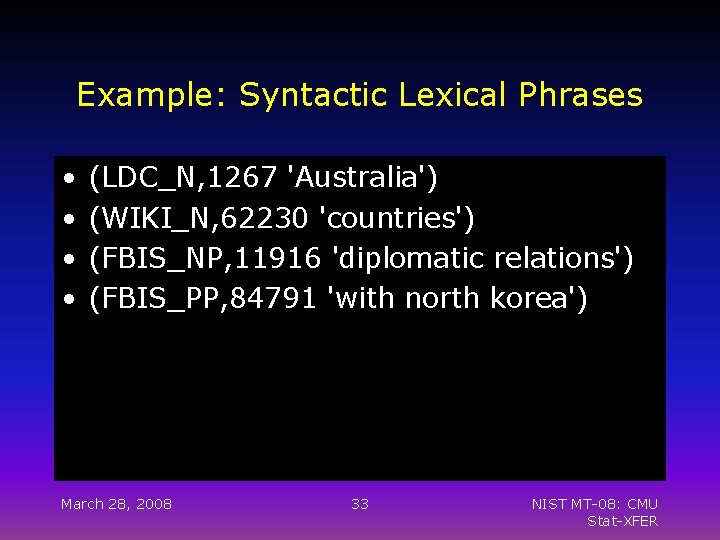

Example: Syntactic Lexical Phrases
• (LDC_N,1267 'Australia')
• (WIKI_N,62230 'countries')
• (FBIS_NP,11916 'diplomatic relations')
• (FBIS_PP,84791 'with north korea')

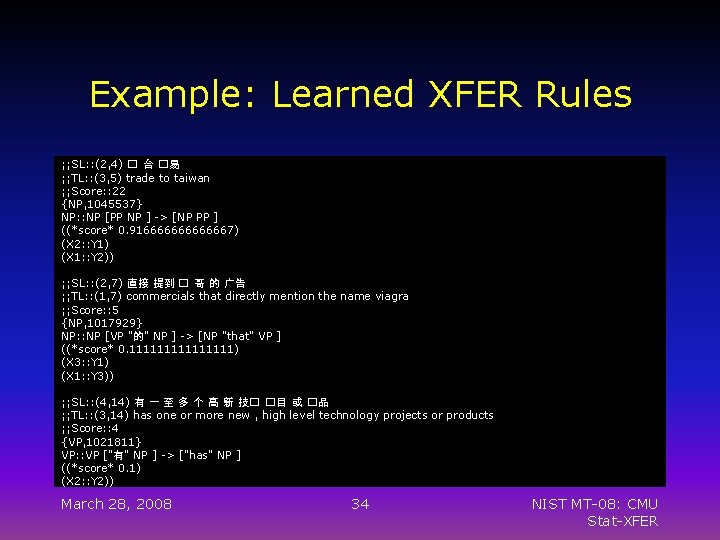

Example: Learned XFER Rules
    ;;SL:: (2,4) 对 台 贸易
    ;;TL:: (3,5) trade to taiwan
    ;;Score:: 22
    {NP,1045537}
    NP::NP [PP NP ] -> [NP PP ]
    ((*score* 0.916666667)
     (X2::Y1)
     (X1::Y2))

    ;;SL:: (2,7) 直接 提到 伟哥 的 广告
    ;;TL:: (1,7) commercials that directly mention the name viagra
    ;;Score:: 5
    {NP,1017929}
    NP::NP [VP "的" NP ] -> [NP "that" VP ]
    ((*score* 0.11111111)
     (X3::Y1)
     (X1::Y3))

    ;;SL:: (4,14) 有 一 至 多 个 高 新 技术 项目 或 产品
    ;;TL:: (3,14) has one or more new , high level technology projects or products
    ;;Score:: 4
    {VP,1021811}
    VP::VP ["有" NP ] -> ["has" NP ]
    ((*score* 0.1)
     (X2::Y2))

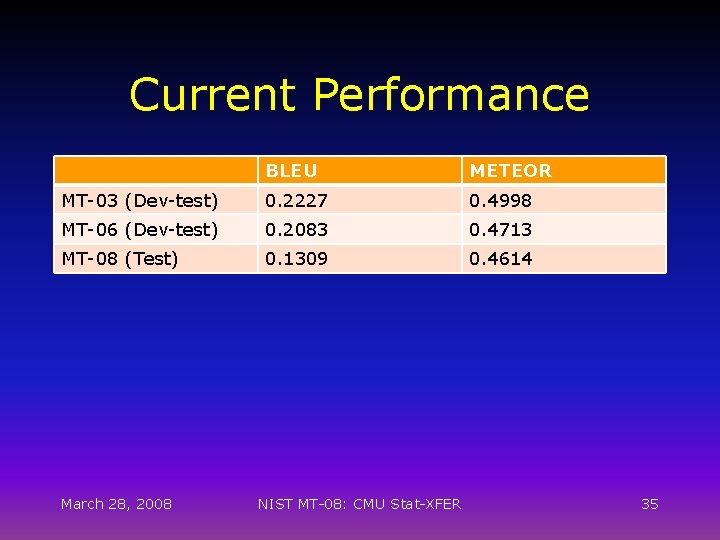

Current Performance

| Test Set | BLEU | METEOR |
|---|---|---|
| MT-03 (Dev-test) | 0.2227 | 0.4998 |
| MT-06 (Dev-test) | 0.2083 | 0.4713 |
| MT-08 (Test) | 0.1309 | 0.4614 |

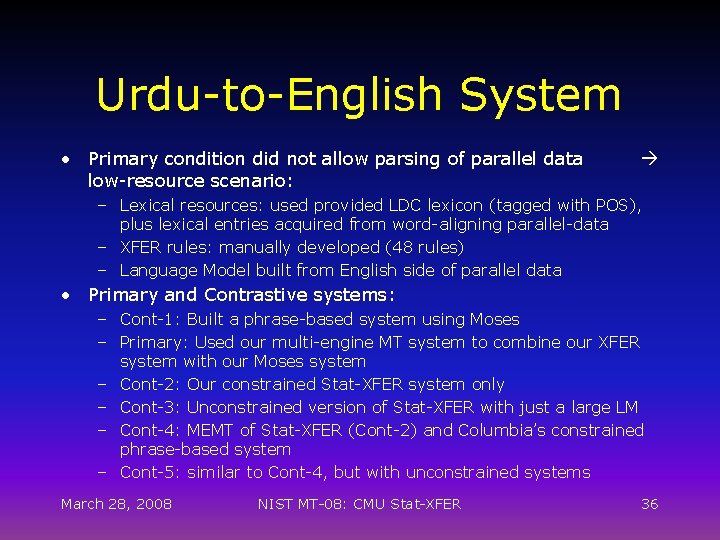

Urdu-to-English System
• Primary condition did not allow parsing of parallel data, making this a low-resource scenario:
  – Lexical resources: used the provided LDC lexicon (tagged with POS), plus lexical entries acquired from word-aligning the parallel data
  – XFER rules: manually developed (48 rules)
  – Language Model built from the English side of the parallel data
• Primary and Contrastive systems:
  – Cont-1: Built a phrase-based system using Moses
  – Primary: Used our multi-engine MT (MEMT) system to combine our XFER system with our Moses system
  – Cont-2: Our constrained Stat-XFER system only
  – Cont-3: Unconstrained version of Stat-XFER with just a large LM
  – Cont-4: MEMT of Stat-XFER (Cont-2) and Columbia’s constrained phrase-based system
  – Cont-5: similar to Cont-4, but with unconstrained systems

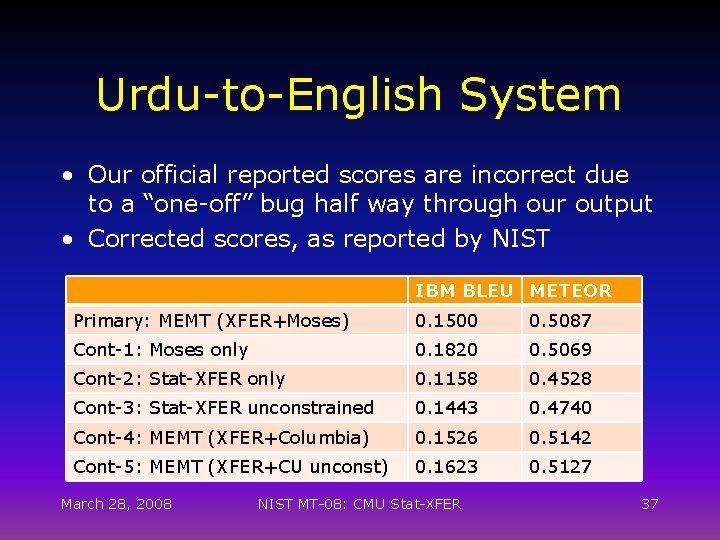

Urdu-to-English System
• Our officially reported scores are incorrect due to a “one-off” bug halfway through our output
• Corrected scores, as reported by NIST:

| System | IBM BLEU | METEOR |
|---|---|---|
| Primary: MEMT (XFER+Moses) | 0.1500 | 0.5087 |
| Cont-1: Moses only | 0.1820 | 0.5069 |
| Cont-2: Stat-XFER only | 0.1158 | 0.4528 |
| Cont-3: Stat-XFER unconstrained | 0.1443 | 0.4740 |
| Cont-4: MEMT (XFER+Columbia) | 0.1526 | 0.5142 |
| Cont-5: MEMT (XFER+CU unconst) | 0.1623 | 0.5127 |

Open Research Questions
• Our large-scale Chinese-English system is still significantly behind phrase-based SMT. Why?
  – Feature set is not sufficiently discriminant?
  – Problems with the parsers for the two sides?
  – Weaker decoder?
  – Syntactic constituents don’t provide sufficient coverage?
  – Bugs and deficiencies in the underlying algorithms?
• The ISI experience indicates that it may take a couple of years to catch up with and surpass the phrase-based systems
• Significant engineering issues remain: improving speed, runtime efficiency, and search

Open Research Questions
• Immediate Research Issues:
  – Rule Learning:
    • Study effects of learning rules from manually vs. automatically word-aligned data
    • Study effects of parser accuracy on learned rules
    • Effective discriminant methods for modeling rule scores
    • Rule filtering strategies
  – Syntax-based LMs:
    • Our translations come out with a syntax tree attached to them
    • Add a syntax-based LM feature that can discriminate between good and bad trees

Conclusions
• Stat-XFER is a promising general MT framework, suitable for a variety of MT scenarios and languages
• Provides a complete solution for building end-to-end MT systems from parallel data, akin to phrase-based SMT systems (training, tuning, runtime system)
• No open-source publicly available toolkits (yet), but we welcome further collaboration activities
• Complex but highly interesting set of open research issues

Questions?
