Status of Tier 1s & Tier 2s

Status of Tier 1s & Tier 2s: Focus on STEP'09 Metrics & Recommendations
N.B. much more detail in the post-mortems!
Jamie.Shiers@cern.ch
LCG-LHCC Referees Meeting, 6th July 2009

What Were The Metrics?
• Those set by the experiments, based on the main "functional blocks" that Tier 1s and Tier 2s support
• Primary (additional) use cases in STEP'09:
  1. (Concurrent) reprocessing at Tier 1s, including recall from tape
  2. Analysis, primarily at Tier 2s (except LHCb)
• In addition, we set a single service / operations site metric, primarily aimed at the Tier 1s (and Tier 0)
• Details:
  - ATLAS (logbook, post-mortem workshop), CMS (post-mortem), blogs
  - Daily minutes: week 1, week 2
  - WLCG Post-mortem workshop

What Were The Results?
☺ The good news first:
✓ Most Tier 1s and many of the Tier 2s met, and in some cases exceeded by a significant margin, the targets that were set
• In addition, this was done with a reasonable operational load at the site level and with quite a high background of scheduled and unscheduled interventions and other problems, including 5 simultaneous LHC OPN fibre cuts!
→ Operationally, things went really rather well
• Experiment operations, particularly ATLAS, were overloaded
☹ The not-so-good news:
• Some Tier 1s and Tier 2s did not meet one or more of the targets

Tier 1s: "not-so-good"
• Of the Tier 1s that did not meet the metrics, we need to consider (alphabetically) ASGC, DE-KIT and NL-T1
• In terms of priority (i.e. what these sites deliver to the experiments), the order is probably DE-KIT, NL-T1, ASGC
→ In all cases, once the problems have been understood and resolved, we need to re-test (other sites too…)
• It is also important to understand the reproducibility of the results: would another STEP yield the same results (in particular for those sites that passed)?
• There is also a very strong correlation between these sites and those that did not fully satisfy (I think I am being kind…) the site metric (which translates to full compliance with WLCG operations standards)

IN2P3
• IN2P3 had already scheduled an intervention when the timetable for STEP'09 was agreed
• It took longer for them to recover than anticipated, but IMHO their results are good not only with respect to the experiment targets, but also with respect to the "site metric"
• Had we not taken a rather short two-week snapshot, they would almost certainly have caught up with the backlog very quickly: in the end they met 97% of the ATLAS target!

DE-KIT
• (Very) shortly before the start of the main STEP period, DE-KIT announced that they could not take part in any tape-related activities of STEP'09 due to major SAN / tape problems
• Despite this, attempts were made to recover the situation, but the experiment targets were not met
• AFAIK, the situation is still not fully understood
• Communication of problems and follow-up still do not meet the agreed WLCG "standards": even after such a major incident and numerous reminders, there has still not been a "Service Incident Report" for this major issue
→ I do not believe that this is acceptable: this is a major European Tier 1 providing resources to all 4 LHC experiments, and the technical and operational problems must be fixed as soon as possible

• To: WLCG operations and WLCG Management Board

Dear all,

unfortunately, we have some bad news about the GridKa tape infrastructure. The connection to archival storage (tape) at GridKa is broken and will probably not function before STEP'09. The actual cause is as yet unknown but is rooted in the SAN configuration and/or hardware. We experience random timeouts on all fibre channel links that connect the dCache pool hosts to the tape drives and libraries. GridKa technicians (and involved supporters from the vendors) are doing everything possible to fix the problems as soon as possible, but the chances of having the issue solved by next week are low. We therefore cannot take part in any tape-dependent activities during STEP'09. If the situation improves during STEP'09 we might be able to join later, maybe during the second week.

Sorry for the late information (the experiments were informed earlier), but I hoped to get some better news from our technicians this afternoon. We will keep you updated if the situation changes or (hopefully) improves.

Regards,
Andreas

NL-T1
• NL-T1 is somewhat less "critical" than DE-KIT (but still important), as it supports fewer of the experiments (not CMS) and has very few Tier 2s to support
  - as opposed to DE-KIT, which supports a large number in several countries…
• Some progress in resolving the MSS performance problems (DMF tuning) has been made, but it is fair to point out that this site too is relatively poor on "the site metric"
• The problems continue to be compounded by the NIKHEF / SARA "split", which does not seem to affect NDGF, a much more distributed site (but simpler in terms of total capacity & number of VOs)

• Main Issues (Maurice Bouwhuis [bouwhuis@sara.nl]):
  - Network bandwidth to the compute nodes too small to accommodate analysis jobs [will be fixed over the summer together with the new procurement of compute and storage resources]
  - Performance issues with the Mass Storage environment: lack of tape drives and tuning of new components [12 new tape drives have been installed after STEP'09 and will be brought online over the next month; tuning of the Mass Storage disk cache during STEP'09 is finished; unexplained low performance during a limited period is still under investigation]
  - As expected before STEP'09, did not make the grade
• Achievements:
  - dCache performed well and stably (> 24 hours of continuous 10 Gb/s data transfers out of dCache-based storage)
  - Relatively few incidents (an improvement compared to CCRC'08). Performance issues were known before STEP'09 and will be addressed over the summer and early autumn. A detailed technical report (STEP'09 NL-T1 Post-Mortem) is available.
• Resources NL-T1:
  - Until end of 2009: computing 3,800 kSI2k, disk storage 1,110 TB, tape storage 460 TB
  - New resources will be delivered in September/October
  - Beginning of 2010: computing 11,800 kSI2k, disk storage 3,935 TB, tape storage 1,500 TB
  - Upgrade of the network infrastructure at NIKHEF and SARA over the summer
  - Upgrade of the Mass Storage infrastructure over the summer

ASGC
• ASGC suffered a fire in Q1 which had a major impact on the site
• They made very impressive efforts to recover as quickly as possible, including relocating to a temporary centre
→ They did not pass the metric(s) for a number of reasons
• It is clearly important to understand these in detail and to re-test once they have relocated back (ongoing)
• But there have been, and continue to be, major concerns and problems with this site which pre-date the fire by many months
• The manpower situation appears to be sub-critical
• Communication has been and continues to be a major problem, despite improvements including local participation in the daily operations meeting
☹ Other sites that are roughly equidistant from CERN (TRIUMF, Tokyo) do not suffer from these problems

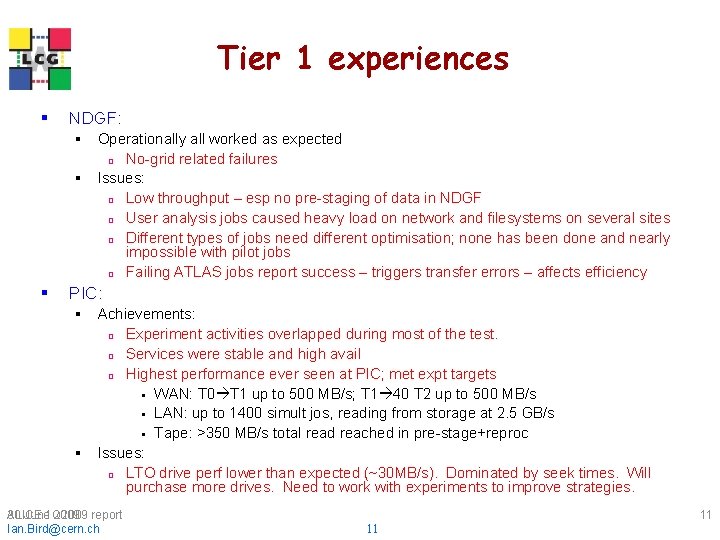

Tier 1 experiences
▪ NDGF:
  ▪ Operationally all worked as expected
    ◦ No grid-related failures
  ▪ Issues:
    ◦ Low throughput, especially no pre-staging of data in NDGF
    ◦ User analysis jobs caused heavy load on network and filesystems at several sites
    ◦ Different types of jobs need different optimisation; none has been done, and it is nearly impossible with pilot jobs
    ◦ Failing ATLAS jobs report success, which triggers transfer errors and affects efficiency
▪ PIC:
  ▪ Achievements:
    ◦ Experiment activities overlapped during most of the test
    ◦ Services were stable and highly available
    ◦ Highest performance ever seen at PIC; met experiment targets:
      WAN: T0→T1 up to 500 MB/s; T1→T2 up to 500 MB/s
      LAN: up to 1400 simultaneous jobs, reading from storage at 2.5 GB/s
      Tape: >350 MB/s total read reached in pre-stage + reprocessing
  ▪ Issues:
    ◦ LTO drive performance lower than expected (~30 MB/s), dominated by seek times. Will purchase more drives. Need to work with the experiments to improve strategies.
30 June 2009, ALICE 1Q 2009 report, Ian.Bird@cern.ch

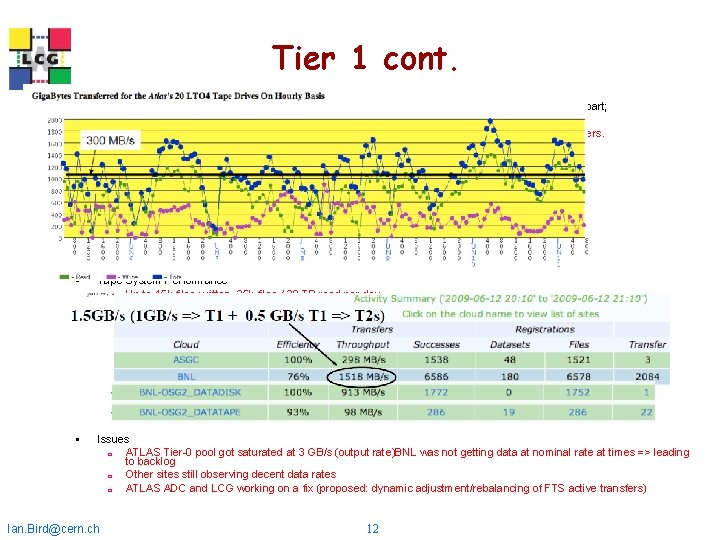

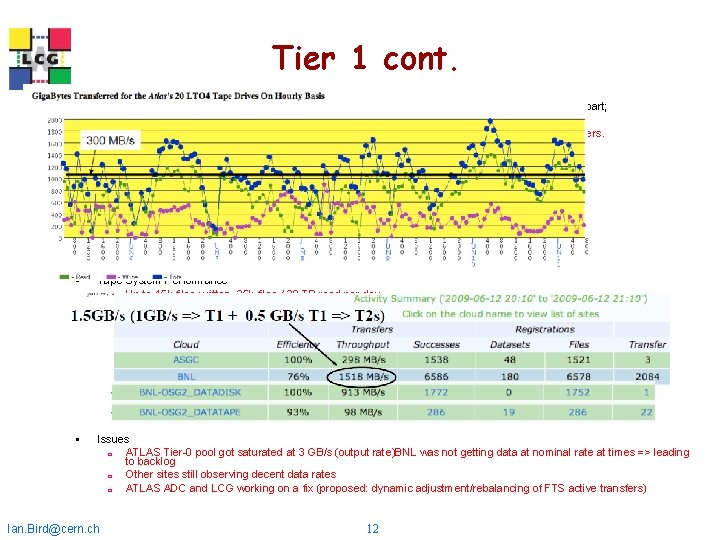

Tier 1 cont.
▪ FZK:
  ▪ MSS problems started before STEP'09; decided not to participate in the tape-testing part of STEP'09
  ▪ Achievements:
    ◦ T0-T1 and T1-T2 worked well for CMS; OK for ATLAS until a gridftp problem overloaded the servers
    ◦ Tape writing was OK
    ◦ Reprocessing from disk was OK
    ◦ No problems with gLite services
    ◦ No load problems with 3D services
    ◦ No WAN bottlenecks, even during the OPN cut
  ▪ Issues:
    ◦ Should have tested the SAN and dCache pool setup better
    ◦ STEP as a load test was important: functional testing and background activity are not enough
    ◦ Need to repeat the tests now that the SAN setup is fixed
▪ BNL:
  ▪ Tape system performance:
    ◦ Up to 45k files written, 35k files / 30 TB read per day
    ◦ 26k staging requests received in 2 hours, 6k files staged in 1 hour
    ◦ >300 MB/s average tape I/O over the course of the exercise
  ▪ (Re-)processing:
    ◦ Up to 10k successfully completed jobs/day with input data from tape
  ▪ LAN and WAN transfers:
    ◦ Up to 1.5 GB/s into/out of the Tier-1 (1 GB/s from CERN/other Tier-1s)
    ◦ >22k/hour completed SRM-managed WAN transfers
    ◦ Fibre cut in DE reduced BNL's bandwidth to ~5 Gbps; TRIUMF was served via BNL
    ◦ 2.7 GB/s average transfer rate between disk storage and WNs
  ▪ All Tier-1 services stable over the entire course of STEP'09
  ▪ Issues:
    ◦ ATLAS Tier-0 pool got saturated at 3 GB/s (output rate); at times BNL was not getting data at the nominal rate, leading to a backlog
    ◦ Other sites still observing decent data rates
    ◦ ATLAS ADC and LCG working on a fix (proposed: dynamic adjustment/rebalancing of FTS active transfers)
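
To compare the per-day volumes with the per-second rates quoted above, a minimal conversion sketch, assuming decimal units (1 TB = 10^6 MB; 1 Gb/s = 125 MB/s). The function names are illustrative only:

```python
SECONDS_PER_DAY = 86_400


def tb_per_day_to_mb_per_s(tb_per_day: float) -> float:
    """Convert a daily volume in TB into an average rate in MB/s (decimal units)."""
    return tb_per_day * 1_000_000 / SECONDS_PER_DAY


def gbit_per_s_to_mb_per_s(gbit_per_s: float) -> float:
    """Convert a network rate in Gb/s into MB/s: 1000 Mb per Gb, 8 bits per byte."""
    return gbit_per_s * 1000 / 8


if __name__ == "__main__":
    # 30 TB read in one day (the BNL peak figure above), spread over 24 hours
    print(f"30 TB/day ~ {tb_per_day_to_mb_per_s(30):.0f} MB/s")
    # ~5 Gbps left to BNL after the fibre cut in DE
    print(f"5 Gb/s = {gbit_per_s_to_mb_per_s(5):.0f} MB/s")
```

On these assumptions a 30 TB read day corresponds to roughly 347 MB/s sustained, the same order as the >300 MB/s average tape I/O quoted for the whole exercise.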

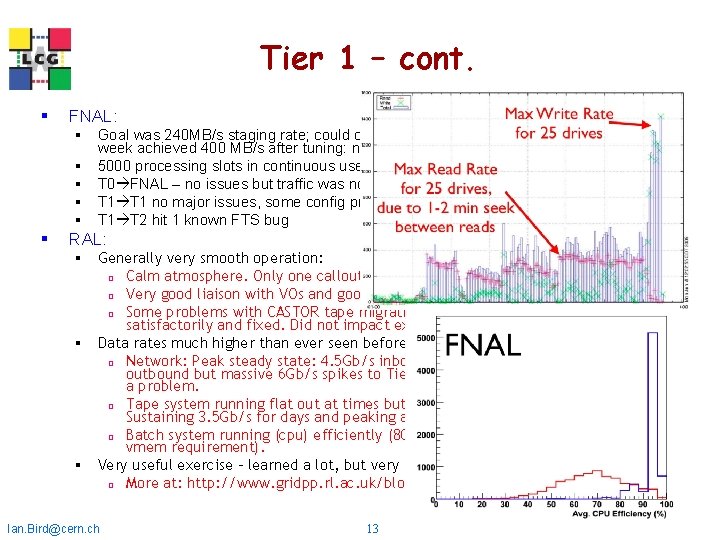

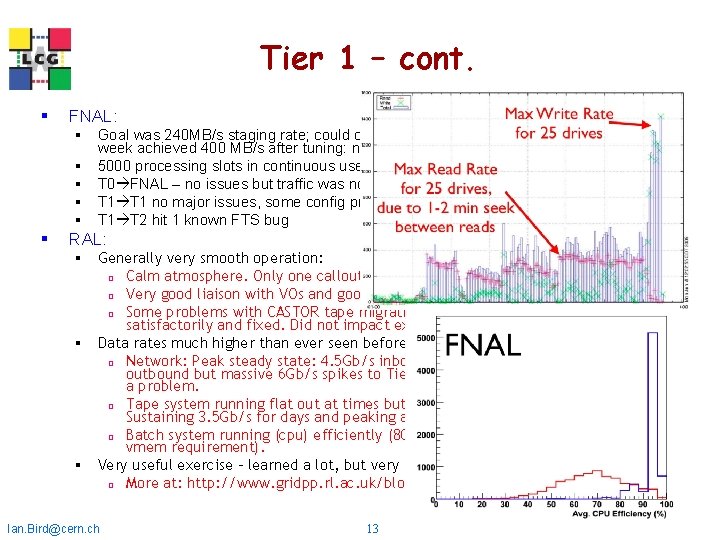

Tier 1 cont.
▪ FNAL:
  ▪ Goal was a 240 MB/s staging rate; could only do 200 MB/s in the 1st week. LTO has long seek times. In the 2nd week achieved 400 MB/s after tuning; need to regroup files on tape and monitor performance
  ▪ 5000 processing slots in continuous use; CPU efficiency good with pre-staging
  ▪ T0→FNAL: no issues, but traffic was not high
  ▪ T1: no major issues, some configuration problems
  ▪ T1→T2: hit 1 known FTS bug
▪ RAL:
  ▪ Generally very smooth operation:
    ◦ Calm atmosphere. Only one callout; load on most service systems was low
    ◦ Very good liaison with the VOs and a good idea of what was going on
    ◦ Some problems with CASTOR tape migration (and robotics), but all handled satisfactorily and fixed. Did not impact the experiments.
  ▪ Data rates much higher than ever seen before:
    ◦ Network: peak steady state 4.5 Gb/s inbound (mostly from the OPN), 2.5 Gb/s outbound, but massive 6 Gb/s spikes to Tier-2 sites. Reprocessing network load was not a problem.
    ◦ Tape system running flat out at times but keeping up (ATLAS/CMS/LHCb). Sustaining 3.5 Gb/s for days and peaking at 6 Gb/s.
    ◦ Batch system running (CPU) efficiently (80-90%) but could not entirely fill it (3 GB vmem requirement)
  ▪ Very useful exercise: learned a lot, but very reassuring
    ◦ More at: http://www.gridpp.rl.ac.uk/blog/category/step09/
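
The LTO remarks (long seeks, need to regroup files on tape) can be illustrated with a simple throughput model. This is a sketch under stated assumptions, not how any site measured its drives: each tape positioning costs a fixed seek time and is followed by streaming one or more adjacent files at the drive's native rate. All parameter values below are hypothetical:

```python
def effective_rate_mb_s(file_size_mb: float, seek_s: float,
                        stream_mb_s: float, files_per_seek: int = 1) -> float:
    """Average drive throughput when each seek is followed by reading
    files_per_seek adjacent files back-to-back.

    Simplified model: ignores mount, rewind and unload times.
    """
    data_mb = file_size_mb * files_per_seek
    elapsed_s = seek_s + data_mb / stream_mb_s
    return data_mb / elapsed_s


if __name__ == "__main__":
    # Hypothetical: 2 GB files, 50 s average seek, 120 MB/s streaming rate
    for n in (1, 10, 100):
        rate = effective_rate_mb_s(2000, 50, 120, files_per_seek=n)
        print(f"{n:>3} files per seek -> {rate:5.1f} MB/s")
```

In this model, once seek time dominates, throughput depends mostly on how many requested files sit next to each other on tape, which is why regrouping files helps more than raw drive speed.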

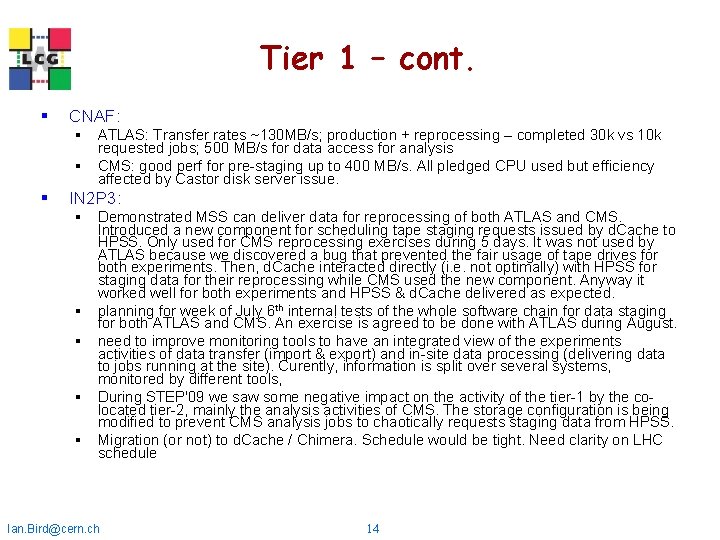

Tier 1 cont.
▪ CNAF:
  ▪ ATLAS: transfer rates ~130 MB/s; production + reprocessing completed 30k jobs vs 10k requested; 500 MB/s for data access for analysis
  ▪ CMS: good performance for pre-staging, up to 400 MB/s. All pledged CPU used, but efficiency affected by a CASTOR disk server issue.
▪ IN2P3:
  ▪ Demonstrated that the MSS can deliver data for reprocessing for both ATLAS and CMS. Introduced a new component for scheduling the tape staging requests issued by dCache to HPSS. It was only used for the CMS reprocessing exercises, during 5 days. It was not used by ATLAS because we discovered a bug that prevented fair usage of the tape drives by both experiments. dCache therefore interacted directly (i.e. not optimally) with HPSS to stage data for ATLAS reprocessing, while CMS used the new component. In any case it worked well for both experiments, and HPSS & dCache delivered as expected.
  ▪ Planning internal tests of the whole software chain for data staging for both ATLAS and CMS in the week of July 6th. An exercise has been agreed with ATLAS for August.
  ▪ Need to improve the monitoring tools to have an integrated view of the experiments' activities: data transfer (import & export) and in-site data processing (delivering data to jobs running at the site). Currently, the information is split over several systems, monitored by different tools.
  ▪ During STEP'09 we saw some negative impact on the Tier-1 activity from the co-located Tier-2, mainly the CMS analysis activities. The storage configuration is being modified to prevent CMS analysis jobs from chaotically requesting data staging from HPSS.
  ▪ Migration (or not) to dCache / Chimera: the schedule would be tight. Need clarity on the LHC schedule.

Tier 1 cont.
▪ NL-T1:
  ▪ Issues:
    ◦ Network bandwidth to the compute nodes too small to accommodate analysis jobs [will be fixed over the summer together with the new procurement of compute and storage resources]
    ◦ Performance issues with the Mass Storage environment: lack of tape drives and tuning of new components [12 new tape drives have been installed after STEP'09 and will be brought online over the next month]; tuning of the Mass Storage disk cache during STEP'09 is finished; unexplained low performance during a limited period is still under investigation
    ◦ As expected before STEP'09, did not make the grade
  ▪ Achievements:
    ◦ dCache performed well and stably (> 24 hours of continuous 10 Gb/s data transfers out of dCache-based storage)
    ◦ Relatively few incidents (an improvement compared to CCRC'08). Performance issues were known before STEP'09 and will be addressed over the summer and early autumn. A detailed technical report (STEP'09 NL-T1 Post-Mortem) is available.
▪ ASGC:
  ▪ Achievements:
    ◦ ATLAS got >1 GB/s to the ASGC cloud
    ◦ Reprocessing smooth in the 2nd week only (several outstanding issues were fixed)
    ◦ CMS pre-staging 150 MB/s vs 73 MB/s target; 8k successful jobs
    ◦ Good data transfer performance
  ▪ Issues (still problems related to the relocation etc. after the fire):
    ◦ Wrong 3D port delayed the start of the ATLAS reprocessing tests
    ◦ Oracle BigID problem in CASTOR (they were missing the workaround)
    ◦ Wrong fairshare allowed too many simulation and Tier 2 jobs
    ◦ CMS reprocessing job efficiency low compared to other Tier 1s

Summary of Tier 1s
• The immediate problems of ASGC, DE-KIT and NL-T1 need to be solved and documented
• Once this has been done, they need to be re-tested (separately, multi-VO and finally together)
• A site visit to these sites (initially DE-KIT and NL-T1) should be performed with priority: it is important that the issues are understood in depth and that the situation is clarified not only with the MB/GDB contacts, but also at the more technical level (buy-in)
☹ Other major changes are underway (RAL CC move) or in the pipeline (dCache PNFS to Chimera migration)
→ A "rerun", already referred to as "SEPT'09", is needed

Tier 2s
• The results from Tier 2s are somewhat more complex to analyse; an example, this time from CMS:
  - Primary goal: use at least 50% of the pledged T2 level for analysis
    - backfill ongoing analysis activity
    - go above 50% if possible
  - Preliminary results:
    - In aggregate, 88% of the pledge was used; 14 sites with > 100%
    - 9 sites below 50%
• The number of Tier 2s is such that it does not make sense to go through each by name, however:
→ Need to understand the primary causes for some sites performing well and some performing relatively badly
→ Some concerns on data access performance / data management in general at Tier 2s: this is an area which has not been looked at in (sufficient?) detail
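
The CMS numbers combine two views: an aggregate over all sites and a per-site check against the 50% goal. A minimal sketch of that bookkeeping, assuming the aggregate is capacity-weighted (total used over total pledged) rather than an average of per-site percentages; the `SiteUsage` type, site names and numbers are hypothetical, not STEP'09 data:

```python
from dataclasses import dataclass


@dataclass
class SiteUsage:
    name: str
    pledged: float  # pledged capacity (any consistent unit, e.g. job slots)
    used: float     # capacity actually used for analysis, same unit


def summarise(sites: list[SiteUsage], goal: float = 0.5):
    """Return (aggregate fraction used, sites above 100%, sites below goal)."""
    total_pledged = sum(s.pledged for s in sites)
    if total_pledged <= 0:
        raise ValueError("total pledge must be positive")
    aggregate = sum(s.used for s in sites) / total_pledged
    above_pledge = [s.name for s in sites if s.used > s.pledged]
    below_goal = [s.name for s in sites if s.used < goal * s.pledged]
    return aggregate, above_pledge, below_goal


if __name__ == "__main__":
    # Hypothetical sites, for illustration only
    sites = [
        SiteUsage("T2_A", pledged=1000, used=1200),
        SiteUsage("T2_B", pledged=500, used=200),
        SiteUsage("T2_C", pledged=800, used=600),
    ]
    agg, above, below = summarise(sites)
    print(f"aggregate {agg:.0%}; above pledge: {above}; below 50%: {below}")
```

As the toy numbers show, a healthy aggregate can coexist with individual sites well below the goal, so the per-site causes still need to be understood.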

Summary of Tier 2s
• Detailed reports written by a number of Tier 2s
  - MC conclusion: "solved since a long time" (Glasgow)
  - Also some numbers on specific tasks, e.g. GangaRobot
• Some specific areas of concern (likely to grow IMHO):
  - Networking: internal bandwidth and/or external
  - Data access: aside from the constraints above, concern over whether data access will meet the load / requirements of heavy end-user analysis
  - "Efficiency" (# successful analysis jobs) varies from 94% down to 56% per (ATLAS) cloud, but from >99% down to 0% (e.g. 13K jobs failed, 100 succeeded); an error analysis also exists
• IMHO, the detailed summaries maintained by the experiments, together with the site reviews, demonstrate that the process is under control, notwithstanding these concerns
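
The gap between per-cloud and finer-grained "efficiency" follows from how the ratio is aggregated. A minimal sketch, assuming efficiency = succeeded / (succeeded + failed) and that a cloud figure sums the job counts of its sites; only the failing site's counts echo the example above (13K failed, 100 succeeded), the other site is hypothetical:

```python
def efficiency(succeeded: int, failed: int) -> float:
    """Fraction of analysis jobs that succeeded."""
    total = succeeded + failed
    if total == 0:
        raise ValueError("no jobs recorded")
    return succeeded / total


def cloud_efficiency(sites: dict[str, tuple[int, int]]) -> float:
    """Job-weighted efficiency of a cloud: sum the counts over its sites first."""
    succeeded = sum(ok for ok, _ in sites.values())
    failed = sum(bad for _, bad in sites.values())
    return efficiency(succeeded, failed)


if __name__ == "__main__":
    cloud = {
        "site_ok": (30_000, 300),    # hypothetical well-behaved site
        "site_bad": (100, 13_000),   # roughly the failing-site example above
    }
    for name, (ok, bad) in cloud.items():
        print(f"{name}: {efficiency(ok, bad):.1%}")
    print(f"cloud: {cloud_efficiency(cloud):.1%}")
```

A cloud can thus report a middling figure while one of its sites is effectively at 0%, which is why the per-site error analysis matters alongside the cloud summaries.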

Recommendations
1. Resolution of major problems, with in-depth written reports
2. Site visits to Tier 1s that gave problems during STEP'09 (at least DE-KIT & NL-T1)
3. Understanding of Tier 2 successes and failures
4. Rerun of "STEP'09", perhaps split into reprocessing and analysis before a "final" re-run, on the timescale of September 2009
5. Review of results prior to LHC restart

Overall Conclusions
• Once again, this was an extremely valuable exercise and we all learned a great deal!
• Progress, again, has been significant
• The WLCG operations procedures / meetings have proven their worth
• Need to understand and resolve the outstanding issues!
→ Overall, STEP'09 was a big step forward!

Tier 1 2 3 vocabulary words

Tier 1 2 3 vocabulary words Tier 3 words

Tier 3 words Tier 1 status

Tier 1 status Tier 1 isp

Tier 1 isp Tier bari

Tier bari Hand in a tier 2

Hand in a tier 2 Tier 1 instruction

Tier 1 instruction Is bc and bce the same

Is bc and bce the same Jkl tier 3

Jkl tier 3 Demand deposit vs savings deposit

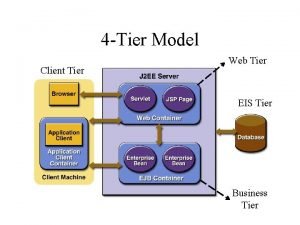

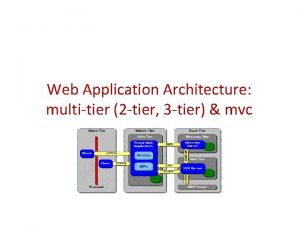

Demand deposit vs savings deposit Two tier data warehouse architecture

Two tier data warehouse architecture Tier 1 isp

Tier 1 isp N tier architecture advantages and disadvantages

N tier architecture advantages and disadvantages Maria tier 0

Maria tier 0 Tier one services

Tier one services Self assessment report nba

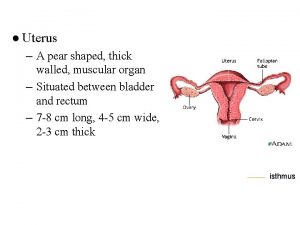

Self assessment report nba Nerve supply uterus

Nerve supply uterus Keiron bailey

Keiron bailey Marzano tier 2 vocabulary list

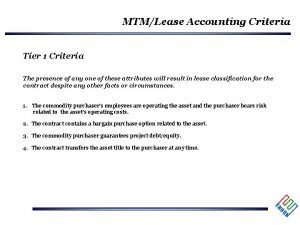

Marzano tier 2 vocabulary list Tier the presence

Tier the presence What is scaled advice

What is scaled advice