Statistical Topic Models for Text Mining Cheng Xiang

- Slides: 123

Statistical Topic Models for Text Mining

ChengXiang Zhai

Department of Computer Science, Graduate School of Library & Information Science, Institute for Genomic Biology, Department of Statistics

University of Illinois, Urbana-Champaign

http://www.cs.illinois.edu/homes/czhai, czhai@illinois.edu

NLP&CC 2012 Tutorial, Beijing, China, Nov. 2, 2012

Goal of the Tutorial
- Brief introduction to the emerging area of applying statistical topic models (STMs) to text mining (TM)
- Targeted audience:
  - Practitioners working on information retrieval and data mining who are interested in learning about cutting-edge text mining techniques
  - Researchers who are looking for new research problems in text mining, information retrieval, and natural language processing
- Emphasis is on basic concepts, principles, and major application ideas
- Accessible to anyone with basic knowledge of probability and statistics
- Check out David Blei’s tutorials on this topic for a more complete coverage of advanced topic models: http://www.cs.princeton.edu/~blei/topicmodeling.html

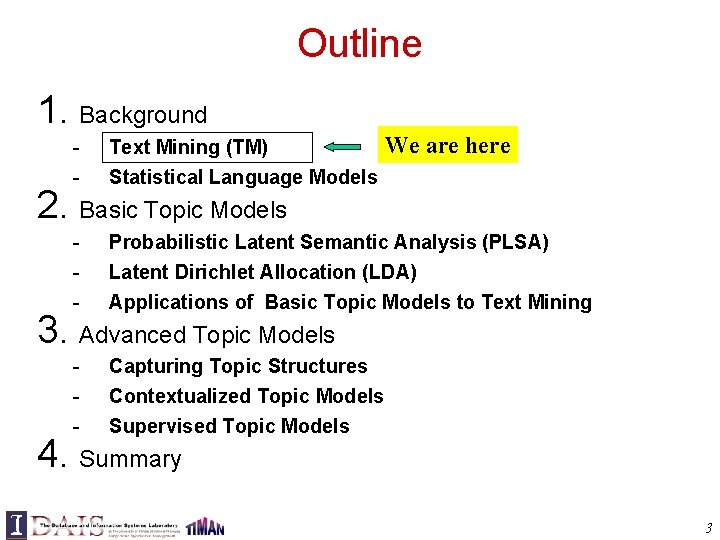

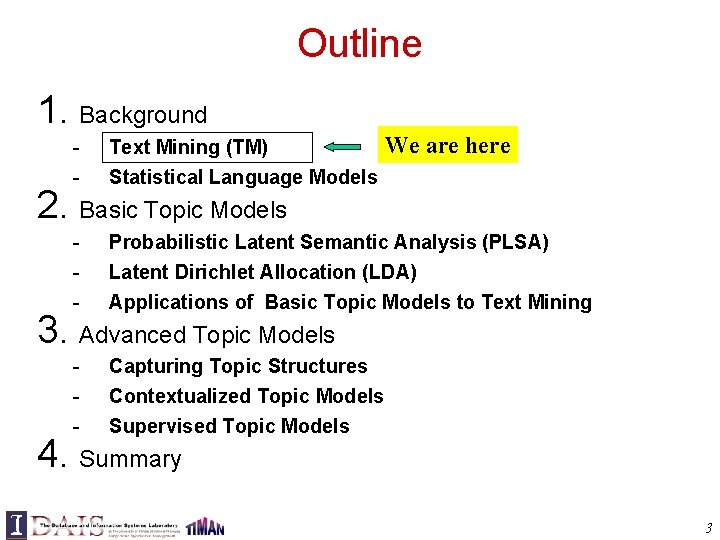

Outline
1. Background
   - Text Mining (TM) (we are here)
   - Statistical Language Models
2. Basic Topic Models
   - Probabilistic Latent Semantic Analysis (PLSA)
   - Latent Dirichlet Allocation (LDA)
   - Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models
   - Capturing Topic Structures
   - Contextualized Topic Models
   - Supervised Topic Models
4. Summary

What is Text Mining?
- Data Mining View: Explore patterns in textual data
  - Find latent topics
  - Find topical trends
  - Find outliers and other hidden patterns
- Natural Language Processing View: Make inferences based on partial understanding of natural language text
  - Information extraction
  - Question answering

Applications of Text Mining
- Direct applications
  - Discovery-driven (bioinformatics, business intelligence, etc.): We have specific questions; how can we exploit data mining to answer the questions?
  - Data-driven (WWW, literature, email, customer reviews, etc.): We have a lot of data; what can we do with it?
- Indirect applications
  - Assist information access (e.g., discover major latent topics to better summarize search results)
  - Assist information organization (e.g., discover hidden structures to link scattered information)

Text Mining Methods
- Data Mining Style: View text as high dimensional data
  - Frequent pattern finding
  - Association analysis
  - Outlier detection
- Information Retrieval Style: Fine granularity topical analysis (this tutorial)
  - Topic extraction
  - Exploit term weighting and text similarity measures
- Natural Language Processing Style: Information Extraction
  - Entity extraction
  - Relation extraction
  - Sentiment analysis
- Machine Learning Style: Unsupervised or semi-supervised learning
  - Mixture models
  - Dimension reduction

Outline
1. Background
   - Text Mining (TM)
   - Statistical Language Models (we are here)
2. Basic Topic Models
   - Probabilistic Latent Semantic Analysis (PLSA)
   - Latent Dirichlet Allocation (LDA)
   - Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models
   - Capturing Topic Structures
   - Contextualized Topic Models
   - Supervised Topic Models
4. Summary

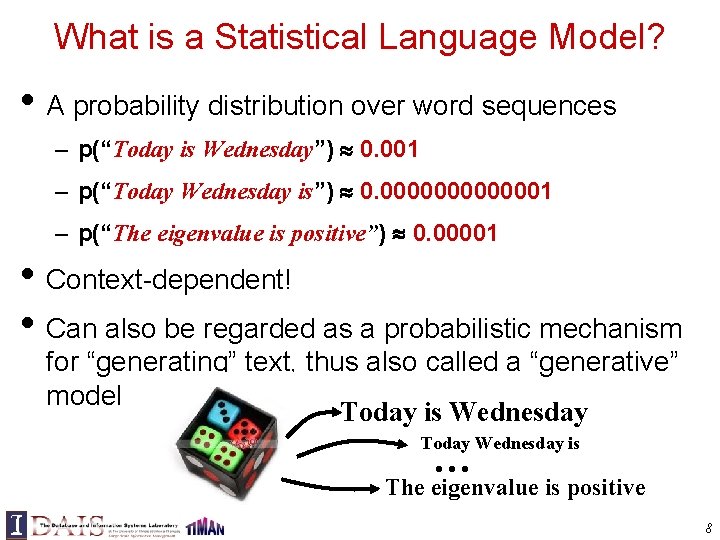

What is a Statistical Language Model?
- A probability distribution over word sequences
  - p(“Today is Wednesday”) ≈ 0.001
  - p(“Today Wednesday is”) ≈ 0.0000001
  - p(“The eigenvalue is positive”) ≈ 0.00001
- Context-dependent!
- Can also be regarded as a probabilistic mechanism for “generating” text, thus also called a “generative” model

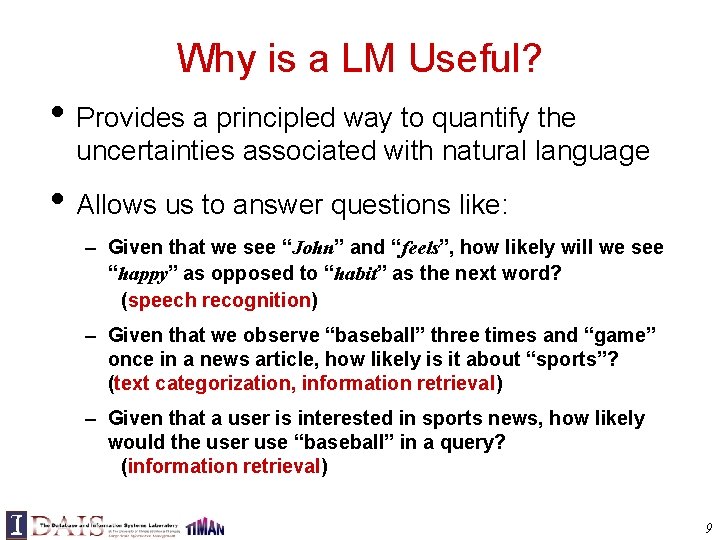

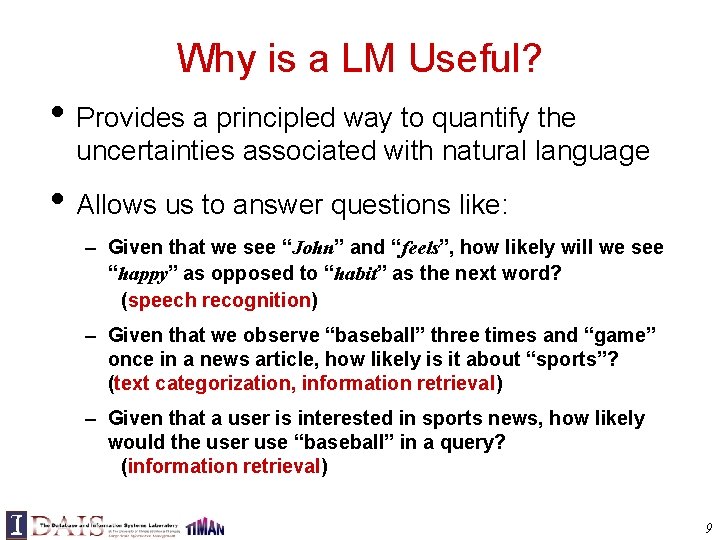

Why is a LM Useful?
- Provides a principled way to quantify the uncertainties associated with natural language
- Allows us to answer questions like:
  - Given that we see “John” and “feels”, how likely will we see “happy” as opposed to “habit” as the next word? (speech recognition)
  - Given that we observe “baseball” three times and “game” once in a news article, how likely is it about “sports”? (text categorization, information retrieval)
  - Given that a user is interested in sports news, how likely would the user use “baseball” in a query? (information retrieval)

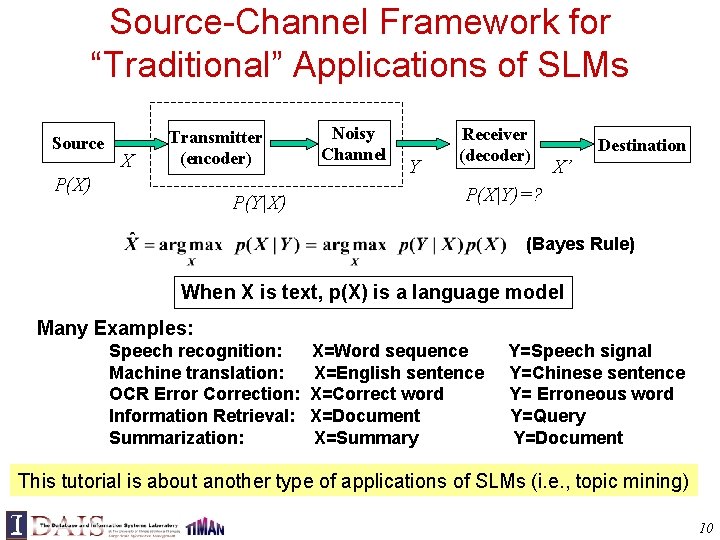

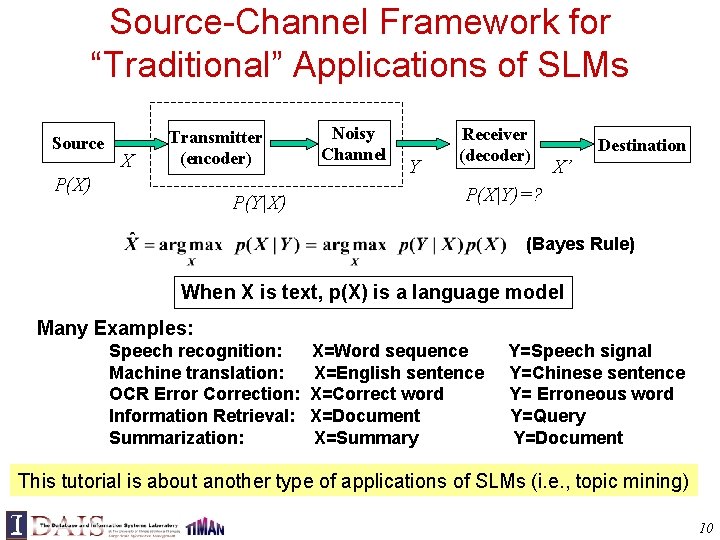

Source-Channel Framework for “Traditional” Applications of SLMs

Source → Transmitter (encoder) → Noisy Channel → Receiver (decoder) → Destination

- The source emits $X$ with probability $P(X)$; the noisy channel produces $Y$ with probability $P(Y|X)$; the receiver recovers $X'$ by asking $P(X|Y) = ?$ (Bayes rule)
- When $X$ is text, $p(X)$ is a language model

Many examples:

| Application | X | Y |
| --- | --- | --- |
| Speech recognition | Word sequence | Speech signal |
| Machine translation | English sentence | Chinese sentence |
| OCR error correction | Correct word | Erroneous word |
| Information retrieval | Document | Query |
| Summarization | Summary | Document |

This tutorial is about another type of application of SLMs (i.e., topic mining)

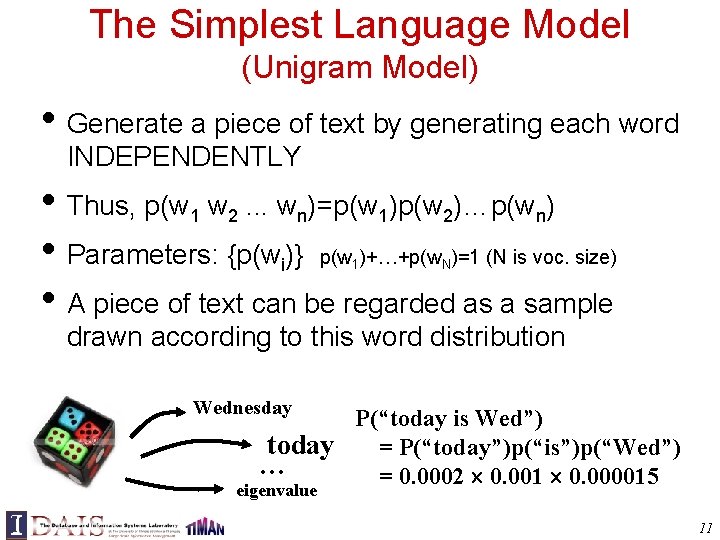

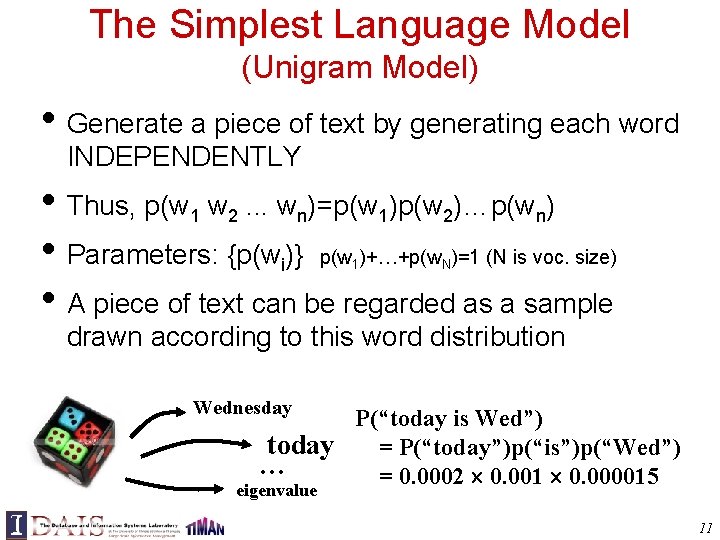

The Simplest Language Model (Unigram Model)
- Generate a piece of text by generating each word INDEPENDENTLY
- Thus, $p(w_1 w_2 \ldots w_n) = p(w_1)\,p(w_2) \cdots p(w_n)$
- Parameters: $\{p(w_i)\}$, with $p(w_1) + \cdots + p(w_N) = 1$ ($N$ is the vocabulary size)
- A piece of text can be regarded as a sample drawn according to this word distribution
- Example: P(“today is Wed”) = P(“today”) p(“is”) p(“Wed”) = 0.0002 × 0.001 × 0.000015
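The independence assumption can be sketched in a few lines of Python. The three word probabilities are the ones from the slide's example; the function name `sequence_probability` is only an illustrative choice.

```python
import math

# Word probabilities taken from the slide's example; the rest of the vocabulary is omitted.
unigram_lm = {"today": 0.0002, "is": 0.001, "Wed": 0.000015}

def sequence_probability(words, lm):
    """p(w1 ... wn) = p(w1) * ... * p(wn) under the unigram independence assumption."""
    return math.prod(lm[w] for w in words)

p_text = sequence_probability(["today", "is", "Wed"], unigram_lm)
# Word order does not matter to a unigram model (up to floating-point rounding).
p_reordered = sequence_probability(["Wed", "today", "is"], unigram_lm)
```

Because the model ignores word order, it cannot tell “Today is Wednesday” from “Today Wednesday is”, which is the price paid for its simplicity.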

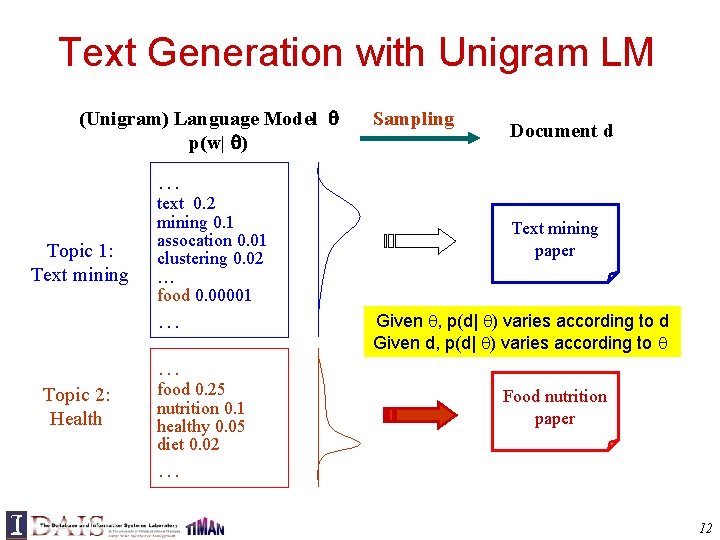

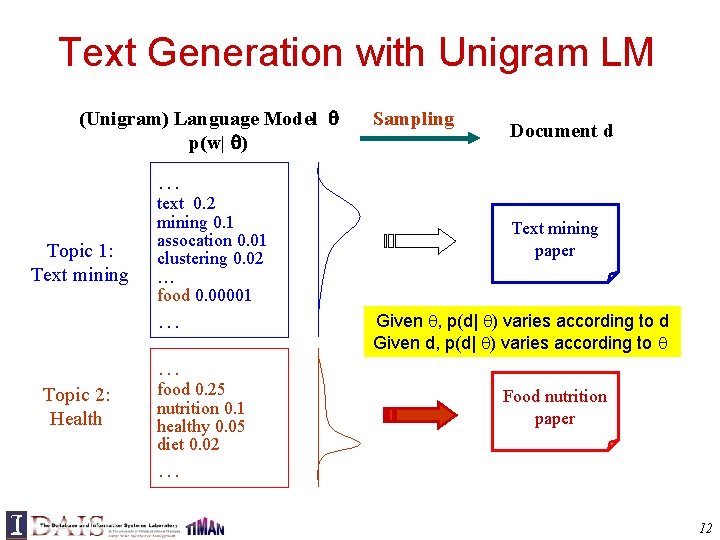

Text Generation with Unigram LM
- A (unigram) language model $\theta$ with word distribution $p(w|\theta)$ generates a document $d$ by sampling
- Topic 1 (text mining): text 0.2, mining 0.1, association 0.01, clustering 0.02, …, food 0.00001, … → text mining paper
- Topic 2 (health): food 0.25, nutrition 0.1, healthy 0.05, diet 0.02, … → food nutrition paper
- Given $\theta$, $p(d|\theta)$ varies according to $d$
- Given $d$, $p(d|\theta)$ varies according to $\theta$

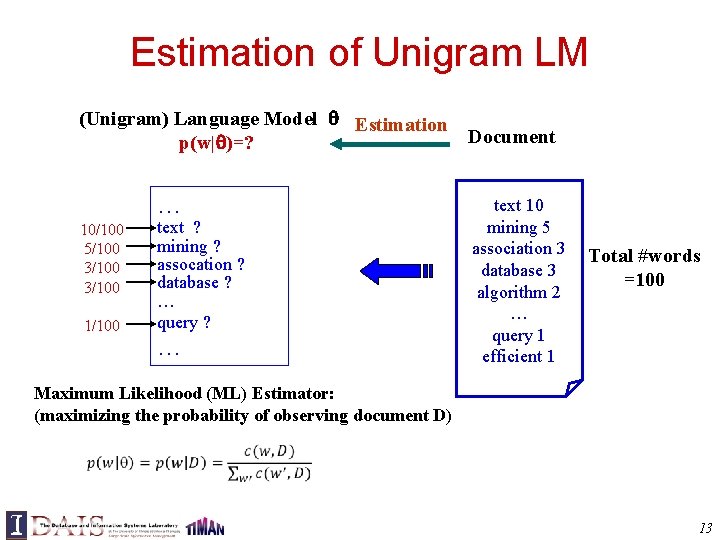

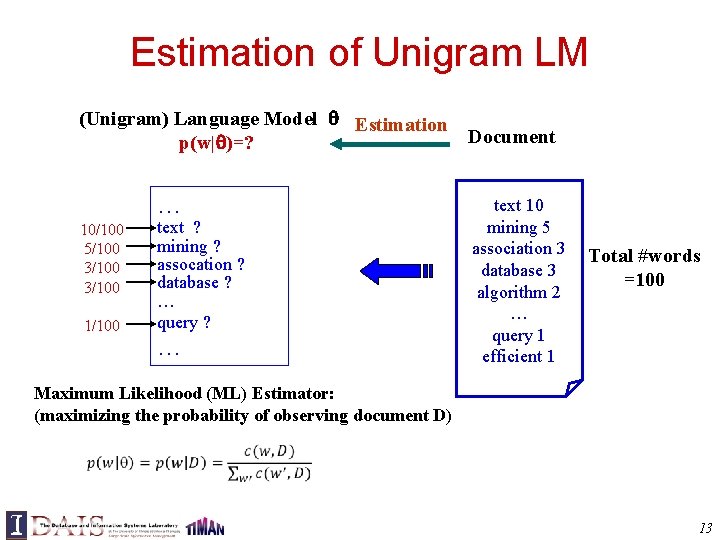

Estimation of Unigram LM
- Given a document, estimate the (unigram) language model $p(w|\theta) = ?$
- Observed word counts in the document: text 10, mining 5, association 3, database 3, algorithm 2, …, query 1, efficient 1; total number of words = 100
- Maximum Likelihood (ML) estimator (maximizing the probability of observing document $d$): $p(w|\theta) = c(w,d)/|d|$
- Estimates: text 10/100, mining 5/100, association 3/100, …, query 1/100
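As a minimal sketch, the ML estimate is just a normalization of the counts. The counts and the document length of 100 come from the slide; words elided on the slide are left out here, so the listed probabilities do not sum to 1.

```python
# Counts listed on the slide; the remaining words of the document are elided there.
counts = {"text": 10, "mining": 5, "association": 3, "database": 3,
          "algorithm": 2, "query": 1, "efficient": 1}
doc_length = 100  # total number of words in the document, as stated on the slide

# ML estimate: relative frequency of each word in the document.
p_ml = {w: c / doc_length for w, c in counts.items()}
```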

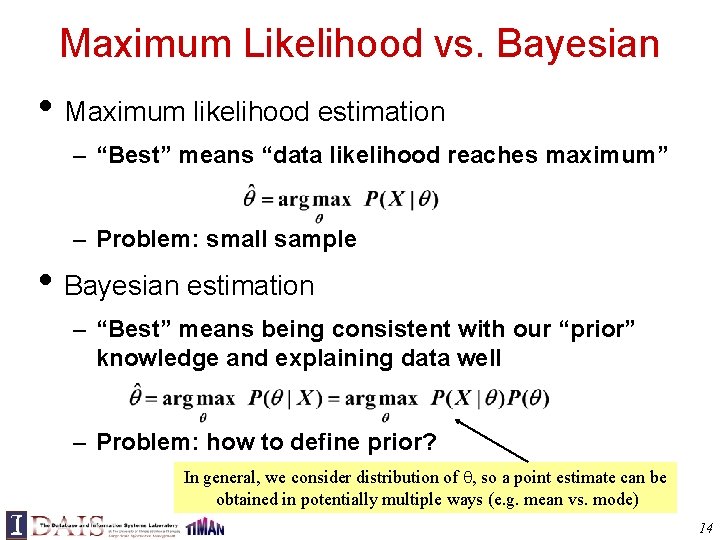

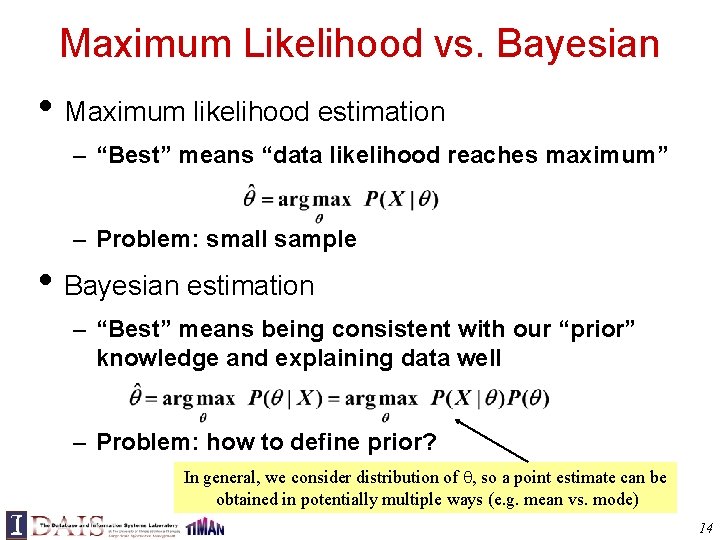

Maximum Likelihood vs. Bayesian
- Maximum likelihood estimation
  - “Best” means “data likelihood reaches maximum”
  - Problem: small sample
- Bayesian estimation
  - “Best” means being consistent with our “prior” knowledge and explaining data well
  - Problem: how to define prior?
- In general, we consider the distribution of $\theta$, so a point estimate can be obtained in potentially multiple ways (e.g., mean vs. mode)

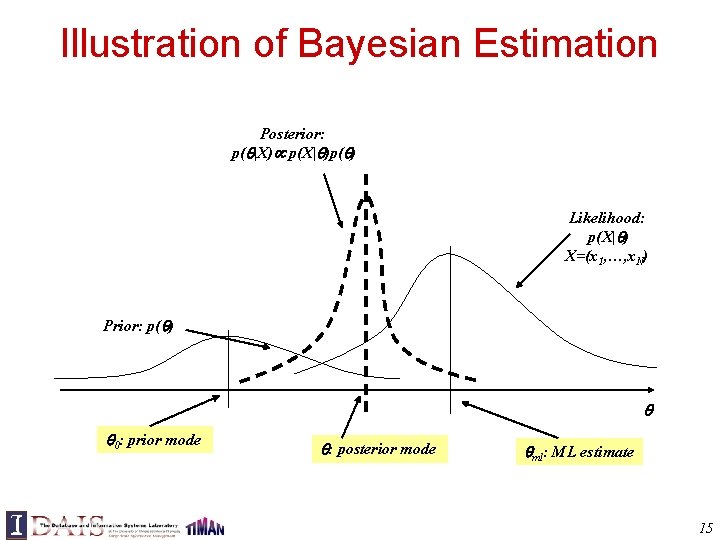

Illustration of Bayesian Estimation
- Posterior: $p(\theta|X) \propto p(X|\theta)\,p(\theta)$
- Likelihood: $p(X|\theta)$, with $X = (x_1, \ldots, x_N)$
- Prior: $p(\theta)$
- $\theta_0$: prior mode; $\theta$: posterior mode; $\theta_{ml}$: ML estimate

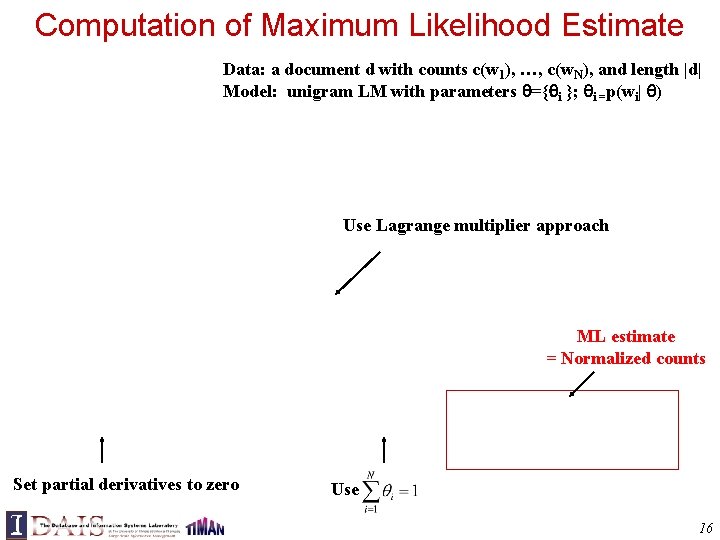

Computation of Maximum Likelihood Estimate
- Data: a document $d$ with counts $c(w_1), \ldots, c(w_N)$, and length $|d|$
- Model: unigram LM $\theta$ with parameters $\{\theta_i\}$; $\theta_i = p(w_i|\theta)$
- Use the Lagrange multiplier approach: set partial derivatives to zero, then use the constraint $\sum_i \theta_i = 1$
- ML estimate = normalized counts: $\hat{\theta}_i = c(w_i)/|d|$
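The equations on this slide did not survive extraction. A standard version of the derivation, written with the slide's symbols and with the multiplier name $\mu$ chosen here for illustration, runs as follows:

$$
\begin{aligned}
\ell(\theta) &= \log p(d|\theta) = \sum_{i=1}^{N} c(w_i)\,\log\theta_i \\
\ell'(\theta) &= \ell(\theta) + \mu\Big(\sum_{i=1}^{N}\theta_i - 1\Big) \\
\frac{\partial \ell'}{\partial \theta_i} &= \frac{c(w_i)}{\theta_i} + \mu = 0
\;\Rightarrow\; \theta_i = -\frac{c(w_i)}{\mu} \\
\sum_{i=1}^{N}\theta_i = 1 &\;\Rightarrow\; \mu = -\sum_{i=1}^{N} c(w_i) = -|d|
\;\Rightarrow\; \hat{\theta}_i = \frac{c(w_i)}{|d|}
\end{aligned}
$$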

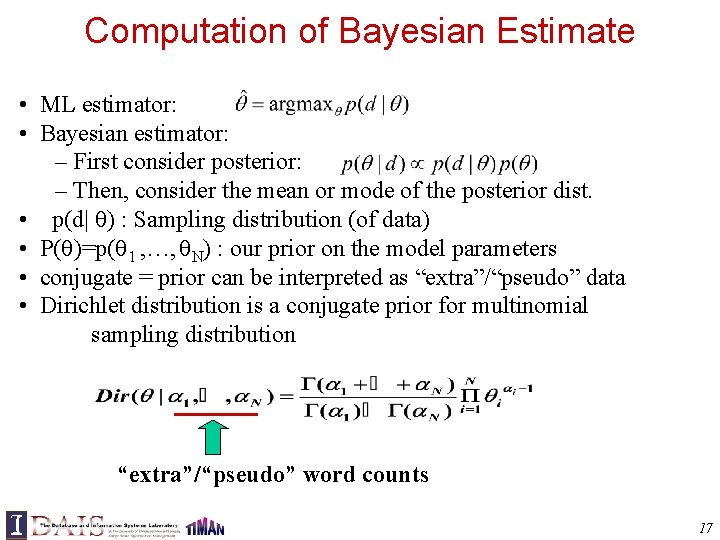

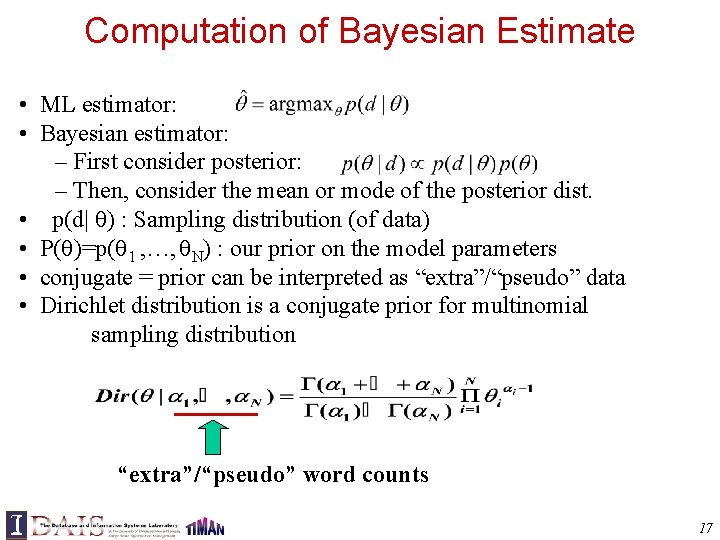

Computation of Bayesian Estimate
- ML estimator: $\hat{\theta}_{ML} = \arg\max_\theta p(d|\theta)$
- Bayesian estimator:
  - First consider the posterior: $p(\theta|d) = p(d|\theta)\,p(\theta)/p(d)$
  - Then, consider the mean or mode of the posterior distribution
- $p(d|\theta)$: sampling distribution (of data)
- $p(\theta) = p(\theta_1, \ldots, \theta_N)$: our prior on the model parameters
- Conjugate = prior can be interpreted as “extra”/“pseudo” data
- The Dirichlet distribution is a conjugate prior for the multinomial sampling distribution; its parameters act as “extra”/“pseudo” word counts

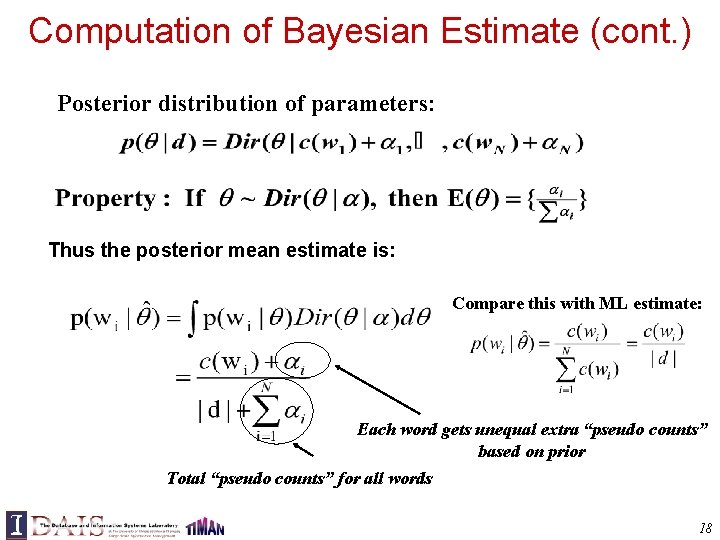

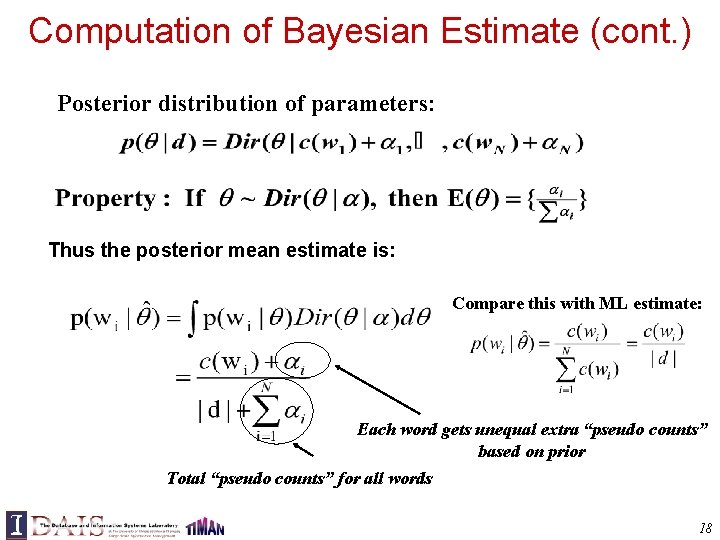

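Computation of Bayesian Estimate (cont.)
- Posterior distribution of parameters, for a Dirichlet prior with parameters $\alpha_1, \ldots, \alpha_N$: $p(\theta|d) = \mathrm{Dir}(\theta \mid c(w_1)+\alpha_1, \ldots, c(w_N)+\alpha_N)$
- Thus the posterior mean estimate is: $p(w_i|\hat{\theta}) = \dfrac{c(w_i) + \alpha_i}{|d| + \sum_{j=1}^{N} \alpha_j}$
- Compare this with the ML estimate $c(w_i)/|d|$:
  - Each word gets unequal extra “pseudo counts” $\alpha_i$ based on the prior
  - $\sum_j \alpha_j$ is the total “pseudo counts” for all words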
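A small sketch of how pseudo counts change the estimate. The three-word vocabulary, the counts, and the pseudo counts below are hypothetical values picked for illustration, not numbers from the slides.

```python
def posterior_mean(counts, pseudo_counts):
    """Posterior mean under a Dirichlet prior: (c(w) + pseudo(w)) / (|d| + total pseudo)."""
    doc_length = sum(counts.values())
    total_pseudo = sum(pseudo_counts.values())
    return {w: (counts[w] + pseudo_counts[w]) / (doc_length + total_pseudo)
            for w in counts}

# Hypothetical toy document (4 words) and hypothetical prior pseudo counts.
counts = {"text": 3, "mining": 1, "food": 0}
pseudo_counts = {"text": 1, "mining": 1, "food": 2}

p_ml = {w: c / sum(counts.values()) for w, c in counts.items()}
p_bayes = posterior_mean(counts, pseudo_counts)
```

The unseen word “food” gets probability 0 under ML but a nonzero probability under the posterior mean, which is why the small-sample problem of ML is softened by the prior.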

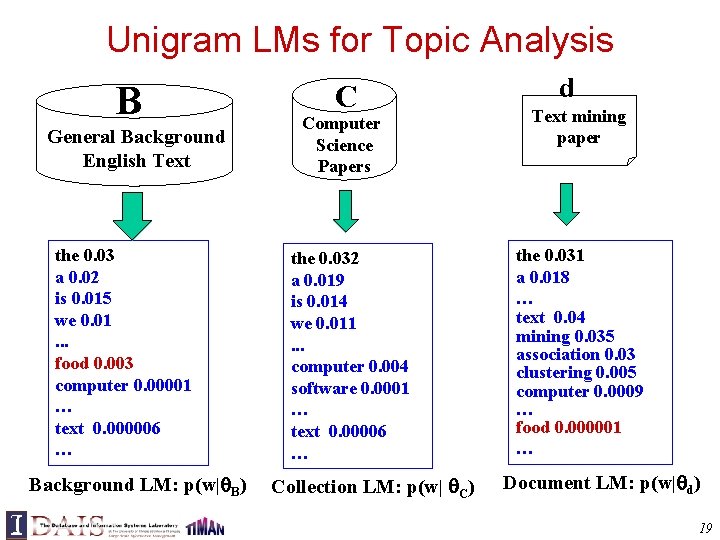

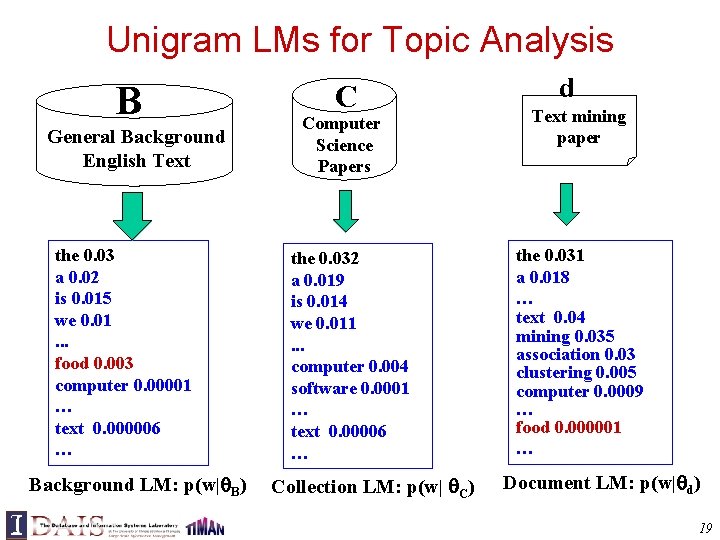

Unigram LMs for Topic Analysis
- Background LM $p(w|\theta_B)$, estimated from general background English text: the 0.03, a 0.02, is 0.015, we 0.01, ..., food 0.003, computer 0.00001, …, text 0.000006, …
- Collection LM $p(w|\theta_C)$, estimated from computer science papers: the 0.032, a 0.019, is 0.014, we 0.011, ..., computer 0.004, software 0.0001, …, text 0.00006, …
- Document LM $p(w|\theta_d)$, estimated from a text mining paper: the 0.031, a 0.018, …, text 0.04, mining 0.035, association 0.03, clustering 0.005, computer 0.0009, …, food 0.000001, …

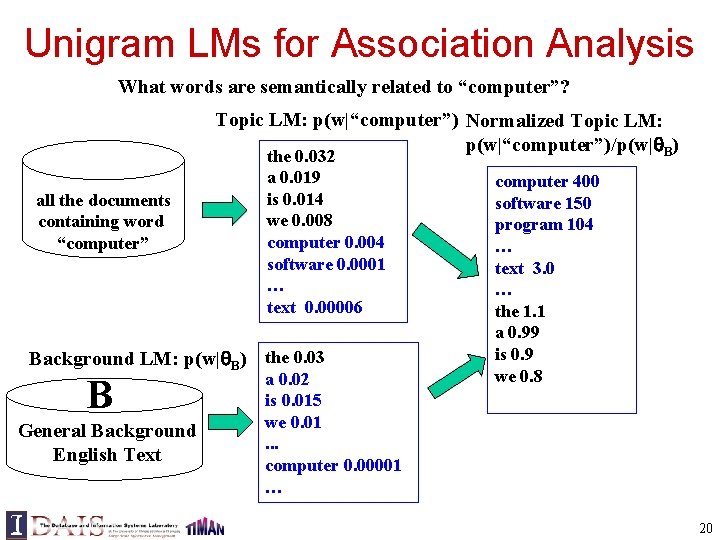

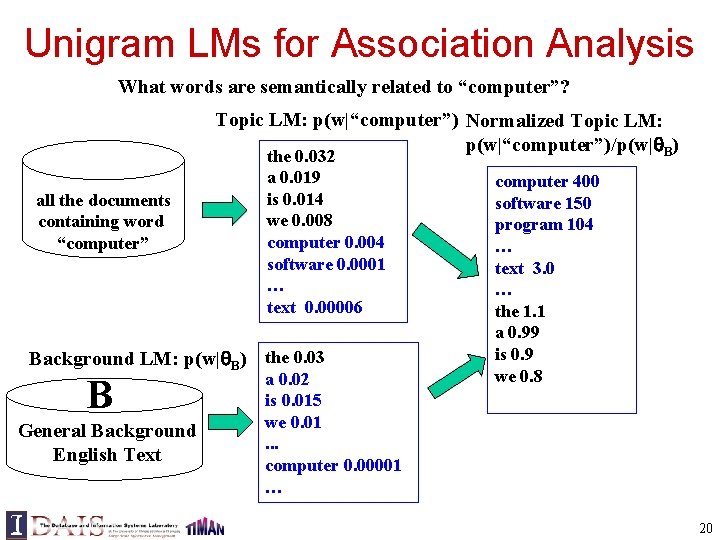

Unigram LMs for Association Analysis
- What words are semantically related to “computer”?
- Topic LM $p(w|\text{“computer”})$, estimated from all the documents containing the word “computer”: the 0.032, a 0.019, is 0.014, we 0.008, computer 0.004, software 0.0001, …, text 0.00006
- Background LM $p(w|\theta_B)$, estimated from general background English text: the 0.03, a 0.02, is 0.015, we 0.01, ..., computer 0.00001, …
- Normalized topic LM $p(w|\text{“computer”})/p(w|\theta_B)$: computer 400, software 150, program 104, …, text 3.0, …, the 1.1, a 0.99, is 0.9, we 0.8
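The normalization step can be sketched as a per-word ratio. Only the words for which the slide lists both a topic probability and a background probability are included; the variable names are illustrative.

```python
# Probabilities listed on the slide for words that appear in both models.
topic_lm = {"the": 0.032, "a": 0.019, "is": 0.014, "we": 0.008, "computer": 0.004}
background_lm = {"the": 0.03, "a": 0.02, "is": 0.015, "we": 0.01, "computer": 0.00001}

# Normalized topic LM: how much more likely a word is in the topic than in general English.
normalized = {w: topic_lm[w] / background_lm[w] for w in topic_lm}

# Words sorted from most to least topic-specific.
ranked = sorted(normalized, key=normalized.get, reverse=True)
```

Common words such as “the” end up with ratios near 1, while “computer” is pushed to the top with a ratio of about 400, matching the slide.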

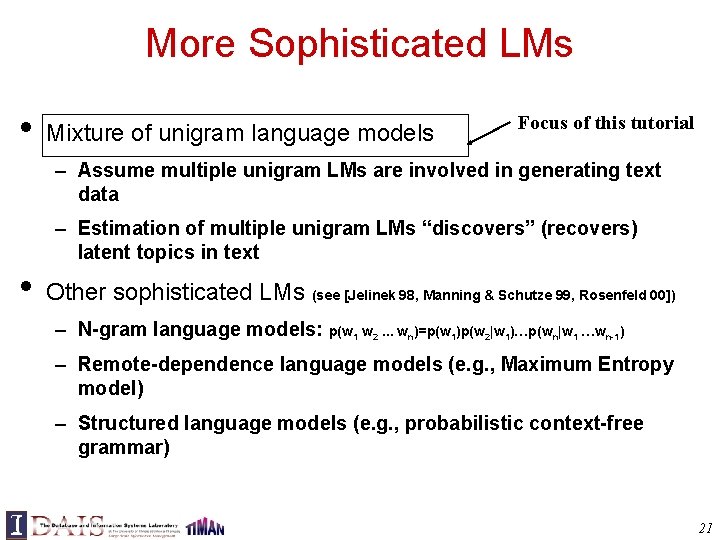

More Sophisticated LMs
- Mixture of unigram language models (focus of this tutorial)
  - Assume multiple unigram LMs are involved in generating text data
  - Estimation of multiple unigram LMs “discovers” (recovers) latent topics in text
- Other sophisticated LMs (see [Jelinek 98, Manning & Schutze 99, Rosenfeld 00])
  - N-gram language models: $p(w_1 w_2 \ldots w_n) = p(w_1)\,p(w_2|w_1) \cdots p(w_n|w_1 \ldots w_{n-1})$
  - Remote-dependence language models (e.g., Maximum Entropy model)
  - Structured language models (e.g., probabilistic context-free grammar)

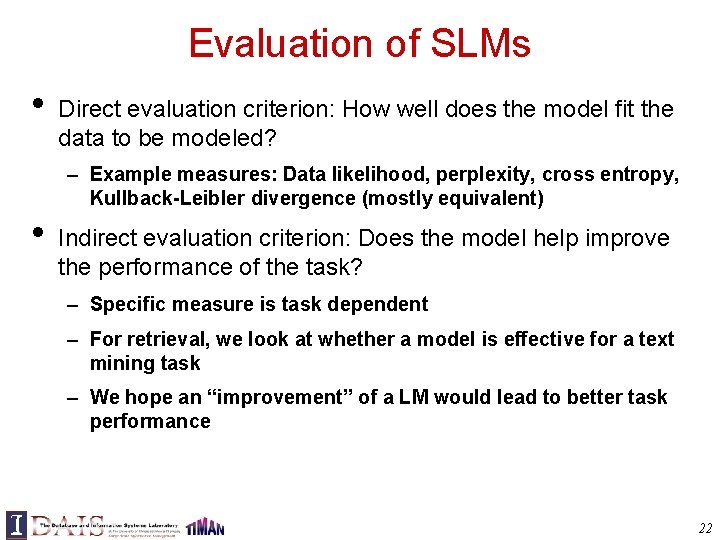

Evaluation of SLMs
- Direct evaluation criterion: How well does the model fit the data to be modeled?
  - Example measures: data likelihood, perplexity, cross entropy, Kullback-Leibler divergence (mostly equivalent)
- Indirect evaluation criterion: Does the model help improve the performance of the task?
  - Specific measure is task dependent
  - For retrieval, we look at whether a model is effective for a text mining task
  - We hope an “improvement” of a LM would lead to better task performance

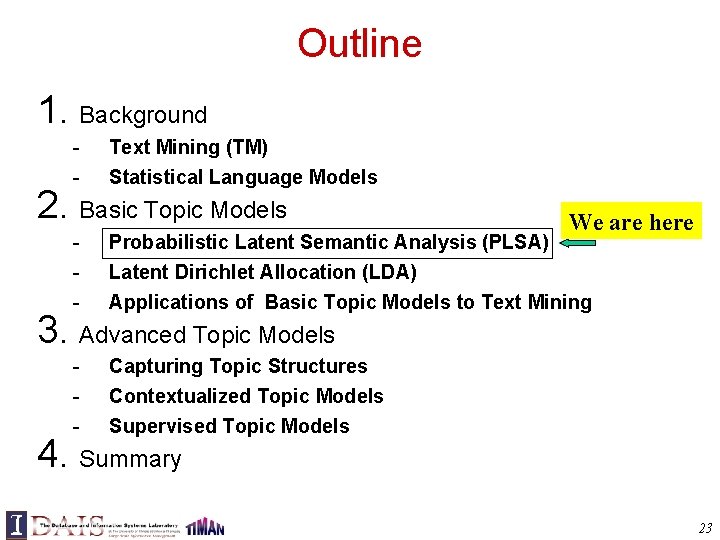

Outline
1. Background
   - Text Mining (TM)
   - Statistical Language Models
2. Basic Topic Models
   - Probabilistic Latent Semantic Analysis (PLSA) (we are here)
   - Latent Dirichlet Allocation (LDA)
   - Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models
   - Capturing Topic Structures
   - Contextualized Topic Models
   - Supervised Topic Models
4. Summary

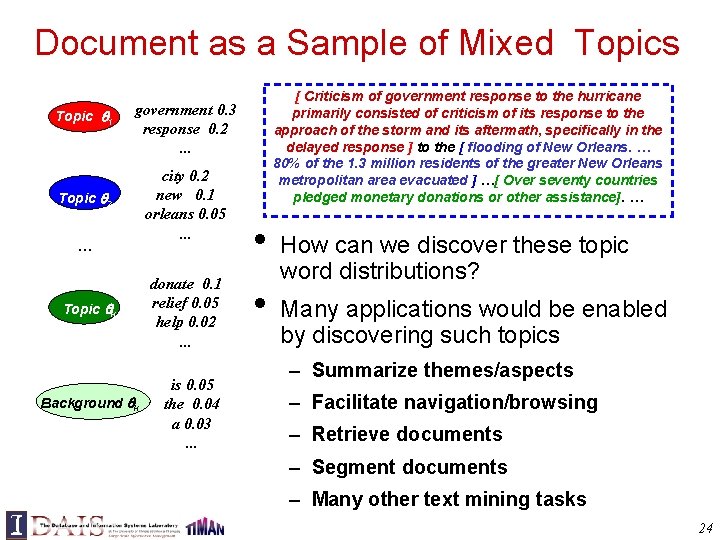

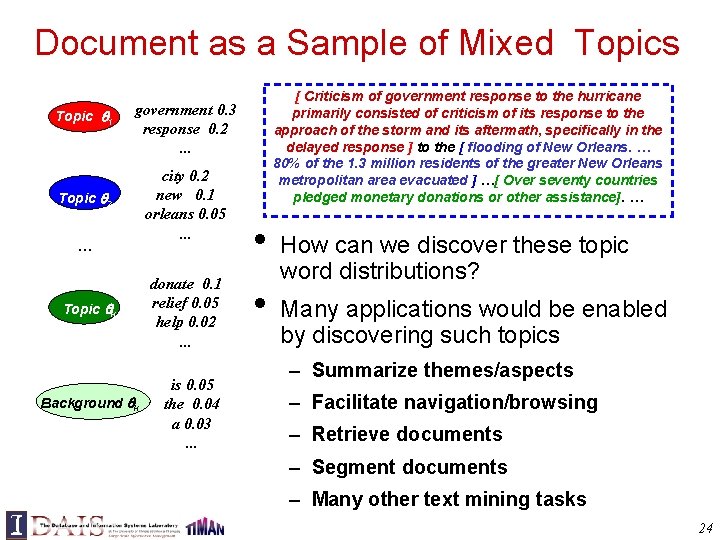

Document as a Sample of Mixed Topics

Example document (bracketed segments come from different topics): [ Criticism of government response to the hurricane primarily consisted of criticism of its response to the approach of the storm and its aftermath, specifically in the delayed response ] to the [ flooding of New Orleans. … 80% of the 1.3 million residents of the greater New Orleans metropolitan area evacuated ] … [ Over seventy countries pledged monetary donations or other assistance ]. …

- Topic $\theta_1$: government 0.3, response 0.2, ...
- Topic $\theta_2$: city 0.2, new 0.1, orleans 0.05, ...
- …
- Topic $\theta_k$: donate 0.1, relief 0.05, help 0.02, ...
- Background $\theta_B$: is 0.05, the 0.04, a 0.03, ...

- How can we discover these topic word distributions?
- Many applications would be enabled by discovering such topics
  - Summarize themes/aspects
  - Facilitate navigation/browsing
  - Retrieve documents
  - Segment documents
  - Many other text mining tasks

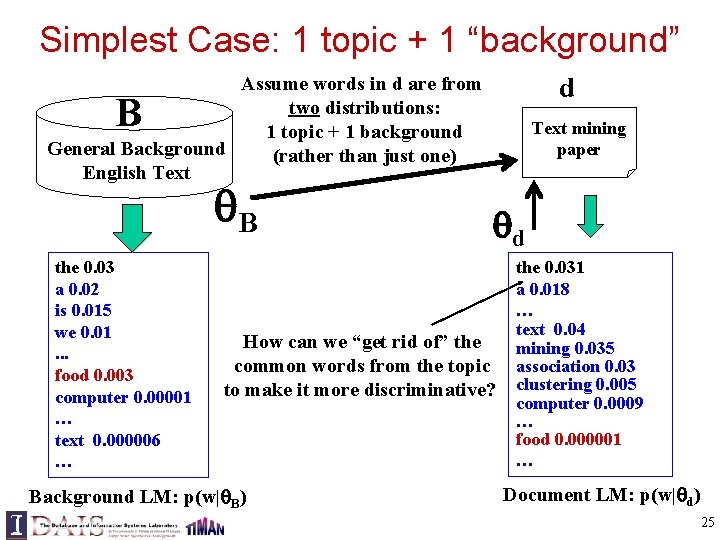

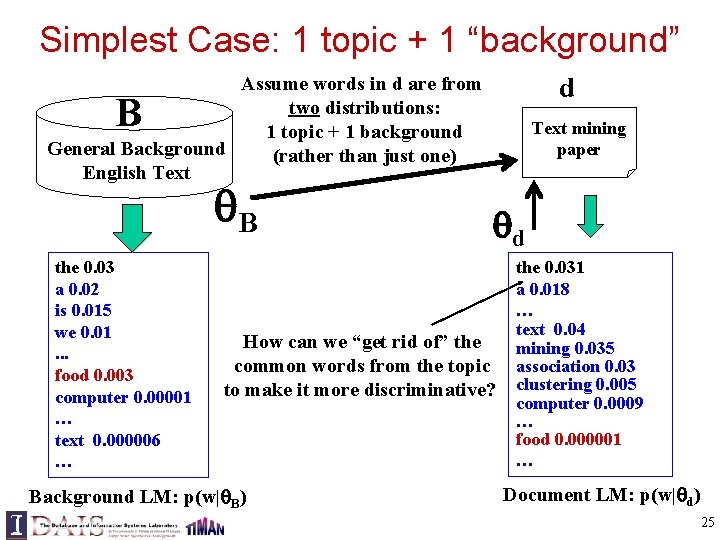

Simplest Case: 1 topic + 1 “background”
- Assume words in $d$ are from two distributions: 1 topic + 1 background (rather than just one)
- Background LM $p(w|\theta_B)$, from general background English text: the 0.03, a 0.02, is 0.015, we 0.01, ..., food 0.003, computer 0.00001, …, text 0.000006, …
- Document LM $p(w|\theta_d)$, from a text mining paper $d$: the 0.031, a 0.018, …, text 0.04, mining 0.035, association 0.03, clustering 0.005, computer 0.0009, …, food 0.000001, …
- How can we “get rid of” the common words from the topic to make it more discriminative?

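The Simplest Case: One Topic + One Background Model
- Assume $p(w|\theta_B)$ and $\lambda$ are known; $\lambda$ = assumed percentage of background words in $d$
- Topic choice for each word $w$ in document $d$:
  - with probability $\lambda$, generate a background word from $P(w|\theta_B)$
  - with probability $1-\lambda$, generate a topic word from $P(w|\theta)$
- So each word is generated with probability $\lambda\,p(w|\theta_B) + (1-\lambda)\,p(w|\theta)$
- $\theta$ is estimated by maximum likelihood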

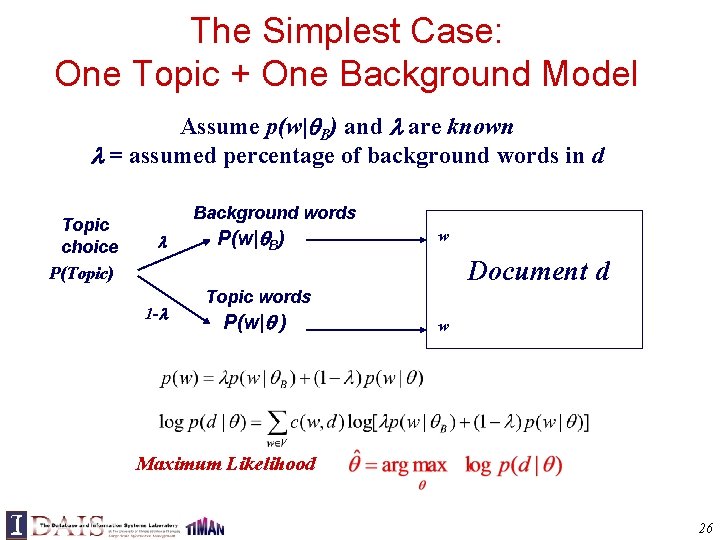

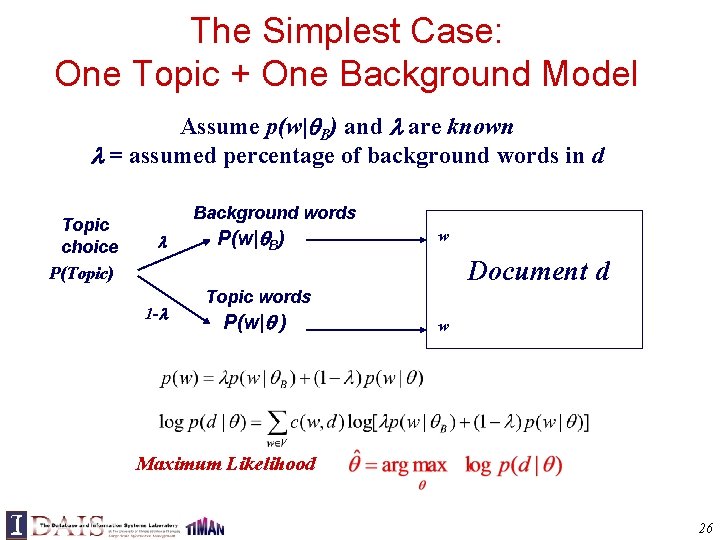

Understanding a Mixture Model
- Known background $p(w|\theta_B)$: the 0.2, a 0.1, we 0.01, to 0.02, …, text 0.0001, mining 0.00005, …
- Unknown query topic $p(w|\theta) = ?$ (“Text mining”): text = ?, mining = ?, association = ?, word = ?, …
- Suppose each model would be selected with equal probability, $\lambda = 0.5$
- The probability of observing word “text”: $\lambda\,p(\text{“text”}|\theta_B) + (1-\lambda)\,p(\text{“text”}|\theta) = 0.5 \times 0.0001 + 0.5 \times p(\text{“text”}|\theta)$
- The probability of observing word “the”: $\lambda\,p(\text{“the”}|\theta_B) + (1-\lambda)\,p(\text{“the”}|\theta) = 0.5 \times 0.2 + 0.5 \times p(\text{“the”}|\theta)$
- The probability of observing “the” & “text” (likelihood): $[0.5 \times 0.0001 + 0.5 \times p(\text{“text”}|\theta)] \times [0.5 \times 0.2 + 0.5 \times p(\text{“the”}|\theta)]$
- How to set $p(\text{“the”}|\theta)$ and $p(\text{“text”}|\theta)$ so as to maximize this likelihood?
  - Assume $p(\text{“the”}|\theta) + p(\text{“text”}|\theta)$ = constant
  - Give $p(\text{“text”}|\theta)$ a higher probability than $p(\text{“the”}|\theta)$ (why?)
- $\theta_B$ and $\theta$ are competing for explaining words in document $d$!
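The “why?” can be checked numerically with a small grid search. This is a sketch under one added assumption that is not on the slide: the constant is taken to be 1, i.e. the topic contains only the two words “the” and “text”.

```python
lam = 0.5                                # probability of choosing the background model
p_bg = {"the": 0.2, "text": 0.0001}      # background probabilities from the slide

def likelihood(p_text):
    """Likelihood of observing "the" and "text" once each, with p(the|topic) = 1 - p(text|topic)."""
    p_the = 1.0 - p_text                 # assumption: the two topic probabilities sum to 1
    return ((lam * p_bg["text"] + (1 - lam) * p_text)
            * (lam * p_bg["the"] + (1 - lam) * p_the))

# Try p(text|topic) = 0.000, 0.001, ..., 1.000 and keep the best value.
grid = [i / 1000 for i in range(1001)]
best_p_text = max(grid, key=likelihood)
```

The maximizer is close to 0.6 for “text”, leaving about 0.4 for “the”: since the background already explains “the” well, the topic model gains more by spending its probability mass on “text”.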

Simplest Case Continued: How to Estimate $\theta$?
- Known background $p(w|\theta_B)$, chosen with probability $\lambda = 0.7$: the 0.2, a 0.1, we 0.01, to 0.02, …, text 0.0001, mining 0.00005, …
- Unknown query topic $p(w|\theta) = ?$ (“Text mining”), chosen with probability $1-\lambda = 0.3$: text = ?, mining = ?, association = ?, word = ?, …
- Observed words are fed to the ML estimator
- Suppose we know the identity/label of each word ...

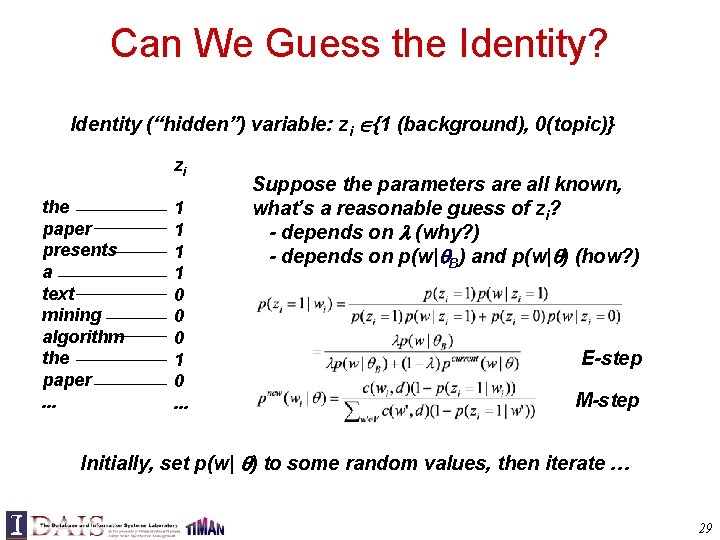

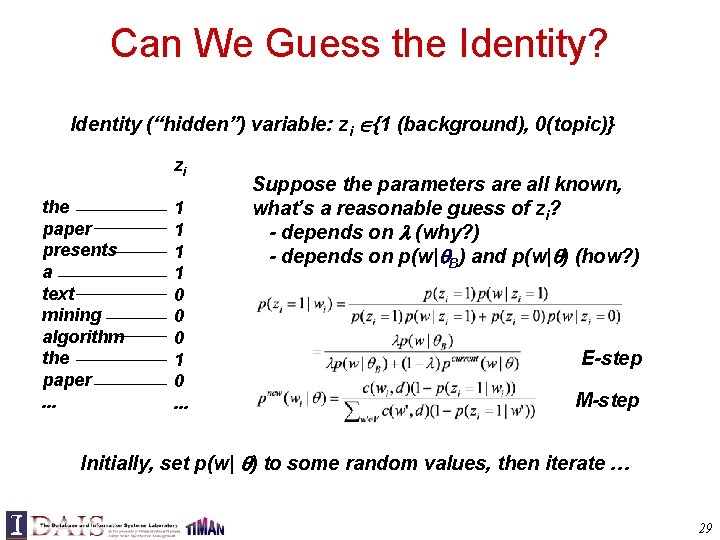

Can We Guess the Identity?
- Identity (“hidden”) variable: $z_i \in \{1\ (\text{background}),\ 0\ (\text{topic})\}$
- Example word sequence: the paper presents a text mining algorithm the paper ...
- Example $z_i$ values: 1 1 0 0 0 1 0 ...
- Suppose the parameters are all known, what’s a reasonable guess of $z_i$?
  - depends on $\lambda$ (why?)
  - depends on $p(w|\theta_B)$ and $p(w|\theta)$ (how?)
- E-step and M-step: initially, set $p(w|\theta)$ to some random values, then iterate …
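The E-step and M-step formulas on this slide were lost in extraction. For the one-topic-plus-background mixture defined above, the standard EM updates take the following form (the iteration superscript $(n)$ is notation added here):

$$
\begin{aligned}
\text{E-step:}\quad & p^{(n)}(z_i = 1 \mid w_i) = \frac{\lambda\,p(w_i|\theta_B)}{\lambda\,p(w_i|\theta_B) + (1-\lambda)\,p^{(n)}(w_i|\theta)} \\
\text{M-step:}\quad & p^{(n+1)}(w|\theta) = \frac{c(w,d)\,\big(1 - p^{(n)}(z = 1 \mid w)\big)}{\sum_{w'} c(w',d)\,\big(1 - p^{(n)}(z = 1 \mid w')\big)}
\end{aligned}
$$

The E-step is Bayes' rule applied to the two competing explanations of a word; the M-step renormalizes the word counts after discounting the share attributed to the background.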

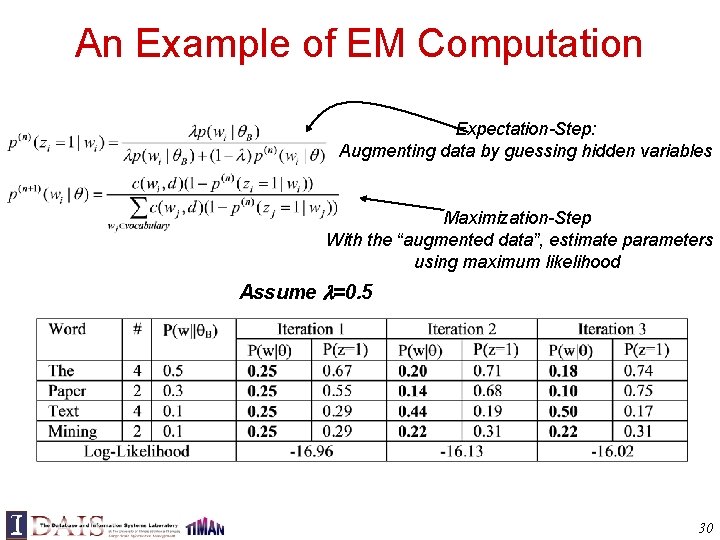

An Example of EM Computation
- Expectation step: augmenting data by guessing hidden variables
- Maximization step: with the “augmented data”, estimate parameters using maximum likelihood
- Assume $\lambda = 0.5$
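The slide's worked table is only available as an image, so here is a minimal runnable sketch of the same computation. The word counts and background probabilities are hypothetical illustration values, and `em_two_component` is a name chosen for this sketch.

```python
import math

def em_two_component(counts, p_bg, lam=0.5, iters=50):
    """EM for one unknown topic mixed with a known background model (lam = background weight)."""
    words = list(counts)
    p_topic = {w: 1.0 / len(words) for w in words}   # uniform starting point
    history = []                                      # log-likelihood before each update
    for _ in range(iters):
        history.append(sum(c * math.log(lam * p_bg[w] + (1 - lam) * p_topic[w])
                           for w, c in counts.items()))
        # E-step: probability that each word was generated by the background
        p_z_bg = {w: lam * p_bg[w] / (lam * p_bg[w] + (1 - lam) * p_topic[w])
                  for w in words}
        # M-step: renormalize the counts attributed to the topic
        topic_counts = {w: counts[w] * (1 - p_z_bg[w]) for w in words}
        total = sum(topic_counts.values())
        p_topic = {w: topic_counts[w] / total for w in words}
    return p_topic, history

# Hypothetical document counts and background model.
counts = {"the": 4, "paper": 2, "text": 4, "mining": 2}
p_bg = {"the": 0.5, "paper": 0.3, "text": 0.1, "mining": 0.1}
p_topic, history = em_two_component(counts, p_bg)
```

Although “the” and “text” occur equally often in this toy document, the estimated topic gives “text” a clearly higher probability, because the background absorbs most of the occurrences of “the”.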

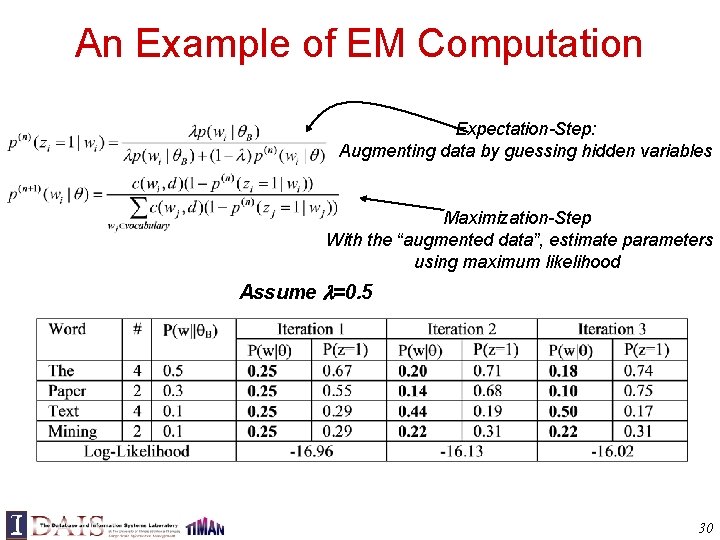

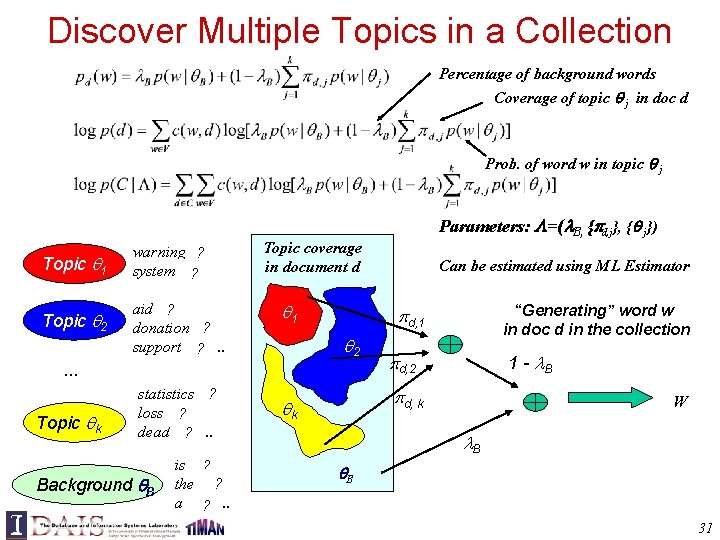

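Discover Multiple Topics in a Collection
- “Generating” word $w$ in doc $d$ in the collection: with probability $\lambda_B$ use the background $\theta_B$; with probability $1-\lambda_B$ pick topic $\theta_j$ with probability $\pi_{d,j}$ ($j = 1, \ldots, k$), then draw $w$ from the chosen distribution
- $\lambda_B$: percentage of background words
- $\pi_{d,j}$: coverage of topic $j$ in doc $d$ (topic coverage in document $d$: $\pi_{d,1}, \pi_{d,2}, \ldots, \pi_{d,k}$)
- $p(w|\theta_j)$: probability of word $w$ in topic $j$
- Topic word distributions (all values unknown, marked “?” on the slide):
  - Topic $\theta_1$: warning 0.3?, system 0.2?, ...
  - Topic $\theta_2$: aid 0.1?, donation 0.05?, support 0.02?, ...
  - …
  - Topic $\theta_k$: statistics 0.2?, loss 0.1?, dead 0.05?, ...
  - Background $\theta_B$: is 0.05?, the 0.04?, a 0.03?, ...
- Parameters: $\Lambda = (\lambda_B, \{\pi_{d,j}\}, \{\theta_j\})$; can be estimated using the ML estimator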


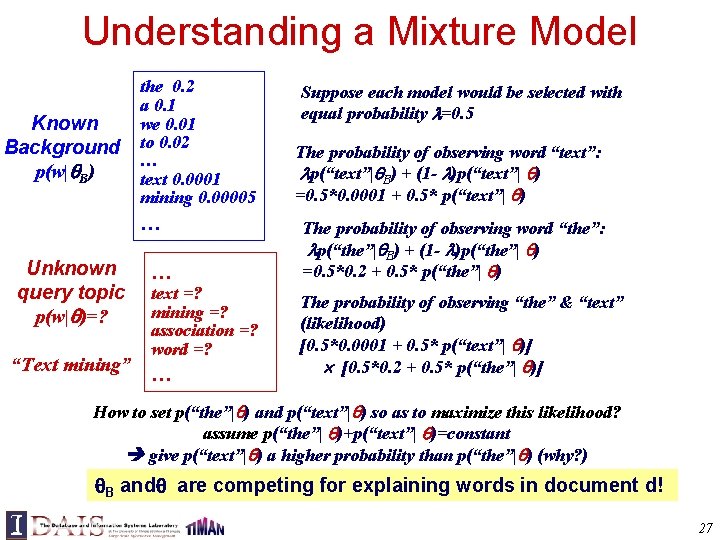

Probabilistic Latent Semantic Analysis/Indexing (PLSA/PLSI) [Hofmann 99 a, 99 b]
- Mix k multinomial distributions to generate a document
- Each document has a potentially different set of mixing weights which captures the topic coverage
- When generating words in a document, each word may be generated using a DIFFERENT multinomial distribution (this is in contrast with the document clustering model where, once a multinomial distribution is chosen, all the words in a document would be generated using the same multinomial distribution)
- By fitting the model to text data, we can estimate (1) the topic coverage in each document, and (2) the word distribution for each topic, thus achieving “topic mining”

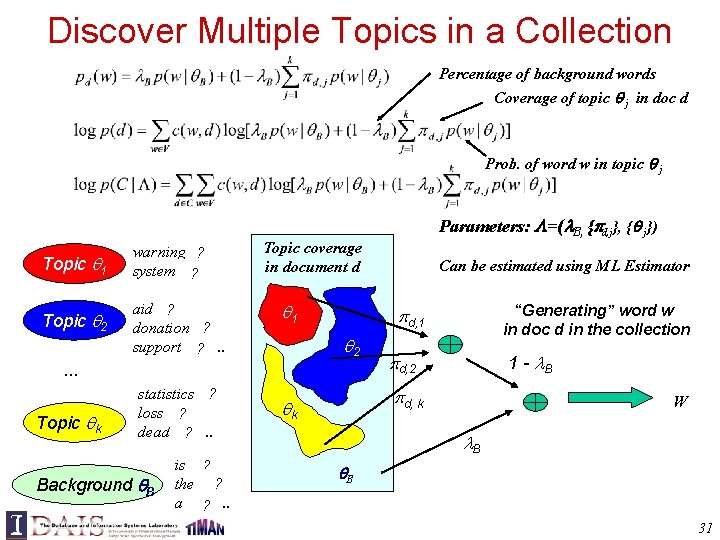

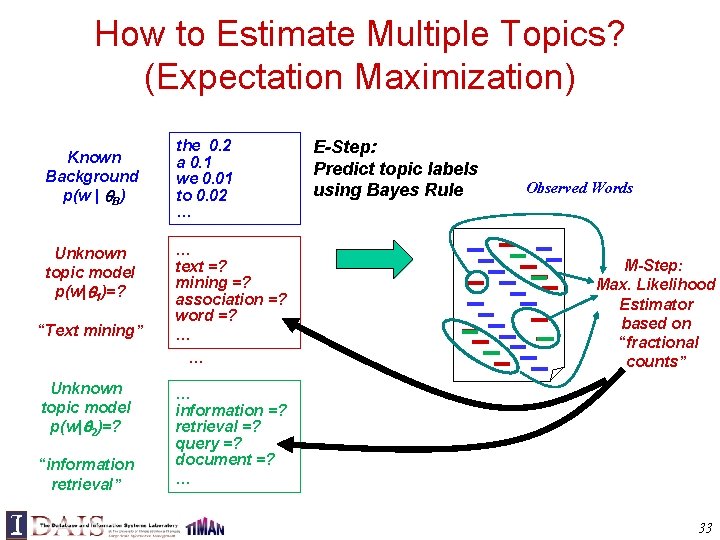

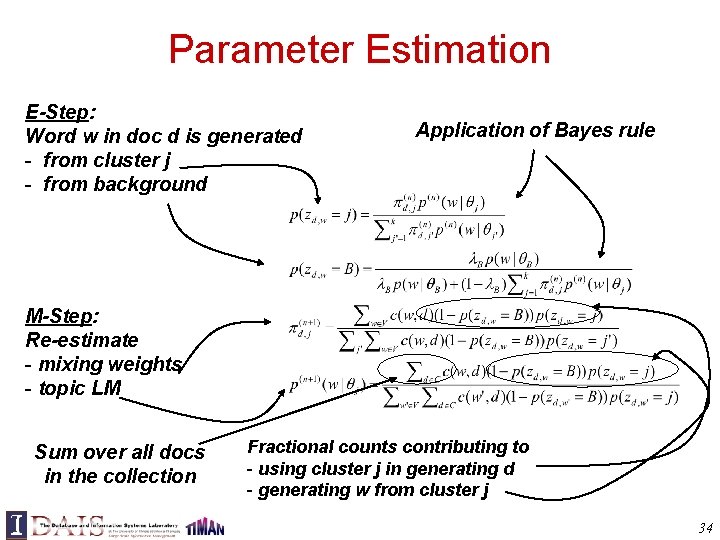

How to Estimate Multiple Topics? (Expectation Maximization)
- Known background $p(w|\theta_B)$: the 0.2, a 0.1, we 0.01, to 0.02, …
- Unknown topic model $p(w|\theta_1) = ?$ (“Text mining”): text = ?, mining = ?, association = ?, word = ?, …
- Unknown topic model $p(w|\theta_2) = ?$ (“information retrieval”): information = ?, retrieval = ?, query = ?, document = ?, …
- E-step: predict topic labels of the observed words using Bayes rule
- M-step: maximum likelihood estimator based on “fractional counts”

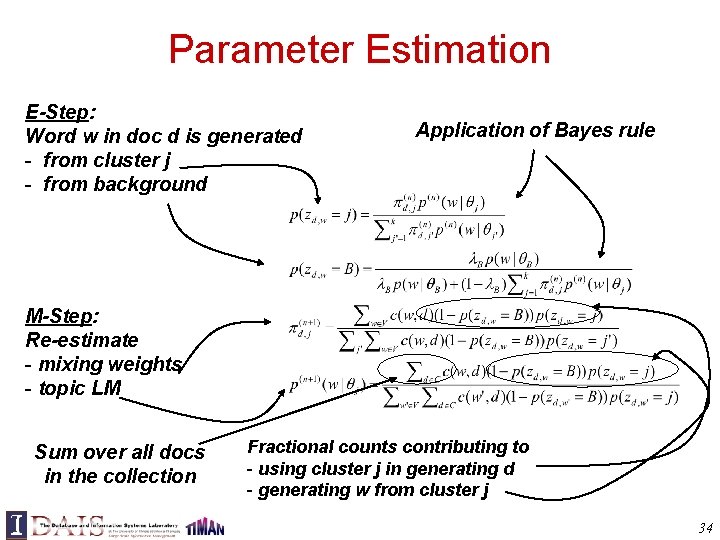

Parameter Estimation
- E-step (application of Bayes rule): compute the probability that word $w$ in doc $d$ is generated
  - from cluster $j$
  - from background
- M-step: re-estimate
  - mixing weights
  - topic LM (sum over all docs in the collection)
- Fractional counts contributing to
  - using cluster $j$ in generating $d$
  - generating $w$ from cluster $j$
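The update equations themselves were images on the slide. Written with the slide's symbols ($\lambda_B$, $\pi_{d,j}$, $\theta_j$, and the hidden variable $z_{d,w}$), the standard EM updates for this background-augmented PLSA are:

$$
\begin{aligned}
\text{E-step:}\quad
p(z_{d,w} = j) &= \frac{\pi_{d,j}^{(n)}\,p^{(n)}(w|\theta_j)}{\sum_{j'=1}^{k} \pi_{d,j'}^{(n)}\,p^{(n)}(w|\theta_{j'})} \\
p(z_{d,w} = B) &= \frac{\lambda_B\,p(w|\theta_B)}{\lambda_B\,p(w|\theta_B) + (1-\lambda_B)\sum_{j=1}^{k} \pi_{d,j}^{(n)}\,p^{(n)}(w|\theta_j)} \\[4pt]
\text{M-step:}\quad
\pi_{d,j}^{(n+1)} &= \frac{\sum_{w} c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j)}{\sum_{j'}\sum_{w} c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j')} \\
p^{(n+1)}(w|\theta_j) &= \frac{\sum_{d} c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j)}{\sum_{w'}\sum_{d} c(w',d)\,(1 - p(z_{d,w'} = B))\,p(z_{d,w'} = j)}
\end{aligned}
$$

Here $p(z_{d,w} = j)$ is the probability of topic $j$ given that the word was not generated by the background, so the fractional count $c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j)$ is the share of the count credited to topic $j$.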

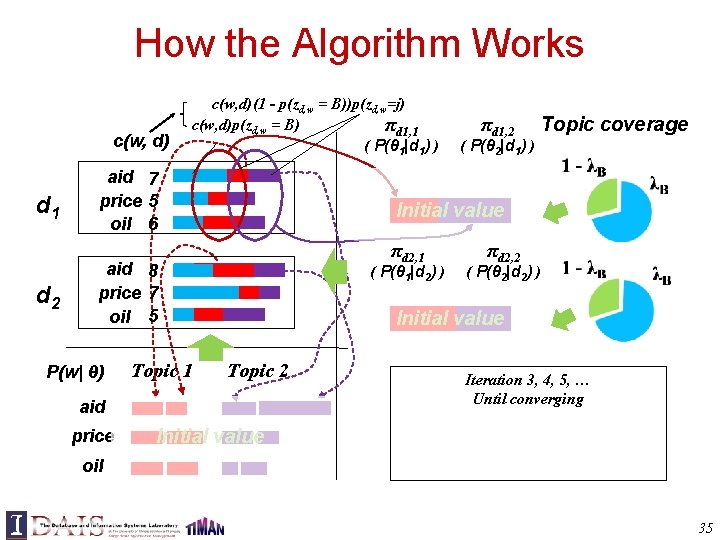

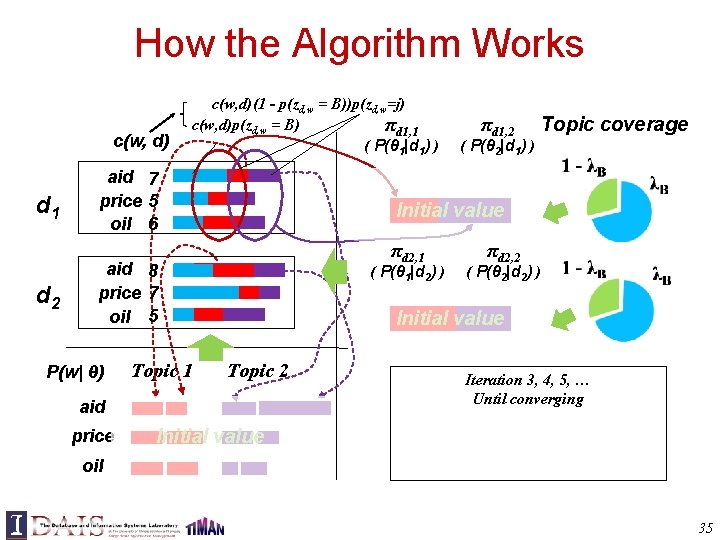

How the Algorithm Works
- Word counts $c(w,d)$: $d_1$: aid 7, price 5, oil 6; $d_2$: aid 8, price 7, oil 5
- Each count is split into a background part $c(w,d)\,p(z_{d,w} = B)$ and, for each topic $j$, a topic part $c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j)$
- Topic coverage: $\pi_{d_1,1}$ ($P(\theta_1|d_1)$), $\pi_{d_1,2}$ ($P(\theta_2|d_1)$), $\pi_{d_2,1}$ ($P(\theta_1|d_2)$), $\pi_{d_2,2}$ ($P(\theta_2|d_2)$)
- Topic word distributions $P(w|\theta)$ for Topic 1 and Topic 2 over aid, price, oil
- Steps:
  - Initialize $\pi_{d,j}$ and $P(w|\theta_j)$ with random values
  - Iteration 1, E-step: split word counts with different topics (by computing the z’s)
  - Iteration 1, M-step: re-estimate $\pi_{d,j}$ and $P(w|\theta_j)$ by adding and normalizing the split word counts
  - Iteration 2: E-step and M-step again
  - Iterations 3, 4, 5, … until converging
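The loop described above can be sketched in pure Python. The two documents reuse the slide's toy counts; the uniform background model, `lam_b = 0.5`, and the function name `plsa_em` are assumptions made for this sketch.

```python
import math
import random

def plsa_em(docs, p_bg, k=2, lam_b=0.5, iters=30, seed=0):
    """EM for PLSA with a fixed background model; docs is a list of {word: count}."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    theta = []                                   # random initial topic word distributions
    for _ in range(k):
        raw = {w: rng.random() + 0.1 for w in vocab}
        norm = sum(raw.values())
        theta.append({w: v / norm for w, v in raw.items()})
    pi = [[1.0 / k] * k for _ in docs]           # uniform initial topic coverage
    history = []
    for _ in range(iters):
        pi_counts = [[0.0] * k for _ in docs]
        theta_counts = [{w: 0.0 for w in vocab} for _ in range(k)]
        loglik = 0.0
        for d, doc in enumerate(docs):
            for w, c in doc.items():
                parts = [pi[d][j] * theta[j][w] for j in range(k)]
                mix = sum(parts)
                p_w = lam_b * p_bg[w] + (1 - lam_b) * mix
                loglik += c * math.log(p_w)
                p_z_bg = lam_b * p_bg[w] / p_w   # E-step: background responsibility
                for j in range(k):
                    # E-step: split the count c(w, d) among the topics
                    split = c * (1 - p_z_bg) * parts[j] / mix
                    pi_counts[d][j] += split
                    theta_counts[j][w] += split
        history.append(loglik)
        # M-step: add up the split counts and normalize
        pi = [[x / sum(row) for x in row] for row in pi_counts]
        theta = []
        for tc in theta_counts:
            norm = sum(tc.values())
            theta.append({w: v / norm for w, v in tc.items()})
    return pi, theta, history

docs = [{"aid": 7, "price": 5, "oil": 6}, {"aid": 8, "price": 7, "oil": 5}]
p_bg = {"aid": 1 / 3, "price": 1 / 3, "oil": 1 / 3}   # assumed uniform background
pi, theta, history = plsa_em(docs, p_bg)
```

Different seeds can lead to different local maxima, so in practice one would typically run several random restarts and keep the run with the highest log-likelihood.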

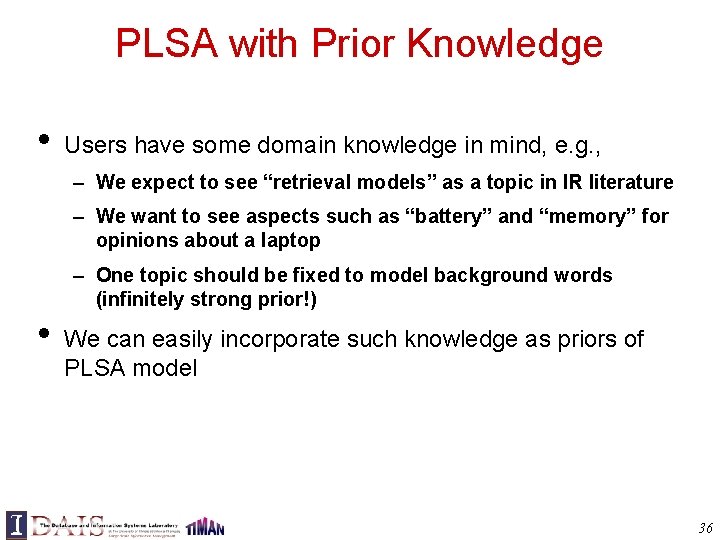

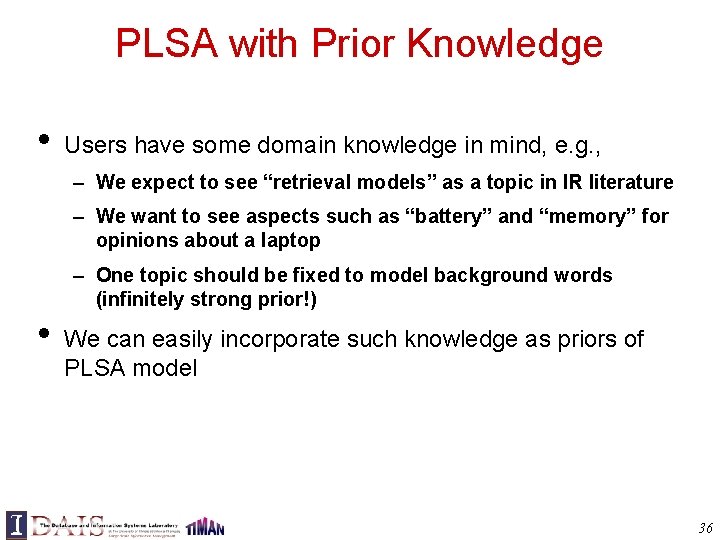

PLSA with Prior Knowledge
- Users have some domain knowledge in mind, e.g.,
  - We expect to see “retrieval models” as a topic in IR literature
  - We want to see aspects such as “battery” and “memory” for opinions about a laptop
  - One topic should be fixed to model background words (infinitely strong prior!)
- We can easily incorporate such knowledge as priors of the PLSA model

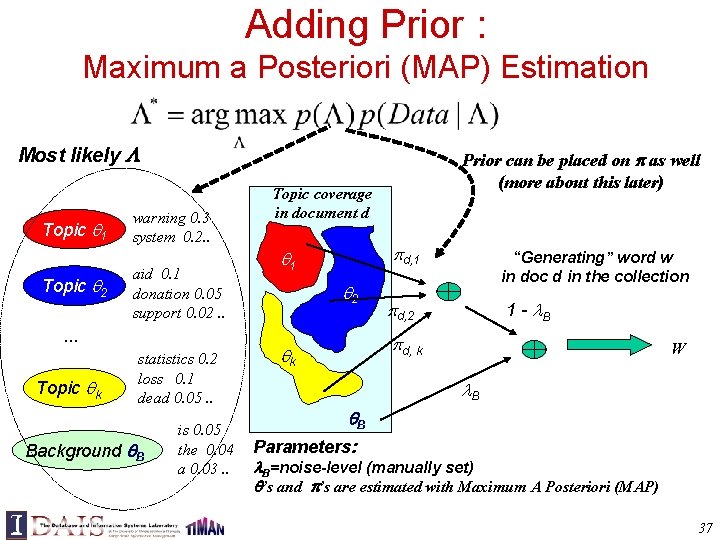

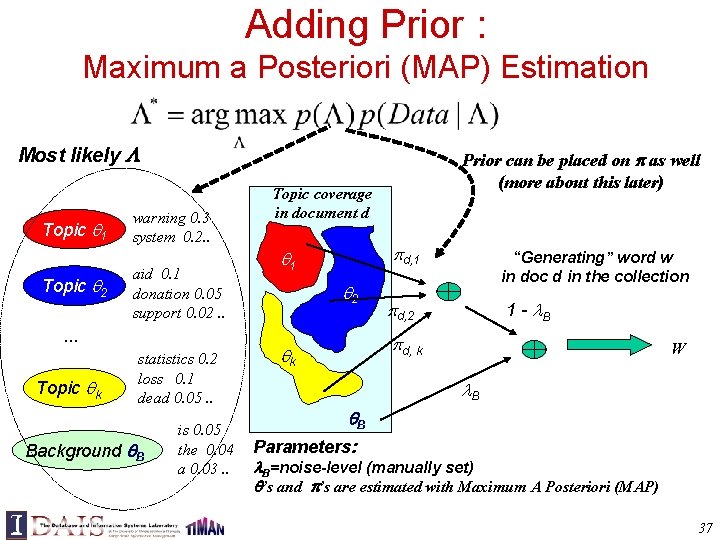

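Adding Prior: Maximum a Posteriori (MAP) Estimation
- Find the most likely $\Lambda$ given both the prior and the data
- Topic word distributions:
  - Topic $\theta_1$: warning 0.3, system 0.2, ...
  - Topic $\theta_2$: aid 0.1, donation 0.05, support 0.02, ...
  - …
  - Topic $\theta_k$: statistics 0.2, loss 0.1, dead 0.05, ...
  - Background $\theta_B$: is 0.05, the 0.04, a 0.03, ...
- “Generating” word $w$ in doc $d$ in the collection: background $\theta_B$ with probability $\lambda_B$; otherwise (probability $1-\lambda_B$) topic $\theta_j$ with probability $\pi_{d,j}$ (topic coverage in document $d$: $\pi_{d,1}, \pi_{d,2}, \ldots, \pi_{d,k}$)
- Prior can be placed on $\pi$ as well (more about this later)
- Parameters: $\lambda_B$ = noise level (manually set); the $\theta$’s and $\pi$’s are estimated with Maximum A Posteriori (MAP)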

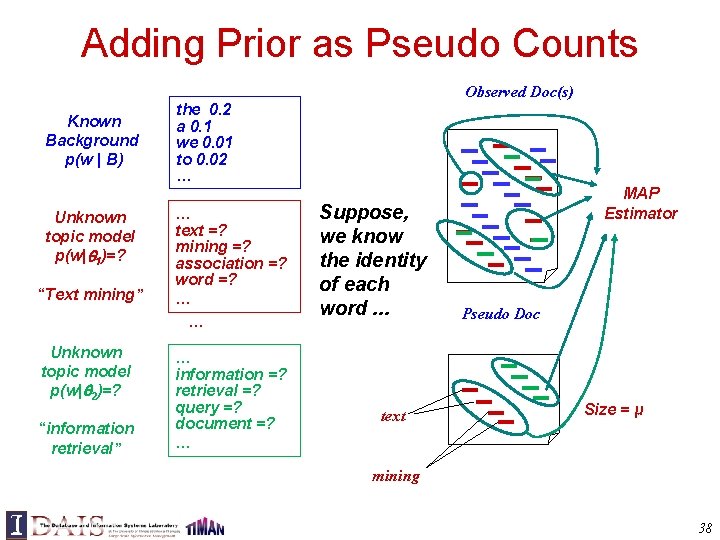

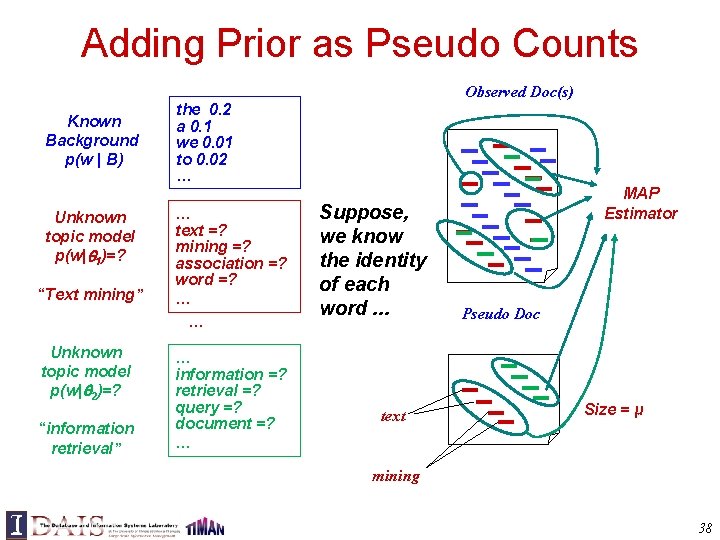

Adding Prior as Pseudo Counts
- Known background $p(w|\theta_B)$: the 0.2, a 0.1, we 0.01, to 0.02, …
- Unknown topic model $p(w|\theta_1) = ?$ (“Text mining”): text = ?, mining = ?, association = ?, word = ?, …
- Unknown topic model $p(w|\theta_2) = ?$ (“information retrieval”): information = ?, retrieval = ?, query = ?, document = ?, …
- Suppose we know the identity of each word ...
- The MAP estimator combines the observed doc(s) with a pseudo doc (e.g., containing “text” and “mining”) of size $\mu$

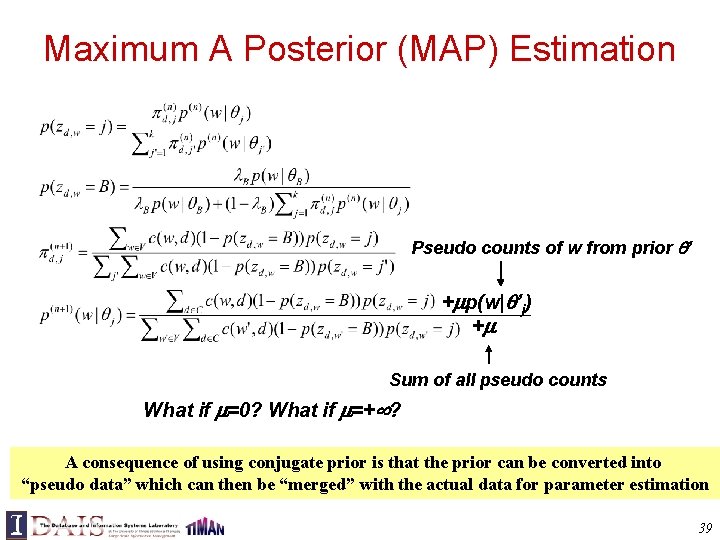

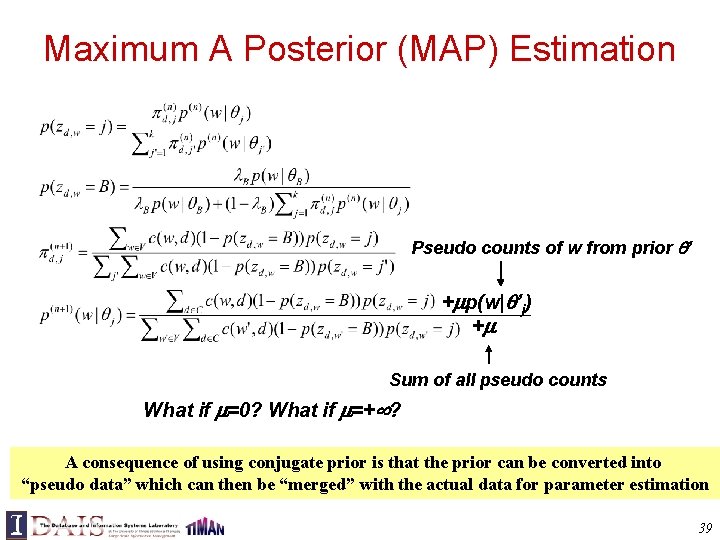

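Maximum A Posteriori (MAP) Estimation

$$p^{(n+1)}(w|\theta_j) = \frac{\sum_{d} c(w,d)\,(1 - p(z_{d,w} = B))\,p(z_{d,w} = j) + \mu\,p(w|\theta'_j)}{\sum_{w'}\sum_{d} c(w',d)\,(1 - p(z_{d,w'} = B))\,p(z_{d,w'} = j) + \mu}$$

- $\mu\,p(w|\theta'_j)$: pseudo counts of $w$ from prior $\theta'$
- $\mu$: sum of all pseudo counts
- What if $\mu = 0$? What if $\mu = +\infty$?
- A consequence of using a conjugate prior is that the prior can be converted into “pseudo data” which can then be “merged” with the actual data for parameter estimation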
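The two limiting questions can be explored with a short sketch. The fractional counts and the prior distribution below are hypothetical values, and `map_estimate` is an illustrative helper rather than code from the tutorial.

```python
def map_estimate(frac_counts, prior, mu):
    """MAP re-estimate of a topic LM: (fractional count + mu * prior) / (total count + mu)."""
    total = sum(frac_counts.values()) + mu
    return {w: (frac_counts[w] + mu * prior[w]) / total for w in frac_counts}

# Hypothetical fractional counts from an E-step and a hypothetical prior topic LM.
frac_counts = {"text": 6.0, "mining": 3.0, "the": 1.0}
prior = {"text": 0.5, "mining": 0.5, "the": 0.0}

no_prior = map_estimate(frac_counts, prior, mu=0)         # reduces to the ML estimate
some_prior = map_estimate(frac_counts, prior, mu=10)      # data and prior both matter
huge_prior = map_estimate(frac_counts, prior, mu=1e9)     # essentially the prior itself
```

With $\mu = 0$ the prior is ignored, and as $\mu$ grows the estimate is pulled toward the prior until the data no longer matter, which is the “infinitely strong prior” used to fix the background topic.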

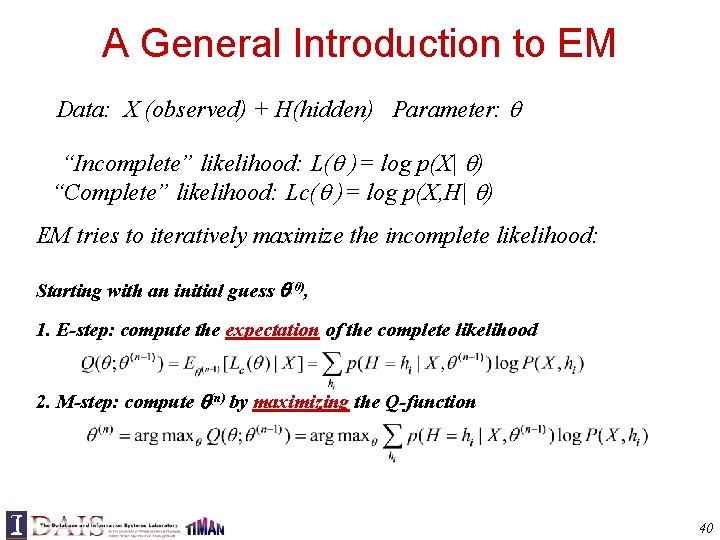

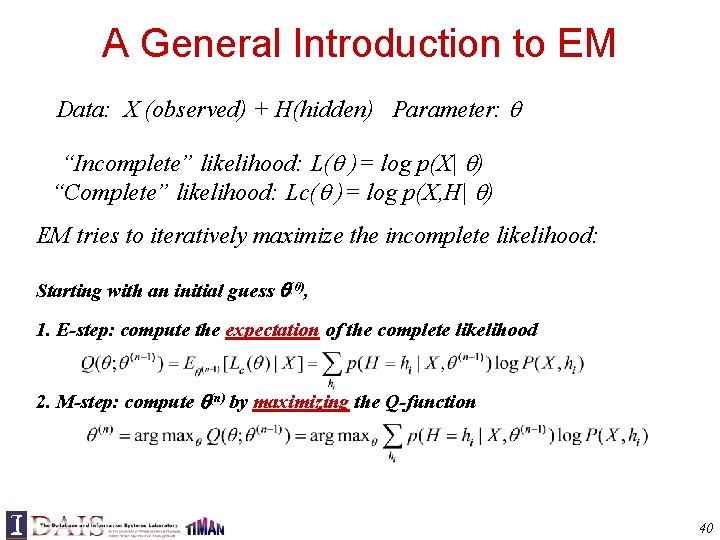

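A General Introduction to EM
- Data: $X$ (observed) + $H$ (hidden); parameter: $\theta$
- “Incomplete” likelihood: $L(\theta) = \log p(X|\theta)$
- “Complete” likelihood: $L_c(\theta) = \log p(X, H|\theta)$
- EM tries to iteratively maximize the incomplete likelihood. Starting with an initial guess $\theta^{(0)}$:
  1. E-step: compute the expectation of the complete likelihood, $Q(\theta; \theta^{(n-1)}) = E_{p(H|X,\theta^{(n-1)})}[L_c(\theta)]$
  2. M-step: compute $\theta^{(n)}$ by maximizing the Q-function, $\theta^{(n)} = \arg\max_\theta Q(\theta; \theta^{(n-1)})$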

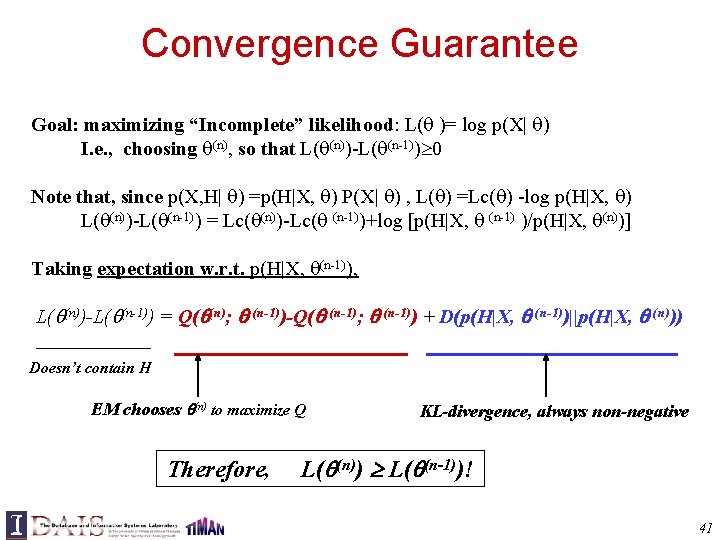

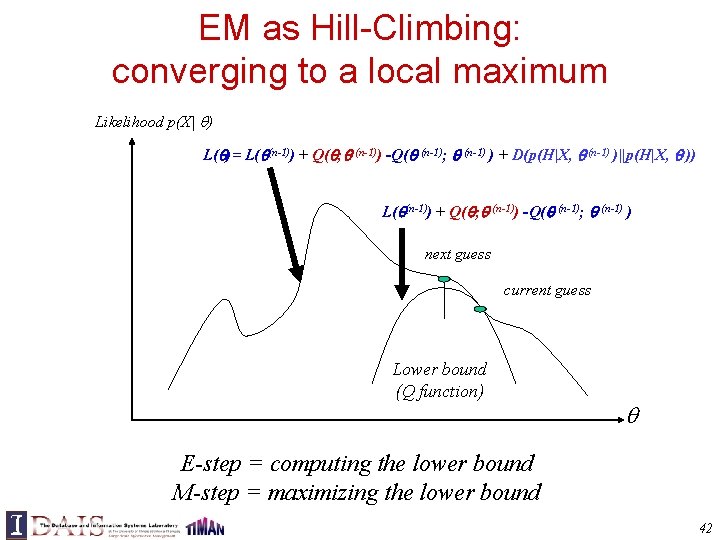

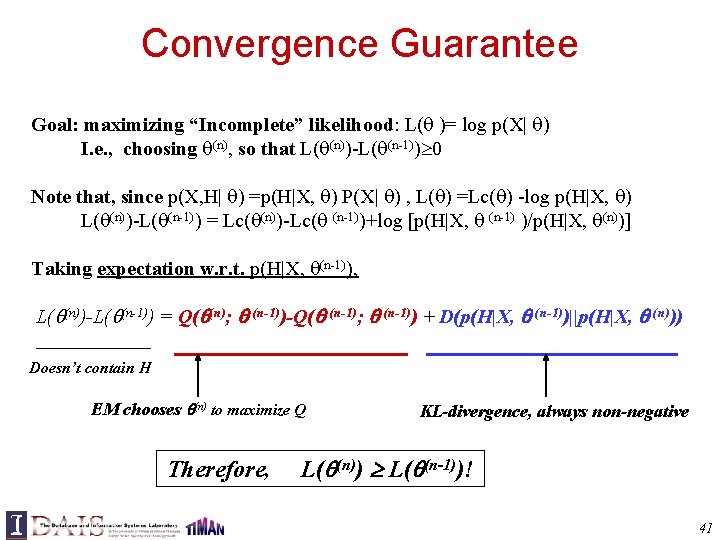

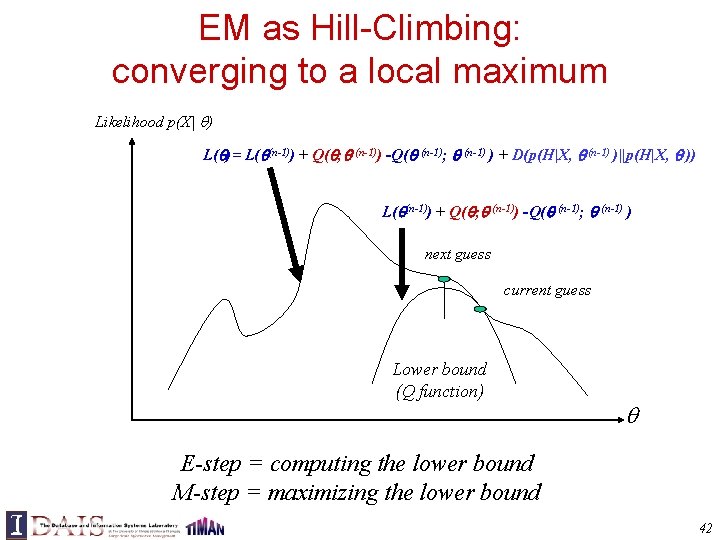

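Convergence Guarantee
- Goal: maximizing the “incomplete” likelihood $L(\theta) = \log p(X|\theta)$, i.e., choosing $\theta^{(n)}$ so that $L(\theta^{(n)}) - L(\theta^{(n-1)}) \ge 0$
- Note that, since $p(X, H|\theta) = p(H|X,\theta)\,p(X|\theta)$, we have $L(\theta) = L_c(\theta) - \log p(H|X,\theta)$, so

$$L(\theta^{(n)}) - L(\theta^{(n-1)}) = L_c(\theta^{(n)}) - L_c(\theta^{(n-1)}) + \log\frac{p(H|X,\theta^{(n-1)})}{p(H|X,\theta^{(n)})}$$

- Taking expectation w.r.t. $p(H|X,\theta^{(n-1)})$ (the left-hand side doesn’t contain $H$):

$$L(\theta^{(n)}) - L(\theta^{(n-1)}) = Q(\theta^{(n)}; \theta^{(n-1)}) - Q(\theta^{(n-1)}; \theta^{(n-1)}) + D\big(p(H|X,\theta^{(n-1)})\,\|\,p(H|X,\theta^{(n)})\big)$$

- EM chooses $\theta^{(n)}$ to maximize $Q$, so the difference of the two $Q$ terms is non-negative; the KL-divergence $D$ is always non-negative
- Therefore, $L(\theta^{(n)}) \ge L(\theta^{(n-1)})$!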

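EM as Hill-Climbing: converging to a local maximum
- Likelihood $p(X|\theta)$:

$$
\begin{aligned}
L(\theta) &= L(\theta^{(n-1)}) + Q(\theta; \theta^{(n-1)}) - Q(\theta^{(n-1)}; \theta^{(n-1)}) + D\big(p(H|X,\theta^{(n-1)})\,\|\,p(H|X,\theta)\big) \\
&\ge L(\theta^{(n-1)}) + Q(\theta; \theta^{(n-1)}) - Q(\theta^{(n-1)}; \theta^{(n-1)})
\end{aligned}
$$

- The right-hand side is a lower bound (Q function) that touches the likelihood at the current guess $\theta^{(n-1)}$; its maximizer is the next guess
- E-step = computing the lower bound
- M-step = maximizing the lower bound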

Outline
1. Background
   - Text Mining (TM)
   - Statistical Language Models
2. Basic Topic Models
   - Probabilistic Latent Semantic Analysis (PLSA)
   - Latent Dirichlet Allocation (LDA) (we are here)
   - Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models
   - Capturing Topic Structures
   - Contextualized Topic Models
   - Supervised Topic Models
4. Summary

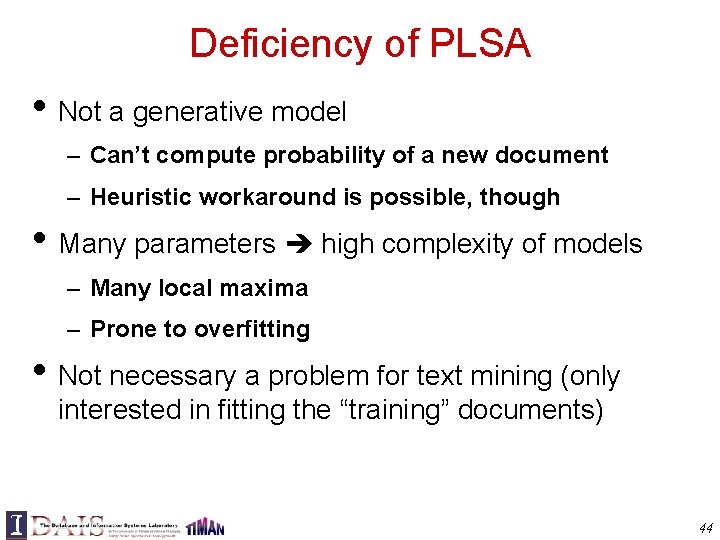

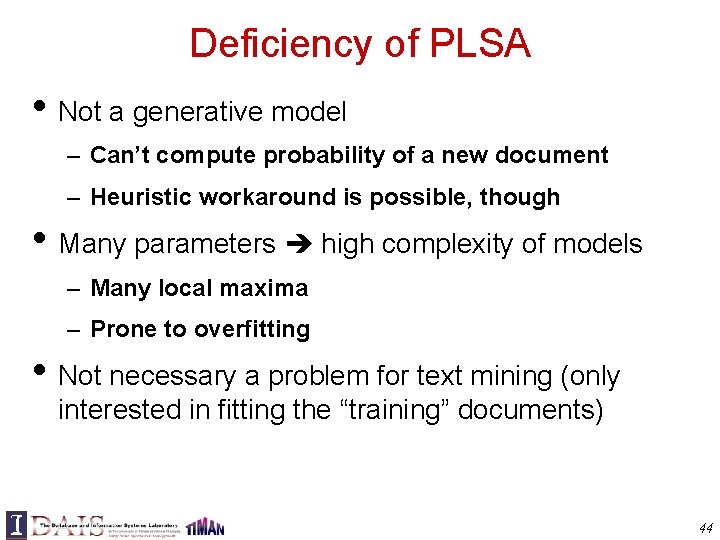

Deficiency of PLSA
- Not a generative model
  - Can’t compute the probability of a new document
  - Heuristic workaround is possible, though
- Many parameters → high complexity of models
  - Many local maxima
  - Prone to overfitting
- Not necessarily a problem for text mining (only interested in fitting the “training” documents)


Latent Dirichlet Allocation (LDA) [Blei et al. 02]
- Make PLSA a generative model by imposing a Dirichlet prior on the model parameters
  - LDA = Bayesian version of PLSA
  - Parameters are regularized
- Can achieve the same goal as PLSA for text mining purposes
  - Topic coverage and topic word distributions can be inferred using Bayesian inference

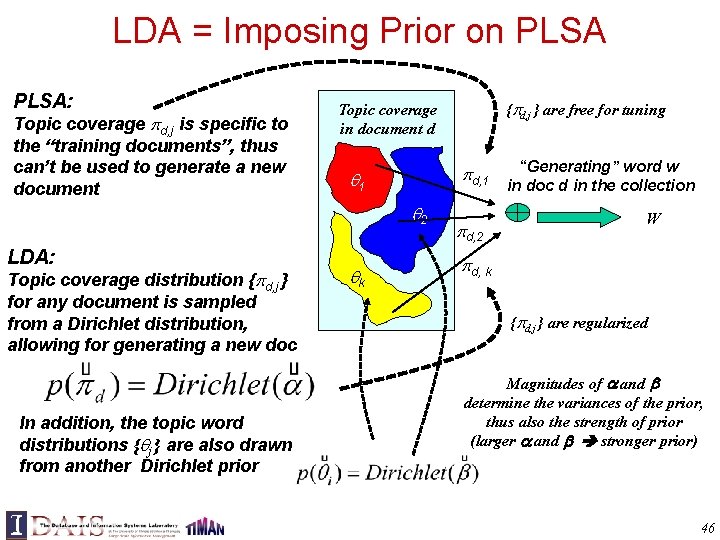

LDA = Imposing Prior on PLSA
- “Generating” word $w$ in doc $d$ in the collection: pick topic $\theta_j$ with probability $\pi_{d,j}$ (topic coverage in document $d$: $\pi_{d,1}, \pi_{d,2}, \ldots, \pi_{d,k}$), then draw $w$ from $\theta_j$
- PLSA: topic coverage $\pi_{d,j}$ is specific to the “training documents”, thus can’t be used to generate a new document
  - $\{\pi_{d,j}\}$ are free for tuning
- LDA: topic coverage distribution $\{\pi_{d,j}\}$ for any document is sampled from a Dirichlet distribution, allowing for generating a new doc
  - $\{\pi_{d,j}\}$ are regularized
- In addition, the topic word distributions $\{\theta_j\}$ are also drawn from another Dirichlet prior
- Magnitudes of $\alpha$ and $\beta$ determine the variances of the prior, thus also the strength of the prior (larger $\alpha$ and $\beta$ → stronger prior)
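A minimal sketch of the generative story, using symmetric Dirichlet priors. The vocabulary, the values of `alpha` and `beta`, and the helper names are hypothetical; Dirichlet draws are obtained by normalizing independent Gamma samples from the standard library.

```python
import random

def sample_dirichlet(alphas, rng):
    """Draw from Dirichlet(alphas) by normalizing independent Gamma(alpha_i, 1) samples."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [x / total for x in draws]

def generate_corpus(num_docs, doc_len, vocab, num_topics, alpha, beta, seed=0):
    rng = random.Random(seed)
    # Topic word distributions theta_j ~ Dirichlet(beta)
    topics = [sample_dirichlet([beta] * len(vocab), rng) for _ in range(num_topics)]
    corpus = []
    for _ in range(num_docs):
        # Topic coverage pi_d ~ Dirichlet(alpha); this is what lets LDA generate new docs
        coverage = sample_dirichlet([alpha] * num_topics, rng)
        words = []
        for _ in range(doc_len):
            j = rng.choices(range(num_topics), weights=coverage)[0]  # pick a topic
            words.append(rng.choices(vocab, weights=topics[j])[0])   # pick a word from it
        corpus.append((coverage, words))
    return topics, corpus

vocab = ["text", "mining", "food", "nutrition", "the"]   # hypothetical vocabulary
topics, corpus = generate_corpus(num_docs=3, doc_len=8, vocab=vocab,
                                 num_topics=2, alpha=0.5, beta=0.5)
```

Small values of `alpha` and `beta` give sparse, peaked coverage and word distributions; larger values pull every draw toward uniform, which is the "stronger prior" effect noted on the slide.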

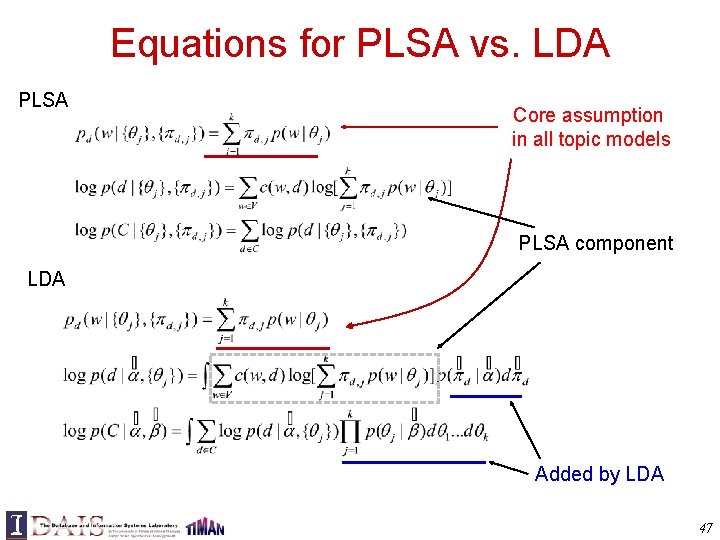

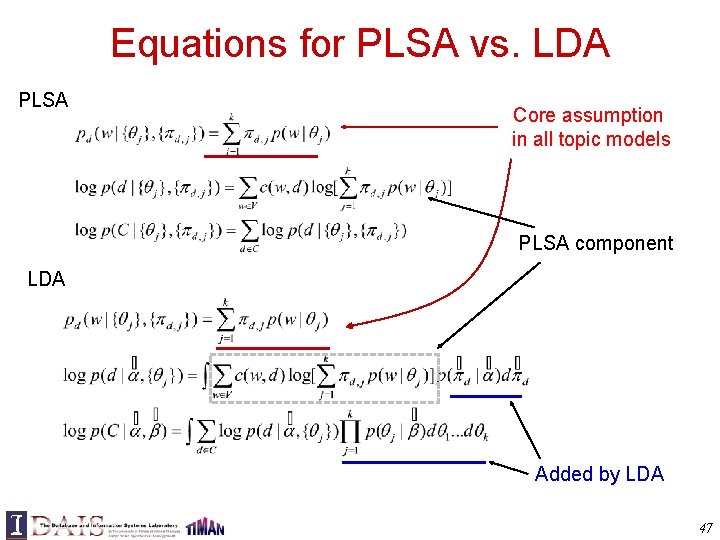

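Equations for PLSA vs. LDA
- The mixture of topic word distributions is the core assumption in all topic models
- PLSA: the document and collection likelihoods are built directly on this PLSA component
- LDA: the same PLSA component, plus Dirichlet priors and integration over the parameters (added by LDA)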
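The equations on this slide are only available as an image. A standard way to write the comparison, using the symbols of the previous slides and omitting the background component for brevity, is sketched below; treat it as a reconstruction rather than the slide's exact formulas.

$$
\begin{aligned}
\text{Core assumption:}\quad & p_d(w \mid \{\theta_j\}, \{\pi_{d,j}\}) = \sum_{j=1}^{k} \pi_{d,j}\,p(w|\theta_j) \\[4pt]
\text{PLSA:}\quad & \log p(d \mid \{\theta_j\}, \{\pi_{d,j}\}) = \sum_{w} c(w,d)\,\log\Big[\sum_{j=1}^{k} \pi_{d,j}\,p(w|\theta_j)\Big] \\
& \log p(C \mid \{\theta_j\}, \{\pi_{d,j}\}) = \sum_{d \in C} \log p(d \mid \{\theta_j\}, \{\pi_{d,j}\}) \\[4pt]
\text{LDA:}\quad & p(d \mid \alpha, \{\theta_j\}) = \int \prod_{w} \Big[\sum_{j=1}^{k} \pi_{d,j}\,p(w|\theta_j)\Big]^{c(w,d)} p(\pi_d|\alpha)\,d\pi_d \\
& p(C \mid \alpha, \beta) = \int \prod_{d \in C} p(d \mid \alpha, \{\theta_j\}) \prod_{j=1}^{k} p(\theta_j|\beta)\,d\theta_1 \cdots d\theta_k
\end{aligned}
$$

The bracketed mixture is shared by both models; LDA adds the priors $p(\pi_d|\alpha)$ and $p(\theta_j|\beta)$ and integrates the parameters out.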

Parameter Estimation & Inferences in LDA
- Parameter estimation can be done in the same way as in PLSA, with a maximum likelihood estimator for the prior parameters $\alpha$ and $\beta$
- However, $\{\theta_j\}$ and $\{\pi_{d,j}\}$ must now be computed using posterior inference
- This is computationally intractable, so we must resort to approximate inference!


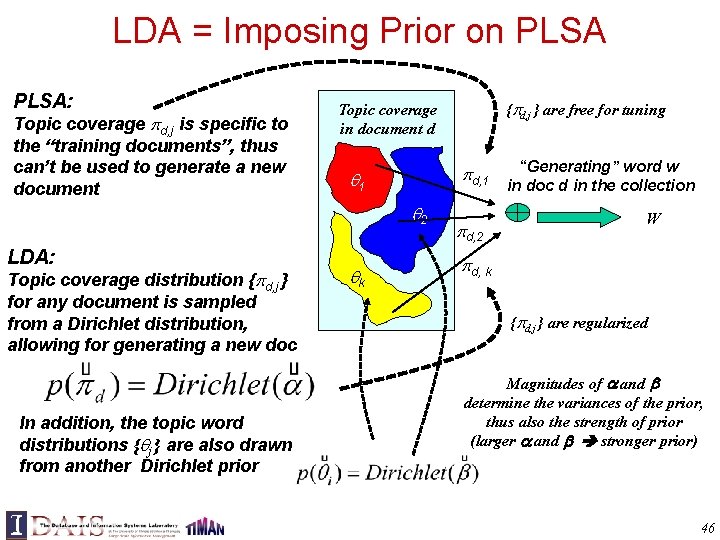

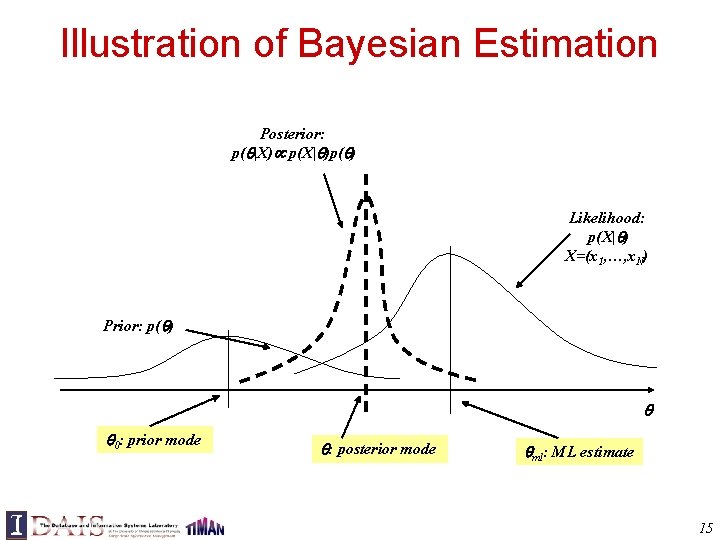

LDA as a graph model [Blei et al. 03 a]
- $\theta^{(d)} \sim \mathrm{Dirichlet}(\alpha)$: distribution over topics for each document (same as $\pi_d$ on the previous slides)
- $\phi^{(j)} \sim \mathrm{Dirichlet}(\beta)$: distribution over words for each topic (same as $\theta_j$ on the previous slides)
- $z_i \sim \mathrm{Discrete}(\theta^{(d)})$: topic assignment for each word
- $w_i \sim \mathrm{Discrete}(\phi^{(z_i)})$: word generated from assigned topic
- $\alpha$ and $\beta$ are Dirichlet priors; plates: $T$ topics, $N_d$ words per document, $D$ documents
- Most approximate inference algorithms aim to infer the topic assignments $z$, from which other interesting variables can be easily computed

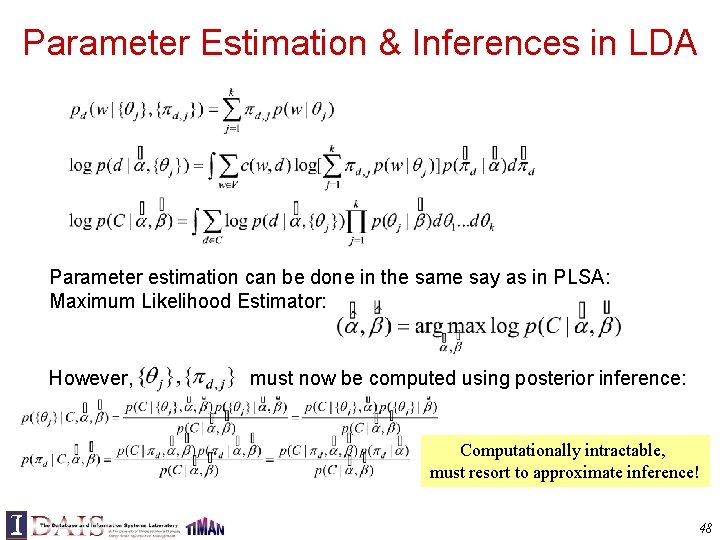

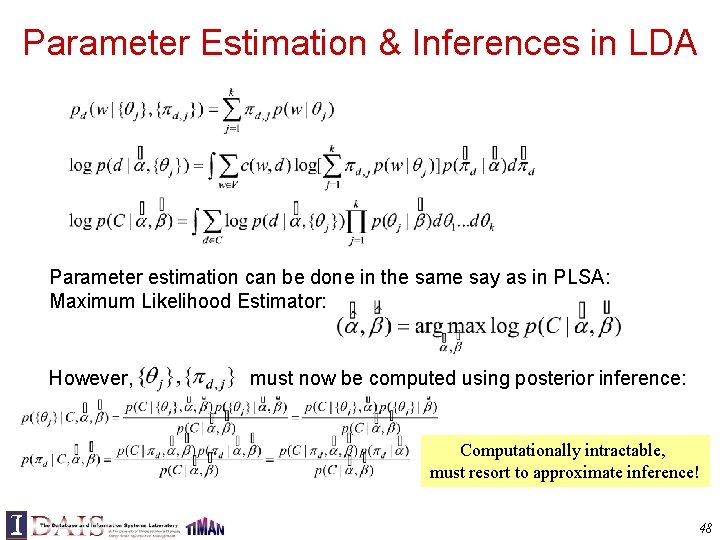

Approximate Inferences for LDA
- Many different ways; each has its pros & cons
- Deterministic approximation
  - variational EM [Blei et al. 03 a]
  - expectation propagation [Minka & Lafferty 02]
- Markov chain Monte Carlo
  - full Gibbs sampler [Pritchard et al. 00]
  - collapsed Gibbs sampler [Griffiths & Steyvers 04]: most efficient, and quite popular, but can only work with a conjugate prior


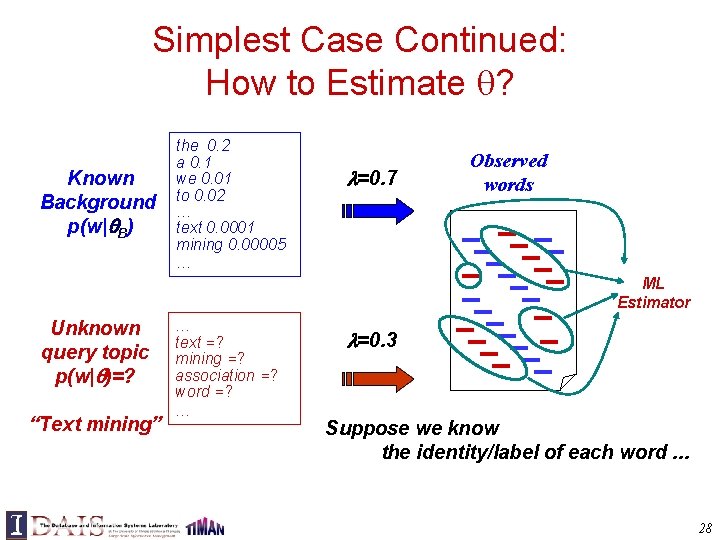

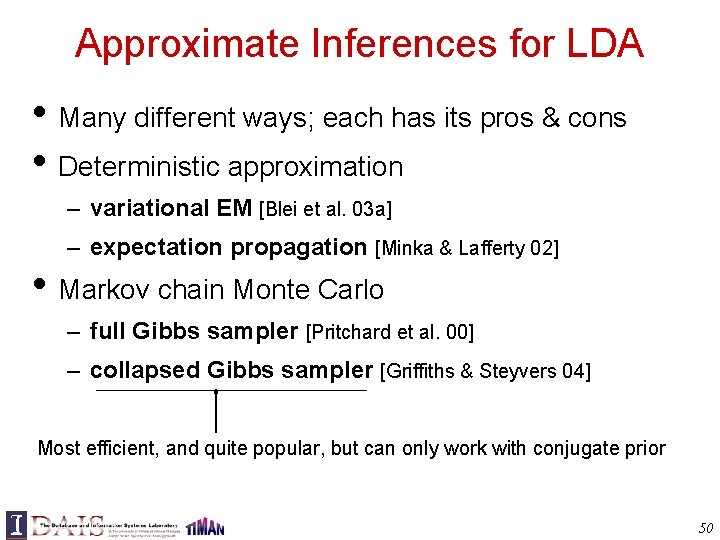

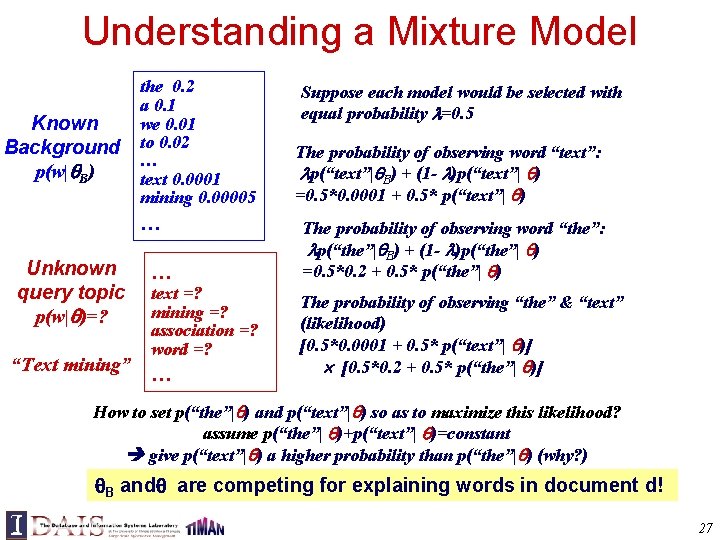

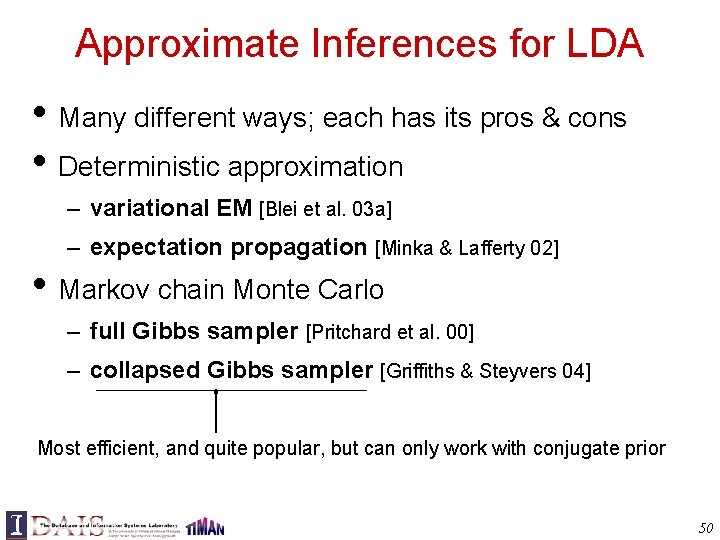

The collapsed Gibbs sampler [Griffiths & Steyvers 04]
- Using conjugacy of Dirichlet and multinomial distributions, integrate out continuous parameters
- Defines a distribution on discrete ensembles $z$


The collapsed Gibbs sampler [Griffiths & Steyvers 04]
- Sample each $z_i$ conditioned on $z_{-i}$
- This is nicer than your average Gibbs sampler:
  - memory: counts can be cached in two sparse matrices
  - optimization: no special functions, simple arithmetic
  - the distributions on $\phi$ and $\theta$ are analytic given $z$ and $w$, and can later be found for each sample
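The sampling formula was an image on the slide. In the usual statement of the collapsed sampler with symmetric priors $\alpha$ and $\beta$, $W$ vocabulary words, and $T$ topics (the count notation $n$ is introduced here), the conditional is:

$$
P(z_i = j \mid z_{-i}, w) \;\propto\;
\frac{n^{(w_i)}_{-i,j} + \beta}{n^{(\cdot)}_{-i,j} + W\beta}
\cdot
\frac{n^{(d_i)}_{-i,j} + \alpha}{n^{(d_i)}_{-i,\cdot} + T\alpha}
$$

where $n^{(w_i)}_{-i,j}$ counts how often word $w_i$ is assigned to topic $j$, $n^{(\cdot)}_{-i,j}$ counts all words assigned to topic $j$, $n^{(d_i)}_{-i,j}$ counts words in document $d_i$ assigned to topic $j$, and $n^{(d_i)}_{-i,\cdot}$ counts all words in $d_i$, each excluding the current position $i$.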

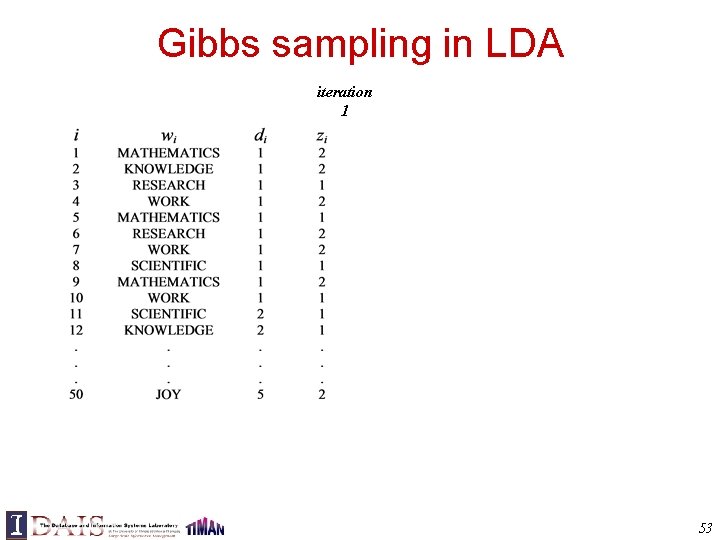

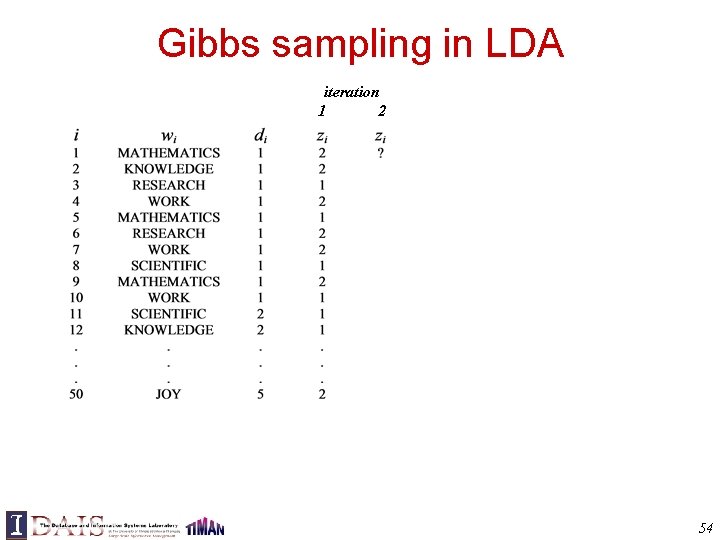

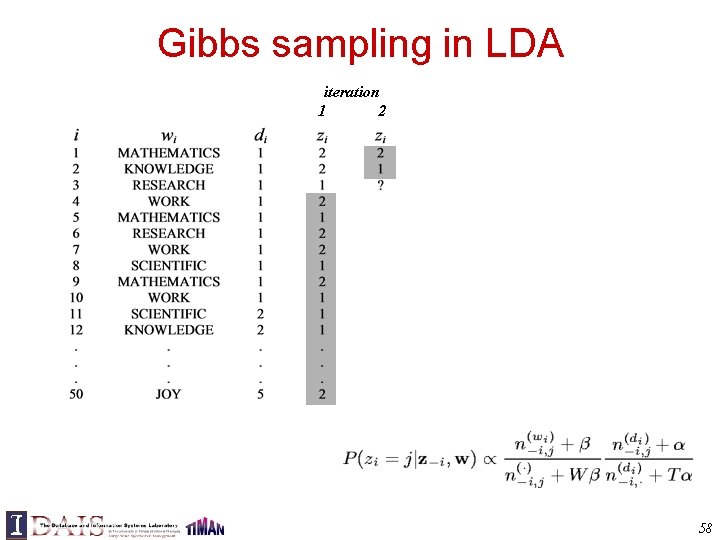

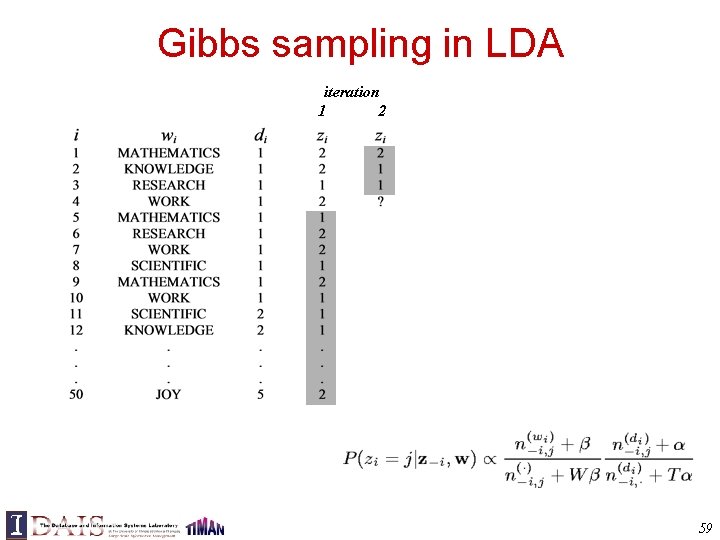

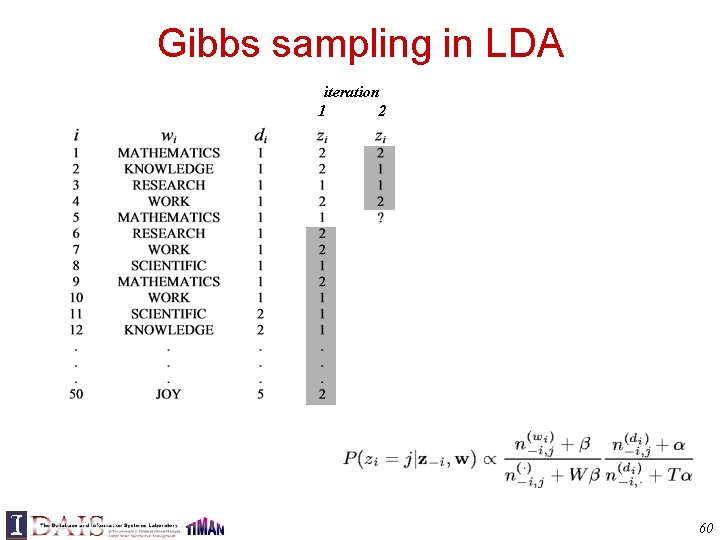

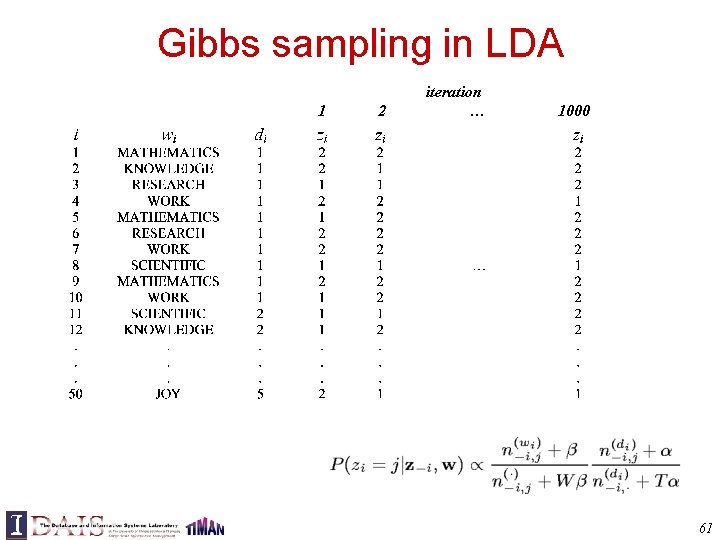

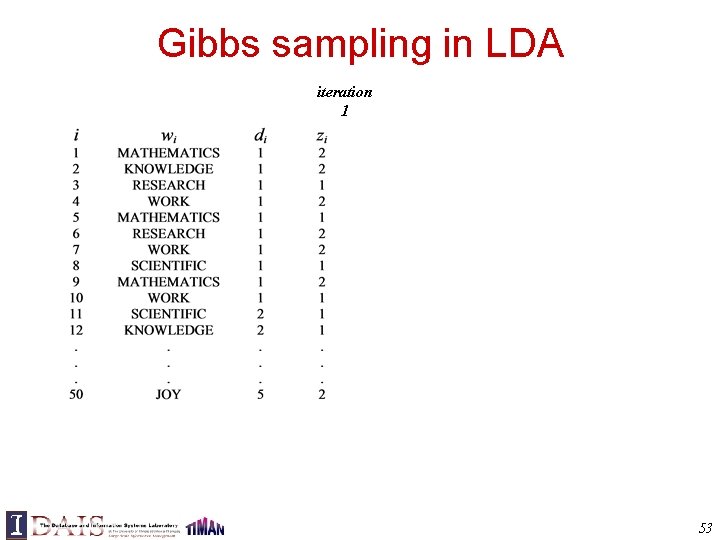

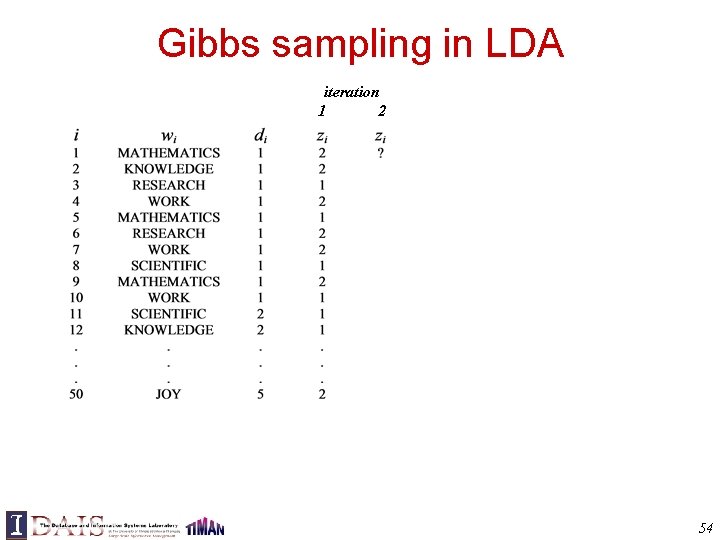

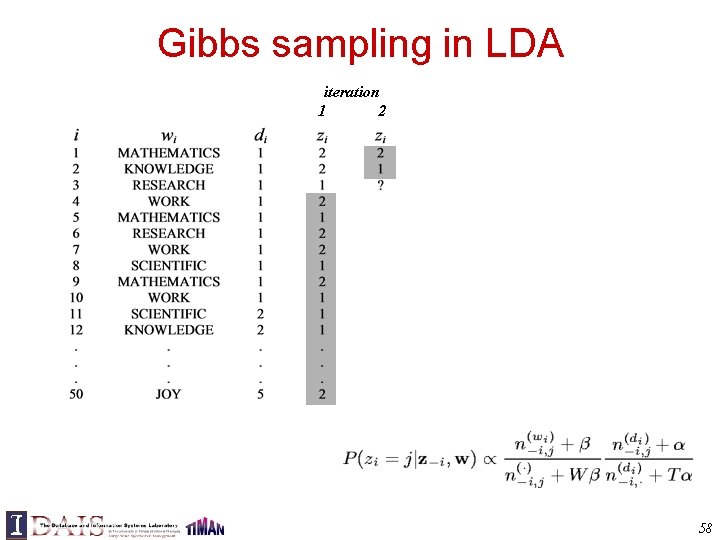

Gibbs sampling in LDA: iterations 1 and 2

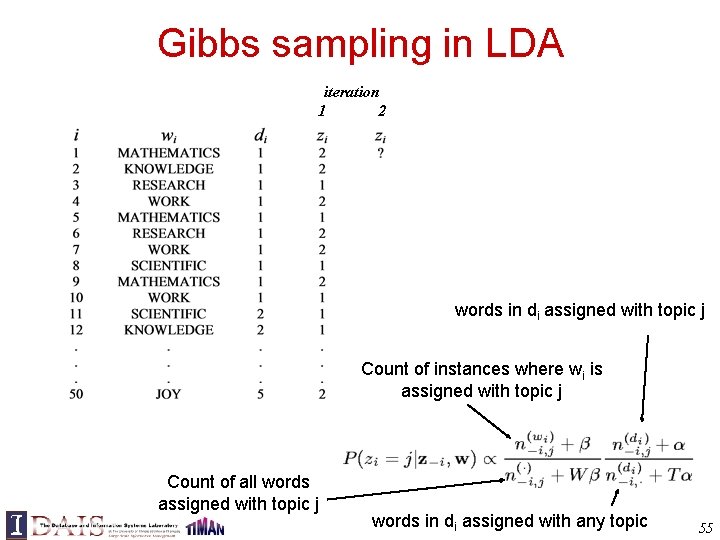

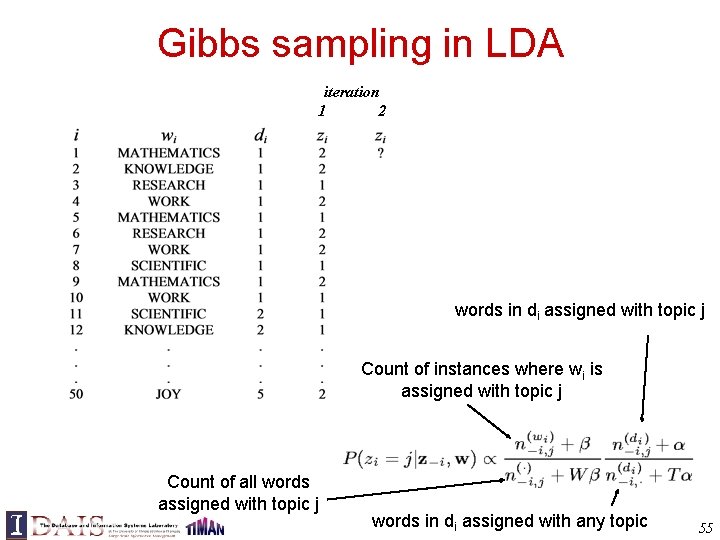

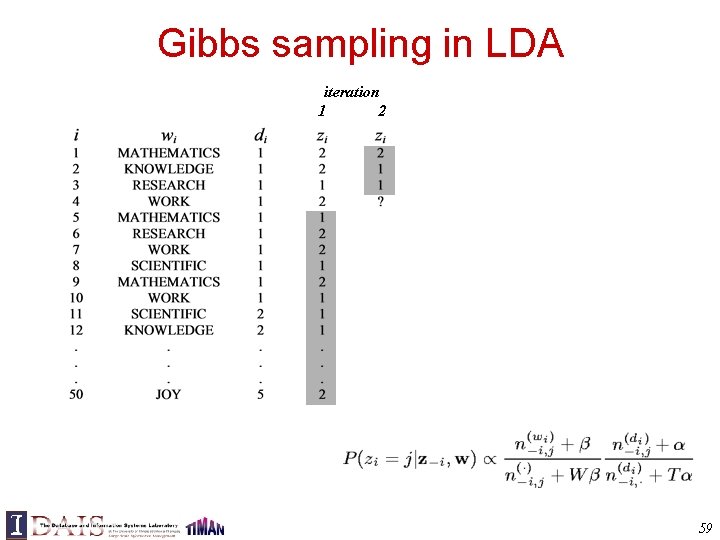

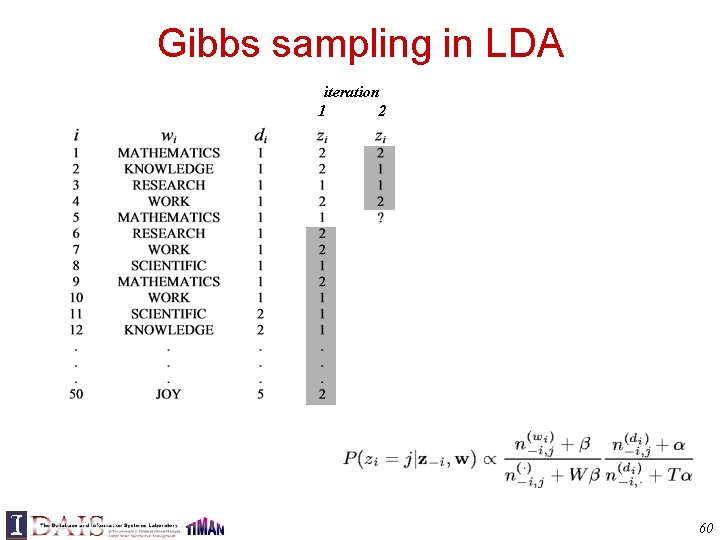

Gibbs sampling in LDA: counts used when resampling the topic of word w_i in document d_i

- Count of words in d_i assigned to topic j
- Count of instances where w_i is assigned to topic j
- Count of all words assigned to topic j
- Count of words in d_i assigned to any topic

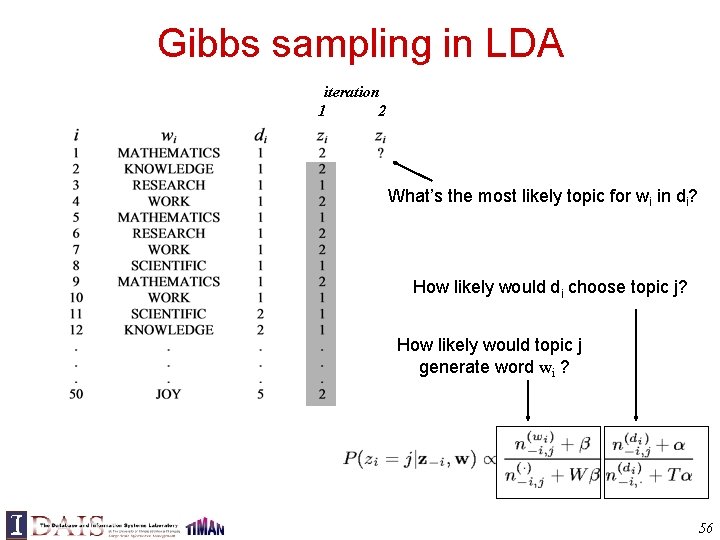

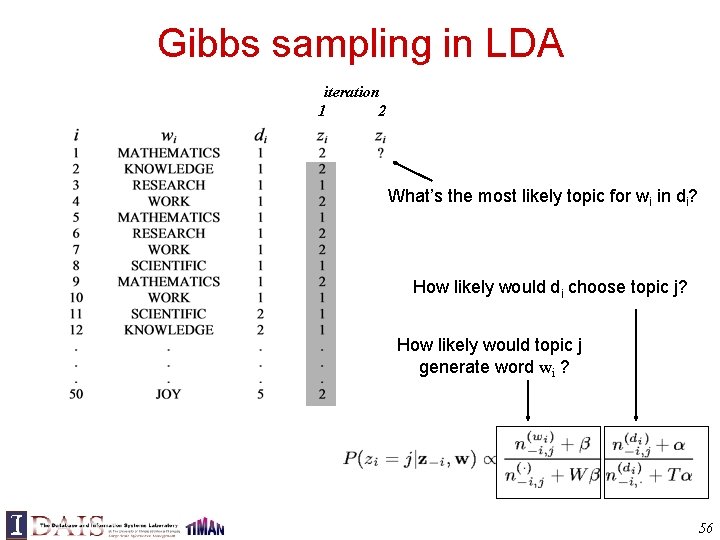

Gibbs sampling in LDA: what is the most likely topic for w_i in d_i?

- How likely would d_i choose topic j?
- How likely would topic j generate word w_i?
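The two questions correspond to the two factors of the sampling weight for each topic. The following is a minimal sketch of a collapsed Gibbs sampler, assuming symmetric hyperparameters `alpha` and `beta` and documents given as lists of integer word ids. It illustrates the technique and is not the authors' implementation.

```python
import random

def collapsed_gibbs(docs, num_topics, vocab_size, alpha, beta, iterations, seed=0):
    """Run collapsed Gibbs sampling for LDA; returns assignments and count tables."""
    rng = random.Random(seed)
    n_dj = [[0] * num_topics for _ in docs]               # words in doc d assigned to topic j
    n_jw = [[0] * vocab_size for _ in range(num_topics)]  # instances of word w assigned to topic j
    n_j = [0] * num_topics                                # all words assigned to topic j
    z = [[rng.randrange(num_topics) for _ in doc] for doc in docs]  # random start
    for d, doc in enumerate(docs):
        for w, j in zip(doc, z[d]):
            n_dj[d][j] += 1
            n_jw[j][w] += 1
            n_j[j] += 1
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                old = z[d][i]
                # Remove the current assignment so the counts describe z_-i.
                n_dj[d][old] -= 1
                n_jw[old][w] -= 1
                n_j[old] -= 1
                # (how likely topic j generates w) * (how likely doc d chooses j).
                # The per-document denominator is identical for all j and is dropped.
                weights = [
                    (n_jw[j][w] + beta) / (n_j[j] + vocab_size * beta) * (n_dj[d][j] + alpha)
                    for j in range(num_topics)
                ]
                new = rng.choices(range(num_topics), weights=weights)[0]
                z[d][i] = new
                n_dj[d][new] += 1
                n_jw[new][w] += 1
                n_j[new] += 1
    return z, n_dj, n_jw, n_j
```

Only additions, multiplications, and divisions on cached counts are needed, which is why the sampler requires no special functions.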

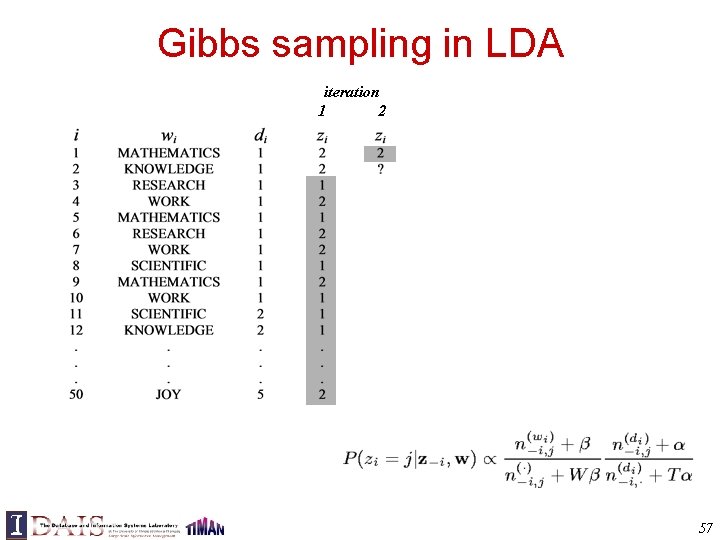

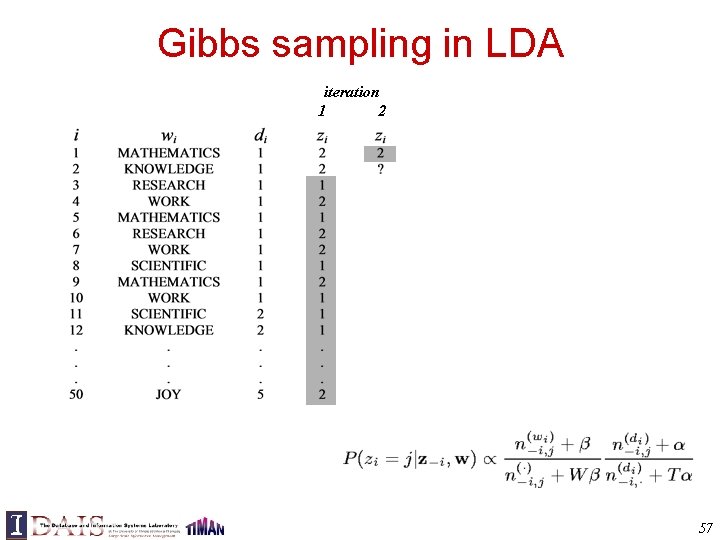


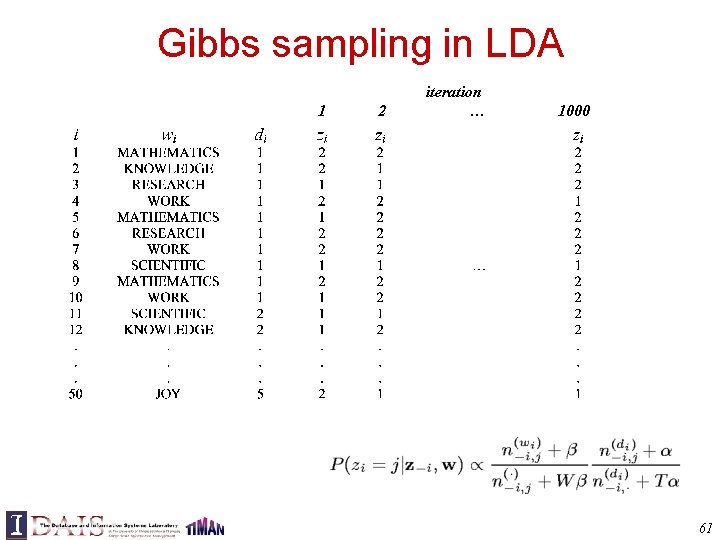

Gibbs sampling in LDA: iterations 1, 2, …, 1000

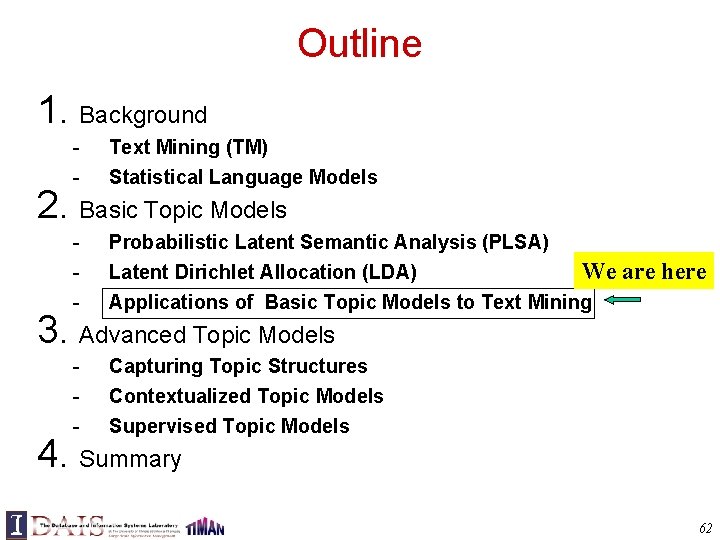

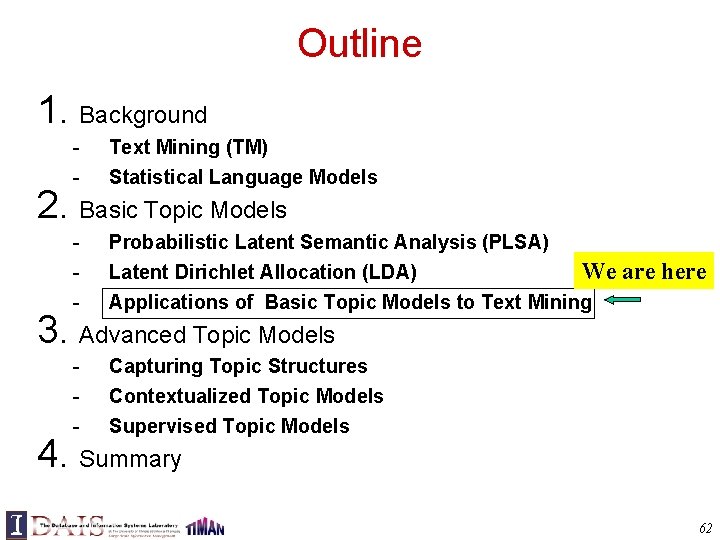

Outline

1. Background: Text Mining (TM); Statistical Language Models
2. Basic Topic Models: Probabilistic Latent Semantic Analysis (PLSA); Latent Dirichlet Allocation (LDA); Applications of Basic Topic Models to Text Mining (we are here)
3. Advanced Topic Models: Capturing Topic Structures; Contextualized Topic Models; Supervised Topic Models
4. Summary

Applications of Topic Models for Text Mining: Illustration with 2 Topics

Likelihood: a two-topic mixture in which each word of the document is drawn from p(w|θ1) with probability λ and from p(w|θ2) with probability 1 − λ.

Application scenarios:

- p(w|θ1) & p(w|θ2) are known; estimate λ. The doc is about text mining and food nutrition; what percentage is about text mining?
- p(w|θ1) & λ are known; estimate p(w|θ2). 30% of the doc is about text mining; what is the rest about?
- p(w|θ1) is known; estimate λ & p(w|θ2). The doc is about text mining; is it also about some other topic, and if so to what extent?
- λ is known; estimate p(w|θ1) & p(w|θ2). 30% of the doc is about one topic and 70% is about another; what are these two topics?
- Estimate λ, p(w|θ1), p(w|θ2). The doc is about two subtopics; find out what these two subtopics are and to what extent the doc covers each.
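The first scenario (both word distributions known, mixing weight unknown) can be solved with a short EM loop. The sketch below is an illustration under assumptions: the mixing weight is called `lam`, the two topics are plain dictionaries, and every word in the document has nonzero probability under at least one topic. The function name and the example numbers are hypothetical.

```python
def estimate_lambda(counts, p1, p2, iterations=100, lam=0.5):
    """Estimate the weight of topic 1 in a two-topic mixture with known topics.

    counts[w] is the count of word w in the document; p1[w] and p2[w] are the
    known word probabilities under topic 1 and topic 2.
    """
    total = sum(counts.values())
    for _ in range(iterations):
        expected_topic1 = 0.0
        for w, c in counts.items():
            a = lam * p1[w]
            b = (1.0 - lam) * p2[w]
            # E-step: posterior probability that an occurrence of w came from topic 1.
            expected_topic1 += c * a / (a + b)
        # M-step: the new weight is the expected fraction of words from topic 1.
        lam = expected_topic1 / total
    return lam

text_mining = {"mining": 0.5, "text": 0.4, "diet": 0.1}
nutrition = {"mining": 0.1, "text": 0.1, "diet": 0.8}
coverage = estimate_lambda({"mining": 2, "text": 1, "diet": 7}, text_mining, nutrition)
```

The other scenarios use the same E-step and additionally re-estimate whichever word distributions are unknown in the M-step.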

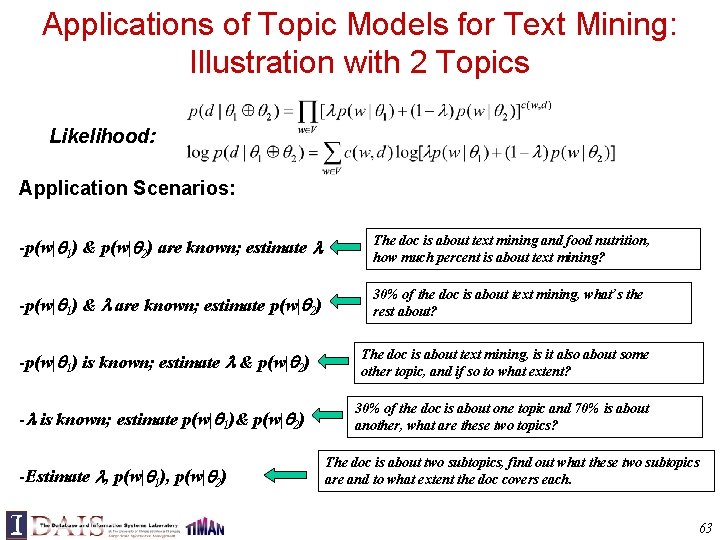

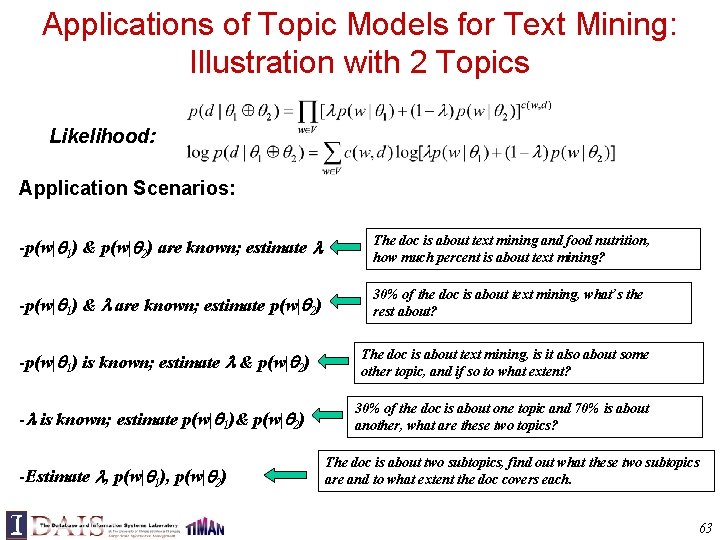

Use PLSA/LDA for Text Mining

- Both PLSA and LDA would be able to generate
  - Topic coverage in each document: p(π_d = j)
  - Word distribution for each topic: p(w|θ_j)
  - Topic assignment at the word level for each document
  - Caveat: the number of topics must be given in advance
- These probabilities can be used in many different ways
  - θ_j naturally serves as a word cluster
  - π_{d,j} can be used for document clustering
  - Contextual text mining: make these parameters conditioned on context, e.g.,
    - p(θ_j|time), from which we can compute/plot p(time|θ_j)
    - p(θ_j|location), from which we can compute/plot p(loc|θ_j)
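Going from p(topic | time) to p(time | topic) is an application of Bayes' rule. The sketch below assumes a prior over time points is available (for example, the fraction of documents per time point). The function name and input layout are hypothetical.

```python
def time_given_topic(topic_given_time, time_prior):
    """Invert p(topic j | t) into p(t | topic j) with Bayes' rule.

    topic_given_time[t] is a list with p(topic j | t) for each topic j;
    time_prior[t] is p(t). Returns one dict {t: p(t | topic j)} per topic.
    """
    num_topics = len(next(iter(topic_given_time.values())))
    result = []
    for j in range(num_topics):
        joint = {t: probs[j] * time_prior[t] for t, probs in topic_given_time.items()}
        norm = sum(joint.values())
        result.append({t: value / norm for t, value in joint.items()})
    return result

trend = time_given_topic({2004: [0.8, 0.2], 2005: [0.2, 0.8]}, {2004: 0.5, 2005: 0.5})
```

Plotting each returned dictionary over time gives the kind of topic trend curve used in the temporal analyses later in the tutorial. The same inversion works for location.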

![Sample Topics from TDT Corpus Hofmann 99 b 65 Sample Topics from TDT Corpus [Hofmann 99 b] 65](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-65.jpg)

Sample Topics from TDT Corpus [Hofmann 99b]

![How to Help Users Interpret a Topic Model Mei et al 07 b How to Help Users Interpret a Topic Model? [Mei et al. 07 b] •](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-66.jpg)

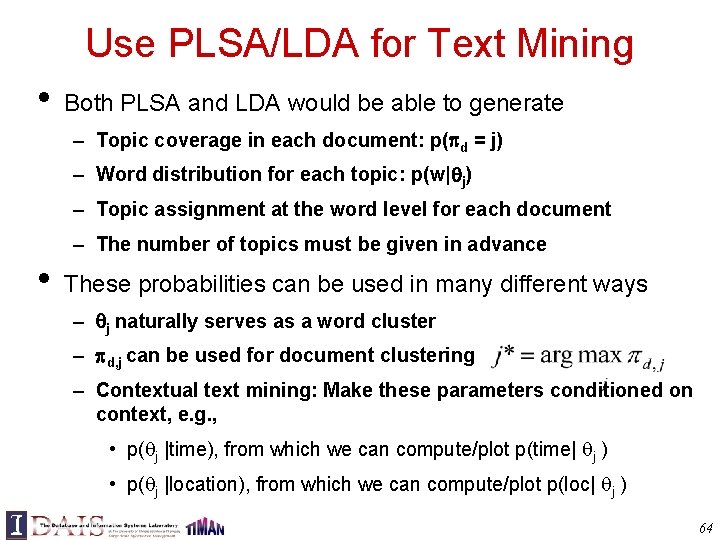

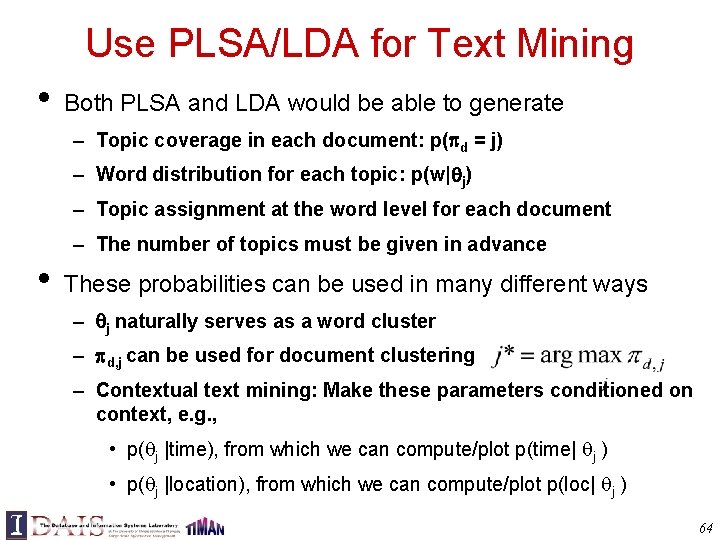

How to Help Users Interpret a Topic Model? [Mei et al. 07b]

- Use top words: automatic, but hard to make sense of
  - Example topic: term 0.16, relevance 0.08, weight 0.07, feedback 0.04, independence 0.03, model 0.03, frequent 0.02, probabilistic 0.02, document 0.02, …
  - Reading off the top words gives "term, relevance, weight, feedback"
- Human generated labels: make sense, but cannot scale up
  - Example label for the topic above: "Retrieval Models"
  - Another topic: insulin, foraging, foragers, collected, grains, loads, collection, nectar, … (label: ?)
- Question: Can we automatically generate understandable labels for topics?

What is a Good Label?

- Example topic from [Mei & Zhai 06b]: term 0.1599, relevance 0.0752, weight 0.0660, feedback 0.0372, independence 0.0311, model 0.0310, frequent 0.0233, probabilistic 0.0188, document 0.0173, …
- Candidate labels: Retrieval models; iPod Nano; じょうほうけんさく (Japanese for "information retrieval"); Pseudo-feedback; Information Retrieval
- Criteria for a good label:
  - Semantically close (relevance)
  - Understandable (phrases?)
  - High coverage inside topic
  - Discriminative across topics
  - …

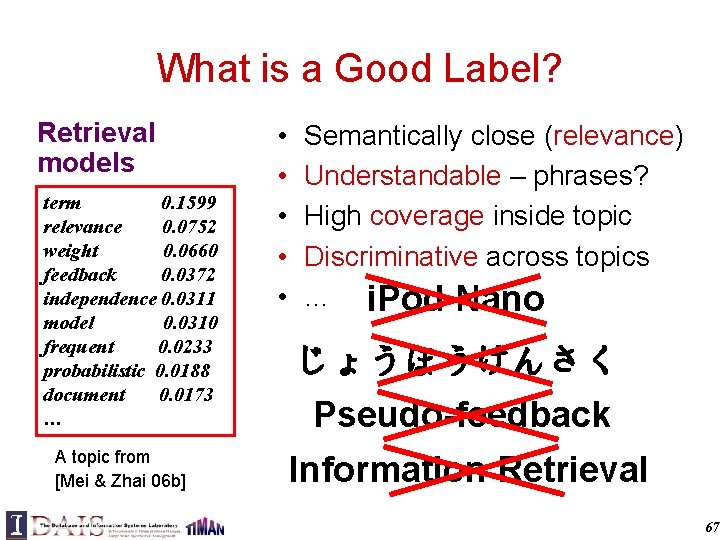

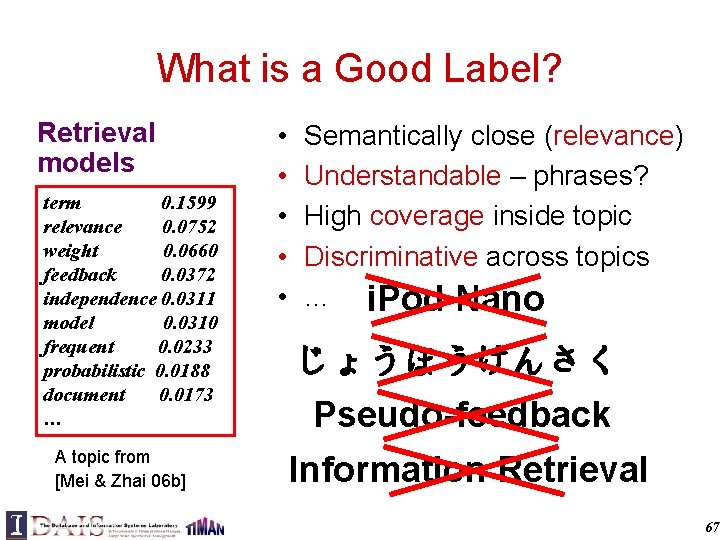

![Automatic Labeling of Topics Mei et al 07 b Statistical topic models term 0 Automatic Labeling of Topics [Mei et al. 07 b] Statistical topic models term 0.](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-68.jpg)

Automatic Labeling of Topics [Mei et al. 07b]

- Input: a collection (context) and the multinomial topic models estimated from it by statistical topic modeling, e.g., term 0.1599, relevance 0.0752, weight 0.0660, feedback 0.0372, independence 0.0311, model 0.0310, frequent 0.0233, probabilistic 0.0188, document 0.0173, …
- Step 1: an NLP chunker and n-gram statistics build a candidate label pool, e.g., database system, clustering algorithm, r tree, functional dependency, iceberg cube, concurrency control, index structure, …
- Step 2: a relevance score ranks the candidates against each topic, followed by re-ranking for coverage and discrimination
- Output: a ranked list of labels, e.g., clustering algorithm; distance measure; …

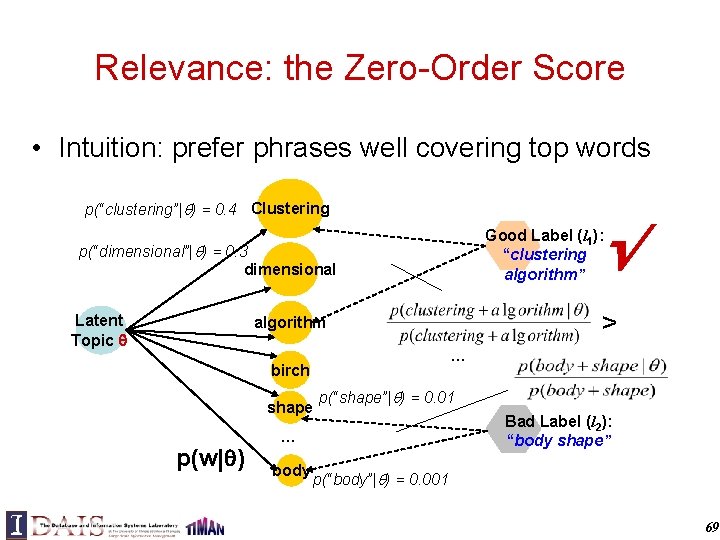

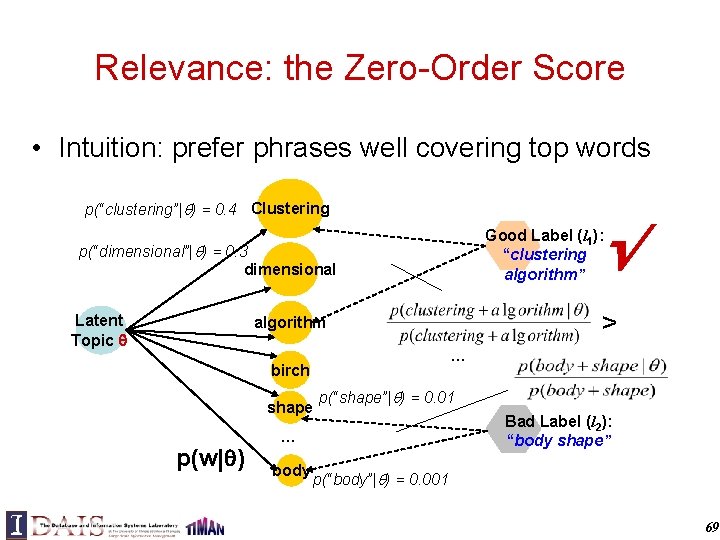

Relevance: the Zero-Order Score

- Intuition: prefer phrases that cover the top words of the topic well
- Latent topic θ with word distribution p(w|θ): clustering, dimensional, algorithm, birch, …, shape, …, body
  - p("clustering"|θ) = 0.4
  - p("dimensional"|θ) = 0.3
  - p("shape"|θ) = 0.01
  - p("body"|θ) = 0.001
- Good label (l1): "clustering algorithm" scores higher than (>) bad label (l2): "body shape"
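The intuition can be made concrete by summing the log probabilities of a label's words under the topic. This is a simplified sketch: it omits any normalization by background word probabilities. The value for "algorithm" is a made-up assumption, since the slide text does not give it. The `floor` parameter for unseen words is also an assumption.

```python
import math

def zero_order_score(label, topic, floor=1e-6):
    """Sum of log p(w | theta) over the words of a candidate label."""
    return sum(math.log(topic.get(word, floor)) for word in label.split())

topic = {
    "clustering": 0.4,
    "dimensional": 0.3,
    "algorithm": 0.2,  # hypothetical value, not given on the slide
    "shape": 0.01,
    "body": 0.001,
}
good = zero_order_score("clustering algorithm", topic)
bad = zero_order_score("body shape", topic)
```

With these numbers `good` is larger than `bad`, matching the ordering on the slide.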

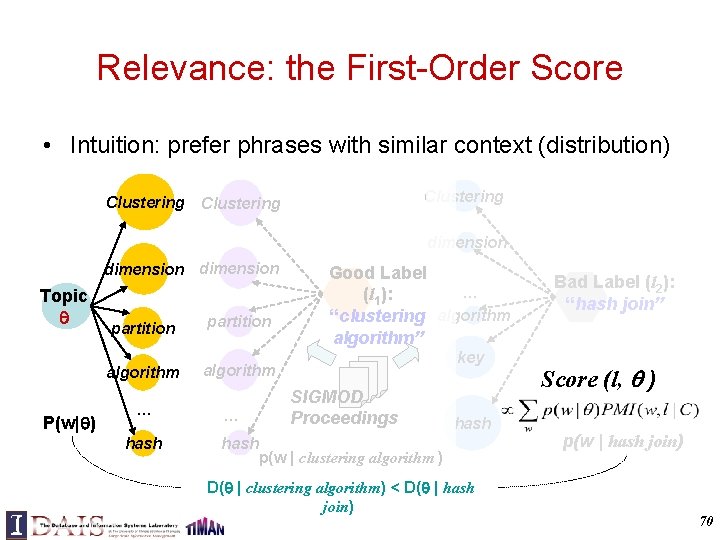

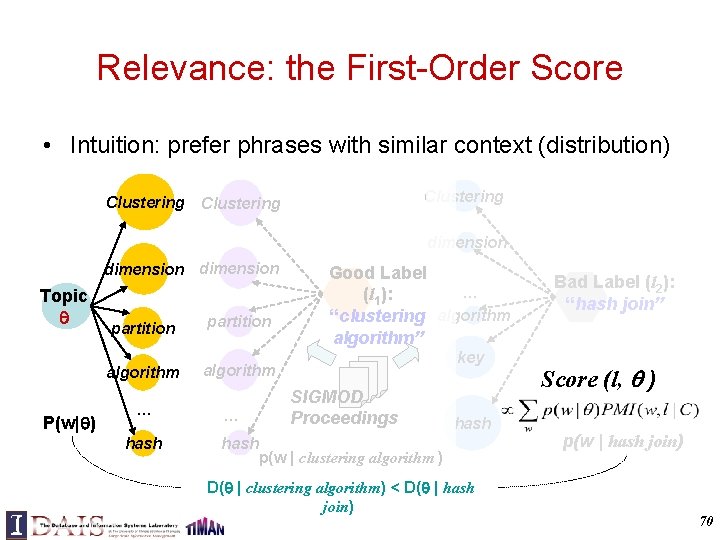

Relevance: the First-Order Score

- Intuition: prefer phrases with a similar context (distribution)
- Topic θ with word distribution p(w|θ): clustering, dimension, partition, algorithm, …, hash, …, key
- Context collection: SIGMOD Proceedings, used to estimate p(w | clustering algorithm) and p(w | hash join)
- Good label (l1): "clustering algorithm"; bad label (l2): "hash join"
- Score(l, θ): D(θ | clustering algorithm) < D(θ | hash join)
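The comparison D(θ | l1) < D(θ | l2) can be sketched with a KL divergence between the topic and the word distribution observed around each candidate label. All distributions below are invented for illustration, and using the negative divergence as the score is an assumption about the general idea, not the exact formula of the paper.

```python
import math

def kl_divergence(p, q, floor=1e-9):
    """D(p || q) for distributions stored as word -> probability dictionaries."""
    return sum(
        pw * math.log(pw / max(q.get(w, 0.0), floor))
        for w, pw in p.items()
        if pw > 0.0
    )

def first_order_score(topic, label_context):
    """Higher is better: labels whose context is close to the topic score near zero."""
    return -kl_divergence(topic, label_context)

topic = {"clustering": 0.5, "algorithm": 0.3, "hash": 0.2}
context_clustering_algorithm = {"clustering": 0.45, "algorithm": 0.35, "hash": 0.20}
context_hash_join = {"clustering": 0.05, "algorithm": 0.15, "hash": 0.80}
```

Here `first_order_score(topic, context_clustering_algorithm)` is closer to zero than the score for `context_hash_join`, so "clustering algorithm" would be ranked first.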

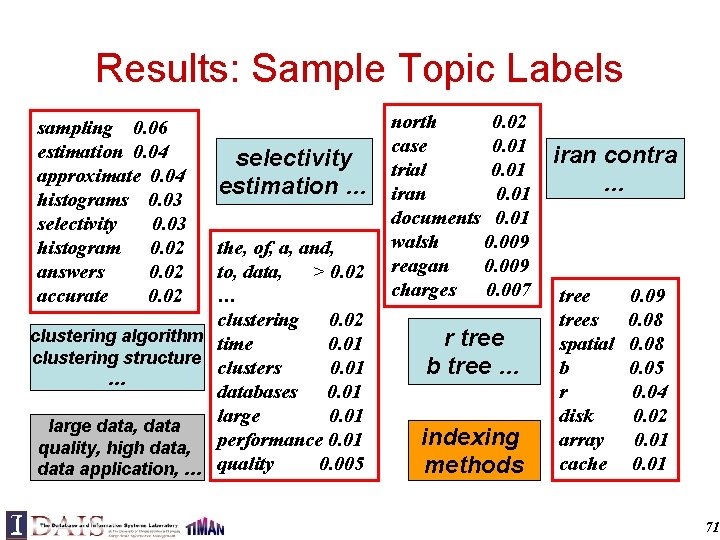

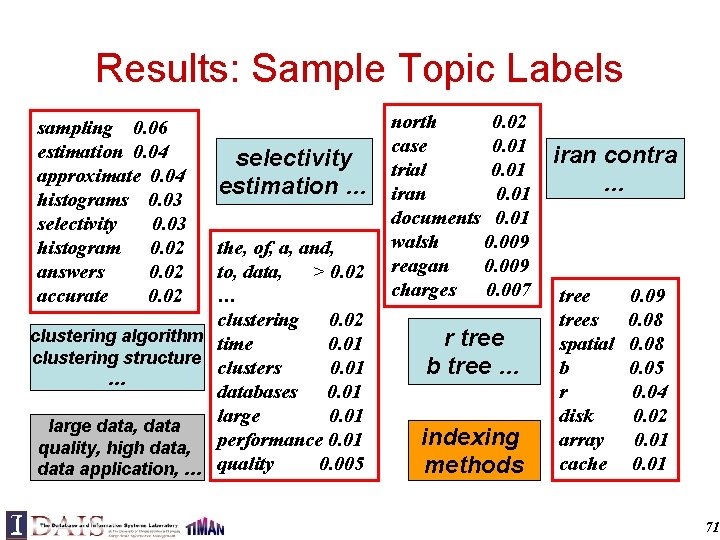

Results: Sample Topic Labels

- Topic: sampling 0.06, estimation 0.04, approximate 0.04, histograms 0.03, selectivity 0.03, histogram 0.02, answers 0.02, accurate 0.02. Labels: selectivity estimation, …
- Topic: the, of, a, and, to, data (each > 0.02), …, clustering 0.02, time 0.01, clusters 0.01, databases 0.01, performance 0.01, quality 0.005. Labels: clustering algorithm, clustering structure, …; large data, data quality, high data, data application, …
- Topic: north 0.02, case 0.01, trial 0.01, iran 0.01, documents 0.01, walsh 0.009, reagan 0.009, charges 0.007. Labels: iran contra, …
- Topic: tree 0.09, trees 0.08, spatial 0.08, b 0.05, r 0.04, disk 0.02, array 0.01, cache 0.01. Labels: r tree, b tree, …; indexing methods

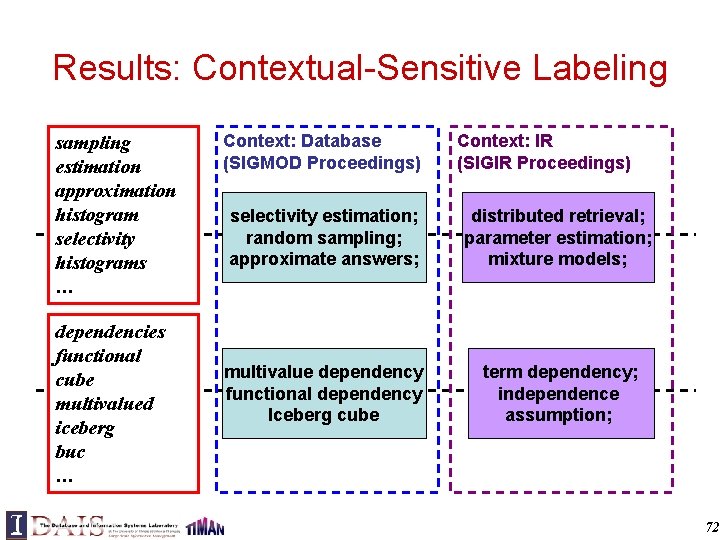

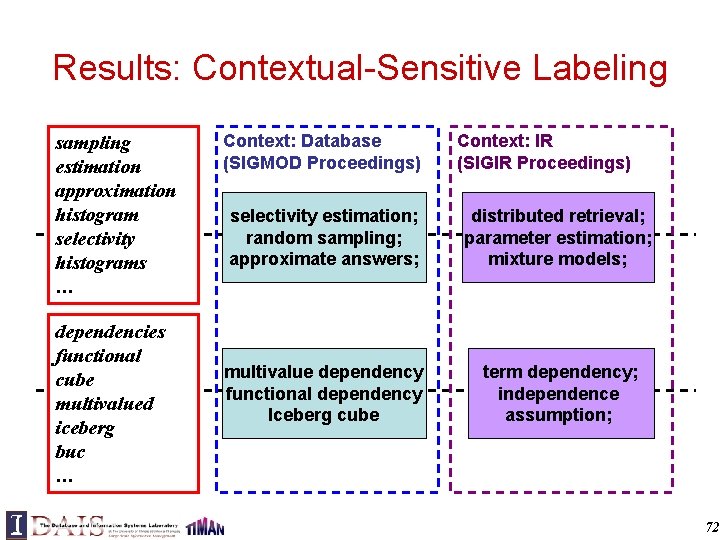

Results: Context-Sensitive Labeling

- Topic: sampling, estimation, approximation, histogram, selectivity, histograms, …
  - Context: Database (SIGMOD Proceedings): selectivity estimation; random sampling; approximate answers
  - Context: IR (SIGIR Proceedings): distributed retrieval; parameter estimation; mixture models
- Topic: dependencies, functional, cube, multivalued, iceberg, buc, …
  - Context: Database (SIGMOD Proceedings): multivalue dependency; functional dependency; iceberg cube
  - Context: IR (SIGIR Proceedings): term dependency; independence assumption

![Using PLSA to Discover Temporal Topic Trends Mei Zhai 05 gene 0 0173 Using PLSA to Discover Temporal Topic Trends [Mei & Zhai 05] gene 0. 0173](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-73.jpg)

Using PLSA to Discover Temporal Topic Trends [Mei & Zhai 05]

- gene 0.0173, expressions 0.0096, probability 0.0081, microarray 0.0038, …
- marketing 0.0087, customer 0.0086, model 0.0079, business 0.0048, …
- rules 0.0142, association 0.0064, support 0.0053, …

![Construct Theme Evolution Graph Mei Zhai 05 1999 2000 2001 2002 SVM 0 Construct Theme Evolution Graph [Mei & Zhai 05] 1999 2000 2001 2002 SVM 0.](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-74.jpg)

Construct Theme Evolution Graph [Mei & Zhai 05]

Timeline: 1999, 2000, 2001, 2002, 2003, 2004. Themes shown as nodes in the graph:

- SVM 0.007, criteria 0.007, classification 0.006, linear 0.005, …
- decision 0.006, tree 0.006, classifier 0.005, class 0.005, Bayes 0.005, …
- web 0.009, classification 0.007, features 0.006, topic 0.005, …
- mixture 0.005, random 0.006, clustering 0.005, variables 0.005, …
- classification 0.015, text 0.013, unlabeled 0.012, document 0.008, labeled 0.008, learning 0.007, …
- information 0.012, web 0.010, social 0.008, retrieval 0.007, distance 0.005, networks 0.004, …
- topic 0.010, mixture 0.008, LDA 0.006, semantic 0.005, …

![Use PLSA to Integrate Opinions Lu Zhai 08 Output Topic i Pod Expert Use PLSA to Integrate Opinions [Lu & Zhai 08] Output Topic: i. Pod Expert](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-75.jpg)

Use PLSA to Integrate Opinions [Lu & Zhai 08]

- Input
  - Topic: iPod
  - Expert review with aspects: Design, Battery, Price, …
  - Text collection of ordinary opinions, e.g., Weblogs
- Output: integrated summary
  - Review aspects, each with similar opinions and supplementary opinions
    - Design: cute… tiny…; ..thicker..
    - Battery: last many hrs; die out soon
    - Price: could afford it; still expensive
  - Extra aspects
    - iTunes: … easy to use…
    - warranty: …better to extend..

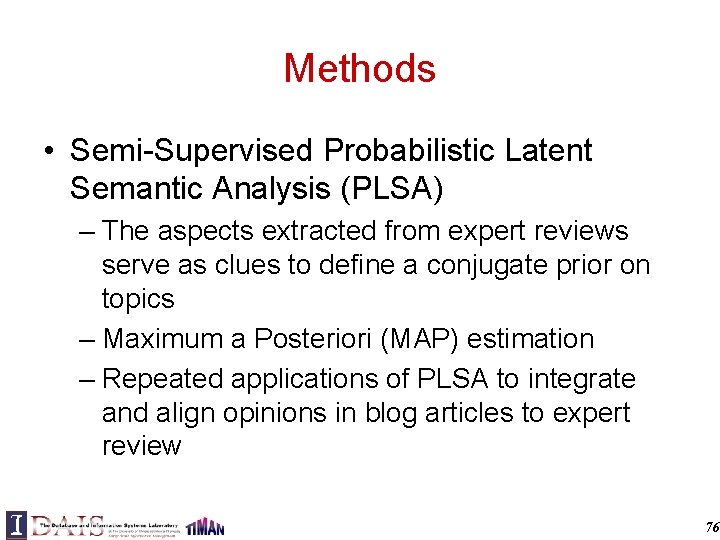

Methods

- Semi-Supervised Probabilistic Latent Semantic Analysis (PLSA)
  - The aspects extracted from expert reviews serve as clues to define a conjugate prior on topics
  - Maximum a Posteriori (MAP) estimation
  - Repeated applications of PLSA to integrate and align opinions in blog articles with the expert review

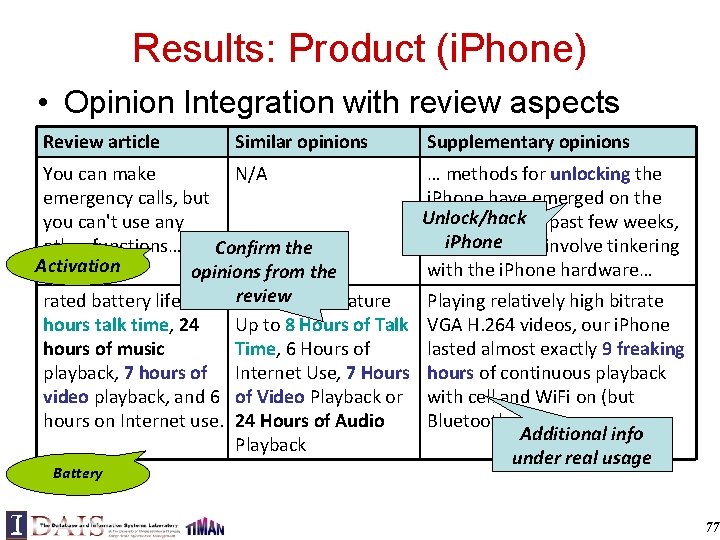

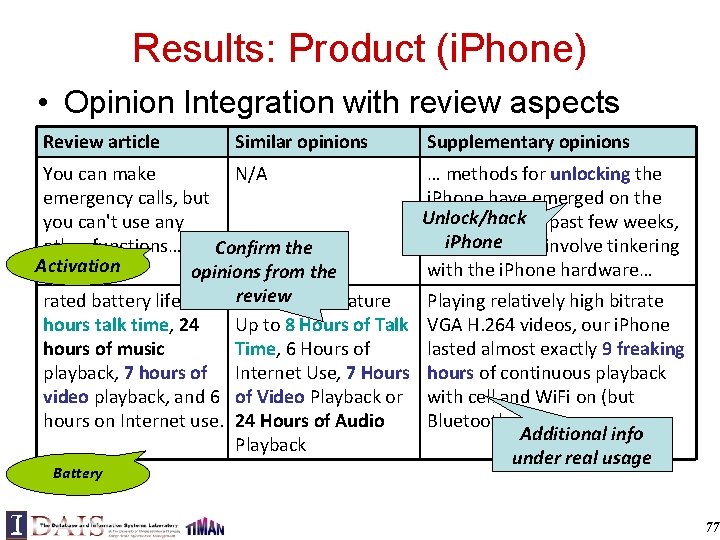

Results: Product (iPhone)

Opinion integration with review aspects:

- Aspect: Activation
  - Review article: "You can make emergency calls, but you can't use any other functions…"
  - Similar opinions: N/A
  - Supplementary opinions (unlock/hack iPhone): "… methods for unlocking the iPhone have emerged on the Internet in the past few weeks, although they involve tinkering with the iPhone hardware…"
- Aspect: Battery
  - Review article: "rated battery life of 8 hours talk time, 24 hours of music playback, 7 hours of video playback, and 6 hours on Internet use."
  - Similar opinions (confirm the opinions from the review): "iPhone will Feature Up to 8 Hours of Talk Time, 6 Hours of Internet Use, 7 Hours of Video Playback or 24 Hours of Audio Playback"
  - Supplementary opinions (additional info under real usage): "Playing relatively high bitrate VGA H.264 videos, our iPhone lasted almost exactly 9 freaking hours of continuous playback with cell and WiFi on (but Bluetooth off)."

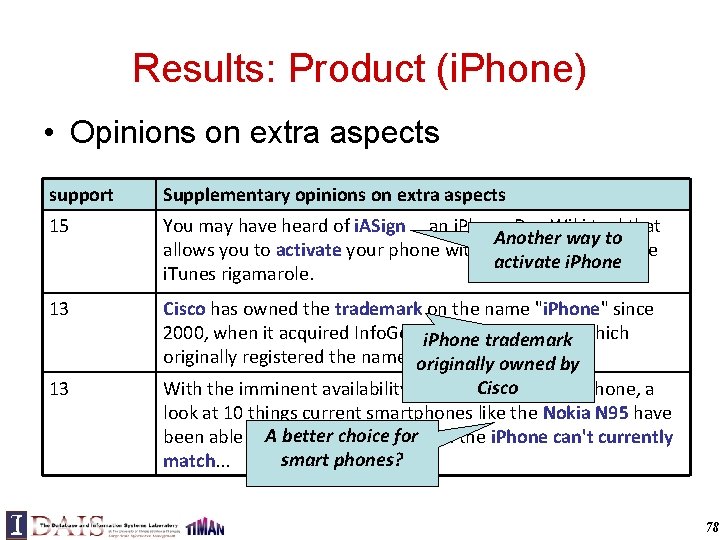

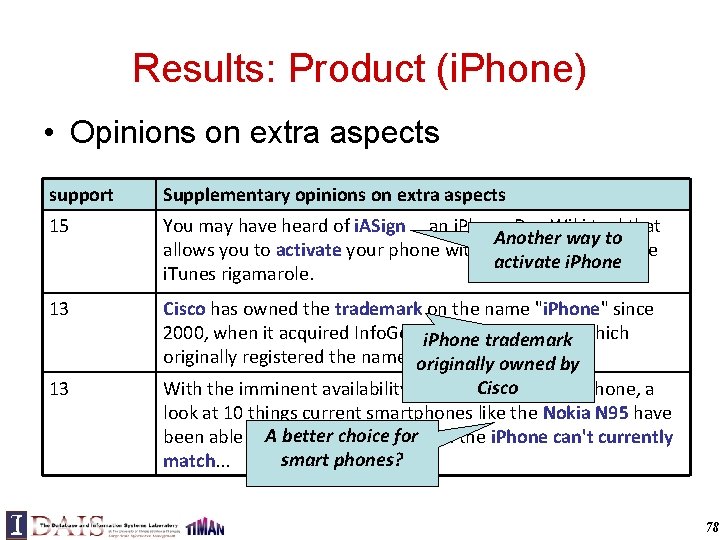

Results: Product (iPhone)

Opinions on extra aspects (support count, supplementary opinion, interpretation):

- Support 15: "You may have heard of iASign … an iPhone Dev Wiki tool that allows you to activate your phone without going through the iTunes rigamarole." (Another way to activate iPhone)
- Support 13: "Cisco has owned the trademark on the name "iPhone" since 2000, when it acquired InfoGear Technology Corp., which originally registered the name." (iPhone trademark originally owned by Cisco)
- Support 13: "With the imminent availability of Apple's uber cool iPhone, a look at 10 things current smartphones like the Nokia N95 have been able to do for a while and that the iPhone can't currently match..." (A better choice for smart phones?)

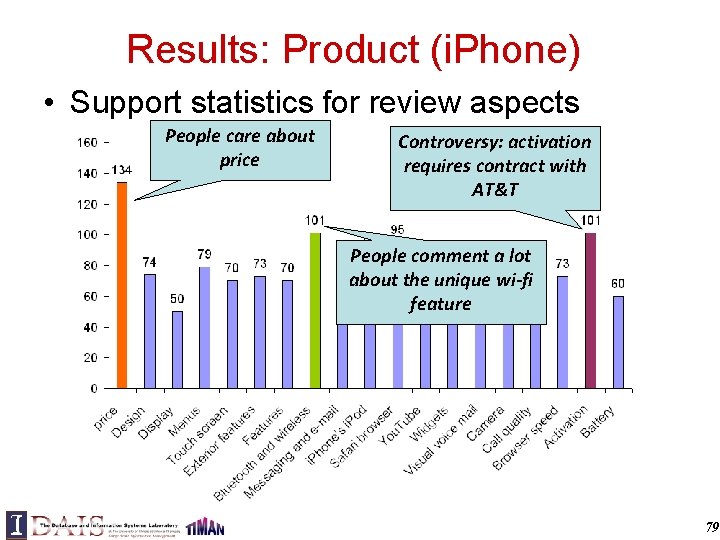

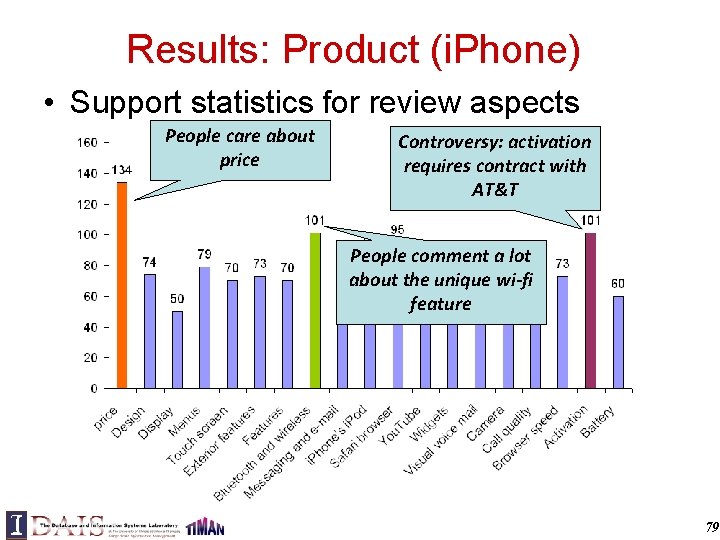

Results: Product (iPhone)

- Support statistics for review aspects
  - People care about price
  - Controversy: activation requires contract with AT&T
  - People comment a lot about the unique wi-fi feature

![Comparison of Task Performance of PLSA and LDA Lu et al 11 Three Comparison of Task Performance of PLSA and LDA [Lu et al. 11] • Three](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-80.jpg)

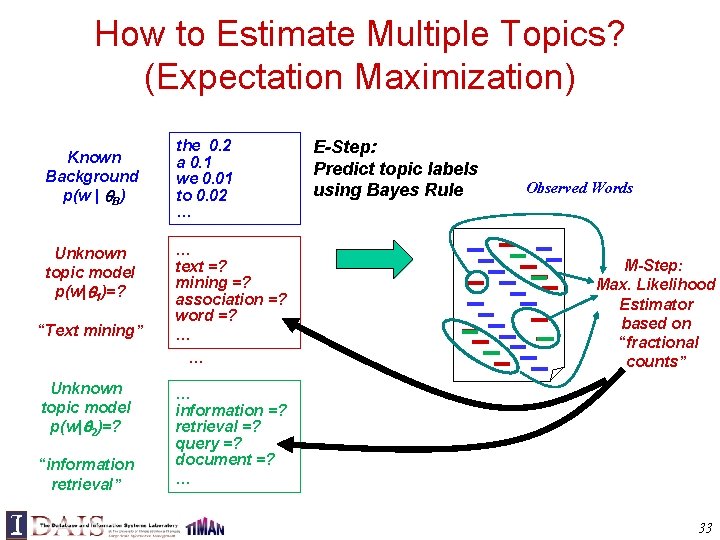

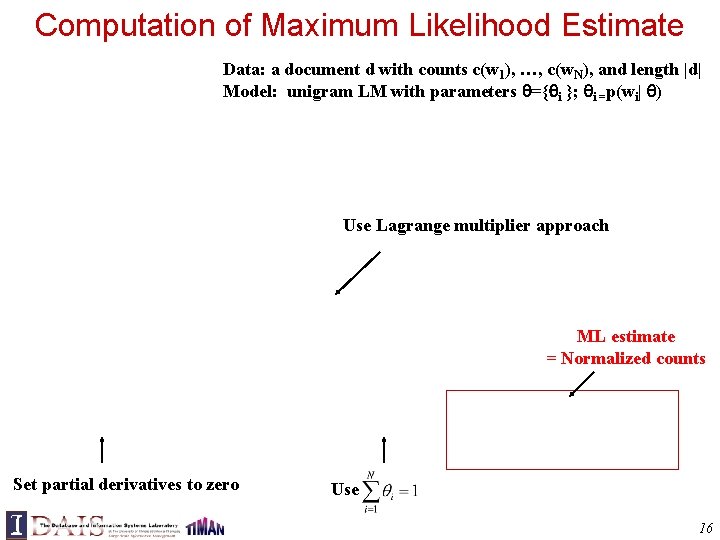

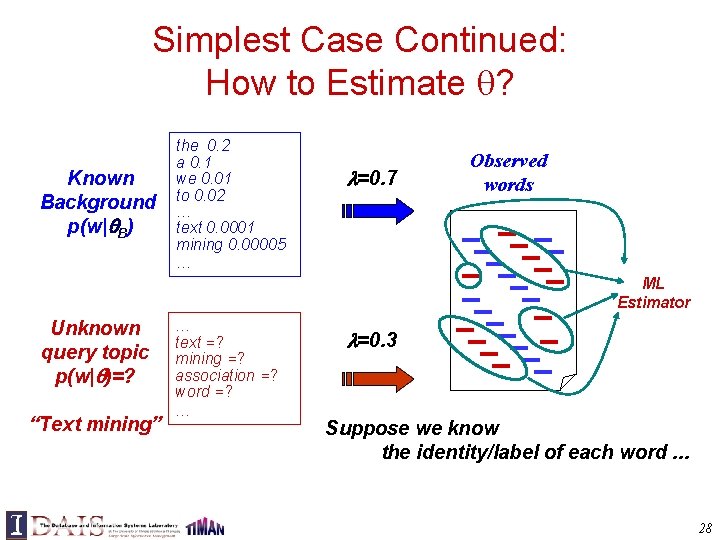

Comparison of Task Performance of PLSA and LDA [Lu et al. 11]

- Three text mining tasks considered
  - Topic model for text clustering
  - Topic model for text categorization (topic model is used to obtain a low-dimensional representation)
  - Topic model for smoothing the language model for retrieval
- Conclusions
  - PLSA and LDA generally have similar task performance for clustering and retrieval
  - LDA works better than PLSA when used to generate a low-dimensional representation (PLSA suffers from overfitting)
  - Task performance of LDA is very sensitive to the setting of hyperparameters
  - The multiple local maxima problem of PLSA didn't seem to affect task performance much

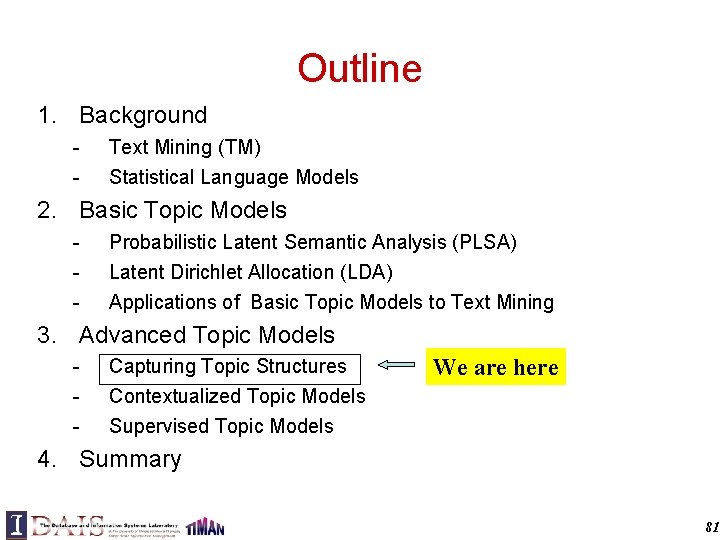

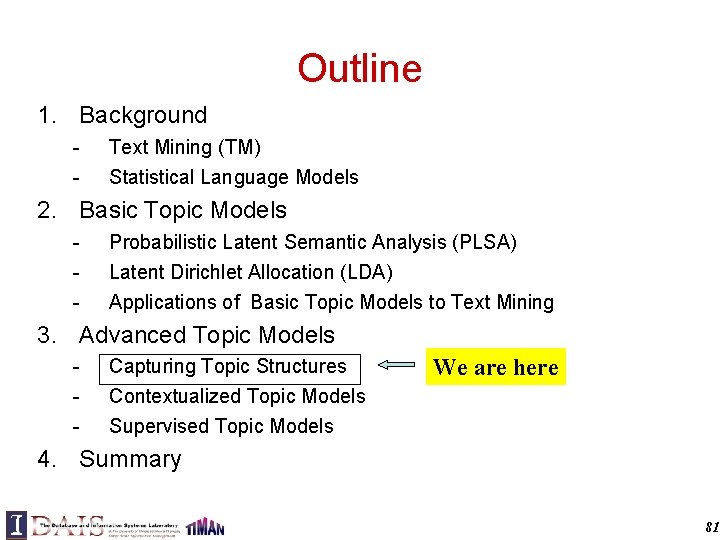

Outline

1. Background: Text Mining (TM); Statistical Language Models
2. Basic Topic Models: Probabilistic Latent Semantic Analysis (PLSA); Latent Dirichlet Allocation (LDA); Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models (we are here): Capturing Topic Structures; Contextualized Topic Models; Supervised Topic Models
4. Summary

Overview of Advanced Topic Models

- There are MANY variants and extensions of the basic PLSA/LDA topic models!
- Selected major lines to cover in this tutorial
  - Capturing Topic Structures
  - Contextualized Topic Models
  - Supervised Topic Models

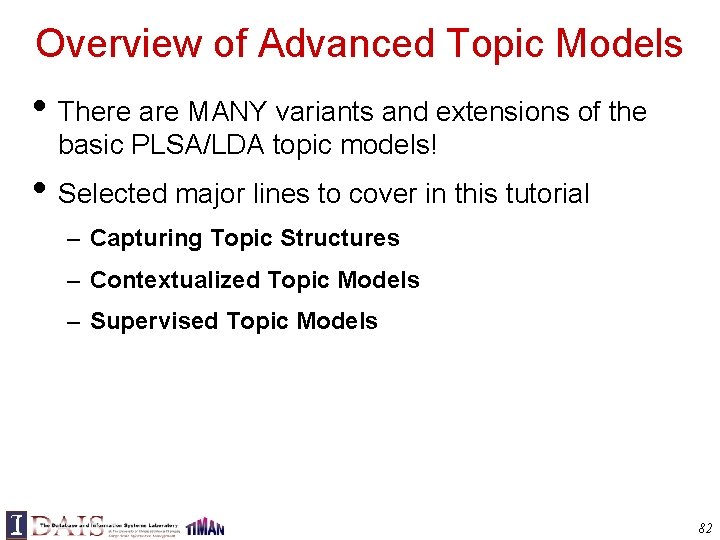

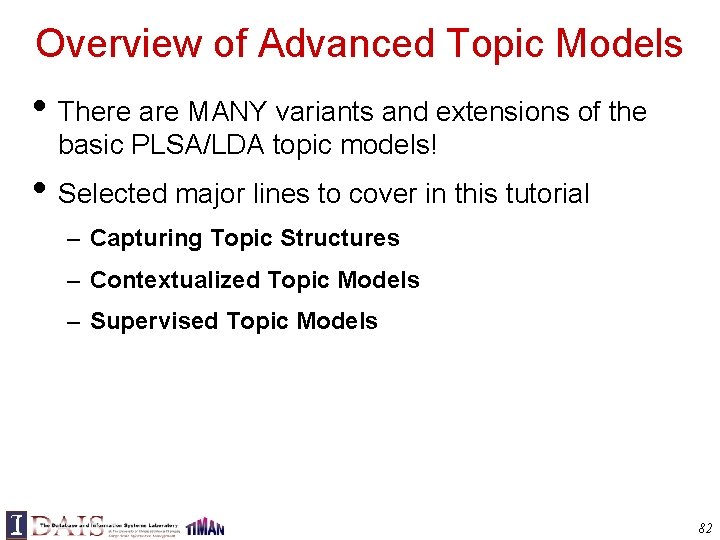

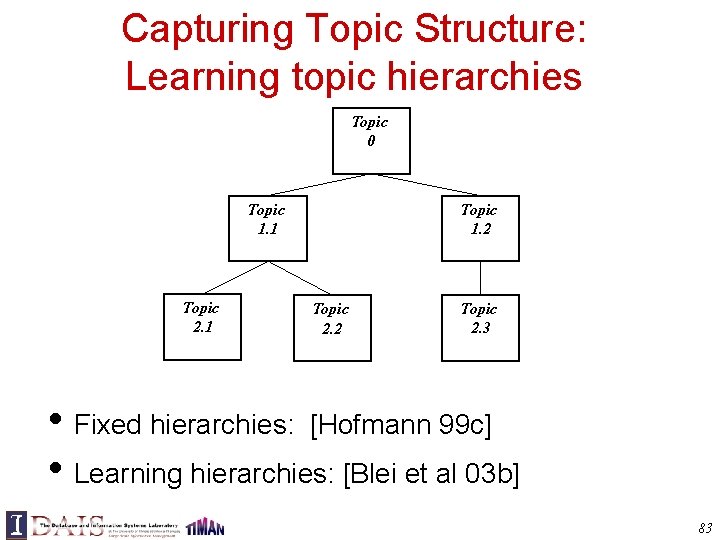

Capturing Topic Structure: Learning topic hierarchies

- Example hierarchy: Topic 0 at the root, with Topic 1.1, Topic 1.2, Topic 2.1, and Topic 2.3 below it
- Fixed hierarchies: [Hofmann 99c]
- Learning hierarchies: [Blei et al. 03b]

Learning topic hierarchies

- The topics in each document form a path from root to leaf (hierarchy: Topic 0, Topic 1.1, Topic 1.2, Topic 2.1, Topic 2.3)
- Fixed hierarchies: [Hofmann 99c]
- Learning hierarchies: [Blei et al. 03b]

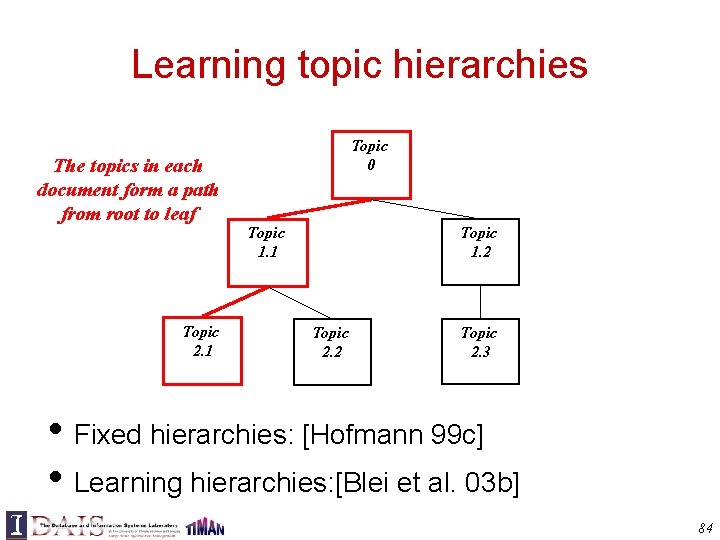

![Twelve Years of NIPS Blei et al 03 b 85 Twelve Years of NIPS [Blei et al. 03 b] 85](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-85.jpg)

Twelve Years of NIPS [Blei et al. 03b]

![Capturing Topic Structures Correlated Topic Model CTM Blei Lafferty 05 86 Capturing Topic Structures: Correlated Topic Model (CTM) [Blei & Lafferty 05] 86](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-86.jpg)

Capturing Topic Structures: Correlated Topic Model (CTM) [Blei & Lafferty 05]

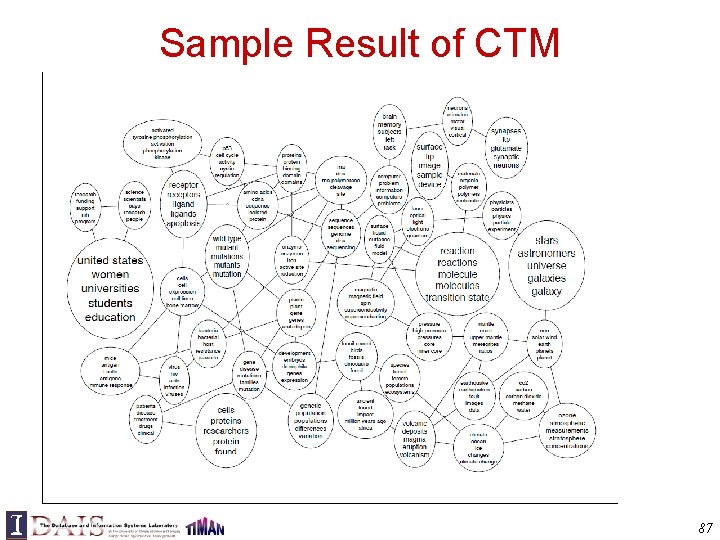

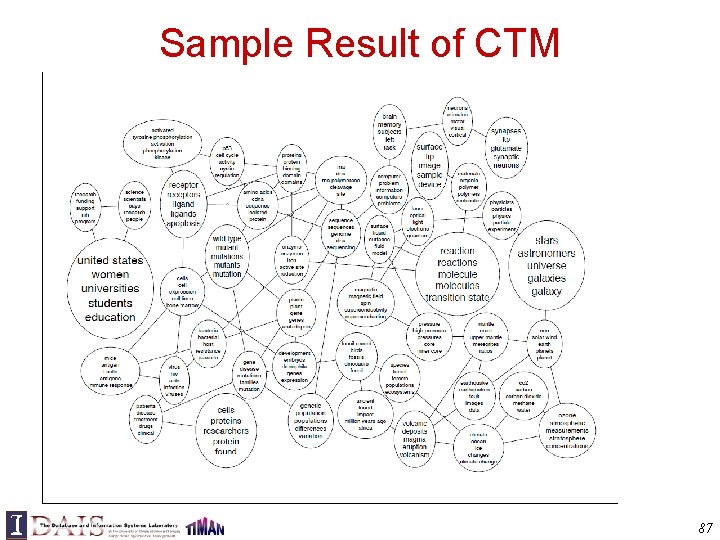

Sample Result of CTM

Outline

1. Background: Text Mining (TM); Statistical Language Models
2. Basic Topic Models: Probabilistic Latent Semantic Analysis (PLSA); Latent Dirichlet Allocation (LDA); Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models: Capturing Topic Structures; Contextualized Topic Models (we are here); Supervised Topic Models
4. Summary

Contextual Topic Mining

- Documents are often associated with context (metadata)
  - Direct context: time, location, source, authors, …
  - Indirect context: events, policies, …
- Many applications require "contextual text analysis":
  - Discovering topics from text in a context-sensitive way
  - Analyzing variations of topics over different contexts
  - Revealing interesting patterns (e.g., topic evolution, topic variations, topic communities)

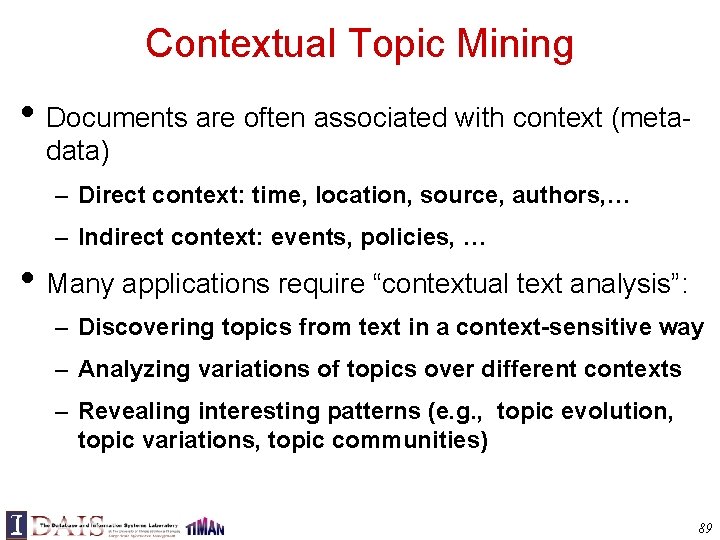

Example: Comparing News Articles

- Contexts that can be compared
  - Event: Vietnam War, Afghan War, Iraq War
  - Source: CNN, Fox, Blog
  - Time: before 9/11, during Iraq war, current
  - Blog origin: US blog, European blog, others
- Target output: a table of themes with columns Common Themes, "Vietnam" specific, "Afghan" specific, and "Iraq" specific, and rows such as United nations and Death of people
- What's in common? What's unique?

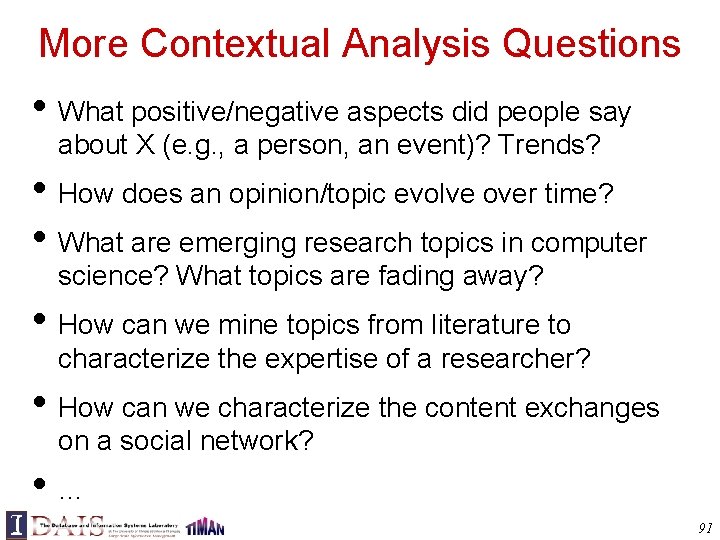

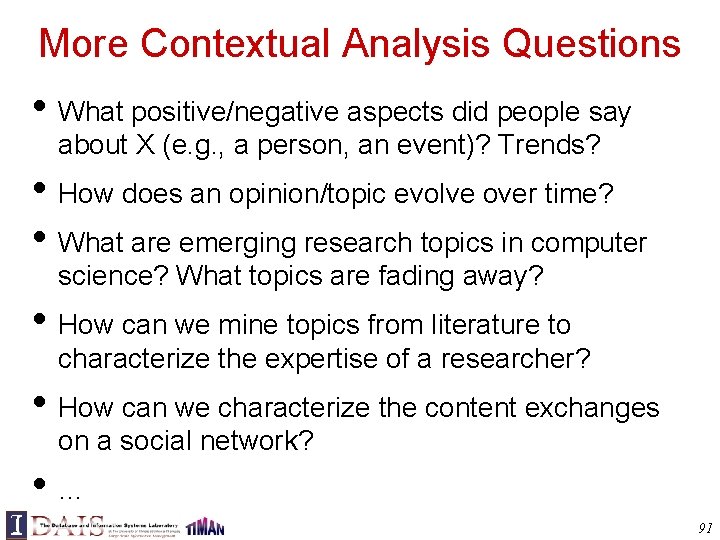

More Contextual Analysis Questions

- What positive/negative aspects did people say about X (e.g., a person, an event)? Trends?
- How does an opinion/topic evolve over time?
- What are emerging research topics in computer science? What topics are fading away?
- How can we mine topics from literature to characterize the expertise of a researcher?
- How can we characterize the content exchanges on a social network?
- …

![Contextual Probabilistic Latent Semantics Analysis Mei Zhai 06 b Themes View 1 View Contextual Probabilistic Latent Semantics Analysis [Mei & Zhai 06 b] Themes View 1 View](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-92.jpg)

Contextual Probabilistic Latent Semantic Analysis [Mei & Zhai 06b]

- Generative process for each word: choose a view, choose a coverage, choose a theme, then draw a word from θ_i
- Document context: Time = July 2005; Location = Texas; Author = xxx; Occup. = Sociologist; Age Group = 45+
- Views: View 1, View 2, View 3 (e.g., Texas, July 2005, sociologist)
- Theme coverages: Texas, July 2005, document, …
- Themes
  - government: government 0.3, response 0.2, ..
  - donation: donate 0.1, relief 0.05, help 0.02, ..
  - New Orleans: city 0.2, new 0.1, orleans 0.05, ..
- Example document text: "Criticism of government response to the hurricane primarily consisted of criticism of its response to … The total shut-in oil production from the Gulf of Mexico … approximately 24% of the annual production and the shut-in gas production … Over seventy countries pledged monetary donations or other assistance. …"

![Comparing News Articles Zhai et al 04 Iraq War 30 articles vs Afghan War Comparing News Articles [Zhai et al. 04] Iraq War (30 articles) vs. Afghan War](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-93.jpg)

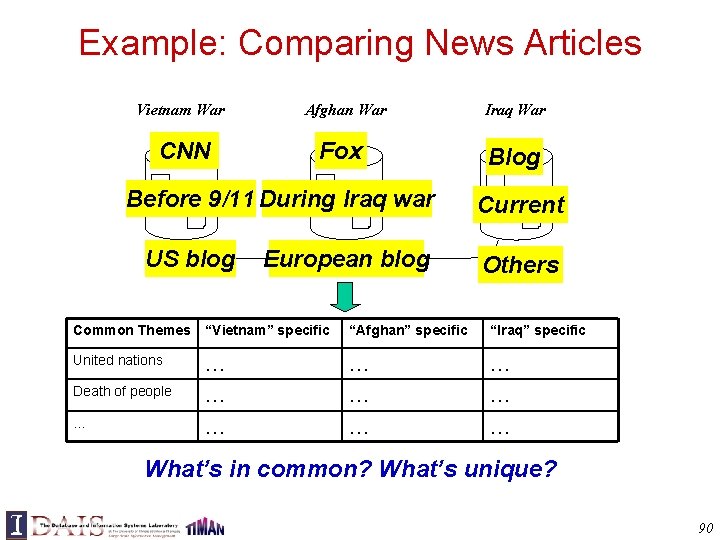

Comparing News Articles [Zhai et al. 04]

Iraq War (30 articles) vs. Afghan War (26 articles)

- Cluster 1
  - Common theme: united, nations, …
  - Iraq theme: n 0.03, Weapons 0.024, Inspections 0.023, …
  - Afghan theme: Northern 0.04, alliance 0.04, kabul 0.03, taleban 0.025, aid 0.02, …
- Cluster 2 (probabilities as extracted, in the same column order: 0.035, 0.032, 0.023, …; 0.016, 0.015, 0.012, …; 0.026, 0.02, 0.011, …)
  - Common theme: killed, month, deaths, …
  - Iraq theme: troops, hoon, sanches, …
  - Afghan theme: taleban, rumsfeld, hotel, front, …
- Cluster 3: …
- The common theme indicates that "United Nations" is involved in both wars
- Collection-specific themes indicate different roles of "United Nations" in the two wars

![Spatiotemporal Patterns in Blog Articles Mei et al 06 a Query Spatiotemporal Patterns in Blog Articles [Mei et al. 06 a] • • • Query=](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-94.jpg)

Spatiotemporal Patterns in Blog Articles [Mei et al. 06a]

- Query = "Hurricane Katrina"
- Topics in the results
- Spatiotemporal patterns

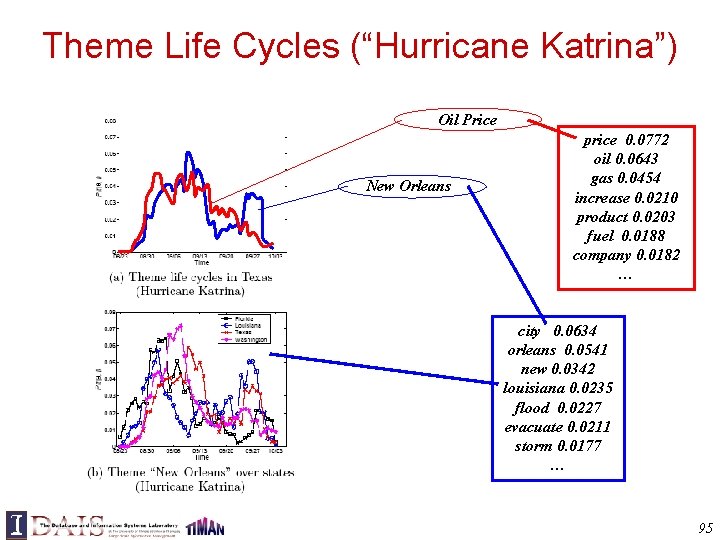

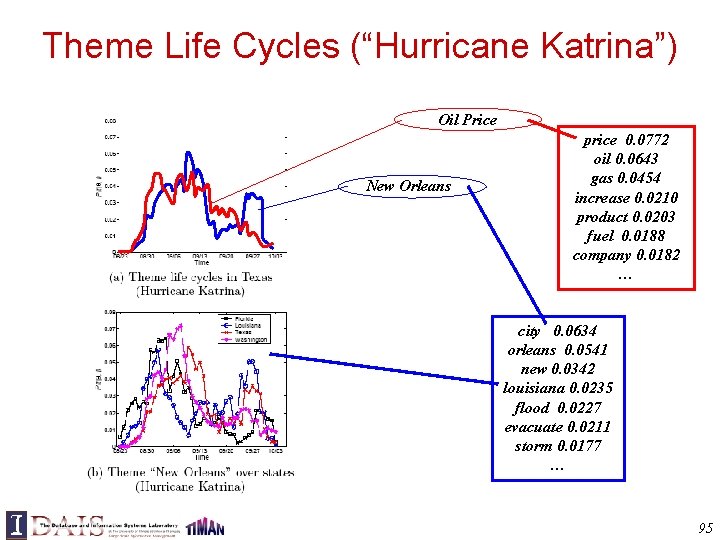

Theme Life Cycles ("Hurricane Katrina")

- Oil Price: price 0.0772, oil 0.0643, gas 0.0454, increase 0.0210, product 0.0203, fuel 0.0188, company 0.0182, …
- New Orleans: city 0.0634, orleans 0.0541, new 0.0342, louisiana 0.0235, flood 0.0227, evacuate 0.0211, storm 0.0177, …

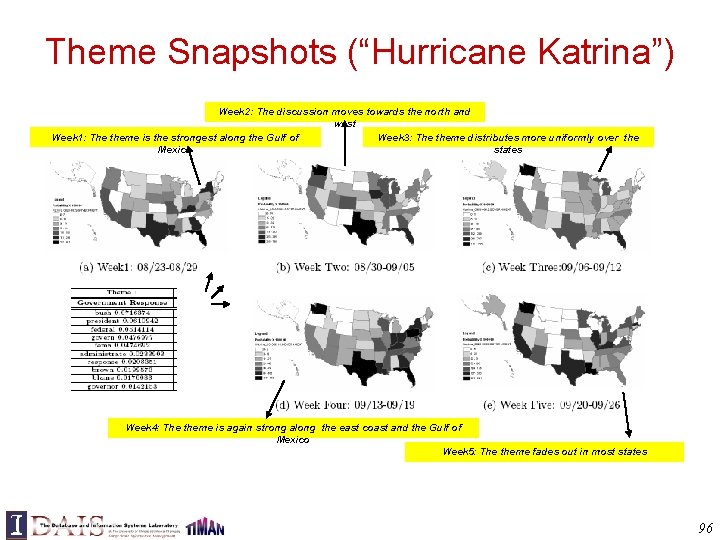

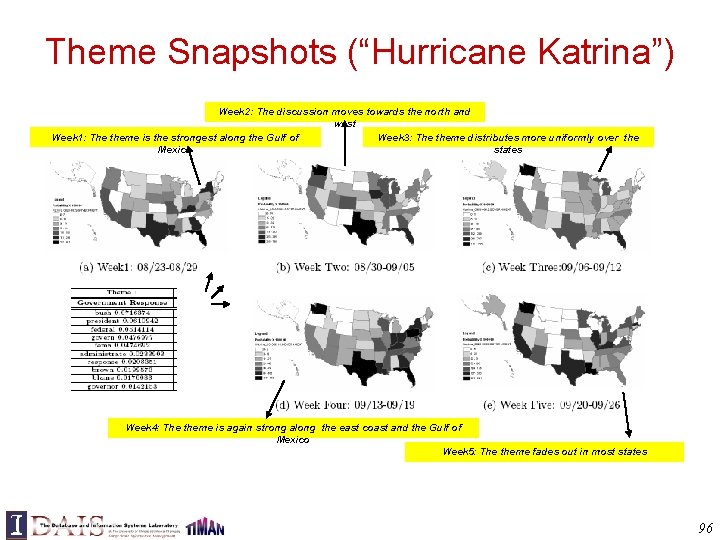

Theme Snapshots ("Hurricane Katrina")

- Week 1: The theme is the strongest along the Gulf of Mexico
- Week 2: The discussion moves towards the north and west
- Week 3: The theme distributes more uniformly over the states
- Week 4: The theme is again strong along the east coast and the Gulf of Mexico
- Week 5: The theme fades out in most states

![MultiFaceted Sentiment Summary Mei et al 07 a queryDa Vinci Code Facet 1 Movie Multi-Faceted Sentiment Summary [Mei et al. 07 a] (query=“Da Vinci Code”) Facet 1: Movie](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-97.jpg)

Multi-Faceted Sentiment Summary [Mei et al. 07a] (query = "Da Vinci Code")

- Facet 1: Movie
  - Neutral: "... Ron Howards selection of Tom Hanks to play Robert Langdon."; "Directed by: Ron Howard Writing credits: Akiva Goldsman ..."; "After watching the movie I went online and some research on ..."
  - Positive: "Tom Hanks stars in the movie, who can be mad at that?"; "Tom Hanks, who is my favorite movie star act the leading role."; "Anybody is interested in it?"
  - Negative: "But the movie might get delayed, and even killed off if he loses."; "protesting ... will lose your faith by ... watching the movie."; "... so sick of people making such a big deal about a FICTION book and movie."
- Facet 2: Book
  - Neutral: "I remembered when i first read the book, I finished the book in two days."; "I'm reading "Da Vinci Code" now. …"
  - Positive: "Awesome book."; "So still a good book to past time."
  - Negative: "... so sick of people making such a big deal about a FICTION book and movie."; "This controversy book cause lots conflict in west society."

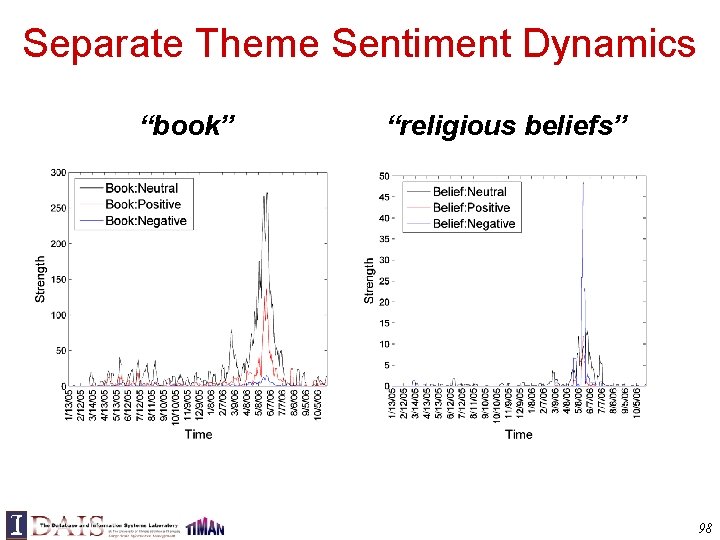

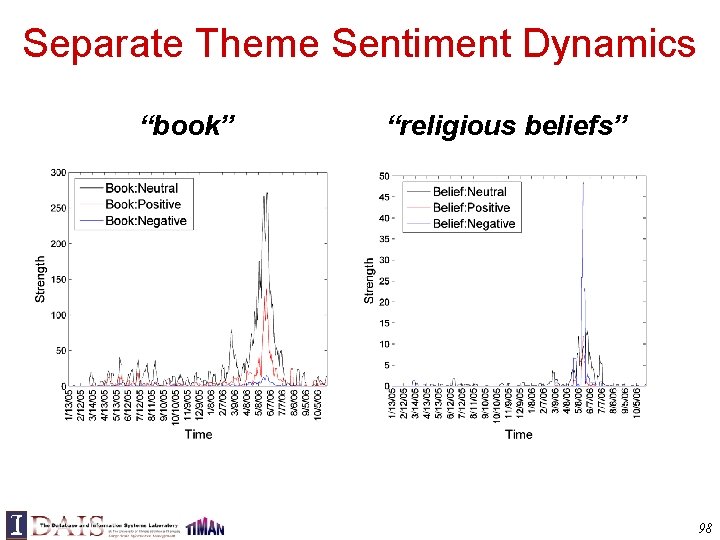

Separate Theme Sentiment Dynamics (panels: "book" and "religious beliefs")

![Event Impact Analysis IR Research Mei Zhai 06 b Theme retrieval models term Event Impact Analysis: IR Research [Mei & Zhai 06 b] Theme: retrieval models term](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-99.jpg)

Event Impact Analysis: IR Research [Mei & Zhai 06b]

- Collection: SIGIR papers
- Theme: retrieval models: term 0.1599, relevance 0.0752, weight 0.0660, feedback 0.0372, independence 0.0311, model 0.0310, frequent 0.0233, probabilistic 0.0188, document 0.0173, …
- Events: start of the TREC conferences (1992); publication of the paper "A language modeling approach to information retrieval" (1998)
- Variations of the theme around these events
  - vector 0.0514, concept 0.0298, extend 0.0297, model 0.0291, space 0.0236, boolean 0.0151, function 0.0123, feedback 0.0077, …
  - xml 0.0678, email 0.0197, model 0.0191, collect 0.0187, judgment 0.0102, rank 0.0097, subtopic 0.0079, …
  - probabilist 0.0778, model 0.0432, logic 0.0404, ir 0.0338, boolean 0.0281, algebra 0.0200, estimate 0.0119, weight 0.0111, …
  - model 0.1687, language 0.0753, estimate 0.0520, parameter 0.0281, distribution 0.0268, probable 0.0205, smooth 0.0198, markov 0.0137, likelihood 0.0059, …

![The AuthorTopic model RosenZvi et al 04 each author has a distribution over topics The Author-Topic model [Rosen-Zvi et al. 04] each author has a distribution over topics](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-100.jpg)

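The Author-Topic model [Rosen-Zvi et al. 04]

- θ^(a) ~ Dirichlet(α): each author has a distribution over topics
- x_i ~ Uniform(A^(d)): the author of each word is chosen uniformly at random from the authors of the document
- φ^(j) ~ Dirichlet(β): distribution over words for each topic
- z_i ~ Discrete(θ^(x_i)): topic assignment drawn from the chosen author's topic distribution
- w_i ~ Discrete(φ^(z_i)): word generated from the assigned topic
- Plates: A authors, T topics, N_d words per document, D documents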
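The per-word generative steps of the author-topic model can be sketched as follows. The sketch assumes the author and topic distributions are already given (in the full model they are drawn from Dirichlet priors) and uses integer topic and word ids. The function name and data layout are hypothetical.

```python
import random

def generate_document(doc_authors, author_topics, topic_words, length, seed=0):
    """Generate (author, topic, word) triples for one document.

    doc_authors: list of author ids for this document.
    author_topics[a]: topic distribution of author a.
    topic_words[j]: word distribution of topic j.
    """
    rng = random.Random(seed)
    tokens = []
    for _ in range(length):
        x = rng.choice(doc_authors)                           # author chosen uniformly
        theta = author_topics[x]
        z = rng.choices(range(len(theta)), weights=theta)[0]  # topic from that author
        phi = topic_words[z]
        w = rng.choices(range(len(phi)), weights=phi)[0]      # word from that topic
        tokens.append((x, z, w))
    return tokens
```

Compared with LDA, the only change is that the topic distribution is indexed by the sampled author rather than by the document.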

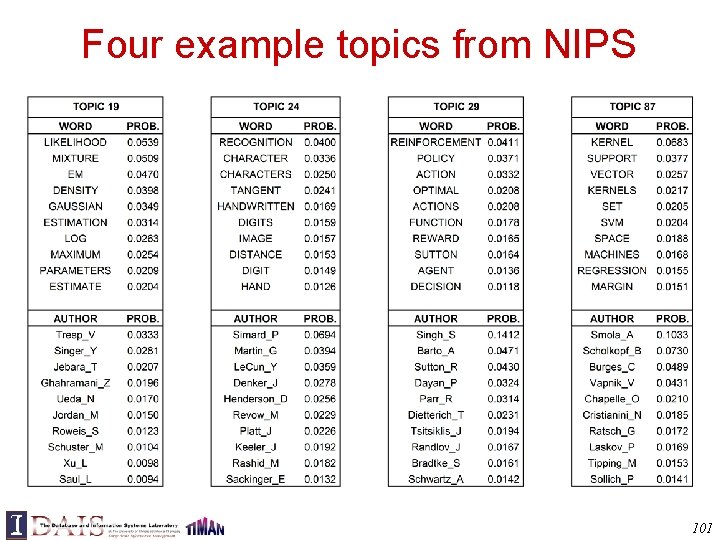

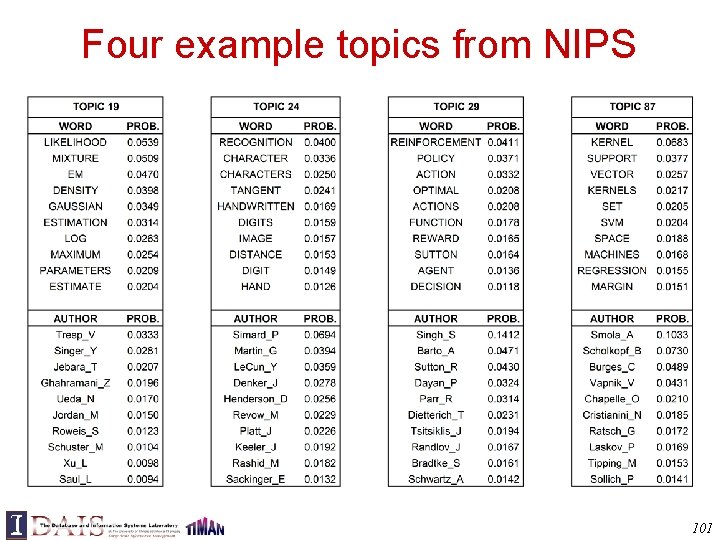

Four example topics from NIPS

![Dirichletmultinomial Regression DMR Mimno Mc Callum 08 Allows arbitrary features to be used Dirichlet-multinomial Regression (DMR) [Mimno & Mc. Callum 08] Allows arbitrary features to be used](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-102.jpg)

Dirichlet-multinomial Regression (DMR) [Mimno & McCallum 08]

- Allows arbitrary features to be used to influence the choice of topics

Outline

1. Background: Text Mining (TM); Statistical Language Models
2. Basic Topic Models: Probabilistic Latent Semantic Analysis (PLSA); Latent Dirichlet Allocation (LDA); Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models: Capturing Topic Structures; Contextualized Topic Models; Supervised Topic Models (we are here)
4. Summary

![Supervised LDA Blei Mc Auliffe 07 104 Supervised LDA [Blei & Mc. Auliffe 07] 104](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-104.jpg)

Supervised LDA [Blei & McAuliffe 07]

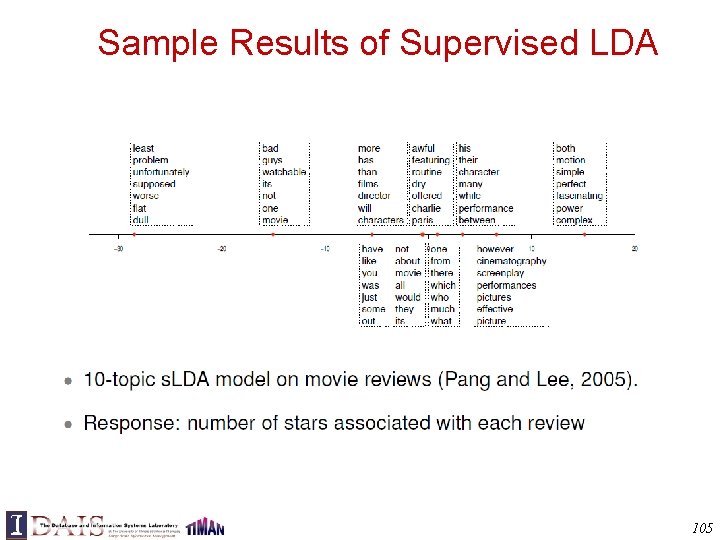

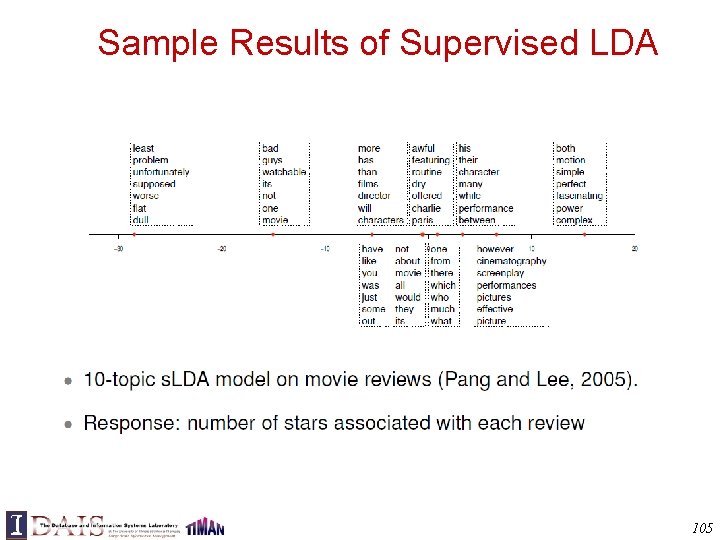

Sample Results of Supervised LDA

![Latent Aspect Rating Analysis Wang et al 11 Given a set of review Latent Aspect Rating Analysis [Wang et al. 11] • Given a set of review](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-106.jpg)

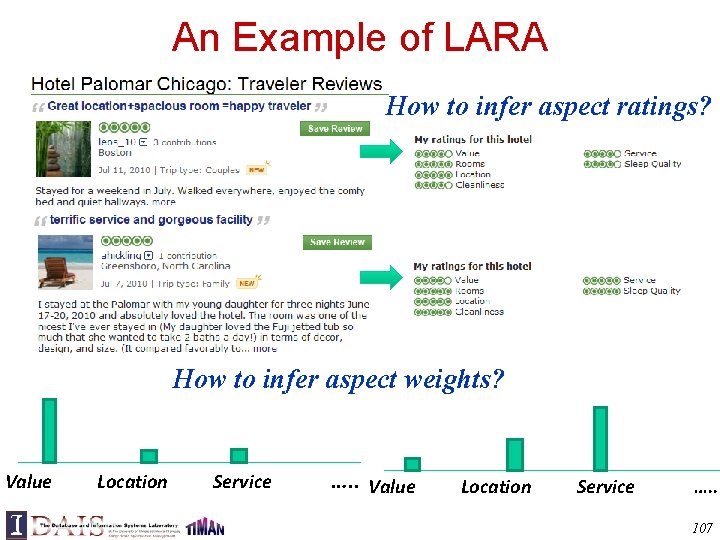

Latent Aspect Rating Analysis [Wang et al. 11]

- Given: a set of review articles about a topic with overall ratings (ratings as "supervision signals")
- Output
  - Major aspects commented on in the reviews
  - Ratings on each aspect
  - Relative weights placed on different aspects by reviewers
- Many applications
  - Opinion-based entity ranking
  - Aspect-level opinion summarization
  - Reviewer preference analysis
  - Personalized recommendation of products
  - …

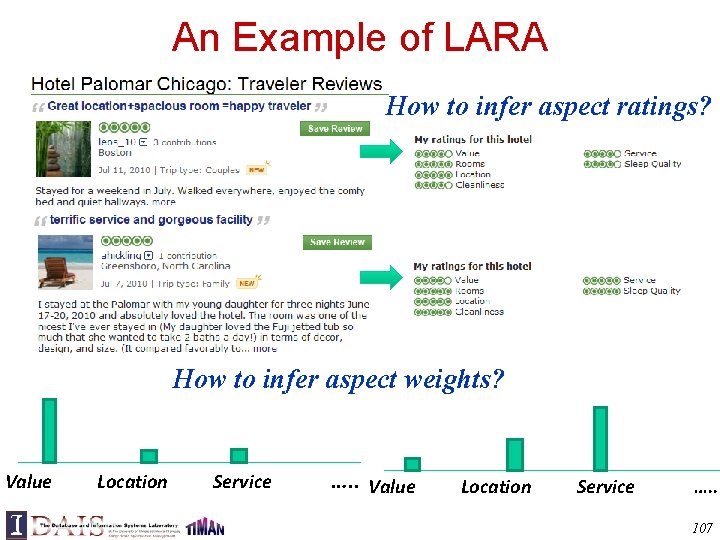

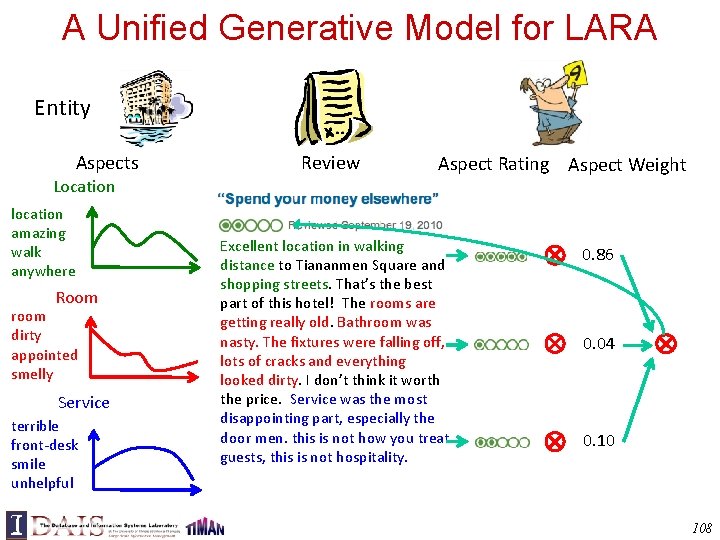

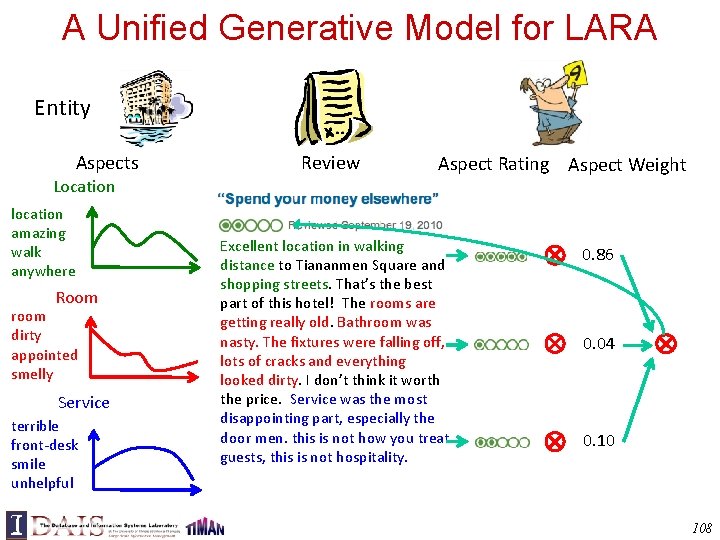

An Example of LARA

- How to infer aspect ratings?
- How to infer aspect weights?
- Aspects shown: Value, Location, Service, …

A Unified Generative Model for LARA

- Entity and review: "Excellent location in walking distance to Tiananmen Square and shopping streets. That's the best part of this hotel! The rooms are getting really old. Bathroom was nasty. The fixtures were falling off, lots of cracks and everything looked dirty. I don't think it worth the price. Service was the most disappointing part, especially the door men. this is not how you treat guests, this is not hospitality."
- Aspects and their words
  - Location: location, amazing, walk, anywhere
  - Room: room, dirty, appointed, smelly
  - Service: terrible, front-desk, smile, unhelpful
- Latent variables per aspect: aspect rating and aspect weight (weights shown: 0.86, 0.04, 0.10)

![Latent Aspect Rating Analysis Model Wang et al 11 Unified framework Excellent location Latent Aspect Rating Analysis Model [Wang et al. 11] • Unified framework Excellent location](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-109.jpg)

Latent Aspect Rating Analysis Model [Wang et al. 11]

- Unified framework with two modules: an aspect modeling module and a rating prediction module
- Example review: "Excellent location in walking distance to Tiananmen Square and shopping streets. That's the best part of this hotel! The rooms are getting really old. Bathroom was nasty. The fixtures were falling off, lots of cracks and everything looked dirty. I don't think it worth the price. Service was the most disappointing part, especially the door men. this is not how you treat guests, this is not hospitality."

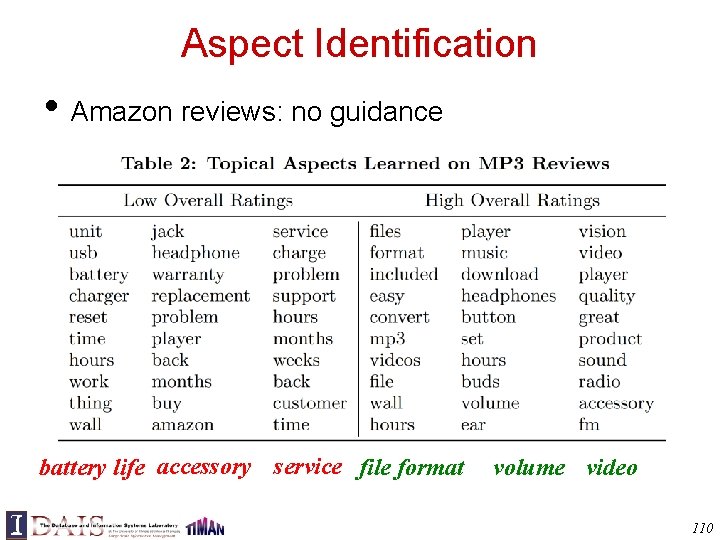

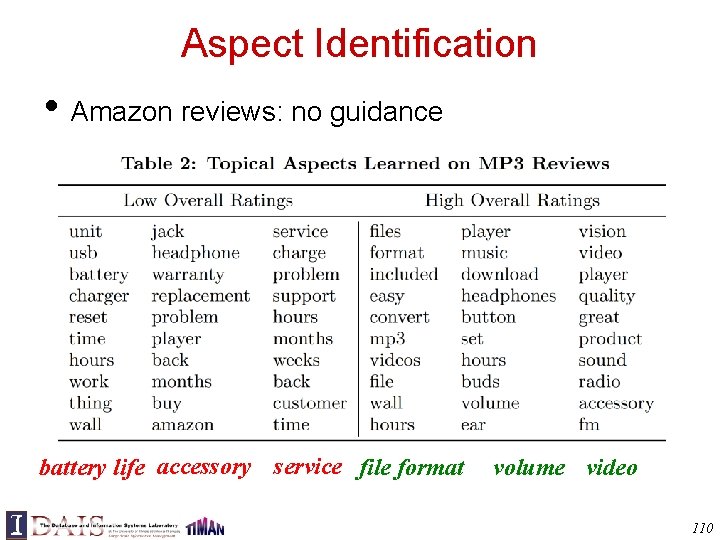

Aspect Identification

- Amazon reviews: no guidance
- Aspects found: battery life, accessory, service, file format, volume, video

![Network Supervised Topic Modeling Mei et al 08 Probabilistic topic modeling as Network Supervised Topic Modeling [Mei et al. 08] • • Probabilistic topic modeling as](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-111.jpg)

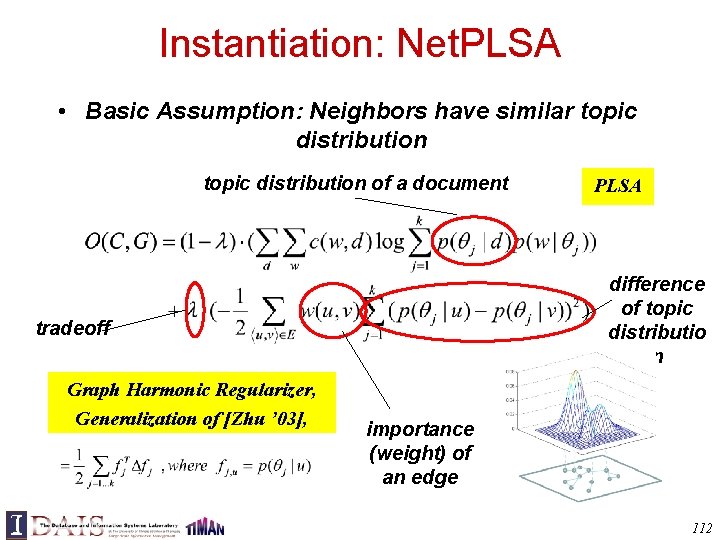

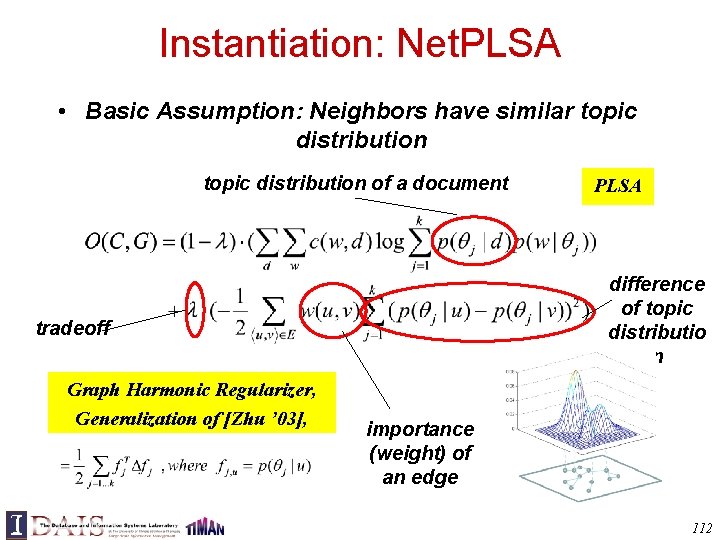

Network Supervised Topic Modeling [Mei et al. 08]

- Probabilistic topic modeling as an optimization problem (e.g., PLSA/LDA: maximum likelihood)
- Regularized objective function with network constraints
  - Topic distributions are smoothed over adjacent vertices
- Flexibility in selecting topic models and regularizers

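Instantiation: NetPLSA

- Basic assumption: neighbors have similar topic distributions
- The objective trades off two terms, controlled by a tradeoff weight:
  - the PLSA likelihood
  - a graph harmonic regularizer, a generalization of [Zhu '03], that sums over edges the importance (weight) of the edge times the difference between the topic distributions of the two documents it connects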
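The structure of such a regularized objective can be sketched as follows. The exact formula on the slide is an image and is not reproduced in the text, so the form below is an assumption: a squared difference between neighboring topic distributions, a factor of one half, and a tradeoff weight `lam` between the negative log-likelihood and the regularizer. All names are hypothetical.

```python
def harmonic_regularizer(edges, doc_topics):
    """Half the weighted sum of squared topic-distribution differences over edges.

    edges: list of (u, v, weight); doc_topics[u][j] = p(topic j | document u).
    """
    total = 0.0
    for u, v, weight in edges:
        total += weight * sum((a - b) ** 2 for a, b in zip(doc_topics[u], doc_topics[v]))
    return 0.5 * total

def regularized_objective(log_likelihood, edges, doc_topics, lam):
    """Quantity to minimize: weighted negative log-likelihood plus graph penalty."""
    return -(1.0 - lam) * log_likelihood + lam * harmonic_regularizer(edges, doc_topics)

doc_topics = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 0.0]}
edges = [("a", "b", 2.0), ("a", "c", 1.0)]
penalty = harmonic_regularizer(edges, doc_topics)
```

Setting `lam` to 0 recovers plain maximum likelihood, while larger values push connected documents toward the same topic distribution.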

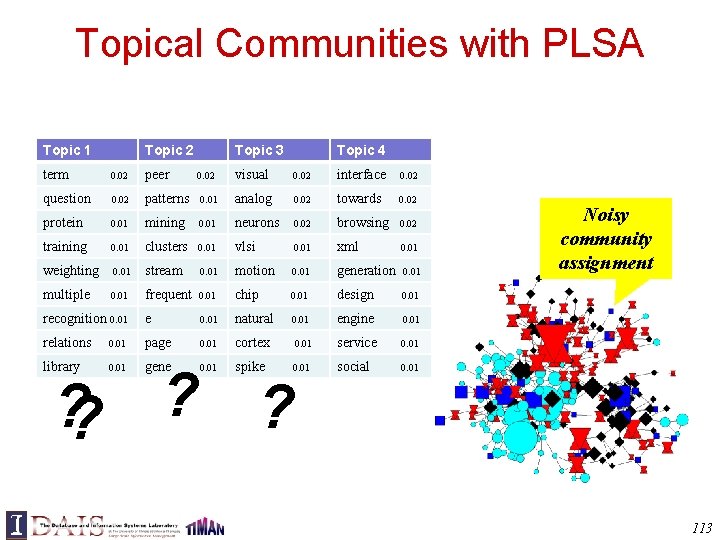

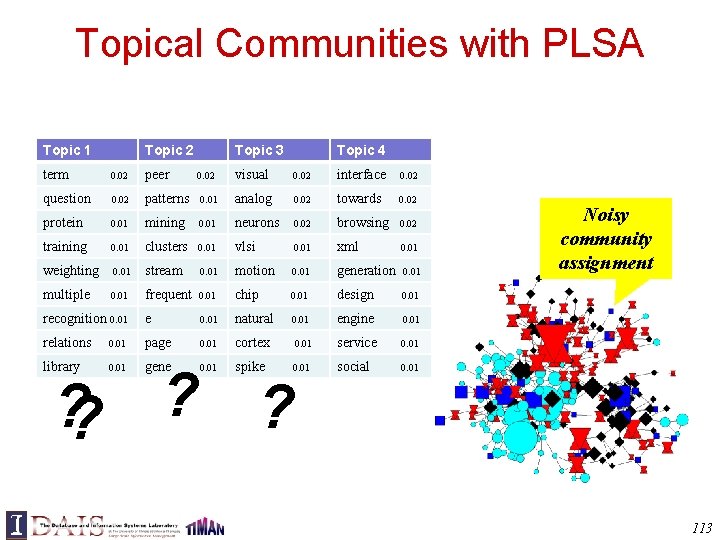

Topical Communities with PLSA

- Topic 1: term 0.02, question 0.02, protein 0.01, training 0.01, weighting 0.01, multiple 0.01, recognition 0.01, relations 0.01, library 0.01
- Topic 2: peer 0.02, patterns 0.01, mining 0.01, clusters 0.01, stream 0.01, frequent 0.01, e 0.01, page 0.01, gene 0.01
- Topic 3: visual 0.02, analog 0.02, neurons 0.02, vlsi 0.01, motion 0.01, chip 0.01, natural 0.01, cortex 0.01, spike 0.01
- Topic 4: interface 0.02, towards 0.02, browsing 0.02, xml 0.01, generation 0.01, design 0.01, engine 0.01, service 0.01, social 0.01
- Community labels: ? ? (noisy community assignment)

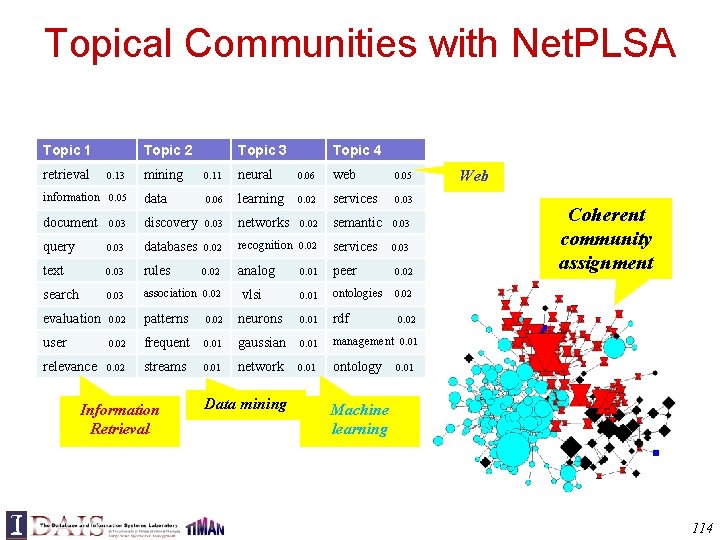

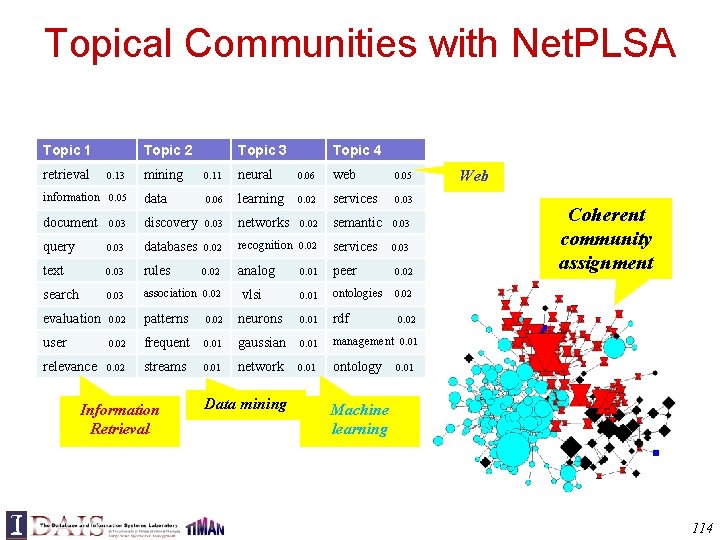

Topical Communities with NetPLSA

- Topic 1 (Information Retrieval): retrieval 0.13, information 0.05, document 0.03, query 0.03, text 0.03, search 0.03, evaluation 0.02, user 0.02, relevance 0.02
- Topic 2 (Data mining): mining 0.11, data 0.06, discovery 0.03, databases 0.02, rules 0.02, association 0.02, patterns 0.02, frequent 0.01, streams 0.01
- Topic 3 (Machine learning): neural 0.06, learning 0.02, networks 0.02, recognition 0.02, analog 0.01, vlsi 0.01, neurons 0.01, gaussian 0.01, network 0.01
- Topic 4 (Web): web 0.05, services 0.03, semantic 0.03, services 0.03, peer 0.02, ontologies 0.02, rdf 0.02, management 0.01, ontology 0.01
- Coherent community assignment

Outline

1. Background: Text Mining (TM); Statistical Language Models
2. Basic Topic Models: Probabilistic Latent Semantic Analysis (PLSA); Latent Dirichlet Allocation (LDA); Applications of Basic Topic Models to Text Mining
3. Advanced Topic Models: Capturing Topic Structures; Contextualized Topic Models; Supervised Topic Models
4. Summary (we are here)

Summary

- Statistical Topic Models (STMs) are a new family of language models, especially useful for
  - Discovering latent topics in text
  - Analyzing latent structures and patterns of topics
  - Joint modeling and analysis of text and associated non-textual data (the models are extensible)
- PLSA & LDA are two basic topic models that tend to function similarly, with LDA better as a generative model
- Many different models have been proposed, with probably many more to come
- Many demonstrated applications in multiple domains, with many more to come

Summary (cont.)

- However, all topic models suffer from the problem of multiple local maxima
  - This makes it hard/impossible to reproduce research results
  - This makes it hard/impossible to interpret results in real applications
- Complex models can't scale up to handle large amounts of text data
  - Collapsed Gibbs sampling is efficient, but only works for conjugate priors
  - Variational EM needs to be derived in a model-specific way
  - Parallel algorithms are promising
- Many challenges remain…

Challenges and Future Directions

- Challenge 1: How can we quantitatively evaluate the benefit of topic models for text mining?
  - Currently, most quantitative evaluation is based on perplexity, which doesn't reflect the actual utility of a topic model for text mining
  - Need to separately evaluate the quality of both topic word distributions and topic coverage
  - Need to consider multiple aspects of a topic (e.g., coherent? meaningful?) and define appropriate measures
  - Need to compare topic models with alternative approaches to solving the same text mining problem (e.g., traditional IR methods, non-negative matrix factorization)
  - Need to create standard test collections
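For reference, perplexity is the exponentiated negative average log-likelihood per word. The sketch below computes it for a fitted topic model, assuming the document-topic and topic-word distributions are given as nested lists and that every word has nonzero probability. The function name and data layout are hypothetical.

```python
import math

def perplexity(docs, doc_topics, topic_words):
    """Perplexity of documents (lists of word ids) under a topic model.

    doc_topics[d][j] = p(topic j | document d); topic_words[j][w] = p(w | topic j).
    """
    log_likelihood = 0.0
    num_words = 0
    for doc, theta in zip(docs, doc_topics):
        for w in doc:
            # p(w | d) mixes the topic word distributions by the document's coverage.
            p = sum(t * phi[w] for t, phi in zip(theta, topic_words))
            log_likelihood += math.log(p)
            num_words += 1
    return math.exp(-log_likelihood / num_words)
```

A model that spreads probability uniformly over V words has perplexity V, so lower values mean better prediction of the words. As the slide notes, this says little about whether the topics are useful for a mining task.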

Challenges and Future Directions (cont.)

- Challenge 2: How can we help users interpret a topic?
  - Most of the time, a topic is manually labeled in a research paper; this is insufficient for real applications
  - Automatic labeling can help, but its utility still needs to be evaluated
  - Need to generate a summary for a topic to enable a user to navigate into text documents to better understand a topic
  - Need to facilitate post-processing of discovered topics (e.g., ranking, comparison)

Challenges and Future Directions (cont.)

- Challenge 3: How can we address the problem of multiple local maxima?
  - All topic models have the problem of multiple local maxima, causing problems with reproducing results
  - Need to compute the variance of a discovered topic
  - Need to define and report the confidence interval for a topic
- Challenge 4: How can we develop efficient estimation/inference algorithms for sophisticated models?
  - How can we leverage a user's knowledge to speed up inferences for topic models?
  - Need to develop parallel estimation/inference algorithms

Challenges and Future Directions (cont.)

- Challenge 5: How can we incorporate linguistic knowledge into topic models?
  - Most current topic models are purely statistical
  - Some progress has been made to incorporate linguistic knowledge (e.g., [Griffiths et al. 04, Wallach 08])
  - More needs to be done
- Challenge 6: How can we incorporate domain knowledge and preferences from an analyst into a topic model to support complex text mining tasks?
  - Current models are mostly pre-specified with little flexibility for an analyst to "steer" the analysis process
  - Need to develop a general analysis framework to enable an analyst to use multiple topic models together to perform complex text mining tasks

![References (incomplete)](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-122.jpg)

References (incomplete)
[Blei et al. 02] D. Blei, A. Ng, and M. Jordan. Latent Dirichlet allocation. In T. G. Dietterich, S. Becker, and Z. Ghahramani, editors, Advances in Neural Information Processing Systems 14, Cambridge, MA, 2002. MIT Press.
[Blei et al. 03a] David M. Blei, Andrew Y. Ng, Michael I. Jordan: Latent Dirichlet Allocation. Journal of Machine Learning Research 3: 993-1022 (2003)
[Griffiths et al. 04] Thomas L. Griffiths, Mark Steyvers, David M. Blei, Joshua B. Tenenbaum: Integrating Topics and Syntax. NIPS 2004
[Blei et al. 03b] David M. Blei, Thomas L. Griffiths, Michael I. Jordan, Joshua B. Tenenbaum: Hierarchical Topic Models and the Nested Chinese Restaurant Process. NIPS 2003
[Teh et al. 04] Yee Whye Teh, Michael I. Jordan, Matthew J. Beal, David M. Blei: Sharing Clusters among Related Groups: Hierarchical Dirichlet Processes. NIPS 2004
[Blei & Lafferty 05] David M. Blei, John D. Lafferty: Correlated Topic Models. NIPS 2005
[Blei & McAuliffe 07] David M. Blei, Jon D. McAuliffe: Supervised Topic Models. NIPS 2007
[Hofmann 99a] T. Hofmann. Probabilistic latent semantic indexing. In Proceedings of the 22nd annual international ACM SIGIR 1999, pages 50-57.
[Hofmann 99b] Thomas Hofmann: Probabilistic Latent Semantic Analysis. UAI 1999: 289-296
[Hofmann 99c] Thomas Hofmann: The Cluster-Abstraction Model: Unsupervised Learning of Topic Hierarchies from Text Data. IJCAI 1999: 682-687
[Jelinek 98] F. Jelinek, Statistical Methods for Speech Recognition, Cambridge: MIT Press, 1998.
[Lu & Zhai 08] Yue Lu, ChengXiang Zhai: Opinion integration through semi-supervised topic modeling. WWW 2008: 121-130
[Lu et al. 11] Yue Lu, Qiaozhu Mei, ChengXiang Zhai: Investigating task performance of probabilistic topic models: an empirical study of PLSA and LDA. Inf. Retr. 14(2): 178-203 (2011)
[Mei et al. 05] Qiaozhu Mei, ChengXiang Zhai: Discovering evolutionary theme patterns from text: an exploration of temporal text mining. KDD 2005: 198-207
[Mei et al. 06a] Qiaozhu Mei, Chao Liu, Hang Su, ChengXiang Zhai: A probabilistic approach to spatiotemporal theme pattern mining on weblogs. WWW 2006: 533-542

![References (incomplete)](https://slidetodoc.com/presentation_image_h/da70ebff47edfca59bdfbace5a6cf26e/image-123.jpg)

References (incomplete)
[Mei & Zhai 06b] Qiaozhu Mei, ChengXiang Zhai: A mixture model for contextual text mining. KDD 2006: 649-655
[Mei et al. 07a] Qiaozhu Mei, Xu Ling, Matthew Wondra, Hang Su, ChengXiang Zhai: Topic sentiment mixture: modeling facets and opinions in weblogs. WWW 2007: 171-180
[Mei et al. 07b] Qiaozhu Mei, Xuehua Shen, ChengXiang Zhai: Automatic labeling of multinomial topic models. KDD 2007: 490-499
[Mei et al. 08] Qiaozhu Mei, Deng Cai, Duo Zhang, ChengXiang Zhai: Topic modeling with network regularization. WWW 2008: 101-110
[Mimno & McCallum 08] David M. Mimno, Andrew McCallum: Topic Models Conditioned on Arbitrary Features with Dirichlet-multinomial Regression. UAI 2008: 411-418
[Minka & Lafferty 03] T. Minka and J. Lafferty, Expectation-propagation for the generative aspect model, In Proceedings of the UAI 2002, pages 352-359.
[Pritchard et al. 00] J. K. Pritchard, M. Stephens, P. Donnelly, Inference of population structure using multilocus genotype data, Genetics. 2000 Jun; 155(2): 945-59.
[Rosen-Zvi et al. 04] Michal Rosen-Zvi, Thomas L. Griffiths, Mark Steyvers, Padhraic Smyth: The Author-Topic Model for Authors and Documents. UAI 2004: 487-494
[Wang et al. 10] Hongning Wang, Yue Lu, ChengXiang Zhai: Latent aspect rating analysis on review text data: a rating regression approach. KDD 2010: 783-792
[Wang et al. 11] Hongning Wang, Yue Lu, ChengXiang Zhai: Latent aspect rating analysis without aspect keyword supervision. KDD 2011: 618-626
[Zhai et al. 04] ChengXiang Zhai, Atulya Velivelli, Bei Yu: A cross-collection mixture model for comparative text mining. KDD 2004: 743-748