Statistical Significance Testing

The Purpose of Statistical Significance Testing

The purpose of statistical significance testing is to answer the following questions:

- Can the observed results be attributed to real characteristics of the classifiers under scrutiny, or were they obtained by chance?
- Were the data sets representative of the problems to which the classifiers will be applied in the future?

Important: Unfortunately, because of the inductive nature of the problem, such questions cannot be fully answered. The user should instead accept that, no matter what evaluation procedure is followed, it only allows us to gather some evidence about the classifiers' behaviour. This evidence is almost never conclusive.

Current Disagreements with Statistical Significance Testing

There is currently a controversy in the statistical community, with some scholars calling for the rejection of Null Hypothesis Significance Testing (NHST) because:

- It is commonly misinterpreted, causing over-confidence in meaningless results.
- Its results can be manipulated to show statistical significance even when that significance is of no practical importance.

While remaining cautious about these issues, we believe that NHST is the best tool we have for answering the two questions above, and we will continue to use it.

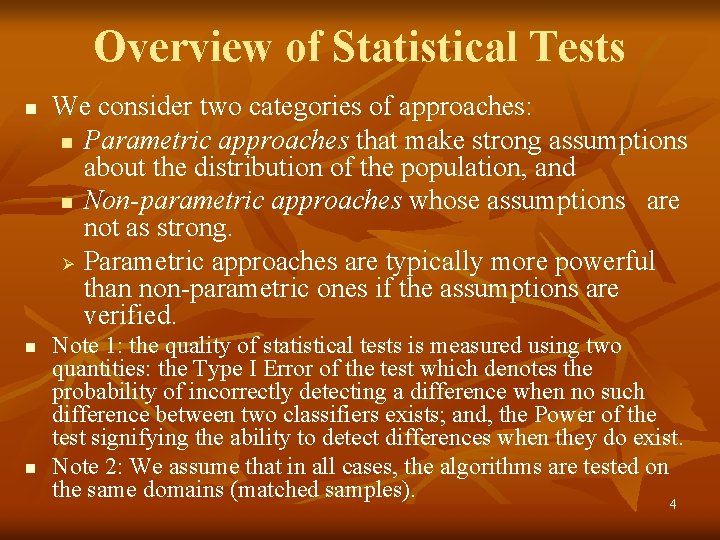

Overview of Statistical Tests

We consider two categories of approaches:

- Parametric approaches, which make strong assumptions about the distribution of the population.
- Non-parametric approaches, whose assumptions are weaker.

When their assumptions hold, parametric approaches are typically more powerful than non-parametric ones.

Note 1: The quality of a statistical test is measured using two quantities: its Type I error, the probability of incorrectly detecting a difference between two classifiers when no such difference exists; and its power, the probability of detecting a difference when one does exist.

Note 2: We assume that in all cases the algorithms are tested on the same domains (matched samples).

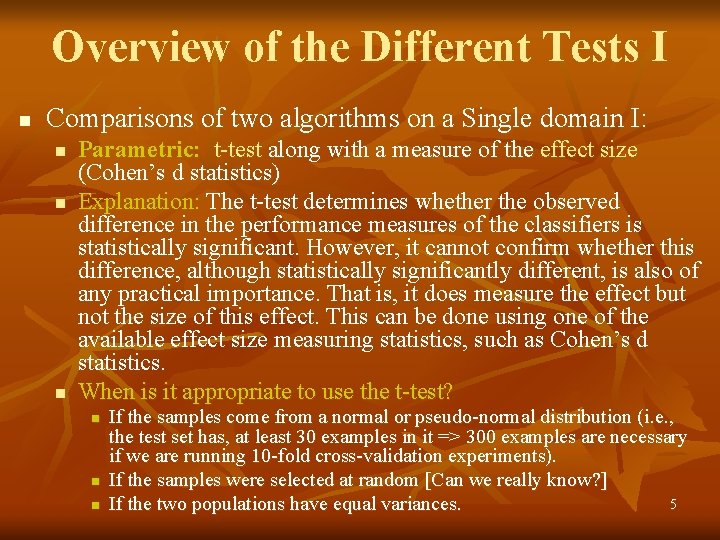

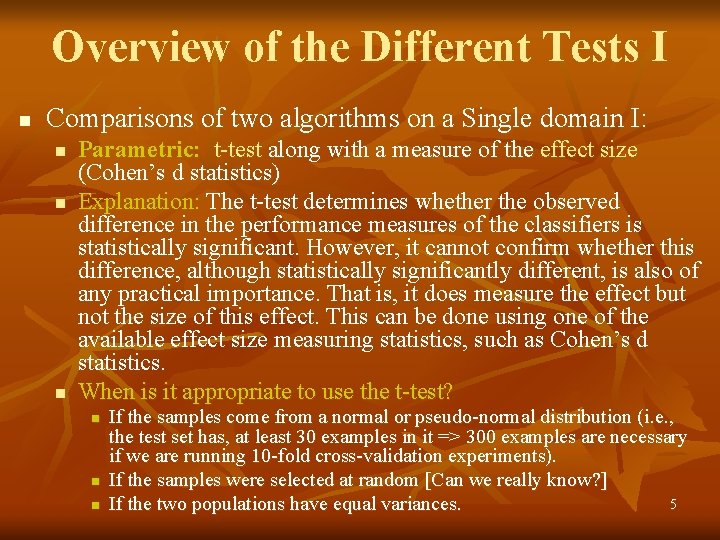
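To make Note 1 concrete, the sketch below (not part of the original slides) estimates both quantities by simulation for the exact two-sided Sign test, which is discussed on a later slide. Purely for illustration, it assumes 20 domains with no ties, 5,000 simulated experiments, a significance level of 0.05, and a hypothetical per-domain probability `p_win` that classifier A beats classifier B. With `p_win = 0.5` there is no real difference, so the rejection rate estimates the Type I error; with `p_win = 0.75` it estimates the power. Only the Python standard library is used.

```python
import math
import random


def sign_test_p_value(wins: int, losses: int) -> float:
    """Exact two-sided Sign test p-value; ties must already be discarded."""
    n = wins + losses
    k = min(wins, losses)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def rejection_rate(p_win: float, n_domains: int = 20, trials: int = 5000,
                   alpha: float = 0.05, seed: int = 0) -> float:
    """Fraction of simulated experiments in which the Sign test rejects H0.

    p_win is the hypothetical probability that classifier A beats B on a
    domain (no ties). With p_win = 0.5 there is no real difference, so the
    result estimates the Type I error; otherwise it estimates the power.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        wins = sum(rng.random() < p_win for _ in range(n_domains))
        if sign_test_p_value(wins, n_domains - wins) < alpha:
            rejections += 1
    return rejections / trials


type_i_error = rejection_rate(p_win=0.5)
power = rejection_rate(p_win=0.75)
print(f"estimated Type I error: {type_i_error:.3f}")
print(f"estimated power:        {power:.3f}")
```

With these settings the estimated Type I error should land a little below 0.05 (the exact test is conservative because the binomial distribution is discrete), while the estimated power is much higher.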

Overview of the Different Tests I

Comparison of two algorithms on a single domain (I):

- Parametric: the t-test, along with a measure of the effect size (Cohen's d statistic).

Explanation: The t-test determines whether the observed difference in the performance measures of the classifiers is statistically significant. However, it cannot tell whether this difference, although statistically significant, is also of any practical importance. That is, it detects whether there is an effect, but not how large that effect is. The size of the effect can be measured with one of the available effect size statistics, such as Cohen's d.

When is it appropriate to use the t-test?

- If the samples come from a normal or approximately normal distribution (i.e., the test set has at least 30 examples in it; with 10-fold cross-validation, 300 examples are therefore necessary so that each test fold holds 30).
- If the samples were selected at random [Can we really know?].
- If the two populations have equal variances.

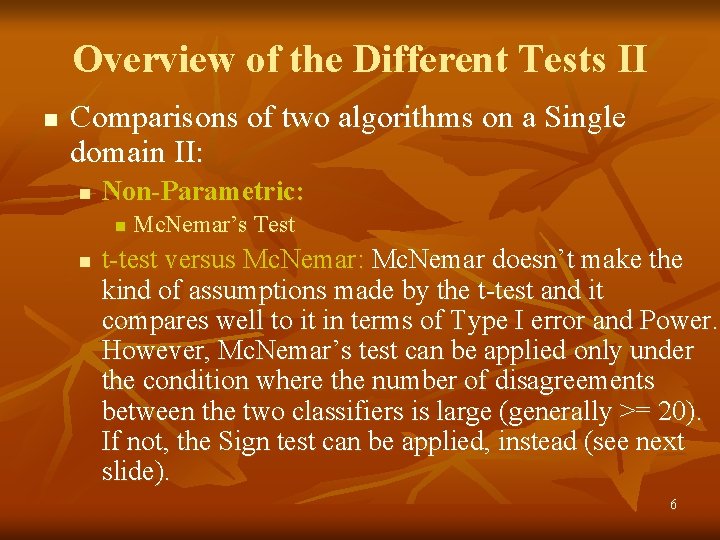

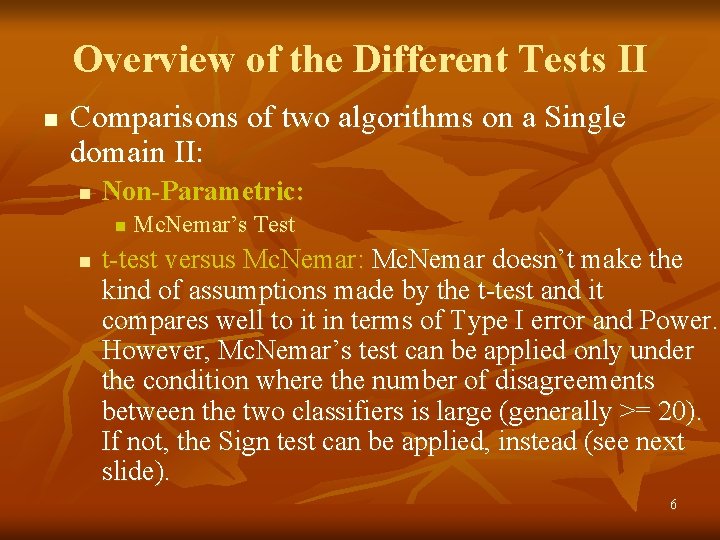
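A minimal sketch of the paired t statistic and of Cohen's d for two classifiers evaluated on the same 10 cross-validation folds. The fold accuracies are invented for illustration. Cohen's d is computed here with the pooled standard deviation sqrt((s_A^2 + s_B^2) / 2), which is one common variant; other variants exist (for example, dividing by the standard deviation of the paired differences), and the book may use a different one. The Python standard library has no Student t distribution, so the sketch stops at the statistic; if SciPy is available, `scipy.stats.ttest_rel` can supply the p-value.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Paired t statistic for two classifiers scored on the same folds.

    Returns (t, degrees_of_freedom). Turning t into a p-value requires the
    Student t distribution, which the standard library does not provide.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length samples of at least 2 scores")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (divides by n - 1)
    t = statistics.mean(diffs) / (sd_diff / math.sqrt(n))
    return t, n - 1


def cohens_d(scores_a, scores_b):
    """Cohen's d with pooled SD sqrt((s_a^2 + s_b^2) / 2), one common variant."""
    pooled_sd = math.sqrt((statistics.variance(scores_a)
                           + statistics.variance(scores_b)) / 2)
    return (statistics.mean(scores_a) - statistics.mean(scores_b)) / pooled_sd


# Invented per-fold accuracies from one 10-fold cross-validation run.
ACC_A = [0.82, 0.85, 0.80, 0.84, 0.83, 0.86, 0.81, 0.85, 0.84, 0.83]
ACC_B = [0.80, 0.83, 0.79, 0.81, 0.82, 0.84, 0.80, 0.82, 0.83, 0.81]

t, df = paired_t_statistic(ACC_A, ACC_B)
d = cohens_d(ACC_A, ACC_B)
print(f"t = {t:.2f} (df = {df}), Cohen's d = {d:.2f}")
```

For these invented accuracies, t is about 7.2 with 9 degrees of freedom and d is about 1.03; by Cohen's conventional benchmarks (roughly 0.2 small, 0.5 medium, 0.8 large) that would count as a large effect.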

Overview of the Different Tests II

Comparison of two algorithms on a single domain (II):

- Non-parametric: McNemar's test.

t-test versus McNemar: McNemar's test does not make the kind of assumptions made by the t-test, and it compares well with it in terms of Type I error and power. However, McNemar's test can be applied only when the number of disagreements between the two classifiers is large (generally at least 20). Otherwise, the Sign test can be applied instead (see next slide).

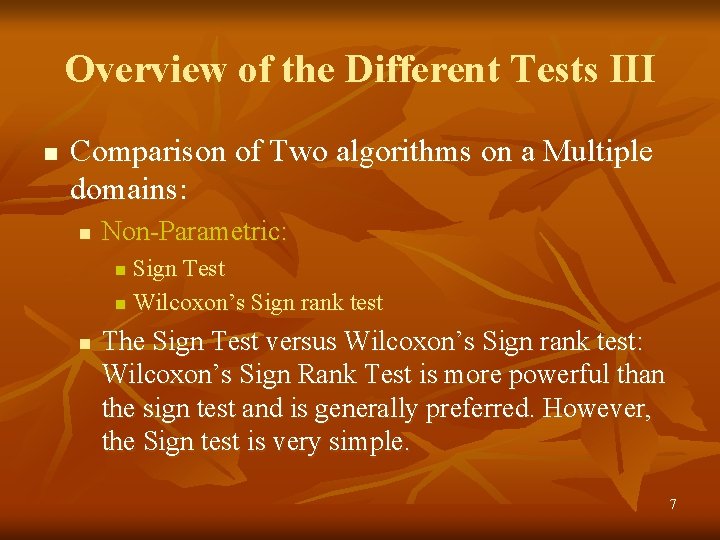

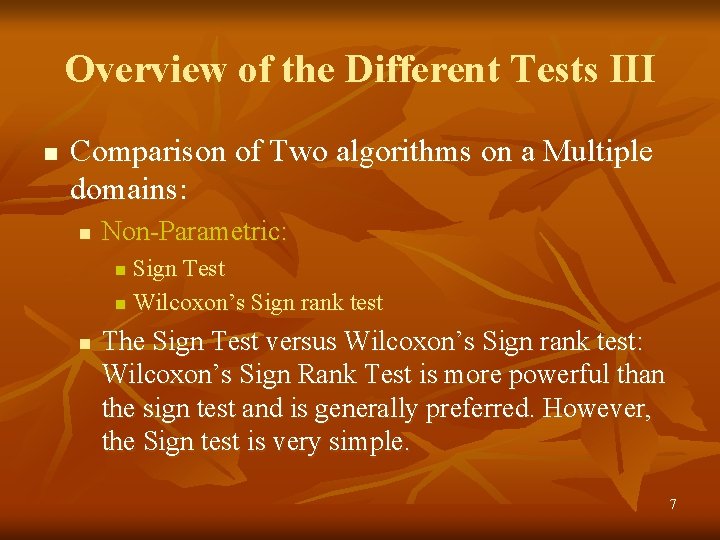
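McNemar's test only needs two disagreement counts: n01, the number of test examples misclassified by classifier A but correctly classified by B, and n10, the reverse. The sketch below (Python standard library only) uses the continuity-corrected chi-square statistic with one degree of freedom and, following the slide, switches to the exact Sign test on the disagreements when there are fewer than 20 of them. The function names, the threshold parameter and the example counts are illustrative assumptions.

```python
import math


def disagreement_counts(y_true, pred_a, pred_b):
    """Return (n01, n10) for two classifiers' predictions on the same test set.

    n01: examples classifier A gets wrong but B gets right.
    n10: examples classifier A gets right but B gets wrong.
    """
    n01 = n10 = 0
    for y, a, b in zip(y_true, pred_a, pred_b):
        if a != y and b == y:
            n01 += 1
        elif a == y and b != y:
            n10 += 1
    return n01, n10


def mcnemar_test(n01, n10, min_disagreements=20):
    """Return (method, statistic, two-sided p-value) for McNemar's test.

    Below min_disagreements, fall back to the exact Sign test on the
    disagreements, since the chi-square approximation is unreliable there.
    """
    n = n01 + n10
    if n == 0:
        raise ValueError("the classifiers never disagree; nothing to test")
    if n < min_disagreements:
        k = min(n01, n10)
        tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
        return "sign", float(k), min(1.0, 2 * tail)
    # Continuity-corrected statistic, chi-square with 1 degree of freedom.
    chi2 = max(0, abs(n01 - n10) - 1) ** 2 / n
    # Upper tail of chi-square(1): P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return "mcnemar", chi2, math.erfc(math.sqrt(chi2 / 2))


print(mcnemar_test(10, 25))  # enough disagreements: chi-square version
print(mcnemar_test(2, 9))    # only 11 disagreements: exact Sign test
```

For example, with 10 and 25 disagreements the statistic is (|10 - 25| - 1)^2 / 35 = 5.6, which gives a p-value of about 0.018.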

Overview of the Different Tests III

Comparison of two algorithms on multiple domains:

- Non-parametric: the Sign test and Wilcoxon's Signed-Rank test.

The Sign test versus Wilcoxon's Signed-Rank test: Wilcoxon's Signed-Rank test is more powerful than the Sign test and is generally preferred. The Sign test, however, is very simple.

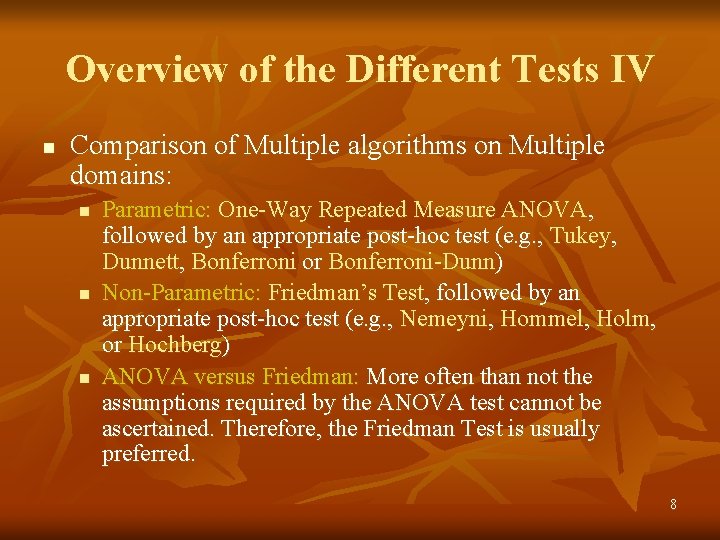
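Both tests operate on the per-domain differences between the two classifiers' scores (for example, accuracy of A minus accuracy of B on each domain). The Sign test only counts wins and losses, whereas Wilcoxon's test also ranks the sizes of the differences, which is where its extra power comes from. In this sketch, zero differences are discarded, tied absolute differences receive their average rank, and the Wilcoxon p-value uses the normal approximation without a tie correction; with only a few domains, comparing T against an exact table of critical values is more appropriate. The domain differences are invented for illustration.

```python
import math


def sign_test(diffs):
    """Exact two-sided Sign test on per-domain differences (ties discarded)."""
    wins = sum(1 for d in diffs if d > 0)
    losses = sum(1 for d in diffs if d < 0)
    n, k = wins + losses, min(wins, losses)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return wins, losses, min(1.0, 2 * tail)


def wilcoxon_signed_rank(diffs):
    """Return (T, z, approximate two-sided p) for Wilcoxon's Signed-Rank test."""
    nonzero = [d for d in diffs if d != 0]  # zero differences are discarded
    n = len(nonzero)
    if n == 0:
        raise ValueError("all differences are zero")
    # Rank |d| from smallest (rank 1) upward; tied values share the average rank.
    order = sorted(range(n), key=lambda i: abs(nonzero[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(nonzero[order[j + 1]]) == abs(nonzero[order[i]]):
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, nonzero) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, nonzero) if d < 0)
    t_stat = min(w_plus, w_minus)
    # Normal approximation to the null distribution of T (no tie correction).
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t_stat - mean) / sd
    return t_stat, z, math.erfc(abs(z) / math.sqrt(2))


# Invented differences (accuracy of A minus accuracy of B) on 10 domains.
DIFFS = [0.02, -0.01, 0.03, 0.01, 0.04, 0.0, 0.02, 0.05, -0.02, 0.03]
print(sign_test(DIFFS))
print(wilcoxon_signed_rank(DIFFS))
```

Here the Sign test sees only 7 wins and 2 losses, while Wilcoxon's test also takes into account that the two losses are small compared with most of the wins.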

Overview of the Different Tests IV

Comparison of multiple algorithms on multiple domains:

- Parametric: One-Way Repeated-Measures ANOVA, followed by an appropriate post-hoc test (e.g., Tukey, Dunnett, Bonferroni, or Bonferroni-Dunn).
- Non-parametric: Friedman's test, followed by an appropriate post-hoc test (e.g., Nemenyi, Hommel, Holm, or Hochberg).

ANOVA versus Friedman: More often than not, the assumptions required by the ANOVA test cannot be verified. Therefore, the Friedman test is usually preferred.
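A sketch of Friedman's test under stated assumptions: `scores[d][j]` holds the (higher-is-better) performance of algorithm j on domain d; algorithms are ranked within each domain with rank 1 as the best and tied scores sharing the average rank; and the statistic chi2_F = 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4) is compared with a chi-square distribution with k - 1 degrees of freedom, where N is the number of domains, k the number of algorithms and R_j the average rank of algorithm j. No tie correction is applied, and the post-hoc tests listed above are not shown. The chi-square tail is computed with a standard recurrence so that only the Python standard library is needed; the scores are invented.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square with integer df >= 1."""
    if x <= 0:
        return 1.0
    # Start from df = 1 or df = 2, then climb in steps of 2 using
    # Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    if df % 2 == 1:
        q, k = math.erfc(math.sqrt(x / 2)), 1
    else:
        q, k = math.exp(-x / 2), 2
    while k < df:
        q += (x / 2) ** (k / 2) * math.exp(-x / 2) / math.gamma(k / 2 + 1)
        k += 2
    return q


def rank_row(row):
    """Rank one domain's scores: 1 = best (highest); ties get the average rank."""
    order = sorted(range(len(row)), key=lambda j: -row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_test(scores):
    """Return (average ranks, chi2_F, p-value); scores[d][j] as described above."""
    n_domains, k = len(scores), len(scores[0])
    if k < 2:
        raise ValueError("need at least two algorithms")
    all_ranks = [rank_row(row) for row in scores]
    avg_ranks = [sum(r[j] for r in all_ranks) / n_domains for j in range(k)]
    chi2 = 12 * n_domains / (k * (k + 1)) * (
        sum(r ** 2 for r in avg_ranks) - k * (k + 1) ** 2 / 4)
    return avg_ranks, chi2, chi2_sf(chi2, k - 1)


# Invented accuracies of 3 algorithms (columns) on 6 domains (rows).
SCORES = [
    [0.85, 0.83, 0.80],
    [0.90, 0.91, 0.86],
    [0.78, 0.76, 0.76],
    [0.88, 0.85, 0.84],
    [0.70, 0.69, 0.65],
    [0.93, 0.90, 0.90],
]
print(friedman_test(SCORES))
```

On the invented scores the average ranks are about 1.17, 2.00 and 2.83, and the statistic is 25/3 (about 8.33) with 2 degrees of freedom, giving p of about 0.016. If the test rejects, one of the post-hoc tests listed above would then be used to find which pairs of algorithms differ.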

Practical Concerns

Section 6.8 of my book (with M. Shah) shows how to use the freely downloadable R statistical software to compute most of the tests discussed on the previous slides.