Statistical Learning. Dong Liu, Dept. EEIS, USTC

- Slides: 36


Chapter 8. Decision Tree (2020/9/30)

1. Tree model
2. Tree building
3. Tree pruning
4. Tree and ensemble

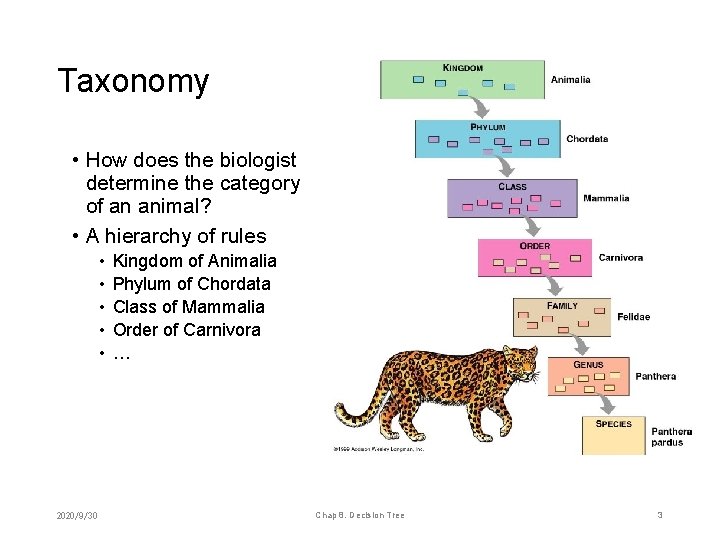

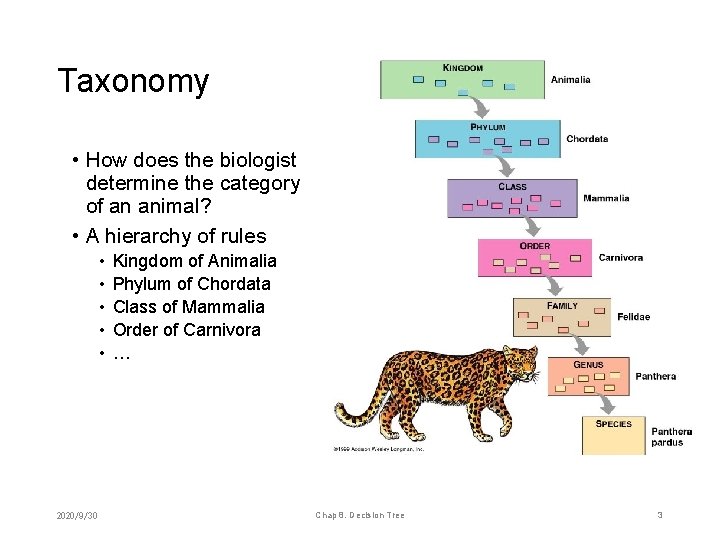

Taxonomy

- How does a biologist determine the category of an animal?
- A hierarchy of rules
  - Kingdom of Animalia
  - Phylum of Chordata
  - Class of Mammalia
  - Order of Carnivora
  - …

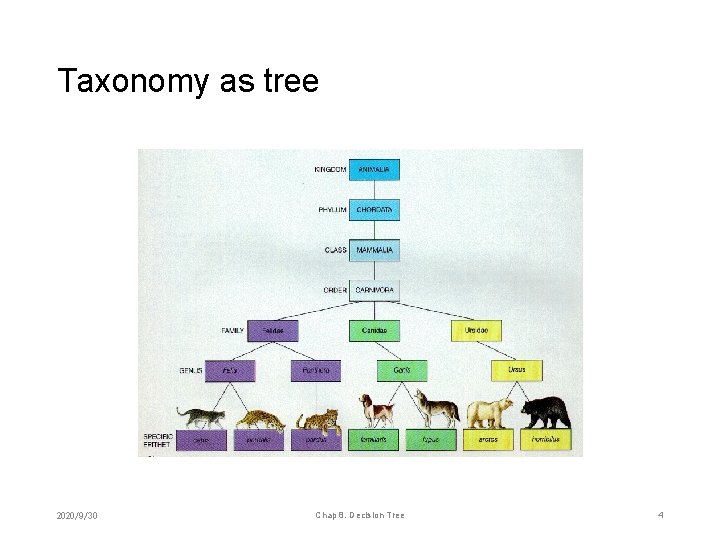

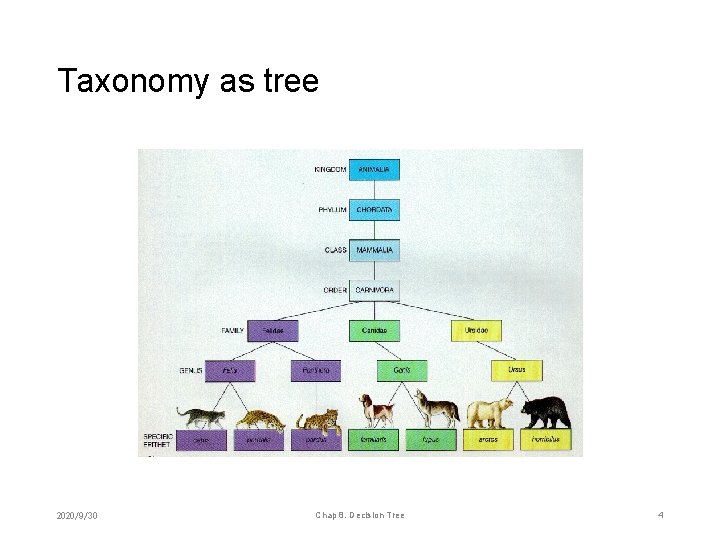

Taxonomy as tree

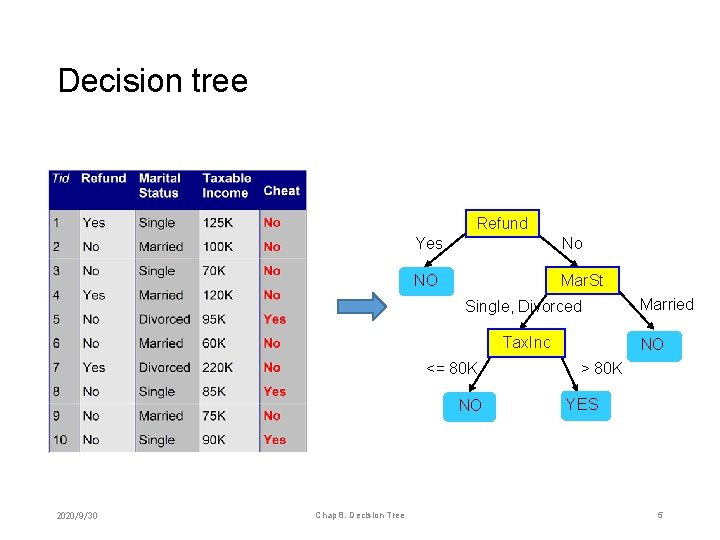

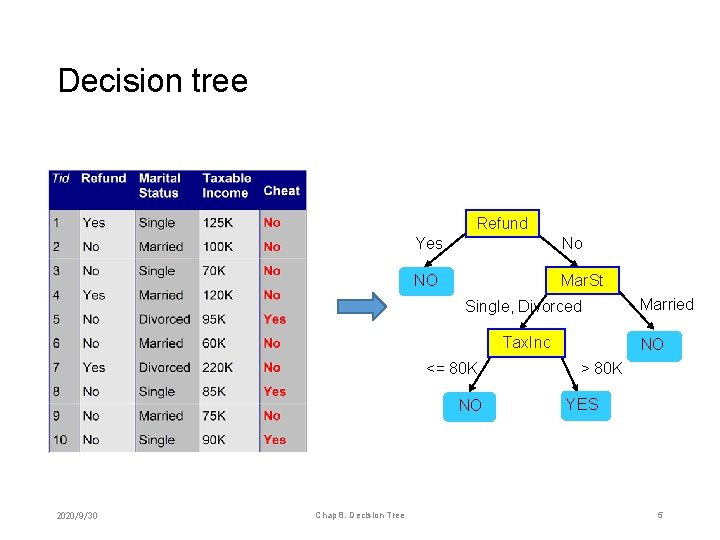

Decision tree

- Refund = Yes: NO
- Refund = No: test Mar. St (marital status)
  - Married: NO
  - Single, Divorced: test Tax. Inc (taxable income)
    - <= 80K: NO
    - > 80K: YES

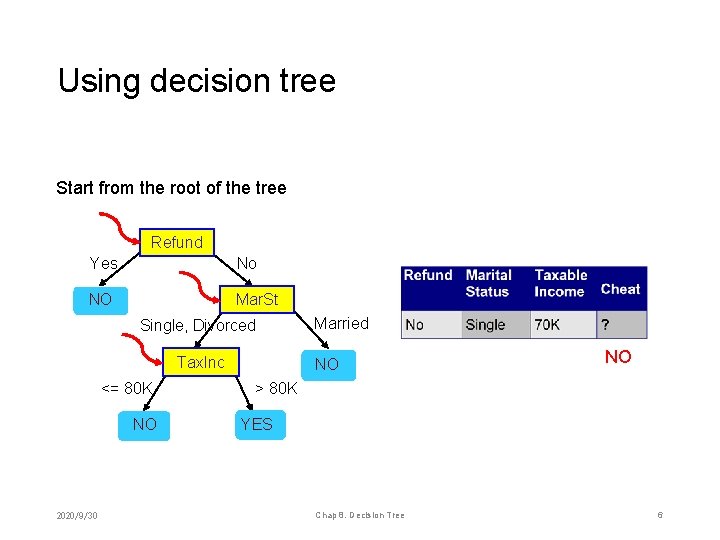

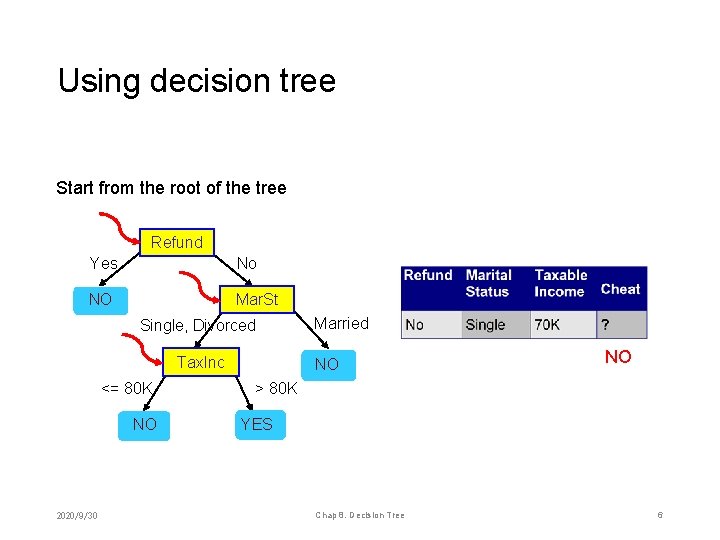

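Using decision tree

Start from the root of the tree, apply the condition at each internal node to the record, and follow the matching branch until a leaf node gives the prediction. The tree is the same as on the previous slide: Refund at the root, then Mar. St, then Tax. Inc.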

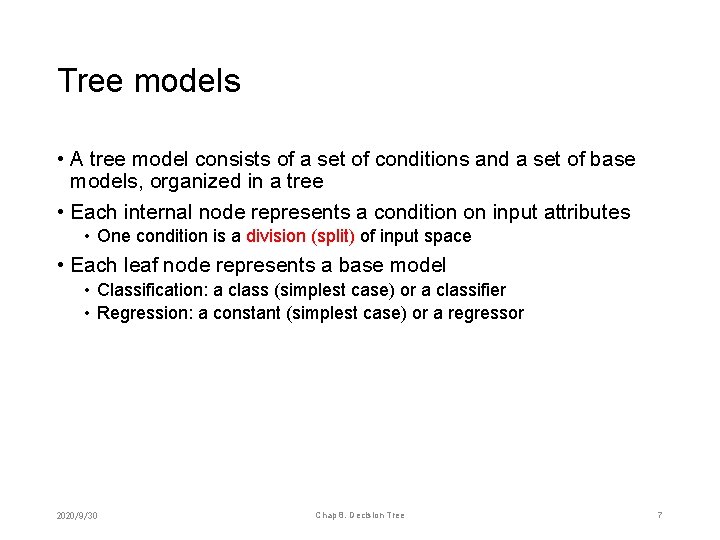

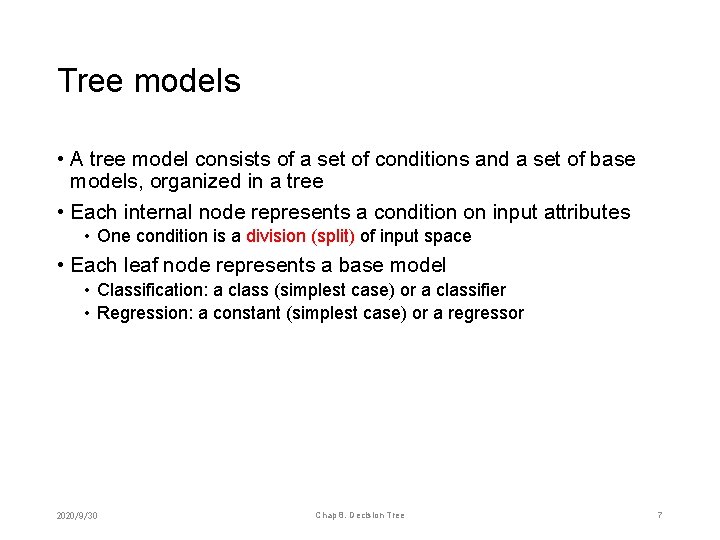

Tree models

- A tree model consists of a set of conditions and a set of base models, organized in a tree
- Each internal node represents a condition on input attributes
  - One condition is a division (split) of input space
- Each leaf node represents a base model
  - Classification: a class (simplest case) or a classifier
  - Regression: a constant (simplest case) or a regressor
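The structure above can be sketched in a few lines of Python. This is only an illustration: the dictionary layout, the field names (`condition`, `children`, `label`) and the record keys are assumptions made for this sketch, and income is assumed to be given in thousands. Internal nodes hold a condition, leaves hold a class (the simplest base model), and prediction walks from the root to a leaf.

```python
# Leaves carry a class label; internal nodes carry a condition that maps a
# record to the name of one branch. All names here are hypothetical.
tax_node = {
    "condition": lambda r: "<=80K" if r["taxable_income"] <= 80 else ">80K",
    "children": {"<=80K": {"label": "NO"}, ">80K": {"label": "YES"}},
}
marital_node = {
    "condition": lambda r: "Married" if r["marital_status"] == "Married"
    else "Single/Divorced",
    "children": {"Married": {"label": "NO"}, "Single/Divorced": tax_node},
}
root = {
    "condition": lambda r: r["refund"],
    "children": {"Yes": {"label": "NO"}, "No": marital_node},
}


def predict(node, record):
    """Start from the root and follow matching branches until a leaf."""
    while "label" not in node:
        branch = node["condition"](record)
        node = node["children"][branch]
    return node["label"]


record = {"refund": "No", "marital_status": "Single", "taxable_income": 95}
print(predict(root, record))  # YES
```

Replacing the `label` of a leaf with a callable would give the more general case in which a leaf holds a classifier or a regressor rather than a single class.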

2. Tree building

Tree induction

- Assume we have defined the form of base models
- How to find the optimal tree structure (set of conditions, division of input space)?
  - Exhaustive search is computationally expensive
  - Heuristic approach: Hunt’s algorithm

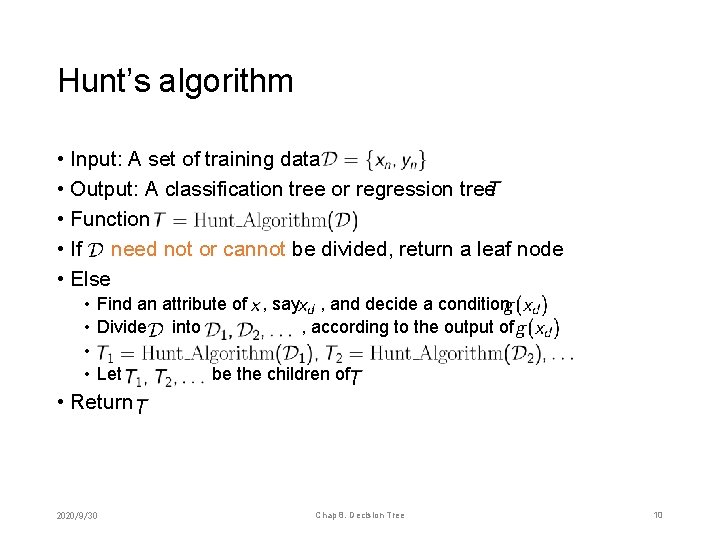

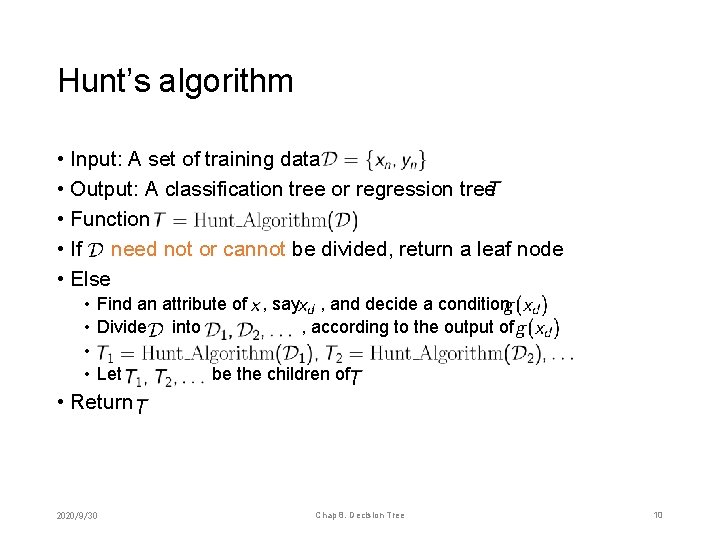

Hunt’s algorithm

- Input: a set of training data $D$
- Output: a classification tree or regression tree $T$
- Function: build $T$ from $D$
  - If $D$ need not or cannot be divided, return a leaf node
  - Else
    - Find an attribute of $D$ and decide a condition on it
    - Divide $D$ into $D_1, D_2, \dots$ according to the output of the condition
    - Build $T_1, T_2, \dots$ from $D_1, D_2, \dots$ by applying the function recursively
    - Let $T_1, T_2, \dots$ be the children of $T$
    - Return $T$
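The recursion can be sketched as follows. This is a minimal sketch under several assumptions that the slide does not fix: attributes are discrete, every split is multi-way (one branch per value), the attribute is chosen by the smallest weighted Gini index, and a leaf predicts the majority class. The toy rows and all names are made up for illustration.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split(rows, attr):
    """Divide the rows into groups, one per value of the attribute."""
    groups = {}
    for row in rows:
        groups.setdefault(row[attr], []).append(row)
    return groups


def hunt(rows, attrs, target):
    labels = [r[target] for r in rows]
    # Need not be divided: all class labels have the same value.
    if len(set(labels)) == 1:
        return {"label": labels[0]}
    # Cannot be divided: no attribute takes different values.
    usable = [a for a in attrs if len({r[a] for r in rows}) > 1]
    if not usable:
        return {"label": Counter(labels).most_common(1)[0][0]}

    def weighted_gini(attr):
        return sum(len(g) / len(rows) * gini([r[target] for r in g])
                   for g in split(rows, attr).values())

    best = min(usable, key=weighted_gini)
    # Recurse on every subset; the results become the children of this node.
    children = {value: hunt(group, attrs, target)
                for value, group in split(rows, best).items()}
    return {"attribute": best, "children": children}


rows = [  # hypothetical toy data, not the table from the slides
    {"refund": "Yes", "status": "Single", "cheat": "No"},
    {"refund": "No", "status": "Married", "cheat": "No"},
    {"refund": "No", "status": "Single", "cheat": "Yes"},
    {"refund": "No", "status": "Divorced", "cheat": "Yes"},
]
tree = hunt(rows, ["refund", "status"], "cheat")
print(tree["attribute"])  # status
```

Each recursive call works on a strictly smaller subset, because the chosen attribute takes at least two values in the current set, so the recursion always terminates.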

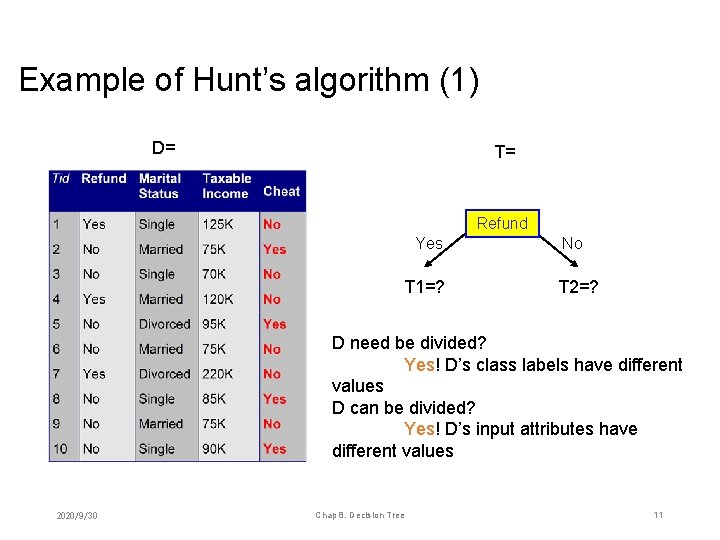

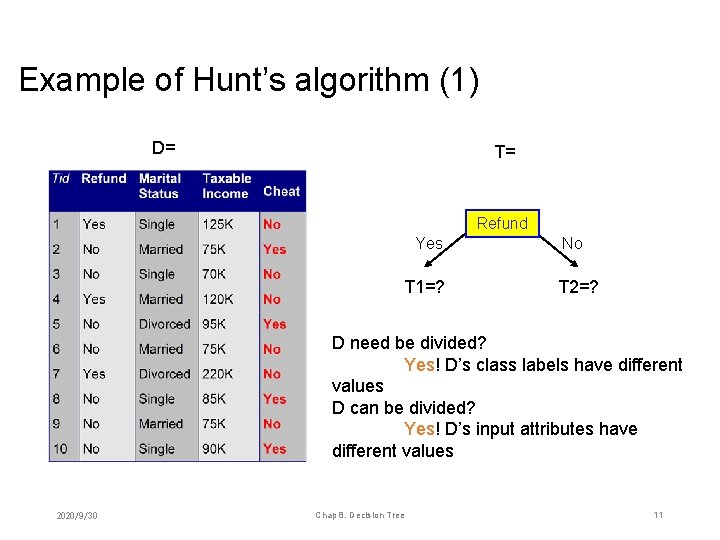

Example of Hunt’s algorithm (1)

- $D$ = the training set (table in the figure)
- $T$ = Refund, with branches Yes: $T_1$ = ? and No: $T_2$ = ?
- Does $D$ need to be divided? Yes! $D$’s class labels have different values
- Can $D$ be divided? Yes! $D$’s input attributes have different values

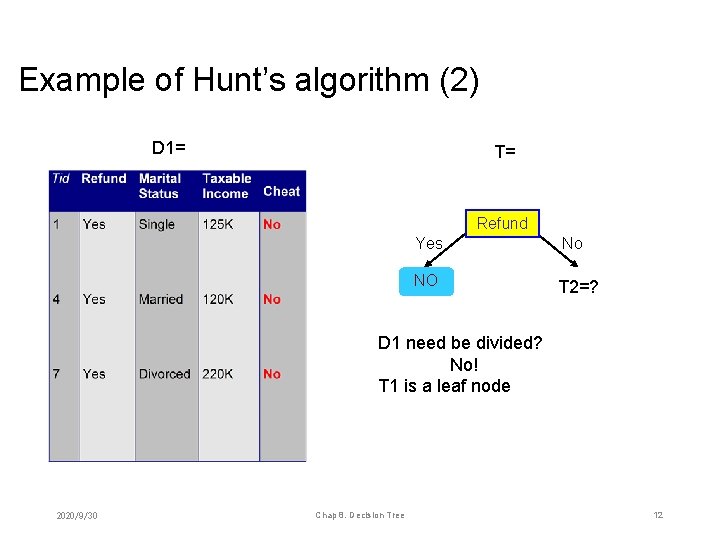

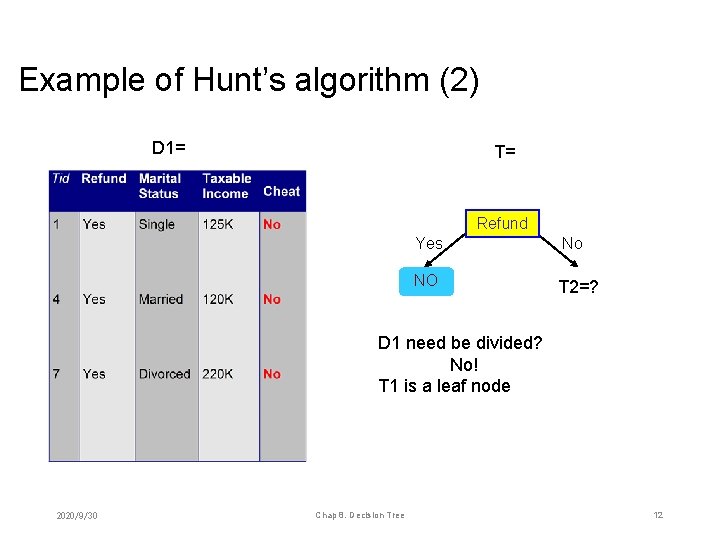

Example of Hunt’s algorithm (2)

- $D_1$ = the subset of $D$ on the Refund = Yes branch (table in the figure)
- $T$ = Refund, with branches Yes: $T_1$ = NO and No: $T_2$ = ?
- Does $D_1$ need to be divided? No! $T_1$ is a leaf node

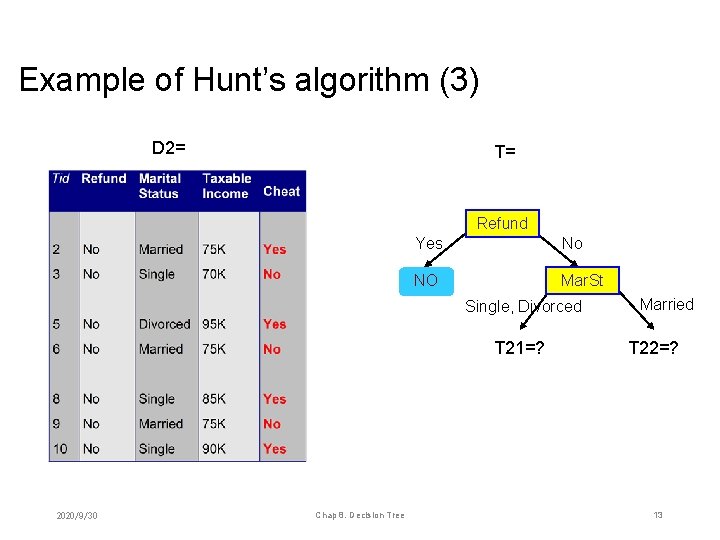

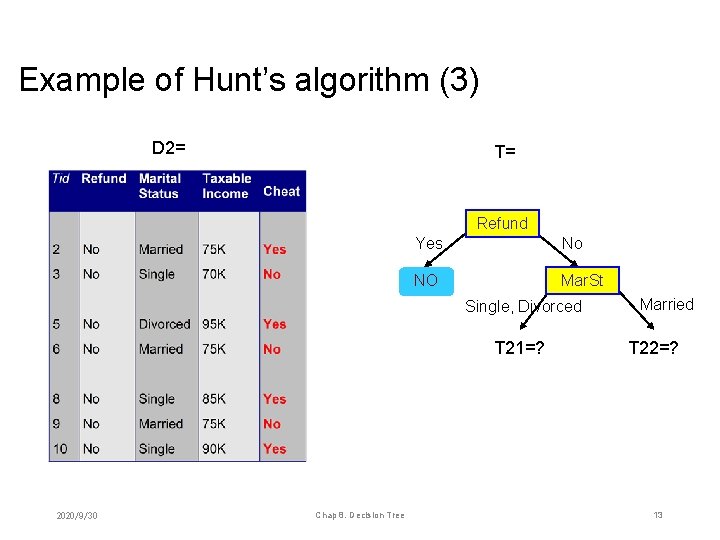

Example of Hunt’s algorithm (3)

- $D_2$ = the subset of $D$ on the Refund = No branch (table in the figure)
- $T$ = Refund
  - Yes: NO
  - No: $T_2$ = Mar. St
    - Single, Divorced: $T_{21}$ = ?
    - Married: $T_{22}$ = ?

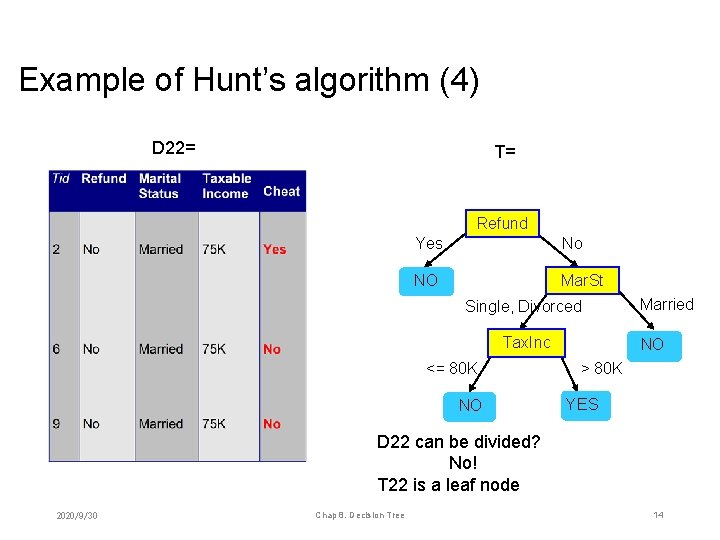

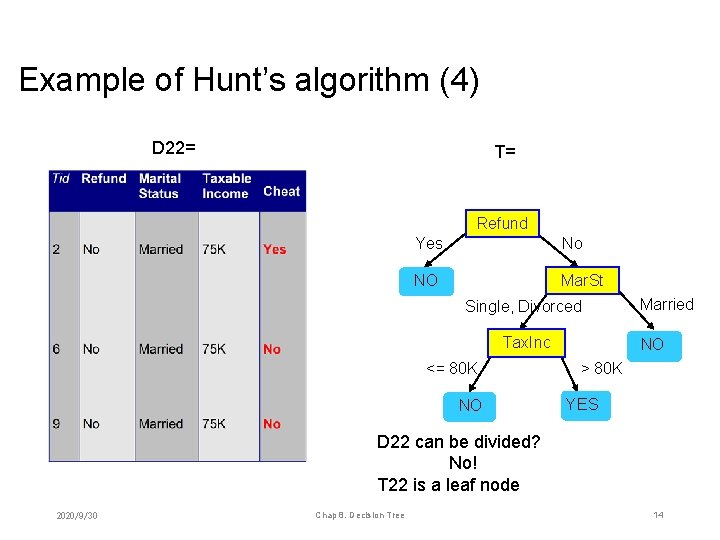

Example of Hunt’s algorithm (4)

- $D_{22}$ = the subset of $D_2$ on the Married branch (table in the figure)
- $T$ = Refund
  - Yes: NO
  - No: Mar. St
    - Single, Divorced: Tax. Inc
      - <= 80K: NO
      - > 80K: YES
    - Married: $T_{22}$ = NO
- Can $D_{22}$ be divided? No! $T_{22}$ is a leaf node

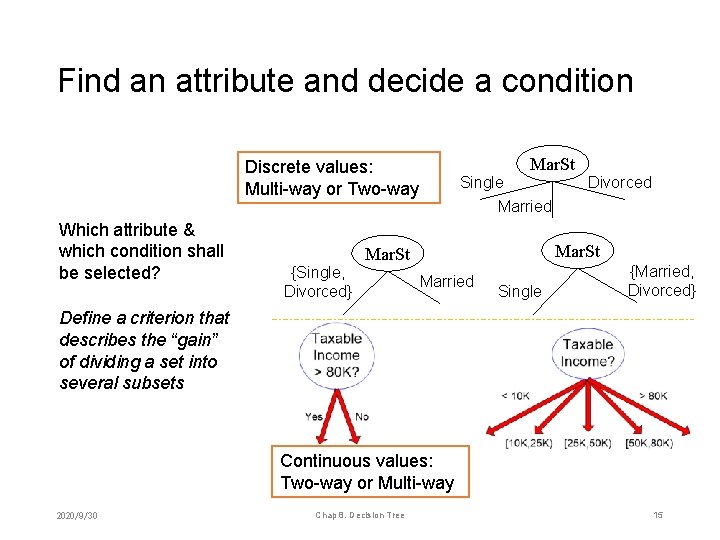

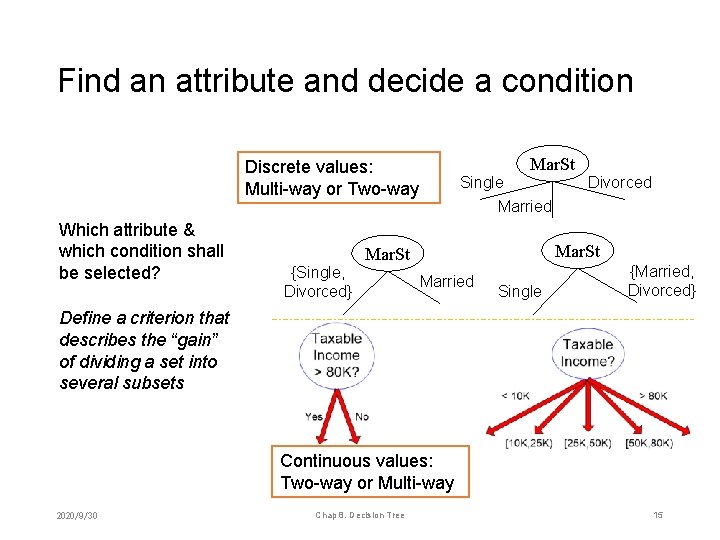

Find an attribute and decide a condition

- Which attribute and which condition shall be selected?
- Discrete values: multi-way or two-way
  - Multi-way: Mar. St into Single / Married / Divorced
  - Two-way: Mar. St into {Single, Divorced} / Married, or into Single / {Married, Divorced}
- Continuous values: two-way or multi-way
- Define a criterion that describes the “gain” of dividing a set into several subsets
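To make the size of the search concrete, the candidate conditions for one attribute can be enumerated. The sketch below is illustrative only; the function names are hypothetical, and taking midpoints between consecutive values as thresholds for a continuous attribute is a common convention assumed here, not something stated on the slide.

```python
from itertools import combinations


def two_way_splits(values):
    """All ways to divide a set of discrete values into two non-empty groups."""
    ordered = sorted(values)
    first, rest = ordered[0], ordered[1:]
    splits = []
    # Keep `first` on the left side so that each split is listed only once.
    for size in range(len(rest)):
        for extra in combinations(rest, size):
            left = {first, *extra}
            splits.append((left, set(ordered) - left))
    return splits


def candidate_thresholds(values):
    """Midpoints between consecutive distinct values of a continuous attribute."""
    distinct = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]


for left, right in two_way_splits({"Single", "Married", "Divorced"}):
    print(sorted(left), "vs.", sorted(right))
print(candidate_thresholds([60, 70, 75, 70]))  # [65.0, 72.5]
```

A discrete attribute with $m$ values has $2^{m-1} - 1$ two-way splits, which is why a criterion is needed to rank them rather than trying every combination across all attributes and depths.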

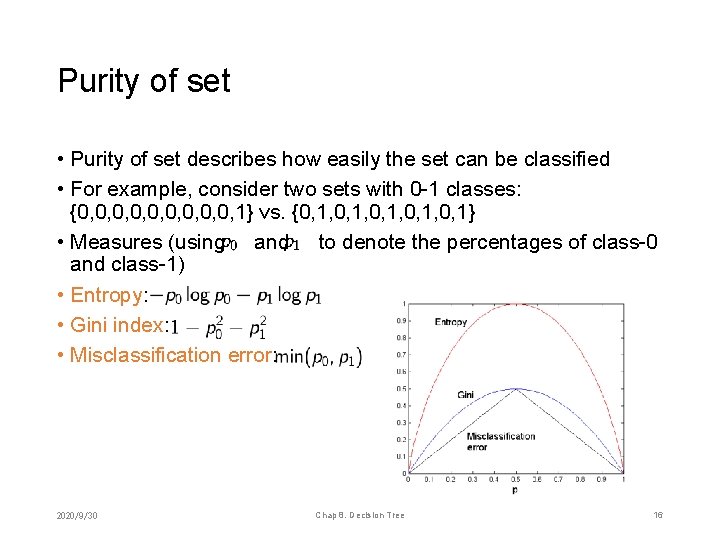

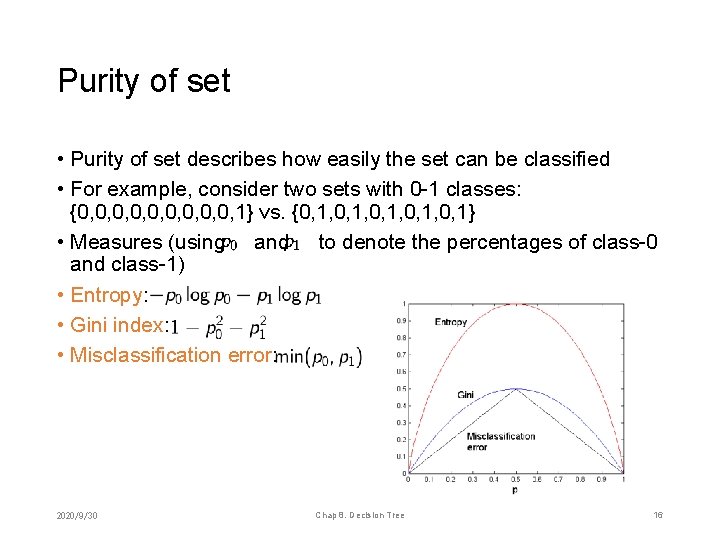

Purity of set

- Purity of a set describes how easily the set can be classified
- For example, consider two sets with 0-1 classes: {0, 0, 0, 1} vs. {0, 1, 0, 1}
- Measures (using $p_0$ and $p_1$ to denote the percentages of class-0 and class-1); each one measures impurity, so a smaller value means a purer set
  - Entropy: $-p_0 \log p_0 - p_1 \log p_1$
  - Gini index: $1 - p_0^2 - p_1^2$
  - Misclassification error: $1 - \max(p_0, p_1)$
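The two example sets can be compared numerically. The sketch below assumes the usual definitions of the three measures and a base-2 logarithm for the entropy (the base is an assumption; it only rescales the value).

```python
import math
from collections import Counter


def proportions(labels):
    n = len(labels)
    return [count / n for count in Counter(labels).values()]


def entropy(labels):
    return -sum(p * math.log2(p) for p in proportions(labels))


def gini(labels):
    return 1.0 - sum(p * p for p in proportions(labels))


def misclassification_error(labels):
    return 1.0 - max(proportions(labels))


for s in ([0, 0, 0, 1], [0, 1, 0, 1]):
    print(s, round(entropy(s), 3), round(gini(s), 3),
          round(misclassification_error(s), 3))
# [0, 0, 0, 1] 0.811 0.375 0.25
# [0, 1, 0, 1] 1.0 0.5 0.5
```

All three measures are smaller for {0, 0, 0, 1}, which matches the intuition that it is the purer, more easily classified set.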

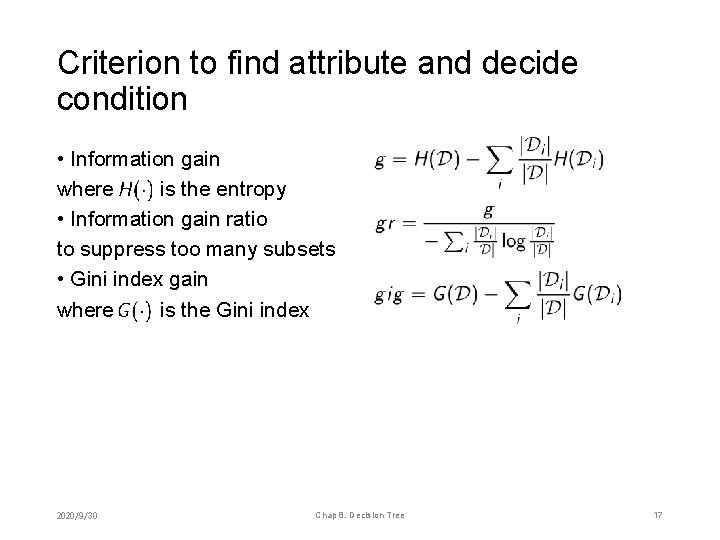

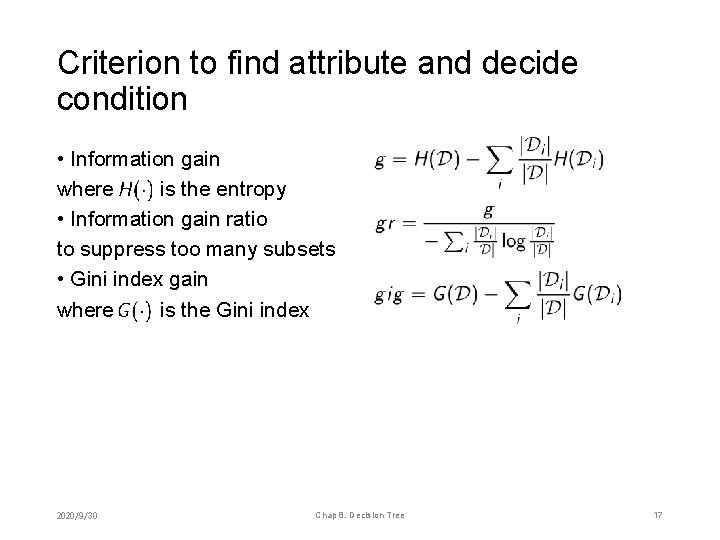

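Criterion to find attribute and decide condition

- Information gain: $H(D) - \sum_k \frac{|D_k|}{|D|} H(D_k)$, where $H$ is the entropy and $D_1, D_2, \dots$ are the subsets of $D$ after the split
- Information gain ratio, to suppress too many subsets: the information gain divided by $-\sum_k \frac{|D_k|}{|D|} \log \frac{|D_k|}{|D|}$
- Gini index gain: $G(D) - \sum_k \frac{|D_k|}{|D|} G(D_k)$, where $G$ is the Gini index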
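A small numeric sketch of the three criteria follows. It assumes the standard definitions (impurity of the parent minus the size-weighted impurity of the subsets, and the C4.5-style split information as the denominator of the gain ratio). The label lists are hypothetical and are not the training table from the slides.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def gain(parent, subsets, impurity):
    """Impurity of the parent minus the weighted impurity of the subsets."""
    n = len(parent)
    return impurity(parent) - sum(len(s) / n * impurity(s) for s in subsets)


def gain_ratio(parent, subsets):
    """Information gain divided by the entropy of the subset sizes."""
    n = len(parent)
    split_info = -sum(len(s) / n * math.log2(len(s) / n) for s in subsets)
    return gain(parent, subsets, entropy) / split_info


# Hypothetical labels: 10 samples divided into two subsets by one condition.
parent = ["No"] * 7 + ["Yes"] * 3
left = ["No"] * 3 + ["Yes"] * 3
right = ["No"] * 4

print(round(gain(parent, [left, right], gini), 3))     # 0.12
print(round(gain(parent, [left, right], entropy), 3))  # 0.281
print(round(gain_ratio(parent, [left, right]), 3))     # 0.29
```

The split information grows with the number of subsets, so dividing by it penalizes conditions that shatter the set into many small pieces.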

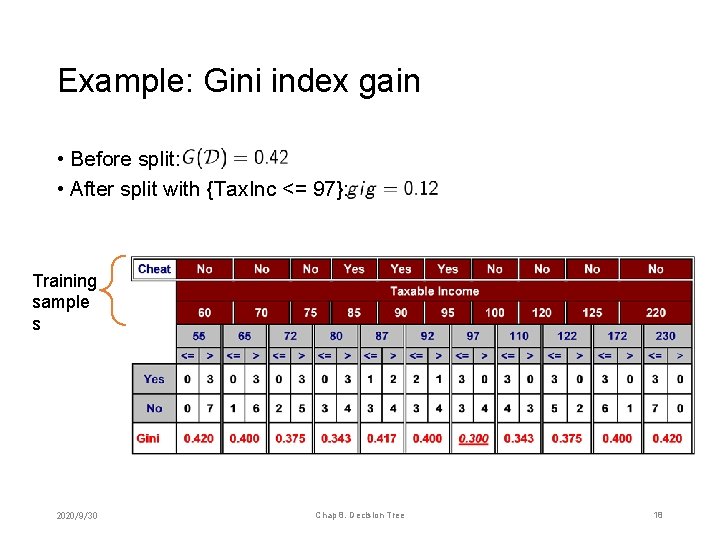

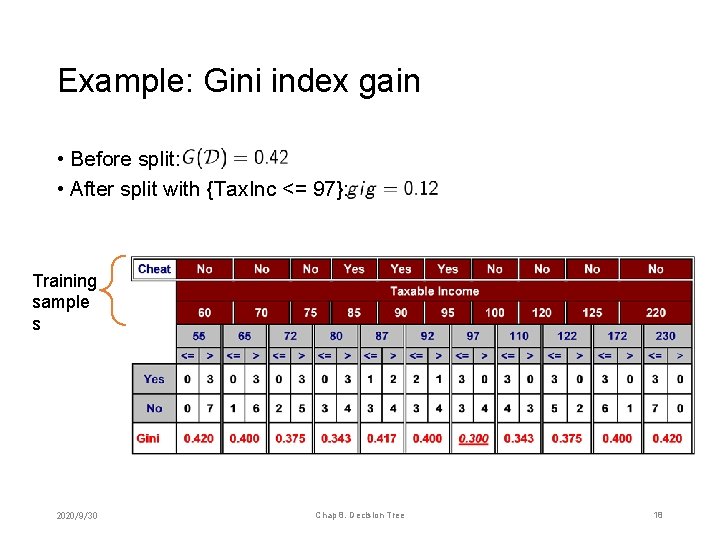

Example: Gini index gain

- Training samples: the table in the figure
- Before split: the Gini index of the whole training set
- After split with {Tax. Inc <= 97}: the weighted Gini index of the two subsets

3. Tree pruning

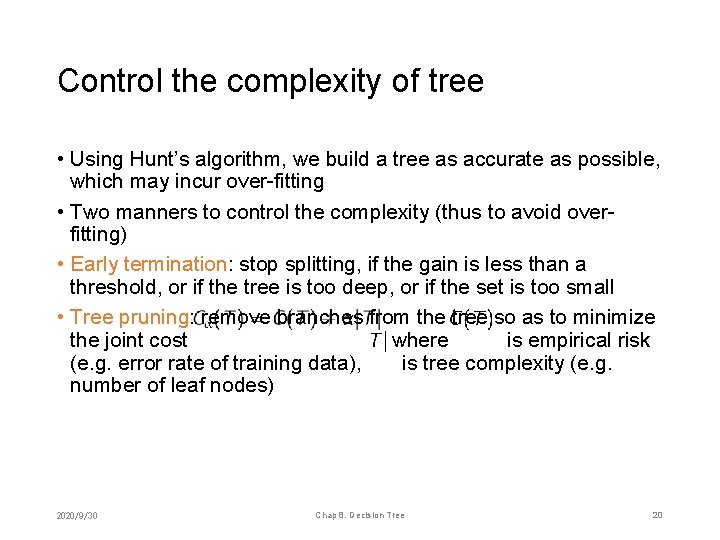

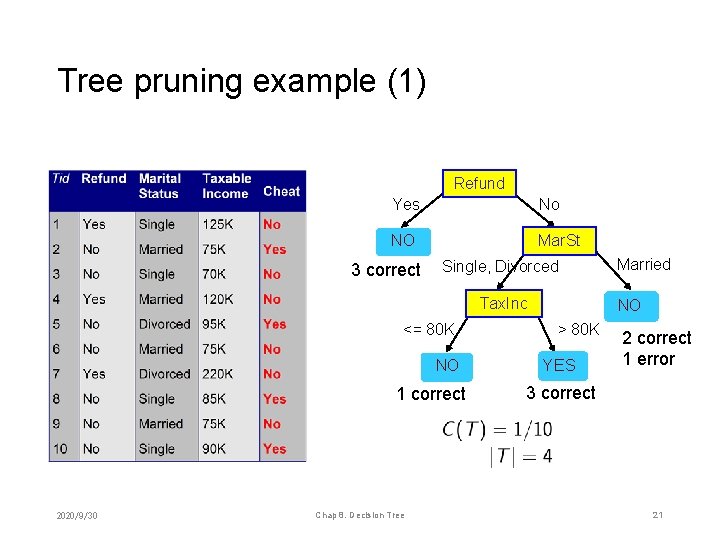

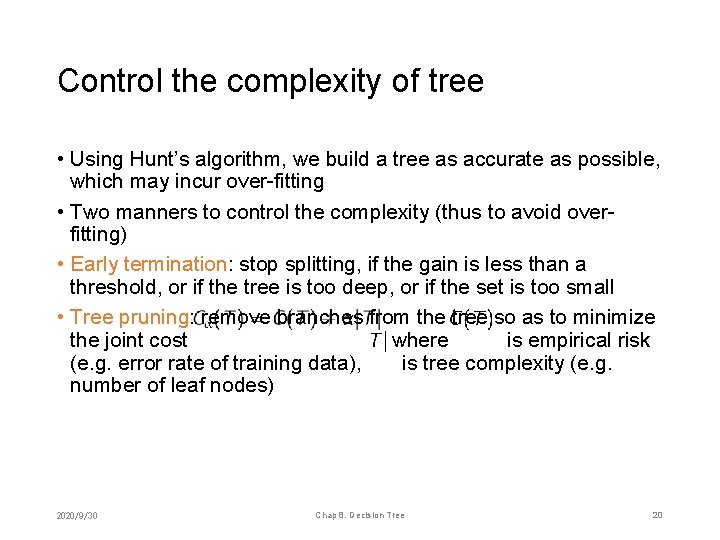

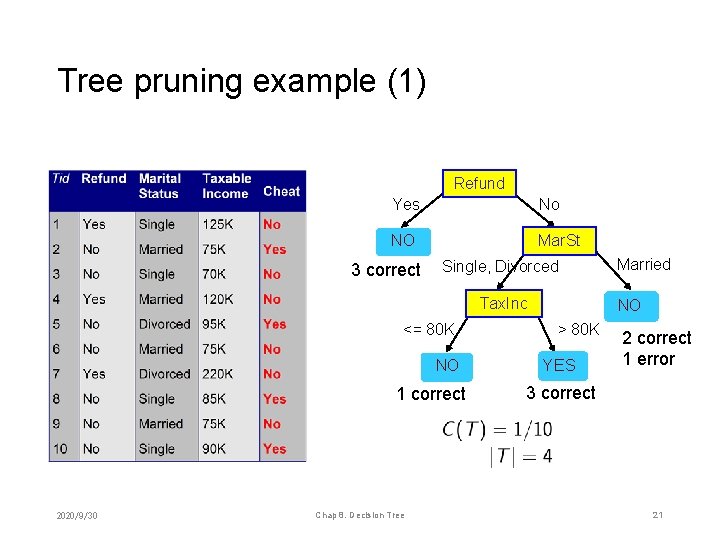

Control the complexity of tree

- Using Hunt’s algorithm, we build a tree that is as accurate as possible, which may incur over-fitting
- Two ways to control the complexity (and thus avoid over-fitting)
  - Early termination: stop splitting if the gain is less than a threshold, or if the tree is too deep, or if the set is too small
  - Tree pruning: remove branches from the tree so as to minimize the joint cost $R(T) + \alpha\,\Omega(T)$, where $R(T)$ is the empirical risk (e.g. error rate on training data), $\Omega(T)$ is the tree complexity (e.g. number of leaf nodes), and $\alpha \ge 0$ weights the two terms
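The effect of the trade-off can be seen with a tiny sketch. The symbol `alpha`, the use of the error count (rather than the error rate) as empirical risk, and the candidate numbers below are all assumptions chosen for illustration; they are not taken from the pruning example in the slides.

```python
def joint_cost(errors, leaves, alpha):
    """Empirical risk (error count) plus alpha times the number of leaf nodes."""
    return errors + alpha * leaves


# Hypothetical candidates: name -> (training errors, number of leaf nodes).
candidates = {
    "four leaves": (1, 4),
    "three leaves": (2, 3),
    "one leaf": (6, 1),
}


def best_tree(alpha):
    return min(candidates, key=lambda name: joint_cost(*candidates[name], alpha))


for alpha in (0.5, 1.5, 3.0):
    print(alpha, best_tree(alpha))
# 0.5 four leaves
# 1.5 three leaves
# 3.0 one leaf
```

As the weight on complexity grows, the minimizer moves from the largest tree to smaller ones, which is why the choice of the weight has to be settled separately, for example by validation.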

Tree pruning example (1)

- Refund = Yes: NO (3 correct)
- Refund = No: Mar. St
  - Single, Divorced: Tax. Inc
    - <= 80K: NO (1 correct)
    - > 80K: YES (2 correct, 1 error)
  - Married: NO (3 correct)

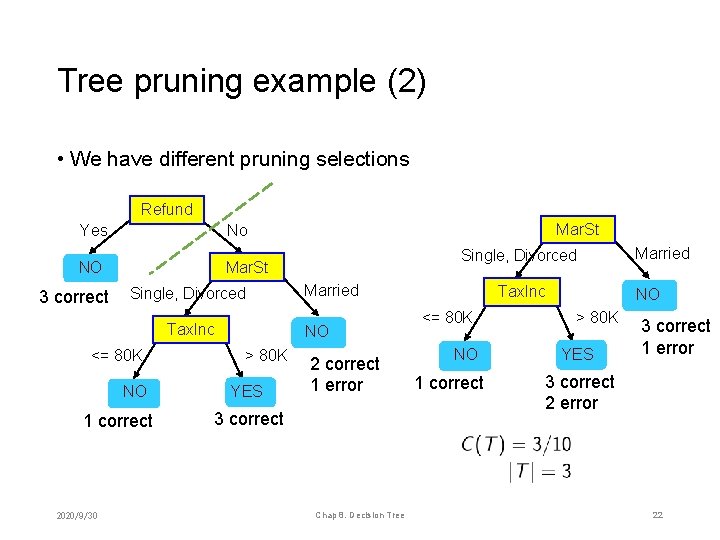

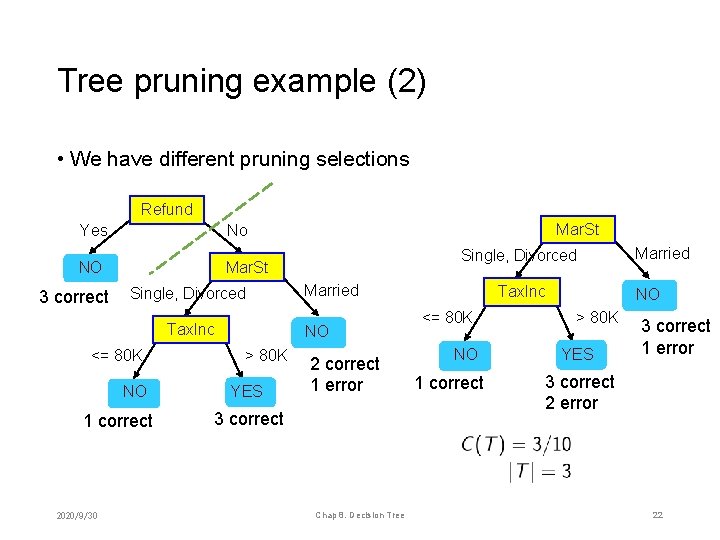

Tree pruning example (2)

- We have different pruning selections
- Selection shown here, next to the original tree: remove the Refund node, so that Mar. St becomes the root (the correct/error counts of each leaf are in the figure)
  - Single, Divorced: Tax. Inc
    - <= 80K: NO
    - > 80K: YES
  - Married: NO

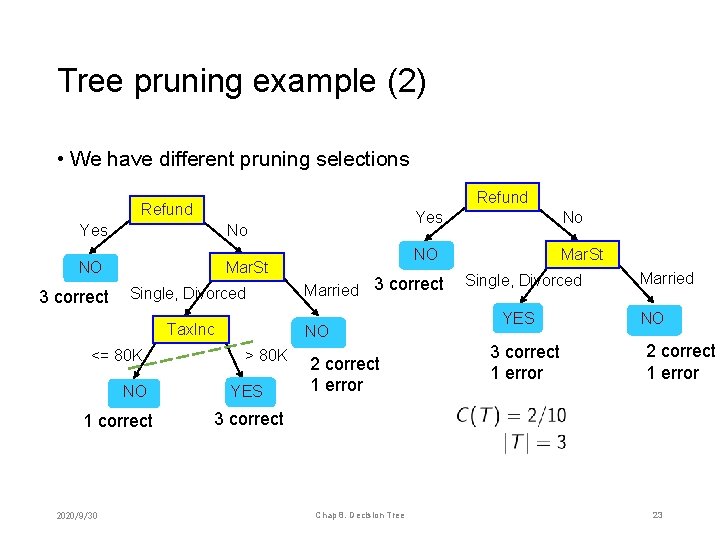

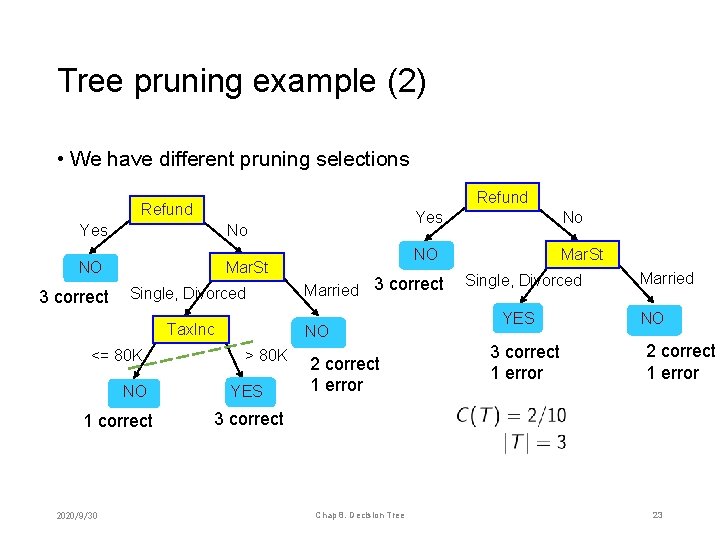

Tree pruning example (2)

- We have different pruning selections
- Selection shown here, next to the original tree: replace the Tax. Inc subtree with a single leaf (the correct/error counts of each leaf are in the figure)
  - Refund = Yes: NO
  - Refund = No: Mar. St
    - Single, Divorced: YES
    - Married: NO

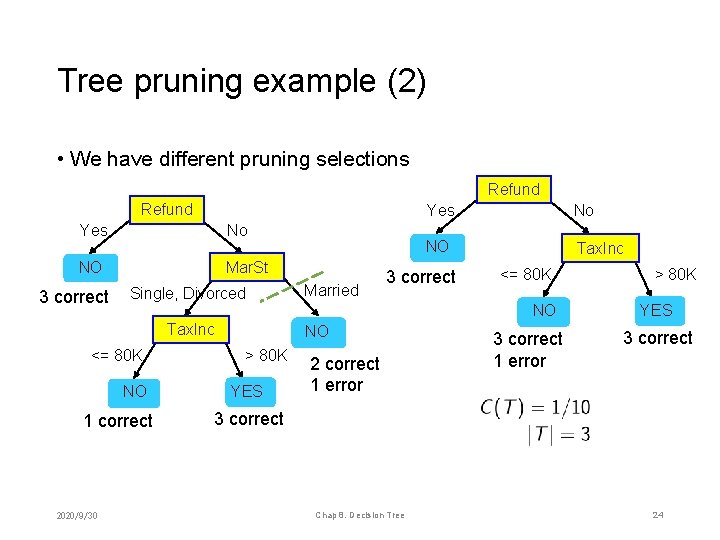

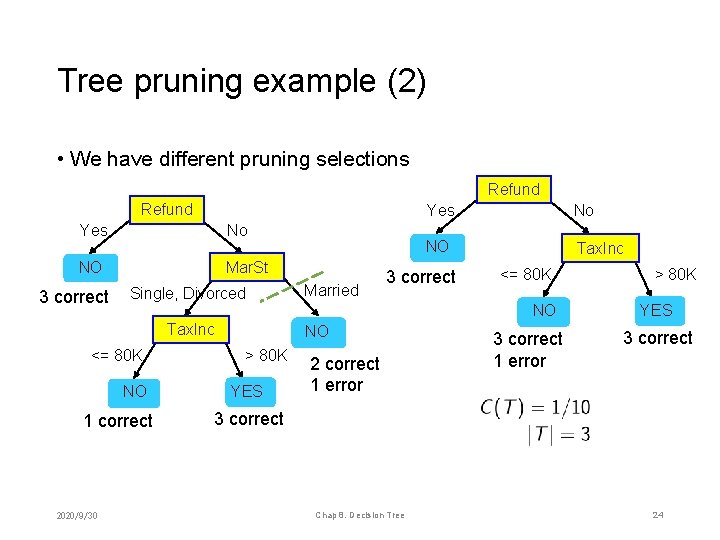

Tree pruning example (2)

- We have different pruning selections
- Selection shown here, next to the original tree: remove the Mar. St node, so that Tax. Inc follows Refund directly (the correct/error counts of each leaf are in the figure)
  - Refund = Yes: NO
  - Refund = No: Tax. Inc
    - <= 80K: NO
    - > 80K: YES

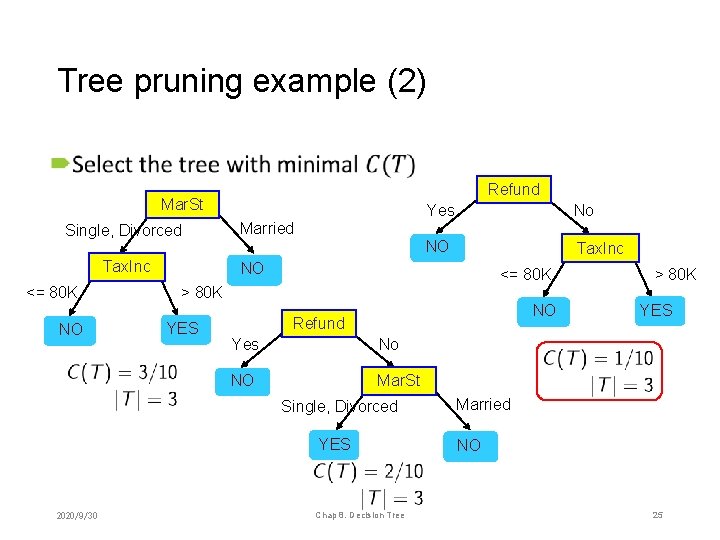

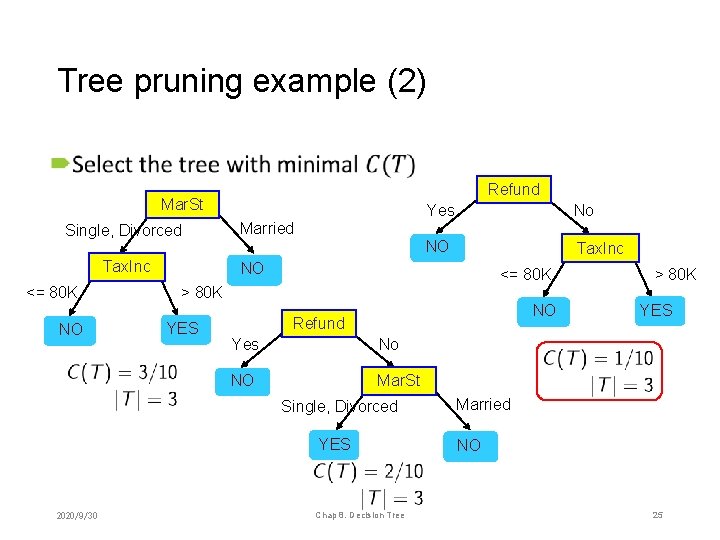

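Tree pruning example (2)

- The three pruned trees, each with three leaf nodes
  - Mar. St at the root
    - Single, Divorced: Tax. Inc (<= 80K: NO; > 80K: YES)
    - Married: NO
  - Refund, then Tax. Inc
    - Refund = Yes: NO
    - Refund = No: Tax. Inc (<= 80K: NO; > 80K: YES)
  - Refund, then Mar. St
    - Refund = Yes: NO
    - Refund = No: Mar. St (Single, Divorced: YES; Married: NO)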

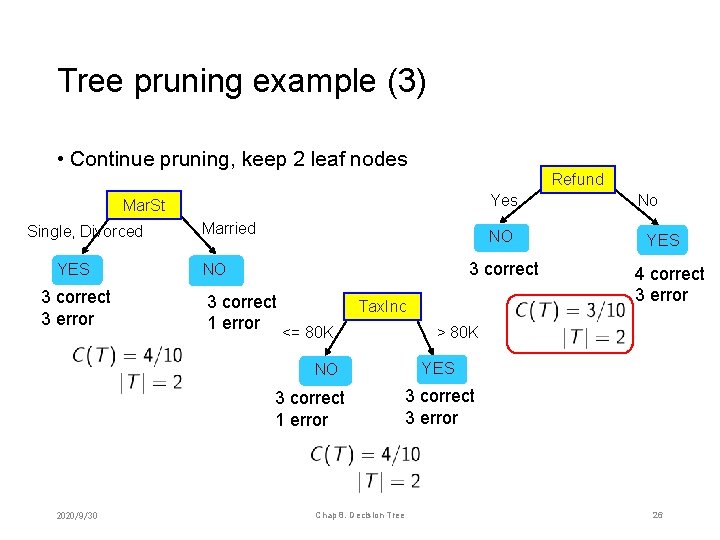

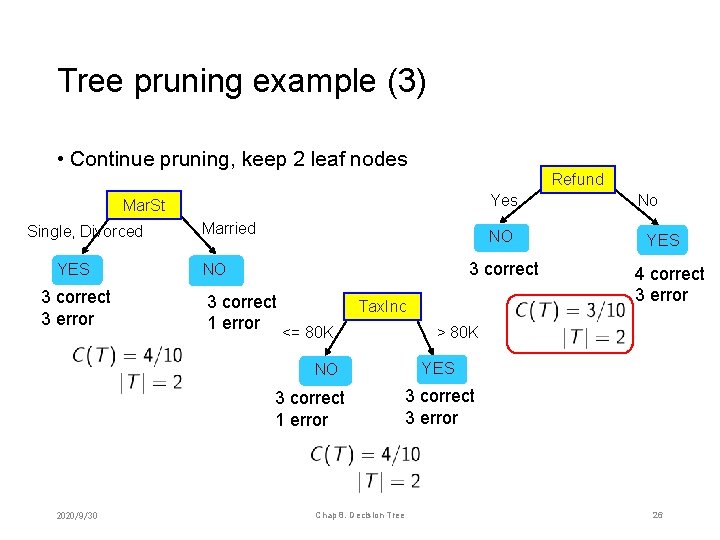

Tree pruning example (3)

- Continue pruning, keep 2 leaf nodes
  - Split on Refund
    - Yes: NO
    - No: YES (4 correct, 3 error)
  - Split on Mar. St
    - Single, Divorced: YES (3 correct, 3 error)
    - Married: NO (3 correct, 1 error)
  - Split on Tax. Inc
    - <= 80K: NO (3 correct, 1 error)
    - > 80K: YES (3 correct, 3 error)

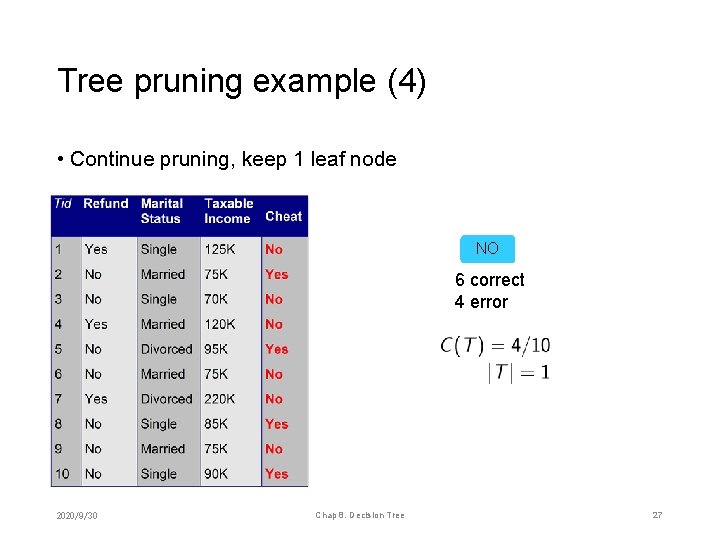

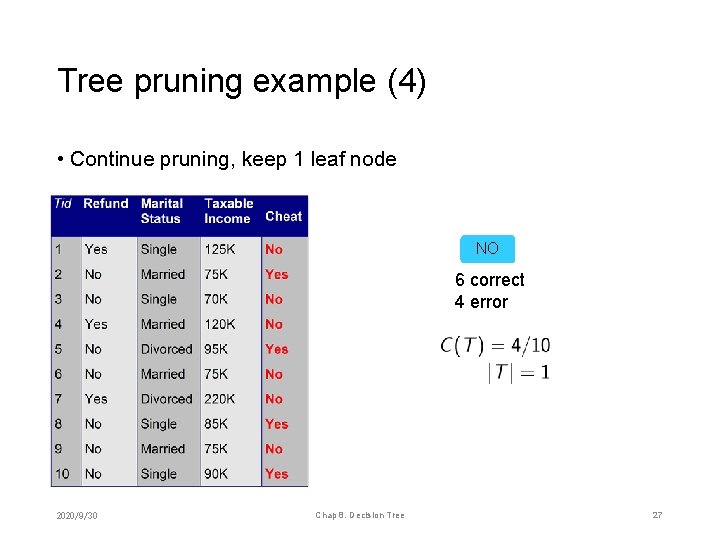

Tree pruning example (4)

- Continue pruning, keep 1 leaf node
  - NO (6 correct, 4 error)

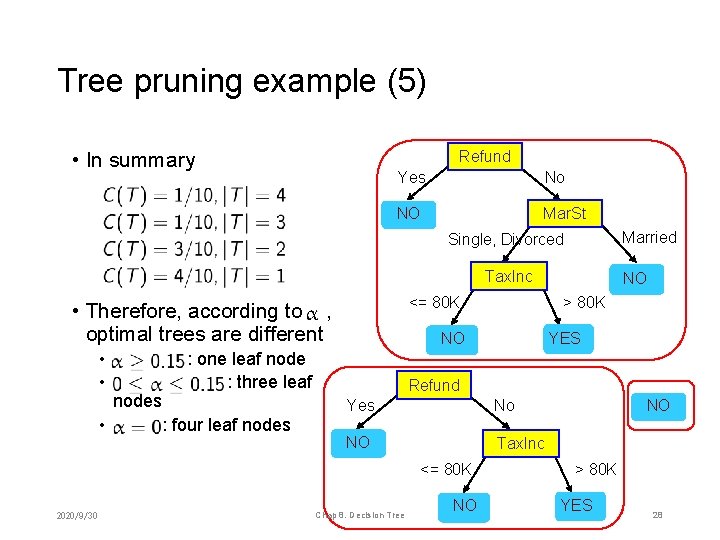

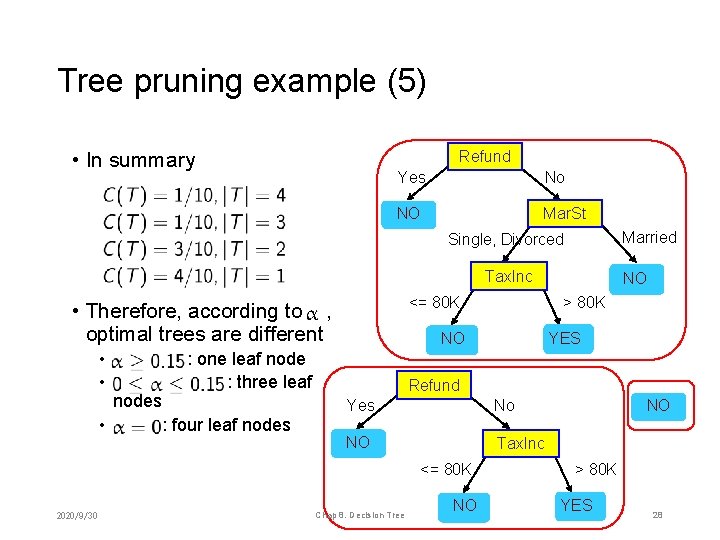

Tree pruning example (5)

- In summary (the comparison is in the figure)
- Therefore, according to the weight given to tree complexity in the joint cost, the optimal trees are different
  - Smallest weights: four leaf nodes (the full tree: Refund, then Mar. St, then Tax. Inc)
  - Intermediate weights: three leaf nodes (Refund = Yes: NO; Refund = No: Tax. Inc, with <= 80K: NO and > 80K: YES)
  - Largest weights: one leaf node (NO)

4. Tree and ensemble

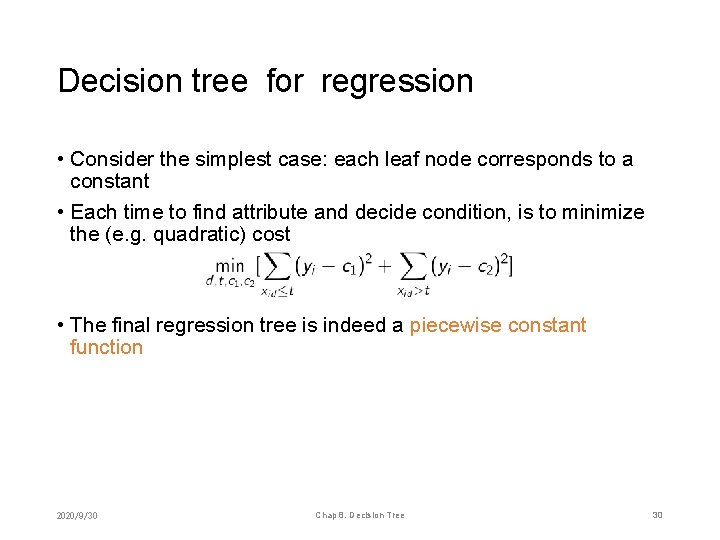

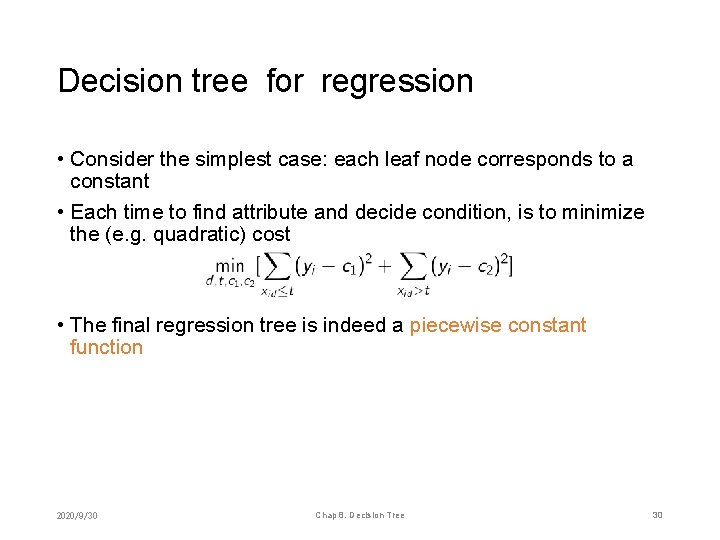

Decision tree for regression

- Consider the simplest case: each leaf node corresponds to a constant
- Each time we find an attribute and decide a condition, the goal is to minimize the (e.g. quadratic) cost
- The final regression tree is a piecewise constant function
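One splitting step for regression can be sketched as below. The sketch assumes a single continuous attribute, a two-way split, and the quadratic cost taken as the sum of squared errors around each side's mean (the mean is the constant that minimizes the quadratic cost). The data and function names are made up for illustration.

```python
def sse(ys):
    """Quadratic cost of predicting the mean of ys for every sample."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def best_split(xs, ys):
    """Return (cost, threshold, left constant, right constant) of the best split."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between two equal attribute values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, threshold,
                    sum(left) / len(left), sum(right) / len(right))
    return best


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.8, 3.0, 3.2, 2.8]
cost, threshold, left_value, right_value = best_split(xs, ys)
print(threshold)  # 3.5
```

Applying the same step recursively to each side yields more regions, each with its own constant, which is the piecewise constant function mentioned above.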

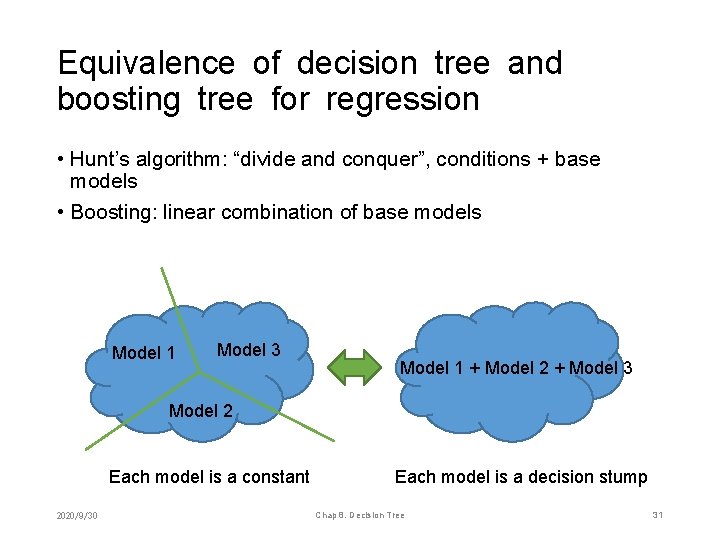

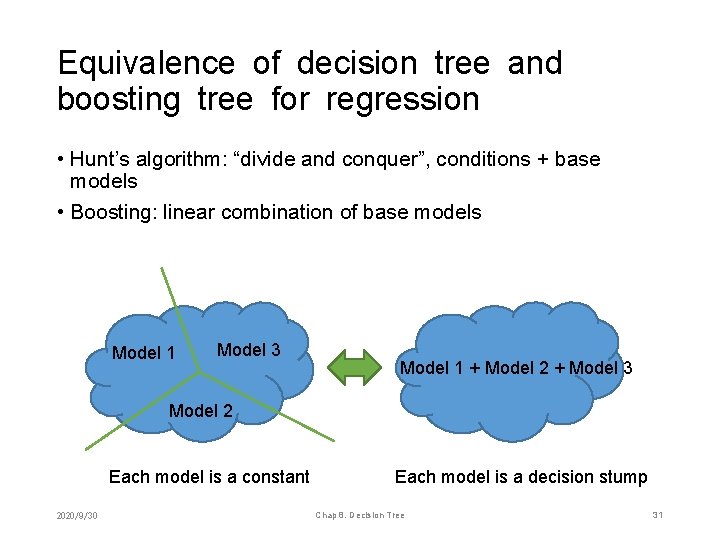

Equivalence of decision tree and boosting tree for regression

- Hunt’s algorithm: “divide and conquer”, conditions + base models
  - The input space is divided into regions served by Model 1, Model 2 and Model 3; each model is a constant
- Boosting: linear combination of base models
  - The output is Model 1 + Model 2 + Model 3; each model is a decision stump
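A one-dimensional sketch of this equivalence: a piecewise constant tree can be rewritten as a sum of decision stumps. The thresholds and constants are hypothetical, and two stumps happen to suffice for three regions here, so the number of models differs from the figure.

```python
def tree(x):
    """Regression tree with two conditions and three constant leaves."""
    if x <= 2.0:
        return 1.0
    if x <= 5.0:
        return 3.0
    return 2.0


def stump(threshold, left, right):
    """Decision stump: a single condition with two constant leaves."""
    return lambda x: left if x <= threshold else right


# The first stump sets the level on each side of 2.0; the second one
# adds the change in level that happens at 5.0.
stumps = [stump(2.0, 1.0, 3.0), stump(5.0, 0.0, -1.0)]


def boosted(x):
    return sum(s(x) for s in stumps)


for x in (0.0, 2.0, 3.5, 5.0, 7.0):
    print(x, tree(x), boosted(x))
```

Both functions agree at every point, so the same piecewise constant regressor can be read either as conditions plus constants or as a linear combination of stumps.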

Implementation

- ID3: uses information gain
- C4.5: uses information gain ratio (by default); one of the most famous classification algorithms
- CART: uses Gini index (for classification) and quadratic cost (for regression); only 2-way splits
  - According to the joint cost, increase the weight on tree complexity gradually to get a series of subtrees
  - Determine which subtree is optimal according to validation (or cross validation)

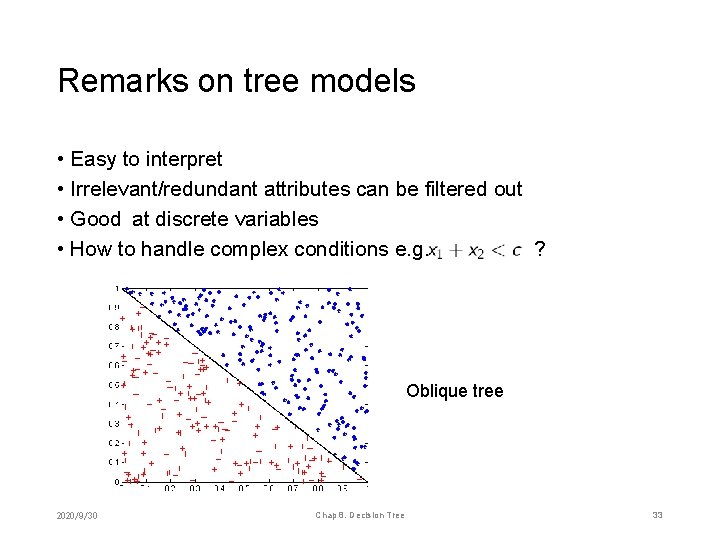

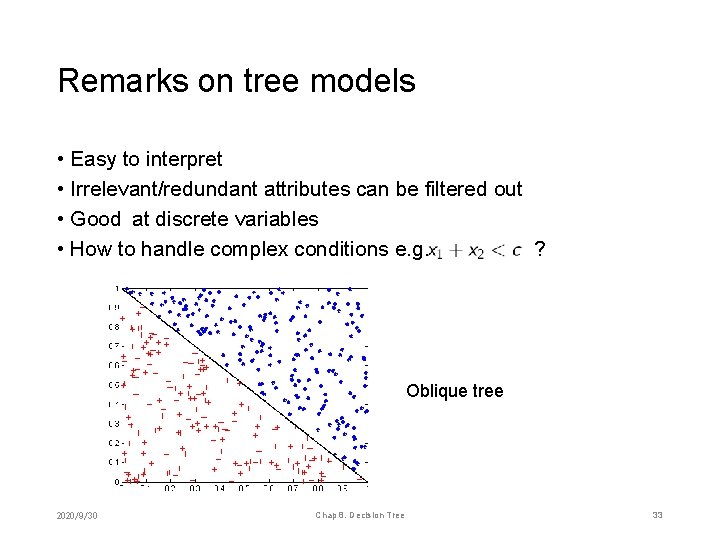

Remarks on tree models

- Easy to interpret
- Irrelevant/redundant attributes can be filtered out
- Good at discrete variables
- How to handle complex conditions, e.g. a condition that combines several attributes? Oblique tree

Random forest

- Combination of decision tree and ensemble learning
- Following bagging, first generate multiple datasets (bootstrap samples), each of which gives rise to a tree model
- During tree building, consider a random subset of features when splitting
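The two sources of randomness, together with a way to combine the trees, can be sketched as follows. Tree building itself is omitted; the helper names are hypothetical, and majority voting is the usual aggregation for classification, assumed here because the slide does not say how the trees are combined.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) samples with replacement (one dataset per tree)."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(features, k, rng):
    """Pick the k features that may be considered at one split."""
    return rng.sample(features, k)


def majority_vote(predictions):
    """Combine the class predictions of the individual trees."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
rows = list(range(10))  # stand-ins for training samples
features = ["refund", "marital_status", "taxable_income"]

print(bootstrap_sample(rows, rng))
print(random_feature_subset(features, 2, rng))
print(majority_vote(["NO", "YES", "NO"]))  # NO
```

A bootstrap sample usually contains repeated rows and leaves some rows out, so the trees see different data; the feature subsets further reduce how similar the trees are to each other.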

Chapter summary

Dictionary
- Decision tree
- Gini index
- Information gain, ~ ratio
- Pruning (of decision tree)

Toolbox
- C4.5
- CART
- Hunt’s algorithm
- Random forest

Home exercises