Statistical Learning, Dong Liu, Dept. EEIS, USTC

- Slides: 43


Chapter 2. Linear Classification (2022/1/25)

1. The ABC of classification
2. Logistic regression formulation
3. The exponential family and maximum entropy
4. Logistic regression solution
5. Fisher’s linear discriminant analysis
6. Perceptron
7. Multi-class / Multi-label

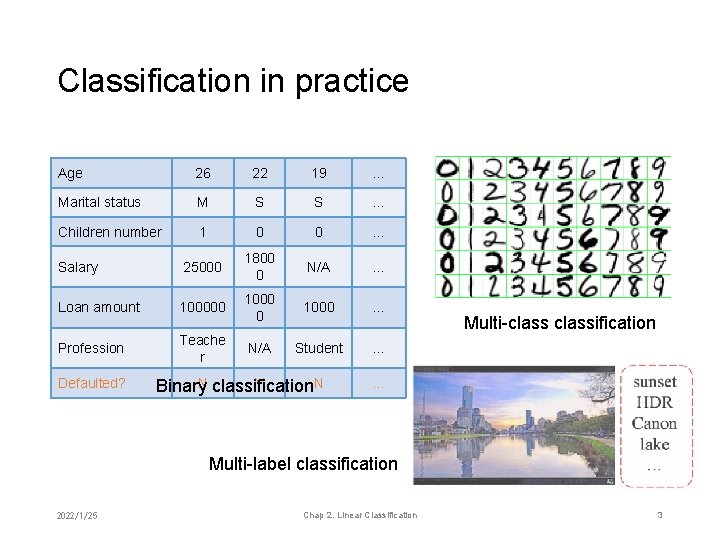

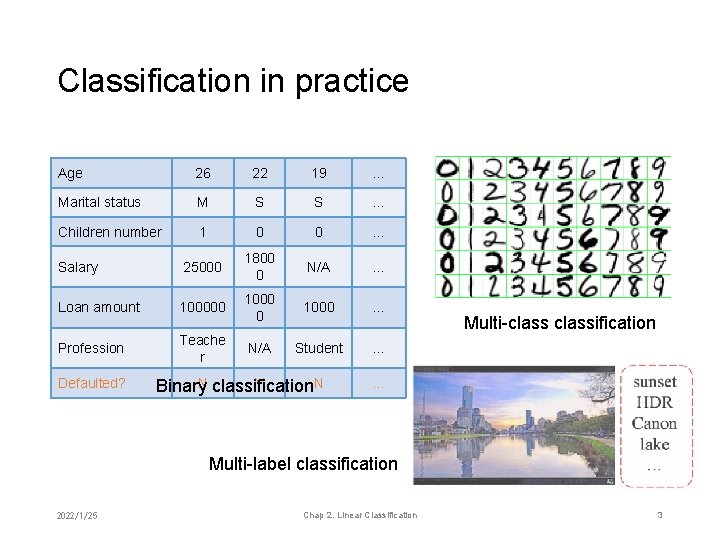

Classification in practice

- Age: 26, 22, 19, …
- Marital status: M, S, S, …
- Children number: 1, 0, 0, …
- Salary: 25000, 18000, N/A, …
- Loan amount: 100000, 1000, …
- Profession: Teacher, N/A, Student, …
- Defaulted?: N, Y, N, …

Task types: binary classification, multi-classification, multi-label classification

Classification versus Regression

- Both characterize the correlation between two variables
- When the dependent variable is treated as continuous → regression
- When the dependent variable is treated as discrete → classification
- A necessary step in classification is to ensure discrete output (i.e., quantization)

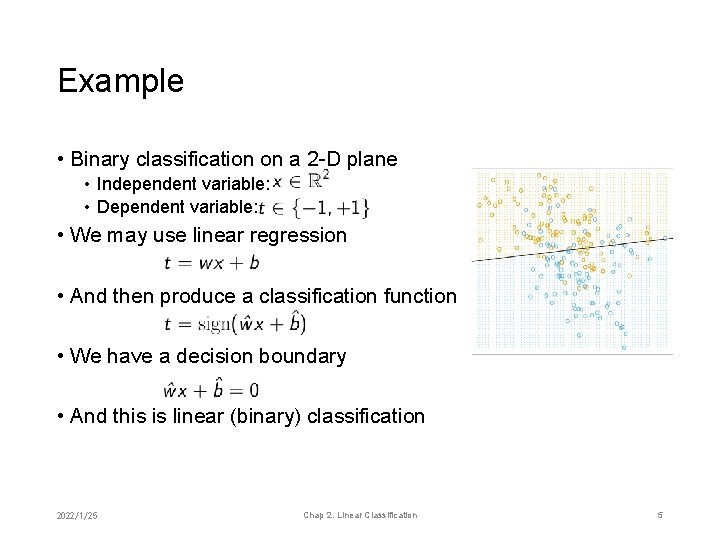

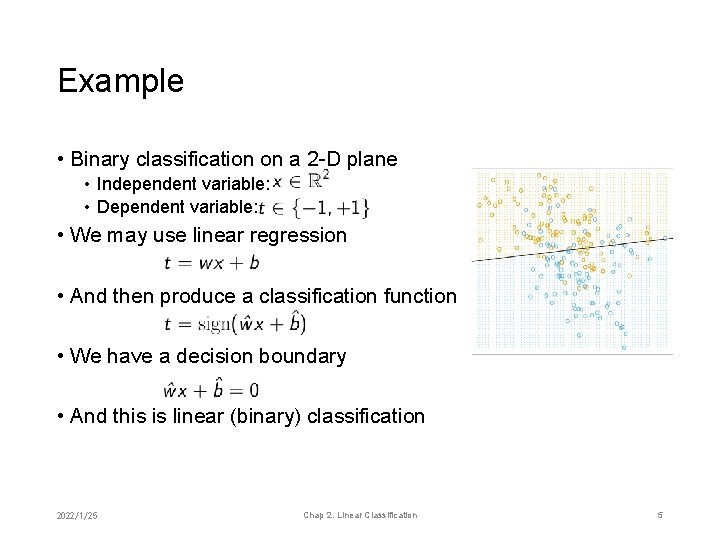

Example

- Binary classification on a 2-D plane
- Independent variable:
- Dependent variable:
- We may use linear regression
- And then produce a classification function
- We have a decision boundary
- And this is linear (binary) classification

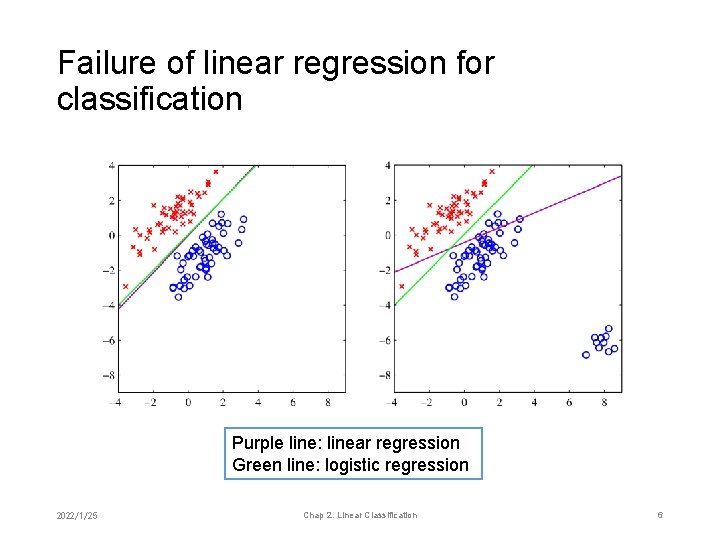

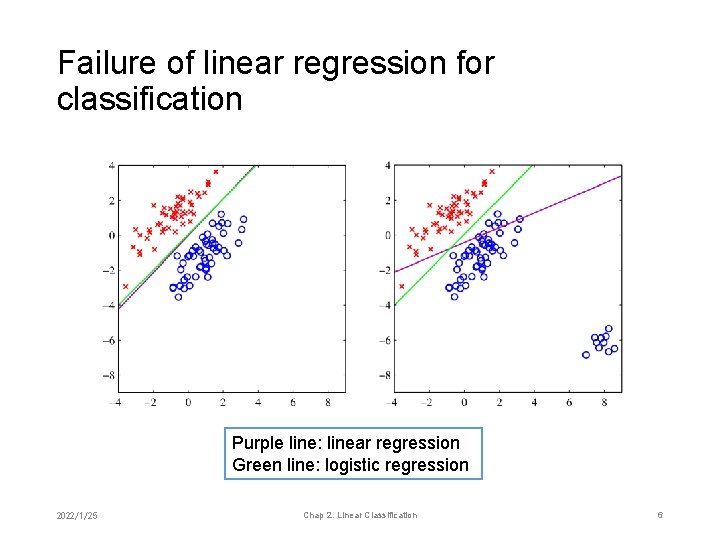

Failure of linear regression for classification

Figure: purple line, linear regression; green line, logistic regression.

From regression to classification

- Why is the regression method not suitable for classification?
- Training-testing mismatch: training fits a continuous output, while testing uses the quantized (discrete) decision
- But it is difficult to incorporate quantization into regression
- Consider solving

Chapter 2. Linear Classification, part 2: Logistic regression formulation

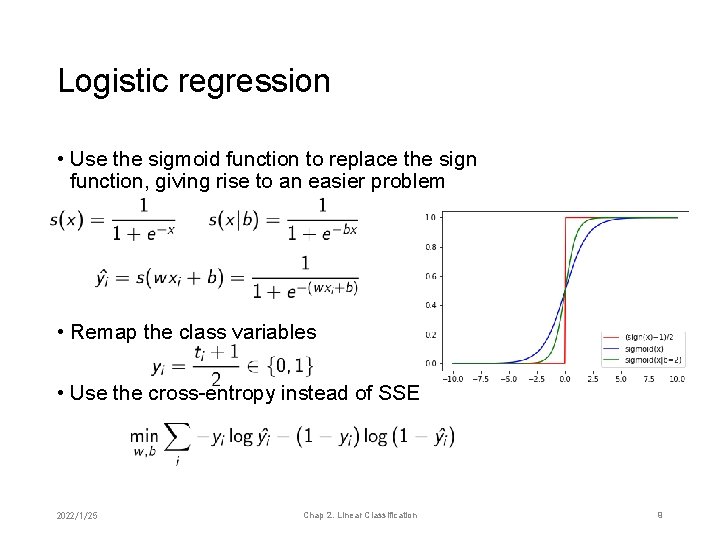

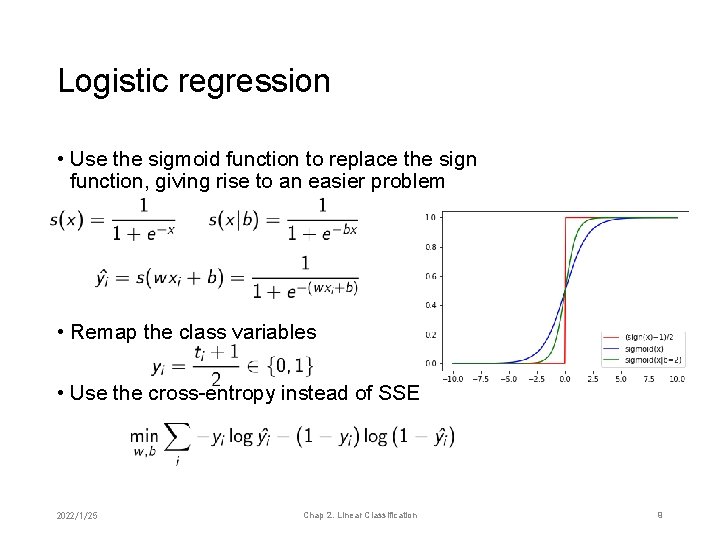

Logistic regression

- Use the sigmoid function to replace the sign function, giving rise to an easier problem
- Remap the class variables
- Use the cross-entropy instead of the sum of squared errors (SSE)
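The three ingredients above can be sketched in a few lines of plain Python. This is a minimal illustration, not the slide's own formulation: it assumes the original labels are in {-1, +1} and are remapped to {0, 1}, and that the loss is averaged over the data. The names `sigmoid`, `remap`, `cross_entropy` and the example scores are hypothetical.

```python
import math

def sigmoid(a):
    """Logistic sigmoid: maps any real score to a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))

def remap(label):
    """Remap a class label from {-1, +1} to {0, 1} (assumed convention)."""
    return (label + 1) // 2

def cross_entropy(targets, probs):
    """Mean binary cross-entropy for targets in {0, 1} and predicted probabilities."""
    total = 0.0
    for t, p in zip(targets, probs):
        total += t * math.log(p) + (1 - t) * math.log(1 - p)
    return -total / len(targets)

scores = [-2.0, -0.5, 0.5, 2.0]   # hypothetical linear scores w^T x
labels = [-1, -1, 1, 1]           # hypothetical labels in {-1, +1}
targets = [remap(label) for label in labels]
probs = [sigmoid(a) for a in scores]
loss = cross_entropy(targets, probs)
```

Unlike the sign function, the sigmoid is differentiable everywhere, which is what makes the resulting problem easier to optimize.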

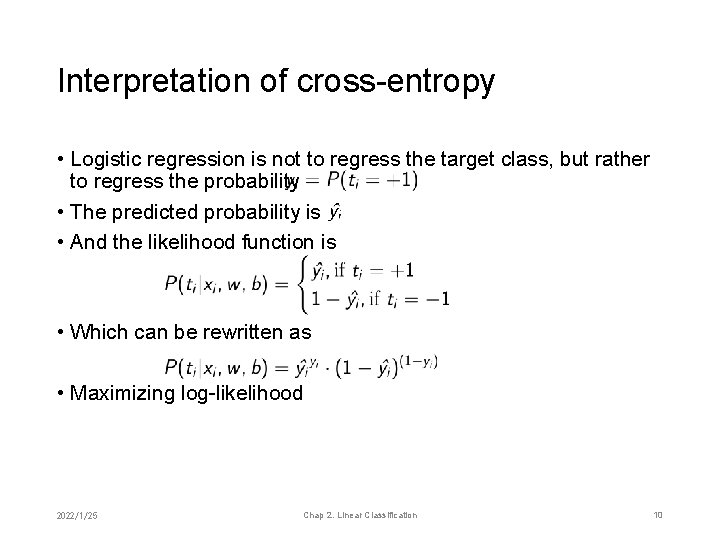

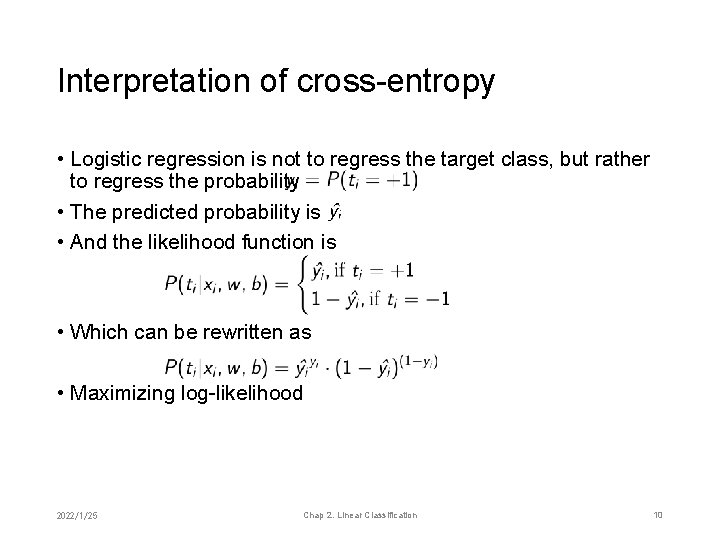

Interpretation of cross-entropy

- Logistic regression does not regress the target class; rather, it regresses the probability of the class
- The predicted probability is
- And the likelihood function is
- Which can be rewritten as
- Maximizing the log-likelihood is equivalent to minimizing the cross-entropy
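The chain from predicted probability to cross-entropy can be written out as follows. The notation is an assumption, since the slide's equations are not available here: targets $t_n \in \{0, 1\}$, inputs $\mathbf{x}_n$, weights $\mathbf{w}$, and $y_n$ for the predicted probability.

$$
y_n = p(t_n = 1 \mid \mathbf{x}_n) = \sigma(\mathbf{w}^\top \mathbf{x}_n), \qquad \sigma(a) = \frac{1}{1 + e^{-a}}
$$

$$
L(\mathbf{w}) = \prod_{n=1}^{N} y_n^{t_n} (1 - y_n)^{1 - t_n}
$$

$$
-\ln L(\mathbf{w}) = -\sum_{n=1}^{N} \left[ t_n \ln y_n + (1 - t_n) \ln (1 - y_n) \right]
$$

The right-hand side of the last line is the cross-entropy between the targets and the predictions, so the maximum-likelihood weights are exactly the minimum cross-entropy weights.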

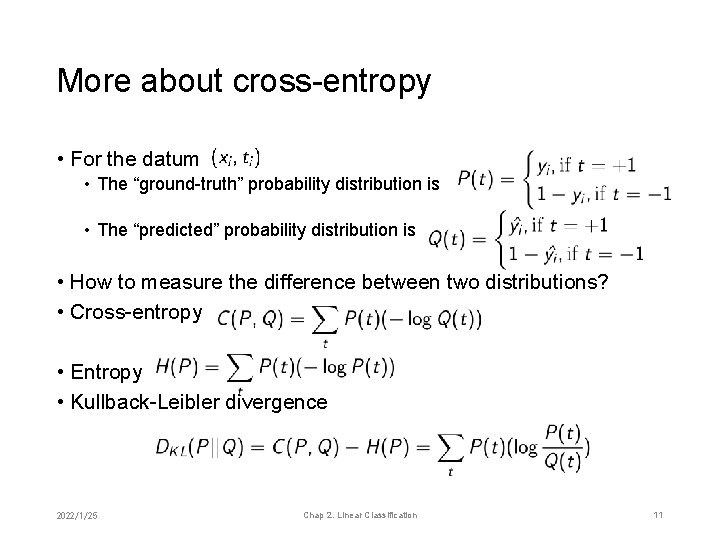

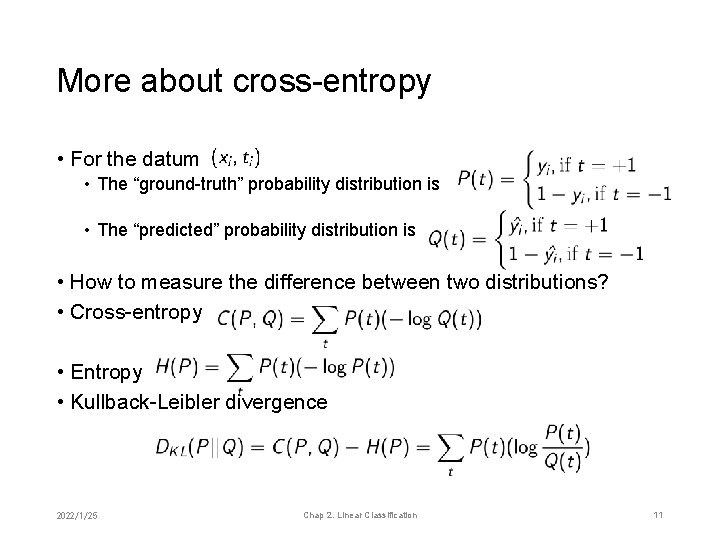

More about cross-entropy

- For the datum
- The “ground-truth” probability distribution is
- The “predicted” probability distribution is
- How to measure the difference between two distributions?
  - Cross-entropy
  - Entropy
  - Kullback-Leibler divergence
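The three quantities are linked by the identity cross-entropy = entropy + K-L divergence. The sketch below checks it numerically for discrete distributions given as lists; the function names and the example distributions are illustrative assumptions, and natural logarithms are used.

```python
import math

def entropy(p):
    """Entropy H(p) = -sum p_i ln p_i, with 0 ln 0 treated as 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) = -sum p_i ln q_i."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) = sum p_i ln(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p_true = [1.0, 0.0]   # one-hot "ground-truth" distribution for one datum
q_pred = [0.8, 0.2]   # hypothetical "predicted" distribution

h_pq = cross_entropy(p_true, q_pred)
gap = h_pq - (entropy(p_true) + kl_divergence(p_true, q_pred))
```

For a one-hot ground truth the entropy term is zero, so minimizing the cross-entropy and minimizing the K-L divergence coincide.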

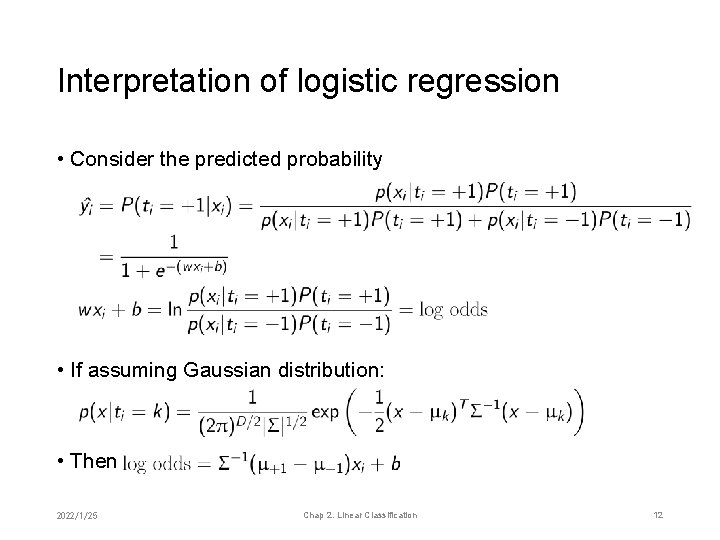

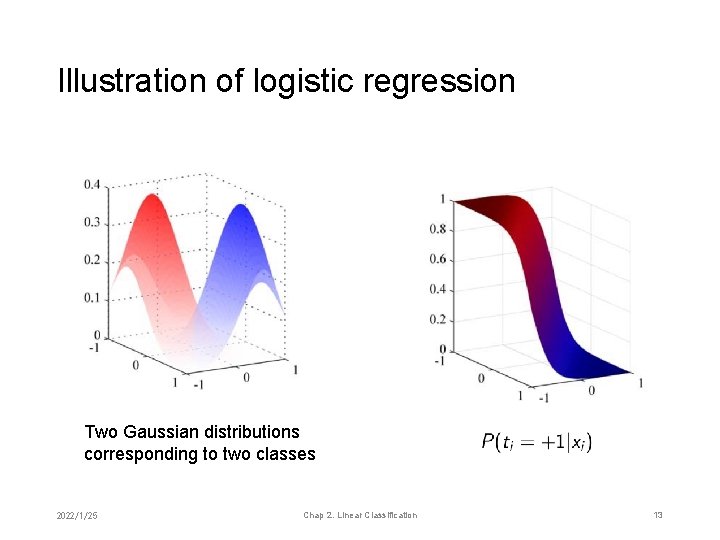

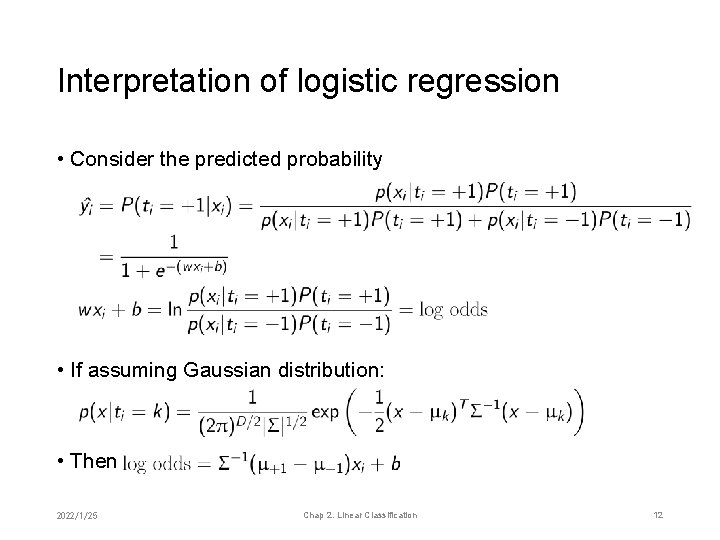

Interpretation of logistic regression

- Consider the predicted probability
- If we assume Gaussian class-conditional distributions:
- Then
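A standard version of this argument (as in PRML, Section 4.2) is sketched below. It assumes the two class-conditional Gaussians share one covariance matrix $\boldsymbol{\Sigma}$; the symbols $C_1$, $C_2$, $\boldsymbol{\mu}_k$ and $w_0$ are notation chosen here, not taken from the slide.

$$
p(C_1 \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C_1)\, p(C_1)}{p(\mathbf{x} \mid C_1)\, p(C_1) + p(\mathbf{x} \mid C_2)\, p(C_2)} = \sigma(a), \qquad a = \ln \frac{p(\mathbf{x} \mid C_1)\, p(C_1)}{p(\mathbf{x} \mid C_2)\, p(C_2)}
$$

$$
p(\mathbf{x} \mid C_k) = \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}) \;\Longrightarrow\; a = \mathbf{w}^\top \mathbf{x} + w_0
$$

$$
\mathbf{w} = \boldsymbol{\Sigma}^{-1} (\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2), \qquad w_0 = -\tfrac{1}{2} \boldsymbol{\mu}_1^\top \boldsymbol{\Sigma}^{-1} \boldsymbol{\mu}_1 + \tfrac{1}{2} \boldsymbol{\mu}_2^\top \boldsymbol{\Sigma}^{-1} \boldsymbol{\mu}_2 + \ln \frac{p(C_1)}{p(C_2)}
$$

The quadratic terms in $\mathbf{x}$ cancel because the covariance is shared, which is why the posterior is a sigmoid of a linear function.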

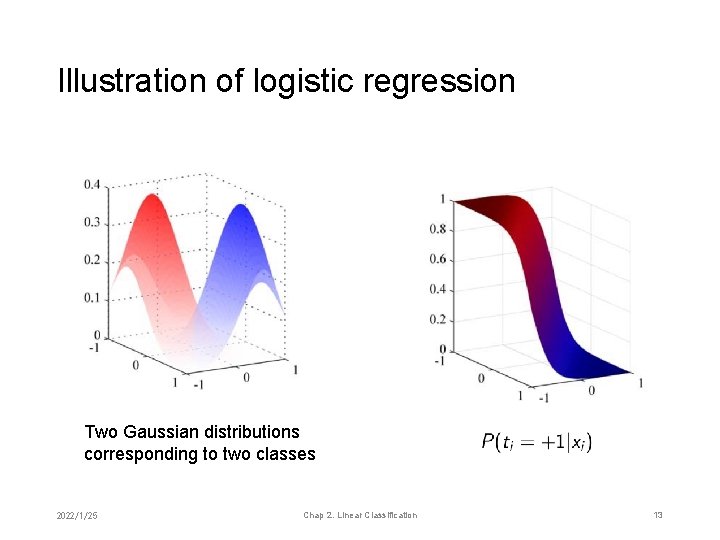

Illustration of logistic regression

Figure: two Gaussian distributions corresponding to the two classes.

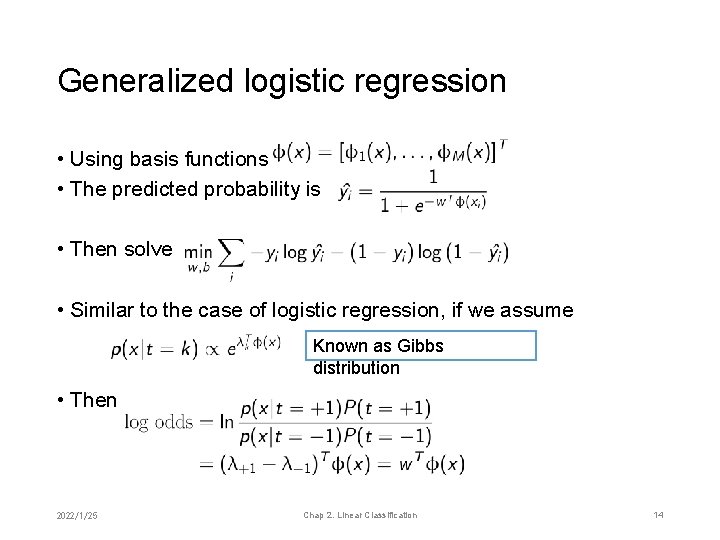

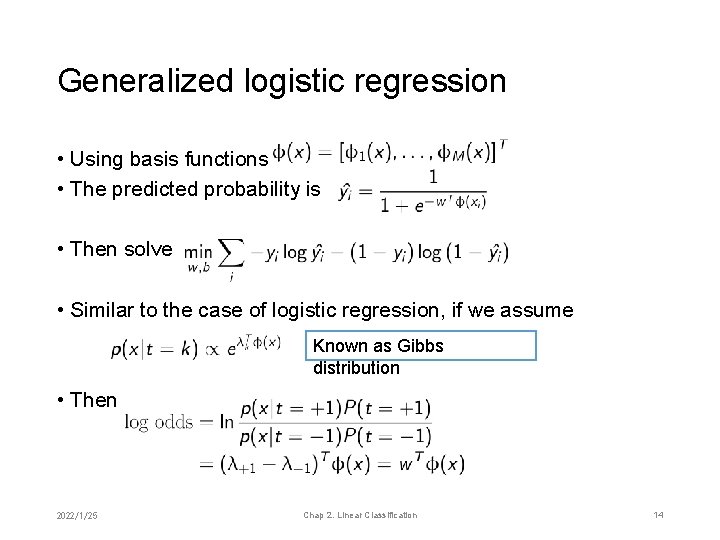

Generalized logistic regression

- Using basis functions
- The predicted probability is
- Then solve
- Similar to the case of logistic regression, if we assume (known as the Gibbs distribution)
- Then

Chapter 2. Linear Classification, part 3: The exponential family and maximum entropy

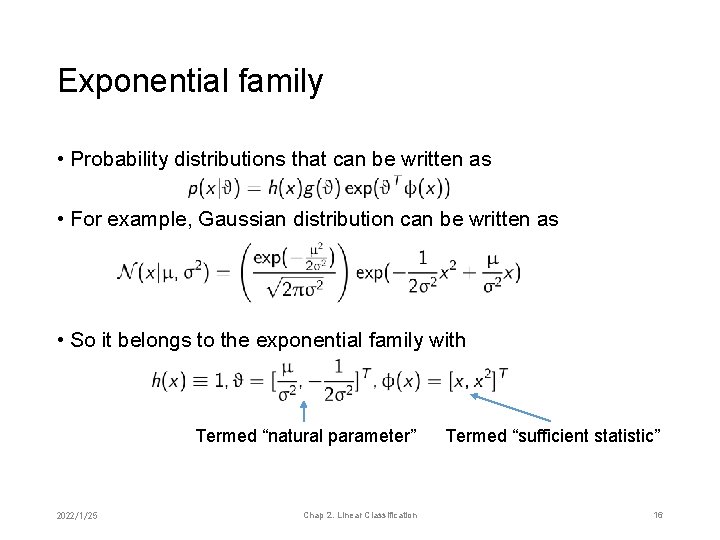

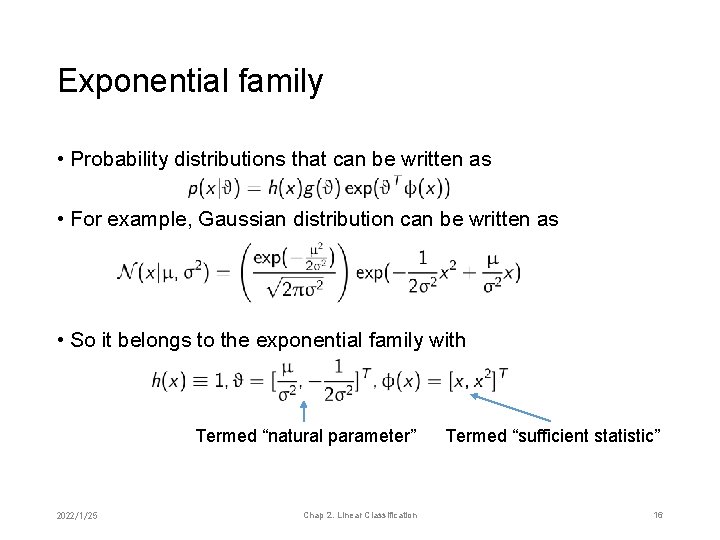

Exponential family

- Probability distributions that can be written as
- For example, the Gaussian distribution can be written as
- So it belongs to the exponential family, with the terms labeled “natural parameter” and “sufficient statistic”
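One common way to write the family, and the Gaussian as a member of it, is given below. The symbols $h$, $\boldsymbol{\eta}$, $T$ and $A$ follow a widespread convention and are an assumption here; the slide's own notation may differ.

$$
p(x \mid \boldsymbol{\eta}) = h(x) \exp\!\left( \boldsymbol{\eta}^\top T(x) - A(\boldsymbol{\eta}) \right)
$$

$$
\mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi}} \exp\!\left( \frac{\mu}{\sigma^2}\, x - \frac{1}{2\sigma^2}\, x^2 - \frac{\mu^2}{2\sigma^2} - \ln \sigma \right)
$$

$$
\boldsymbol{\eta} = \begin{pmatrix} \mu / \sigma^2 \\ -1 / (2\sigma^2) \end{pmatrix}, \qquad T(x) = \begin{pmatrix} x \\ x^2 \end{pmatrix}, \qquad A(\boldsymbol{\eta}) = \frac{\mu^2}{2\sigma^2} + \ln \sigma, \qquad h(x) = \frac{1}{\sqrt{2\pi}}
$$

Here $\boldsymbol{\eta}$ is the natural parameter, $T(x)$ the sufficient statistic, and $A(\boldsymbol{\eta})$ the cumulant (log-normalizer) function.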

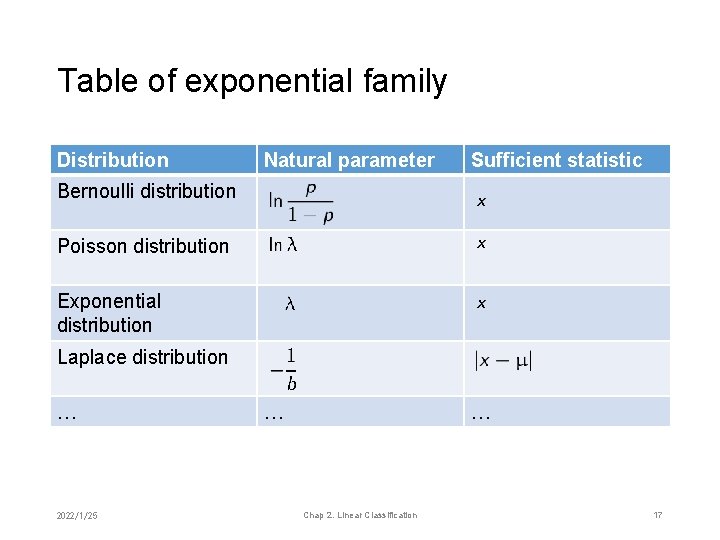

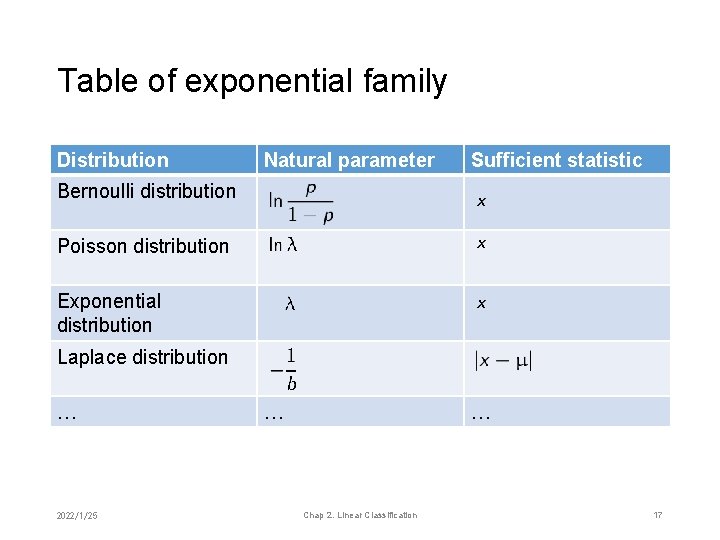

Table of exponential family

Columns: Distribution, Natural parameter, Sufficient statistic

- …
- Bernoulli distribution
- Poisson distribution
- Exponential distribution
- Laplace distribution
- …
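As a hedged illustration of two of the listed rows, the standard results for the Bernoulli and Poisson distributions are worked out below in the form $p(x \mid \eta) = h(x) \exp(\eta\, T(x) - A(\eta))$. This notation is assumed here and may not match the table on the slide.

$$
\text{Bernoulli:} \quad \mu^x (1 - \mu)^{1 - x} = \exp\!\left( x \ln \frac{\mu}{1 - \mu} + \ln (1 - \mu) \right), \qquad \eta = \ln \frac{\mu}{1 - \mu}, \quad T(x) = x, \quad A(\eta) = \ln (1 + e^{\eta})
$$

$$
\text{Poisson:} \quad \frac{\lambda^x e^{-\lambda}}{x!} = \frac{1}{x!} \exp\!\left( x \ln \lambda - \lambda \right), \qquad \eta = \ln \lambda, \quad T(x) = x, \quad A(\eta) = e^{\eta}
$$

Inverting the Bernoulli natural parameter gives $\mu = 1 / (1 + e^{-\eta})$, the sigmoid used in logistic regression.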

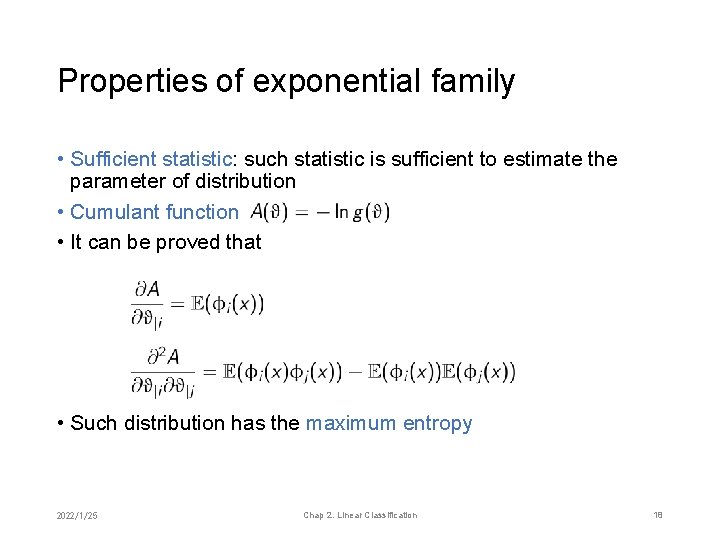

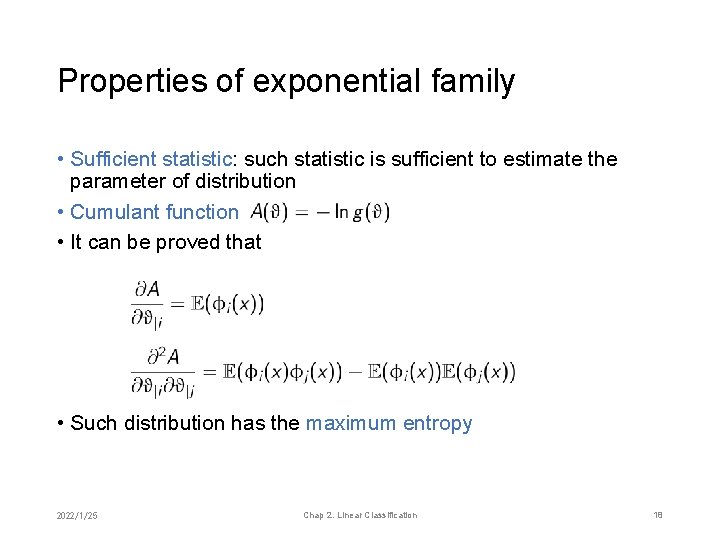

Properties of exponential family

- Sufficient statistic: this statistic is sufficient to estimate the parameter of the distribution
- Cumulant function
- It can be proved that
- Such a distribution has the maximum entropy

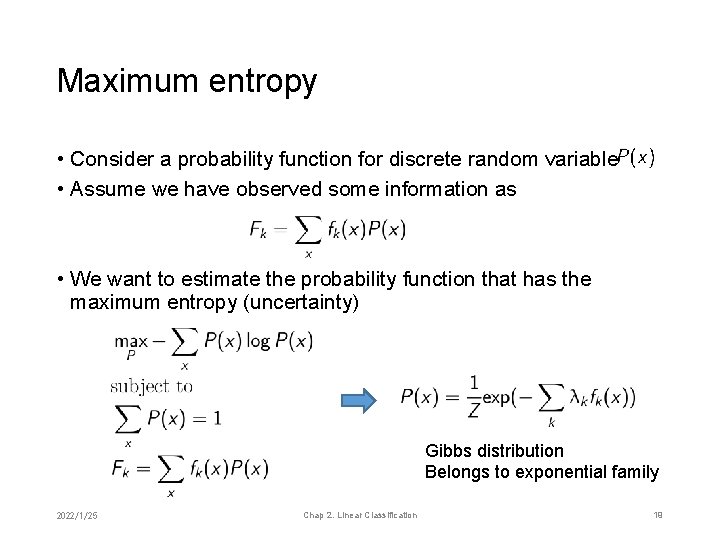

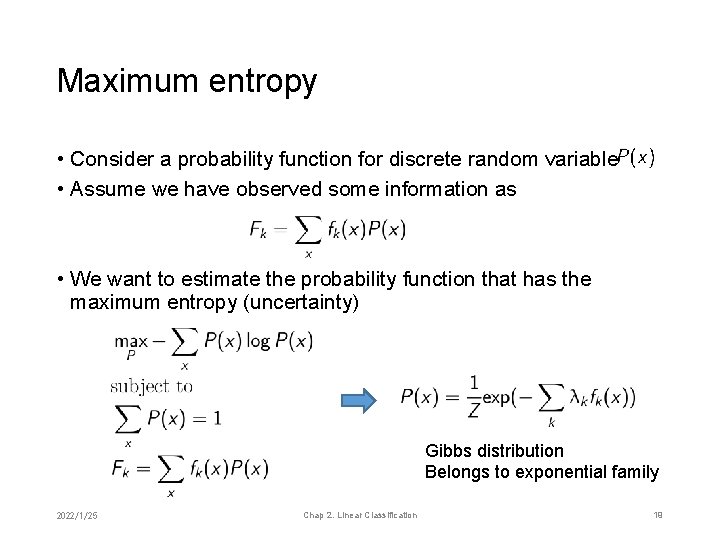

Maximum entropy

- Consider a probability function for a discrete random variable
- Assume we have observed some information as
- We want to estimate the probability function that has the maximum entropy (uncertainty)
- The result is the Gibbs distribution, which belongs to the exponential family
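A sketch of why the maximizer is a Gibbs distribution, using Lagrange multipliers. It assumes the observed information takes the form of expectation constraints on feature functions $f_k$ with observed values $F_k$; these symbols are chosen here for illustration.

$$
\max_{p} \; -\sum_{x} p(x) \ln p(x) \quad \text{s.t.} \quad \sum_{x} p(x) = 1, \qquad \sum_{x} p(x) f_k(x) = F_k, \quad k = 1, \dots, K
$$

$$
\mathcal{L} = -\sum_{x} p(x) \ln p(x) + \lambda_0 \Big( \sum_{x} p(x) - 1 \Big) + \sum_{k=1}^{K} \lambda_k \Big( \sum_{x} p(x) f_k(x) - F_k \Big)
$$

$$
\frac{\partial \mathcal{L}}{\partial p(x)} = -\ln p(x) - 1 + \lambda_0 + \sum_{k=1}^{K} \lambda_k f_k(x) = 0 \;\Longrightarrow\; p(x) = \frac{1}{Z} \exp\!\Big( \sum_{k=1}^{K} \lambda_k f_k(x) \Big), \qquad Z = \sum_{x} \exp\!\Big( \sum_{k=1}^{K} \lambda_k f_k(x) \Big)
$$

The multipliers $\lambda_k$ play the role of natural parameters and the $f_k$ the role of sufficient statistics.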

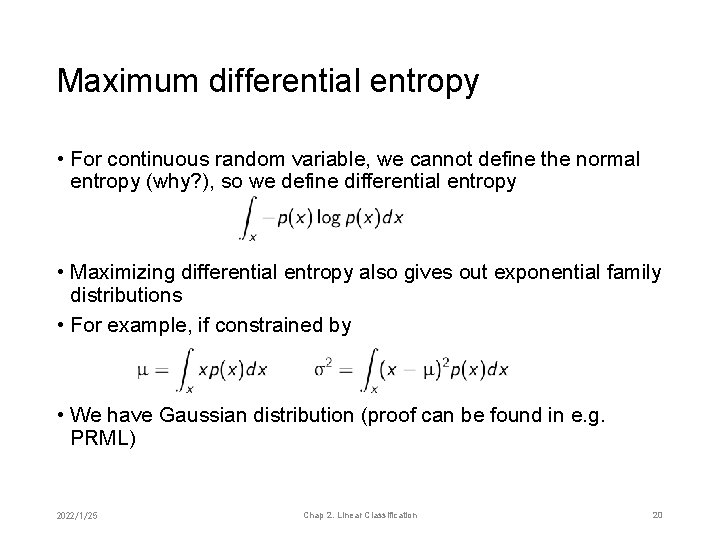

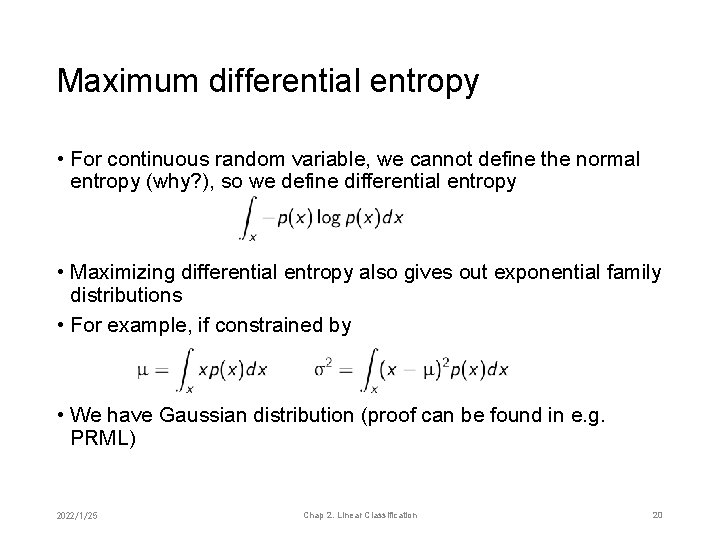

Maximum differential entropy

- For a continuous random variable, we cannot define the ordinary entropy (why?), so we define the differential entropy
- Maximizing the differential entropy also yields exponential family distributions
- For example, if constrained by
- We have the Gaussian distribution (a proof can be found in, e.g., PRML)

Chapter 2. Linear Classification, part 4: Logistic regression solution

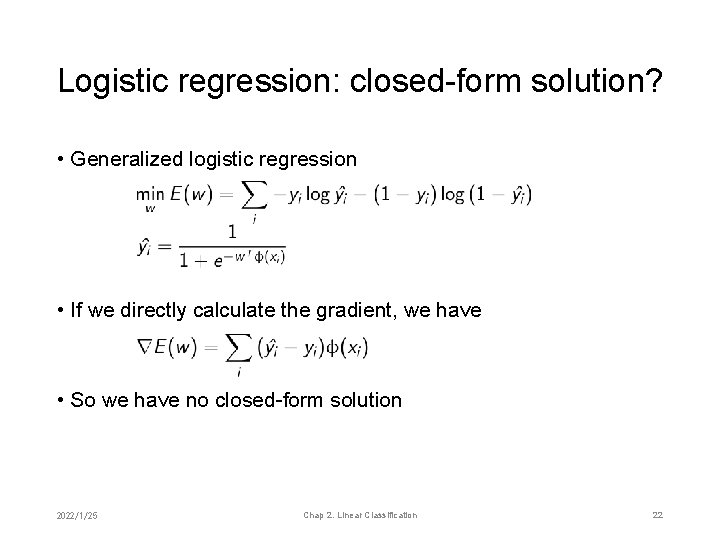

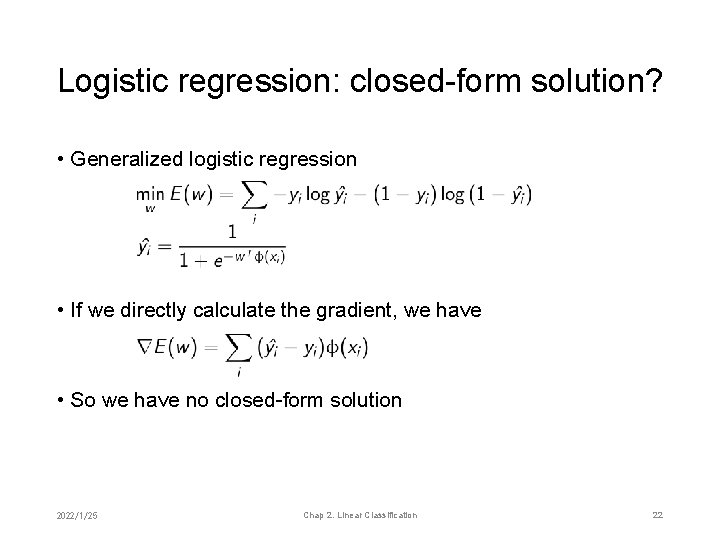

Logistic regression: closed-form solution?

- Generalized logistic regression
- If we directly calculate the gradient, we have
- So we have no closed-form solution

Numerical algorithms for optimization problems

- Many optimization problems have no closed-form solution, so we have to resort to numerical algorithms
- The more tractable the problem, the more efficient the algorithm
- Second-order: Newton-Raphson
- First-order: gradient descent, Frank-Wolfe

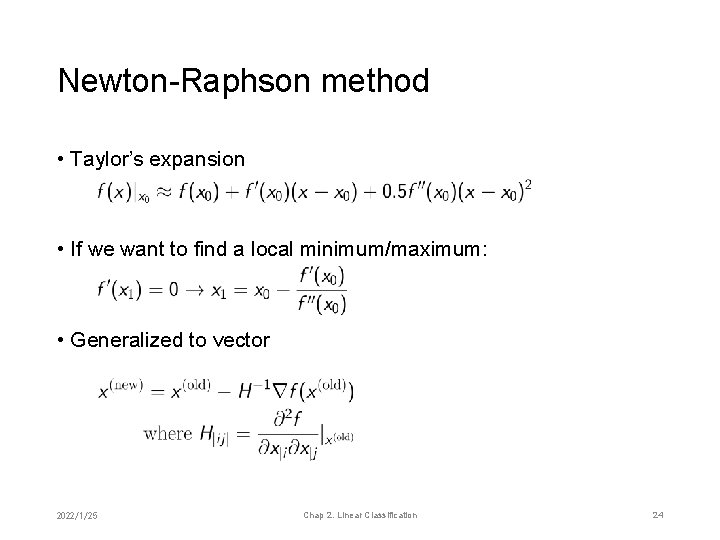

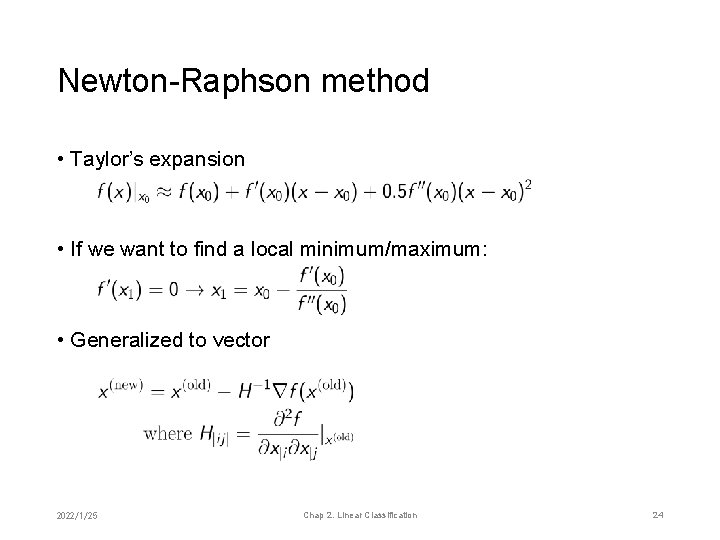

Newton-Raphson method

- Taylor’s expansion
- If we want to find a local minimum/maximum:
- Generalized to the vector case
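In one dimension, the second-order Taylor expansion leads to the update x ← x − f′(x) / f″(x). A minimal sketch follows, assuming the caller supplies the first and second derivatives; the function name `newton_raphson` and the test function f(x) = x − ln x (minimum at x = 1) are illustrative choices.

```python
def newton_raphson(grad, hess, x0, tol=1e-12, max_iter=50):
    """Find a stationary point of a 1-D function from its first and second derivatives."""
    x = x0
    for _ in range(max_iter):
        step = grad(x) / hess(x)   # Newton step: f'(x) / f''(x)
        x -= step
        if abs(step) < tol:        # stop once the update is negligible
            break
    return x

# Example: f(x) = x - ln(x), so f'(x) = 1 - 1/x and f''(x) = 1/x^2
x_star = newton_raphson(lambda x: 1.0 - 1.0 / x,
                        lambda x: 1.0 / x ** 2,
                        x0=0.5)
```

Note that the iteration only finds a point where the derivative vanishes; whether it is a minimum or a maximum depends on the sign of the second derivative there.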

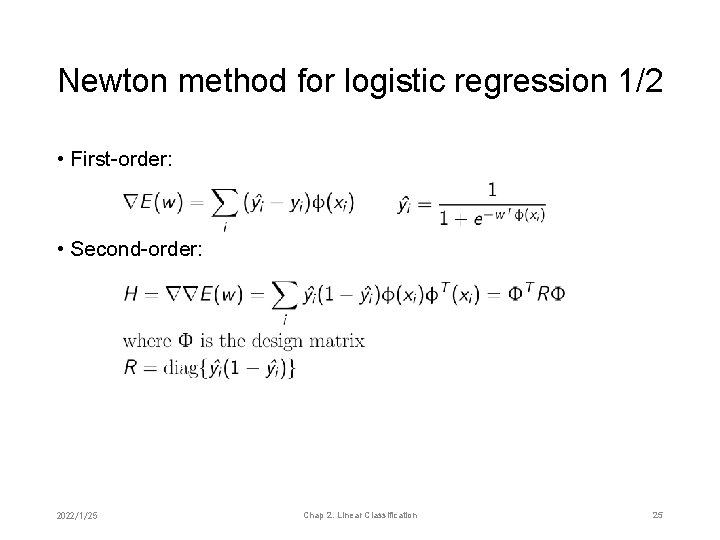

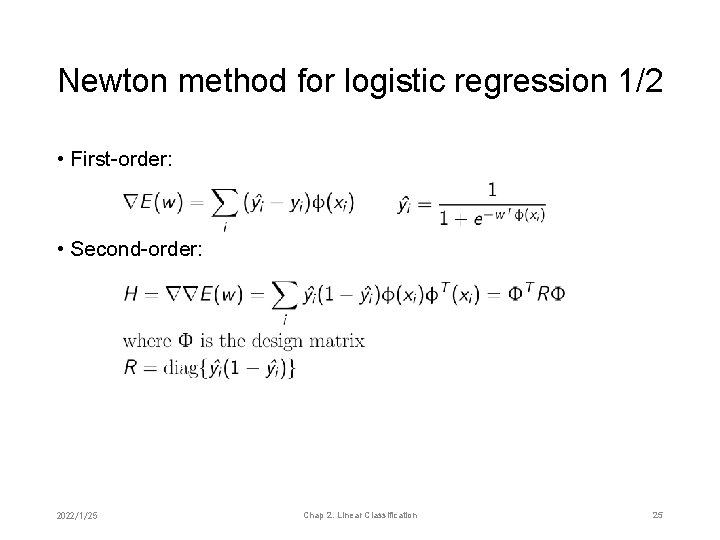

Newton method for logistic regression 1/2

- First-order:
- Second-order:

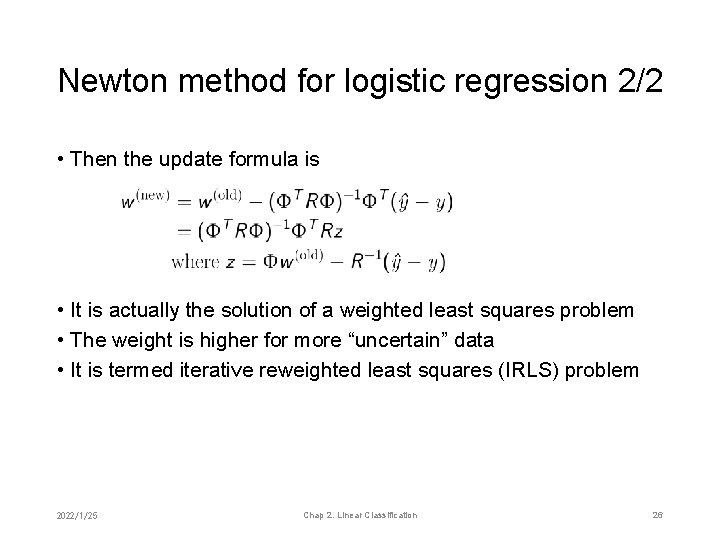

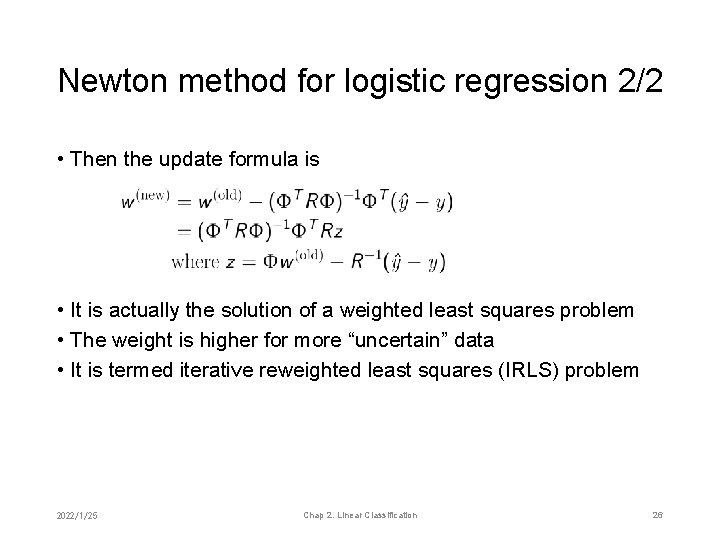

Newton method for logistic regression 2/2

- Then the update formula is
- It is actually the solution of a weighted least squares problem
- The weight is higher for more “uncertain” data
- It is termed the iteratively reweighted least squares (IRLS) method
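The Newton update can be sketched for the smallest interesting case: one input feature plus a bias, so the Hessian is 2×2 and can be inverted by hand. The weights r = p(1 − p) are largest where p is near 0.5, which is the sense in which “uncertain” data count more. The data, the function names, and the fixed iteration count are assumptions for illustration; the data are deliberately not linearly separable so that a finite solution exists.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def nll_gradient(w0, w1, xs, ys):
    """Gradient of the negative log-likelihood: sum over data of (p - y) * [1, x]."""
    ps = [sigmoid(w0 + w1 * x) for x in xs]
    g0 = sum(p - y for p, y in zip(ps, ys))
    g1 = sum((p - y) * x for p, y, x in zip(ps, ys, xs))
    return g0, g1

def irls_1d(xs, ys, n_iter=25):
    """Newton-Raphson (IRLS) for logistic regression with a bias w0 and a slope w1."""
    w0, w1 = 0.0, 0.0
    for _ in range(n_iter):
        ps = [sigmoid(w0 + w1 * x) for x in xs]
        g0, g1 = nll_gradient(w0, w1, xs, ys)
        # Hessian = sum of r * [1, x][1, x]^T with weights r = p (1 - p)
        rs = [p * (1.0 - p) for p in ps]
        h00 = sum(rs)
        h01 = sum(r * x for r, x in zip(rs, xs))
        h11 = sum(r * x * x for r, x in zip(rs, xs))
        det = h00 * h11 - h01 * h01
        # Newton step d = H^{-1} g, using the closed-form 2x2 inverse
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        w0 -= d0
        w1 -= d1
    return w0, w1

xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]   # hypothetical 1-D inputs
ys = [0, 0, 0, 1, 0, 1, 1, 1]                   # hypothetical overlapping labels
w0, w1 = irls_1d(xs, ys)
```

For more than a couple of parameters, the 2×2 inverse would be replaced by a linear solve from a numerical library.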

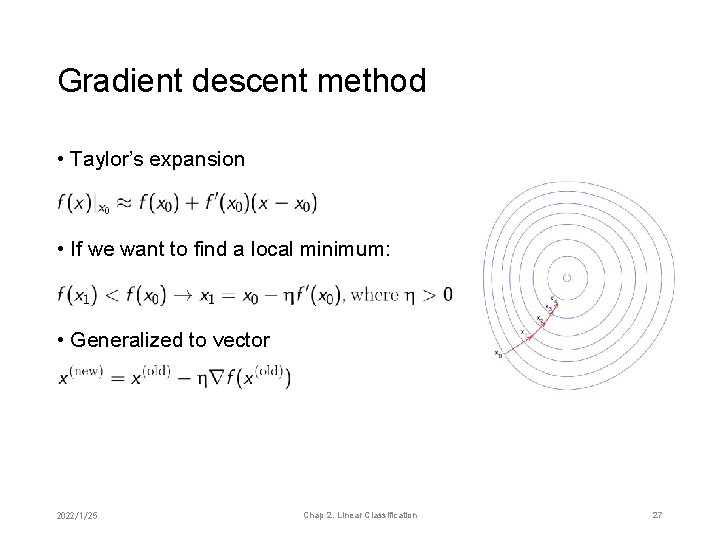

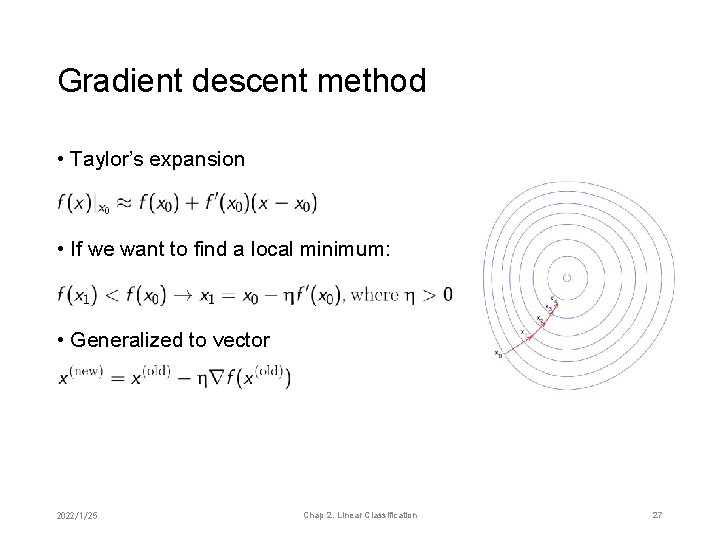

Gradient descent method

- Taylor’s expansion
- If we want to find a local minimum:
- Generalized to the vector case
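The first-order update x ← x − η ∇f(x) can be sketched as follows. The fixed learning rate, the iteration count, and the quadratic test function are assumptions chosen so that the example converges quickly.

```python
def gradient_descent(grad, x0, lr=0.1, n_iter=200):
    """Repeatedly step against the gradient with a fixed learning rate."""
    x = list(x0)
    for _ in range(n_iter):
        g = grad(x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x

def grad_f(x):
    """Gradient of f(x) = (x[0] - 1)^2 + 2 * (x[1] + 2)^2, minimized at (1, -2)."""
    return [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 2.0)]

x_min = gradient_descent(grad_f, [0.0, 0.0])
```

Compared with Newton-Raphson, each step is cheaper because no second derivative is needed, but the learning rate has to be chosen and convergence is typically slower.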

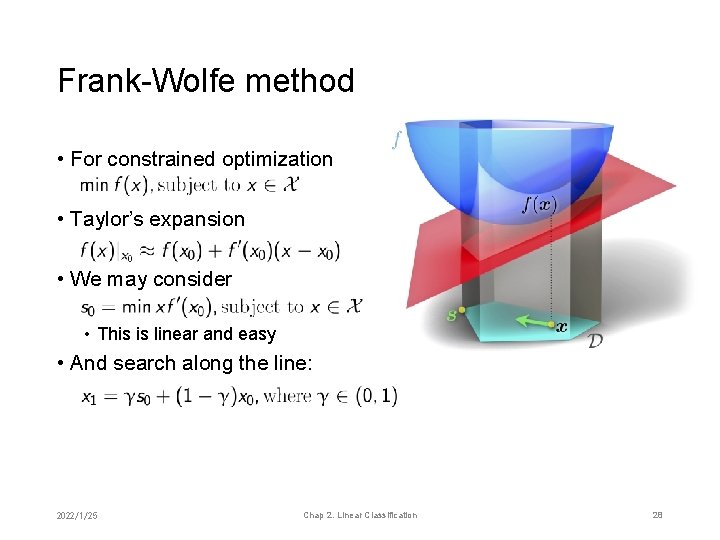

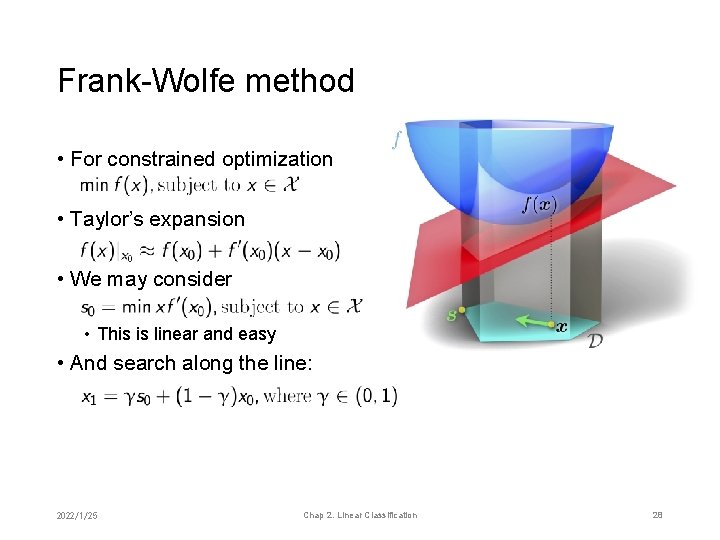

Frank-Wolfe method

- For constrained optimization
- Taylor’s expansion
- We may consider
- This subproblem is linear and easy to solve
- And search along the line:
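A minimal sketch of the two steps, linear subproblem then line search, on a toy problem: minimize ||x − c||² over the probability simplex. The problem, the function name, and the stopping tolerance are assumptions made for illustration. On the simplex the linear subproblem is solved by the vertex with the smallest gradient component, and for a quadratic objective the line search has a closed form.

```python
def frank_wolfe_simplex(c, n_iter=2000, tol=1e-12):
    """Minimize ||x - c||^2 over the probability simplex with Frank-Wolfe."""
    n = len(c)
    x = [1.0] + [0.0] * (n - 1)                  # start at a vertex of the simplex
    for _ in range(n_iter):
        grad = [2.0 * (xi - ci) for xi, ci in zip(x, c)]
        # Linear subproblem: min over the simplex of grad . s is attained at a vertex
        k = min(range(n), key=lambda i: grad[i])
        s = [1.0 if i == k else 0.0 for i in range(n)]
        d = [si - xi for si, xi in zip(s, x)]    # search direction toward that vertex
        gap = -sum(gi * di for gi, di in zip(grad, d))
        if gap < tol:                            # Frank-Wolfe gap: zero at the optimum
            break
        # Exact line search for the quadratic objective, clipped to [0, 1]
        gamma = min(1.0, gap / (2.0 * sum(di * di for di in d)))
        x = [xi + gamma * di for xi, di in zip(x, d)]
    return x

c = [0.2, 0.3, 0.5]          # hypothetical target, already inside the simplex
x_fw = frank_wolfe_simplex(c)
```

Every iterate is a convex combination of feasible points, so the constraint is satisfied without any projection step.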

Chapter 2. Linear Classification, part 5: Fisher’s linear discriminant analysis

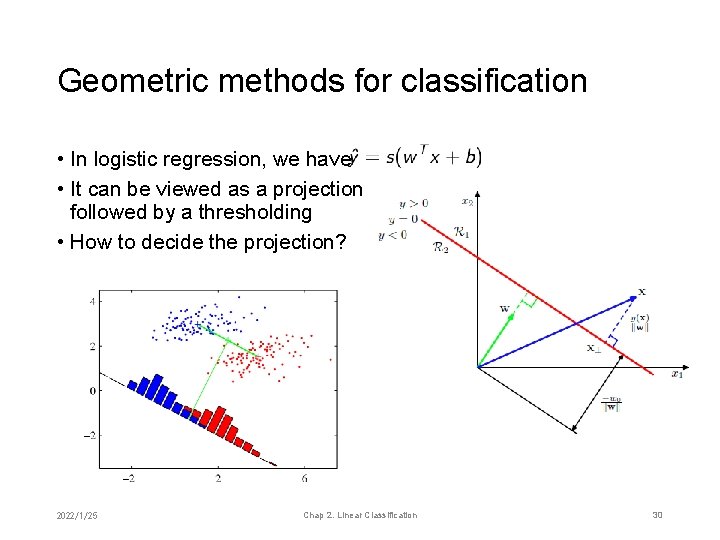

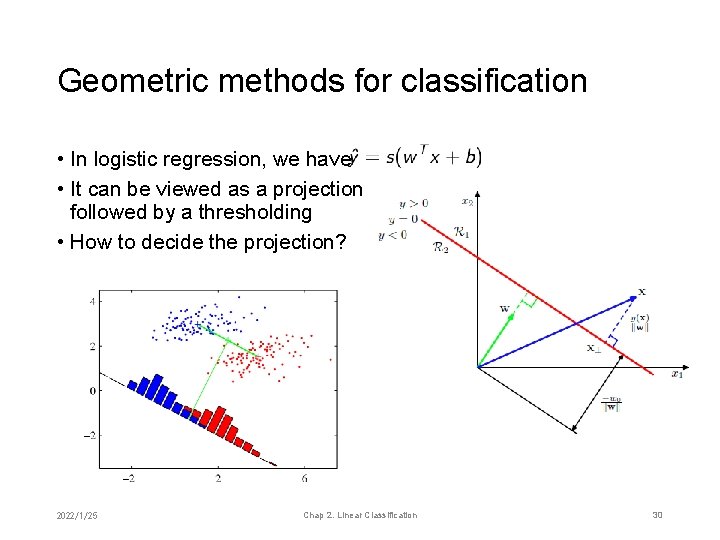

Geometric methods for classification

- In logistic regression, we have
- It can be viewed as a projection followed by a thresholding
- How to decide the projection?

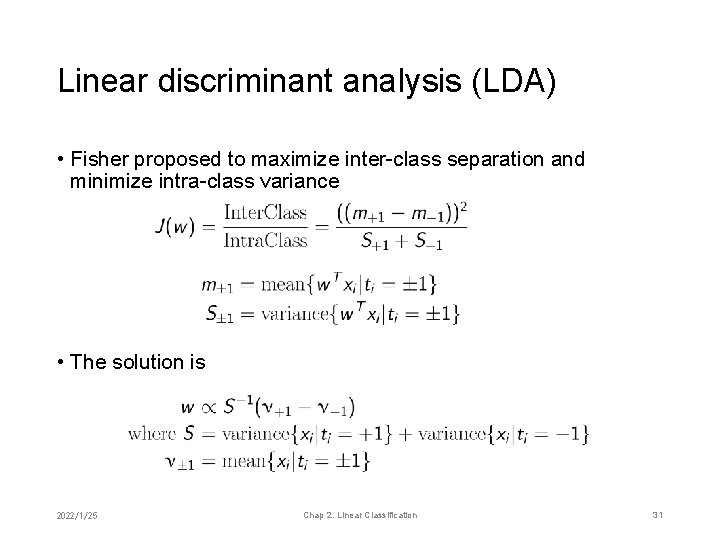

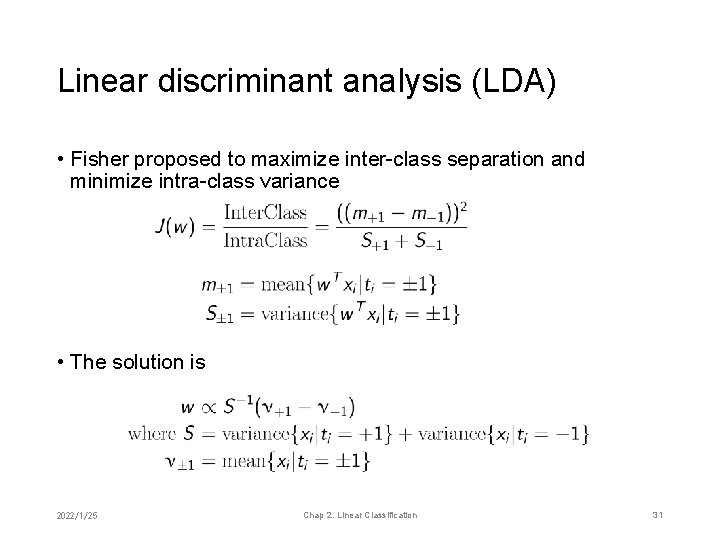

Linear discriminant analysis (LDA)

- Fisher proposed to maximize inter-class separation and minimize intra-class variance
- The solution is
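The standard closed-form Fisher direction is w ∝ S_W⁻¹ (m₁ − m₀), where S_W is the within-class scatter matrix and m₀, m₁ are the class means. The sketch below computes it for 2-D points with a hand-written 2×2 inverse. The data and function names are hypothetical, and the formula is the textbook result rather than a transcription of the slide.

```python
def mean(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]

def scatter(points, m):
    """Entries (sxx, sxy, syy) of the scatter matrix of 2-D points around mean m."""
    sxx = sum((p[0] - m[0]) ** 2 for p in points)
    sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in points)
    syy = sum((p[1] - m[1]) ** 2 for p in points)
    return sxx, sxy, syy

def fisher_direction(class0, class1):
    """Fisher's projection direction w = S_W^{-1} (m1 - m0) for 2-D data."""
    m0, m1 = mean(class0), mean(class1)
    a0, b0, c0 = scatter(class0, m0)
    a1, b1, c1 = scatter(class1, m1)
    a, b, c = a0 + a1, b0 + b1, c0 + c1      # S_W = [[a, b], [b, c]]
    det = a * c - b * b
    dx, dy = m1[0] - m0[0], m1[1] - m0[1]
    # Closed-form 2x2 inverse applied to the mean difference
    return [(c * dx - b * dy) / det, (a * dy - b * dx) / det]

class0 = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]   # hypothetical data
class1 = [(5.0, 5.0), (6.0, 7.0), (7.0, 6.0), (6.0, 5.0)]
w = fisher_direction(class0, class1)
```

Only the direction of w matters for the projection; a threshold on the projected value then gives the classifier.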

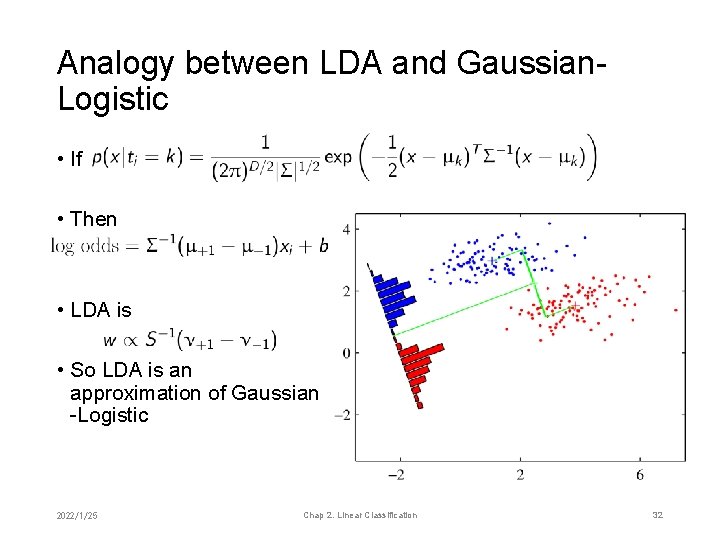

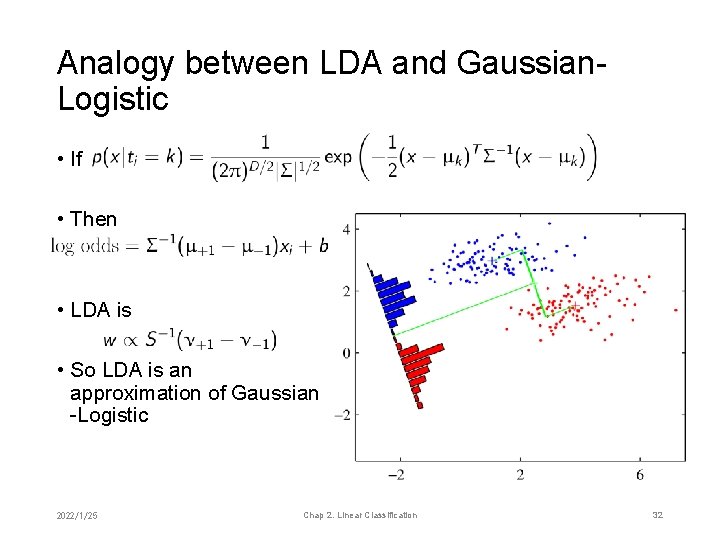

Analogy between LDA and Gaussian-Logistic

- If
- Then
- LDA is
- So LDA is an approximation of Gaussian-Logistic

Chapter 2. Linear Classification, part 6: Perceptron

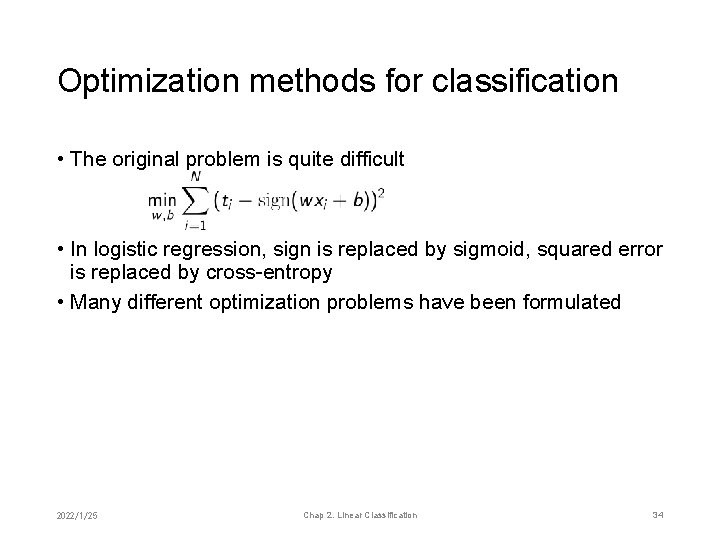

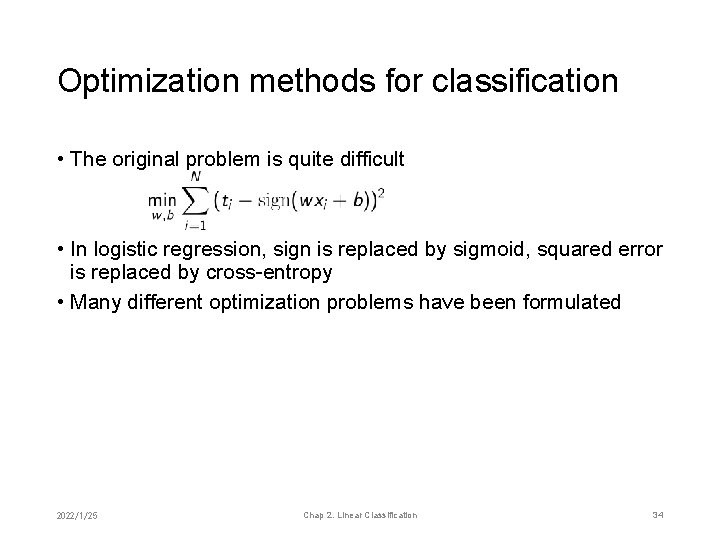

Optimization methods for classification

- The original problem is quite difficult
- In logistic regression, sign is replaced by sigmoid, and squared error is replaced by cross-entropy
- Many different optimization problems have been formulated

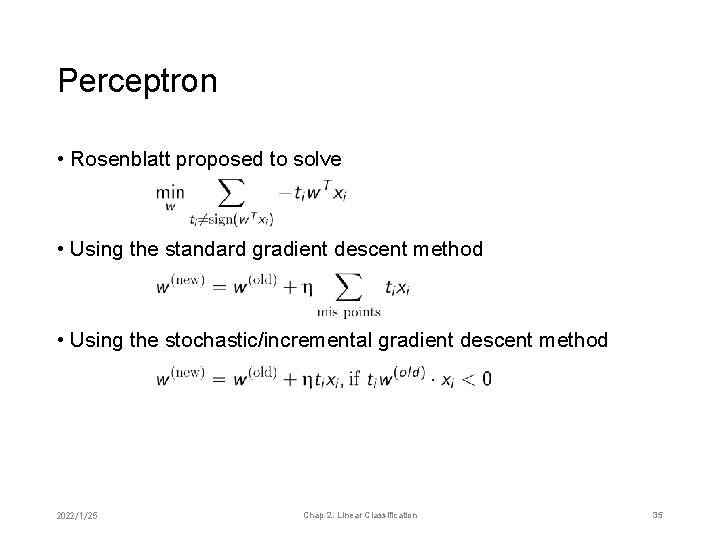

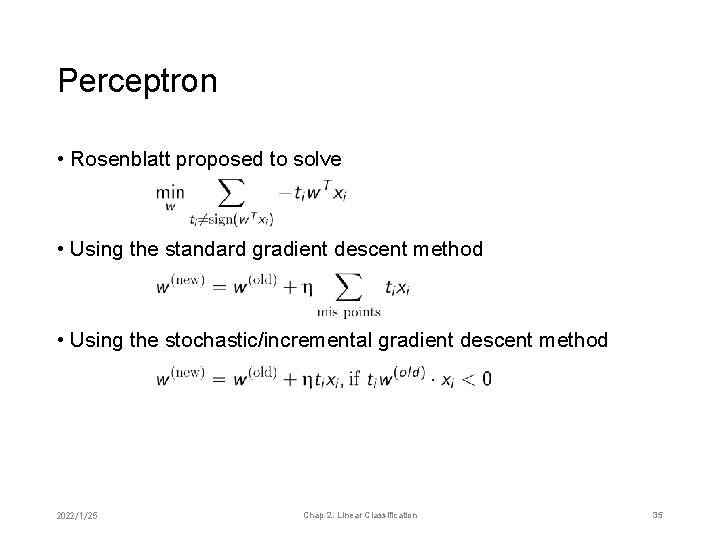

Perceptron

- Rosenblatt proposed to solve
- Using the standard gradient descent method
- Using the stochastic/incremental gradient descent method
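The stochastic variant can be sketched as below: visit the points one at a time and, whenever a point is misclassified, move the weights toward it. The sketch assumes labels in {-1, +1}, a separate bias term, and a learning rate of 1; the data and the function name are hypothetical.

```python
def train_perceptron(xs, ys, lr=1.0, max_epochs=100):
    """Perceptron trained with stochastic (one point at a time) updates."""
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(xs, ys):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:            # misclassified, or exactly on the boundary
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:                      # a full pass with no errors: converged
            break
    return w, b

# Hypothetical linearly separable data with labels in {-1, +1}
xs = [(2.0, 3.0), (3.0, 3.0), (4.0, 5.0), (-1.0, -2.0), (-2.0, -1.0), (0.0, -3.0)]
ys = [1, 1, 1, -1, -1, -1]
w, b = train_perceptron(xs, ys)
```

The `max_epochs` cap matters in practice: if the data are not linearly separable, the updates never stop on their own.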

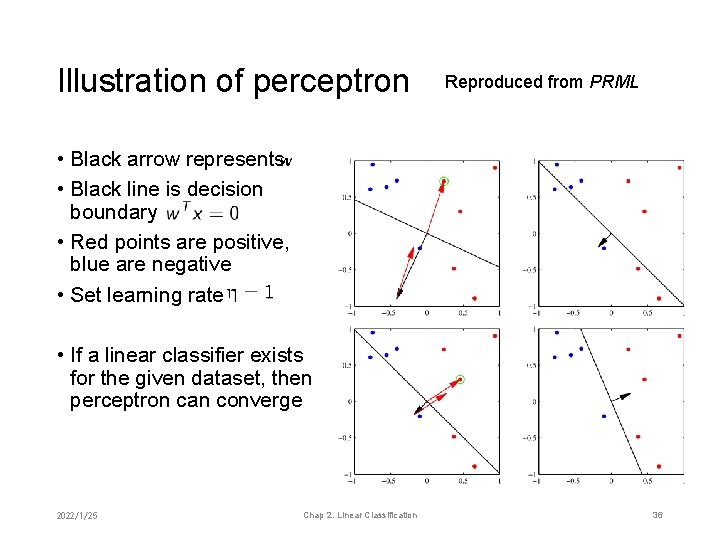

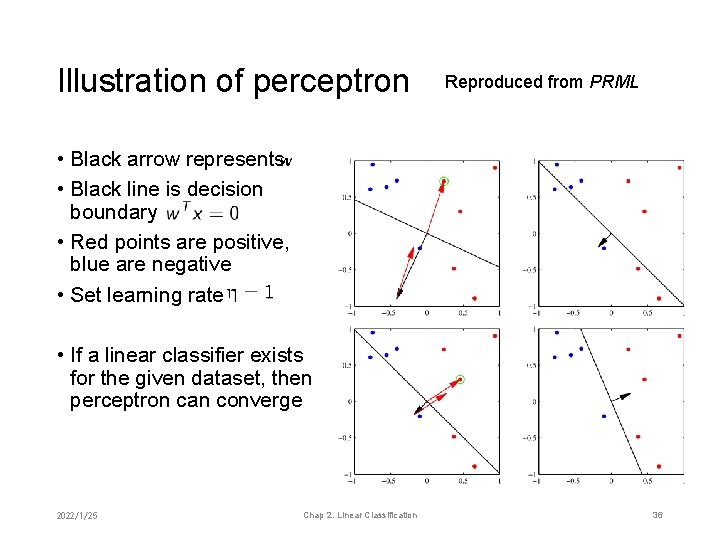

Illustration of perceptron

Figure reproduced from PRML.

- Black arrow represents
- Black line is the decision boundary
- Red points are positive, blue points are negative
- Set learning rate
- If a linear classifier that separates the given dataset exists, then the perceptron algorithm converges

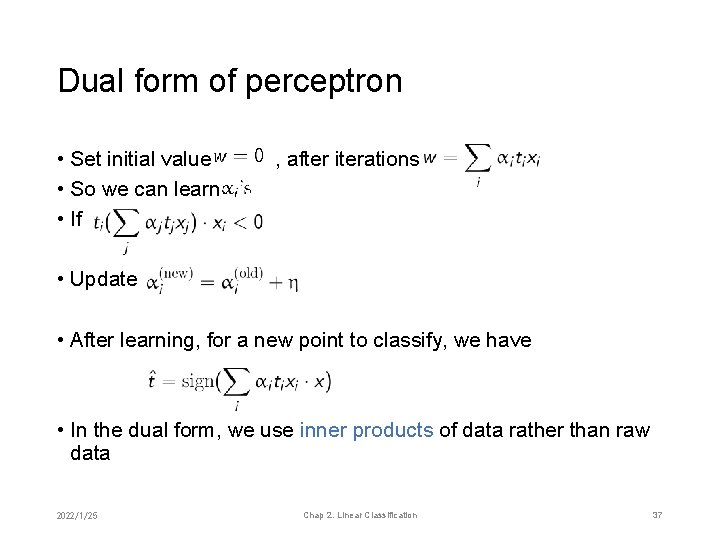

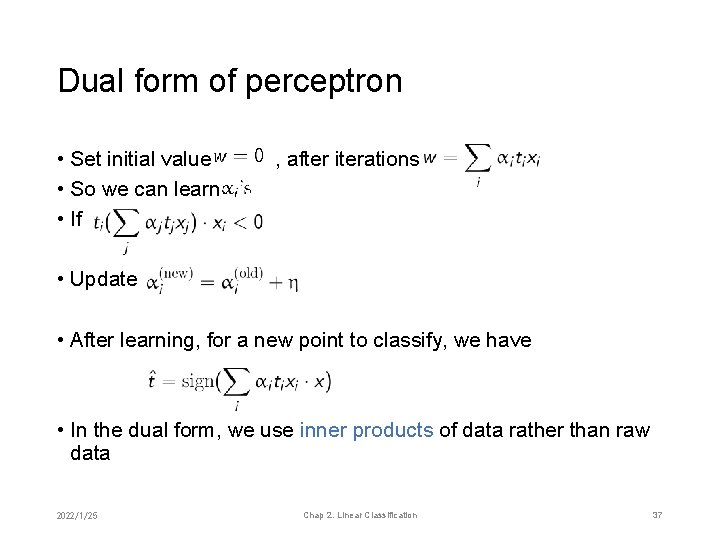

Dual form of perceptron

- Set initial value
- So we can learn
- If , after iterations
- Update
- After learning, for a new point to classify, we have
- In the dual form, we use inner products of data rather than raw data
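A sketch of the dual form under common assumptions: zero initial weights, learning rate 1, and labels in {-1, +1}, so that the weight vector is the sum of αᵢ yᵢ xᵢ, where αᵢ counts how often point i triggered an update. Training and prediction then touch the data only through inner products. The names and data are hypothetical.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def train_dual_perceptron(xs, ys, max_epochs=100):
    """Dual perceptron: learn per-point update counts alpha and a bias b."""
    n = len(xs)
    gram = [[dot(xs[i], xs[j]) for j in range(n)] for i in range(n)]  # inner products only
    alpha = [0] * n
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for i in range(n):
            activation = sum(alpha[j] * ys[j] * gram[j][i] for j in range(n)) + b
            if ys[i] * activation <= 0:        # point i is misclassified
                alpha[i] += 1
                b += ys[i]
                mistakes += 1
        if mistakes == 0:
            break
    return alpha, b

def predict(x_new, xs, ys, alpha, b):
    """Classify a new point using inner products with the training points."""
    score = sum(a * y * dot(x, x_new) for a, y, x in zip(alpha, ys, xs)) + b
    return 1 if score > 0 else -1

xs = [(2.0, 3.0), (3.0, 3.0), (4.0, 5.0), (-1.0, -2.0), (-2.0, -1.0), (0.0, -3.0)]
ys = [1, 1, 1, -1, -1, -1]
alpha, b = train_dual_perceptron(xs, ys)
```

Because only `dot` touches the raw inputs, swapping it for another inner product changes the classifier without changing the algorithm.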

Chapter 2. Linear Classification, part 7: Multi-class / Multi-label

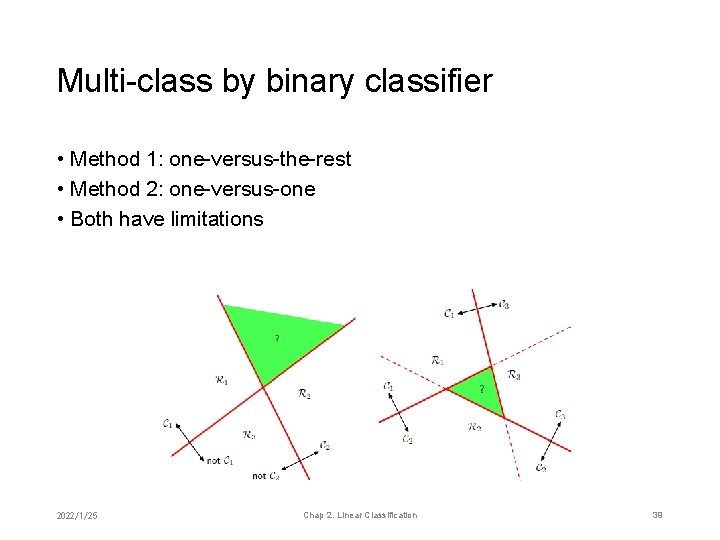

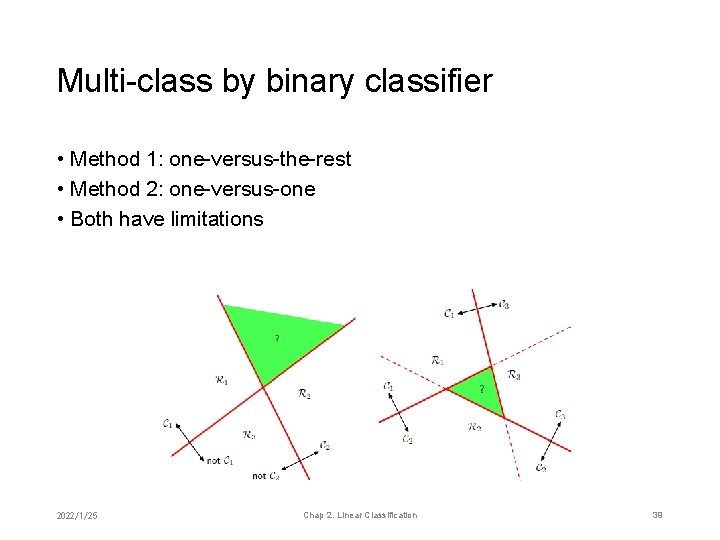

Multi-class by binary classifier

- Method 1: one-versus-the-rest
- Method 2: one-versus-one
- Both have limitations

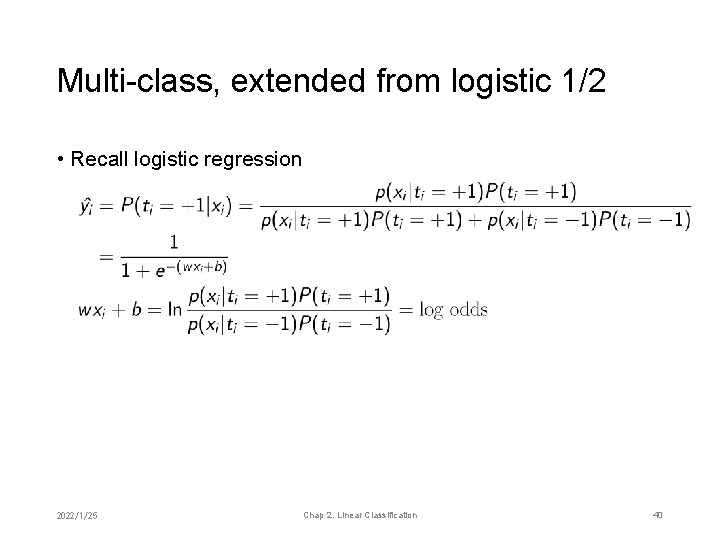

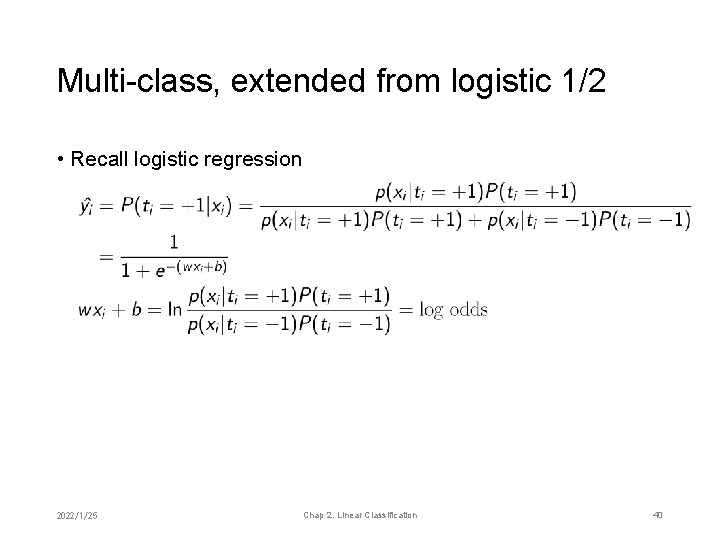

Multi-class, extended from logistic 1/2

- Recall logistic regression

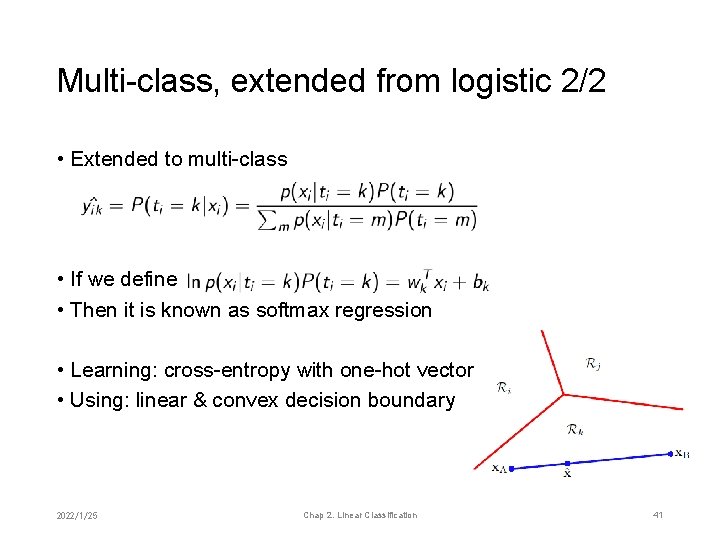

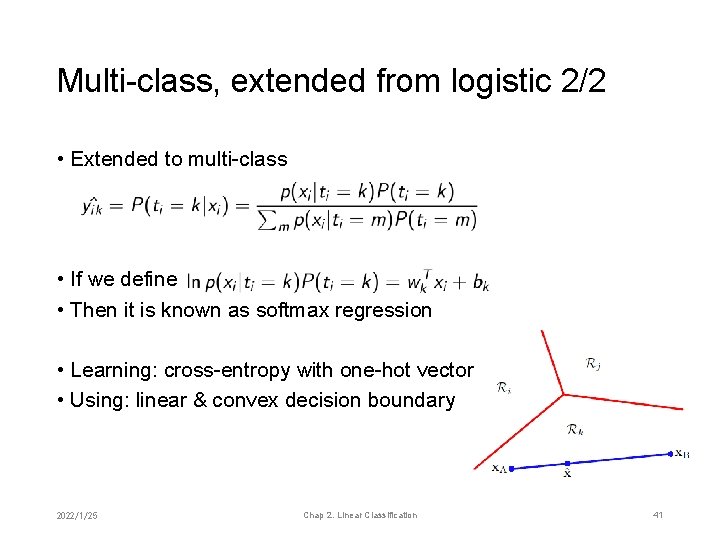

Multi-class, extended from logistic 2/2

- Extended to multi-class
- If we define
- Then it is known as softmax regression
- Learning: cross-entropy with one-hot vector
- Using: linear & convex decision boundary
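A minimal sketch of the softmax function and the cross-entropy against a one-hot target. The per-class scores stand in for the linear outputs of each class, and the function names and example values are illustrative assumptions. Subtracting the maximum score before exponentiating is a common numerical-stability step and does not change the result.

```python
import math

def softmax(scores):
    """Turn per-class scores into probabilities that sum to 1."""
    m = max(scores)                            # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def cross_entropy_one_hot(probs, true_class):
    """Cross-entropy against a one-hot target: only the true class term survives."""
    return -math.log(probs[true_class])

scores = [2.0, 1.0, -1.0]                      # hypothetical scores for 3 classes
probs = softmax(scores)
predicted = max(range(len(probs)), key=lambda k: probs[k])
loss = cross_entropy_one_hot(probs, 0)
```

With two classes and scores (a, 0), the first softmax output equals the sigmoid of a, so binary logistic regression is recovered as a special case.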

Multi-label by binary classifier

- Since the classes are not exclusive, it is natural to use yes-versus-no for each class
- Thus, it is simply a concatenation of multiple binary classifiers
- Using: we may rank the predicted probabilities to decide which labels to assign
- Learning: we may also learn to rank using, e.g., a pairwise objective function

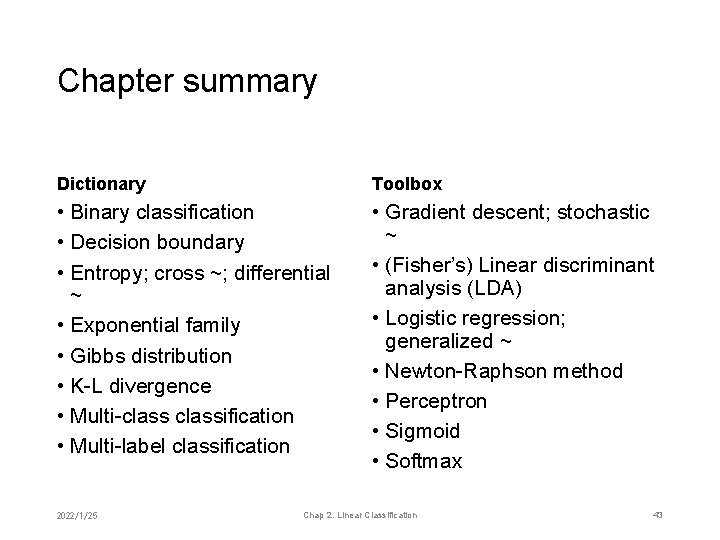

Chapter summary

Dictionary

- Binary classification
- Decision boundary
- Entropy; cross ~; differential ~
- Exponential family
- Gibbs distribution
- K-L divergence
- Multi-classification
- Multi-label classification

Toolbox

- Gradient descent; stochastic ~
- (Fisher’s) Linear discriminant analysis (LDA)
- Logistic regression; generalized ~
- Newton-Raphson method
- Perceptron
- Sigmoid
- Softmax