Statistical Learning
Dong Liu, Dept. EEIS, USTC

- Slides: 24


Chapter 5. Non-Parametric Supervised Learning
1. Parzen window
2. k-nearest-neighbor (k-NN)
3. Sparse coding
2021/2/28

Non-parametric learning
• Most statistical learning methods assume a model
• For example, linear regression assumes that the output is a linear function of the input, and linear classification assumes a linear decision boundary
• Learning is then converted into a problem of solving for (estimating) the model parameters
• In this chapter, we consider learning without explicit modeling
• Non-parametric learning is sometimes equivalent to instance-based or memory-based learning

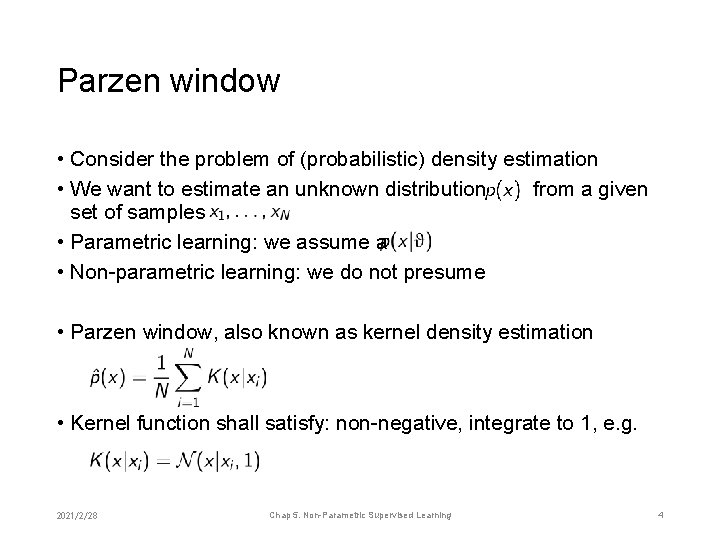

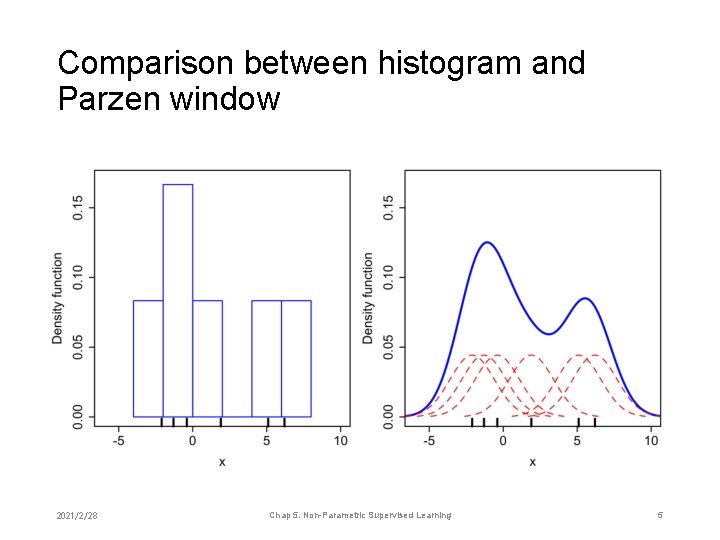

Parzen window
• Consider the problem of (probabilistic) density estimation
• We want to estimate an unknown distribution from a given set of samples
• Parametric learning: we assume a parametric form for the distribution
• Non-parametric learning: we do not presume any form
• Parzen window is also known as kernel density estimation
• The kernel function shall be non-negative and integrate to 1
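A minimal sketch of the Parzen window estimator, assuming the standard form $\hat{p}(x) = \frac{1}{N}\sum_{i=1}^{N} K(x - x_i)$ with a Gaussian kernel. The notation, the kernel choice, the function names, and the sample values are assumptions made for illustration.

```python
import numpy as np

def gaussian_kernel(u):
    """Standard normal density: non-negative and integrates to 1."""
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)

def parzen_density(x, samples, kernel=gaussian_kernel):
    """Estimate the density at the points x as the average of kernels
    centered on the samples (unit window size)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    samples = np.asarray(samples, dtype=float)
    # diffs[i, j] = x[i] - samples[j]
    diffs = x[:, None] - samples[None, :]
    return kernel(diffs).mean(axis=1)

# Hypothetical 1-D samples, for illustration only
samples = np.array([-1.2, -0.4, 0.1, 0.3, 1.5])
grid = np.linspace(-10.0, 10.0, 4001)
density = parzen_density(grid, samples)

# Riemann-sum approximation of the integral of the estimate
step = grid[1] - grid[0]
total_mass = float(np.sum(density) * step)
print(total_mass)
```

Because each kernel is itself a valid density, their average is non-negative and integrates to 1, which is why the two kernel conditions are required.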

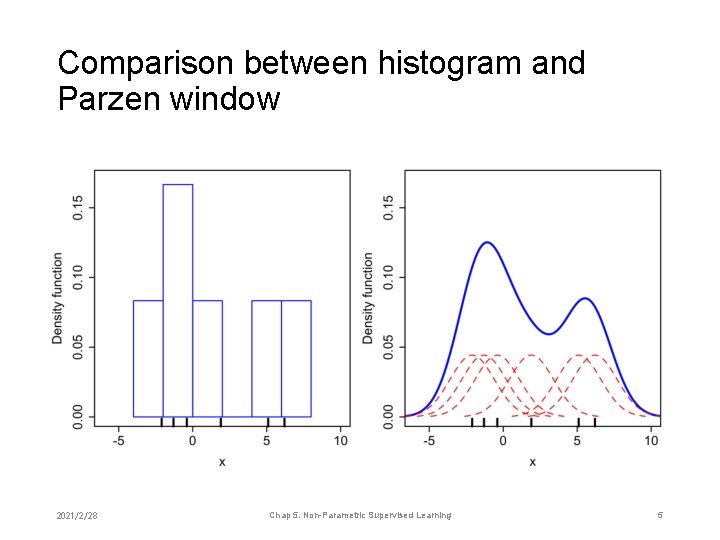

Comparison between histogram and Parzen window

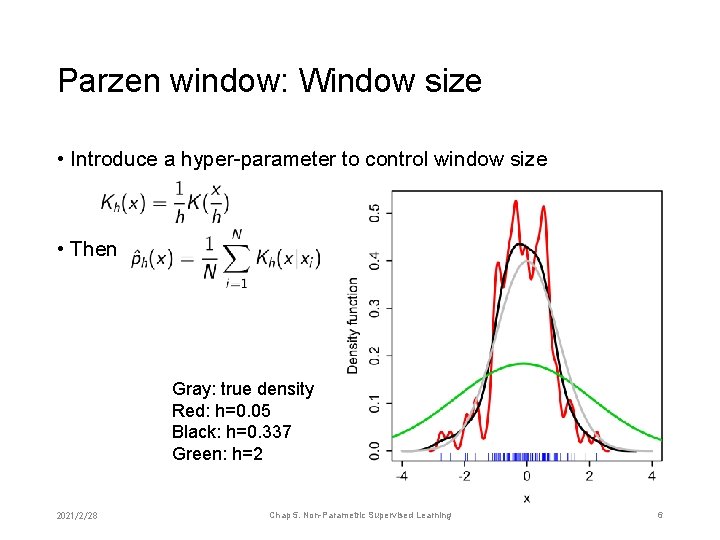

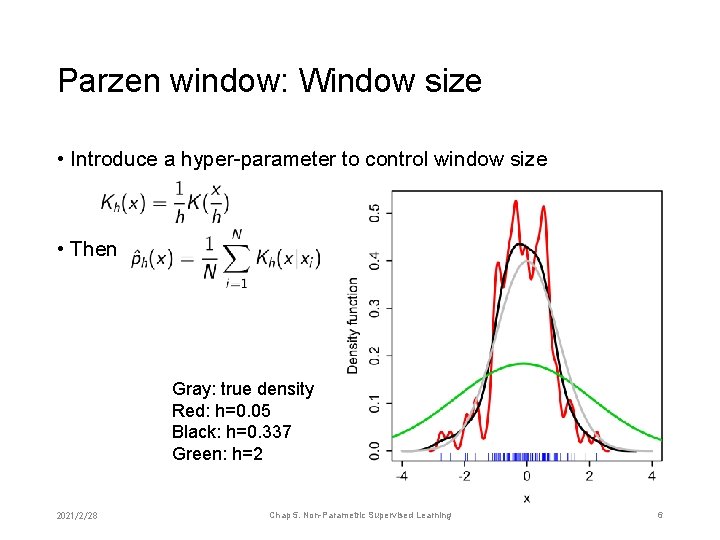

Parzen window: Window size
• Introduce a hyper-parameter h to control the window size
• Figure legend: gray: true density; red: h = 0.05; black: h = 0.337; green: h = 2
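The effect of the window size can be sketched as follows, assuming the usual scaled form $\hat{p}_h(x) = \frac{1}{Nh}\sum_{i=1}^{N} K\big(\frac{x - x_i}{h}\big)$ with a Gaussian kernel. The formula notation, the helper names, and the synthetic data are assumptions; the three values of h are the ones in the figure legend.

```python
import numpy as np

def parzen_density_h(x, samples, h):
    """Parzen window estimate with a Gaussian kernel and window size h (assumed form)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    samples = np.asarray(samples, dtype=float)
    u = (x[:, None] - samples[None, :]) / h
    kernel_values = np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)
    return kernel_values.mean(axis=1) / h

def count_modes(density):
    """Count strict local maxima of a density evaluated on a grid."""
    inner = density[1:-1]
    return int(np.sum((inner > density[:-2]) & (inner > density[2:])))

rng = np.random.default_rng(0)
samples = rng.normal(loc=0.0, scale=1.0, size=100)  # synthetic data
grid = np.linspace(-12.0, 12.0, 9601)
step = grid[1] - grid[0]

results = {}
for h in (0.05, 0.337, 2.0):
    density = parzen_density_h(grid, samples, h)
    # (approximate integral, number of local maxima)
    results[h] = (float(np.sum(density) * step), count_modes(density))
    print(h, results[h])
```

A small h yields a spiky estimate with many local maxima (under-smoothing), while a large h merges them into a single broad bump (over-smoothing). Every choice of h still integrates to 1.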


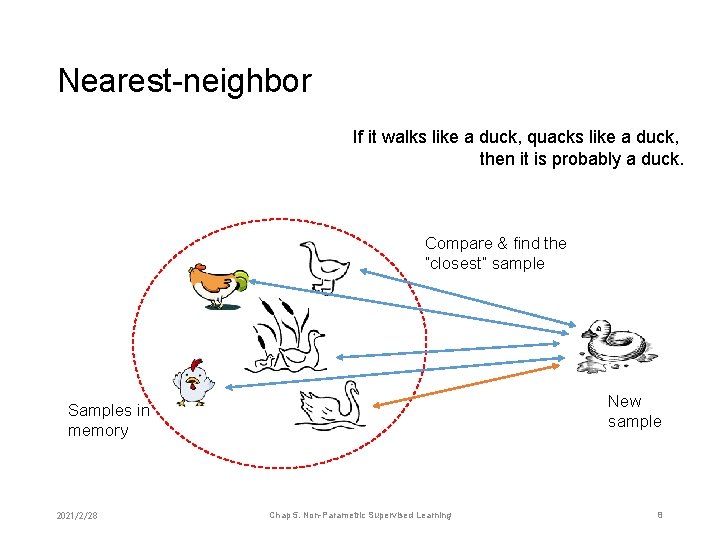

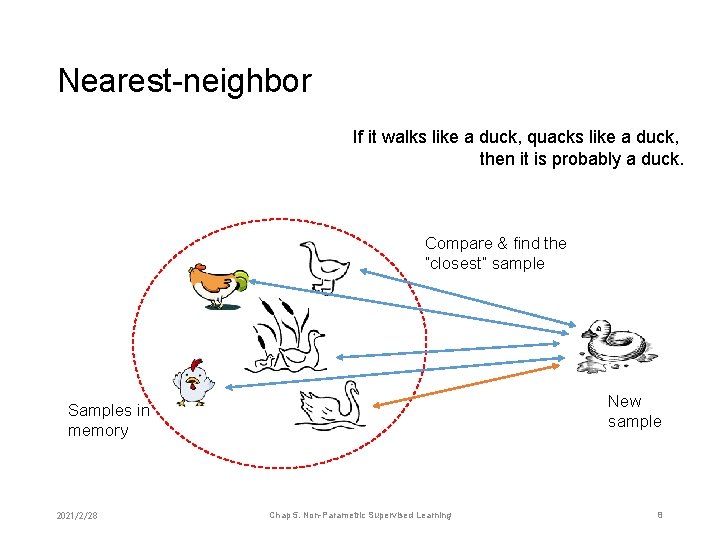

Nearest-neighbor
If it walks like a duck, quacks like a duck, then it is probably a duck.
• Compare & find the “closest” sample (figure labels: new sample, samples in memory)

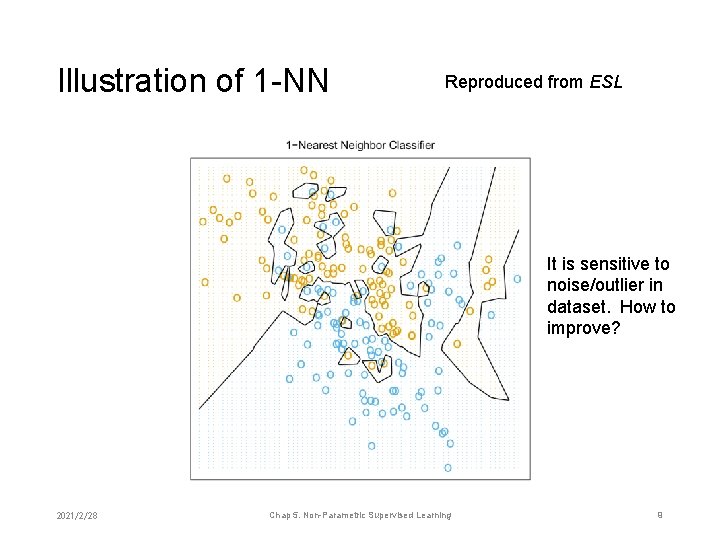

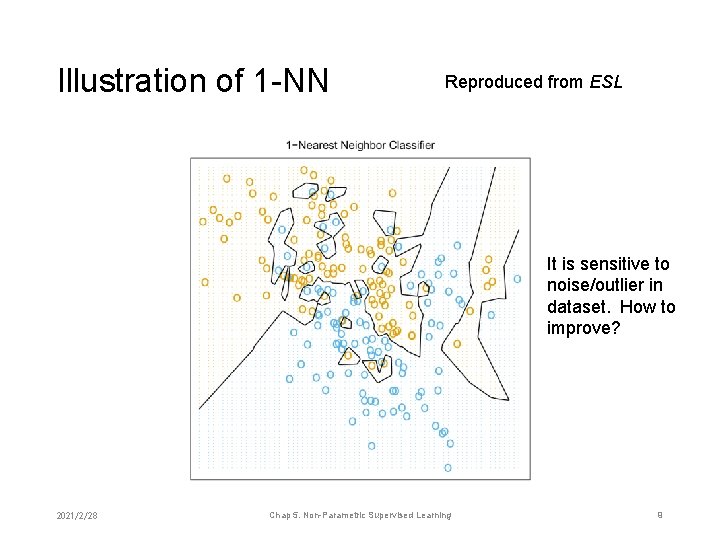

Illustration of 1-NN (reproduced from ESL)
• It is sensitive to noise/outliers in the dataset. How to improve?

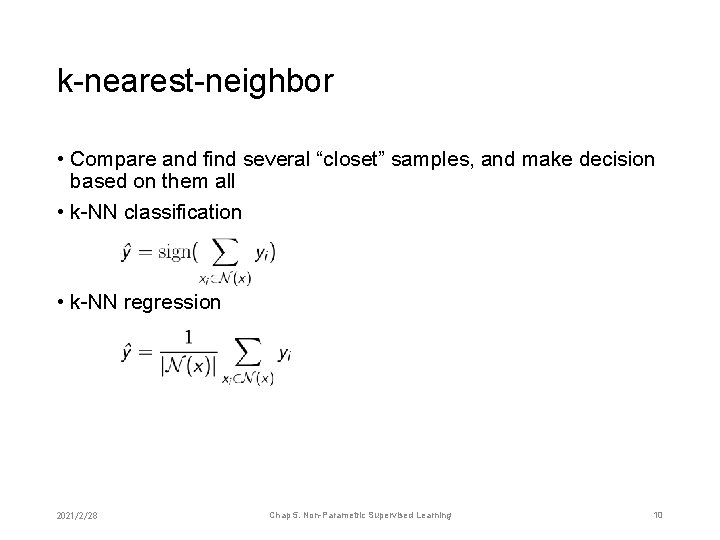

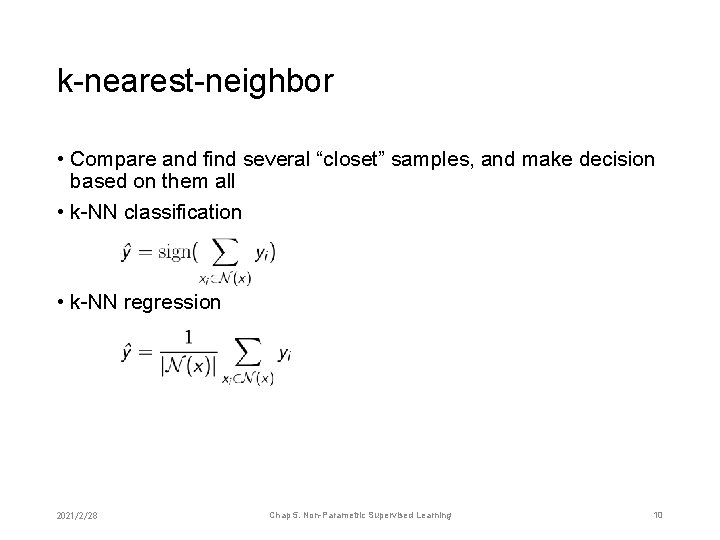

k-nearest-neighbor
• Compare and find several “closest” samples, and make the decision based on them all
• k-NN classification: majority vote among the labels of the k nearest samples
• k-NN regression: average of the target values of the k nearest samples
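A minimal sketch of k-NN classification and regression, assuming Euclidean distance, a plain majority vote, and an unweighted average. The function names and the toy data (which include one deliberately mislabeled point) are hypothetical.

```python
import numpy as np
from collections import Counter

def k_nearest_indices(query, X, k):
    """Indices of the k training samples closest to query (Euclidean distance)."""
    distances = np.linalg.norm(X - query, axis=1)
    return np.argsort(distances, kind="stable")[:k]

def knn_classify(query, X, labels, k):
    """Majority vote among the labels of the k nearest samples."""
    nearest = k_nearest_indices(query, X, k)
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

def knn_regress(query, X, targets, k):
    """Average of the target values of the k nearest samples."""
    nearest = k_nearest_indices(query, X, k)
    return float(np.mean(targets[nearest]))

# Toy data: two clusters, plus one outlier (index 3) sitting inside cluster "a"
X = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [0.15, 0.15],
              [1.0, 1.0], [1.1, 0.9], [0.9, 1.1]])
labels = np.array(["a", "a", "a", "b", "b", "b", "b"])
targets = np.array([0.0, 0.1, 0.2, 5.0, 1.0, 1.1, 0.9])
query = np.array([0.14, 0.16])

label_1nn = knn_classify(query, X, labels, k=1)
label_3nn = knn_classify(query, X, labels, k=3)
value_3nn = knn_regress(query, X, targets, k=3)
print(label_1nn, label_3nn, value_3nn)
```

With k=1 the query inherits the label of the outlier, whereas with k=3 the two correctly labeled neighbors outvote it. This is the noise sensitivity of 1-NN that k-NN is meant to reduce.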

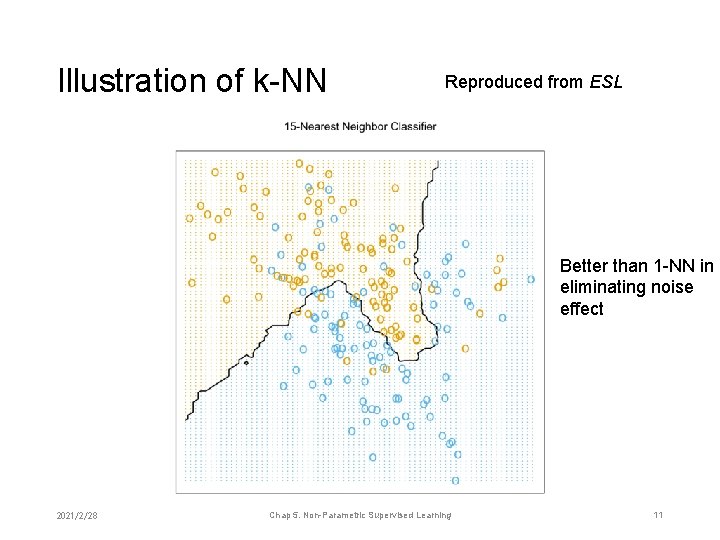

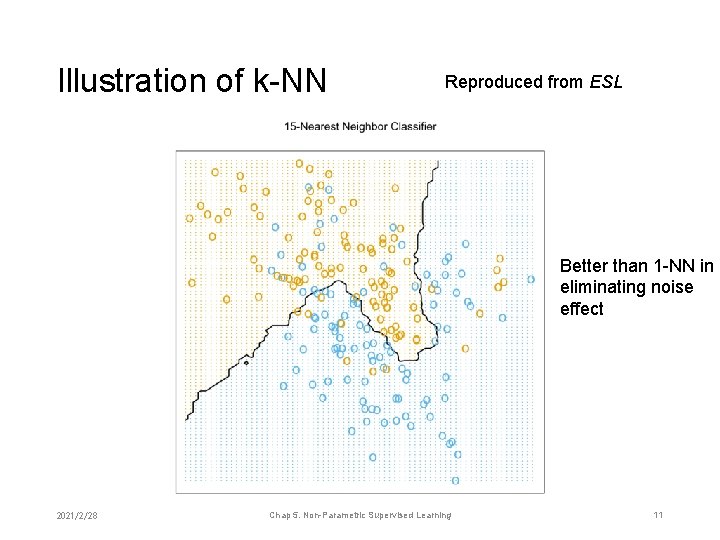

Illustration of k-NN (reproduced from ESL)
• Better than 1-NN at suppressing the effect of noise

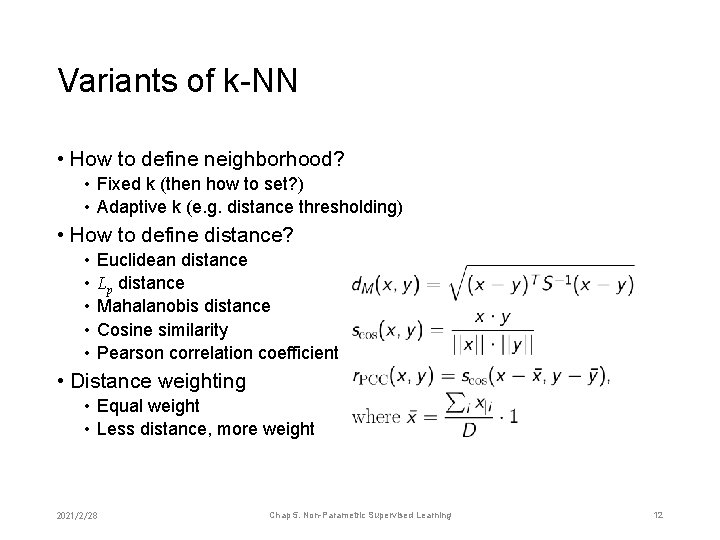

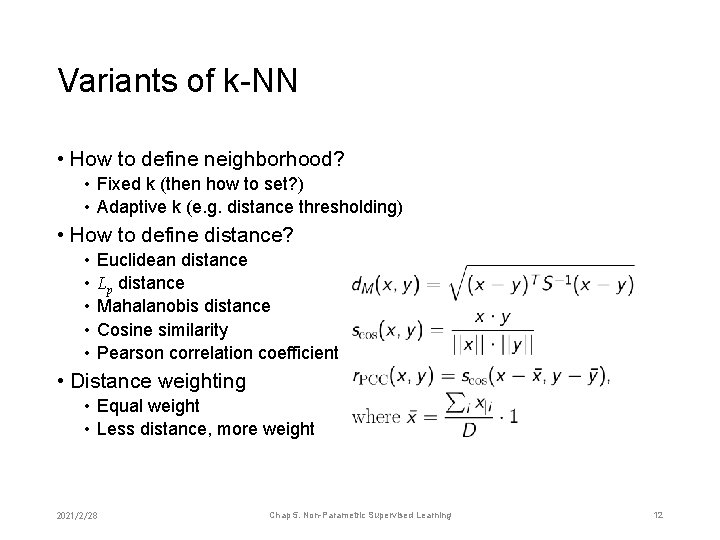

Variants of k-NN
• How to define neighborhood?
  • Fixed k (then how to set it?)
  • Adaptive k (e.g. distance thresholding)
• How to define distance?
  • Euclidean distance
  • Lp distance
  • Mahalanobis distance
  • Cosine similarity
  • Pearson correlation coefficient
• Distance weighting
  • Equal weight
  • Less distance, more weight
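The distance measures and the "less distance, more weight" idea listed above can be sketched as follows. Inverse-distance weighting is only one possible weighting scheme and is an assumption here, as are the function names and the example vectors.

```python
import numpy as np

def lp_distance(a, b, p=2.0):
    """Lp (Minkowski) distance; p=2 gives the Euclidean distance."""
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))

def mahalanobis_distance(a, b, cov):
    """Mahalanobis distance under the covariance matrix cov."""
    diff = a - b
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))

def cosine_similarity(a, b):
    """Cosine of the angle between a and b (larger means more similar)."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def pearson_correlation(a, b):
    """Pearson correlation: cosine similarity of the mean-centered vectors."""
    return cosine_similarity(a - a.mean(), b - b.mean())

def weighted_knn_regress(query, X, targets, k, eps=1e-12):
    """k-NN regression with inverse-distance weights (one possible weighting)."""
    distances = np.linalg.norm(X - query, axis=1)
    nearest = np.argsort(distances, kind="stable")[:k]
    weights = 1.0 / (distances[nearest] + eps)  # eps avoids division by zero
    return float(np.sum(weights * targets[nearest]) / np.sum(weights))

a = np.array([1.0, 2.0, 3.0])
b = np.array([2.0, 4.0, 6.5])
print(lp_distance(a, b, p=1), lp_distance(a, b, p=2))
print(mahalanobis_distance(a, b, np.eye(3)))
print(cosine_similarity(a, b), pearson_correlation(a, b))

X = np.array([[0.0], [1.0], [3.0]])
targets = np.array([0.0, 10.0, 30.0])
midpoint_value = weighted_knn_regress(np.array([0.5]), X, targets, k=2)
near_one_value = weighted_knn_regress(np.array([0.9]), X, targets, k=2)
print(midpoint_value, near_one_value)
```

Note that cosine similarity and Pearson correlation are similarities rather than distances, so the nearest neighbors are the samples with the largest values. With an identity covariance matrix, the Mahalanobis distance reduces to the Euclidean distance.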

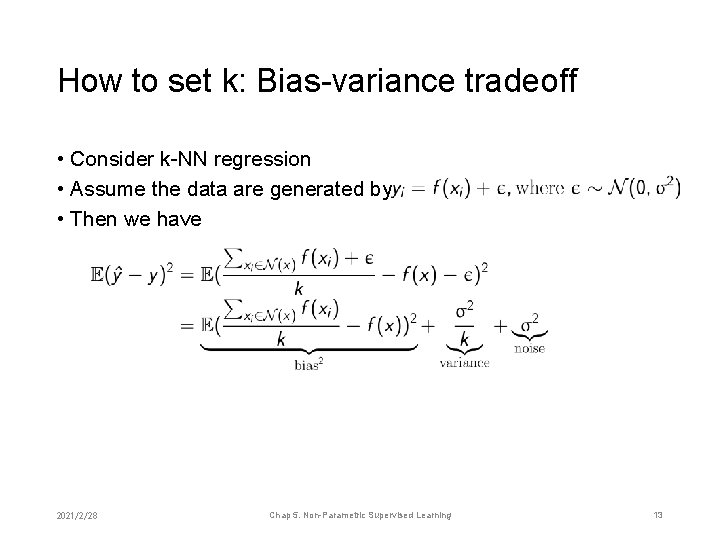

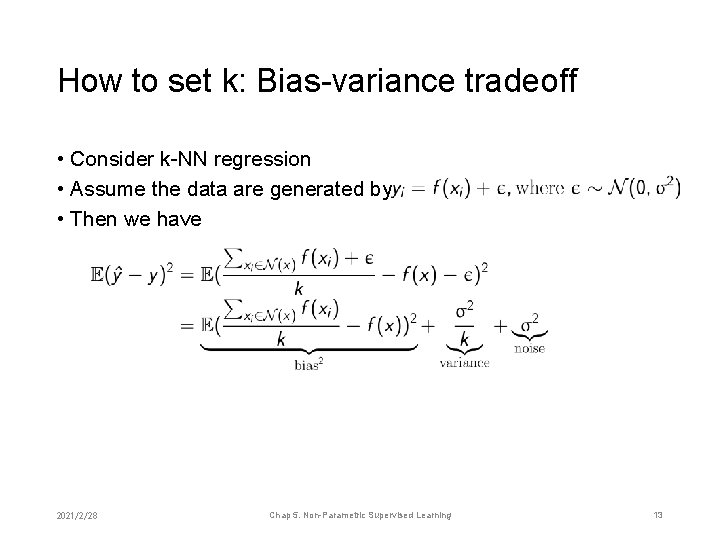

How to set k: Bias-variance tradeoff
• Consider k-NN regression
• Assume the data are generated by a true function plus additive zero-mean noise
• Then the expected prediction error decomposes into irreducible noise, squared bias, and variance
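For reference, the standard bias-variance decomposition for k-NN regression, as given in ESL, can be written as below. The notation is an assumption: $f$ is the true function, $\varepsilon$ the noise with variance $\sigma^2$, $\hat{f}_k$ the k-NN estimate, and $x_{(\ell)}$ the $\ell$-th nearest neighbor of the query point $x_0$. The training inputs are treated as fixed.

```latex
Y = f(X) + \varepsilon, \qquad
\mathbb{E}[\varepsilon] = 0, \qquad
\operatorname{Var}(\varepsilon) = \sigma^2

\begin{aligned}
\mathrm{Err}(x_0)
  &= \mathbb{E}\big[(Y - \hat{f}_k(x_0))^2 \mid X = x_0\big] \\
  &= \sigma^2
   + \Big[f(x_0) - \frac{1}{k}\sum_{\ell=1}^{k} f(x_{(\ell)})\Big]^2
   + \frac{\sigma^2}{k}
\end{aligned}
```

The three terms are the irreducible noise, the squared bias, and the variance. A larger k shrinks the variance term $\sigma^2/k$ but typically increases the bias, because the average then includes neighbors farther from $x_0$.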

Example: Rating prediction for making recommendations 1/5
• Recommendations, advertisements, search
• Items: products, news, movies, music, …

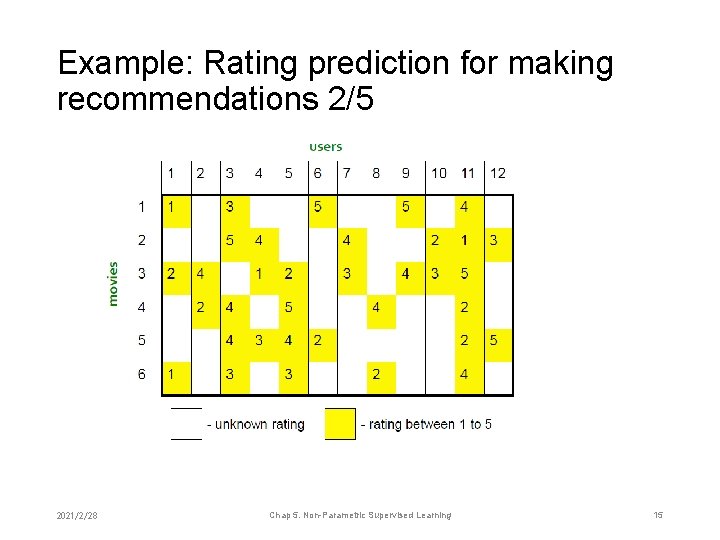

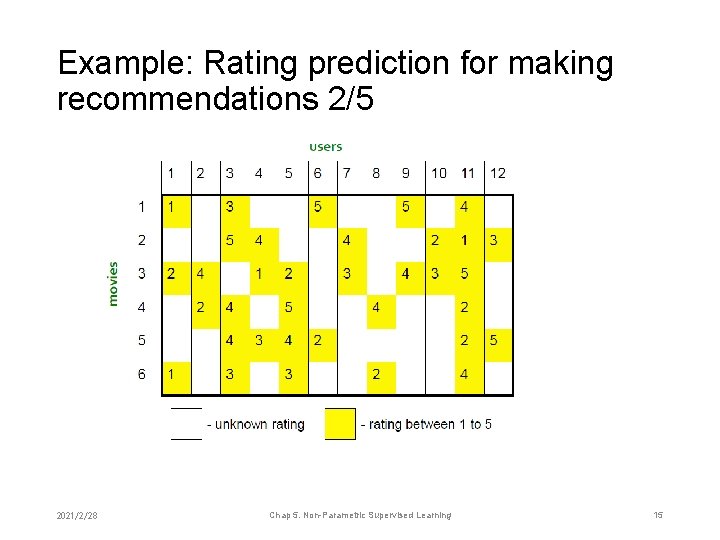

Example: Rating prediction for making recommendations 2/5

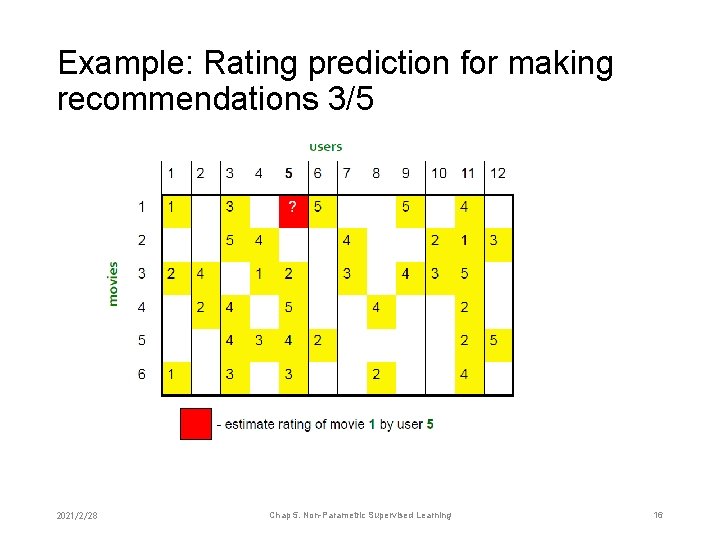

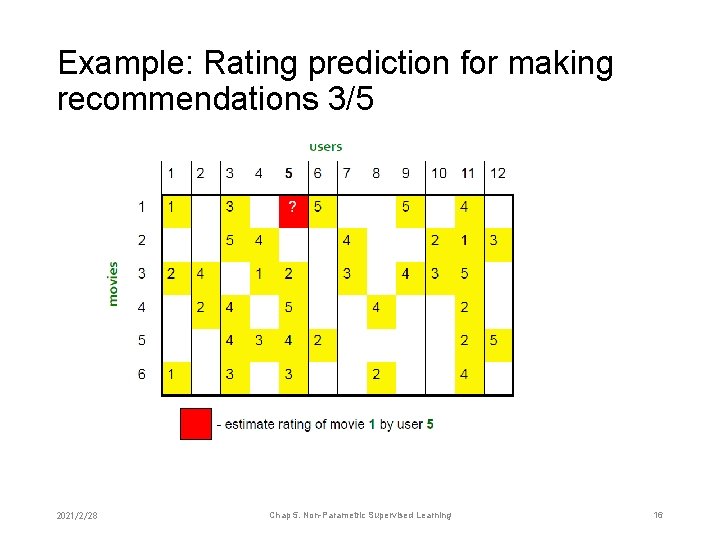

Example: Rating prediction for making recommendations 3/5

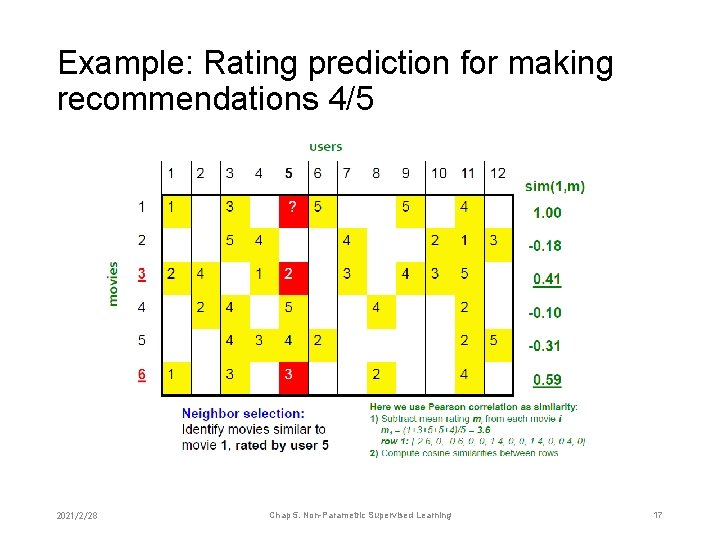

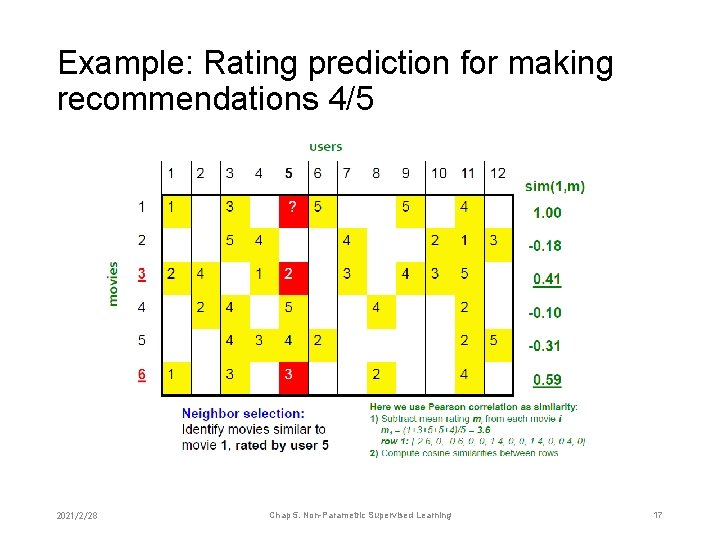

Example: Rating prediction for making recommendations 4/5

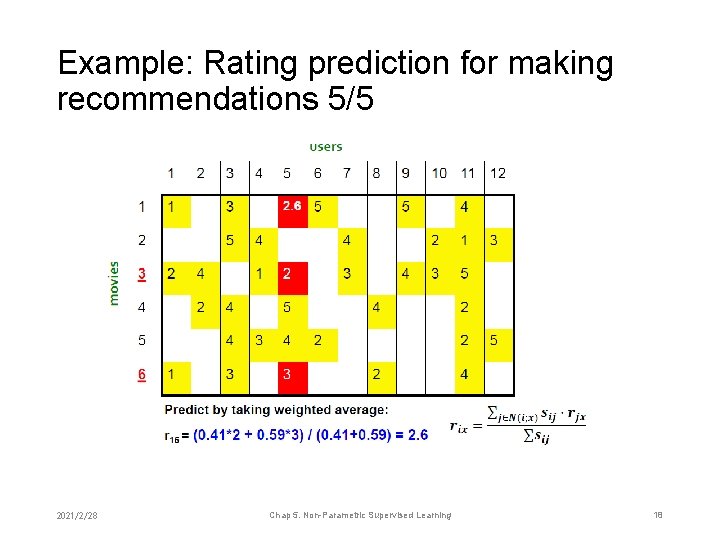

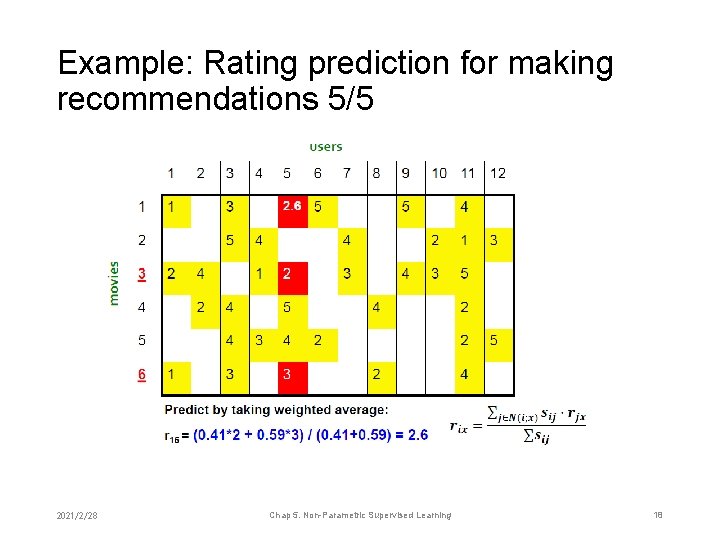

Example: Rating prediction for making recommendations 5/5


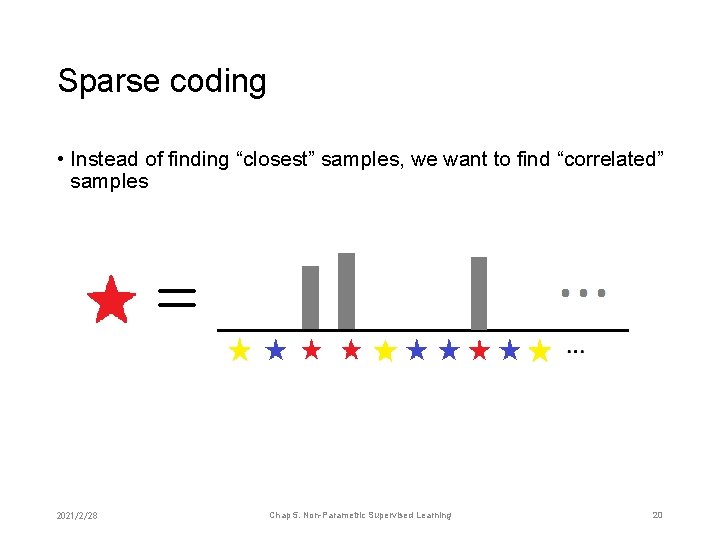

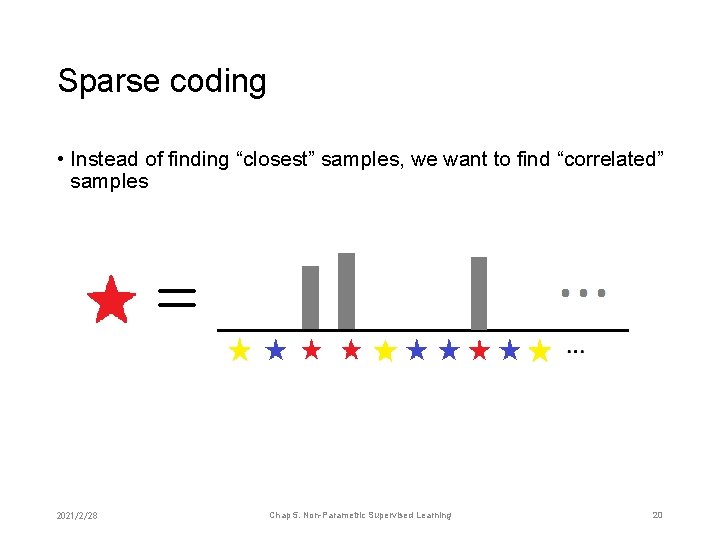

Sparse coding
• Instead of finding “closest” samples, we want to find “correlated” samples

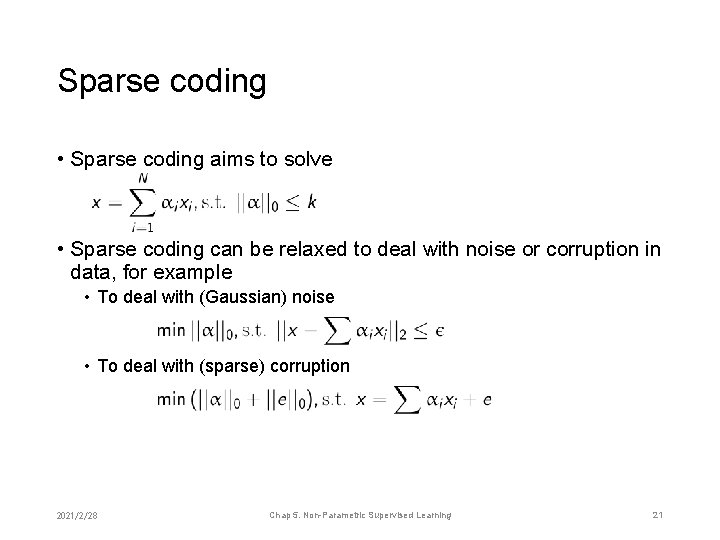

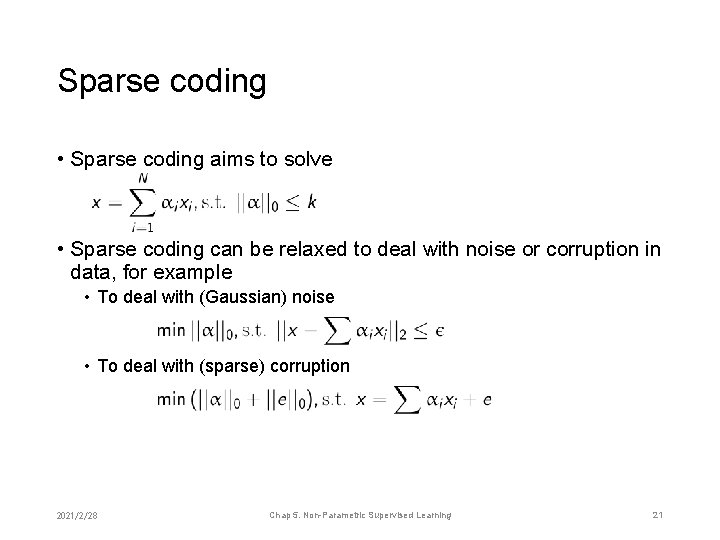

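Sparse coding
• Sparse coding aims to solve for the sparsest coefficient vector that represents a sample as a linear combination of the samples in memory (the dictionary)
• Sparse coding can be relaxed to deal with noise or corruption in data, for example
  • To deal with (Gaussian) noise: allow a small reconstruction error
  • To deal with (sparse) corruption: add a sparse error term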
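In a commonly used notation (an assumption here, in the spirit of Wright et al., 2009), the exact problem is $\min_{\alpha} \|\alpha\|_0$ subject to $x = D\alpha$. The noise-tolerant relaxation is $\min_{\alpha} \|\alpha\|_1$ subject to $\|x - D\alpha\|_2 \le \epsilon$, and the corruption-tolerant variant is $\min_{\alpha, e} \|\alpha\|_1 + \|e\|_1$ subject to $x = D\alpha + e$. The sketch below solves the closely related Lagrangian form $\min_{\alpha} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1$ with ISTA (iterative soft-thresholding). ISTA is chosen here for brevity and is not necessarily the solver used in the lecture; the dictionary, the value of $\lambda$, and all names are hypothetical.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def objective(D, x, a, lam=0.05):
    """Value of 0.5 * ||x - D a||_2^2 + lam * ||a||_1."""
    return float(0.5 * np.sum((x - D @ a) ** 2) + lam * np.sum(np.abs(a)))

def ista_sparse_code(D, x, lam=0.05, n_iter=2000):
    """Minimize 0.5 * ||x - D a||_2^2 + lam * ||a||_1 with ISTA."""
    lipschitz = np.linalg.norm(D, 2) ** 2  # largest eigenvalue of D^T D
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        gradient = D.T @ (D @ a - x)
        a = soft_threshold(a - gradient / lipschitz, lam / lipschitz)
    return a

rng = np.random.default_rng(0)
D = rng.normal(size=(30, 60))
D /= np.linalg.norm(D, axis=0)  # unit-norm columns play the role of samples in memory
true_code = np.zeros(60)
true_code[[5, 17, 42]] = [1.5, -2.0, 1.0]
x = D @ true_code + 0.01 * rng.normal(size=30)  # small Gaussian noise

code = ista_sparse_code(D, x)
largest = np.sort(np.argsort(-np.abs(code))[:3])
print(largest, np.count_nonzero(np.abs(code) > 1e-3))
```

The L1 penalty drives most coefficients to exactly zero, so the few columns with large coefficients can be read as the "correlated" samples selected for the query.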

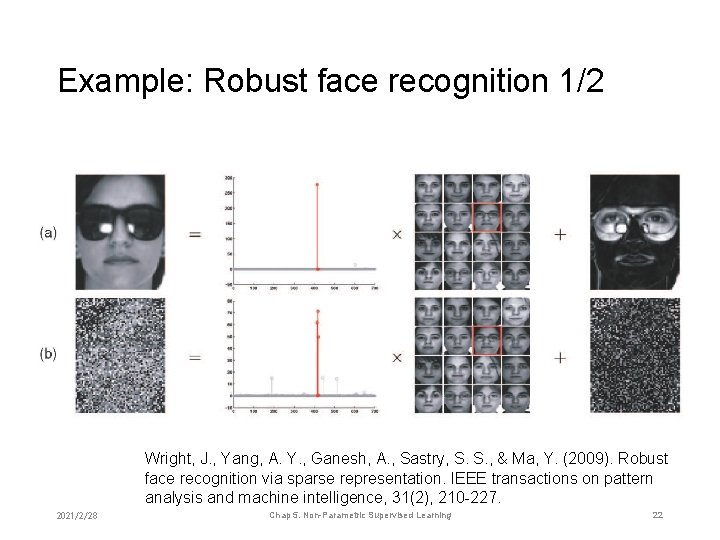

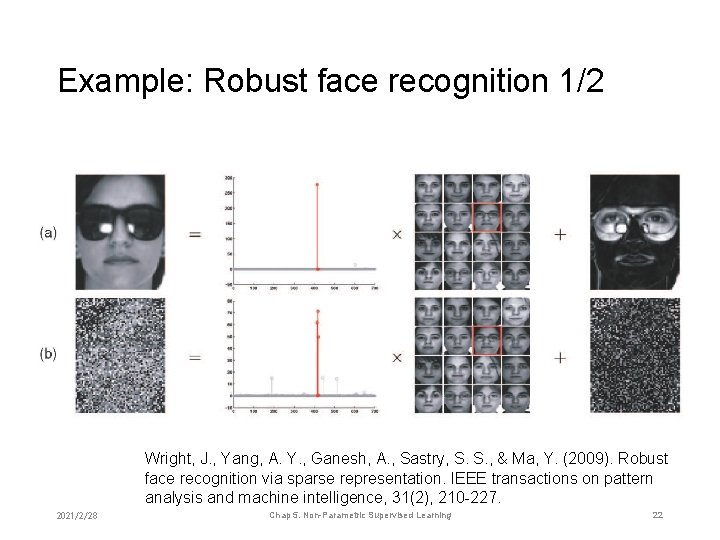

Example: Robust face recognition 1/2
Wright, J., Yang, A. Y., Ganesh, A., Sastry, S. S., & Ma, Y. (2009). Robust face recognition via sparse representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(2), 210-227.

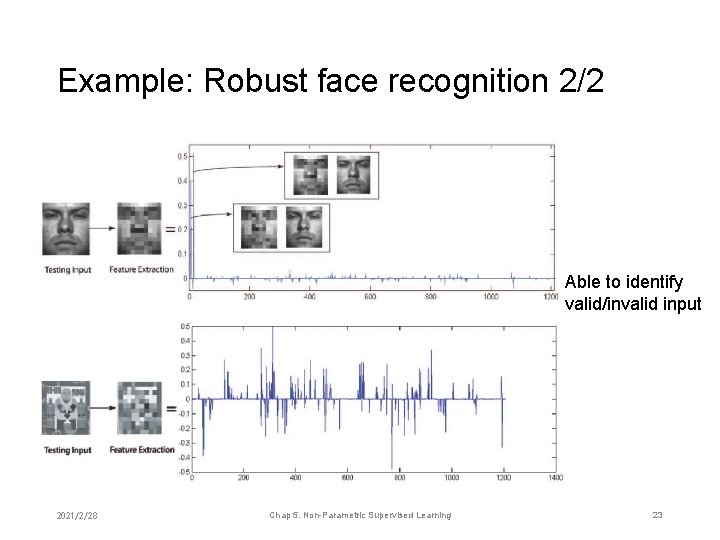

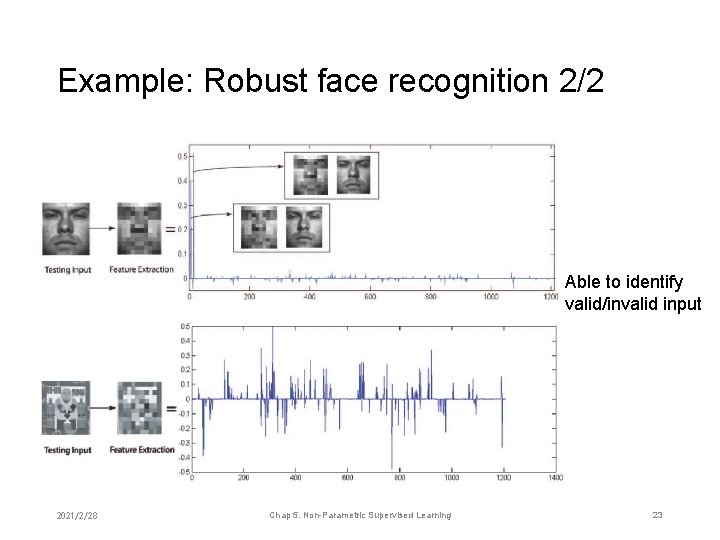

Example: Robust face recognition 2/2
• Able to identify valid/invalid input

Chapter summary
Dictionary
• Instance-based learning
• Memory-based learning
Toolbox
• k-nearest neighbor (k-NN)
• Parzen window
• Sparse coding