Statistical Inference and Synthesis in the Image Domain

- Slides: 40

Statistical Inference and Synthesis in the Image Domain for Mobile Robot Environment Modeling

L. Abril Torres-Méndez and Gregory Dudek

Centre for Intelligent Machines, School of Computer Science, McGill University

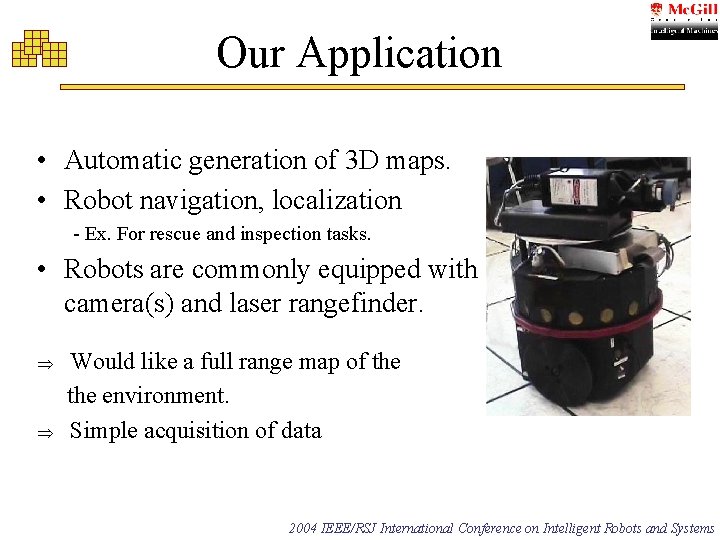

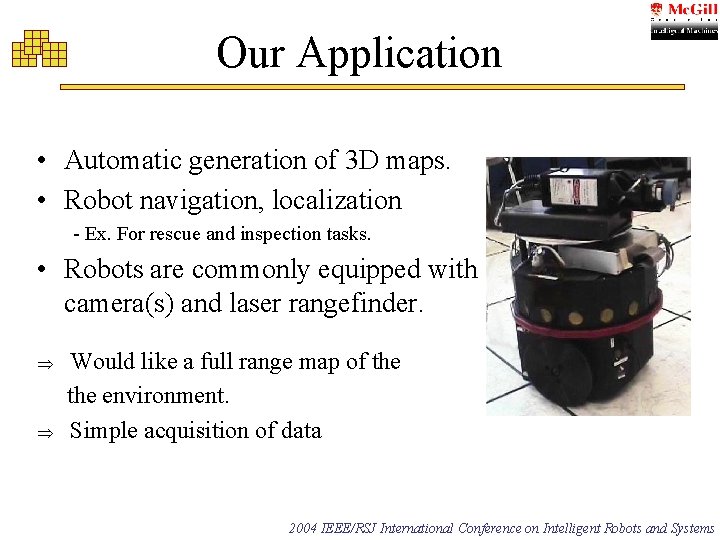

Our Application

- Automatic generation of 3D maps.
- Robot navigation and localization, e.g. for rescue and inspection tasks.
- Robots are commonly equipped with camera(s) and a laser rangefinder.

⇒ Would like a full range map of the environment.
⇒ Simple acquisition of data.

2004 IEEE/RSJ International Conference on Intelligent Robots and Systems

Problem Context

- Pure vision-based methods
  - Shape-from-X remains challenging, especially in unconstrained environments.
- Laser line scanners are commonplace, but
  - Volume scanners remain exotic, costly, and slow.
  - Incomplete range maps are far easier to obtain than complete ones.

Proposed solution: combine visual and partial depth data, i.e. shape from (partial) shape.

Problem Statement

From incomplete range data combined with intensity, perform scene recovery: from range scans like the one shown, infer the rest of the map.

Overview of the Method

- Approximate the composite of intensity and range data at each point as a Markov process.
- Infer complete range maps by estimating joint statistics of observed range and intensity.

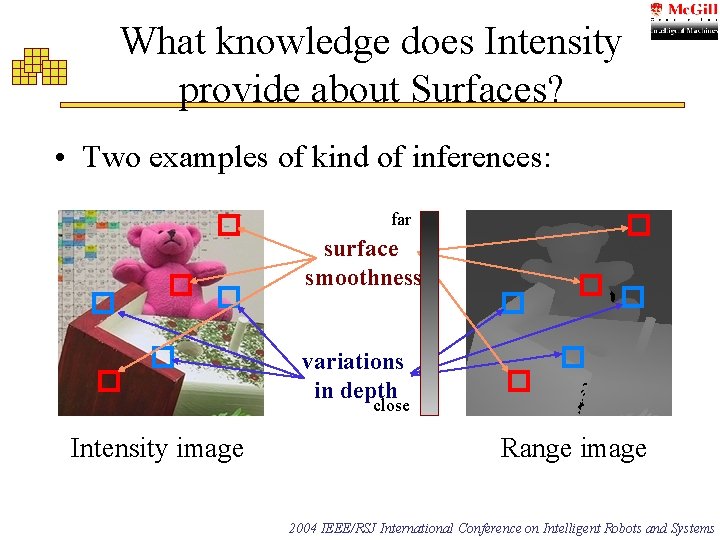

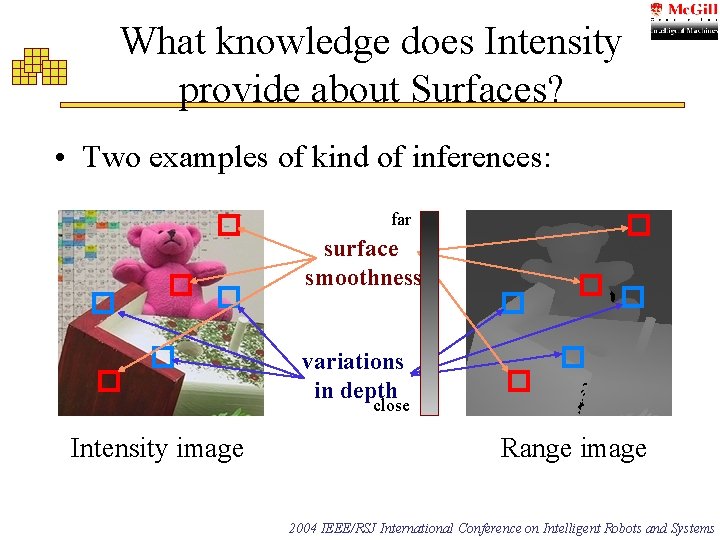

What Knowledge Does Intensity Provide about Surfaces?

- Two examples of the kinds of inference possible: surface smoothness, and variations in depth (far vs. close).

[Figure: intensity image and range image]

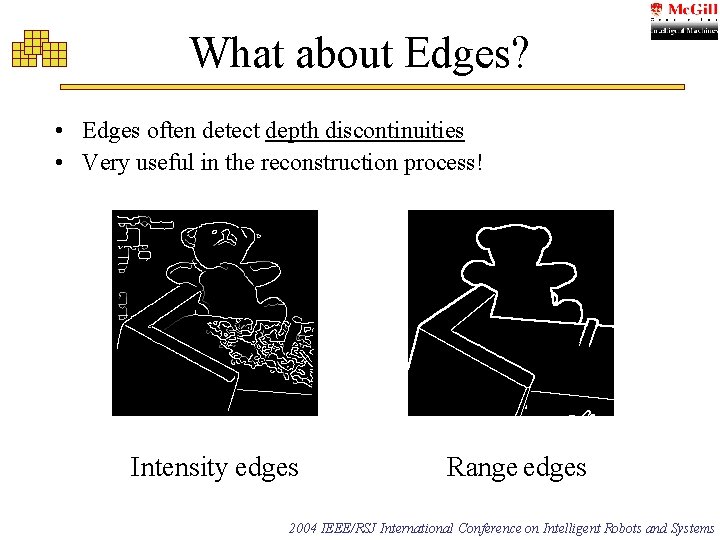

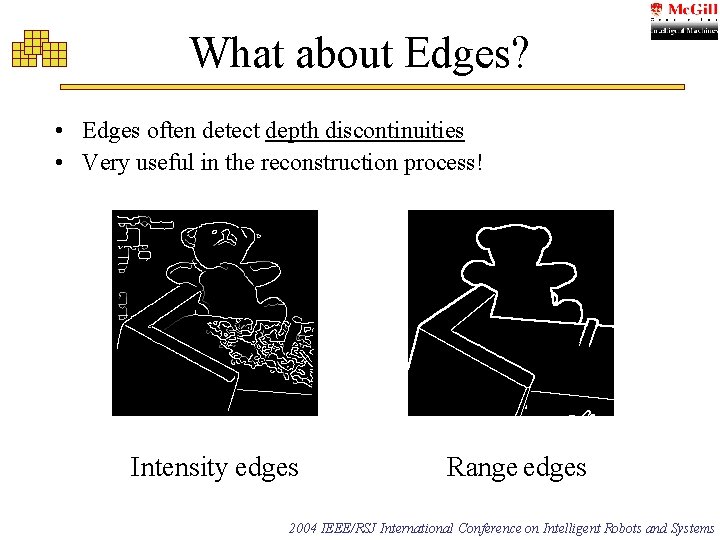

What about Edges?

- Intensity edges often indicate depth discontinuities.
- Very useful in the reconstruction process!

[Figure: intensity edges and range edges]

Range Synthesis Basis

- Range and intensity images are correlated in complicated ways, exhibiting useful structure.
  - This is the basis of shape from shading and shape from darkness, but those methods rely on strong assumptions.
- The variations of pixels in the intensity and range images are related to the values elsewhere in the image(s).

Markov Random Fields

Related Work

- Probabilistic updating has been used for
  - image restoration [e.g. Geman & Geman, TPAMI 1984], as well as
  - texture synthesis [e.g. Efros & Leung, ICCV 1999].
- Problems: pure extrapolation/interpolation
  - is suitable only for textures with a stationary distribution;
  - can converge to inappropriate dynamic equilibria.

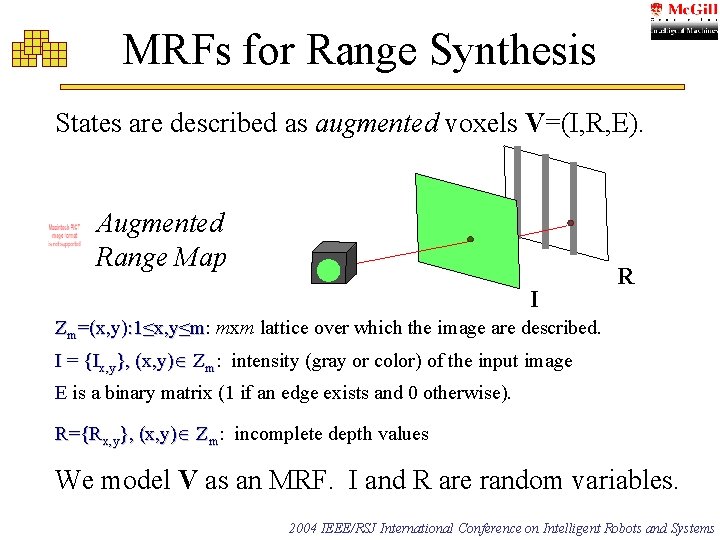

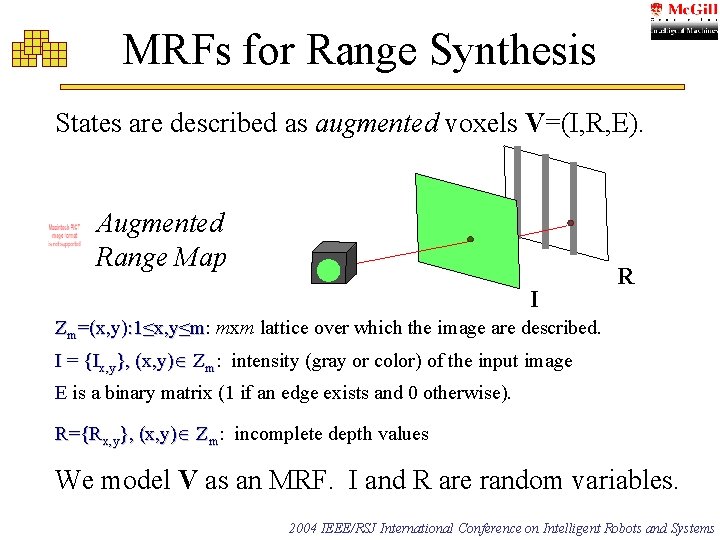

MRFs for Range Synthesis

States are described as augmented voxels $V = (I, R, E)$.

- $Z_m = \{(x, y) : 1 \le x, y \le m\}$: the $m \times m$ lattice over which the images are described.
- $I = \{I_{x,y}\},\ (x, y) \in Z_m$: intensity (gray or color) of the input image.
- $E$: a binary matrix (1 if an edge exists and 0 otherwise).
- $R = \{R_{x,y}\},\ (x, y) \in Z_m$: incomplete depth values.

We model $V$ as an MRF. $I$ and $R$ are random variables.

[Figure: augmented range map, voxel $v_{x,y}$ with components $I$ and $R$]
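One possible way to hold the augmented voxels in memory is sketched below. It is only an illustration, not the authors' data structure: the function name `make_augmented_map`, the use of NaN to mark missing range, and the layer order are all assumptions.

```python
import numpy as np

def make_augmented_map(intensity, range_map, edges):
    """Stack I, R and E into one m x m x 3 array V (hypothetical layout).

    Missing range values are assumed to be stored as NaN in `range_map`.
    Returns V and a boolean mask that is True where range is known.
    """
    I = np.asarray(intensity, dtype=float)
    R = np.asarray(range_map, dtype=float)
    E = np.asarray(edges, dtype=float)
    if not (I.shape == R.shape == E.shape):
        raise ValueError("I, R and E must be defined on the same lattice")
    V = np.stack([I, R, E], axis=-1)   # V[y, x] = (I, R, E) at that voxel
    known = ~np.isnan(R)               # where a range measurement exists
    return V, known

# Tiny 2 x 2 example with two missing range values.
I = np.array([[0.2, 0.4], [0.6, 0.8]])
R = np.array([[1.5, np.nan], [np.nan, 3.0]])
E = np.array([[0, 1], [0, 0]])
V, known = make_augmented_map(I, R, E)
```

The `known` mask is what later steps would consult to decide which voxels still need to be synthesized.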

Markov Random Field Model

Definition: a stochastic process for which a voxel value is predicted by its neighborhood in range and intensity.

$N_{x,y}$ is a square neighborhood of size $n \times n$ centered at voxel $V_{x,y}$.

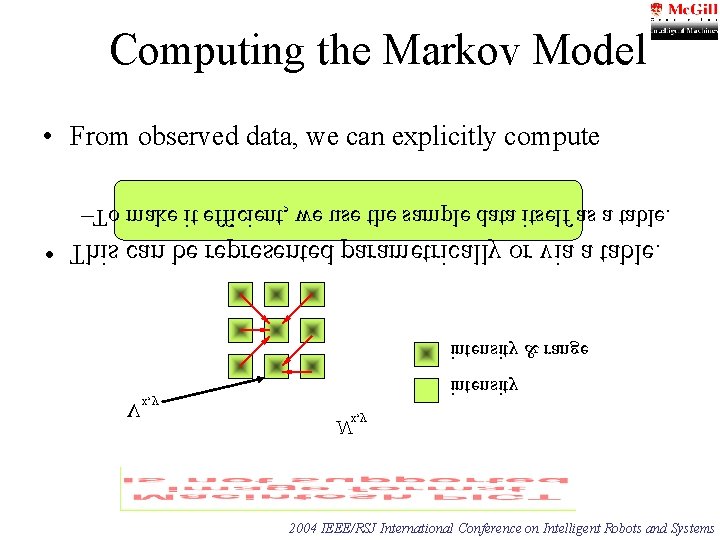

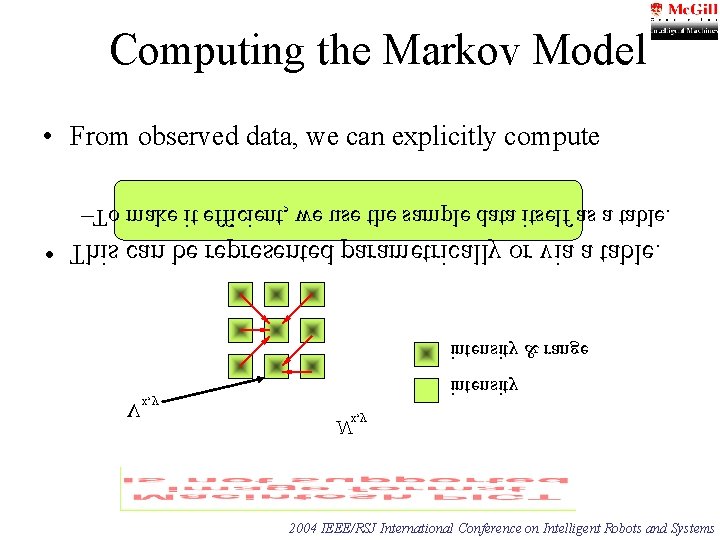

Computing the Markov Model

- From observed data, we can explicitly compute the distribution of a voxel $V_{x,y}$ given its neighborhood $N_{x,y}$.
  - To make it efficient, we use the sample data itself as a table.
- This can be represented parametrically or via a table.

[Figure: voxel $V_{x,y}$ and its neighborhood $N_{x,y}$ over the intensity and range layers]

Estimation Using the Markov Model

- From the model, what should an unknown range value be?
- For an unknown range value with a known neighborhood, we can select the maximum likelihood estimate for $V_{x,y}$.
- Further, we can do this even with partial neighborhood information.

Interpolate PDF

- In general, we cannot uniquely solve for the desired neighborhood configuration. Instead, we assume that the values in $N_{u,v}$ are similar to the values in $N_{x,y}$, with $(x, y) \neq (u, v)$.
- Similarity measure: Gaussian-weighted SSD (sum of squared differences).
- The update schedule is purely causal and deterministic.
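A minimal sketch of a Gaussian-weighted SSD between two neighborhoods, followed by a best-match lookup. This is an illustration under assumptions, not the authors' implementation: it works on a single channel, the helper names (`gaussian_kernel`, `weighted_ssd`, `best_match`) are hypothetical, and renormalizing the weights over the known entries is one possible way to handle partial neighborhoods.

```python
import numpy as np

def gaussian_kernel(n, sigma):
    """n x n Gaussian weights centred on the middle pixel, summing to 1."""
    ax = np.arange(n) - (n - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def weighted_ssd(query, candidate, valid, kernel):
    """Gaussian-weighted SSD over the entries marked valid in the query.

    Weights are renormalised over the valid entries (an assumption) so that
    neighbourhoods with different amounts of known data stay comparable.
    """
    w = kernel * valid
    total = w.sum()
    if total == 0:
        return np.inf                      # nothing known to compare against
    diff = np.where(valid, query - candidate, 0.0)
    return float((w * diff ** 2).sum() / total)

def best_match(query, valid, candidates, kernel):
    """Index of the candidate neighbourhood with the smallest weighted SSD."""
    scores = [weighted_ssd(query, c, valid, kernel) for c in candidates]
    return int(np.argmin(scores))

kernel = gaussian_kernel(3, sigma=1.0)
# Query neighbourhood with an unknown centre value (NaN).
query = np.array([[1.0, 1.0, 1.0], [1.0, np.nan, 2.0], [2.0, 2.0, 2.0]])
valid = ~np.isnan(query)
candidates = [
    np.full((3, 3), 5.0),
    np.array([[1.0, 1.0, 1.0], [1.0, 1.5, 2.0], [2.0, 2.0, 2.0]]),
]
idx = best_match(query, valid, candidates, kernel)
estimate = candidates[idx][1, 1]           # centre of the best match
```

In a full system the comparison would run over both the intensity and range layers of each neighborhood; the single-channel version here only shows the distance itself.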

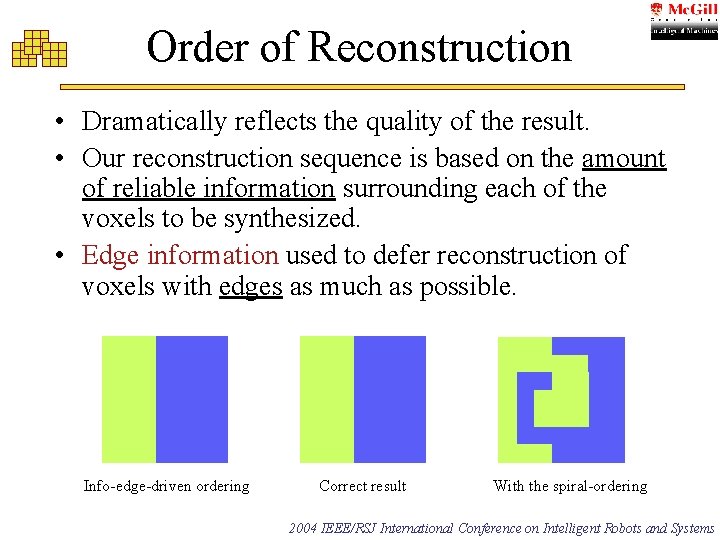

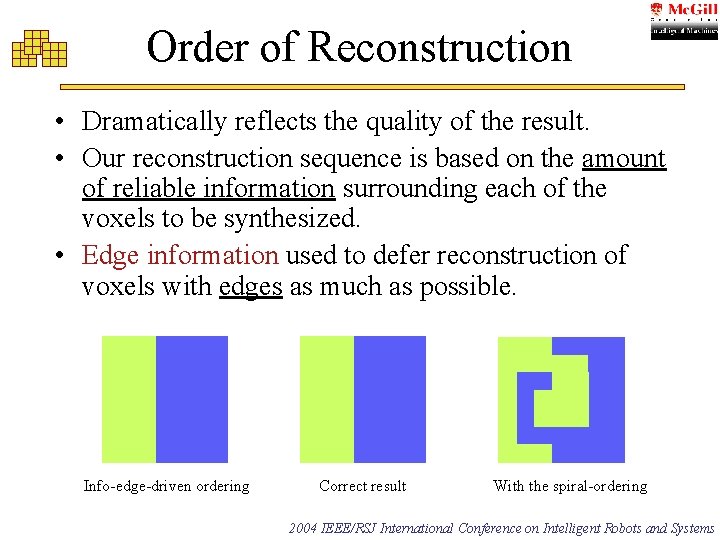

Order of Reconstruction

- Dramatically affects the quality of the result.
- Our reconstruction sequence is based on the amount of reliable information surrounding each of the voxels to be synthesized.
- Edge information is used to defer the reconstruction of voxels with edges as much as possible.

[Figure panels: correct result; result with info-edge-driven ordering; result with spiral ordering]
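The ordering idea can be sketched as a simple priority rule: pick the unknown voxel with the most known neighbors, and push voxels lying on an edge to the back of the queue. The scoring below (neighbor count minus a fixed edge penalty) and the function names are assumptions chosen for illustration; the slides do not give the exact priority function.

```python
import numpy as np

def next_voxel(known, edges, n=3):
    """Unknown voxel with the most known neighbours, deferring edge voxels."""
    rows, cols = known.shape
    half = n // 2
    edge_penalty = n * n                   # larger than any neighbour count
    best, best_score = None, -np.inf
    for y in range(rows):
        for x in range(cols):
            if known[y, x]:
                continue
            y0, y1 = max(0, y - half), min(rows, y + half + 1)
            x0, x1 = max(0, x - half), min(cols, x + half + 1)
            score = int(known[y0:y1, x0:x1].sum())
            if edges[y, x]:
                score -= edge_penalty      # edge voxels go last
            if score > best_score:
                best, best_score = (y, x), score
    return best

def reconstruction_order(known, edges, n=3):
    """Sequence in which the unknown voxels would be synthesized."""
    known = np.asarray(known, dtype=bool).copy()
    order = []
    while not known.all():
        voxel = next_voxel(known, edges, n)
        order.append(voxel)
        known[voxel] = True                # a synthesized value counts as known
    return order

known = np.array([[1, 1, 1], [1, 0, 0], [1, 0, 0]], dtype=bool)
edges = np.zeros((3, 3), dtype=int)
edges[1, 1] = 1                            # an intensity edge at the centre
order = reconstruction_order(known, edges)
```

In this toy lattice the centre voxel has the most known neighbors but sits on an edge, so it ends up last in `order`.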

Experimental Evaluation

- Obtain full range and intensity maps of the same environment.
- Remove most of the range data, then try to estimate it.
- Use the original ground truth data to estimate the accuracy of the reconstruction.
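The accuracy figure reported on the result slides is the mean absolute residual (MAR). A minimal sketch of how it could be computed follows; averaging only over the voxels whose range was removed is an assumption, since the slides do not say which pixels enter the mean, and the function name is hypothetical.

```python
import numpy as np

def mean_absolute_residual(ground_truth, synthesized, was_missing):
    """Mean |ground truth - synthesized| over the voxels that were removed."""
    gt = np.asarray(ground_truth, dtype=float)
    syn = np.asarray(synthesized, dtype=float)
    mask = np.asarray(was_missing, dtype=bool)
    if not mask.any():
        raise ValueError("no missing voxels to evaluate")
    return float(np.abs(gt[mask] - syn[mask]).mean())

# Toy example in centimetres: two voxels were removed and re-estimated.
gt = np.array([[100.0, 200.0], [300.0, 400.0]])
syn = np.array([[100.0, 210.0], [290.0, 400.0]])
missing = np.array([[False, True], [True, False]])
mar = mean_absolute_residual(gt, syn, missing)
```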

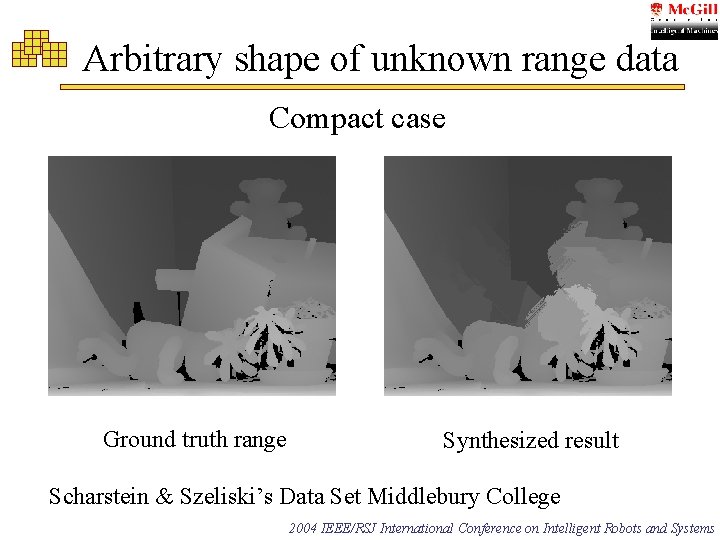

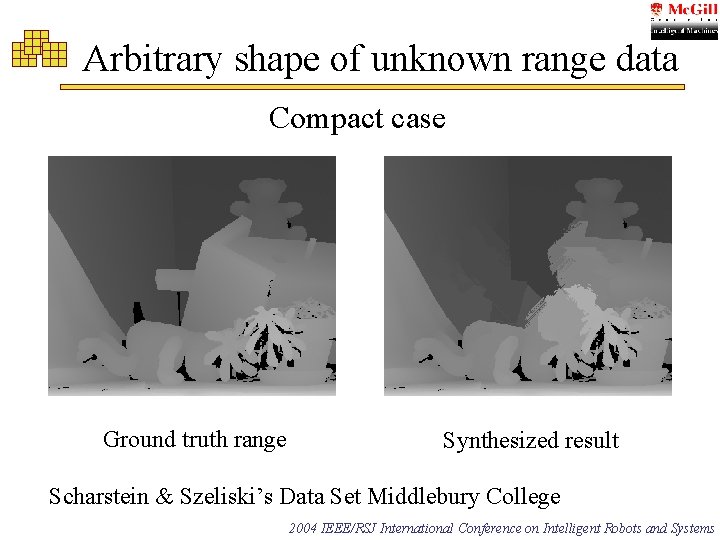

Arbitrary Shape of Unknown Range Data: Compact Case

[Figure panels: input intensity image, input range, ground truth range, synthesized result]

Data: Scharstein & Szeliski's data set, Middlebury College.

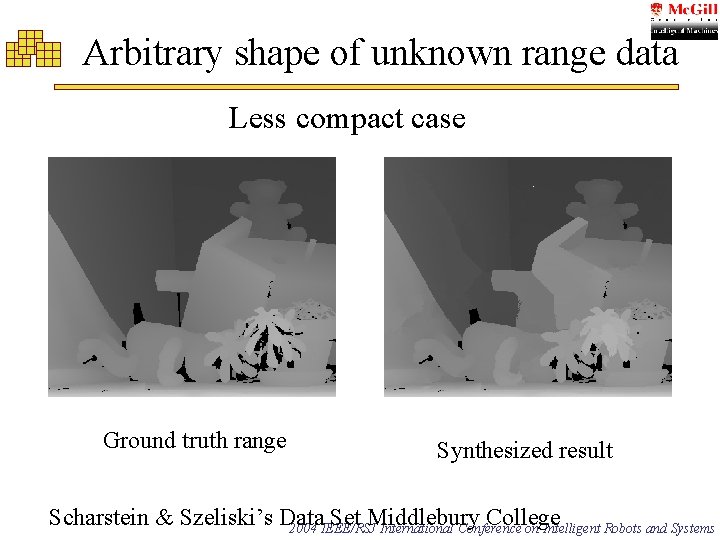

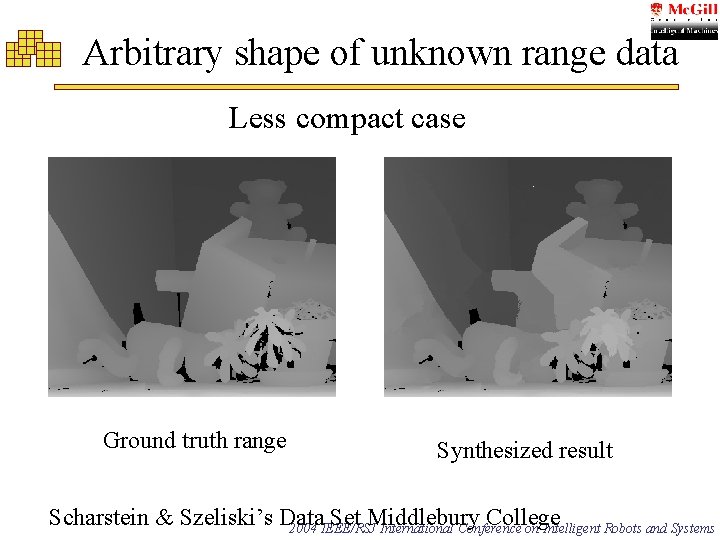

Arbitrary Shape of Unknown Range Data: Less Compact Case

[Figure panels: input intensity image, input range, ground truth range, synthesized result]

Data: Scharstein & Szeliski's data set, Middlebury College.

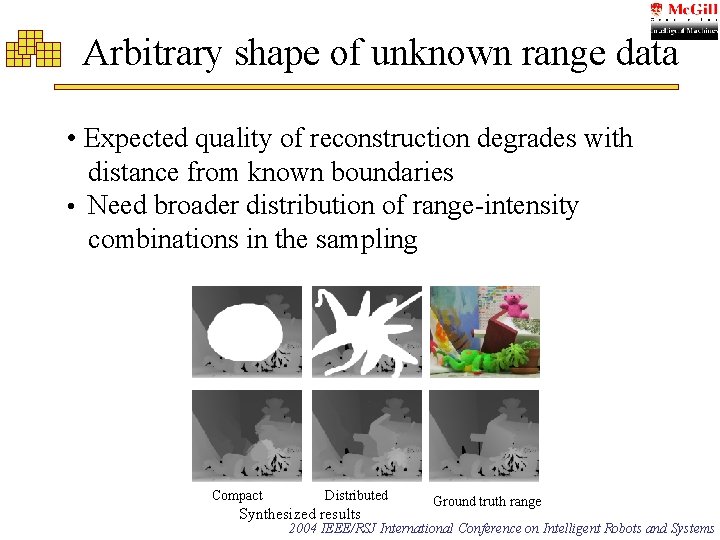

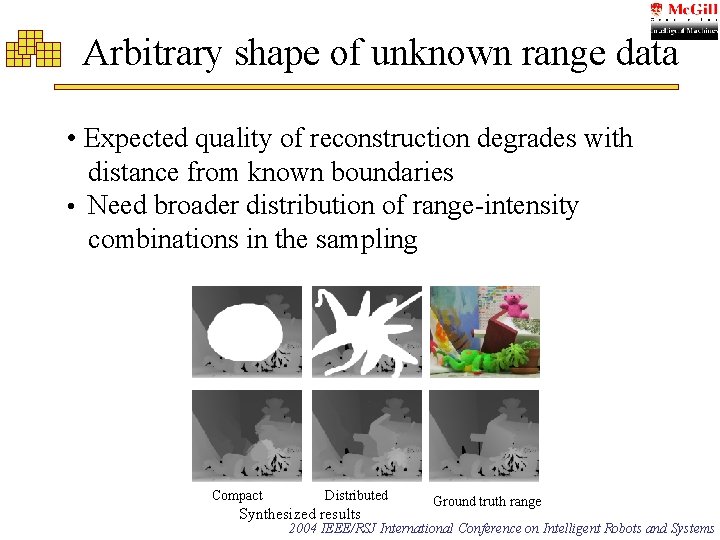

Arbitrary Shape of Unknown Range Data

- Expected quality of reconstruction degrades with distance from known boundaries.
- Need a broader distribution of range-intensity combinations in the sampling.

[Figure panels: synthesized results for the compact and distributed cases; ground truth range]

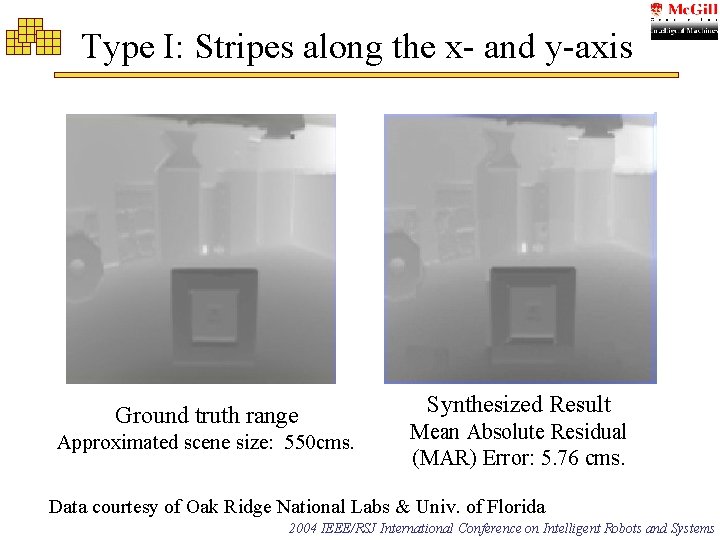

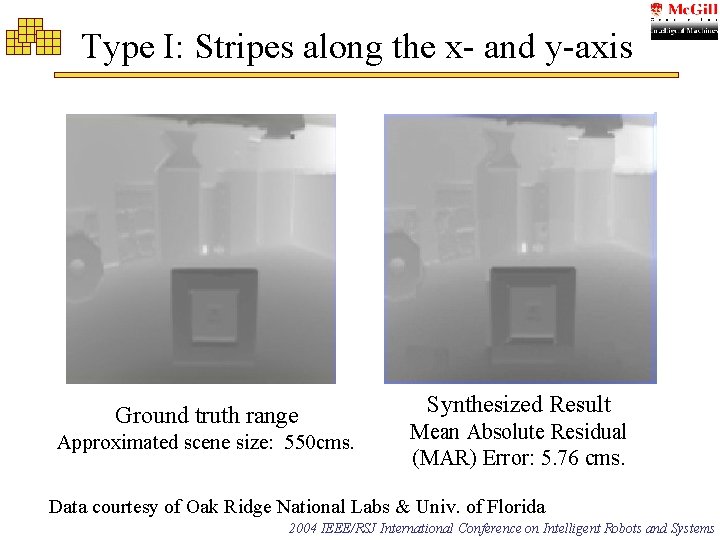

Type I: Stripes along the x- and y-axis

Case 1 ($r_w = 10$, $x_w = 20$): 39% of range missing

- Approximate scene size: 550 cm.
- Mean Absolute Residual (MAR) error: 5.76 cm.

[Figure panels: input intensity, ground truth range, synthesized result]

Data courtesy of Oak Ridge National Labs & Univ. of Florida.
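The striped sampling patterns can be sketched as boolean masks. The code below assumes that $r_w$ is the width of the stripes where range is kept and $x_w$ the width of the gaps where range is removed; the slides do not define the symbols explicitly, and the function name `stripe_mask` is hypothetical. The missing percentages it produces depend on the image size, so it is not expected to reproduce the reported figures.

```python
import numpy as np

def stripe_mask(shape, rw, xw, both_axes=True):
    """Boolean mask that is True where range is kept.

    Rows are kept in bands of width rw separated by gaps of width xw.
    With both_axes=True, columns are kept in the same pattern (grid of
    known stripes); with both_axes=False only horizontal stripes remain.
    """
    rows, cols = shape
    period = rw + xw
    keep_rows = (np.arange(rows) % period) < rw
    known = np.repeat(keep_rows[:, None], cols, axis=1)
    if both_axes:
        keep_cols = (np.arange(cols) % period) < rw
        known = known | keep_cols[None, :]
    return known

grid = stripe_mask((60, 60), rw=10, xw=20, both_axes=True)      # stripes on both axes
rows_only = stripe_mask((60, 60), rw=10, xw=20, both_axes=False)  # stripes on one axis
missing_grid = 1.0 - grid.mean()
missing_rows = 1.0 - rows_only.mean()
```

On this 60 x 60 toy lattice the one-axis pattern removes two thirds of the range values and the two-axis pattern removes four ninths.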

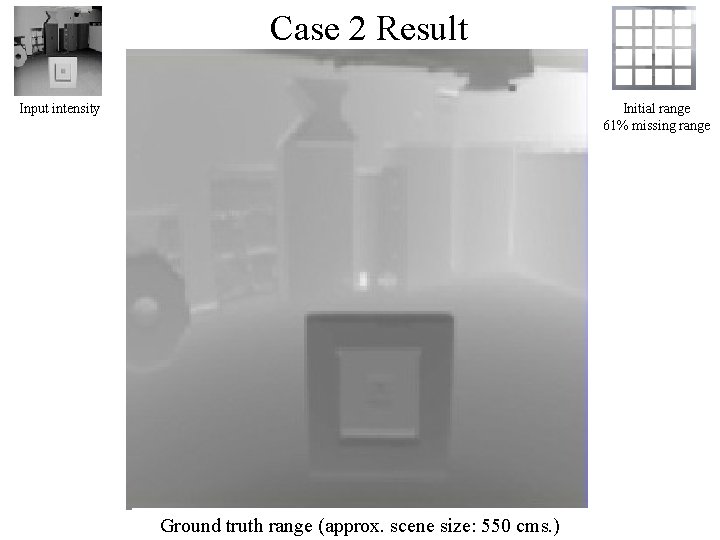

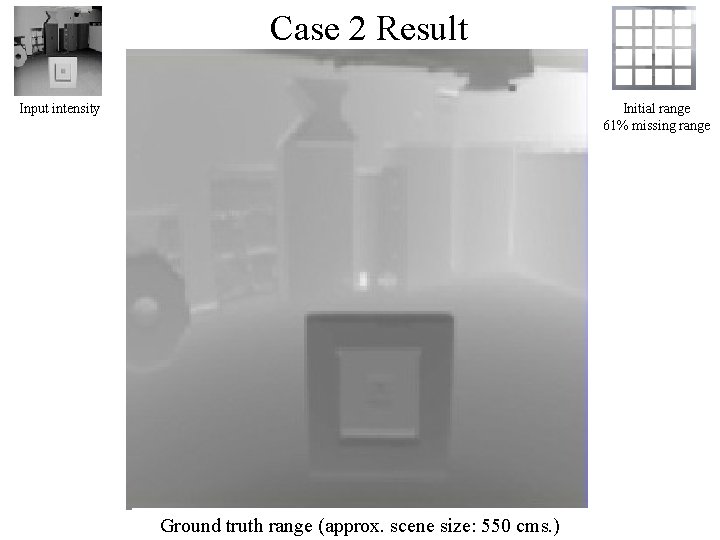

Case 2 ($r_w = 5$, $x_w = 25$): 61% of range missing

- Approximate scene size: 550 cm.
- Mean Absolute Residual (MAR) error: 8.86 cm.

[Figure panels: input intensity, initial range, ground truth range, result]

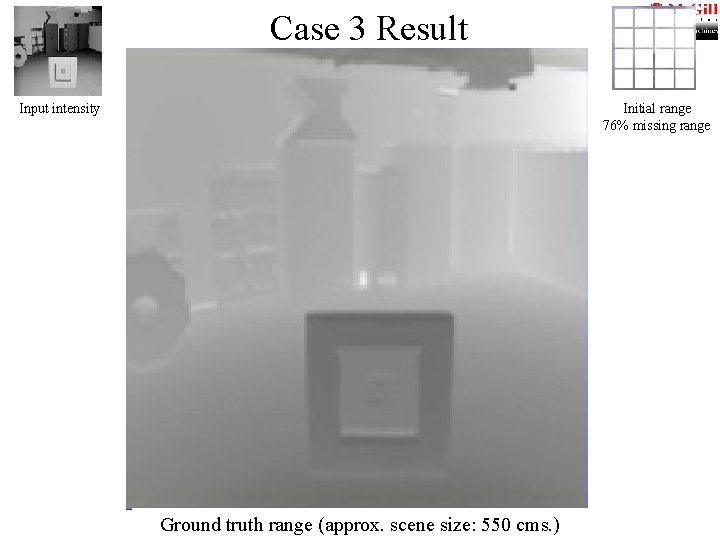

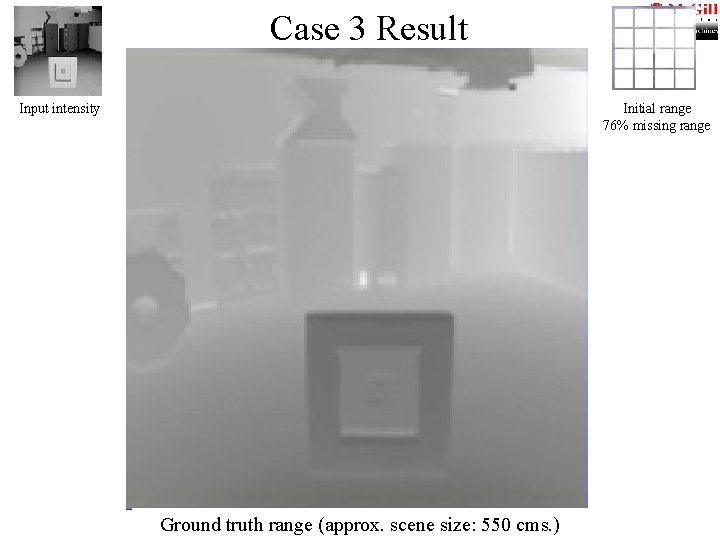

Case 3 ($r_w = 3$, $x_w = 28$): 76% of range missing

- Approximate scene size: 550 cm.
- Mean Absolute Residual (MAR) error: 9.99 cm.

[Figure panels: input intensity, initial range, ground truth range, result]

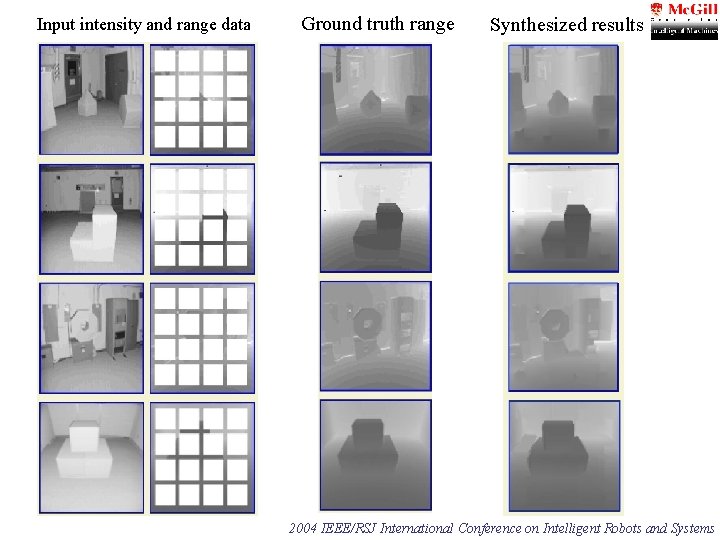

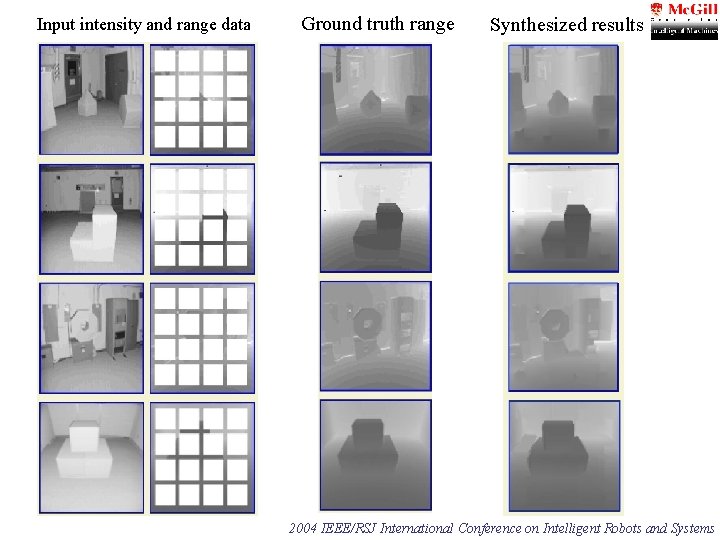

[Figure panels: input intensity and range data, ground truth range, synthesized results]

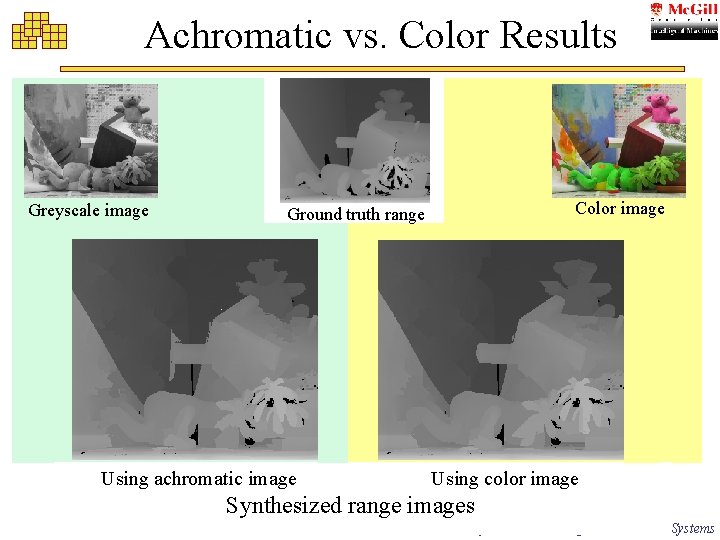

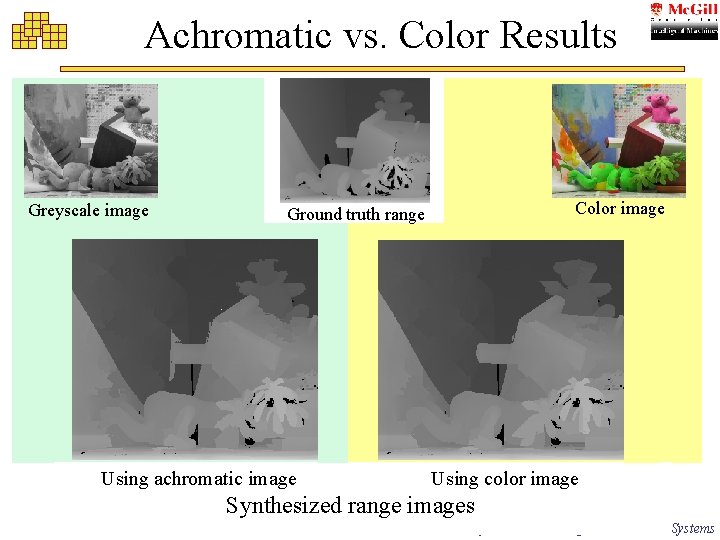

Achromatic vs. Color Results

Input range data: 66% of range missing.

[Figure panels: greyscale image, color image, ground truth range, synthesized range using the achromatic image, synthesized range using the color image]

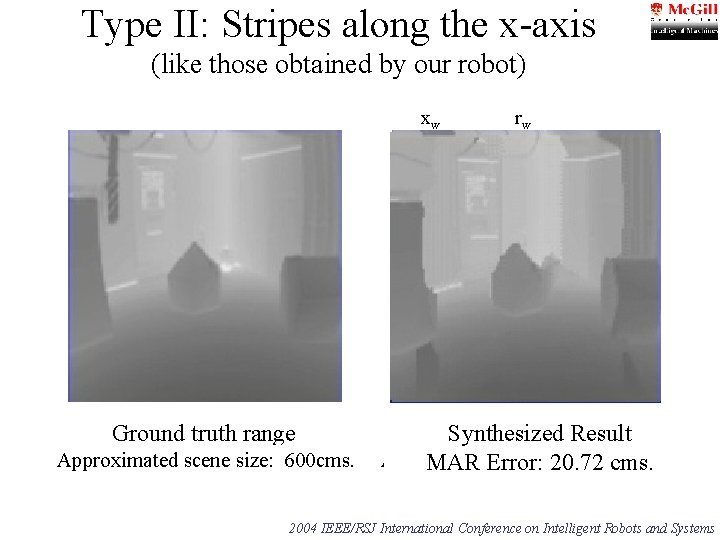

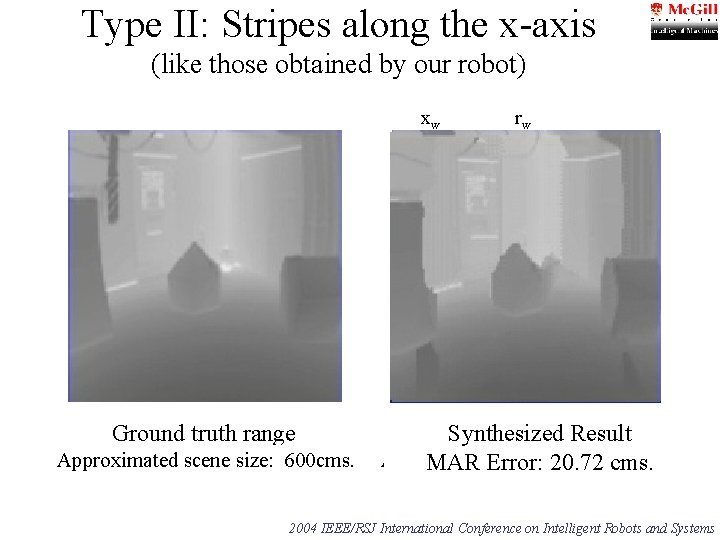

Type II: Stripes along the x-axis (like those obtained by our robot)

Case 1 ($r_w = 10$, $x_w = 20$): 62.5% of range missing

- Approximate scene size: 600 cm.
- Mean Absolute Residual (MAR) error: 20.72 cm.

[Figure panels: input intensity, ground truth range, synthesized result]

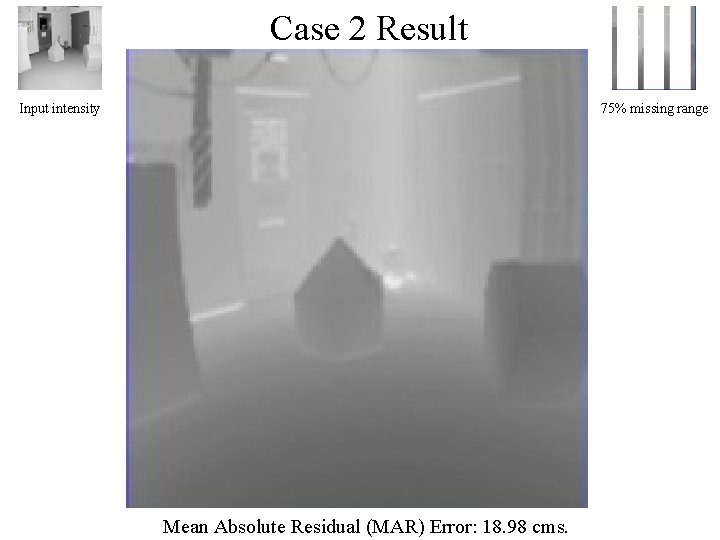

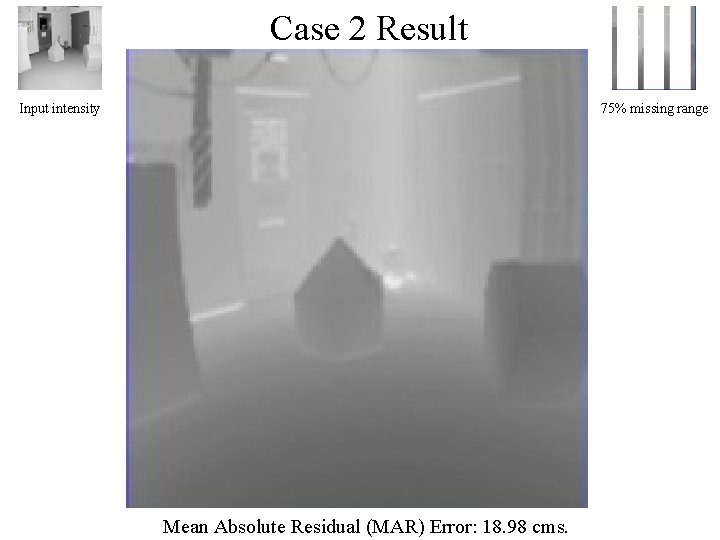

Case 2: 75% of range missing

- Mean Absolute Residual (MAR) error: 18.98 cm.

[Figure panels: input intensity, result]

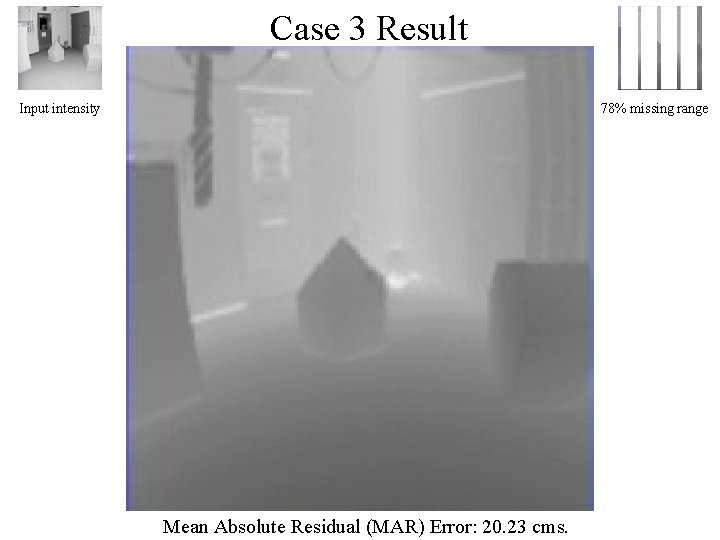

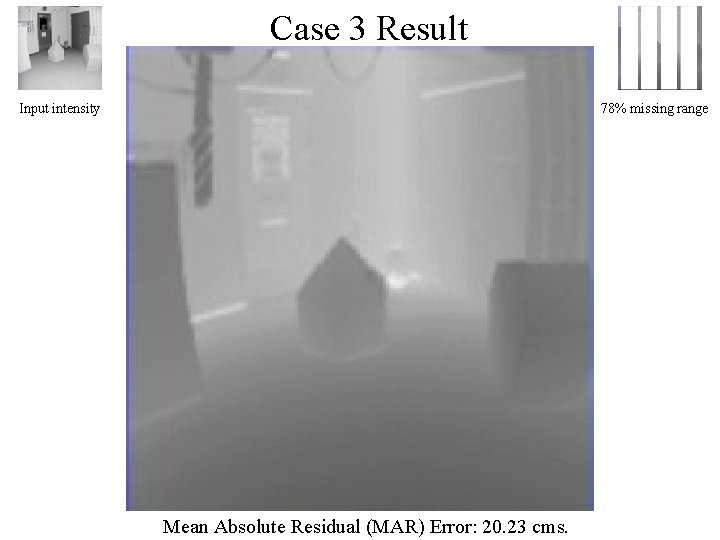

Case 3: 78% of range missing

- Mean Absolute Residual (MAR) error: 20.23 cm.

[Figure panels: input intensity, result]

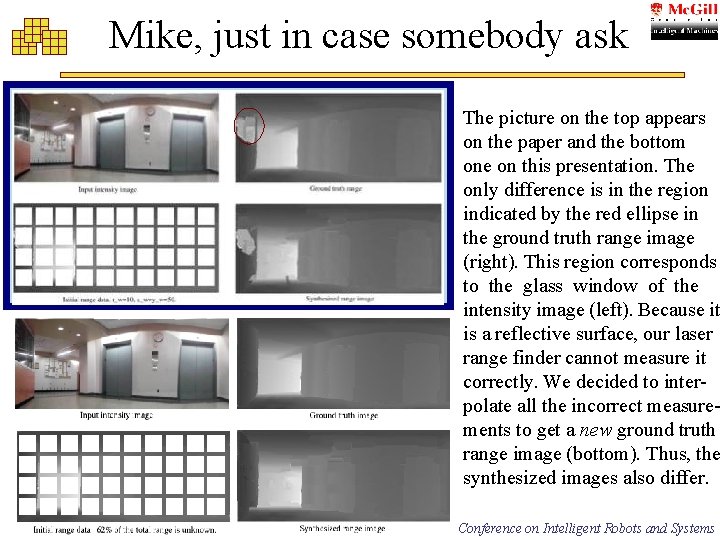

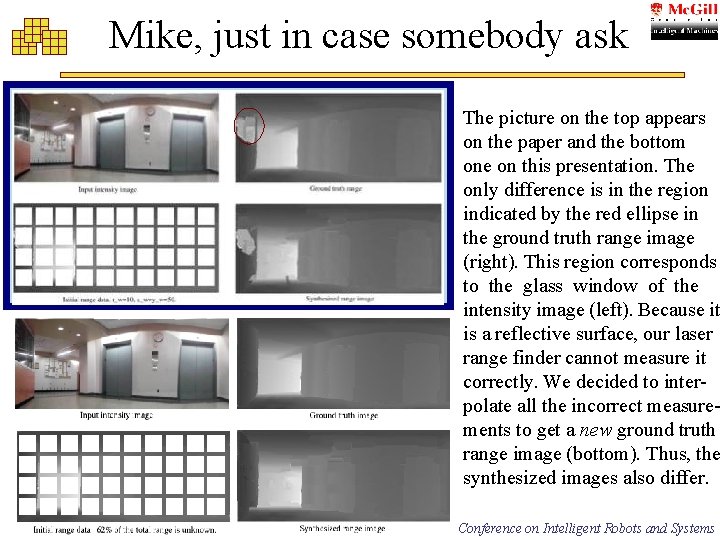

Mike, just in case somebody asks: the picture on the top appears in the paper and the bottom one in this presentation. The only difference is in the region indicated by the red ellipse in the ground truth range image (right). This region corresponds to the glass window in the intensity image (left). Because it is a reflective surface, our laser rangefinder cannot measure it correctly. We decided to interpolate all the incorrect measurements to get a new ground truth range image (bottom). Thus, the synthesized images also differ.

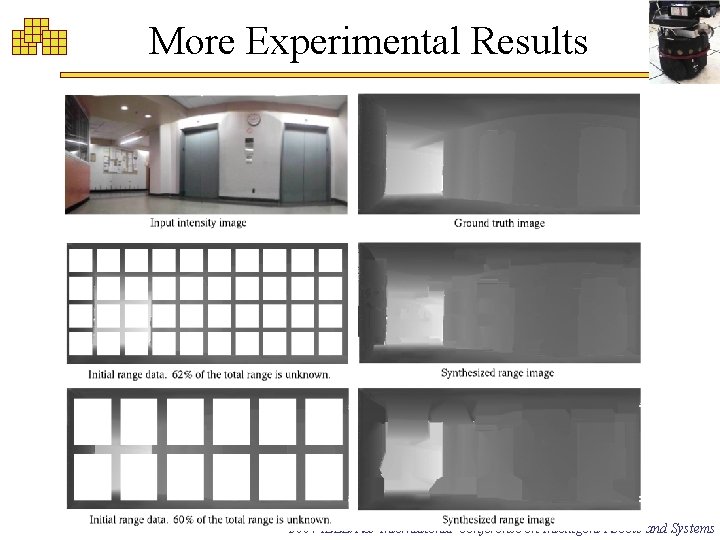

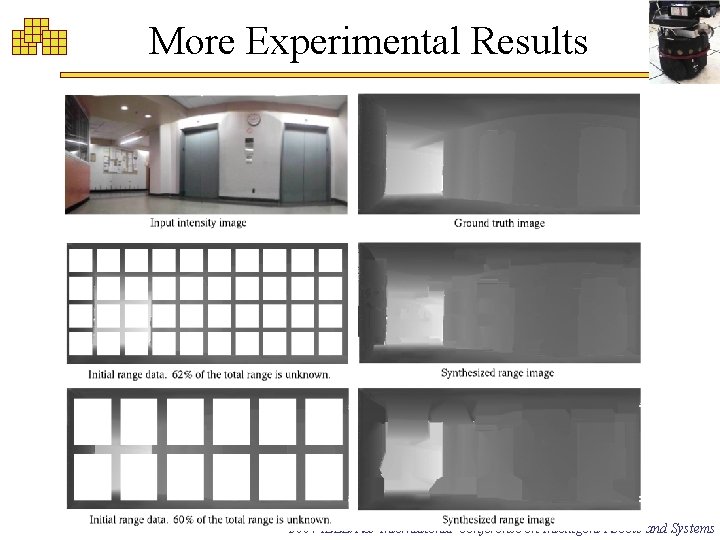

More Experimental Results

Conclusions

- Works very well; is this consistent?
- Can be more robust than standard methods (e.g. shape from shading) due to limited dependence on a priori reflectance assumptions.
- Depends on an adequate amount of reliable range data as input.
- Depends on statistical consistency between the region to be constructed and the region that has been measured.

Discussion & Ongoing Work

- Surface normals are needed when the input range data do not capture the underlying structure.
- Data from a real robot
  - Issues: non-uniform scale, registration, correlation across different types of data.
  - Integration of data from different viewpoints.

Questions?

Adding Surface Normals (not in the paper)

- We compute the normals by fitting a plane (smooth surface) in windows of $n \times n$ pixels.
- Normal vector: the eigenvector with the smallest eigenvalue of the covariance matrix.
- Similarity is now computed between surface normals instead of range values.
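The plane fit can be sketched with an eigendecomposition of the covariance matrix of the points in a window. This is an illustration under assumptions: each pixel in the window is taken to be a 3D point (x, y, range), the function name `plane_normal` is hypothetical, and the sign convention (normal pointing towards positive z) is an arbitrary choice.

```python
import numpy as np

def plane_normal(points):
    """Unit normal of the least-squares plane through a (k, 3) point set.

    Returns the normal and the smallest eigenvalue of the covariance
    matrix, i.e. the variance of the points along the normal direction.
    """
    pts = np.asarray(points, dtype=float)
    centred = pts - pts.mean(axis=0)
    cov = centred.T @ centred / len(pts)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    normal = eigvecs[:, 0]                   # direction of least variance
    if normal[2] < 0:                        # fix the sign: point towards +z
        normal = -normal
    return normal, float(eigvals[0])

# 3 x 3 window of points lying exactly on the plane z = 2x + 1.
window = [(x, y, 2.0 * x + 1.0) for y in range(3) for x in range(3)]
normal, residual = plane_normal(window)
```

For points that lie exactly on a plane the smallest eigenvalue is zero up to rounding; larger values indicate that the window is not well described by a single plane.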

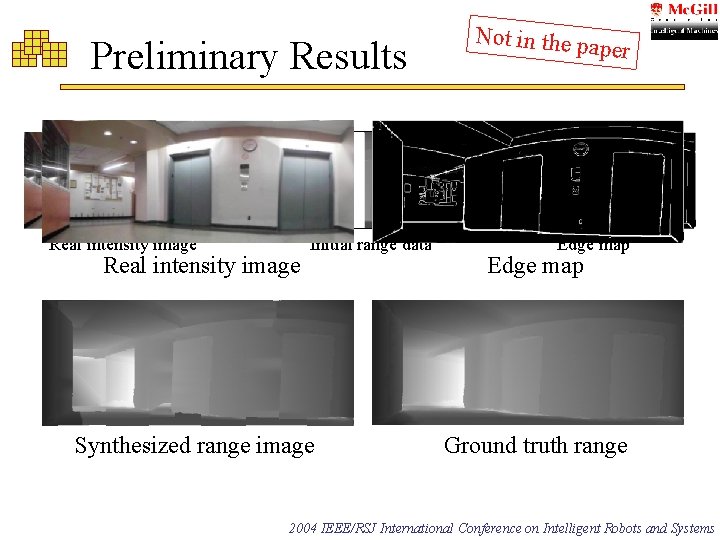

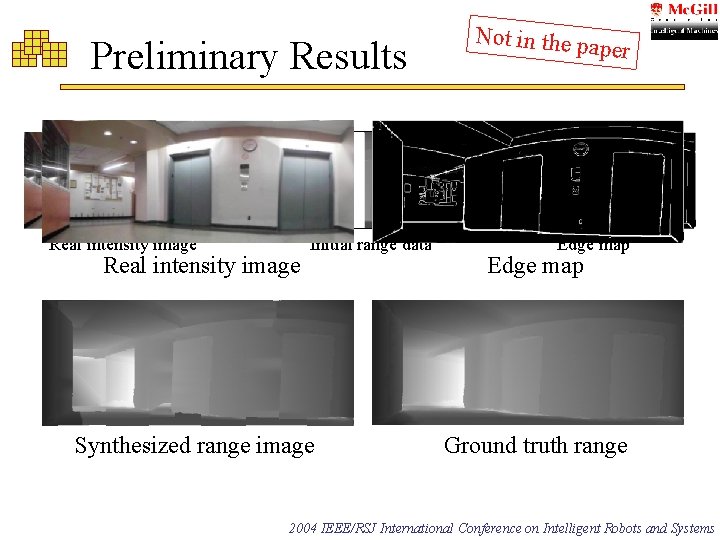

Preliminary Results (not in the paper)

[Figure panels: real intensity image, edge map, initial range scans, initial range data, synthesized range image, ground truth range]

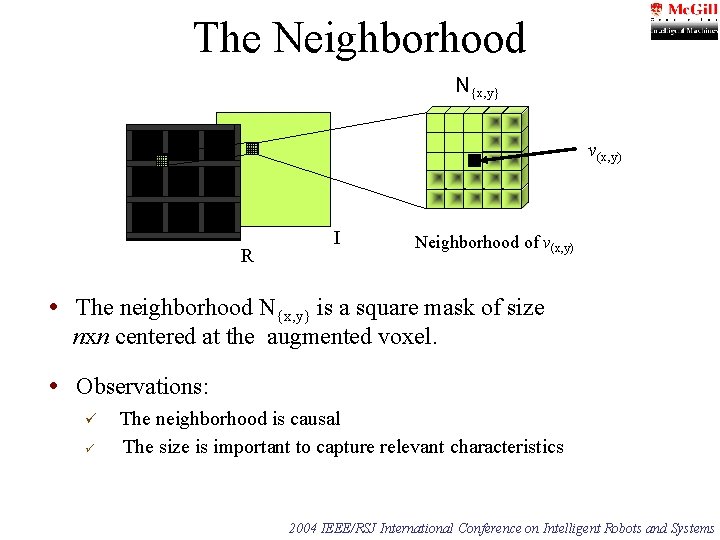

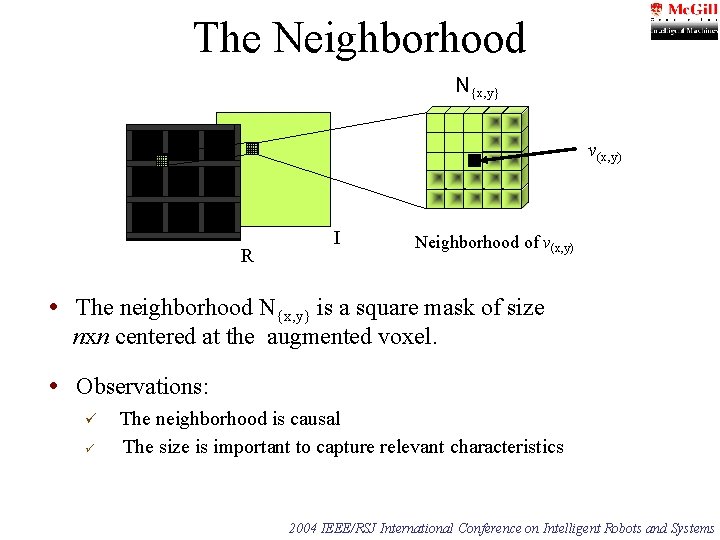

The Neighborhood $N_{x,y}$

- The neighborhood $N_{x,y}$ is a square mask of size $n \times n$ centered at the augmented voxel $v_{x,y}$.
- Observations:
  - The neighborhood is causal.
  - Its size is important for capturing relevant characteristics.

[Figure: neighborhood of $v_{x,y}$ over the $R$ and $I$ layers]


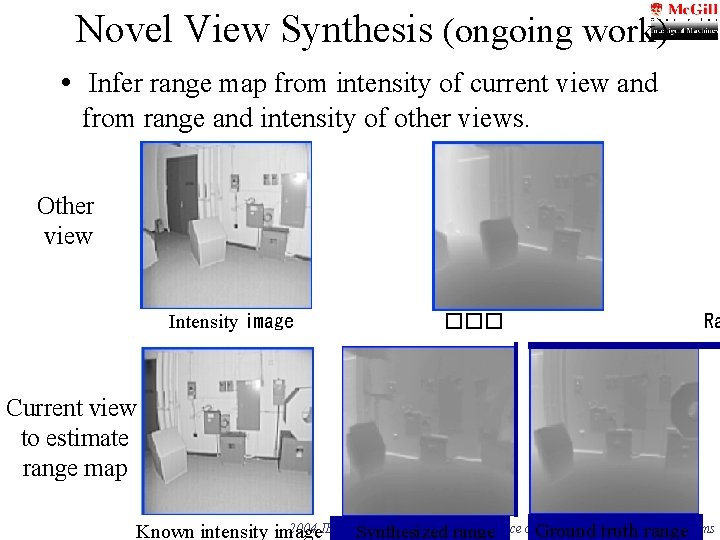

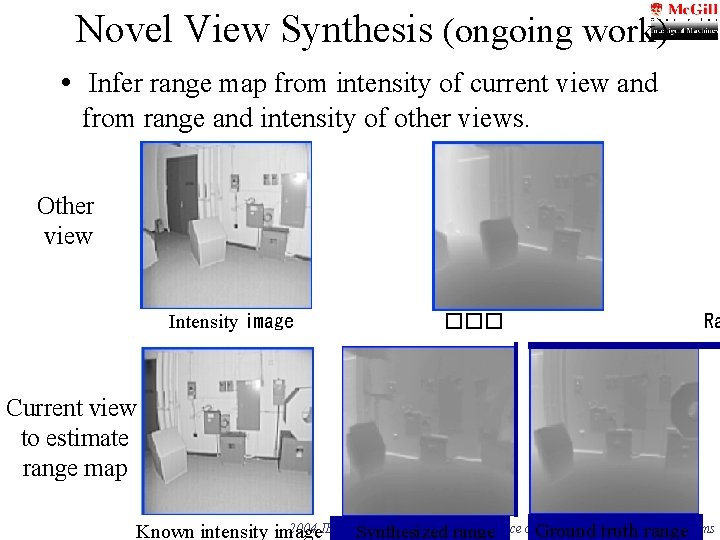

Novel View Synthesis (ongoing work)

- Infer the range map from the intensity of the current view and from the range and intensity of other views.

[Figure panels: other view (intensity image and range); current view whose range map is estimated (known intensity image; initial range: none); synthesized range; ground truth range]

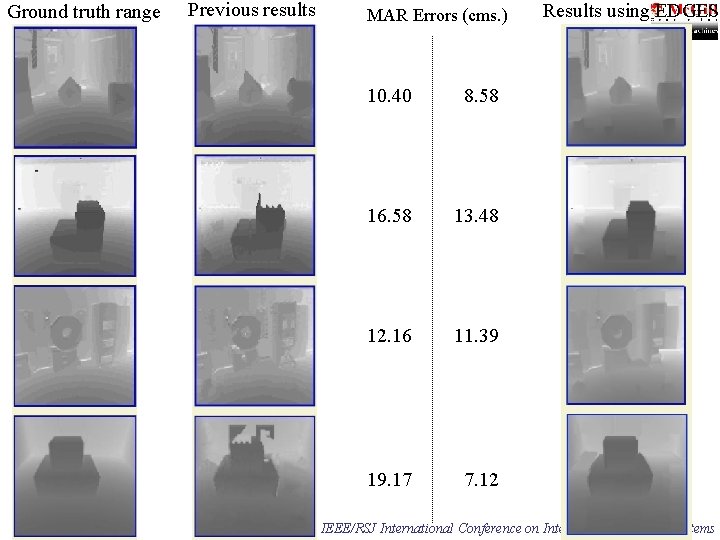

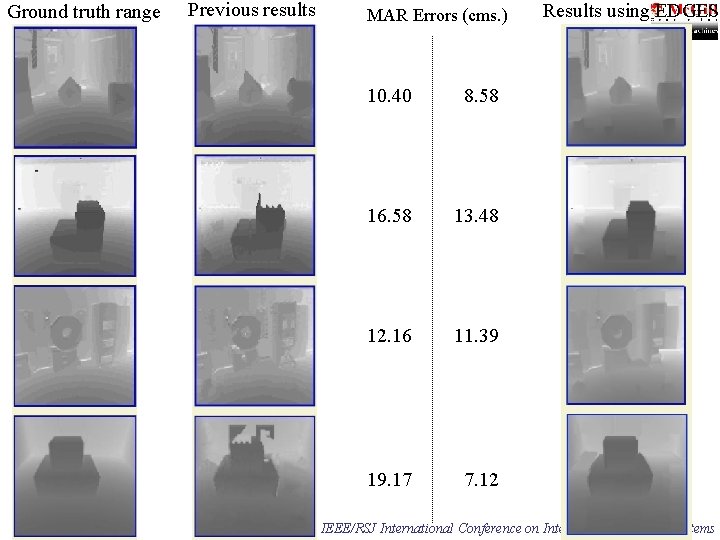

Previous Results vs. Results Using Edges

[Figure rows: ground truth range; previous results; results using edges]

MAR errors (cm): 10.40, 8.58, 16.58, 13.48, 12.16, 11.39, 19.17, 7.12

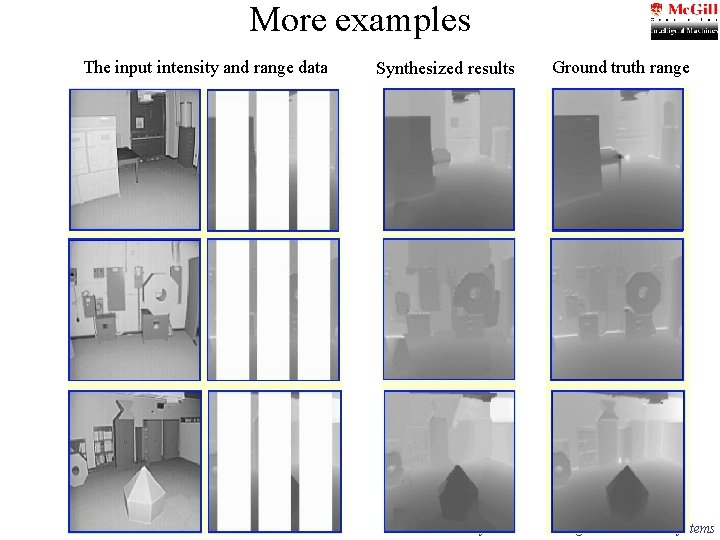

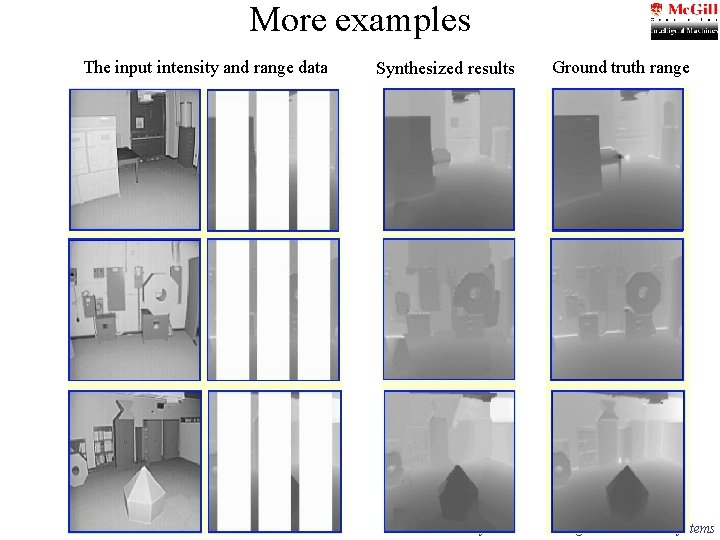

More Examples

[Figure panels: input intensity and range data, synthesized results, ground truth range]

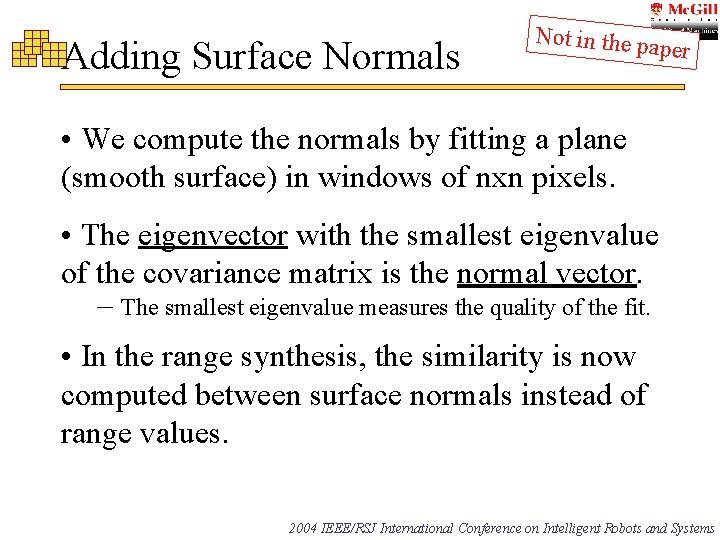

Adding Surface Normals (not in the paper)

- We compute the normals by fitting a plane (smooth surface) in windows of $n \times n$ pixels.
- The eigenvector with the smallest eigenvalue of the covariance matrix is the normal vector.
  - The smallest eigenvalue measures the quality of the fit.
- In the range synthesis, the similarity is now computed between surface normals instead of range values.